Premature infant retinal image classification method and device based on attention mechanism

A method and device for classifying retinal images of premature infants based on an attention mechanism. The technology addresses the unbalanced distribution of disease features and background in ROP images and the resulting inaccurate classification of ROP images. It improves recognition and classification efficiency, improves classification performance, and offers high reliability.

Examples

Embodiment 1

[0040] A method for classifying retinal images of premature infants based on an attention mechanism, comprising the following steps:

[0041] Step 1: preprocess the 2D retinal fundus image to be identified to obtain the preprocessed 2D retinal fundus image.

[0042] Preprocessing includes downsampling and normalization by mean subtraction.

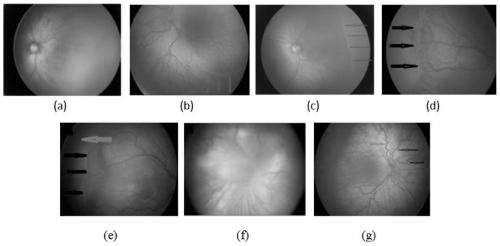

[0043] Figure 1 shows examples of the two-dimensional retinal fundus images classified in the present invention: (a) normal image, (b) stage 1, (c) stage 2, (d) stage 3, (e) stage 4, (f) stage 5, (g) plus disease.

[0044] To prevent GPU memory overflow, the original 640×480 two-dimensional retinal fundus image is downsampled to 320×240 by bilinear interpolation. To improve image contrast, the image is normalized by mean subtraction: the average pixel value of the image is subtracted from each of its pixel values.
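The preprocessing in [0044] can be sketched as follows. This is a minimal NumPy illustration, not the patent's implementation: the function names `bilinear_resize` and `preprocess` are hypothetical, the half-pixel sampling convention is an assumption, and the mean is assumed to be taken over the whole image (the text does not say whether it is computed per channel).

```python
import numpy as np

def bilinear_resize(img, out_h, out_w):
    """Resize an (H, W) or (H, W, C) array with bilinear interpolation."""
    img = np.asarray(img, dtype=np.float64)
    in_h, in_w = img.shape[:2]
    # Map output pixel centres back to input coordinates
    # (half-pixel convention, an assumption of this sketch).
    ys = np.clip((np.arange(out_h) + 0.5) * in_h / out_h - 0.5, 0, in_h - 1)
    xs = np.clip((np.arange(out_w) + 0.5) * in_w / out_w - 0.5, 0, in_w - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, in_h - 1)
    x1 = np.minimum(x0 + 1, in_w - 1)
    wy = ys - y0  # vertical interpolation weights
    wx = xs - x0  # horizontal interpolation weights
    if img.ndim == 3:
        wy, wx = wy[:, None, None], wx[None, :, None]
    else:
        wy, wx = wy[:, None], wx[None, :]
    # Interpolate along x on the two neighbouring rows, then along y.
    top = img[y0][:, x0] * (1 - wx) + img[y0][:, x1] * wx
    bottom = img[y1][:, x0] * (1 - wx) + img[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy

def preprocess(img, out_h=240, out_w=320):
    """Downsample to 320x240 (width x height), then subtract the image mean."""
    resized = bilinear_resize(img, out_h, out_w)
    return resized - resized.mean()

# Usage with a synthetic 640x480 RGB image (array shape is height x width x channels).
rng = np.random.default_rng(0)
fundus = rng.integers(0, 256, size=(480, 640, 3)).astype(np.uint8)
prepared = preprocess(fundus)
```

With an exact factor-of-two reduction and this sampling convention, each output pixel is the average of a 2×2 input block. After mean subtraction the image has zero mean, so values may be negative.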

[0045] Step 2. Input the 2D retinal fundus image preprocesse...

Embodiment 2

[0059] An attention-based retinal image classification device for premature infants, including:

[0060] A preprocessing module, configured to preprocess the two-dimensional retinal fundus image to be identified, to obtain the preprocessed two-dimensional retinal fundus image;

[0061] An identification module, configured to input the preprocessed two-dimensional retinal fundus image into the pre-trained deep attention network model and to output the classification result, which identifies images showing retinopathy of prematurity (ROP);

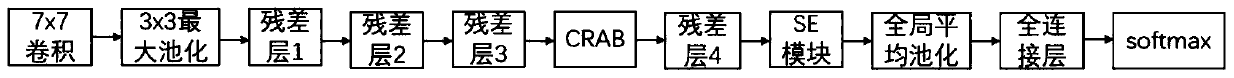

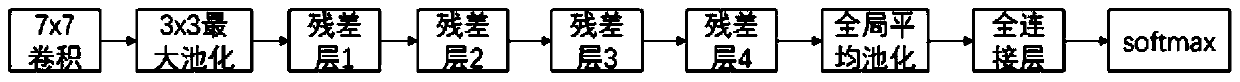

[0062] The deep attention network model is built from the original ResNet18 network by adding a complementary residual attention module after the third residual layer and a channel attention SE module after the fourth residual layer.

[0063] Further, the complementary residual attention module includes a channel attention SE module, a max pooling layer, an average pooling layer, a two-dimensional convolutional layer, and a sigmoid layer; SE module is use...
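The channel attention SE module named above is commonly implemented as a squeeze-and-excitation block: global average pooling per channel, two fully connected layers with a ReLU between them, and a sigmoid that yields one scale factor per channel. The sketch below shows only that generic block in NumPy. The function name `se_block`, the weight shapes, and the reduction ratio are assumptions for illustration; the excerpt does not specify how the pooling, convolution, and sigmoid layers of the complementary residual attention module are wired, so that part is not sketched.

```python
import numpy as np

def se_block(x, w1, b1, w2, b2):
    """Generic squeeze-and-excitation channel attention (illustrative sketch).

    x  : feature map of shape (C, H, W)
    w1 : (C // r, C) weights of the reduction layer (r is a hypothetical ratio)
    b1 : (C // r,) bias of the reduction layer
    w2 : (C, C // r) weights of the expansion layer
    b2 : (C,) bias of the expansion layer
    Returns the rescaled feature map and the per-channel scale factors.
    """
    squeeze = x.mean(axis=(1, 2))                      # global average pool -> (C,)
    hidden = np.maximum(w1 @ squeeze + b1, 0.0)        # fully connected + ReLU
    scale = 1.0 / (1.0 + np.exp(-(w2 @ hidden + b2)))  # fully connected + sigmoid
    return x * scale[:, None, None], scale

# Usage with random weights: 8 channels, reduction ratio 4 (both hypothetical).
rng = np.random.default_rng(0)
features = rng.standard_normal((8, 15, 20))
w1, b1 = rng.standard_normal((2, 8)), np.zeros(2)
w2, b2 = rng.standard_normal((8, 2)), np.zeros(8)
attended, channel_scale = se_block(features, w1, b1, w2, b2)
```

Each channel is multiplied by a factor between 0 and 1, so the block can suppress uninformative channels without changing the feature map's shape.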

Embodiment 3

[0070] The method of the invention is compared with the prior art; the experimental results are as follows:

[0071] To quantitatively evaluate the performance of the present invention, 1443 two-dimensional retinal fundus images (850 normal images and 593 ROP images) from 100 subjects were preprocessed as described, and four commonly used classification metrics were used for testing: Accuracy, Precision, Recall, and F1-score. The definitions of Accuracy, Precision, Recall, and F1-score are as follows:

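[0072] $$\mathrm{Accuracy} = \frac{TP + TN}{TP + FP + TN + FN}$$

[0073] $$\mathrm{Precision} = \frac{TP}{TP + FP}$$

[0074] $$\mathrm{Recall} = \frac{TP}{TP + FN}$$

[0075] $$\mathrm{F1\text{-}score} = \frac{2 \times P \times R}{P + R}$$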

[0076] Here, TP, FP, TN, and FN denote the numbers of true positives, false positives, true negatives, and false negatives, respectively, and P and R denote precision (Precision) and recall (Recall), respectively.
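The four indicators, in their standard definitions, can be computed from the confusion-matrix counts as sketched below. The function name `classification_metrics` and the counts in the usage example are hypothetical and are not results from the patent. Returning 0.0 when a denominator is zero is a choice made for this sketch.

```python
def classification_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall and F1-score from confusion-matrix counts."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision (P) and recall (R).
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Usage with made-up counts (illustrative only).
metrics = classification_metrics(tp=8, fp=2, tn=85, fn=5)
```

For these made-up counts, accuracy is 93/100, precision is 8/10, recall is 8/13, and the F1-score is 16/23.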

[0077] The present invention evaluates and compares the original ResNet18 network, the method of Zhang et al., and the deep attention network model described in the present invention in the test data se...
