Human body action recognition method based on a TP-STG framework

A human action recognition method based on TP-STG technology, applied in the fields of character and pattern recognition, instruments, and computer components. It addresses the poor recognition performance and large errors of existing human action recognition approaches, prevents early divergence and instability, and reduces prediction loss.


Embodiment Construction

[0074] The technical solutions of the present invention will be further described below in conjunction with the drawings and embodiments.

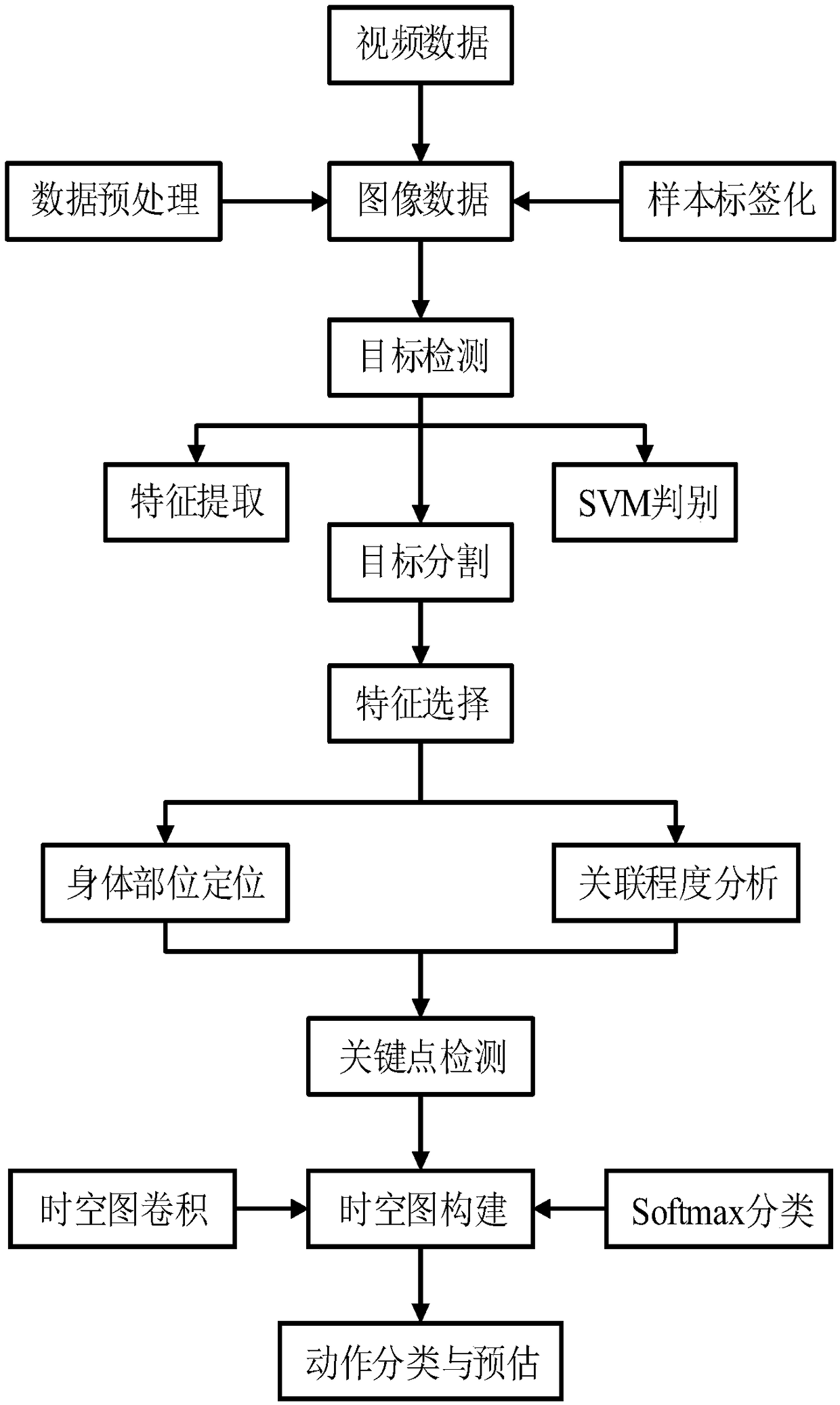

[0075] A human action recognition method based on the TP-STG framework. Figure 1 shows the structural flow diagram of the method, which includes the following steps:

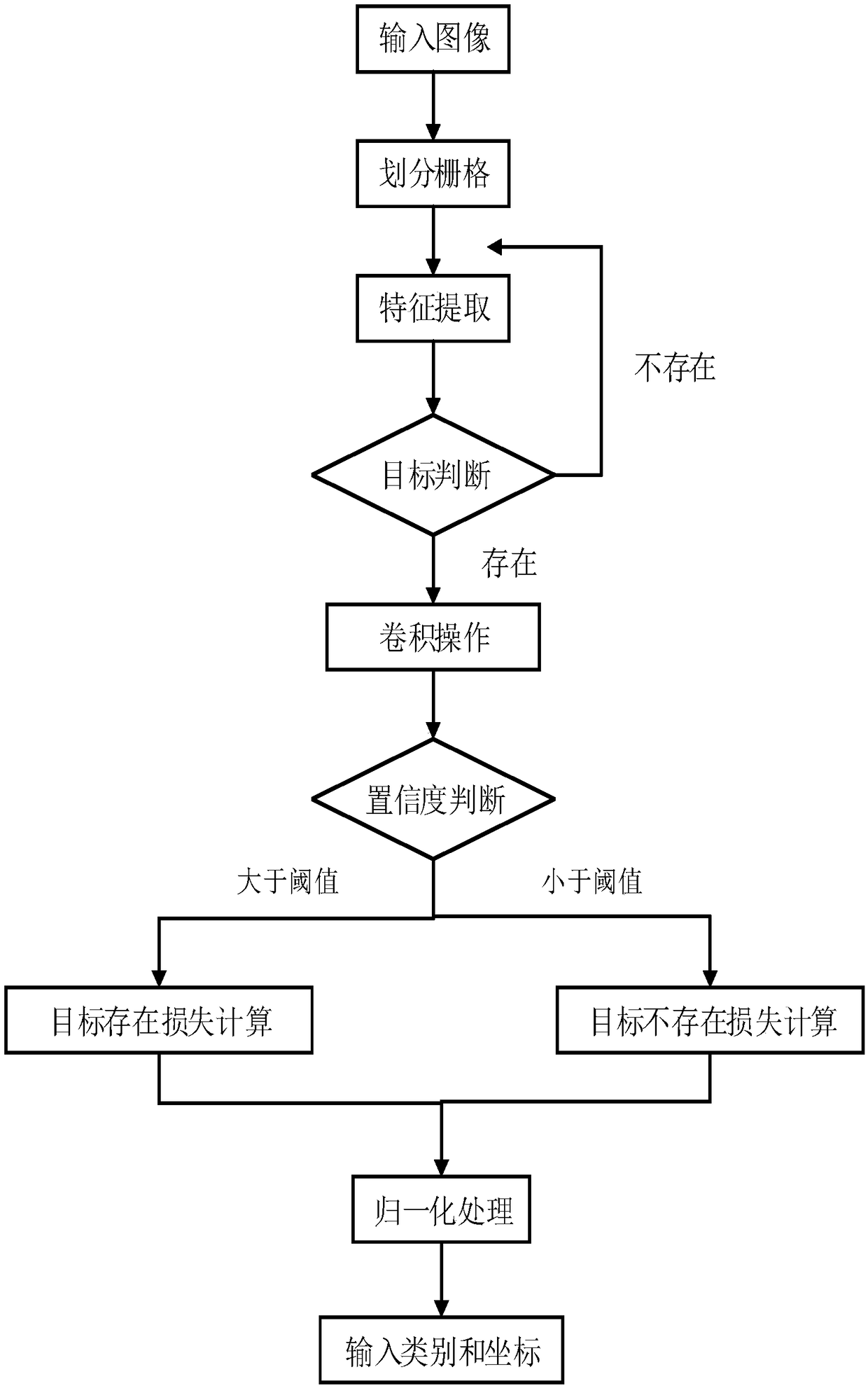

[0076] (S100) Take video information as input and perform feature extraction; add prior knowledge to the SVM classifier and introduce a posterior discriminant criterion to remove non-human objects;

[0077] (S200) Segment the human target with a target localization and detection algorithm, output the result as a target bounding box with coordinate information, and use feature selection to provide information for human body key point detection;

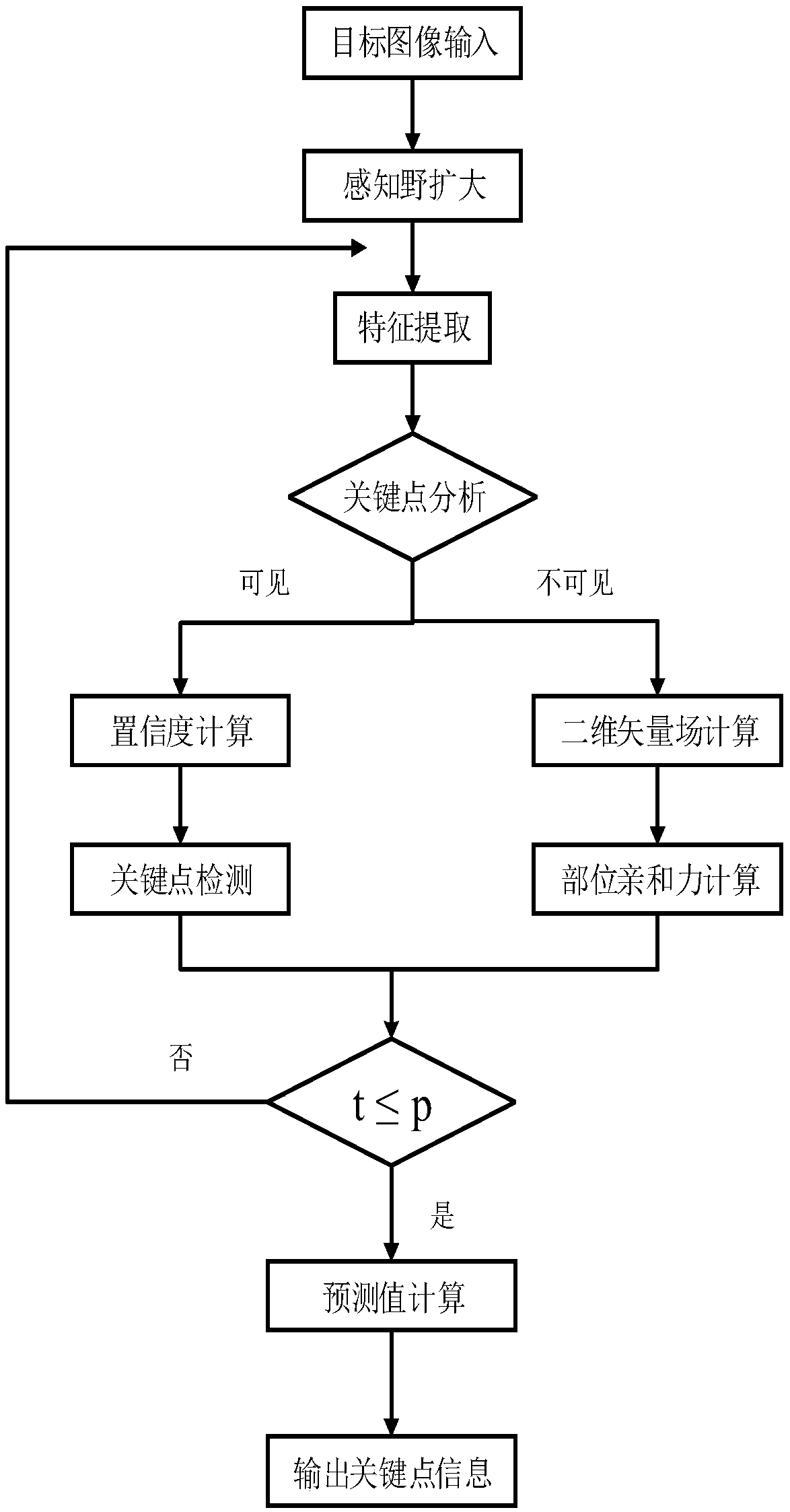

[0078] (S300) Use the improved posture recognition algorithm to locate body parts and analyze their degree of correlation, in order to detect all key point...
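Steps S100 and S200 above can be illustrated with a minimal sketch. The excerpt does not specify how the prior knowledge enters the SVM classifier or what the posterior discriminant criterion is, so everything here is an assumption for illustration only: the prior is modeled as a log-odds shift on a raw SVM decision score, the posterior criterion as a simple probability threshold, and the names `Candidate`, `human_posterior`, `keep_humans`, and `box_corners` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Candidate:
    """A detected region; both fields are illustrative assumptions."""
    box: Tuple[int, int, int, int]  # (x, y, width, height) in pixels
    svm_score: float                # signed SVM decision score, > 0 favors "human"


def human_posterior(svm_score: float, prior_human: float = 0.5) -> float:
    """Map an SVM score to a pseudo-posterior, shifted by the prior log-odds.

    This sigmoid mapping is an assumed stand-in for the patent's
    unspecified posterior discriminant criterion.
    """
    prior_logit = math.log(prior_human / (1.0 - prior_human))
    return 1.0 / (1.0 + math.exp(-(svm_score + prior_logit)))


def keep_humans(candidates: List[Candidate],
                prior_human: float = 0.5,
                threshold: float = 0.5) -> List[Candidate]:
    """S100 (sketch): drop candidates whose posterior falls below the threshold."""
    return [c for c in candidates
            if human_posterior(c.svm_score, prior_human) >= threshold]


def box_corners(box: Tuple[int, int, int, int]) -> Tuple[int, int, int, int]:
    """S200 (sketch): convert (x, y, w, h) to corner coordinates (x1, y1, x2, y2)."""
    x, y, w, h = box
    return (x, y, x + w, y + h)


candidates = [
    Candidate(box=(10, 20, 30, 40), svm_score=2.0),    # likely human
    Candidate(box=(100, 50, 20, 20), svm_score=-2.0),  # likely non-human
]
humans = keep_humans(candidates)
target_boxes = [box_corners(c.box) for c in humans]
```

Under these assumptions, lowering `prior_human` makes the filter stricter, since a candidate then needs a higher SVM score to pass the same threshold. The resulting `target_boxes` stand in for the target frame and coordinate information that is passed on to key point detection.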
