Action detection model based on convolutional neural network

An action detection technique based on convolutional neural networks, applied in the field of computer vision research, that addresses problems such as low time efficiency and time-consuming action localization.


Embodiment Construction

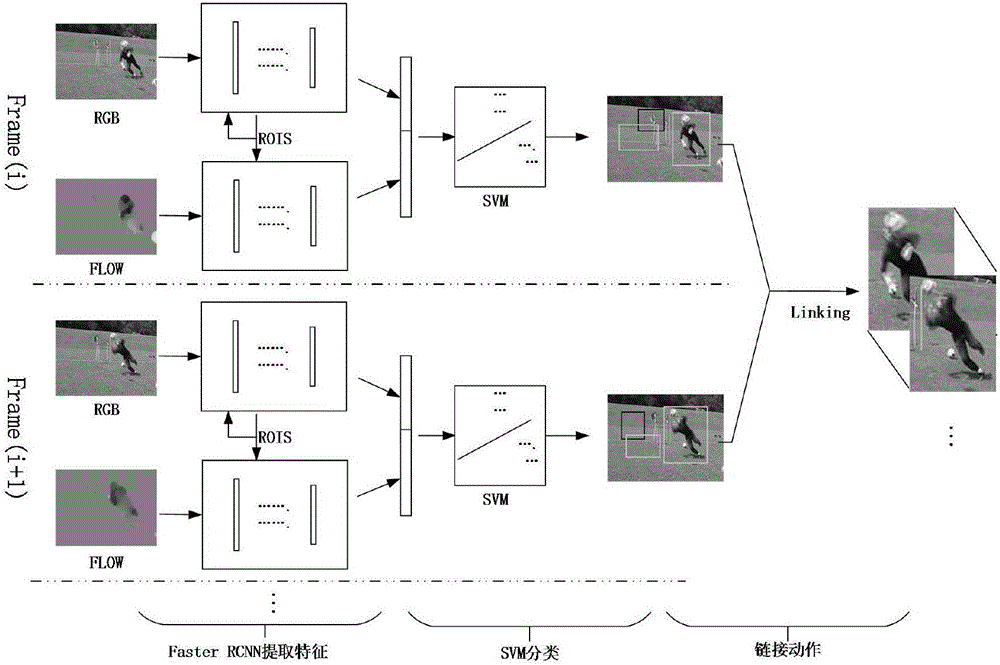

[0019] The action detection model of the present invention was implemented and verified using a GPU (K80) as the computing platform, CUDA as the GPU parallel computing framework, and Caffe as the CNN framework. The specific implementation steps are as follows:

[0020] Step 1: Preprocessing of video data

[0021] The video data used by this method must be split and saved frame by frame ("one frame, one picture"), and all frames must have the same size. Many public video datasets are currently available; one or more can be chosen according to the specific task. Next, optical flow must be computed for every frame in the dataset to obtain the optical flow map corresponding to each frame, and the optical flow maps are then organized and saved as a dataset.
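The "organize and save" step above can be illustrated with a minimal sketch. It assumes, purely for illustration, that frames and optical flow maps are stored in two parallel directories and that each flow map has the same file name as its frame. The directory layout, the `.jpg` extension, and the function name `pair_frames_with_flow` are hypothetical and do not come from the patent text.

```python
from pathlib import Path


def pair_frames_with_flow(frame_dir, flow_dir, ext=".jpg"):
    """Return sorted (frame_path, flow_path) pairs that share a file name.

    Assumed layout (hypothetical): frame_dir/000001.jpg pairs with
    flow_dir/000001.jpg. Raises ValueError if any frame has no flow map.
    """
    frame_dir, flow_dir = Path(frame_dir), Path(flow_dir)
    # Sort so that pairs come back in frame order.
    frames = sorted(p for p in frame_dir.iterdir() if p.suffix == ext)

    pairs, missing = [], []
    for frame in frames:
        flow = flow_dir / frame.name
        if flow.is_file():
            pairs.append((frame, flow))
        else:
            missing.append(frame.name)

    if missing:
        raise ValueError(f"no optical flow map for: {missing}")
    return pairs
```

Failing early on a missing flow map is one possible design choice: it surfaces gaps in the preprocessed data before training starts, when every frame is expected to have a corresponding optical flow map.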

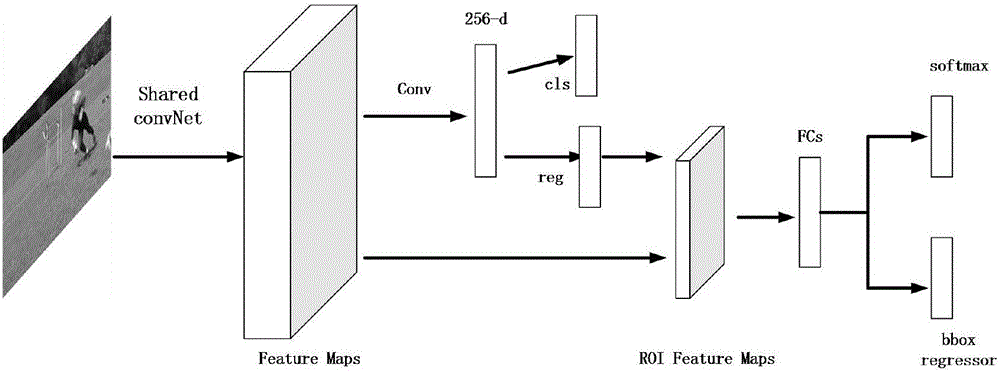

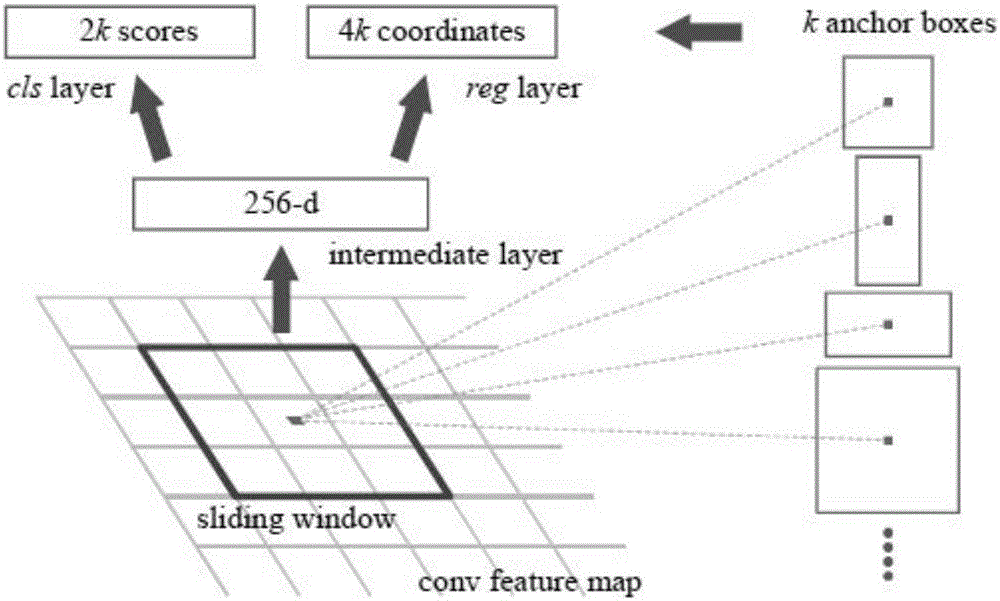

[0022] Step 2: Training of Faster RCNN

[0023] The two-channel Faster RCNN network is trained with the frame image data set and the optical flow image...
