Method for segmenting and indexing scenes by combining captions and video image information

A video image and scene segmentation technology, applied in the field of video indexing and search. It addresses the problems of time-consuming and laborious manual work, unsatisfactory recall and precision, and subjective labeling results, and it achieves high accuracy while avoiding manual labeling.

Embodiment Construction

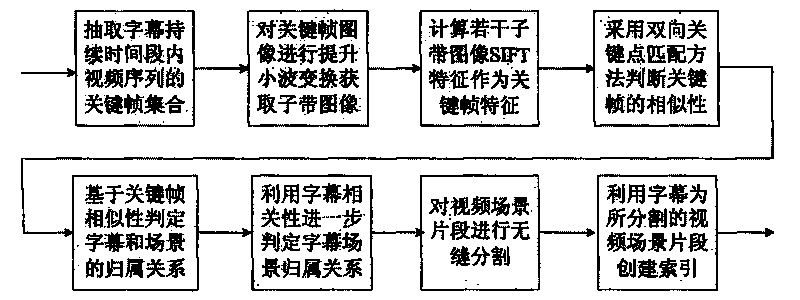

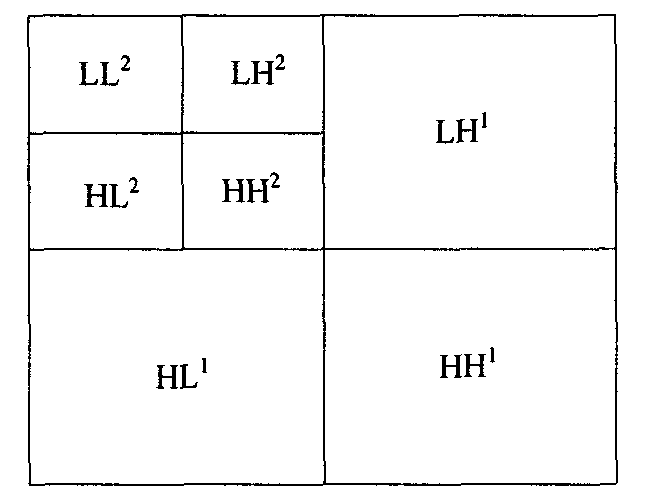

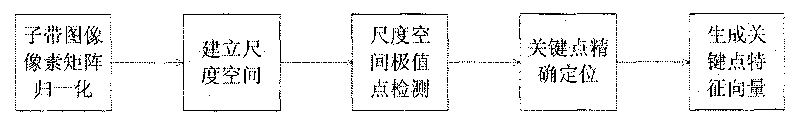

[0045] When extracting and indexing scene segments of movie-like videos, the present invention uses both the video image information and the subtitles of the movie to extract scene segments with higher precision. It can also automatically match each extracted scene segment with the keywords contained in its subtitles and use those keywords as the segment's index, thereby avoiding manual labeling. Subtitles are generally the dialogue of the characters in a movie, and each subtitle has three attributes: the moment it appears, the moment it disappears, and its text. At present, for high-definition DVD movies, the subtitles are generally released together with the video files as external (plug-in) files, which are easy to obtain; for embedded subtitles (subtitle text superimposed on the video image), the subtitles can be extracted through video OCR technology. Each subtitle includes the appearance and disappearance time of the subtitle in the video, and the present invention extract...
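The subtitle attributes and the keyword index described above can be illustrated with a minimal Python sketch. It is not the method of the invention: the SRT layout of the external subtitle file, the `Subtitle` record, the `parse_srt`, `keywords`, and `index_segments` helpers, the small stop-word list, and the scene boundaries passed in as `(start, end)` pairs are all assumptions made for illustration. How the scene boundaries themselves are obtained is not covered here.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class Subtitle:
    start: float  # appearance moment, in seconds
    end: float    # disappearance moment, in seconds
    text: str     # subtitle text


_TIME = re.compile(r"(\d+):(\d{2}):(\d{2})[,.](\d{3})")

# Hypothetical stop-word list; a real system would use a fuller one.
STOPWORDS = {"the", "and", "you", "are", "was", "for", "that", "this"}


def _to_seconds(stamp: str) -> float:
    """Convert an 'HH:MM:SS,mmm' timestamp to seconds."""
    m = _TIME.fullmatch(stamp.strip())
    if m is None:
        raise ValueError(f"bad timestamp: {stamp!r}")
    h, mi, s, ms = (int(g) for g in m.groups())
    return h * 3600 + mi * 60 + s + ms / 1000.0


def parse_srt(content: str) -> list[Subtitle]:
    """Parse SRT-style text (assumed format) into Subtitle records."""
    subs = []
    for block in re.split(r"\n\s*\n", content.strip()):
        lines = [ln.strip() for ln in block.splitlines() if ln.strip()]
        for i, ln in enumerate(lines):
            if "-->" in ln:
                start, end = ln.split("-->")
                # Ignore optional position data after the end timestamp.
                end = end.split()[0]
                text = " ".join(lines[i + 1:])
                subs.append(Subtitle(_to_seconds(start), _to_seconds(end), text))
                break
    return subs


def keywords(text: str) -> set[str]:
    """Naive keyword extraction: lowercase words, minus short and stop words."""
    words = re.findall(r"[a-z']+", text.lower())
    return {w for w in words if len(w) > 2 and w not in STOPWORDS}


def index_segments(subs, segments):
    """Map each keyword to the ids of the scene segments whose time span
    overlaps a subtitle containing that keyword."""
    index: dict[str, set[int]] = {}
    for seg_id, (seg_start, seg_end) in enumerate(segments):
        for sub in subs:
            if sub.start < seg_end and sub.end > seg_start:  # time overlap
                for kw in keywords(sub.text):
                    index.setdefault(kw, set()).add(seg_id)
    return index
```

Given parsed subtitles and a list of segment boundaries, `index_segments(subs, segments)` returns a dictionary from keyword to segment ids, so a keyword query can be answered by a single lookup. Embedded subtitles recovered by video OCR could be fed into the same index once they are converted to `Subtitle` records.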

