Dynamic gesture recognition method and system based on deep neural network

A dynamic gesture recognition technology based on a deep neural network, applied in the field of computer vision and pattern recognition, which addresses the problem of low recognition rates.

Examples

Specific Embodiment 1

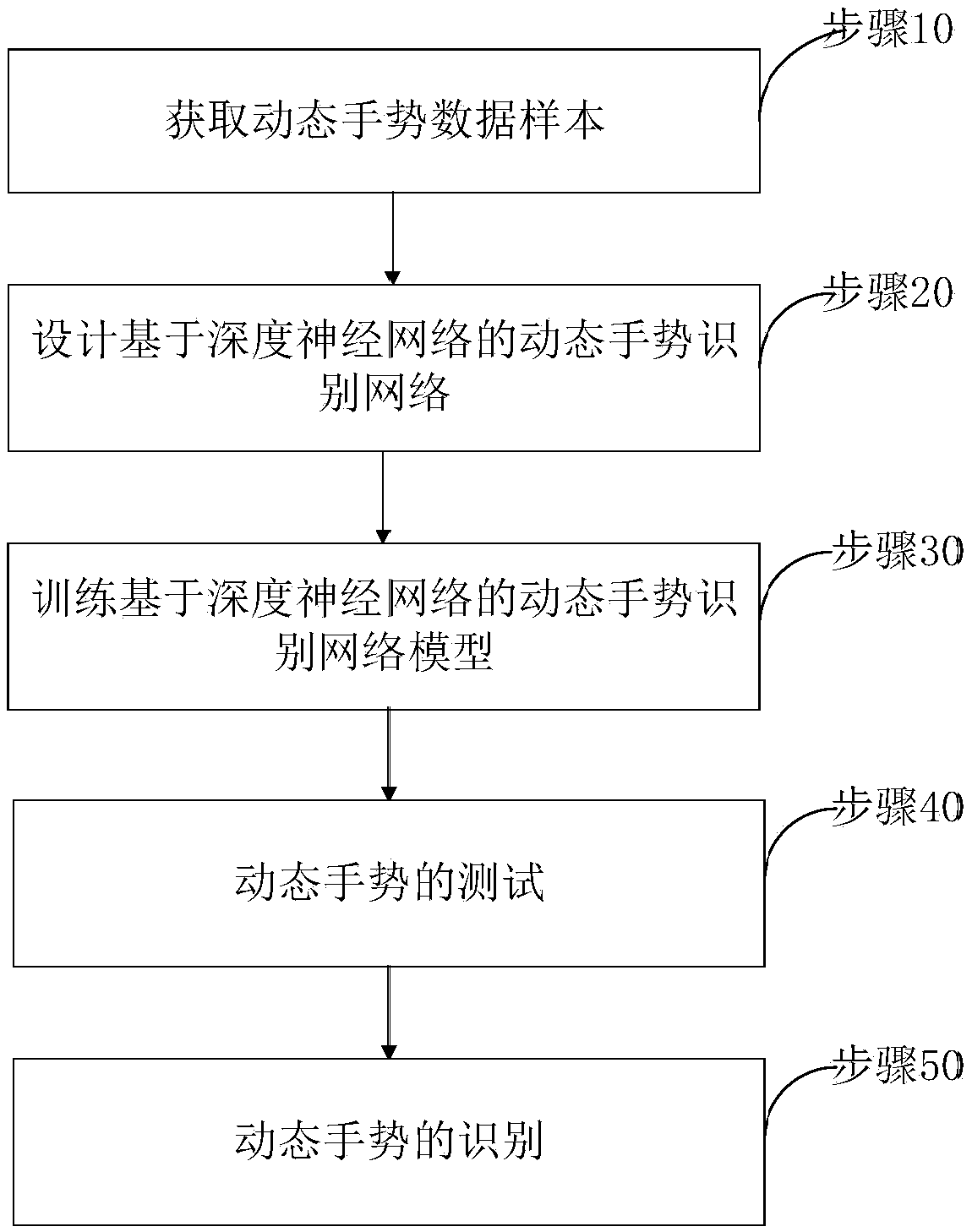

[0074] As shown in Figure 1, the dynamic gesture recognition method based on a deep neural network provided by the present invention comprises the following steps:

[0075] Step 10: Acquire dynamic gesture data samples. This step includes:

[0076] Step 11: Use a 3D depth camera to collect video clips of C kinds of dynamic gestures with different meanings, collecting at least 50 different video clips for each gesture.

[0077] For each dynamic gesture video clip, n frames of RGB images and the corresponding depth images are sampled at equal intervals to form a sample $x_i = \{x_{i1}, x_{i2}, \ldots, x_{ik}, \ldots, x_{in}\}$, where $x_{ik}$, the k-th frame of sample $x_i$, is four-channel data in RGB-D format with a size of 640×320×4, and C is a positive integer.

[0078] Step 12: Label all the collected video clips with gesture information, so that each video corresponds to a unique gesture label. The labeled clips are used as the training sample data set.
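The equal-interval sampling in Step 11 and the labeling in Step 12 can be sketched as follows. This is a minimal illustration, not the patent's implementation: the function names `sample_frame_indices` and `build_labeled_sample`, the `(frames, height, width, 4)` array layout, and the use of rounded `np.linspace` positions to pick frames are all assumptions made for the example.

```python
import numpy as np


def sample_frame_indices(num_frames: int, n: int) -> np.ndarray:
    """Return n frame indices spread at equal intervals over a clip.

    Hypothetical helper: the source only says frames are sampled
    "at equal intervals"; rounding linspace positions is one way to do it.
    """
    if n <= 0 or num_frames < n:
        raise ValueError("need 0 < n <= num_frames")
    return np.linspace(0, num_frames - 1, num=n).round().astype(int)


def build_labeled_sample(clip: np.ndarray, label: int, n: int):
    """Build one training pair (x_i, label) from an RGB-D clip.

    clip is assumed to have shape (frames, height, width, 4), where the
    last axis holds the R, G, B and depth channels.
    """
    if clip.ndim != 4 or clip.shape[-1] != 4:
        raise ValueError("expected an array of shape (frames, height, width, 4)")
    indices = sample_frame_indices(clip.shape[0], n)
    return clip[indices], label
```

Each returned pair holds the n sampled RGB-D frames of one clip together with its single gesture label, matching the one-label-per-video rule in Step 12.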

[0079] Here, sample $x_i$ forms ...

Specific Embodiment 2

[0091] When training a neural network model, the number of samples strongly affects the training results. To reduce the workload of sample collection, the present invention expands each video in the training sample data set by methods such as random translation, flipping, noise addition, and deformation. The expanded training samples and the original training samples together form the final training sample data set, i.e., the training sample library.

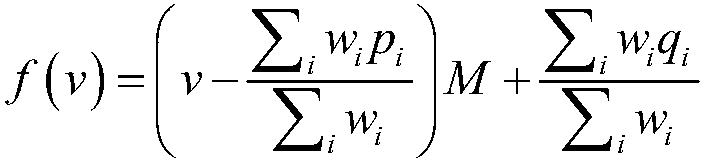

[0092] For each sample $x_i$ in the training sample data set, the translation operation is performed as follows:

[0093] The coordinates $(x, y)$ of any pixel on each channel in each frame of RGB-D data of sample $x_i$ are translated by $t_x$ units along the x-axis and $t_y$ units along the y-axis to obtain $(x', y')$, where $x' = x + t_x$ and $y' = y + t_y$. Here $t_x$ and $t_y$ are random integers in $[-0.1 \times \text{width},\ 0.1 \times \text{width}]$ and $[-0.1 \times \text{height},\ 0.1 \times \text{height}]$, respectively, and the width is x ...
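The translation rule $x' = x + t_x$, $y' = y + t_y$ can be illustrated with the NumPy sketch below. Several details are assumptions rather than statements from the source: the function names `translate` and `random_translate`, the `(frames, height, width, 4)` layout, the use of one shared shift for every frame of a sample, and filling the vacated pixels with zeros (the source text is cut off before it says how uncovered pixels are handled).

```python
import numpy as np


def translate(sample: np.ndarray, t_x: int, t_y: int) -> np.ndarray:
    """Shift every frame and channel so pixel (x, y) moves to (x + t_x, y + t_y).

    sample is assumed to have shape (frames, height, width, 4).
    Pixels shifted outside the frame are dropped; vacated pixels are
    zero-filled (an assumption, not stated in the source).
    """
    _, height, width, _ = sample.shape
    if abs(t_x) >= width or abs(t_y) >= height:
        raise ValueError("shift must be smaller than the frame size")
    out = np.zeros_like(sample)
    # Source window: the pixels that remain inside the frame after shifting.
    src_x0, src_x1 = max(0, -t_x), min(width, width - t_x)
    src_y0, src_y1 = max(0, -t_y), min(height, height - t_y)
    out[:, src_y0 + t_y:src_y1 + t_y, src_x0 + t_x:src_x1 + t_x, :] = (
        sample[:, src_y0:src_y1, src_x0:src_x1, :]
    )
    return out


def random_translate(sample: np.ndarray, rng: np.random.Generator,
                     max_frac: float = 0.1):
    """Draw integer shifts within +/- max_frac of width and height, then translate."""
    _, height, width, _ = sample.shape
    max_tx, max_ty = int(max_frac * width), int(max_frac * height)
    t_x = int(rng.integers(-max_tx, max_tx + 1))  # upper bound is exclusive
    t_y = int(rng.integers(-max_ty, max_ty + 1))
    return translate(sample, t_x, t_y), (t_x, t_y)
```

A translated copy produced this way would be appended to the data set alongside the original sample, keeping the original gesture label.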

Specific Embodiment 3

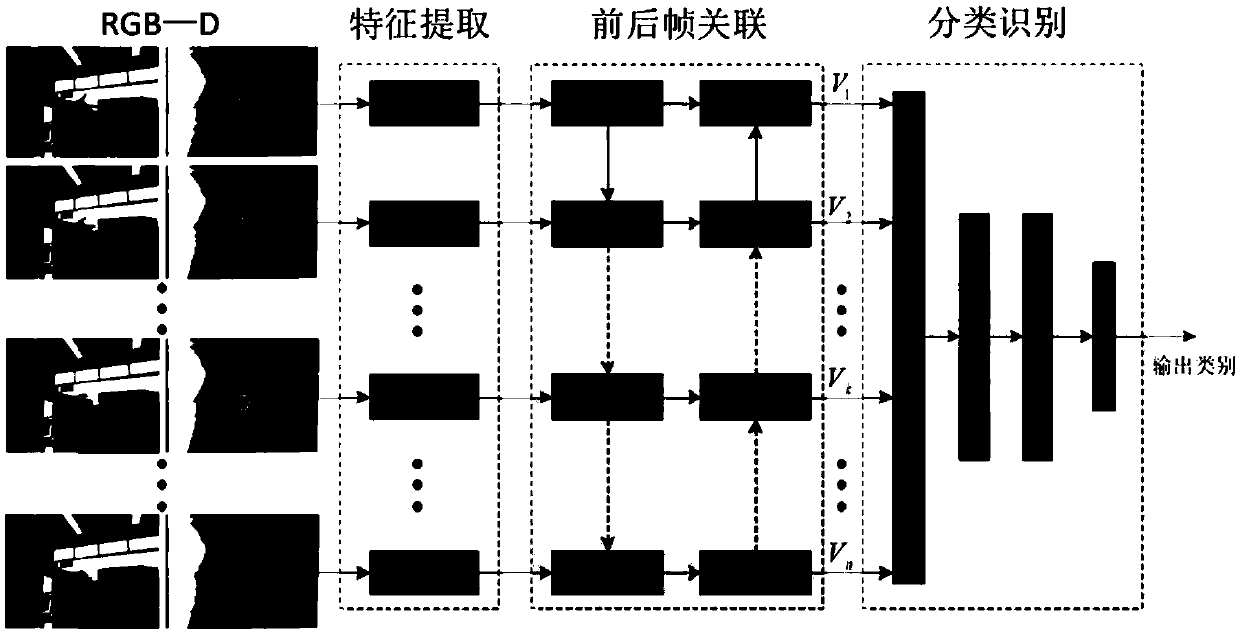

[0104] Specific embodiment 3 further refines the dynamic gesture recognition network model based on the deep neural network designed in the specific embodiment. The specific steps include:

[0105] Step 21: Design the feature extraction network as follows:

[0106] A 4-layer convolutional neural network performs feature extraction on the n frames (n is a positive integer) of four-channel RGB-D data, each of size 640×320×4, of a gesture video input sample $x_i$. The numbers of convolution kernels in the first to fourth convolutional layers are set to 32, 64, 128, and 256, respectively.

[0107] In each convolutional layer, the convolution kernel window size is set to 3×3 with a sliding stride of 2, and the max pooling window is set to 2×2 with a sliding stride of 2. The final output is n features, each of size 2×1×256.
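The stated output size of 2×1×256 per frame can be checked with simple shape arithmetic. The sketch below assumes that each of the four layers applies a 3×3 convolution with stride 2 followed by 2×2 max pooling with stride 2, and that the convolution uses a padding of 1 pixel; the padding is not given in the source and is chosen here because it reproduces the stated size. The helper names `conv_out`, `pool_out`, and `feature_shape` are hypothetical.

```python
def conv_out(size: int, kernel: int = 3, stride: int = 2, padding: int = 1) -> int:
    """Output length of one spatial axis after a strided convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size: int, window: int = 2, stride: int = 2) -> int:
    """Output length of one spatial axis after max pooling (no padding)."""
    return (size - window) // stride + 1


def feature_shape(dim_a: int, dim_b: int, kernels=(32, 64, 128, 256)):
    """Trace the two spatial dimensions through every conv + pool layer."""
    for _ in kernels:
        dim_a = pool_out(conv_out(dim_a))
        dim_b = pool_out(conv_out(dim_b))
    # The channel count after the last layer equals its number of kernels.
    return dim_a, dim_b, kernels[-1]


shape = feature_shape(640, 320)
flattened_length = shape[0] * shape[1] * shape[2]
```

Under these assumptions `feature_shape(640, 320)` evaluates to `(2, 1, 256)`, so each frame contributes 512 values once its feature is flattened. Without padding the 320-pixel axis would shrink to zero before the fourth pooling step, which is why the padding assumption matters here.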

[0108] The n 2×1×256 features of the final output are flattened into a column vector to form ...

