Positioning method and system based on visual inertial navigation information fusion

A vision and depth vision technology, applied in the field of sensor fusion, that addresses problems such as difficult operation, low algorithm robustness, and inability to handle loop closures, and achieves ease of use, convenient assembly and disassembly, and reduced cost.

Embodiment Construction

[0065] In order to better explain the present invention and facilitate understanding, the present invention will be described in detail below through specific embodiments in conjunction with the accompanying drawings.

[0066] In the following description, various aspects of the present invention will be described. However, those skilled in the art can implement the present invention by using only some or all of the structures or processes of the present invention. For clarity of explanation, specific numbers, arrangements and sequences are set forth, but it will be apparent that the invention may be practiced without these specific details. In other instances, well-known features have not been described in detail in order not to obscure the invention.

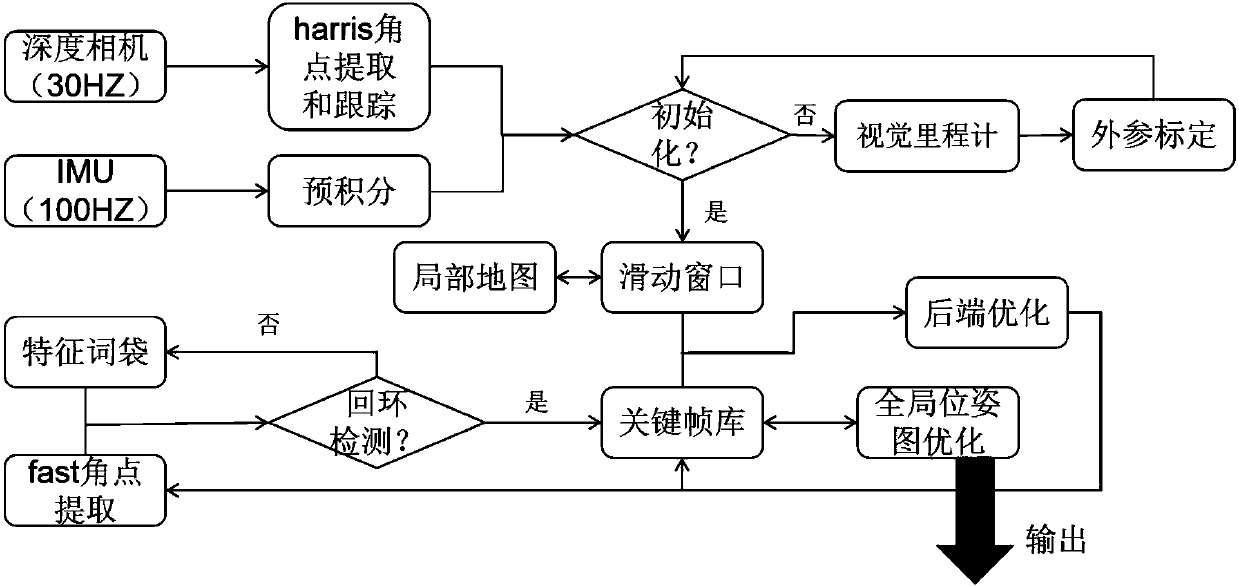

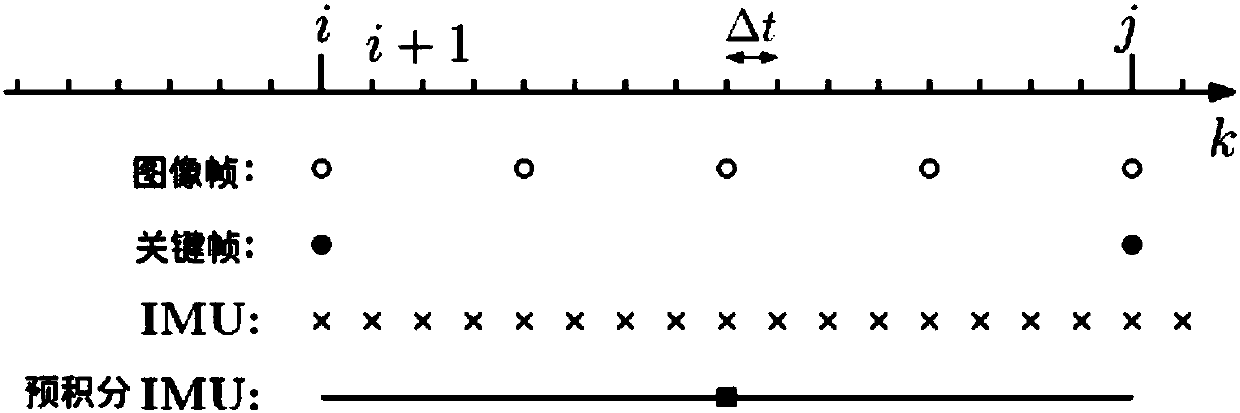

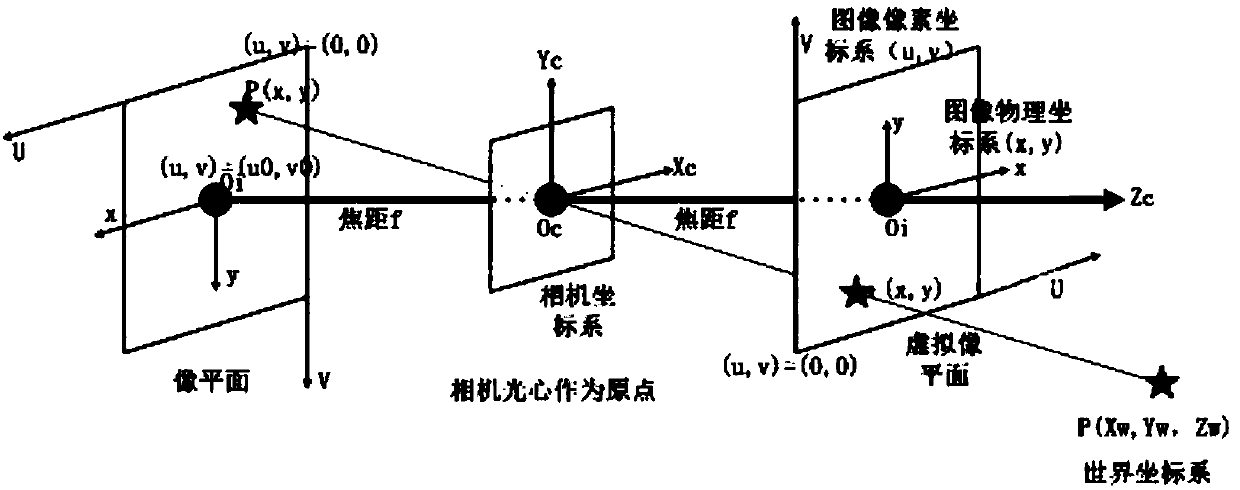

[0067] At present, methods based on nonlinear optimization compute the measurement residual of the inertial navigation and the reprojection error of the visual sensor separately, and obtain the optimal estimation of the s...
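
To make the two error terms named in paragraph [0067] concrete, the following is a minimal Python sketch, not the patent's method. It assumes a pinhole camera model for the reprojection error and a position-only inertial residual; the function names, the weights `w_visual` and `w_inertial`, and the combination of both terms into one weighted sum of squares are all hypothetical choices made for illustration.

```python
def reprojection_residual(point_cam, observed_px, fx, fy, cx, cy):
    """Pixel difference between a projected 3D point and its observation.

    point_cam is the landmark in the camera frame; fx, fy, cx, cy are
    pinhole intrinsics (an assumed camera model).
    """
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    u = fx * x / z + cx
    v = fy * y / z + cy
    return (u - observed_px[0], v - observed_px[1])


def inertial_residual(predicted_pos, estimated_pos):
    """Difference between an IMU-propagated position and the estimated one.

    A real inertial residual also covers velocity, orientation and biases;
    only position is kept here to keep the sketch short.
    """
    return tuple(p - e for p, e in zip(predicted_pos, estimated_pos))


def squared_norm(vec):
    """Sum of squared components of a residual vector."""
    return sum(c * c for c in vec)


def total_cost(visual_residuals, inertial_residuals,
               w_visual=1.0, w_inertial=1.0):
    """Weighted sum of squared residuals (assumed combination rule)."""
    visual = sum(squared_norm(r) for r in visual_residuals)
    inertial = sum(squared_norm(r) for r in inertial_residuals)
    return w_visual * visual + w_inertial * inertial


# Usage: one landmark observation and one inertial constraint.
r_vis = reprojection_residual((0.0, 0.0, 2.0), (321.0, 239.0),
                              fx=500.0, fy=500.0, cx=320.0, cy=240.0)
r_imu = inertial_residual((1.0, 0.0, 0.0), (0.5, 0.0, 0.0))
cost = total_cost([r_vis], [r_imu])
```

A nonlinear optimizer would adjust the estimated state to reduce a cost of this kind; how the two terms are actually weighted and combined is not stated in the visible text.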
