Target object three-dimensional color point cloud generation method based on KINECT

A method for generating a three-dimensional color point cloud of a target object, applied in the field of virtual reality. It addresses problems such as point cloud data being unable to completely express the target object, and achieves low memory space requirements, accurate registration results, and improved search speed.

Embodiment Construction

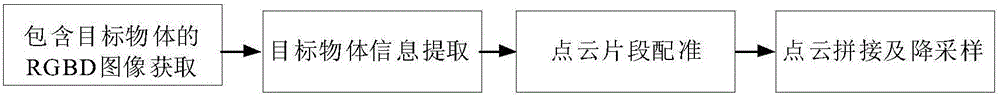

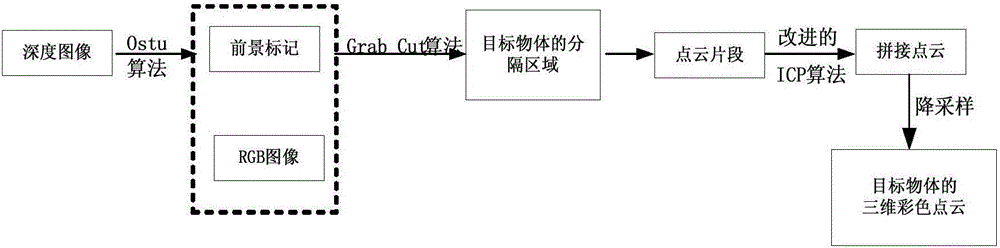

[0033] The present invention generates a three-dimensional color point cloud of a target object in the following 4 steps (as shown in Figure 1 and Figure 2):

[0034] Step 1: Obtain an RGBD image containing the target object.

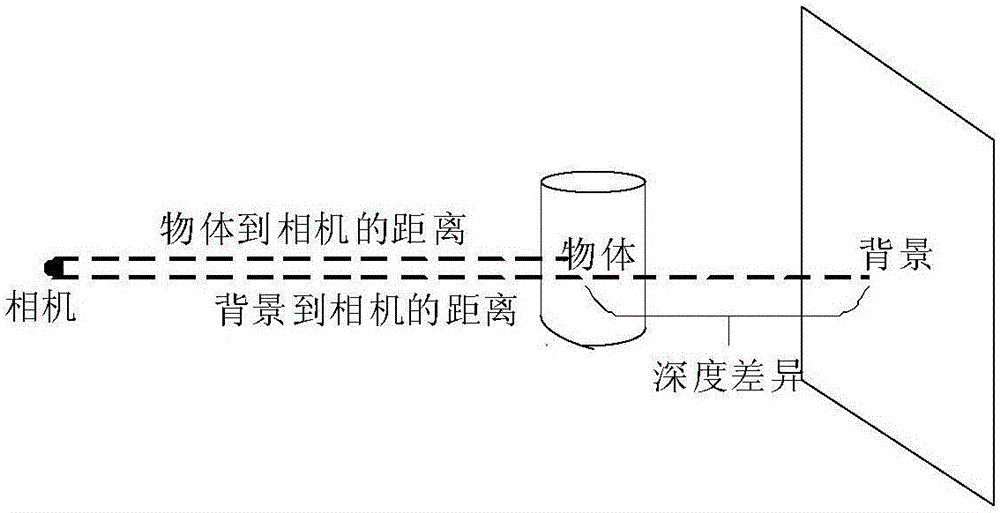

[0035] A single Kinect shot yields an RGBD image (an RGB image and a depth image, collectively referred to as an RGBD image) that contains only the local area of the target object visible from the camera's viewpoint. To obtain the complete color and depth information of the target object, the object must be shot from different angles. The Kinect is moved one full circle around the target object, and multiple RGBD images are captured so that together they contain the complete information of the target object. During shooting, the positional relationship (rotation and translation) between each two adjacent images is recorded as the point cloud registration initial ...
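As a minimal sketch of how the recorded rotation and translation between adjacent images could be used, the pure-Python snippet below chains the pairwise relations so that every view is expressed in the coordinate frame of the first view. Several things here are assumptions rather than part of the source: the convention that each recorded pair `(R, t)` maps a point from view i+1 into view i as `p_i = R * p_(i+1) + t`, the helper names (`compose`, `chain_to_first_view`, `rot_y`, `apply_pose`), and the hypothetical capture of four views spaced 90 degrees apart around a vertical axis. The result is only a rough starting alignment, not a substitute for the registration step itself.

```python
import math

def mat_mul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def mat_vec(a, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]

def compose(outer, inner):
    """Return the rigid transform equal to applying `inner`, then `outer`."""
    r_o, t_o = outer
    r_i, t_i = inner
    rotation = mat_mul(r_o, r_i)
    translation = [x + y for x, y in zip(mat_vec(r_o, t_i), t_o)]
    return rotation, translation

def rot_y(degrees):
    """Rotation matrix about the vertical (y) axis."""
    a = math.radians(degrees)
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def chain_to_first_view(adjacent):
    """Accumulate adjacent-view transforms into poses relative to view 0.

    adjacent[i] is assumed to map points from view i+1 into view i.
    poses[k] then maps points from view k into view 0.
    """
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    poses = [(identity, [0.0, 0.0, 0.0])]
    for step in adjacent:
        poses.append(compose(poses[-1], step))
    return poses

def apply_pose(pose, point):
    """Transform a 3D point with a (rotation, translation) pair."""
    rotation, translation = pose
    return [x + y for x, y in zip(mat_vec(rotation, point), translation)]

# Hypothetical capture: four steps of 90 degrees, no translation between views.
steps = [(rot_y(90.0), [0.0, 0.0, 0.0]) for _ in range(4)]
poses = chain_to_first_view(steps)

# A point seen in view 1, expressed in the frame of view 0.
point_in_view0 = apply_pose(poses[1], [1.0, 0.0, 0.0])
```

In this toy setup the four 90 degree steps close the loop, so the last accumulated rotation returns to the identity. With real recorded poses, small errors accumulate along the chain, which is one reason such values would serve only as an initial estimate.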
