1057 results about "Gradient direction" patented technology

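The direction of the gradient is the arctangent of the y-gradient divided by the x-gradient, $\theta = \tan^{-1}(\text{sobel}_y / \text{sobel}_x)$. Each pixel of the resulting image holds the angle of the gradient measured from the horizontal, in radians, in the range $-\pi/2$ to $\pi/2$. Because the single-argument arctangent cannot distinguish a gradient from its opposite, the two-argument form $\operatorname{atan2}(\text{sobel}_y, \text{sobel}_x)$ is the usual choice when the full range $-\pi$ to $\pi$ is needed.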
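
A minimal NumPy sketch of this computation follows. The 3×3 Sobel kernels with edge padding and the function names `sobel_xy` and `gradient_direction` are illustrative assumptions, not a particular library's API. The angle is computed with `np.arctan2` and then folded into $[-\pi/2, \pi/2]$, which reproduces the single-argument arctangent while avoiding a division by zero wherever `sobel_x` is 0.

```python
import numpy as np

def sobel_xy(img):
    """Return (sobel_x, sobel_y) for a 2-D image, using edge padding at the borders."""
    kx = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
    ky = kx.T  # vertical kernel: positive where intensity increases downward
    padded = np.pad(np.asarray(img, dtype=float), 1, mode="edge")
    h, w = padded.shape[0] - 2, padded.shape[1] - 2
    sx = np.zeros((h, w))
    sy = np.zeros((h, w))
    for i in range(3):          # accumulate the 3x3 correlation tap by tap
        for j in range(3):
            window = padded[i:i + h, j:j + w]
            sx += kx[i, j] * window
            sy += ky[i, j] * window
    return sx, sy

def gradient_direction(img):
    """Angle of the gradient from horizontal, in radians, folded into [-pi/2, pi/2]."""
    sx, sy = sobel_xy(img)
    theta = np.arctan2(sy, sx)                                  # full range [-pi, pi]
    theta = np.where(theta > np.pi / 2, theta - np.pi, theta)   # fold opposite directions together
    theta = np.where(theta < -np.pi / 2, theta + np.pi, theta)
    return theta

ramp_x = np.tile(np.arange(6.0), (6, 1))   # brightness increases to the right
ramp_y = ramp_x.T                          # brightness increases downward
print(gradient_direction(ramp_x)[2, 2])    # 0.0
print(gradient_direction(ramp_y)[2, 2])    # 1.5707963267948966 (pi/2)
```

Dropping the two `np.where` lines gives the full $-\pi$ to $\pi$ range, which keeps the polarity of the edge.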

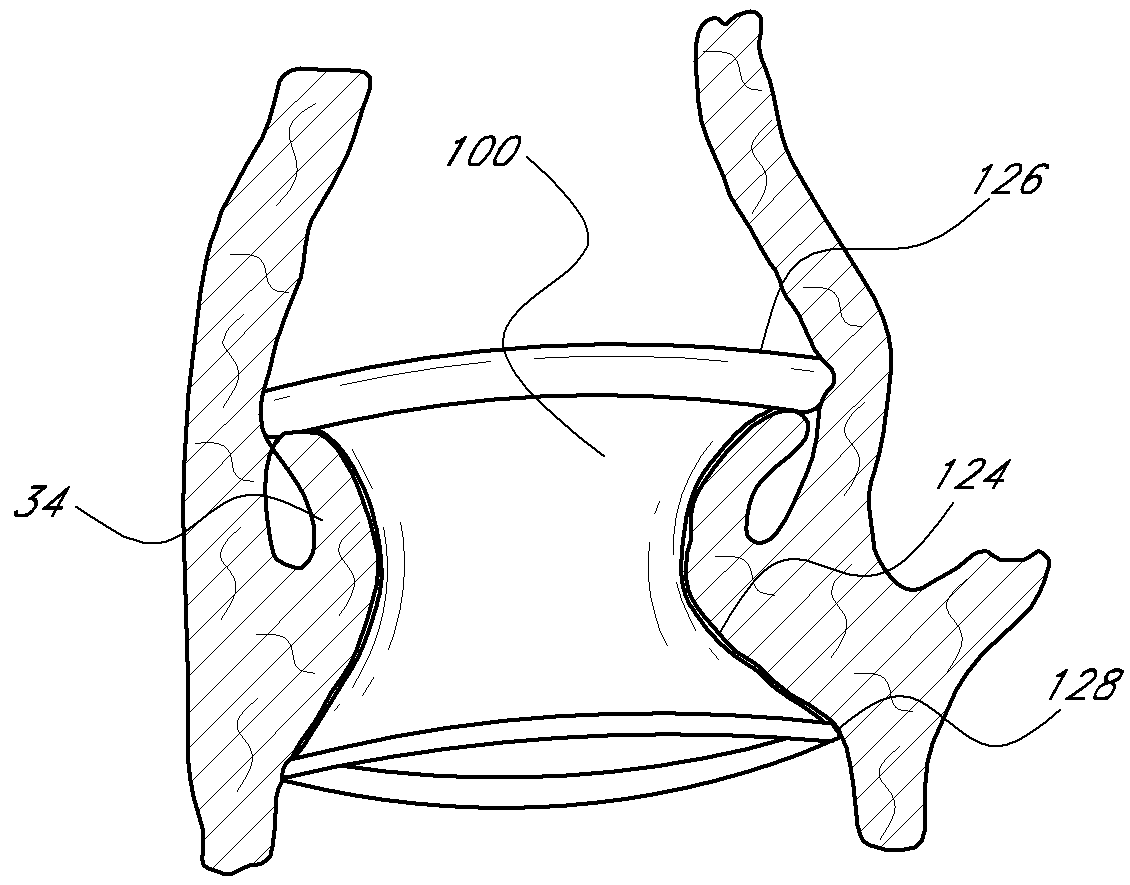

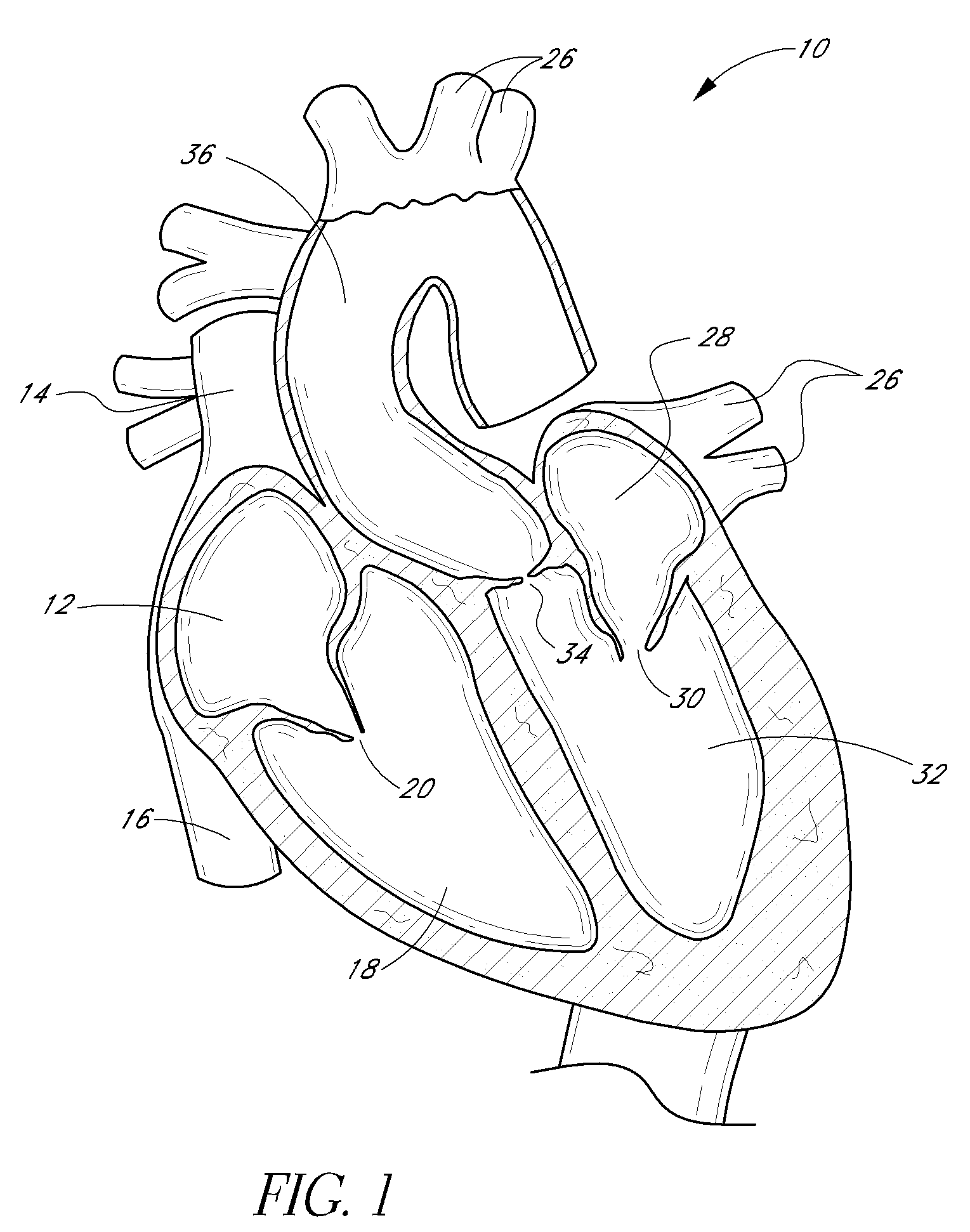

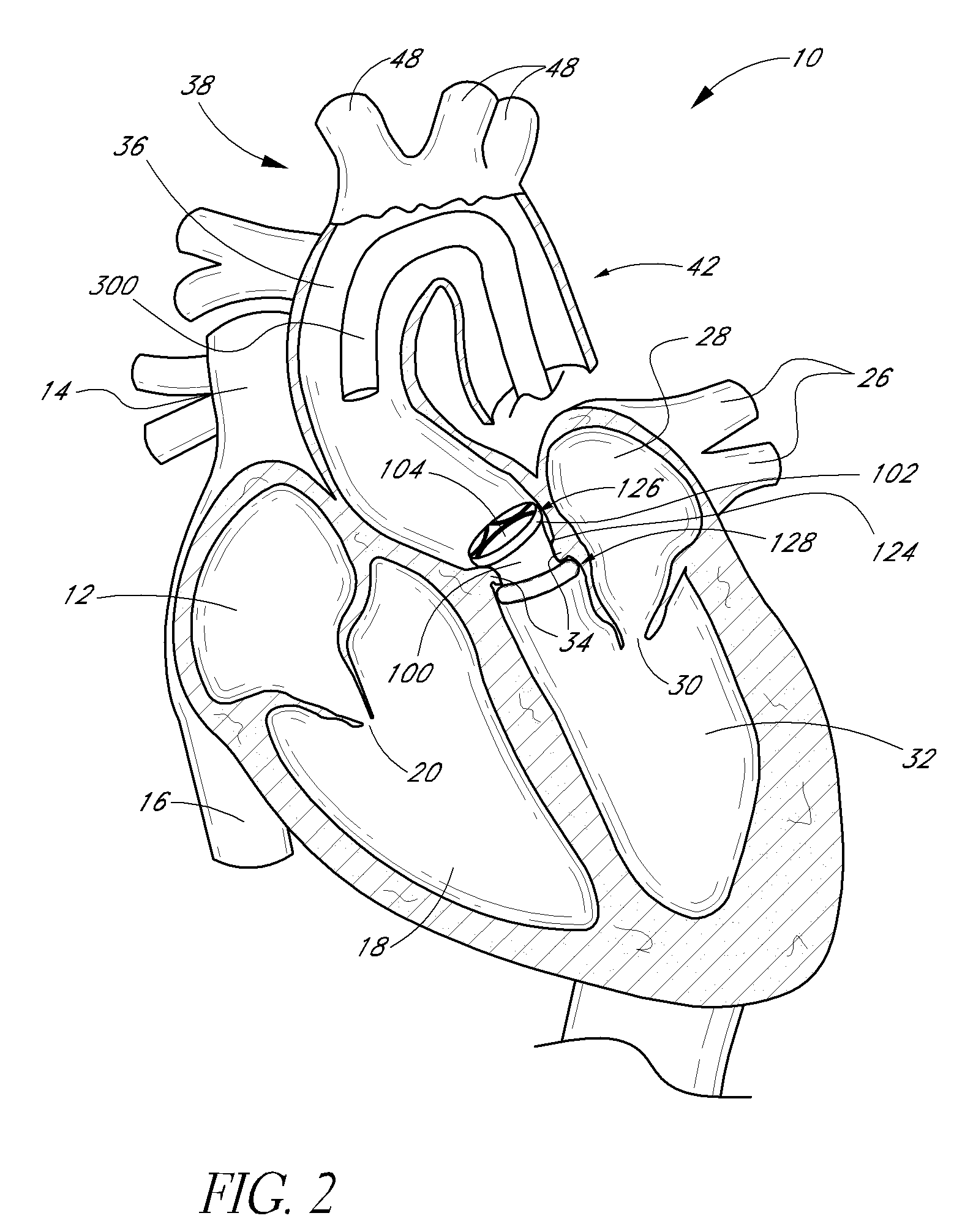

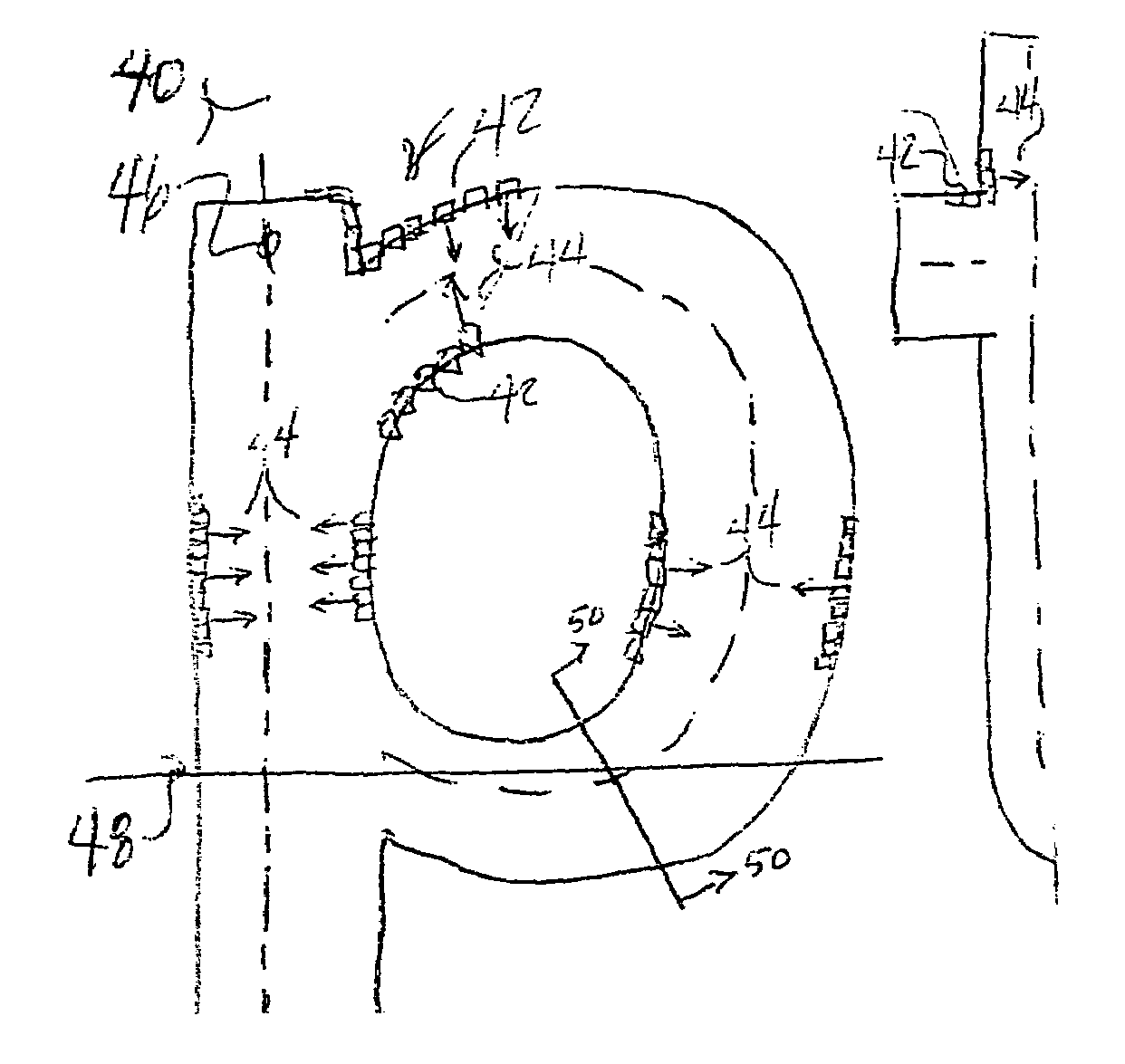

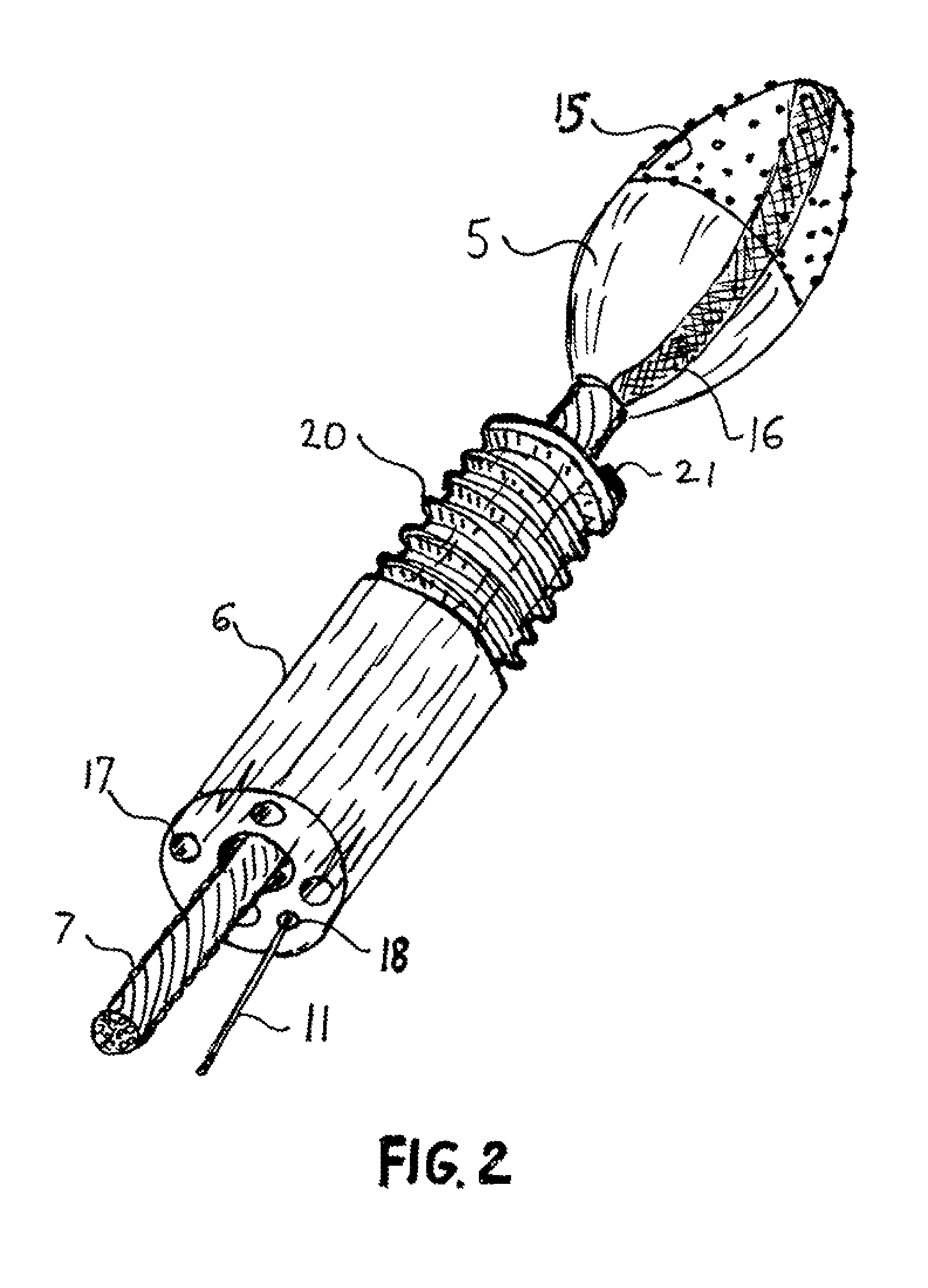

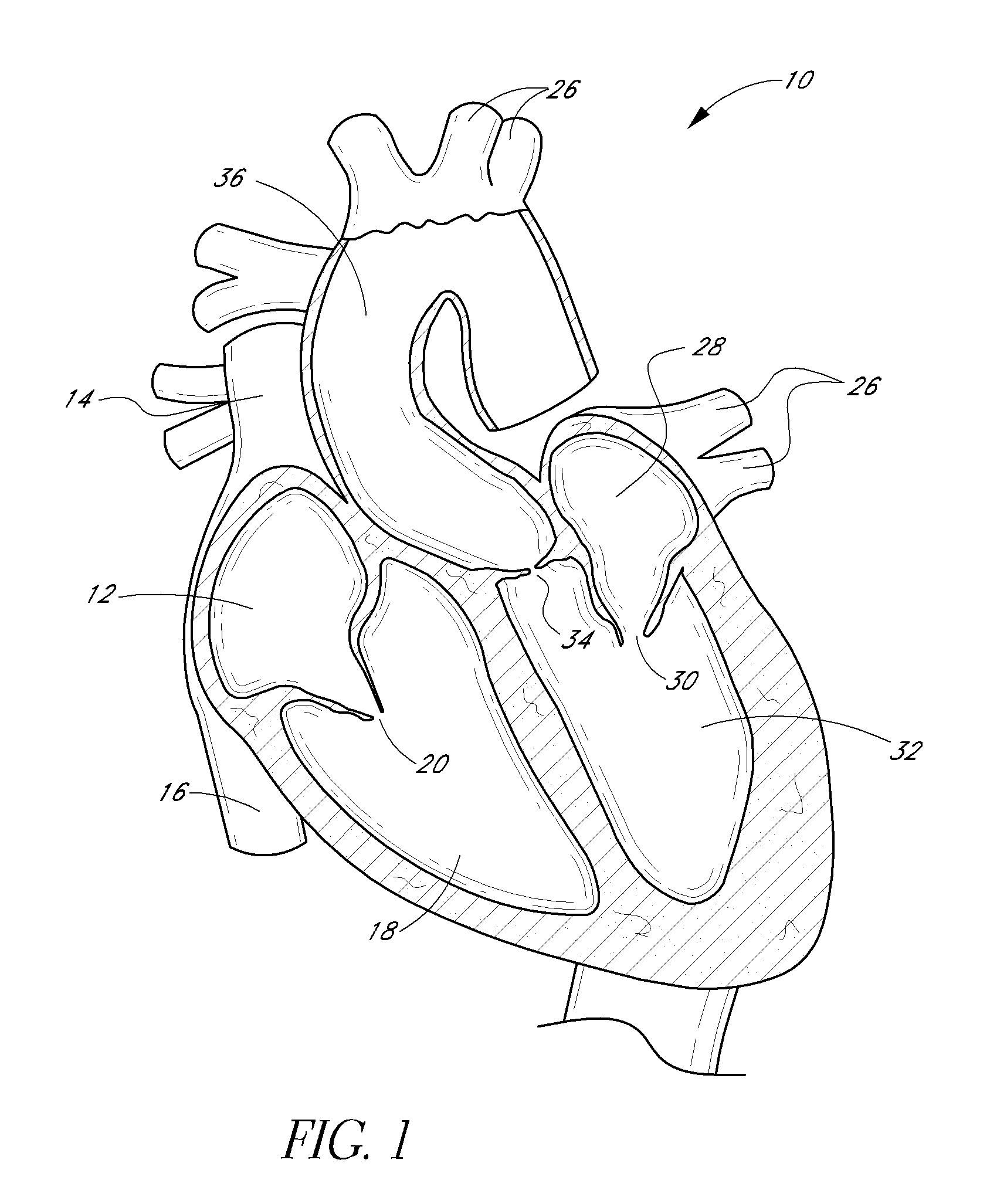

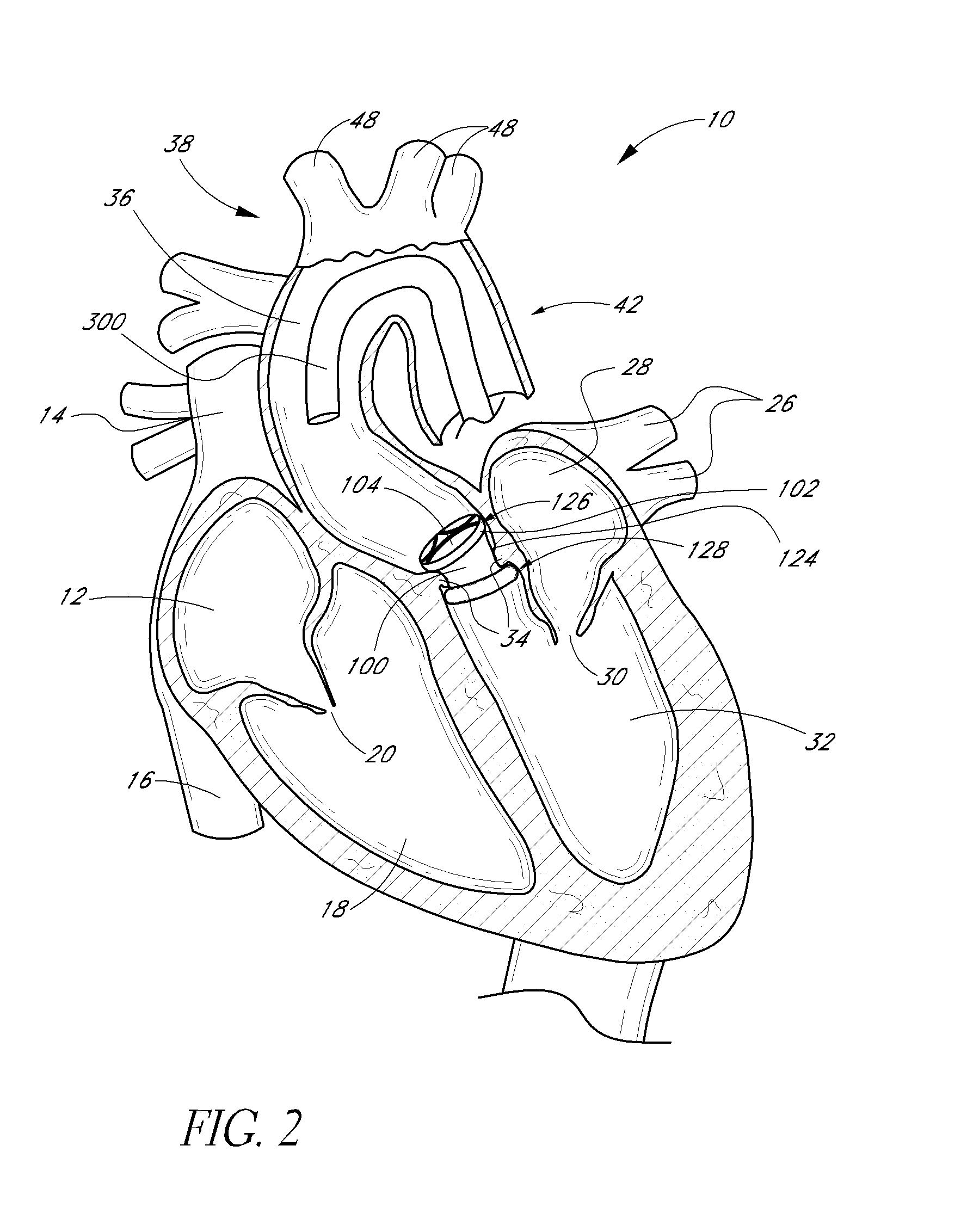

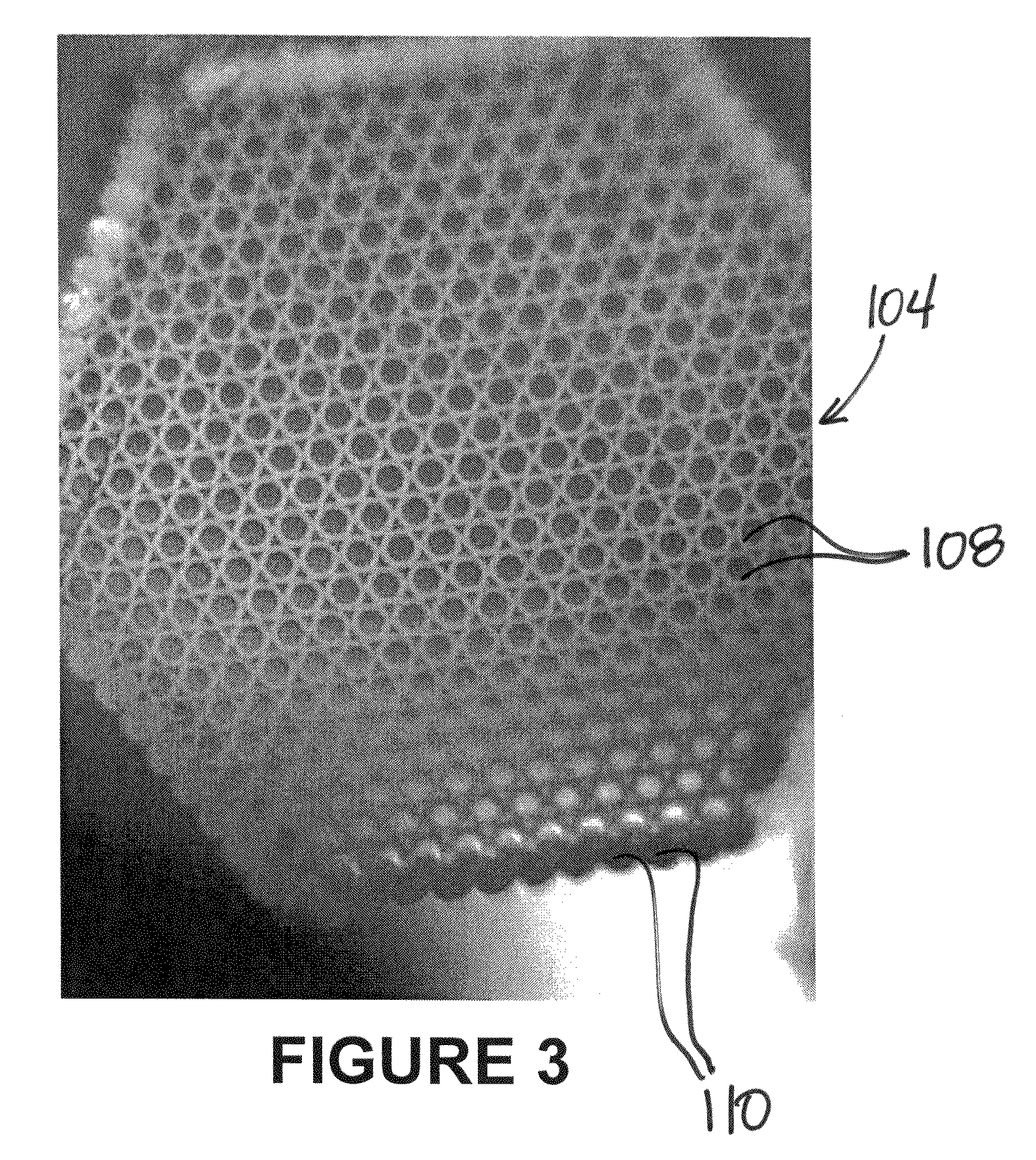

Translumenally implantable heart valve with formed in place support

A cardiovascular prosthetic valve, the valve comprising an inflatable cuff comprising at least one inflatable channel that forms, at least in part, an inflatable structure, and a valve coupled to the inflatable cuff, the valve configured to permit flow in a first axial direction and to inhibit flow in a second axial direction opposite to the first axial direction, the valve comprising a plurality of tissue supports that extend generally in the axial direction and that are flexible and/or movable throughout a range in a radial direction.

Owner:DIRECT FLOW MEDICAL INC

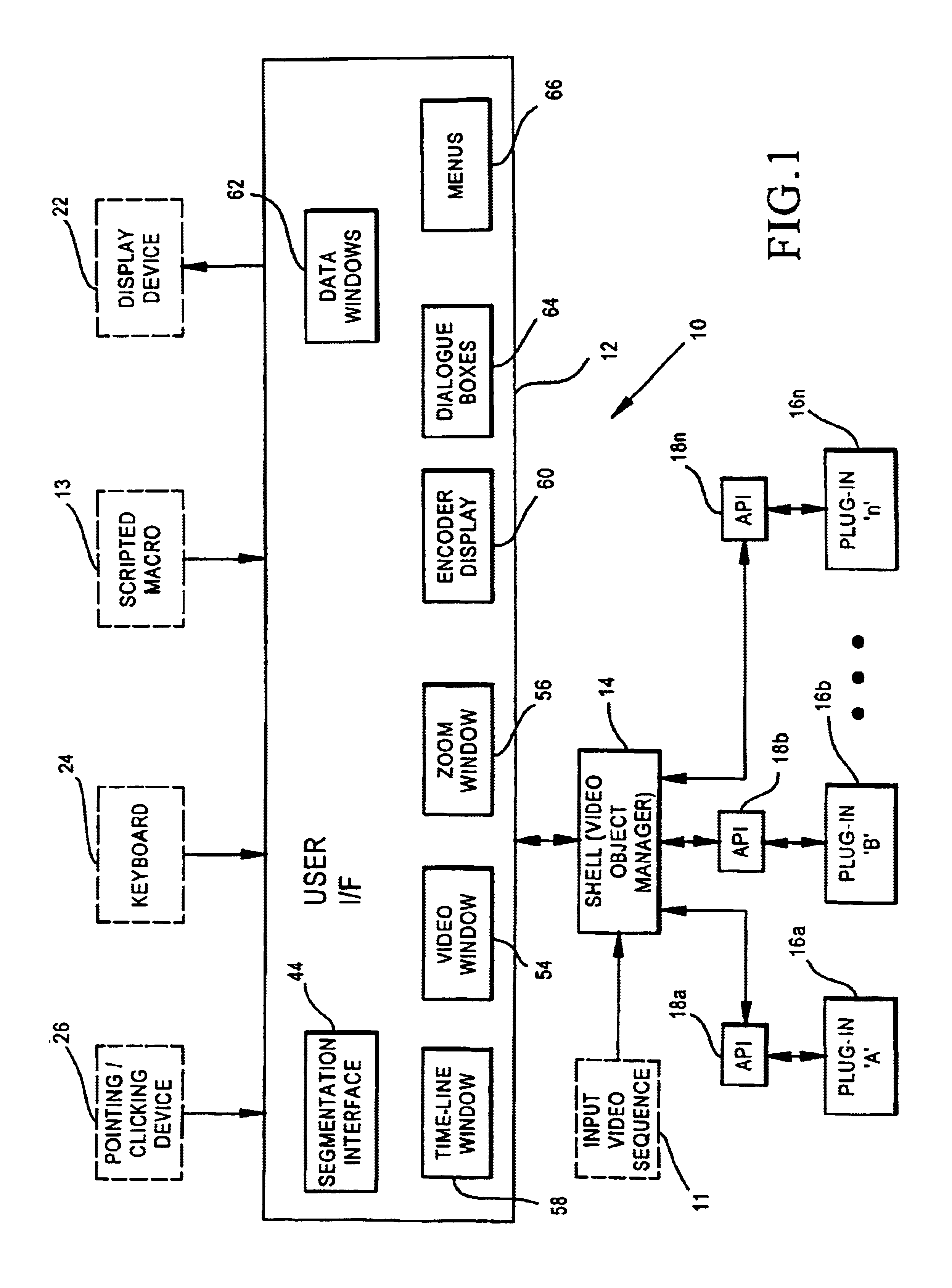

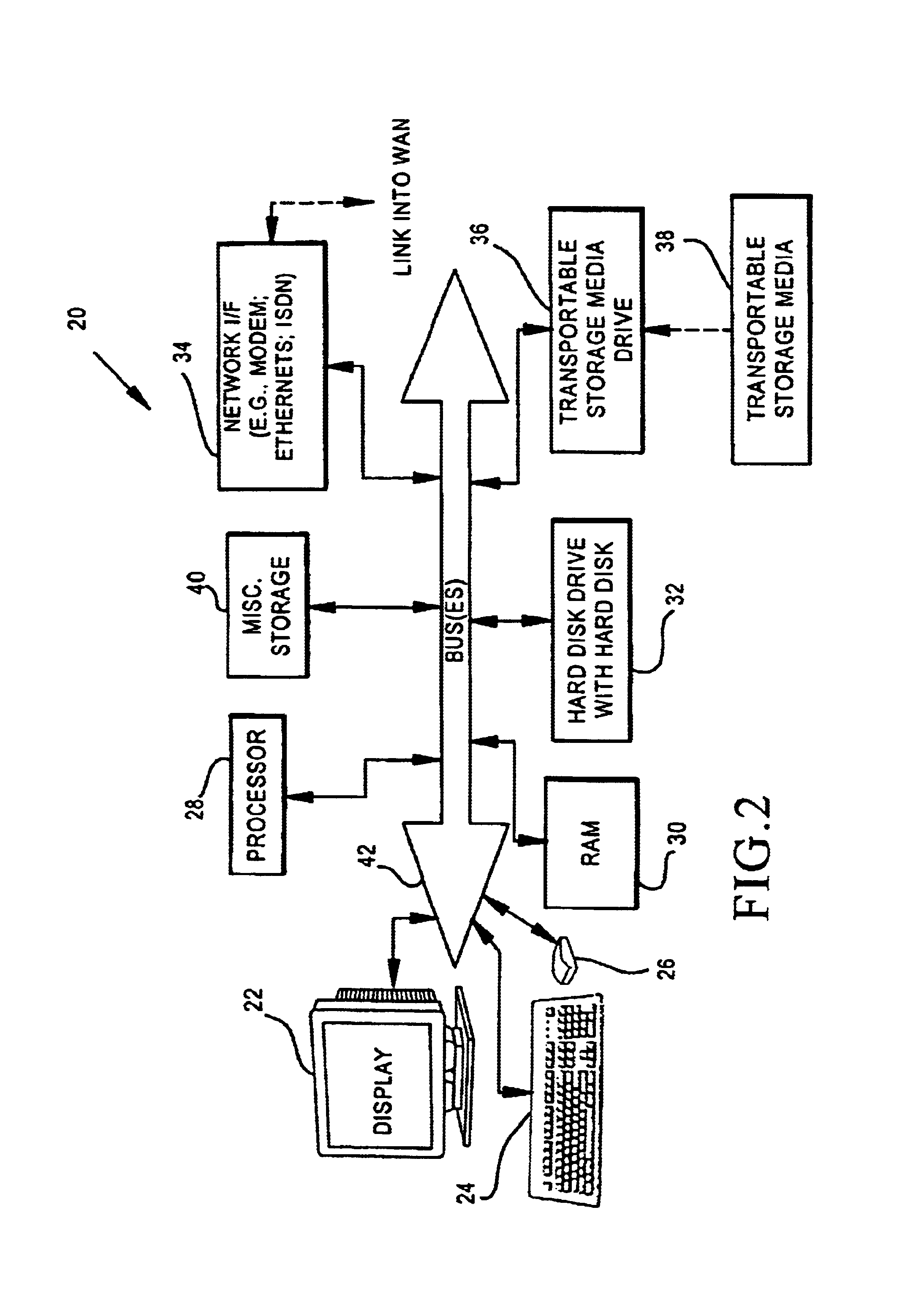

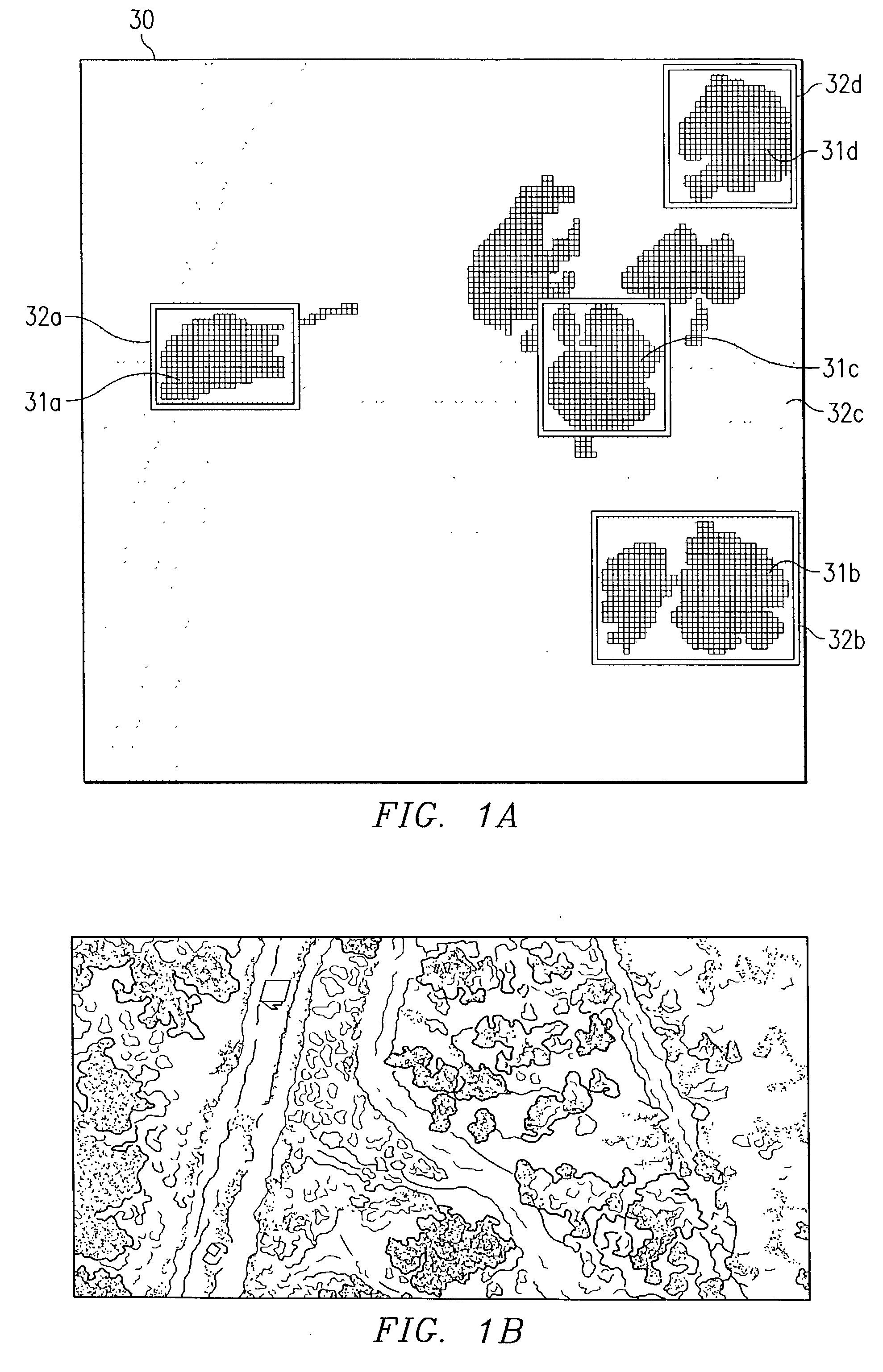

Methods and systems for text detection in mixed-context documents using local geometric signatures

Inactive | US7043080B1 | Quality and; Speed and; Character and pattern recognition; Visual presentation; Graphics; Imaging processing

Embodiments of the present invention relate to methods and systems for detection and delineation of text characters in images which may contain combinations of text and graphical content. Embodiments of the present invention employ intensity contrast edge detection methods and intensity gradient direction determination methods in conjunction with analyses of intensity curve geometry to determine the presence of text and verify text edge identification. These methods may be used to identify text in mixed-content images, to determine text character edges and to achieve other image processing purposes.

Owner:SHARP KK

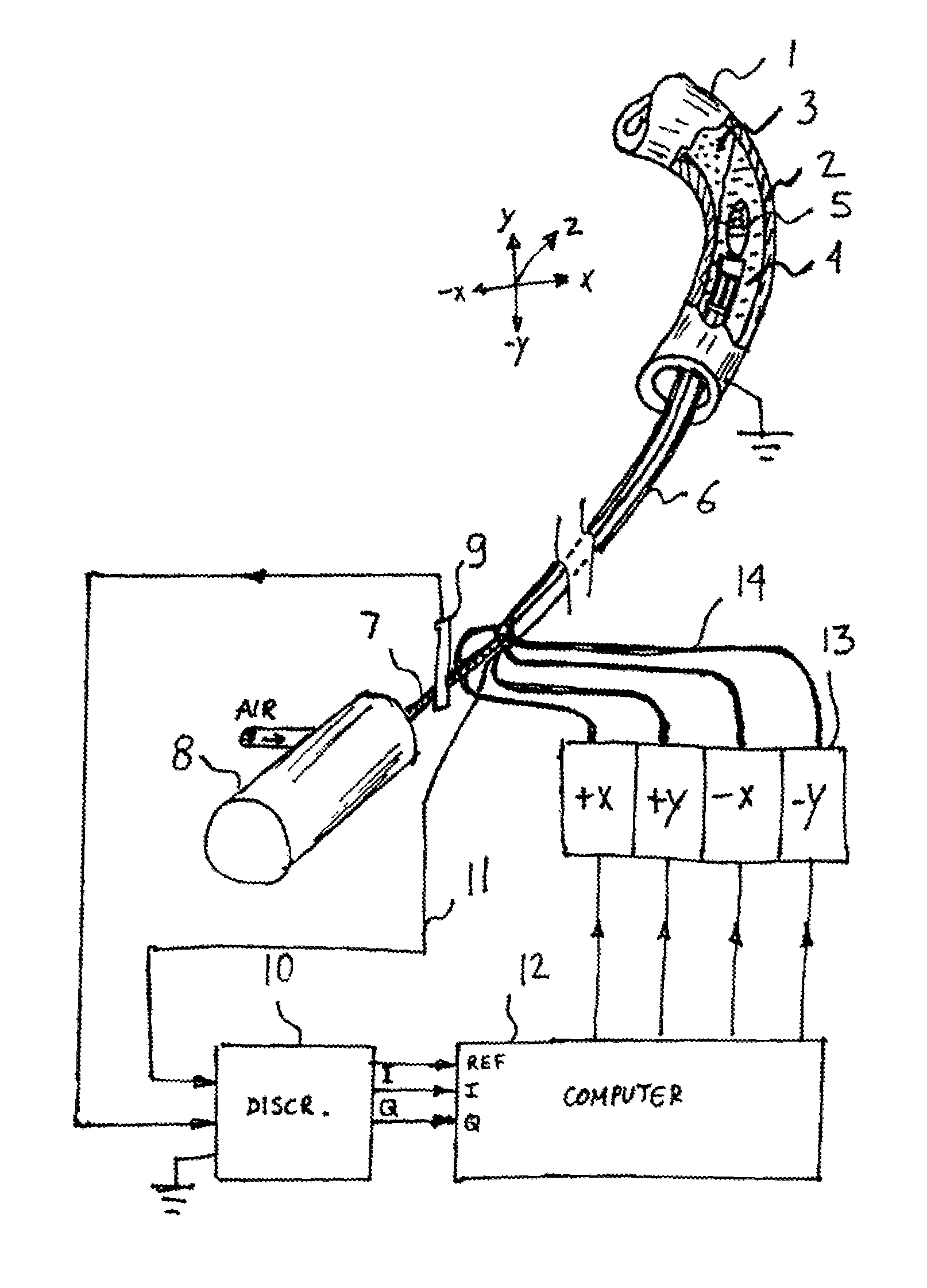

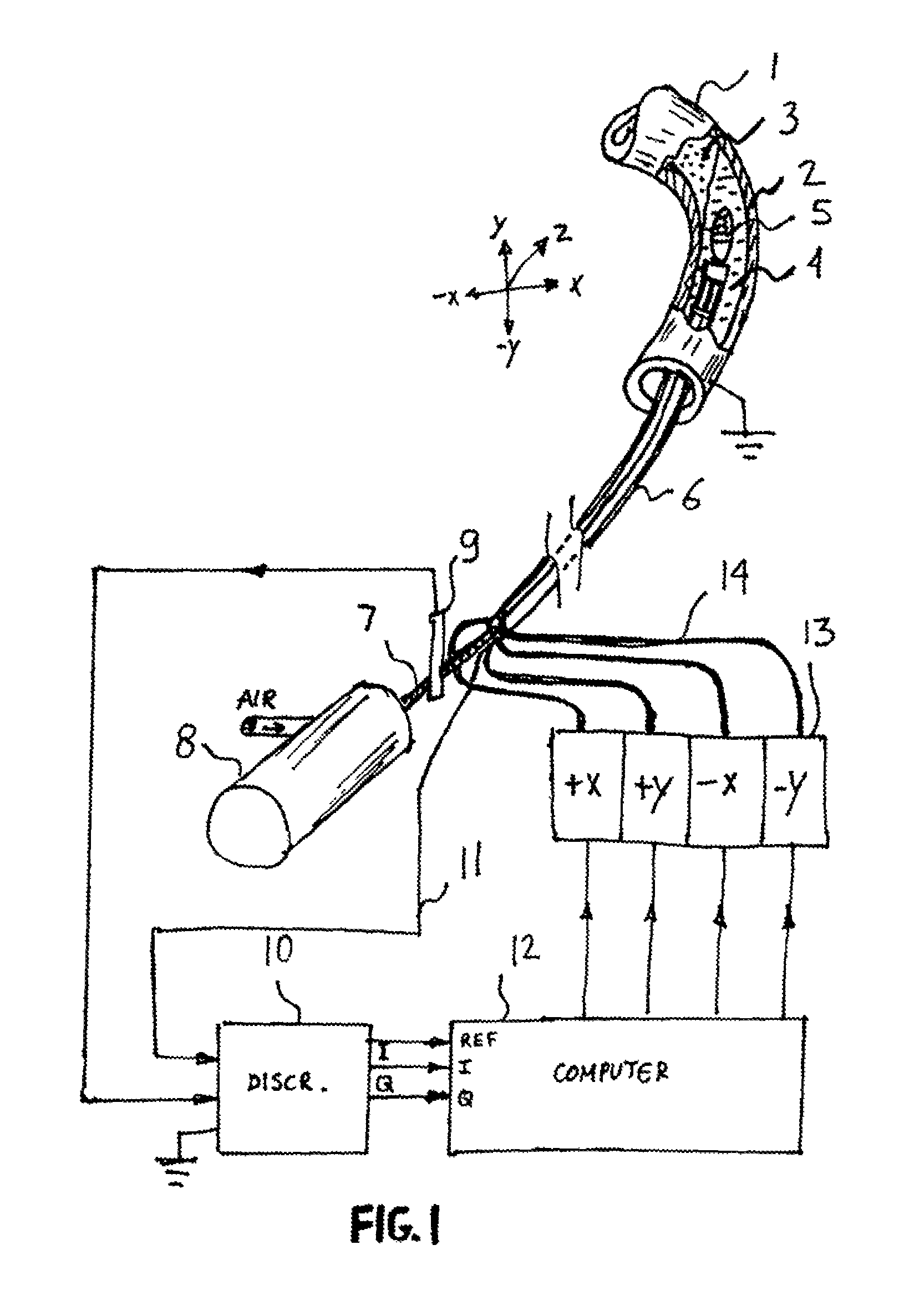

Automatic atherectomy system

An automatic atherectomy system uses a rotary burr at the tip of a catheter as a sensing device, in order to measure both electrical conductivity and permittivity of surrounding tissue at multiple frequencies. From these parameters it is determined which tissue lies in different directions around the tip. A servo system steers the catheter tip in the direction of the tissue to be removed. In non-atherectomy applications the rotary burr can be replaced with any desired tool and the system can be used to automatically steer the catheter to the desired position. The steering may be done hydraulically, by pressurizing miniature bellows located near the catheter tip.

Owner:KARDIUM

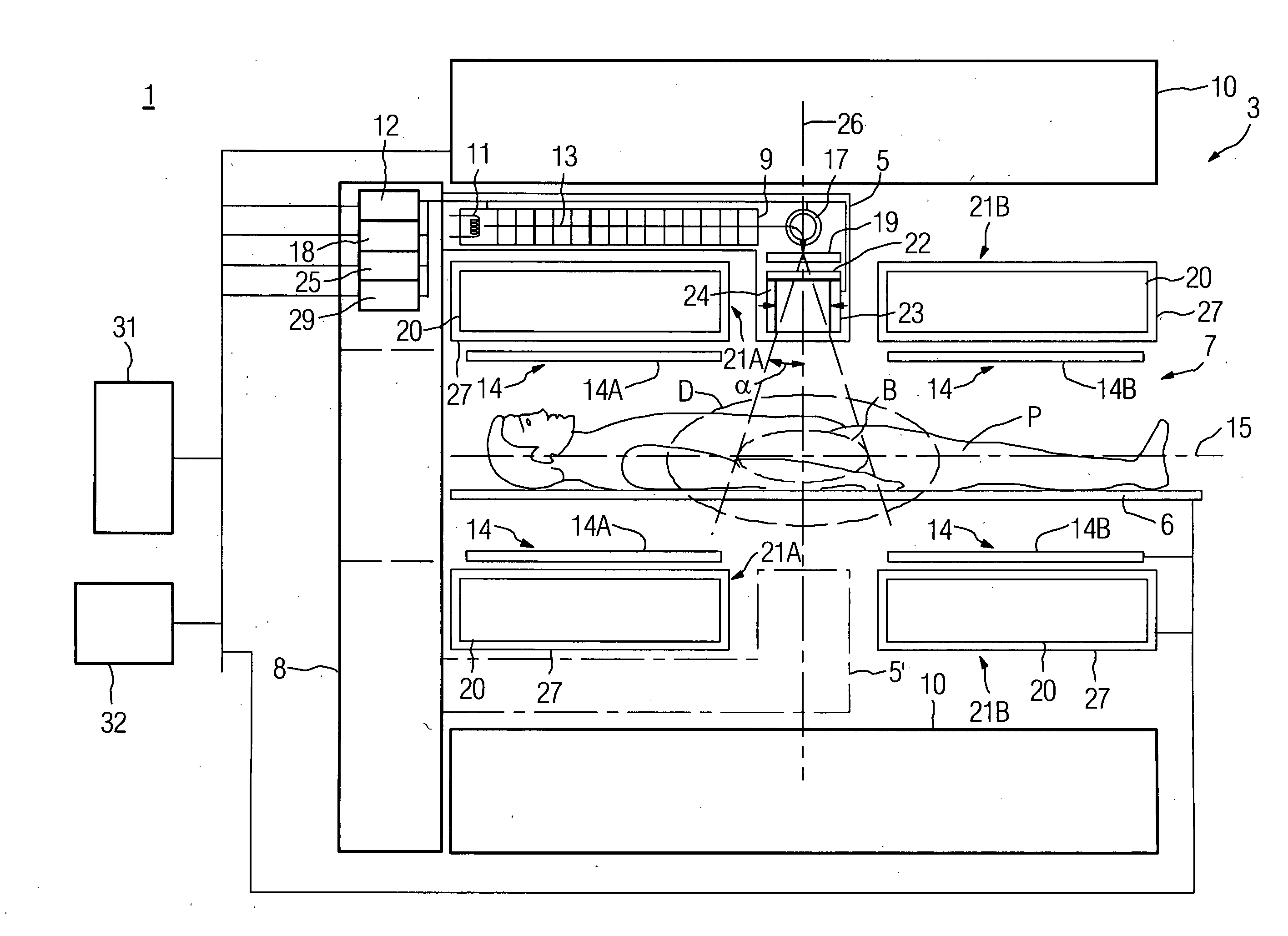

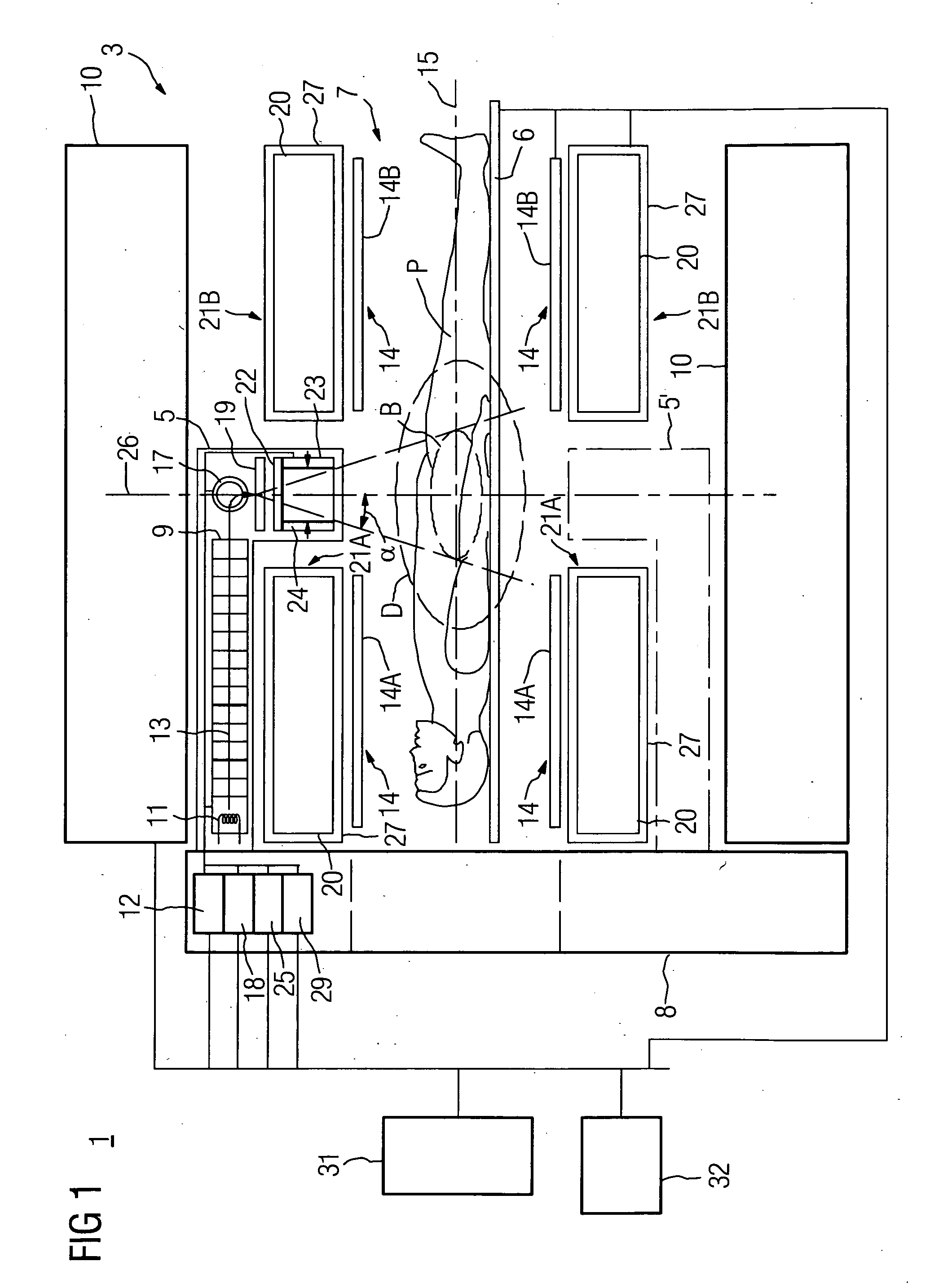

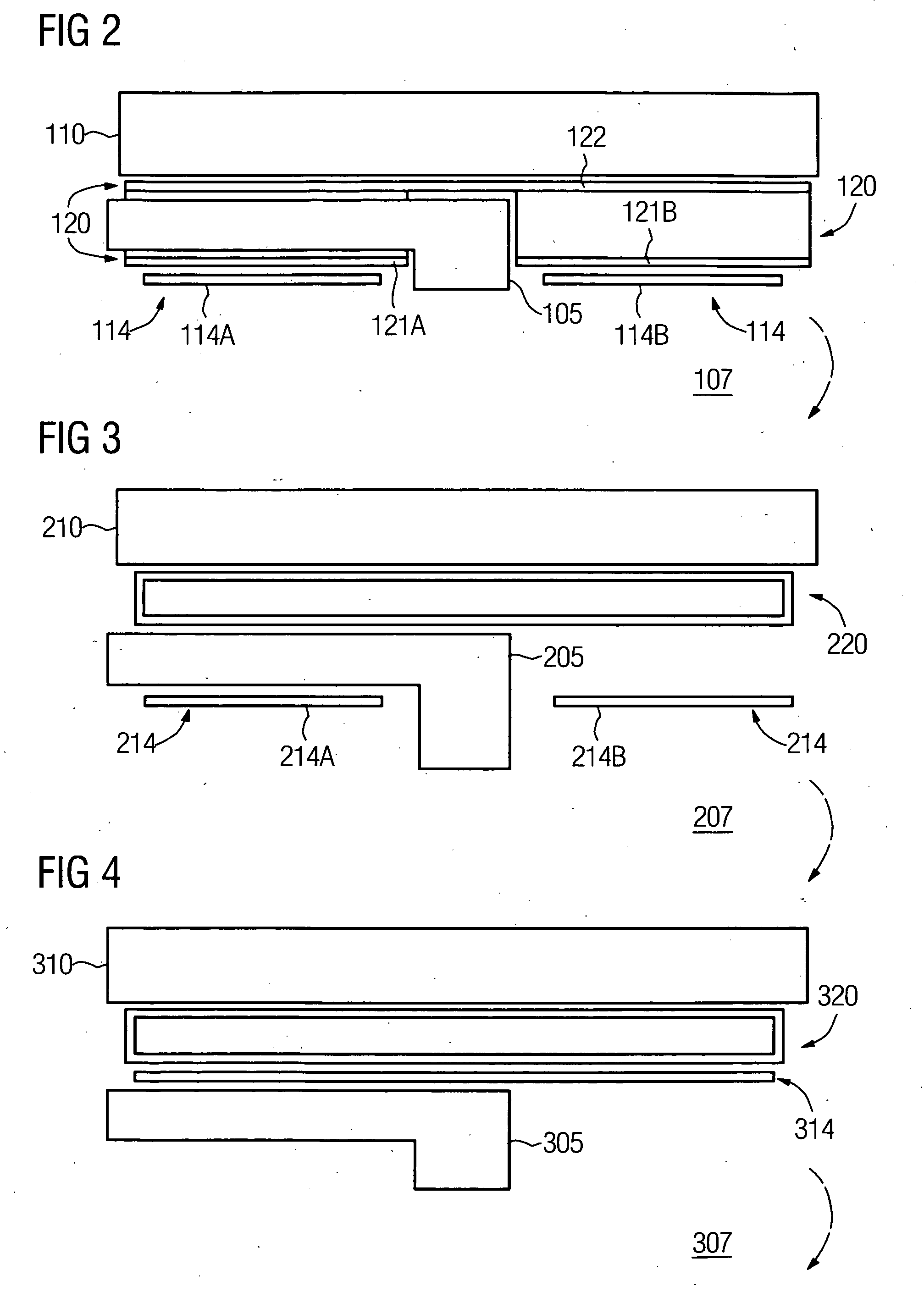

Combined radiation therapy and magnetic resonance unit

Active | US20080208036A1 | Avoid huge expenses; High quality imaging; Magnetic measurements; Diagnostic recording/measuring; Resonance; Radiation therapy

The invention relates to a combined radiation therapy and magnetic resonance unit. For this purpose, in accordance with the invention, a combined radiation therapy and magnetic resonance unit is provided comprising a magnetic resonance diagnosis part with an interior, which is bounded in the radial direction by a main magnet, and a radiation therapy part for the irradiation of an irradiation area within the interior, wherein at least parts of the radiation therapy part, which comprise a beam deflection arrangement, are arranged within the interior.

Owner:SIEMENS HEALTHCARE GMBH

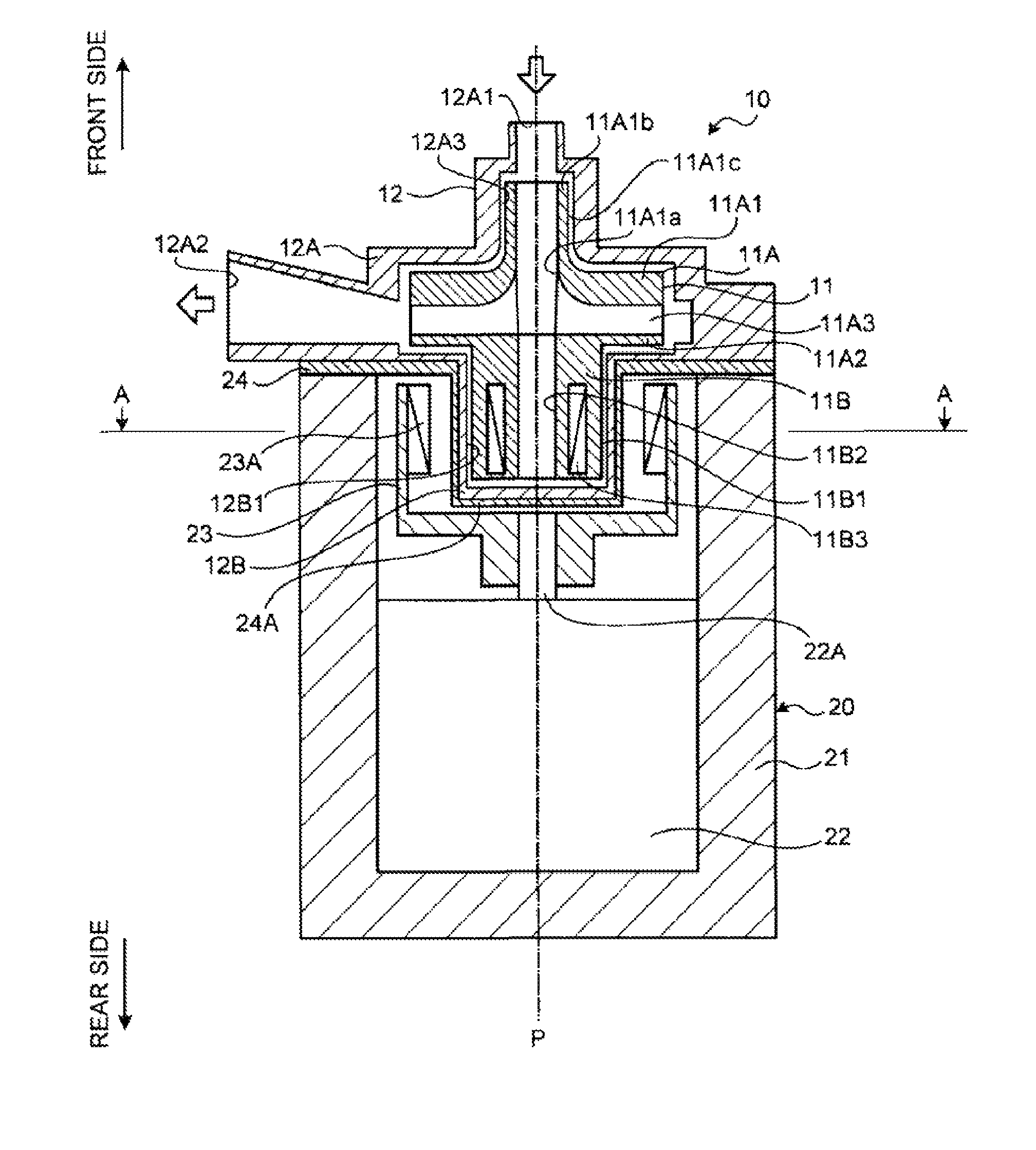

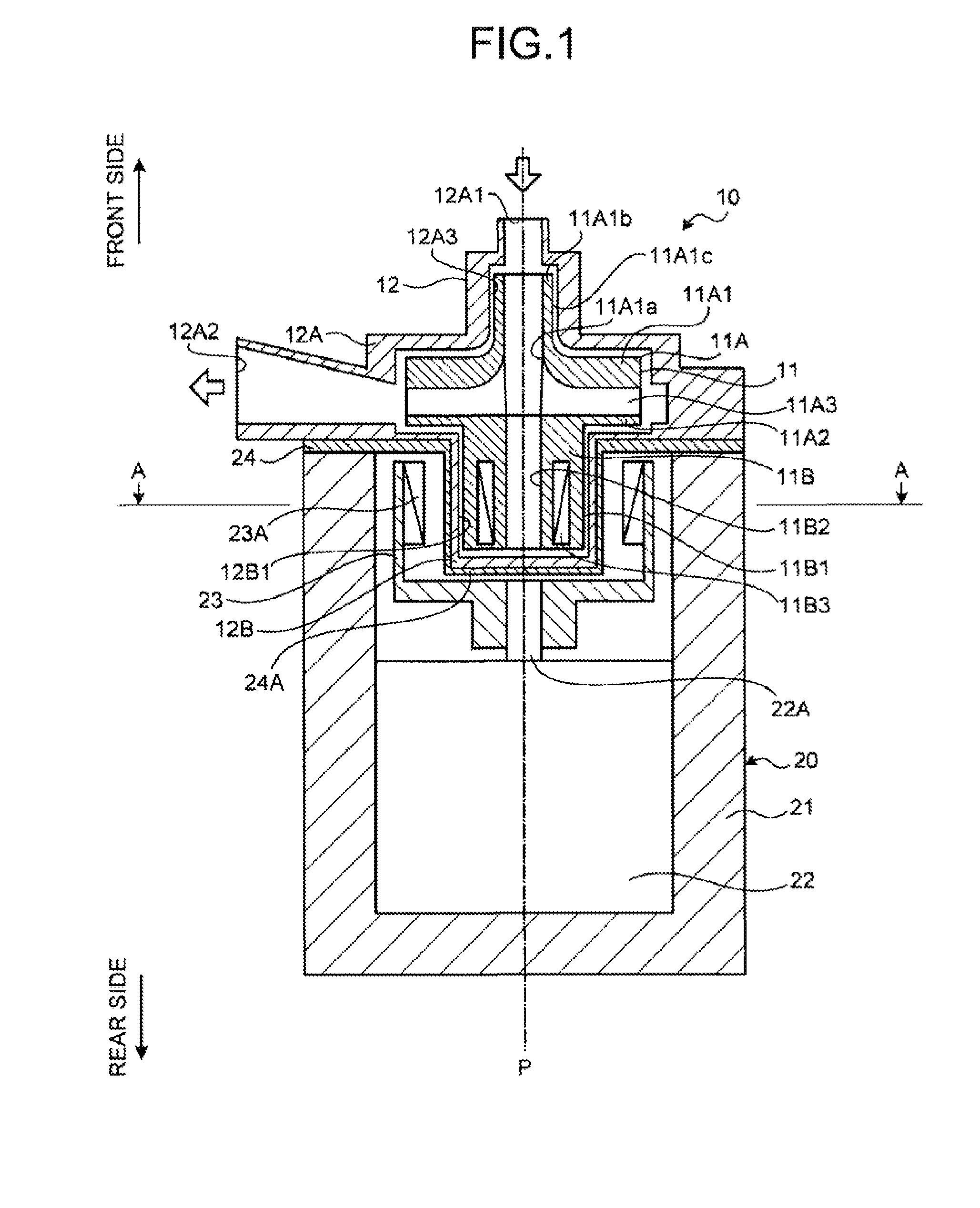

Blood pump and pump unit

Active | US8114008B2 | Reduce manufacturing cost; Improve reliability; Pump components; Blood pumps; Impeller; Coupling

Provided are a magnetic coupling as an axial bearing including a driven magnet (11B3) that is a permanent magnet provided to a rotating body (11) inside a casing (12) and a drive magnet (23A) that is a permanent magnet placed face to face with the driven magnet in a radial direction of the rotating body outside the casing to be magnetically coupled with the driven magnet, a driving motor (22) that rotatably drives the drive magnet about an axis (P) of the rotating body, a radial bearing that is a dynamic bearing having annular bearing surfaces (12B1, 11B1) centering on the axis on an inner wall of the casing and the rotating body, each of the annular bearing surfaces being arranged with a gap between the drive magnet and the driven magnet in the radial direction of the rotating body, and a closed impeller (11A) including a front shroud (11A1) arranged on a front side in the axial direction in the rotating body, a rear shroud (11A2) arranged on a rear side in the axial direction of the front shroud, and a vane (11A3) arranged between the front shroud and the rear shroud.

Owner:NIPRO CORP

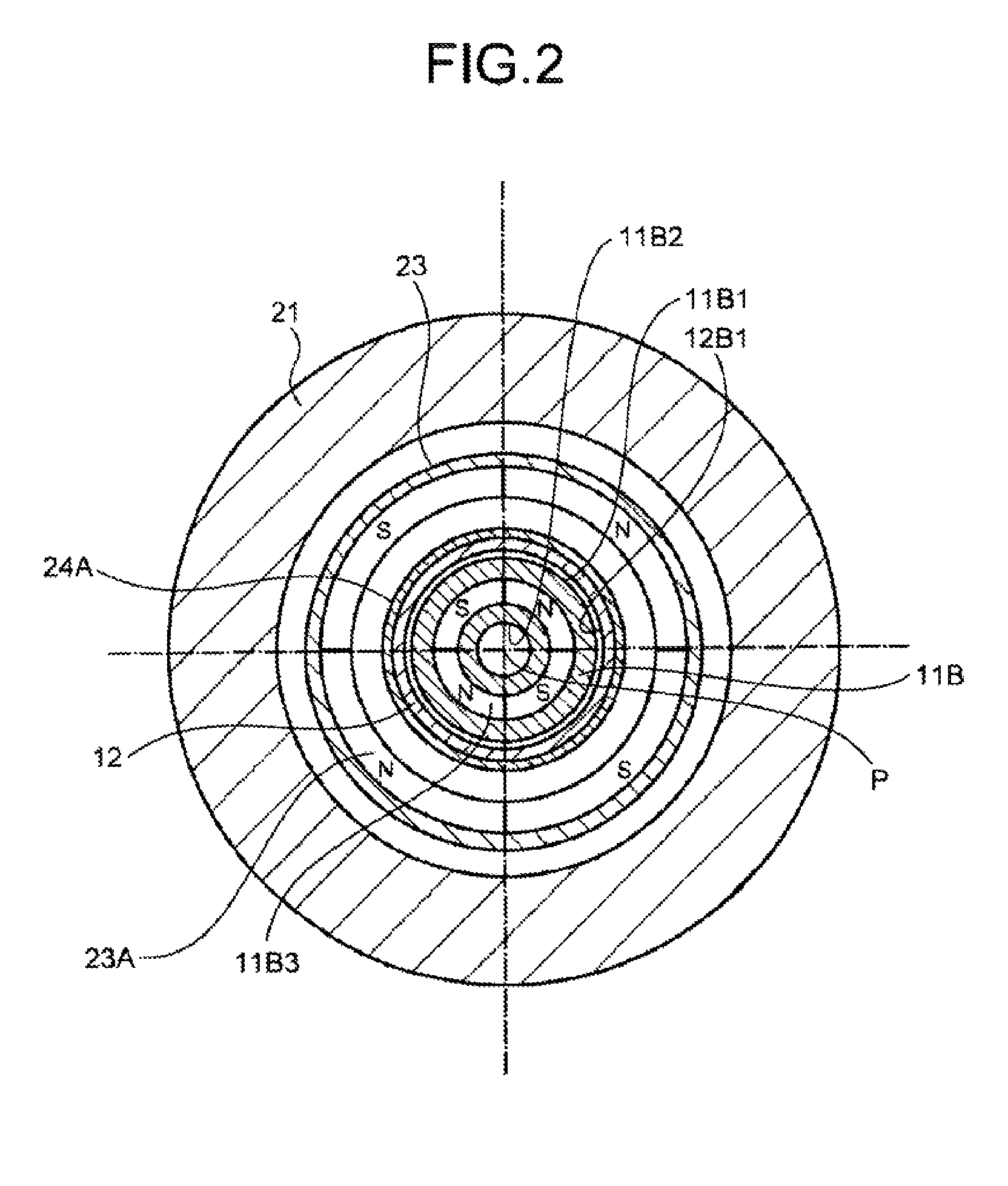

Translumenally implantable heart valve with formed in place support

A cardiovascular prosthetic valve, the valve comprising an inflatable cuff comprising at least one inflatable channel that forms, at least in part, an inflatable structure, and a valve coupled to the inflatable cuff, the valve configured to permit flow in a first axial direction and to inhibit flow in a second axial direction opposite to the first axial direction, the valve comprising a plurality of tissue supports that extend generally in the axial direction and that are flexible and/or movable throughout a range in a radial direction.

Owner:SPEYSIDE MEDICAL LLC

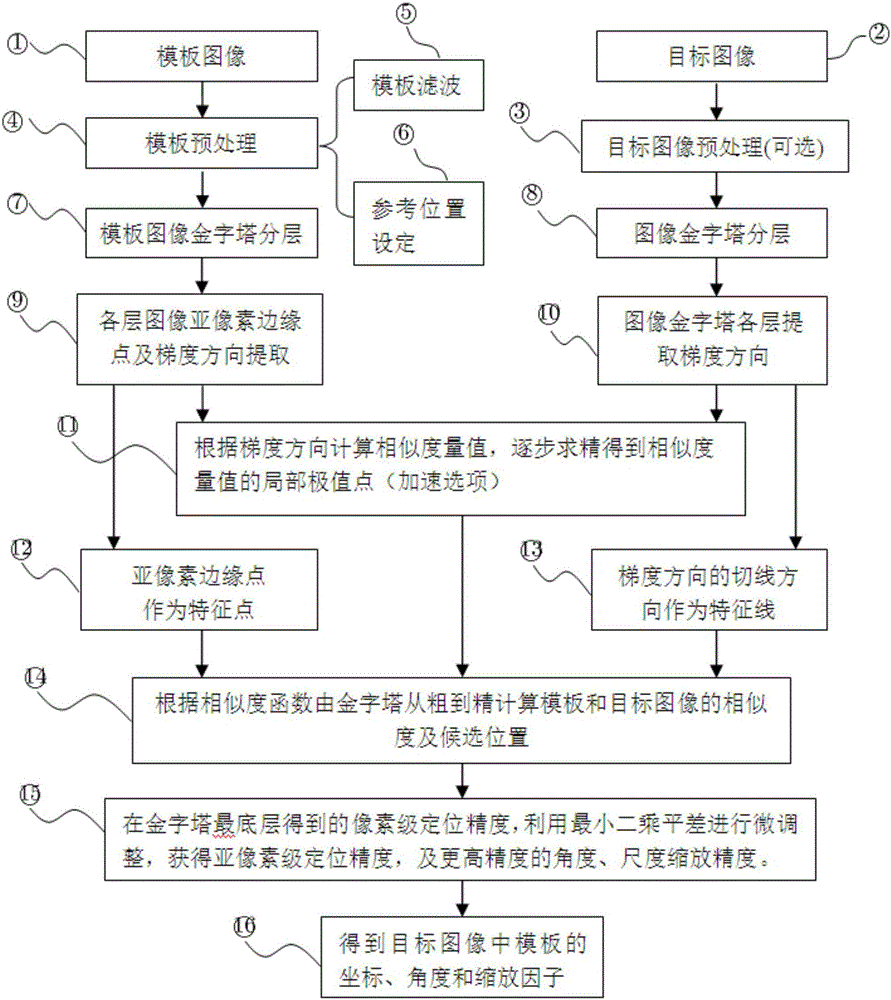

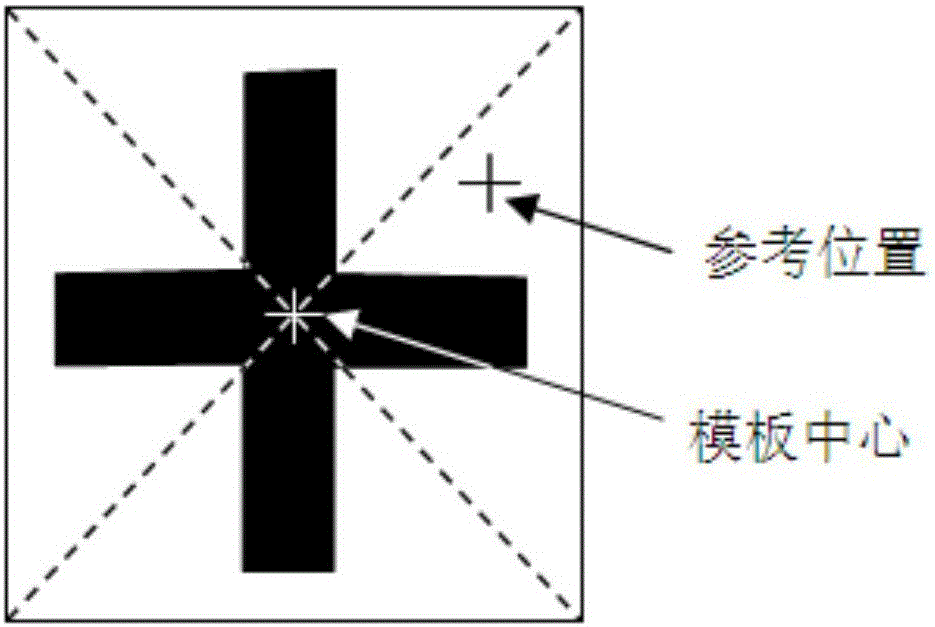

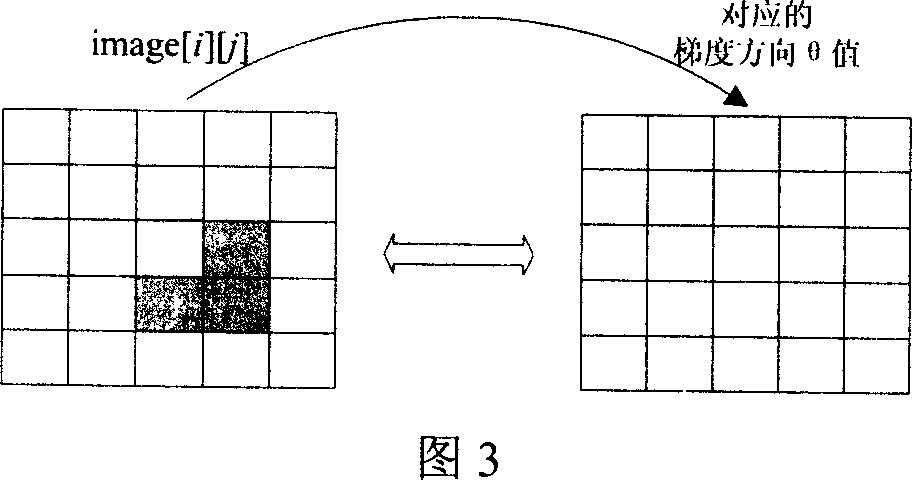

Fast high-precision geometric template matching method enabling rotation and scaling functions

Active | CN105930858A | Precise positioning; Guaranteed stability; Character and pattern recognition; Template matching; Machine vision

The present invention provides a fast, high-precision geometric template matching method that handles rotation and scaling. Based on image edge information, the method takes the sub-pixel edge points of the template image as feature points and the tangent directions of the gradient directions of the target image as feature lines. Using the local extremum points of a similarity value, it computes the similarity and the candidate positions of the template image in the target image from coarse to fine with an image pyramid and a similarity function. Pixel-level positioning accuracy is obtained at the bottom layer of the pyramid, and the least squares method is then used for fine adjustment, so that sub-pixel positioning accuracy and higher-precision angle and scale estimates are achieved. The method provides fast, stable, high-precision positioning and identification of target images that are translated, rotated, scaled, or partially occluded, including under changing or uneven illumination and against cluttered backgrounds. It can be applied wherever machine vision is used for target positioning and identification.

Owner:吴晓军
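
The template-matching abstract above does not give its similarity function. A common choice in shape-based matching, assumed here, scores a candidate position by the mean cosine between the template's gradient vectors at its edge points and the target's gradient vectors at the shifted points. The sketch below (hypothetical names `direction_similarity` and `best_offset`) covers translation only; the pyramid, the rotation and scale search, and the least-squares refinement are omitted.

```python
import numpy as np

def direction_similarity(pts, dirs, gx, gy, offset, eps=1e-9):
    """Mean cosine between template gradient vectors and target gradients at shifted edge points."""
    r0, c0 = offset
    total = 0.0
    for (r, c), (tx, ty) in zip(pts, dirs):
        ex, ey = gx[r + r0, c + c0], gy[r + r0, c + c0]
        norm = np.hypot(tx, ty) * np.hypot(ex, ey)
        if norm > eps:                      # pixels with no target gradient contribute 0
            total += (tx * ex + ty * ey) / norm
    return total / len(pts)

def best_offset(pts, dirs, gx, gy):
    """Exhaustive translation search; template points are (row, col) with non-negative coordinates."""
    max_r = max(r for r, _ in pts)
    max_c = max(c for _, c in pts)
    best_score, best_pos = -np.inf, None
    for r0 in range(gx.shape[0] - max_r):
        for c0 in range(gx.shape[1] - max_c):
            s = direction_similarity(pts, dirs, gx, gy, (r0, c0))
            if s > best_score:
                best_score, best_pos = s, (r0, c0)
    return best_score, best_pos

# Synthetic example: three template gradient vectors placed at offset (2, 3) in an 8x8 target.
pts = [(0, 0), (0, 2), (2, 1)]
dirs = [(1.0, 0.0), (0.0, 1.0), (-1.0, 1.0)]
gx, gy = np.zeros((8, 8)), np.zeros((8, 8))
for (r, c), (tx, ty) in zip(pts, dirs):
    gx[r + 2, c + 3], gy[r + 2, c + 3] = tx, ty
score, pos = best_offset(pts, dirs, gx, gy)
```

Because the score depends only on directions, it is insensitive to the illumination changes the abstract mentions, which is one reason this family of measures is popular.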

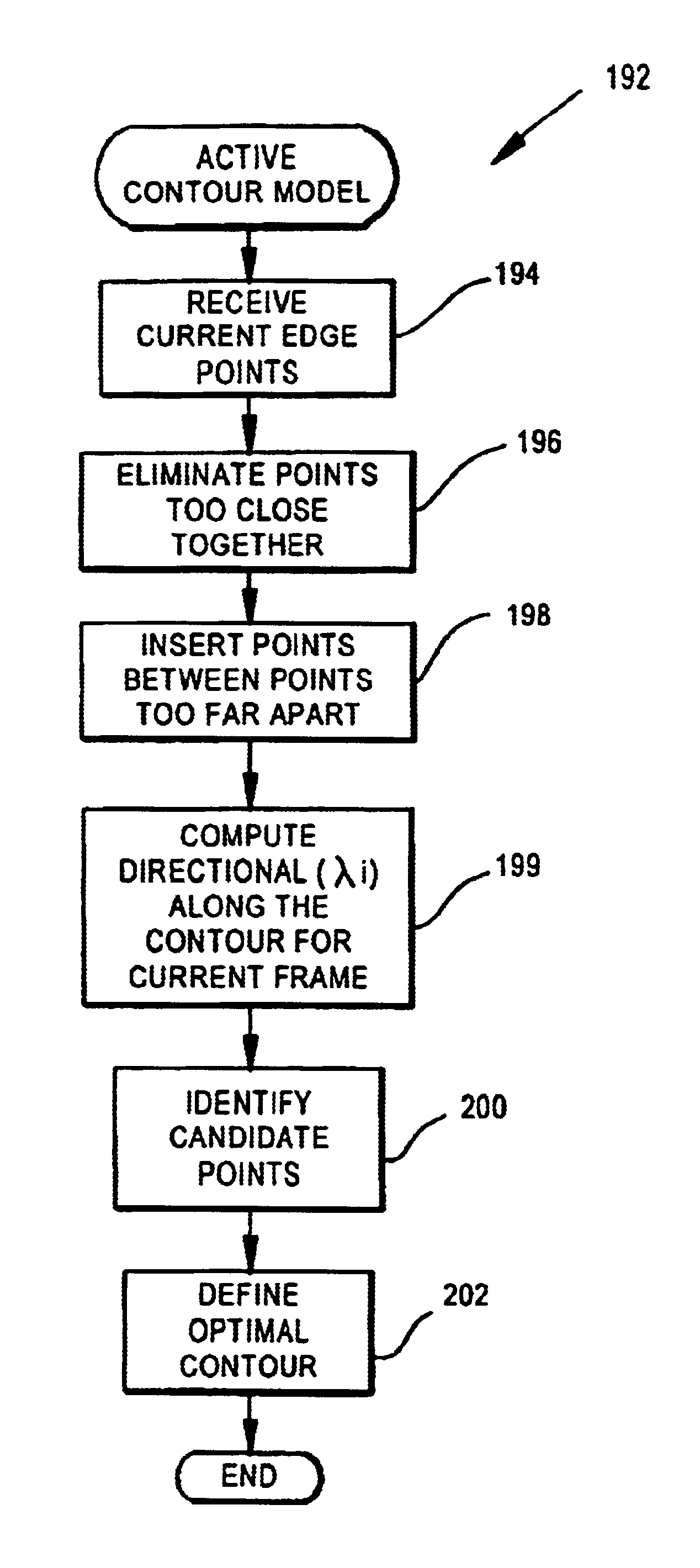

Video object segmentation using active contour model with directional information

Inactive | US6912310B1 | Improve segmentation; Accurate segmentation; Image analysis; Character and pattern recognition; Gradient strength; Energy minimization

Object segmentation and tracking are improved by including directional information to guide the placement of an active contour (i.e., the elastic curve or ‘snake’) in estimating the object boundary. In estimating an object boundary the active contour deforms from an initial shape to adjust to image features using an energy minimizing function. The function is guided by external constraint forces and image forces to achieve a minimal total energy of the active contour. Both gradient strength and gradient direction of the image are analyzed in minimizing contour energy for an active contour model.

Owner:UNIV OF WASHINGTON
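
For the active-contour method above, one way to use both gradient strength and gradient direction in the image-energy term, assumed here rather than taken from the patent, is to reward only the gradient component along the contour normal: $E_{\text{image}} = -\sum_i \max(0, \nabla I(p_i) \cdot \hat n_i)$. An edge of the wrong polarity then attracts the snake no more than a flat region does. The function name and the normal convention $\hat n = (t_y, -t_x)/\lVert t \rVert$ are illustrative; which side the normal points to depends on the vertex ordering, and the internal (elastic) energy and the minimization loop are not shown.

```python
import numpy as np

def directional_image_energy(points, grads):
    """points: (N, 2) closed-contour vertices as (x, y); grads: (N, 2) image gradients sampled there."""
    p = np.asarray(points, dtype=float)
    g = np.asarray(grads, dtype=float)
    tangent = np.roll(p, -1, axis=0) - np.roll(p, 1, axis=0)   # central difference along the contour
    normal = np.stack([tangent[:, 1], -tangent[:, 0]], axis=1)
    normal /= np.linalg.norm(normal, axis=1, keepdims=True)
    along = np.sum(g * normal, axis=1)          # |grad| * cos(angle between gradient and normal)
    return -np.sum(np.maximum(along, 0.0))      # lower energy = stronger, correctly oriented edges

square = [(0, 0), (1, 0), (1, 1), (0, 1)]
center = np.array([0.5, 0.5])
outward = np.asarray(square, dtype=float) - center   # gradients pointing away from the center
e_out = directional_image_energy(square, outward)    # about -2.83: aligned with the normals
e_in = directional_image_energy(square, -outward)    # 0: opposite polarity is ignored
```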

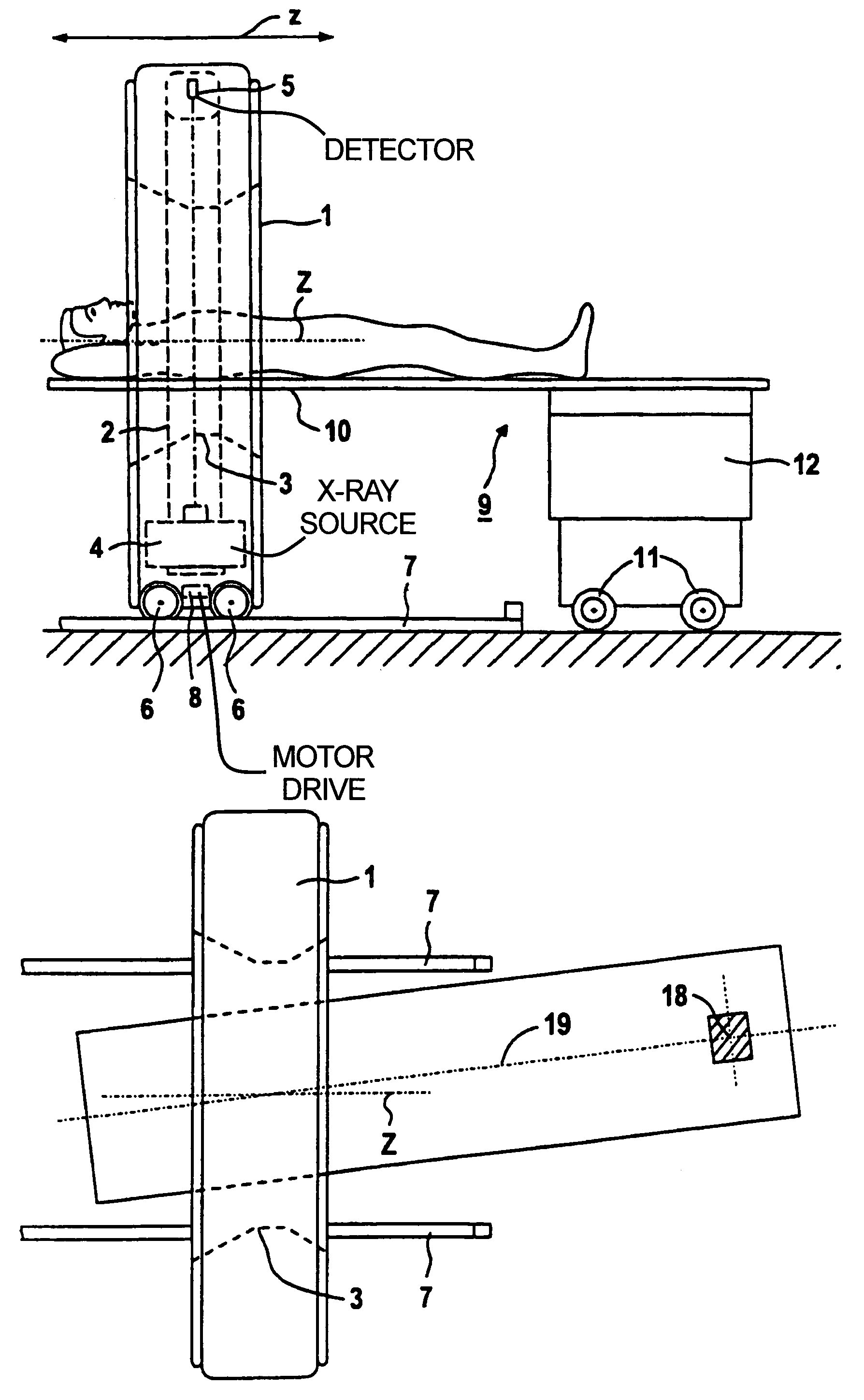

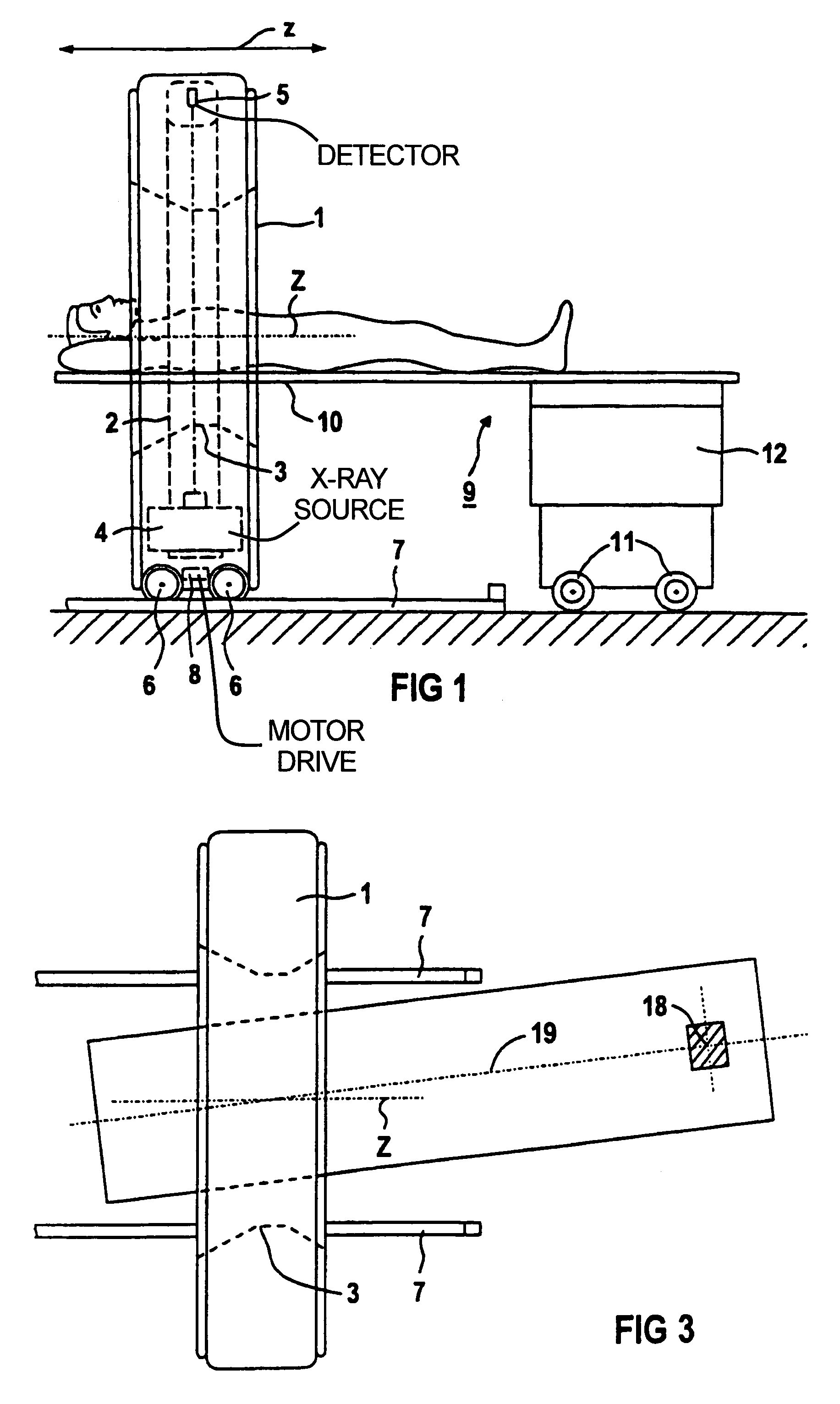

Computed tomography apparatus

Inactive | US6959068B1 | Technically simple; Simple preparation process; Material analysis using wave/particle radiation; Radiation/particle handling; Computed tomography; X-ray

A computed tomography apparatus has a gantry with a measuring opening, with an x-ray source movable around the measuring opening for irradiating an examination area from different directions, a detector for registering corresponding sets of projection data, and at least one support table having a support plate. The gantry can be moved into a use position independently of the support table and the support table is fashioned such that the support plate extends through the measuring opening when the gantry assumes a use position.

Owner:SIEMENS HEALTHCARE GMBH

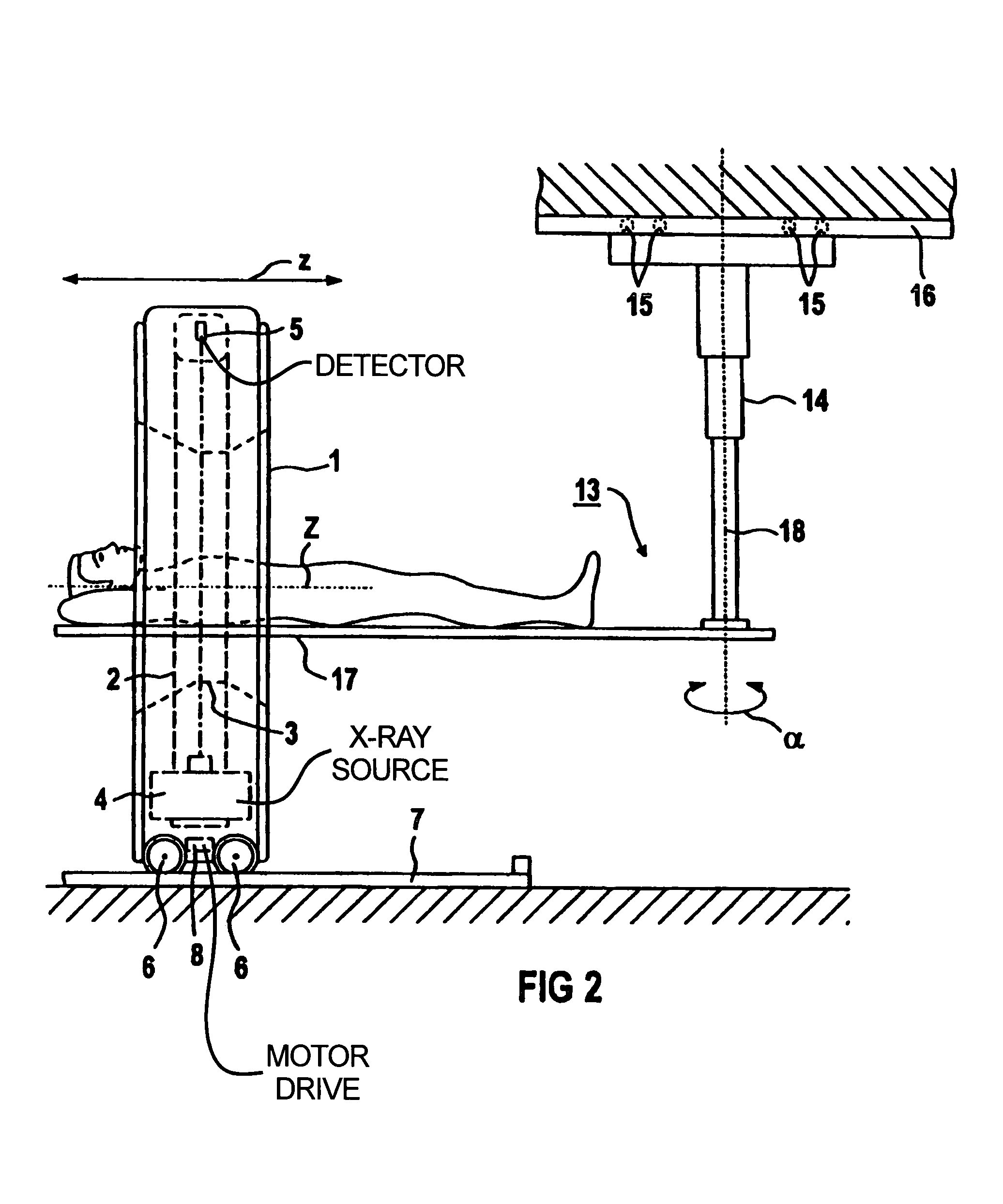

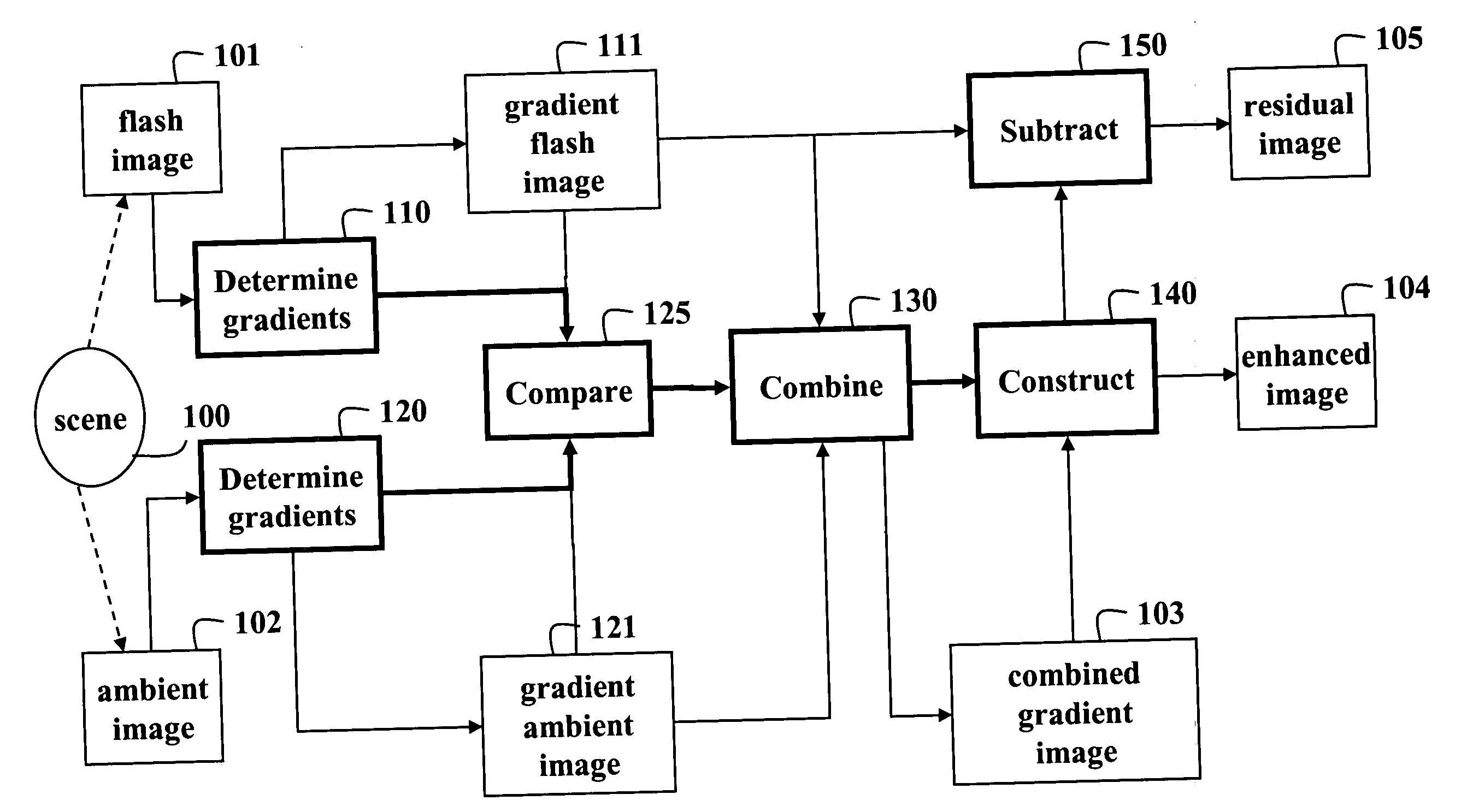

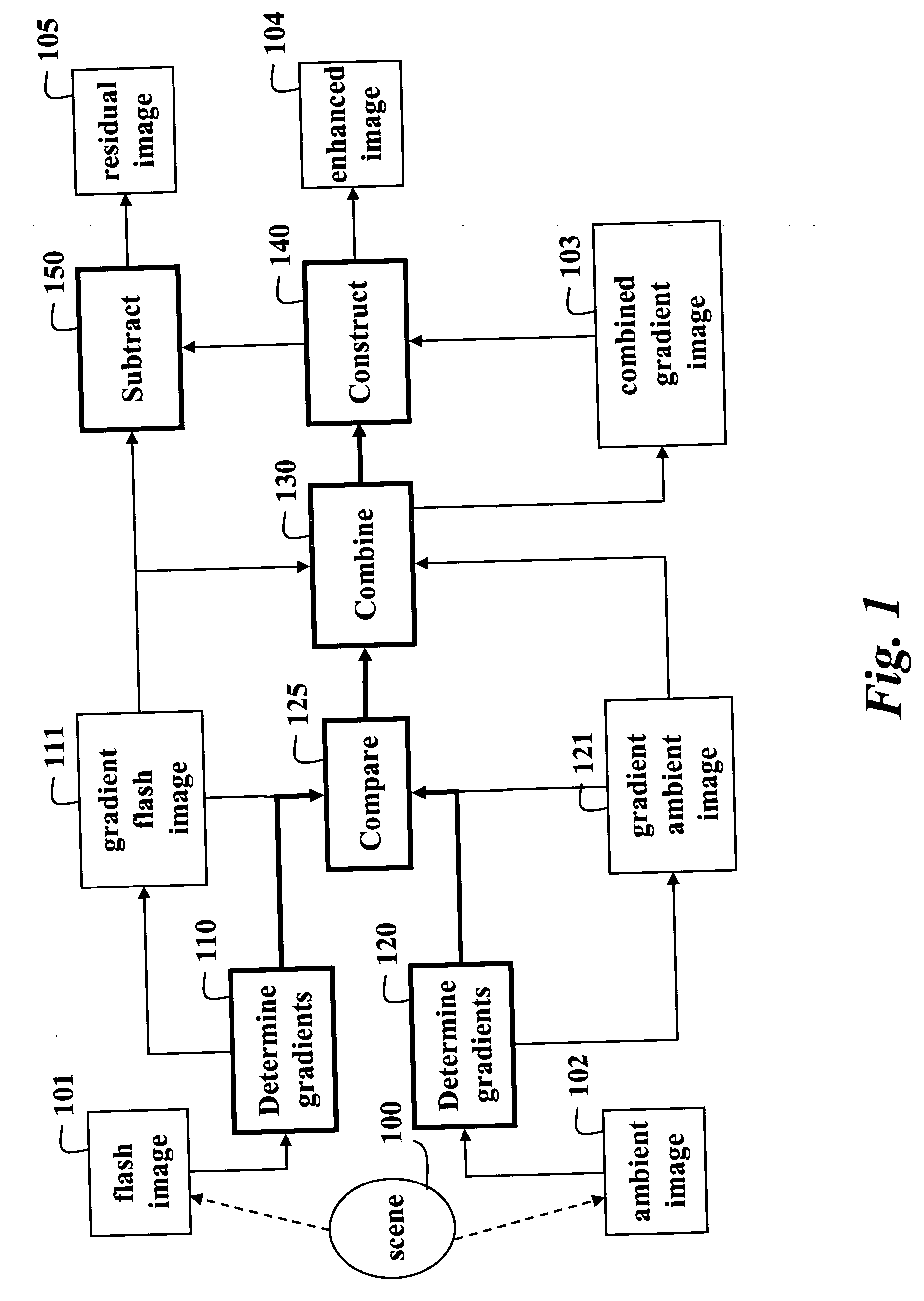

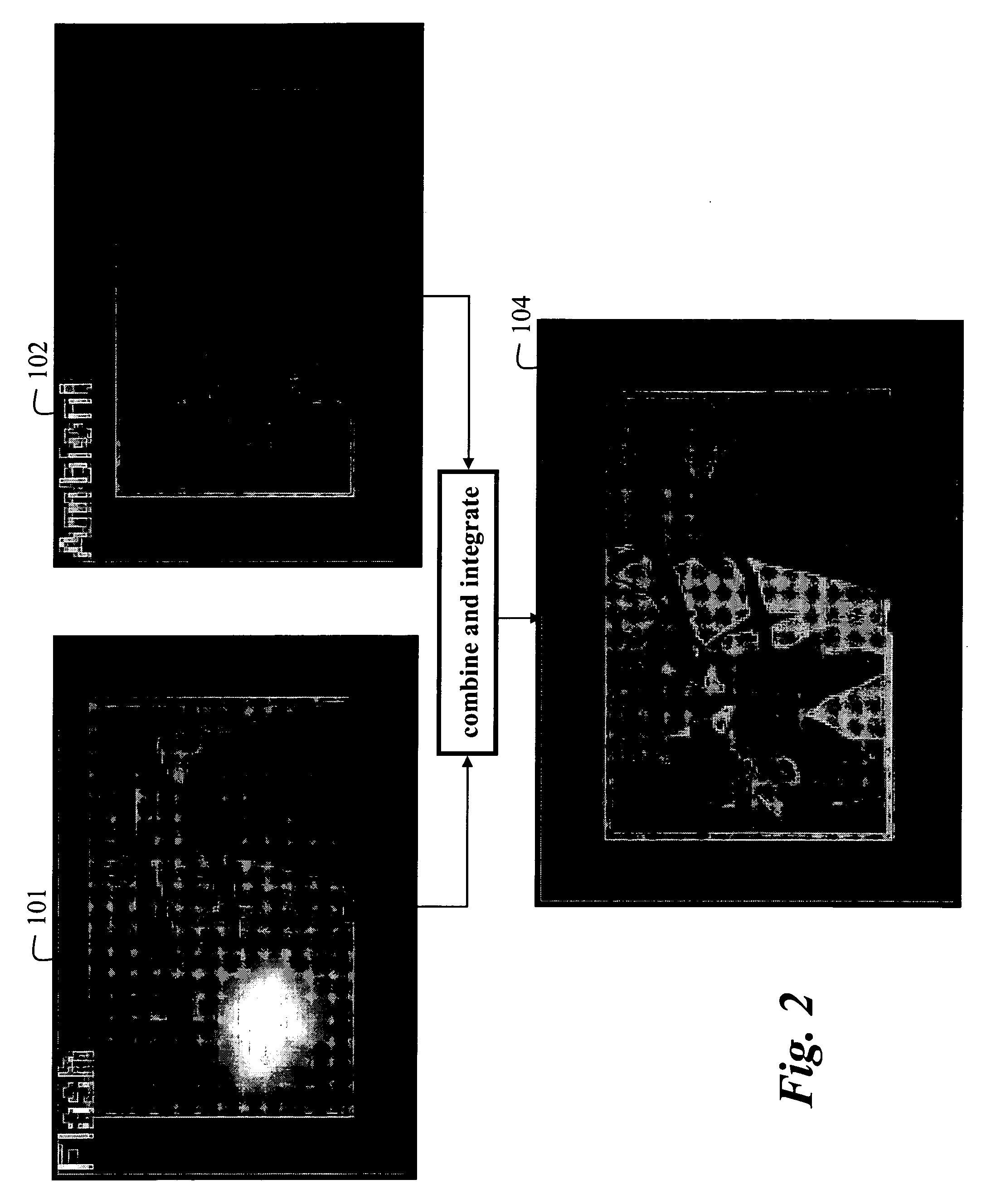

Method and apparatus for enhancing flash and ambient images

Inactive | US20070024742A1 | Minimize the number; Quality improvement; Television system details; Color signal processing circuits; Gradient direction

A method and system generate an enhanced output image. A first image is acquired of a scene illuminated by a first illumination condition. A second image is acquired of the scene illuminated by a second illumination condition. First and second gradient images are determined from the first and second images. Orientations of gradients in the first and second gradient images are compared to produce a combined gradient image, and an enhanced output image is constructed from the combined gradient image.

Owner:MITSUBISHI ELECTRIC RES LAB INC
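
The flash/ambient abstract above does not state how the gradient orientations are compared or combined. The sketch below assumes two common ingredients: a per-pixel coherence $|\mathbf g_1 \cdot \mathbf g_2| / (\lVert \mathbf g_1\rVert \lVert \mathbf g_2\rVert)$, which is 1 where the gradients are parallel and 0 where they are orthogonal, and a projection of one gradient onto the direction of the other, which keeps only the component consistent with both images. Integrating the combined gradient field back into an image (for example by solving a Poisson equation) is not shown, and the function names are hypothetical.

```python
import numpy as np

def orientation_coherence(ax, ay, bx, by, eps=1e-12):
    """Per-pixel |cos| of the angle between gradient fields a and b (0 where either vanishes)."""
    dot = ax * bx + ay * by
    norms = np.hypot(ax, ay) * np.hypot(bx, by)
    return np.where(norms > eps, np.abs(dot) / np.maximum(norms, eps), 0.0)

def project_onto(ax, ay, bx, by, eps=1e-12):
    """Component of gradient a along the direction of gradient b (zero where b vanishes)."""
    b2 = bx * bx + by * by
    scale = np.where(b2 > eps, (ax * bx + ay * by) / np.maximum(b2, eps), 0.0)
    return scale * bx, scale * by

# Three pixels: parallel, 45 degrees apart, and orthogonal gradients.
ax, ay = np.array([1.0, 1.0, 1.0]), np.array([0.0, 1.0, 0.0])
bx, by = np.array([2.0, 1.0, 0.0]), np.array([0.0, 0.0, 3.0])
coh = orientation_coherence(ax, ay, bx, by)
px, py = project_onto(ax, ay, bx, by)
```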

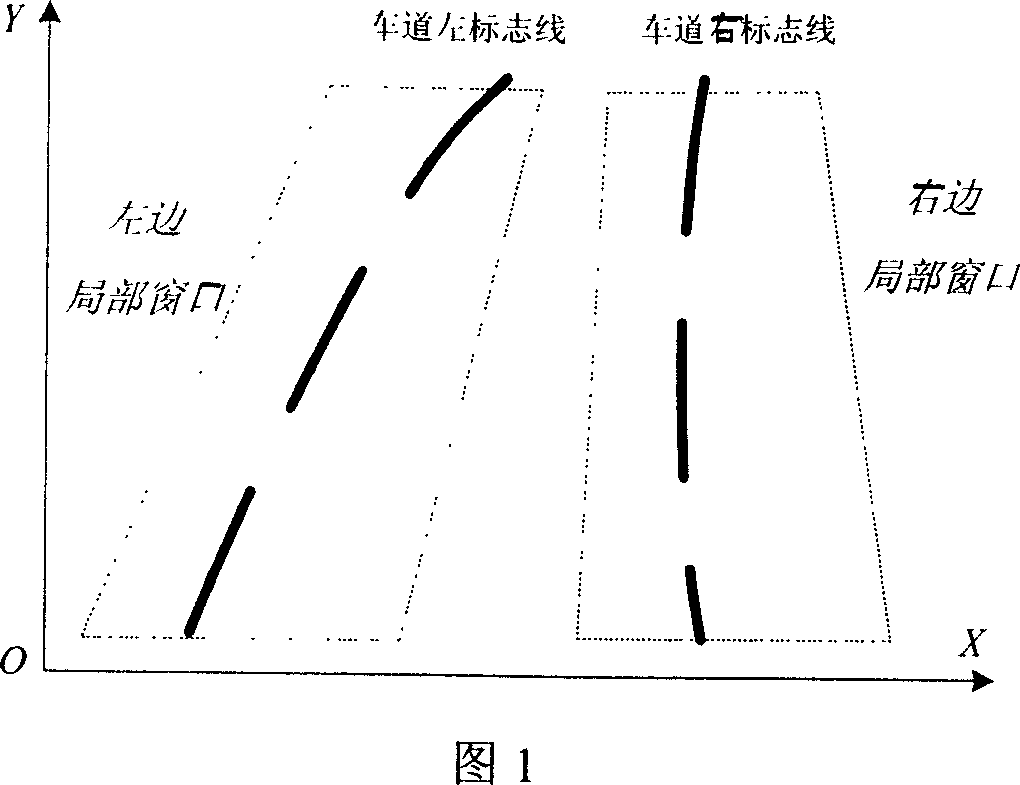

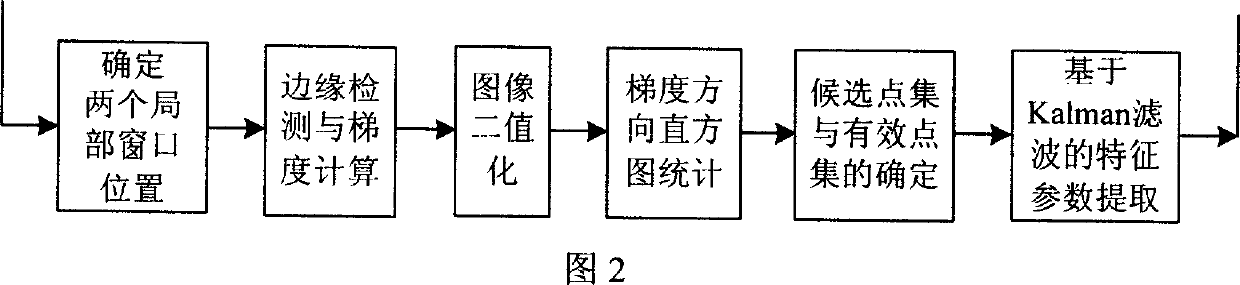

Vehicle lane Robust identifying method for lane deviation warning

Inactive | CN1945596A | Improve active safety; Reduce and avoid deviation traffic accidents; Image analysis; Character and pattern recognition; Point set; Gradient direction

A robust lane identification method for lane departure warning first performs conditional edge detection in left and right local windows and constructs the corresponding gradient direction maps. Then, using histogram processing, the gradient direction range of the lane edge points in each local window is determined. Exploiting the continuity of lane edge points in both direction and space, a filtering algorithm over candidate and effective point sets is proposed and applied to each window. Finally, from the effective point set established for each window, the lane markings are extracted quickly and accurately with a Kalman filter based on scalar processing.

Owner:SOUTHEAST UNIV
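
The histogram step of the lane method above can be sketched as follows, under assumptions the abstract does not state: directions are folded to $[0, \pi)$ so that both sides of a lane marking vote together, only pixels above a magnitude threshold vote, and the direction range is taken to be the single most populated bin. `lane_direction_range` and `in_range` are hypothetical names; the candidate/effective point filtering and the Kalman tracking are not shown.

```python
import numpy as np

def lane_direction_range(theta, magnitude, mag_thresh, n_bins=18):
    """Return (lo, hi), the bounds of the most populated gradient-direction bin among strong pixels."""
    strong = magnitude > mag_thresh
    folded = np.mod(theta[strong], np.pi)                    # ignore edge polarity
    hist, edges = np.histogram(folded, bins=n_bins, range=(0.0, np.pi))
    k = int(np.argmax(hist))
    return edges[k], edges[k + 1]

def in_range(theta, lo, hi):
    """Mask of pixels whose folded direction lies in [lo, hi); used to keep candidate edge points."""
    folded = np.mod(theta, np.pi)
    return (folded >= lo) & (folded < hi)

theta = np.array([0.5] * 10 + [2.0] * 3 + [0.5] * 5)
mag = np.array([10.0] * 13 + [0.1] * 5)                      # the last five pixels are too weak to vote
lo, hi = lane_direction_range(theta, mag, mag_thresh=1.0)
```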

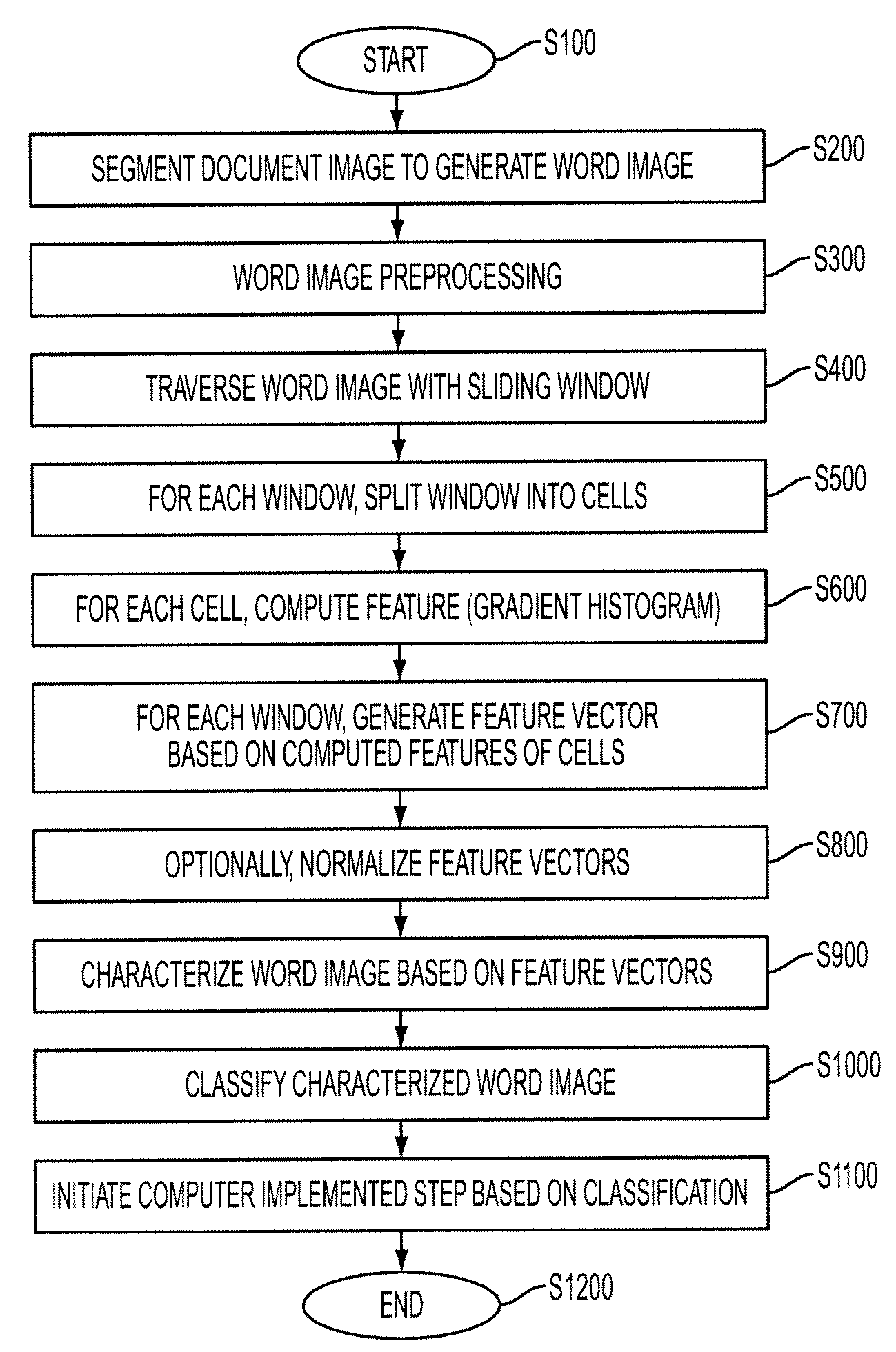

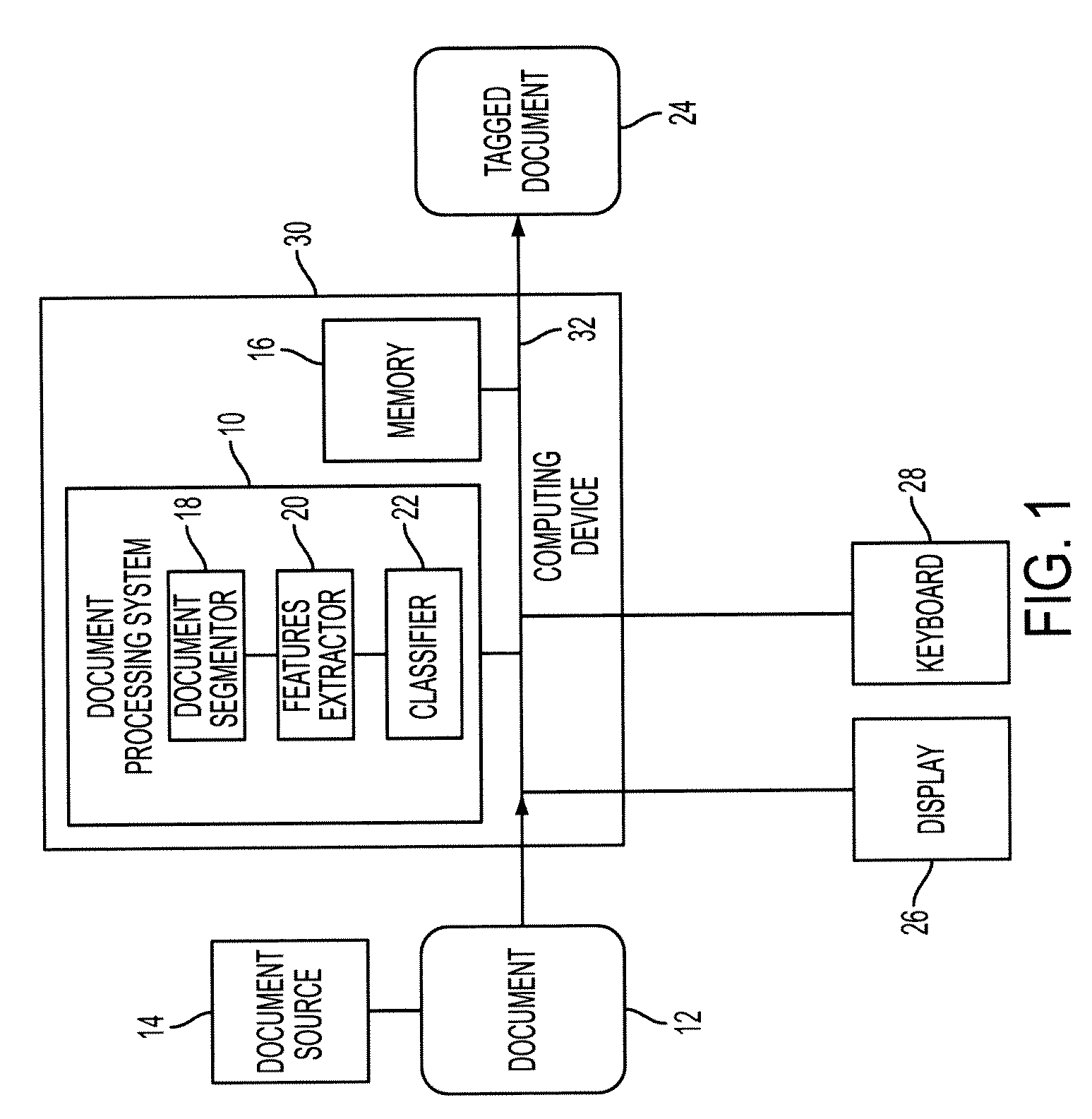

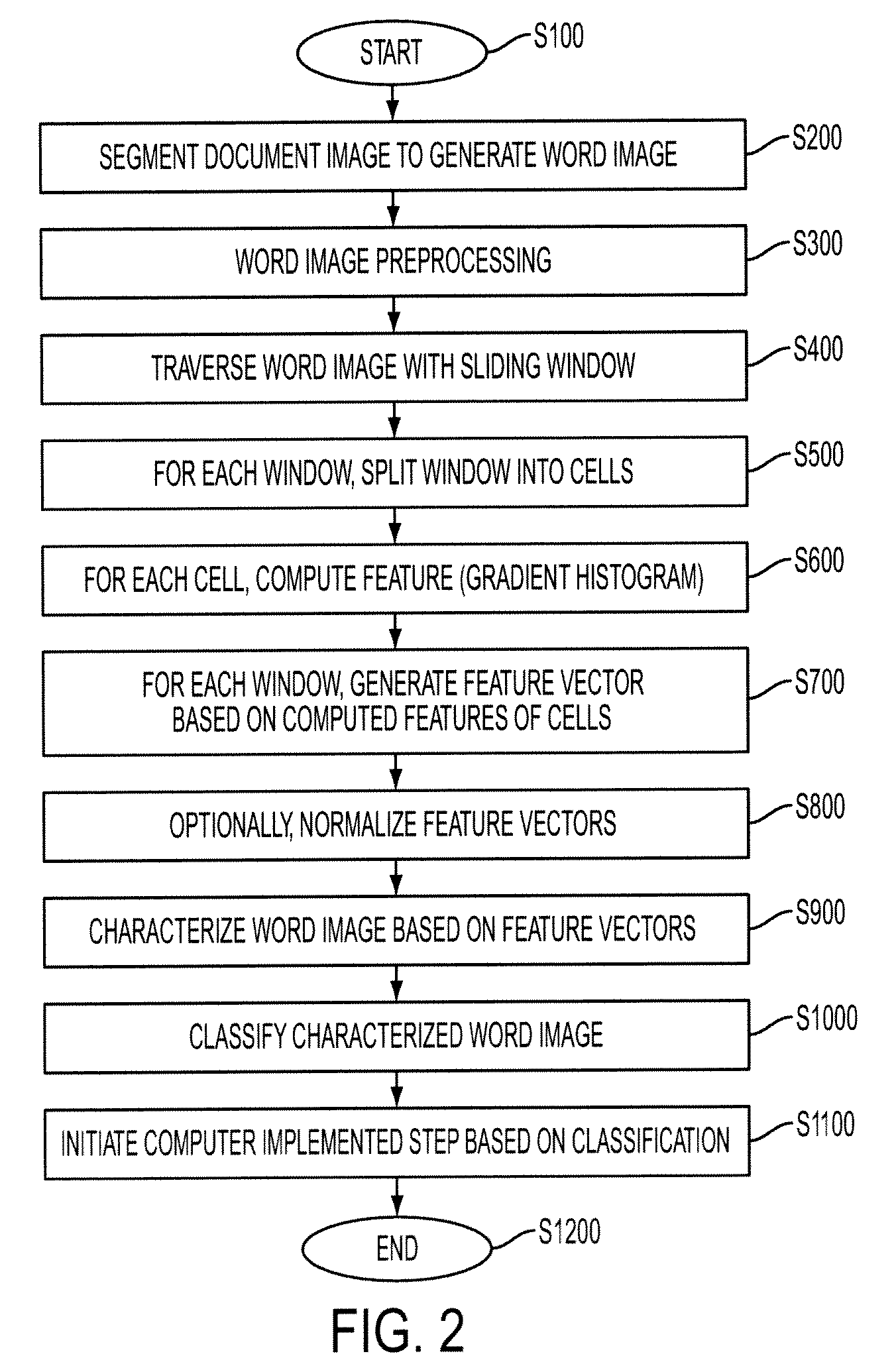

System and method for characterizing handwritten or typed words in a document

A method of characterizing a word image includes traversing the word image stepwise with a window to provide a plurality of window images. For each of the plurality of window images, the method includes splitting the window image to provide a plurality of cells. A feature, such as a gradient direction histogram, is extracted from each of the plurality of cells. The word image can then be characterized based on the features extracted from the plurality of window images.

Owner:MAJANDRO LLC
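
The word-image traversal described above can be sketched as below. The window is assumed to span the full height of the word image and to be split into equal-width vertical cells; each cell gets a normalized histogram of gradient directions over pixels with a non-zero gradient. Window width, step, cell count, and bin count are hypothetical parameters, not values from the patent.

```python
import numpy as np

def word_image_features(gx, gy, win_w=8, step=4, n_cells=2, n_bins=8):
    """One feature vector per window position: concatenated per-cell direction histograms."""
    h, w = gx.shape
    theta = np.mod(np.arctan2(gy, gx), 2 * np.pi)   # signed direction in [0, 2*pi)
    has_grad = np.hypot(gx, gy) > 0
    cell_w = win_w // n_cells
    features = []
    for x0 in range(0, w - win_w + 1, step):        # traverse the word image stepwise
        vec = []
        for c in range(n_cells):
            cols = slice(x0 + c * cell_w, x0 + (c + 1) * cell_w)
            vals = theta[:, cols][has_grad[:, cols]]
            hist, _ = np.histogram(vals, bins=n_bins, range=(0.0, 2 * np.pi))
            vec.append(hist / max(hist.sum(), 1))   # normalize counts per cell
        features.append(np.concatenate(vec))
    return np.array(features)

# A 10x24 image whose gradients all point right: 5 windows, 2 cells x 8 bins each.
feats = word_image_features(np.ones((10, 24)), np.zeros((10, 24)))
```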

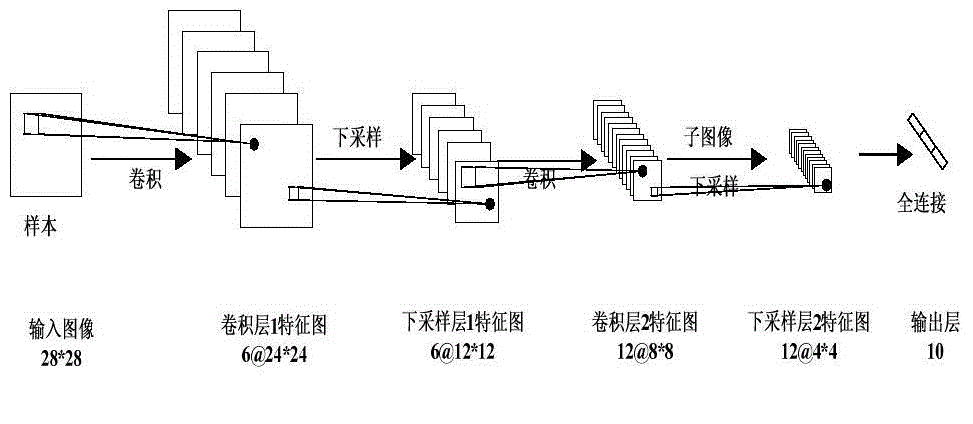

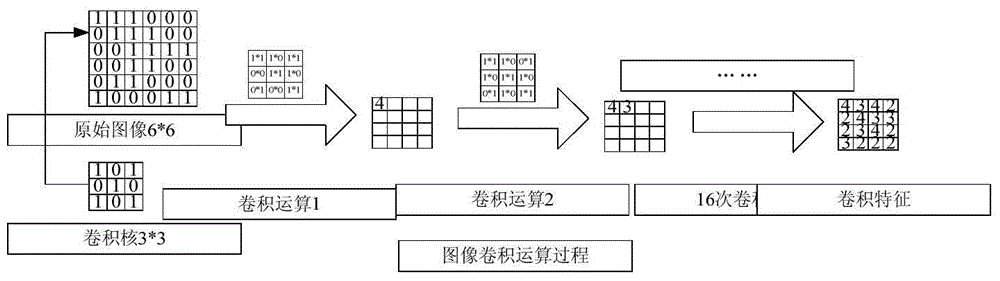

Face recognition method of deep convolutional neural network

Inactive | CN104866810A | Improve classification ability; The actual output value is small; Character and pattern recognition; Time complexity; Euclidean vector

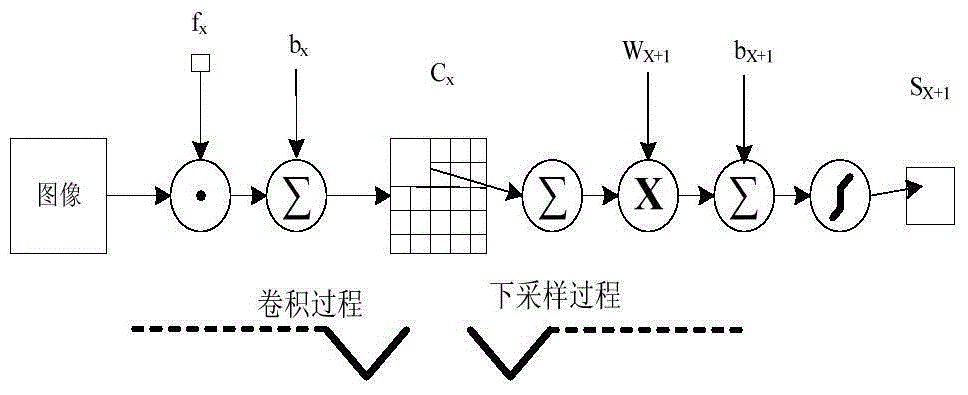

The invention discloses a face recognition method based on a deep convolutional neural network that reduces time complexity and lets the network weights retain a high classification capability even when fewer training samples are used. The method comprises a training stage and a classification stage. The training stage comprises the steps of (1) randomly generating the weights $w_j$ between the input units and the hidden units and the offsets $b_j$ of the hidden units, where $j = 1, \dots, L$ indexes the weights and offsets and $L$ is their total number; (2) inputting a training image $Y$ and its label and computing the output value $h_{W,b}(x^{(i)})$ of each layer with the forward propagation formula $h_{W,b}(x) = f(W^{T}x)$, where $x$ is the input and $h_{W,b}(x)$ is the output; (3) calculating the offset of the last layer from the label value and the output value of the last layer; (4) calculating the offset of each layer from the offset of the last layer to obtain the gradient direction; and (5) updating the weights. The classification stage comprises the steps of (a) keeping all network parameters unchanged and recording the category vector output by the network for each training sample; (b) calculating the residual $\delta = \lVert h_{W,b}(x^{(i)}) - y^{(i)} \rVert^{2}$; and (c) classifying a test image according to the minimum residual.

Owner:BEIJING UNIV OF TECH
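
Step (2) and steps (a) to (c) of the face recognition method above can be sketched with NumPy. The logistic activation $f$, the explicit bias term, and reading step (c) as "assign the label of the recorded training output with the smallest residual" are assumptions the abstract does not spell out; the convolutional layers and the training updates are not shown, and the function names are hypothetical.

```python
import numpy as np

def forward(x, W, b):
    """One fully connected layer, h_{W,b}(x) = f(W^T x + b), with f the logistic sigmoid (assumed)."""
    return 1.0 / (1.0 + np.exp(-(W.T @ x + b)))

def classify_min_residual(test_output, train_outputs, train_labels):
    """Label of the training sample whose recorded output vector has the smallest squared residual."""
    diffs = np.asarray(train_outputs, dtype=float) - np.asarray(test_output, dtype=float)
    residuals = np.sum(diffs ** 2, axis=1)          # delta = ||h(x) - y||^2 for each stored vector
    return train_labels[int(np.argmin(residuals))], residuals

label, res = classify_min_residual([0.9, 0.2], [[1.0, 0.0], [0.0, 1.0]], ["A", "B"])
```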

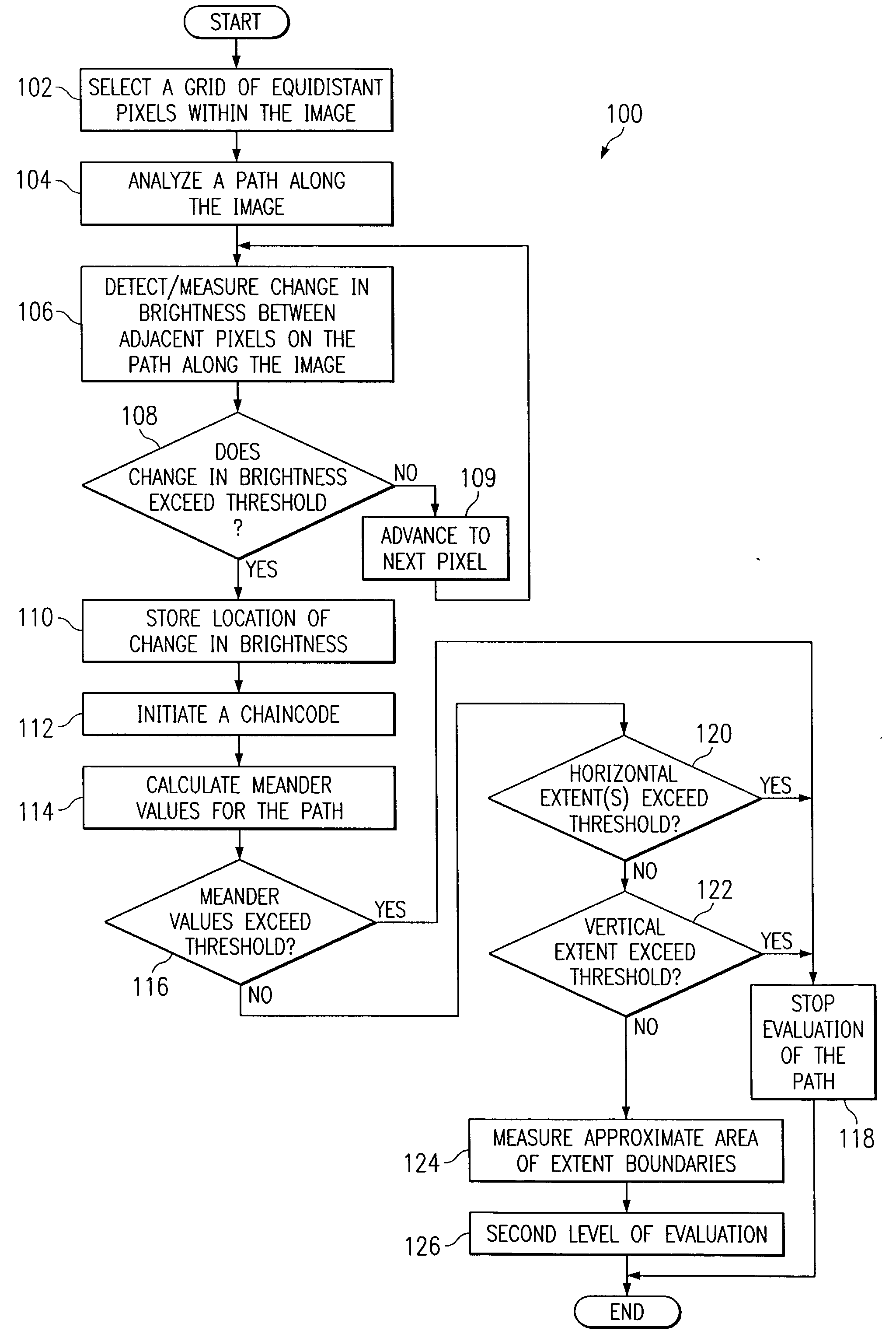

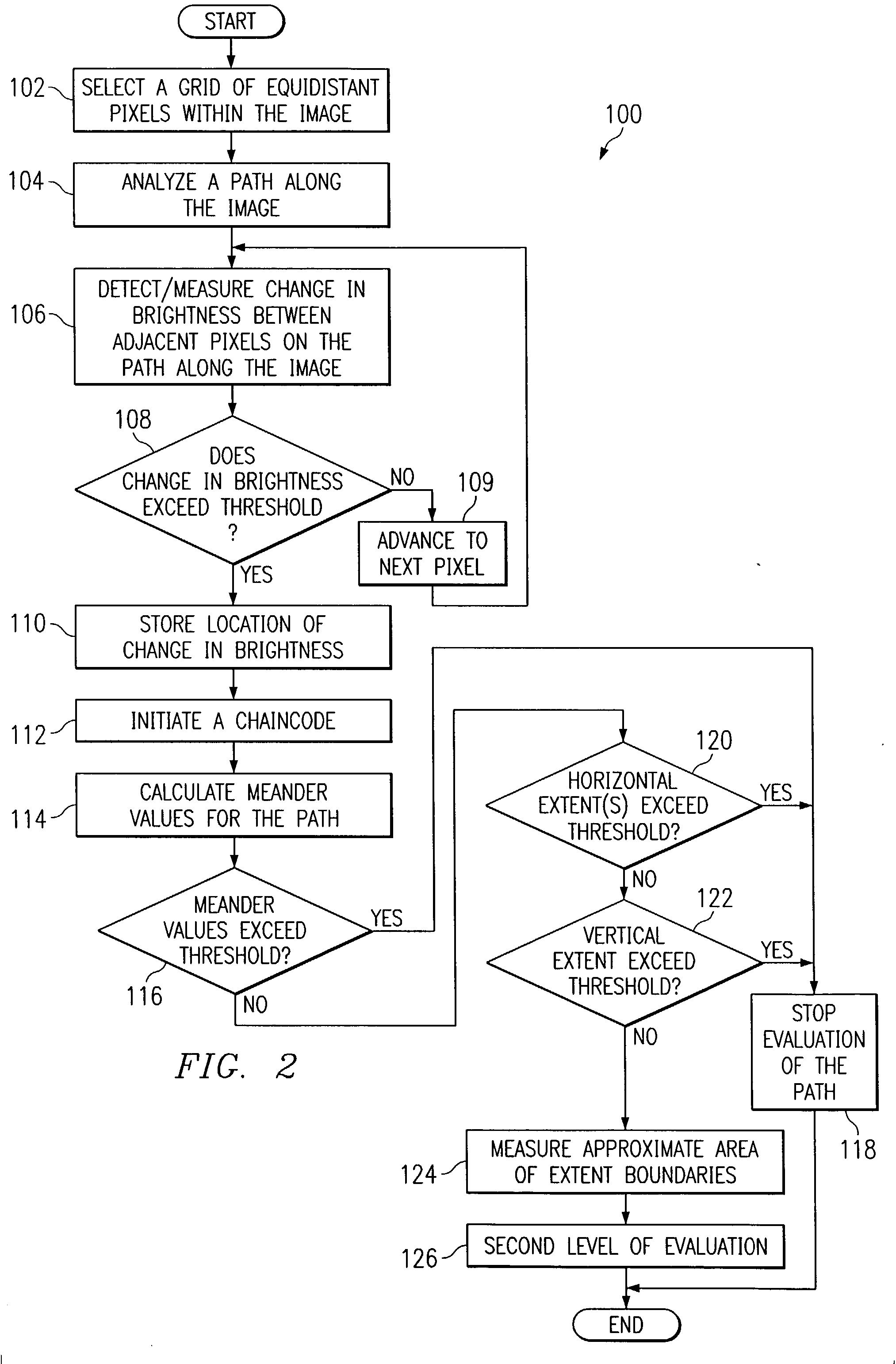

System and method for analyzing a contour of an image by applying a sobel operator thereto

Active | US20050002570A1 | Accurate analysis; Image enhancement; Image analysis; Angular degrees; Characteristic space

The present invention includes a method for analyzing an image in which elements defining a path within a two-dimensional image are received from a prescreener. A Sobel operator may be applied to the region around each element of the chain to obtain a corresponding array of gradient directions. An angle correction may be applied to any gradient direction that crosses the $\pi$/$-\pi$ transition (in radians), yielding an array of gradient directions free of artificial jumps in value. The bandwidth of this gradient direction array (the Sobel chaincode) can be taken as a single straightness measure that distinguishes extremely straight edges (man-made objects) from less straight edges (natural objects). A similar process can be used to find contour sections that are straight and also parallel. These and other filters applied to the gradient array can form part of a feature suite for feature space analysis.

Owner:NORTHROP GRUMMAN SYST CORP
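
The angle correction and straightness measure described above can be sketched in plain Python. Reading "bandwidth" as the spread (maximum minus minimum) of the unwrapped gradient directions along the chain is an assumption, and the function names are hypothetical. NumPy users get the same unwrapping from `np.unwrap`.

```python
import math

def unwrap_directions(angles):
    """Remove artificial 2*pi jumps at the +pi/-pi transition from a chain of gradient directions."""
    out = [angles[0]]
    for a in angles[1:]:
        step = (a - out[-1] + math.pi) % (2 * math.pi) - math.pi   # wrap the step into [-pi, pi)
        out.append(out[-1] + step)
    return out

def direction_bandwidth(angles):
    """Spread of the unwrapped directions: near 0 for a straight edge, larger for a curved one."""
    u = unwrap_directions(angles)
    return max(u) - min(u)

straight = [3.10, -3.11, 3.12, -3.13]   # nearly constant direction straddling the +pi/-pi seam
curved = [0.0, 0.4, 0.8, 1.2]           # steadily turning direction
```

Without the unwrapping, `straight` would show a spurious spread of about $2\pi$ and be misclassified as a highly curved contour.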

Improved image edge detection method

Inactive | CN107067382A | Clear outline; Improve continuity; Image enhancement; Image analysis; Perpendicular direction; Double threshold

The invention discloses an improved image edge detection method comprising the steps of: S1, smoothing the image and suppressing noise with an improved median filter; S2, obtaining the differences in the horizontal and vertical directions from the first-order partial derivatives in the x, y, 45° and 135° directions, and thus the gradient magnitude and gradient direction; S3, performing non-maximum suppression on the gradient magnitude; S4, deriving a high threshold and a low threshold from a gradient histogram and then detecting edges with a double-threshold algorithm; and S5, sharpening and connecting the edges to obtain the final edge image. The method replaces Gaussian filtering with weighted median filtering, uses partial derivatives in four directions, and determines the high and low thresholds from the gradient histogram; this reduces detection errors, improves detection accuracy, and makes the contours of the edge image clearer and more continuous.

Owner:南宁市正祥科技有限公司
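
Step S3 of the edge detection method above is the standard non-maximum suppression of Canny-style detectors; a minimal NumPy sketch follows. Quantizing the direction into four sectors and skipping the one-pixel border are simplifying assumptions, and the patent's weighted median filter, four-direction derivatives, and histogram-derived thresholds are not reproduced. Angles follow `np.arctan2(gy, gx)` with rows increasing downward.

```python
import numpy as np

def non_max_suppression(mag, theta):
    """Keep pixels whose magnitude is a local maximum along the (quantized) gradient direction."""
    h, w = mag.shape
    out = np.zeros_like(mag)
    ang = np.rad2deg(theta) % 180.0                 # gradient direction modulo 180 degrees
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            a = ang[r, c]
            if a < 22.5 or a >= 157.5:              # ~0 degrees: compare left and right
                n1, n2 = mag[r, c - 1], mag[r, c + 1]
            elif a < 67.5:                          # ~45 degrees: down-right / up-left
                n1, n2 = mag[r + 1, c + 1], mag[r - 1, c - 1]
            elif a < 112.5:                         # ~90 degrees: compare up and down
                n1, n2 = mag[r - 1, c], mag[r + 1, c]
            else:                                   # ~135 degrees: up-right / down-left
                n1, n2 = mag[r - 1, c + 1], mag[r + 1, c - 1]
            if mag[r, c] >= n1 and mag[r, c] >= n2:
                out[r, c] = mag[r, c]
    return out

# A blurred vertical edge: magnitude profile 0, 1, 3, 1, 0 across columns, horizontal gradient.
mag = np.tile([0.0, 1.0, 3.0, 1.0, 0.0], (5, 1))
thin = non_max_suppression(mag, np.zeros_like(mag))   # only column 2 survives (interior rows)
```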

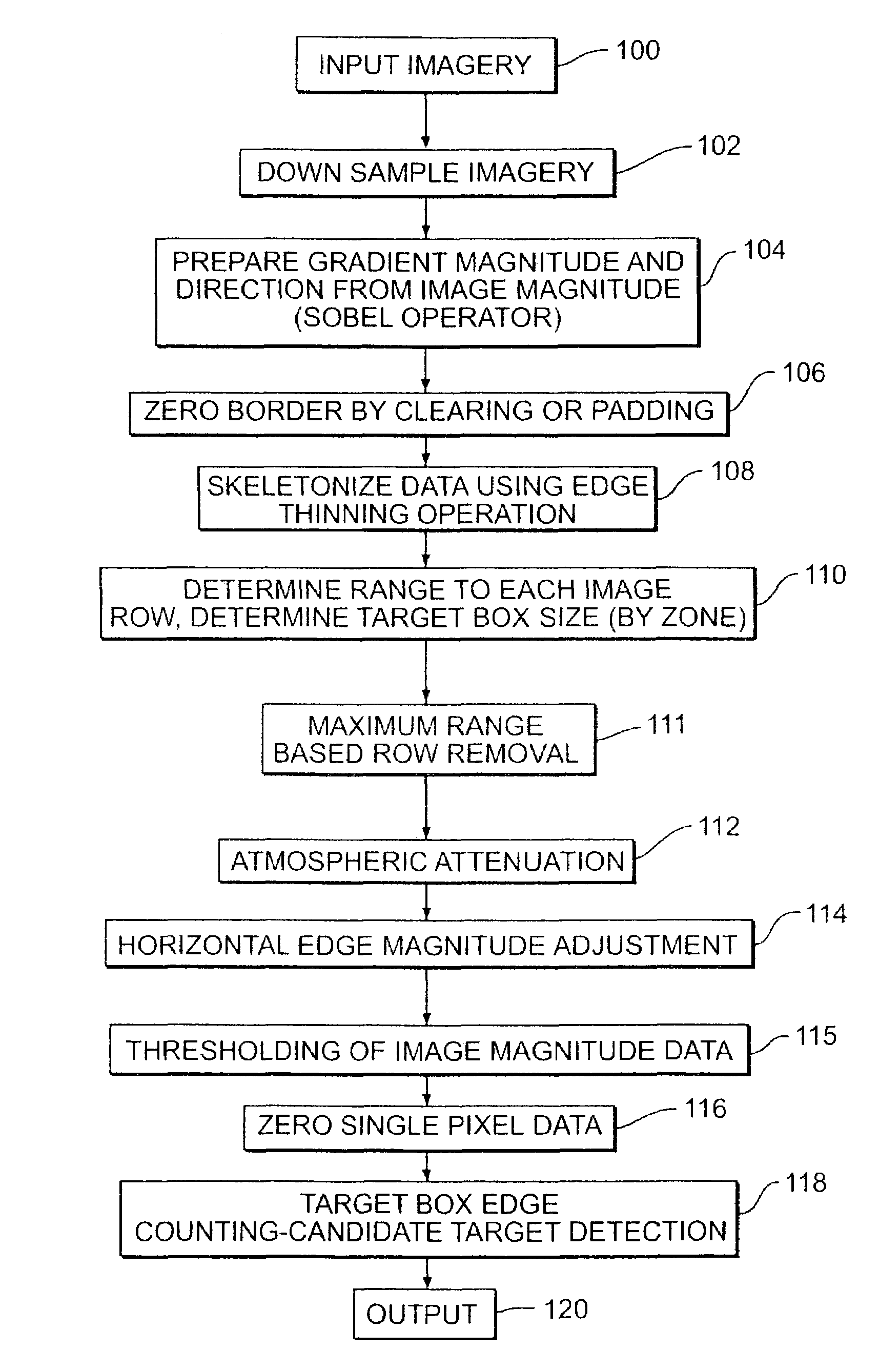

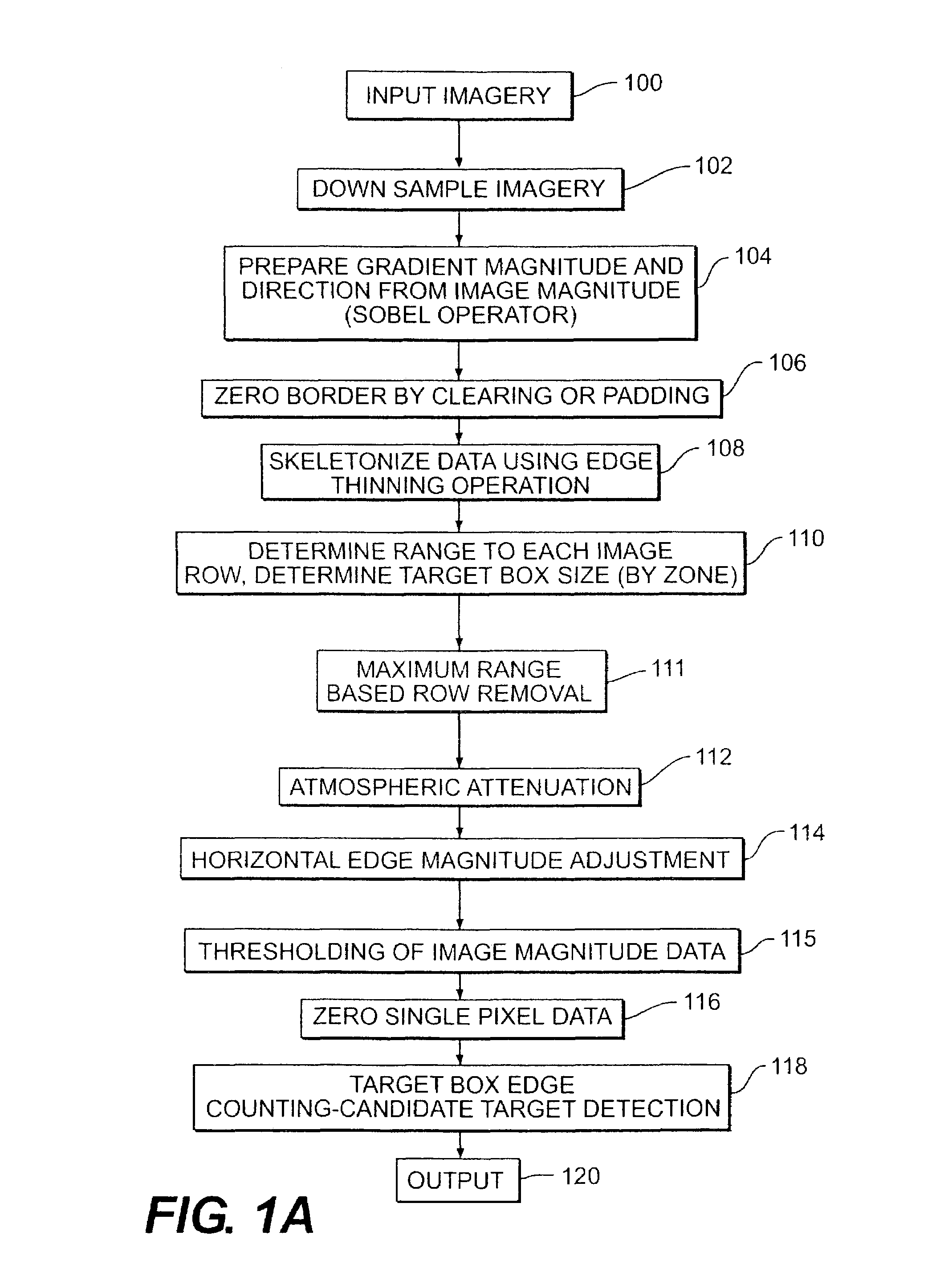

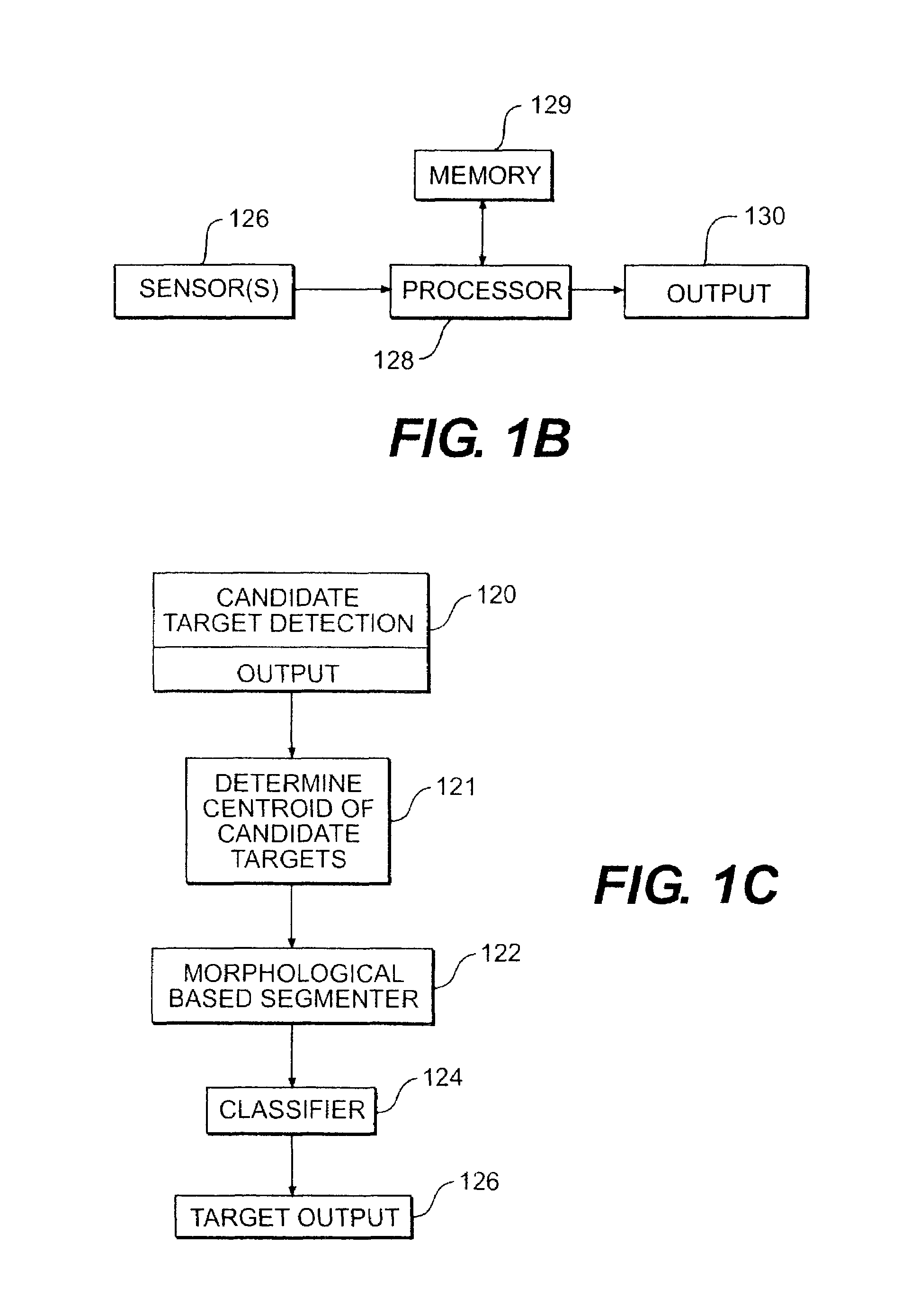

Target detection method and system

Inactive | US7430303B2 | Simple technology; Improve efficiency; Character and pattern recognition; Color television details; Ultrasound attenuation; Algorithm

A method and system detect candidate targets or objects in a viewed scene by converting the image data to gradient magnitude and direction data, which is thresholded to simplify it. Horizontal edges within the data are softened to reduce their masking of adjacent non-horizontal features. One or more target boxes are stepped across the image data, and the number of distinct gradient directions within a box is used to determine the presence of a target. Atmospheric attenuation is compensated. In one embodiment, the thresholding compares the gradient magnitude data with a localized threshold calculated from the local variance of the image gradient magnitude data. Imagery subsets containing the candidate targets may then be used to detect and identify features and to apply a classifier function that screens candidate detections and determines a likely target.

Owner:LOCKHEED MARTIN CORP
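
The box-stepping test of the target detection method above can be sketched as follows. The exact threshold formula is not given, so mean plus `k` standard deviations of the magnitude inside the box is assumed as the "localized threshold from the local variance"; the number of direction bins, the box size, and the function name are likewise hypothetical. Edge softening and atmospheric compensation are not shown.

```python
import numpy as np

def direction_count_map(mag, theta, box=5, n_dirs=8, k=1.0):
    """For each box position, count distinct quantized directions among locally strong pixels."""
    h, w = mag.shape
    bins = np.floor(np.mod(theta, 2 * np.pi) / (2 * np.pi / n_dirs)).astype(int) % n_dirs
    counts = np.zeros((h - box + 1, w - box + 1), dtype=int)
    for r in range(h - box + 1):
        for c in range(w - box + 1):
            m = mag[r:r + box, c:c + box]
            thresh = m.mean() + k * m.std()        # localized threshold from the local variance
            strong = m > thresh
            counts[r, c] = np.unique(bins[r:r + box, c:c + box][strong]).size
    return counts

# A compact object: four strong gradients pointing right, left, down and up around (3, 3).
mag = np.zeros((7, 7))
theta = np.zeros((7, 7))
for (r, c), t in {(3, 2): 0.0, (3, 4): np.pi, (2, 3): np.pi / 2, (4, 3): -np.pi / 2}.items():
    mag[r, c], theta[r, c] = 10.0, t
counts = direction_count_map(mag, theta)
```

A straight man-made edge or a horizon yields only one or two directions per box, whereas a compact object yields several, which is the intuition behind counting directions.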

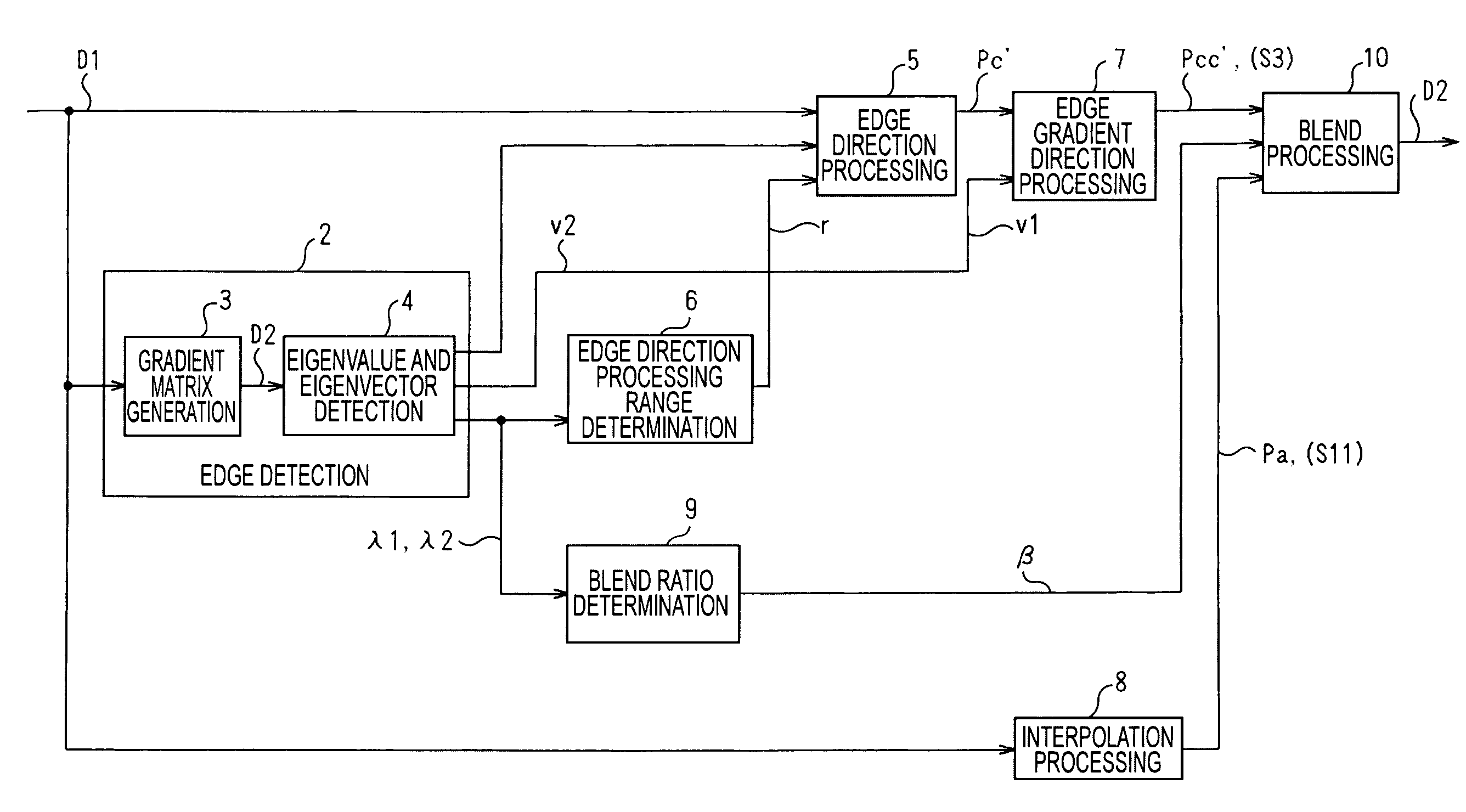

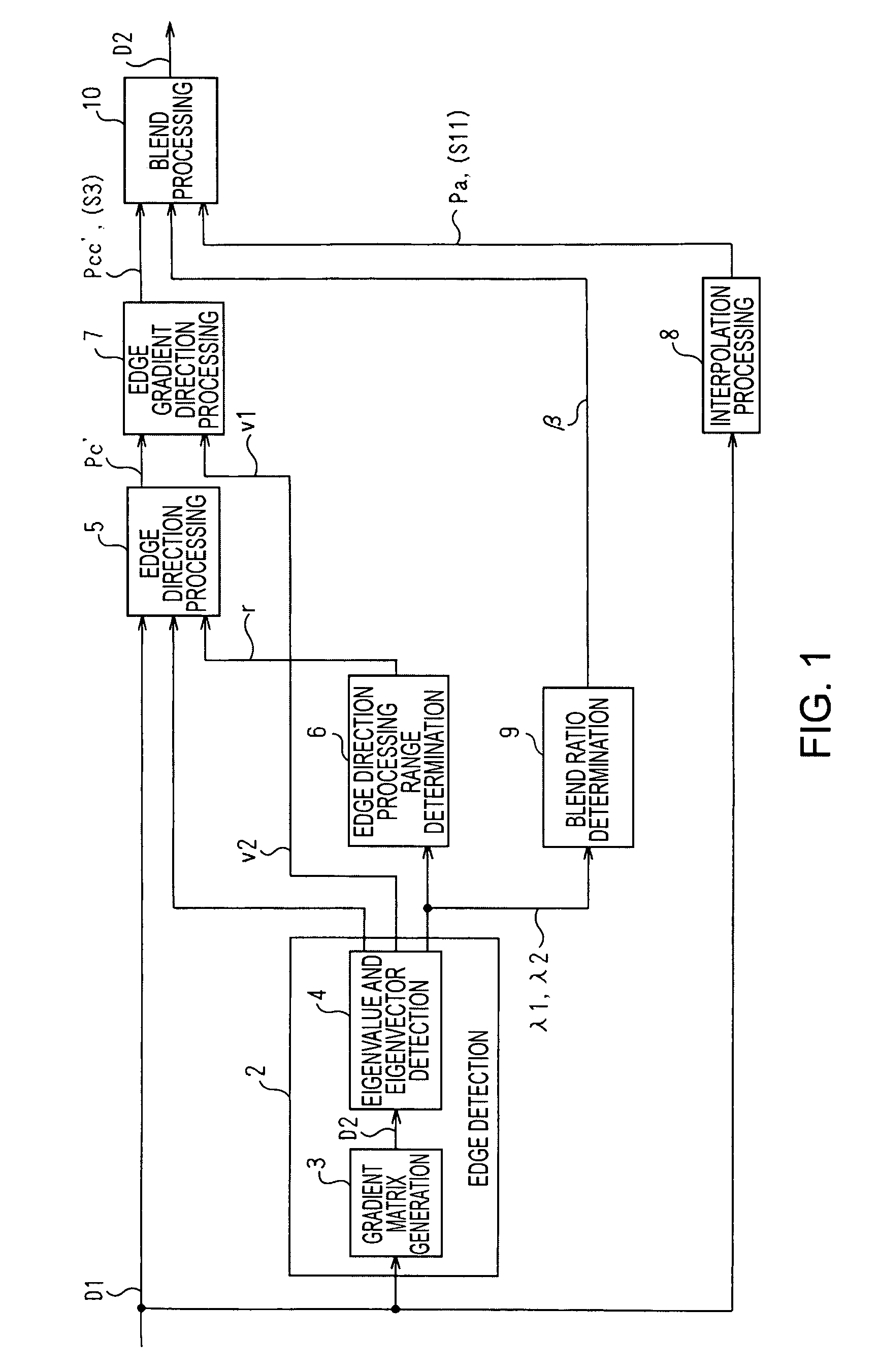

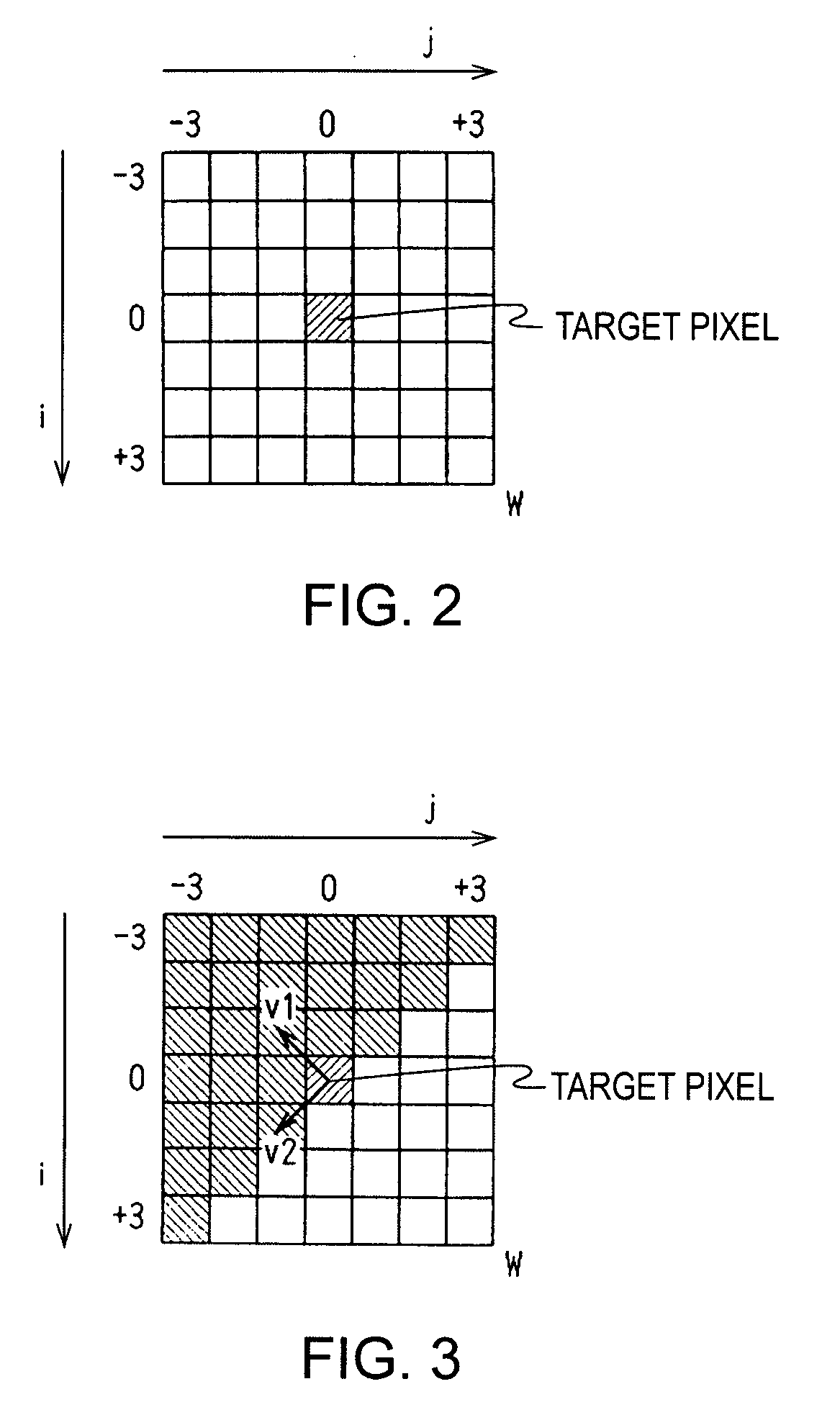

Image Processing Apparatus, Image Processing Method, Program of Image Processing Method, and Recording Medium in Which Program of Image Processing Method Has Been Recorded

Inactive | US20080123998A1 | Effectively avoiding loss; Avoid it happening again; Image enhancement; Image analysis; Imaging processing; Computer science

The present invention is applied, for example, to resolution conversion. An edge gradient direction v1 with the largest gradient of pixel values and an edge direction v2 orthogonal to the edge gradient direction v1 are detected. Edge enhancement and smoothing processing are performed in the edge gradient direction v1 and in the edge direction v2, respectively, to generate output image data D2.

Owner:SONY CORP
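
The Sony abstract above does not say how the edge gradient direction v1 is detected. A common estimator, assumed here, is the structure tensor $J = \sum \begin{pmatrix} g_x^2 & g_x g_y \\ g_x g_y & g_y^2 \end{pmatrix}$ over a neighborhood: its leading eigenvector gives the dominant gradient direction v1, and v2 is v1 rotated by 90 degrees. The patch-level function below (hypothetical name) stops there; the enhancement along v1 and smoothing along v2 are not shown.

```python
import numpy as np

def edge_directions(gx, gy):
    """Unit vectors (v1, v2) for a patch: v1 = dominant gradient direction, v2 = orthogonal edge direction."""
    jxx, jxy, jyy = np.sum(gx * gx), np.sum(gx * gy), np.sum(gy * gy)
    tensor = np.array([[jxx, jxy], [jxy, jyy]])
    _, evecs = np.linalg.eigh(tensor)        # eigenvalues in ascending order
    v1 = evecs[:, 1]                         # eigenvector of the largest eigenvalue
    v2 = np.array([-v1[1], v1[0]])           # v1 rotated by 90 degrees
    return v1, v2

v1, v2 = edge_directions(np.ones((3, 3)), np.zeros((3, 3)))   # purely horizontal gradients
```

Eigenvectors are defined only up to sign, which suits this use: a gradient direction and its opposite describe the same edge.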

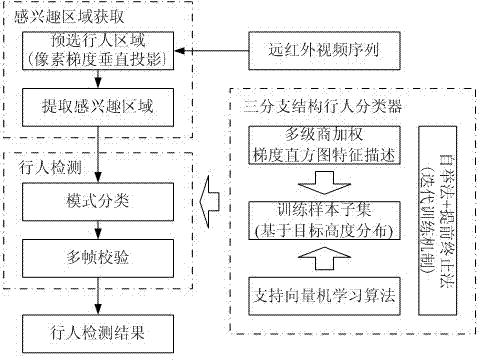

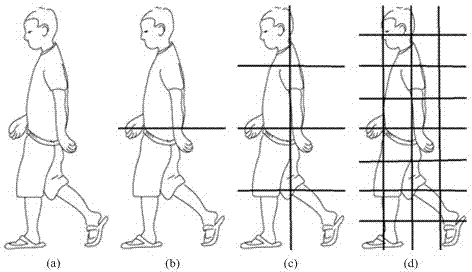

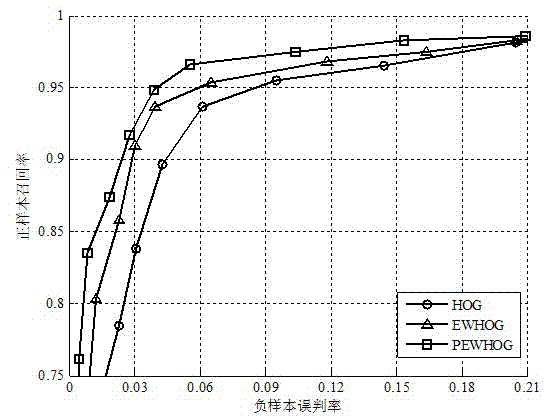

Real-time robust far infrared vehicle-mounted pedestrian detection method

Inactive | CN103198332A | Exact search; Detailed description; Character and pattern recognition; Vertical projection; Far infrared

The invention discloses a real-time, robust far-infrared vehicle-mounted pedestrian detection method. The method captures potential pedestrian pre-selection areas in the input image through a vertical projection of pixel gradients; searches for regions of interest within those areas using a local threshold method and morphological post-processing; describes each region of interest with a multi-stage entropy-weighted histogram of gradient directions; feeds the histogram to a support vector machine pedestrian classifier for online judgment of the region; and achieves pedestrian detection through multi-frame verification and screening of the classifier's judgments. For training, the sample space is divided according to the distribution of sample heights, a classification framework with a three-branch structure is built, and hard samples are collected and the pedestrian classifier is trained iteratively by combining a bootstrap method with an early-termination method. The method not only improves pedestrian detection accuracy but also reduces the false alarm rate, increases input image processing speed and the generalization capability of the classifier, and provides an effective early-warning method for night-time vehicle-mounted pedestrian assistance.

Owner:SOUTH CHINA UNIV OF TECH
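
The pre-selection step of the pedestrian method above can be sketched as a column-wise (vertical) projection of gradient magnitude, keeping the column spans whose projection exceeds a fraction of the maximum. Both the use of gradient magnitude for the "pixel gradient vertical projection" and the relative threshold are assumptions; `candidate_column_spans` is a hypothetical name, and the local thresholding, entropy-weighted histograms, and SVM stages are not shown.

```python
import numpy as np

def candidate_column_spans(gx, gy, rel_thresh=0.5):
    """Vertical projection of gradient magnitude and the column spans that exceed rel_thresh * max."""
    proj = np.hypot(gx, gy).sum(axis=0)          # sum each column over all rows
    above = proj > rel_thresh * proj.max()
    spans, start = [], None
    for c, on in enumerate(above):
        if on and start is None:
            start = c                            # a span begins
        elif not on and start is not None:
            spans.append((start, c - 1))         # a span ends
            start = None
    if start is not None:
        spans.append((start, len(above) - 1))
    return proj, spans

gx = np.zeros((6, 10))
gx[:, 3:5] = 5.0                                 # a warm, upright object occupying columns 3-4
proj, spans = candidate_column_spans(gx, np.zeros((6, 10)))
```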

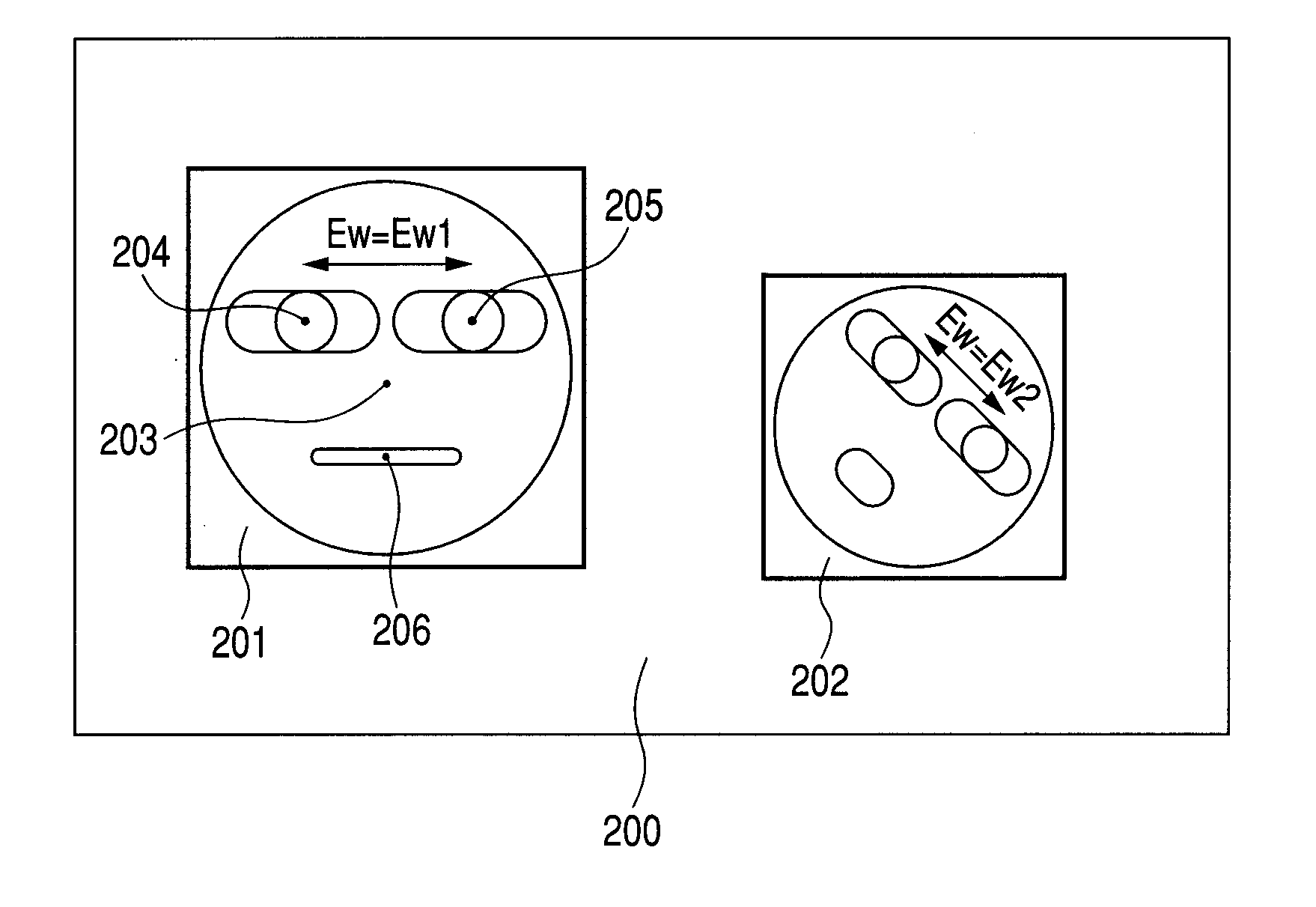

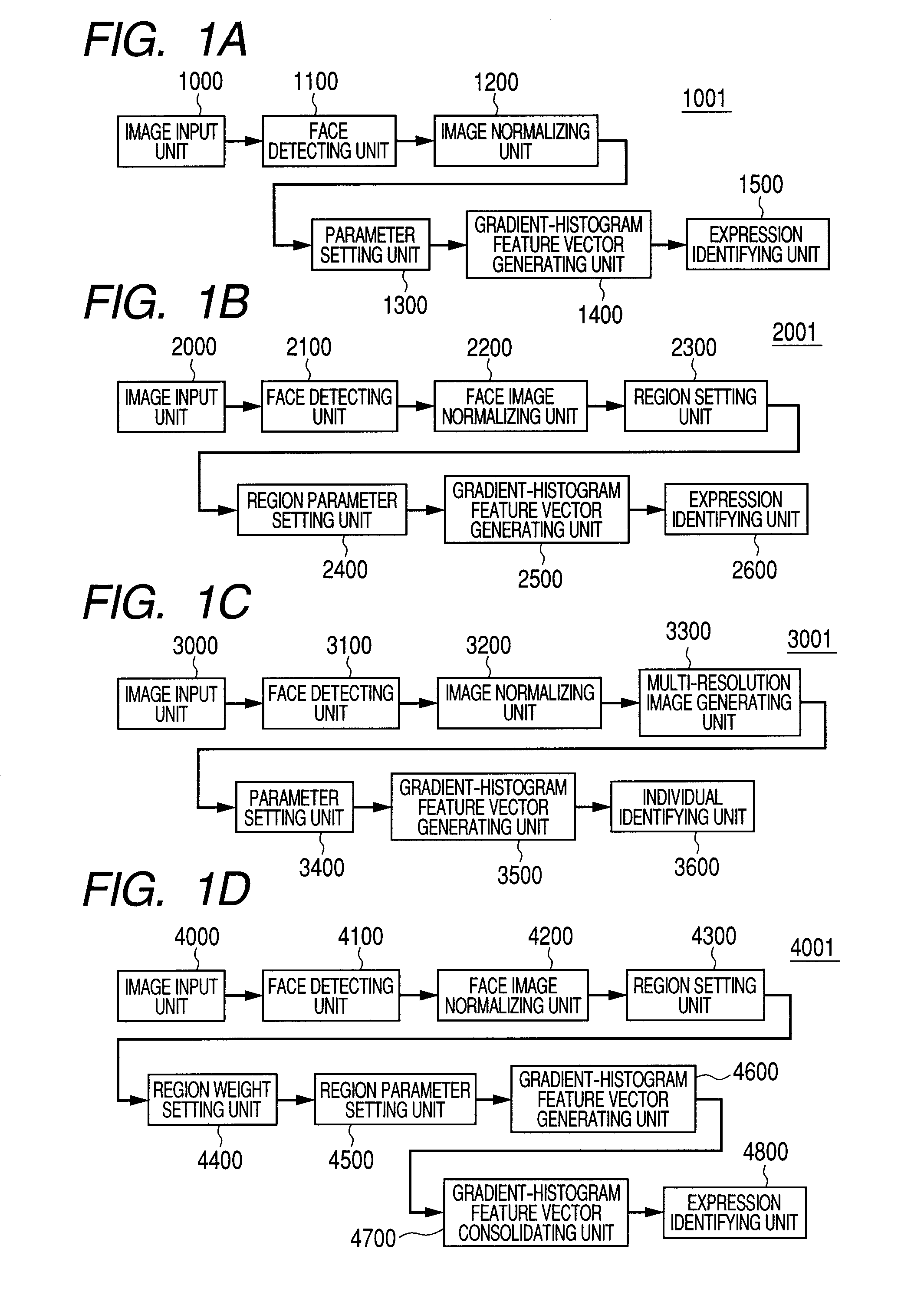

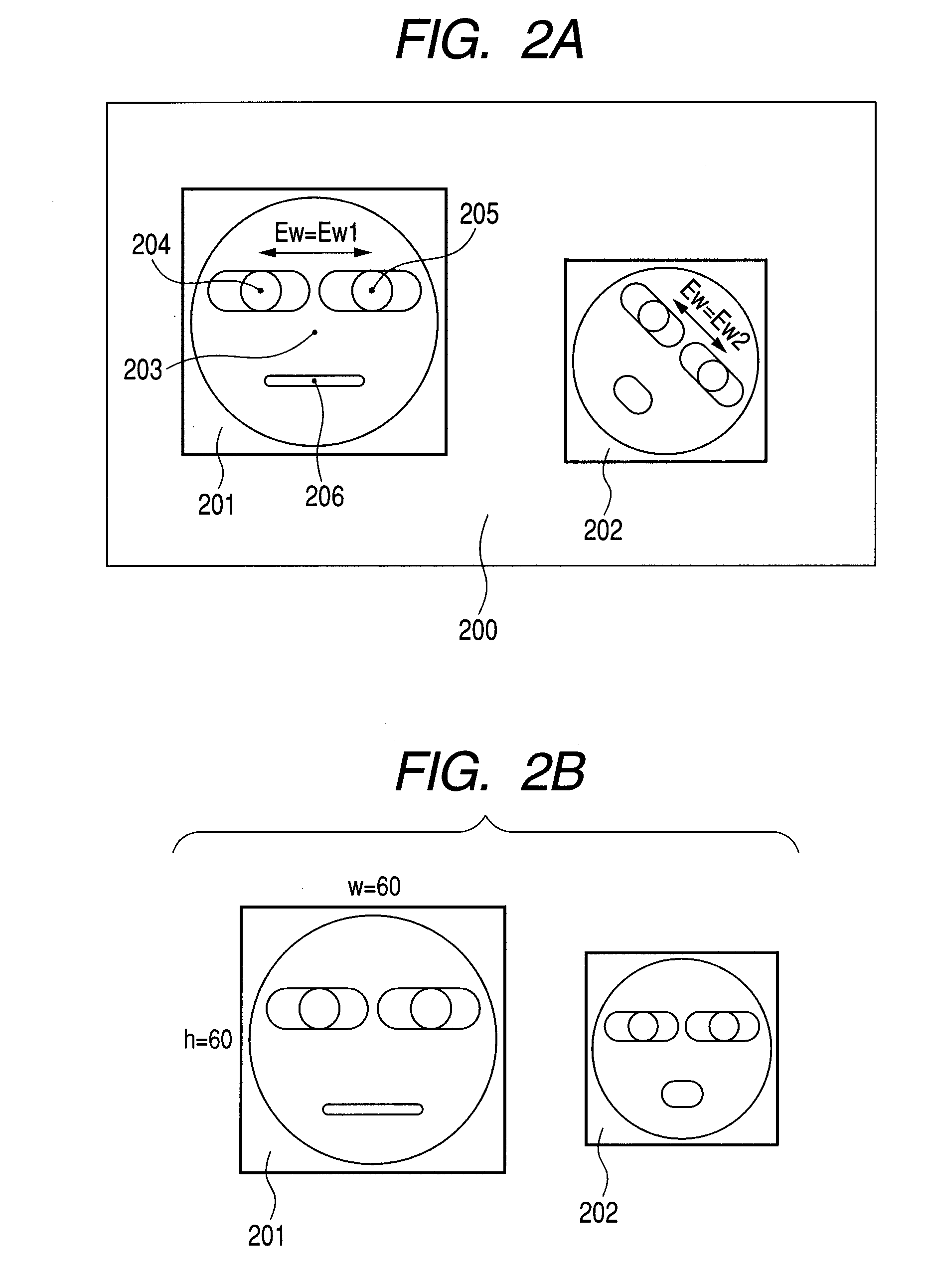

Image recognition apparatus for identifying facial expression or individual, and method for the same

Inactive | US20100296706A1 | Improve accuracy; High-precision identification; Character and pattern recognition; Feature vector; Facial expression

A face detecting unit detects a person's face from input image data, and a parameter setting unit sets parameters for generating a gradient histogram indicating the gradient direction and gradient magnitude of a pixel value based on the detected face. Further, a generating unit sets a region (a cell) from which to generate a gradient histogram in the region of the detected face, and generates a gradient histogram for each such region to generate feature vectors. An expression identifying unit identifies an expression exhibited by the detected face based on the feature vectors. Thereby, the facial expression of a person included in an image is identified with high precision.

Owner:CANON KK
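
A per-cell, magnitude-weighted gradient histogram of the kind described in the facial-expression abstract above can be sketched as follows. The fixed cell size, nine unsigned direction bins, and L2 normalization are illustrative assumptions (the patent sets such parameters through its parameter setting unit, based on the detected face), and the expression classifier is not shown.

```python
import numpy as np

def cell_histograms(gx, gy, cell=4, n_bins=9):
    """Concatenate magnitude-weighted, unsigned gradient-direction histograms over a grid of cells."""
    mag = np.hypot(gx, gy)
    ang = np.mod(np.arctan2(gy, gx), np.pi)     # unsigned direction in [0, pi)
    h, w = mag.shape
    feats = []
    for r in range(0, h - cell + 1, cell):
        for c in range(0, w - cell + 1, cell):
            hist, _ = np.histogram(ang[r:r + cell, c:c + cell], bins=n_bins,
                                   range=(0.0, np.pi), weights=mag[r:r + cell, c:c + cell])
            feats.append(hist)
    v = np.concatenate(feats)
    return v / (np.linalg.norm(v) + 1e-12)      # L2-normalized feature vector

feature = cell_histograms(np.ones((8, 8)), np.zeros((8, 8)))   # 4 cells x 9 bins
```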

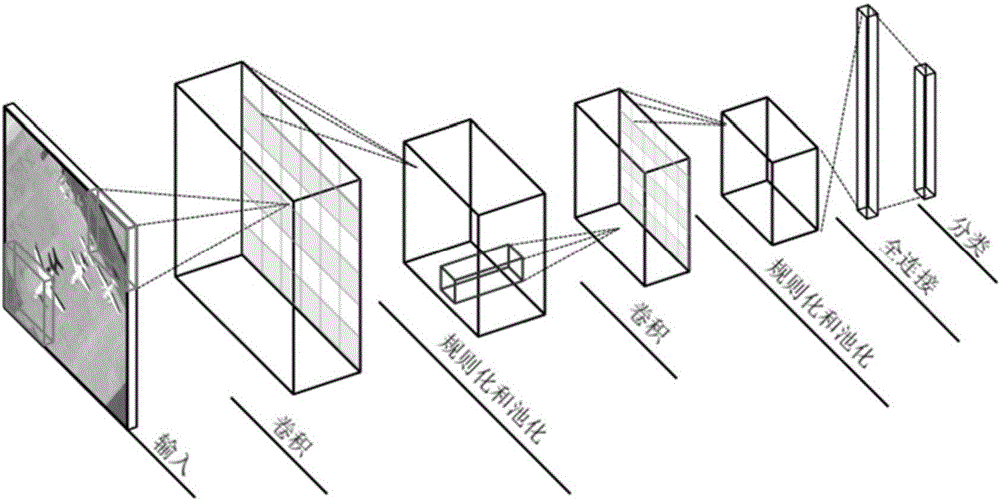

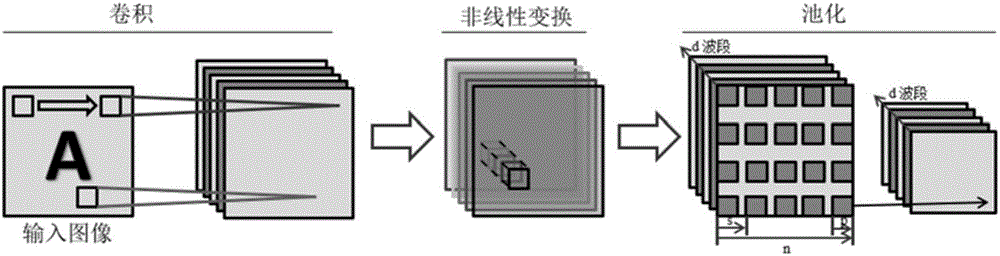

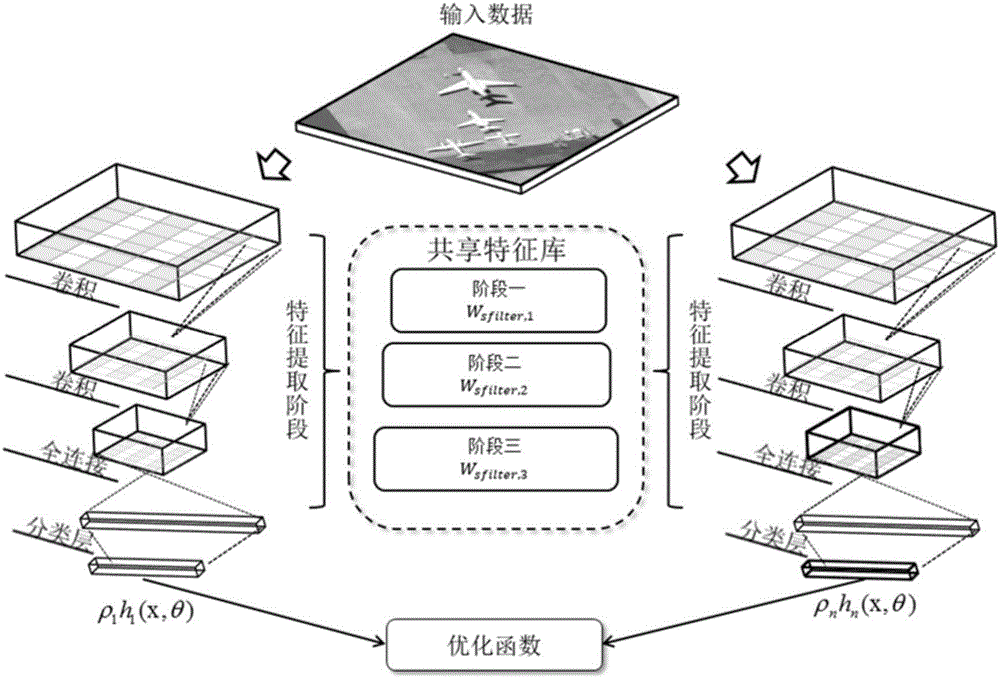

Random convolutional neural network-based high-resolution image scene classification method

Inactive | CN106250931A | Improve generalization ability; Improve training efficiency; Character and pattern recognition; Nerve network; Algorithm

The invention discloses a high-resolution image scene classification method based on a random convolutional neural network. The method comprises the steps of removing the data mean to obtain a to-be-classified image set and a training image set; randomly initializing a shared model parameter library; calculating the negative gradient directions for the to-be-classified image set and the training image set; training a basic convolutional neural network model and its weights; predicting an update function to obtain an additive model; and, when the iteration count reaches the maximum number of training iterations, classifying the to-be-classified image set with the additive model. Features are learned hierarchically by a deep convolutional network, and the models are aggregated with a gradient boosting method, which counters the tendency of a single model to fall into a local optimum and improves generalization. A random parameter-sharing mechanism added during training improves training efficiency, so that hierarchical features can be learned at a reasonable time cost, and the learned features are more robust for scene identification.

Owner:WUHAN UNIV
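
The "negative gradient direction" and "additive model" steps of the scene classification method above are those of gradient boosting. The sketch below shows only that loop, with squared loss (whose negative gradient is the residual $y - F(x)$), one-dimensional inputs, and decision stumps standing in for the patent's randomly parameter-shared CNN base models; all names and hyperparameters are hypothetical.

```python
import numpy as np

def fit_stump(x, r):
    """Decision stump on 1-D inputs x, fit to targets r by least squares."""
    best_err, best = np.inf, None
    for t in np.unique(x)[:-1]:             # thresholds that leave both sides non-empty
        left, right = r[x <= t], r[x > t]
        err = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if err < best_err:
            best_err, best = err, (t, left.mean(), right.mean())
    if best is None:                        # all inputs identical: fall back to a constant
        mean = r.mean()
        return lambda z: np.full(np.shape(z), mean)
    t, lv, rv = best
    return lambda z: np.where(z <= t, lv, rv)

def gradient_boost(x, y, n_rounds=50, lr=0.1):
    """Additive model F = f0 + lr * sum(h_m), each h_m fit to the negative gradient of 0.5*(y - F)^2."""
    f0 = y.mean()
    pred = np.full(y.shape, f0)
    learners = []
    for _ in range(n_rounds):               # stop after a maximum number of iterations
        neg_grad = y - pred
        h = fit_stump(x, neg_grad)
        pred = pred + lr * h(x)
        learners.append(h)
    return lambda z: f0 + lr * sum(h(z) for h in learners)

x = np.arange(10.0)
y = (x > 4).astype(float)
model = gradient_boost(x, y)
```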

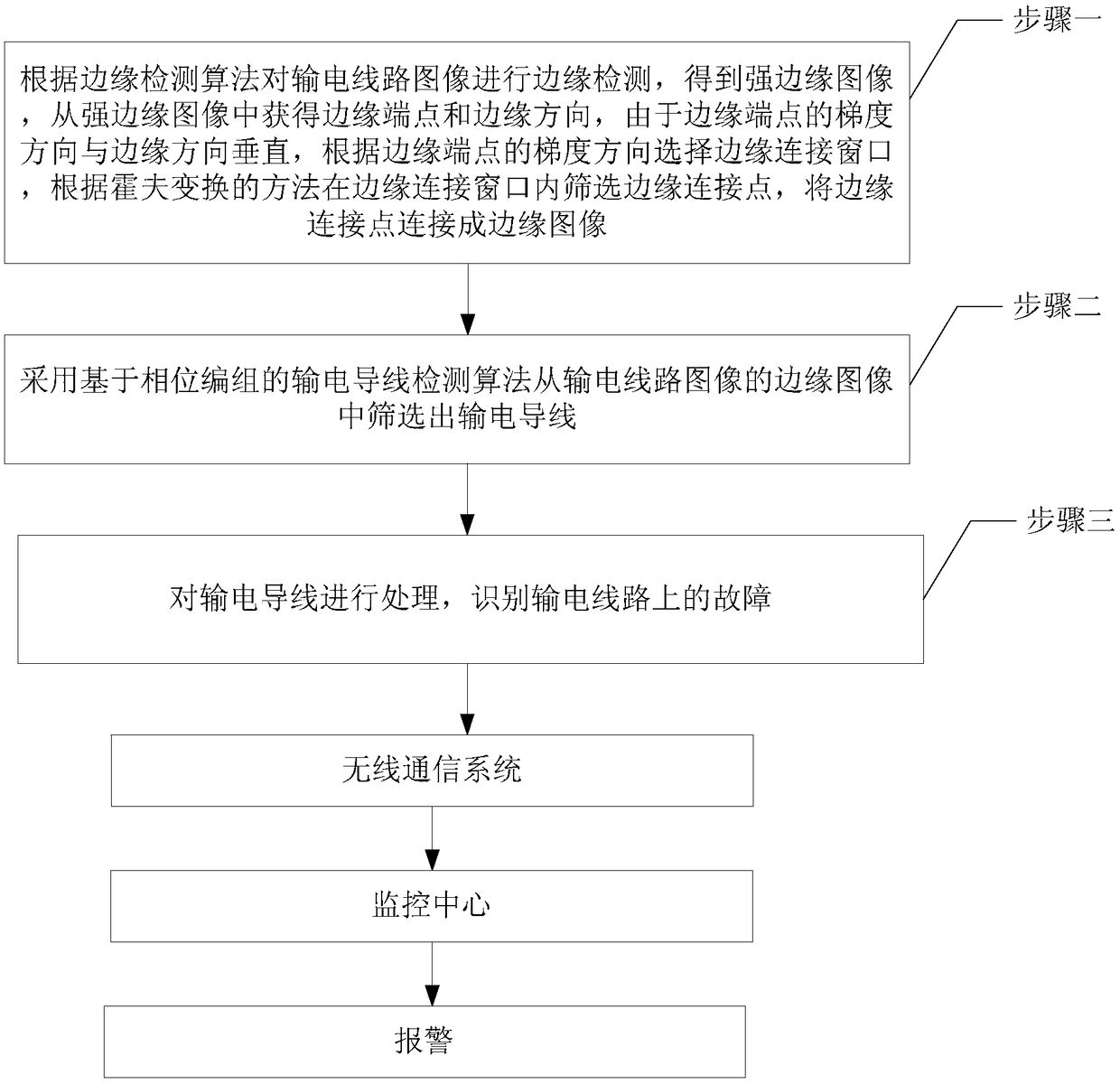

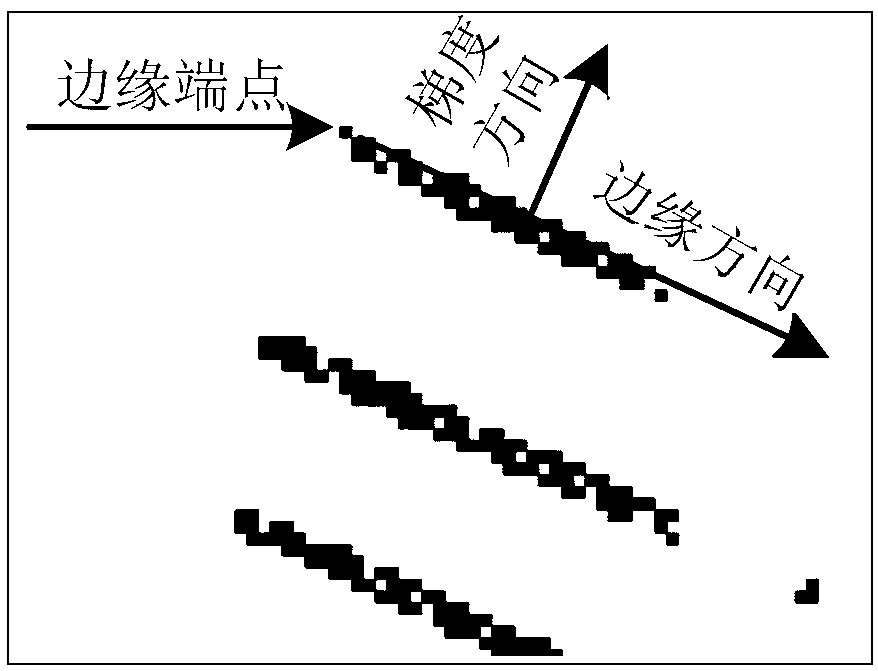

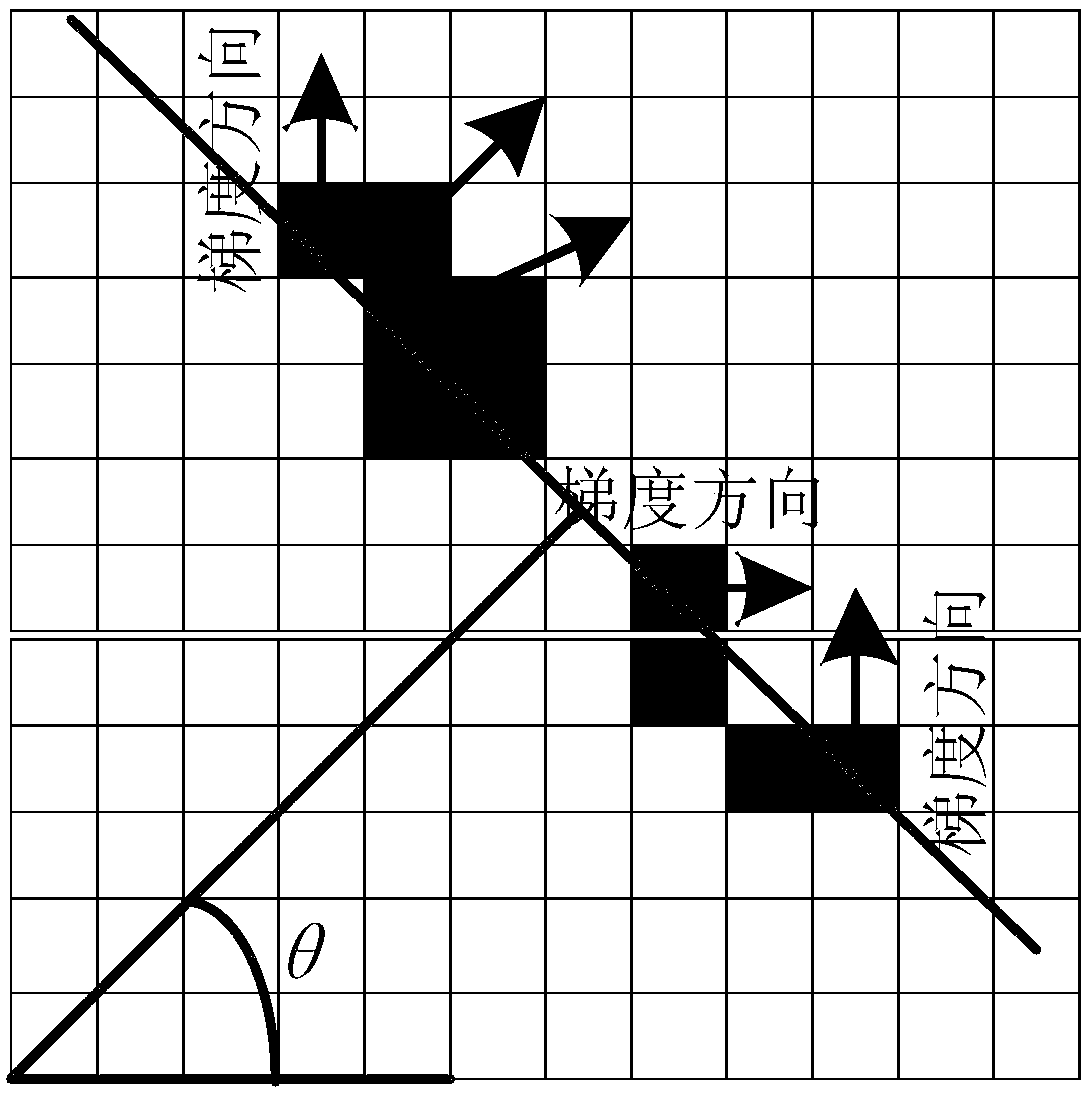

Fault identification method of high voltage transmission line based on computer vision

Active | CN109215020A | Precise screening; Quick filter; Image enhancement; Image analysis; Hough transform; Monitoring system

The invention relates to a computer-vision-based method for identifying faults on high-voltage transmission lines, in the technical field of monitoring the running state of such lines. It aims to reduce the high false alarm rate of existing online monitoring systems for high-voltage transmission lines. Step 1: edge detection is performed on the transmission line image with an edge detection algorithm to obtain a strong-edge image, from which edge endpoints and edge directions are obtained. Because the gradient direction at an edge endpoint is perpendicular to the edge direction, an edge connection window is selected according to the gradient direction of the endpoint, edge connection points are selected within that window by a Hough transform method, and the connection points are joined into an edge image. Step 2: the transmission lines are screened from the edge images with a transmission line detection algorithm based on phase grouping. Step 3: the transmission conductors are processed to identify faults on the line. The method is used to identify transmission line faults.

Owner:国网黑龙江省电力有限公司佳木斯供电公司 +2
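
The window-selection step of the transmission line method above can be sketched as follows: the edge direction is the gradient direction rotated by 90 degrees, its sign is chosen to point away from the rest of the edge, and a square window is centered a few pixels ahead of the endpoint. The window size, the reach, the use of a previous edge point to fix the sign, and the function name are assumptions; the Hough-based choice of connection points is not shown. Points are (row, col), with x = col and y = row.

```python
import math

def connection_window(endpoint, prev_point, gradient_dir, size=5, reach=4):
    """Square search window (row0, col0, row1, col1) ahead of an edge endpoint."""
    r, c = endpoint
    dx = math.cos(gradient_dir + math.pi / 2)    # edge direction is perpendicular to the gradient
    dy = math.sin(gradient_dir + math.pi / 2)
    # choose the sign of the edge direction that points away from the rest of the edge
    if dx * (c - prev_point[1]) + dy * (r - prev_point[0]) < 0:
        dx, dy = -dx, -dy
    cr, cc = round(r + reach * dy), round(c + reach * dx)
    half = size // 2
    return cr - half, cc - half, cr + half, cc + half

# A horizontal line ending at (10, 20): its gradient is vertical, so the window extends to the right.
window = connection_window((10, 20), (10, 15), math.pi / 2)
```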

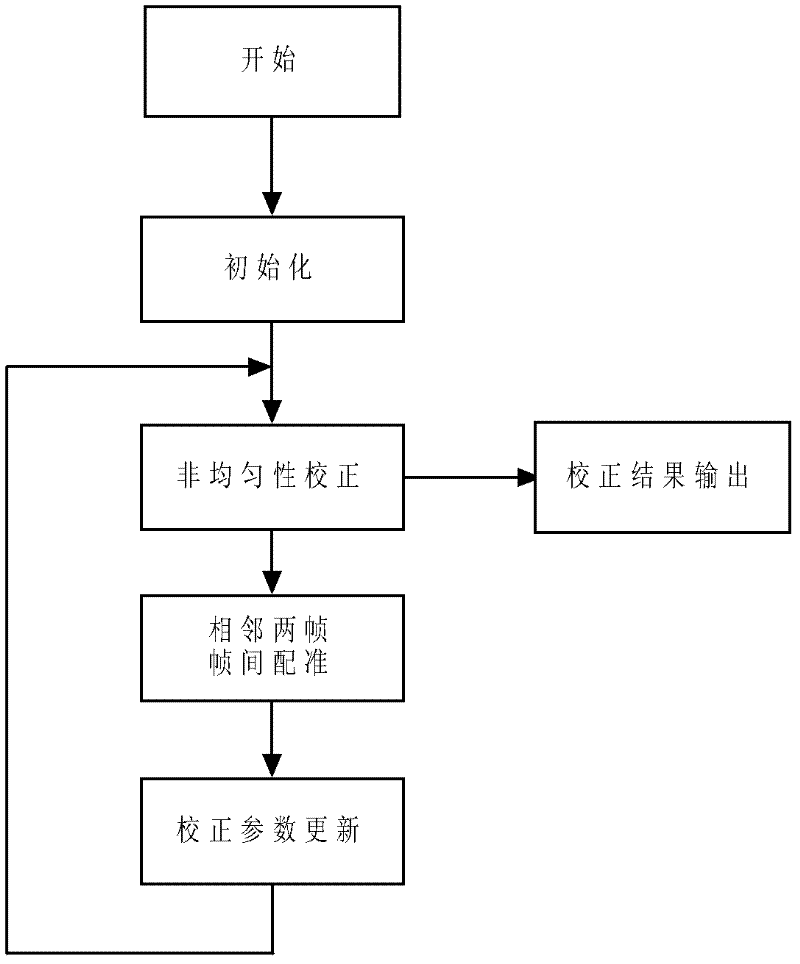

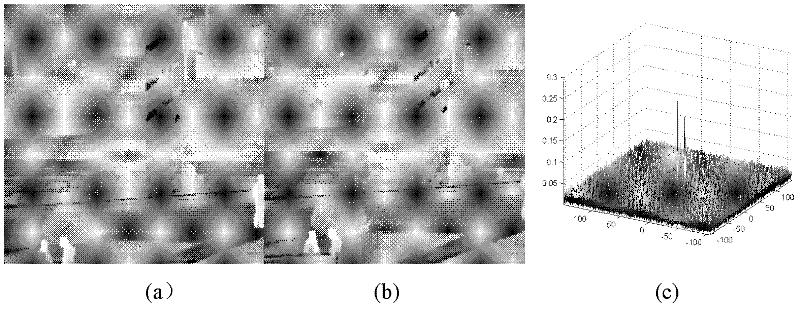

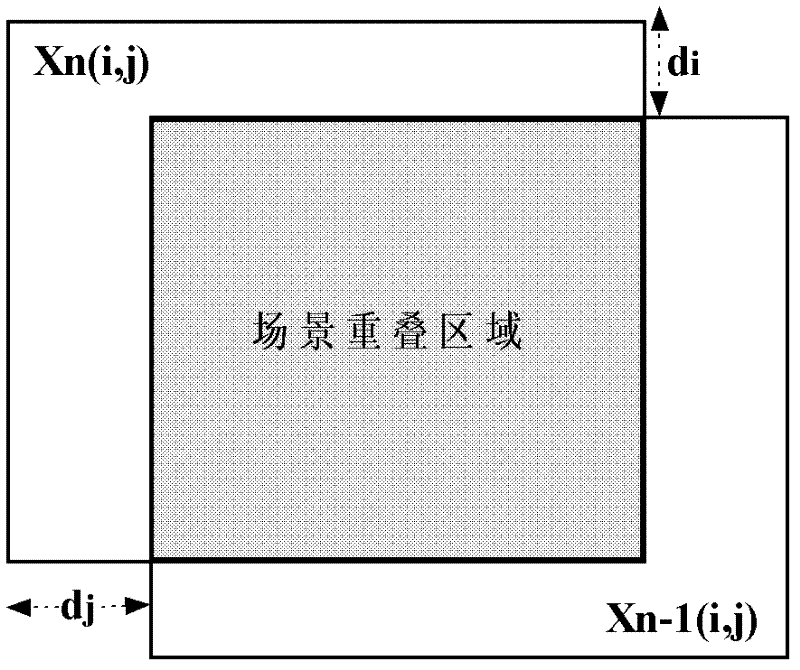

Rapidly converged scene-based non-uniformity correction method

Inactive | CN102538973A | Prevent erroneous updates; Bug update avoidance; Radiation pyrometry; Phase correlation; Steep descent

The invention discloses a rapidly converging scene-based non-uniformity correction method that achieves non-uniformity correction by minimizing the inter-frame registration error between two adjacent images. The method mainly comprises the steps of: initializing the gain and offset correction parameters and acquiring an uncorrected original image; acquiring a new uncorrected original image and applying non-uniformity correction to both the new and the previous uncorrected images with the current correction parameters; obtaining the relative displacement, scene correlation coefficient, and inter-frame registration error of the two corrected images by an origin-masking phase correlation method; and updating the correction parameters along the negative gradient direction by the steepest descent method. The method offers high correction accuracy, fast convergence, no ghosting, and low computation and storage requirements. It is especially suitable for integration into infrared focal plane imaging systems, where it improves the imaging quality, environmental adaptability, and temporal stability of the infrared focal plane array.

Owner:NANJING UNIV OF SCI & TECH
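
The two numerical pieces of the non-uniformity correction method above (estimating the inter-frame shift by phase correlation, and updating gain and offset along the negative gradient of the registration error) can be sketched as below. Assumptions not in the abstract: integer, cyclic shifts (`np.roll`), a per-pixel update that treats the shifted previous frame as fixed, and a hypothetical step size `mu`. The origin masking of the phase-correlation peak and the scene-correlation check are not shown.

```python
import numpy as np

def phase_correlation_shift(a, b):
    """Integer (dy, dx) such that b is approximately np.roll(a, (dy, dx), axis=(0, 1))."""
    cross = np.fft.fft2(b) * np.conj(np.fft.fft2(a))
    cross /= np.abs(cross) + 1e-12                  # keep phase only
    corr = np.real(np.fft.ifft2(cross))
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    h, w = a.shape
    dy = dy - h if dy > h // 2 else dy              # map wrap-around peaks to negative shifts
    dx = dx - w if dx > w // 2 else dx
    return int(dy), int(dx)

def nuc_step(gain, offset, raw_prev, raw_curr, mu=1e-3):
    """One steepest-descent update of per-pixel gain and offset from two consecutive raw frames."""
    y_prev = gain * raw_prev + offset
    y_curr = gain * raw_curr + offset
    shift = phase_correlation_shift(y_prev, y_curr)
    predicted = np.roll(y_prev, shift, axis=(0, 1))   # previous corrected frame moved onto the current one
    err = y_curr - predicted                          # inter-frame registration error
    # Negative gradient of 0.5 * err**2 with respect to the current pixel's gain and offset,
    # treating the shifted previous frame as constant (a simplifying assumption).
    gain = gain - mu * err * raw_curr
    offset = offset - mu * err
    return gain, offset, float(np.mean(err ** 2))
```

Repeating `nuc_step` over a frame sequence drives the correction parameters toward values that make consecutive corrected frames agree after registration.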

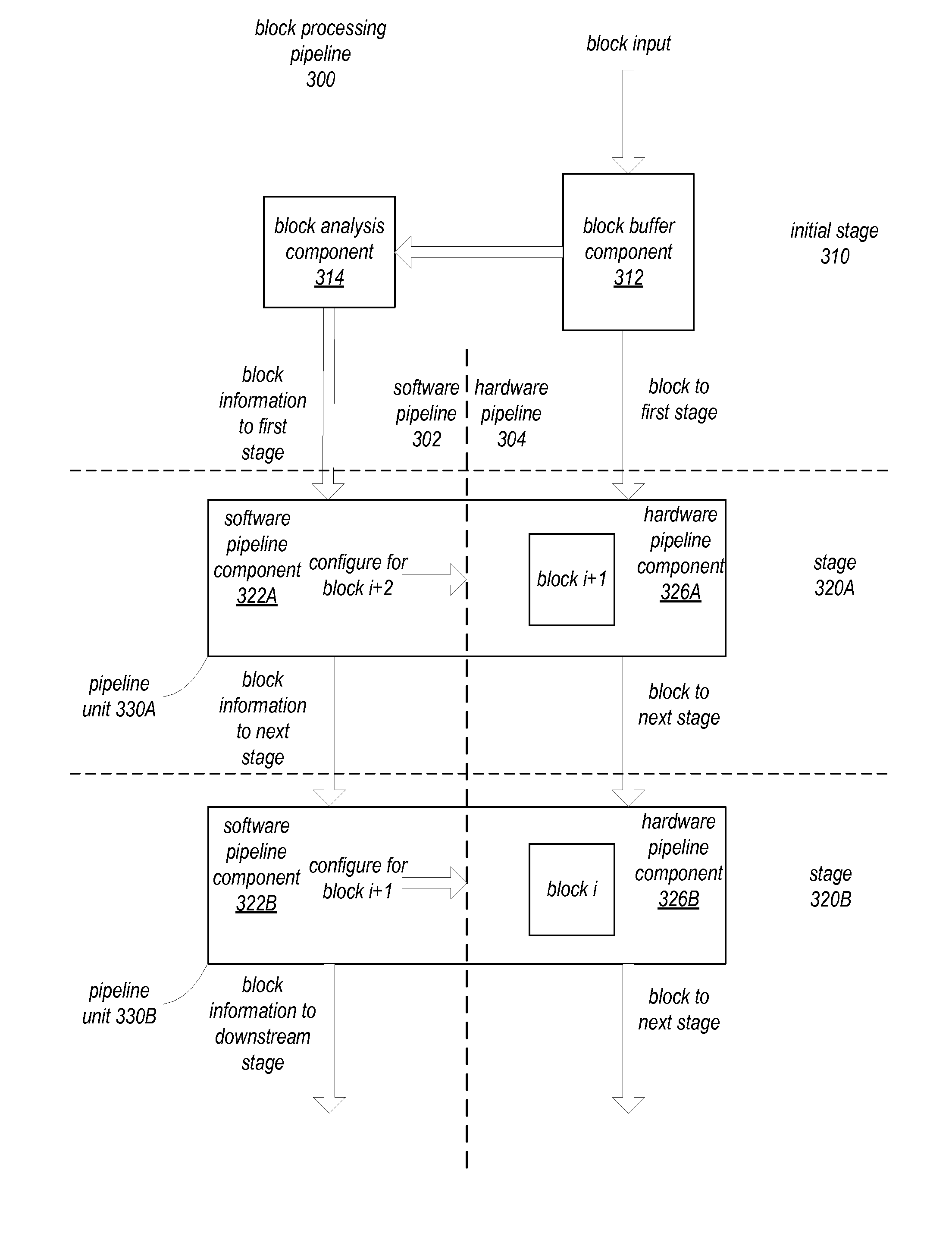

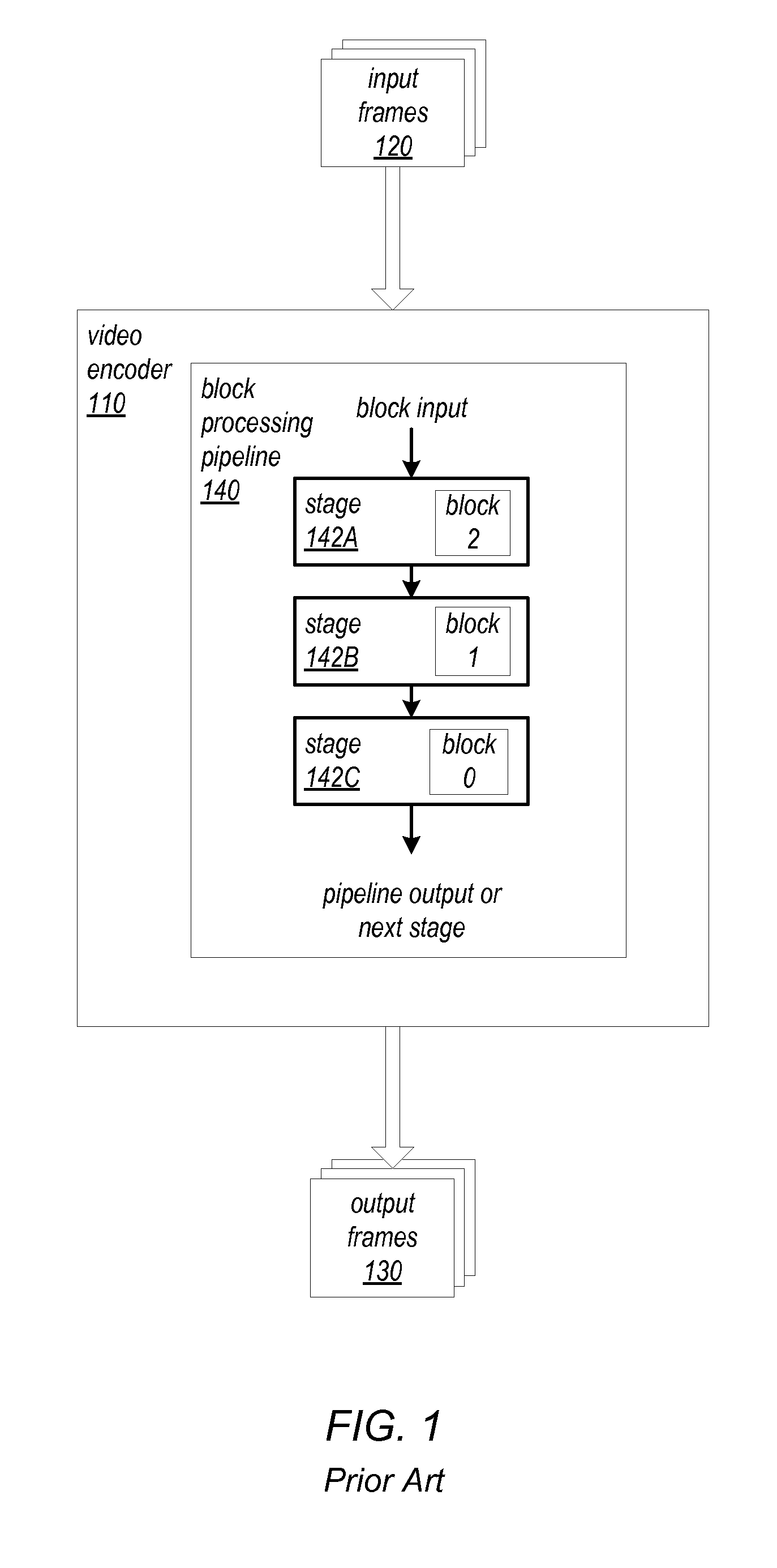

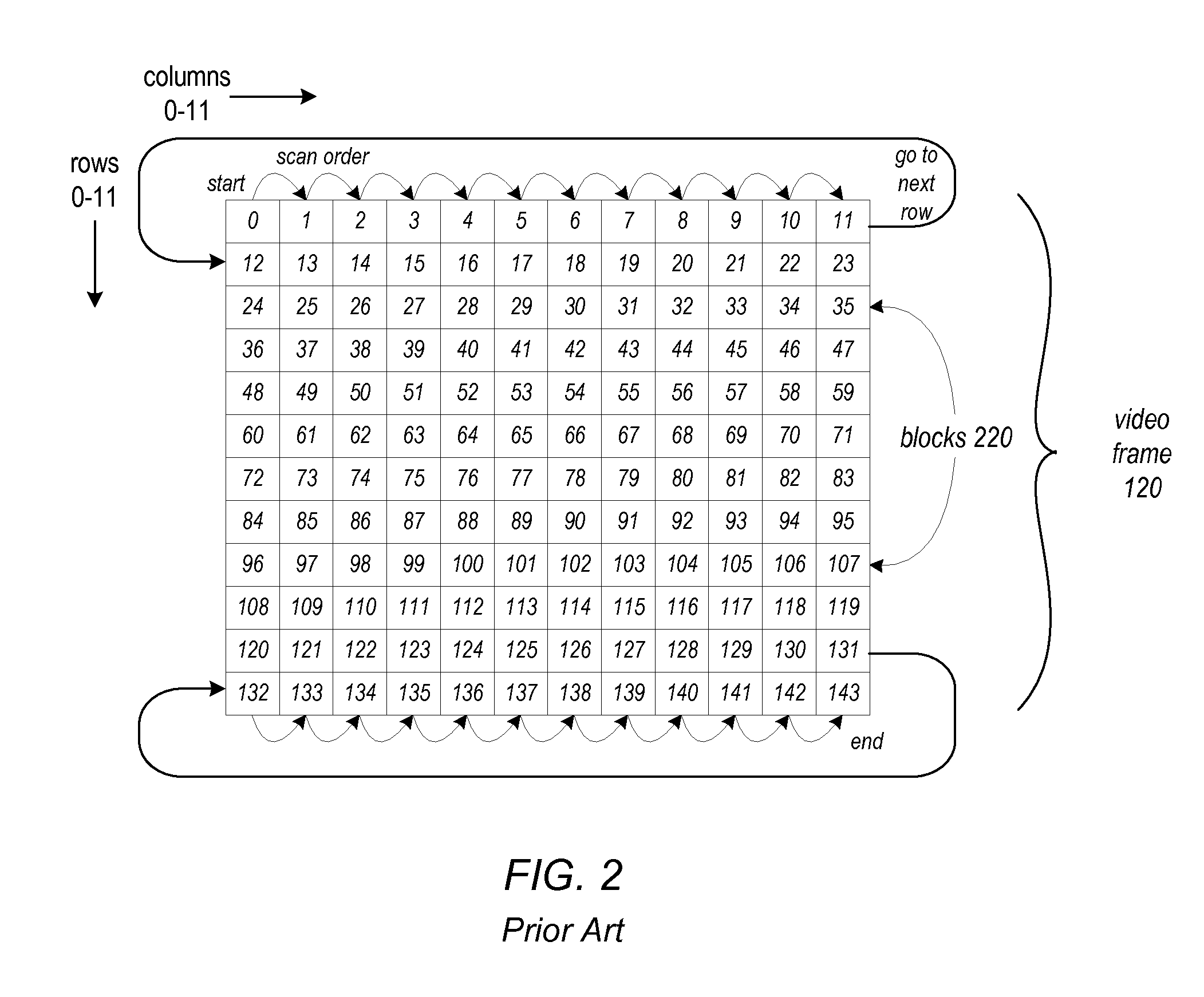

Encoding blocks in video frames containing text using histograms of gradients

Active | US20160014421A1 | Improve performance; Quality improvement; Character and pattern recognition; Processor architectures/configuration; Video encoding; Motion vector

A block input component of a video encoding pipeline may, for a block of pixels in a video frame, compute gradients in multiple directions, and may accumulate counts of the computed gradients in one or more histograms. The block input component may analyze the histogram(s) to compute block-level statistics and determine whether a dominant gradient direction exists in the block, indicating the likelihood that it represents an image containing text. If text is likely, various encoding parameter values may be selected to improve the quality of encoding for the block (e.g., by lowering a quantization parameter value). The computed statistics or selected encoding parameter values may be passed to other stages of the pipeline, and used to bias or control selection of a prediction mode, an encoding mode, or a motion vector. Frame-level or slice-level parameter values may be generated from gradient histograms of multiple blocks.

Owner:APPLE INC
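
The block analysis in the video-encoding abstract above can be sketched as a histogram of unsigned gradient directions over the block, declaring a dominant direction when one bin holds at least a given share of the strong-gradient pixels, and then lowering the quantization parameter for such blocks. The central-difference gradients, the bin count, the thresholds, and the QP delta are all hypothetical choices, not values from the patent.

```python
import numpy as np

def dominant_direction(block, n_bins=8, mag_thresh=1.0, min_share=0.6):
    """Return (bin index or None, histogram) for the unsigned gradient directions in a pixel block."""
    b = np.asarray(block, dtype=float)
    gx = b[1:-1, 2:] - b[1:-1, :-2]          # central differences on the block interior
    gy = b[2:, 1:-1] - b[:-2, 1:-1]
    mag = np.hypot(gx, gy)
    ang = np.mod(np.arctan2(gy, gx), np.pi)  # fold opposite directions together
    hist, _ = np.histogram(ang[mag > mag_thresh], bins=n_bins, range=(0.0, np.pi))
    total = hist.sum()
    if total == 0:
        return None, hist
    k = int(np.argmax(hist))
    return (k if hist[k] / total >= min_share else None), hist

def choose_qp(base_qp, dominant, text_qp_delta=2):
    """Lower the quantization parameter for blocks that look like text (hypothetical delta)."""
    return base_qp - text_qp_delta if dominant is not None else base_qp

step = np.zeros((8, 8))
step[:, 4:] = 200.0                          # a sharp vertical stroke edge
d, hist = dominant_direction(step)
```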

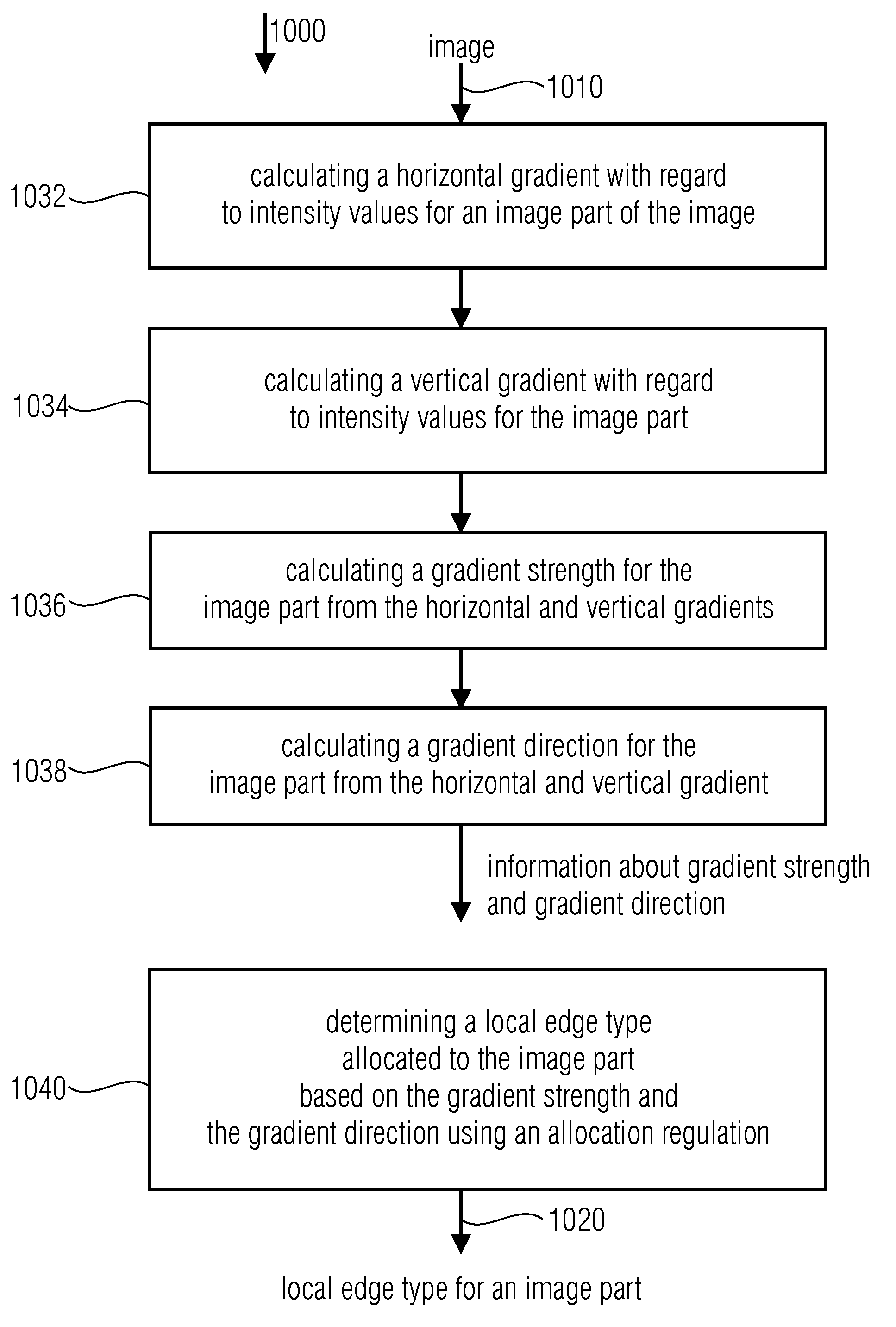

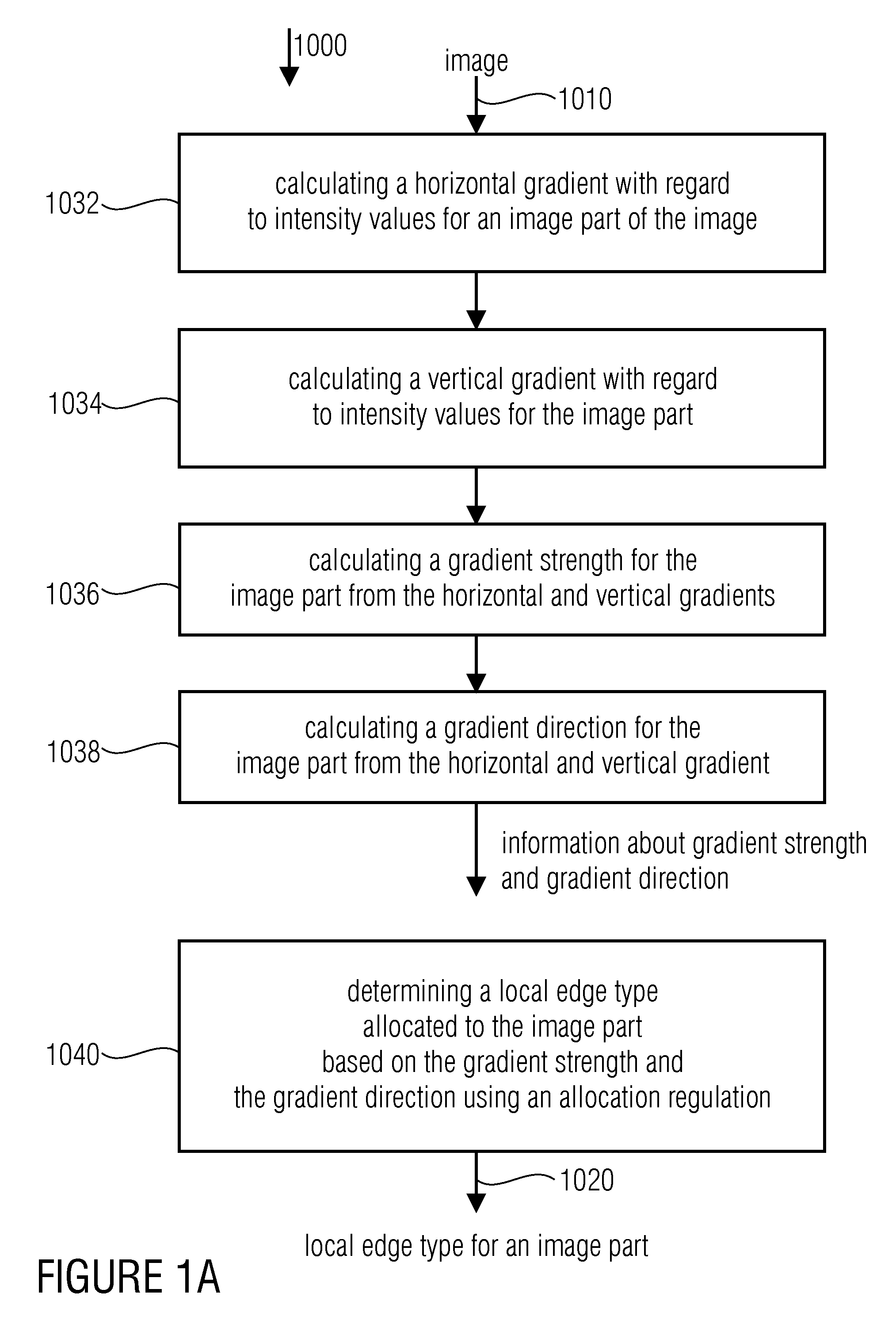

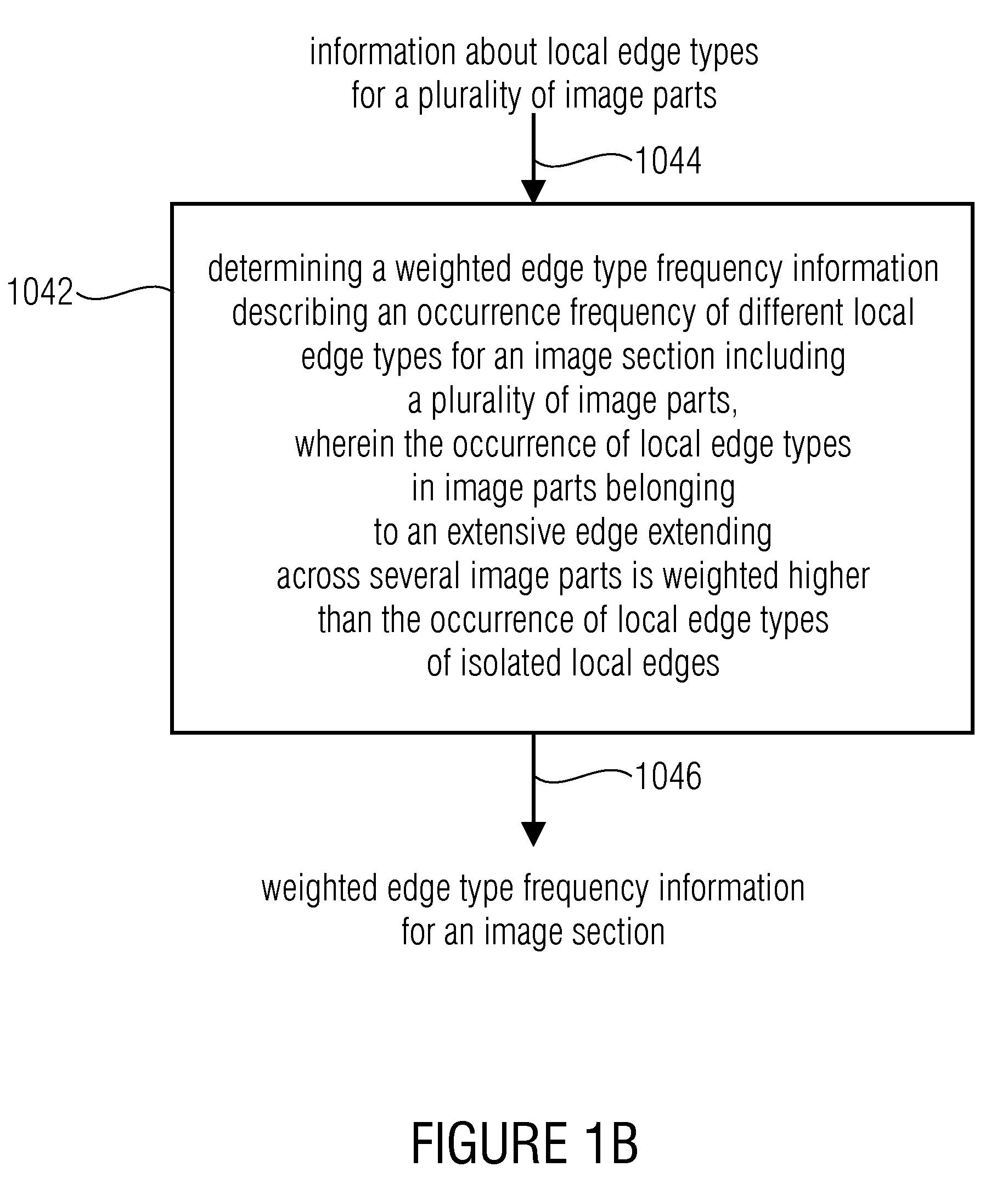

Device and method for determining an edge histogram, device and method for storing an image in an image database, device and method for finding two similar images and computer program

Inactive | US20100111416A1 | Reduce distortion problems; Reliable edge histogram; Image analysis; Digital data information retrieval; Gradient strength; Edge type

A device for determining an edge histogram of an image based on information about a gradient strength and a gradient direction of a local gradient in an image content of a partial image of the image includes an allocator which is implemented to allocate the information about the gradient strength and the gradient direction based on an allocation rule to an edge image in order to obtain edge type information. The allocation rule is selected such that, for a predetermined gradient direction, at least three different allocated edge types exist, reflecting different gradient strengths. The device further includes an edge histogram determiner which is implemented to determine the edge histogram based on the edge type information so that in the edge type histogram at least three edge types having different allocated gradient strengths may be differentiated.

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV
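
An allocation rule with the property required above (at least three edge types per gradient direction, differing in strength) can be sketched as a direction bin combined with a strength level. The four direction bins over $[0, \pi)$ and the two strength thresholds are hypothetical values; the patent's actual rule is not given in the abstract.

```python
import numpy as np

def edge_histogram(strengths, directions, n_dirs=4, thresholds=(10.0, 50.0)):
    """Count edge types, where each type is a (direction bin, strength level) pair."""
    n_levels = len(thresholds) + 1                                  # 3 strength levels per direction
    s = np.asarray(strengths, dtype=float)
    d = np.asarray(directions, dtype=float)
    levels = np.digitize(s, thresholds)                             # 0 = weak, 1 = medium, 2 = strong
    dir_bins = np.floor(np.mod(d, np.pi) / (np.pi / n_dirs)).astype(int) % n_dirs
    edge_types = dir_bins * n_levels + levels
    return np.bincount(edge_types, minlength=n_dirs * n_levels)

# Three gradients in the same direction but of different strength map to three edge types.
hist = edge_histogram([5.0, 20.0, 80.0], [0.0, 0.0, 0.0])
```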

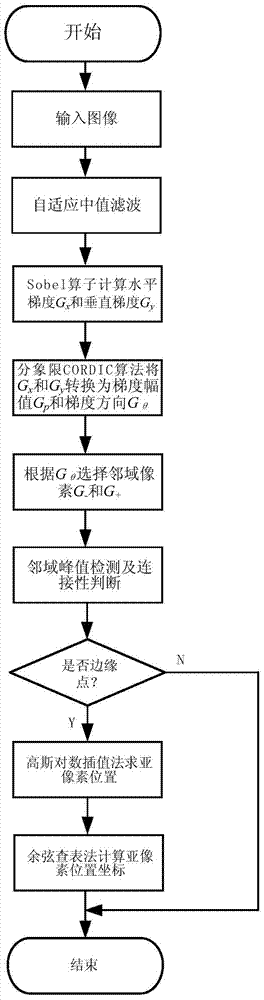

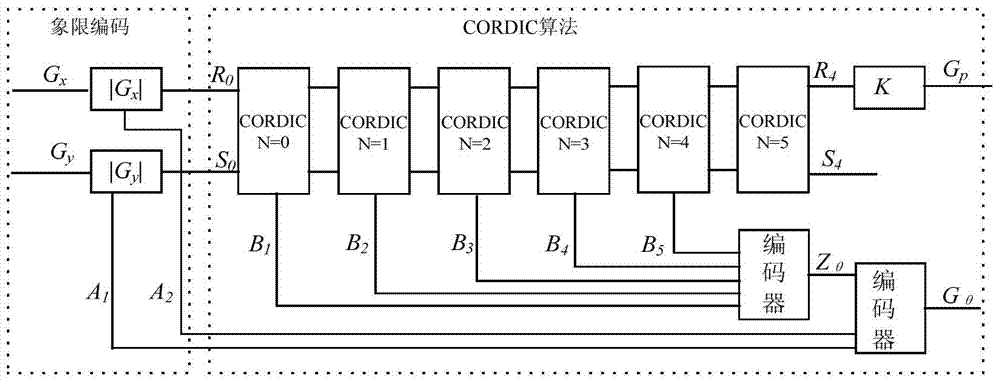

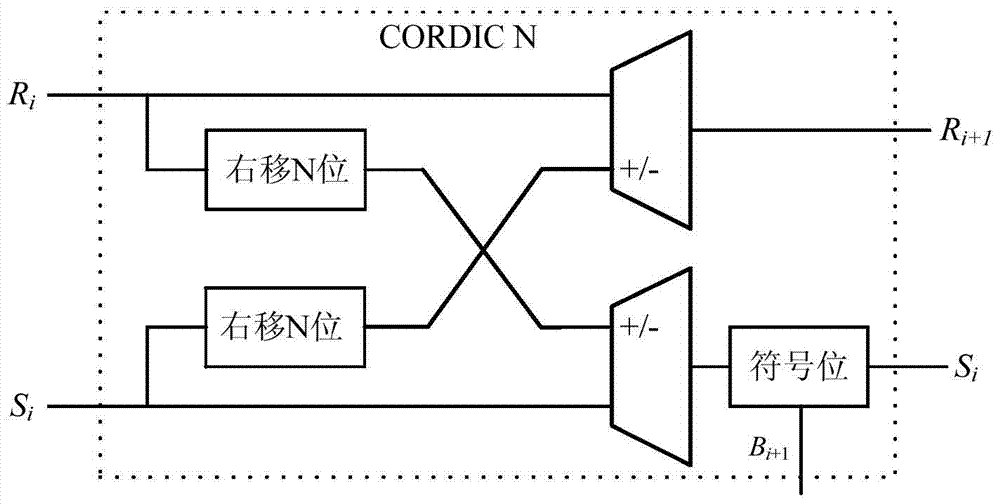

Rapid sub pixel edge detection and locating method based on machine vision

Active | CN104268857A | The detection process is fast; Fast positioning; Image enhancement; Image analysis; Machine vision; Rectangular coordinates

The invention discloses a rapid machine-vision-based method for sub-pixel edge detection and localization. The method comprises the following steps: first, a detection image is acquired; second, the image is denoised; third, the horizontal gradient $G_x$ and the vertical gradient $G_y$ of each pixel are calculated; fourth, the gradient magnitude $G_0$ and the gradient direction $G_\theta$ of each pixel are calculated in polar coordinates; fifth, the neighboring pixels of each pixel are determined; sixth, pixel-level edge points are determined; seventh, for each pixel-level edge point, the distance to its sub-pixel edge point along each of the eight quantized gradient directions is calculated; eighth, the distance $d$ between each pixel-level edge point and its sub-pixel edge point along the actual gradient direction $G_\theta$ is calculated; ninth, the rectangular coordinates of each sub-pixel edge point along the actual gradient direction $G_\theta$ are calculated with a cosine lookup table, so that the image edge points are detected and localized to sub-pixel accuracy. The method as a whole is both accurate and fast.

Owner:湖南湘江时代机器人研究院有限公司
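
The sub-pixel method above derives the distance $d$ from the eight quantized directions; the sketch below substitutes a common alternative, fitting a parabola through the gradient magnitudes at $-1$, $0$, $+1$ pixel along the gradient direction, and then converts the point to rectangular coordinates with a 1-degree cosine/sine lookup table. The parabola fit, the table resolution, and the function names are assumptions, and sampling magnitudes along a non-axis-aligned direction (which needs interpolation) is left to the caller.

```python
import math

# 1-degree cosine/sine lookup tables (the table resolution is an assumption)
COS_LUT = [math.cos(math.radians(a)) for a in range(360)]
SIN_LUT = [math.sin(math.radians(a)) for a in range(360)]

def parabola_offset(g_minus, g0, g_plus):
    """Vertex of the parabola through magnitudes at -1, 0, +1 pixel along the gradient direction."""
    denom = g_minus - 2.0 * g0 + g_plus
    if denom == 0:
        return 0.0                       # flat profile: keep the pixel-level position
    return 0.5 * (g_minus - g_plus) / denom

def subpixel_point(x, y, g_minus, g0, g_plus, theta):
    """Shift a pixel-level edge point (x, y) by distance d along gradient direction theta (radians)."""
    d = parabola_offset(g_minus, g0, g_plus)
    idx = int(round(math.degrees(theta))) % 360
    return x + d * COS_LUT[idx], y + d * SIN_LUT[idx]

# Magnitudes sampled from a peak located 0.25 pixel beyond the edge pixel, gradient along +x.
px, py = subpixel_point(10, 5, -0.5625, 0.9375, 0.4375, 0.0)   # (10.25, 5.0)
```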

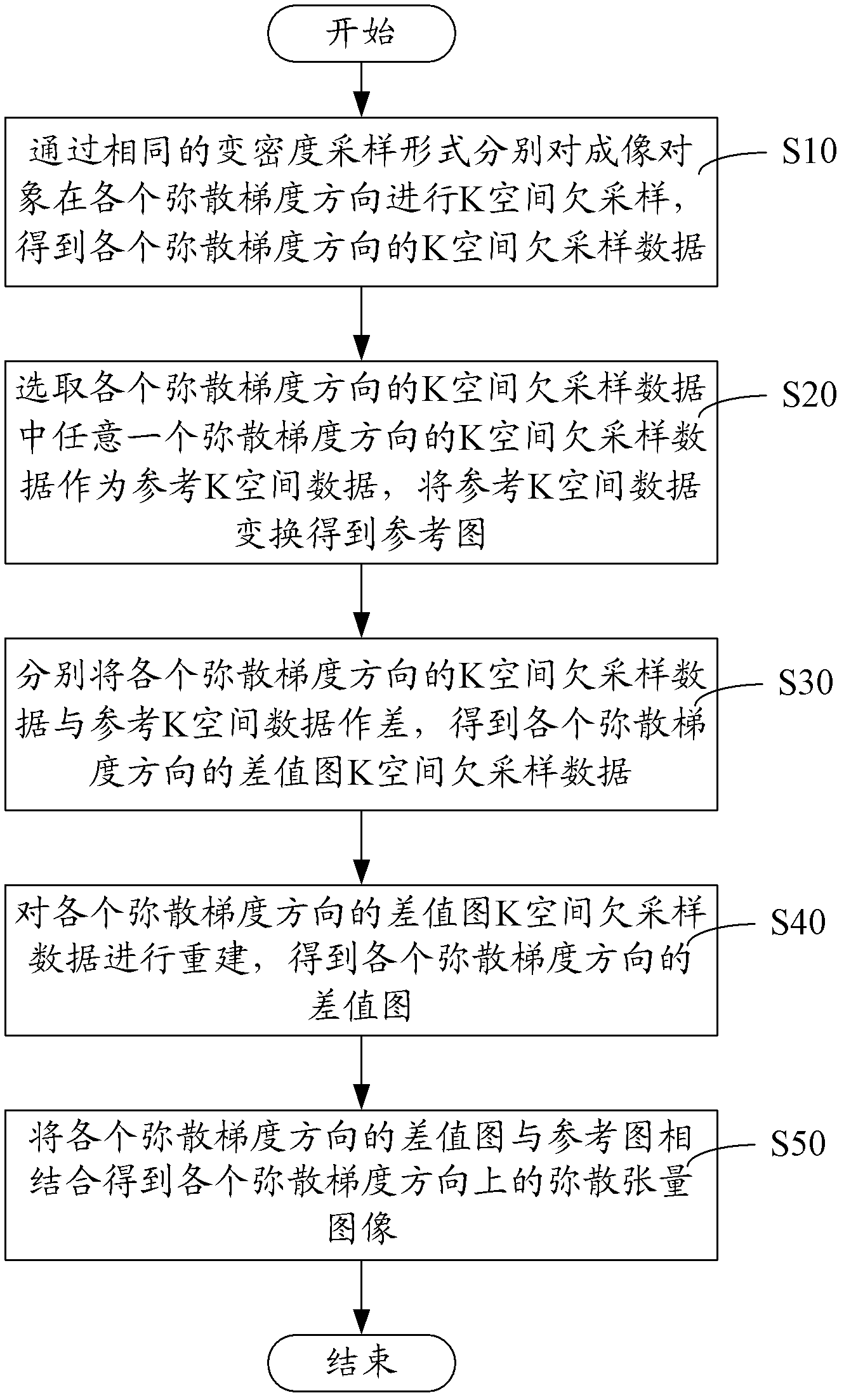

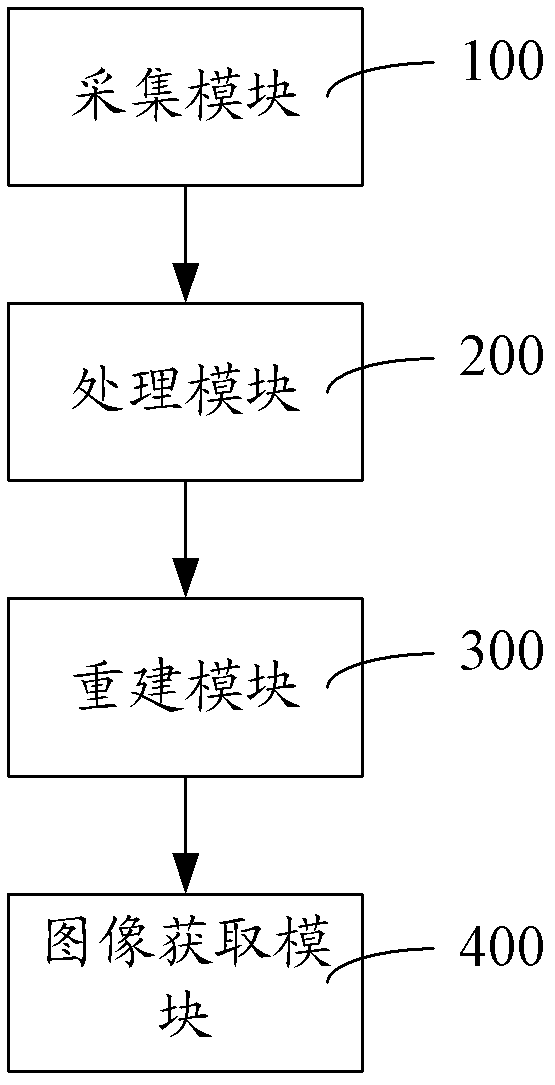

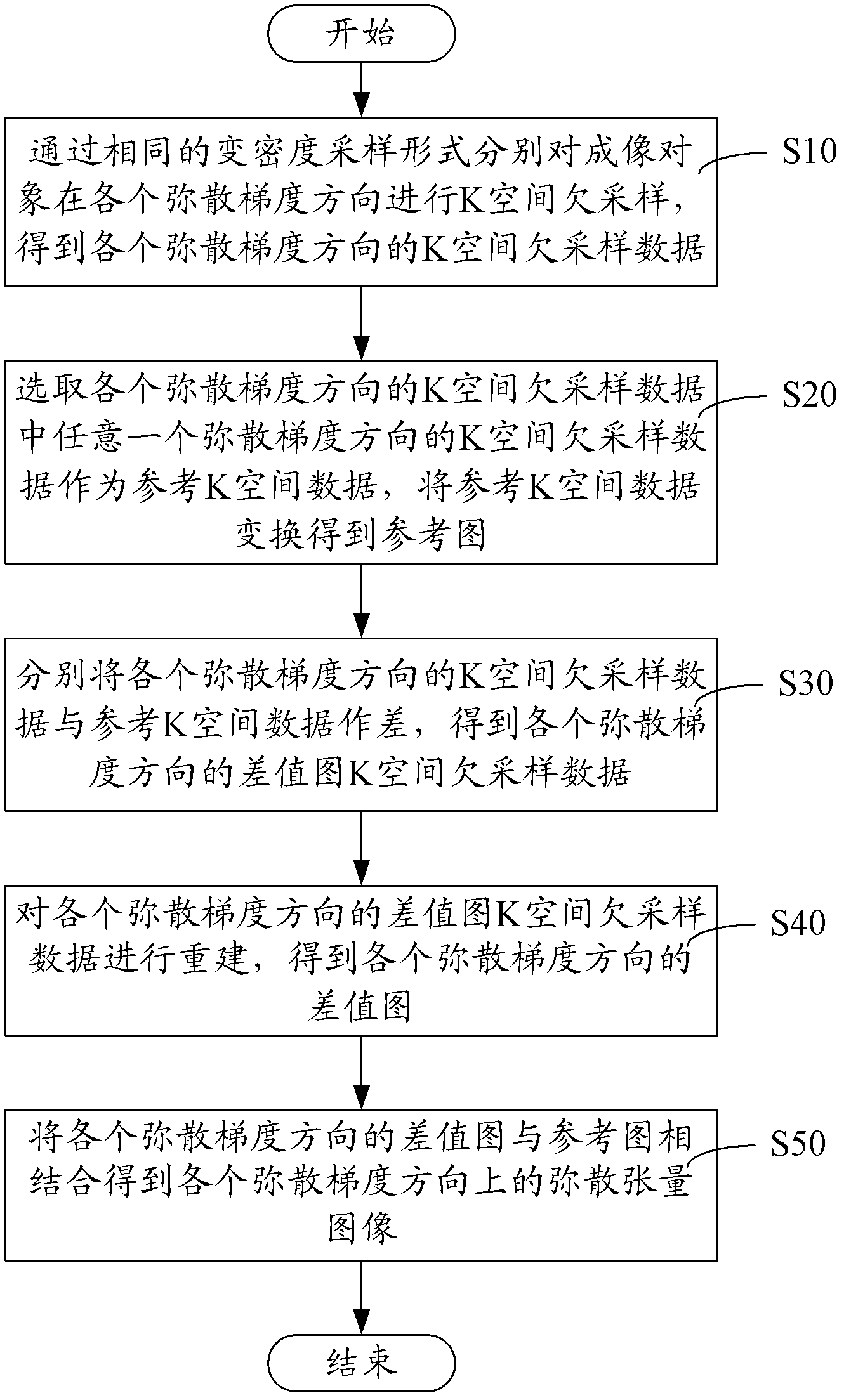

Diffusion-tensor imaging method and system

Active | CN102309328A | Reduce acquisition time; Diffusion Tensor Imaging Fast; Diagnostic recording/measuring; Sensors; Diffusion; Reference image

The invention provides a diffusion-tensor imaging method comprising the following steps: undersampling k-space of the imaged target in each diffusion gradient direction with the same variable-density sampling pattern, to acquire undersampled k-space data for each diffusion gradient direction; selecting the undersampled k-space data of any one diffusion gradient direction as reference k-space data and transforming it to obtain a reference image; subtracting the reference k-space data from the undersampled k-space data of each diffusion gradient direction to obtain undersampled difference k-space data for each direction; reconstructing the undersampled difference k-space data of each direction to obtain a difference image for each direction; and combining each direction's difference image with the reference image to obtain a diffusion-tensor image for each diffusion gradient direction. The invention also provides a corresponding diffusion-tensor imaging system.

Owner:SHANGHAI UNITED IMAGING HEALTHCARE
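
The data flow of the difference-image scheme in the diffusion-tensor abstract above can be sketched with NumPy FFTs. The reconstruction of each difference image is where the method gains from undersampling, and the abstract does not name it; the placeholder here is a plain zero-filled inverse FFT (an assumption), with which the scheme reduces to zero-filled reconstruction of each direction. A practical version would swap that line for a sparsity-promoting solver, since difference images are expected to be sparser than the images themselves. Function and variable names are hypothetical.

```python
import numpy as np

def reconstruct_by_difference(kspace, mask, ref=0):
    """kspace: (n_dirs, H, W) k-space per diffusion direction; mask: shared (H, W) sampling pattern."""
    sampled = kspace * mask                         # same variable-density pattern for every direction
    ref_image = np.fft.ifft2(sampled[ref])          # reference image from the reference k-space data
    images = []
    for d in range(sampled.shape[0]):
        diff_k = sampled[d] - sampled[ref]          # difference k-space for direction d
        diff_image = np.fft.ifft2(diff_k)           # placeholder: zero-filled reconstruction
        images.append(ref_image + diff_image)       # combine the difference image with the reference
    return ref_image, np.array(images)
```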

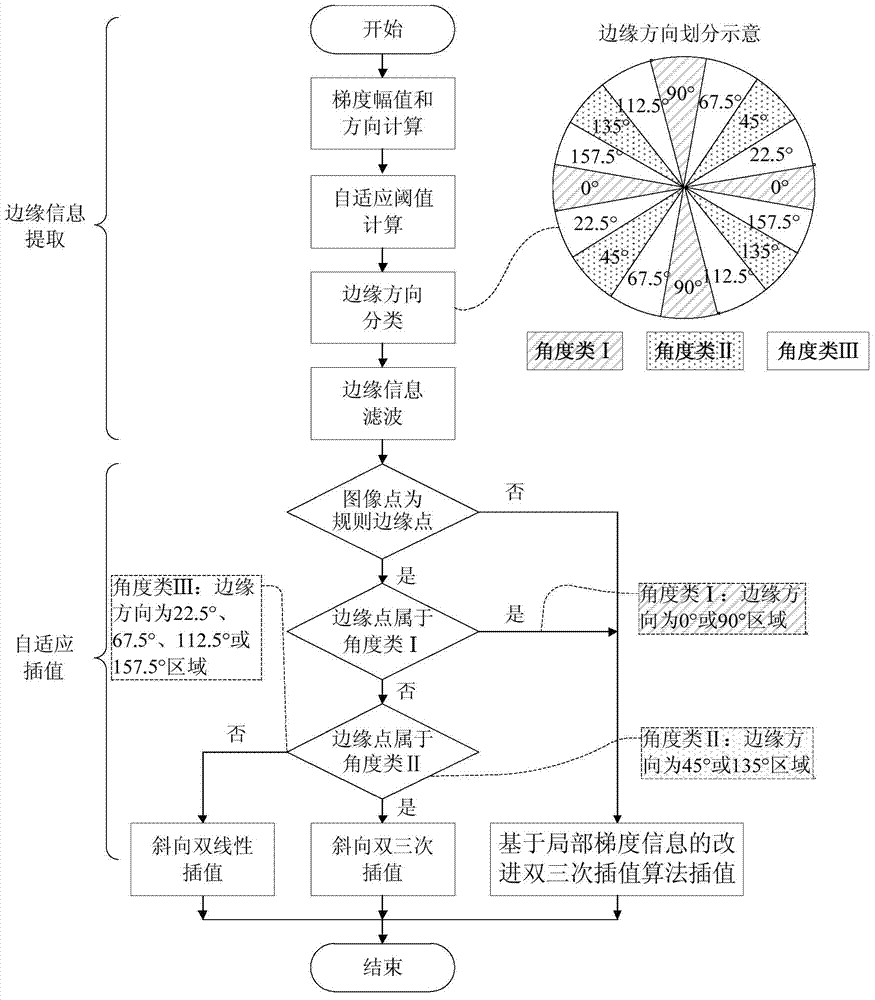

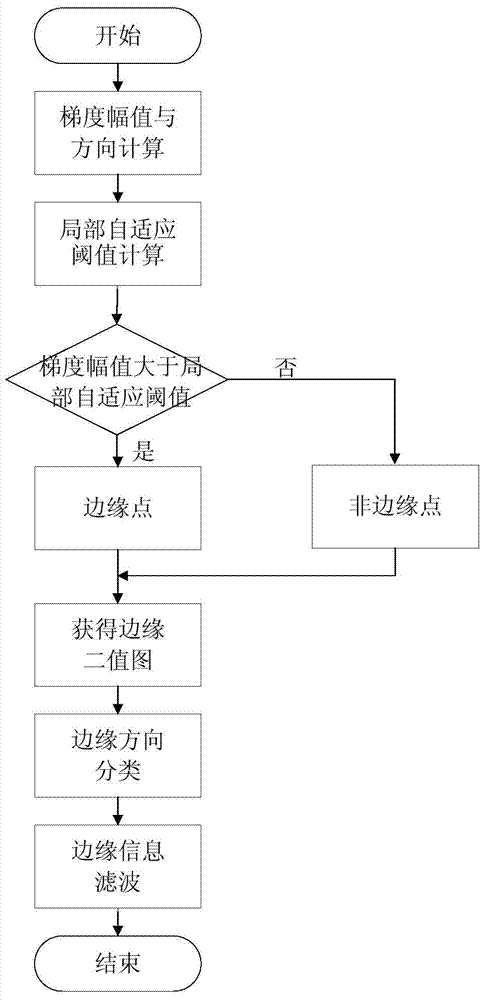

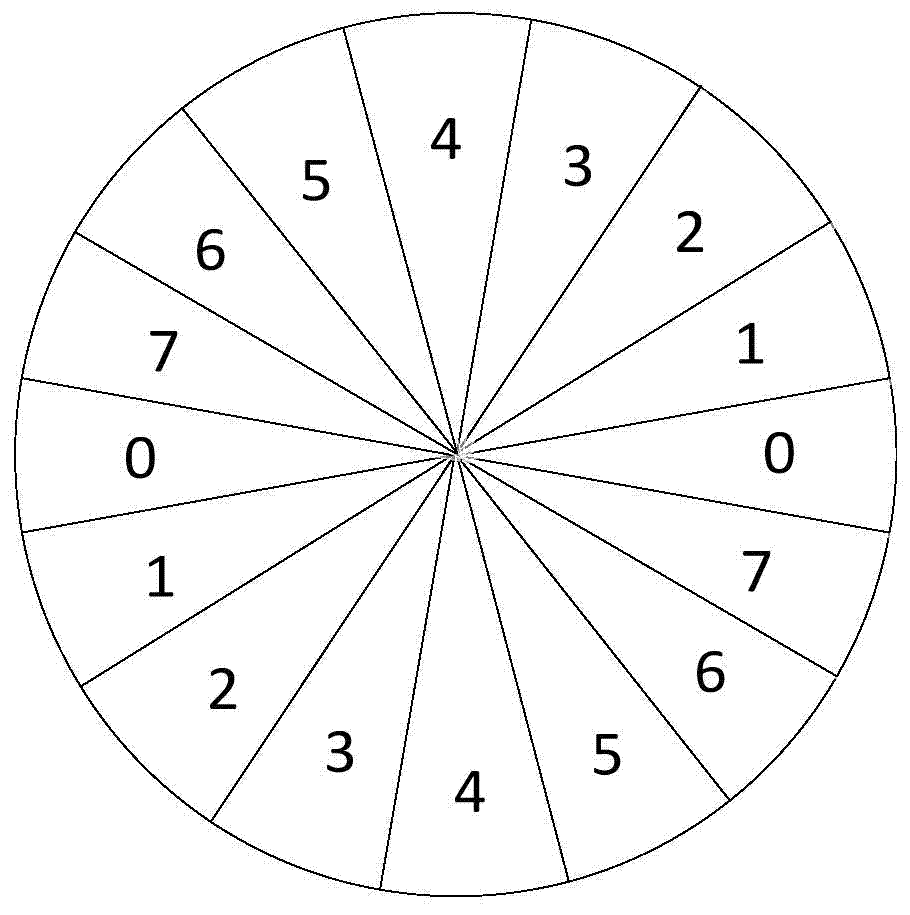

Margin-oriented self-adaptive image interpolation method and VLSI implementation device thereof

Active | CN103500435A | Guaranteed accuracy; Protect; Image enhancement; Image analysis; Vlsi implementations; Synchronous control

The invention discloses an edge-oriented adaptive image interpolation method and a VLSI implementation device for it. The method computes the gradient magnitude and gradient direction of each source image pixel and obtains edge information by comparing the gradient magnitude with a locally adaptive threshold, the edge direction being perpendicular to the gradient direction. The edge directions are classified, the edge information is filtered, and the image is divided into regular edge areas and non-edge areas. Regular edge areas are interpolated along the edge direction, using an improved bicubic, a slanted bicubic, or a slanted bilinear interpolation method based on local gradient information according to the edge classification; non-edge areas are interpolated with the improved bicubic method based on local gradient information. The VLSI implementation device comprises an edge information extraction module, an adaptive interpolation module, an input line/field synchronization control module, and a post-scaling line/field synchronization control module. The method and device effectively improve image interpolation at high scaling factors and are well suited to integrated-circuit implementation.

Owner:XI AN JIAOTONG UNIV
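The edge-detection and classification stage described above can be sketched with NumPy. The abstract does not give the threshold rule or the direction classes, so both are hypothetical here. A pixel counts as an edge when its gradient magnitude exceeds `alpha` times the mean magnitude in its local window. Edge directions are quantized to 0°, 45°, 90° and 135°. The sketch uses `arctan2` and then folds the edge direction into [0, π). This makes it equivalent, for undirected edges, to the arctan(sobel_y/sobel_x) formulation.

```python
import numpy as np

def sobel(img):
    """3x3 Sobel gradients with edge-replicated borders; gx is along columns, gy along rows."""
    p = np.pad(np.asarray(img, dtype=float), 1, mode="edge")
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    return gx, gy

def box_mean(a, r=1):
    """Mean over a (2r+1) x (2r+1) window, used here as the local adaptive threshold base."""
    p = np.pad(a, r, mode="edge")
    k = 2 * r + 1
    h, w = a.shape
    acc = np.zeros((h, w))
    for di in range(k):
        for dj in range(k):
            acc += p[di:di + h, dj:dj + w]
    return acc / (k * k)

EDGE_CLASSES = np.array([0, 45, 90, 135])  # hypothetical direction classes, in degrees

def classify_edges(img, alpha=1.0, min_mag=1e-3, r=1):
    gx, gy = sobel(img)
    mag = np.hypot(gx, gy)
    theta = np.arctan2(gy, gx)  # gradient direction
    # Hypothetical rule: edge if magnitude beats the scaled local mean (and a small floor)
    is_edge = mag > np.maximum(alpha * box_mean(mag, r), min_mag)
    edge_dir = np.mod(theta + np.pi / 2, np.pi)  # edge direction is perpendicular to the gradient
    idx = np.round(edge_dir / (np.pi / 4)).astype(int) % 4
    cls = np.where(is_edge, EDGE_CLASSES[idx], -1)  # -1 marks non-edge pixels
    return is_edge, cls
```

For a vertical step edge, the gradient points horizontally (0 rad), so the classified edge direction is 90°. In the patented method, this is the direction along which the edge-region interpolation would then run.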

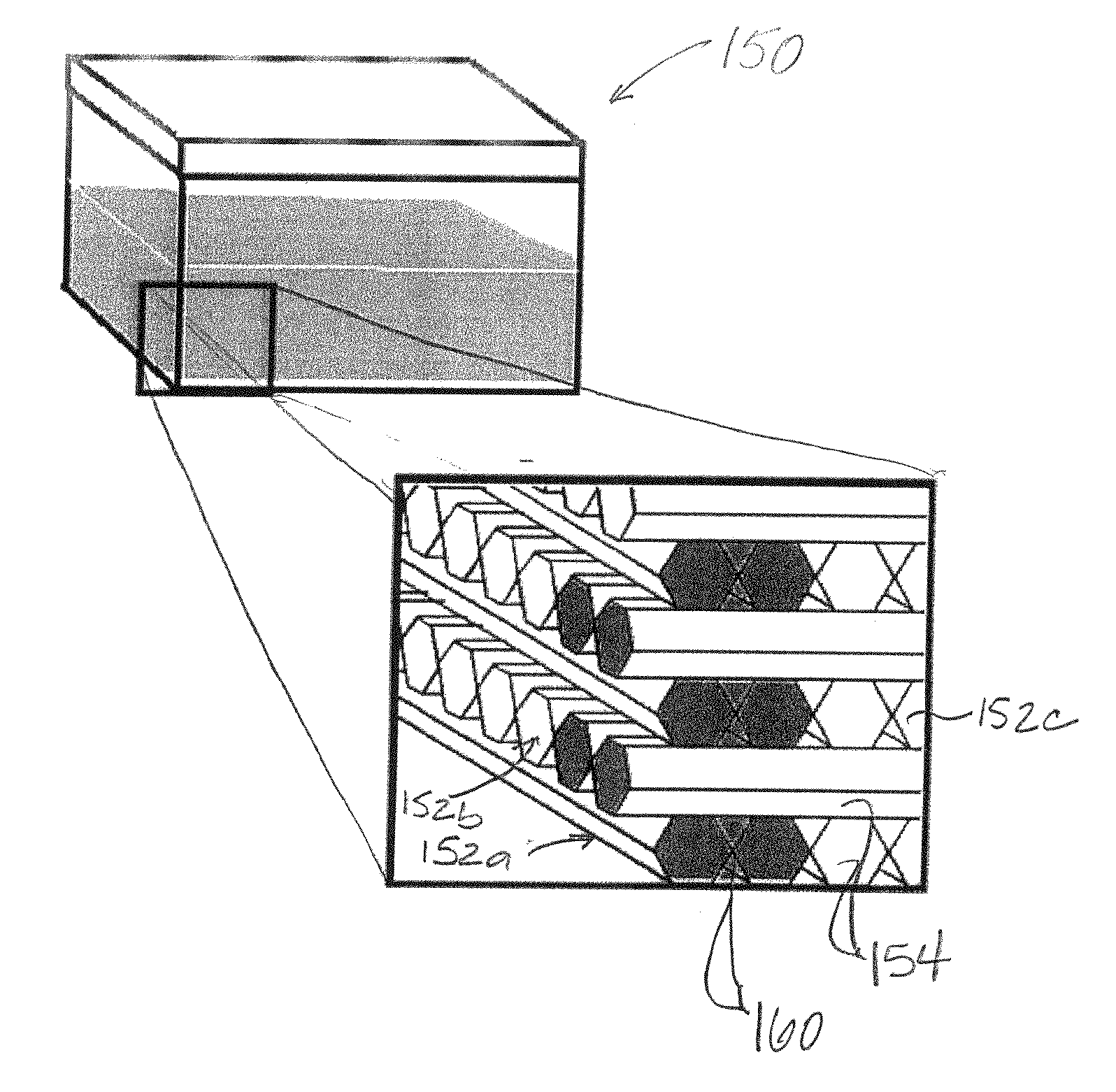

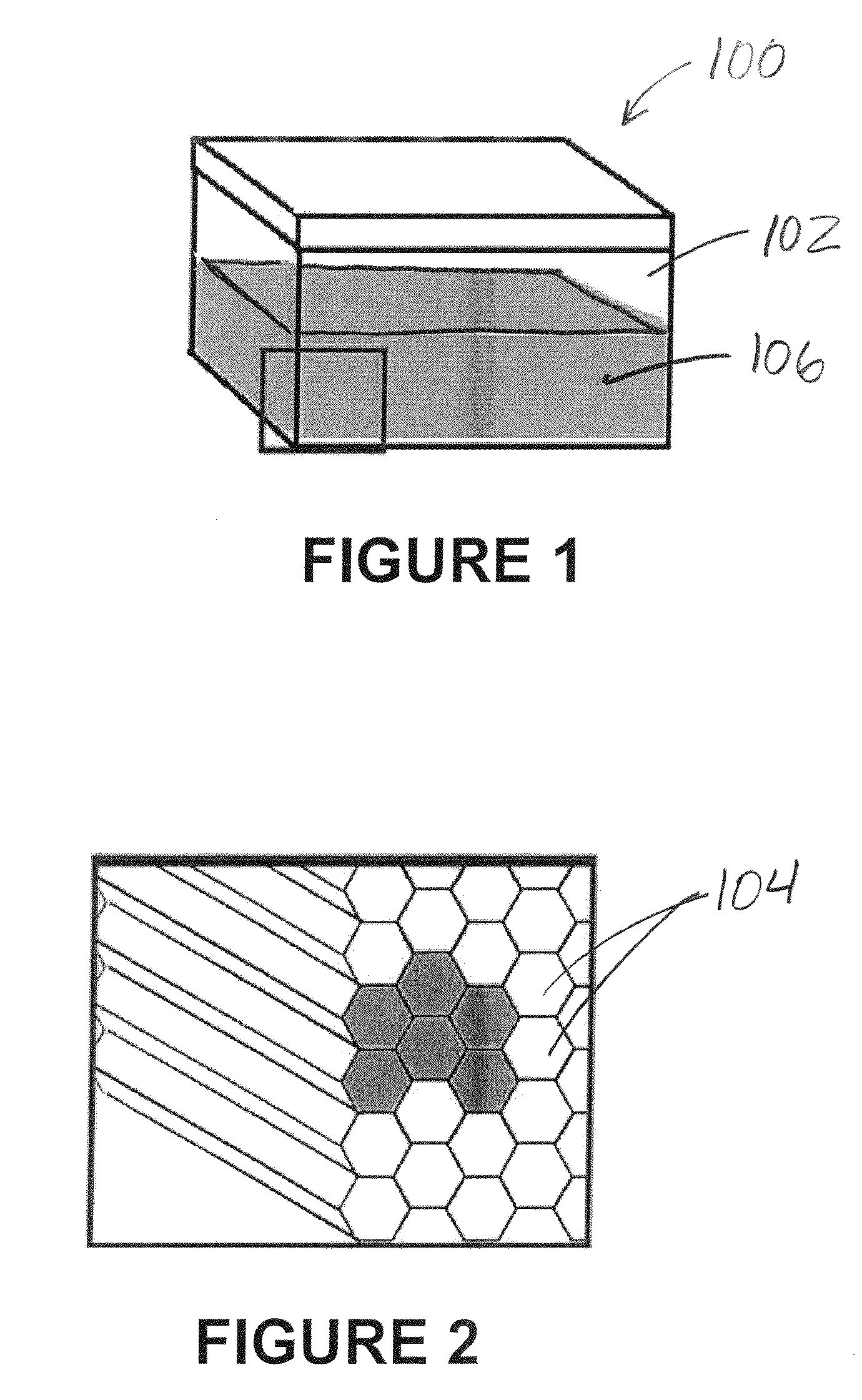

Test object for use with diffusion MRI and system and method of synthesizing complex diffusive geometries using novel gradient directions

Inactive | US20090058417A1 | Diagnostic recording/measuring | Measurements using NMR imaging systems | Diffusion | Test object

A test object for use with diffusion MRI and a system and methods of synthesizing complex diffusive geometries. The test object, which includes anisotropic structures, can be used to monitor DTI measures by providing a baseline measurement. Using measurements of the phantom, data characteristic of more complicated diffusive behavior can be “synthesized”, or composed of actual measurements re-arranged into a desired spatial distribution function describing diffusion. Unlike a typical DTI scan, the ADC measurements of the present invention are treated in a “reconstruction” phase as if the gradients were applied in different directions. Given a set of reconstruction directions, a judicious choice of acquisition directions for each reconstruction direction allows for the synthesis of any distribution.

Owner:AUGUSTA UNIV RES INST INC
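The "synthesis" idea can be illustrated with a small sketch. It computes ADCs from phantom signals using the standard mono-exponential model S = S0·exp(−b·ADC). It models the phantom as a cylindrically symmetric tensor, whose ADC along a unit gradient g is d⊥ + (d∥ − d⊥)(g·a)². For each reconstruction direction, it then picks the acquisition whose measured ADC best matches a target value. The abstract does not state its selection rule, so the nearest-ADC choice, the tensor model of the phantom, and all function names here are hypothetical.

```python
import numpy as np

def adc_from_signal(s, s0, b):
    # Mono-exponential (Stejskal-Tanner) model: S = S0 * exp(-b * ADC)
    return -np.log(np.asarray(s, dtype=float) / s0) / b

def phantom_adc(g, axis, d_par, d_perp):
    # ADC along unit gradient g for a cylindrically symmetric tensor with principal axis `axis`:
    # g^T D g = d_perp + (d_par - d_perp) * (g . axis)^2
    g = np.asarray(g, dtype=float)
    a = np.asarray(axis, dtype=float)
    c = np.dot(g / np.linalg.norm(g), a / np.linalg.norm(a))
    return d_perp + (d_par - d_perp) * c ** 2

def choose_acquisitions(measured_adc, target_adc):
    # Hypothetical rule: for each reconstruction direction, take the acquisition
    # whose measured ADC is closest to the ADC the target distribution requires,
    # then treat it as if the gradient had been applied along that direction.
    measured = np.asarray(measured_adc, dtype=float)
    target = np.asarray(target_adc, dtype=float)
    choice = np.argmin(np.abs(target[:, None] - measured[None, :]), axis=1)
    return choice, measured[choice]
```

For example, with acquisition ADCs `[0.5, 1.0, 2.0]` and target values `[1.9, 0.6]`, the sketch reuses acquisitions 2 and 0 for the two reconstruction directions.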

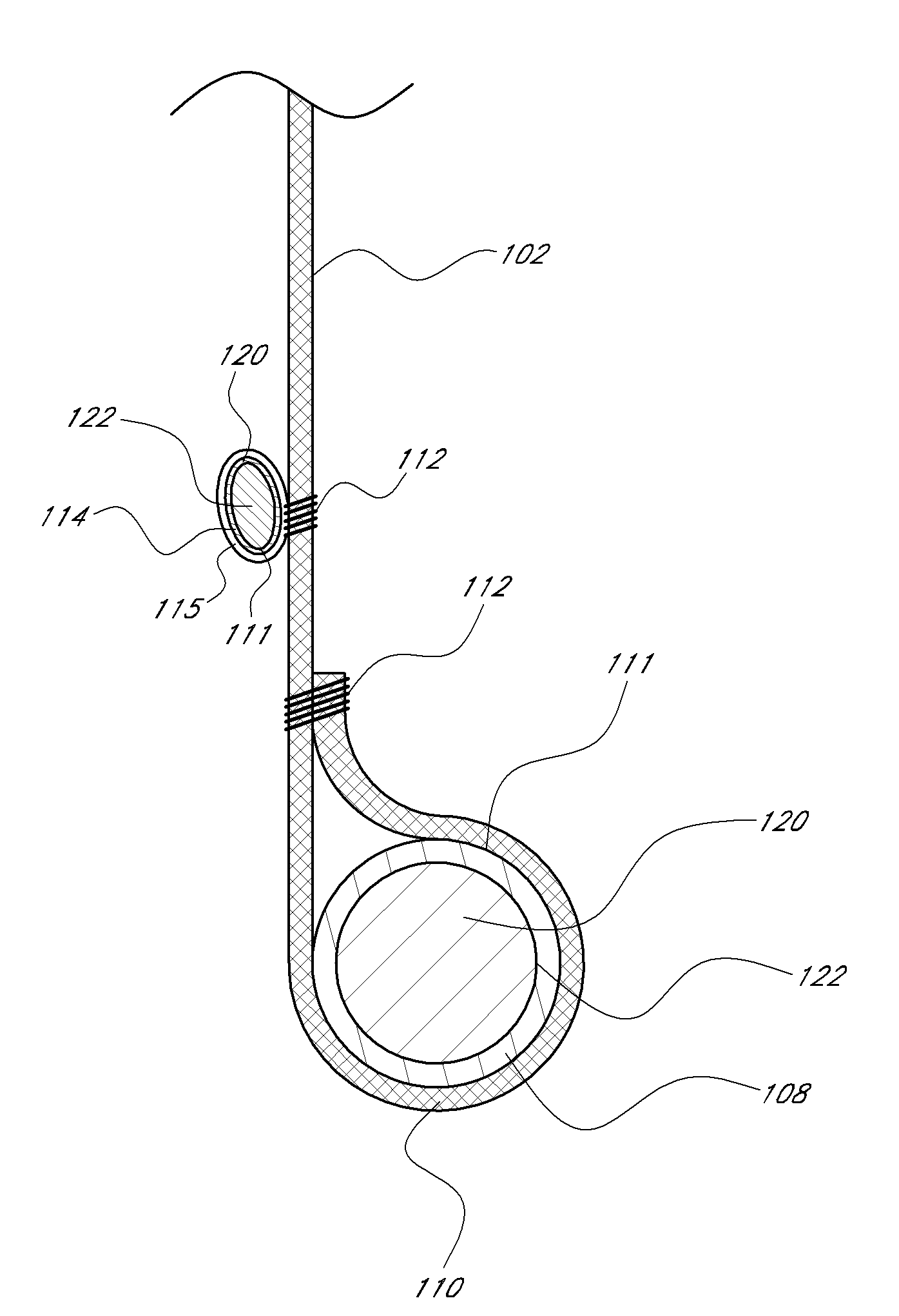

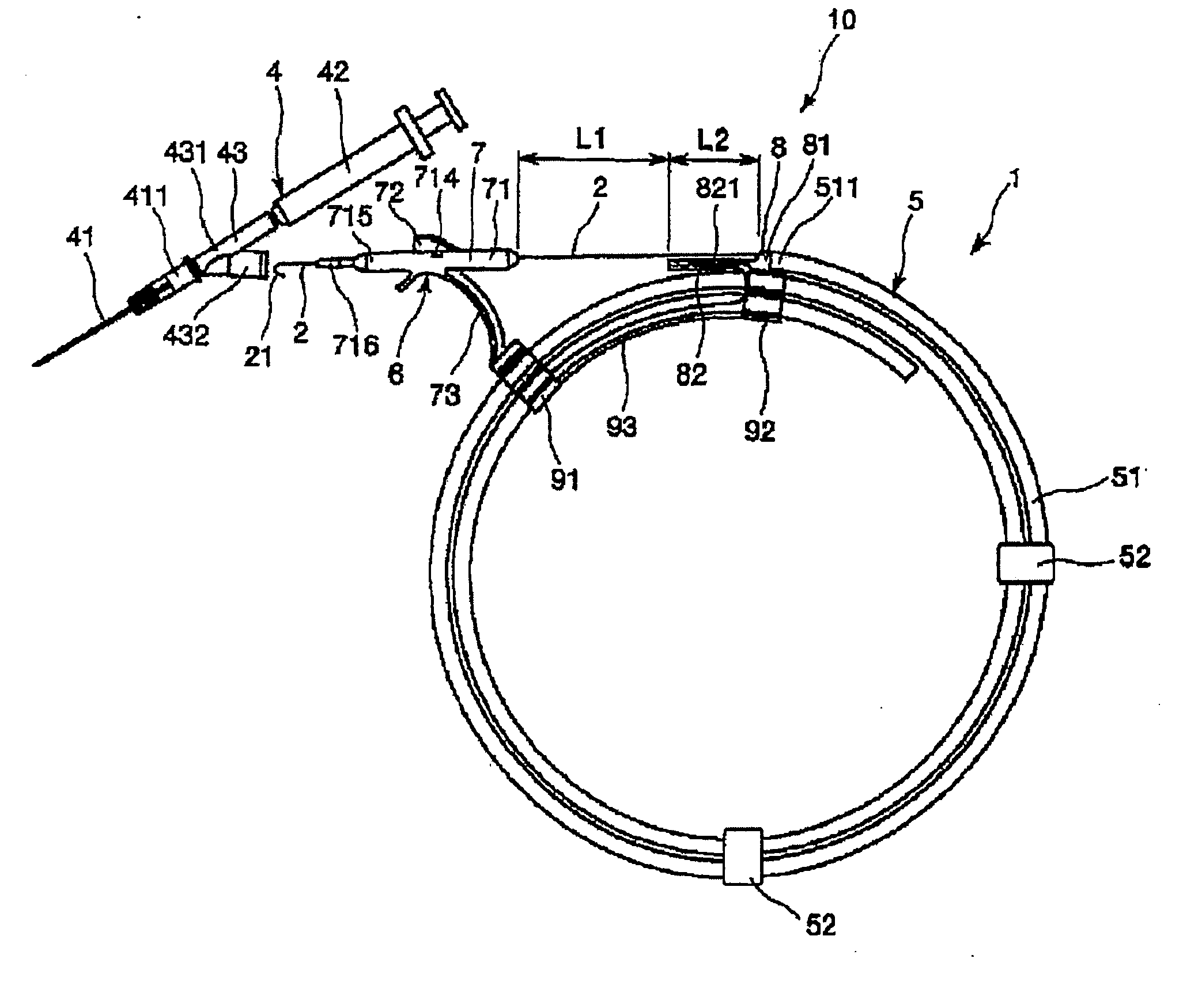

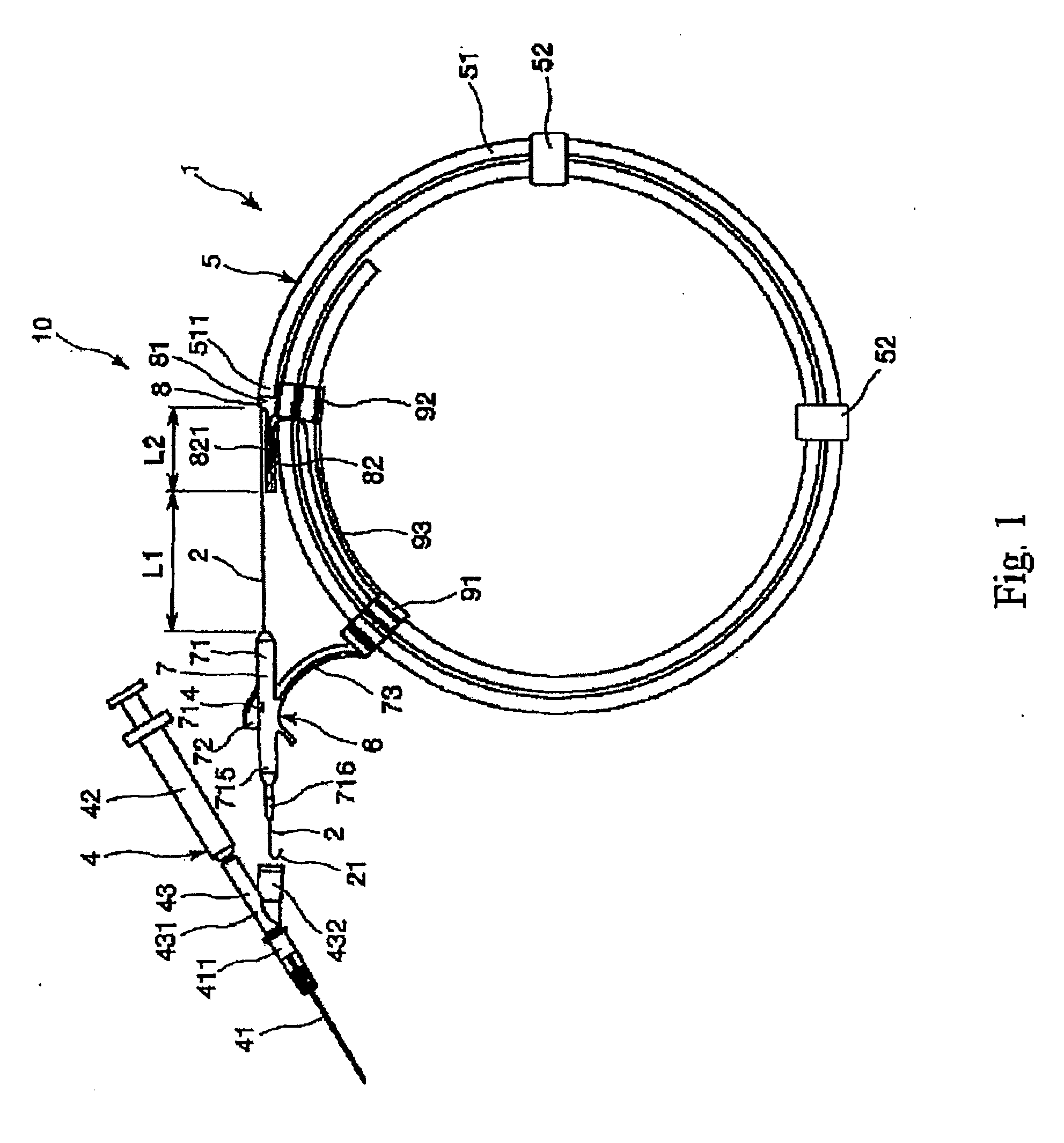

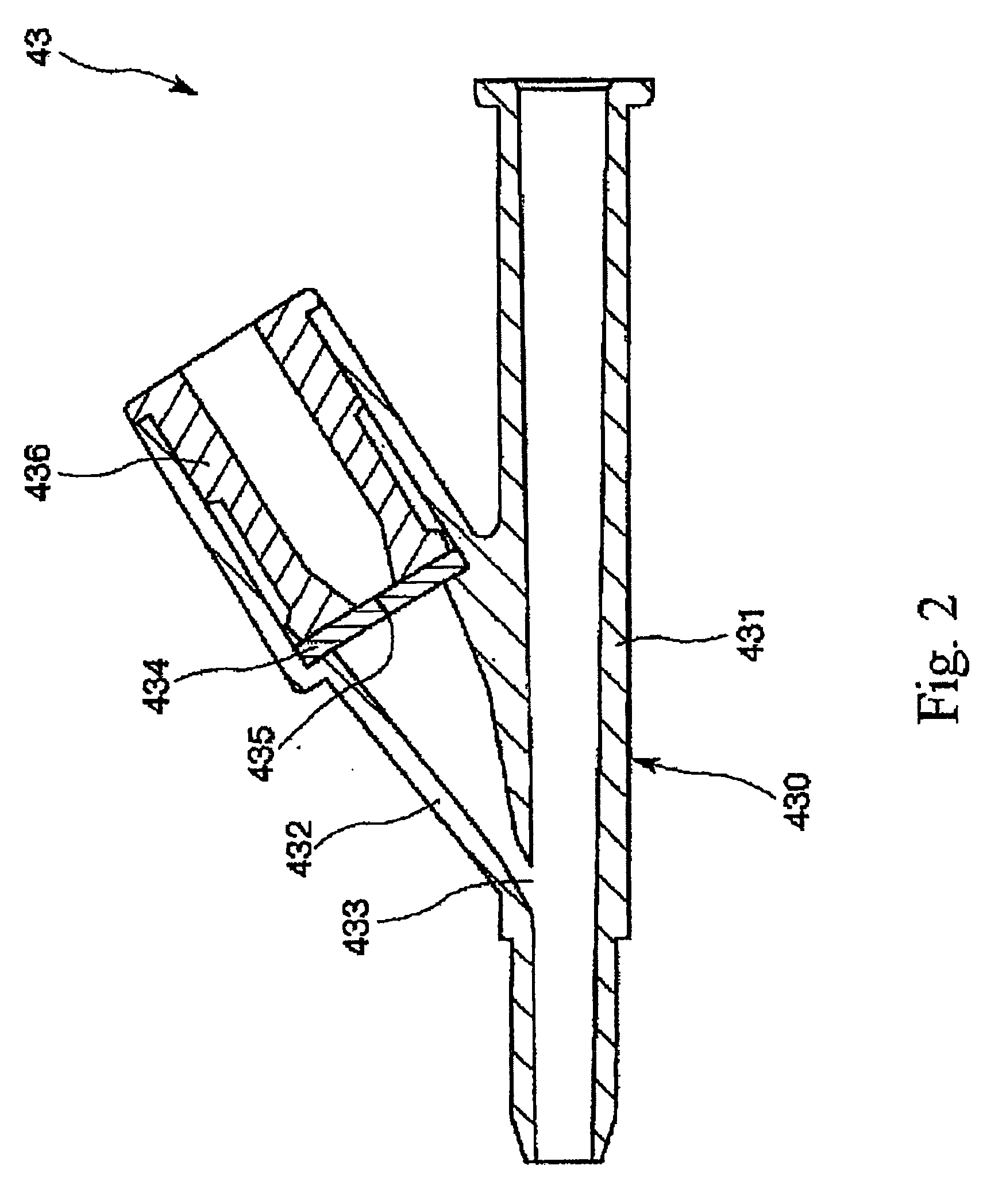

Guide wire assembly

Inactive | US20070185413A1 | Accurate perception | Maximizes width | Guide wires | Diagnostic recording/measuring | Gradient direction | Engineering

A guide wire assembly (1) includes a guide wire (2) having a main body section (22), a front-end angular section (21) and a base-end angular section (23). The guide wire assembly (1) further includes a storage section for storing the guide wire (2) inside a hollow section of a substantially annularly wound pipe body (51). The base-end angular section (23) is arranged so that it is dynamically stabilized in elasticity, in a state in which a bent direction thereof is aligned with a direction of curvature of the pipe body (51). When the base-end angular section (23) passes through the pipe body as the guide wire (2) is delivered from the storage section (5), a state is maintained in which the bent direction of the base-end angular section (23) is aligned with the direction of curvature of the pipe body (51), and the direction of the front-end angular section (21) is maintained at a predetermined direction with respect to the storage section (5).

Owner:TERUMO KK

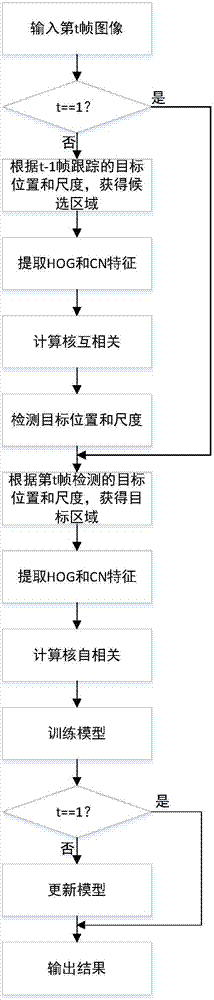

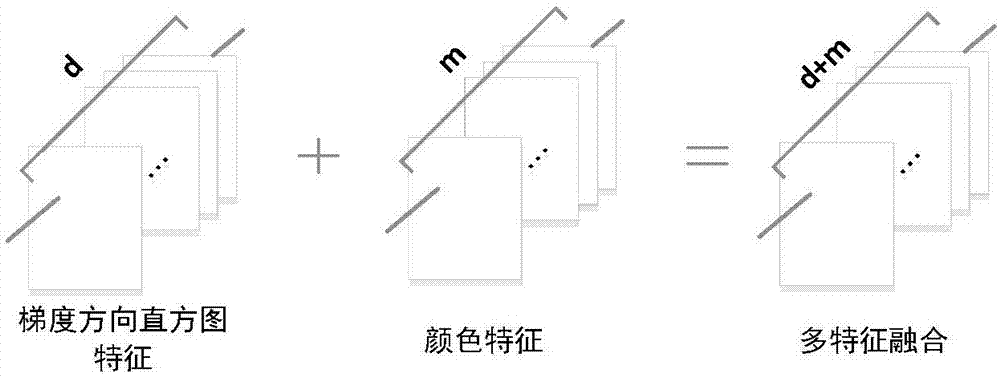

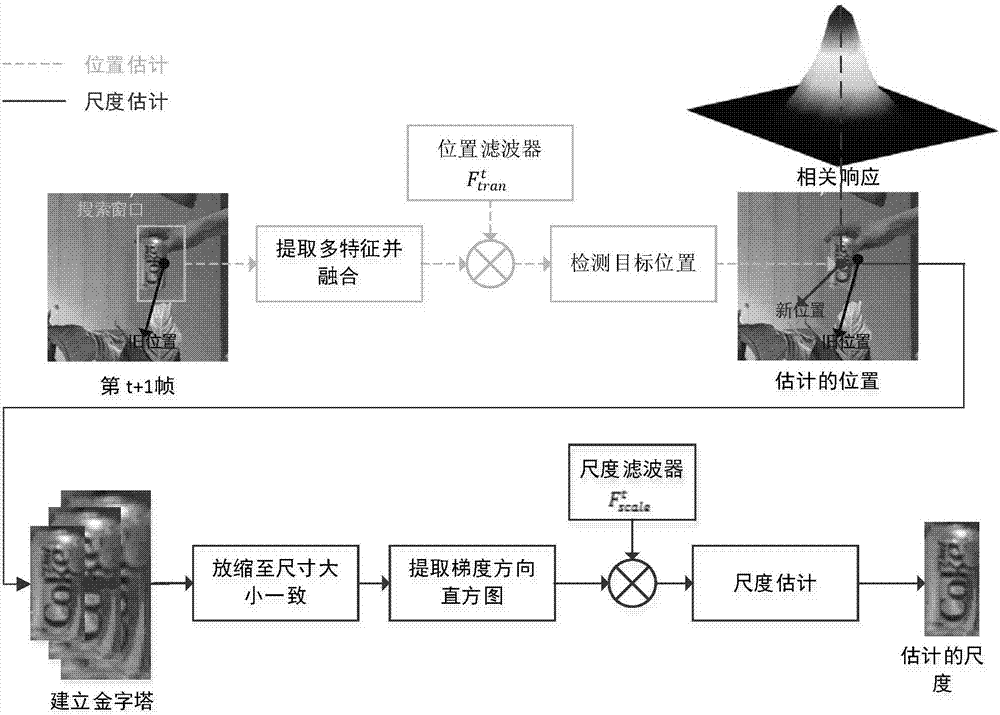

Target tracking method based on multi-characteristic adaptive fusion and kernelized correlation filtering technology

Inactive | CN107316316A | Improve discrimination ability | Improve stability | Image enhancement | Image analysis | Computation complexity | Correlation filter

The invention provides a target tracking method based on multi-feature adaptive fusion and kernelized correlation filtering. The method comprises the following steps:

- Acquire a candidate region of target motion from the target position and scale tracked in the previous frame.
- Extract histogram-of-oriented-gradients (HOG) features and color features from the candidate region and fuse the two kinds of features. Apply a Fourier transform to obtain a feature spectrum, then compute the kernelized cross-correlation.
- Determine the position and scale of the target in the current frame, and acquire the target region.
- Extract HOG and color features from the target region and fuse them. Apply a Fourier transform to obtain a feature spectrum, then compute the kernelized auto-correlation.
- Design an adaptive target correlation, and train a position filter model and a scale filter model.
- Update the feature spectra and correlation filters by linear interpolation.

The invention improves the discriminative ability of the models and makes tracking more robust in complex scenes and under changes in target appearance. It also reduces computational complexity and improves real-time tracking performance.

Owner:NANJING UNIV OF SCI & TECH
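The position-filter steps can be sketched with the widely used single-scale KCF formulation: Gaussian kernel, ridge regression in the Fourier domain, and a linear-interpolation update. This is an illustration under assumptions, not the patented method. HOG and color feature extraction are assumed to happen elsewhere. `fuse_features` is a hypothetical fixed-weight concatenation standing in for the patent's adaptive fusion. The scale filter is omitted. The names, `sigma`, `lam`, and `eta` are hypothetical.

```python
import numpy as np

def gaussian_label(h, w, sigma=2.0):
    """Desired response: a Gaussian peaked at (0, 0) with circular wrap-around."""
    dy = np.minimum(np.arange(h), h - np.arange(h))
    dx = np.minimum(np.arange(w), w - np.arange(w))
    return np.exp(-(dy[:, None] ** 2 + dx[None, :] ** 2) / (2.0 * sigma ** 2))

def fuse_features(hog, color, weight=0.5):
    """Hypothetical fixed-weight fusion of H x W x C feature maps by channel concatenation."""
    return np.concatenate([weight * hog, (1.0 - weight) * color], axis=2)

def kernel_correlation(a, b, sigma):
    """FFT of k[s] = exp(-||a - roll(b, s)||^2 / (sigma^2 * a.size)) over all cyclic shifts s."""
    A = np.fft.fft2(a, axes=(0, 1))
    B = np.fft.fft2(b, axes=(0, 1))
    cross = np.real(np.fft.ifft2(np.sum(A * np.conj(B), axis=2)))  # sum_n a[n] * b[n - s]
    dist = np.maximum(np.sum(a ** 2) + np.sum(b ** 2) - 2.0 * cross, 0.0) / a.size
    return np.fft.fft2(np.exp(-dist / sigma ** 2))

def train(x, y, sigma=0.5, lam=1e-4):
    """Kernel ridge regression in the Fourier domain: alpha_hat = y_hat / (k_hat^{xx} + lambda)."""
    return np.fft.fft2(y) / (kernel_correlation(x, x, sigma) + lam)

def detect(alphaf, x_model, z, sigma=0.5):
    """Response over all cyclic shifts of patch z; the peak location is the estimated translation."""
    return np.real(np.fft.ifft2(alphaf * kernel_correlation(z, x_model, sigma)))

def interpolate(old, new, eta=0.02):
    """Linear-interpolation update of the model spectra and filter coefficients."""
    return (1.0 - eta) * old + eta * new
```

In use, one would call `train` on the fused features of the target region and `detect` on the next frame's candidate region. The feature model `x_model` and the coefficients `alphaf` would then be blended with `interpolate`. The kernel trick keeps every step an element-wise product of FFTs, which is where the low computational cost comes from.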

© 2025 PatSnap. All rights reserved.