Face recognition method based on a deep convolutional neural network

The invention relates to neural networks and face recognition technology, specifically face recognition with a deep convolutional neural network. It addresses the problems of a reduced recognition rate on test samples and high time complexity. Its effects are lower time complexity and strong classification ability, which benefit classification and recognition.


Embodiment Construction

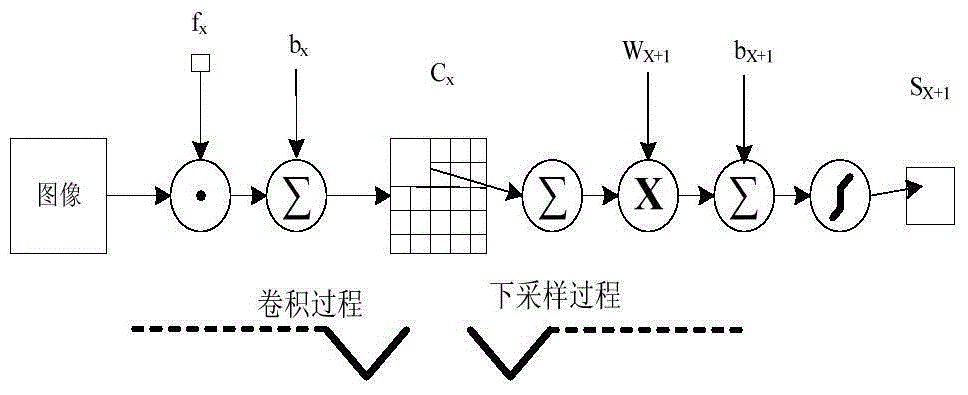

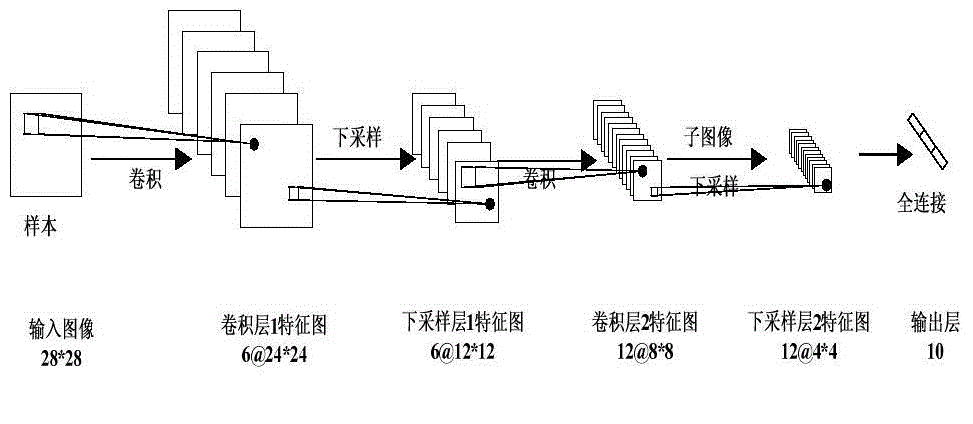

[0026] The face recognition method based on the deep convolutional neural network comprises a training phase and a classification phase. The training phase includes the following steps:

[0027] (1) Randomly generate the weights $w_j$ between the input units and the hidden units, and the hidden-unit biases $b_j$, $j = 1, \dots, L$, where $j$ indexes the weights and biases and $L$ is their total number;
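Step (1) can be illustrated with a minimal sketch. The text does not say which distribution the random values are drawn from, so the uniform range `[-scale, scale]`, the function name `init_hidden_params`, and the layer sizes below are assumptions made only for illustration.

```python
import random


def init_hidden_params(n_inputs, n_hidden, scale=0.5, seed=None):
    """Randomly generate hidden-unit weights w_j and biases b_j, j = 1..L.

    Assumption: values are drawn uniformly from [-scale, scale]; the source
    only says "randomly generate" and does not name a distribution.
    """
    rng = random.Random(seed)
    # One weight vector per hidden unit, each with n_inputs entries.
    weights = [
        [rng.uniform(-scale, scale) for _ in range(n_inputs)]
        for _ in range(n_hidden)
    ]
    # One bias per hidden unit.
    biases = [rng.uniform(-scale, scale) for _ in range(n_hidden)]
    return weights, biases


# Hypothetical sizes: 4 input units, L = 3 hidden units.
weights, biases = init_hidden_params(n_inputs=4, n_hidden=3, seed=0)
```

Passing a fixed `seed` makes the initialization repeatable, which is convenient when comparing training runs.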

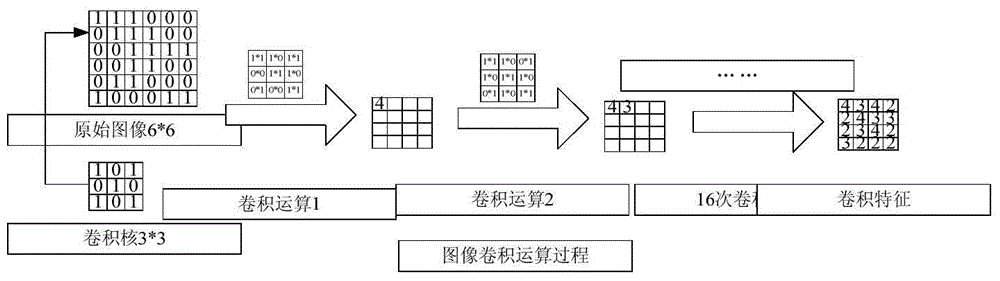

[0028] (2) Input the training image Y and its label, and use the forward propagation formula $h_{W,b}(x) = f(W^T x)$, where $h_{W,b}(x)$ is the output value and $x$ is the input, to calculate the output value $h_{W,b}(x^{(i)})$ of each layer;
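The forward pass in step (2) can be sketched as follows. The activation function f is not specified in this excerpt, so the sigmoid used here is an assumption, as are the names `sigmoid` and `forward` and the storage layout `W[i][j]` (input i to unit j). The formula as written has no explicit bias term; one common convention, also assumed here, is to fold the bias into W by appending a constant 1 to x.

```python
import math


def sigmoid(z):
    """Assumed activation f; the excerpt does not name one."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(layers, x, f=sigmoid):
    """Apply h(x) = f(W^T x) layer by layer.

    layers: list of weight matrices, each stored as W[i][j]
            (weight from input i to unit j).
    Returns the list of activations, starting with the input itself.
    """
    activations = [list(x)]
    for W in layers:
        prev = activations[-1]
        n_out = len(W[0])
        # z_j = sum_i W[i][j] * prev[i], i.e. the j-th entry of W^T x.
        z = [
            sum(W[i][j] * prev[i] for i in range(len(prev)))
            for j in range(n_out)
        ]
        activations.append([f(v) for v in z])
    return activations


# Hypothetical 2-input, 2-unit layer with all-zero weights:
# every pre-activation is 0, so each sigmoid output is 0.5.
outputs = forward([[[0.0, 0.0], [0.0, 0.0]]], [1.0, 2.0])[-1]
```

Keeping every layer's activation, rather than only the last one, matters because the backward pass reuses them when computing error terms.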

[0029] (3) Calculate the error term (residual) of the last layer from the label value and the last-layer output value obtained in step (2), using formula (4):

[0030] $$\delta_i^{(n_l)} = \frac{\partial J_1}{\partial Z_i^{(n_l)}} = \frac{\partial}{\partial Z_i^{(n_l)}} \frac{1}{2} \left\| h_{W,b}(x^{(i)}) - y^{(i)} \right\|^2 \tag{4}$$ ...
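Formula (4) is cut off in this excerpt, so the sketch below does not reproduce the patent's own expansion. It applies the chain rule to the squared-error cost, which gives (a_i - y_i) · f'(Z_i) for output unit i, where a_i is that unit's output. It assumes a sigmoid activation, for which f'(Z) = a(1 - a). The names `output_delta` and `cost` are hypothetical.

```python
import math


def sigmoid(z):
    """Assumed activation f; the excerpt does not name one."""
    return 1.0 / (1.0 + math.exp(-z))


def output_delta(a, y):
    """Last-layer error terms for the cost 0.5 * ||a - y||^2.

    a: outputs of the last layer, a_i = sigmoid(Z_i)
    y: label values
    Chain rule: dJ/dZ_i = (a_i - y_i) * f'(Z_i), with f'(Z_i) = a_i * (1 - a_i).
    """
    return [(ai - yi) * ai * (1.0 - ai) for ai, yi in zip(a, y)]


def cost(z, y):
    """Squared-error cost for a single output unit, used as a sanity check."""
    return 0.5 * (sigmoid(z) - y) ** 2


# One output unit with pre-activation 0 (output 0.5) and label 1.
delta = output_delta([sigmoid(0.0)], [1.0])

# Central finite difference of the cost at the same point, for comparison.
eps = 1e-6
numeric = (cost(eps, 1.0) - cost(-eps, 1.0)) / (2 * eps)
```

Comparing `delta[0]` with `numeric` is a quick gradient check: the two values should agree to several decimal places if the analytic error term is right.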
