268 results for "Energy minimization" patented technology

In the field of computational chemistry, energy minimization (also called energy optimization, geometry minimization, or geometry optimization) is the process of finding an arrangement in space of a collection of atoms where, according to some computational model of chemical bonding, the net inter-atomic force on each atom is acceptably close to zero and the position on the potential energy surface (PES) is a stationary point. The collection of atoms might be a single molecule, an ion, a condensed phase, a transition state, or even a collection of any of these. The computational model of chemical bonding might, for example, be quantum mechanics.
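As a minimal sketch of the idea, assume the computational model is a Lennard-Jones pair potential in reduced units (ε = σ = 1) instead of quantum mechanics, and use plain steepest descent: atoms move against the energy gradient until the largest net force falls below a tolerance. The function names, step size, and tolerance are illustrative choices, not taken from any particular package; production codes use quasi-Newton optimizers and far richer energy models.

```python
import numpy as np

def lj_energy_and_gradient(pos, epsilon=1.0, sigma=1.0):
    """Lennard-Jones energy of a cluster and its gradient w.r.t. each atom."""
    energy = 0.0
    grad = np.zeros_like(pos)
    for i in range(len(pos)):
        for j in range(i + 1, len(pos)):
            rij = pos[i] - pos[j]
            r = np.linalg.norm(rij)
            sr6 = (sigma / r) ** 6
            energy += 4 * epsilon * (sr6 ** 2 - sr6)
            # dV/dr of the pair term, projected onto the bond direction
            dvdr = 4 * epsilon * (-12 * sr6 ** 2 + 6 * sr6) / r
            g = dvdr * rij / r
            grad[i] += g
            grad[j] -= g
    return energy, grad

def minimize(pos, step=0.01, force_tol=1e-6, max_iter=100_000):
    """Steepest descent: stop once every net force (-gradient) is ~0."""
    pos = np.array(pos, dtype=float)
    for _ in range(max_iter):
        _, grad = lj_energy_and_gradient(pos)
        if np.max(np.linalg.norm(grad, axis=1)) < force_tol:
            break
        pos -= step * grad
    energy, _ = lj_energy_and_gradient(pos)
    return pos, energy

# Two atoms start 1.5 sigma apart and relax toward the pair minimum
dimer, e_min = minimize([[0.0, 0.0, 0.0], [1.5, 0.0, 0.0]])
print(np.linalg.norm(dimer[1] - dimer[0]), e_min)
```

The separation should approach 2^(1/6)σ ≈ 1.122 and the energy −ε, the analytic minimum of the pair potential, at which point the force on each atom is effectively zero.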

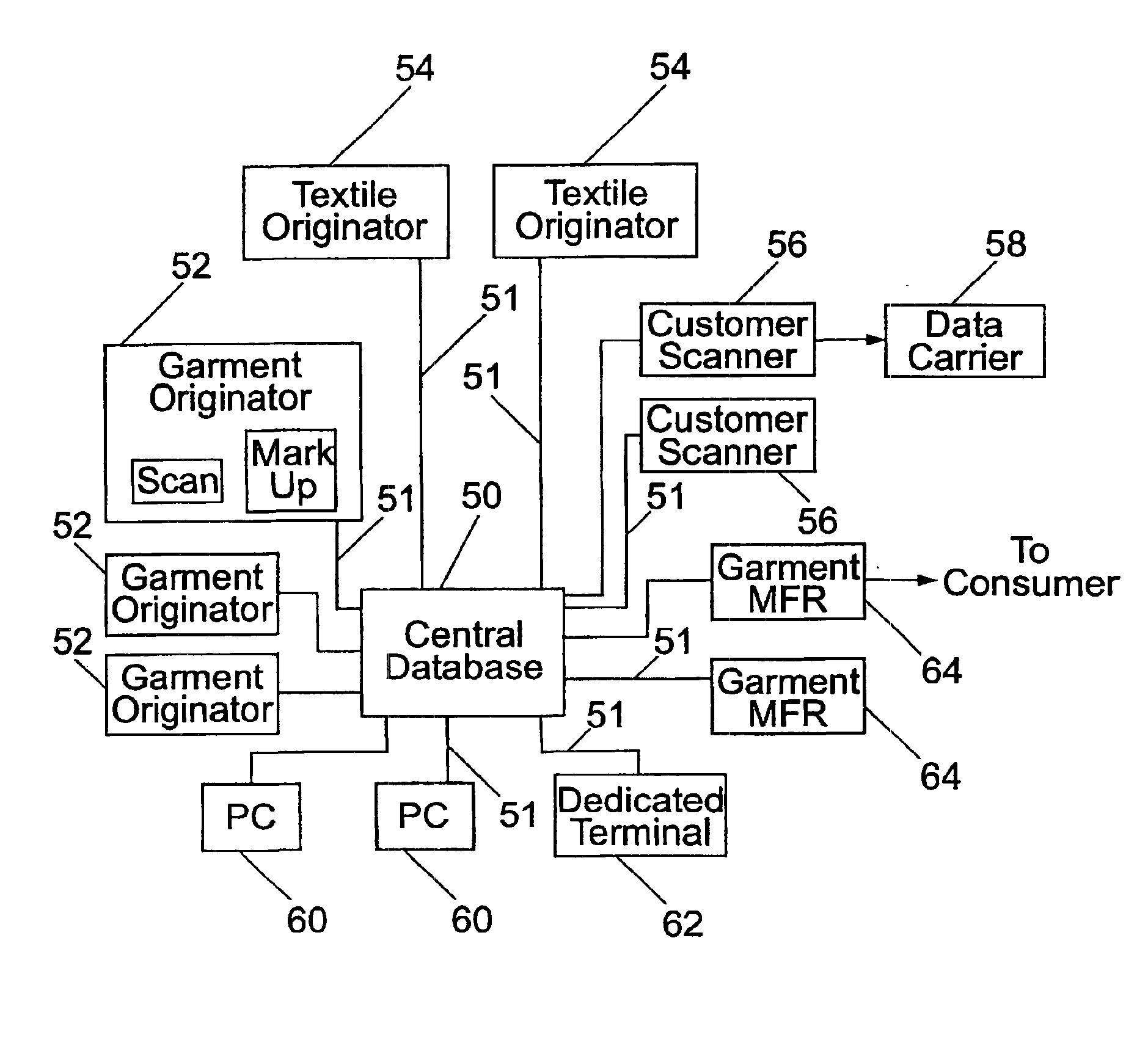

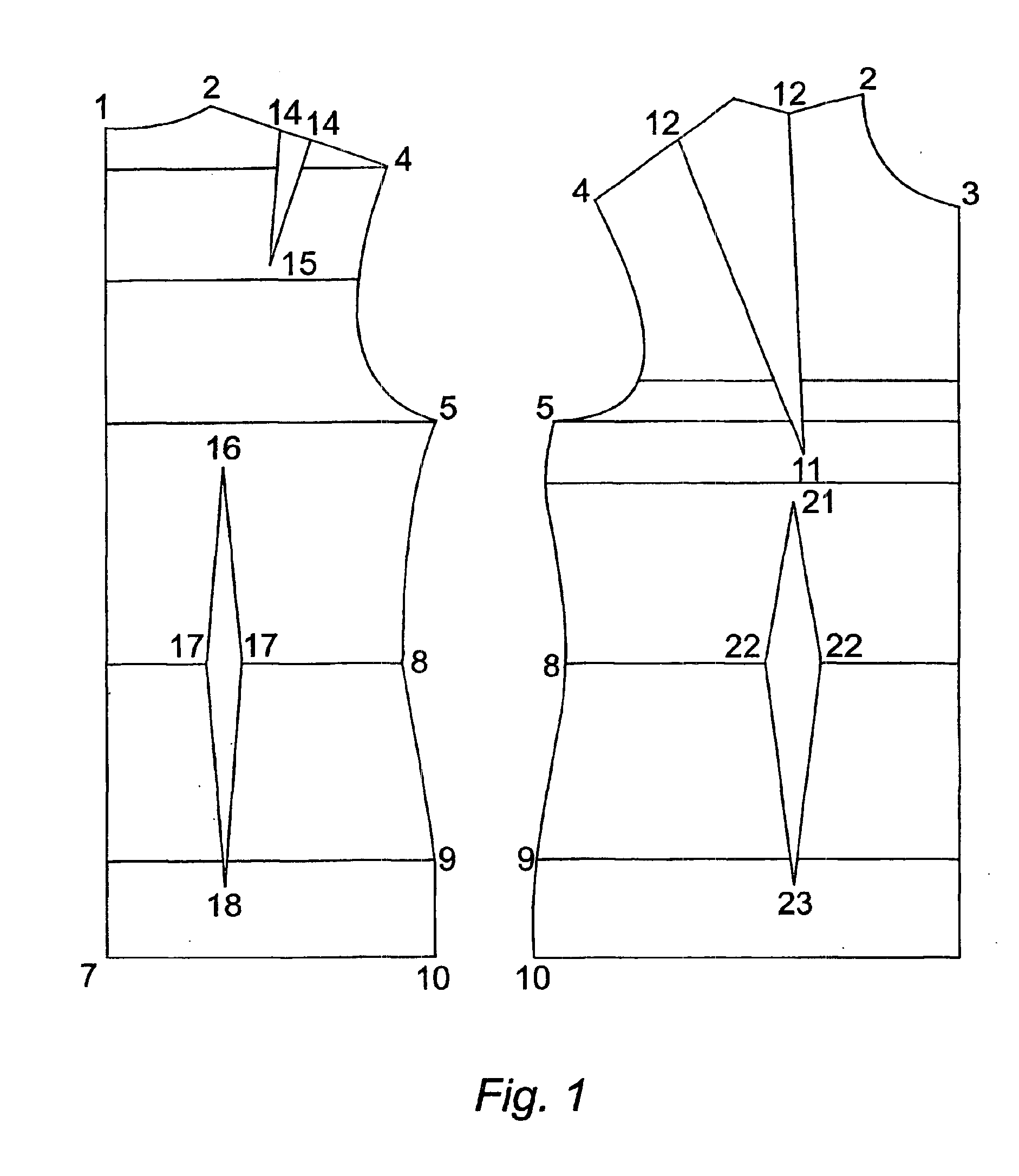

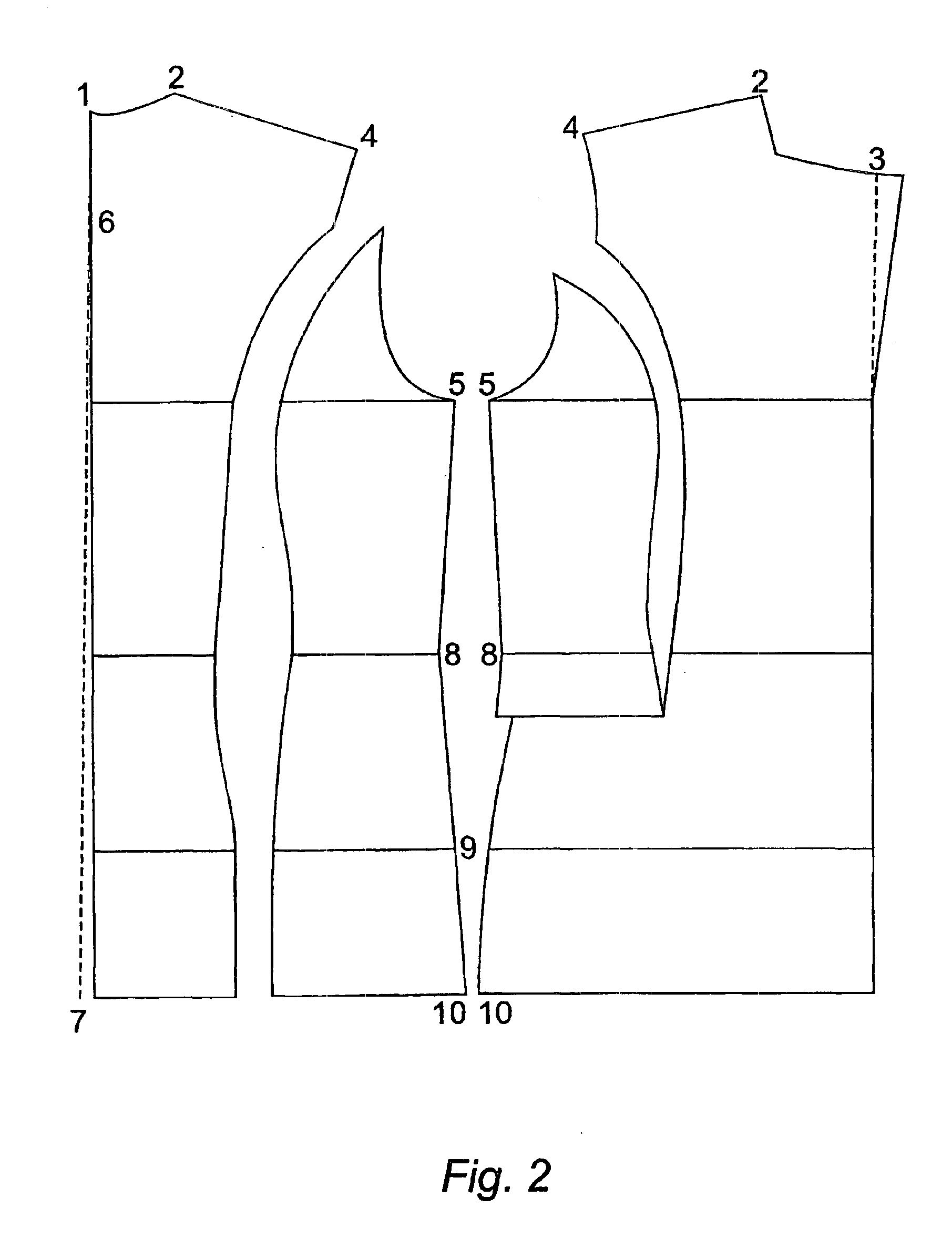

Production and visualization of garments

Inactive | US6907310B2 | Special data processing applications; Clothes making appliances; Body shape; Energy minimization

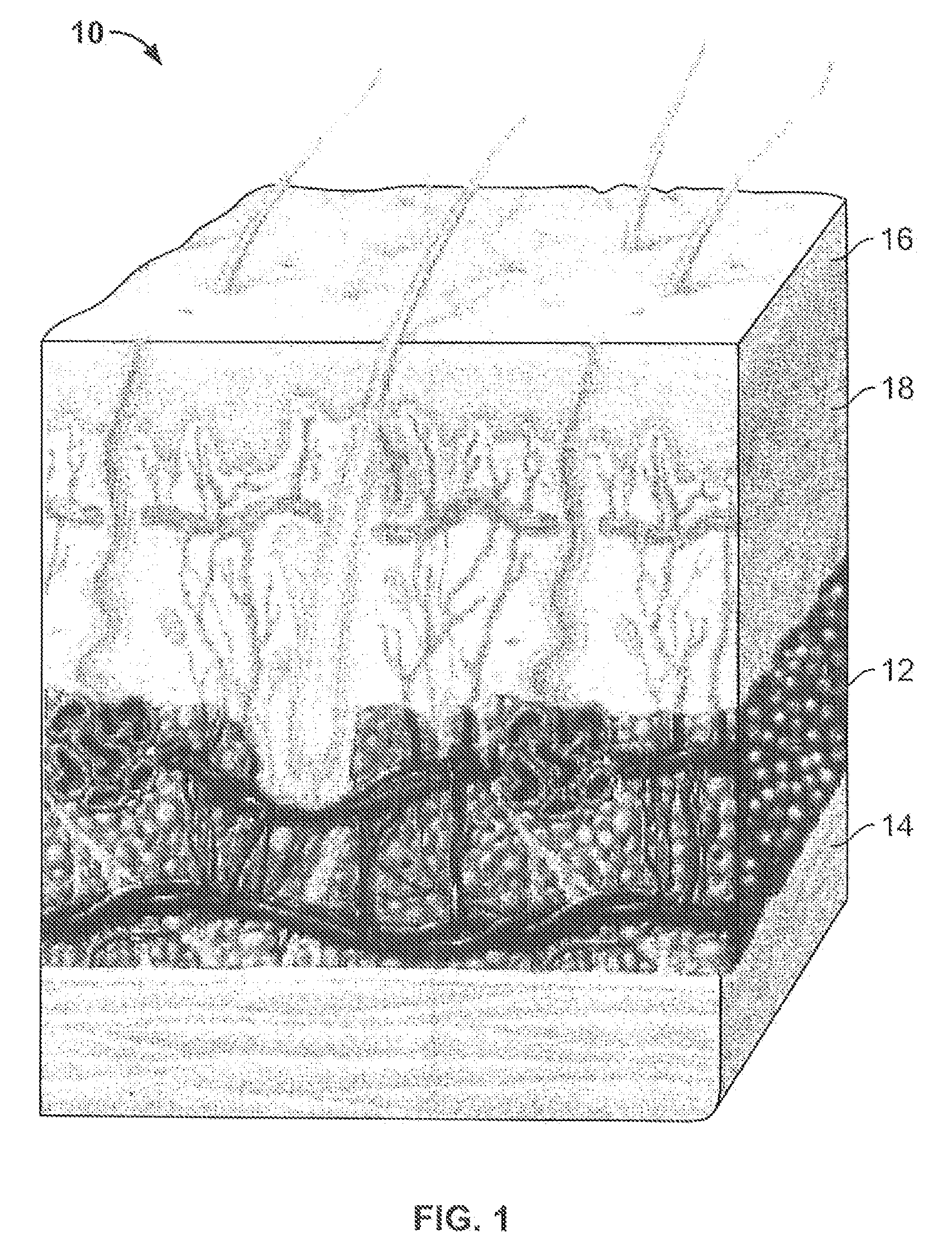

Methods and systems for modelling and modifying garments, providing a basis for integrated “virtual tailoring” systems and processes. The garment models provided relate specific design points of the garment to specific body regions in terms of explicit 3D relationship rules, enabling garments to be modified holistically, by a constrained 3D warp process, to fit different body shapes / sizes, either in order to generate a range of graded sizes or made-to-measure garments, for the purposes of visualization and / or garment production. The methods described further facilitate the generation of 2D pattern pieces by flattening 3D representations of modified garments using a constrained internal energy minimization process, in a manner that ensures that the resulting pattern pieces can be assembled in substantially the same way as those of the base garment. The methods enable the visualisation and / or production of bespoke or graded garments, and garment design modifications, within an integrated virtual tailoring environment.

Owner:VIRTUAL MIRRORS

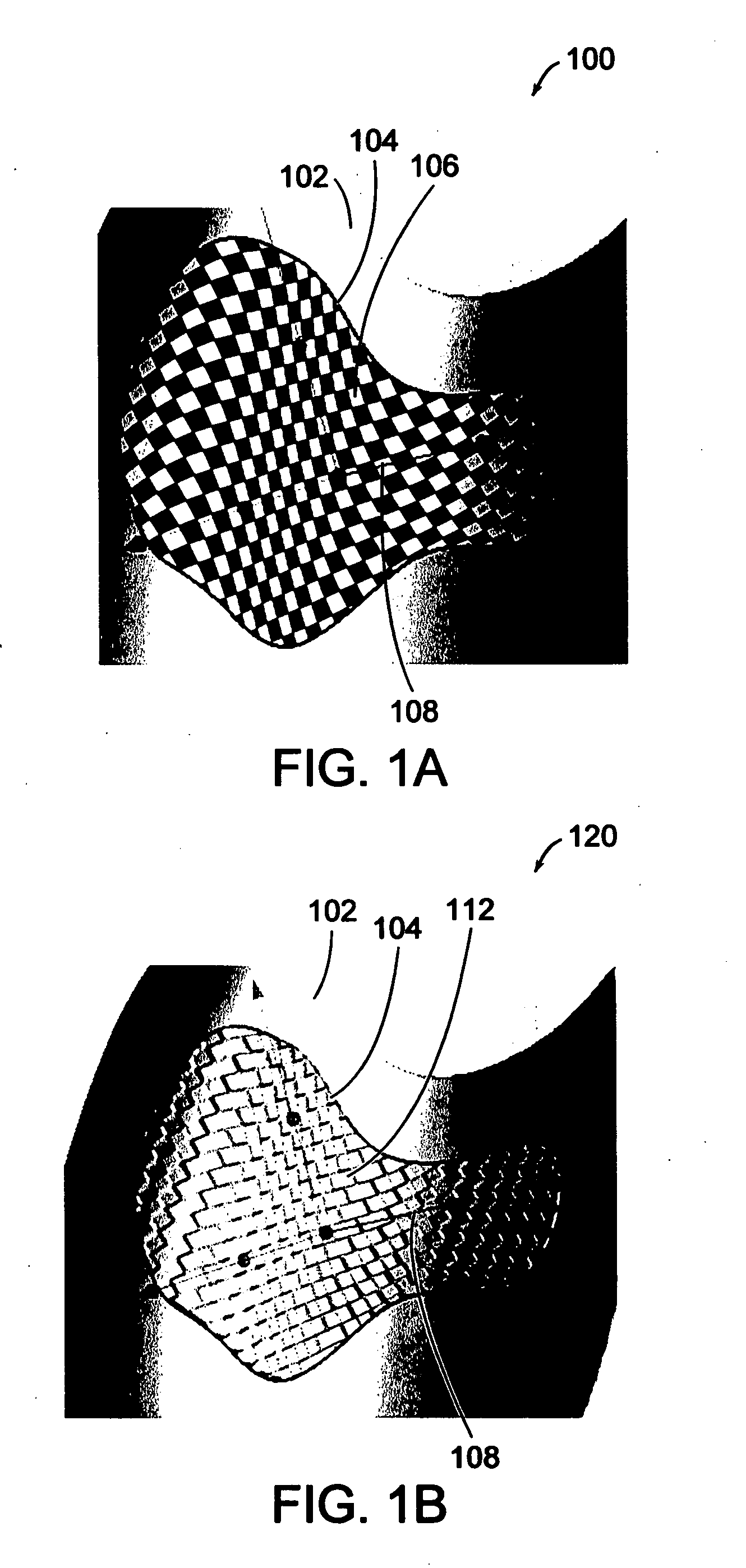

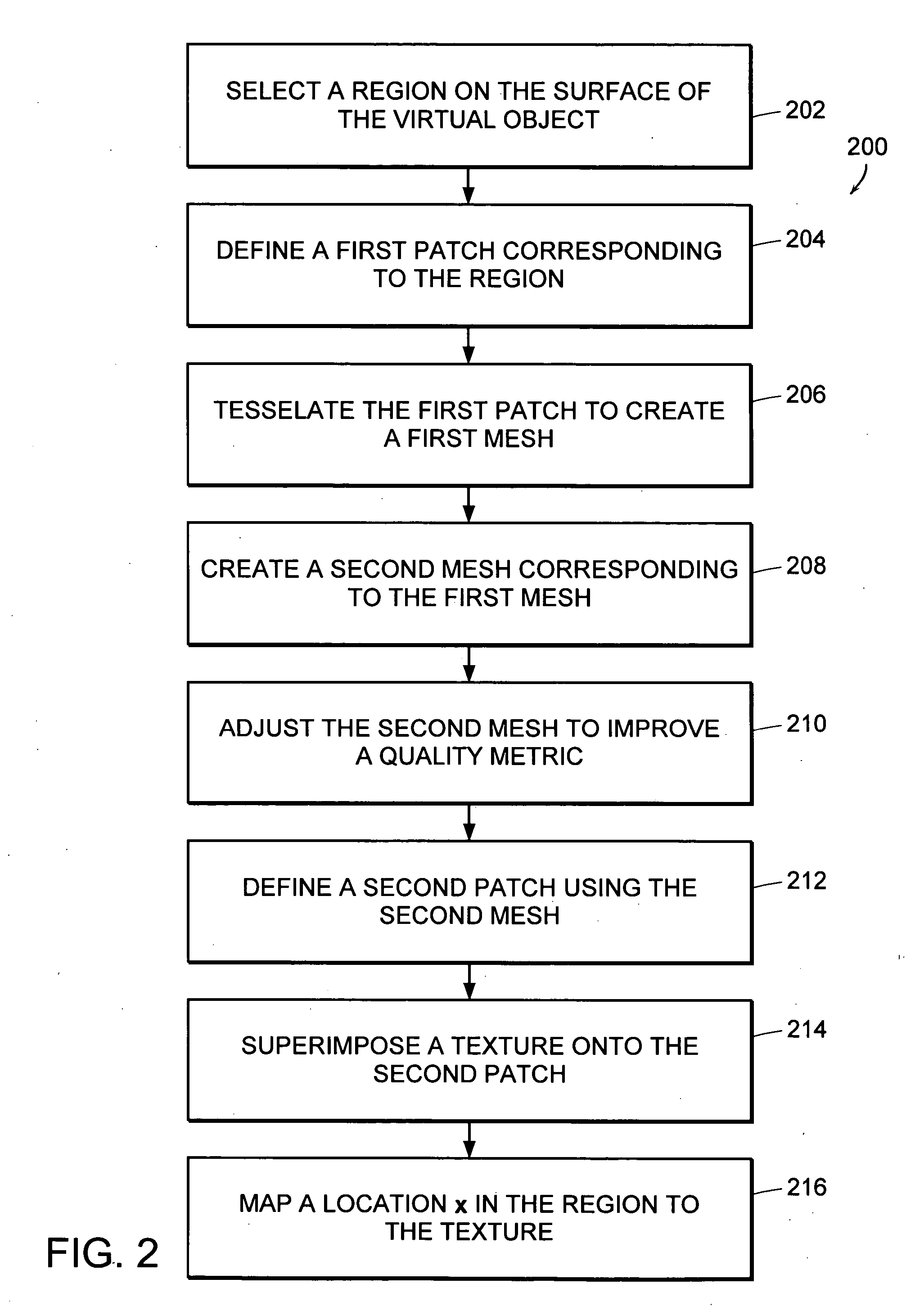

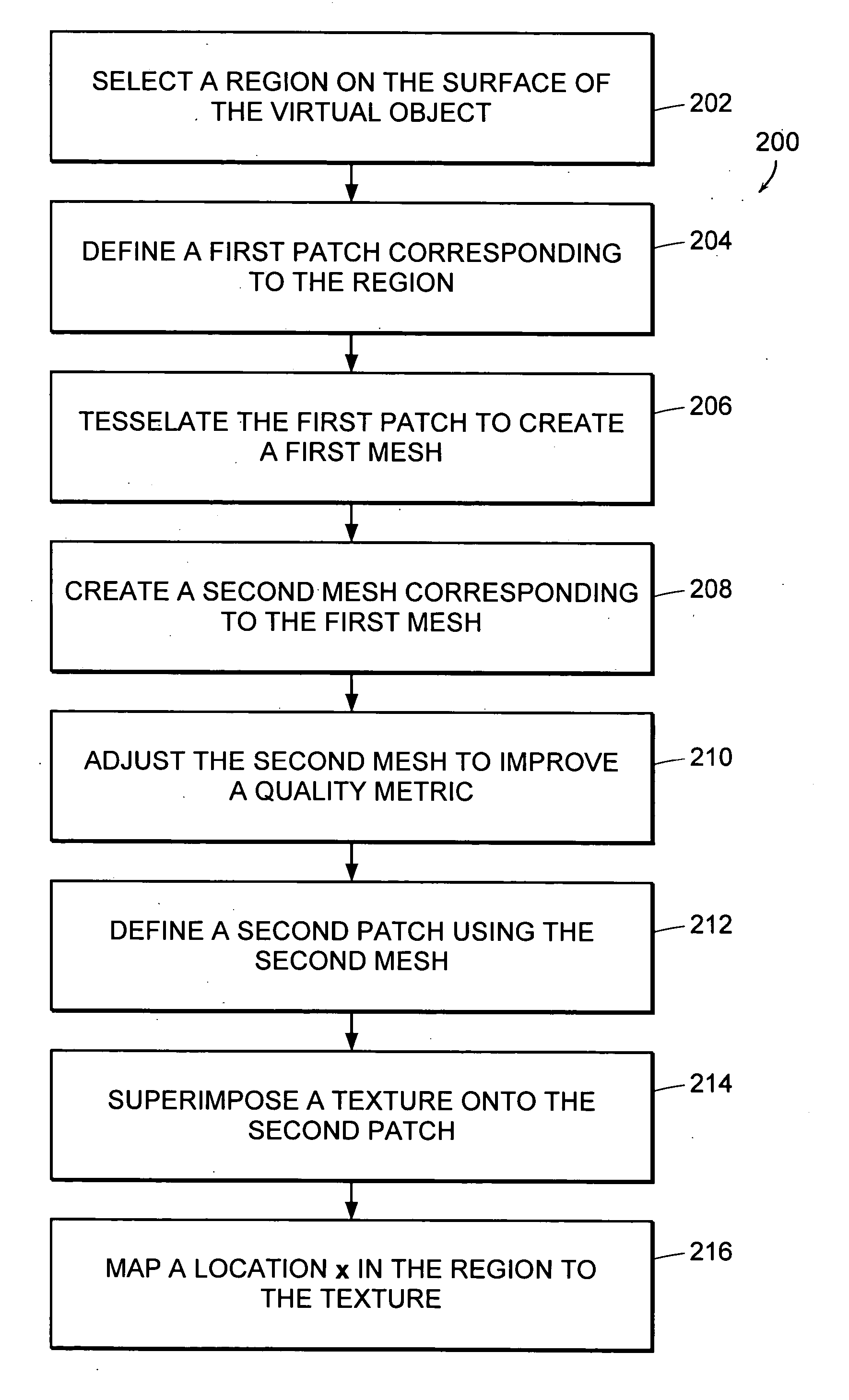

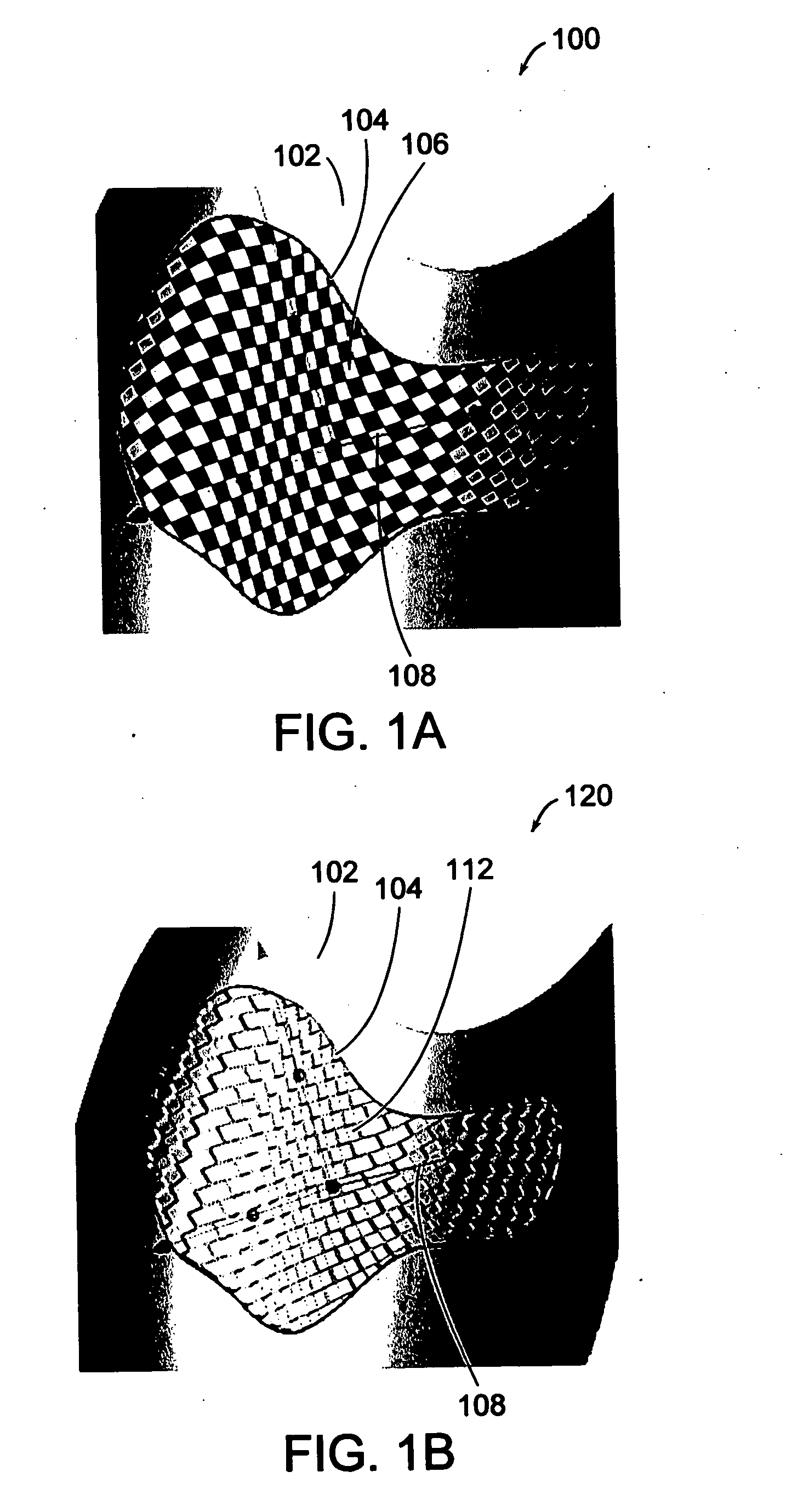

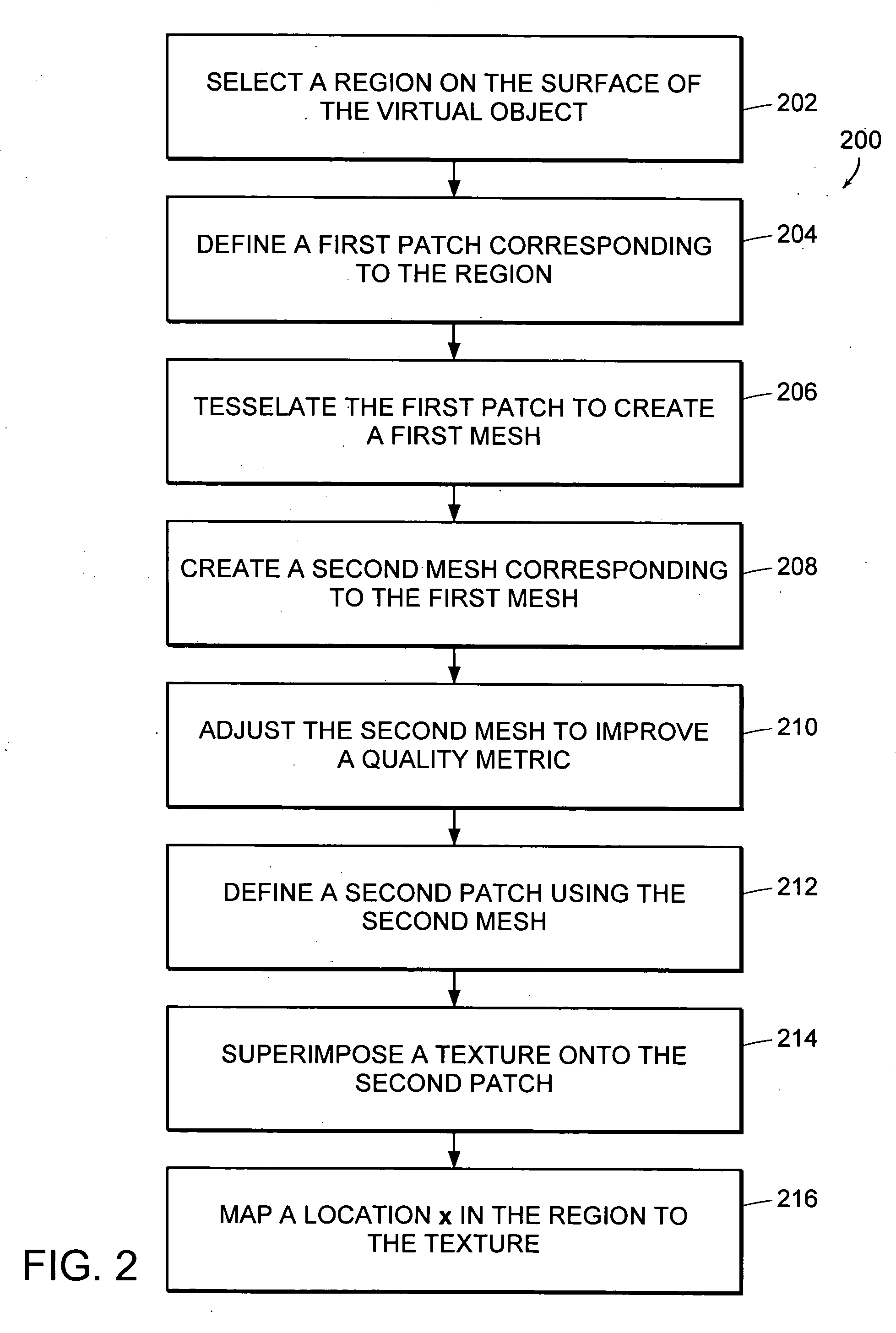

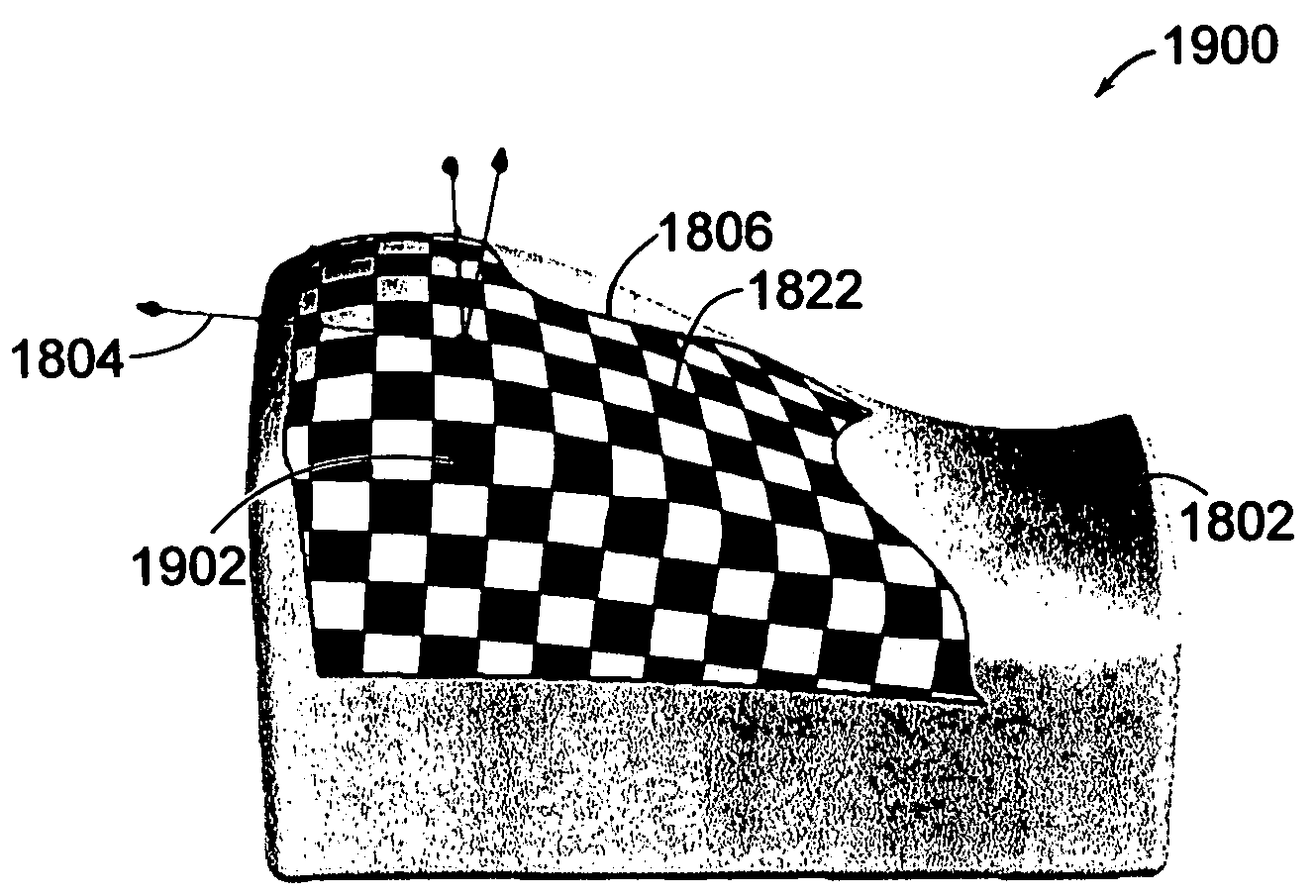

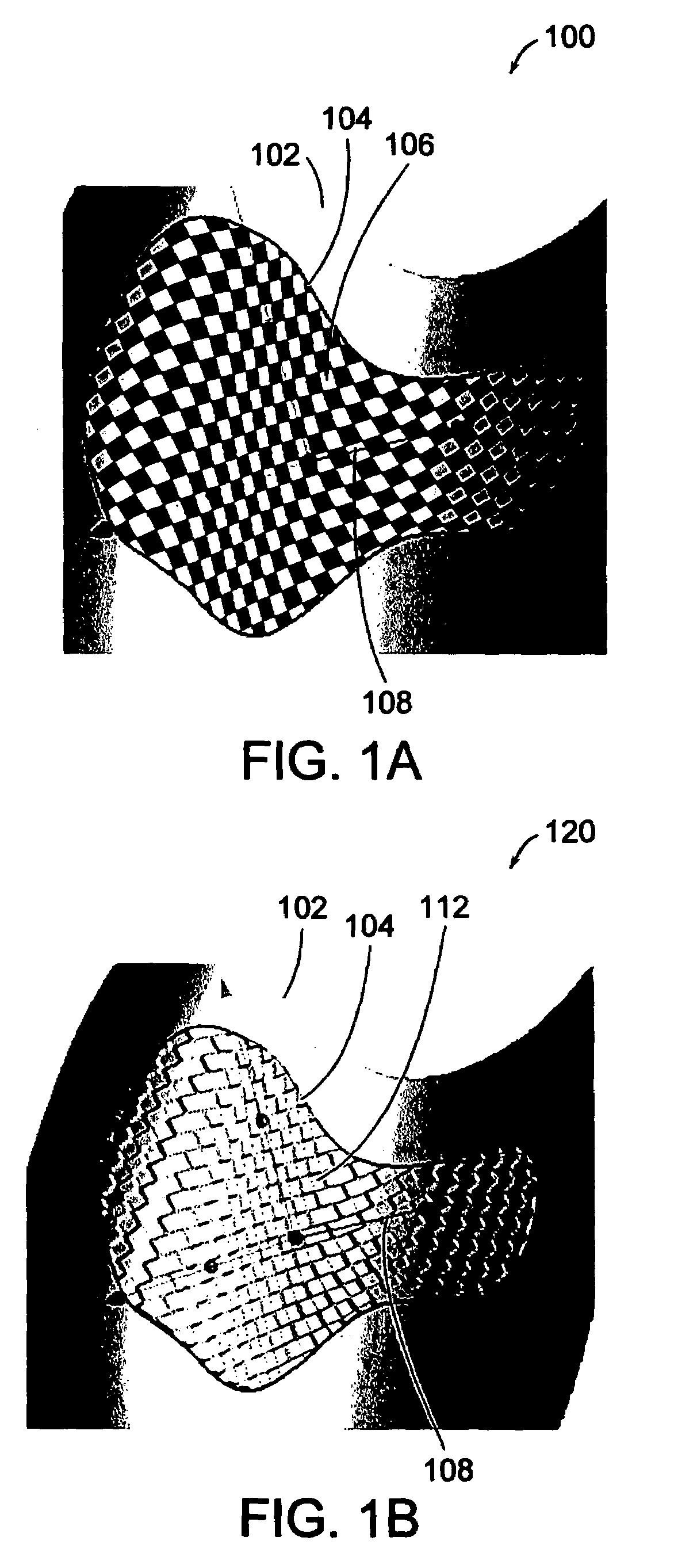

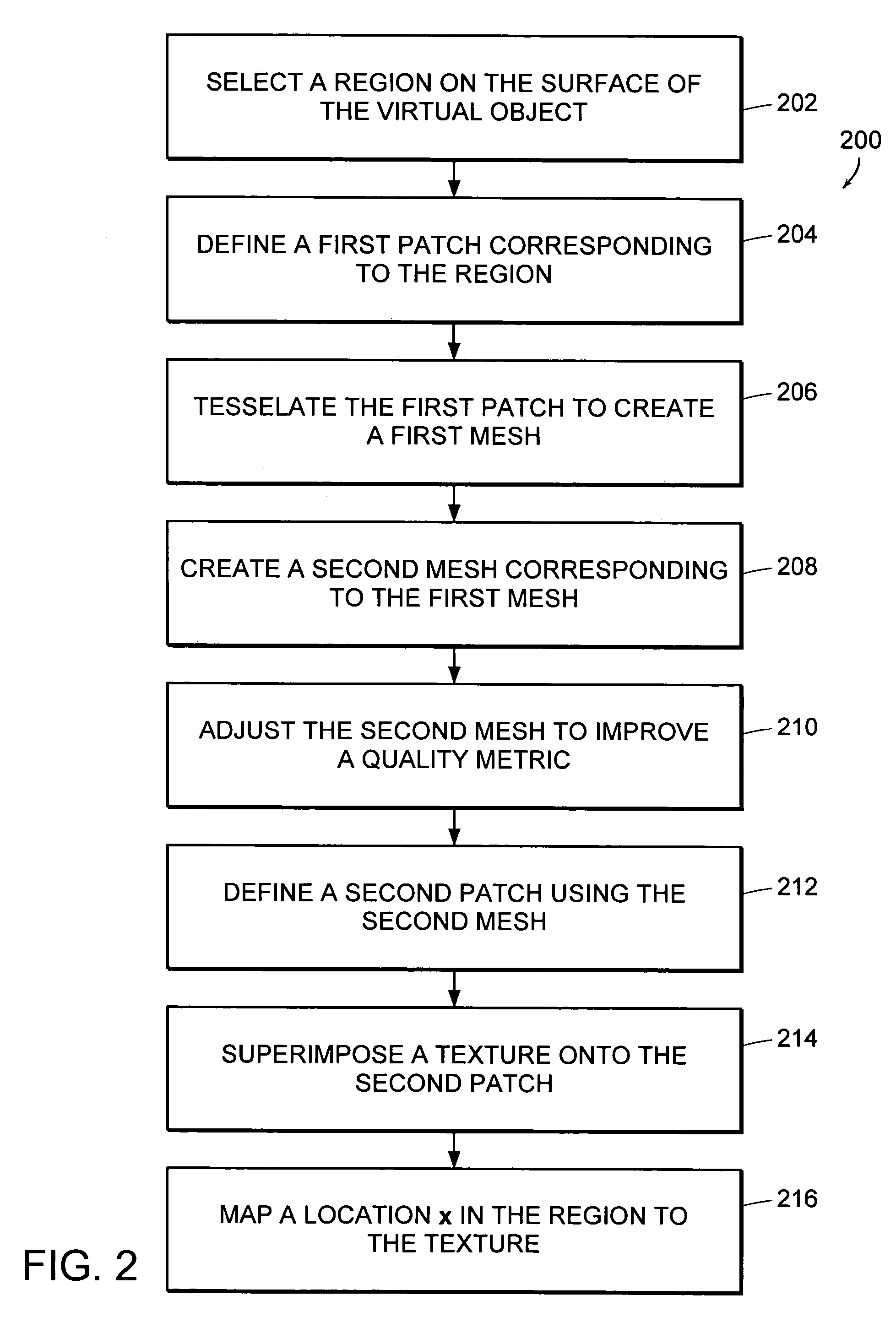

Apparatus and methods for wrapping texture onto the surface of a virtual object

Active | US20050128211A1 | Simple method; Easy to find; Texturing/coloring; Cathode-ray tube indicators; Graphics; Energy minimization

The invention provides techniques for wrapping a two-dimensional texture conformally onto the surface of a three-dimensional virtual object within an arbitrarily shaped, user-defined region. The techniques provide minimum distortion and allow interactive manipulation of the mapped texture. They feature an energy minimization scheme in which distances between points on the surface of the three-dimensional virtual object serve as set lengths for springs connecting points of a planar mesh. The planar mesh is adjusted to minimize spring energy and is then used to define a patch onto which a two-dimensional texture is superimposed. Points on the surface of the virtual object are then mapped to corresponding points of the texture. The invention also features a haptic/graphical user interface element that allows a user to interactively and intuitively adjust the texture mapped within the arbitrary, user-defined region.
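The spring scheme can be sketched as follows, assuming the 3D region is given as a small mesh (vertex array plus edge list), that every mesh edge becomes a spring of stiffness 1 whose set length is the 3D edge length (a straight-line stand-in for on-surface distance), and that plain gradient descent adjusts the planar positions. The names `flatten` and `spring_energy_and_grad` are hypothetical; the abstract does not specify the solver.

```python
import numpy as np

def spring_energy_and_grad(xy, edges, rest, k=1.0):
    """E = 1/2 k sum (|xy_i - xy_j| - rest_ij)^2 and its gradient."""
    i, j = edges[:, 0], edges[:, 1]
    d = xy[i] - xy[j]
    length = np.linalg.norm(d, axis=1)
    stretch = length - rest
    energy = 0.5 * k * np.sum(stretch ** 2)
    g = (k * stretch / length)[:, None] * d   # dE/dxy_i for each spring
    grad = np.zeros_like(xy)
    np.add.at(grad, i, g)    # accumulate over springs sharing a vertex
    np.add.at(grad, j, -g)
    return energy, grad

def flatten(verts3d, edges, step=0.05, iters=5000, seed=0):
    """Relax a planar copy of the mesh so its edge lengths match the 3D ones."""
    verts3d = np.asarray(verts3d, dtype=float)
    edges = np.asarray(edges)
    rest = np.linalg.norm(verts3d[edges[:, 0]] - verts3d[edges[:, 1]], axis=1)
    # Start from the orthographic projection onto the xy-plane, slightly jittered
    rng = np.random.default_rng(seed)
    xy = verts3d[:, :2] + 1e-3 * rng.standard_normal((len(verts3d), 2))
    for _ in range(iters):
        _, grad = spring_energy_and_grad(xy, edges, rest)
        xy -= step * grad
    energy, _ = spring_energy_and_grad(xy, edges, rest)
    return xy, energy   # residual energy measures the remaining distortion

# A unit square tilted 60 degrees out of the plane, with both diagonals as springs
s = np.sqrt(0.75)
square = [[0, 0, 0], [1, 0, 0], [1, 0.5, s], [0, 0.5, s]]
springs = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2), (1, 3)]
xy, residual = flatten(square, springs)
```

Here the projection starts as a 1 × 0.5 rectangle and relaxes back to a unit square with near-zero residual energy. For a patch that cannot be laid flat without stretching (a piece of a sphere, say), the residual stays positive and the springs spread the distortion across the patch.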

Owner:3D SYST INC

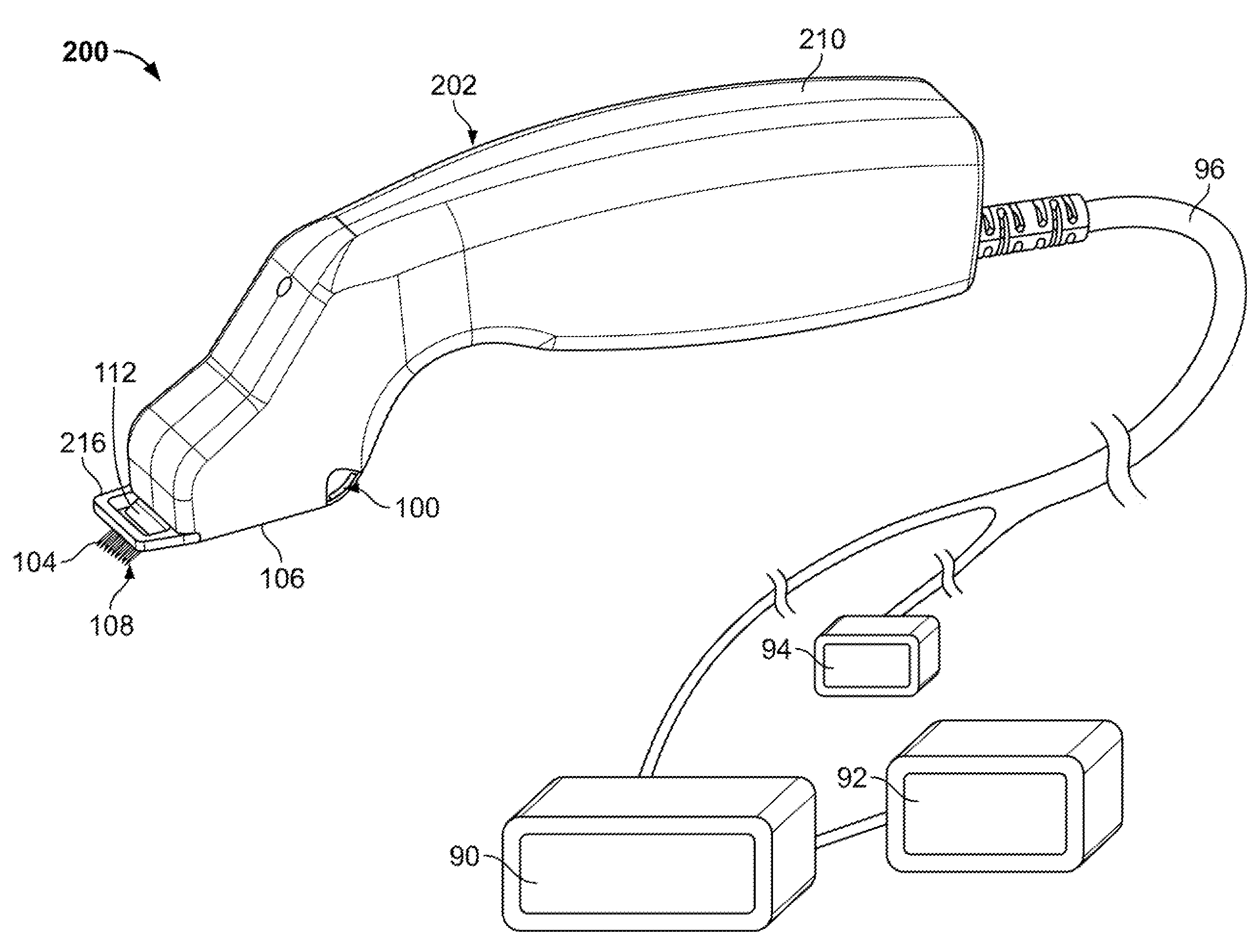

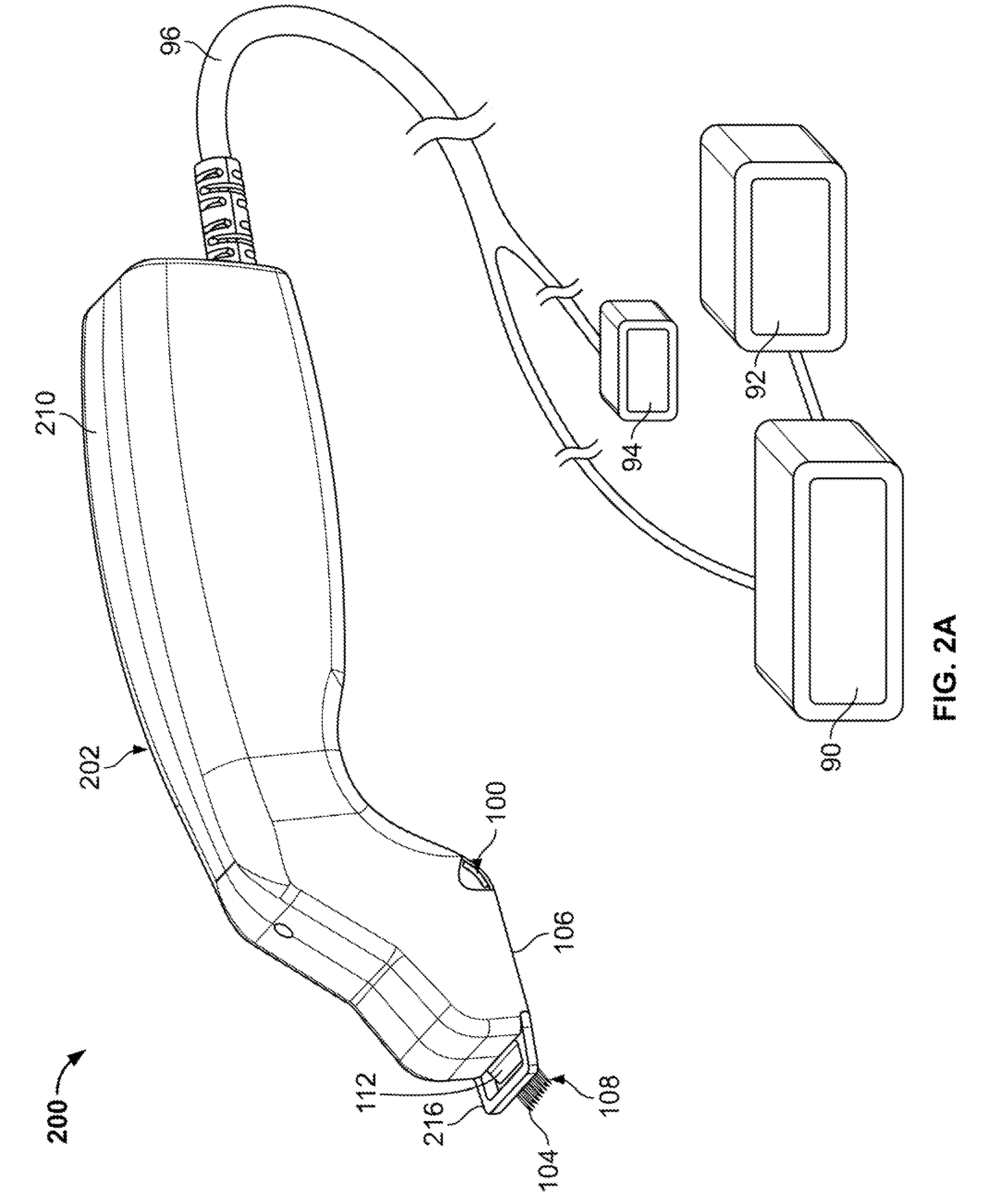

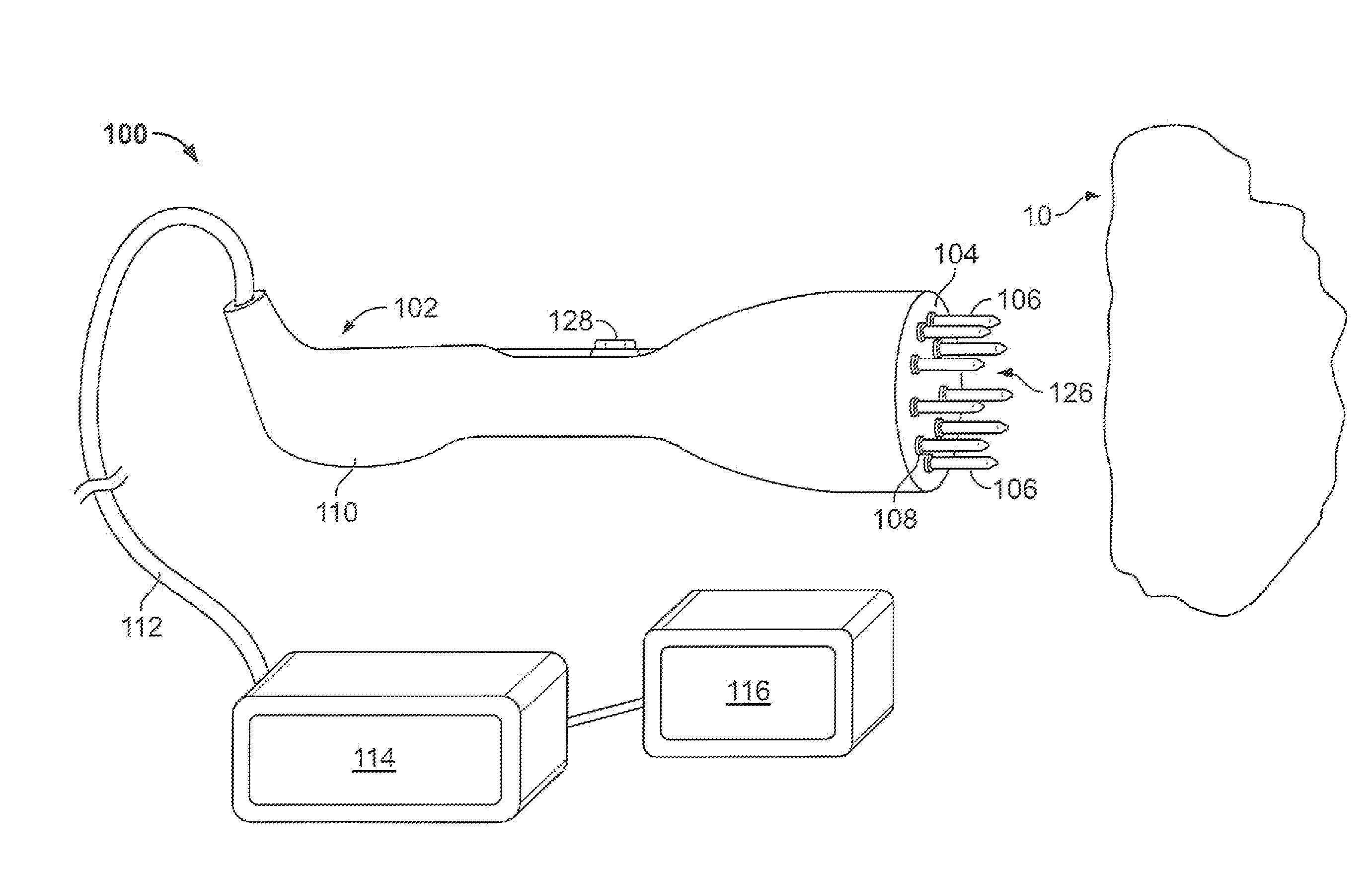

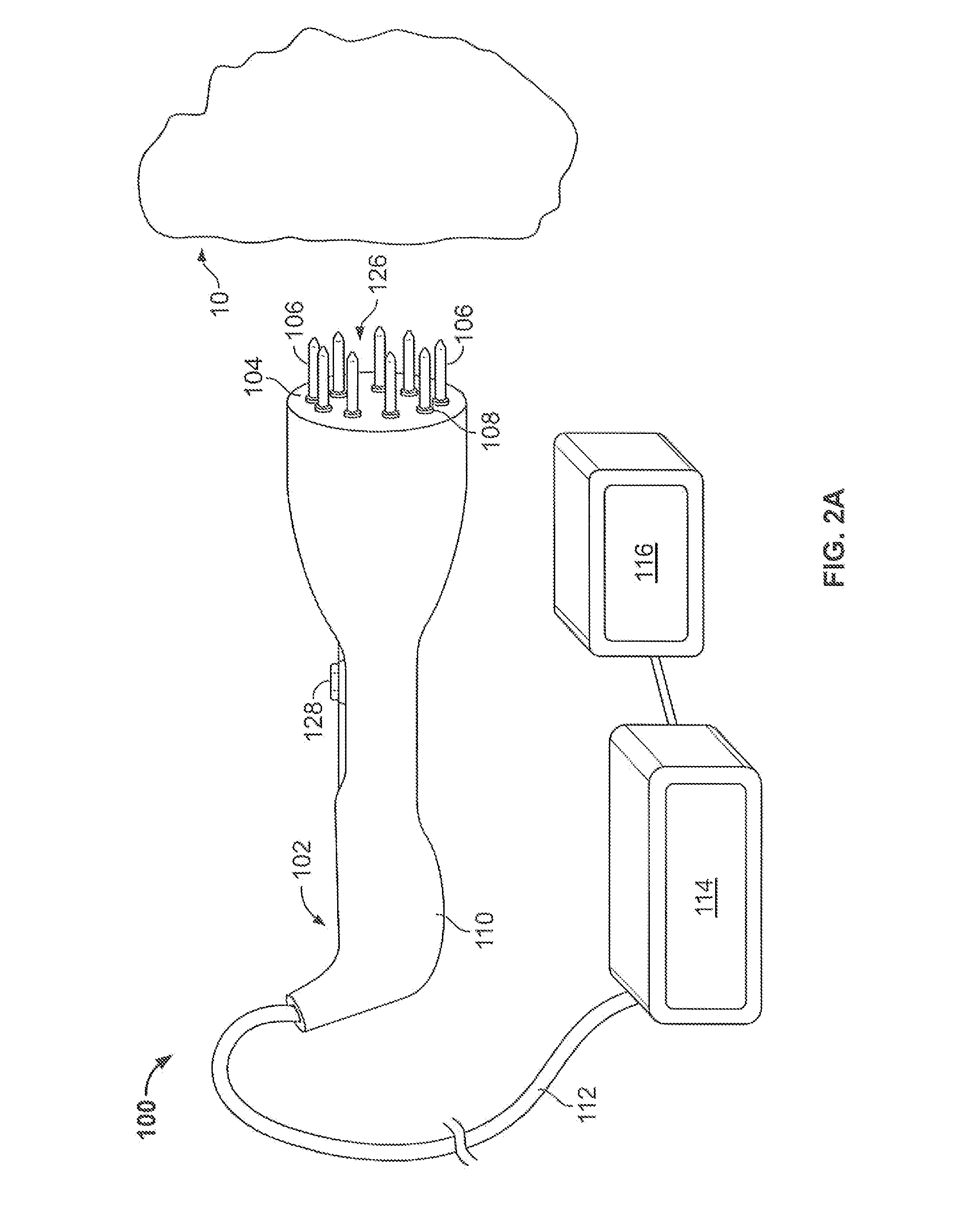

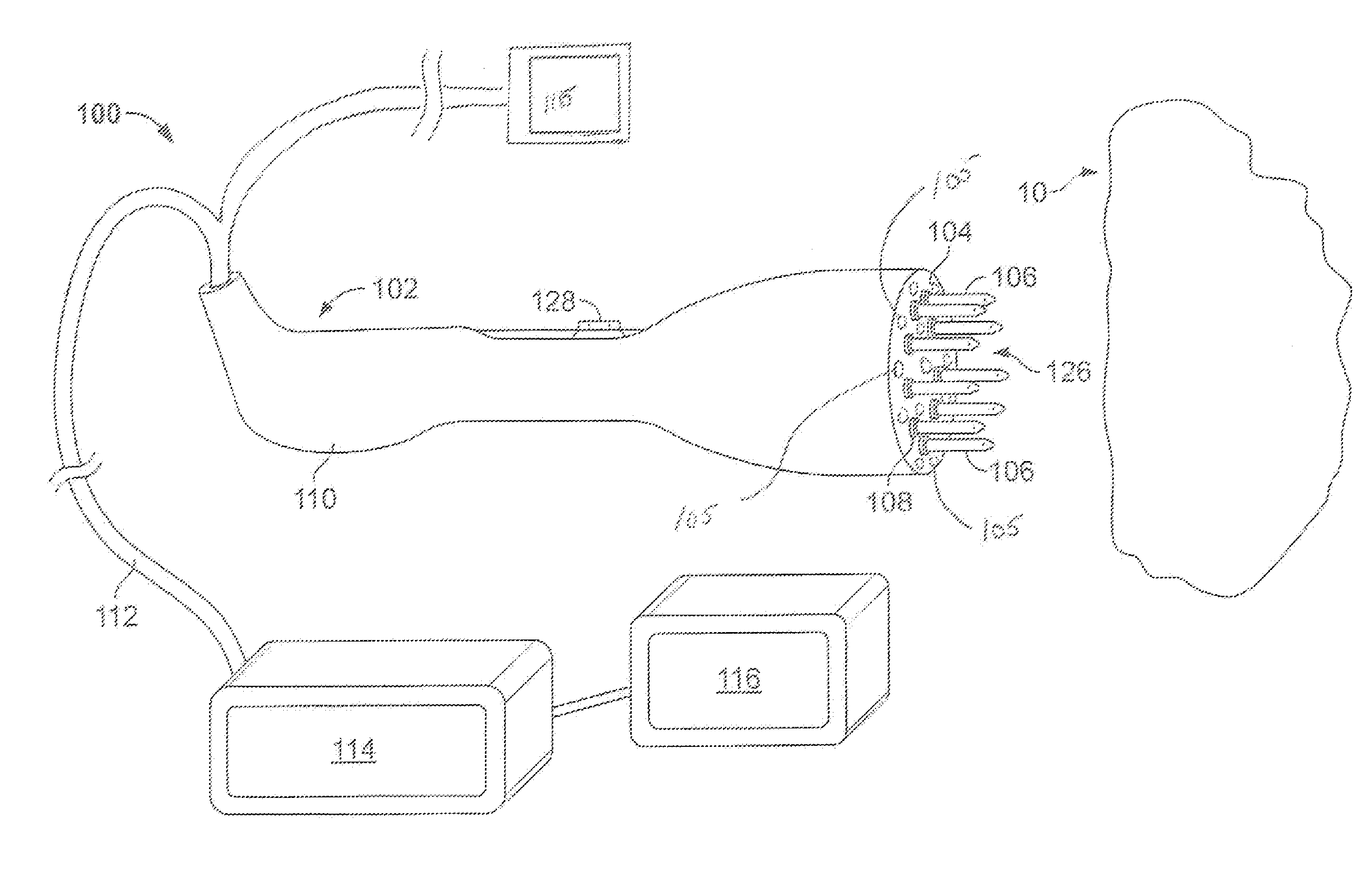

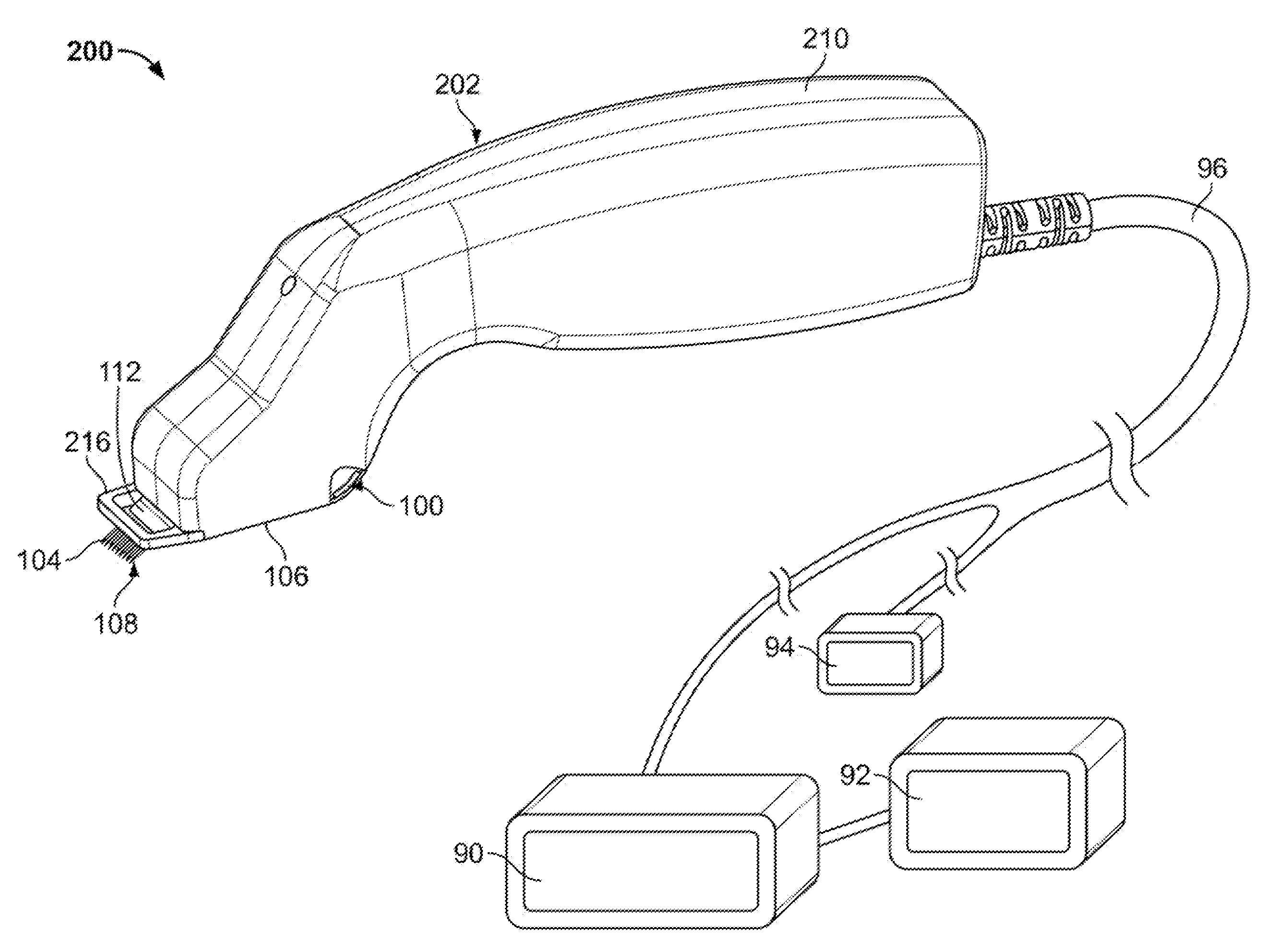

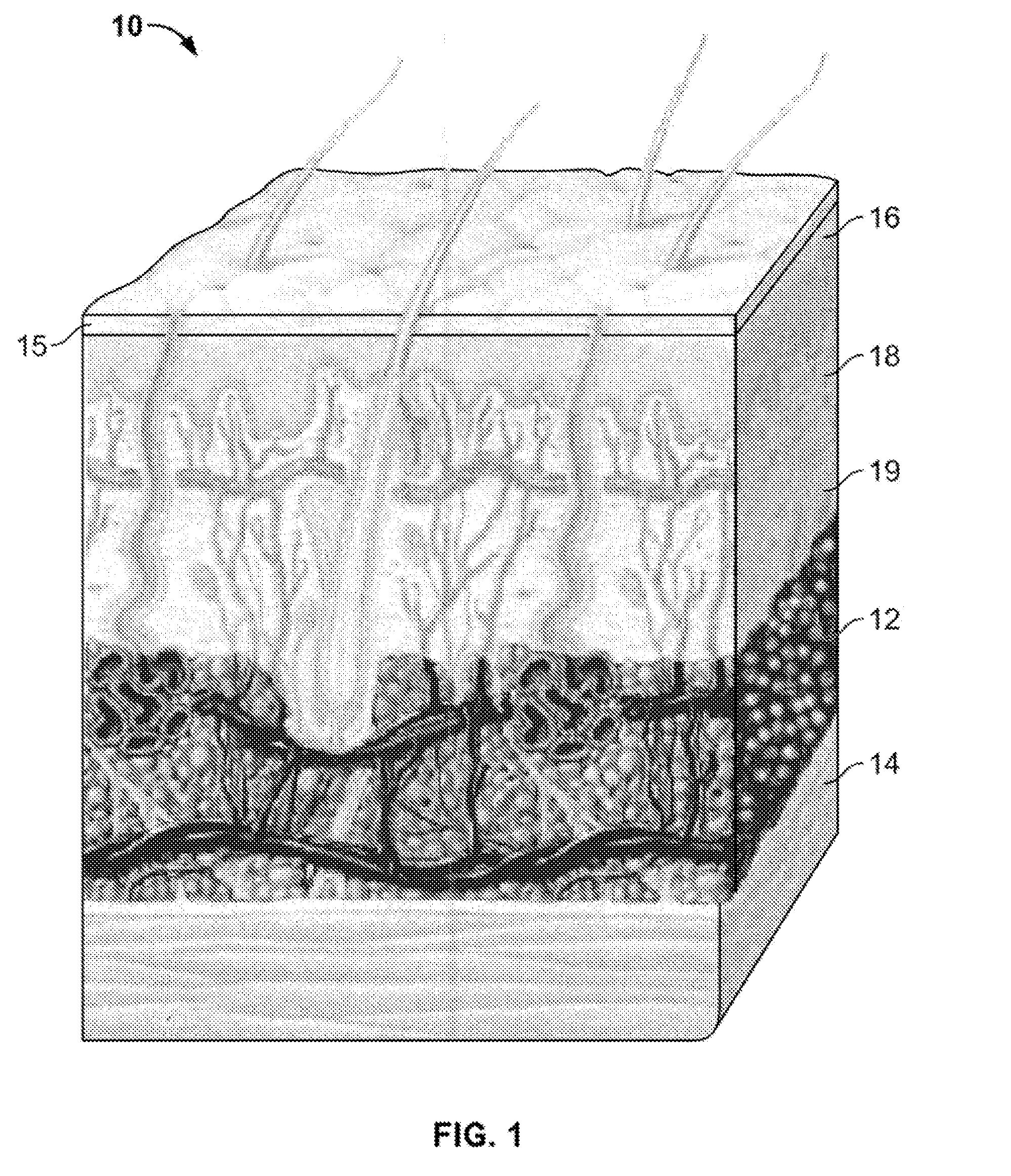

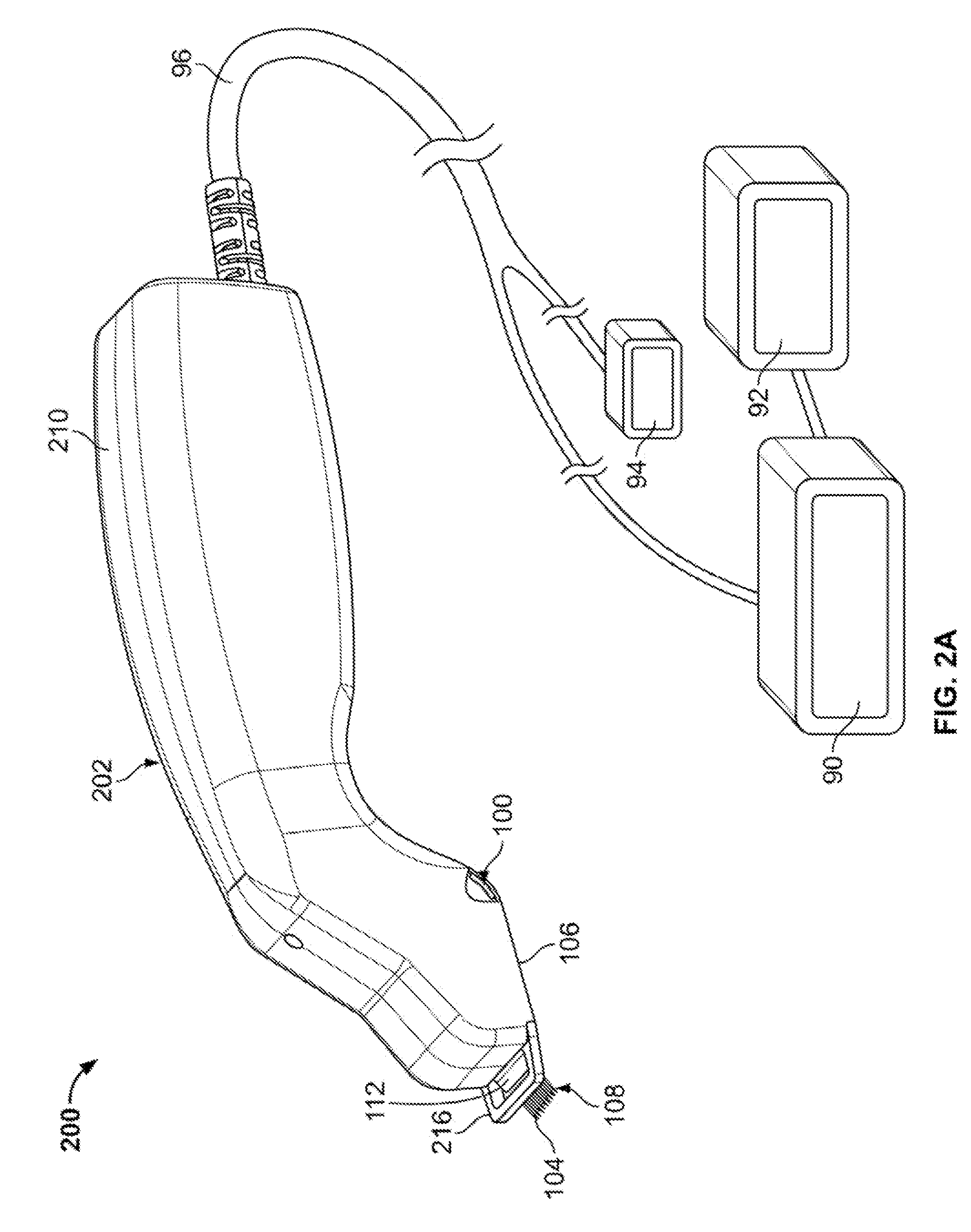

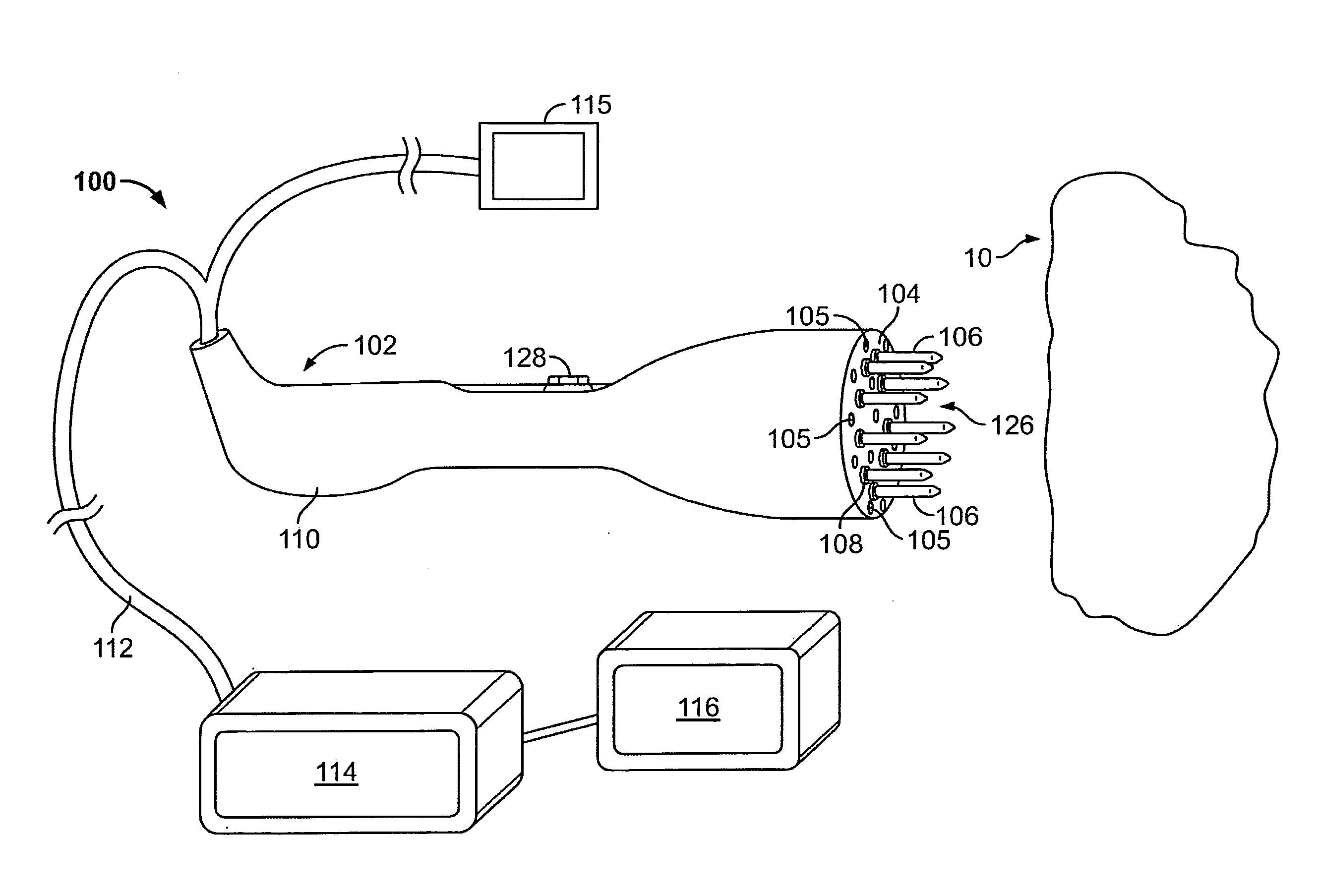

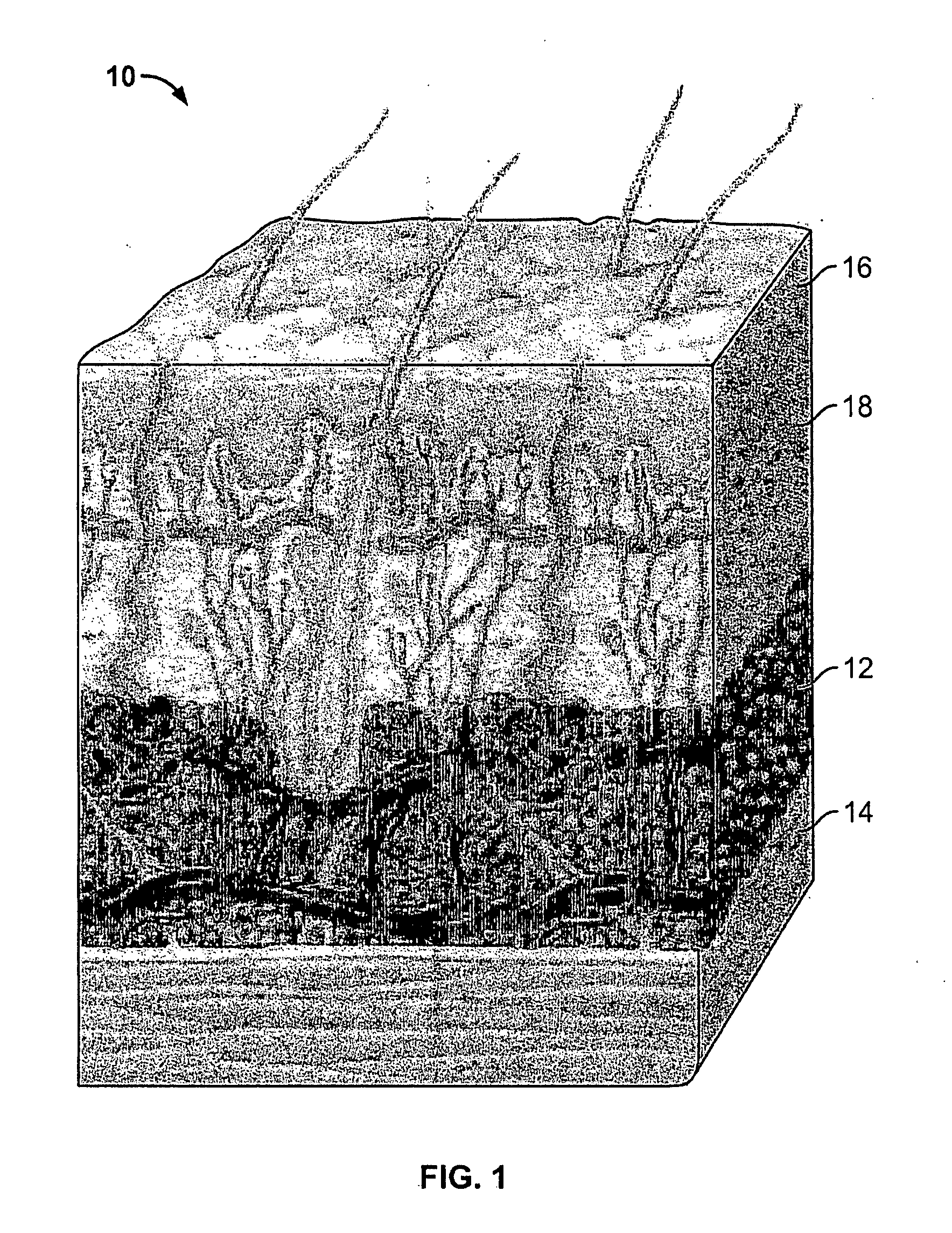

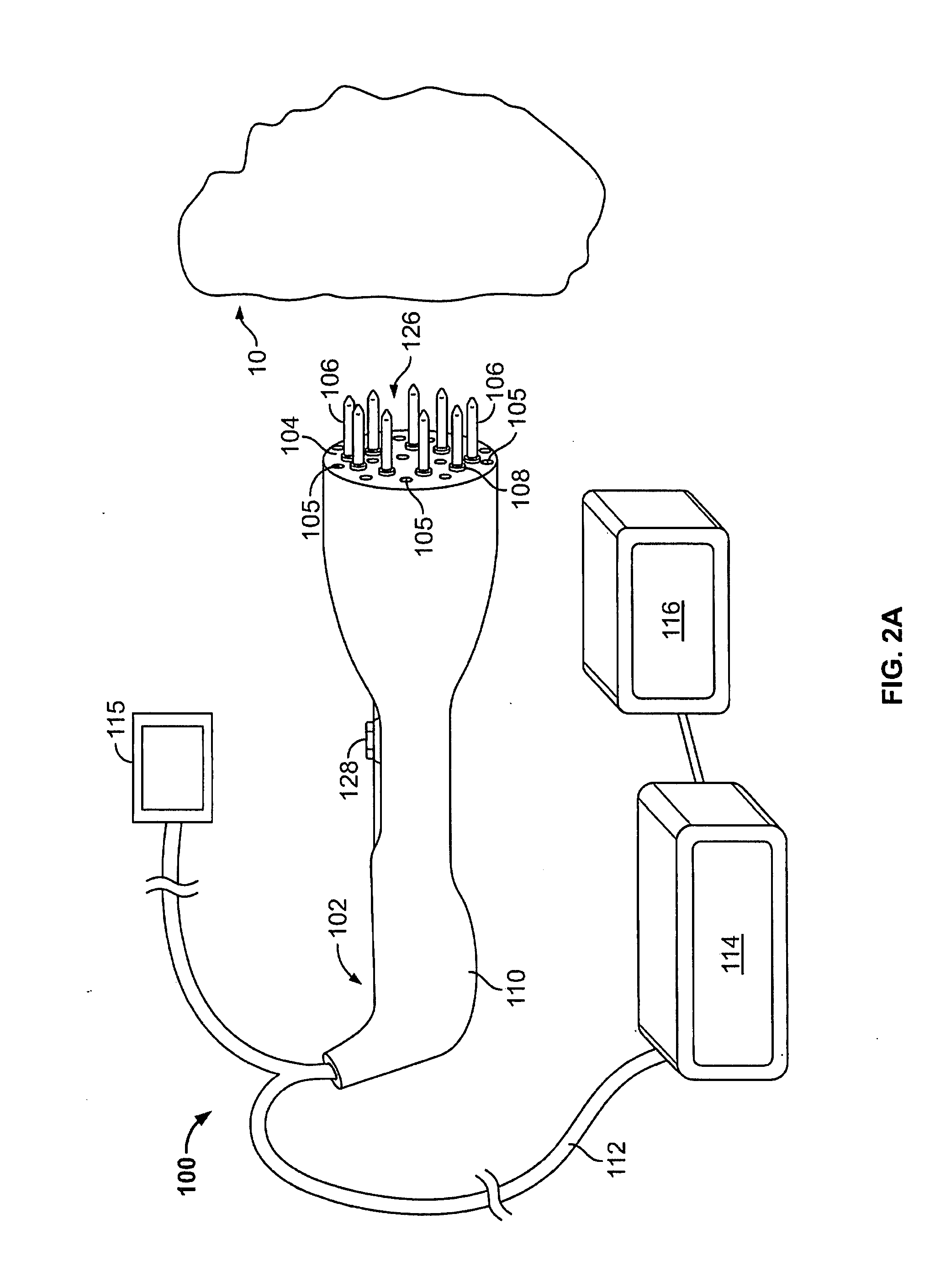

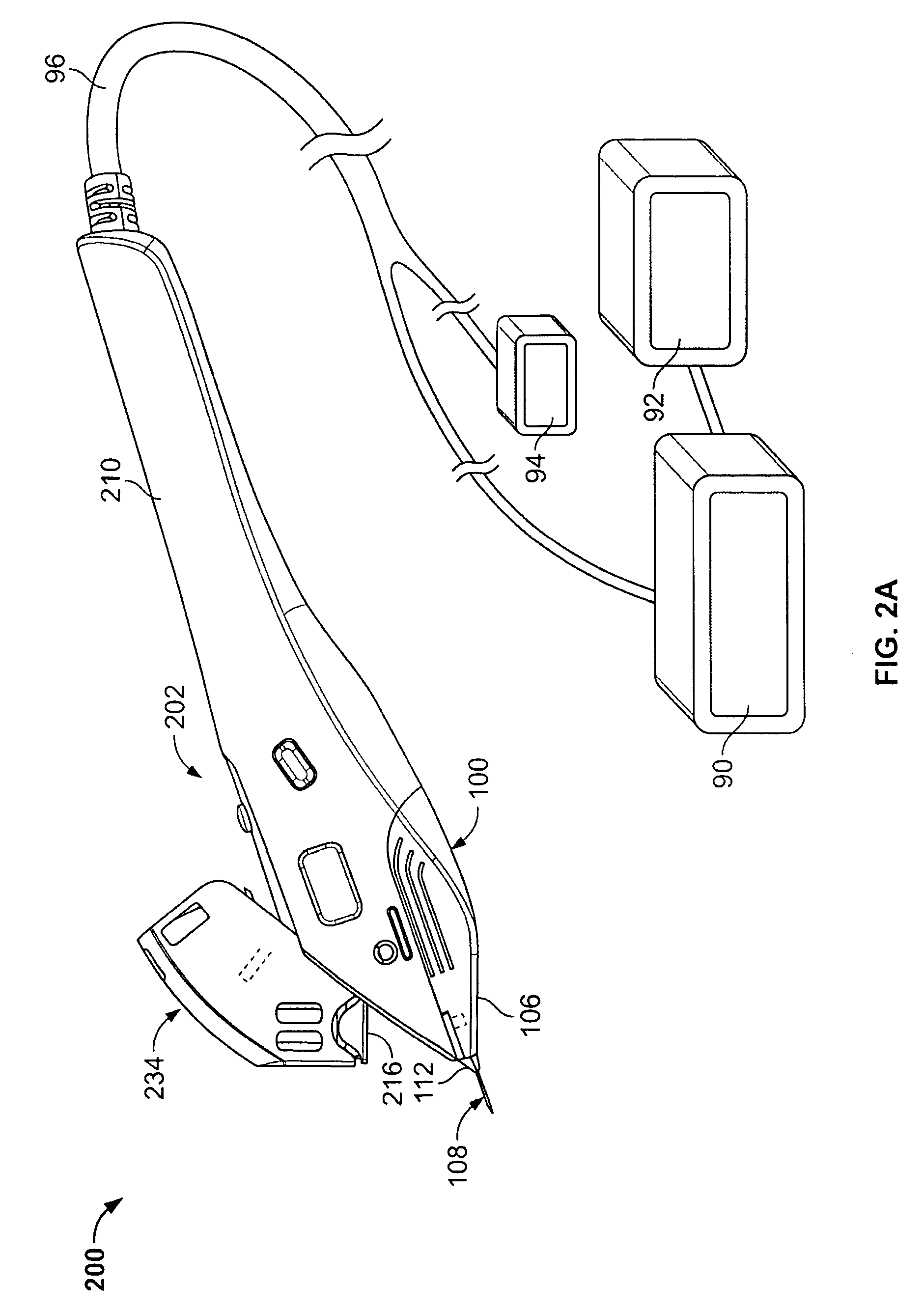

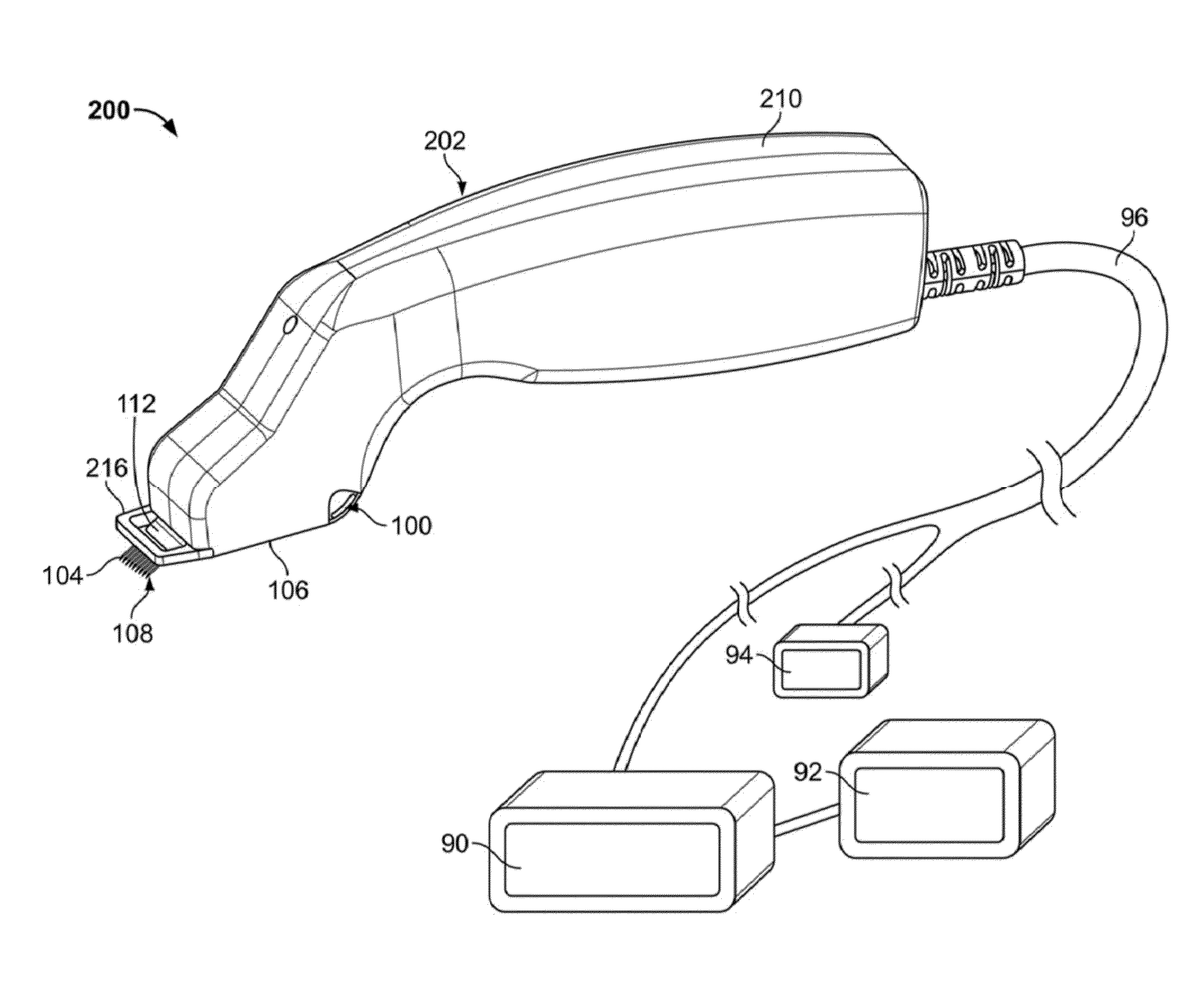

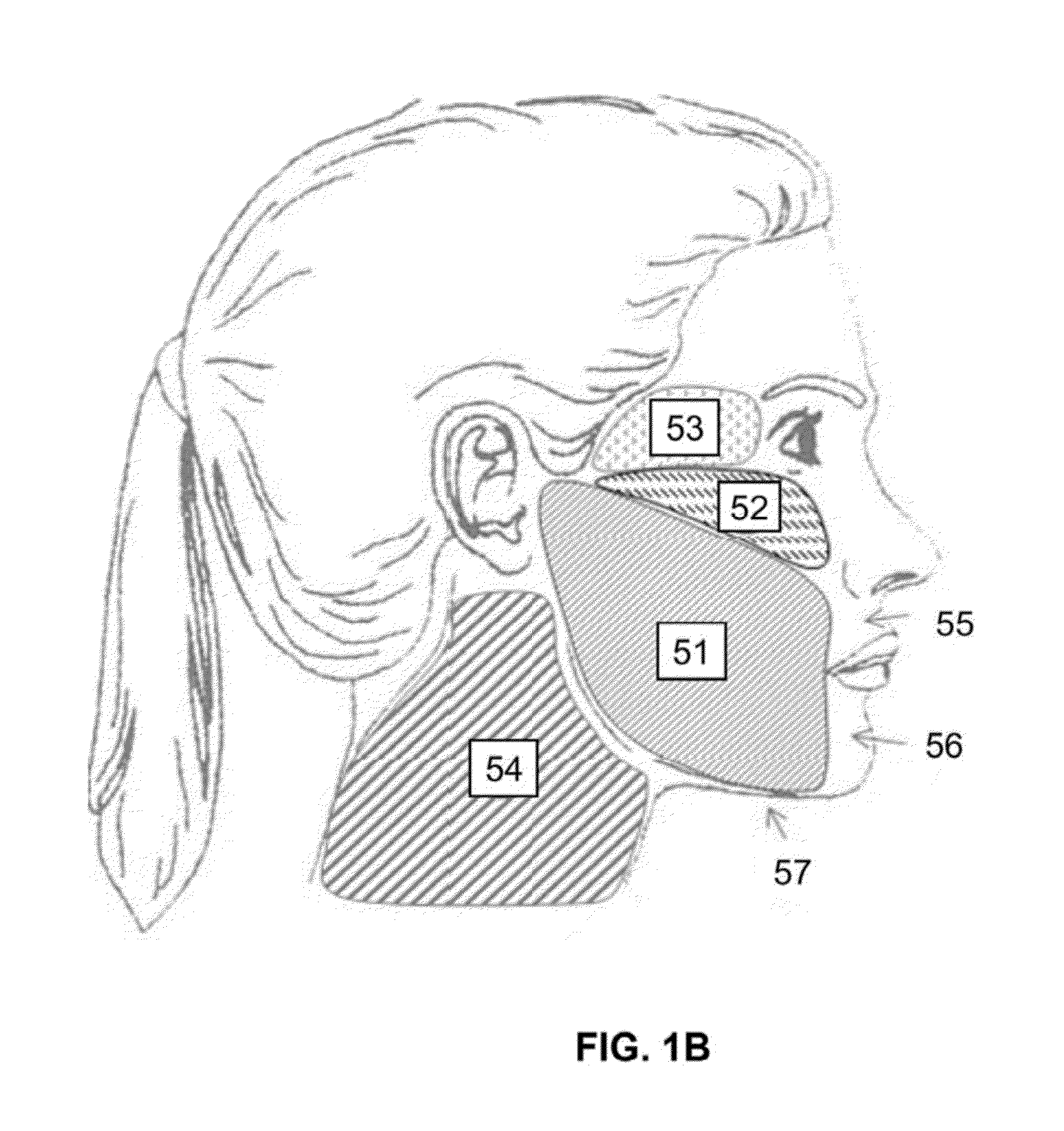

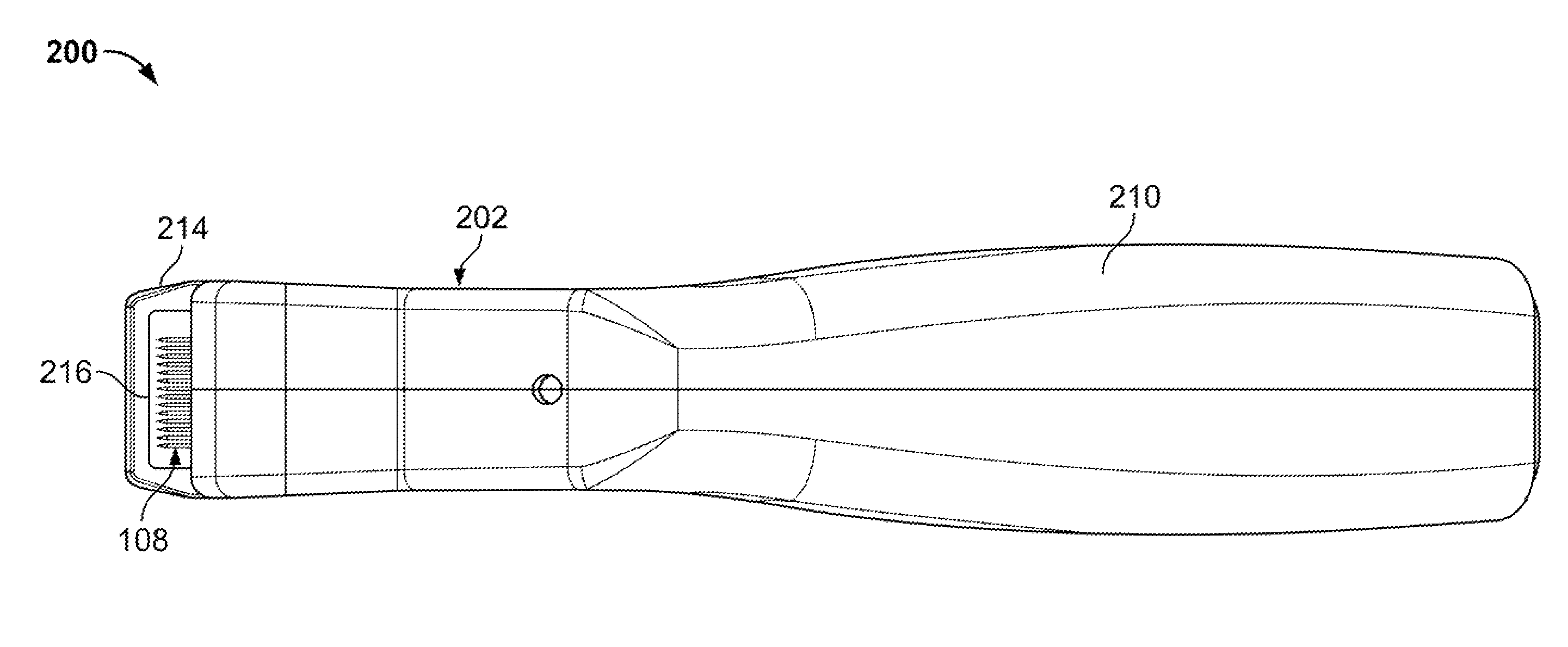

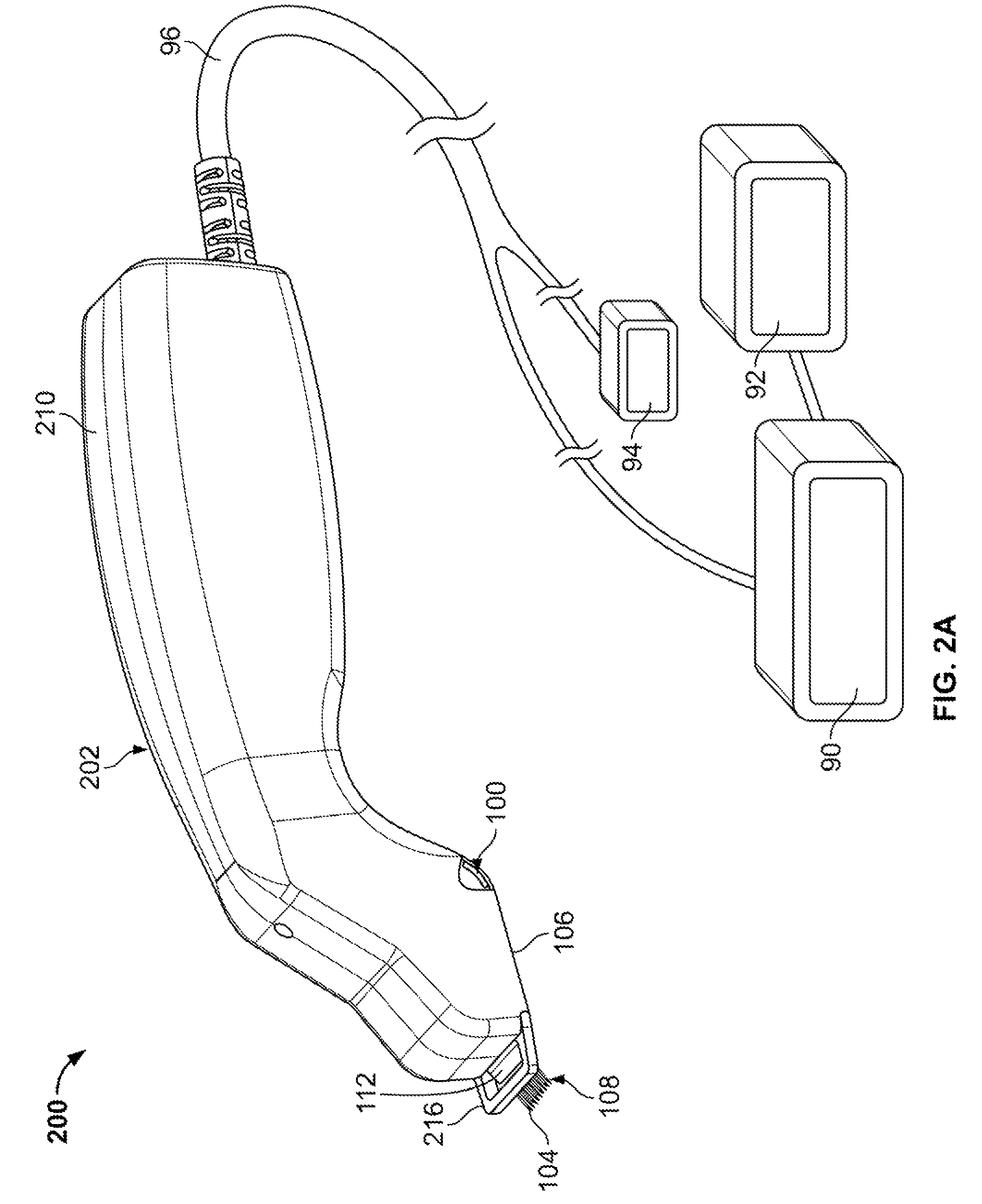

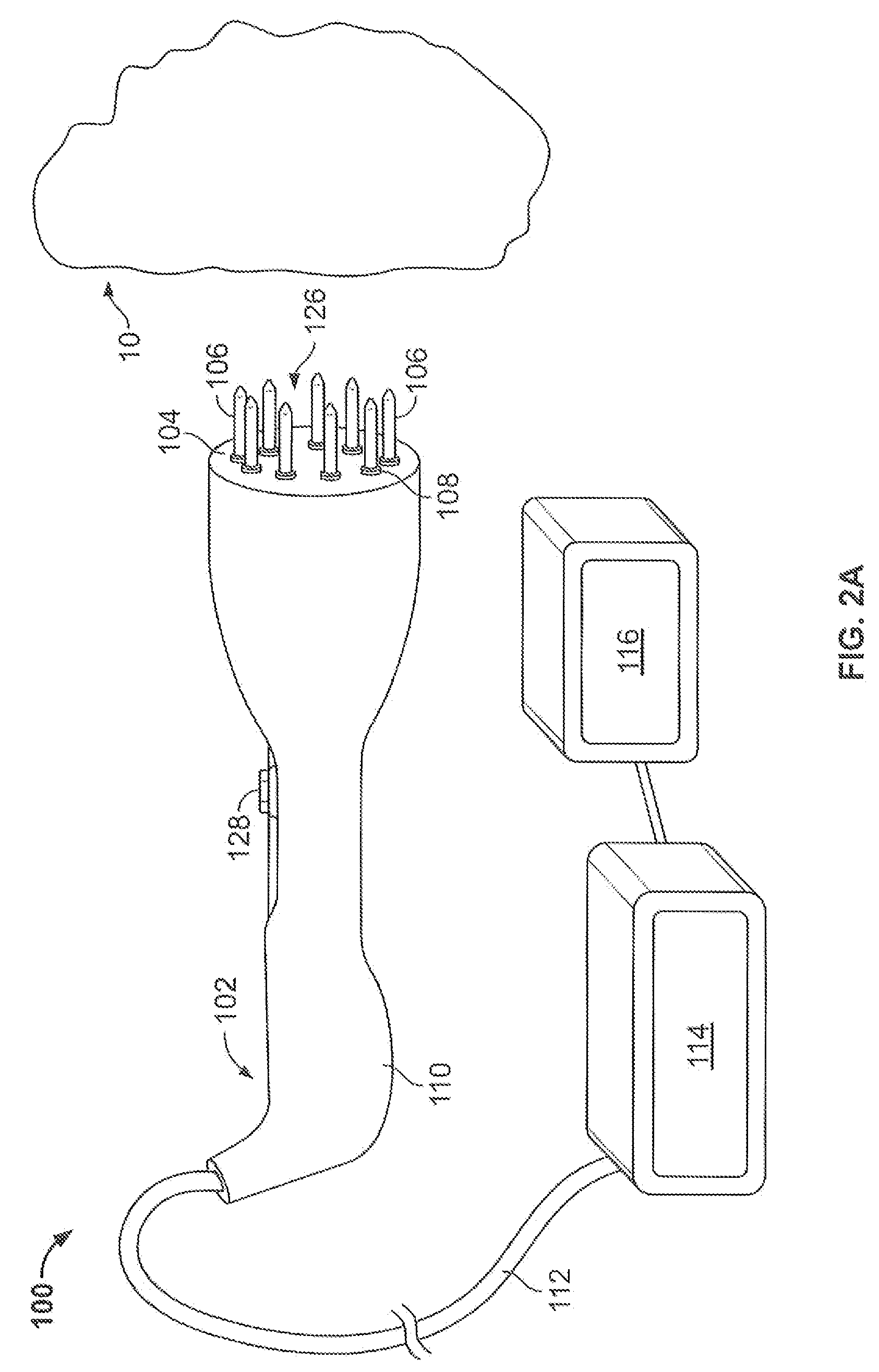

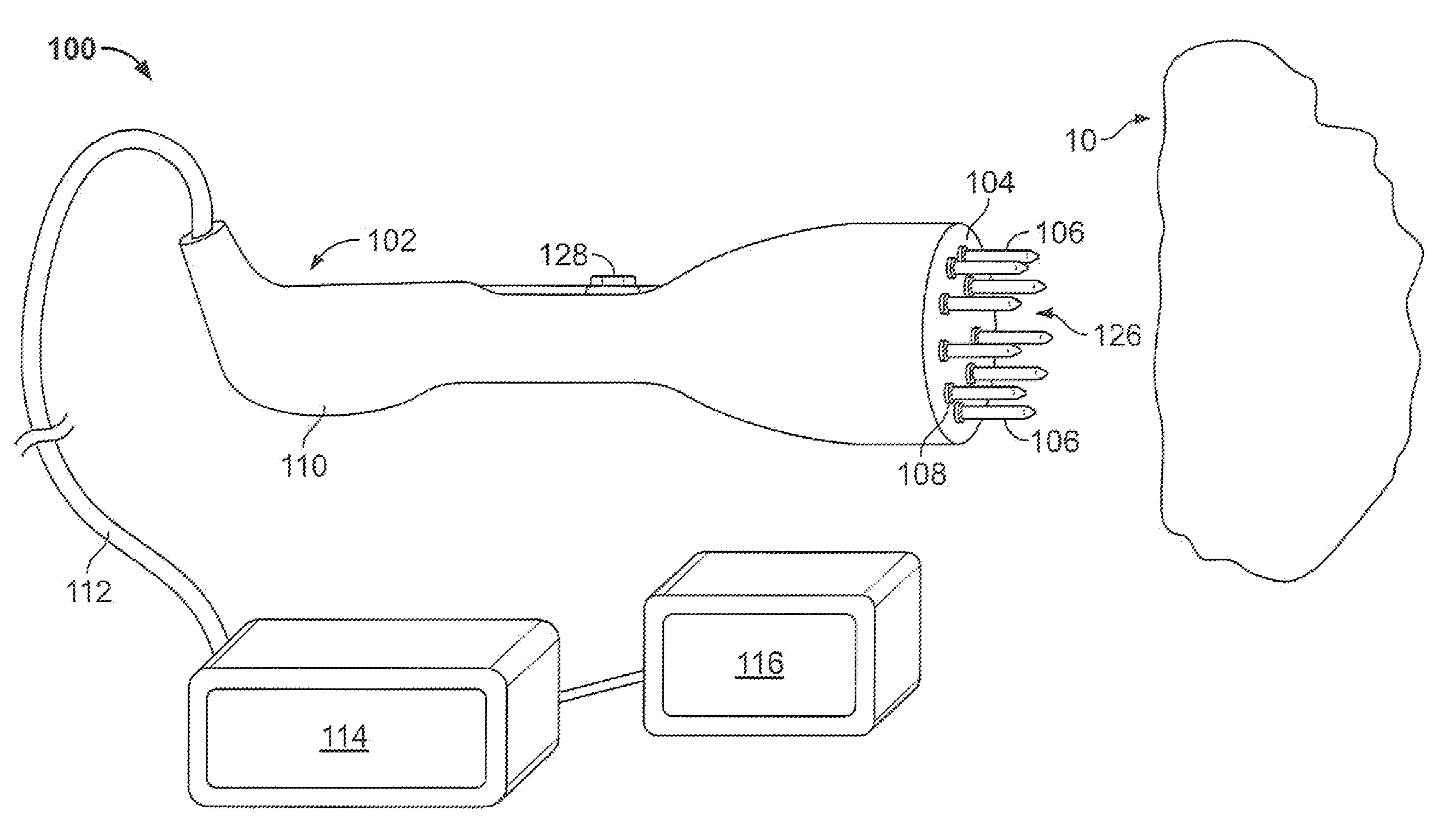

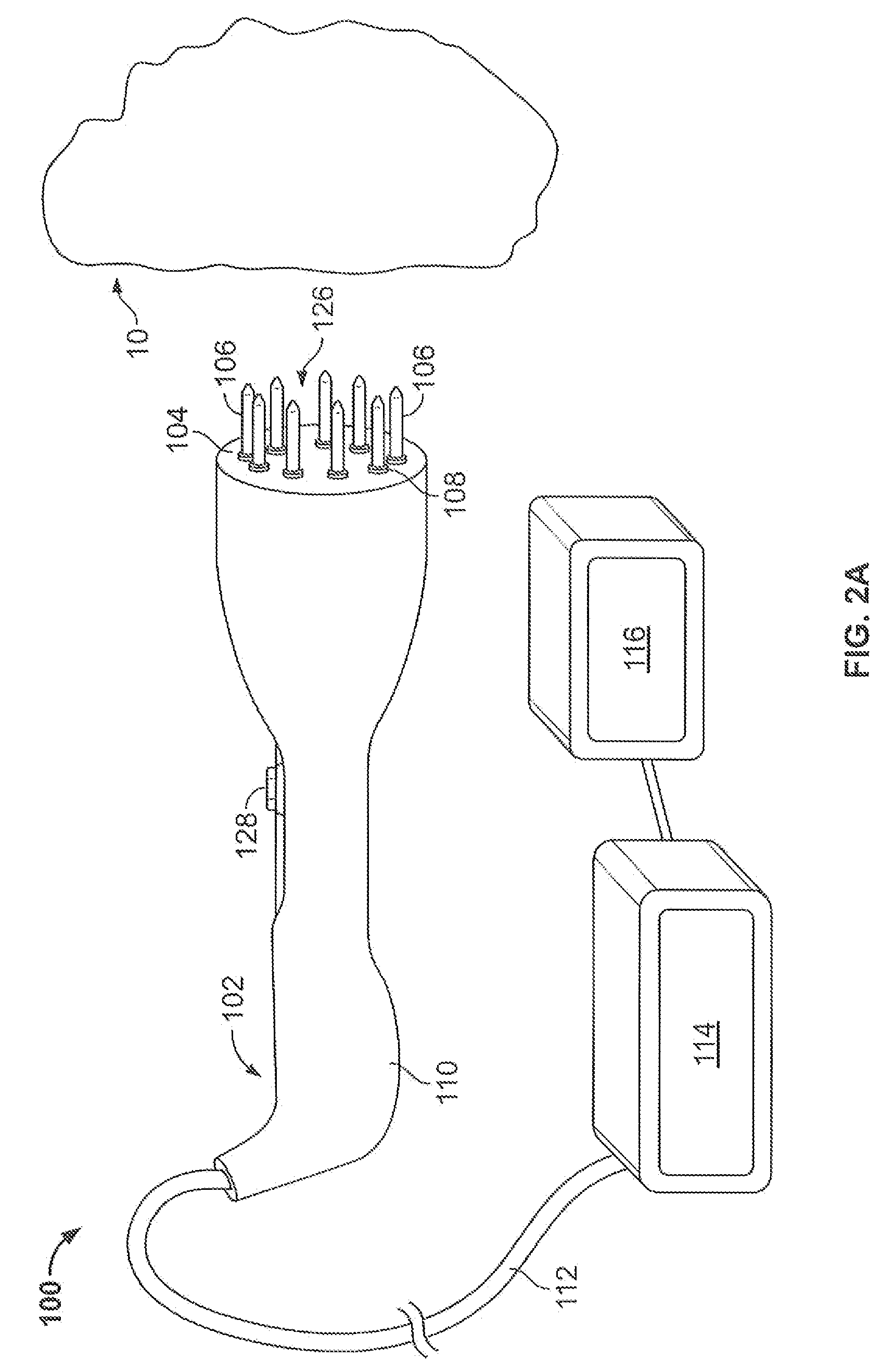

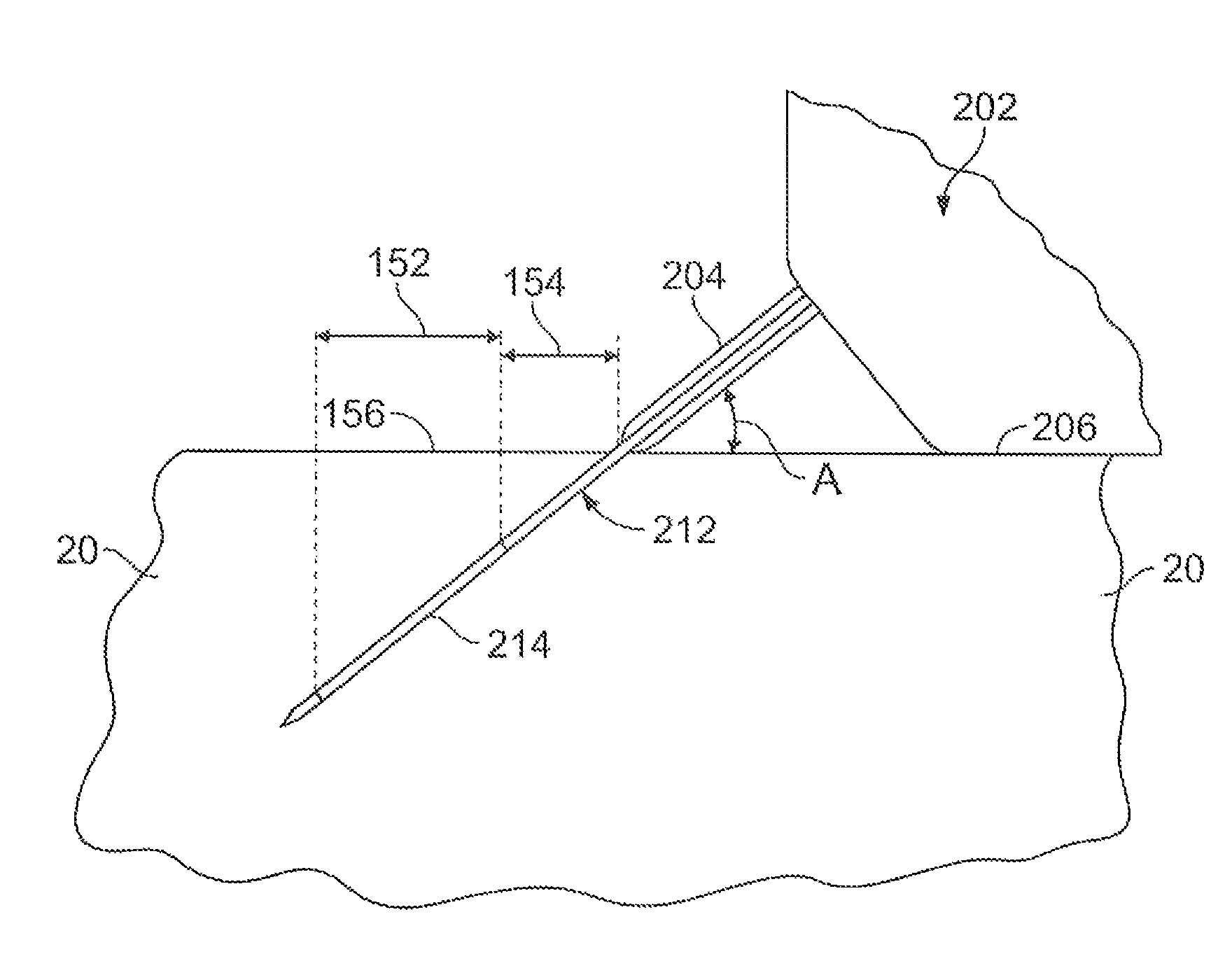

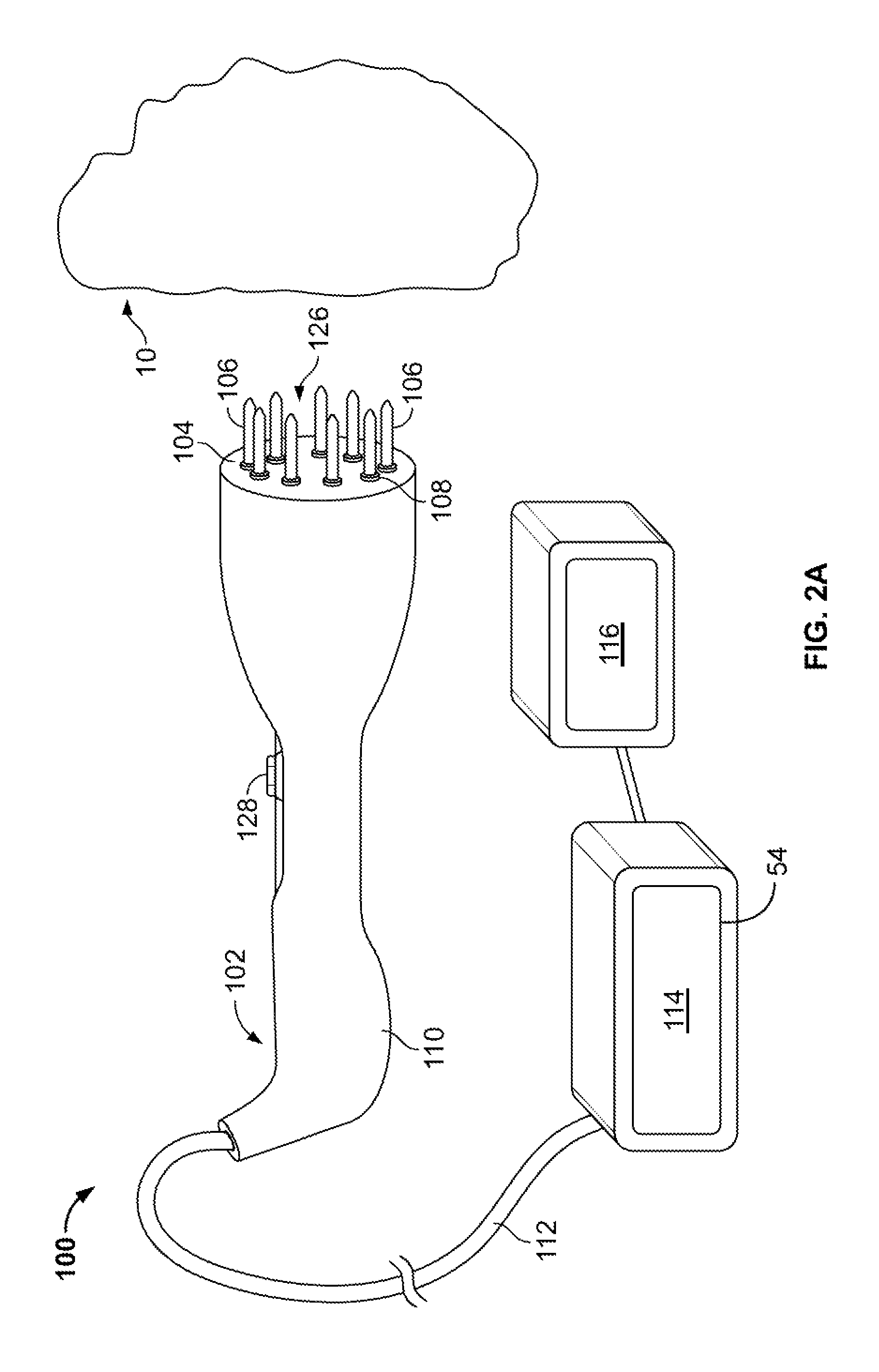

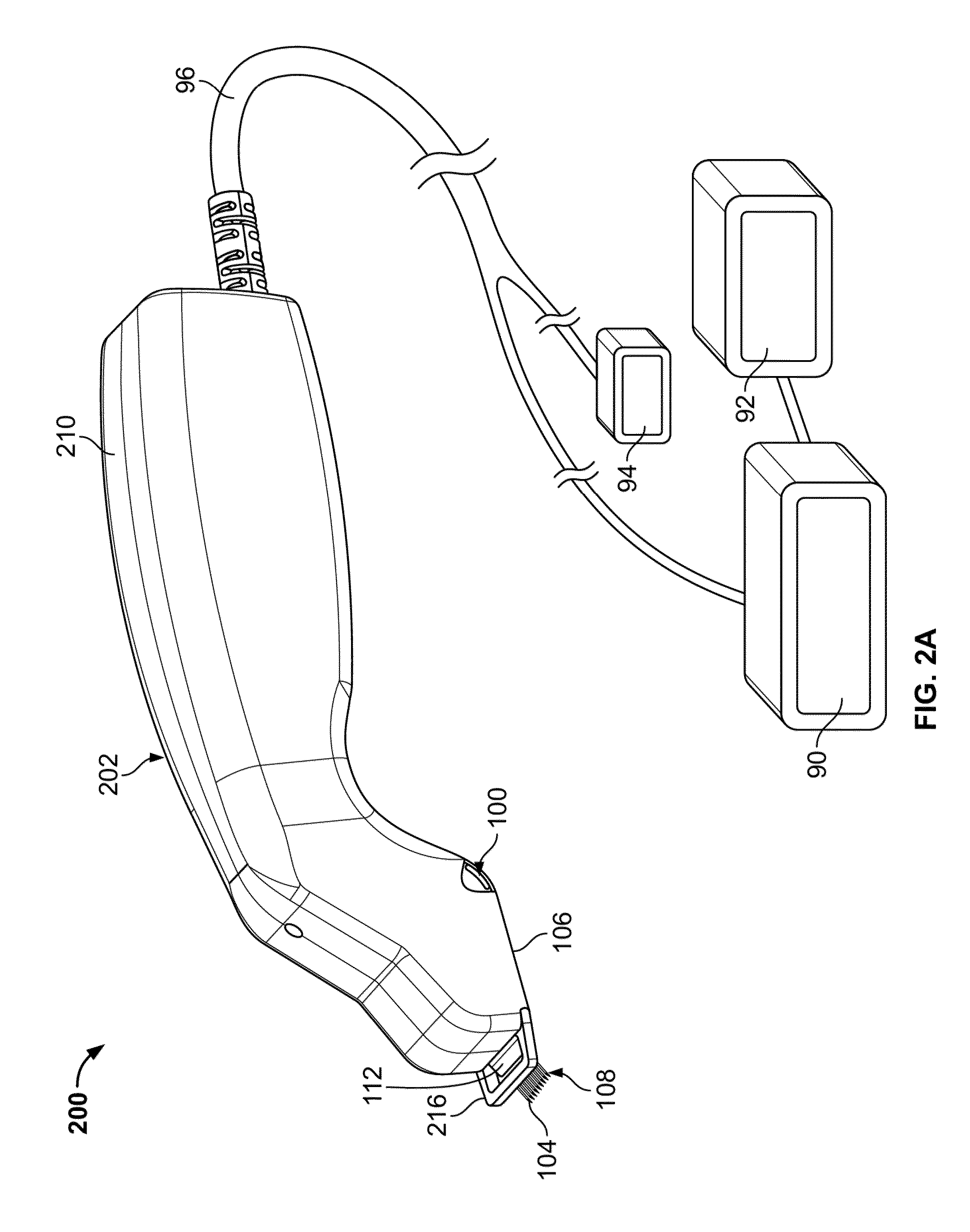

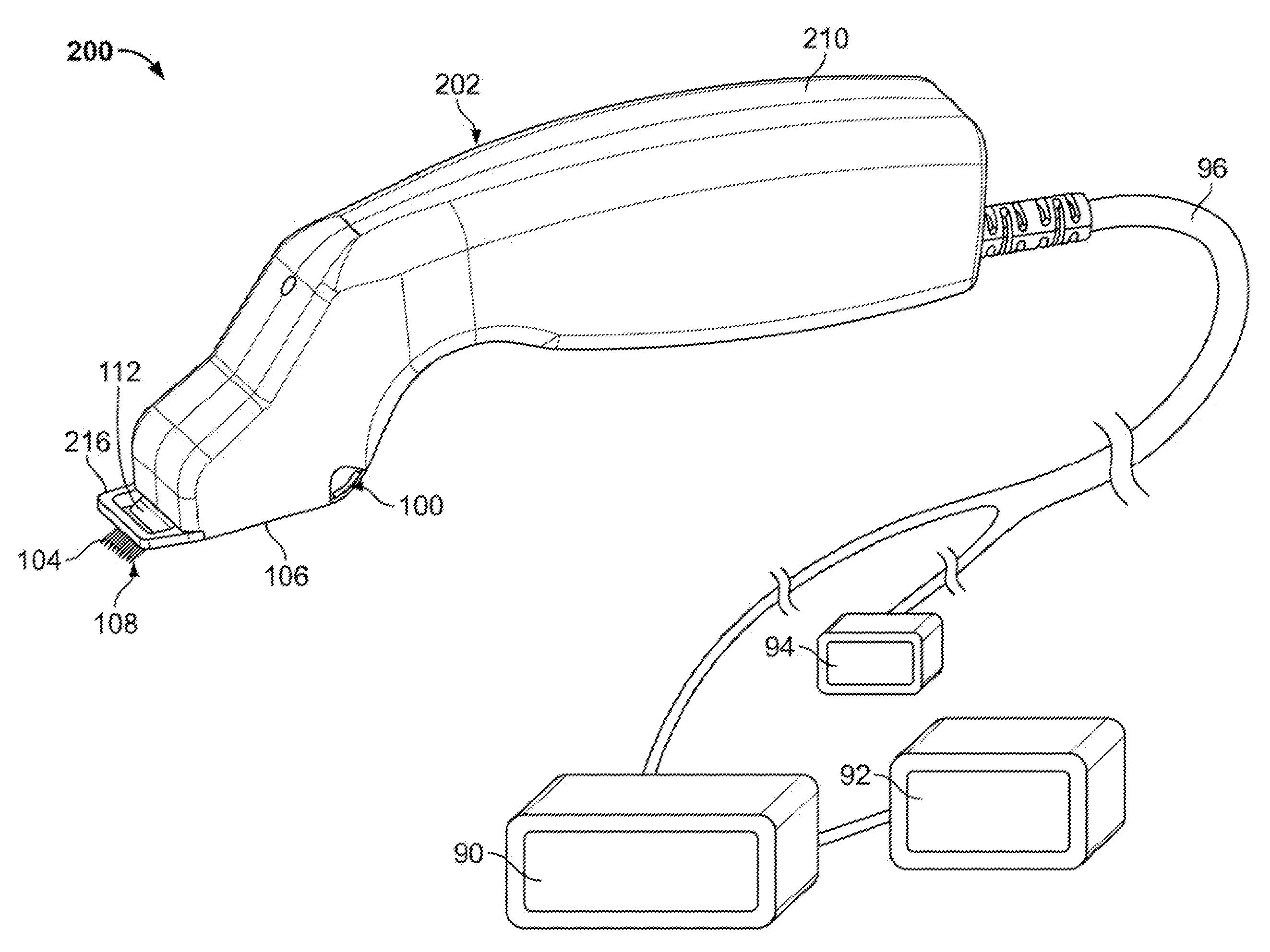

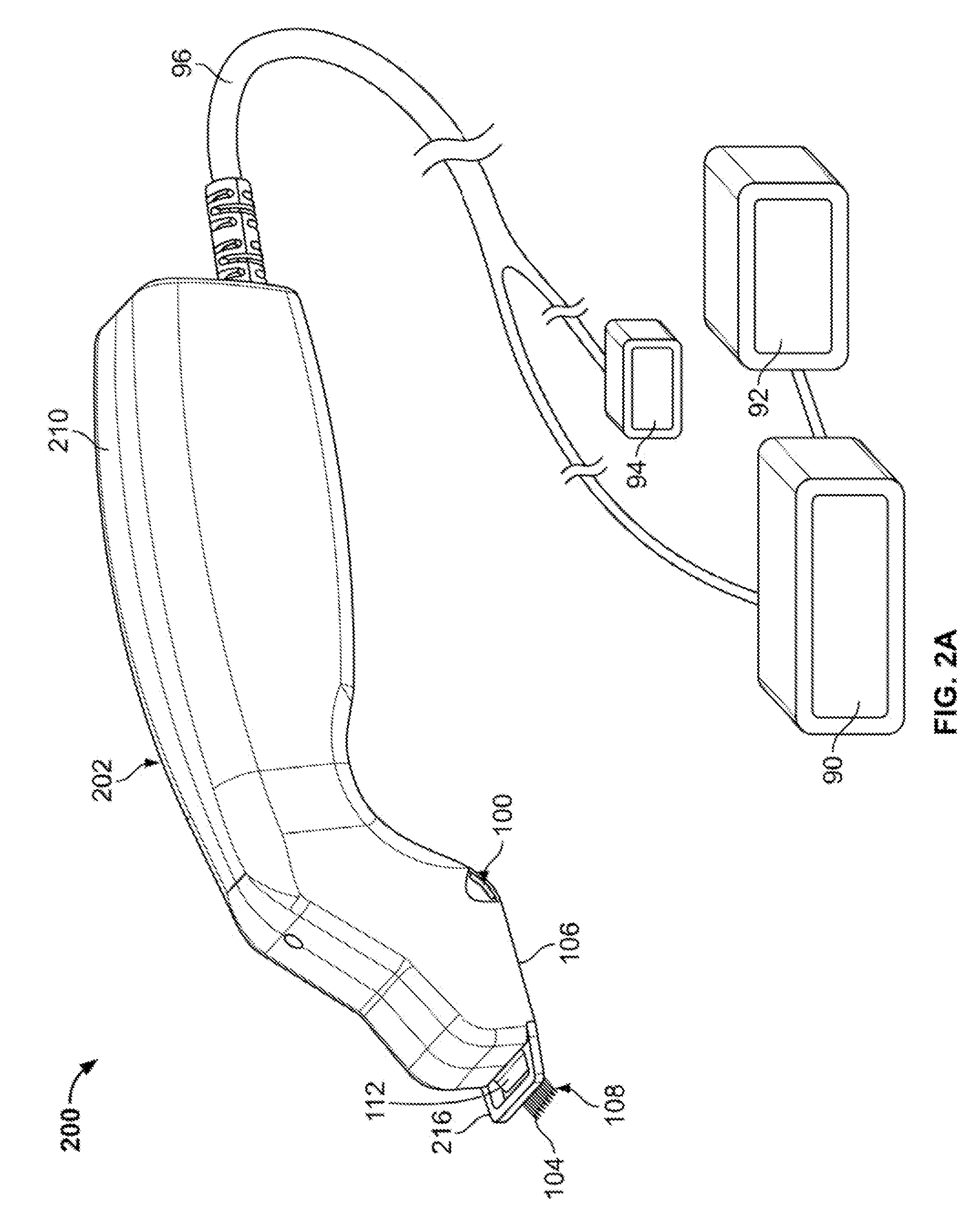

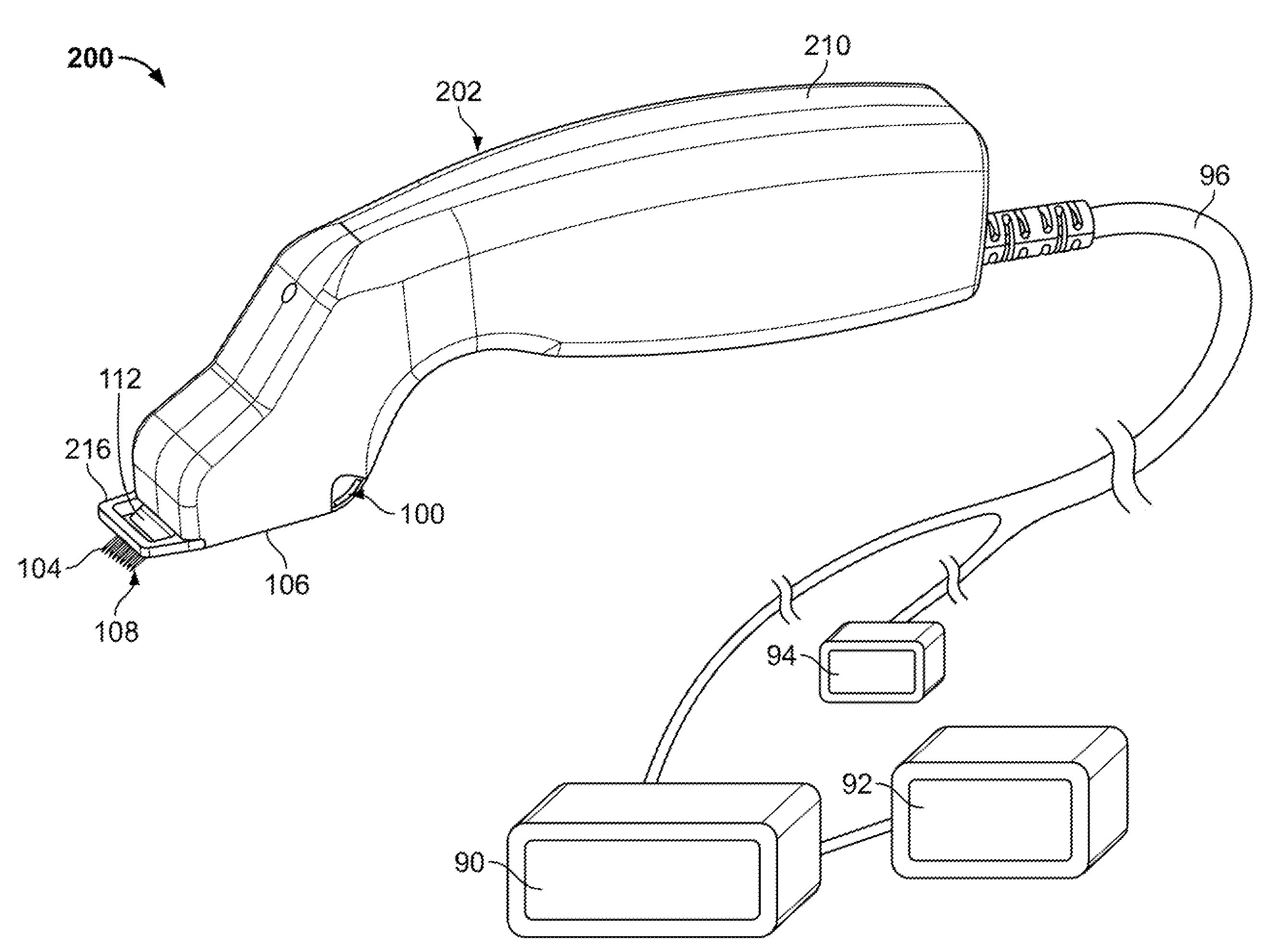

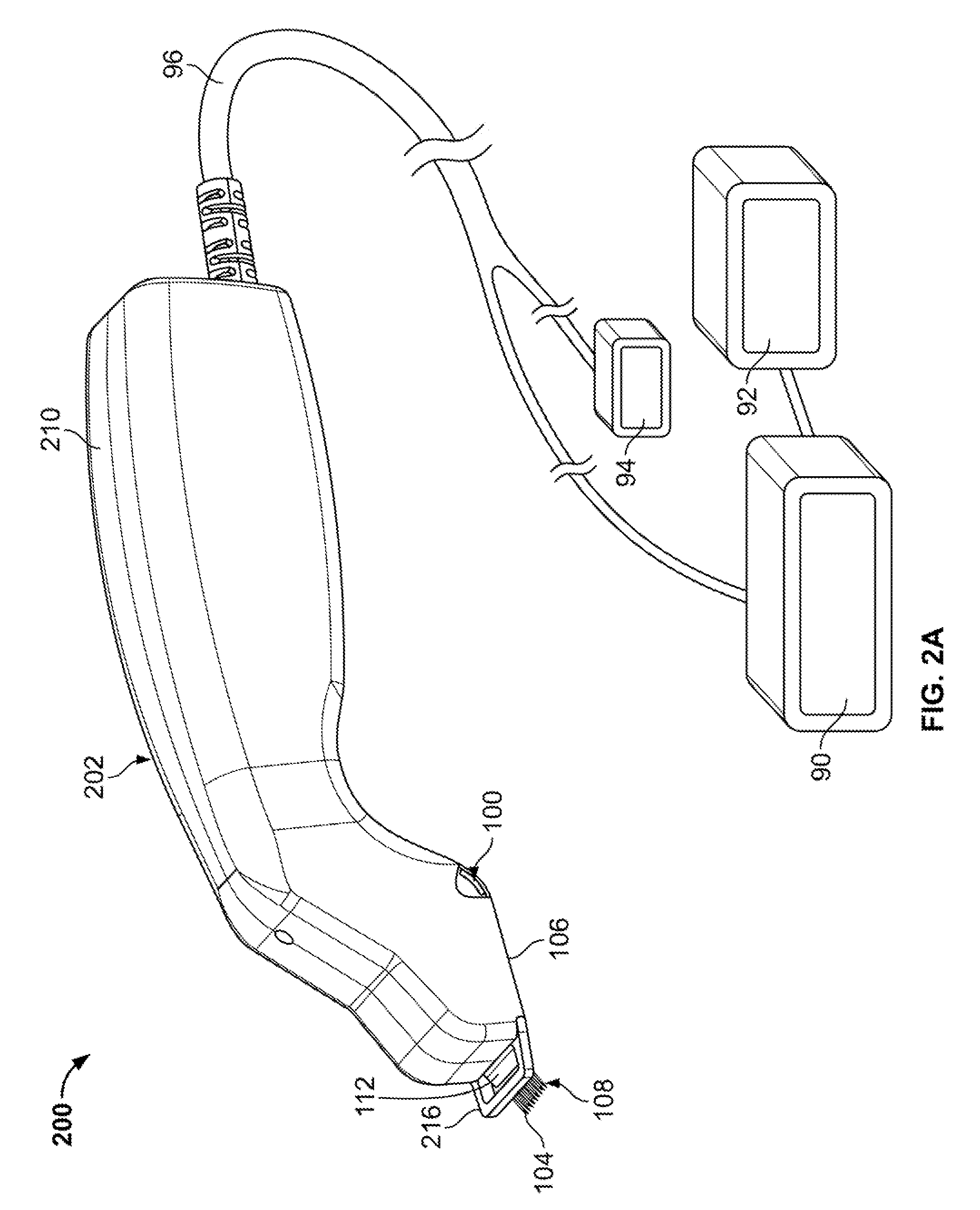

Devices and methods for percutaneous energy delivery

Active | US20100010480A1 | Avoid energy; Good looking; Ultrasound therapy; Surgical needles; Energy minimization; Engineering

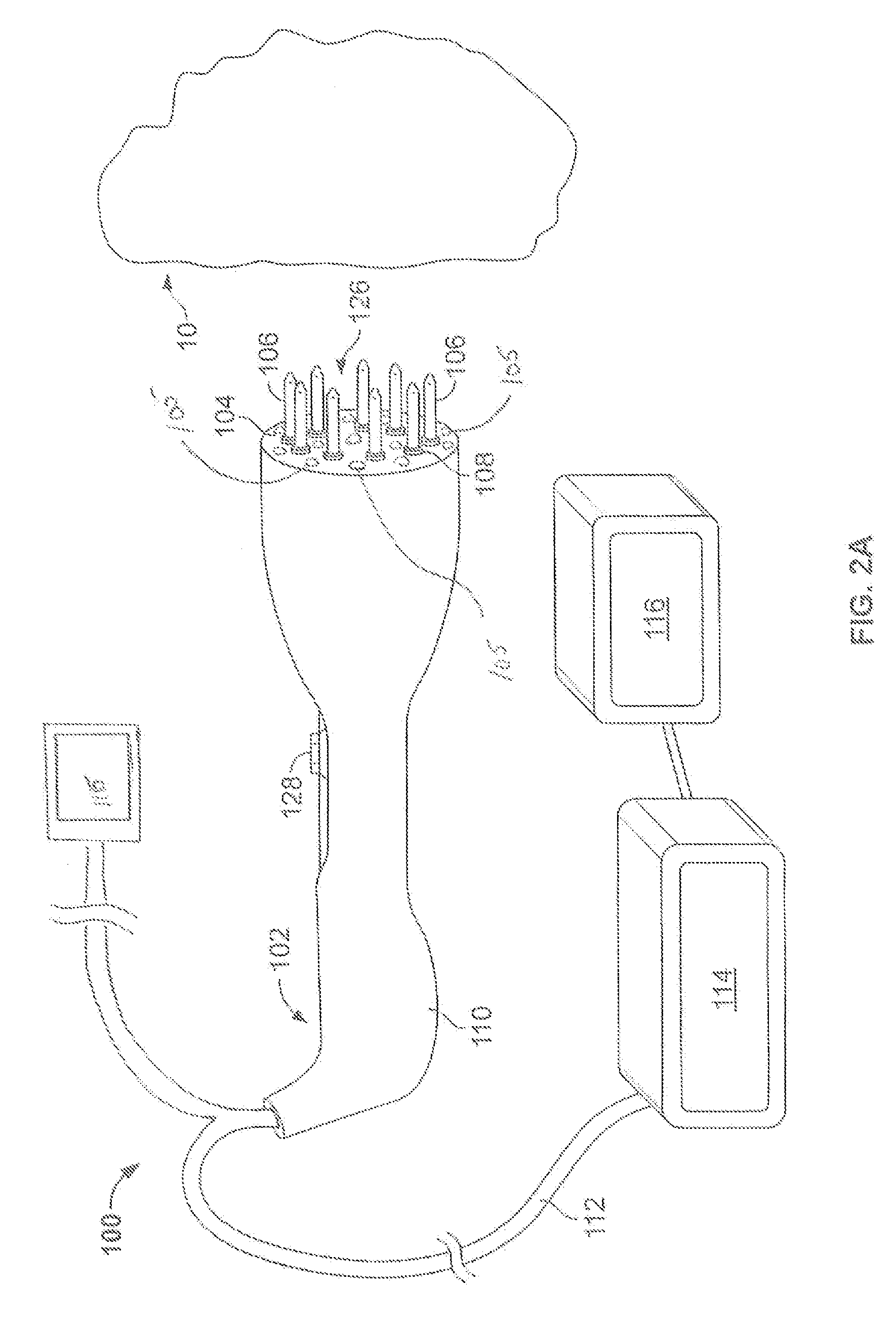

The invention provides a system and method for percutaneous energy delivery in an effective manner using one or more probes. Additional variations of the system include an array of probes configured to minimize the energy required to produce the desired effect.

Owner:SYNERON MEDICAL LTD

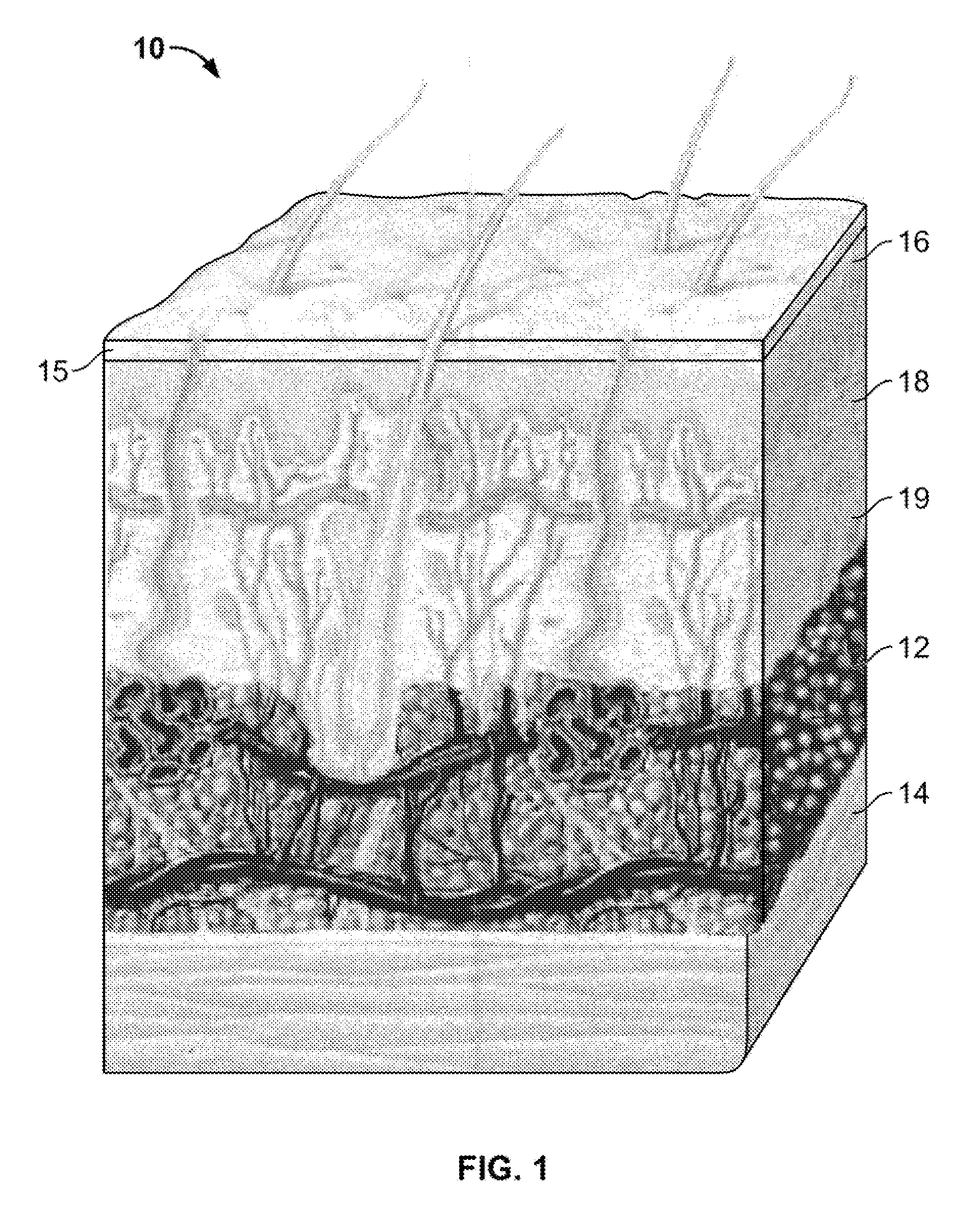

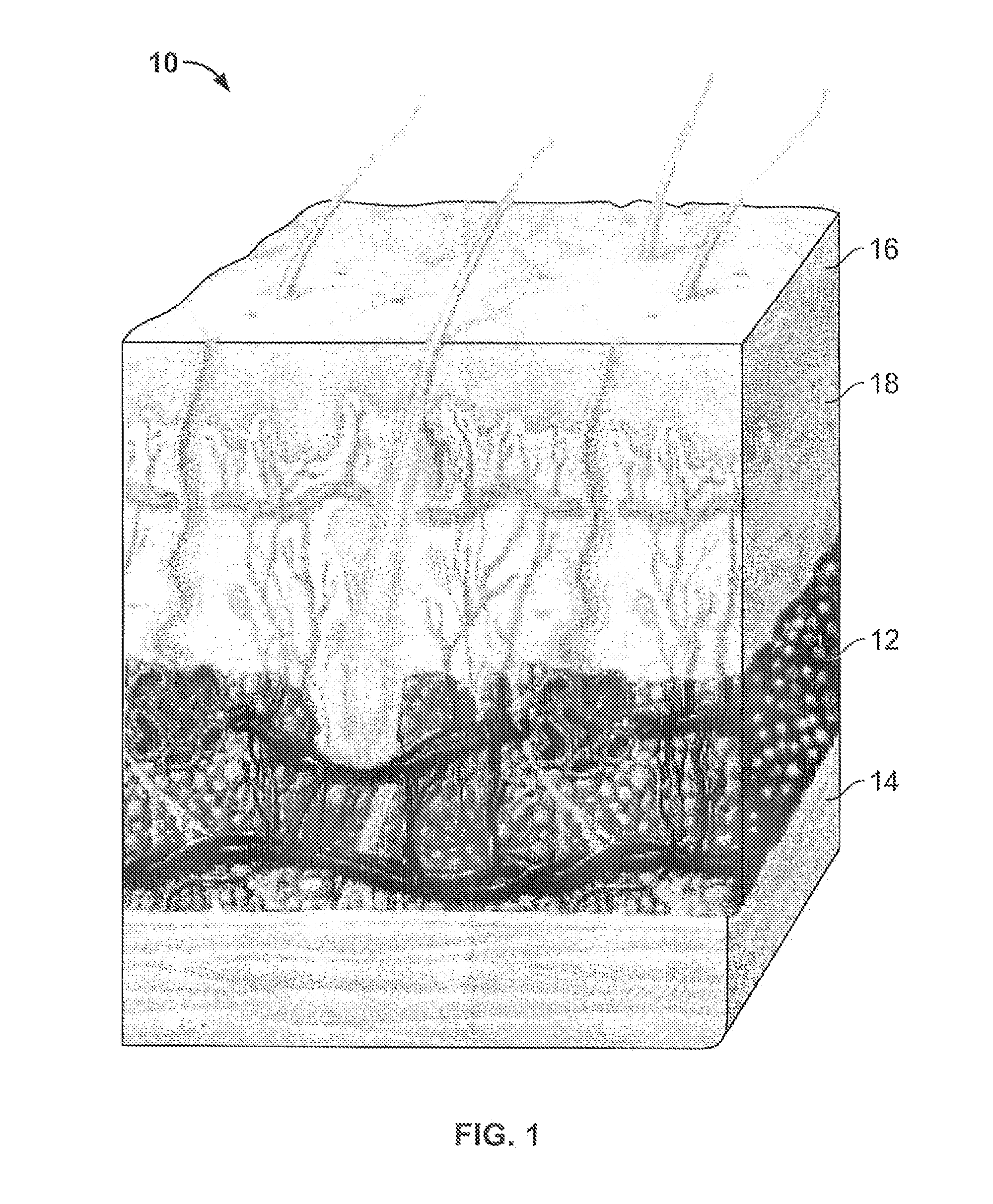

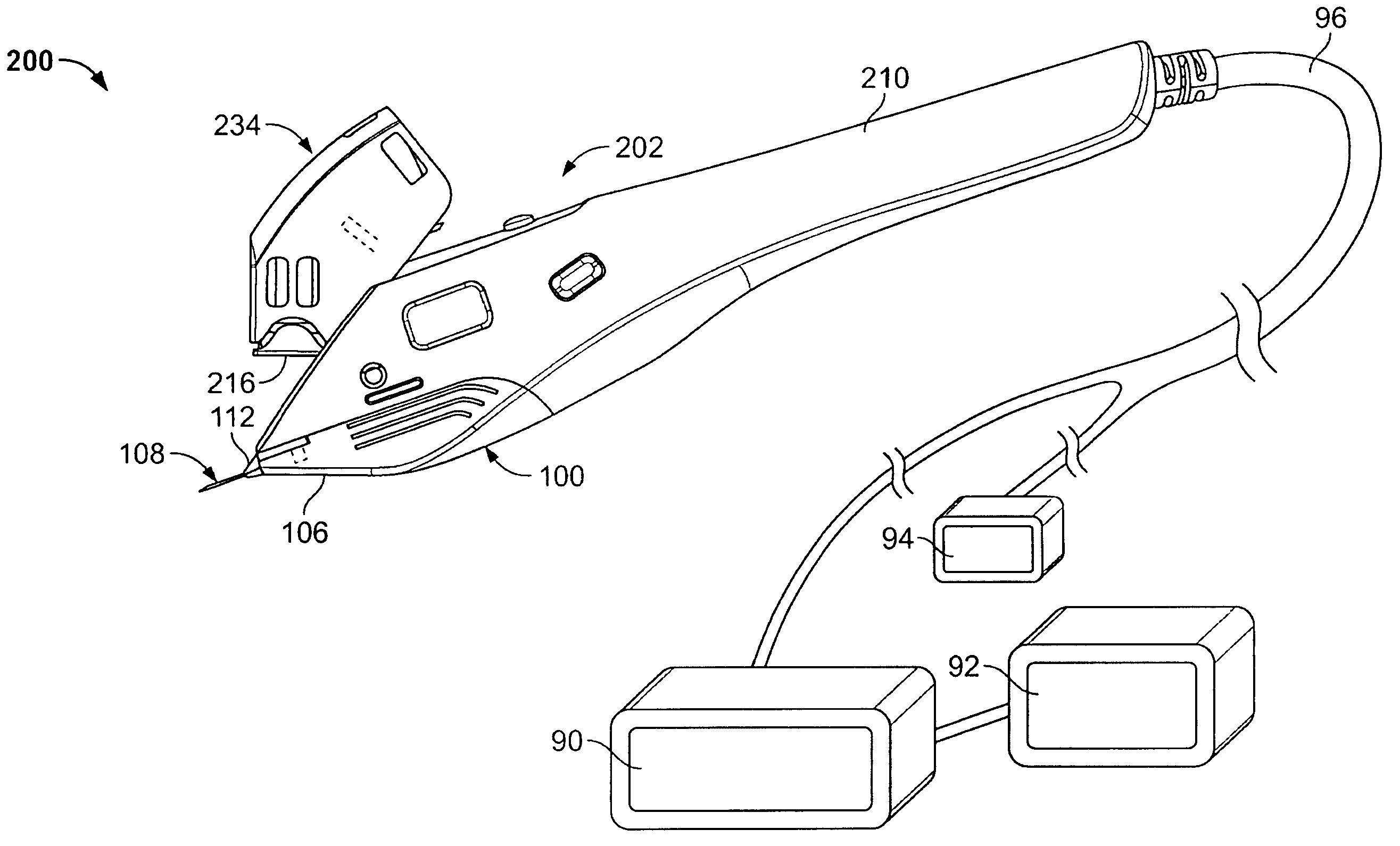

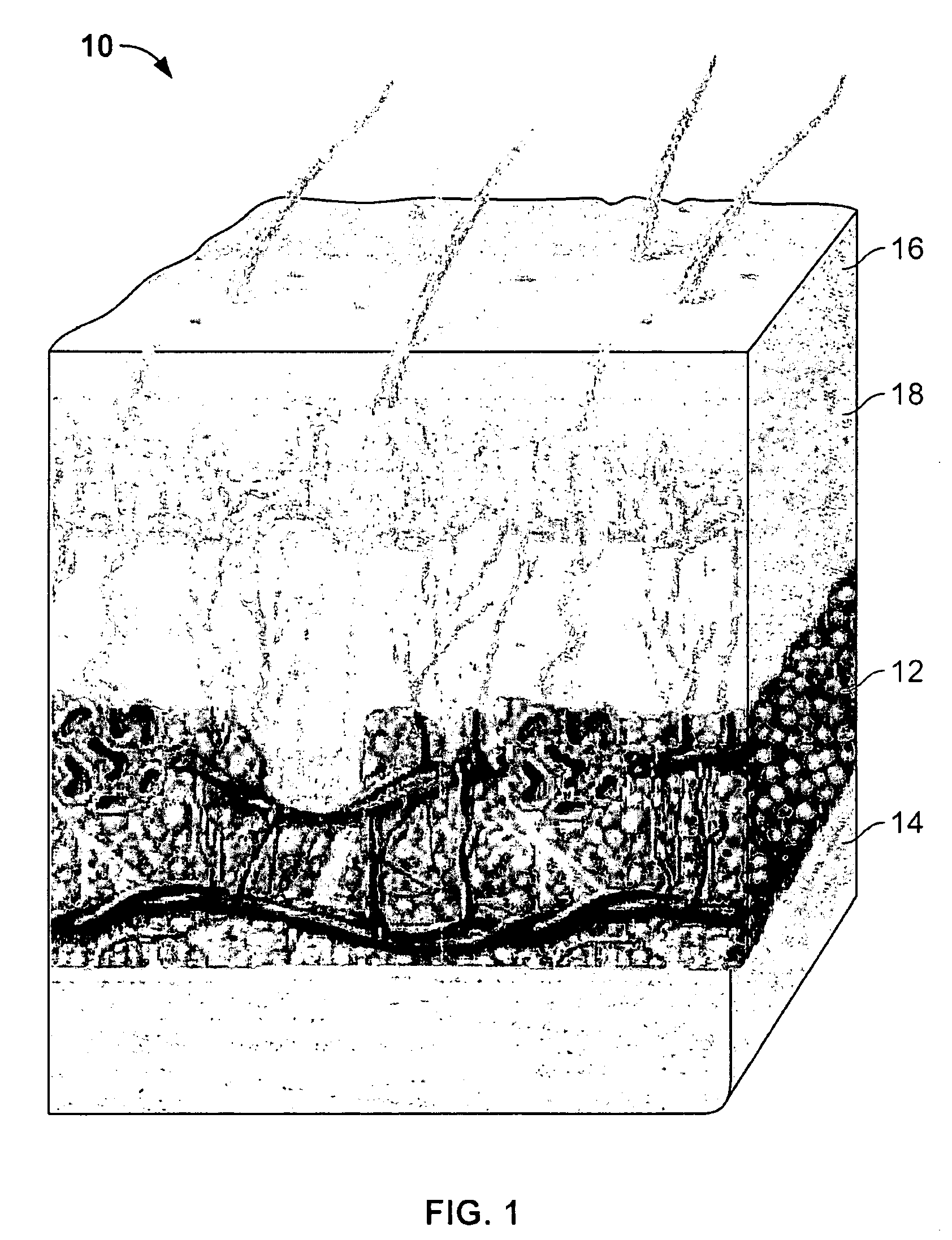

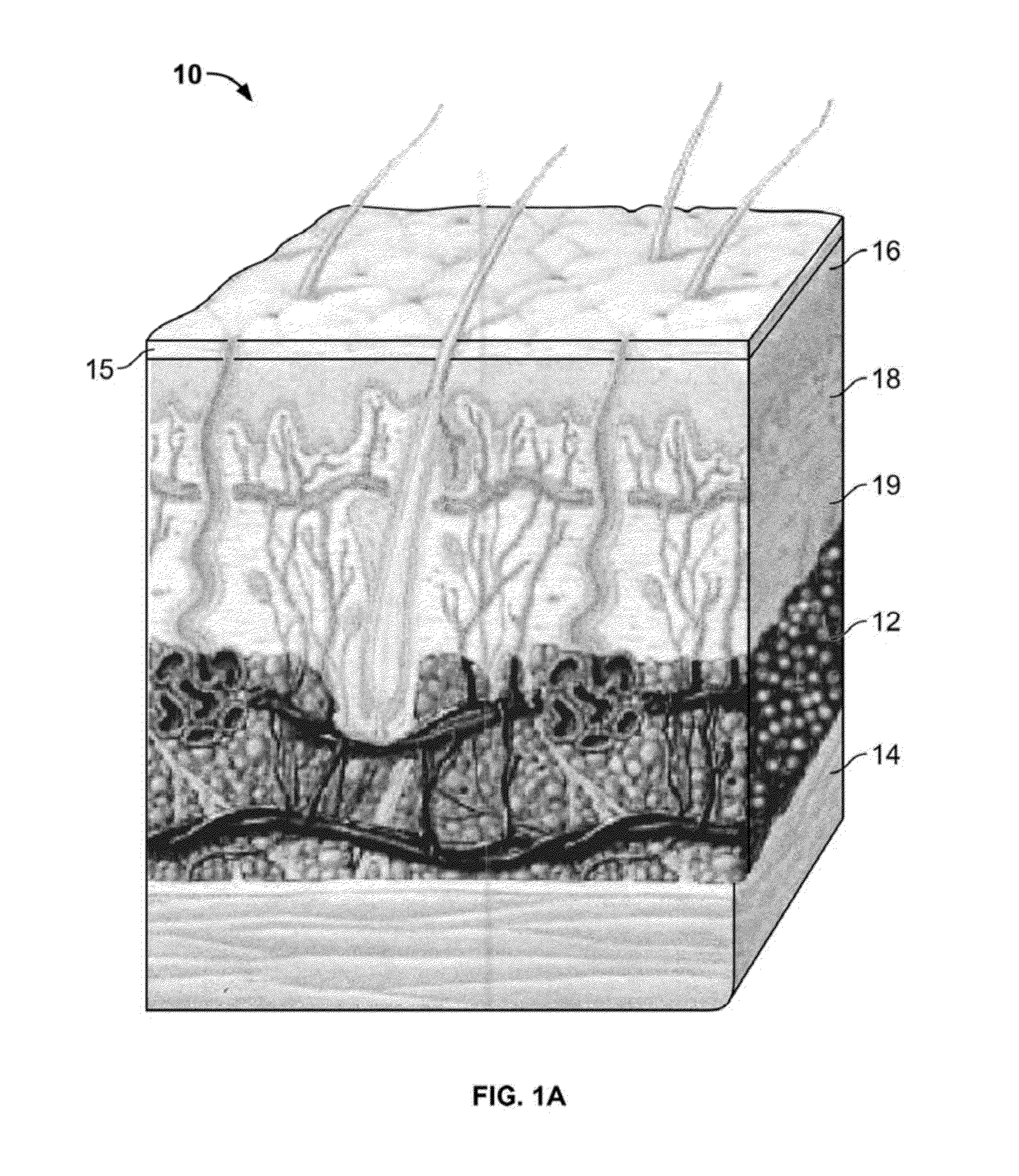

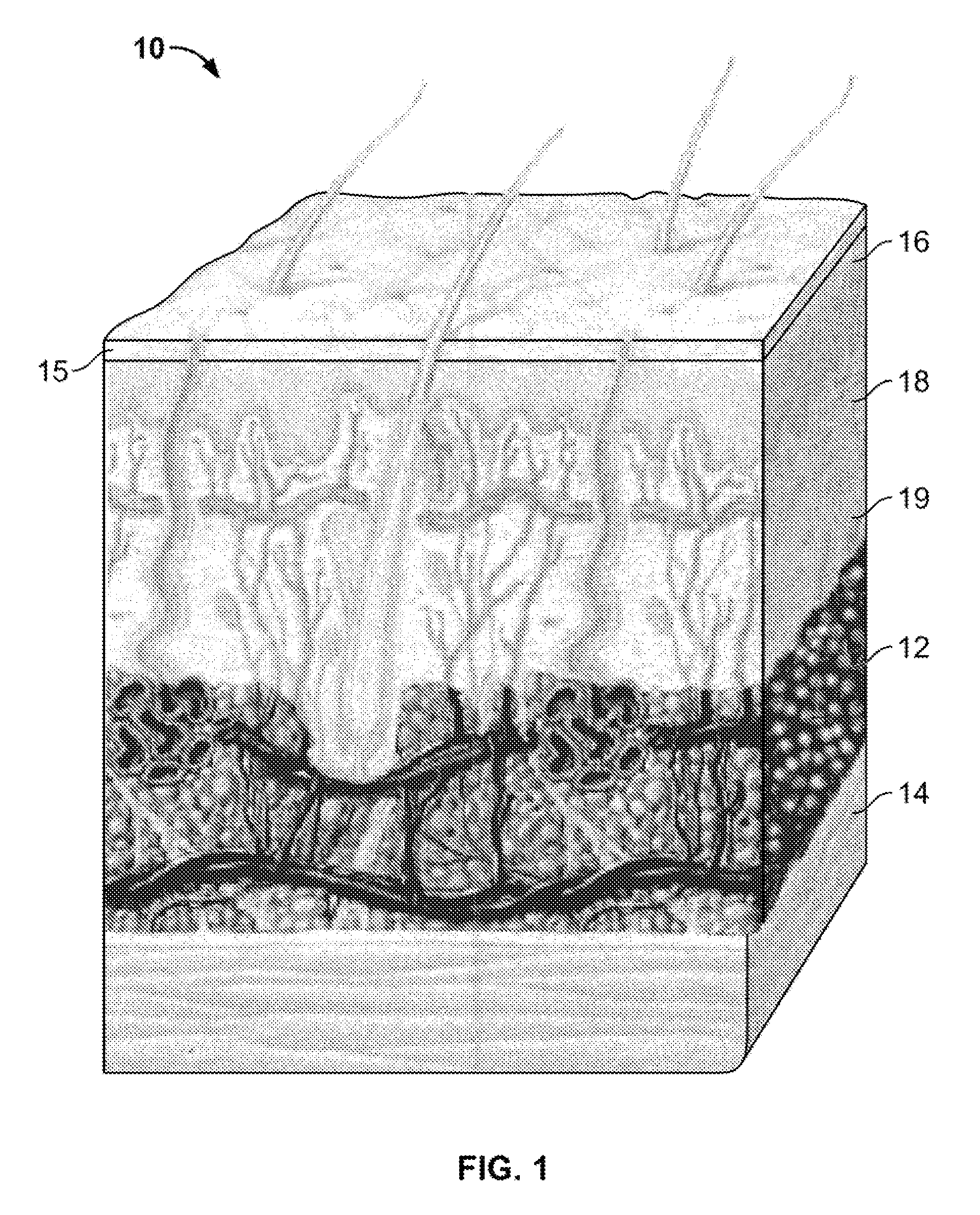

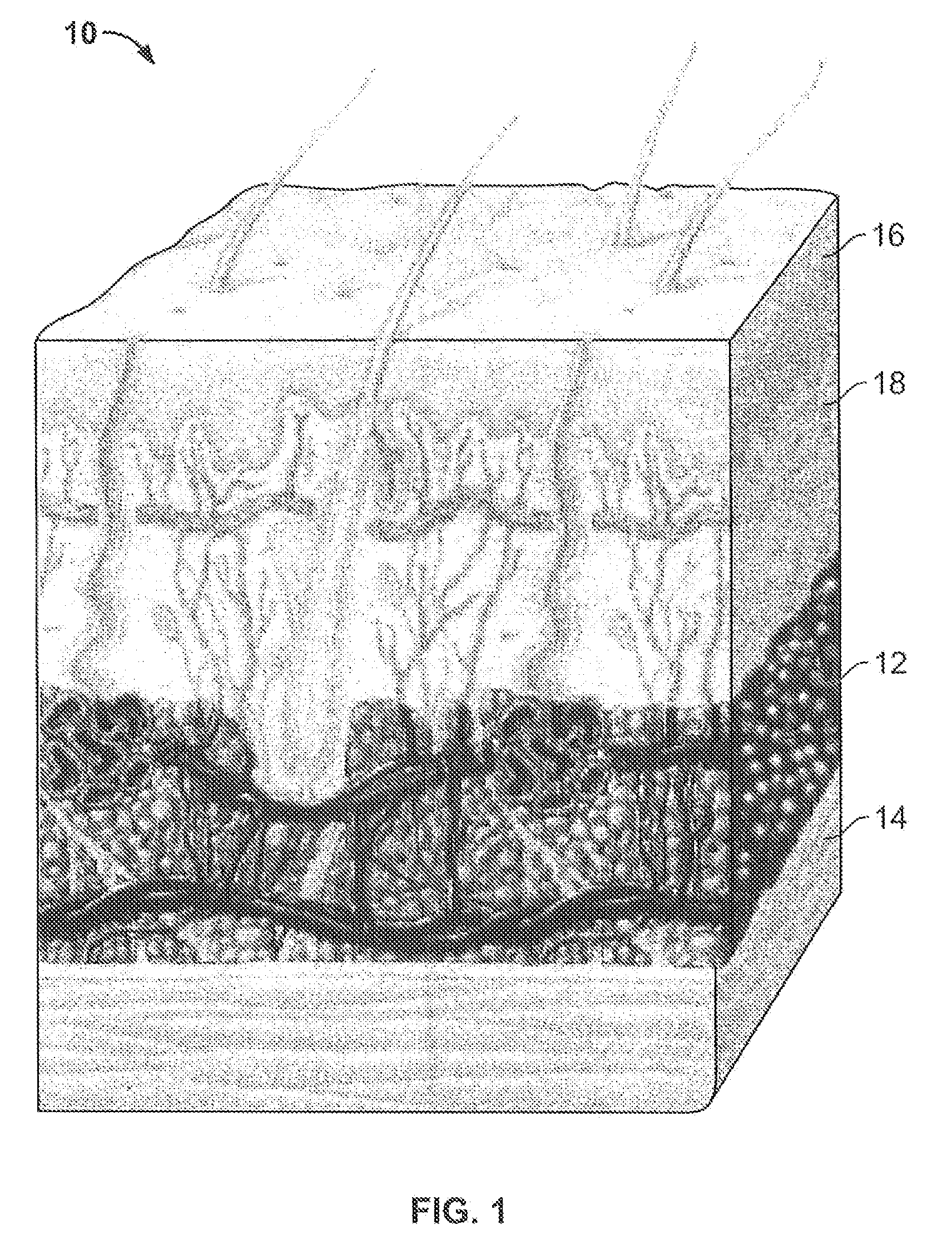

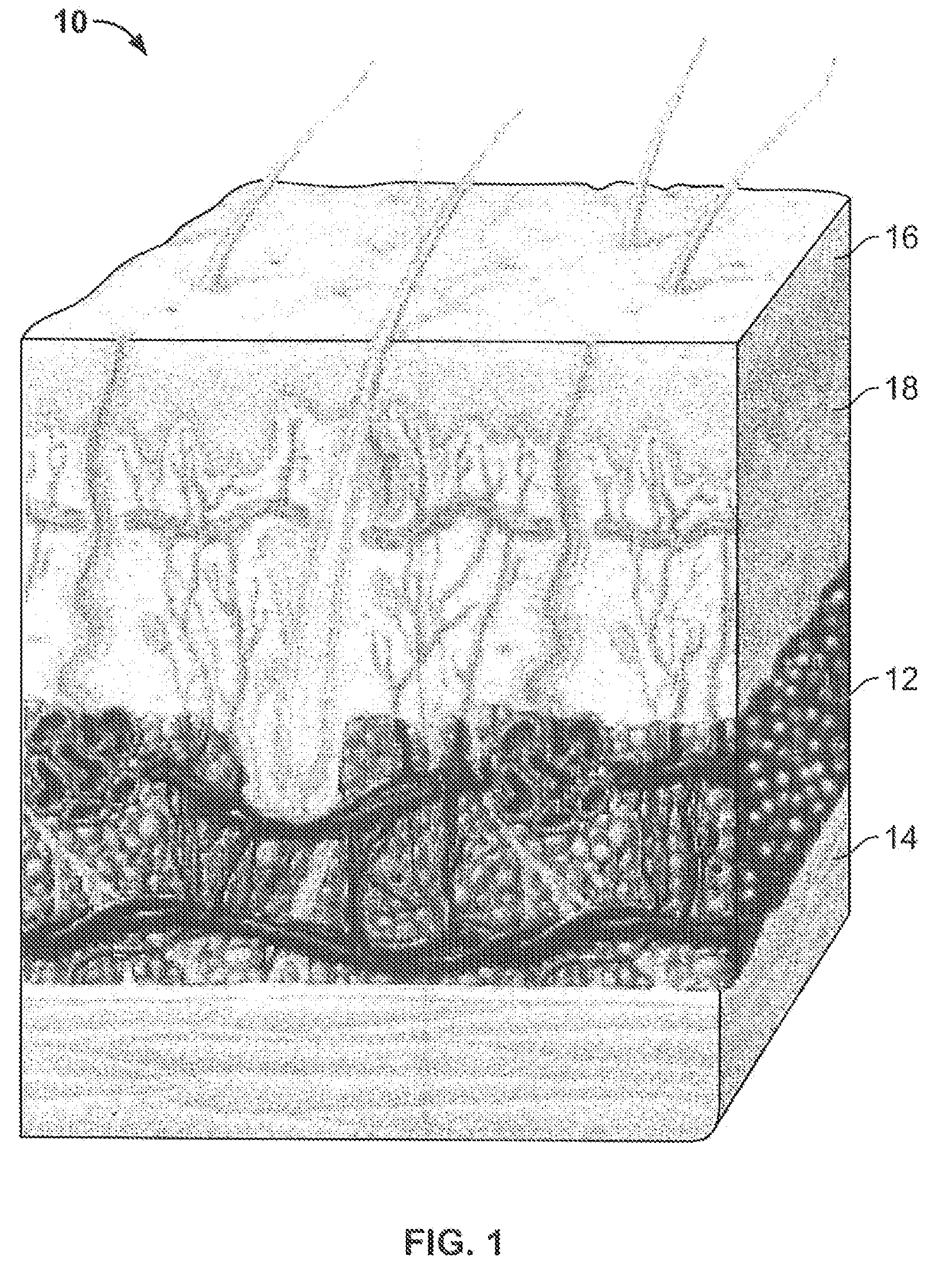

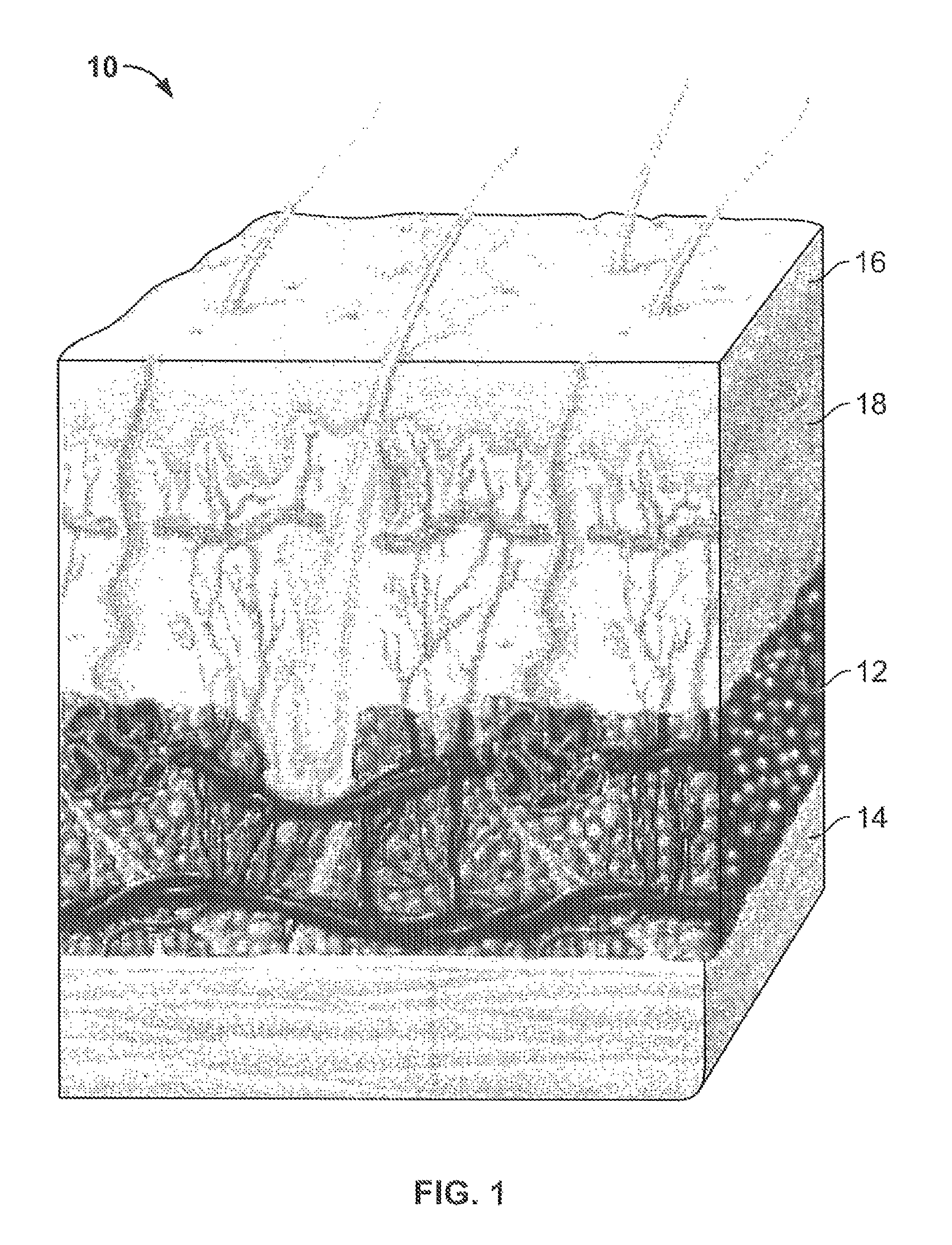

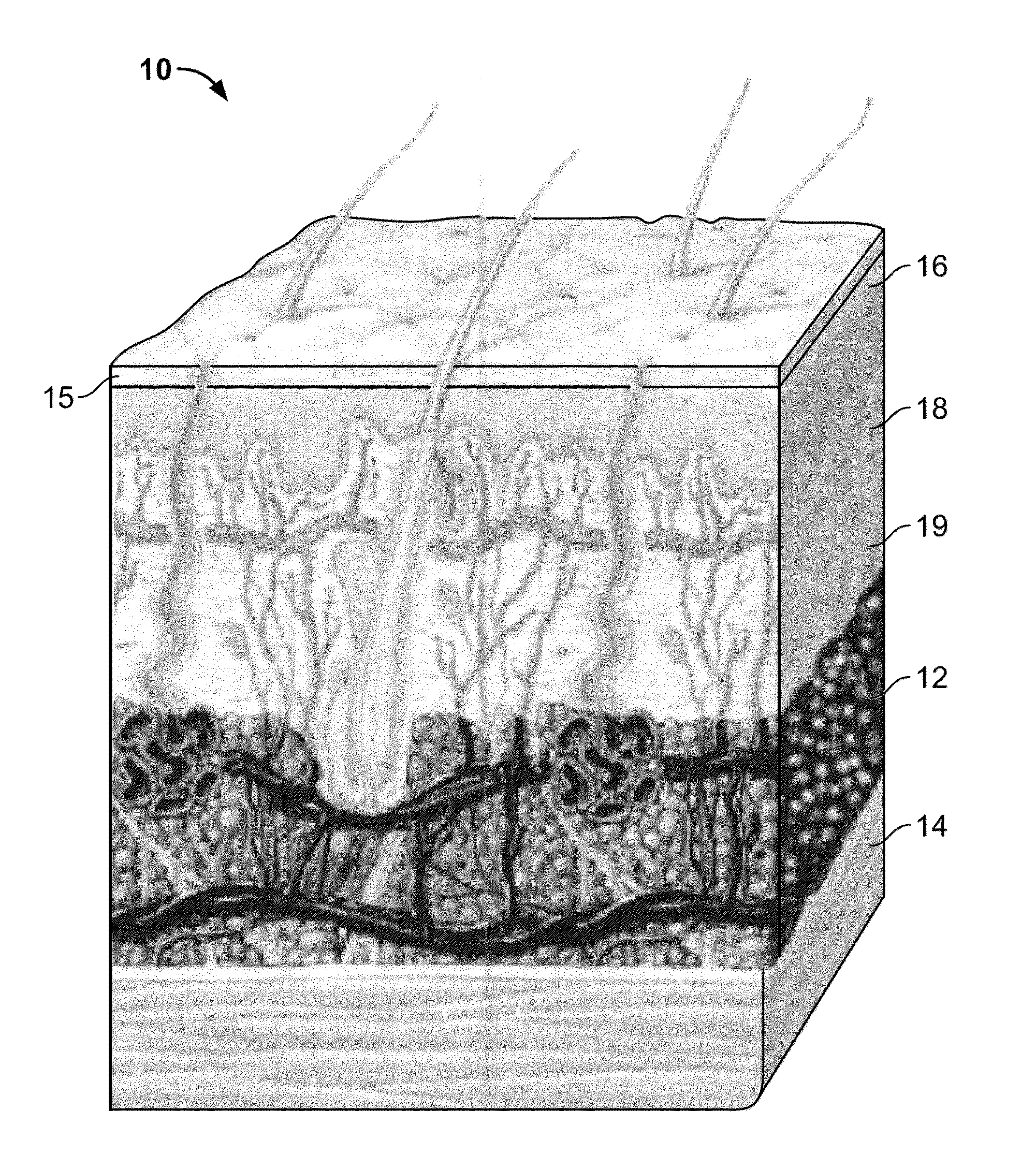

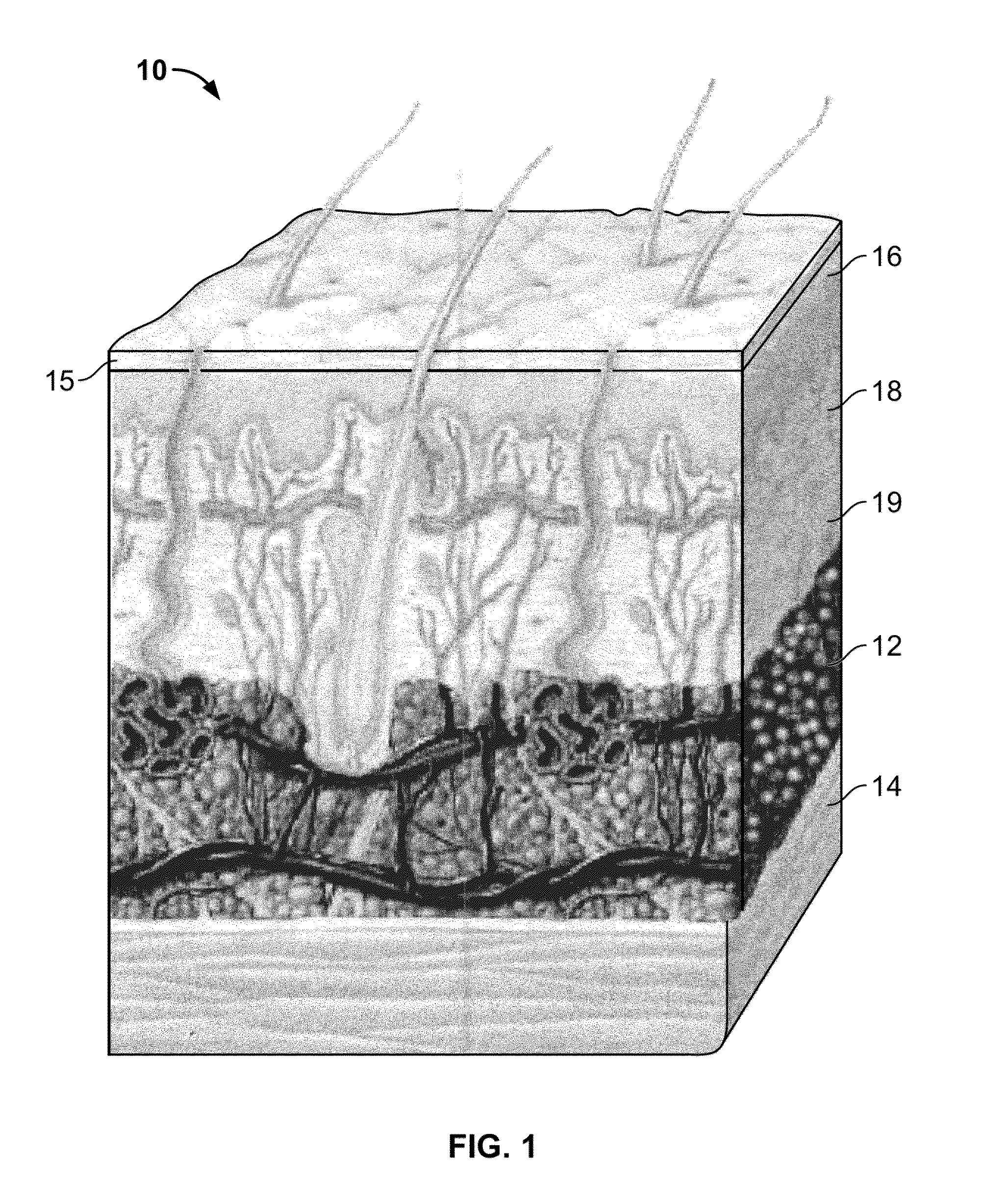

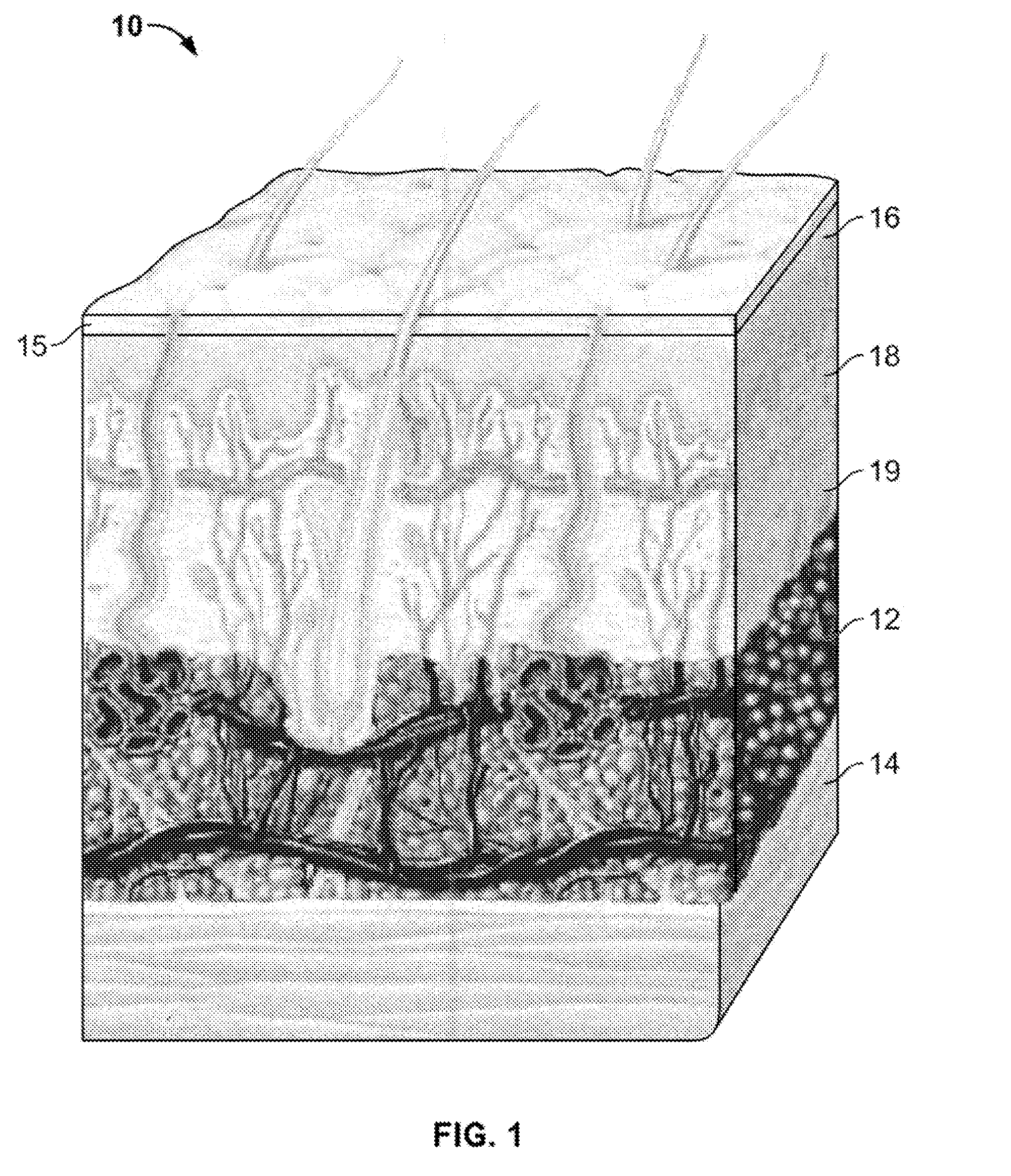

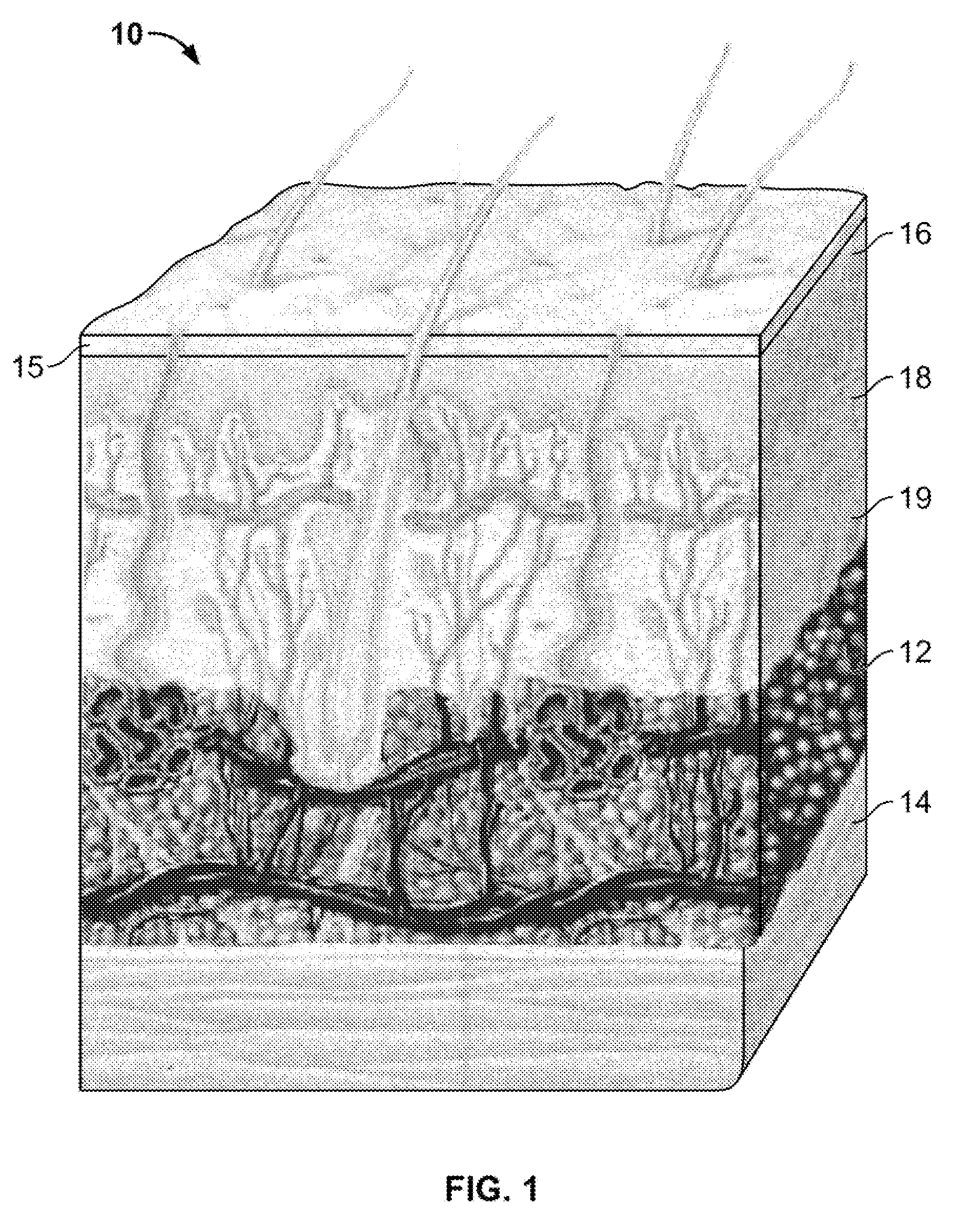

Methods and devices for treating tissue

Active | US20080091182A1 | Avoid energy; Easy to insert; Electrotherapy; Surgical needles; Energy minimization; Non invasive

The invention provides a system and method for achieving the cosmetically beneficial effects of shrinking collagen tissue in the dermis or other areas of tissue in an effective, non-invasive manner using an array of electrodes. Systems described herein allow for improved treatment of tissue. Additional variations of the system include an array of electrodes configured to minimize the energy required to produce the desired effect.

Owner:SYNERON MEDICAL LTD

Methods and devices for treating tissue

Inactive | US20080312647A1 | Easy to insert; Minimize formation; Surgical needles; Surgical instruments for heating; Energy minimization; Non invasive

The invention provides a system and method for achieving the cosmetically beneficial effects of shrinking collagen tissue in the dermis or other areas of tissue in an effective, non-invasive manner using an array of electrodes. Systems described herein allow for improved treatment of tissue. Additional variations of the system include an array of electrodes configured to minimize the energy required to produce the desired effect.

Owner:SYNERON MEDICAL LTD

Video object segmentation using active contour model with directional information

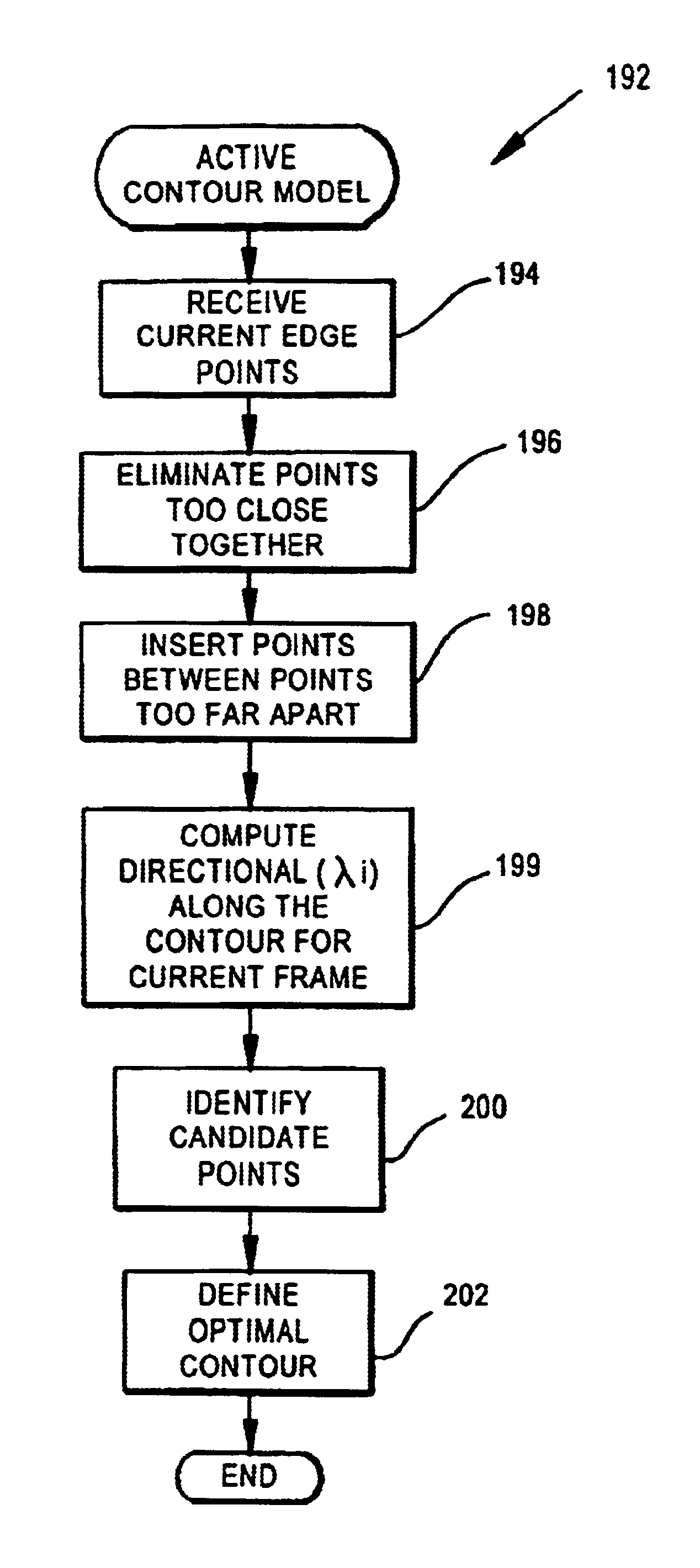

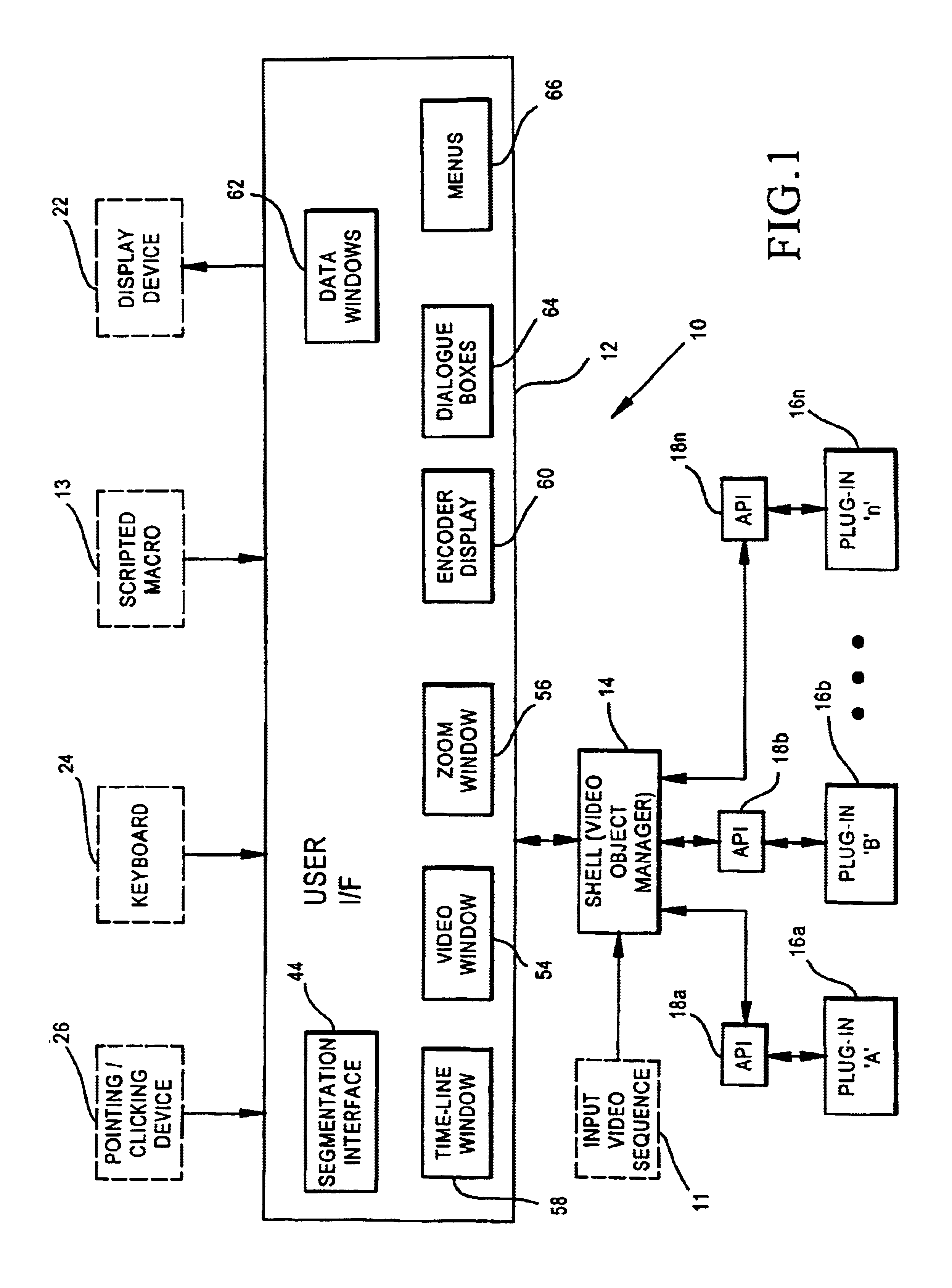

Inactive | US6912310B1 | Improve segmentation; Accurate segmentation; Image analysis; Character and pattern recognition; Gradient strength; Energy minimization

Object segmentation and tracking are improved by including directional information to guide the placement of an active contour (i.e., the elastic curve or ‘snake’) in estimating the object boundary. In estimating an object boundary the active contour deforms from an initial shape to adjust to image features using an energy minimizing function. The function is guided by external constraint forces and image forces to achieve a minimal total energy of the active contour. Both gradient strength and gradient direction of the image are analyzed in minimizing contour energy for an active contour model.
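One way to fold gradient direction into the external energy is sketched below. The formulation is an assumption for illustration, not the patented function: each contour point carries an expected unit normal, and the point is rewarded (negative energy) only in proportion to the gradient strength along that normal, so edges whose gradient points the wrong way (a bright-to-dark transition where dark-to-bright is expected, for instance) contribute nothing. Points are (row, column) pairs sampled at the nearest pixel; the function name is hypothetical.

```python
import numpy as np

def directional_edge_energy(image, points, normals):
    """External snake energy per contour point using gradient strength AND direction.

    points:  (n, 2) array of (row, col) positions
    normals: (n, 2) array of unit (d_row, d_col) vectors giving the direction in
             which image intensity is expected to increase across the boundary
    """
    g_row, g_col = np.gradient(image.astype(float))
    r = np.clip(np.rint(points[:, 0]).astype(int), 0, image.shape[0] - 1)
    c = np.clip(np.rint(points[:, 1]).astype(int), 0, image.shape[1] - 1)
    grad = np.stack([g_row[r, c], g_col[r, c]], axis=1)
    strength = np.linalg.norm(grad, axis=1)
    # Cosine between the image gradient and the expected normal (0 on flat areas)
    cos = np.einsum("ij,ij->i", grad, normals) / np.maximum(strength, 1e-12)
    return -strength * np.clip(cos, 0.0, None)

# Dark left half, bright right half: the edge lies between columns 31 and 32
img = np.zeros((64, 64))
img[:, 32:] = 1.0
pts = np.array([[10.0, 31.0], [10.0, 31.0], [10.0, 5.0]])
nrm = np.array([[0.0, 1.0], [0.0, -1.0], [0.0, 1.0]])
energies = directional_edge_energy(img, pts, nrm)
```

Only the first point, which sits on the edge with a correctly oriented normal, gets negative energy; the same edge with a flipped normal and a point on a flat area both score zero. In a full active contour this term would be added to the internal (elasticity and bending) energy and minimized over candidate positions for each point.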

Owner:UNIV OF WASHINGTON

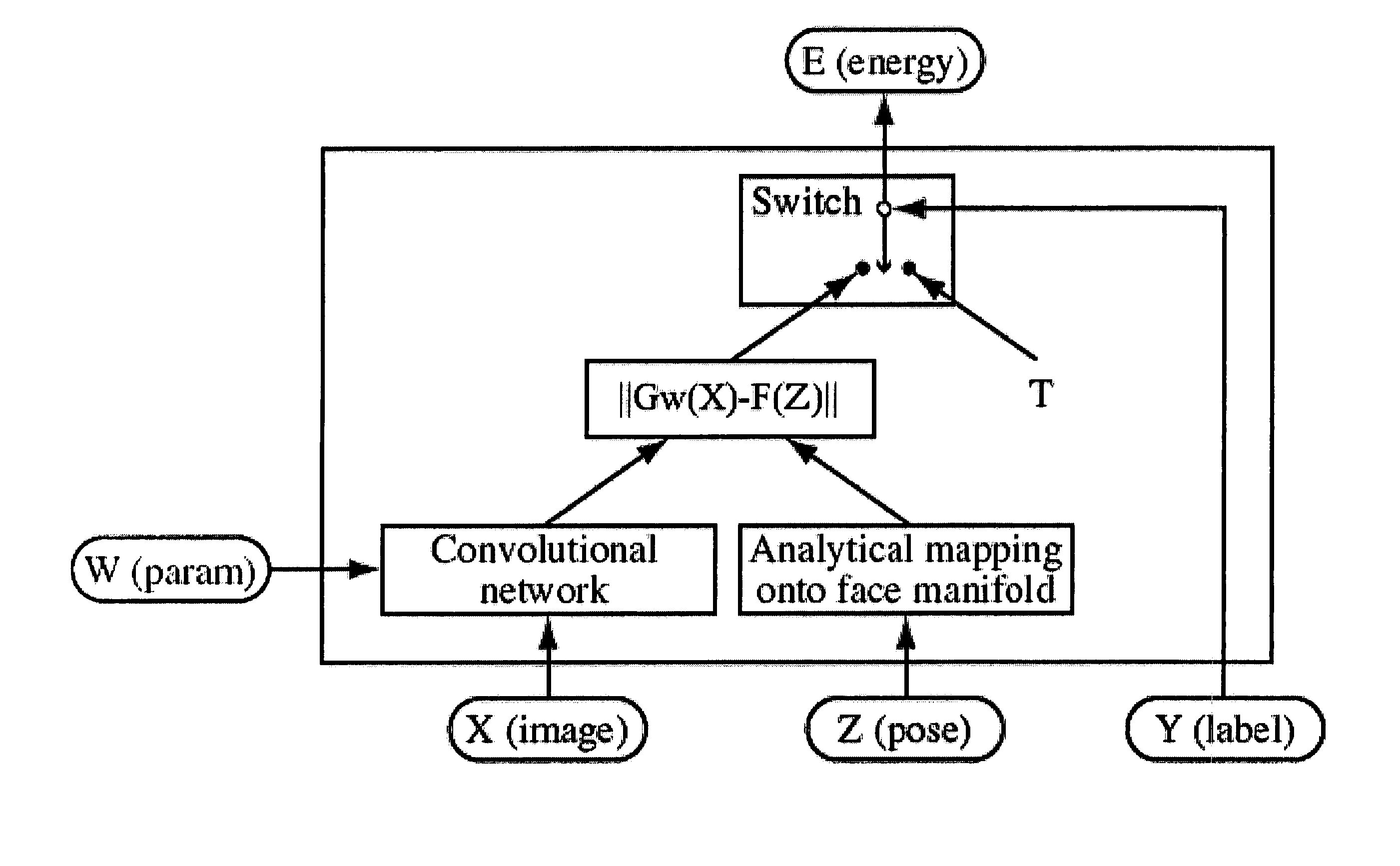

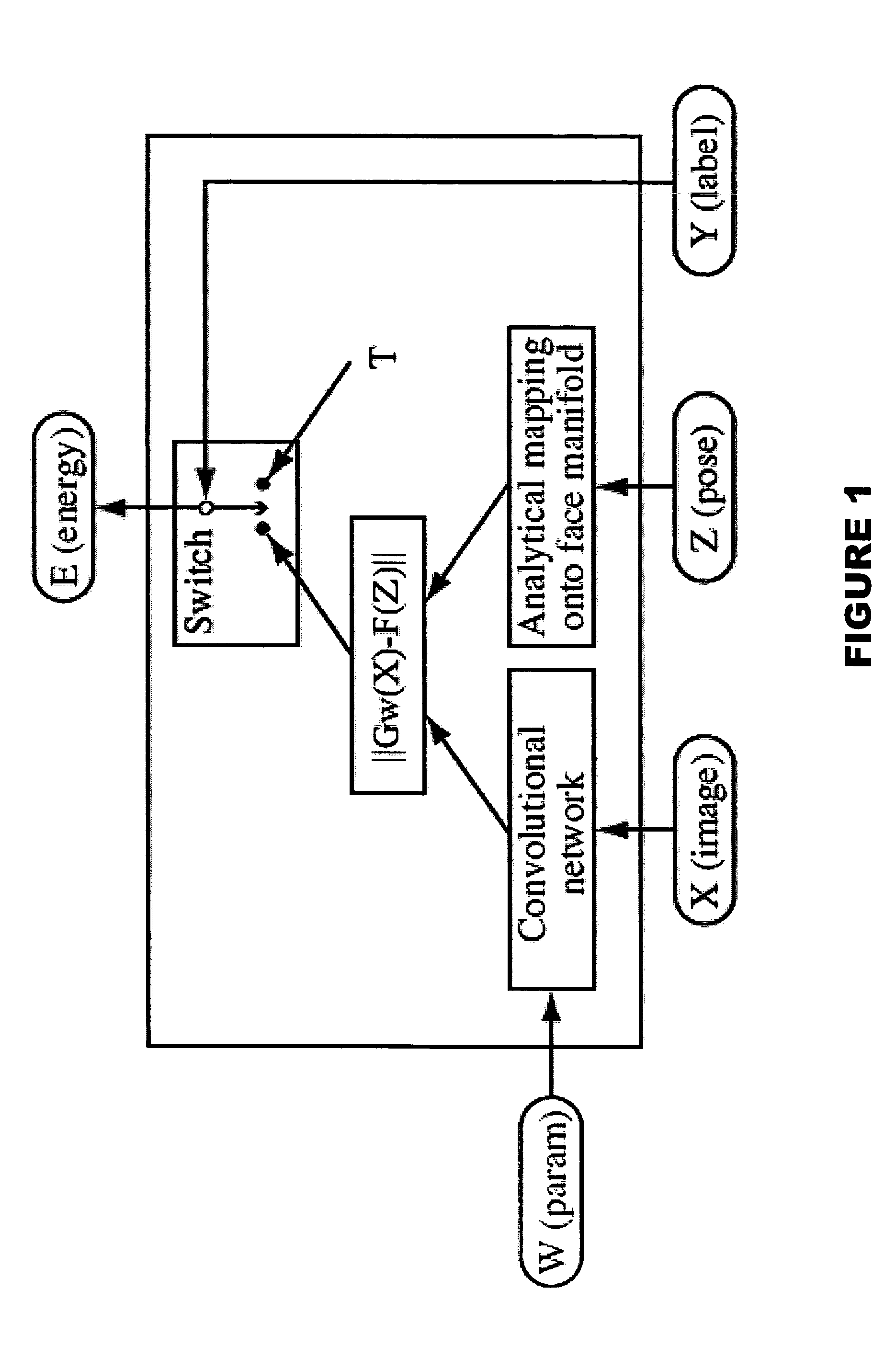

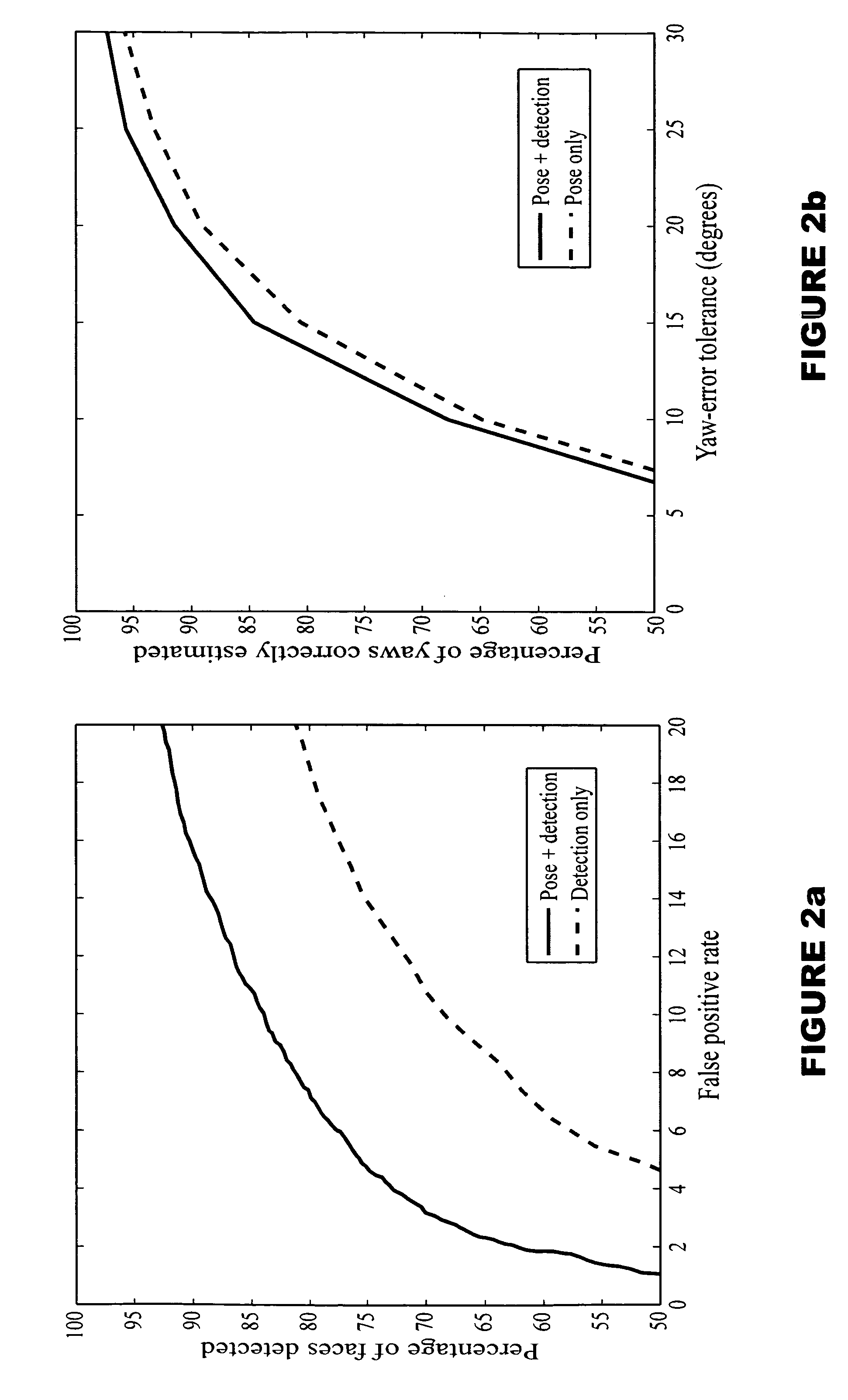

Synergistic face detection and pose estimation with energy-based models

Active | US20060034495A1 | Improve reliability; Three-dimensional object recognition; Face detection; Energy minimization

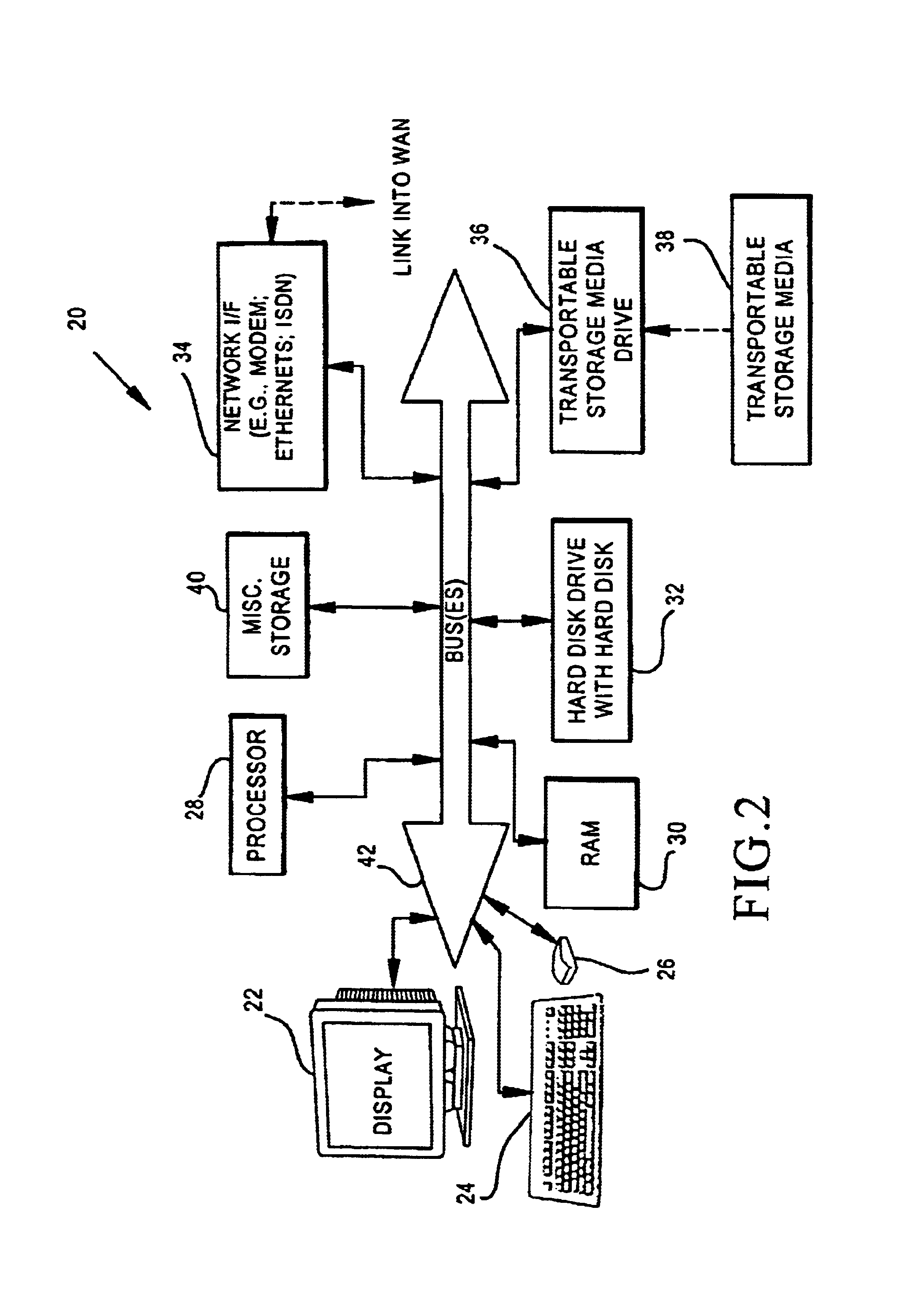

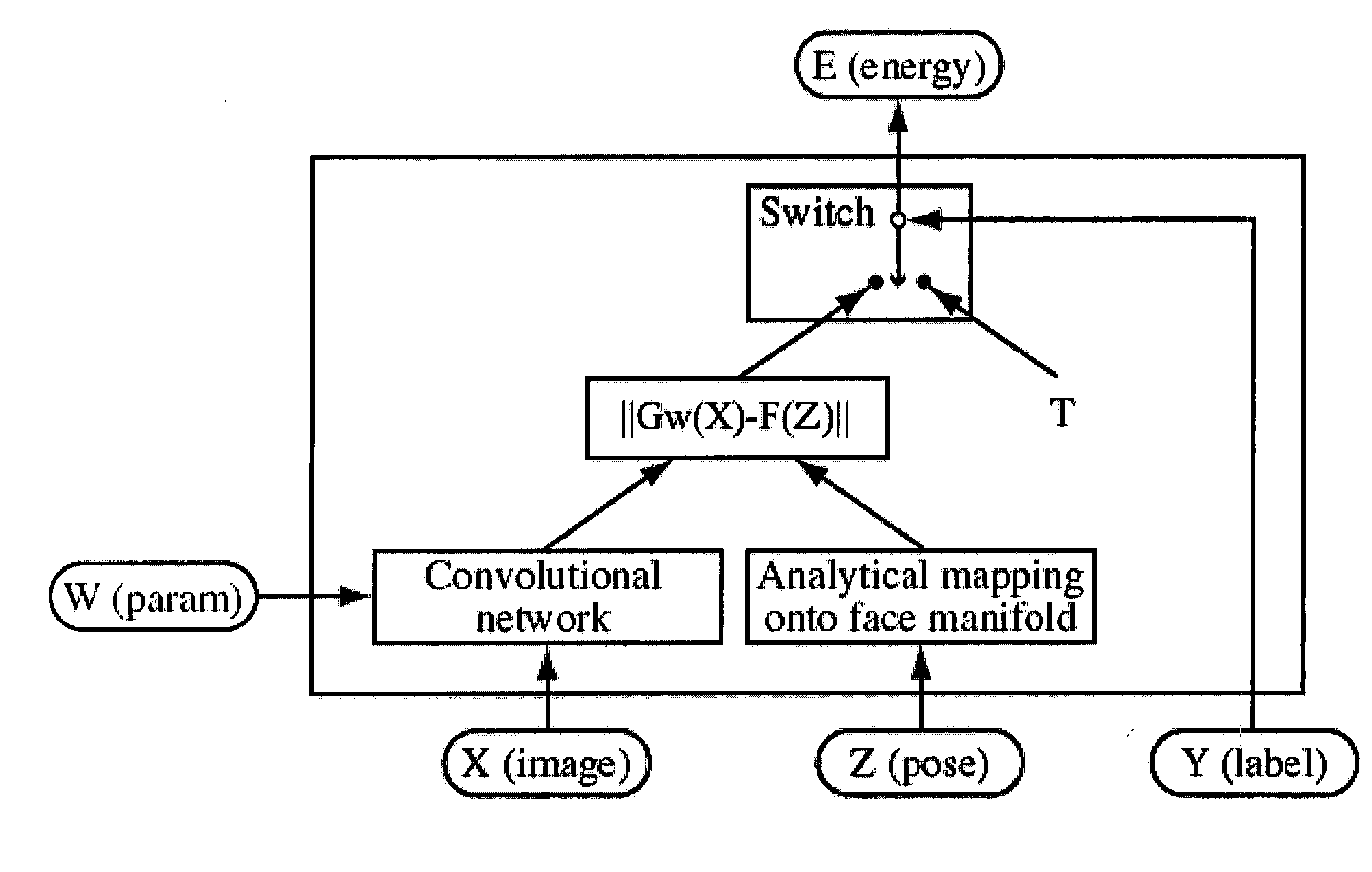

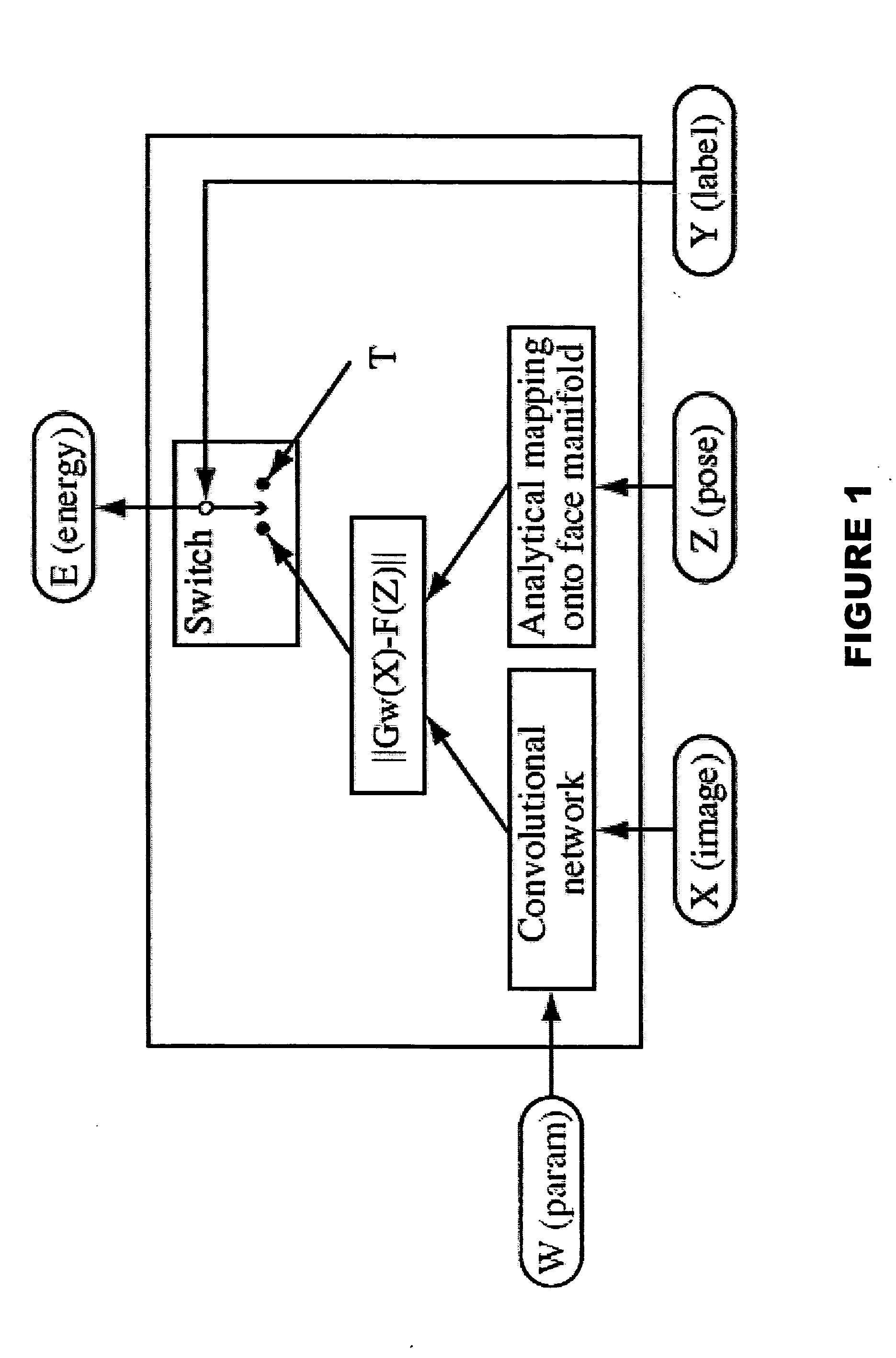

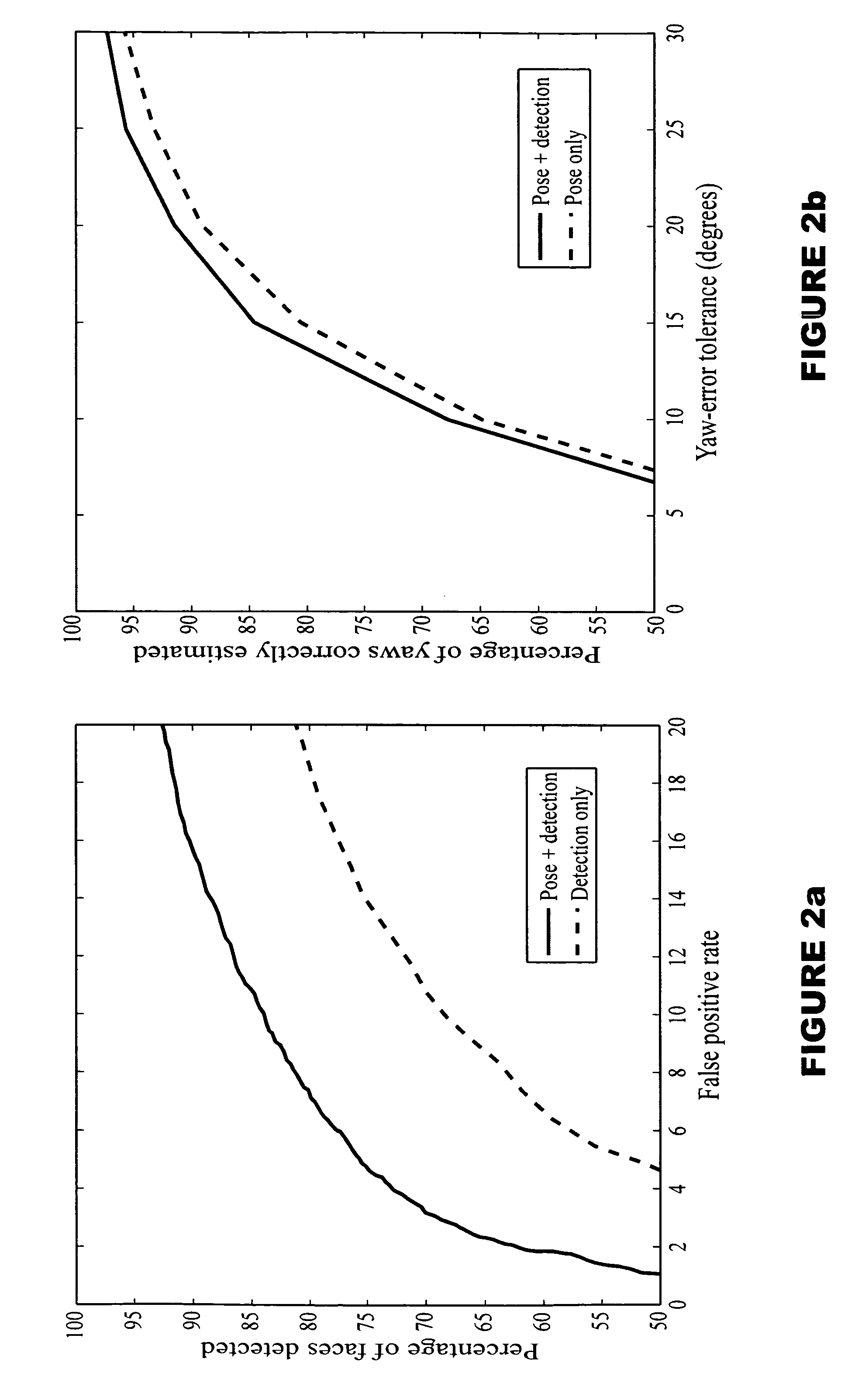

A method for human face detection that detects faces independently of their particular poses and simultaneously estimates those poses. Our method is immune to variations in skin color, eyeglasses, facial hair, lighting, scale, facial expression, and other factors. In operation, we train a convolutional neural network to map face images to points on a face manifold, and non-face images to points far away from that manifold, wherein that manifold is parameterized by facial pose. Conceptually, we view a pose parameter as a latent variable, which may be inferred through an energy-minimization process. To train systems based upon our inventive method, we derive a new type of discriminative loss function that is tailored to such detection tasks. Our method enables a multi-view detector that can detect faces in a variety of poses, for example, looking left or right (yaw axis), up or down (pitch axis), or tilting left or right (roll axis). Systems employing our method are highly reliable, run at near real time (5 frames per second on conventional hardware), and are robust against variations in yaw (±90°), roll (±45°), and pitch (±60°).
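The latent-variable inference step can be sketched as follows. Everything here is a hypothetical stand-in: the trained convolutional network is replaced by a precomputed output vector `g_x`, the face manifold is a unit circle parameterized by yaw alone, and the detection threshold is arbitrary. The energy for a candidate pose Z is the distance between the network output and the manifold point F(Z); the pose estimate is the minimizing Z, and the image counts as a face only if that minimum energy is below the threshold.

```python
import numpy as np

def face_manifold(yaw):
    """Hypothetical manifold F(Z): yaw angle (radians) -> point on a unit circle."""
    return np.array([np.cos(yaw), np.sin(yaw)])

def detect_and_estimate(g_x, threshold=0.3, yaw_grid=None):
    """Minimise E(Z) = ||G(X) - F(Z)|| over a grid of candidate poses Z."""
    if yaw_grid is None:
        yaw_grid = np.radians(np.arange(-90, 91))   # 1-degree steps, +/-90 degrees
    manifold = np.stack([face_manifold(z) for z in yaw_grid])
    energies = np.linalg.norm(manifold - g_x, axis=1)
    best = int(np.argmin(energies))
    return bool(energies[best] < threshold), yaw_grid[best], energies[best]

# An output lying on the manifold at 30 degrees yaw is a face with that pose;
# an output far from the manifold (the origin) is rejected
print(detect_and_estimate(face_manifold(np.radians(30))))
print(detect_and_estimate(np.zeros(2)))
```

A grid search is the simplest way to minimize over a one-dimensional latent pose; with several pose axes (yaw, pitch, roll) a finer or continuous minimization would be needed.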

Owner:NEC CORP

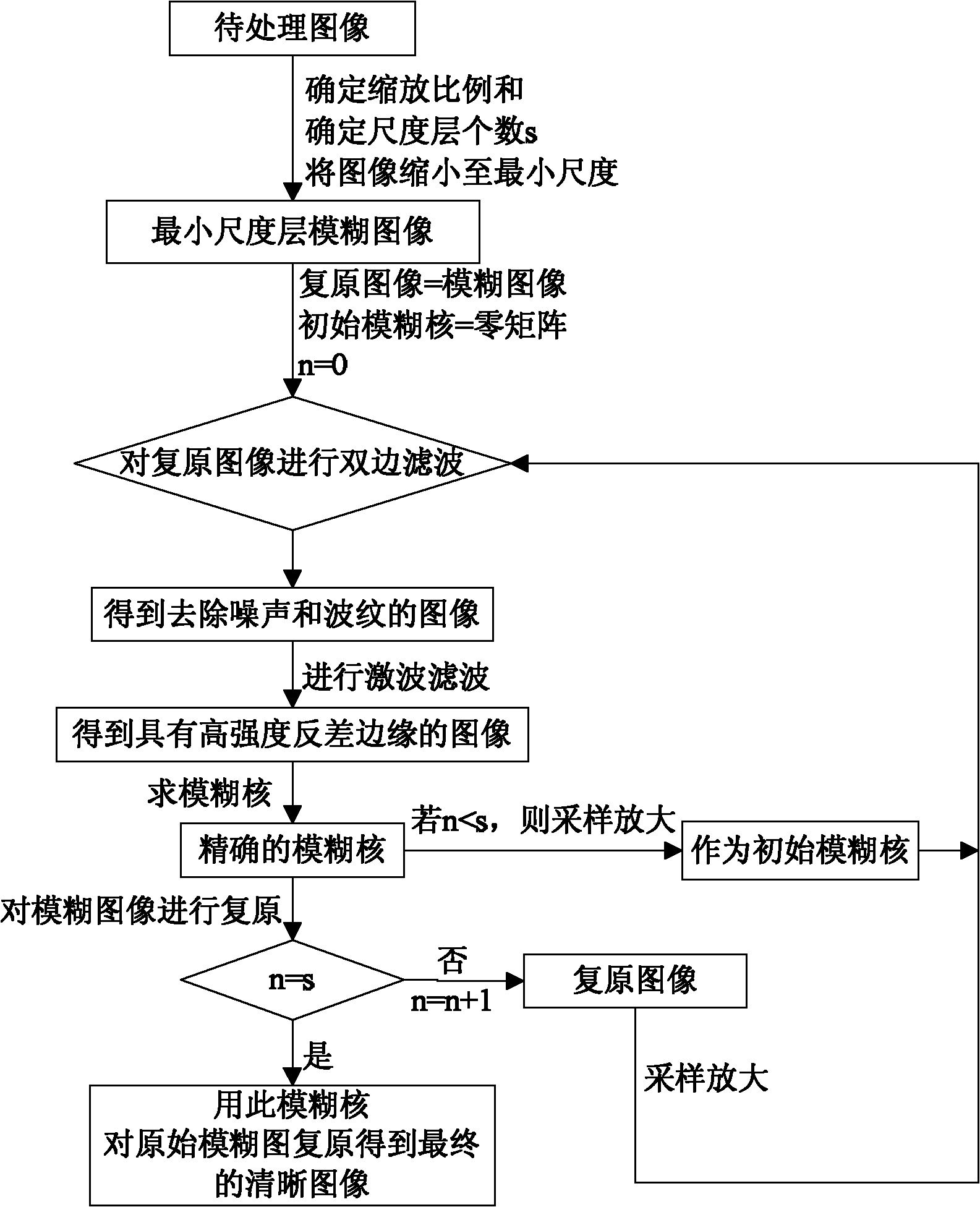

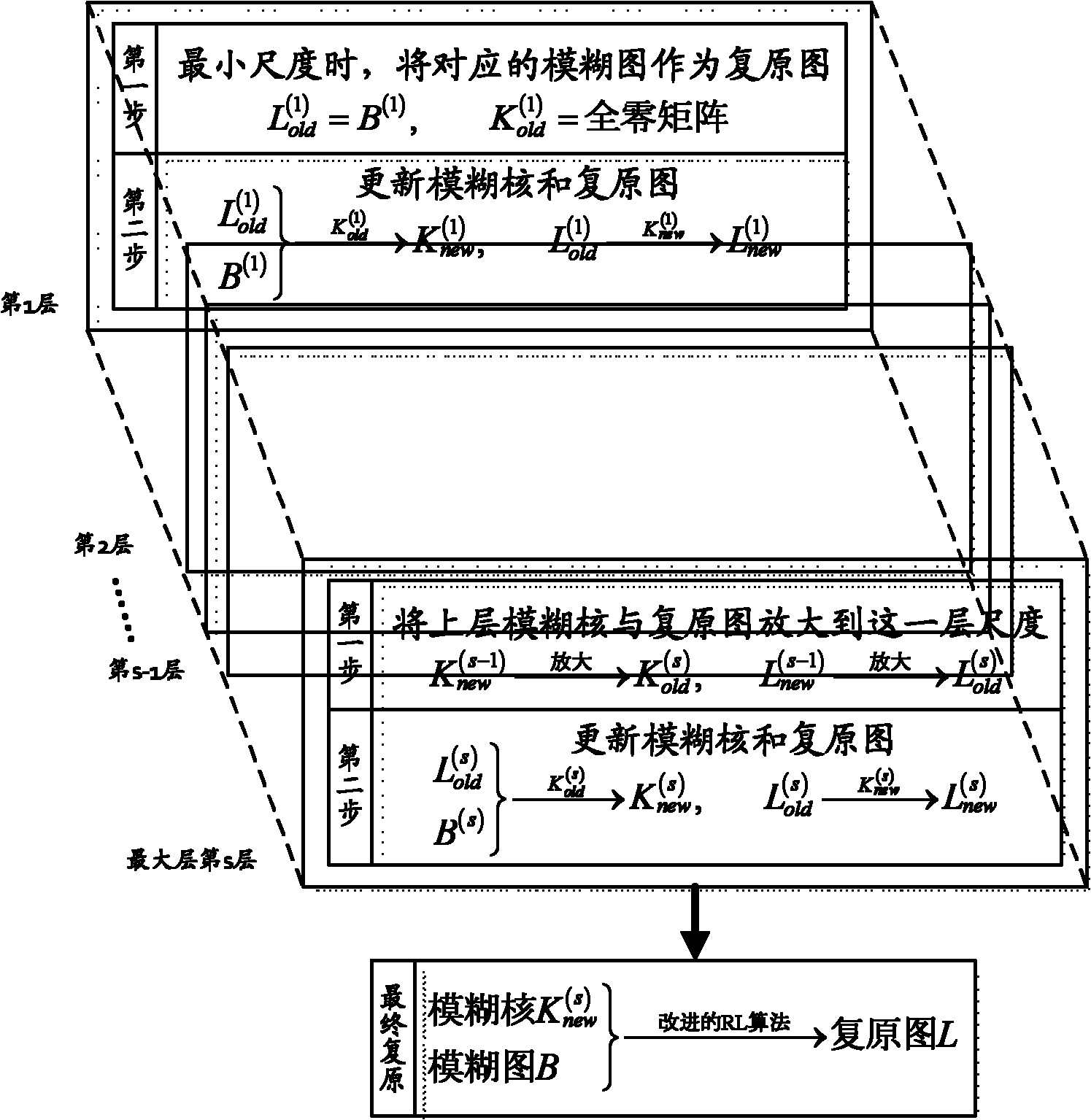

Edge information-based multi-scale blurred image blind restoration method

Inactive | CN101930601A | Effective convergence; Reduce computational complexity; Image enhancement; Shock wave; Pattern recognition

The invention discloses an edge information-based multi-scale blind restoration method for blurred images. The image is restored progressively in a loop, from the smallest scale layer to the largest, with adaptive parameters set at each scale. Each scale layer is processed as follows: the restored image is bilaterally filtered to remove noise and ripples; shock filtering is applied to obtain an image with high-contrast edges; the edges are extracted and combined with an initial blur kernel estimate and the blurred image to obtain an accurate blur kernel; the blurred image at the current scale is restored with the estimated blur kernel to obtain a sharp image; and the restored image and blur kernel are upsampled to serve as the initial values for the adjacent larger scale layer, on which the loop is repeated. The method converges effectively for a variety of images with different degrees of blur, and compared with general blind restoration methods that solve the energy minimization directly, it has lower computational complexity and stronger noise suppression.

Owner:ZHEJIANG UNIV

Devices and methods for percutaneous energy delivery

Inactive | US20100217253A1 | Avoid energy; Good looking; Surgical needles; Surgical instruments for heating; Energy minimization; Biomedical engineering

The invention provides a system and method for percutaneous energy delivery in an effective manner using one or more probes. Additional variations of the system include an array of probes configured to minimize the energy required to produce the desired effect.

Owner:SYNERON MEDICAL LTD

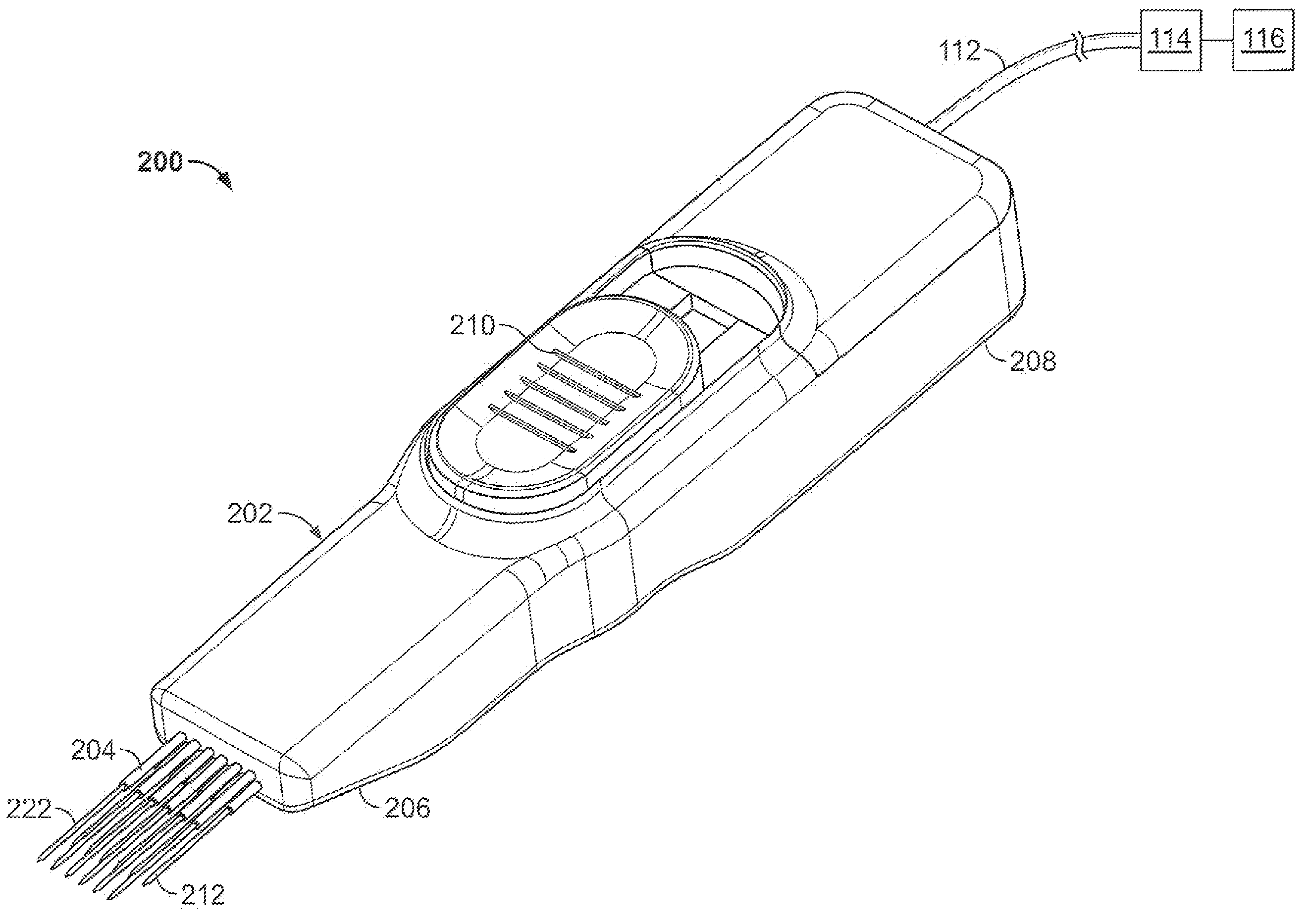

Methods and devices for treating tissue

Inactive | US20090036958A1 | Avoid energy; Easy to insert; Surgical needles; Surgical instrument details; Energy minimization; Non invasive

The invention provides a system and method for achieving the cosmetically beneficial effects of shrinking collagen tissue in the dermis or other areas of tissue in an effective, non-invasive manner using an array of electrodes. Systems described herein allow for improved treatment of tissue. Additional variations of the system include an array of electrodes configured to minimize the energy required to produce the desired effect.

Owner:SYNERON MEDICAL LTD

Cartridge electrode device

Inactive | US20090112205A1 | Avoid energy; Reduce unnecessary damage; Surgical instruments for heating; Energy minimization; Engineering

The invention provides a system and method for achieving the cosmetically beneficial effects of shrinking collagen tissue in the dermis or other areas of tissue in an effective, non-invasive manner using an array of electrodes. Systems described herein allow for improved treatment of tissue. Additional variations of the system include an array of electrodes configured to minimize the energy required to produce the desired effect.

Owner:SYNERON MEDICAL LTD

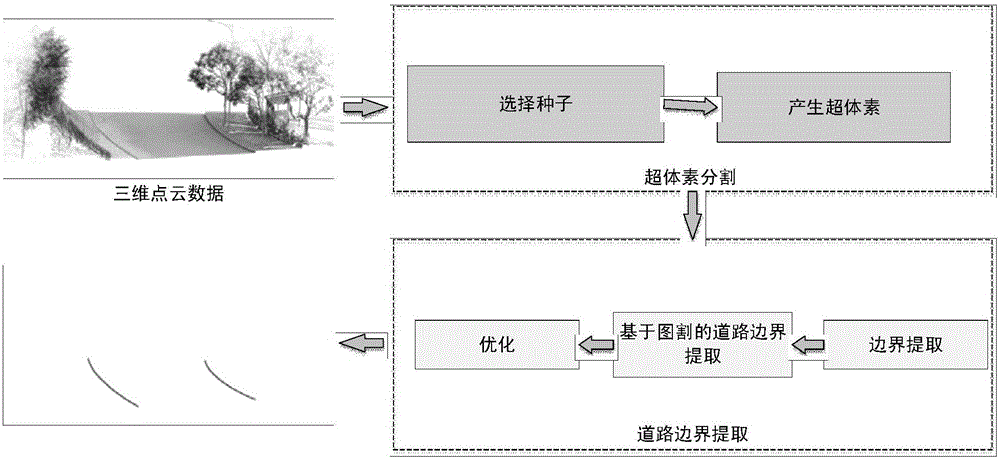

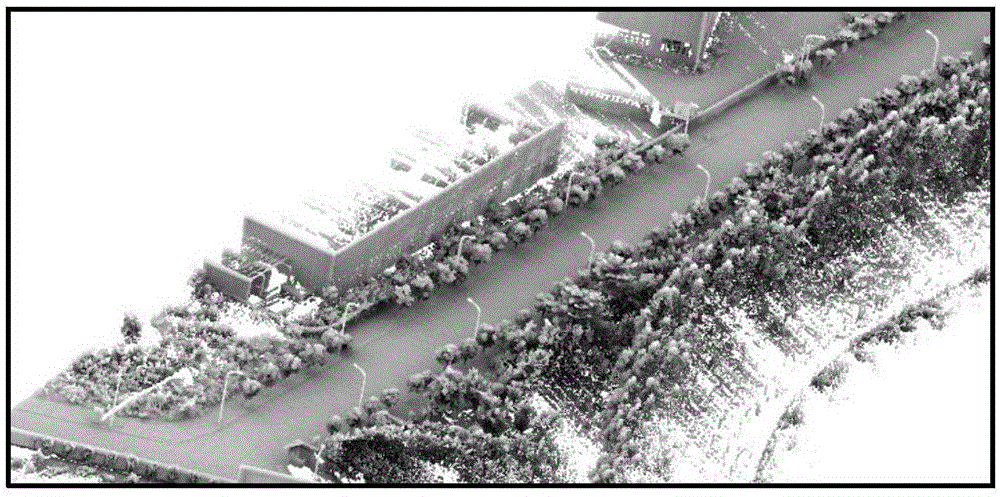

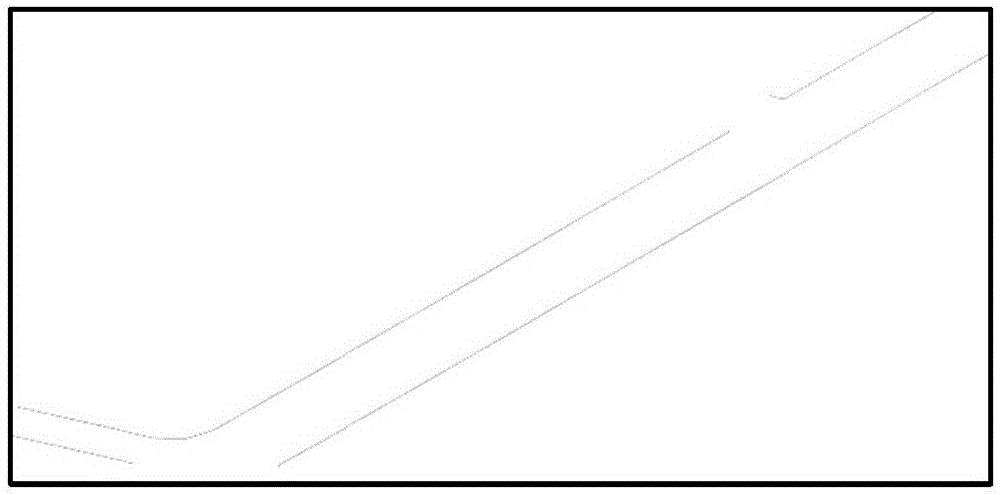

Three-dimensional point cloud road boundary automatic extraction method

Active | CN106780524A | Reduce human subjective intervention; The result is stable and robust; Image enhancement; Image analysis; Cluster algorithm; Voxel

The invention relates to the field of point cloud processing, and specifically discloses a method for automatically extracting road boundaries from three-dimensional point clouds. The method comprises the steps of: S1, selecting seed points from the entire acquired three-dimensional point cloud data set P to perform supervoxel classification; S2, extracting boundary points between adjacent non-coplanar supervoxels using an alpha-shape algorithm; S3, extracting road boundary points using a graph-cut-based energy minimization algorithm; S4, removing outliers using a Euclidean distance clustering algorithm; and S5, fitting the extracted road boundary points to a smooth curve. The method operates directly on large-scale three-dimensional point clouds, can be applied to different scenes, has high computation speed and good robustness, and can extract road boundaries quickly.
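Step S3 relies on the fact that a binary labeling energy with a Potts smoothness term can be minimized exactly by an s-t minimum cut. A minimal sketch, assuming each candidate point p has hypothetical costs (cost0, cost1) for being labeled non-boundary (0) or boundary (1), and that neighboring points pay `lam` whenever their labels differ. The max-flow is plain Edmonds-Karp rather than whatever solver the patent uses, and the costs and neighborhood are made up for illustration.

```python
from collections import deque

def min_cut_labels(unary, pairs, lam):
    """Exactly minimise sum_p unary[p][l_p] + lam * #{(p, q) in pairs : l_p != l_q}
    over binary labels l_p in {0, 1}, via an s-t minimum cut (Edmonds-Karp)."""
    n = len(unary)
    s, t = n, n + 1
    cap = [dict() for _ in range(n + 2)]   # residual capacities cap[u][v]

    def add_edge(u, v, c):
        cap[u][v] = cap[u].get(v, 0) + c
        cap[v].setdefault(u, 0)            # make sure the reverse edge exists

    for p, (cost0, cost1) in enumerate(unary):
        add_edge(s, p, cost1)   # cut iff p ends on the sink side (label 1)
        add_edge(p, t, cost0)   # cut iff p stays on the source side (label 0)
    for p, q in pairs:
        add_edge(p, q, lam)     # cut iff p and q are separated (labels differ)
        add_edge(q, p, lam)

    def reachable_from_source():
        parent = {s: None}
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        return parent

    while True:
        parent = reachable_from_source()
        if t not in parent:
            break
        # Walk back along the shortest augmenting path and push its bottleneck
        path, v = [], t
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        flow = min(cap[u][w] for u, w in path)
        for u, w in path:
            cap[u][w] -= flow
            cap[w][u] += flow

    source_side = reachable_from_source()
    return [0 if p in source_side else 1 for p in range(n)]

# Five points along a scan line with hypothetical costs
labels = min_cut_labels([(0, 5), (0, 5), (3, 2), (5, 0), (5, 0)],
                        [(0, 1), (1, 2), (2, 3), (3, 4)], lam=1)
print(labels)   # -> [0, 0, 1, 1, 1]
```

After the maximum flow is found, the points still reachable from the source form the minimum cut's source side, and the cut capacity equals the minimum energy (3 in this example). Integer costs keep the augmenting-path loop exact.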

Owner:XIAMEN UNIV

Devices and methods for percutaneous energy delivery

Active | US8540705B2 | Avoid energy; Good looking; Surgical needles; Controlling energy of instrument; Energy minimization; Biomedical engineering

The invention provides a system and method for percutaneous energy delivery in an effective manner using one or more probes. Additional variations of the system include an array of probes configured to minimize the energy required to produce the desired effect.

Owner:SYNERON MEDICAL LTD

Synergistic face detection and pose estimation with energy-based models

Active | US7236615B2 | Improve reliability; Pictorial communication; Three-dimensional object recognition; Face detection; Energy minimization

A method for human face detection that detects faces independently of their particular poses and simultaneously estimates those poses. Our method is immune to variations in skin color, eyeglasses, facial hair, lighting, scale, facial expression, and other factors. In operation, we train a convolutional neural network to map face images to points on a face manifold, and non-face images to points far away from that manifold, wherein that manifold is parameterized by facial pose. Conceptually, we view a pose parameter as a latent variable, which may be inferred through an energy-minimization process. To train systems based upon our inventive method, we derive a new type of discriminative loss function that is tailored to such detection tasks. Our method enables a multi-view detector that can detect faces in a variety of poses, for example, looking left or right (yaw axis), up or down (pitch axis), or tilting left or right (roll axis). Systems employing our method are highly reliable, run at near real time (5 frames per second on conventional hardware), and are robust against variations in yaw (±90°), roll (±45°), and pitch (±60°).

Owner:NEC CORP

Haptic graphical user interface for adjusting mapped texture

Active | US20050128210A1 | Easy to find; Easy selection; Input/output for user-computer interaction; Cathode-ray tube indicators; Graphics; Energy minimization

The invention provides techniques for wrapping a two-dimensional texture conformally onto the surface of a three-dimensional virtual object within an arbitrarily shaped, user-defined region. The techniques provide minimum distortion and allow interactive manipulation of the mapped texture. They feature an energy minimization scheme in which distances between points on the surface of the three-dimensional virtual object serve as set lengths for springs connecting points of a planar mesh. The planar mesh is adjusted to minimize spring energy and is then used to define a patch onto which a two-dimensional texture is superimposed. Points on the surface of the virtual object are then mapped to corresponding points of the texture. The invention also features a haptic/graphical user interface element that allows a user to interactively and intuitively adjust the texture mapped within the arbitrary, user-defined region.

Owner:3D SYST INC

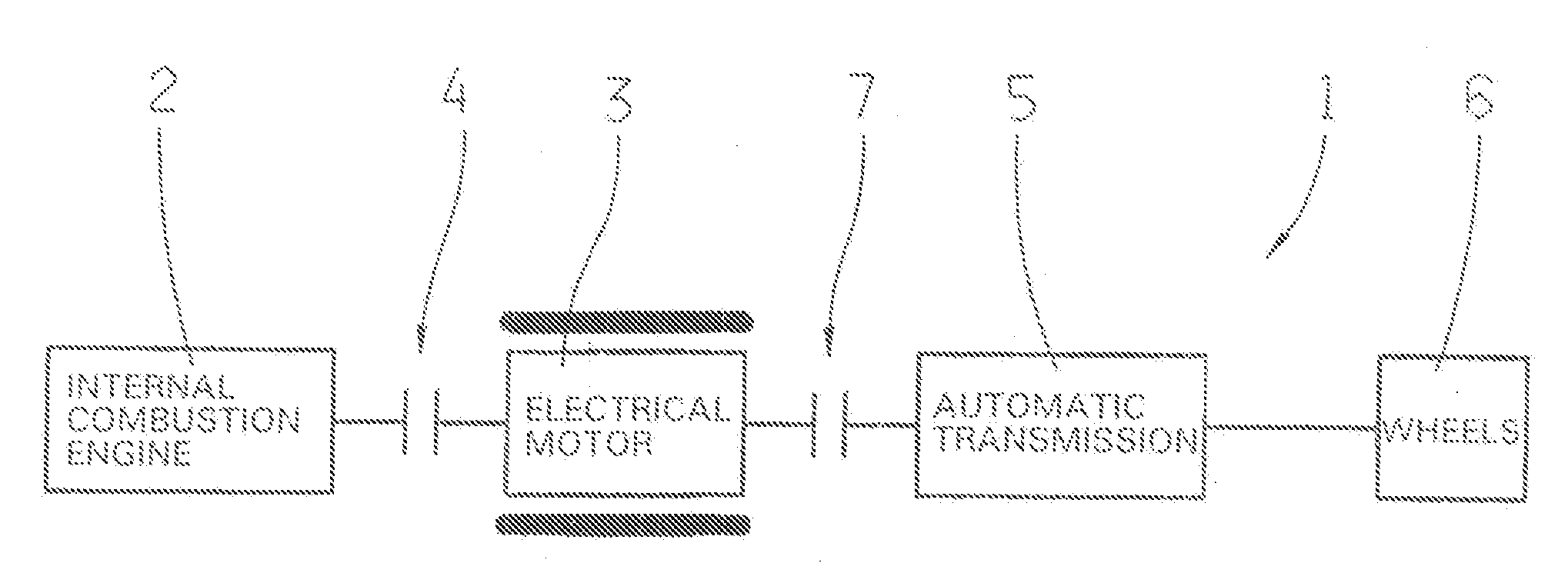

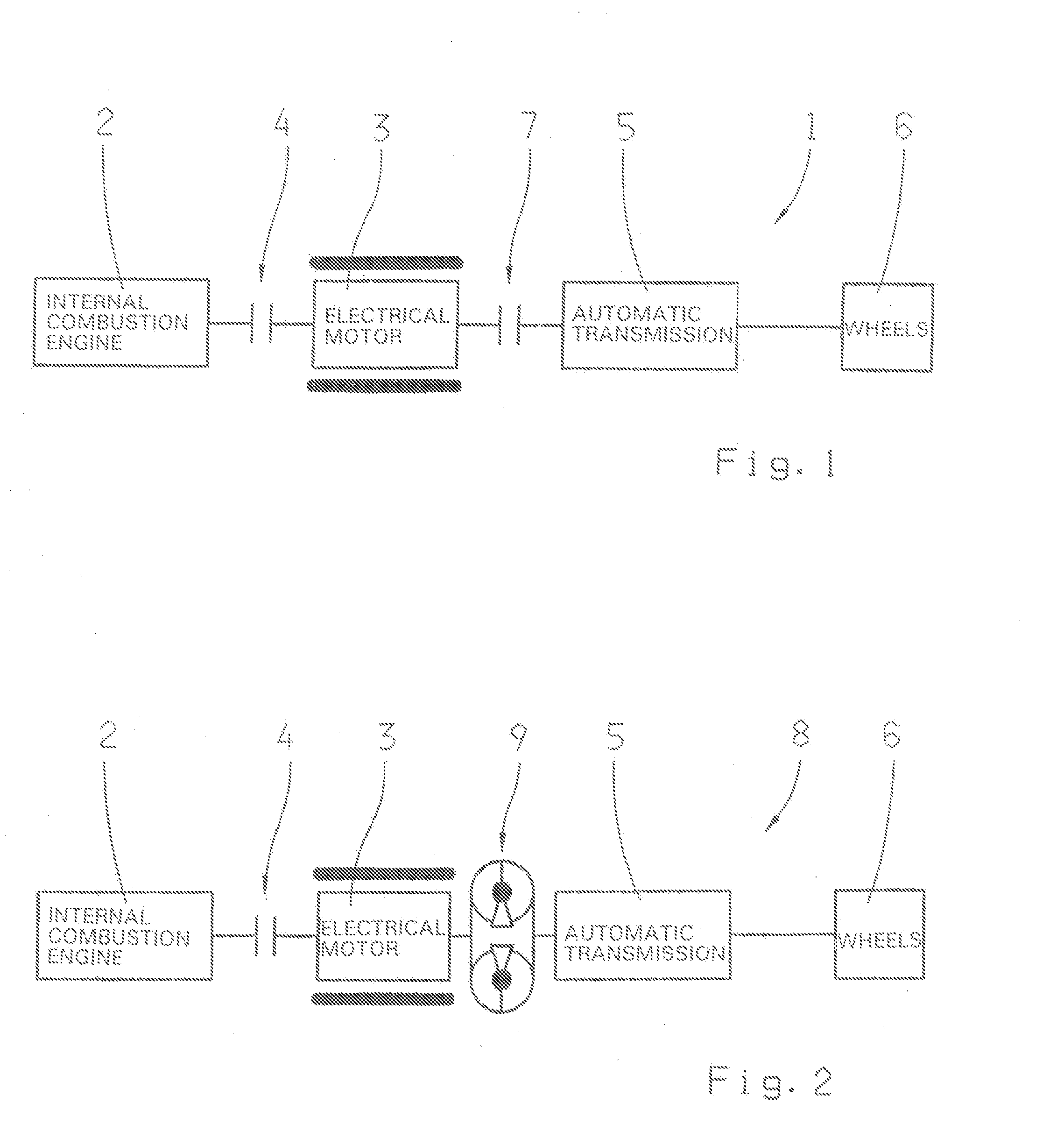

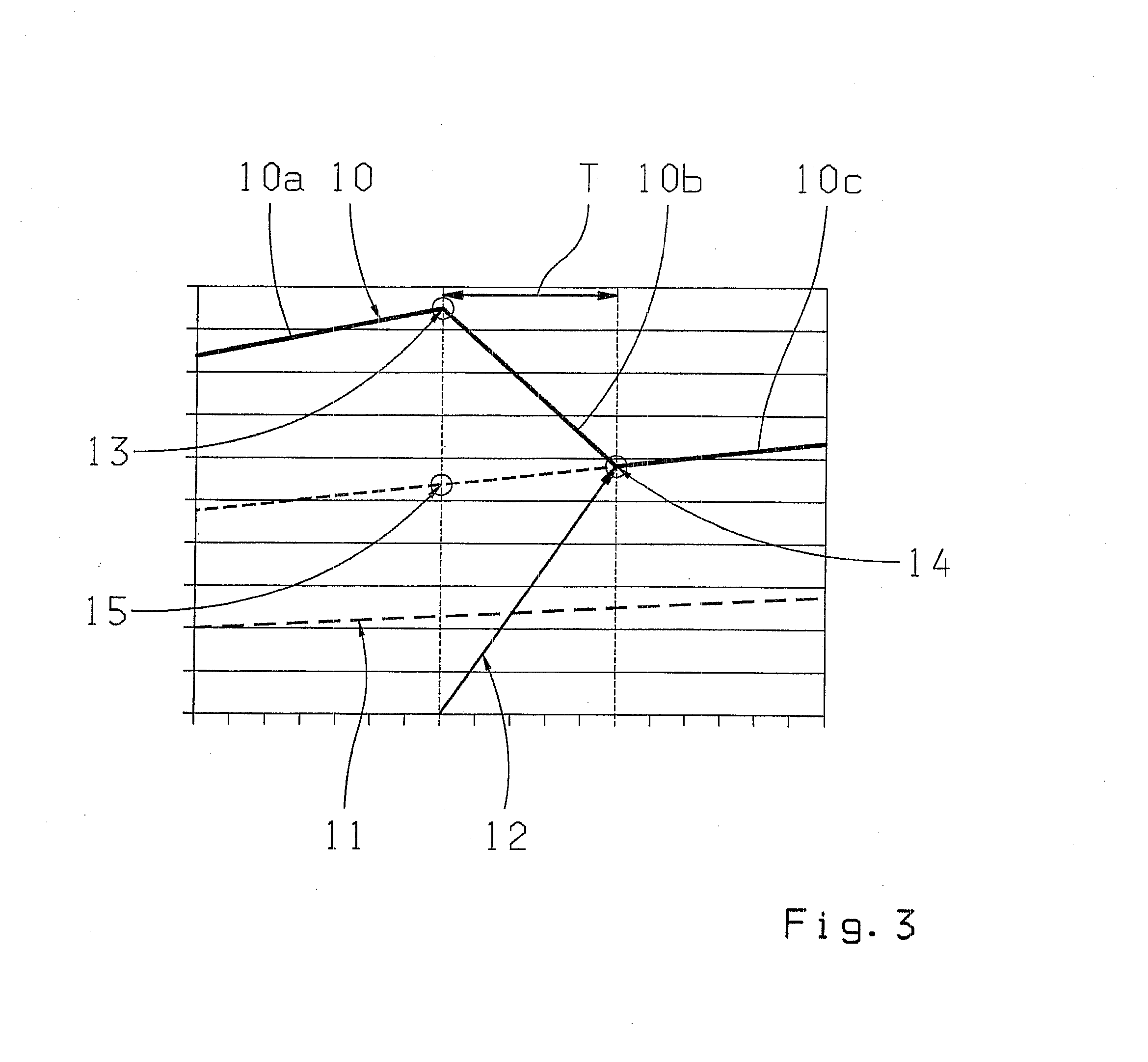

Method for operating a drive train

Inactive | US20080064560A1 | Minimizing overall energy required; Minimizing energy; Auxiliary drives; Gas pressure propulsion mounting; Mobile vehicle; Energy minimization

A method of operating a drive train of a motor vehicle with a hybrid drive having an internal combustion engine, an electric motor, and an automatic transmission. The drive train also has a clutch between the internal combustion engine and the electric motor, and a clutch or a torque converter between the electric motor and the automatic transmission. This arrangement enables the internal combustion engine to be started by engaging the clutch between the internal combustion engine and the electric motor when the drive train is powered exclusively by the electric motor. The braking power generated during an upshift, in response to a drop in the rotational speed of the drive train components, is used to start the internal combustion engine, so that the energy required from the electric motor to start the combustion engine is minimized.

Owner:ZF FRIEDRICHSHAFEN AG

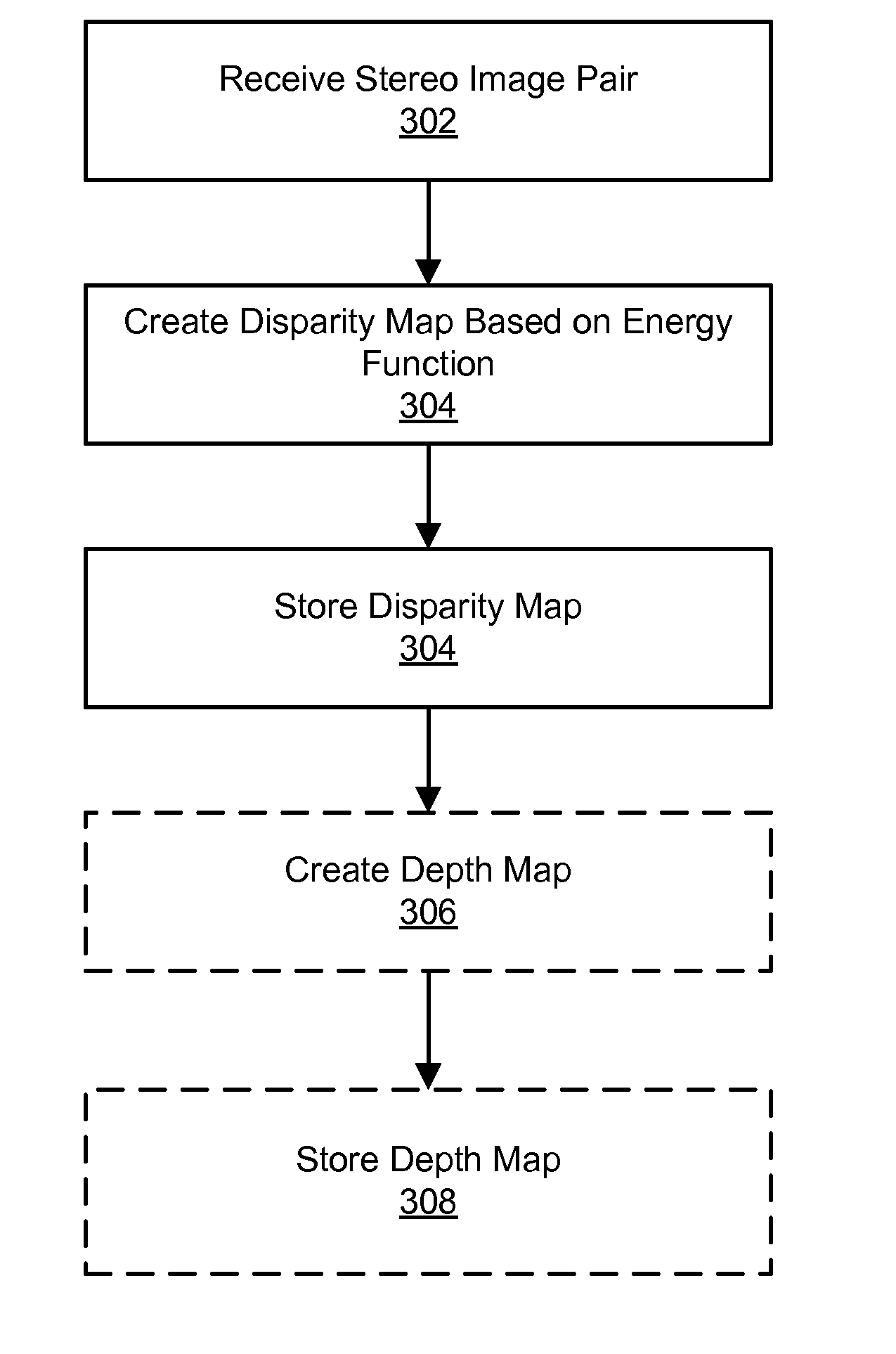

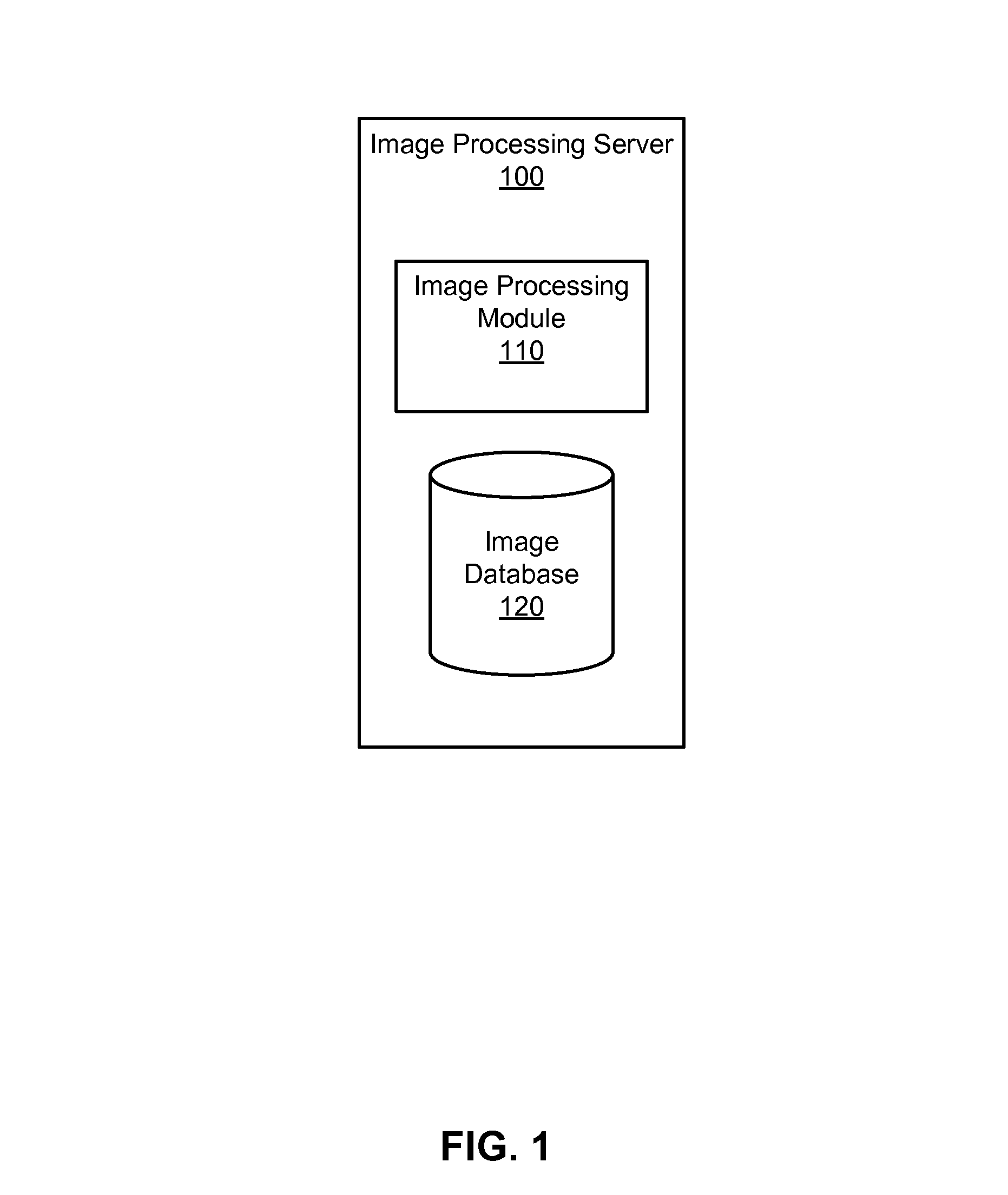

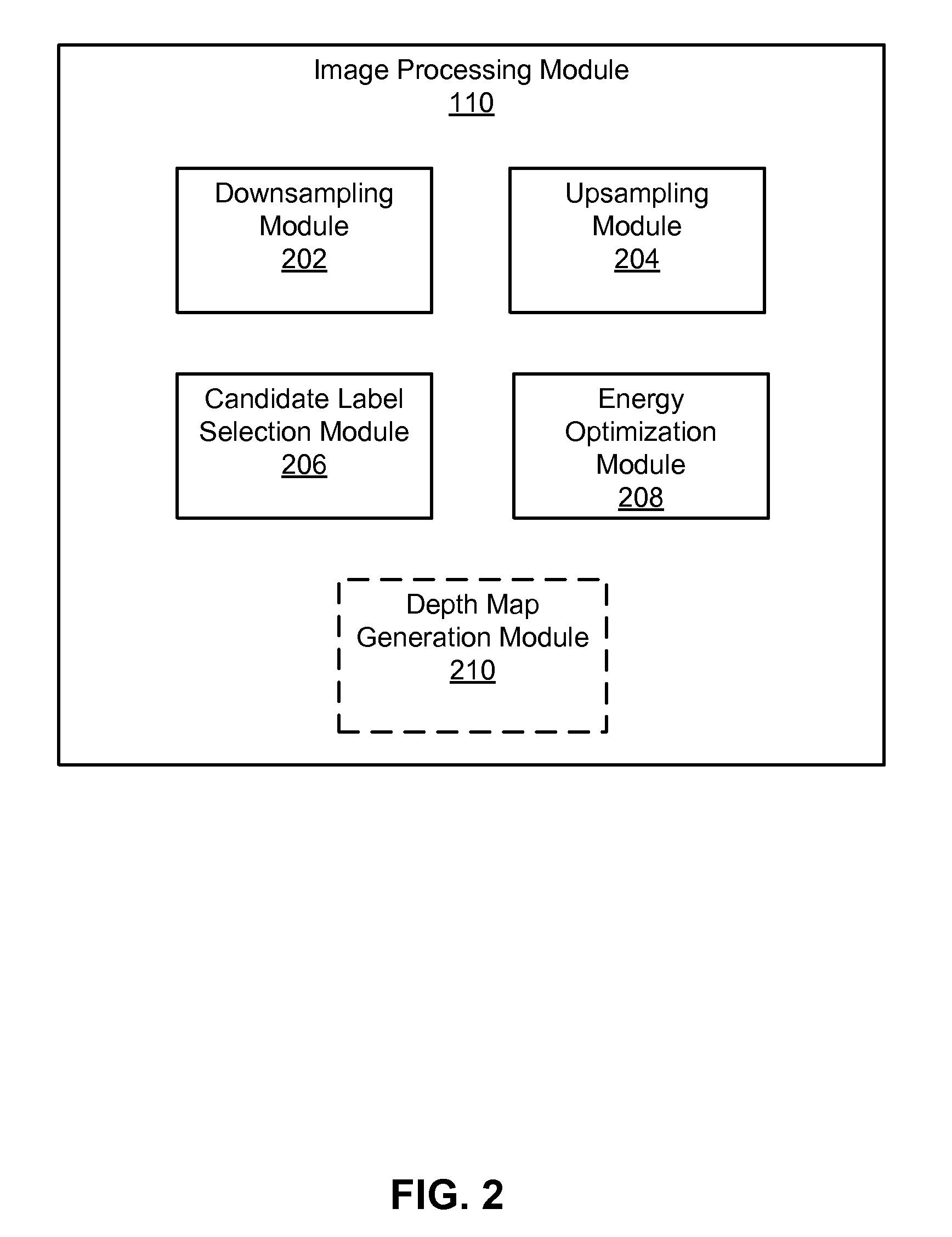

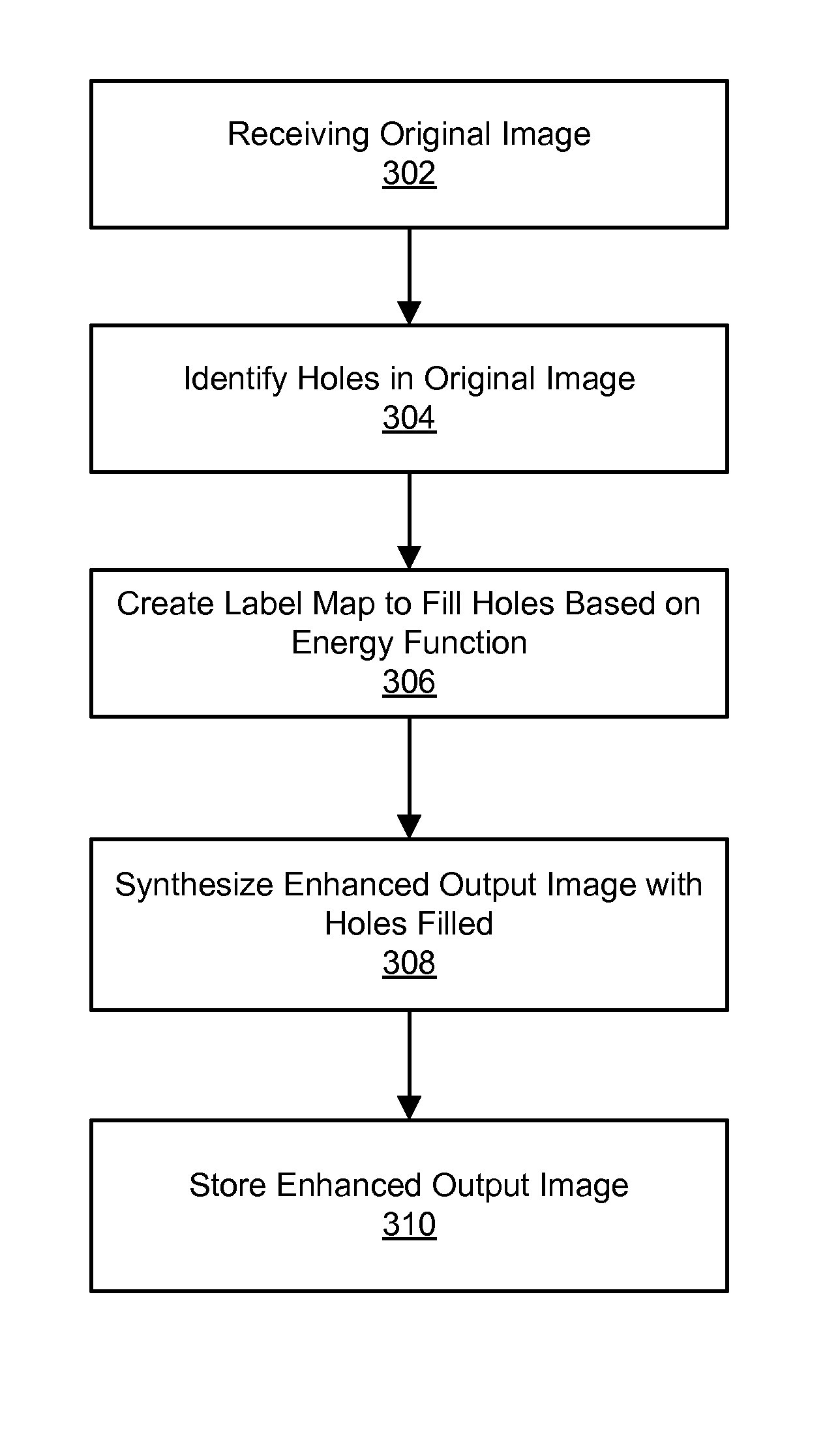

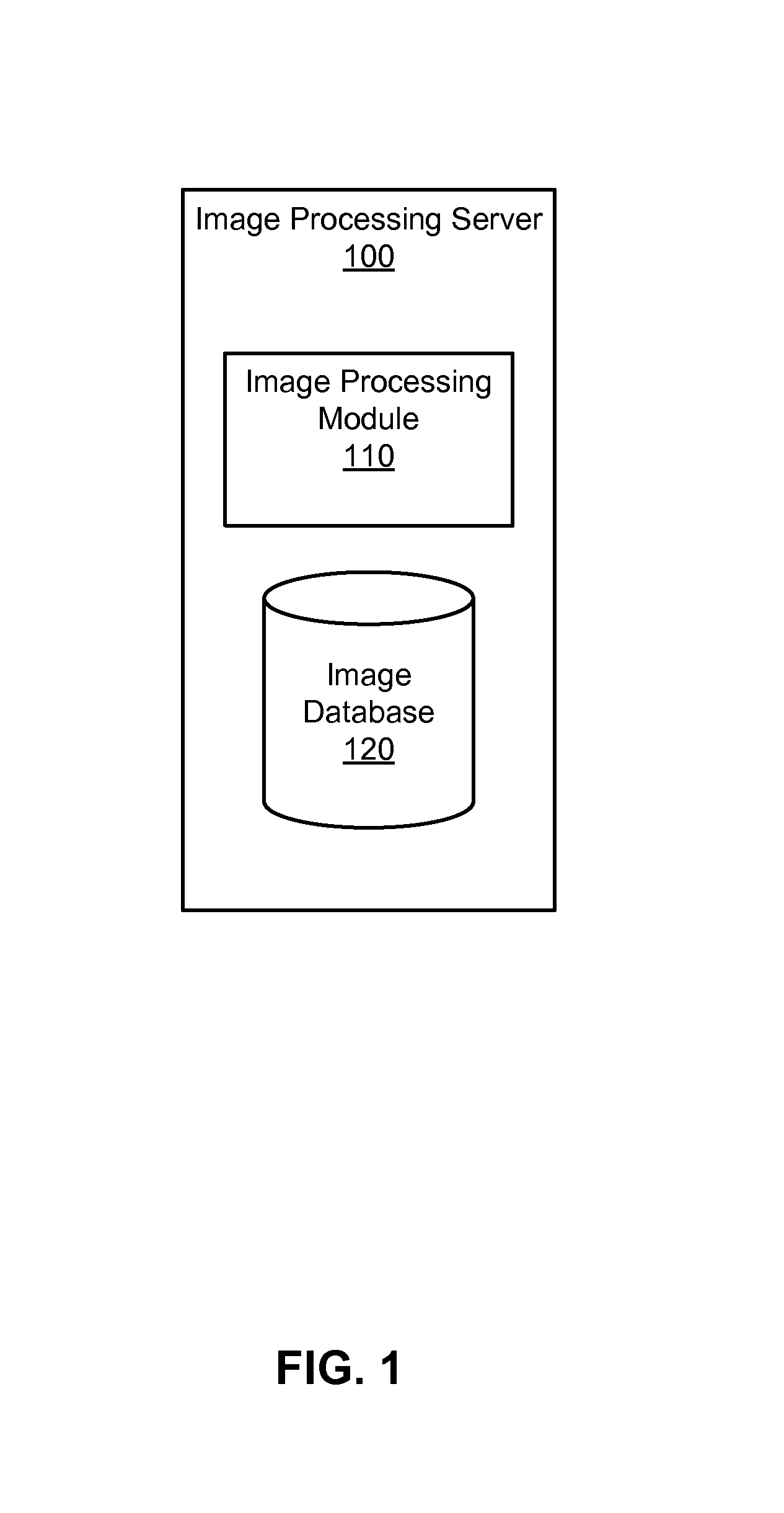

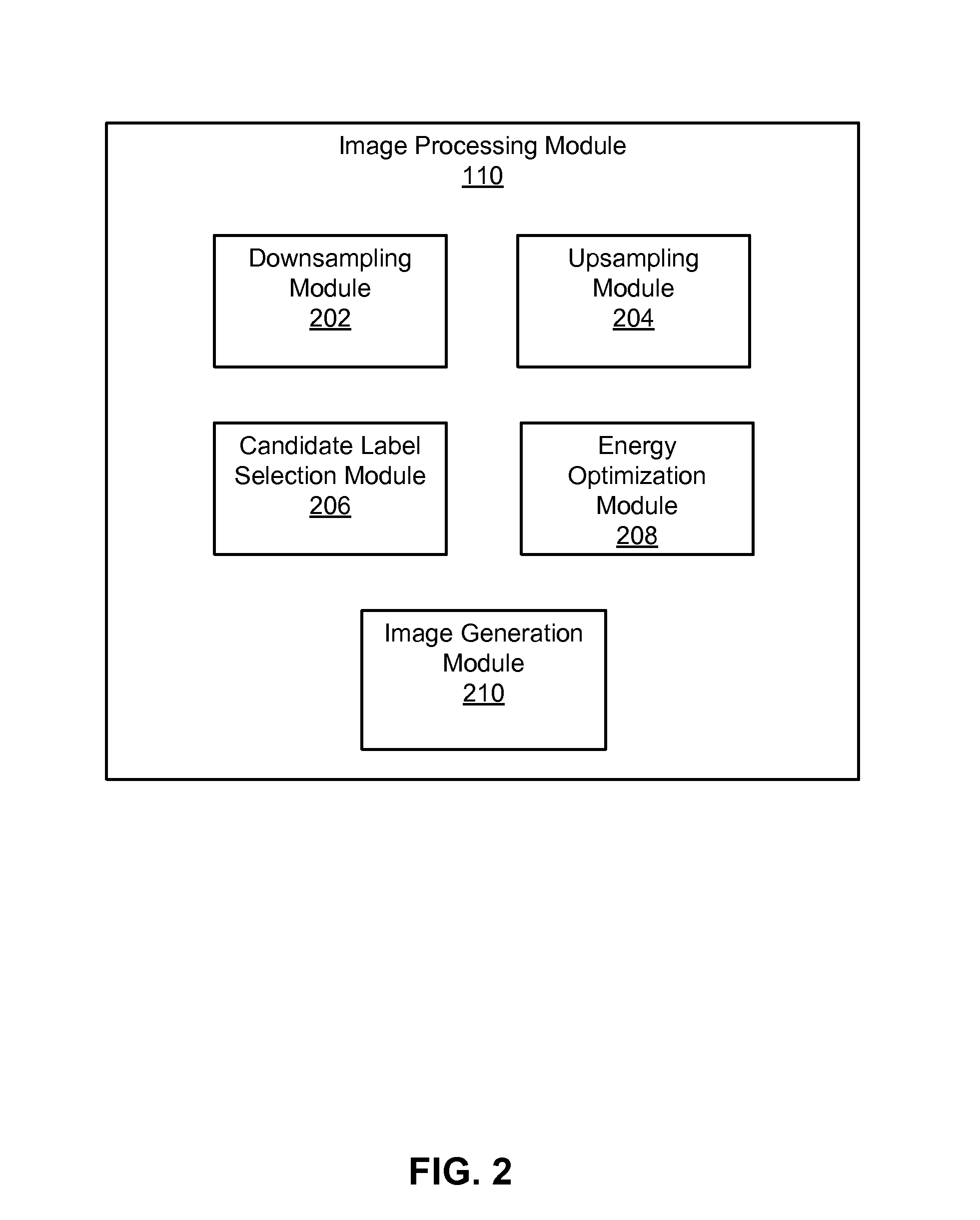

Fast randomized multi-scale energy minimization for inferring depth from stereo image pairs

An image processing module infers depth from a stereo image pair according to a multi-scale energy minimization process. A stereo image pair is progressively downsampled to generate a pyramid of downsampled image pairs of varying resolution. Starting with the coarsest downsampled image pair, a disparity map is generated that reflects displacement between corresponding pixels in the stereo image pair. The disparity map is then progressively upsampled. At each upsampling stage, the disparity labels are refined according to an energy function. The disparity labels provide depth information related to surfaces depicted in the stereo image pair.
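A coarse-to-fine disparity search of this kind might look like the sketch below. It is an illustrative reading of the abstract, not the patented implementation: the energy is an absolute-difference data term plus `lam` times an L1 smoothness term over 4-neighbors, the pyramid uses 2x2 averaging, and the randomized refinement is a parallel ICM-style update in which each pixel keeps the cheapest of its current label, its ±1 neighbors, and a few random labels. All function names and parameters are hypothetical.

```python
import numpy as np

def downsample(img):
    """Halve resolution by averaging 2x2 blocks (odd edges are cropped)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    return img[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def data_cost(left, right, disp):
    """Per-pixel |L(y, x) - R(y, x - d)|, clamping x - d to the image."""
    ys, xs = np.indices(left.shape)
    return np.abs(left - right[ys, np.clip(xs - disp, 0, left.shape[1] - 1)])

def smooth_cost(cand, disp):
    """Sum of |d_p - d_q| over the 4-neighbours q (current labels) of each p."""
    p = np.pad(disp, 1, mode="edge")
    nbrs = (p[:-2, 1:-1], p[2:, 1:-1], p[1:-1, :-2], p[1:-1, 2:])
    return sum(np.abs(cand - n) for n in nbrs)

def refine(left, right, disp, max_disp, lam, rng, sweeps, n_random=2):
    """Parallel ICM-style sweeps; ties keep the current label (listed first)."""
    for _ in range(sweeps):
        cands = [np.clip(disp + k, 0, max_disp) for k in (0, -1, 1)]
        cands += [rng.integers(0, max_disp + 1, size=disp.shape) for _ in range(n_random)]
        cost = np.stack([data_cost(left, right, c) + lam * smooth_cost(c, disp)
                         for c in cands])
        disp = np.take_along_axis(np.stack(cands), cost.argmin(axis=0)[None], axis=0)[0]
    return disp

def multiscale_disparity(left, right, max_disp, levels=3, lam=0.05, sweeps=5, seed=0):
    rng = np.random.default_rng(seed)
    pyramid = [(np.asarray(left, float), np.asarray(right, float))]
    for _ in range(levels - 1):
        pyramid.append(tuple(downsample(im) for im in pyramid[-1]))
    disp = None
    for level in range(levels - 1, -1, -1):      # coarsest level first
        l, r = pyramid[level]
        md = max_disp >> level
        if disp is None:
            disp = rng.integers(0, md + 1, size=l.shape)   # random initial labels
        else:
            # Upsample: each coarse label covers a 2x2 block and its value doubles
            up = np.repeat(np.repeat(2 * disp, 2, axis=0), 2, axis=1)[:l.shape[0], :l.shape[1]]
            pad = ((0, l.shape[0] - up.shape[0]), (0, l.shape[1] - up.shape[1]))
            disp = np.pad(up, pad, mode="edge")
        disp = refine(l, r, disp, md, lam, rng, sweeps)
    return disp

# Synthetic check: the right view is the left view shifted 4 pixels
texture = np.random.default_rng(1).random((64, 64))
disparity = multiscale_disparity(texture, np.roll(texture, -4, axis=1), max_disp=8)
```

Because the correct labels have zero data cost in this synthetic pair, the interior of the result should be dominated by disparity 4; the leftmost columns, where x − d falls outside the image, stay ambiguous. Real image pairs need a more robust data term.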

Owner:GOOGLE LLC

Devices and methods for percutaneous energy delivery

Active | US8882753B2 | Avoid energy; Good looking; Ultrasound therapy; Diagnostics; Energy minimization; Biomedical engineering

The invention provides a system and method for percutaneous energy delivery in an effective manner using one or more probes. Additional variations of the system include an array of probes configured to minimize the energy required to produce the desired effect.

Owner:SYNERON MEDICAL LTD

Methods and devices for treating tissue

Inactive | US20080281389A1 | Avoid energy; Easy to insert; Internal electrodes; Surgical instruments for heating; Energy minimization; Non invasive

The invention provides a system and method for achieving the cosmetically beneficial effects of shrinking collagen tissue in the dermis or other areas of tissue in an effective, non-invasive manner using an array of electrodes. Systems described herein allow for improved treatment of tissue. Additional variations of the system include an array of electrodes configured to minimize the energy required to produce the desired effect.

Owner:SYNERON MEDICAL LTD

Methods and devices for treating tissue

Active | US20080091183A1 | Avoid energy; Easy to insert; Surgical needles; Internal electrodes; Energy minimization; Non invasive

The invention provides a system and method for achieving the cosmetically beneficial effects of shrinking collagen tissue in the dermis or other areas of tissue in an effective, non-invasive manner using an array of electrodes. Systems described herein allow for improved treatment of tissue. Additional variations of the system include an array of electrodes configured to minimize the energy required to produce the desired effect.

Owner:SYNERON MEDICAL LTD

Fast randomized multi-scale energy minimization for image processing

Active | US8670630B1 | Minimize energy function; Enhance the image; Image enhancement; Digitally marking record carriers; Energy minimization; Computer vision

An image processing module performs efficient image enhancement according to a multi-scale energy minimization process. One or more input images are progressively downsampled to generate a pyramid of downsampled images of varying resolution. Starting with the coarsest downsampled image, a label map is generated that maps output pixel positions to pixel positions in the downsampled input images. The label map is then progressively upsampled. At each upsampling stage, the labels are refined according to an energy function configured to produce the desired enhancements. Using the multi-scale energy minimization, the image processing module enhances images via hole-filling and/or super-resolution.

Owner:GOOGLE LLC

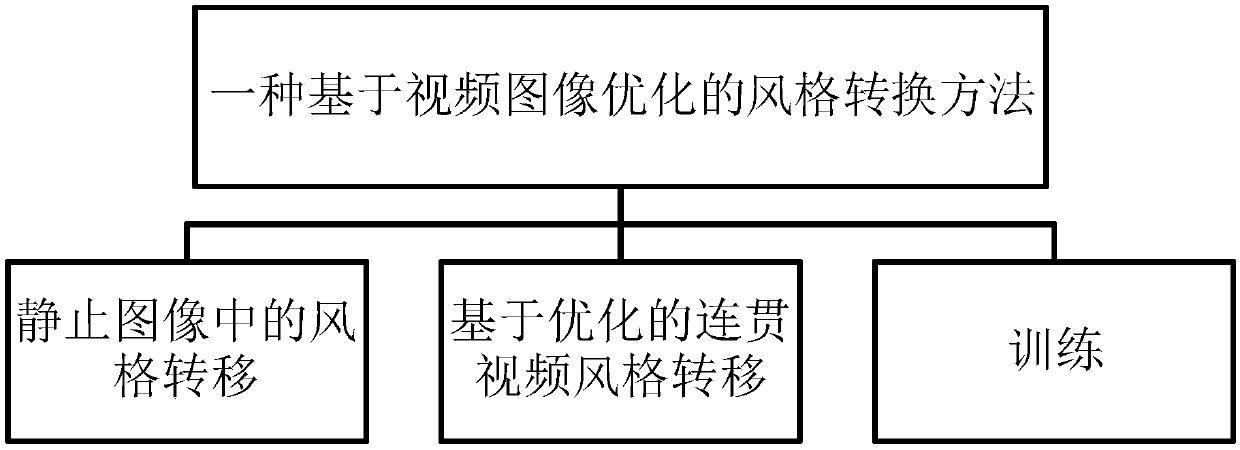

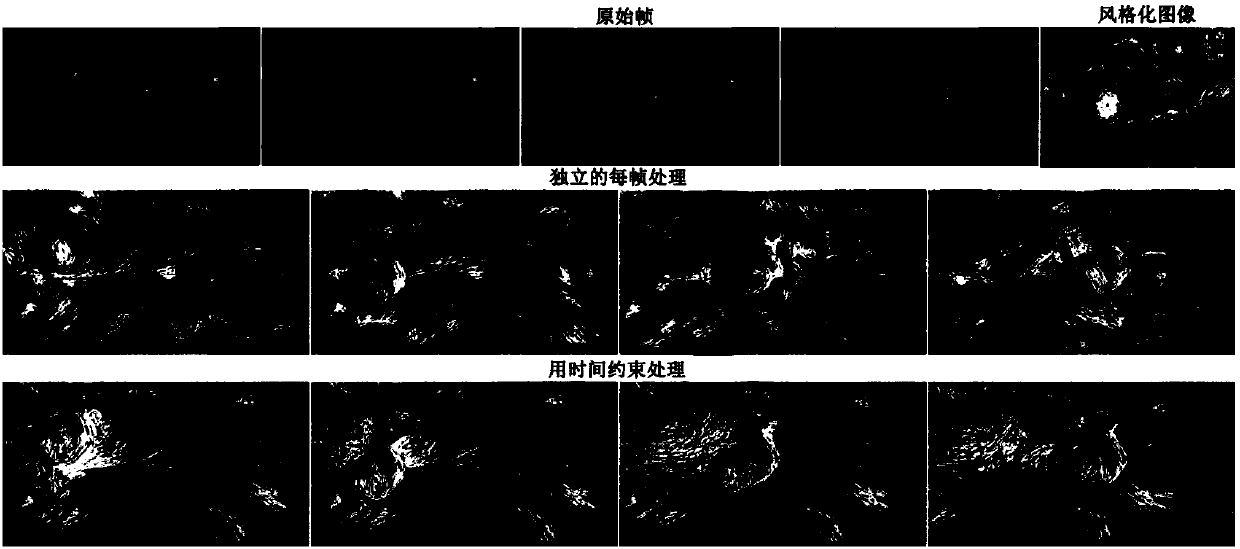

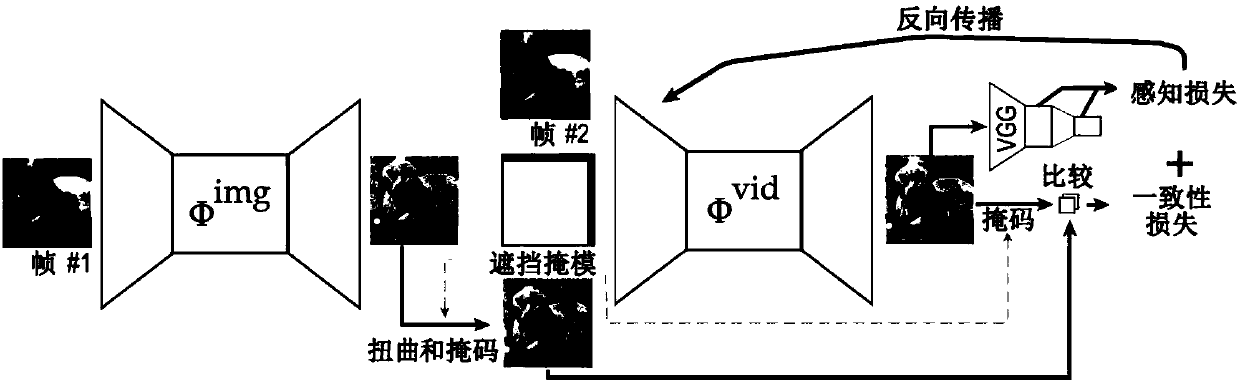

Video image optimization-based style transformation method

The invention provides a video image optimization-based style transformation method. The method mainly comprises style transfer for still images, optimization-based coherent video style transfer, and training. In the method, the output is initialized with random Gaussian noise, and a content loss function and a style loss function are defined, so that video style transfer can be solved as an energy minimization problem, improving long-term consistency and image quality while the camera moves. A network with parameters takes the input frames together with previously generated frames that have been warped and masked by occlusion masks, generates the output, and is applied recursively to produce the video. The method realizes loss functions for both the short-term and long-term consistency of the stylized video, as well as a multi-channel mode for the stylized video, so that stable results are generated under fast motion and heavy occlusion; it also greatly reduces running time and can improve image quality.
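The energy being minimized can be illustrated with the widely used Gram-matrix formulation of style transfer; this is an assumption, since the abstract does not define its losses. Feature maps are taken as (channels, positions) arrays from some network layer, and the temporal term penalizes differences from the previous stylized frame after warping, skipping pixels masked out as occluded. In a real pipeline the features would be computed from the frame by the network; here they are passed in separately, and the weights `alpha`, `beta`, `gamma` are placeholders.

```python
import numpy as np

def gram(features):
    """Channel-by-channel correlations of a (channels, positions) feature map."""
    return features @ features.T

def content_loss(gen_feat, content_feat):
    return 0.5 * np.sum((gen_feat - content_feat) ** 2)

def style_loss(gen_feat, style_feat):
    n_ch, n_pos = gen_feat.shape
    diff = gram(gen_feat) - gram(style_feat)
    return np.sum(diff ** 2) / (4.0 * n_ch ** 2 * n_pos ** 2)

def temporal_loss(frame, warped_prev, mask):
    """Mean squared change from the warped previous output; mask = 0 where occluded."""
    return np.sum(mask * (frame - warped_prev) ** 2) / frame.size

def frame_energy(gen_feat, content_feat, style_feat, frame, warped_prev, mask,
                 alpha=1.0, beta=1e3, gamma=1e2):
    """Weighted energy for one output frame (weights are illustrative)."""
    return (alpha * content_loss(gen_feat, content_feat)
            + beta * style_loss(gen_feat, style_feat)
            + gamma * temporal_loss(frame, warped_prev, mask))
```

Minimizing `frame_energy` with respect to the frame (starting, as the abstract says, from Gaussian noise) balances staying close to the content, matching the style statistics, and not flickering relative to the previous output.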

Owner:SHENZHEN WEITESHI TECH

Methods and devices for treating tissue

Active | US8133216B2 | Avoid energy; Easy to insert; Surgical needles; Surgical instruments for heating; Energy minimization; Medicine

The invention provides a system and method for achieving the cosmetically beneficial effects of shrinking collagen tissue in the dermis or other areas of tissue in an effective, non-invasive manner using an array of electrodes. Systems described herein allow for improved treatment of tissue. Additional variations of the system include an array of electrodes configured to minimize the energy required to produce the desired effect.

Owner:SYNERON MEDICAL LTD

Methods for applying energy to tissue using a graphical interface

Active | US8608737B2 | Avoid energy; Good looking; Ultrasound therapy; Diagnostics; Energy minimization; Graphical user interface

The invention provides a system and method for percutaneous energy delivery in an effective manner using one or more probes. Additional variations of the system include an array of probes configured to minimize the energy required to produce the desired effect.

Owner:SYNERON MEDICAL LTD

Devices and methods for percutaneous energy delivery

Inactive | US20120143178A9 | Avoid energy; Good looking; Surgical needles; Surgical instruments for heating; Energy minimization; Engineering

The invention provides a system and method for percutaneous energy delivery in an effective manner using one or more probes. Additional variations of the system include an array of probes configured to minimize the energy required to produce the desired effect.

Owner:SYNERON MEDICAL LTD

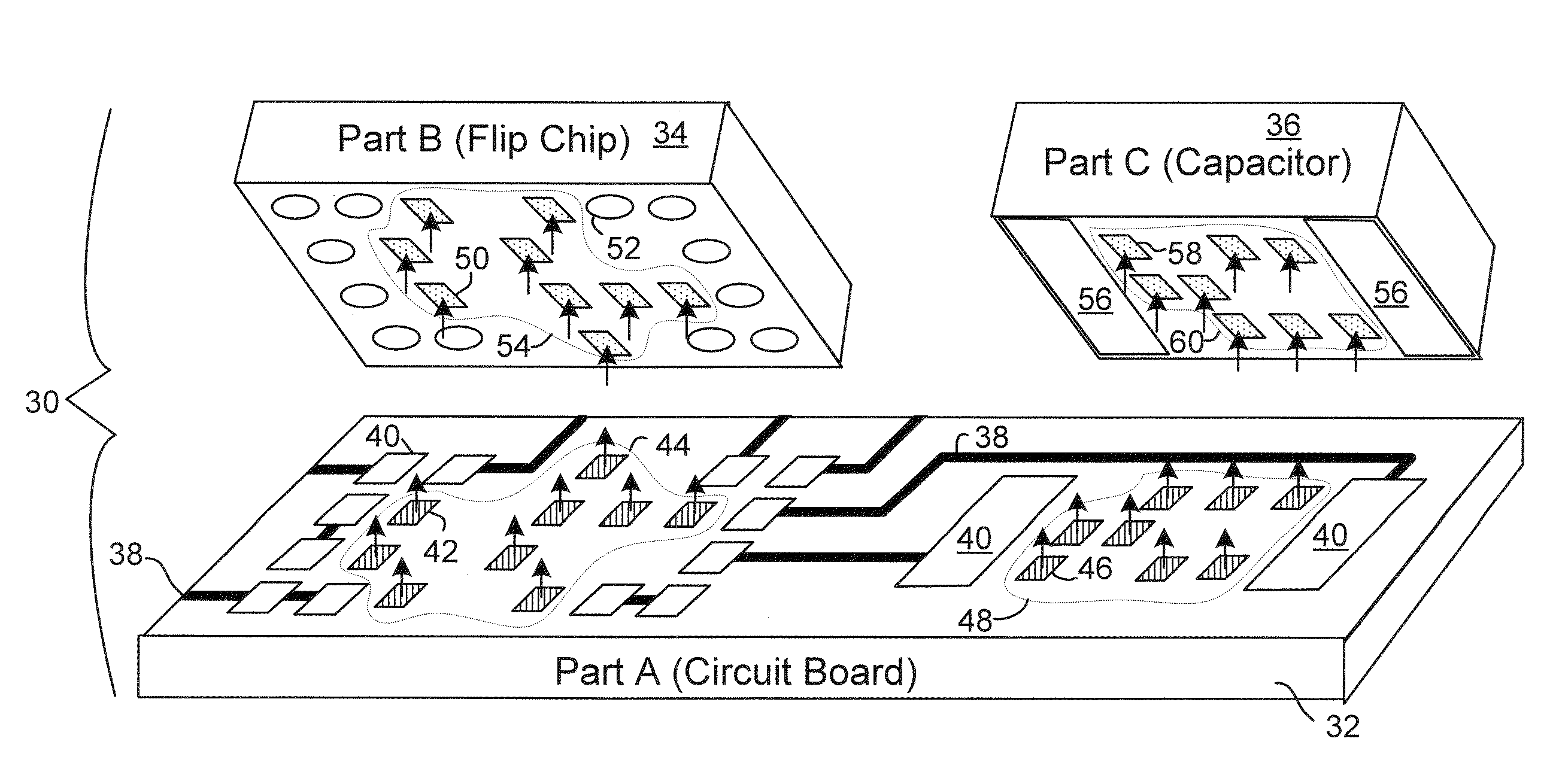

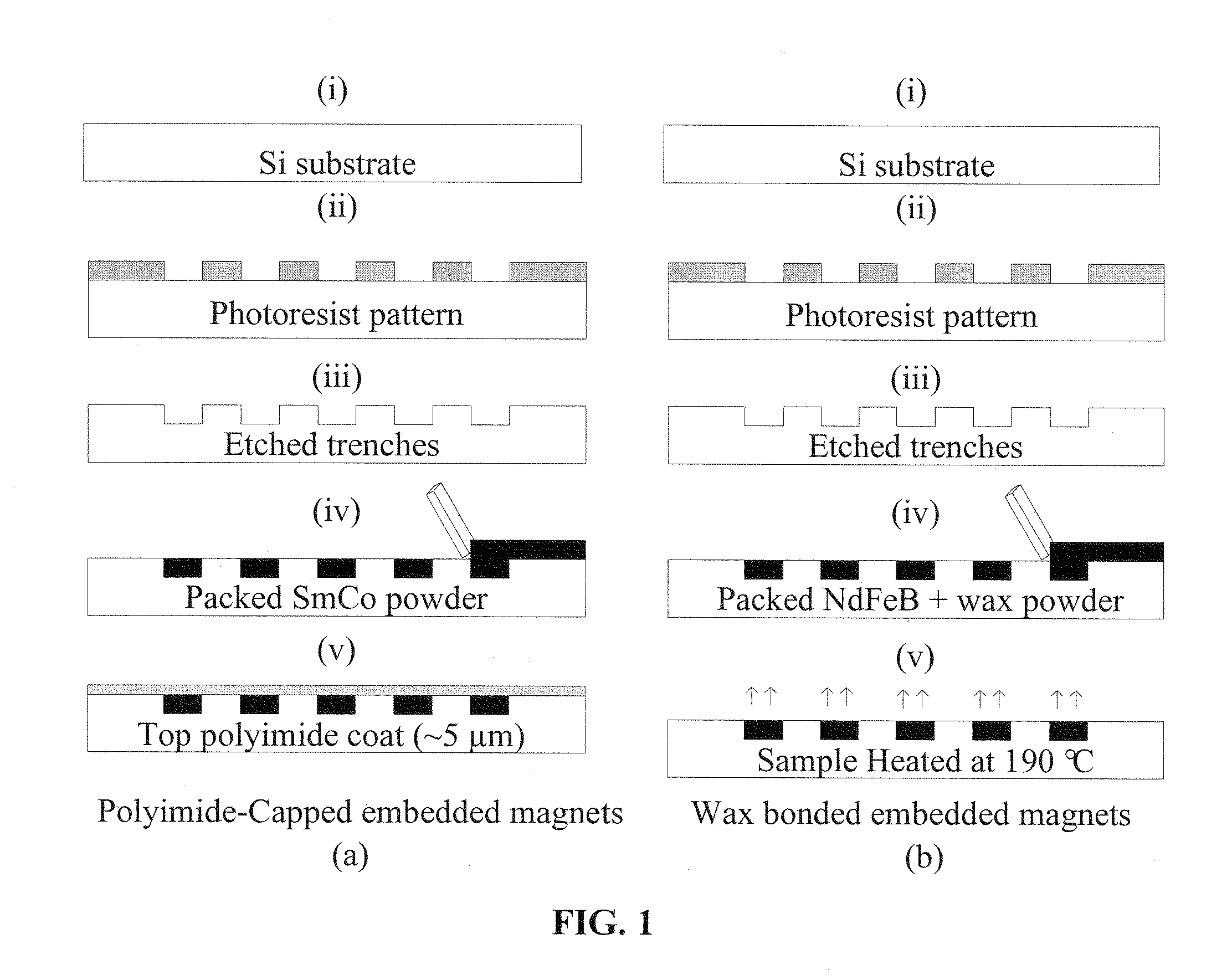

Enhanced magnetic self-assembly using integrated micromagnets

Inactive | US20110179640A1 | Minimal energy; Line/current collector details; Final product manufacture; Magnetic tension force; Energy minimization

Embodiments of the invention relate to a method and system for magnetic self-assembly (MSA) of one or more parts to another part. Assembly occurs when parts having magnet patterns bond to one another, and such bonding can result in energy minima. The magnetic forces and torques, controlled by the size, shape, material, and magnetization direction of the magnetic patterns, cause the components to rotate and align. Specific embodiments of MSA can offer self-assembly features such as angular orientation, where assembly is restricted to one physical orientation; inter-part bonding, allowing free-floating components to be assembled to one another; assembly of free-floating components to a substrate; and bonding specificity, where assembly is restricted to one type of component when multiple components may be present.

Owner:UNIV OF FLORIDA RES FOUNDATION INC

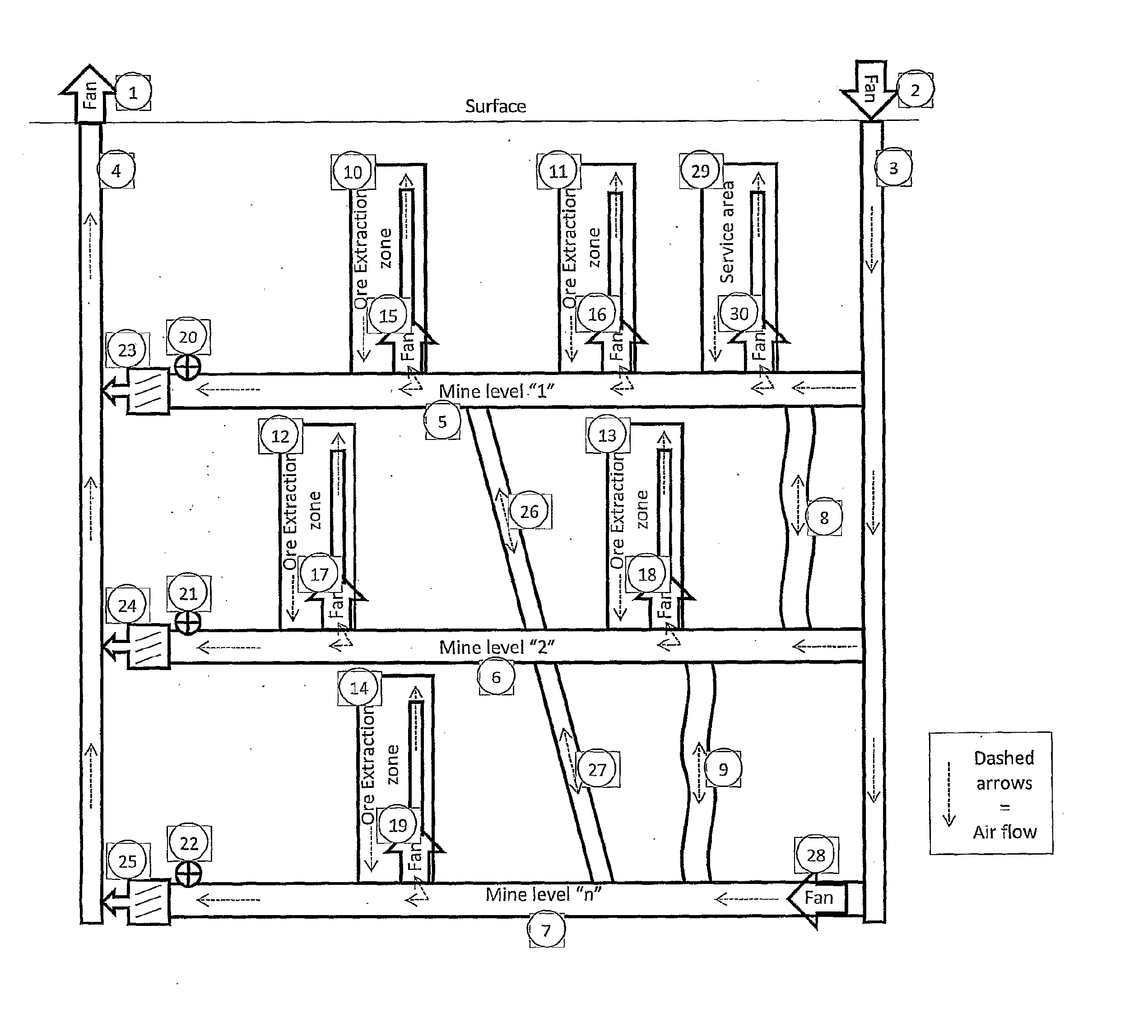

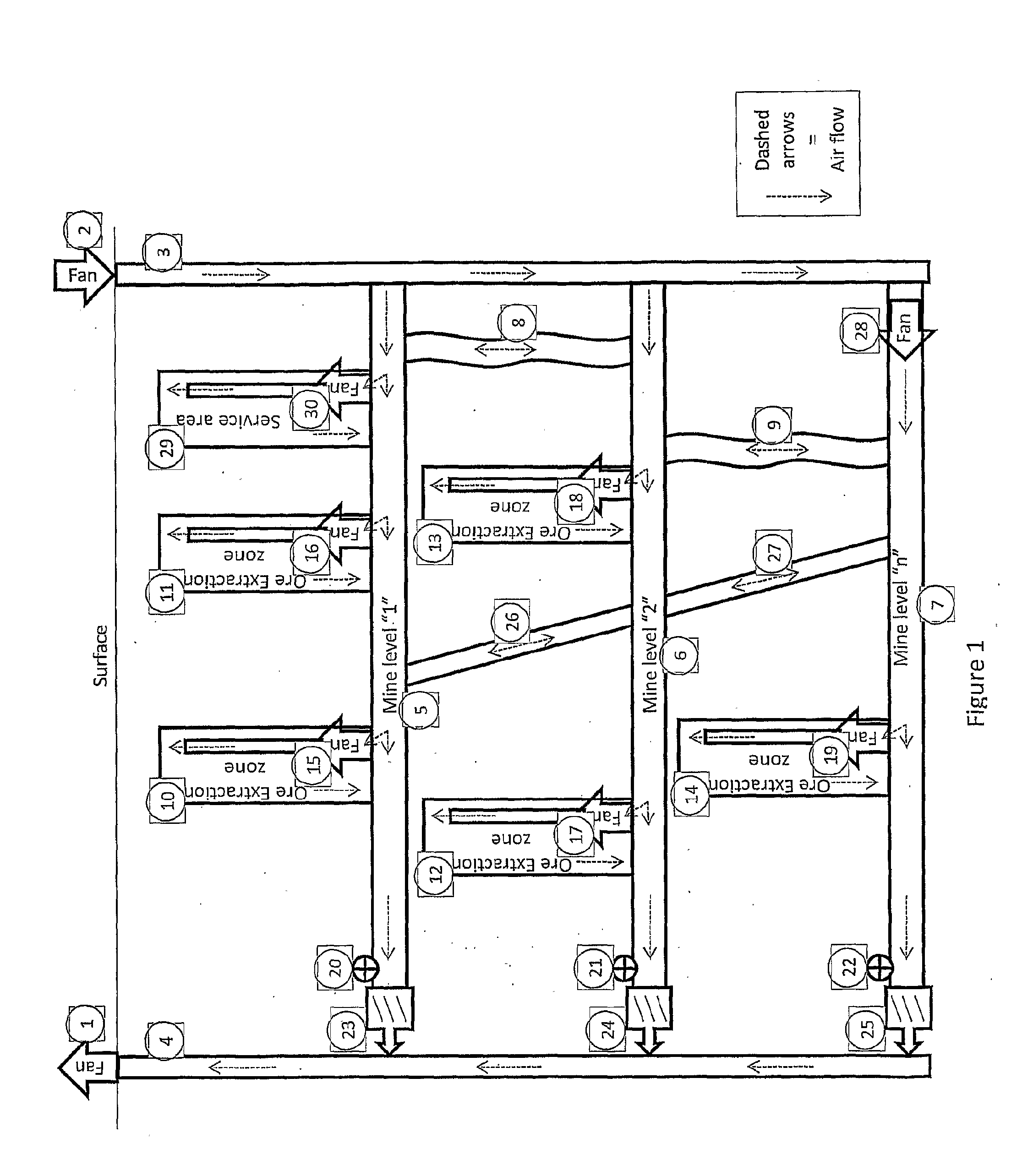

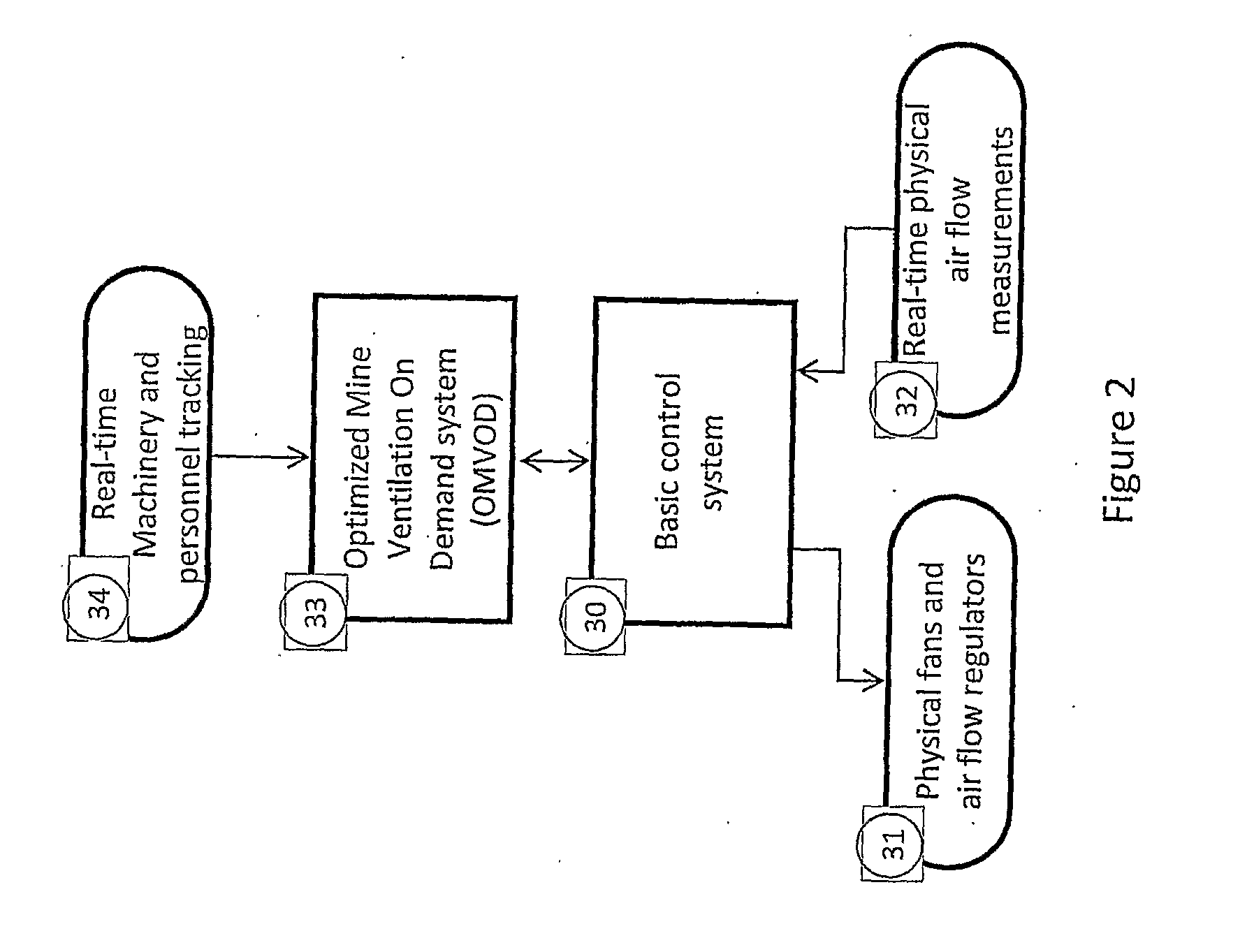

Optimized Mine Ventilation System

Active | US20100105308A1 | Reduce operating costs; Programme control; Energy efficient ICT; Human–machine interface; Engineering

The optimized mine ventilation system of this invention supplements basic mine ventilation control systems, composed of PLCs (Programmable Logic Controllers with human-machine interfaces from vendors such as Allen-Bradley™, Modicon™ and others) or DCSs (Distributed Control Systems from vendors such as ABB™ and others), with supervisory control. The supervisory control establishes a dynamic ventilation demand as a function of real-time tracking of machinery and/or personnel location, distributes this demand optimally across the work zones via the mine ventilation network, and minimizes the energy required for ventilation while fully satisfying the demand of each work zone. The system operates on the basis of a predictive dynamic simulation model of the mine ventilation network, together with emulated control equipment such as fans and airflow regulators. The model always reaches an air mass flow balance in which pressure and density are preferably compensated for depth, and it accounts for natural ventilation pressure flows caused by temperature differences. Model setpoints are checked against safety bounds and sent to the real physical control equipment via the basic control system.

Owner:HOWDEN CANADA INC

Devices and methods for percutaneous energy delivery

Active | US20100010486A1 | Avoid energy; Good looking; Ultrasound therapy; Surgical needles; Energy minimization; Biomedical engineering

The invention provides a system and method for percutaneous energy delivery in an effective manner using one or more probes. Additional variations of the system include an array of probes configured to minimize the energy required to produce the desired effect.

Owner:SYNERON MEDICAL LTD

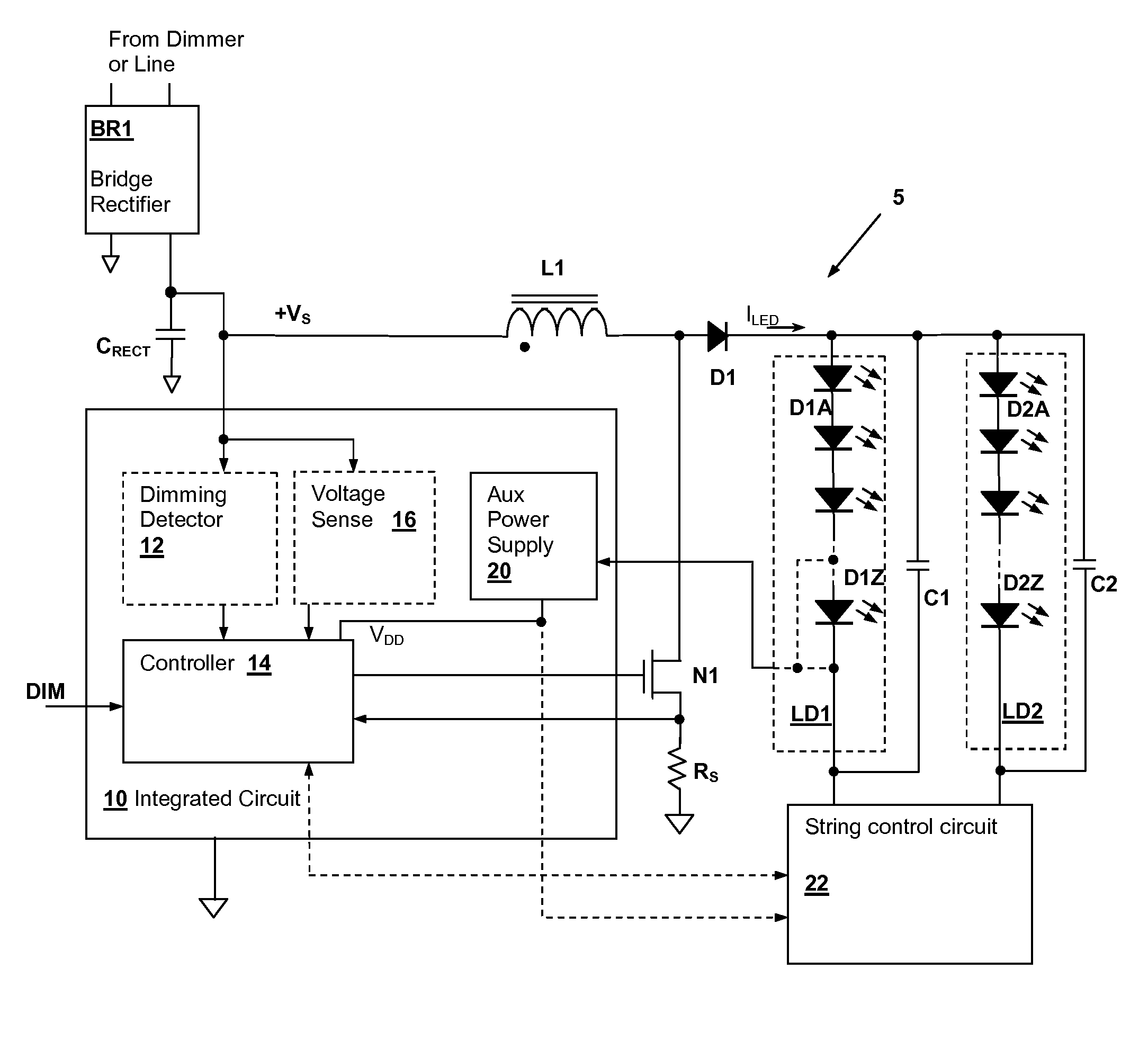

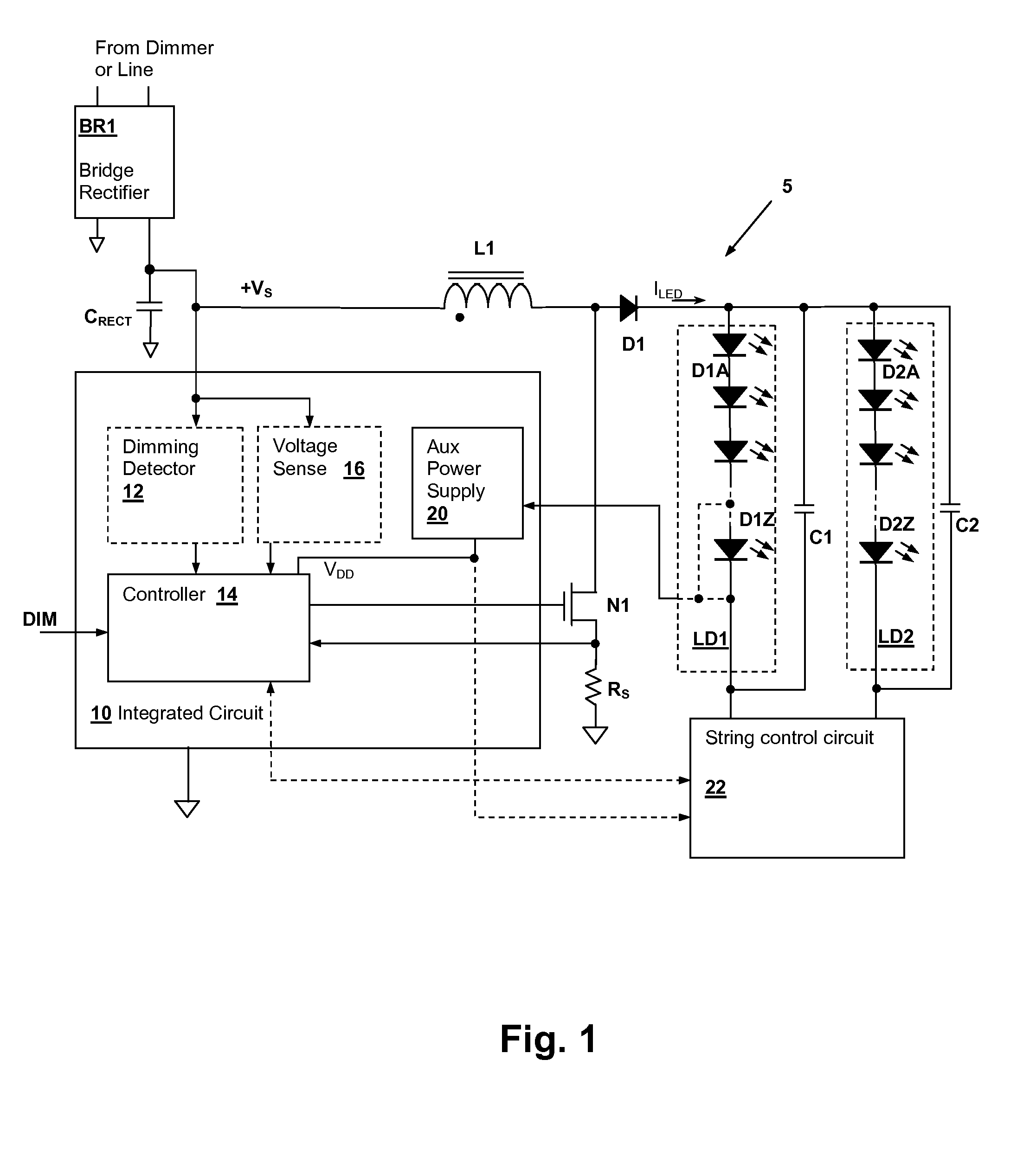

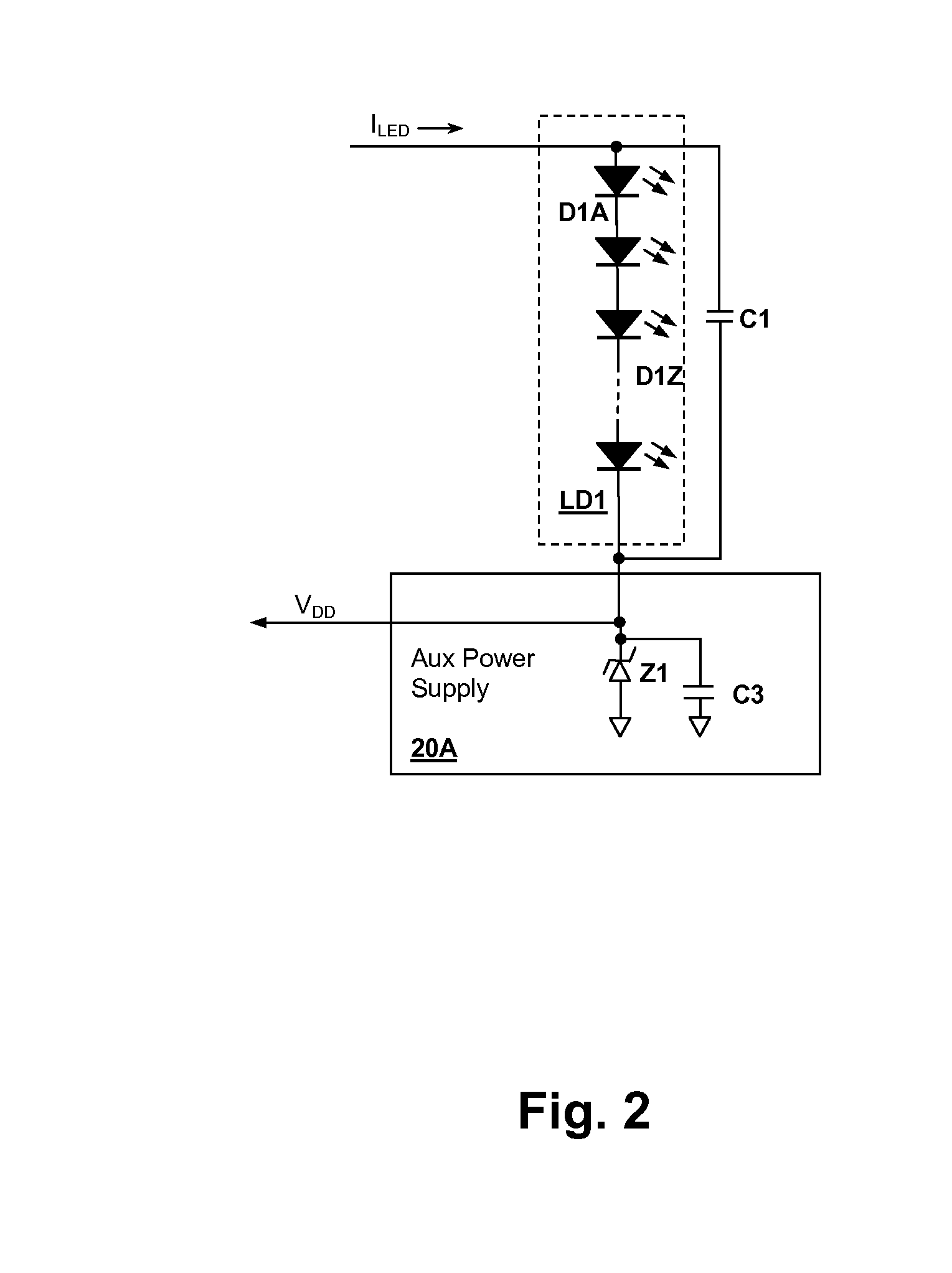

LED (light-emitting diode) string derived controller power supply

Active | US20150271882A1 | Improve efficiency; Reduce complexity; Electrical apparatus; Electroluminescent light sources; Linear regulator; Light equipment

An LED lighting device includes an auxiliary power supply that powers a control circuit of the LED lighting device. The auxiliary power supply receives its input from a terminal of the device's light-emitting diode (LED) string, at a voltage substantially lower than the line voltage to which the lighting device is connected. The terminal may be within the LED string, or may be an end of the string. A linear regulator may be operated from the voltage drop across a number of the LEDs in the string so that the energy wasted by the auxiliary power supply is minimized. In other designs, the auxiliary power supply may be intermittently connected in series with the LED string only when needed. The intermittent connection can be used to forward-bias a portion of the LED string when the voltage supplied to the LED string is low, increasing overall brightness.

Owner:SIGNIFY HLDG BV

Haptic graphical user interface for adjusting mapped texture

Inactive | US7626589B2 | Easy to find; Easy selection; Input/output for user-computer interaction; Data processing applications; Graphics; Touch Perception

The invention provides techniques for wrapping a two-dimensional texture conformally onto the surface of a three-dimensional virtual object within an arbitrarily shaped, user-defined region. The techniques provide minimum distortion and allow interactive manipulation of the mapped texture. They feature an energy minimization scheme in which distances between points on the surface of the three-dimensional virtual object serve as set lengths for springs connecting points of a planar mesh. The planar mesh is adjusted to minimize spring energy and is then used to define a patch onto which a two-dimensional texture is superimposed. Points on the surface of the virtual object are then mapped to corresponding points of the texture. The invention also features a haptic/graphical user interface element that allows a user to interactively and intuitively adjust the texture mapped within the arbitrary, user-defined region.

Owner:3D SYST INC

© 2025 PatSnap. All rights reserved.