Neural network optimization method based on a floating-point operation inline function library

An inline function library and neural network technology, applied in the field of pattern recognition. It addresses the large amount of nonlinear and floating-point calculation that hinders the execution efficiency of embedded processors, and achieves the effect of improving recognition efficiency.

Embodiment Construction

[0035] The present invention will be described in detail below with reference to the drawings and examples.

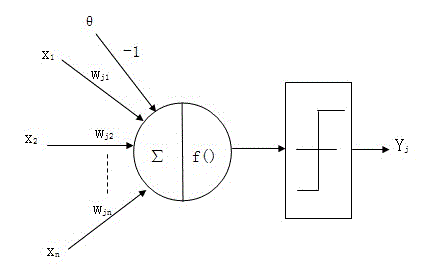

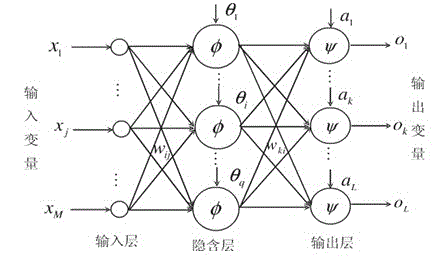

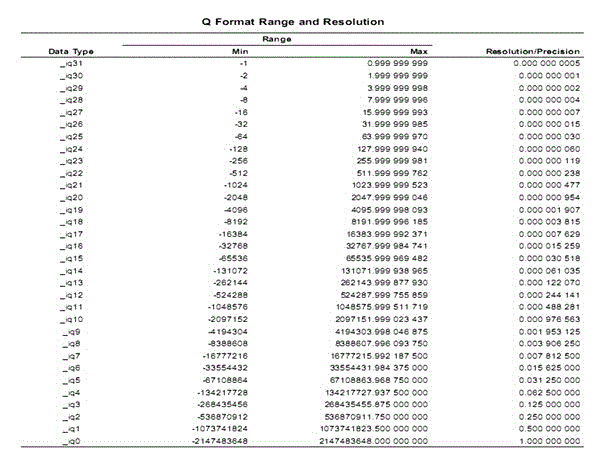

[0036] In the neural network optimization method of the present invention, based on the floating-point operation inline function library, the model of the neural unit is Y = 1 / (1 + exp(-∑ w_i × x_i)), where i ranges from 1 to n and n is the number of neural units. The floating-point arithmetic inline function library is built into the dual-core chip, namely the function library IQmath Library, which includes: format conversion functions, for mutual conversion between calibrated floating-point numbers and integers; arithmetic functions, for multiplication and division of calibrated floating-point numbers; trigonometric functions, for sine, cosine, and tangent operations on calibrated floating-point numbers; and mathematical functions, for the root, exponent, logarithm and multiple power operations of calibrated floating-poi...
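The neural unit model above can be illustrated with a minimal sketch that evaluates Y using scaled-integer arithmetic in place of native floating point. This is not the IQmath Library API and not the patent's implementation: the helper names (`to_fixed`, `fx_mul`, `fx_div`, `fx_exp`, `neuron`) and the choice of 16 fractional bits are assumptions made for illustration, and the exponential is a floating-point stand-in where a fixed-point library would typically use a table or polynomial approximation.

```python
import math

# Assumption: 16 fractional bits; the source does not state a scaling format.
Q = 16
ONE = 1 << Q  # the value 1.0 in the scaled-integer representation


def to_fixed(x: float) -> int:
    """Format conversion: float -> scaled integer (hypothetical helper)."""
    return int(round(x * ONE))


def to_float(a: int) -> float:
    """Format conversion: scaled integer -> float (hypothetical helper)."""
    return a / ONE


def fx_mul(a: int, b: int) -> int:
    """Multiply two scaled integers and shift the product back to Q bits."""
    return (a * b) >> Q


def fx_div(a: int, b: int) -> int:
    """Divide two scaled integers, pre-shifting the numerator to keep Q bits."""
    return (a << Q) // b


def fx_exp(a: int) -> int:
    """Stand-in exponential: evaluated in floating point, then rescaled.
    A real fixed-point library would not call math.exp here."""
    return to_fixed(math.exp(to_float(a)))


def neuron(weights, inputs) -> int:
    """Y = 1 / (1 + exp(-sum(w_i * x_i))) on scaled integers."""
    acc = 0
    for w, x in zip(weights, inputs):
        acc += fx_mul(w, x)  # accumulate the weighted sum
    return fx_div(ONE, ONE + fx_exp(-acc))


if __name__ == "__main__":
    w = [to_fixed(v) for v in (0.3, 0.7, -0.2)]
    x = [to_fixed(v) for v in (1.5, -0.5, 2.0)]
    print(to_float(neuron(w, x)))
```

Python integers do not overflow, so this sketch does not model the word-length limits of an embedded processor; it only shows how the weighted sum, exponent, and division map onto conversion, multiply, divide, and exponent primitives.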
