140 results for "Automatic extraction" patented technology

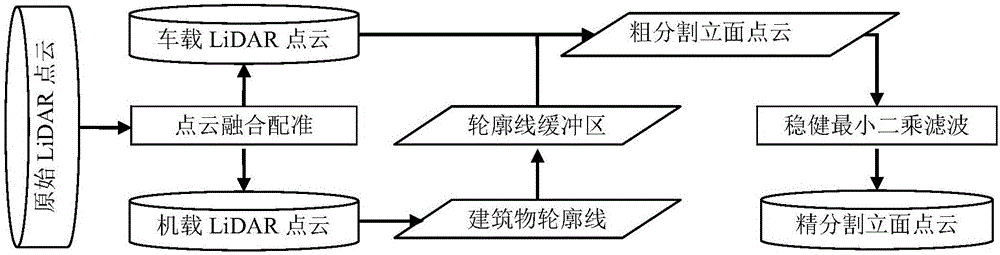

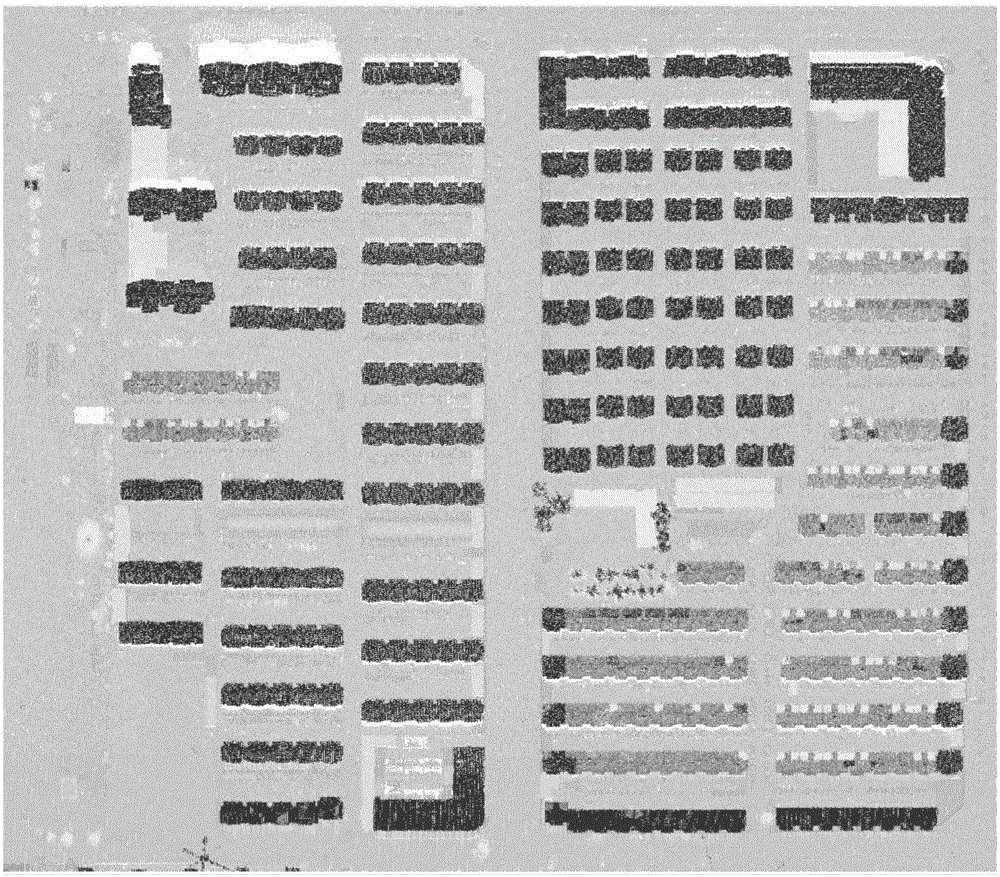

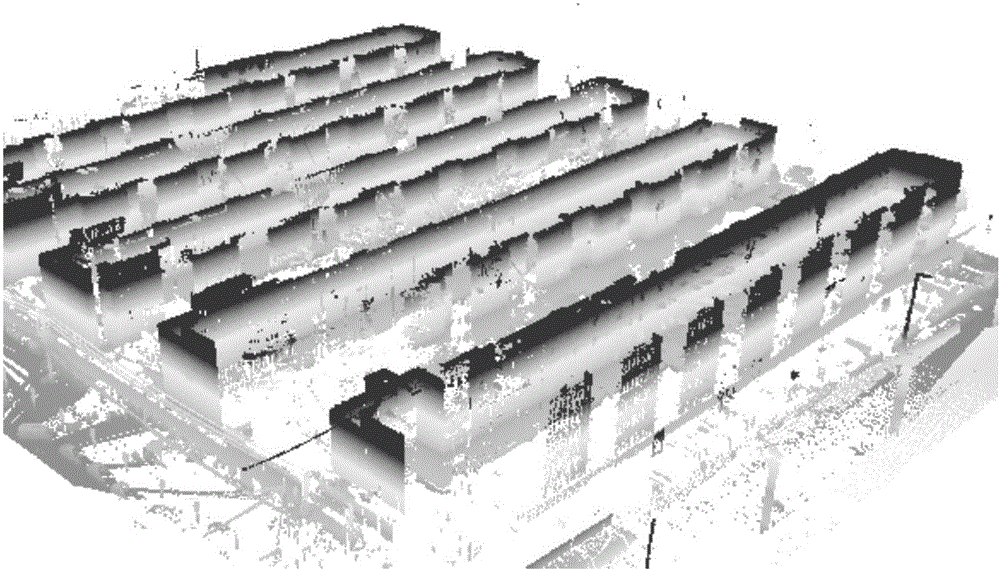

Automatic segmentation method for point cloud of facade of large scene city building

Active | CN105844629A | High precision | Accurate segmentation | Image enhancement | Image analysis | Automatic segmentation | Point cloud

The invention discloses an automatic segmentation method for building-facade point clouds in large urban scenes. The method comprises the following steps: (1) fusing and registering airborne LiDAR point cloud data with vehicle-mounted LiDAR point cloud data; (2) extracting the building points from the registered airborne LiDAR data; (3) segmenting the extracted airborne building points into individual buildings; (4) tracing the contour of each segmented building; (5) simplifying and normalizing the traced contour lines; (6) coarsely segmenting the building-facade point cloud using the simplified, normalized contour lines; and (7) finely segmenting the coarsely segmented facade point cloud. The method can quickly and accurately separate the building-facade point cloud from the vehicle-mounted LiDAR point cloud.

Owner:HENAN POLYTECHNIC UNIV

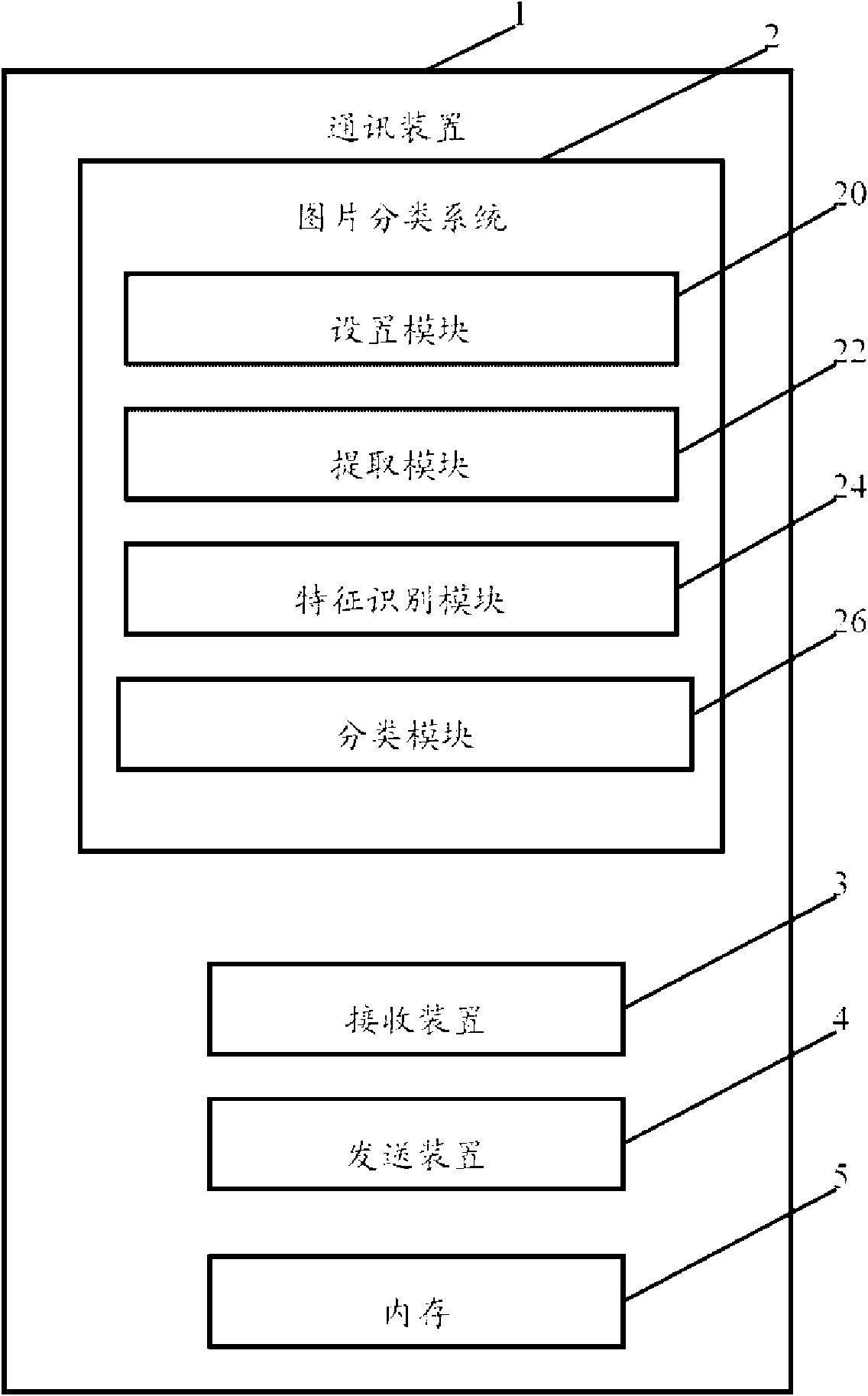

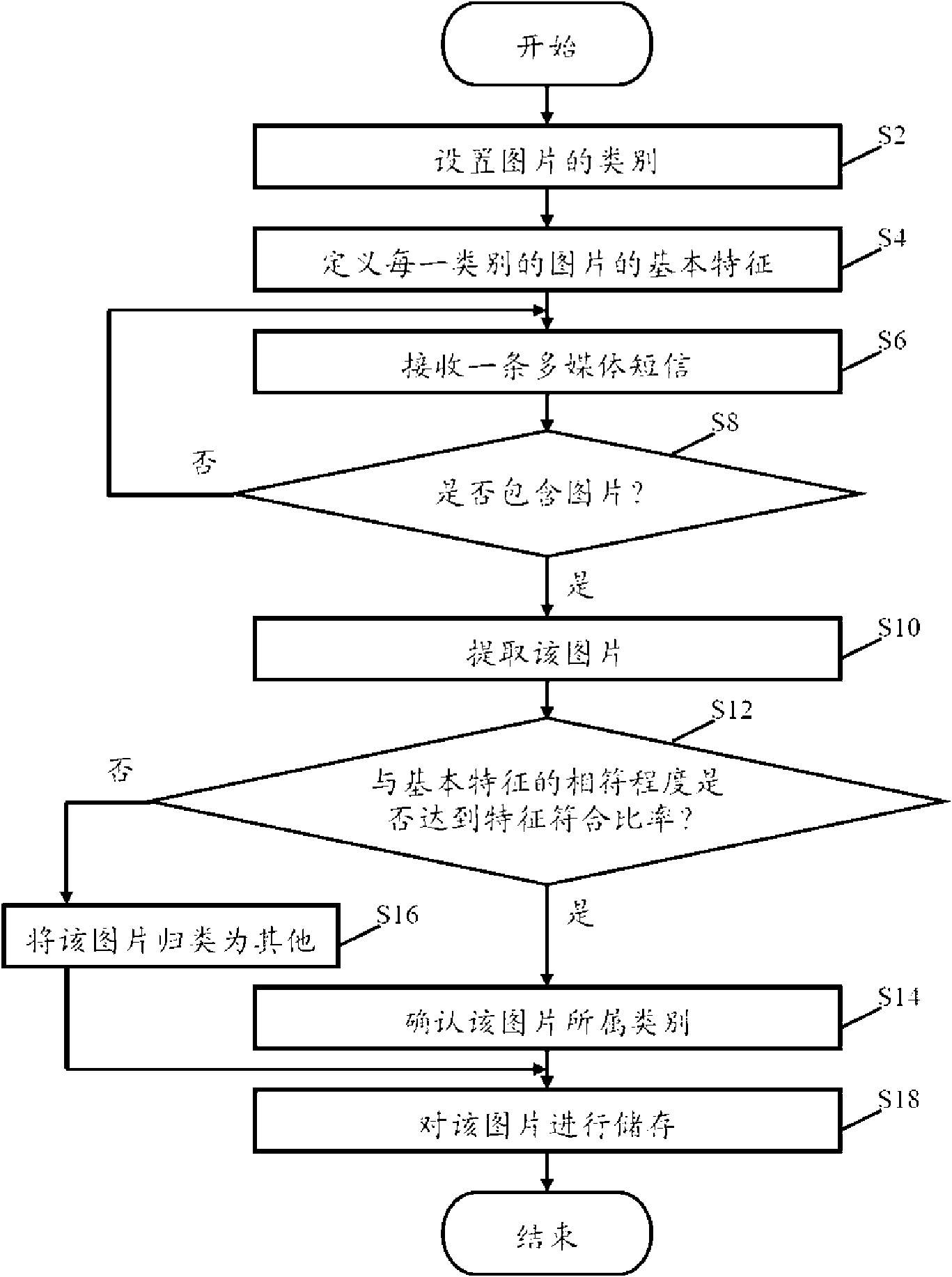

Picture classification system and method

Inactive | CN101635763A | Automatic extraction | Character and pattern recognition | Substation equipment | Synchronized Multimedia Integration Language | Classification methods

The invention discloses a picture classification system for communication devices, comprising a setting module, an extracting module, a characteristic identification module and a classifying module. The setting module sets the picture classes and the storage location for each class, defines the basic characteristics of each class, and sets a characteristic correspondence ratio. The extracting module uses Synchronized Multimedia Integration Language (SMIL) to decode a received MMS message and extracts the pictures it contains. The characteristic identification module identifies the characteristics of each extracted picture and compares them with the defined basic characteristics; if the degree of correspondence with one of the basic characteristics reaches the preset ratio, the class to which that basic characteristic belongs is taken as the class of the extracted picture. The classifying module stores the picture in the storage location for the confirmed class. The invention also provides a picture classification method. The invention can automatically classify and store pictures received by the communication device.

Owner:SHENZHEN FUTAIHONG PRECISION IND CO LTD +1
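
The threshold rule in the picture classification abstract above can be sketched as follows. This is a minimal illustration, not the patented implementation: it assumes characteristics are represented as plain string labels and that the "correspondence degree" is the fraction of a class's basic characteristics found in the picture. The names `classify_picture`, `class_features` and `min_ratio` are hypothetical.

```python
from typing import Dict, Optional, Set


def classify_picture(features: Set[str],
                     class_features: Dict[str, Set[str]],
                     min_ratio: float) -> Optional[str]:
    """Return the first class whose basic features are matched at or above min_ratio."""
    for name, basic in class_features.items():
        if not basic:
            continue  # a class without basic features can never be matched
        # Assumed definition of the correspondence degree: matched / defined.
        ratio = len(features & basic) / len(basic)
        if ratio >= min_ratio:
            return name
    return None  # no class reached the preset ratio


# Hypothetical class definitions set up by the "setting module".
classes = {
    "landscape": {"sky", "mountain", "water", "tree"},
    "portrait": {"face", "eyes", "hair"},
}
label = classify_picture({"face", "eyes", "tree"}, classes, min_ratio=0.6)
```

Here the picture matches two of the three "portrait" characteristics, which clears the 0.6 ratio, while it matches only one of four "landscape" characteristics.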

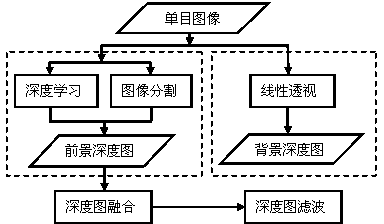

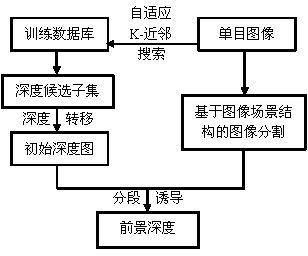

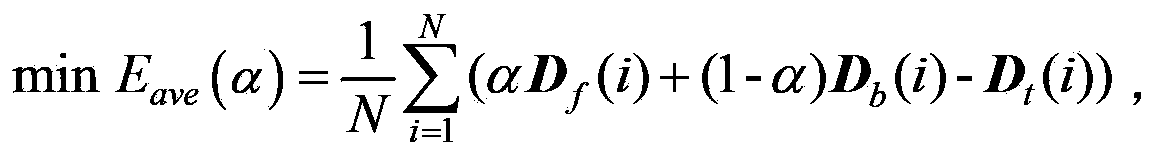

Extraction method of monocular image depth map based on foreground and background fusion

Active | CN103413347A | Automatic extraction | Accurate classification | Image enhancement | Image analysis | Computation complexity | Visual perception

The invention discloses an extraction method of a monocular image depth map based on foreground and background fusion, and belongs to the field of three-dimensional image reconstruction in computer vision. The method comprises the following steps: step A, a non-parametric machine learning method is used to extract a foreground depth map from an original monocular image; step B, a linear perspective method is used to estimate a background depth map with an overall distribution trend in the original monocular image; step C, the foreground depth map and the background depth map of the original monocular image are globally fused to obtain the final depth map of the original monocular image. Compared with the prior art, the method does not need to compute camera parameters, has low computational complexity, and is simple and practical.

Owner:NANJING UNIV OF POSTS & TELECOMM

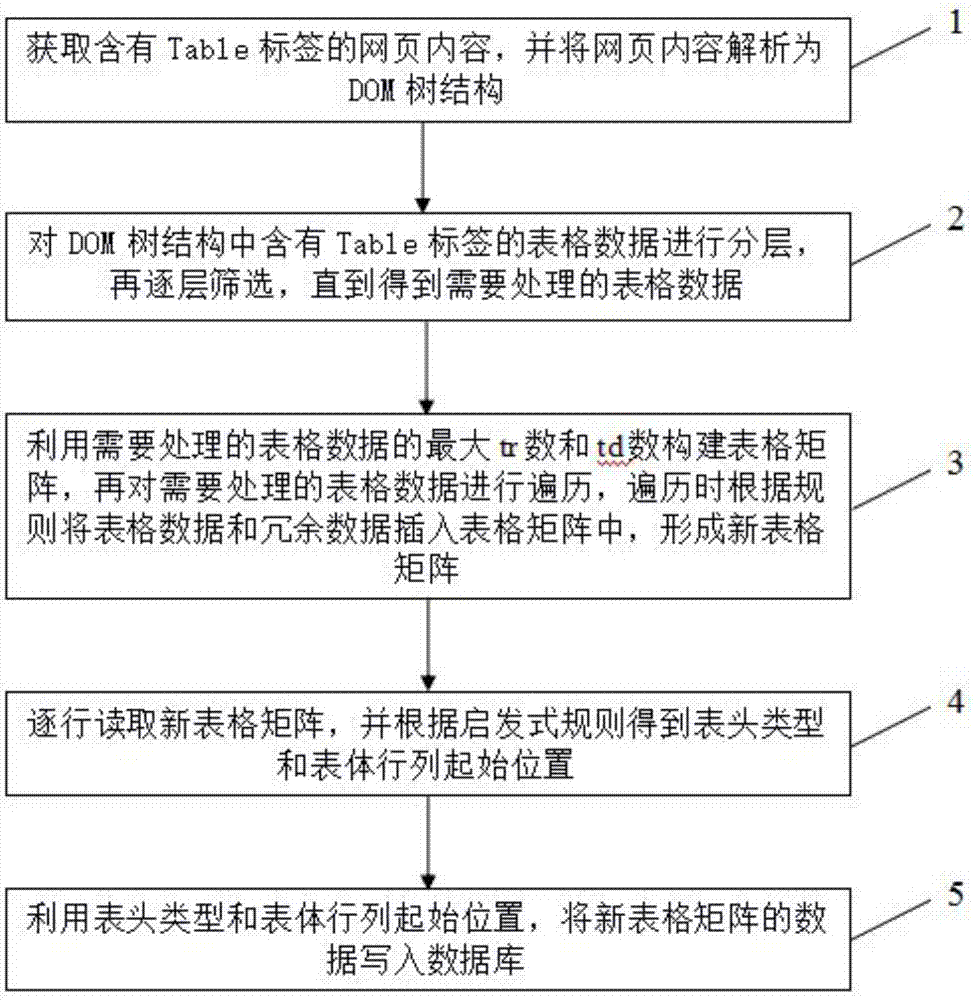

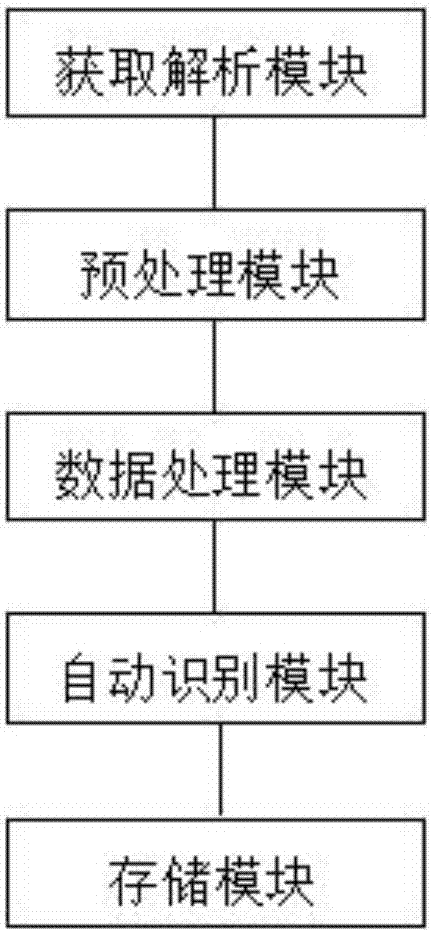

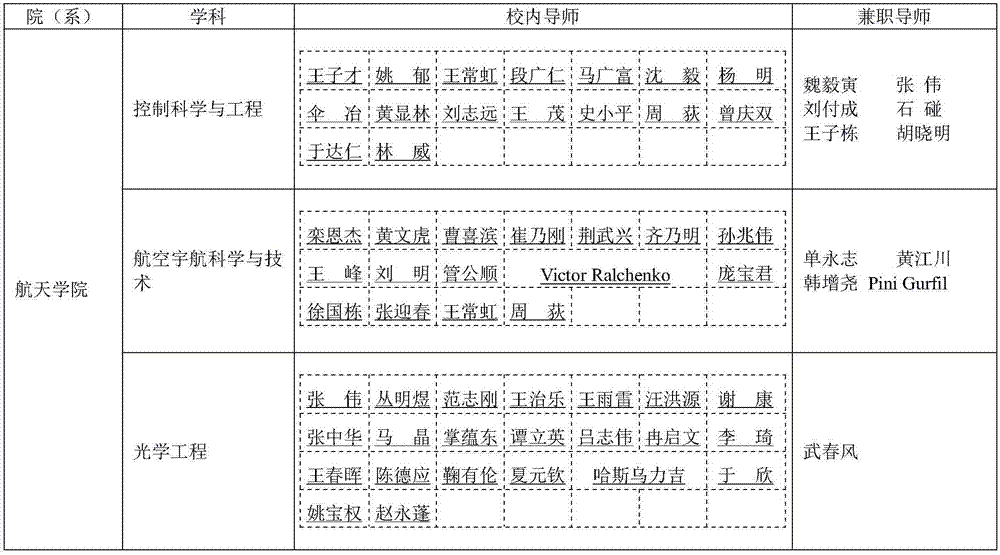

Automatic webpage table data extraction method and device

Inactive | CN107992625A | Solve extraction problems | Automatic extraction | Text processing | Semi-structured data mapping/conversion | Data mining | Web page

The invention discloses an automatic web page table data extraction method and device. The method includes: acquiring web page content that contains Table tags and parsing it into a DOM tree; layering the Table-tagged table data in the DOM tree and screening it layer by layer until the table data to be processed is obtained; creating a table matrix sized by the maximum tr count and td count of that table data, traversing the table data, and inserting the table data and redundant data into the matrix to form a new table matrix; reading the new table matrix line by line to obtain the header type and the starting position of the body; and writing the data of the new table matrix into a database using the header type and the body starting position. The method handles complicated web tables with multiple levels of nested table tags and merged cells, extracts table data quickly and accurately, and has high practical value.

Owner:湖南星汉数智科技有限公司
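
The table-matrix step in the abstract above can be illustrated with a short sketch. It is an assumption-laden example rather than the patented procedure: it handles a single, non-nested table, uses only Python's standard `html.parser`, and interprets "redundant data" as copying a merged cell's text into every slot it spans. The names `_CellCollector` and `table_to_matrix` are hypothetical.

```python
from html.parser import HTMLParser


class _CellCollector(HTMLParser):
    """Collects each row as a list of (text, rowspan, colspan) tuples."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._cell = None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])
        elif tag in ("td", "th") and self.rows:
            a = dict(attrs)
            self._cell = ["", int(a.get("rowspan") or 1), int(a.get("colspan") or 1)]

    def handle_data(self, data):
        if self._cell is not None:
            self._cell[0] += data.strip()

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self.rows[-1].append(tuple(self._cell))
            self._cell = None


def table_to_matrix(html):
    """Expand merged cells so that every row has the same number of columns."""
    parser = _CellCollector()
    parser.feed(html)
    parser.close()
    grid = {}
    for r, row in enumerate(parser.rows):
        c = 0
        for text, rowspan, colspan in row:
            while (r, c) in grid:  # skip slots already filled by a rowspan from above
                c += 1
            for dr in range(rowspan):
                for dc in range(colspan):
                    grid[(r + dr, c + dc)] = text  # redundant copies fill the merged area
            c += colspan
    n_rows = max((r for r, _ in grid), default=-1) + 1
    n_cols = max((c for _, c in grid), default=-1) + 1
    return [[grid.get((r, c), "") for c in range(n_cols)] for r in range(n_rows)]


sample = """
<table>
  <tr><th rowspan="2">Name</th><th colspan="2">Score</th></tr>
  <tr><th>Math</th><th>Art</th></tr>
  <tr><td>Ann</td><td>90</td><td>85</td></tr>
</table>
"""
matrix = table_to_matrix(sample)
```

With merged cells expanded into a rectangular matrix, a later pass can read it line by line to decide where the header ends and the body starts, as the abstract describes.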

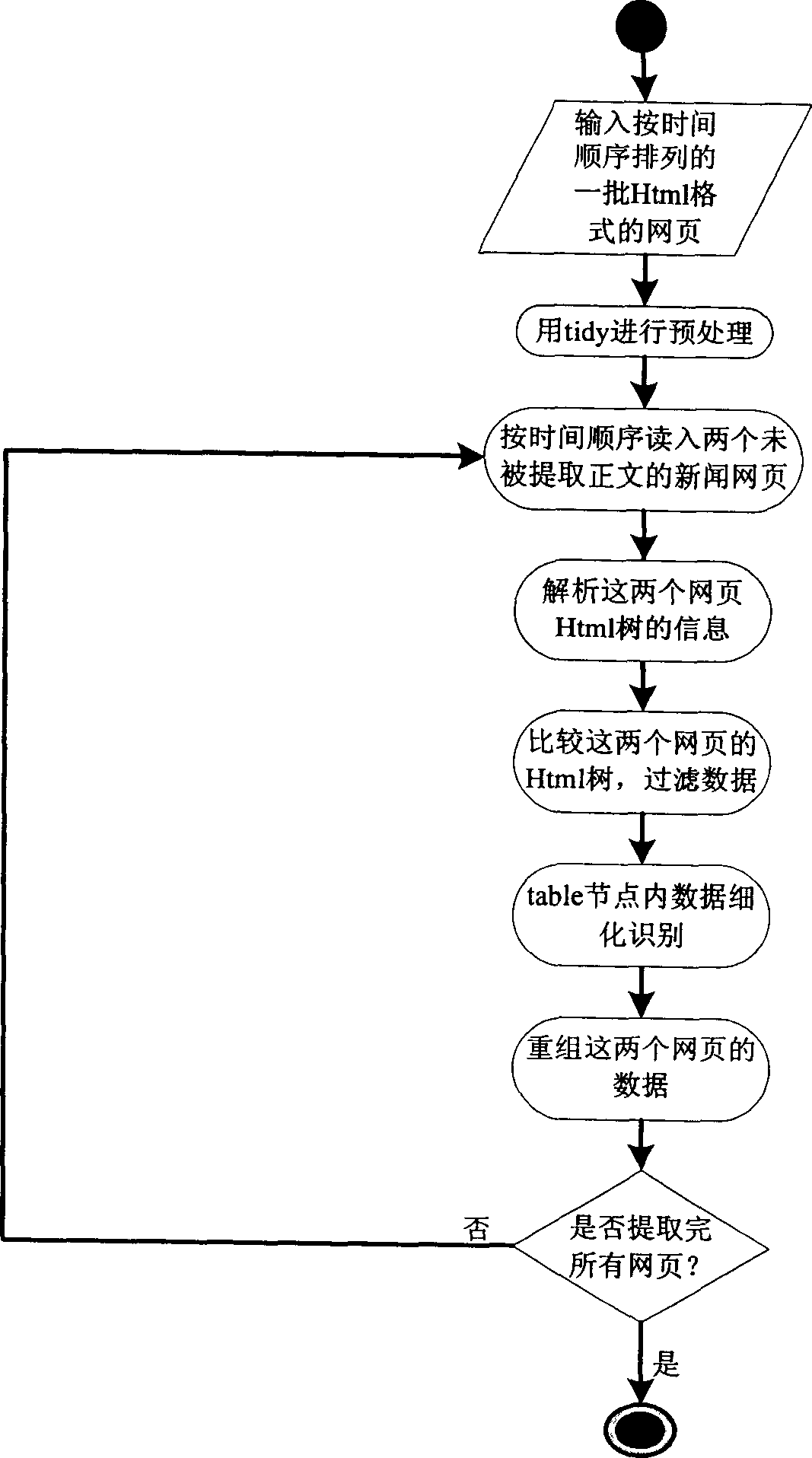

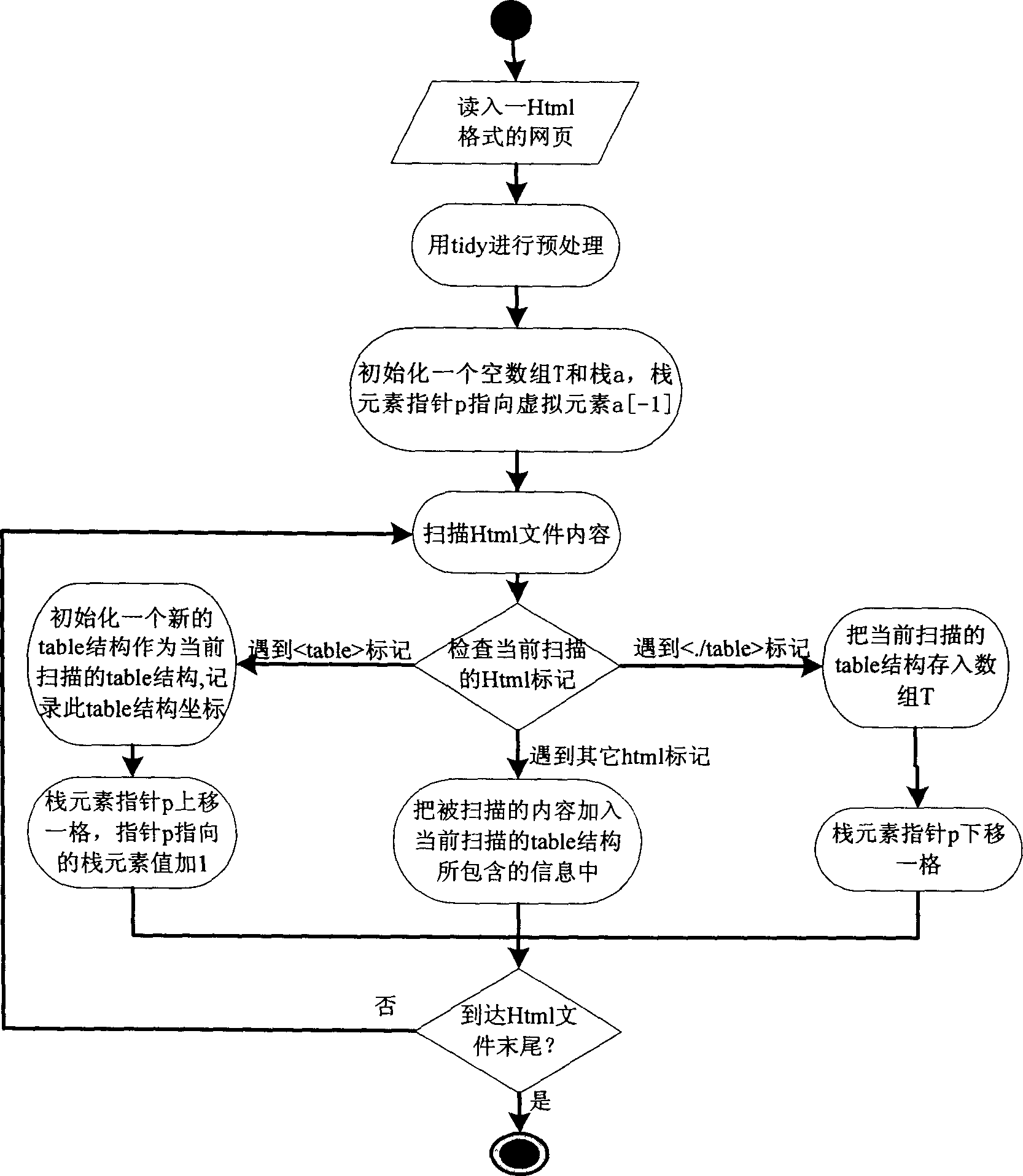

Method for acquiring news web page text information

Active | CN1786965A | Reduce manual intervention | Improve efficiency and accuracy | Special data processing applications | Information retrieval | Data science

The invention relates to a method for extracting the text information from news web pages, belonging to the field of web page information analysis and processing. Existing techniques ordinarily use a wrapper to extract the data of interest from web pages, and acquiring the pattern recognition knowledge that the wrapper needs is time-consuming, laborious work that demands considerable expertise. The invention uses a stack data structure to convert the hierarchy information of the web page data into vectors, constructs and analyzes an HTML tree, then compresses the data at each layer of the tree and performs data filtering, thinning, recognition and recombination to extract the required information. The invention is suited to extracting template-generated news content from the news pages of a fixed website.

Owner:新方正控股发展有限责任公司 +2
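
One way to picture the stack-based conversion of page hierarchy into vectors, mentioned in the abstract above, is the sketch below. The abstract does not specify the vector layout, so the `(depth, tag path, text)` tuple used here is an assumption, as are the names `DepthVectorizer` and `VOID_TAGS`. Only Python's standard `html.parser` is used.

```python
from html.parser import HTMLParser

# Tags that never have a closing tag and so must not stay on the stack.
VOID_TAGS = {"br", "img", "hr", "meta", "link", "input"}


class DepthVectorizer(HTMLParser):
    """Records one (depth, tag path, text) vector for every non-empty text node."""

    def __init__(self):
        super().__init__()
        self.stack = []    # currently open tags, outermost first
        self.vectors = []

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_TAGS:
            self.stack.append(tag)

    def handle_endtag(self, tag):
        # Pop back to the matching open tag; tolerates unclosed inner tags.
        if tag in self.stack:
            while self.stack and self.stack.pop() != tag:
                pass

    def handle_data(self, data):
        text = data.strip()
        if text:
            self.vectors.append((len(self.stack), "/".join(self.stack), text))


page = "<html><body><div><h1>Title</h1><p>Body text</p></div></body></html>"
vectorizer = DepthVectorizer()
vectorizer.feed(page)
vectorizer.close()
vectors = vectorizer.vectors
```

Text nodes that share a depth and tag path across pages of the same site are natural candidates for template-generated fields, which is where the filtering and recombination steps would take over.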

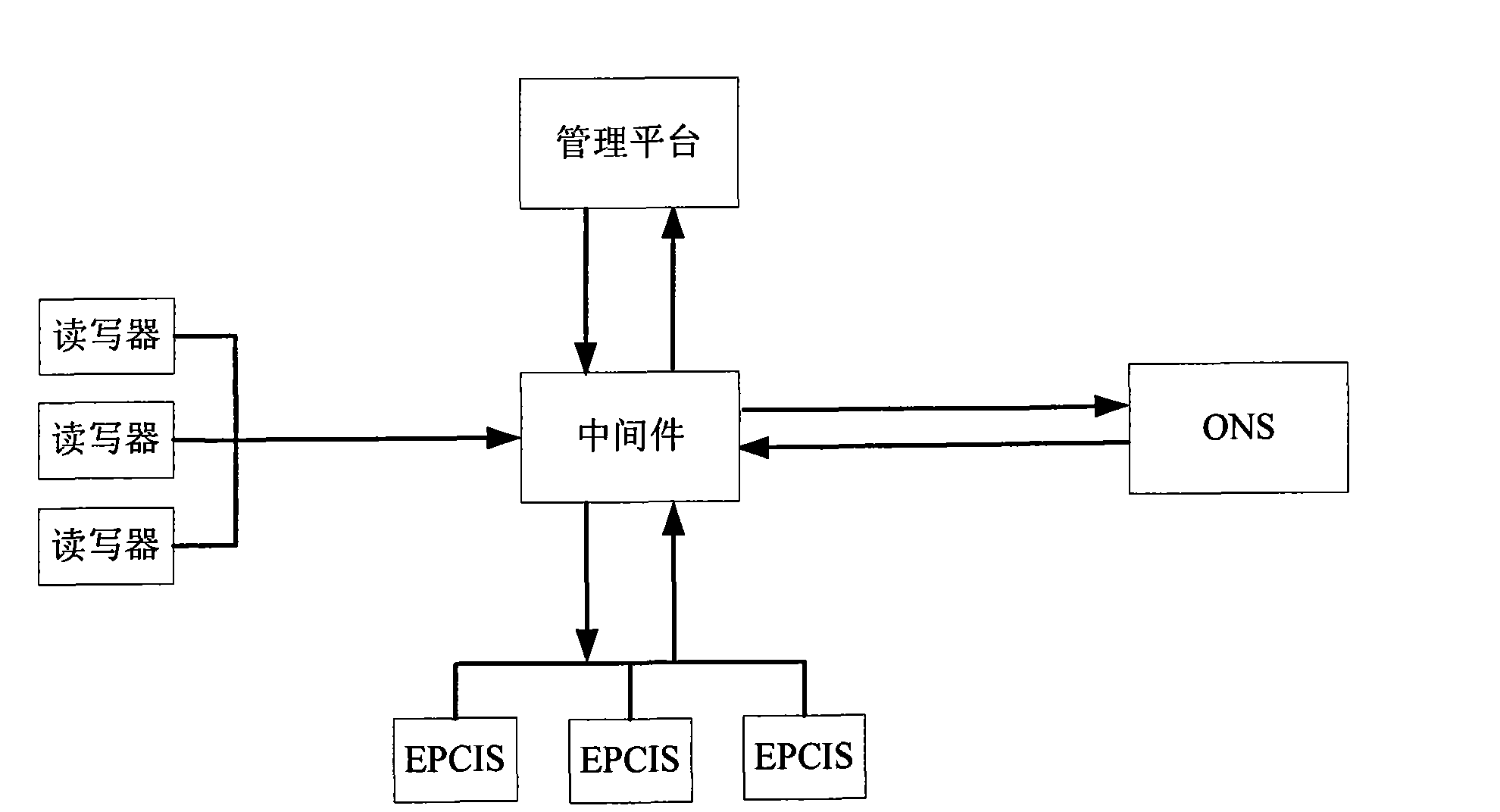

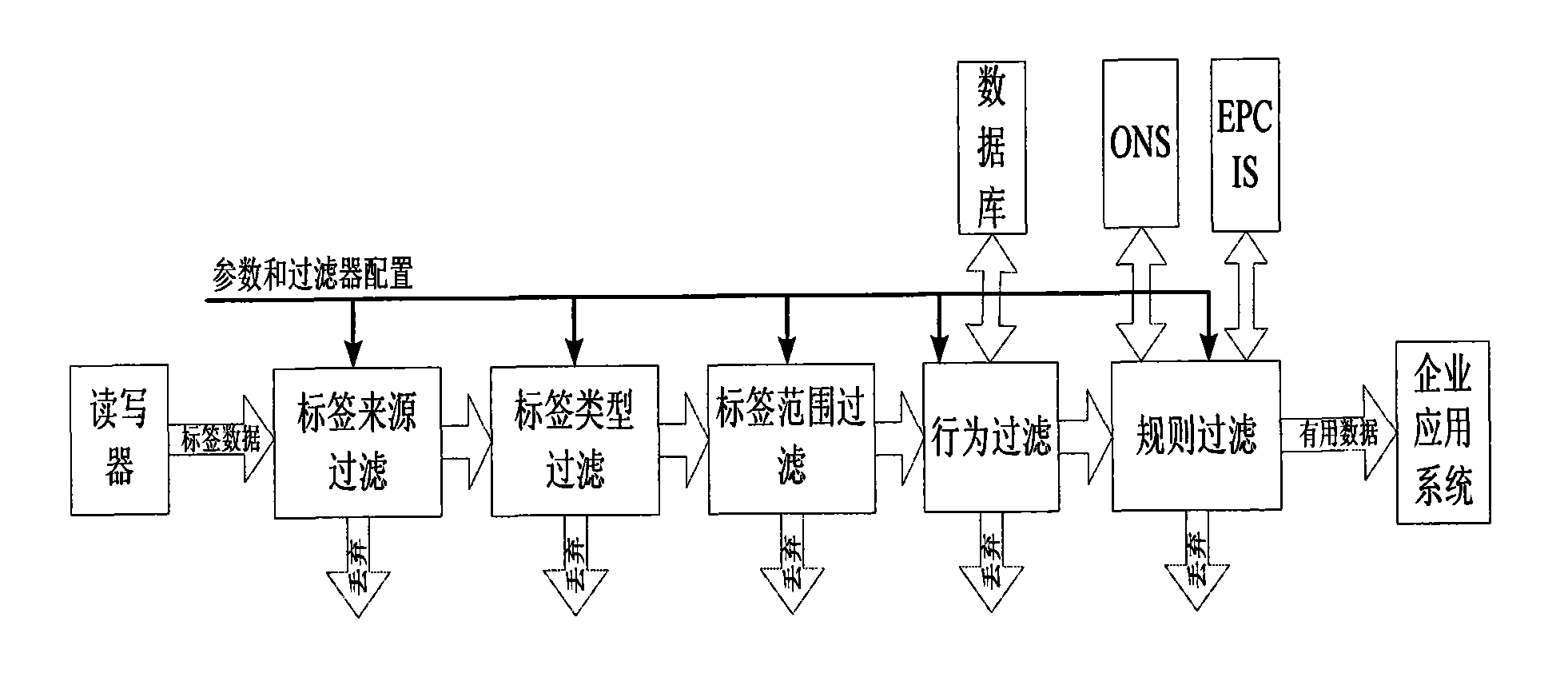

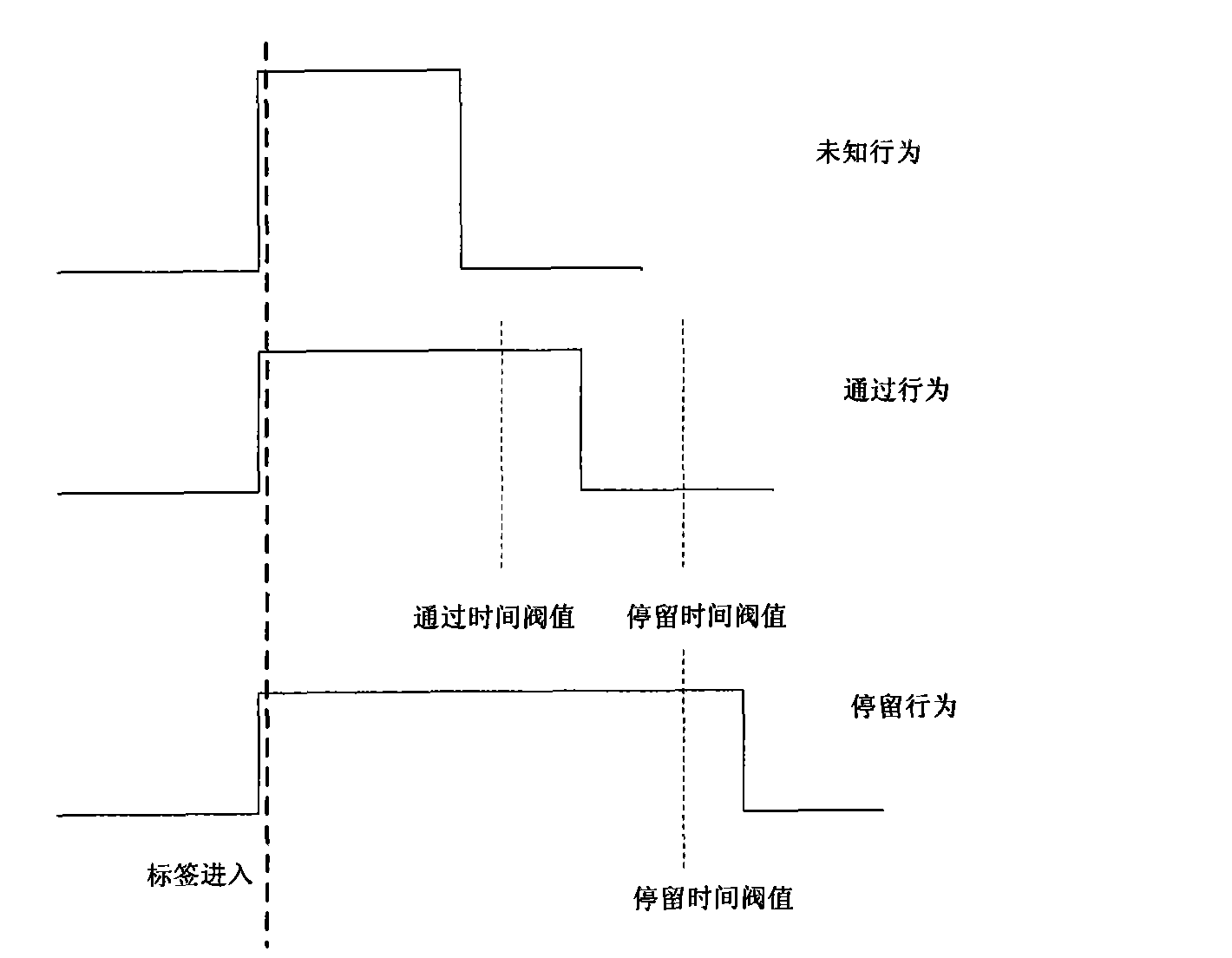

Electronic tag data filtering method used for radio frequency identification middleware

Inactive | CN101515334A | Data load reduction | Improve data processing capabilities and overall throughput | Co-operative working arrangements | Data mining | Radio-frequency identification

The invention provides an electronic tag data filtering method used for a radio frequency identification middleware. The middleware sequentially filters according to the source, type, coding range, behavior type and entity attribute of an electronic tag. The method comprehensively considers the attribute, behavior information and EPC network information of the tag, greatly reduces the redundant data and realizes the efficient screening of data.

Owner:HUAZHONG UNIV OF SCI & TECH
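
The sequential filtering order named in the abstract above (source, type, coding range, behavior type, entity attribute) can be sketched as a chain of predicates. The record layout, field names and sample values below are hypothetical and chosen only for illustration; the patent's actual data model is not given in the abstract.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class TagRead:
    reader_id: str   # source of the read
    tag_type: str
    epc: int         # code value, checked against a coding range
    behavior: str
    entity: str


Filter = Callable[[TagRead], bool]


def build_pipeline(sources, types, epc_range, behaviors, entities) -> List[Filter]:
    """Build the five filter stages in the order the abstract lists them."""
    lo, hi = epc_range
    return [
        lambda t: t.reader_id in sources,
        lambda t: t.tag_type in types,
        lambda t: lo <= t.epc <= hi,
        lambda t: t.behavior in behaviors,
        lambda t: t.entity in entities,
    ]


def apply_filters(reads: Iterable[TagRead], pipeline: List[Filter]) -> List[TagRead]:
    survivors = list(reads)
    for keep in pipeline:  # each stage sees only what earlier stages kept
        survivors = [t for t in survivors if keep(t)]
    return survivors


reads = [
    TagRead("dock-1", "pallet", 1050, "arrive", "warehouse-A"),
    TagRead("dock-9", "pallet", 1051, "arrive", "warehouse-A"),   # unknown source
    TagRead("dock-1", "pallet", 9999, "arrive", "warehouse-A"),   # outside coding range
    TagRead("dock-1", "pallet", 1052, "pass-by", "warehouse-A"),  # unwanted behavior
]
pipeline = build_pipeline({"dock-1"}, {"pallet"}, (1000, 2000), {"arrive"}, {"warehouse-A"})
kept = apply_filters(reads, pipeline)
```

Running the cheaper checks first means later stages handle fewer records, which is one plausible reading of the data load reduction the listing claims.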

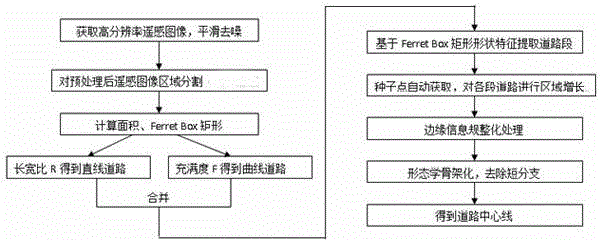

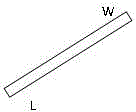

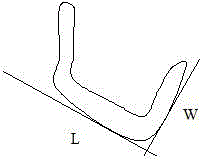

Road extracting method based on shape characteristics of roads of remote sensing images

Inactive | CN104657978A | Automatic extraction | Overcome the disadvantage of only extracting straight road segments | Image enhancement | Image analysis | Minimum bounding rectangle | Gray level

The invention discloses a road extraction method based on the shape characteristics of roads in remote sensing images. The method first segments local regions of the preprocessed remote sensing image, exploiting the consistency of local gray levels on road surfaces and the large local gray-level difference between the target region and the background. It then combines the segmentation results with the area and the shape characteristics of the Feret box (minimum bounding rectangle) to obtain straight and curved road sections, performs region seed growing on the confirmed roads, and connects most of the road sections to extract the road information. Compared with the prior art, the method obtains both straight and curved road sections, extracts seed points automatically, and obtains road networks automatically; the extraction is not affected by image rotation, which overcomes the limitation that most methods can extract only straight road sections.

Owner:FUZHOU UNIV

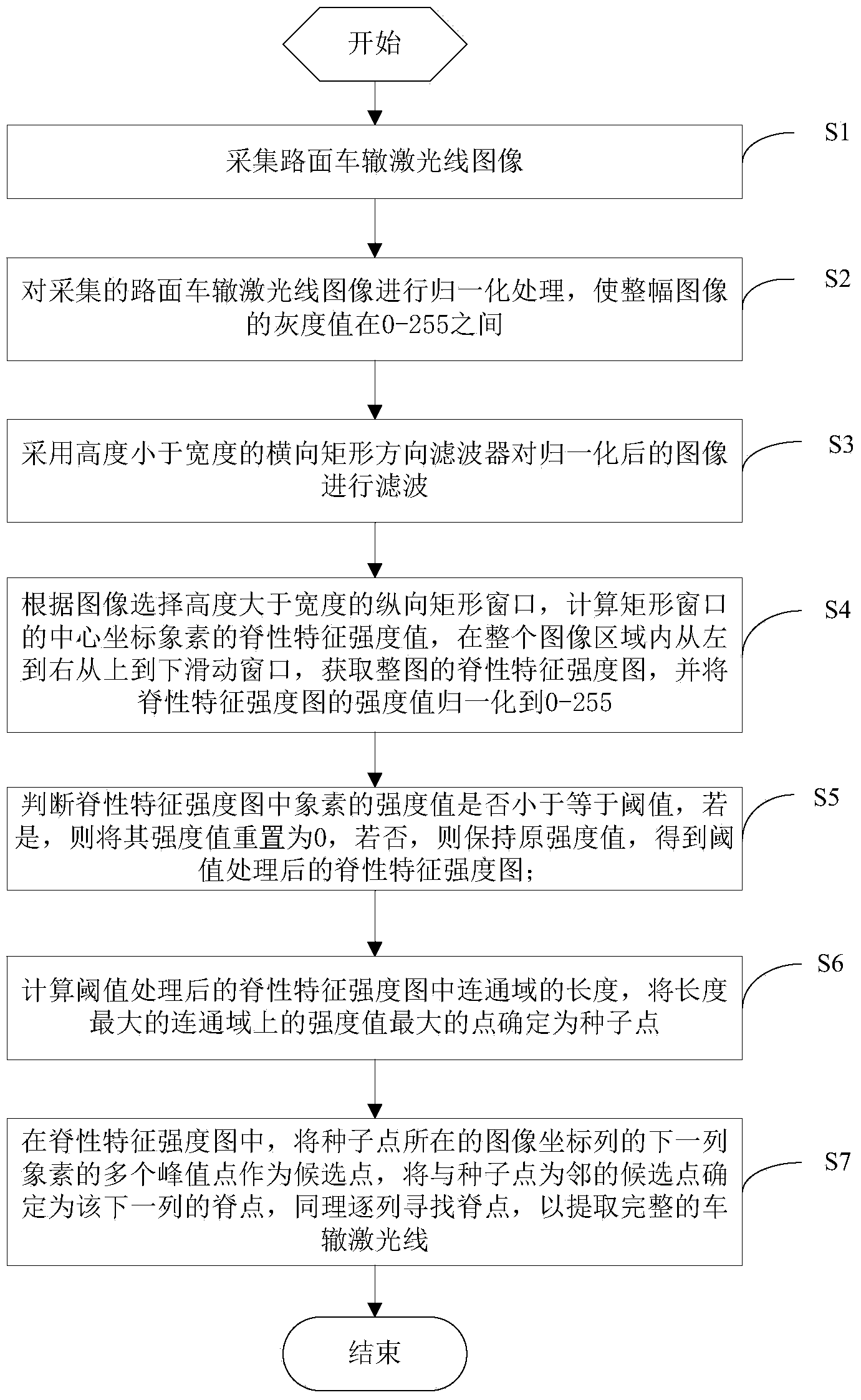

Road surface line laser rut detection and identification method and processing system

Inactive | CN103886594A | Improve robustness | Automatic extraction | Image analysis | Feature extraction | Characteristic strength

The invention discloses a road surface line laser rut detection and identification method and a processing system. The method includes the following steps: road surface rut laser line images are collected; feature extraction is carried out on the images to obtain a ridge characteristic strength graph; in the characteristic strength graph, the grey-level values along each row of pixels form a waveform curve with one or more peaks, and these peaks, called ridge points, are candidate points on the rut laser line; the ridge points of each row are searched one by one according to their peak values to determine the rut laser line. The method extracts the rut laser line automatically, precisely and in real time; the processing system is highly robust and provides precise, reliable data for the subsequent calculation of road surface rut models and rut depths, which improves the measurement accuracy of road surface rut depth.

Owner:WUHAN INSTITUTE OF TECHNOLOGY
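
The ridge-point search in the rut detection abstract above can be sketched in plain Python. The abstract says only that peaks are examined "according to peak values"; the continuity rule used here (prefer the strongest peak that lies within `max_jump` pixels of the previous ridge point) is an added assumption, and `local_peaks` and `trace_laser_line` are hypothetical names.

```python
def local_peaks(profile):
    """Indices where the profile rises to a value and does not rise further."""
    return [i for i in range(1, len(profile) - 1)
            if profile[i - 1] < profile[i] >= profile[i + 1]]


def trace_laser_line(strength, max_jump=2):
    """Pick one ridge point per profile of the characteristic strength graph."""
    line = []
    prev = None
    for profile in strength:
        # Examine candidate peaks from strongest to weakest.
        peaks = sorted(local_peaks(profile), key=lambda i: profile[i], reverse=True)
        chosen = None
        for i in peaks:
            if prev is None or abs(i - prev) <= max_jump:
                chosen = i
                break
        if chosen is None and peaks:
            chosen = peaks[0]  # no continuous candidate: fall back to the strongest
        line.append(chosen)
        if chosen is not None:
            prev = chosen
    return line


# Toy strength graph: the third profile has a stronger outlier at index 5.
strength = [
    [0, 1, 9, 1, 0, 0, 0],
    [0, 1, 8, 2, 0, 0, 0],
    [0, 0, 2, 7, 1, 9, 0],
    [0, 0, 1, 3, 8, 2, 0],
]
laser_line = trace_laser_line(strength)
```

In the third profile the strongest peak is rejected because it would break the line's continuity, so the weaker peak next to the previous ridge point is kept instead.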

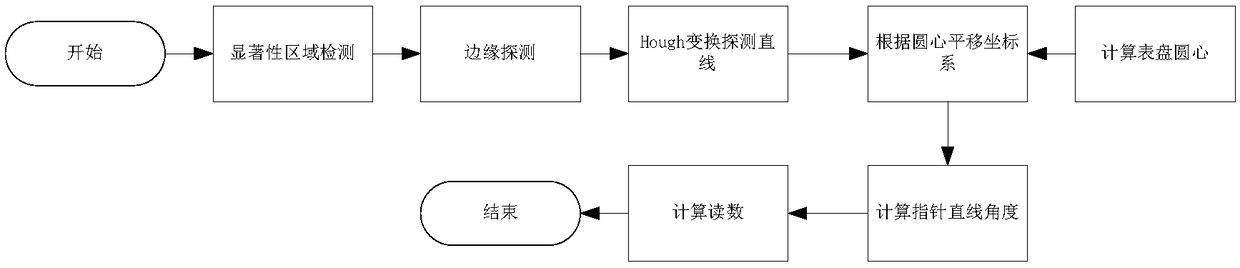

An application of machine vision understanding in electric power remote monitoring

Pending | CN109190473A | Automatic extraction | Realize automatic collection | Character and pattern recognition | Hough transform | Machine vision

The invention relates to an application of machine vision understanding in electric power remote monitoring, belonging to the field of data identification. The application includes: performing salient region detection on the image; carrying out edge detection; searching for the smallest square contour to locate the dial; enhancing the de-noised image using Gamma correction combined with a homomorphic filter; applying the Hough transform to the pointer image obtained after salient region detection to find the straight line; searching the transform space for the maximum Hough value by setting a threshold, then inversely transforming that value to the color space of the original image to obtain the straight-line equation of the pointer edge; transforming the line coordinates into a coordinate system whose origin is the center point of the dial; and converting the slope of the pointer line into the pointer's inclination angle, from which the meter reading is calculated according to the correspondence between angle and reading. The application can be used for remote data acquisition, automatic analysis and operation management of substations.

Owner:SHANGHAI MUNICIPAL ELECTRIC POWER CO +1
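
The last step of the meter-reading abstract above, converting the pointer angle into a reading, can be sketched as a linear mapping. This example assumes a dial whose scale is linear in angle and whose pointer sweeps clockwise from a known zero angle to a known full-scale angle. Because a slope alone leaves a 180-degree ambiguity, the sketch also assumes the pointer tip position is known. All function and parameter names are hypothetical.

```python
import math


def pointer_angle_deg(tip_xy, center_xy):
    """Counterclockwise angle of the pointer from the +x axis, with the dial center as origin."""
    dx = tip_xy[0] - center_xy[0]
    dy = center_xy[1] - tip_xy[1]  # flip the sign: image y grows downward
    return math.degrees(math.atan2(dy, dx)) % 360.0


def angle_to_reading(angle_deg, zero_angle_deg, full_angle_deg, min_value, max_value):
    """Map an angle to a reading, assuming a clockwise sweep and a linear scale."""
    sweep = (zero_angle_deg - full_angle_deg) % 360.0
    travelled = (zero_angle_deg - angle_deg) % 360.0
    if travelled > sweep:
        raise ValueError("pointer angle lies outside the dial scale")
    return min_value + (max_value - min_value) * travelled / sweep


# Hypothetical dial: zero at 225 degrees (lower left), full scale at 315 degrees
# (lower right), so the pointer sweeps 270 degrees clockwise over a 0..100 range.
angle = pointer_angle_deg(tip_xy=(100, 40), center_xy=(100, 100))  # pointing straight up
reading = angle_to_reading(angle, 225.0, 315.0, 0.0, 100.0)
```

A pointer that points straight up sits halfway through this dial's sweep, so the reading falls at mid-scale.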

Automatic generation method and system for HTTP (Hypertext Transfer Protocol) network feature code

Inactive | CN103746982A | Automatic extraction | Improve production efficiency | Transmission | Programming language | Traffic capacity

The invention discloses an automatic generation method for HTTP (Hypertext Transfer Protocol) network feature codes. The method comprises a packet feature code generation step, a URI (Uniform Resource Identifier) feature code generation step and an HTTP network feature code total set generation step. The packet feature code generation step comprises: extracting feature statistics and packet content from a plurality of network samples as alternating request and response packets; generating a coarse-grained cluster set through secondary clustering, and then generating a fine-grained cluster set on the basis of the coarse-grained cluster set; and generating a request/response packet feature code set for the network samples from the fine-grained cluster set. The URI feature code generation step comprises: dividing the traffic of the network samples into flows of a single type and carrying out supplementary extraction of URI path and parameter feature codes to generate a URI feature code set. Finally, the request/response packet feature code set and the URI feature code set are combined to generate the total feature code set Tall.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

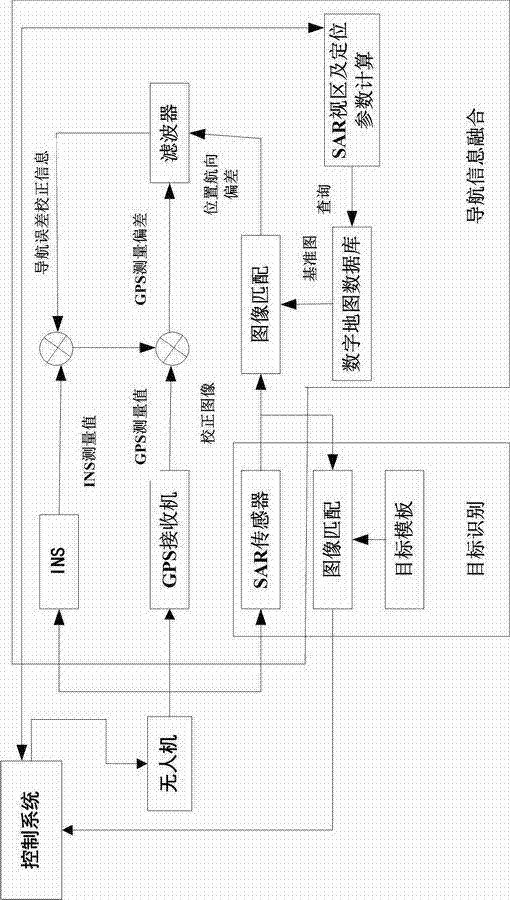

Combined navigation method based on INS (inertial navigation system)/GPS (Global Positioning System)/SAR (synthetic aperture radar)

Inactive | CN104777499A | Increase redundancy | Improve fault tolerance | Navigation by speed/acceleration measurements | Satellite radio beaconing | Synthetic aperture sonar | Inverse synthetic aperture radar

A combined navigation method based on an INS (inertial navigation system), GPS (Global Positioning System) and SAR (synthetic aperture radar) comprises the following steps: an INS receiver, a GPS receiver and an SAR sensor are mounted in parallel at appropriate positions on an unmanned aerial vehicle; image matching is performed, and the position navigation deviation of the INS is obtained and input into a filter as an observed quantity, where it is filtered jointly with the other observed quantities; the GPS measuring deviation is obtained and input into the filter as an observed quantity, where it is filtered jointly with the other observed quantities; the filter integrates the measuring deviation from the GPS with the INS position navigation deviation obtained through image matching, and a navigation error estimate is calculated. Topographic features are automatically and reliably extracted from the SAR image, a map image is formed in a digital map, and the SAR topographic features are automatically predicted; topographic deviation is accurately estimated and integrated; capture and matching are automatically initialized; the fault tolerance, autonomy and reliability of navigation are high.

Owner:HENAN POLYTECHNIC UNIV

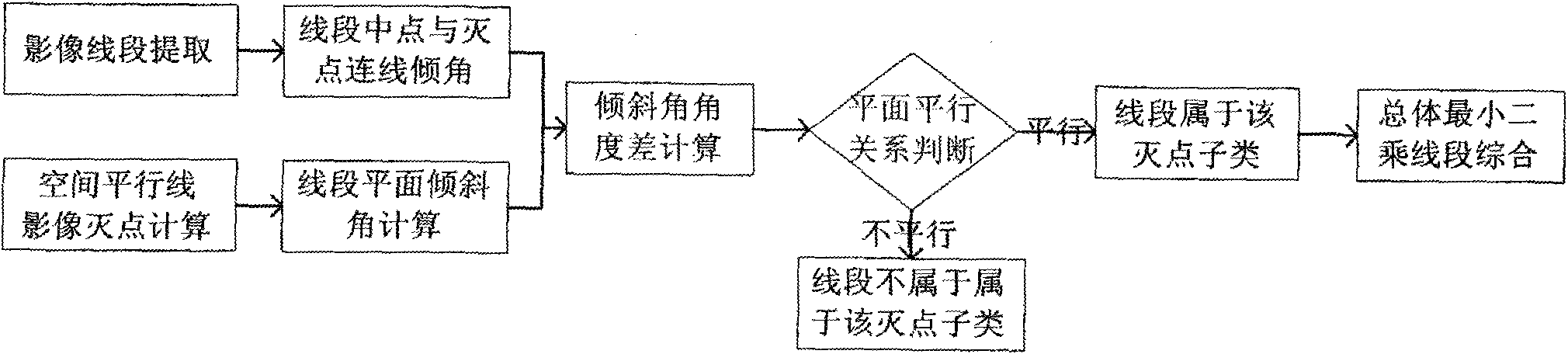

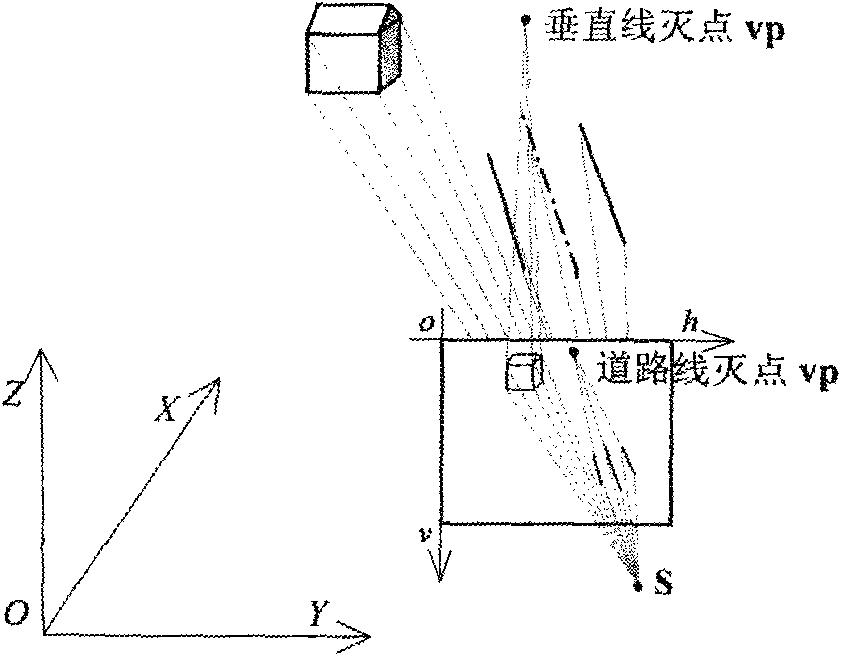

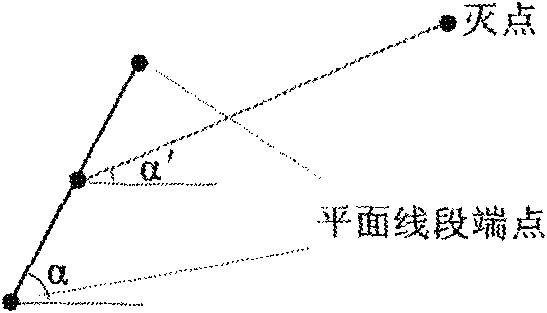

Vanishing point based method for automatically extracting and classifying ground movement measurement image line segments

Inactive | CN101915570A | Automatic extraction | Accurate extraction | Picture interpretation | Hough transform | Total least squares

The invention relates to a vanishing point based method for automatically extracting and classifying ground movement measurement image line segments. The method comprises the following steps of: firstly, analyzing the attribute of ground object space parallel characteristic lines of a target and calculating vanishing points of an image of the target on the basis of image sequences acquired by ground movement measurement; secondly, extracting characteristic line segments based on an image edge according to the Hough transform of a polar coordinate parameter area extremum and the continuous change of image greyscale; and then, classifying the extracted plane line segments by using vanishing points; and finally integrating the classified line segments according to a total least square method and conditions of the plane adjacency and overlapping degree of the line segments so as to extract image characteristic line segments which correspond to multiple ground object space parallel lines. The invention can improve the indoor processing capability of a movement measurement system and promote the research on automatic recognition, mapping, three-dimensional reconstruction and the like of multiple ground objects (such as buildings, roads and the like).

Owner:TONGJI UNIV

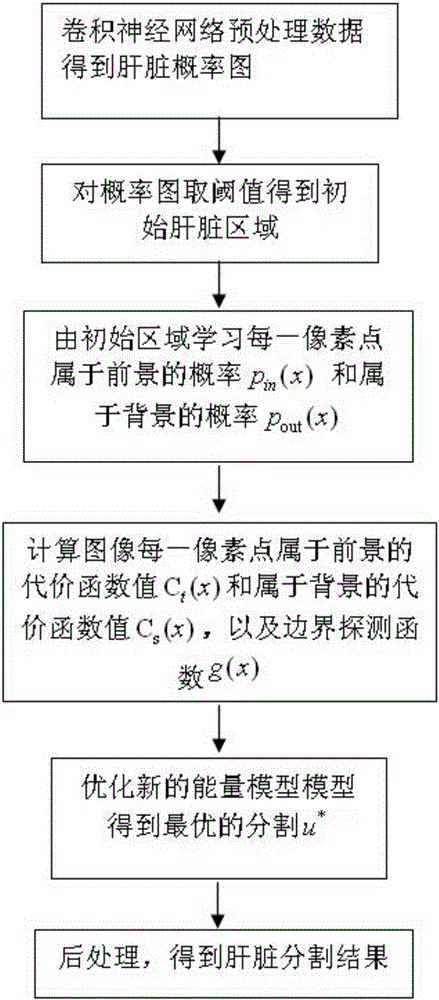

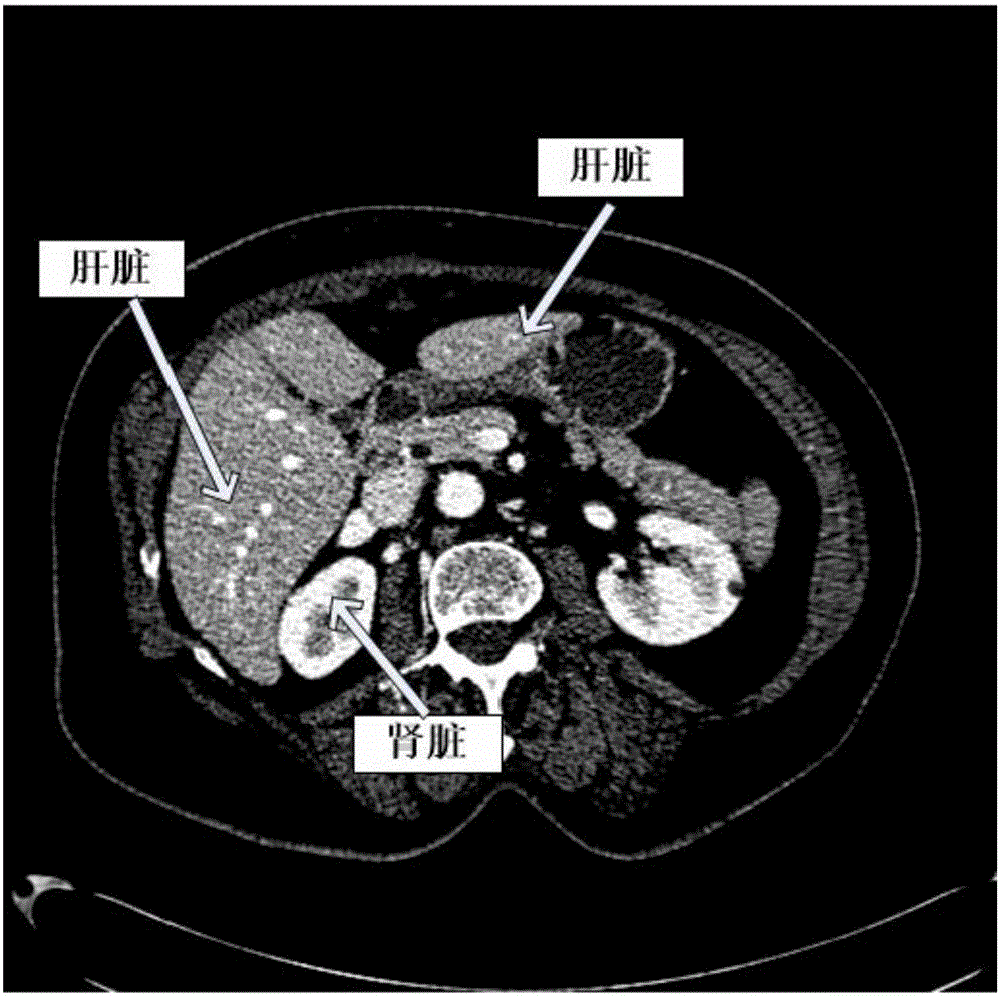

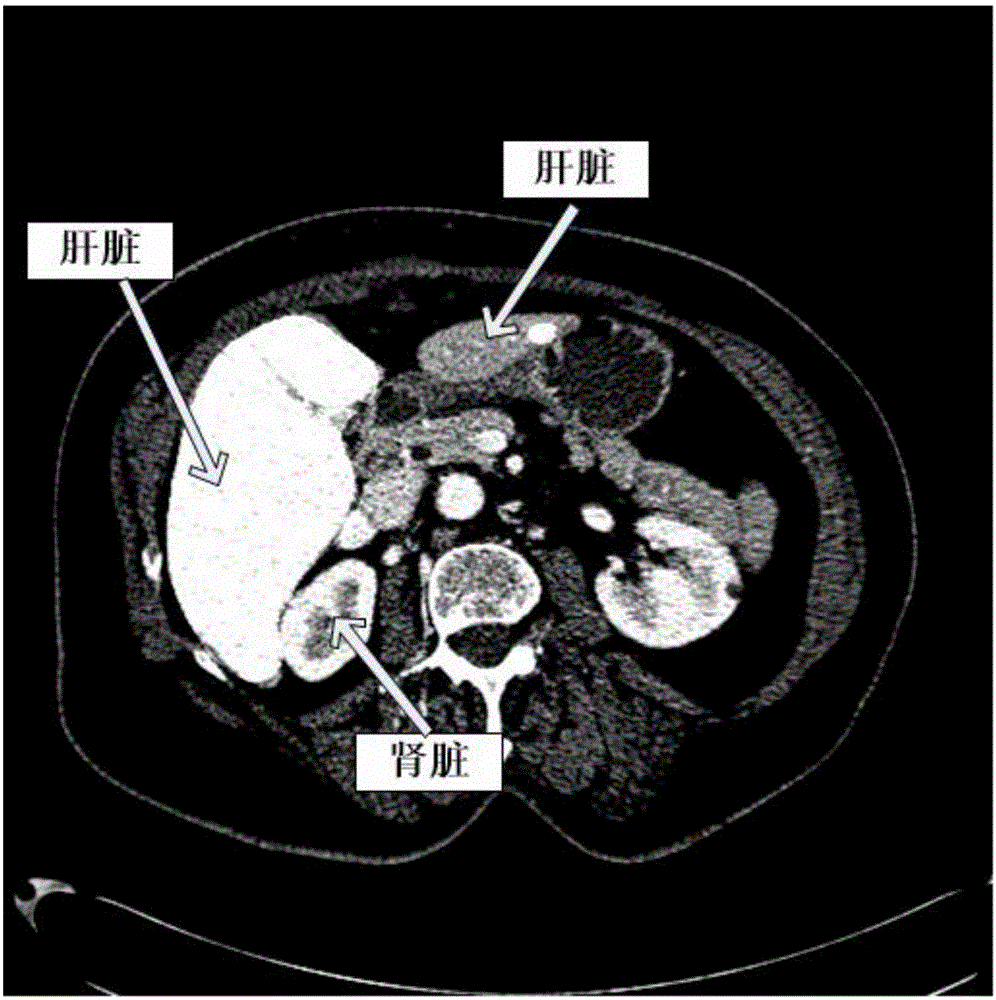

Fully-automatic three-dimensional liver segmentation method based on local apriori information and convex optimization

Active | CN106056596A | Automatic extraction | The segmentation result is accurate | Image enhancement | Image analysis | Fully automatic | Algorithm

The invention relates to medical image processing and aims to provide a fully automatic three-dimensional liver segmentation method based on local a priori information and convex optimization. The method comprises the following steps: processing abdominal liver CTA volume data with a trained three-dimensional convolutional neural network to obtain a prior probability map of the liver; obtaining an initial liver region from the prior probability map; determining, for each pixel in the image, the probabilities of belonging to the foreground liver and to the background; optimizing a new energy model using convex optimization to segment the liver; and post-processing to obtain the liver contour. The method overcomes the under-segmentation and inaccurate boundaries that arise when the liver is segmented with a convolutional neural network alone, and yields an accurate segmentation result.

Owner:ZHEJIANG DE IMAGE SOLUTIONS CO LTD

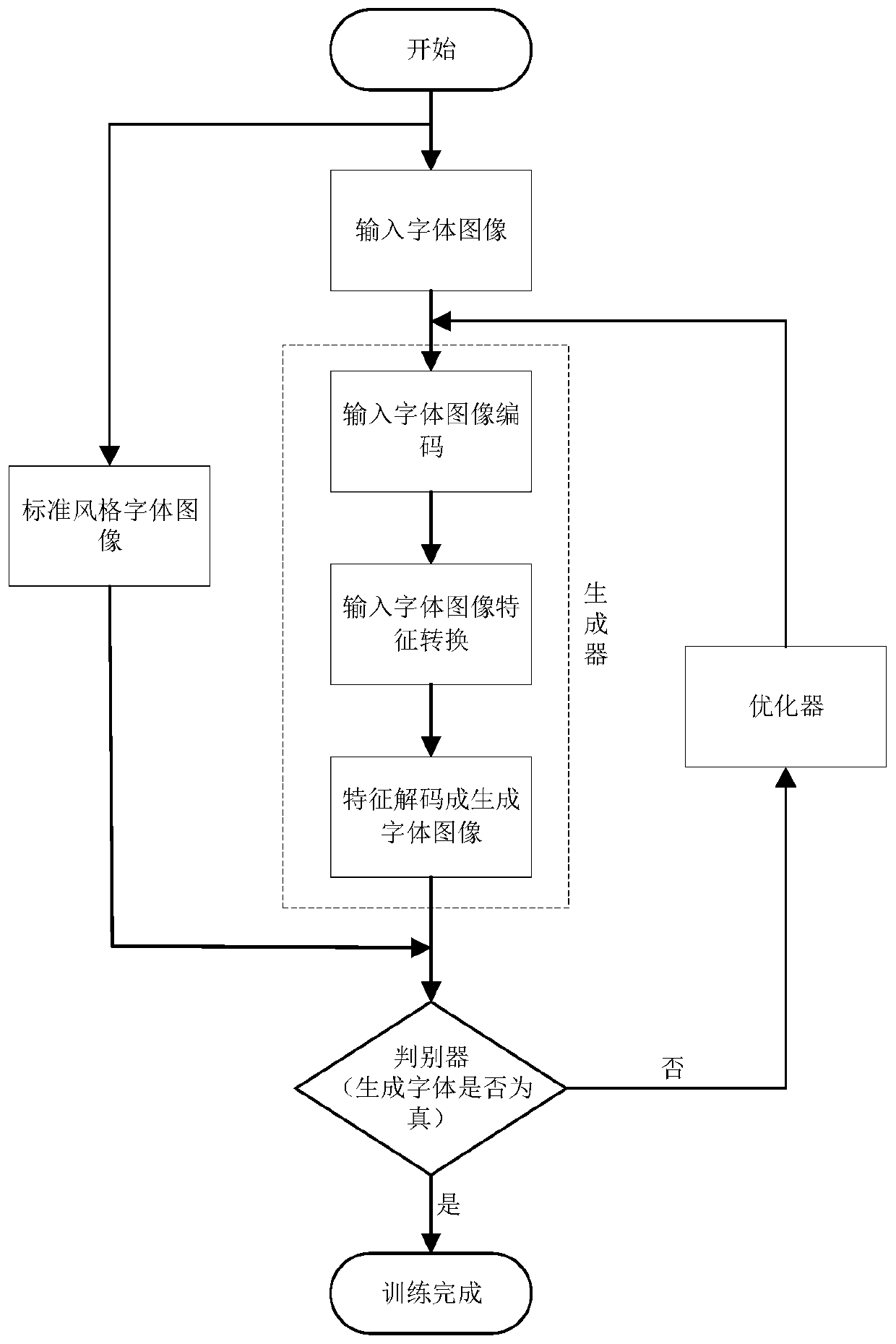

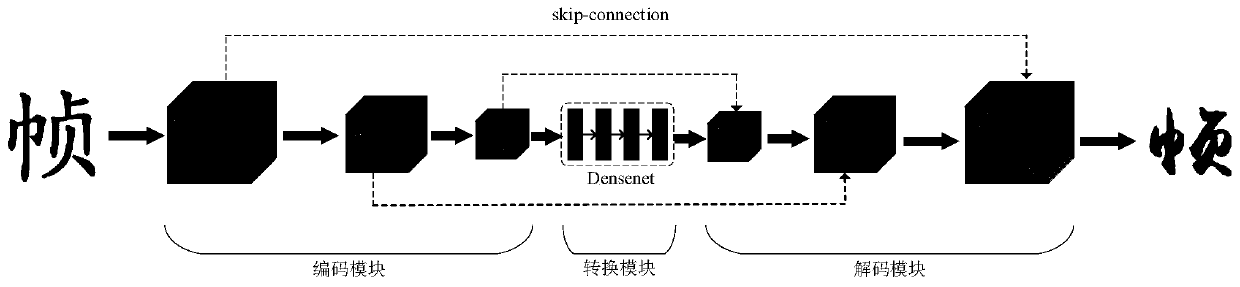

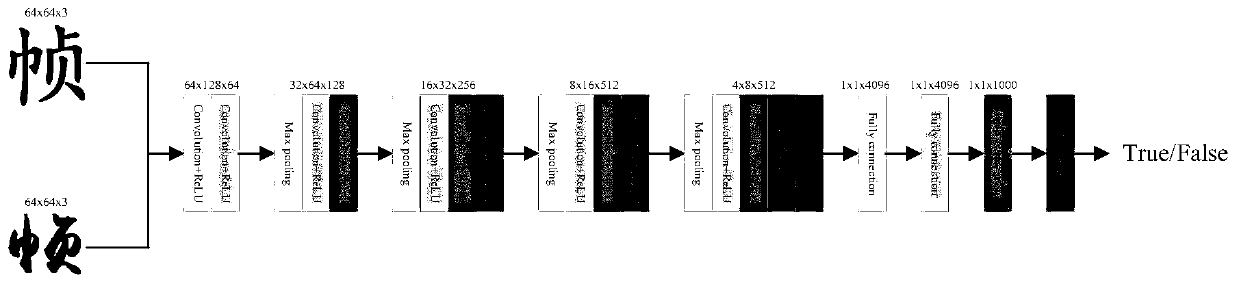

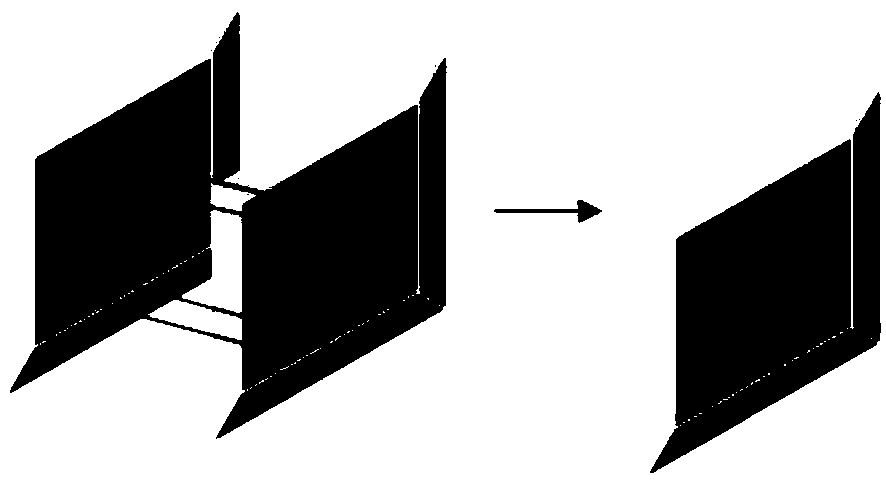

Calligraphy word stock automatic restoration method and system based on style migration

Pending | CN110570481A | Automatic extraction | Improve the situation where there is a large deformation | Texturing/coloring | Neural architectures | Code module | Discriminator

The invention provides a method and system for automatic restoration of a calligraphy font library based on style migration. The method comprises the following steps: input fonts and standard style fonts are set; the input font image is fed to a coding module, which obtains latent feature information; a conversion module converts this feature information into feature information of the standard style font; a decoding module processes it to obtain a generated font image; the input font image and the generated font image are fed to a discriminator, which outputs the probability that the generated font image is a real standard style font; similarly, the input font image and the standard style font image are fed to the discriminator to obtain the probability that the standard style font image is a real standard style font; finally, the loss functions of the generator and the discriminator are computed from the two probabilities. An optimizer adjusts the generator and the discriminator according to the loss functions until both converge, giving a trained generator with which a complete font library of the standard style can be obtained.

Owner:CHINA UNIV OF GEOSCIENCES (WUHAN)

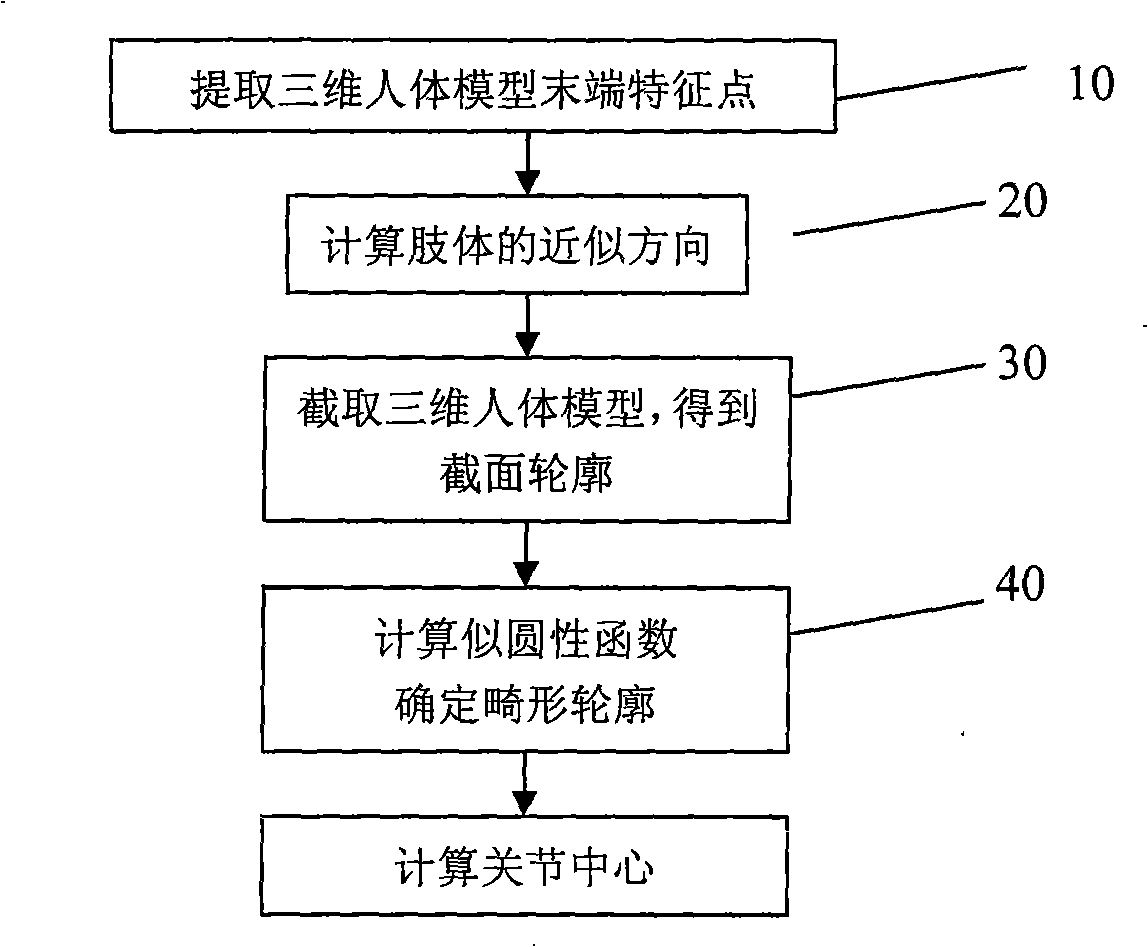

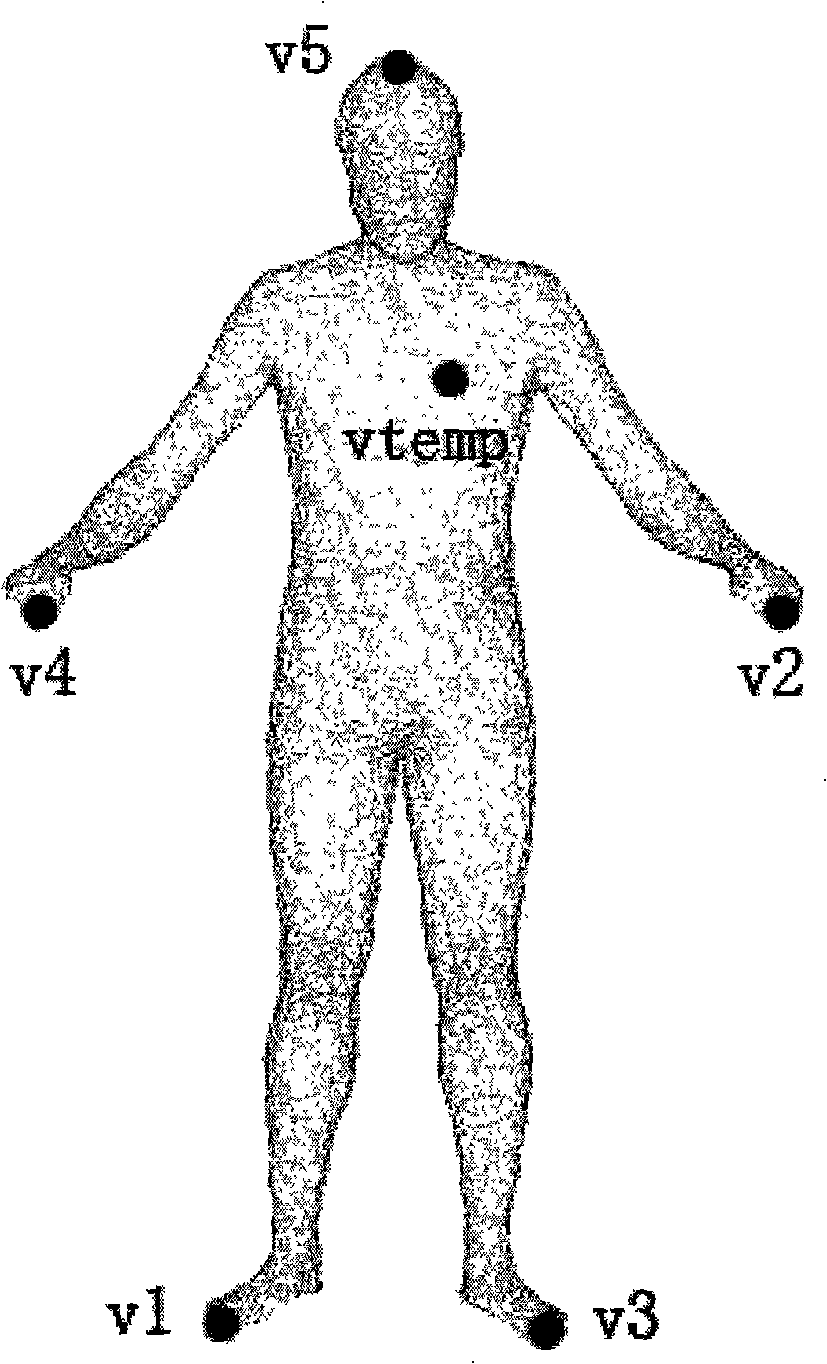

Three-dimensional mannequin joint center extraction method

Inactive | CN101271589A | Automatic extraction | No human intervention required | Image analysis | 3D modelling | Gravity center | Sacroiliac joint

The invention discloses a joint center extraction method for a three-dimensional human model, comprising the following steps: the surface mesh information of the three-dimensional human model is imported and used to extract the extremity feature points of the model; the limbs of the model are divided on the basis of these extremity feature points, and an approximate direction is computed for each limb; a group of parallel planes perpendicular to the approximate direction of the limb is used to cut the model, giving a group of sectional profiles; a circularity function is computed for the sectional profiles, giving a sequence of circularity values; the local minima of this sequence are computed and used to identify the deformed profiles in the group; and the center of gravity of each deformed profile is computed and taken as the joint center at the corresponding position of the model. The method can be applied to three-dimensional human models in arbitrary postures and extracts joint centers with comparatively high precision.

Owner:北京宗元科技有限公司
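
The circularity test in the joint center abstract above can be sketched on 2D polygons. The abstract does not define its circularity function, so this example assumes the common isoperimetric measure 4πA/P² (1.0 for a perfect circle, lower for elongated shapes) and uses the polygon area centroid as the profile's center of gravity. The function names and toy cross sections are hypothetical.

```python
import math


def circularity(polygon):
    """4*pi*area / perimeter**2 for a closed polygon given as (x, y) vertices."""
    area2 = 0.0       # twice the signed area (shoelace formula)
    perimeter = 0.0
    n = len(polygon)
    for i in range(n):
        x0, y0 = polygon[i]
        x1, y1 = polygon[(i + 1) % n]
        area2 += x0 * y1 - x1 * y0
        perimeter += math.hypot(x1 - x0, y1 - y0)
    return 4.0 * math.pi * (abs(area2) / 2.0) / perimeter ** 2


def centroid(polygon):
    """Area centroid of a simple polygon."""
    a2 = cx = cy = 0.0
    n = len(polygon)
    for i in range(n):
        x0, y0 = polygon[i]
        x1, y1 = polygon[(i + 1) % n]
        cross = x0 * y1 - x1 * y0
        a2 += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    return (cx / (3.0 * a2), cy / (3.0 * a2))


def local_minima(values):
    return [i for i in range(1, len(values) - 1)
            if values[i - 1] > values[i] <= values[i + 1]]


# Toy cross sections along a limb: the middle one is flattened, as at a joint.
sections = [
    [(0, 0), (2, 0), (2, 2), (0, 2)],
    [(0, 0), (4, 0), (4, 1), (0, 1)],
    [(0, 0), (2, 0), (2, 2), (0, 2)],
]
scores = [circularity(s) for s in sections]
joint_centers = [centroid(sections[i]) for i in local_minima(scores)]
```

The flattened middle section scores lowest, so its centroid is reported as the joint center candidate.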

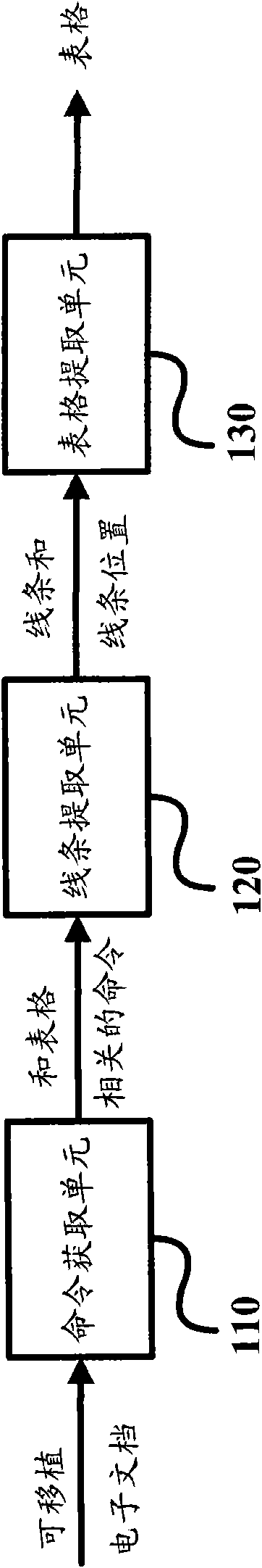

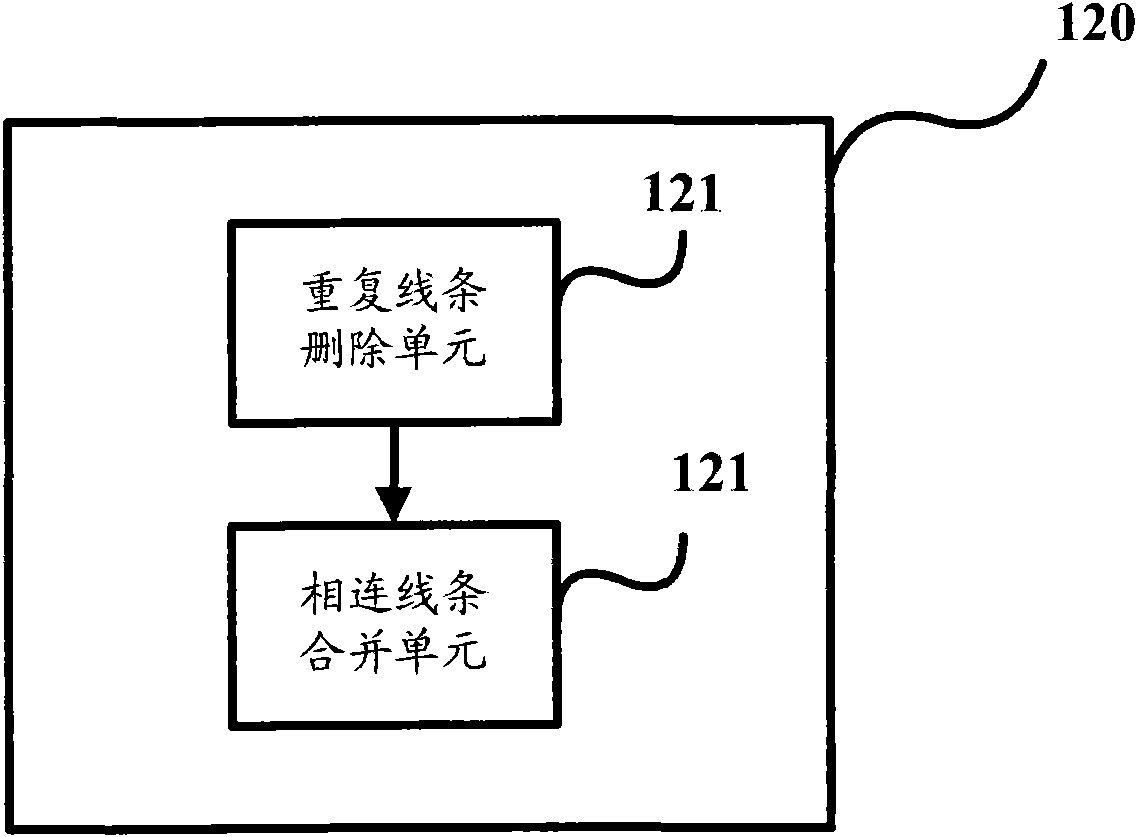

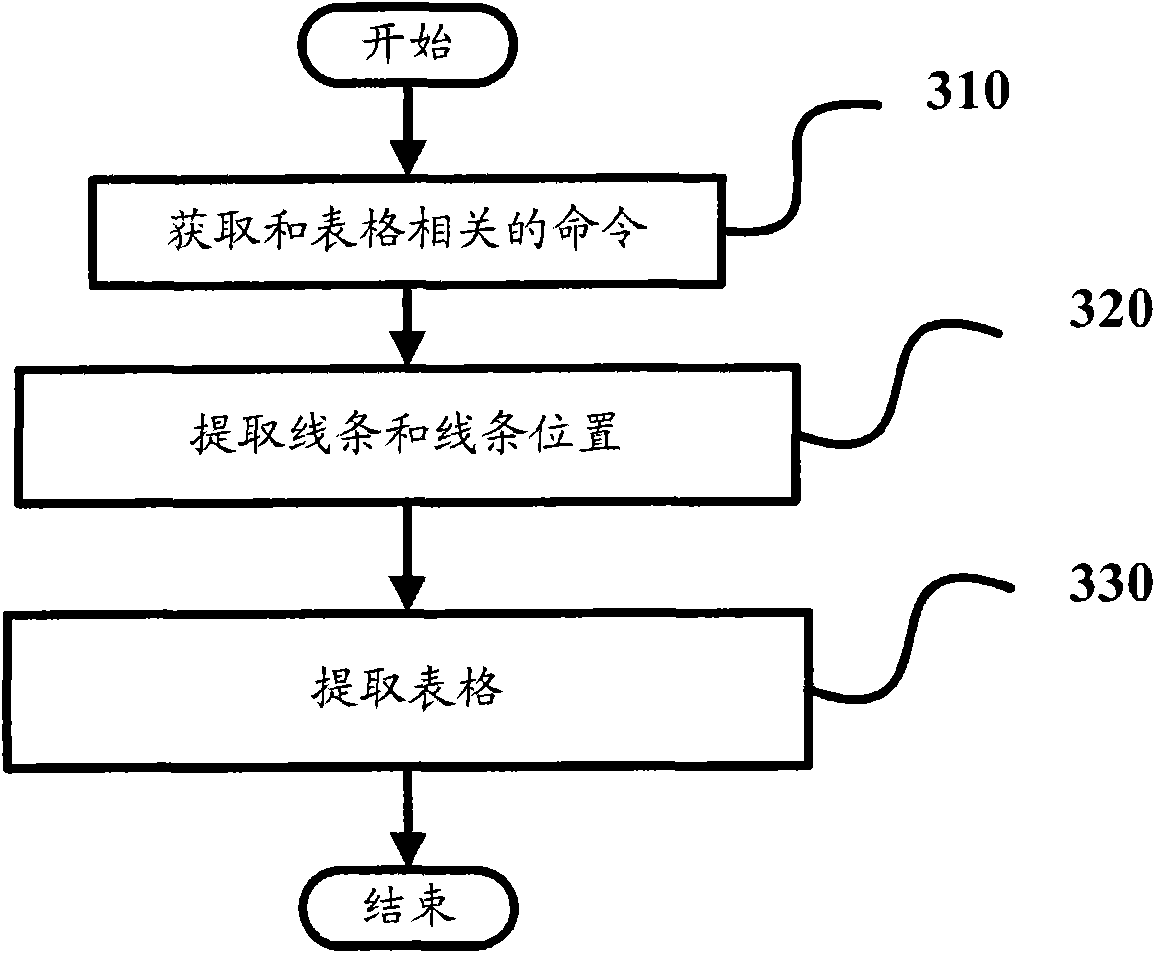

Method and device for extracting form from portable electronic document

Inactive | CN101833546A | Automatic extraction | Special data processing applications | Electronic document | Computer hardware

The invention provides a device and a method for extracting a form from a portable electronic document. The device for extracting the form comprises a command acquiring unit, a line extracting unit and a form extracting unit, wherein the command acquiring unit is used for analyzing the content of the portable electronic document so as to acquire commands related to the form; the line extracting unit is used for extracting lines and the positions of the lines by processing the commands; and the form extracting unit is used for analyzing the position relation of the lines to extract the form. The device and the method can be used for automatically extracting the form from the portable electronic document.

Owner:RICOH KK

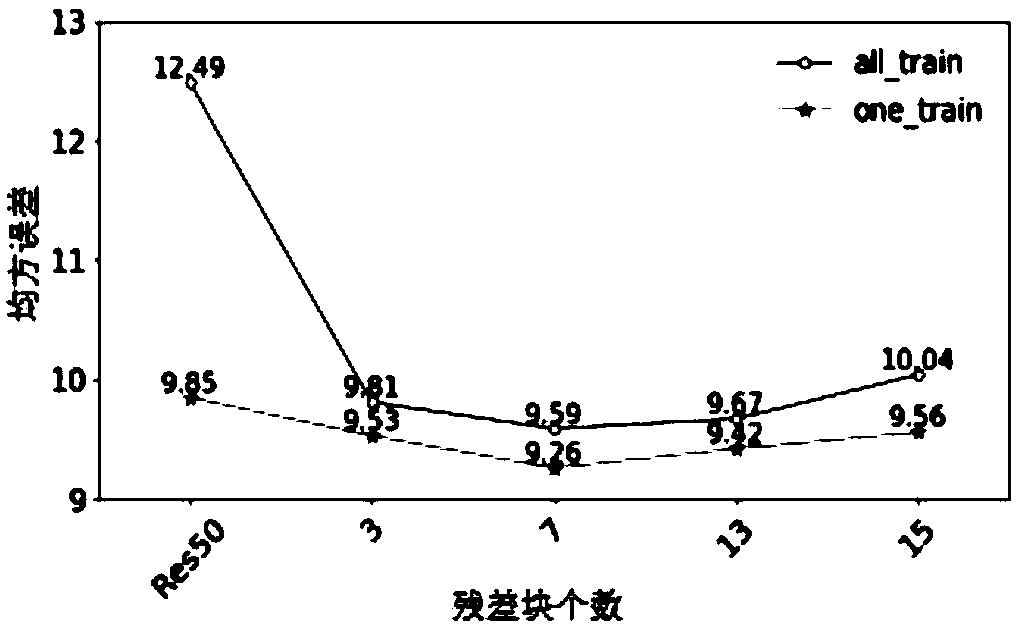

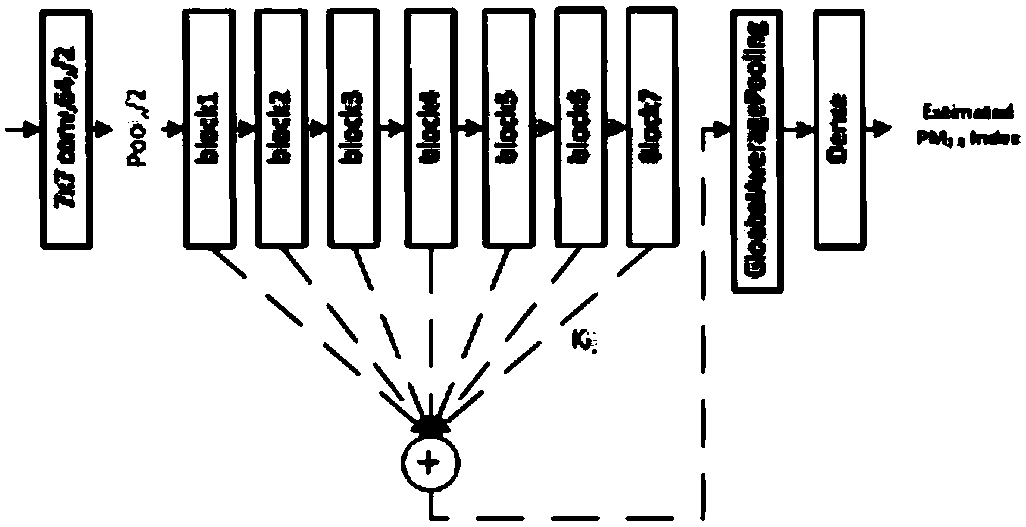

A method for estimating air particulate pollution degree based on shallow convolution neural network

Active | CN109523013A | Automatic extraction | Avoid features | Character and pattern recognition | Neural architectures | Feature extraction | Particulate pollution

The invention discloses a method for estimating the degree of air particulate pollution based on a shallow convolutional neural network. The basic steps are: 1. constructing a shallow convolutional neural network (PMIE) model with a layer enhancement function; 2. combining the output of the PMIE model with four kinds of weather feature values to construct a regression model; 3. training the PMIE model and the regression model; 4. estimating the PM2.5 index of the test set images using the trained PMIE model and regression model. By combining the output of the shallow network with four kinds of weather features to estimate the air particulate pollution in an image, the method avoids the problems caused by feature extraction and feature optimization, obtains a specific PM2.5 index value, and improves training convergence speed and algorithm robustness.

Owner:NORTHWEST UNIV(CN)

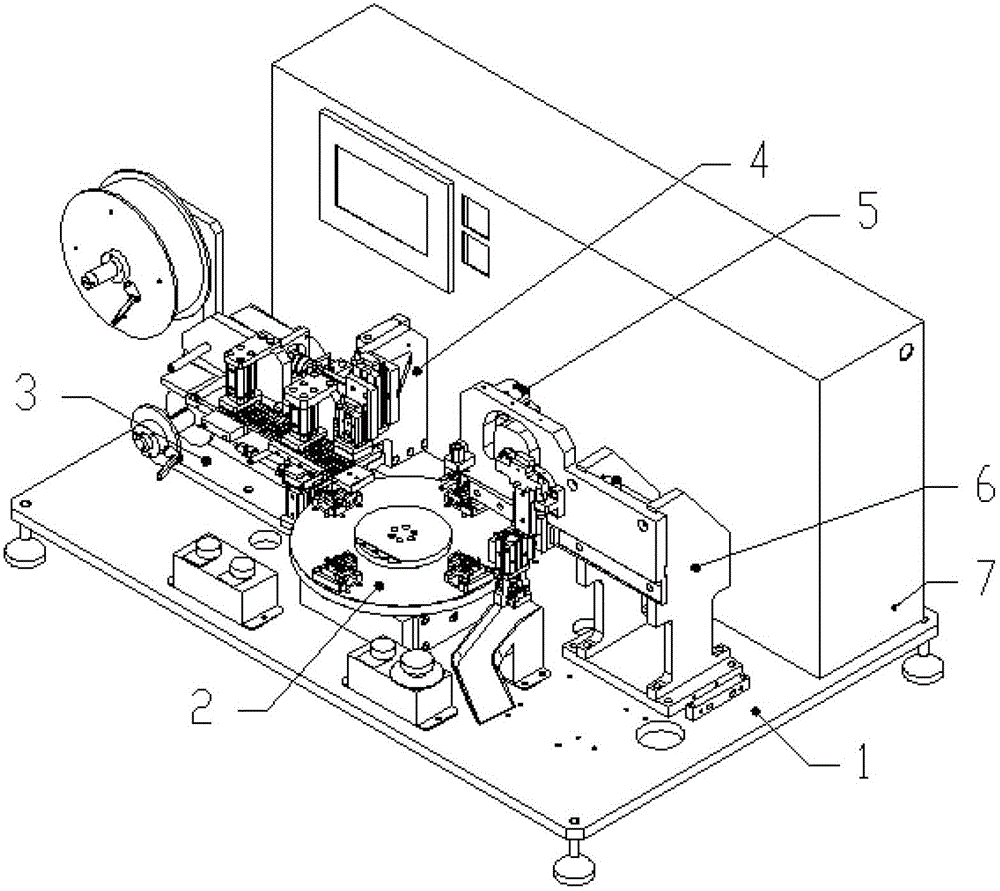

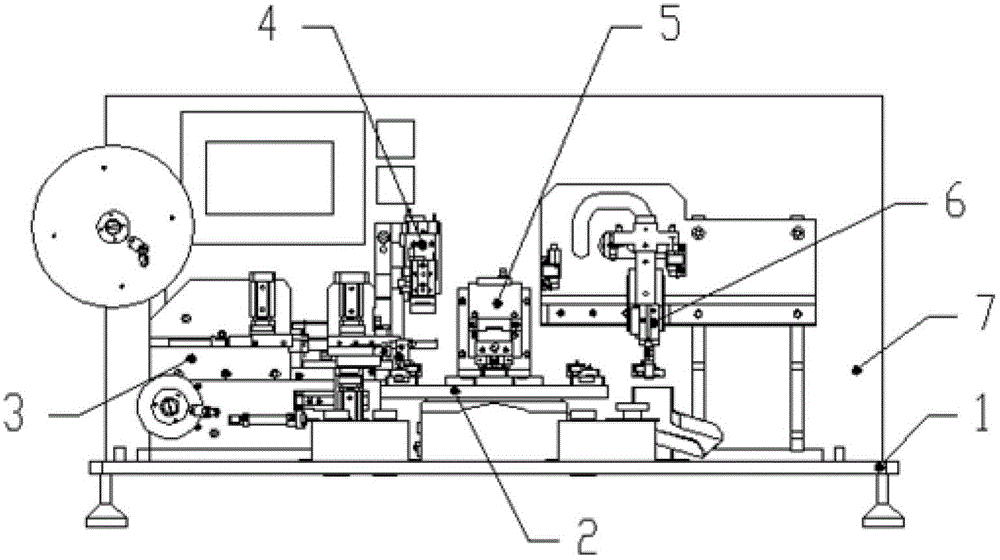

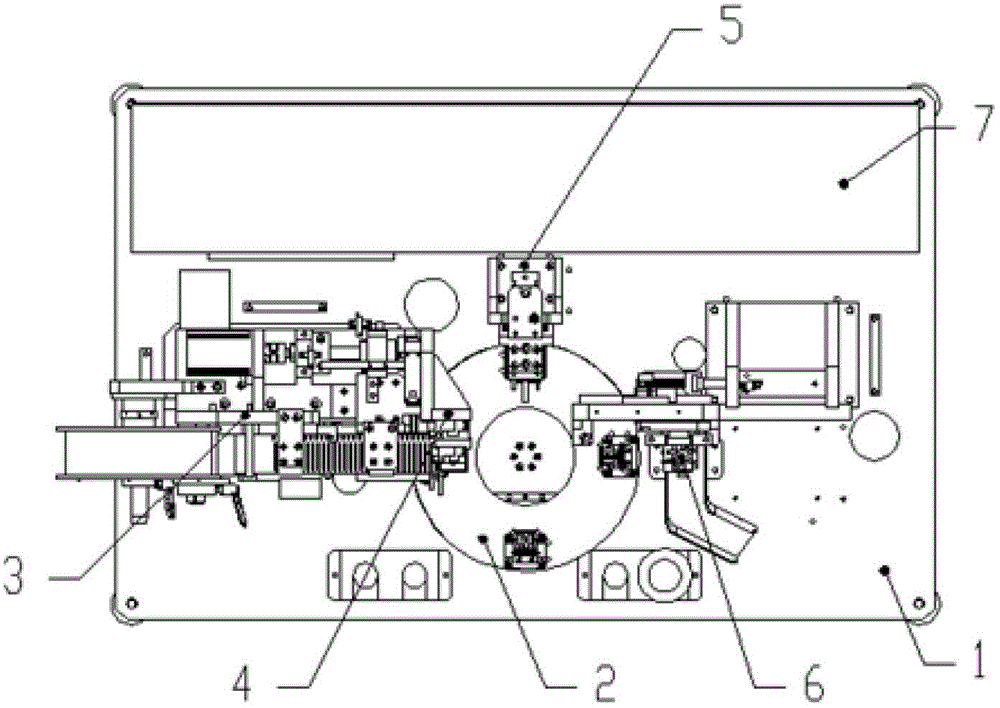

Adhesive tape sticking device

The invention discloses an adhesive tape sticking device comprising a rack, a carrier turntable instrument, an adhesive tape feeder, an adhesive tape sticking instrument, a component discharge instrument and a controller. The adhesive tape feeder feeds the adhesive tape; the adhesive tape sticking instrument is disposed at the discharge end of the feeder, and a suction nozzle on the sticking instrument picks up the tape. The carrier turntable instrument is located on the rack and conveys the carrier on it to the position directly below the suction nozzle so that the nozzle can stick the tape. The component discharge instrument sends the carrier holding the taped product into a discharge channel. The controller controls the operation of each component. The device automatically peels and sticks adhesive tape and automatically receives the taped products, so it works fully automatically without manual operation. Tape is applied quickly, accurately positioned and firmly attached. Manpower and space are saved, production efficiency is improved, and production cost is reduced.

Owner:KUNSHAN FULIRUI ELECTRONICS TECH

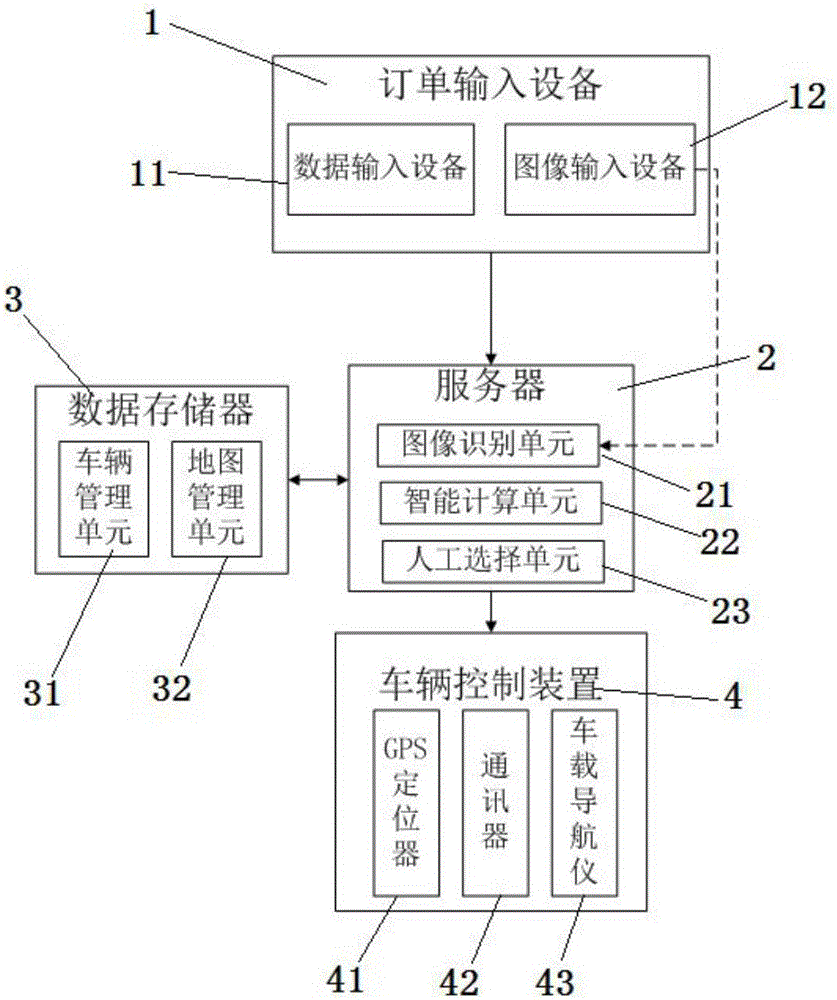

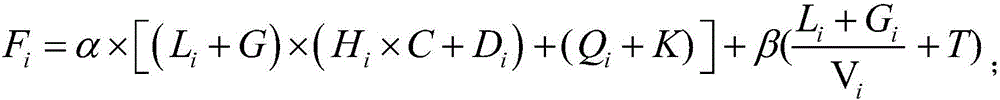

Logistic transportation dispatching system

Pending | CN106651051A | Realize unified management and scheduling | Avoid blindness | Forecasting | Logistics | Logistics management | Data memory

The invention discloses a logistic transportation dispatching system. The logistic transportation dispatching system comprises order input equipment, a server, a data storage and a vehicle control device; the order input equipment is used for inputting information of cargoes needing to be transported, and the information comprises the cargo types, the cargo quantity, the delivery place and the destination; the server is connected with the order input equipment and used for calculating the transportation cost and obtaining an optimal transportation scheme; the data storage is connected with the server and used for storing logistic transportation information; and the vehicle control device is installed on a vehicle and used for positioning the vehicle and transmitting a transportation instruction. According to the logistic transportation dispatching system, the control device is installed on the vehicle and used for positioning the vehicle and transmitting the transportation instruction to the vehicle through the server, and therefore unified managing and dispatching of the vehicle are achieved.

Owner:宁波贤晟信息技术有限公司

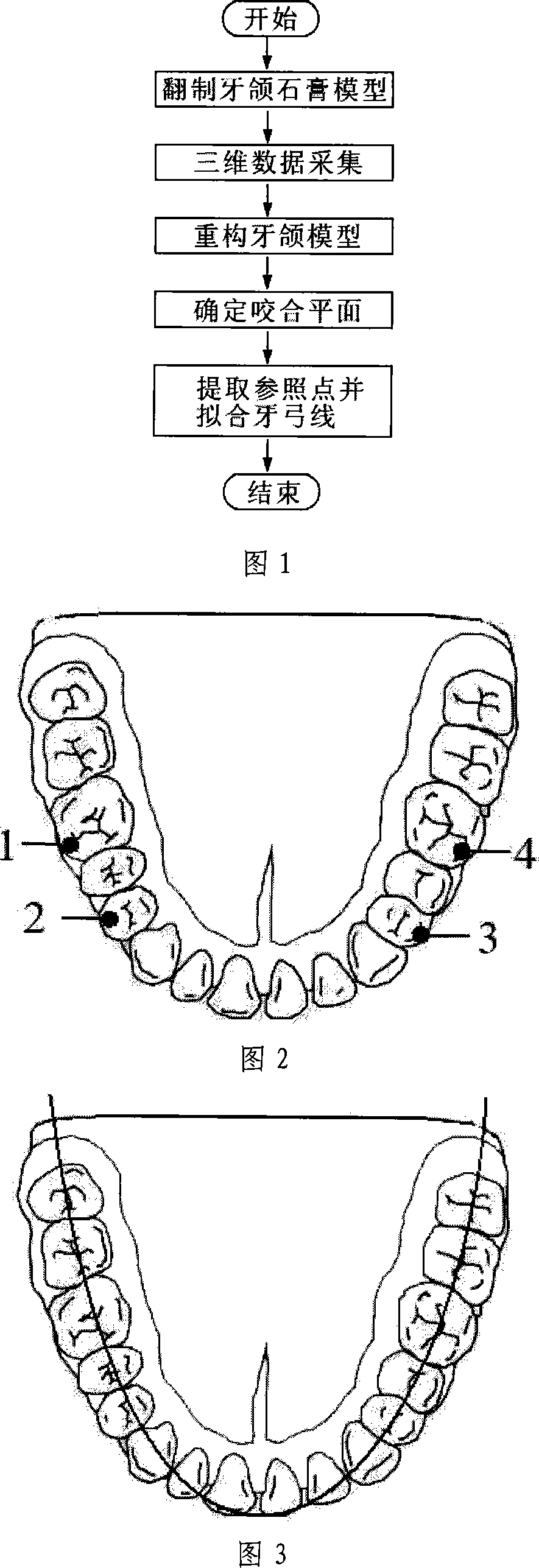

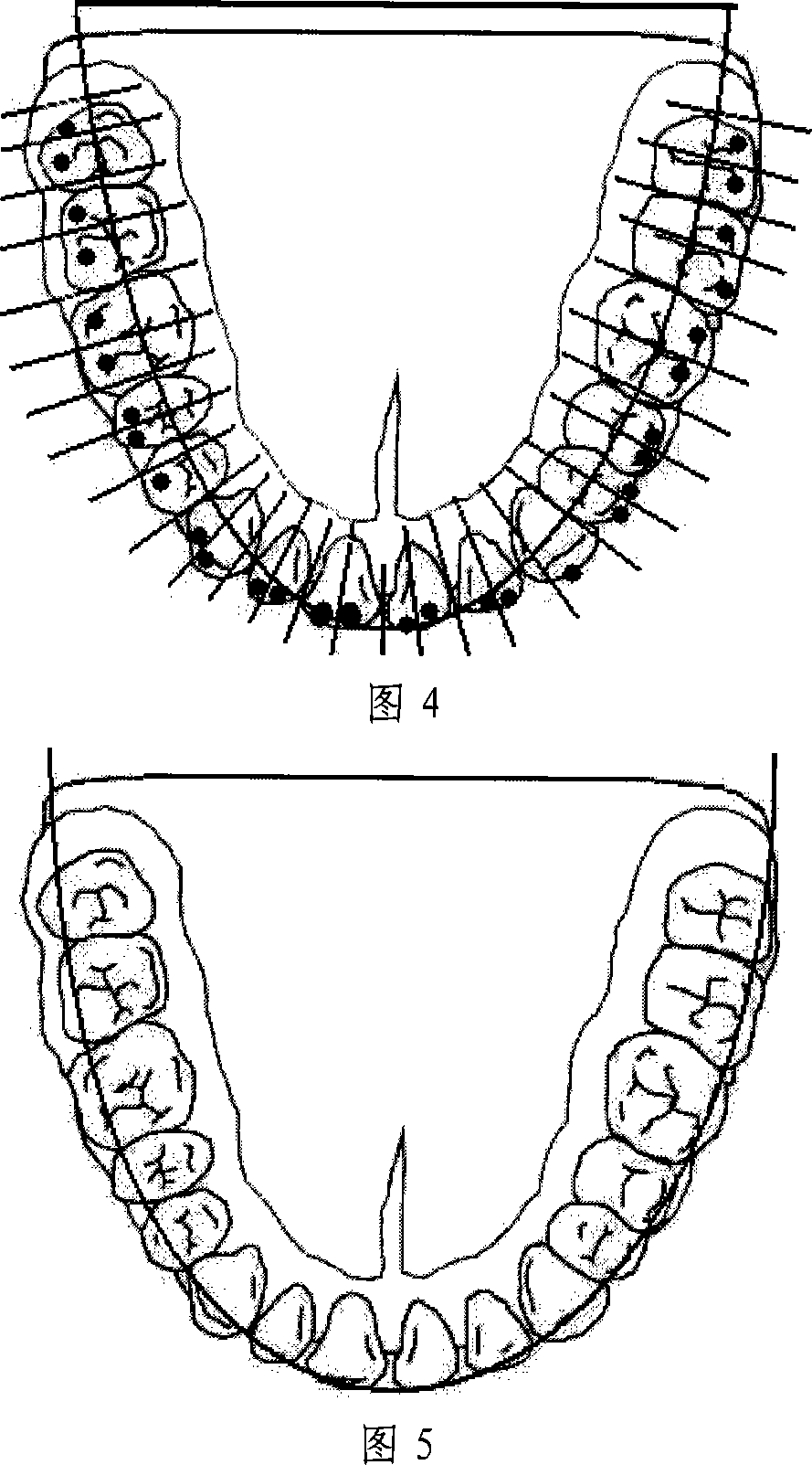

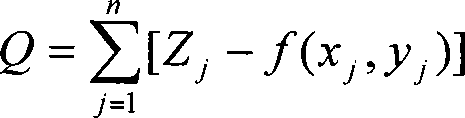

Method for fast and accurately detecting dental arch line on three-dimension grid dental cast

Inactive | CN101103940A | Quick extraction | Automatic extraction | Dentistry | Special data processing applications | Computer Aided Design | Occlusal plane

Disclosed is a method for quickly and accurately detecting the dental arch line on a three-dimensional mesh dental model, belonging to the field of computer aided f. The method includes five steps: confirming an occlusal plane through simple human-computer interaction, screening initial reference points, fitting an initial dental arch, extracting the final reference points, and fitting the final dental arch. The method requires only simple human-computer interaction, which gives a high degree of automation and avoids complex computation; it relies mainly on linear computation and can detect the dental arch on a three-dimensional dental model quickly, stably and precisely, which makes it valuable in computer aided orthodontic aesthetics.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

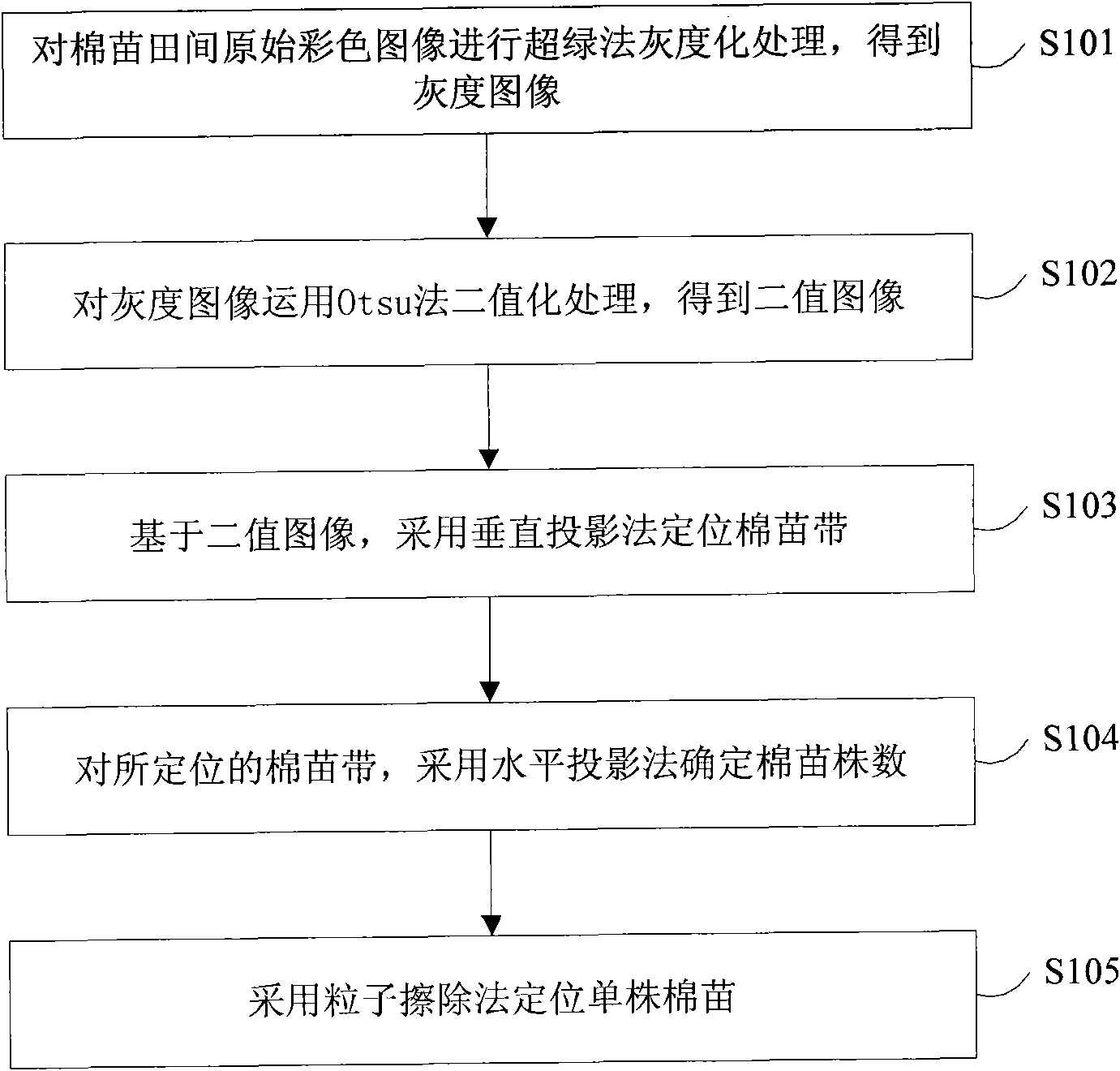

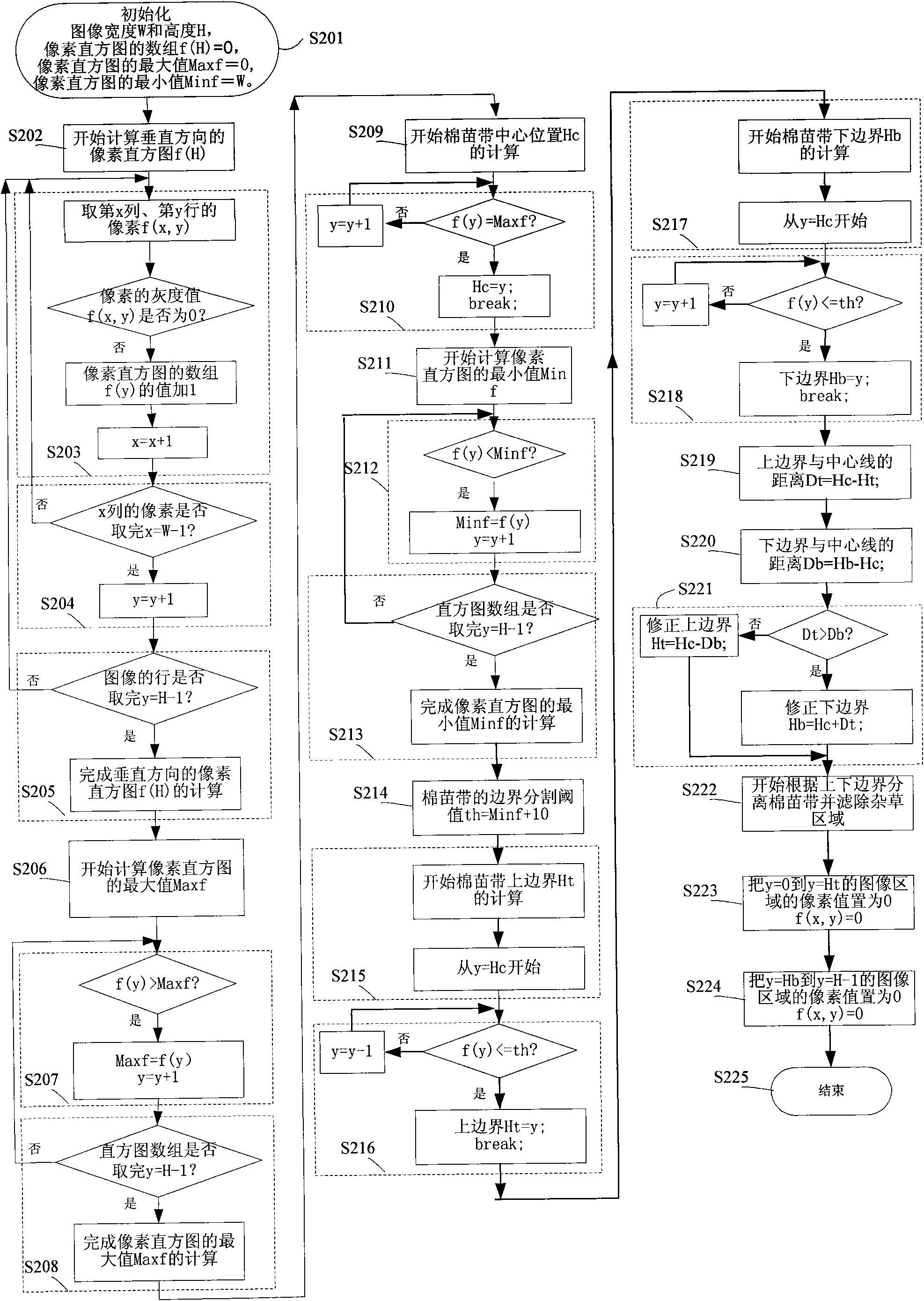

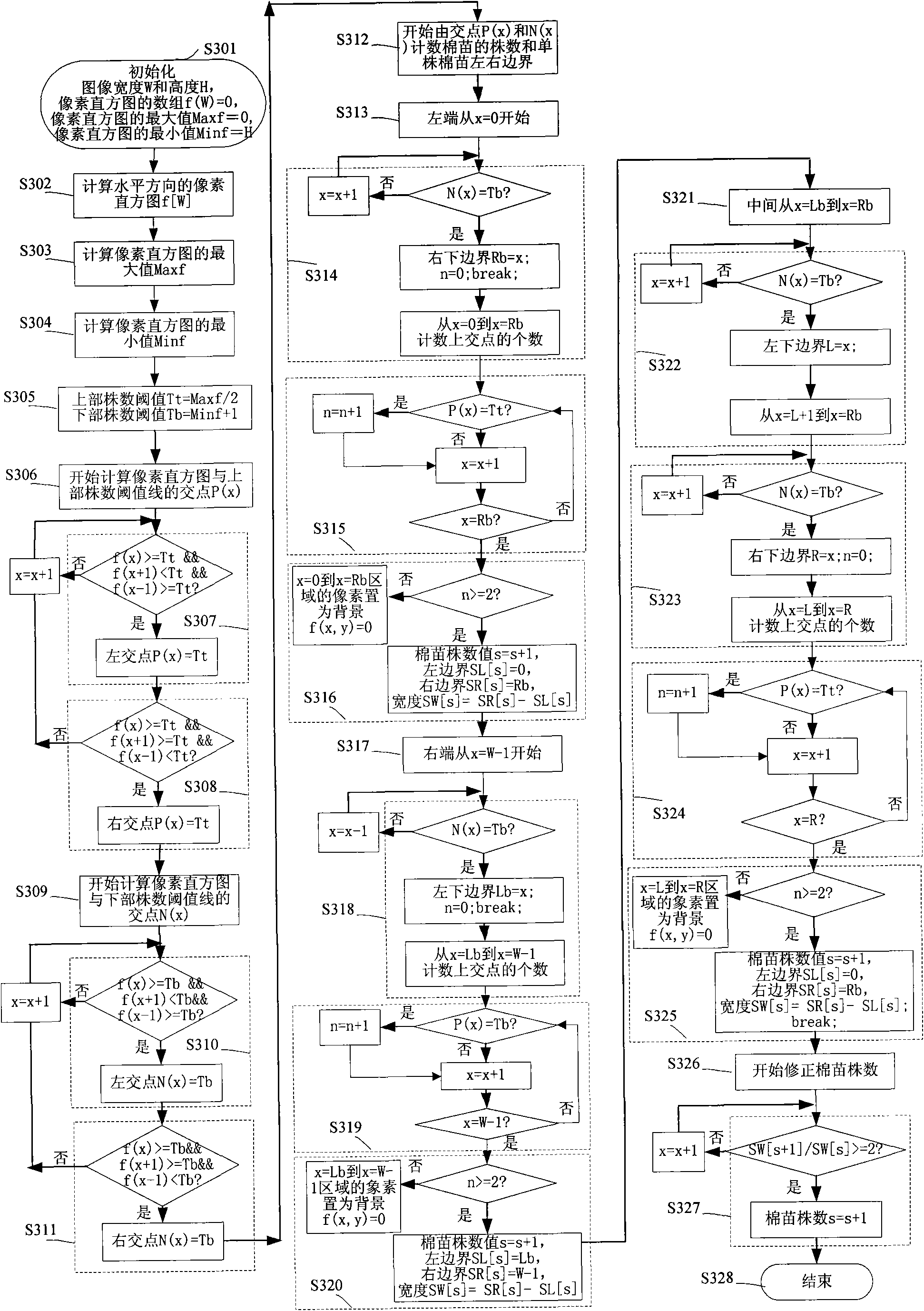

Method and device for automatically extracting cotton seeds

Inactive | CN102339378A | Improve recognition rate | Quick extraction | Character and pattern recognition | Horticulture | Agricultural science | Soil color

The invention provides a method and device for automatically extracting cotton seeds. The method comprises the following steps of: step one, using green plants and soil color characteristics to carry out background segmentation on colorful images in a cotton seed field; step two, using position characteristics of cotton seed line spacing to carry out cotton seed belt location on the green plants obtained by the background segmentation; step three, using position characteristics of cotton seed row spacing to carry out single-plant cotton seed location and plant counting on the located cotton seed belts; and step four, using shape characteristics of the cotton seeds and weeds to extract the single-plant cotton seeds to obtain a cotton seed image in the field in accordance with the plant number of the cotton seeds. According to the method and device, the quick and automatic extraction of the cotton seeds with high recognition rate can be realized.

Owner:CHINESE ACAD OF AGRI MECHANIZATION SCI

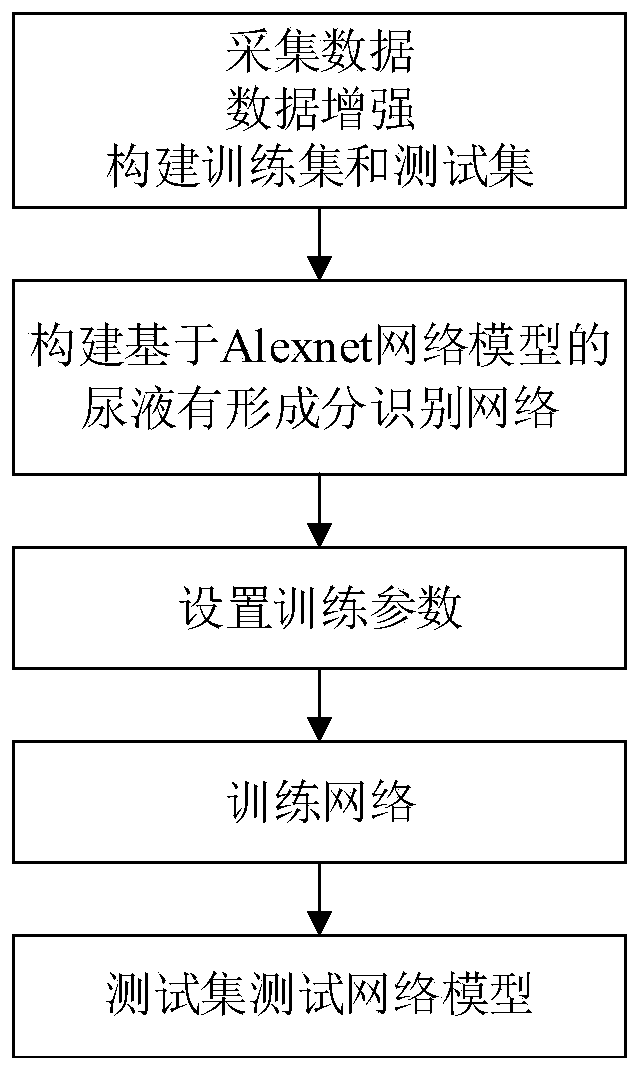

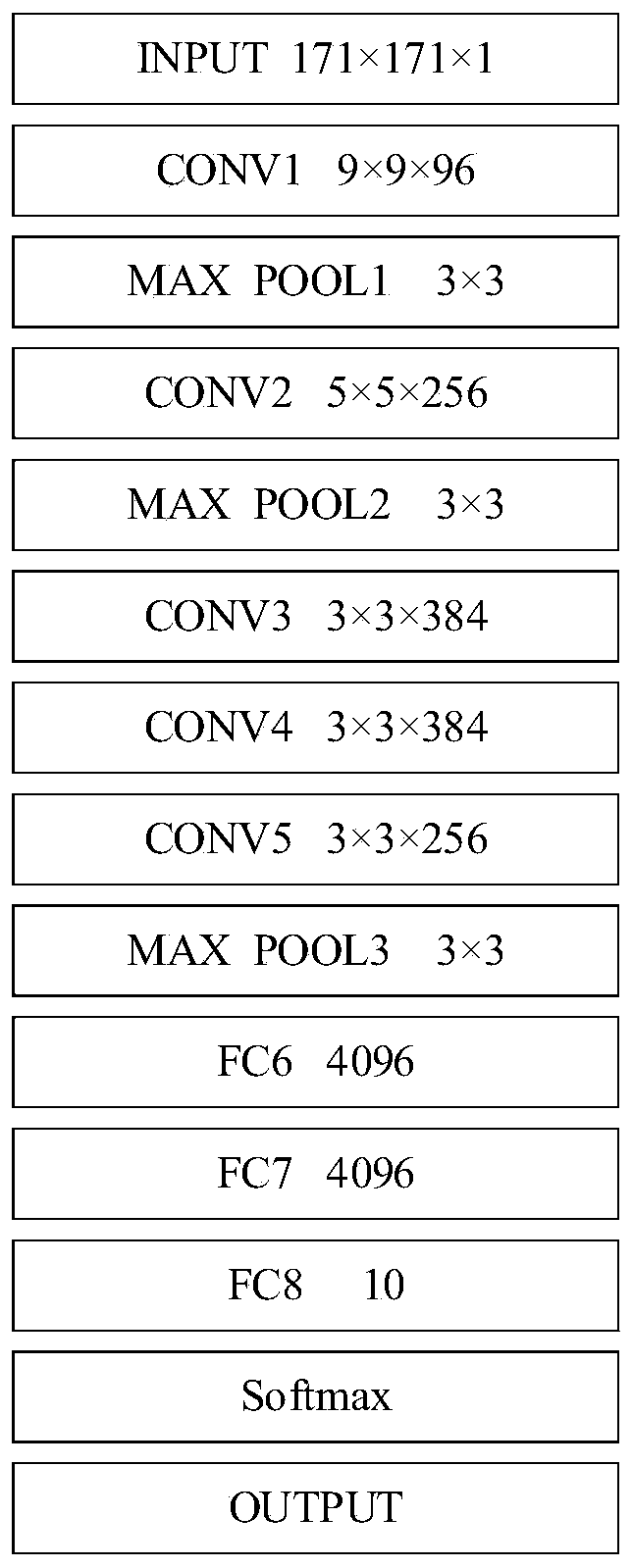

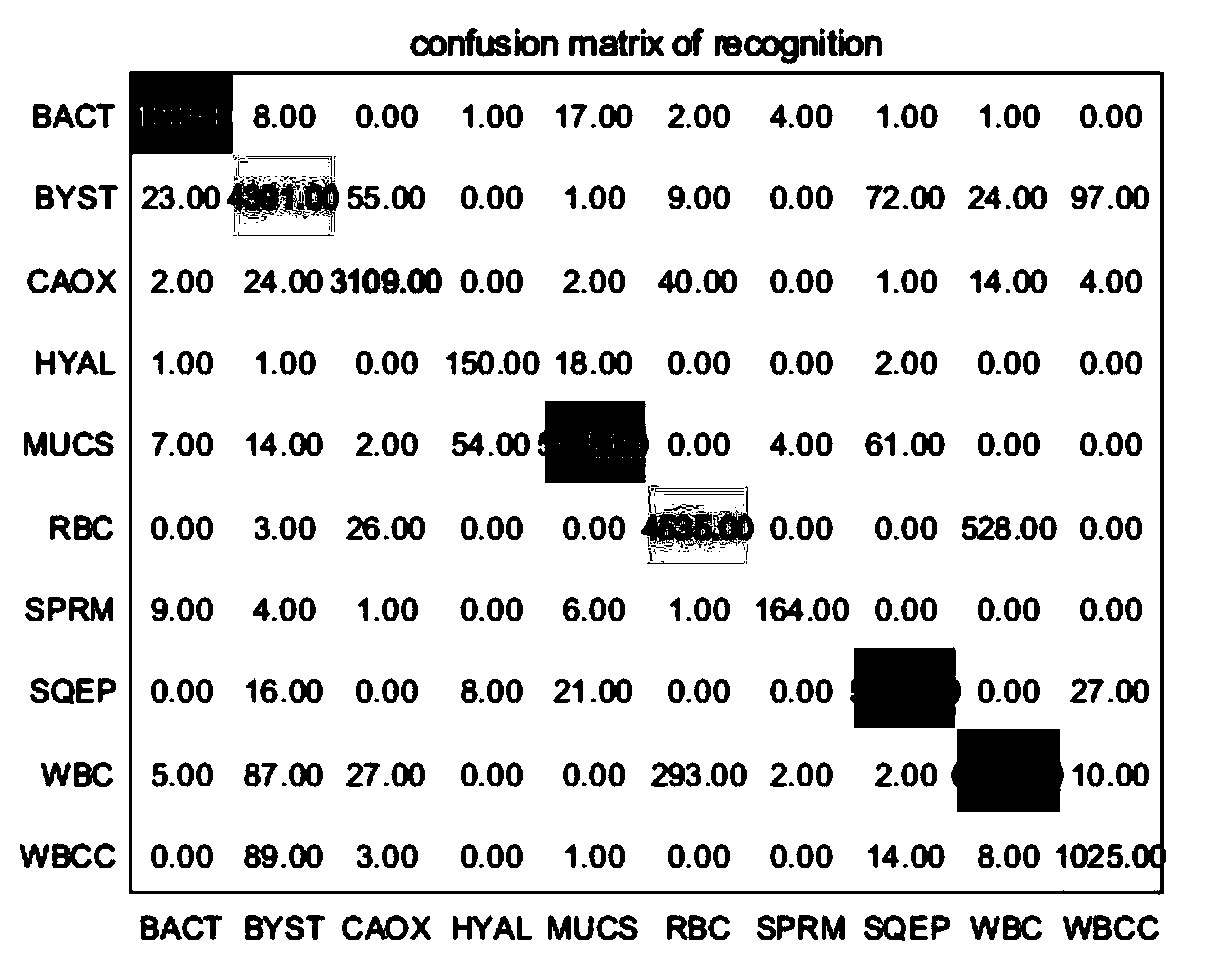

Urine visible component recognition method based on improved Alexnet model

Inactive | CN110473166A | Automatic feature | Automatic identification | Image enhancement | Image analysis | Data set | Imaging processing

The invention relates to the field of medical image processing, in particular to a urine visible component recognition method based on an improved Alexnet model. The method comprises the following steps: 1, acquiring and expanding an image data set, and constructing a urinary sediment image training set and test set; 2, constructing a urine visible component recognition network model based on an Alexnet network model; 3, setting the training parameters of the recognition network model; 4, training the recognition network model; 5, testing the recognition network model. The method improves on the Alexnet network model and reduces the number of network training parameters. It extracts image features automatically, offers a high recognition rate, short recognition time and strong generalization ability, and has important application prospects for assisting medical diagnosis and reducing the burden on doctors.

Owner:HARBIN ENG UNIV

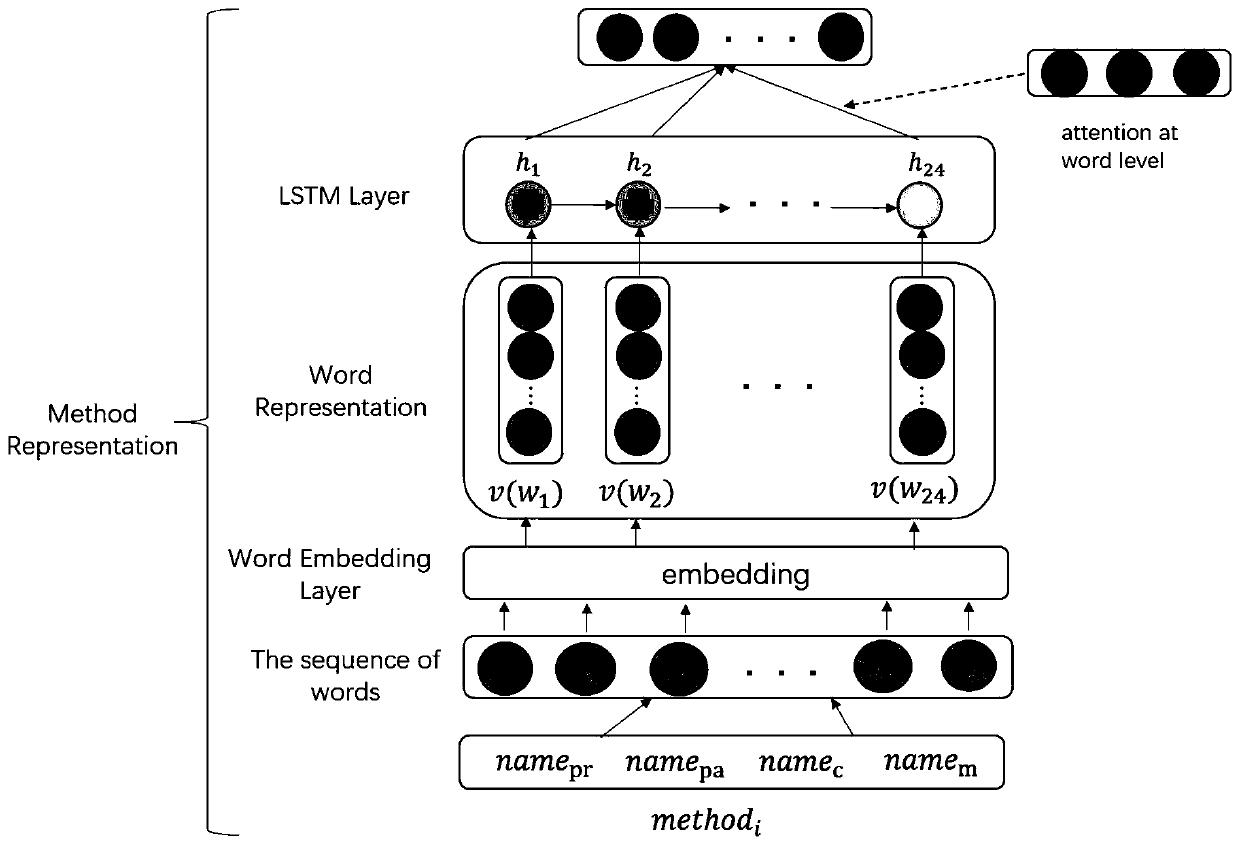

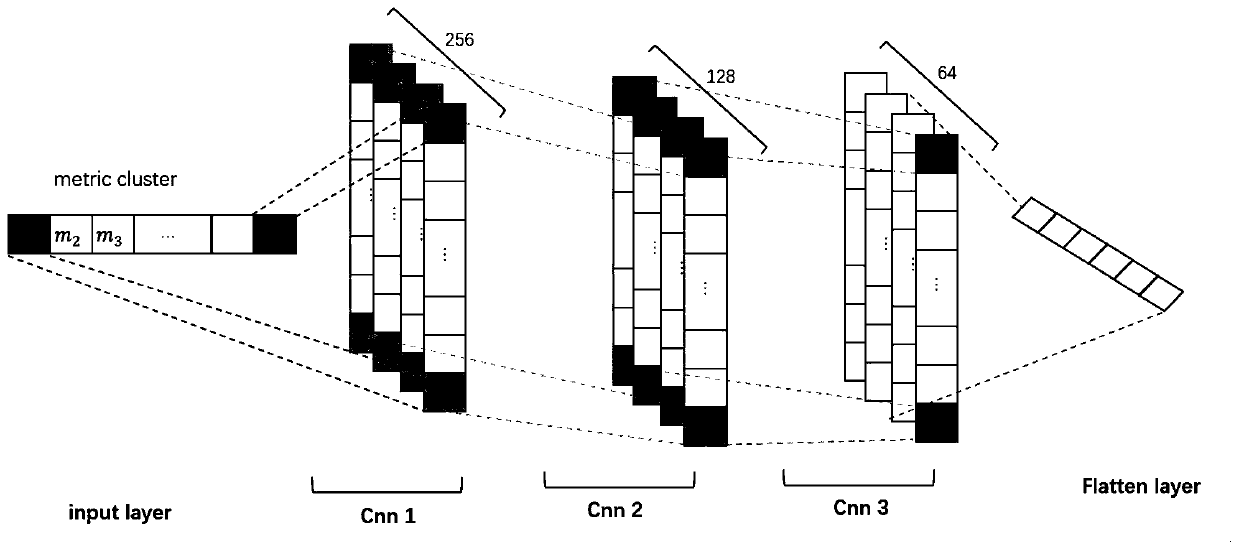

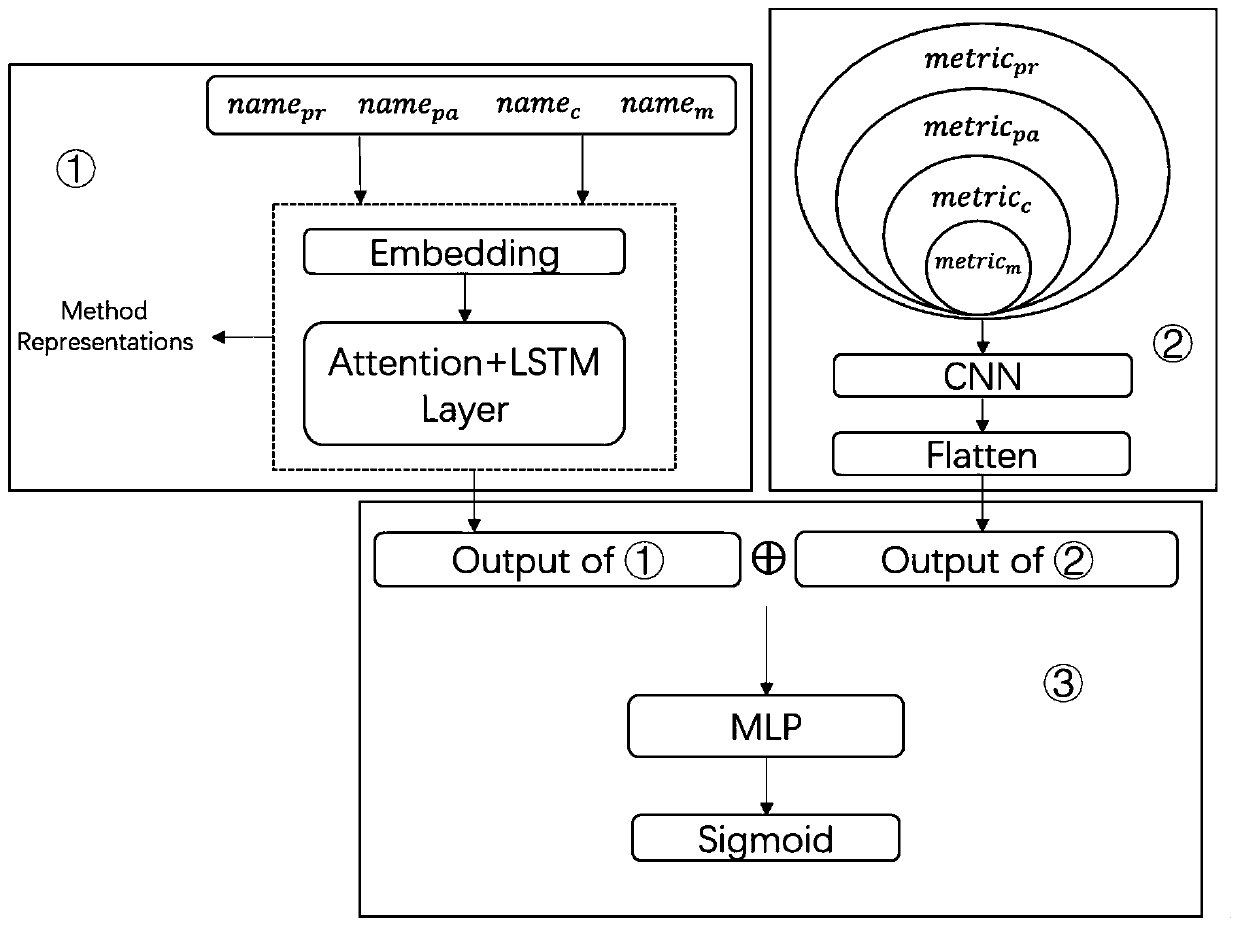

Code function taste detection method based on deep semantics

ActiveCN110413319AAutomatic extractionSteps to eliminate manual feature extractionCharacter and pattern recognitionSoftware metricsFeature extractionModel testing

The invention relates to a code function taste detection method based on deep semantics, and belongs to the technical field of automatic software reconstruction. The method comprises the following steps: extracting semantic features and digital features in text information and structured information, including model training and model testing. The model training comprises code function representation A, structured feature extraction A and code taste classification A, wherein code function representation B, structured feature extraction B and code taste classification B are included. The code function representation A and the code function representation B are code function representations based on an attention mechanism and an LSTM neural network, wherein the structured feature extractionA and the structured feature extraction B are structured feature extraction based on a convolutional neural network. The code taste classification A and the code taste classification B are function-level code taste detection methods based on deep learning provided by code taste classification of a multi-layer perceptron. Under the condition of short detection time, it can be guaranteed that the detection result has high recall rate and accuracy.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

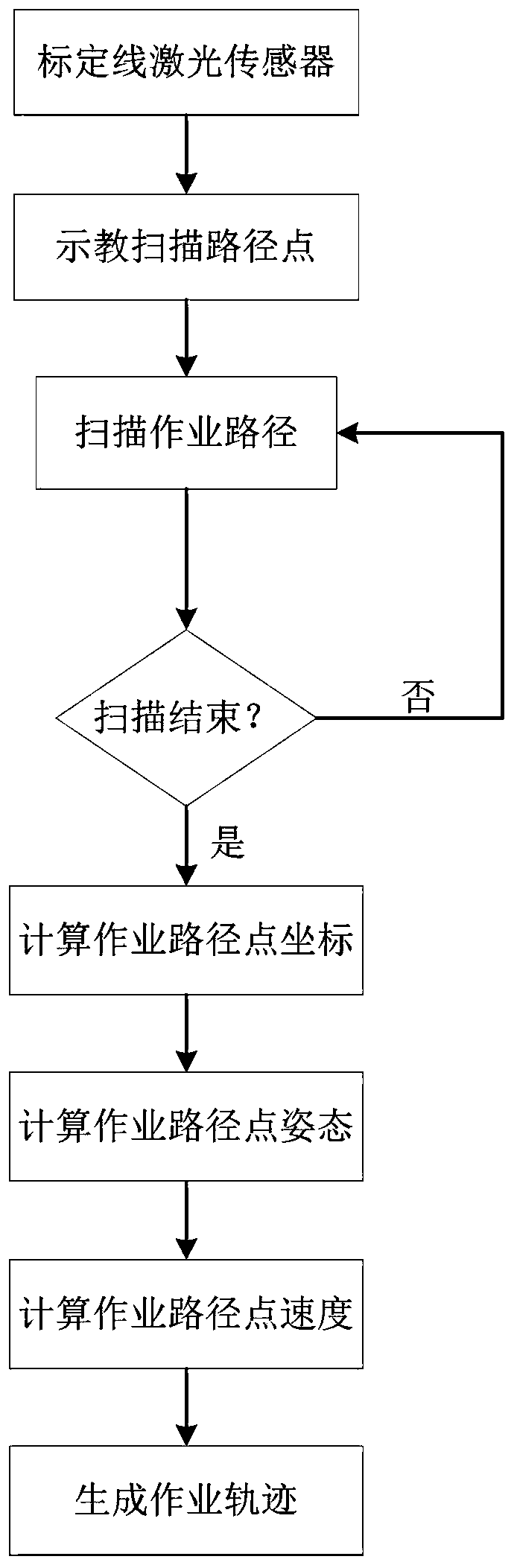

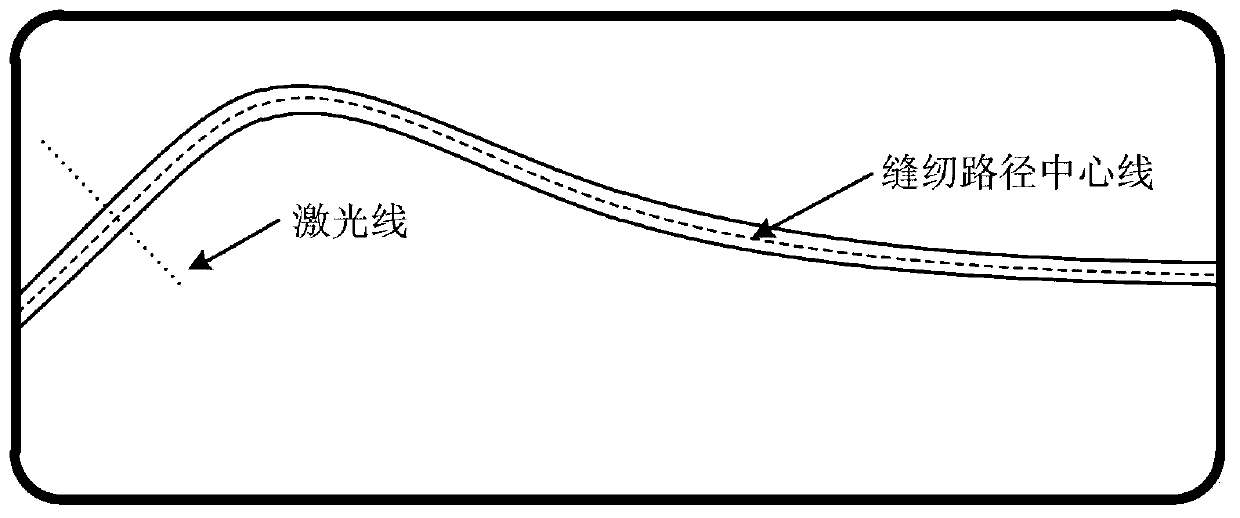

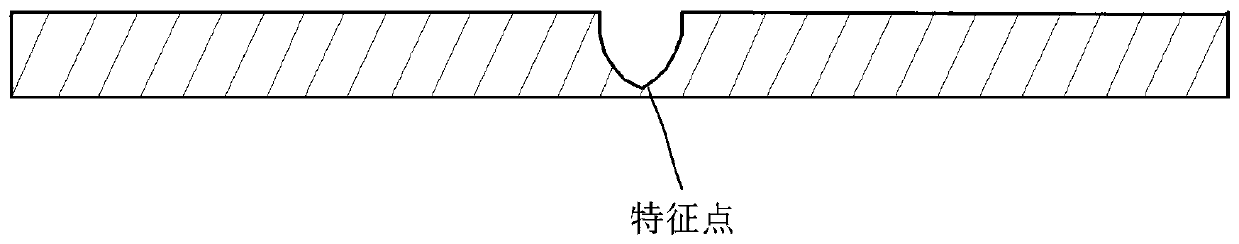

Robot operation trajectory acquisition method based on line laser sensor

Active | CN109732589A | Improve work efficiency | Increase the level of automation | Programme-controlled manipulator | Laser sensor | Laser line

The invention relates to a robot operation trajectory acquisition method based on a line laser sensor. The method comprises the following steps: a to-be-operated path of a robot is scanned by using the line laser sensor; contour data of the laser line are extracted at a certain sampling frequency; and finally an operation trajectory of the robot is automatically generated according to the contour sampling information on the whole path and the position information of the robot. The operation trajectory comprises information such as the operation position, the operation posture and the operation speed of the robot. According to the method, the operation efficiency of the robot on a complex path can be improved, and the automation level of the robot during working can be improved.

Owner:716TH RES INST OF CHINA SHIPBUILDING INDAL CORP +2

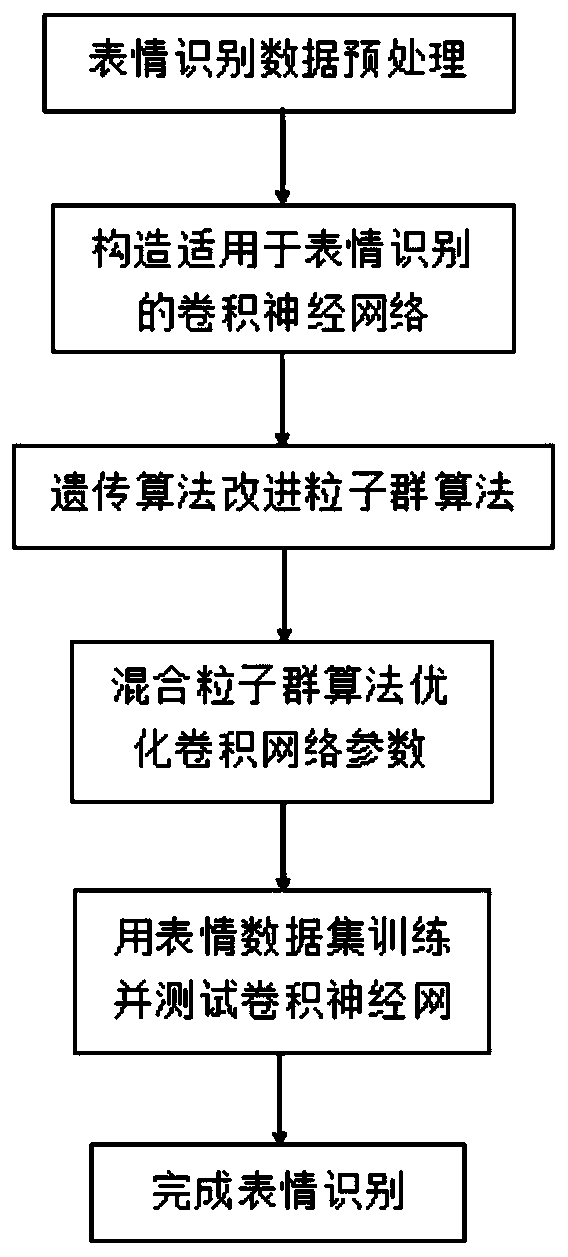

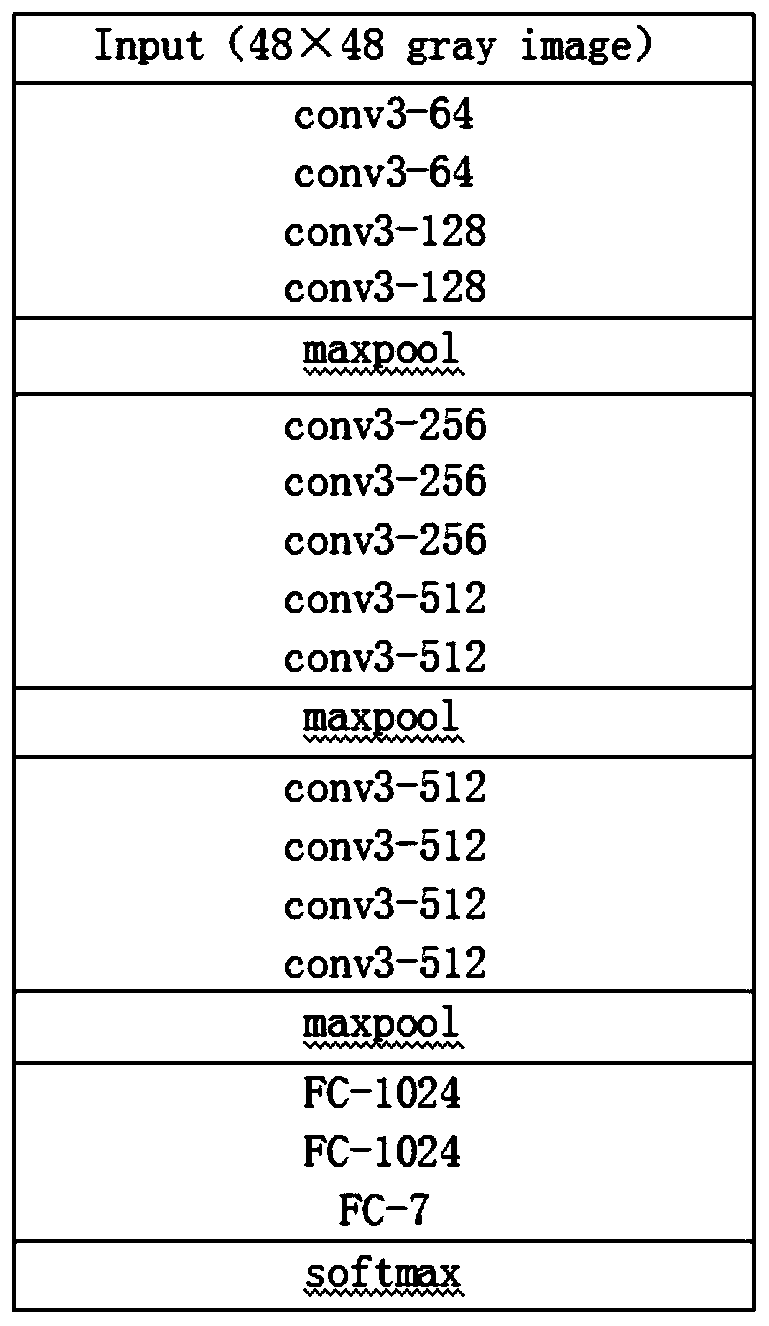

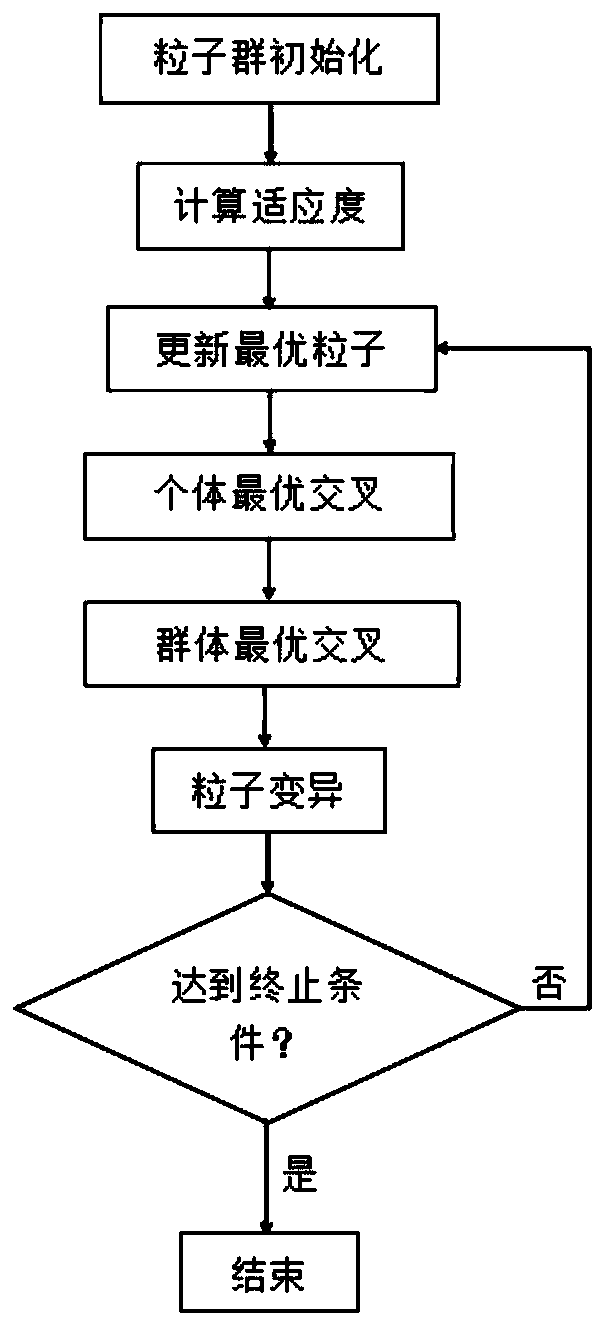
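
The trajectory generation step described in the abstract above can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration and is not taken from the patent: the function name `build_trajectory`, the choice of the lowest profile point as the feature to follow, a sensor frame that is only translated (never rotated) relative to the robot base, and a constant operation speed. The operation posture is left out entirely.

```python
def build_trajectory(scans, speed=10.0):
    """Fuse line laser profiles with robot positions into trajectory points.

    scans: list of (robot_xyz, profile) pairs, where robot_xyz is the robot
           position (x, y, z) at sampling time and profile is a list of
           (y, z) contour points measured in the sensor frame.
    Assumption: the sensor frame is a pure translation of the base frame.
    """
    trajectory = []
    for robot_xyz, profile in scans:
        # Hypothetical feature choice: follow the lowest point of the profile.
        y, z = min(profile, key=lambda p: p[1])
        rx, ry, rz = robot_xyz
        trajectory.append({"position": (rx, ry + y, rz + z), "speed": speed})
    return trajectory


scans = [
    ((0, 0, 100), [(-1, 5), (0, 2), (1, 5)]),
    ((10, 0, 100), [(-1, 5), (0.5, 1), (1, 5)]),
]
trajectory = build_trajectory(scans)
```

A real system would also need the sensor-to-flange calibration and the full robot pose to compute the operation posture, which this sketch does not attempt.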

Expression recognition method for optimizing convolutional neural network based on improved particle swarm optimization algorithm

Pending | CN110598552A | Automatic extraction | Reduce computational complexity | Artificial life | Neural architectures | Local optimum | Network Convergence

The invention relates to an expression recognition method for optimizing a convolutional neural network based on an improved particle swarm optimization algorithm. The method constructs a convolutional neural network suitable for expression recognition, forms a hybrid particle swarm optimization algorithm by combining the crossover and mutation operations of a genetic algorithm with a particle swarm optimization algorithm, and optimizes the constructed convolutional neural network with this hybrid algorithm. This addresses the problems of vanishing gradients and falling into a local optimum during training of the convolutional neural network, so that the network converges faster and reaches higher accuracy. The expression recognition method comprises the following steps: (1) preprocessing an expression data set with gray normalization and scale normalization; (2) constructing a convolutional neural network suitable for expression recognition; (3) improving a particle swarm optimization algorithm by using the crossover mutation algorithm of the genetic algorithm; (4) optimizing parameters of the convolutional neural network by using the improved particle swarm optimization algorithm; and (5) training and testing the optimized convolutional neural network on the preprocessed expression data set.

Owner:JILIN UNIV

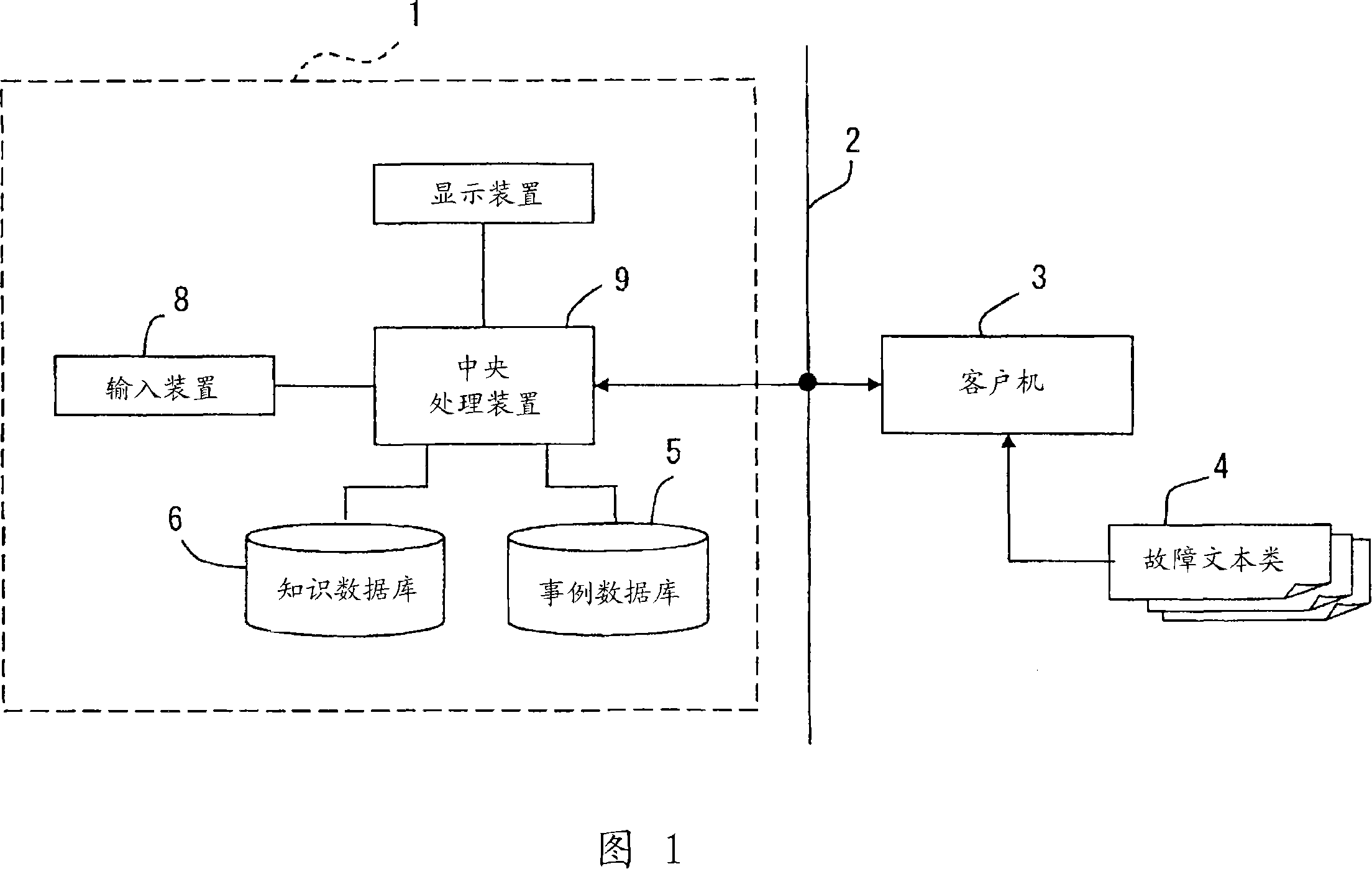

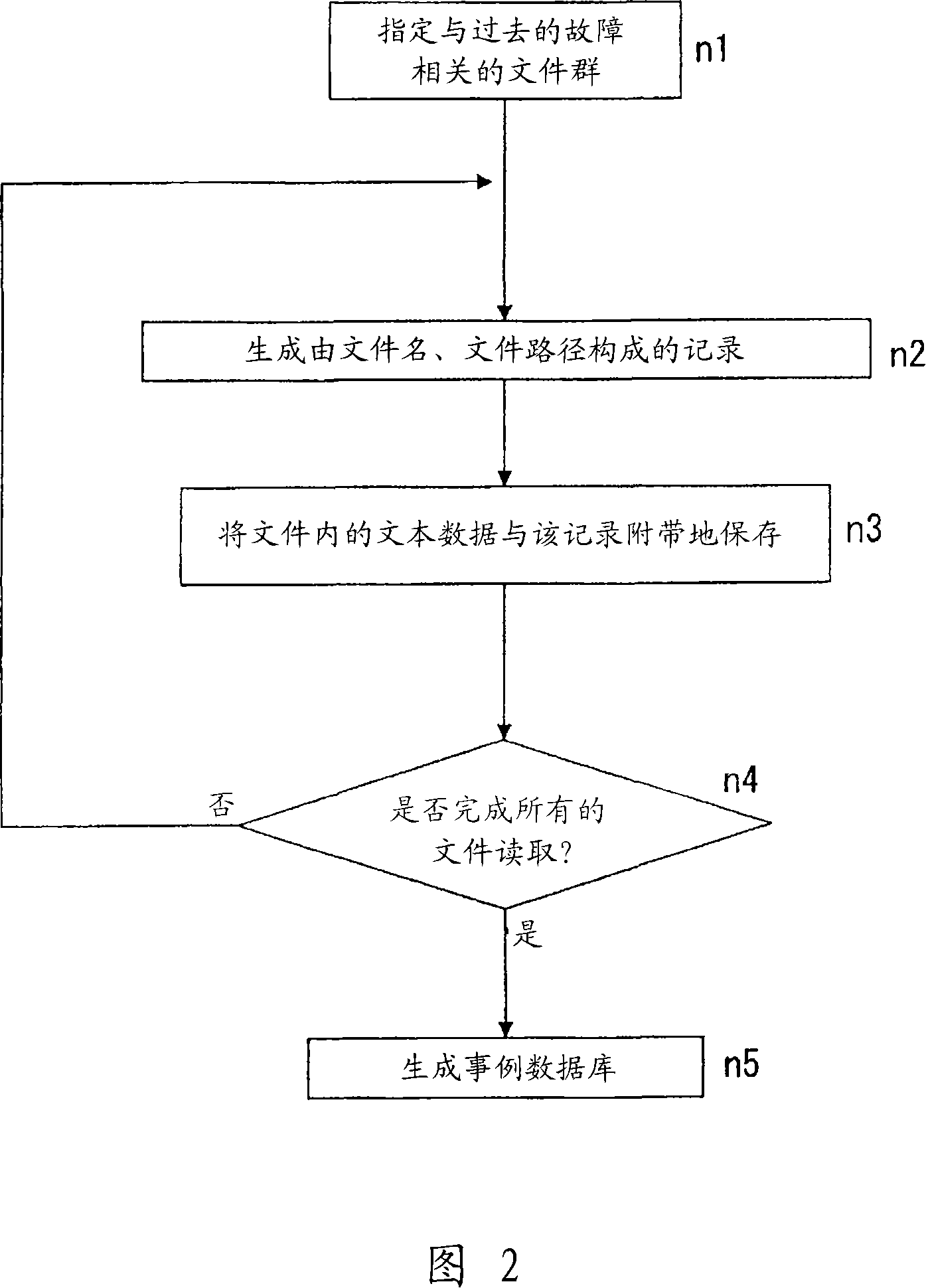

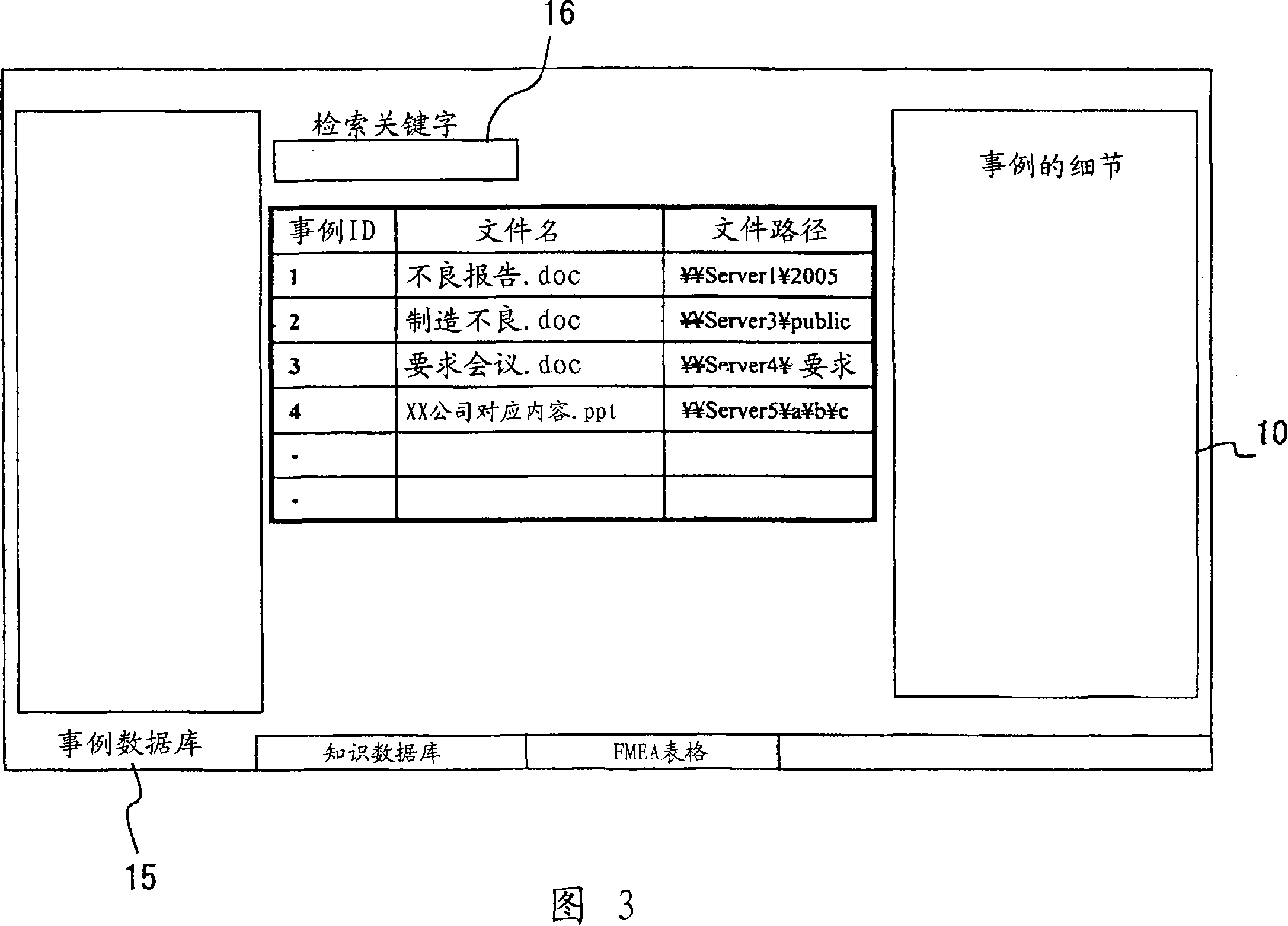
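
To make the idea of a particle swarm optimizer augmented with genetic crossover and mutation more concrete, here is a minimal sketch. It is not the patented algorithm: the function `hybrid_pso`, every parameter value, the arithmetic crossover with a randomly chosen particle's personal best, and the single-coordinate mutation are all assumptions. A toy sphere function stands in for the convolutional neural network's loss, which the abstract does not specify.

```python
import random


def sphere(x):
    """Toy objective standing in for a CNN validation loss (assumption)."""
    return sum(v * v for v in x)


def hybrid_pso(objective, dim=5, swarm_size=20, iters=100, w=0.7, c1=1.5,
               c2=1.5, crossover_rate=0.3, mutation_rate=0.1, bound=5.0,
               seed=0):
    rng = random.Random(seed)
    pos = [[rng.uniform(-bound, bound) for _ in range(dim)]
           for _ in range(swarm_size)]
    vel = [[0.0] * dim for _ in range(swarm_size)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(swarm_size), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(iters):
        for i in range(swarm_size):
            # Standard PSO velocity and position update, clamped to the bounds.
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = max(-bound, min(bound, pos[i][d] + vel[i][d]))
            # GA-style arithmetic crossover with another particle's best.
            if rng.random() < crossover_rate:
                j = rng.randrange(swarm_size)
                alpha = rng.random()
                pos[i] = [alpha * a + (1 - alpha) * b
                          for a, b in zip(pos[i], pbest[j])]
            # GA-style mutation: re-sample one coordinate to keep diversity.
            if rng.random() < mutation_rate:
                d = rng.randrange(dim)
                pos[i][d] = rng.uniform(-bound, bound)
            # Update the personal and global bests.
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


best, best_val = hybrid_pso(sphere)
```

The crossover and mutation steps perturb particles after the usual update, which is one common way to reduce premature convergence to a local optimum; in the setting of the abstract, `objective` would evaluate the network for a candidate parameter vector.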

Database generation and use aid apparatus

Inactive | CN101154236A | Automatic extraction | Data processing applications | Digital data information retrieval | Data mining | Knowledge level

Owner:OMRON CORP

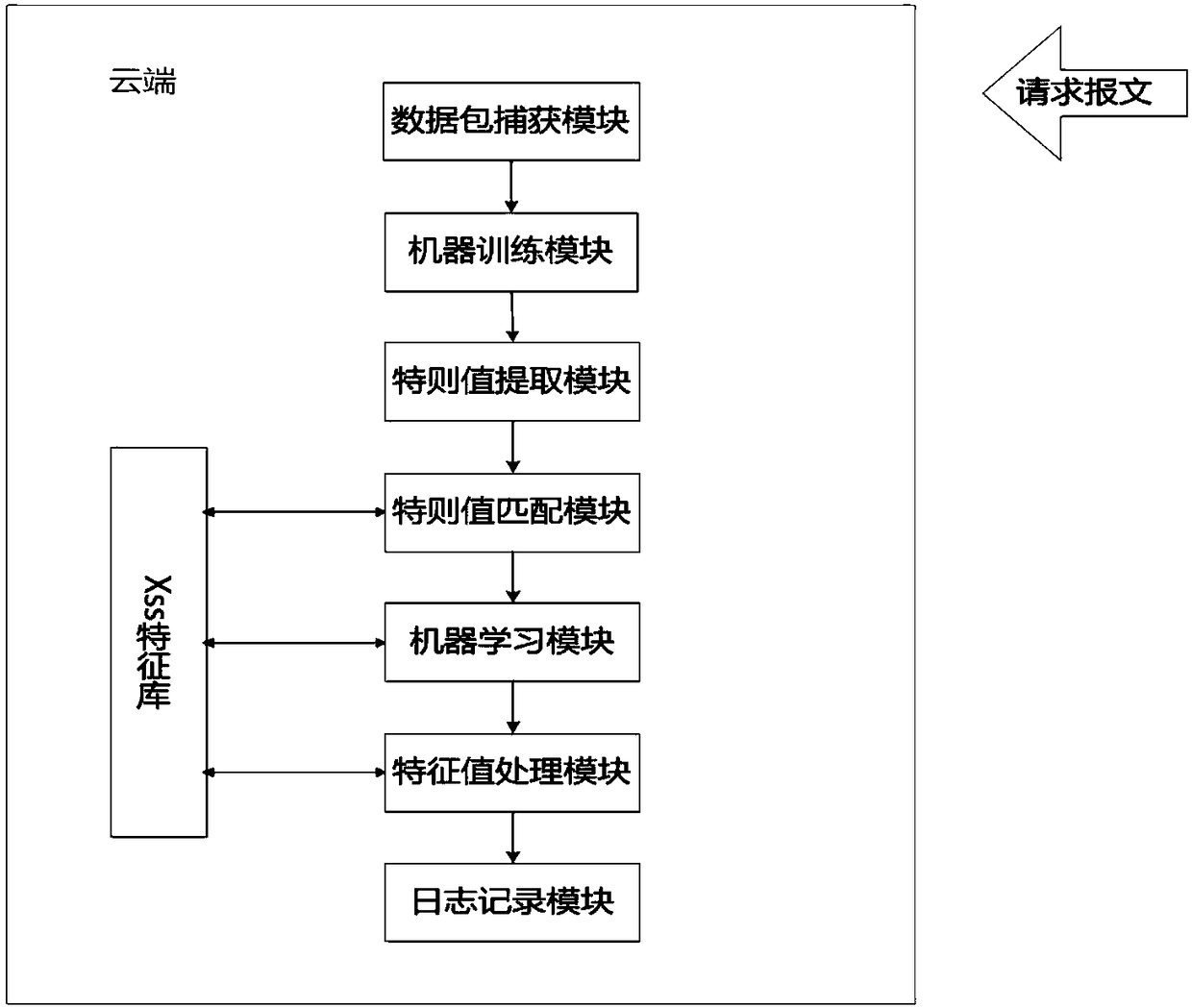

Machine learning-based xss defense system and realization method thereof

Inactive | CN108491717A | Efficiently identify and predict | Improve work efficiency | Platform integrity maintenance | Transmission | Social engineering (security) | Learning based

The invention discloses a machine learning-based xss defense system and a realization method thereof. The system comprises a data packet capturing module, a machine training module, a feature value extraction module, a feature value matching module, a machine learning module, a feature value processing module and a log record module which are sequentially connected. In the machine learning-based xss defense system of the invention, monitoring of xss attacks is carried out on a defended target, machine learning is carried out on strange xss attack code feature values, latest xss feature values can be quickly and automatically collected in cloud-side xss attack defense, manual collection of social engineering information is eliminated, and high effectiveness and rapidity of xss defense are realized.

Owner:SICHUAN CHANGHONG ELECTRIC CO LTD

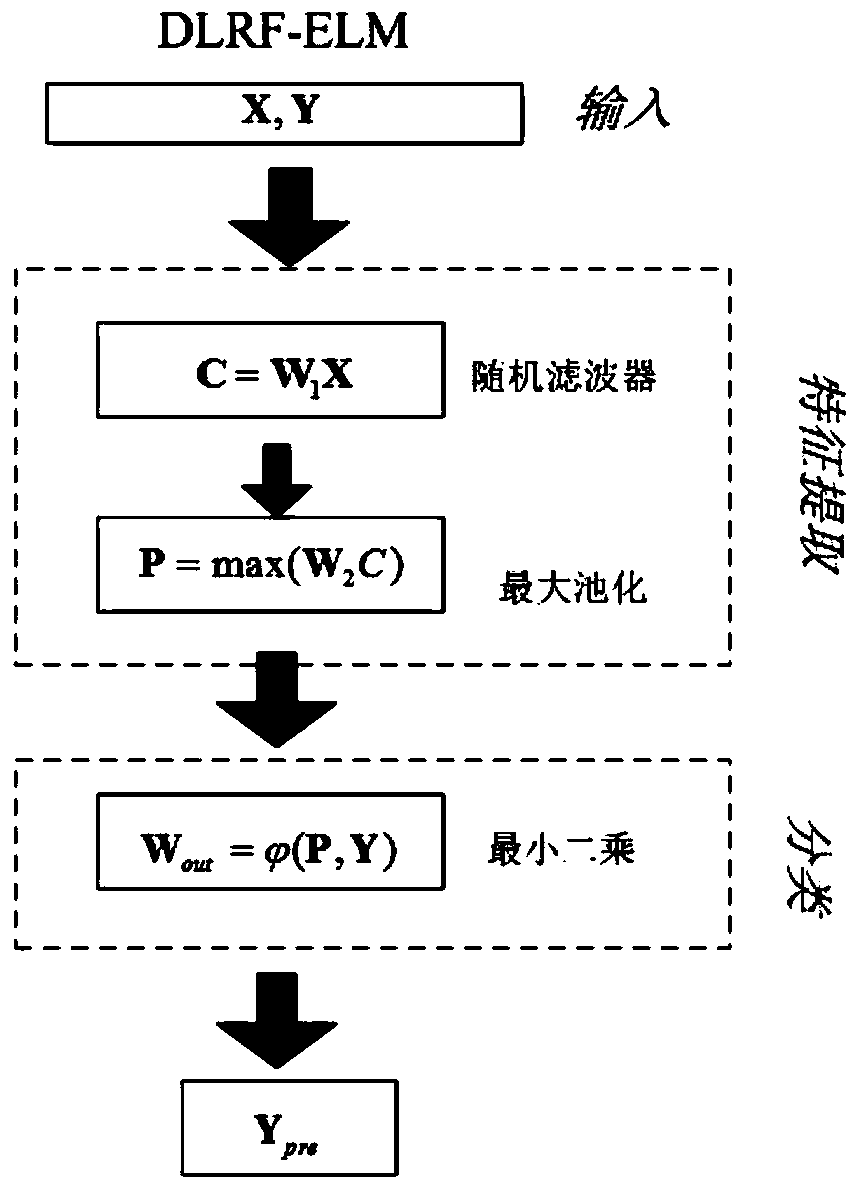

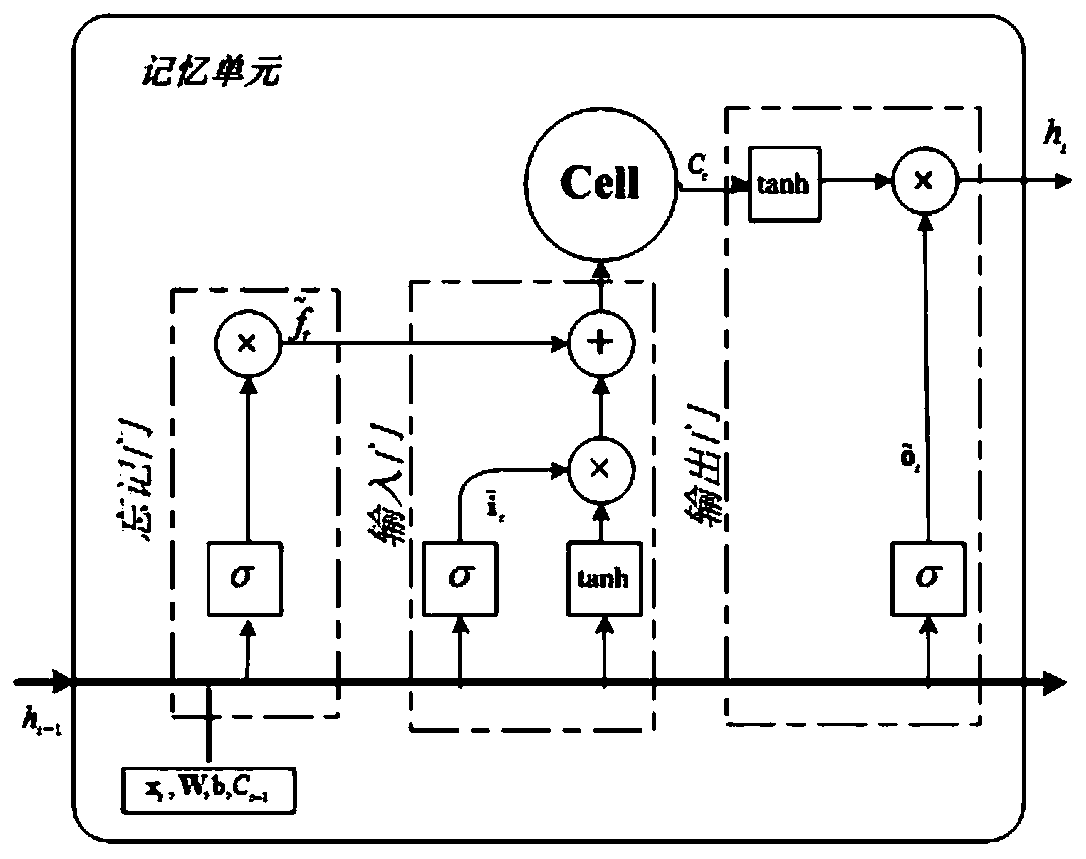
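
The chain of feature value extraction, matching, learning and logging in the abstract above can be sketched in a few lines. This is only an illustration under stated assumptions: the token pattern `TOKEN_RE`, the class `XssFeatureStore`, and the rule "when a payload matches a known feature, remember its other features" are all hypothetical, and that rule is a crude stand-in for the patent's machine learning module, whose details the abstract does not give.

```python
import re
from urllib.parse import unquote

# Hypothetical token pattern: opening/closing tags, inline event handlers,
# javascript: URLs and function calls.
TOKEN_RE = re.compile(
    r"<\s*/?\s*[a-z]+|on[a-z]+\s*=|javascript:|[a-z]+\(", re.IGNORECASE)


def extract_features(payload):
    """URL-decode and lowercase the payload, then return its token set."""
    decoded = unquote(payload).lower()
    return {re.sub(r"\s+", "", m) for m in TOKEN_RE.findall(decoded)}


class XssFeatureStore:
    def __init__(self, known):
        self.known = set(known)   # feature values collected so far
        self.log = []             # simple log record: (payload, blocked)

    def inspect(self, payload, threshold=1):
        features = extract_features(payload)
        hits = features & self.known
        blocked = len(hits) >= threshold
        if blocked:
            # Stand-in for the learning step: remember unfamiliar features
            # that co-occur with known ones in a blocked payload.
            self.known |= features - self.known
        self.log.append((payload, blocked))
        return blocked


store = XssFeatureStore({"<script"})
first = store.inspect("<script>alert(1)</script>")
benign = store.inspect("hello world")
second = store.inspect("%3Cimg src=x onerror=alert(1)%3E")
```

The third payload contains no `<script` tag and is flagged only because `alert(` was learned from the first one. A naive rule like this can also absorb harmless tokens, so a production system would need a proper classifier and validation of newly collected feature values.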

Electrocardiosignal classifying method and system based on LRF-ELM and BLSTM

Active | CN110558975A | Automatic extraction | Quick extraction | Diagnostic recording/measuring | Sensors | Time information | Ecg signal

The invention provides an electrocardiosignal classifying method and system based on LRF-ELM and BLSTM. The electrocardiosignal classifying method includes the steps: acquiring electrocardiosignal data, preprocessing the electrocardiosignal data to obtain a dataset, and using the electrocardiosignal data in the dataset as input data of a neural network; using an LRF-ELM network as a feature extractor, learning spatial information in the electrocardiosignal data, and through three stacked random convolution and pooling processes, extracting feature data of different dimensions in the electrocardiosignal data; and after fusion, using the extracted feature data as input of a sequence learning stage, adopting a deep BLSTM network to carry out sequence learning, and finally outputting electrocardiosignal classifying results. Because the method and system take both the time information and the spatial information of electrocardiosignals into account, electrocardiosignal features can be extracted efficiently and rapidly while good classification and identification properties are ensured.

Owner:山东山科智心科技有限公司

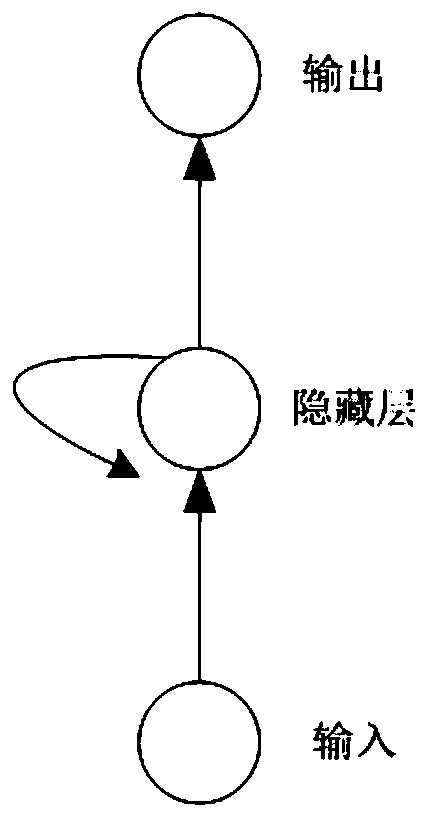

Inflammable gas concentration monitoring and early warning method

The invention relates to a method for monitoring and early warning of flammable gas concentration, which mainly addresses the poor safety of the prior art. The method stores flammable gas concentration data in a database, processes the data as a time series to obtain flammable gas concentration time series data, and passes that data through a neural network to produce an output. A concentration boundary value is set in advance, and the system raises an alarm automatically when the boundary value is reached. This technical solution solves the above problem well and can be used in flammable gas concentration monitoring and early warning.

Owner:CHINA PETROLEUM & CHEM CORP +1

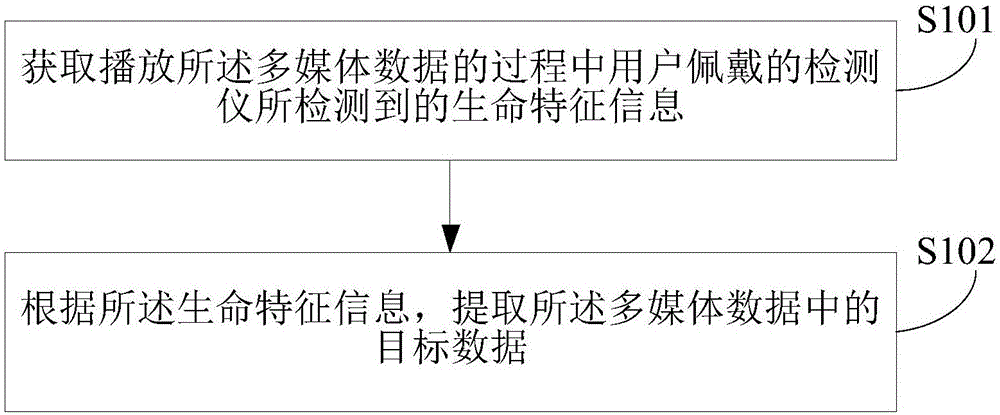

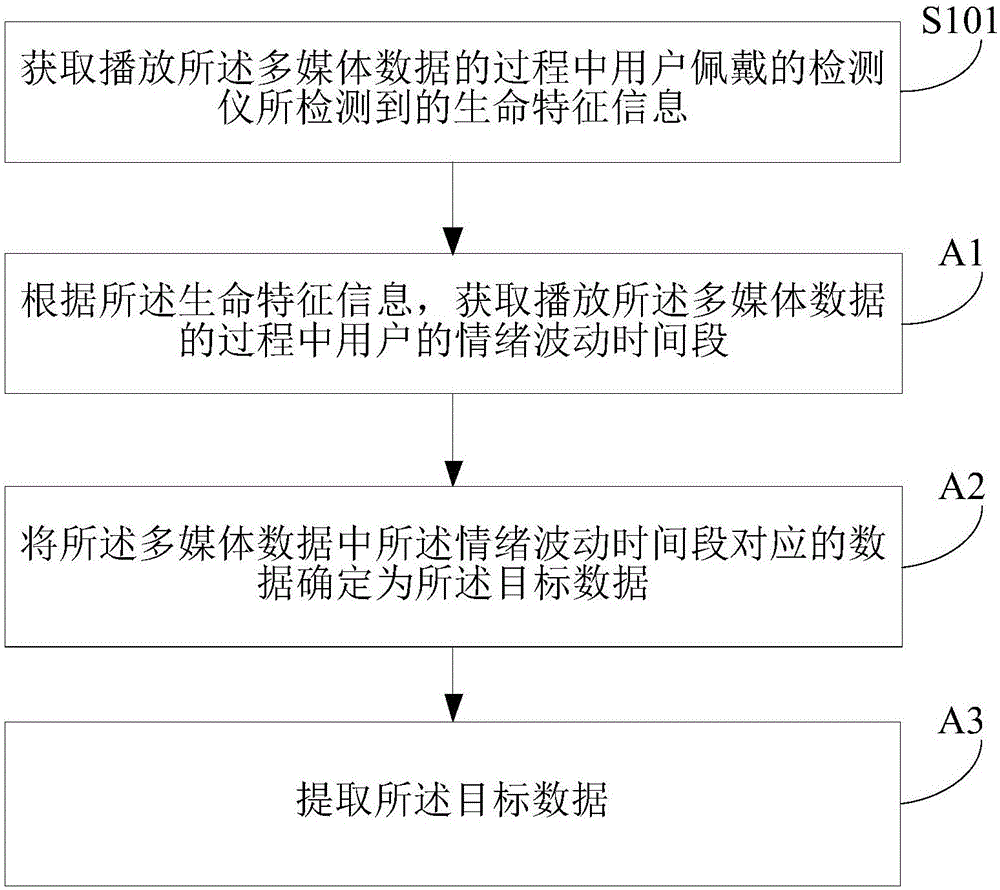

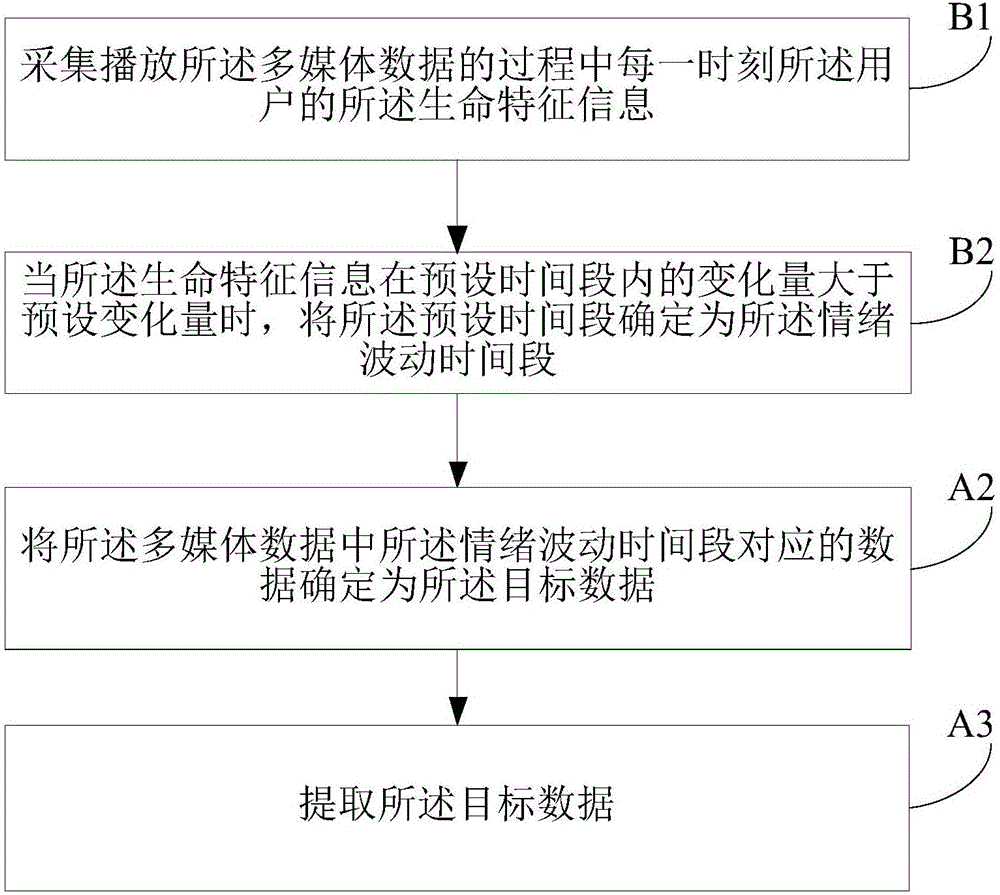
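
The monitoring loop in the abstract above (time series in, predicted value out, alarm at a preset boundary) can be sketched as follows. The abstract uses a neural network for the output step; this sketch deliberately swaps in a simple linear trend extrapolation over a sliding window so that it stays self-contained. The class `GasMonitor`, the window size, the alarm level and the choice to also alarm on the predicted value are assumptions for illustration.

```python
from collections import deque


class GasMonitor:
    def __init__(self, alarm_level, window=3):
        self.alarm_level = alarm_level        # preset concentration boundary
        self.history = deque(maxlen=window)   # most recent readings

    def predict_next(self):
        """Linear trend extrapolation (placeholder for the neural network)."""
        h = list(self.history)
        if len(h) < 2:
            return h[-1]
        slope = (h[-1] - h[0]) / (len(h) - 1)
        return h[-1] + slope

    def add_reading(self, value):
        """Store a reading; return (predicted next value, alarm flag)."""
        self.history.append(value)
        predicted = self.predict_next()
        alarm = value >= self.alarm_level or predicted >= self.alarm_level
        return predicted, alarm


monitor = GasMonitor(alarm_level=25.0, window=3)
results = [monitor.add_reading(v) for v in (10.0, 12.0, 14.0, 20.0, 23.0)]
```

With these readings the last call returns a predicted value of 27.5 and raises the alarm, although the measured value of 23.0 is still below the boundary. That early trigger is the design choice this sketch makes to illustrate early warning; the abstract itself only states that the alarm fires when the boundary value is reached.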

Processing method and apparatus of multimedia data

The invention relates to a processing method and apparatus of multimedia data. The method comprises the following steps: obtaining life characteristic information detected by a detector worn by a user while multimedia data is played; and extracting target data from the multimedia data according to the life characteristic information. With this technical scheme, the target data in the multimedia data, i.e., the wonderful fragments (highlights), can be extracted automatically according to the life characteristic information. This avoids the conventional approach of relying on the user's recall to extract the wonderful fragments and reduces the user's memory burden. Because the life characteristic information reflects the user's emotion more accurately, the extracted target data is also more accurate and truly corresponds to the fragments that left a deep impression on the user.

Owner:BEIJING XIAOMI MOBILE SOFTWARE CO LTD
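
As a rough illustration of extracting target data from life characteristic information, the sketch below marks playback intervals in which a wearer's heart rate stays well above a resting baseline. Heart rate as the signal, the function `extract_highlights`, the margin and the minimum segment length are all assumptions; the abstract does not say which signal or rule the method uses.

```python
def extract_highlights(samples, baseline, margin=15, min_len=2.0):
    """Return (start, end) playback times where heart rate is elevated.

    samples: list of (time_sec, heart_rate) pairs sorted by time.
    A segment is kept only if it spans at least min_len seconds.
    """
    segments = []
    start = None
    last = None
    for t, hr in samples:
        if hr >= baseline + margin:
            if start is None:
                start = t          # an elevated segment begins
            last = t
        elif start is not None:
            if last - start >= min_len:
                segments.append((start, last))
            start = None           # the segment ended
    # Close a segment that runs to the end of playback.
    if start is not None and last - start >= min_len:
        segments.append((start, last))
    return segments


samples = [(0, 70), (1, 72), (2, 90), (3, 95), (4, 92),
           (5, 71), (6, 88), (7, 70)]
highlights = extract_highlights(samples, baseline=70)
```

Here the interval from 2 s to 4 s is returned, while the single elevated sample at 6 s is discarded as too short. The returned time ranges would then be used to cut the corresponding fragments out of the multimedia data.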


© 2025 PatSnap. All rights reserved.