Method for automatically tagging animation scenes by matching that jointly uses global color features and local invariant features

A technology combining local invariant features and color features, applied in special-purpose data processing, editing/combining graphics or text, image data processing, etc. It addresses problems such as the lack of fast image matching methods.

Embodiment Construction

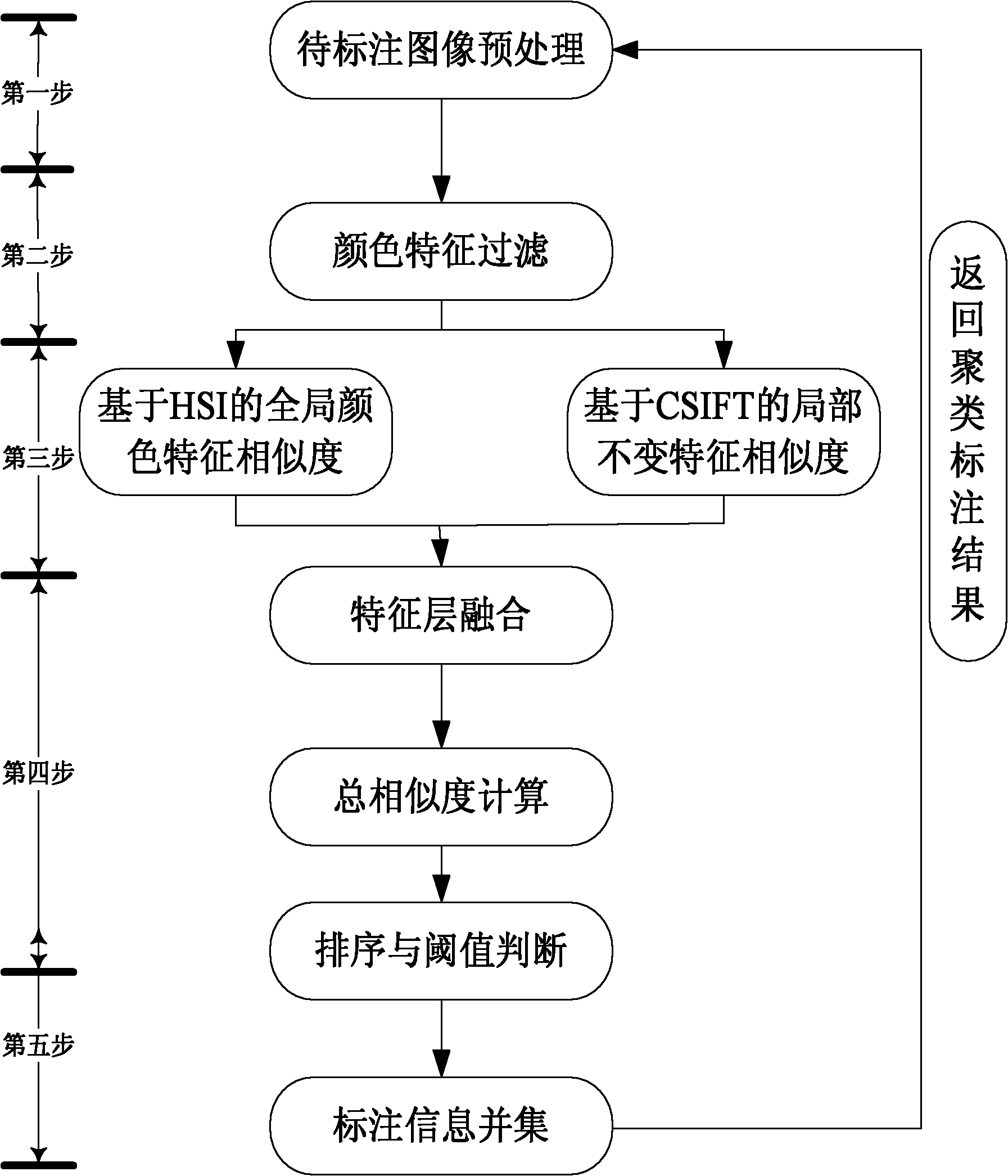

[0057] Figure 1 is a flow chart of the animation scene image labeling method based on color and local invariant features according to the present invention. The specific steps are as follows:

[0058] The first step is to preprocess the animation image to be labeled, so as to enhance the grayscale details of the image and normalize all images to the same size;
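The patent text does not say which enhancement or resizing method is used. As a minimal sketch, assuming global histogram equalization for the grayscale enhancement and nearest-neighbour resampling to a fixed square size (both are stand-ins; the names `equalize_histogram`, `resize_nearest`, `preprocess` and the 64-pixel default are hypothetical), step 1 could look like this in pure Python:

```python
from typing import List

# Hypothetical representation: a grayscale image as rows of ints in 0..255.
Image = List[List[int]]


def equalize_histogram(img: Image) -> Image:
    """Stretch grey levels over 0..255 with global histogram equalization."""
    flat = [p for row in img for p in row]
    n = len(flat)
    hist = [0] * 256
    for p in flat:
        hist[p] += 1
    # Cumulative distribution of grey levels.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    if n == cdf_min:  # single grey level: nothing to stretch
        return [row[:] for row in img]
    # Look-up table mapping each old level to its equalized level.
    lut = [max(0, round((c - cdf_min) * 255 / (n - cdf_min))) for c in cdf]
    return [[lut[p] for p in row] for row in img]


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Resample to out_h x out_w by nearest-neighbour lookup."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def preprocess(img: Image, size: int = 64) -> Image:
    """Hypothetical step 1: enhance grayscale detail, then unify the size."""
    return resize_nearest(equalize_histogram(img), size, size)


if __name__ == "__main__":
    small = [[10, 10], [20, 30]]
    print(equalize_histogram(small))  # [[0, 0], [128, 255]]
    out = preprocess(small, size=4)
    print(len(out), len(out[0]))  # 4 4
```

Equalizing before resizing keeps the look-up table based on the original pixel statistics; a real pipeline would more likely use a library routine and an interpolating resize.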

[0059] The second step is to calculate the global color similarity between the target image and each image in the animation scene material library, and to filter by color feature, keeping the top-ranked similar images;
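The excerpt does not name the color space, histogram layout or similarity measure used for the global color similarity, nor how many candidates survive the filter. A minimal sketch, assuming a coarsely quantized joint RGB histogram compared by histogram intersection and a hypothetical `top_k` cut-off (the function names are also illustrative), might be:

```python
from typing import Dict, List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0..255


def color_histogram(pixels: Sequence[Pixel], bins: int = 4) -> List[float]:
    """Normalized joint RGB histogram with `bins` levels per channel."""
    hist = [0.0] * (bins ** 3)
    for r, g, b in pixels:
        # Map 0..255 onto 0..bins-1 for each channel.
        ri, gi, bi = r * bins // 256, g * bins // 256, b * bins // 256
        hist[(ri * bins + gi) * bins + bi] += 1
    total = len(pixels)
    return [h / total for h in hist]


def histogram_intersection(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Similarity in [0, 1] for normalized histograms (1 = identical)."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def color_filter(
    target: Sequence[Pixel],
    library: Dict[str, Sequence[Pixel]],
    top_k: int = 2,
) -> List[Tuple[str, float]]:
    """Hypothetical step 2: rank library images by global color similarity
    to the target and keep only the top_k candidates."""
    h_target = color_histogram(target)
    scored = [
        (name, histogram_intersection(h_target, color_histogram(px)))
        for name, px in library.items()
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    red, blue = (255, 0, 0), (0, 0, 255)
    library = {
        "red_scene": [red] * 4,
        "blue_scene": [blue] * 4,
        "mixed_scene": [red] * 2 + [blue] * 2,
    }
    print(color_filter([red] * 4, library))
    # [('red_scene', 1.0), ('mixed_scene', 0.5)]
```

Histogram intersection is chosen here only because it is cheap and bounded, which suits a coarse first-pass filter; the patent's own measure may differ.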

[0060] The third step is to calculate, between each of these images and the target image, the global color similarity and the CSIFT-based local feature similarity;
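CSIFT (colored SIFT) builds SIFT-style descriptors on a color-invariant representation of the image; extracting them is beyond a short example. Assuming the descriptors of both images are already available as float vectors, one hypothetical way to turn them into a local similarity score is nearest-neighbour matching with Lowe's ratio test, scoring the fraction of the target's descriptors that find a distinctive match. The 0.8 ratio, the scoring rule and the name `local_similarity` are assumptions, not taken from the patent:

```python
import math
from typing import Sequence

Descriptor = Sequence[float]


def local_similarity(
    target_desc: Sequence[Descriptor],
    candidate_desc: Sequence[Descriptor],
    ratio: float = 0.8,
) -> float:
    """Hypothetical step 3 score: fraction of target descriptors whose nearest
    neighbour in the candidate is clearly closer than the second nearest
    (Lowe's ratio test). Returns a value in [0, 1]."""
    if not target_desc or len(candidate_desc) < 2:
        return 0.0  # the ratio test needs at least two candidate descriptors
    matches = 0
    for d in target_desc:
        # Euclidean distances to every candidate descriptor, closest first.
        dists = sorted(math.dist(d, e) for e in candidate_desc)
        if dists[0] < ratio * dists[1]:
            matches += 1
    return matches / len(target_desc)


if __name__ == "__main__":
    # Toy 2-D vectors stand in for real high-dimensional descriptors.
    target = [[0.0, 0.0], [10.0, 10.0]]
    candidate = [[0.0, 0.1], [10.0, 10.2], [50.0, 50.0]]
    print(local_similarity(target, candidate))  # 1.0
    print(local_similarity([[5.0, 5.0]], [[0.0, 0.0], [10.0, 10.0]]))  # 0.0
```

The second call shows the point of the ratio test: a descriptor equidistant from two candidates is ambiguous and is not counted as a match.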

[0061] The fourth step is to fuse the global color similarity and the local invariant feature similarity of the two images into a final total similarity and to sort the candidates by total similarity. If the total similarity is greater than the threshold, the image is consi...
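The excerpt does not give the fusion rule, the weights or the threshold value, and it is cut off before stating what happens to images above the threshold. As a minimal sketch, assuming a linear weighted sum S = w * S_color + (1 - w) * S_local with a hypothetical weight `w` and threshold, and simply returning the above-threshold candidates, step 4 could be written as:

```python
from typing import Dict, List, Tuple


def fuse_and_rank(
    color_sim: Dict[str, float],
    local_sim: Dict[str, float],
    weight: float = 0.5,
    threshold: float = 0.6,
) -> Tuple[List[Tuple[str, float]], List[Tuple[str, float]]]:
    """Hypothetical step 4: fuse both similarities, sort, apply a threshold.

    total = weight * color + (1 - weight) * local; candidates missing a
    local score are treated as having local similarity 0.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    totals = {
        name: weight * c + (1.0 - weight) * local_sim.get(name, 0.0)
        for name, c in color_sim.items()
    }
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    above = [(name, s) for name, s in ranked if s > threshold]
    return ranked, above


if __name__ == "__main__":
    ranked, above = fuse_and_rank(
        {"scene_a": 0.9, "scene_b": 0.4},
        {"scene_a": 0.7, "scene_b": 0.2},
    )
    print([name for name, _ in ranked])  # ['scene_a', 'scene_b']
    print([name for name, _ in above])   # ['scene_a']
```

When both input similarities lie in [0, 1], a convex combination keeps the total in [0, 1] as well, so one threshold remains meaningful for any choice of `weight`.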
