Video stream-based human facial expression fantasy method

A technique combining facial expressions and video streams, applied in image data processing, instruments, computing, and related fields. It addresses the problems that existing approaches are hard to use in practical applications and require a large amount of manual interaction, and it achieves high reliability and strong expressiveness.

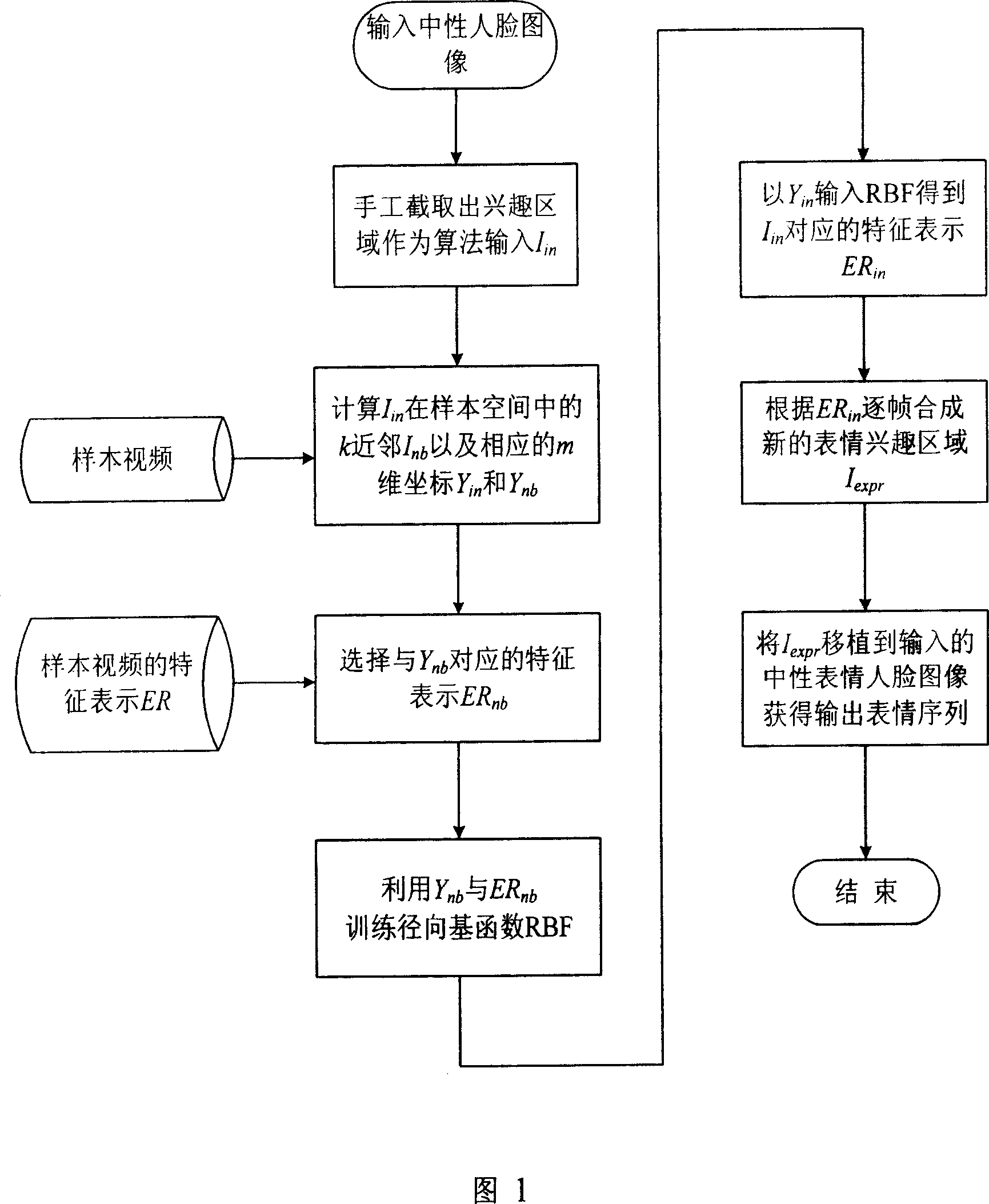

Examples

Embodiment 1

[0044] Example of fantasizing a surprised expression sequence:

[0045] 1: The input image is 1920×1080 pixels. Manually locate the pupils of both eyes on the image; the horizontal distance between the two pupils is 190 pixels. Extending 105 pixels to the left and right of the pupils, and 100 pixels above and below them, yields an eye sub-region of 400×200 pixels. Manually locate the two mouth corners on the image; the horizontal distance between them is 140 pixels. Extending 80 pixels to the left and right of the mouth corners, and 150 pixels above and 50 pixels below them, yields a mouth sub-region of 300×200 pixels. The eye sub-region and the mouth sub-region together constitute the facial expression sub-region of interest of the input image.
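The sub-region construction in step 1 can be sketched in code. This is a minimal illustration, not the patent's implementation: the helper `padded_box`, the `Box` type, and the absolute landmark coordinates are all hypothetical, since the text gives only the distances between landmarks and the padding amounts. It also assumes both landmarks of a pair lie on the same image row.

```python
from typing import NamedTuple, Tuple

Point = Tuple[int, int]  # (x, y) in pixels, y grows downwards


class Box(NamedTuple):
    x: int  # left edge
    y: int  # top edge
    w: int  # width
    h: int  # height


def padded_box(left_pt: Point, right_pt: Point,
               pad_x: int, pad_up: int, pad_down: int) -> Box:
    """Bounding box of two landmarks, grown by the given padding."""
    (lx, ly), (rx, ry) = left_pt, right_pt
    x0 = min(lx, rx) - pad_x
    x1 = max(lx, rx) + pad_x
    y0 = min(ly, ry) - pad_up
    y1 = max(ly, ry) + pad_down
    return Box(x0, y0, x1 - x0, y1 - y0)


# Hypothetical landmark positions in a 1920x1080 image. Only the
# 190 px pupil distance and 140 px mouth-corner distance come from the text.
left_pupil, right_pupil = (865, 400), (1055, 400)
left_mouth, right_mouth = (890, 700), (1030, 700)

eye_box = padded_box(left_pupil, right_pupil, pad_x=105, pad_up=100, pad_down=100)
mouth_box = padded_box(left_mouth, right_mouth, pad_x=80, pad_up=150, pad_down=50)

print(eye_box)    # 400x200 eye sub-region
print(mouth_box)  # 300x200 mouth sub-region
```

With these values the eye box is 190 + 2×105 = 400 pixels wide and 100 + 100 = 200 pixels high, and the mouth box is 140 + 2×80 = 300 pixels wide and 150 + 50 = 200 pixels high, matching the sizes stated in the step.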

[0046] 2: Take the sub-region around the eyes and the sub-region around the mouth as I r...

Embodiment 2

[0055] Example of fantasizing a happy expression sequence:

[0056] 1: The input image is 1920×1080 pixels. Manually locate the pupils of both eyes on the image; the horizontal distance between the two pupils is 188 pixels. Extending 106 pixels to the left and right of the pupils, and 100 pixels above and below them, yields an eye sub-region of 400×200 pixels. Manually locate the two mouth corners on the image; the horizontal distance between them is 144 pixels. Extending 78 pixels to the left and right of the mouth corners, and 150 pixels above and 50 pixels below them, yields a mouth sub-region of 300×200 pixels. The eye sub-region and the mouth sub-region together constitute the facial expression sub-region of interest of the input image.

[0057] 2: Take the sub-region around the eyes and the sub-region around the mouth as I_in respectively, select the first f...

Embodiment 3

[0066] Example of fantasizing an angry expression sequence:

[0067] 1: The input image is 1920×1080 pixels. Manually locate the pupils of both eyes on the image; the horizontal distance between the two pupils is 186 pixels. Extending 107 pixels to the left and right of the pupils, and 100 pixels above and below them, yields an eye sub-region of 400×200 pixels. Manually locate the two mouth corners on the image; the horizontal distance between them is 138 pixels. Extending 81 pixels to the left and right of the mouth corners, and 150 pixels above and 50 pixels below them, yields a mouth sub-region of 300×200 pixels. The eye sub-region and the mouth sub-region together constitute the facial expression sub-region of interest of the input image.
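Across the three embodiments the landmark distances differ, but the sub-region sizes stay fixed at 400×200 and 300×200 pixels. The stated horizontal offsets are consistent with splitting the leftover width evenly on both sides, which the following sketch checks. The function name `horizontal_padding` is hypothetical, and the even-split rule is an inference from the numbers in the text, not something the source states explicitly.

```python
def horizontal_padding(landmark_distance: int, target_width: int) -> int:
    """Padding per side so that distance + 2 * padding == target_width."""
    extra = target_width - landmark_distance
    if extra < 0 or extra % 2 != 0:
        raise ValueError("target width cannot be reached with equal integer padding")
    return extra // 2


# (pupil distance, mouth-corner distance) in pixels, as given in each embodiment
embodiments = {1: (190, 140), 2: (188, 144), 3: (186, 138)}

paddings = {
    number: (horizontal_padding(eyes, 400), horizontal_padding(mouth, 300))
    for number, (eyes, mouth) in embodiments.items()
}

for number, (eye_pad, mouth_pad) in paddings.items():
    print(f"Embodiment {number}: eye padding {eye_pad} px, mouth padding {mouth_pad} px")
```

The computed values (105/80, 106/78, 107/81) match the offsets listed in Embodiments 1, 2, and 3.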

[0068] 2: Take the sub-region around the eyes and the sub-region around the mouth as I respe...
