Hierarchical Methods and Apparatus for Extracting User Intent from Spoken Utterances

A hierarchical technology for extracting user intent from spoken utterances. It addresses the following problems: a human operator is required; people do not talk or think in terms of specific machine-based grammars; and users forget precise predetermined commands.


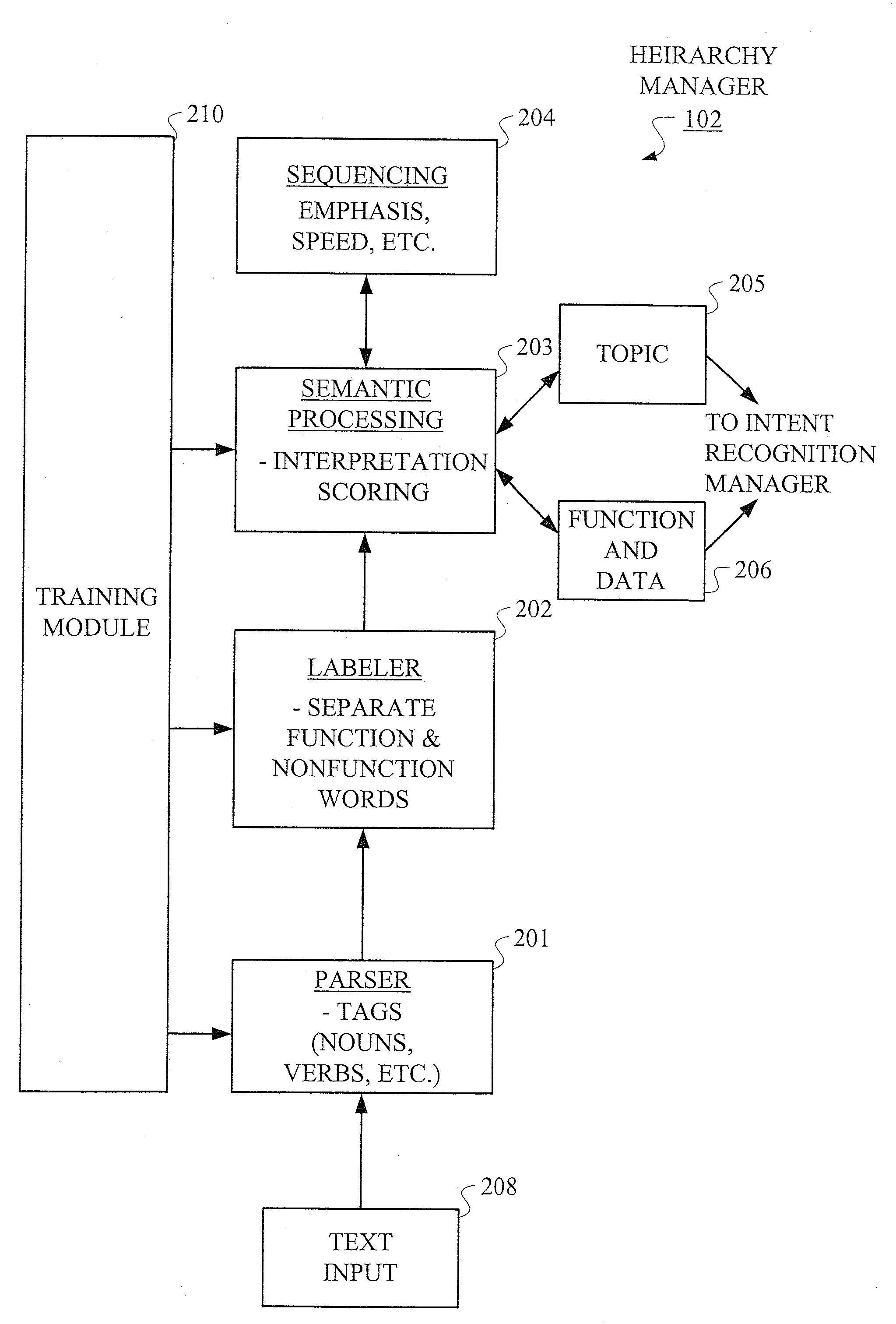

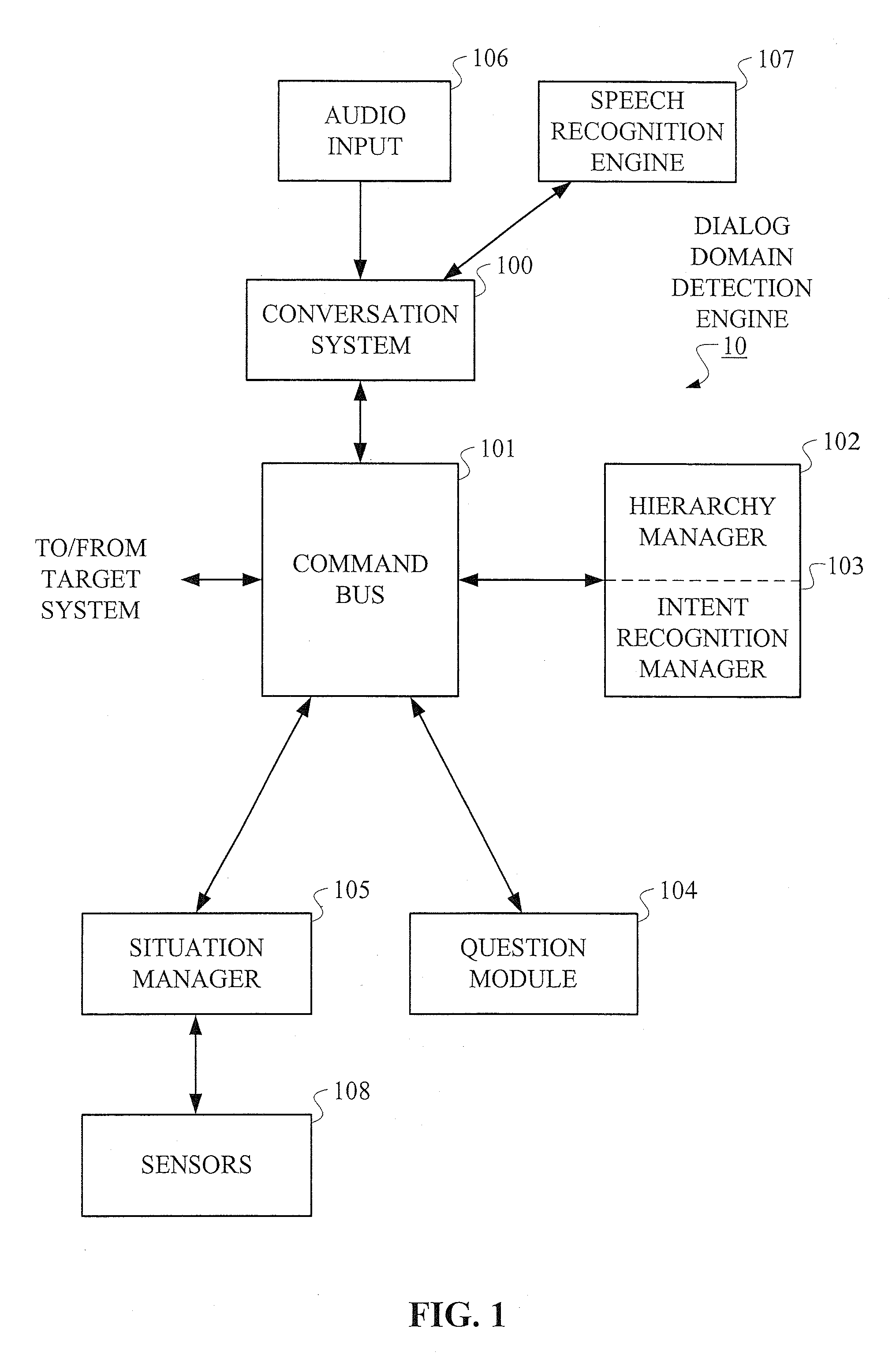

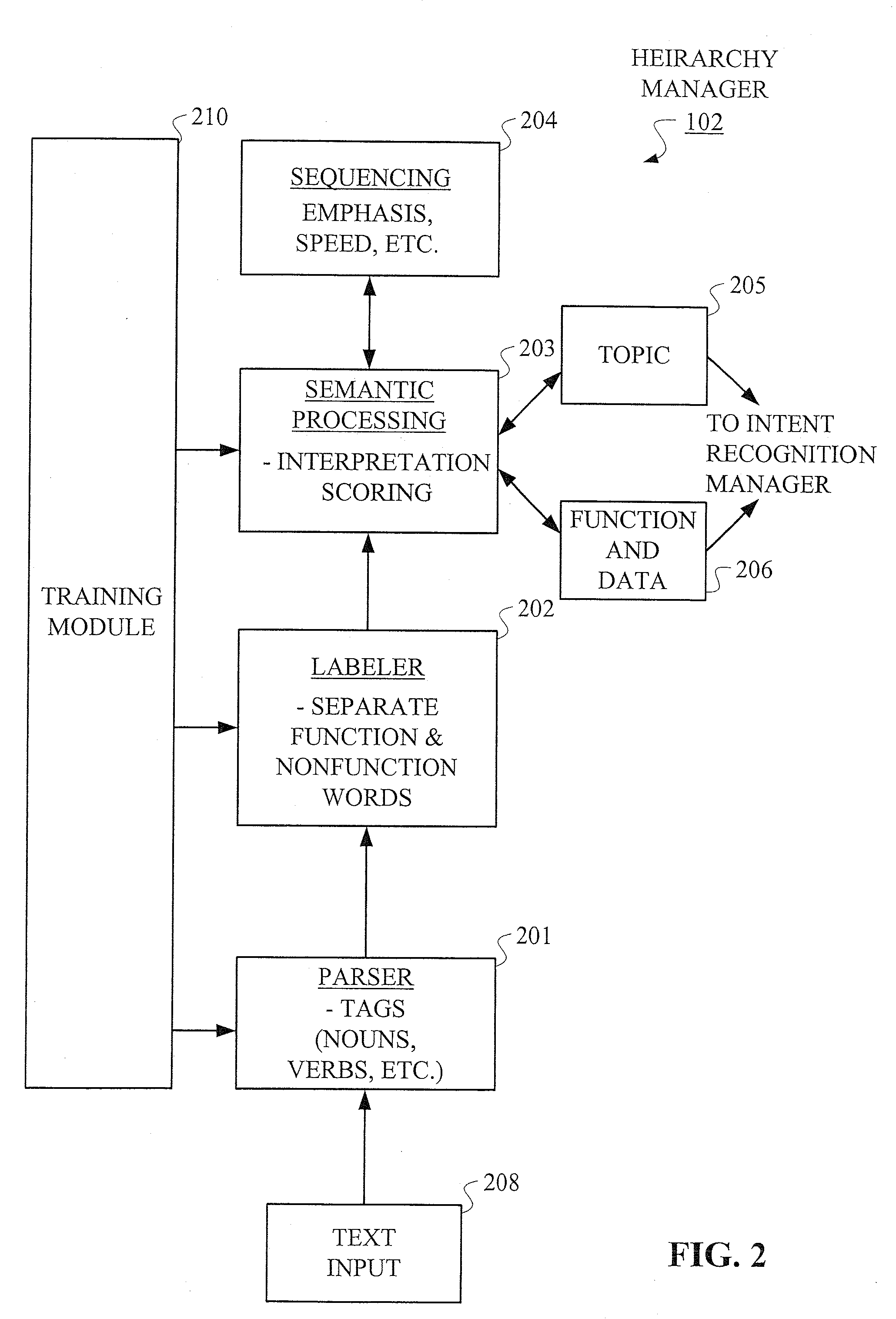


Examples

Embodiment Construction

[0021] While the present invention may be illustratively described below in the context of a vehicle-based voice system, it is to be understood that principles of the invention are not limited to any particular computing system environment or any particular speech recognition application. Rather, principles of the invention are more generally applicable to any computing system environment and any speech recognition application in which it would be desirable to permit the user to provide free-form or conversational speech input.

[0022] Principles of the invention address the problem of extracting user intent from free-form spoken utterances. For example, returning to the vehicle-based climate control example described above, principles of the invention permit a driver to interact with a voice system in the vehicle by giving free-form voice instructions that differ from the precise (machine-based grammar) voice commands understood by the climate control system. Thus, in this ...
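The idea of mapping a free-form instruction onto a precise machine command can be illustrated with a minimal sketch. It is not the method of the invention, whose details are not reproduced in this excerpt. It assumes a simple two-level keyword lookup: the first level picks a domain (for example, climate), and the second level picks a command within that domain. The keyword tables, the command names such as `DECREASE_TEMPERATURE`, and the functions `tokenize`, `best_match`, and `extract_intent` are all hypothetical.

```python
import re

# Hypothetical keyword tables (assumptions for illustration, not from the patent).
# Level 1: words that suggest which subsystem the speaker is talking about.
DOMAIN_KEYWORDS = {
    "climate": {"hot", "cold", "warm", "cool", "freezing", "temperature"},
    "audio": {"radio", "music", "volume", "loud", "quiet"},
}

# Level 2: within each domain, words that suggest a specific machine command.
INTENT_KEYWORDS = {
    "climate": {
        "DECREASE_TEMPERATURE": {"hot", "warm", "cooler"},
        "INCREASE_TEMPERATURE": {"cold", "freezing", "warmer"},
    },
    "audio": {
        "INCREASE_VOLUME": {"louder", "up", "quiet"},
        "DECREASE_VOLUME": {"quieter", "down", "loud"},
    },
}


def tokenize(utterance):
    """Lowercase the utterance and return its set of word tokens."""
    return set(re.findall(r"[a-z']+", utterance.lower()))


def best_match(tokens, table):
    """Return the label whose keyword set overlaps the tokens most, or None."""
    best, best_score = None, 0
    for label, keywords in table.items():
        score = len(tokens & keywords)
        if score > best_score:
            best, best_score = label, score
    return best


def extract_intent(utterance):
    """Resolve the domain first, then a command within it (two-level lookup)."""
    tokens = tokenize(utterance)
    domain = best_match(tokens, DOMAIN_KEYWORDS)
    if domain is None:
        return None
    command = best_match(tokens, INTENT_KEYWORDS[domain])
    if command is None:
        return None
    return (domain, command)


if __name__ == "__main__":
    for text in ["It's way too hot in here", "Turn the music down", "Open the sunroof"]:
        print(text, "->", extract_intent(text))
```

Under these assumptions, a driver who says "It's way too hot in here" never utters a predetermined command, yet the lookup still resolves to a climate command. A real system would replace the keyword tables with statistical models; the sketch only shows the coarse-to-fine structure.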
