316 results about "Speech applications" patented technology

The Speech Application Programming Interface or SAPI is an API developed by Microsoft to allow the use of speech recognition and speech synthesis within Windows applications.

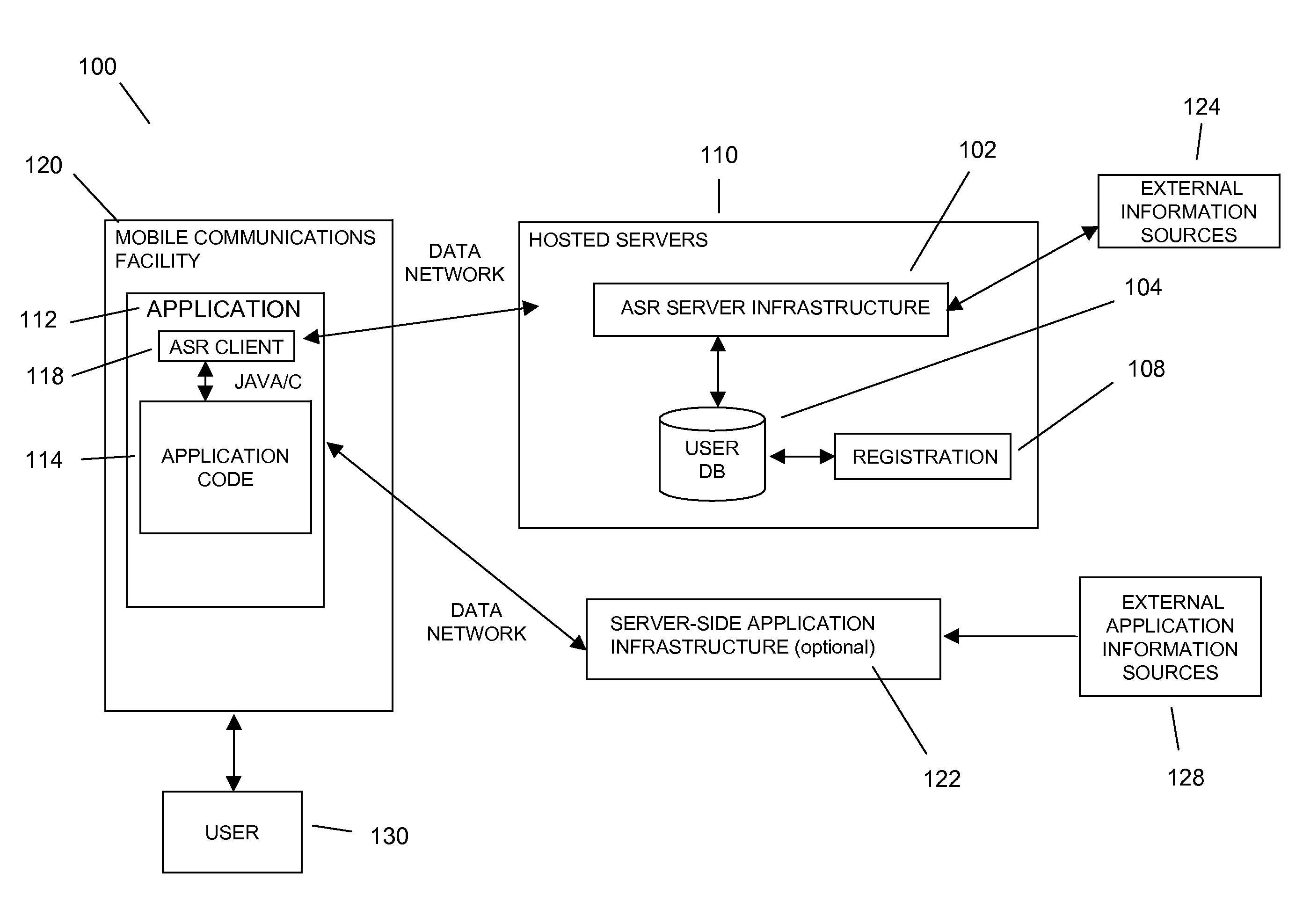

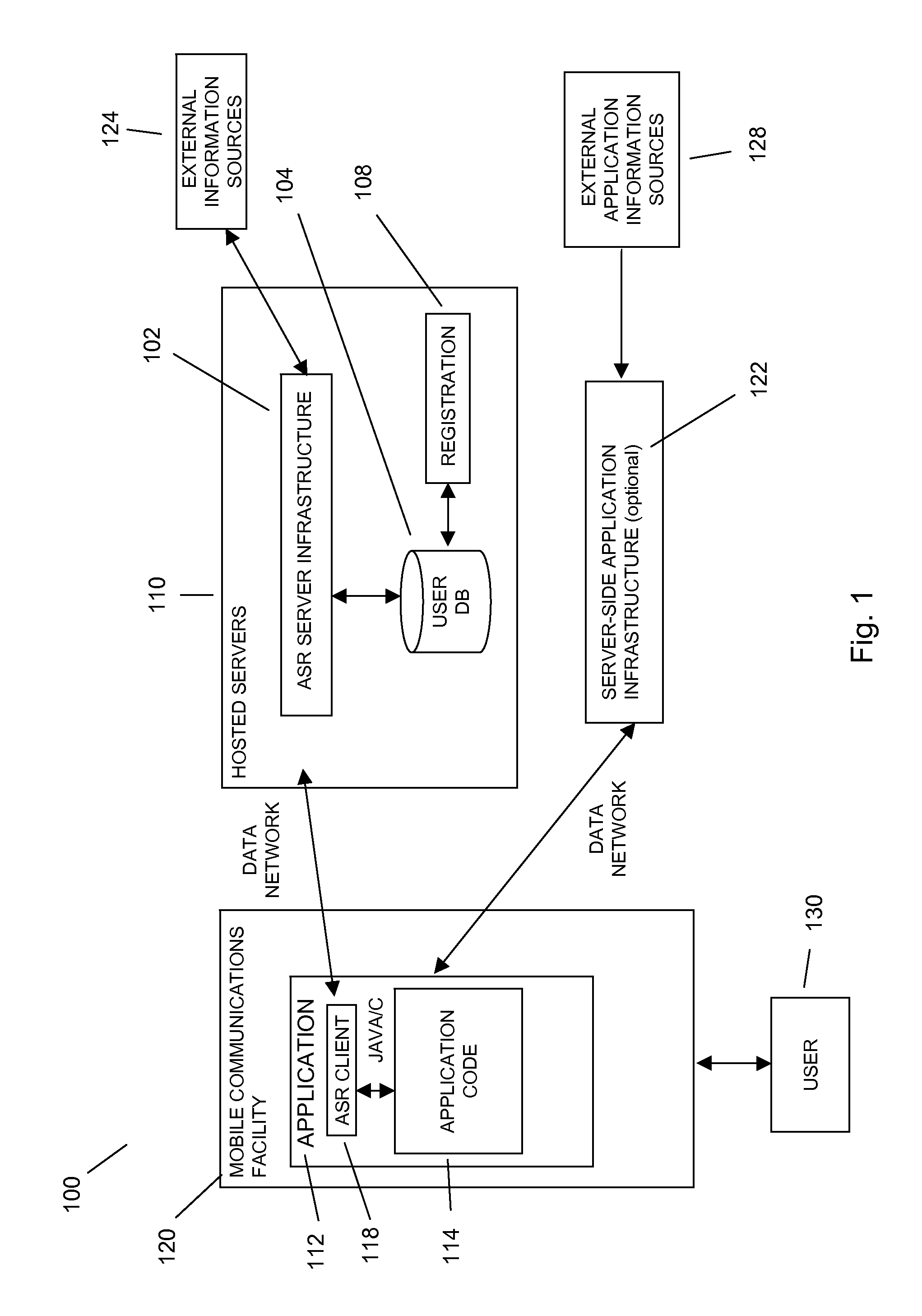

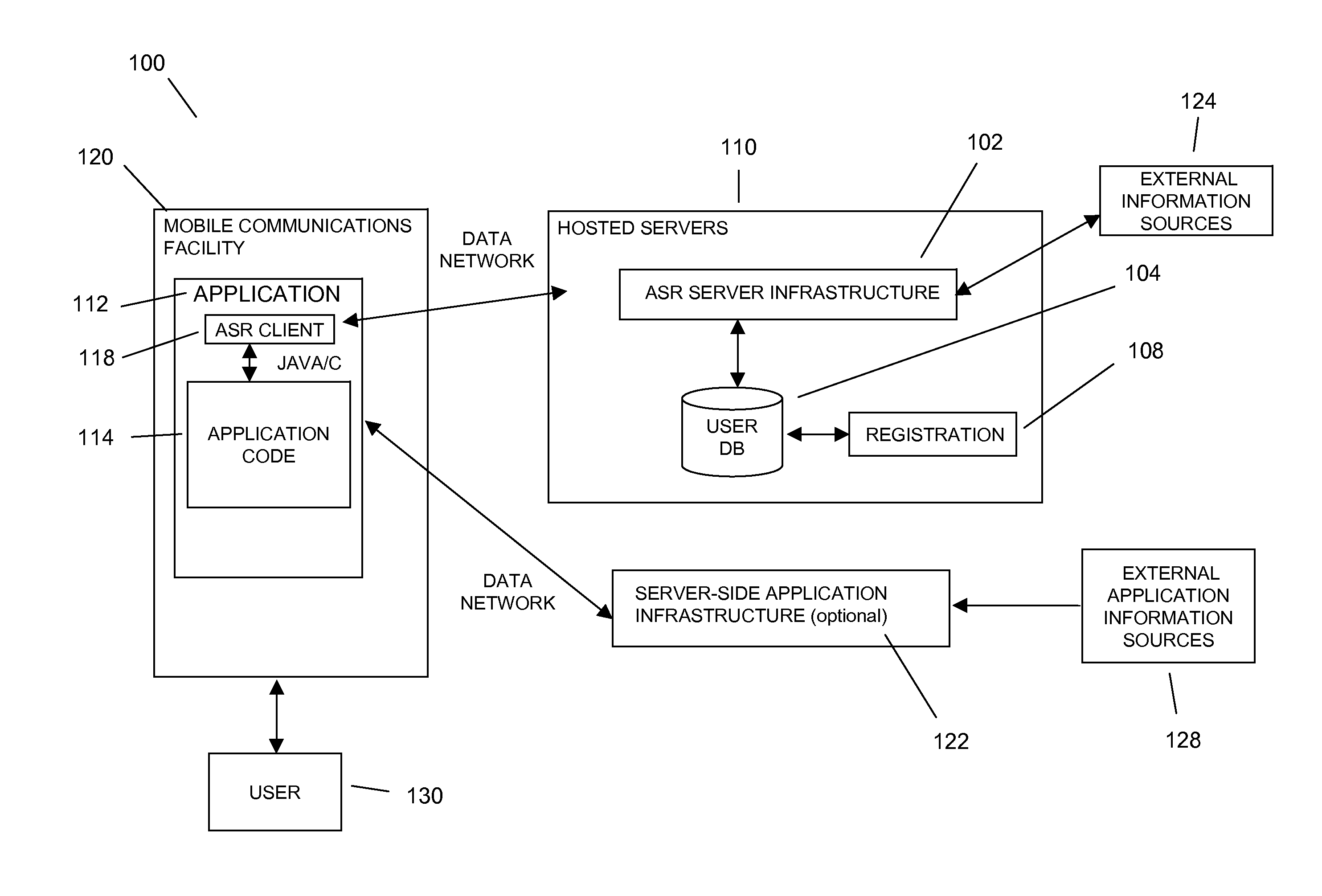

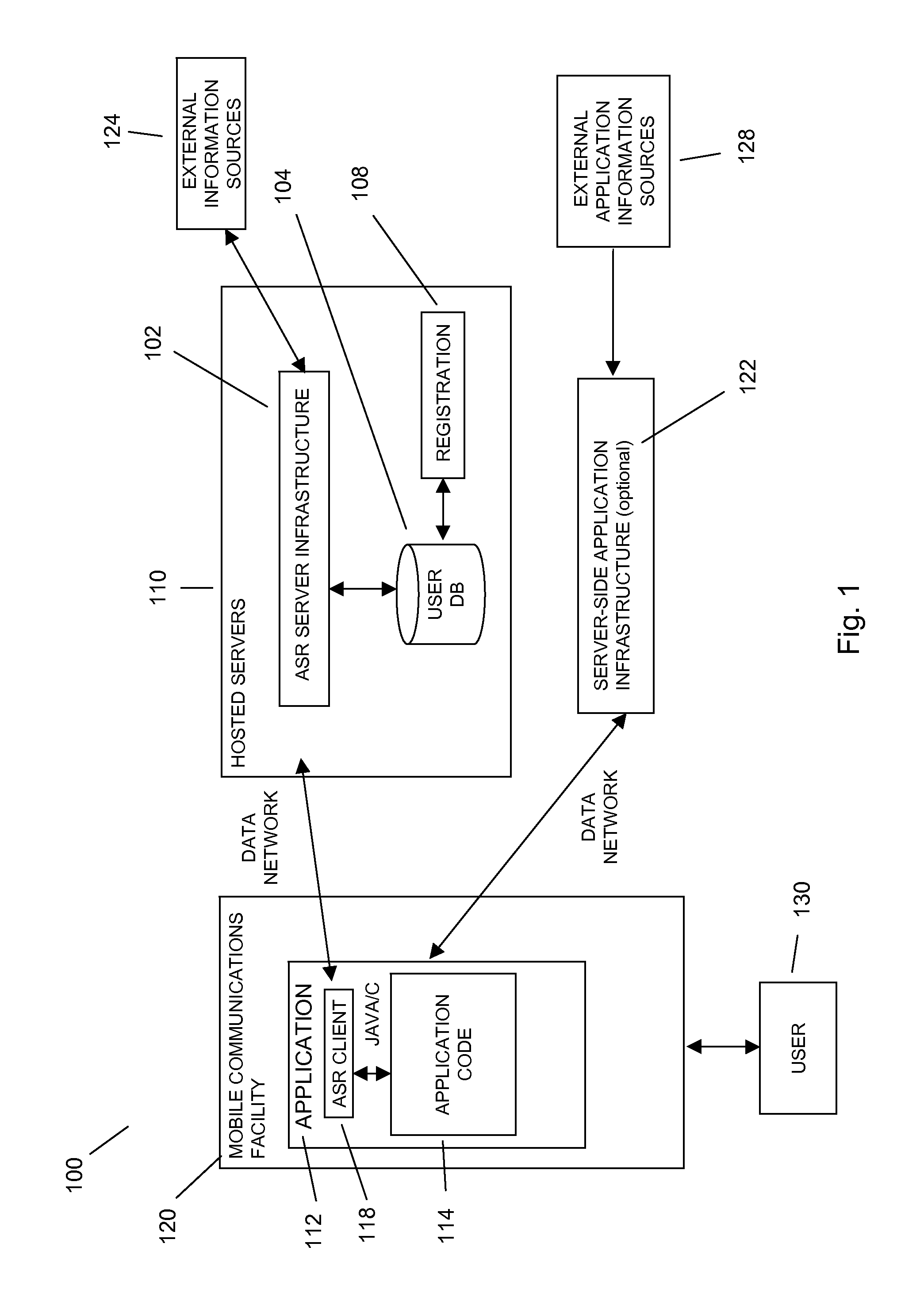

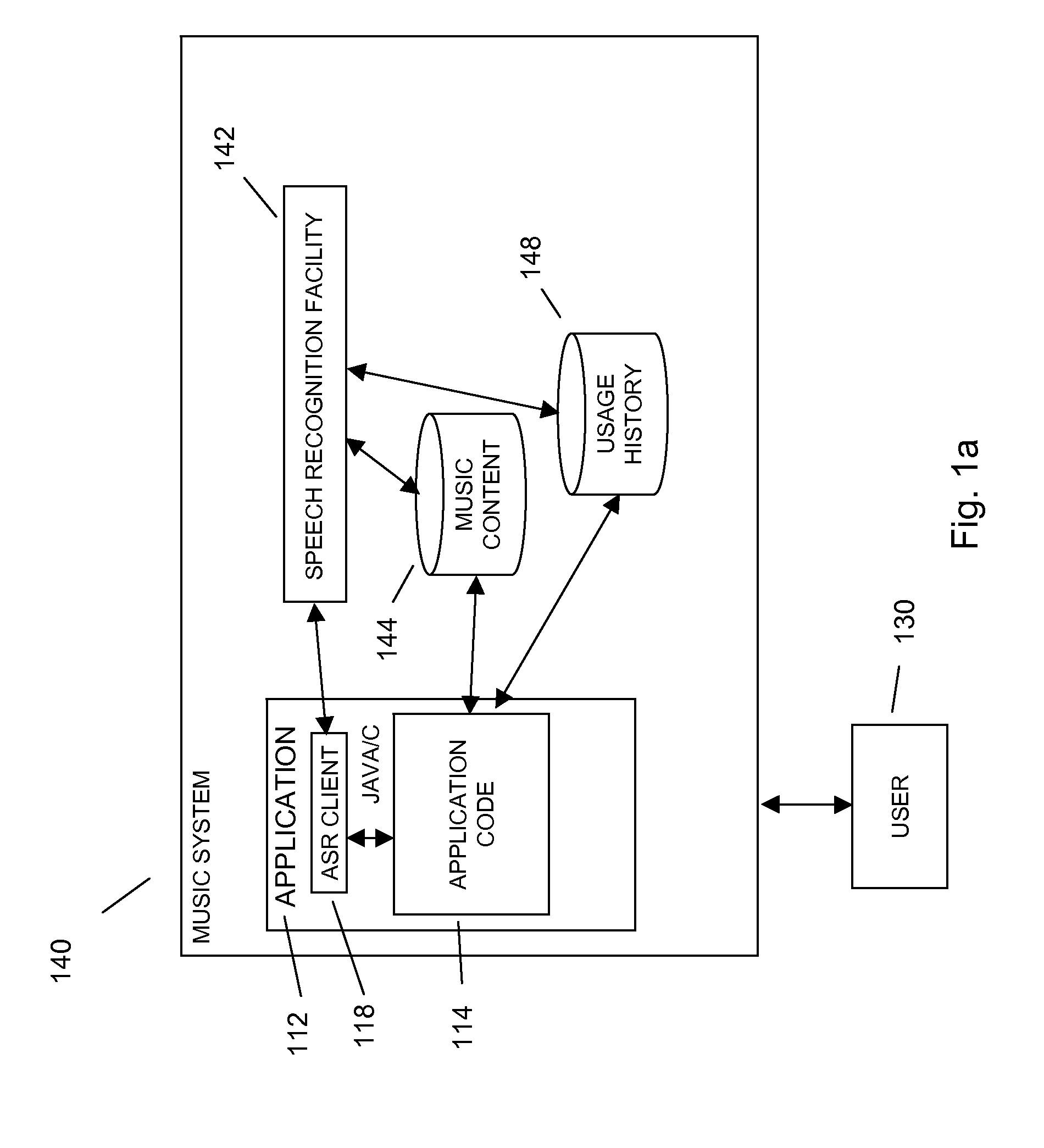

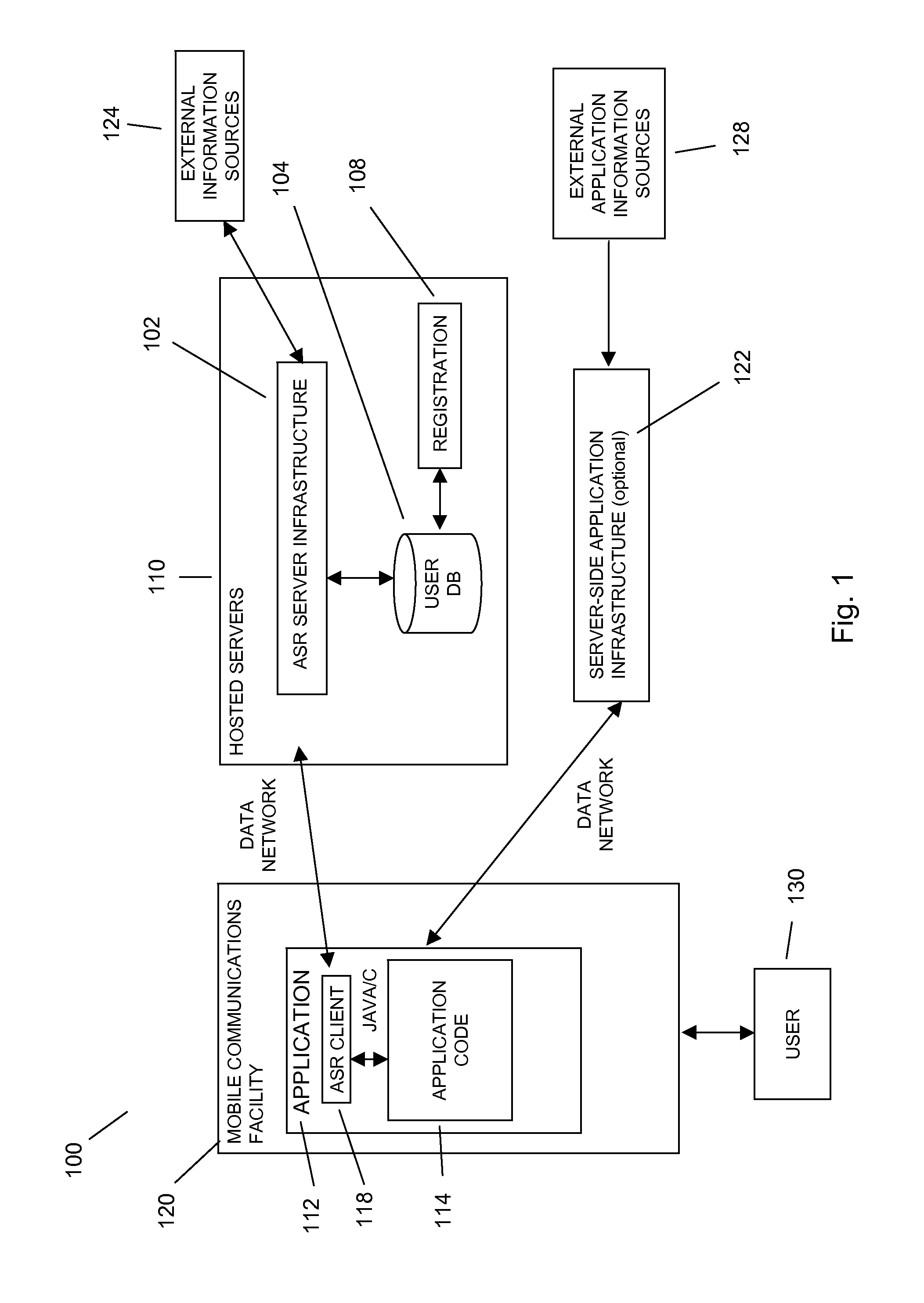

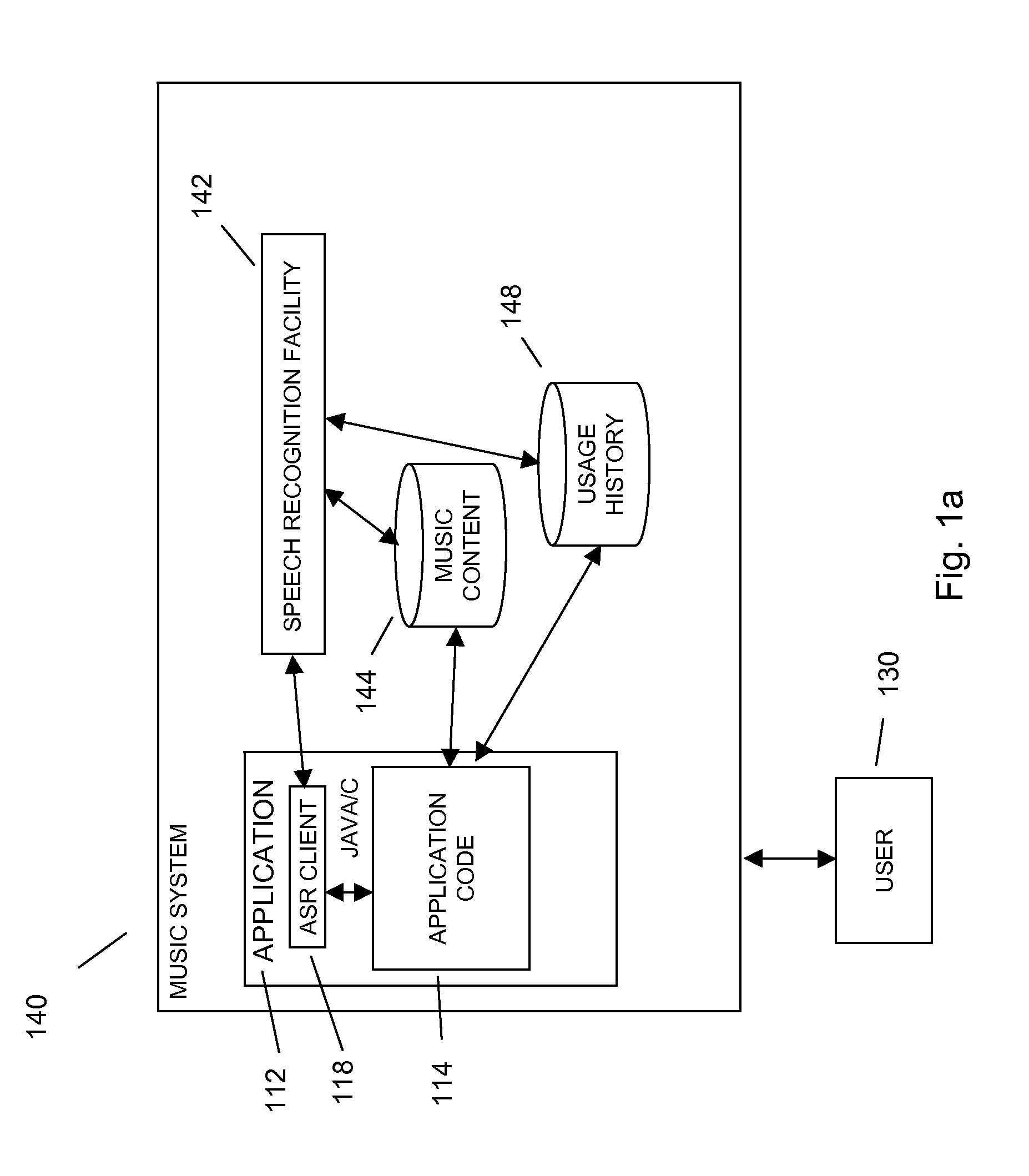

Command and control utilizing ancillary information in a mobile voice-to-speech application

In embodiments of the present invention improved capabilities are described for controlling a mobile communication facility utilizing ancillary information comprising accepting speech presented by a user using a resident capture facility on the mobile communication facility while the user engages an interface that enables a command mode for the mobile communications facility; processing the speech using a resident speech recognition facility to recognize command elements and content elements; transmitting at least a portion of the speech through a wireless communication facility to a remote speech recognition facility; transmitting information from the mobile communication facility to the remote speech recognition facility, wherein the information includes information about a command recognizable by the resident speech recognition facility and at least one of language, location, display type, model, identifier, network provider, and phone number associated with the mobile communication facility; generating speech-to-text results utilizing the remote speech recognition facility based at least in part on the speech and on the information related to the mobile communication facility; and transmitting the text results for use on the mobile communications facility.

Owner:VLINGO CORP

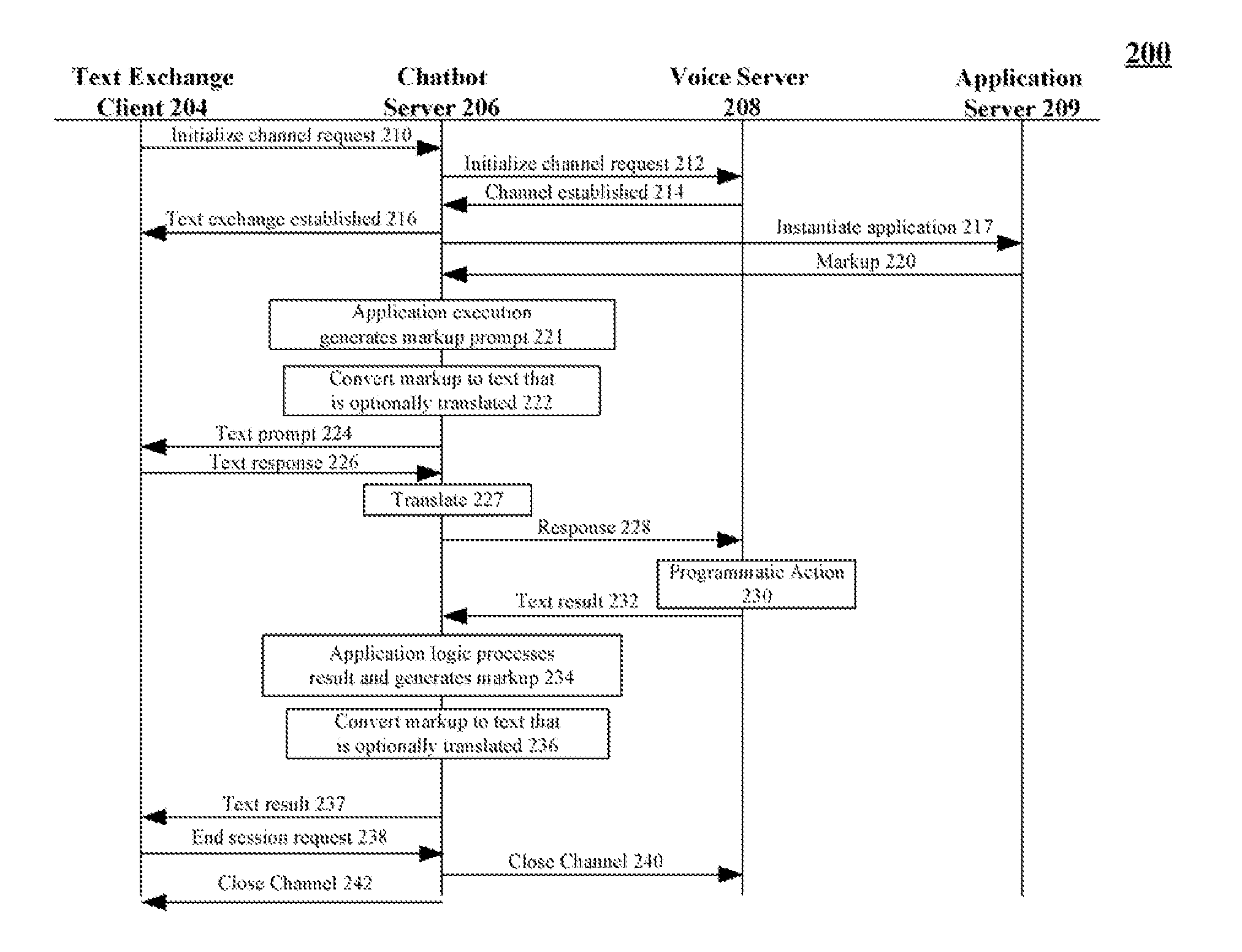

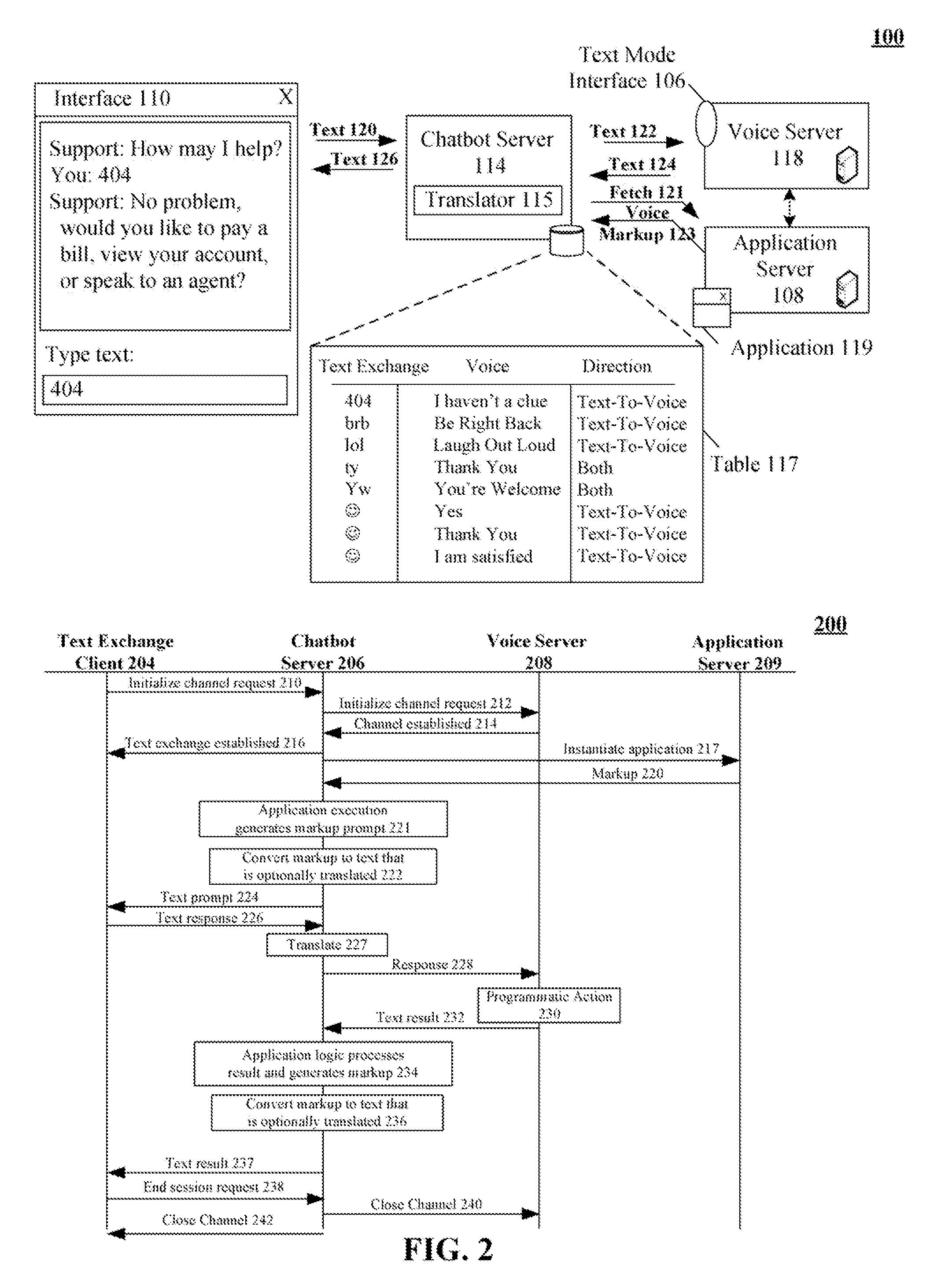

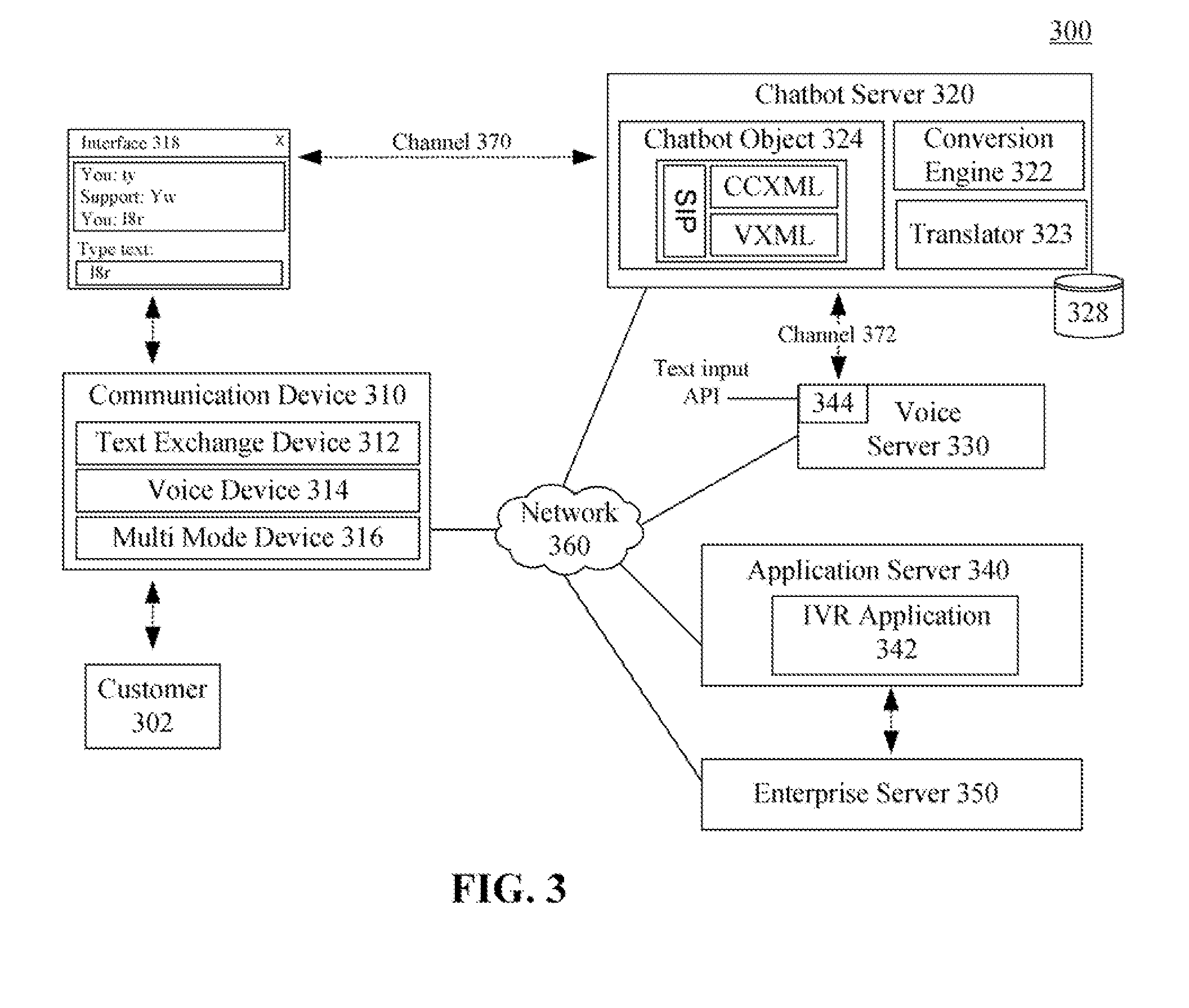

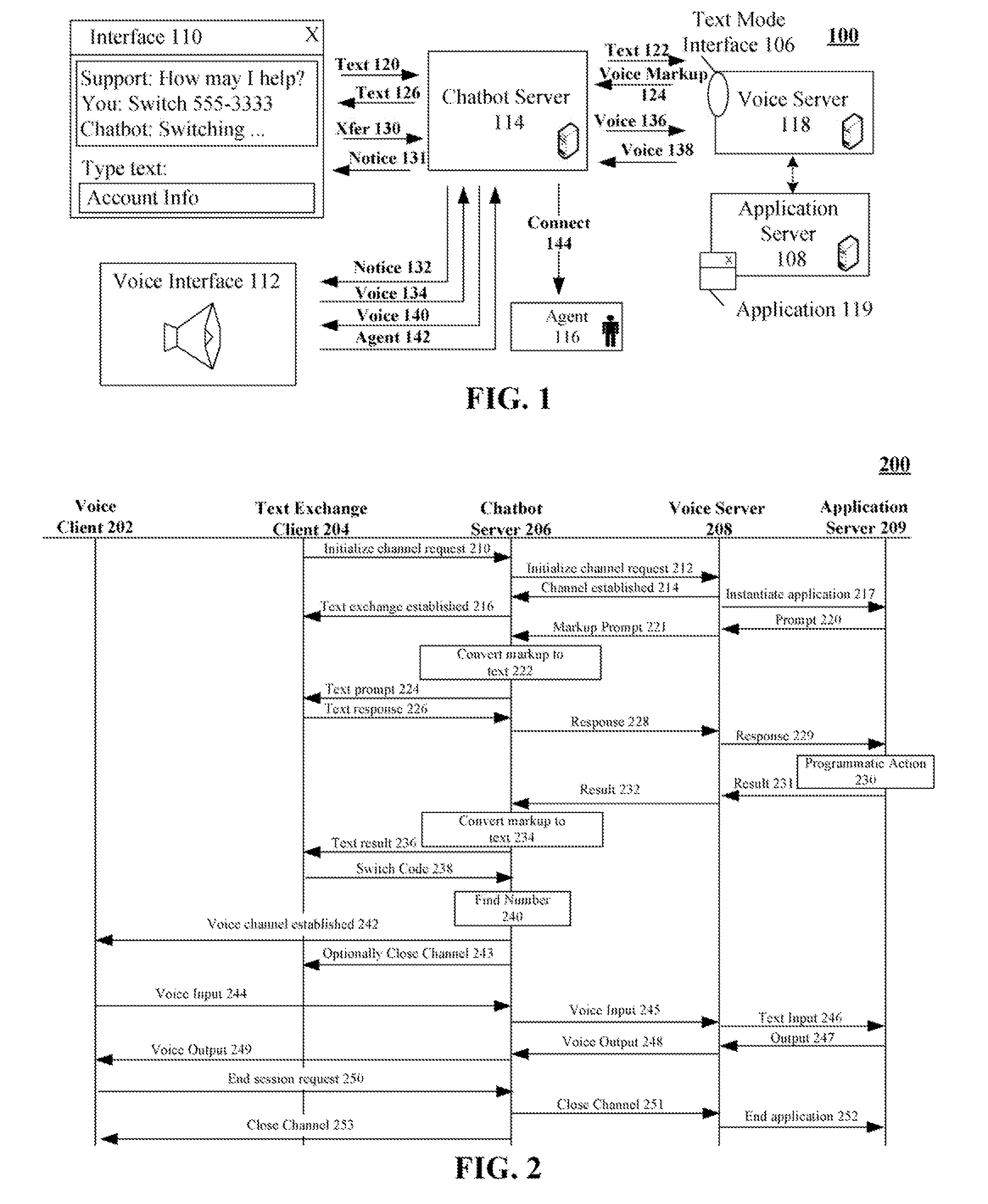

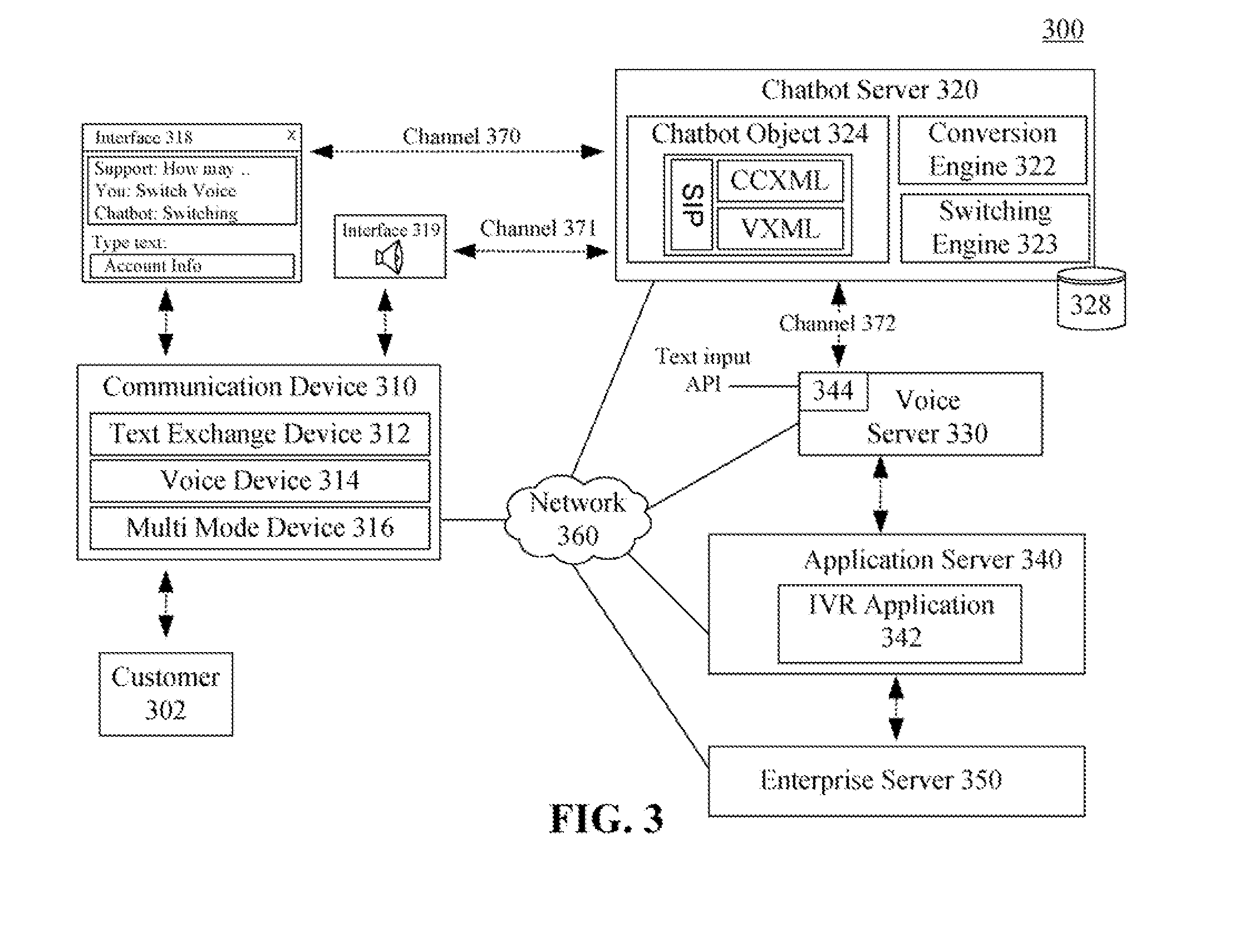

Dialect translator for a speech application environment extended for interactive text exchanges

The present solution includes a real-time automated communication method. In the method, a real-time communication session can be established between a text exchange client and a speech application. A translation table can be identified that includes multiple entries, each entry including a text exchange item and a corresponding conversational translation item. A text exchange message can be received that was entered into a text exchange client. Content in the text exchange message that matches a text exchange item in the translation table can be substituted with a corresponding conversational item. The translated text exchange message can be sent as input to a voice server. Output from the voice server can be used by the speech application, which performs an automatic programmatic action based upon the output.

Owner:NUANCE COMM INC
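The substitution step in the abstract above can be illustrated with a minimal Python sketch. The table entries, the function name `translate_message`, and the whole-word, case-insensitive matching rule are all assumptions made for illustration; the abstract does not specify them.

```python
import re

# Hypothetical translation table: text exchange item -> conversational item.
TRANSLATION_TABLE = {
    "brb": "be right back",
    "thx": "thank you",
    "u": "you",
}


def translate_message(message: str, table: dict) -> str:
    """Replace whole-word text exchange items with their conversational form."""
    if not table:
        return message
    # Longest keys first so a longer item is preferred over a shorter one.
    keys = sorted(table, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(k) for k in keys) + r")\b",
        re.IGNORECASE,
    )
    # Lower-case the match before the lookup because matching ignores case.
    return pattern.sub(lambda m: table[m.group(1).lower()], message)
```

In this sketch the translated string is what would be forwarded to the voice server; words that merely contain a table item (such as "but") are left untouched because of the word-boundary anchors.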

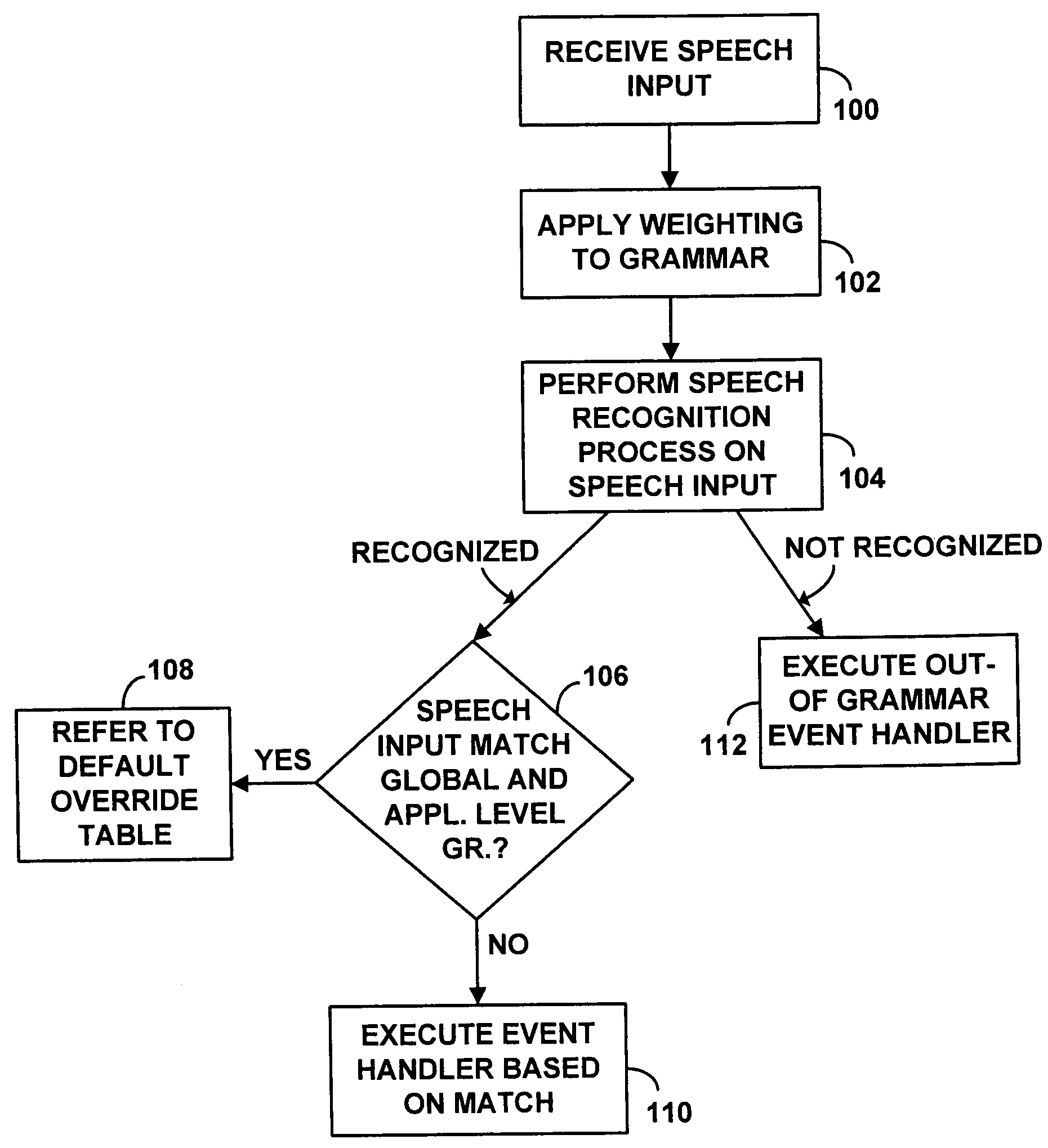

Voice browser with weighting of browser-level grammar to enhance usability

A computer system in the form of a voice command platform includes a voice browser and voice-based applications. The voice browser has global-level grammar elements and the voice applications have application-level grammar and grammar elements. A programming feature is provided by which developers of the voice applications can programmably weigh or weight global-level grammar elements relative to the application-level grammar or grammar elements. As a consequence of the weighting, a speech recognition engine for the voice browser is more likely to accurately recognize voice input from a user. The weighting can be applied on the application as a whole, or at any given state in the application. Also, the weighting can be made to the global level grammar elements as a group, or on an individual basis.

Owner:SPRINT SPECTRUM LLC
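One way to picture the weighting described above is the following Python sketch. It assumes the recognizer produces a confidence score per candidate phrase and that a weight is a simple multiplier on that score; the function `pick_phrase`, its parameters, and the scoring model are hypothetical and are not taken from the patent.

```python
from typing import Dict, Optional, Set


def pick_phrase(
    scores: Dict[str, float],
    app_grammar: Set[str],
    global_grammar: Set[str],
    group_weight: float = 1.0,
    element_weights: Optional[Dict[str, float]] = None,
) -> Optional[str]:
    """Return the best-scoring in-grammar phrase after weighting global elements."""
    element_weights = element_weights or {}
    best_phrase, best_score = None, float("-inf")
    for phrase, score in scores.items():
        if phrase in app_grammar:
            weighted = score  # application-level grammar is the reference
        elif phrase in global_grammar:
            # An individual weight, when given, overrides the group weight.
            weighted = score * element_weights.get(phrase, group_weight)
        else:
            continue  # out-of-grammar hypotheses are ignored
        if weighted > best_score:
            best_phrase, best_score = phrase, weighted
    return best_phrase
```

Calling the function with a different `group_weight` at each dialog state would correspond to weighting "at any given state in the application", while `element_weights` corresponds to weighting global elements on an individual basis.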

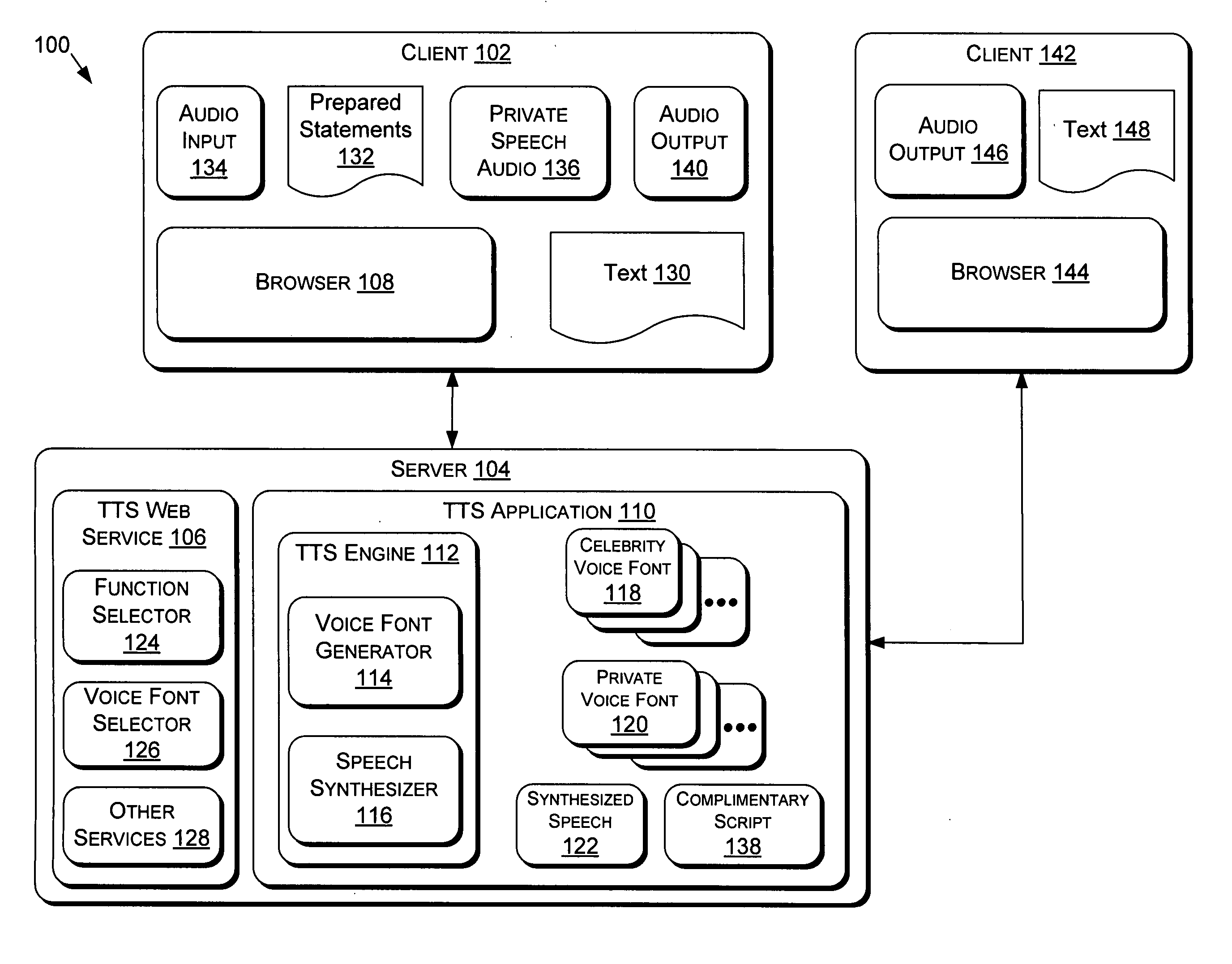

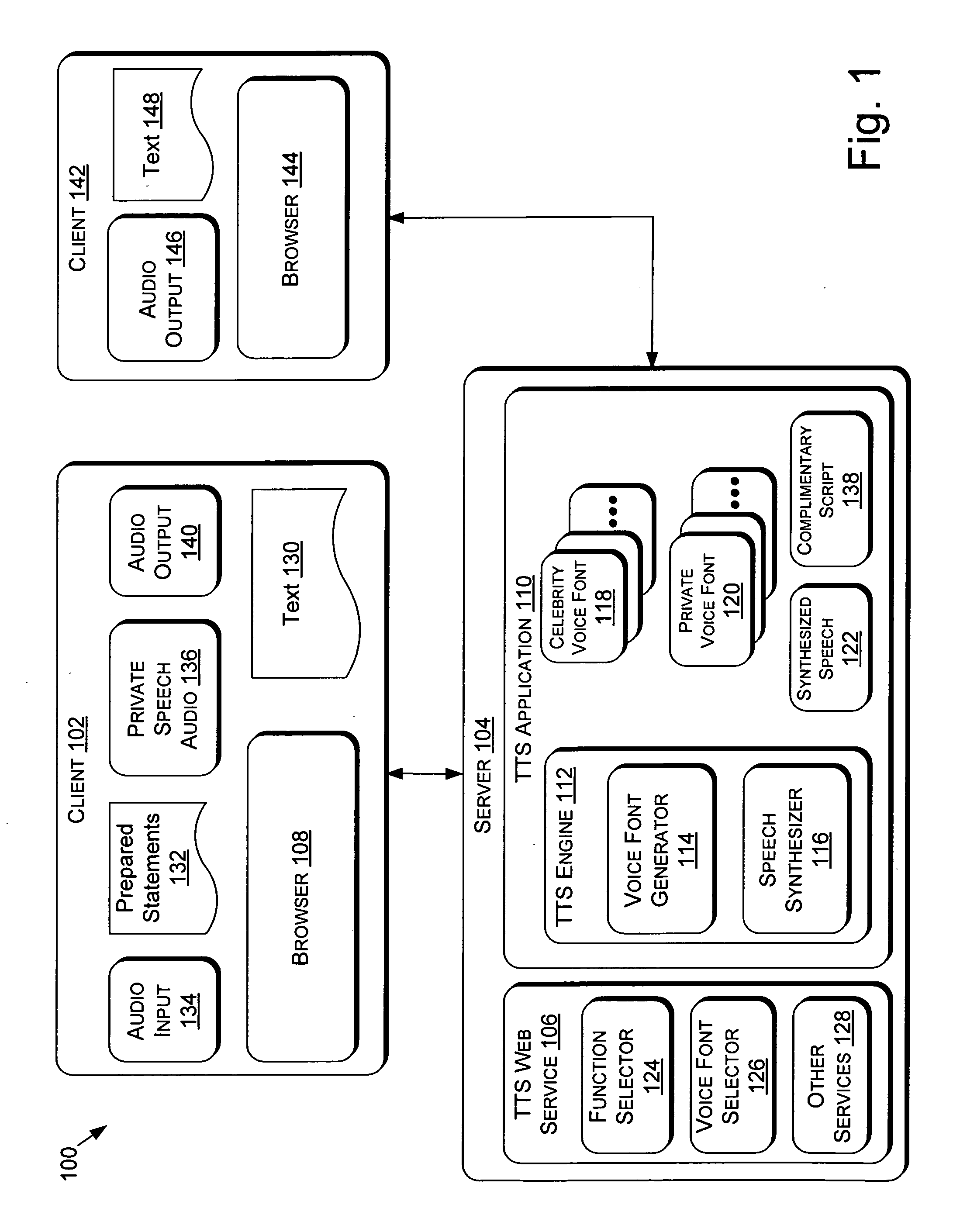

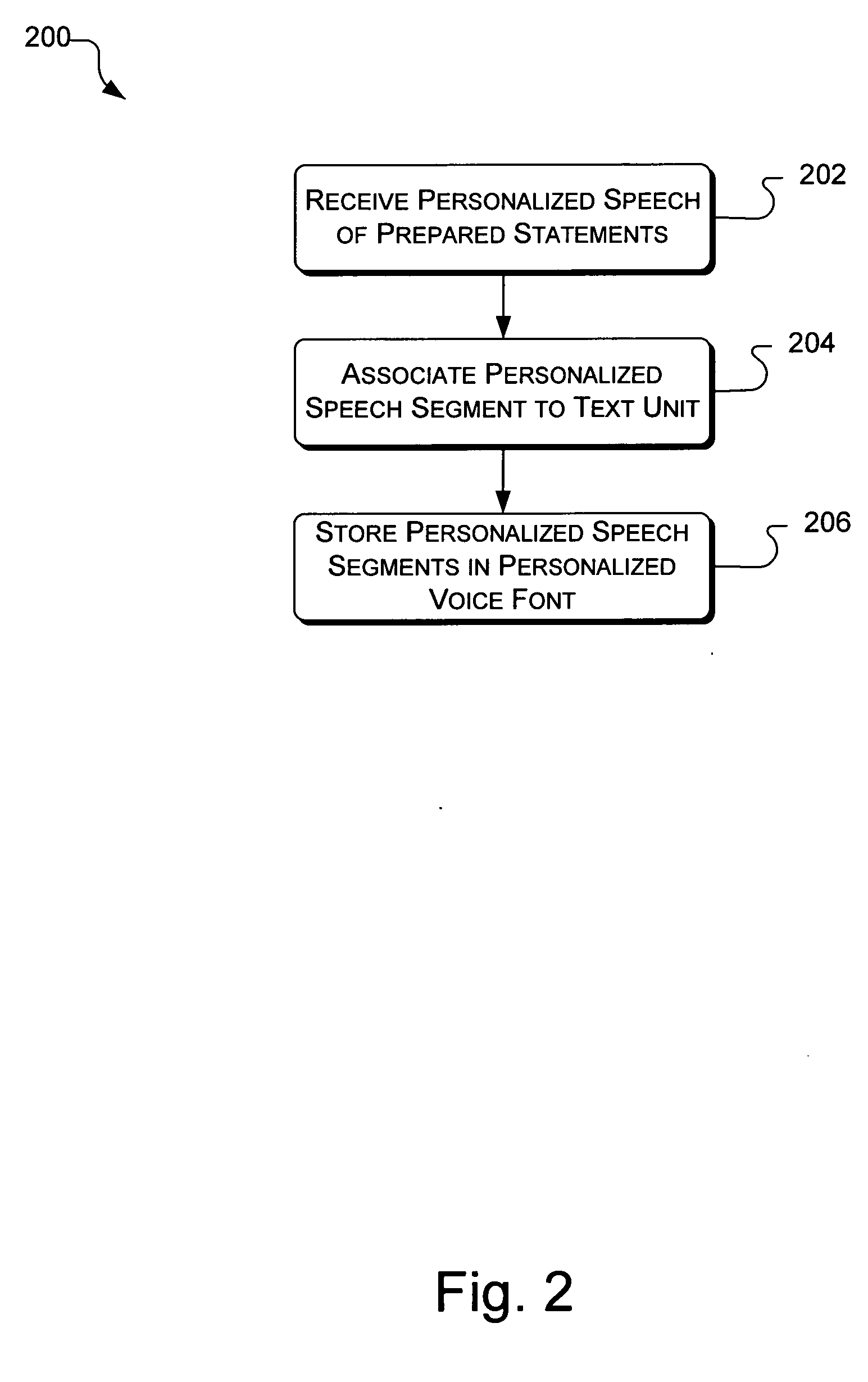

Providing personalized voice font for text-to-speech applications

A method for synthesizing speech from text includes receiving one or more waveforms characteristic of a voice of a person selected by a user, generating a personalized voice font based on the one or more waveforms, and delivering the personalized voice font to the user's computer, whereby speech can be synthesized from text, the speech being in the voice of the selected person, the speech being synthesized using the personalized voice font. A system includes a text-to-speech (TTS) application operable to generate a voice font based on speech waveforms transmitted from a client computer remotely accessing the TTS application.

Owner:MICROSOFT TECH LICENSING LLC

Handling of speech recognition in a declarative markup language

Inactive | US6941268B2 | Reliably and simply determining | Easy to solve | Automatic exchanges | Speech recognition | Speech applications | Application software

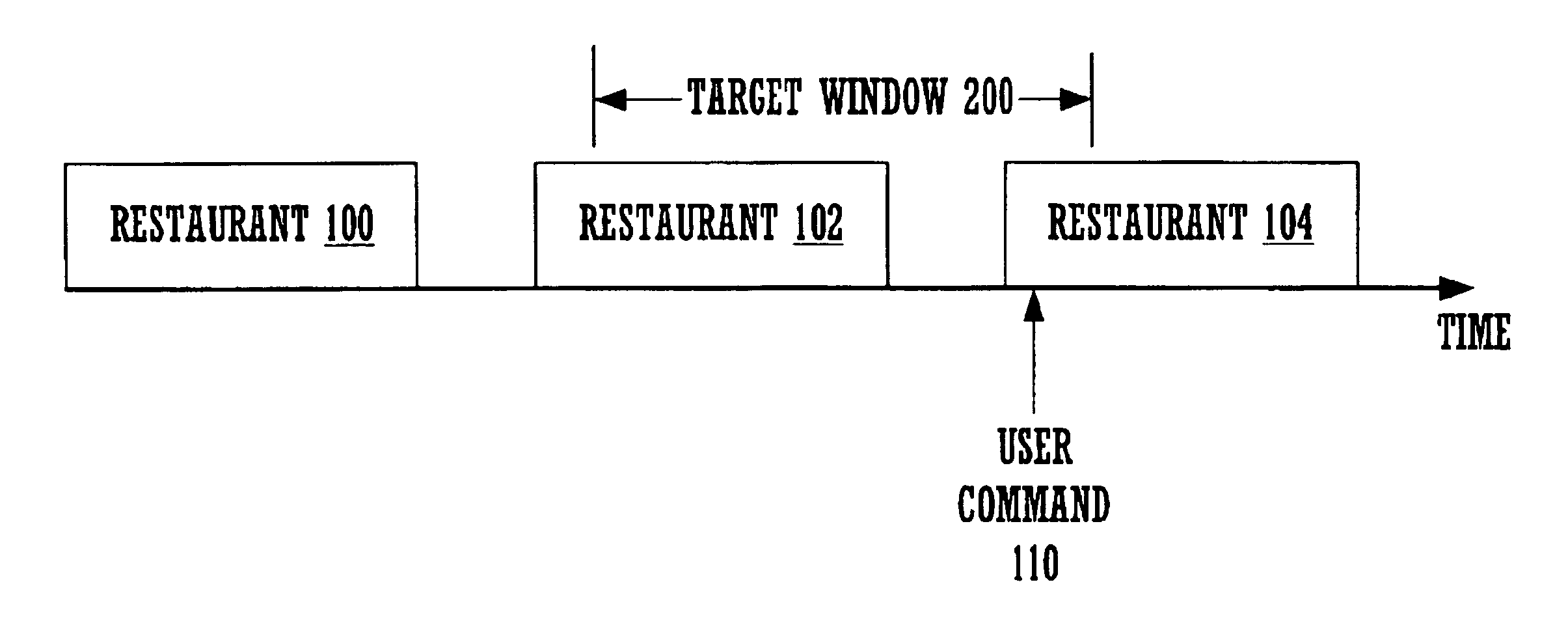

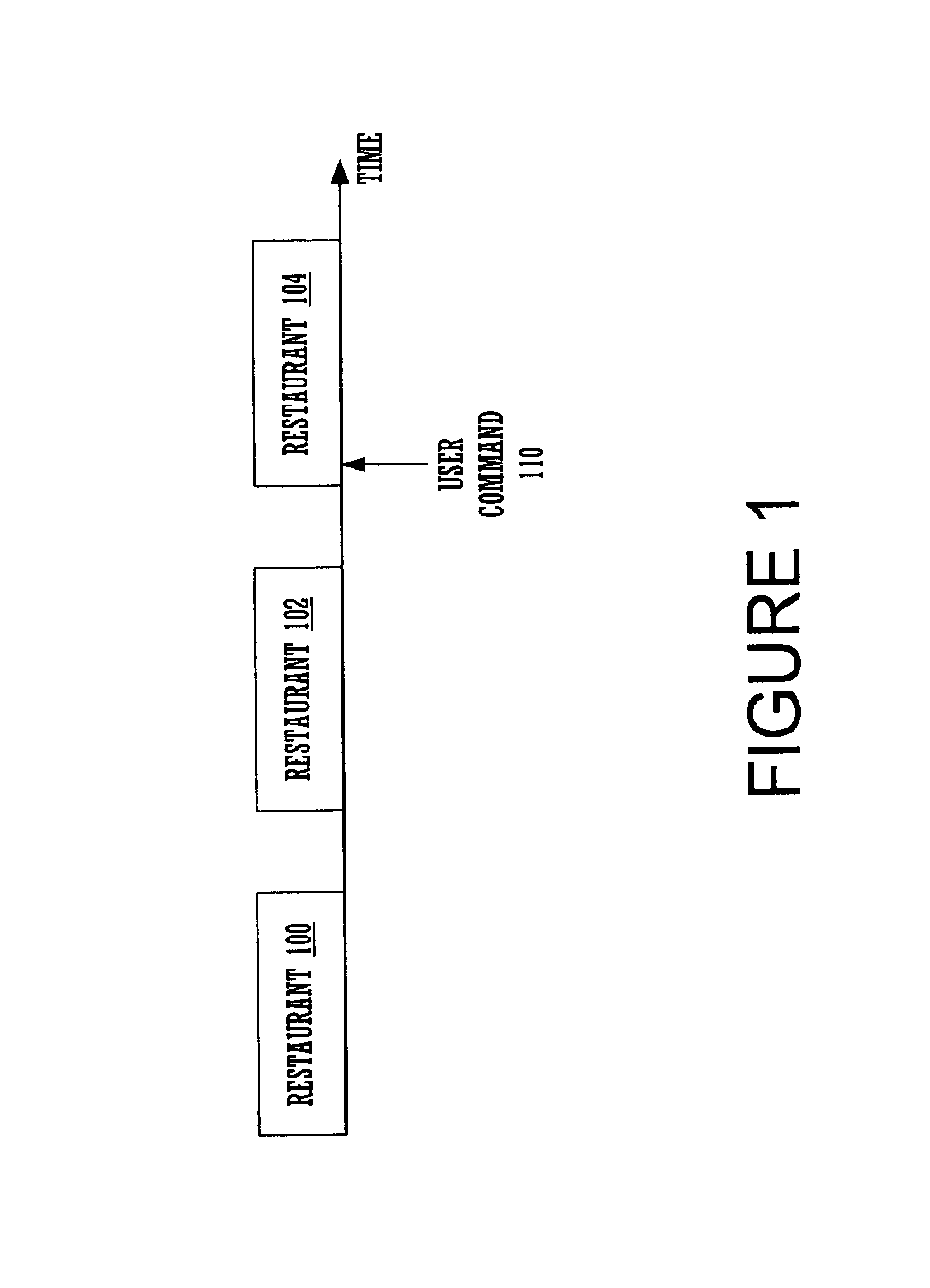

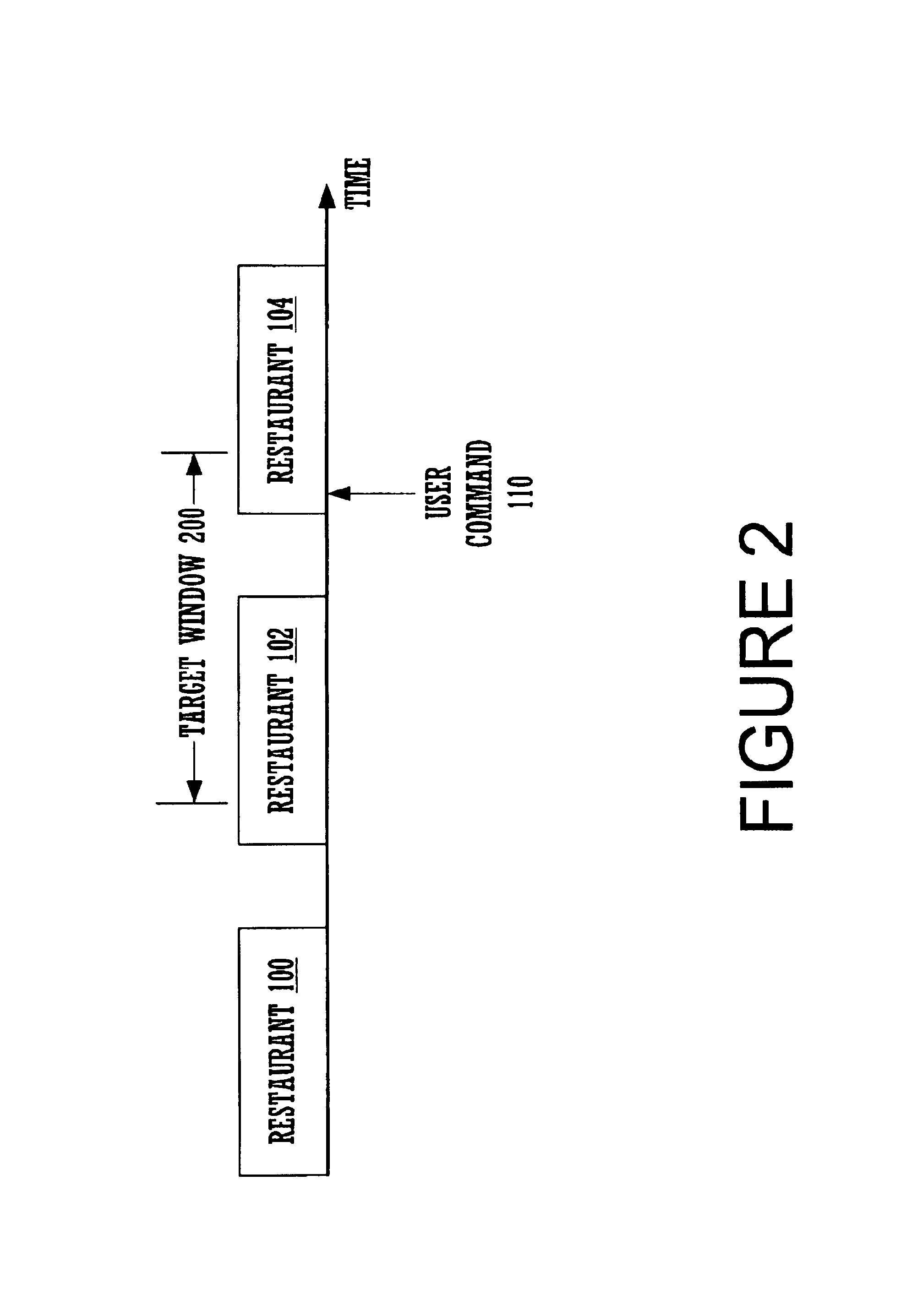

Declarative markup languages for speech applications such as VoiceXML are becoming more prevalent programming modalities for describing speech applications. Present declarative markup languages for speech applications model the running speech application as a state machine with the program specifying the transitions amongst the states. These languages can be extended to support a marker-semantic to more easily solve several problems that are otherwise not easily solved. In one embodiment, a partially overlapping target window is implemented using a mark semantic. Other uses include measurement of user listening time, detection and avoidance of errors, and better resumption of playback after a false barge in.

Owner:MICROSOFT TECH LICENSING LLC

System and method for determining utterance context in a multi-context speech application

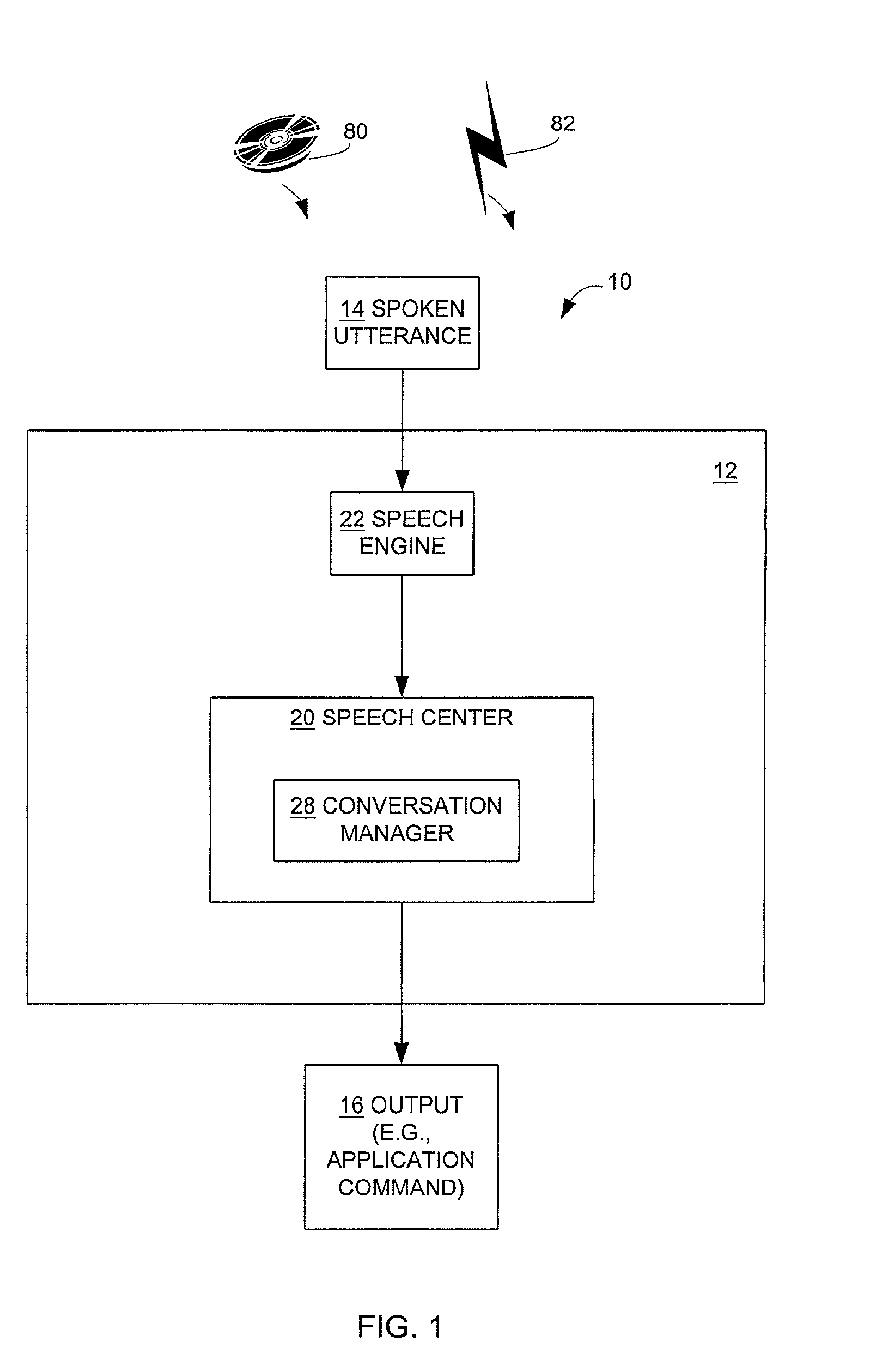

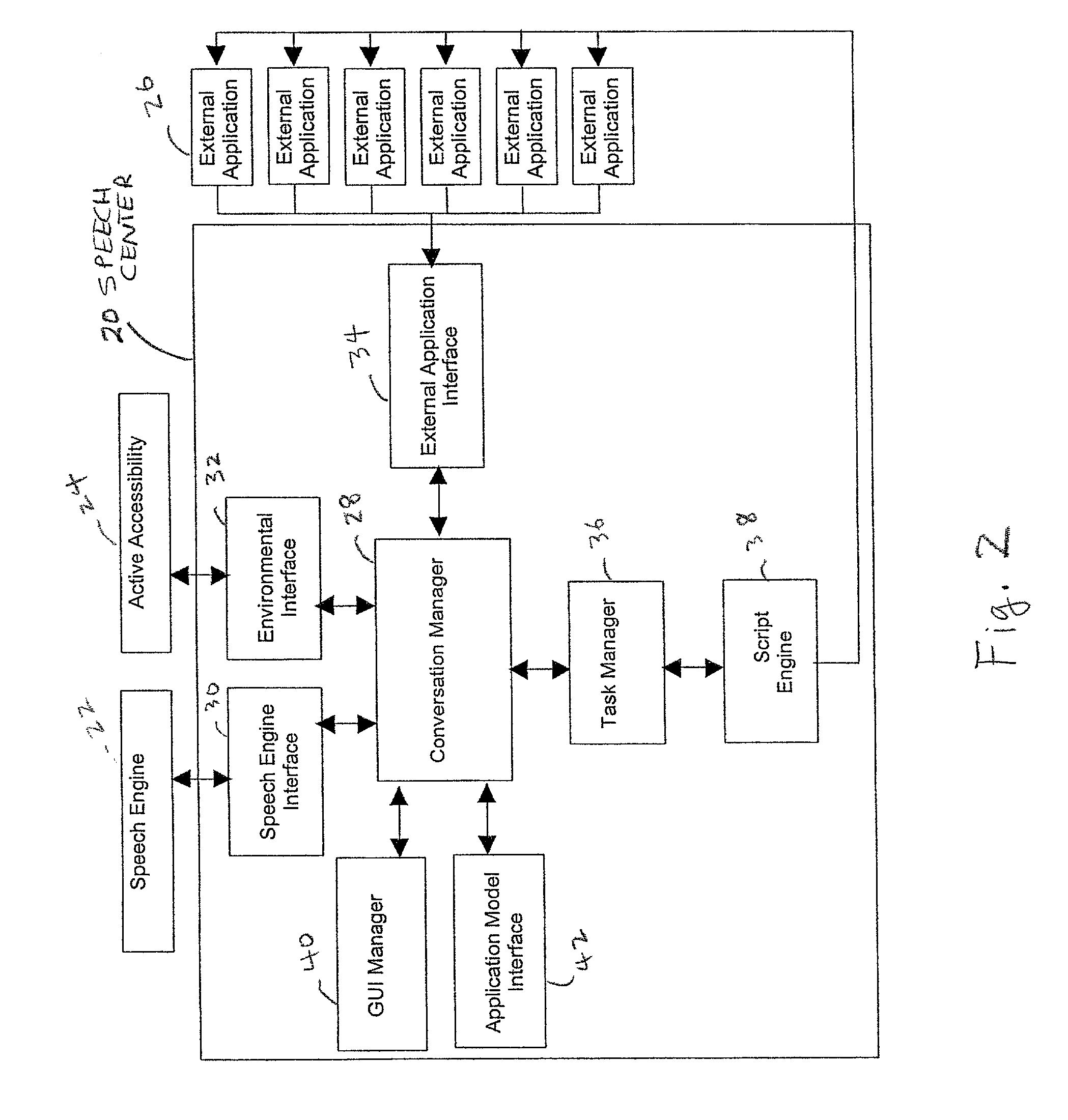

Inactive | US7085723B2 | Avoids time and expense and footprint | Speech recognition | Session management | Computerized system

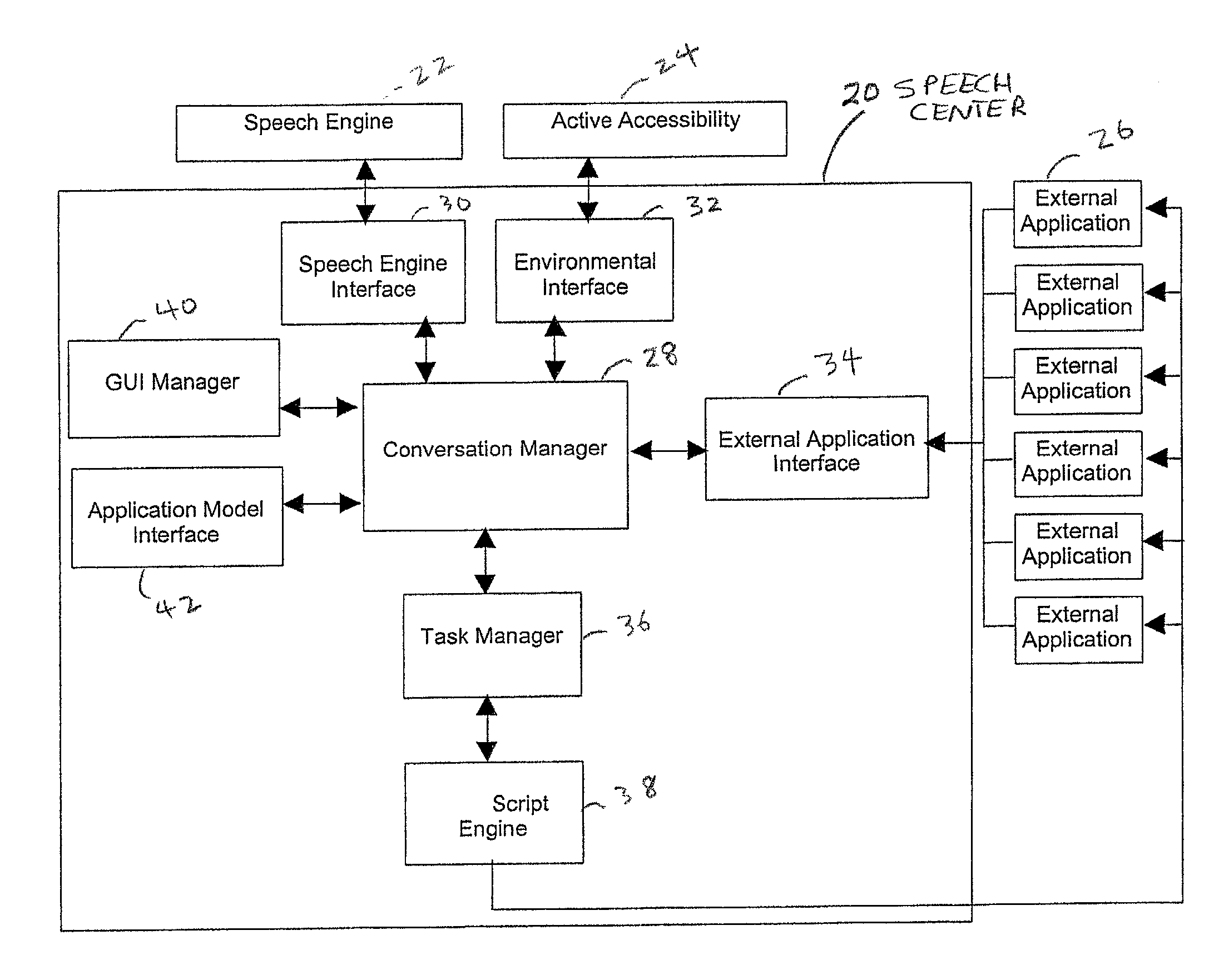

A speech center coordinates speech services for a number of speech-enabled applications performing on a computer system. The speech center includes a conversation manager that manages conversations between a user and the speech-enabled applications. The conversation manager includes a context manager that maintains a context list of speech-enabled applications that the user has accessed. If the user speaks an utterance, the context manager determines which speech-enabled application should receive a translation of the utterance from the context list, such as by determining the most recently accessed application.

Owner:NUANCE COMM INC
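The routing rule in the abstract above (prefer the most recently accessed application) might look like the following Python sketch. The class and method names, and the idea that each application supplies an `accepts` predicate standing in for its grammar, are assumptions made for illustration.

```python
from typing import Callable, Dict, List, Optional


class ContextManager:
    """Keeps speech-enabled applications ordered by most recent access."""

    def __init__(self) -> None:
        self._accepts: Dict[str, Callable[[str], bool]] = {}
        self._context_list: List[str] = []  # most recently accessed first

    def register(self, app: str, accepts: Callable[[str], bool]) -> None:
        # `accepts` is a hypothetical stand-in for the application's grammar.
        self._accepts[app] = accepts

    def record_access(self, app: str) -> None:
        if app not in self._accepts:
            raise KeyError(f"unregistered application: {app}")
        if app in self._context_list:
            self._context_list.remove(app)
        self._context_list.insert(0, app)  # move to the front of the list

    def route(self, utterance: str) -> Optional[str]:
        # Walk the context list from most to least recently accessed.
        for app in self._context_list:
            if self._accepts[app](utterance):
                return app
        return None
```

When two applications could both handle an utterance, this sketch resolves the ambiguity in favour of whichever the user touched last, which is one reading of "determining the most recently accessed application".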

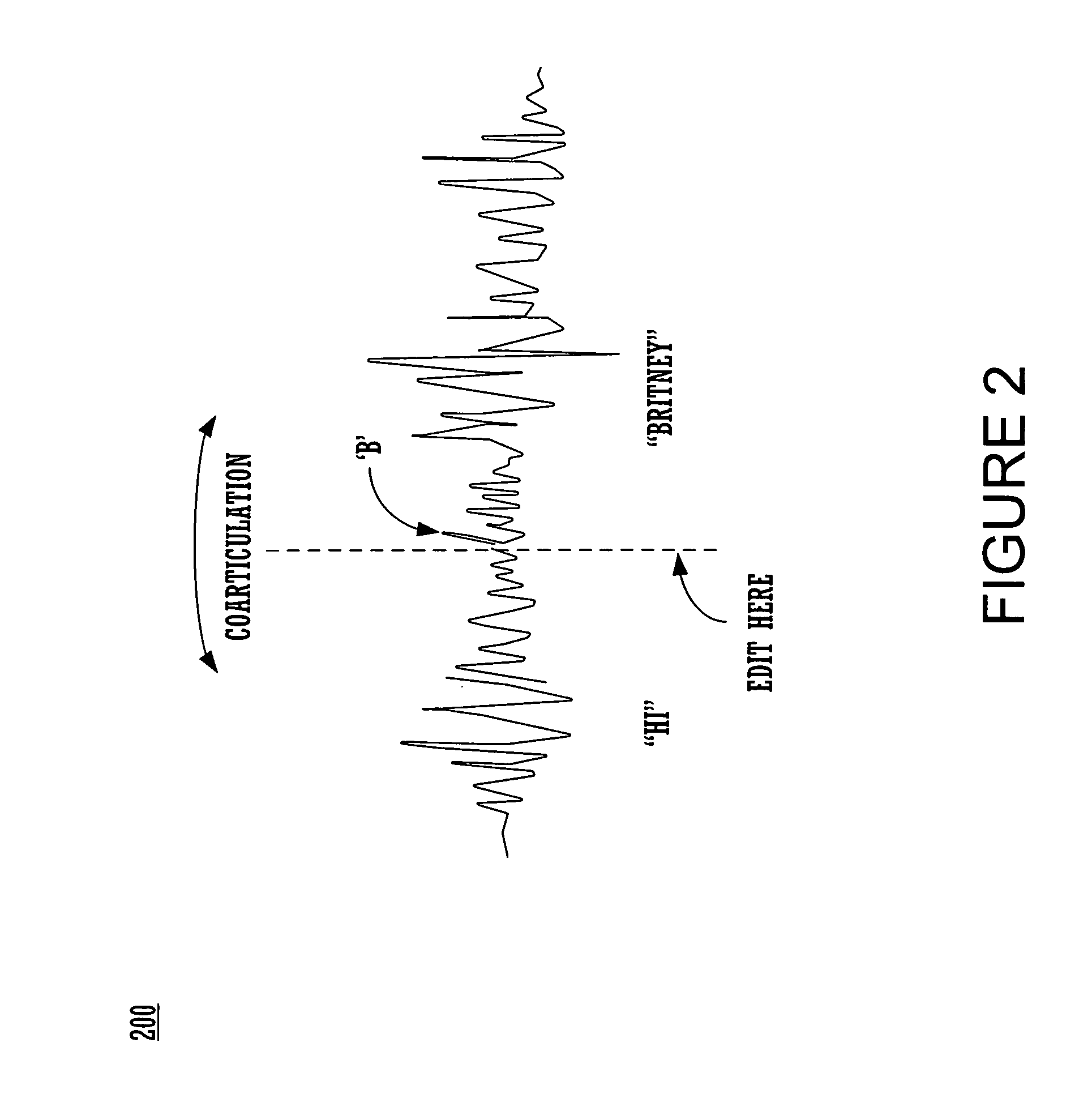

Coarticulated concatenated speech

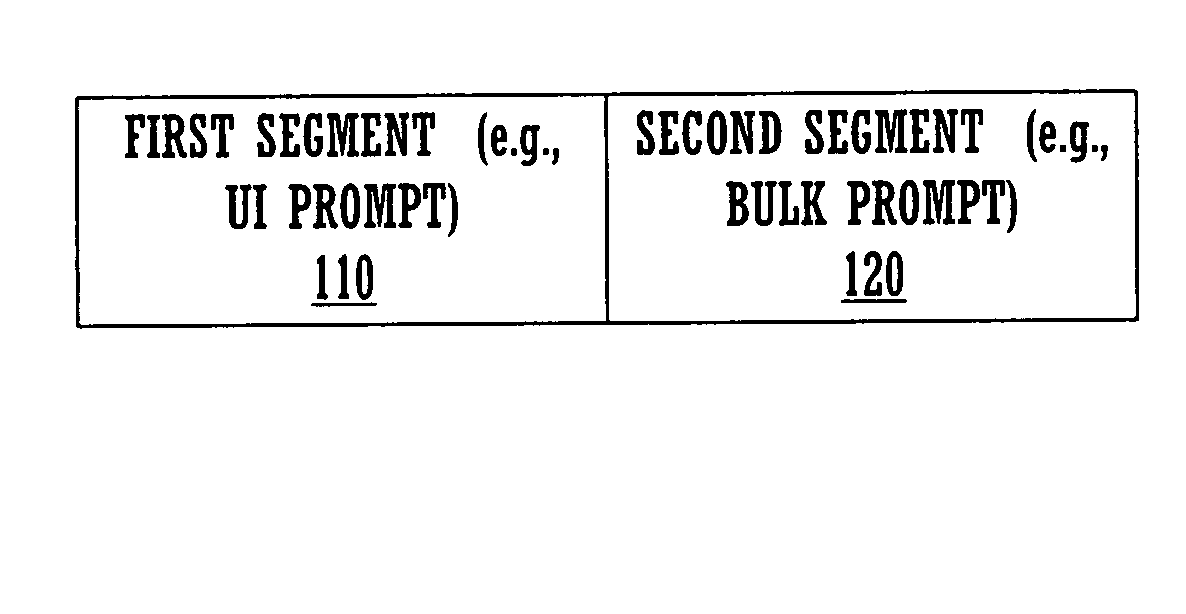

Described are methods and systems for reducing the audible gap in concatenated recorded speech, resulting in more natural sounding speech in voice applications. The sound of concatenated, recorded speech is improved by also coarticulating the recorded speech. The resulting message is smooth, natural sounding and lifelike. Existing libraries of regularly recorded bulk prompts can be used by coarticulating the user interface prompt occurring just before the bulk prompt. Applications include phone-based applications as well as non-phone-based applications.

Owner:MICROSOFT TECH LICENSING LLC

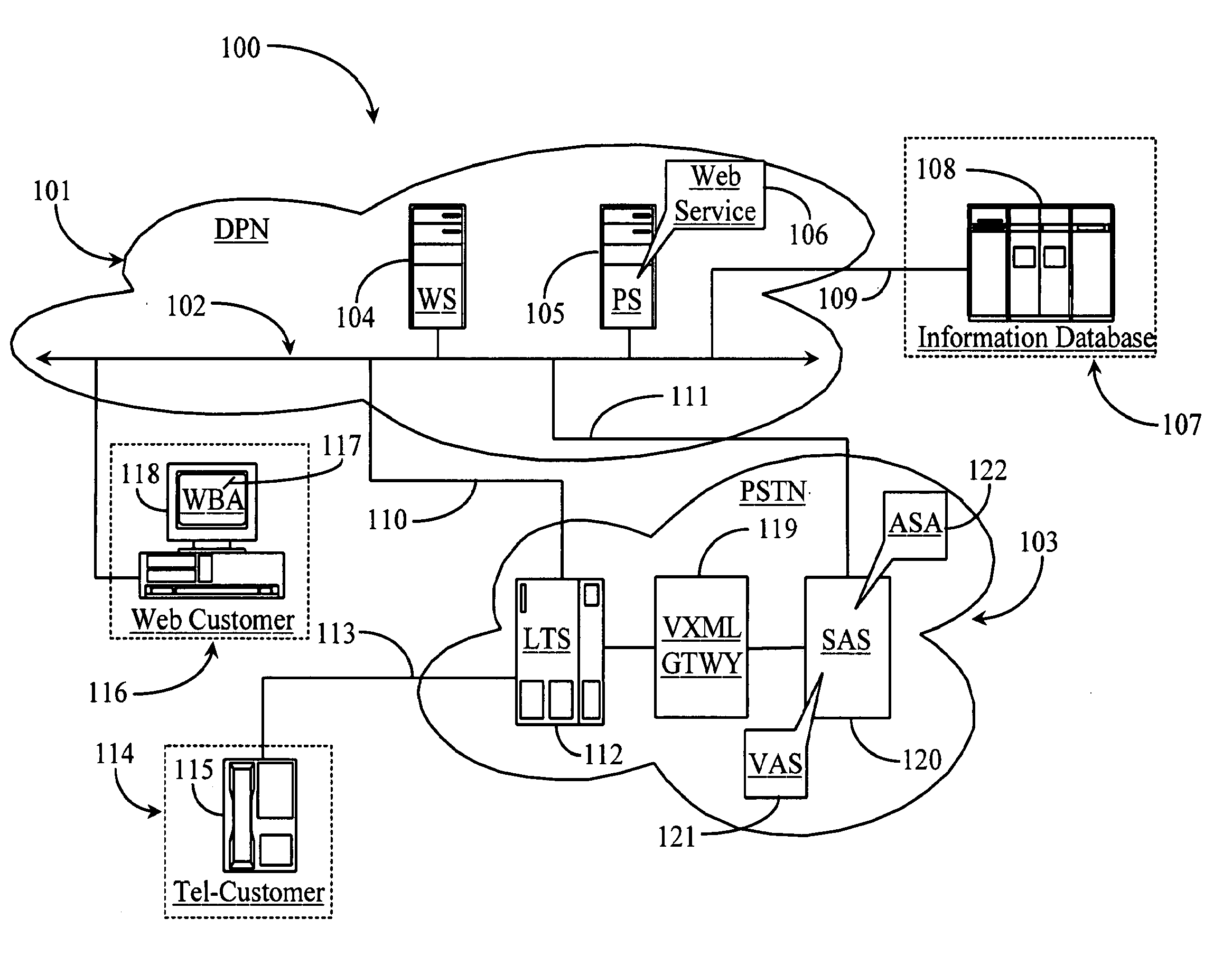

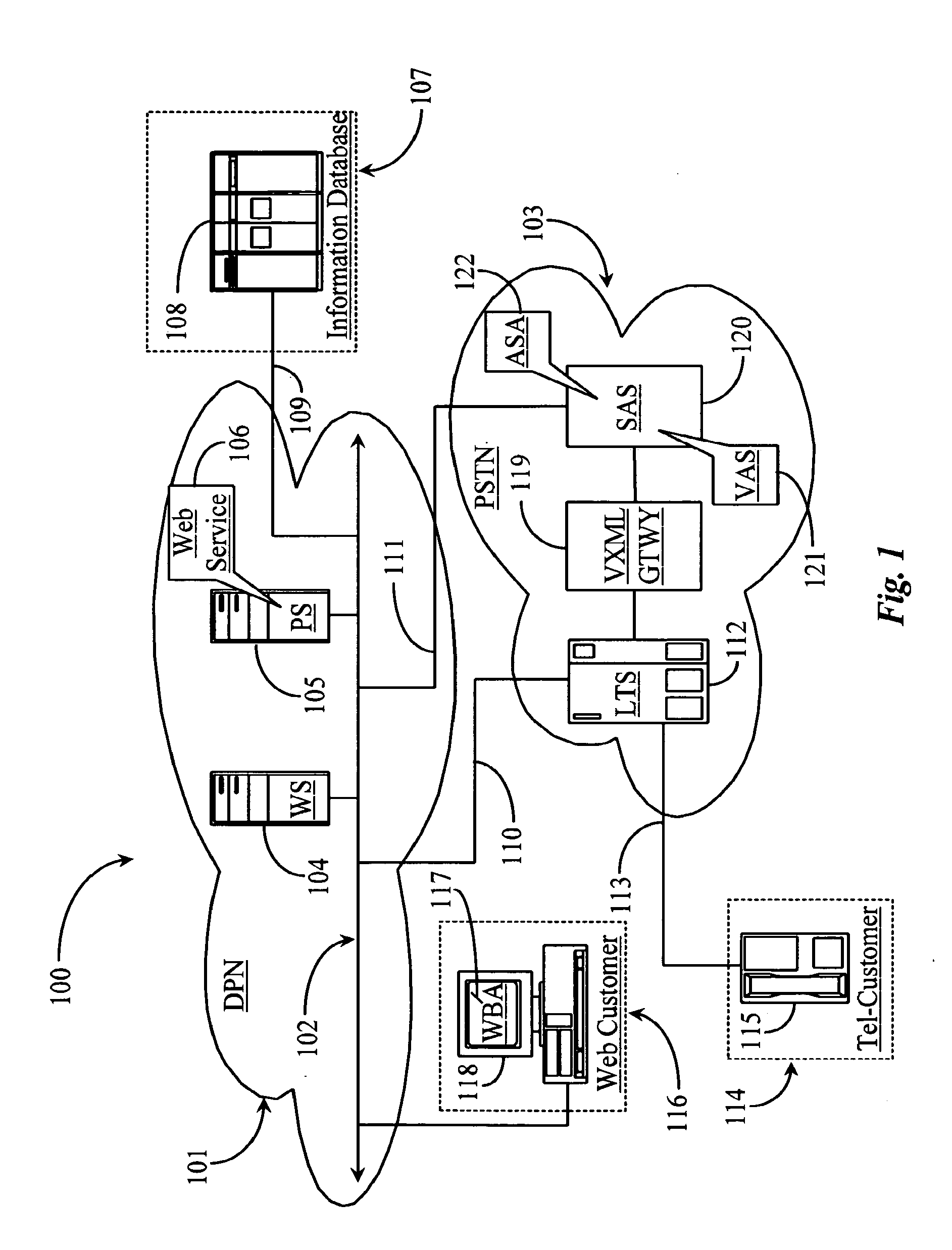

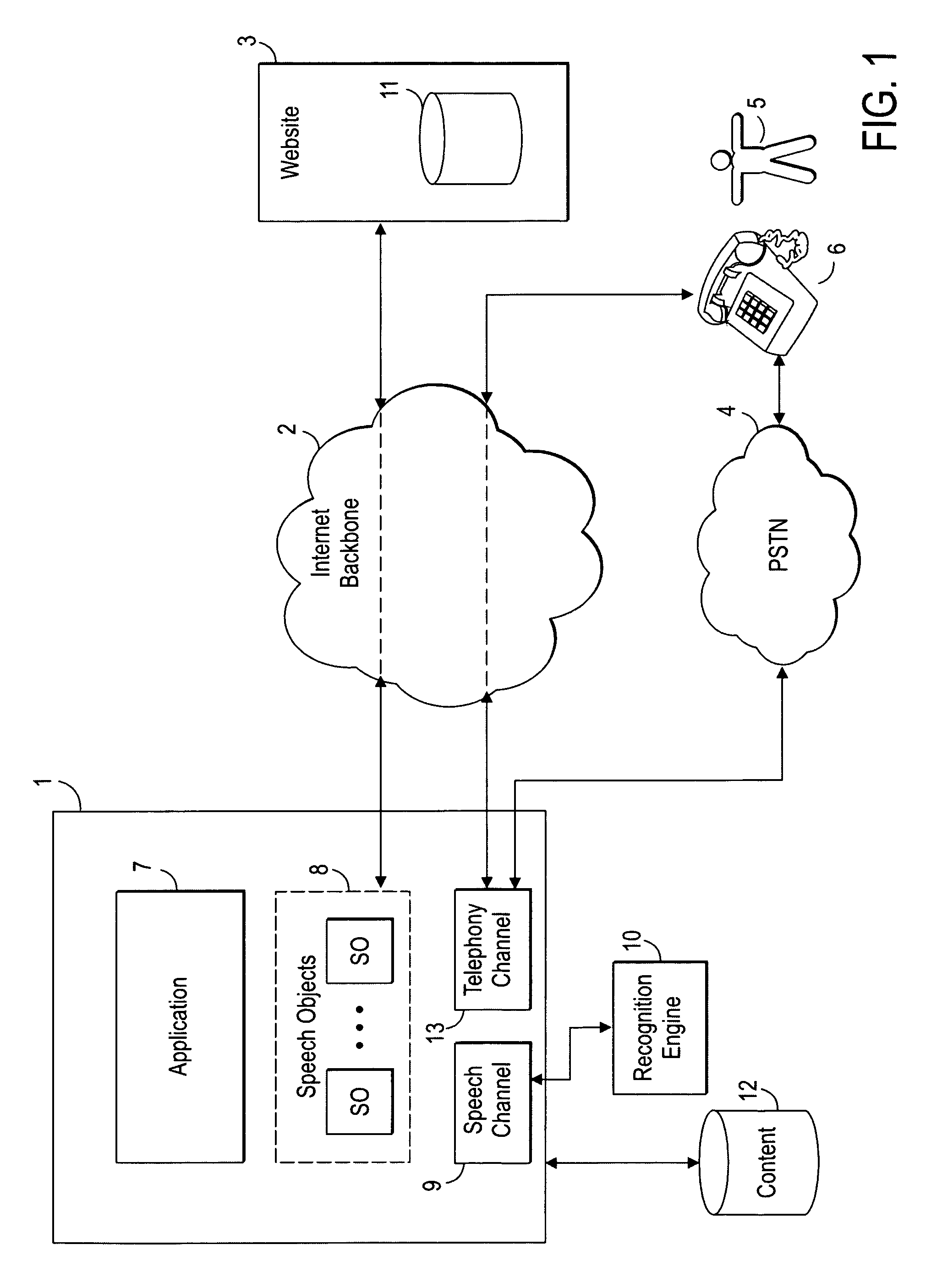

System and methods for dynamic integration of a voice application with one or more Web services

A system is provided for leveraging a Web service to provide access to information for telephone users. The system includes a first network service node for hosting the Web service, an information database accessible from the service node, a voice terminal connected to the first service node, and a service adaptor for integrating a voice application executable from the voice terminal to the Web service. In a preferred aspect, the service adaptor subscribes to data published by the Web service and creates code and functional modules based on that data and uses the created components to facilitate creation of a voice application or to update an existing voice application to provide access to and leverage of the Web service to telephone callers.

Owner:APPTERA

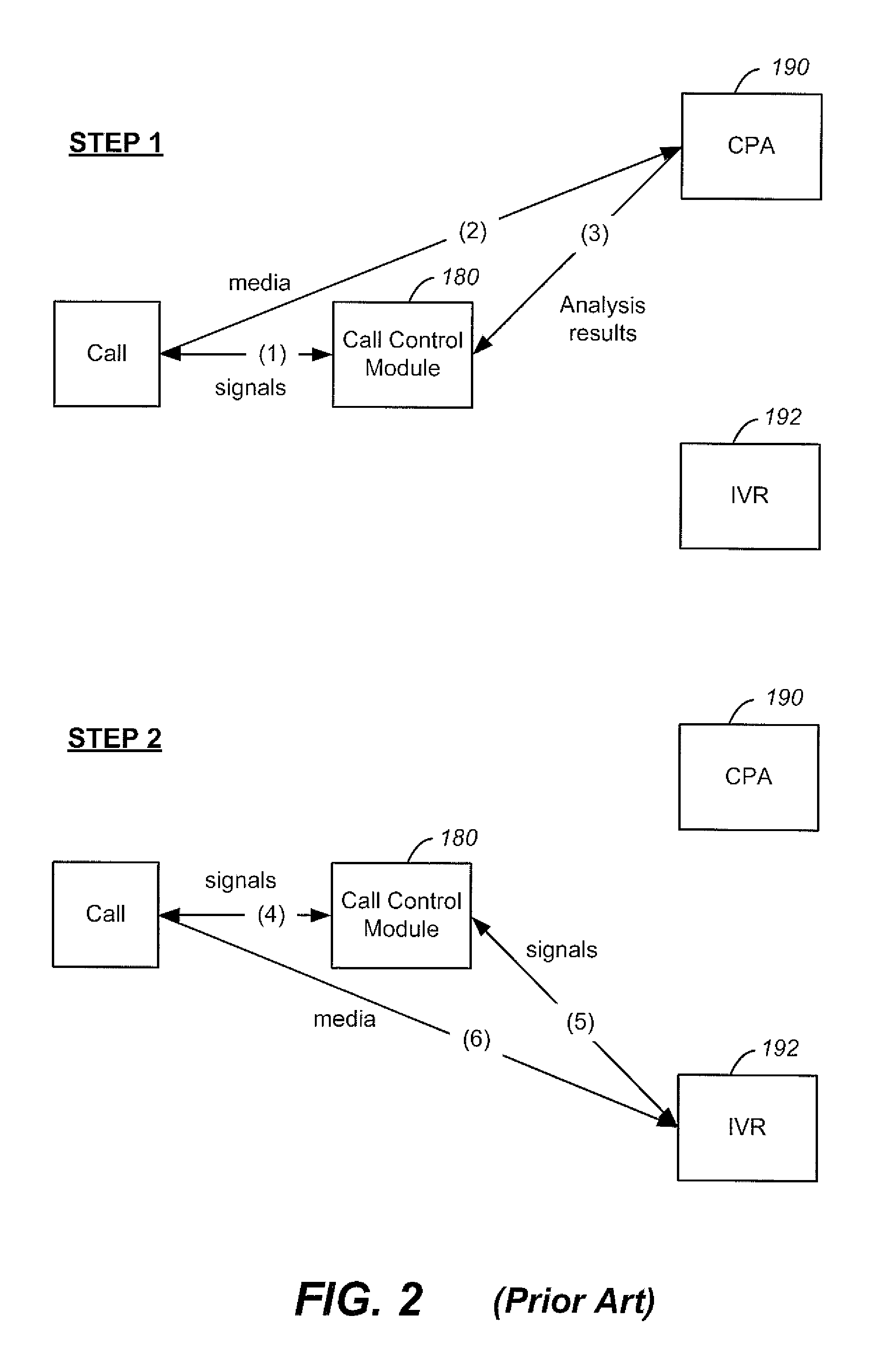

System and Method for Dynamic Call-Progress Analysis and Call Processing

Active | US20090052641A1 | Minimum delay | Automatic call-answering/message-recording/conversation-recording | Automatic exchanges | Speech applications | Speech sound

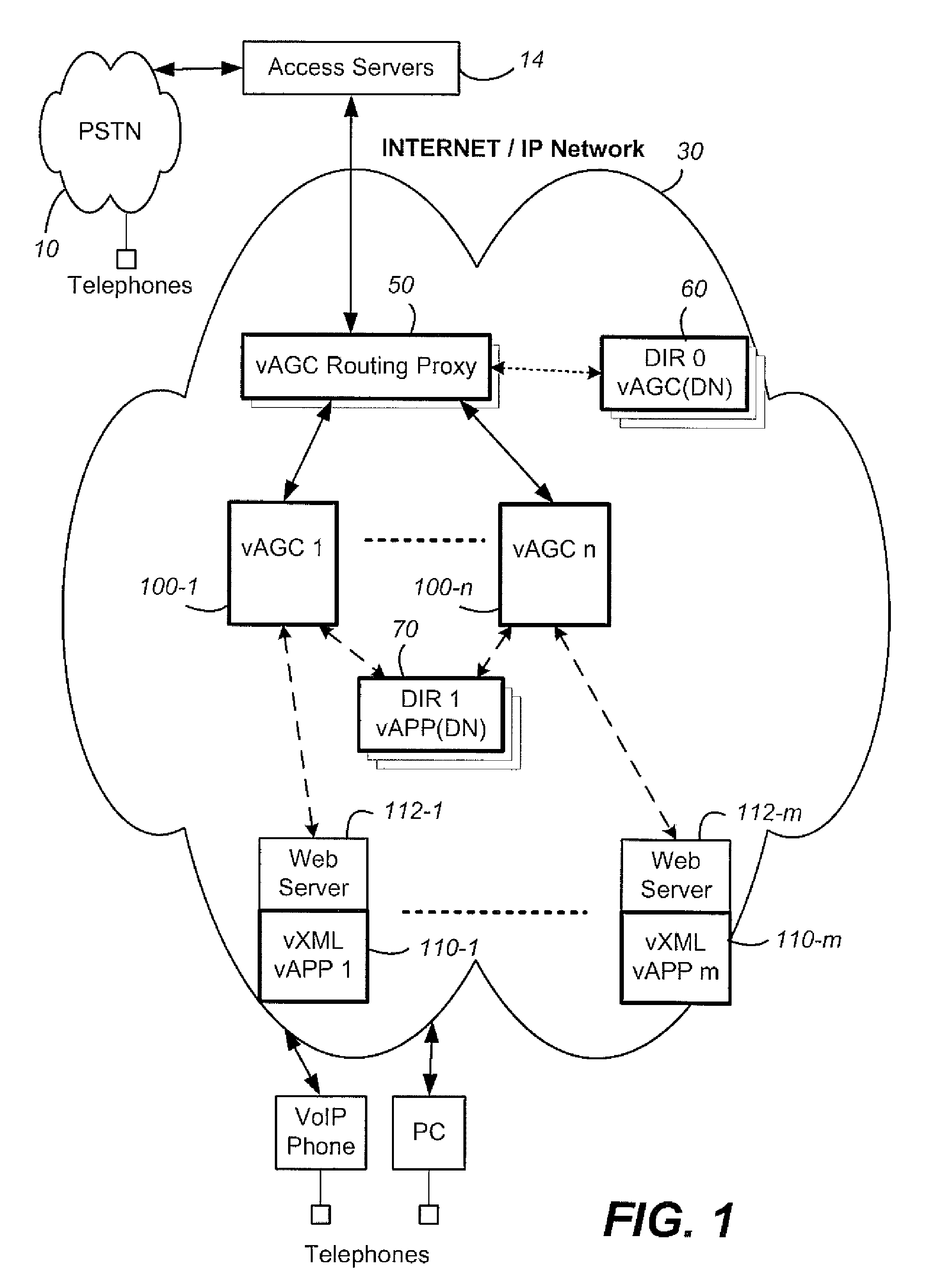

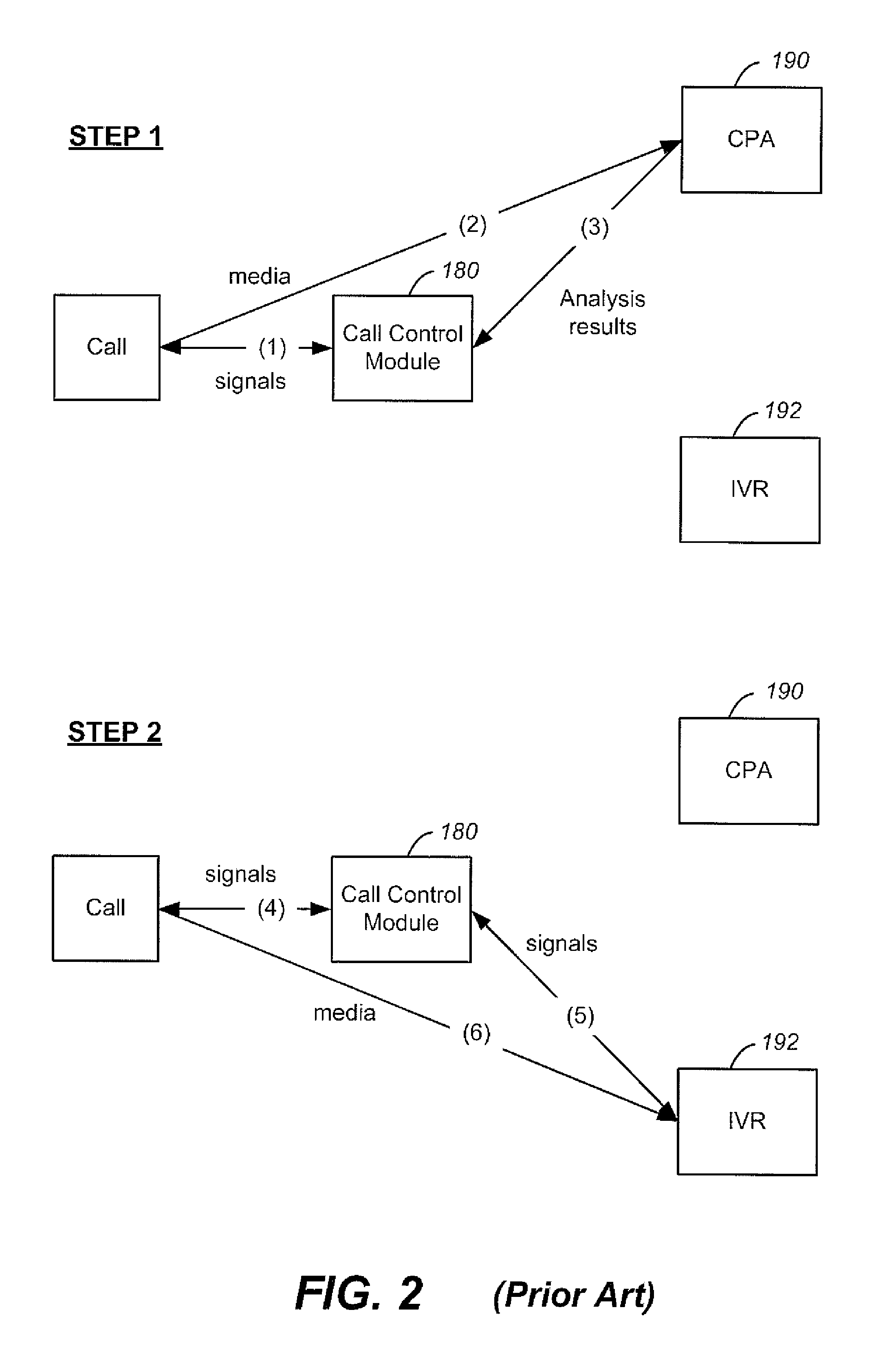

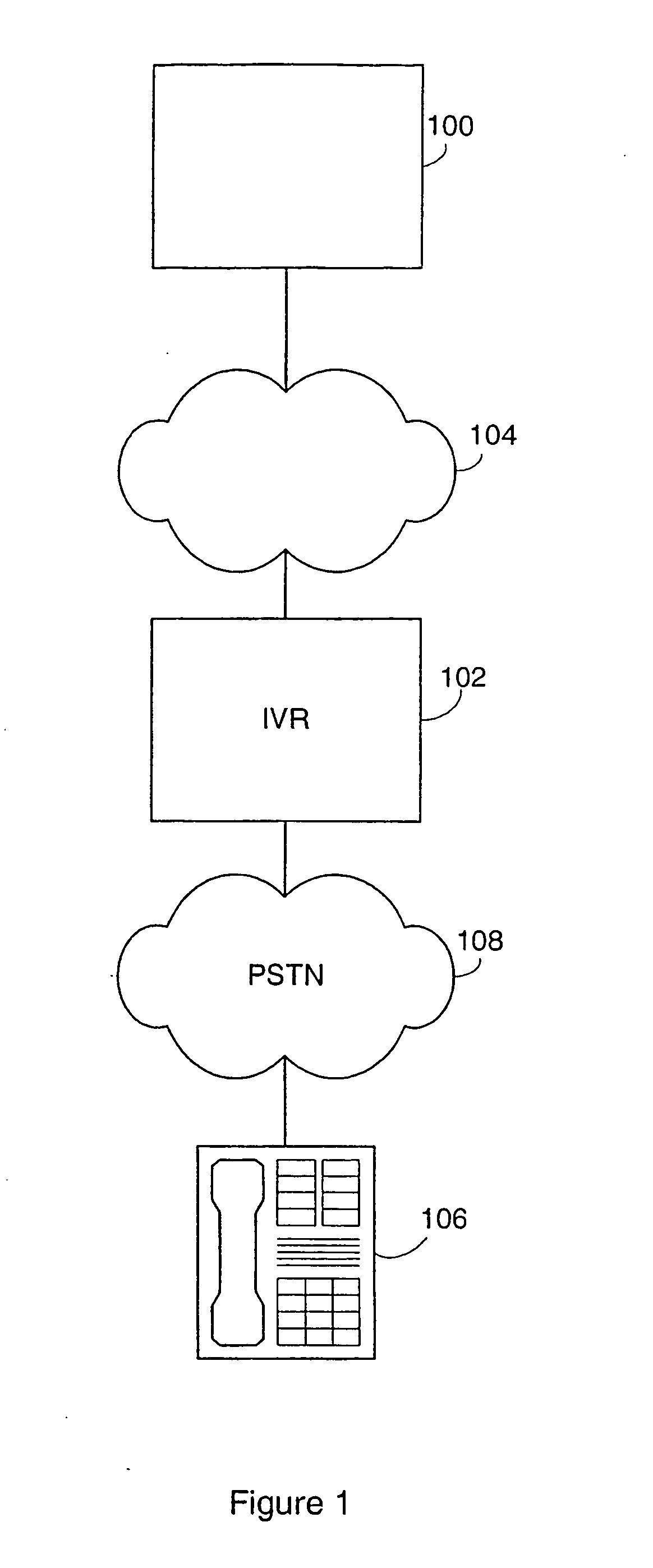

A telephony application such as an interactive voice response (“IVR”) needs to identify quickly the nature of the call (e.g., whether it is a person or machine answering a call) in order to initiate an appropriate voice application. Conventionally, the call stream is sent to a call-progress analyzer (“CPA”) for analysis. Once a result is reached, the call stream is redirected to a call processing unit running the IVR according to the analyzed result. The present scheme feeds the call stream simultaneously to both the CPA and the IVR. The CPA is allowed to continue analyzing and outputting a series of analysis results until a predetermined result appears. In the meantime, the IVR can dynamically adapt itself to the latest analysis results and interact with the call with a minimum of delay.

Owner:ALVARIA INC
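The parallel feed described in this abstract can be sketched roughly as follows in Python. The frame representation, the `analyze` callback, and the labels in `FINAL_RESULTS` are all hypothetical; the sketch only shows each frame reaching both the analyzer and the IVR side, with analysis stopping once a predetermined result appears.

```python
from typing import Callable, Iterable, List, Tuple

FINAL_RESULTS = frozenset({"human", "machine"})  # hypothetical terminal labels


def handle_call(
    frames: Iterable[str],
    analyze: Callable[[List[str]], str],
) -> List[Tuple[str, str]]:
    """Feed every frame to the analyzer and to the IVR view of the call."""
    heard: List[str] = []
    result = "unknown"
    analyzing = True
    ivr_view: List[Tuple[str, str]] = []
    for frame in frames:
        if analyzing:
            heard.append(frame)
            result = analyze(heard)  # the CPA emits a running result
            analyzing = result not in FINAL_RESULTS
        # The IVR receives the same frame together with the latest result,
        # so it can adapt without waiting for the analysis to finish.
        ivr_view.append((frame, result))
    return ivr_view
```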

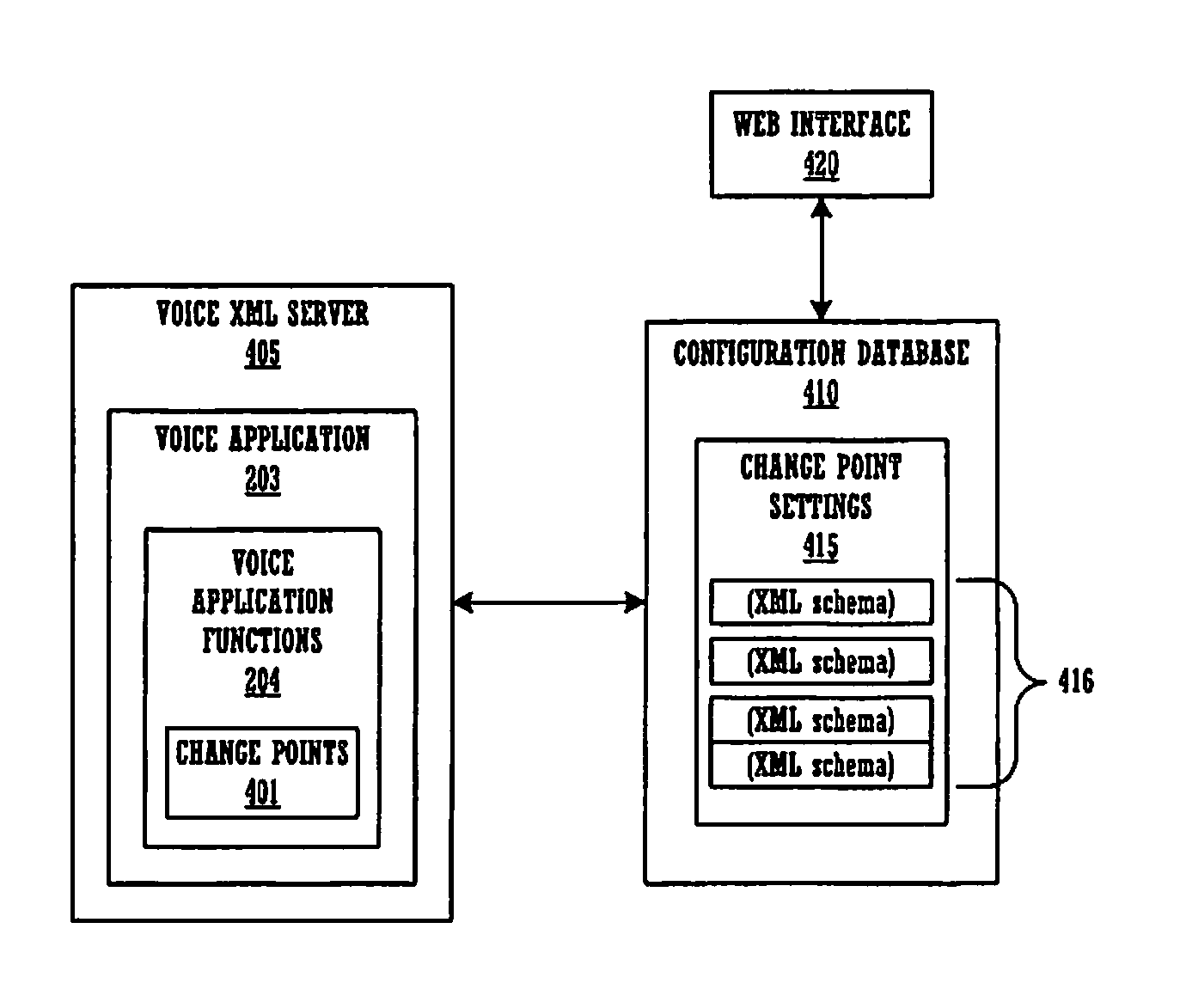

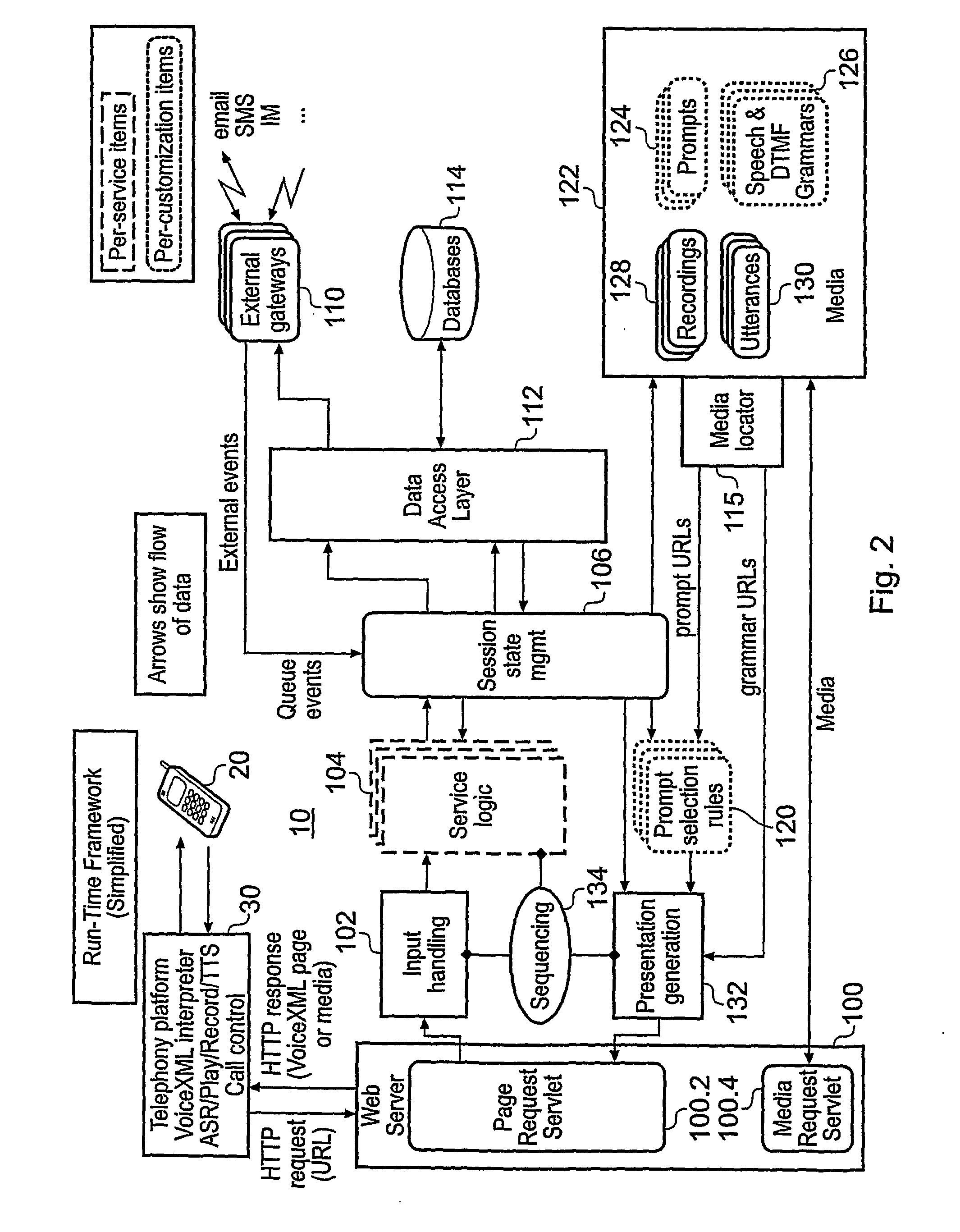

Method and system for design for run-time control of voice XML applications

Active | US7672295B1 | Efficiently and rapidly adapt | Easy and rapid adaptation | Automatic call-answering/message-recording/conversation-recording | Manual exchanges | Computer hardware | Speech applications

A method and system for implementing run-time control over voice application behavior. The method includes identifying a plurality of control point locations within a given voice application and a plurality of corresponding required responses to the control points. A design-for-control methodology is used to instrument the control points of voice application. A control agent is configured with web-based controls to manage the control points. The control points are used to alter the voice application's behavior during run-time.

Owner:MICROSOFT TECH LICENSING LLC

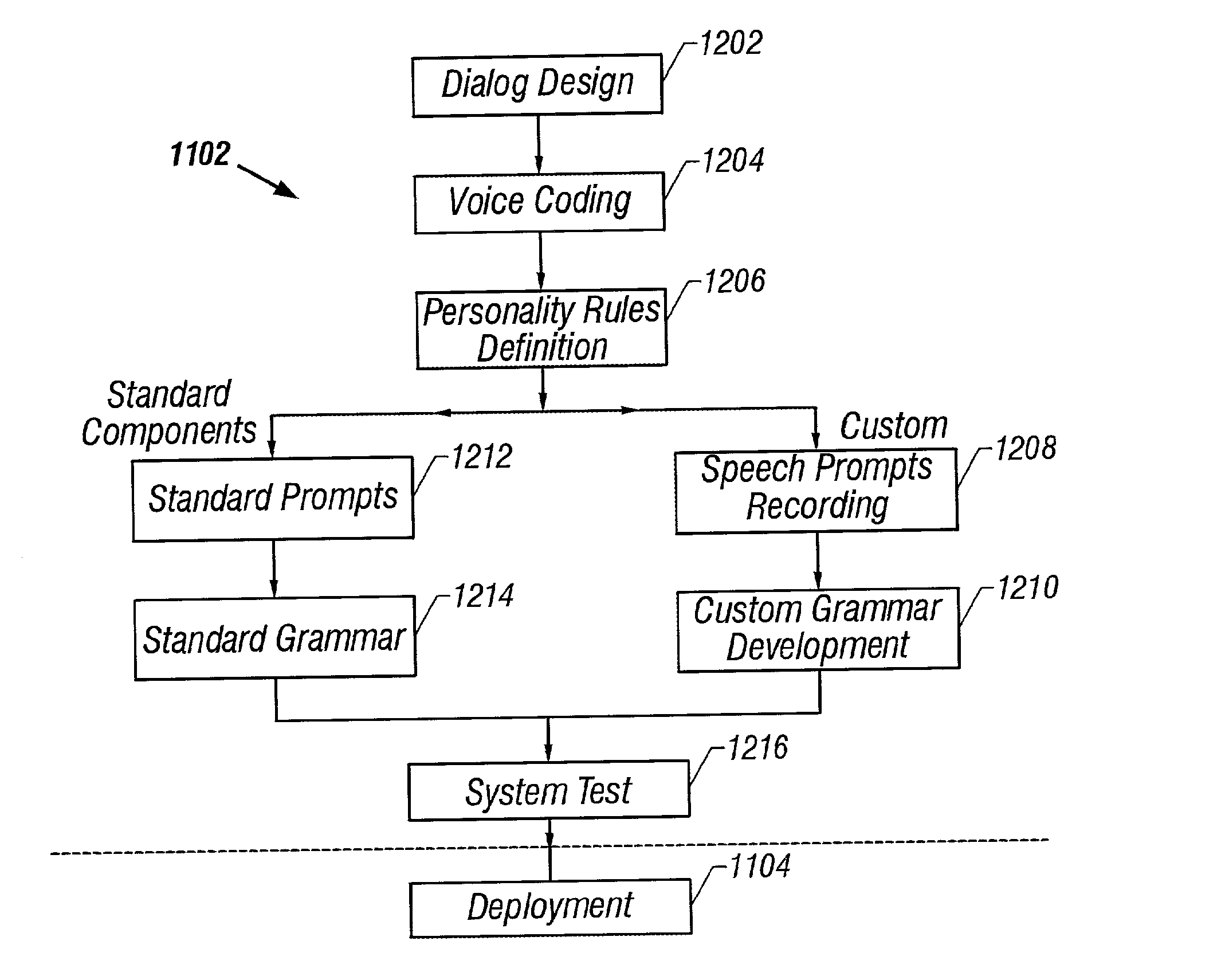

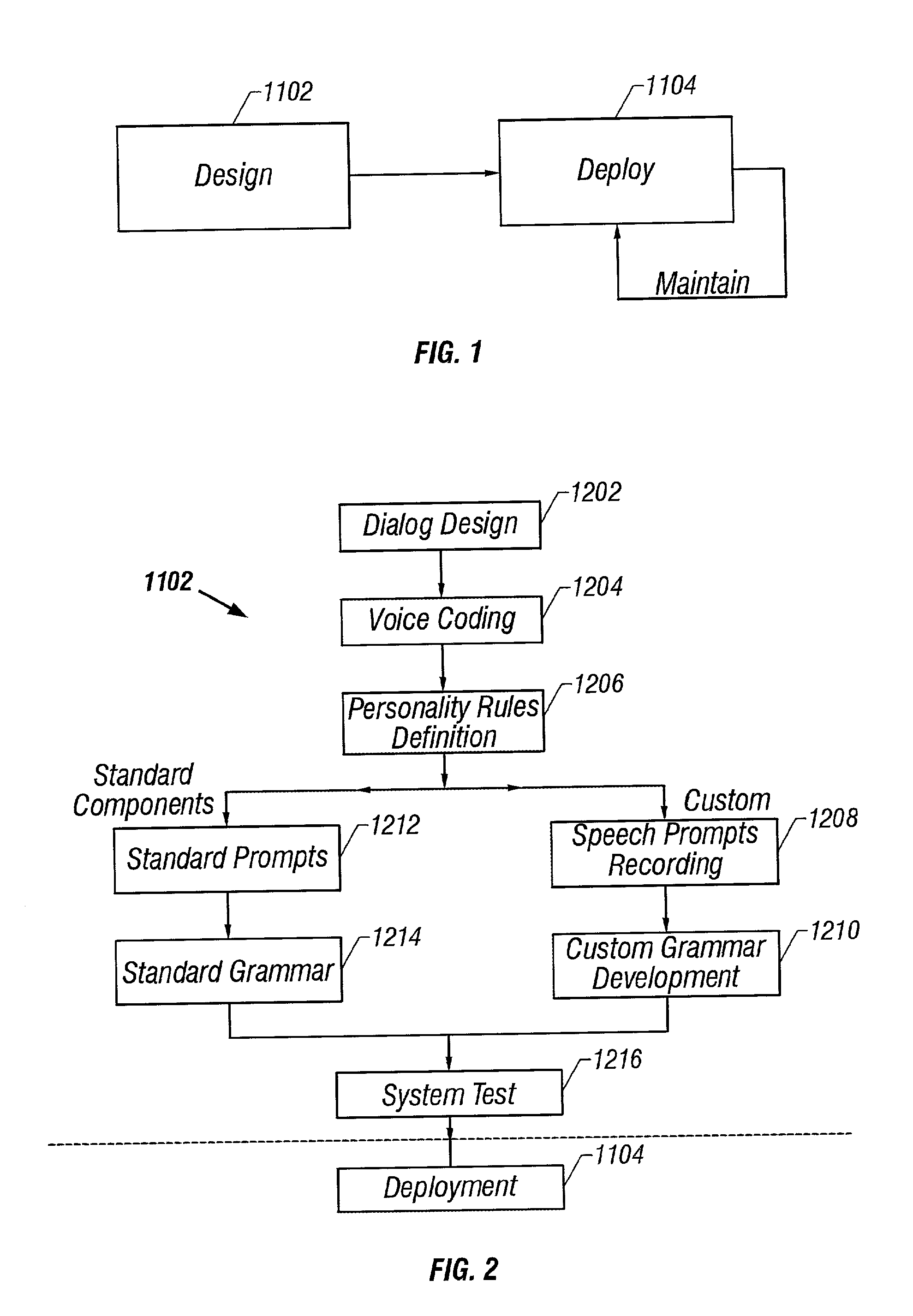

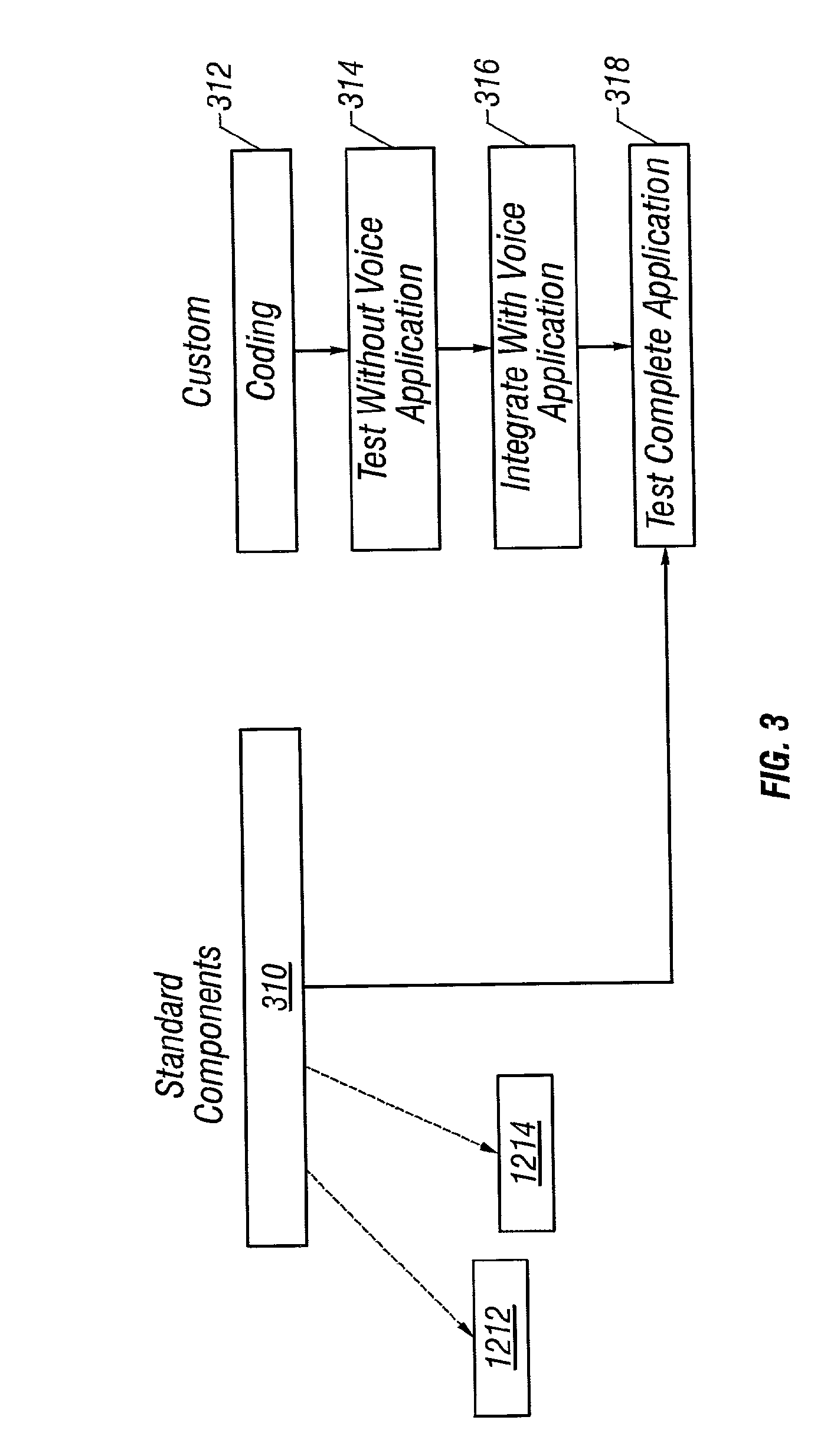

Voice application development methodology

A method of utilizing one or more generic software components to develop a specific voice application. The generic software components are configured to enable development of a specific voice application. The generic software components include a generic dialog asset that is stored in a repository. The method further comprises the step of deploying the specific voice application in a deployment environment, wherein the deployment environment includes the repository.

Owner:BEN FRANKLIN PATENT HLDG L L C

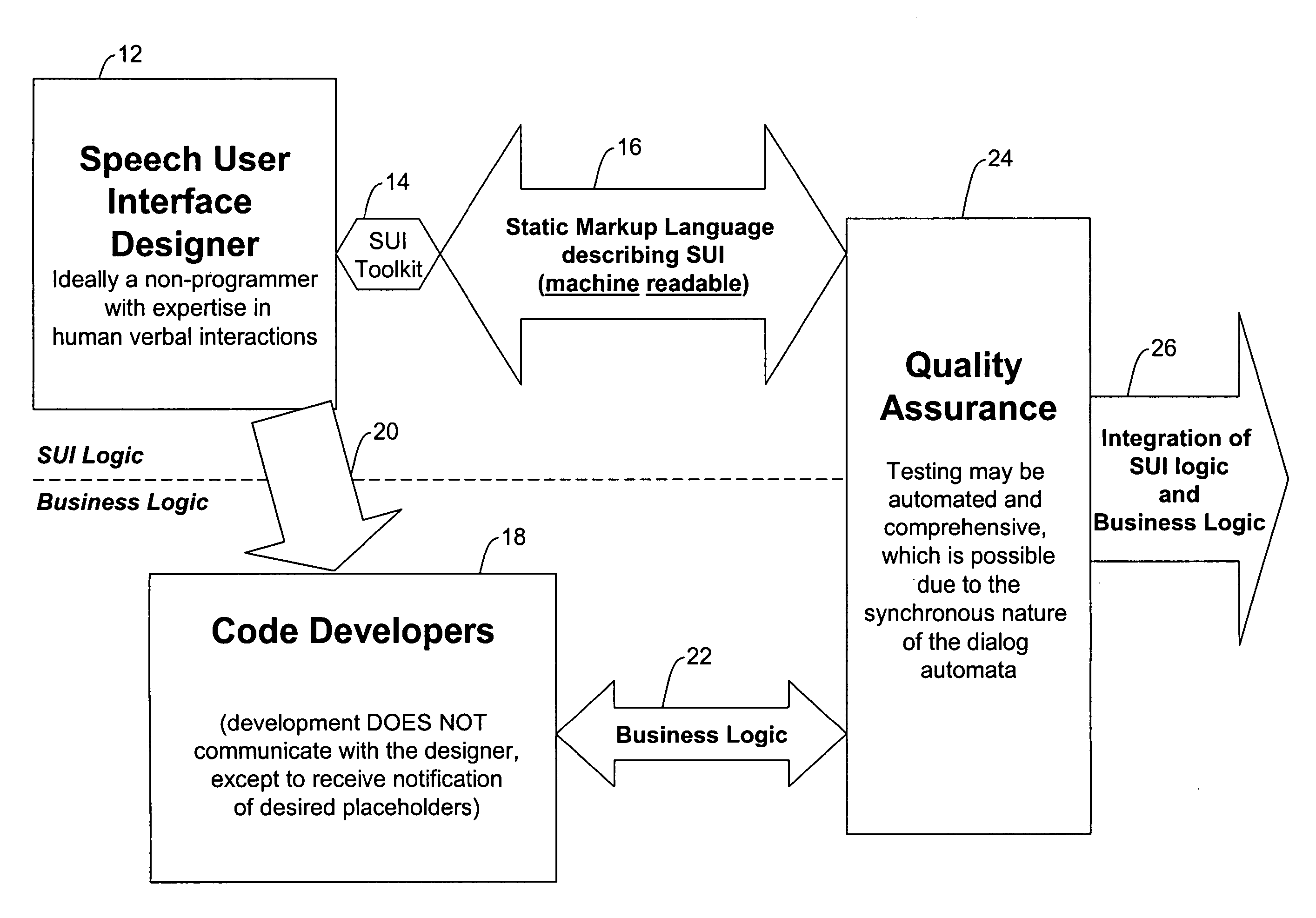

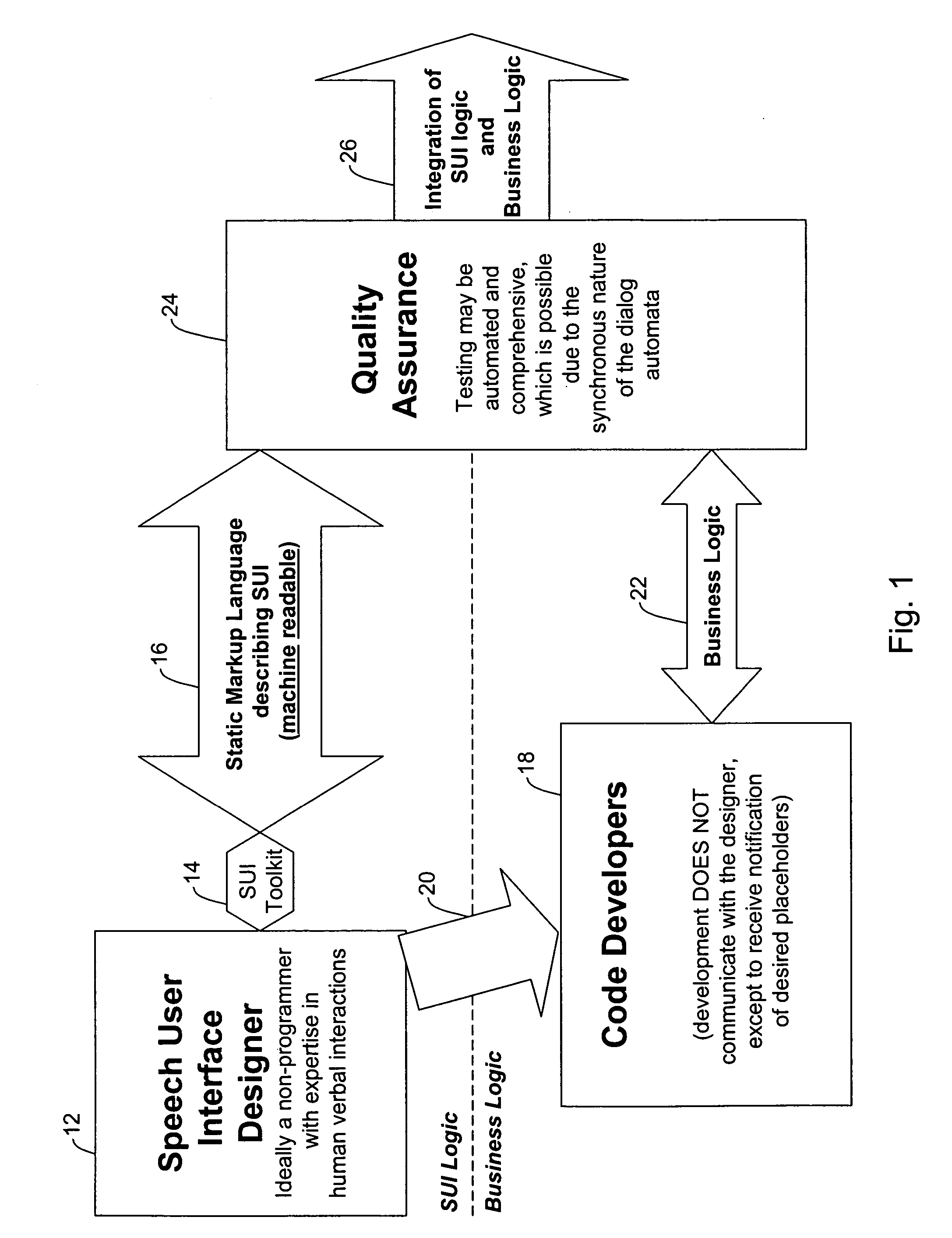

Methods and systems for developing and testing speech applications

Inactive | US20060230410A1 | Simple and intuitive design | Eliminate requirements | Multiprogramming arrangements | Automatic exchanges | Speech applications | Speech sound

A system and method for facilitating the efficient development, testing and implementation of speech applications is disclosed which separates the speech user interface from the business logic. A speech user interface description is created devoid of business logic in the form of a machine readable markup language directly executable by the runtime environment based on business requirements. At least one business logic component is created separately for the speech user interface, the at least one business logic component being accessible by the runtime environment.

Owner:PARUS HLDG

System and method for dynamic call-progress analysis and call processing

Active | US8243889B2 | Minimum delay | Automatic call-answering/message-recording/conversation-recording | Automatic exchanges | Speech applications | Speech sound

A telephony application such as an interactive voice response (“IVR”) needs to identify quickly the nature of the call (e.g., whether it is a person or machine answering a call) in order to initiate an appropriate voice application. Conventionally, the call stream is sent to a call-progress analyzer (“CPA”) for analysis. Once a result is reached, the call stream is redirected to a call processing unit running the IVR according to the analyzed result. The present scheme feeds the call stream simultaneously to both the CPA and the IVR. The CPA is allowed to continue analyzing and outputting a series of analysis results until a predetermined result appears. In the meantime, the IVR can dynamically adapt itself to the latest analysis results and interact with the call with a minimum of delay.

Owner:ALVARIA INC

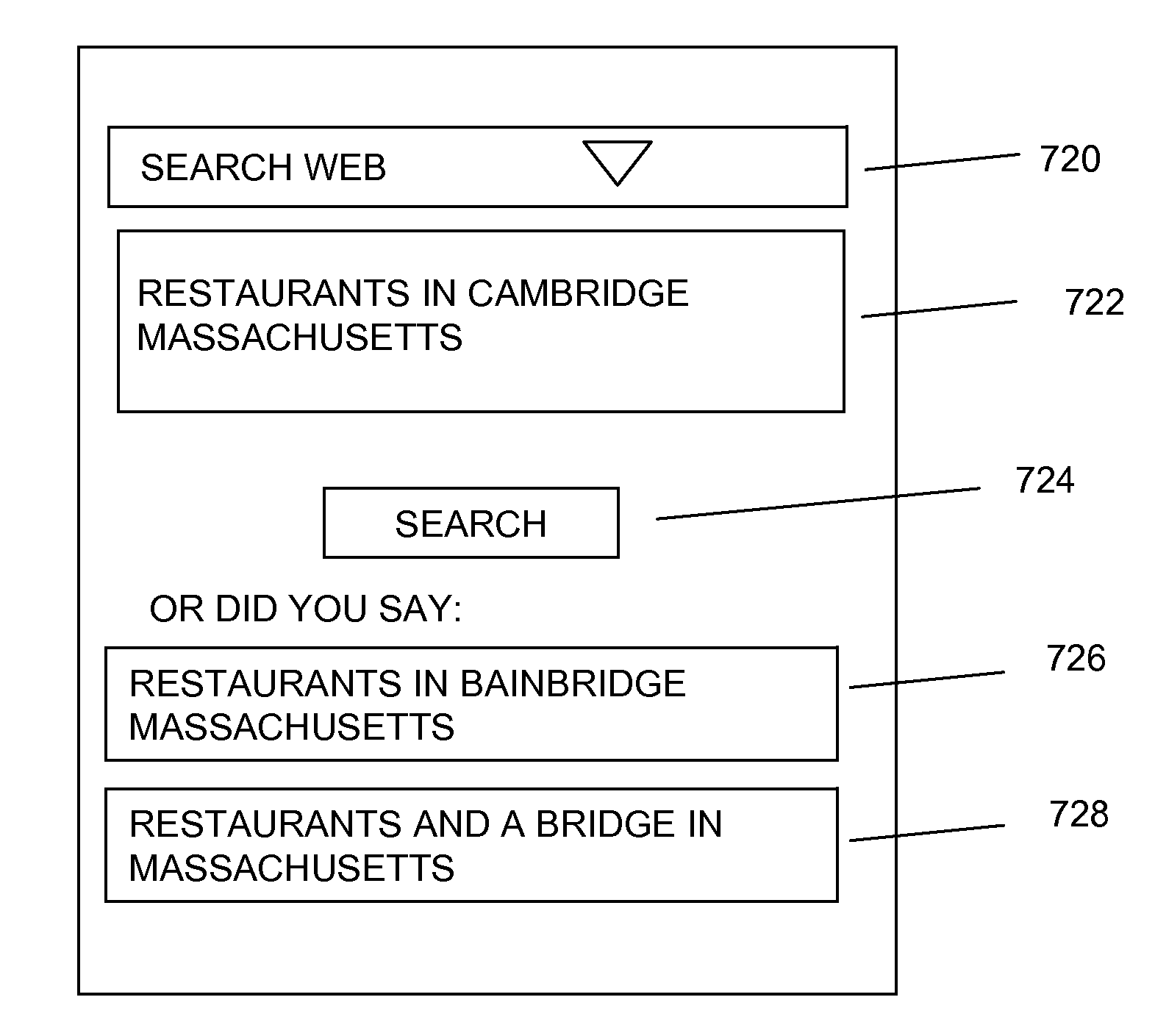

Method and system for presenting dynamic commercial content to clients interacting with a voice extensible markup language system

Inactive | US20050246174A1 | Speech analysis | Special service for subscribers | Interaction interface | Spoken dialog

A system for selecting a voice dialog, which may be an advertisement or information message, from a pool of voice dialogs and for causing the selected voice dialog to be utilized by a voice application for presentation to a caller during an automated voice interactive session includes a voice-enabled interaction interface hosting the voice application; and a server monitoring the voice-enabled interaction interface for selecting the voice dialog and for serving at least identification and location of the dialog to be presented to the caller via the voice application.

Owner:APPTERA

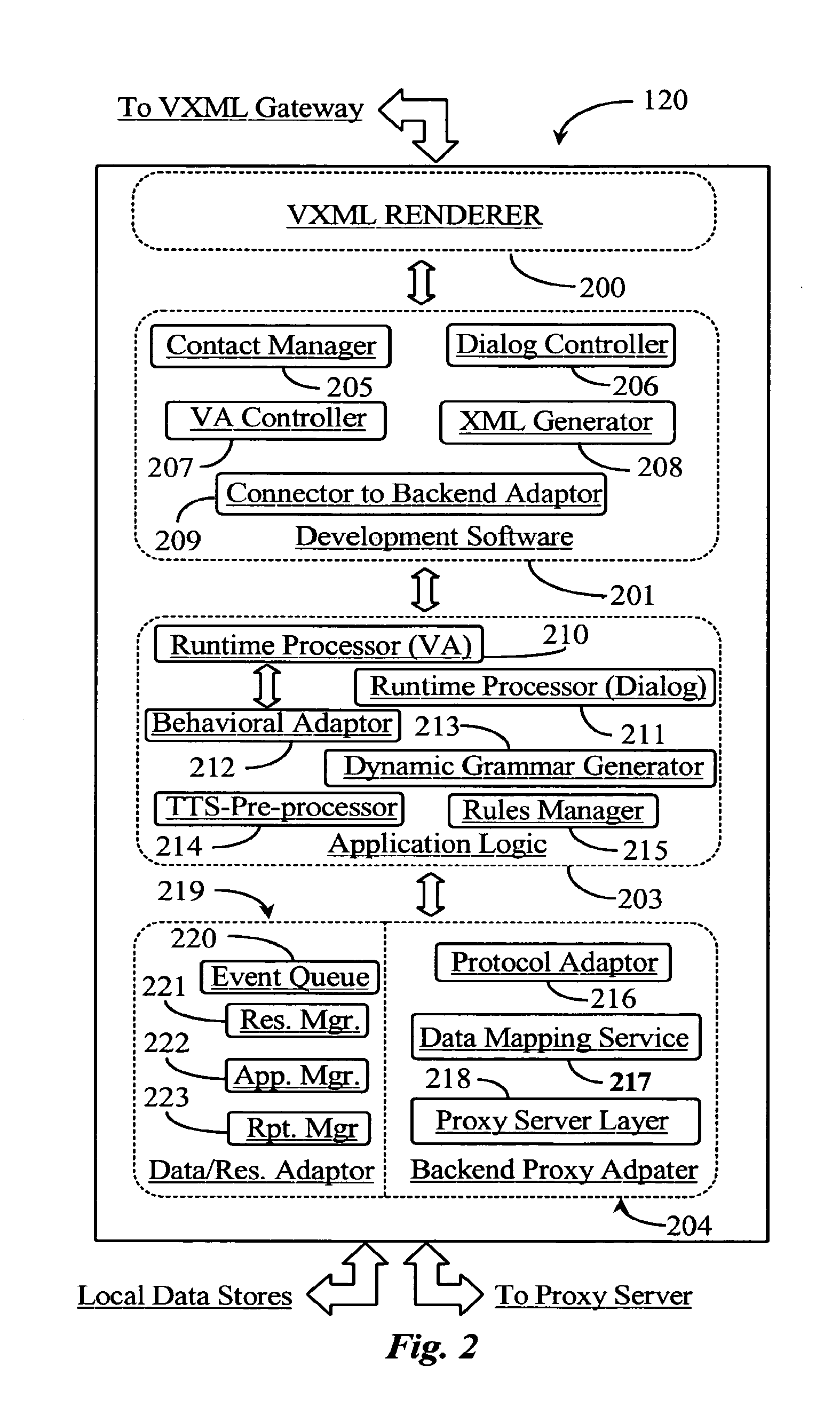

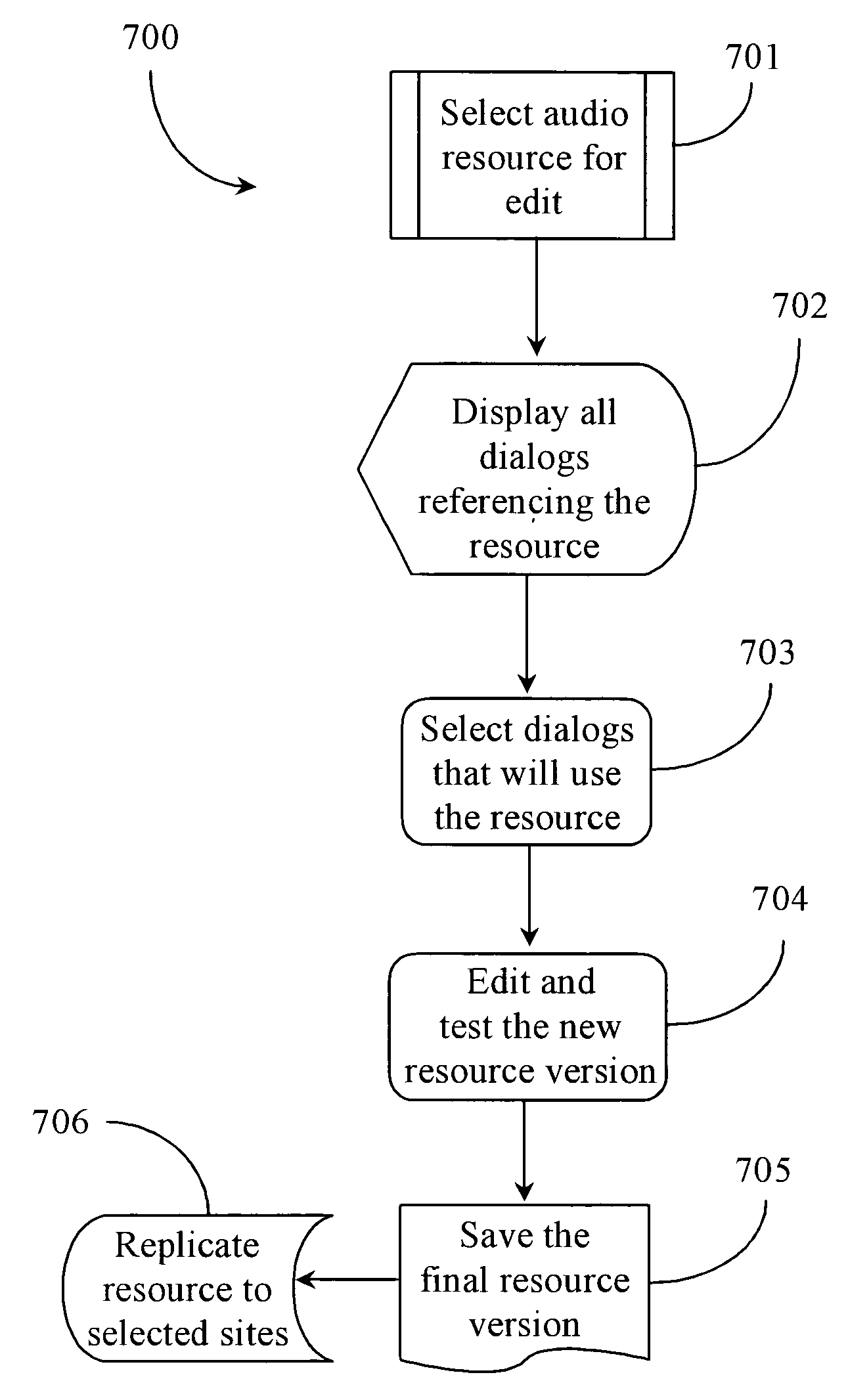

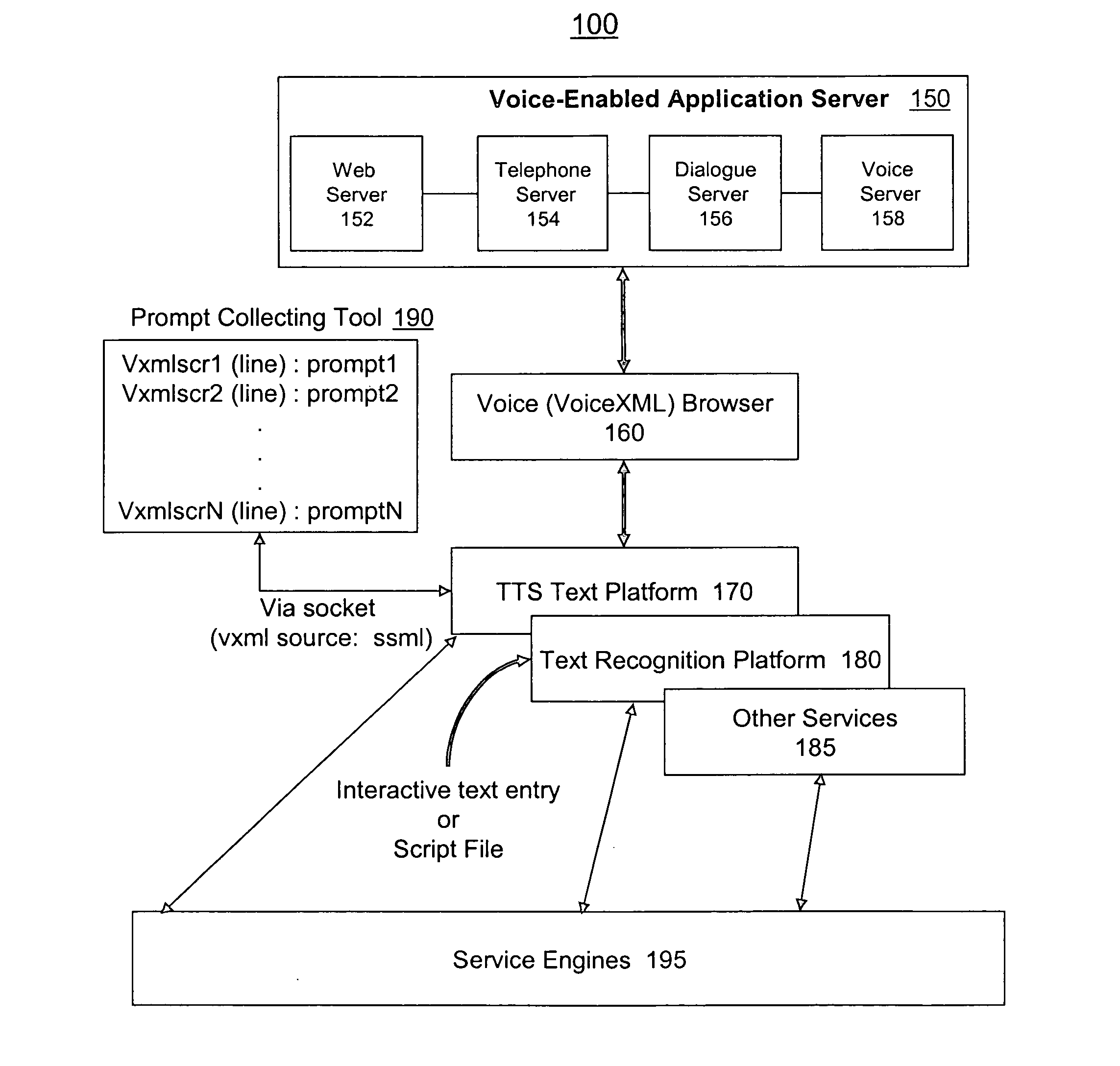

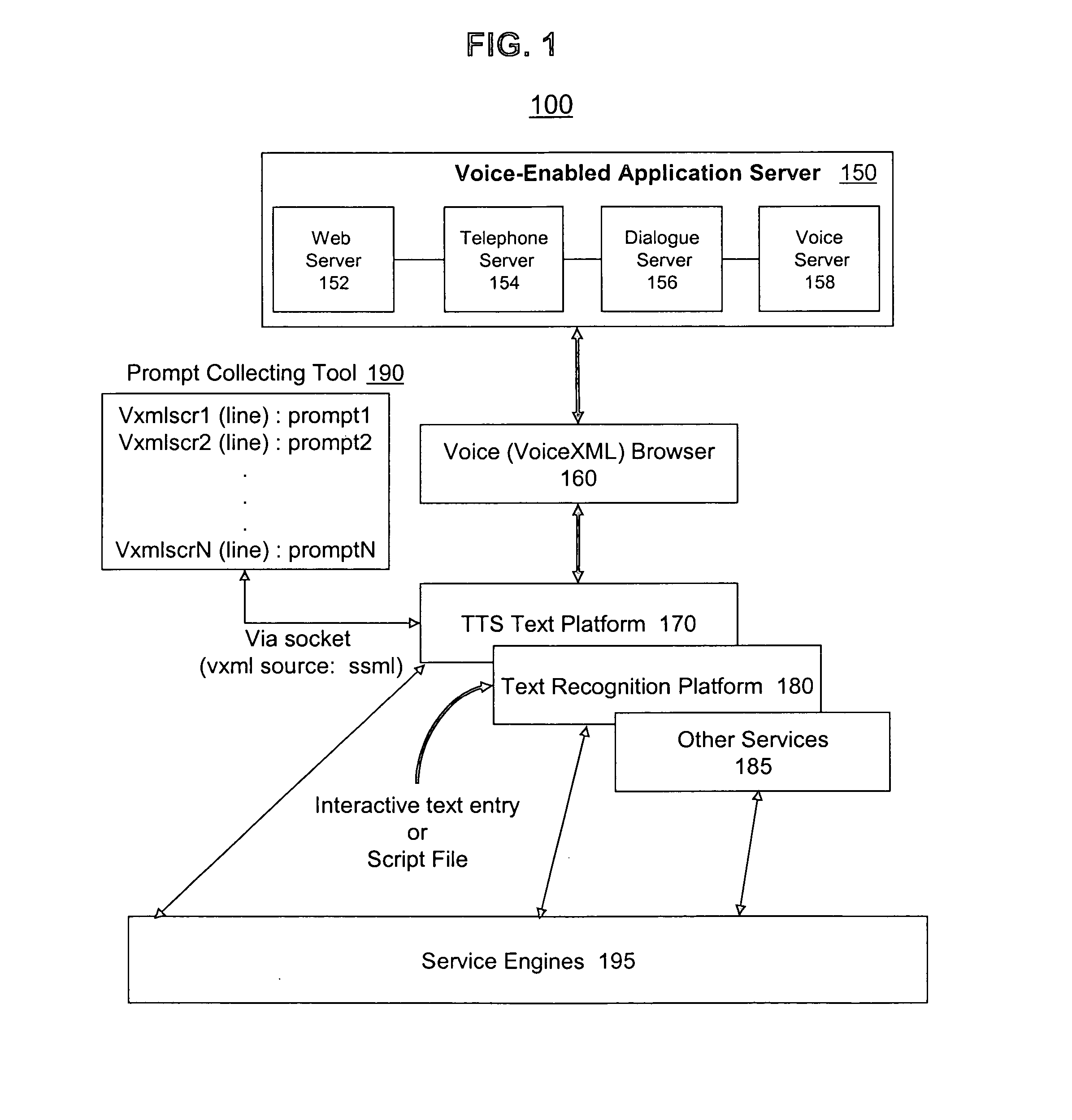

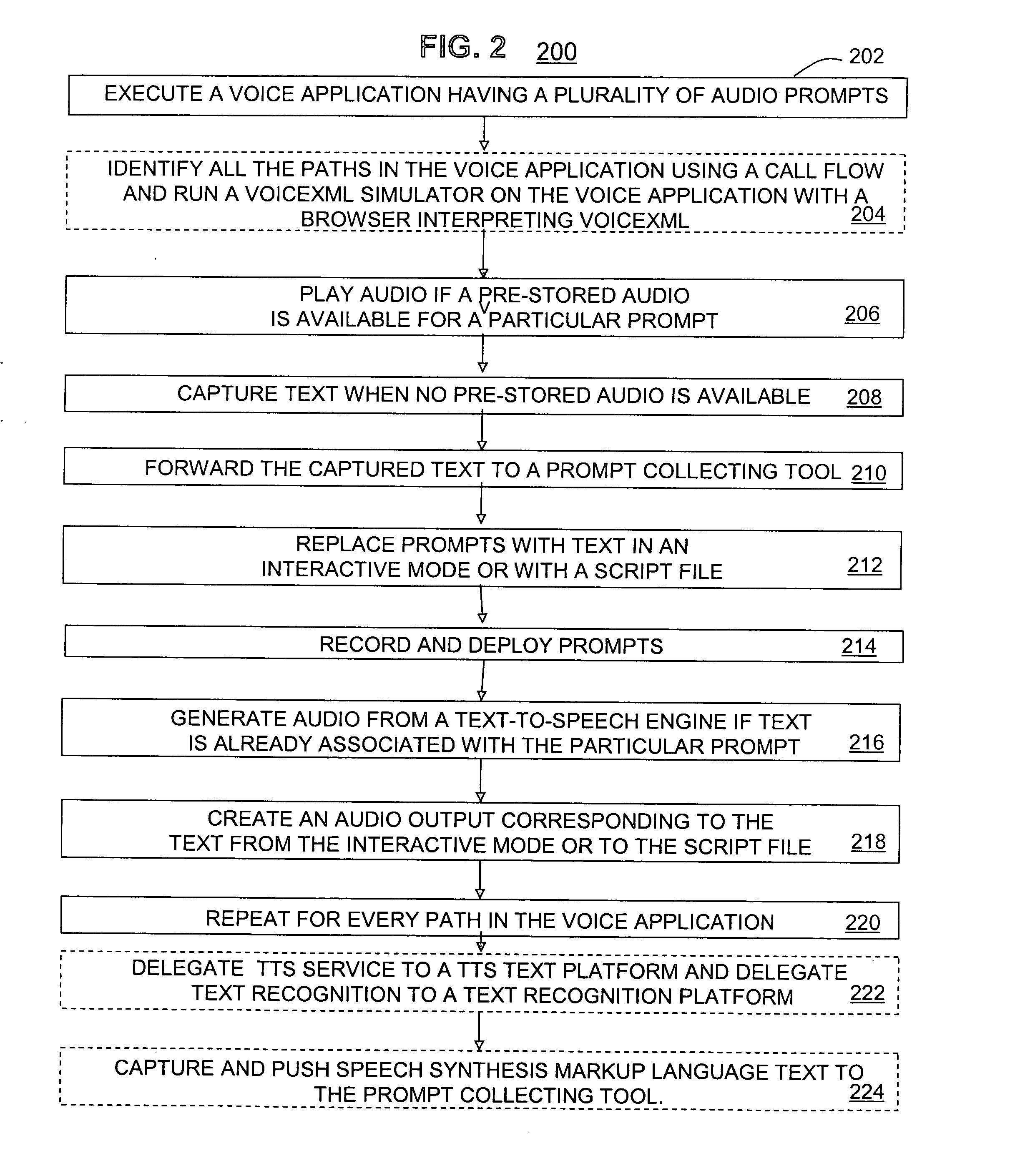

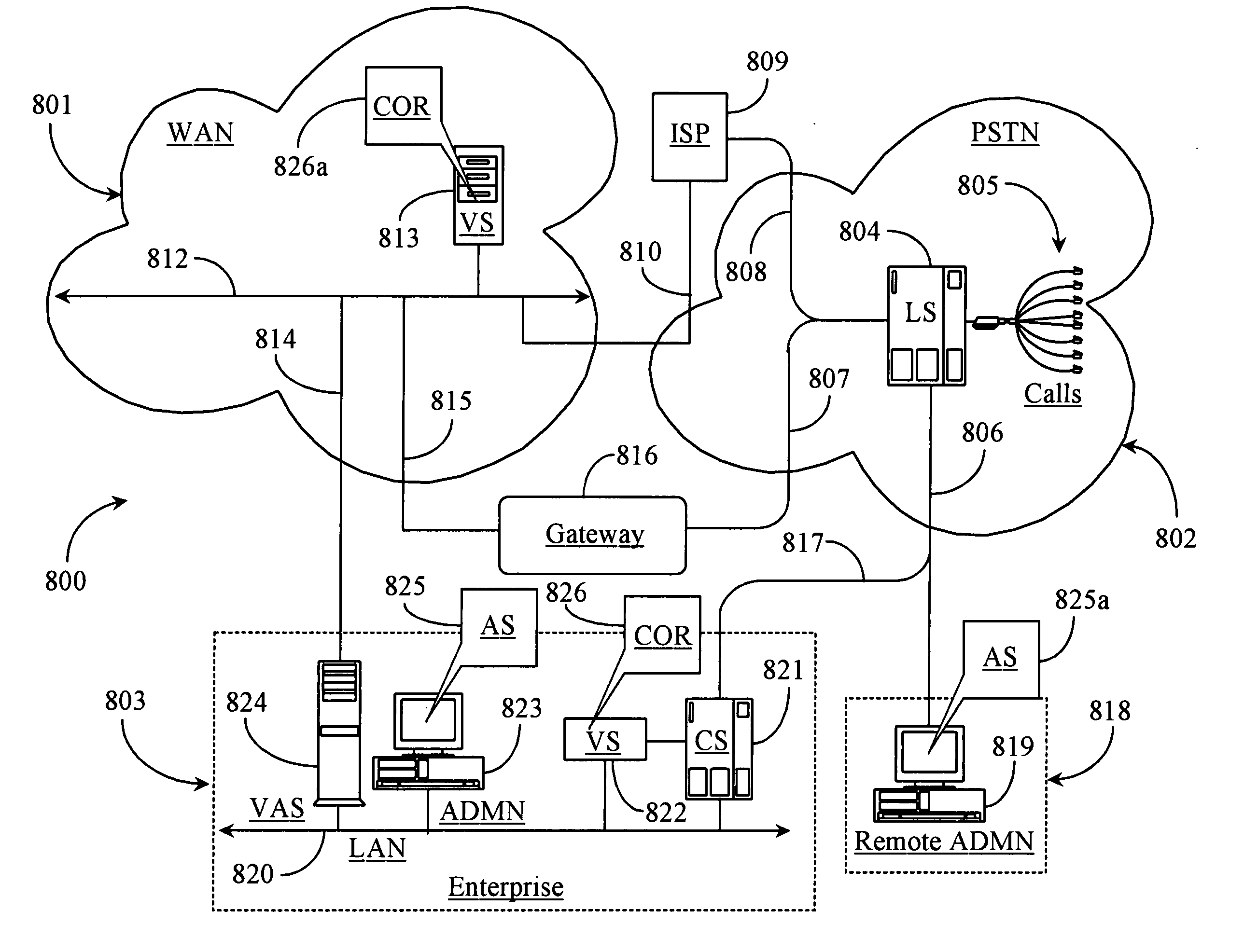

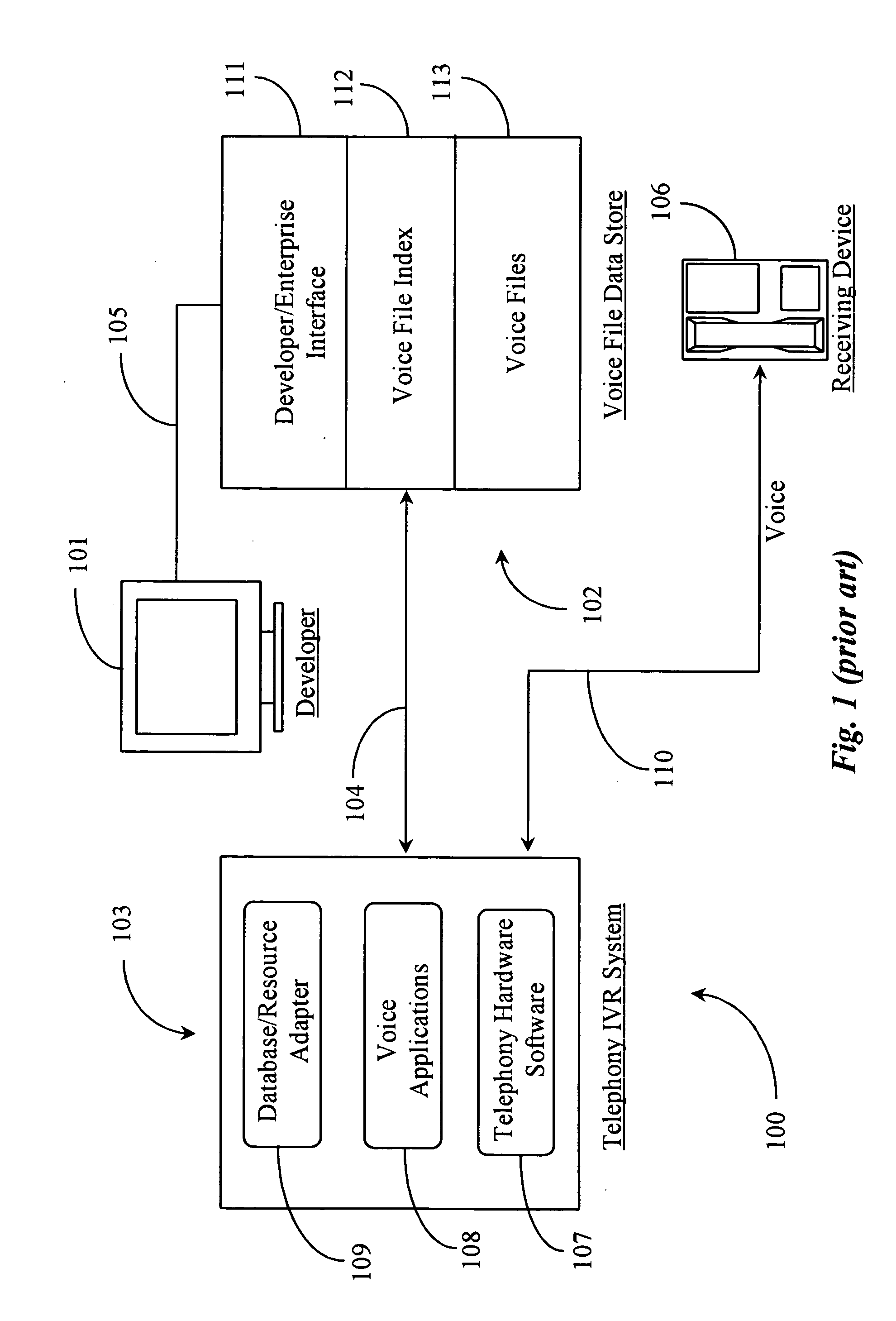

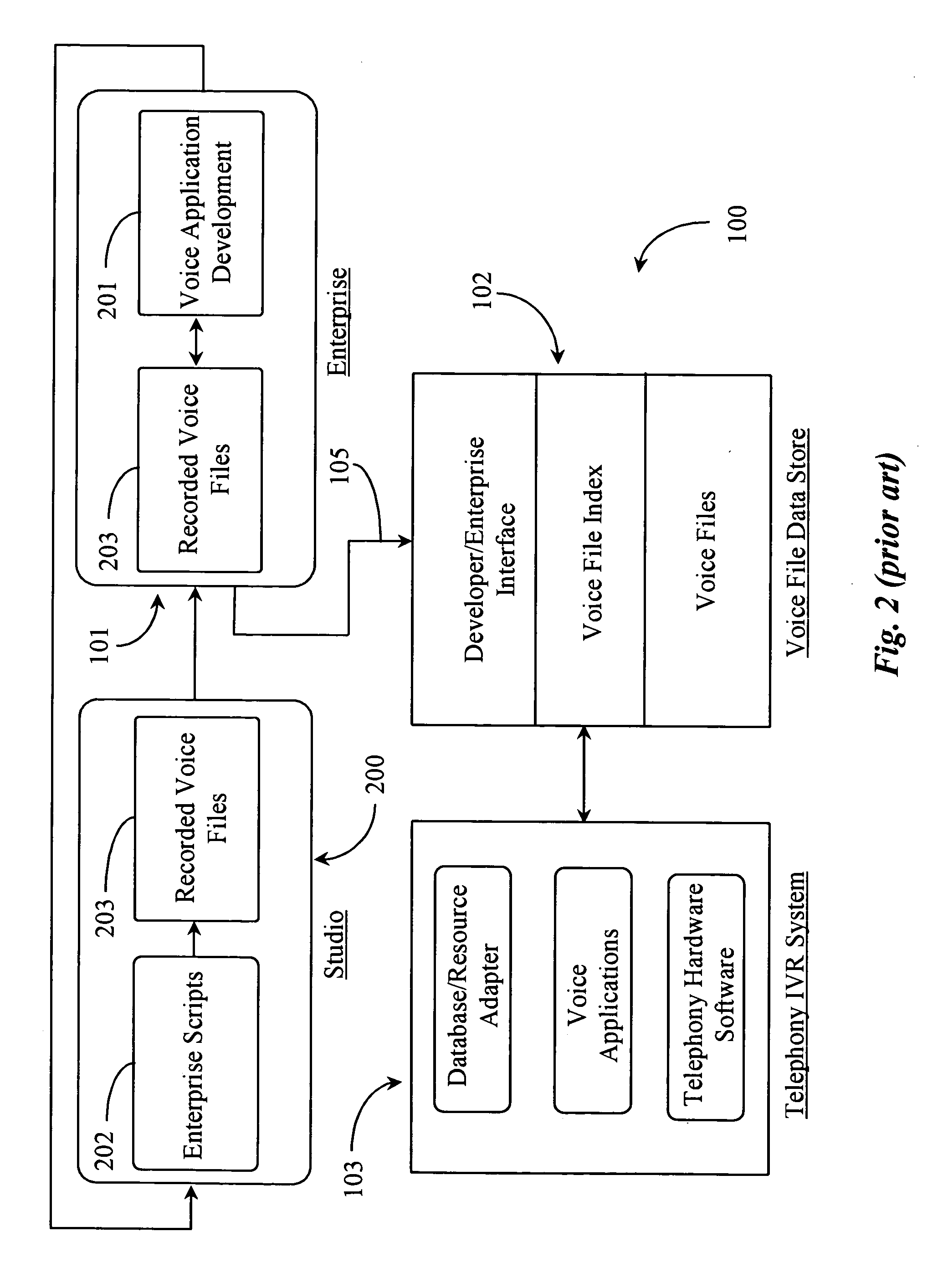

Method and system for collecting audio prompts in a dynamically generated voice application

Active | US20070043568A1 | Precise positioning | Automatic exchanges | Speech synthesis | Simulator coupling | Application server

Owner:CERENCE OPERATING CO

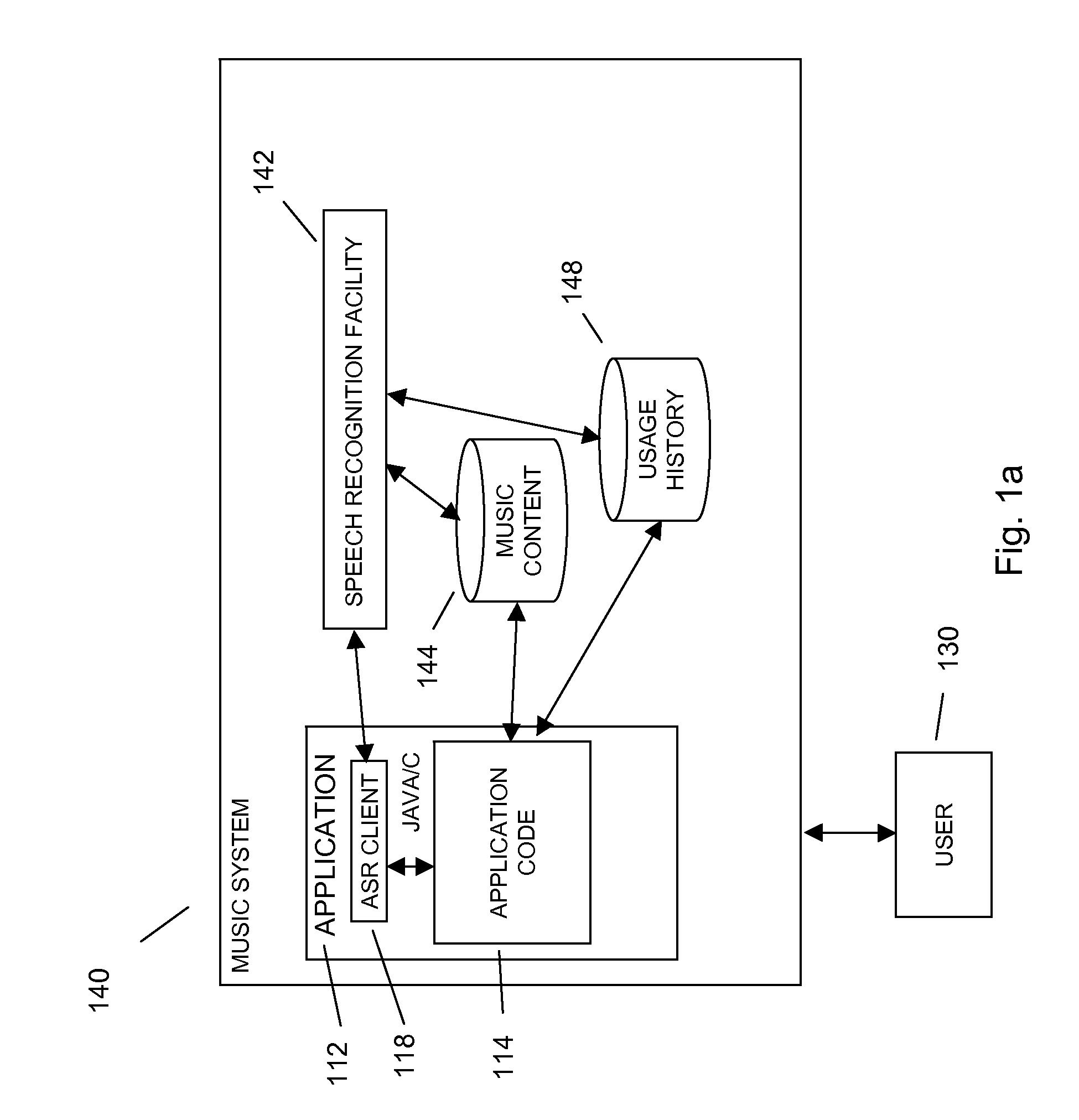

Hybrid command and control between resident and remote speech recognition facilities in a mobile voice-to-speech application

In embodiments of the present invention improved capabilities are described for hybrid command and control between resident and remote speech recognition facilities in controlling a mobile communication facility comprising accepting speech presented by a user using a resident capture facility on the mobile communication facility while the user engages an interface that enables a command mode for the mobile communications facility; processing the speech using a resident speech recognition facility to recognize command elements and content elements; transmitting at least a portion of the speech through a wireless communication facility to a remote speech recognition facility; transmitting information from the mobile communication facility to the remote speech recognition facility, wherein the information includes information about a command recognizable by the resident speech recognition facility; generating speech-to-text results utilizing a hybrid of the resident speech recognition facility and the remote speech recognition facility based at least in part on the speech and on the information related to the mobile communication facility; and transmitting the text results for use on the mobile communications facility.

Owner:VLINGO CORP

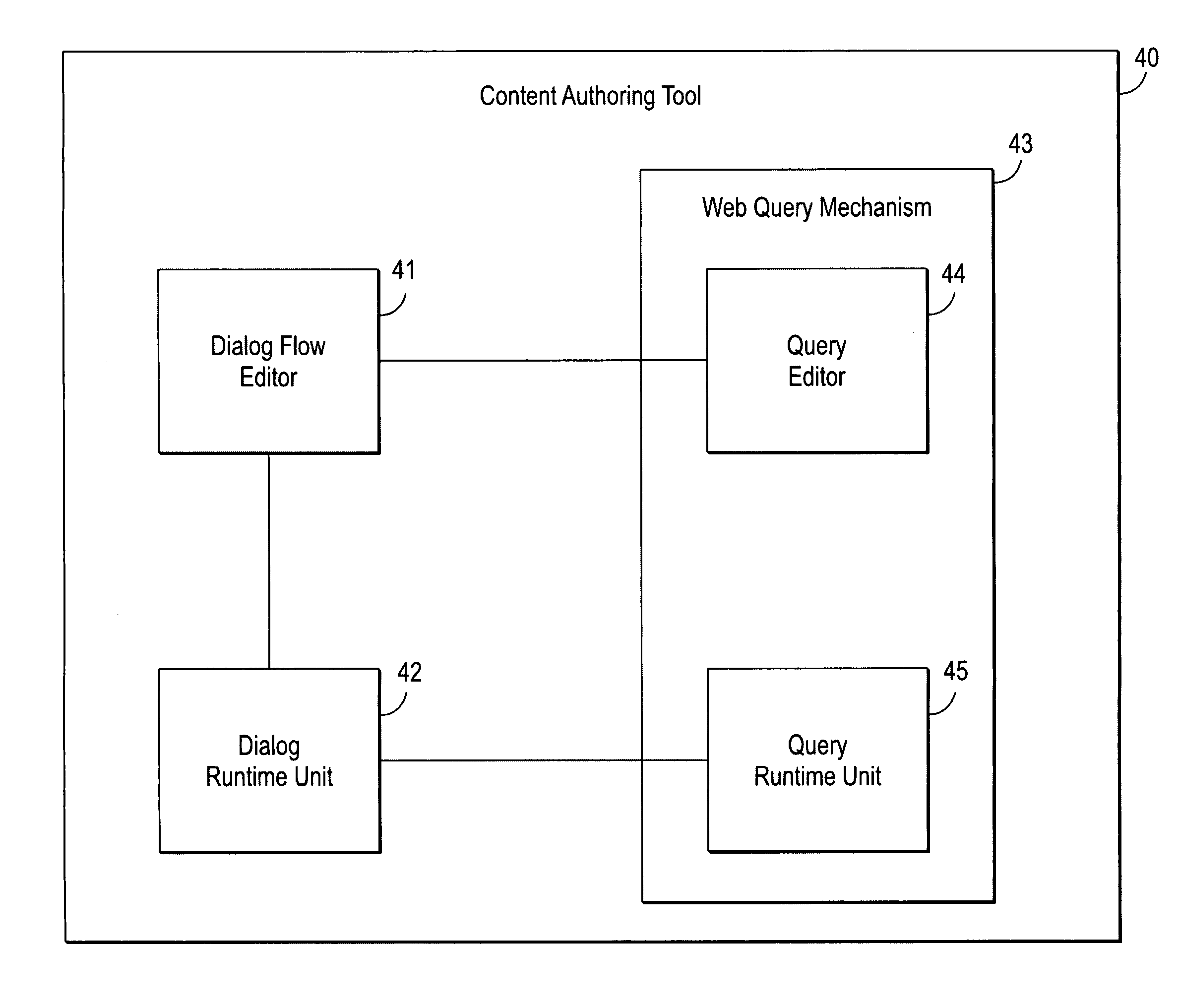

Tool for graphically defining dialog flows and for establishing operational links between speech applications and hypermedia content in an interactive voice response environment

A computer-implemented graphical design tool allows a developer to graphically author a dialog flow for use in a voice response system and to graphically create an operational link between a hypermedia page and a speech object. The hypermedia page may be a Web site, and the speech object may define a spoken dialog interaction between a person and a machine. Using a drag-and-drop interface, the developer can graphically define a dialog as a sequence of speech objects. The developer can also create a link between a property of any speech object and any field of a Web page, to voice-enable the Web page, or to enable a speech application to access Web site data.

Owner:NUANCE COMM INC

Command and control utilizing content information in a mobile voice-to-speech application

In embodiments of the present invention improved capabilities are described for controlling a mobile communication facility utilizing content information comprising accepting speech presented by a user using a resident capture facility on the mobile communication facility while the user engages an interface that enables a command mode for the mobile communications facility; processing the speech using a resident speech recognition facility to recognize command elements and content elements; transmitting at least a portion of the speech through a wireless communication facility to a remote speech recognition facility; transmitting information from the mobile communication facility to the remote speech recognition facility, wherein the information includes information about a command recognized by the resident speech recognition facility and information about the content recognized by the resident speech recognition facility; generating speech-to-text results utilizing the remote speech recognition facility based at least in part on the speech and on the information related to the mobile communication facility; and transmitting the text results for use on the mobile communications facility.

Owner:VLINGO CORP

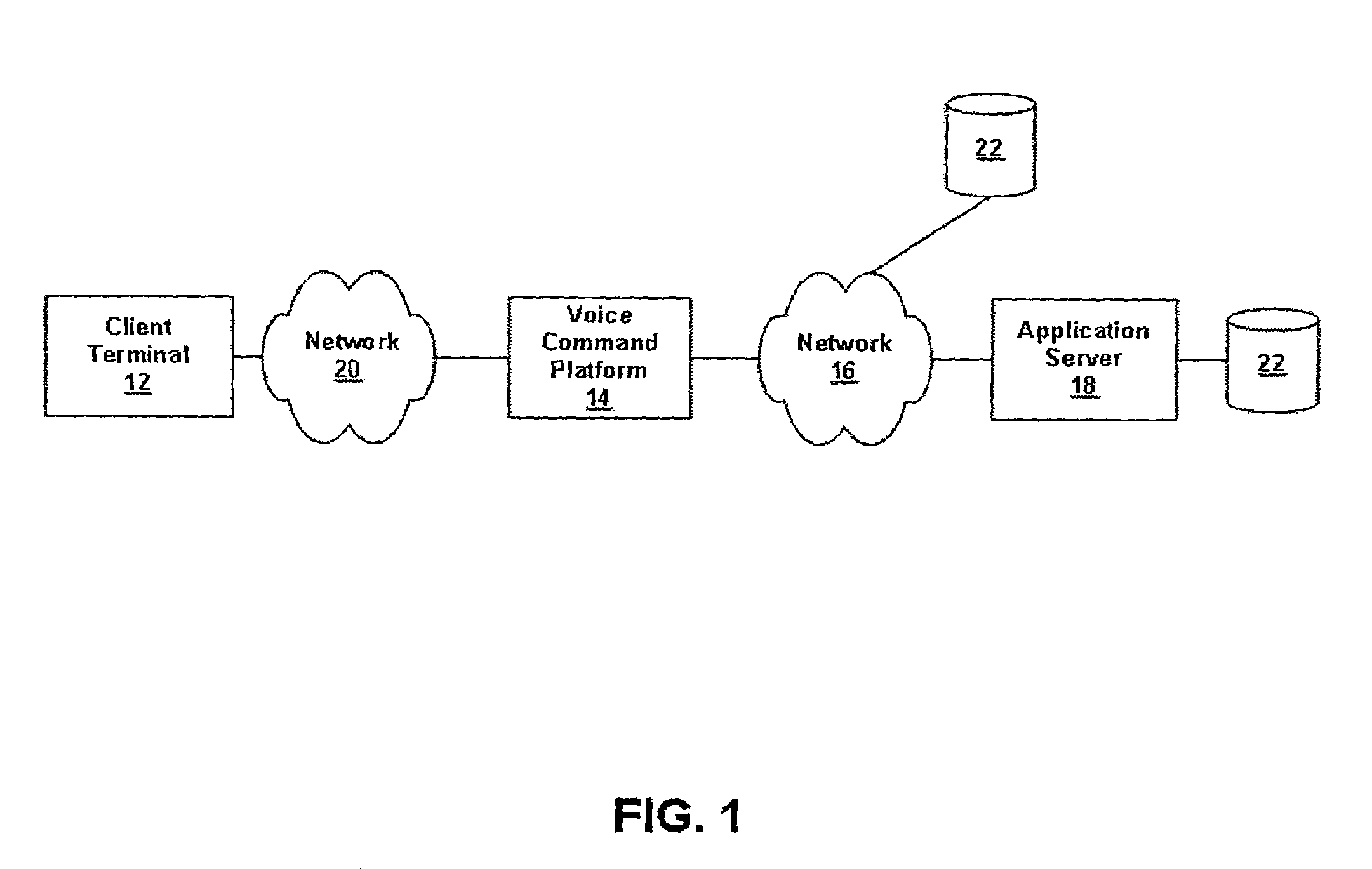

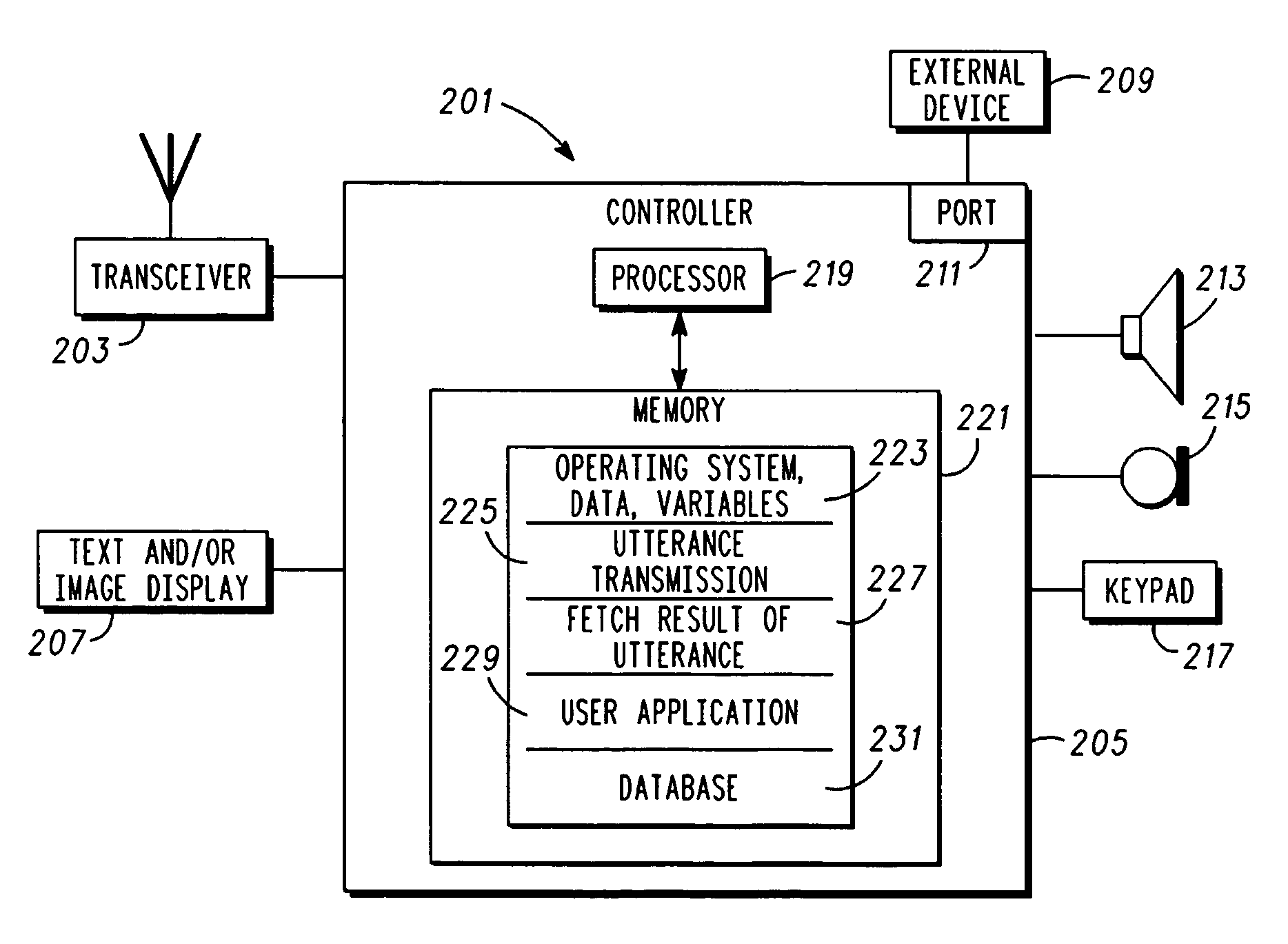

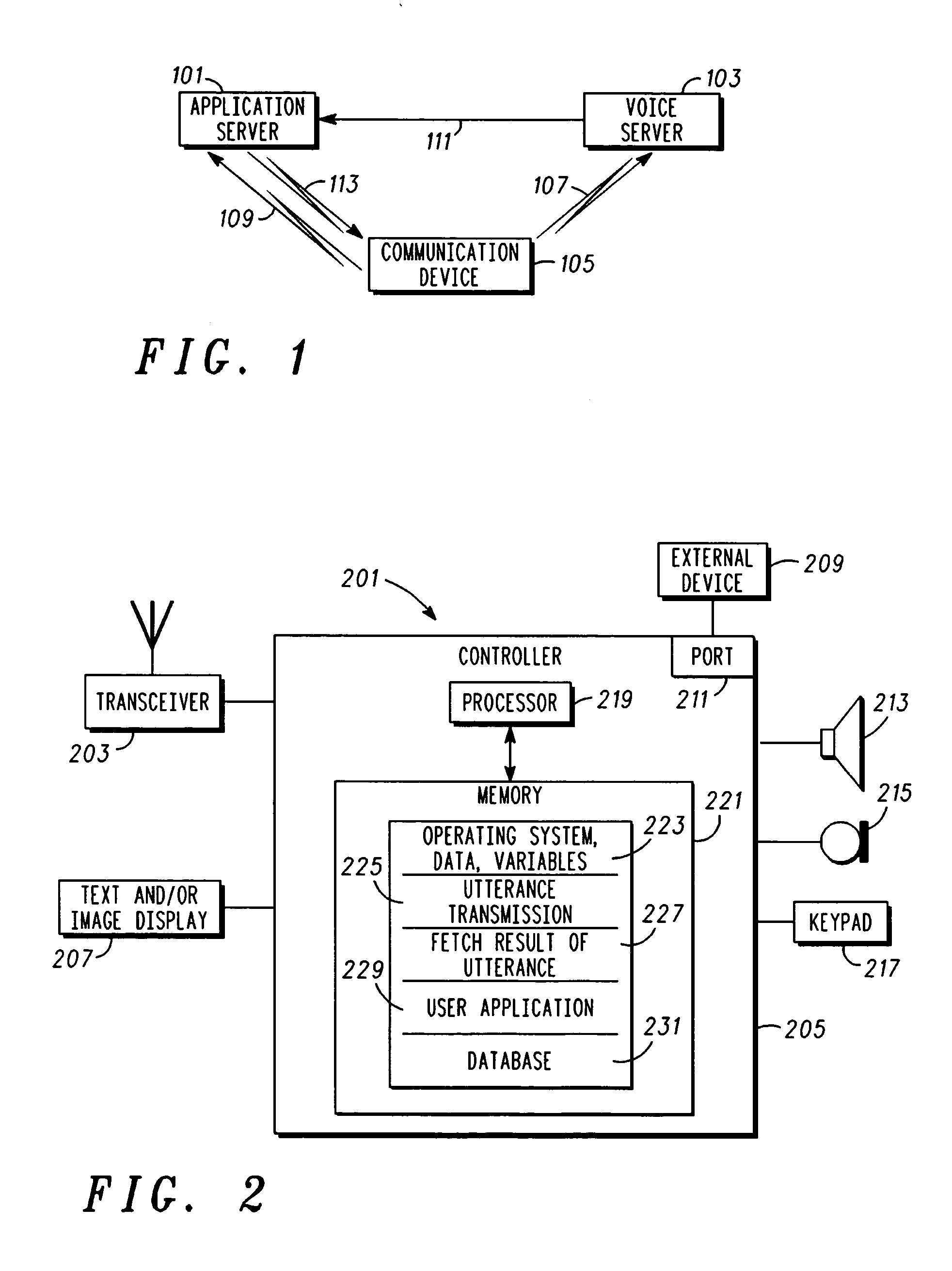

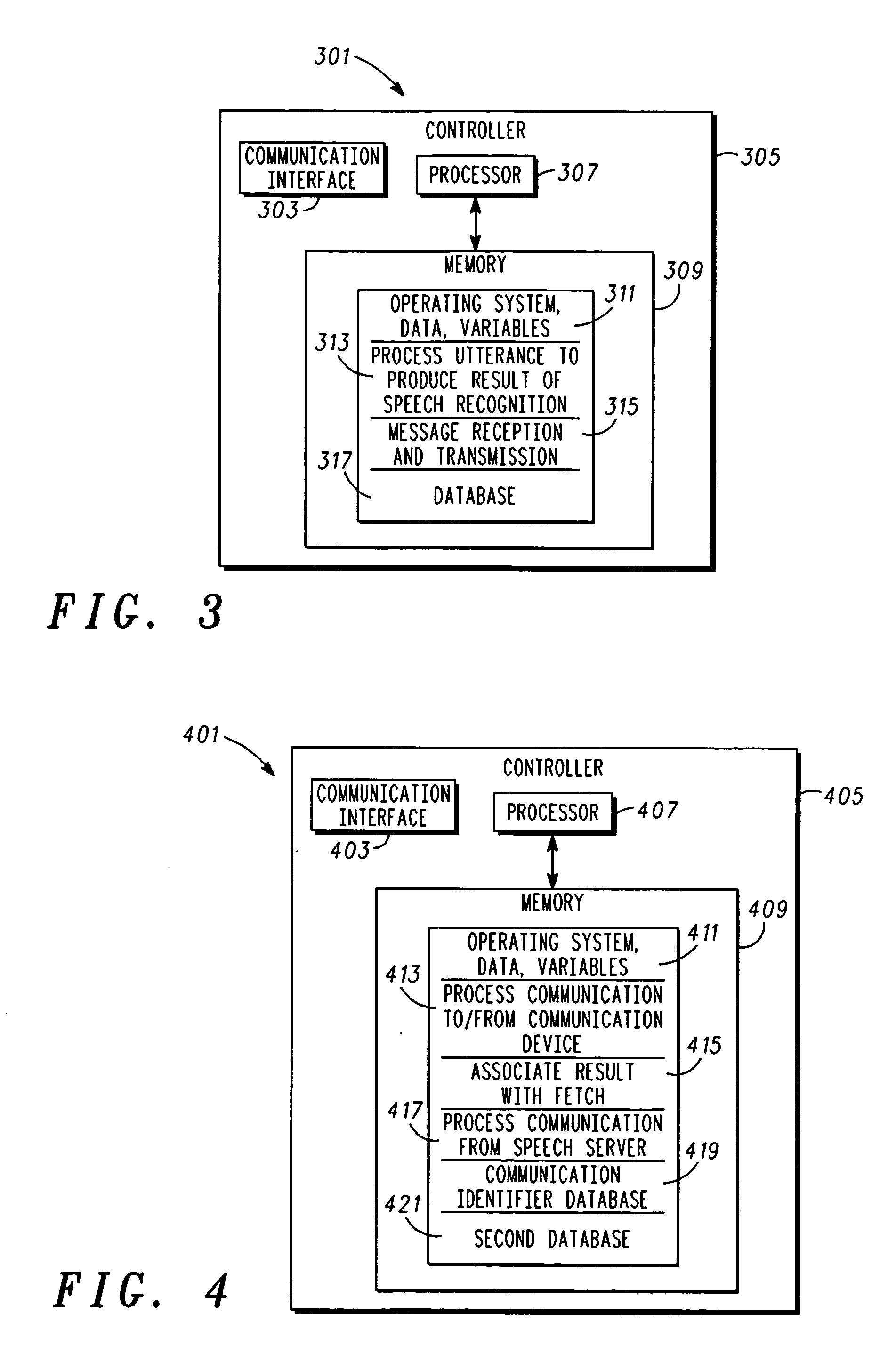

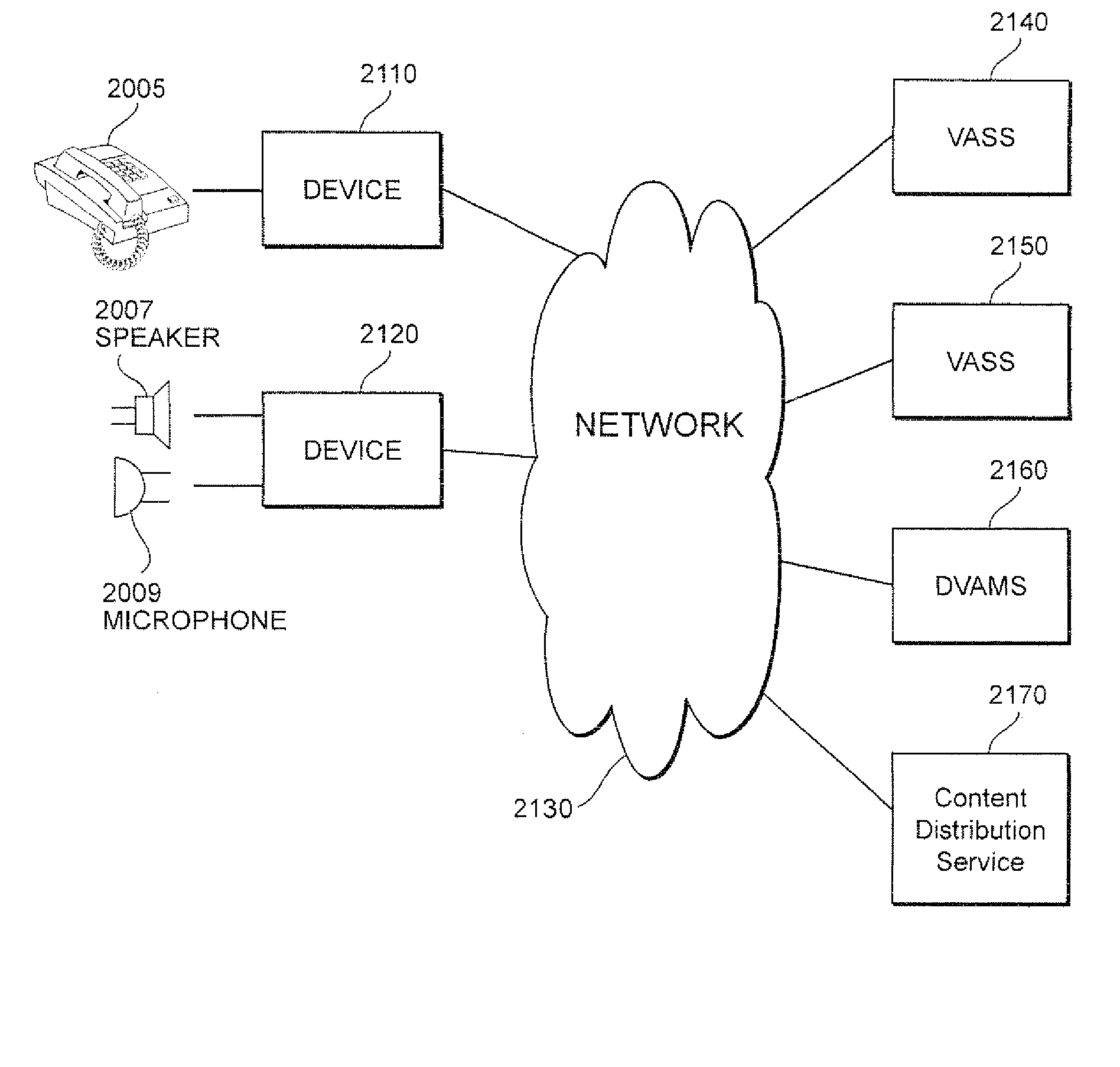

Method and apparatus for distributed speech applications

A communication unit (105) includes a communication interface, for transmitting and receiving communications when operably connected to a first communication network; and a processor cooperatively operable with the communication interface. Responsive to receipt of an utterance, the communication unit (105) can perform a fetch (109) over the communication interface and can transmit a first message (107) having the utterance over the communication interface. The communication unit (105) can receive a second message (113) having a result (111) of a recognition of the utterance from the communication interface in response to the fetch (109).

Owner:GOOGLE TECH HLDG LLC

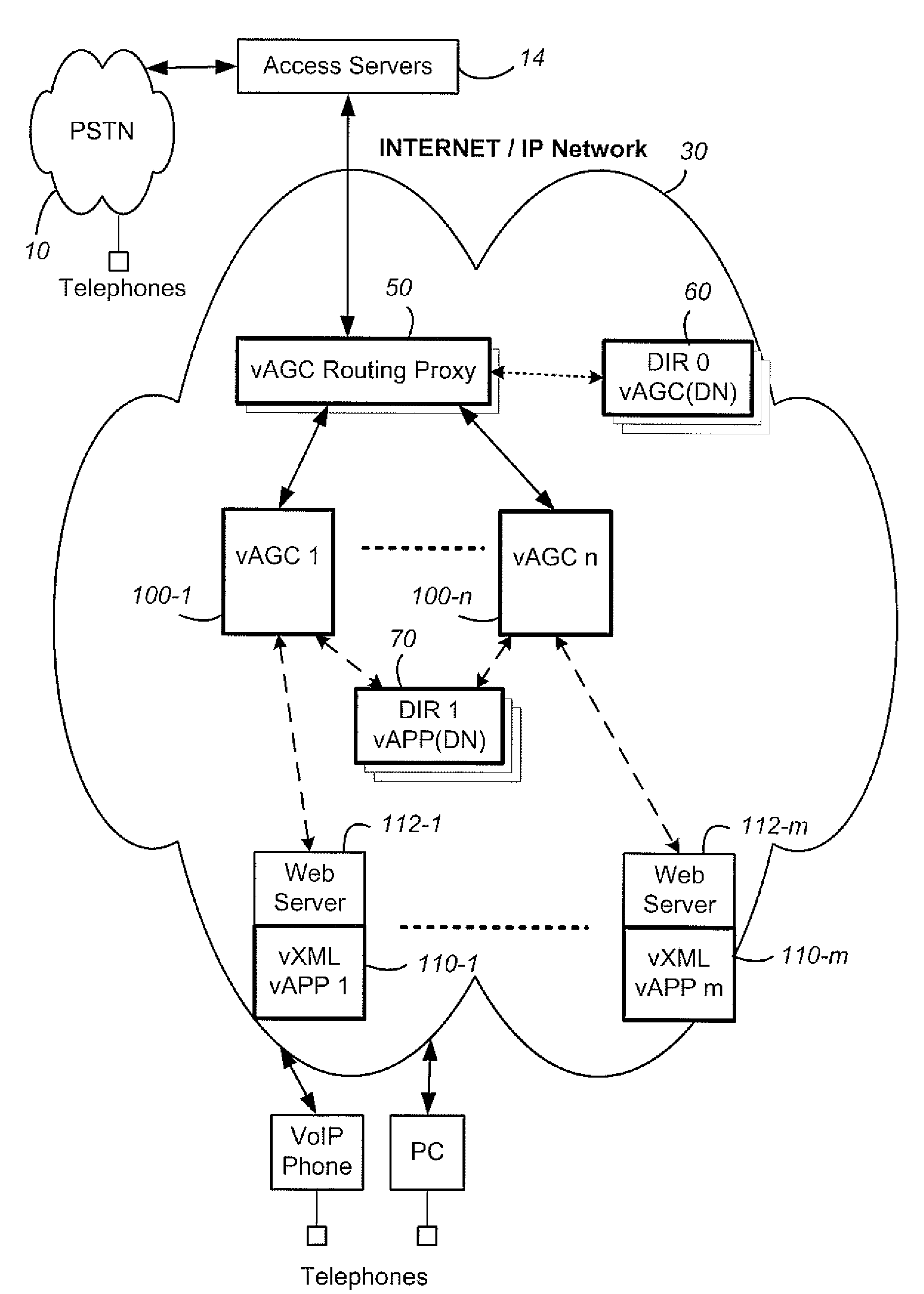

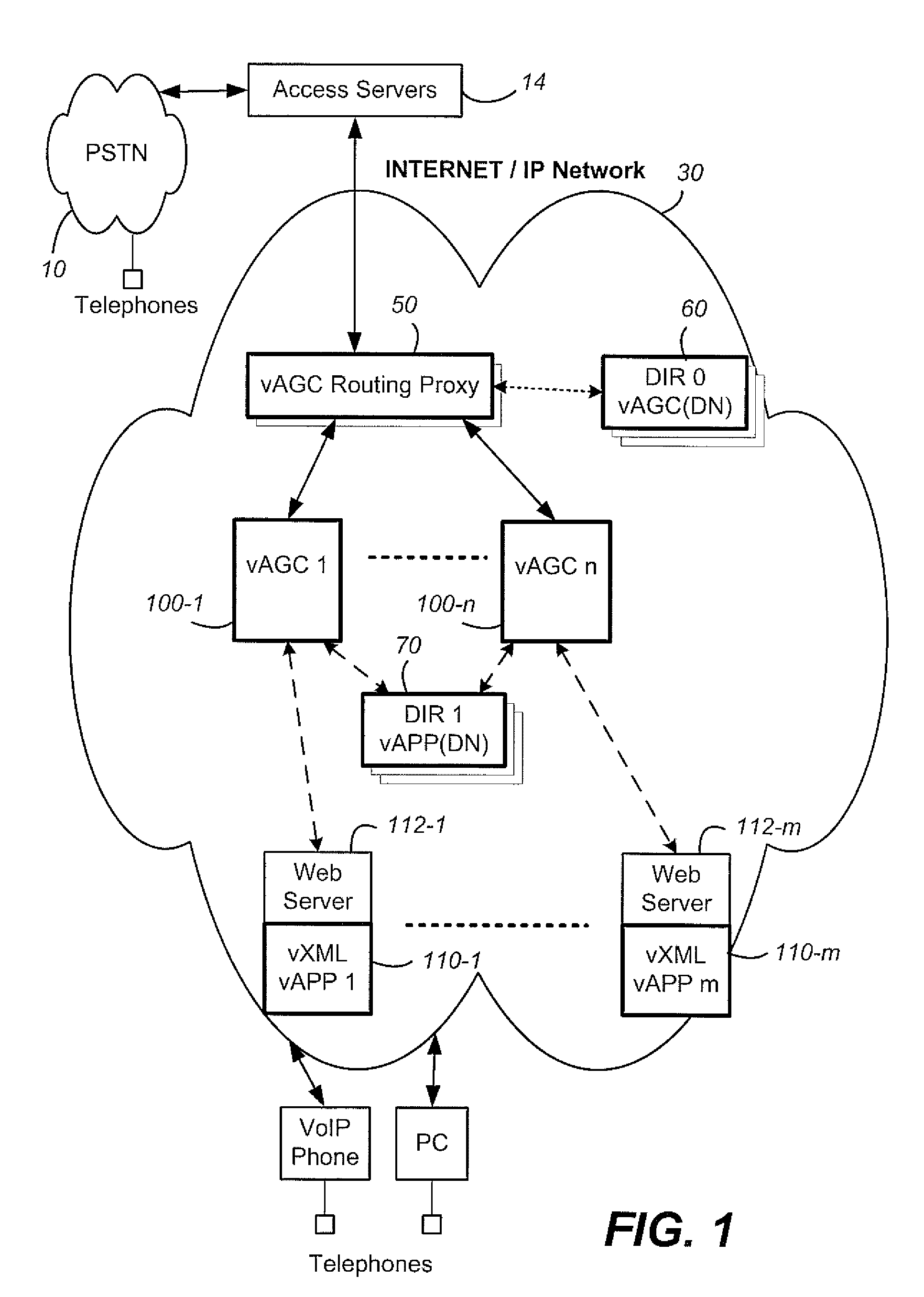

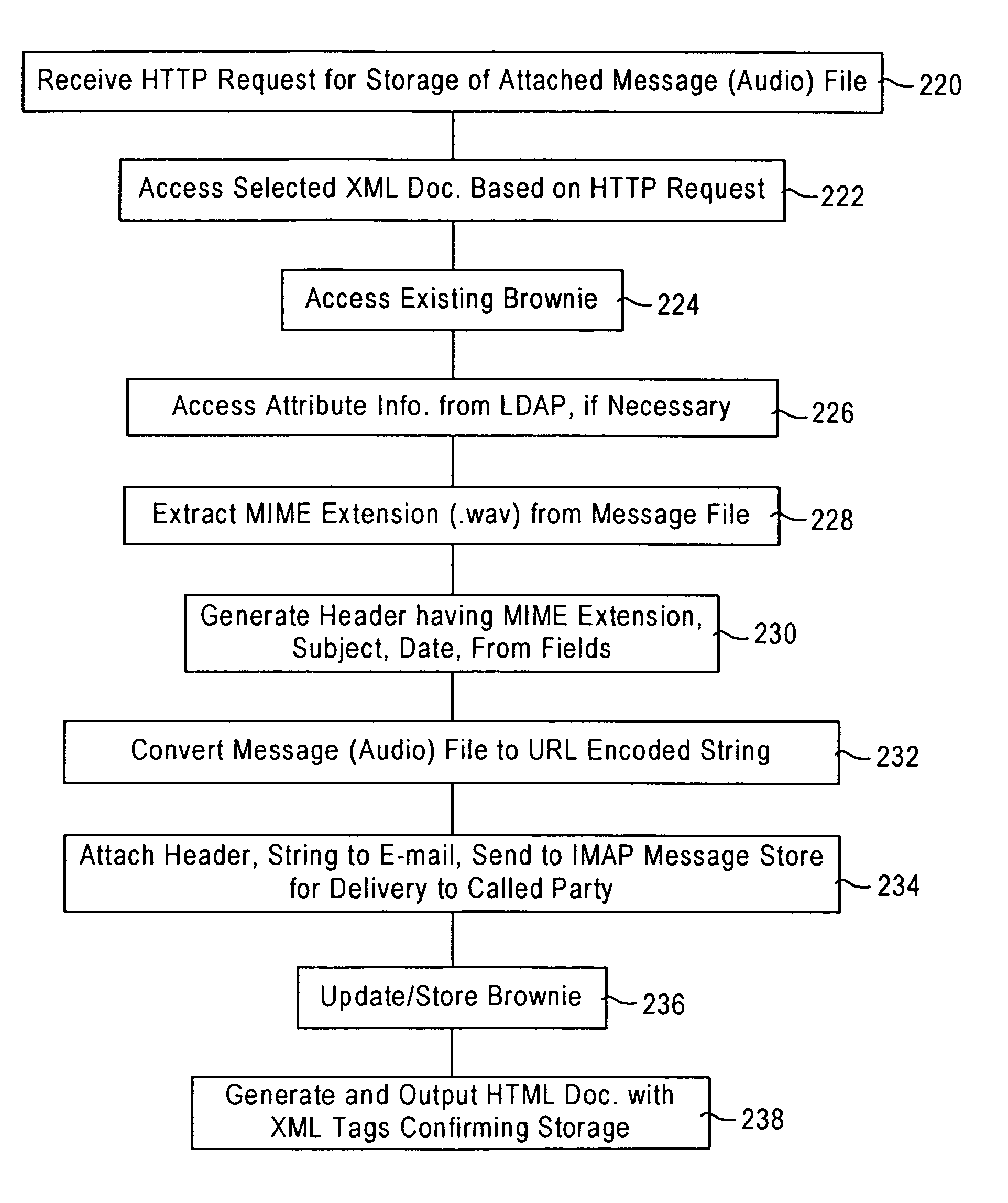

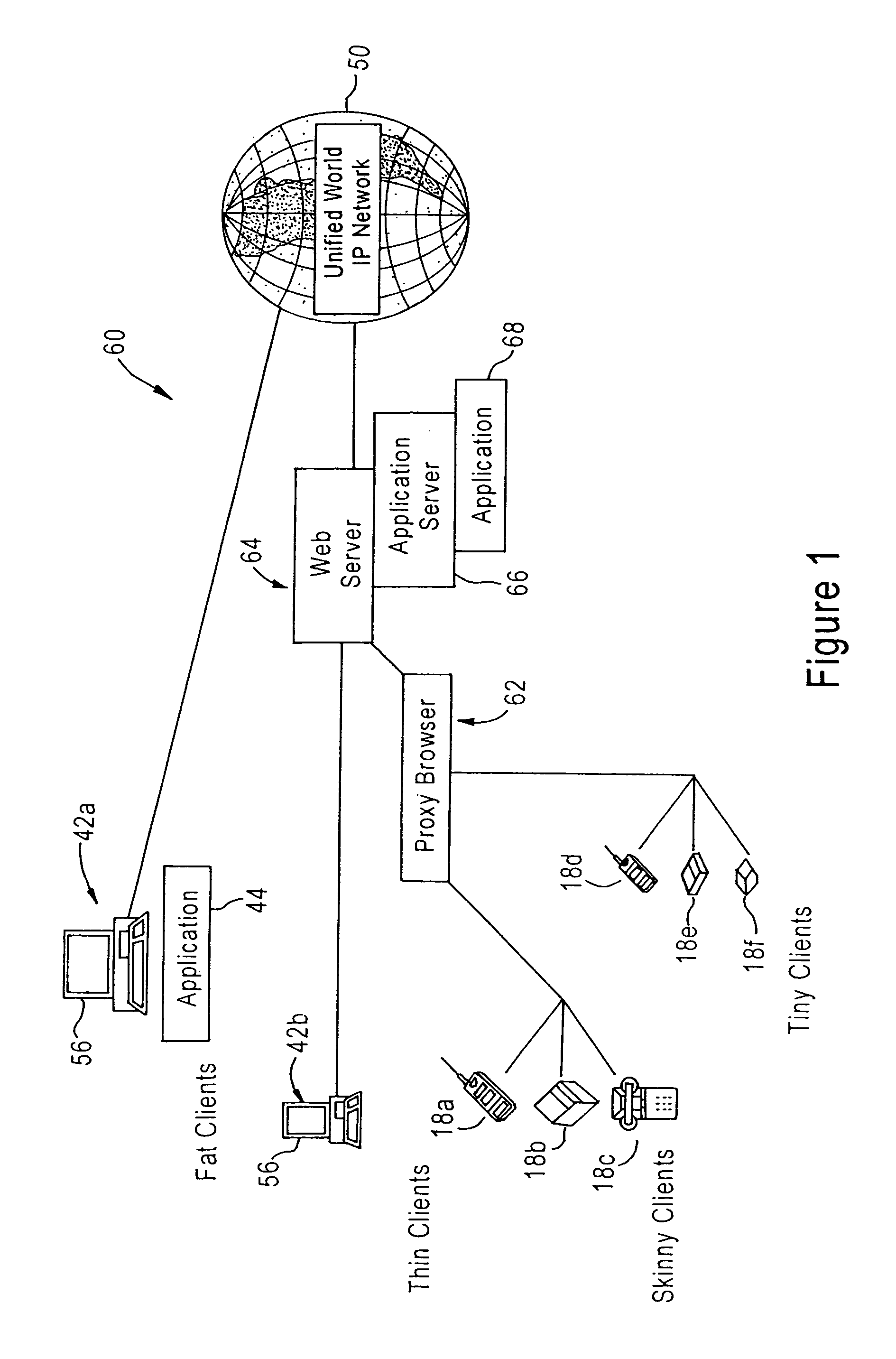

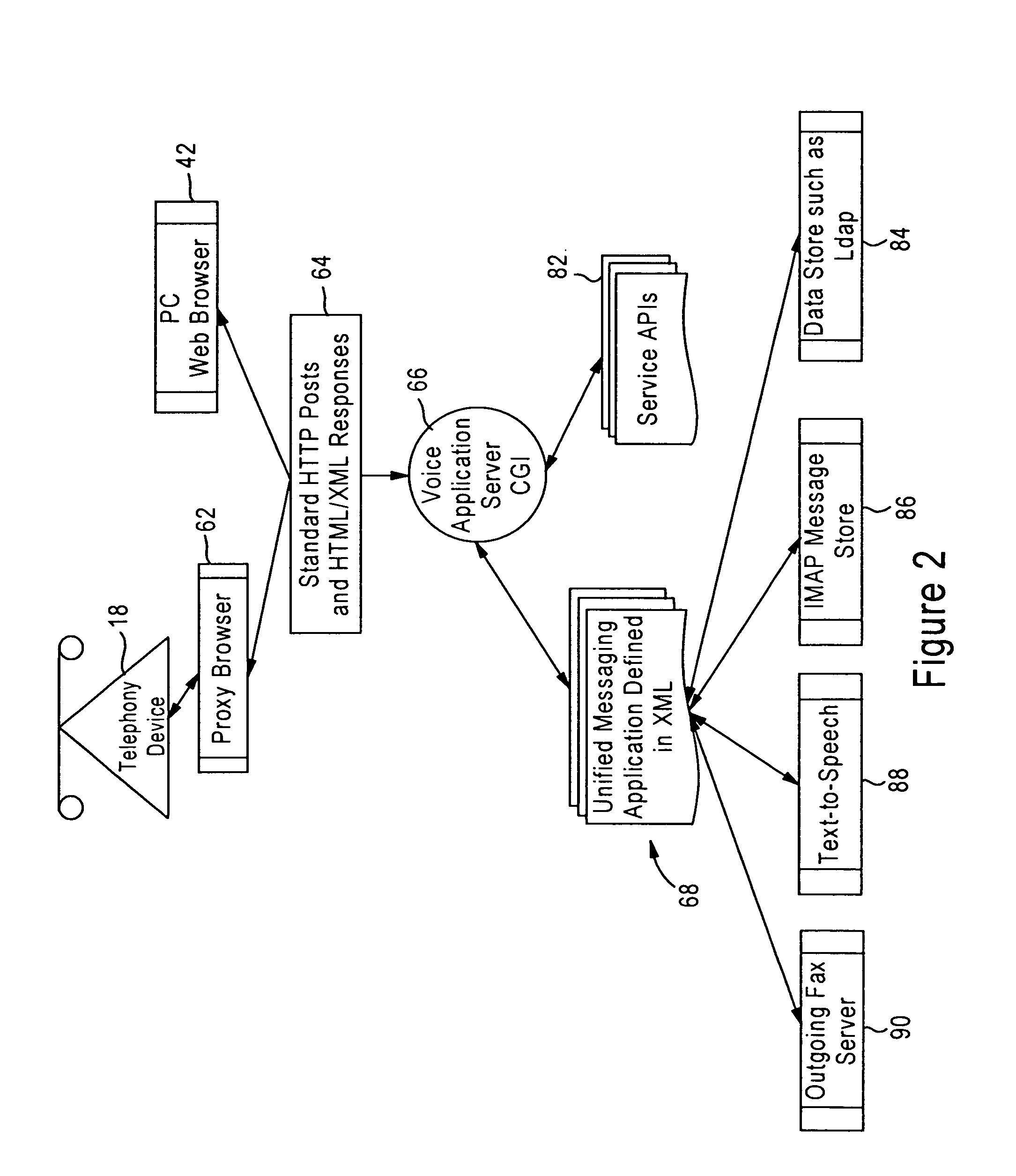

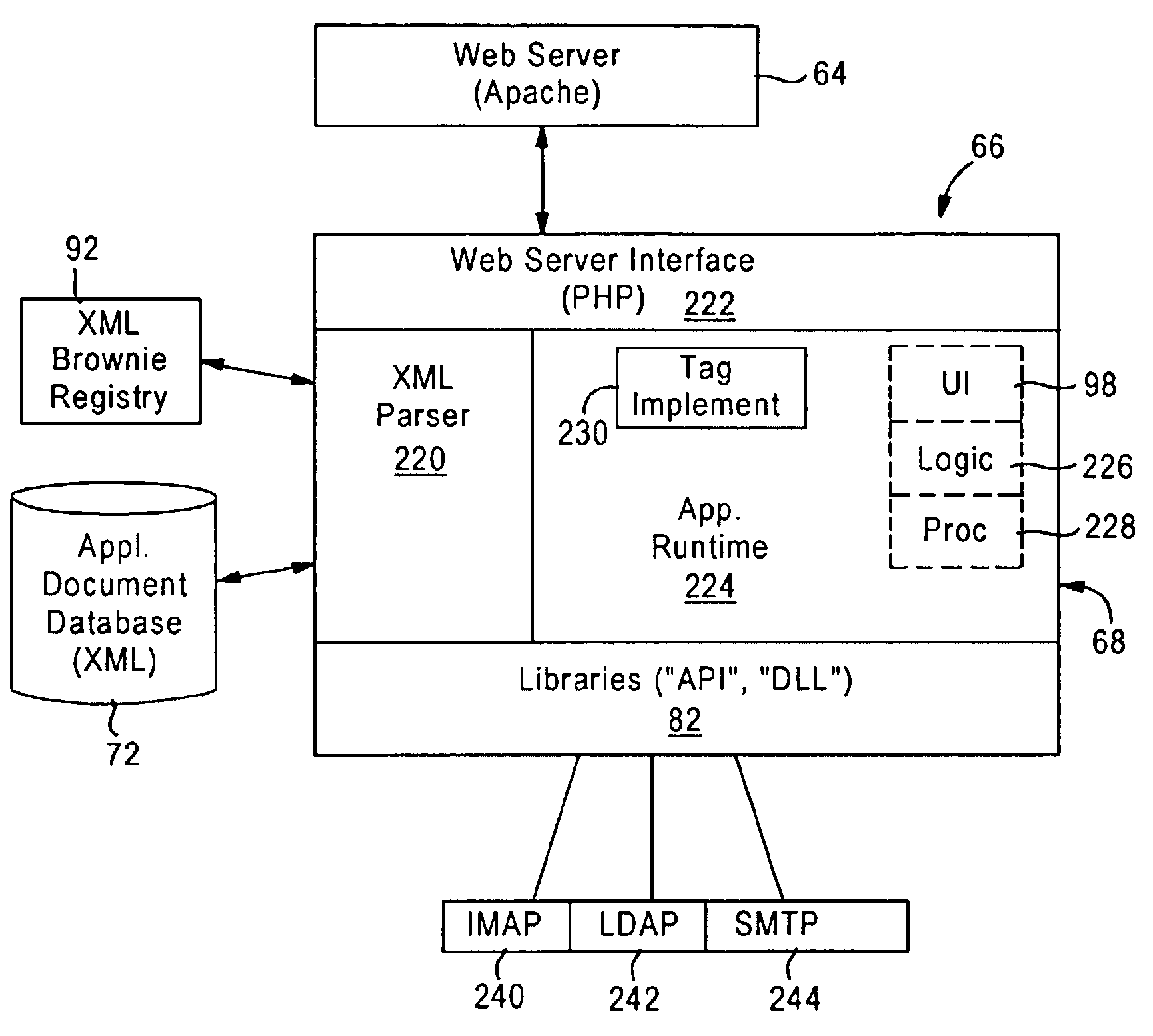

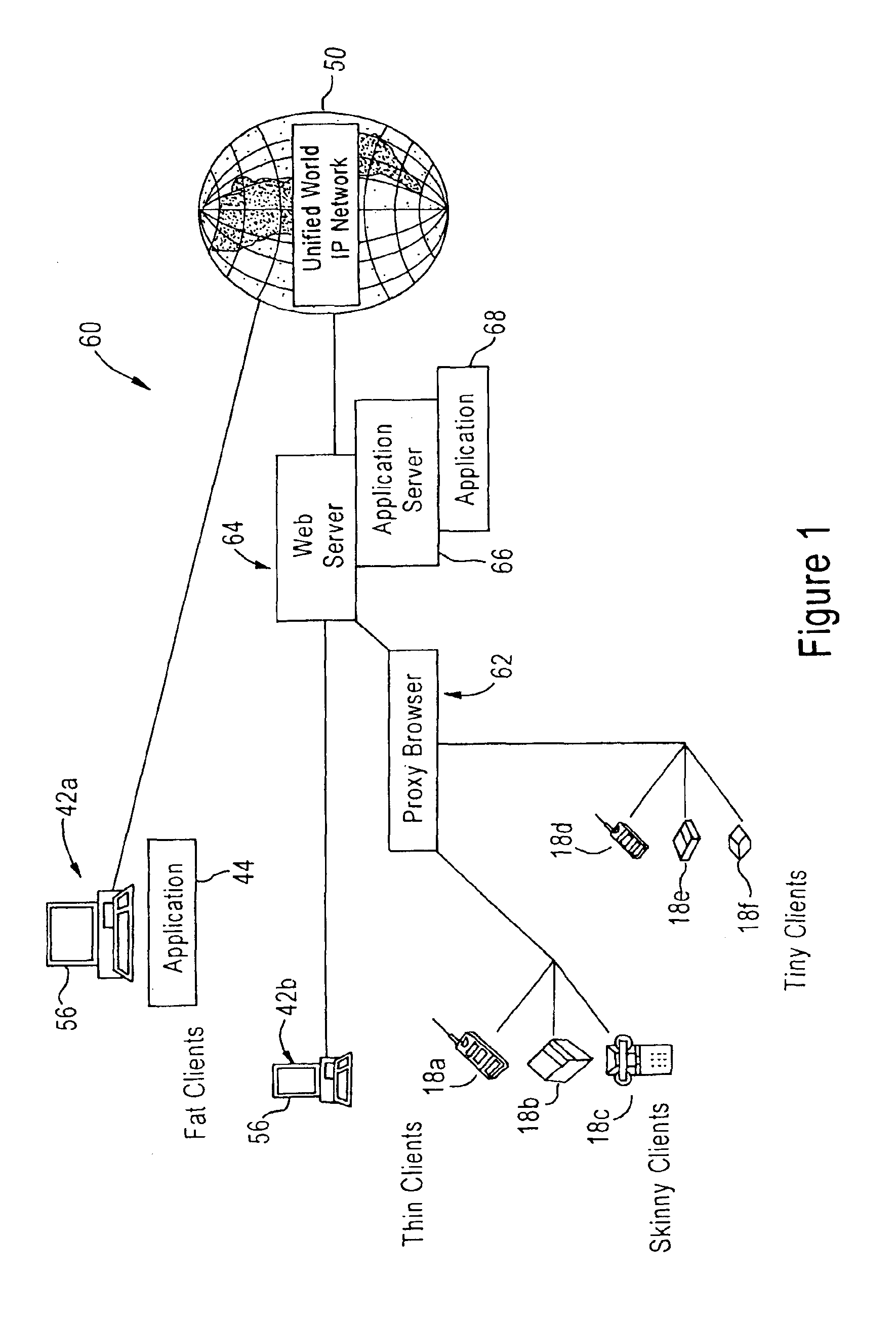

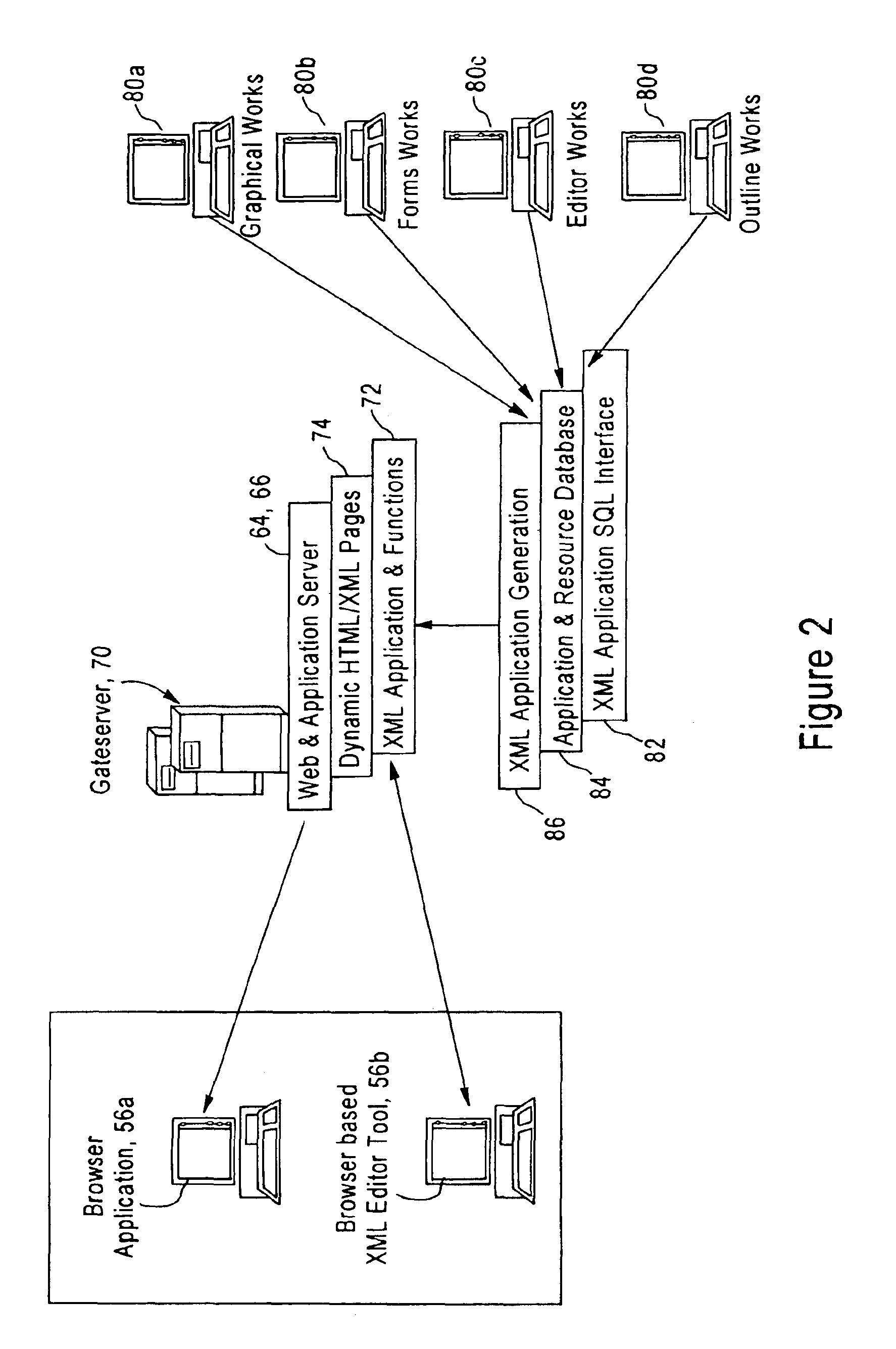

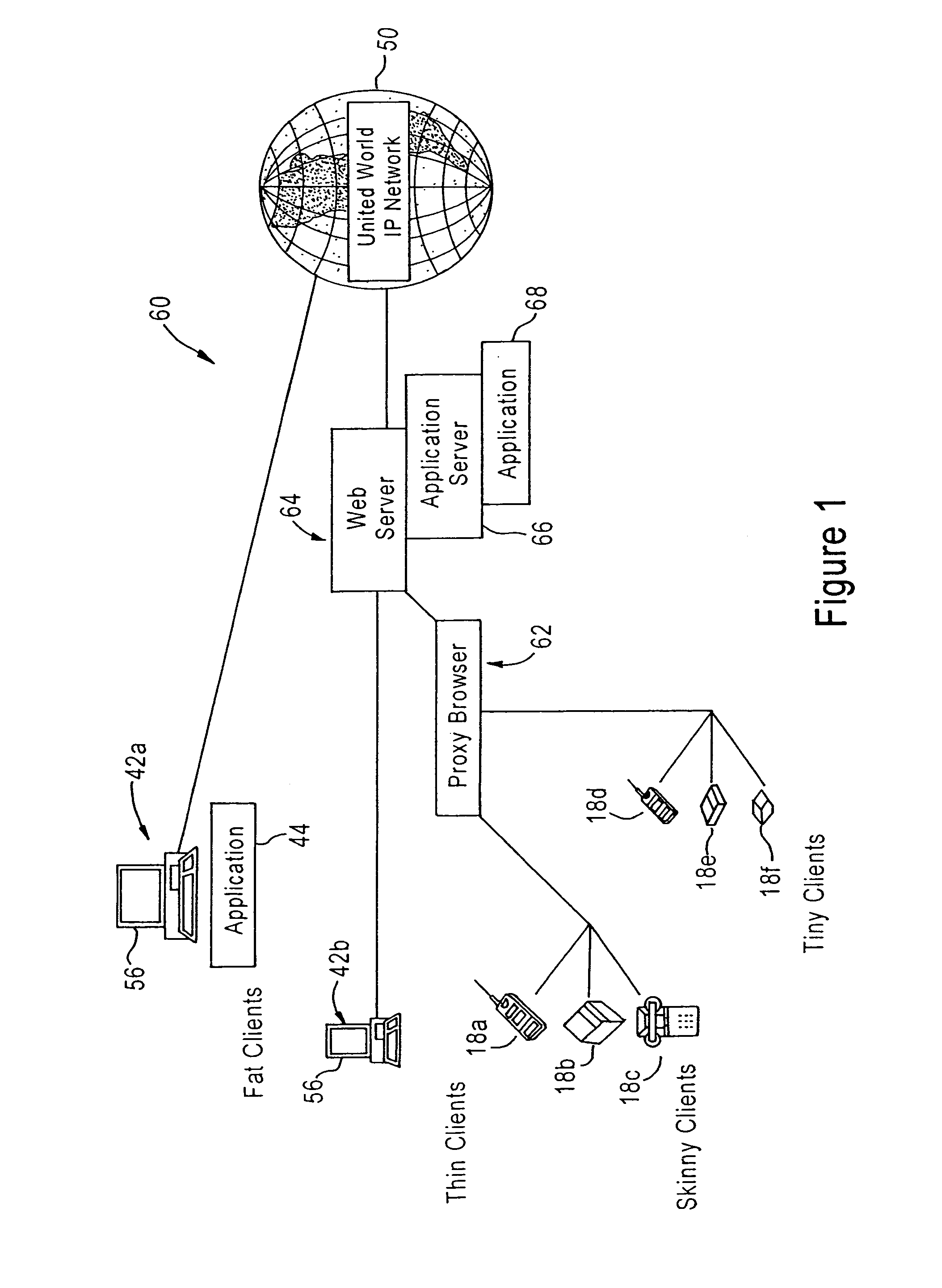

Unified messaging system using web based application server for management of messages using standardized servers

Inactive | US6990514B1 | Special service for subscribers | Multiple digital computer combinations | Personalization | Speech applications

A unified web-based voice messaging system uses an application server, configured for executing a voice application defined by XML documents, that accesses subscriber attributes from a standardized information database server (such as LDAP), and messages from a standardized messaging server (such as IMAP), regardless of message format. The application server, upon receiving a request from a browser serving a user, accesses the standardized database server to obtain attribute information for responding to the voice application operation request. The application server generates an HTML document having media content and control tags for personalized execution of the voice application operation based on the attribute information obtained from the standardized database server. The application server also is configured for storing messages for a called party in the standardized messaging server by storing within the message format information that specifies the corresponding message format. Hence, the application server can respond to a request for a stored message from a subscriber by accessing the stored message from the standardized messaging server, and generating an HTML document having media content and control tags for presenting the subscriber with the stored message in a prescribed format based on the message format and the capabilities of the access device used by the subscriber.

Owner:CISCO TECH INC

System and method for verifying the identity of a user by voiceprint analysis

Active | US20100158207A1 | Devices with voice recognition | Automatic call-answering/message-recording/conversation-recording | Computer network | Speech applications

A distributed voice application execution environment system conducts a voiceprint analysis when a user initially begins to interact with the system. If the system is able to identify the user through a voiceprint analysis, the system immediately begins to interact with the user utilizing voice applications which have been customized for that user.

Owner:XTONE INC

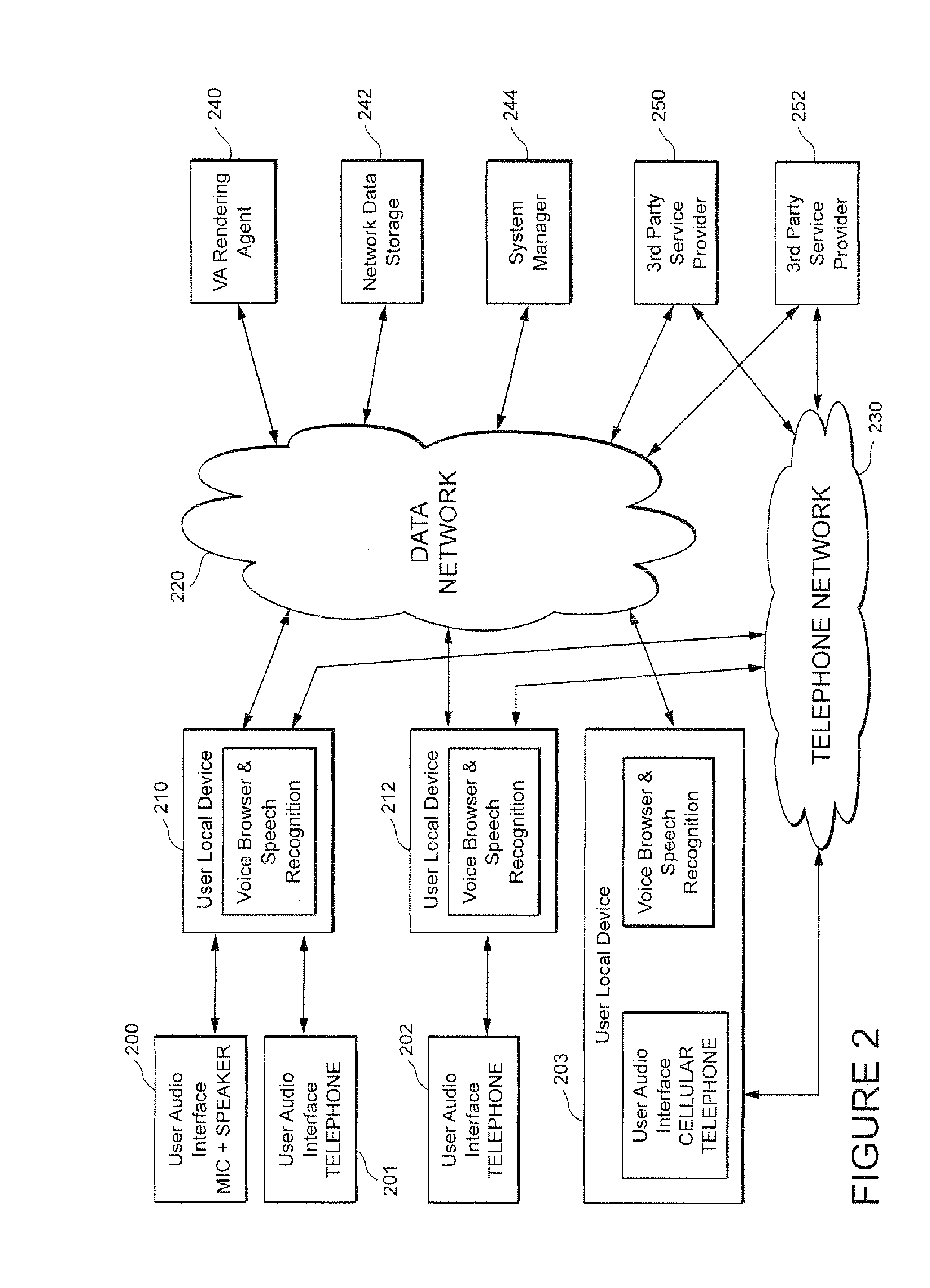

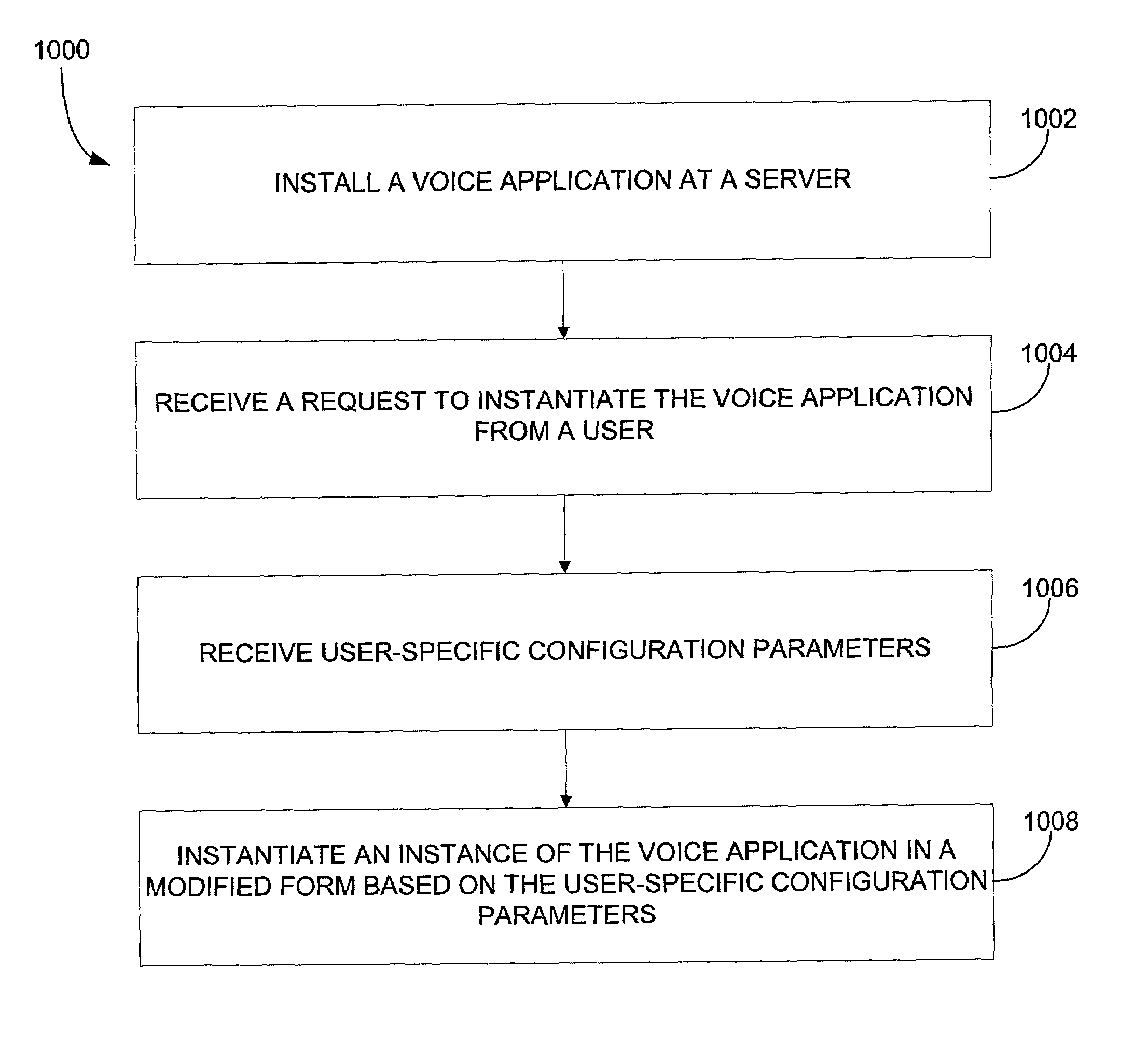

Voice applications and voice-based interface

Inactive | US7334050B2 | Network traffic/resource management | Speech analysis | Computer network | Application software

A system, method and computer program product are provided for initiating a tailored voice application according to an embodiment. First, a voice application is installed at a server. A request to instantiate the voice application is received from a user. User-specific configuration parameters are also received. An instance of the voice application is instantiated in a modified form based on the user-specific configuration parameters. A system, method and computer program product provide a voice-based interface according to one embodiment. A voice application is provided for verbally outputting content to a user. An instance of the voice application is instantiated. Content is selected for output. The content is output verbally using the voice application. The instance of the voice application pauses the output and resumes the output. A method for providing a voice habitat is also provided according to one embodiment. An interface to a habitat is provided. A user is allowed to aggregate content in the habitat utilizing the interface. A designation of content for audible output is received from the user. Some or all of the designated content is output. The user is also allowed to aggregate applications in the habitat utilizing the interface. Spoken commands are received from the user and are interpreted using a voice application. Commands are issued to one or more of the applications in the habitat via the voice application.

Owner:NVIDIA INT

Application server providing personalized voice enabled web application services using extensible markup language documents

Inactive | US6901431B1 | Interconnection arrangements | Automatic call-answering/message-recording/conversation-recording | Personalization | Speech applications

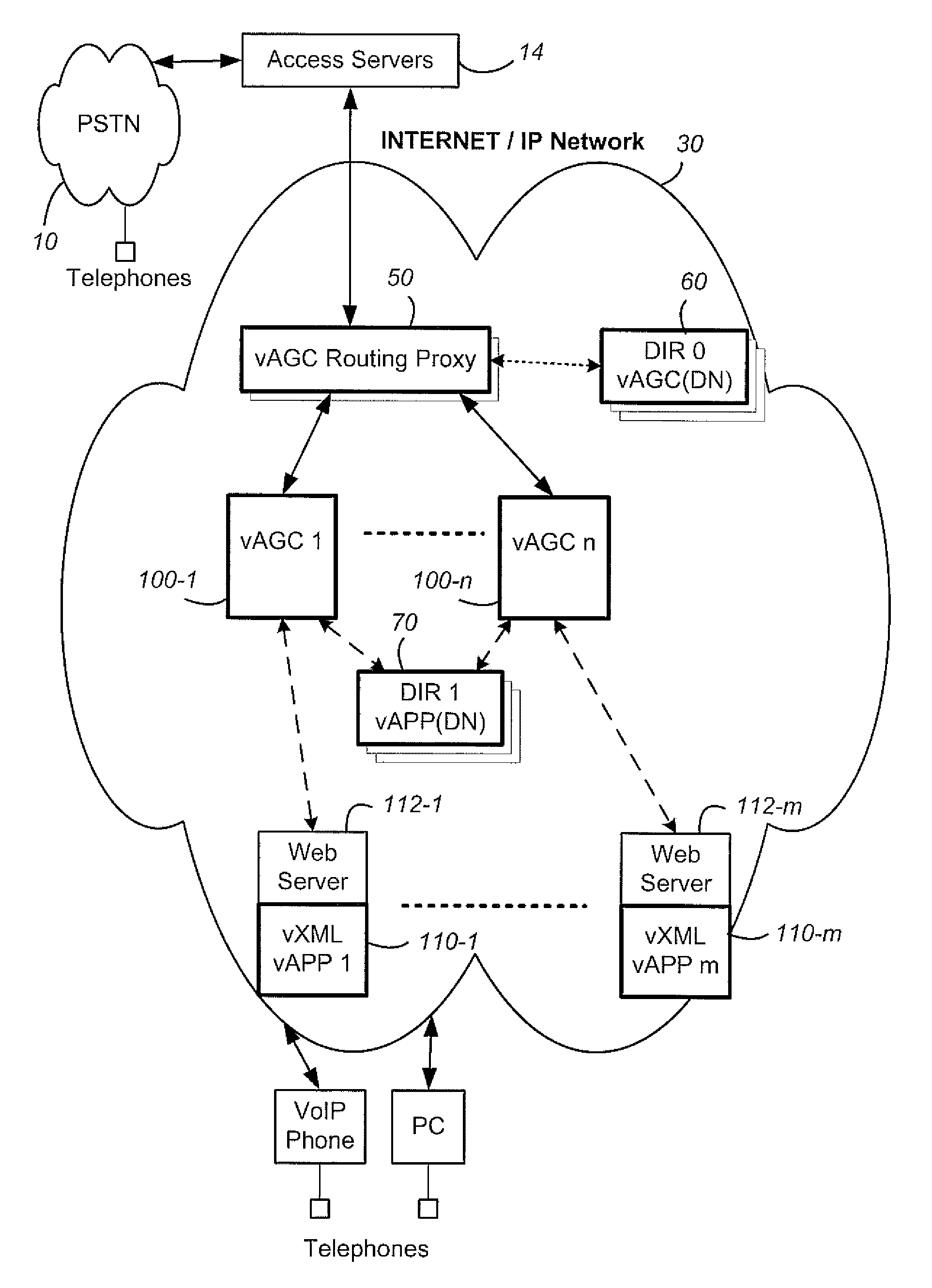

A unified web-based voice messaging system provides voice application control between a web browser and an application server via a hypertext transport protocol (HTTP) connection on an Internet Protocol (IP) network. The application server, configured for executing a voice application defined by XML documents, selects an XML document for execution of a corresponding voice application operation based on a determined presence of a user-specific XML document that specifies the corresponding voice application operation. The application server, upon receiving a voice application operation request from a browser serving a user, determines whether a personalized, user-specific XML document exists for the user and for the corresponding voice application operation. If the application server determines the presence of the personalized XML document for a user-specific execution of the corresponding voice application operation, the application server dynamically generates a personalized HTML page having media content and control tags for personalized execution of the voice application operation; however, if the application server determines an absence of the personalized XML document for the user-specific execution of the corresponding voice application operation, the application server dynamically generates a generic HTML page for generic execution of the voice application operation. Hence, a user can personalize any number of voice application operations, enabling a web-based voice application to be completely customized or merely partially customized.

Owner:CISCO TECH INC
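The personalized-versus-generic selection in this abstract reduces to a presence check, which the following Python sketch illustrates. The directory layout (`users/<user>/<operation>.xml` and `generic/<operation>.xml`) and the function name `select_document` are assumptions; the abstract does not say how the documents are stored.

```python
from pathlib import Path


def select_document(root: Path, user: str, operation: str) -> Path:
    """Prefer the user's personalized XML document, else fall back to generic."""
    # Hypothetical layout: <root>/users/<user>/<operation>.xml
    personalized = root / "users" / user / f"{operation}.xml"
    if personalized.is_file():
        return personalized
    # Hypothetical layout: <root>/generic/<operation>.xml
    return root / "generic" / f"{operation}.xml"
```

Because the check is made per operation, a user who has personalized only some operations still gets generic behaviour for the rest, which matches the "completely customized or merely partially customized" outcome described in the abstract.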

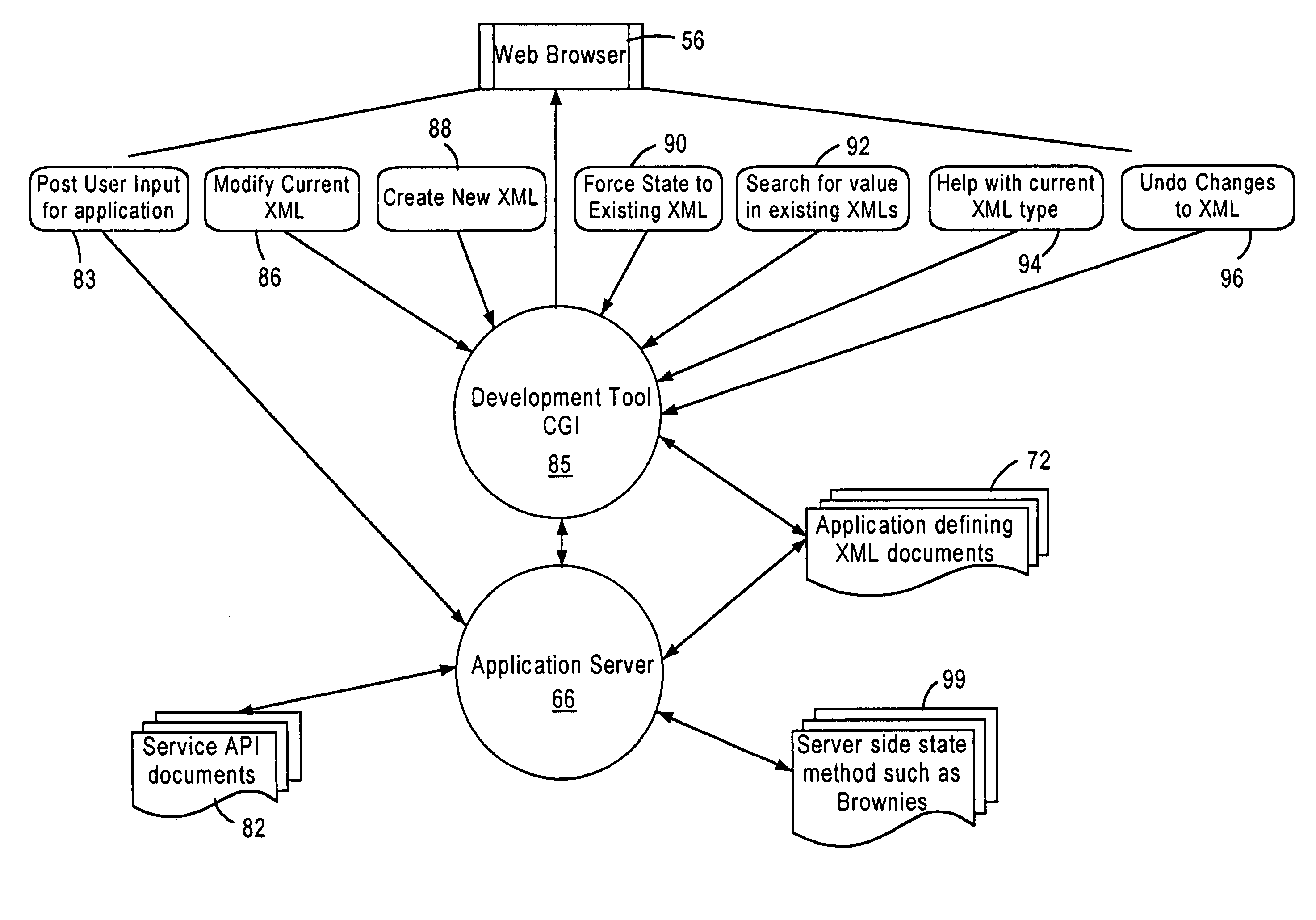

Browser-based arrangement for developing voice enabled web applications using extensible markup language documents

Inactive | US6954896B1 | Promote generation | Operate application operating | Digital computer details | Computer security arrangements | Web browser | Application server

A unified web-based voice messaging system provides voice application control between a web browser and an application server via a hypertext transport protocol (HTTP) connection on an Internet Protocol (IP) network. The application server executes the voice-enabled web application by runtime execution of a first set of extensible markup language (XML) documents that define the voice-enabled web application to be executed. The application server generates an HTML form specifying selected application parameters from an XML document executable by the voice application. The HTML form is supplied to a browser, enabling a user of the browser to input or modify application parameters for the corresponding XML document into the form. The application server inserts the received input application parameters into the XML document, and stores the document.

Owner:CISCO TECH INC

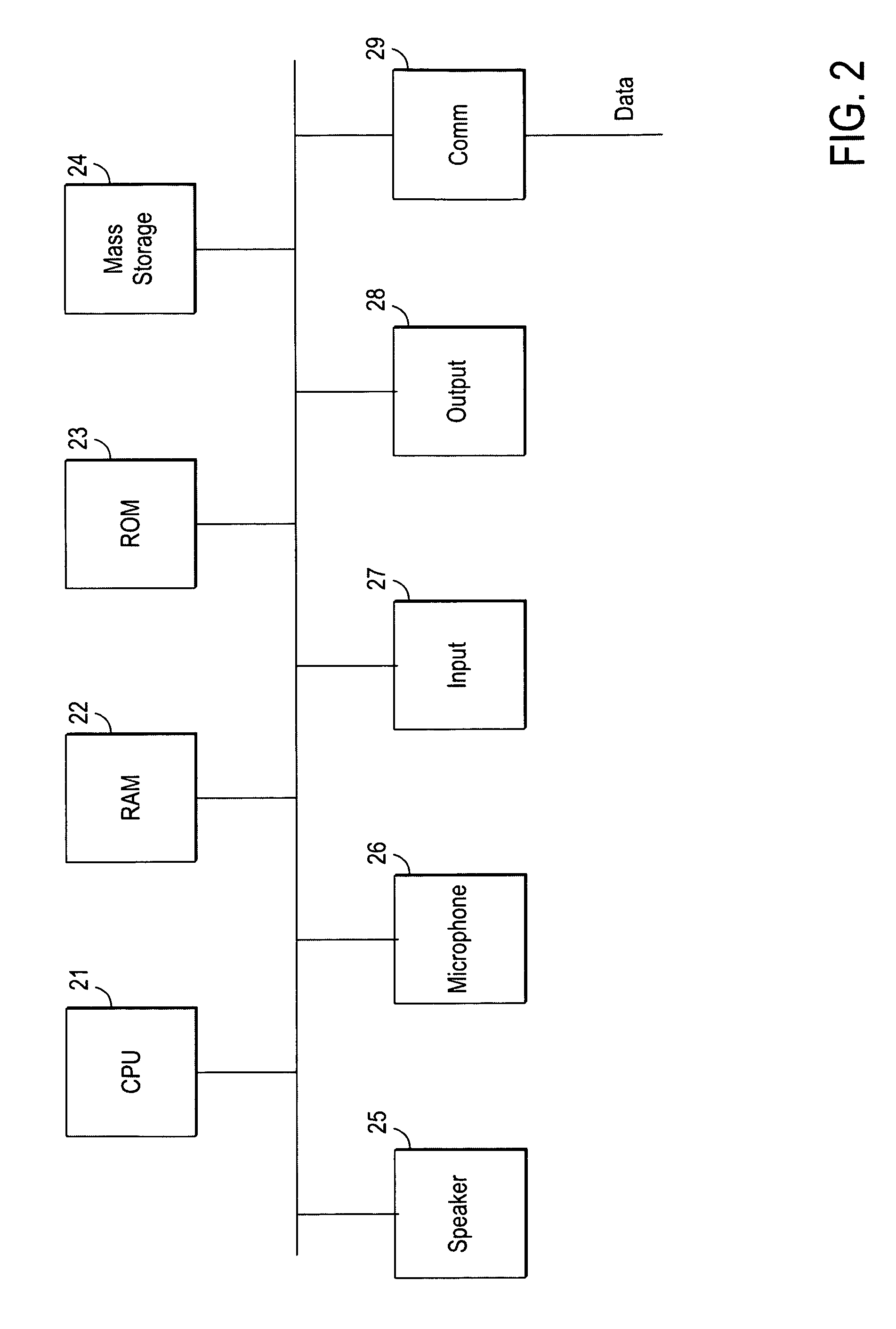

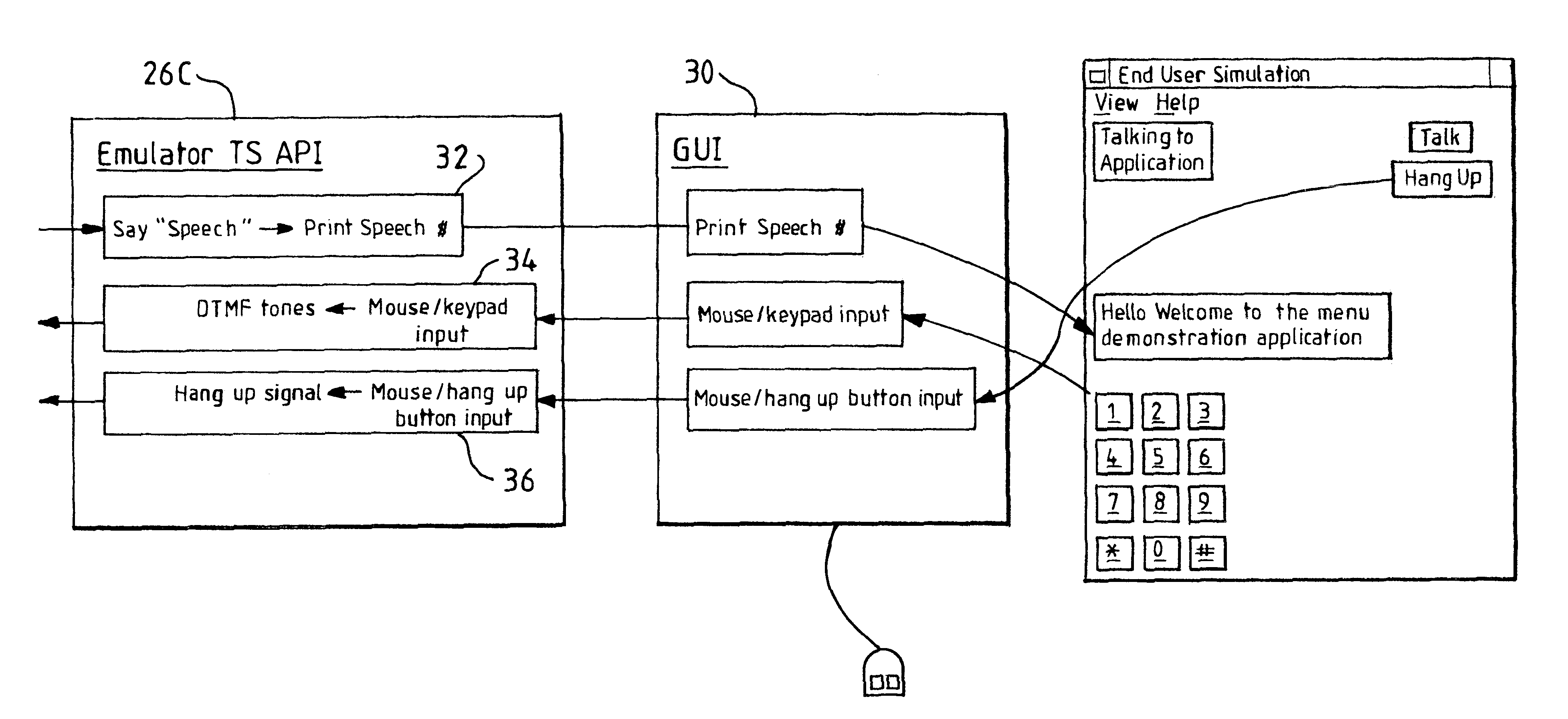

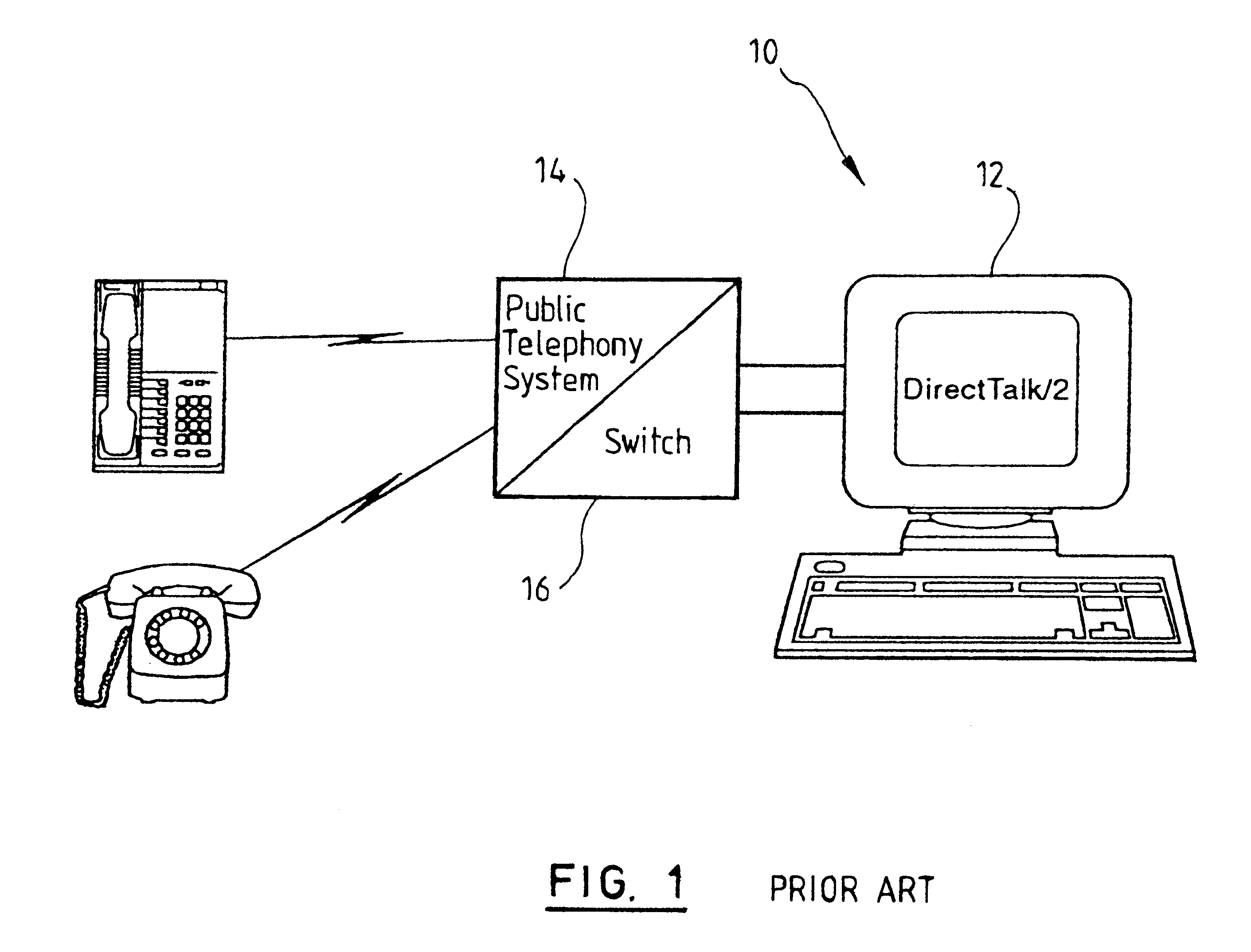

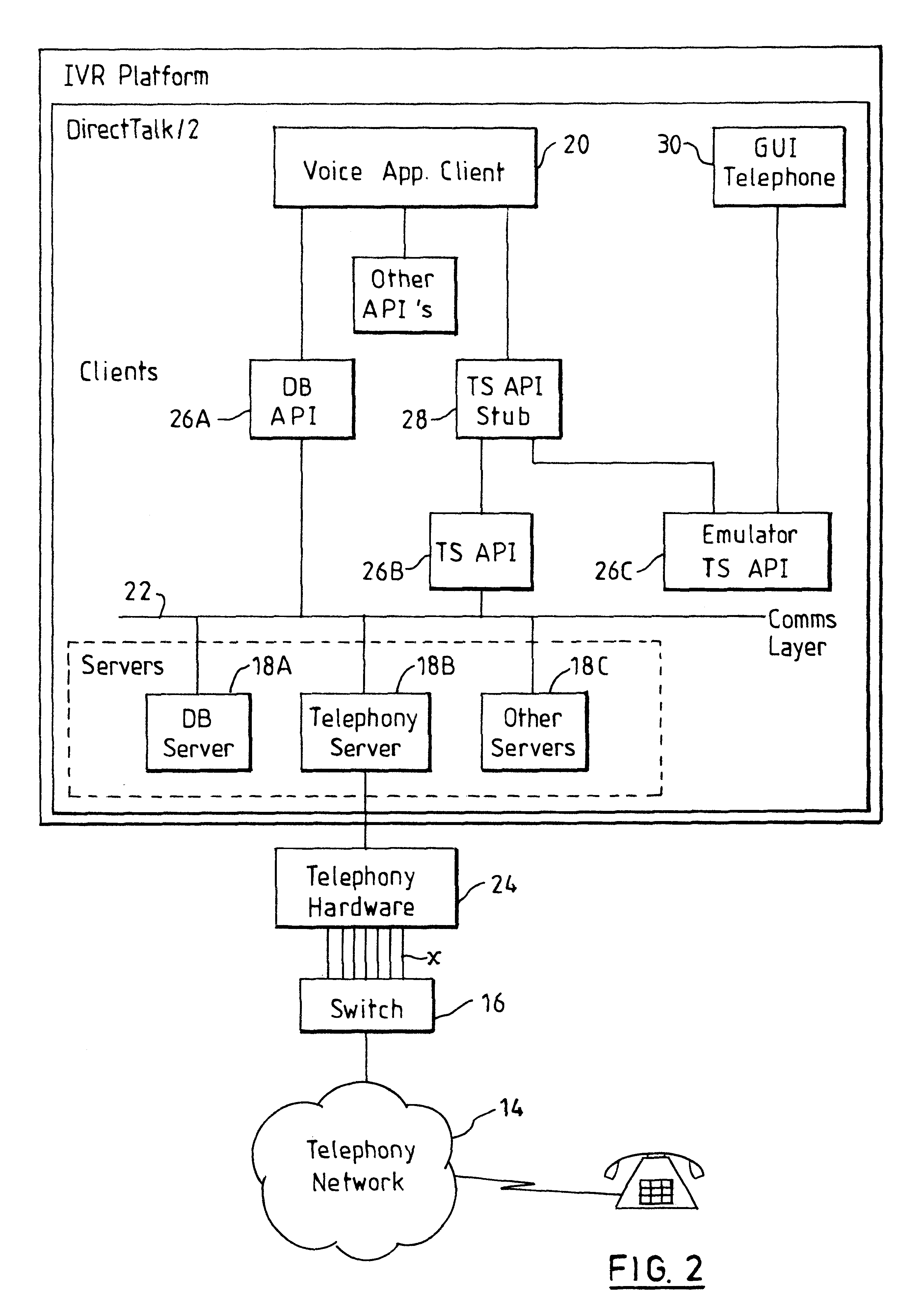

Simulation of telephone handset

Inactive | US6400807B1 | Error detection/correction | Special service for subscribers | Computer hardware | Graphics

A system for developing and testing a telephony application on an interactive voice system without using associated telephony hardware. The telephony application normally sends and receives hardware signals to and from the system telephony hardware for communication with a telephone. In this invention a telephony emulator intercepts the hardware signals from the voice application and sends back simulated hardware signals to the voice application. A graphical user interface provides the user output in response to the telephony emulator means and accepts and passes on user input to the emulator means.

Owner:TWITTER INC

Method for creating and deploying system changes in a voice application system

Active | US20050135338A1 | Special service for subscribers | Automatic call-answering/message-recording/conversation-recording | Speech applications | Software

A system for configuring and implementing changes to a voice application system has a first software component and host node for configuring one or more changes; a second software component and host node for receiving and implementing the configured change or changes; and a data network connecting the host nodes. In a preferred embodiment, a pre-configured change-order resulting from the first software component and host node is deployed after pre-configuration, deployment and execution thereof requiring only one action.

Owner:HTC CORP

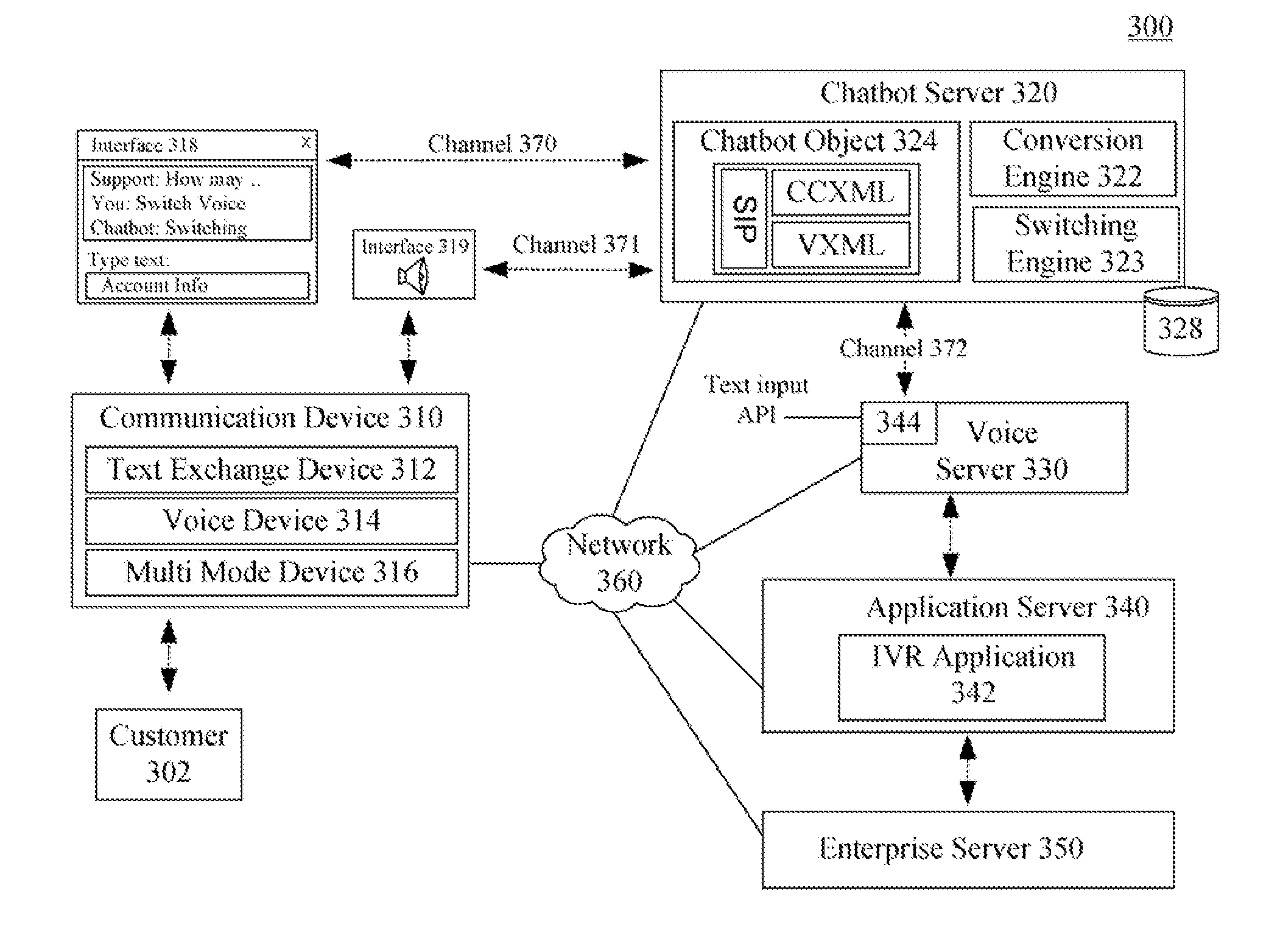

Switching between modalities in a speech application environment extended for interactive text exchanges

Active | US20080147406A1 | Multiple digital computer combinations | Speech recognition | VoiceXML | Speech applications

The present solution includes a method for dynamically switching modalities in a dialogue session involving a voice server. In the method, a dialogue session can be established between a user and a speech application. During the dialogue session, the user can interact using an original modality, which is either a speech modality, a text exchange modality, or a multi mode modality that includes a text exchange modality. The speech application can interact using a speech modality. A modality switch trigger can be detected that changes the original modality to a different modality. The modality transition to the second modality can be transparent to the speech application. The speech application can be a standard VoiceXML based speech application that lacks an inherent text exchange capability.

Owner:NUANCE COMM INC

Applications Server and Method

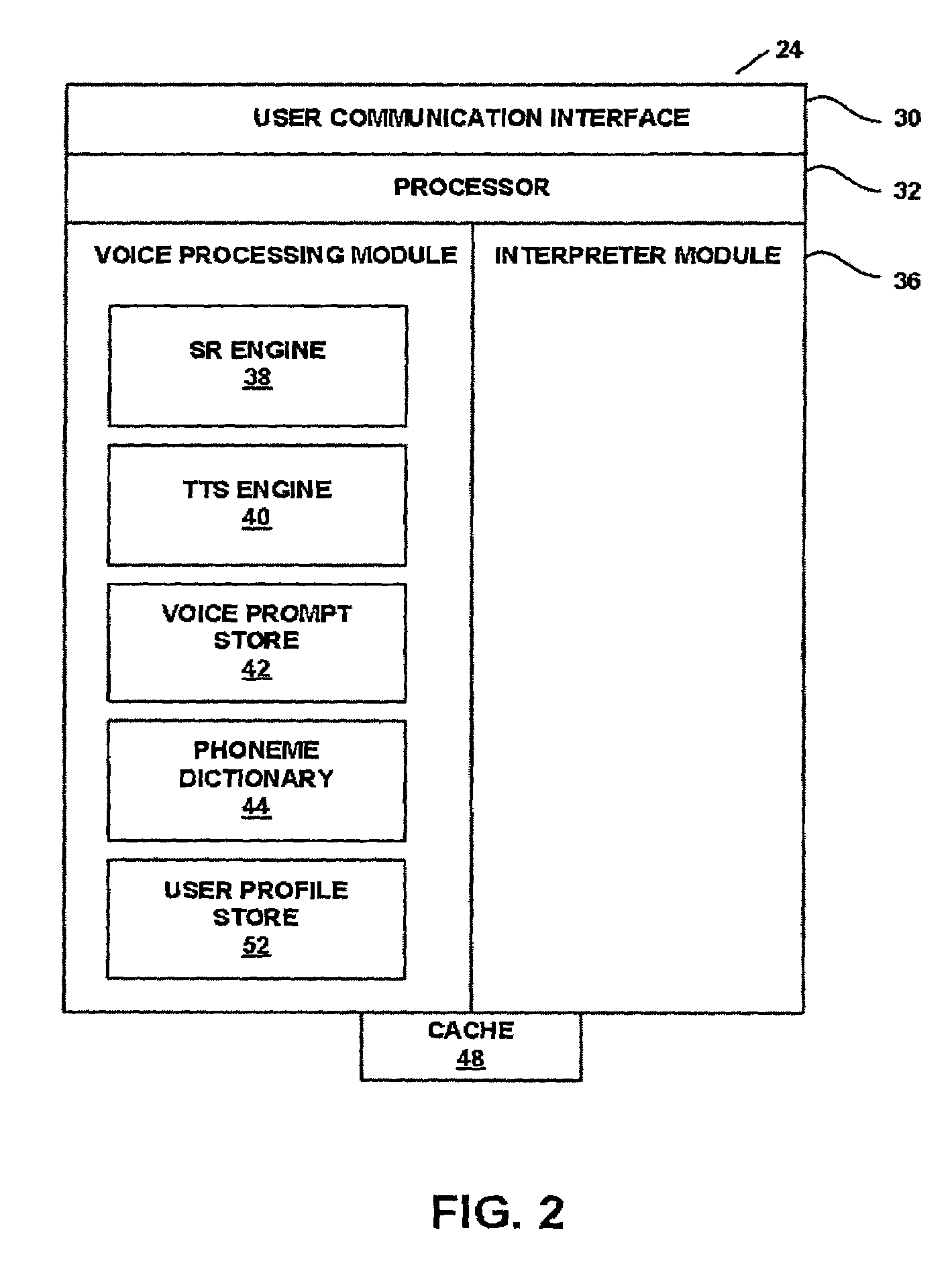

A speech applications server is arranged to provide a user driven service in accordance with an application program in response to user commands for selecting service options. The user is prompted by audio prompts to issue the user commands. The application program comprises a state machine operable to determine a state of the application program from one of a predetermined set of states defining a logical procedure through the user selected service options, transitions between states being determined in accordance with logical conditions to be satisfied in order to change between one state of the set and another state of the set. The logical conditions include whether a user has provided one of a set of possible commands. A prompt selection engine is operable to generate the audio prompts for prompting the commands from the user in accordance with predetermined rules. The prompt selected by the prompt selection engine is determined at run-time. Since the state machine and the prompt selection engine are separate entities and the prompts to be selected are determined at run-time, it is possible to effect a change to the prompt selection engine without influencing the operation of the state machine, enabling different customisations to be provided for the same user driven services. In particular, this allows multilingual support, with the possibility of providing rules that adapt the prompt structure so that grammatical differences between languages are taken into account, thus providing higher quality multiple language support.

Owner:ORANGE SA (FR)
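The separation between the state machine and the prompt selection engine can be illustrated with a small Python sketch. The states, commands, prompt texts, and function names below are invented for illustration; the point is only that the prompt rules can be swapped (for example, per language) without touching the transition table.

```python
# Hypothetical state machine: (current state, user command) -> next state.
TRANSITIONS = {
    ("main", "balance"): "balance",
    ("main", "transfer"): "transfer",
    ("balance", "back"): "main",
    ("transfer", "back"): "main",
}


def next_state(state: str, command: str) -> str:
    """Stay in the current state when the command is not valid there."""
    return TRANSITIONS.get((state, command), state)


# Hypothetical prompt rules, kept apart from the transitions above.
PROMPT_RULES = {
    "en": {
        "main": "Say balance or transfer.",
        "balance": "Here is your balance. Say back to return.",
        "transfer": "Who do you want to pay? Say back to return.",
    },
    "fr": {
        "main": "Dites solde ou virement.",
        "balance": "Voici votre solde. Dites retour pour revenir.",
        "transfer": "Qui voulez-vous payer ? Dites retour pour revenir.",
    },
}


def select_prompt(state: str, language: str) -> str:
    """Choose the prompt at run-time from the rules for the given language."""
    return PROMPT_RULES[language][state]
```

Adding a language in this sketch means adding an entry to `PROMPT_RULES` only; `TRANSITIONS` and `next_state` stay unchanged.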

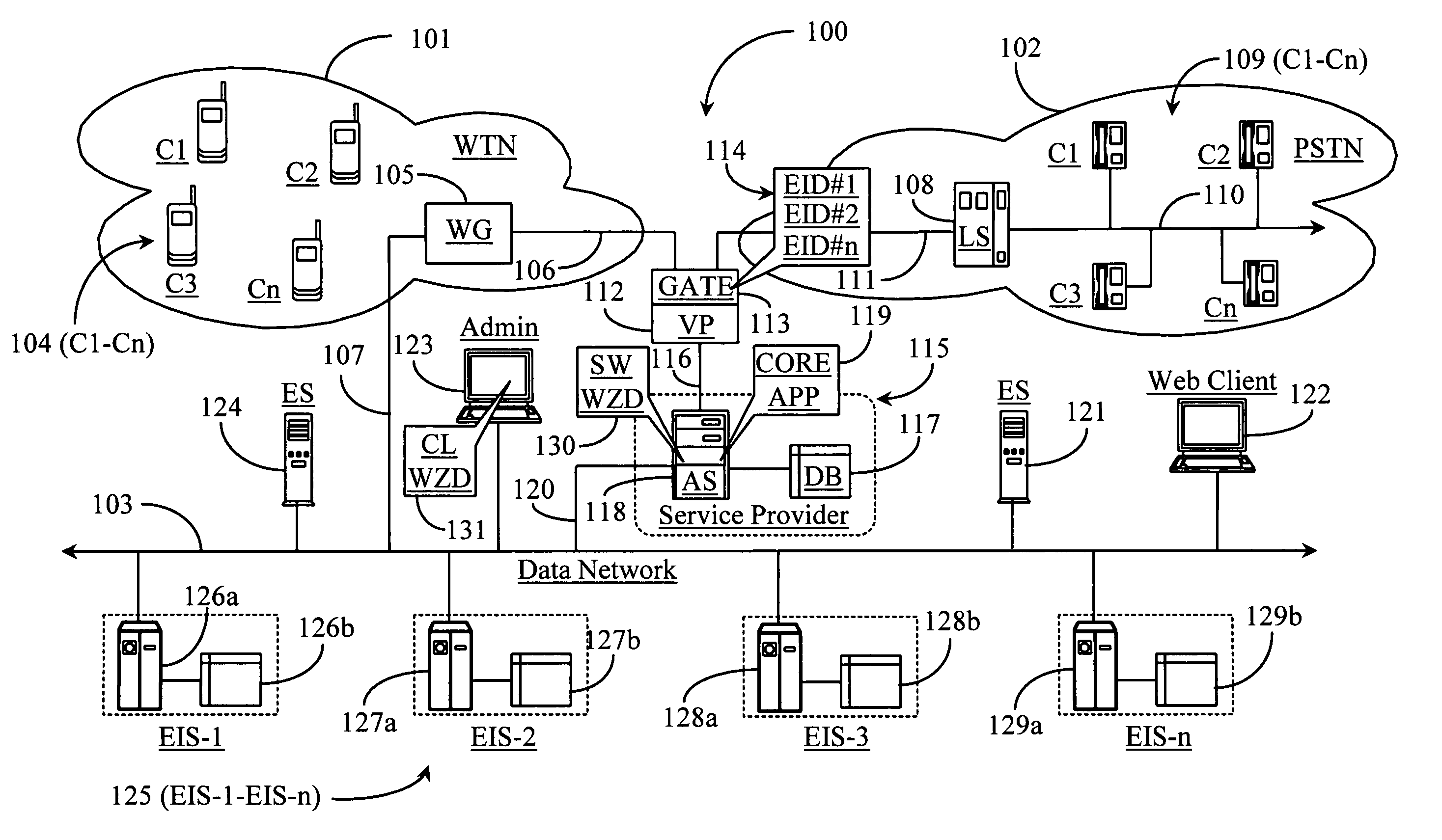

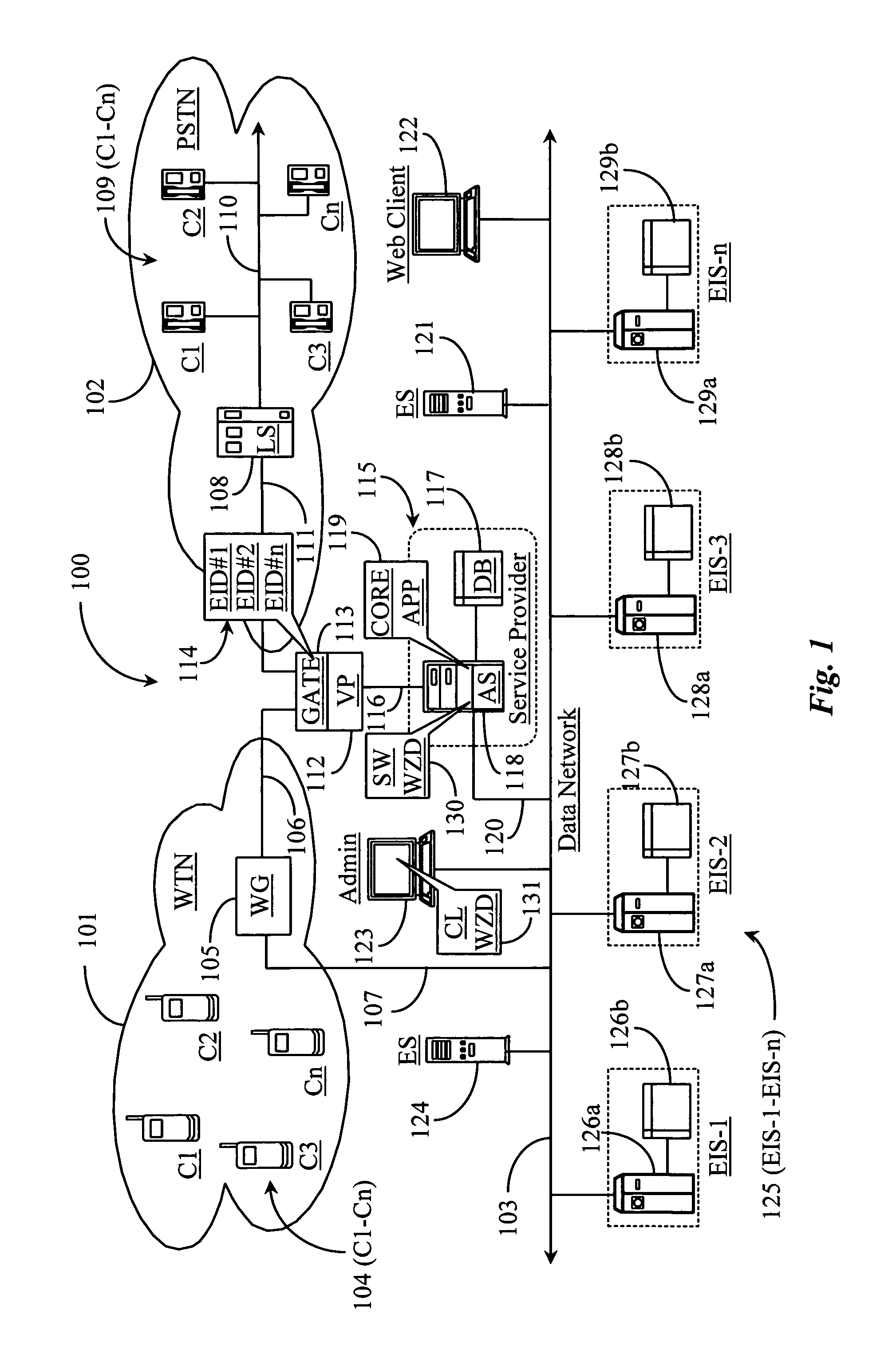

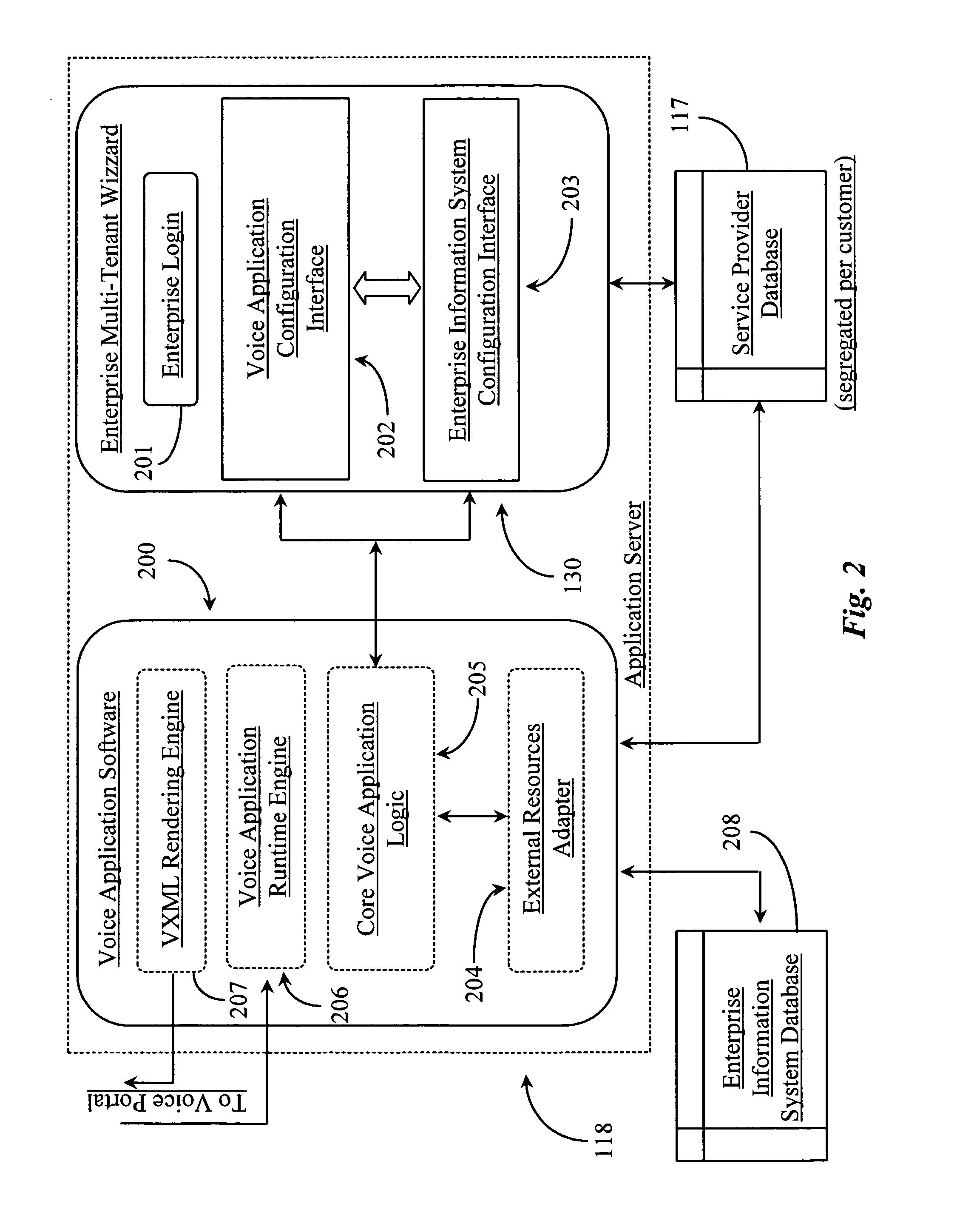

Multi-tenant self-service VXML portal

Inactive | US20050163136A1 | Data switching by path configuration | Automatic exchanges | Application server | Telephone network

A multi-tenant voice extensible markup language (VXML) voice system includes a voice portal connected to at least one telephony network; a voice application server integrated with the voice portal; and a multi-tenant configuration application integrated with the voice application server, the configuration application accessible to the tenants from a data packet network.

Owner:APPTERA

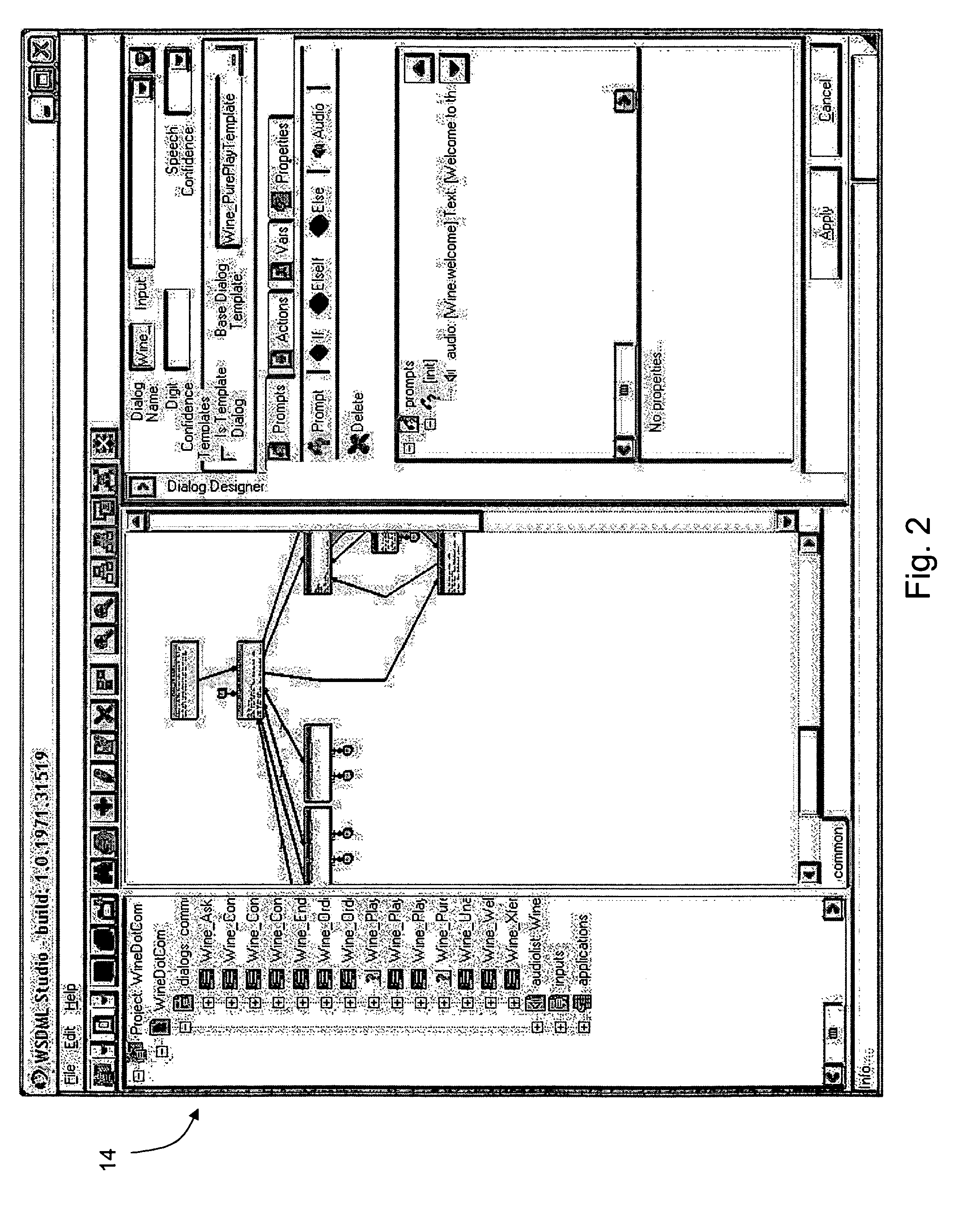

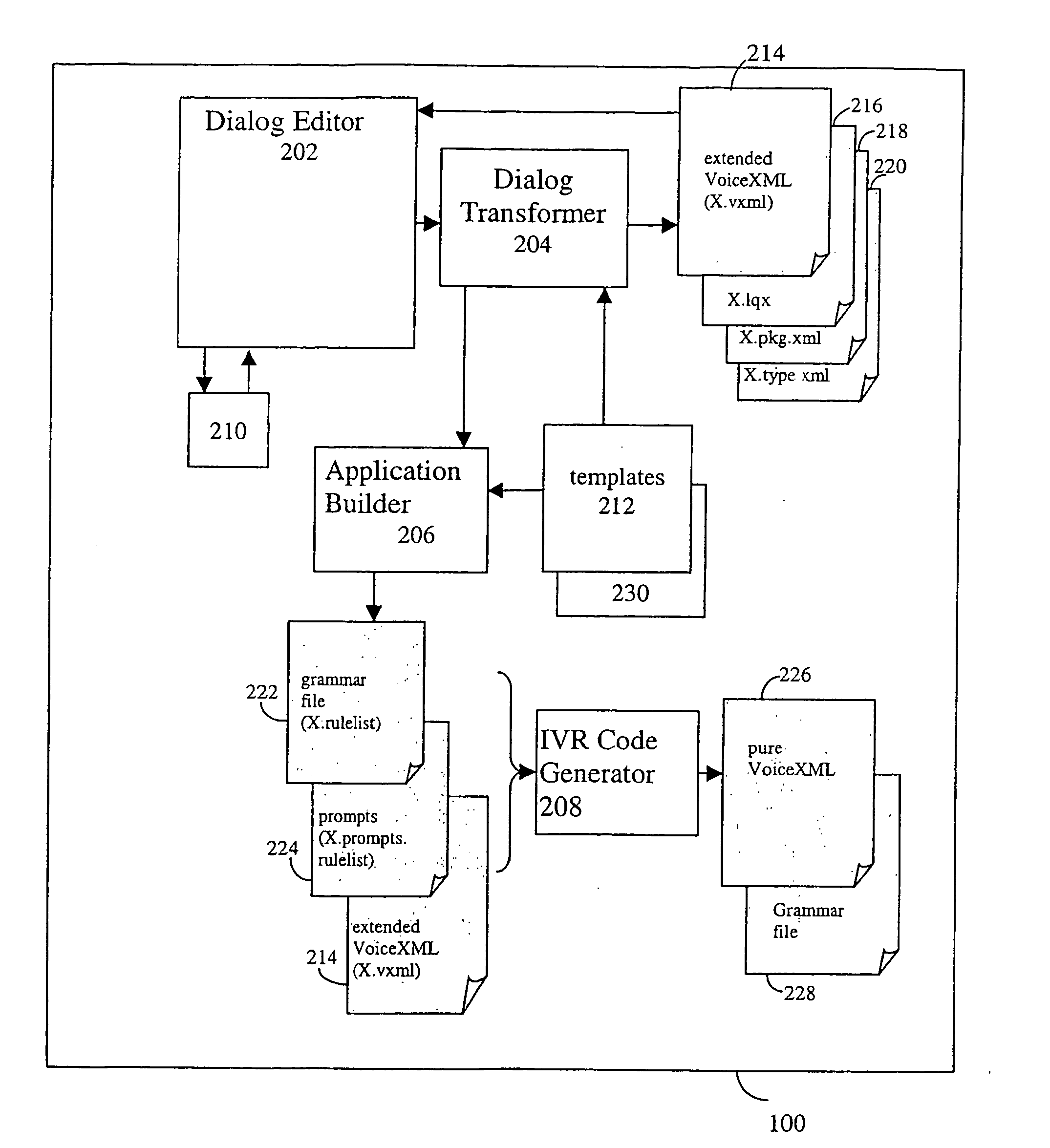

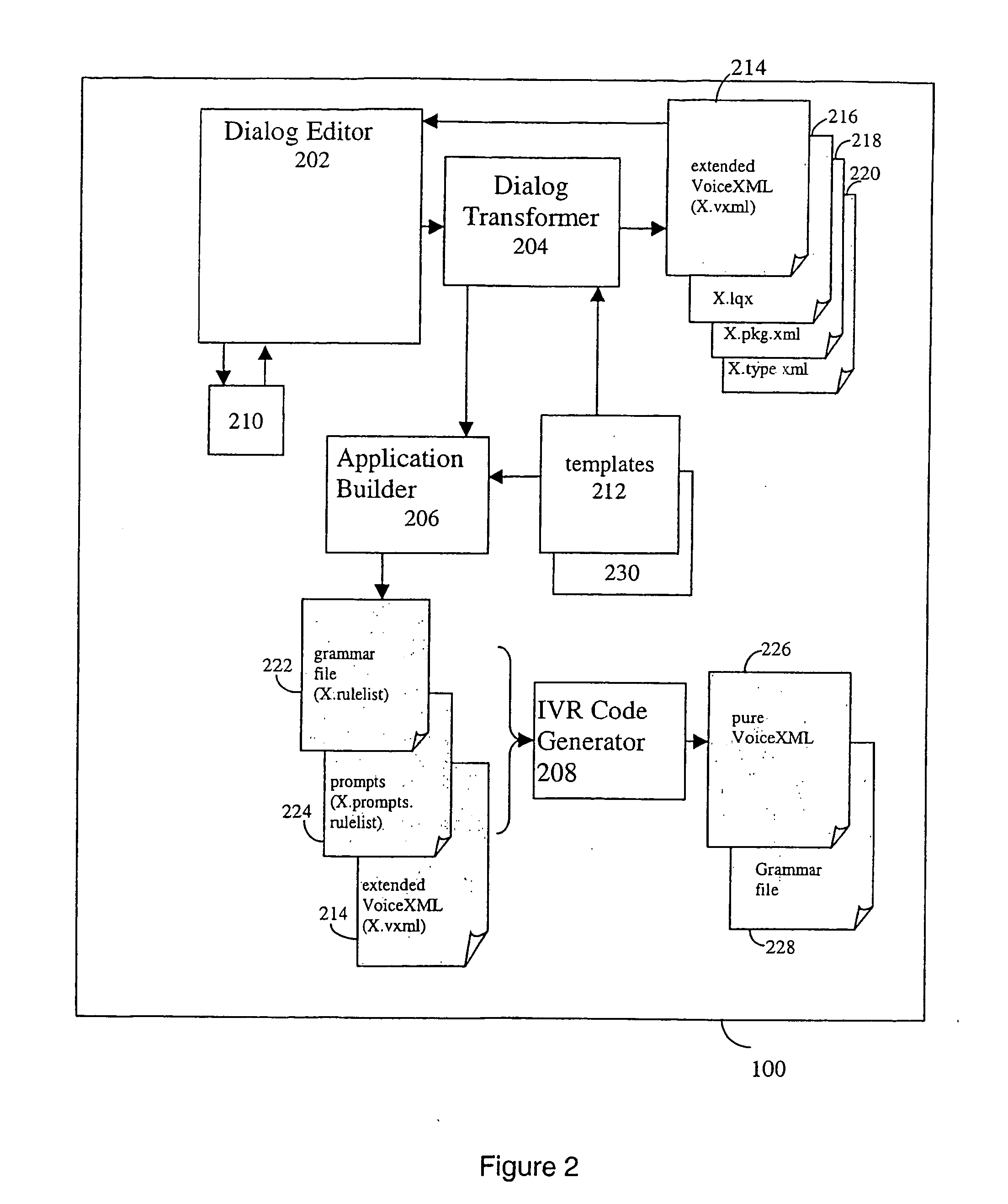

System and process for developing a voice application

Inactive | US20060025997A1 | Natural language data processing | Multiple digital computer combinations | Language module | Computer module

A system for use in developing a voice application, including a dialog element selector for defining execution paths of the application by selecting dialog elements and adding the dialog elements to a tree structure, each path through the tree structure representing one of the execution paths, a dialog element generator for generating the dialog elements on the basis of predetermined templates and properties of the dialog elements, the properties templates received from a user of the system, each of said dialog elements corresponding to at least one voice language template, and a code generator for generating at least one voice language module for the application on the basis of said at least one voice language template and said properties. The voice language templates include VoiceXML elements, and the dialog elements can be regenerated from the voice language module. The voice language module can be used to provide the voice application for an IVR.

Owner:TELSTRA CORPORATION LIMITD
