Mass video semantic annotation method based on Spark

A Spark-based semantic annotation technology for video, applicable to video data retrieval, image data processing, instruments, and similar fields. It addresses the problem that available computing resources cannot support large-scale operations and therefore cannot be applied to massive video annotation, and it achieves improved scalability.


Embodiment Construction

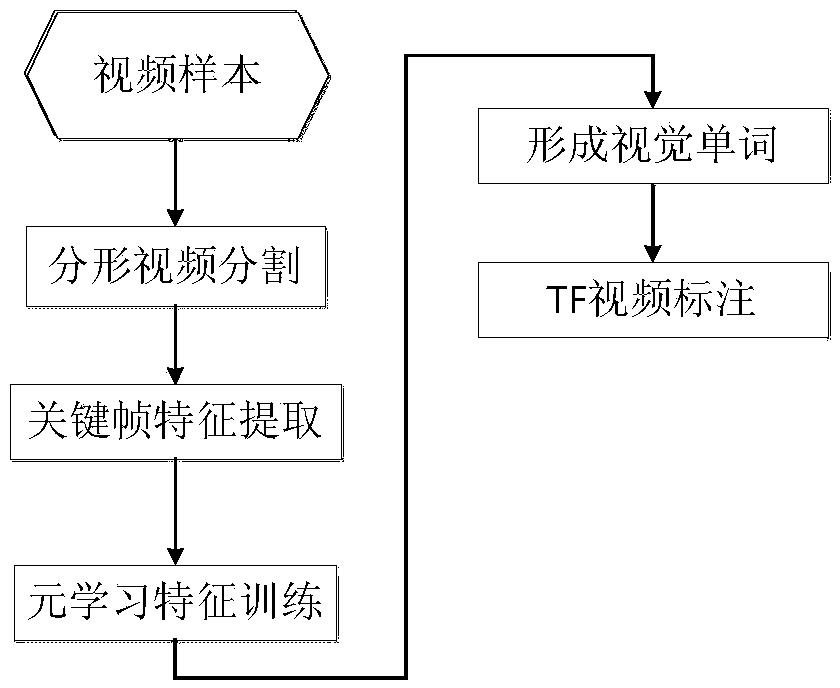

[0035] The Spark-based massive video semantic annotation method of the present invention comprises the following steps:

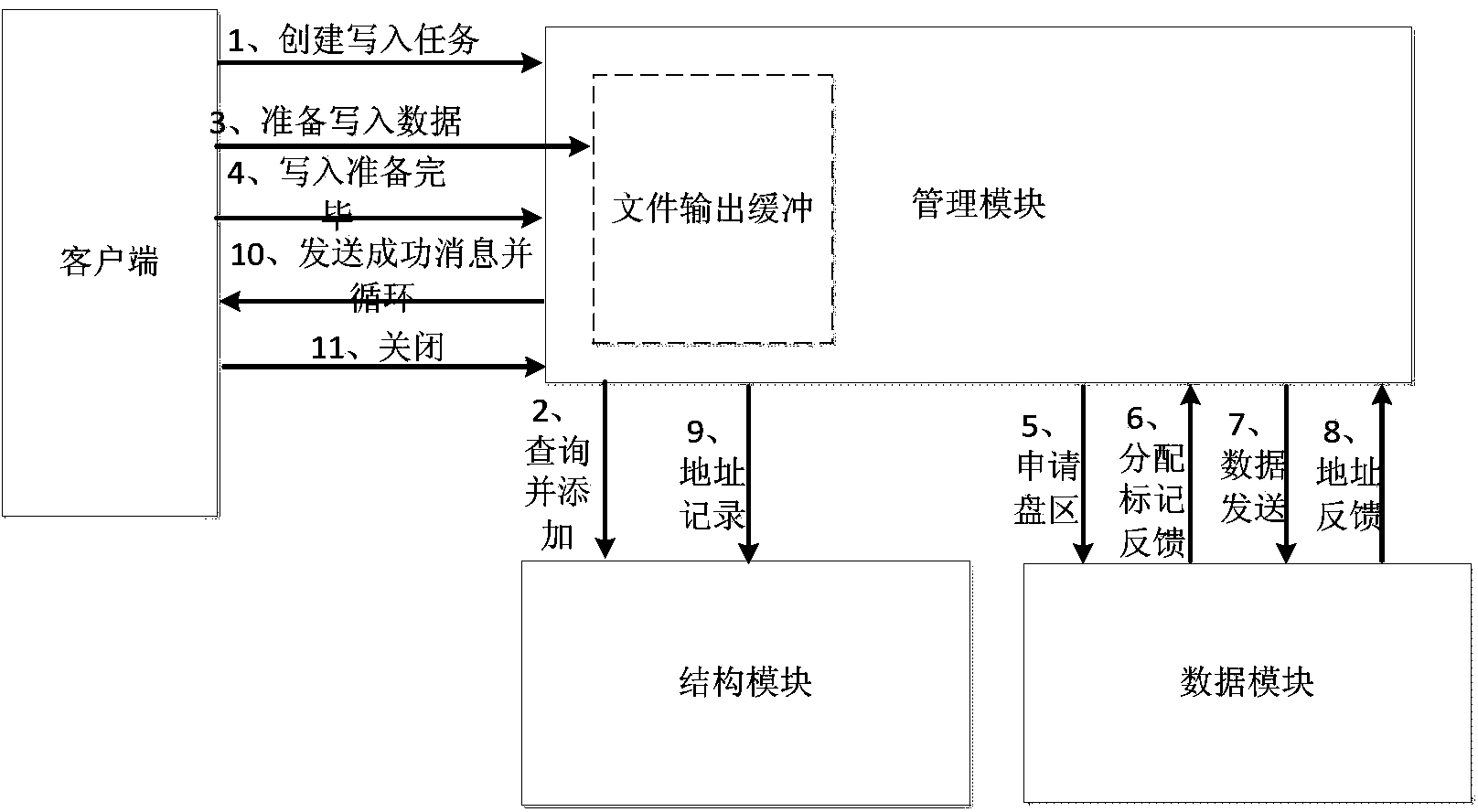

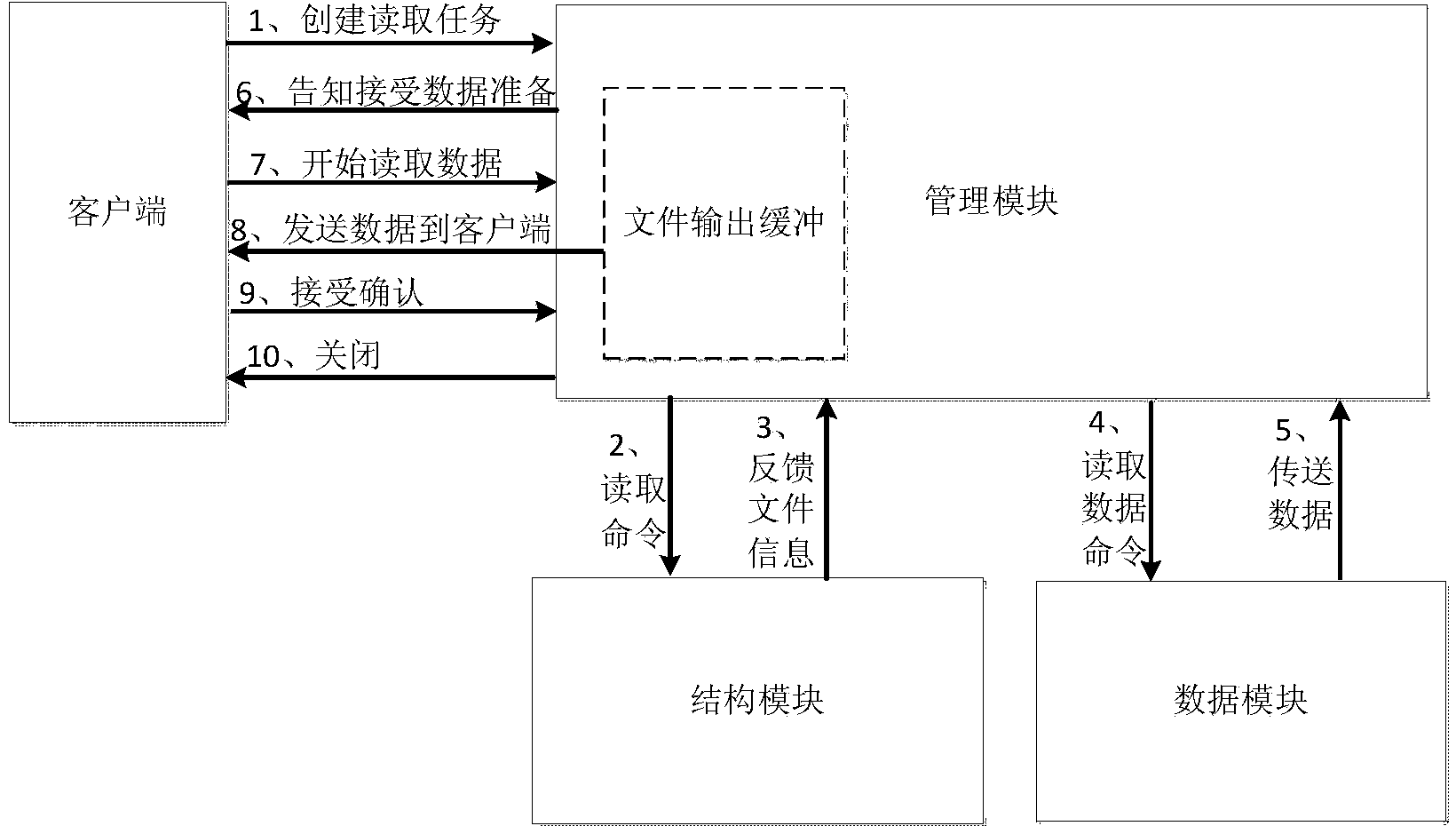

[0036] The first step is to establish a Hadoop/Spark big data platform for massive video. The platform consists of three parts: a management module, a structure module, and a data module. The three modules are independent of one another, which allows flexible storage of massive data, independent maintenance and upgrades, and flexible handling of system redundancy and backup. As shown in Figure 2, the management module provides the operating system (client) with a set of access interfaces, mainly for creating, opening, closing, revoking, reading, writing, and managing rights on files and directories. The operating system (client) obtains the services of the data storage system through these access interfaces. The structure module creates corresponding data tables in the database for data files of different structures, and the table describes file attribute informa...
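The access interface of the management module can be illustrated with a small sketch. This is not the patent's implementation: it is an in-memory Python stand-in, and every name in it (`ManagementModule`, `Entry`, `grant`, the `"r"`/`"w"` rights, the example path and user) is hypothetical. It assumes that "revoking" means deleting an entry and that the creator of a file receives read and write rights by default.

```python
from dataclasses import dataclass, field


@dataclass
class Entry:
    """One file or directory tracked by the hypothetical management module."""
    is_dir: bool
    data: bytearray = field(default_factory=bytearray)
    # Maps a user name to the set of rights granted ("r", "w").
    rights: dict = field(default_factory=dict)


class ManagementModule:
    """In-memory stand-in for the access interface offered to clients."""

    def __init__(self):
        self._entries = {}       # path -> Entry
        self._handles = {}       # handle id -> (path, user)
        self._next_handle = 1

    def create(self, path, user, is_dir=False):
        if path in self._entries:
            raise FileExistsError(path)
        # Assumption: the creator gets read and write rights by default.
        self._entries[path] = Entry(is_dir=is_dir, rights={user: {"r", "w"}})

    def grant(self, path, user, rights):
        """Rights management: add rights for a user on an existing entry."""
        self._entries[path].rights.setdefault(user, set()).update(rights)

    def open(self, path, user):
        if path not in self._entries:
            raise FileNotFoundError(path)
        handle = self._next_handle
        self._next_handle += 1
        self._handles[handle] = (path, user)
        return handle

    def close(self, handle):
        self._handles.pop(handle)

    def _check(self, handle, right):
        # Look up the entry behind a handle and verify the user's right.
        path, user = self._handles[handle]
        entry = self._entries[path]
        if right not in entry.rights.get(user, set()):
            raise PermissionError(f"{user} lacks '{right}' on {path}")
        return entry

    def write(self, handle, payload: bytes):
        self._check(handle, "w").data.extend(payload)

    def read(self, handle) -> bytes:
        return bytes(self._check(handle, "r").data)

    def revoke(self, path):
        # Assumption: "revoking" is read here as deleting the entry.
        del self._entries[path]


# Usage: a client creates a file, writes to it, and reads it back.
mm = ManagementModule()
mm.create("/videos/clip1.mp4", user="alice")
h = mm.open("/videos/clip1.mp4", user="alice")
mm.write(h, b"frame-bytes")
data = mm.read(h)
mm.close(h)
```

Keeping permission checks inside the module, rather than in the client, mirrors the description's point that clients reach the storage system only through these interfaces. A real deployment would back the calls with HDFS rather than a dictionary.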
