103 results about "Video annotation" patented technology

Retrieving video annotation metadata using a p2p network

Inactive | US20110246471A1 | Minimize barrier | Minimal cost | Digital data information retrieval | Digital data processing details | Video annotation | Video equipment

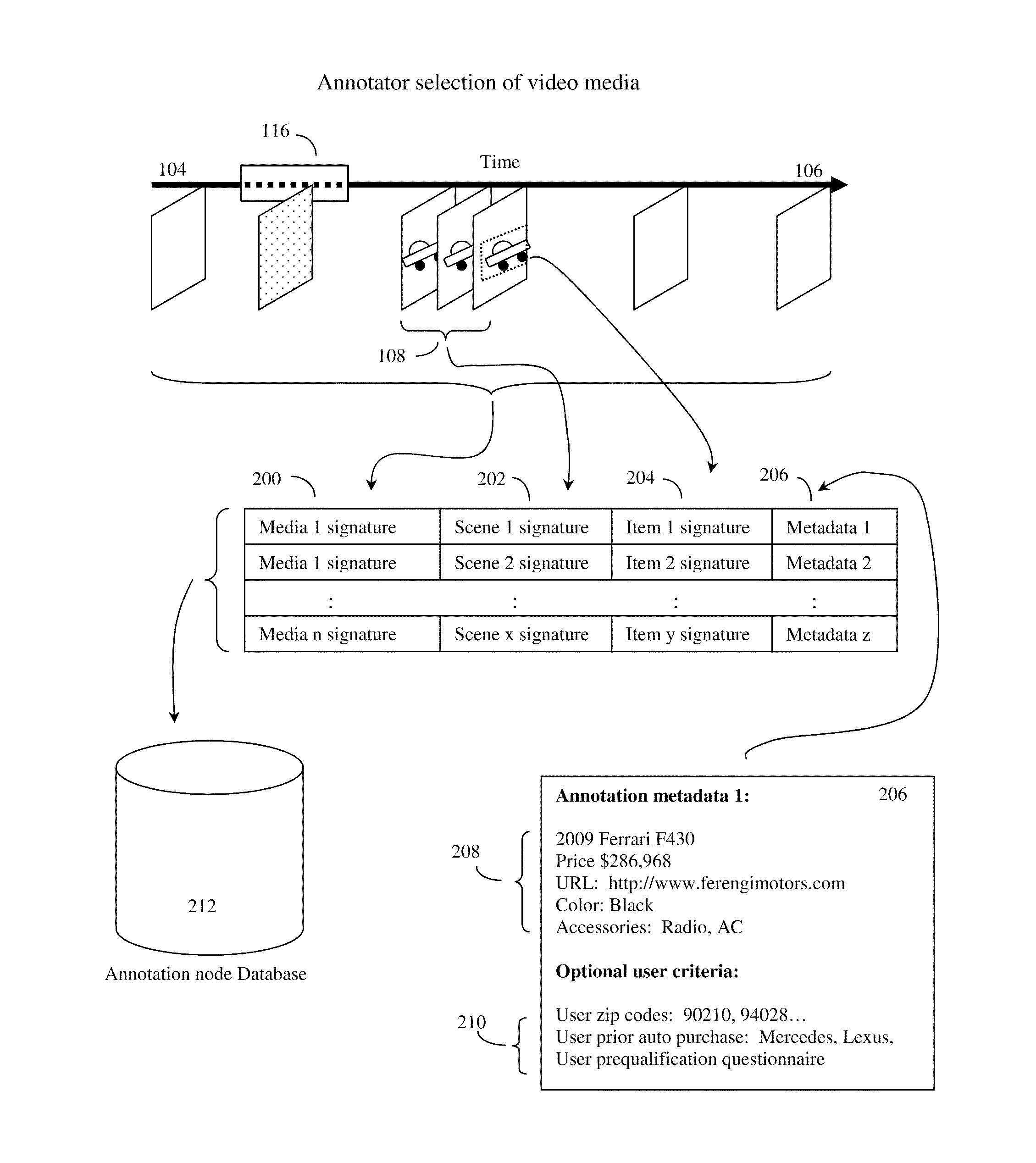

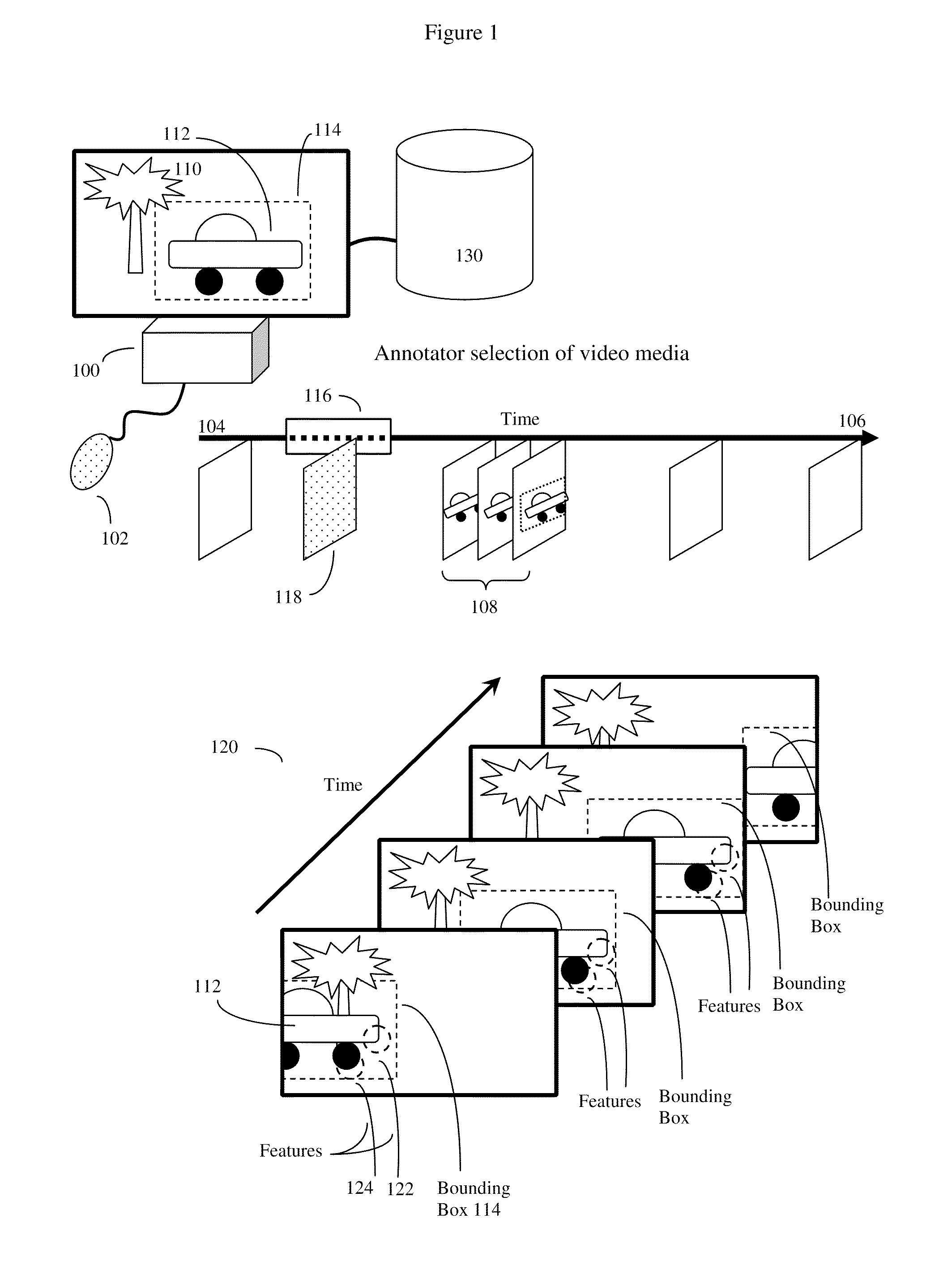

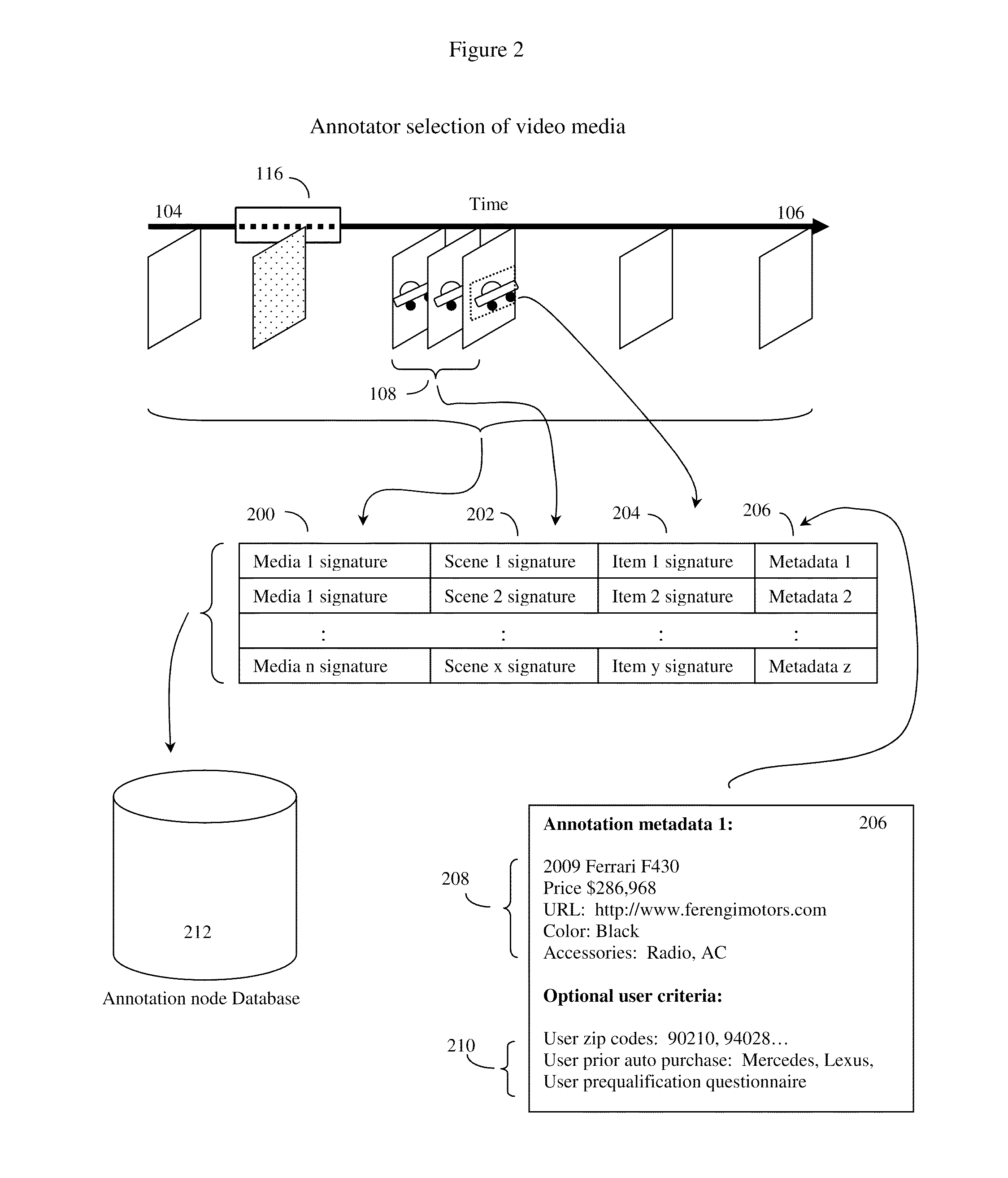

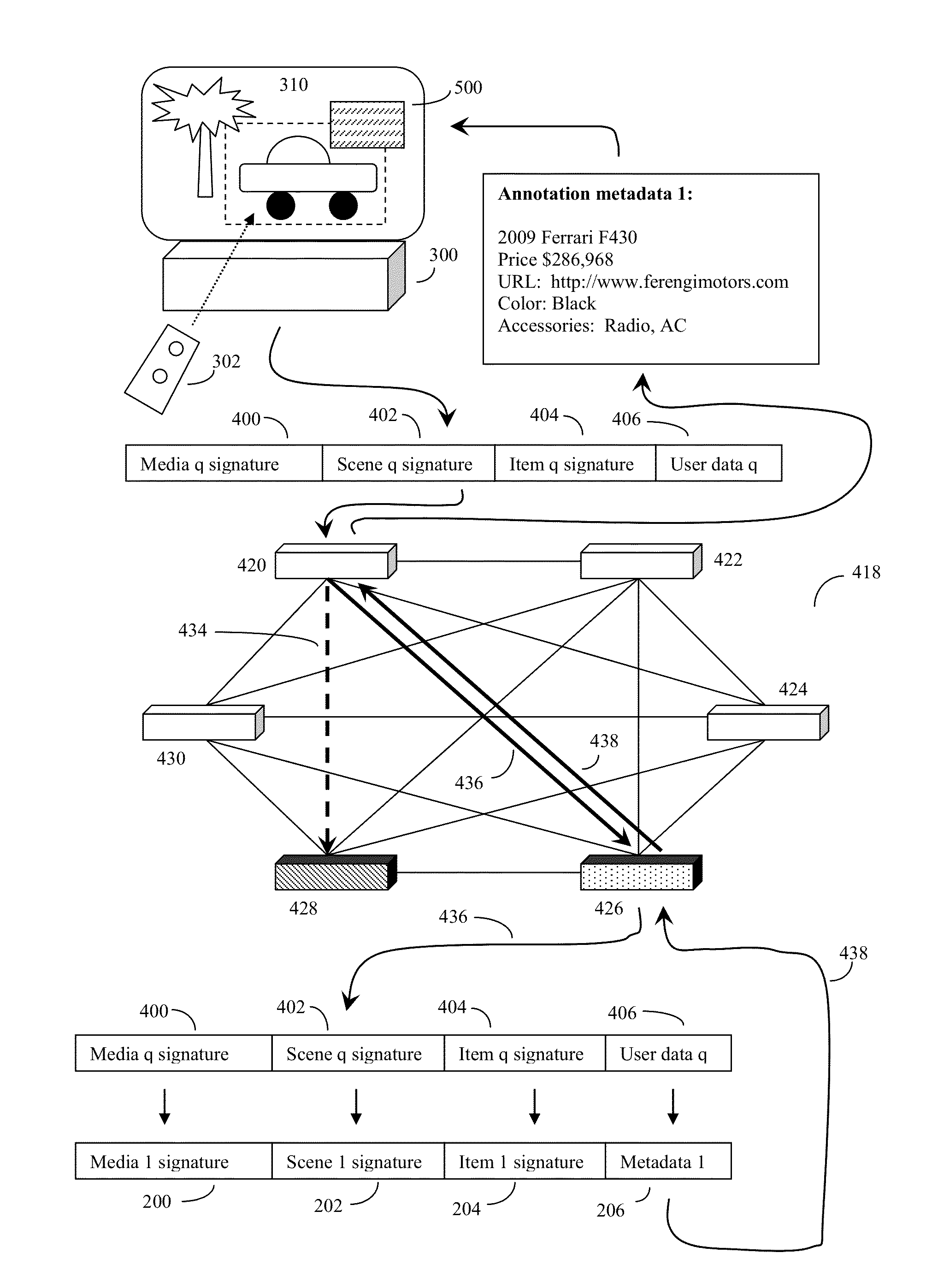

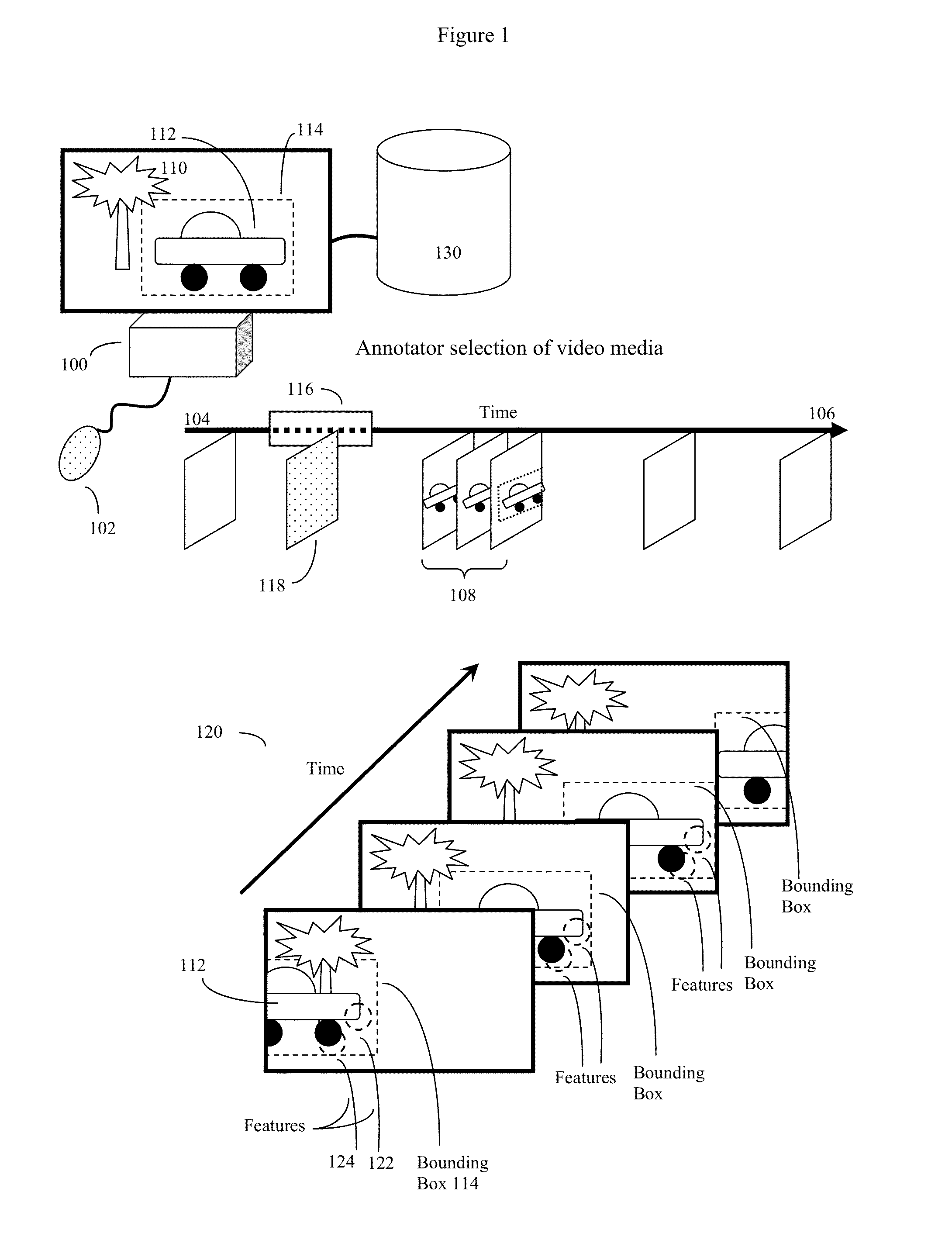

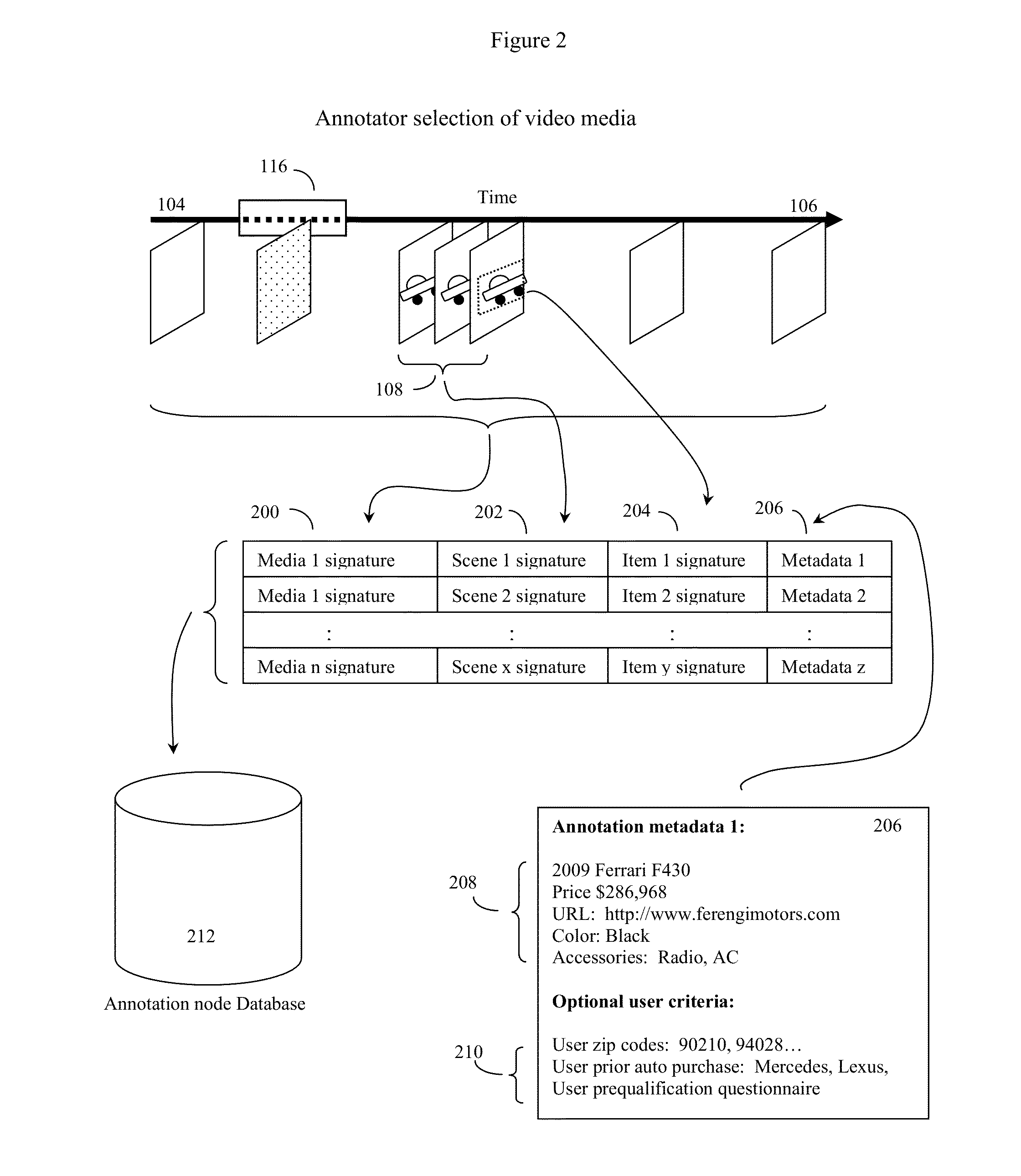

A method of annotating video programs (media) with metadata and making the metadata available for download on a P2P network. Program annotators analyze a video media item and construct annotator index descriptors or signatures descriptive of the video media as a whole, annotator scenes of interest, and annotator items of interest. These serve as an index to annotator metadata associated with specific scenes and items of interest. Viewers of the video media on processor-equipped, network-capable video devices select scenes and items of interest as well, and the video devices construct user indexes that are also descriptive of the video media, scenes, and areas of interest. The user index is sent over the P2P network to annotation nodes and used as a search key to find the appropriate index-linked metadata, which is then sent back to the user video device over the P2P network.

Owner:RAKIB SELIM SHLOMO

Retrieving video annotation metadata using a p2p network and copyright free indexes

Inactive | US20150046537A1 | Minimize barrier | Minimal cost | Digital data information retrieval | Using detectable carrier information | Third party | Computer graphics (images)

Video programs (media) are analyzed, often using computerized image feature analysis methods. Annotator index descriptors or signatures that are indexes to specific video scenes and items of interest are determined, and these in turn serve as an index to annotator metadata (often third party metadata) associated with these video scenes. The annotator index descriptors and signatures, typically chosen to be free from copyright restrictions, are in turn linked to annotator metadata, and then made available for download on a P2P network. Media viewers can then use processor equipped video devices to select video scenes and areas of interest, determine the corresponding user index, and send this user index over the P2P network to search for index linked annotator metadata. This metadata is then sent back to the user video device over the P2P network. Thus video programs can be enriched with additional content without transmitting any copyrighted video data.

Owner:NOVAFORA

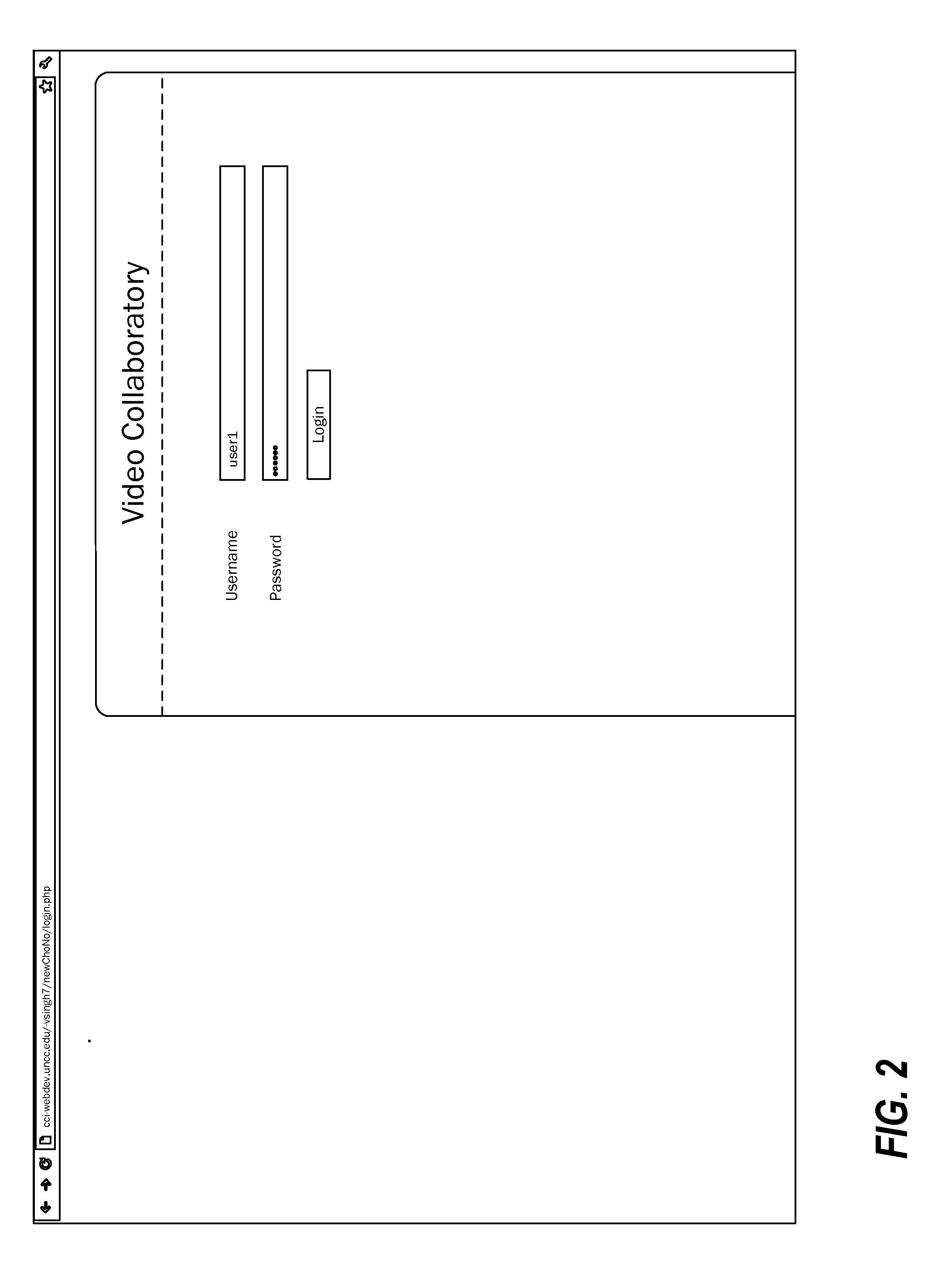

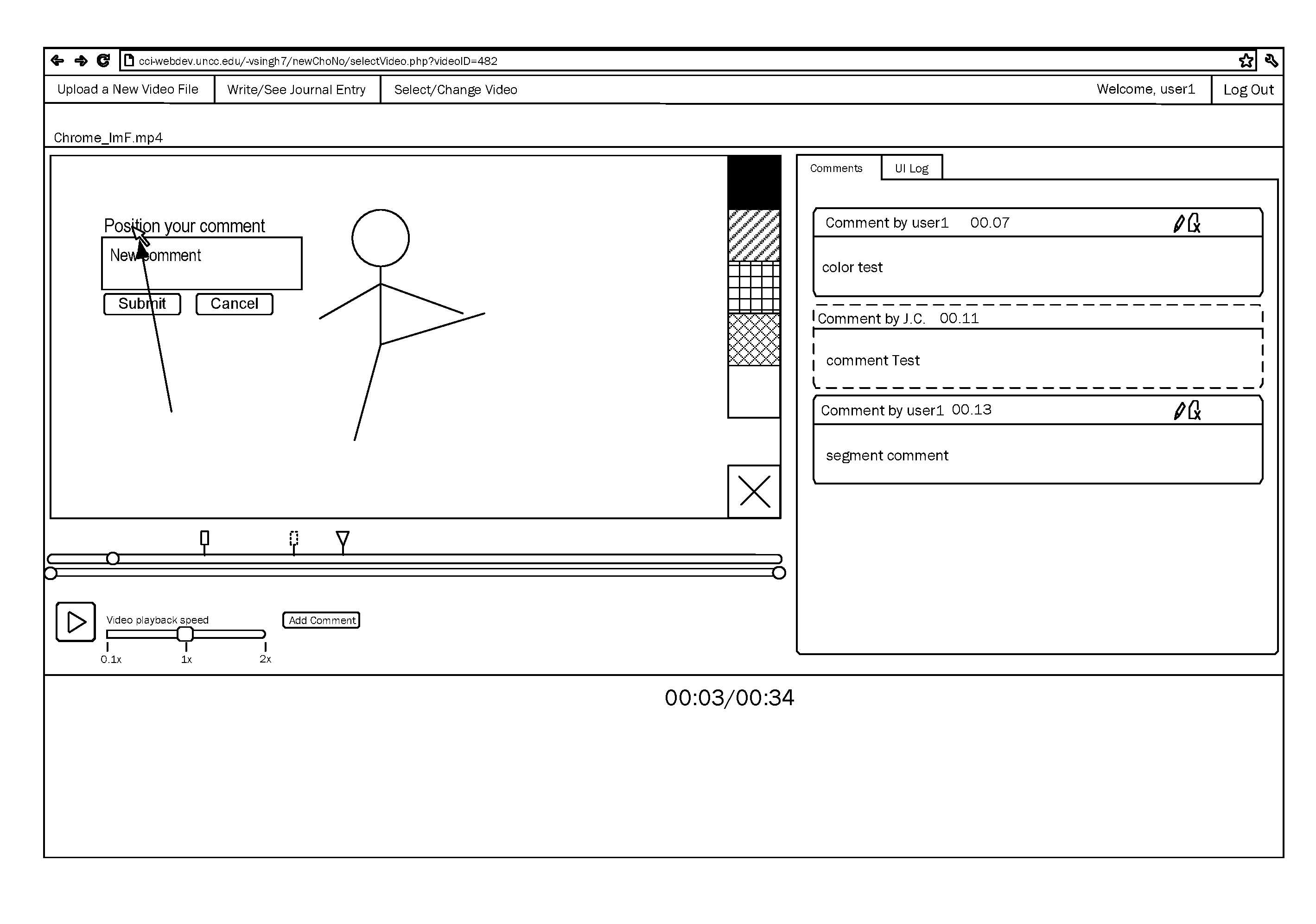

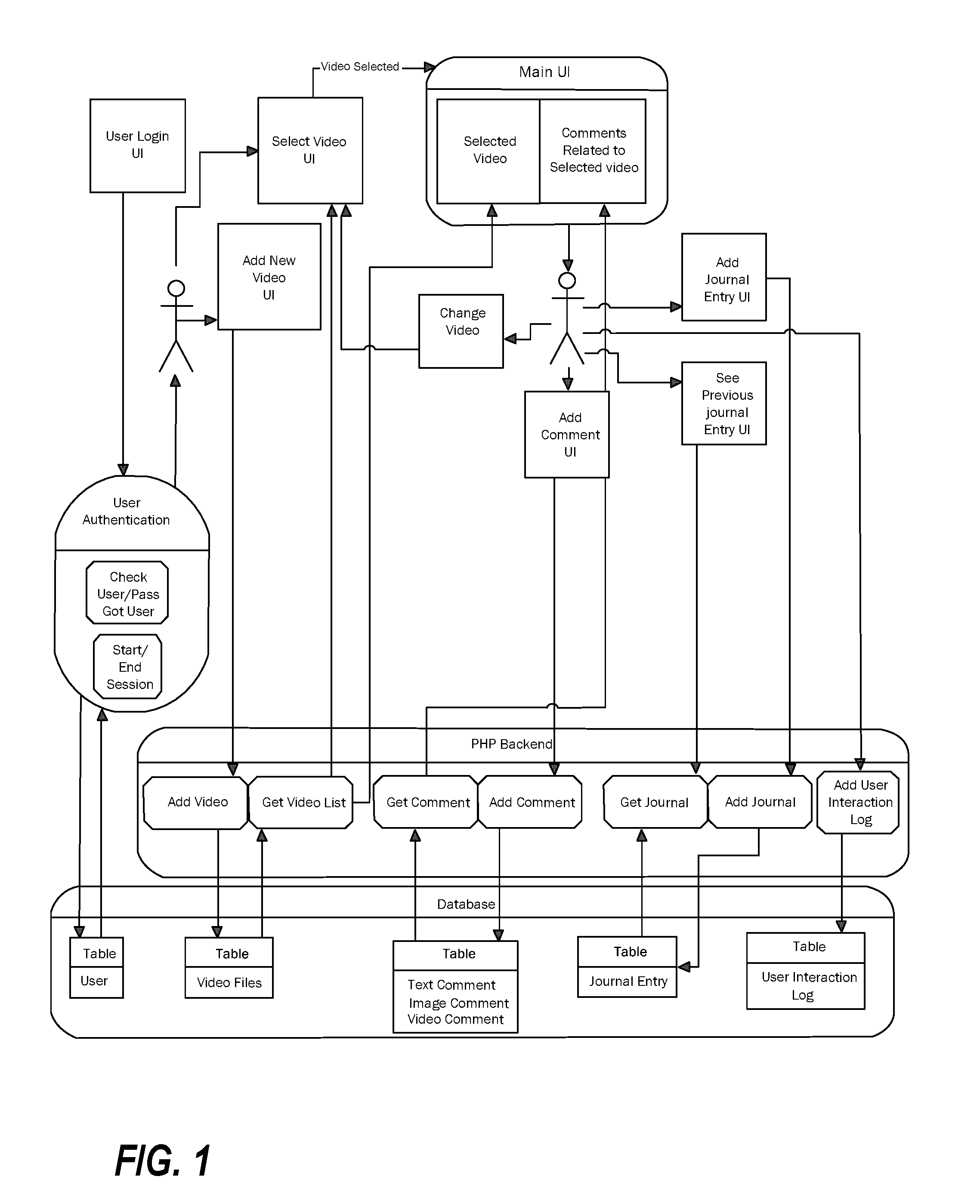

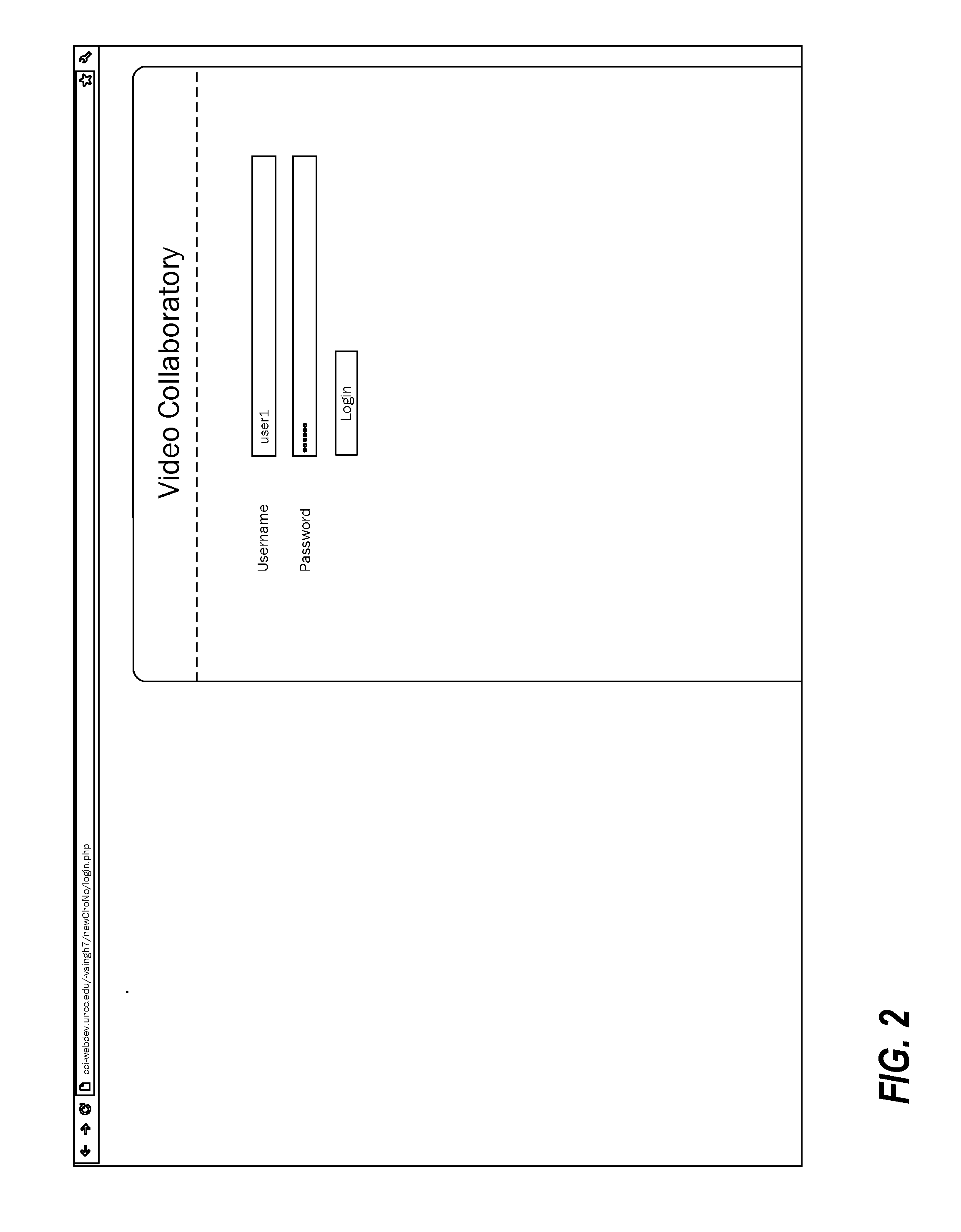

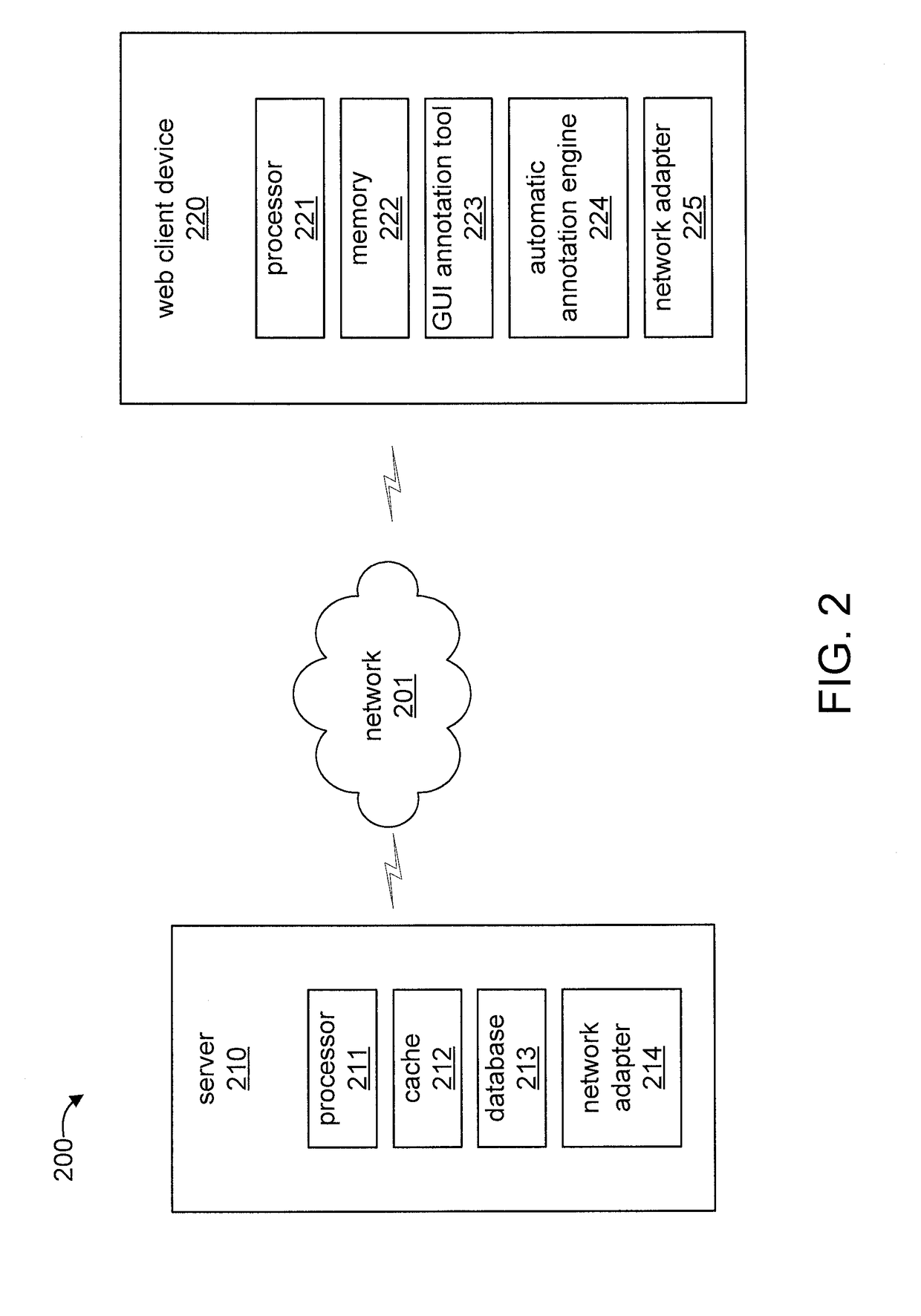

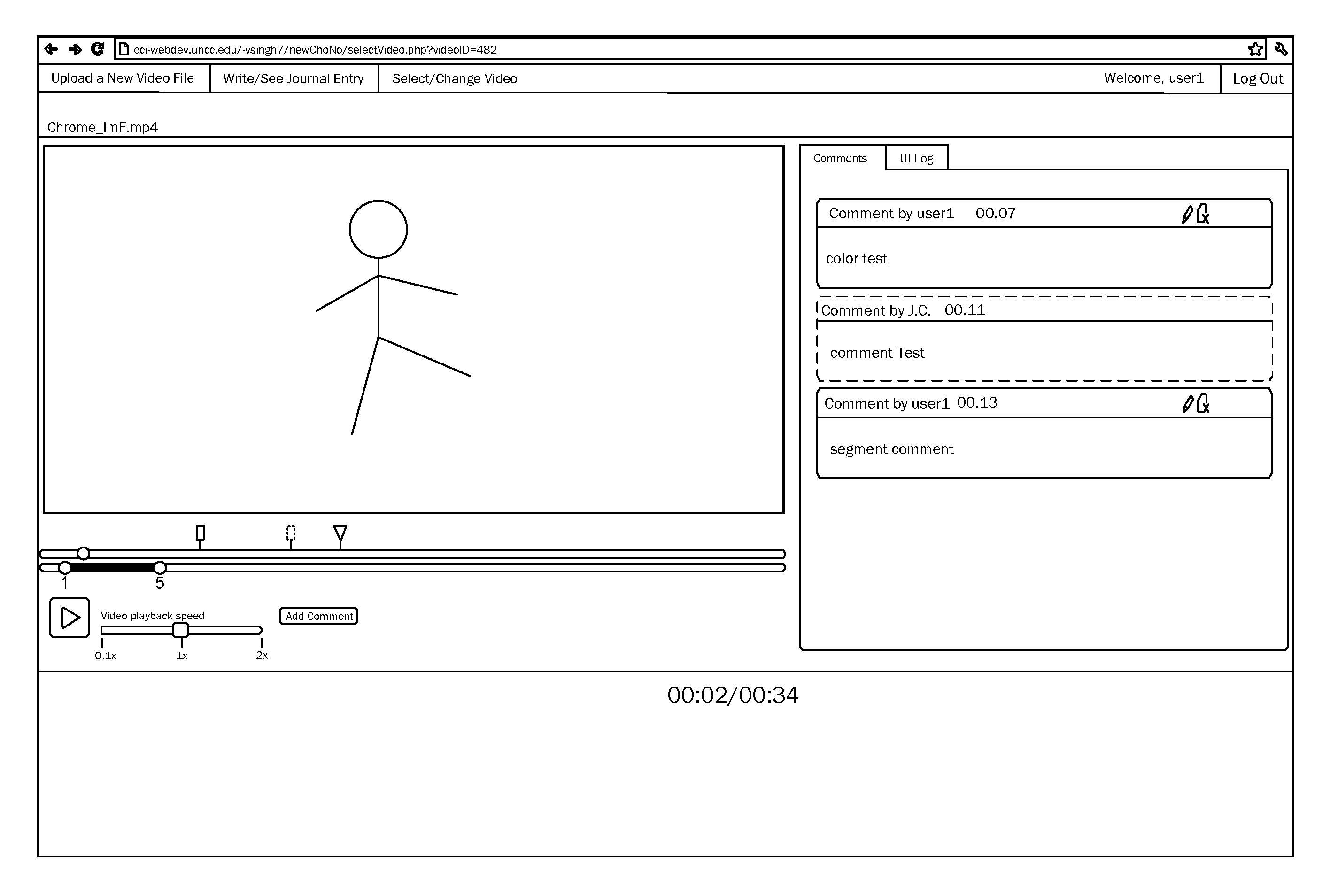

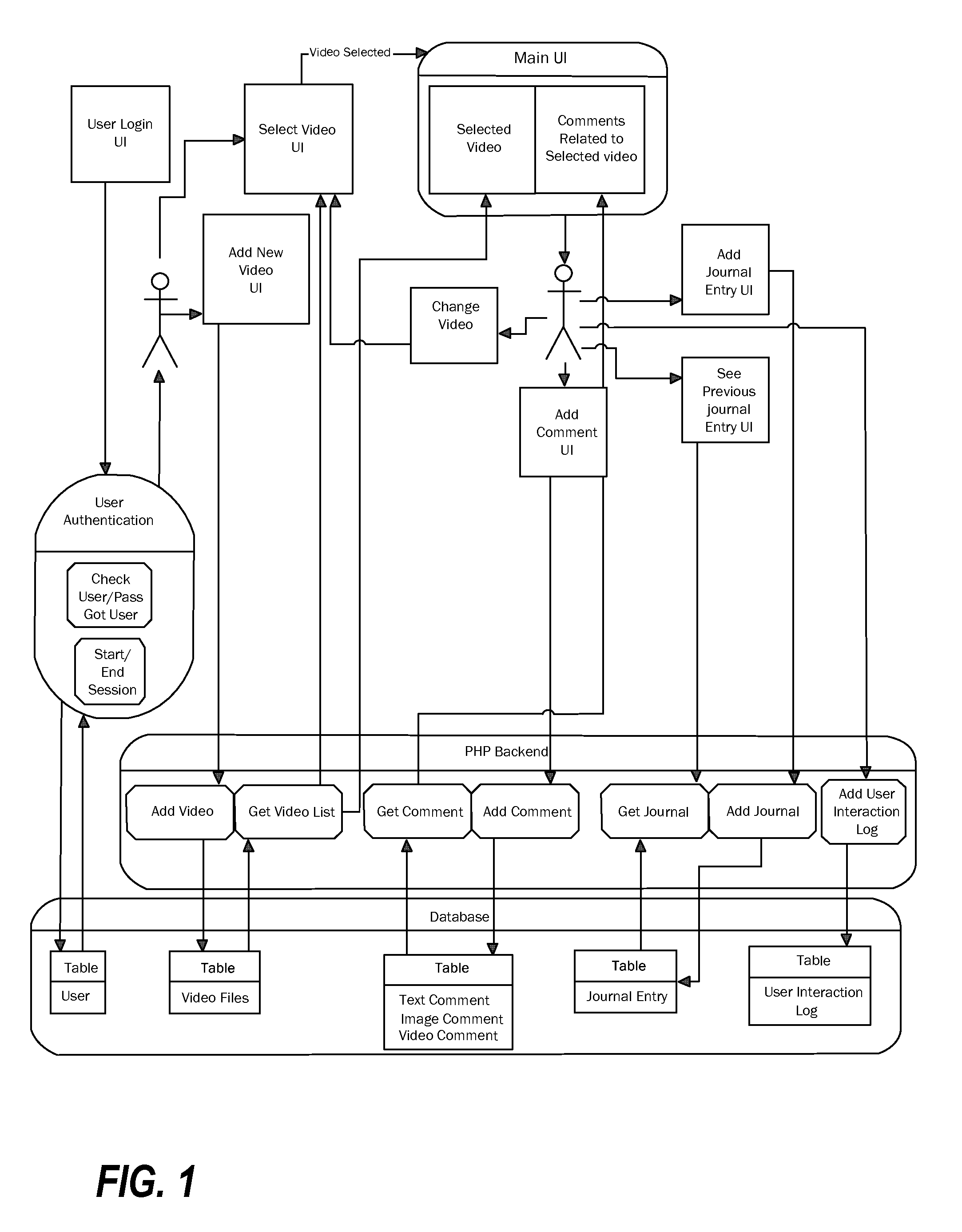

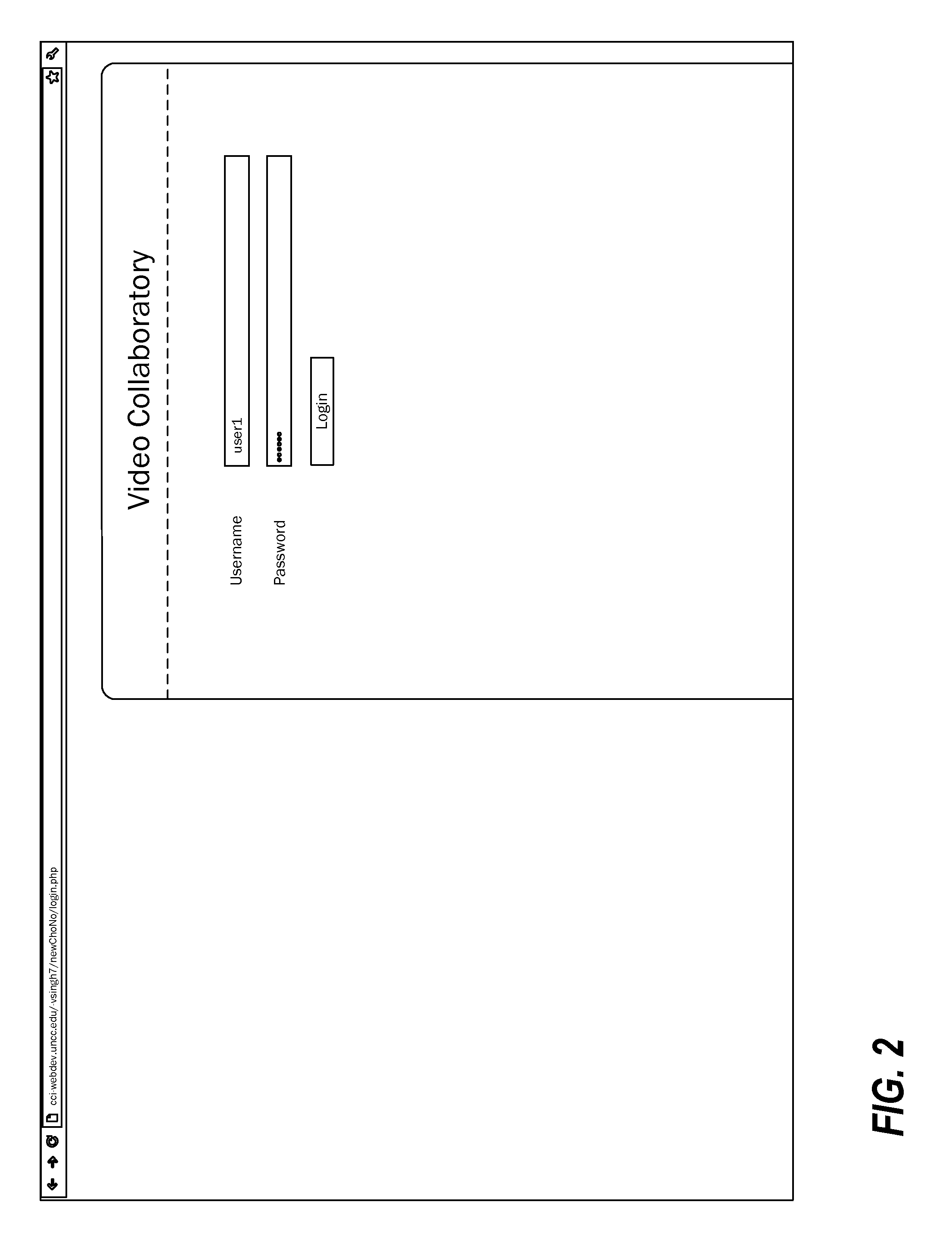

Multi-modal collaborative web-based video annotation system

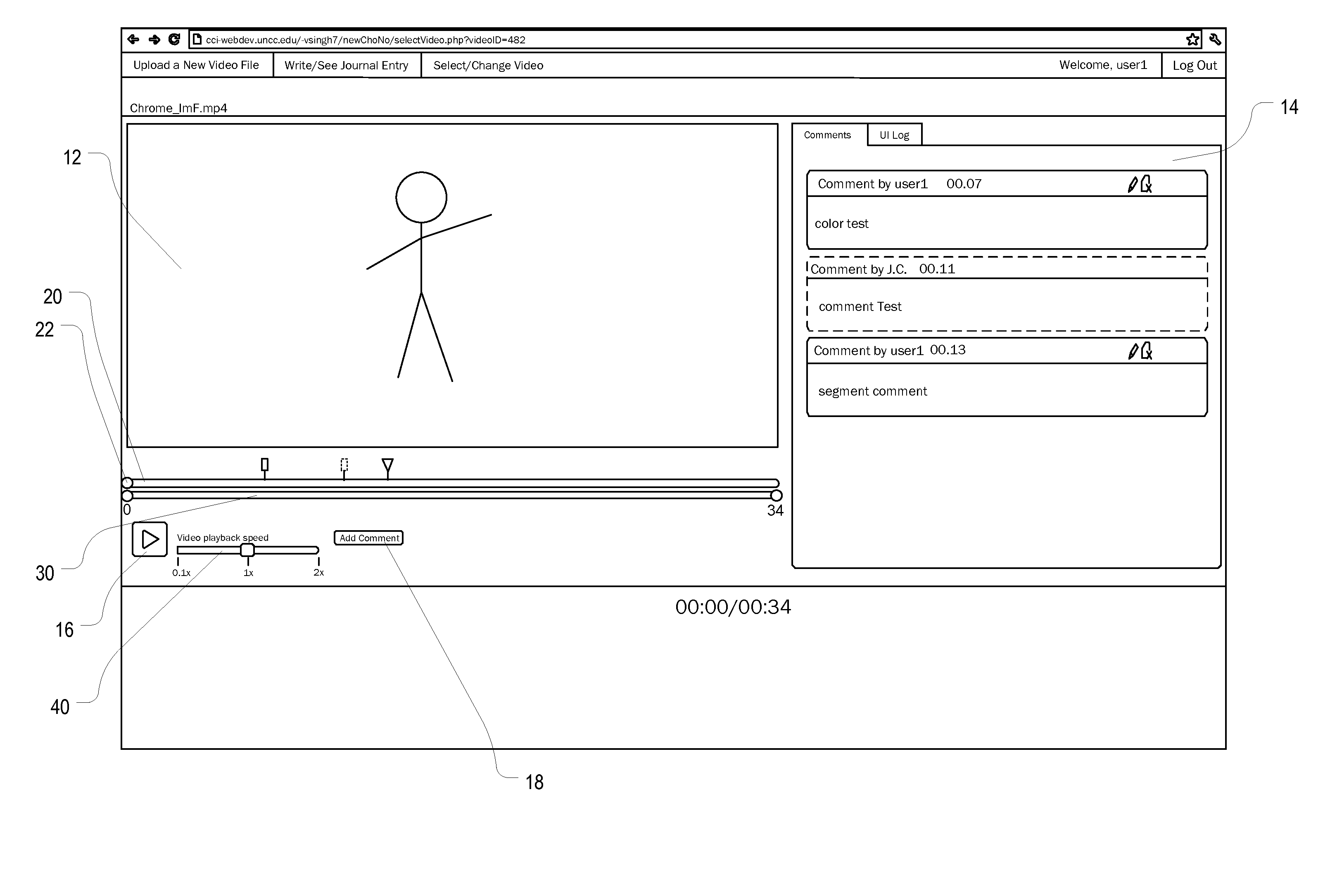

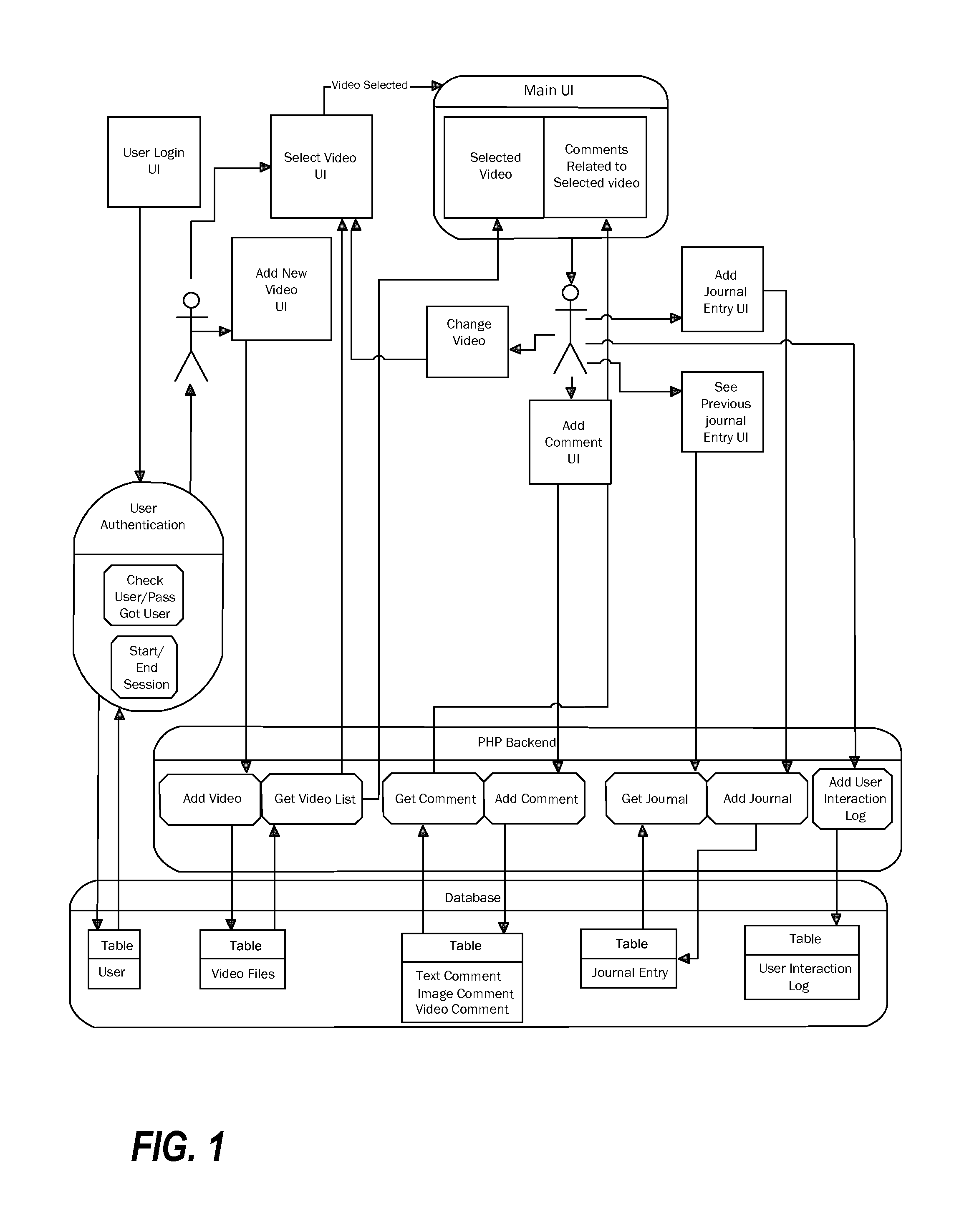

Active | US20130145269A1 | Record information storage | Carrier indicating arrangements | Colour coding | Video annotation

A video annotation interface includes a video pane configured to display a video, a video timeline bar including a video play-head indicating a current point of the video which is being played, a segment timeline bar including initial and final handles configured to define a segment of the video for playing, and a plurality of color-coded comment markers displayed in connection with the video timeline bar. Each of the comment markers is associated with a frame or segment of the video and corresponds to one or more annotations for that frame or segment made by one of a plurality of users. Each of the users can make annotations and view annotations made by other users. The annotations can include annotations corresponding to a plurality of modalities, including text, drawing, video, and audio modalities.

Owner:THE UNIV OF NORTH CAROLINA AT CHAPEL HILL

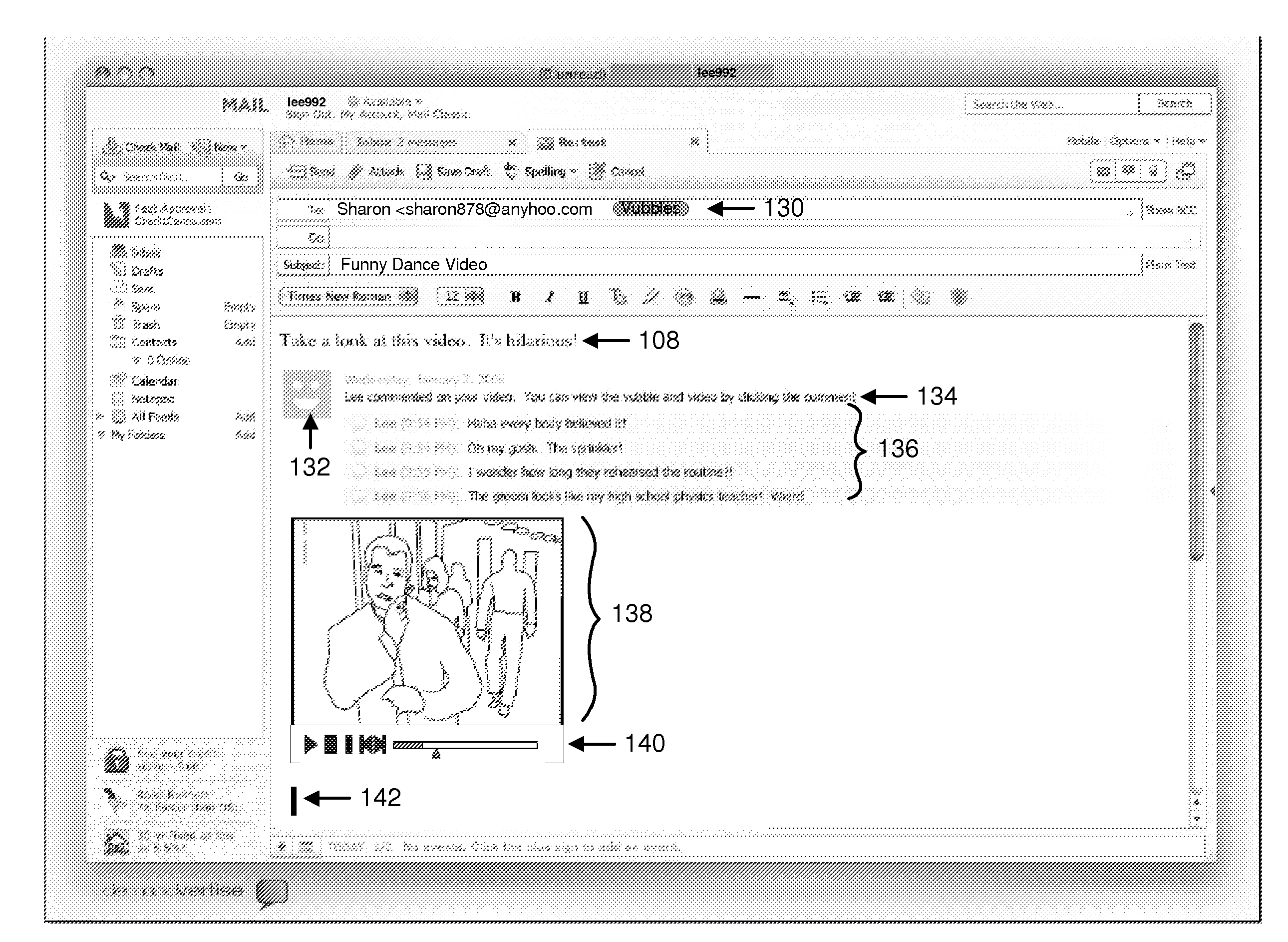

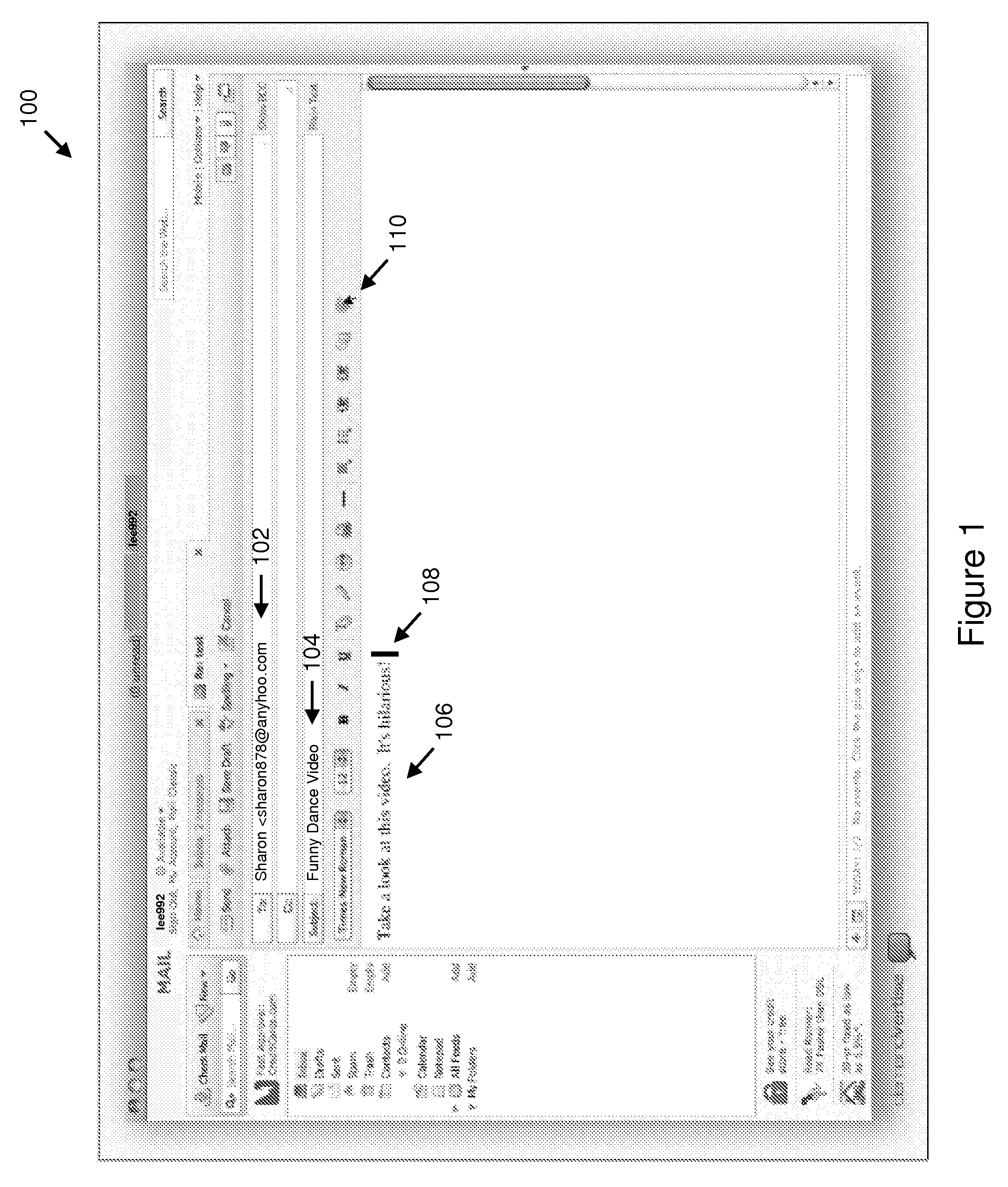

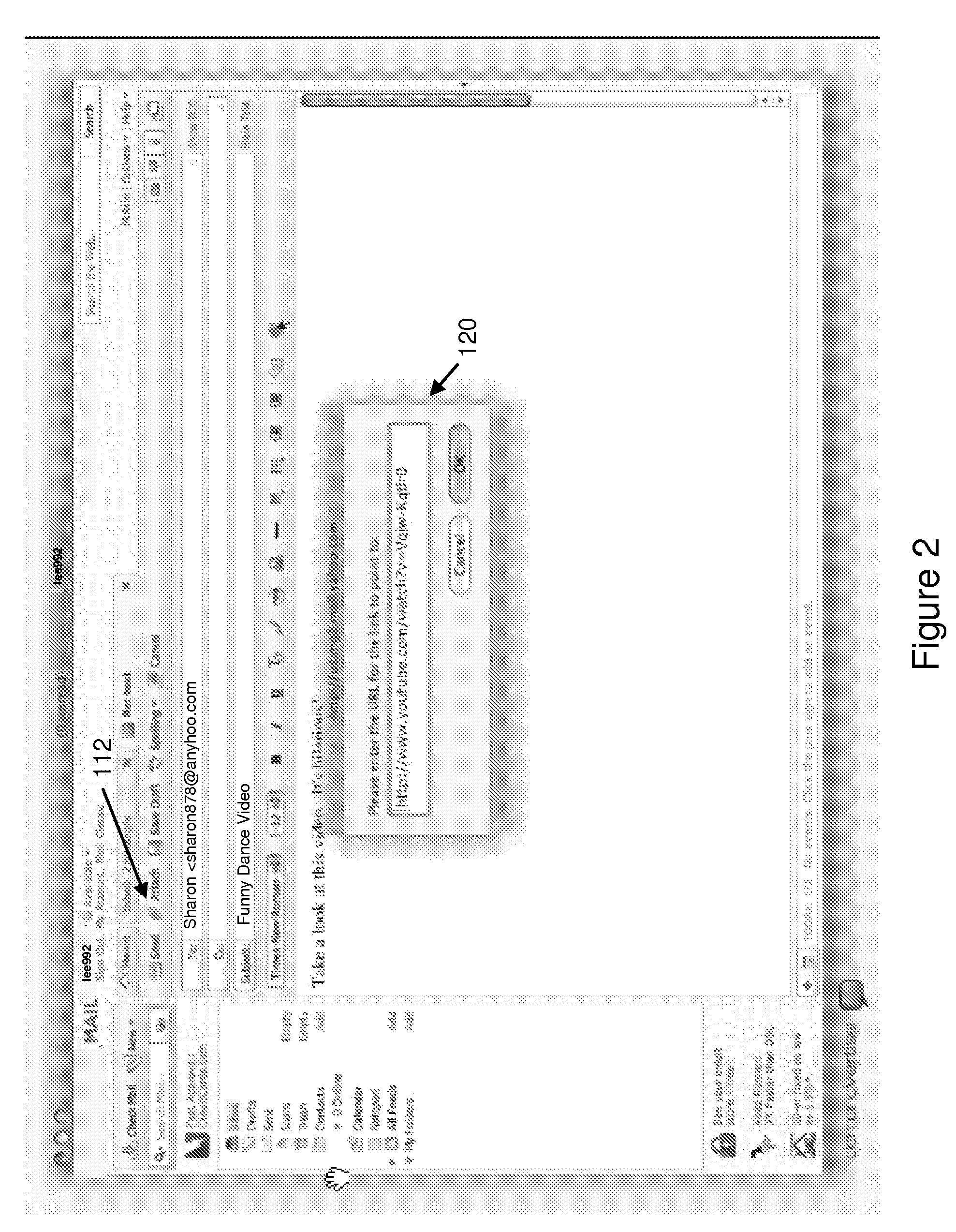

Video linking to electronic text messaging

Inactive | US20090210778A1 | Selective content distribution | Data switching networks | Video annotation | Application software

Certain embodiments of the invention include methods and apparatuses for linking video information to electronic messages such as electronic mail (email), online chat, web logs (“blogs”), bulletin boards, web page text, Short Message Service (SMS), Multimedia Messaging Service (MMS), and other electronic message formats. One embodiment provides for embedding links from a video annotation session at a cursor position in an email application. A method for transferring from email to chat and vice versa is disclosed whereby association with video content is maintained.

Owner:FALL FRONT WIRELESS NY

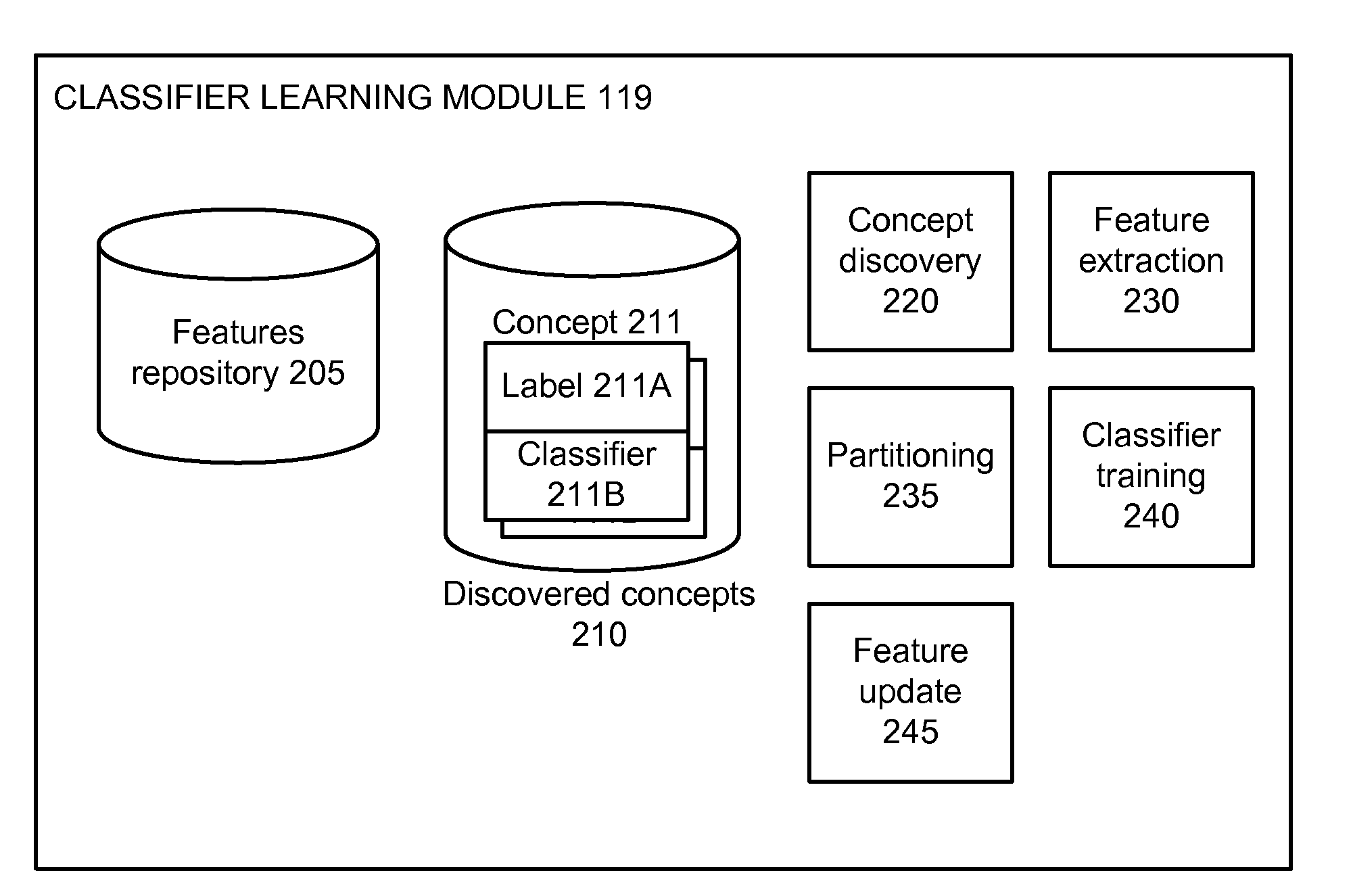

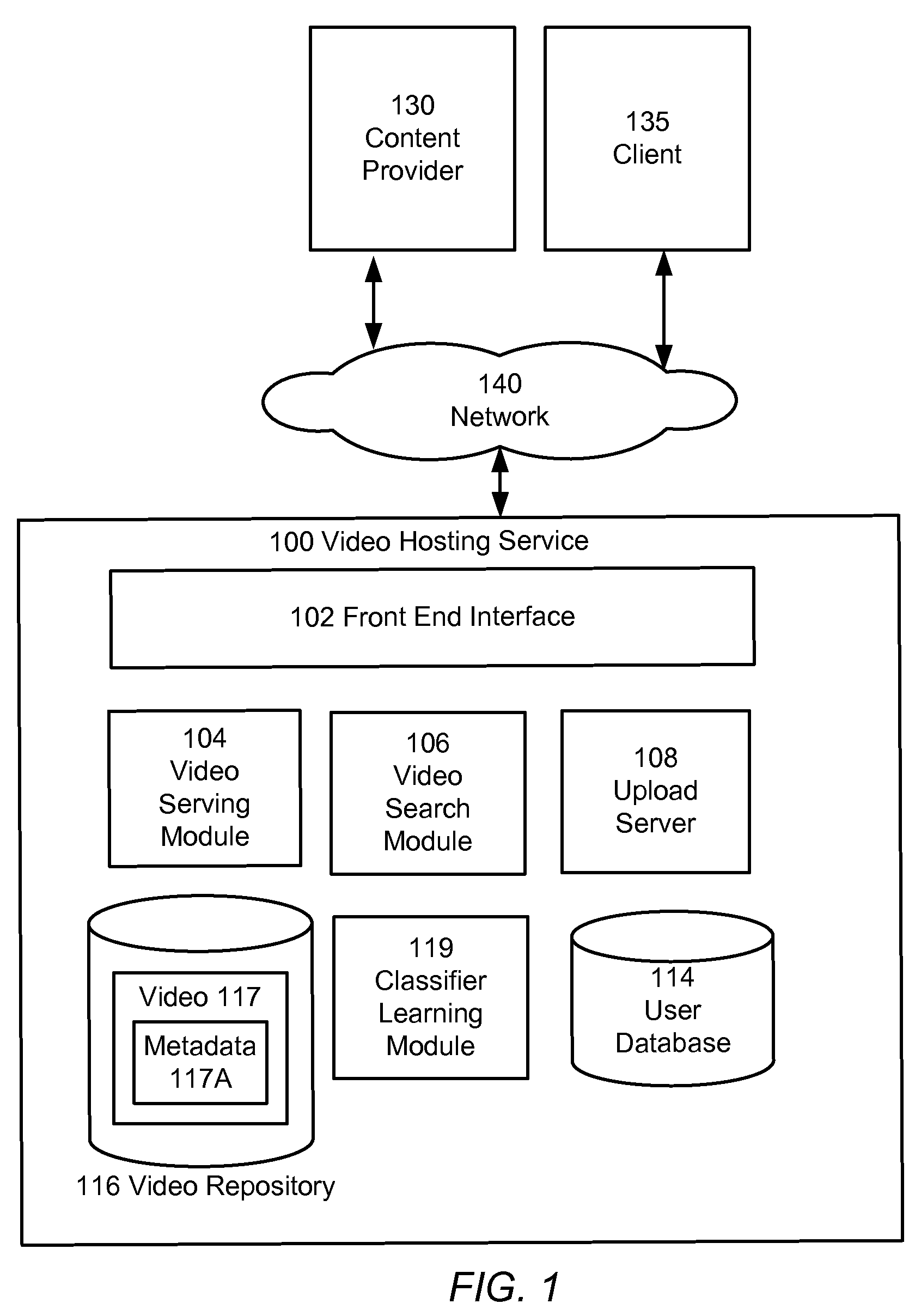

Learning concepts for video annotation

A concept learning module trains video classifiers associated with a stored set of concepts derived from textual metadata of a plurality of videos, the training based on features extracted from training videos. Each of the video classifiers can then be applied to a given video to obtain a score indicating whether or not the video is representative of the concept associated with the classifier. The learning process does not require any concepts to be known a priori, nor does it require a training set of videos having training labels manually applied by human experts. Rather, in one embodiment the learning is based solely upon the content of the videos themselves and on whatever metadata was provided along with the video, e.g., on possibly sparse and / or inaccurate textual metadata specified by a user of a video hosting service who submitted the video.

Owner:GOOGLE LLC

Multi-modal collaborative web-based video annotation system

Active | US20160247535A1 | Digital data information retrieval | Electronic editing digitised analogue information signals | Computer graphics (images) | Colour coding

A video annotation interface includes a video pane configured to display a video, a video timeline bar including a video play-head indicating a current point of the video which is being played, a segment timeline bar including initial and final handles configured to define a segment of the video for playing, and a plurality of color-coded comment markers displayed in connection with the video timeline bar. Each of the comment markers is associated with a frame or segment of the video and corresponds to one or more annotations for that frame or segment made by one of a plurality of users. Each of the users can make annotations and view annotations made by other users. The annotations can include annotations corresponding to a plurality of modalities, including text, drawing, video, and audio modalities.

Owner:THE UNIV OF NORTH CAROLINA AT CHAPEL HILL

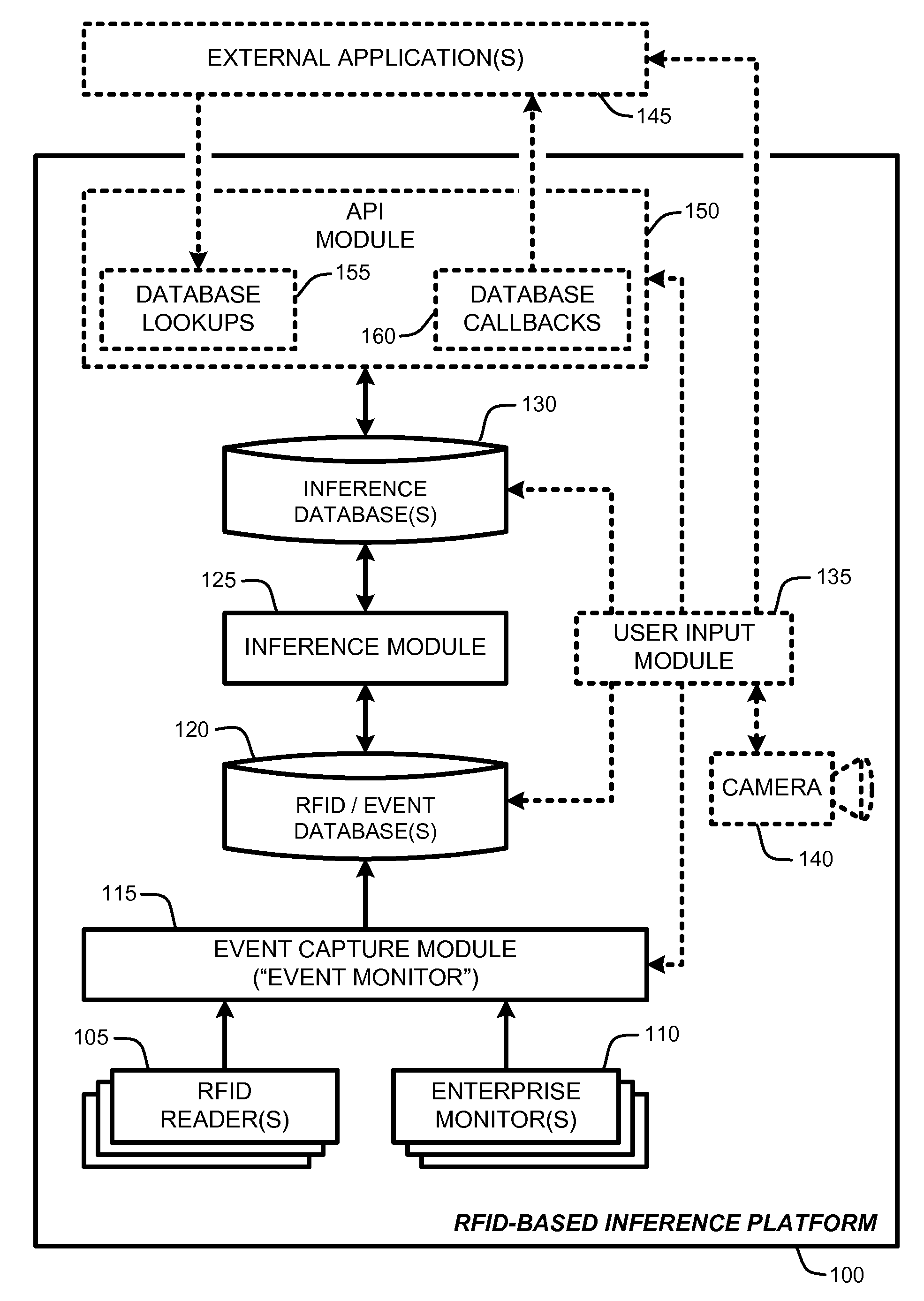

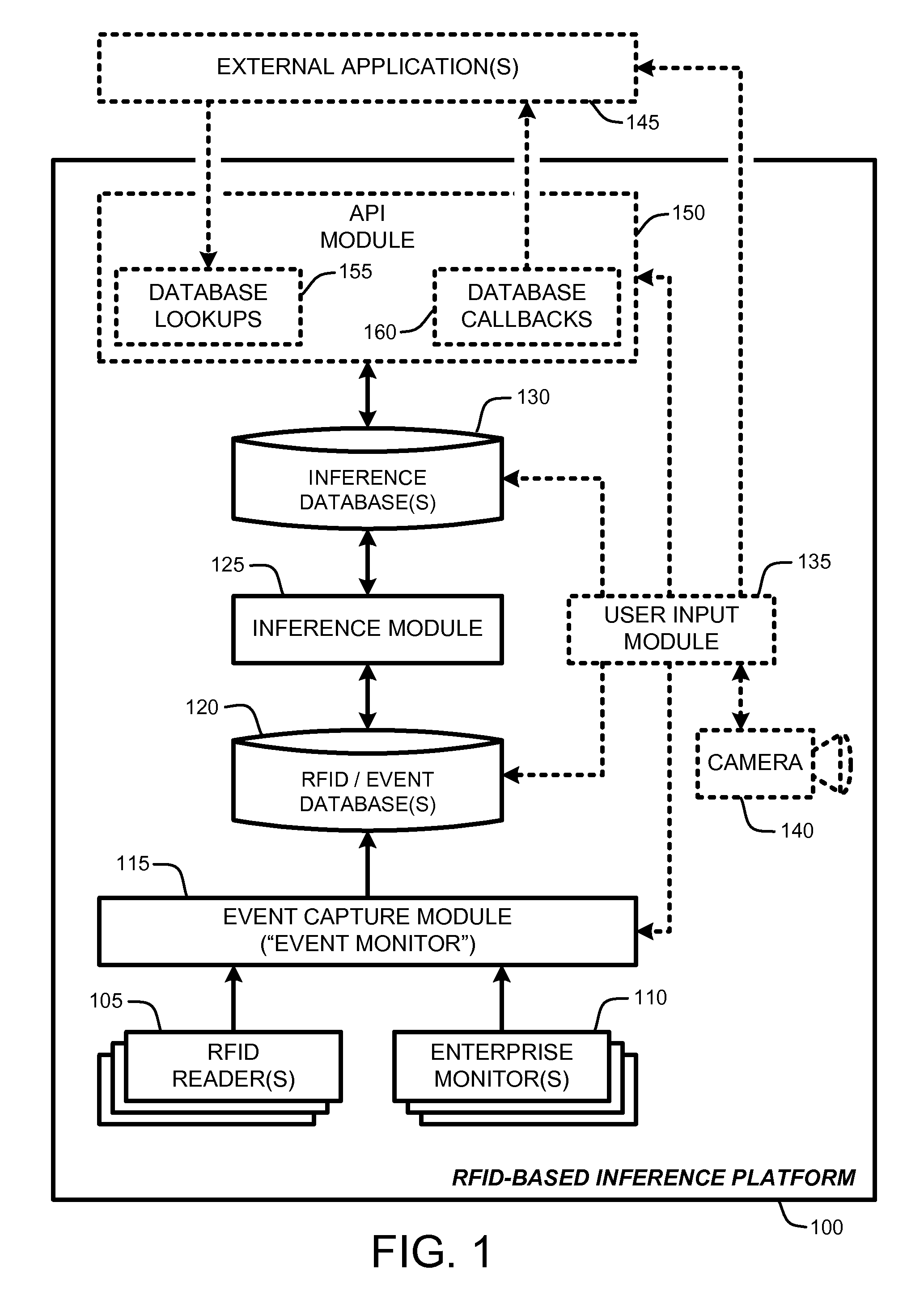

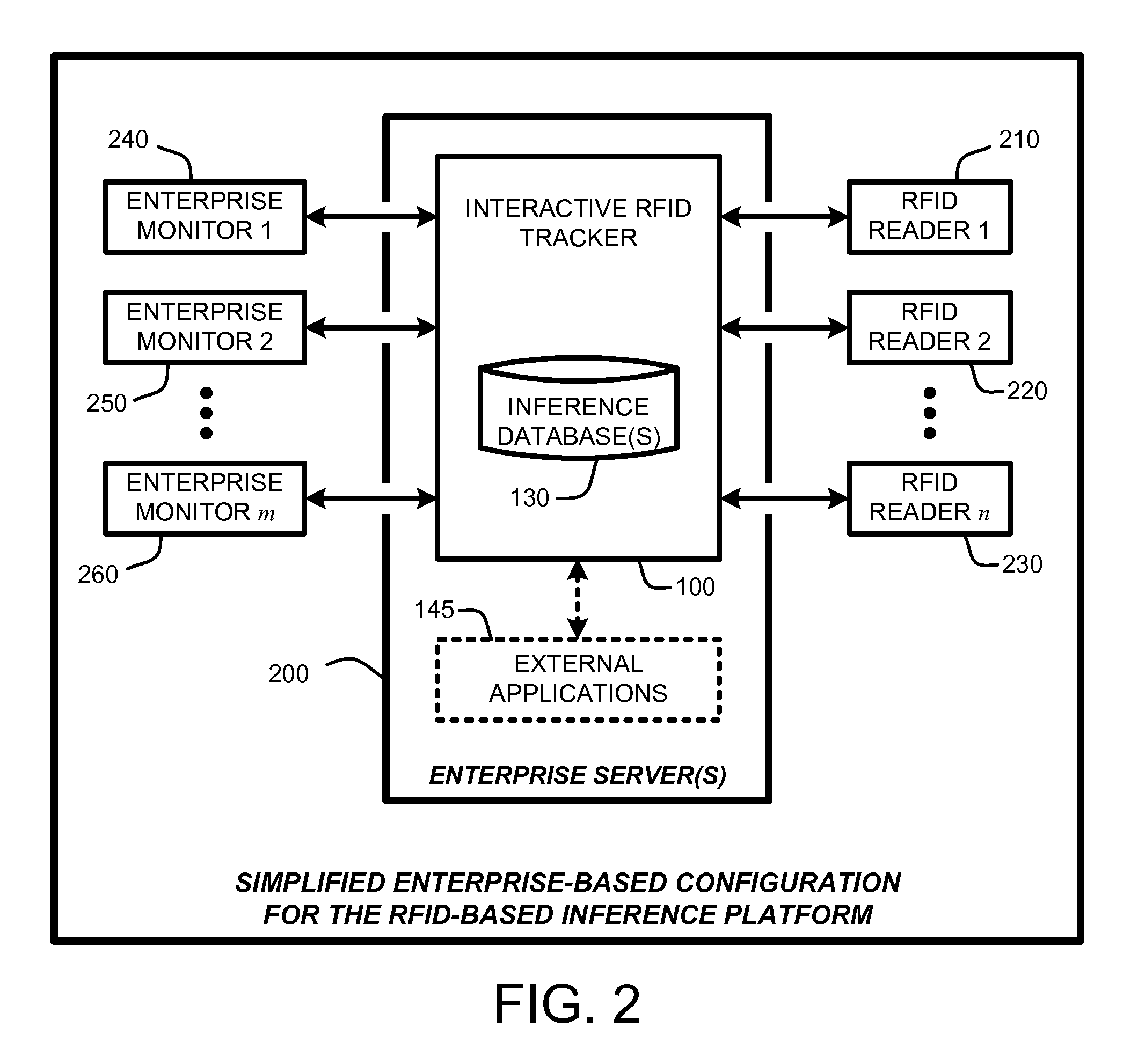

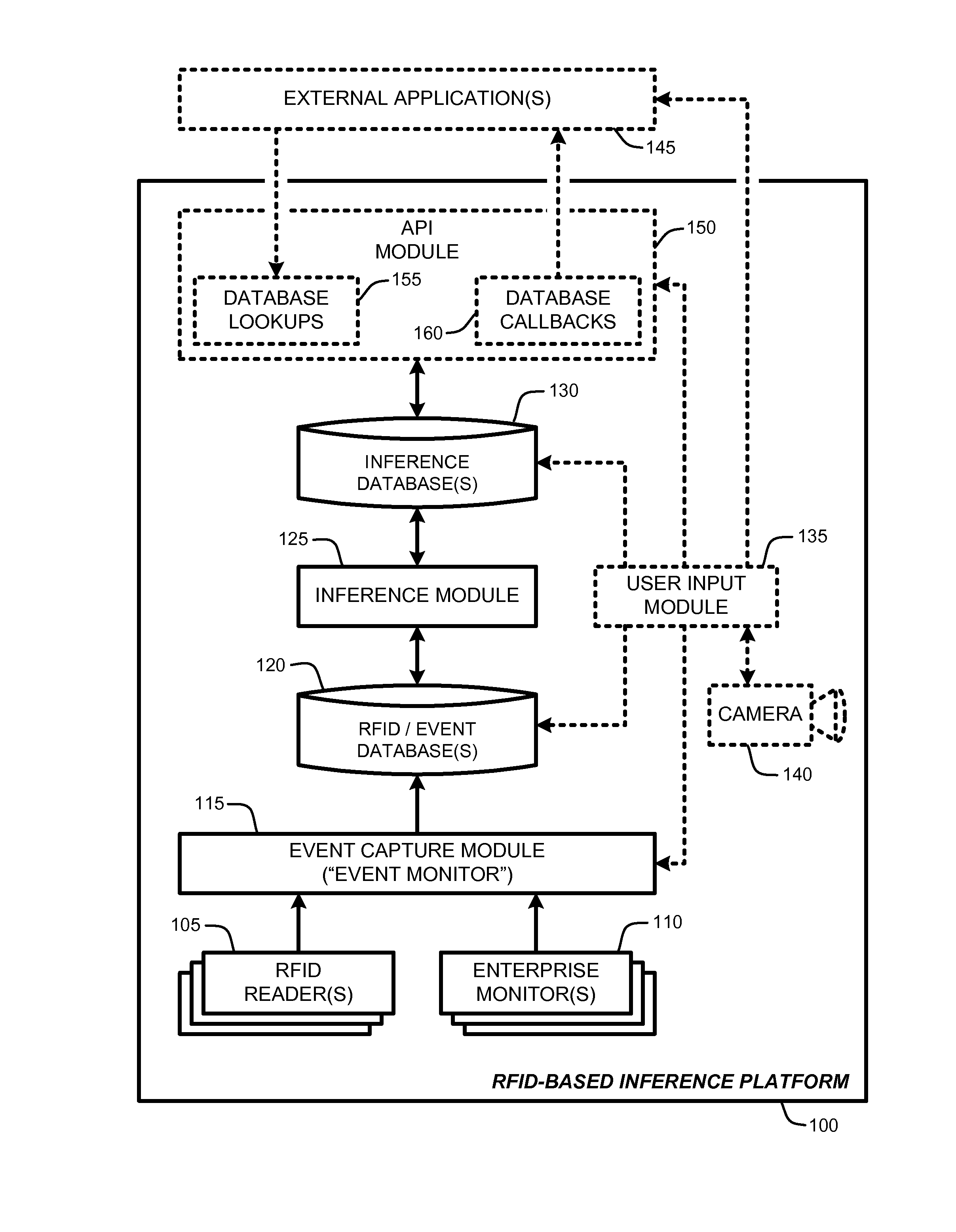

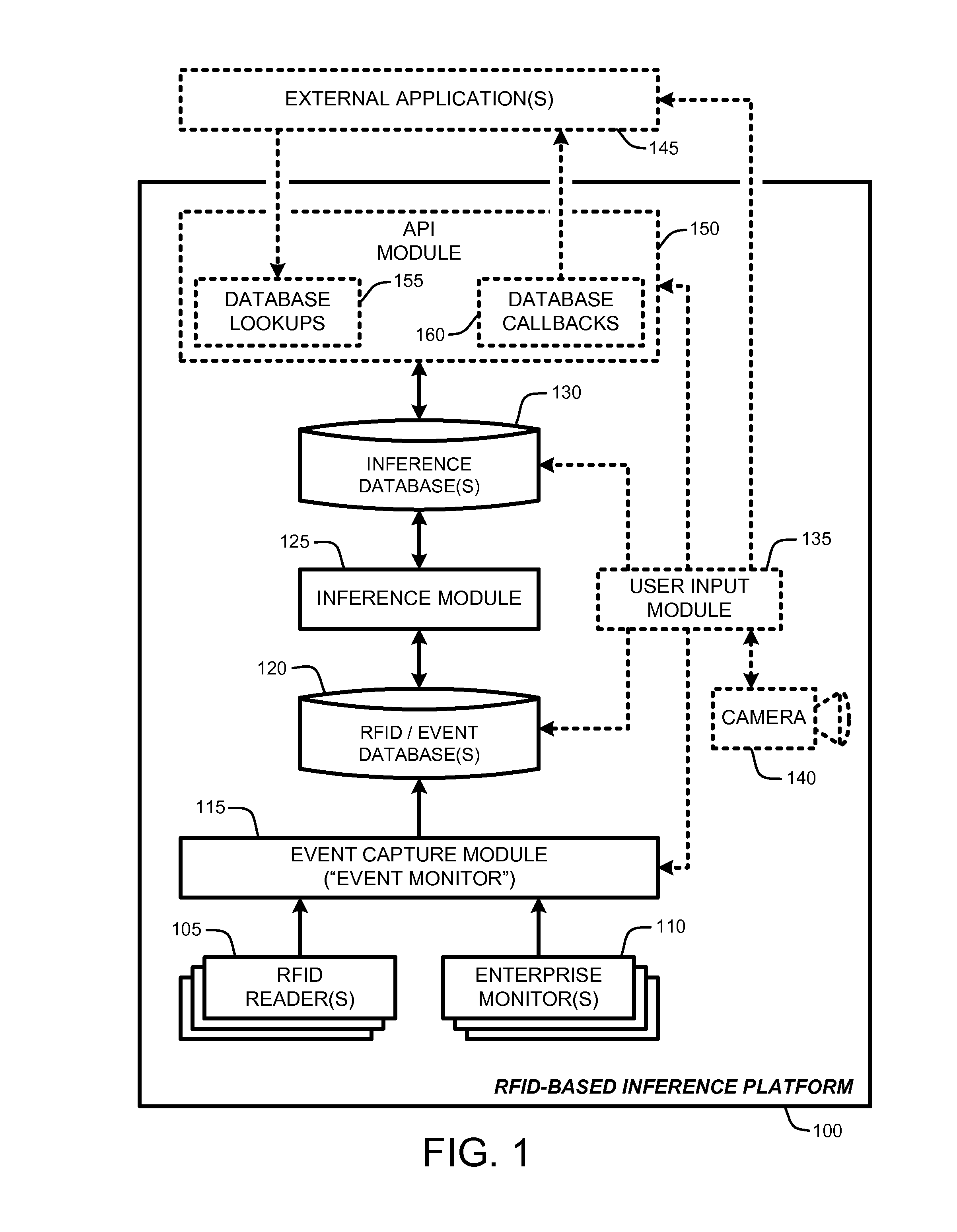

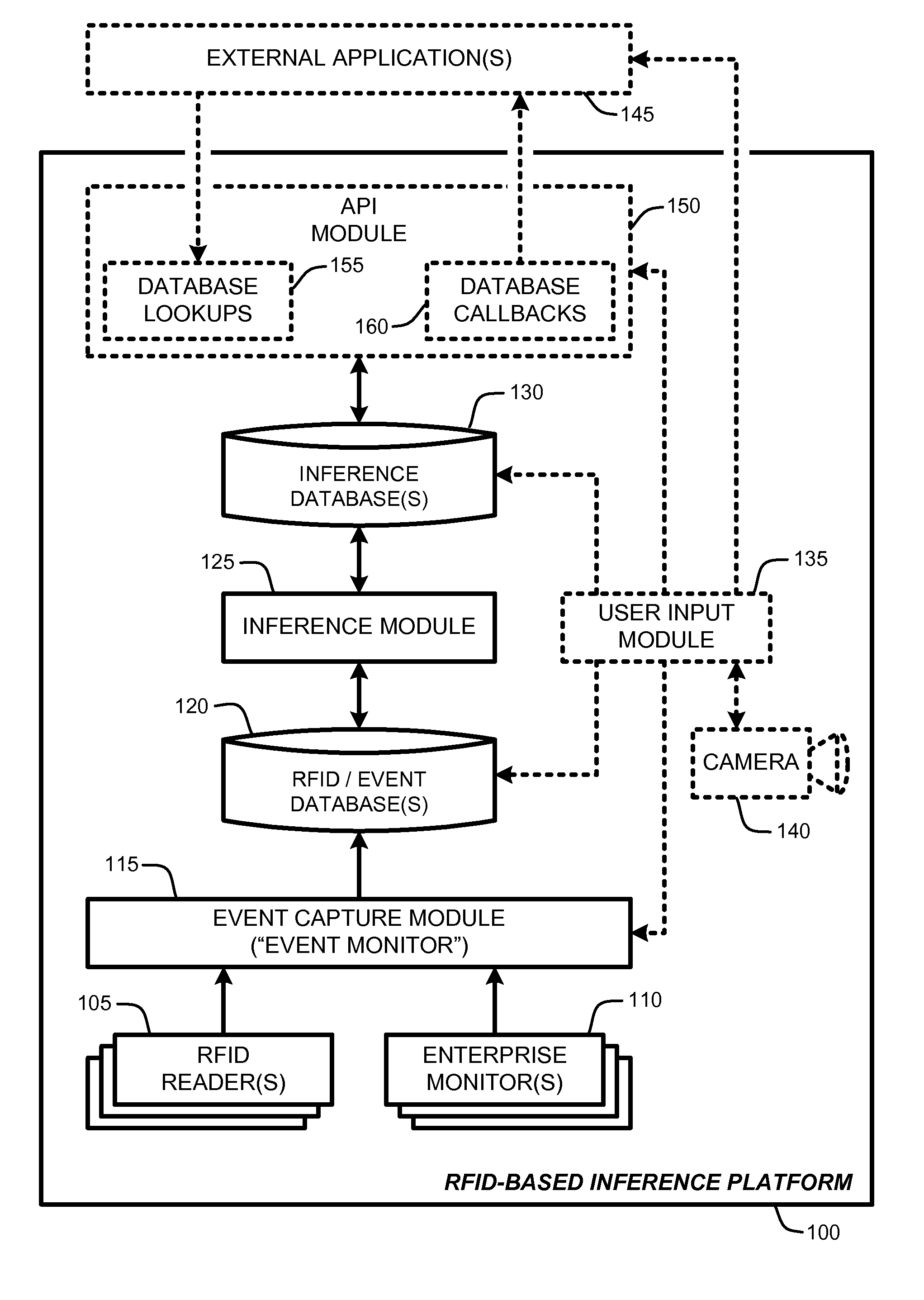

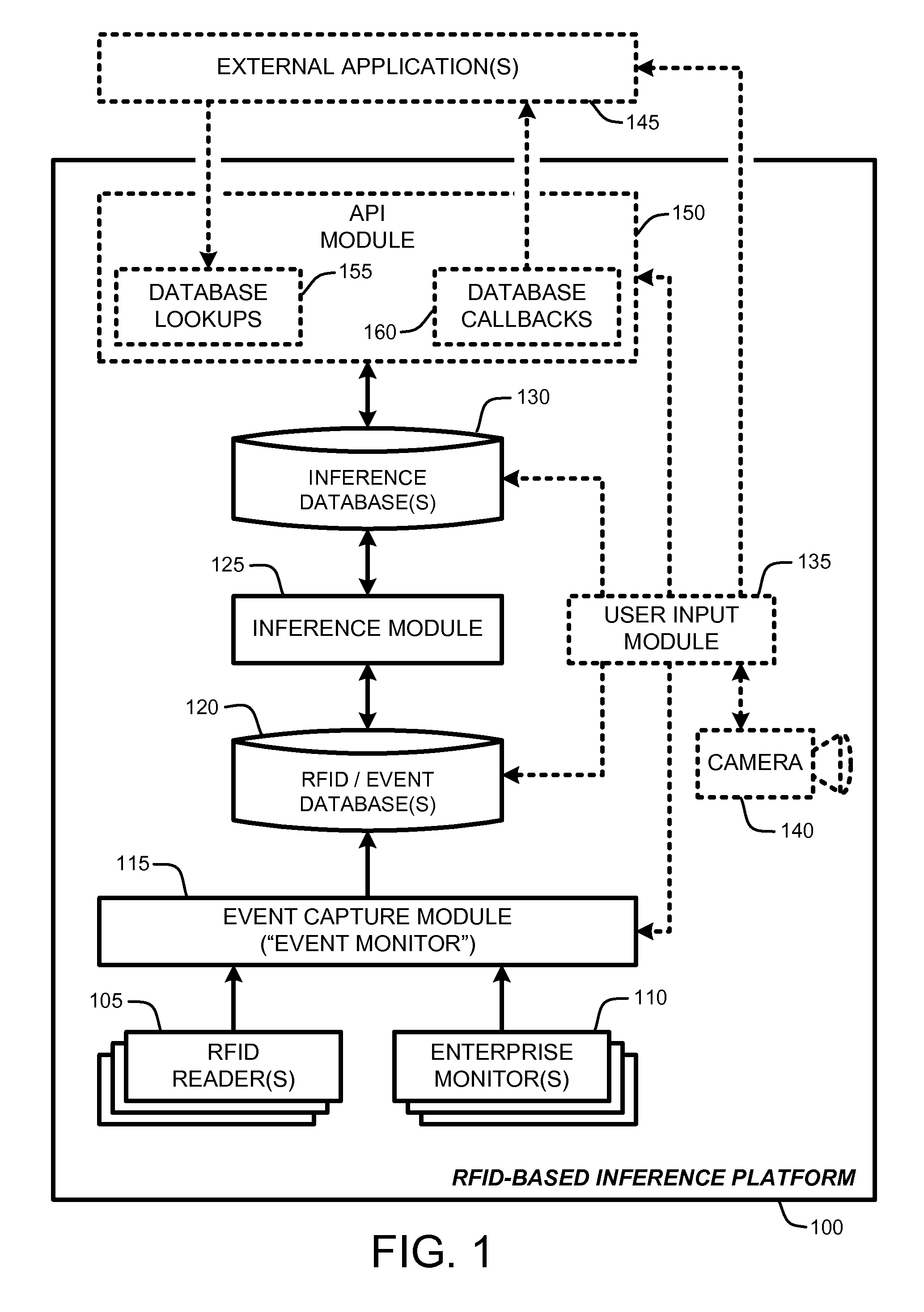

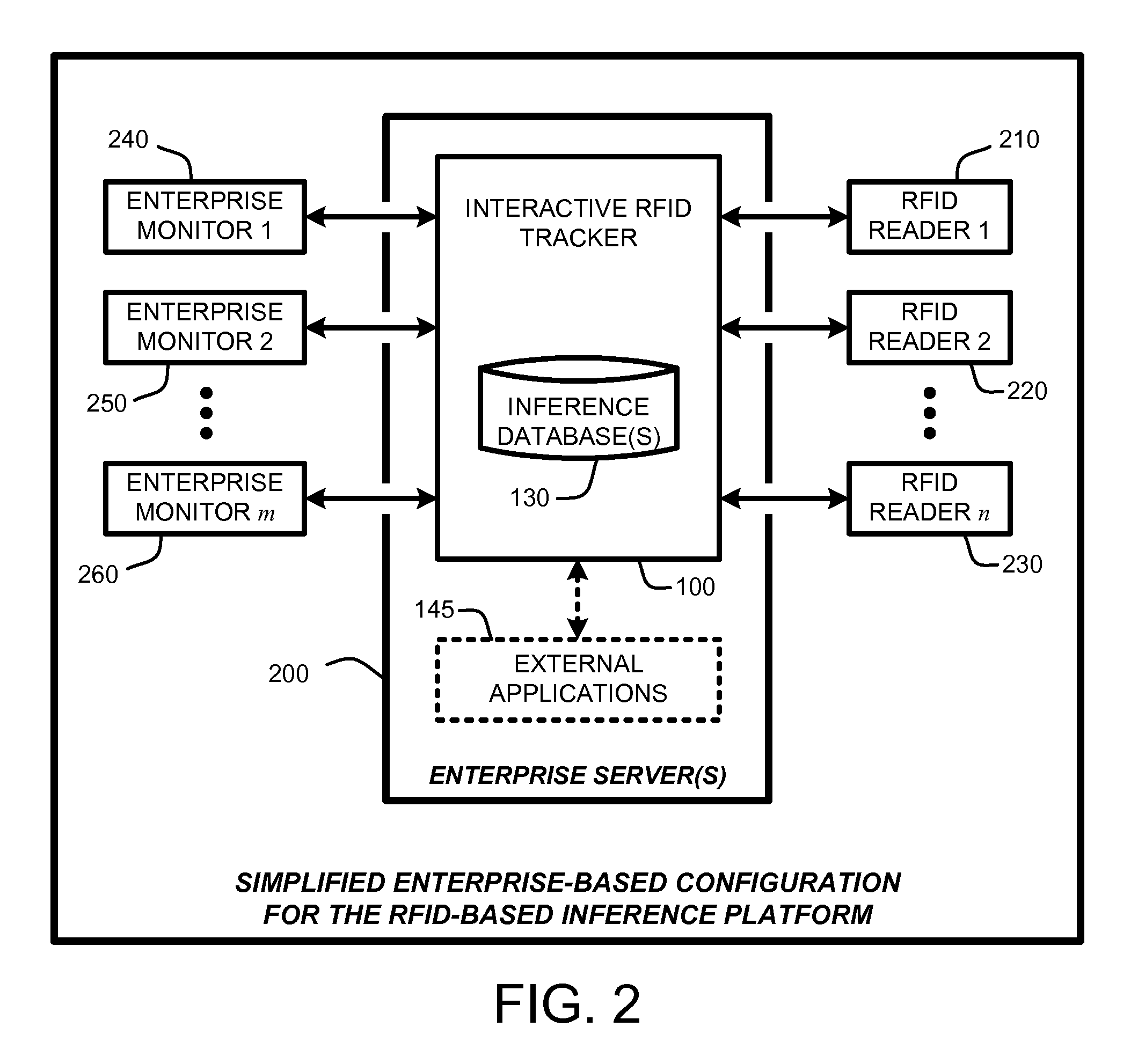

Rfid-based enterprise intelligence

Active | US20090315678A1 | Low cost | Wireless architecture usage | Digital computer details | Workspace | Video annotation

An “RFID-Based Inference Platform” provides various techniques for using RFID tags in combination with other enterprise sensors to track users and objects, infer their interactions, and provide these inferences for enabling further applications. Specifically, observations are collected from combinations of RFID tag reads and other enterprise sensors including electronic calendars, user presence identifiers, cardkey access logs, computer logins, etc. Given sufficient observations, the RFID-Based Inference Platform automatically differentiates between tags associated with or affixed to people and tags affixed to objects. The RFID-Based Inference Platform then infers additional information including identities of people, ownership of specific objects, the nature of different “zones” in a workspace (e.g., private office versus conference room). These inferences are then used to enable various applications including object tracking, automated object ownership determinations, automated object cataloging, automated misplaced object alerts, video annotations, automated conference room scheduling, semi-automated object image catalogs, object interaction query systems, etc.

Owner:MICROSOFT TECH LICENSING LLC

Rfid-based enterprise intelligence

Active | US20110227704A1 | Low cost | Electric signal transmission systems | Digital data processing details | Workspace | Cataloging

An “RFID-Based Inference Platform” provides various techniques for using RFID tags in combination with other enterprise sensors to track users and objects, infer their interactions, and provide these inferences for enabling further applications. Specifically, observations are collected from combinations of RFID tag reads and other enterprise sensors including electronic calendars, user presence identifiers, cardkey access logs, computer logins, etc. Given sufficient observations, the RFID-Based Inference Platform automatically differentiates between tags associated with or affixed to people and tags affixed to objects. The RFID-Based Inference Platform then infers additional information including identities of people, ownership of specific objects, the nature of different “zones” in a workspace (e.g., private office versus conference room). These inferences are then used to enable various applications including object tracking, automated object ownership determinations, automated object cataloging, automated misplaced object alerts, video annotations, automated conference room scheduling, semi-automated object image catalogs, object interaction query systems, etc.

Owner:MICROSOFT TECH LICENSING LLC

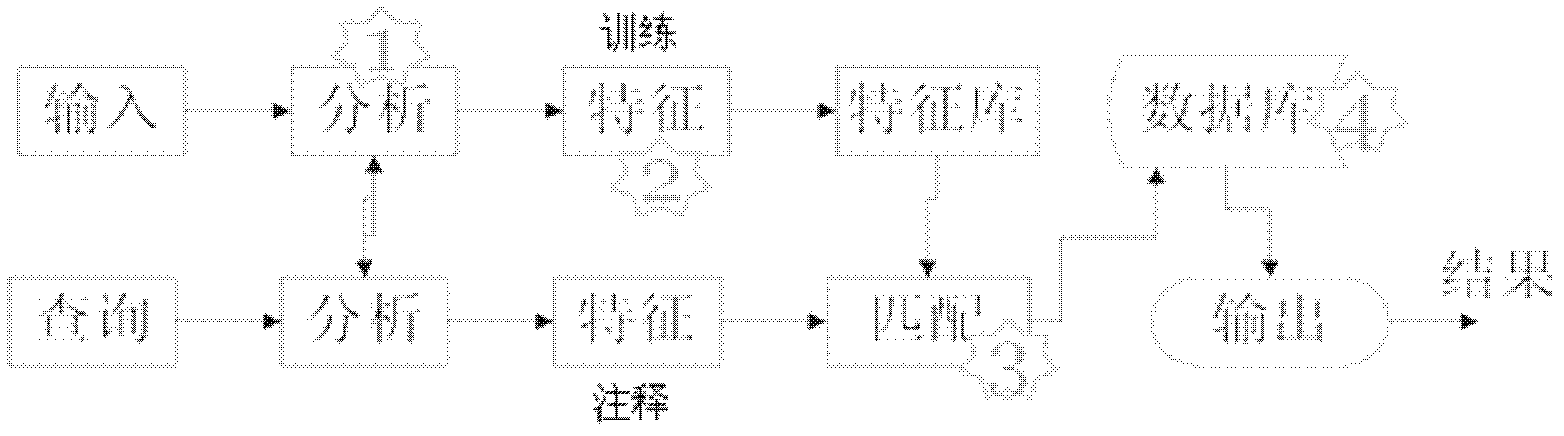

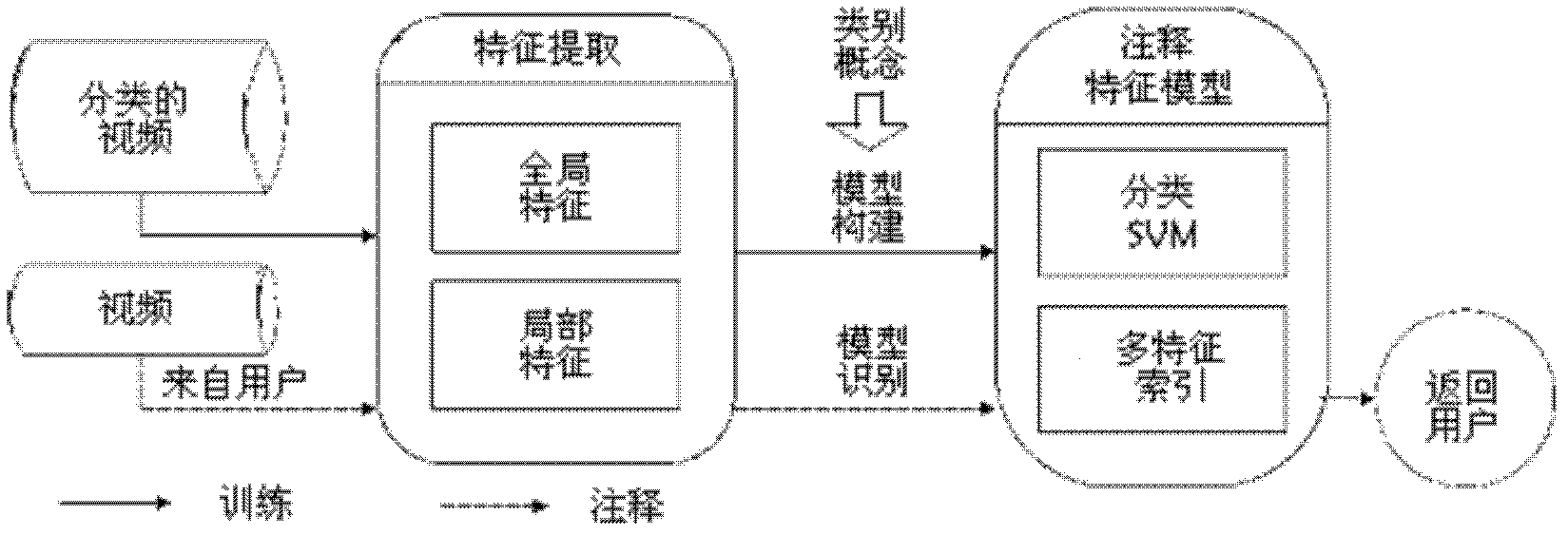

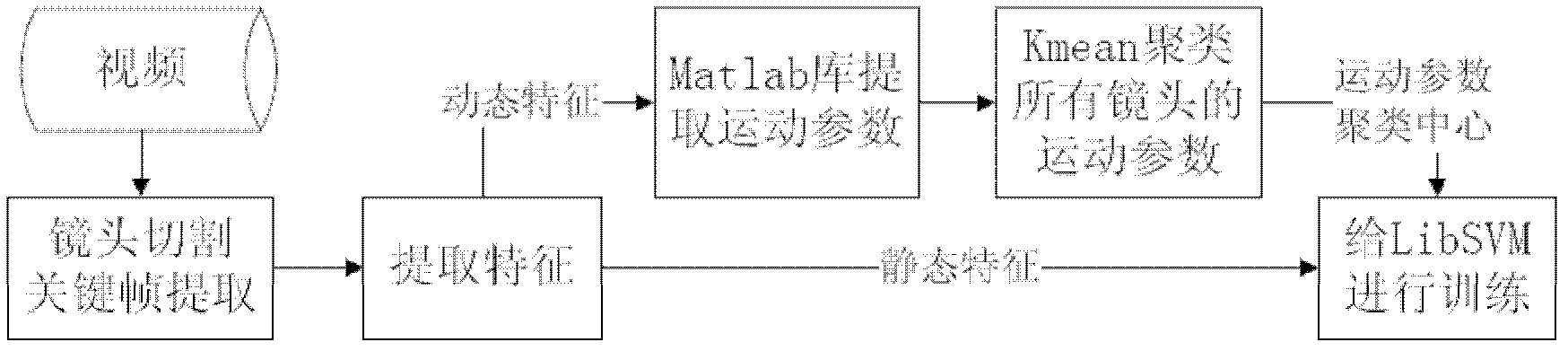

Automatic video annotation method based on automatic classification and keyword marking

Inactive | CN102508923A | Improve labeling ability | Narrow the match | Special data processing applications | Support vector machine | Video annotation

The invention discloses an automatic video annotation method based on automatic classification and keyword marking. The method comprises the following steps: S1, preprocessing the video classification features; S2, extracting the global and local features of a video, where the global features are used to train an SVM (Support Vector Machine) model to identify different video types, and the local features are used to build a multi-feature index model whose features correspond to keywords; S3, for un-annotated videos from a user, extracting the global and local features, using the SVM model with the global features to identify the specific type of each video, and using the local features to retrieve relevant keywords from the multi-feature index model for annotation; and S4, ranking the annotation results by weight and returning them to the user. The method improves video annotation performance.

Owner:PEKING UNIV

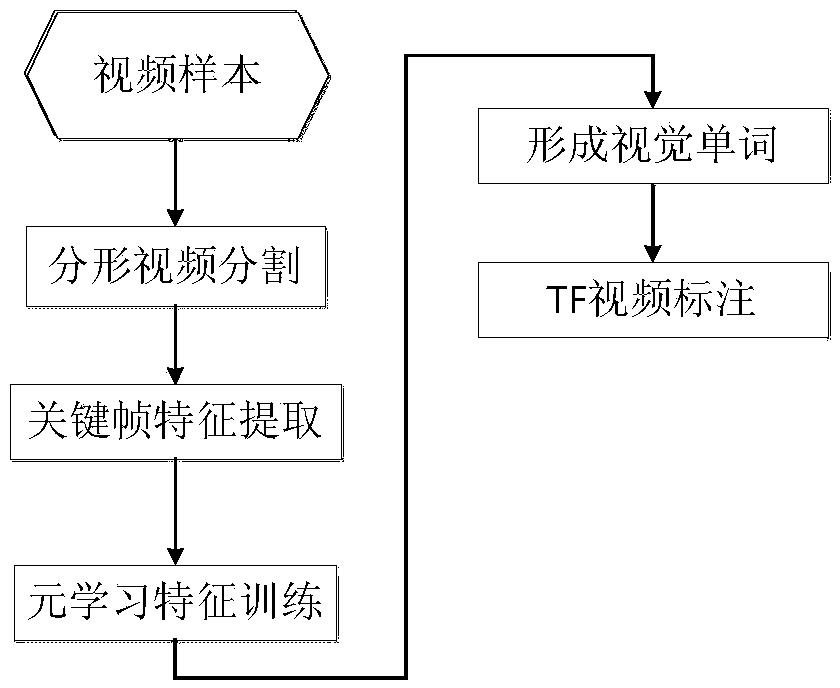

Mass video semantic annotation method based on Spark

Active | CN104239501A | Avoid constraints | Realize analysis | Image analysis | Character and pattern recognition | Feature extraction | Video annotation

The invention provides a mass video semantic annotation method based on Spark. The method is mainly based on elastic distributed storage of mass video under a Hadoop big data cluster environment and adopts a Spark computation mode to conduct video annotation. The method mainly comprises the following contents: a video segmentation method based on a fractal theory and realization thereof on Spark; a video feature extraction method based on Spark and a visual word forming method based on a meta-learning strategy; a video annotation generation method based on Spark. Compared with the traditional single machine computation, parallel computation or distributed computation, the mass video semantic annotation method based on Spark can improve the computation speed by more than a hundred times and has the advantages of complete annotation content information, low error rate and the like.

Owner:THE 28TH RES INST OF CHINA ELECTRONICS TECH GROUP CORP

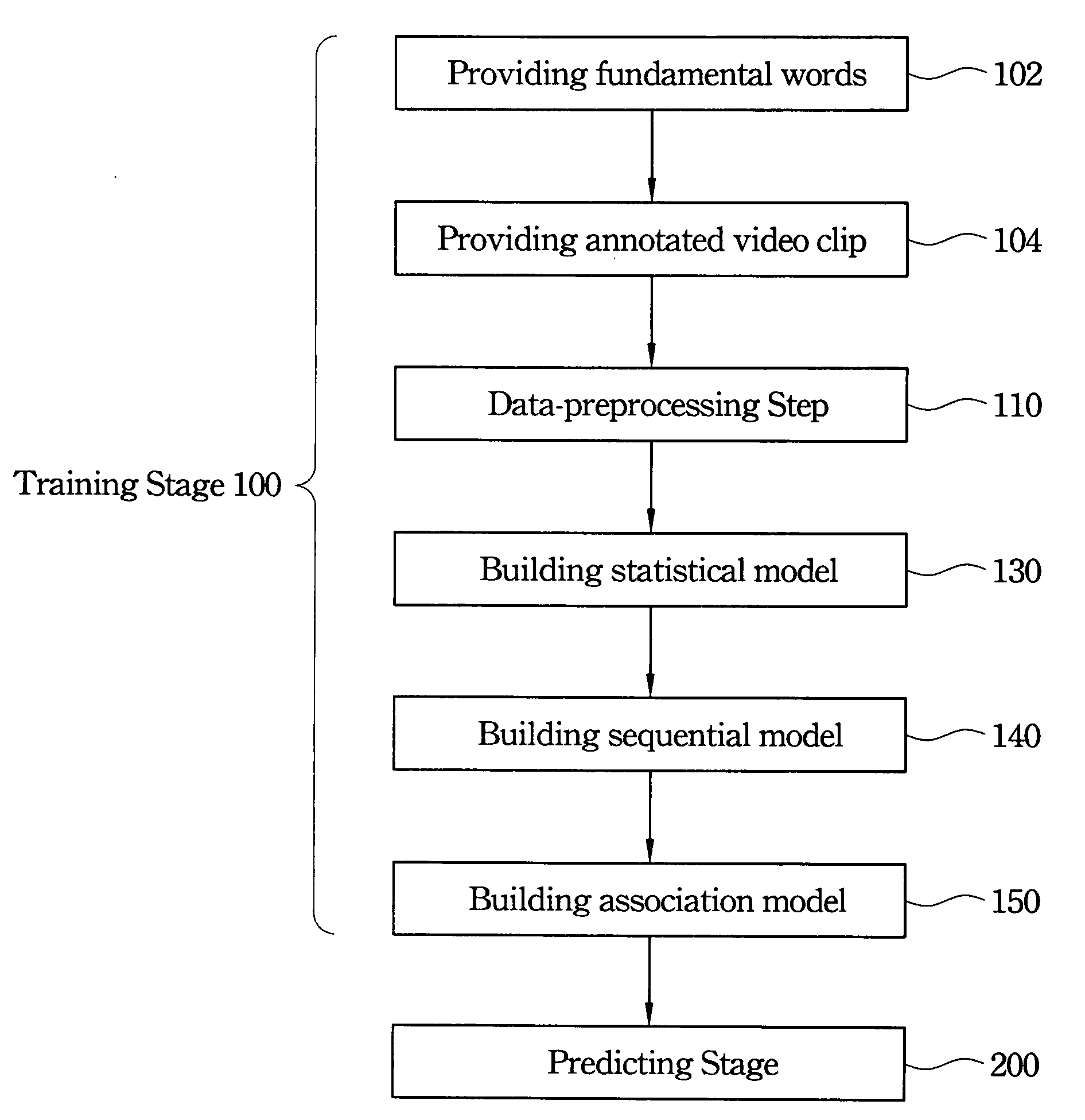

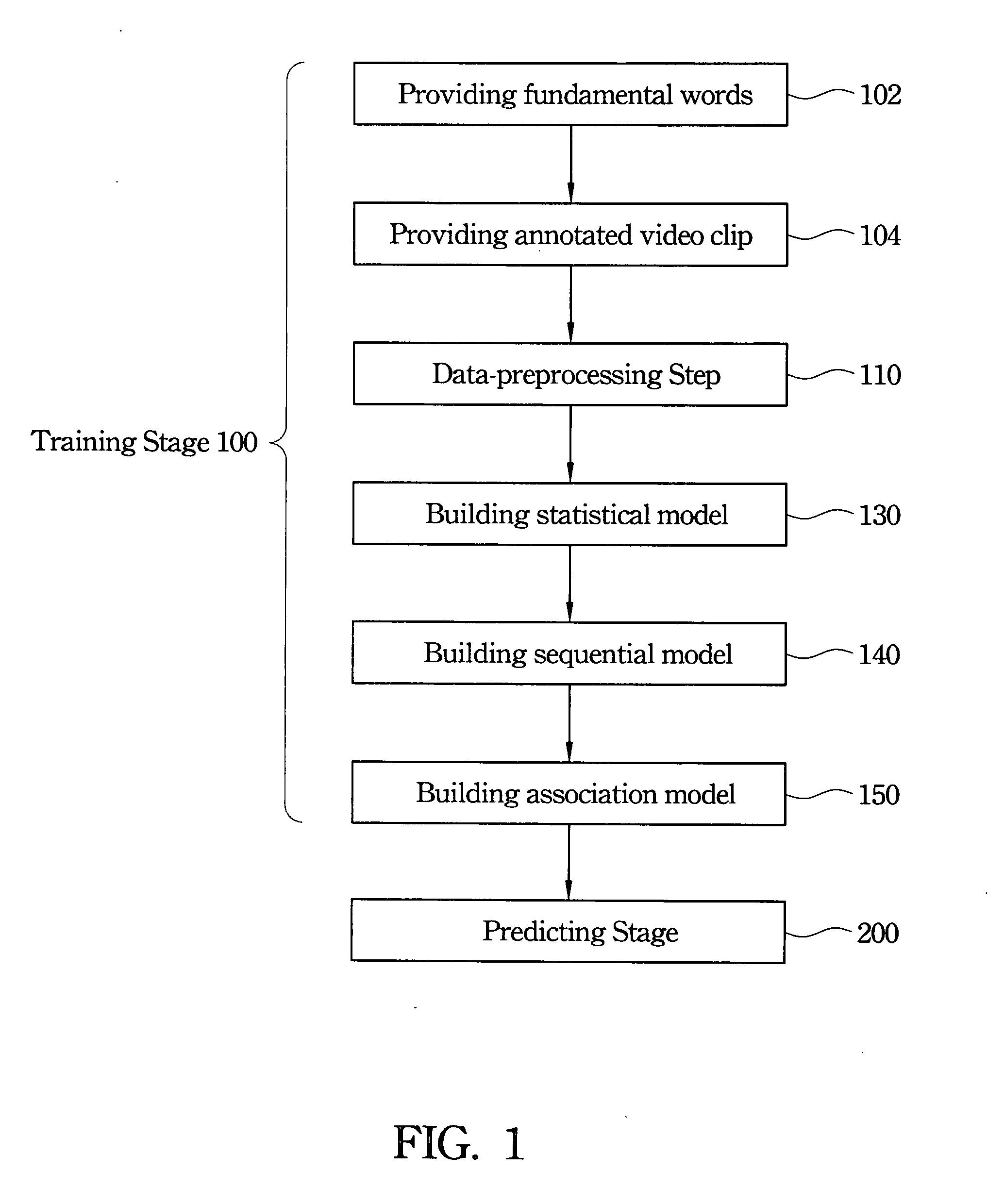

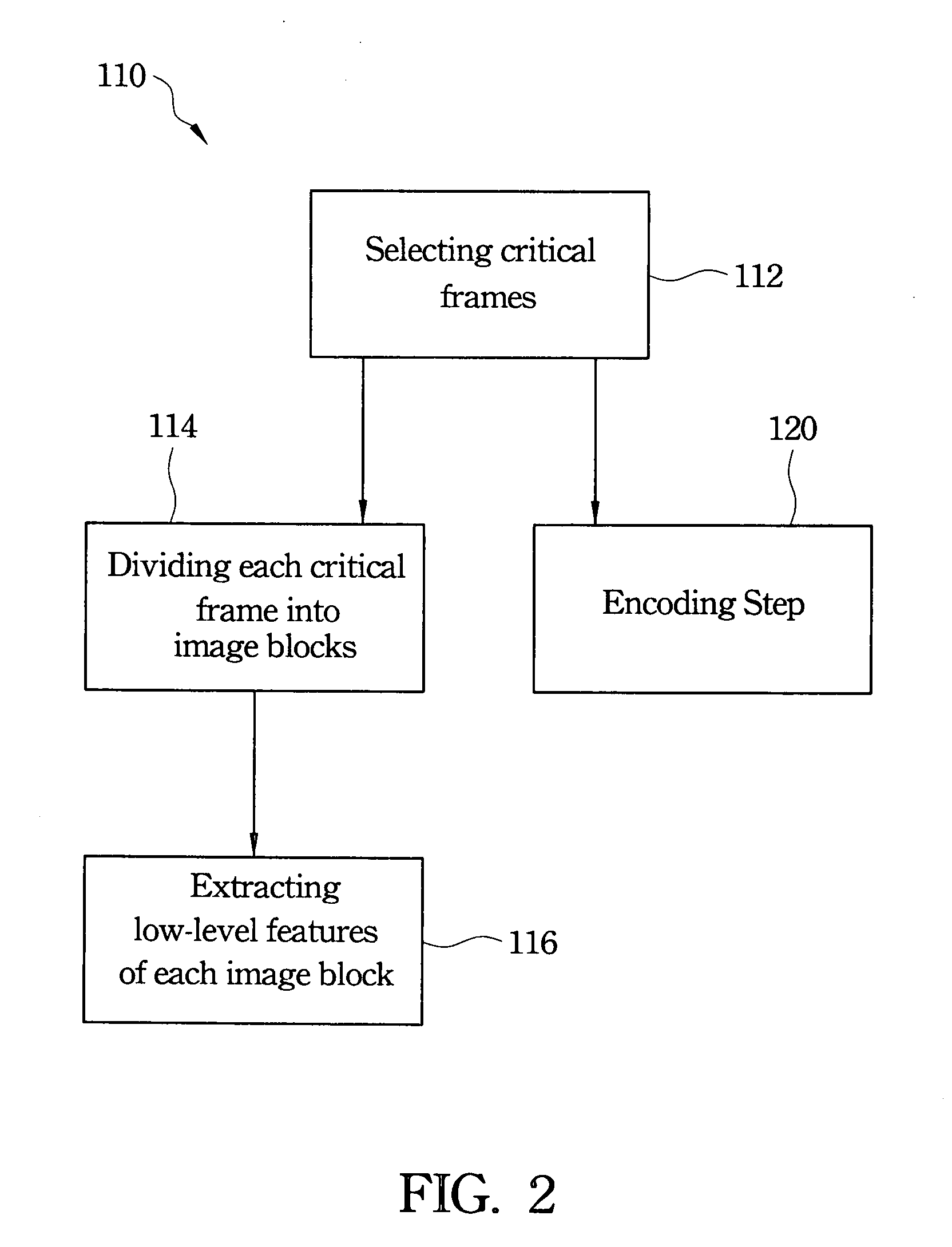

Video annotation method by integrating visual features and frequent patterns

Inactive | US20080059872A1 | Improve forecast accuracy | Overcomes shortcoming | Digital data information retrieval | Character and pattern recognition | Pattern recognition | Video annotation

A video annotation method that integrates visual features and frequent patterns is disclosed. The method combines a statistical model based on visual features with a sequential model and an association model built using data mining techniques to automatically annotate unknown videos. By combining these three models, it takes both visual features and semantic patterns into account to improve annotation accuracy.

Owner:NAT CHENG KUNG UNIV

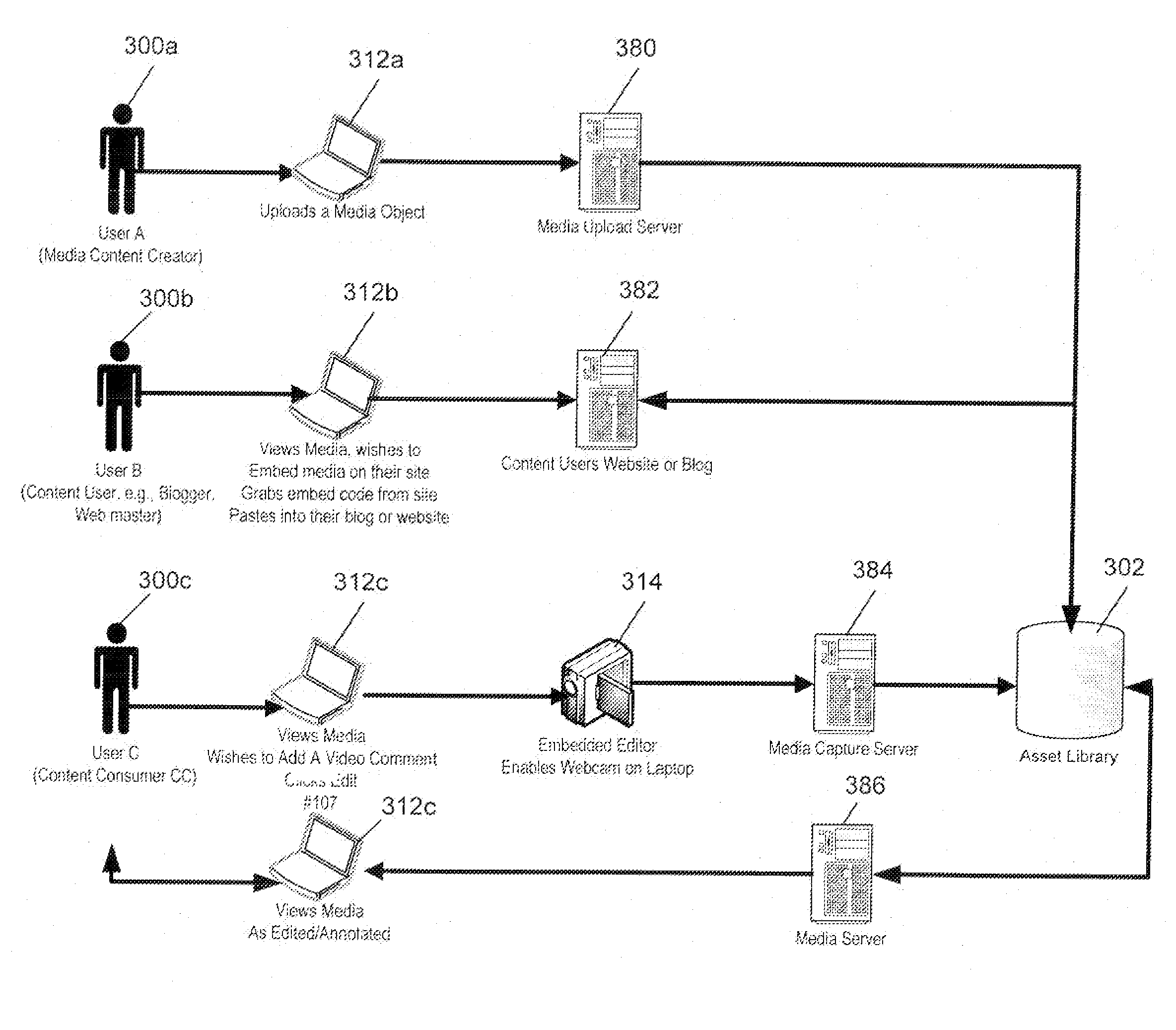

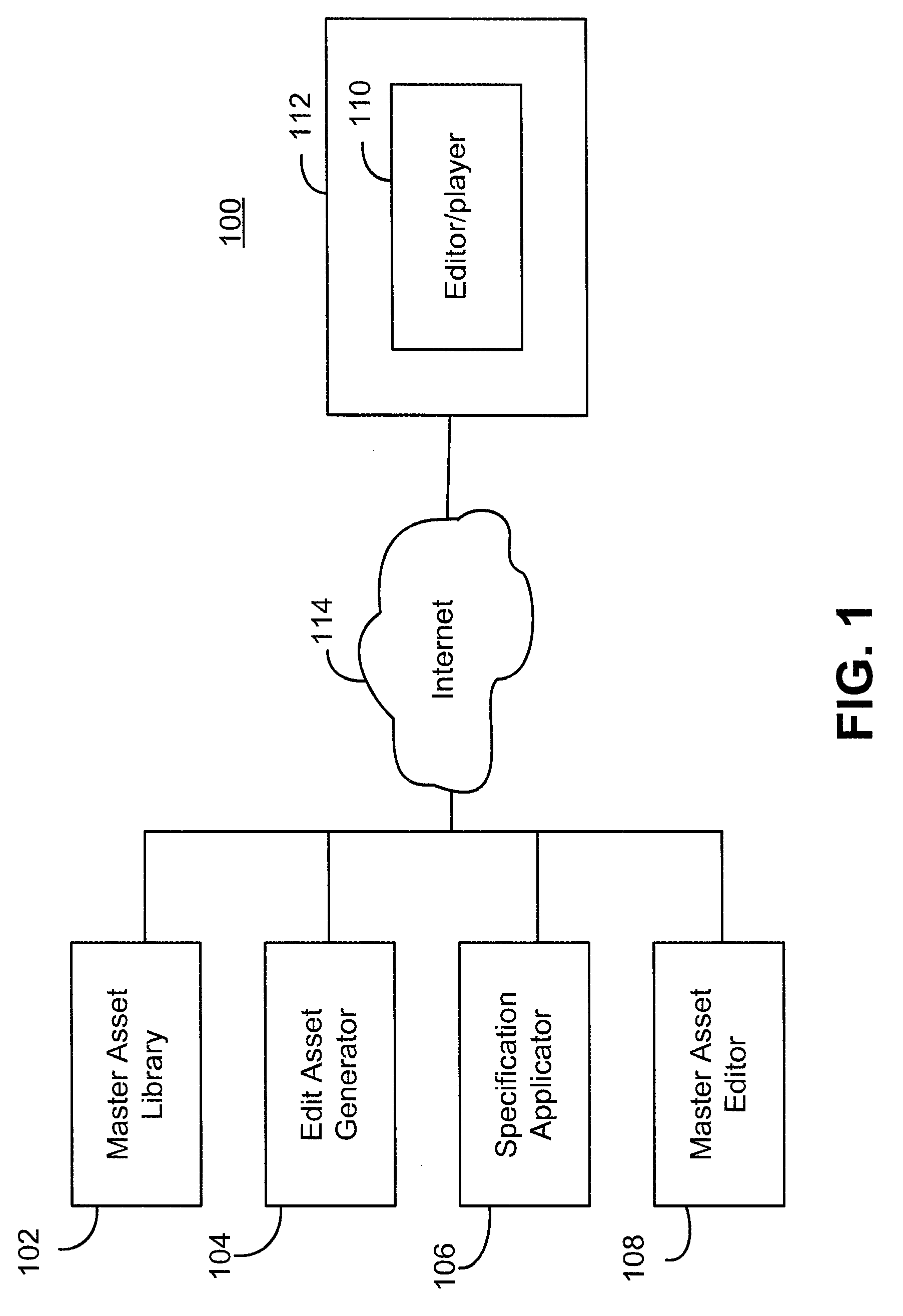

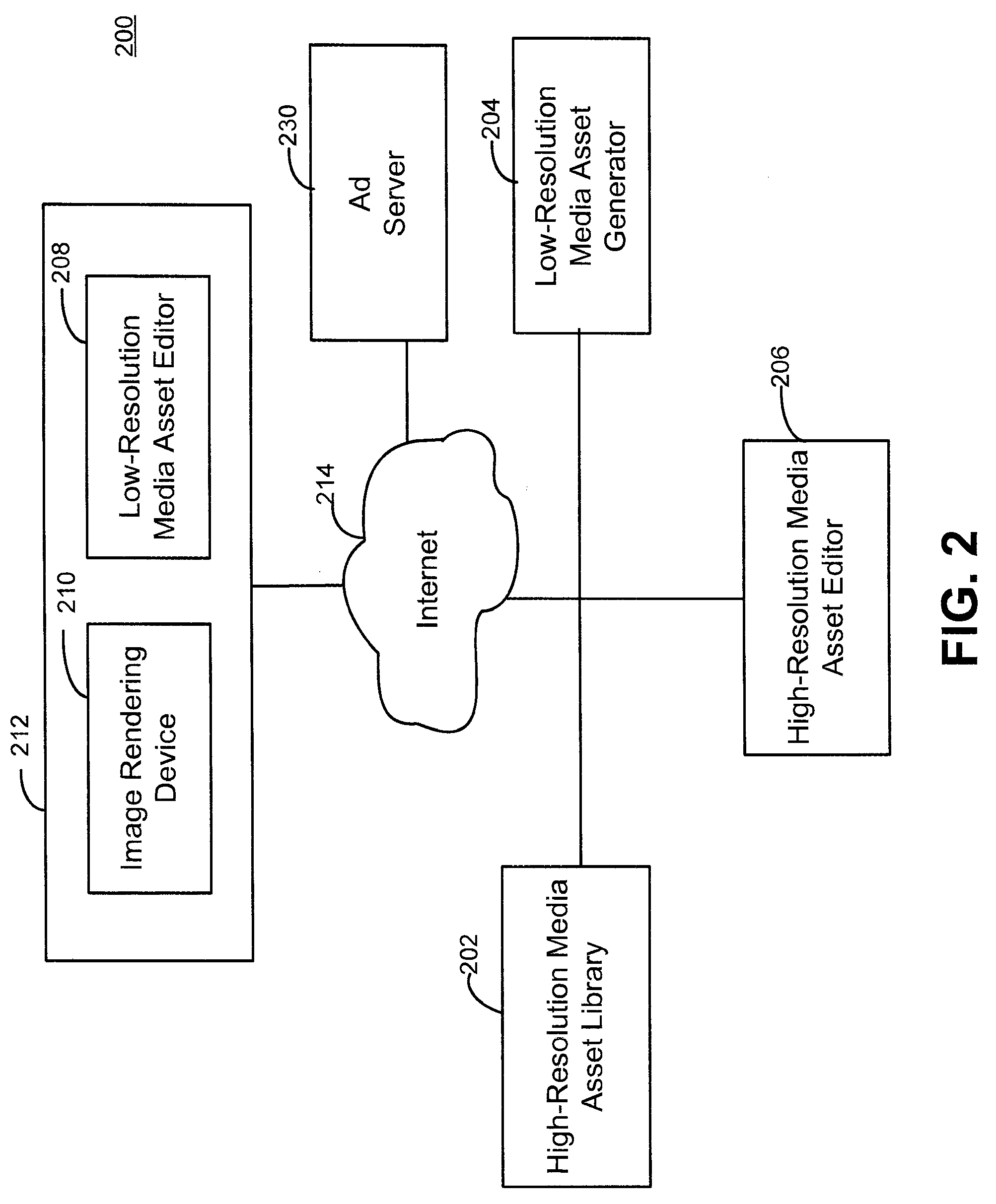

In-place upload and editing application for editing media assets

Inactive | US20090064005A1 | Electronic editing digitised analogue information signals | Record information storage | User input | Video annotation

Systems and methods for editing media assets are provided. In one example, an apparatus for editing media assets includes logic (e.g., software) for causing the display of a media asset editor embedded within a web page, the editor operable to display a media asset and cause an edit of the media asset in response to user input. In one example, the media asset editor comprises a widget embedded within the web page, for example, a Flash-based widget. The editor may be further operable to upload media assets to remote storage, e.g., local or remote media assets or user-generated media assets. An edit to the media asset may include an edit instruction associated with the displayed media asset. In one example, the edit to the media asset may include an annotation of the displayed media asset, e.g., a text, audio, or video annotation.

Owner:OATH INC

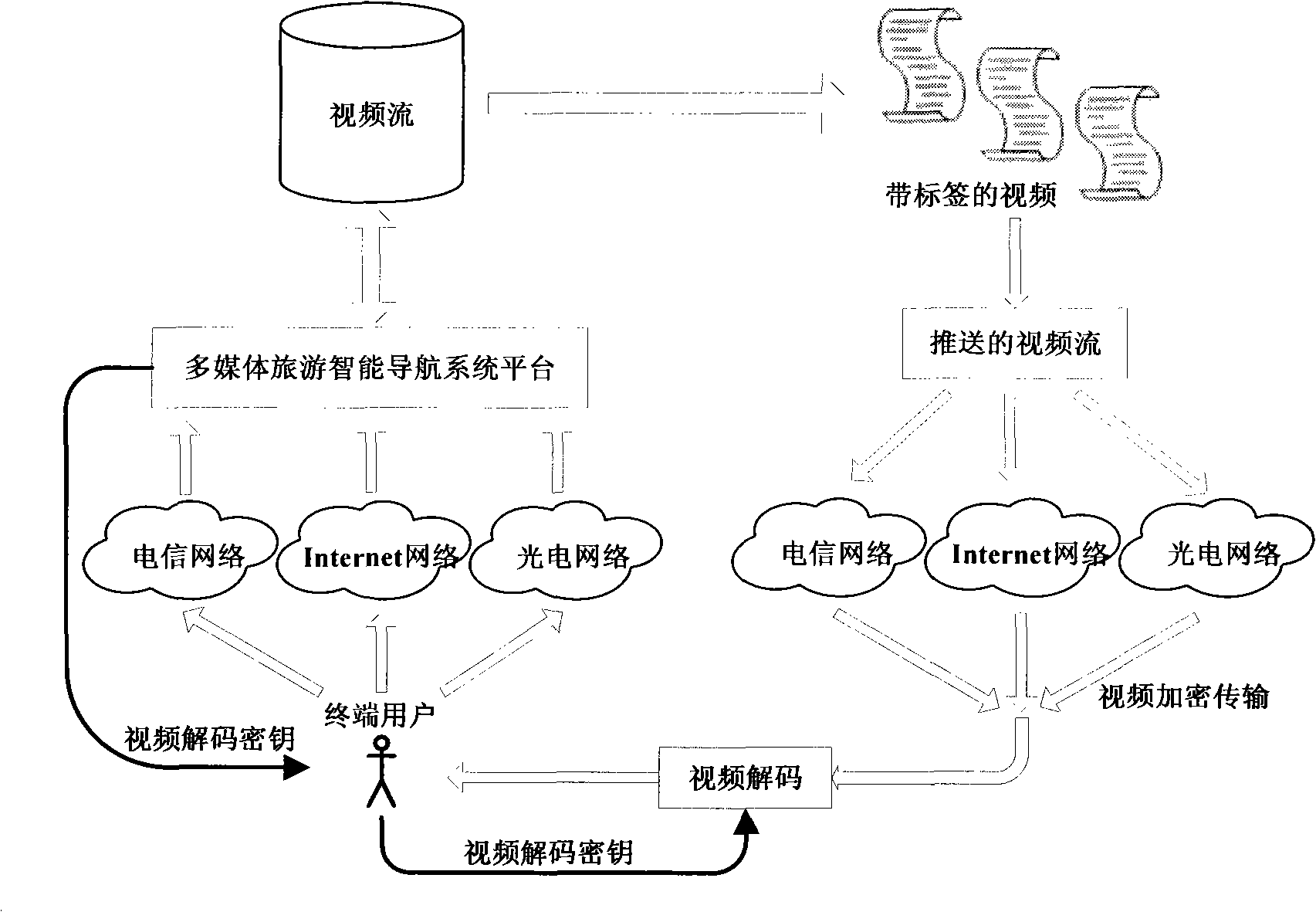

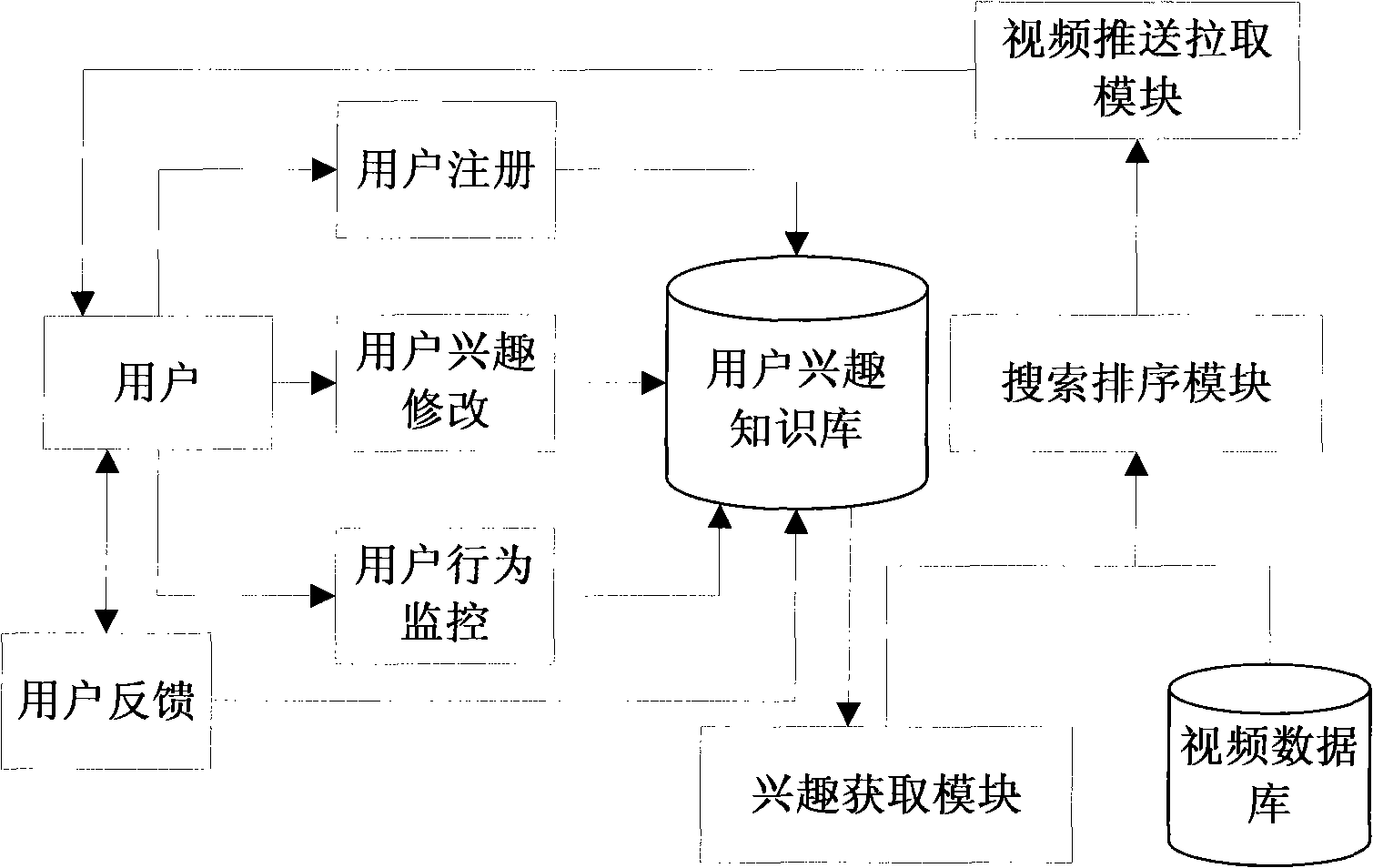

On-line landscape video active information service system of scenic spots in tourist attraction based on bandwidth network

Inactive | CN101834837A | Well defined | Transmission | Special data processing applications | Telecommunications network | Feature extraction

The invention provides an on-line landscape video active information service system for scenic spots in a tourist attraction based on a bandwidth network. The system collects video resources from the Internet, telecommunication networks, and broadcasting / video networks, and provides intelligent video message services over the bandwidth network to various users by classifying and mining video information. The system performs feature extraction, video annotation, and similar processing on the video information that needs to be retrieved, uses intelligent matching to search a labeled video source database according to user requirements, and transmits the processed video stream according to the type of user terminal. The method offers high service efficiency, good real-time performance, and high application value.

Owner:BEIJING UNIV OF POSTS & TELECOMM

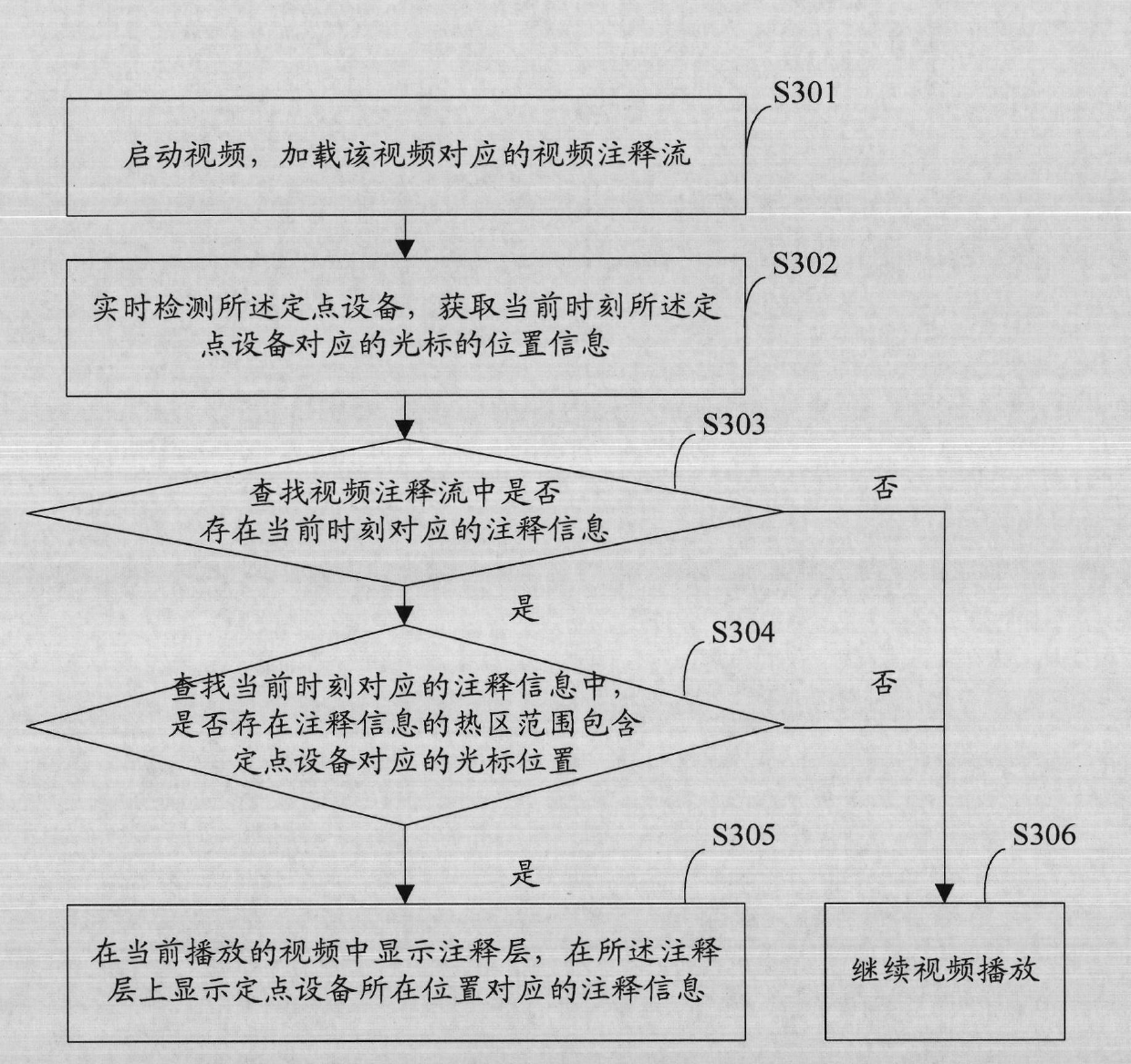

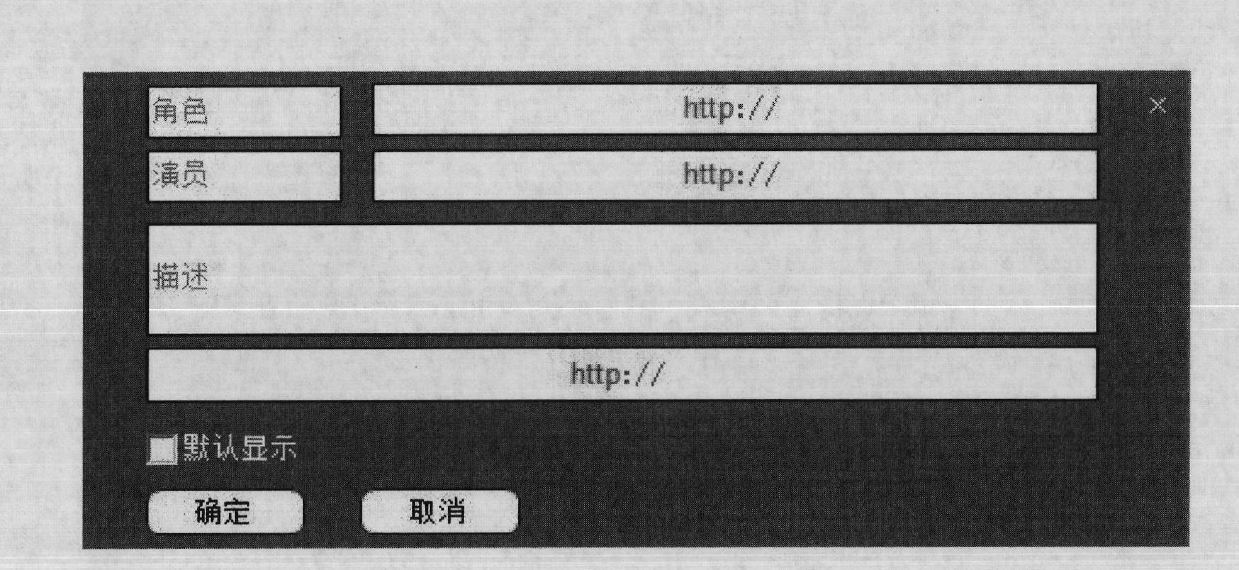

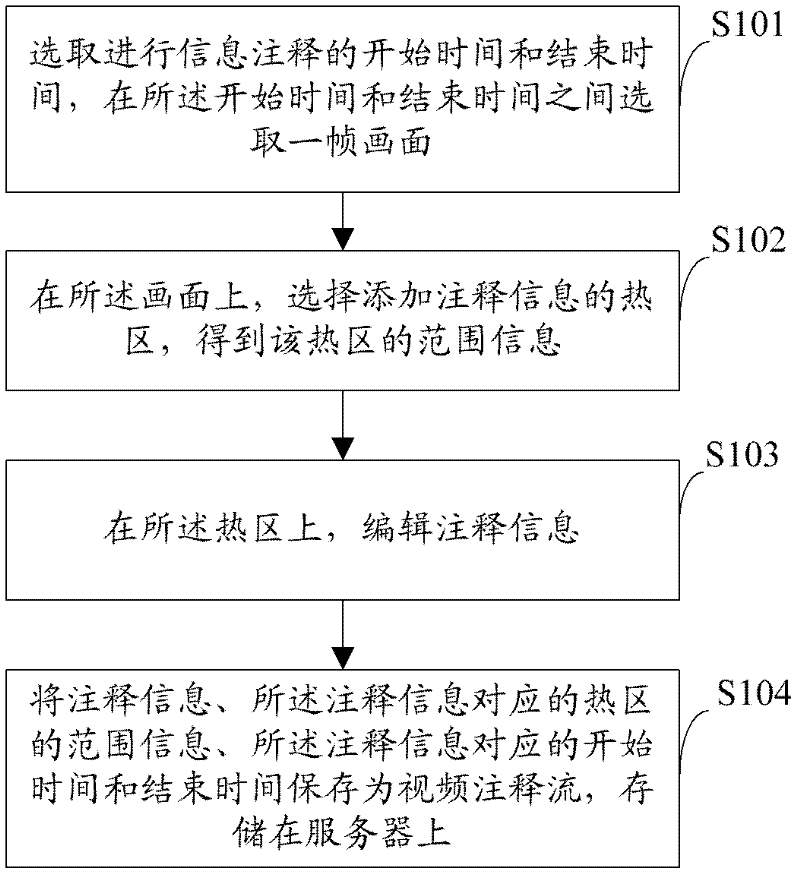

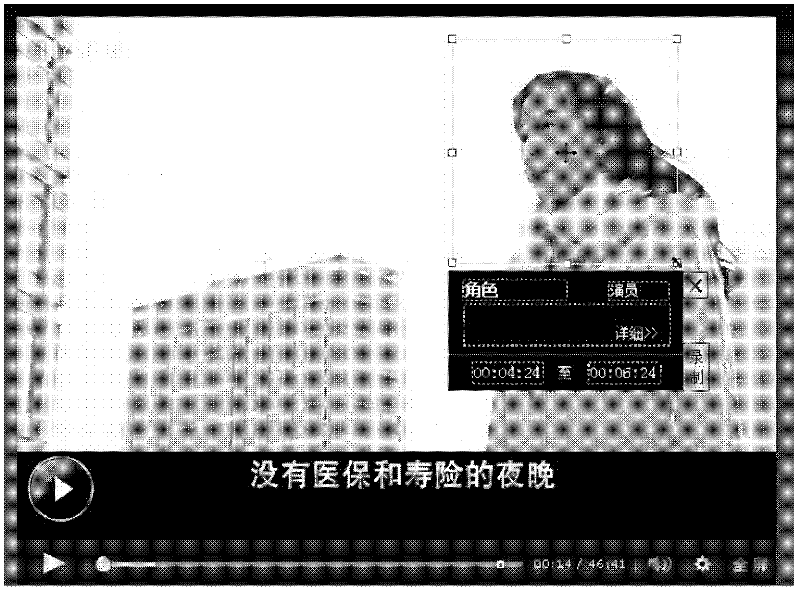

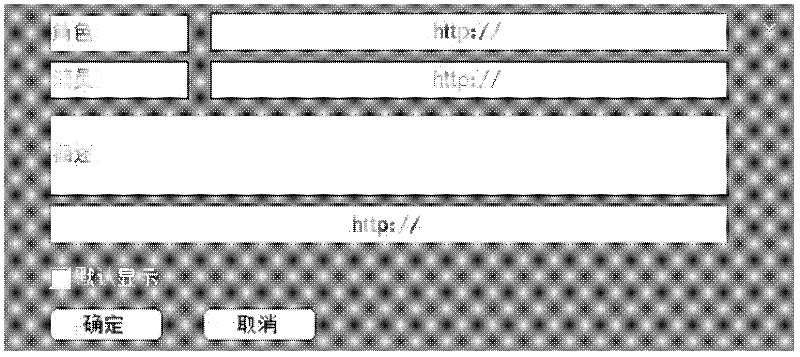

Method and device for adding video information and method and device for displaying video information

Active | CN101950578A | Improve experience | Realize dynamic display | Input/output for user-computer interaction | Electronic editing digitised analogue information signals | Start time | Computer graphics (images)

The invention discloses a method and a device for adding video information. The method for adding the video information comprises the following steps of: selecting starting time and finishing time for adding annotation information and selecting a frame between the starting time and the finishing time; selecting a hot area, into which the annotation information is to be added on the frame to acquire range information of the hot area; editing the annotation information in the hot area; and saving the annotation information, the range information of the hot area corresponding to the annotation information, and the starting time and the finishing time corresponding to the annotation information as video annotation stream and storing the video annotation stream in a server. The invention also provides a method and a device for displaying the video information. Through the methods and the devices in the embodiment of the invention, the video information can be dynamically displayed and user experience can be enhanced.

Owner:BEIJING QIYI CENTURY SCI & TECH CO LTD
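
The annotation stream described above (annotation text, hot area range, start and finish times) can be pictured as a small record type. The following is a minimal sketch, not the patented implementation: the names `HotArea`, `Annotation`, and `annotation_at`, the rectangular hot area, and the lookup by playback time and pointer position are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class HotArea:
    # Hypothetical representation: an axis-aligned rectangle in frame coordinates.
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        """Return True if the point (px, py) lies inside the rectangle."""
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


@dataclass
class Annotation:
    text: str       # the annotation information
    area: HotArea   # range information of the hot area
    start: float    # starting time in seconds
    end: float      # finishing time in seconds


def annotation_at(stream: List[Annotation], t: float,
                  px: float, py: float) -> Optional[Annotation]:
    """Return the first annotation active at time t whose hot area covers (px, py)."""
    for annotation in stream:
        if annotation.start <= t <= annotation.end and annotation.area.contains(px, py):
            return annotation
    return None


# Illustrative annotation stream with two entries (made-up values).
stream = [
    Annotation("Lead actor", HotArea(100, 50, 80, 120), start=12.0, end=18.5),
    Annotation("Product placement", HotArea(300, 200, 60, 40), start=15.0, end=20.0),
]
```

Keeping the annotations in a stream separate from the video frames is what allows them to be shown or hidden on demand; a real system would also need serialization and server storage, which this sketch omits.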

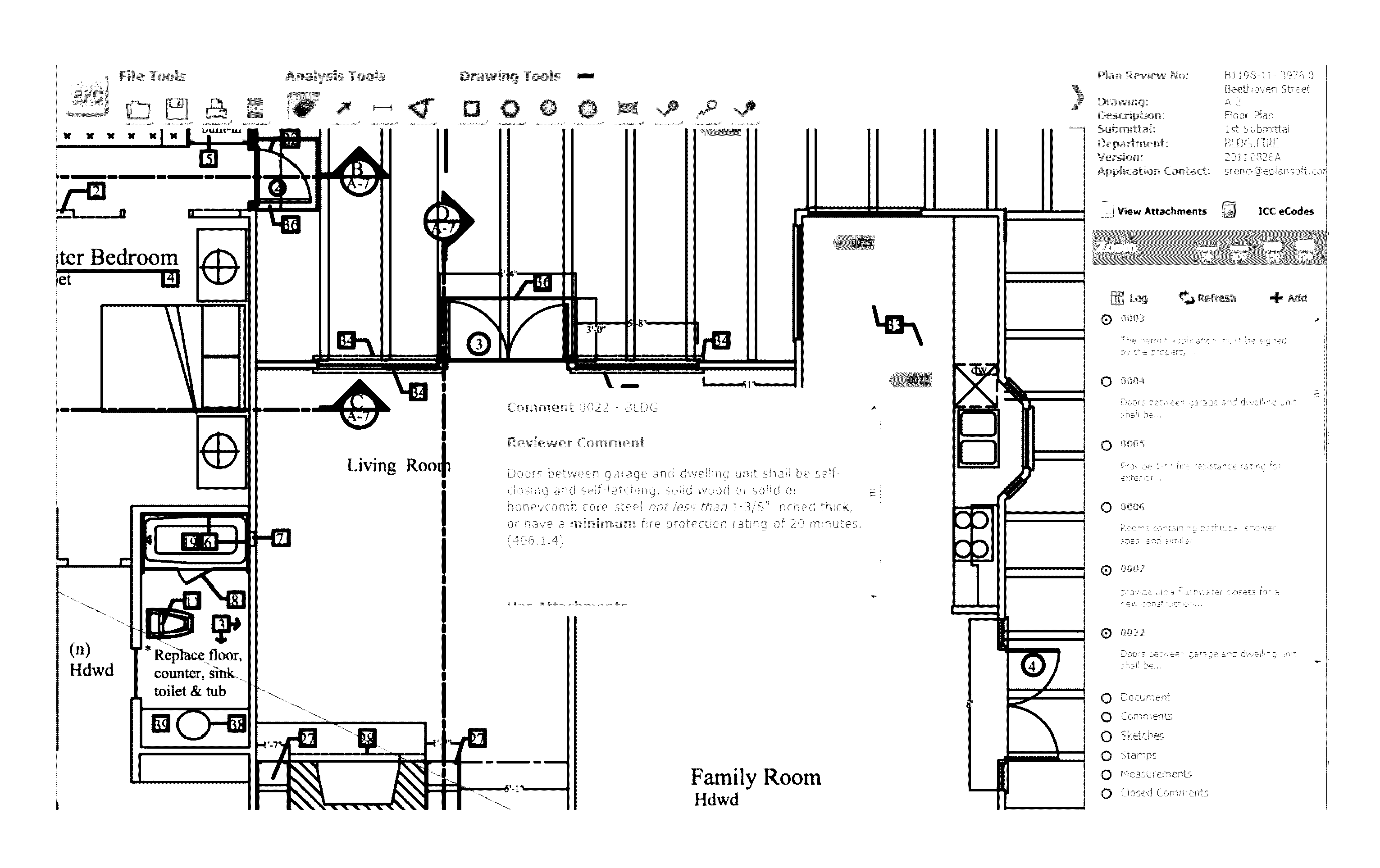

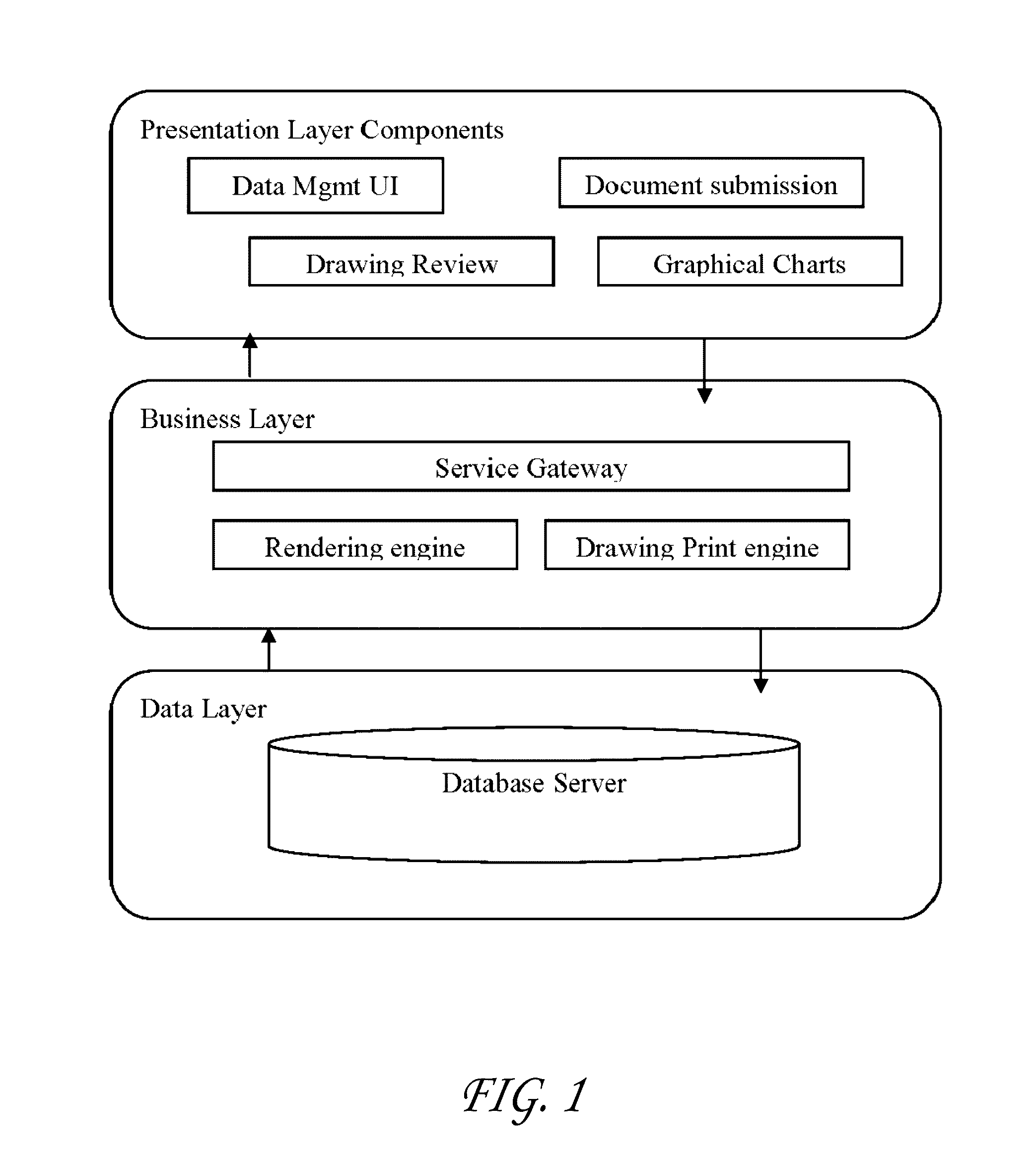

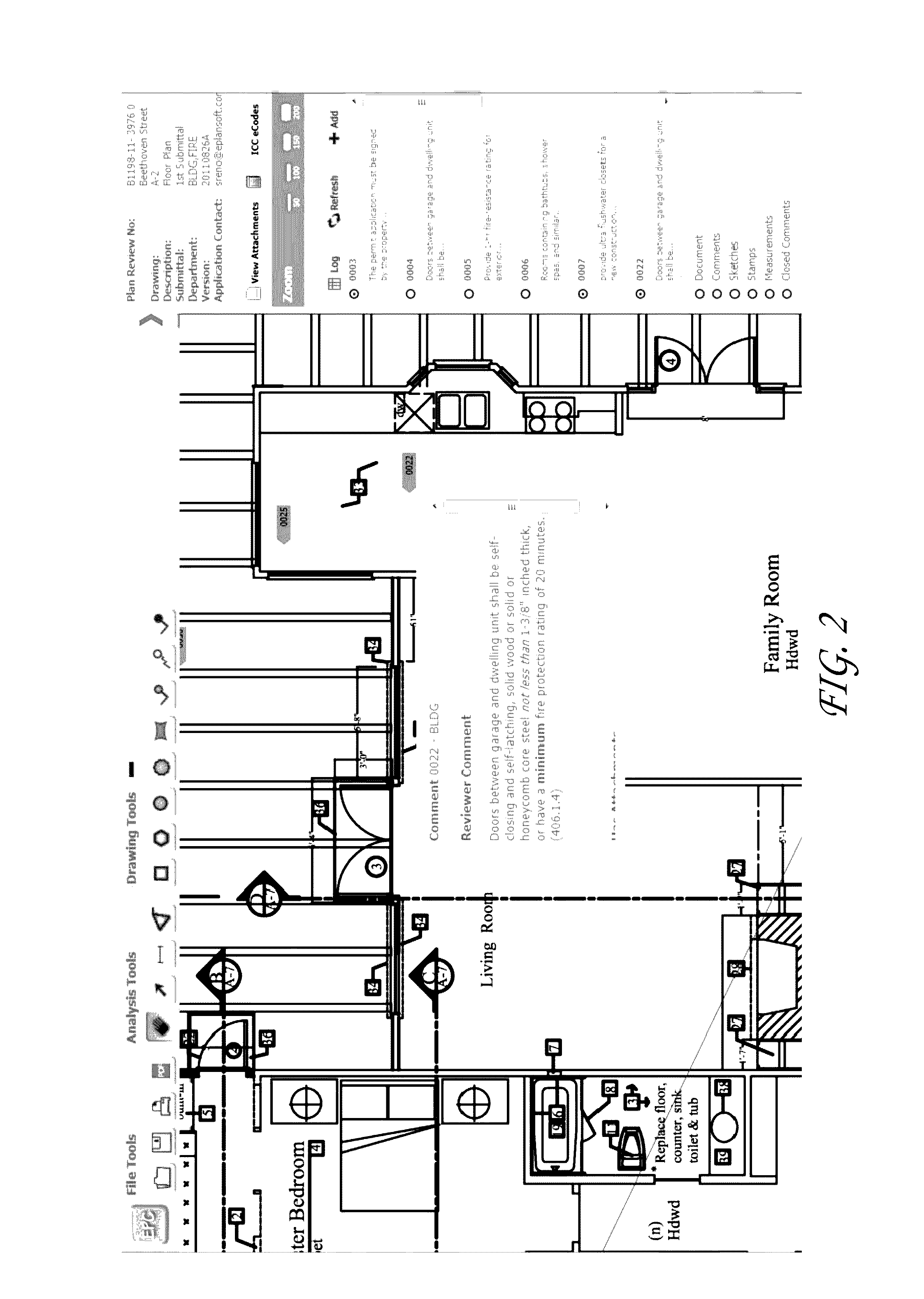

Systems and methods for management and processing of electronic documents using video annotations

Active | US20170052680A1 | Fast presentation | Geometric CAD | Metadata video data retrieval | Drag and drop | Computer graphics (images)

Systems and methods for managing and processing building plan documents including plan sheets. A toolbox of building project-related annotation tools comprising geometrical shapes and corresponding metadata indicating a criticality of a building project related defect is provided. An interface enables a selection of one or more video frames from a video file. A video frame editing area enables a user to drag and drop a shape over a portion of a frame displayed in the editing area to highlight a building defect. An interface enables the user to define a relationship between a video file and a building-related task. A user annotation, comprising a geometrical shape and corresponding metadata indicating a criticality of a building project related defect, of a video frame is received. An association of the video file with a first building project-related task is generated. A search interface enables the user to search for annotated video files associated with building projects.

Owner:E PLAN

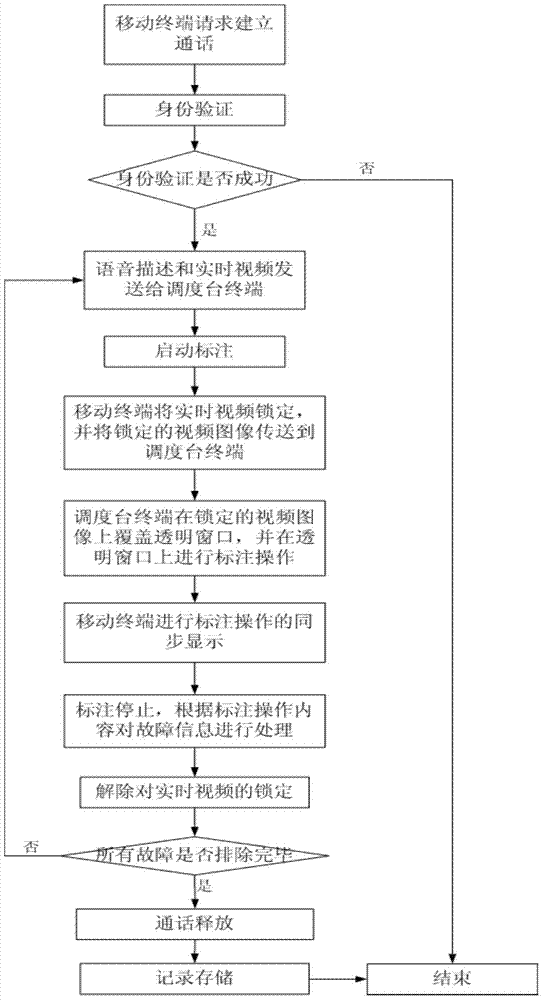

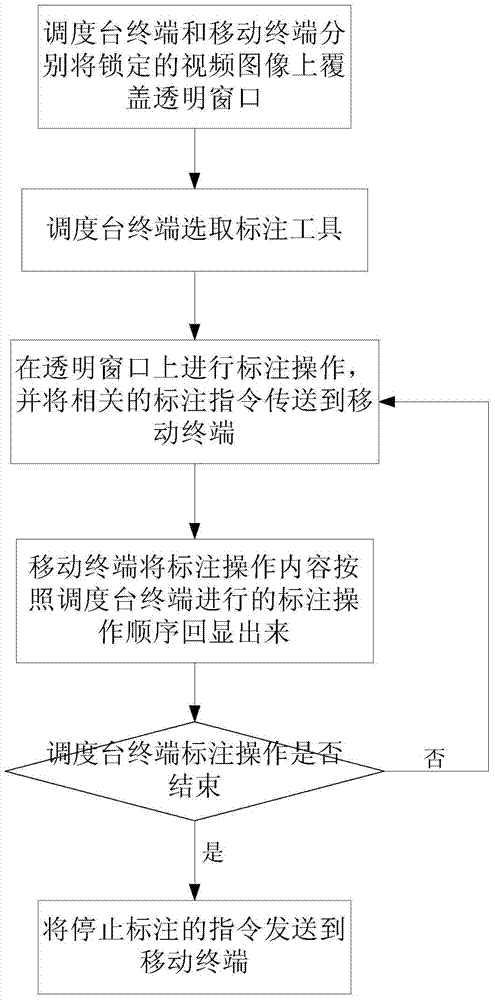

Method for performing long-distance guidance by use of video annotation

Active | CN104506621A | Intuitive labeling operation | Labeling is easy | Closed circuit television systems | Transmission | Voice communication | Video annotation

The invention discloses a method for performing long-distance guidance by use of video annotation. The method comprises the following steps: S1, communicating with a dispatching desk by virtue of a mobile terminal and transmitting relevant failure information and onsite videos to the dispatching desk by virtue of voice description; S2, starting annotation on a video interface by the dispatching desk, automatically locking a real-time video by the mobile terminal and transmitting the real-time video to the dispatching desk; S3, covering a locked video image with a transparent window respectively by virtue of the dispatching desk and the mobile terminal, performing annotation operation on the transparent window and transmitting an annotation instruction to the mobile terminal by virtue of the dispatching desk, and displaying synchronously by virtue of the mobile terminal; S4, processing the failure information according to the annotation operation content and voice communication by virtue of the mobile terminal and unlocking the real-time video image by virtue of the mobile terminal; S5, repeating the steps S1 to S4 until all the failures are eliminated. By use of the method disclosed by the invention, the maintenance and inspection personnel of the mobile terminal seem to accept the personal guidance of dispatching desk technical support personnel, so that the usage experience is effectively improved.

Owner:BEIJING JIAXUN FEIHONG ELECTRIC CO LTD

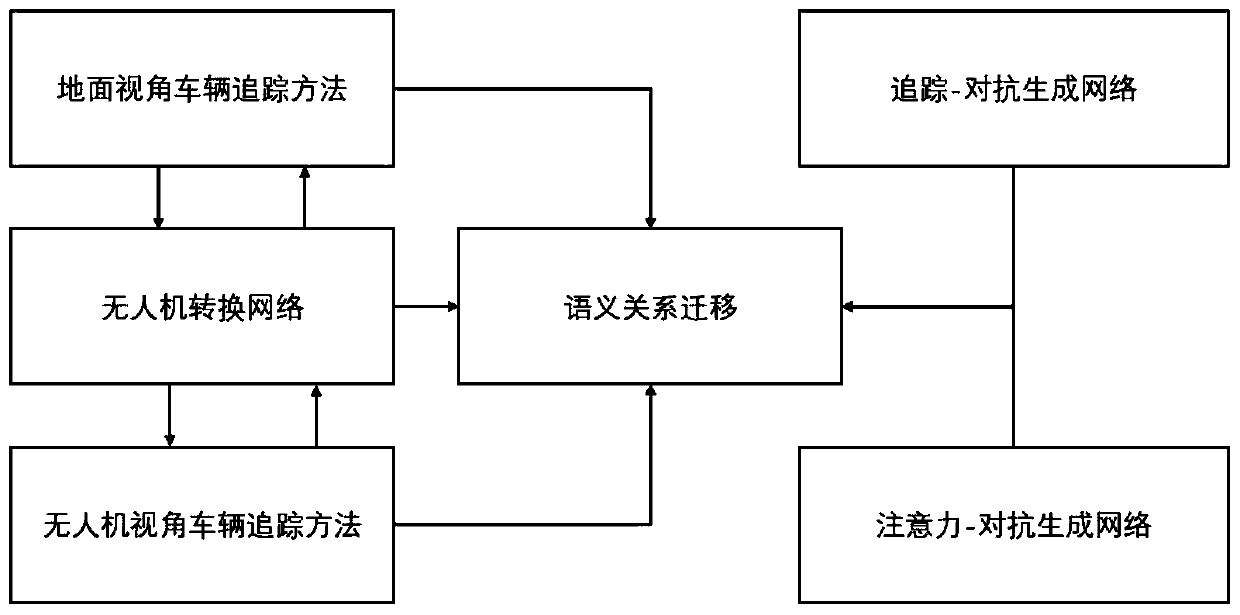

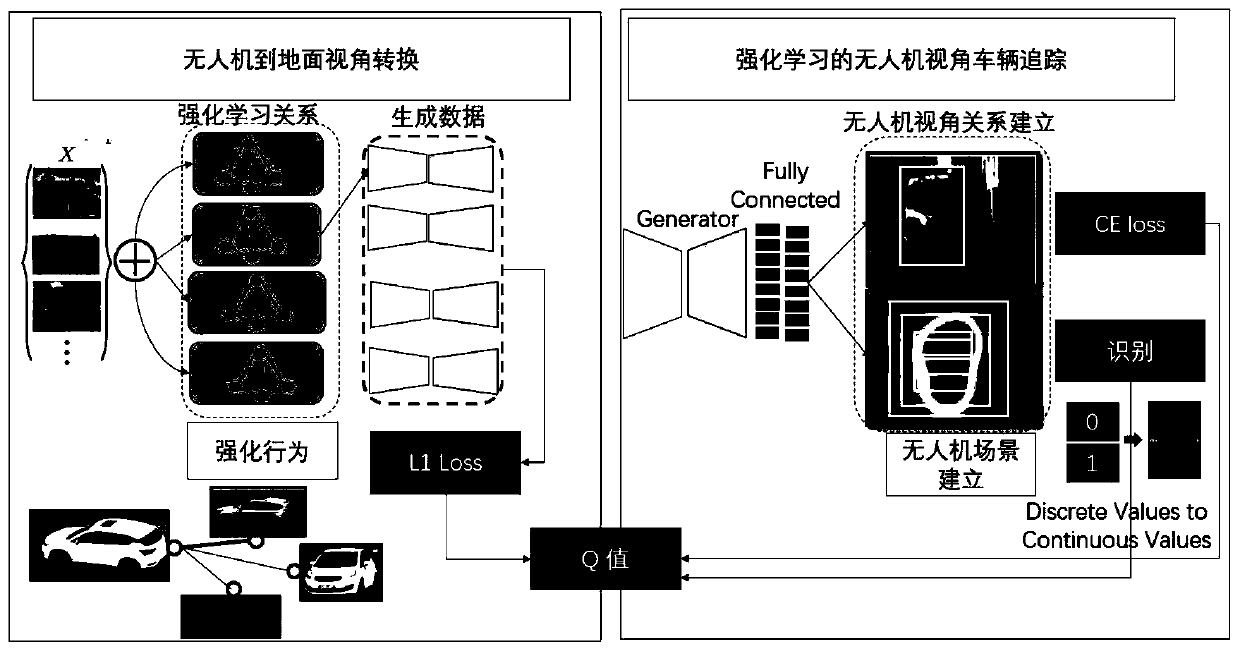

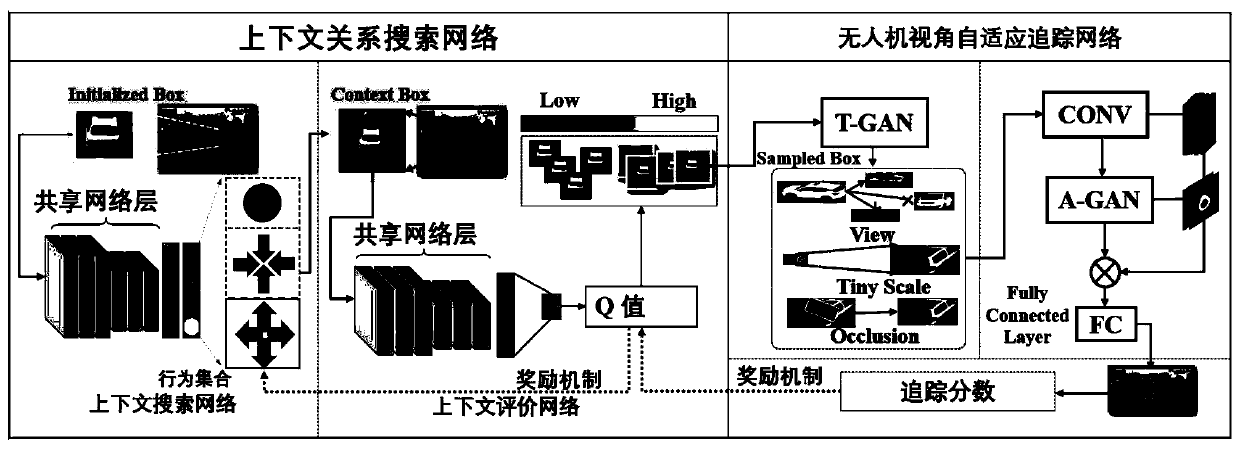

Unmanned aerial vehicle visual angle vehicle identification tracking method based on reinforcement learning

Active | CN110874578A | Accurate and efficient tracking of results | Free labor | Internal combustion piston engines | Character and pattern recognition | Pattern recognition | Video annotation

The invention discloses an unmanned aerial vehicle visual angle vehicle identification tracking method based on reinforcement learning. Based on scene understanding, monitoring, and tracking from the unmanned aerial vehicle's viewpoint, efficient and self-adaptive panoramic video management is established, and a reinforcement-learning-based transfer learning target tracking method lets the unmanned aerial vehicle adaptively track fast-moving vehicles without supervision. A cross-view, cross-azimuth space-ground cooperative tracking system is realized through ground camera data, cooperative processing, and re-identification information and algorithms, so that traffic analysis no longer depends on repetitive video annotation work, manual monitoring labor is freed up, and automatic analysis and monitoring can be carried out quickly, efficiently, and accurately from an initial target vehicle provided by software in advance.

Owner:QINGDAO RES INST OF BEIHANG UNIV +1

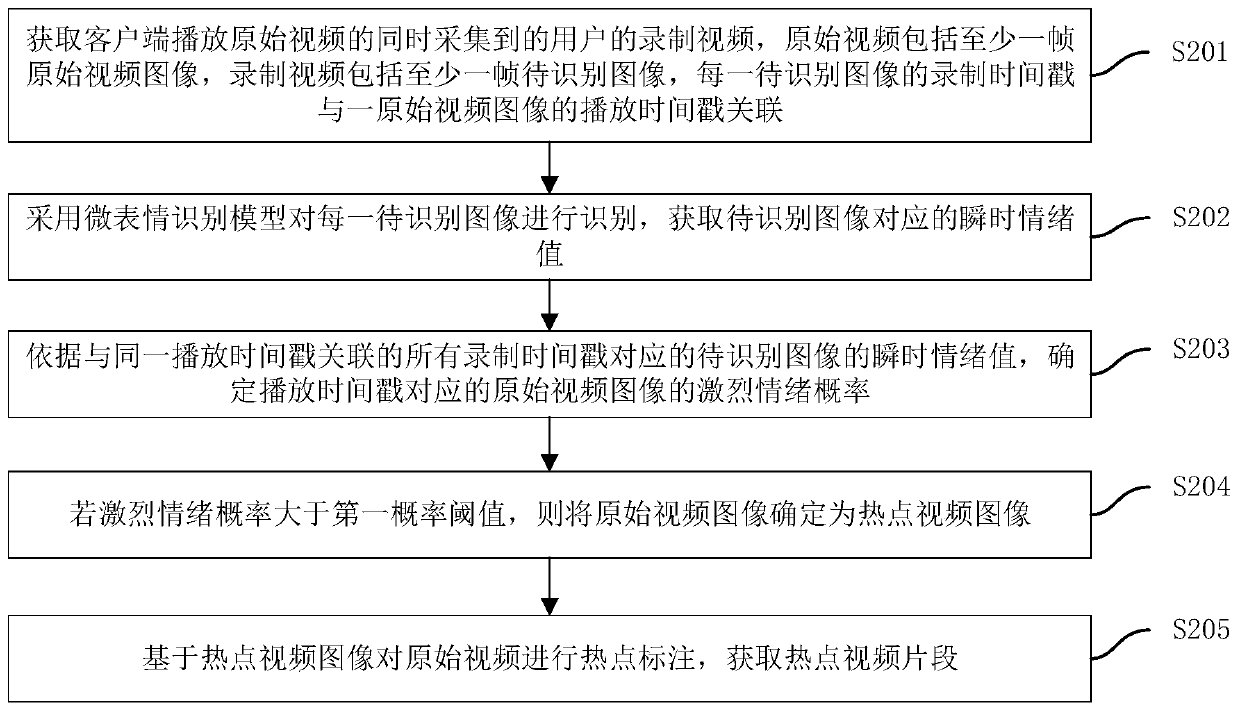

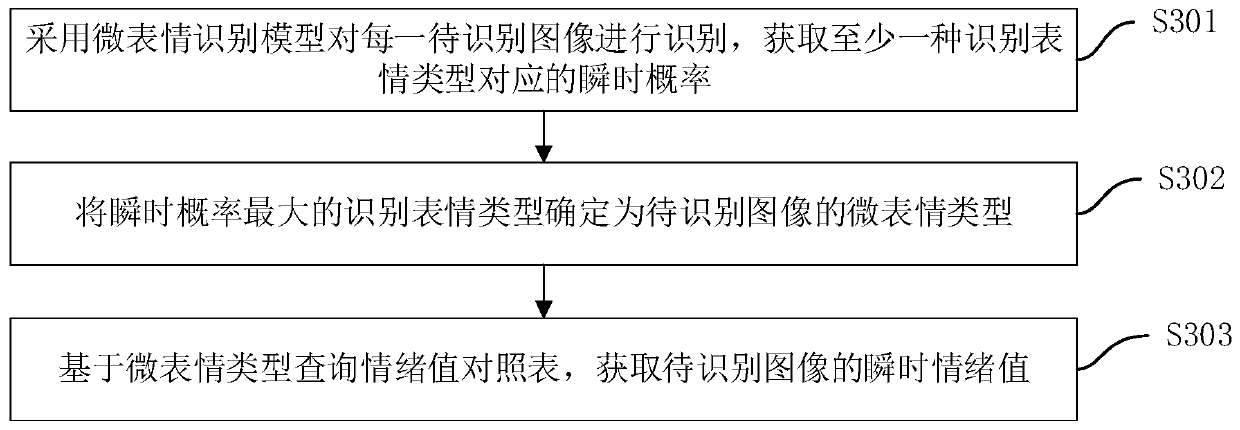

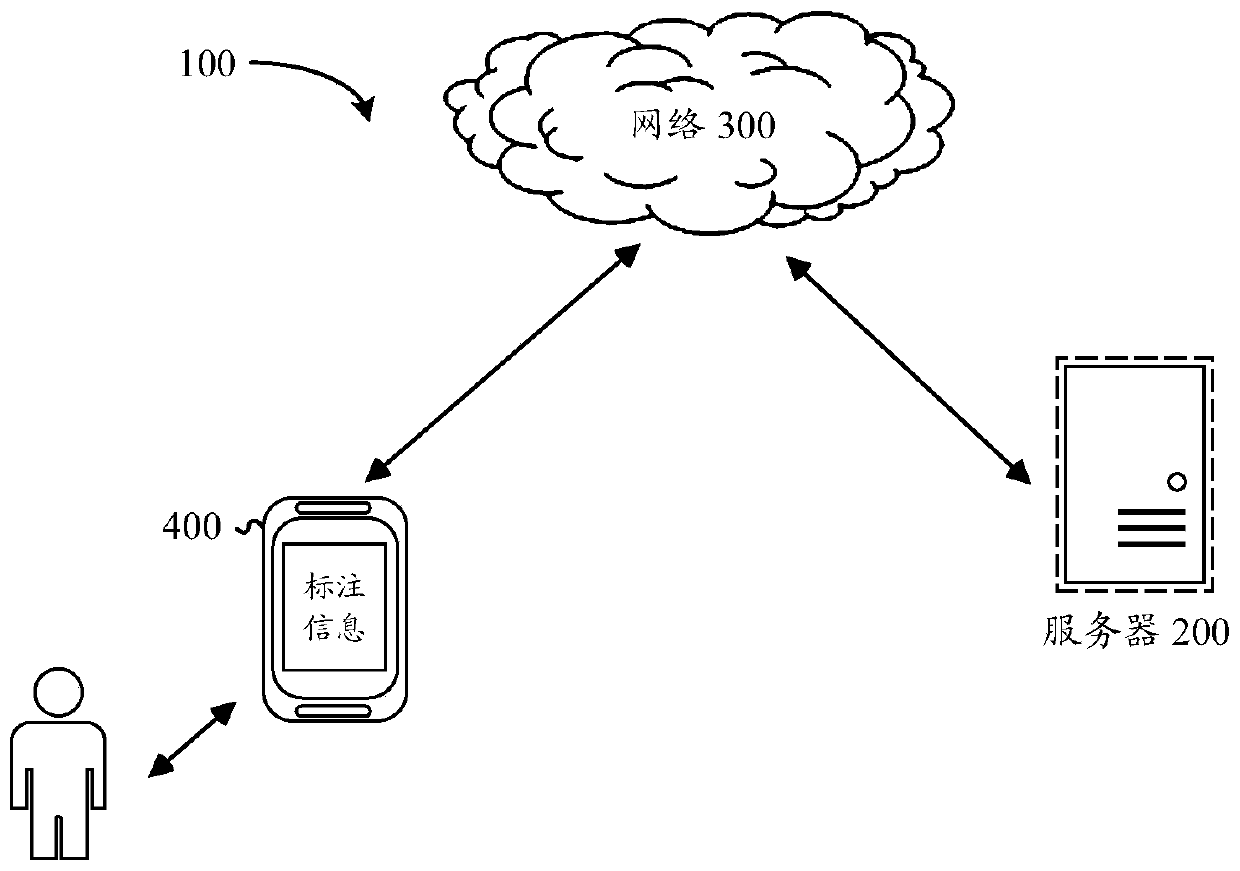

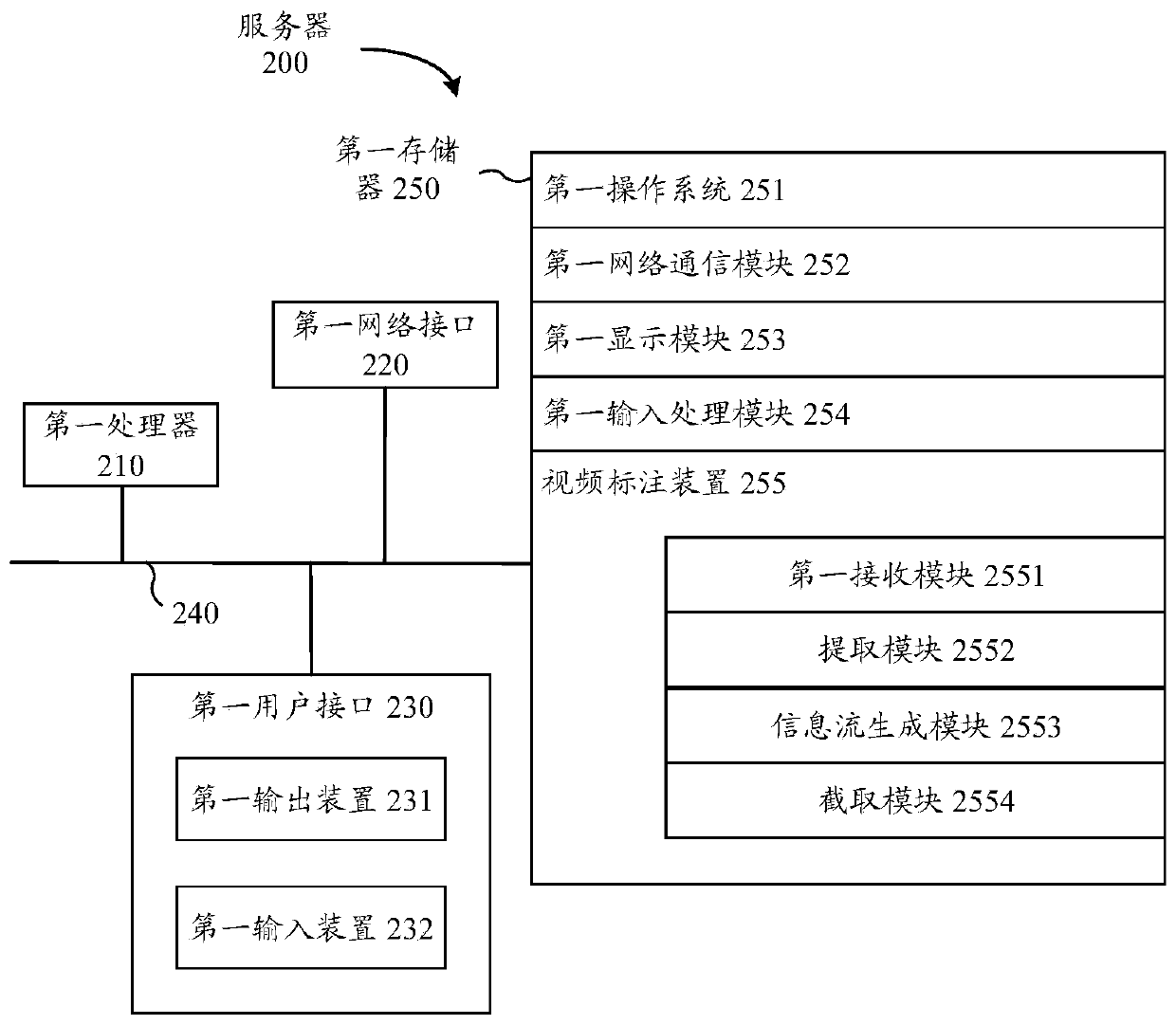

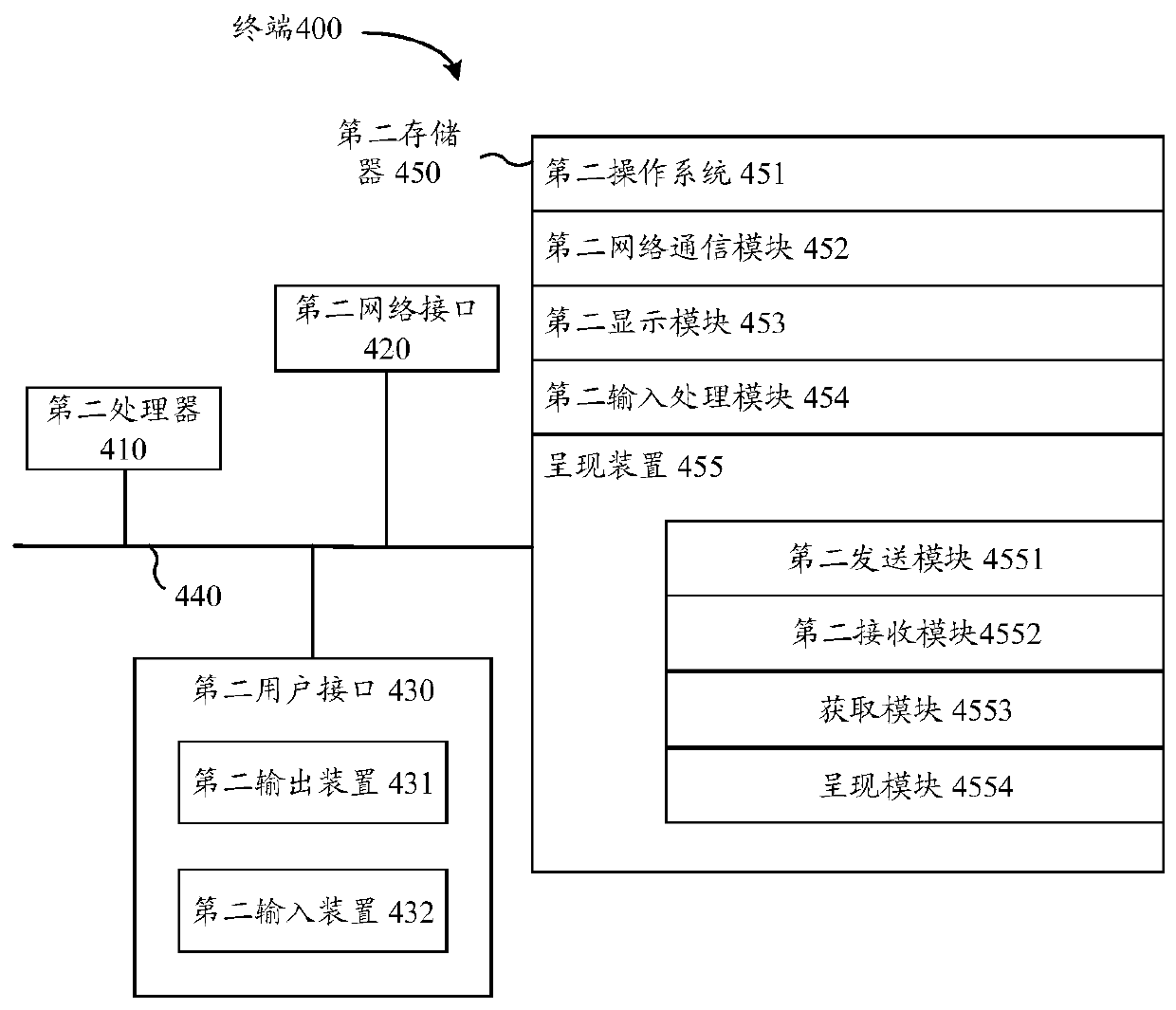

Hot video annotation processing method and device, computer equipment and storage medium

Active | CN109819325A | Ensure objectivity | Guaranteed accuracy | Television system details | Character and pattern recognition | Video annotation | Video image

The invention discloses a hot video annotation processing method and device, computer equipment, and a storage medium. The method comprises: acquiring a user recorded video collected while a client plays an original video, where the original video comprises at least one frame of original video image and the recorded video comprises at least one frame of to-be-identified image; identifying each to-be-identified image with a micro-expression identification model; and acquiring an instantaneous emotion value corresponding to the to-be-identified image. According to the instantaneous emotion value, an intense emotion probability of the original video image corresponding to the playing timestamp is determined. If the intense emotion probability is greater than a first probability threshold, the original video image is determined to be a hot video image, and hot spot marking is performed on the original video based on the hot video image to obtain a hot video clip. The method labels hot video clips automatically and improves labeling efficiency.

Owner:PING AN TECH (SHENZHEN) CO LTD
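
The thresholding step in the abstract above can be sketched as follows. This is an illustration under stated assumptions: the per-timestamp intense emotion probabilities are taken as given (the micro-expression model is not modeled), and the function name `hot_clips` and the rule of joining consecutive hot frames into one clip are hypothetical choices, not details from the patent.

```python
from typing import List, Optional, Tuple


def hot_clips(probabilities: List[float], timestamps: List[float],
              threshold: float) -> List[Tuple[float, float]]:
    """Group consecutive frames whose probability exceeds the threshold into clips.

    Each clip is returned as (first_hot_timestamp, last_hot_timestamp).
    """
    clips: List[Tuple[float, float]] = []
    start: Optional[float] = None
    previous: Optional[float] = None
    for t, p in zip(timestamps, probabilities):
        if p > threshold:
            # Frame counts as a hot video image: open a clip or extend the current one.
            if start is None:
                start = t
            previous = t
        elif start is not None:
            # A non-hot frame closes the current clip.
            clips.append((start, previous))
            start = None
    if start is not None:
        # Close a clip that runs to the end of the video.
        clips.append((start, previous))
    return clips
```

For example, with probabilities `[0.1, 0.8, 0.9, 0.2, 0.7]` at timestamps `[0, 1, 2, 3, 4]` and a threshold of `0.6`, the sketch yields the clips `(1, 2)` and `(4, 4)`.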

Video annotation method and device, and storage medium

Active | CN110996138A | Improve labeling efficiency | Selective content distribution | Computer graphics (images) | Algorithm

The invention provides a video annotation method and device and a storage medium. The method comprises the steps of receiving a video extraction instruction sent by a terminal, and obtaining a to-be-extracted video according to the video extraction instruction; extracting one or more pieces of event information from the video frame of the to-be-extracted video; wherein the event information represents basic elements forming plot contents of the to-be-extracted video; forming at least one event information flow by utilizing one or more pieces of event information; based on the at least one event information flow, intercepting at least one first segment meeting a plot triggering condition from the to-be-extracted video, and obtaining a plot labeling label of the at least one first segment; wherein the plot labeling label is used for labeling the at least one first segment. According to the invention, the video annotation efficiency can be improved.

Owner:TENCENT TECH (SHENZHEN) CO LTD

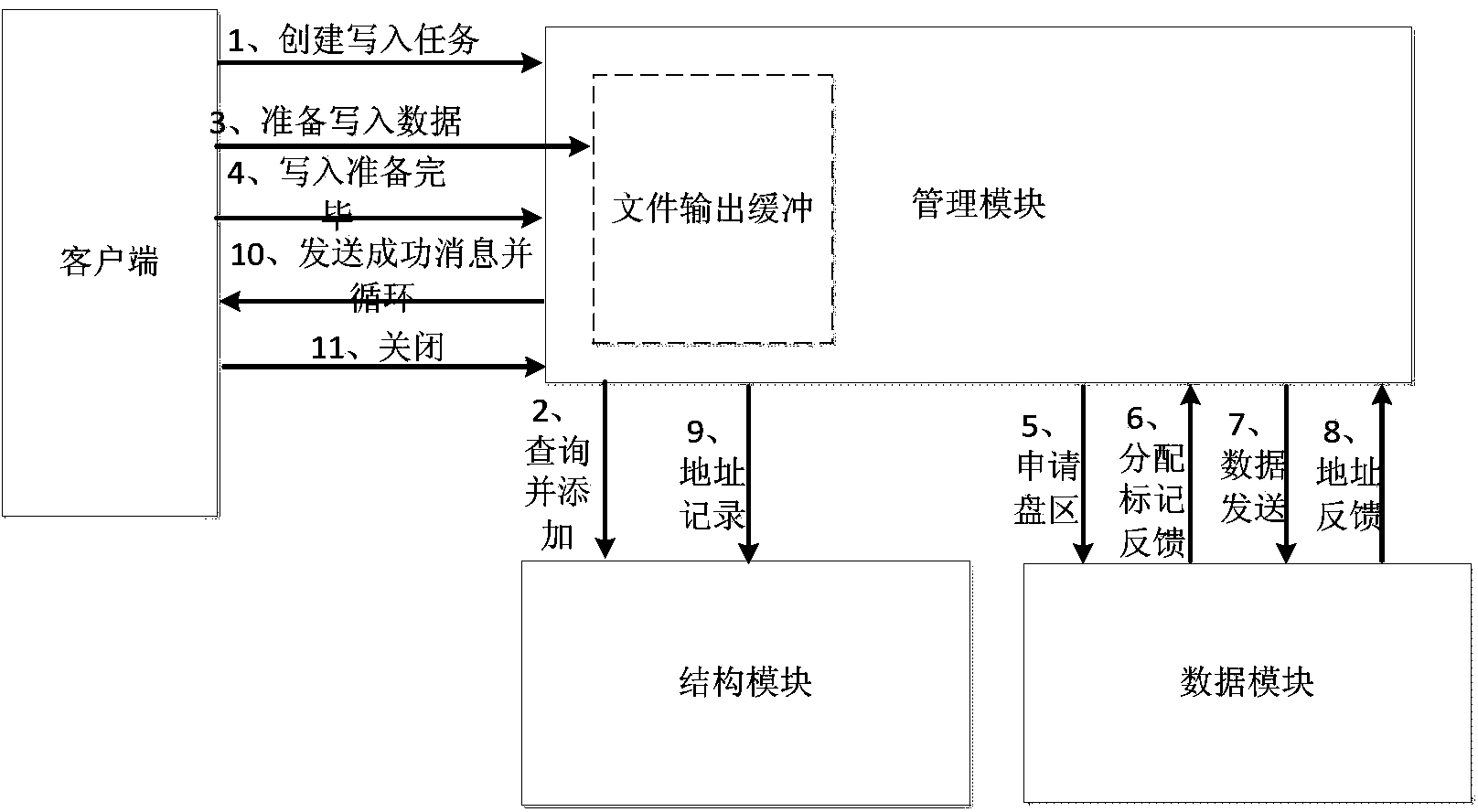

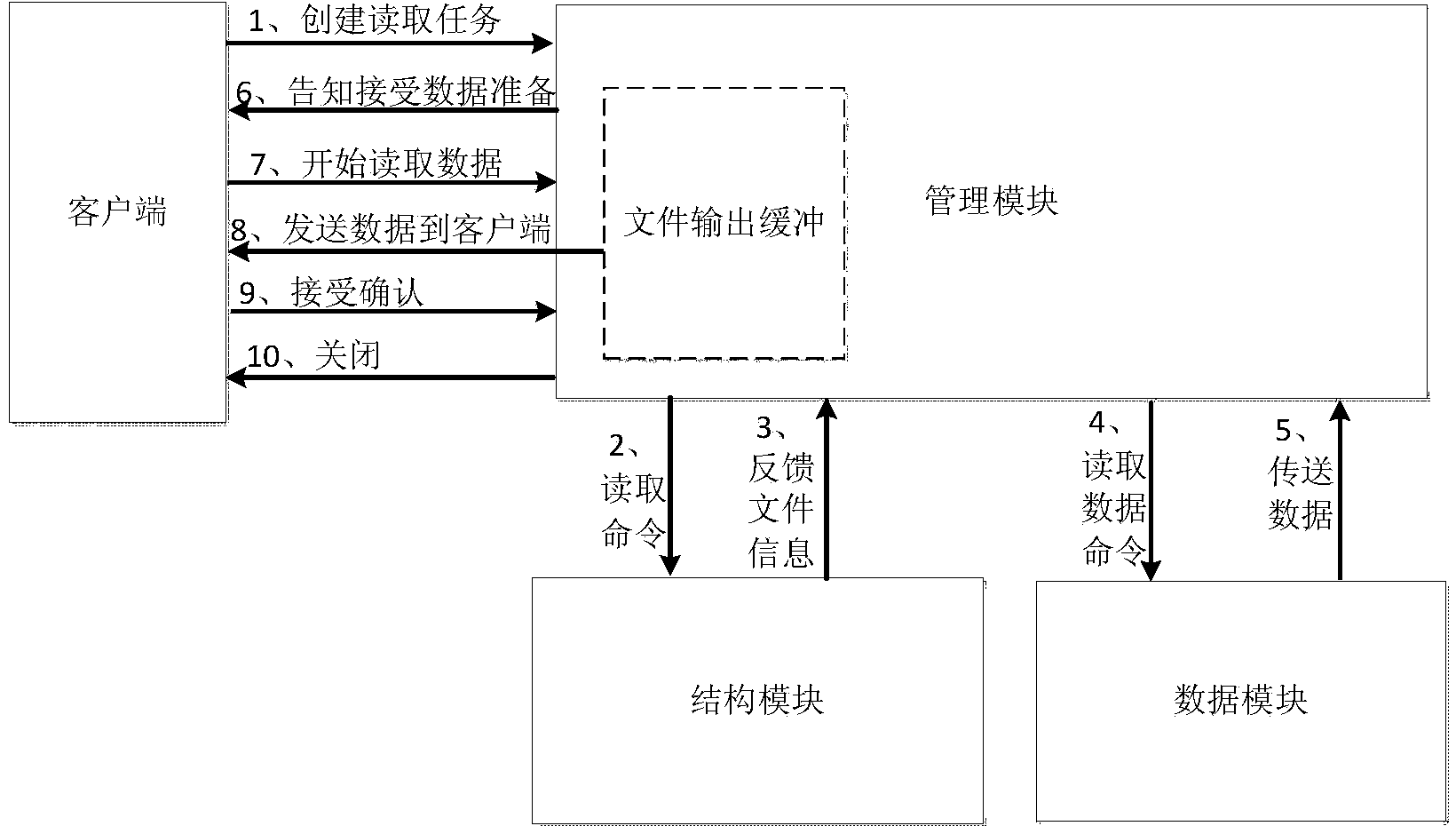

Video annotation processing method and video annotation processing server

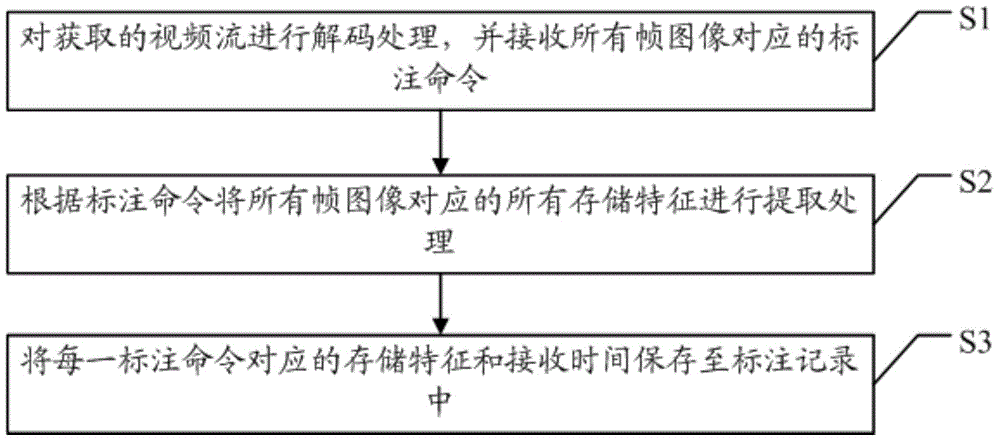

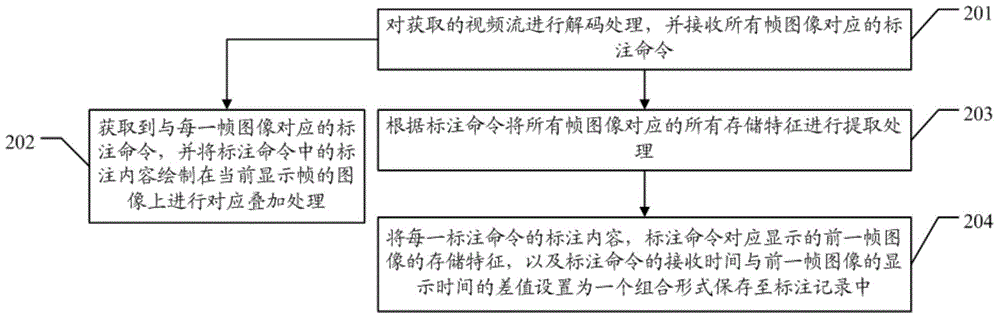

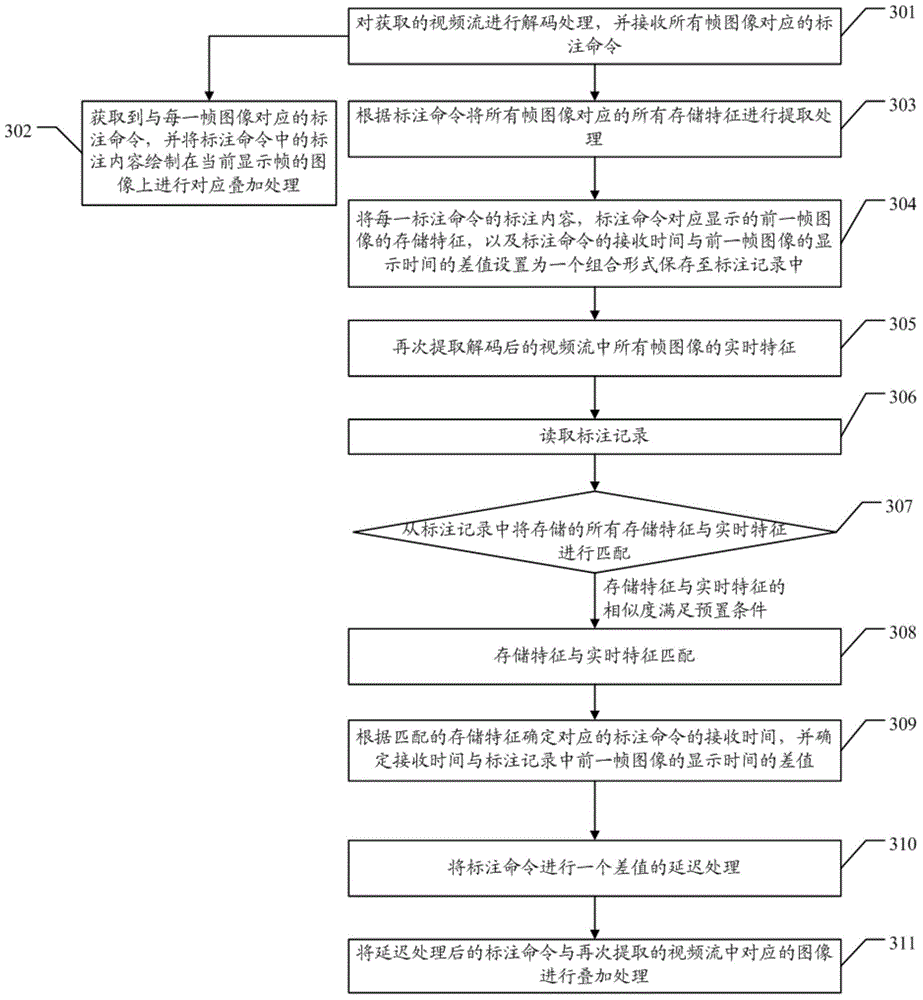

Active | CN104883515A | Resolved technical issue where callouts could not be displayed as desired | Television system details | Color television details | Video annotation | Computer vision

An embodiment of the invention discloses a video annotation processing method and a video annotation processing server, and solves the technical problem that due to the design of storing an image of which annotation information and video are overlapped, annotations cannot be displayed according to needs. The video annotation processing method provided by the embodiment of the invention comprises a step S1 of decoding an obtained video stream and receiving annotation commands corresponding to all frame images; a step S2 of extracting all storage characteristics corresponding to all the frame images according to the annotation commands; and a step S3 of storing storage characteristics and reception time corresponding to each annotation command to an annotation record.

Owner:GUANGDONG VTRON TECH CO LTD

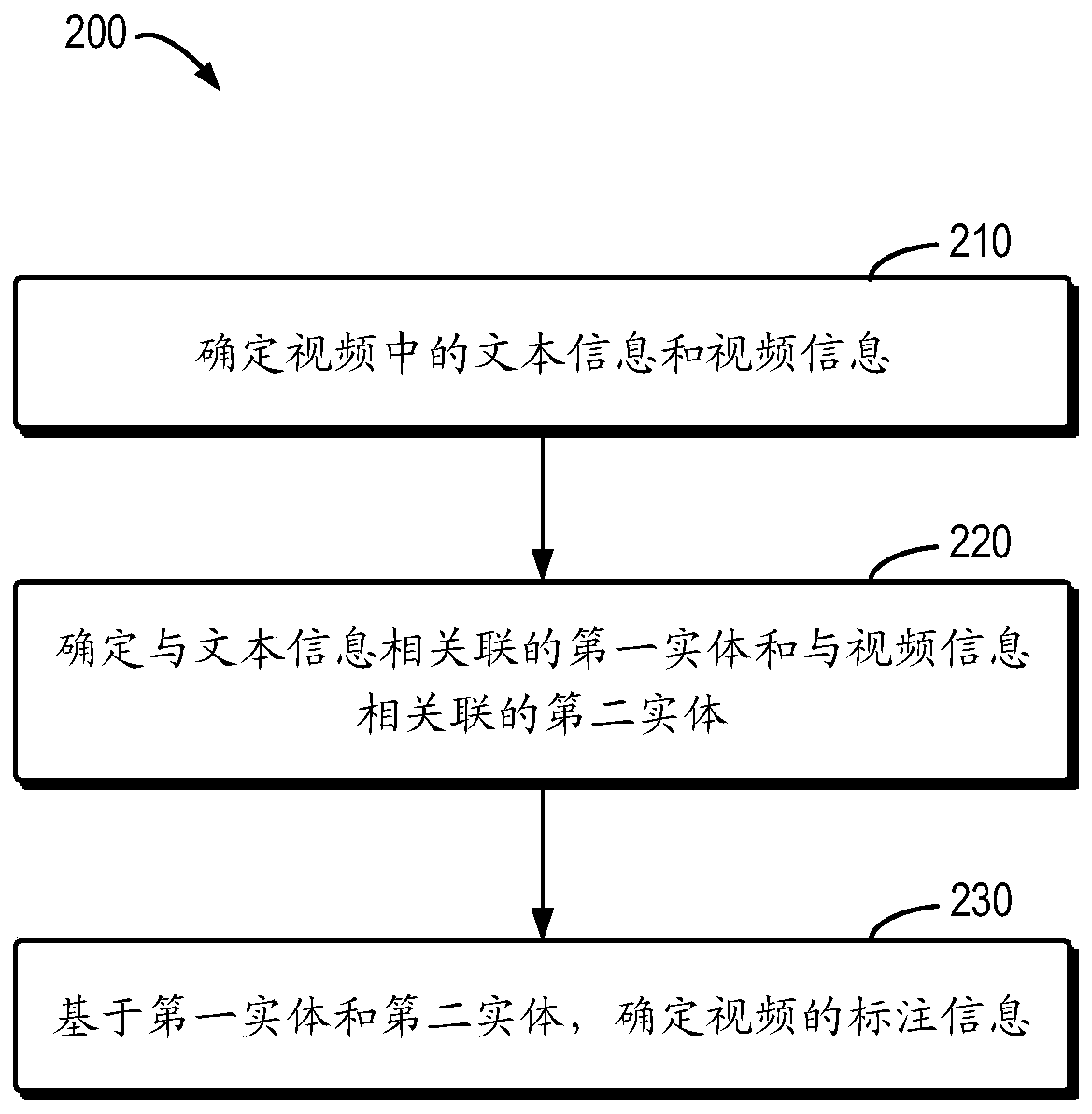

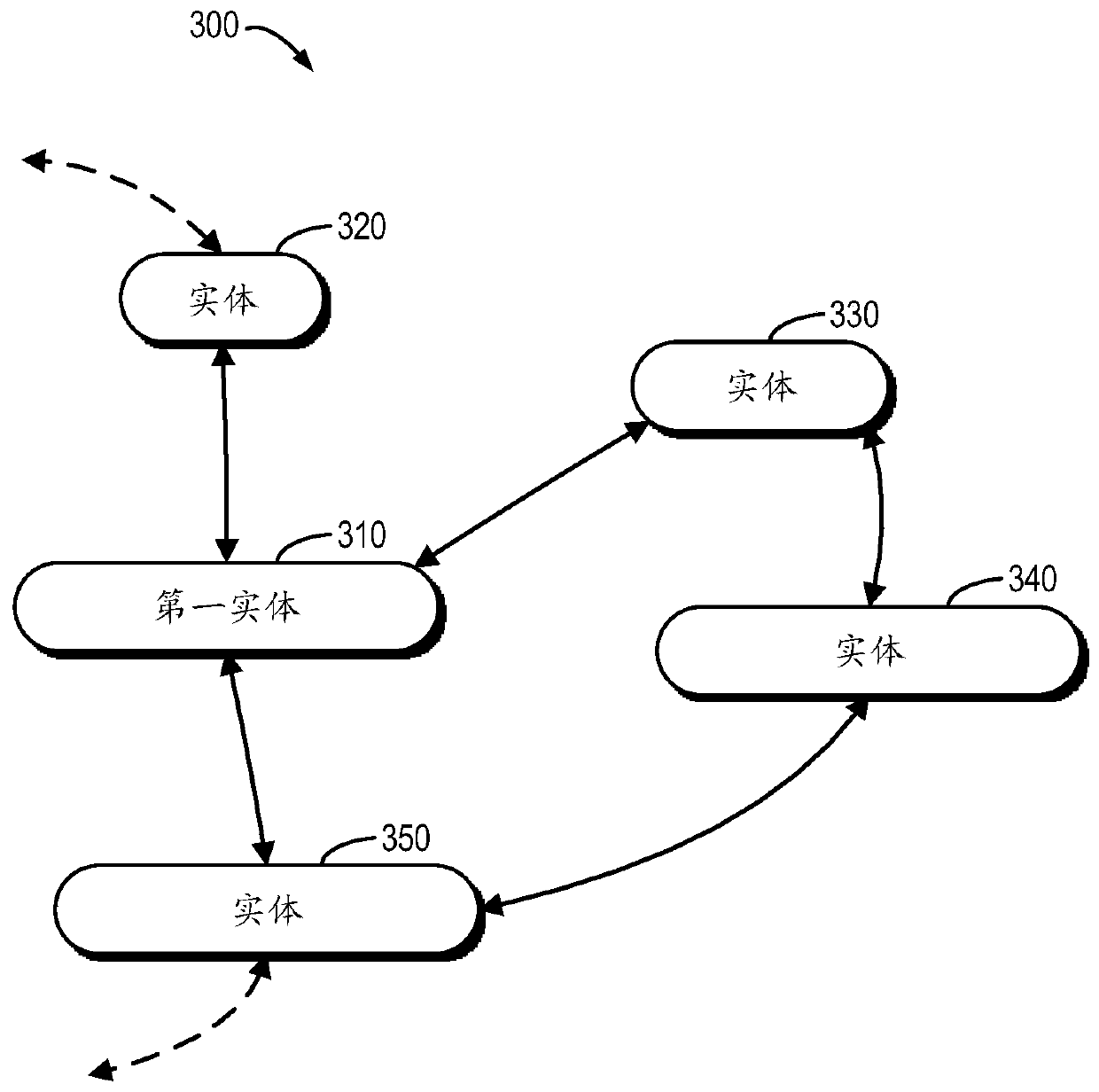

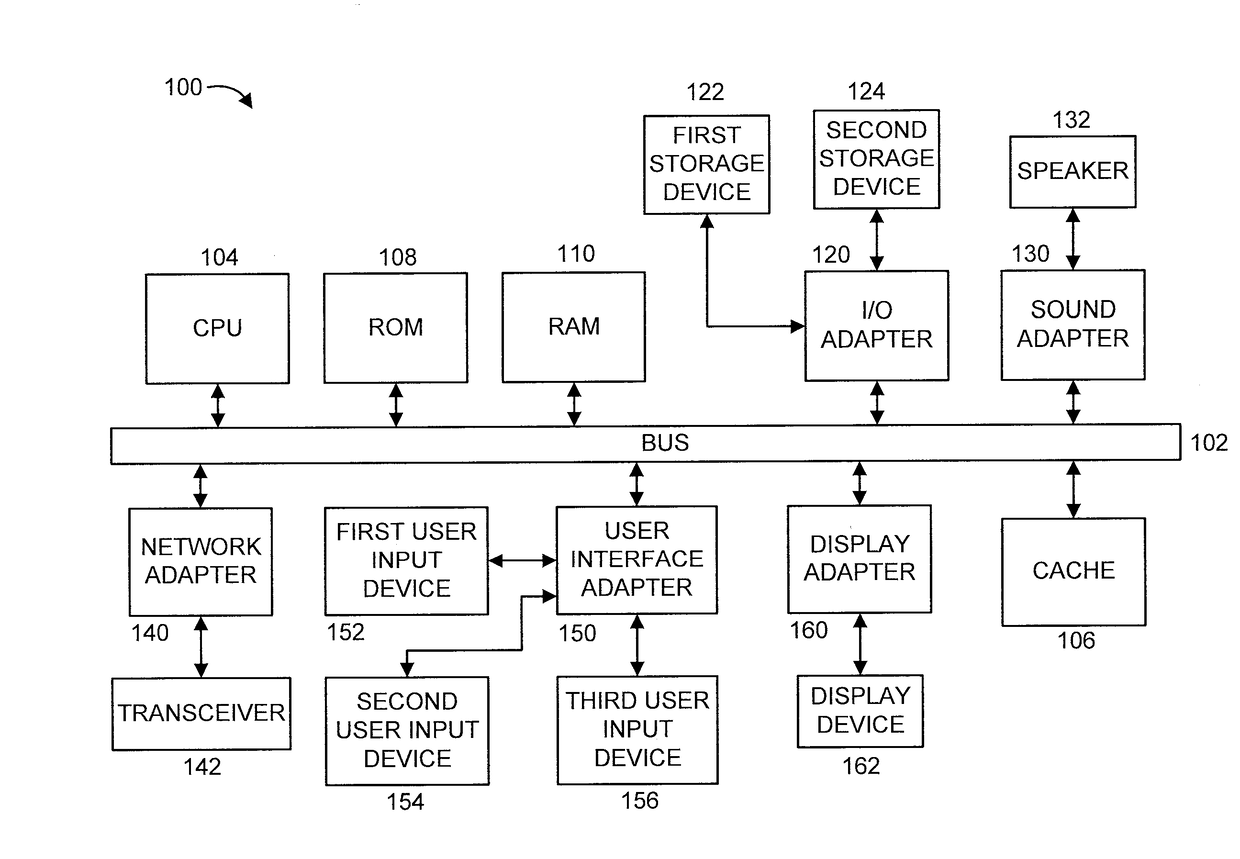

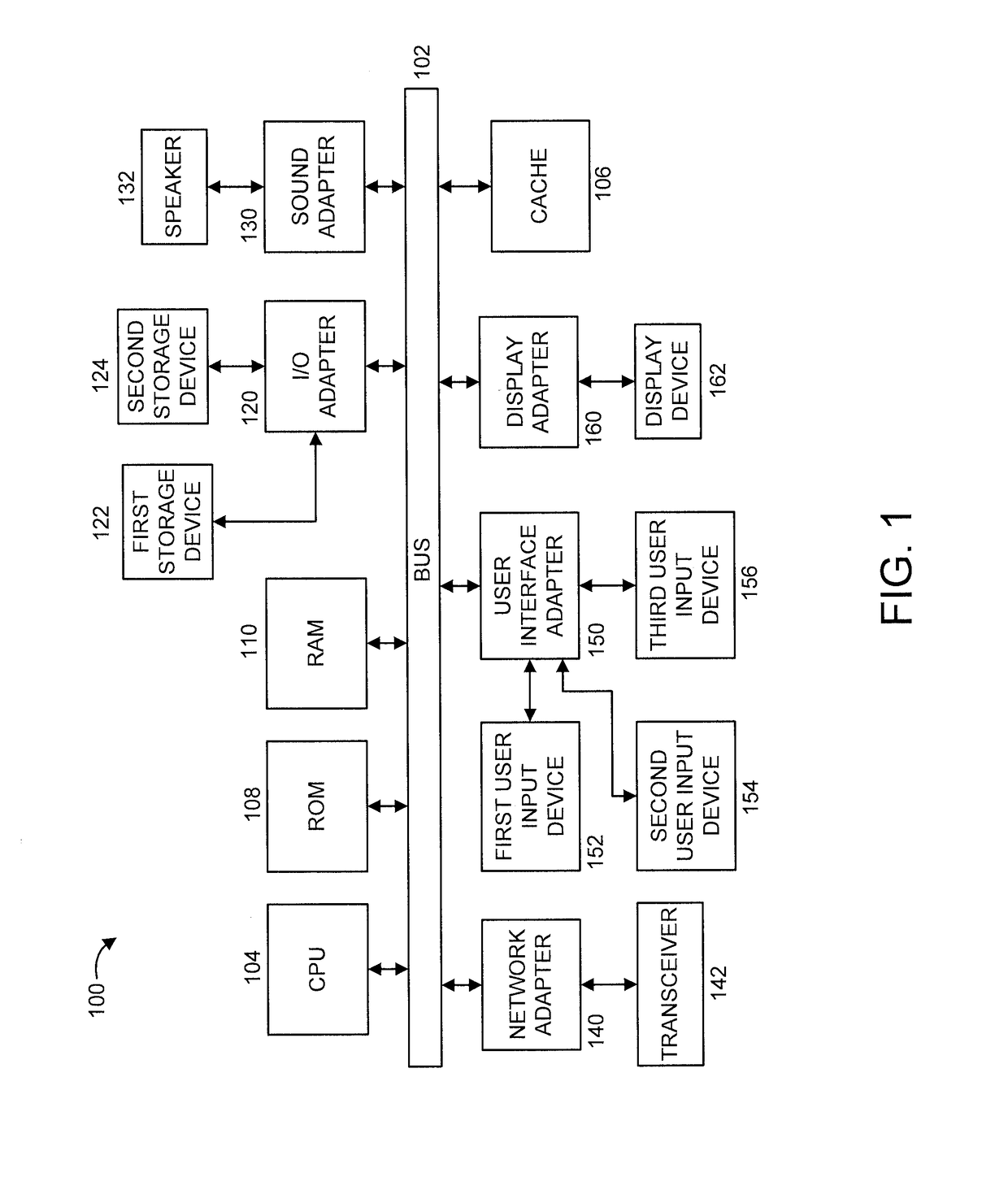

Method and device for determining video annotation information, equipment and computer storage medium

The embodiment of the invention relates to a method and device for determining annotation information of a video, equipment and a computer readable storage medium. The method may include determining text information and video information in the video. The method may also include determining a first entity associated with the text information and a second entity associated with the video information. Further, the method may further include determining annotation information of the video based on the first entity and the second entity. According to the technical scheme, multi-mode video annotation can be achieved, and the applicability of the video annotation scheme is remarkably improved. Moreover, the entity information associated with the text information and the entity information associated with the video information can be used for mutual verification, so that the situation that the video annotation has errors is avoided.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

Efficient video annotation with optical flow based estimation and suggestion

A computer-implemented method is provided for video annotation of a video sequence that includes a plurality of frames. The method includes storing, by a computer, information representative of a bounding box around a given target object in the video sequence in both a first frame and a last frame of the video sequence that include the target object. The method further includes generating, by the computer based on the information, estimated box annotations of the given target object in suggested frames from among the plurality of frames. The suggested frames are determined based on an annotation uncertainty measure calculated using a set of already provided annotations for at least some of the plurality of frames together with optical flow information for the video sequence. The method also includes displaying, by the computer, various ones of the estimated box annotations of the given target object in the suggested frames.

Owner:NEC CORP
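
To make the "estimate, then suggest" loop in the abstract above concrete, here is a deliberately simplified sketch. It does not use optical flow: box estimates come from plain linear interpolation between the first and last annotated frames, and the uncertainty measure is replaced by a stand-in (distance to the nearest annotated frame). Both substitutions, and the names `interpolate_boxes` and `suggest_frame`, are assumptions for illustration only.

```python
from typing import Dict, Set, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height)


def interpolate_boxes(first_frame: int, first_box: Box,
                      last_frame: int, last_box: Box) -> Dict[int, Box]:
    """Linearly interpolate a bounding box for every frame between two annotations."""
    span = last_frame - first_frame
    if span <= 0:
        raise ValueError("last_frame must come after first_frame")
    estimates: Dict[int, Box] = {}
    for frame in range(first_frame, last_frame + 1):
        w = (frame - first_frame) / span  # 0.0 at the first frame, 1.0 at the last
        estimates[frame] = tuple((1 - w) * a + w * b
                                 for a, b in zip(first_box, last_box))
    return estimates


def suggest_frame(annotated: Set[int], first_frame: int, last_frame: int) -> int:
    """Suggest the frame farthest from any annotated frame.

    Stand-in for the uncertainty measure; the abstract's measure also uses optical flow.
    """
    return max(range(first_frame, last_frame + 1),
               key=lambda f: min(abs(f - a) for a in annotated))
```

In an annotation tool, the suggested frame would be shown to the user with its estimated box, the user would correct it, and the corrected frame would join the annotated set before the next suggestion is computed.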

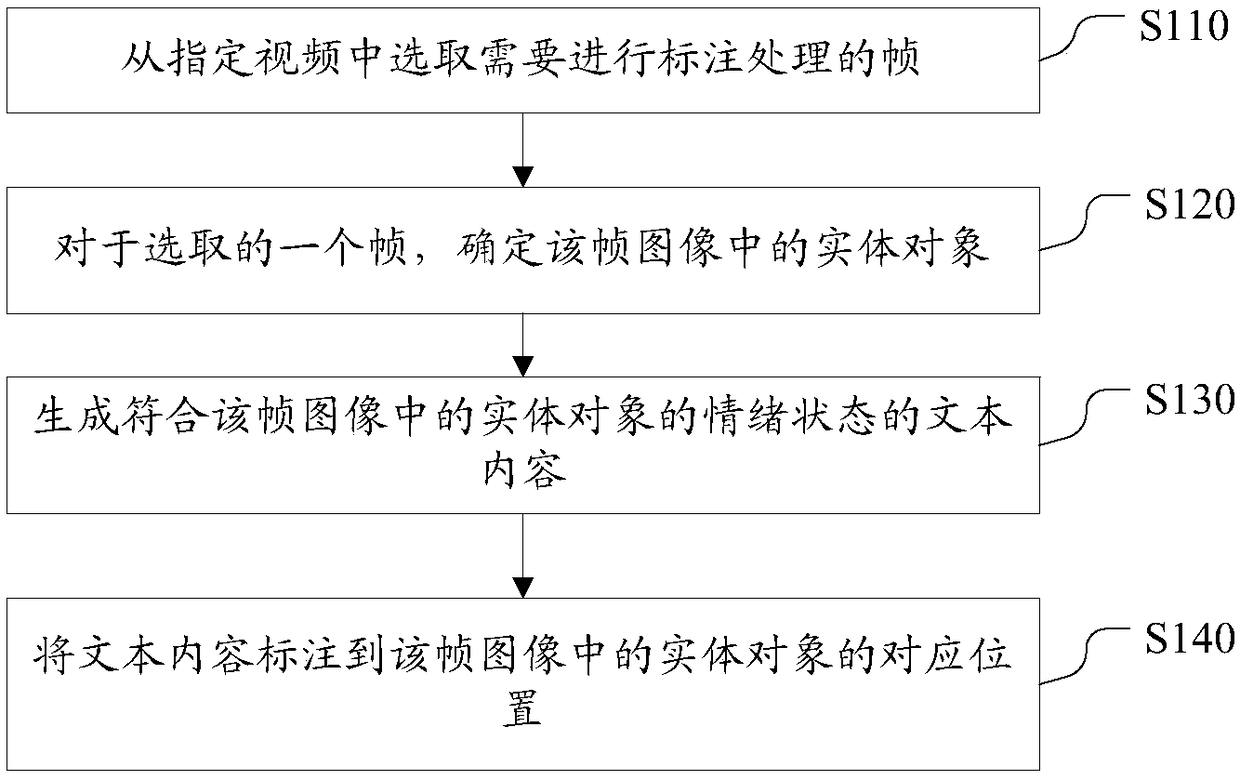

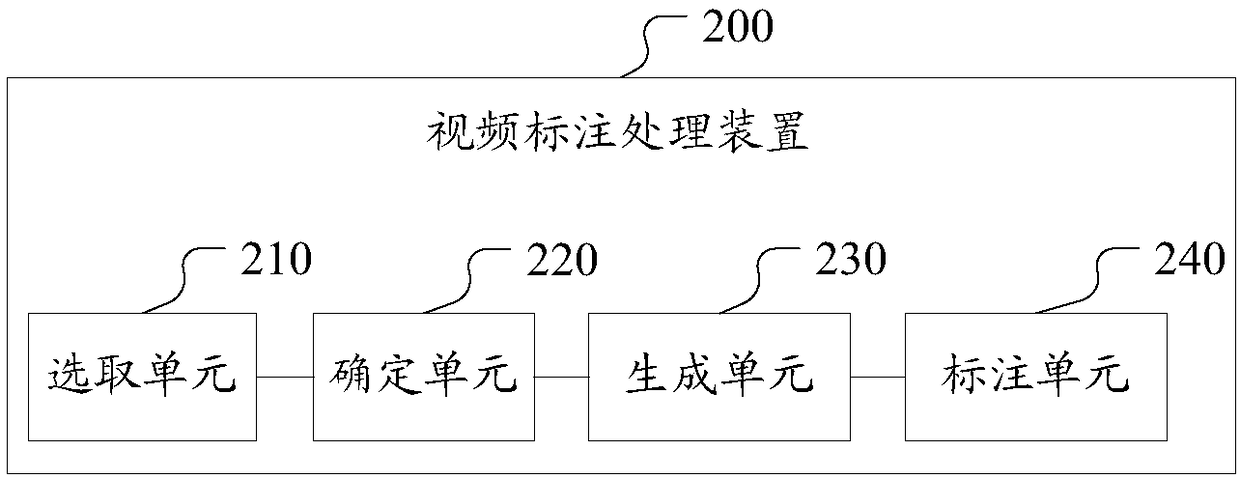

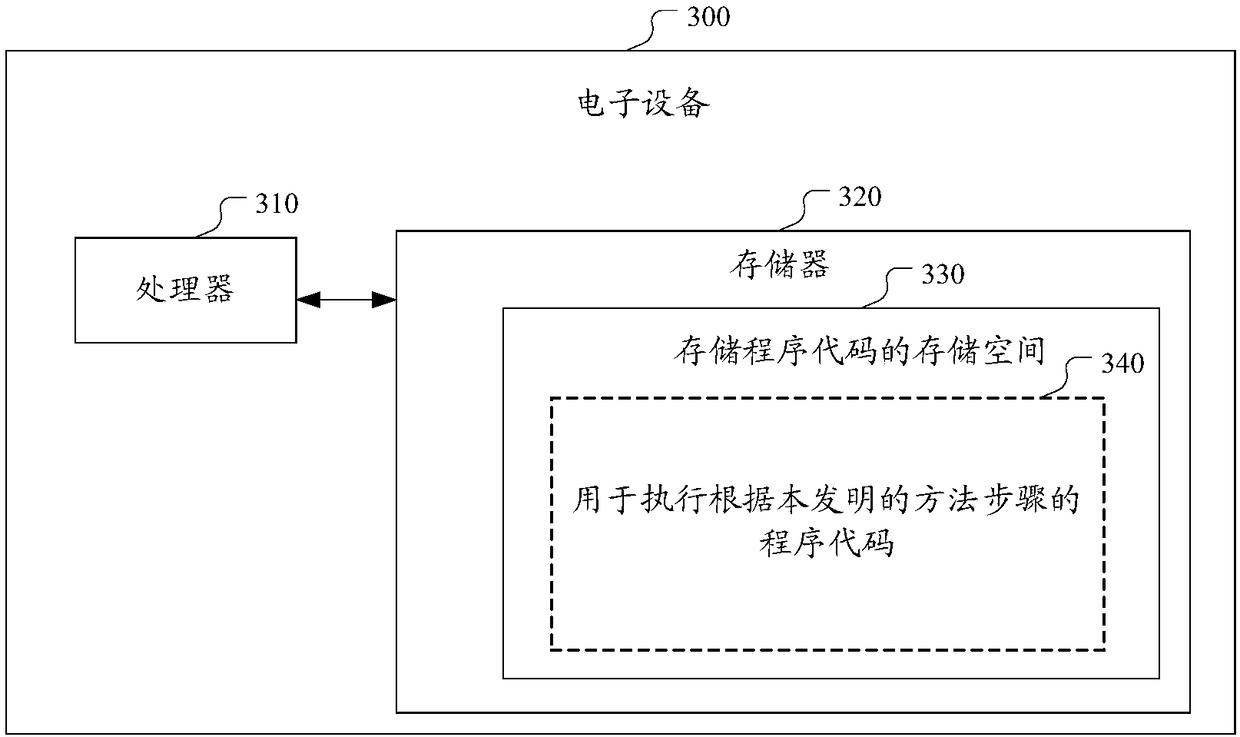

Video annotation processing method and apparatus

Active | CN108377418A | Improve display effect | Add fun | Selective content distribution | Computer graphics (images) | Video annotation

The invention discloses a video annotation processing method and apparatus, an electronic device, and a computer readable storage medium. The method comprises the following steps: selecting a frame for annotation processing from a specified video; determining a physical object in the frame image of the selected frame; generating text content that conforms to an emotional state of the physical object in the frame image; and annotating the text content at the corresponding location of the physical object in the frame image. With this technical scheme, text content corresponding to the emotion of the object is annotated in the video image, so that the video display is richer and more engaging, no user annotation is needed, user demands are met, and the user experience is improved.

Owner:BEIJING QIHOO TECH CO LTD

Multi-modal collaborative web-based video annotation system

Active | US9354763B2 | Digital data information retrieval | Record information storage | Video annotation | Current point

A video annotation interface includes a video pane configured to display a video, a video timeline bar including a video play-head indicating a current point of the video which is being played, a segment timeline bar including initial and final handles configured to define a segment of the video for playing, and a plurality of color-coded comment markers displayed in connection with the video timeline bar. Each of the comment markers is associated with a frame or segment of the video and corresponds to one or more annotations for that frame or segment made by one of a plurality of users. Each of the users can make annotations and view annotations made by other users. The annotations can include annotations corresponding to a plurality of modalities, including text, drawing, video, and audio modalities.

Owner:THE UNIV OF NORTH CAROLINA AT CHAPEL HILL

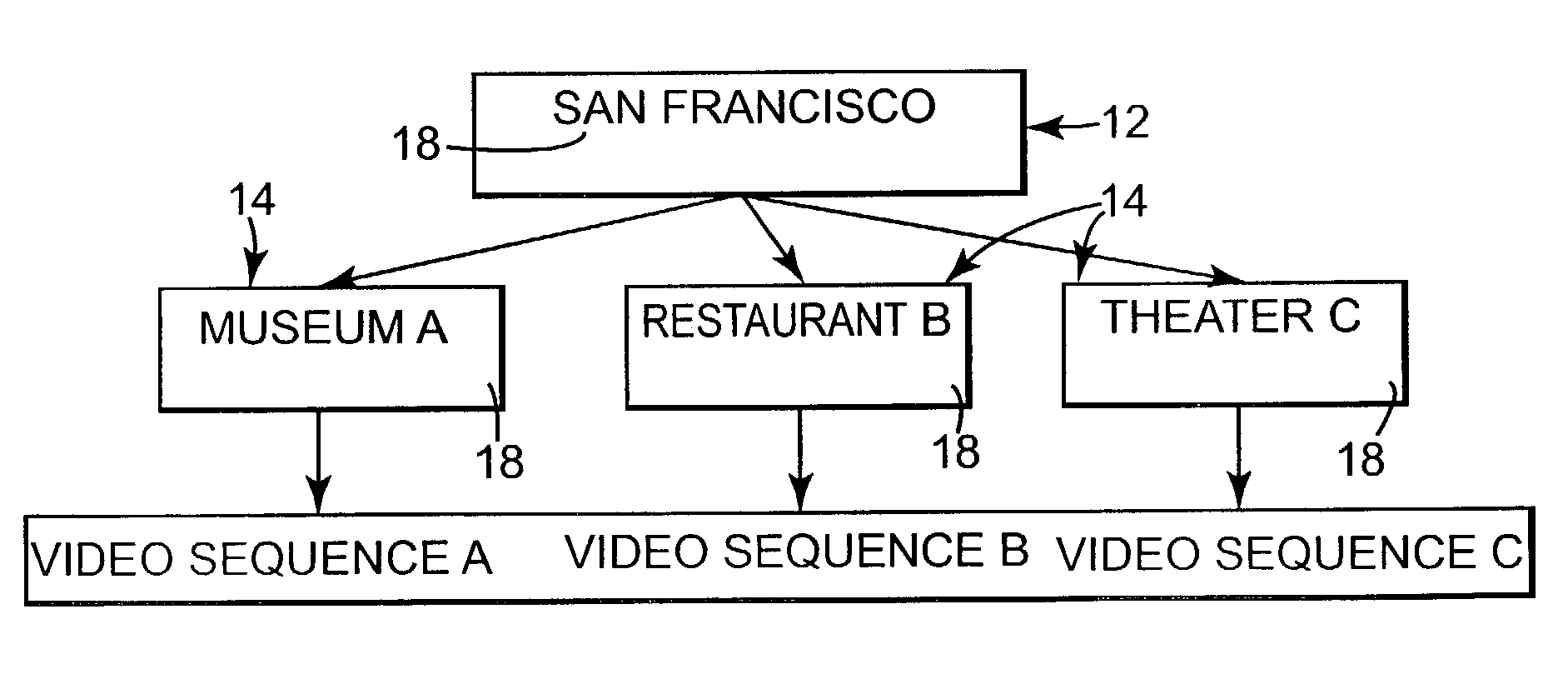

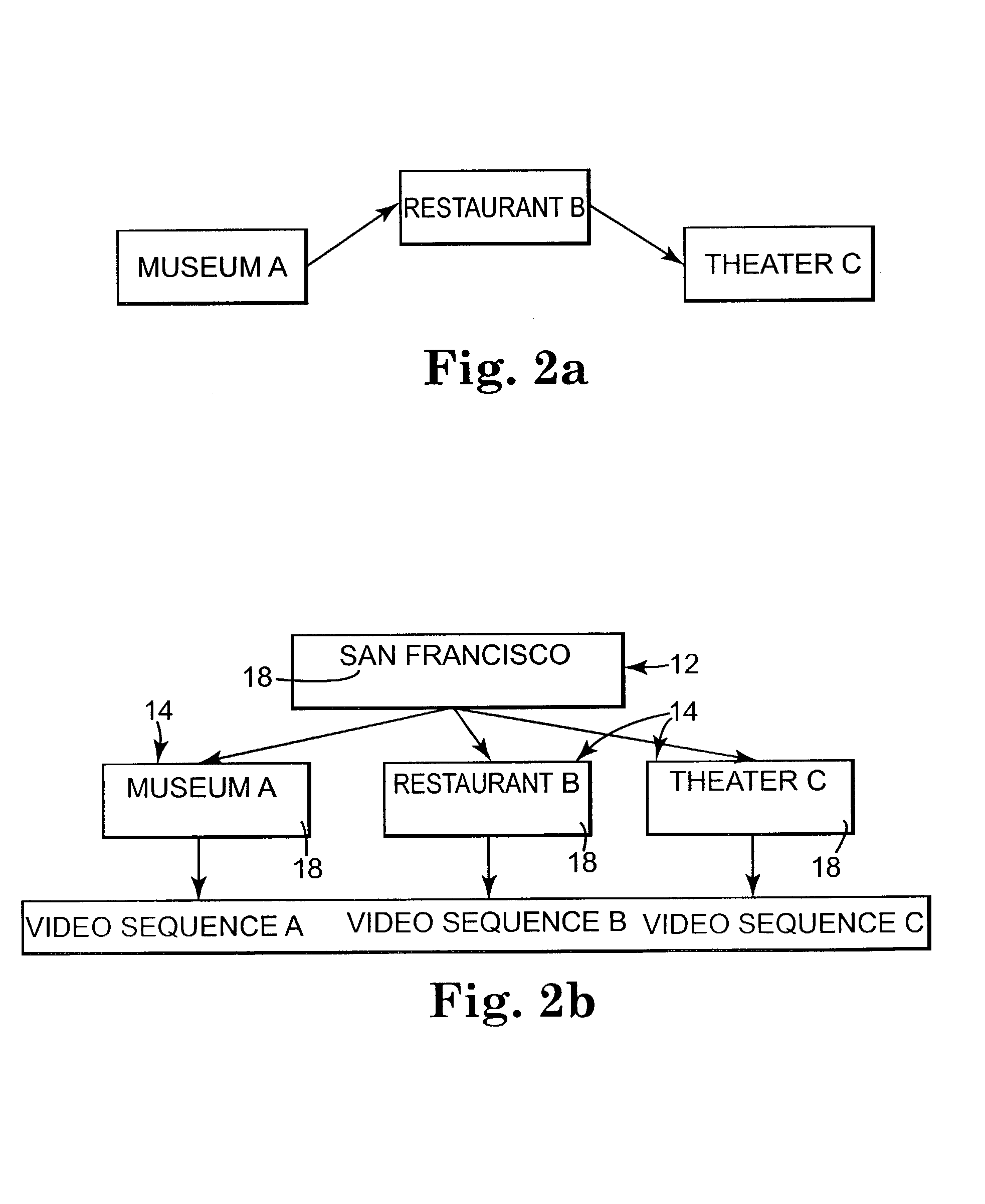

System and method for creation of video annotations

Inactive | US7334186B2 | Easy to browse | Television system details | Electronic editing digitised analogue information signals | Data stream | Video annotation

A system and method for annotating a video data stream integrates geographic location data into the video data stream. A site name is mapped from the geographic location data, and the site name is automatically assigned as a text label to the associated portion of the video data stream.

Owner:HEWLETT PACKARD DEV CO LP
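
Mapping geographic location data to a site name can be sketched as a nearest-site lookup. This is a minimal illustration, not the patented mapping: the `SITES` table, the `max_km` cutoff, and the function names are hypothetical, and the great-circle (haversine) distance is one common choice among several.

```python
import math
from typing import Dict, Optional, Tuple

# Hypothetical site table: name -> (latitude, longitude) in degrees.
SITES: Dict[str, Tuple[float, float]] = {
    "Eiffel Tower": (48.8584, 2.2945),
    "Louvre Museum": (48.8606, 2.3376),
}


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    radius_km = 6371.0  # mean Earth radius
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(a))


def site_label(lat: float, lon: float, max_km: float = 1.0) -> Optional[str]:
    """Return the nearest known site name, or None if none lies within max_km."""
    name, (site_lat, site_lon) = min(
        SITES.items(),
        key=lambda item: haversine_km(lat, lon, item[1][0], item[1][1]),
    )
    if haversine_km(lat, lon, site_lat, site_lon) <= max_km:
        return name
    return None
```

A video segment recorded at, say, `(48.8580, 2.2950)` would be labeled with the nearest site in the table, while a location far from every known site gets no label.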

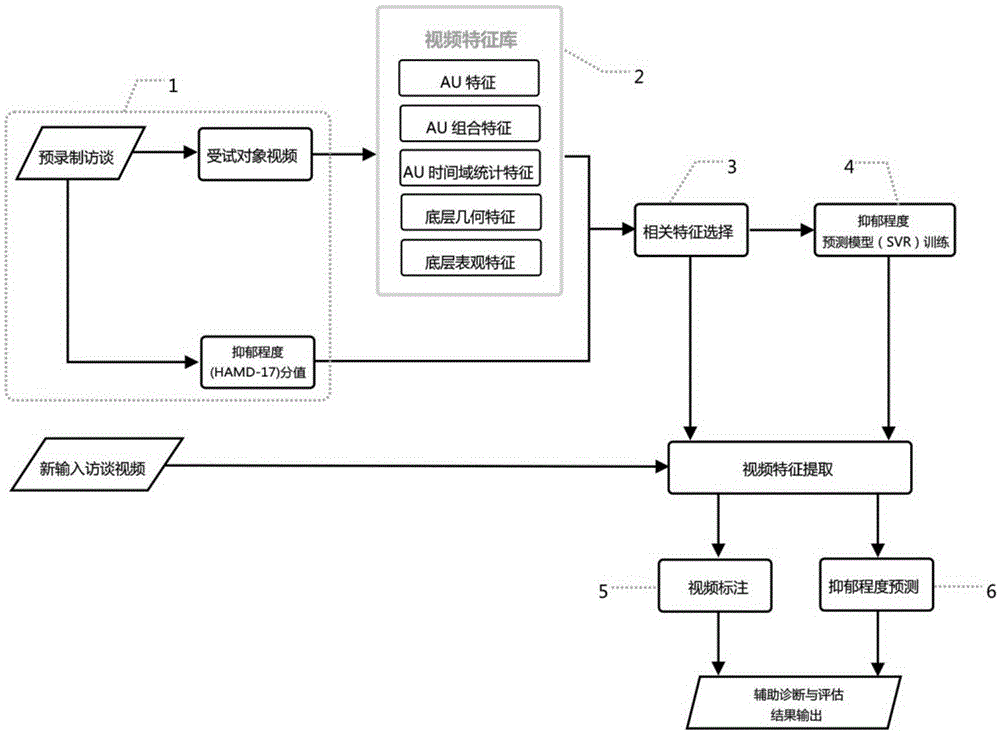

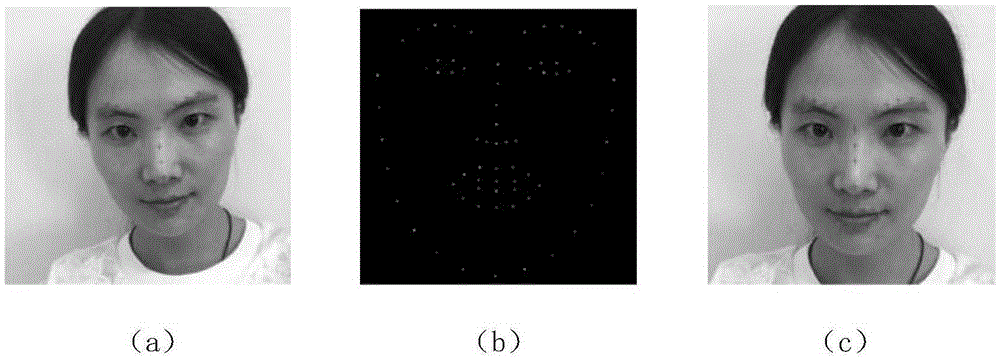

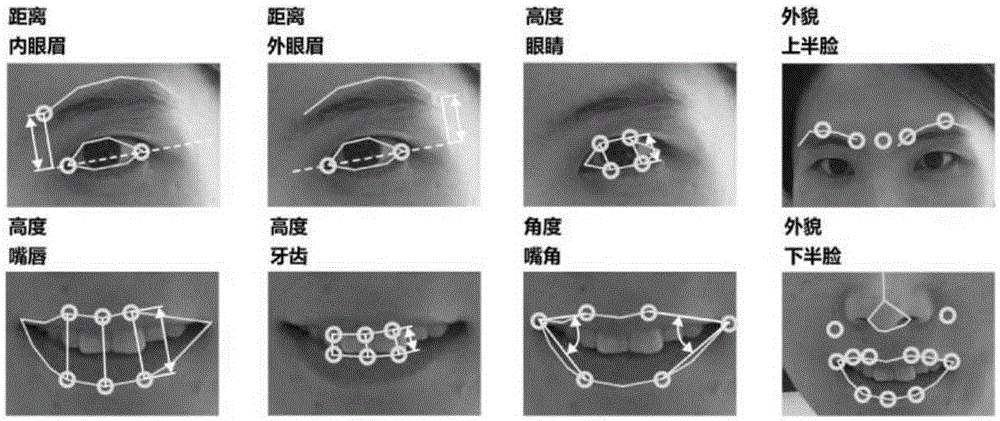

Facial expression analysis-based depression degree automatic evaluation system

Active | CN105279380A | No intrusion | Evaluation is objective and effective | Character and pattern recognition | Special data processing applications | Feature extraction | Video annotation

The invention discloses a facial expression analysis-based depression degree automatic evaluation system. The system includes a data acquisition module, a preprocessing and feature extraction module, a related feature extraction module, a prediction model training module, a new video annotation module, and a new video prediction module. The system has the advantages of full-process automation, non-invasiveness, and no need for long-term cooperation from the tested subject, and can work for a long time. It provides objective evaluation standards, so objective and effective evaluation can be realized without relying on subjective experience. The system is not limited to isolated analysis of individual subjects.

Owner:SOUTHEAST UNIV

Video annotation method and system

Active | CN104391960A | Show clear order | Character and pattern recognition | Selective content distribution | Video player | Video annotation

An embodiment of the invention provides a video annotation method and system. The method includes the steps: a server provides an annotation interface; when a video player terminal plays a video, annotation information is generated according to a video image and submitted to the server through the annotation interface; the server receives the annotation information and extracts a corresponding video segment according to the annotation information; the server judges, in the video segment, whether or not a video annotation having an overlap ratio reaching an overlap threshold with the annotation information exists, combines the annotation information into the video annotation if yes, and generates the video annotation according to the annotation information if not.

Owner:BEIJING QIYI CENTURY SCI & TECH CO LTD
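
The merge-or-create decision in the abstract above can be sketched as follows. The abstract does not define the overlap ratio, so this sketch assumes a temporal intersection-over-union between two time ranges; the names `VideoAnnotation`, `overlap_ratio`, and `submit`, and the default threshold of 0.5, are likewise hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class VideoAnnotation:
    start: float  # seconds
    end: float    # seconds
    notes: List[str] = field(default_factory=list)


def overlap_ratio(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Temporal intersection-over-union of two time ranges (assumed definition)."""
    intersection = max(0.0, min(a_end, b_end) - max(a_start, b_start))
    union = max(a_end, b_end) - min(a_start, b_start)
    return intersection / union if union > 0 else 0.0


def submit(annotations: List[VideoAnnotation], start: float, end: float,
           note: str, threshold: float = 0.5) -> VideoAnnotation:
    """Merge the note into an existing annotation if it overlaps enough, else create one."""
    for existing in annotations:
        if overlap_ratio(existing.start, existing.end, start, end) >= threshold:
            existing.notes.append(note)
            return existing
    created = VideoAnnotation(start, end, [note])
    annotations.append(created)
    return created
```

Merging near-duplicate submissions this way keeps the number of displayed annotations manageable when many viewers comment on the same moment.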

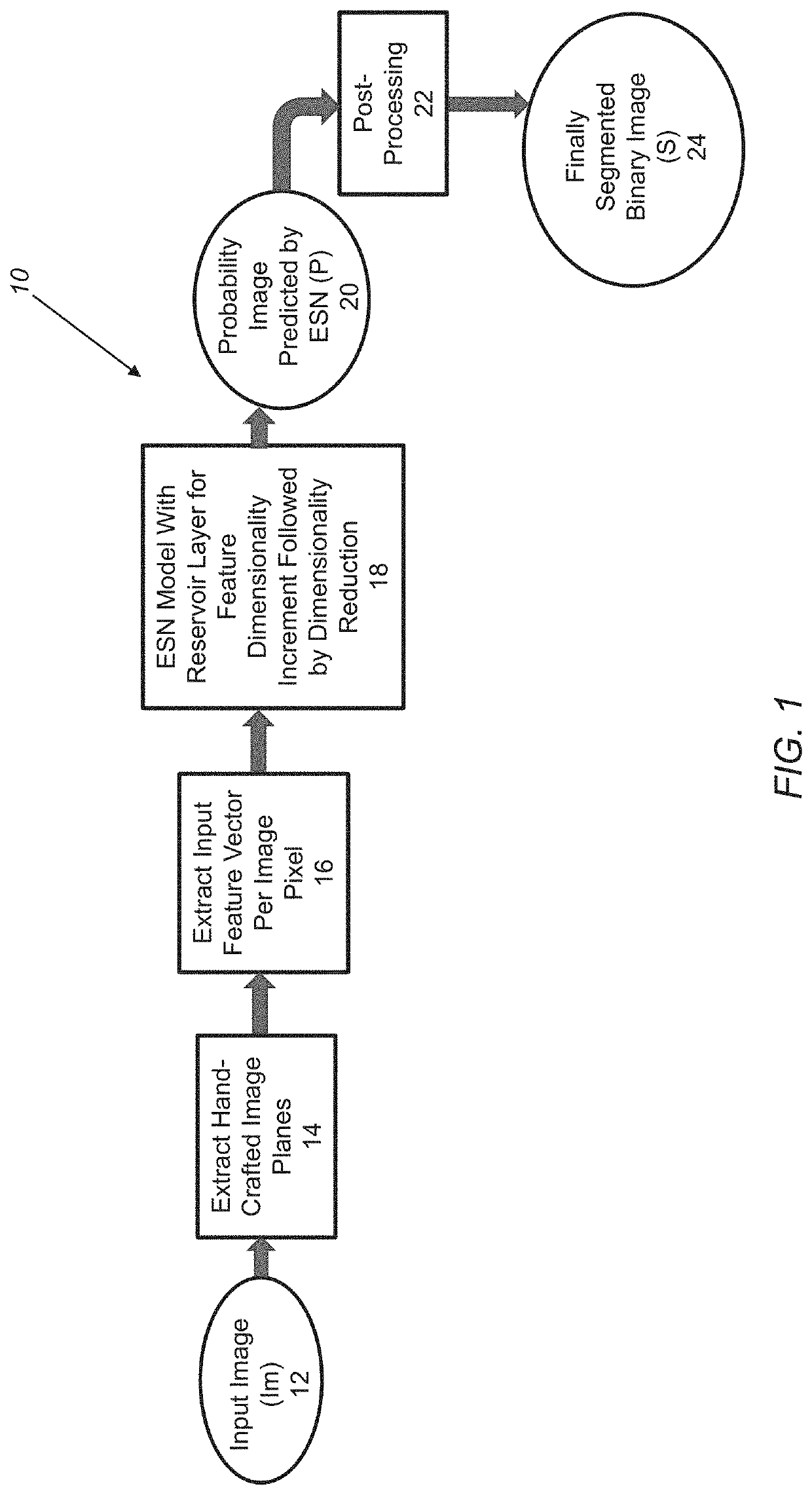

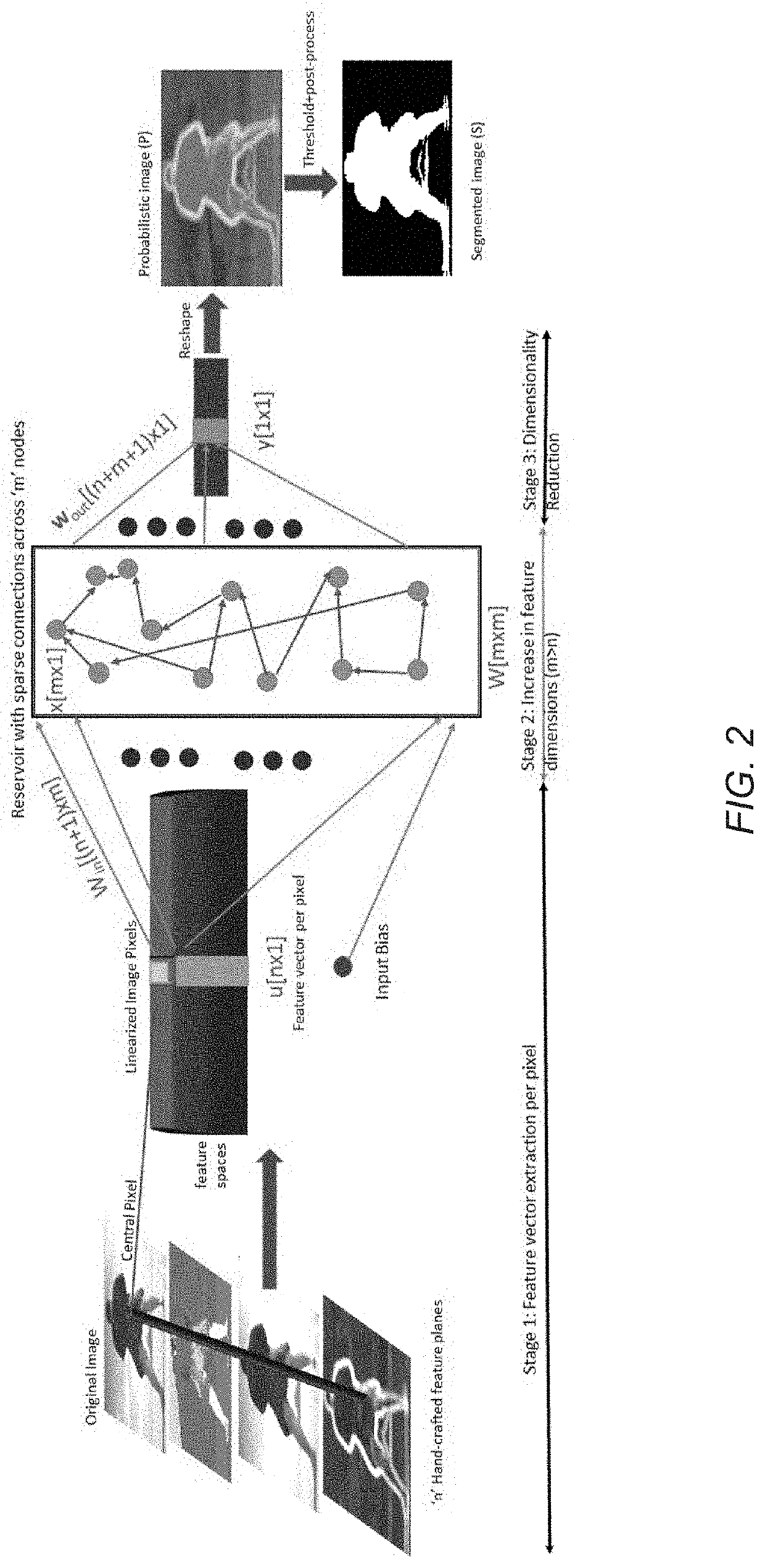

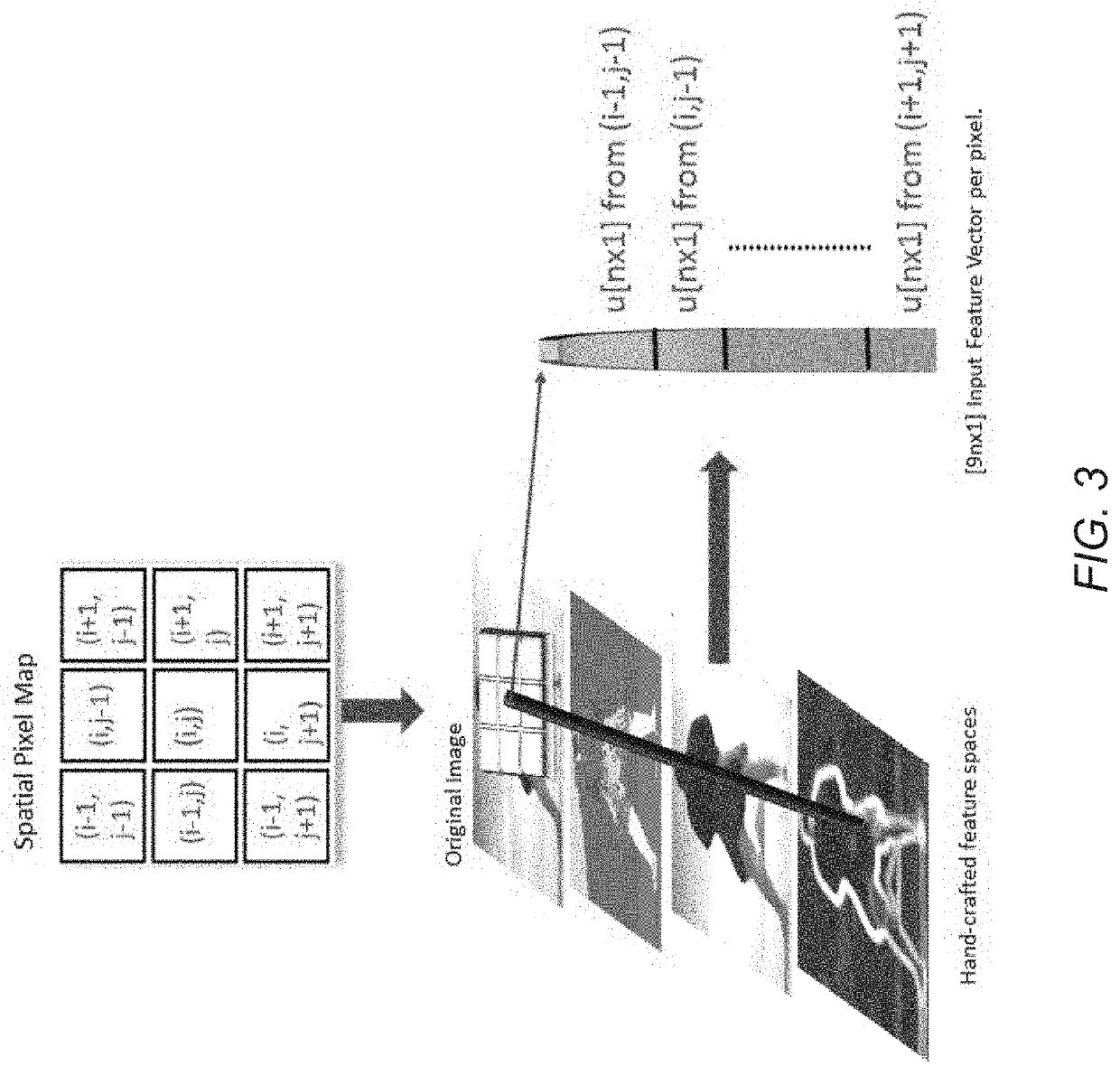

Methods and systems for providing fast semantic proposals for image and video annotation

Active | US20200082540A1 | Shorten the time | Fast and accurate pre-proposals | Image enhancement | Autonomous decision making process | Feature vector | Feature Dimension

Methods and systems for providing fast semantic proposals for image and video annotation including: extracting image planes from an input image; linearizing each of the image planes to generate a one-dimensional array to extract an input feature vector per image pixel for the image planes; abstracting features for a region of interest using a modified echo state network model, wherein a reservoir increases feature dimensions per pixel location to multiple dimensions followed by feature reduction to one dimension per pixel location, wherein the echo state network model includes both spatial and temporal state factors for reservoir nodes associated with each pixel vector, and wherein the echo state network model outputs a probability image; post-processing the probability image to form a segmented binary image mask; and applying the segmented binary image mask to the input image to segment the region of interest and form a semantic proposal image.

Owner:VOLVO CAR CORP

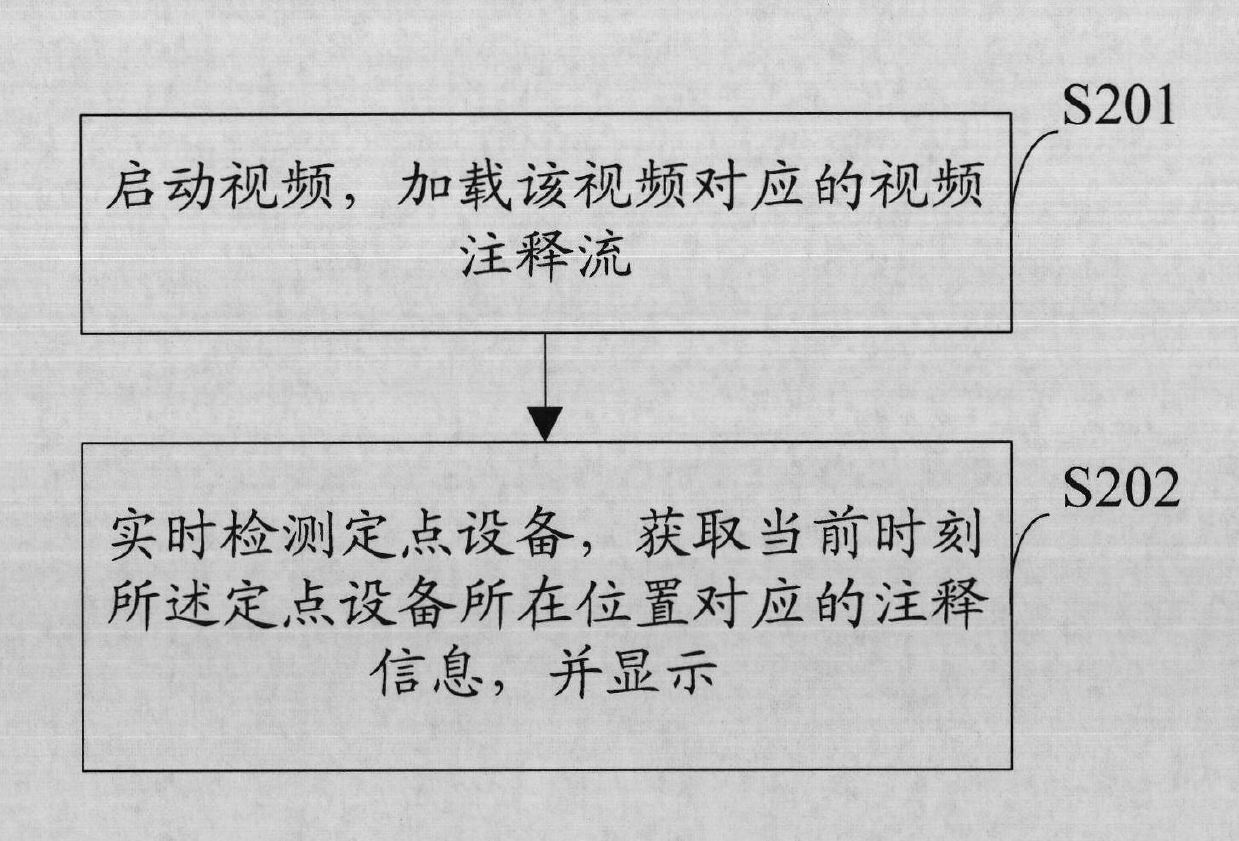

Display method and device for video information

Active | CN102572601A | Improve experience | Realize dynamic display | Selective content distribution | Computer graphics (images) | Video annotation

The invention discloses a display method for video information. The method comprises the following steps of: starting a video, and loading a video annotation stream corresponding to the video; and detecting a pointing device in real time, and acquiring and displaying annotation information corresponding to the position of the pointing device at a current moment. The invention also provides a display device for the video information. By the embodiment of the invention, the video information can be dynamically displayed, and user experiences can be enhanced.

Owner:BEIJING QIYI CENTURY SCI & TECH CO LTD
