105 patent results for "Video prediction"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

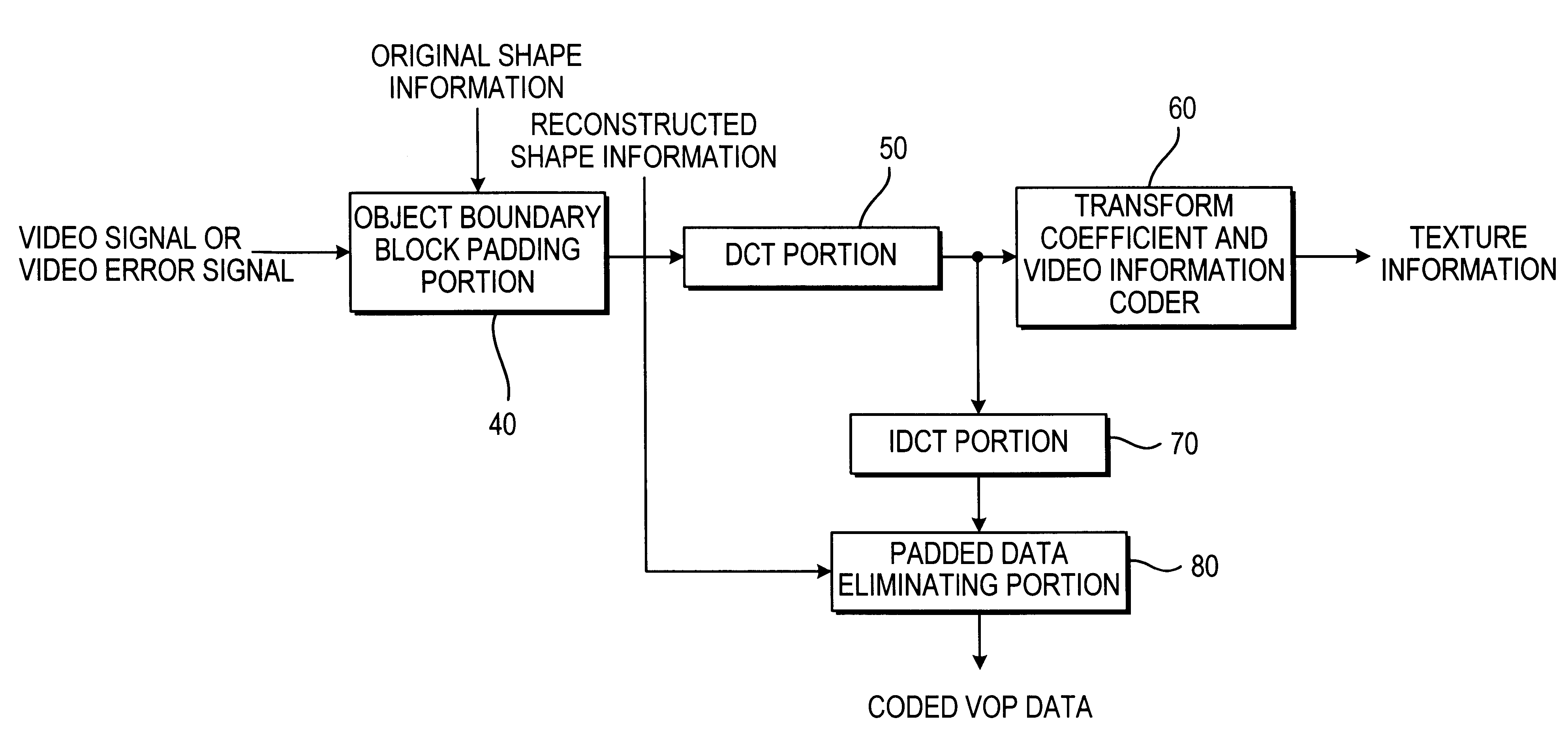

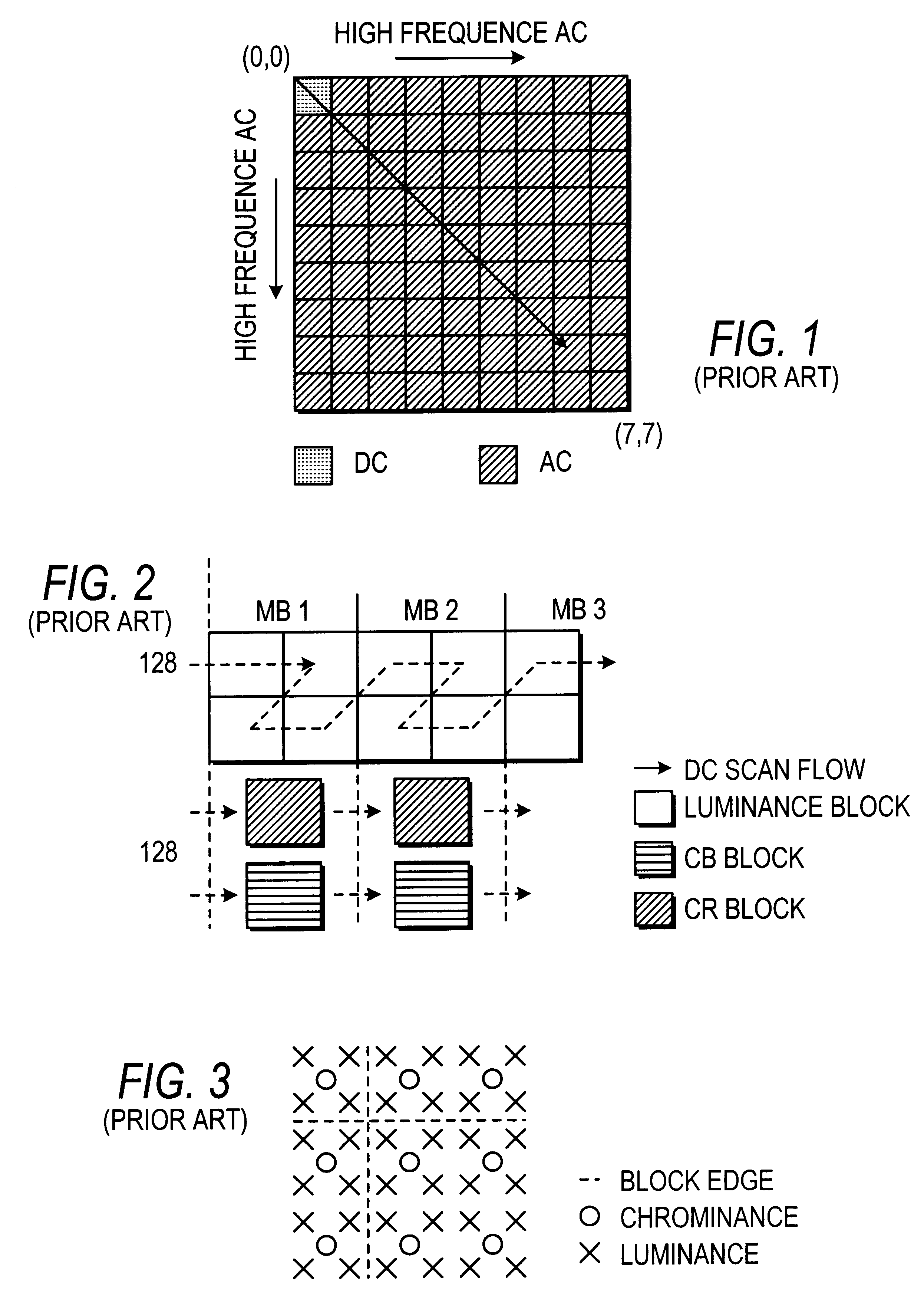

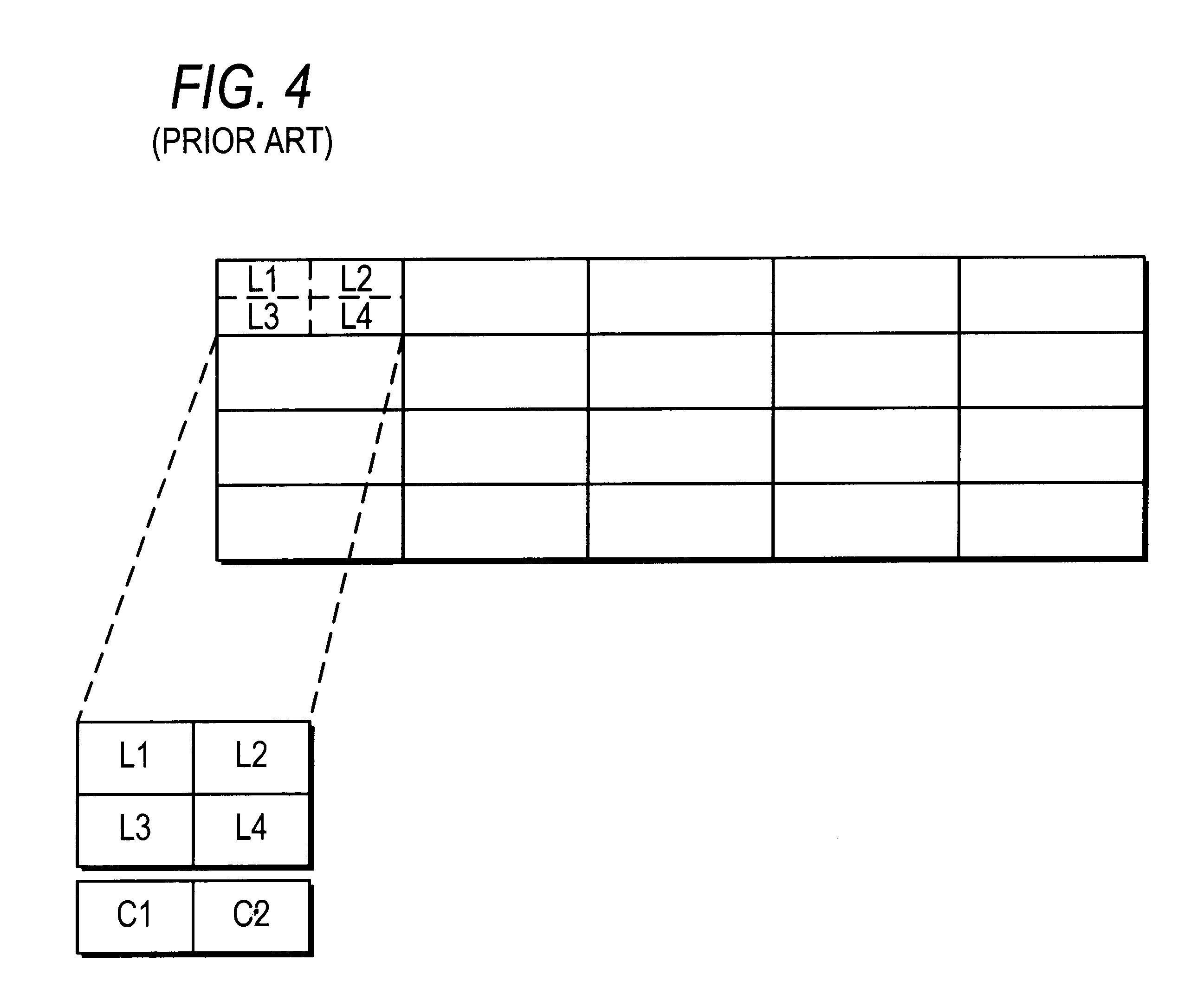

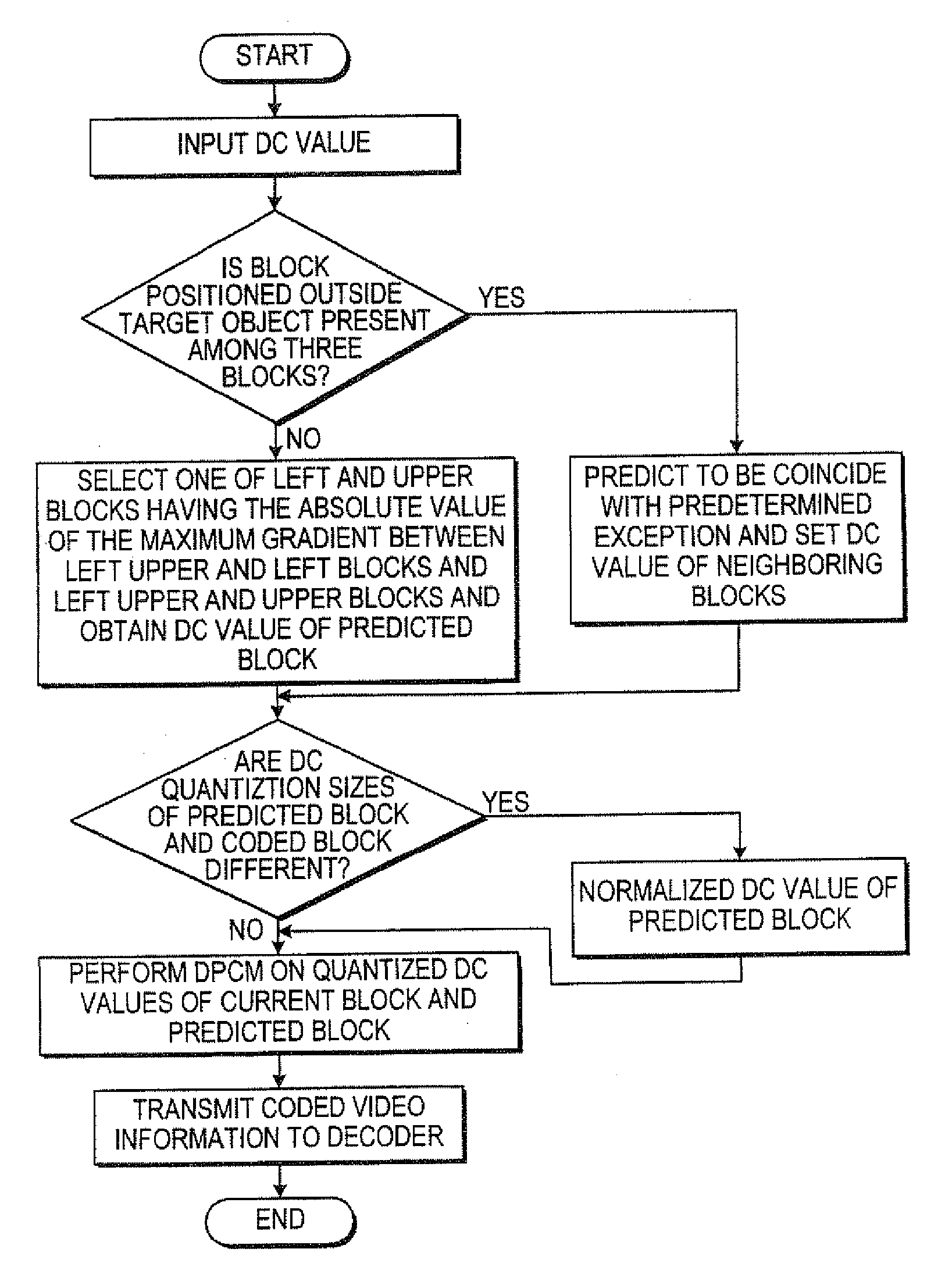

Video predictive coding apparatus and method

Status: Inactive | Publication no.: US6215905B1 | Tags: Pulse modulation television signal transmission; Picture reproducers using cathode ray tubes; Computer architecture; Video encoding

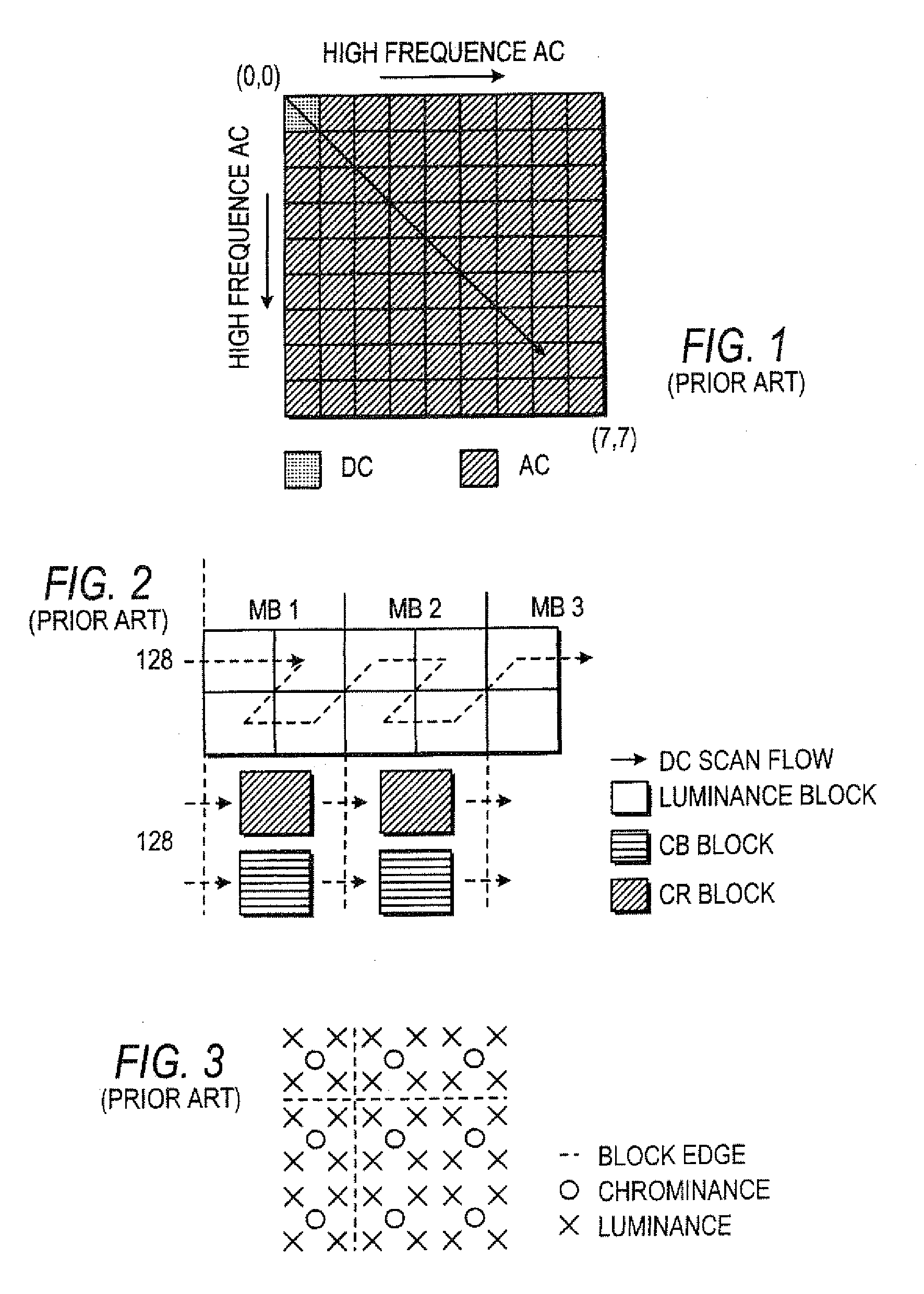

A predictive coding technique for a video coding system that improves coding efficiency by predictively coding the DC coefficient of a block to be coded using the DC gradients of a plurality of previously coded neighboring blocks. The predictive coefficient is selected according to the difference between the quantized DC gradients (coefficients) of the neighboring blocks, and the DC coefficient of the block to be coded is then predictively coded with the selected predictive coefficient.

Owner:PANTECH CO LTD
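
The abstract does not give the exact selection rule. The gradient-based choice can be illustrated with the well-known MPEG-4 Visual intra DC prediction rule, which is an assumption here and not necessarily the patent's method. Let A be the left block, B the above-left block and C the above block. The encoder predicts from C when |A - B| is smaller than |B - C|, and from A otherwise; only the residual is then coded. The function names are hypothetical.

```python
def predict_dc(dc_left, dc_above_left, dc_above):
    """Choose a DC predictor from quantized neighbour DCs (MPEG-4 Visual-style rule, assumed).

    A = left block, B = above-left block, C = above block.
    """
    # A lies directly below B, so |A - B| measures vertical change;
    # B and C share a row, so |B - C| measures horizontal change.
    vertical_grad = abs(dc_left - dc_above_left)
    horizontal_grad = abs(dc_above_left - dc_above)
    if vertical_grad < horizontal_grad:
        # Values change little down the columns: predict from the block above.
        return dc_above, "above"
    # Otherwise the row-wise change is no larger: predict from the left block.
    return dc_left, "left"


def encode_dc(dc_current, dc_left, dc_above_left, dc_above):
    """Return the DC residual to entropy-code and the chosen prediction direction."""
    predictor, direction = predict_dc(dc_left, dc_above_left, dc_above)
    return dc_current - predictor, direction


print(encode_dc(61, 98, 97, 60))  # (1, 'above')
```

In this example the left column is nearly flat (97 above 98), so the block above (60) is chosen as the predictor, and only a residual of 1 remains to be coded.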

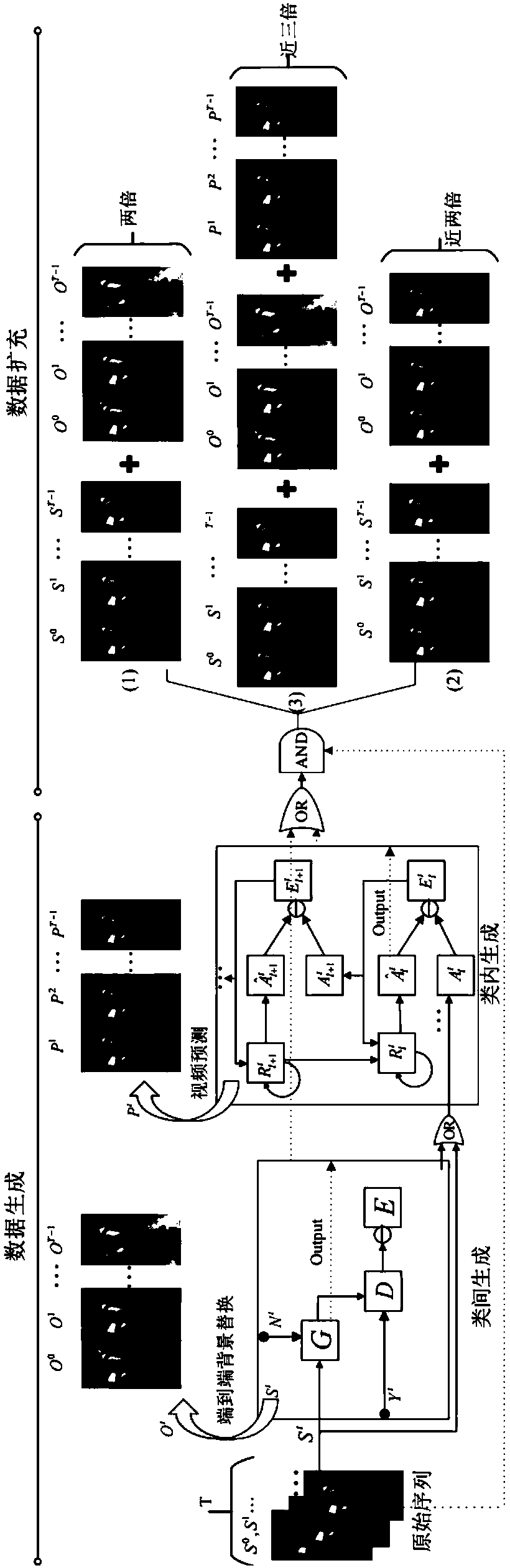

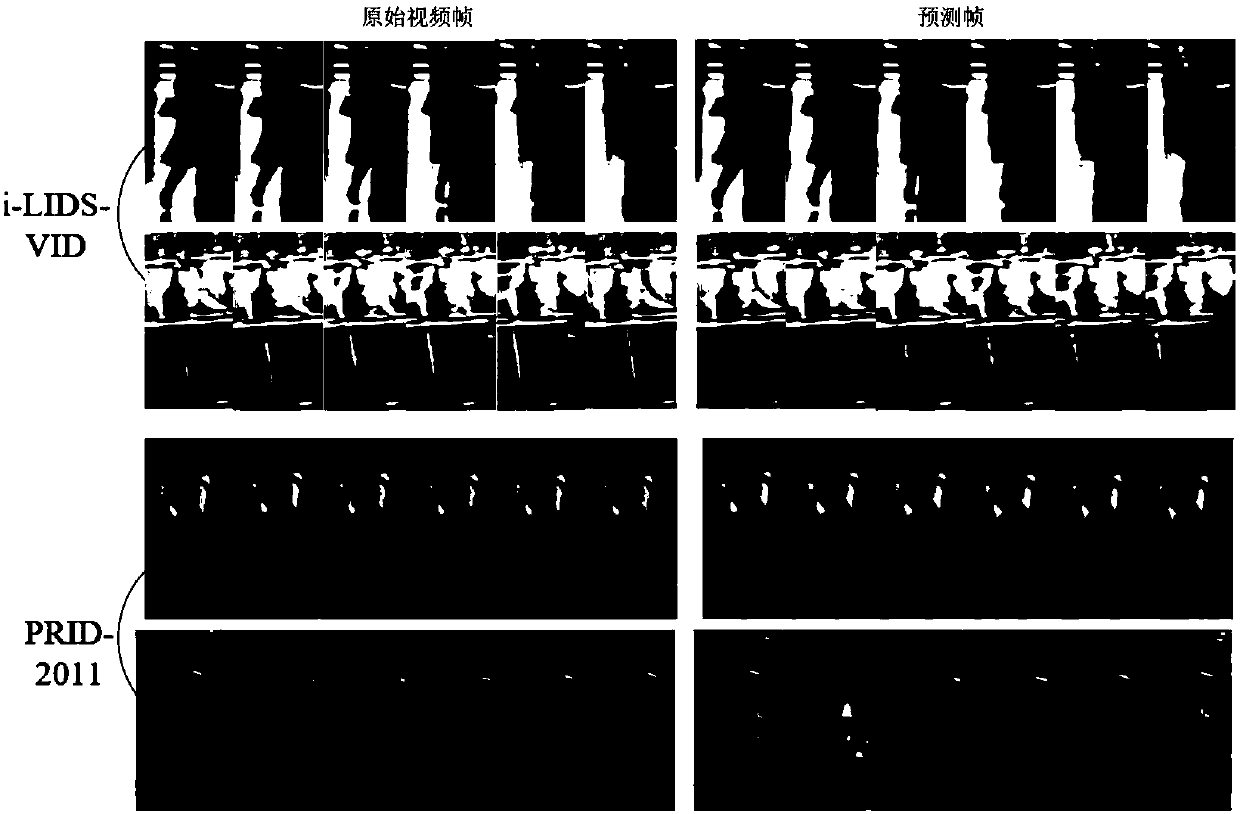

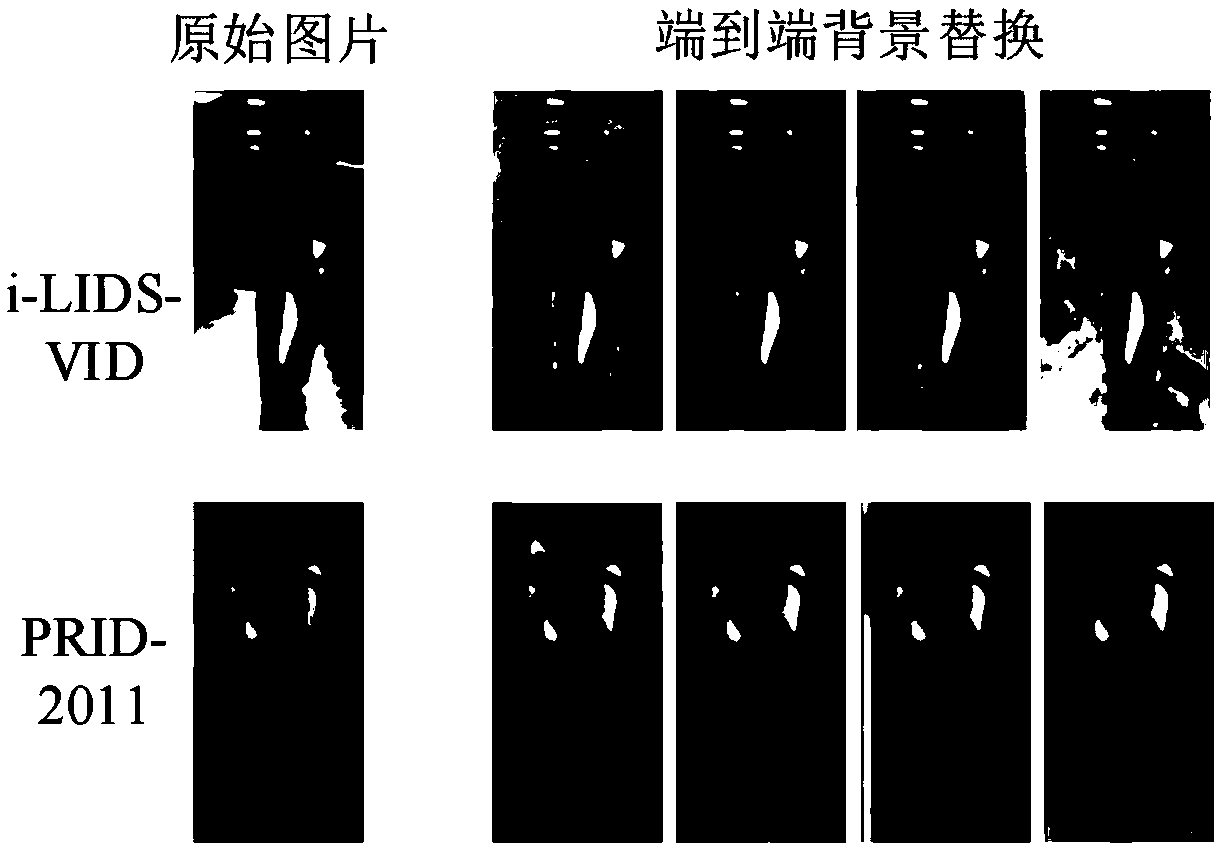

Method for generating and expanding pedestrian re-identification data based on generative network

Status: Active | Publication no.: CN107679465A | Tags: Wide applicability; Increase the length; Character and pattern recognition; Data expansion; Data set

The invention provides a method for generating and expanding pedestrian re-identification data based on generative networks. The method includes the following steps:

- generating new pedestrian video frame samples with a video prediction network;
- generating end-to-end pedestrian background transformation data with a deep generative adversarial network;
- expanding the breadth and richness of the pedestrian data set with these different data generation methods;
- sending the expanded data set to a feature extraction network, extracting features, and evaluating performance by Euclidean distance.

The method considers both intra-class and inter-class data expansion of pedestrians. Combining different generative networks produces more abundant samples, so the expanded data set has good diversity and robustness. This largely solves the performance loss caused by insufficient samples and by background interference. The method is generally applicable, and the expanded data set achieves better performance and efficiency in subsequent pedestrian recognition.

Owner:SHANGHAI JIAO TONG UNIV

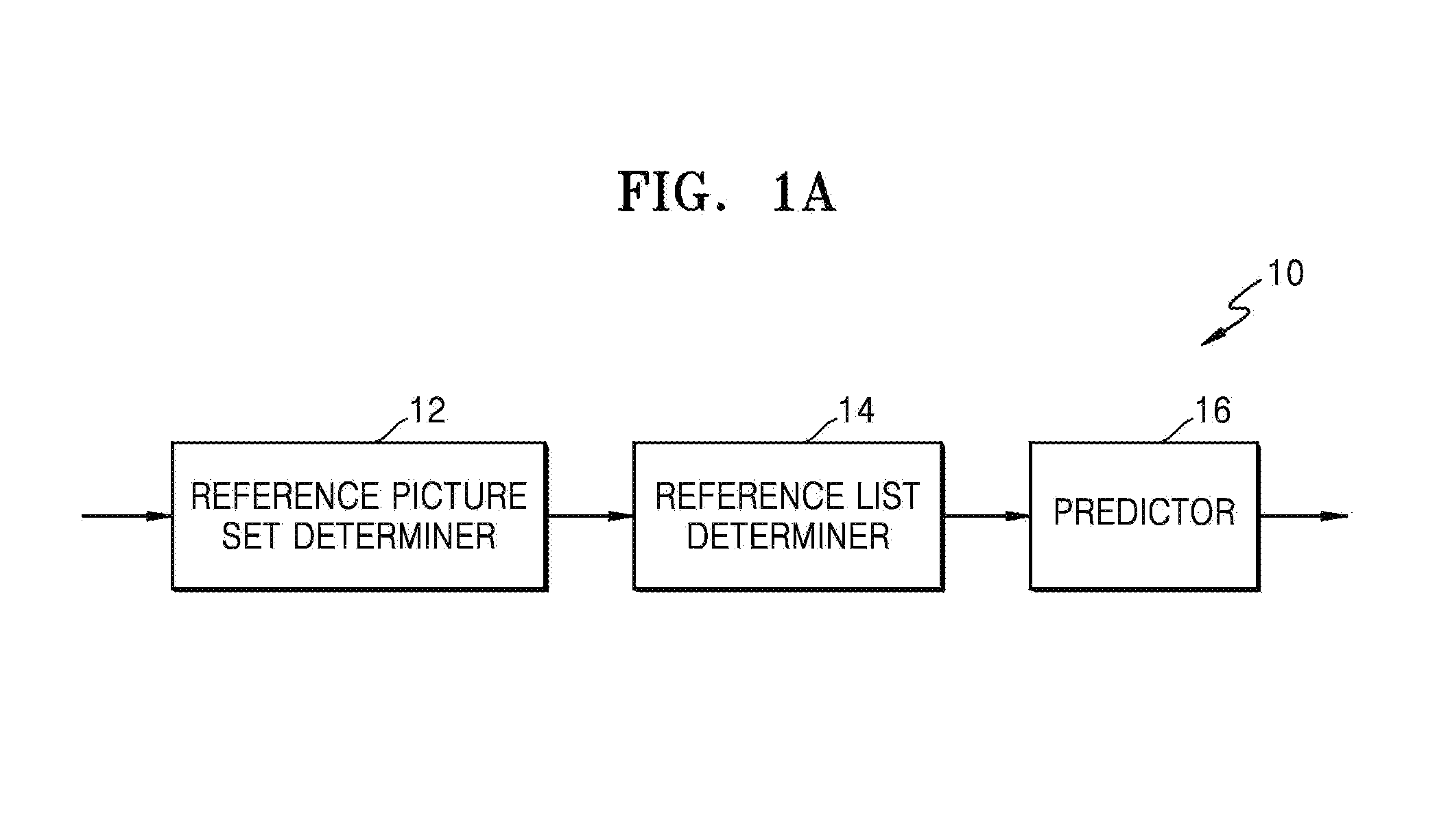

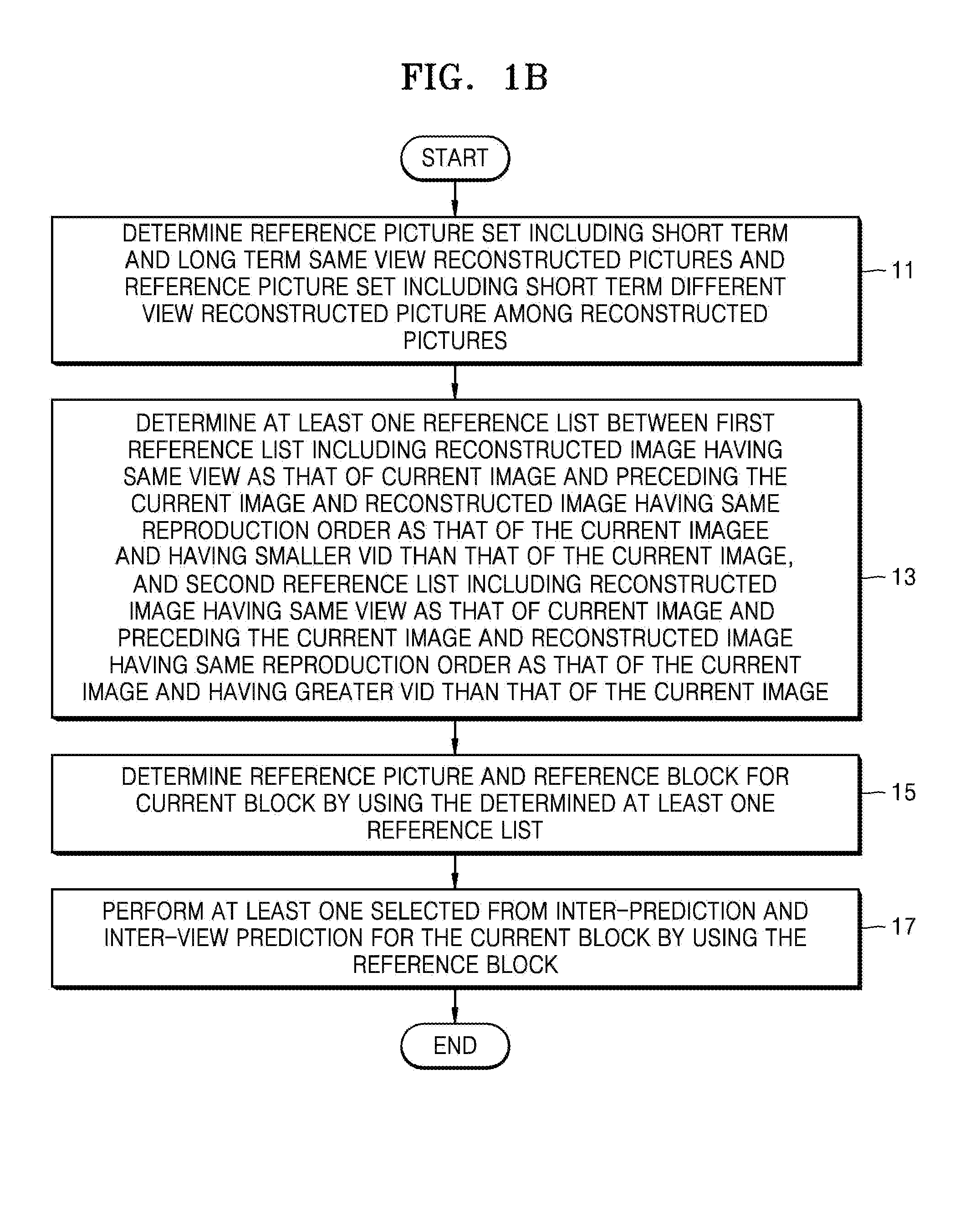

Multiview video encoding method using reference picture set for multiview video prediction and device therefor, and multiview video decoding method using reference picture set for multiview video prediction and device therefor

Status: Inactive | Publication no.: US20150124877A1 | Tags: Color television with pulse code modulation; Color television with bandwidth reduction; Video encoding; Computer graphics (images)

Methods are provided for encoding and decoding multi-view video by performing inter-prediction and inter-view prediction on each picture of the multi-view video according to its view. A prediction encoding method for multi-view video includes the following steps:

- determining a reference picture set;
- determining at least one reference list from among a first reference list and a second reference list;
- determining a reference picture and a reference block for a current block of the current picture using the determined reference list;
- performing inter-prediction or inter-view prediction on the current block using the reference block.

Owner:SAMSUNG ELECTRONICS CO LTD

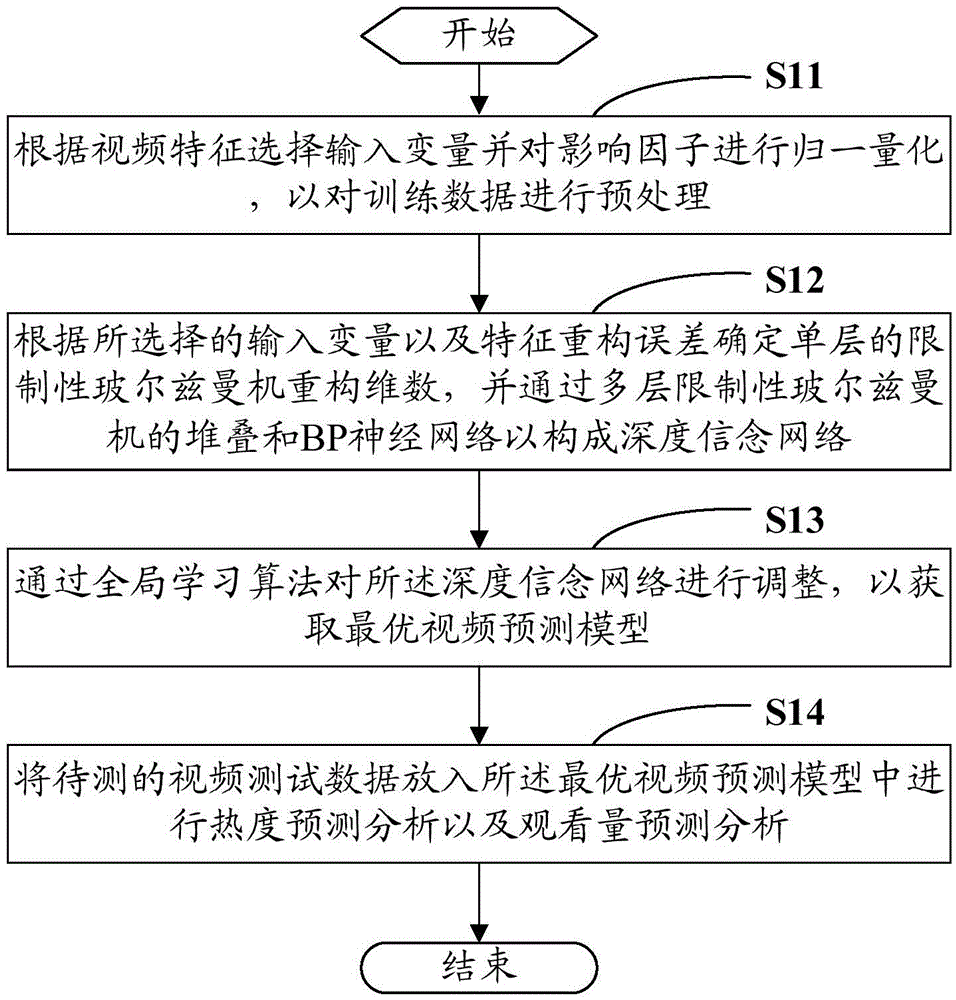

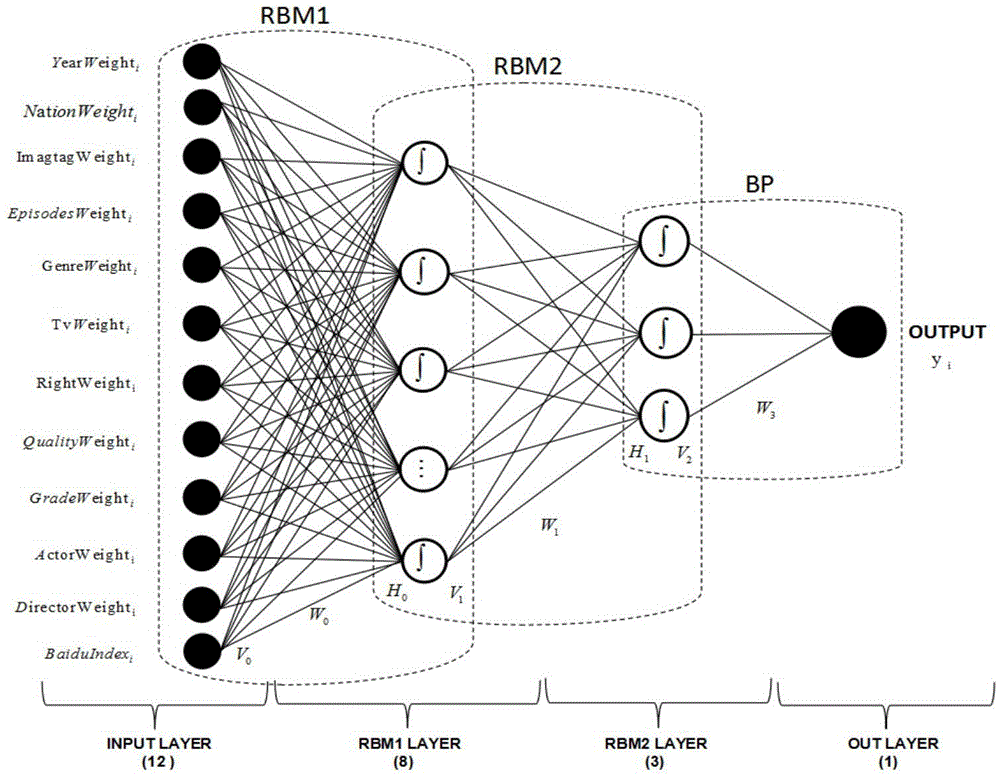

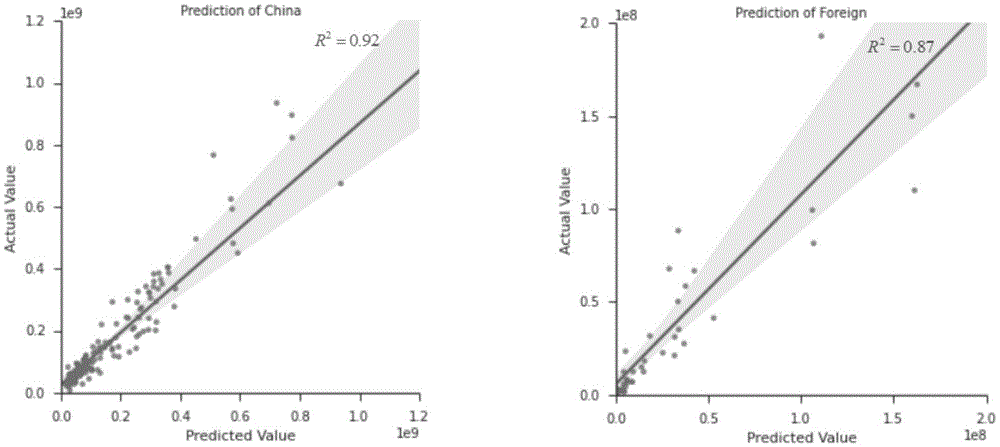

Video heat prediction method based on deep belief networks and system thereof

Status: Active | Publication no.: CN105635762A | Tags: Improve accuracy; Improve reliability; Selective content distribution; Special data processing applications; Restricted Boltzmann machine; Restrict boltzmann machine

The invention provides a video heat (popularity) prediction method based on deep belief networks. The method comprises the following steps:

- selecting input variables according to video features;
- normalizing and quantifying the influencing factors to preprocess the training data;
- determining the reconstruction dimension of a single-layer restricted Boltzmann machine from the selected input variables and the feature reconstruction error;
- forming the deep belief network from multiple stacked restricted Boltzmann machines and a BP (back propagation) neural network;
- tuning the deep belief network with a global learning algorithm to obtain an optimal video prediction model;
- feeding the test video data into the optimal video prediction model for heat prediction analysis and view-count prediction analysis.

The invention also provides a video heat prediction system based on deep belief networks. The method and system provide an online video prediction model based on deep belief networks and apply deep neural networks to online video prediction. This can improve prediction accuracy and reliability.

Owner:SHENZHEN UNIV

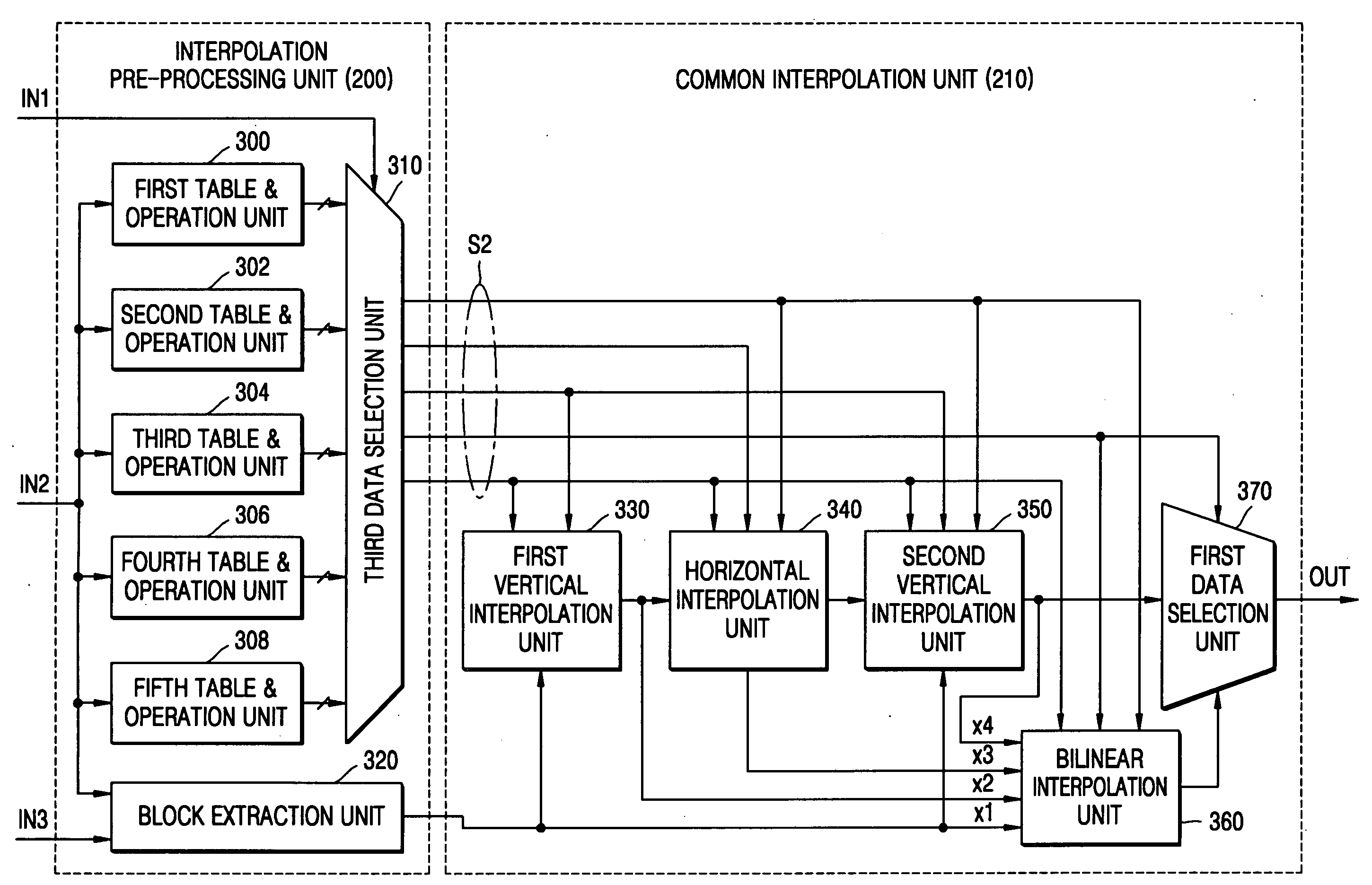

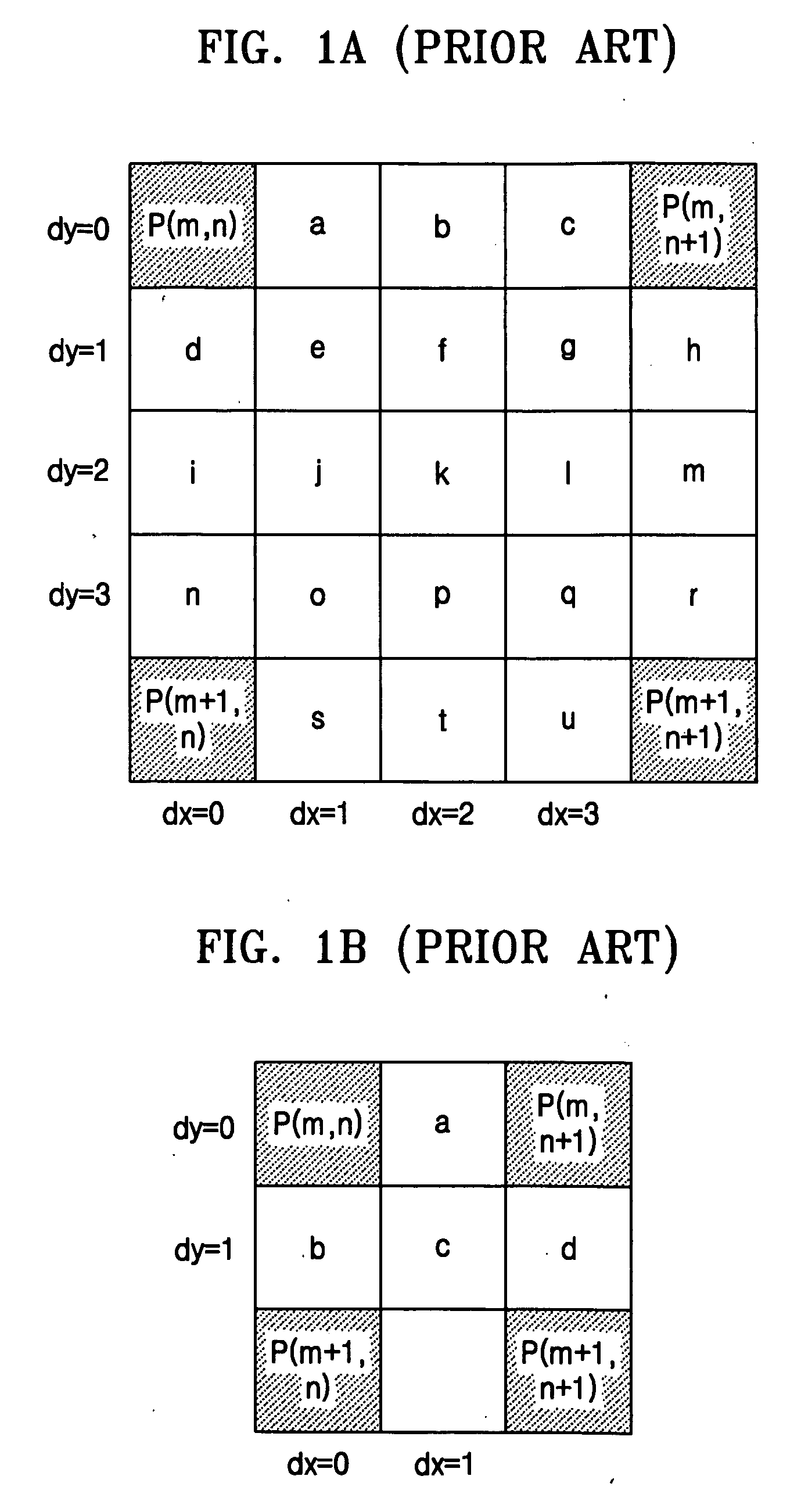

Video prediction apparatus and method for multi-format codec and video encoding/decoding apparatus and method using the video prediction apparatus and method

Status: Inactive | Publication no.: US20070047651A1 | Tags: Requires cost; Requires time; Color television with pulse code modulation; Color television with bandwidth reduction; Dirac (video compression format); Motion vector

Provided are a video prediction apparatus and method for a multi-format codec and a video encoding / decoding apparatus and method using the video prediction apparatus and method. The video prediction apparatus that generates a prediction block based on a motion vector and a reference frame according to a plurality of video compression formats includes an interpolation pre-processing unit and a common interpolation unit. The interpolation pre-processing unit receives video compression format information of a current block to be predicted, and extracts a block of a predetermined size to be used for interpolation from the reference frame and generates interpolation information using the motion vector. The common interpolation unit interpolates a pixel value of the extracted block or a previously interpolated pixel value in an interpolation direction according to the interpolation information to generate the prediction block.

Owner:SAMSUNG ELECTRONICS CO LTD
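
A common interpolation unit shared by several formats can be pictured as one filtering routine driven by per-format parameters. The sketch below is an assumption for illustration and does not reproduce the patent's units. It uses two well-known half-sample filters:

- the H.264/AVC luma 6-tap filter (1, -5, 20, 20, -5, 1) with rounding (+16) >> 5;
- MPEG-2-style bilinear averaging (a + b + 1) >> 1.

The `HALF_PEL_FILTERS` table and the function names are hypothetical. The routine filters one row; the vertical direction is handled by applying it to columns.

```python
# Hypothetical per-format parameters: (filter taps, rounding offset, right shift).
HALF_PEL_FILTERS = {
    "h264": ((1, -5, 20, 20, -5, 1), 16, 5),
    "mpeg2": ((1, 1), 1, 1),
}


def clip8(value):
    """Clip to the 8-bit sample range."""
    return max(0, min(255, value))


def half_pel_row(row, fmt):
    """Interpolate the half-sample position between row[i] and row[i + 1] for every i."""
    taps, offset, shift = HALF_PEL_FILTERS[fmt]
    half = len(taps) // 2  # integer samples used on each side of the half position
    out = []
    for i in range(len(row) - 1):
        acc = 0
        for k, tap in enumerate(taps):
            # Samples i - half + 1 .. i + half; indices past the edges are clamped.
            j = min(len(row) - 1, max(0, i - half + 1 + k))
            acc += tap * row[j]
        out.append(clip8((acc + offset) >> shift))
    return out


print(half_pel_row([10, 20, 31], "mpeg2"))  # [15, 26]
```

Keeping the filtering loop common and moving only the taps, rounding and shift into a table is one way to share hardware or code across codecs. That shared-filter idea is the motivation the abstract describes.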

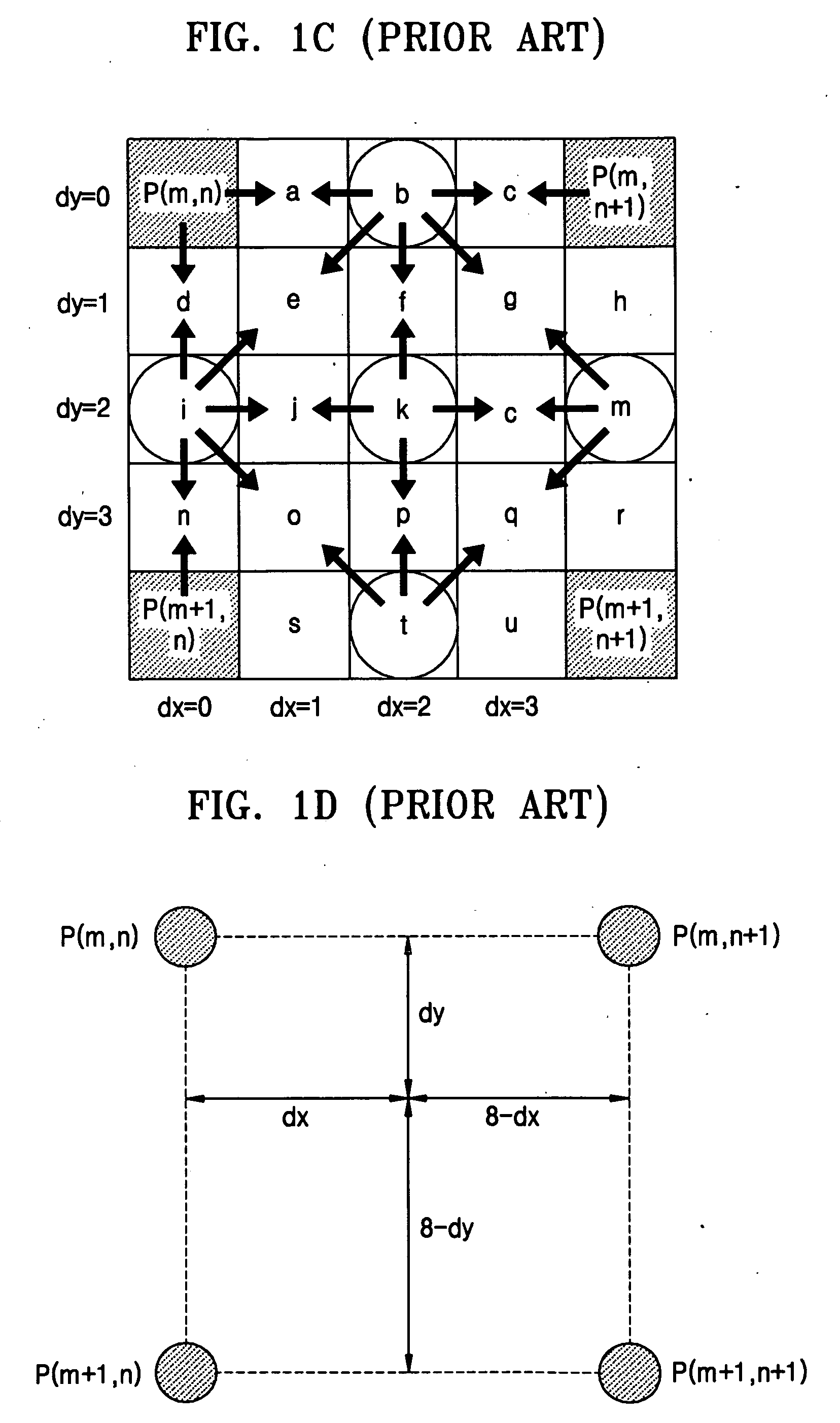

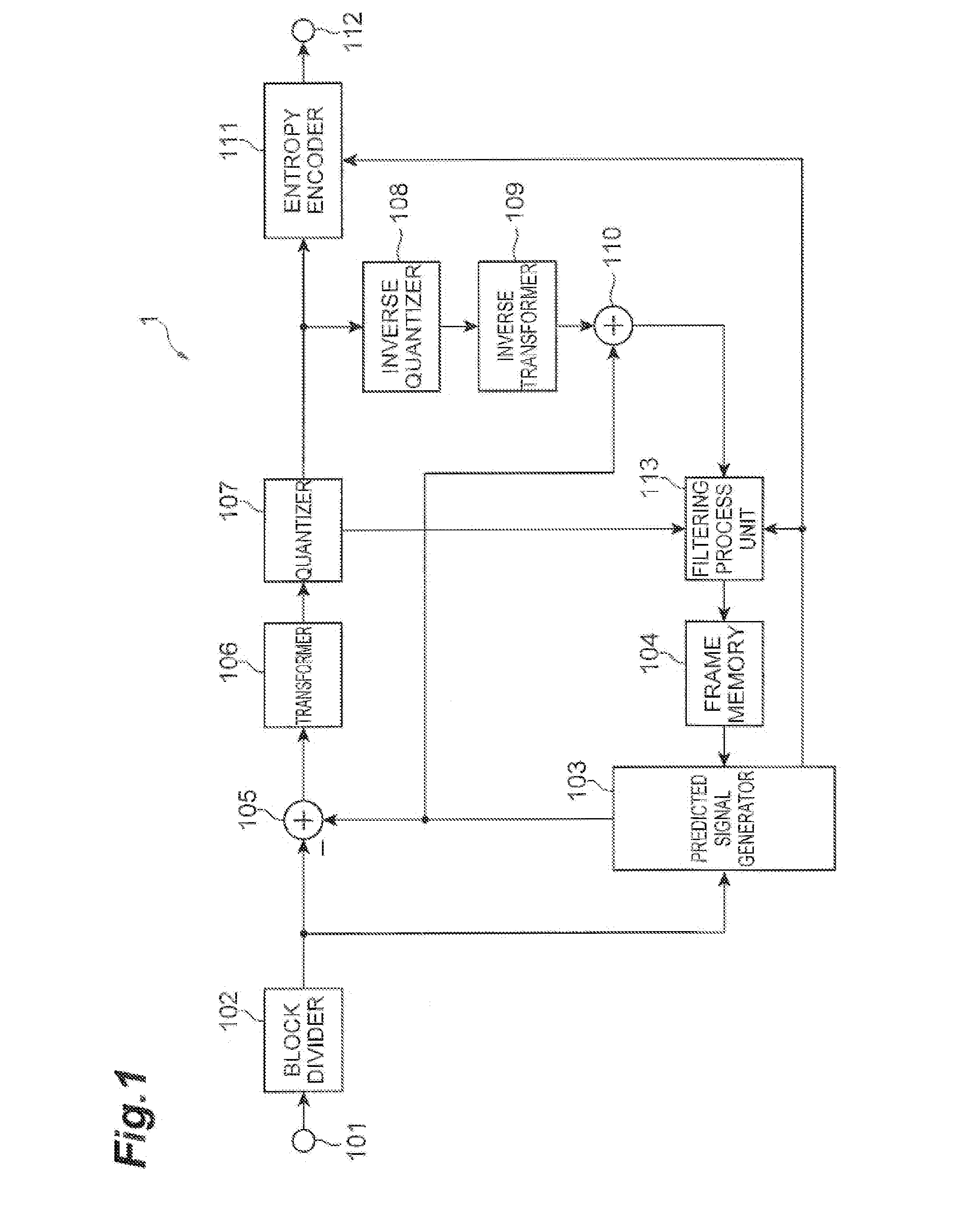

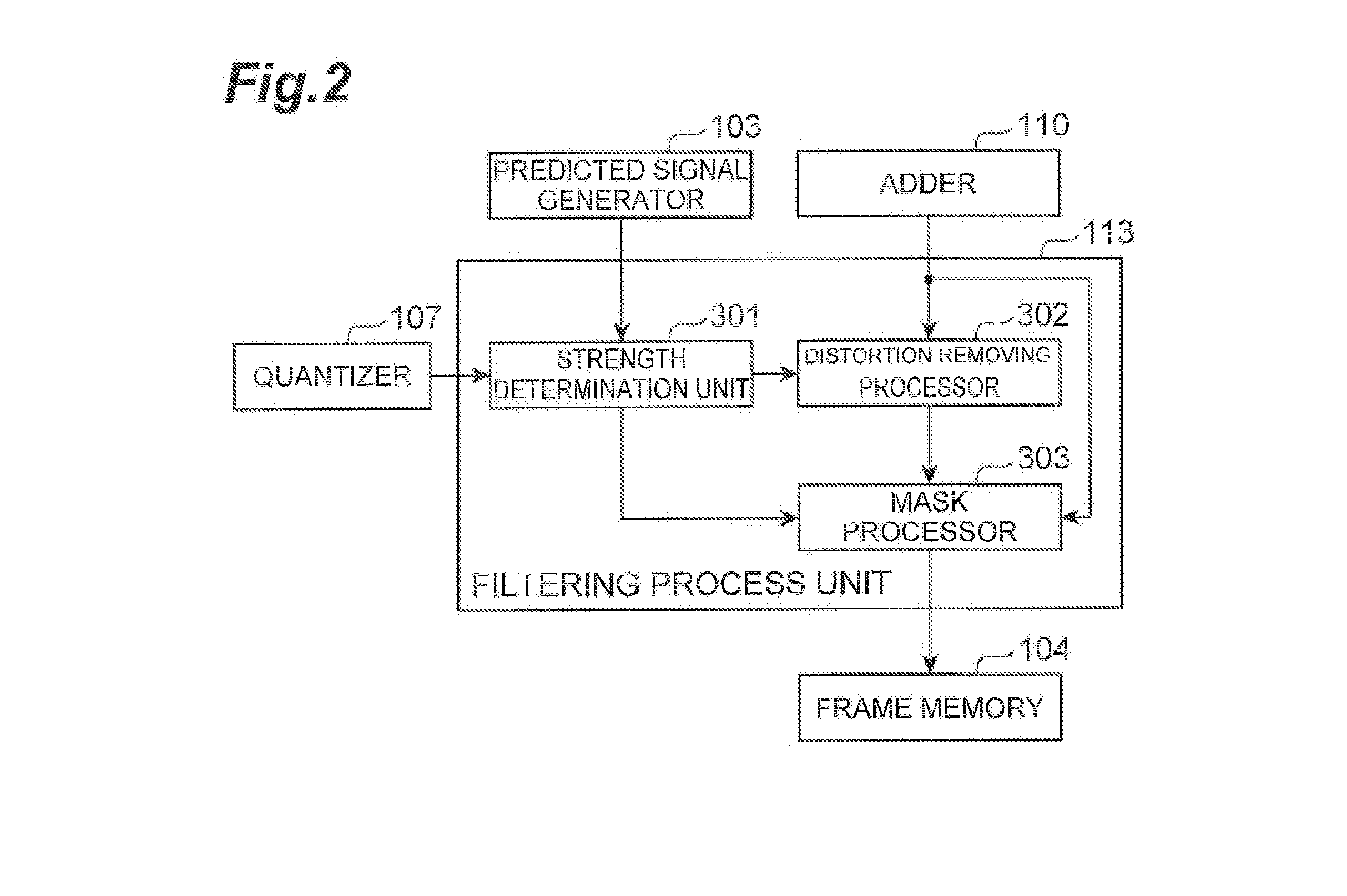

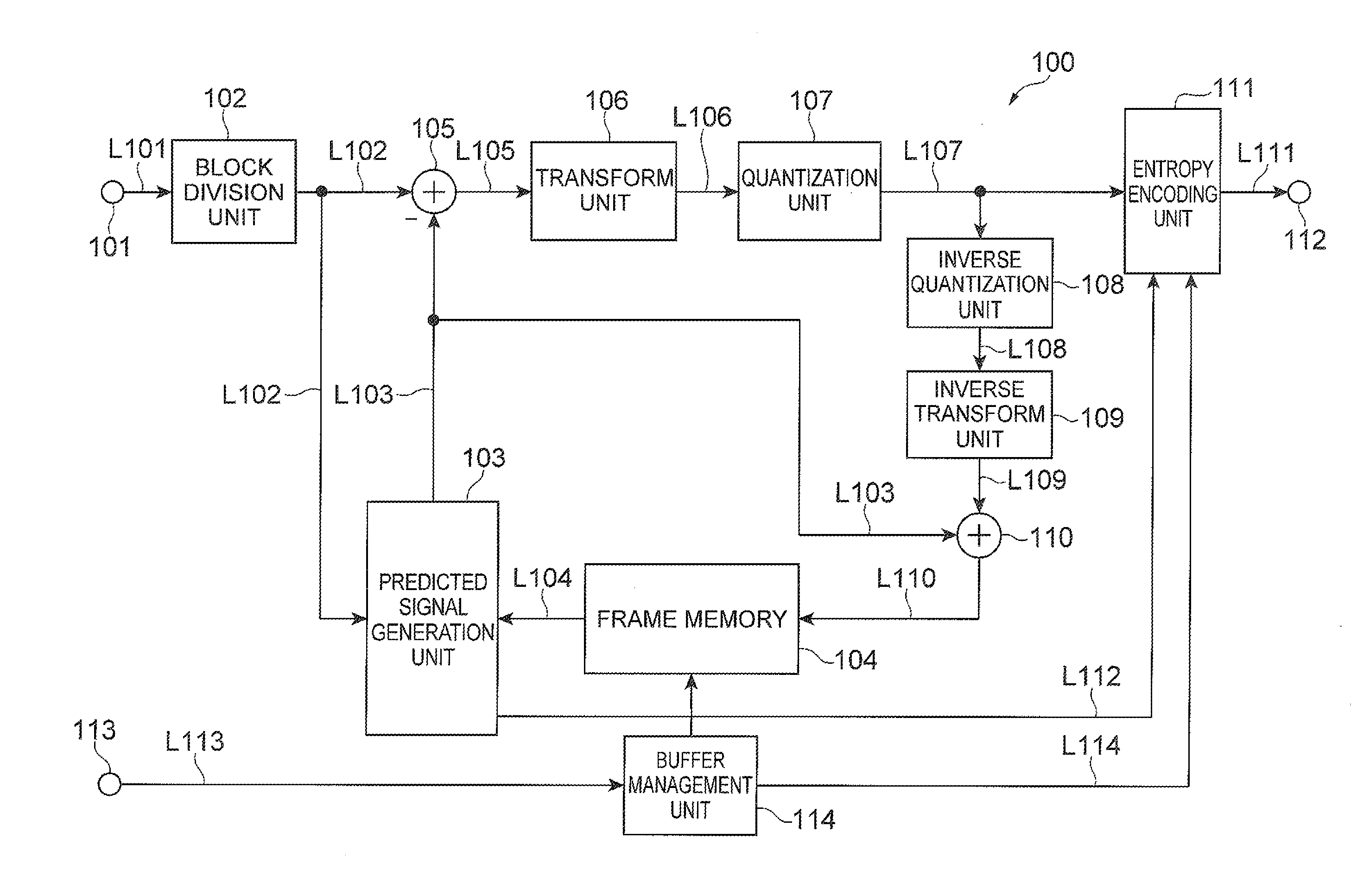

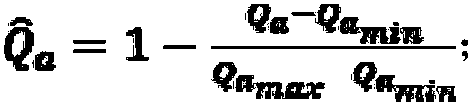

Moving image prediction encoder, moving image prediction decoder, moving image prediction encoding method, and moving image prediction decoding method

Status: Inactive | Publication no.: US20130028322A1 | Tags: Improve efficiency; High reproduction quality; Color television with pulse code modulation; Color television with bandwidth reduction; Restoration device; Reference image

The object is to improve the quality of a reproduced picture and the efficiency of predicting a picture that uses the reproduced picture as a reference picture. To this end, a video prediction encoder 1 comprises:

- an input terminal 101 that receives a plurality of pictures in a video sequence;
- an encoder that encodes an input picture by intra-frame or inter-frame prediction to generate compressed data, and encodes parameters for luminance compensation prediction between blocks in the picture;
- a restoration device that decodes the compressed data to restore a reproduced picture;
- a filtering processor 113 that determines a filtering strength and a target region to be filtered using the luminance compensation prediction parameters between the blocks, and filters the reproduced picture according to that strength and target region;
- a frame memory 104 that stores the filtered reproduced picture as a reference picture.

Owner:NTT DOCOMO INC

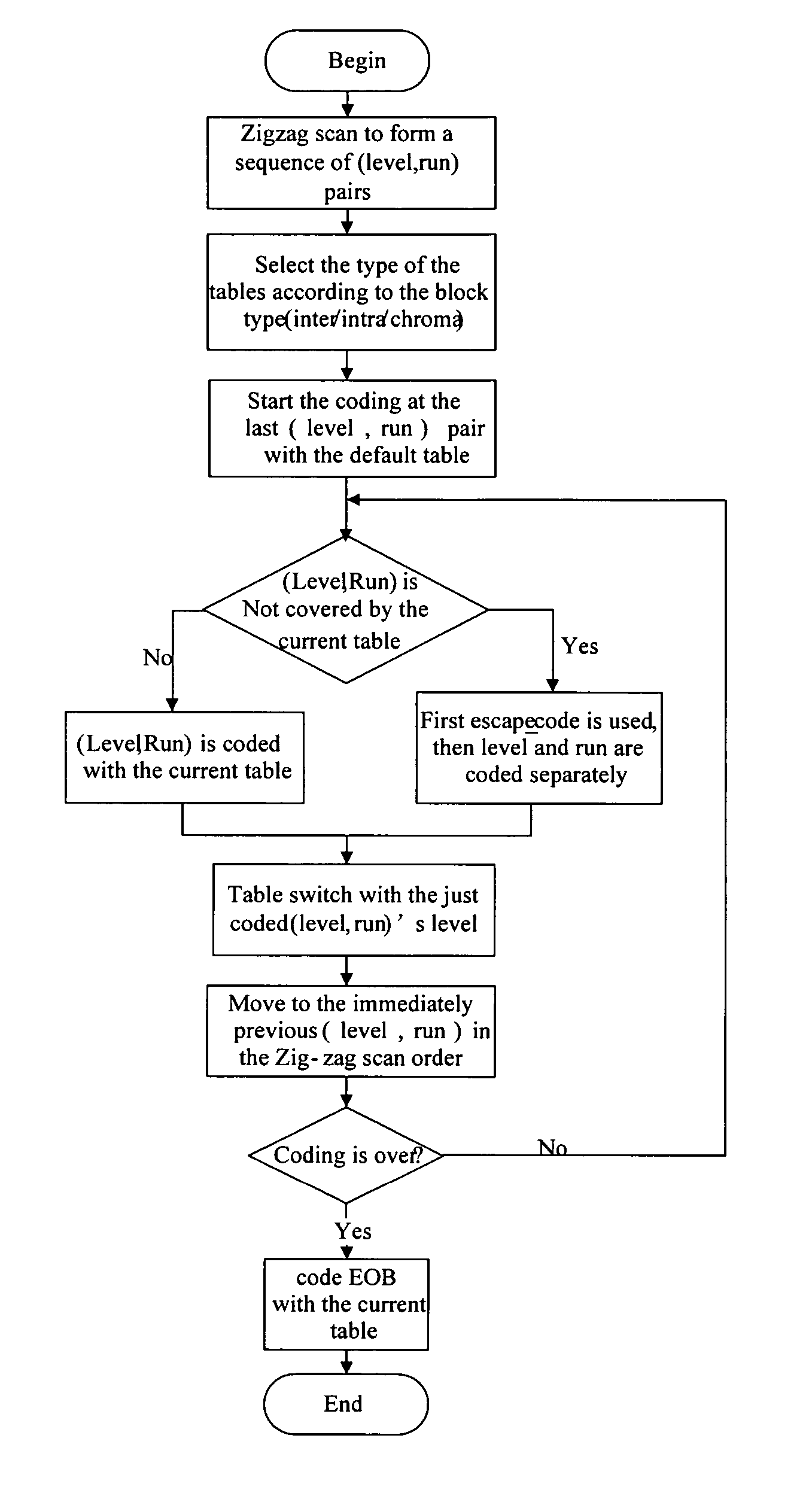

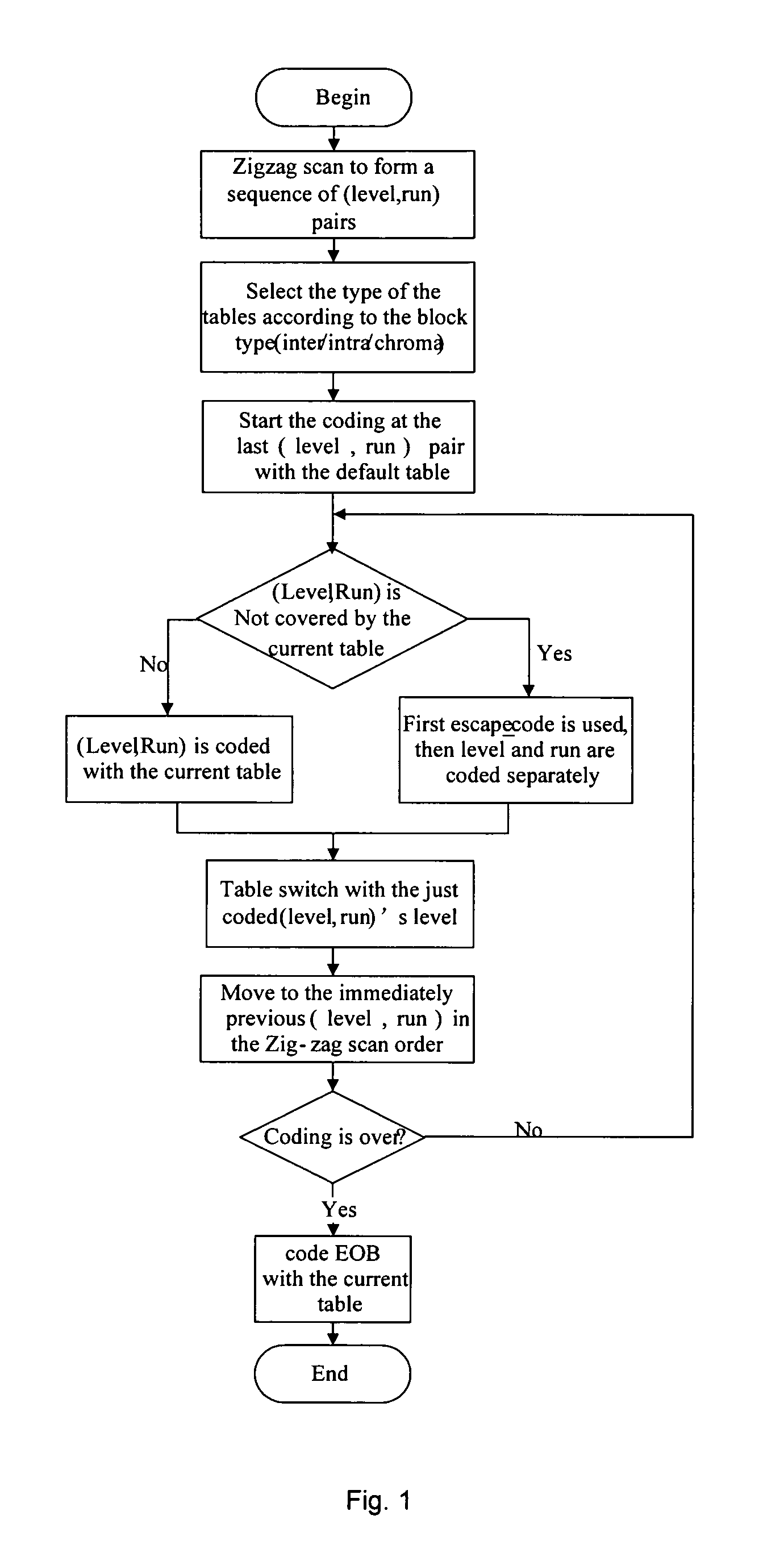

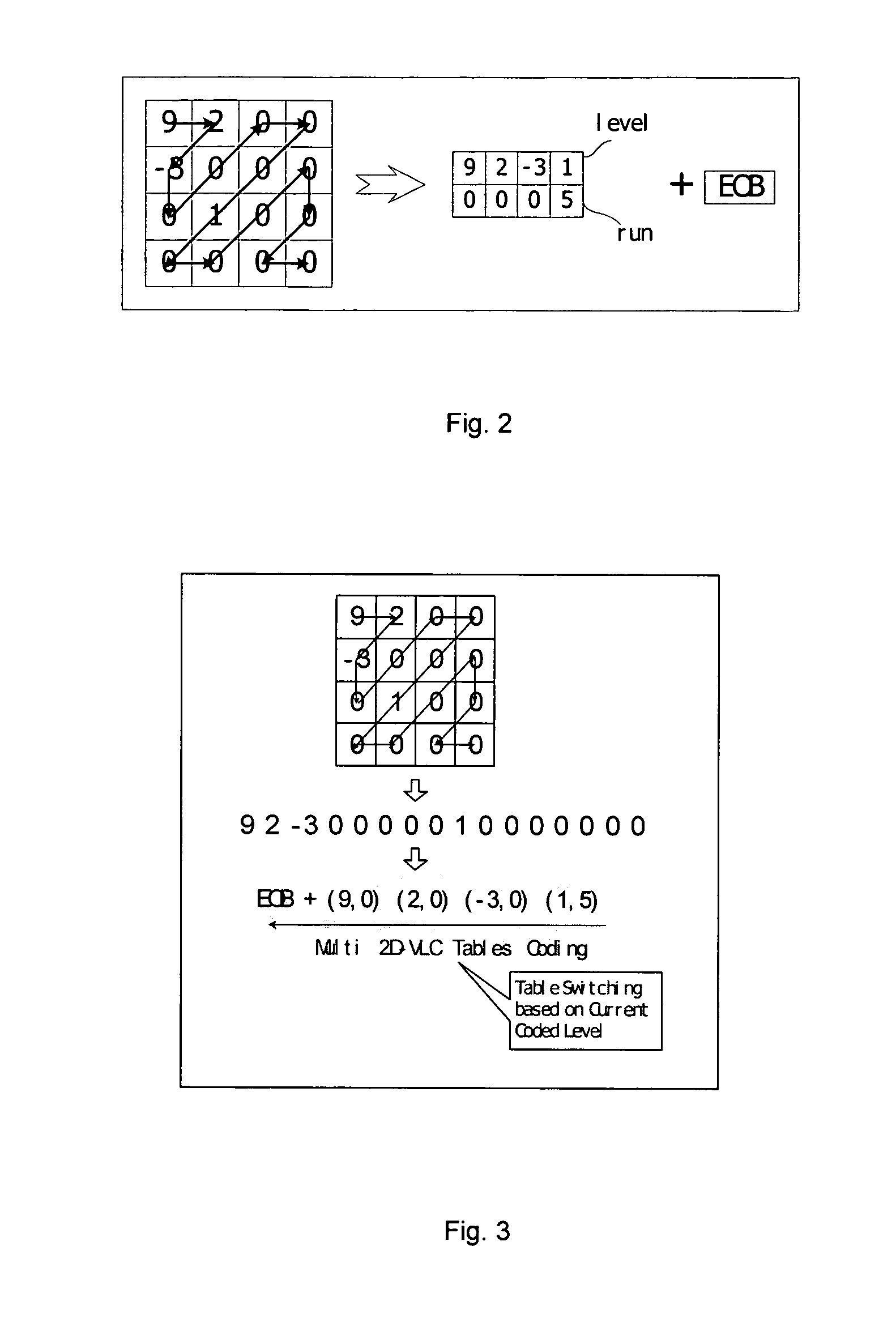

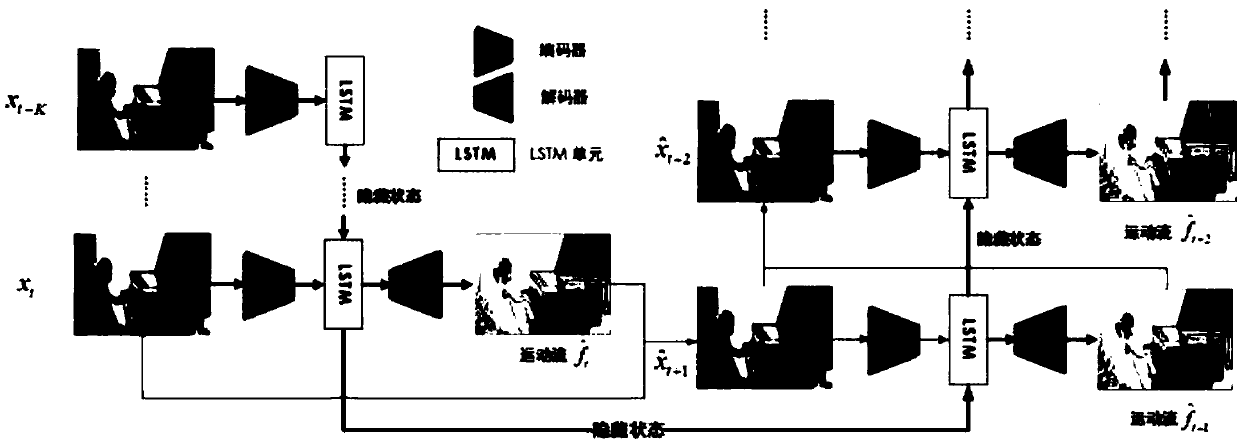

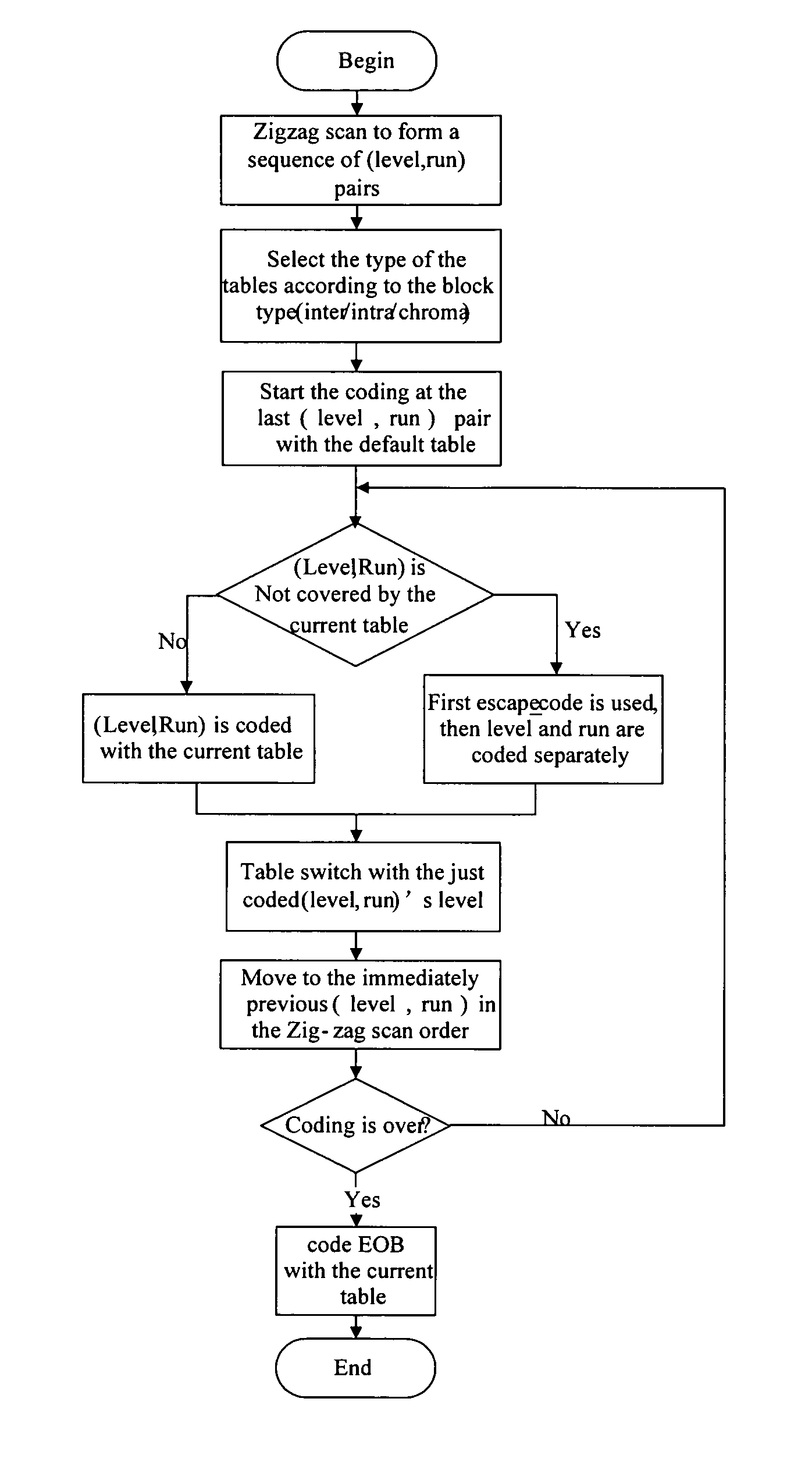

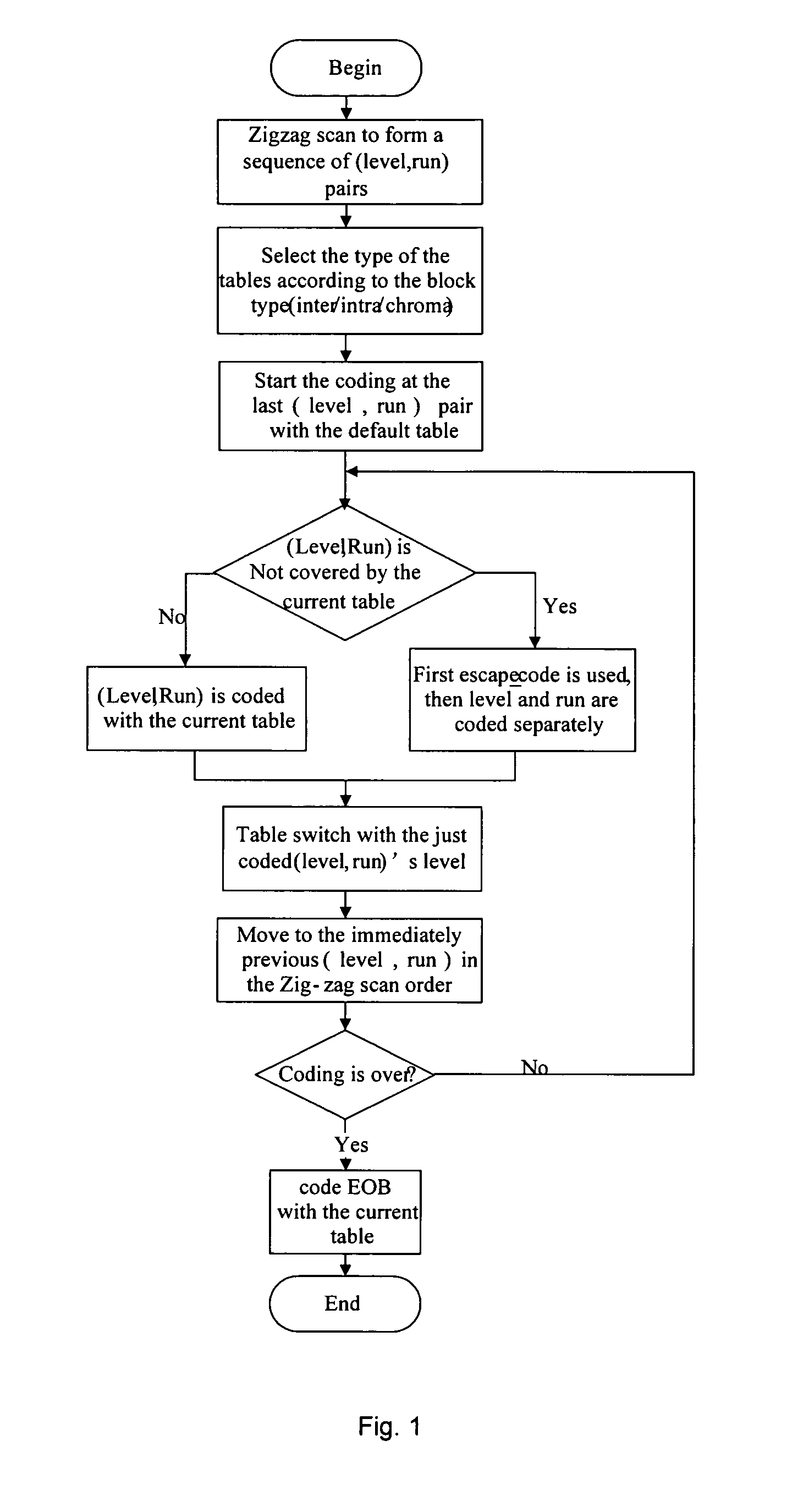

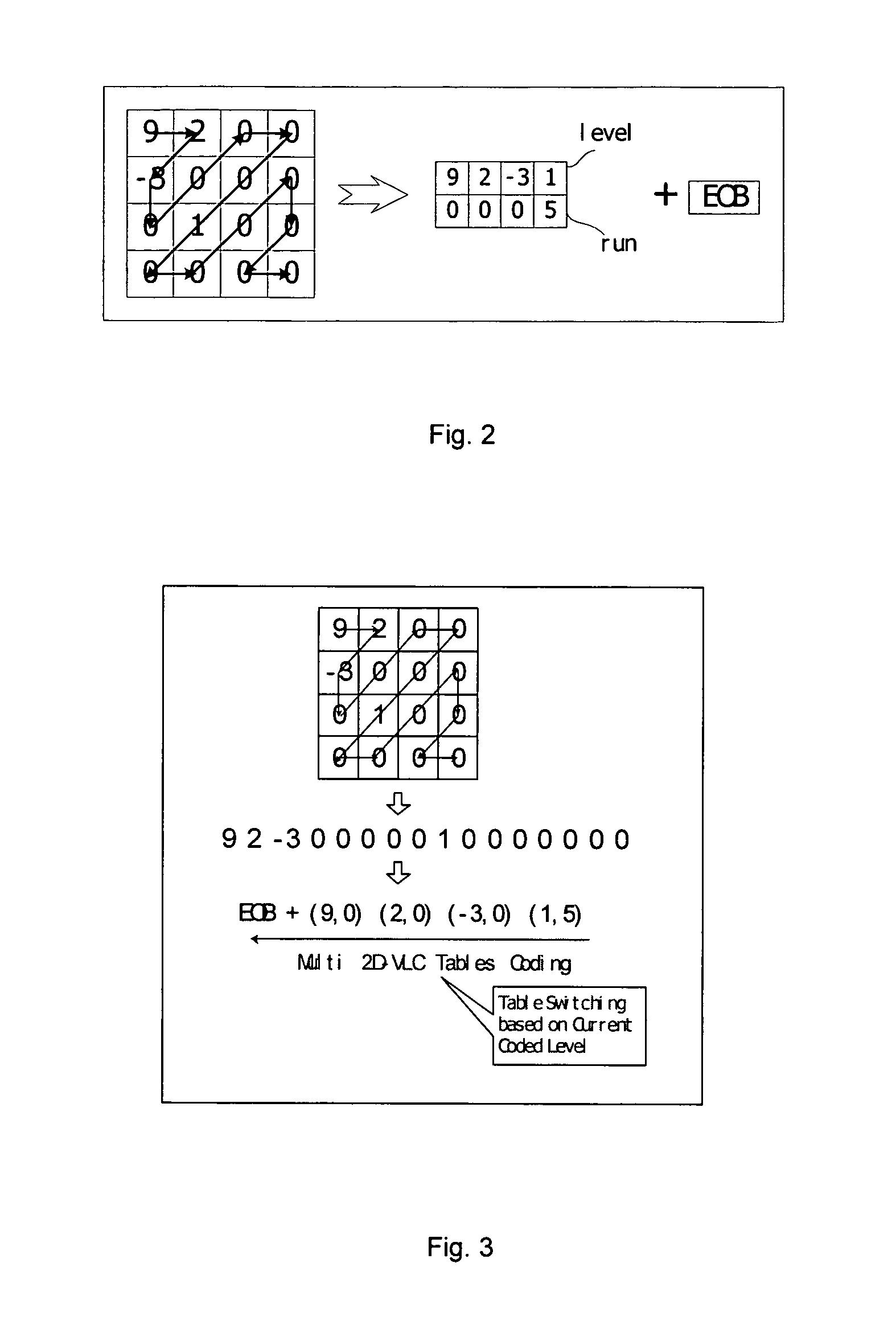

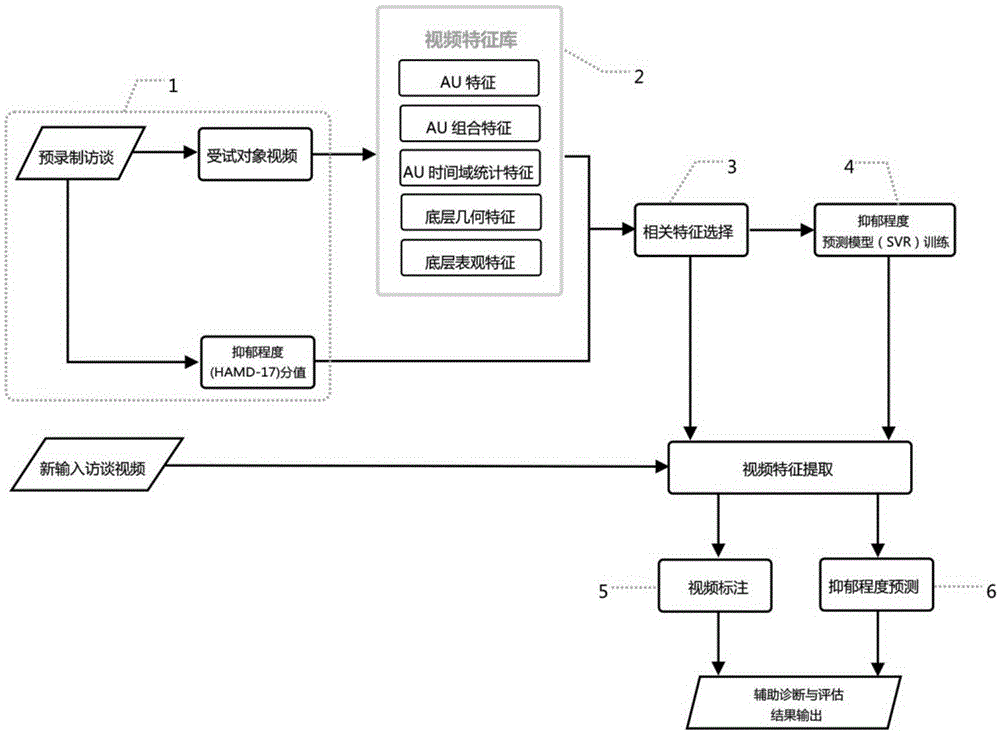

Entropy Coding Method For Coding Video Prediction Residual Coefficients

Status: Active | Publication no.: US20070200737A1 | Tags: No impact to computational implementation complexity; Improve coding efficiency; Picture reproducers using cathode ray tubes; Code conversion; Coding block; Computer architecture

The present invention provides an entropy coding method for coding video prediction residual coefficients, comprising the following steps:

1. Zig-zag scan the coefficients of the block to be coded to form a sequence of (level, run) pairs.
2. Select the type of code table for coding the current image block according to the macroblock type.
3. Code each (level, run) pair in the sequence while switching among multiple tables, coding the pairs in reverse zig-zag scan order.
4. Code an End of Block (EOB) flag with the current code table.

By designing different tables for different block types and different level ranges, the method fully exploits context information and the conditional probability distribution of the symbols. Coding efficiency improves without increasing computational implementation complexity.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
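
The scanning and ordering steps can be sketched as follows: zig-zag scan into (level, run) pairs, code the pairs in reverse scan order, then emit an EOB. The sketch makes three assumptions that are not taken from the patent:

- the block is 4x4;
- `run` counts the zeros that precede each nonzero level;
- table switching uses a hypothetical `choose_table` rule that only moves to a higher table as larger magnitudes appear. It stands in for the patent's context-adaptive tables, and `initial_table` stands in for the macroblock-type selection.

The sketch outputs (table, level, run) symbols rather than actual codewords.

```python
def zigzag_order(n):
    """Return the (row, col) positions of an n x n block in zig-zag scan order."""
    order = []
    for s in range(2 * n - 1):  # s = row + col indexes each anti-diagonal
        diag = [(r, s - r) for r in range(n) if 0 <= s - r < n]
        # Odd diagonals run top-right to bottom-left; even ones the other way.
        order.extend(diag if s % 2 else reversed(diag))
    return order


def run_level_pairs(block):
    """Scan a block into (level, run) pairs; trailing zeros are implied by the EOB."""
    pairs, run = [], 0
    for r, c in zigzag_order(len(block)):
        if block[r][c] == 0:
            run += 1
        else:
            pairs.append((block[r][c], run))
            run = 0
    return pairs


def choose_table(level):
    """Hypothetical context rule: a larger magnitude selects a higher-numbered table."""
    return 1 if abs(level) <= 2 else 2


def encode_block(block, initial_table=0):
    """Emit (table, level, run) symbols in reverse scan order, then ("EOB", table)."""
    symbols, table = [], initial_table
    for level, run in reversed(run_level_pairs(block)):
        symbols.append((table, level, run))
        table = max(table, choose_table(level))  # tables only switch upward (assumed)
    symbols.append(("EOB", table))
    return symbols


block = [[9, 0, -2, 0], [3, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 0]]
print(encode_block(block))
# [(0, 1, 2), (1, -2, 2), (1, 3, 1), (2, 9, 0), ('EOB', 2)]
```

Coding in reverse scan order means the small, high-frequency levels are coded first. A table index that only rises therefore tracks the growing magnitudes toward the DC coefficient.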

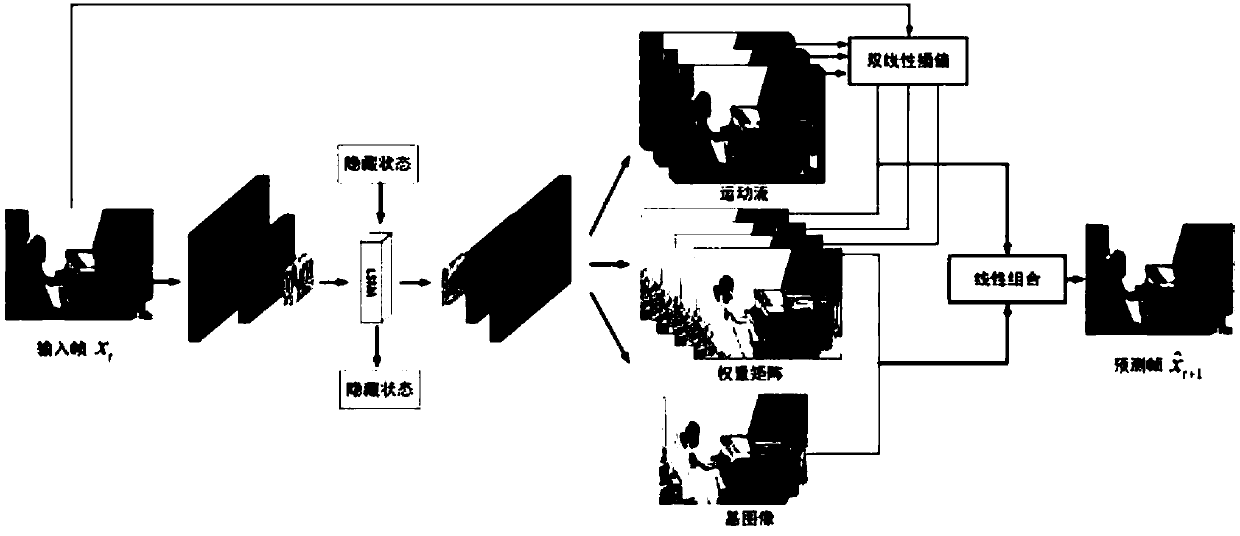

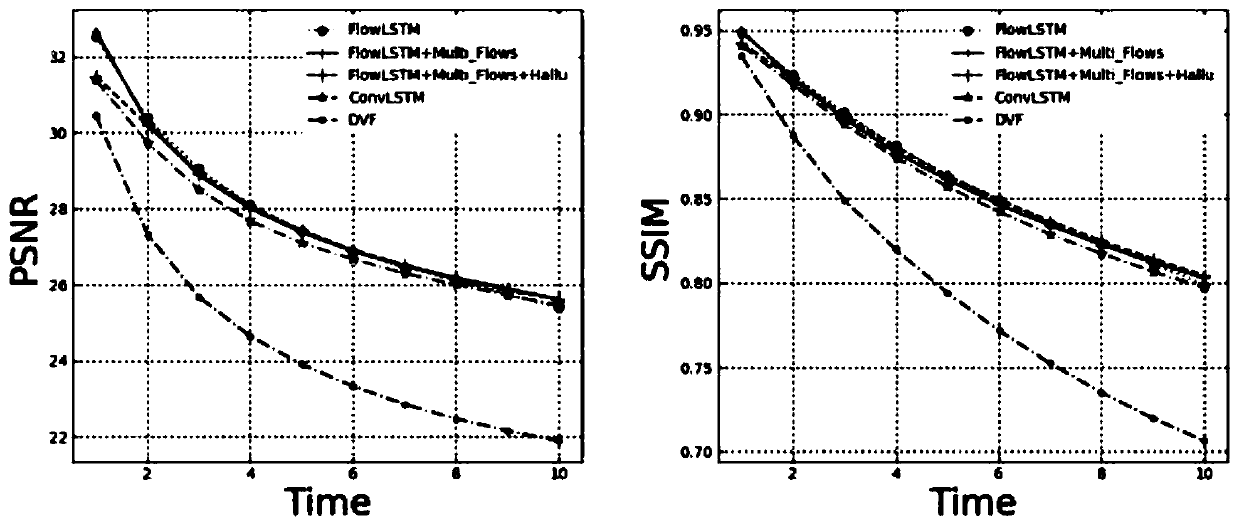

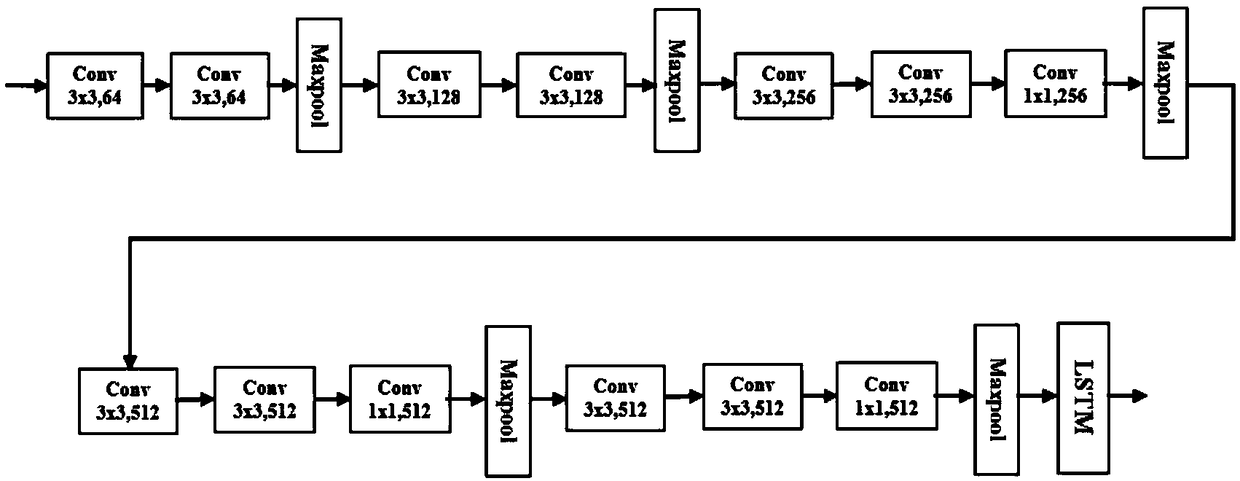

Depth convolution network model of multi-motion streams for video prediction

Status: Active | Publication no.: CN109064507A | Tags: Reduce blur; Increase frame rate; Television system details; Image enhancement; Time information; Model method

The invention discloses a multi-motion-stream deep convolutional network model method for video prediction. It comprises constructing a new convolutional autoencoder network framework that fuses long short-term memory (LSTM) network modules, and it proposes the motion stream as the motion transformation from the input frame to the output frame.

- Multiple motion streams are generated simultaneously to learn finer motion information, which effectively improves prediction.
- A base image is proposed as a pixel-level supplement to the motion stream approach, improving the robustness of the model and the overall prediction quality.
- Bilinear interpolation applies the multiple motion streams to the input frame to obtain multiple motion prediction maps. These maps are then linearly combined with the base image according to weight matrices to obtain the final prediction.

With this technical solution, temporal information in the video sequence is extracted and propagated more fully, enabling longer-term, clearer and more accurate video prediction.

Owner:PEKING UNIV SHENZHEN GRADUATE SCHOOL
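
The final combination step can be sketched in plain Python: warp the input frame with each motion stream by bilinear interpolation, then blend the warped maps with the base image through weight matrices. The sketch rests on these assumptions:

- each motion stream is a per-pixel backward displacement (dx, dy);
- the weights are per-pixel matrices, with the last one applied to the base image;
- the network that would predict the streams, base image and weights is omitted.

All names are hypothetical.

```python
import math


def bilinear_sample(img, x, y):
    """Sample a 2-D image (list of rows) at real-valued (x, y), clamping to the border."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def warp(img, flow):
    """Backward-warp img with one motion stream: output (x, y) reads img at (x + dx, y + dy)."""
    return [[bilinear_sample(img, x + flow[y][x][0], y + flow[y][x][1])
             for x in range(len(img[0]))]
            for y in range(len(img))]


def combine(frame, flows, base, weights):
    """Blend the motion prediction maps and the base image with per-pixel weights.

    weights[k] weights the k-th motion prediction map; weights[-1] weights the base image.
    """
    maps = [warp(frame, flow) for flow in flows] + [base]
    return [[sum(weights[k][y][x] * maps[k][y][x] for k in range(len(maps)))
             for x in range(len(frame[0]))]
            for y in range(len(frame))]
```

With one zero flow, one half-pixel shift and a constant base image, each output pixel is a weighted mix of the unmoved frame, the shifted frame and the base. This mirrors how the abstract describes the base image supplementing the motion streams.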

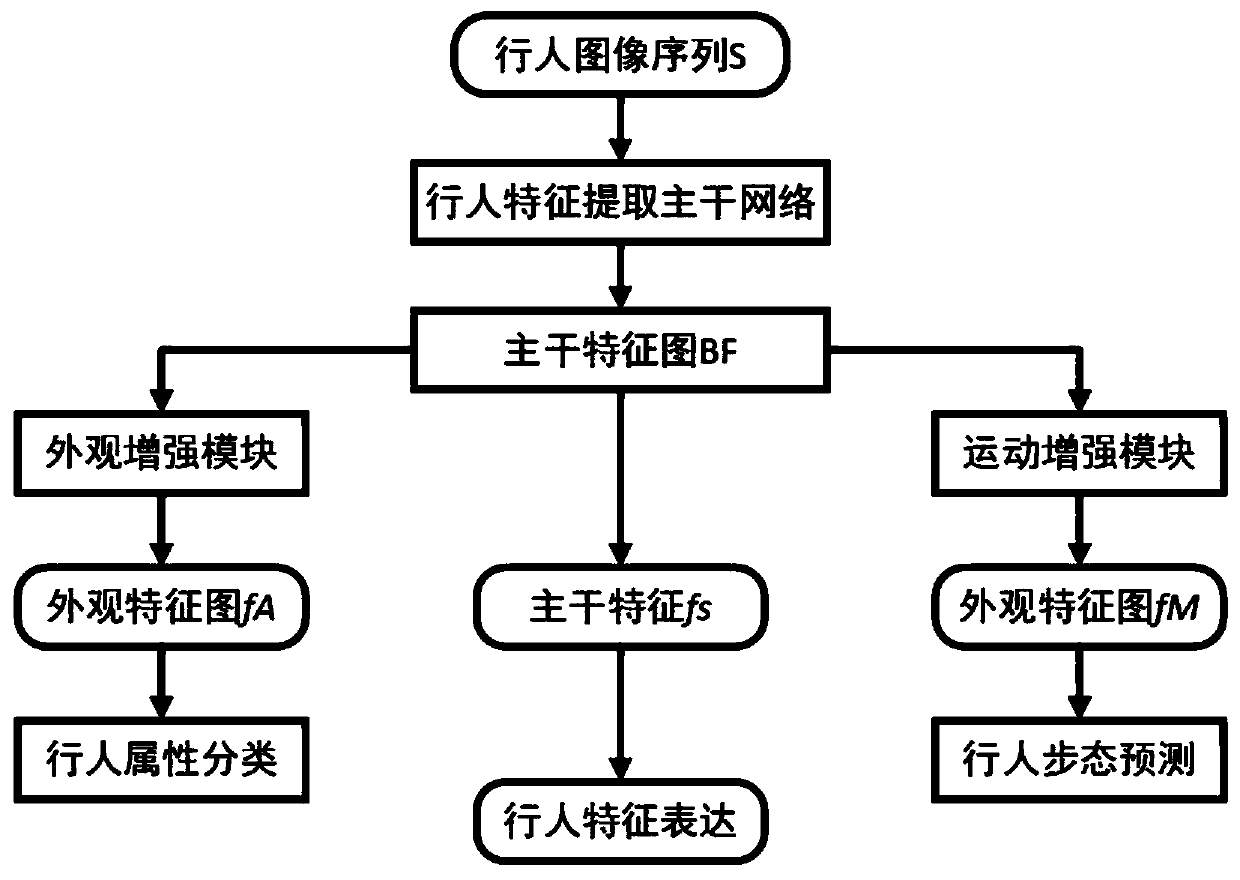

Pedestrian re-identification method based on video appearance and motion information synchronous enhancement

Status: Active | Publication no.: CN111259786A | Tags: Improve re-identification performance; Improve the extraction effect; Biometric pattern recognition; Neural architectures; Feature extraction; Video prediction

The invention discloses a pedestrian re-identification method based on synchronous enhancement of video appearance and motion information. During training, pedestrian appearance and motion information in a backbone network are enhanced by two modules:

- The appearance enhancement module (AEM) uses an attribute recognition model, trained on an existing large-scale pedestrian attribute data set, to provide attribute pseudo-labels for a large-scale pedestrian video data set. It enhances appearance and semantic information through attribute learning.
- The motion enhancement module (MEM) predicts pedestrian gait information with a video prediction model. It strengthens identity-discriminative gait features in the pedestrian feature extraction backbone network, improving re-identification performance.

In practical application, only the pedestrian feature extraction backbone network needs to be retained. Higher re-identification performance is therefore obtained without increasing network complexity or model size, and the enhanced backbone features achieve higher accuracy in video-based pedestrian re-identification.

Owner:ZHEJIANG UNIV

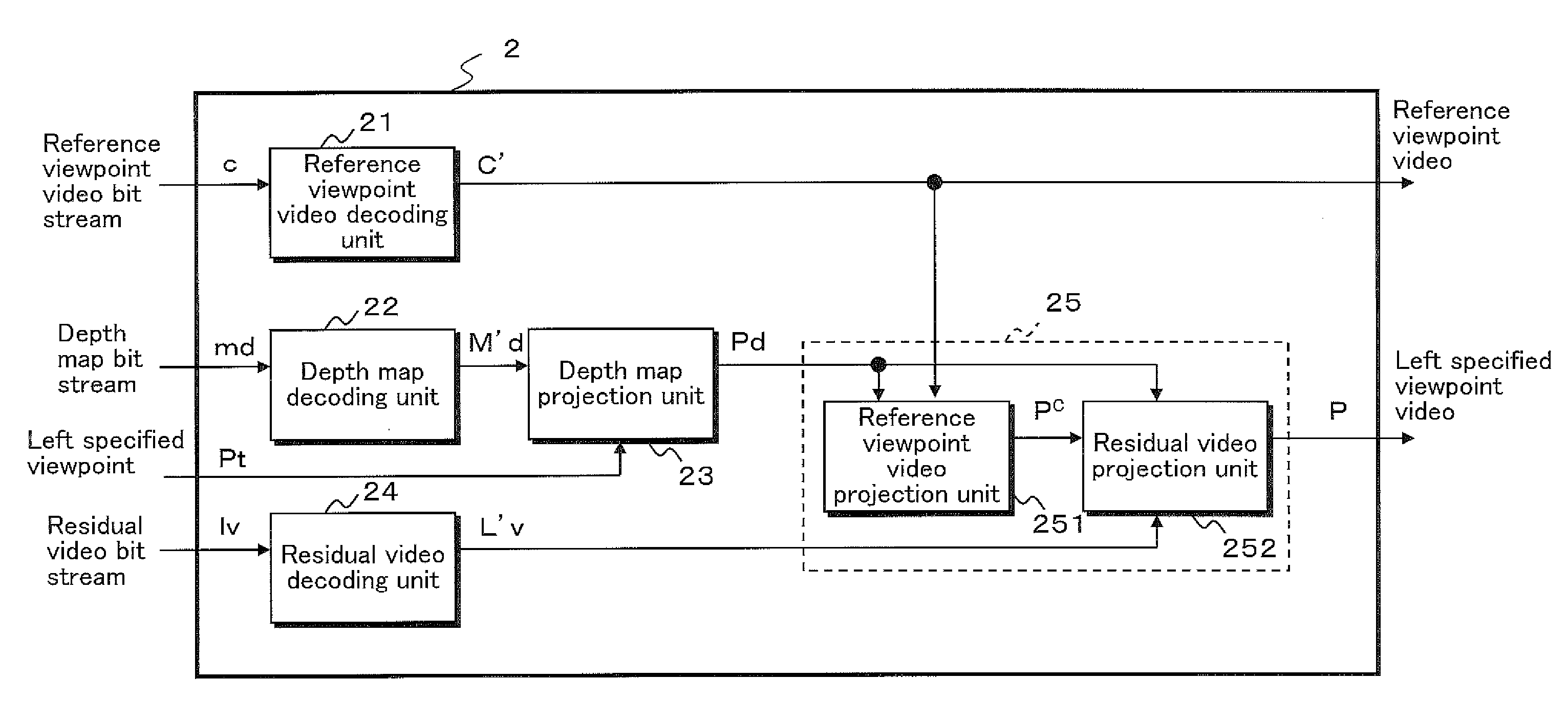

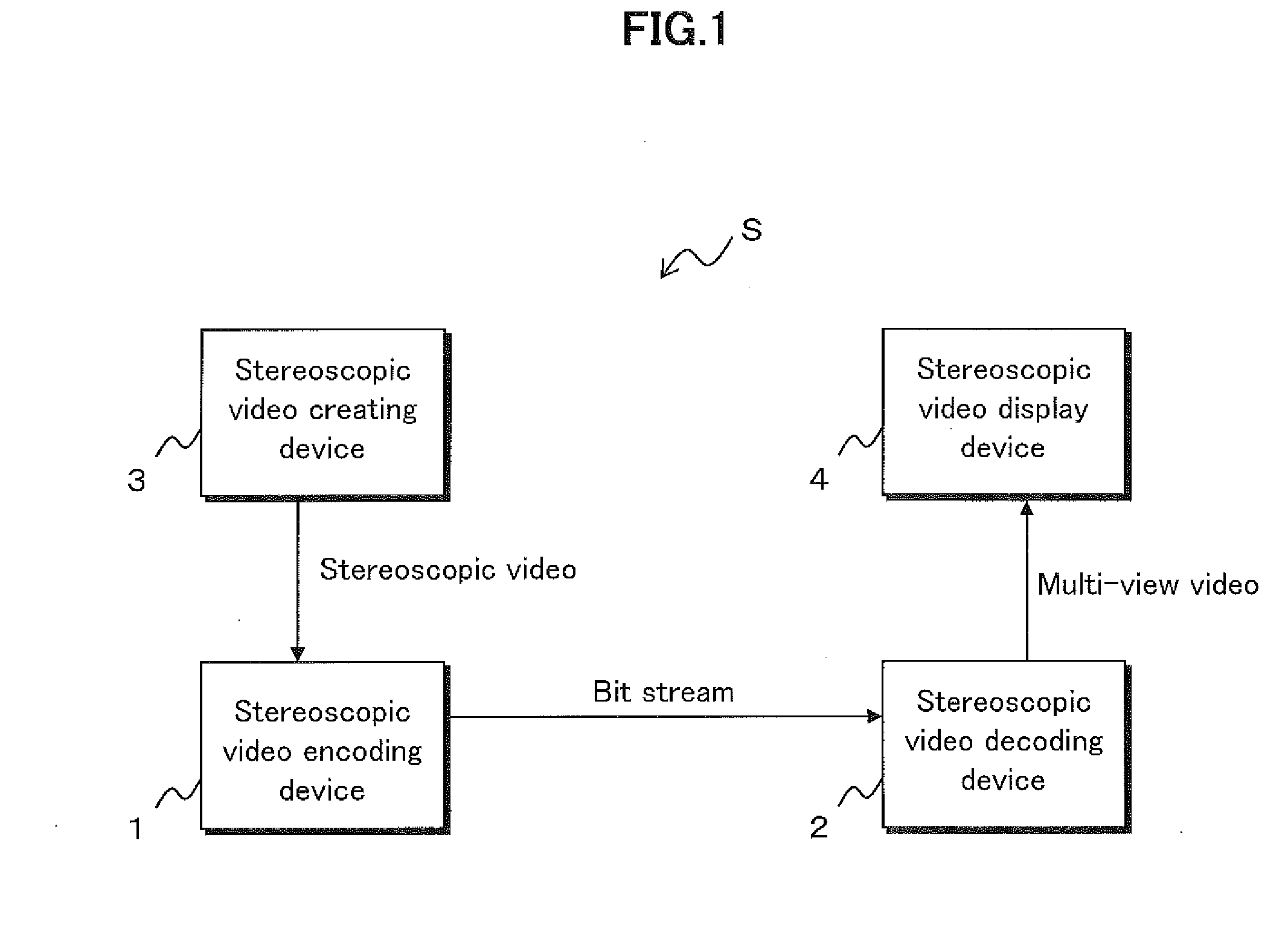

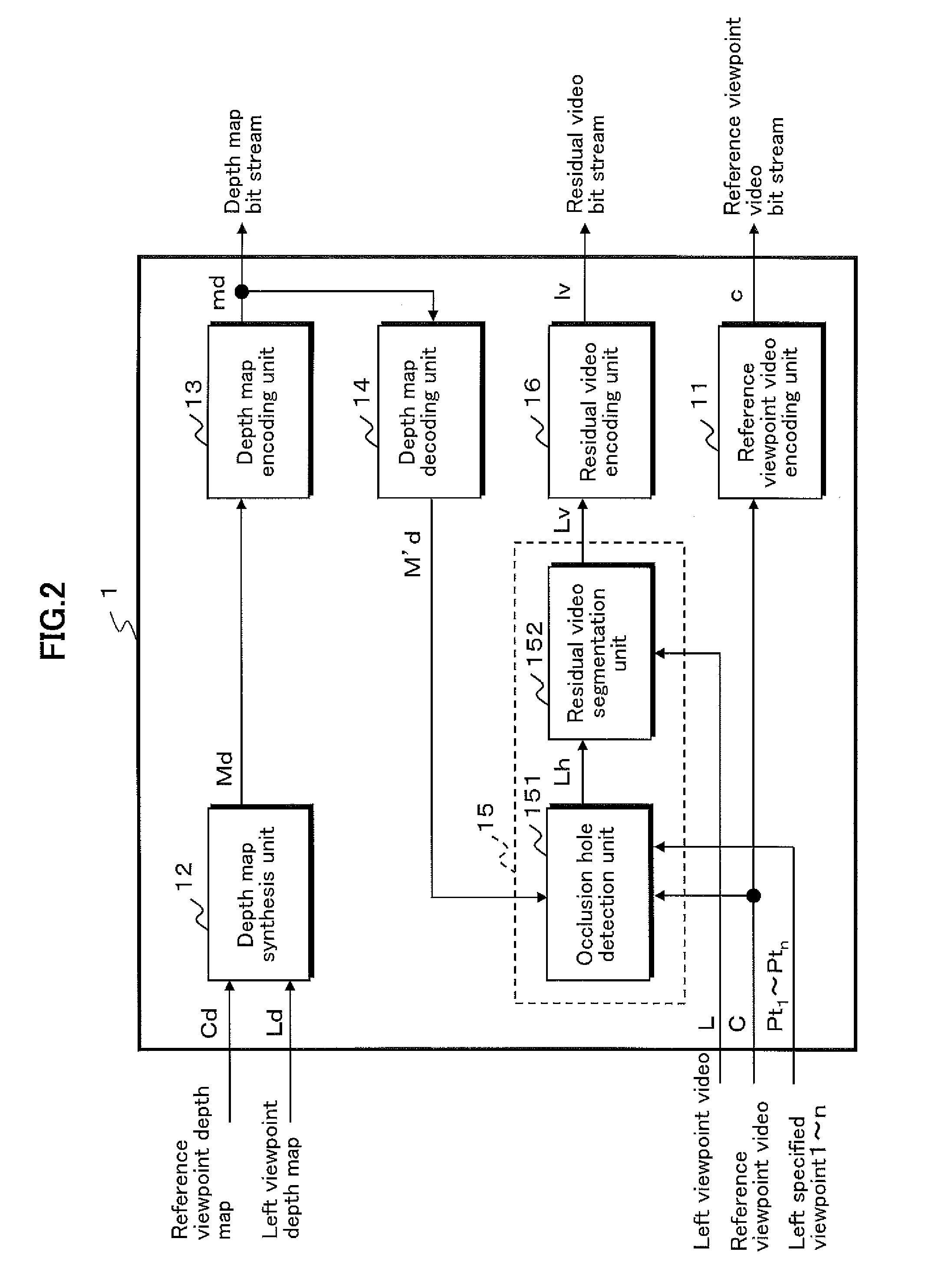

Stereoscopic video coding device, stereoscopic video decoding device, stereoscopic video coding method, stereoscopic video decoding method, stereoscopic video coding program, and stereoscopic video decoding program

Status: Inactive | Publication no.: US20140376635A1 | Tags: Improve efficiency; Less overlooking; Color television with pulse code modulation; Color television with bandwidth reduction; Stereoscopic video; Viewpoints

A stereoscopic video coding device receives a reference viewpoint video and a left viewpoint video, together with a reference viewpoint depth map and a left viewpoint depth map that give the depth values of the respective viewpoint videos.

- A depth map synthesis unit of the device creates a left synthesized depth map at an intermediate viewpoint from the two depth maps.
- A projected video prediction unit creates a left residual video. It does so by extracting from the left viewpoint video the pixels in the area that becomes an occlusion hole when the reference viewpoint video is projected to another viewpoint.

The device encodes and transmits the reference viewpoint video, the left synthesized depth map, and the left residual video.

Owner:NAT INST OF INFORMATION & COMM TECH

Method and device for video predictive encoding

Status: Active | Publication no.: US8654845B2 | Tags: Improve compression efficiency; Reduce overhead; Color television with pulse code modulation; Pulse modulation television signal transmission; Computer hardware; Video prediction

Owner:TENCENT TECH (SHENZHEN) CO LTD +1

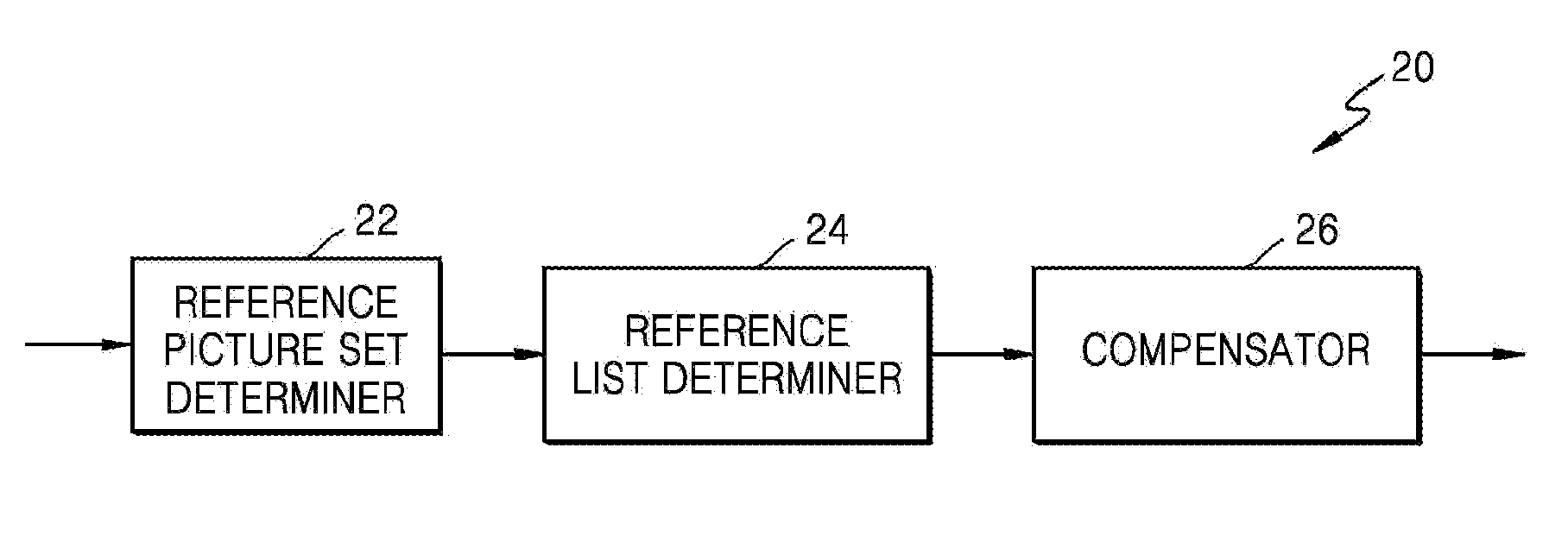

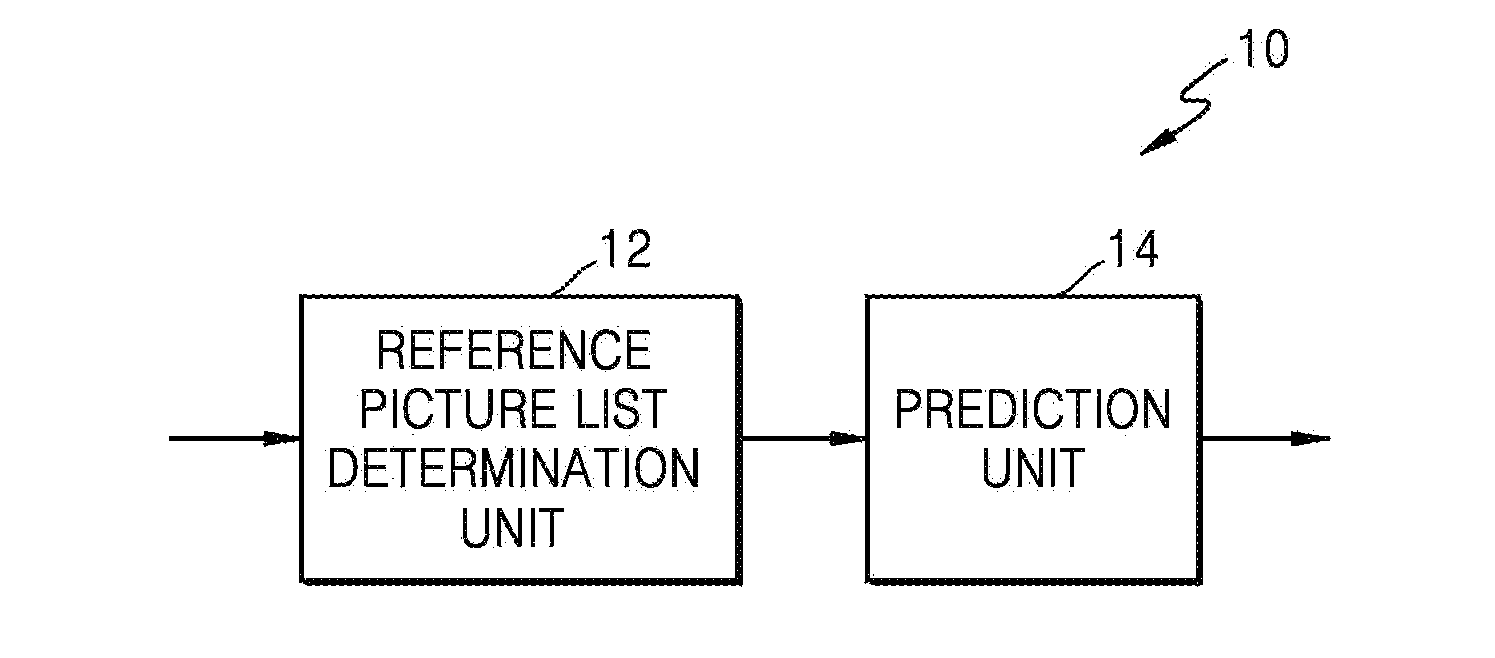

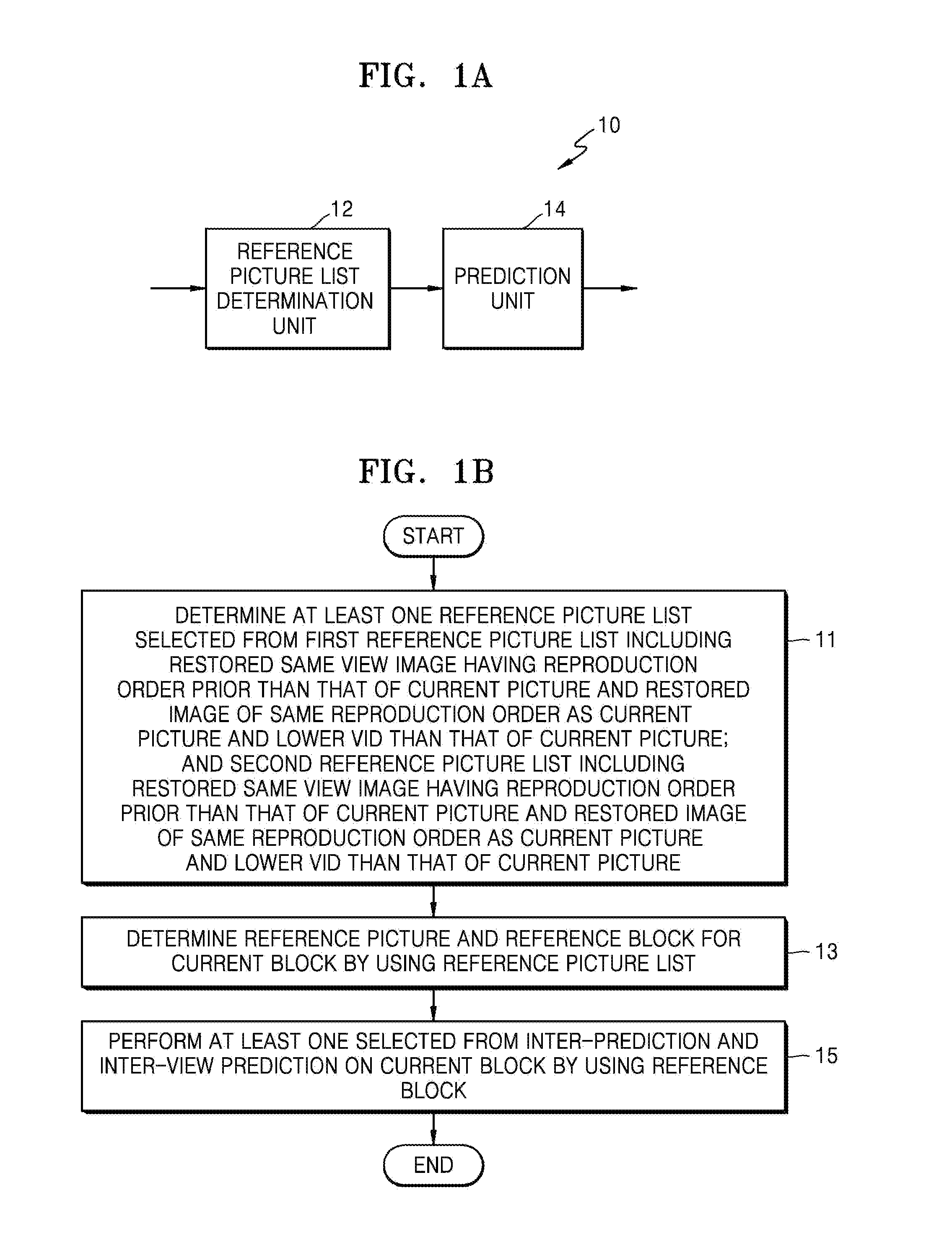

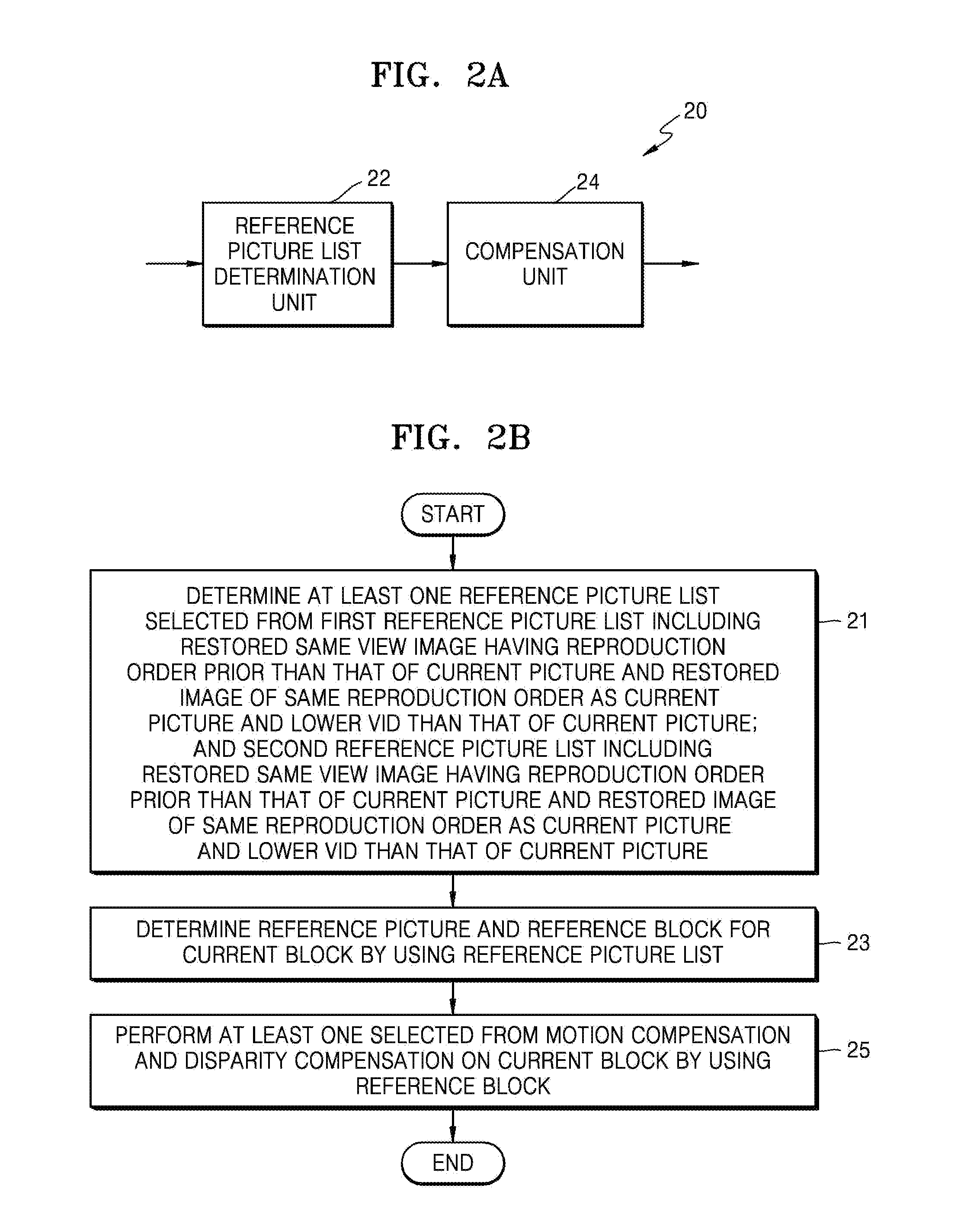

Method for encoding multiview video using reference list for multiview video prediction and device therefor, and method for decoding multiview video using reference list for multiview video prediction and device therefor

Status: Inactive | Publication no.: US20150117526A1 | Tags: Color television with pulse code modulation; Color television with bandwidth reduction; Viewpoints; Computer graphics (images)

Methods are provided for encoding and decoding a multiview video by performing inter-prediction and inter-view prediction on each view image of the multiview video. A method of prediction-encoding the multiview video includes the following steps:

- determining at least one reference picture list from among first and second reference picture lists, which respectively include restored images having the viewpoint of the current picture (for inter-prediction of the current picture) and restored images for inter-view prediction;
- determining at least one reference picture and reference block for a current block of the current picture by using the determined reference picture list;
- performing at least one of inter-prediction and inter-view prediction on the current block by using the reference block.

Owner:SAMSUNG ELECTRONICS CO LTD

Entropy coding method for coding video prediction residual coefficients

Status: Active | Publication no.: US7974343B2 | Tags: Improve coding efficiency; Reduce complexity; Picture reproducers using cathode ray tubes; Code conversion; Computer architecture; Code table

The present invention provides an entropy coding method for coding video prediction residual coefficients, comprising the following steps:

1. Zig-zag scan the coefficients of the block to be coded to form a sequence of (level, run) pairs.
2. Select the type of code table for coding the current image block according to the macroblock type.
3. Code each (level, run) pair in the sequence while switching among multiple tables, coding the pairs in reverse zig-zag scan order.
4. Code an End of Block (EOB) flag with the current code table.

By designing different tables for different block types and different level ranges, the method fully exploits context information and the conditional probability distribution of the symbols. Coding efficiency improves without increasing computational implementation complexity.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

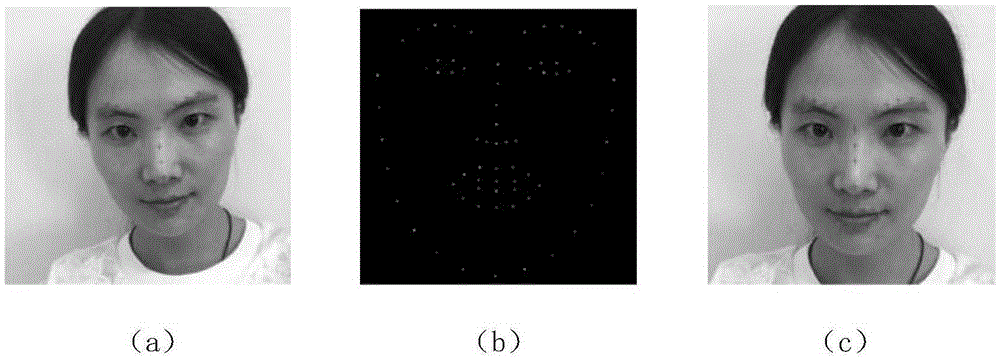

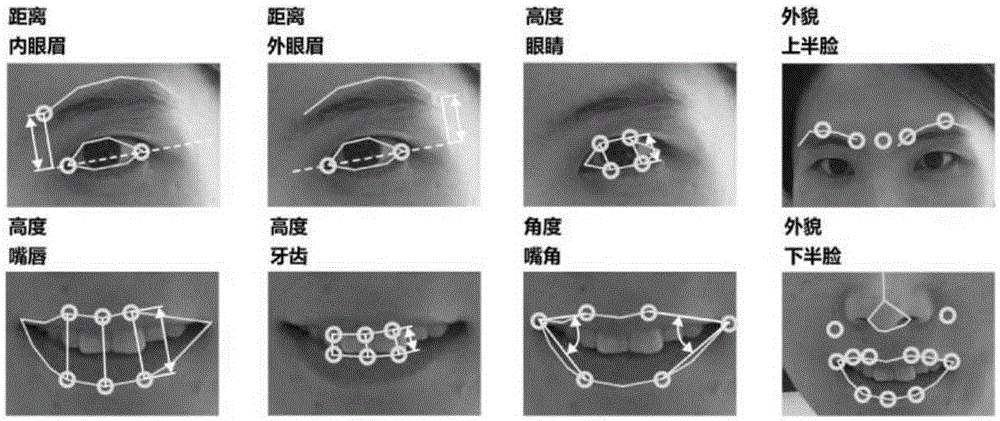

Facial expression analysis-based depression degree automatic evaluation system

Status: Active | Publication no.: CN105279380A | Tags: No intrusion; Evaluation is objective and effective; Character and pattern recognition; Special data processing applications; Feature extraction; Video annotation

The invention discloses an automatic depression-degree evaluation system based on facial expression analysis. The system includes the following modules:

- a data acquisition module;
- a preprocessing and feature extraction module;
- a related feature extraction module;
- a prediction model training module;
- a new-video annotation module;
- a new-video prediction module.

The system is fully automated and non-invasive, does not require long-term cooperation from the subject, and can operate for long periods. It provides objective evaluation criteria, so objective and effective evaluation can be achieved without relying on subjective experience. The system is not limited to isolated analysis of individual subjects.

Owner:SOUTHEAST UNIV

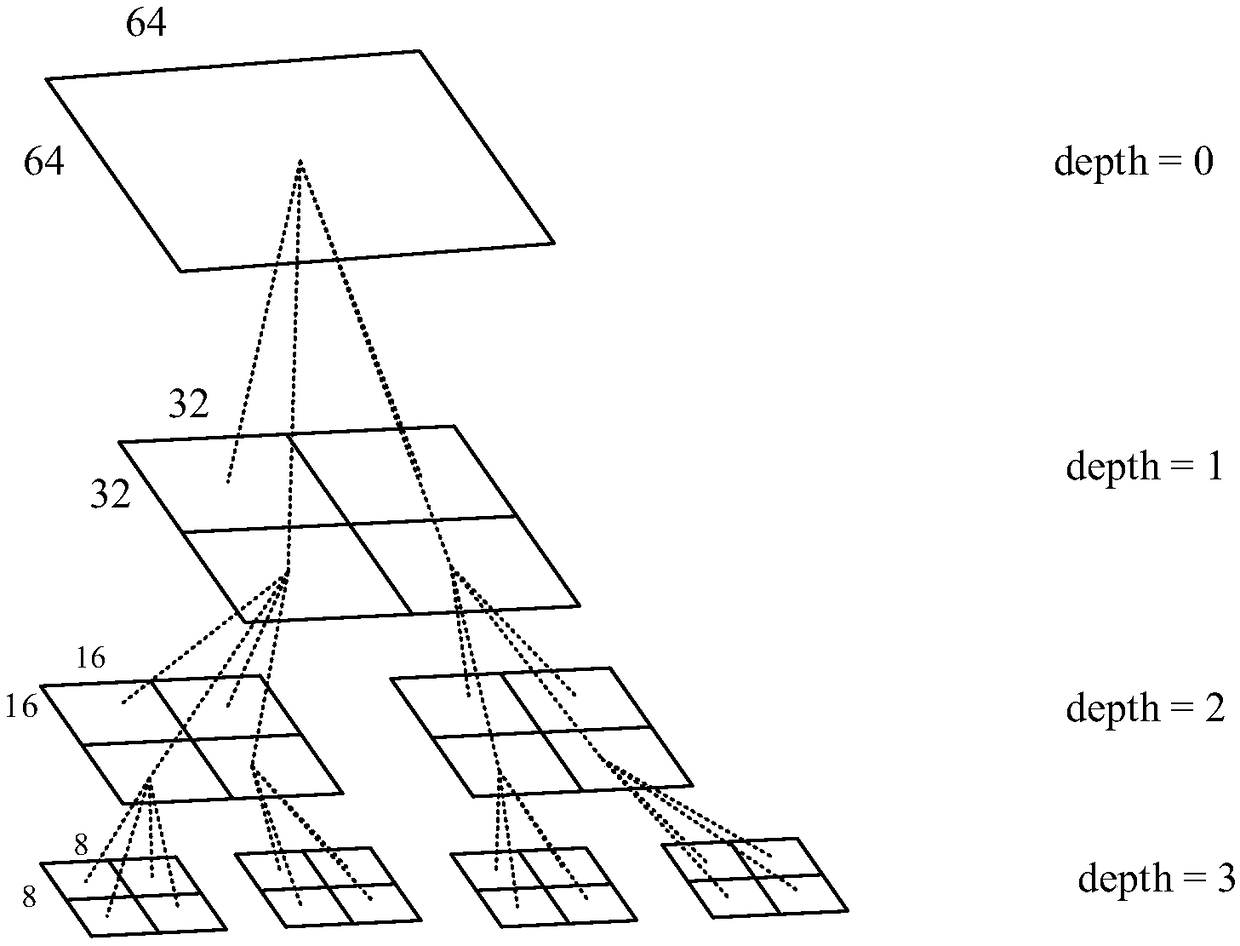

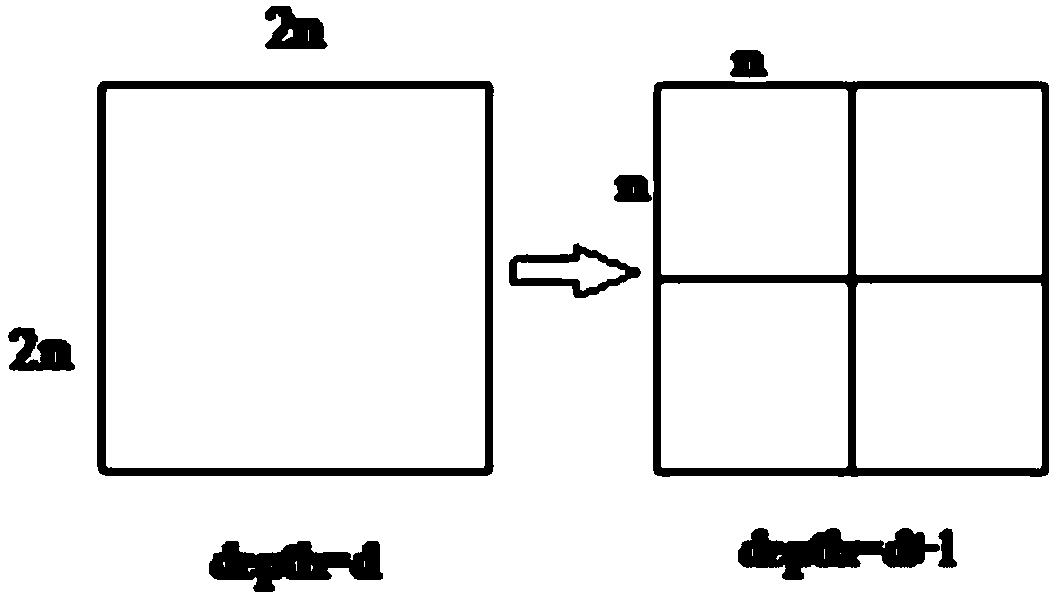

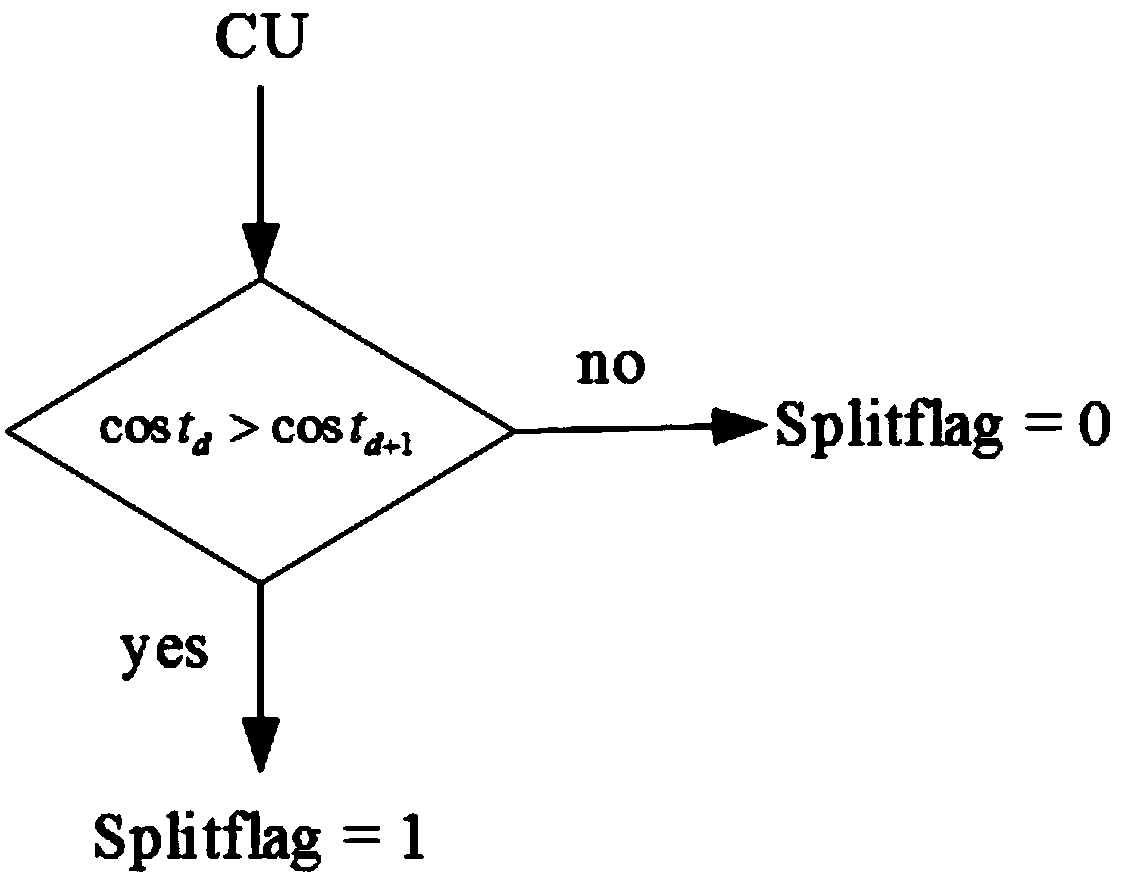

Video predictive encoding method based on neural networks

Status: Active | Publication no.: CN108924558A | Tags: Improve coding efficiency; Reduce coding complexity; Character and pattern recognition; Digital video signal modification; Unit size; Coding tree unit

The invention discloses a video predictive encoding method based on neural networks, in the technical field of video compression coding. The method comprises the following steps:

- S1: Input a 64*64 coding tree unit and make a coarse decision with a Bayes classifier on whether to use SKIP mode. If so, the current coding tree unit is not partitioned and its coding unit size decision is obtained directly; otherwise, execute S2.
- S2: Make coding unit partition decisions at the depths of the coding tree unit in parallel with three neural networks to obtain the coding unit partition result.
- S3: Obtain the coding unit size decision from the partition result of S2.
- S4: Perform predictive encoding according to the coding unit size decision from S1 or S3 to obtain the encoding result.

While maintaining coding performance, the method can greatly reduce encoding complexity and improve encoding efficiency.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
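
Steps S1 to S3 can be sketched as a quadtree decision over a 64x64 coding tree unit. In this sketch the Bayes classifier and the three per-depth neural networks are passed in as plain callables. These are hypothetical interfaces, not the patent's models. The depths are visited sequentially rather than in parallel, and the predictive encoding of S4 is left out.

```python
from typing import Callable, List, Sequence, Tuple

CU = Tuple[int, int, int]  # (x, y, size) of one coding unit inside the CTU


def decide_cu_sizes(ctu, is_skip: Callable, split_nets: Sequence[Callable]) -> List[CU]:
    """Return the coding units chosen for a 64x64 CTU.

    is_skip(ctu) stands in for the Bayes classifier (S1); split_nets[d](ctu, x, y, size)
    stands in for the depth-d neural network (S2). Both interfaces are hypothetical.
    """
    # S1: coarse SKIP decision; a skipped CTU stays a single 64x64 CU.
    if is_skip(ctu):
        return [(0, 0, 64)]

    cus: List[CU] = []

    def visit(x, y, size, depth):
        # S2: ask this depth's predictor whether to split 64->32, 32->16 or 16->8.
        if depth < len(split_nets) and split_nets[depth](ctu, x, y, size):
            half = size // 2
            for dy in (0, half):
                for dx in (0, half):
                    visit(x + dx, y + dy, half, depth + 1)
        else:
            # S3: an unsplit block becomes part of the final CU size decision.
            cus.append((x, y, size))

    visit(0, 0, 64, 0)
    return cus
```

For example, suppose the depth-0 predictor always splits, the depth-1 predictor splits only the top-left 32x32 block, and the depth-2 predictor never splits. The result is then four 16x16 CUs in the top-left quadrant and three 32x32 CUs elsewhere.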

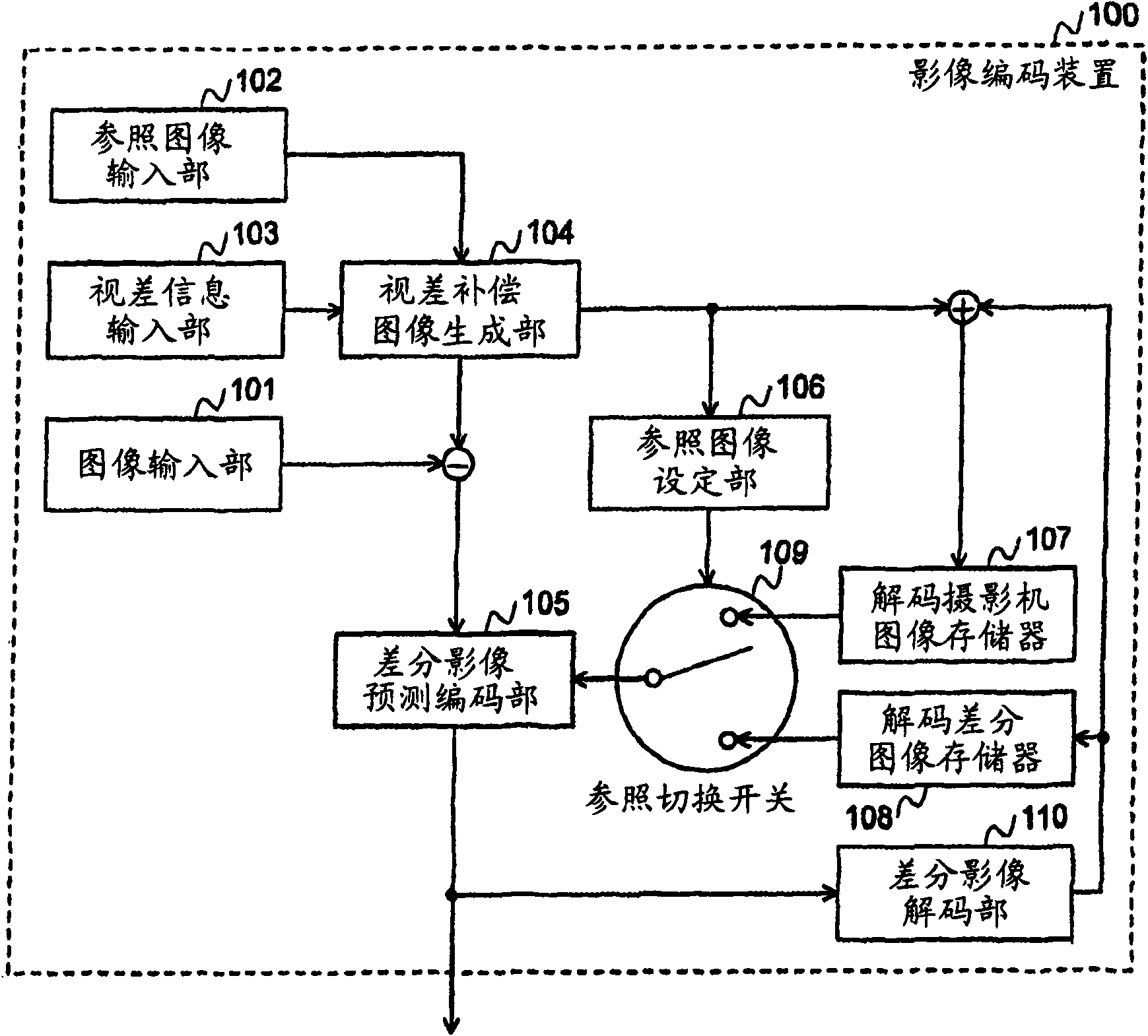

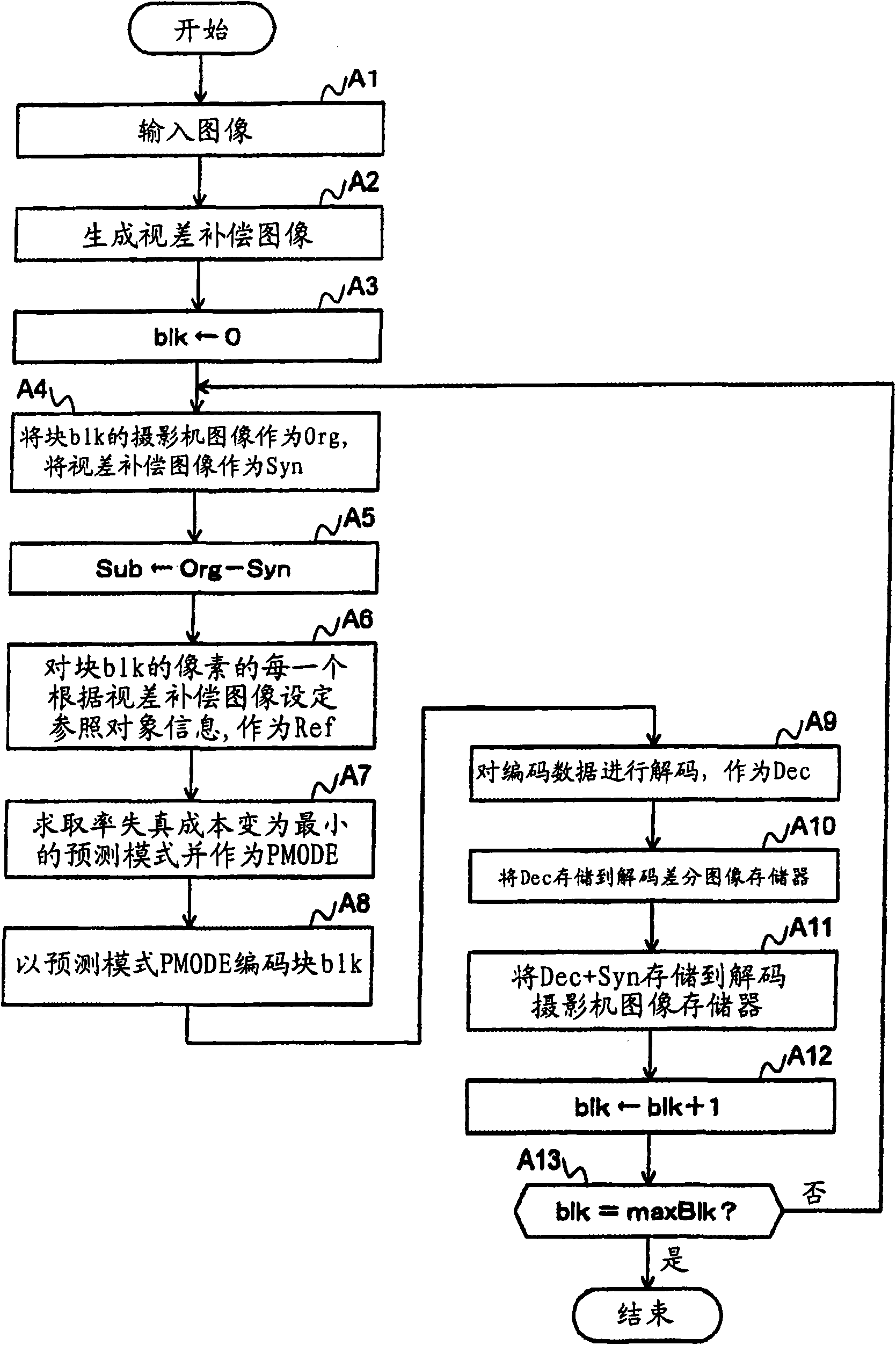

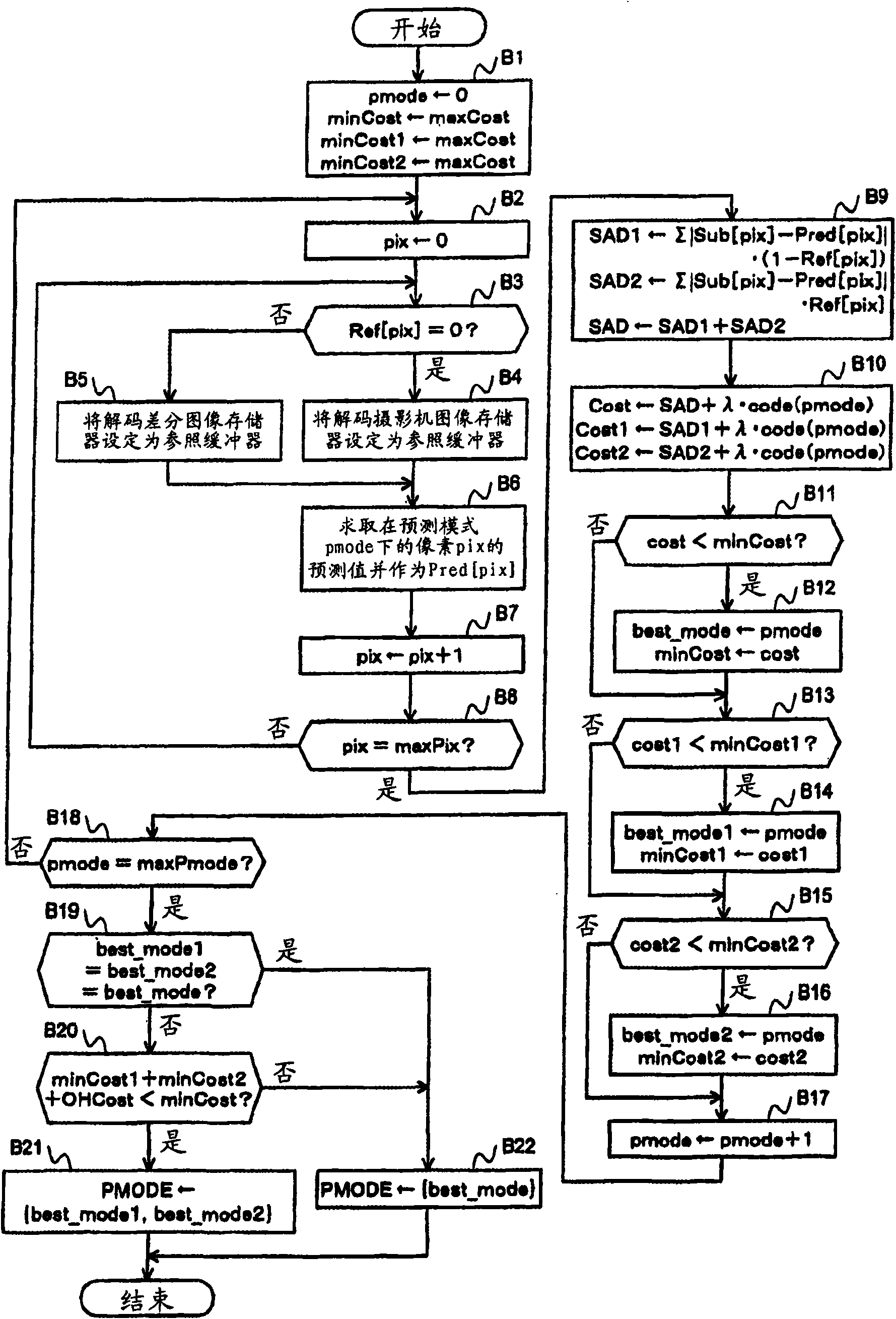

Video encoding method, decoding method, device thereof, program thereof, and storage medium containing the program

Status: Active | Publication no.: CN101563930A | Tags: Reduce residual; Pulse modulation television signal transmission; Digital video signal modification; Camera image; Parallax

A video encoding method performs video prediction between cameras by using parallax information between a reference camera image which has been already encoded and a camera image to be encoded and corresponding to the reference camera image, so as to create a parallax-compensated image and encode a differential image between the camera image to be encoded and the parallax-compensated image. For each predetermined division unit of the differential image, depending on whether the parallax-compensated image at the same position is present or absent, i.e., the pixel value of the parallax-compensated image is valid or not, a decoded differential image group obtained by decoding a differential image between the already encoded camera image and the parallax-compensated image or a decoded camera image group obtained by decoding the already encoded camera image is set as a reference object.

Owner:NIPPON TELEGRAPH & TELEPHONE CORP

Video predictive coding apparatus and method

Status: Inactive | Publication no.: US20070086663A1 | Tags: Improve coding efficiency; Pulse modulation television signal transmission; Image coding; Computer architecture; Video prediction

A predictive coding technique for a video coding system that improves coding efficiency by predictively coding the DC coefficient of a block to be coded using the DC gradients of a plurality of previously coded neighboring blocks. The predictive coefficient is selected according to the difference between the quantized DC gradients (coefficients) of the neighboring blocks, and the DC coefficient of the block to be coded is then predictively coded with the selected predictive coefficient.

Owner:PANTECH & CURITEL COMM INC

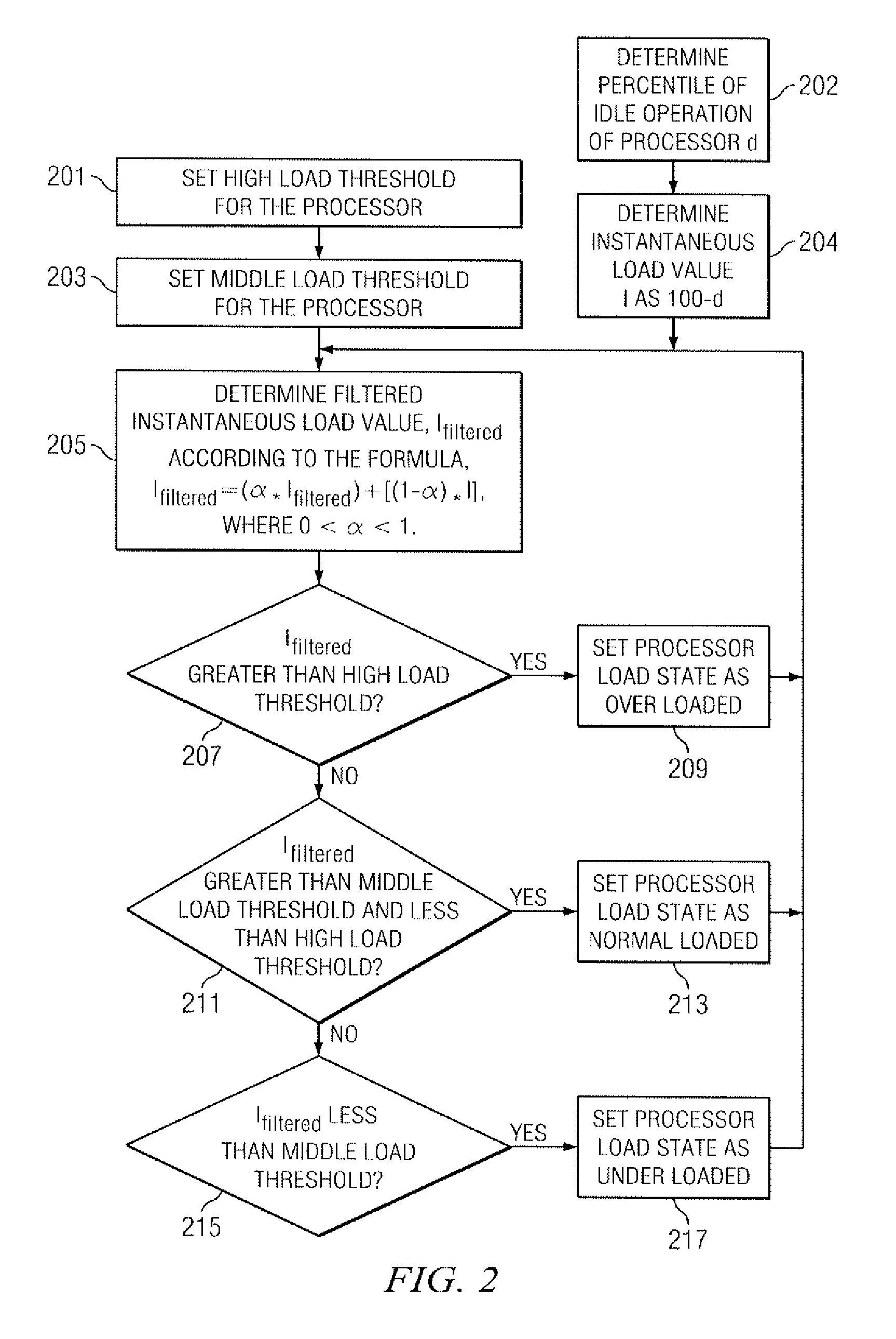

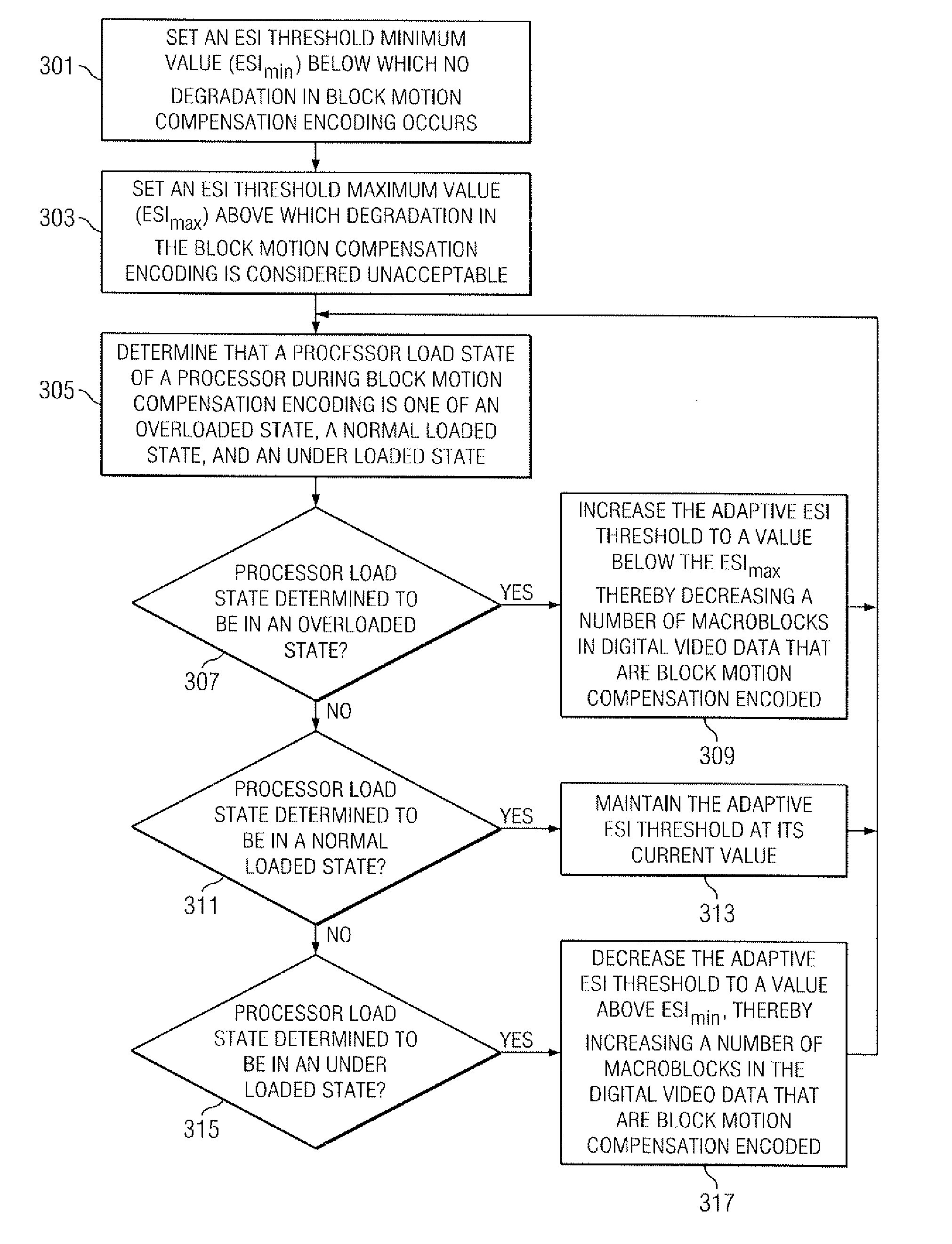

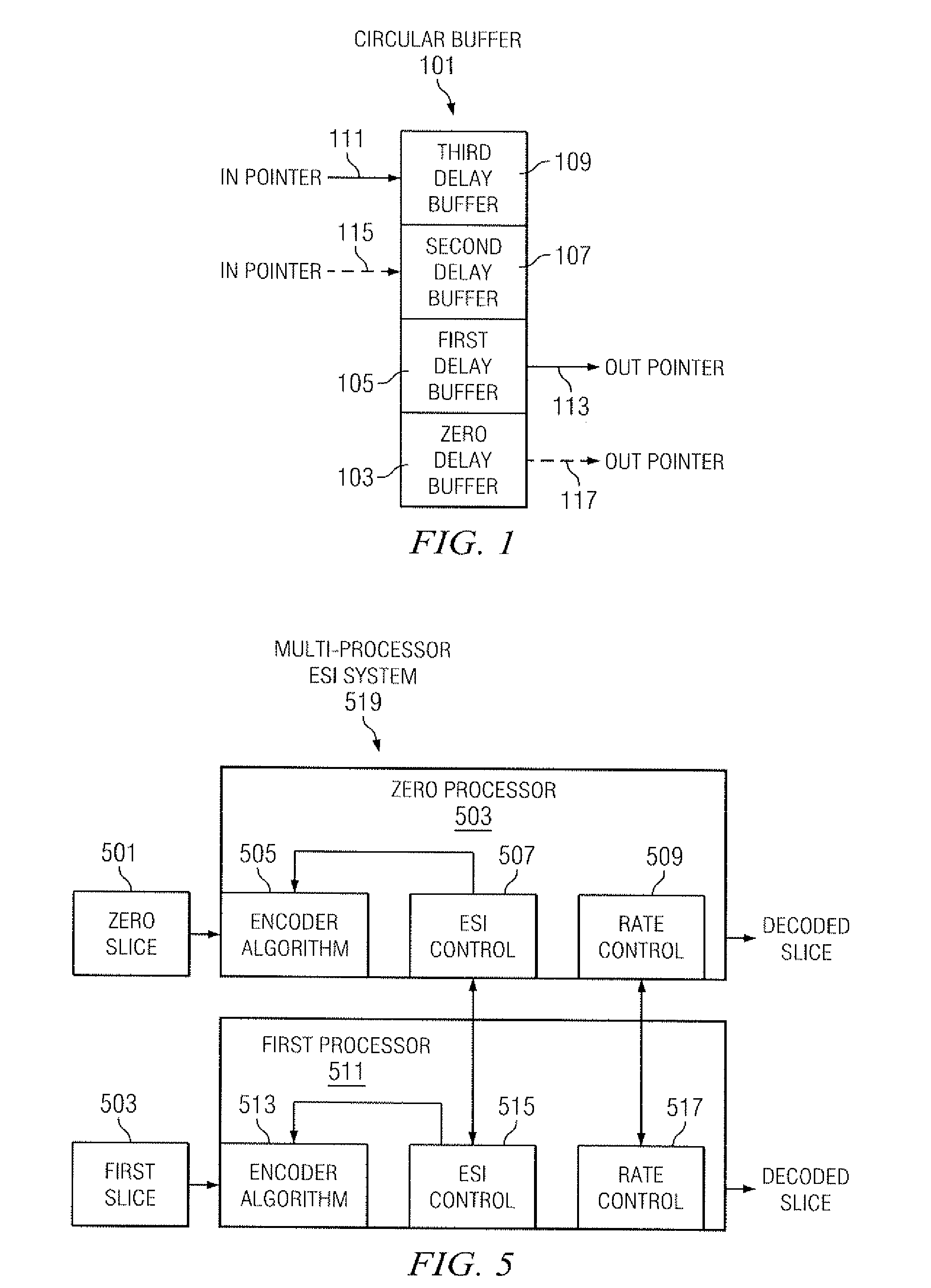

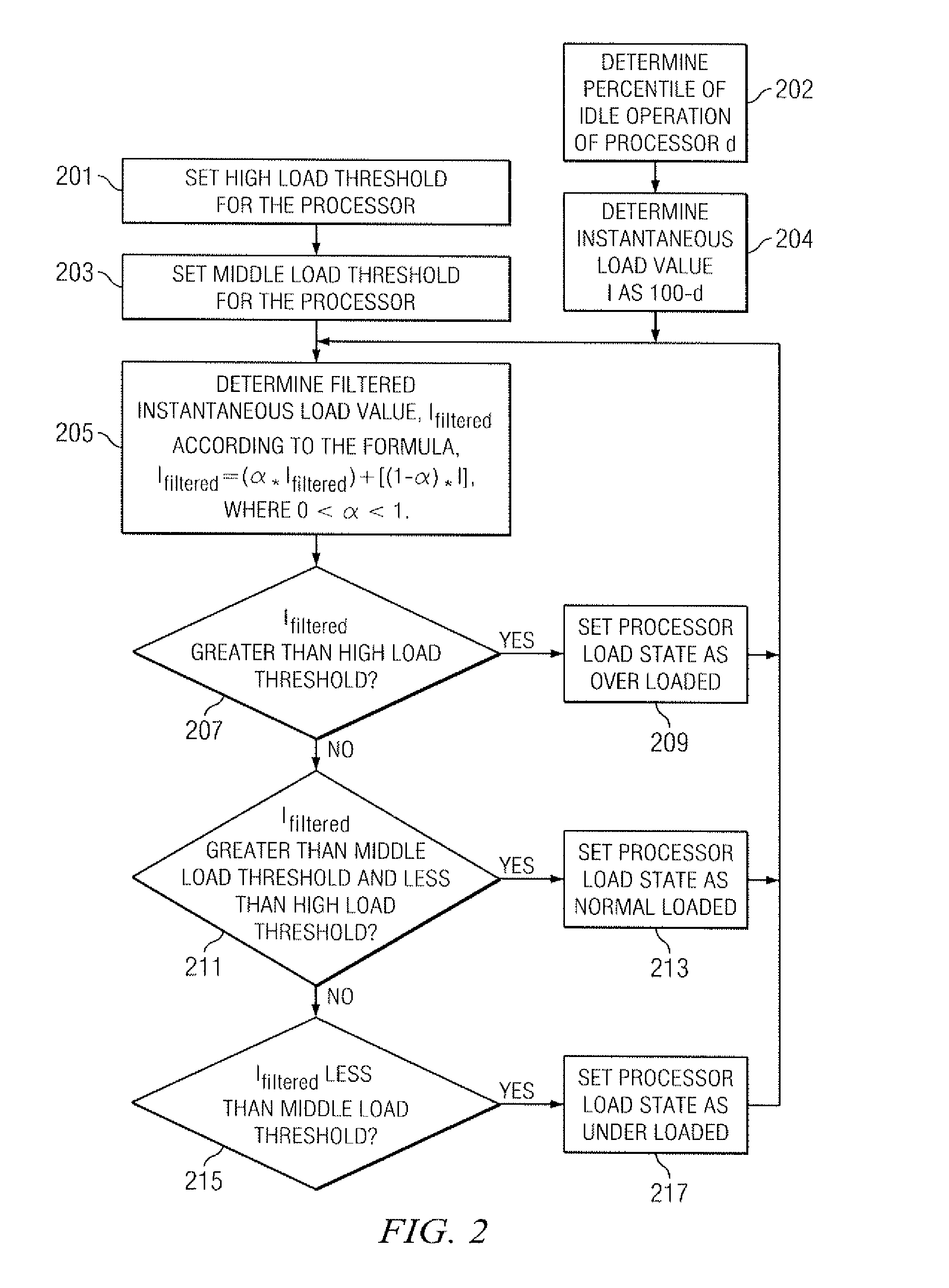

Adaptive real-time video prediction mode method and computer-readable medium and processor for storage and execution thereof

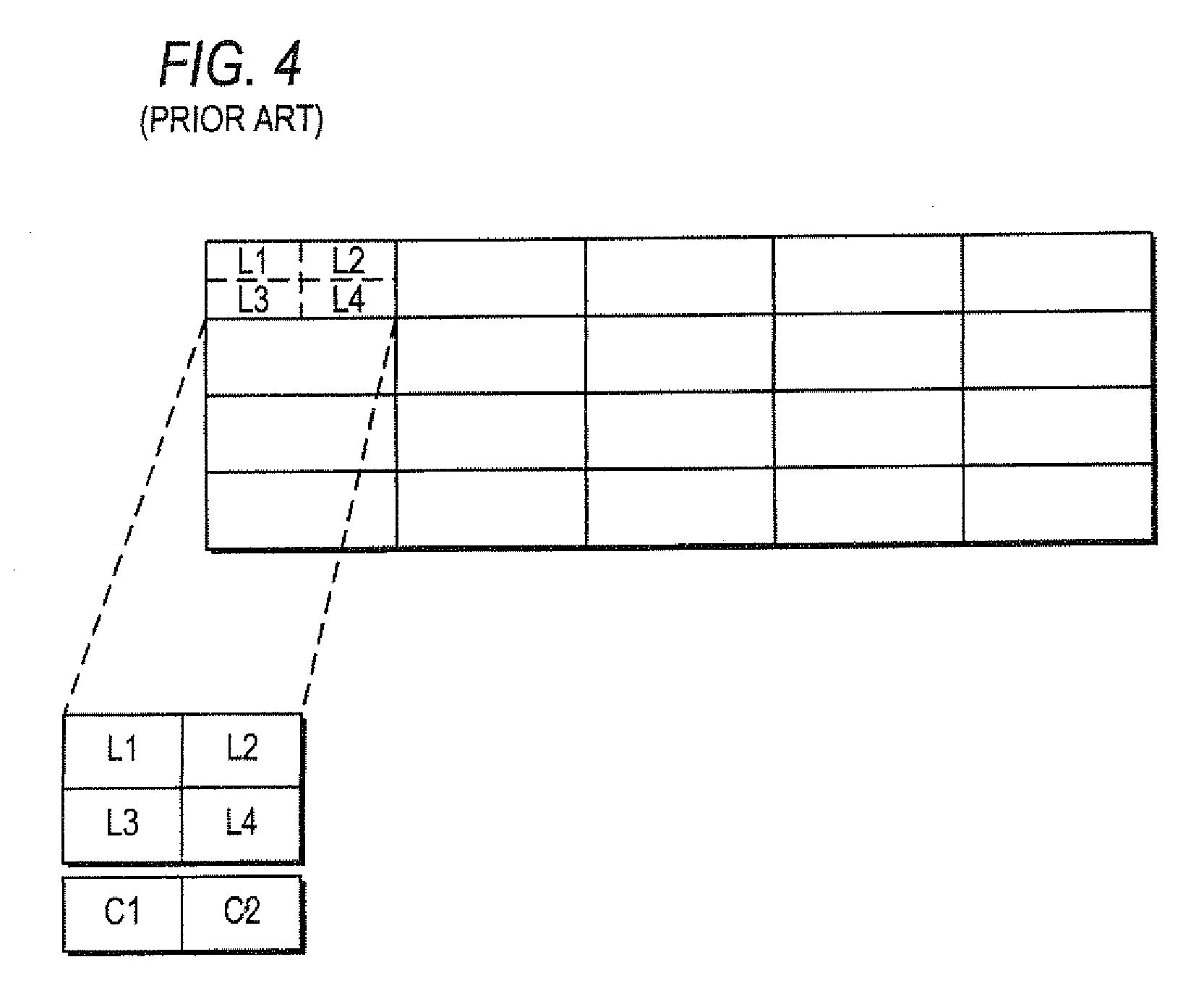

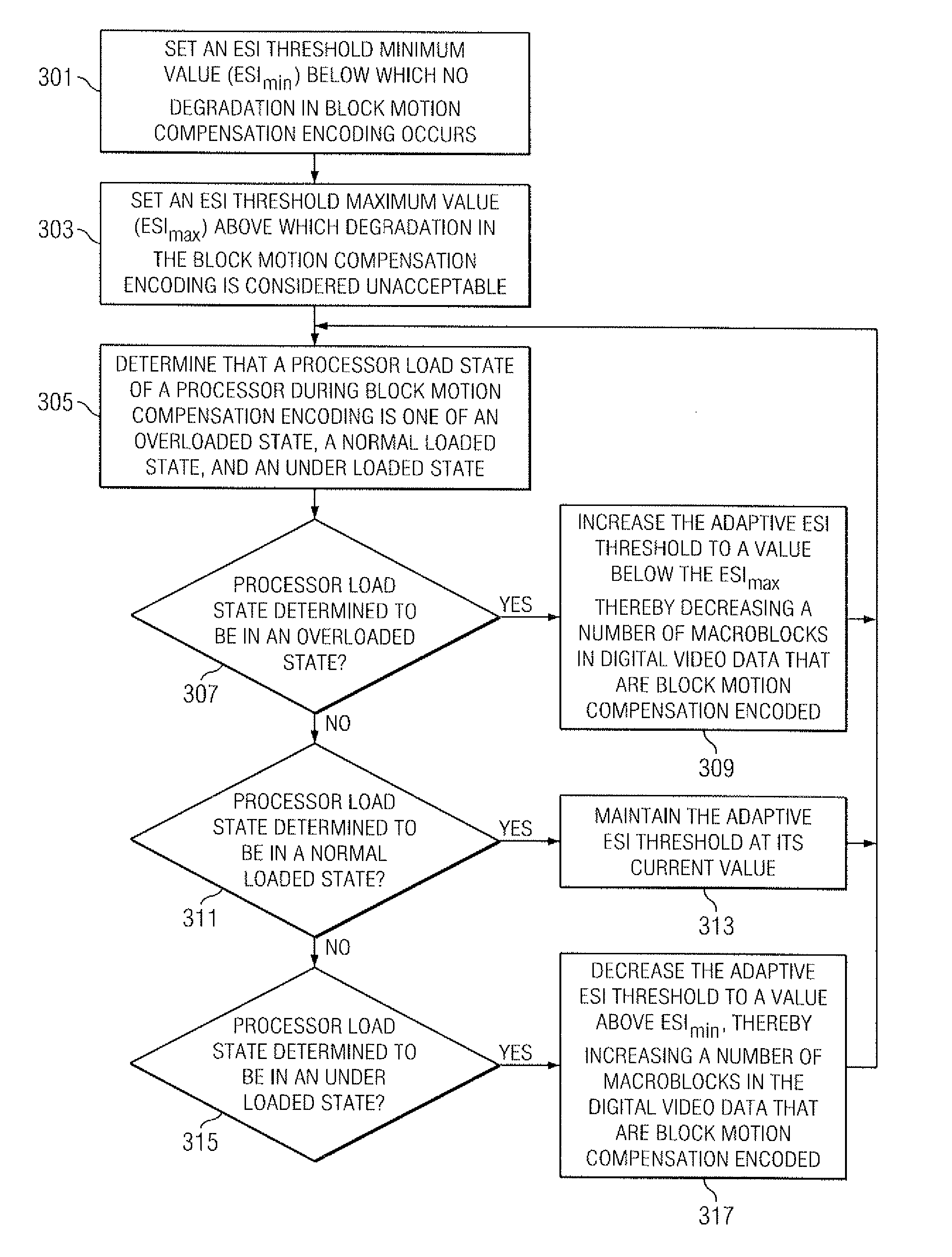

Status: Active | Publication no.: US8379728B2 | Tags: Reduce in quantity; Color television with pulse code modulation; Color television with bandwidth reduction; Digital video; Normal load

A method is disclosed for determining an adaptive early skip indication (ESI) threshold during block motion compensation encoding of digital video data. The method includes the following steps:

- setting an ESI threshold minimum value, below which no degradation in the block motion compensation encoding occurs;
- setting an ESI threshold maximum value, above which degradation in the block motion compensation encoding is considered unacceptable;
- determining whether the load state of the processor during block motion compensation encoding is an overloaded state, a normally loaded state, or an underloaded state;
- when the processor is overloaded, increasing the adaptive ESI threshold to a value below the ESI threshold maximum value, thereby decreasing the number of macroblocks in the digital video data that are block motion compensation encoded;
- when the processor is normally loaded, keeping the adaptive ESI threshold at its current value;
- when the processor is underloaded, decreasing the adaptive ESI threshold to a value above the ESI threshold minimum value, thereby increasing the number of macroblocks in the digital video data that are block motion compensation encoded.

A block motion compensation encoding device that implements the method is also disclosed. A computer-readable medium is also disclosed that stores instructions which, when executed by a processing unit, perform the method.

Owner:TEXAS INSTR INC
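
The three-state control loop can be sketched as follows. The step size, the `Load` labels and the function name are assumptions for illustration. The abstract fixes only the direction of each adjustment and the minimum and maximum bounds. A larger threshold lets more macroblocks be skipped early, so fewer undergo full block motion compensation encoding.

```python
from enum import Enum


class Load(Enum):
    OVERLOADED = "overloaded"
    NORMAL = "normal"
    UNDERLOADED = "underloaded"


def update_esi_threshold(threshold, load, t_min, t_max, step=1):
    """Return the next adaptive early-skip threshold, kept within [t_min, t_max]."""
    if load is Load.OVERLOADED:
        # Raise the threshold: more macroblocks skip full motion-compensated encoding.
        return min(threshold + step, t_max)
    if load is Load.UNDERLOADED:
        # Lower the threshold: more macroblocks are fully motion-compensation encoded.
        return max(threshold - step, t_min)
    # Normal load: keep the current value.
    return threshold
```

Calling this once per frame (or per measurement interval) with the measured processor load gives the adaptive behavior described. The encoder throttles itself under load and spends spare cycles on quality when idle.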

Adaptive real-time video prediction mode method and computer-readable medium and processor for storage and execution thereof

Status: Active | Publication no.: US20100266045A1 | Tags: Reduce in quantity; Color television with pulse code modulation; Color television with bandwidth reduction; Digital video; Normal load

A method is disclosed for determining an adaptive early skip indication (ESI) threshold during block motion compensation encoding of digital video data. The method includes the following steps:

- setting an ESI threshold minimum value, below which no degradation in the block motion compensation encoding occurs;
- setting an ESI threshold maximum value, above which degradation in the block motion compensation encoding is considered unacceptable;
- determining whether the load state of the processor during block motion compensation encoding is an overloaded state, a normally loaded state, or an underloaded state;
- when the processor is overloaded, increasing the adaptive ESI threshold to a value below the ESI threshold maximum value, thereby decreasing the number of macroblocks in the digital video data that are block motion compensation encoded;
- when the processor is normally loaded, keeping the adaptive ESI threshold at its current value;
- when the processor is underloaded, decreasing the adaptive ESI threshold to a value above the ESI threshold minimum value, thereby increasing the number of macroblocks in the digital video data that are block motion compensation encoded.

A block motion compensation encoding device that implements the method is also disclosed. A computer-readable medium is also disclosed that stores instructions which, when executed by a processing unit, perform the method.

Owner:TEXAS INSTR INC

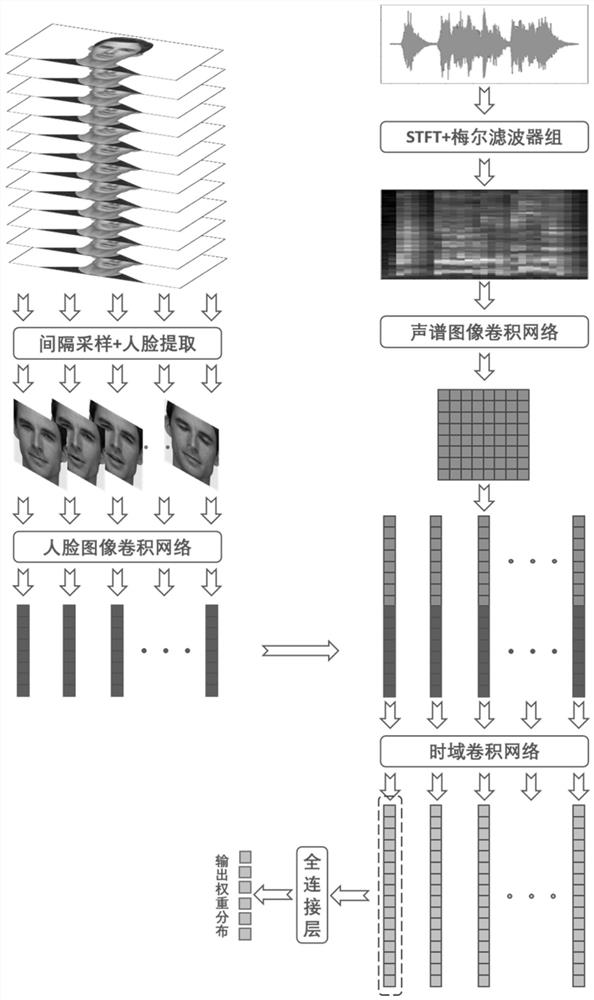

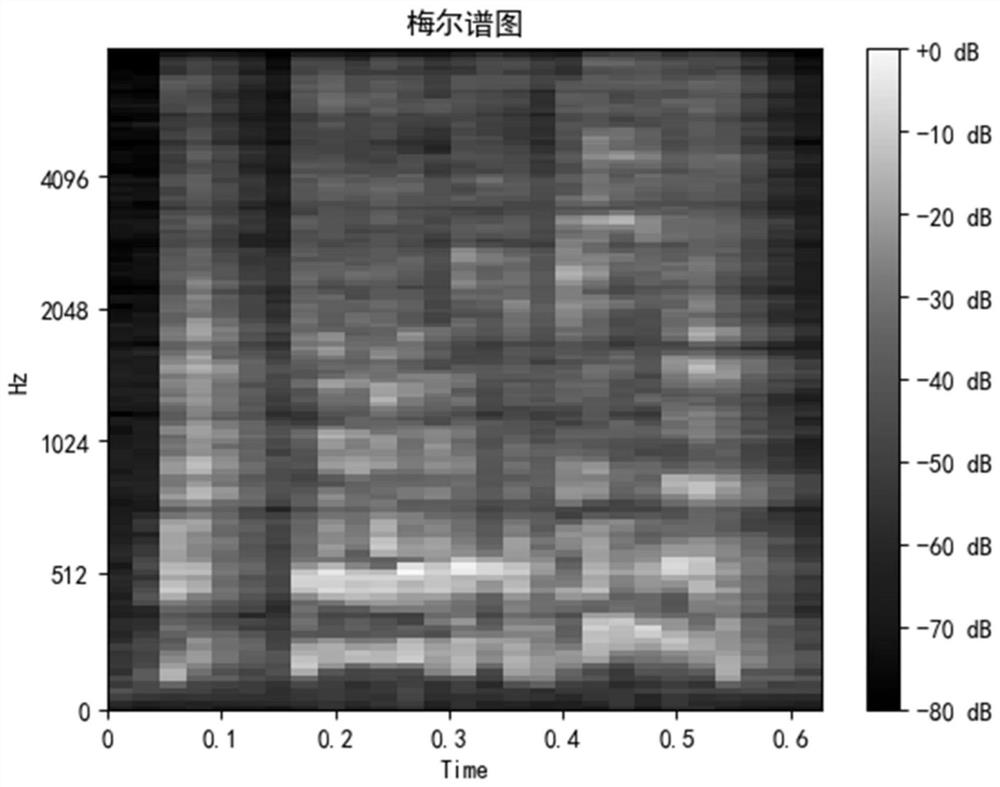

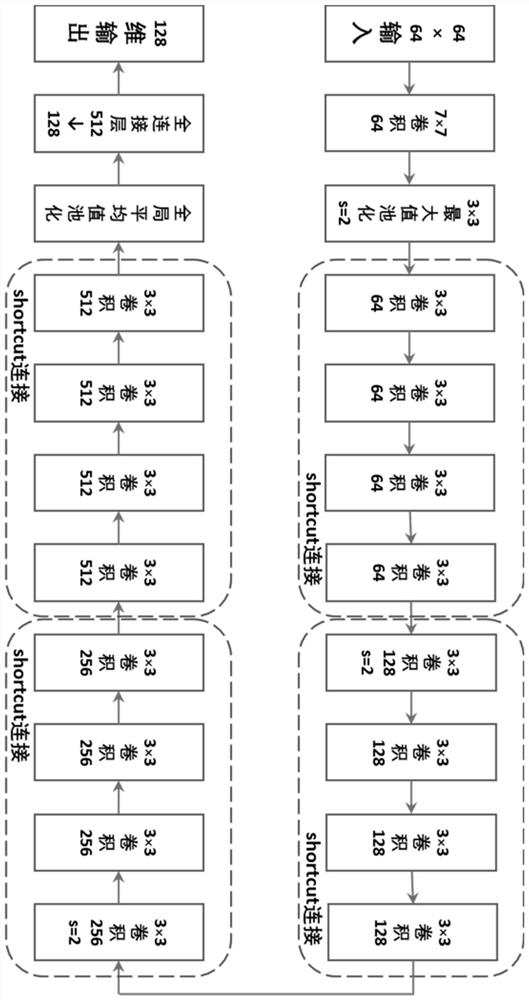

Multi-modal emotion recognition method based on time domain convolutional network

Status: Active | Publication no.: CN112784730A | Tags: Improve recognition accuracy; Speech analysis; Character and pattern recognition; Face detection; Prediction probability

The invention discloses a multi-modal emotion recognition method based on a time domain (temporal) convolutional network. The method comprises the following steps:

1. Sample the video-modality data of an audio-video sample at intervals, perform face detection and keypoint localization, and obtain a grayscale face image sequence.
2. Apply a short-time Fourier transform to the audio and obtain a Mel spectrogram through a Mel filter bank.
3. Pass the grayscale face image sequence and the Mel spectrogram through a face image convolutional network and a spectrogram convolutional network, respectively, and fuse the features.
4. Feed the fused feature sequence into the temporal convolutional network to obtain a high-level feature vector.
5. Pass the high-level feature vector through a fully connected layer and Softmax regression to obtain the predicted probability of each emotion category.
6. Compute the cross-entropy loss between the predicted probabilities and the actual probability distribution, and train the whole network by back propagation to obtain a trained neural network model.

Emotion can thus be predicted from audio and video, with short training time and high recognition accuracy.

Owner:SOUTHEAST UNIV
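
The last stage reduces to the formulas below: a fully connected layer, Softmax regression, and a cross-entropy loss against the actual label distribution. This is a minimal dependency-free sketch of those formulas only. The feature extractors and the temporal convolutional network are omitted, and the function names are hypothetical.

```python
import math


def dense(features, weights, bias):
    """Fully connected layer: z_k = sum_j weights[k][j] * features[j] + bias[k]."""
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, bias)]


def softmax(logits):
    """Softmax regression: p_k = exp(z_k) / sum_j exp(z_j)."""
    m = max(logits)  # subtracting the max avoids overflow without changing the result
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, target):
    """Cross-entropy L = -sum_k target_k * log(probs_k) against the actual distribution."""
    return -sum(t * math.log(p) for p, t in zip(probs, target) if t > 0)
```

With equal logits over two classes, each class gets probability 0.5, and the loss against a one-hot target is log 2 (about 0.693). Back propagation would then push the logit of the true class up.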

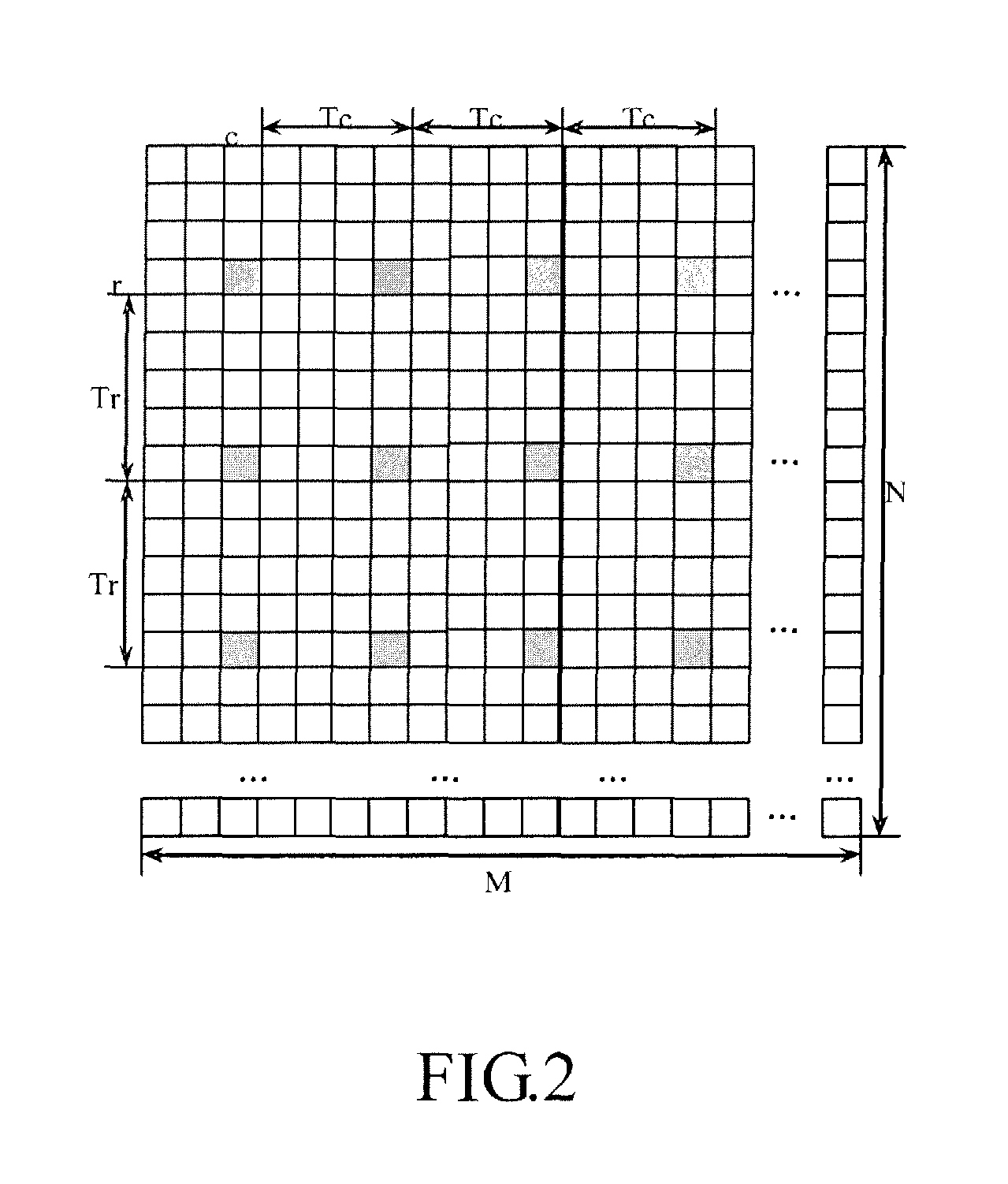

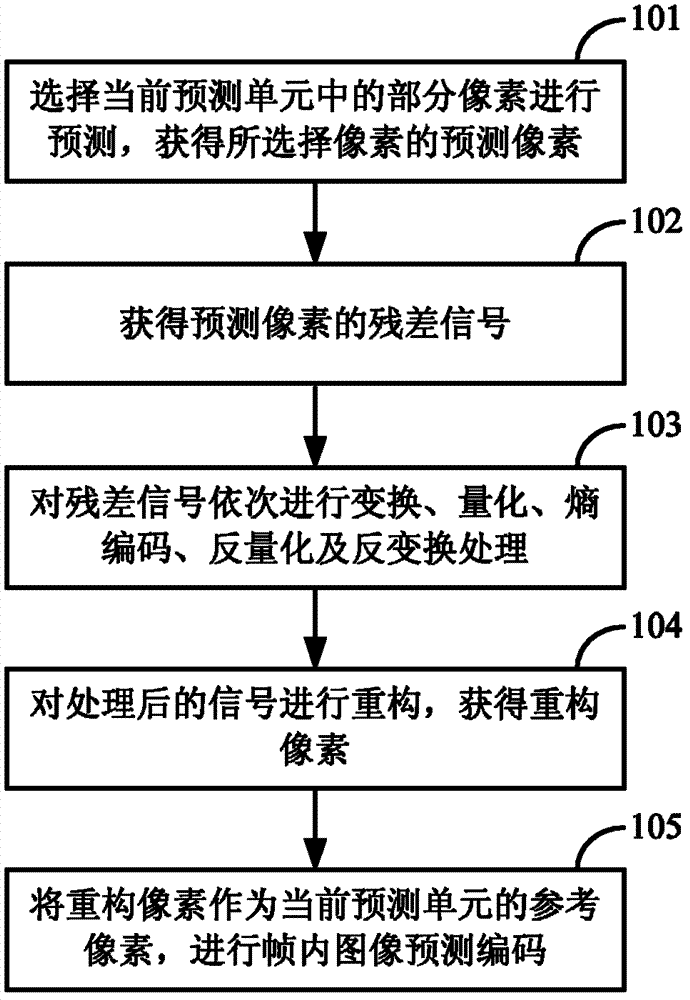

Intra frame image prediction coding and decoding methods, video coder and video decoder

Status: Inactive | Publication no.: CN103248885A | Tags: Improve accuracy; Improve codec efficiency; Television systems; Digital video signal modification; Intra-frame; Image prediction

The invention discloses intra-frame image prediction coding and decoding methods, a video coder and a video decoder. The intra-frame image prediction coding method comprises the following steps:

1. Select some of the pixels in the current prediction unit and predict them to obtain predicted pixels.
2. Obtain the residual signals of the predicted pixels.
3. Apply transformation, quantization, entropy coding, inverse quantization and inverse transformation to the residual signals in sequence.
4. Reconstruct the processed signals to obtain reconstructed pixels.
5. Use the reconstructed pixels as reference pixels for the current prediction unit when performing intra-frame image prediction coding.

The methods, coder and decoder improve the accuracy of intra-frame image prediction and therefore the efficiency of intra-frame coding and decoding. Because they do not break the structure of the conventional video prediction coding and decoding unit, they are well suited to hardware implementation. They can be applied in the conventional intra-frame prediction modes, improving their accuracy. They can also be applied to the prediction of boundary prediction units when the intra-frame image is coded.

Owner:LG ELECTRONICS (CHINA) R&D CENT CO LTD

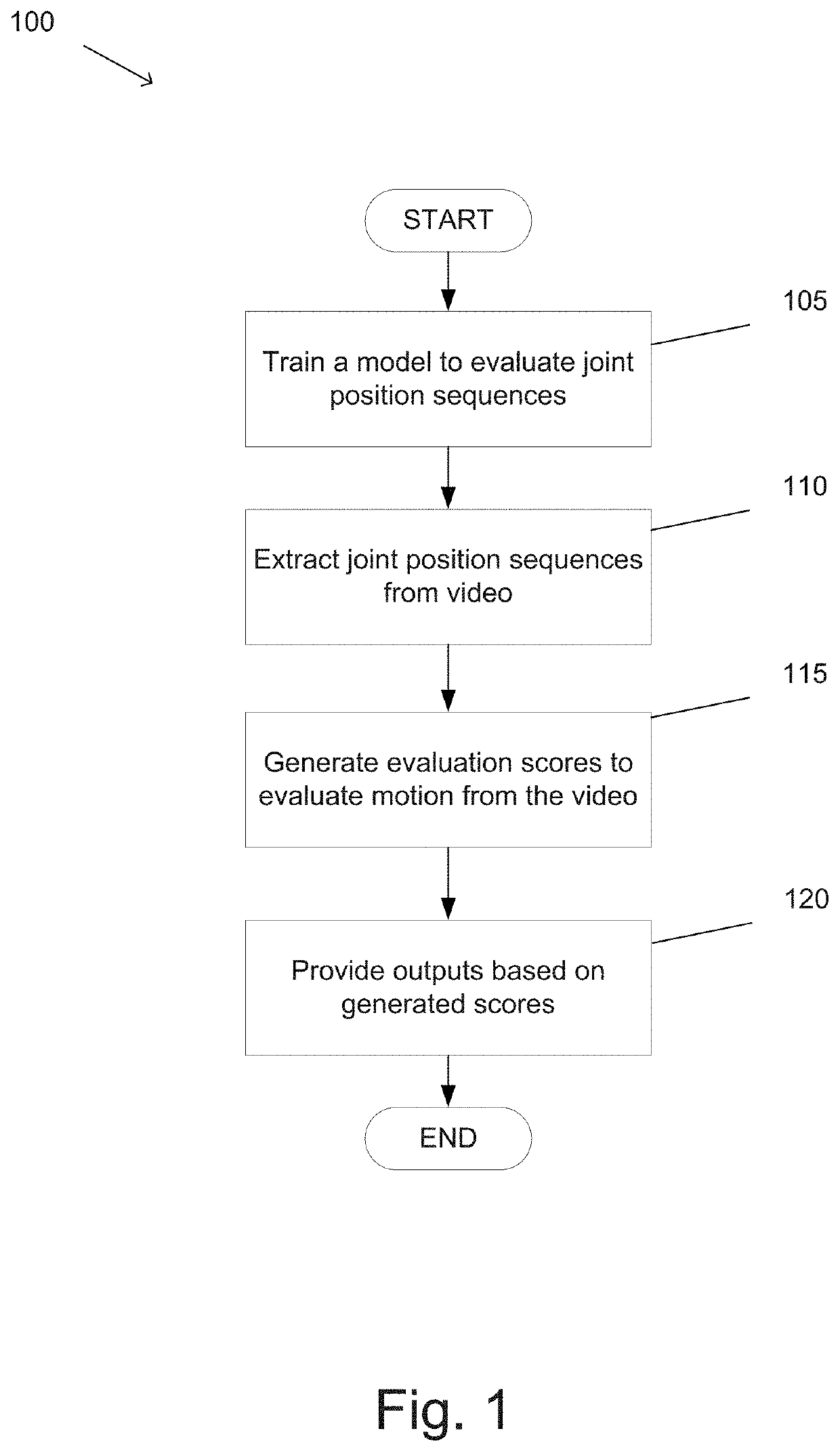

System and Method for Automatic Evaluation of Gait Using Single or Multi-Camera Recordings

Systems and methods in accordance with many embodiments of the invention include a motion evaluation system that trains a model to evaluate motion (such as, but not limited to, gait) through images (or video) captured by a single image capture device. In certain embodiments, motion evaluation includes predicting clinically relevant variables from videos of patients walking from keypoint trajectories extracted from the captured images.

Owner:THE BOARD OF TRUSTEES OF THE LELAND STANFORD JUNIOR UNIV +1

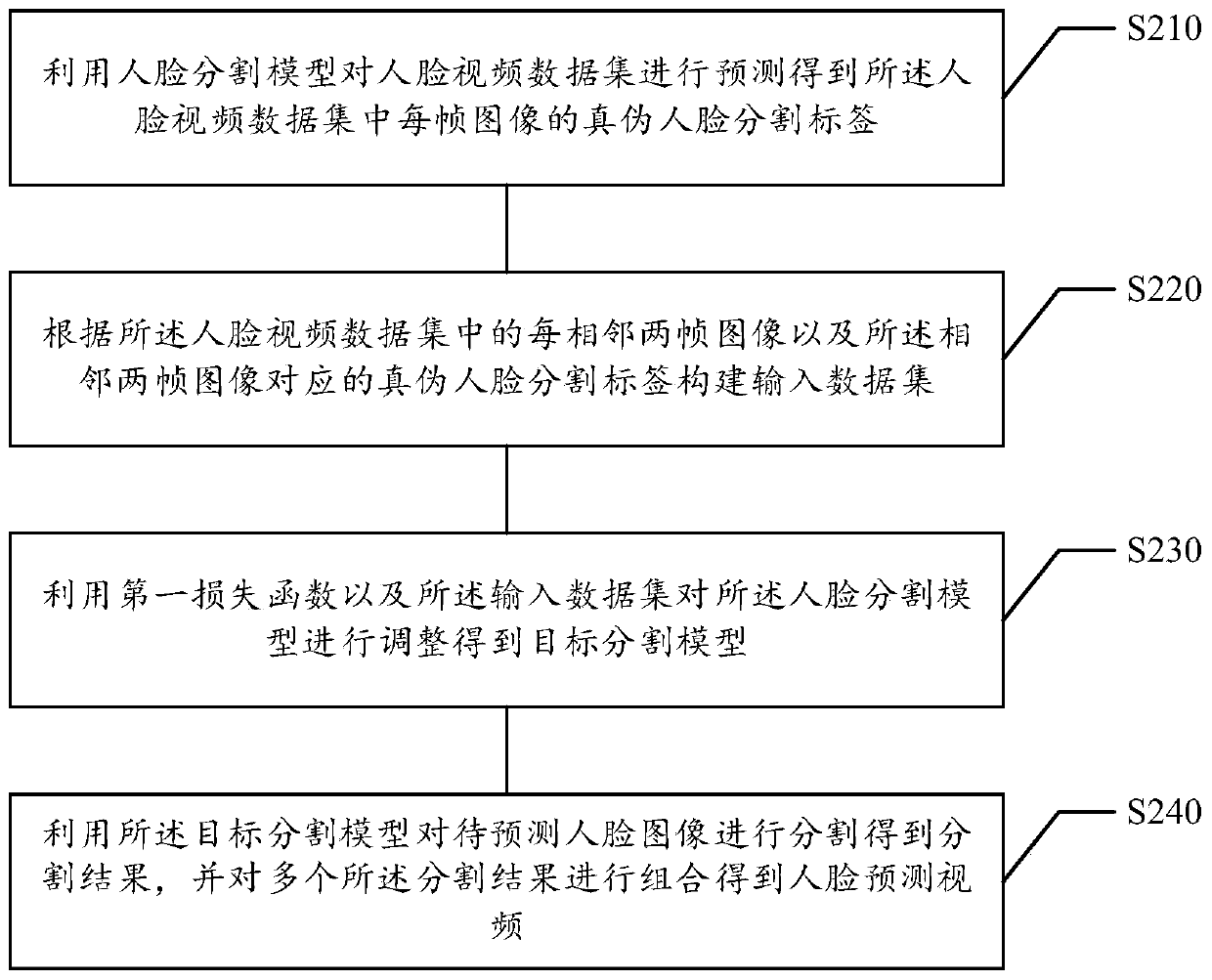

Video prediction method and device based on face segmentation, medium and electronic equipment

Status: Active | Publication no.: CN110427899A | Tags: Improve stability; Fast adjustment; Image analysis; Internal combustion piston engines; Pattern recognition; Data set

The embodiment of the invention relates to a video prediction method and device based on face segmentation, a medium and electronic equipment, in the technical field of image processing. The method comprises the following steps:

1. Run a face segmentation model over a face video data set to obtain the true and false face segmentation labels of each frame in the data set.
2. Construct an input data set from every two adjacent frames in the face video data set and their corresponding true and false face segmentation labels.
3. Adjust the face segmentation model with a first loss function and the input data set to obtain a target segmentation model.
4. Segment the face images to be predicted with the target segmentation model, and combine the multiple segmentation results to obtain a face prediction video.

The method improves the stability of the face prediction video.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD

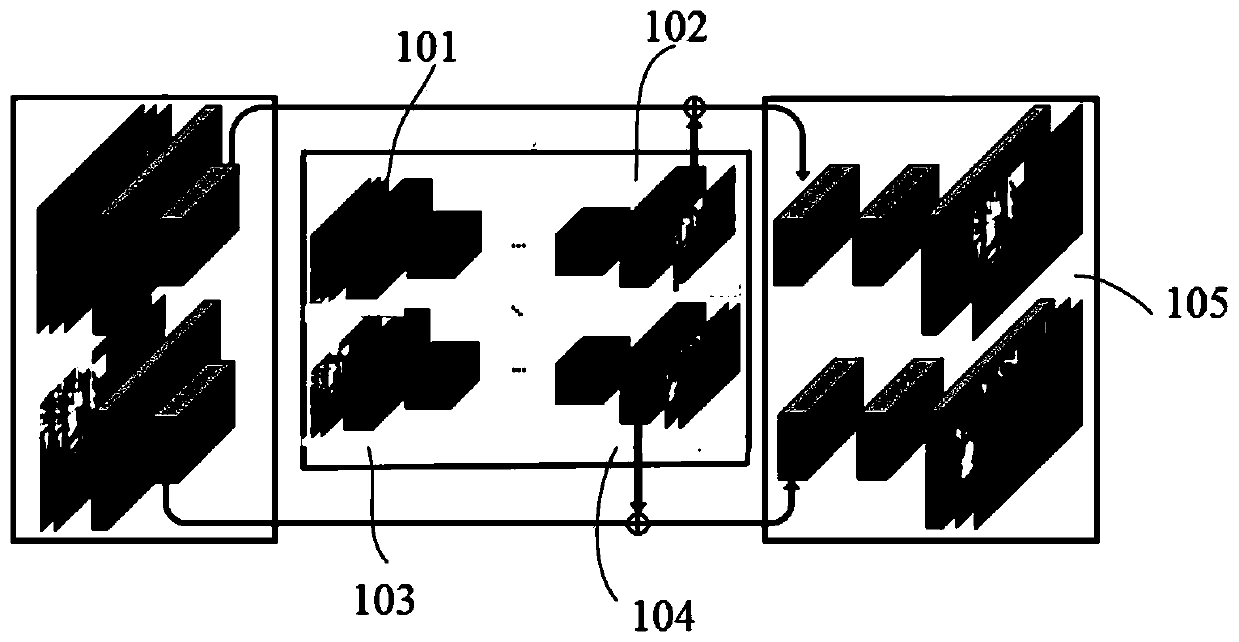

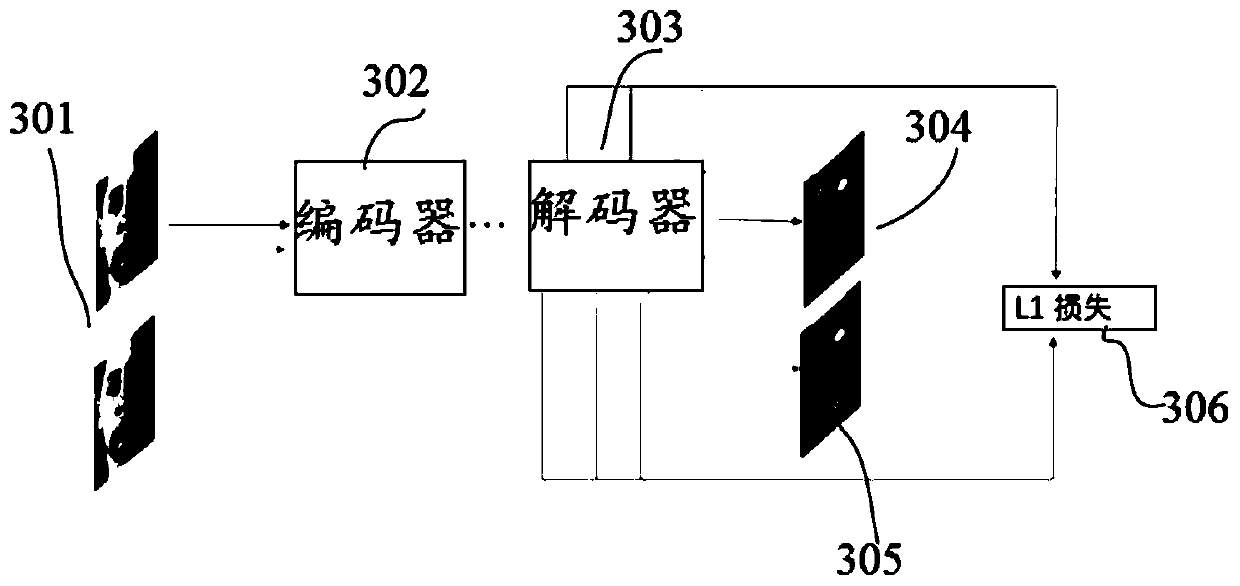

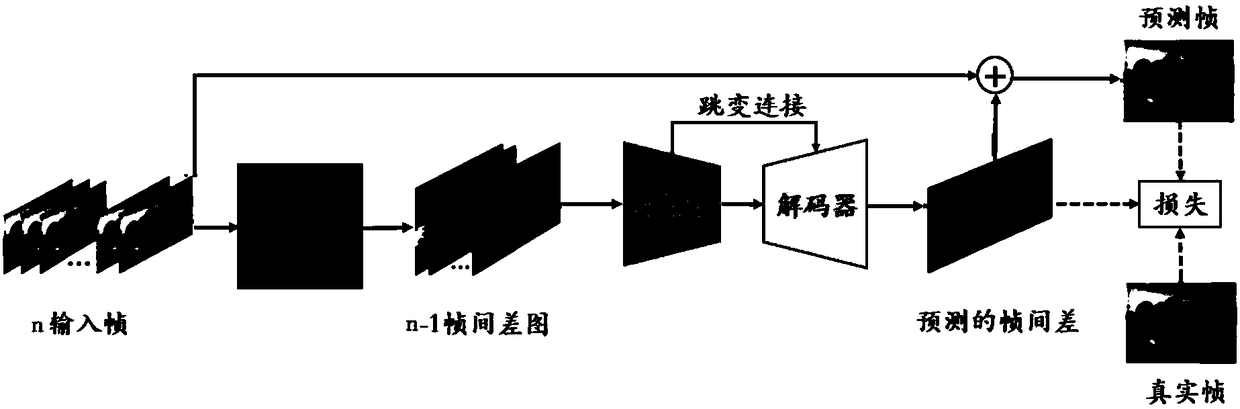

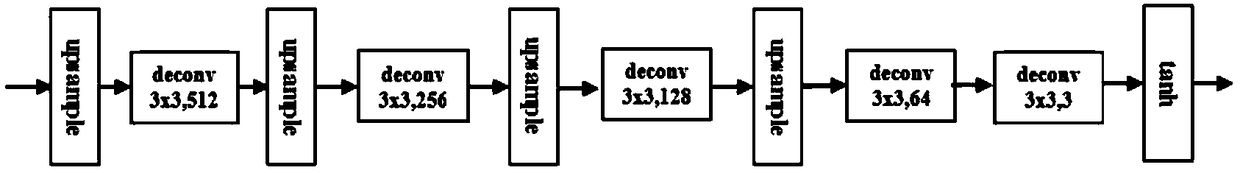

A method of generating a neural network model for video prediction

Status: Active | Publication no.: CN109168003A | Tags: Easy extraction; Convenience to follow; Digital video signal modification; Algorithm; Network model

The present invention provides a method of training a generator model G for video prediction, so that better long-term video prediction can be obtained with less computation. The generator model G comprises an encoder and a decoder with a neural network structure, with skip connections between the encoder and the decoder. It generates a predicted inter-frame difference ΔX, and the sum of ΔX and the training sample is the predicted frame X̂. The method includes the following steps:

1. Select successive video frames as training samples and extract the inter-frame differences of the training samples.
2. Use the inter-frame differences as the input of the encoder in the generator model G.

The training formula for step 2 is given in the patent description. In it, ΔX_{i-1} is a value related to the i-th inter-frame difference, X_i is the i-th frame in the training sample, and X̂_i is the i-th predicted frame; X_i and X̂_i are associated with the neural network weights of the encoder and the decoder.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
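
The difference-based prediction rule (predicted frame = frame + predicted inter-frame difference) can be sketched as follows. This is a minimal sketch, assuming frames are flattened lists of pixel values and that the predicted difference is added to the most recent frame; the encoder-decoder generator G is replaced by a hypothetical stand-in, `repeat_last_diff`, so the example shows only the data flow, not the trained network.

```python
from typing import Callable, List

Frame = List[float]  # one frame flattened to a list of pixel intensities


def frame_differences(frames: List[Frame]) -> List[Frame]:
    """Inter-frame differences: diffs[i] = frames[i + 1] - frames[i], pixel by pixel."""
    return [[b - a for a, b in zip(prev, nxt)] for prev, nxt in zip(frames, frames[1:])]


def predict_next(frames: List[Frame], predict_diff: Callable[[List[Frame]], Frame]) -> Frame:
    """Predicted frame = last observed frame + predicted inter-frame difference."""
    delta = predict_diff(frame_differences(frames))
    return [x + d for x, d in zip(frames[-1], delta)]


def repeat_last_diff(diffs: List[Frame]) -> Frame:
    """Hypothetical stand-in for the generator G: reuse the most recent difference."""
    return diffs[-1]
```

Appending each predicted frame to `frames` and calling `predict_next` again gives a multi-step rollout; a trained generator would replace `repeat_last_diff` with a learned mapping from past differences to the next one.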

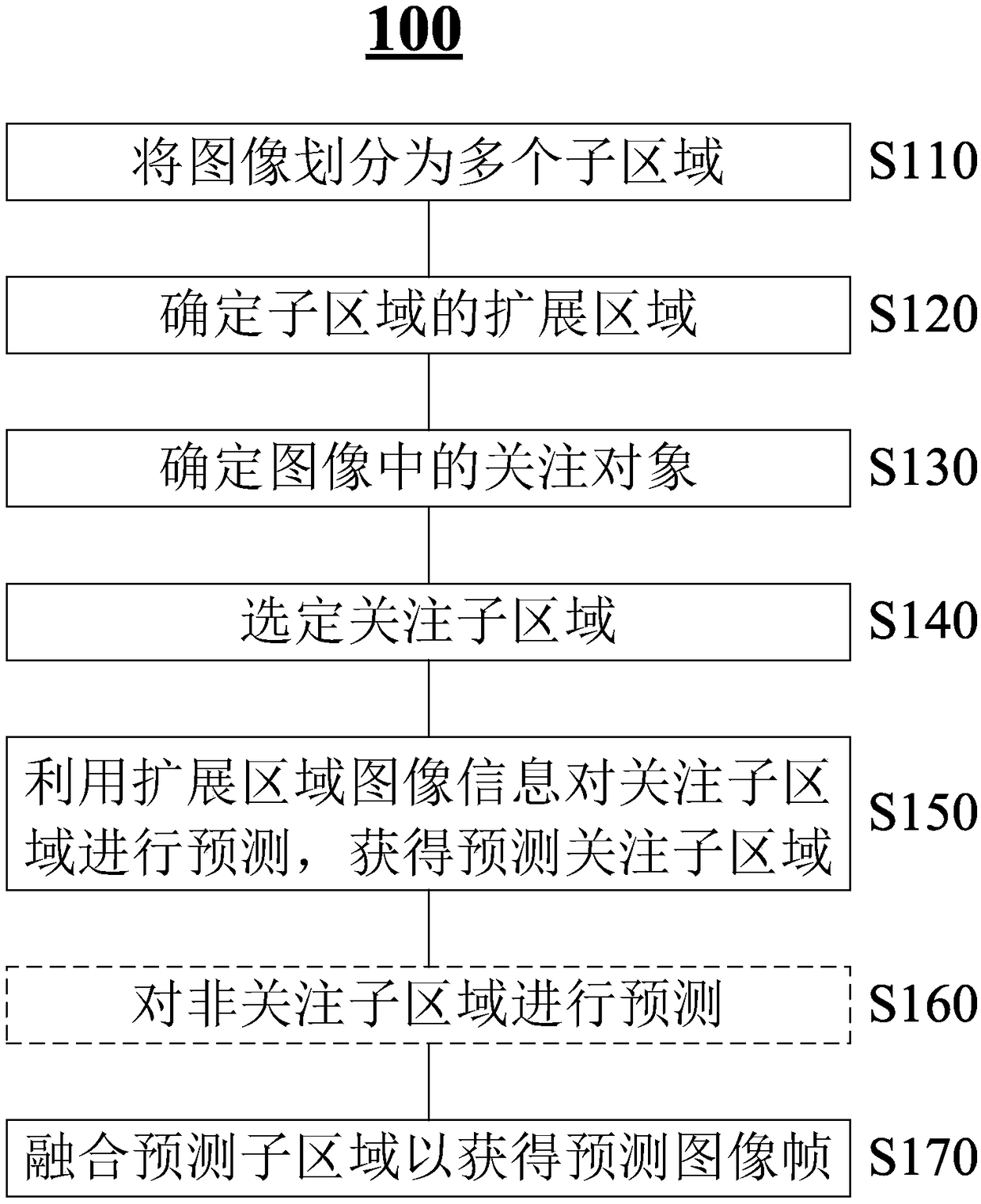

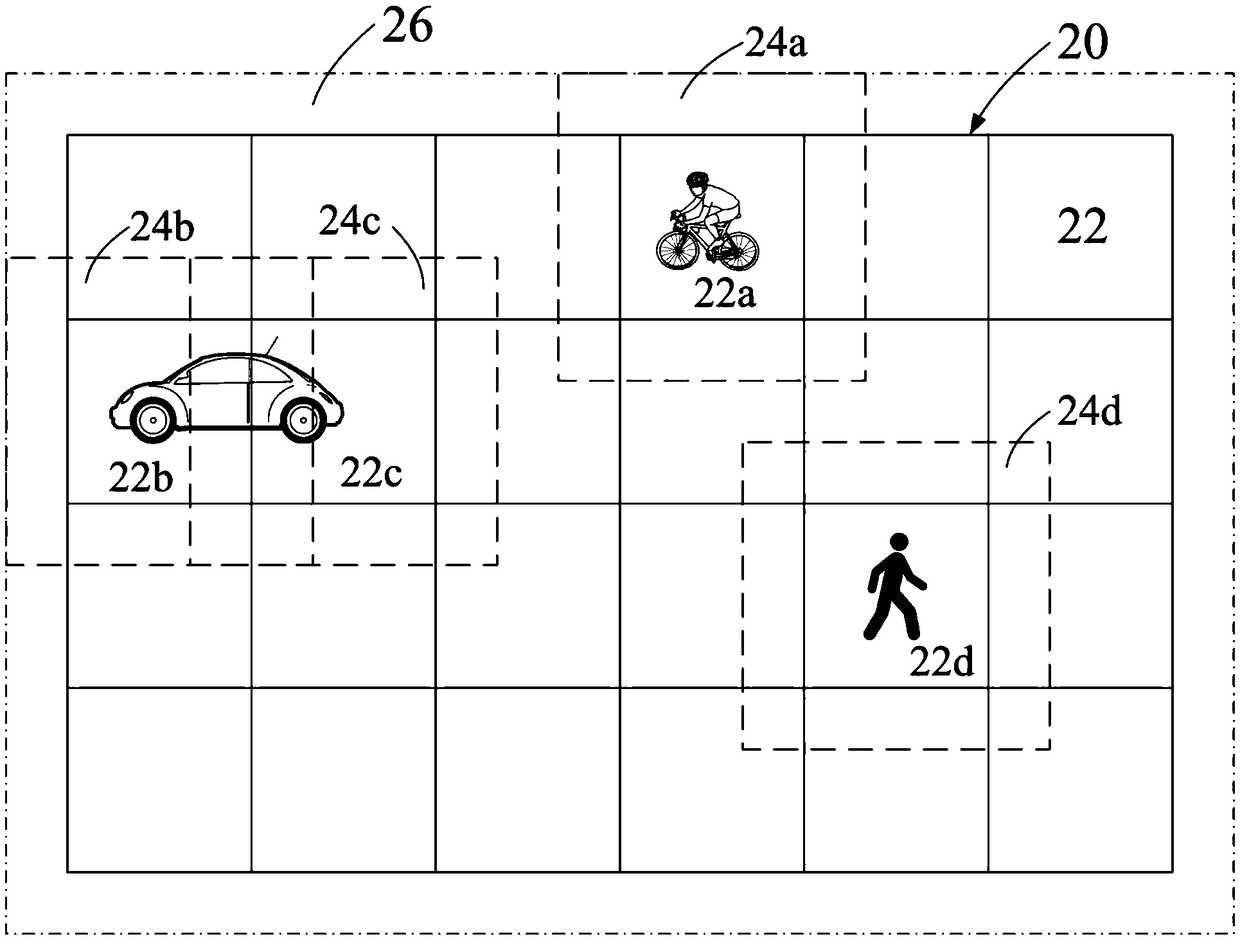

Image prediction method and device based on region dynamic screening and electronic device

Active | CN109063603A | Improve efficiency | High speed | Television system details | Character and pattern recognition | Image prediction | Video prediction

The present application relates to an image prediction method and apparatus based on region dynamic screening, and an electronic device. According to an embodiment, the image prediction method may include: dividing a current frame image into a plurality of sub-regions according to a predetermined standard; determining, for each sub-region, an extended region that contains the sub-region and is larger than it; performing image recognition to determine an object of interest in the current frame image; determining a sub-region to be a sub-region of interest if its extended region contains at least a portion of the object of interest; predicting each sub-region of interest using the image information within its extended region to obtain a predicted sub-region of interest; and replacing the corresponding sub-region of interest in the current frame image with the predicted sub-region of interest to obtain a predicted frame image. The prediction method improves the efficiency and speed of video prediction, and its prediction accuracy is not limited by the video size.

Owner:SHENZHEN HORIZON ROBOTICS TECH CO LTD
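
The screening steps can be sketched with axis-aligned boxes. This is a minimal sketch, assuming a fixed square grid as the "predetermined standard", a fixed pixel margin for the extended regions, and detected objects given as bounding boxes; all names are hypothetical, and the per-region predictor itself is left out.

```python
from typing import List, NamedTuple, Tuple


class Box(NamedTuple):
    """Axis-aligned region covering pixels x0 <= x < x1 and y0 <= y < y1."""
    x0: int
    y0: int
    x1: int
    y1: int


def grid_subregions(width: int, height: int, tile: int) -> List[Box]:
    """Divide the frame into tile x tile sub-regions (edge tiles may be smaller)."""
    return [
        Box(x, y, min(x + tile, width), min(y + tile, height))
        for y in range(0, height, tile)
        for x in range(0, width, tile)
    ]


def extend(box: Box, margin: int, width: int, height: int) -> Box:
    """Grow a sub-region by `margin` pixels on every side, clipped to the frame."""
    return Box(max(box.x0 - margin, 0), max(box.y0 - margin, 0),
               min(box.x1 + margin, width), min(box.y1 + margin, height))


def overlaps(a: Box, b: Box) -> bool:
    """True if the two boxes share at least one pixel."""
    return a.x0 < b.x1 and b.x0 < a.x1 and a.y0 < b.y1 and b.y0 < a.y1


def subregions_of_interest(width: int, height: int, tile: int, margin: int,
                           objects: List[Box]) -> List[Tuple[Box, Box]]:
    """Return (sub-region, extended region) pairs whose extended region touches an object."""
    selected = []
    for sub in grid_subregions(width, height, tile):
        ext = extend(sub, margin, width, height)
        if any(overlaps(ext, obj) for obj in objects):
            selected.append((sub, ext))
    return selected
```

On an 8 x 8 frame with 4 x 4 tiles and a 1-pixel margin, an object occupying only pixel (4, 4) lies inside just one tile, yet all four tiles are selected because their extended regions reach it; only the selected tiles would then be passed to the predictor.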

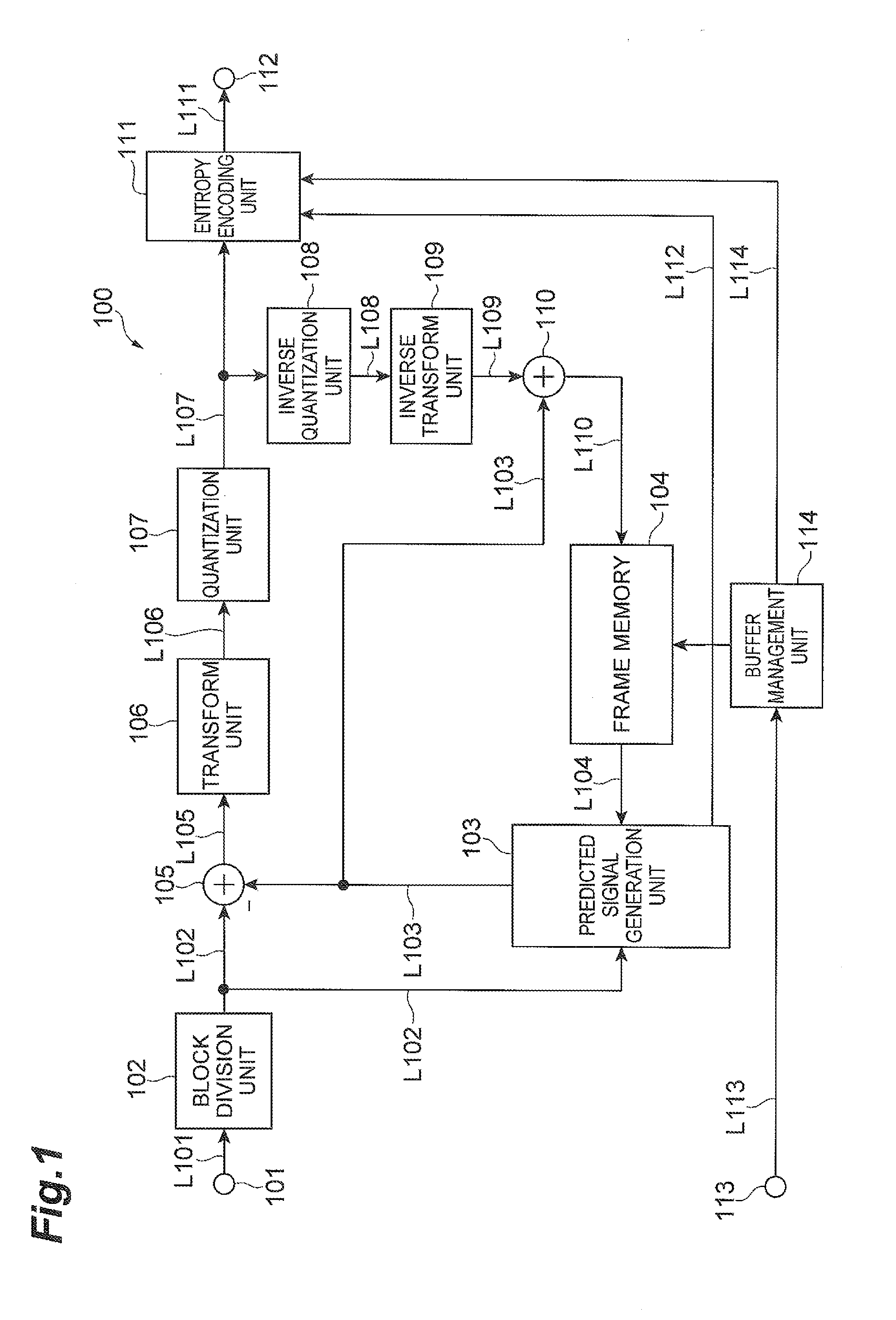

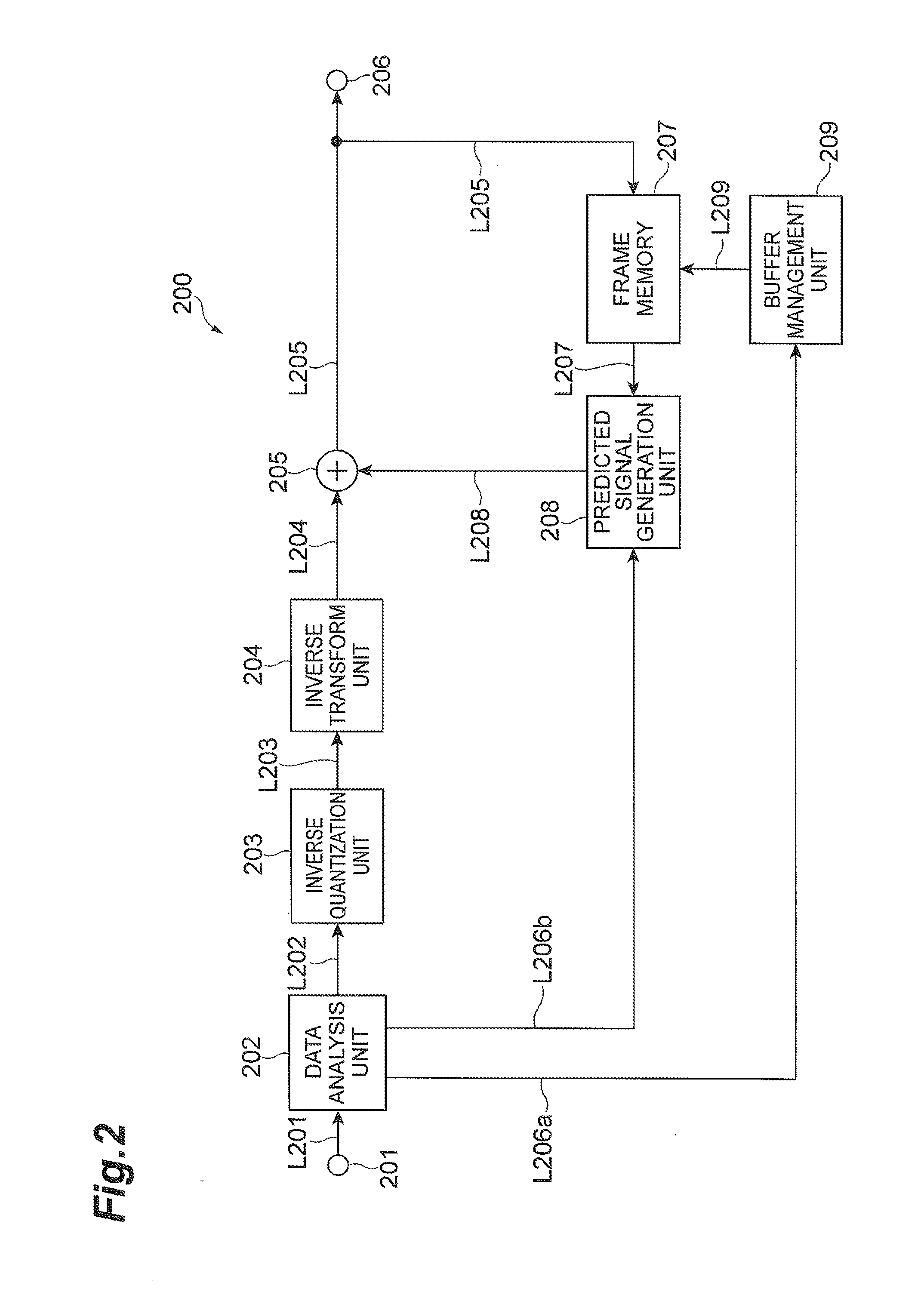

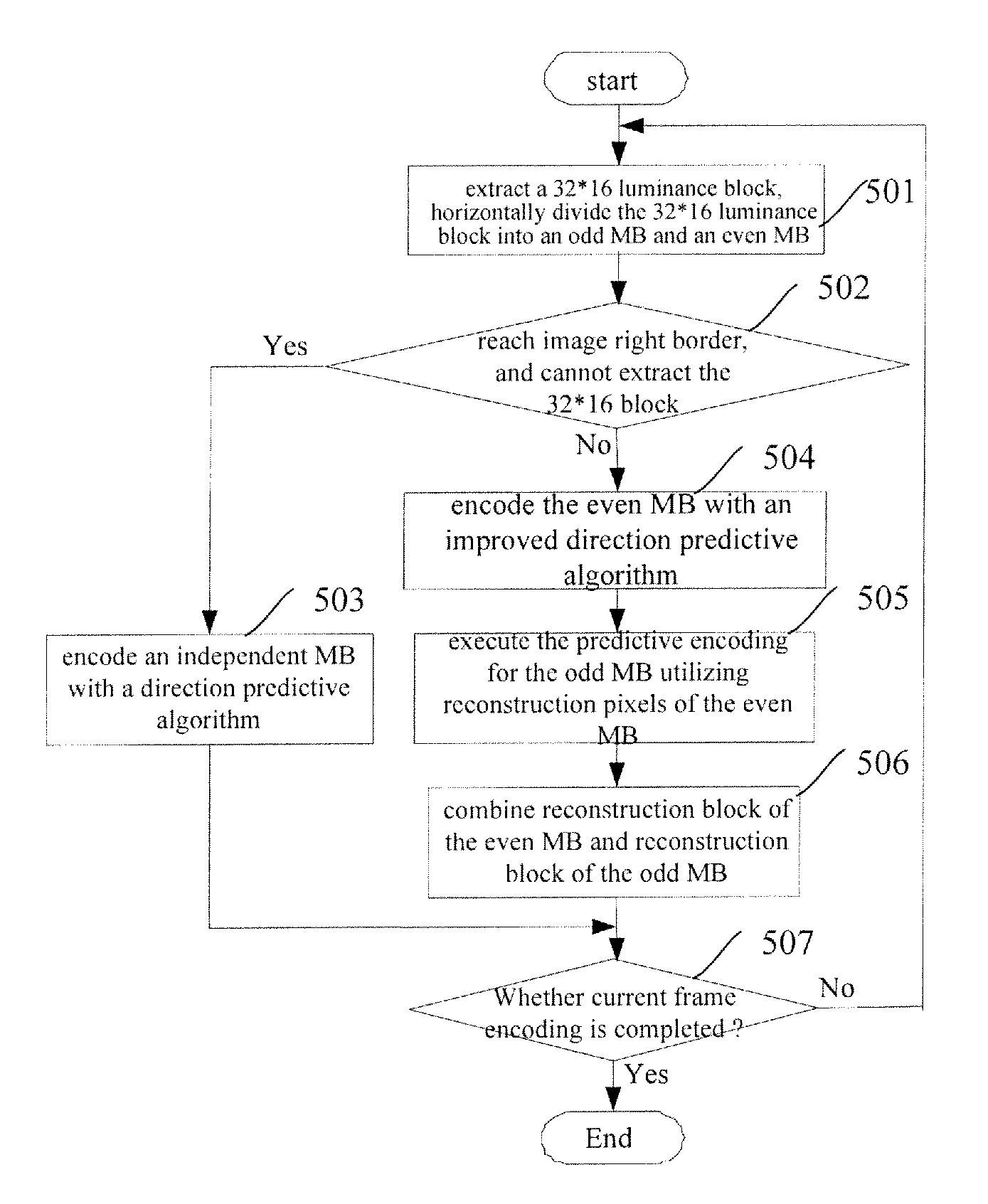

Motion video predict coding method, motion video predict coding device, motion video predict coding program, motion video predict decoding method, motion predict decoding device, and motion video predict decoding program

Active | US20140226716A1 | Encoding efficiency can be improved | Improve efficiency | Color television with pulse code modulation | Color television with bandwidth reduction | Algorithm | Video sequence

A video predictive coding system includes a video predictive encoding device having: an input circuit that receives pictures constituting a video sequence; an encoding circuit that predictively codes a target picture, using previously encoded and reconstructed pictures as reference pictures, to generate compressed picture data; a reconstruction circuit that decodes the compressed picture data to reconstruct a reproduced picture; picture storage that stores the reproduced picture as a reference picture for encoding subsequent pictures; and a buffer management circuit that, prior to predictive encoding of the target picture, controls the picture storage on the basis of buffer description information BD[k] related to the reference pictures used in predictive encoding of the target picture, encodes BD[k] with reference to buffer description information BD[m] for a picture different from the target picture, and adds the encoded data to the compressed picture data.

Owner:NTT DOCOMO INC
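
Encoding BD[k] relative to BD[m] pays off when consecutive pictures share most of their reference pictures. The sketch below is a hypothetical scheme, not the patent's or any standard's syntax: it assumes a buffer description is a sorted list of reference-picture identifiers (for example, picture order counts), sends one keep/drop flag per entry of BD[m], and lists only the entries of BD[k] that BD[m] lacks.

```python
from typing import List, Tuple


def encode_bd_relative(bd_k: List[int], bd_m: List[int]) -> Tuple[List[bool], List[int]]:
    """Describe bd_k relative to bd_m.

    keep_flags[j] tells whether bd_m[j] is still referenced in bd_k;
    extra lists the entries of bd_k that bd_m does not contain.
    """
    k_set, m_set = set(bd_k), set(bd_m)
    keep_flags = [entry in k_set for entry in bd_m]
    extra = [entry for entry in bd_k if entry not in m_set]
    return keep_flags, extra


def decode_bd_relative(keep_flags: List[bool], extra: List[int], bd_m: List[int]) -> List[int]:
    """Rebuild bd_k (as a sorted list) from bd_m, the flags, and the extra entries."""
    kept = [entry for entry, keep in zip(bd_m, keep_flags) if keep]
    return sorted(kept + extra)
```

When BD[k] differs from BD[m] by only one picture, the encoder sends a few one-bit flags plus a single new identifier instead of repeating the whole list; the decoder, which already holds BD[m], reconstructs BD[k] exactly.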

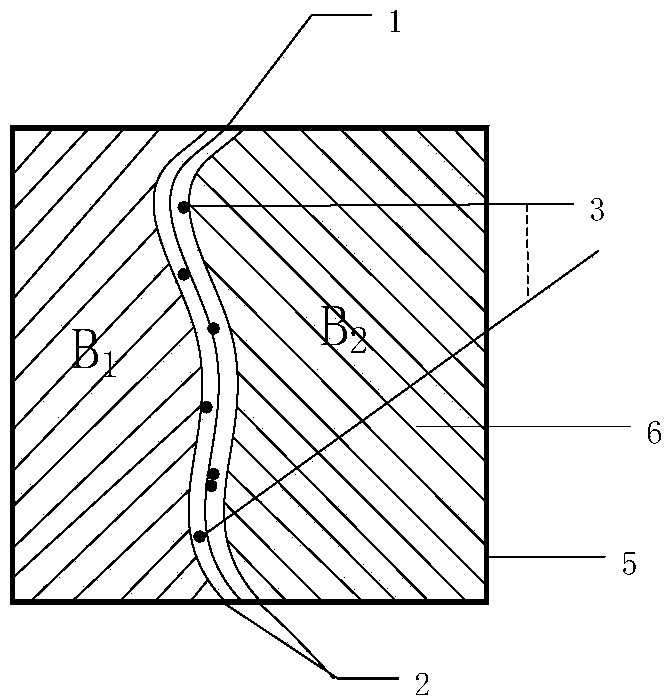

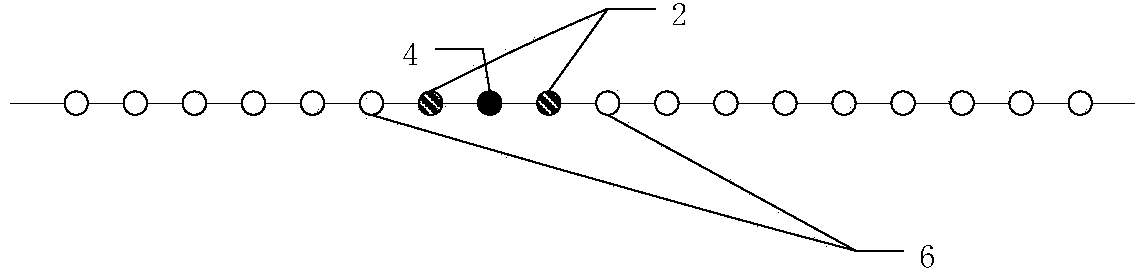

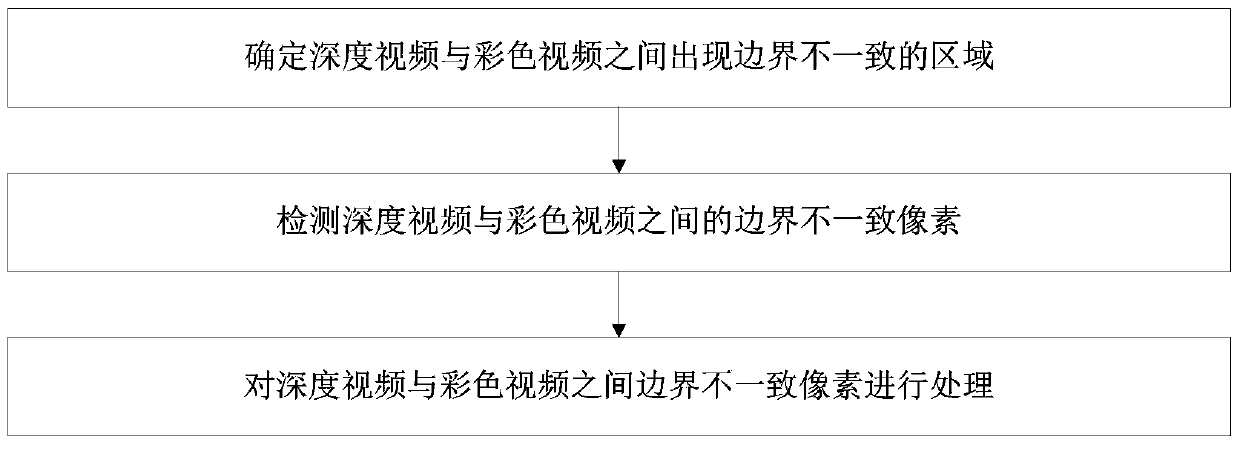

Detection and processing method for boundary inconsistency of depth and color videos

Active | CN103747248A | Improve rate-distortion performance | Improve composite quality | Television systems | Digital video signal modification | Viewpoints | Rate distortion

The invention belongs to the field of 3D (three-dimensional) video coding and relates to a method, based on color-video boundaries, for detecting and processing boundary inconsistencies of the depth video in color-assisted depth video coding. The method comprises the steps of determining the areas where the boundaries of the depth video and the color video are inconsistent, detecting the inconsistent boundary pixels between the depth video and the color video, and processing those pixels before entropy coding. By exploiting the structural similarity between the color video and the depth video, the method detects and processes boundary inconsistencies between them during depth video prediction, which improves the rate-distortion performance of coding and the quality of virtual viewpoint synthesis at the decoder, and effectively reduces the coding cost.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
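
Detecting inconsistent boundary pixels can be sketched with simple gradient edge maps. This is a minimal sketch, assuming depth and color frames are same-sized 2D lists of intensities (color reduced to luminance); the thresholds, the neighborhood radius, and the rule "a depth edge with no color edge nearby is inconsistent" are hypothetical simplifications of the structural-similarity criterion the abstract mentions, and the processing step before entropy coding is omitted.

```python
from typing import List, Tuple

Image = List[List[int]]  # 2D grid of intensities, row-major


def edge_map(img: Image, threshold: int) -> List[List[bool]]:
    """Mark pixels whose forward horizontal or vertical difference reaches the threshold."""
    h, w = len(img), len(img[0])
    edges = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = abs(img[y][x + 1] - img[y][x]) if x + 1 < w else 0
            gy = abs(img[y + 1][x] - img[y][x]) if y + 1 < h else 0
            edges[y][x] = max(gx, gy) >= threshold
    return edges


def inconsistent_boundary_pixels(depth: Image, color: Image, depth_thr: int,
                                 color_thr: int, radius: int = 1) -> List[Tuple[int, int]]:
    """Return (y, x) of depth-edge pixels with no color edge within `radius` pixels."""
    d_edges = edge_map(depth, depth_thr)
    c_edges = edge_map(color, color_thr)
    h, w = len(depth), len(depth[0])
    inconsistent = []
    for y in range(h):
        for x in range(w):
            if not d_edges[y][x]:
                continue
            has_color_edge = any(
                c_edges[yy][xx]
                for yy in range(max(0, y - radius), min(h, y + radius + 1))
                for xx in range(max(0, x - radius), min(w, x + radius + 1))
            )
            if not has_color_edge:
                inconsistent.append((y, x))
    return inconsistent
```

A depth step that coincides with a color step is treated as a genuine object boundary; a depth step over flat color is flagged, since such pixels are the ones likely to cost extra bits and distort synthesized views.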

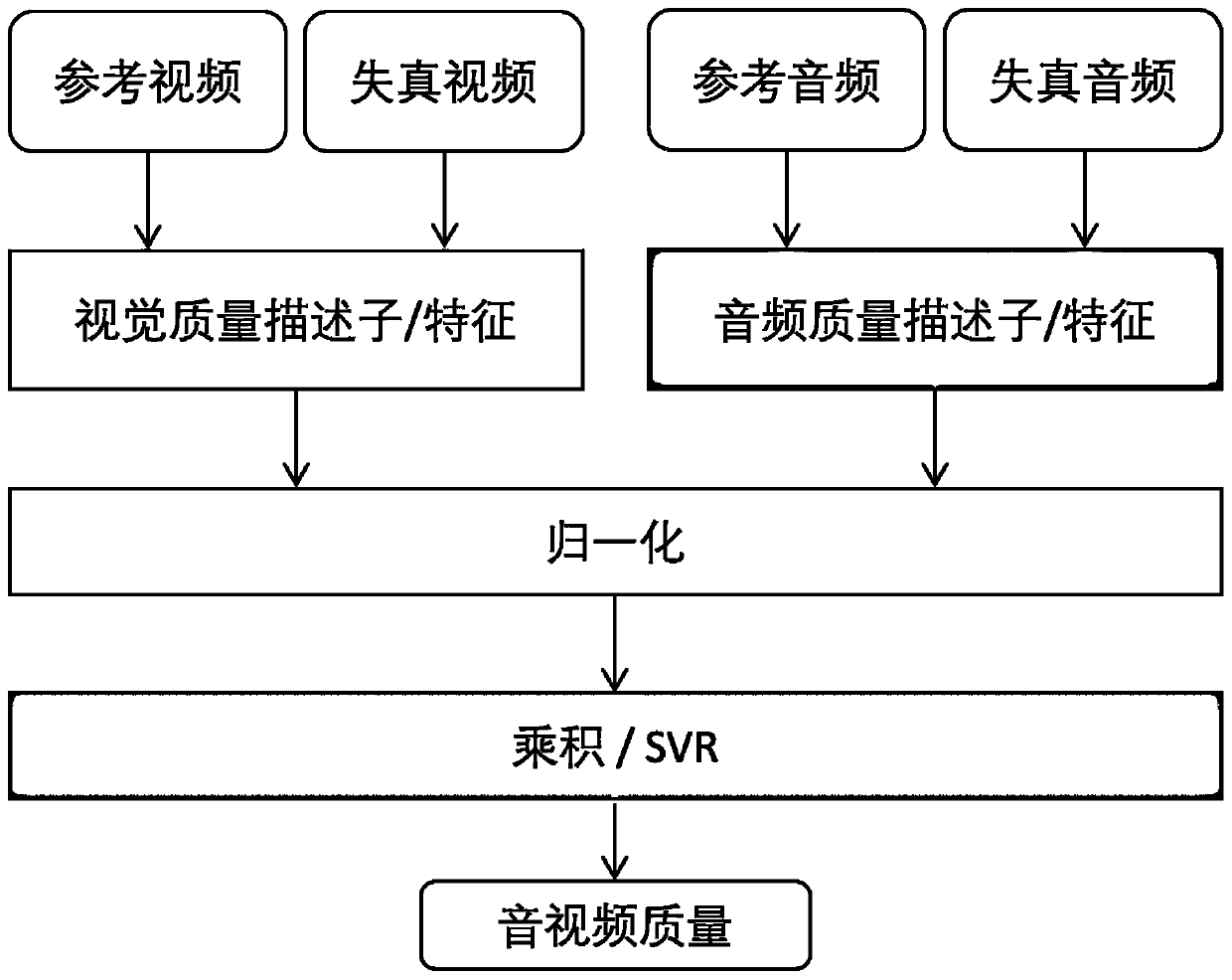

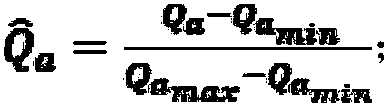

Video and audio joint quality evaluation method and device

Active | CN111479105A | Effective evaluation | Television systems | Selective content distribution | Video quality | Video prediction

The invention provides a video and audio joint quality evaluation method comprising the following steps: predicting the video quality with a video quality evaluation model to obtain a video prediction quality score Qv and a video prediction quality feature fv; predicting the audio quality with an audio quality evaluation model to obtain an audio prediction quality score Qa and an audio prediction quality feature fa; normalizing Qv and Qa separately to obtain normalized video and audio prediction quality scores; and either fusing the normalized scores, or fusing the features fv and fa, to obtain a predicted joint video and audio quality score Qav or joint feature fav. The invention also provides a video and audio joint quality evaluation device. The method and device can effectively evaluate the overall quality of experience of audio and video.

Owner:SHANGHAI JIAO TONG UNIV
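
The score-level and feature-level fusion paths can be sketched as follows. This is a minimal sketch, assuming min-max normalization over each model's known score range and a fixed weighted average for score fusion; the ranges, the weight `w_video`, and the concatenation used for feature fusion are assumptions, since the abstract does not specify the normalization or fusion operators.

```python
from typing import List, Sequence, Tuple


def min_max_normalize(score: float, lo: float, hi: float) -> float:
    """Map a score from the range [lo, hi] onto [0, 1]."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    return (score - lo) / (hi - lo)


def fuse_scores(qv: float, qa: float, v_range: Tuple[float, float],
                a_range: Tuple[float, float], w_video: float = 0.5) -> float:
    """Score-level fusion: weighted average of the normalized Qv and Qa (gives Qav)."""
    nv = min_max_normalize(qv, *v_range)
    na = min_max_normalize(qa, *a_range)
    return w_video * nv + (1.0 - w_video) * na


def fuse_features(fv: Sequence[float], fa: Sequence[float]) -> List[float]:
    """Feature-level fusion by concatenation (gives fav for a downstream regressor)."""
    return list(fv) + list(fa)
```

For example, a video score of 80 on a 0 to 100 scale and an audio score of 4 on a 1 to 5 scale normalize to 0.8 and 0.75, so equal weights give Qav = 0.775. Normalizing first matters because the two models need not share a scale.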

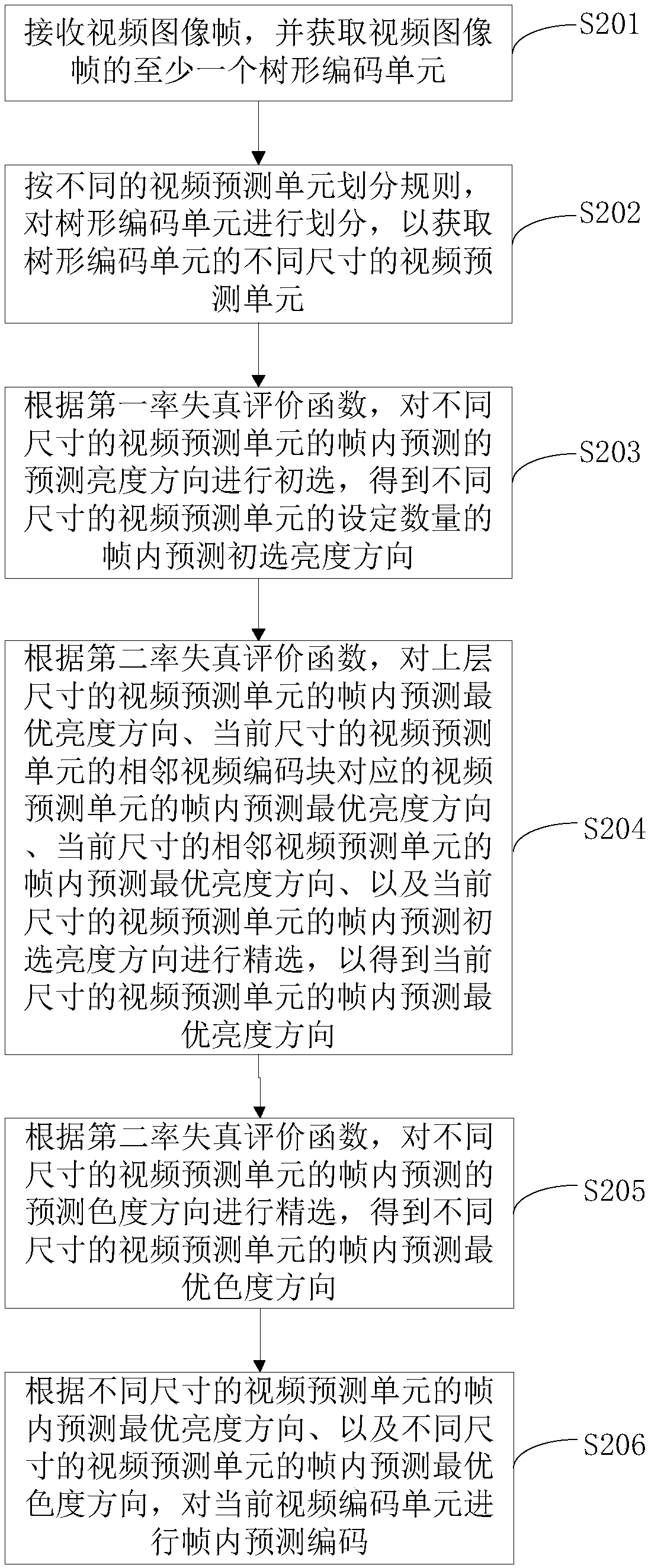

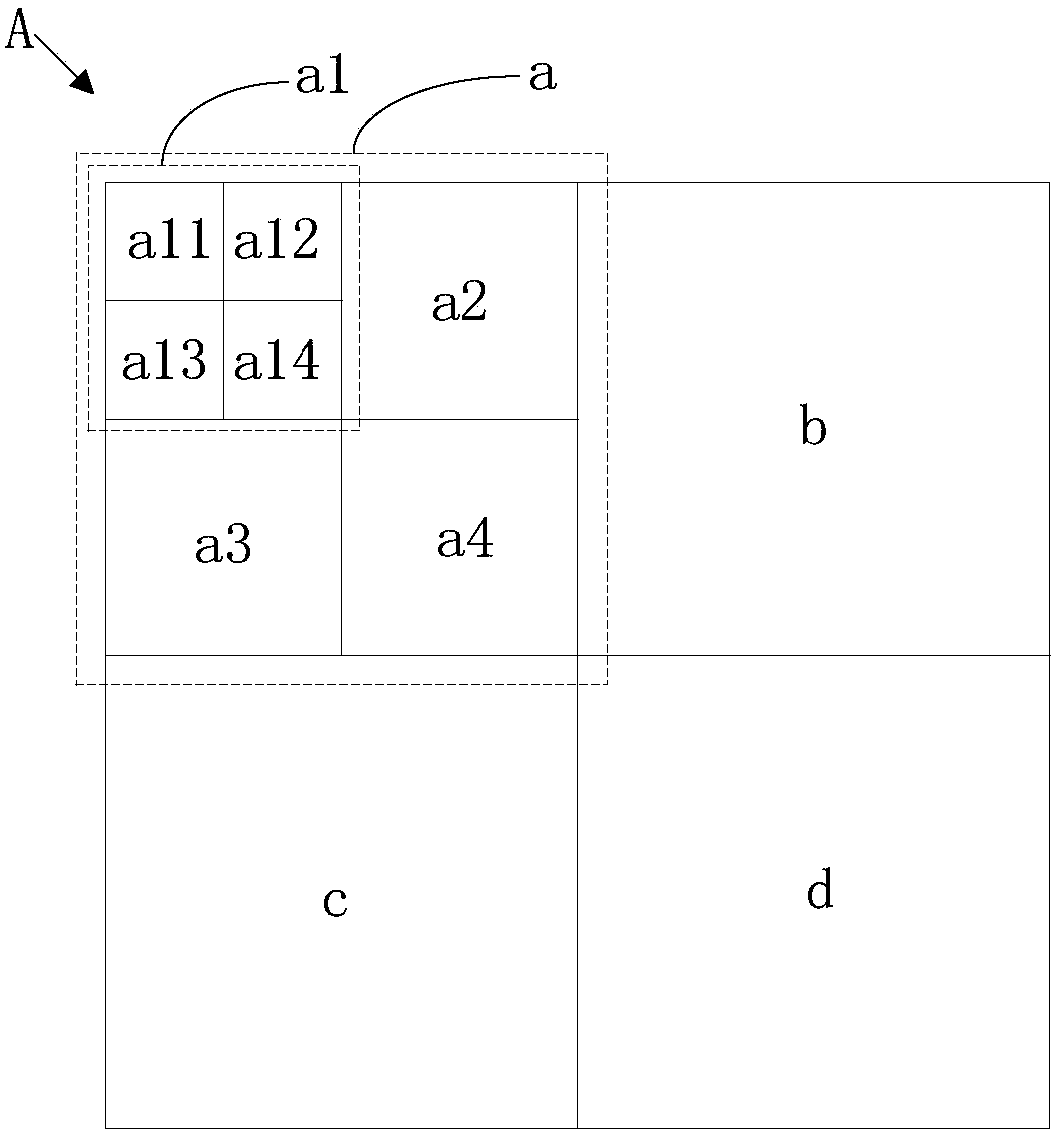

Video coding method, video coding device, electronic equipment and storage medium

Active | CN110213576A | Improve encoding compression | Improve encoding speed | Digital video signal modification | Tree code | Tree shaped

The invention provides a video coding method comprising the following steps: receiving a video image frame and obtaining at least one tree coding unit of the frame; dividing the tree coding units according to different video prediction unit division rules to obtain video prediction units of different sizes; performing a primary selection of the intra-frame prediction luma (brightness) directions for the video prediction units of each size to obtain a set number of candidate intra-frame luma directions per size; determining the optimal intra-frame luma direction for the video prediction unit of the current size; selecting among the intra-frame chroma directions for the video prediction units of each size to obtain their optimal intra-frame chroma direction; and performing intra-frame predictive coding on the current video image frame according to the optimal intra-frame luma direction and the optimal intra-frame chroma direction.

Owner:TENCENT TECH (SHENZHEN) CO LTD
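
The two-stage direction search (a cheap primary selection followed by a final choice) can be sketched for a single block. This is a minimal sketch, assuming only three luma modes (DC, horizontal, vertical) predicted from the reconstructed top row and left column, SAD as the primary-selection cost, and a caller-supplied `full_cost` for the final decision; all names are hypothetical, and real encoders evaluate many more angular modes with rate-distortion costs.

```python
from typing import Callable, Dict, List, Sequence

Block = List[List[int]]
MODES = ("DC", "horizontal", "vertical")


def predict(mode: str, top: Sequence[int], left: Sequence[int], n: int) -> Block:
    """Build an n x n prediction from the reconstructed top row and left column."""
    if mode == "vertical":
        return [list(top[:n]) for _ in range(n)]
    if mode == "horizontal":
        return [[left[y]] * n for y in range(n)]
    dc = (sum(top[:n]) + sum(left[:n]) + n) // (2 * n)  # rounded mean of neighbors
    return [[dc] * n for _ in range(n)]


def sad(a: Block, b: Block) -> int:
    """Sum of absolute differences between two equally sized blocks."""
    return sum(abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


def preselect_modes(block: Block, top: Sequence[int], left: Sequence[int], keep: int) -> List[str]:
    """Primary selection: keep the `keep` modes with the lowest SAD."""
    n = len(block)
    costs: Dict[str, int] = {m: sad(block, predict(m, top, left, n)) for m in MODES}
    return sorted(costs, key=costs.get)[:keep]


def choose_mode(candidates: List[str], full_cost: Callable[[str], float]) -> str:
    """Final selection among the preselected candidates with a more expensive cost."""
    return min(candidates, key=full_cost)
```

The point of the split is cost control: the cheap SAD pass prunes the mode list so that the expensive evaluation in `choose_mode` runs on only a few candidates per block size.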

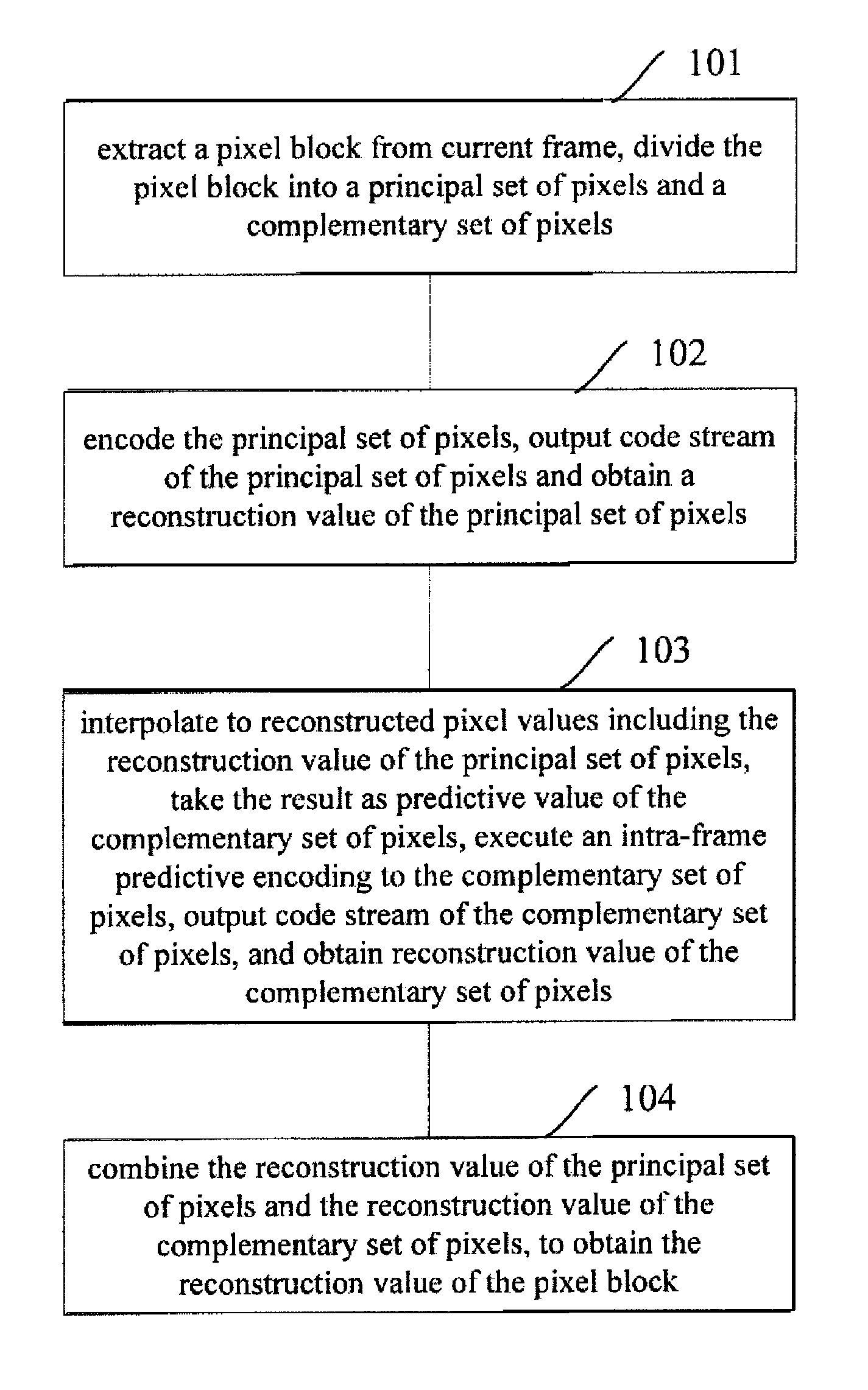

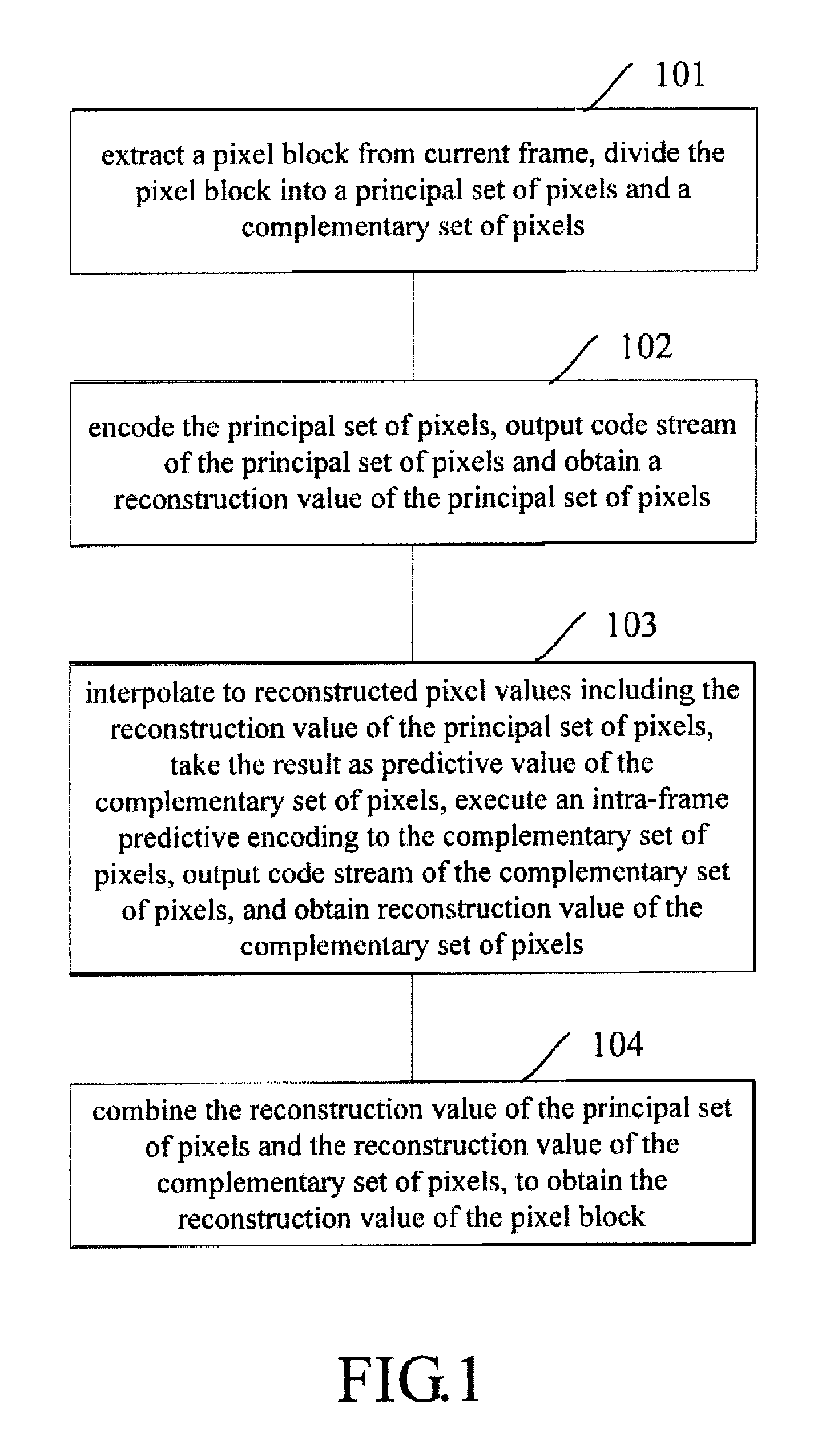

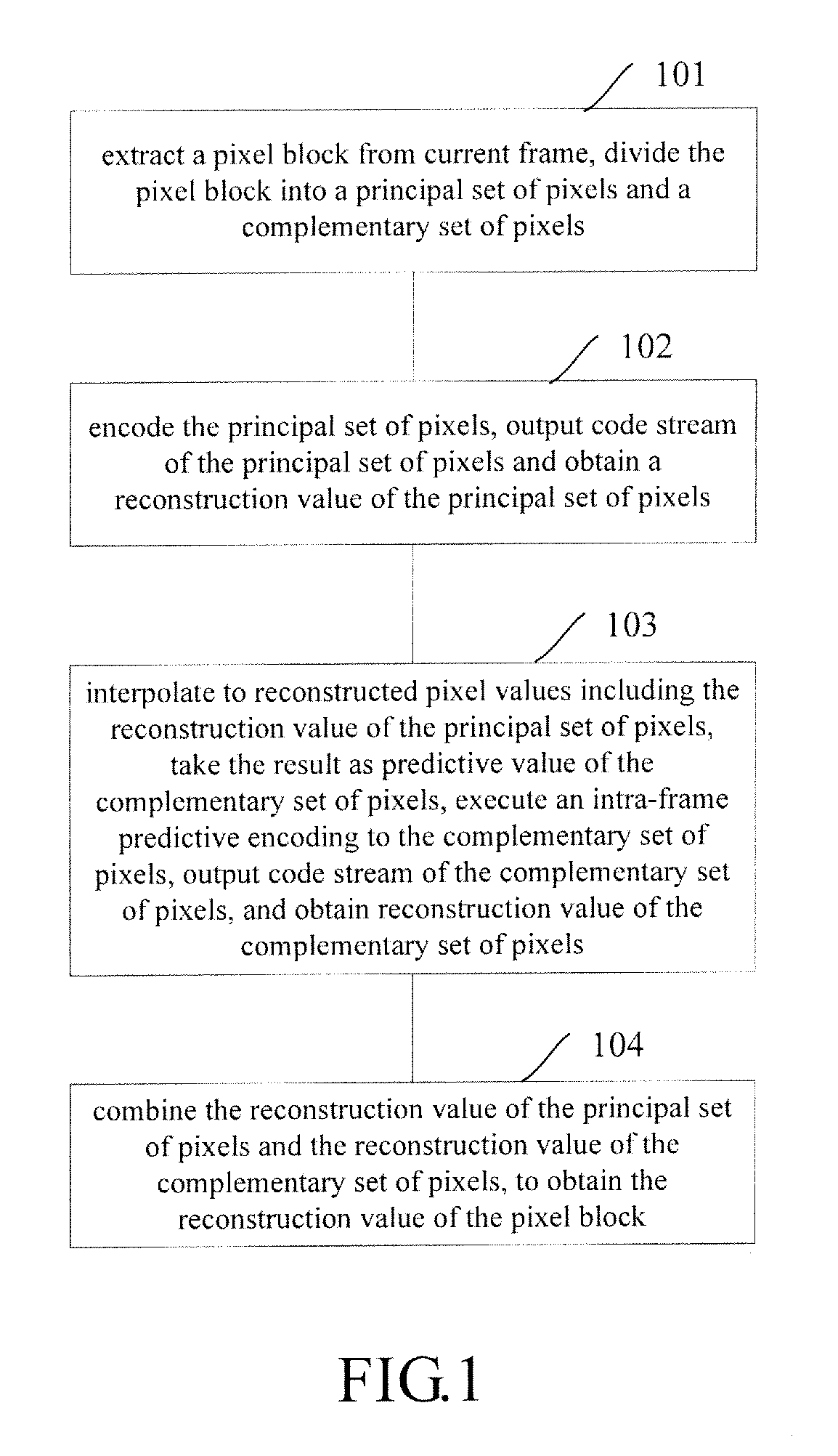

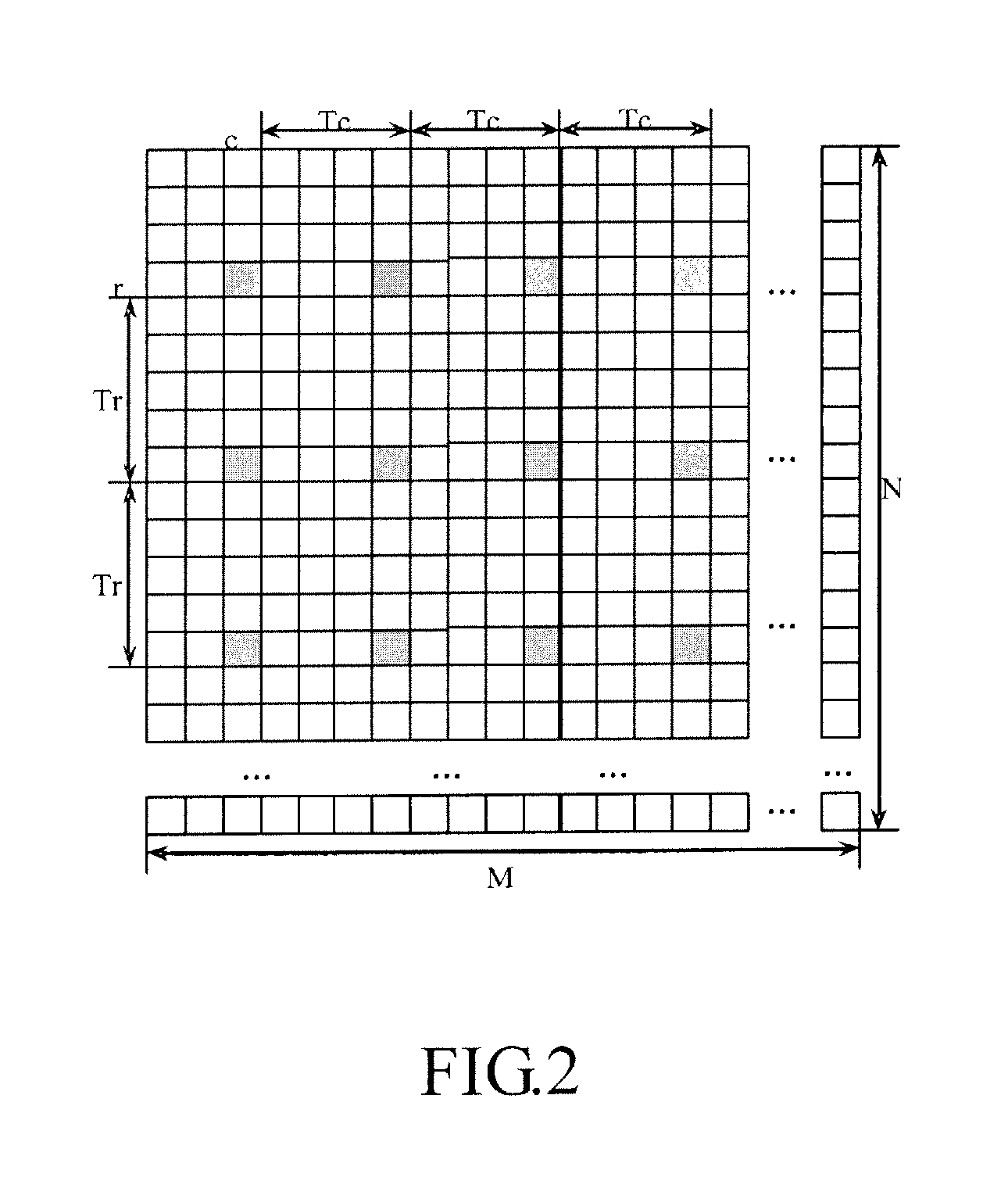

Method and device for video predictive encoding

Active | US20130051468A1 | Improve forecast accuracy | Not be predicted in advance | Color television with pulse code modulation | Color television with bandwidth reduction | Pattern recognition | Video encoding

An embodiment of the invention provides a method for video predictive encoding. First, a pixel block is extracted from the current frame and divided into a principal set of pixels and a complementary set of pixels. The principal set is encoded, its code stream is output, and its reconstruction values are obtained. The reconstructed pixel values, including those of the principal set, are then interpolated, and the result is taken as the predicted values of the complementary set; intra-frame predictive encoding is performed on the complementary set, its code stream is output, and its reconstruction values are obtained. Finally, the reconstruction values of the principal and complementary sets are combined to obtain the reconstruction value of the pixel block. Another embodiment of the invention provides a device for video predictive encoding. Implementations of the invention can improve the compression efficiency of video encoding while lowering implementation complexity, saving memory, and improving the cache hit rate. They are suitable for highly parallel computation and can perform fast encoding of high-definition video sources.

Owner:TENCENT TECH (SHENZHEN) CO LTD +1
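
The principal/complementary split can be sketched for one block. This is a minimal sketch, assuming even columns form the principal set, odd columns form the complementary set, the principal set is reconstructed losslessly, and the complementary set is predicted by averaging its left and right reconstructed neighbors; the split pattern and the interpolation filter are hypothetical choices, not those of the patent.

```python
from typing import List, Tuple

Block = List[List[int]]


def split_columns(block: Block) -> Tuple[Block, Block]:
    """Principal set = even columns, complementary set = odd columns."""
    return [row[0::2] for row in block], [row[1::2] for row in block]


def interpolate_complementary(principal_rec: Block, width: int) -> Block:
    """Predict each odd column as the rounded mean of its reconstructed even neighbors."""
    prediction = []
    for row in principal_rec:
        out = []
        for x in range(1, width, 2):
            left = row[(x - 1) // 2]
            right_idx = (x + 1) // 2
            right = row[right_idx] if right_idx < len(row) else left  # last column: copy left
            out.append((left + right + 1) // 2)
        prediction.append(out)
    return prediction


def residual(actual: Block, predicted: Block) -> Block:
    """Prediction residual that the intra-frame coder would encode for the complementary set."""
    return [[a - p for a, p in zip(ra, rp)] for ra, rp in zip(actual, predicted)]


def merge_columns(principal: Block, complementary: Block, width: int) -> Block:
    """Interleave the two reconstructed sets back into the full pixel block."""
    merged = []
    for p_row, c_row in zip(principal, complementary):
        row = [0] * width
        row[0::2] = p_row
        row[1::2] = c_row
        merged.append(row)
    return merged
```

In this sketch every complementary pixel depends only on already reconstructed principal pixels, so all of them can be predicted independently of one another, which fits the parallel-computation claim in the abstract.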

© 2025 PatSnap. All rights reserved.