Detection and processing method for boundary inconsistency of depth and color videos

A technology for color and depth video, applied in the field of 3D video coding. It addresses the problems of large prediction residuals and reduced coding efficiency, with the effects of reducing coding cost and improving rate-distortion performance.

Specific Embodiments

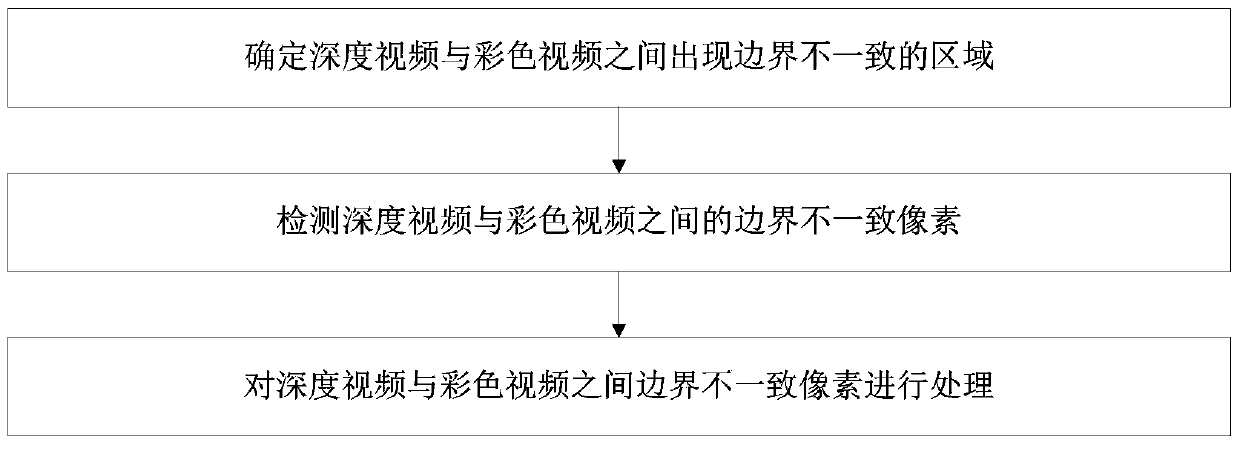

[0031] As shown in Figure 3:

[0032] S1. Identify areas where boundary inconsistencies occur between the depth video and the color video:

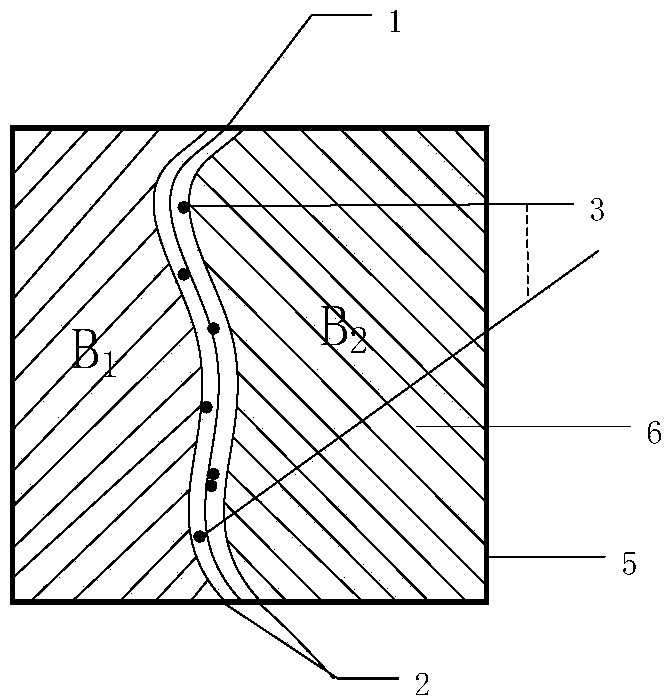

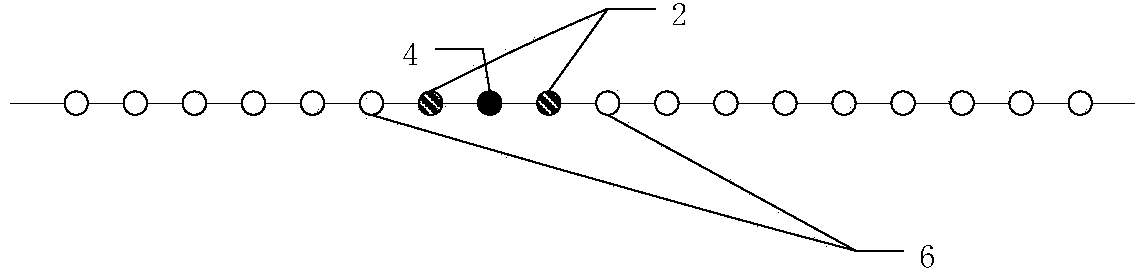

[0033] Use a boundary detection operator, such as the Canny edge detector (a multi-stage edge detection operator), to detect the boundaries in the depth map, and denote the detected boundary as $E_d$. Boundary inconsistency between the depth video and the color video appears mainly within 2 to 3 pixels of the object boundaries in the depth video, so errors more than 3 pixels away are no longer treated as boundary inconsistency. The region H pixels wide, centered on the object boundaries detected in the depth map, is therefore taken as the area where the boundaries of the depth video and the color video are inconsistent, with 2 ≤ H ≤ 3. Accordingly, $E_d$ is dilated with a 3×3 rectangular structuring element to obtain the region 3 pixels wide centered on $E_d$, which is the area where the boundary between the depth video and the colo...
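The detection step above (find $E_d$, then dilate it with a 3×3 rectangle) can be sketched as follows. This is a minimal illustration, not the patent's implementation: to stay dependency-light it swaps the Canny operator for a simple depth-discontinuity test, and the function names and the `threshold` value are hypothetical. With OpenCV available, `cv2.Canny` and `cv2.dilate` with `cv2.getStructuringElement(cv2.MORPH_RECT, (3, 3))` would be the closer match to the text.

```python
import numpy as np


def detect_depth_boundaries(depth: np.ndarray, threshold: float = 10.0) -> np.ndarray:
    """Return a boolean boundary map E_d for a depth map.

    Hypothetical stand-in for the Canny operator named in the text: a pixel is
    marked when its right or lower neighbour differs by more than `threshold`.
    Only the pixel before each jump is marked, so edges stay 1 pixel wide.
    """
    # Convert first so uint8 depth values do not wrap around in np.diff.
    d = depth.astype(np.float64)
    edges = np.zeros(d.shape, dtype=bool)
    edges[:, :-1] |= np.abs(np.diff(d, axis=1)) > threshold  # horizontal jumps
    edges[:-1, :] |= np.abs(np.diff(d, axis=0)) > threshold  # vertical jumps
    return edges


def dilate_3x3(mask: np.ndarray) -> np.ndarray:
    """Binary dilation with a 3x3 rectangular structuring element."""
    h, w = mask.shape
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    out = np.zeros((h, w), dtype=bool)
    # OR together the 9 shifted copies covered by the 3x3 window.
    for dy in range(3):
        for dx in range(3):
            out |= padded[dy:dy + h, dx:dx + w]
    return out


def inconsistency_region(depth: np.ndarray, threshold: float = 10.0) -> np.ndarray:
    """Band 3 pixels wide centred on the detected depth boundaries (H = 3)."""
    return dilate_3x3(detect_depth_boundaries(depth, threshold))


# Toy depth map: value 50 on the left half, 200 on the right half.
depth = np.full((6, 8), 50, dtype=np.uint8)
depth[:, 4:] = 200
region = inconsistency_region(depth)
print(np.flatnonzero(region.any(axis=0)))  # columns 2, 3 and 4 are flagged
```

Because the sketch keeps $E_d$ one pixel wide, a single 3×3 dilation yields a band of exactly H = 3 pixels around each boundary, matching the upper end of the 2 ≤ H ≤ 3 range given above.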

