188 results about "View synthesis" patented technology

View synthesis, currently a branch of computer science research, aims to create new views of a specific subject from a number of pictures taken from given viewpoints. Both computer vision and artificial intelligence research contribute approaches to the problem.

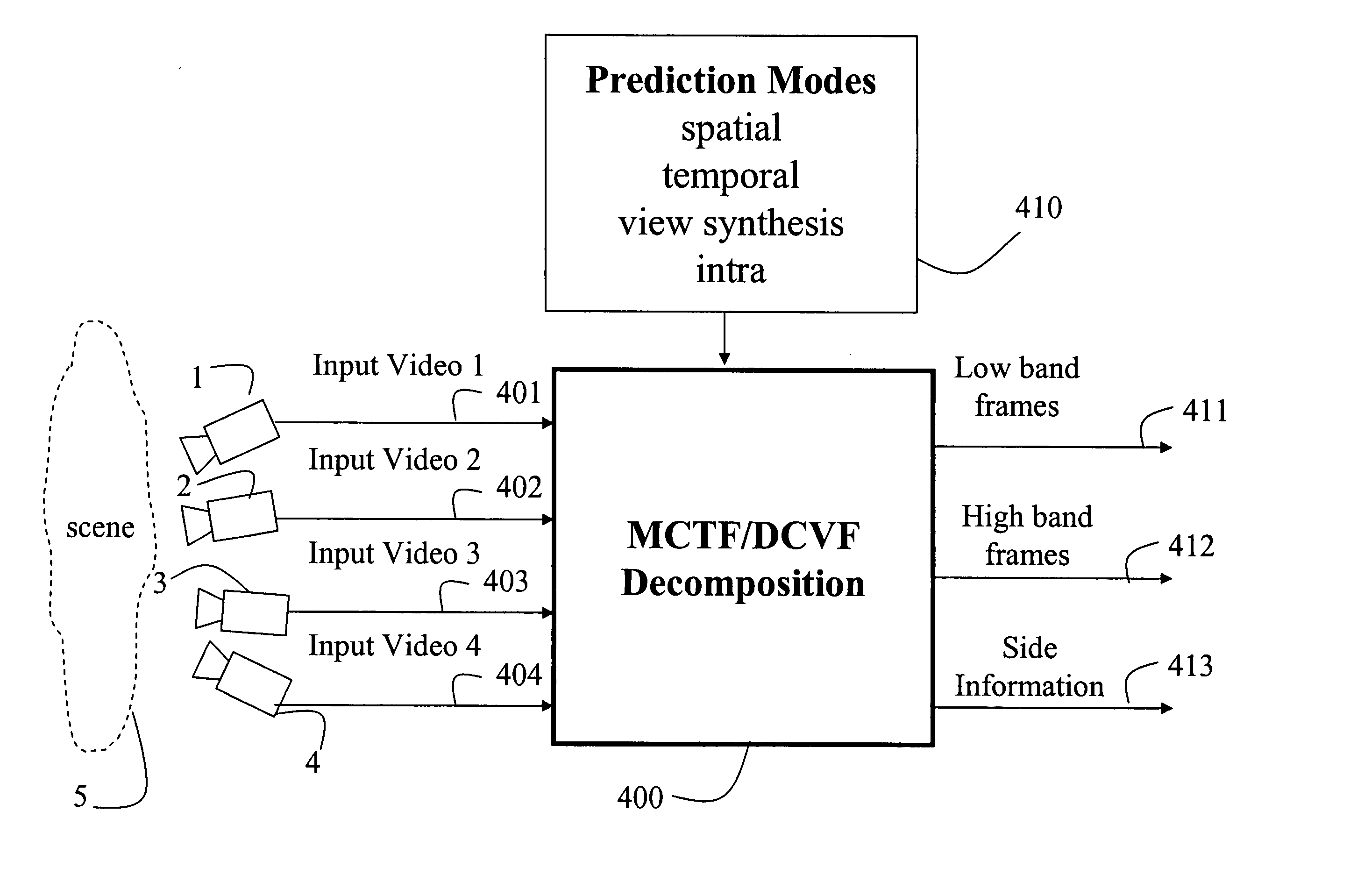

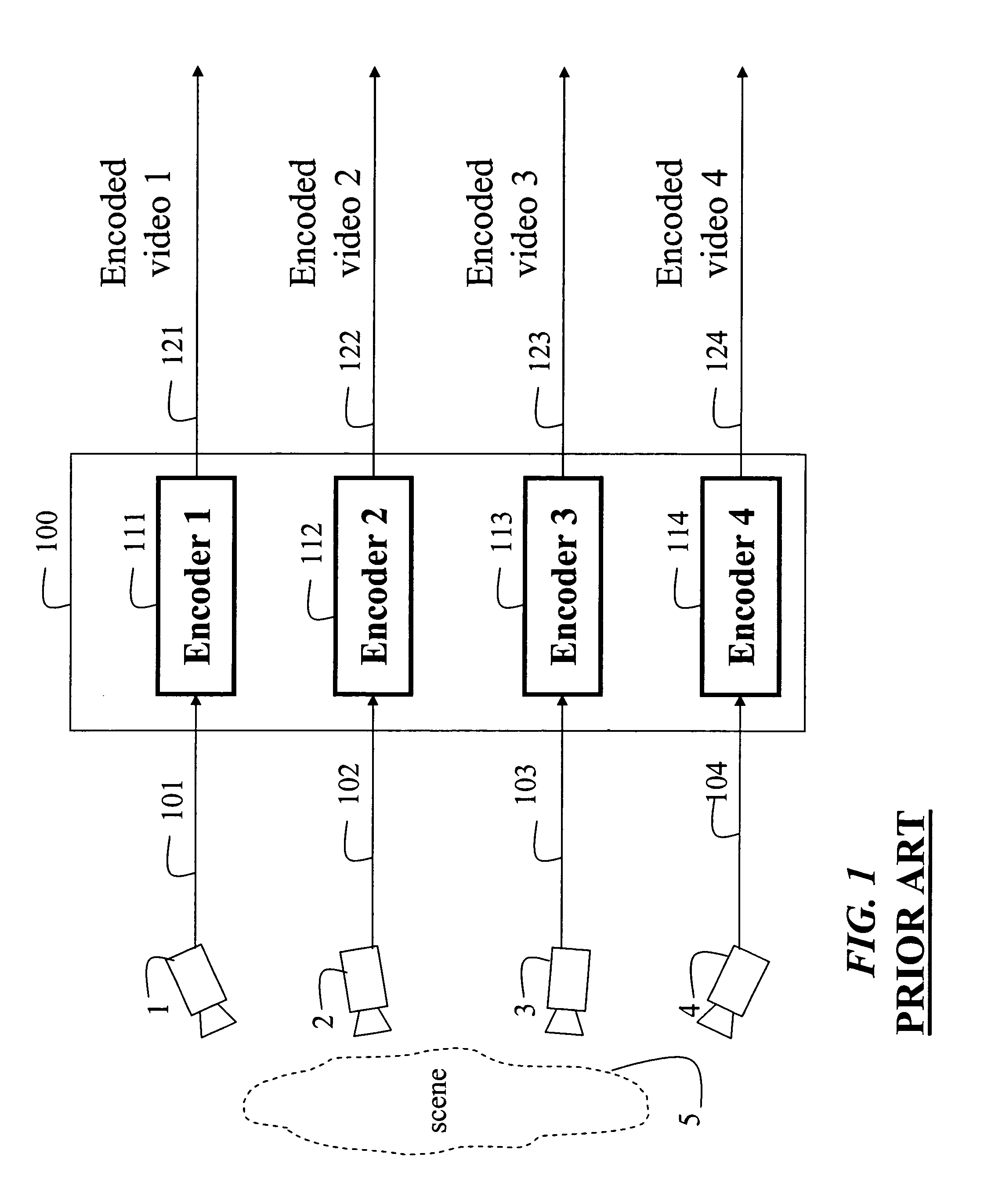

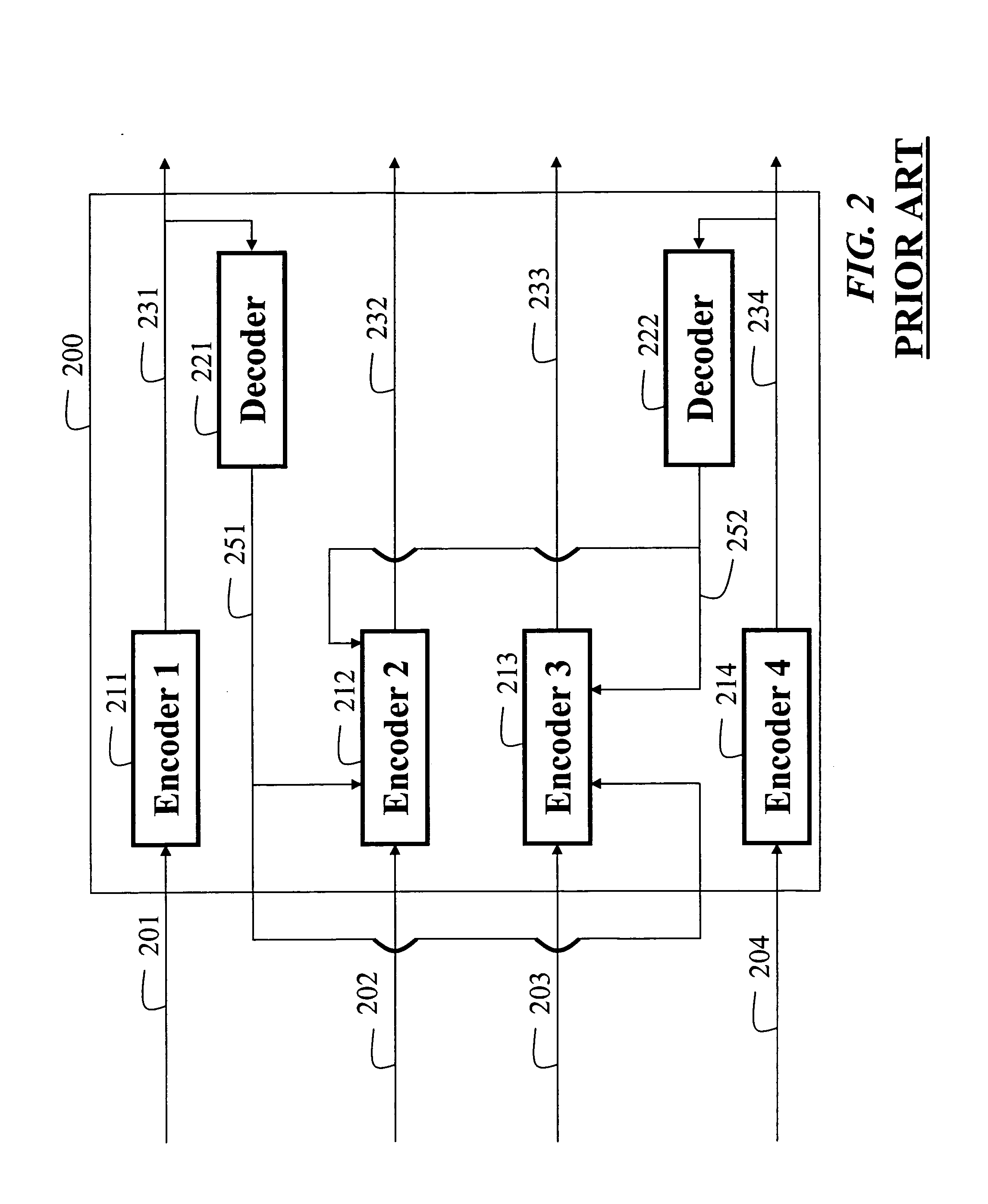

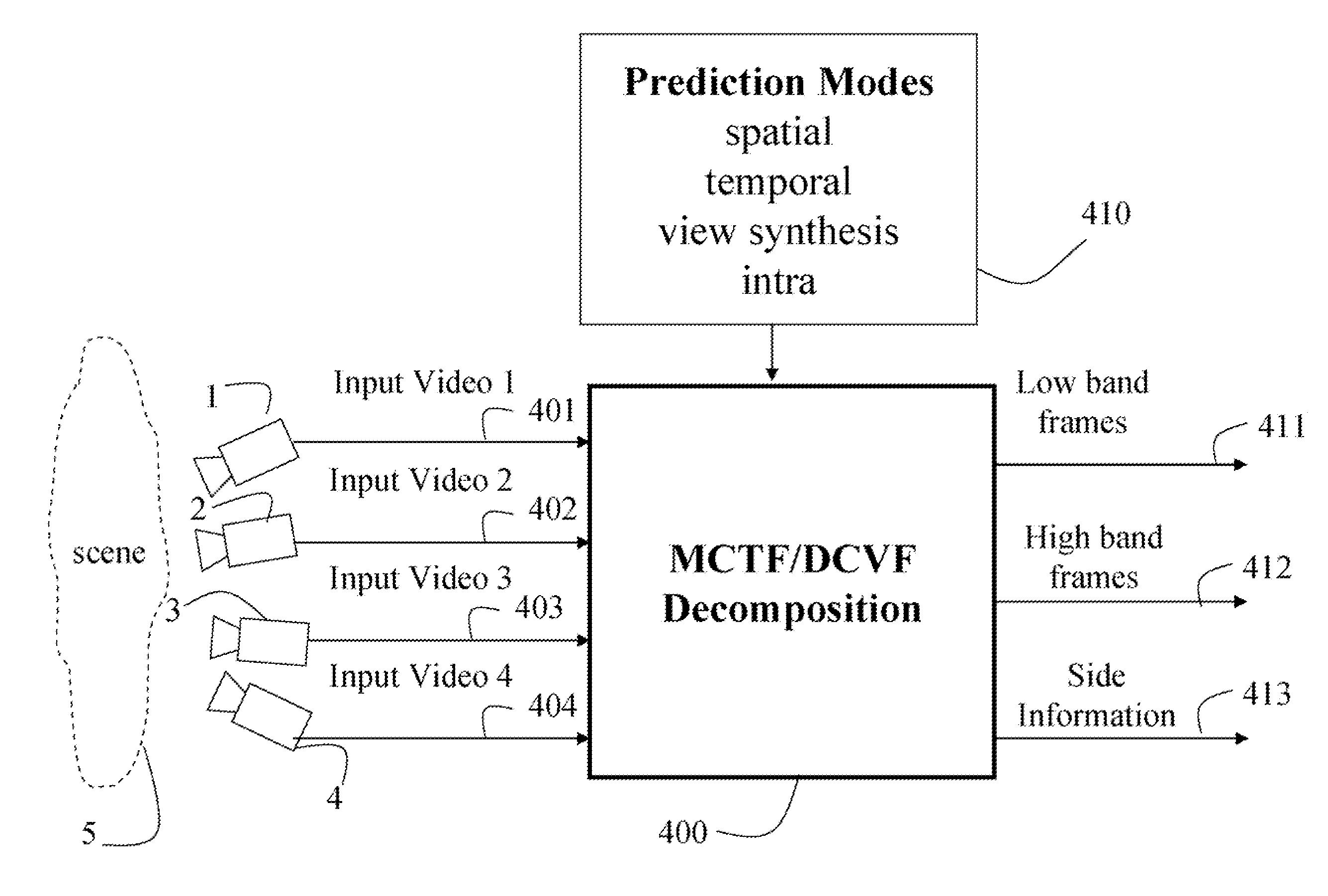

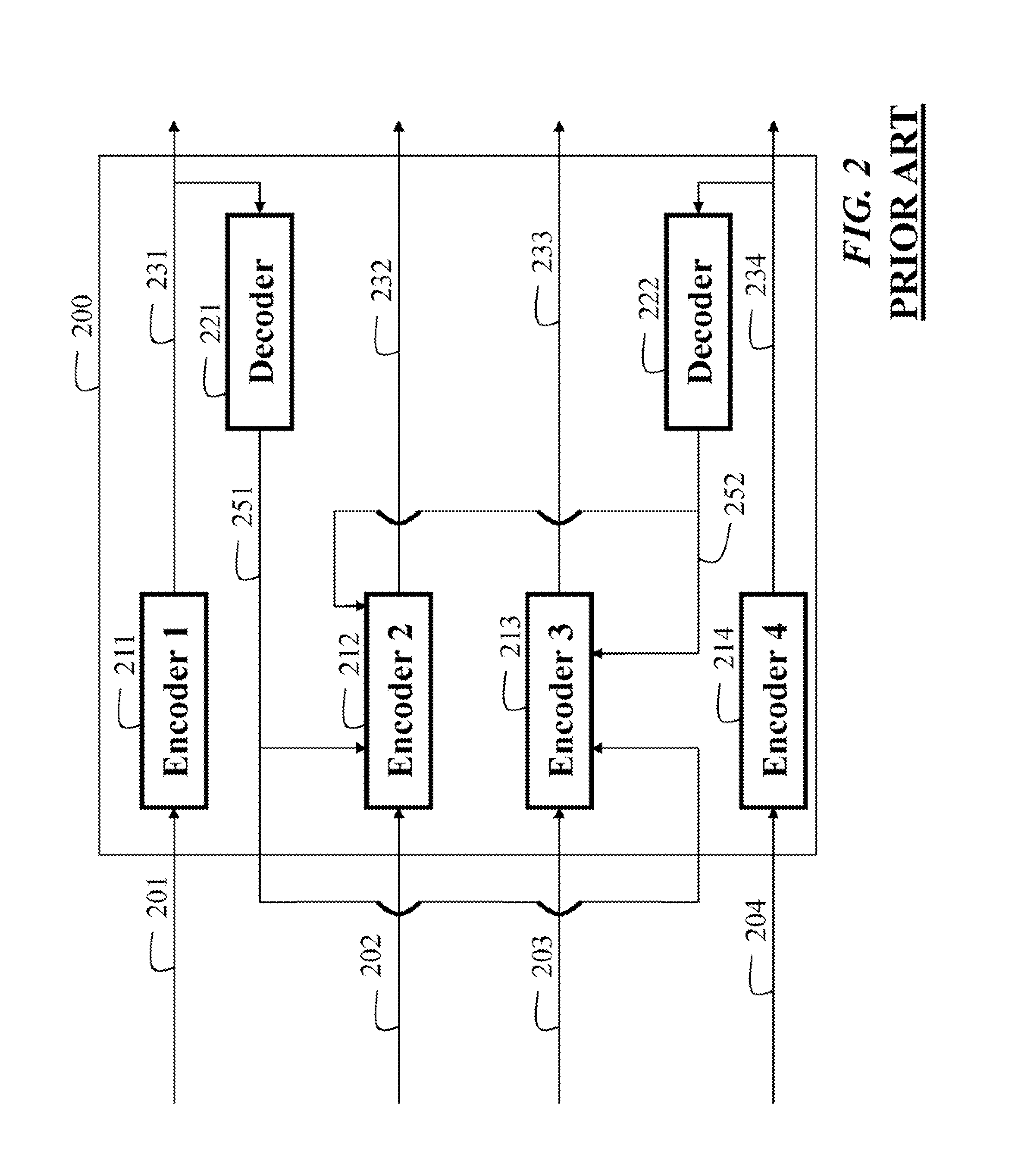

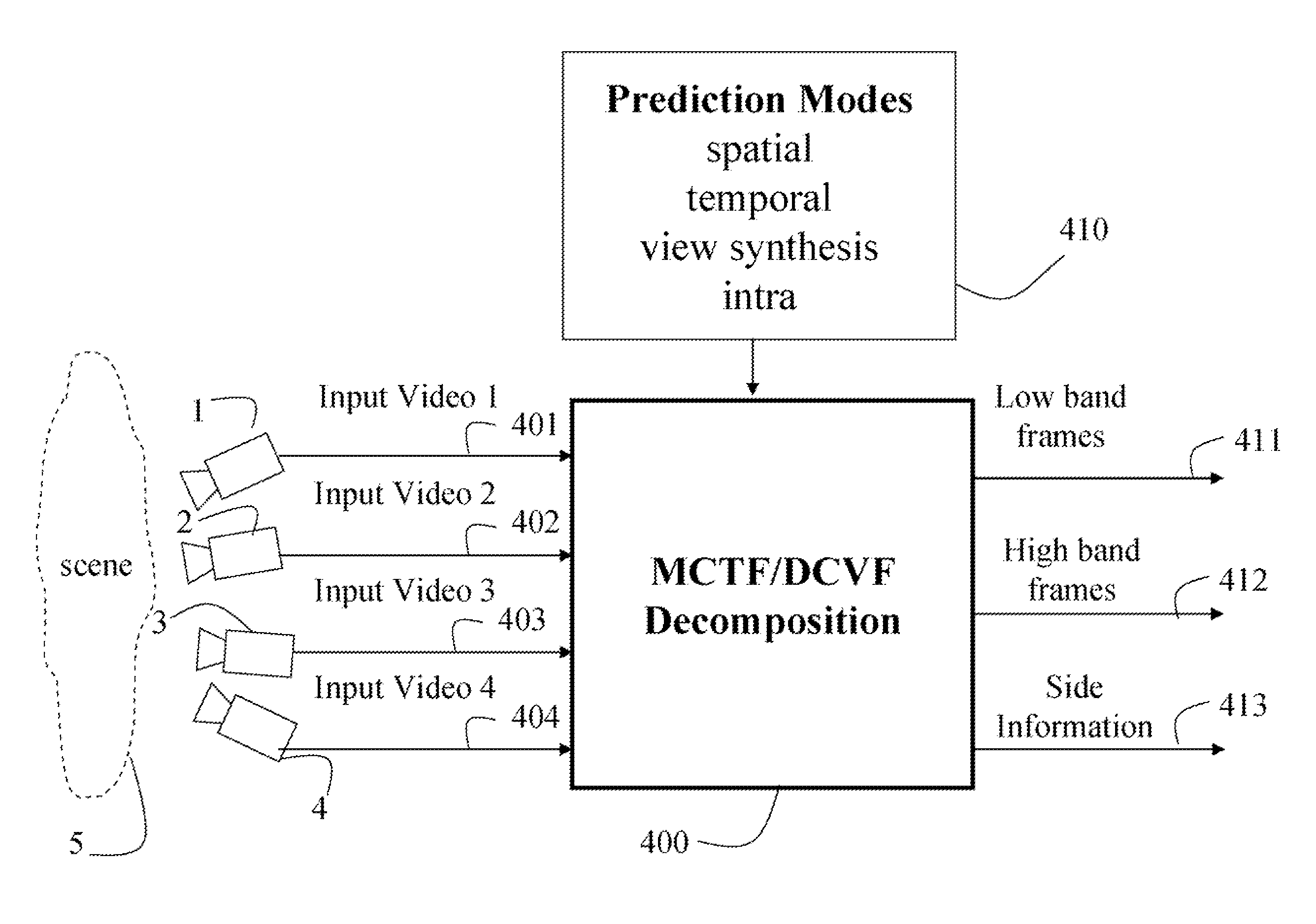

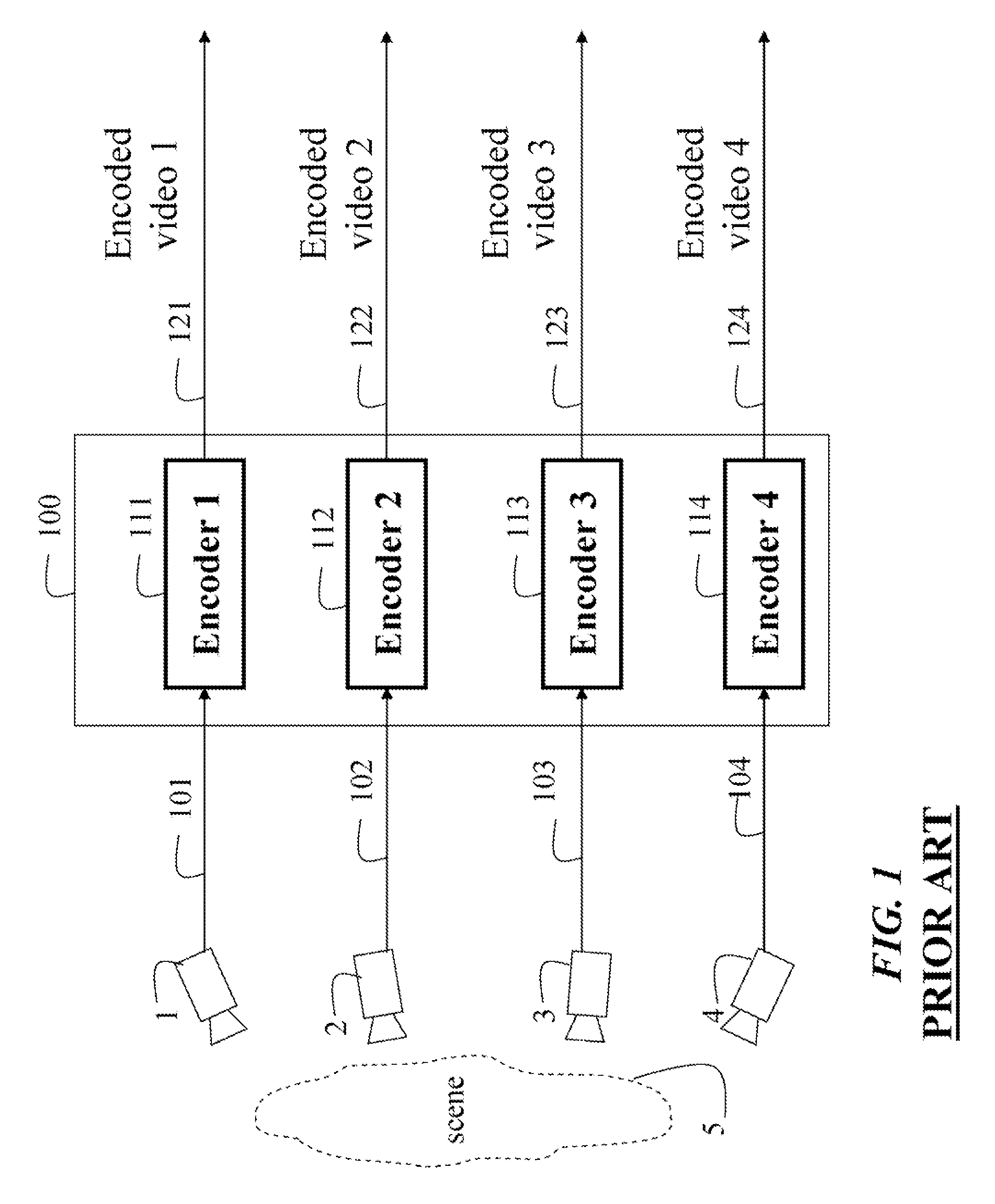

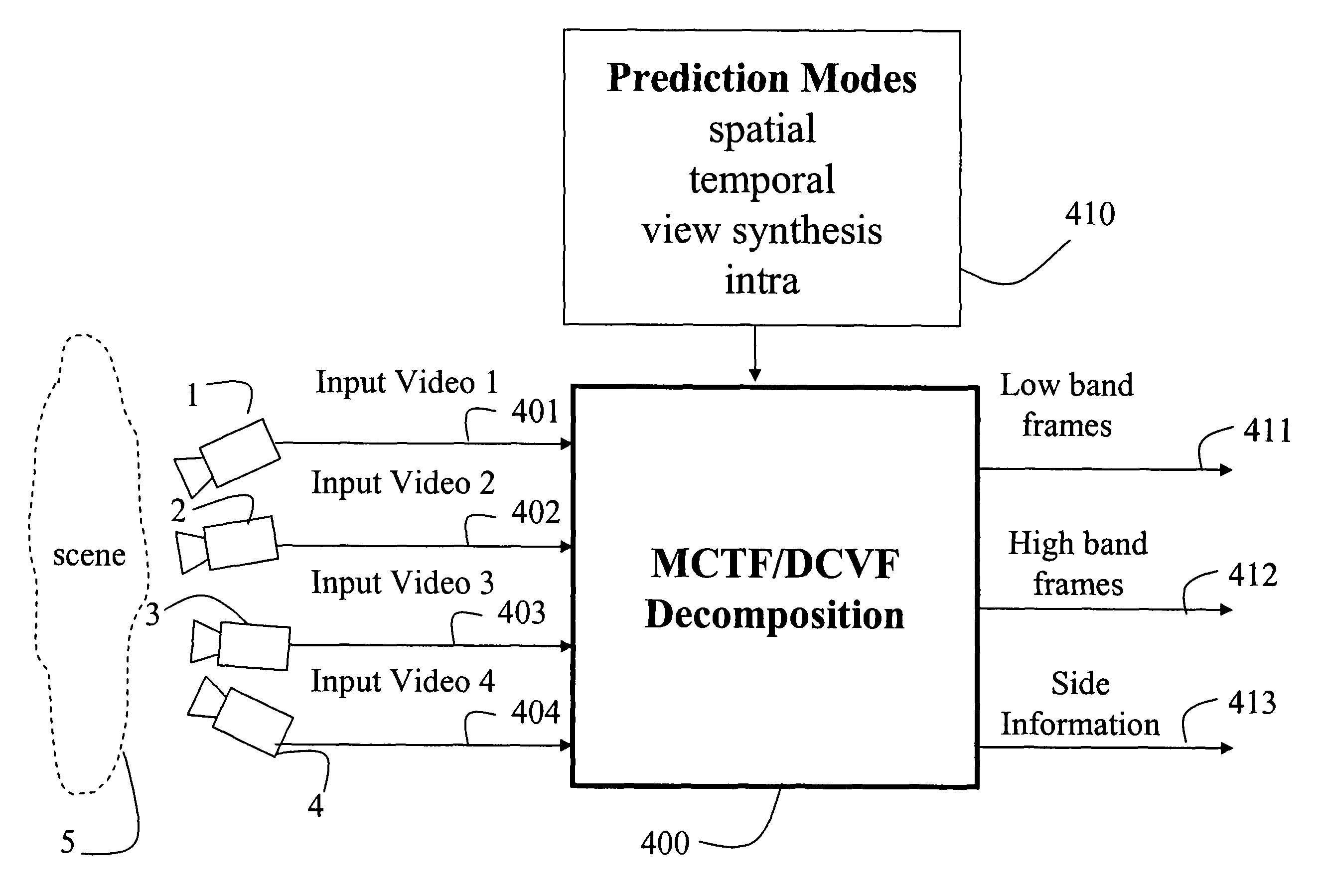

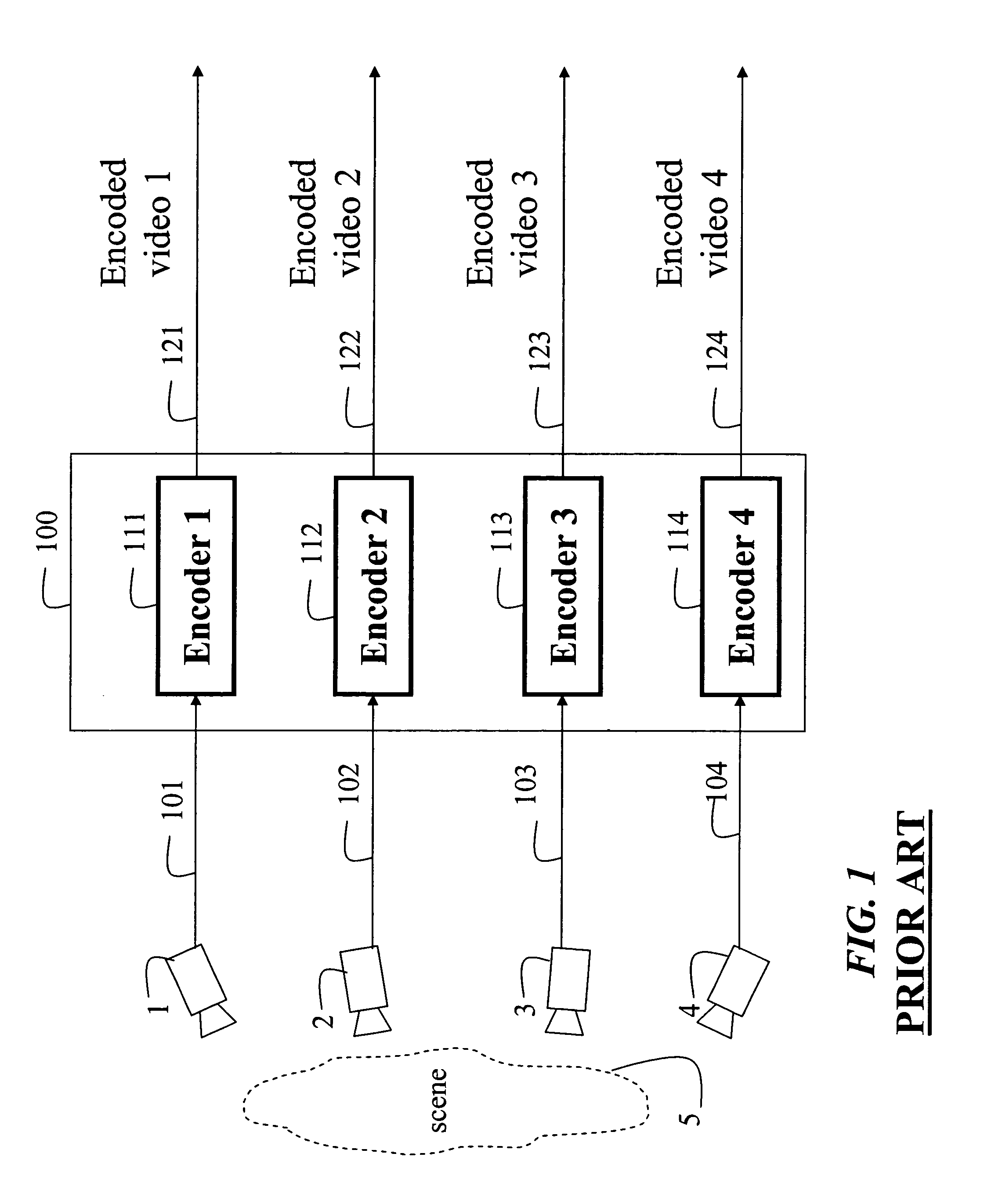

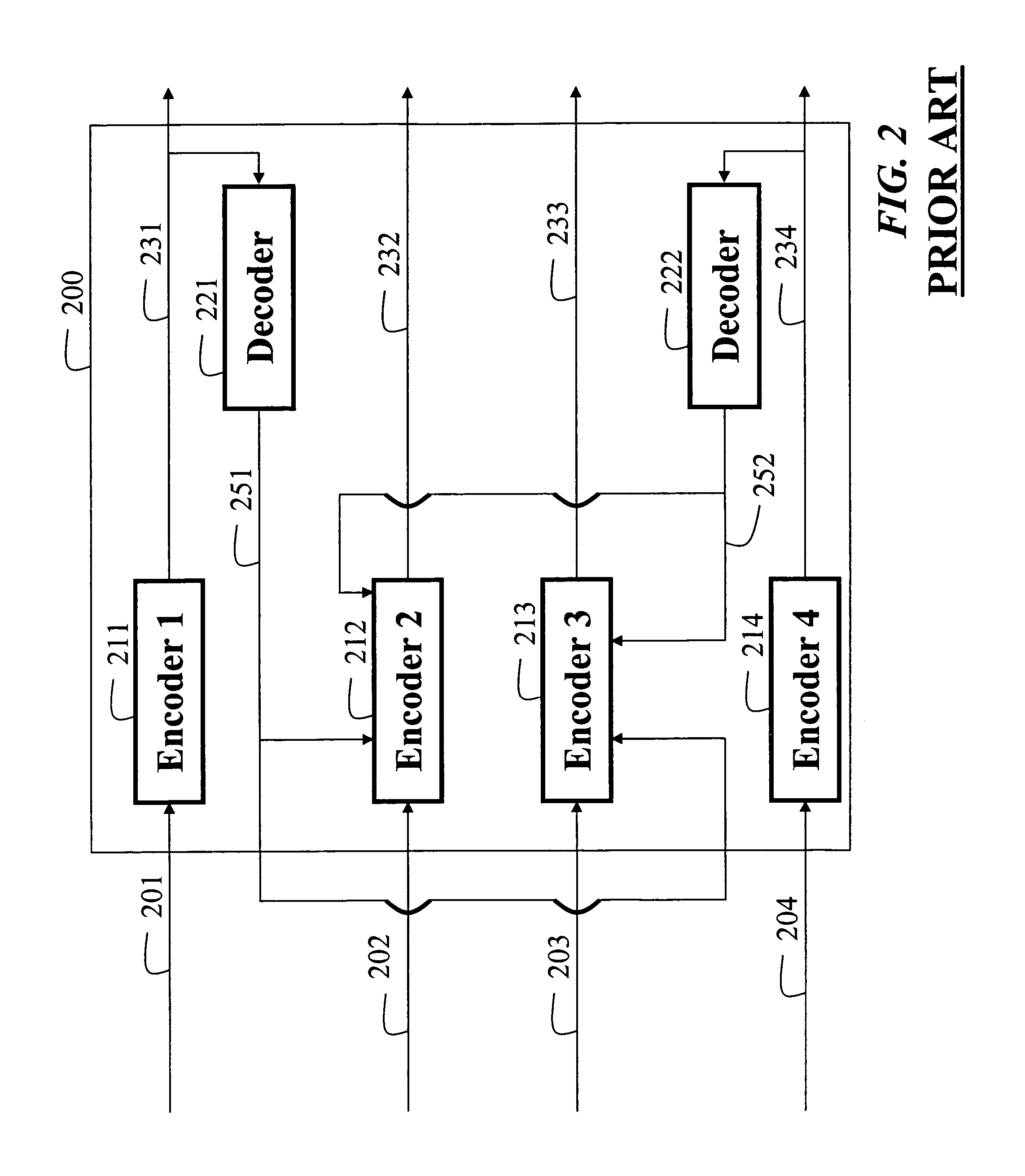

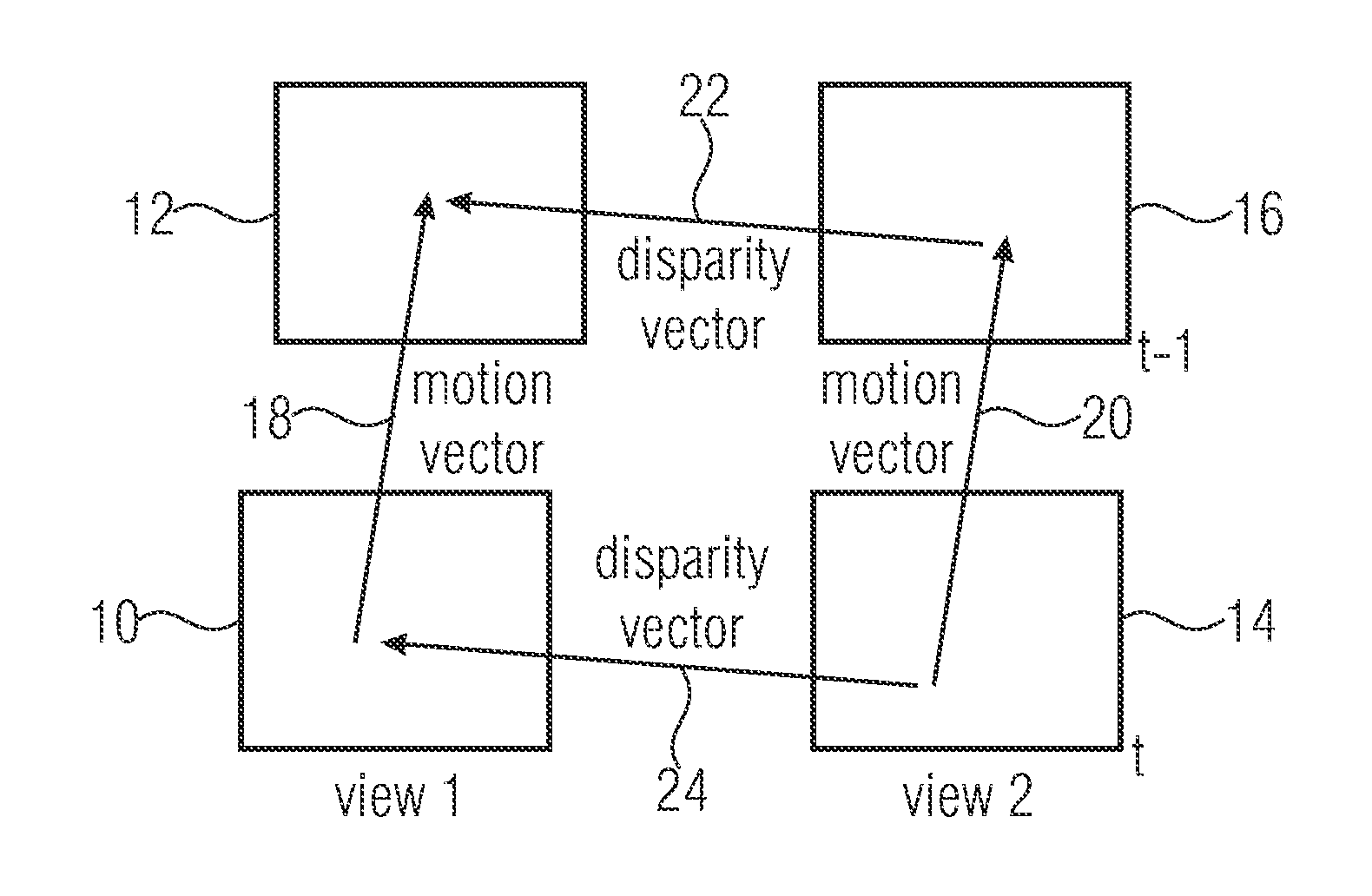

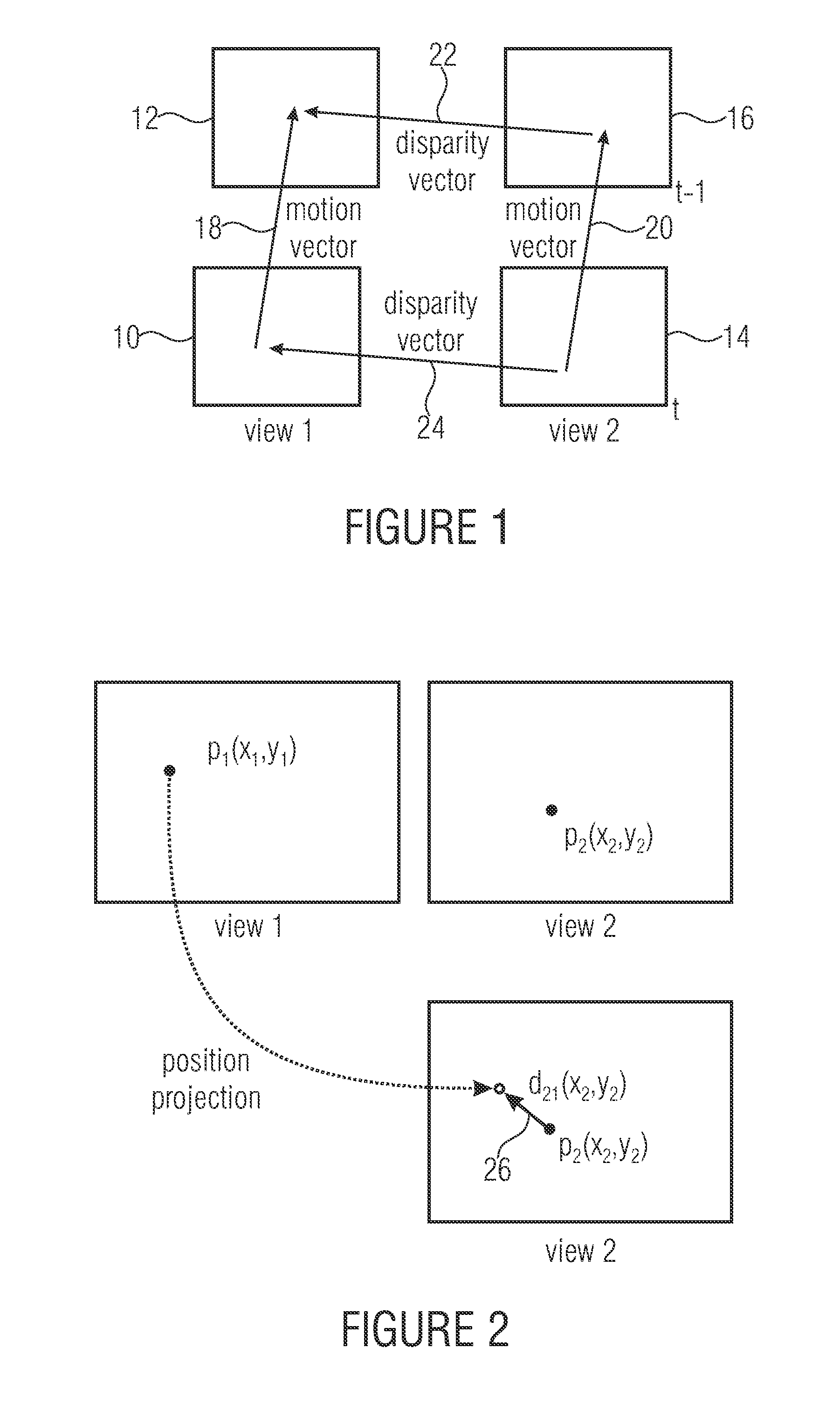

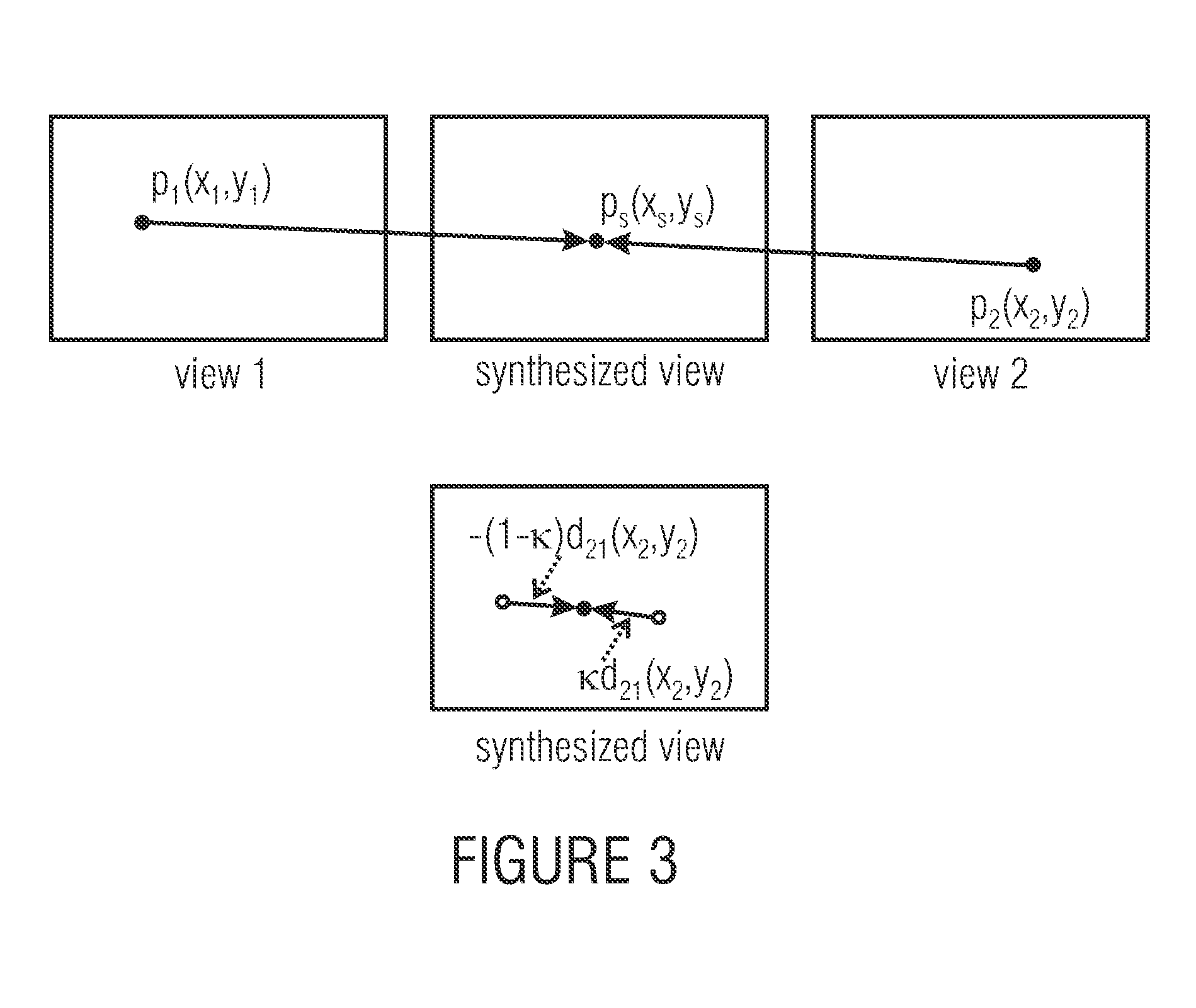

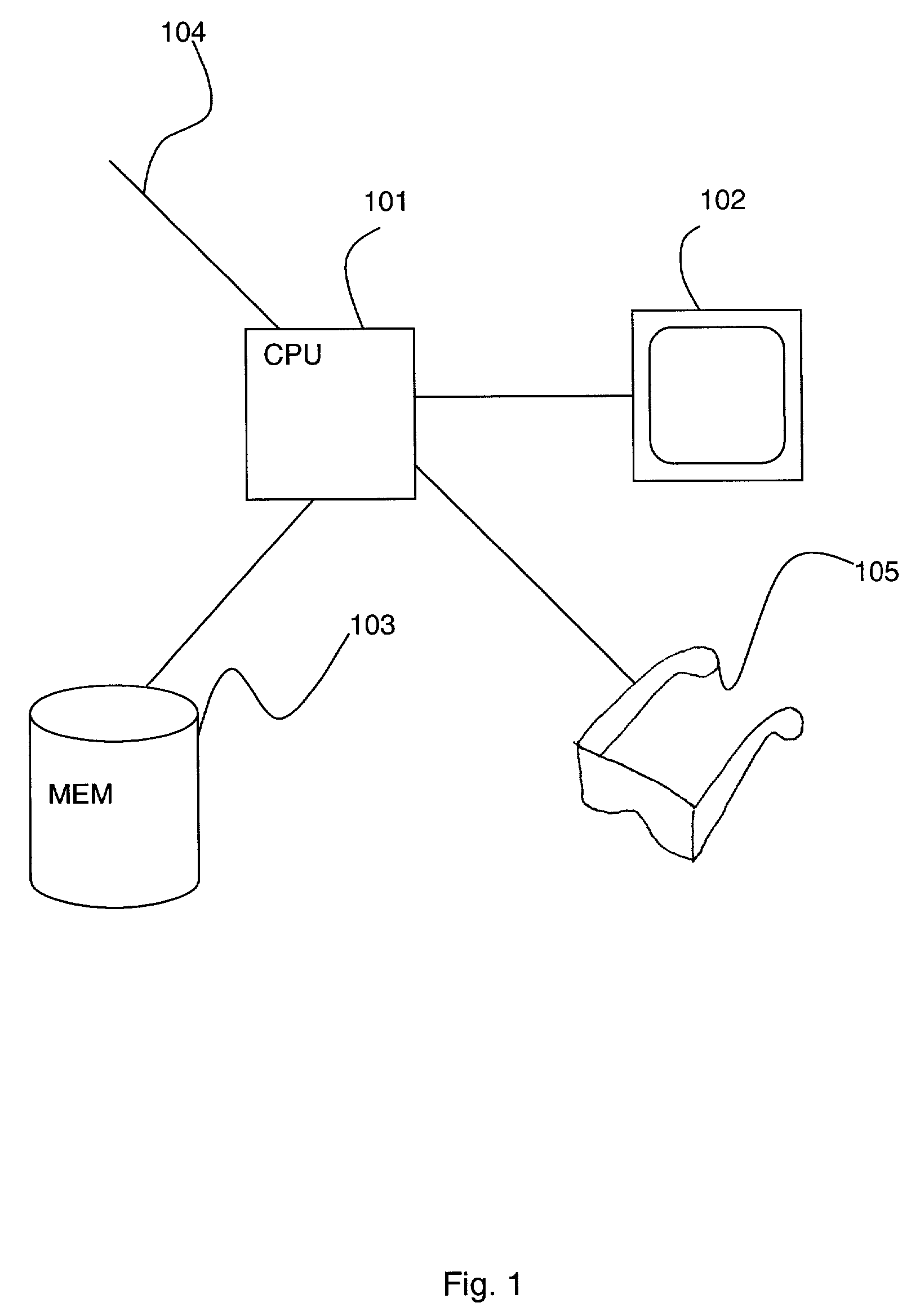

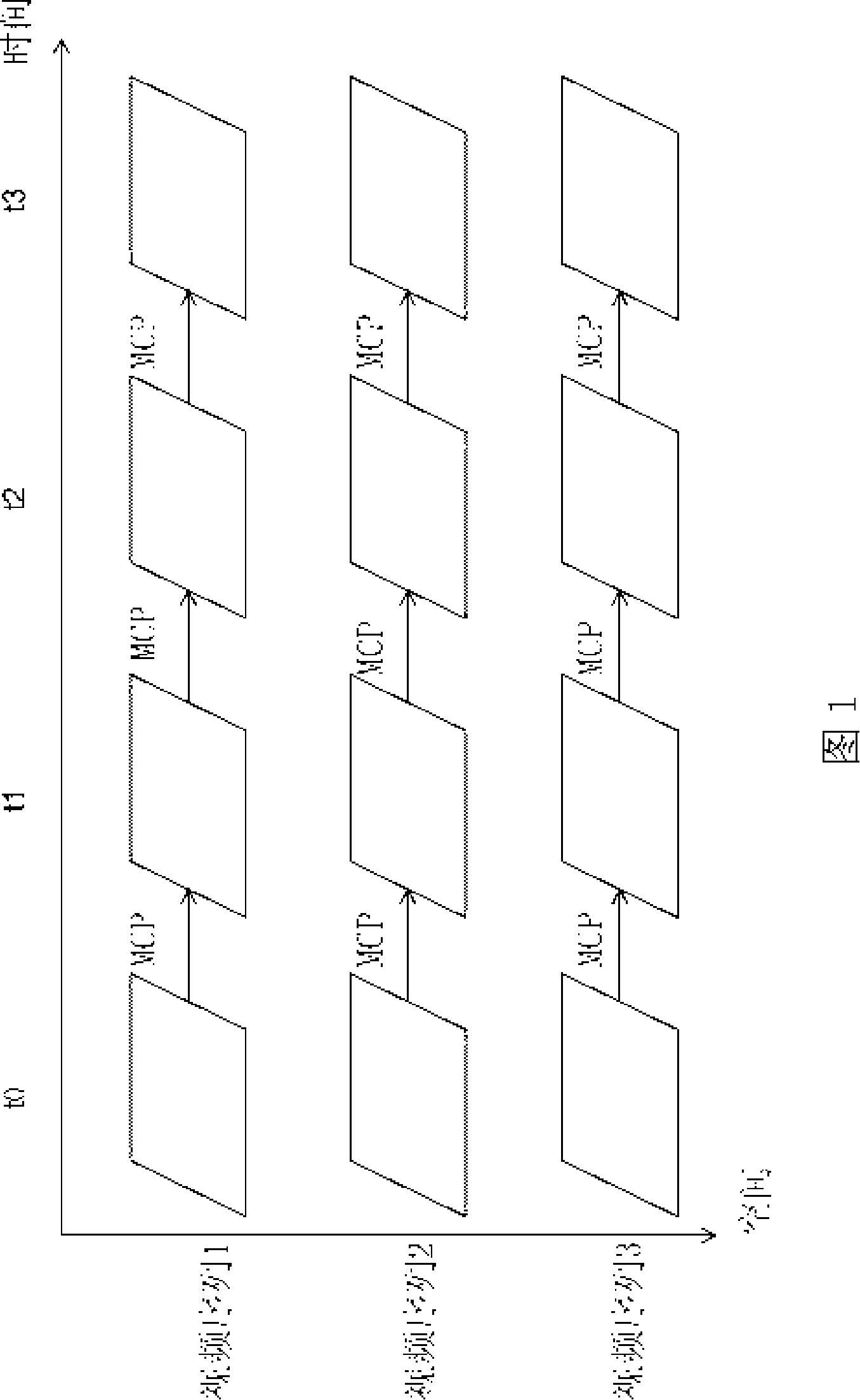

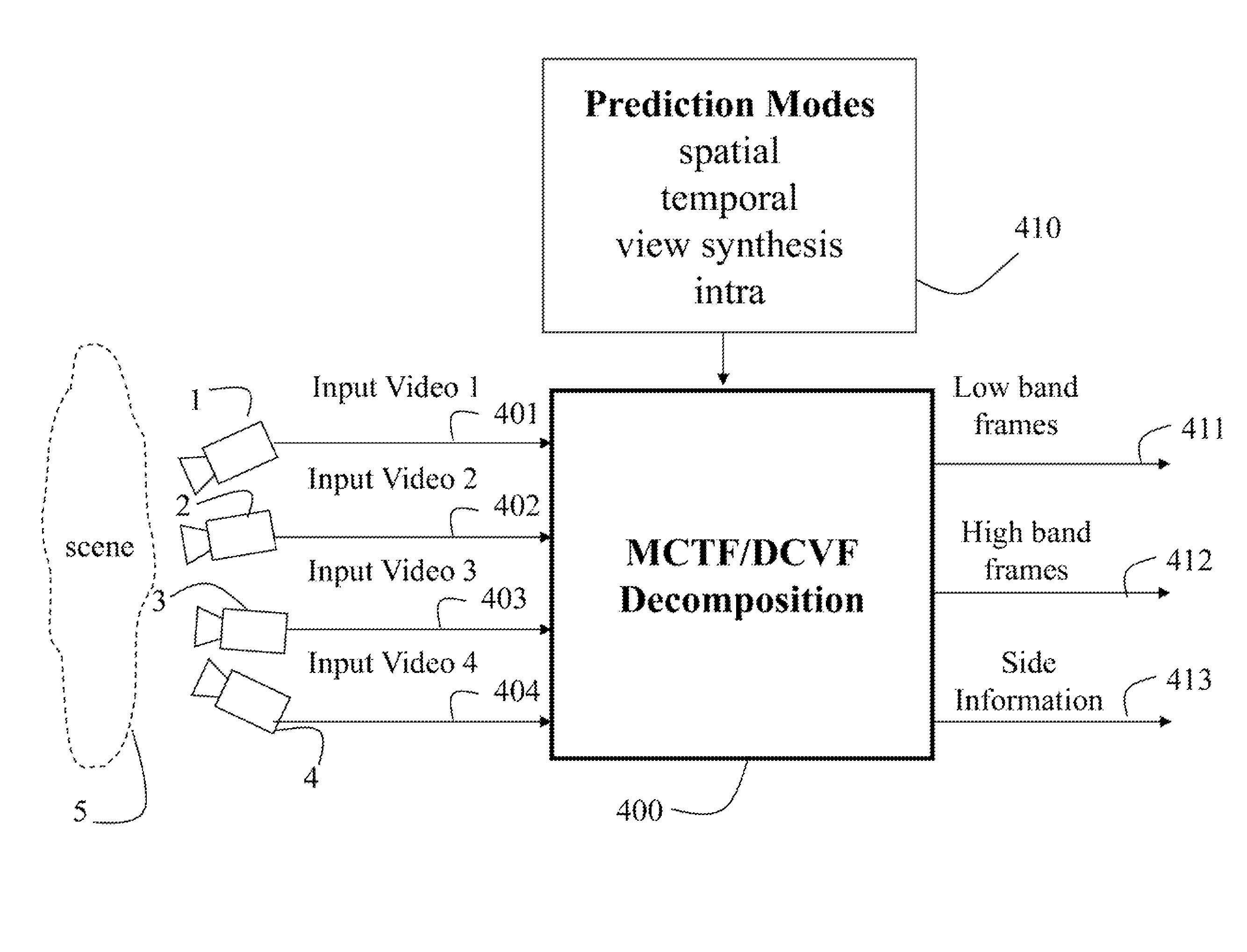

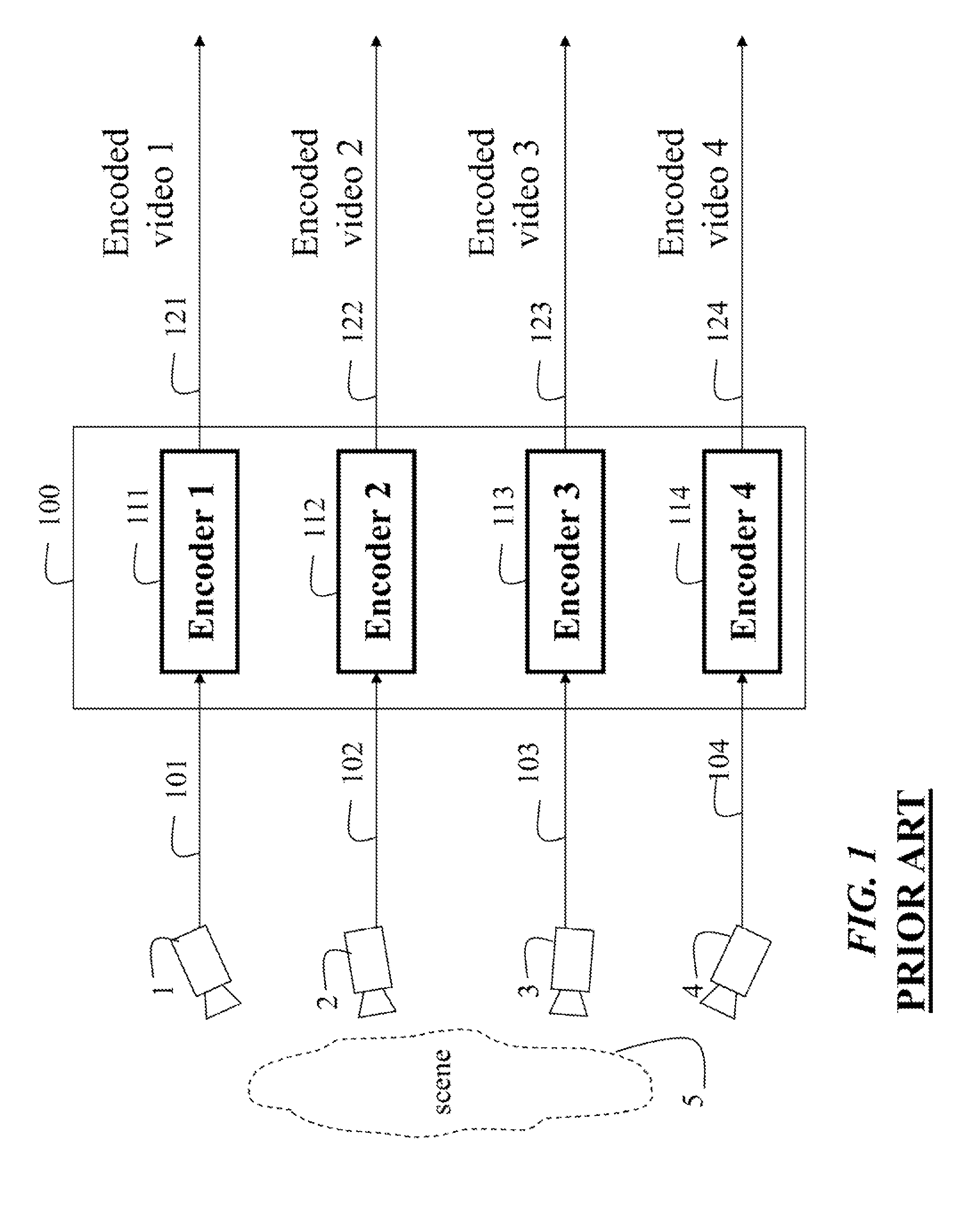

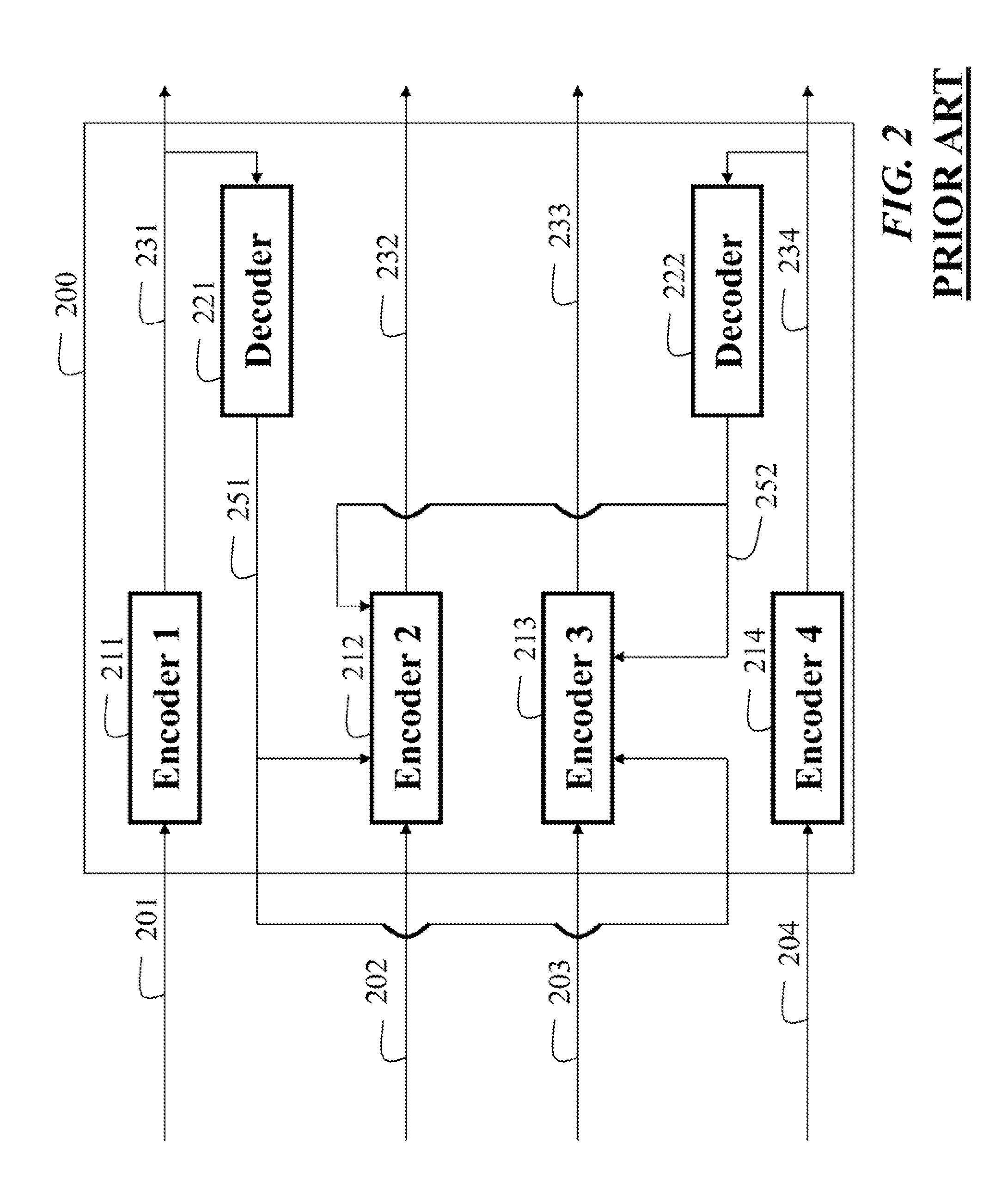

Method and system for processing multiview videos for view synthesis using side information

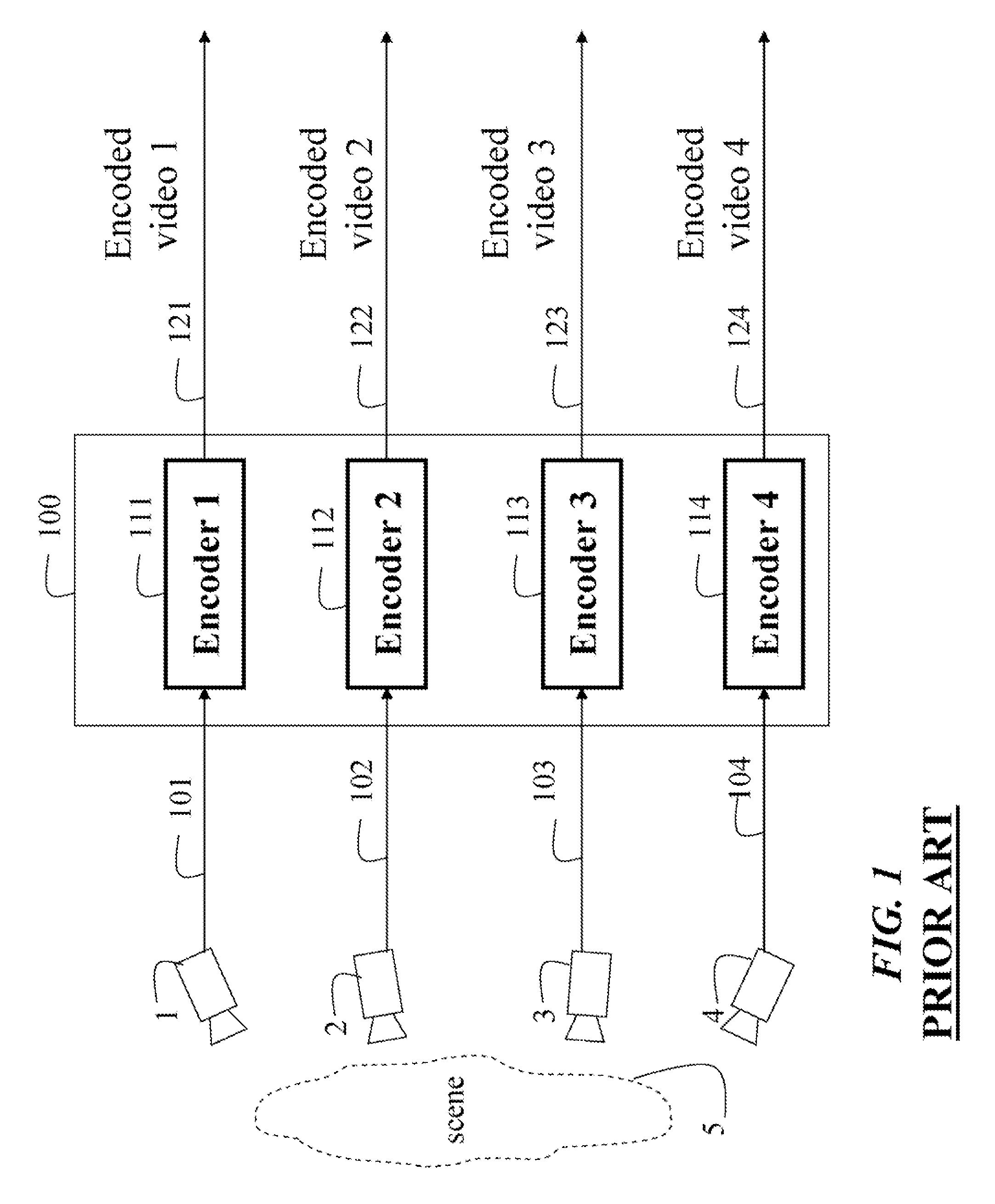

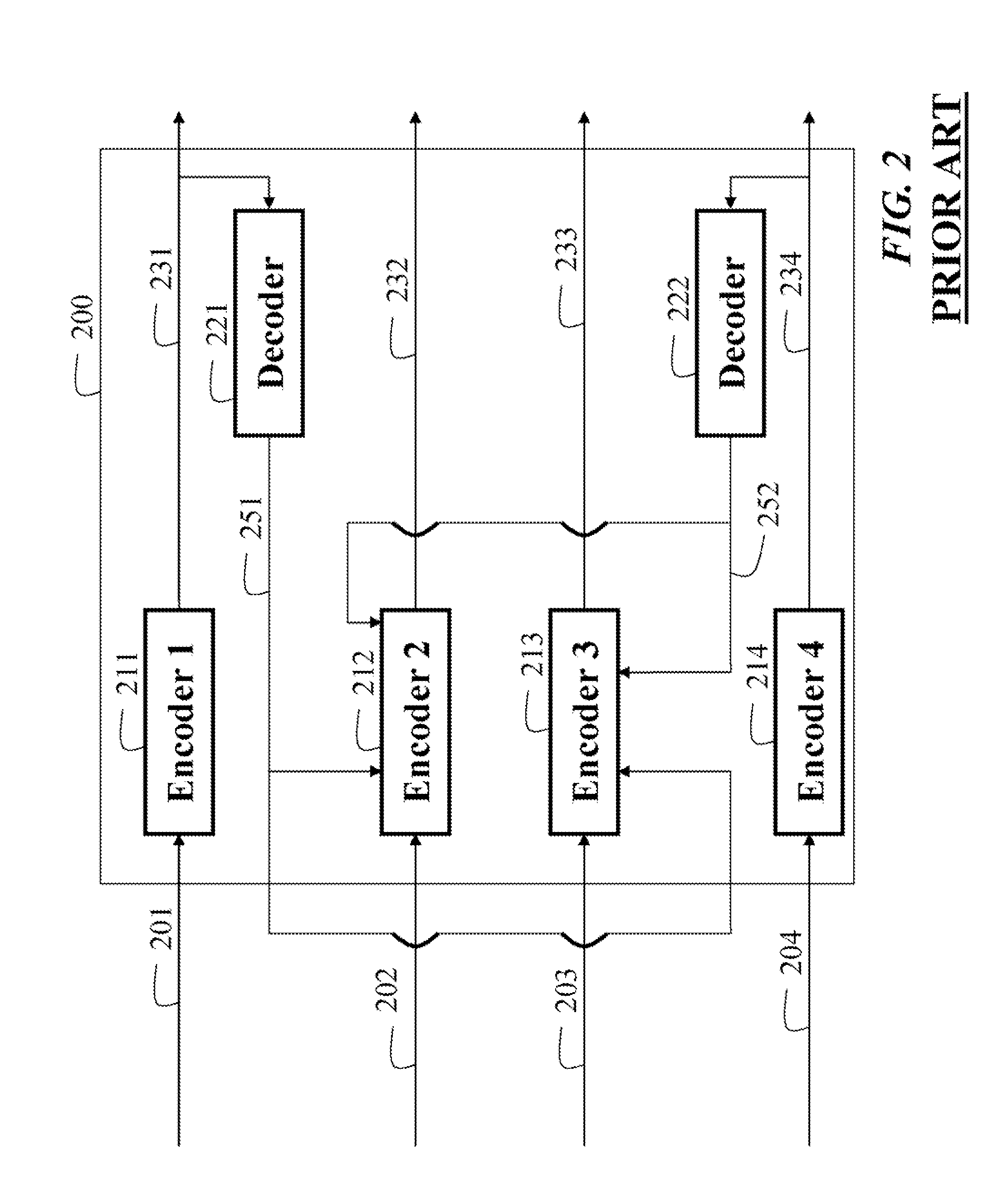

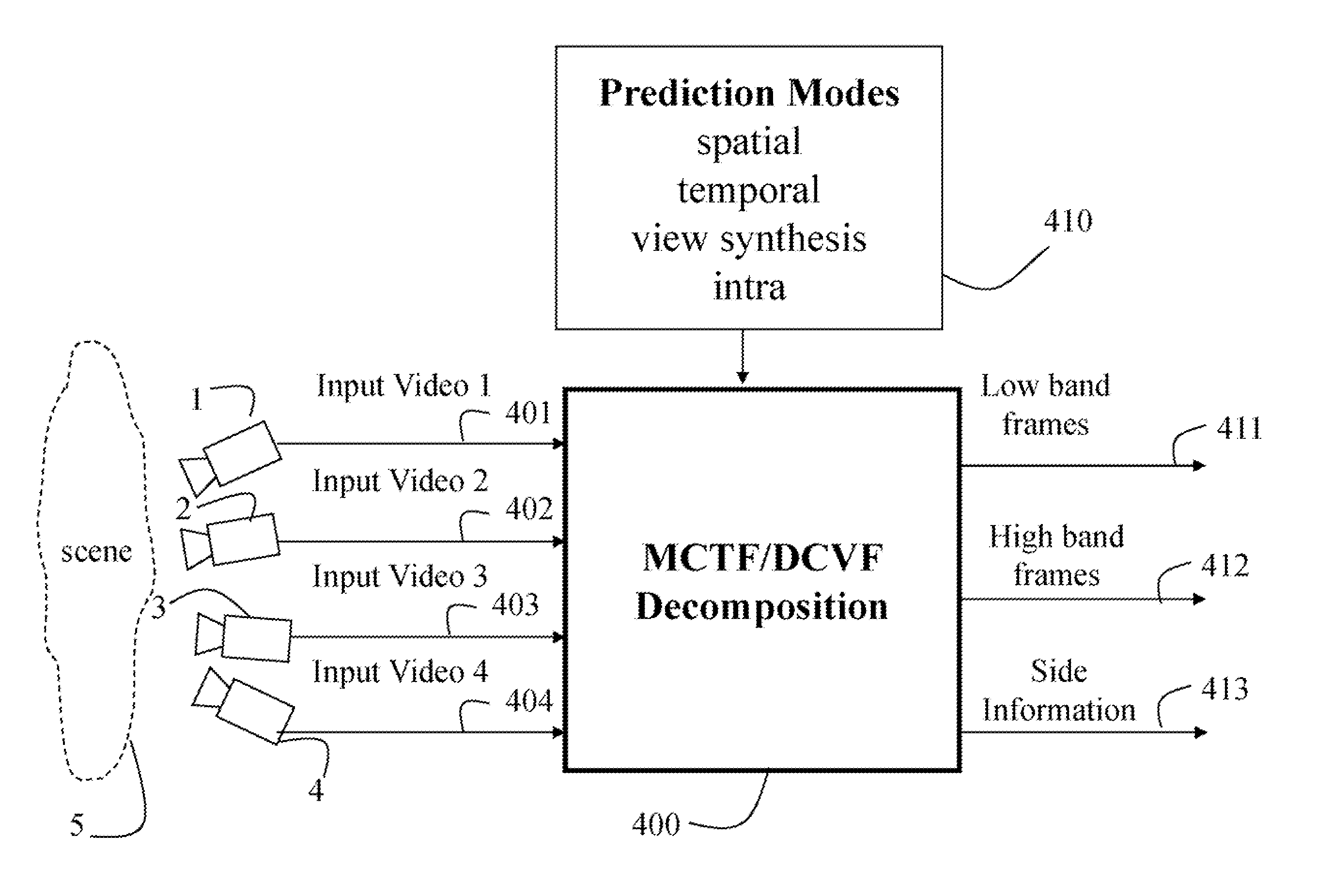

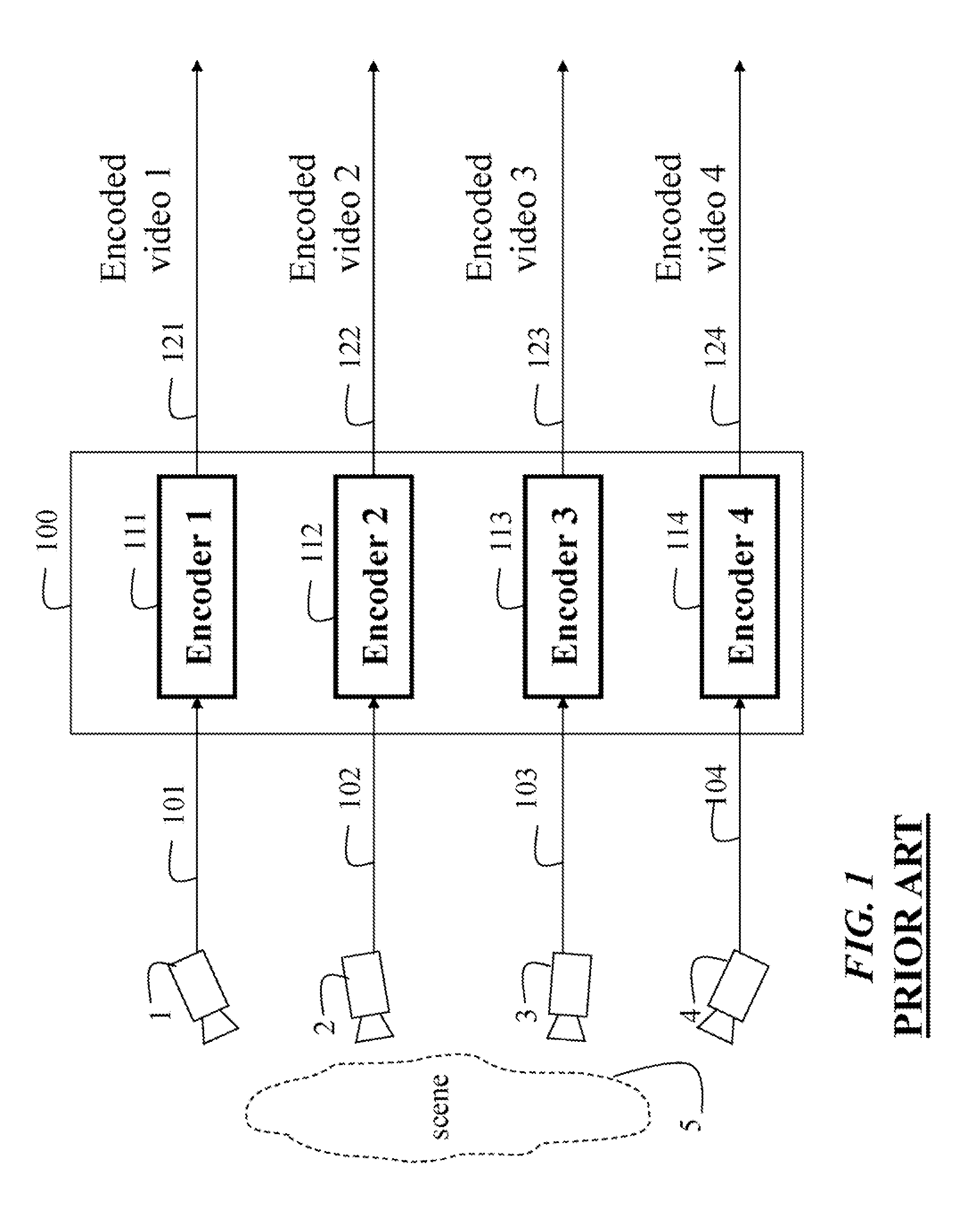

Inactive | US20070030356A1 | Television system details, Picture signal generators, Computer graphics (images), Side information

A method processes multiview videos of a scene, in which each video is acquired by a corresponding camera arranged at a particular pose, and in which the view of each camera overlaps with the view of at least one other camera. Side information for synthesizing a particular view of the multiview video is obtained in either an encoder or a decoder. A synthesized multiview video is generated from the multiview videos and the side information. A reference picture list is maintained for each current frame of each of the multiview videos; the list indexes temporal reference pictures and spatial reference pictures of the acquired multiview videos and the synthesized reference pictures of the synthesized multiview video. Each current frame of the multiview videos is predicted according to reference pictures indexed by the associated reference picture list.

Owner:MITSUBISHI ELECTRIC RES LAB INC
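As a rough illustration of the reference picture list described in this abstract, the sketch below builds a list that indexes temporal, spatial, and synthesized reference pictures for one frame, then picks the candidate that best matches the current block. The names `RefPicture`, `build_reference_list`, and `choose_reference`, and the sum-of-absolute-differences cost, are assumptions made for illustration; none of them come from the patent.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class RefPicture:
    kind: str  # "temporal", "spatial", or "synthesized"
    view: int  # camera index
    time: int  # frame index


def build_reference_list(view: int, time: int,
                         neighbor_views: Sequence[int]) -> List[RefPicture]:
    """Index every picture the frame (view, time) may be predicted from."""
    refs: List[RefPicture] = []
    if time > 0:
        # Temporal reference: previous frame of the same camera.
        refs.append(RefPicture("temporal", view, time - 1))
    for v in neighbor_views:
        # Spatial reference: same instant, overlapping neighbor camera.
        refs.append(RefPicture("spatial", v, time))
    # Synthesized reference: this view rendered from other views and side information.
    refs.append(RefPicture("synthesized", view, time))
    return refs


def sad(a: Sequence[int], b: Sequence[int]) -> int:
    """Sum of absolute differences (hypothetical matching cost)."""
    return sum(abs(x - y) for x, y in zip(a, b))


def choose_reference(block: Sequence[int],
                     candidates: Sequence[Tuple[RefPicture, Sequence[int]]]) -> RefPicture:
    """Return the reference whose co-located block is closest to the current block."""
    best_ref, _ = min(candidates, key=lambda rc: sad(block, rc[1]))
    return best_ref
```

A real encoder would pick references with a rate-distortion criterion rather than plain SAD; the point here is only that all three kinds of reference sit in one list and compete on equal terms.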

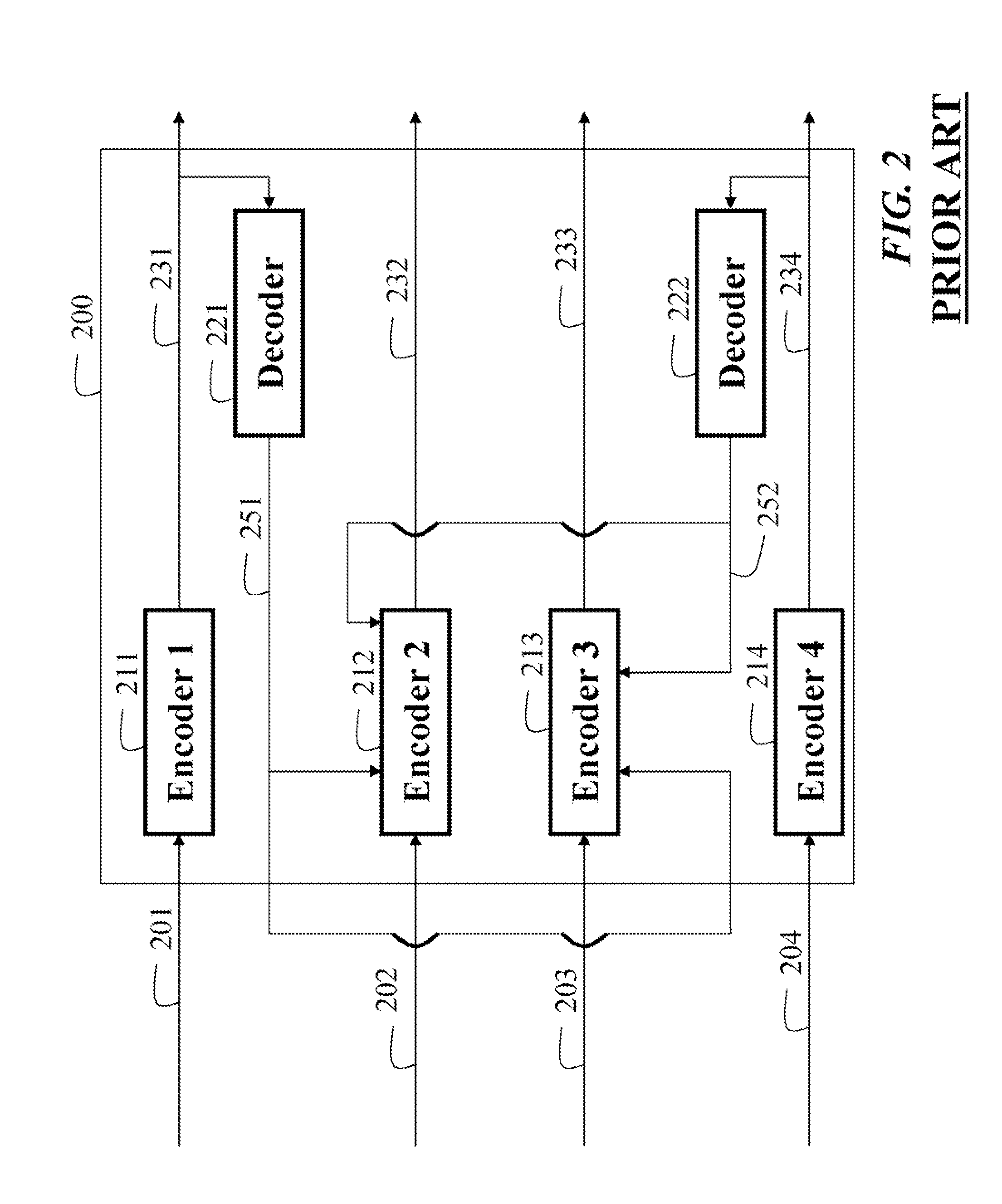

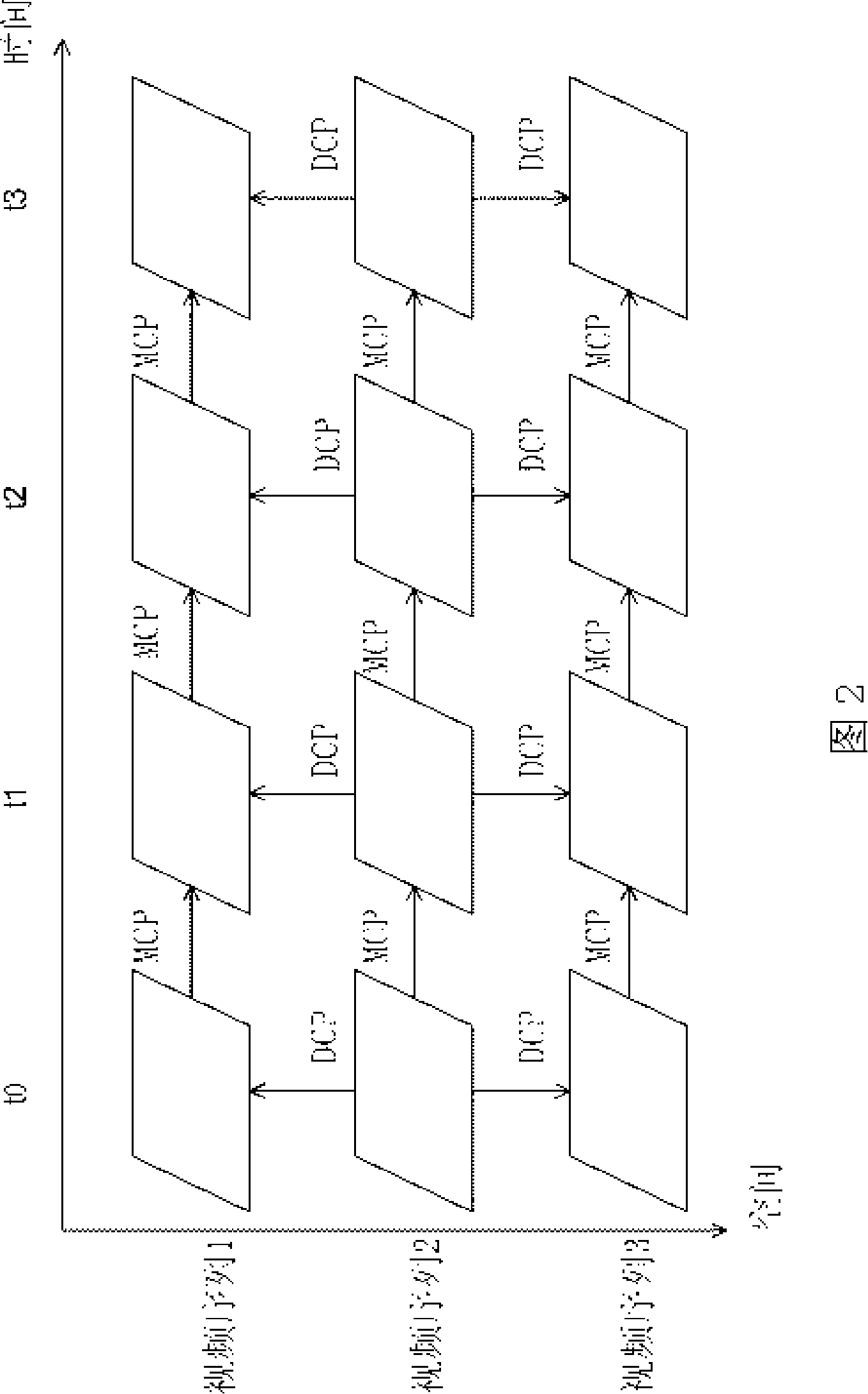

Method and System for Processing Multiview Videos for View Synthesis using Skip and Direct Modes

Inactive | US20070109409A1 | Television system details, Color television details, Computer graphics (images), View synthesis

A method processes multiview videos of a scene, in which each video is acquired by a corresponding camera arranged at a particular pose, and in which the view of each camera overlaps with the view of at least one other camera. Side information for synthesizing a particular view of the multiview video is obtained in either an encoder or a decoder. A synthesized multiview video is generated from the multiview videos and the side information. A reference picture list is maintained for each current frame of each of the multiview videos; the list indexes temporal reference pictures and spatial reference pictures of the acquired multiview videos and the synthesized reference pictures of the synthesized multiview video. Each current frame of the multiview videos is predicted according to reference pictures indexed by the associated reference picture list with a skip mode and a direct mode, whereby the side information is inferred from the synthesized reference picture.

Owner:MITSUBISHI ELECTRIC RES LAB INC

Method and System for Processing Multiview Videos for View Synthesis Using Skip and Direct Modes

Active | US20120062756A1 | Television system details, Color television details, Computer graphics (images), Side information

Multiview videos are acquired by overlapping cameras. Side information is used to synthesize multiview videos. A reference picture list is maintained for current frames of the multiview videos; the list indexes temporal reference pictures and spatial reference pictures of the acquired multiview videos and the synthesized reference pictures of the synthesized multiview video. Each current frame of the multiview videos is predicted according to reference pictures indexed by the associated reference picture list with a skip mode and a direct mode, whereby the side information is inferred from the synthesized reference picture. Alternatively, the depth images corresponding to the multiview videos are part of the input data, and this data is encoded as part of the bitstream depending on a SKIP type.

Owner:MITSUBISHI ELECTRIC RES LAB INC

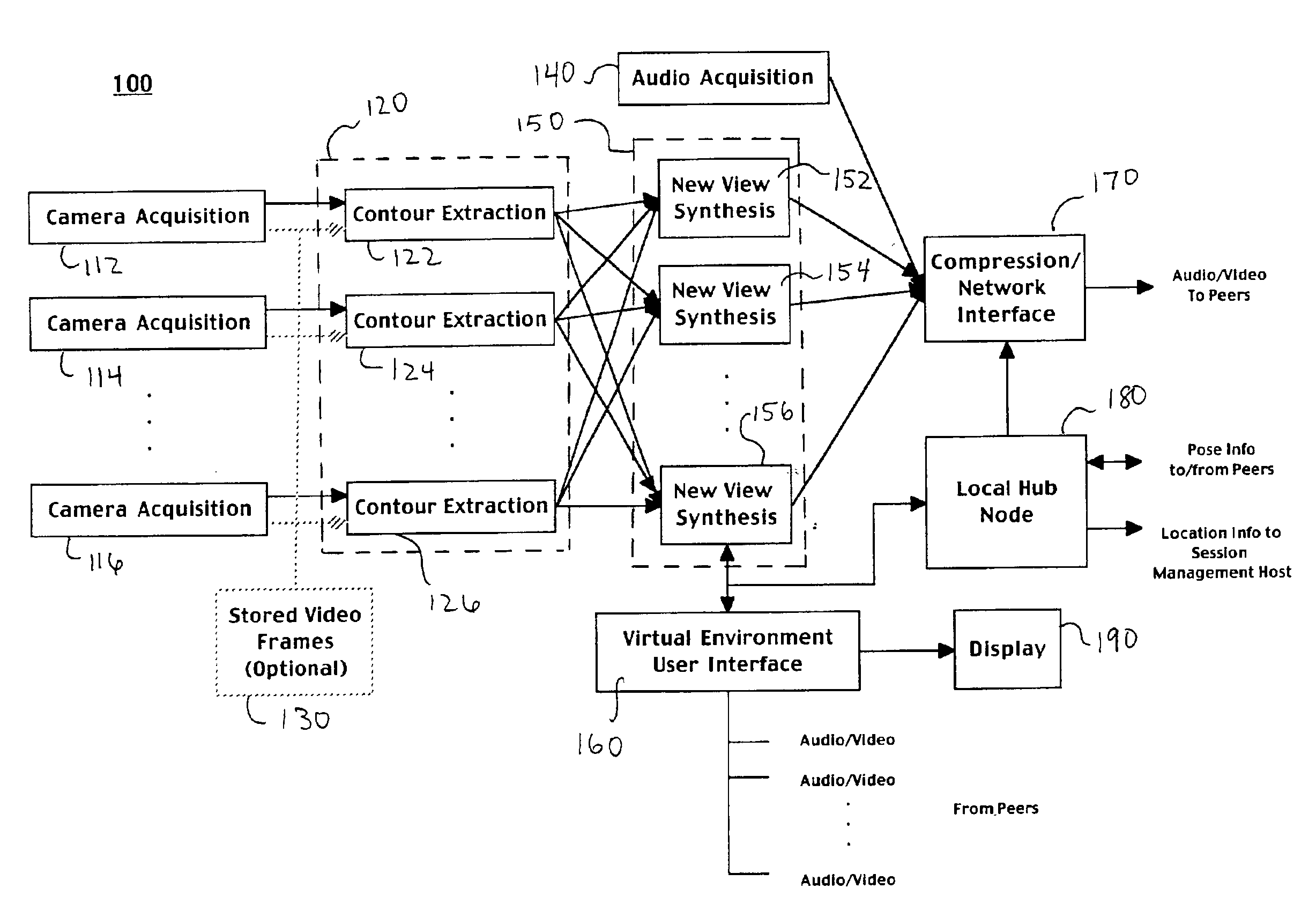

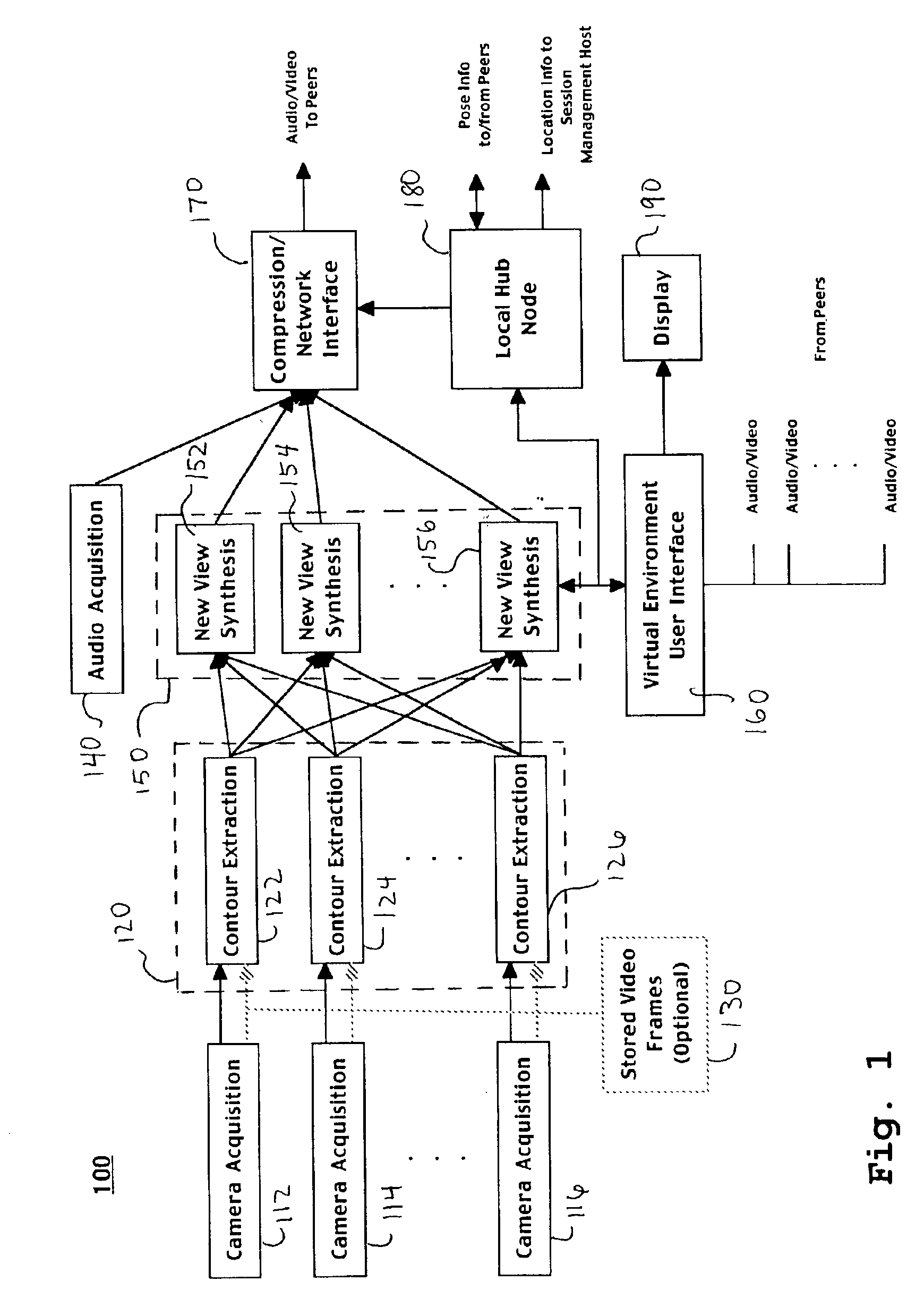

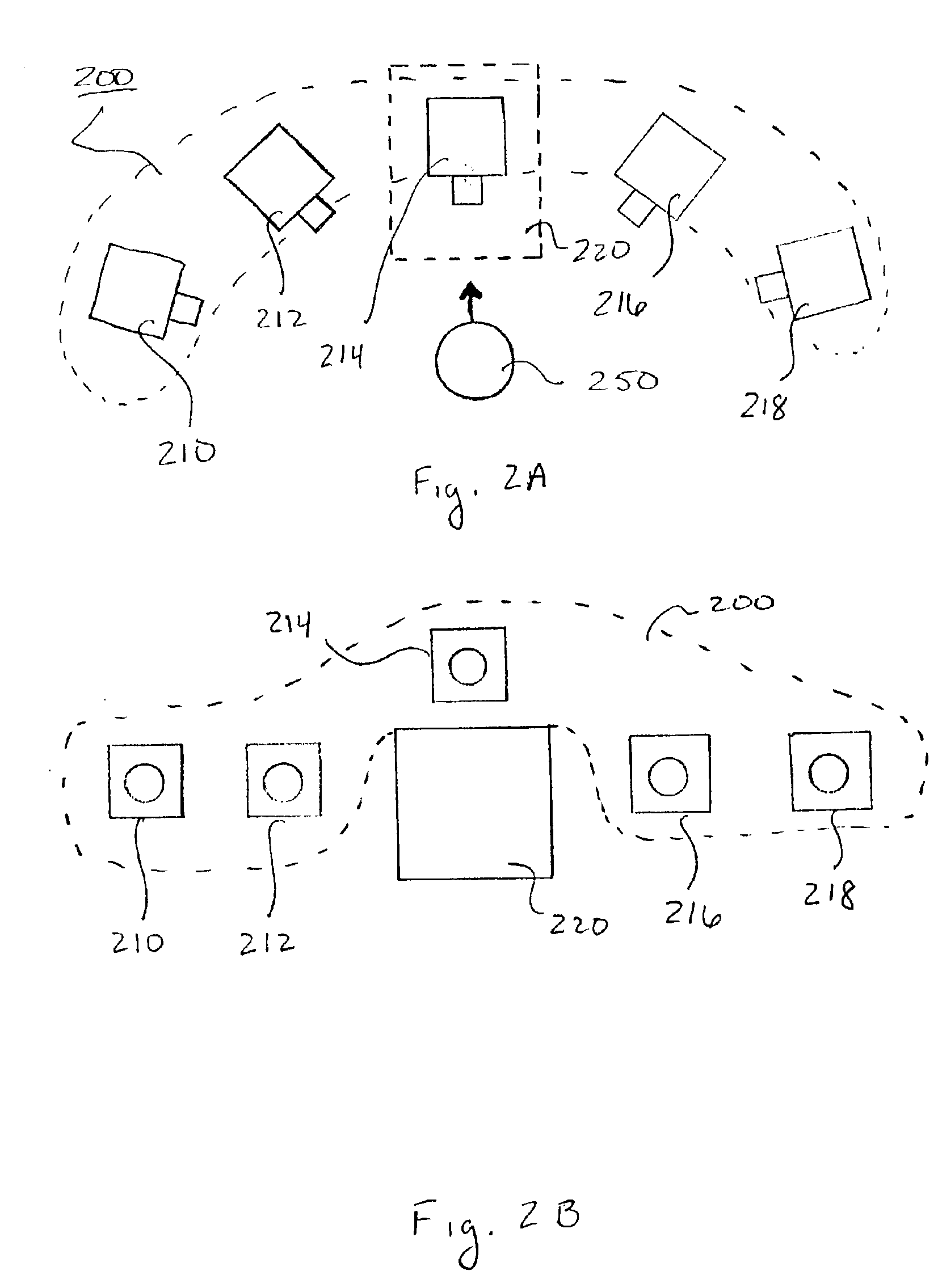

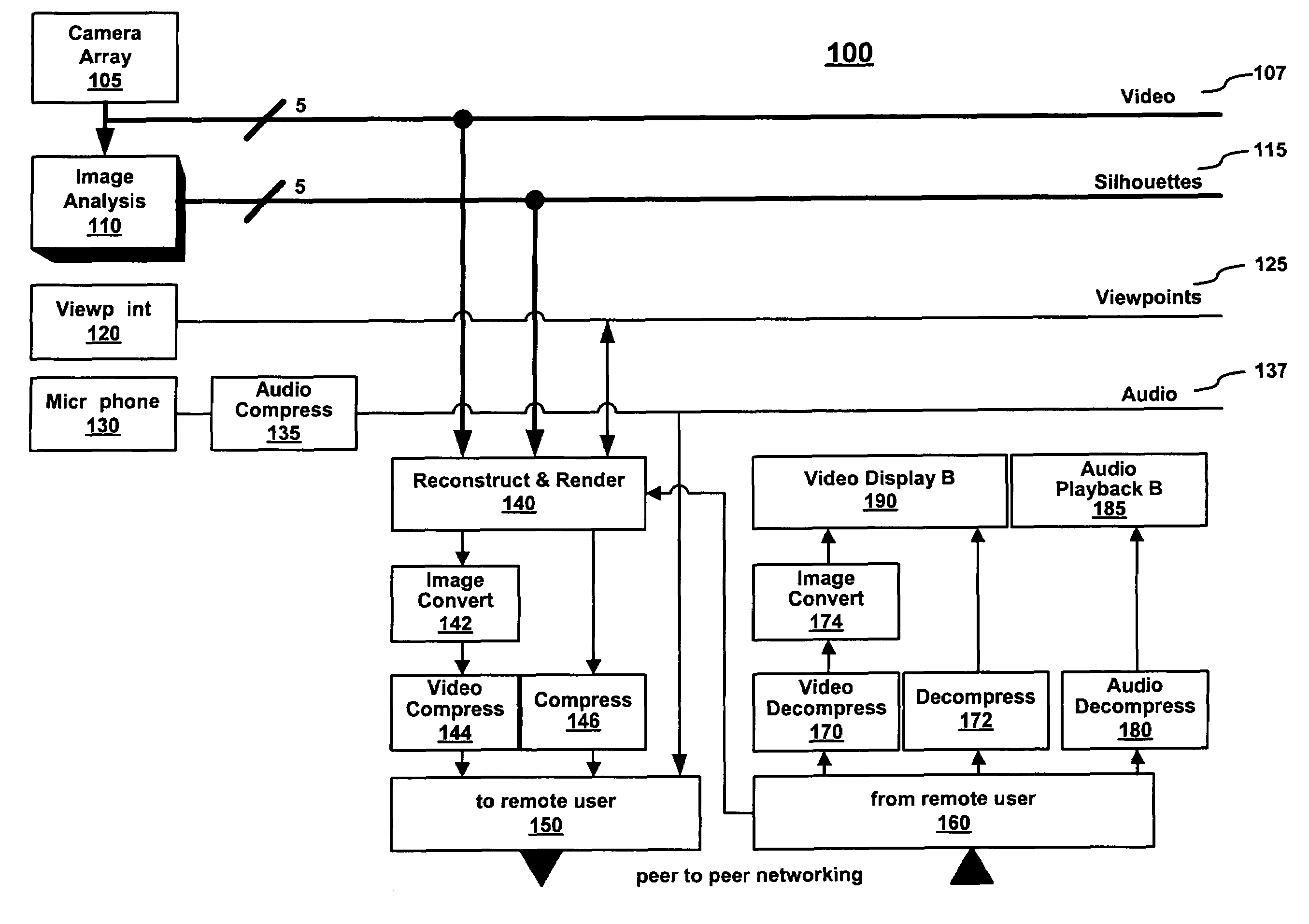

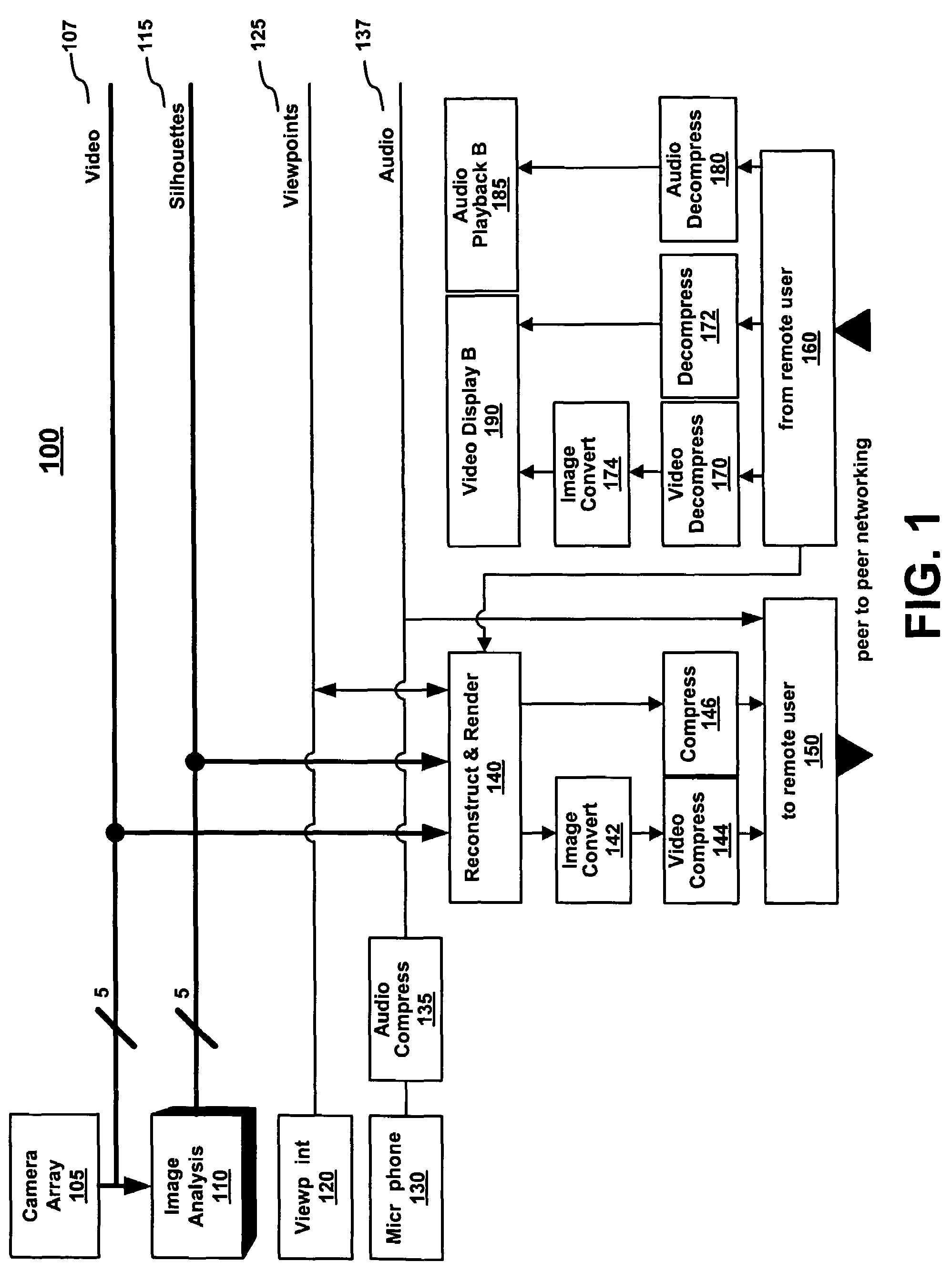

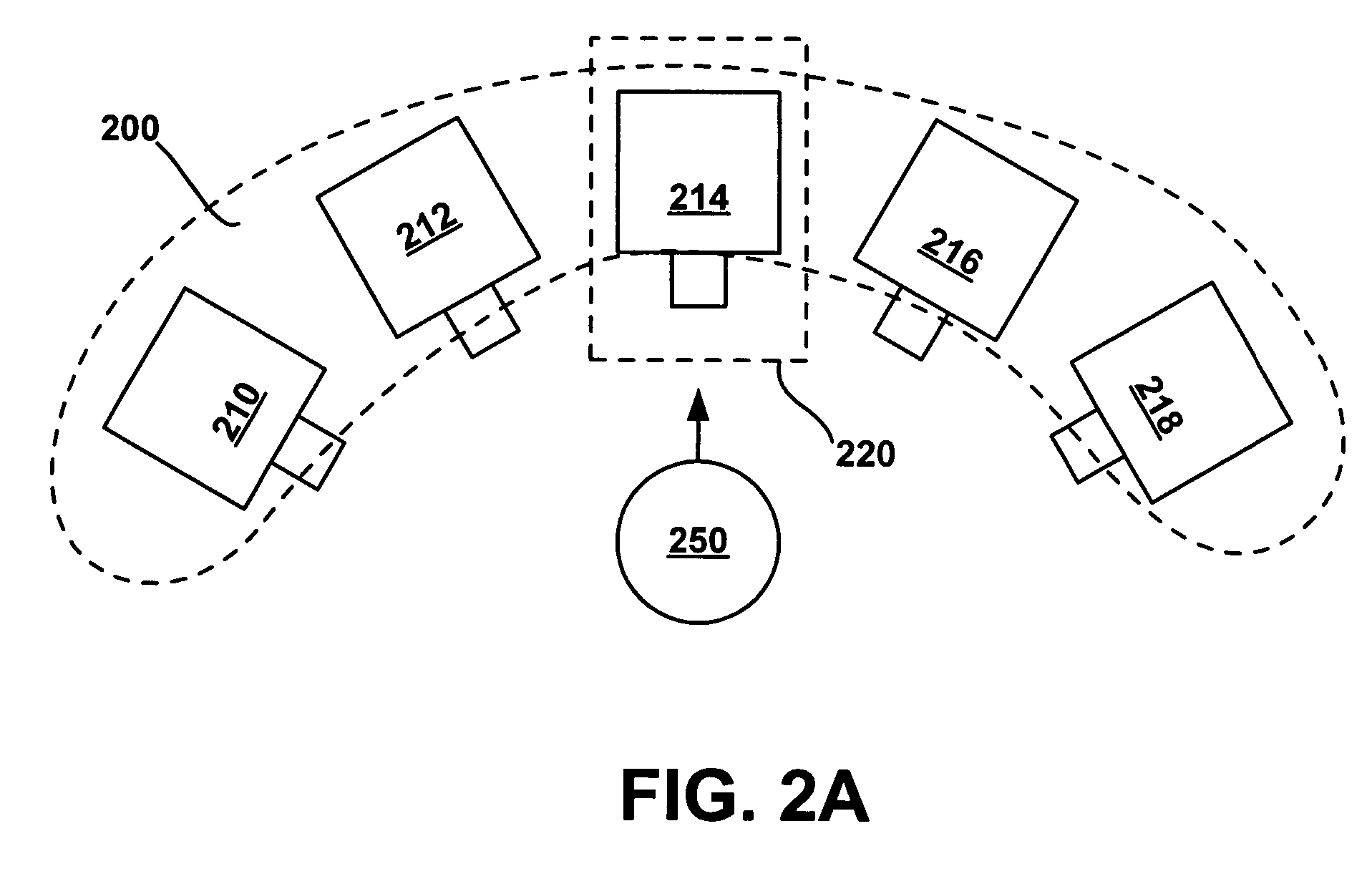

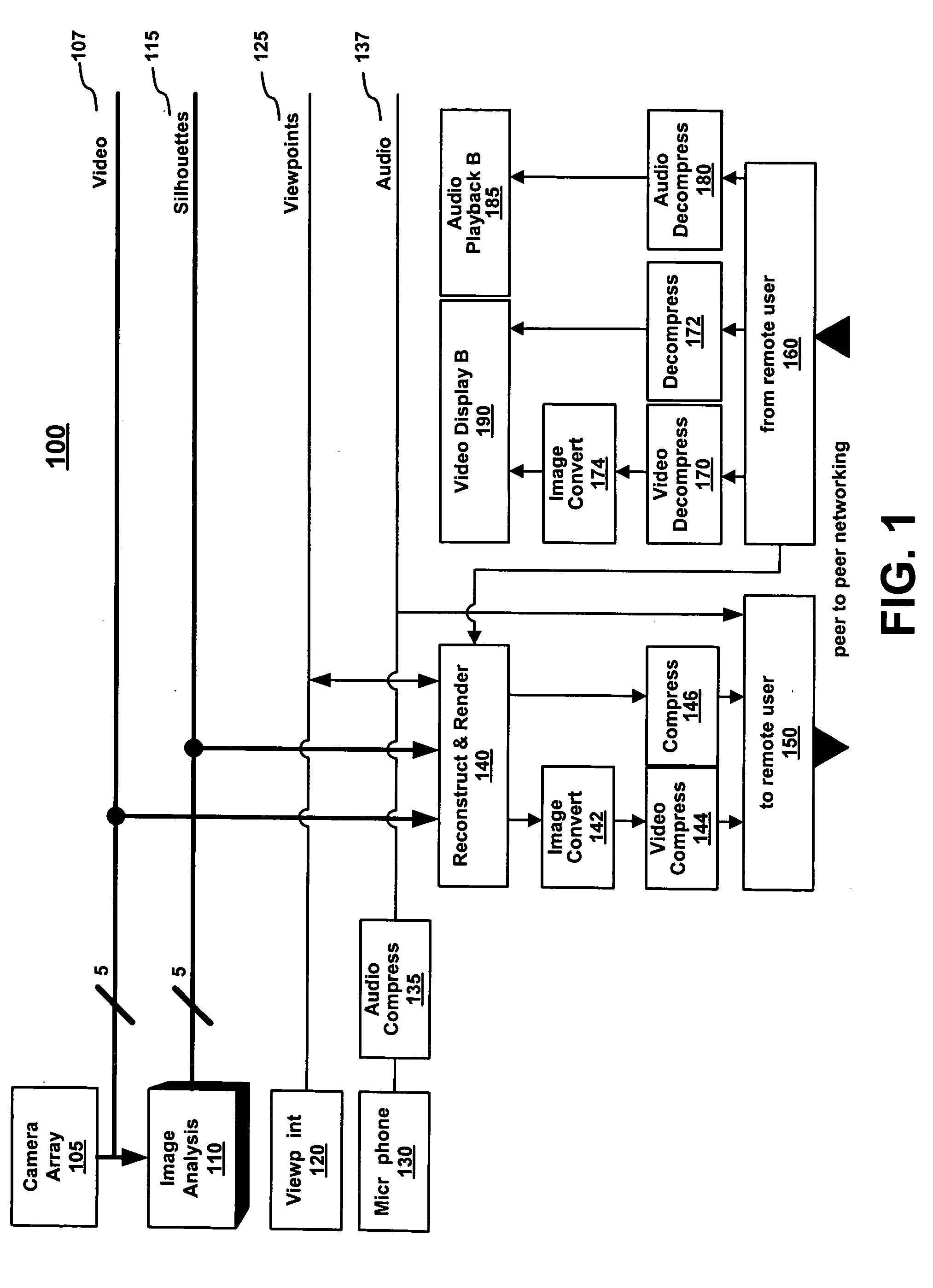

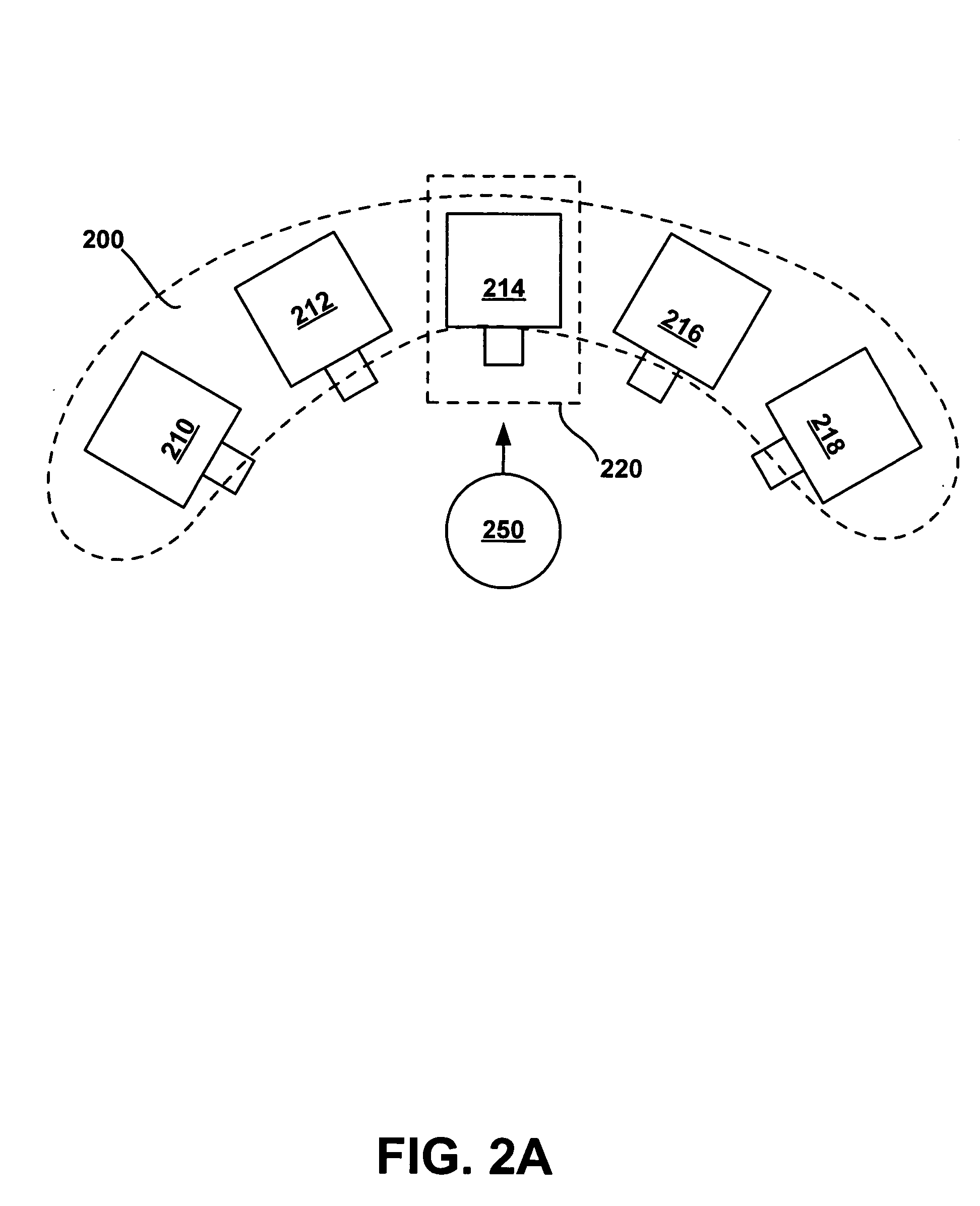

Method and system for real-time video communication within a virtual environment

Inactive | US6853398B2 | Image analysis, Pulse modulation television signal transmission, Viewpoints, View synthesis

A method for real-time video communication. Specifically, one embodiment of the present invention discloses a method of video conferencing that captures a plurality of real-time video streams of a local participant from a plurality of sample viewpoints. From the plurality of video streams, a new view synthesis technique can be applied to generate a video image stream in real-time of the local participant rendered from a second location of a second participant with respect to a first location of the local participant in a coordinate space of a virtual environment. A change in either of the locations leads to the modifying of the video image stream, thereby enabling real-time video communication from the local participant to the second participant.

Owner:HEWLETT PACKARD DEV CO LP

Method and system for real-time rendering within a gaming environment

A method and system for real-time rendering within a gaming environment. Specifically, one embodiment of the present invention discloses a method of rendering a local participant within an interactive gaming environment. The method begins by capturing a plurality of real-time video streams of a local participant from a plurality of camera viewpoints. From the plurality of video streams, a new view synthesis technique is applied to generate a rendering of the local participant. The rendering is generated from a perspective of a remote participant located remotely in the gaming environment. The rendering is then sent to the remote participant for viewing.

Owner:HEWLETT PACKARD DEV CO LP

Method and system for processing multiview videos for view synthesis using skip and direct modes

Inactive | US7671894B2 | Television system details, Color television details, Computer graphics (images), View synthesis

A method processes multiview videos of a scene, in which each video is acquired by a corresponding camera arranged at a particular pose, and in which the view of each camera overlaps with the view of at least one other camera. Side information for synthesizing a particular view of the multiview video is obtained in either an encoder or a decoder. A synthesized multiview video is generated from the multiview videos and the side information. A reference picture list is maintained for each current frame of each of the multiview videos; the list indexes temporal reference pictures and spatial reference pictures of the acquired multiview videos and the synthesized reference pictures of the synthesized multiview video. Each current frame of the multiview videos is predicted according to reference pictures indexed by the associated reference picture list with a skip mode and a direct mode, whereby the side information is inferred from the synthesized reference picture.

Owner:MITSUBISHI ELECTRIC RES LAB INC

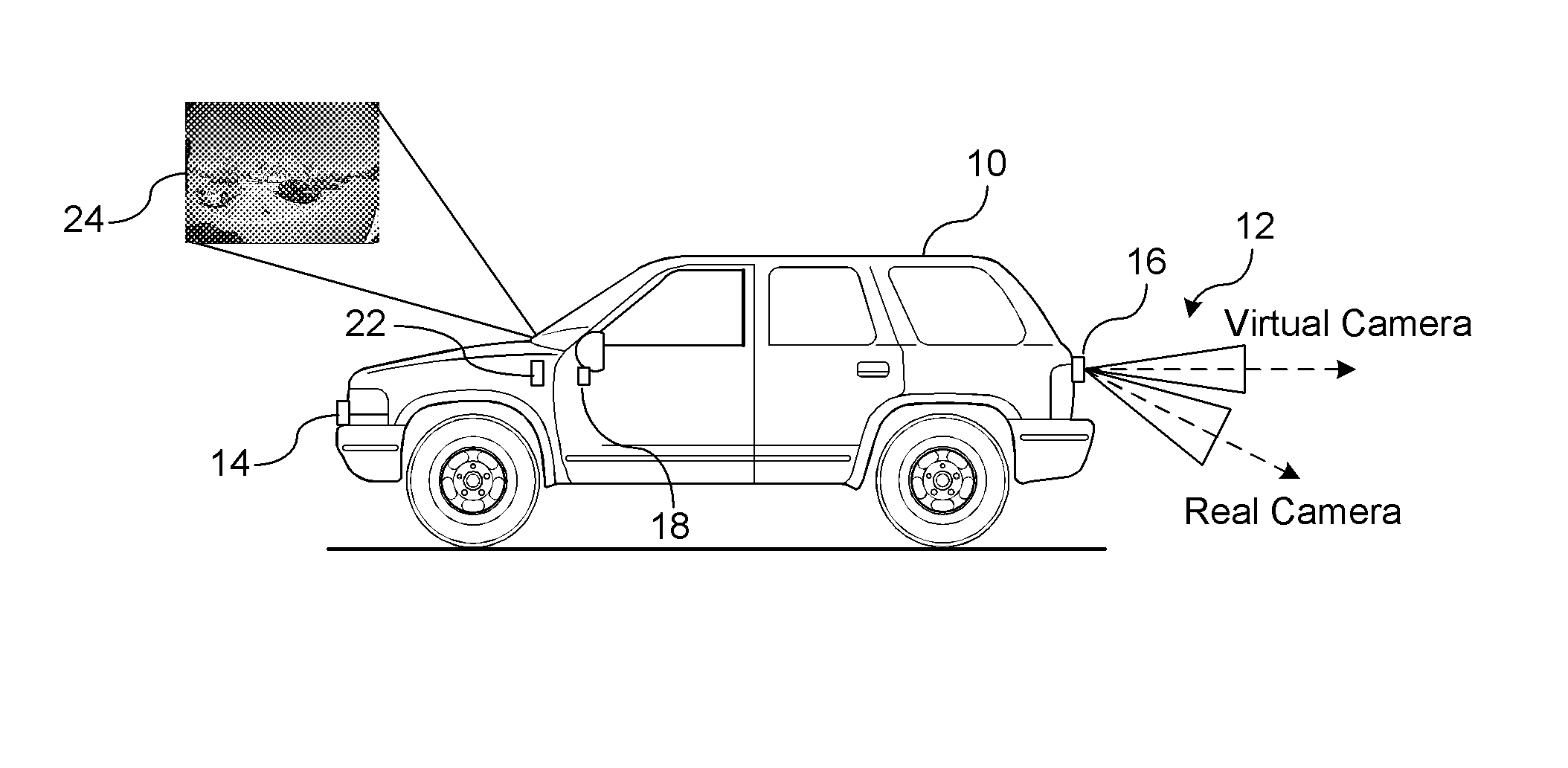

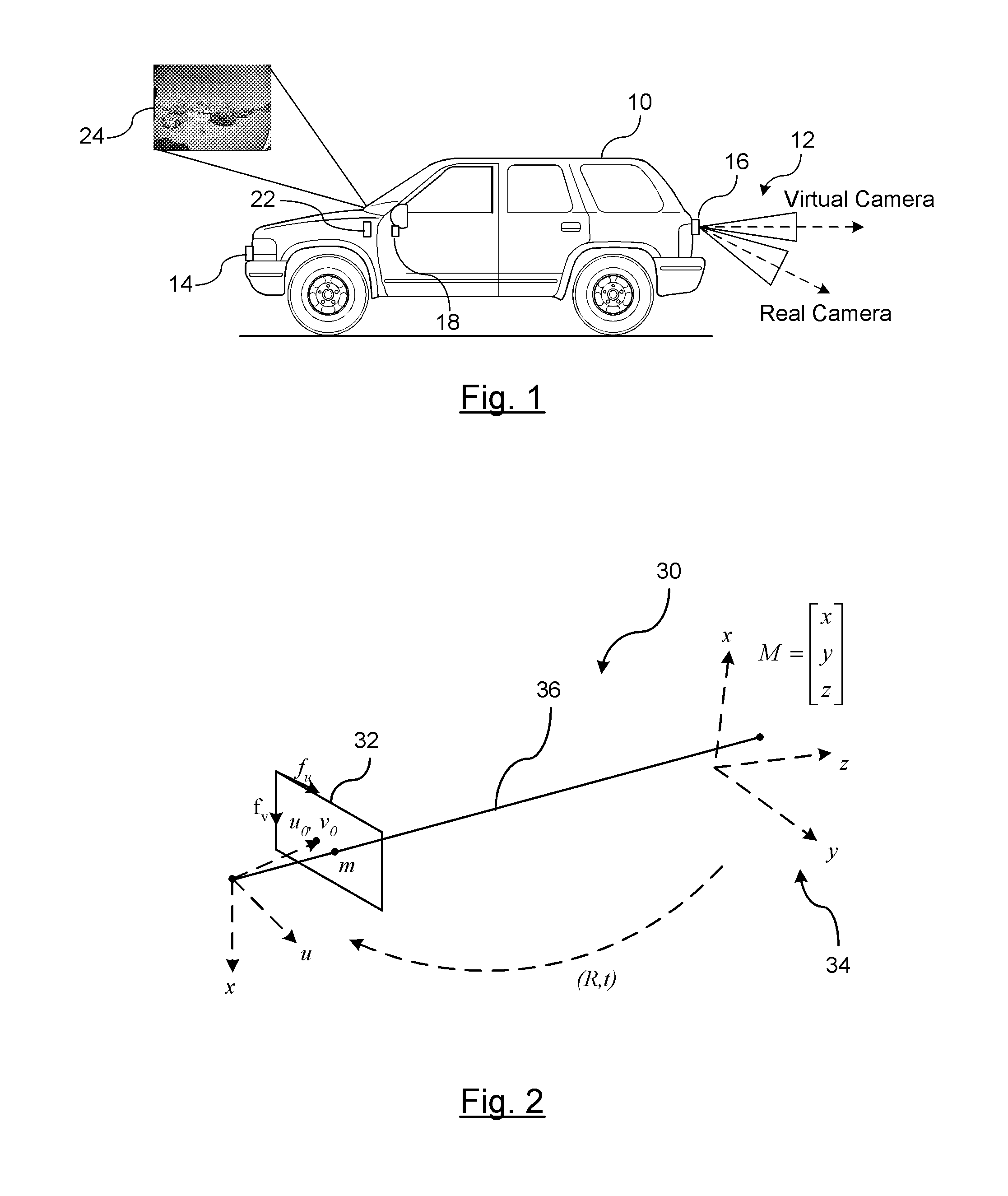

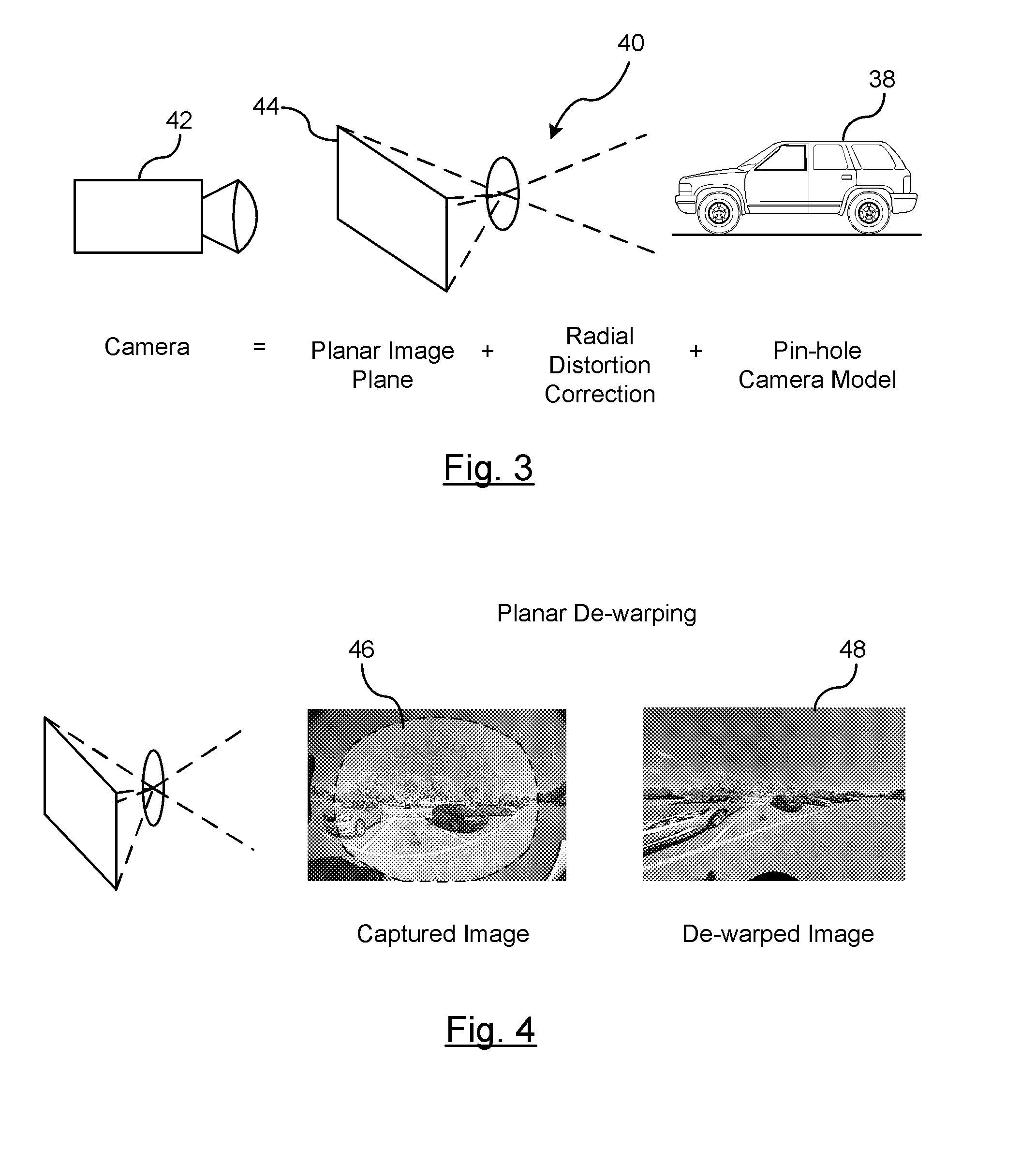

Imaging surface modeling for camera modeling and virtual view synthesis

Active | US20140104424A1 | Enlarge region, Enhances region, Image enhancement, Image analysis, View synthesis, Display device

A method for displaying a captured image on a display device. A real image is captured by a vision-based imaging device. A processor generates a virtual image from the captured real image based on a mapping. The mapping utilizes a virtual camera model with a non-planar imaging surface. The virtual image formed on the non-planar imaging surface of the virtual camera model is projected to the display device.

Owner:GM GLOBAL TECH OPERATIONS LLC

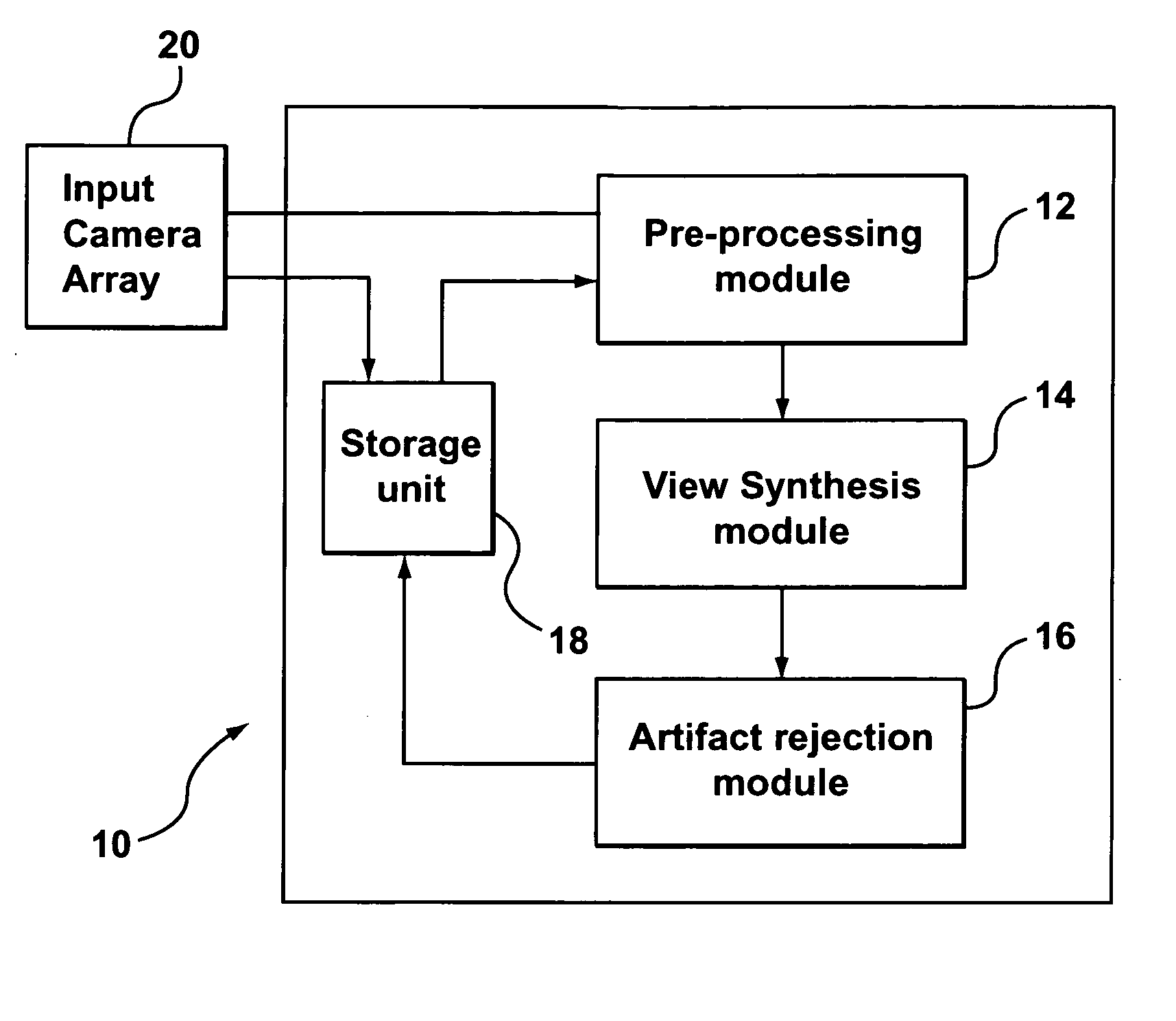

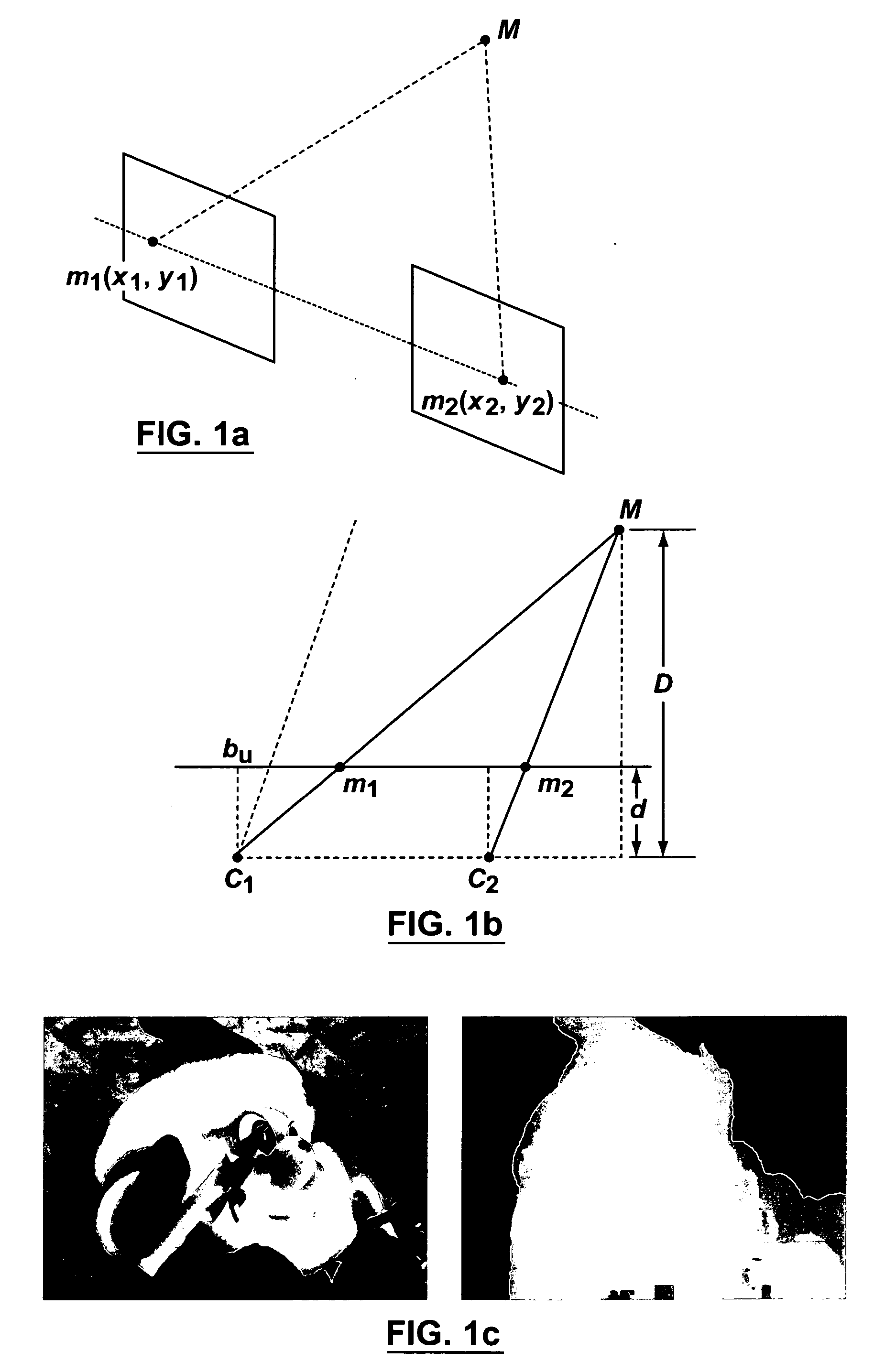

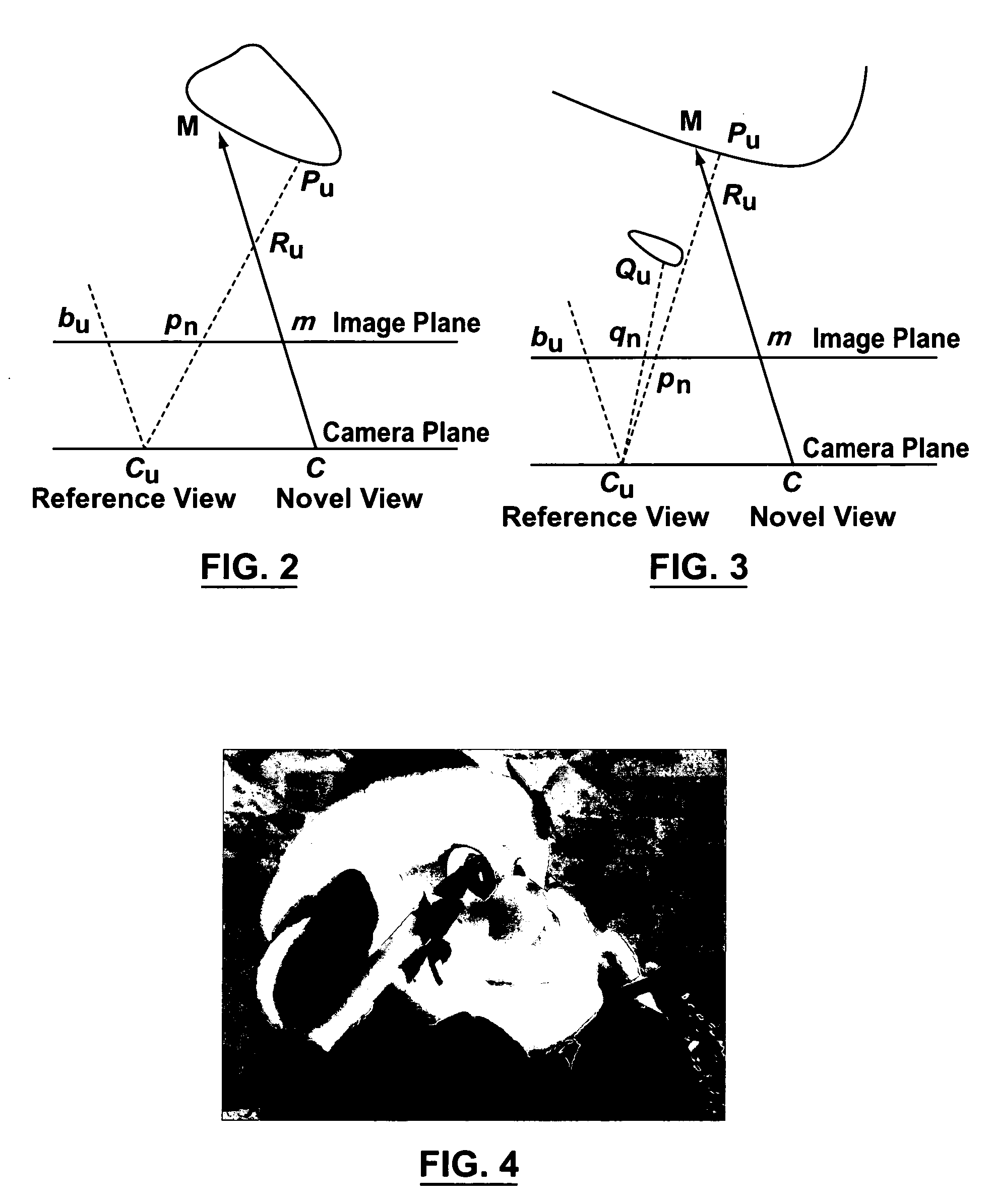

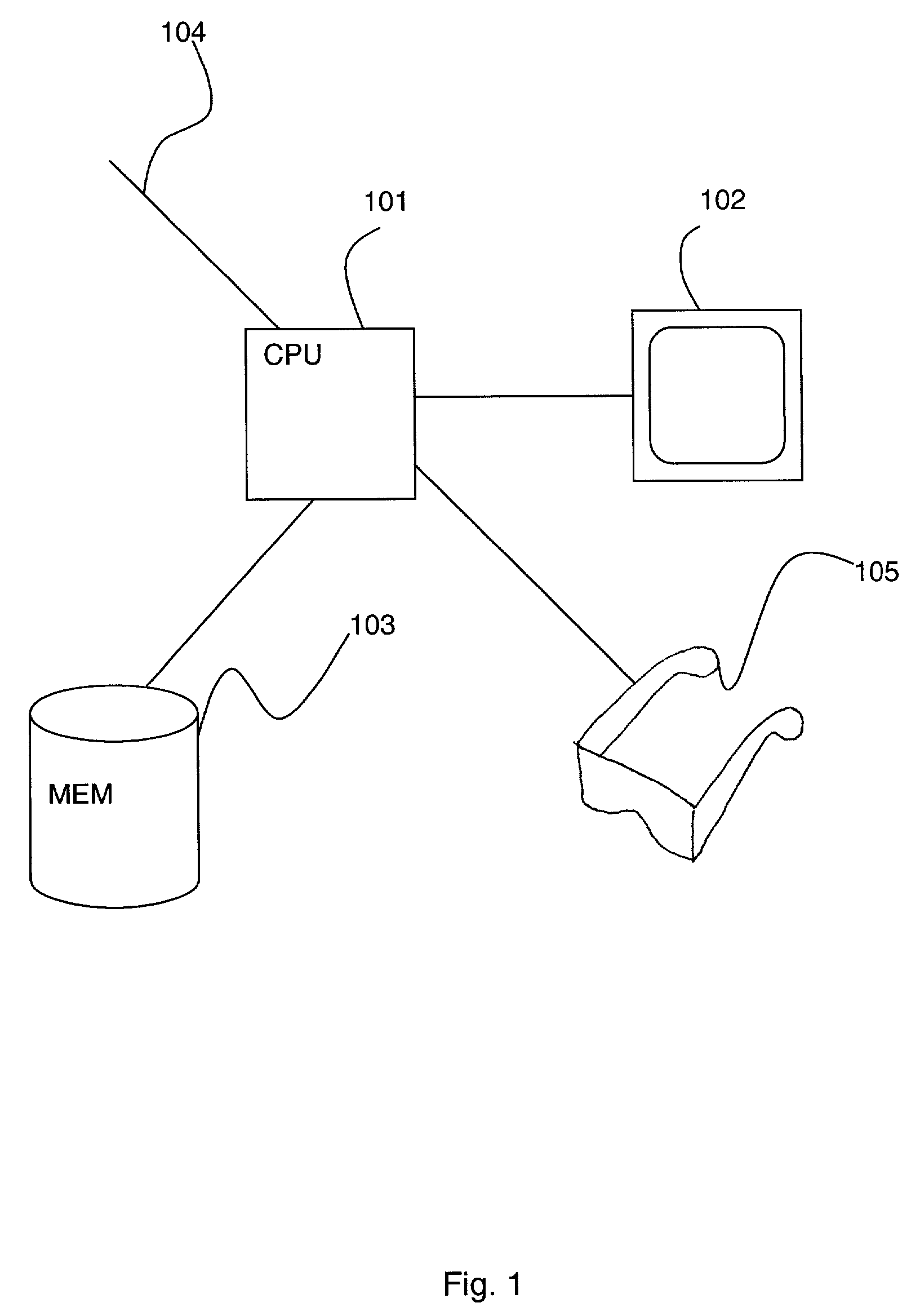

Method and system for real time image rendering

Inactive | US20060066612A1 | Highly interactive frame rate, View accurately, 3D-image rendering, Intermediate image, View synthesis

An image-based rendering system and method for rendering a novel image from several reference images. The system includes a pre-processing module for pre-processing at least two of the reference images and providing pre-processed data; a view synthesis module connected to the pre-processing module for synthesizing an intermediate image from the at least two of the reference images and the pre-processed data; and, an artifact rejection module connected to the view synthesis module for correcting the intermediate image to produce the novel image.

Owner:THE GOVERNORS OF THE UNIV OF ALBERTA

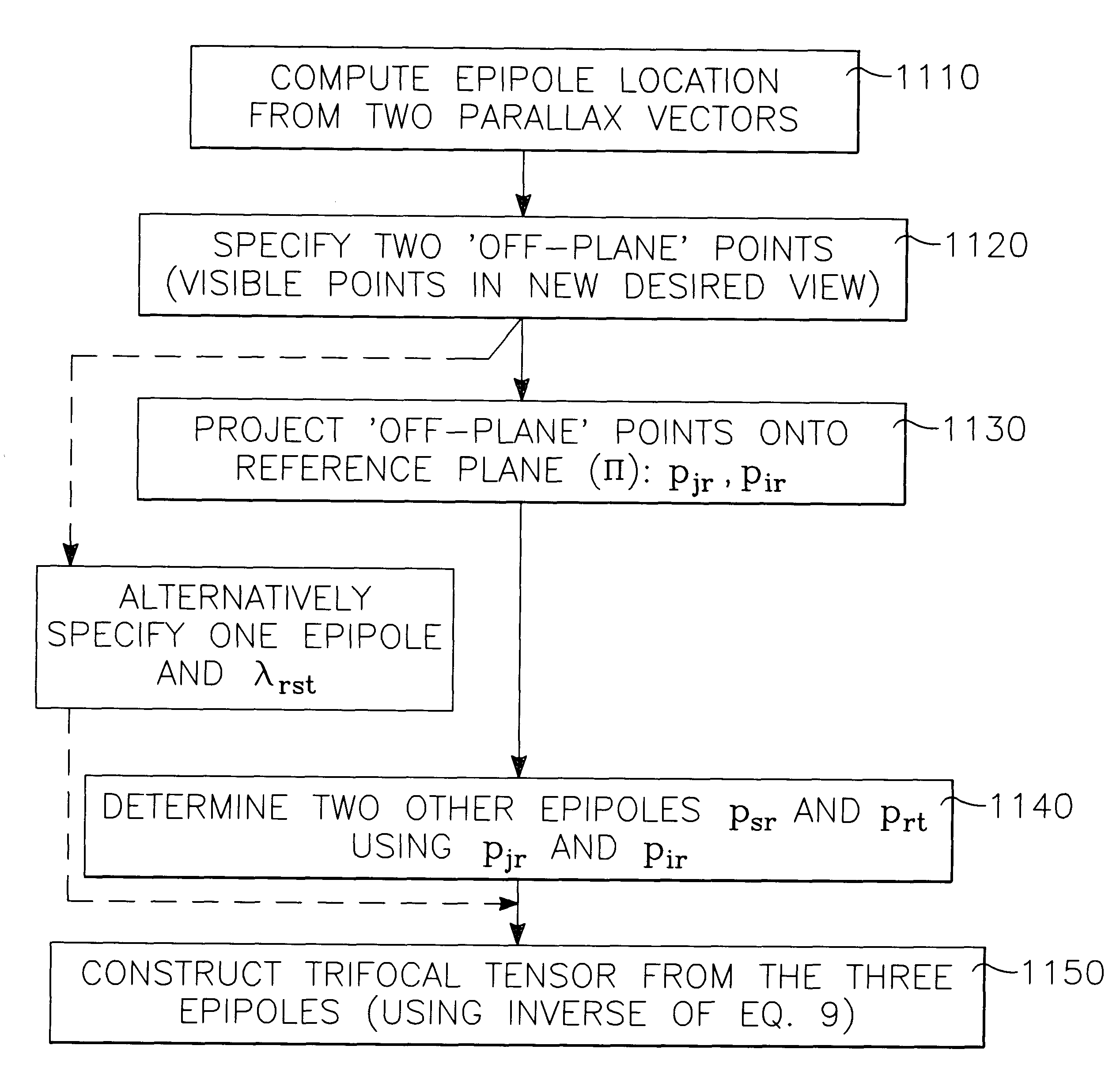

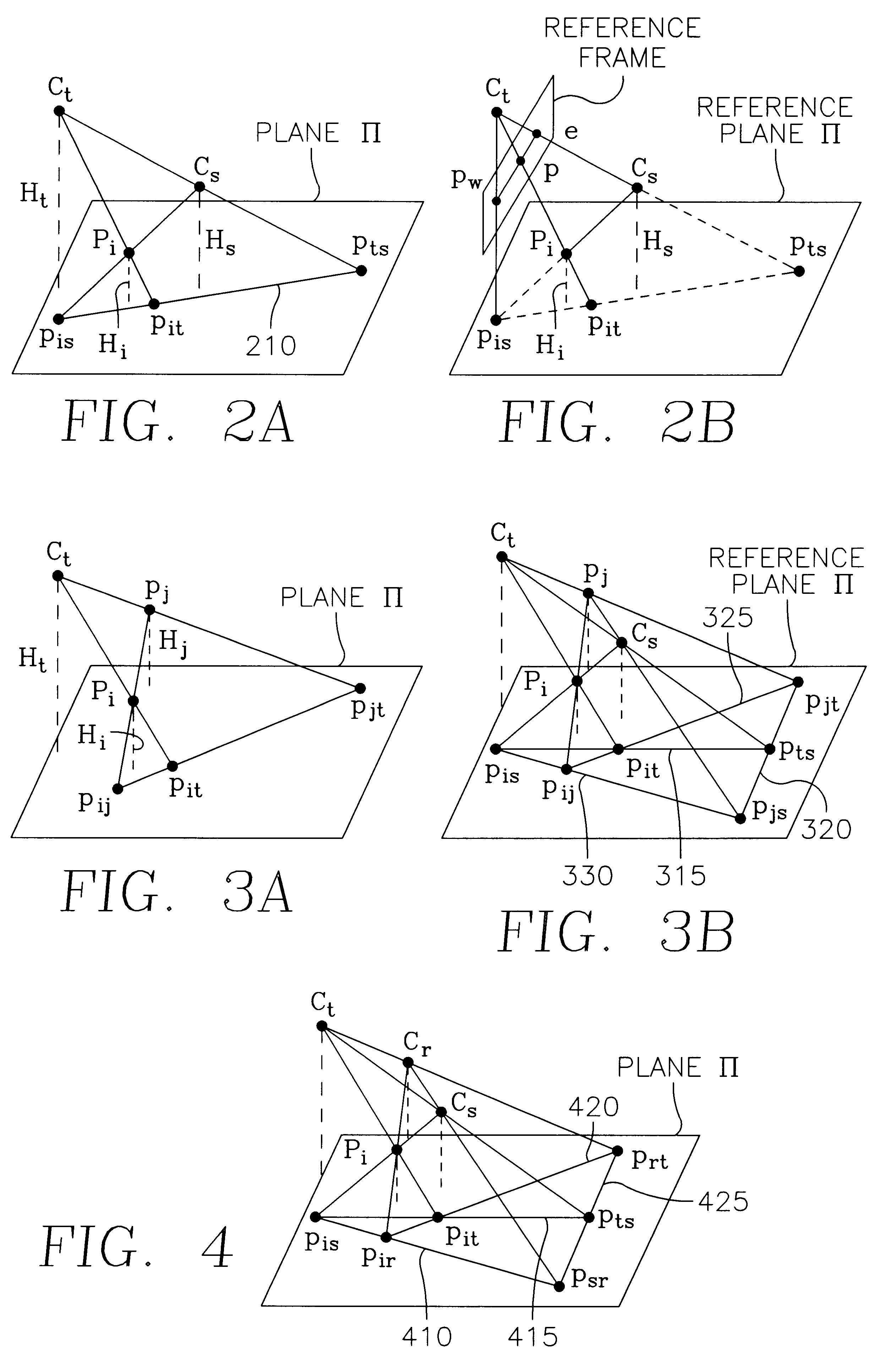

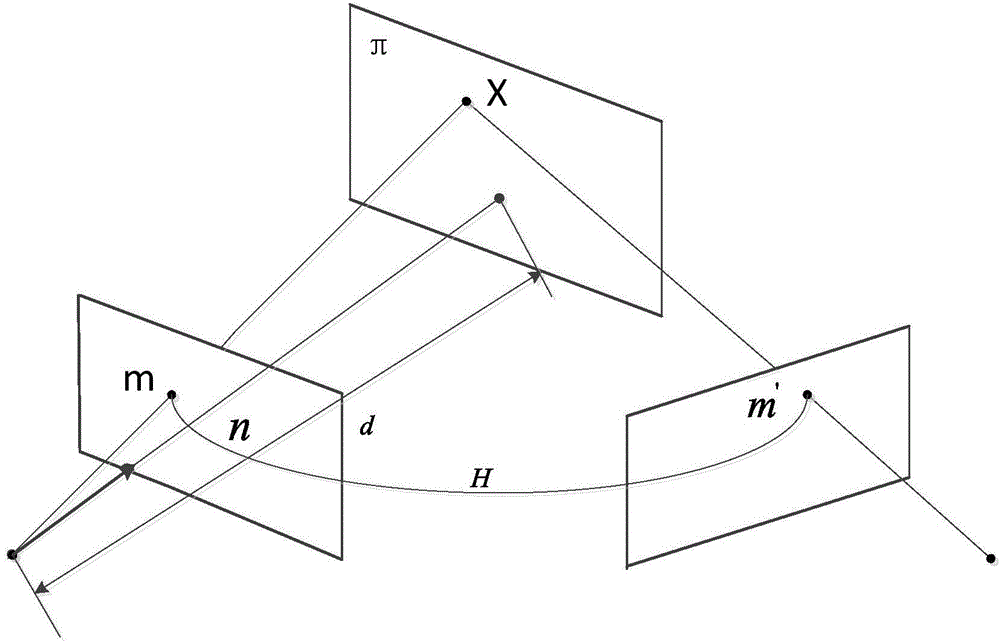

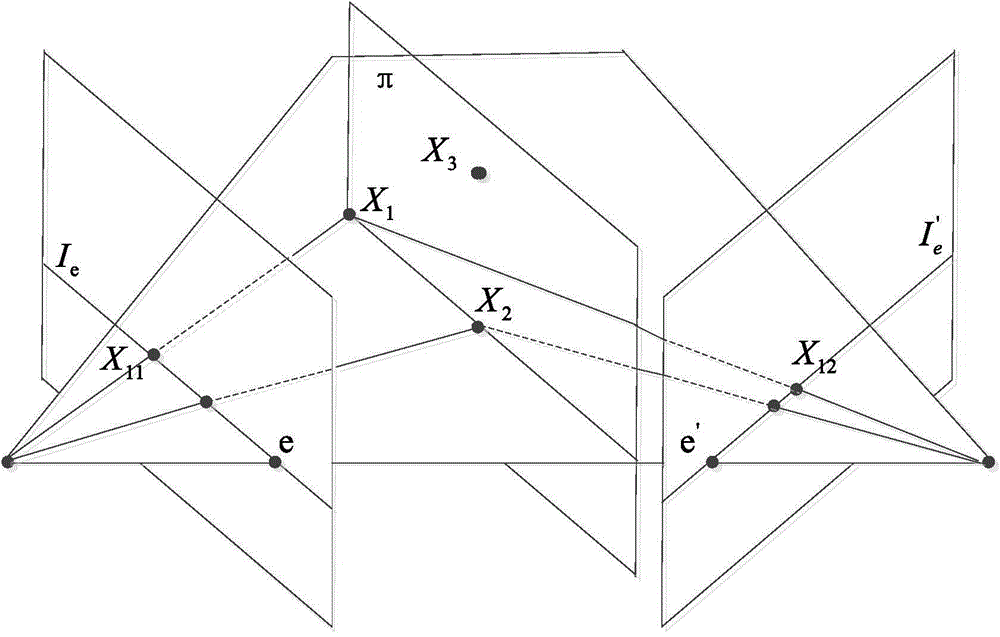

View synthesis from plural images using a trifocal tensor data structure in a multi-view parallax geometry

The invention is embodied in a process for synthesizing a new image representing a new viewpoint of a scene from at least two existing images of the scene taken from different respective viewpoints. The process begins by choosing a planar surface visible in the at least two existing images and transforming the at least two existing images relative to one another so as to bring the planar surface into perspective alignment in the at least two existing images, and then choosing a reference frame and computing parallax vectors between the two images of the projection of common scene points on the reference frame. Preferably, the reference frame comprises an image plane of a first one of the existing images. Preferably, the reference frame is co-planar with the planar surface. In this case, the transforming of the existing images is achieved by performing a projective transform on a second one of the existing images to bring its image of the planar surface into perspective alignment with the image of the planar surface in the first existing image. Preferably, the image parameter of the new view comprises information sufficient, together with the parallax vectors, to deduce: (a) a trifocal ratio in the reference frame and (b) one epipole between the new viewpoint and one of the first and second viewpoints.

Owner:YEDA RES & DEV CO LTD +2

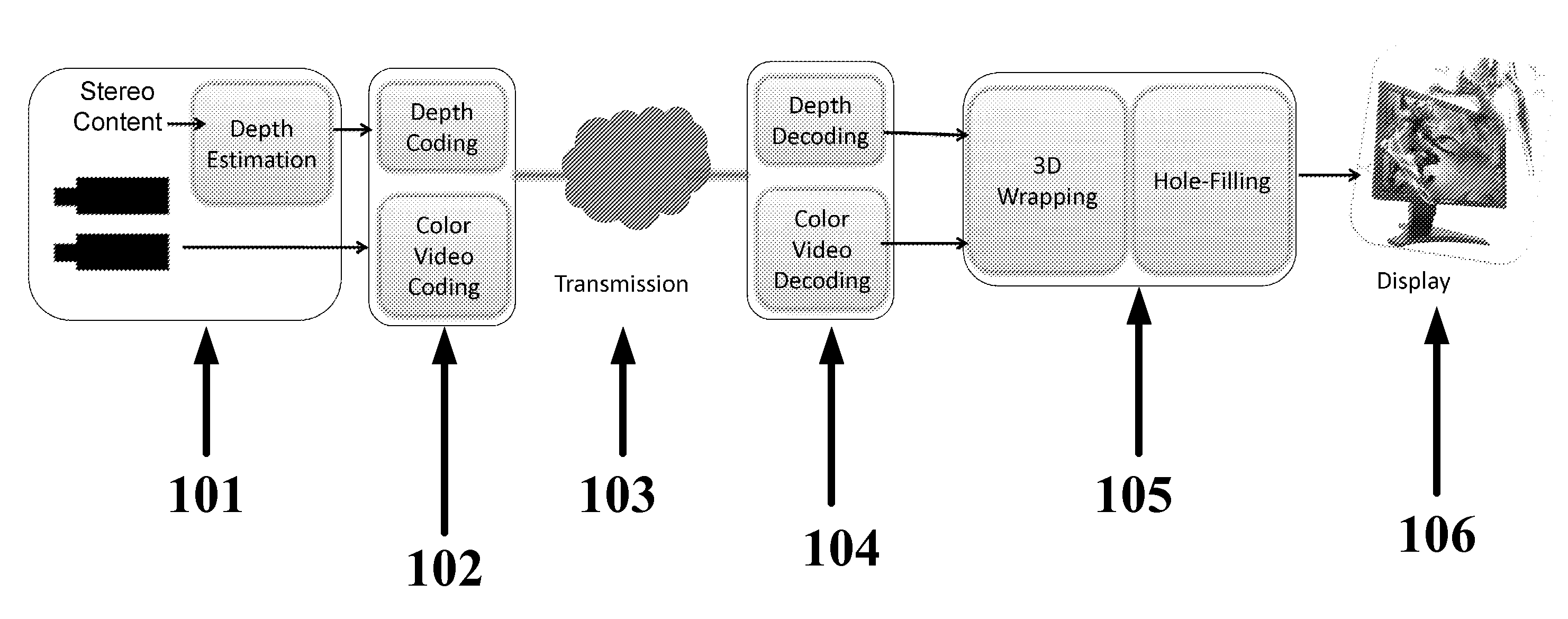

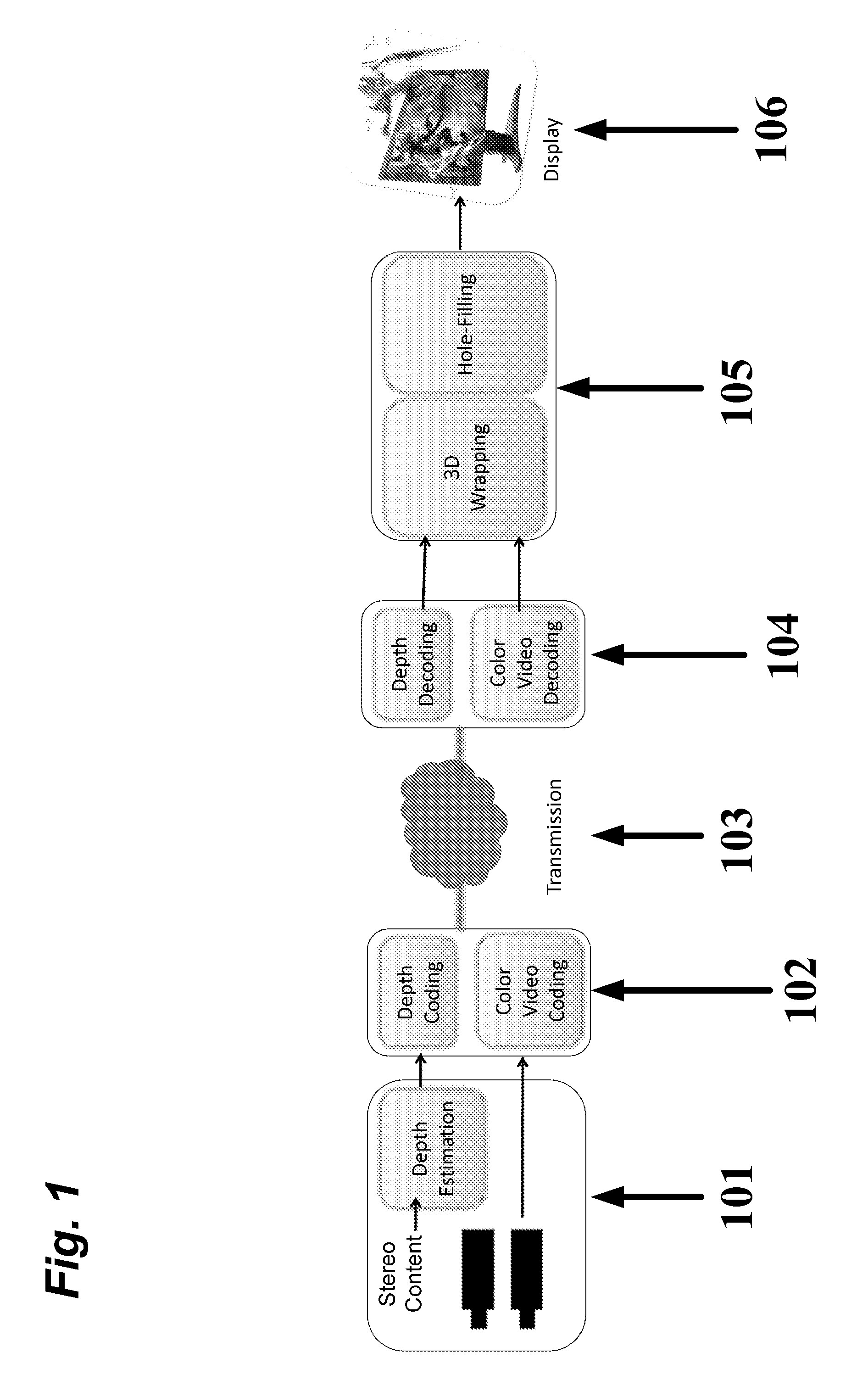

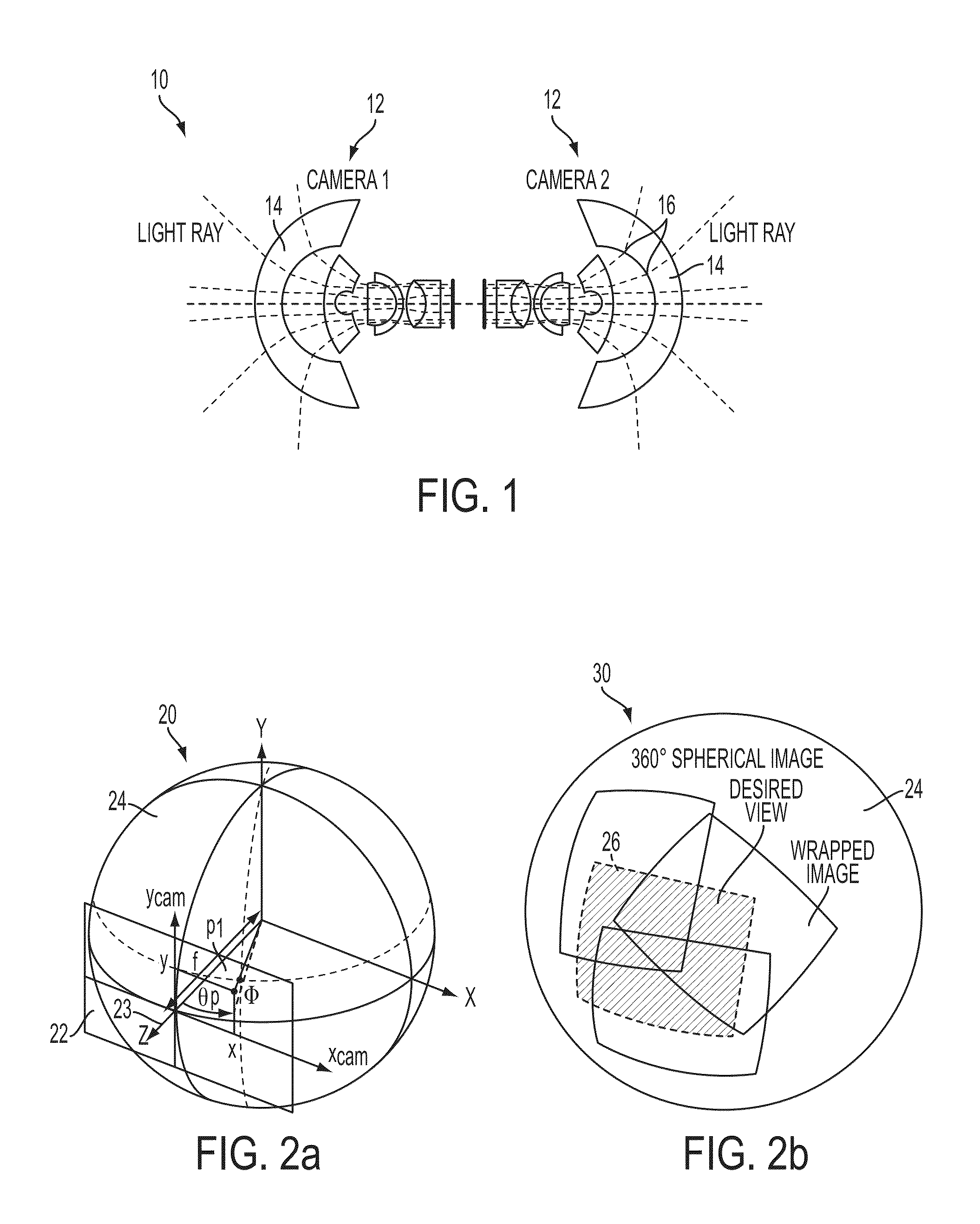

Hierarchical hole-filling for depth-based view synthesis in FTV and 3D video

Active | US20120120192A1 | High resolution, Quality improvement, Image enhancement, Stereoscopic systems, 3D image, View synthesis

Methods for hierarchical hole-filling and depth adaptive hierarchical hole-filling and error correcting in 2D images, 3D images, and 3D wrapped images are provided. Hierarchical hole-filling can comprise reducing an image that contains holes, expanding the reduced image, and filling the holes in the image with data obtained from the expanded image. Depth adaptive hierarchical hole-filling can comprise preprocessing the depth map of a 3D wrapped image that contains holes, reducing the preprocessed image, expanding the reduced image, and filling the holes in the 3D wrapped image with data obtained from the expanded image. These methods can efficiently reduce errors in images and produce 3D images from 2D images and / or depth map information.

Owner:GEORGIA TECH RES CORP
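The reduce, expand, and fill sequence in this abstract can be sketched on a tiny grayscale image. This is a minimal sketch under stated assumptions, not the patented method: holes are marked with `None`, the reduce step averages the valid pixels of each 2x2 block, and the expand step is nearest-neighbor upsampling. The function names are hypothetical.

```python
from typing import List, Optional

Image = List[List[Optional[float]]]  # None marks a hole


def has_holes(img: Image) -> bool:
    return any(v is None for row in img for v in row)


def reduce_half(img: Image) -> Image:
    """Halve the resolution, averaging only the valid pixels of each 2x2 block."""
    h, w = len(img), len(img[0])
    out: Image = []
    for y in range(0, h, 2):
        row: List[Optional[float]] = []
        for x in range(0, w, 2):
            vals = [img[yy][xx]
                    for yy in range(y, min(y + 2, h))
                    for xx in range(x, min(x + 2, w))
                    if img[yy][xx] is not None]
            row.append(sum(vals) / len(vals) if vals else None)
        out.append(row)
    return out


def expand_to(small: Image, h: int, w: int) -> Image:
    """Nearest-neighbor upsampling back to h x w (assumed interpolation)."""
    return [[small[y // 2][x // 2] for x in range(w)] for y in range(h)]


def hierarchical_fill(img: Image) -> Image:
    """Fill holes with data taken from progressively coarser versions of the image."""
    h, w = len(img), len(img[0])
    if not has_holes(img) or (h == 1 and w == 1):
        return [row[:] for row in img]
    coarse = hierarchical_fill(reduce_half(img))  # recurse until the holes vanish
    up = expand_to(coarse, h, w)
    # Valid pixels are kept untouched; only holes take values from the expanded image.
    return [[img[y][x] if img[y][x] is not None else up[y][x]
             for x in range(w)] for y in range(h)]
```

Because averaging skips holes, each reduction shrinks the holes, so a coarse enough level is hole-free whenever the input has at least one valid pixel.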

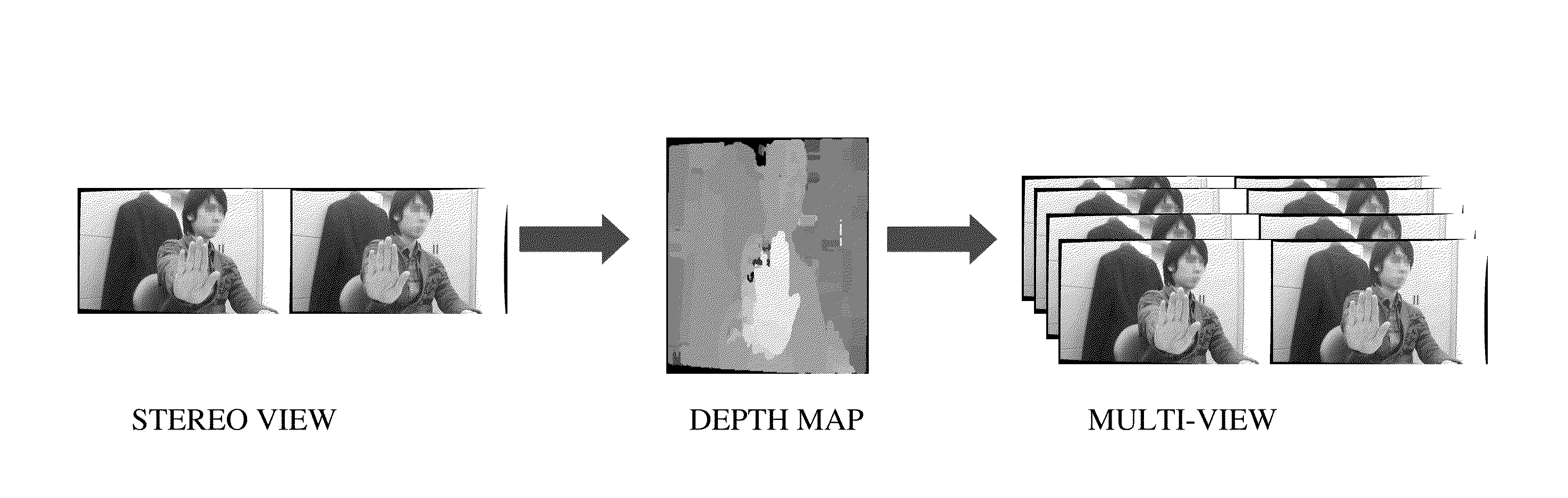

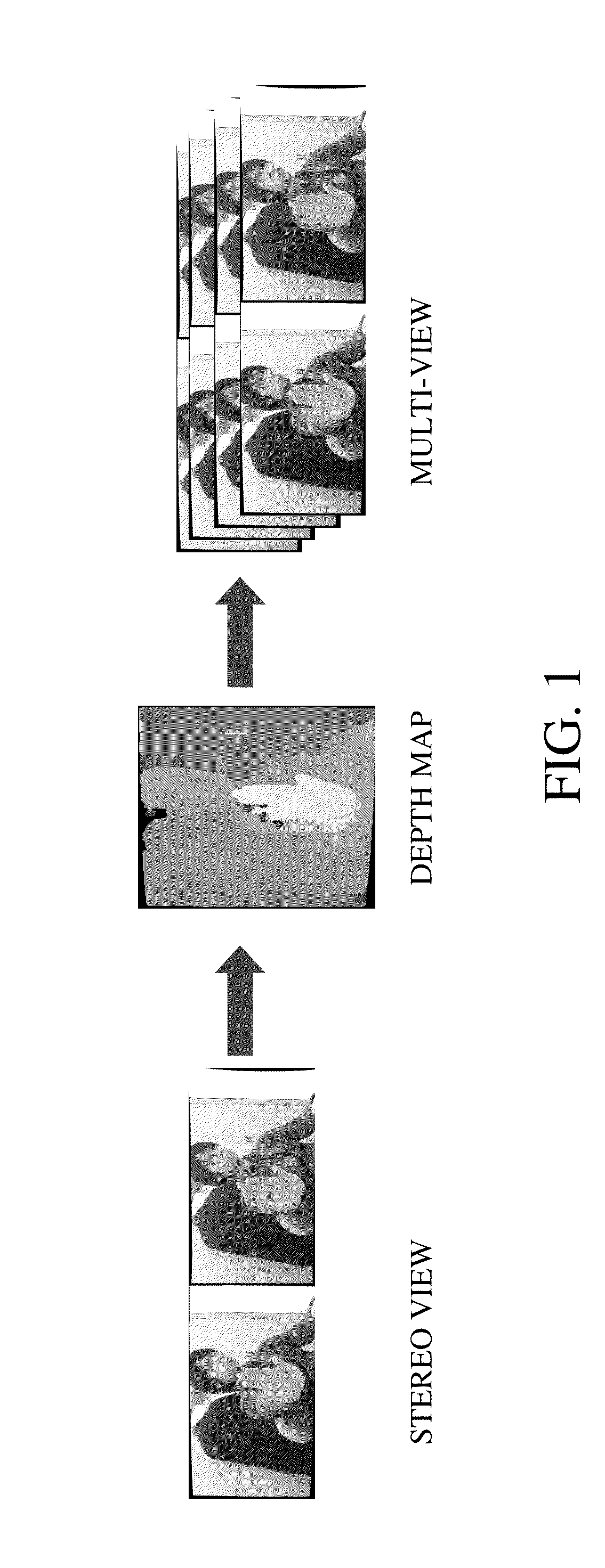

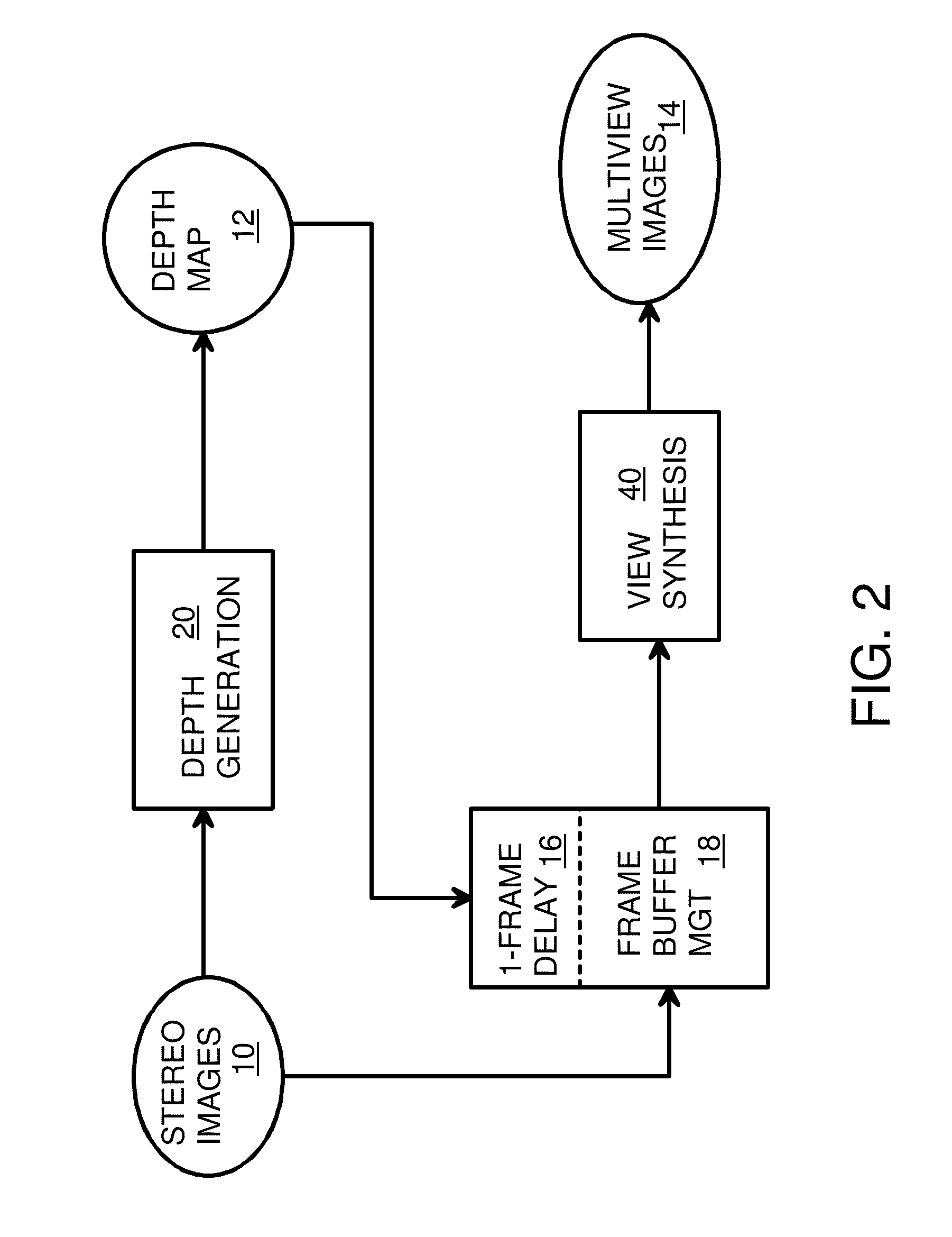

Multi-View Synthesis in Real-Time With Fallback to 2D from 3D to Reduce Flicker in Low or Unstable Stereo-Matching Image Regions

Multi-view images are generated with reduced flickering. A first depth map is generated from stereo images by stereo-matching. When stereo-matching is poor or varies too much from frame to frame, disparity fallback selects a second depth map that is generated from a single view without stereo-matching, preventing stereo-matching errors from producing visible artifacts or flickering. Flat or textureless regions can use the second depth map, while regions with good stereo-matching use the first depth map. Depth maps are generated with a one-frame delay and buffered. Low-cost temporal coherence reduces costs used for stereo-matching when the pixel location selected as the lowest-cost disparity is within a distance threshold of the same pixel in the last frame. Hybrid view synthesis uses forward mapping for smaller numbers of views, and backward mapping from the forward-mapping results for larger numbers of views. Rotated masks are generated on-the-fly for backward mapping.

Owner:HONG KONG APPLIED SCI & TECH RES INST
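The disparity fallback described in this abstract amounts to a per-pixel choice between two depth maps. The sketch below shows one way such a selection could look. The function name, the two thresholds, and the use of a per-pixel matching cost and frame-to-frame depth change as the "poor" and "unstable" tests are all assumptions for illustration.

```python
from typing import List, Sequence


def fallback_depth(stereo_depth: Sequence[float],
                   mono_depth: Sequence[float],
                   match_cost: Sequence[float],
                   prev_stereo_depth: Sequence[float],
                   cost_thresh: float,
                   flicker_thresh: float) -> List[float]:
    """Pick the stereo-matched depth where it is reliable, else the single-view depth."""
    out: List[float] = []
    for i in range(len(stereo_depth)):
        poor = match_cost[i] > cost_thresh  # weak match, e.g. textureless region
        unstable = abs(stereo_depth[i] - prev_stereo_depth[i]) > flicker_thresh
        out.append(mono_depth[i] if (poor or unstable) else stereo_depth[i])
    return out
```

For example, with `cost_thresh=0.5` and `flicker_thresh=1.0`, a pixel whose stereo depth jumps from 2 to 7 between frames falls back to the single-view depth even if its matching cost is low.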

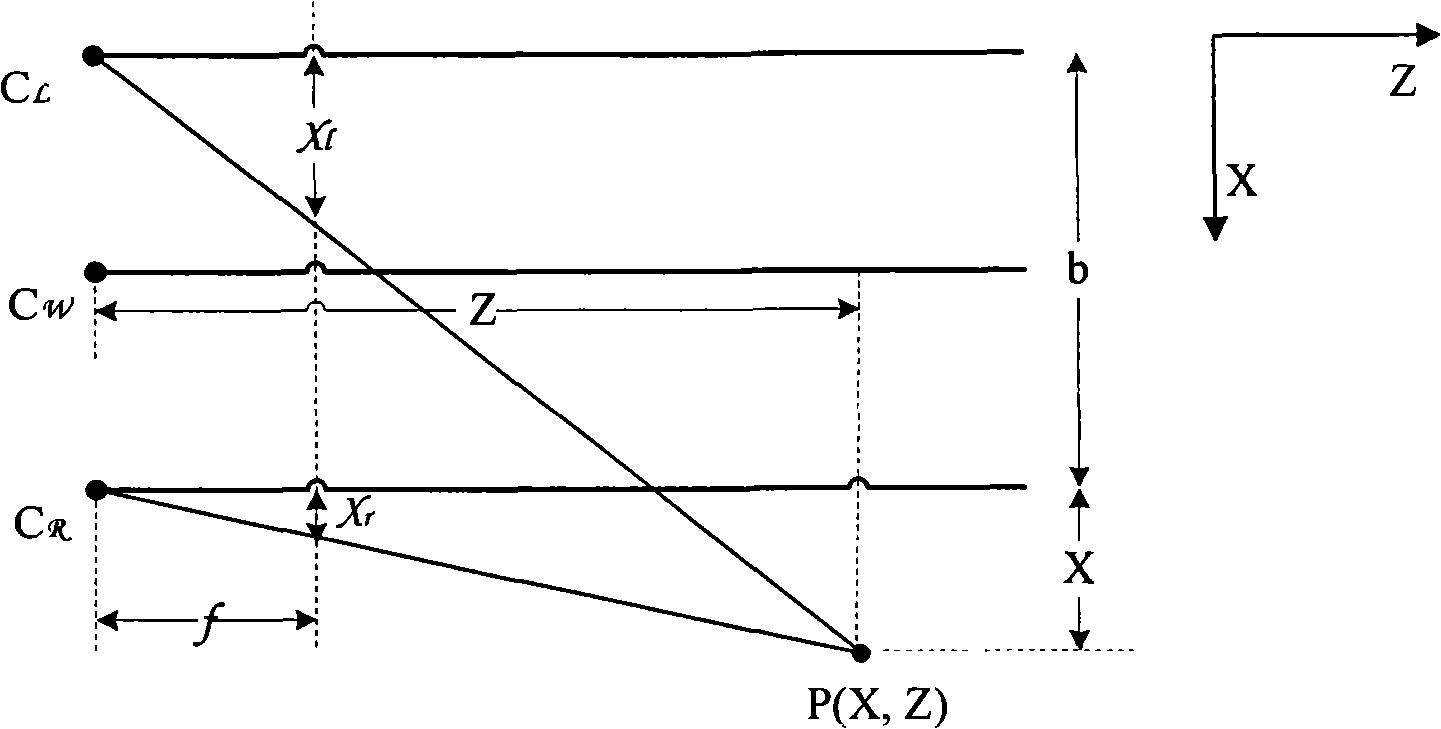

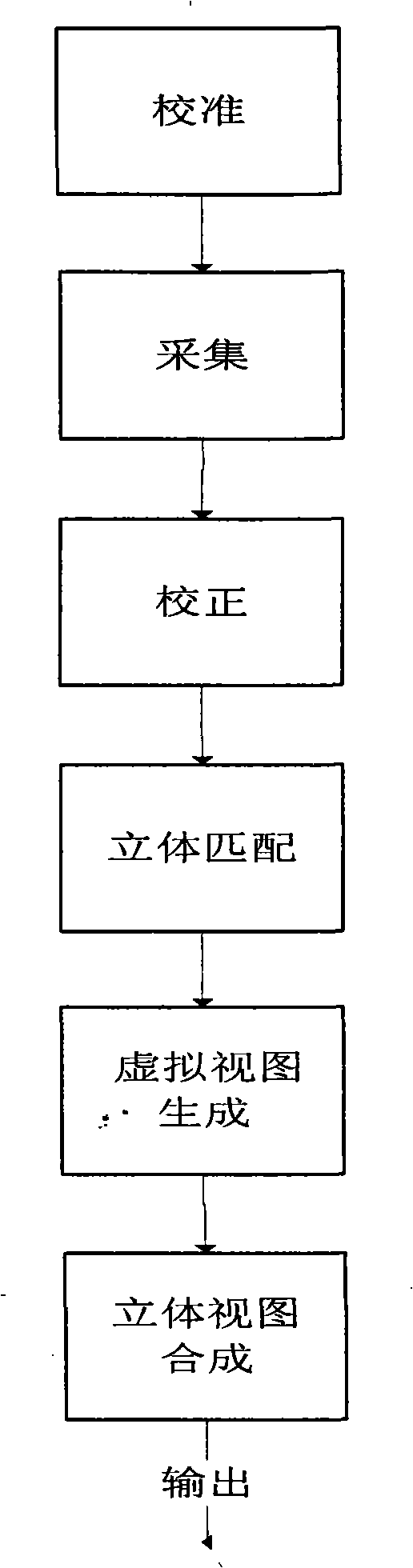

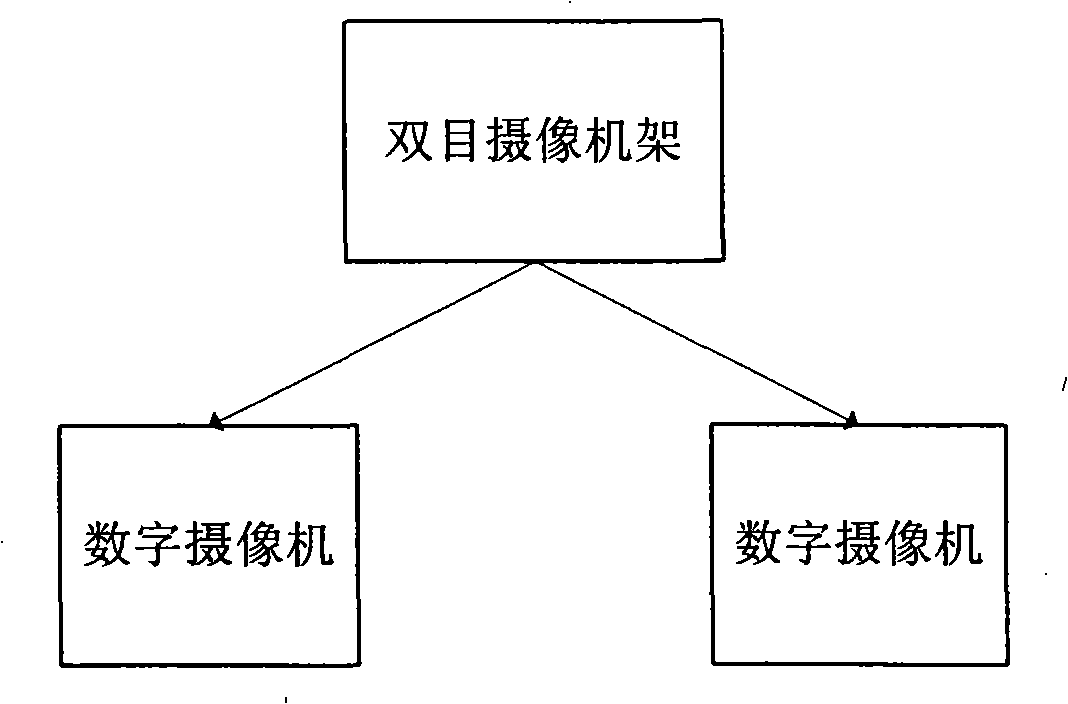

Method for generating real time tridimensional video based on binocular camera

Inactive | CN101277454A | No human intervention required, Low cost, Stereoscopic systems, Stereoscopic video, Stereo matching

The invention relates to a real-time three-dimensional video generation method that belongs to the field of three-dimensional video acquisition and processing. The method involves the following steps: calibration, collection, regulation, stereo matching, virtual view generation, and three-dimensional view synthesis. The method is convenient, simple, and fast, and is particularly suitable for situations that need real-time processing.

Owner:TSINGHUA UNIV

Method and system for processing multiview videos for view synthesis using side information

Inactive | US7728878B2 | Television system details, Picture signal generators, Computer graphics (images), Side information

A method processes multiview videos of a scene, in which each video is acquired by a corresponding camera arranged at a particular pose, and in which the view of each camera overlaps with the view of at least one other camera. Side information for synthesizing a particular view of the multiview video is obtained in either an encoder or a decoder. A synthesized multiview video is generated from the multiview videos and the side information. A reference picture list is maintained for each current frame of each of the multiview videos; the list indexes temporal reference pictures and spatial reference pictures of the acquired multiview videos and the synthesized reference pictures of the synthesized multiview video. Each current frame of the multiview videos is predicted according to reference pictures indexed by the associated reference picture list.

Owner:MITSUBISHI ELECTRIC RES LAB INC

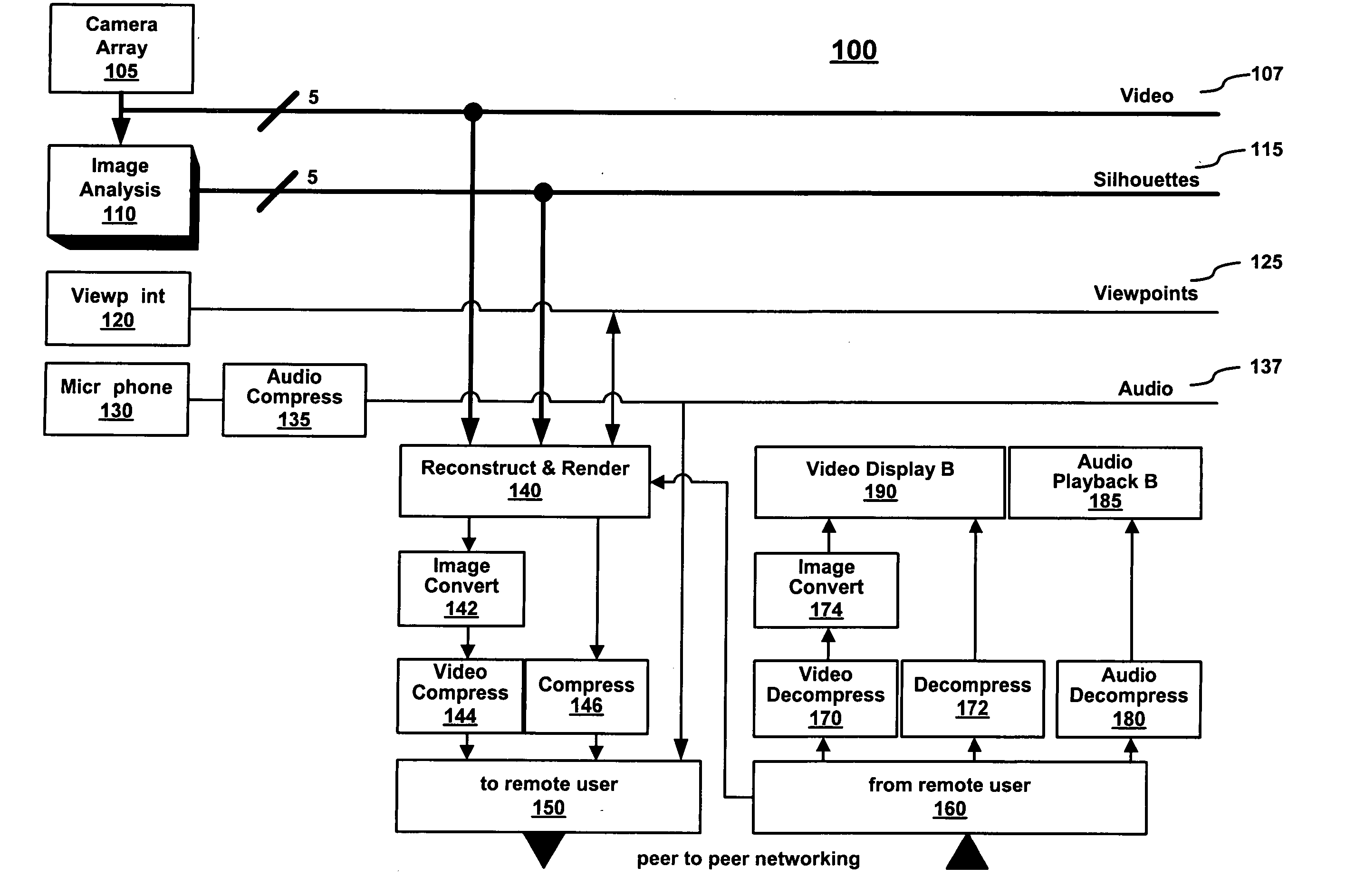

Hybrid video coding supporting intermediate view synthesis

Hybrid video decoder supporting intermediate view synthesis of an intermediate view video from a first- and a second-view video which are predictively coded into a multi-view data signal with frames of the second-view video being spatially subdivided into sub-regions and the multi-view data signal having a prediction mode is provided, having: an extractor configured to respectively extract, from the multi-view data signal, for sub-regions of the frames of the second-view video, a disparity vector and a prediction residual; a predictive reconstructor configured to reconstruct the sub-regions of the frames of the second-view video, by generating a prediction from a reconstructed version of a portion of frames of the first-view video using the disparity vectors and a prediction residual for the respective sub-regions; and an intermediate view synthesizer configured to reconstruct first portions of the intermediate view video.

Owner:GE VIDEO COMPRESSION LLC
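The predictive reconstructor in this abstract rebuilds a sub-region of the second view from the first view, a disparity vector, and a prediction residual. A one-dimensional sketch of that step follows. The function name, the single-scanline layout, and the integer horizontal disparity are simplifying assumptions and not details from the patent.

```python
from typing import List, Sequence


def reconstruct_subregion(first_view_row: Sequence[int],
                          start: int,
                          disparity: int,
                          residual: Sequence[int]) -> List[int]:
    """Reconstruct second-view samples: disparity-shifted prediction plus residual."""
    src = start + disparity
    length = len(residual)
    if src < 0 or src + length > len(first_view_row):
        raise ValueError("disparity vector points outside the first-view picture")
    prediction = first_view_row[src:src + length]  # block fetched from the first view
    return [p + r for p, r in zip(prediction, residual)]
```

With `first_view_row=[1, 2, 3, 4, 5, 6]`, `start=1`, `disparity=2`, and `residual=[1, -1, 0]`, the prediction is `[4, 5, 6]` and the reconstructed samples are `[5, 4, 6]`.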

Method and system for real-time rendering within a gaming environment

Inactive | US20050085296A1 | Television conference systems, Two-way working systems, Viewpoints, Computer graphics (images)

A method and system for real-time rendering within a gaming environment. Specifically, one embodiment of the present invention discloses a method of rendering a local participant within an interactive gaming environment. The method begins by capturing a plurality of real-time video streams of a local participant from a plurality of camera viewpoints. From the plurality of video streams, a new view synthesis technique is applied to generate a rendering of the local participant. The rendering is generated from a perspective of a remote participant located remotely in the gaming environment. The rendering is then sent to the remote participant for viewing.

Owner:HEWLETT PACKARD DEV CO LP

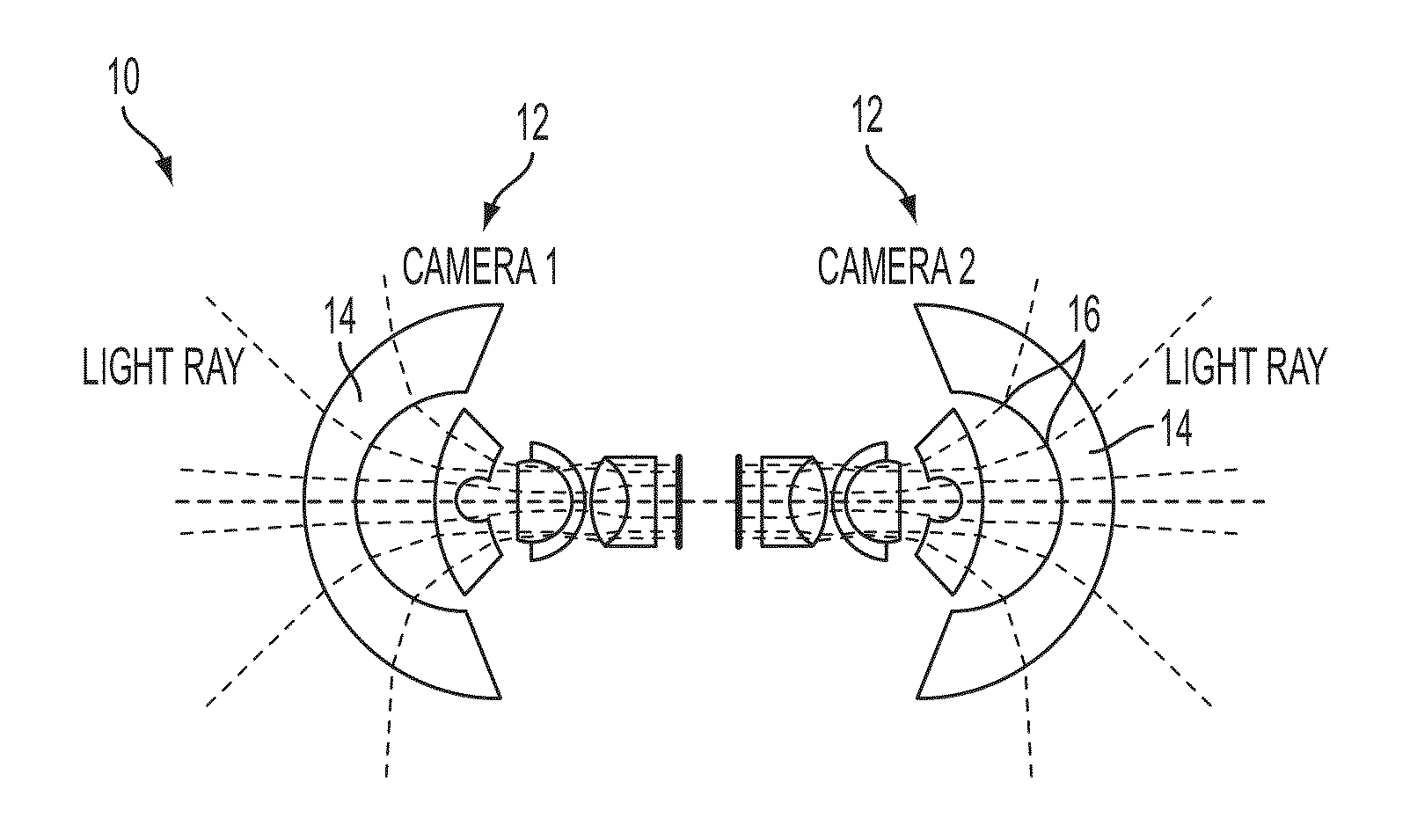

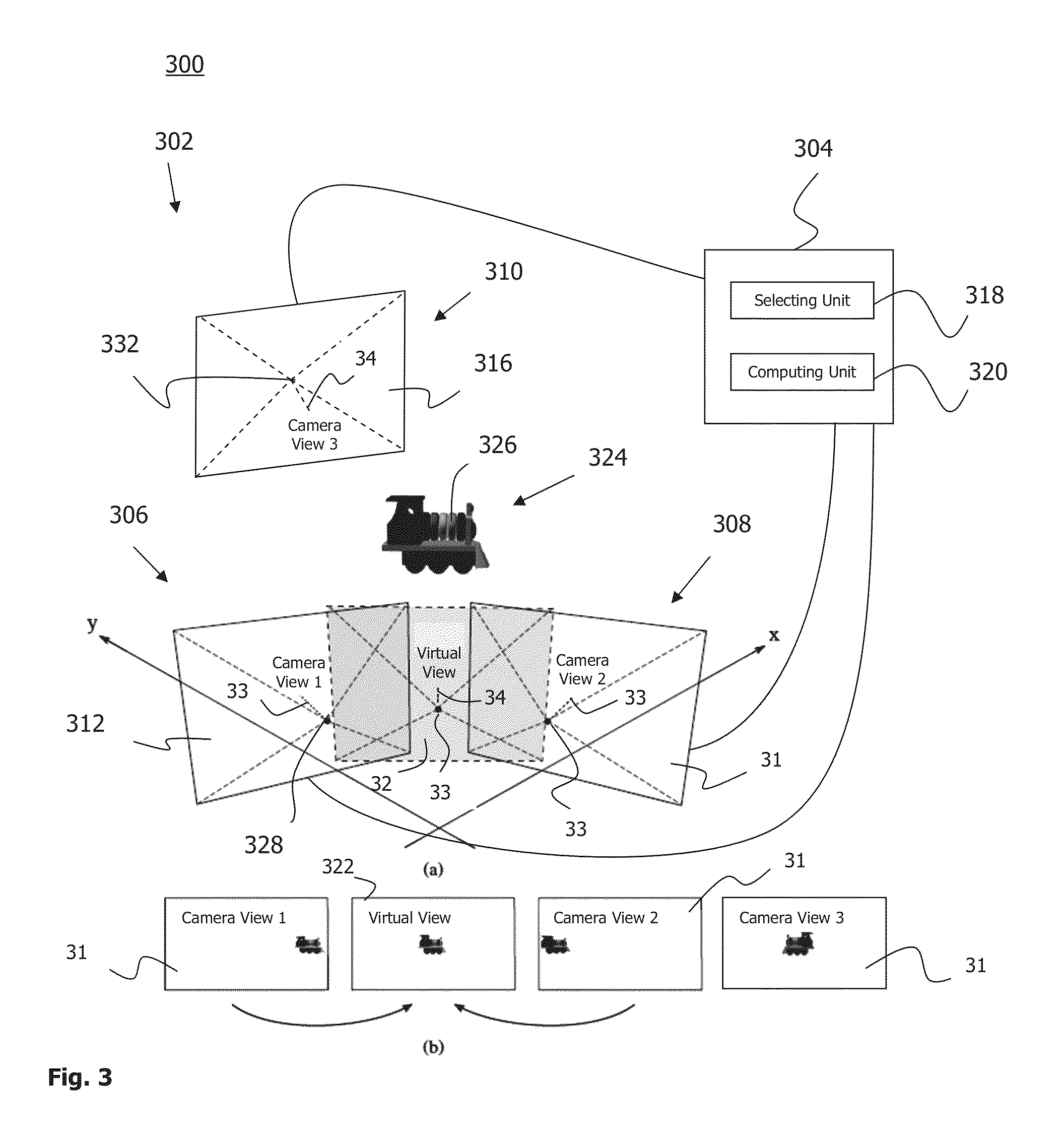

Technique for view synthesis

A technique for computing an image of a virtual view based on a plurality of camera views is presented. One or more cameras provide the plurality of camera views. As to a method aspect of the technique, two or three camera views that at least partially overlap and that at least partially overlap with the virtual view are selected among the plurality of camera views. The image of the virtual view is computed based on objects in the selected camera views using a multilinear relation that relates the selected camera views and the virtual view.

Owner:TELEFON AB LM ERICSSON (PUBL)

Multi view synthesis method and display devices with spatial and inter-view consistency

Active | US20140293028A1 | Easy to use, Low cost, Color television details, Stereoscopic systems, Synthesis methods, Computer graphics (images)

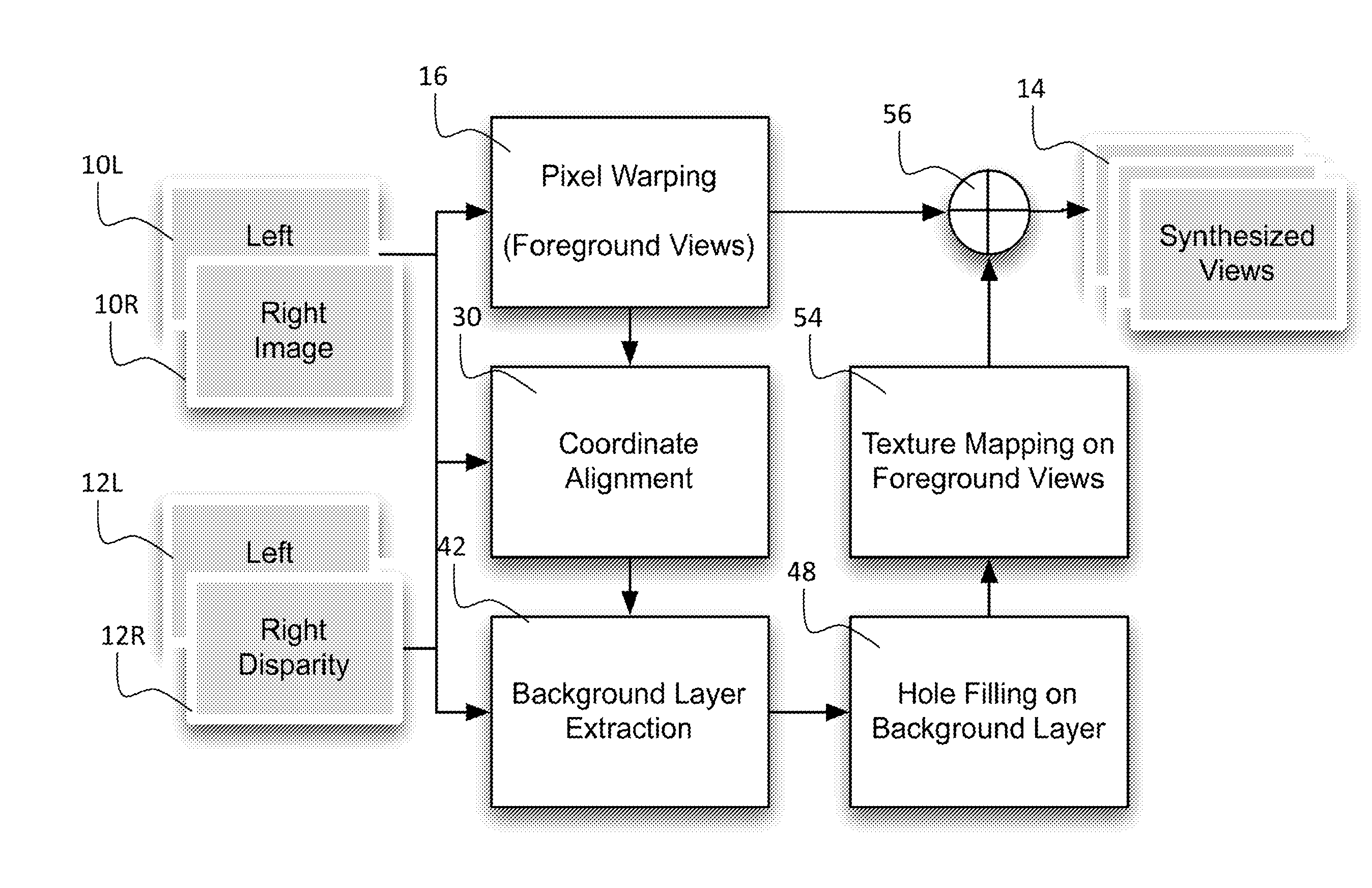

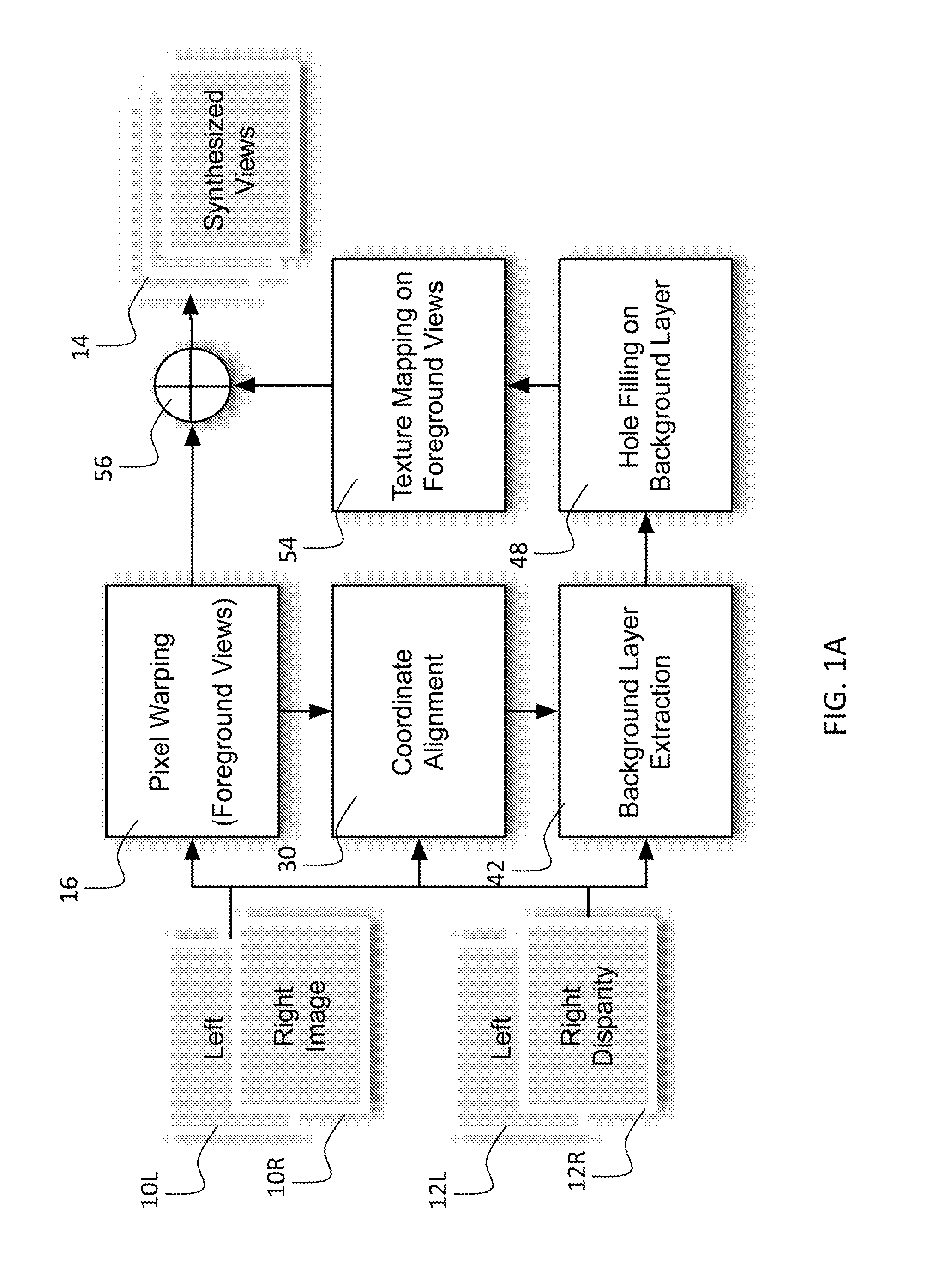

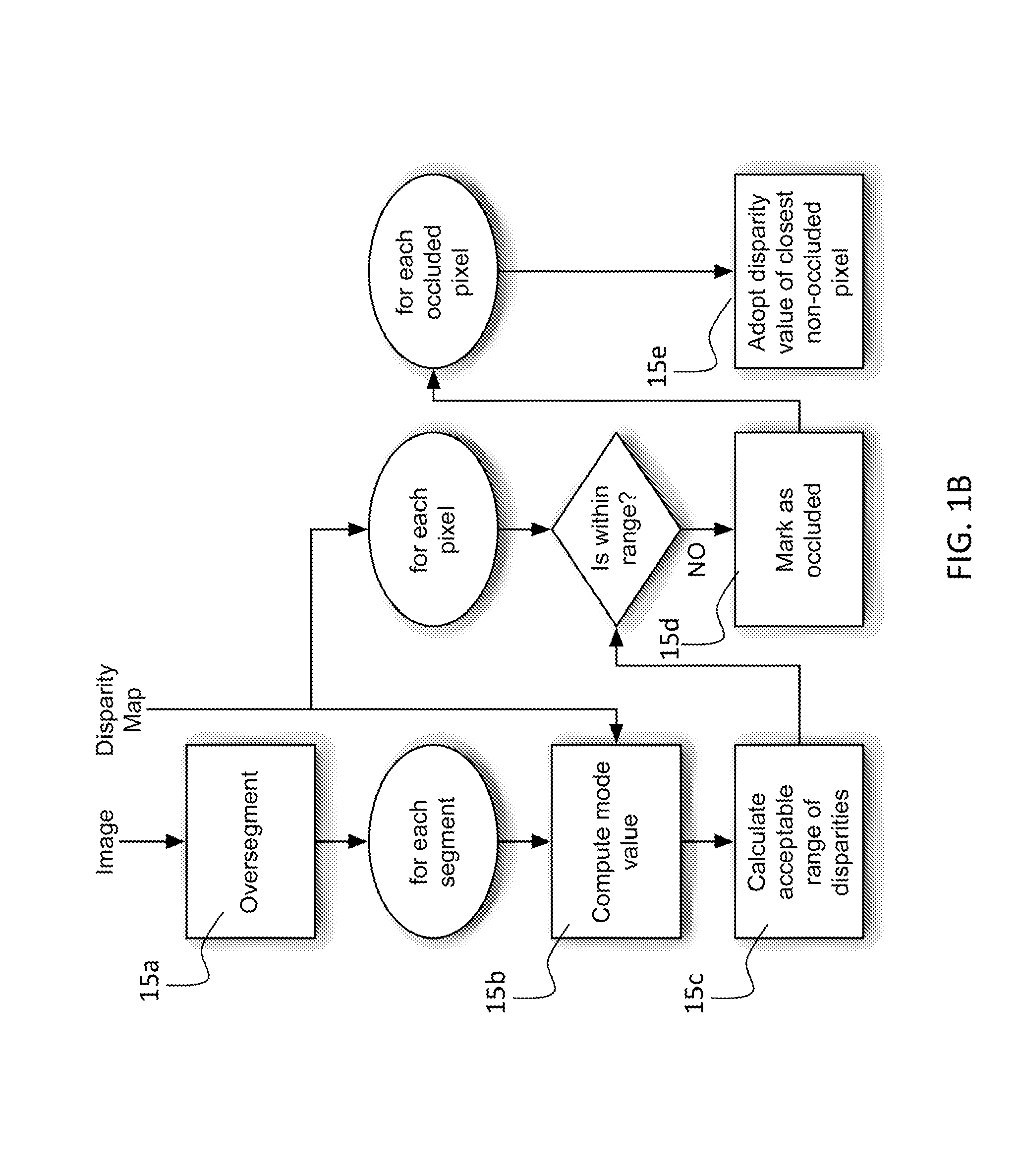

An embodiment of the invention is a method that provides common data and enforces multiple view consistency. In a preferred embodiment, a coordinate alignment matrix is generated after initial warped views are formed. The matrix is used efficiently to repair holes / occlusions when synthesizing final views. In another preferred embodiment, a common background layer is generated based upon initial warped views. Holes are repaired on the common background layer, which can then be used for synthesizing final views. The cost of the latter method is sub-linear, meaning that additional views can be generated with lesser cost. Preferred methods of the invention also use a placement matrix to generate initial warped views, which simplifies warping to a lookup operation.

Owner:RGT UNIV OF CALIFORNIA

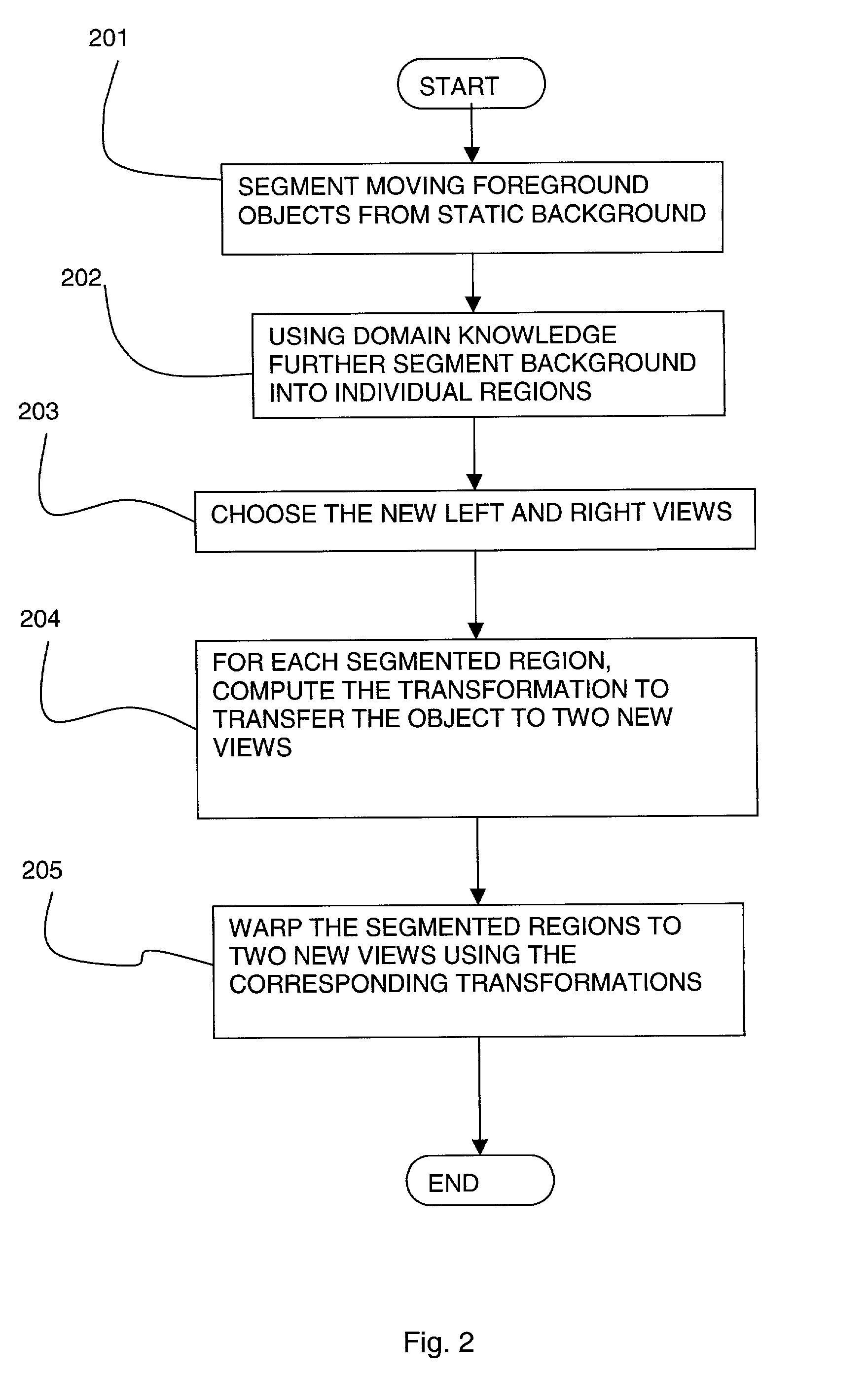

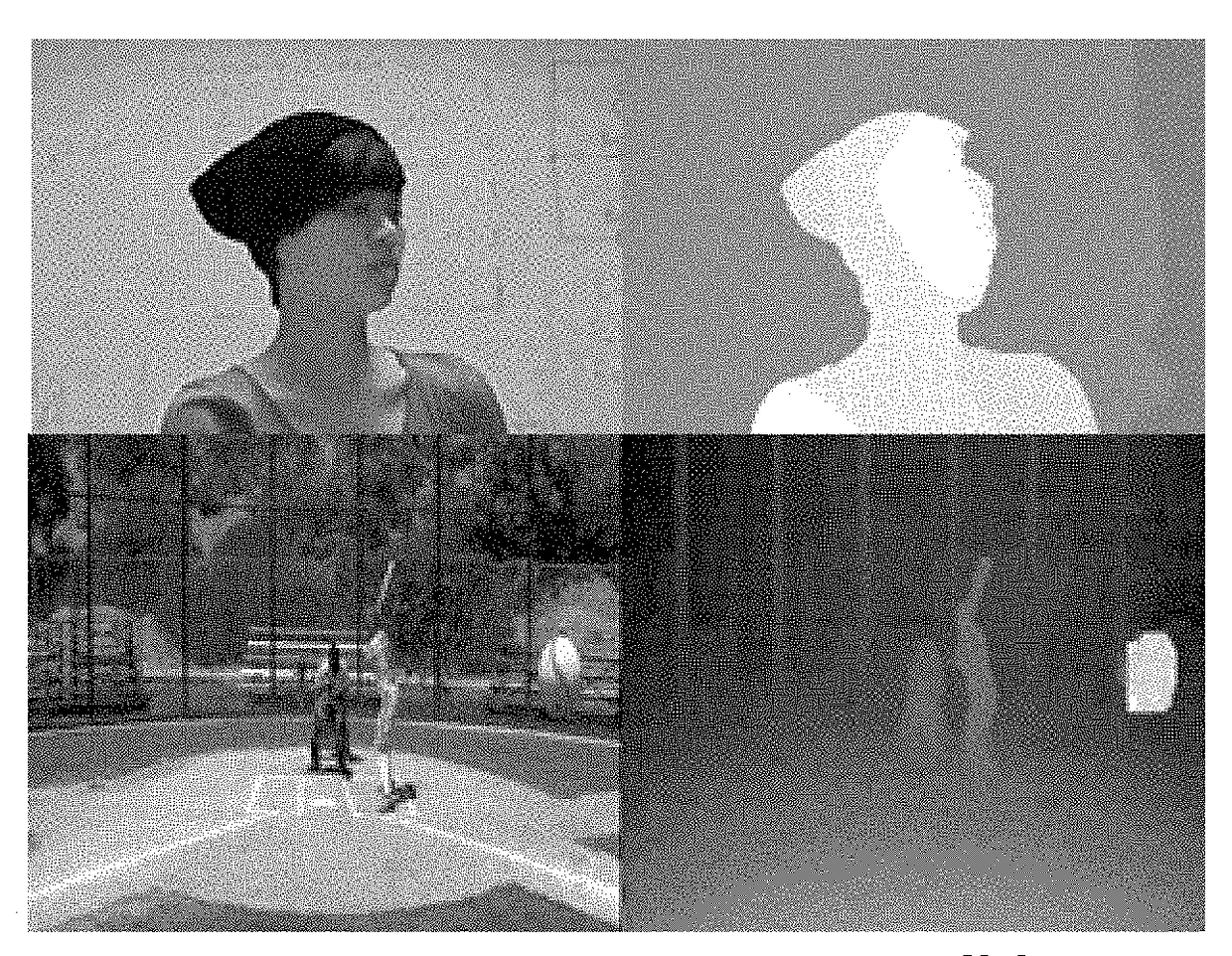

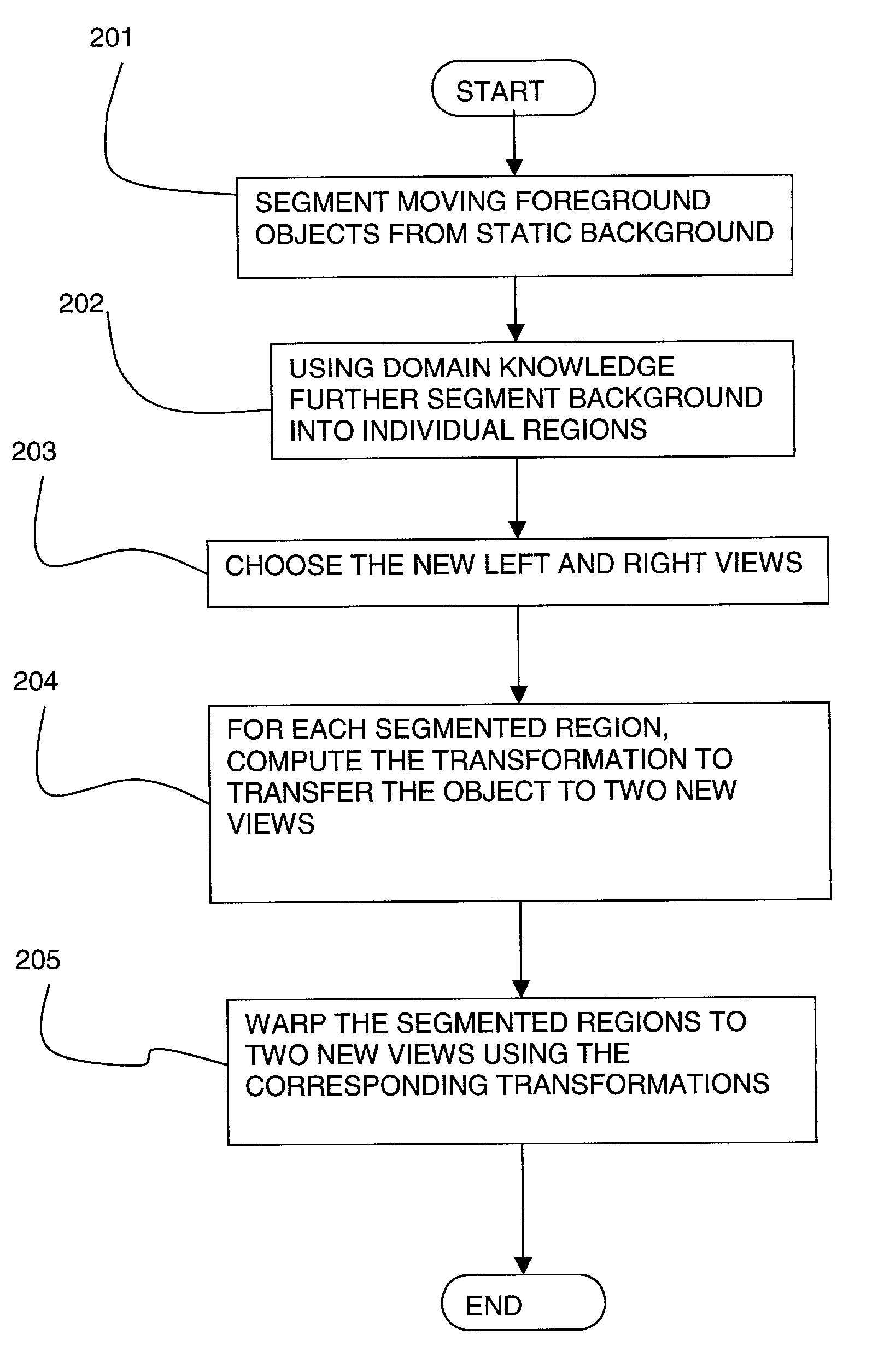

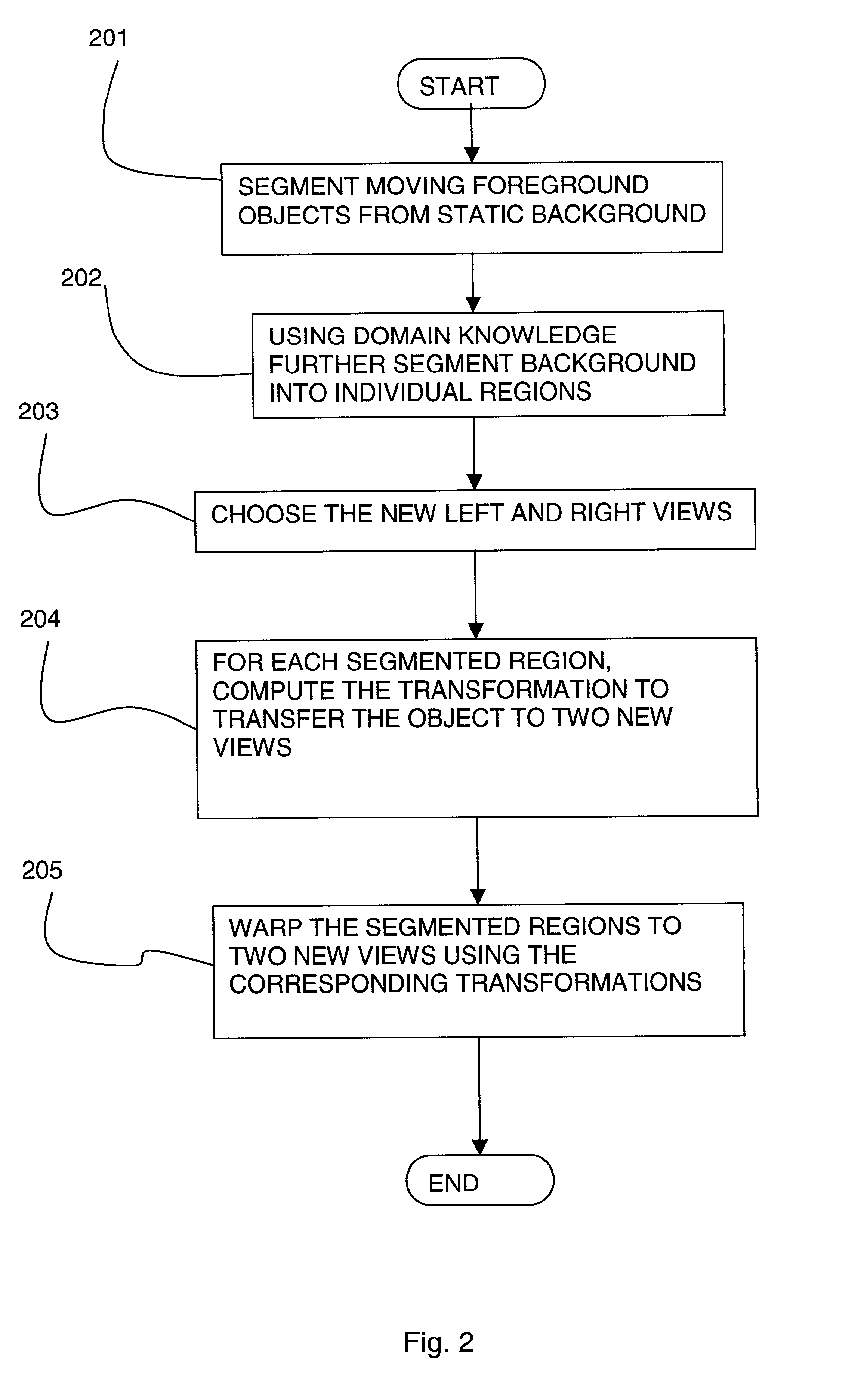

N-view synthesis from monocular video of certain broadcast and stored mass media content

Inactive | US6965379B2 | Improve approximation, Suitable display, Image enhancement, Image analysis, View synthesis, Computer vision

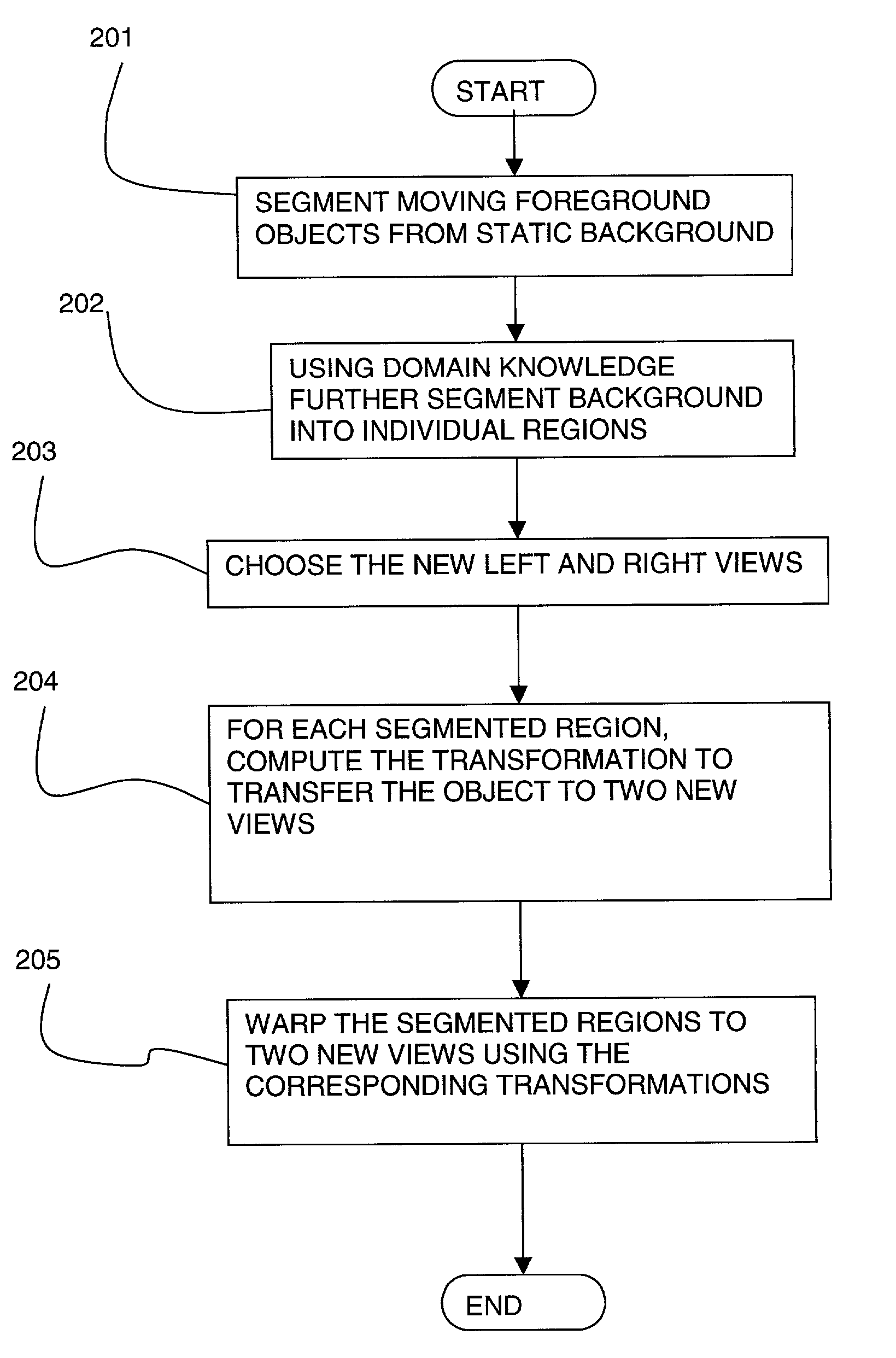

A monocular input image is transformed to give it an enhanced three dimensional appearance by creating at least two output images. Foreground and background objects are segmented in the input image and transformed differently from each other, so that the foreground objects appear to stand out from the background. Given a sequence of input images, the foreground objects will appear to move differently from the background objects in the output images.

Owner:FUNAI ELECTRIC CO LTD

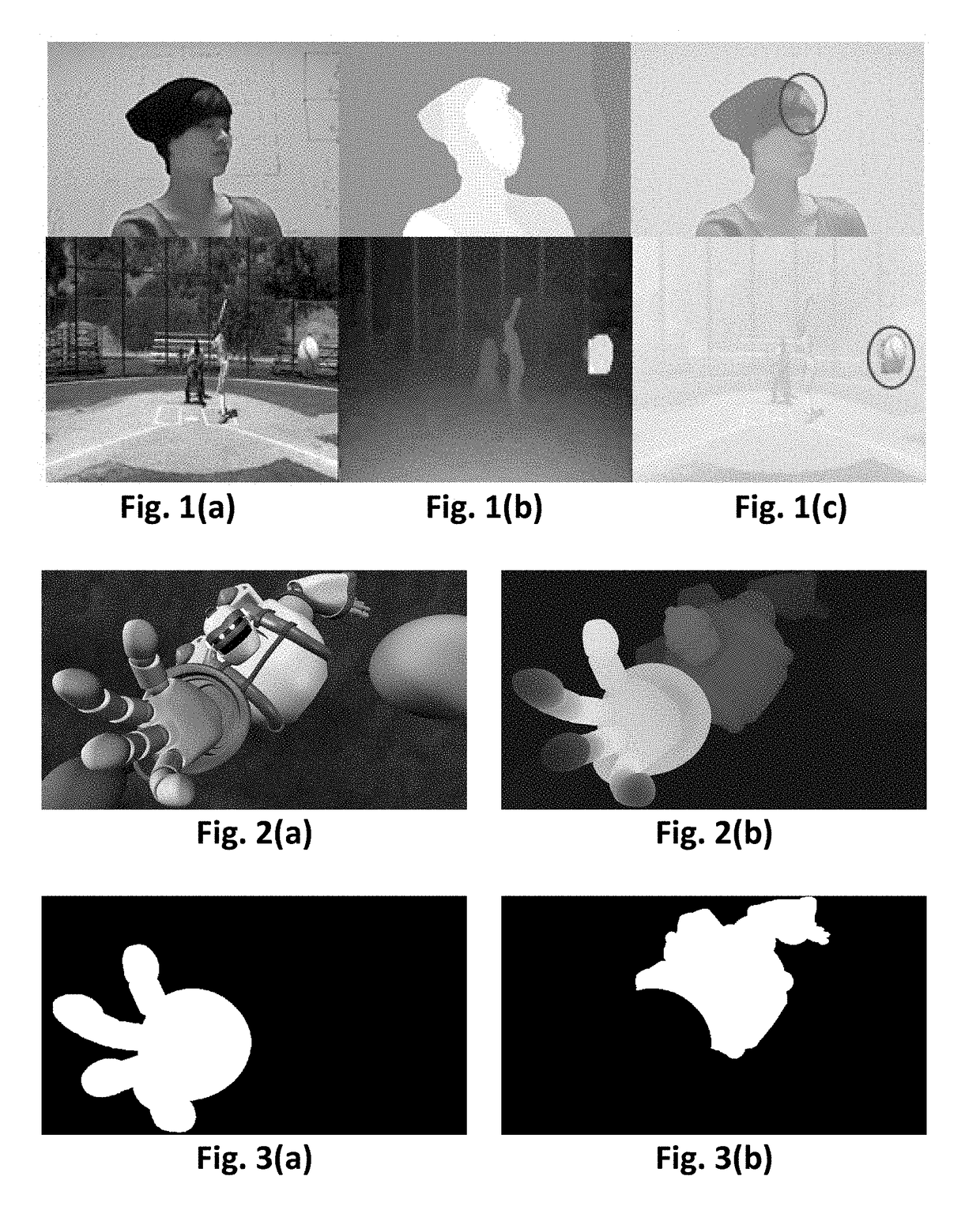

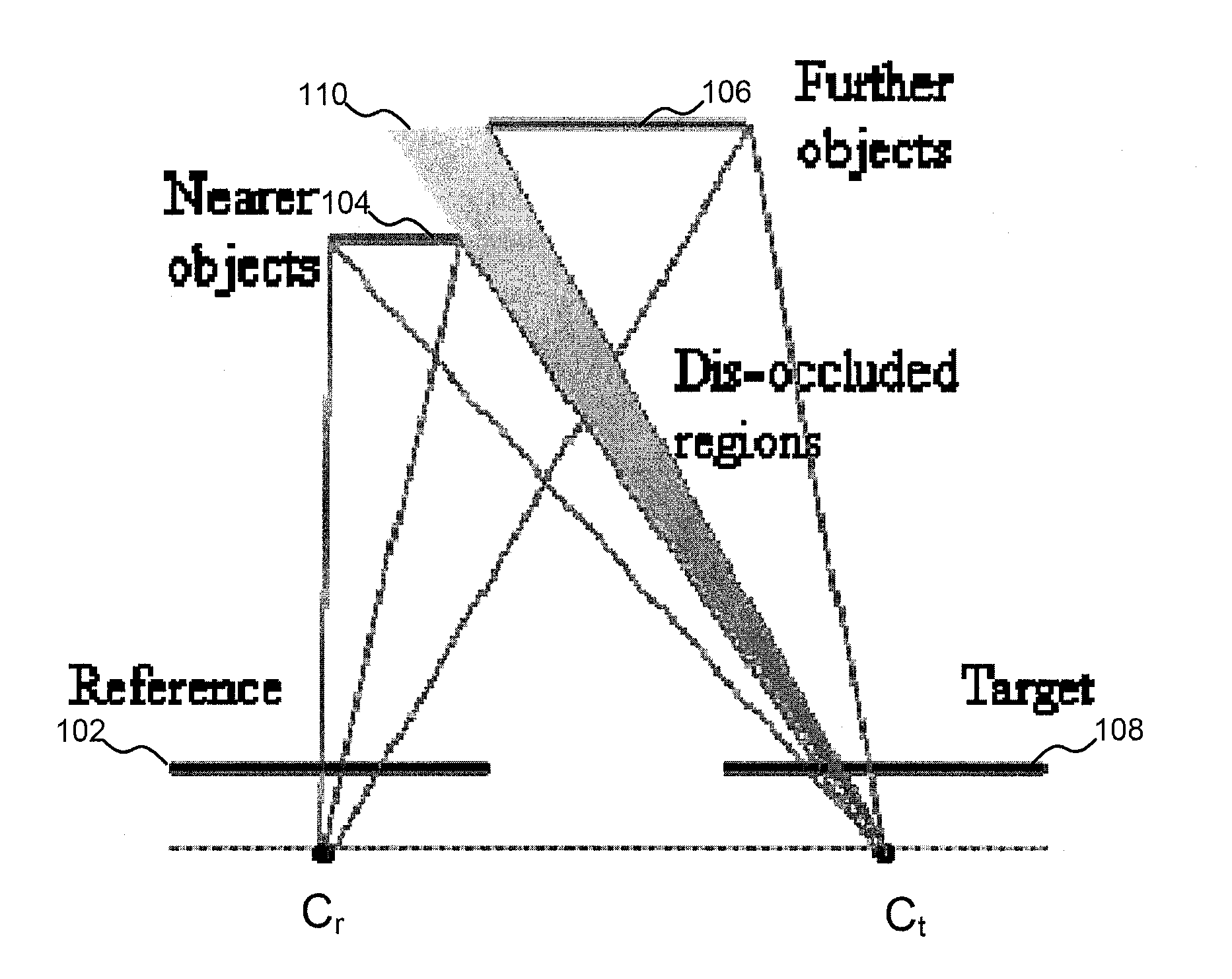

Auxiliary data for artifacts-aware view synthesis

Active | US20170188002A1 | Reduce artifacts, Large discontinuity, Character and pattern recognition, Image data processing, Data pack, Missing data

Original or compressed Auxiliary Data, including possibly major depth discontinuities in the form of shape images, partial occlusion data, associated tuned and control parameters, and depth information of the original video(s), are used to facilitate the interactive display and generation of new views (view synthesis) of conventional 2D, stereo, and multi-view videos in conventional 2D, 3D (stereo) and multi-view or autostereoscopic displays with reduced artifacts. The partial or full occlusion data includes image, depth and opacity data of possibly partially occluded areas to facilitate the reduction of artifacts in the synthesized view. An efficient method is used for extracting objects at partially occluded regions as defined by the auxiliary data from the texture videos to facilitate view synthesis with reduced artifacts. Further, a method for updating the image background and the depth values uses the auxiliary data after extraction of each object to reduce the artifacts due to limited performance of online inpainting of missing data or holes during view synthesis.

Owner:VERSITECH LTD

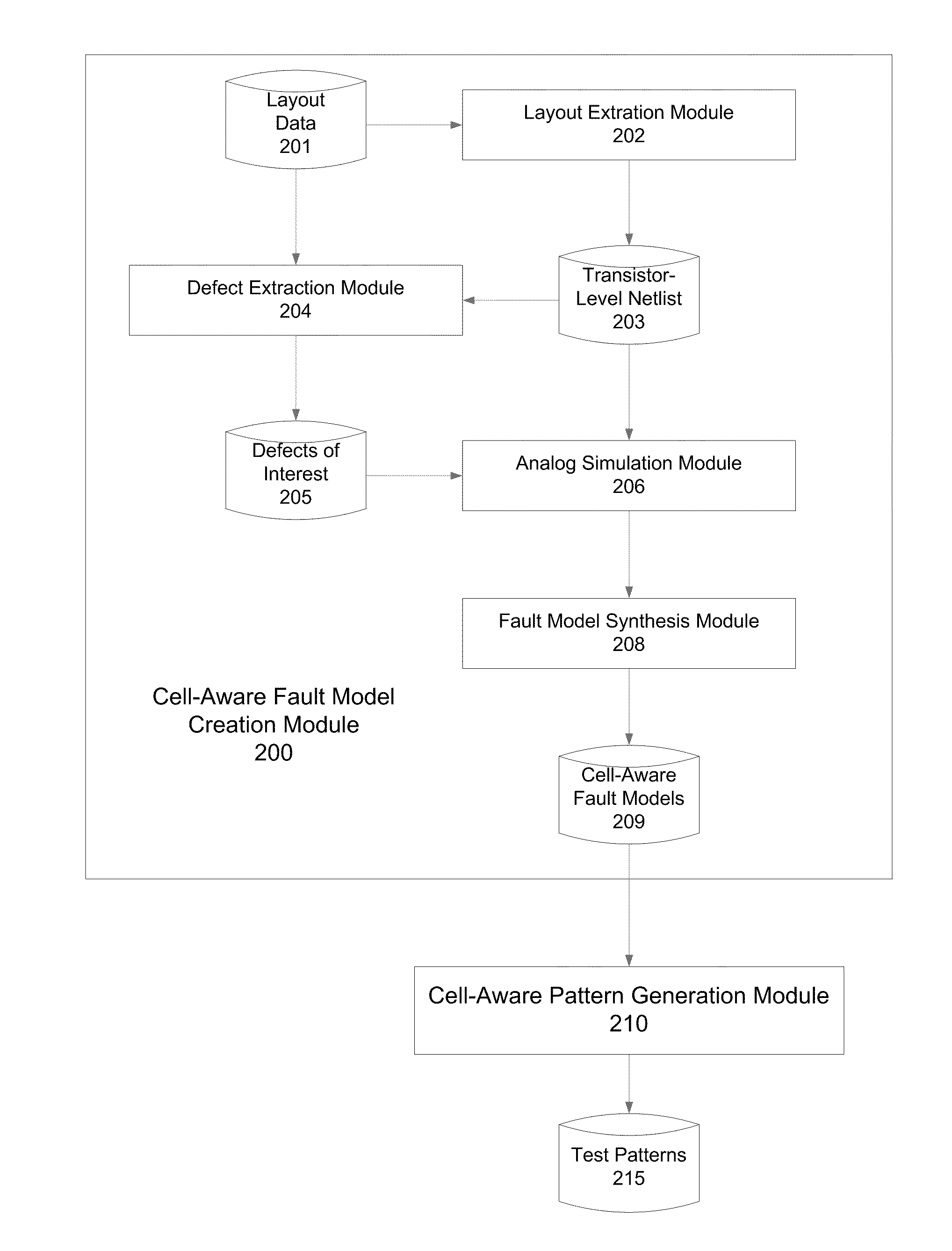

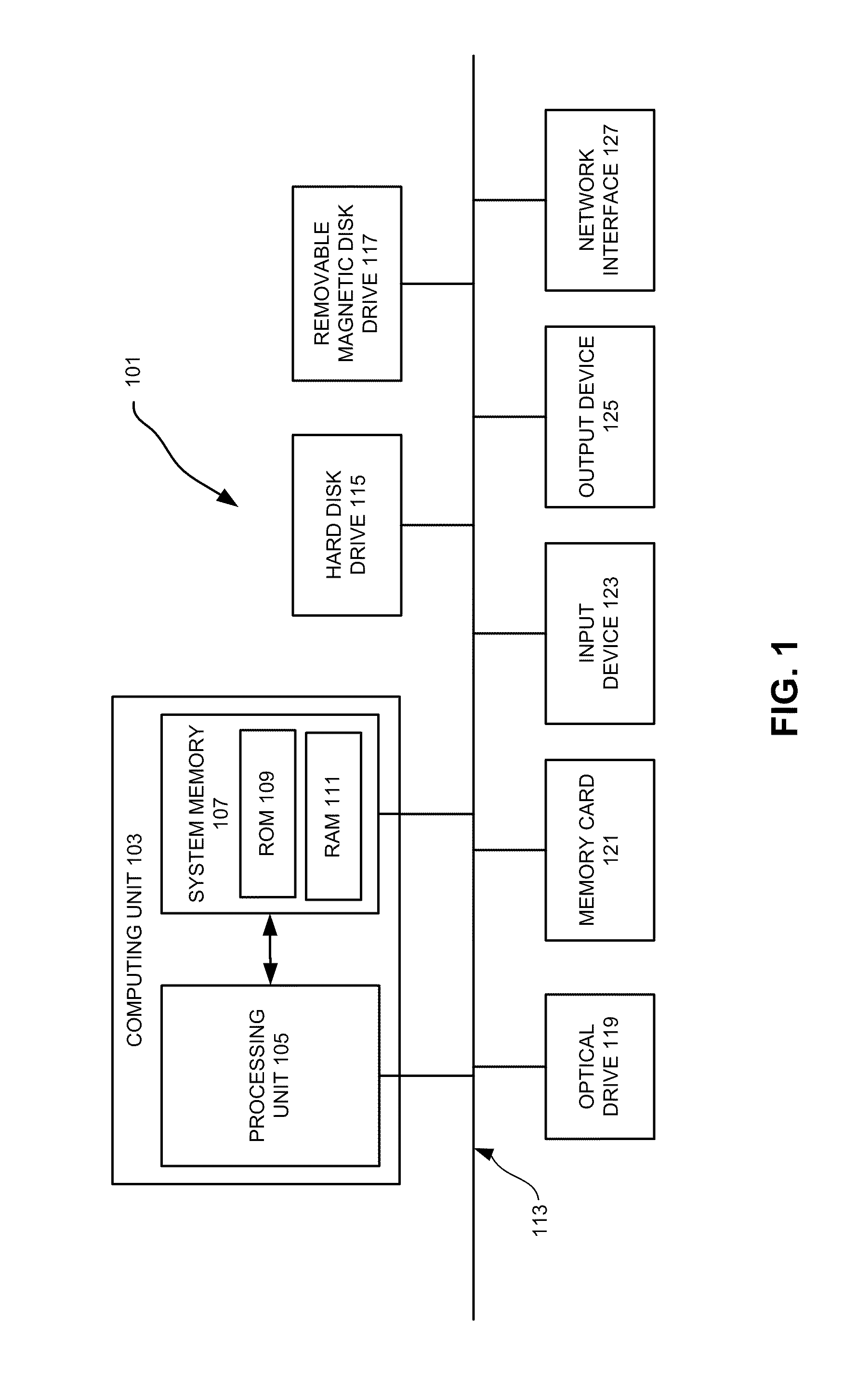

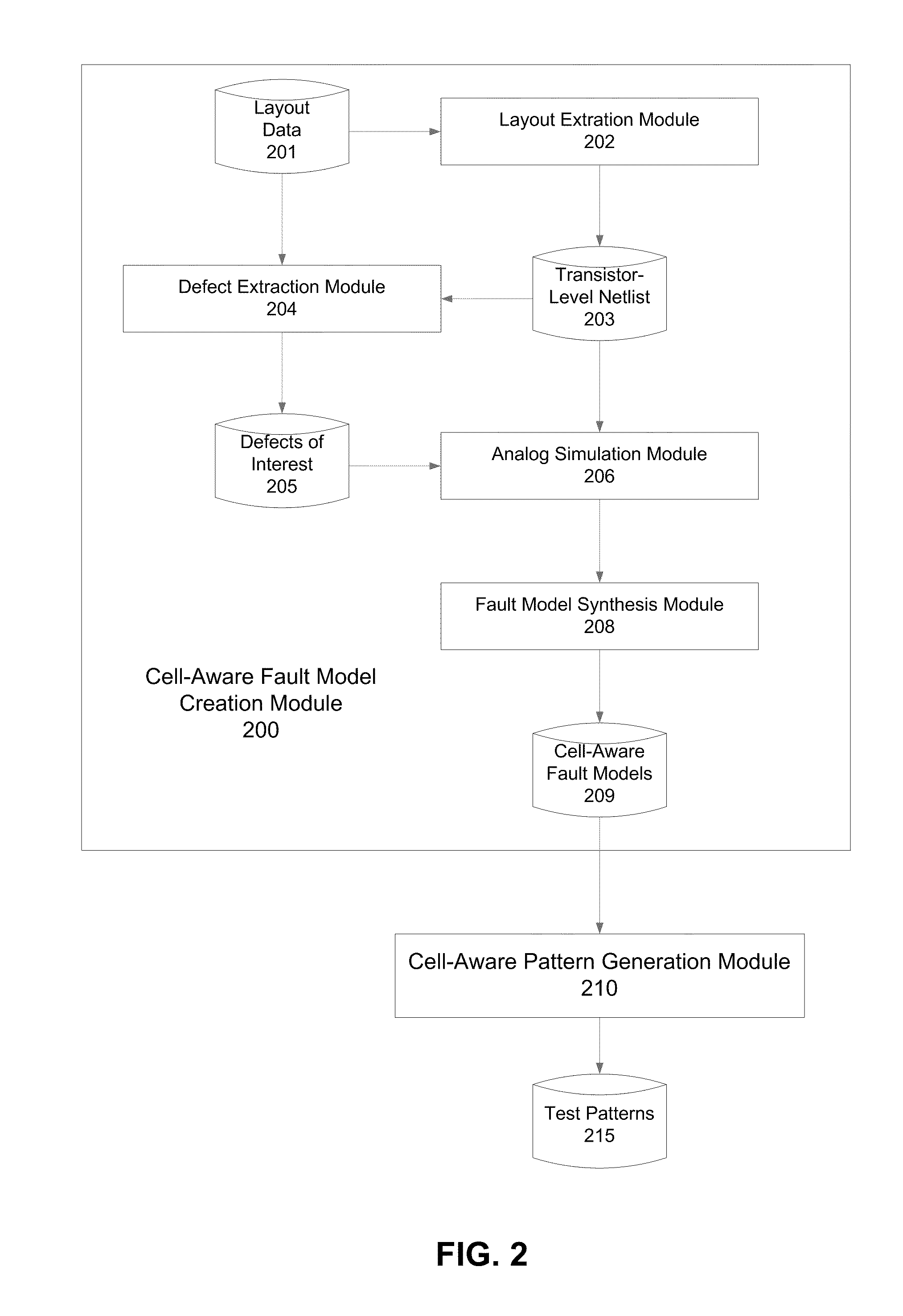

Cell-Aware Fault Model Creation And Pattern Generation

Inactive | US20100229061A1 | Increase coverage, Expand coverage, Electronic circuit testing, Logical operation testing, View synthesis, Theoretical computer science

Cell-aware fault models directly address layout-based intra-cell defects. They are created by performing analog simulations on the transistor-level netlist of a library cell and then by library view synthesis. The cell-aware fault models may be used to generate cell-aware test patterns, which usually have higher defect coverage than those generated by conventional ATPG techniques. The cell-aware fault models may also be used to improve defect coverage of a set of test patterns generated by conventional ATPG techniques.

Owner:MENTOR GRAPHICS CORP

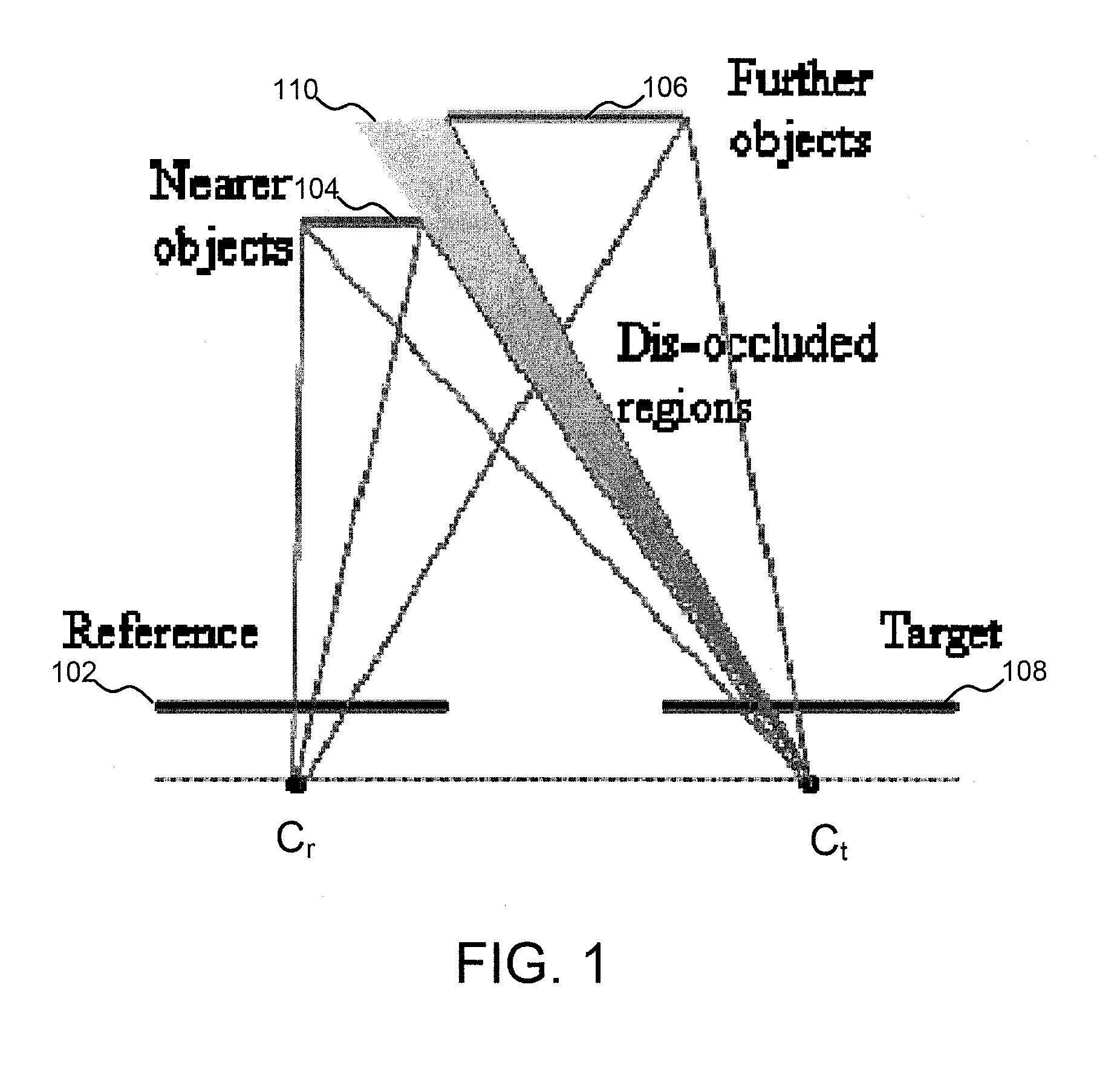

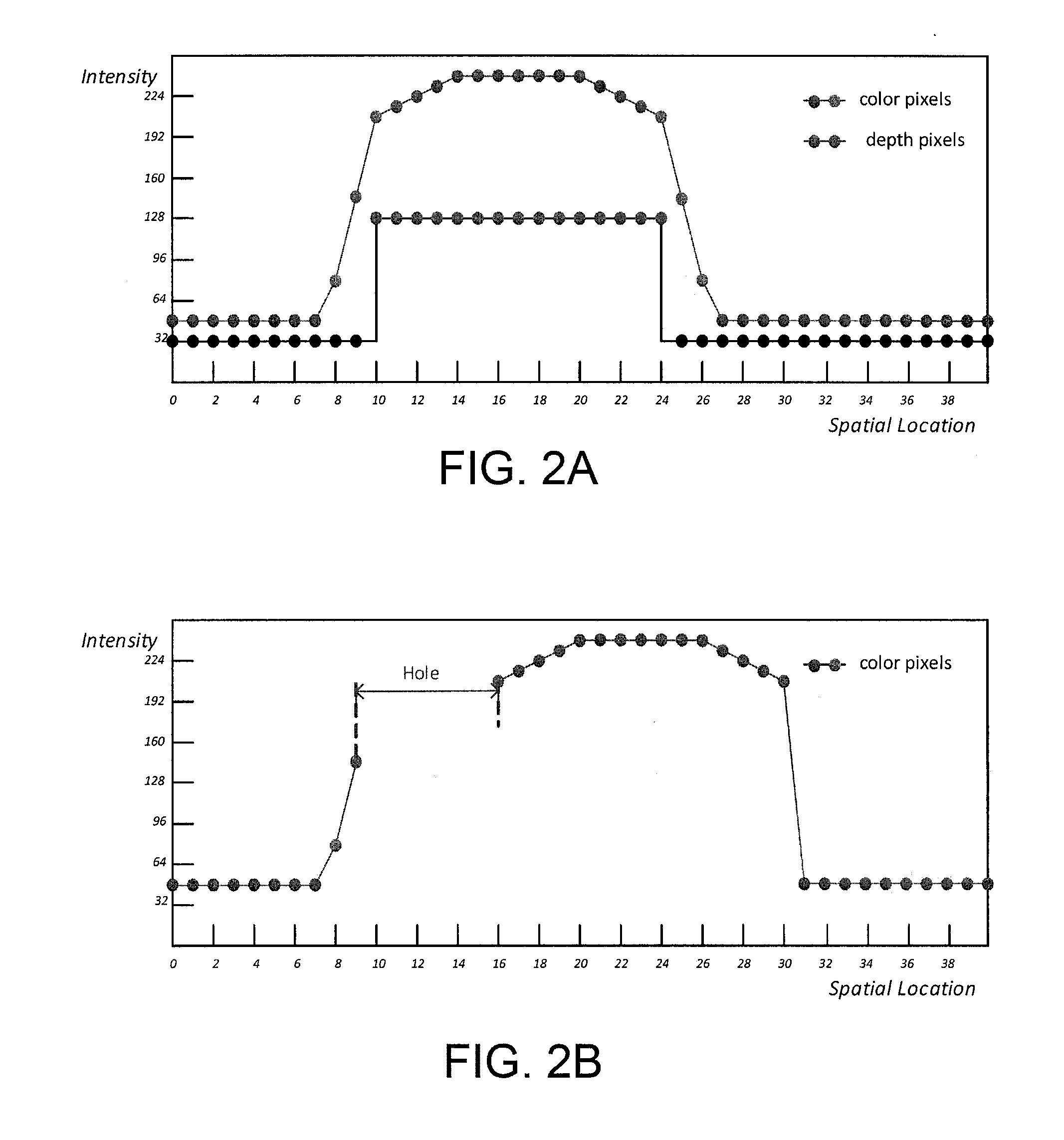

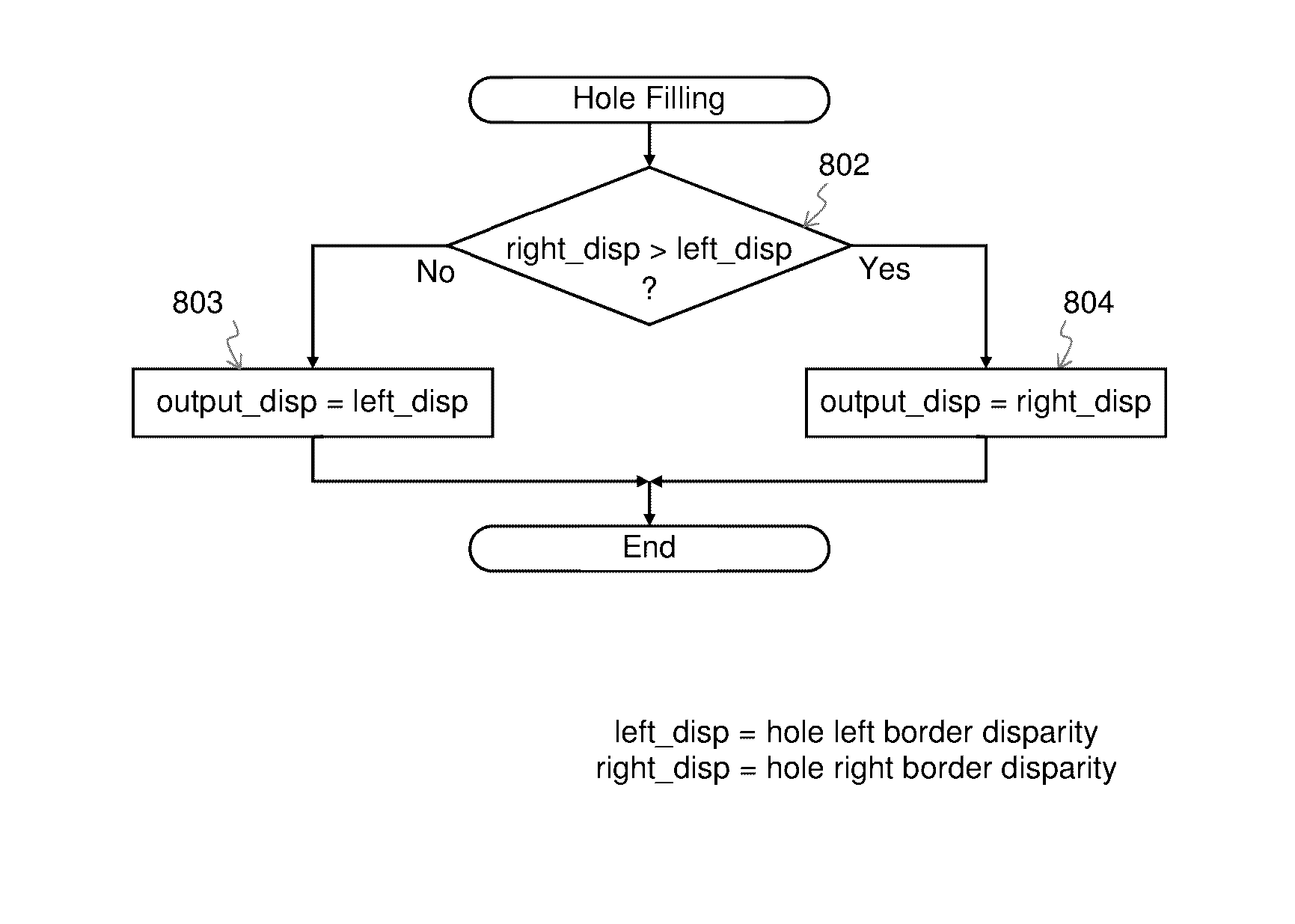

Apparatus, system and method for foreground biased depth map refinement method for DIBR view synthesis

Inactive | US20140002595A1 | Quality improvement, Efficient use of, Stereoscopic systems, Color image, View synthesis

The present embodiments include methods, systems, and apparatuses for foreground-biased depth map refinement, in which the horizontal gradient of the texture edge in the color image is used to guide the shifting of the foreground depth pixels around large depth discontinuities, so that all texture edge pixels are assigned foreground depth values. In such an embodiment, only background information may be used in the hole-filling process. Such embodiments may significantly improve the quality of the synthesized view by avoiding incorrect use of foreground texture information in hole-filling. Additionally, the depth map quality may not be significantly degraded when such methods are used for hole-filling.

Owner:HONG KONG APPLIED SCI & TECH RES INST

Methods for Full Parallax Compressed Light Field Synthesis Utilizing Depth Information

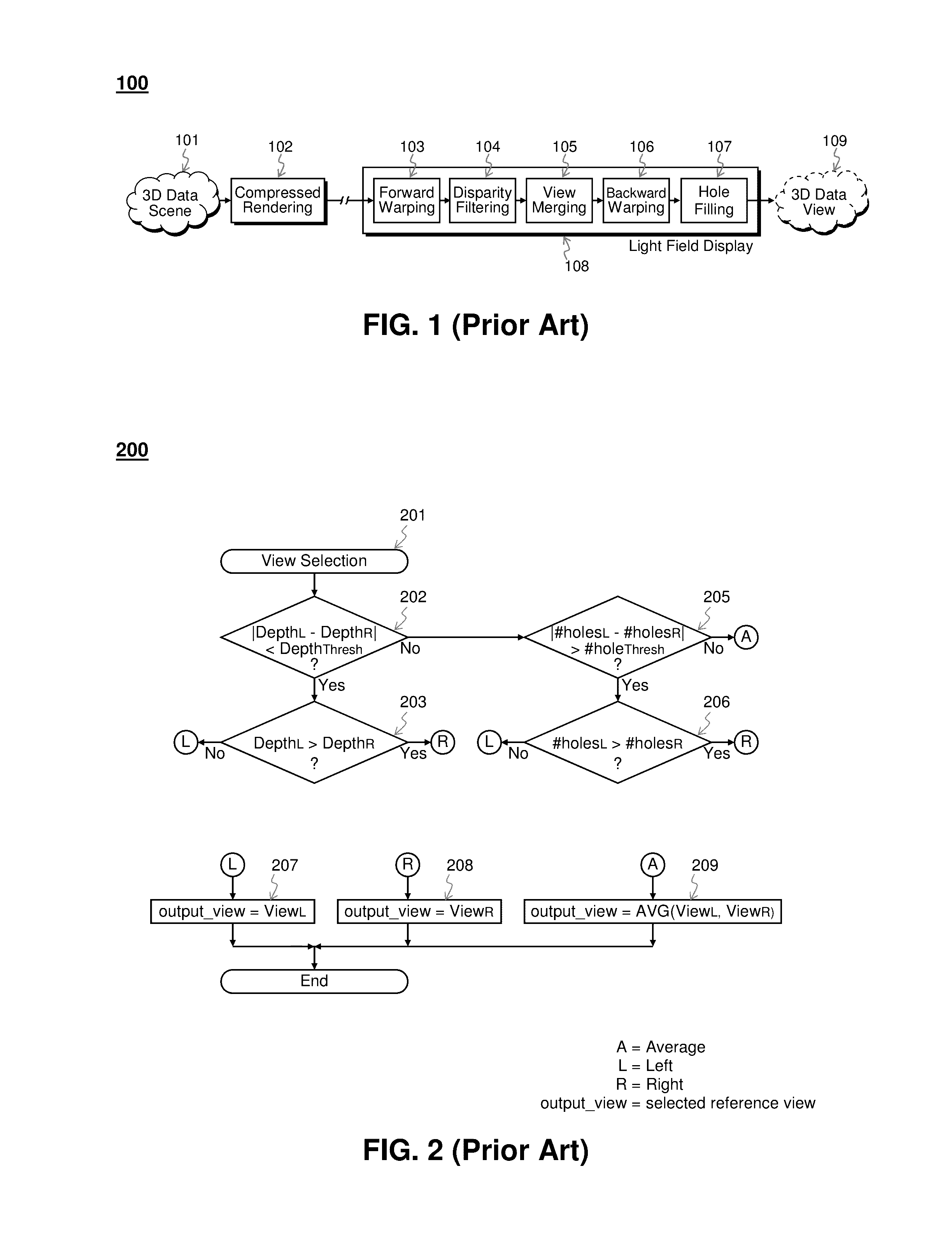

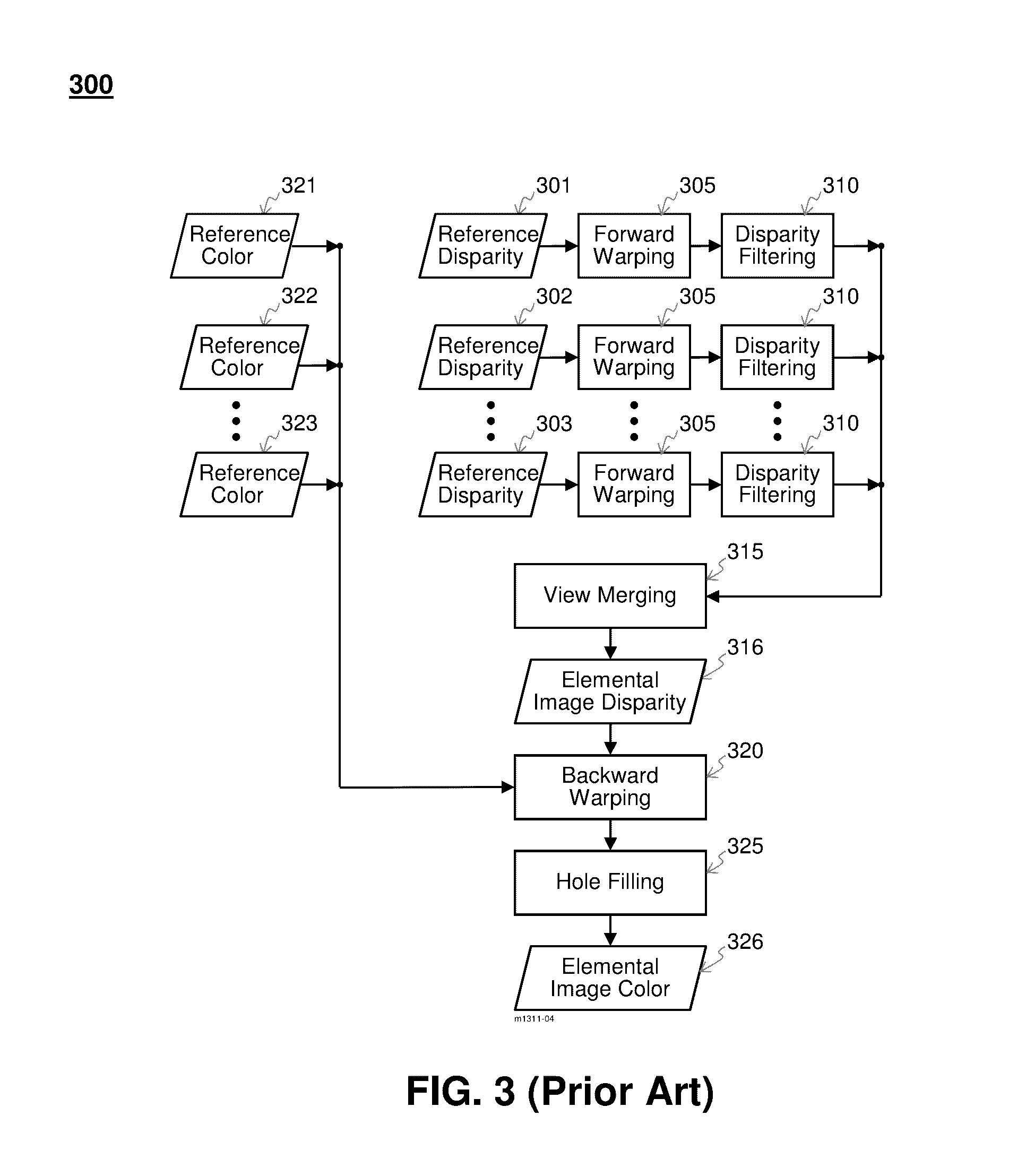

An innovative method for synthesis of compressed light fields is described. Compressed light fields are commonly generated by sub-sampling light field views. The suppressed views must then be synthesized at the display, utilizing information from the compressed light field. The present invention describes a method for view synthesis that utilizes depth information of the scene to reconstruct the absent views. An innovative view merging method coupled with an efficient hole filling procedure compensates for depth misregistrations and inaccuracies to produce realistic synthesized views for full parallax light field displays.

Owner:OSTENDO TECH INC

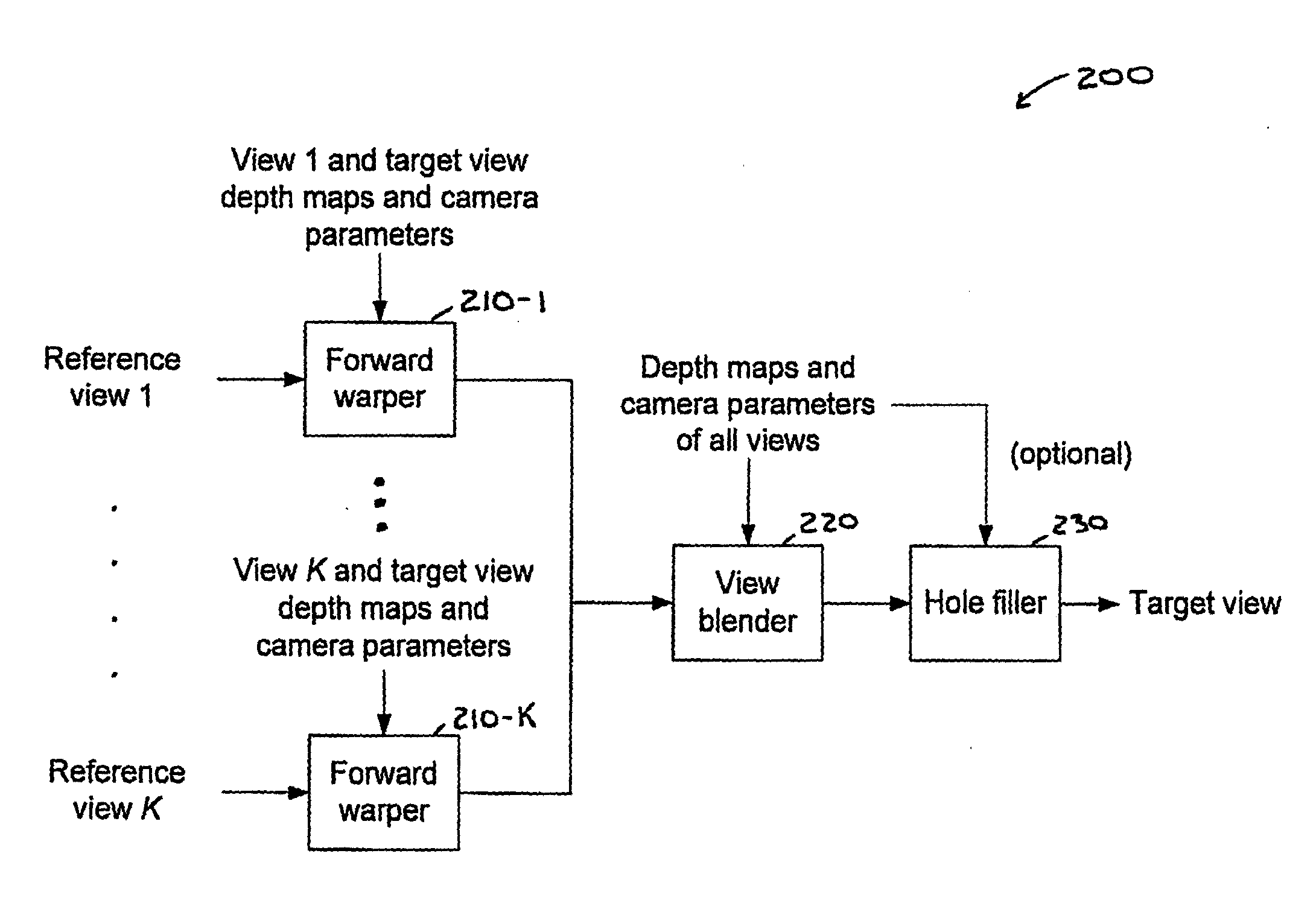

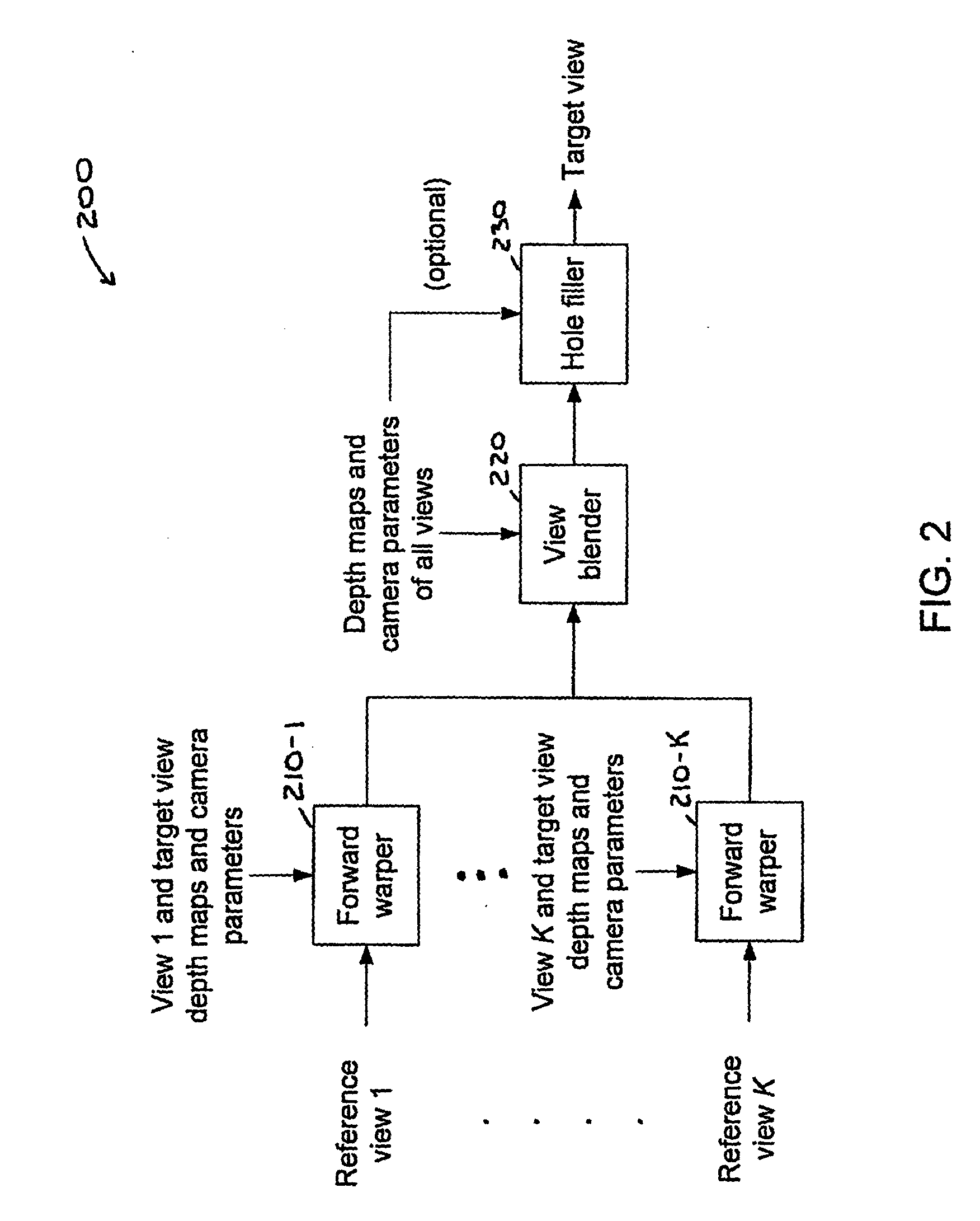

View synthesis with heuristic view blending

Inactive | US20110157229A1 | Cathode-ray tube indicators, Stereoscopic systems, View synthesis, Computer graphics (images)

Various implementations are described. Several implementations relate to view synthesis with heuristic view blending for 3D Video (3DV) applications. According to one aspect, at least one reference picture, or a portion thereof, is warped from at least one reference view location to a virtual view location to produce at least one warped reference. A first candidate pixel and a second candidate pixel are identified in the at least one warped reference. The first candidate pixel and the second candidate pixel are candidates for a target pixel location in a virtual picture from the virtual view location. A value for a pixel at the target pixel location is determined based on values of the first and second candidate pixels.

Owner:THOMSON LICENSING SA
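The abstract says the target pixel value is determined from two candidate pixels but does not spell out the heuristic. The sketch below shows one plausible rule, stated here purely as an assumption: if the candidates disagree strongly in depth, keep the nearer one (it occludes the other); otherwise average them, weighting each by how close its reference view is to the virtual view. The function name, tuple layout, and threshold are hypothetical.

```python
from typing import Tuple

# (pixel value, depth, distance from the candidate's reference view to the virtual view)
Candidate = Tuple[float, float, float]


def blend_candidates(cand_a: Candidate, cand_b: Candidate,
                     depth_gap: float = 0.5) -> float:
    """Merge two warped candidates that land on the same target pixel."""
    va, za, da = cand_a
    vb, zb, db = cand_b
    if abs(za - zb) > depth_gap:
        # Depths disagree: treat the nearer surface as the visible one.
        return va if za < zb else vb
    if da + db == 0:
        return (va + vb) / 2
    # Depths agree: the candidate from the closer reference view gets the larger weight.
    wa = db / (da + db)
    return wa * va + (1 - wa) * vb
```

For two candidates at the same depth with values 100 and 200 and view distances 1 and 3, the weights are 0.75 and 0.25, giving 125.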

Multi-view video coding method

Inactive | CN101170702A | Good effect, Easy to code, Television systems, Digital video signal modification, Parallax, Computer graphics (images)

The invention relates to digital image processing and video coding / decoding techniques, specifically view synthesis predictive coding. The technical problem addressed is to provide a multi-view video coding method suitable for various camera systems. The multi-view video coding method comprises the following steps: (a) independently coding the video sequences of one or more views by means of motion compensation prediction; (b) coding the other video sequences with the minimal-cost prediction mode selected from parallax compensation prediction, view synthesis prediction, and motion compensation prediction. The invention yields better video coding results.

Owner:SICHUAN PANOVASIC TECH
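Choosing the minimal-cost prediction mode among the three named in this abstract can be sketched as follows. The cost used here, a sum of absolute differences between the block and each mode's prediction, is an assumption; the abstract does not define the cost. The mode labels and function names are likewise hypothetical.

```python
from typing import Dict, Sequence, Tuple

MODES = ("parallax_compensation", "view_synthesis", "motion_compensation")


def sad(a: Sequence[int], b: Sequence[int]) -> int:
    """Sum of absolute differences between two equally sized blocks."""
    return sum(abs(x - y) for x, y in zip(a, b))


def select_mode(block: Sequence[int],
                predictions: Dict[str, Sequence[int]]) -> Tuple[str, int]:
    """Return the prediction mode with the lowest cost, and that cost."""
    costs = {mode: sad(block, predictions[mode]) for mode in MODES}
    best = min(MODES, key=lambda mode: costs[mode])  # ties resolve in MODES order
    return best, costs[best]
```

A production encoder would normally also count the bits needed to signal each mode and its side information; this sketch compares distortion only.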

Method and system for processing multiview videos for view synthesis using motion vector predictor list

Active | US8823821B2 | Television system details, Picture reproducers using cathode ray tubes, Motion vector, Video encoding

Multiview videos are acquired by overlapping cameras. Side information is used to synthesize multiview videos. A reference picture list is maintained for current frames of the multiview videos; the list indexes temporal reference pictures and spatial reference pictures of the acquired multiview videos and the synthesized reference pictures of the synthesized multiview video. Each current frame of the multiview videos is predicted according to reference pictures indexed by the associated reference picture list with a skip mode and a direct mode, whereby the side information is inferred from the synthesized reference picture. In addition, the skip and merge modes for single view video coding are modified to support multiview video coding by generating a motion vector prediction list that also considers neighboring blocks associated with synthesized reference pictures.

Owner:MITSUBISHI ELECTRIC RES LAB INC

N-view synthesis from monocular video of certain broadcast and stored mass media content

Inactive | US20020167512A1 | Improve approximation, Suitable display, Image enhancement, Image analysis, View synthesis, Computer vision

A monocular input image is transformed to give it an enhanced three dimensional appearance by creating at least two output images. Foreground and background objects are segmented in the input image and transformed differently from each other, so that the foreground objects appear to stand out from the background. Given a sequence of input images, the foreground objects will appear to move differently from the background objects in the output images.

Owner:FUNAI ELECTRIC CO LTD

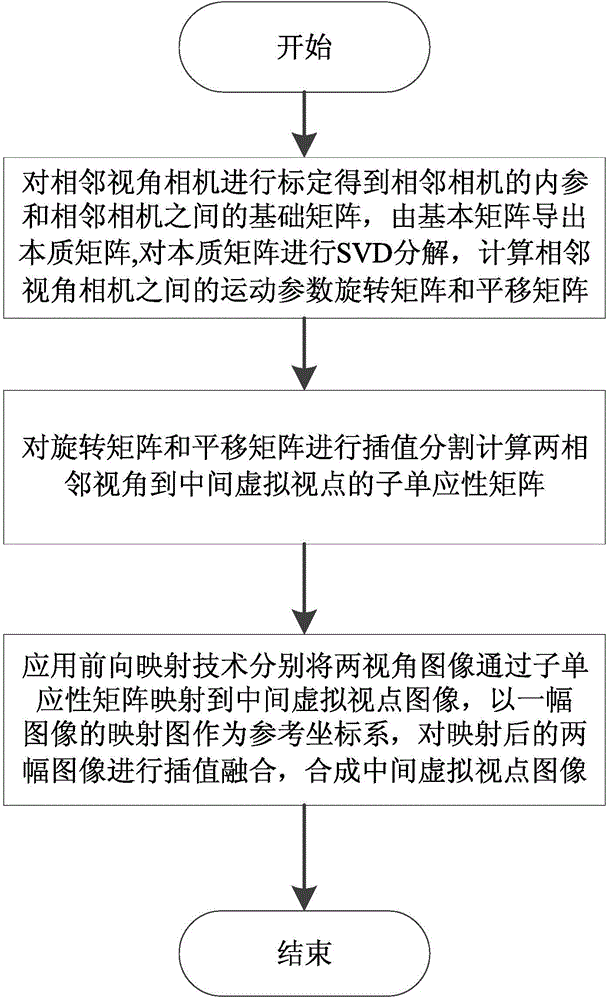

Virtual view synthesis method based on homographic matrix partition

Active | CN104809719A | Improve real-time performance, Rebuild fast, Image analysis, Stereoscopic systems, Singular value decomposition, Essential matrix

The invention discloses a virtual view synthesis method based on homography matrix partition. The method comprises the following steps: 1) calibrating the left and right neighboring view cameras to obtain their intrinsic parameter matrices and the fundamental matrix between them, deriving the essential matrix from the fundamental matrix, performing singular value decomposition on the essential matrix, and computing the motion parameters between the two cameras, namely a rotation matrix and a translation matrix; 2) performing interpolation division on the rotation matrix and the translation matrix to obtain sub-homography matrices from the left and right neighboring views to a middle virtual view; 3) applying forward mapping to map the two view images to the middle virtual view through the sub-homography matrices, taking the mapped image of one of them as the reference coordinate system, and performing interpolation fusion on the two mapped images to synthesize the middle virtual view image. The method is fast, simple and effective, and of high practical engineering value.

Owner:SOUTH CHINA UNIV OF TECH

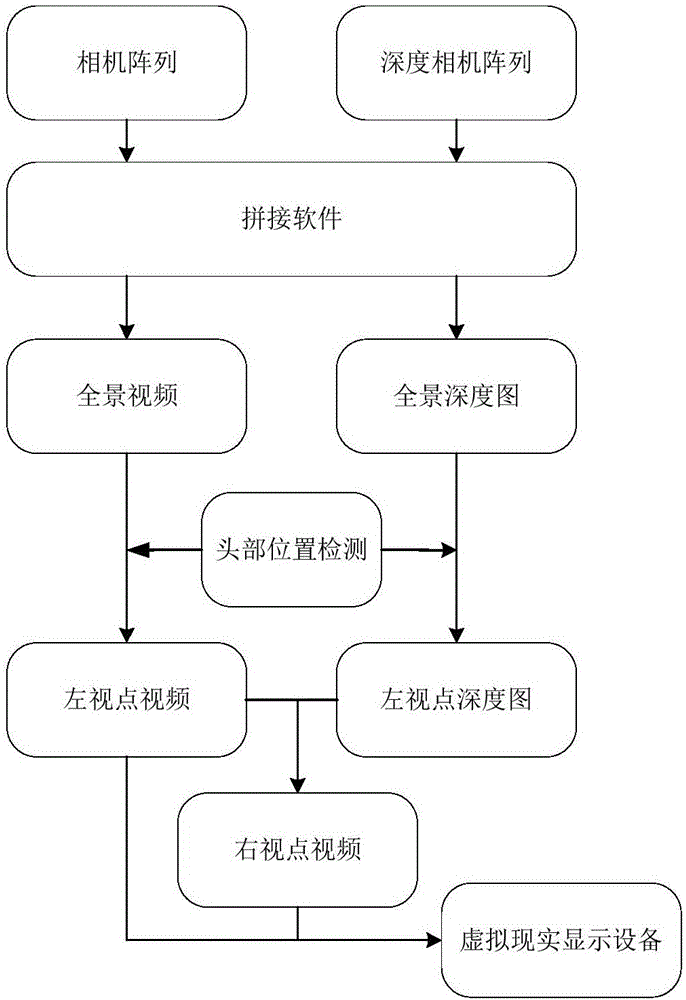

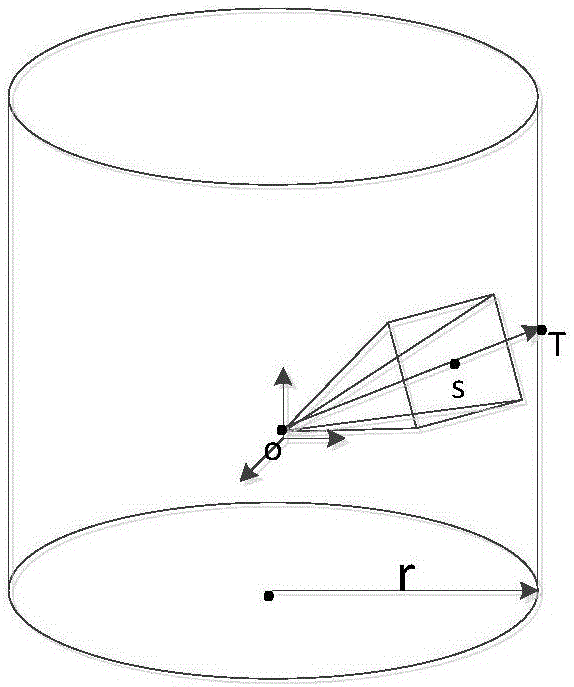

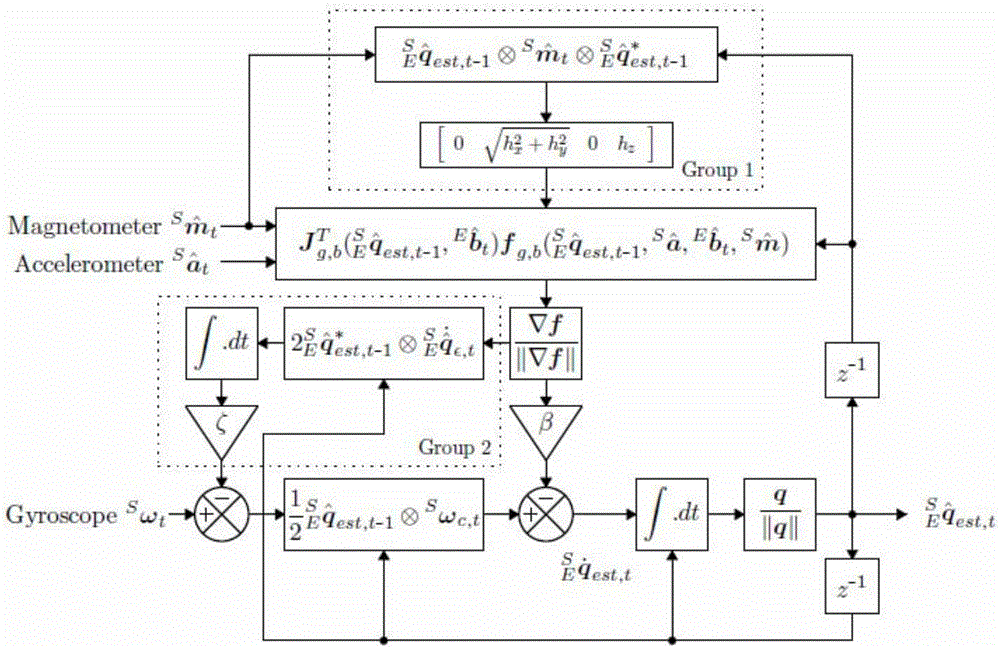

Panoramic 3D video generation method for virtual reality equipment

Active | CN105959665A | Improve experience, Improve realism, Geometric image transformation, Stereoscopic systems, View synthesis, Depth map

The invention discloses a panoramic 3D video generation method for virtual reality equipment, comprising the following steps: shooting a scene video using a wide-angle camera array, and generating a panoramic video through a stitching algorithm; shooting a scene depth map using a depth camera array, and generating a panoramic depth map video through the stitching algorithm; by detecting the head position of a person in real time, cutting an image of a corresponding position in the panoramic video frame as a video of the left view; and generating a right view image through extrapolation based on a virtual view synthesis technology according to the left view image and the corresponding depth map, wherein the two images are stitched into a left-right 3D video which is displayed in virtual reality equipment. By adding the view synthesis technology to a panoramic video displayed by virtual reality equipment, viewers can see the 3D effect of the panoramic video, the scene is more lifelike, and the viewer experience is enhanced.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV +1
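Generating a right view from a left view and its depth map is commonly done by depth-image-based forward warping. The sketch below warps a single scanline under assumptions the abstract does not state: rectified cameras, disparity computed as focal length times baseline divided by depth, rounding to whole pixels, and a depth test to resolve collisions. All names and parameters are hypothetical.

```python
from typing import List, Optional, Sequence


def warp_scanline(colors: Sequence[int],
                  depths: Sequence[float],
                  focal_px: float,
                  baseline: float) -> List[Optional[int]]:
    """Forward-warp one left-view scanline to the right view; None marks a hole."""
    width = len(colors)
    out: List[Optional[int]] = [None] * width
    out_depth = [float("inf")] * width
    for x in range(width):
        disparity = focal_px * baseline / depths[x]  # nearer pixels shift more
        xt = x - int(round(disparity))  # content shifts left in the right view
        # Keep the nearest surface when several pixels land on the same target.
        if 0 <= xt < width and depths[x] < out_depth[xt]:
            out[xt] = colors[x]
            out_depth[xt] = depths[x]
    return out
```

Pixels left as `None` are disocclusions, that is, areas visible from the right eye but hidden in the left view; a practical system would follow this step with hole filling.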

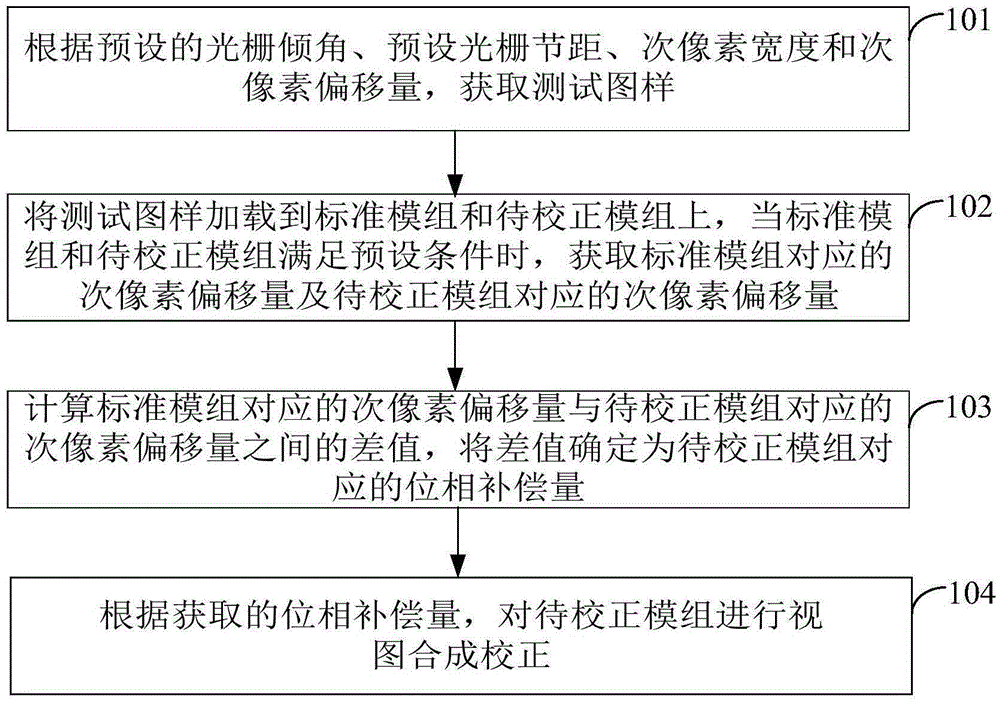

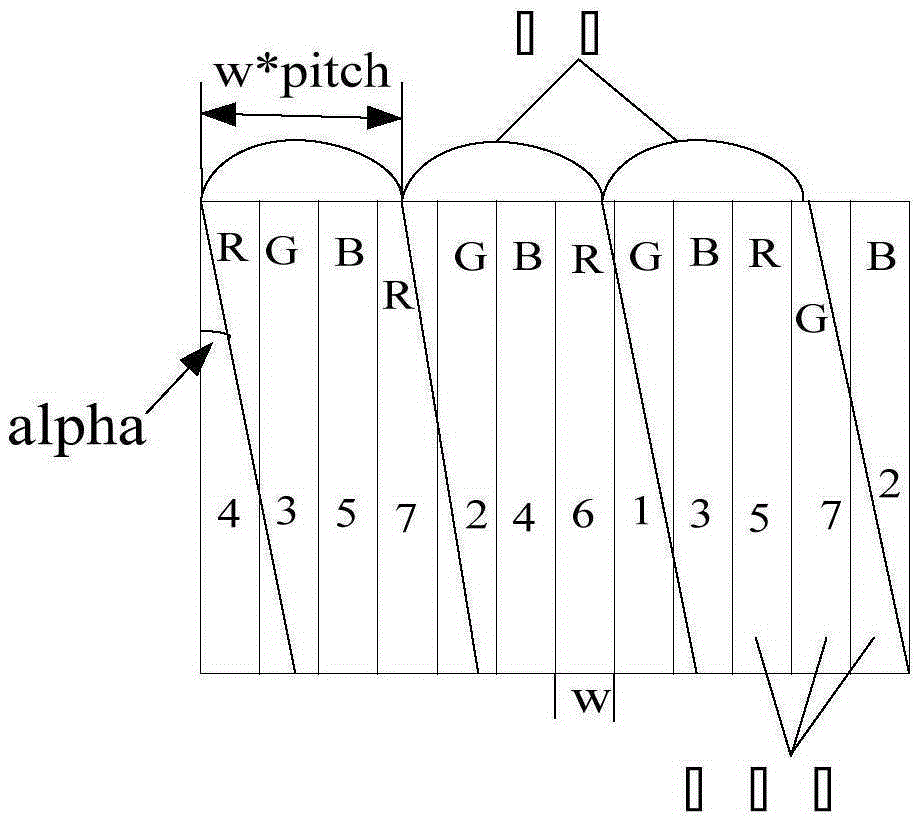

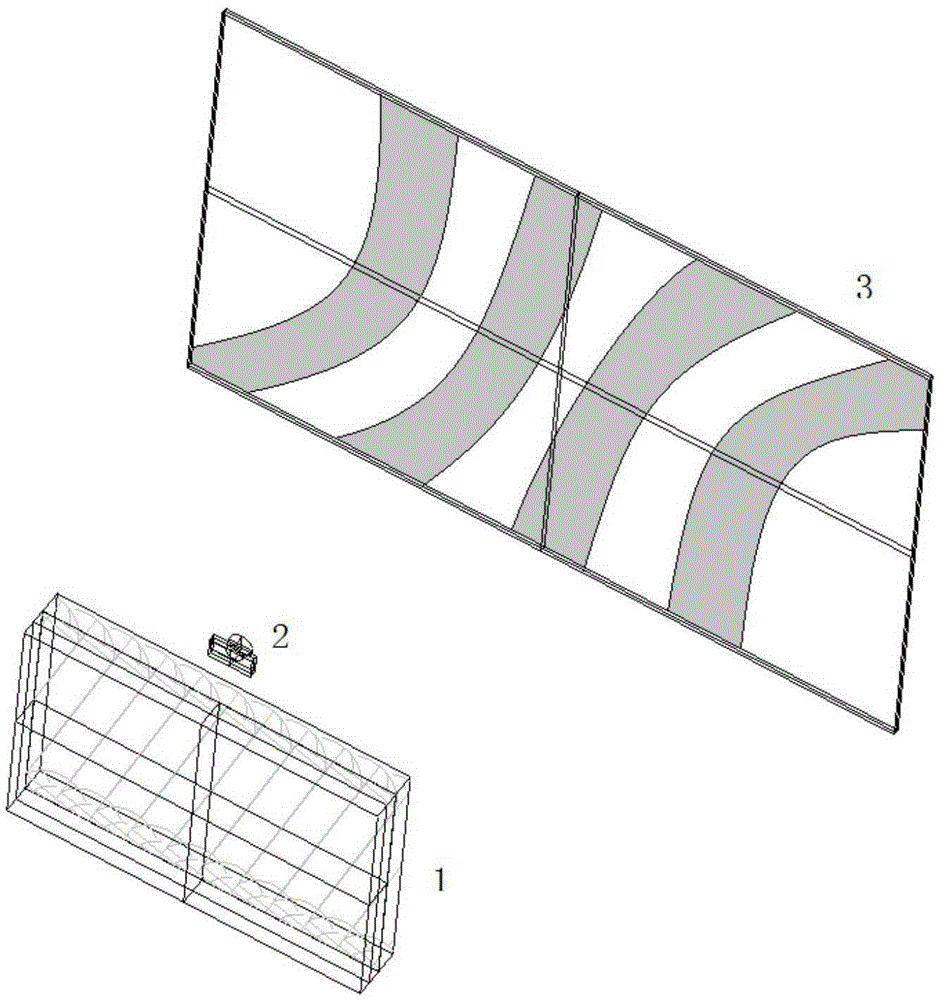

View synthesis correction method and device

Inactive | CN105204173A | Quality improvement, Make up for the error, Stereoscopic systems, Optical elements, Grating, View synthesis

The invention provides a view synthesis correction method and device. The method includes the following steps: a test image sample is obtained according to a preset grating inclination angle, a preset grating pitch, a sub-pixel width, and a sub-pixel offset; the test image sample is loaded onto a standard module set and a module set to be corrected; when the standard module set and the module set to be corrected meet preset conditions, the sub-pixel offset corresponding to each of them is obtained; the difference between the sub-pixel offset of the standard module set and that of the module set to be corrected is calculated and taken as the phase compensation quantity for the module set to be corrected; and view synthesis correction is performed on the module set to be corrected according to the phase compensation quantity. With this phase compensation quantity, view synthesis correction can be applied whenever the module set to be corrected later displays an image, even if grating attachment errors exist on it, so the quality of its image display can be improved.

Owner:CHONGQING DROMAX PHOTOELECTRIC

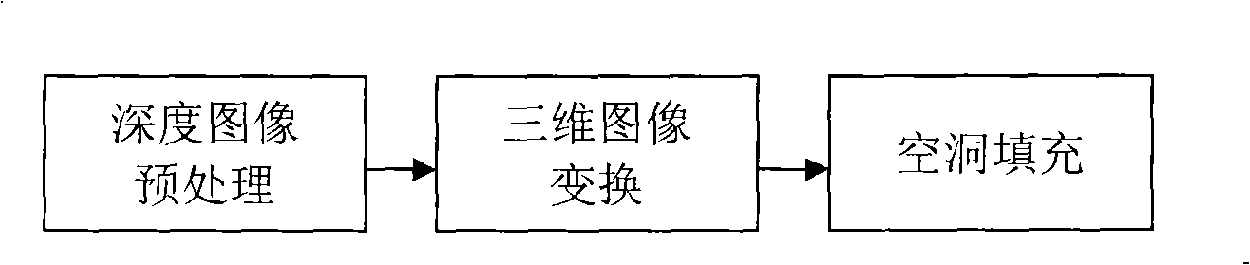

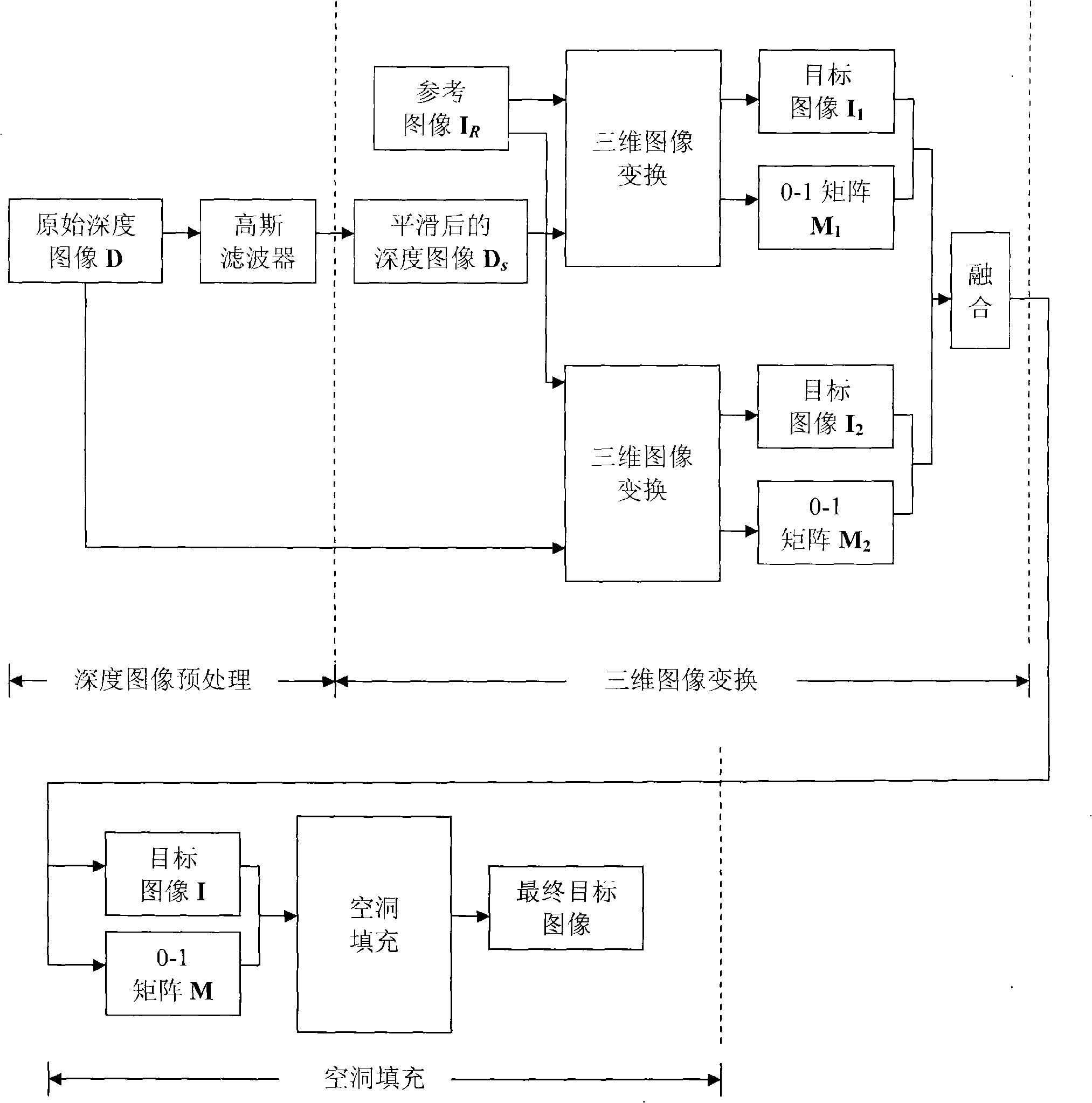

Drafting view synthesizing method based on depth image

Inactive | CN101404777A | Guarantee authenticity, Small amount of calculation, View synthesis, Image transformation

The invention discloses a view synthesis method based on depth-image-based rendering. First, the depth image is smoothed; then the three-dimensional image transformation is carried out twice. The first transformation uses the smoothed depth image to obtain a target image with smaller holes; the second uses the original depth image to obtain a target image with larger holes. The two target images are then blended, taking the image obtained in the second transformation as the basis, and hole filling is carried out on the blended image. The method is characterized in that a zero-one matrix corresponding to the target image is maintained throughout the view synthesis process to indicate whether a given point in the target image is a hole. Because the three-dimensional image transformation is carried out twice, the quality of the image in non-hole areas is not reduced while the holes are eliminated, and the authenticity of the image is preserved as far as possible.

Owner:SICHUAN PANOVASIC TECH +1
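The blending of the two warped images and the zero-one hole matrix described in this abstract can be sketched as below. Assumptions: a matrix entry of 1 marks a hole and 0 a valid pixel, the warps themselves have already been computed, and the final filler (copy the nearest valid pixel in the same row) is a stand-in, since the abstract does not say how the remaining holes are filled. Function names are hypothetical.

```python
from typing import List, Tuple

Grid = List[List[int]]


def blend_two_warps(img_orig: Grid, hole_orig: Grid,
                    img_smooth: Grid, hole_smooth: Grid) -> Tuple[Grid, Grid]:
    """Start from the original-depth warp; patch its holes from the smoothed-depth warp."""
    out = [row[:] for row in img_orig]
    hole = [row[:] for row in hole_orig]
    for y in range(len(out)):
        for x in range(len(out[y])):
            if hole[y][x] == 1 and hole_smooth[y][x] == 0:
                out[y][x] = img_smooth[y][x]
                hole[y][x] = 0  # keep the zero-one matrix in step with the image
    return out, hole


def fill_remaining(img: Grid, hole: Grid) -> Grid:
    """Assumed filler: copy the nearest valid pixel in the same row (ties go left)."""
    out = [row[:] for row in img]
    for y, mask_row in enumerate(hole):
        valid = [x for x, m in enumerate(mask_row) if m == 0]
        if not valid:
            continue  # nothing to copy from in this row
        for x, m in enumerate(mask_row):
            if m == 1:
                nearest = min(valid, key=lambda v: abs(v - x))
                out[y][x] = img[y][nearest]
    return out
```

Valid pixels of the original-depth warp are never overwritten, which mirrors the abstract's claim that image quality in non-hole areas is not reduced.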
