398 patent results for "Inpainting"

Inpainting is the process of reconstructing lost or deteriorated parts of images and videos. In the museum world, restoring a valuable painting in this way would be the job of a skilled art conservator or restorer. In the digital world, inpainting (also known as image interpolation or video interpolation) refers to the use of algorithms to replace lost or corrupted parts of the image data, mainly to fill small regions or to remove small defects.
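The paragraph above describes digital inpainting only in general terms. One classical and deliberately simple illustration (not taken from any of the patents listed here) is diffusion: each missing pixel is repeatedly replaced by the average of its neighbours until the hole holds a smooth interpolation of its boundary. The following is a minimal sketch in plain Python; the function name `diffuse_inpaint` and the fixed iteration count are illustrative assumptions.

```python
def diffuse_inpaint(image, mask, iterations=200):
    """Fill missing pixels by repeatedly averaging their in-image 4-neighbours.

    image: list of rows of floats; mask: same shape, True where a pixel is missing.
    Known pixels are never modified. (Illustrative sketch, not a patented method.)
    """
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]
    # Start every missing pixel at the mean of the known pixels.
    known = [out[y][x] for y in range(h) for x in range(w) if not mask[y][x]]
    start = sum(known) / len(known)
    for y in range(h):
        for x in range(w):
            if mask[y][x]:
                out[y][x] = start
    # Jacobi iterations of the discrete Laplace equation inside the hole.
    for _ in range(iterations):
        nxt = [row[:] for row in out]
        for y in range(h):
            for x in range(w):
                if mask[y][x]:
                    nbrs = [out[ny][nx]
                            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                            if 0 <= ny < h and 0 <= nx < w]
                    nxt[y][x] = sum(nbrs) / len(nbrs)
        out = nxt
    return out


# A horizontal ramp with a corrupted 3x3 block in the middle.
image = [[10.0 * x for x in range(7)] for y in range(7)]
mask = [[2 <= y <= 4 and 2 <= x <= 4 for x in range(7)] for y in range(7)]
for y in range(7):
    for x in range(7):
        if mask[y][x]:
            image[y][x] = -1.0  # corrupted value; ignored by the fill
filled = diffuse_inpaint(image, mask)
```

Because the ramp is linear, diffusion reproduces it inside the hole; on textured images the same approach blurs, which is why many of the methods below add exemplar search or learned generators.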

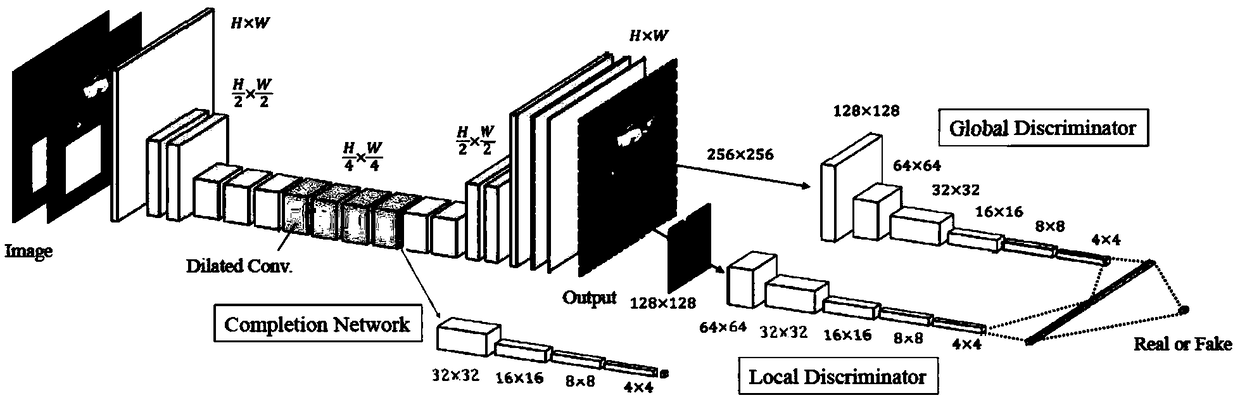

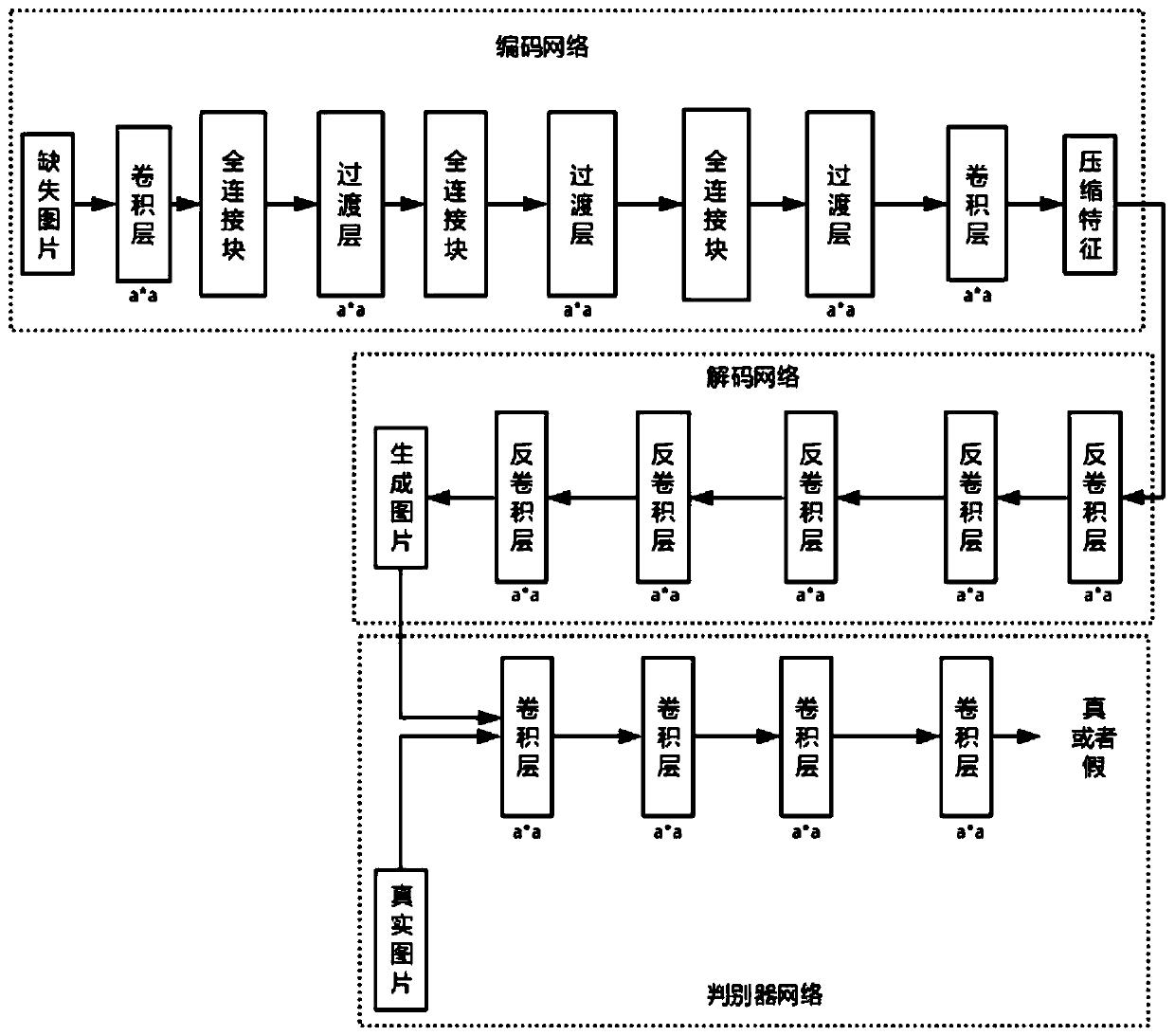

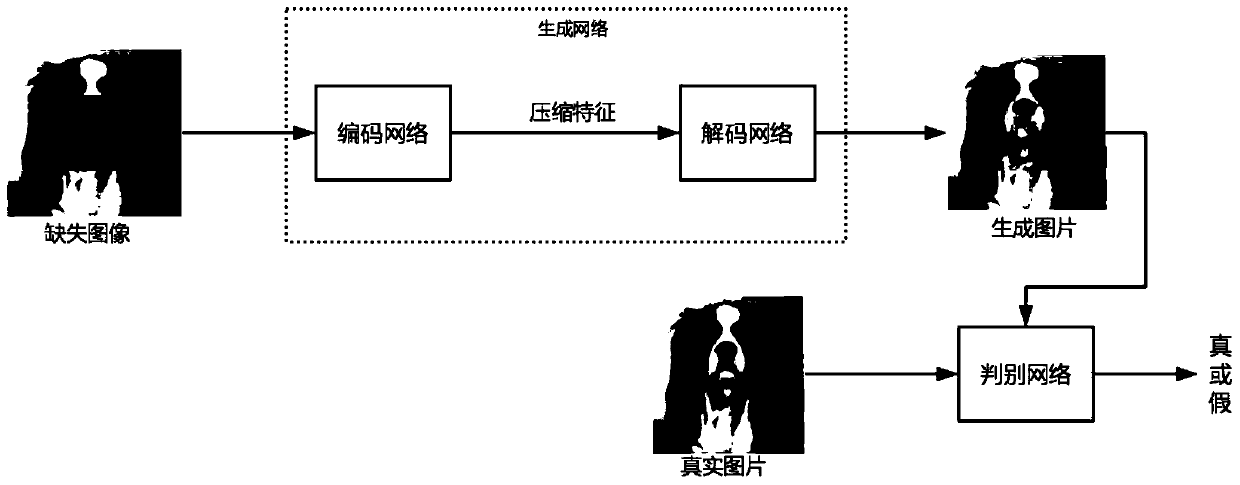

Image inpainting method and system based on antagonistic generation neural network

Status: Active | Publication: CN109191402A | Tags: Eliminate dependencies, Improve robustness, Image enhancement, Image analysis, Algorithm, Network on

The invention provides an image inpainting method and system based on a generative adversarial ("antagonistic generation") neural network, comprising the following steps: first, constructing an autoencoder (self-encoder) convolutional neural network (including an encoder and an encoding discriminator), a decoder (generator) convolutional neural network, a discriminator convolutional neural network, a global discriminator, and a local discriminator; then, constructing a different loss function for each of the five networks and training the whole network step by step; finally, once training is complete, feeding the defective image into the network for repair, with the image generated by the decoder (generator) taken as the final repair result. The advantages of the invention are that the latent constraint of the image is preserved while the image is refined, and that an end-to-end image restoration network is implemented. The repair network no longer depends on mask information about the missing positions in the image, which improves robustness in practical applications.

Owner:WUHAN UNIV

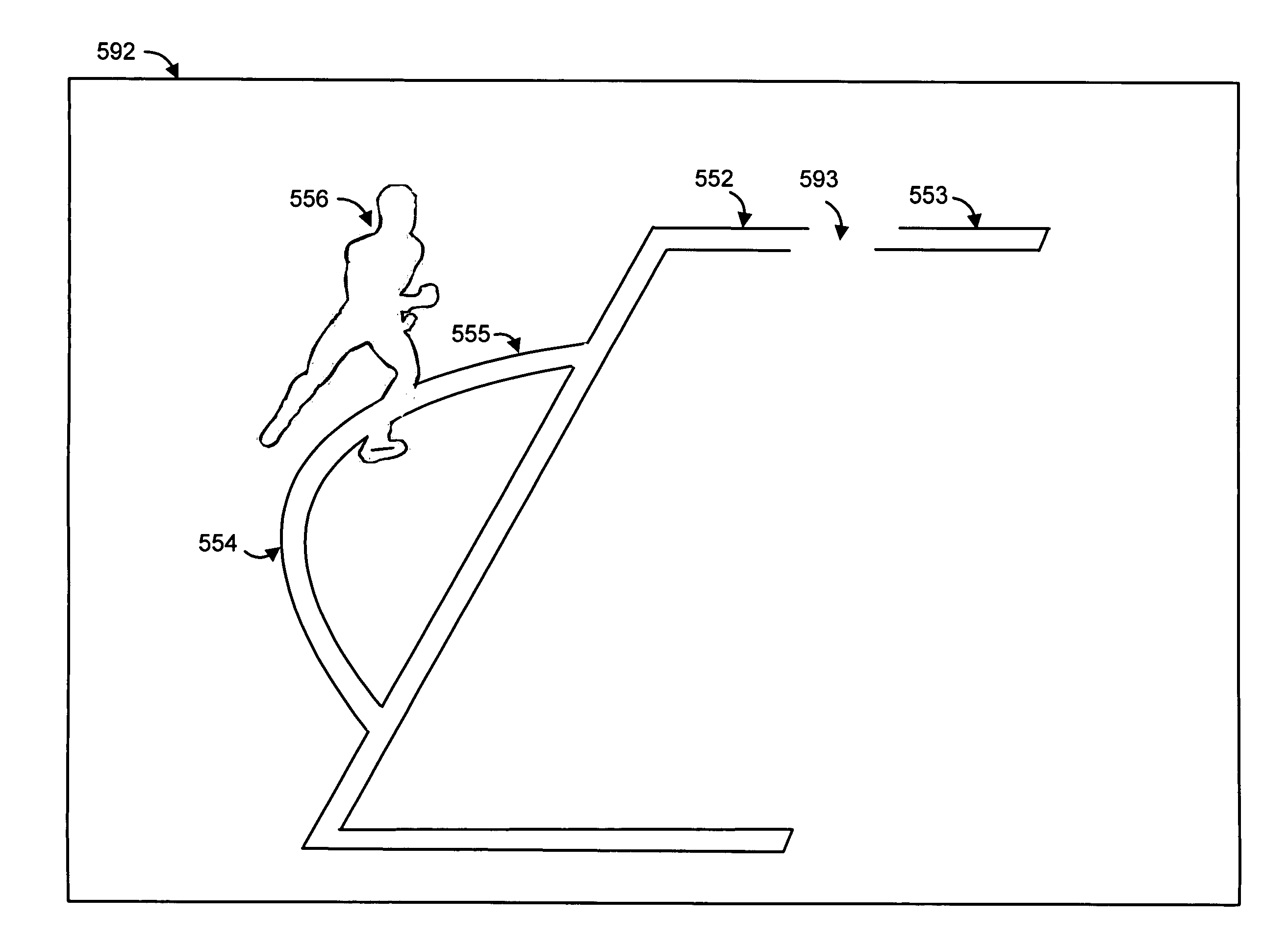

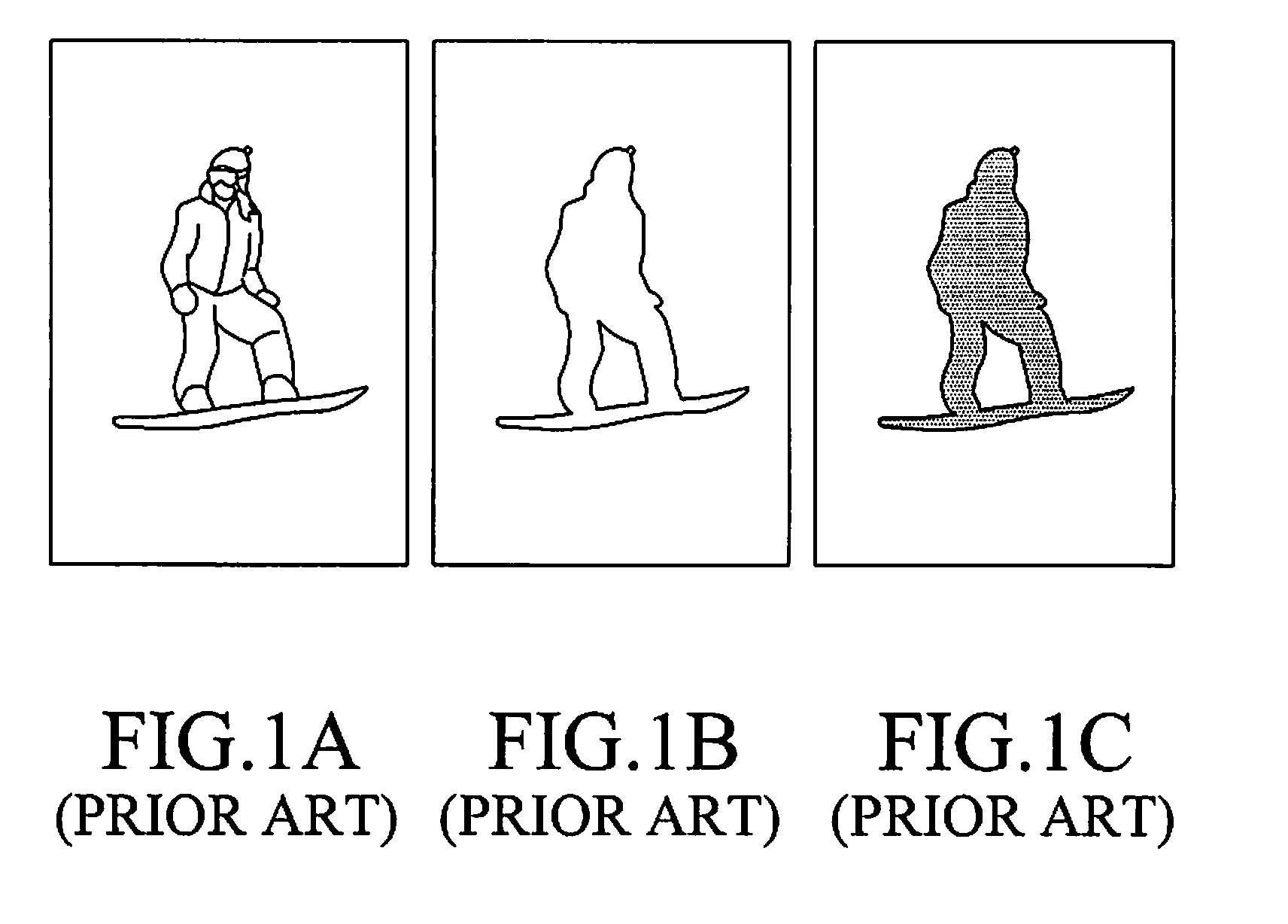

Image repair interface for providing virtual viewpoints

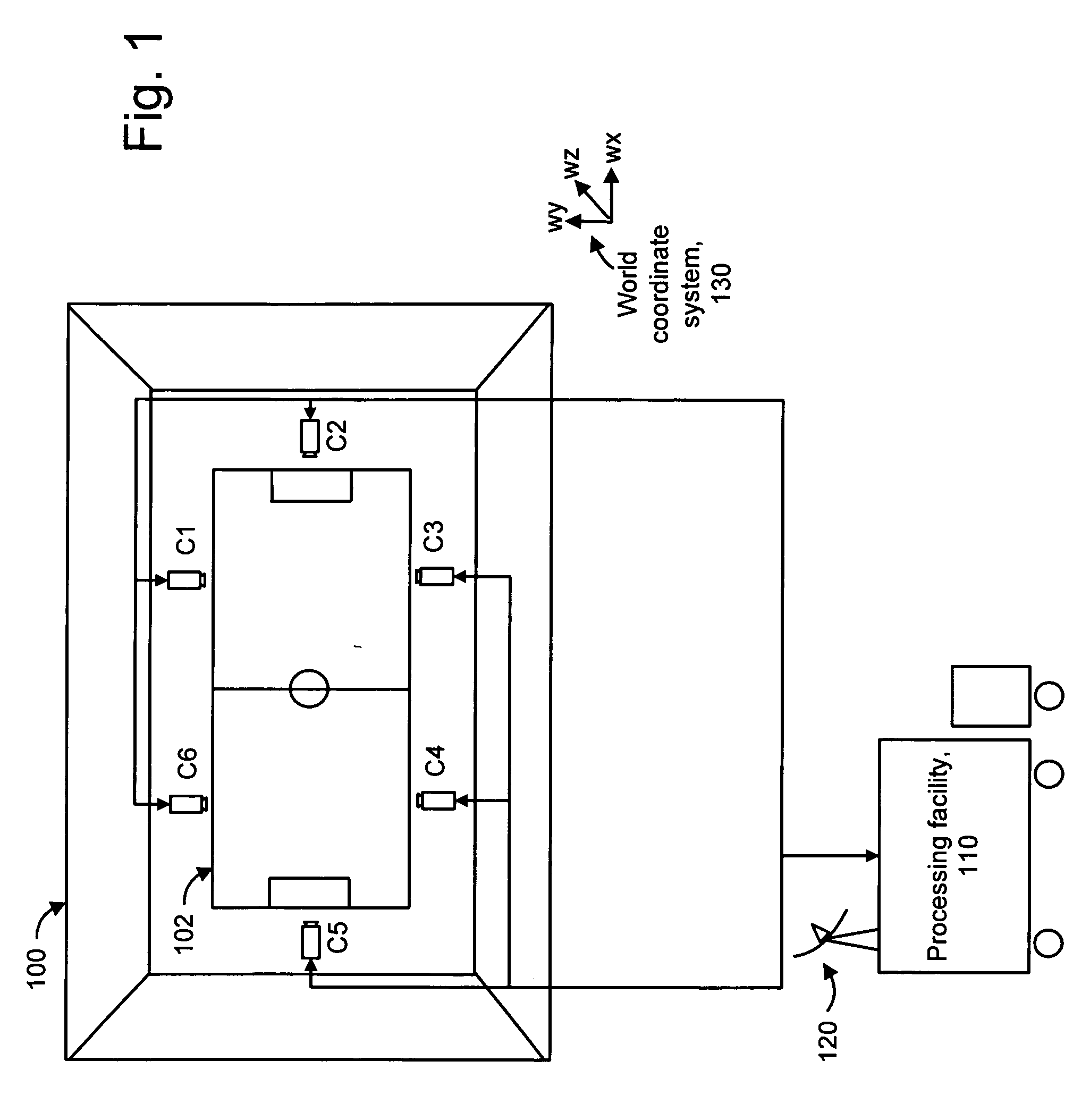

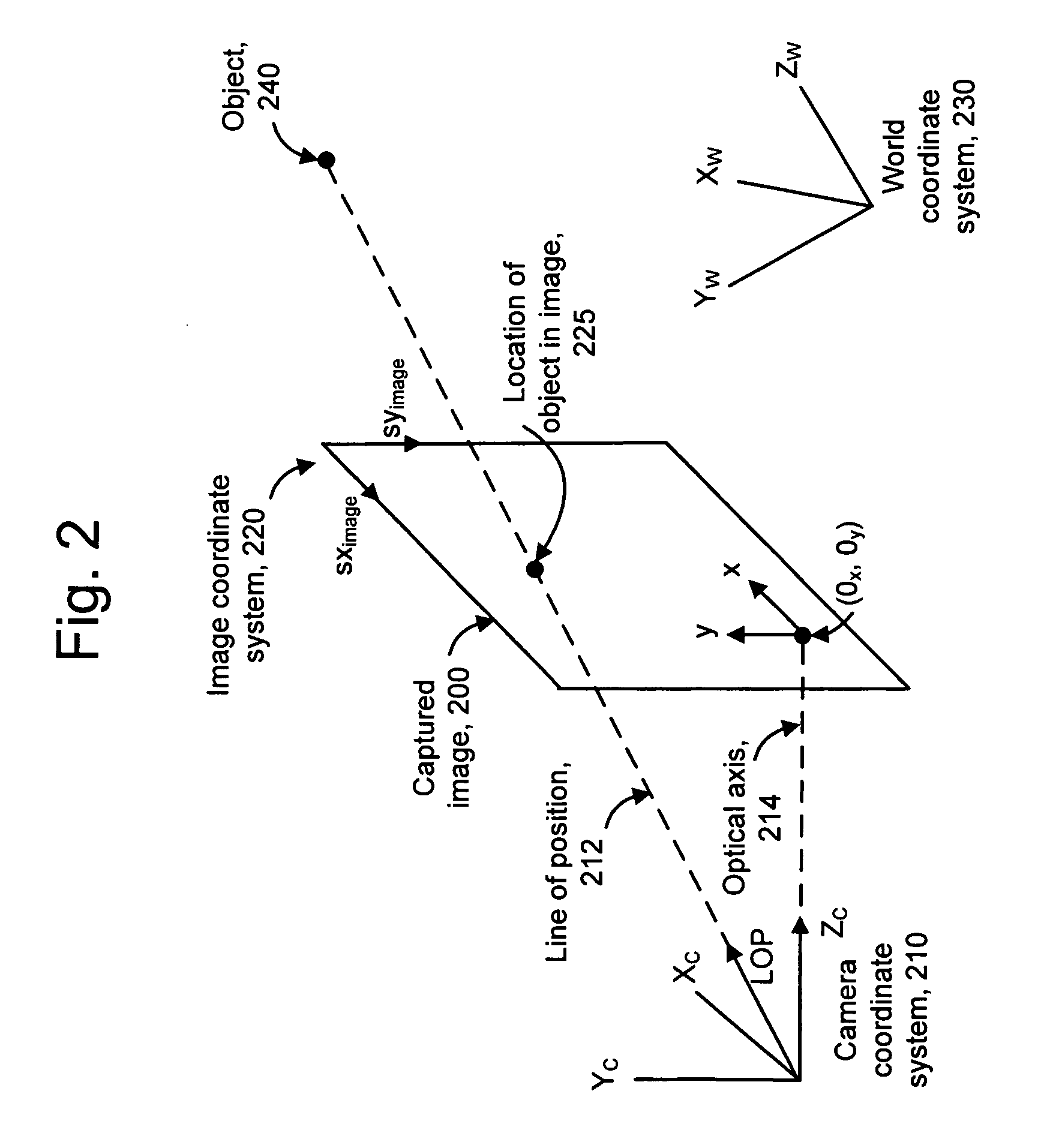

A system and method for repairing an object in image data of an event. An image of the event is obtained from a camera, and an object is detected in the image. For example, the event may be a sporting event in which the object is a participant. A portion of the object is occluded in the camera's viewpoint; for instance, one of the participant's limbs may be occluded by another participant. The object is repaired by providing a substitute for the occluded portion. A user may perform the repair through a user interface by selecting part of an image from an image library and positioning the selected portion relative to the object. A textured 3D model of the event is combined with data from the repaired object to depict a realistic virtual viewpoint of the event that differs from the camera's viewpoint.

Owner:SPORTSMEDIA TECH CORP

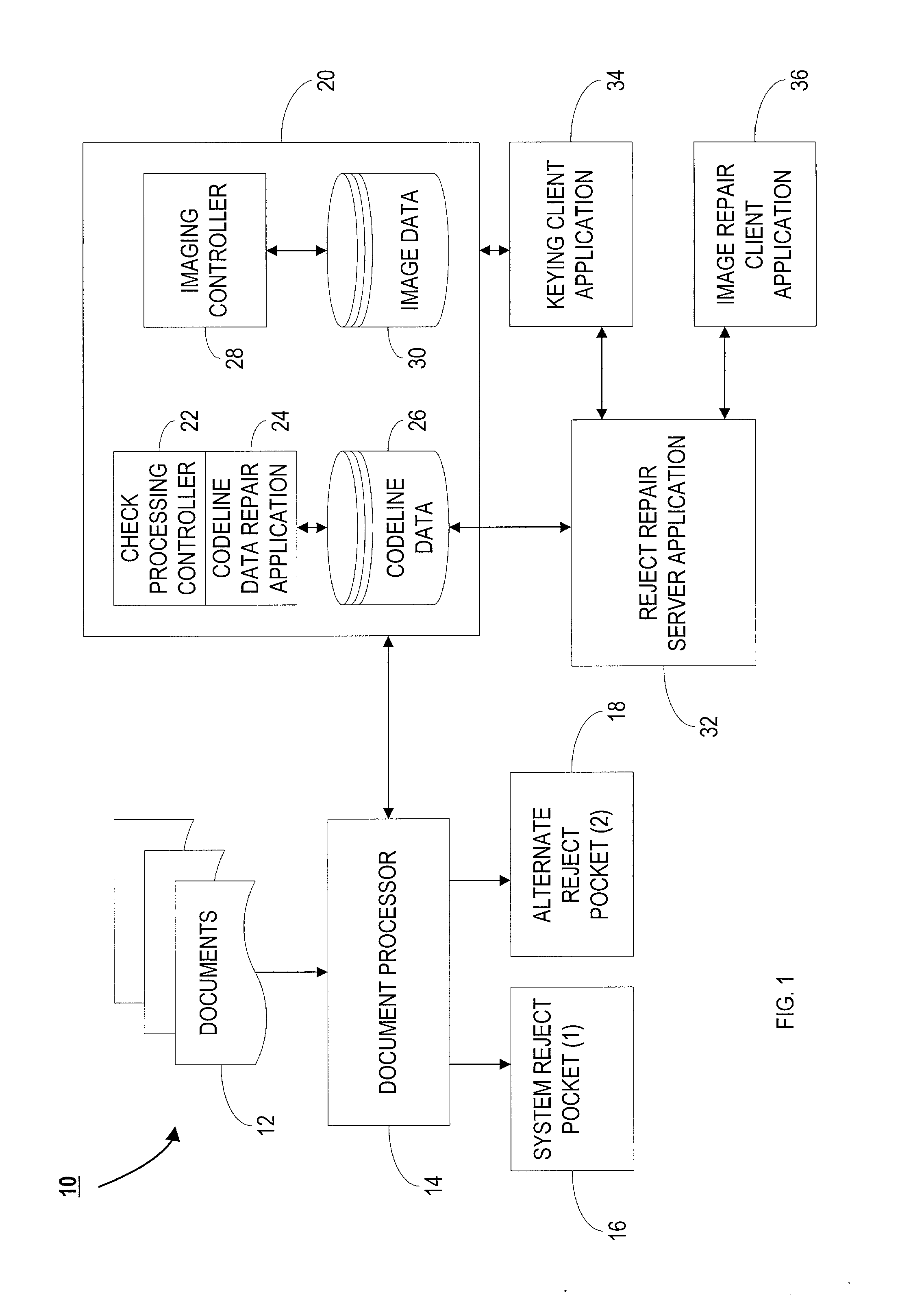

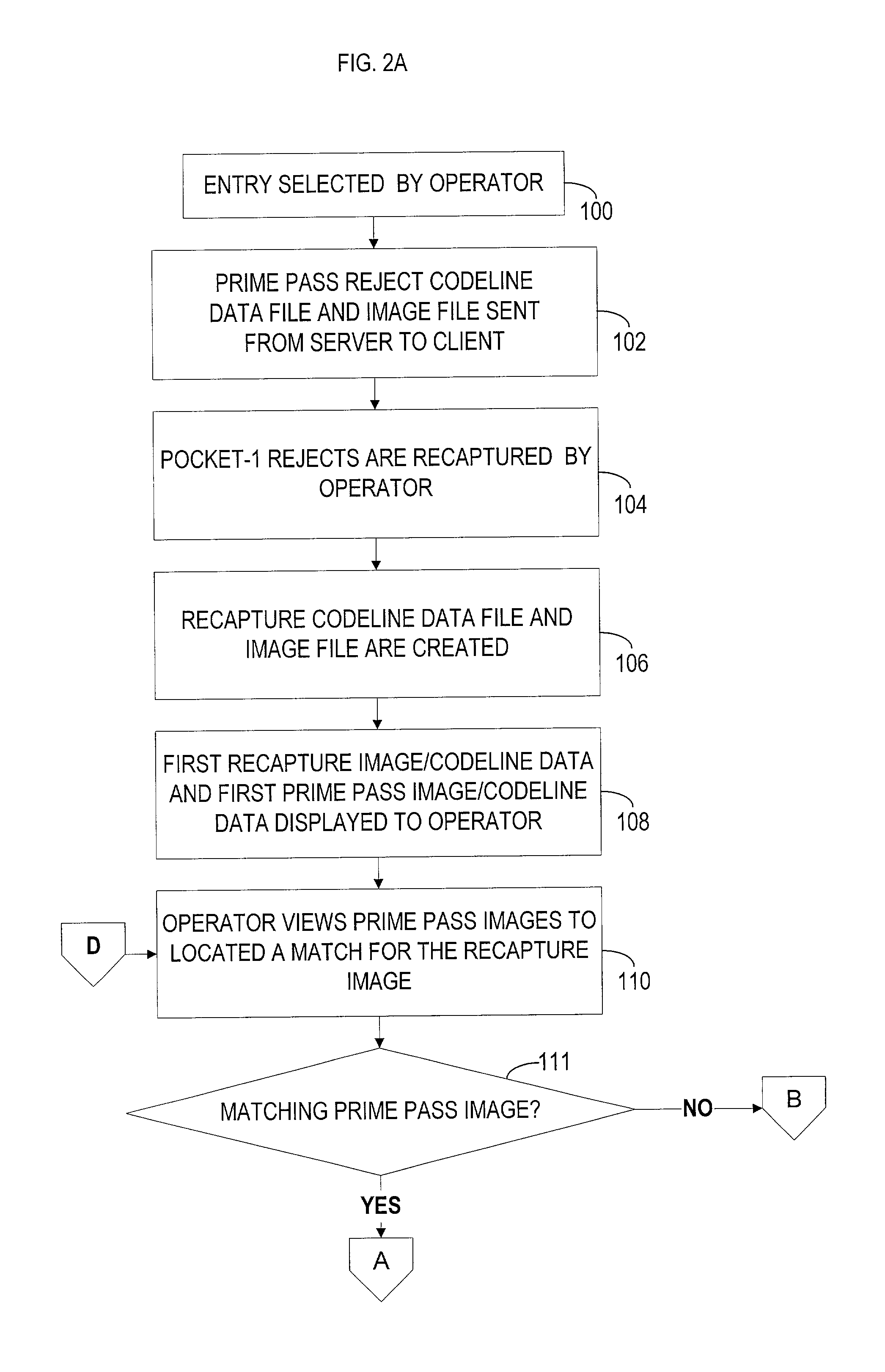

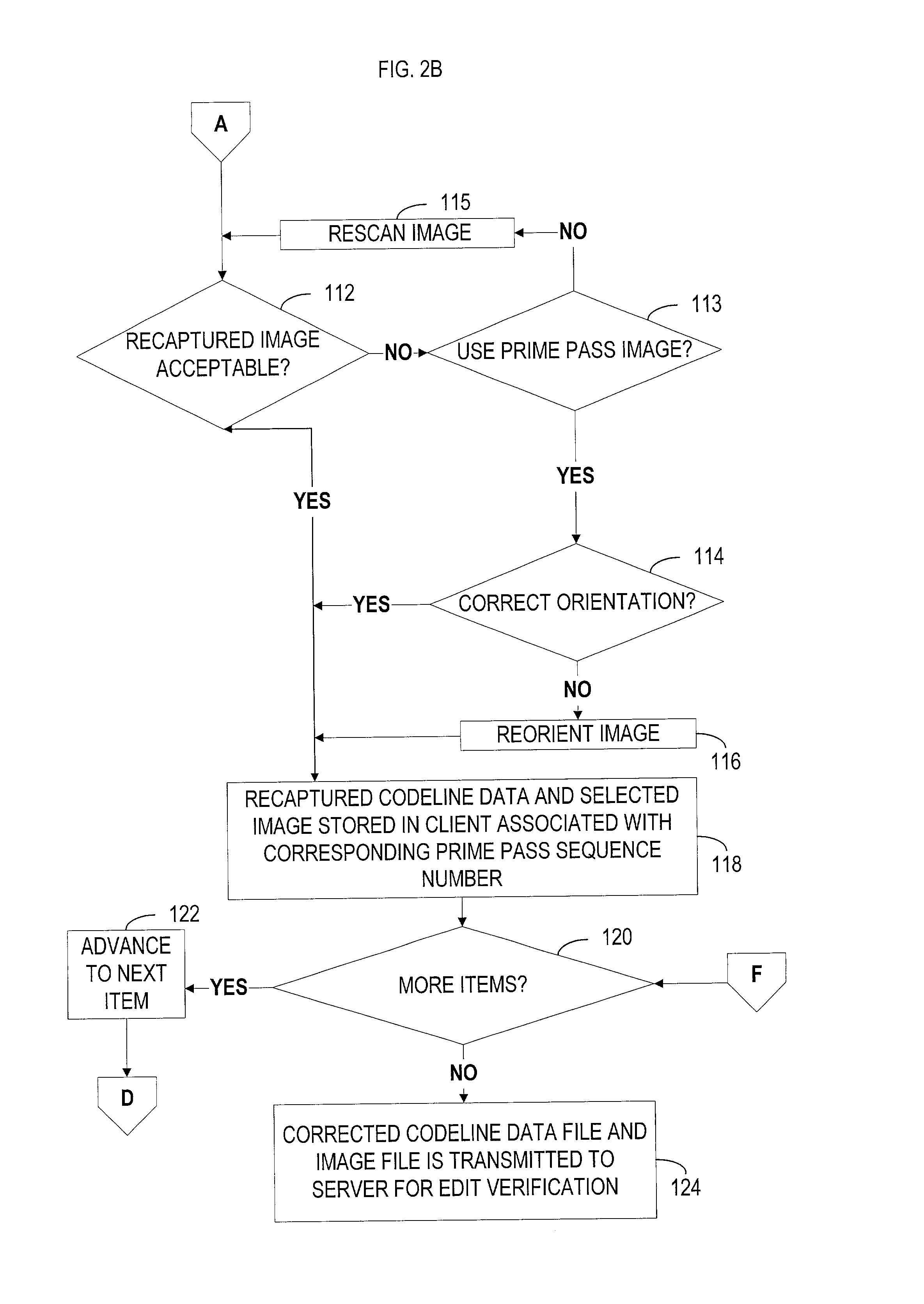

Image enabled reject repair for check processing capture

A method and apparatus for processing a plurality of financial documents, comprising a document processor that, for each financial document, captures the data encoded on the document and an image of the document during a prime pass, and assigns a prime pass sequence number to the document. The apparatus includes a computer database in which the prime pass data and image are stored in association with the document's prime pass sequence number. The document processor is adapted to determine whether the financial document should be rejected because both the data and the document image need to be repaired, or because only the data needs to be repaired. If the data and image need to be repaired, the document processor or a desktop scanner/reader recaptures the data and image and assigns a recapture sequence number to the financial document, and the recaptured data and image are stored in the computer database in association with the recapture sequence number. An image repair application is adapted to let an operator locate the prime pass image that matches the recaptured image, and to repair the document image by visually comparing the recaptured image with the prime pass image. The repaired document image is then stored in the computer database in association with the corresponding prime pass sequence number.

Owner:WELLS FARGO BANK NA

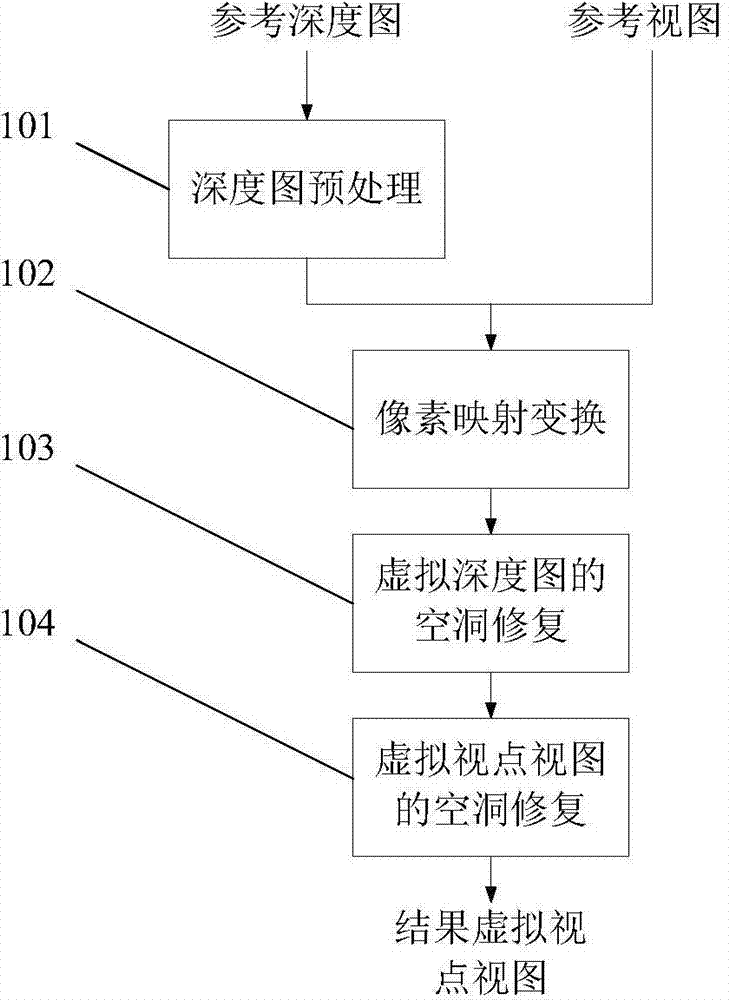

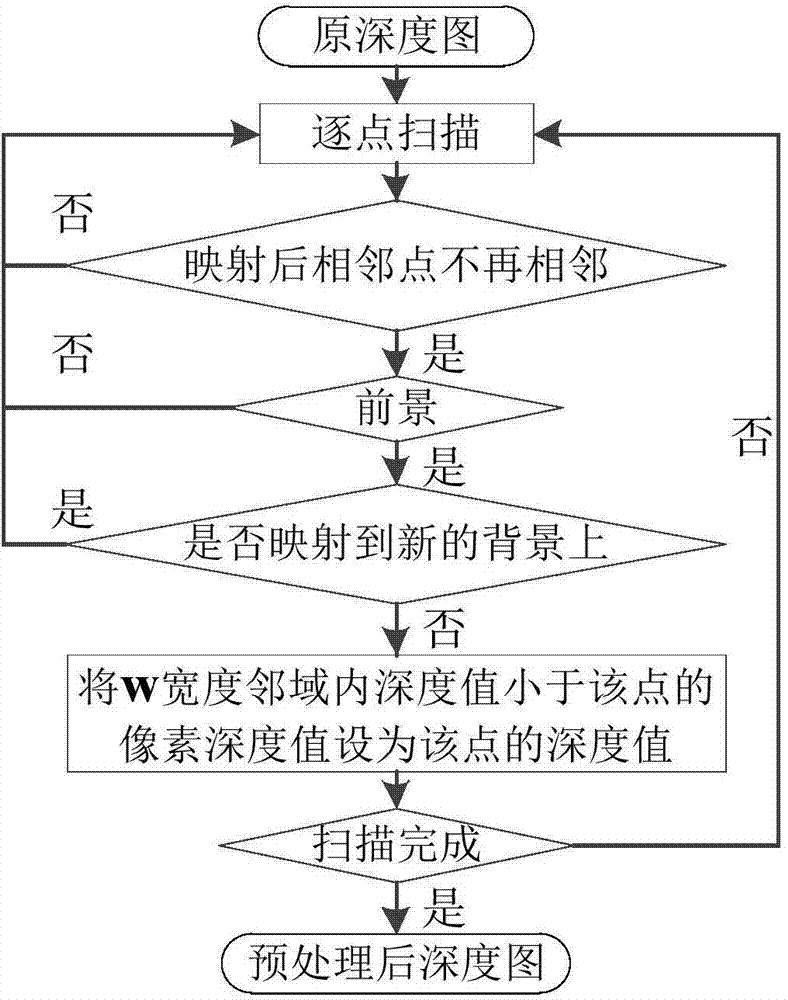

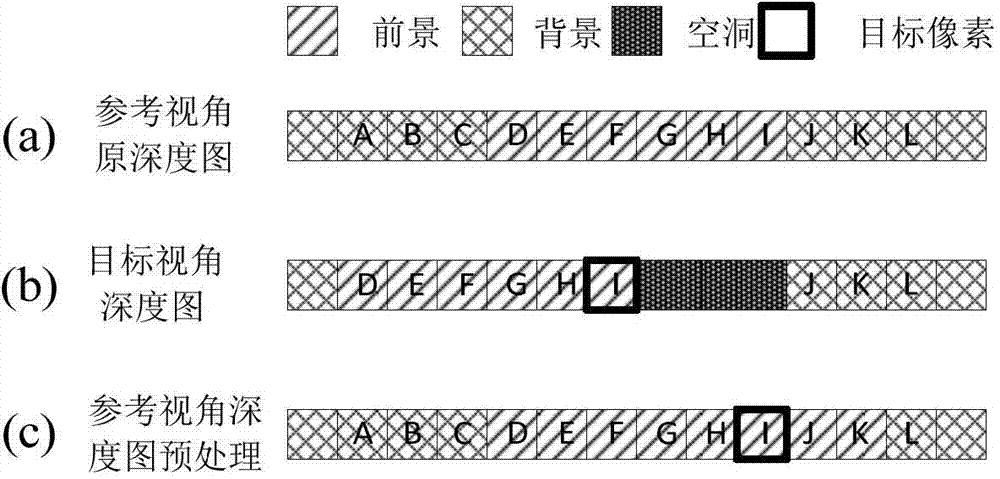

Depth-based cavity repairing method in viewpoint synthesis

Status: Inactive | Publication: CN104780355A | Tags: Improve retention, Improve repair effect, Stereoscopic systems, Viewpoints, Pixel mapping

The invention discloses a depth-based method for repairing holes (cavities) in viewpoint synthesis. The method comprises the following steps: (1) preprocessing the input reference depth map by one-way dilation of its depth edges, to eliminate mutual bleeding (inter-infiltration) of foreground and background pixels in the synthesized virtual view; (2) performing pixel mapping with the preprocessed reference depth map and the original reference view to obtain a virtual view containing holes and a corresponding virtual depth map; (3) repairing the holes in the virtual depth map with a pixel-based image repair method, using the relative positions of the foreground and background around each hole in the virtual depth map; (4) repairing the holes in the virtual view with an improved exemplar-based (sample-based) image repair method guided by the repaired virtual depth map. The method yields a higher-quality virtual view, better preserves the continuity of image edges, and eliminates the mutual bleeding of foreground and background pixels.

Owner:ZHEJIANG UNIV
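Steps (3) and (4) of the Zhejiang University abstract above use the foreground/background relation around each hole. One common heuristic for that relation, sketched here on a single scanline, is to fill each hole from whichever neighbouring pixel lies farther from the camera, since disocclusion holes expose background. This is not the patent's pixel-based or exemplar-based method; the function name and the convention that larger depth values mean "farther" are assumptions (many 8-bit depth maps use the opposite convention, in which case `max` becomes `min`).

```python
def fill_holes_from_background(colors, depths):
    """Fill each run of holes (None) in one scanline from its background side.

    Assumption for this sketch: depths are distances from the camera, so the larger
    of the two depths bordering a hole belongs to the background.
    """
    n = len(colors)
    colors, depths = colors[:], depths[:]
    x = 0
    while x < n:
        if colors[x] is not None:
            x += 1
            continue
        start = x
        while x < n and colors[x] is None:
            x += 1
        # Holes occupy [start, x); look at the valid pixels on either side.
        sides = [i for i in (start - 1, x) if 0 <= i < n]
        if not sides:
            break  # the whole row is a hole; nothing to copy from
        src = max(sides, key=lambda i: depths[i])  # farther neighbour = background
        for i in range(start, x):
            colors[i], depths[i] = colors[src], depths[src]
    return colors, depths


row, d = fill_holes_from_background(["fg", "fg", None, None, "bg", "bg"],
                                    [1.0, 1.0, None, None, 5.0, 5.0])
```

Here the two-pixel hole is filled with `"bg"` rather than the nearer `"fg"` pixel, which is what keeps foreground colours from bleeding into the disoccluded area.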

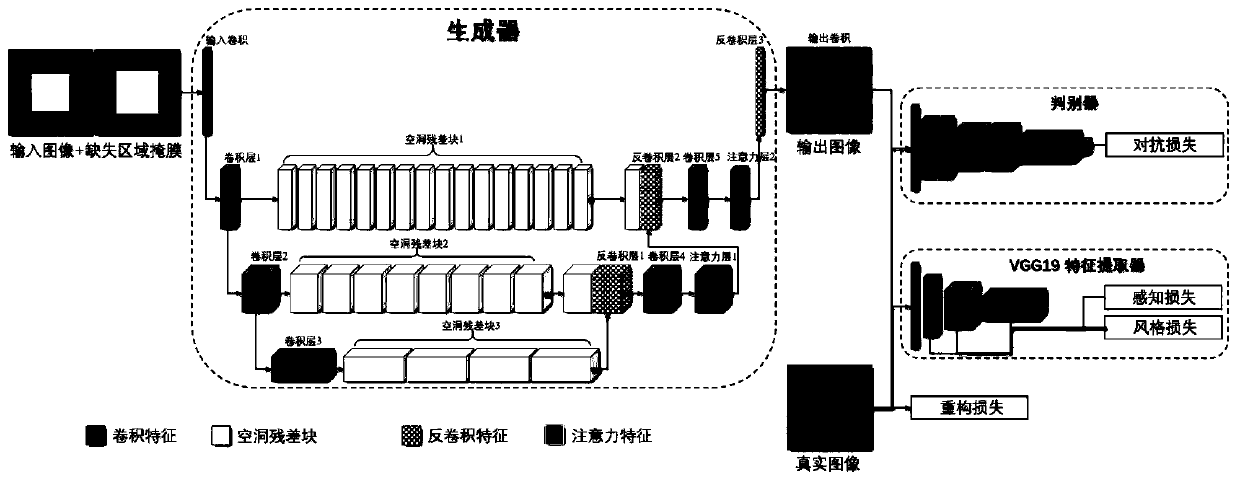

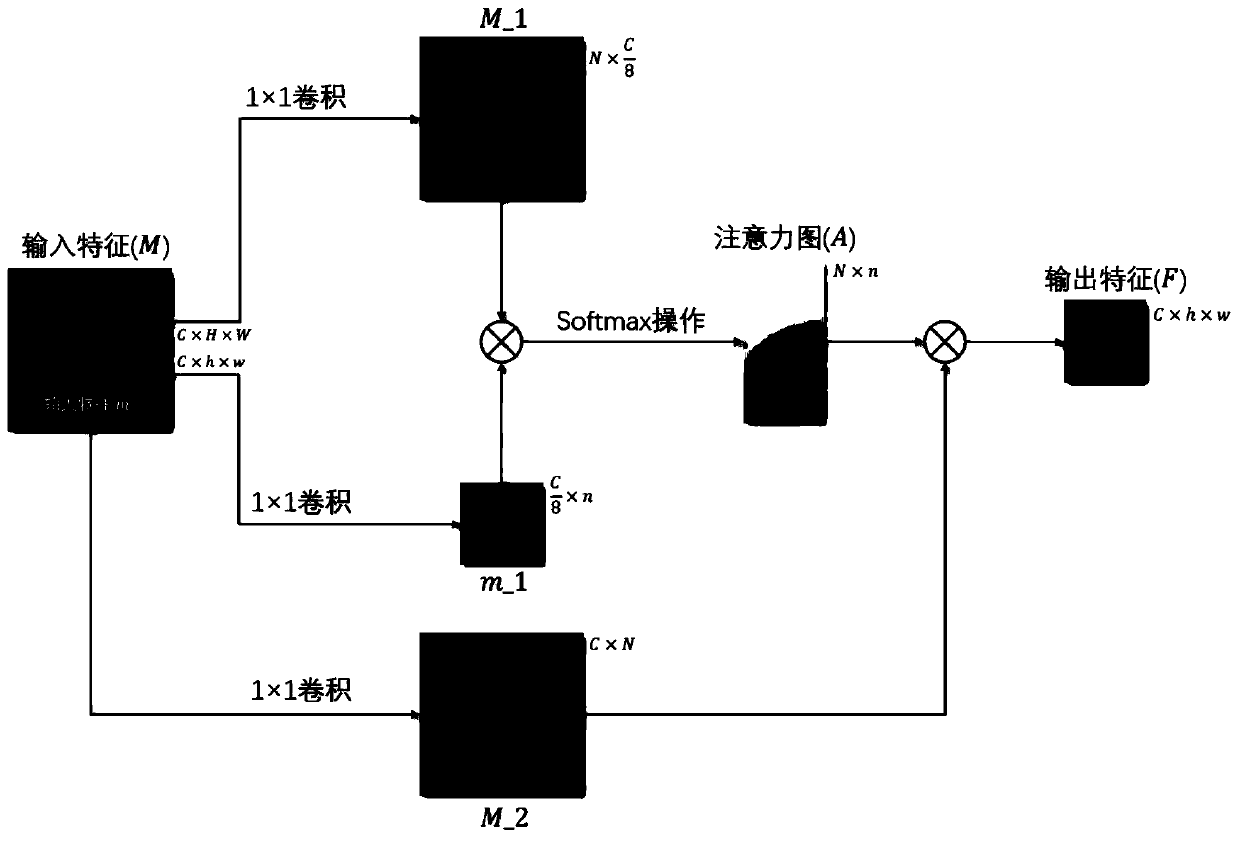

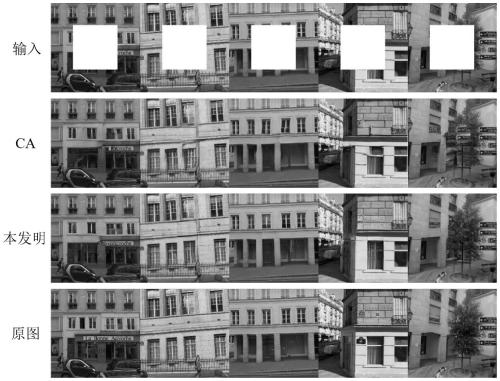

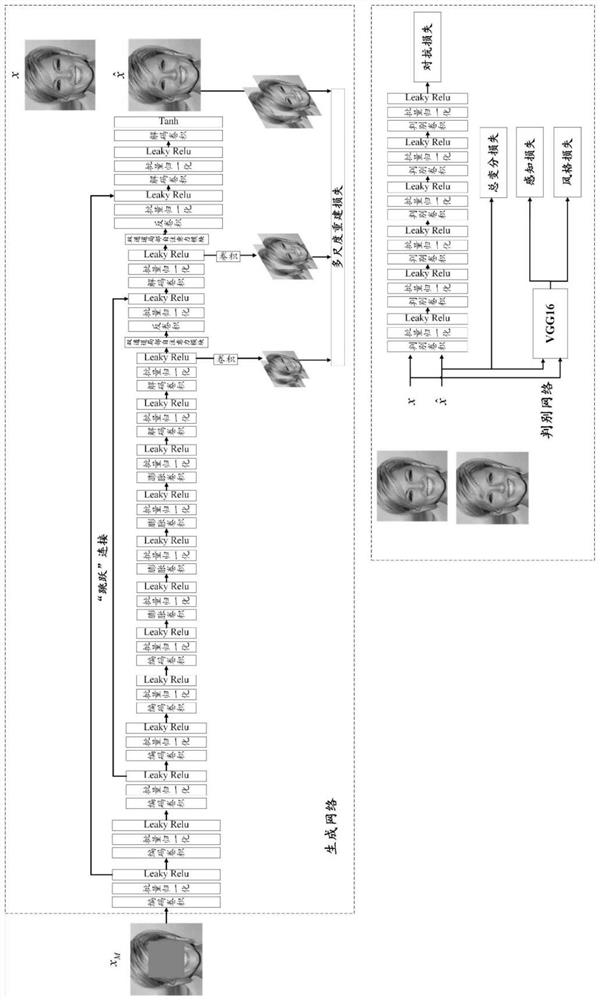

Multi-level image restoration method based on partial-to-overall attention mechanism

Status: Pending | Publication: CN111127346A | Tags: Fine restoration results, Avoids incoherent and unreasonable repair results, Image enhancement, Image analysis, Algorithm, Engineering

The invention belongs to the technical field of intelligent digital image processing, and specifically relates to a multi-level image restoration method based on a partial-to-overall (local-to-global) attention mechanism. Image restoration refers to using an algorithm to replace or generate lost or defective image data. The method provides a multi-level deep convolutional neural network generator structure and integrates convolution layers with partial (local) and overall (global) attention into both the generator and the discriminator of the network. During training, an image patch (block) discriminator and four loss functions (reconstruction loss, perceptual loss, style loss, and adversarial loss) are introduced to help the generator learn the restoration task. Experimental results show that the method generates restored images with vivid details and a plausible overall structure, effectively addressing the image restoration problem.

Owner:FUDAN UNIV
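The Fudan abstract above lists a style loss among its four training losses but does not define it. In the inpainting literature, a style loss is commonly computed by comparing Gram matrices of feature maps (for example, activations of a pretrained VGG network). The sketch below assumes that definition, uses hand-made feature lists in place of network activations, and assumes normalisation by C·N and an L1 distance; other papers use different conventions.

```python
def gram_matrix(features):
    """Gram matrix of a feature map.

    features: C channels, each a flat list of N activations.
    Entry (i, j) is the inner product of channels i and j, normalised by C * N
    (one common convention; an assumption here).
    """
    c, n = len(features), len(features[0])
    return [[sum(a * b for a, b in zip(features[i], features[j])) / (c * n)
             for j in range(c)] for i in range(c)]


def style_loss(feat_out, feat_gt):
    """Mean absolute difference between the Gram matrices of two feature maps."""
    g1, g2 = gram_matrix(feat_out), gram_matrix(feat_gt)
    c = len(g1)
    return sum(abs(g1[i][j] - g2[i][j]) for i in range(c) for j in range(c)) / (c * c)
```

Because the Gram matrix sums over spatial positions, rearranging activations in space leaves it unchanged: this is why it captures texture statistics ("style") rather than layout, complementing the pixel-wise reconstruction loss.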

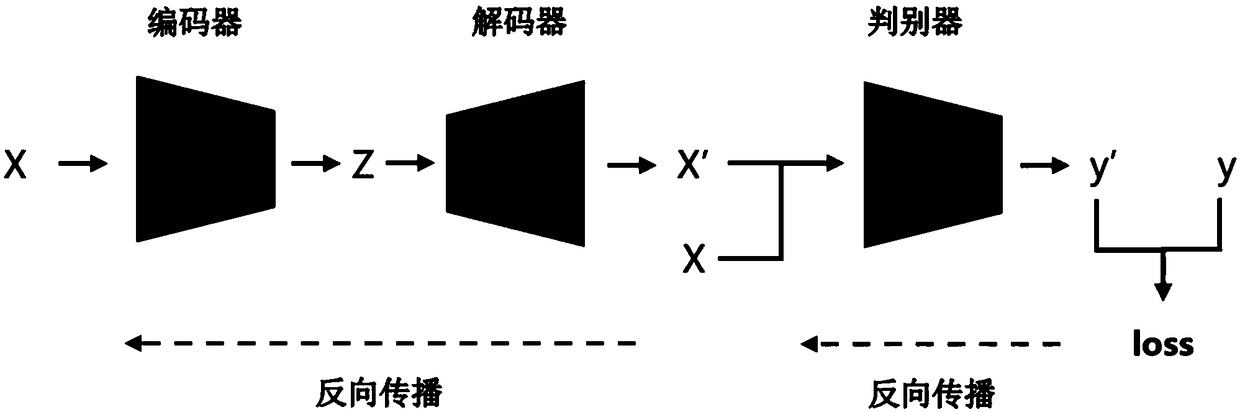

Image restoration method based on a new encoder structure

Status: Active | Publication: CN109801230A | Tags: Accurate extraction, High speed, Image enhancement, Feature learning, Network architecture

The invention discloses an image restoration method based on a new encoder structure. A convolutional neural network composed of an encoder and a decoder is trained to regress the missing pixel values of an image with missing pixels: the encoder captures the image context to obtain a compact feature representation, and the decoder uses that representation to generate the missing image content. AlexNet can improve operation speed, network scale, and performance, while DenseNet can alleviate the vanishing-gradient problem, strengthen feature reuse, and reduce the number of parameters. The method combines the advantages of both by adding a DenseNet framework. Compared with the AlexNet architecture used by the original encoder-decoder, it extracts more compact and realistic features; in addition, the WGAN-GP adversarial loss replaces the traditional GAN adversarial loss, which improves the speed and precision of feature learning and enhances the restoration effect.

Owner:HOHAI UNIV
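The Hohai abstract above replaces the standard GAN loss with the WGAN-GP loss. WGAN-GP adds a gradient penalty λ(‖∇D(x̂)‖₂ − 1)² evaluated at random interpolates x̂ = εx + (1 − ε)x̃ between a real sample x and a generated sample x̃, with λ = 10 in the original WGAN-GP paper. The sketch below is an illustration of that term, not the patent's code: the function name is hypothetical, and the gradient is estimated with central differences so the example needs only the standard library (a real implementation would use automatic differentiation).

```python
import math
import random


def gradient_penalty(critic, real, fake, lam=10.0, eps=None, h=1e-5):
    """WGAN-GP term lam * (||grad critic(x_hat)||_2 - 1)^2 at one interpolate.

    critic: callable taking a list of floats and returning a float.
    The gradient is estimated with central differences (sketch only).
    """
    if eps is None:
        eps = random.random()
    x_hat = [eps * r + (1 - eps) * f for r, f in zip(real, fake)]
    grad = []
    for i in range(len(x_hat)):
        plus, minus = x_hat[:], x_hat[:]
        plus[i] += h
        minus[i] -= h
        grad.append((critic(plus) - critic(minus)) / (2 * h))
    norm = math.sqrt(sum(g * g for g in grad))
    return lam * (norm - 1.0) ** 2


# A linear critic has gradient (3, 4) everywhere, norm 5, so the penalty is 10 * 16.
gp = gradient_penalty(lambda v: 3.0 * v[0] + 4.0 * v[1], [1.0, 2.0], [0.0, 0.0], eps=0.5)
```

The penalty pushes the critic towards unit gradient norm (a soft 1-Lipschitz constraint), which is what replaces weight clipping in the original WGAN.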

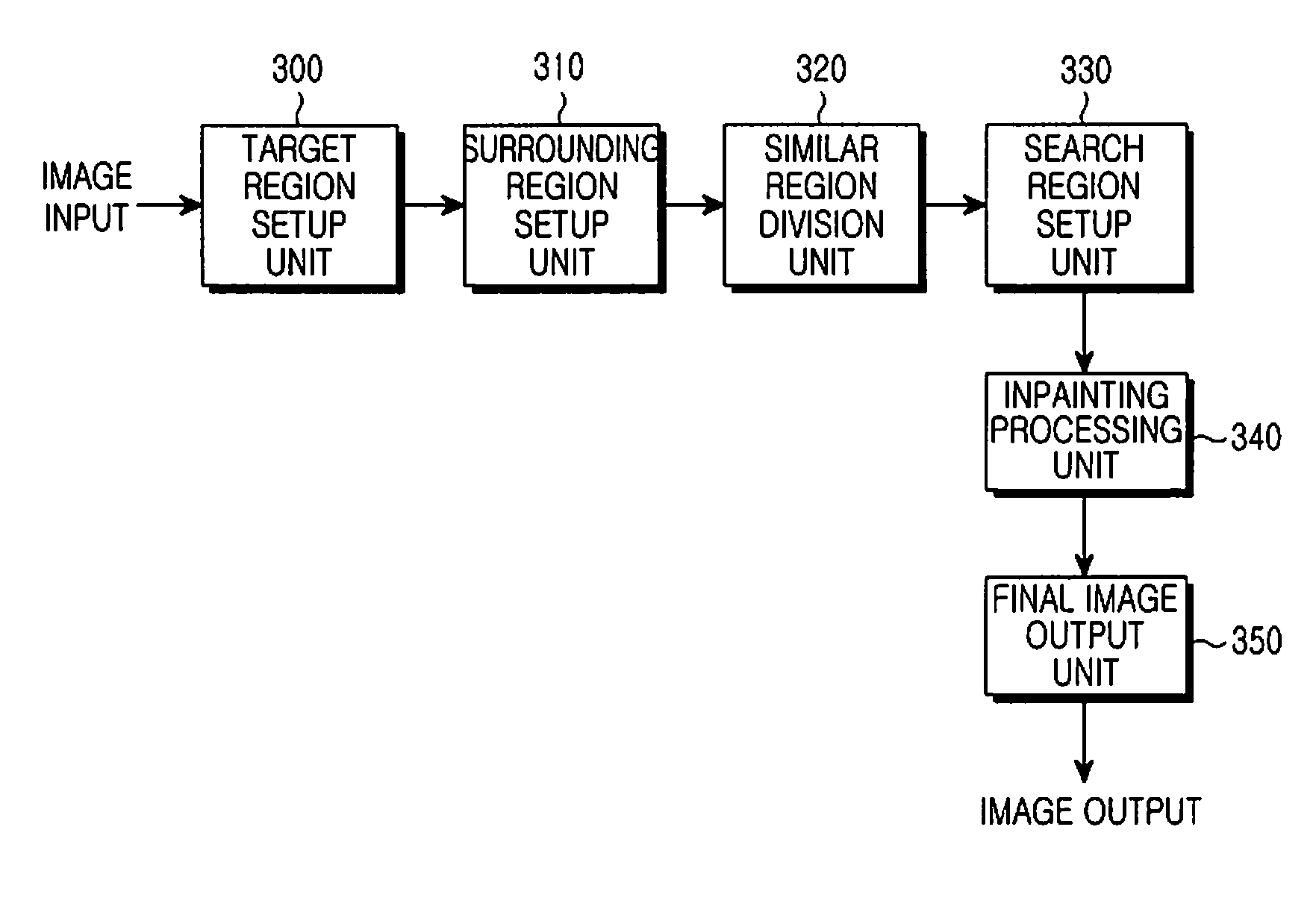

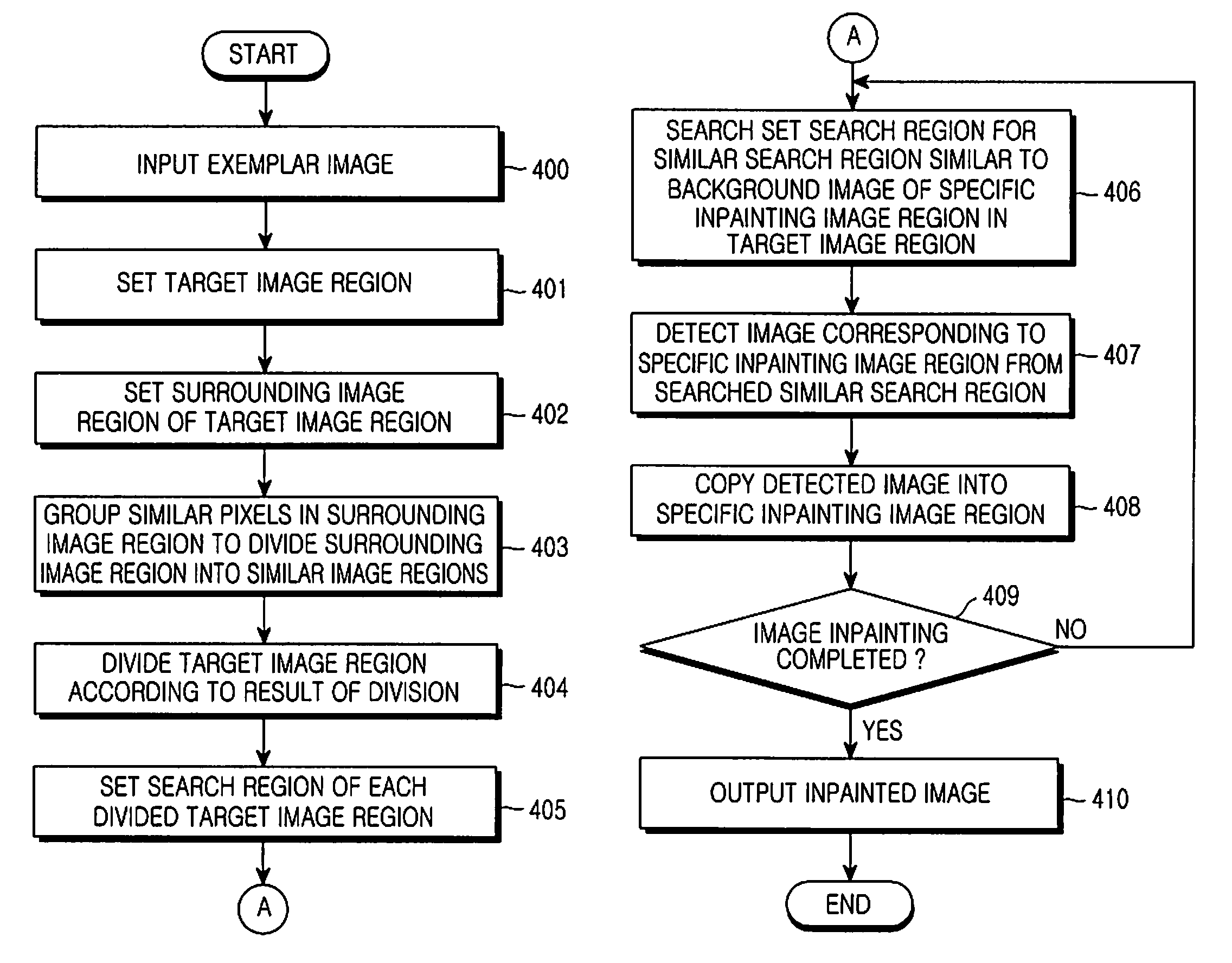

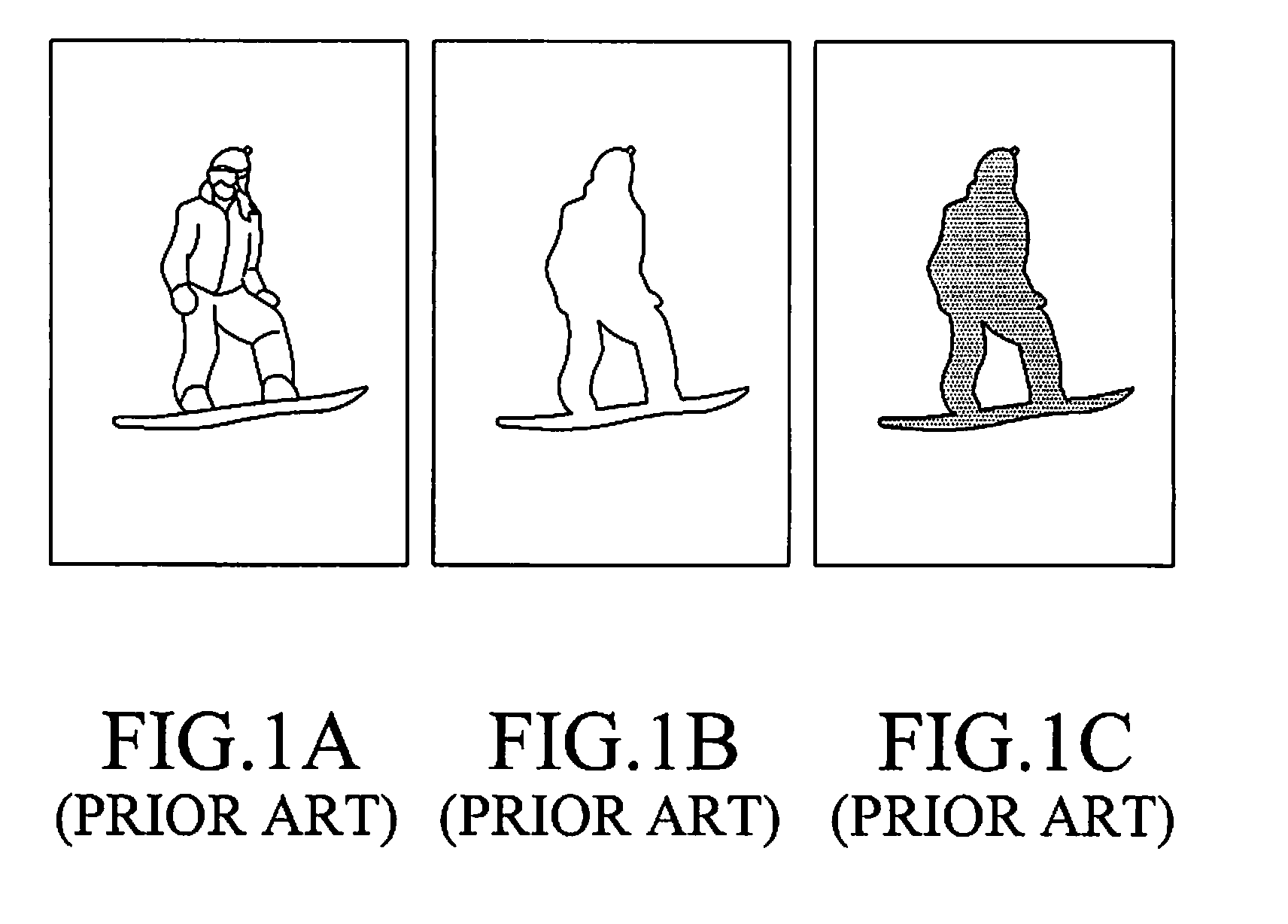

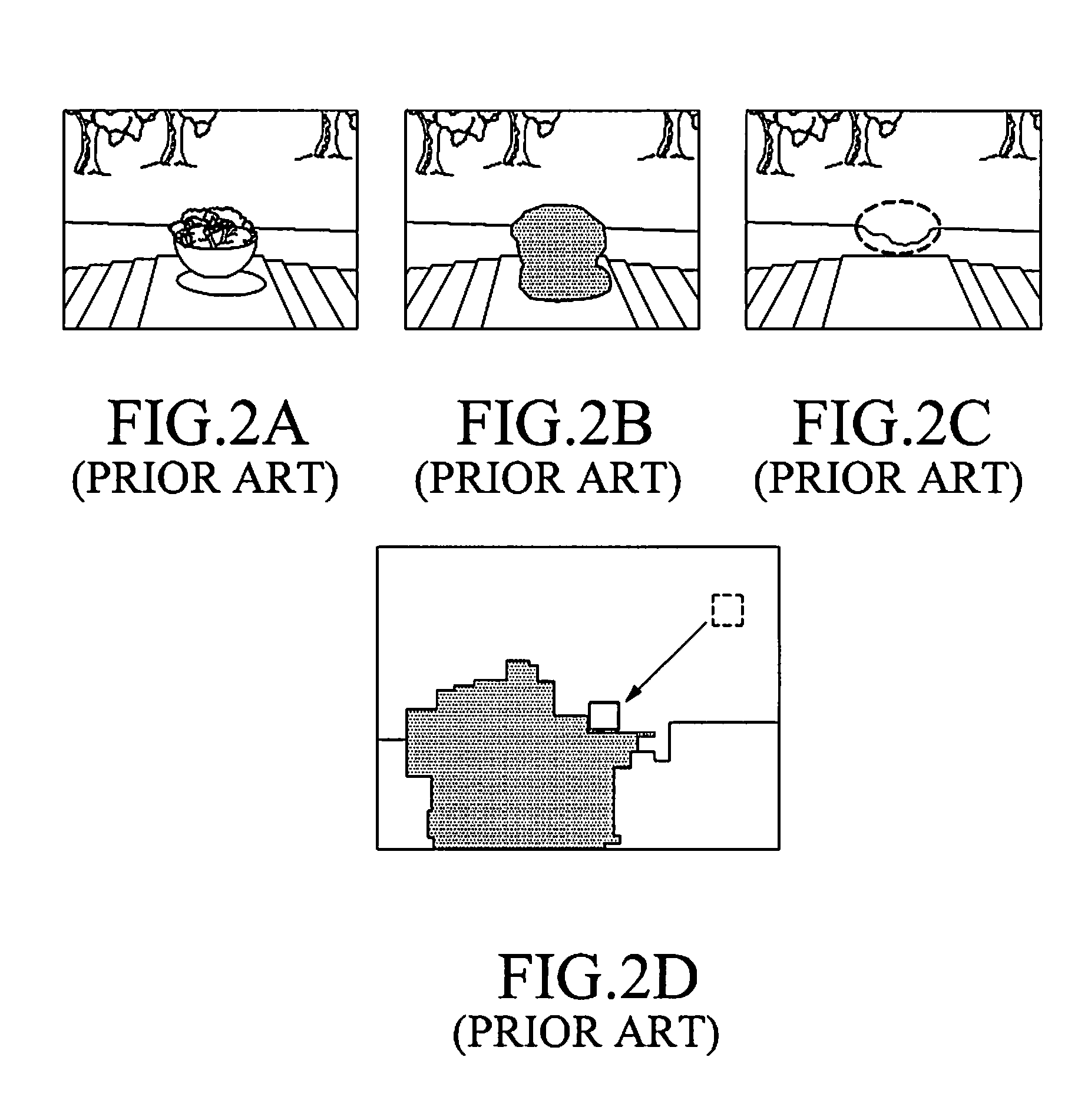

Image inpainting apparatus and method using restricted search region

Status: Inactive | Publication: US20110103706A1 | Tags: Accurate repair, Reduce errors, Image enhancement, Image analysis, Pattern recognition, Performed Imaging

Disclosed is an image inpainting apparatus and method using a restricted search region. In response to an image inpainting request, the apparatus sets a target image region to be inpainted and a surrounding image region of preset size that includes the target region; groups similar pixels in the surrounding region into a plurality of similar image regions and divides the surrounding region accordingly; divides the target region according to those similar image regions; sets a search region for each divided target region; and detects, within that search region, the image region most similar to each divided target region, thereby performing image inpainting. Because inpainting uses a search region that has been determined to contain the image most similar to the image to be inpainted, the probability of finding the image most similar to the region being inpainted increases.

Owner:SAMSUNG ELECTRONICS CO LTD
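The restricted-search idea in the Samsung abstract above can be illustrated on a single row of pixels. This is a simplified 1-D sketch under stated assumptions, not the patented apparatus: "similar image regions" are approximated by quantised intensity groups (`value // bin_width`), each hole pixel inherits the group of its nearest known pixel, and candidates are compared by the mean squared difference of their neighbours. The function name and the parameters `bin_width` and `radius` are hypothetical.

```python
def restricted_search_fill(row, bin_width=50, radius=1):
    """1-D sketch of inpainting with a restricted search region.

    row: list of intensities, with None marking target (hole) pixels;
    at least one pixel must be known.
    1. Group known pixels by quantised intensity (value // bin_width).
    2. Give each hole pixel the group of its nearest known pixel.
    3. Search only known pixels of that group for the one whose neighbourhood
       best matches the hole's neighbourhood (mean squared difference).
    """
    n = len(row)
    out = row[:]
    known = [i for i in range(n) if row[i] is not None]
    group = {i: row[i] // bin_width for i in known}
    for t in range(n):
        if row[t] is not None:
            continue
        nearest = min(known, key=lambda i: abs(i - t))
        best, best_cost = nearest, float("inf")  # fallback: copy the nearest pixel
        for s in known:
            if group[s] != group[nearest]:
                continue  # outside the restricted search region
            cost, used = 0, 0
            for d in range(-radius, radius + 1):
                a, b = t + d, s + d
                if d != 0 and 0 <= a < n and 0 <= b < n \
                        and out[a] is not None and row[b] is not None:
                    cost += (out[a] - row[b]) ** 2
                    used += 1
            if used and cost / used < best_cost:
                best, best_cost = s, cost / used
        out[t] = row[best]
    return out


filled = restricted_search_fill([10, 12, 10, 12, None, 12, 200, 200])
```

The hole continues the alternating 10/12 pattern, and the bright pixels at the end of the row are never examined because they fall in a different intensity group; restricting the search this way both saves work and avoids matches from unrelated regions.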

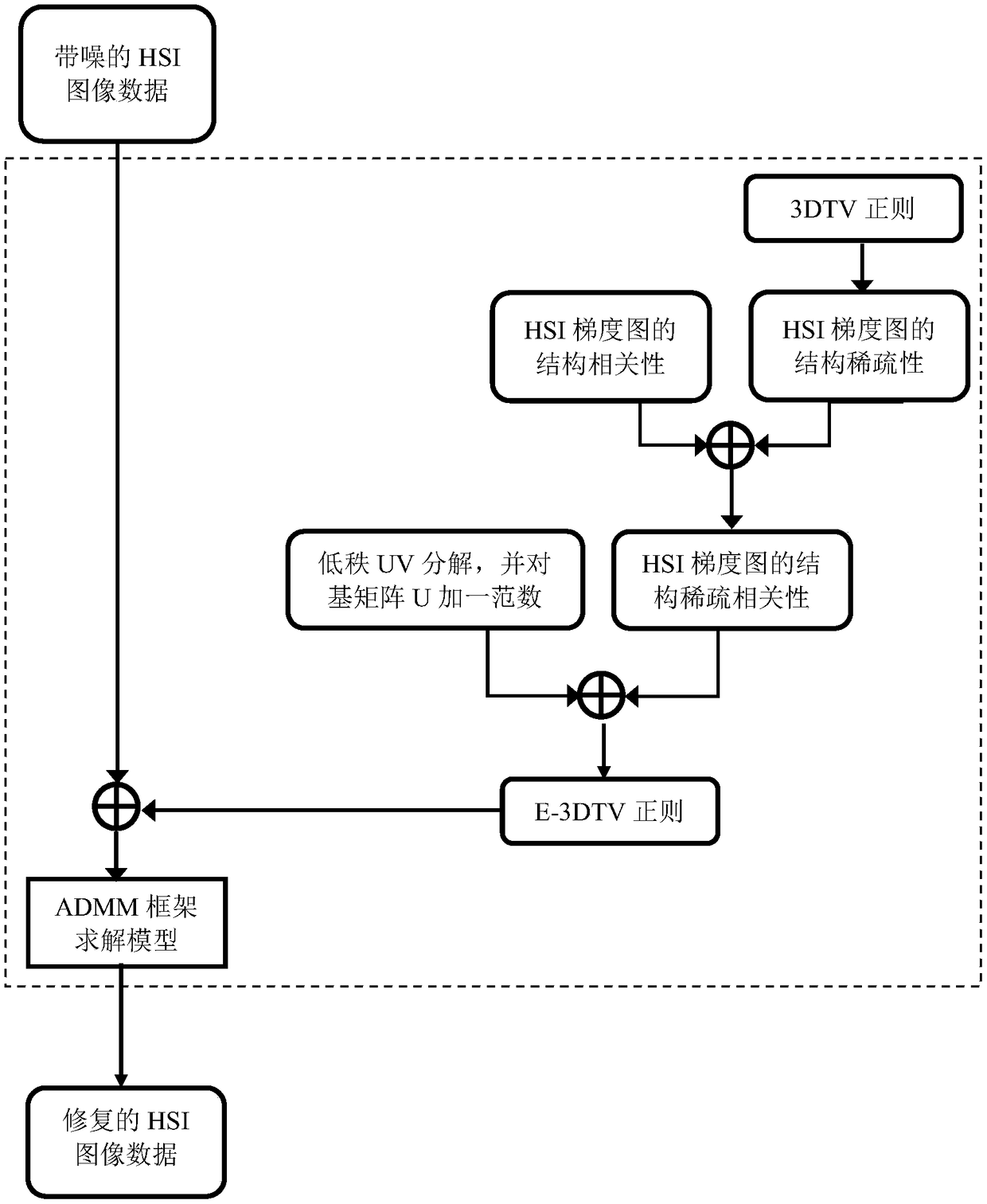

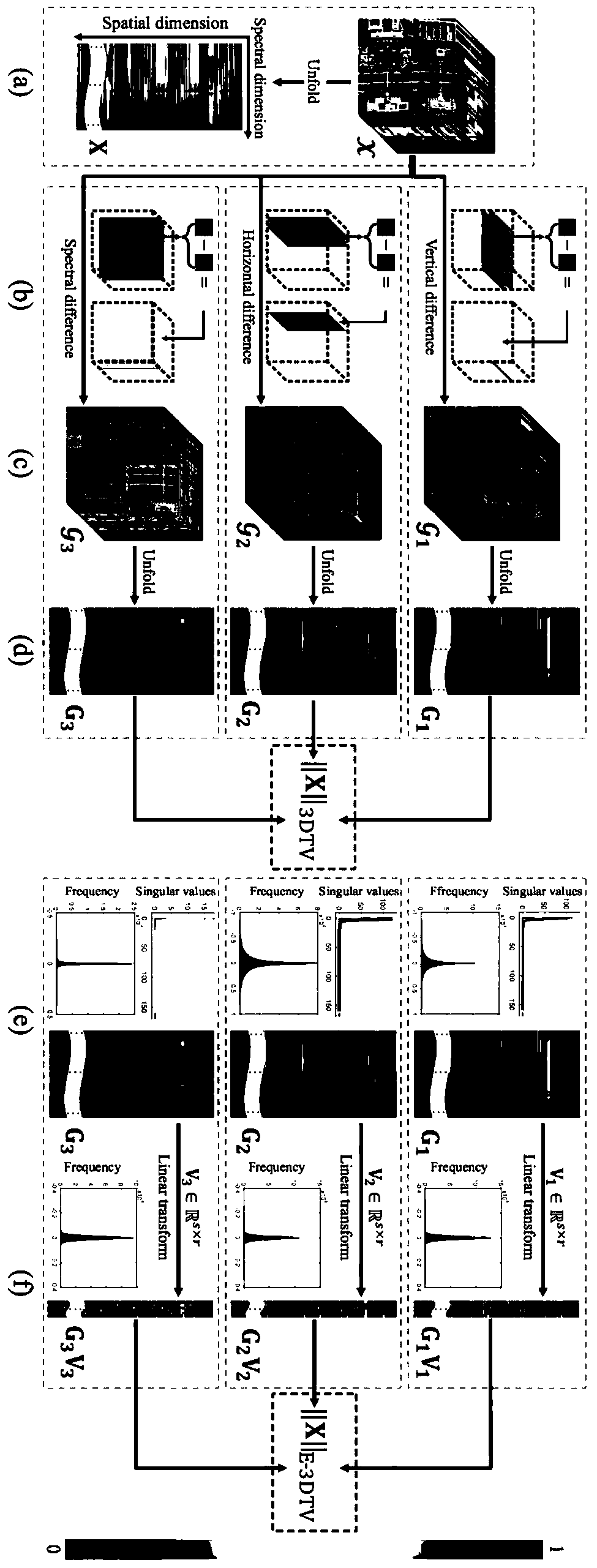

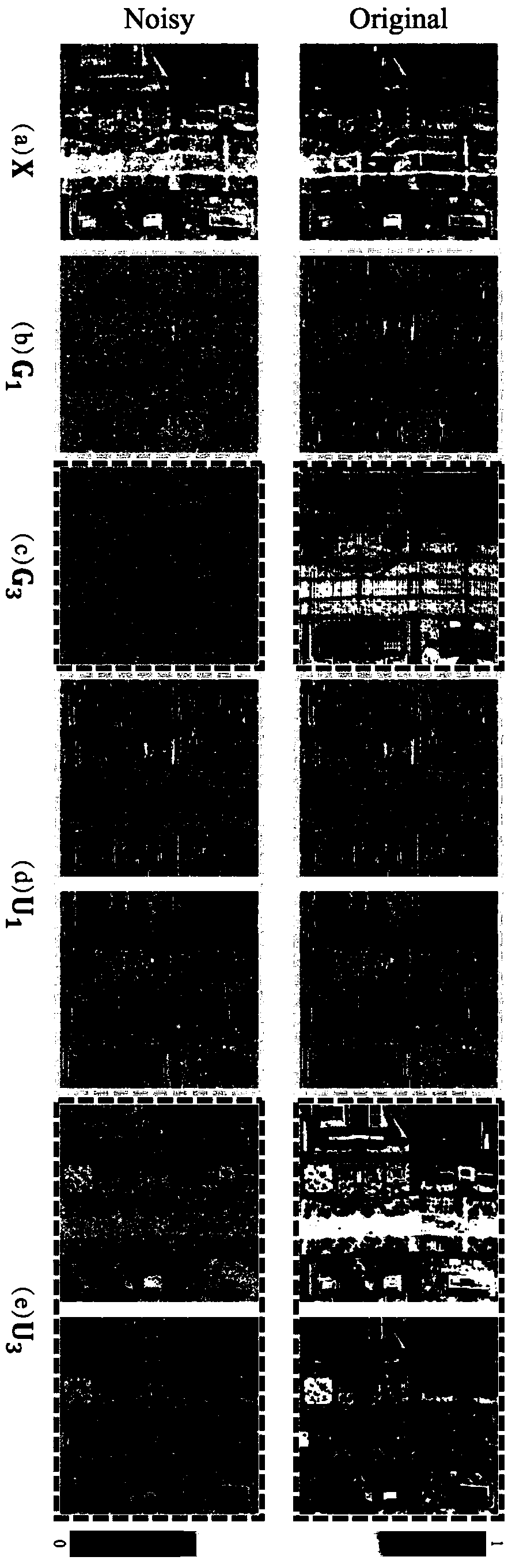

A hyperspectral image inpainting method based on E-3DTV regularity

A hyperspectral image inpainting method based on E-3DTV regularity is provided. The method comprises the following steps: unfolding the original noisy three-dimensional hyperspectral data into a matrix along the spectral dimension, and initializing, under the ADMM framework, the matrix representations of the noise term and of the hyperspectral data to be repaired, together with the other model variables and parameters; applying difference operators to the hyperspectral data to be repaired along the horizontal, vertical, and spectral dimensions to obtain three gradient maps, each unfolded into a matrix along the spectral dimension; decomposing the three gradient-map matrices by low-rank UV factorization and constraining the basis matrices of the gradient maps with sparsity, which yields the E-3DTV regularizer; applying the E-3DTV regularizer to the data to be repaired, formulating the optimization model, and solving it iteratively with ADMM; and obtaining the restored image and the noise once the iteration is stable. The invention performs denoising and compressed reconstruction of hyperspectral image data and enhances traditional 3DTV so that both the structural correlation and the sparsity of the gradient maps are taken into account, overcoming the limitation that traditional 3DTV describes only the sparsity of the gradient maps and ignores their correlation.

Owner:XI AN JIAOTONG UNIV
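The first two operations of the E-3DTV abstract above (difference operators along the horizontal, vertical, and spectral axes, and unfolding a cube into a matrix along the spectral dimension) can be sketched as follows. The cube is stored as nested lists indexed `[y][x][band]`; forward differences with periodic boundaries are an assumption (a common total-variation convention), and the low-rank UV factorization, sparsity constraint, and ADMM solver are not shown.

```python
def gradient_maps(cube):
    """Forward differences of an H x W x B cube along the horizontal, vertical
    and spectral axes, with periodic boundaries (an assumption of this sketch)."""
    H, W, B = len(cube), len(cube[0]), len(cube[0][0])
    gh = [[[cube[y][(x + 1) % W][b] - cube[y][x][b] for b in range(B)]
           for x in range(W)] for y in range(H)]
    gv = [[[cube[(y + 1) % H][x][b] - cube[y][x][b] for b in range(B)]
           for x in range(W)] for y in range(H)]
    gs = [[[cube[y][x][(b + 1) % B] - cube[y][x][b] for b in range(B)]
           for x in range(W)] for y in range(H)]
    return gh, gv, gs


def unfold_spectral(cube):
    """Unfold an H x W x B cube into an (H*W) x B matrix:
    one row per pixel (row-major order), one column per spectral band."""
    return [cube[y][x][:] for y in range(len(cube)) for x in range(len(cube[0]))]


# Toy cube whose value encodes its coordinates: y + 10*x + 100*band.
cube = [[[y + 10 * x + 100 * b for b in range(3)] for x in range(3)] for y in range(3)]
gh, gv, gs = gradient_maps(cube)
mats = [unfold_spectral(g) for g in (gh, gv, gs)]  # the three matrices E-3DTV factorizes
```

Each of the three unfolded gradient matrices has one row per pixel and one column per band; in E-3DTV these are the matrices that are then factorized into low-rank, sparsity-constrained factors.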

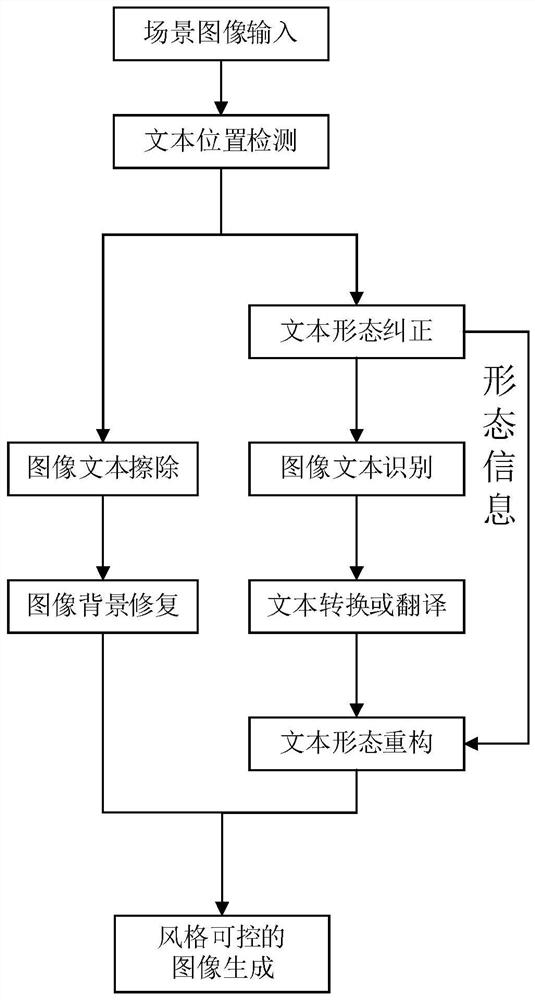

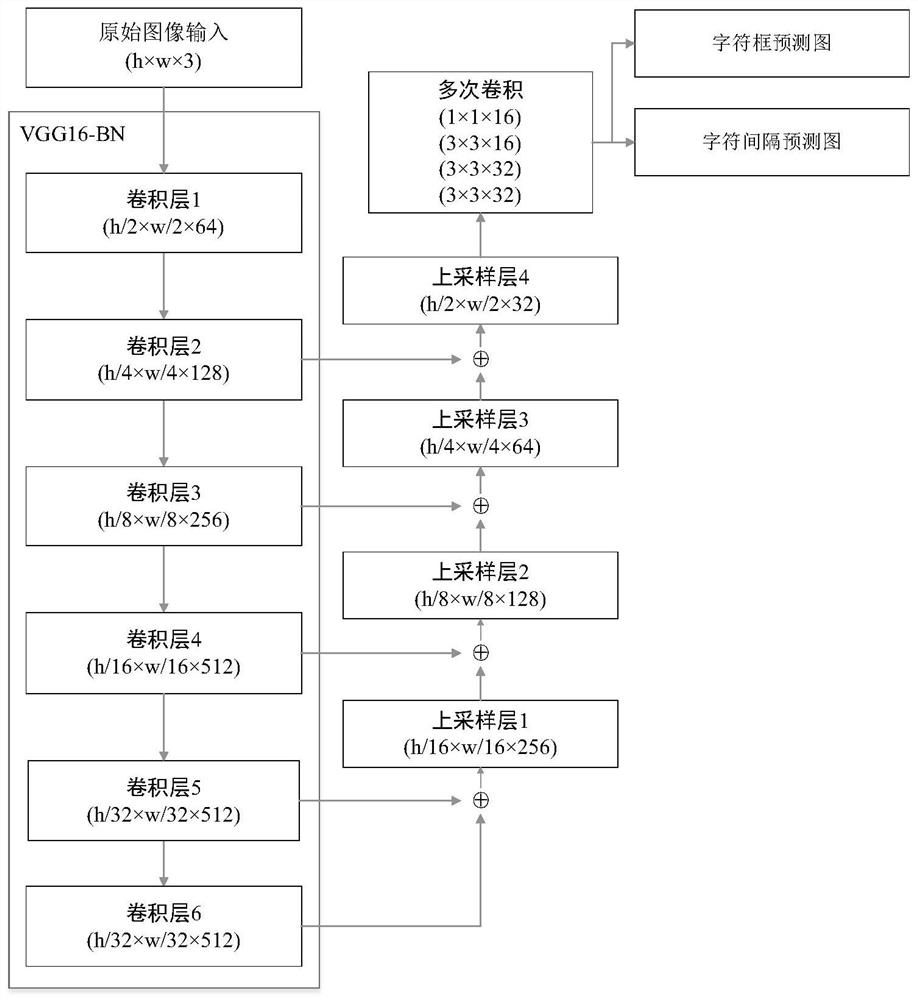

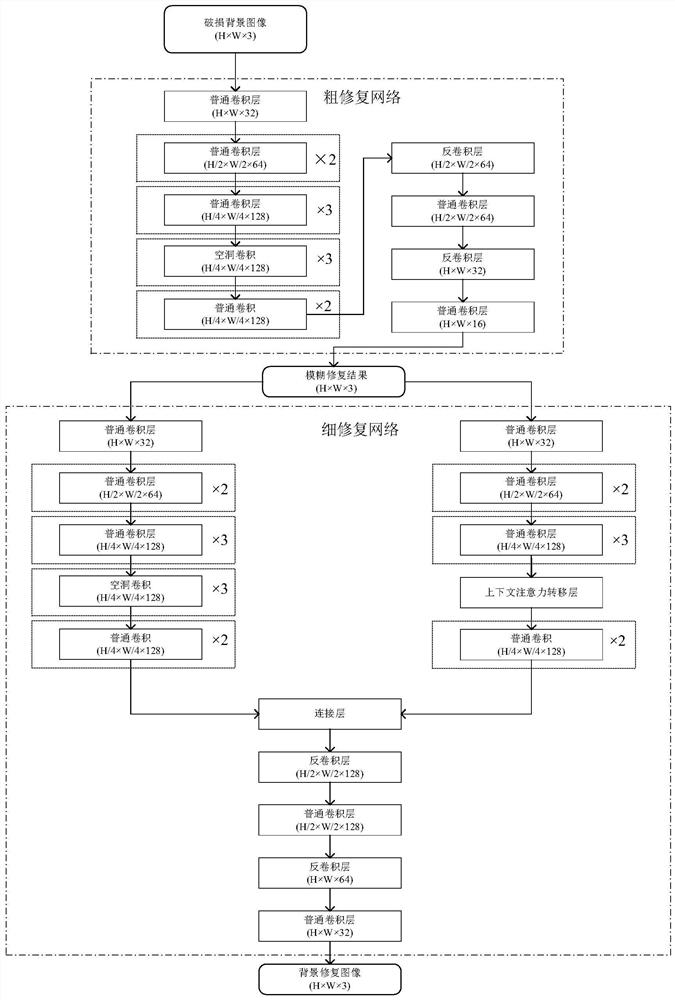

Style-controllable image text real-time translation and conversion method

Status: Pending | Publication: CN111723585A | Tags: Solve the problem of uncontrollable text style, Rich morphological information, Natural language translation, Semantic analysis, Feature extraction, Computer graphics (images)

The invention discloses a style-controllable method for real-time translation and conversion of text in images. The method comprises the following steps: taking a scene image as input; extracting features with a multi-layer CNN and detecting the position and shape of the text in the image; erasing the text pixels inside the text bounding boxes to obtain a background image and a mask, and restoring the background with a coarse restoration network and a fine restoration network, both based on an encoder-decoder (codec) structure; correcting the shape of the text and removing its style to obtain text in a standard font; recognizing the text with a CRNN model, correcting it using text semantics, and translating or converting it as required; stylizing the translated text by learning the artistic style of the original text; and outputting a scene image whose converted text has a controllable style. The method extracts more valuable information from the scene image and markedly improves how much information is preserved during text translation and conversion.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)

Image inpainting apparatus and method using restricted search region

Status: Inactive | Publication: US8437567B2 | Tags: Accurate repair, Reduce errors, Image enhancement, Image analysis, Pattern recognition, Performed Imaging

Disclosed is an image inpainting apparatus and method using a restricted search region. In response to an image inpainting request, the apparatus sets a target image region to be inpainted and a surrounding image region of preset size that includes the target region; groups similar pixels in the surrounding region into a plurality of similar image regions and divides the surrounding region accordingly; divides the target region according to those similar image regions; sets a search region for each divided target region; and detects, within that search region, the image region most similar to each divided target region, thereby performing image inpainting. Because inpainting uses a search region that has been determined to contain the image most similar to the image to be inpainted, the probability of finding the image most similar to the region being inpainted increases.

Owner:SAMSUNG ELECTRONICS CO LTD

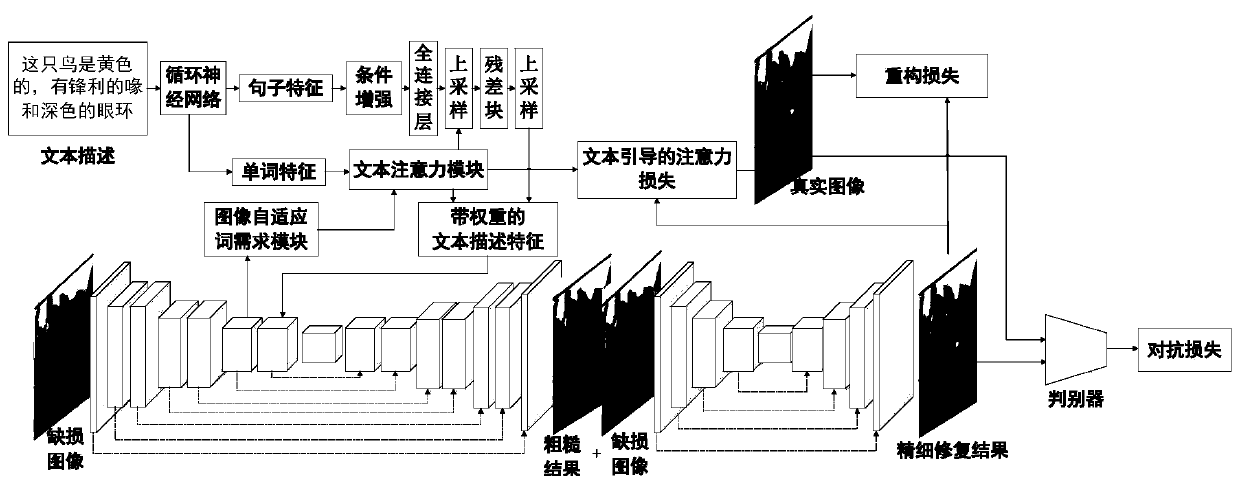

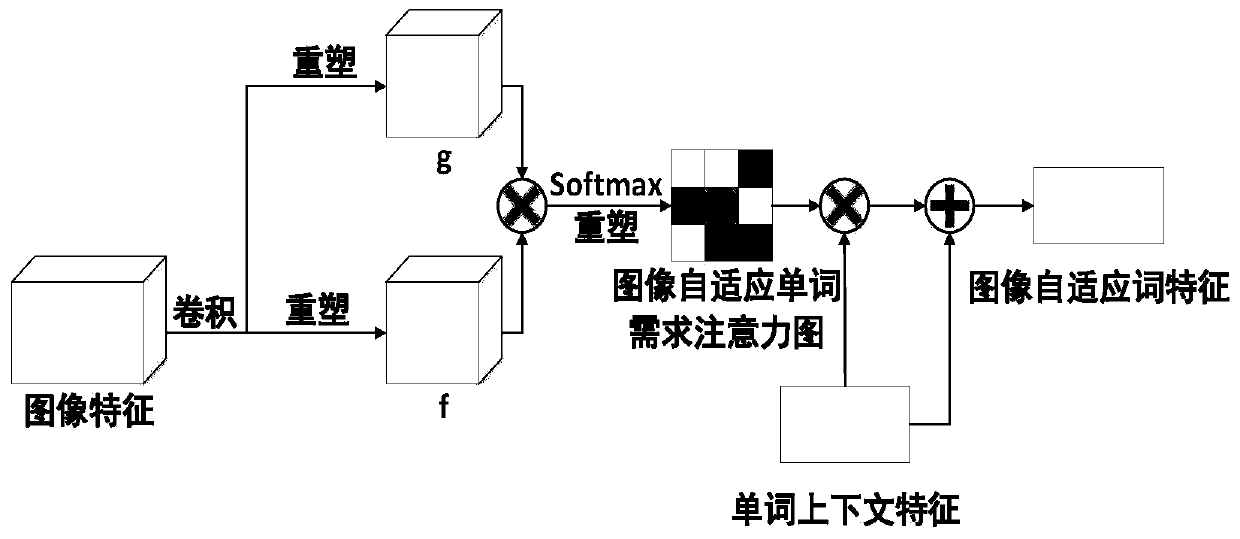

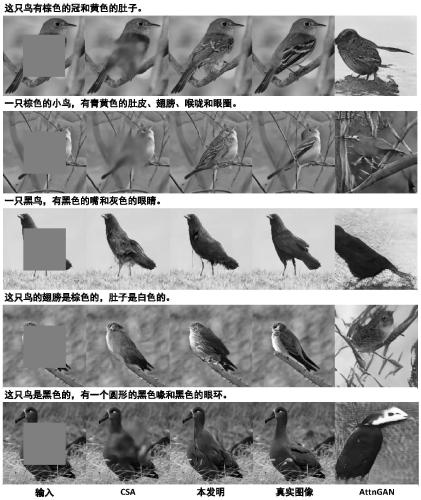

Multi-modal feature fusion text-guided image restoration method

Status: Active | Publication: CN111340122A | Tags: Eliminate bad effects, Fine detail texture, Semantic analysis, Character and pattern recognition, Imaging Feature, Image restoration

The invention belongs to the technical field of intelligent digital image processing, and specifically relates to a text-guided image restoration method with multi-modal feature fusion. The network takes a defective image and its corresponding text description as input and works in two stages: a coarse repair stage and a fine repair stage. In the coarse stage, the network maps the text features and the image features into a unified feature space for fusion and uses the prior knowledge carried by the text features to produce a plausible coarse result. In the fine stage, the network generates finer-grained textures for the coarse result. Reconstruction loss, adversarial loss, and text-guided attention loss are introduced into training to help the network produce more detailed and natural results. Experimental results show that the method better predicts the semantics of objects in the missing region, generates fine-grained textures, and effectively improves restoration quality.

Owner:FUDAN UNIV

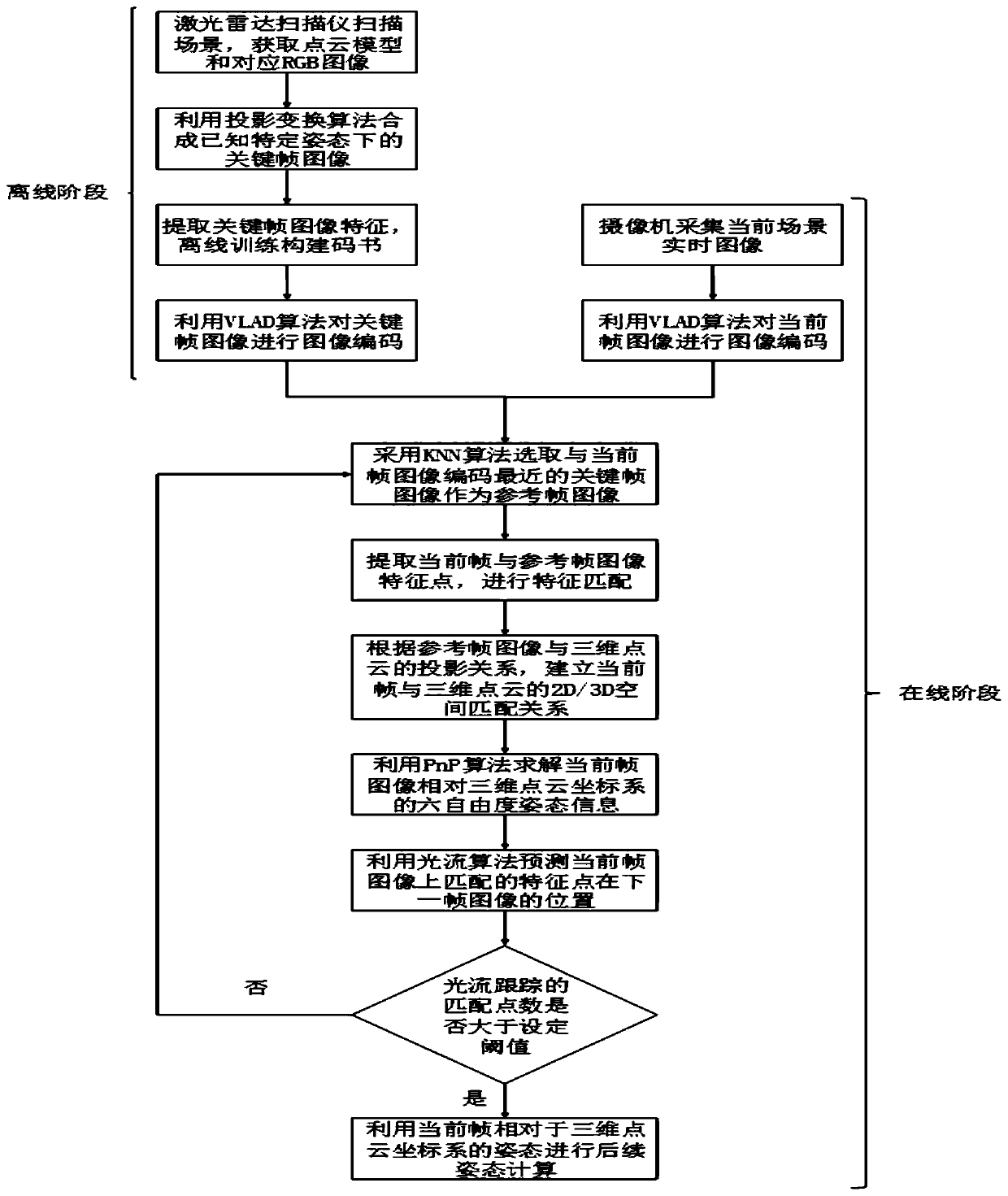

Visual tracking and positioning method based on dense point cloud and composite view

Status: Active | Publication: CN110853075A | Tags: Solve association problems, Realize initial positioning, Image enhancement, Image analysis, Pattern recognition, Point cloud

The invention provides a visual tracking and positioning method based on a dense point cloud and composite views. The method comprises the following steps: three-dimensionally scanning a real scene to obtain color key frame images and corresponding depth images, restoring these images, and encoding the key frame images; encoding the current frame image acquired in real time by the camera, and selecting the composite image closest to it as the reference frame for the current image; obtaining stable sets of matched feature points on the two images and processing them to obtain the six-degree-of-freedom pose of the current camera frame relative to the coordinate system of the scanned point cloud; and checking the result with an optical flow algorithm, and, if the requirement is not met, taking the next frame acquired by the camera as the current frame and matching again. The invention solves the association problem between the three-dimensional point cloud obtained by lidar and heterogeneous visual images, and achieves rapid initial positioning for visual navigation.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

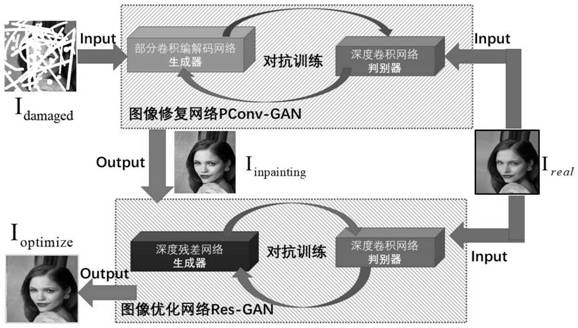

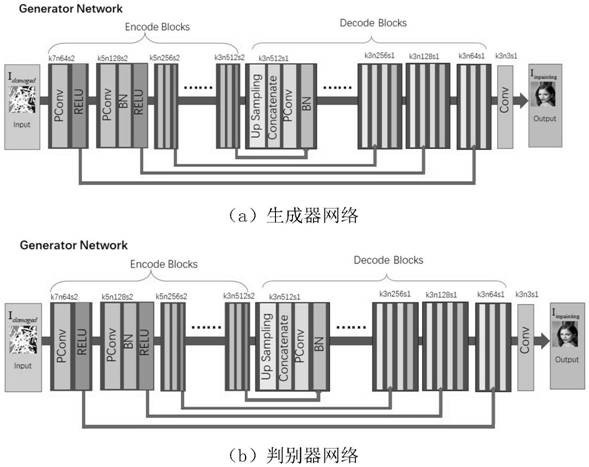

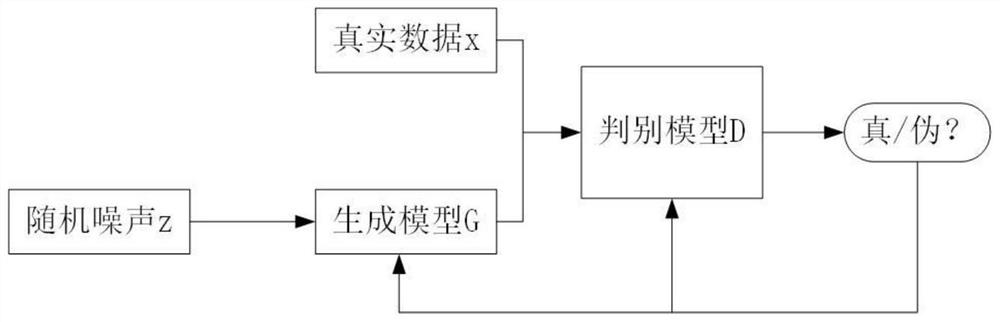

Method of using generative adversarial network in image restoration

Status: Active | Publication: CN111784602A | Tags: Improve repair effect, Improve repair accuracy, Image enhancement, Image analysis, Pattern recognition, Generative adversarial network

The invention discloses an image restoration model, PRGAN, composed of two independent generative adversarial network modules. The image restoration module PConv-GAN combines a partial convolutional network with an adversarial network; it restores images with irregular masks while using the discriminator's feedback to bring the overall texture, structure, and color of the image closer to those of the original. To address the local color differences (chromatic aberration) and mild boundary artifacts left by the restoration module, the invention also designs an image optimization module, Res-GAN, which combines a deep residual network with an adversarial network and is trained with a combination of adversarial loss, perceptual loss, and content loss. This preserves the information in the non-missing area of the image, keeps the texture and structure consistent with the non-missing area, eliminates local color differences, and removes false boundaries.

Owner:JIANGXI UNIV OF SCI & TECH
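The name PConv-GAN in the Jiangxi abstract above points to partial convolution (Liu et al., 2018), in which a convolution window only sums over valid (unmasked) inputs, rescales the sum by window size divided by the number of valid inputs, and marks the output valid if any input was valid. The sketch below shows that published operation for a single channel in 1-D, as an illustration rather than the patent's network; the function name and the no-padding, stride-1 choice are assumptions.

```python
def partial_conv1d(x, mask, weights, bias=0.0):
    """Single-channel 1-D partial convolution (no padding, stride 1).

    Follows the partial convolution of Liu et al. (2018): only valid inputs
    (mask == 1) contribute, the sum is rescaled by window_size / valid_count,
    and a window with no valid input outputs 0 and stays a hole in the
    updated mask. Returns (output, updated_mask).
    """
    k = len(weights)
    out, new_mask = [], []
    for i in range(len(x) - k + 1):
        win_x, win_m = x[i:i + k], mask[i:i + k]
        valid = sum(win_m)
        if valid > 0:
            s = sum(w * v * m for w, v, m in zip(weights, win_x, win_m))
            out.append(s * k / valid + bias)
            new_mask.append(1)
        else:
            out.append(0.0)
            new_mask.append(0)
    return out, new_mask


# The hole holds a garbage value (999) that must not leak into the output.
out, new_mask = partial_conv1d([2.0, 2.0, 999.0, 2.0, 2.0], [1, 1, 0, 1, 1], [1 / 3] * 3)
```

With an averaging kernel every output equals 2.0: the masked 999 is ignored and the rescaling compensates for the missing input, while the updated mask shows the hole shrinking layer by layer, which is how stacked partial convolutions handle irregular masks.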

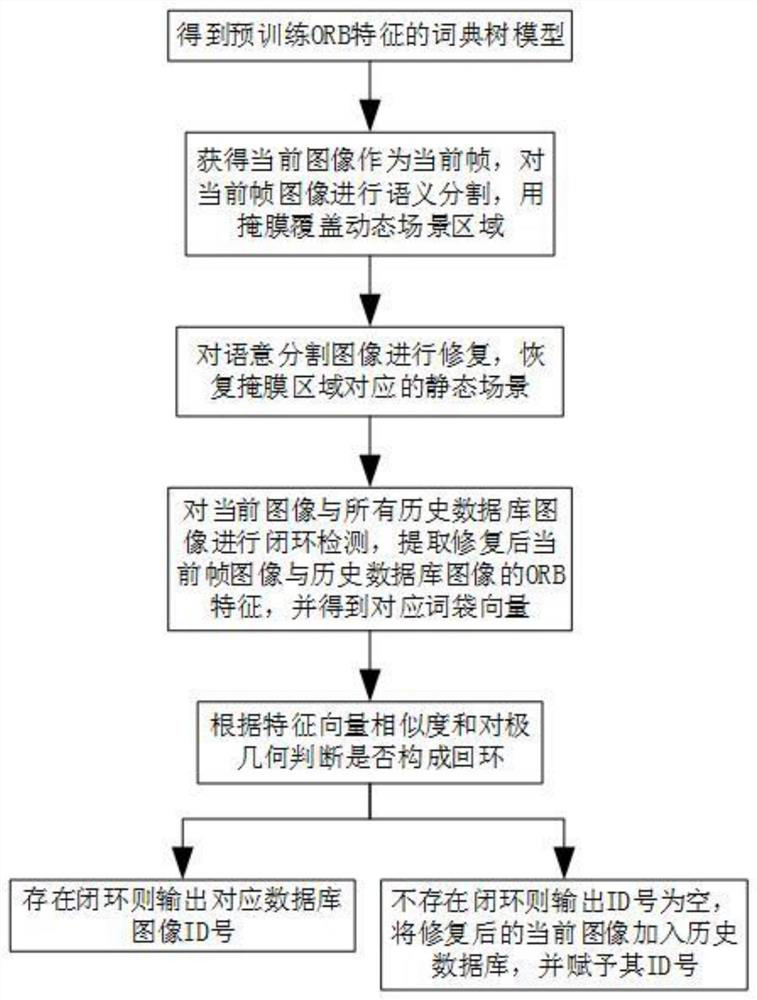

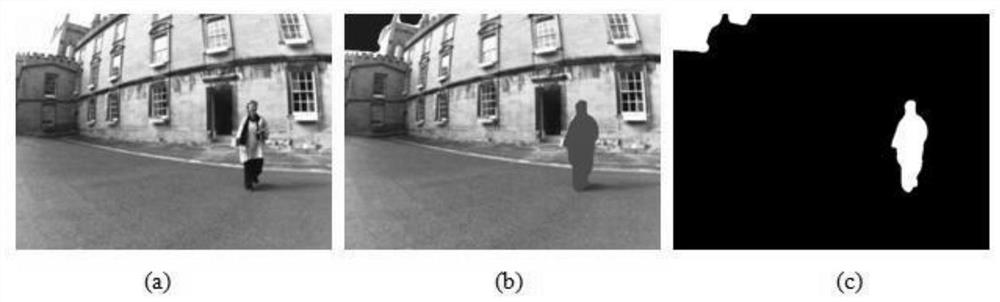

Visual loopback detection method based on semantic segmentation and image restoration in dynamic scene

Status: Active | Publication: CN111696118A | Tags: Improve reliability, Prevent extraction, Image enhancement, Image analysis, Pattern recognition, RGB image

The invention discloses a visual loop closure (loopback) detection method for dynamic scenes based on semantic segmentation and image restoration. The method comprises the following steps: 1) pre-training an offline ORB feature dictionary on a historical image library; 2) acquiring the current RGB image as the current frame and using a DANet semantic segmentation network to segment the regions of the image that belong to the dynamic part of the scene; 3) restoring the masked regions of the image with an image restoration network; 4) taking all images in the historical database as key frames and checking the current frame against each key frame in turn for a loop; 5) determining whether a loop is formed from the similarity of the two frames' bag-of-words vectors and from epipolar geometry; and 6) making the final decision. The method can be used for loop closure detection in visual SLAM in dynamic environments. It addresses two problems: feature matching errors caused by dynamic targets in the scene, such as operators, vehicles, and inspection robots; and failure to detect loops because too few feature points remain after the dynamic regions are segmented out.

Owner:SOUTHEAST UNIV
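Step 5 of the Southeast University abstract above compares the bag-of-words vectors of two frames but does not say which score is used. One widely used choice, assumed here, is the L1 score used by the DBoW2 library: both vectors are L1-normalised and s = 1 − ½‖a − b‖₁, which lies in [0, 1]. Vectors are represented as dictionaries from word id to weight (for example, tf-idf); the function name is hypothetical, and the epipolar-geometry check is not shown.

```python
def l1_score(v1, v2):
    """L1 similarity between two bag-of-words vectors (dict: word id -> weight).

    Each vector is L1-normalised; the score is 1 - 0.5 * ||a - b||_1, in [0, 1].
    Assumes non-empty vectors with non-negative weights (e.g. tf-idf).
    """
    n1 = sum(abs(w) for w in v1.values())
    n2 = sum(abs(w) for w in v2.values())
    words = set(v1) | set(v2)
    diff = sum(abs(v1.get(k, 0.0) / n1 - v2.get(k, 0.0) / n2) for k in words)
    return 1.0 - 0.5 * diff


same = l1_score({1: 1.0, 2: 1.0}, {1: 2.0, 2: 2.0})      # scaled copy of the same frame
half = l1_score({1: 1.0, 2: 1.0}, {1: 1.0, 3: 1.0})      # one shared word out of two
disjoint = l1_score({1: 1.0}, {2: 1.0})                  # no shared words
```

Normalising first makes the score insensitive to how many features each frame produced, which matters in this method because segmenting out dynamic regions changes the feature count.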

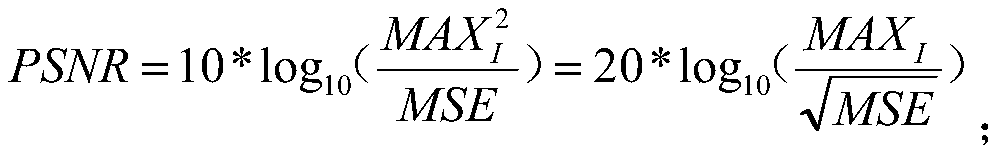

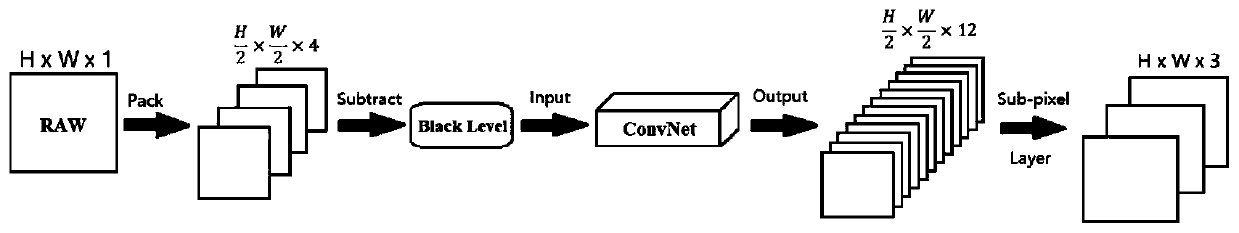

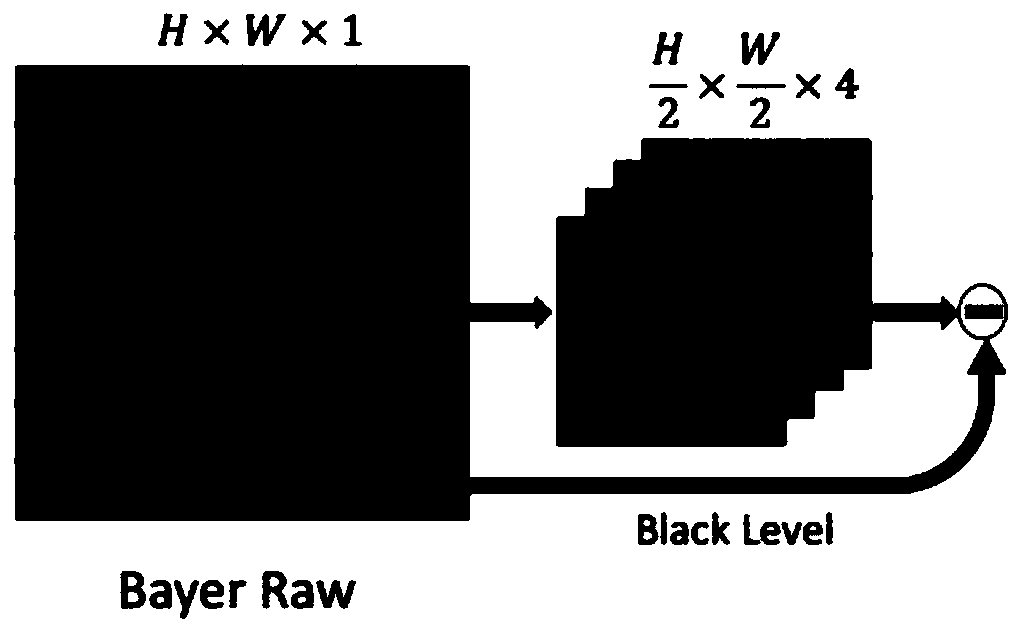

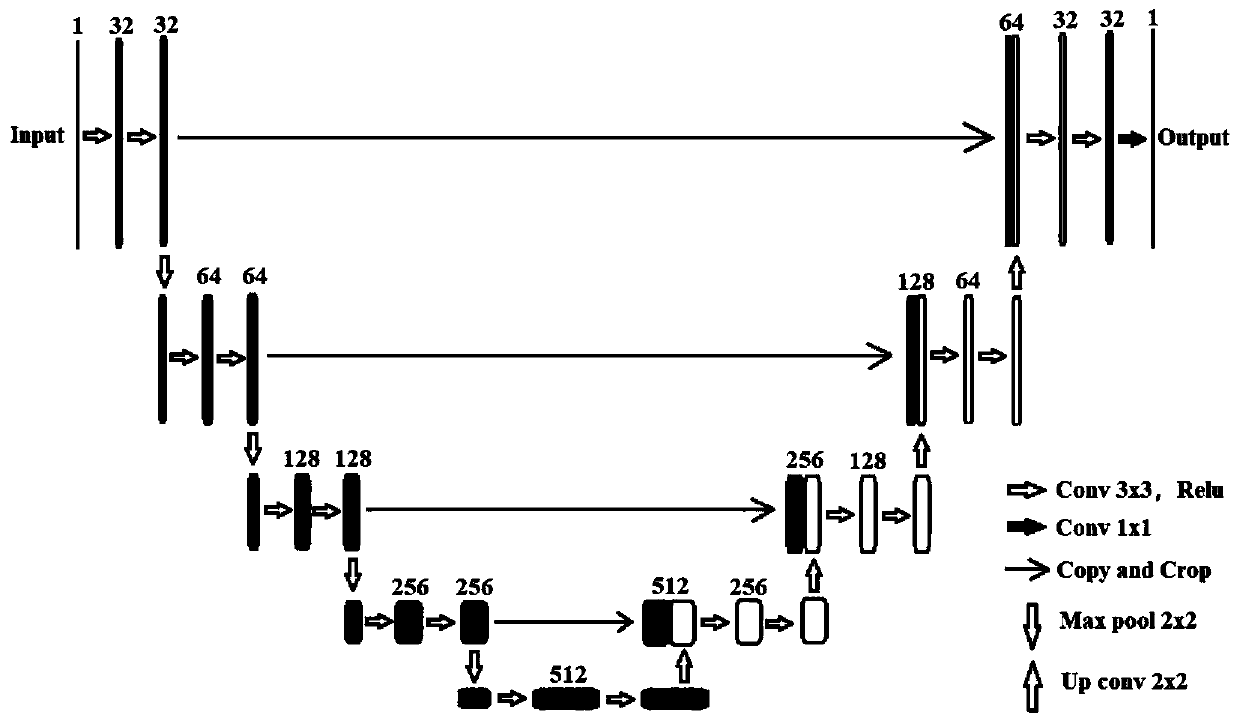

Image restoration method in extreme condition based on convolutional neural network

Status: Active | Publication: CN110378845A | Tags: Solve the problem of fast imaging, Image enhancement, Neural architectures, Rapid imaging, Image denoising

The invention discloses a convolutional-neural-network-based method for restoring images captured under extreme conditions. The method comprises the following steps: 1, preprocessing an acquired short-exposure image; 2, feeding the preprocessed image into a U-Net convolutional neural network for training; 3, computing the error and training iteratively; and 4, evaluating the trained model, using the peak signal-to-noise ratio (PSNR) and the structural similarity (SSIM) of the image as the evaluation criteria for the final result. The method effectively solves the problem of rapid imaging in low light and provides a feasible new approach to image denoising and deblurring.

Owner:HANGZHOU DIANZI UNIV
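Step 4 of the Hangzhou Dianzi abstract above evaluates results with PSNR and SSIM. PSNR is defined as 10·log10(MAX² / MSE), where MAX is the largest possible pixel value and MSE is the mean squared error against the reference. This sketch assumes 8-bit images (MAX = 255) flattened into lists; the function name is illustrative, and SSIM is not shown.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists.

    Returns infinity for identical images (MSE = 0).
    """
    mse = sum((a - b) ** 2 for a, b in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


score = psnr([10, 10], [11, 9])  # MSE = 1, so PSNR = 20*log10(255), about 48.13 dB
```

Higher is better; because PSNR depends only on per-pixel error, it is usually reported alongside SSIM, which is more sensitive to structural distortions.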

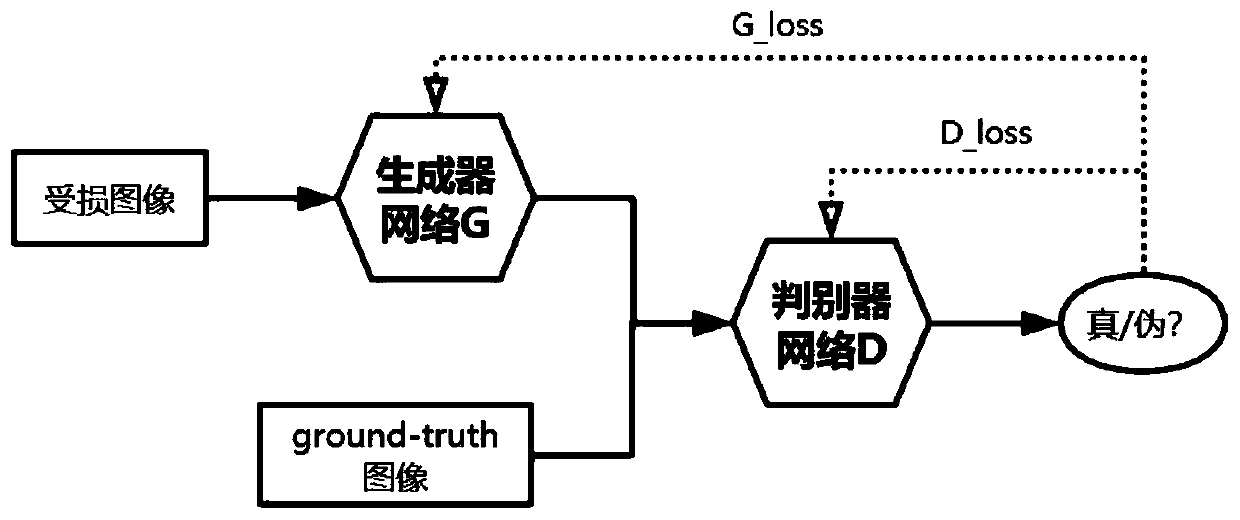

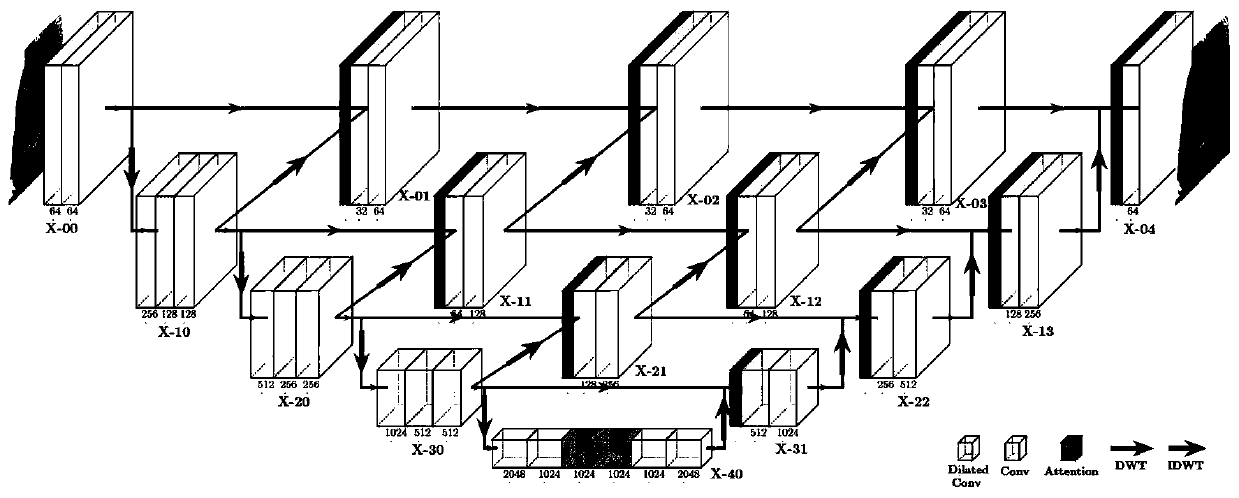

Image restoration method based on wavelet transform attention model

Status: Active | Publication: CN111047541A | Tags: Precise structure, Coherent content, Image enhancement, Image analysis, Pattern recognition, Network generation

The invention relates to an image restoration method based on a wavelet transform attention model, intended to provide high-quality image restoration. The method uses a GAN-based image restoration network consisting of a generator network and a discriminator network. The generator first uses the discrete wavelet transform (DWT) to decompose the features of the known region of a damaged image into multi-frequency sub-bands, then extracts deeper information from the known region through an attention mechanism, and finally generates the restored image through the inverse DWT (IDWT). The discriminator adopts the fully convolutional PatchGAN structure. The restored image and the ground-truth image are both used as input, the generator and discriminator are optimized alternately, back-propagation minimizes a global loss function, and the generator parameters are adjusted iteratively, so that the optimized generator produces high-quality restoration results.

Owner:BEIJING UNIV OF TECH
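The Beijing University of Technology abstract above decomposes features into multi-frequency sub-bands with the DWT and reassembles them with the IDWT, without naming the wavelet. The sketch below assumes the simplest choice, a one-level orthonormal 2-D Haar transform, applied to a small image rather than to network features; the attention step is omitted, and the sub-band naming (LH/HL) follows one of several conventions in use.

```python
def haar_dwt2(img):
    """One-level orthonormal 2-D Haar transform of an image with even height and width.

    Returns (LL, LH, HL, HH), each half the size of the input (Haar wavelet assumed).
    """
    h, w = len(img), len(img[0])
    bands = ([], [], [], [])
    for y in range(0, h, 2):
        rows = ([], [], [], [])
        for x in range(0, w, 2):
            a, b = img[y][x], img[y][x + 1]
            c, d = img[y + 1][x], img[y + 1][x + 1]
            rows[0].append((a + b + c + d) / 2)  # LL: low-frequency approximation
            rows[1].append((a - b + c - d) / 2)  # LH: difference between columns
            rows[2].append((a + b - c - d) / 2)  # HL: difference between rows
            rows[3].append((a - b - c + d) / 2)  # HH: diagonal detail
        for band, row in zip(bands, rows):
            band.append(row)
    return bands


def haar_idwt2(ll, lh, hl, hh):
    """Inverse of haar_dwt2: rebuild each 2x2 block from its four coefficients."""
    h, w = len(ll), len(ll[0])
    img = [[0.0] * (2 * w) for _ in range(2 * h)]
    for y in range(h):
        for x in range(w):
            s, dc, dr, dd = ll[y][x], lh[y][x], hl[y][x], hh[y][x]
            img[2 * y][2 * x] = (s + dc + dr + dd) / 2
            img[2 * y][2 * x + 1] = (s - dc + dr - dd) / 2
            img[2 * y + 1][2 * x] = (s + dc - dr - dd) / 2
            img[2 * y + 1][2 * x + 1] = (s - dc - dr + dd) / 2
    return img


img = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
rebuilt = haar_idwt2(*haar_dwt2(img))
```

Because the transform is perfectly invertible, a network can process the sub-bands separately (coarse structure in LL, edges and texture in the detail bands) and still return to the image domain without loss.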

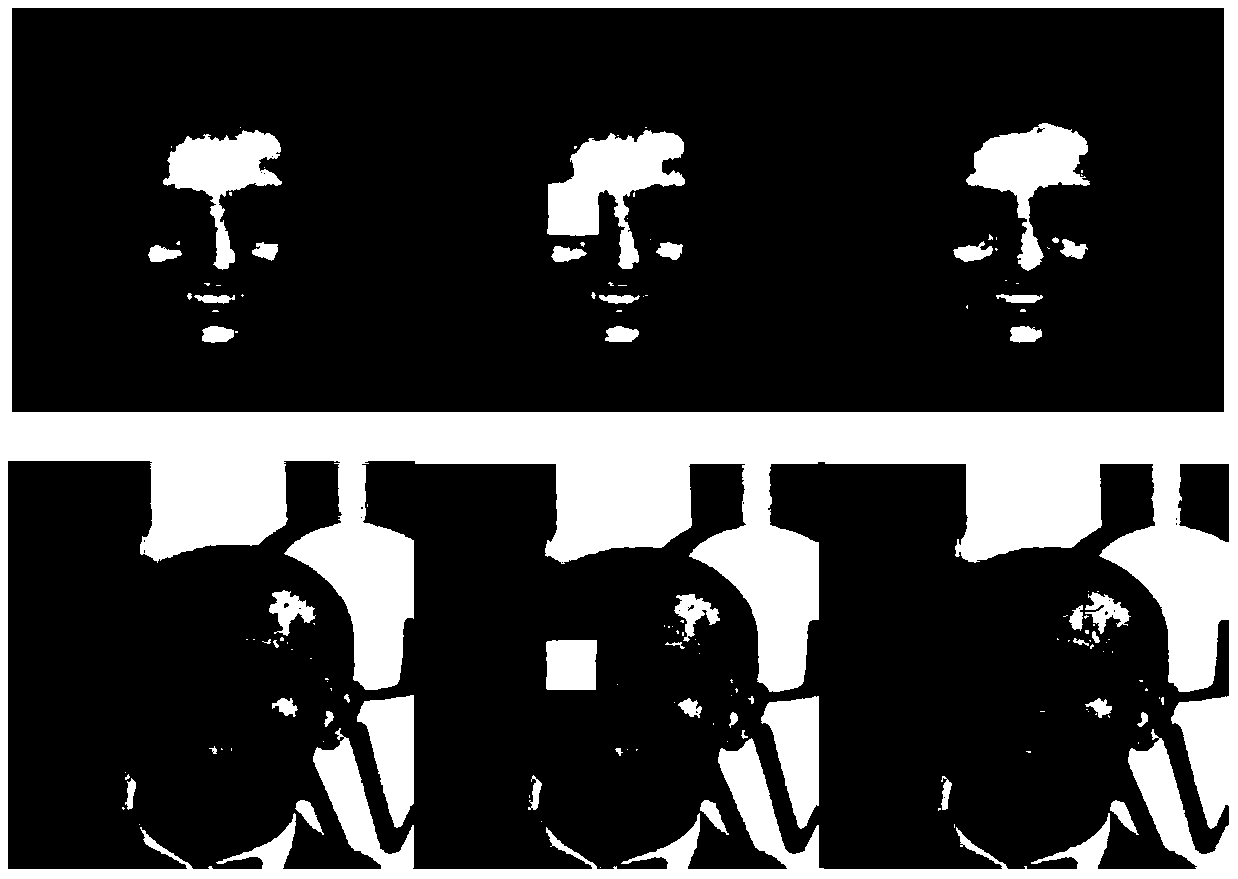

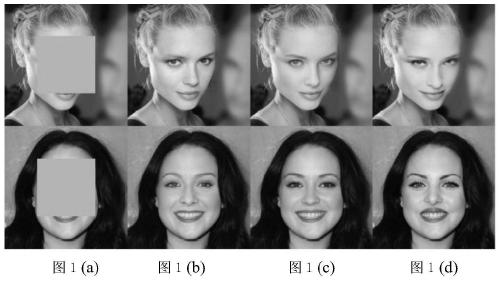

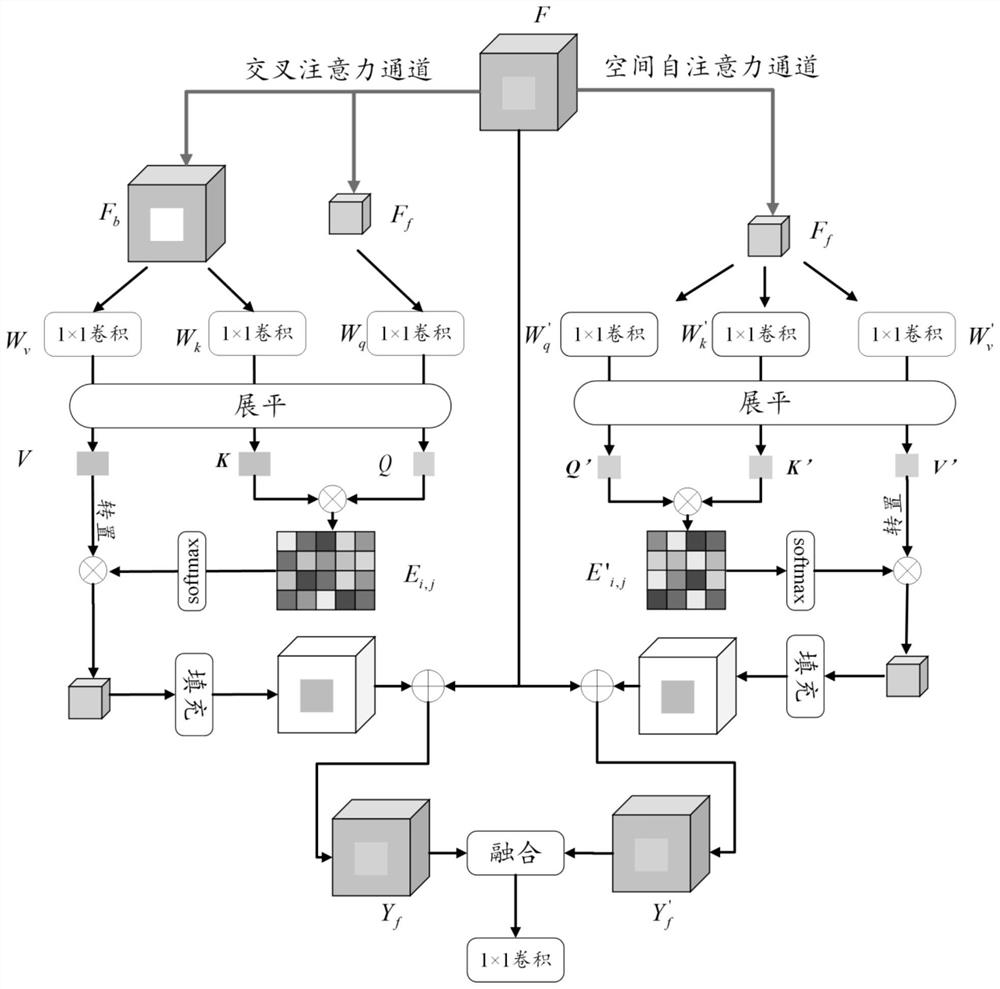

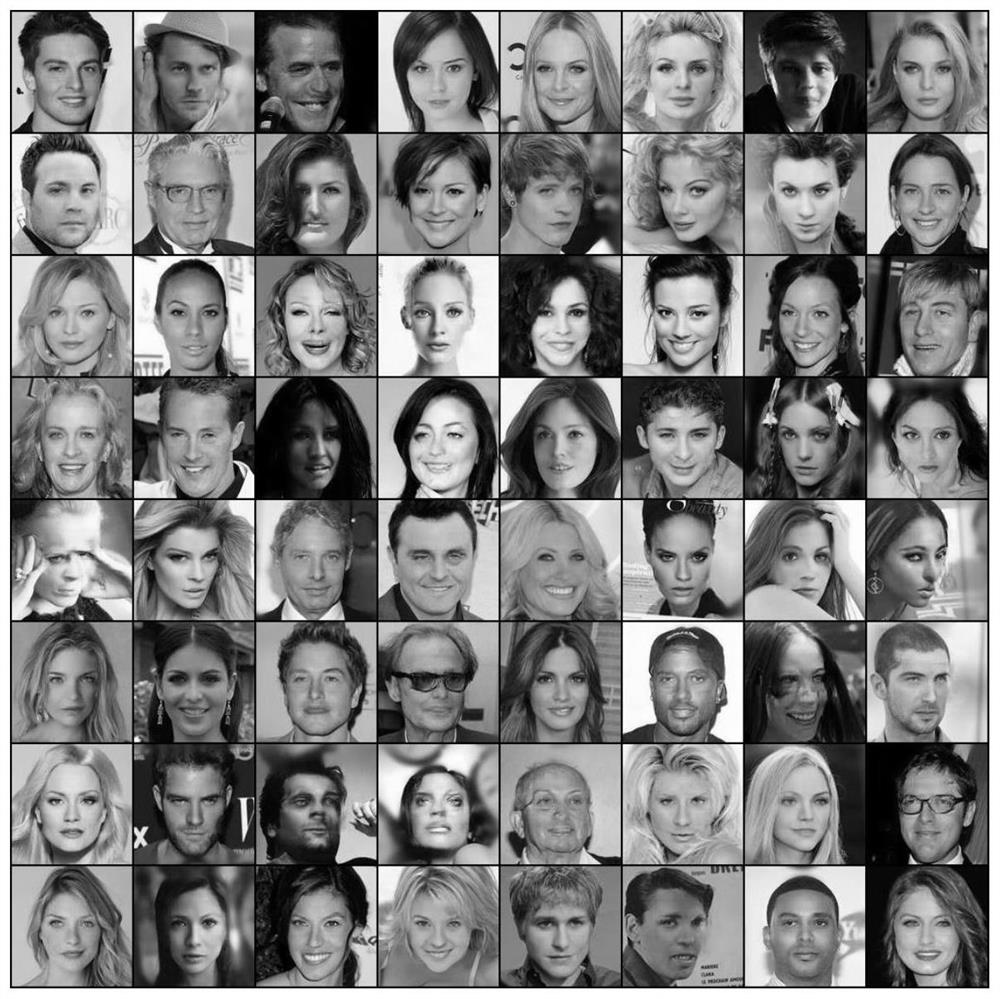

Face image restoration method based on multi-scale local self-attention generative adversarial network

Status: Pending | Publication: CN113962893A | Tags: Improve learning efficiency, Achieve fix, Image enhancement, Image analysis, Pattern recognition, Data set

The invention relates to a face image restoration method based on a multi-scale local self-attention generative adversarial network. The method comprises the following steps: acquiring face images with missing regions and their corresponding masks, and preprocessing them; constructing a multi-scale local self-attention generative adversarial network and training it on the defective face image data set to obtain a face repair model; and using this model to repair the defective face image to be processed. By adding the multi-scale structure and a dual-channel local self-attention module to the generator, the invention addresses the unstable training, low repair precision and efficiency, lack of symmetry, and mode collapse that generative adversarial networks exhibit in face repair, providing an efficient, accurate, and stable method for face repair.

Owner:SHANXI UNIV

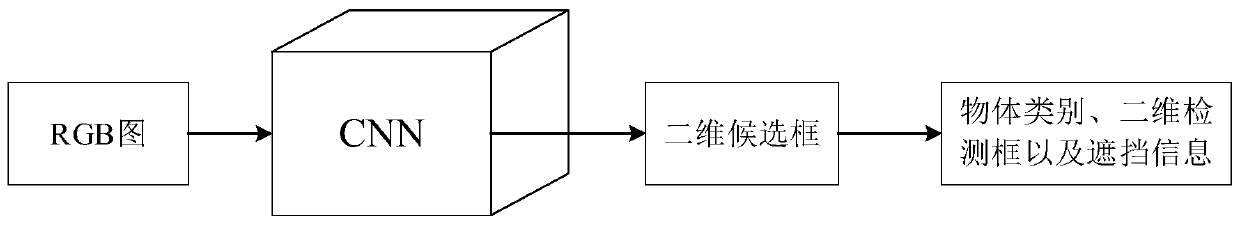

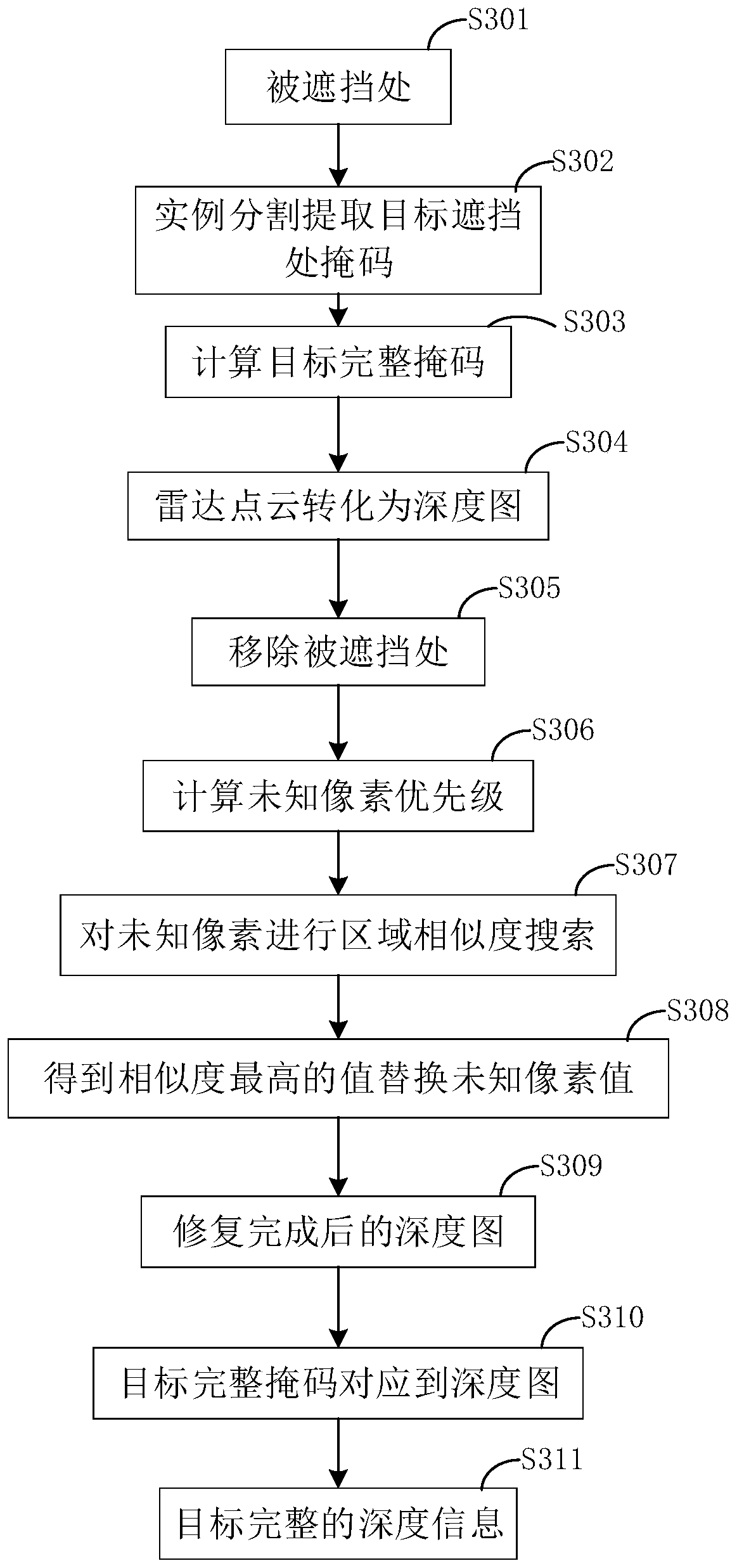

Three-dimensional target detection method and system based on image restoration

Status: Pending | Publication: CN111079545A | Tags: Enhanced Geometry, Increased shape integrity, Image enhancement, Image analysis, Pattern recognition, Point cloud

The invention relates to a three-dimensional object detection method and system based on image restoration. The method comprises the following steps: obtaining an RGB image and a radar point cloud of a three-dimensional target; generating a two-dimensional detection box on the RGB image with a two-dimensional object detection algorithm; for images in which the target is occluded, performing instance segmentation to obtain the mask of the occluded target, and then computing the complete mask of the target with a morphological closing operation; projecting the radar point cloud into a depth map through the camera matrix, restoring the occluded part of the target in the depth map, and, once restoration is complete, extracting the target's depth information from the depth map using the complete mask; converting this depth information back into a restored point cloud; and feeding the restored point cloud into a three-dimensional object detection network. Compared with the prior art, the method reduces offset and improves precision in three-dimensional object detection.

Owner:SHANGHAI UNIV OF ENG SCI
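The Shanghai University of Engineering Science abstract above completes an occluded target's mask with a morphological closing, which is a dilation followed by an erosion that fills gaps narrower than the structuring element. The patent does not specify the element; this sketch assumes a 3x3 square and simply ignores neighbours outside the image. Function names are illustrative.

```python
def _morph(mask, combine):
    """Apply any (dilation) or all (erosion) over each pixel's in-image 3x3 window."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [mask[ny][nx]
                      for ny in range(y - 1, y + 2) for nx in range(x - 1, x + 2)
                      if 0 <= ny < h and 0 <= nx < w]
            out[y][x] = 1 if combine(window) else 0
    return out


def dilate(mask):
    return _morph(mask, any)


def erode(mask):
    return _morph(mask, all)


def binary_close(mask):
    """Morphological closing with a 3x3 square element (assumed), outside pixels ignored."""
    return erode(dilate(mask))


# Two mask fragments separated by a one-pixel gap, as left by a thin occluder.
mask = [[0] * 7 for _ in range(7)]
mask[3][2] = mask[3][4] = 1
closed = binary_close(mask)
```

The gap at row 3, column 3 is filled while no pixel outside the original extent is added, which is the property that lets closing recover a plausible full silhouette from a fragmented instance mask.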

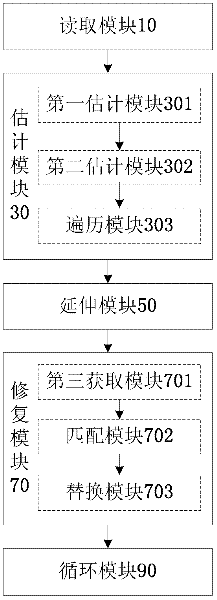

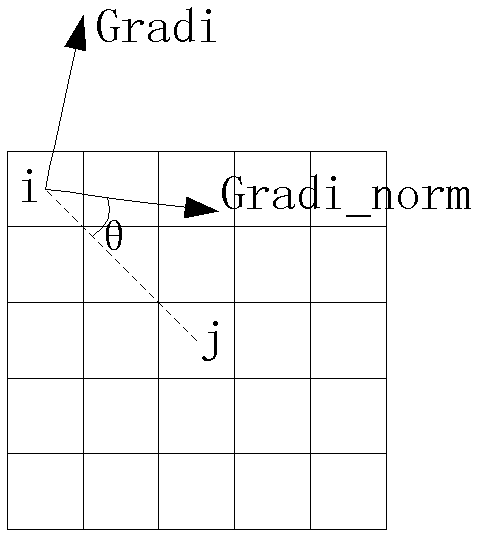

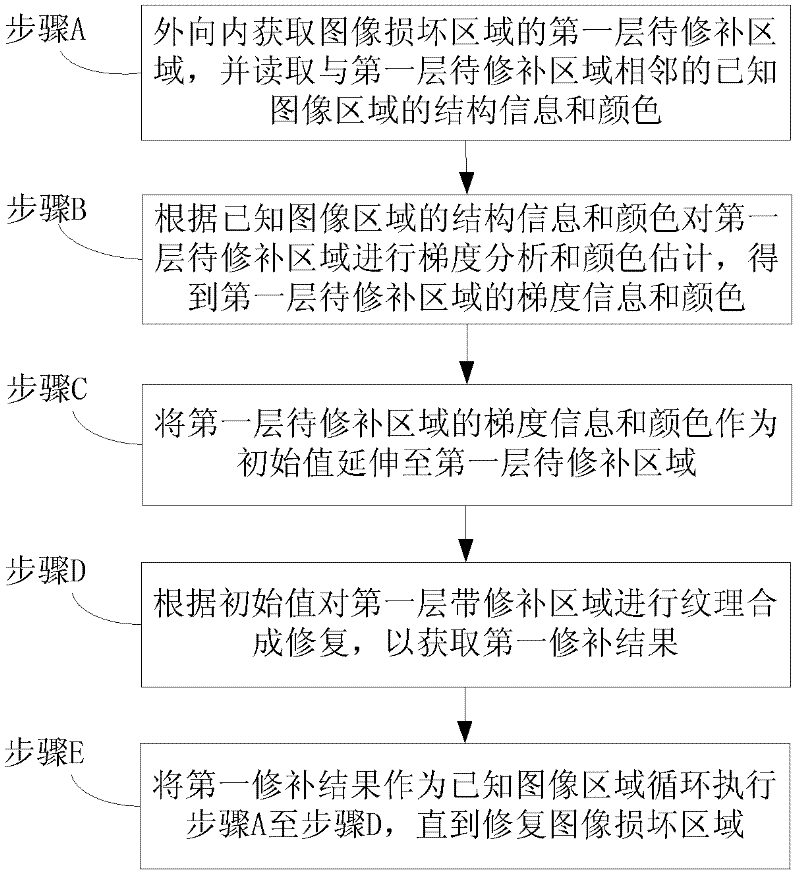

Image restoring method and device

Status: Active | Publication: CN102567970A | Tags: Solve inaccurate, Reduce the introduction, Image enhancement, Pattern recognition, Computer graphics (images)

The invention discloses an image restoring method and device. The method comprises the following steps: step A, obtaining, from inside to outside, the first layer region to be restored of the damaged image region, and reading the structural information and color of the known image region adjacent to that first layer region; step B, performing gradient analysis and color estimation on the first layer region according to the structural information and color of the known image region, to obtain gradient information and a color for the first layer region; step C, extending the gradient information and color into the first layer region as initial values; step D, performing texture synthesis restoration on the first layer region from those initial values to obtain a first restoring result; and step E, treating the first restoring result as part of the known image region and repeating steps A to D until the damaged image region is fully restored. The disclosed method and device locate structural regions accurately, improve block matching accuracy, and reduce the introduction and reproduction of erroneous information.

Owner:FOUNDER INTERNATIONAL CO LTD +1
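Steps A and E of the Founder abstract above restore the damaged region one layer at a time: each layer borders the region already known, and once restored it becomes part of the known region for the next layer. The sketch below shows only that layering, under assumptions: 4-connectivity defines adjacency, and the patent's gradient analysis, colour estimation, and texture synthesis (steps B to D) are replaced by a hypothetical stand-in that averages the already-known neighbours.

```python
NEIGHBOURS = ((-1, 0), (1, 0), (0, -1), (0, 1))  # 4-connectivity (assumed)


def peel_layers(mask):
    """Split the damaged region (mask True) into layers.

    Layer 1 holds damaged pixels 4-adjacent to known pixels; each later layer holds
    damaged pixels adjacent to known pixels or to earlier layers.
    """
    h, w = len(mask), len(mask[0])
    remaining = {(y, x) for y in range(h) for x in range(w) if mask[y][x]}
    layers = []
    while remaining:
        layer = {(y, x) for (y, x) in remaining
                 if any(0 <= y + dy < h and 0 <= x + dx < w
                        and (y + dy, x + dx) not in remaining
                        for dy, dx in NEIGHBOURS)}
        if not layer:
            break  # the whole image is damaged; nothing to grow from
        layers.append(sorted(layer))
        remaining -= layer
    return layers


def layered_fill(image, mask):
    """Restore layer by layer; each pixel gets the mean of its already-known
    4-neighbours (a hypothetical stand-in for steps B to D)."""
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]
    known = [[not m for m in row] for row in mask]
    for layer in peel_layers(mask):
        for y, x in layer:
            vals = [out[y + dy][x + dx] for dy, dx in NEIGHBOURS
                    if 0 <= y + dy < h and 0 <= x + dx < w and known[y + dy][x + dx]]
            out[y][x] = sum(vals) / len(vals)
        for y, x in layer:
            known[y][x] = True  # step E: the restored layer now counts as known
    return out


mask = [[1 <= y <= 3 and 1 <= x <= 3 for x in range(5)] for y in range(5)]
layers = peel_layers(mask)  # an 8-pixel ring, then the centre pixel
```

Restoring from the known boundary inward means every layer is estimated from information that is either original or already restored, which is what lets the structural cues of step A propagate into the hole.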

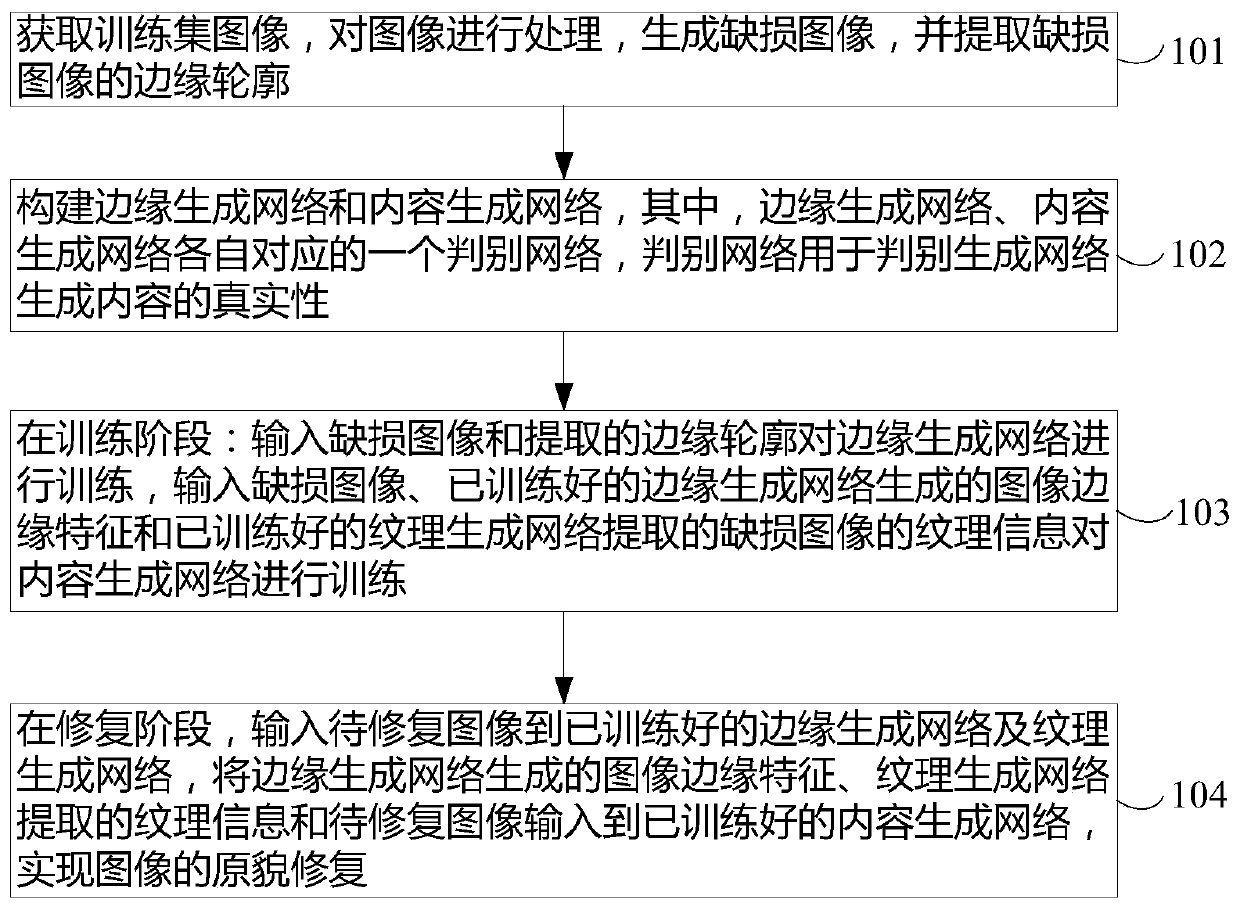

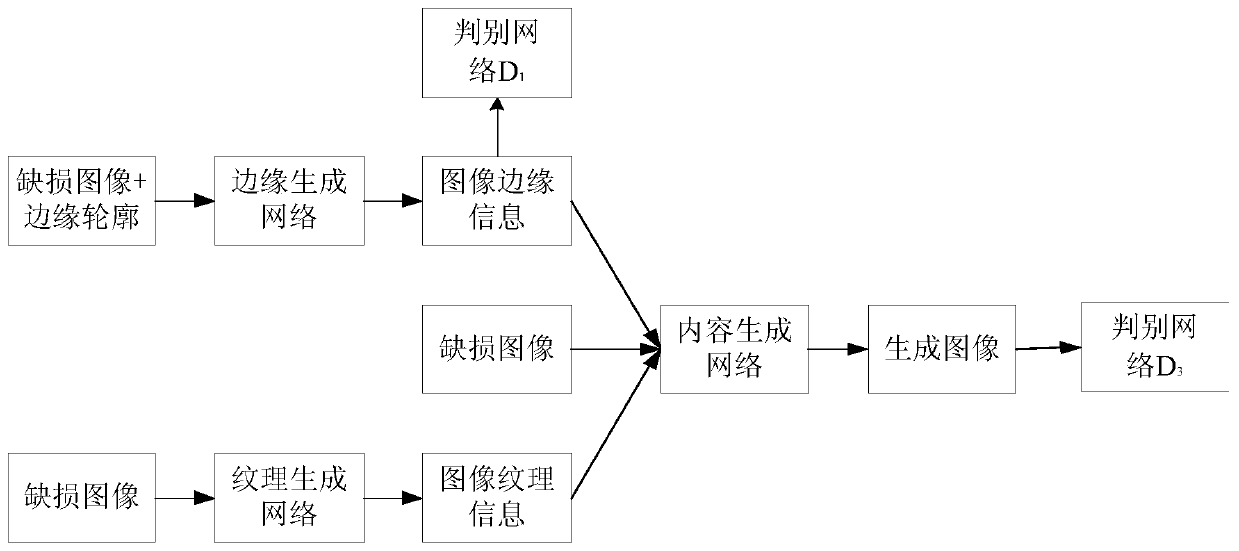

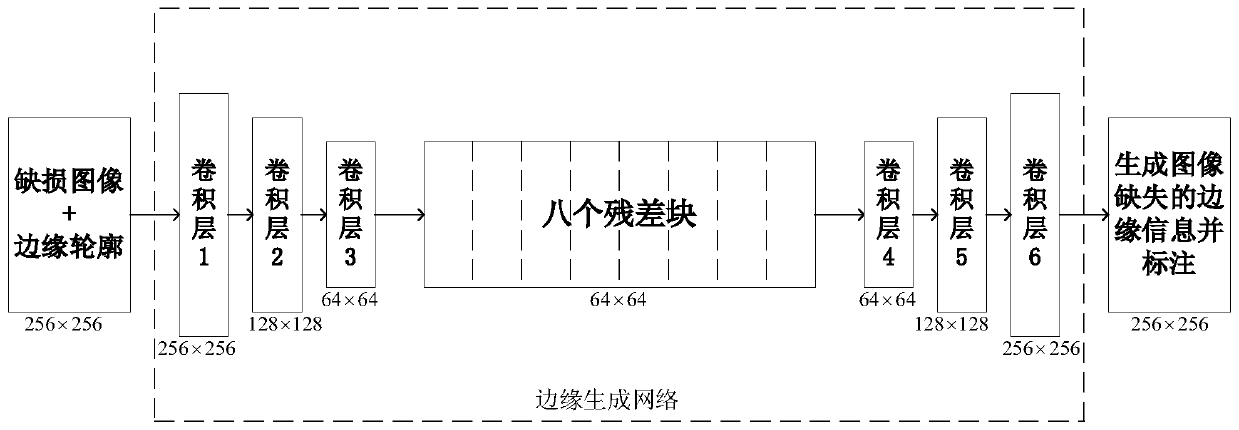

Image restoration method based on edge generation

Status: Active | Publication: CN111047522A | Tags: Achieve fix, Resolve blur, Image enhancement, Image analysis, Pattern recognition, Image extraction

The invention provides an image restoration method based on edge generation, which addresses the problems of fixed restoration areas and blurry generated images in image restoration. The method comprises the following steps: generating a defective image and extracting its edge contour; constructing an edge generation network and a content generation network, the latter using a U-Net structure; in the training stage, training the edge generation network on the defective image and the extracted edge contour, and then training the content generation network on the image edge features produced by the trained edge generation network, the texture information of the defective image extracted by the trained texture generation network, and the defective image itself; and in the restoration stage, feeding the edge features of the image to be restored (produced by the edge generation network), its texture information (extracted by the texture generation network), and the image itself into the trained content generation network, which restores the original appearance of the image. The invention relates to the fields of artificial intelligence and image processing.

Owner:UNIV OF SCI & TECH BEIJING +1

An image restoration method based on a Criminisi algorithm

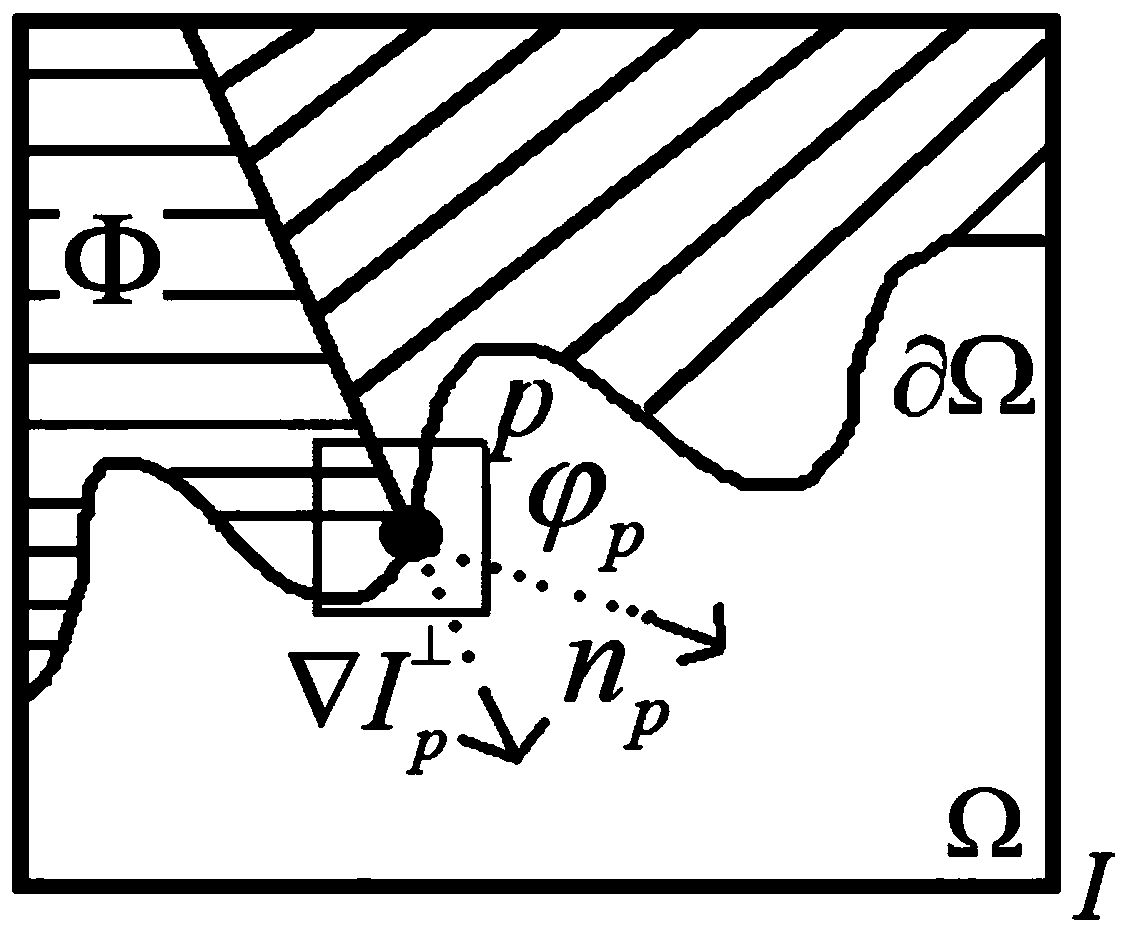

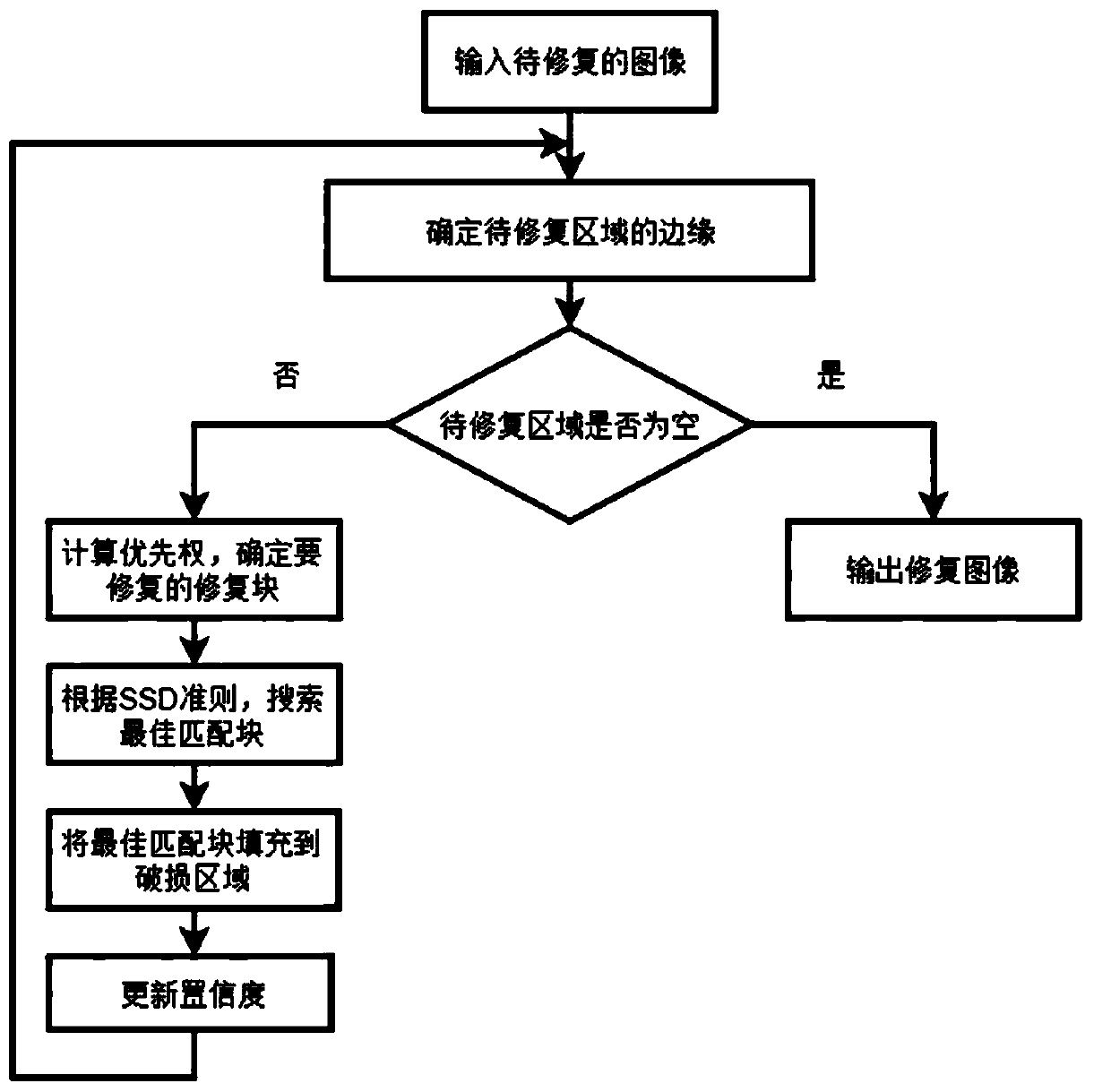

Status: Active | Publication: CN109785250A | Tags: Fix broken images, Structural solution, Image enhancement, Image restoration, Optimal matching

The invention discloses an image restoration method based on the Criminisi algorithm. The core idea of the Criminisi algorithm is to compute the priority of each patch to be repaired, choose the area to repair according to that priority, find the best-matching patch according to the sum of squared differences (SSD) criterion and copy it in, and then update the confidence of the repaired patch, repeating until the whole area to be repaired has been filled. The method optimizes the existing Criminisi algorithm and resolves the broken images and disordered image structure that the prior art can produce after restoration.

Owner:XI'AN POLYTECHNIC UNIVERSITY
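Two pieces of the Criminisi algorithm summarized in the Xi'an Polytechnic abstract above are easy to isolate: the confidence term C(p), the summed confidence of known pixels in the patch around p divided by the patch area, and the SSD search for the best exemplar. The sketch below shows both on single-channel images; the data term D(p) (which needs isophote and normal directions, and multiplies C(p) to give the priority) is omitted, and the function names and the patch half-width parameter `half` are illustrative assumptions, not the patent's optimized variant.

```python
def confidence(conf, mask, y, x, half=1):
    """Criminisi confidence term C(p) for the (2*half+1)^2 patch centred on (y, x):
    summed confidence of known pixels divided by the (in-image) patch area."""
    h, w = len(conf), len(conf[0])
    total, area = 0.0, 0
    for ny in range(y - half, y + half + 1):
        for nx in range(x - half, x + half + 1):
            if 0 <= ny < h and 0 <= nx < w:
                area += 1
                if not mask[ny][nx]:
                    total += conf[ny][nx]
    return total / area


def best_match(image, mask, y, x, half=1):
    """Centre of the fully known source patch with the smallest SSD to the
    known pixels of the target patch centred on (y, x)."""
    h, w = len(image), len(image[0])
    offsets = [(dy, dx) for dy in range(-half, half + 1) for dx in range(-half, half + 1)]
    best, best_ssd = None, float("inf")
    for sy in range(half, h - half):
        for sx in range(half, w - half):
            if any(mask[sy + dy][sx + dx] for dy, dx in offsets):
                continue  # source patches must be entirely known
            ssd = 0.0
            for dy, dx in offsets:
                ty, tx = y + dy, x + dx
                if 0 <= ty < h and 0 <= tx < w and not mask[ty][tx]:
                    ssd += (image[ty][tx] - image[sy + dy][sx + dx]) ** 2
            if ssd < best_ssd:
                best, best_ssd = (sy, sx), ssd
    return best


# Vertical stripes with one missing pixel at (2, 4).
stripes = [[100 if x % 2 == 0 else 0 for x in range(7)] for y in range(5)]
hole = [[(y, x) == (2, 4) for x in range(7)] for y in range(5)]
match = best_match(stripes, hole, 2, 4)
```

The matched patch reproduces the stripe phase, so copying its centre (value 100) continues the pattern; in the full algorithm the filled pixels then inherit C(p), which is how confidence decays towards the centre of large holes.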

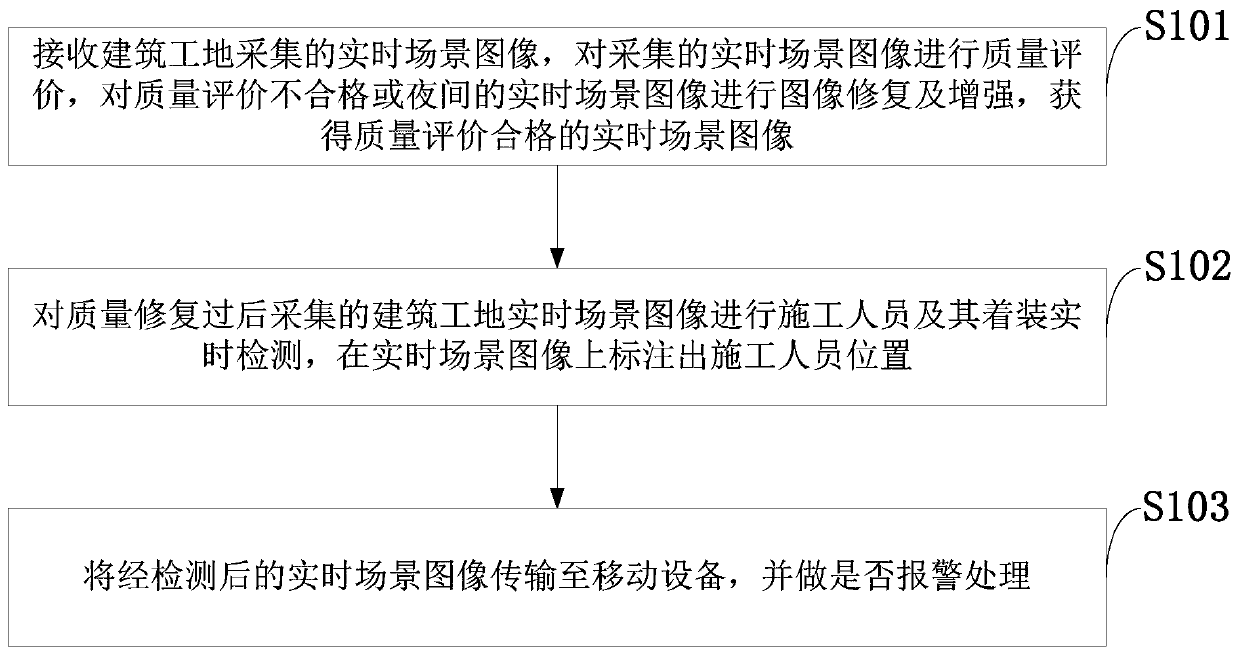

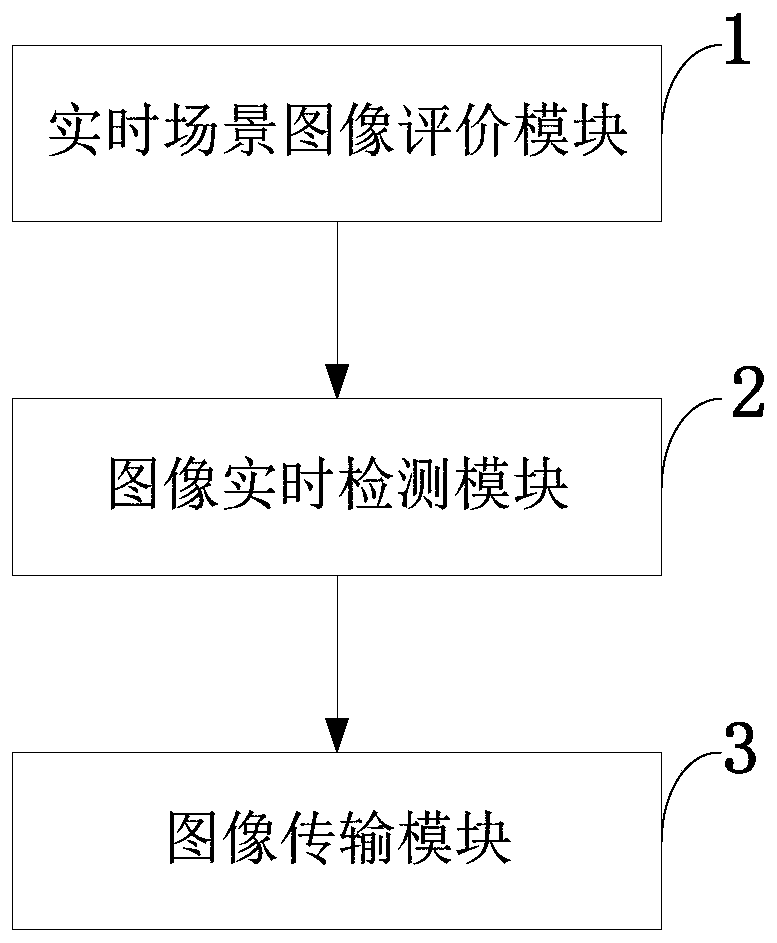

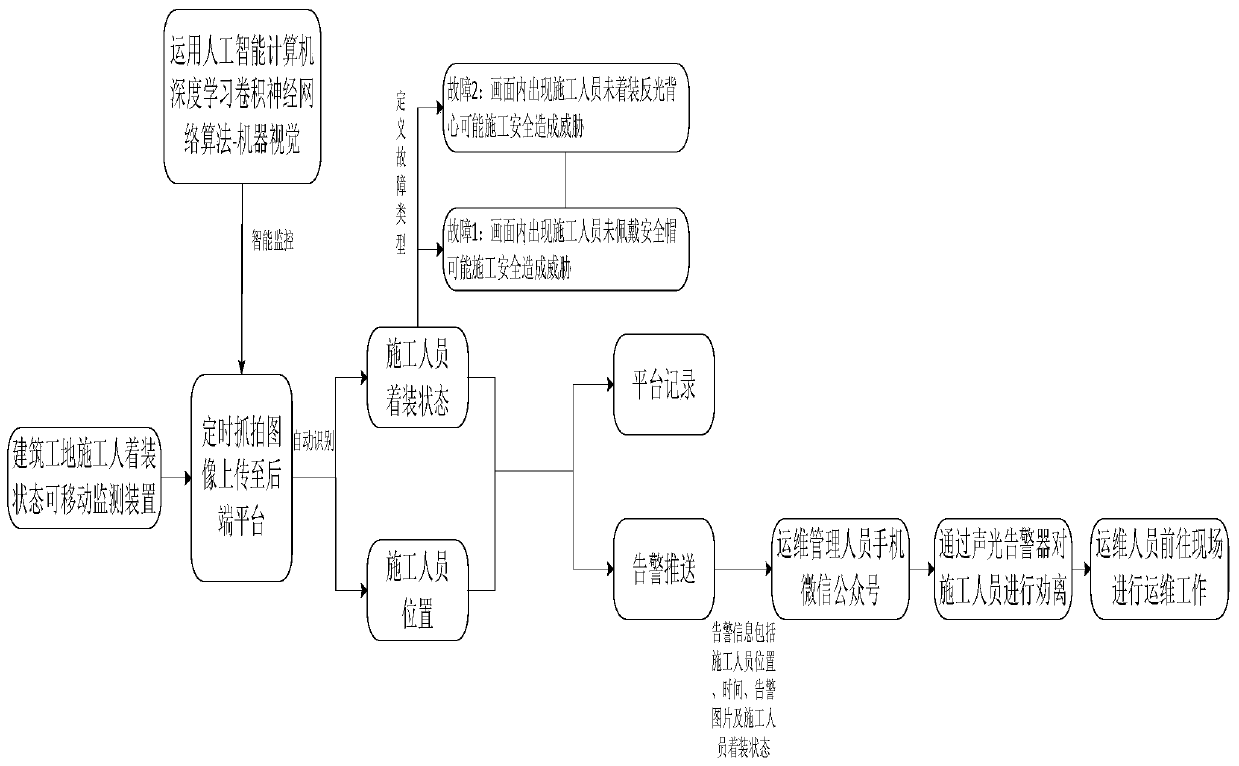

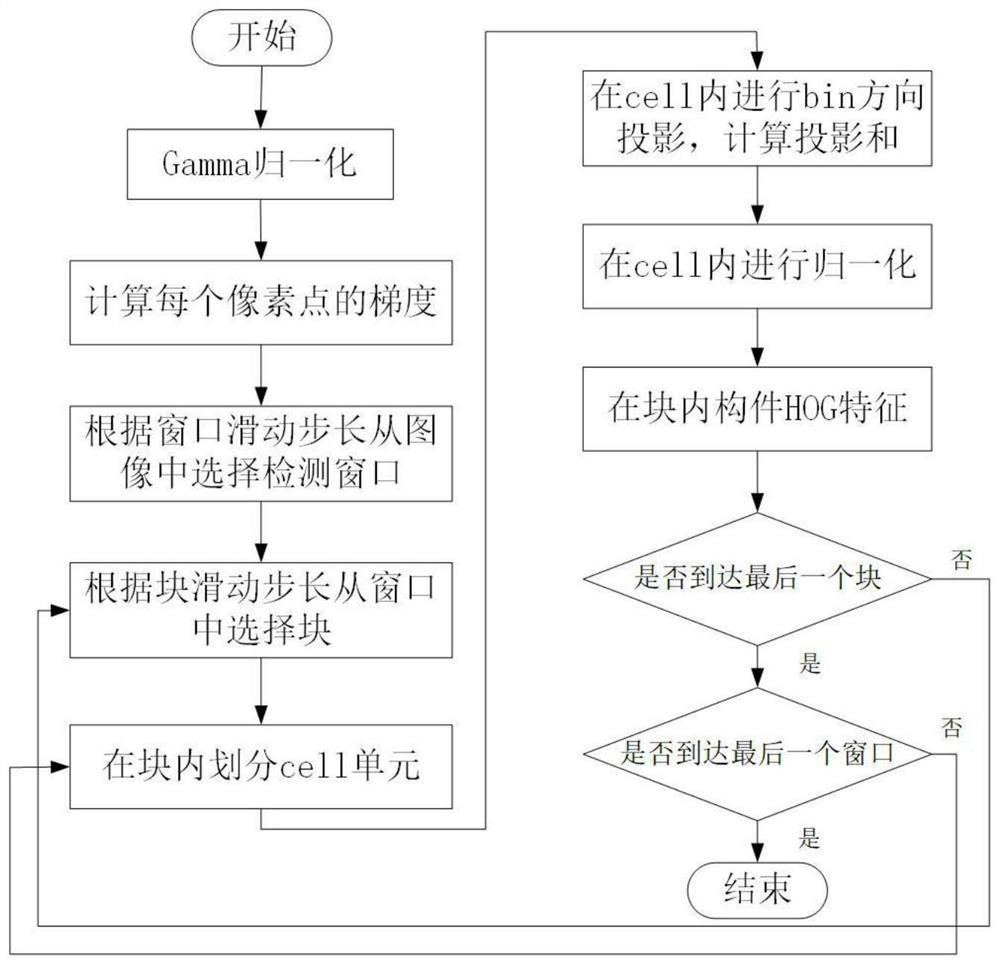

Construction site worker dressing detection method, system and device and storage medium

Status: Inactive | Publication: CN111383429A | Tags: Keep abreast of security threats, Raise the level of high security management, Image enhancement, Image analysis, Site monitoring, Mobile device

The invention belongs to the technical field of construction site monitoring and protection, and discloses a method, system, device, and storage medium for detecting construction site workers' attire. The method comprises the following steps: receiving real-time scene images collected at the construction site and evaluating their quality; restoring and enhancing the images that fail the quality evaluation or were captured at night, so as to obtain images that pass; detecting, in real time, construction workers and their attire in the quality-restored images and marking the workers' positions on the images; and transmitting the detected images to mobile devices and raising alarms. This improves the safety management of construction personnel: once a worker is found, the worker's attire is recognized automatically, the safety threats the worker faces are analyzed in real time, and a WeChat notification is pushed to the operation and maintenance manager, which can greatly reduce safety accidents.

Owner:西安咏圣达电子科技有限公司 (Xi'an Yongshengda Electronic Technology Co., Ltd.)

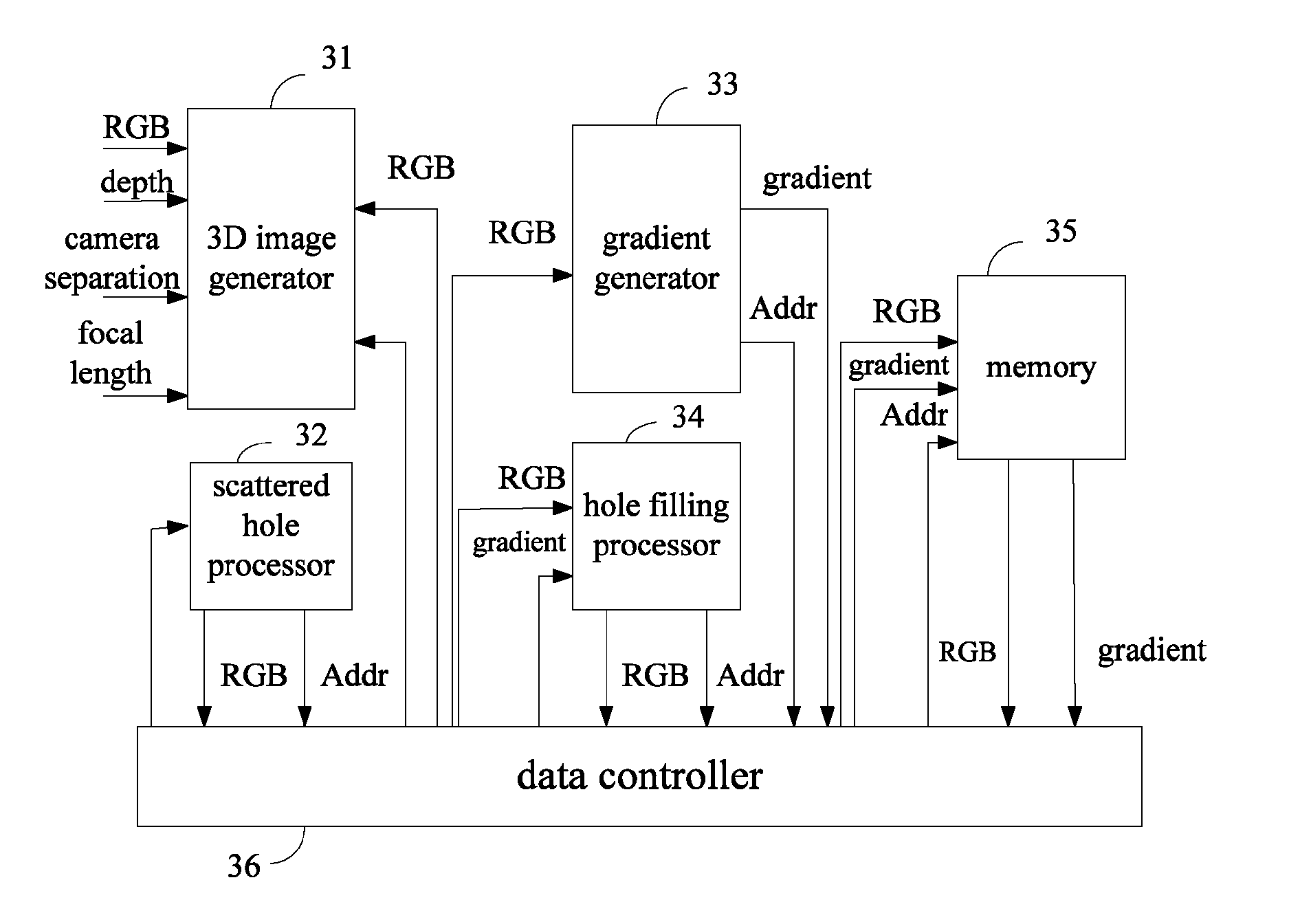

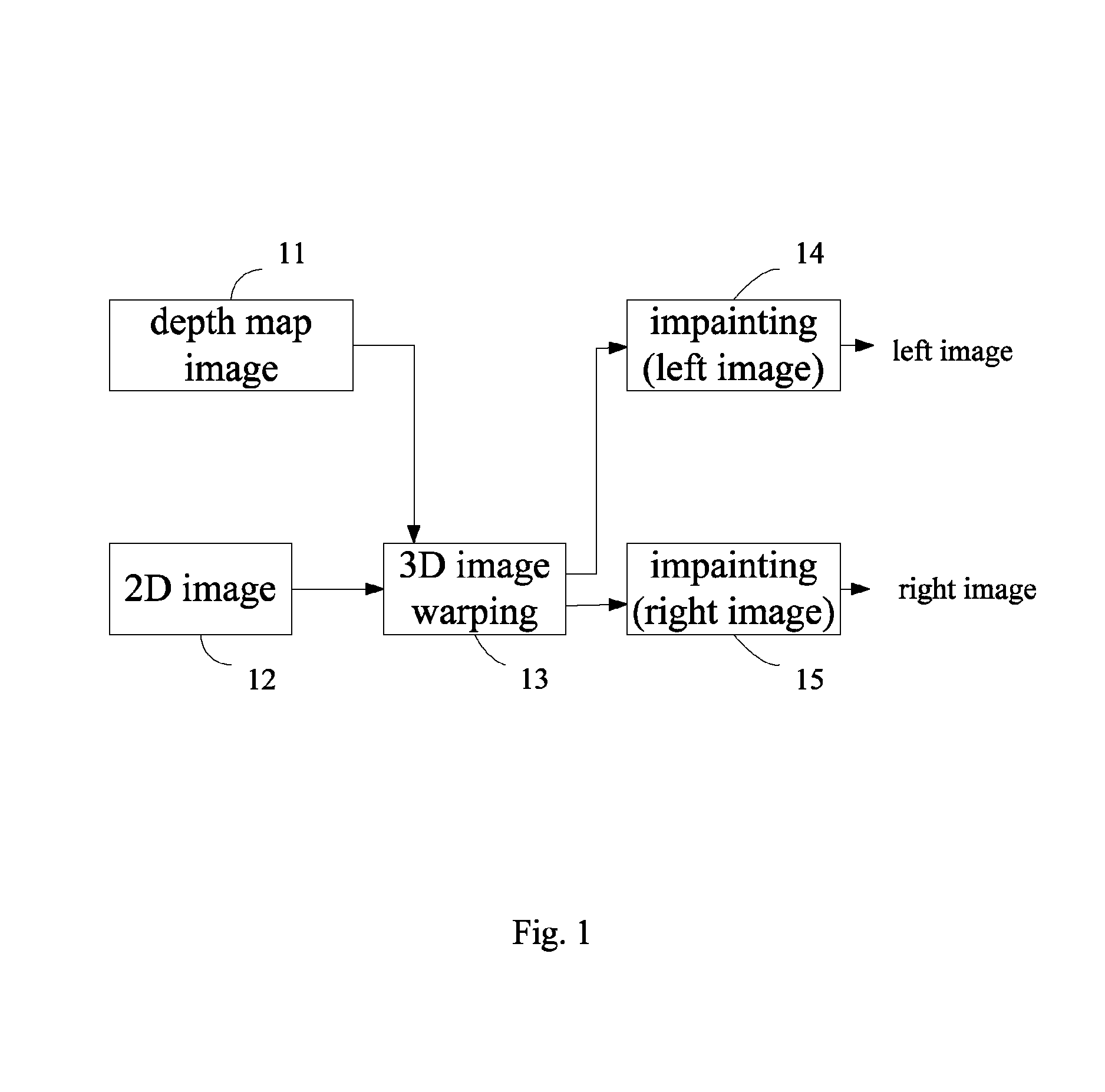

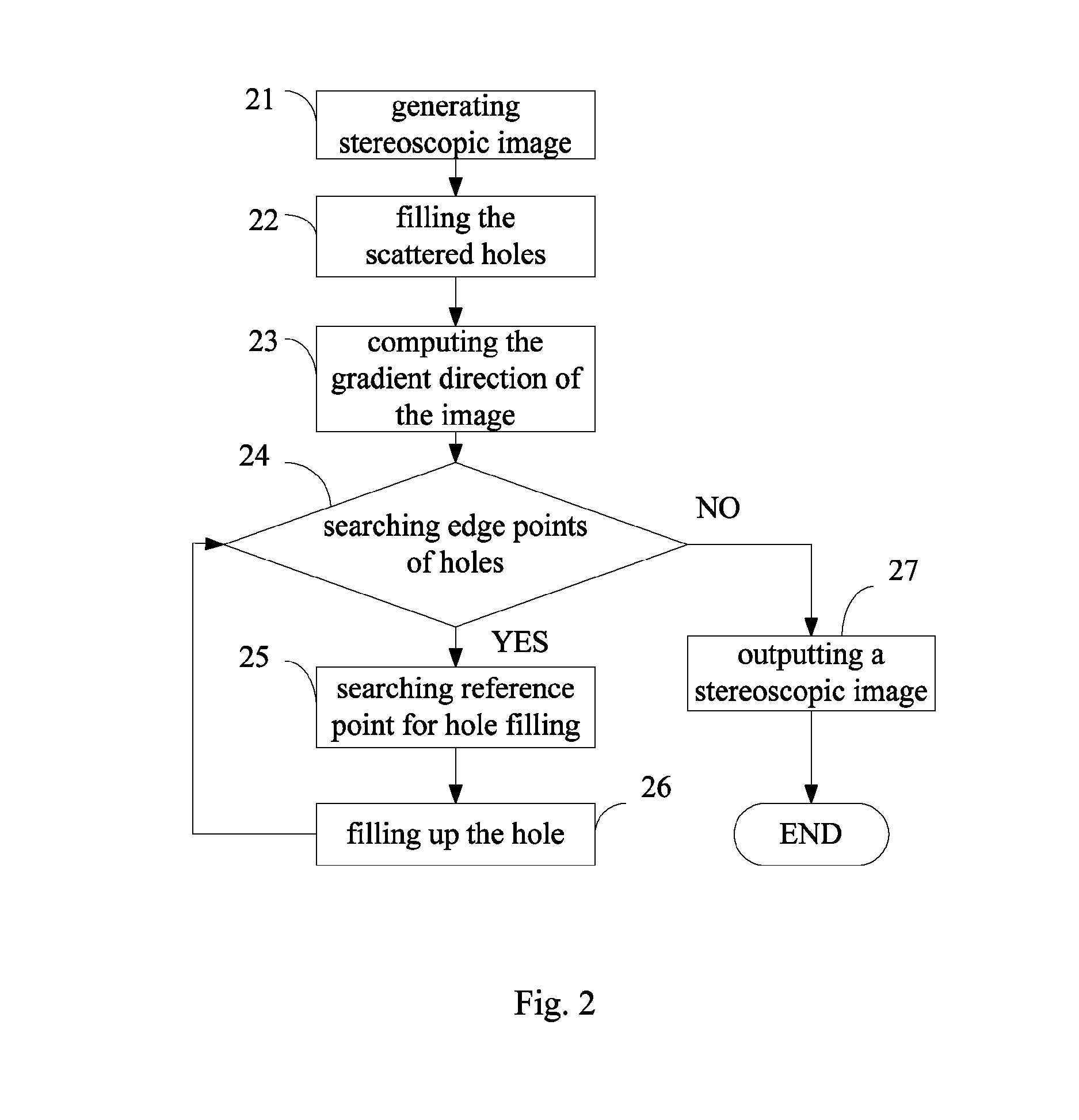

Apparatus for generating real-time stereoscopic image and method thereof

Active | US8704875B2 | Simplify complex method | Fast fill | Character and pattern recognition | Stereoscopic systems | Parallax | Hardware architecture

Disclosed are an apparatus and a method for generating a real-time stereoscopic image from a depth map. Based on the depth information of the image, a depth-image-based rendering (DIBR) algorithm shifts the position of each object in the image to generate a stereoscopic image with parallax. When an object is shifted away from its original position, a hole appears at that position, so an image inpainting algorithm is used to fill the hole. To achieve real-time operation, a hardware architecture and method were developed to perform both the DIBR and the image inpainting algorithm.
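
The DIBR shift and the hole it leaves can be sketched on a single image row. This minimal example assumes integer disparities derived linearly from an 8-bit depth value (larger = nearer the camera), resolves collisions with a z-buffer so the nearer pixel wins, and fills each hole from whichever nearest neighbour is farther from the camera. This background-first fill is a common simple heuristic, not the patented hardware method, and the function names are hypothetical.

```python
def render_row(colors, depths, max_disp):
    """Shift one row horizontally by a depth-dependent disparity; None marks a hole."""
    w = len(colors)
    out = [None] * w
    out_depth = [-1] * w
    for x in range(w):
        d = round(max_disp * depths[x] / 255)
        nx = x + d
        if 0 <= nx < w and depths[x] > out_depth[nx]:  # z-buffer: nearer pixel wins
            out[nx] = colors[x]
            out_depth[nx] = depths[x]
    return out, out_depth


def fill_holes(row, row_depth):
    """Fill each hole from its closest left/right neighbour that lies farther back."""
    w = len(row)
    filled = list(row)
    for x in range(w):
        if row[x] is not None:
            continue
        left = next((i for i in range(x - 1, -1, -1) if row[i] is not None), None)
        right = next((i for i in range(x + 1, w) if row[i] is not None), None)
        candidates = [i for i in (left, right) if i is not None]
        if candidates:
            src = min(candidates, key=lambda i: row_depth[i])  # smaller depth = background
            filled[x] = row[src]
    return filled
```

Because each row is processed independently with only neighbour lookups, this style of fill maps naturally onto streaming hardware, which is the kind of design the real-time requirement suggests.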

Owner:CHUNG HUA UNIVERSITY

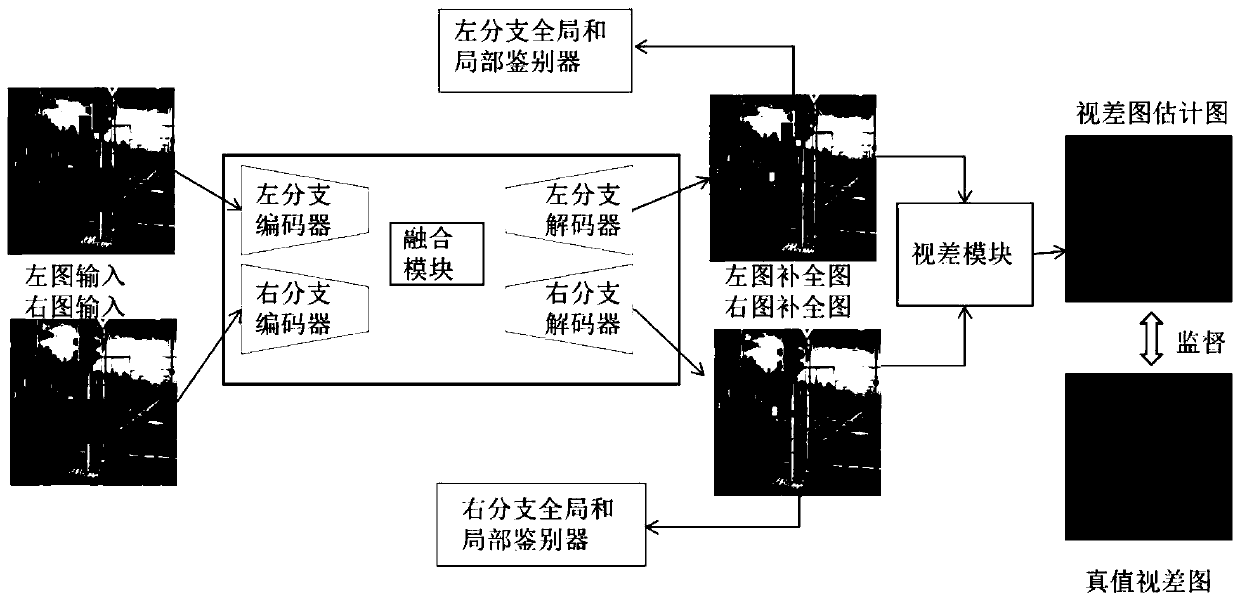

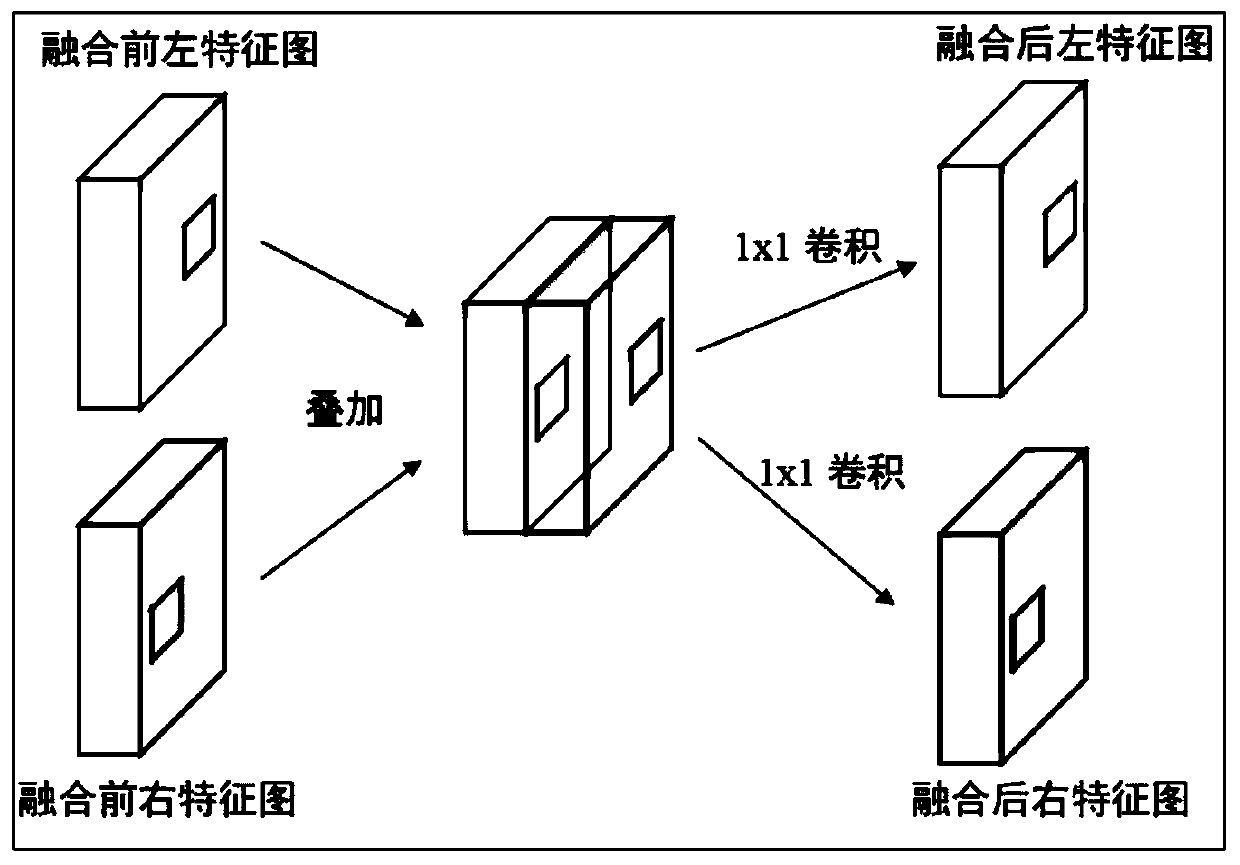

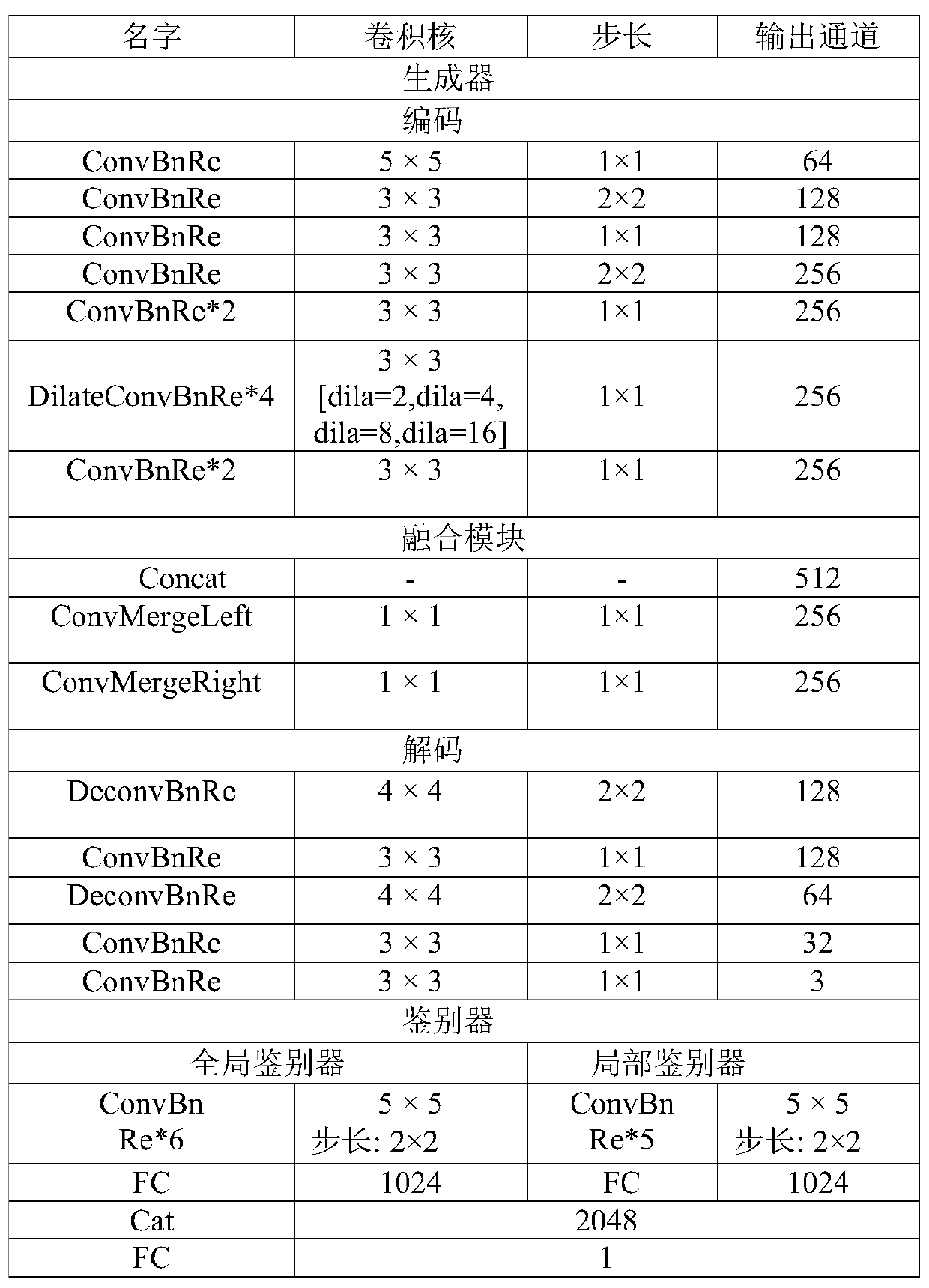

Three-dimensional image restoration method based on deep learning

Pending | CN110766623A | Ensure Visual Consistency | Achieve high-precision restoration | Image enhancement | 3D-image rendering | Pattern recognition | Parallax

The invention relates to a three-dimensional (stereo) image restoration method based on deep learning. The core of the method is a deep neural network model based on local adaptation and visual consistency, composed of an encoder-decoder module, a fusion module, a discriminator module, and a parallax module. For the left and right views, a missing area of any size and at any position can be restored, which improves the practical value and generality of image restoration. Compared with the prior art, the method has the following advantages: 1) exploiting the complementarity of the feature maps of the two views, the mutually referable parts are fused, so that restoration can draw not only on the remaining area of the same view but also on the content of the other view; meanwhile, parallax cues are used to constrain the stereo consistency of the image pair so that the result does not cause dizziness; and 2) the restored stereo image pair has more realistic texture and detail and better matches human visual perception.
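
One common way to express a parallax-based consistency constraint is to warp one view into the other with a disparity map and penalise the difference. The sketch below assumes a rectified pair and integer left-view disparities, so that `left[y][x]` should correspond to `right[y][x - d]`; it is a generic L1 consistency term with a hypothetical name, not the patent's parallax module.

```python
def stereo_consistency_l1(left, right, disparity):
    """Mean absolute difference between the left view and the right view
    sampled at x - d, skipping pixels whose match falls outside the right view."""
    total, count = 0.0, 0
    for y, row in enumerate(left):
        for x, value in enumerate(row):
            xr = x - disparity[y][x]
            if 0 <= xr < len(right[y]):
                total += abs(value - right[y][xr])
                count += 1
    return total / count if count else 0.0
```

In a training setup a term like this would be added to the reconstruction and adversarial losses so that the two restored views stay geometrically consistent.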

Owner:BEIJING UNIV OF TECH

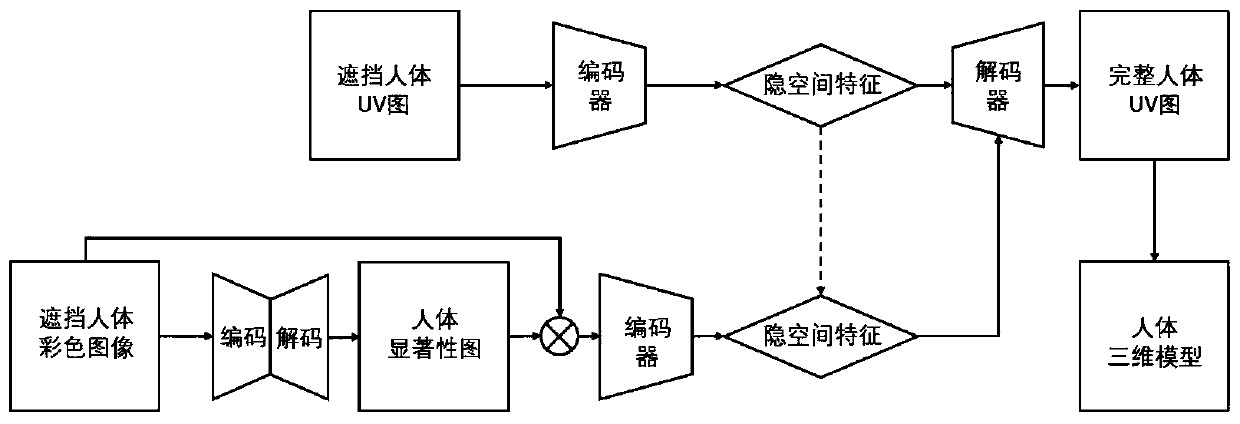

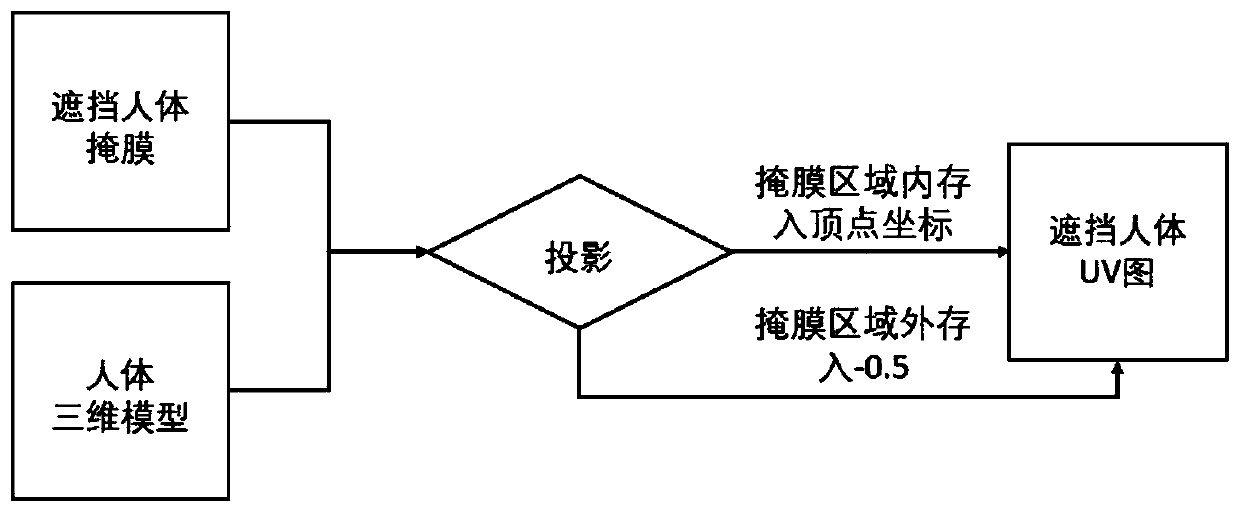

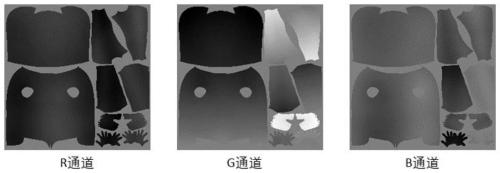

Human body shape and posture estimation method for object occlusion scene

Active | CN111339870A | Improve robustness | Improve smoothness | Biometric pattern recognition | Color image | Human body

The invention discloses a human body shape and pose estimation method for scenes with object occlusion. The method comprises the following steps: converting the computed weak-perspective projection parameters into camera coordinates and obtaining a UV map containing human body shape information in the unoccluded case; adding a random object picture to the two-dimensional human body image as an occluder and obtaining the human body mask under occlusion; training a UV map restoration network with an encoder-decoder structure on the resulting virtual occlusion data; taking a color image of a human body occluded by a real object as input and constructing a saliency detection network with an encoder-decoder structure, using the mask image as ground truth; supervising the training of the human body encoding network with the latent-space features obtained by encoding; inputting the occluded human body color image to obtain a complete UV map; and recovering the three-dimensional human body model under occlusion by using the vertex correspondence between the UV map and the three-dimensional human body model. The method converts shape estimation of an occluded human body into an image restoration problem on a two-dimensional UV map, achieving real-time, dynamic reconstruction of the human body in occluded scenes.
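
The virtual-occlusion step (paste a random object over the body and derive the mask of what remains visible) can be sketched as below. This assumes images and masks stored as equally sized lists of lists and an object patch no larger than the image; the function name and the "visible = body AND NOT object" definition are illustrative assumptions rather than the patent's exact procedure.

```python
import random


def synthesize_occlusion(image, human_mask, obj, obj_mask, rng):
    """Paste an occluding object at a random position.
    Returns the occluded image, the visible-body mask, and the paste position."""
    h, w = len(image), len(image[0])
    oh, ow = len(obj), len(obj[0])
    top = rng.randint(0, h - oh)    # randint bounds are inclusive
    left = rng.randint(0, w - ow)
    out = [row[:] for row in image]          # copy so the input stays untouched
    visible = [row[:] for row in human_mask]
    for dy in range(oh):
        for dx in range(ow):
            if obj_mask[dy][dx]:
                out[top + dy][left + dx] = obj[dy][dx]
                visible[top + dy][left + dx] = False  # body pixel hidden by the object
    return out, visible, (top, left)
```

Passing an explicit `random.Random` instance keeps the synthetic occlusion data reproducible across training runs.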

Owner:SOUTHEAST UNIV

Face image restoration method introducing attention mechanism

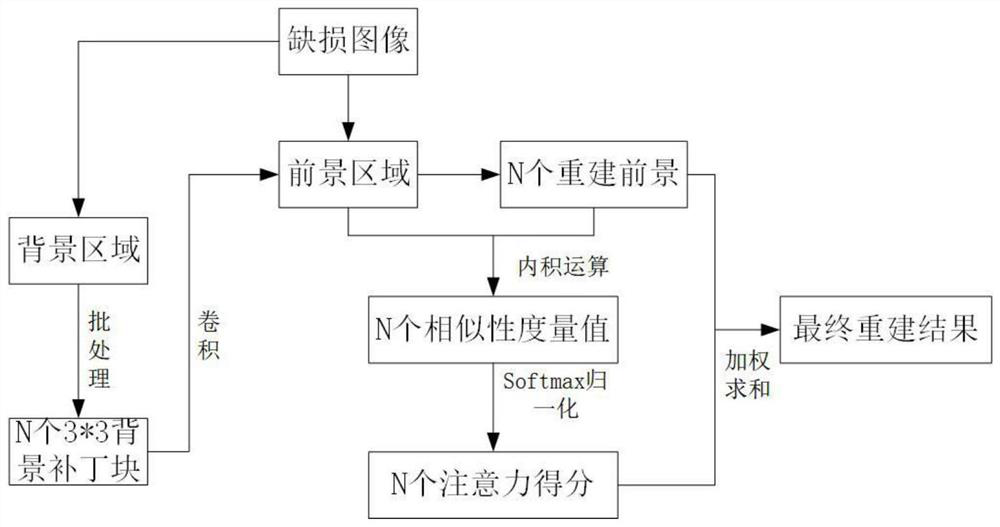

Inactive | CN111612718A | Improve repair effect | Solve the problem caused by the limited receptive field size | Image enhancement | Image analysis | Data set | Original data

The invention relates to a face image restoration method that introduces an attention mechanism. The method comprises the following steps: (1) obtaining an original data set and preprocessing the images to obtain the required face image data set, which is then divided into a training set and a test set; (2) feeding the training set into an image restoration model that incorporates a contextual attention layer, in which the generator network contains two parallel encoders: one performs convolution to extract high-level feature maps, and the other uses a contextual attention layer to establish long-range associations between the foreground (missing) region and the background region; and (3) feeding the test set into the trained face restoration model to evaluate how well it restores defective face images. With the contextual attention layer, the method solves the problem that the restoration model cannot fully use background-region information because of the limited receptive field of convolutional neural networks: long-range associations between the background and the foreground region are established, and background information is fully used to fill the foreground region. As a result, the model restores some detailed textures better, and face restoration quality improves overall.
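
The core of a contextual attention layer is to score every background patch against a foreground patch and rebuild the foreground from a softmax-weighted mix of background patches. The sketch below works on flattened patch feature vectors, uses cosine similarity with a scaled softmax, and assumes a hypothetical default scale of 10; real layers do the same thing with convolutions over feature maps, and this is not the patent's code.

```python
import math


def cosine(a, b):
    """Cosine similarity with a small guard against zero-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / (norm + 1e-8)


def contextual_attention(fg_patch, bg_patches, scale=10.0):
    """Reconstruct a foreground patch as an attention-weighted sum of background patches."""
    scores = [scale * cosine(fg_patch, b) for b in bg_patches]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]  # subtract the max for numerical stability
    z = sum(exps)
    weights = [e / z for e in exps]
    dim = len(bg_patches[0])
    out = [sum(w * b[k] for w, b in zip(weights, bg_patches)) for k in range(dim)]
    return out, weights
```

Because every background patch can contribute regardless of distance, the layer captures the long-range associations that a convolution's limited receptive field misses.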

Owner:SUN YAT SEN UNIV

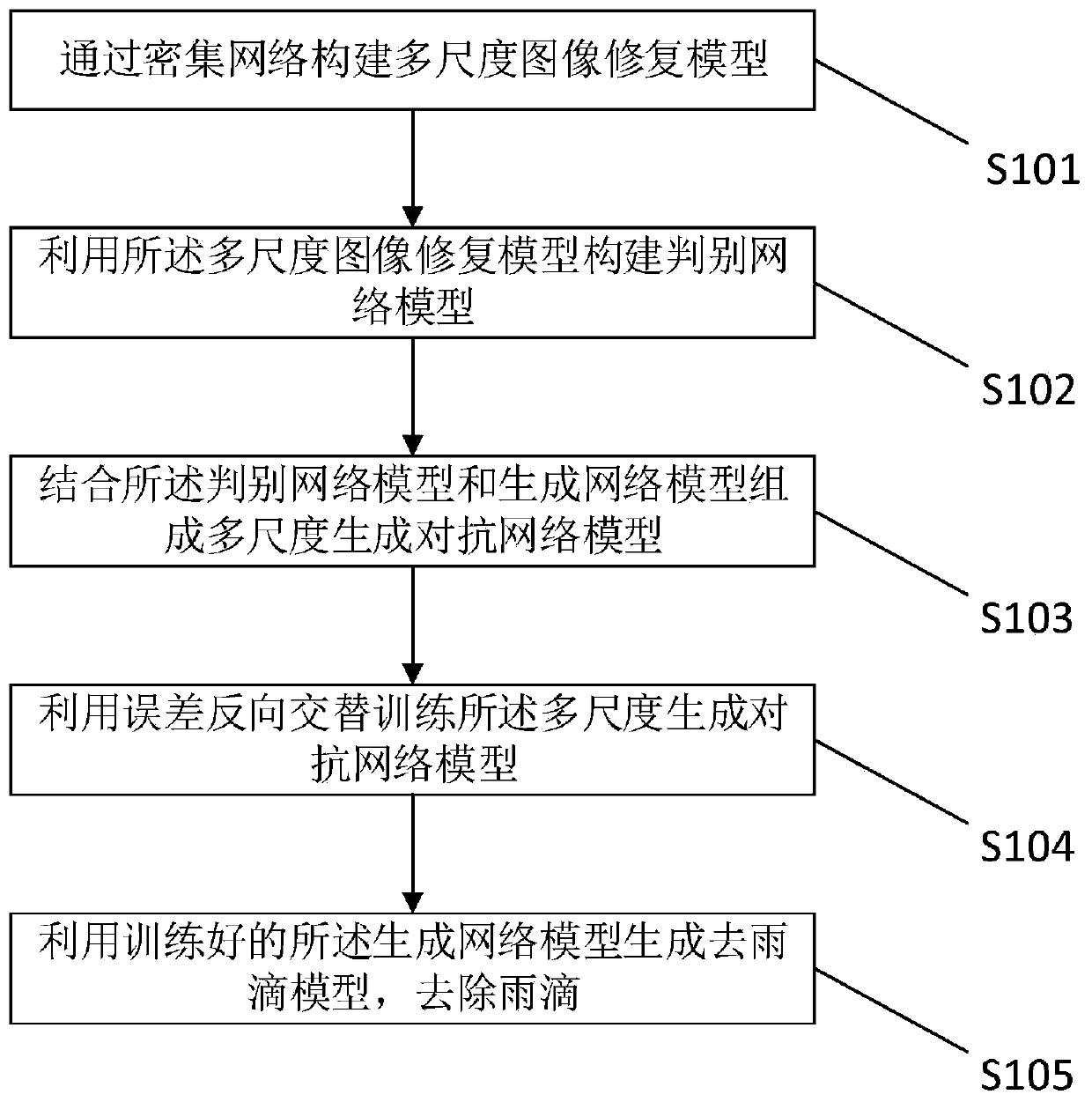

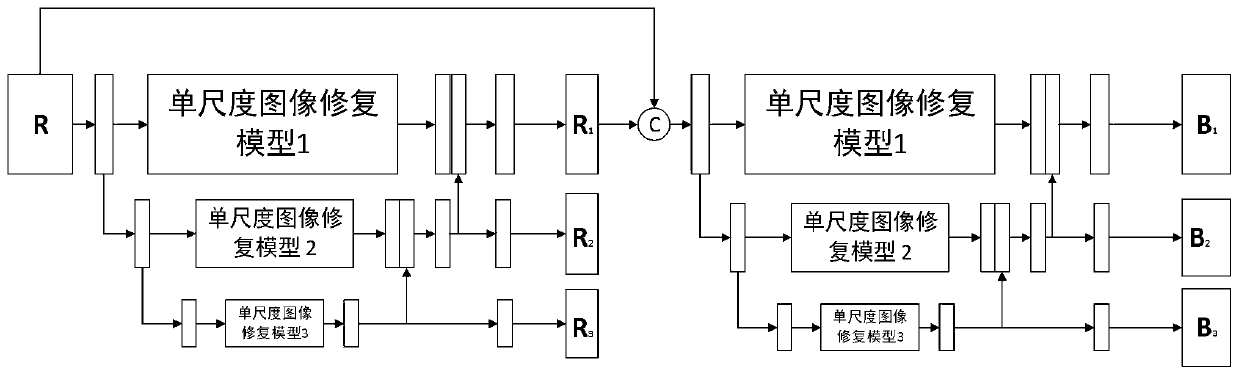

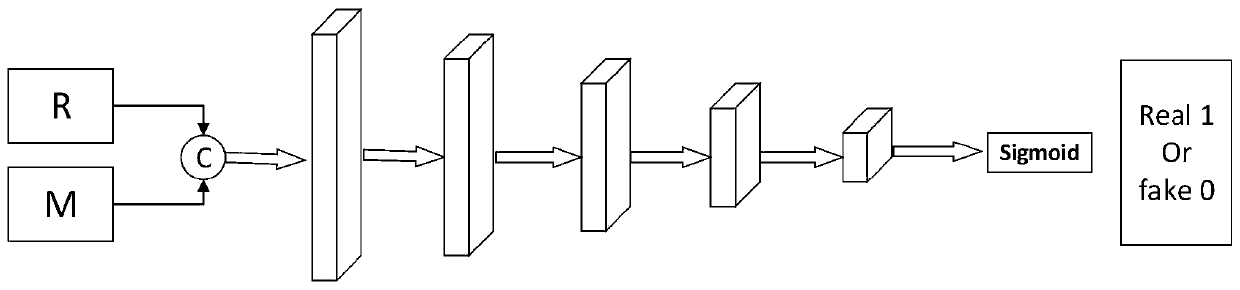

Raindrop removing method for single image based on dense multi-scale generative adversarial network

The invention discloses a method for removing raindrops from a single image based on a dense multi-scale generative adversarial network. The method comprises the following steps: constructing a multi-scale image restoration model that uses a dense network for feature reuse; constructing, together with the multi-scale image restoration model, a discriminator network model with an attention mechanism, so as to form a multi-scale generative adversarial network model; obtaining an original rainy image, the corresponding original rain-free image, and the residual raindrop layer; inputting the original rain-free image and the residual raindrop layer into the discriminator network model; using the errors of the discriminator and generator network models, performing back-propagation to train the multi-scale generative adversarial network model alternately, and stopping training when the errors of both models converge to within a set range; and using the trained generator network as the raindrop removal model to remove relatively large and dense raindrops from a single image.
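
The residual raindrop layer can be illustrated with a simple additive model. This sketch assumes the residual is the per-pixel difference between the rainy image and the rain-free image, and that a rain-free estimate is recovered by subtracting a predicted residual and clipping to the valid 8-bit range; the patent abstract does not define the layer this precisely, and the function names are hypothetical.

```python
def residual_layer(rainy, clean):
    """Residual raindrop layer under an assumed additive model: rainy - clean."""
    return [[r - c for r, c in zip(r_row, c_row)] for r_row, c_row in zip(rainy, clean)]


def remove_raindrops(rainy, predicted_residual, lo=0, hi=255):
    """Subtract a predicted residual and clip the result to [lo, hi]."""
    return [[min(hi, max(lo, r - p)) for r, p in zip(r_row, p_row)]
            for r_row, p_row in zip(rainy, predicted_residual)]
```

Under this model the generator only has to predict the sparse raindrop residual rather than the whole clean image, which is one common reason for working with a residual layer.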

Owner:联友智连科技有限公司 (Lianyou Zhilian Technology Co., Ltd.) +1

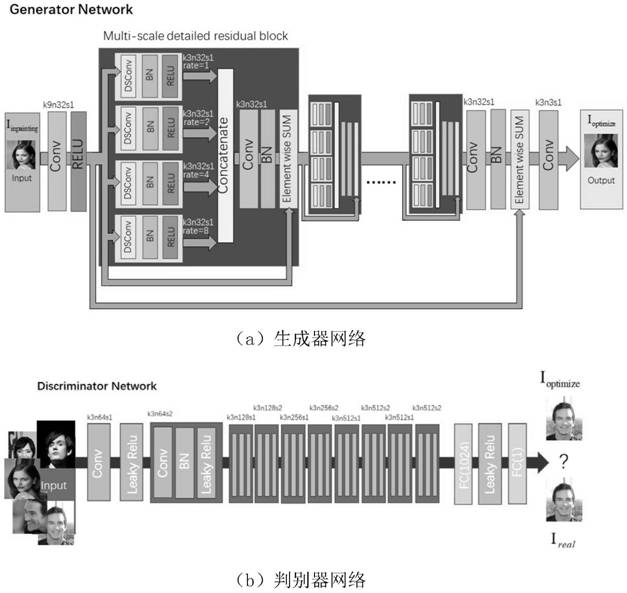

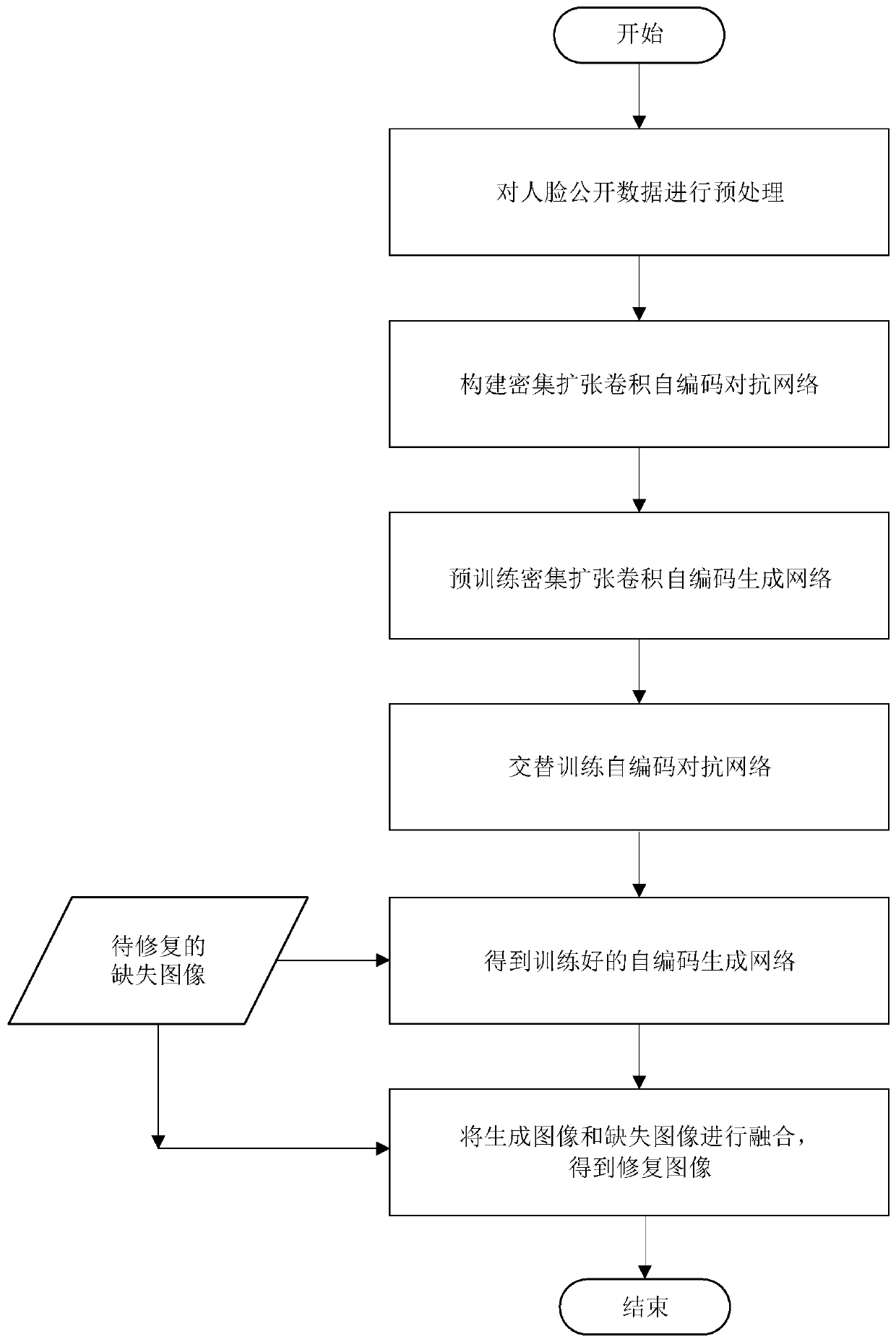

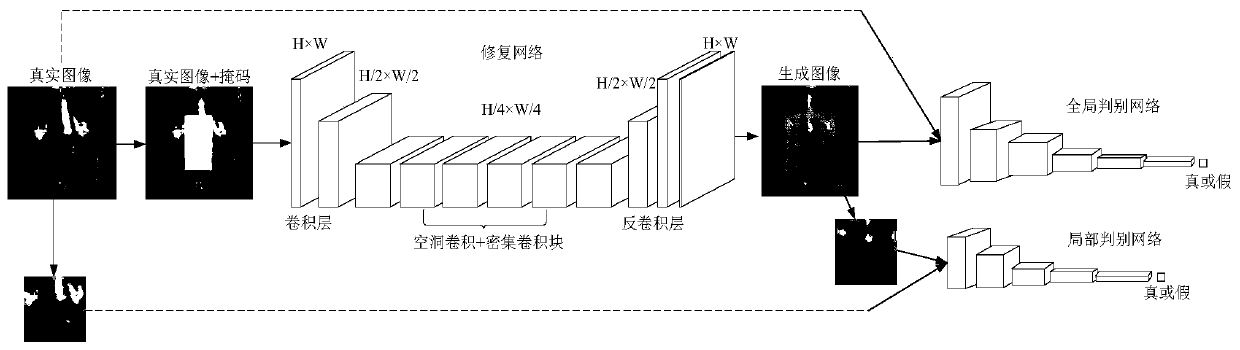

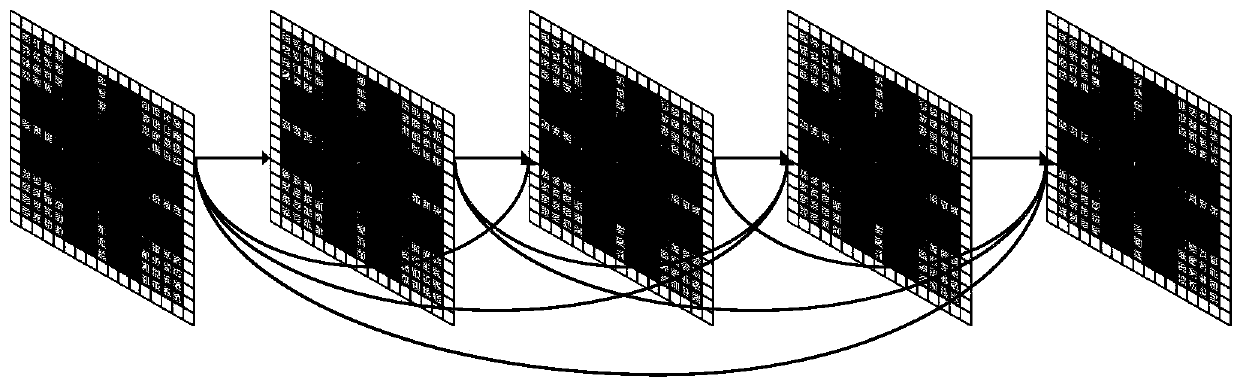

Face image restoration method based on dense expansion convolution self-coding adversarial network

Active | CN110689499A | Improve stability | Enhance representational power | Image enhancement | Internal combustion piston engines | Pattern recognition | Data set

The invention discloses a face image restoration method based on a dense dilated ("expansion") convolution self-encoding adversarial network. The method comprises the following steps: first, preprocessing public face data to obtain a face data set; second, constructing a dense dilated convolution self-encoding adversarial network; then pre-training the dense dilated convolution self-encoding generator network with a reconstruction loss, after which the following training steps are alternated: (1) training a dual discriminator network with an adversarial loss; and (2) training the pre-trained generator network with a joint loss. This yields a trained dense dilated convolution self-encoding generator network. Finally, the image to be restored is input into the generator network, and the generated image is fused with the defective image to obtain the final restored image. The method addresses face image restoration in cases of severe semantic information loss and large, randomly located missing regions.
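
Two details of this pipeline are easy to make concrete: the final fusion, which keeps known pixels from the defective image and takes generated pixels only inside the hole, and the receptive-field growth that motivates dilated convolutions. The sketch assumes a binary mask with 1 marking missing pixels and a stack of stride-1 convolutions; both function names are hypothetical.

```python
def fuse(generated, defective, mask):
    """Final output: generated pixels inside the hole (mask = 1), original pixels elsewhere."""
    return [[m * g + (1 - m) * d for g, d, m in zip(g_row, d_row, m_row)]
            for g_row, d_row, m_row in zip(generated, defective, mask)]


def receptive_field(kernel_size, dilations):
    """Receptive field of a stack of stride-1 convolutions with the given dilation rates."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)
```

With 3×3 kernels, three layers at dilations 1, 2 and 4 see a 15×15 window, versus 7×7 for three undilated layers, which is the usual argument for dilated convolutions when large regions are missing.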

Owner:BEIJING UNIV OF TECH

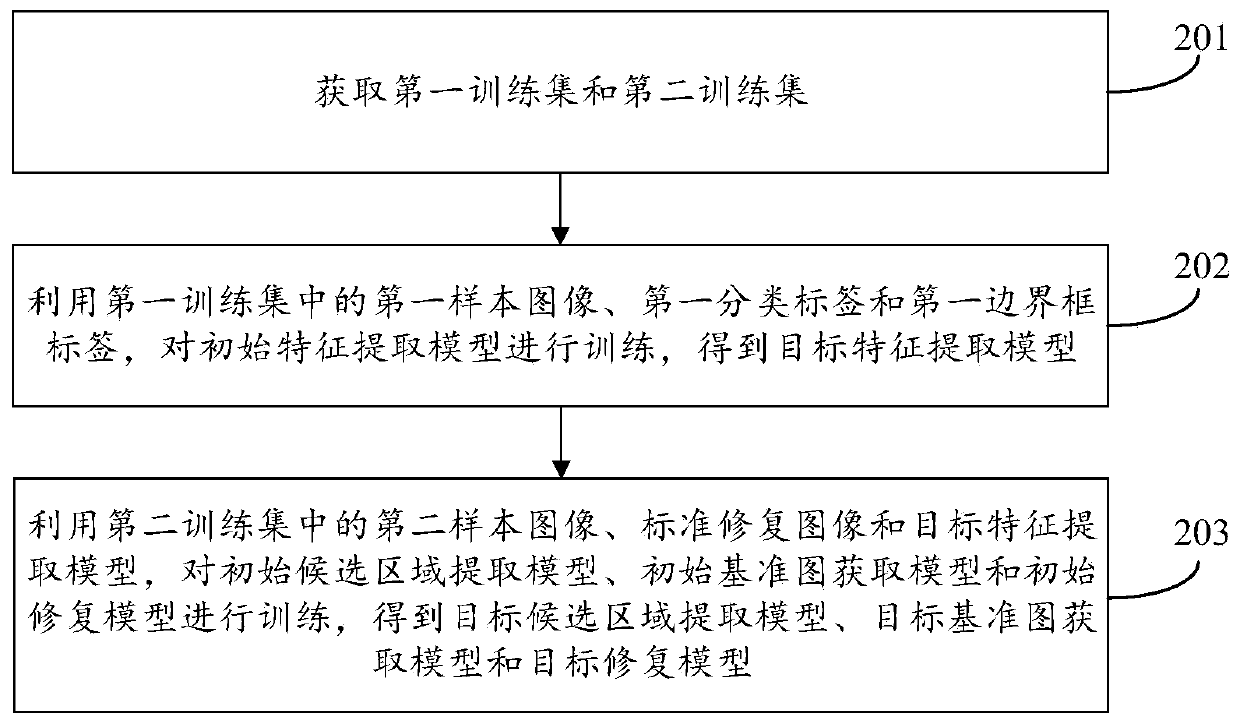

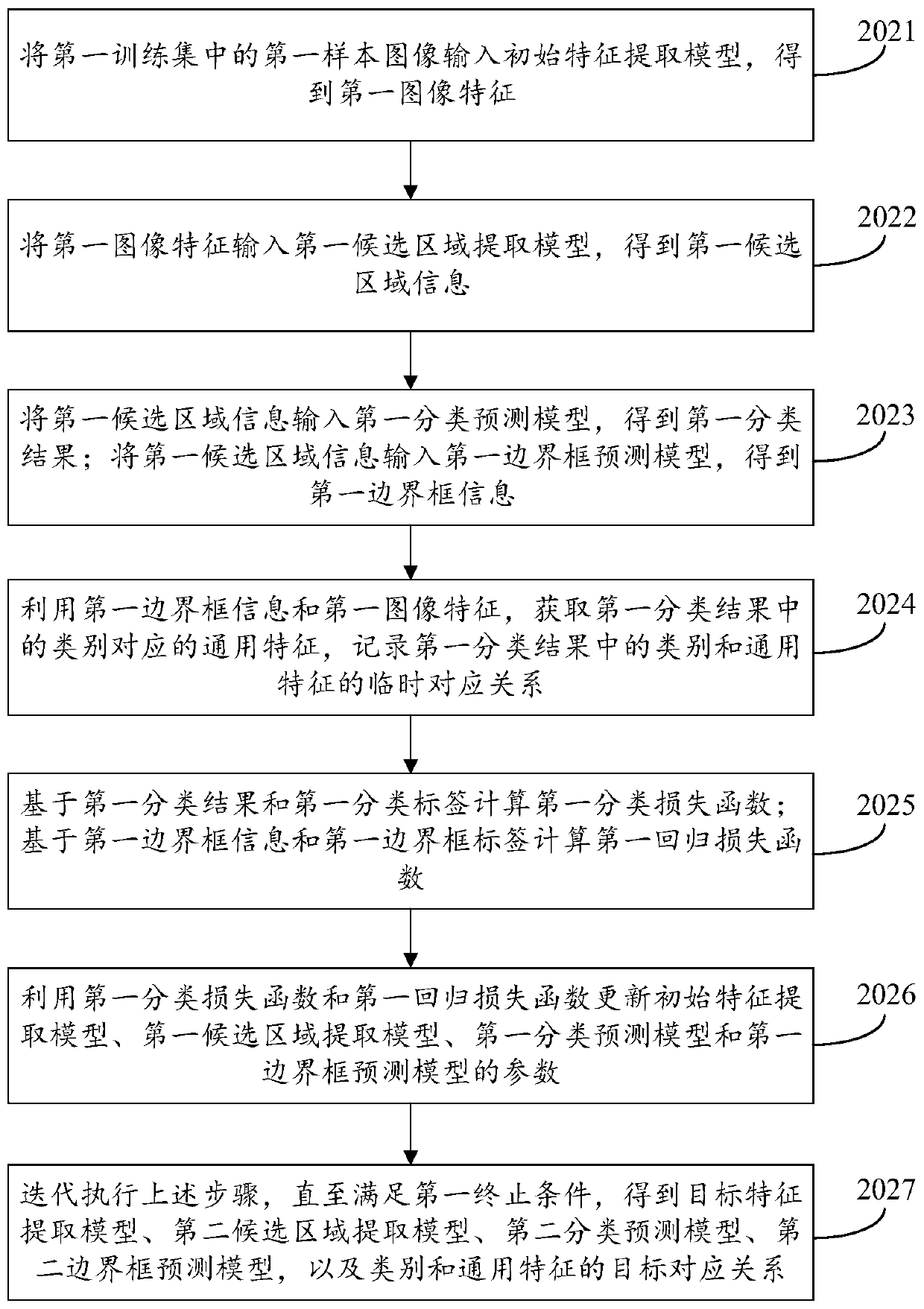

Image restoration method and training method of image restoration model

Active | CN111325699A | Improve repair effect | Increase consideration | Image enhancement | Image analysis | Reference image | Imaging Feature

The invention discloses an image restoration method and a training method for an image restoration model. The method comprises the steps of: obtaining a first target image to be restored; extracting target image features from the first target image; acquiring, based on the target image features, target candidate region information and a target reference image, where the target reference image carries mode information of the first target image; and repairing the first target image based on the target candidate region information and the target reference image to obtain a repaired target image corresponding to the first target image. Because the restoration process additionally takes the mode information of the image into account, the image is considered more comprehensively, the restoration effect is improved, and the restored image looks more natural.
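
Selecting a reference image from extracted features is often done by nearest-neighbour search in feature space. The sketch below assumes each candidate reference image has already been reduced to a feature vector stored in a dictionary and picks the one with the highest cosine similarity to the target's features; the gallery structure and function names are hypothetical, and the abstract does not say how the patent performs this step.

```python
import math


def cosine(a, b):
    """Cosine similarity with a small guard against zero-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / (norm + 1e-12)


def pick_reference(query_feature, gallery):
    """Return the key of the gallery image whose features are most similar to the query."""
    return max(gallery, key=lambda key: cosine(query_feature, gallery[key]))
```

For large galleries the linear scan would normally be replaced by an approximate nearest-neighbour index, but the selection criterion stays the same.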

Owner:TENCENT TECH (SHENZHEN) CO LTD

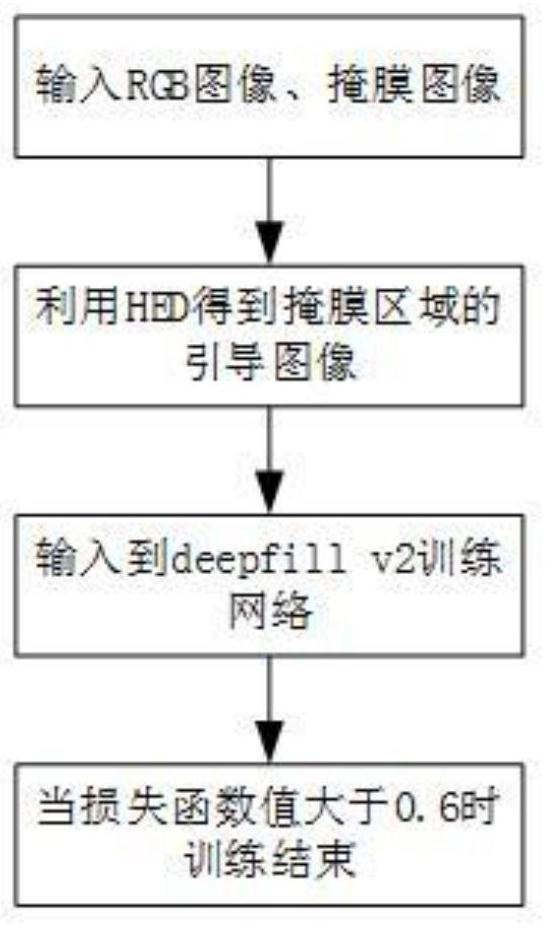

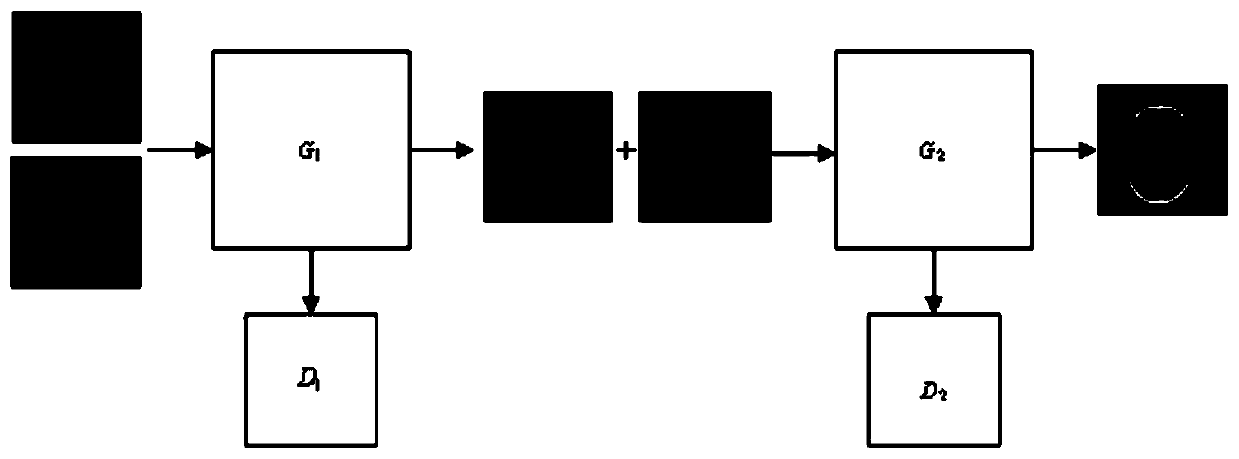

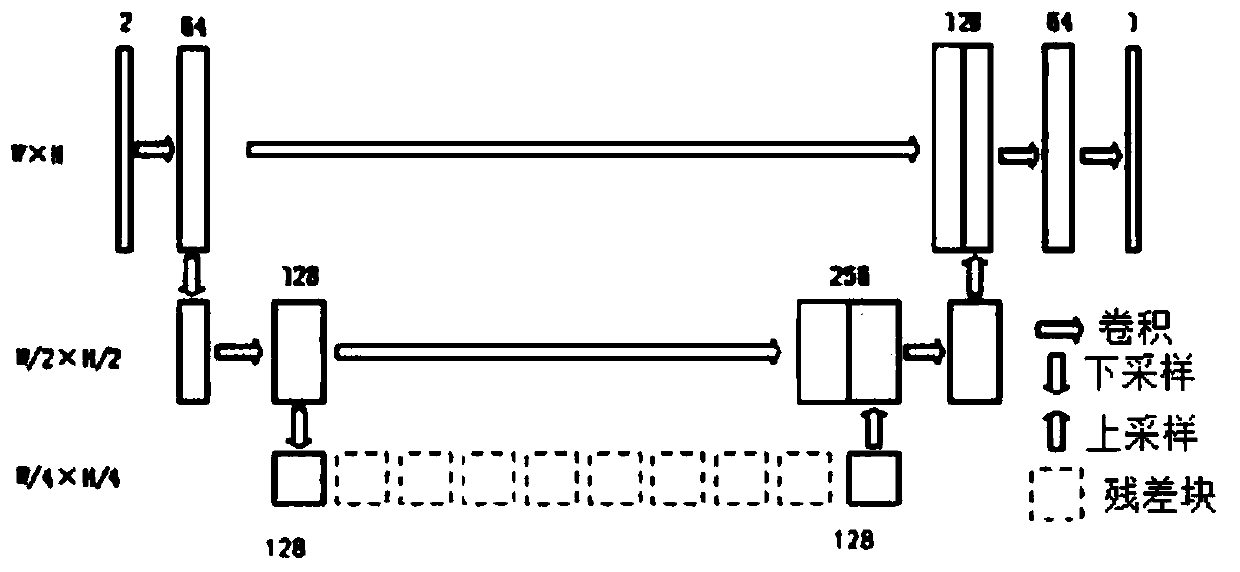

Image restoration method and system based on generative adversarial network and application thereof

Active | CN111553858A | Improve integrity | High clarity | Image enhancement | Image analysis | Pattern recognition | Algorithm

The invention discloses an image restoration method based on generative adversarial networks, together with a system and an application thereof. The method comprises the steps of: obtaining an optimized boundary map of the defective image through a trained boundary generation model in a first generative adversarial network; in a second generative adversarial network, training a restoration model with the original complete image, the original defective image, and the optimized generated boundary map of the defective image as inputs to obtain a trained restoration model; and performing image restoration with the trained boundary generation model and restoration model. The restoration method can accurately restore the defective area of an image, significantly suppresses blurring and artifacts in the content generated for the defective area, and is particularly suitable for applications with high accuracy requirements, such as medical image restoration.
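
To show what a boundary map contributes as an extra input channel, the sketch below computes a classical binary edge map from central-difference gradients and stacks it with the defective image and its mask. The gradient edge detector is a deliberately simple stand-in for the patent's GAN boundary generation model, the threshold is a hypothetical parameter, and the per-pixel tuple layout is an illustrative assumption.

```python
import math


def edge_map(img, threshold):
    """Binary boundary map from central-difference gradient magnitude.
    Border pixels are left at 0 for simplicity."""
    h, w = len(img), len(img[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y][x + 1] - img[y][x - 1]) / 2
            gy = (img[y + 1][x] - img[y - 1][x]) / 2
            if math.hypot(gx, gy) >= threshold:
                edges[y][x] = 1
    return edges


def stack_inputs(defective, mask, boundary):
    """Per-pixel (intensity, missing flag, boundary flag) triples for a second-stage model."""
    return [[(v, m, b) for v, m, b in zip(v_row, m_row, b_row)]
            for v_row, m_row, b_row in zip(defective, mask, boundary)]
```

Feeding structure explicitly in this way lets the second-stage network concentrate on texture, which is the usual rationale for edge-guided, two-stage inpainting.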

Owner:四川大学青岛研究院 (Qingdao Institute of Sichuan University)

© 2025 PatSnap. All rights reserved.