189 results about "Pixel mapping" patented technology

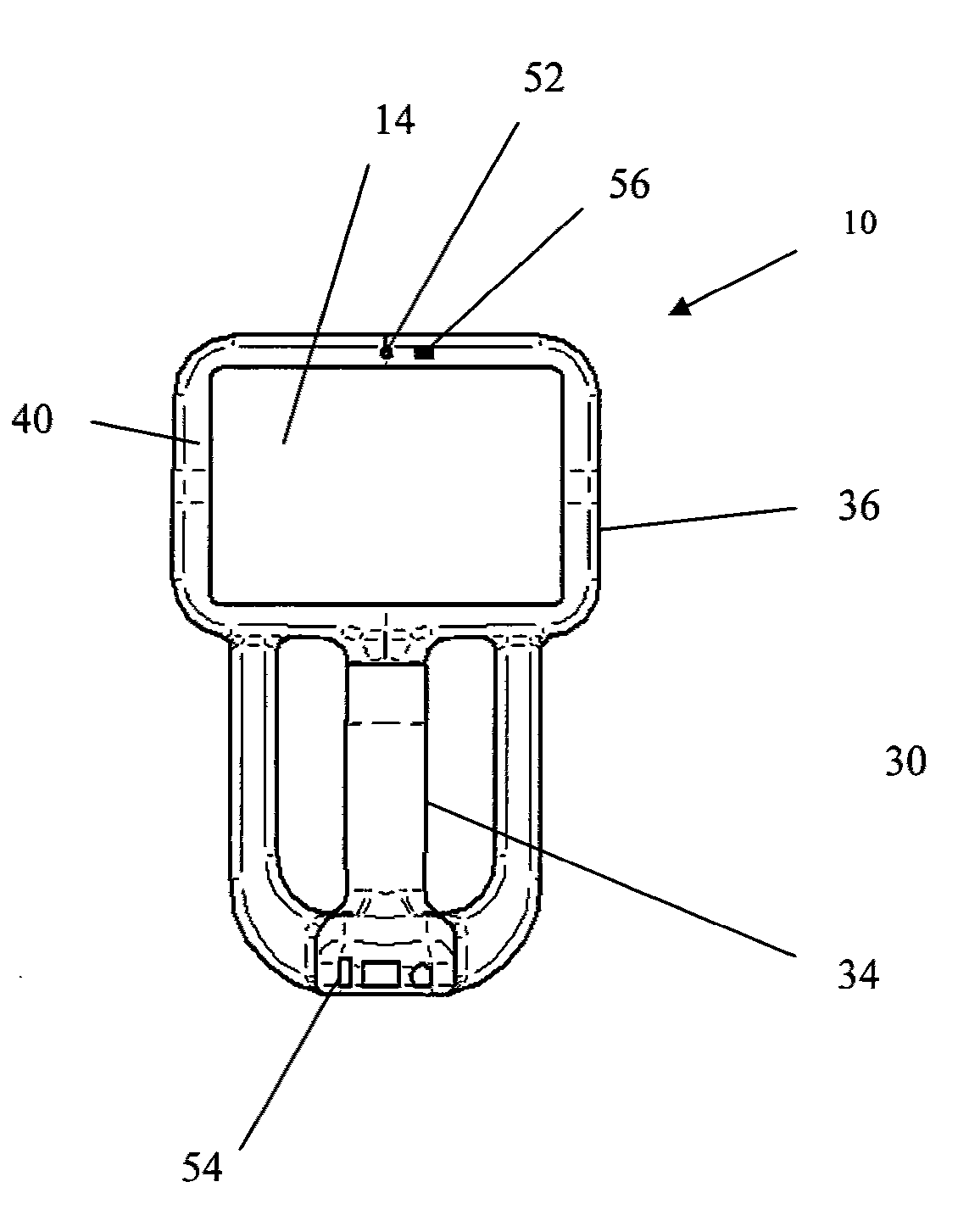

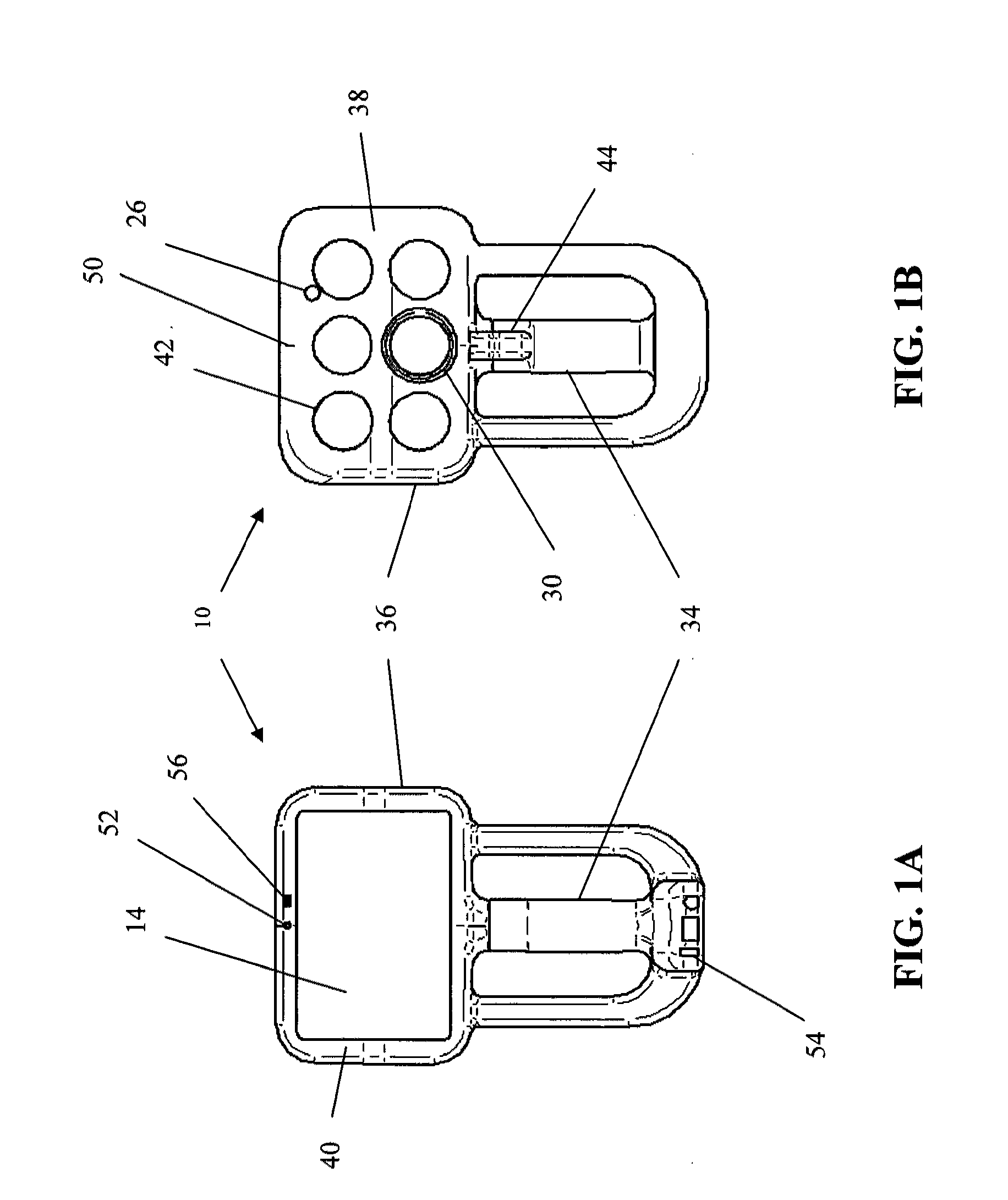

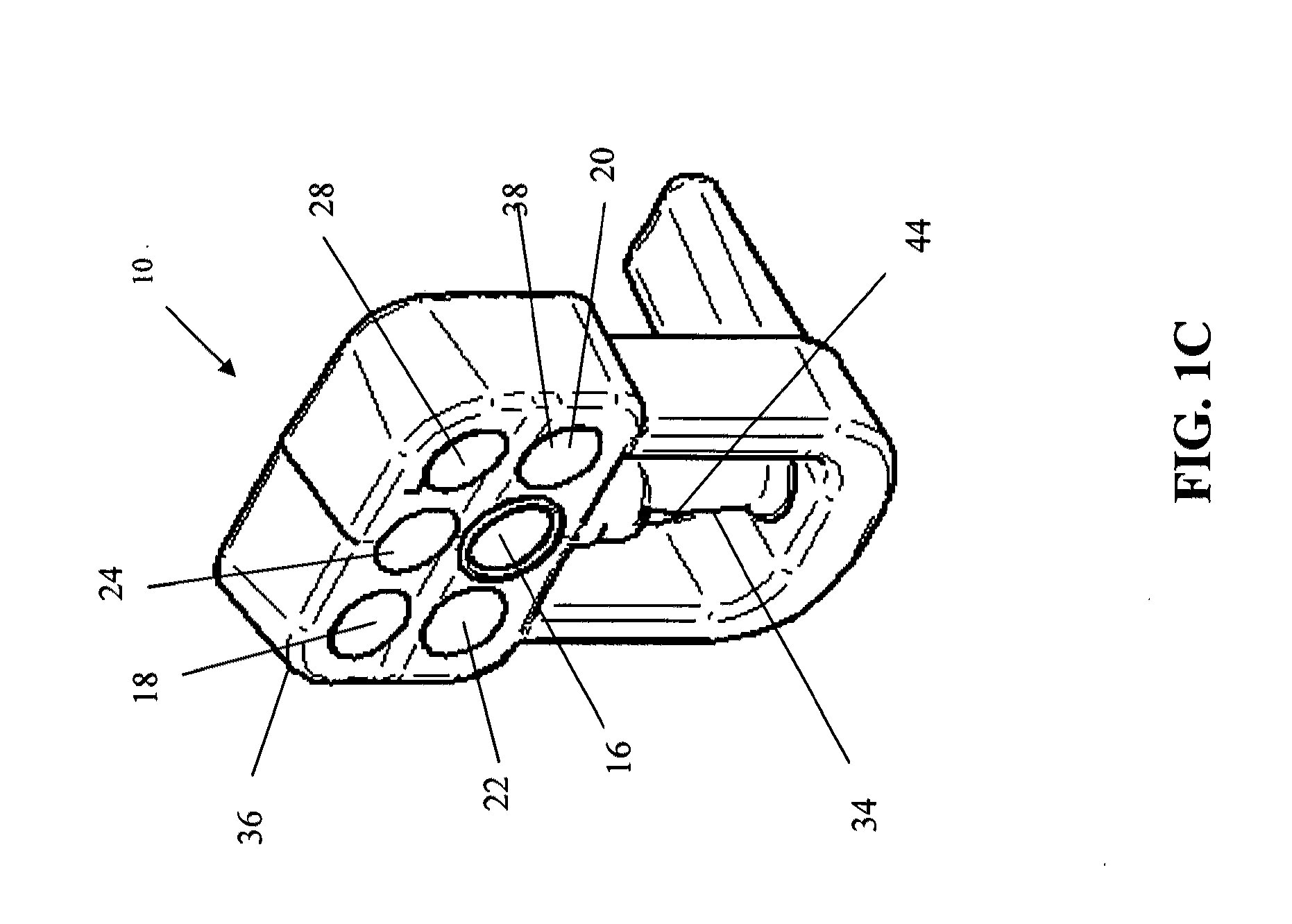

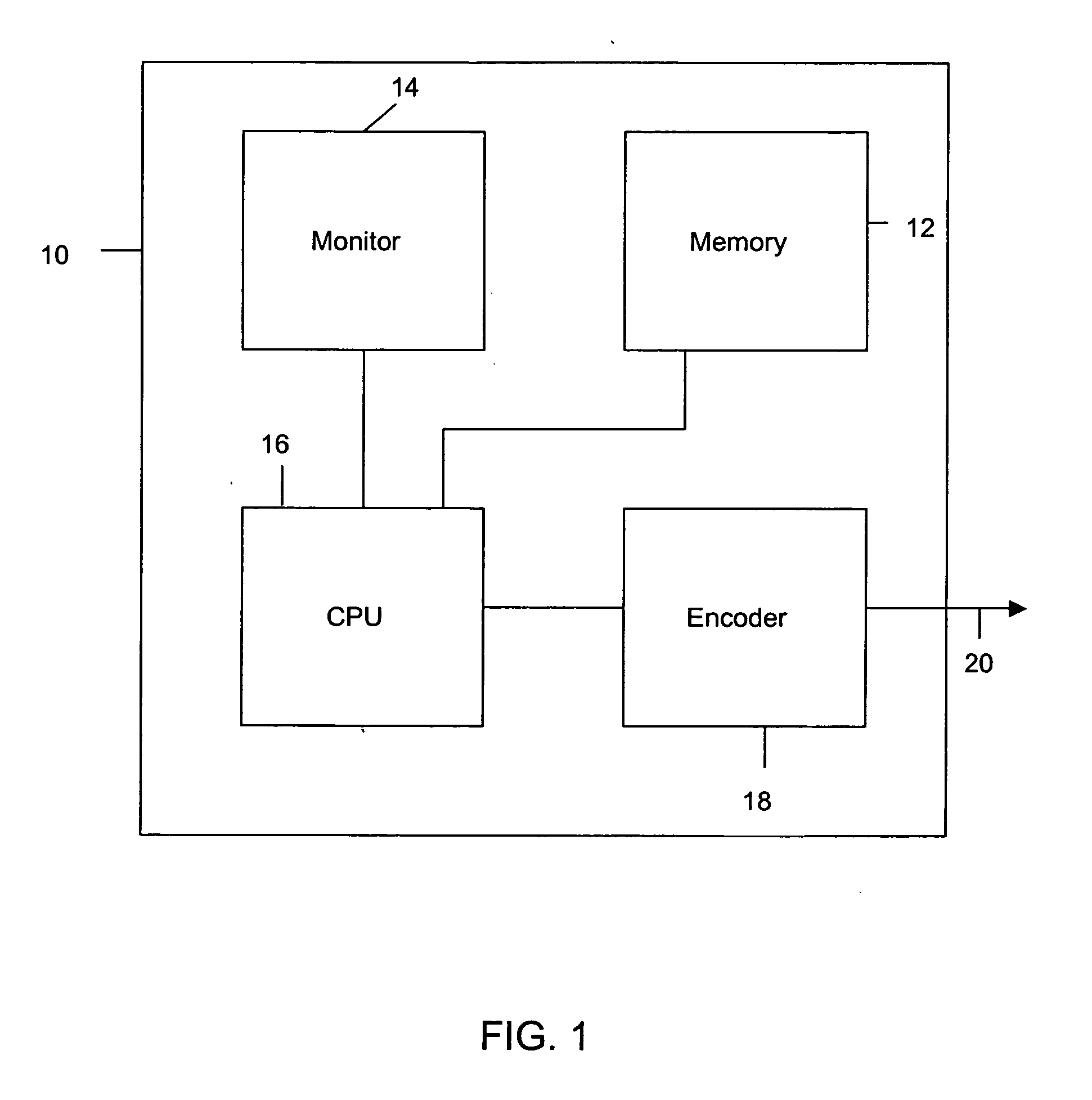

Medical image projection and tracking system

Inactive | US20120078088A1 | Accurately measure body surface area | Reliably identify wound area | Diagnostic recording/measuring | Sensors | Correction algorithm | Pixel mapping

A system comprising a convergent parameter instrument and a laser digital image projector for obtaining a surface map of a target anatomical surface, obtaining images of that surface from a module of the convergent parameter instrument, applying pixel mapping algorithms to impute three dimensional coordinate data from the surface map to a two dimensional image obtained through the convergent parameter instrument, projecting images from the convergent parameter instrument onto the target anatomical surface as a medical reference, and applying a skew correction algorithm to the image.

Owner:POINT OF CONTACT

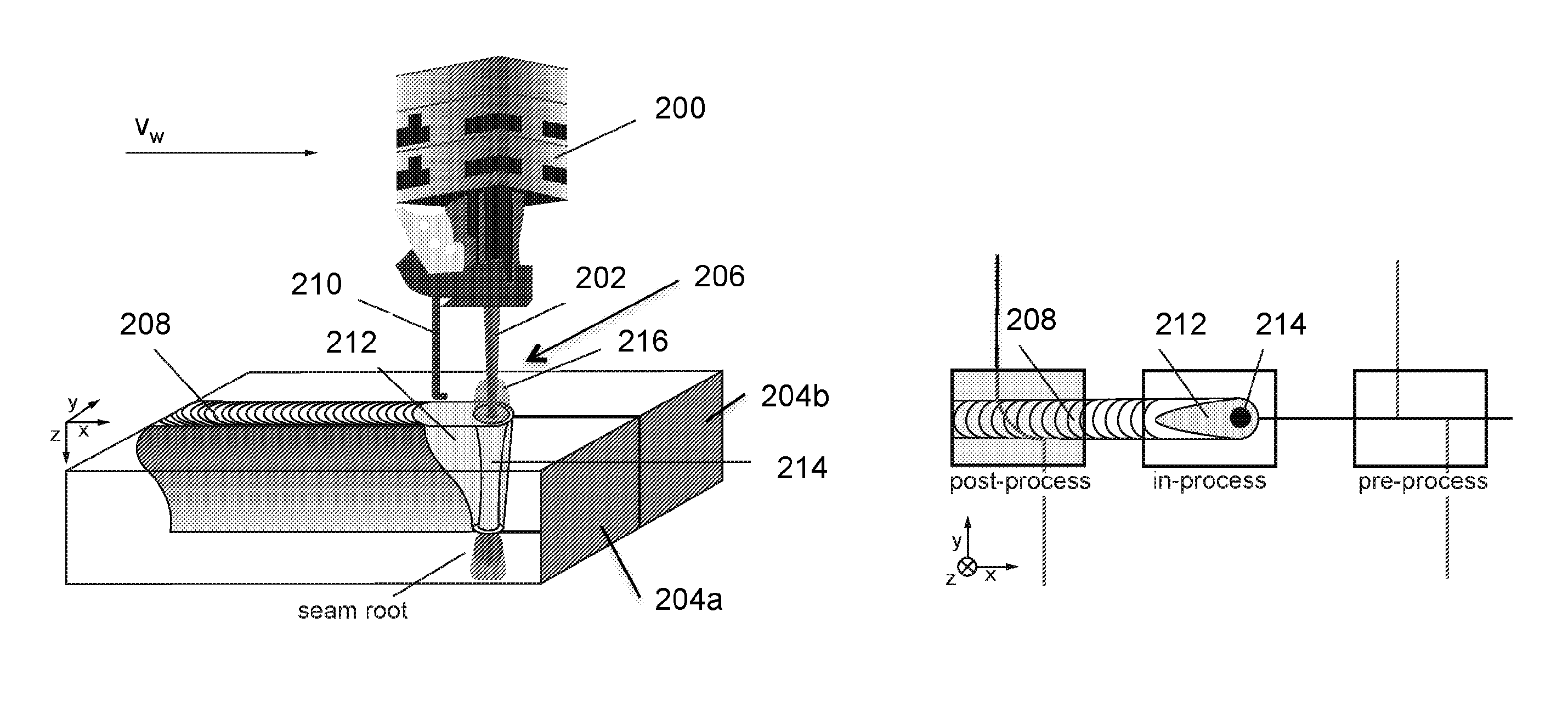

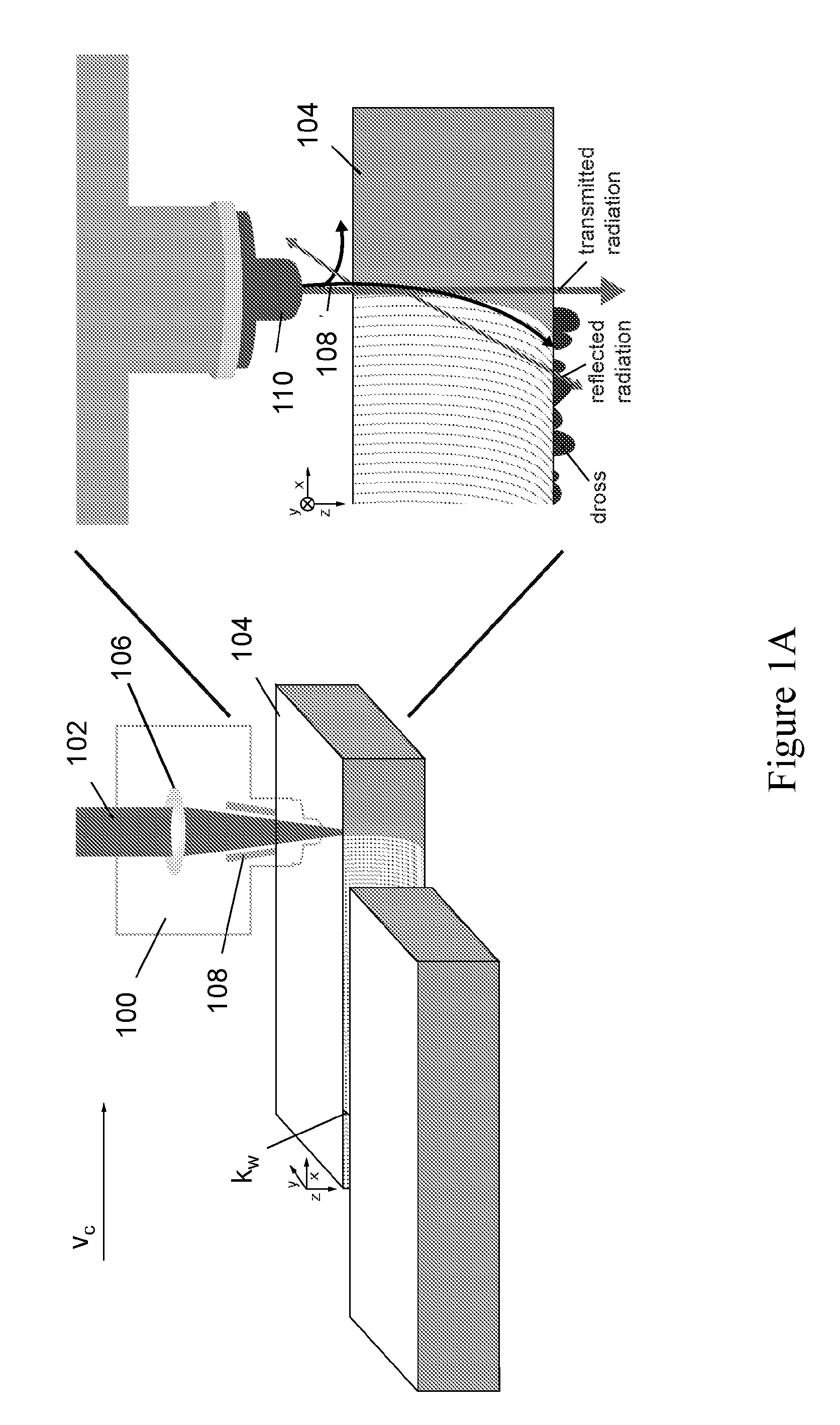

Method for closed-loop controlling a laser processing operation and laser material processing head using the same

Active | US20130178952A1 | Reliable detection | Similar level | Image analysis | Soldering apparatus | Laser processing | Algorithm

The present invention relates to a method for closed-loop controlling a processing operation of a workpiece, comprising the steps of: (a) recording a pixel image at an initial time point of an interaction zone by means of a camera, wherein the workpiece is processed using an actuator having an initial actuator value; (b) converting the pixel image into a pixel vector; (c) representing the pixel vector by a sum of predetermined pixel mappings each multiplied by a corresponding feature value; (d) classifying the set of feature values on the basis of learned feature values into at least two classes of a group of classes comprising a first class of a too high actuator value, a second class of a sufficient actuator value and a third class of a too low actuator value at the initial time point; (e) performing a control step for adapting the actuator value by minimizing the error e_t between a quality indicator y_e and a desired value; and (f) repeating the steps (a) to (e) for further time points to perform a closed-loop controlled processing operation.

Owner:PRECITEC GMBH +1

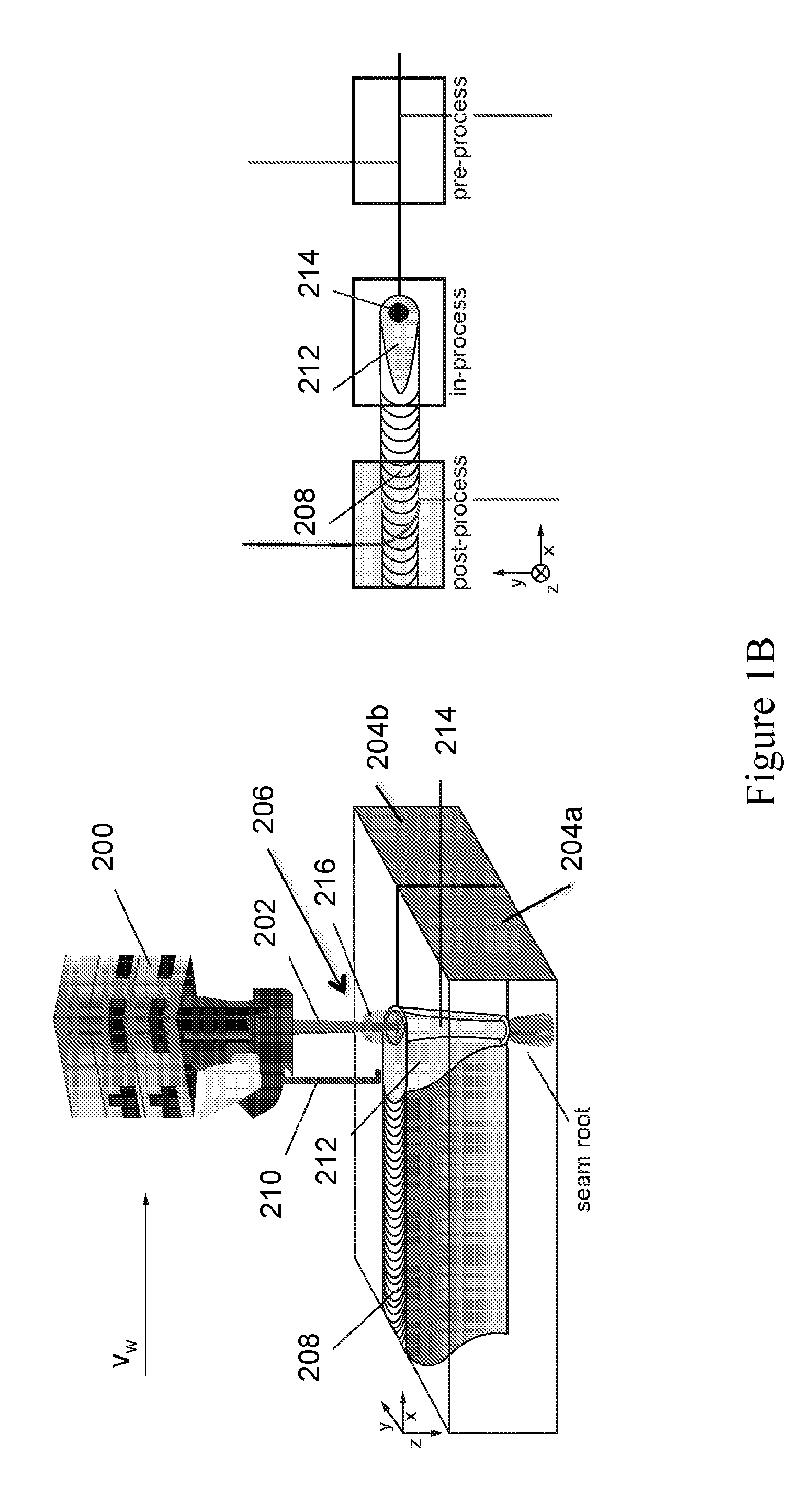

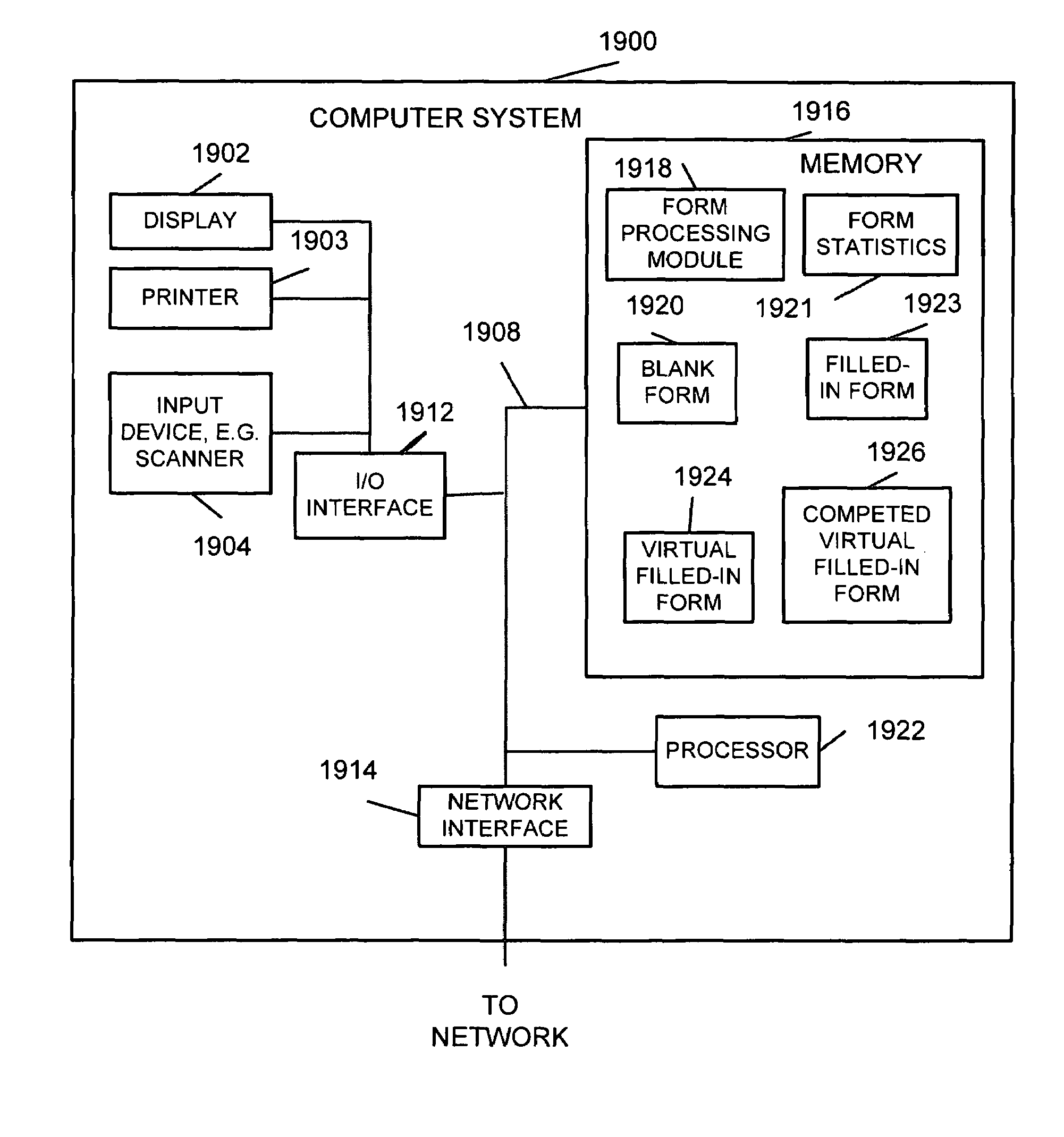

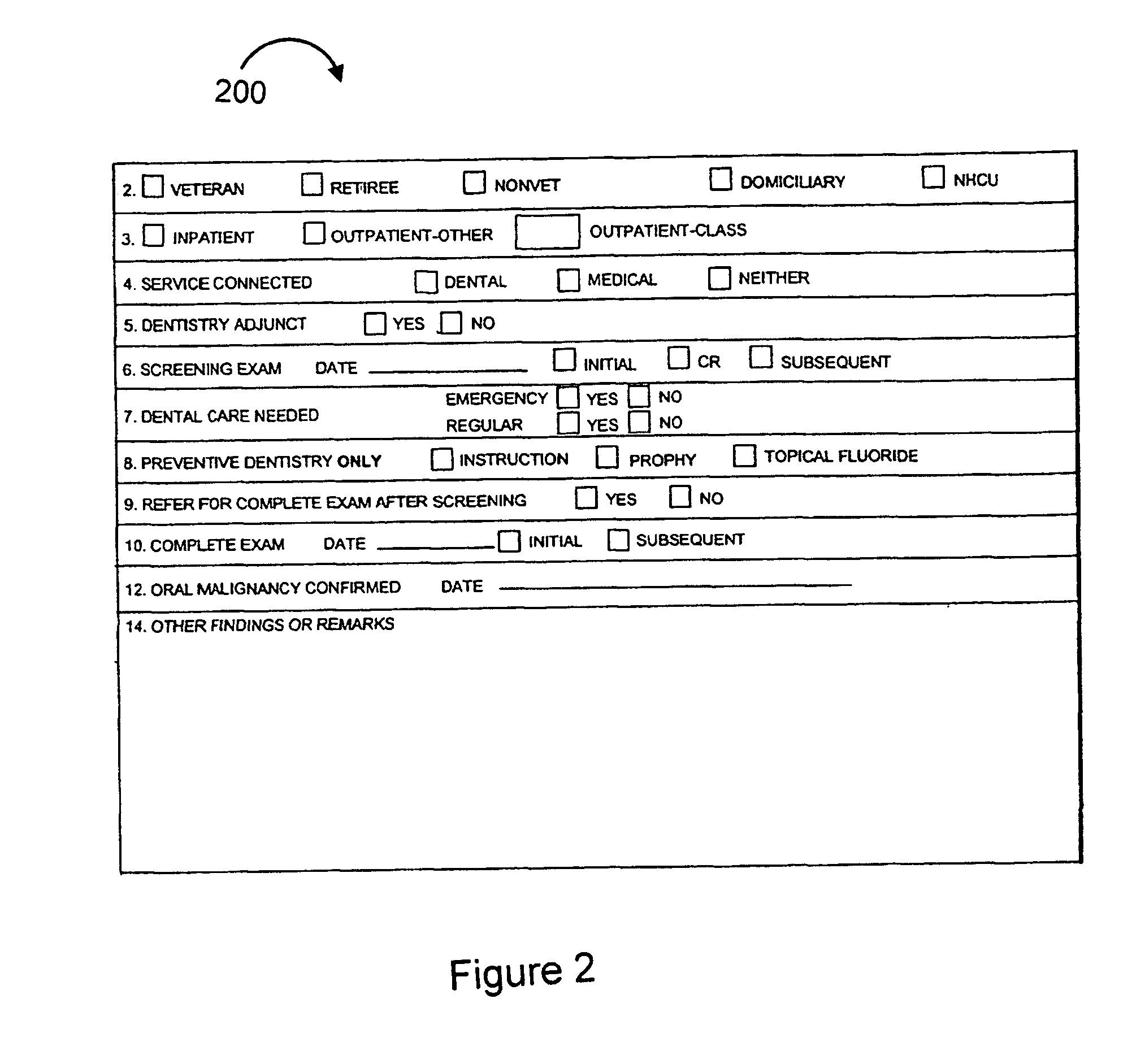

Method and apparatus for recognizing a digitized form, extracting information from a filled-in form, and generating a corrected filled-in form

Active | US7487438B1 | Close in color | Character and pattern recognition | Special data processing applications | Pattern recognition | Computer graphics (images)

Methods and apparatus for comparing blank forms represented in a digital format to digitized filled-in forms are described. Different errors are assigned different weights when attempting to correlate regions of blank and filled-in forms. Foreground pixels in the blank form which are not found in a corresponding portion of a filled-in form are attributed greater error significance than foreground pixels, e.g., pixels which may correspond to added text, found in the filled-in form which correspond to a background pixel value in the blank form. A virtual filled-in form including content, e.g., pixel values, from the filled-in form is generated from the content of the filled-in form and pixel value location mapping information determined from comparing the blank and filled-in forms. Various analyses are performed on a block basis, but in some embodiments the final pixel mapping to the virtual form is performed on a pixel-by-pixel rather than a block basis.

Owner:ACCUSOFT CORP

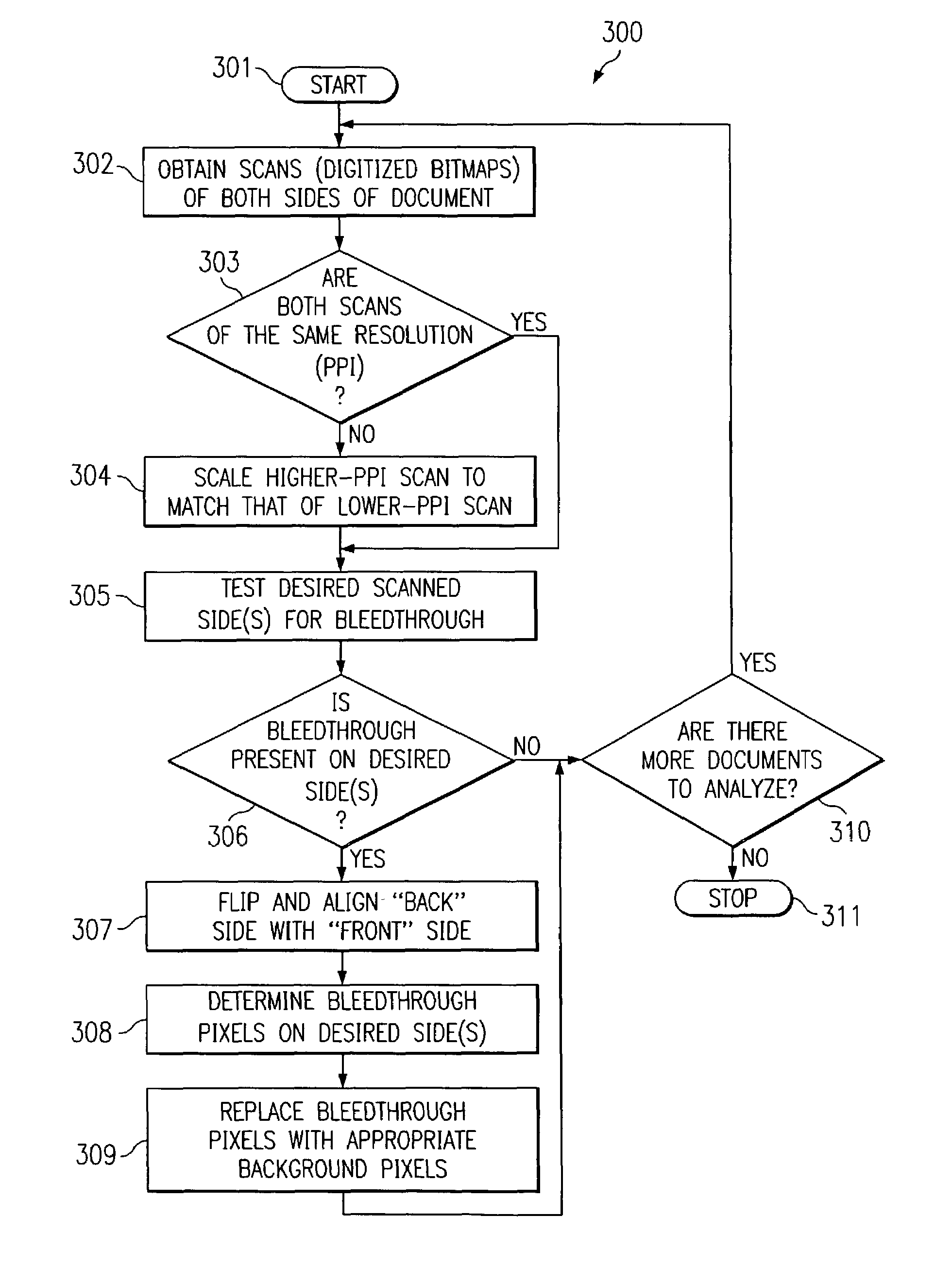

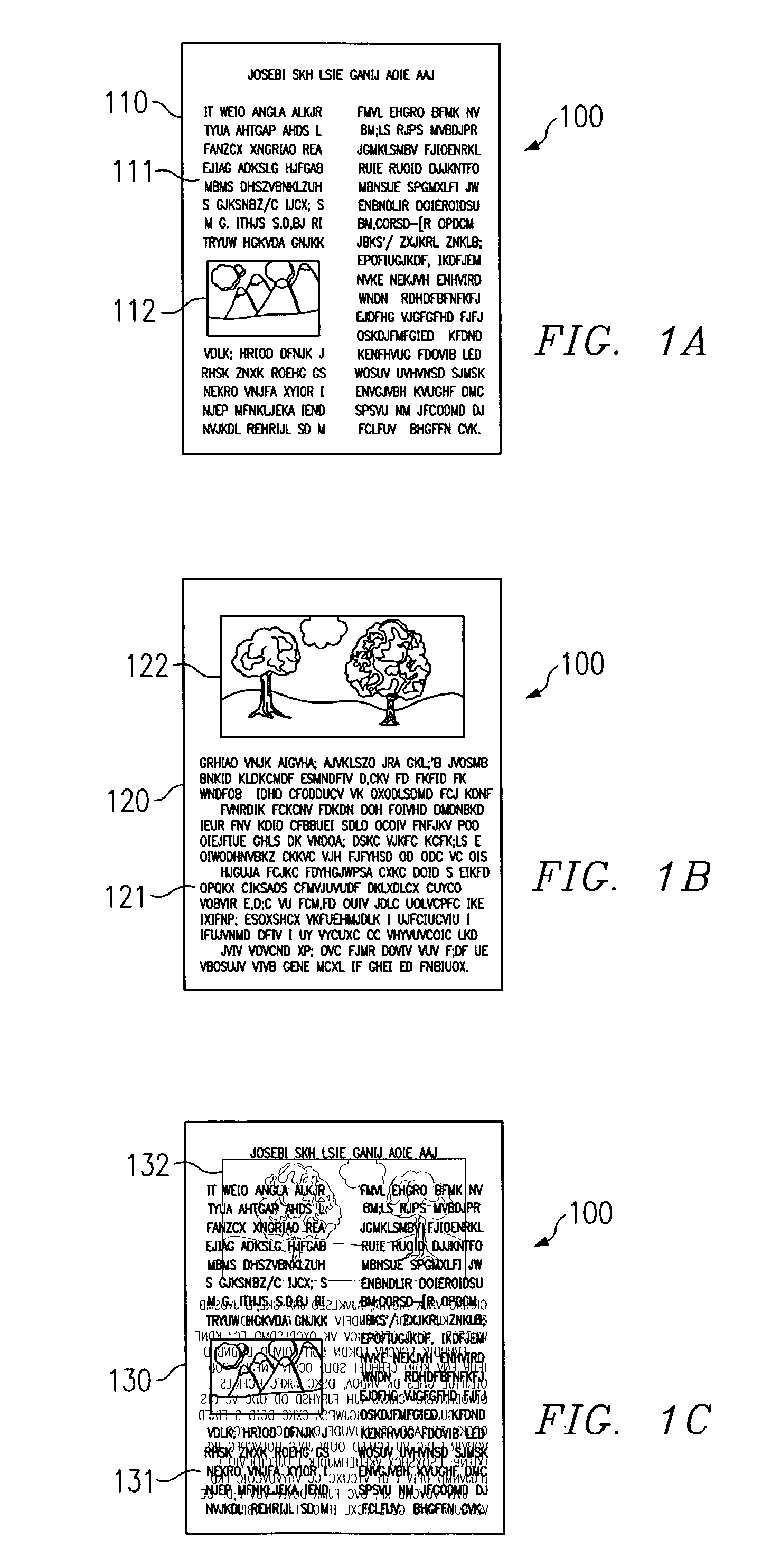

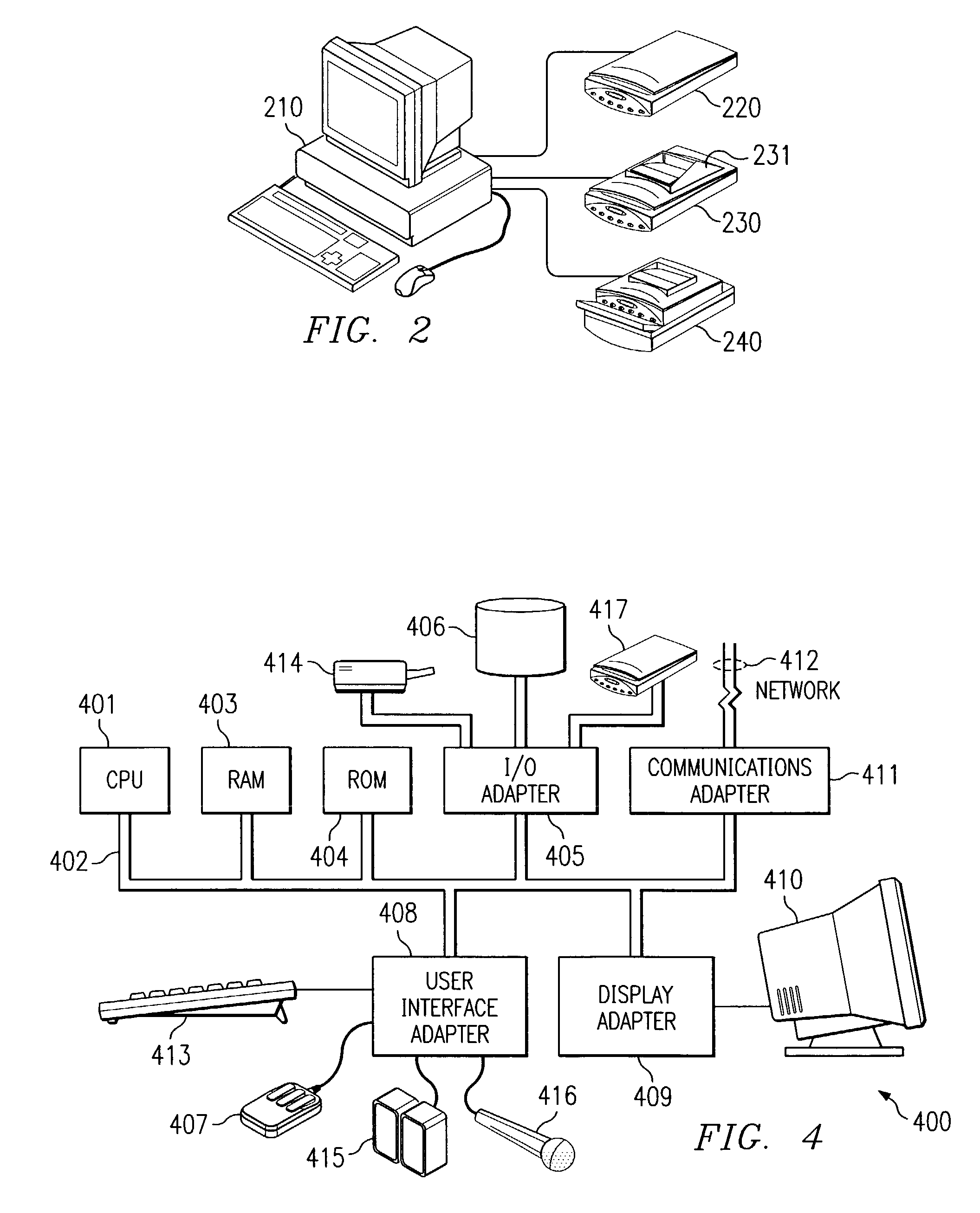

System and method for scanned image bleedthrough processing

Active | US7209599B2 | Character and pattern recognition | Pictorial communication | Pixel mapping | Computer vision

Disclosed are systems and methods for identifying bleedthrough in an image in which a reference tone point of a first image is determined, putative bleedthrough pixels in the first image are identified as a function of the reference tone point, the putative bleedthrough pixels are mapped into a putative bleedthrough representation of said first image, and the putative bleedthrough pixel mapping is analyzed to determine if bleedthrough is present in said first image. Also disclosed are systems and methods for removing bleedthrough from an image in which a document is scanned to provide an electronic first image and an electronic second image, a putative bleedthrough pixel mapping for the first image is generated, the putative bleedthrough pixel mapping is processed using information with respect to said second image, and pixels of the first image corresponding to putative bleedthrough pixels of said mapping are replaced.

Owner:HEWLETT PACKARD DEV CO LP

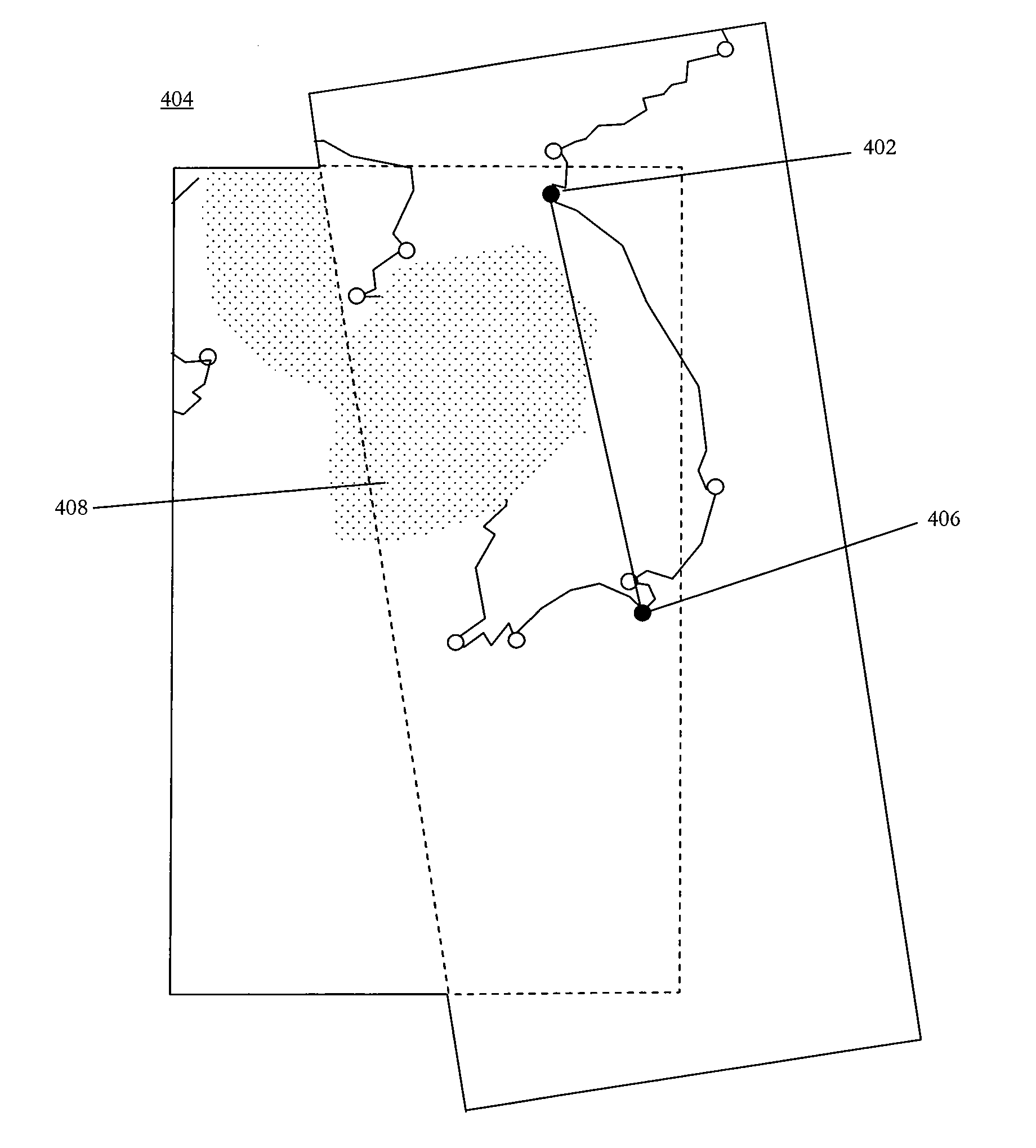

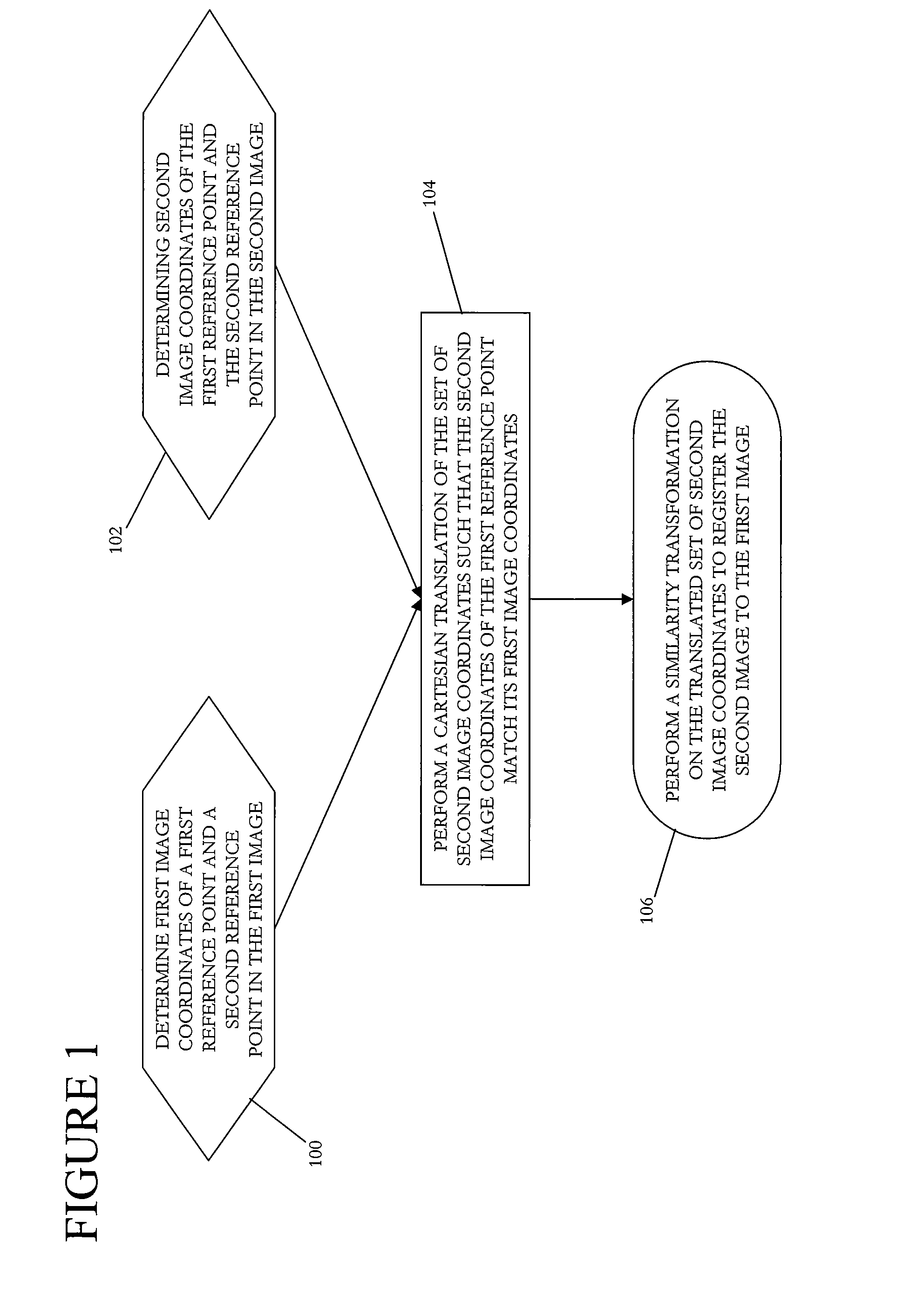

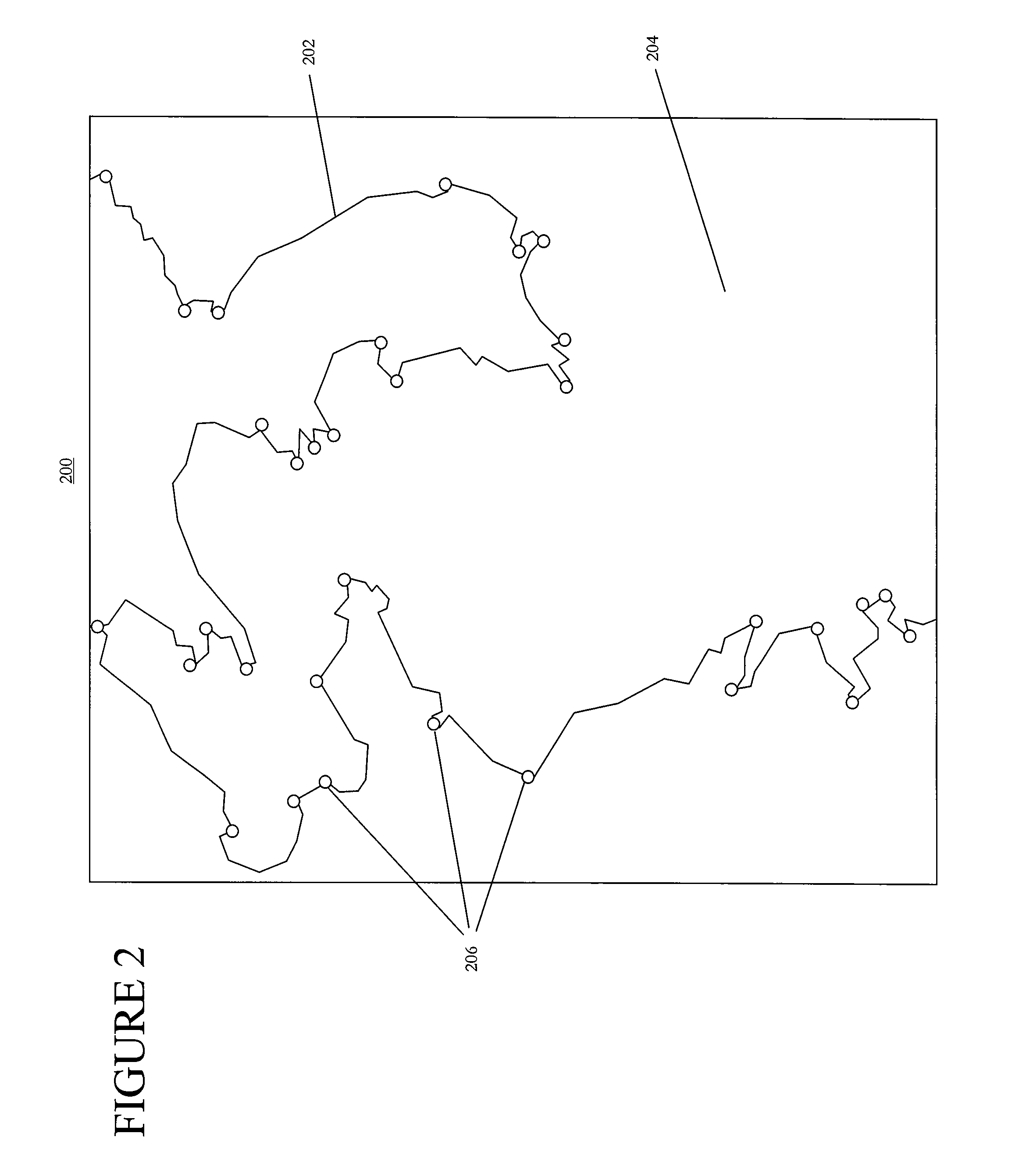

Geometric registration of images by similarity transformation using two reference points

A method for registering a first image to a second image using a similarity transformation. Each image includes a plurality of pixels. The first image pixels are mapped to a set of first image coordinates and the second image pixels are mapped to a set of second image coordinates. The first image coordinates of two reference points in the first image are determined. The second image coordinates of these reference points in the second image are determined. A Cartesian translation of the set of second image coordinates is performed such that the second image coordinates of the first reference point match its first image coordinates. A similarity transformation of the translated set of second image coordinates is performed. This transformation scales and rotates the second image coordinates about the first reference point such that the second image coordinates of the second reference point match its first image coordinates.

Owner:UNIVERSITY OF DELAWARE
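
The two-step registration described in this abstract (translate, then scale and rotate about the first reference point) can be illustrated with a short sketch. It is not taken from the patent: the function name `register_by_two_points` and the use of complex numbers to represent 2D coordinates are assumptions made here for brevity.

```python
def register_by_two_points(p1_first, p2_first, p1_second, p2_second):
    """Build a function that maps second-image coordinates to first-image coordinates.

    p1_*, p2_* are (x, y) coordinates of the two reference points in each image.
    Illustrative sketch only; names and structure are hypothetical.
    """
    a1, a2 = complex(*p1_first), complex(*p2_first)
    b1, b2 = complex(*p1_second), complex(*p2_second)
    if b1 == b2 or a1 == a2:
        raise ValueError("the two reference points must be distinct")

    # Step 1: translation that moves the first reference point onto its
    # first-image coordinates.
    translation = a1 - b1
    # Step 2: a single complex factor encodes both the scale (modulus) and the
    # rotation (argument) that bring the second reference point into place.
    scale_rotate = (a2 - a1) / (b2 - b1)

    def transform(point):
        z = complex(*point) + translation      # Cartesian translation
        z = a1 + scale_rotate * (z - a1)       # scale and rotate about a1
        return (z.real, z.imag)

    return transform


# Usage: reference points (1, 1) and (1, 2) in the second image correspond to
# (0, 0) and (2, 0) in the first image.
to_first = register_by_two_points((0, 0), (2, 0), (1, 1), (1, 2))
mapped_first_ref = to_first((1, 1))
mapped_second_ref = to_first((1, 2))
```

Two reference points fix exactly the four degrees of freedom of a similarity transformation (two for translation, one for rotation, one for uniform scale), which is why no least-squares fit appears in the sketch.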

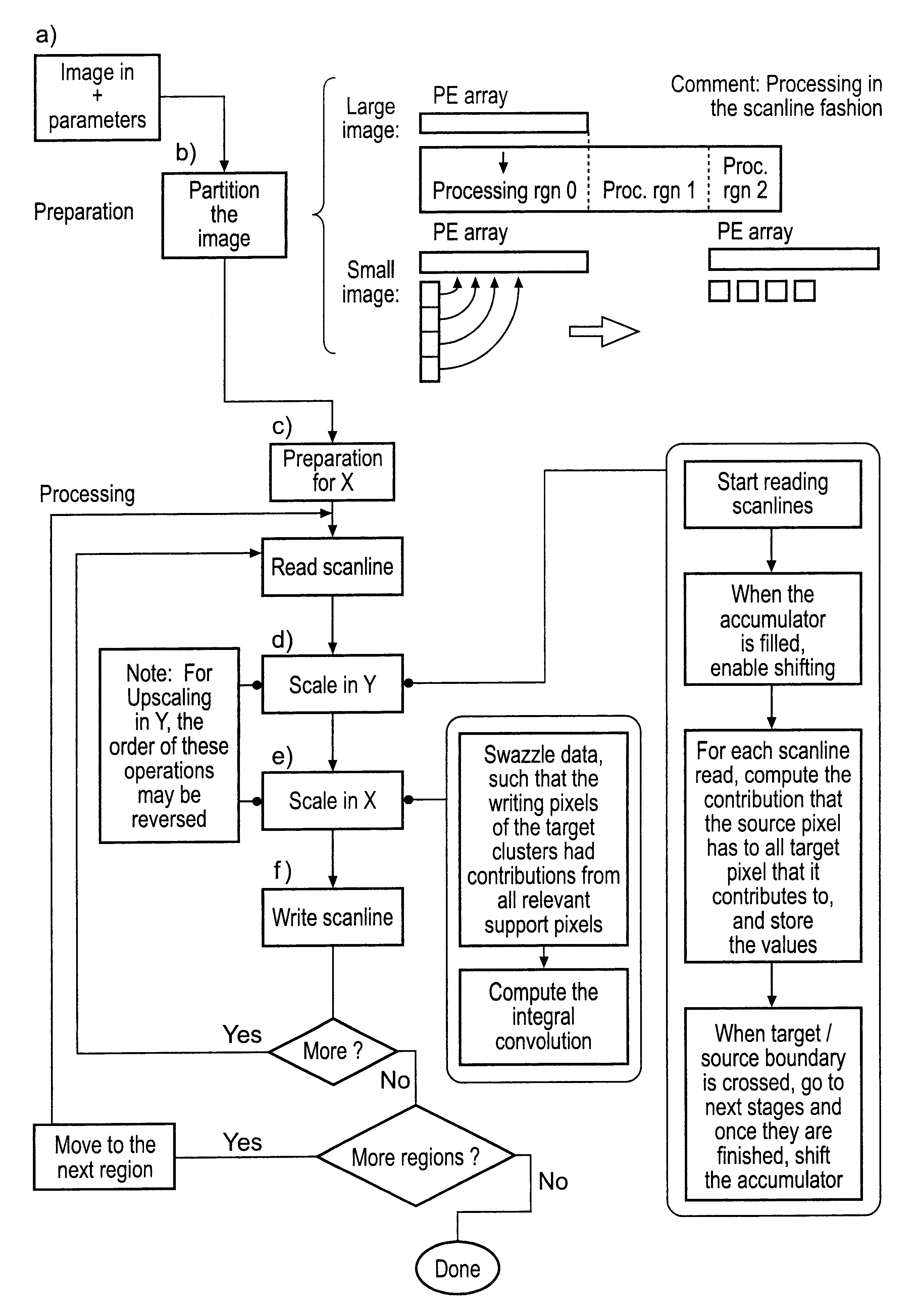

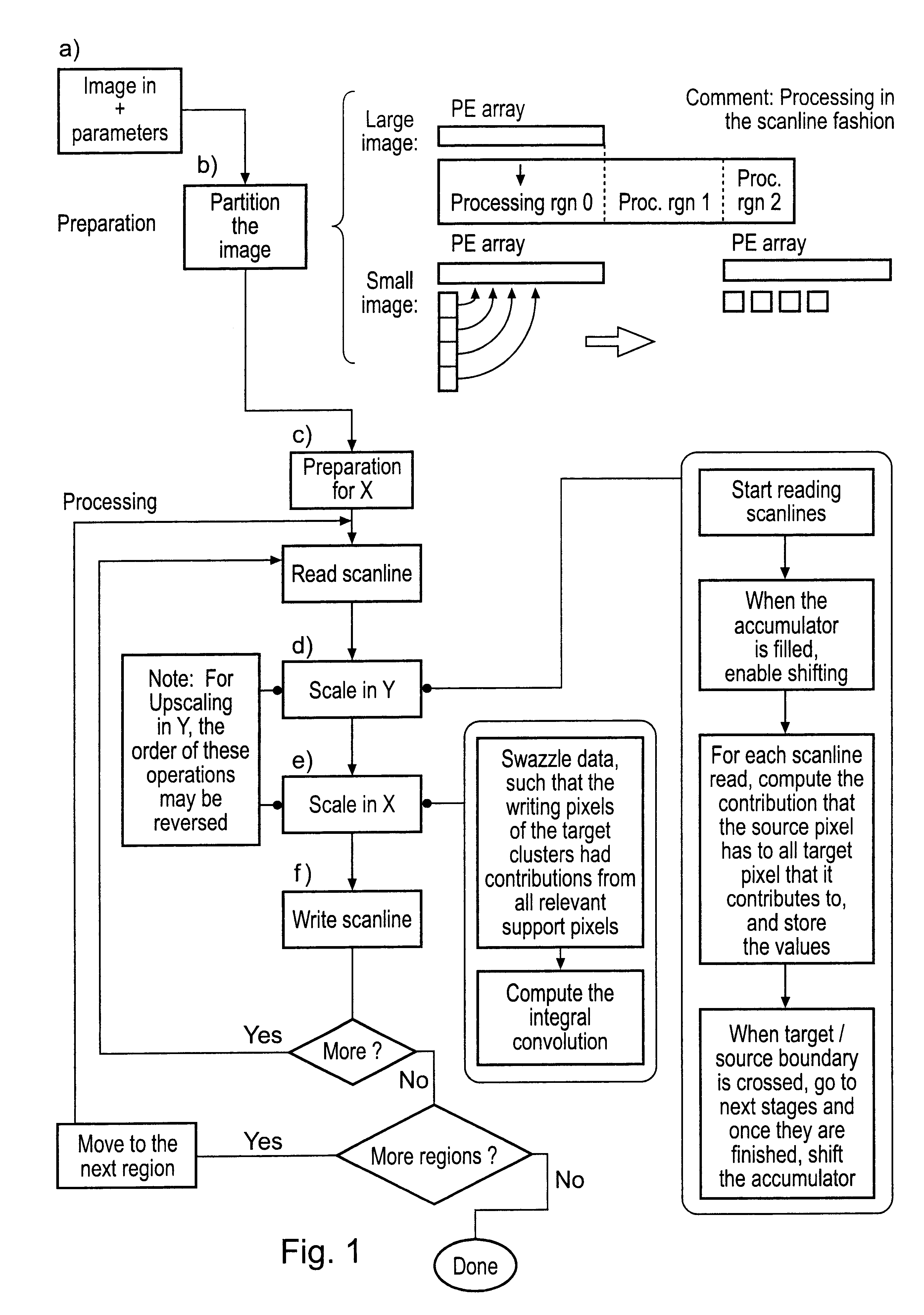

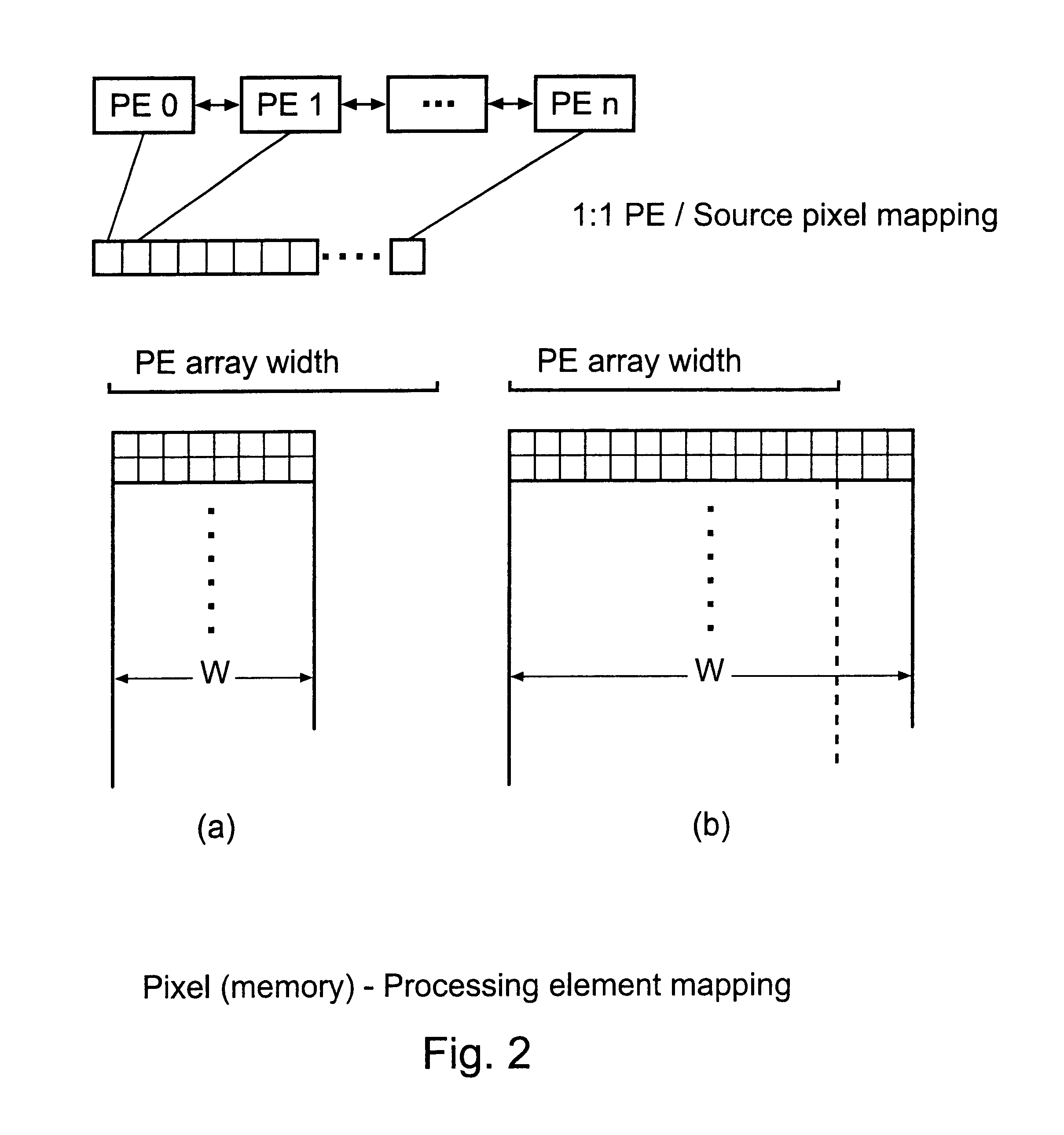

Image scaling

Inactive | US6825857B2 | Exceeding performance | Fast imaging | Geometric image transformation | Picture reproducers using cathode ray tubes | Image resolution | Digital filter

Owner:RAMBUS INC

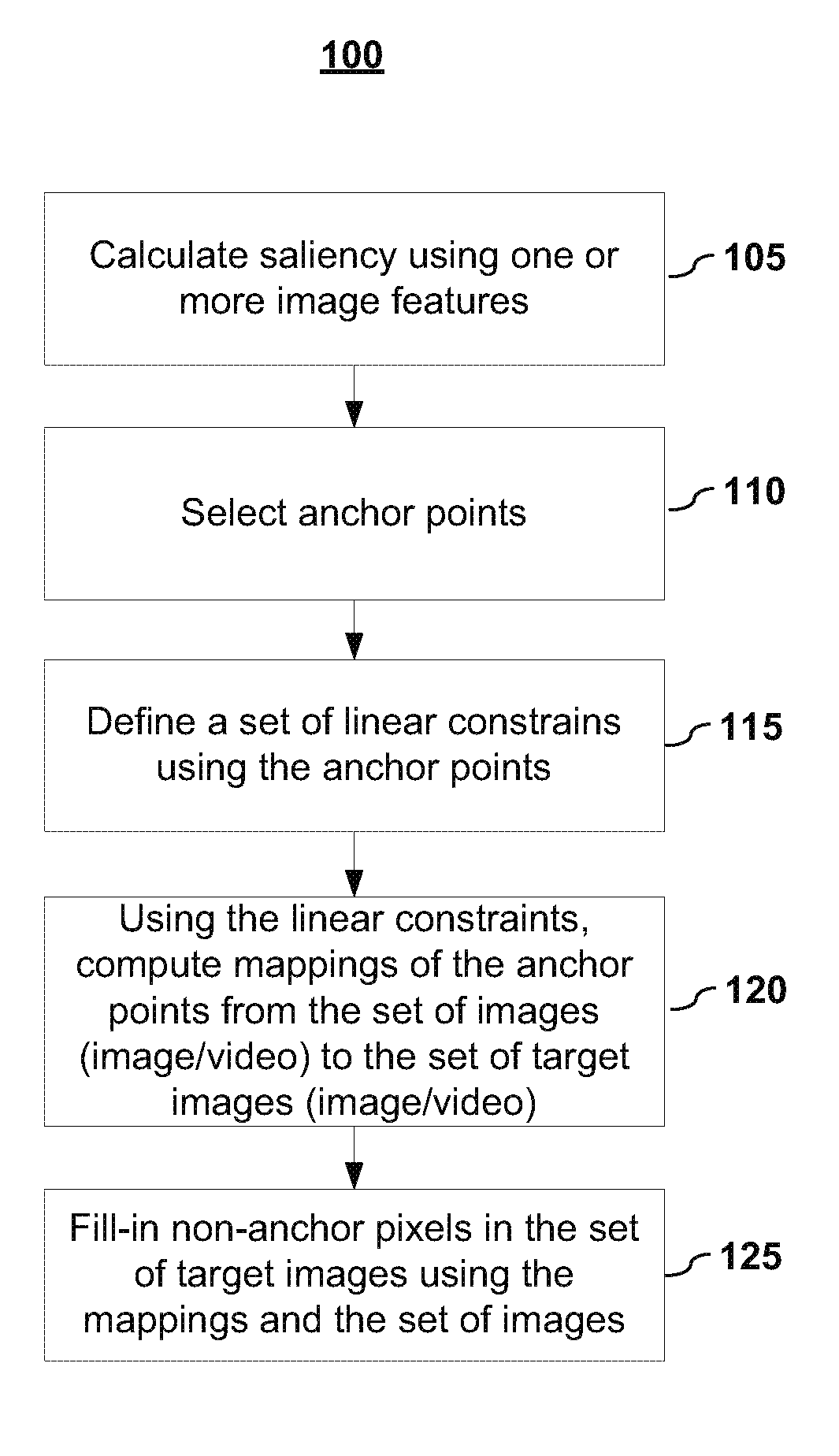

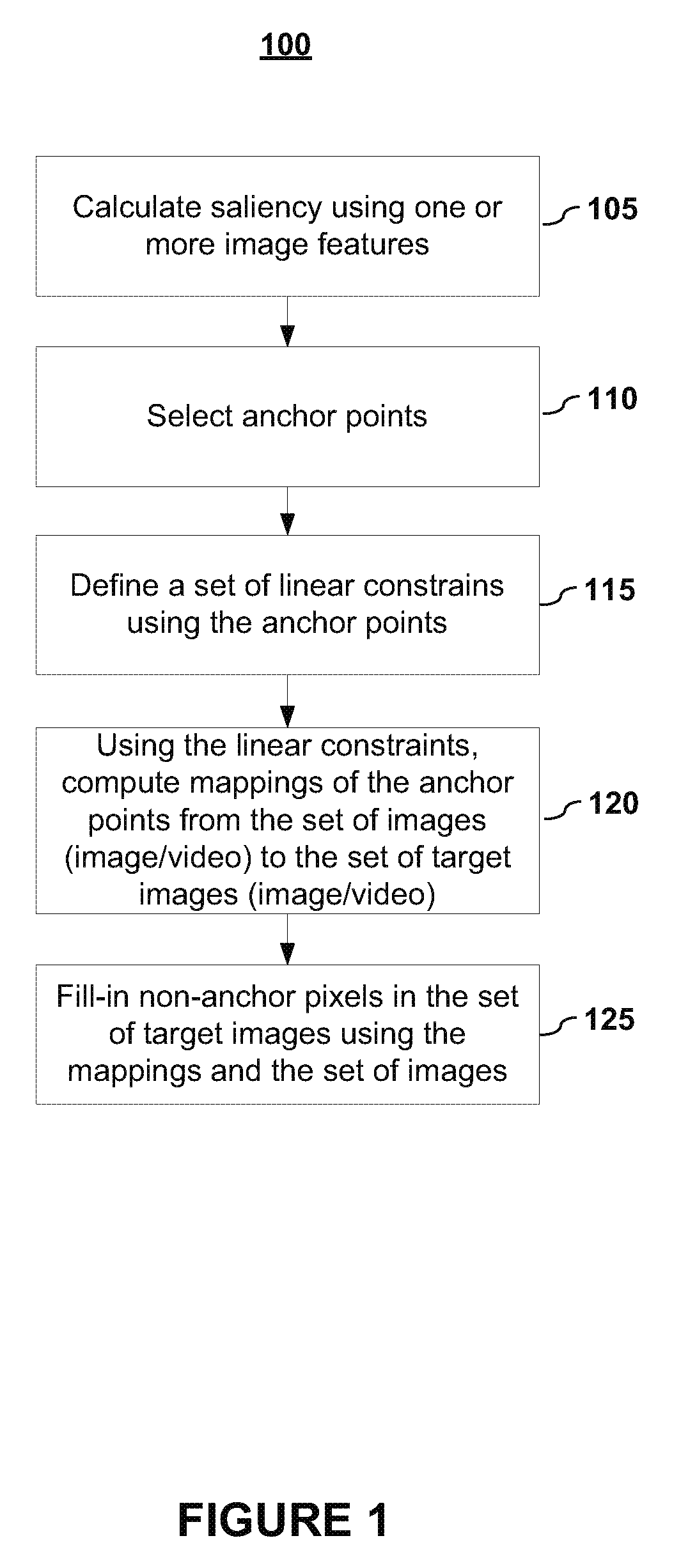

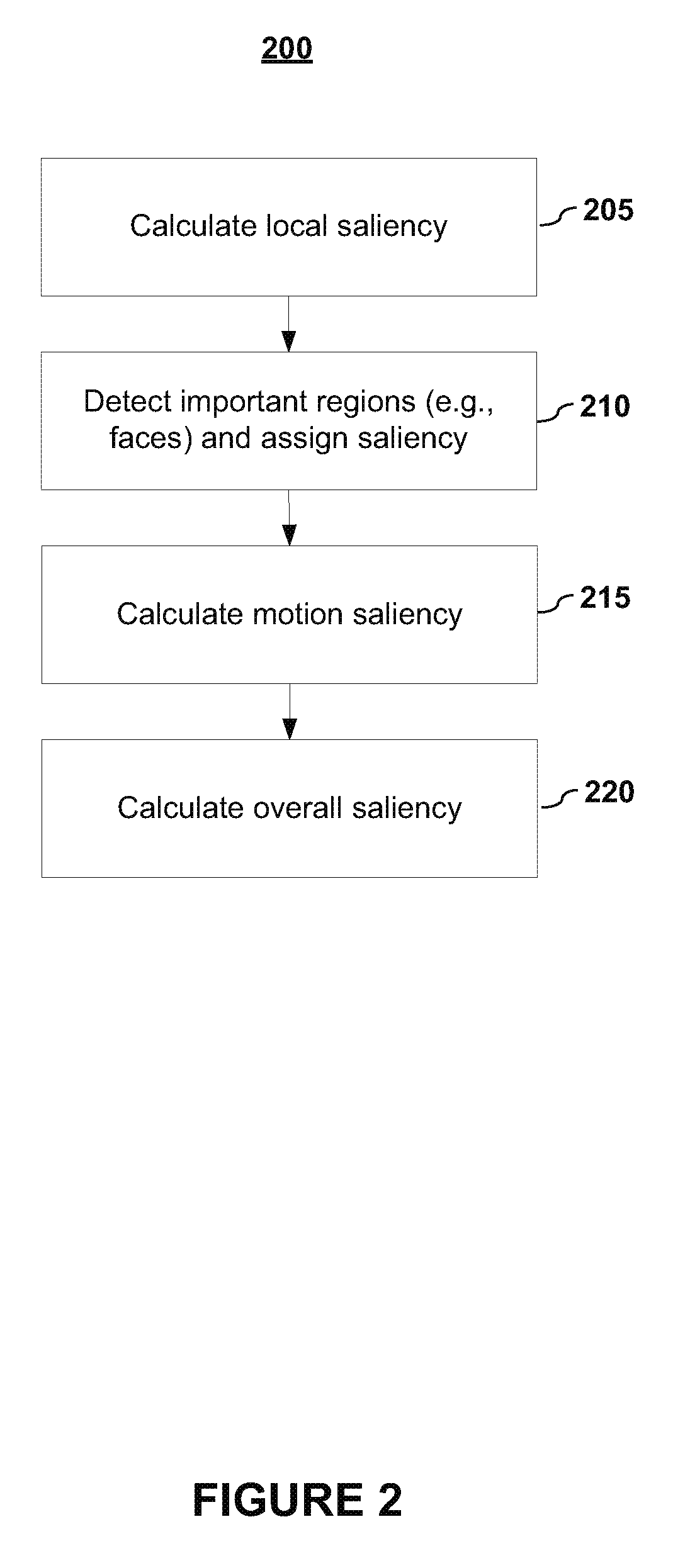

Content-Aware Image and Video Resizing by Anchor Point Sampling and Mapping

Active | US20100124371A1 | Effective preservation | Avoid confusion | Geometric image transformation | Character and pattern recognition | Pattern recognition | Back projection

Aspects of the present invention include systems and methods for resizing a set of images, which may comprise one or more images, while preserving the important content. In embodiments, the saliency of pixels in the set of images is determined using one or more image features. A small number of pixels, called anchor points, are selected from the set of images by saliency-based sampling. The corresponding positions of these anchor points in the set of target images are obtained using pixel mapping. In embodiments, to prevent mis-ordering of pixel mapping, an iterative approach is used to constrain the mapped pixels to be within the boundaries of the target image/video. In embodiments, based on the mapping of neighboring anchor points, other pixels in the target are inpainted by back-projection and interpolation. The combination of sampling and mapping greatly reduces the computational cost yet leads to a global solution to content-aware image/video resizing.

Owner:138 EAST LCD ADVANCEMENTS LTD

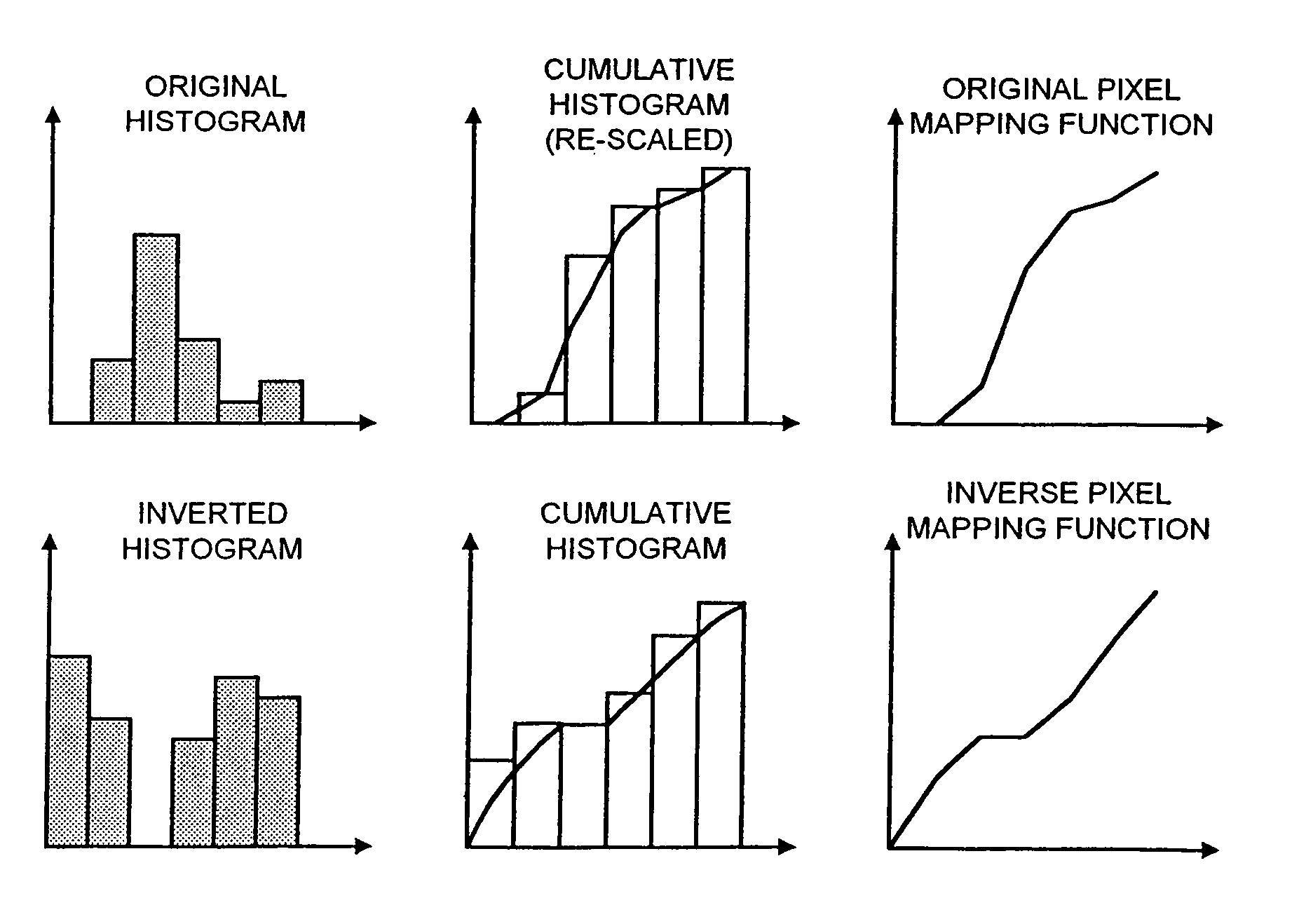

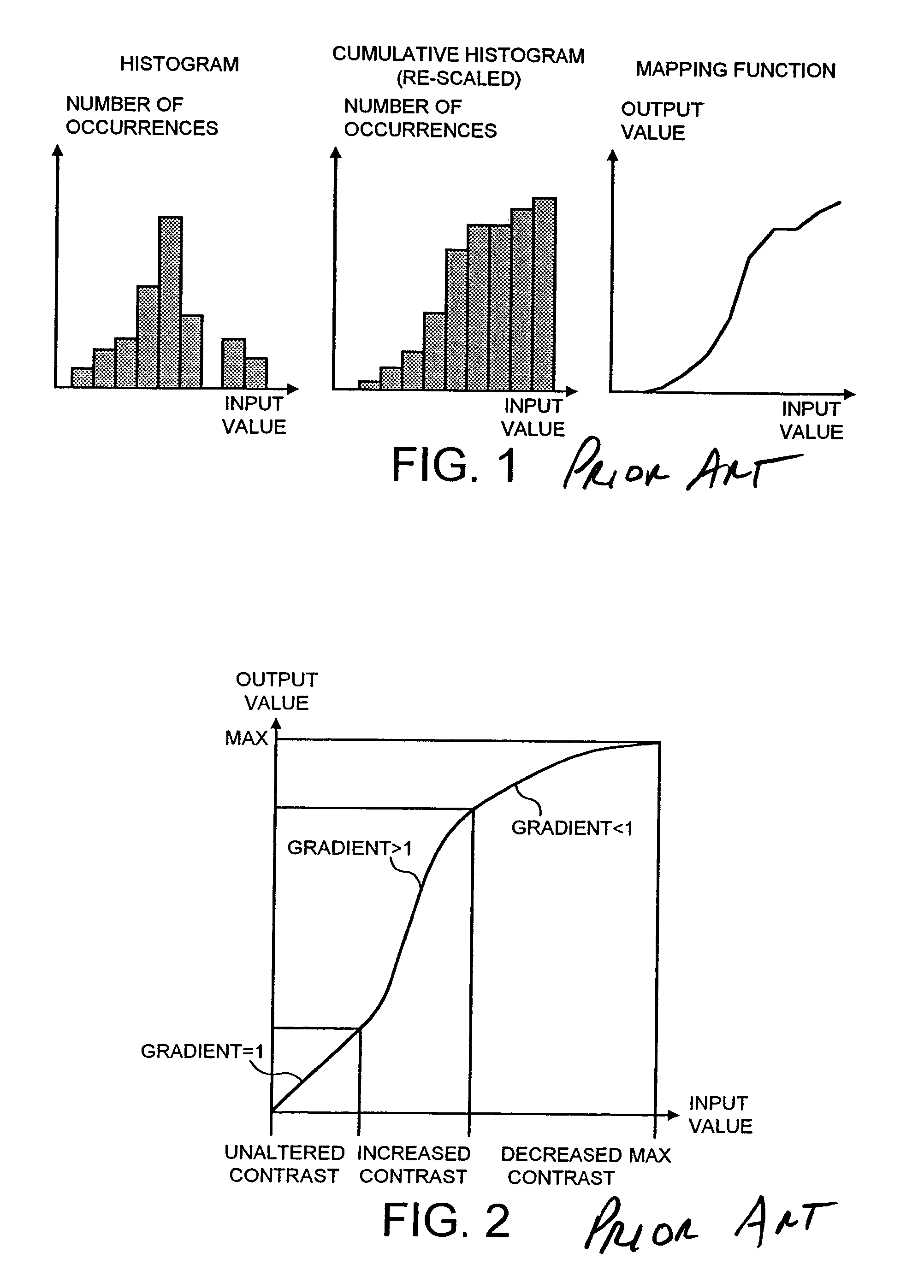

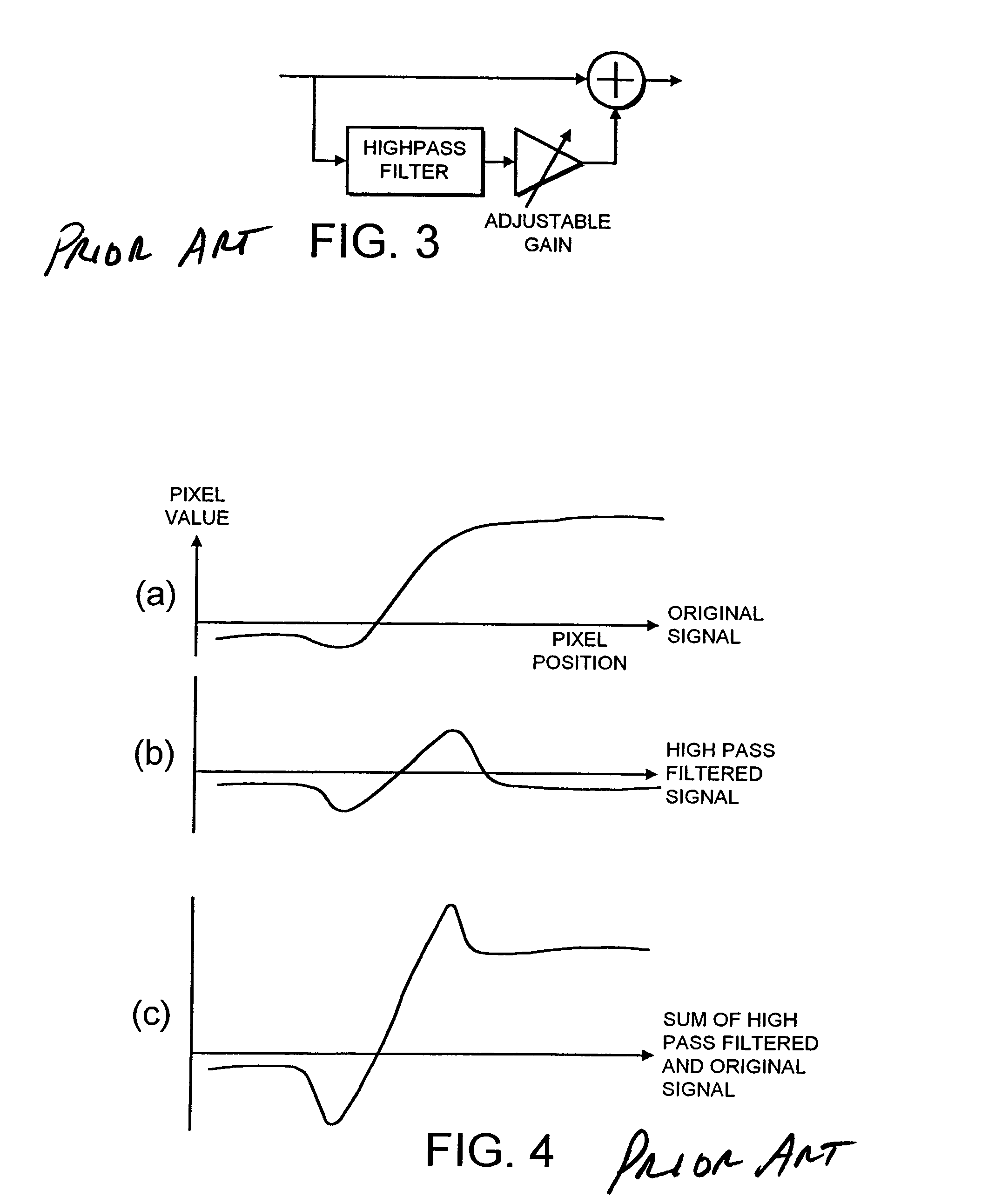

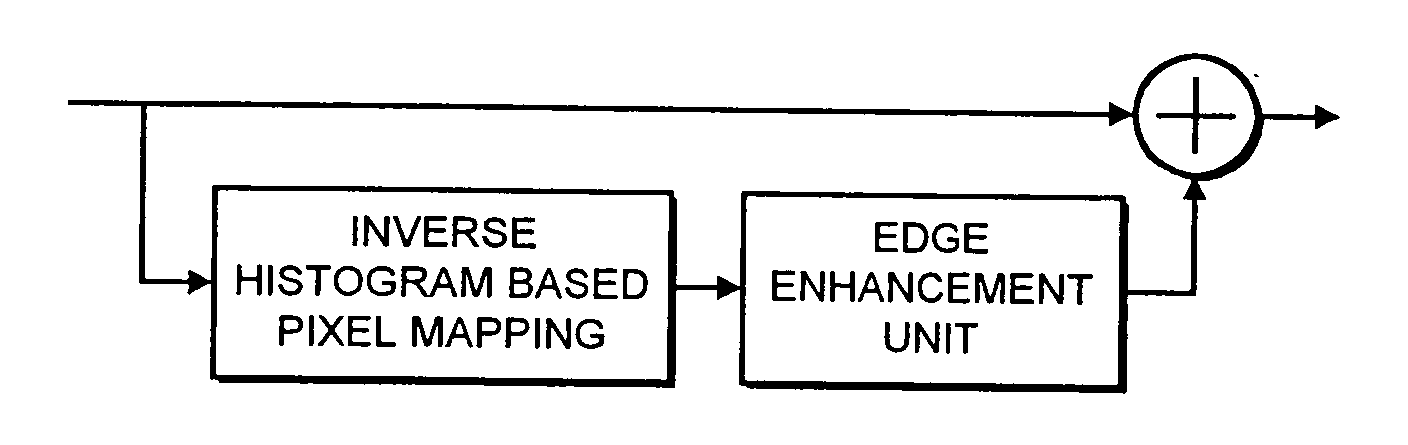

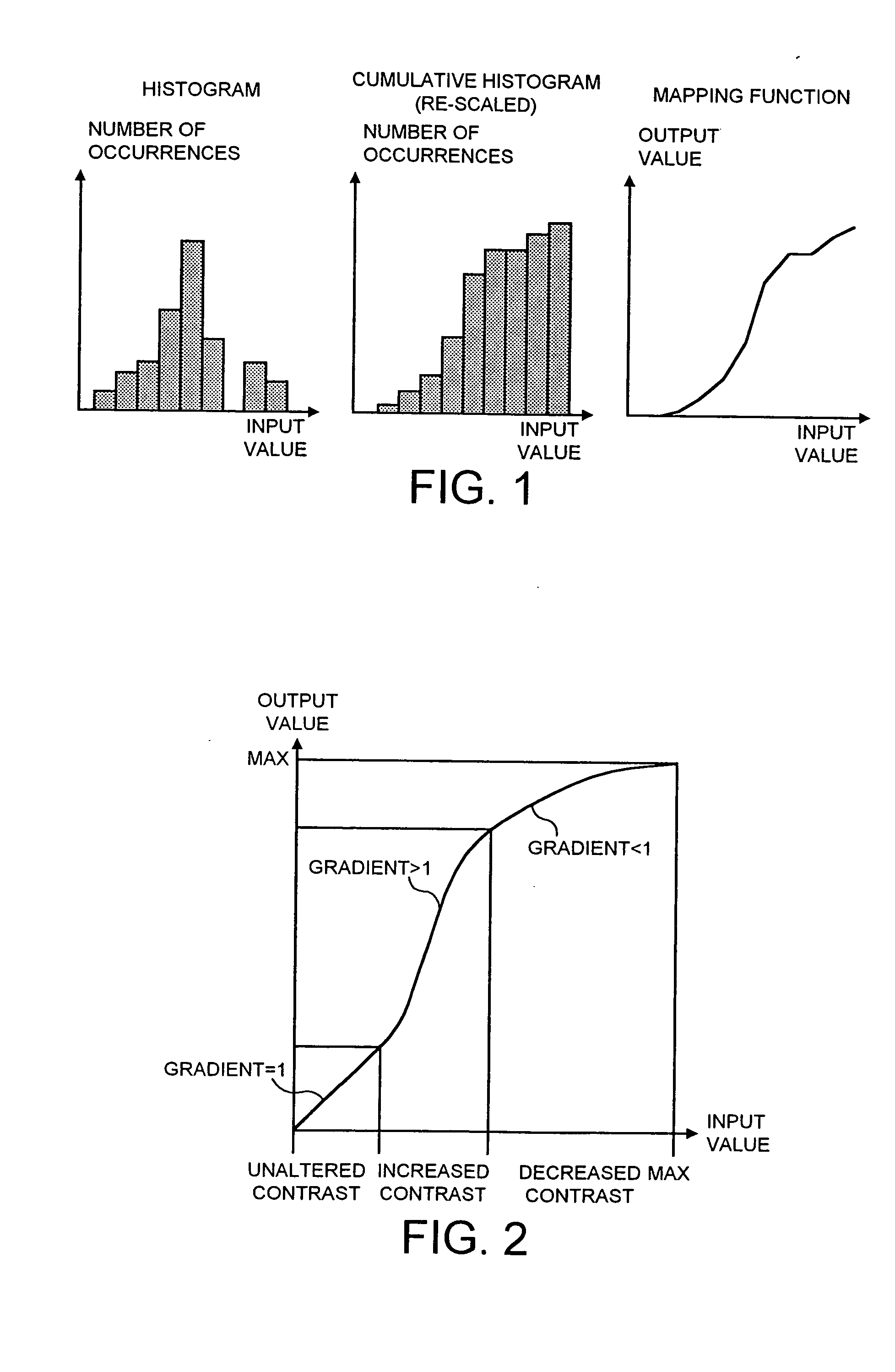

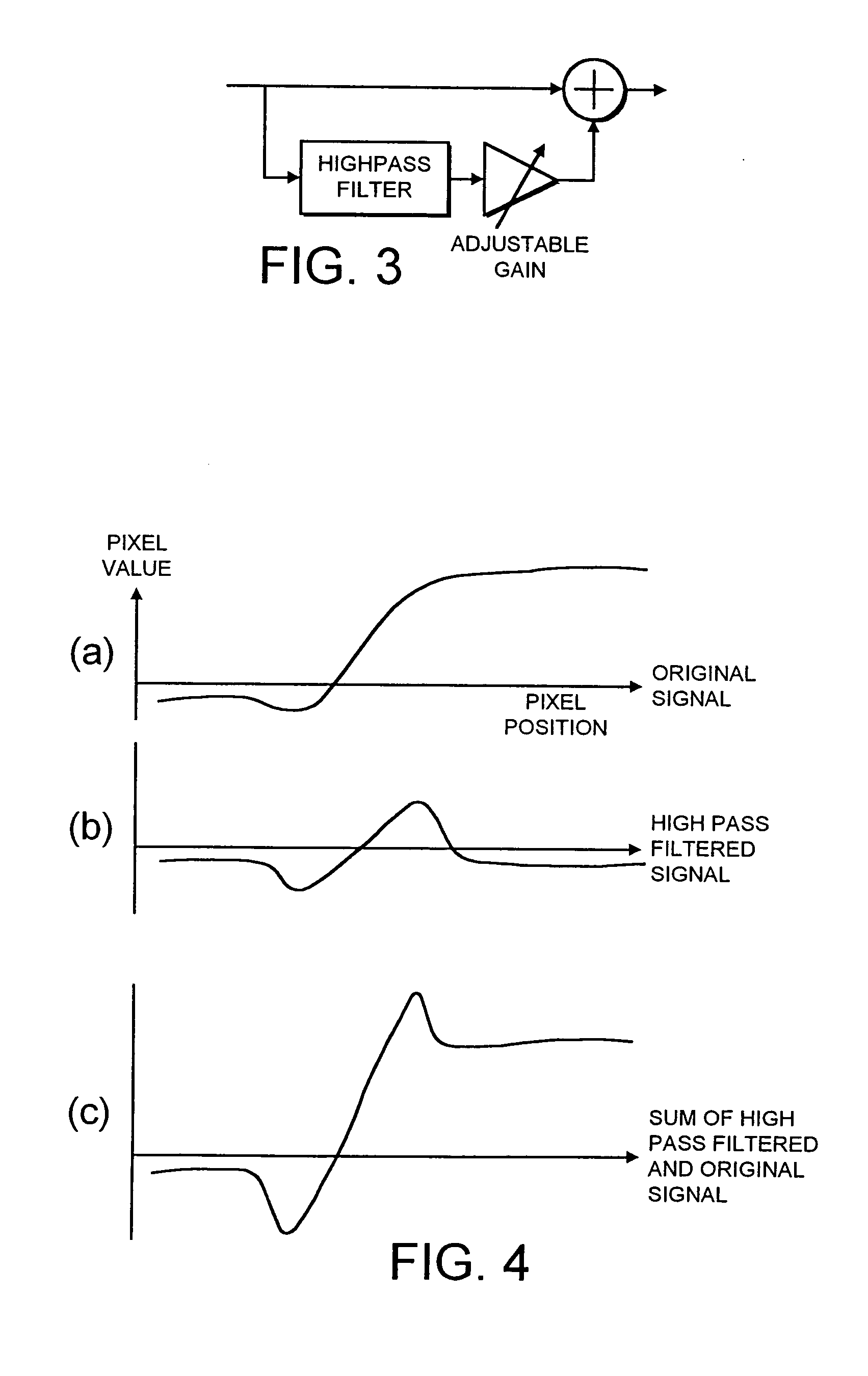

Method and apparatus for enhancing a digital image by applying an inverse histogram-based pixel mapping function to pixels of the digital image

Inactive | US7020332B2 | Improve performance | Good effect | Image enhancement | Character and pattern recognition | Pixel mapping | Digital image

A method and associated device wherein an inverse histogram based pixel mapping step is combined with an edge enhancement step such as unsharp masking. In such an arrangement the inverse histogram based pixel mapping step improves the performance of the unsharp masking step, serving to minimize the enhancement of noise components while desired signal components are sharpened.

Owner:WSOU INVESTMENTS LLC

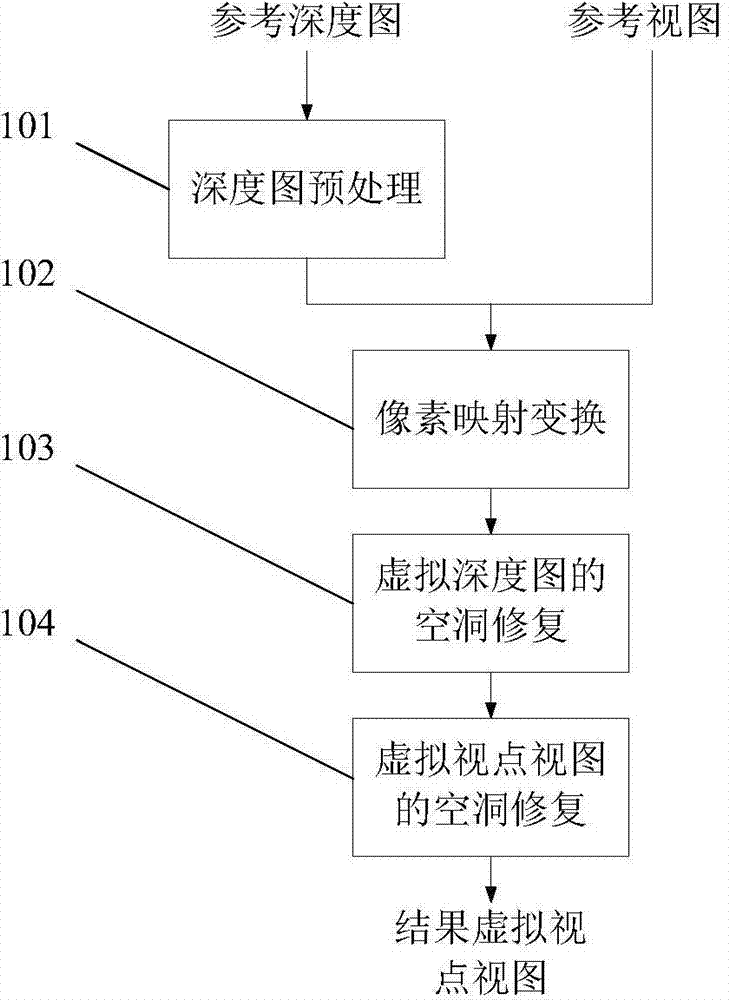

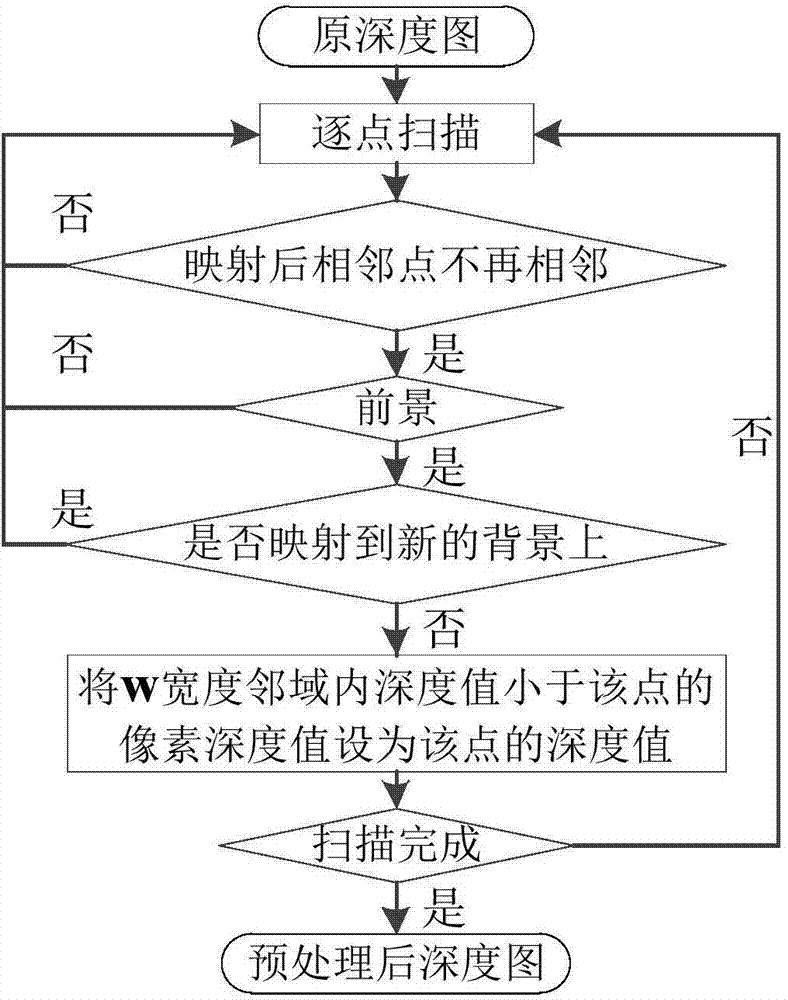

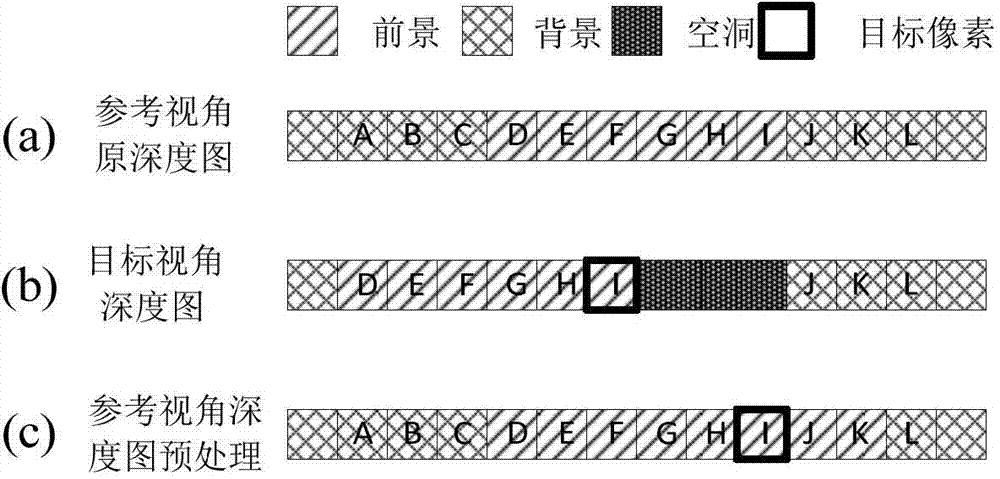

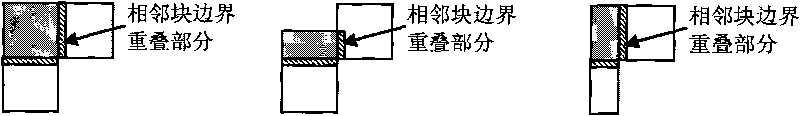

Depth-based cavity repairing method in viewpoint synthesis

Inactive | CN104780355A | Improve retention | Improve repair effect | Stereoscopic systems | Viewpoints | Pixel mapping

The invention discloses a depth-based cavity repairing method in viewpoint synthesis. The method comprises steps as follows: (1) performing depth edge one-way expansion preprocessing on an input reference depth map to eliminate inter-infiltration of a foreground pixel and a background pixel in a synthesized virtual viewpoint view; (2) performing pixel mapping transformation by using the preprocessed reference depth map and an original reference view to obtain a virtual viewpoint view with a cavity and a virtual viewpoint depth map; (3) repairing the cavity in the virtual depth map with a pixel-based image repairing method in combination with the relative position relation between the foreground and the background around the cavity in the virtual viewpoint depth map; (4) repairing the cavity in the virtual viewpoint view with an improved sample-based image repairing method in combination with the repaired virtual viewpoint depth map. With the adoption of the method, the virtual viewpoint view with better quality can be obtained, the continuity of image edges can be better kept, and the inter-infiltration of the foreground and background pixels can be eliminated.

Owner:ZHEJIANG UNIV

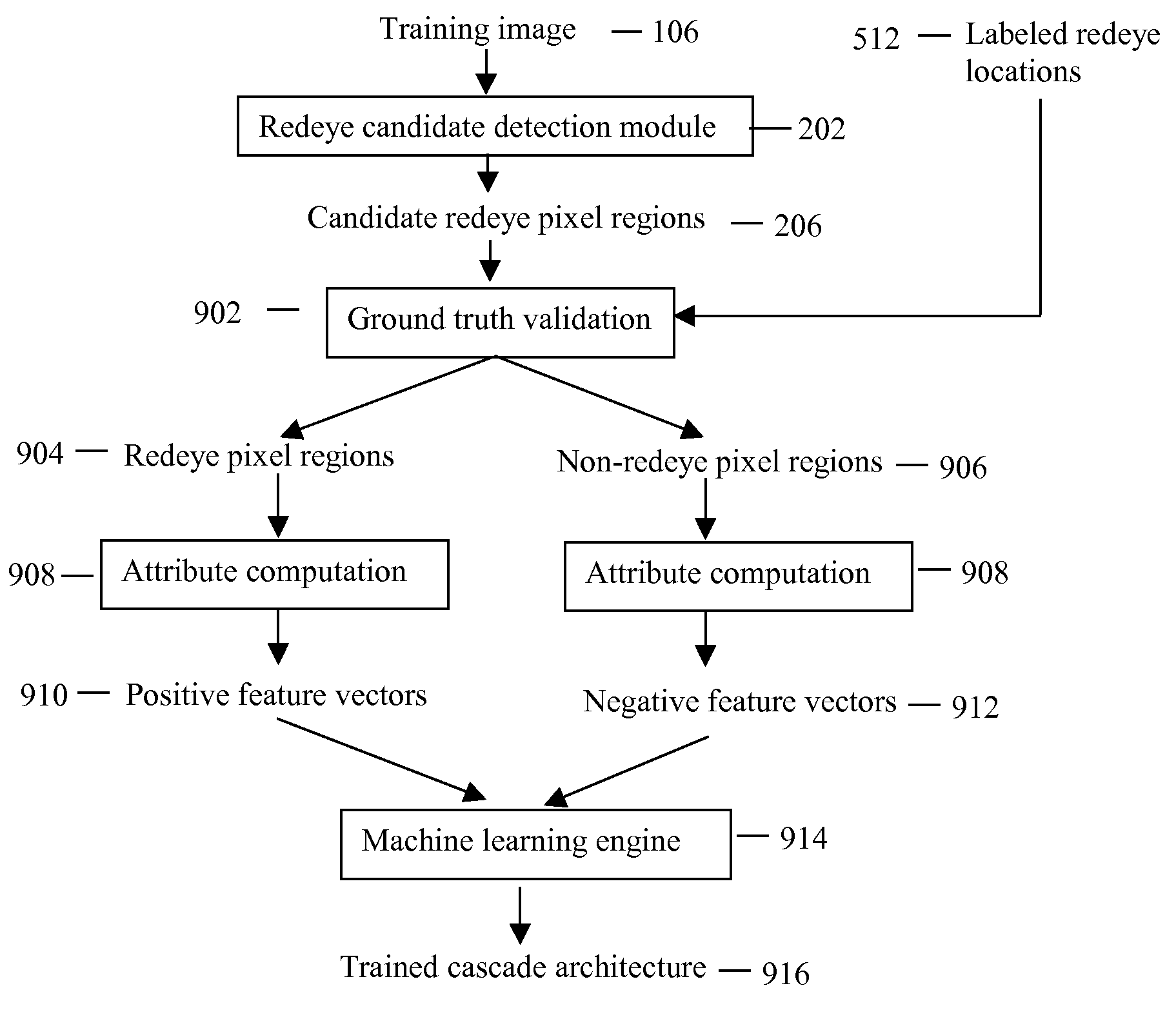

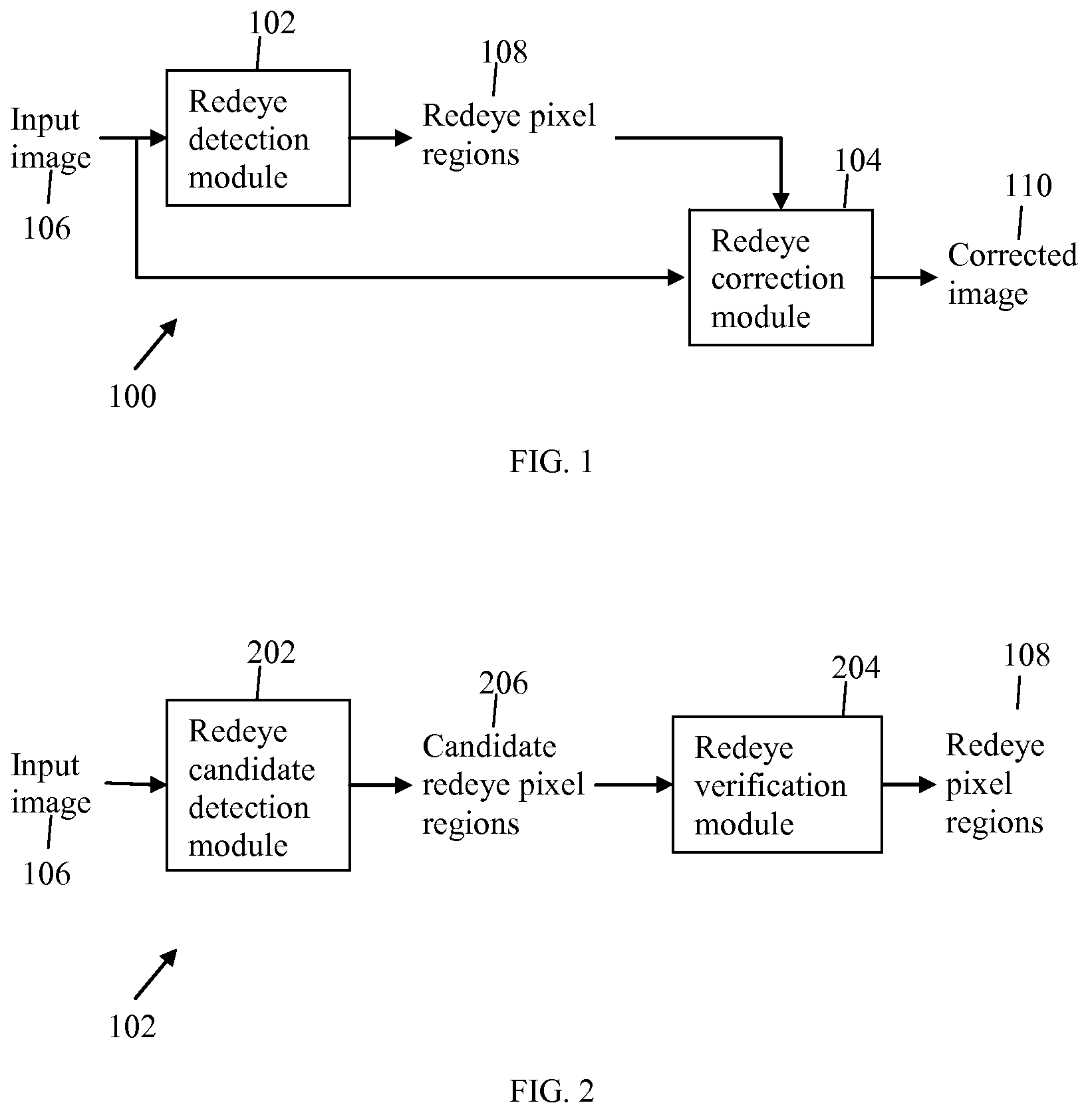

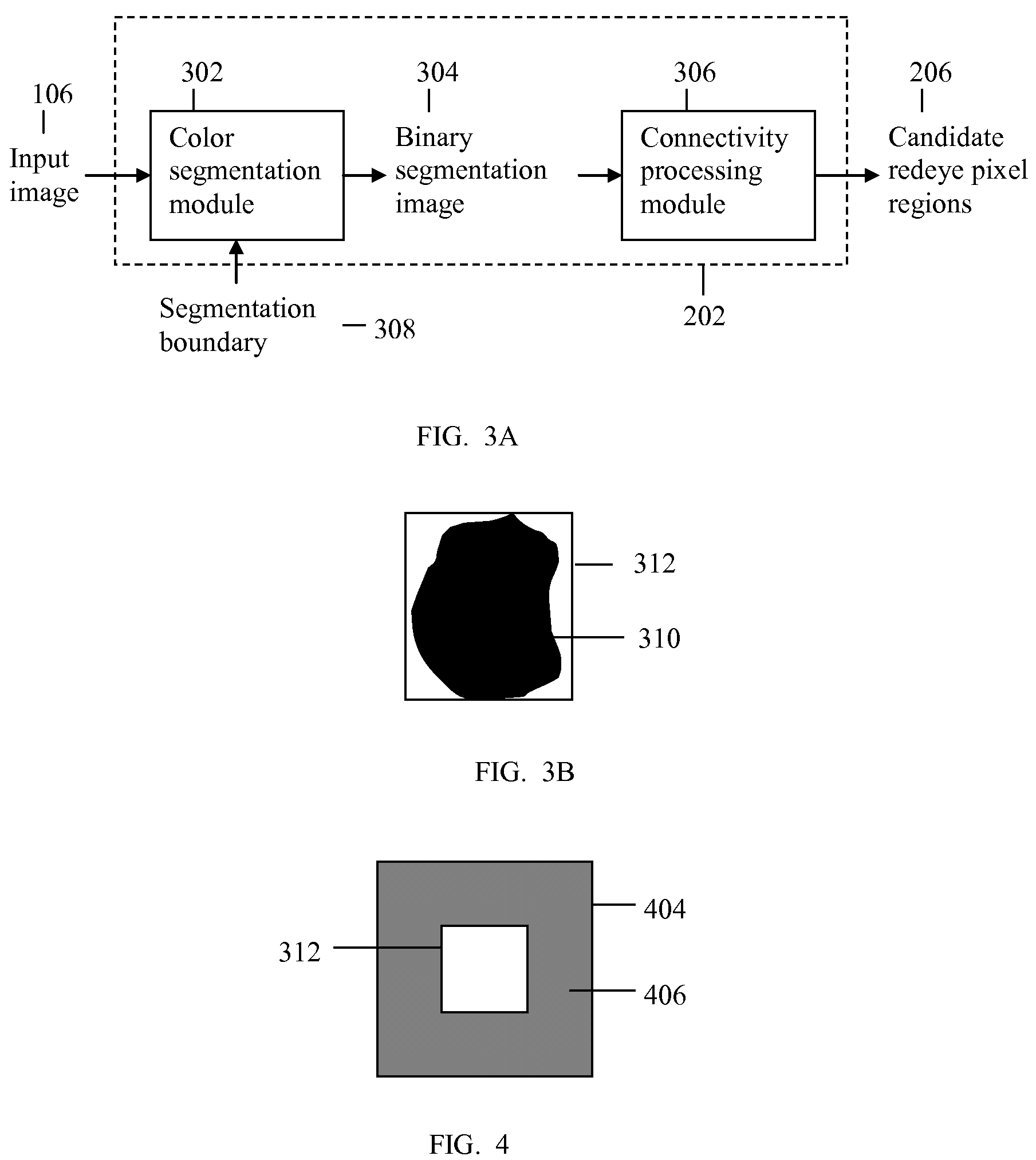

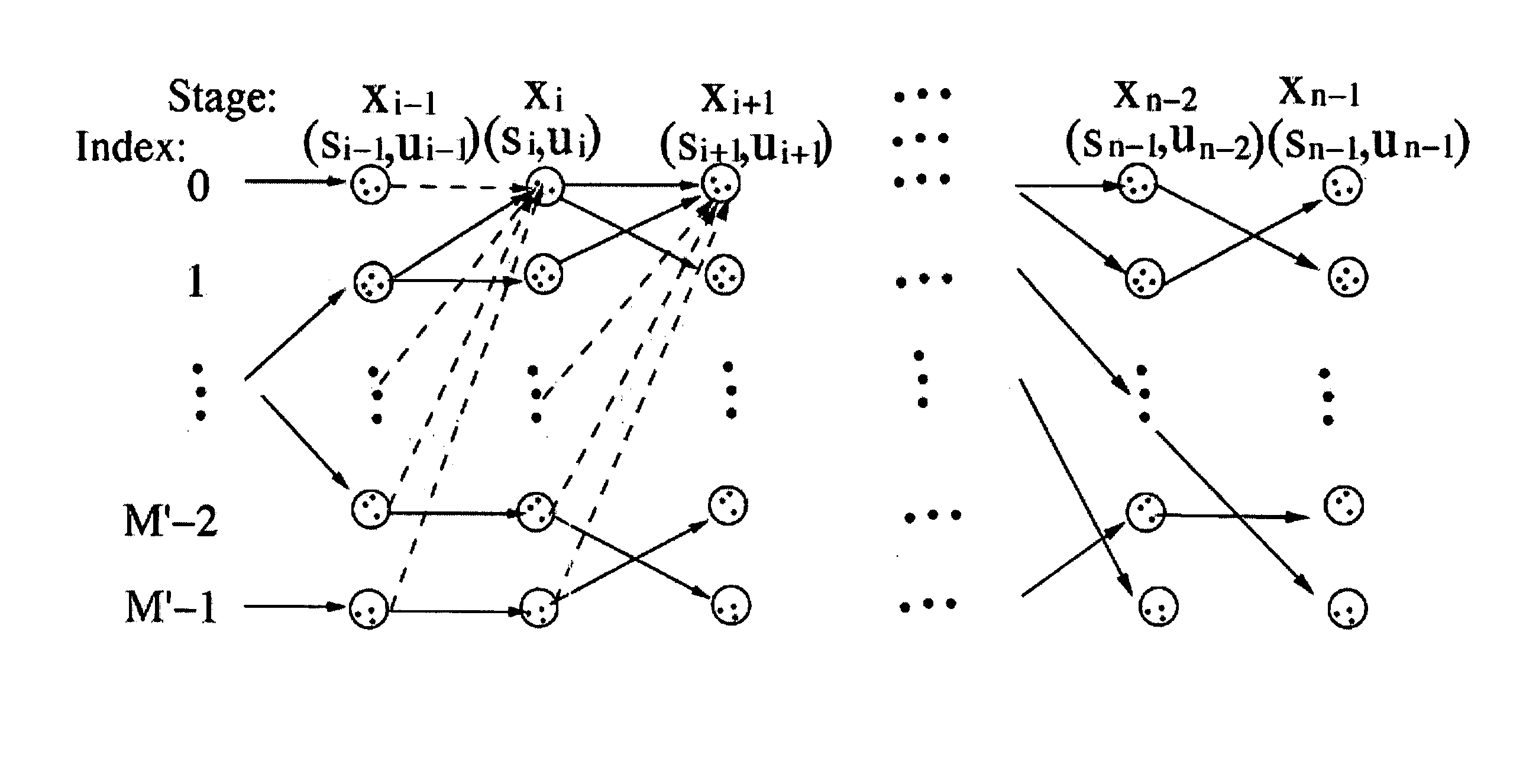

Method and system for detection and removal of redeyes

Inactive | US20080170778A1 | Effective classification | Image enhancement | Image analysis | Color image | Feature vector

Systems and methods for detecting and correcting redeye defects in a digital image are described. In one aspect, the invention proposes a color image segmentation method. In accordance with this method, pixels of the input image are segmented by mapping the pixels to a color space and using a number of segmentation surfaces defined in the color space. Based on segmentation results, candidate redeye pixel regions are further identified. In another aspect, the invention features a method to classify candidate redeye pixel regions into redeye pixel regions and non-redeye pixel regions. In accordance with this method, the candidate redeye pixel regions are processed by a cascade of classification stages. In each classification stage, a plurality of attributes are computed for the input candidate redeye pixel region to define a feature vector. The feature vector is fed to a pre-trained binary classifier. A candidate redeye pixel region that passes a classification stage is further processed by a next classification stage, while a region that fails is rejected and dropped from further processing. Only the candidate redeye pixel regions that pass all the classification stages are identified as the redeye pixel regions. In another aspect, the invention describes a set of attributes that are effective in driving classification of redeye pixel regions from non-redeye pixel regions. The invention also describes a scheme to generate a plurality of attributes and a machine learning scheme to select the best attributes for classification design purposes.

Owner:LUO HUITAO
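
The cascade of classification stages in this abstract follows a common reject-early pattern, which the sketch below illustrates. The attribute names (`redness`, `width`, `height`), the thresholds, and the helpers `make_stage` and `run_cascade` are hypothetical stand-ins; in the patent each stage uses a pre-trained binary classifier, which is replaced here by simple threshold rules.

```python
from typing import Callable, Dict, List, Sequence

Region = Dict[str, float]
Stage = Callable[[Region], bool]


def make_stage(extract_features: Callable[[Region], List[float]],
               classify: Callable[[List[float]], bool]) -> Stage:
    """Combine a feature extractor and a binary classifier into one stage."""
    def stage(region: Region) -> bool:
        return classify(extract_features(region))
    return stage


def run_cascade(candidates: Sequence[Region], stages: Sequence[Stage]) -> List[Region]:
    """Keep only the candidate regions that pass every stage, in order."""
    accepted = []
    for region in candidates:
        # all() stops at the first failing stage, so a rejected region is
        # never passed to the later (typically more expensive) stages.
        if all(stage(region) for stage in stages):
            accepted.append(region)
    return accepted


# Hypothetical stages: threshold rules standing in for pre-trained classifiers.
redness_stage = make_stage(lambda r: [r["redness"]], lambda v: v[0] > 0.6)
shape_stage = make_stage(lambda r: [r["width"] / r["height"]],
                         lambda v: 0.5 <= v[0] <= 2.0)

candidates = [
    {"redness": 0.9, "width": 10, "height": 10},   # passes both stages
    {"redness": 0.2, "width": 10, "height": 10},   # rejected by stage 1
    {"redness": 0.9, "width": 50, "height": 5},    # rejected by stage 2
]
redeye_regions = run_cascade(candidates, [redness_stage, shape_stage])
```

Ordering cheap stages first is the usual design choice for such cascades, since most candidates are expected to be rejected early.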

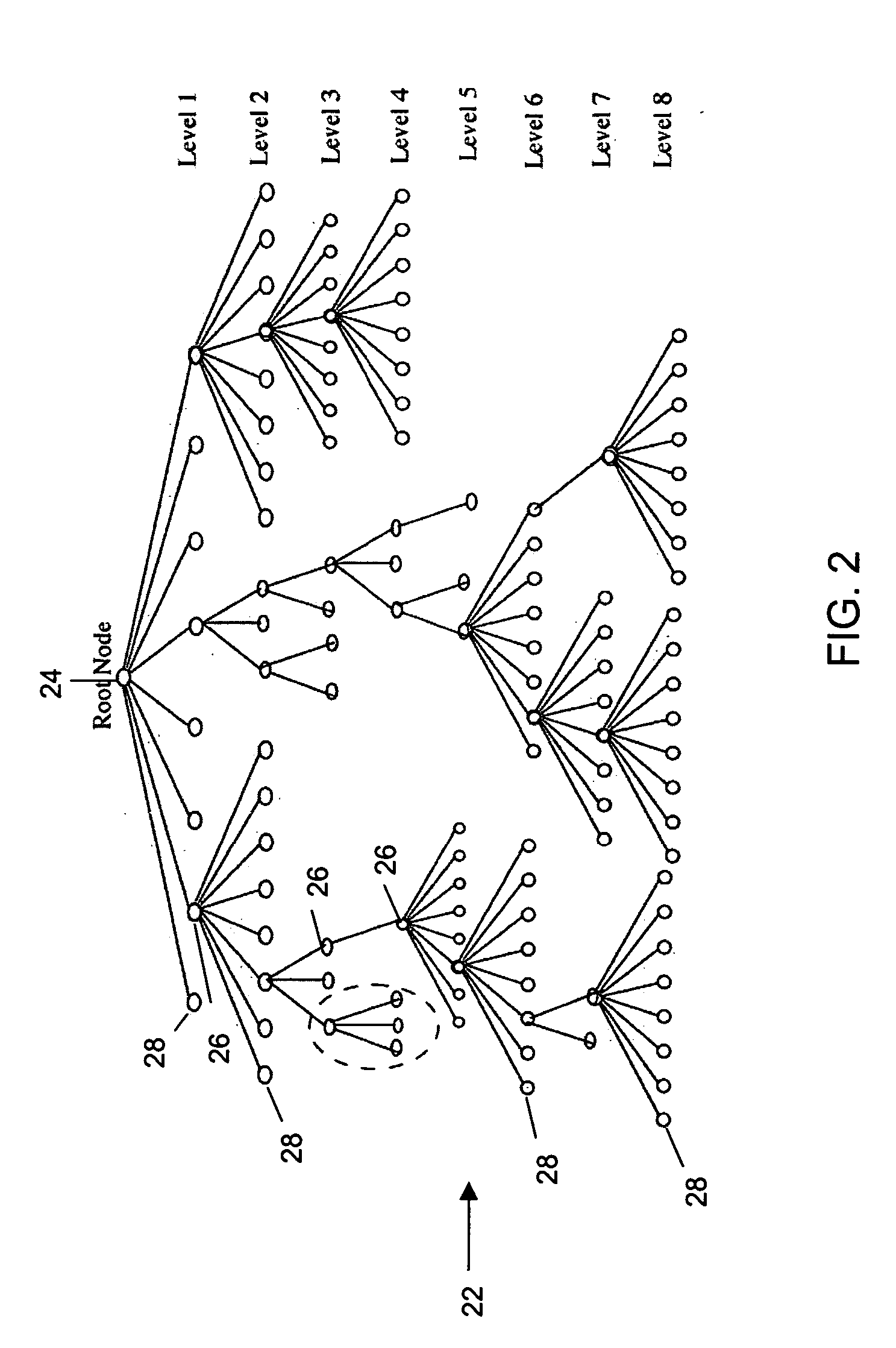

Method, system and software product for color image encoding

Active | CN101065779A | Image coding | Data switching by path configuration | Computation complexity | Color image coding

The present invention relates to the compression of color image data. A combination of hard decision pixel mapping and soft decision pixel mapping is used to jointly address both quantization distortion and compression rate while maintaining low computational complexity and compatibility with standard decoders, such as, for example, the GIF/PNG decoder.

Owner:BLACKBERRY LTD
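
As a rough illustration of the "hard decision" half of this abstract, the sketch below assigns each pixel to its nearest palette color under squared error, which is the usual meaning of hard-decision mapping in palette-based (GIF/PNG-style) coding. That interpretation, the function name `hard_decision_map`, and the example palette are assumptions; the soft-decision step, which would also weigh the compression rate of the resulting index sequence, is not shown.

```python
def hard_decision_map(pixels, palette):
    """Map each RGB pixel to the index of its nearest palette color.

    Distortion-only assignment: the index chosen for a pixel depends only on
    that pixel, not on how compressible the resulting index sequence is.
    """
    def squared_error(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return [
        min(range(len(palette)), key=lambda i: squared_error(pixel, palette[i]))
        for pixel in pixels
    ]


# Hypothetical three-color palette and a few pixels.
palette = [(0, 0, 0), (255, 255, 255), (255, 0, 0)]
pixels = [(10, 10, 10), (250, 240, 245), (200, 30, 20)]
indices = hard_decision_map(pixels, palette)
```

Because the output is an ordinary sequence of palette indices, a standard indexed-color decoder can reconstruct the image without modification.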

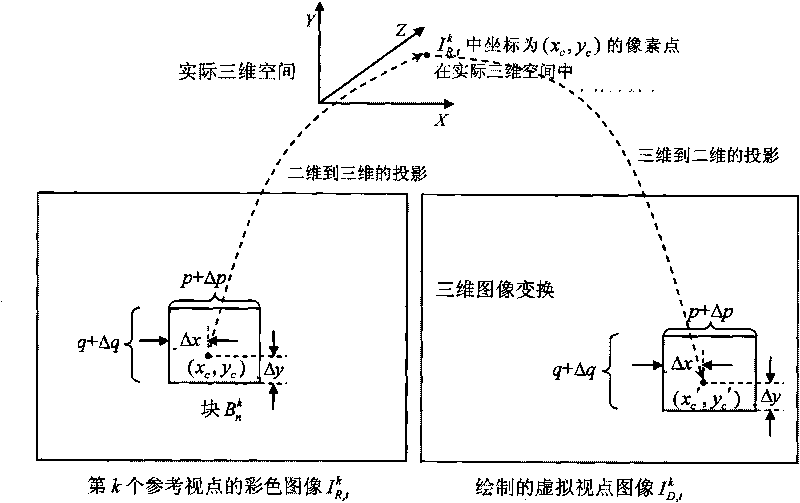

Method for drawing virtual view image

Inactive | CN101556700A | Improve drawing speed | Draw Speed Guaranteed | Image analysis | Stereoscopic systems | Color image | 3D image

The invention discloses a method for drawing a virtual view image. The color image of a reference view is divided into a plurality of blocks of the same size, and the flatness of each block is judged. For flat blocks, 3D image conversion need only be executed on one pixel point in the block to determine the coordinate mapping relation that projects the pixel point from the color image of the reference view to the color image of the virtual view; the whole block is then projected into the color image of the virtual view using that coordinate mapping relation. Because the 3D image conversion is executed on only one pixel point, the drawing speed of flat blocks is effectively improved. For non-flat blocks, per-pixel 3D image conversion is still used to map each pixel point of the block to the color image of the virtual view to be drawn, which effectively ensures drawing precision. Combining the two greatly improves drawing speed while ensuring the drawing precision of the color image of the virtual view.

Owner:NINGBO UNIV
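
The flat-block shortcut described in this abstract can be sketched as follows. Several simplifications are assumptions made here and are not stated in the source: the views are treated as rectified, so the 3D conversion reduces to a horizontal shift of `focal_baseline / depth` pixels; flatness is judged by the depth range within a block; and a simple depth buffer resolves collisions. The name `warp_blockwise` and all parameters are hypothetical.

```python
def warp_blockwise(color, depth, block, focal_baseline, flat_tol):
    """Warp a reference view to a virtual view, block by block.

    color, depth: 2D lists of equal size. Unfilled target pixels stay None.
    """
    h, w = len(color), len(color[0])
    out = [[None] * w for _ in range(h)]
    zbuf = [[float("inf")] * w for _ in range(h)]  # nearest depth written so far

    def shift(z):
        # Assumed rectified setup: the mapping is a horizontal disparity.
        return round(focal_baseline / z)

    for by in range(0, h, block):
        for bx in range(0, w, block):
            ys = range(by, min(by + block, h))
            xs = range(bx, min(bx + block, w))
            zs = [depth[y][x] for y in ys for x in xs]
            flat = max(zs) - min(zs) <= flat_tol
            # Flat block: compute the mapping once, from a single pixel.
            block_shift = shift(depth[by][bx]) if flat else None
            for y in ys:
                for x in xs:
                    z = depth[y][x]
                    # Non-flat block: compute the mapping for every pixel.
                    d = block_shift if flat else shift(z)
                    tx = x - d
                    if 0 <= tx < w and z < zbuf[y][tx]:
                        zbuf[y][tx] = z
                        out[y][tx] = color[y][x]
    return out


# Usage: one row, two blocks of three pixels; the second block is not flat.
virtual = warp_blockwise(
    color=[[1, 2, 3, 4, 5, 6]],
    depth=[[10, 10, 10, 5, 10, 10]],
    block=3, focal_baseline=10, flat_tol=0,
)
```

Target pixels that remain `None` correspond to holes that a later filling step would need to repair.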

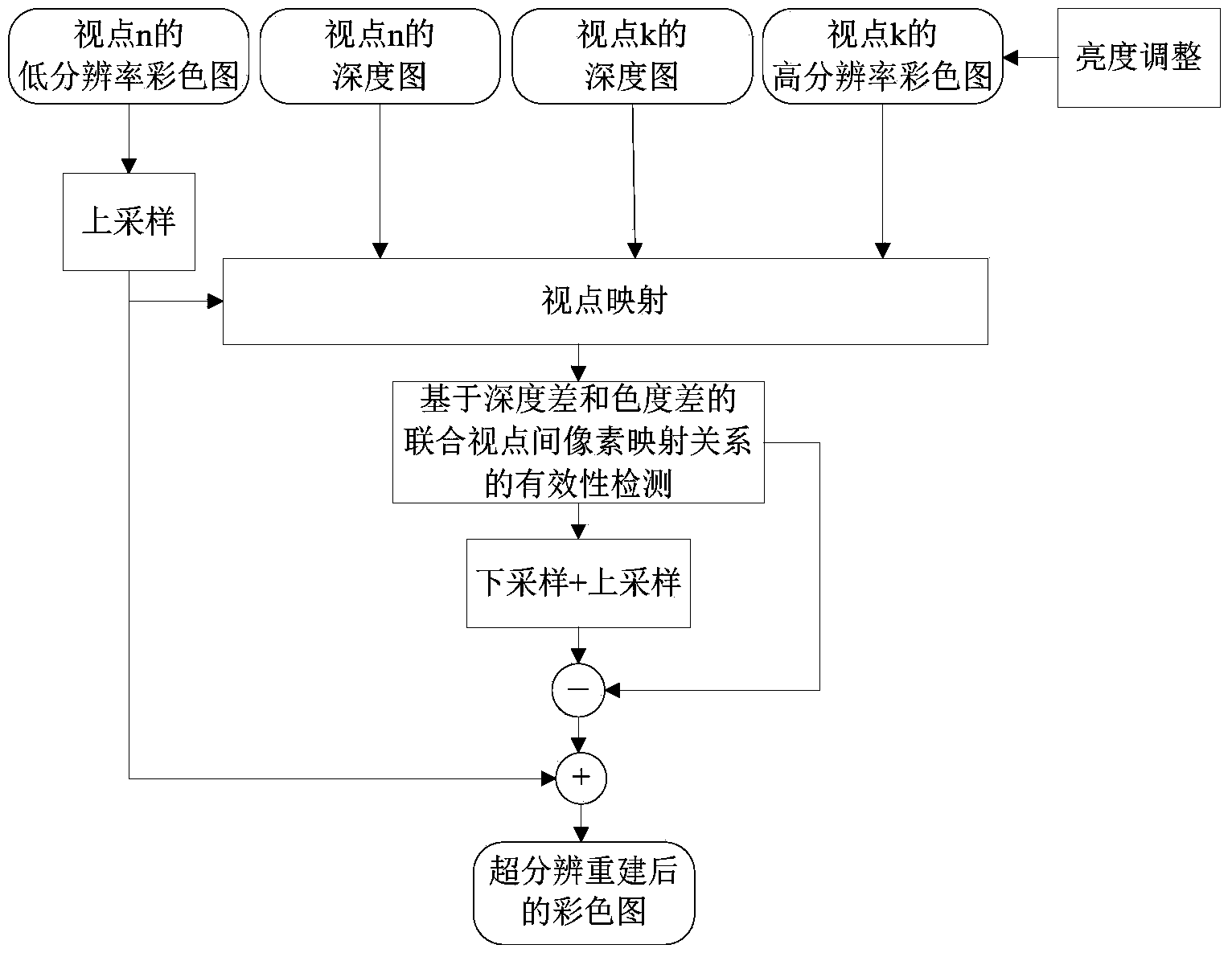

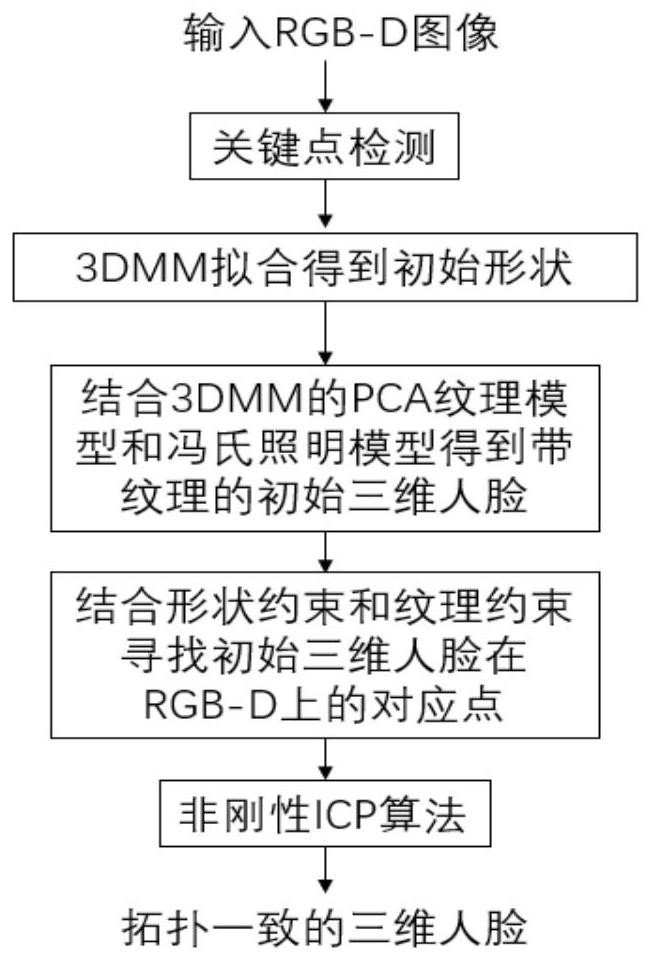

Multi-view-point image super-resolution method based on depth information

Inactive | CN104079914A | Quality improvement | Reduce computational complexity | Stereoscopic systems | Color image | Pinhole camera model

The invention provides a multi-view-point image super-resolution method based on depth information. The method mainly addresses the edge artifacts that occur in the prior art when super-resolution reconstruction is conducted on a low-resolution viewpoint image. The method includes the steps of: mapping a high-resolution color image of viewpoint k to the image position of viewpoint n according to a pinhole camera model, using the depth information, the related camera parameters and a backward projection method; conducting validity detection on the projected image based on the pixel mapping relations between viewpoints, jointly using depth differences and color differences; retaining only the pixel points that pass validity detection, so as to prevent luminance differences between different viewpoints from influencing illumination regulation conducted on the color image of viewpoint k in advance; separating out the high-frequency information of the projected image; and adding the high-frequency information to the image obtained after the low-resolution color image of viewpoint n is sampled, so as to obtain a super-resolution reconstructed image of viewpoint n. The method effectively reduces edge artifacts when super-resolution reconstruction is conducted on the low-resolution viewpoint, and improves the quality of the super-resolution reconstructed image.

Owner:SHANDONG UNIV

Method, system and software product for color image encoding

Active | US20050237951A1 | Character and pattern recognition | Image coding | Computation complexity | Color image coding

The present invention relates to the compression of color image data. A combination of hard decision pixel mapping and soft decision pixel mapping is used to jointly address both quantization distortion and compression rate while maintaining low computational complexity and compatibility with standard decoders, such as, for example, the GIF/PNG decoder.

Owner:MALIKIE INNOVATIONS LTD

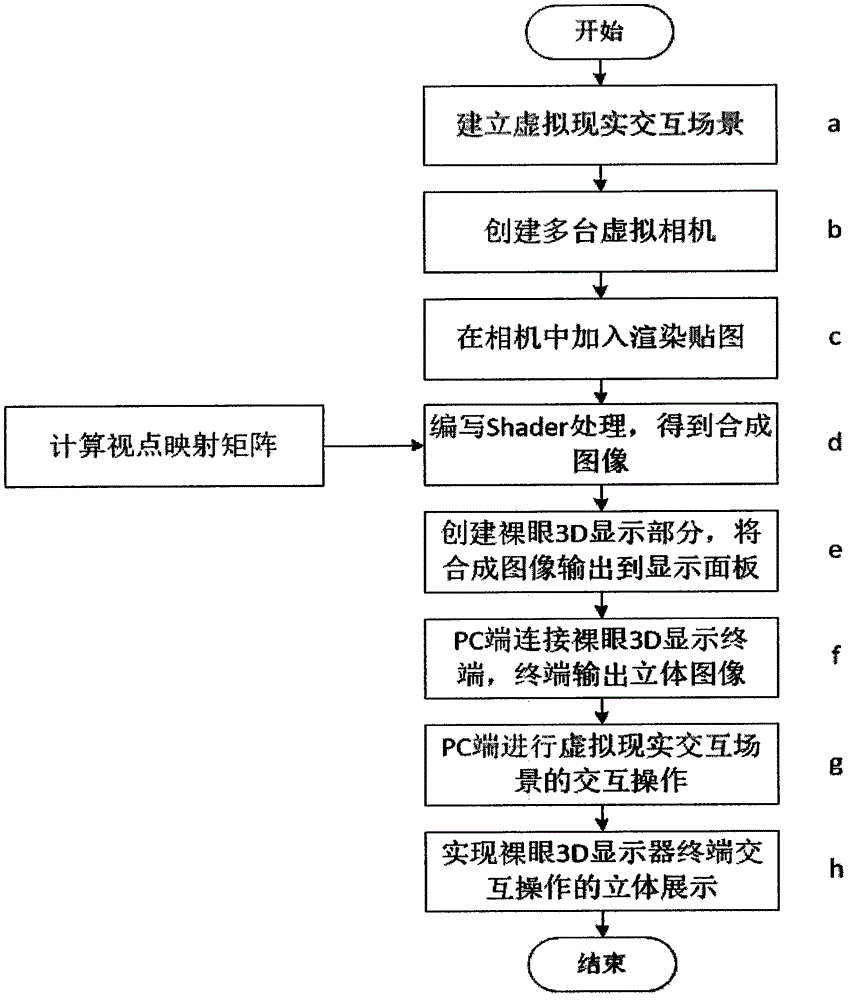

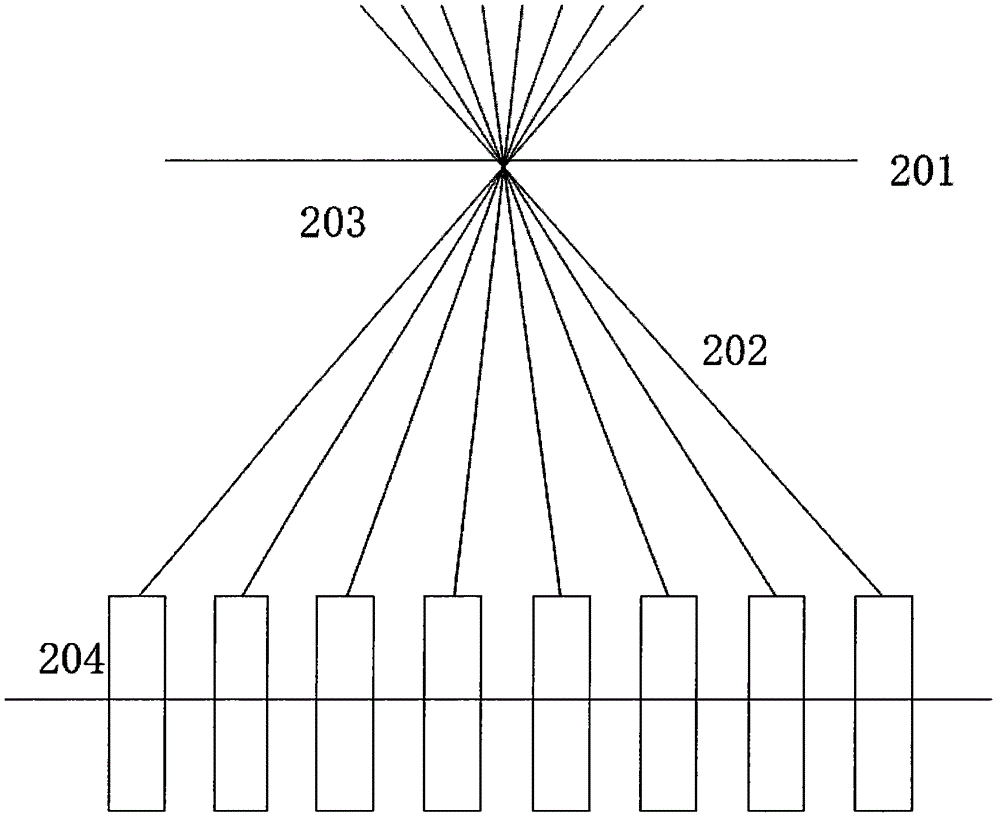

Virtual reality interaction method based on glasses-free 3D display

The invention discloses a virtual reality interaction method based on glasses-free 3D display. The method includes: creating and arranging a plurality of stereo cameras in a virtual reality interaction scene; calculating a viewpoint sub-pixel mapping matrix; compiling a shader that runs on a GPU; sampling the render map output by each stereo camera and fusing the samples to obtain a synthesized image; outputting the synthesized image to a new display panel in the scene; connecting the PC terminal with a glasses-free 3D display terminal so that the glasses-free 3D display terminal displays a stereo image; and performing the interaction operation of the virtual reality interaction scene at the PC terminal while the glasses-free 3D display terminal synchronously displays the interaction operation in stereo. The method effectively addresses problems of glasses-free 3D video content such as a long production period, high production cost and lack of interactivity.

Owner:王子强

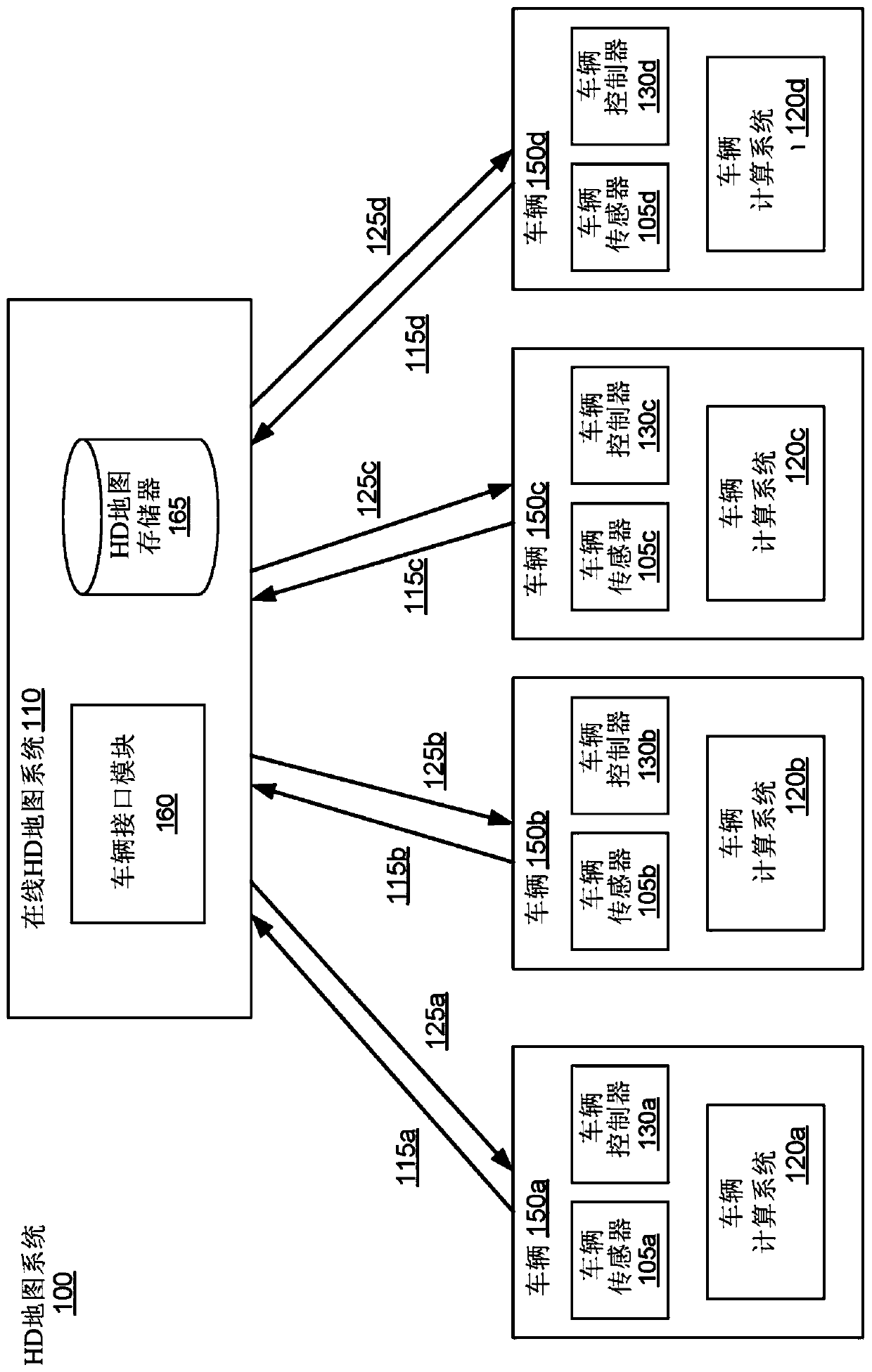

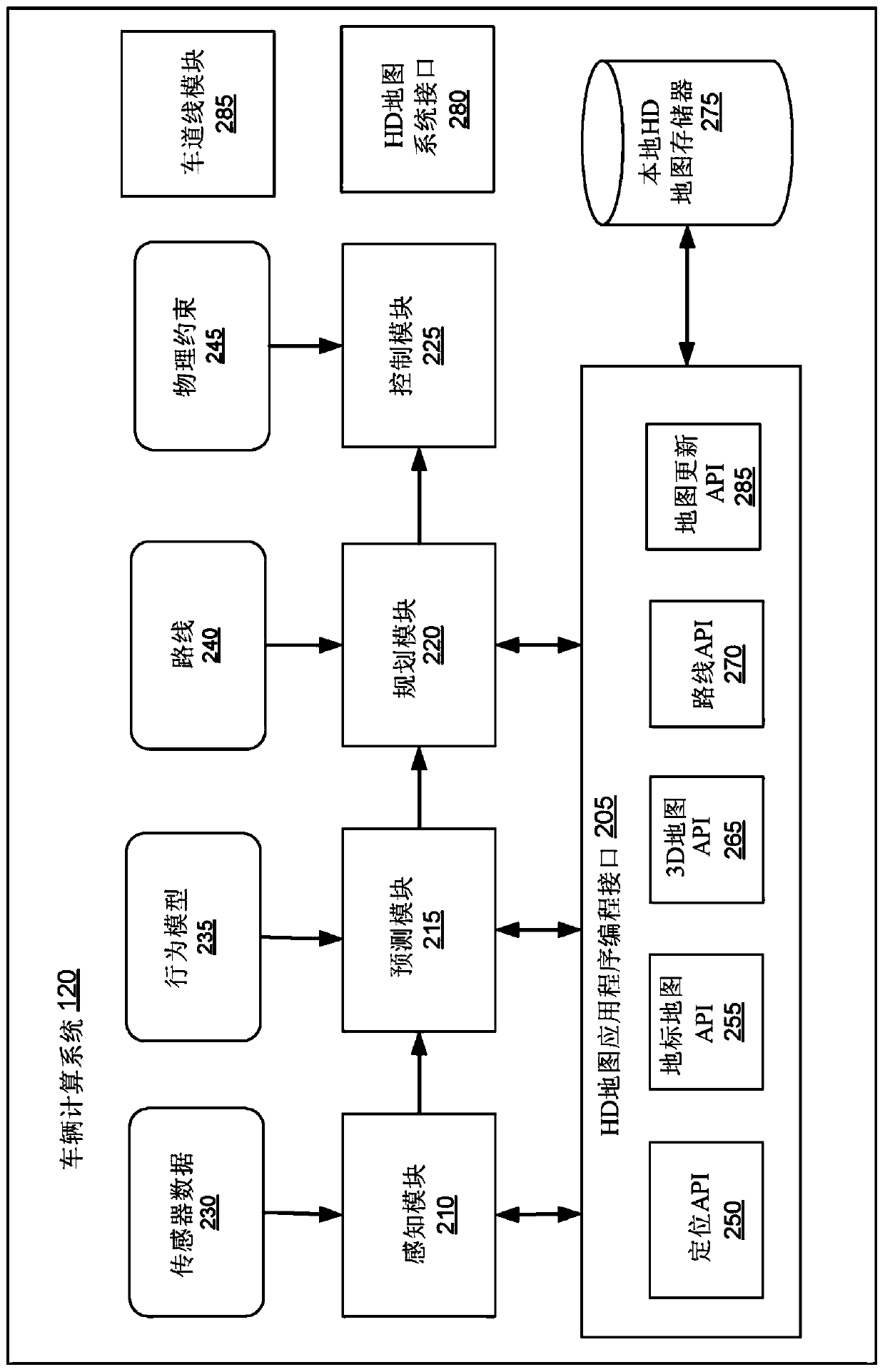

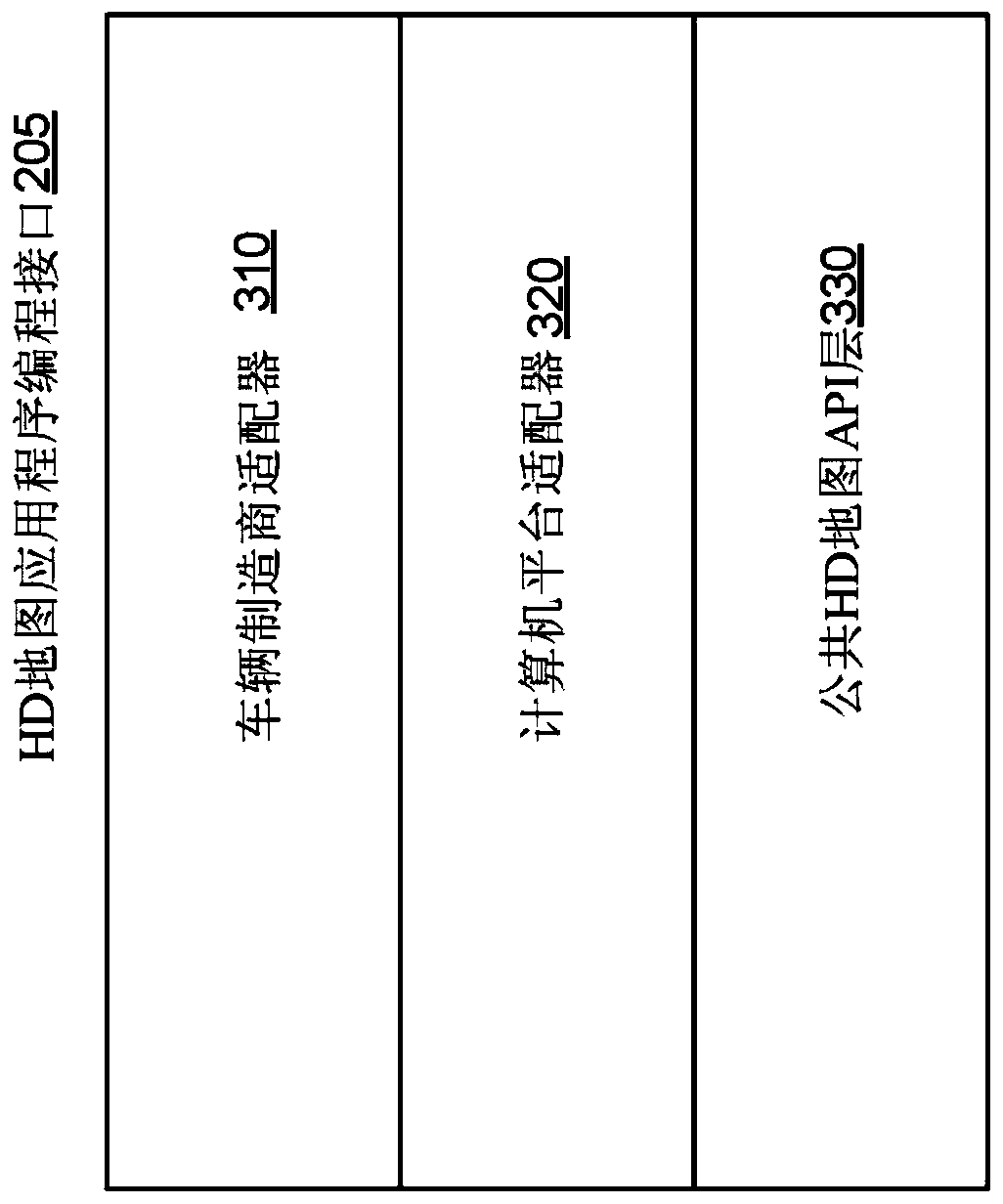

Sign and lane creation for high definition maps used for autonomous vehicles

Pending | CN111542860A | Drawing from basic elements | Autonomous decision making process | Camera image | Three-dimensional space

An HD map system represents landmarks on a high definition map for autonomous vehicle navigation, including describing the spatial location of lanes of a road and semantic information about each lane, along with traffic signs and landmarks. The system generates lane lines designating lanes of roads based on, for example, mapping of camera image pixels with high probability of being on lane lines into a three-dimensional space, and locating/connecting center lines of the lane lines. The system builds a large connected network of lane elements and their connections as a lane element graph. The system also represents traffic signs based on camera images and detection and ranging sensor depth maps. These landmarks are used in building a high definition map that allows autonomous vehicles to safely navigate through their environments.

Owner:NVIDIA CORP

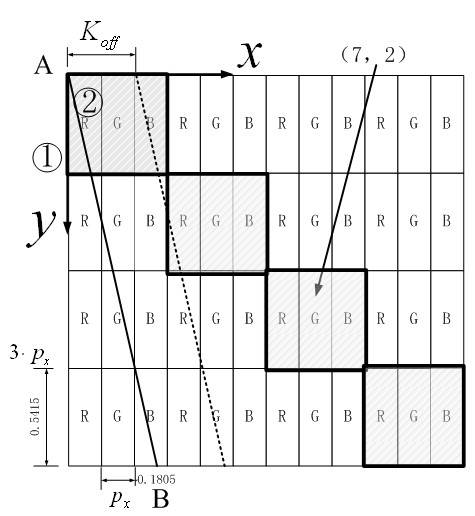

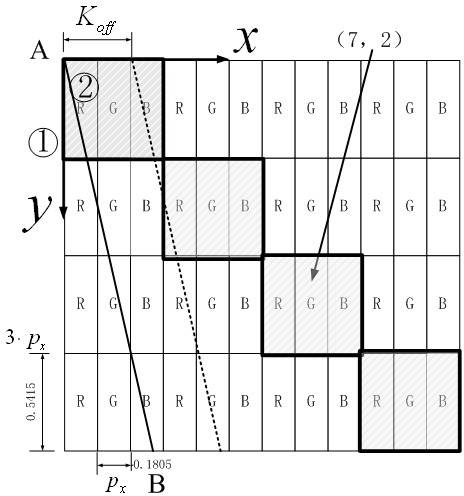

Grating-type multi-viewpoint stereo image synthesis method

The invention discloses a grating-type multi-viewpoint stereo image synthesis method which comprises the following steps: (1) obtaining a lenticular grating pitch parameter p; (2) obtaining a grating inclination angle parameter; (3) obtaining a stereo display sub-pixel and a resolution parameter; (4) according to the size of the lenticular grating pitch, the grating inclination angle and the stereo display sub-pixel, determining the number of viewpoints; (5) obtaining the mapping relationship of the sub-pixel and the viewpoints, namely determining a sub-pixel filling path according to the stereo display grating inclination angle; and (6) after a sub-pixel mapping table is finished, successively filling the pixels of the multi-viewpoint image into a target stereo image according to the filling path. The grating-type multi-viewpoint stereo image synthesis method has the advantages that distortion-free stereo images can be obtained by virtue of the light splitting of a stereo display, and the stereo visual effect is good.

Owner:中航华东光电有限公司

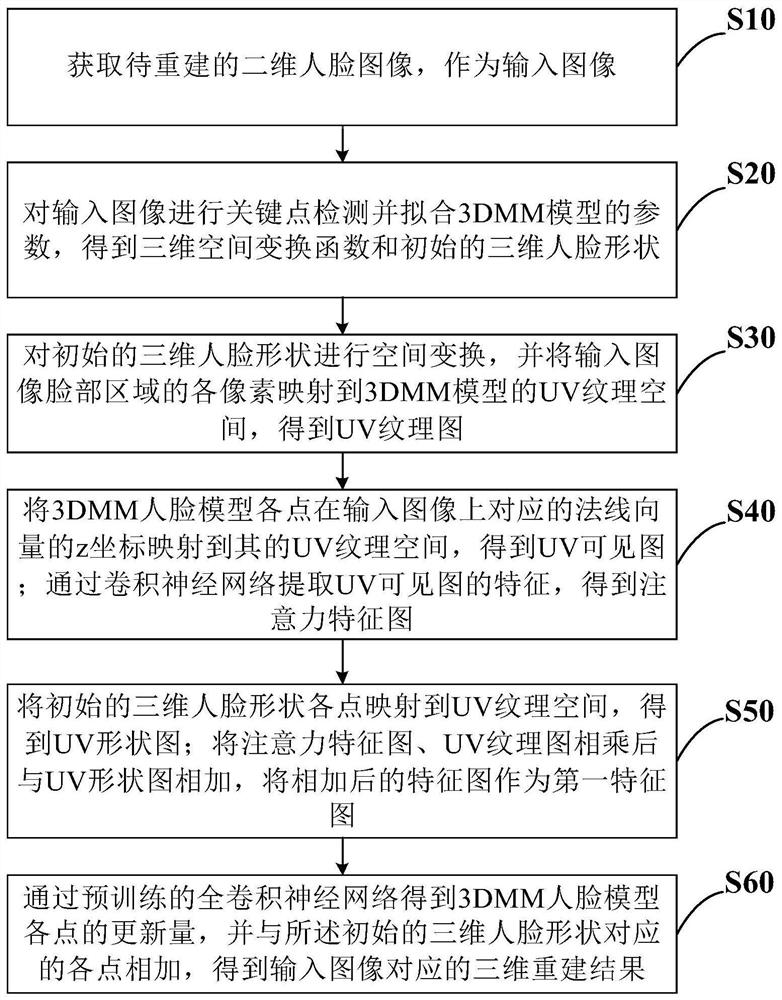

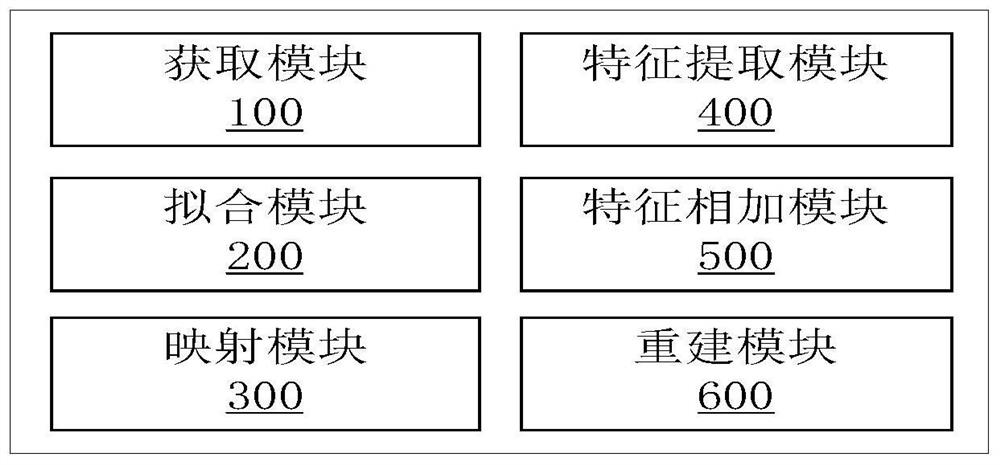

Three-dimensional face reconstruction method, system and device for fine structure

Pending | CN112002014A | High precision | Reduce loss of detail | Character and pattern recognition | Neural architectures | Pattern recognition | Imaging processing

The invention belongs to the technical field of image processing and pattern recognition, particularly relates to a three-dimensional face reconstruction method, system and device for a fine structure, and aims to solve the problem of poor three-dimensional face reconstruction precision. The method comprises the steps of obtaining a to-be-reconstructed two-dimensional face image; obtaining a three-dimensional space transformation function and an initial three-dimensional face shape; performing spatial transformation on the initial three-dimensional face shape, and mapping each pixel of an image face region to a UV texture space of a 3DMM model to obtain a UV texture map; obtaining a UV visible image and extracting features to obtain an attention feature map; mapping each point of the initial three-dimensional face shape to a UV texture space to obtain a UV shape graph; multiplying the attention feature map and the UV texture map, and adding the multiplied attention feature map and UV texture map to the UV shape map; and obtaining the update amount of each point of the 3DMM face model, and adding the update amount to each point corresponding to the initial three-dimensional face shape to obtain a three-dimensional reconstruction result. According to the invention, the precision of face model reconstruction is improved.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

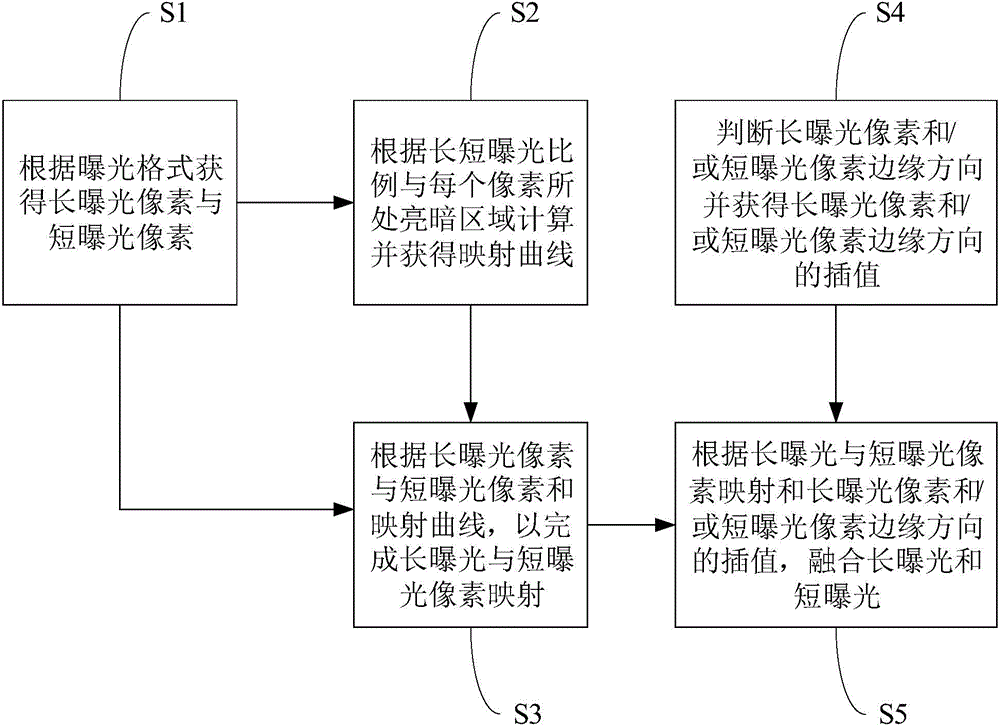

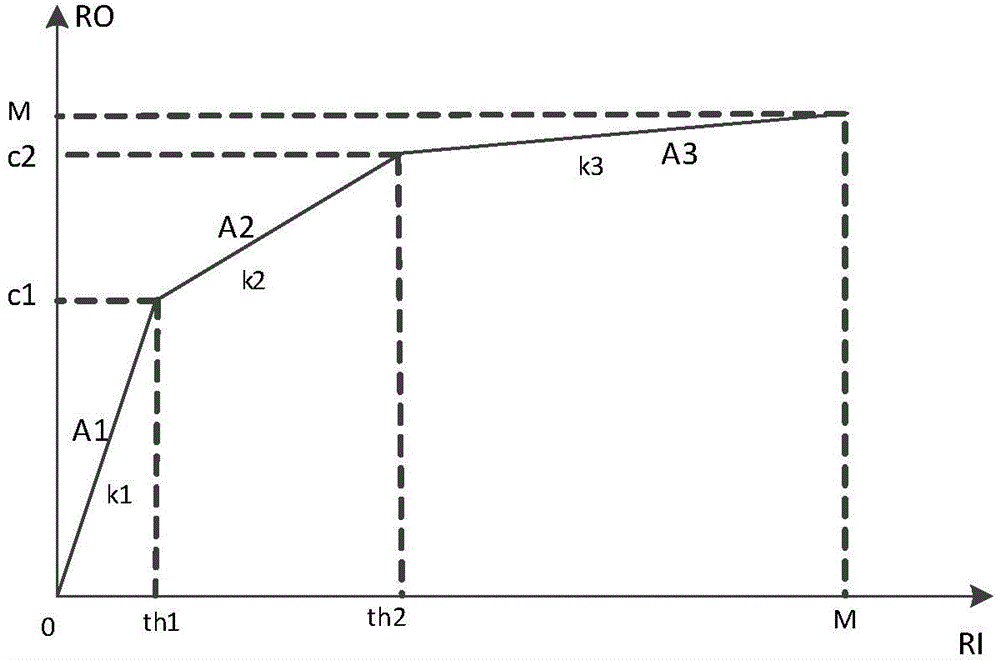

Wide dynamic fusion method based on single-frame double-pulse exposure mode

Active | CN104639920A | Improve protection | Large dynamic range | Image enhancement | Television system details | Pixel mapping | Double pulse

The invention discloses a wide dynamic fusion method based on a single-frame double-pulse exposure mode. The method comprises the following steps: acquiring a long exposure pixel and a short exposure pixel according to an exposure format; calculating a mapping curve according to the ratio of the long exposure pixel to the short exposure pixel and the bright and dark regions of the pixels; performing long exposure pixel mapping and short exposure pixel mapping according to the long exposure pixel, the short exposure pixel and the mapping curve; judging the edge direction of the long exposure pixel and/or the short exposure pixel and acquiring the interpolation along that edge direction; and fusing the long exposure and the short exposure according to the long exposure pixel mapping, the short exposure pixel mapping and the edge-direction interpolation of the long exposure pixel and/or the short exposure pixel. The method yields a wide dynamic range, preserves image details well and keeps colors realistic.

Owner:SHANGHAI MICROSHARP INTELLIGENT TECH CO LTD

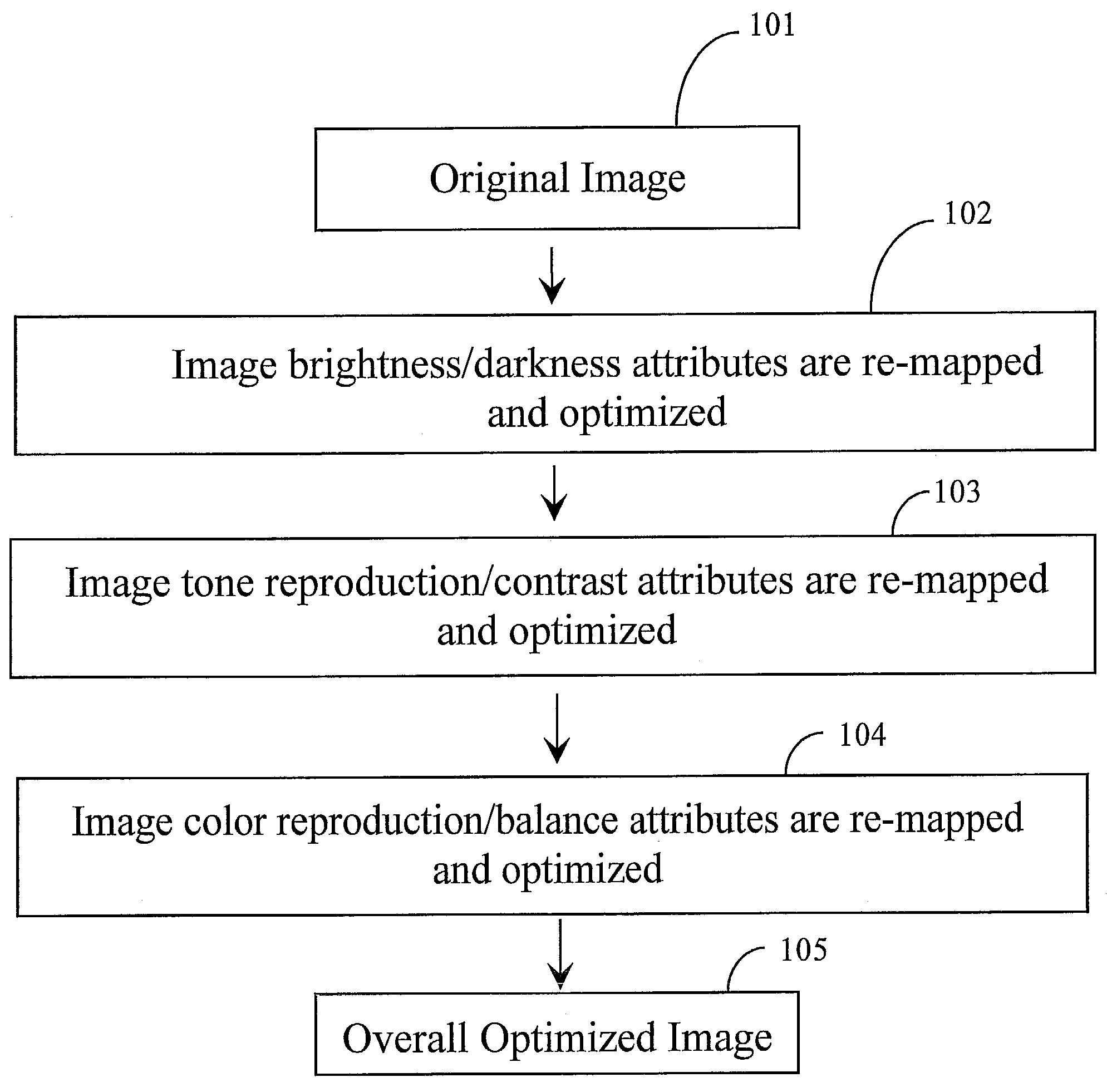

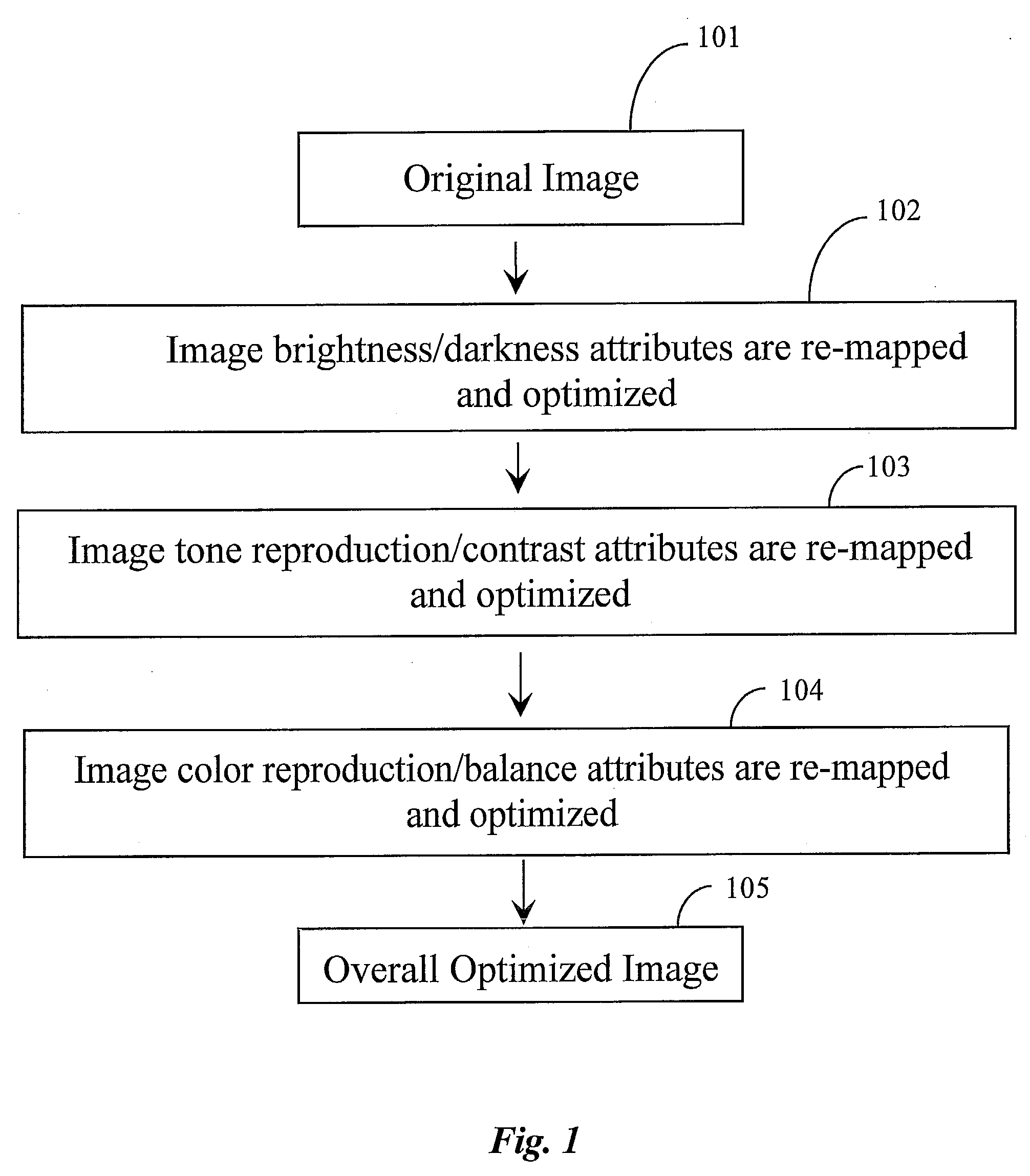

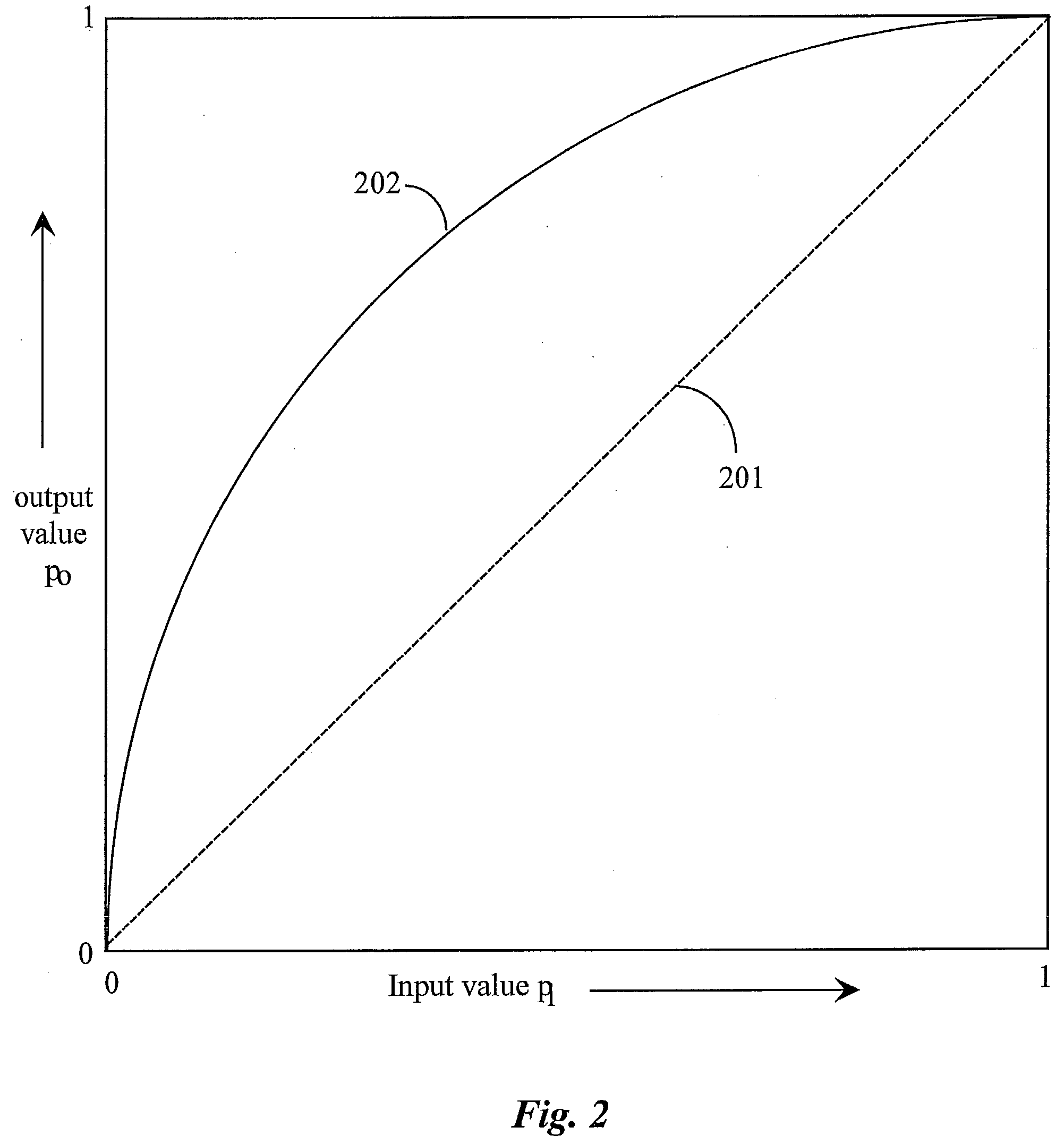

Apparatus and Methods for Enhancing Digital Images

Inactive | US20070104384A1 | Enhanced digital image | Image enhancement | Character and pattern recognition | Pixel mapping | Digital image

A method for enhancing a digital image involves selecting an image as an original image to be enhanced, creating by a pixel mapping procedure at least two images altered from the original image in a first image attribute, each of the altered images differing in the first image attribute, displaying the altered images to a user, and selecting by the user one of the images displayed as preferable to the other images displayed.

Owner:SOUNDSTARTS

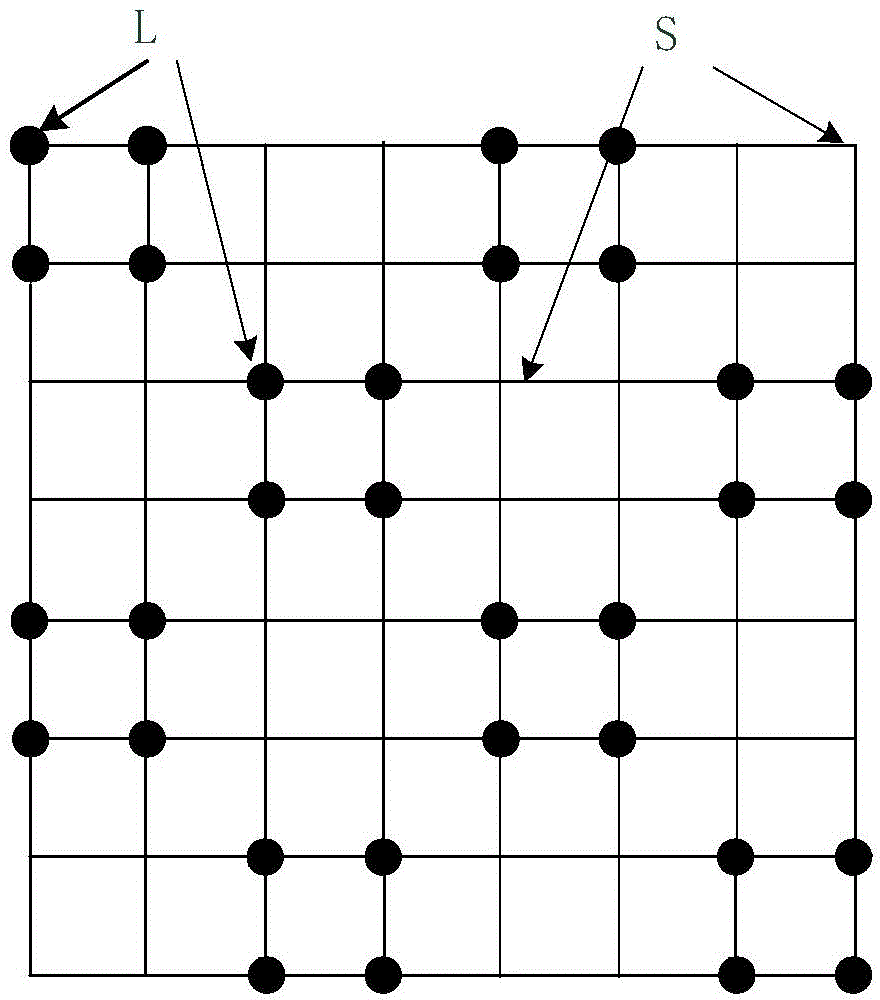

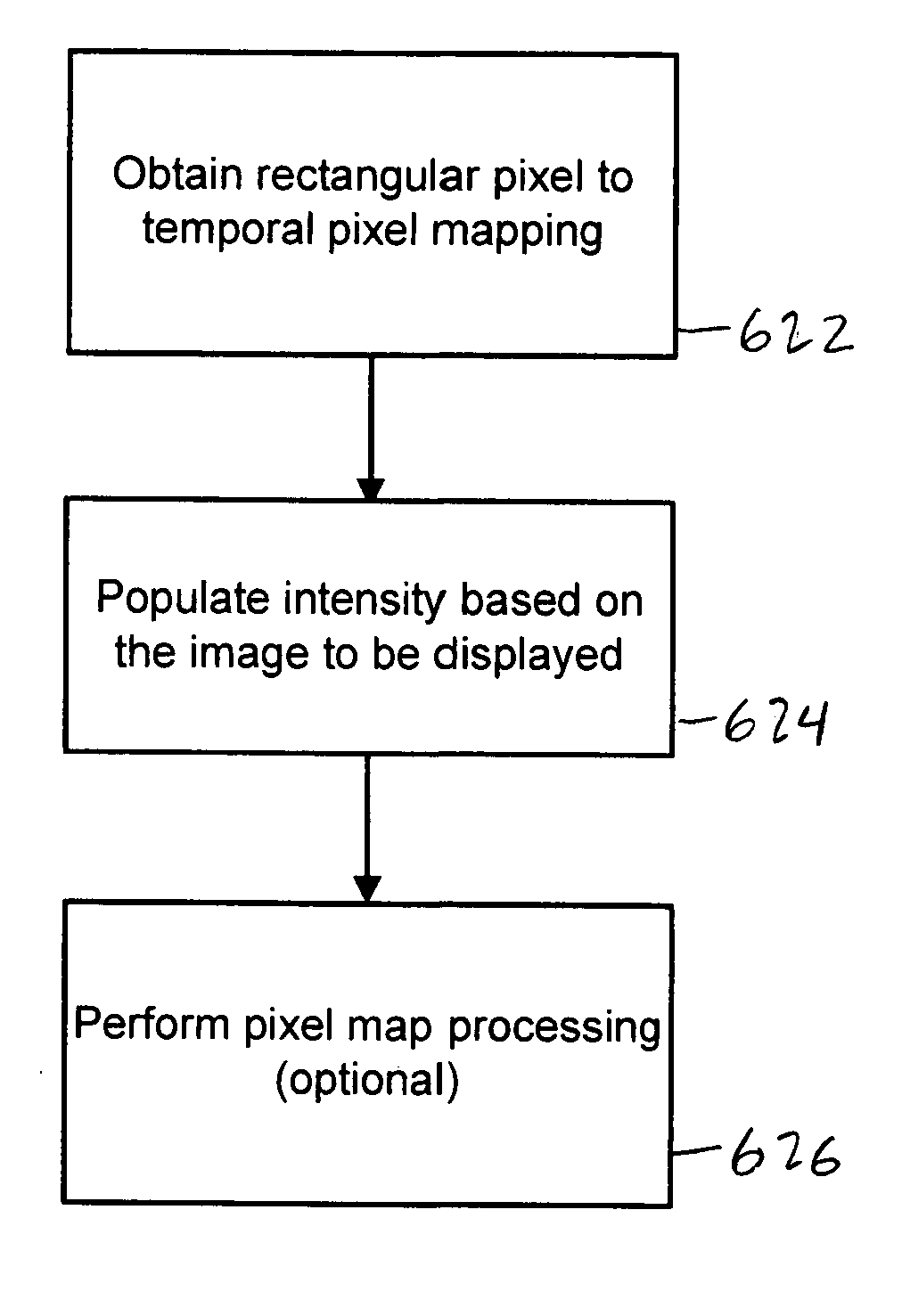

Image to temporal pixel mapping

Image pixel to temporal pixel mapping is disclosed. In some embodiments, a grid of stochastically arranged temporal pixels is employed in a composite display. An image pixel may be mapped to one or more temporal pixels in the grid. One or more of the one or more temporal pixels in the grid to which the image pixel is mapped are employed to render the image pixel in the composite display.

Owner:SNAPTRACK

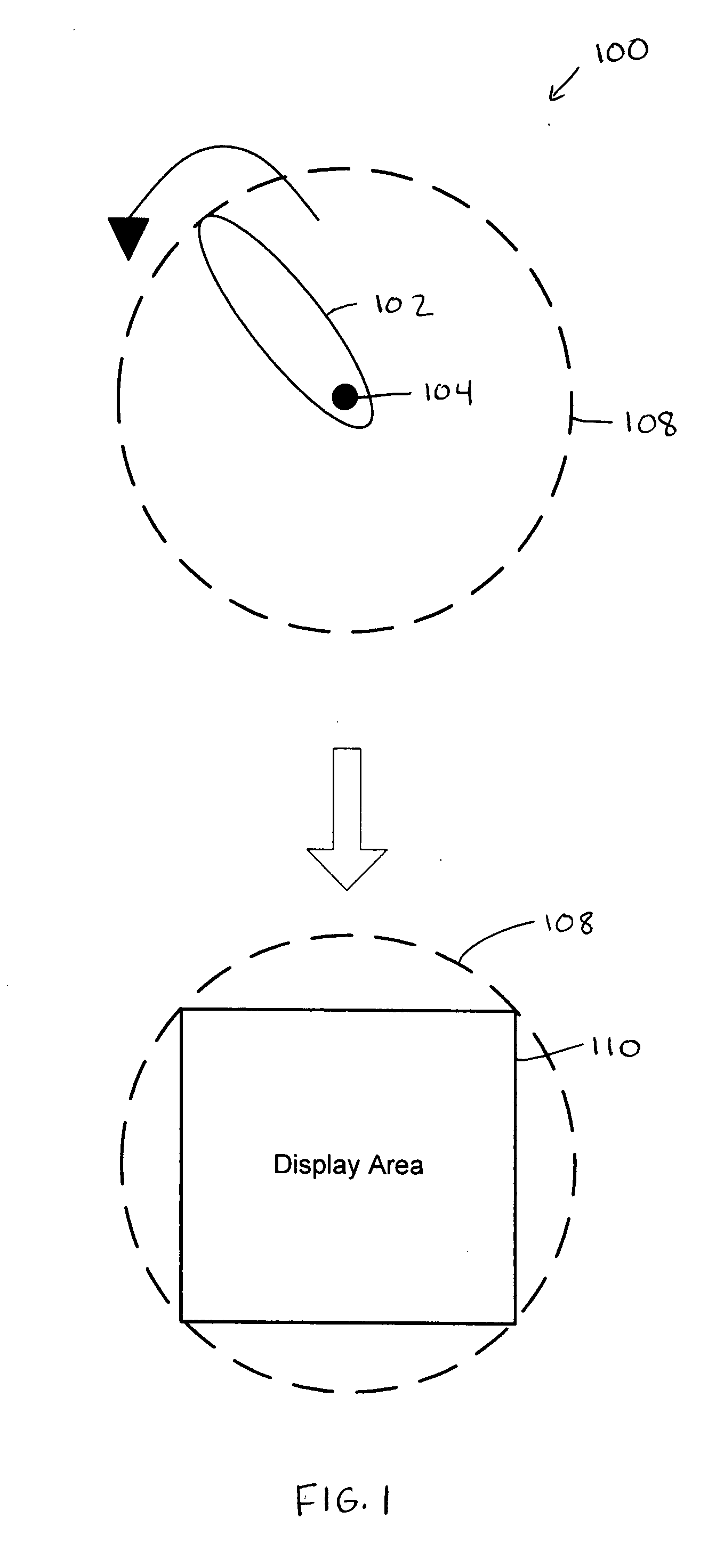

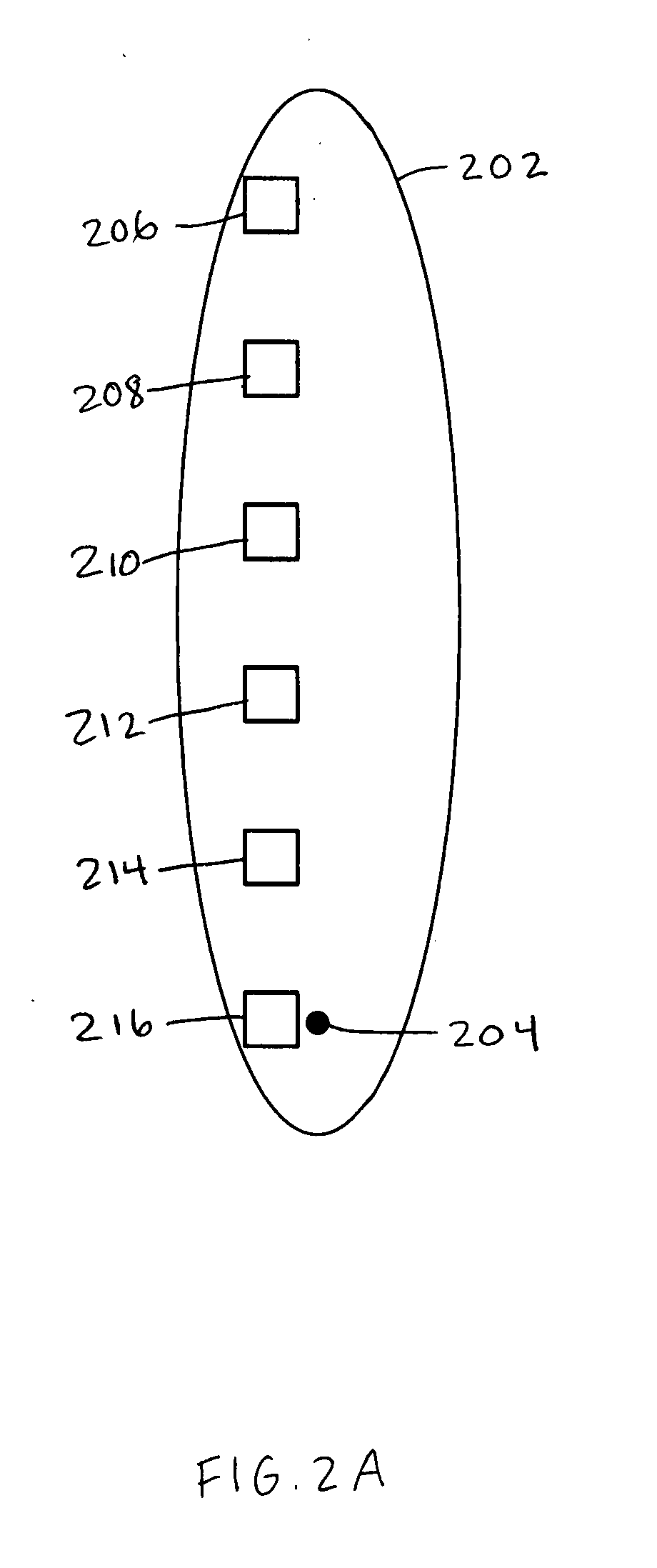

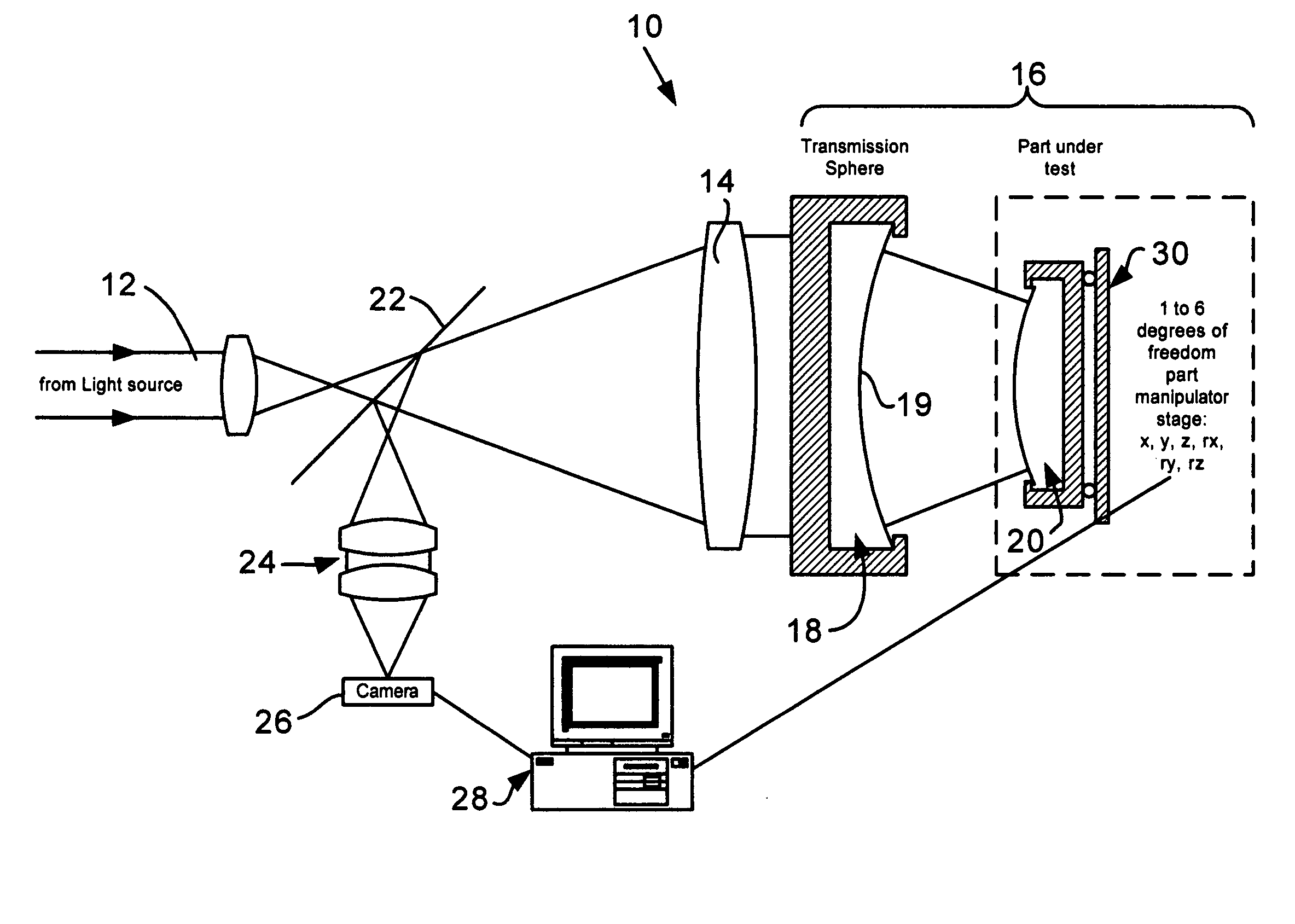

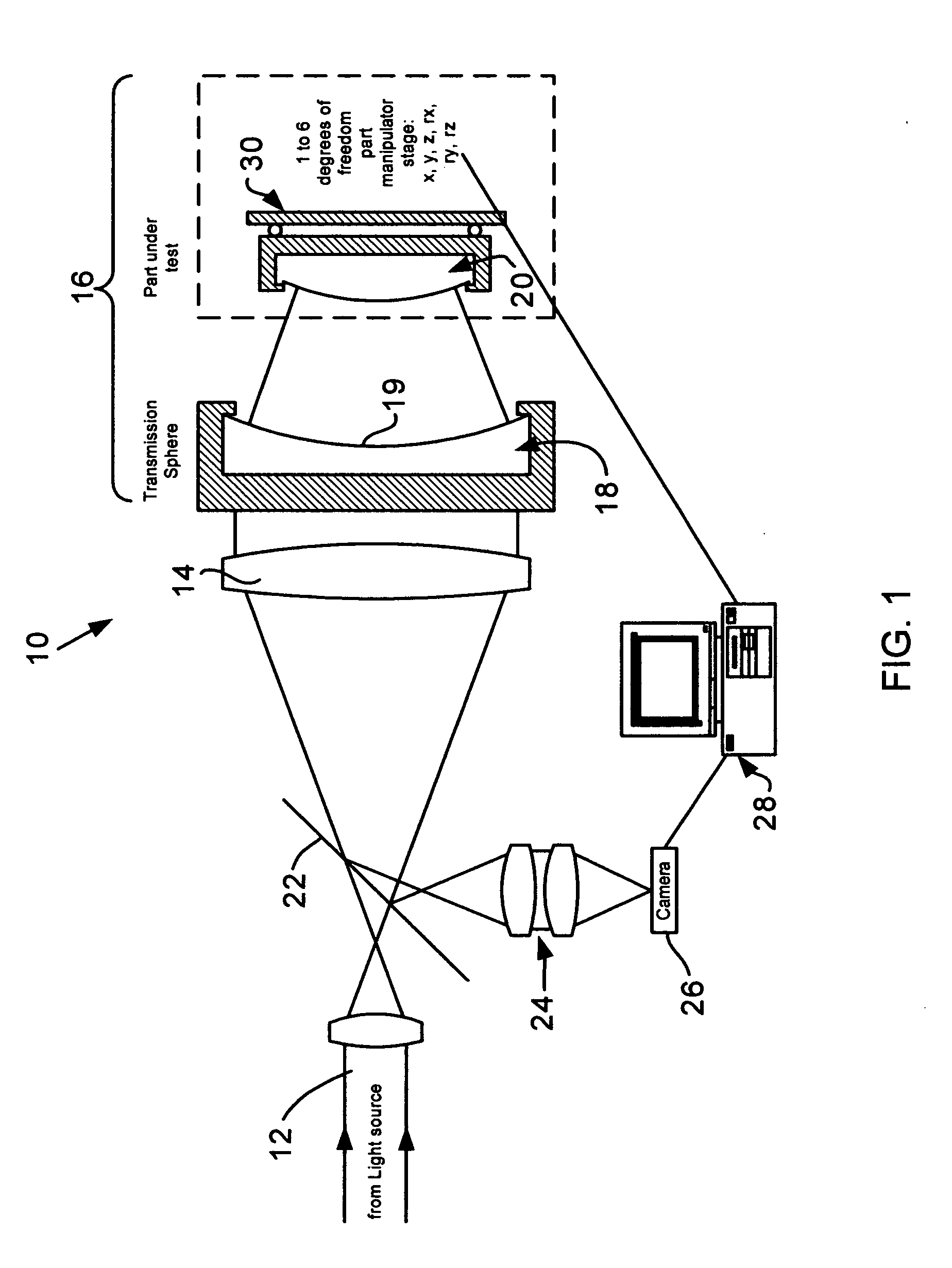

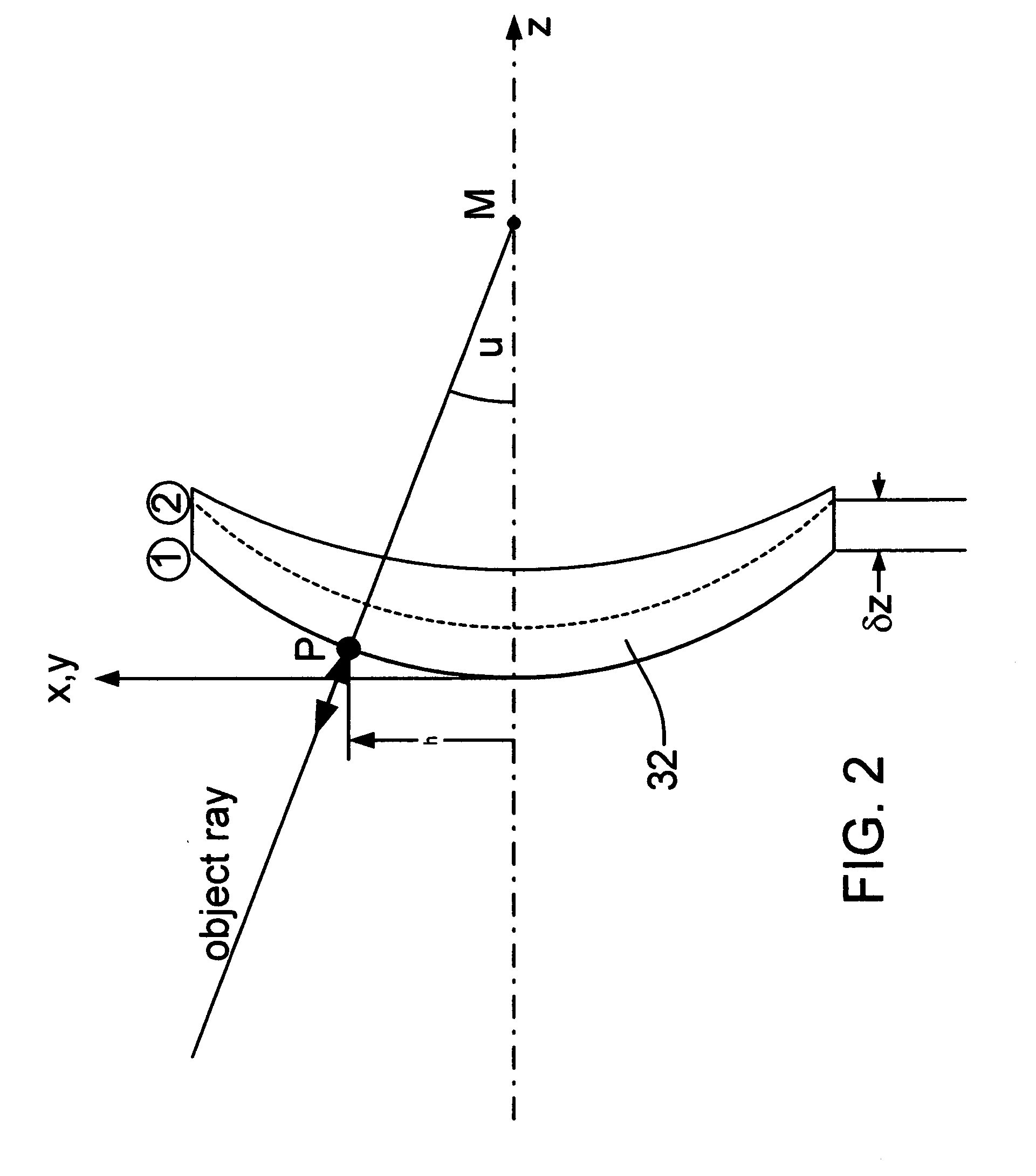

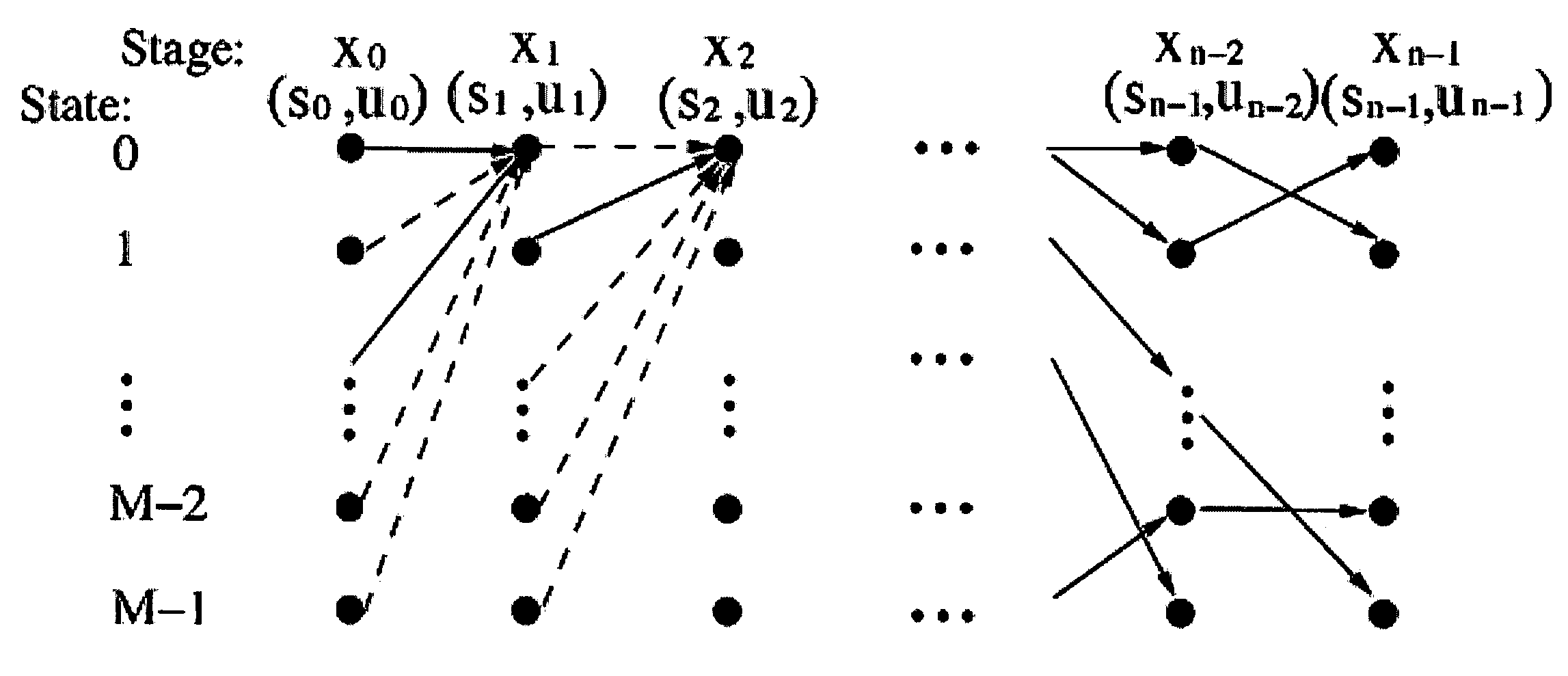

In situ determination of pixel mapping in interferometry

Interferometric methods and apparatus by which the map between pixel positions and corresponding part locations are determined in situ. The part under test, which is assumed to be a rigid body, is precisely moved from a base position to at least one other position in one to six degrees of freedom in three-dimensional space. Then, the actual displacements are obtained. The base position is defined as the position with the smallest fringe density. Measurements for the base and all subsequent positions are stored. After having collected at least one measurement for each degree of freedom under consideration, the part coordinates are calculated using the differences of the various phase maps with respect to the base position. The part coordinates are then correlated with the pixel coordinates and stored.

Owner:ZYGO CORPORATION

Method, system and software product for color image encoding

Active | US7525552B2 | Character and pattern recognition | Image coding | Computation complexity | Color image coding

The present invention relates to the compression of color image data. A combination of hard decision pixel mapping and soft decision pixel mapping is used to jointly address both quantization distortion and compression rate while maintaining low computational complexity and compatibility with standard decoders, such as, for example, the GIF/PNG decoder.

Owner:MALIKIE INNOVATIONS LTD

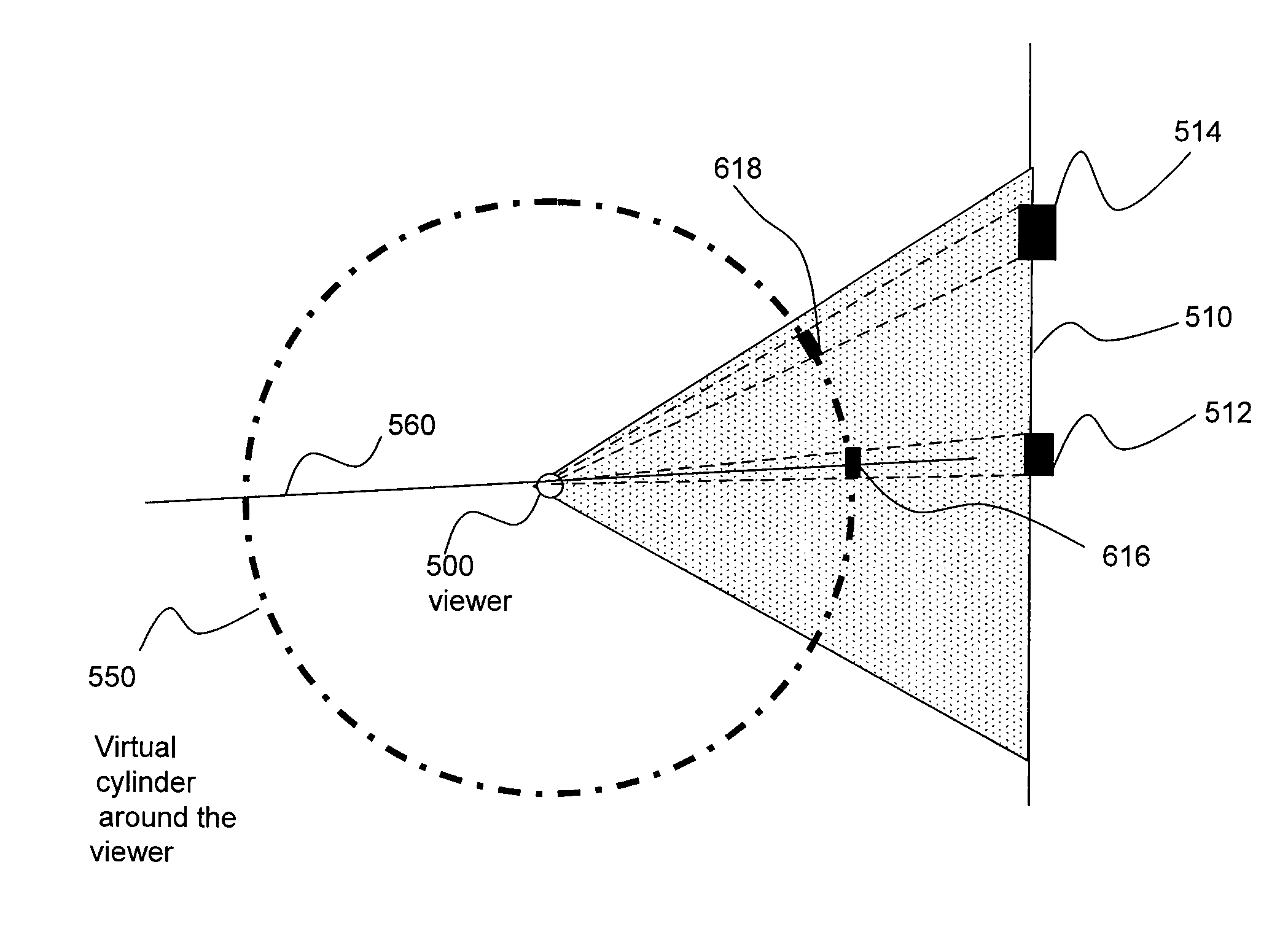

Mapping a two-dimensional image to a cylindrical surface using a tuned distortion curve

Inactive | US8542289B1 | Television system details | Character and pattern recognition | Image resolution | Image edge

In a typical two-dimensional rectilinear image, all pixel widths are constant across the image. If the two-dimensional rectilinear image is mapped to a cylindrical surface, the pixels at the edge of the image are viewed at an angle, making the effective width of the pixels at the edge smaller than the effective width of the pixels in the interior of the image. This problem may be solved by applying a tuned distortion curve to the two-dimensional rectilinear image, which makes the pixels near the edge of the image wider than pixels in the interior of the image. When pixels that have been distorted in this manner are mapped to a cylindrical surface, the effective pixel width is uniform. A lens with a tuned distortion curve built into its optical profile captures at least a portion of the surrounding imagery such that the resultant image has uniform resolution when projected onto a virtual cylinder centered around a viewer.

Owner:GOOGLE LLC
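
The geometric effect this abstract describes can be checked numerically. The sketch below assumes an ideal pinhole (rectilinear) projection with focal length `focal_px` expressed in pixels and a viewer at the center of the cylinder; the function names are hypothetical, and equal-angle spacing is used only as one plausible target for a "tuned" curve, since the patent's actual curve is not given in the abstract.

```python
import math


def rectilinear_angular_widths(n_pixels, focal_px):
    """Angle (radians) subtended by each constant-width pixel of a rectilinear row."""
    half = n_pixels / 2
    edges = [i - half for i in range(n_pixels + 1)]        # pixel boundaries
    angles = [math.atan(e / focal_px) for e in edges]      # viewing angle of each boundary
    return [b - a for a, b in zip(angles, angles[1:])]


def equal_angle_pixel_widths(n_pixels, focal_px):
    """Image-plane width each pixel needs in order to subtend the same angle."""
    half_fov = math.atan((n_pixels / 2) / focal_px)
    step = 2 * half_fov / n_pixels
    edges = [focal_px * math.tan(-half_fov + i * step) for i in range(n_pixels + 1)]
    return [b - a for a, b in zip(edges, edges[1:])]


# An 8-pixel row with a 90-degree horizontal field of view (focal_px = 4).
angular = rectilinear_angular_widths(8, 4.0)    # shrinks toward the edges
widths = equal_angle_pixel_widths(8, 4.0)       # grows toward the edges
```

In this example the edge pixels of the rectilinear row subtend a smaller angle than the central ones, while the equal-angle layout requires edge pixels that are wider on the image plane, matching the direction of the correction described in the abstract.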

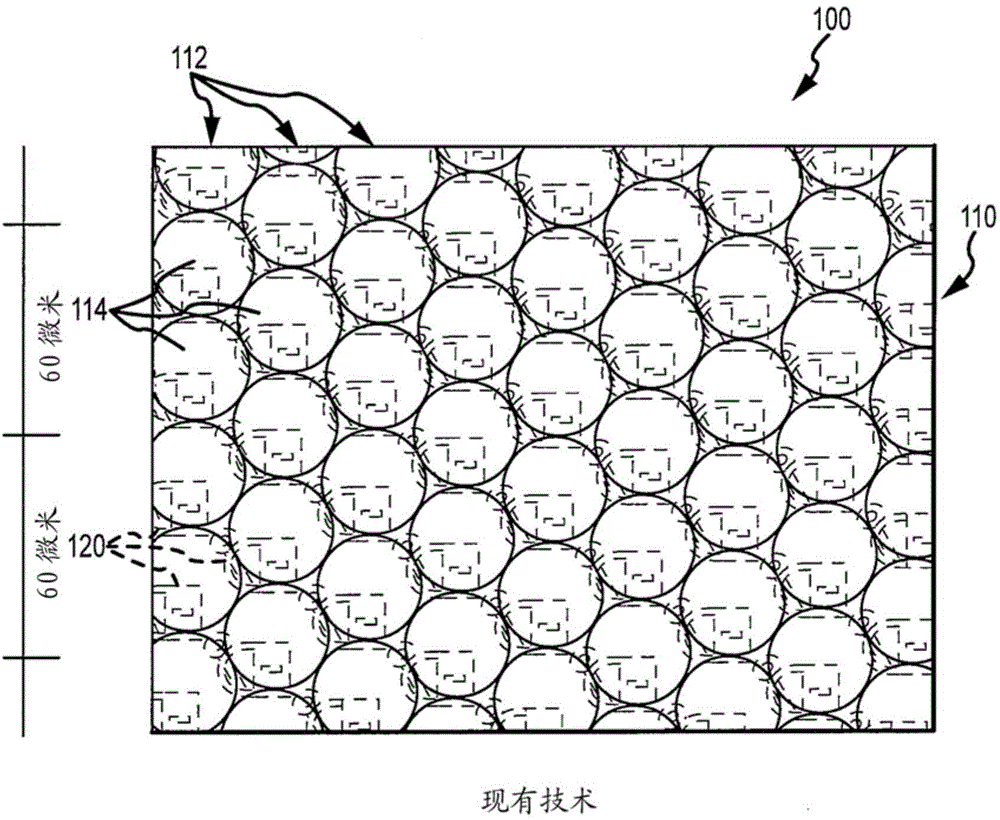

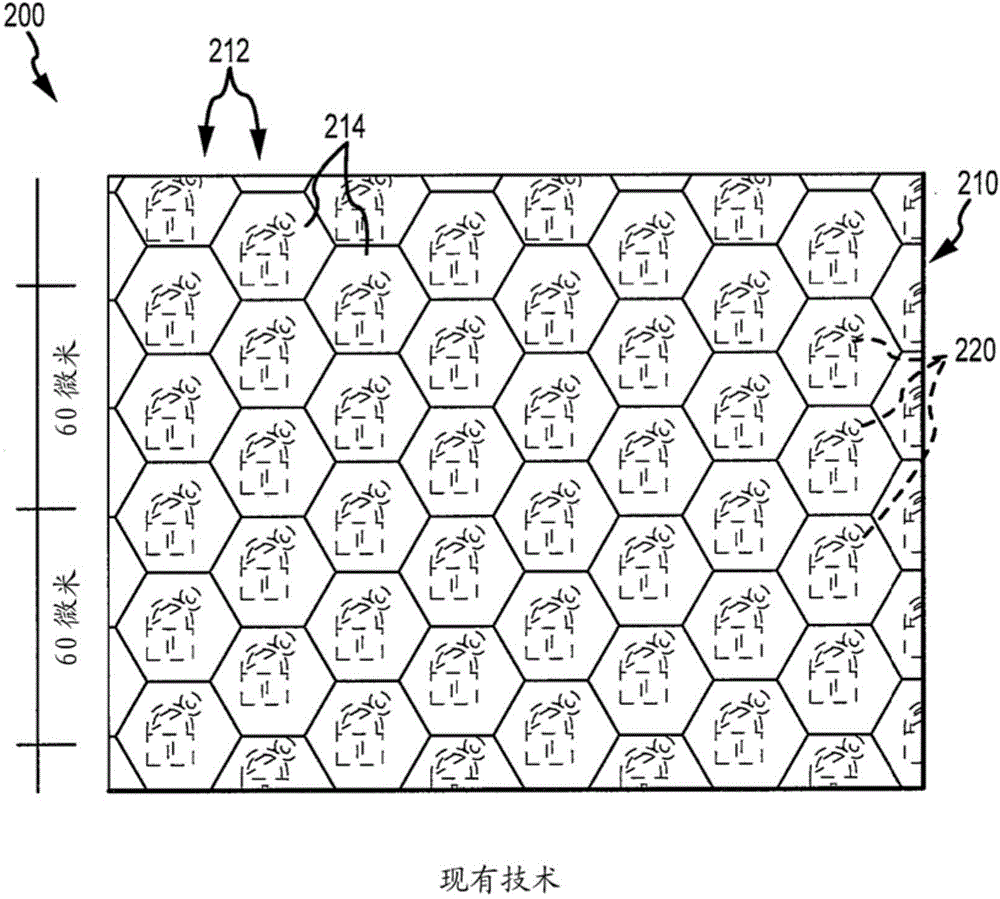

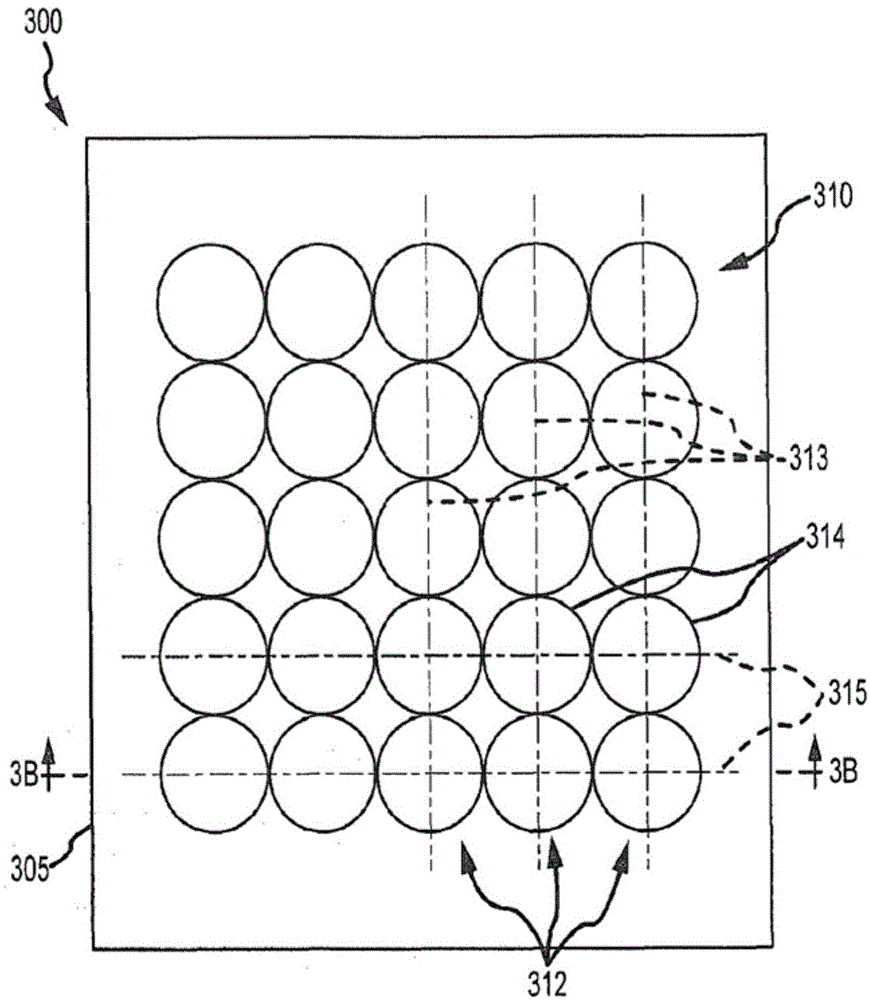

Pixel mapping, arranging, and imaging for round and square-based micro lens arrays to achieve full volume 3D and multi-directional motion

Active | CN104838304A | Increase darkness | Increase brightness | Other printing matter | Pattern printing | Pixel mapping | Micro lens array

A visual display assembly adapted for use as an anti-counterfeiting device on paper currency, product labels, and other objects. The assembly includes a film of transparent material including a first surface including an array of lenses and a second surface opposite the first surface. The assembly also includes a printed image proximate to the second surface. The printed image includes pixels of frames of one or more images interlaced relative to two orthogonal axes. The lenses of the array are nested in a plurality of parallel rows, and adjacent ones of the lenses in columns of the array are aligned to be in a single one of the rows with no offset of lenses in adjacent columns/rows. The lenses may be round-based or square-based lenses, and the lenses may be provided at 200 lenses per inch (LPI) or a higher LPI in both directions.

Owner:LUMENCO

Method and apparatus for enhancing a digital image by applying an inverse histogram-based pixel mapping function to pixels of the digital image

Inactive | US20050058343A1 | Increase contrast | Reduce decrease | Image enhancement | Character and pattern recognition | Pixel mapping | Digital image

A method and associated device wherein an inverse histogram based pixel mapping step is combined with an edge enhancement step such as unsharp masking. In such an arrangement the inverse histogram based pixel mapping step improves the performance of the unsharp masking step, serving to minimize the enhancement of noise components while desired signal components are sharpened.

Owner:WSOU INVESTMENTS LLC

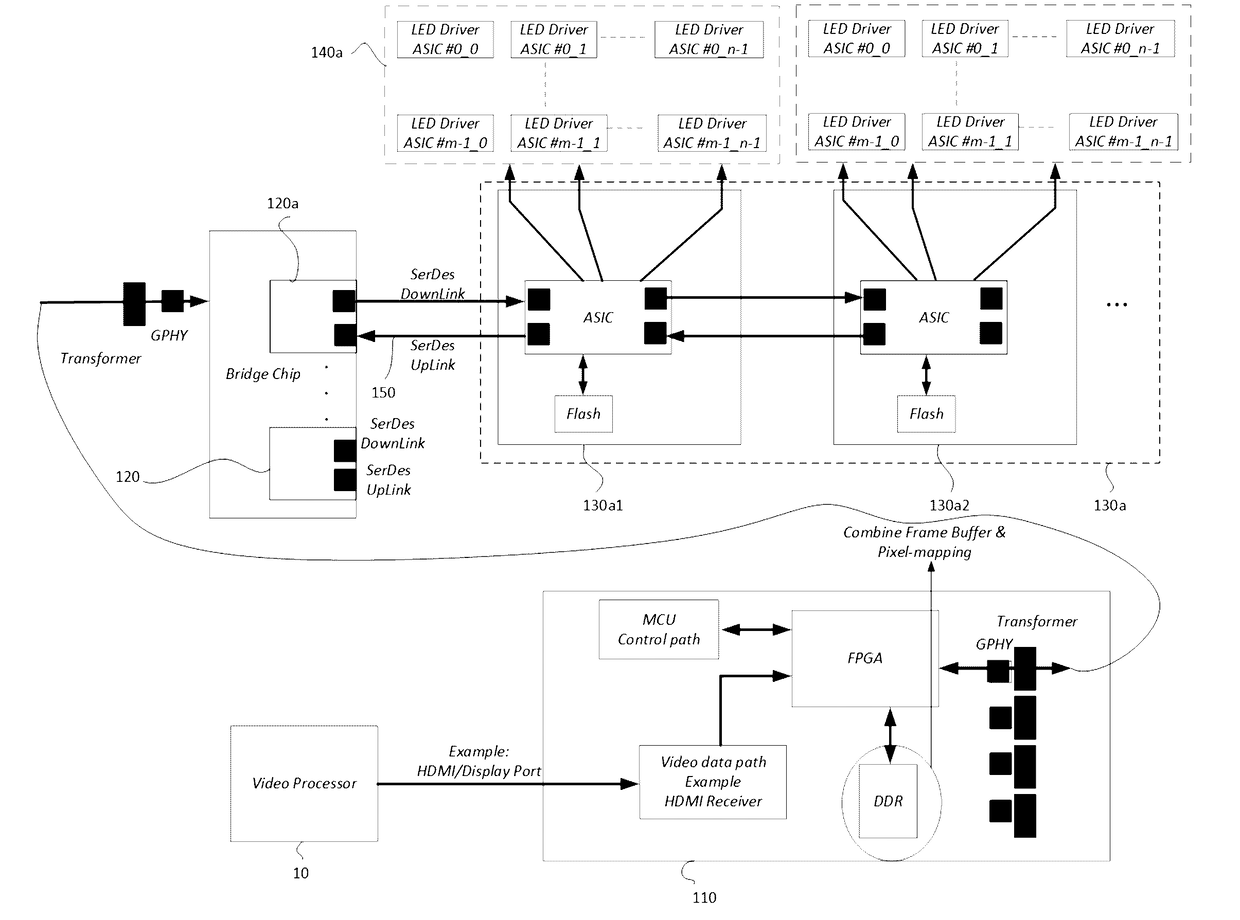

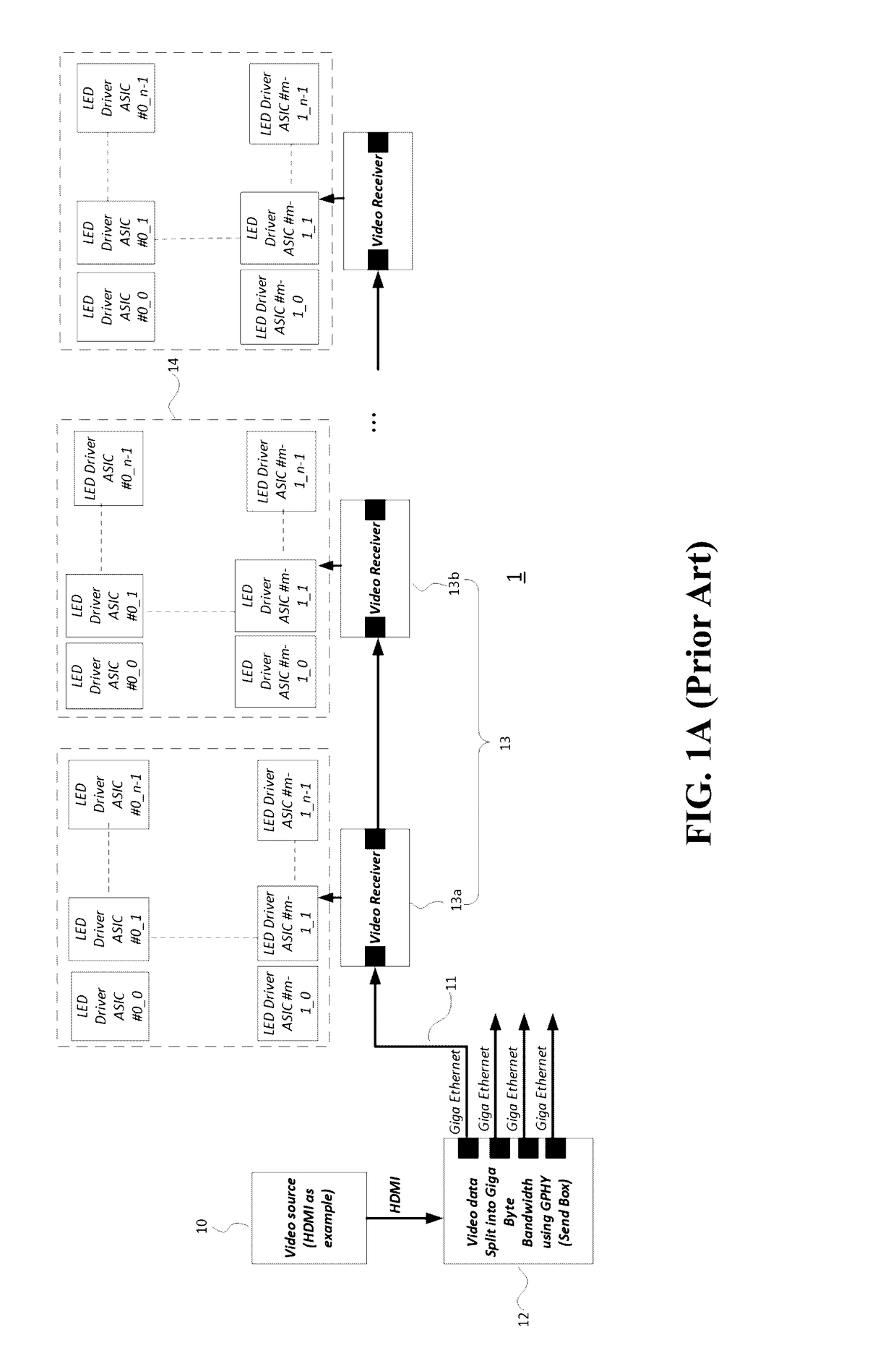

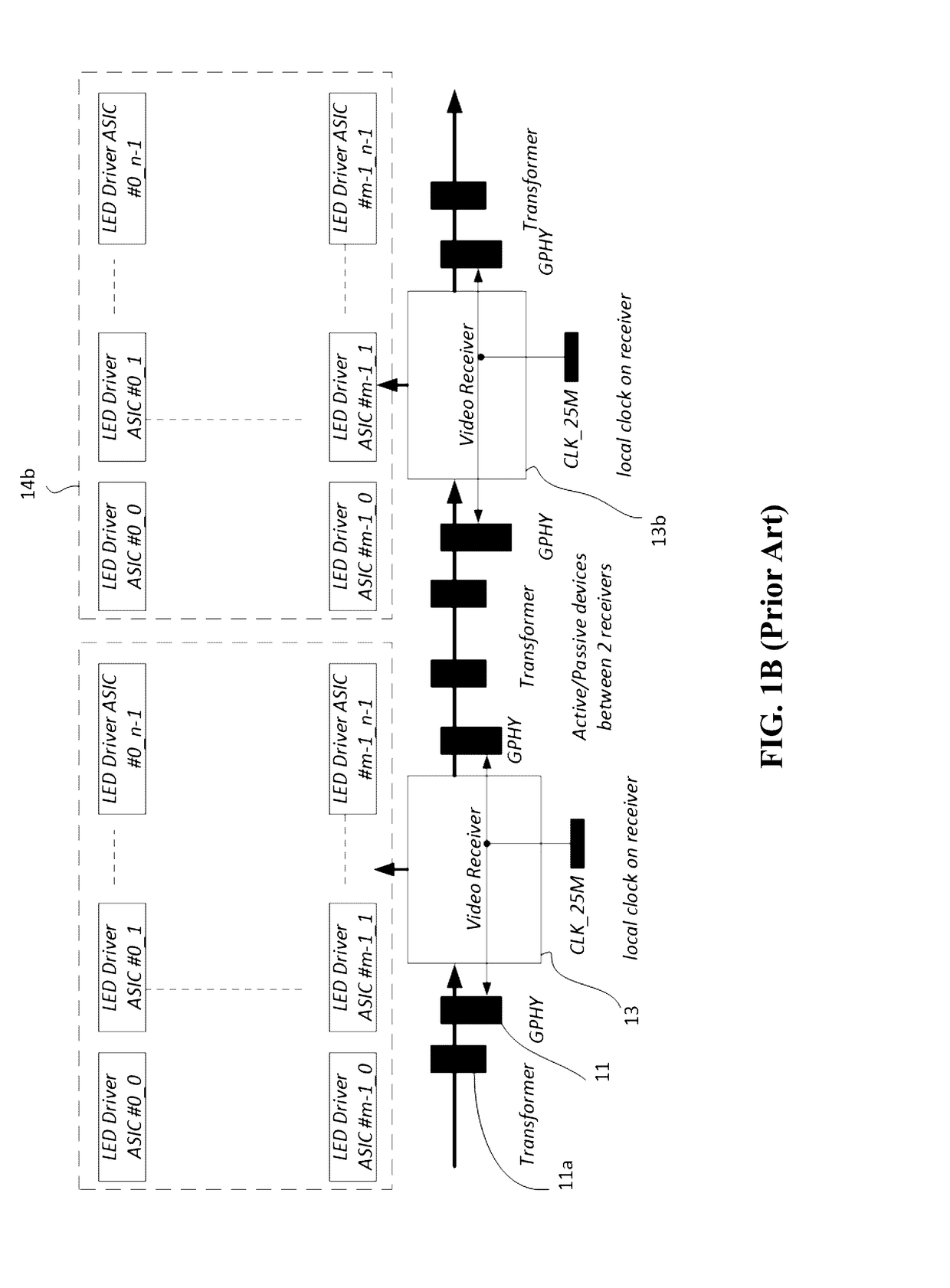

LED display device and method for operating the same

This disclosure describes an LED display device. The LED display device includes a transmitter having a memory and a pixel mapping table, a plurality of first receivers coupled to the transmitter, a plurality of second receiver modules, and a plurality of LED driver groups. A unique address is assigned to a data packet with a use of the pixel mapping table. The data packet has a set of field information and the set of field information includes the unique address. Each of the second receiver modules is coupled to at least one of the first receivers and includes a plurality of second receivers. None of the plurality of second receivers comprises a pixel mapping memory. Each of the LED driver groups is coupled to one of the plurality of second receivers and includes a plurality of LED drivers.

Owner:SCT

Object-based virtual image drawing method of three-dimensional/free viewpoint television

Inactive | CN101695140A | Improve drawing speed | Guaranteed drawing accuracy | Stereoscopic systems | Color image | Object based

The invention discloses an object-based virtual image drawing method for three-dimensional/free viewpoint television. The method comprises the following steps: dividing a color image into an inner region, a background region and a boundary region of an object by means of an object mask image of the color image, and then dividing the color image into a plurality of blocks of different sizes according to the three regions. For a whole-mapping-type block, three-dimensional image conversion need only be carried out on one pixel point in the block to determine the coordinate mapping relation that projects the pixel point from the color image into a virtual viewpoint color image; the whole block is then projected into the virtual viewpoint color image using that coordinate mapping relation. Because the three-dimensional image conversion is carried out on only one pixel point, the drawing speed of whole-mapping-type blocks is effectively improved. Sequential-pixel-mapping-type blocks are mainly positioned in the boundary region, so each pixel point in these blocks is still mapped into the virtual viewpoint color image by a sequential pixel-mapping three-dimensional image conversion method, thereby effectively ensuring the drawing precision.

Owner:上海贵知知识产权服务有限公司

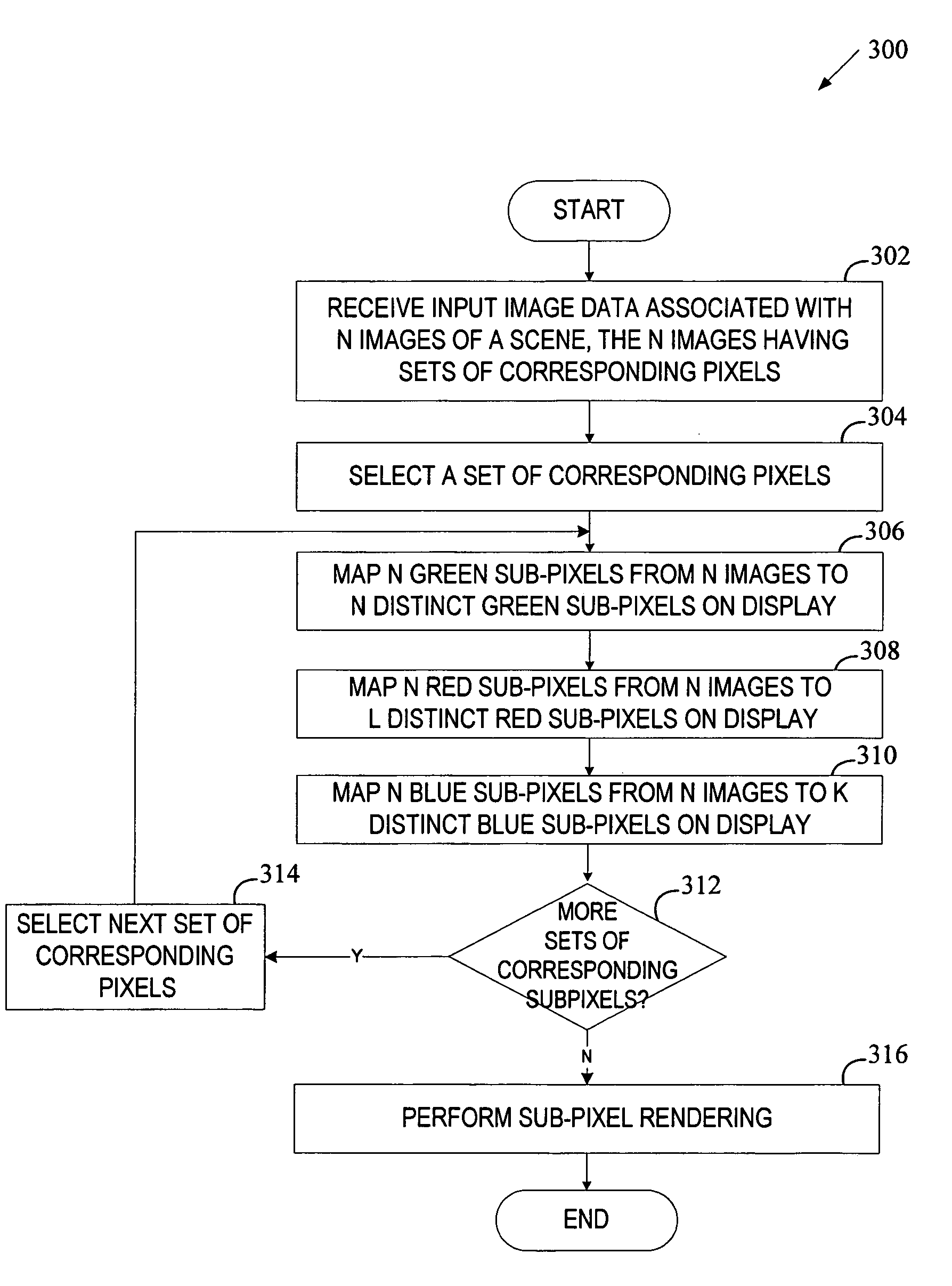

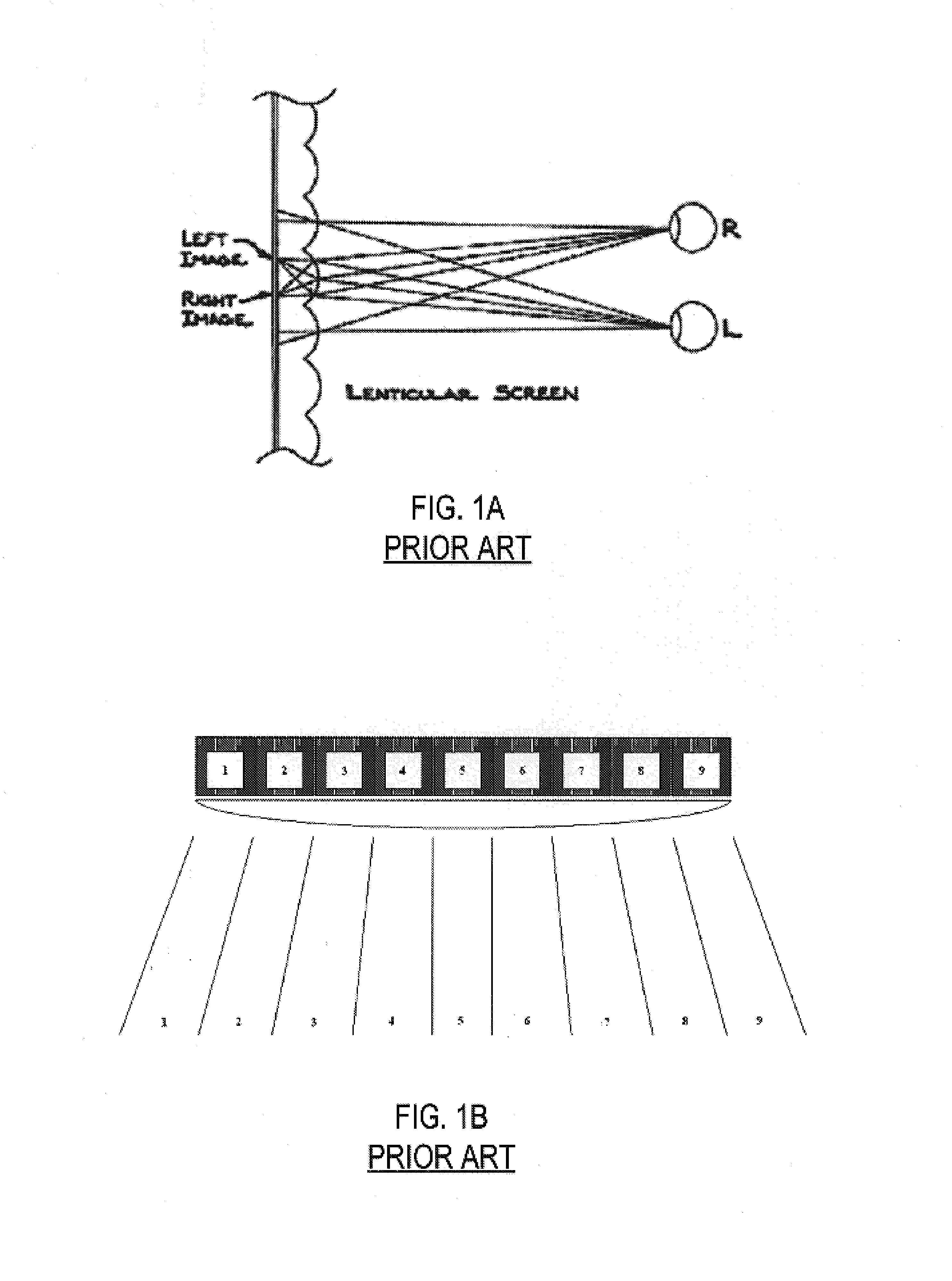

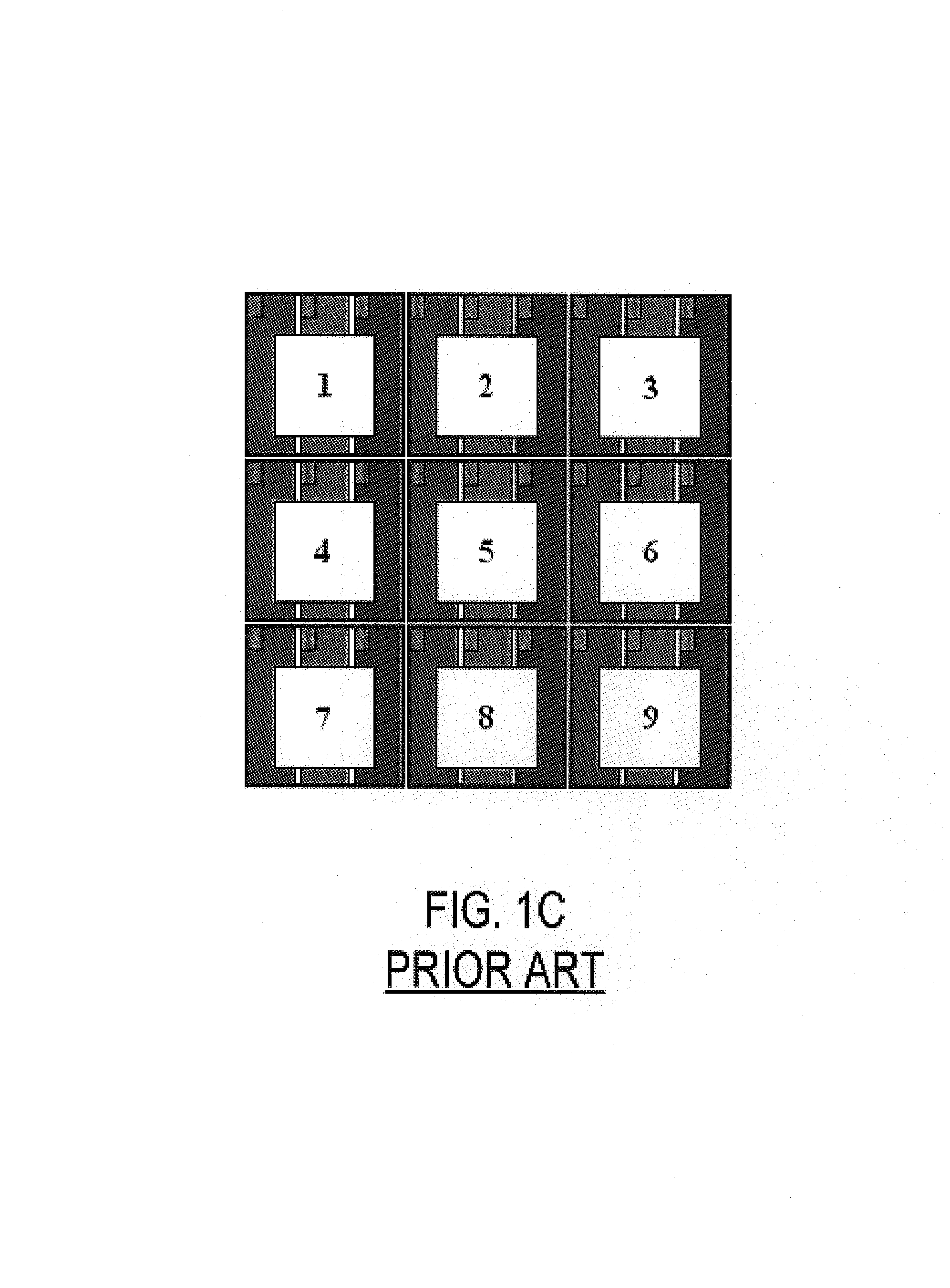

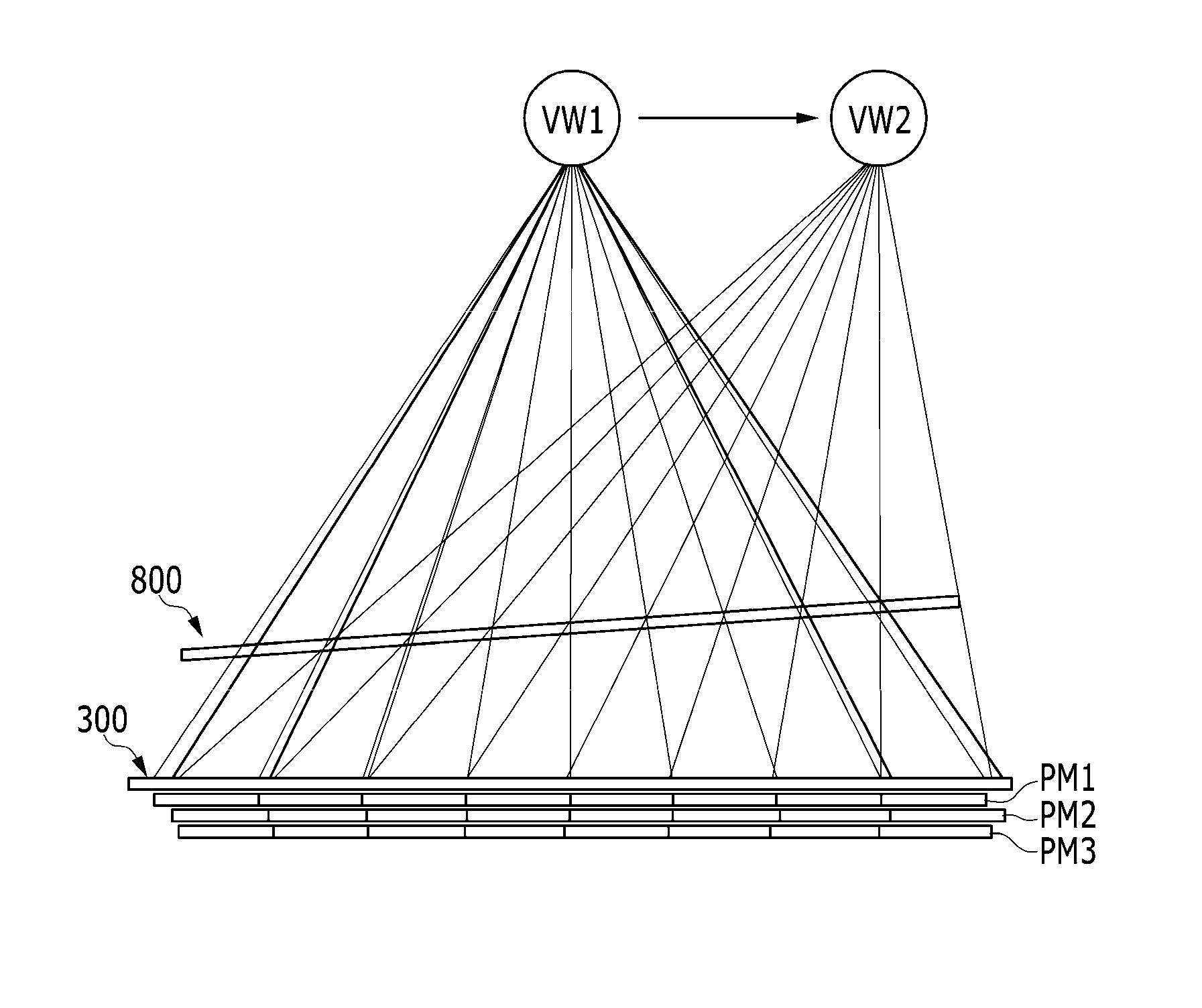

High-resolution micro-lens 3D display with shared sub-pixel color signals

In one embodiment, a sub-pixel rendering method includes receiving 3D image data associated with pixel intensity values of N two-dimensional images having multiple sets of corresponding pixels. Each set of corresponding pixels includes N pixels (one pixel from each of N images) and each pixel has a green sub-pixel, a red sub-pixel and a blue sub-pixel. The method further includes mapping, for each selected set, N green sub-pixels, N red sub-pixels and N blue sub-pixels to M sub-pixels on a display to form a stereogram of the scene. The above mapping includes mapping N green sub-pixels from N images to N green sub-pixels on the display, mapping N red sub-pixels from N images to L red sub-pixels on the display, and mapping N blue sub-pixels from N images to K blue sub-pixels on the display, where L does not exceed N and K is lower than N.

Owner:SONY CORP +1

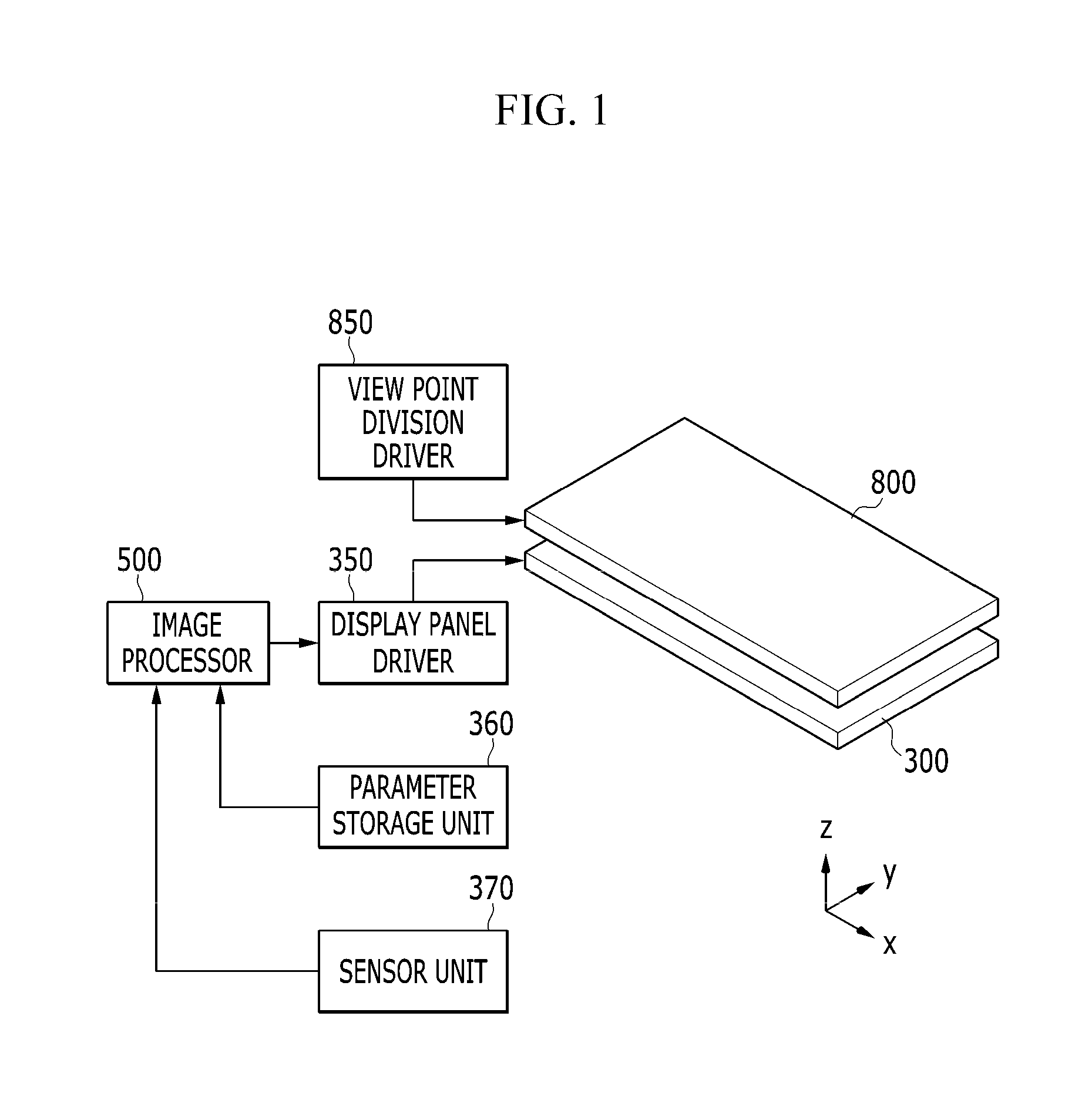

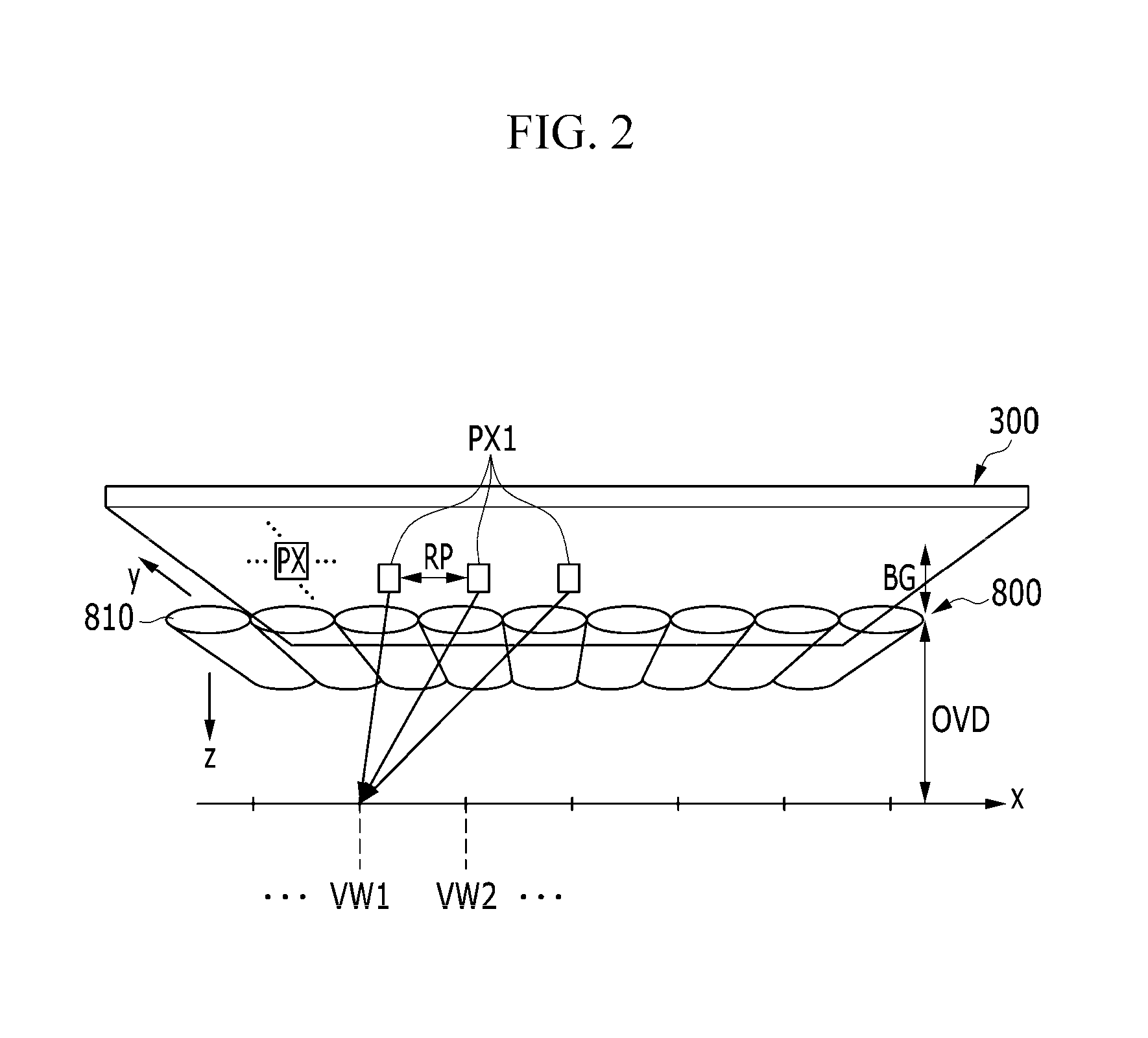

Three-dimensional image display device and driving method thereof

Inactive | US20160323570A1 | Eliminate crosstalk | Compensate for misalignment | Stereoscopic systems | Viewpoints | Computer graphics (images)

There is provided a three-dimensional image display device, including: a display panel including a plurality of signal lines and a plurality of pixels connected to the plurality of signal lines; a viewpoint divider configured to divide an image displayed by the display panel into a plurality of viewpoints; a parameter storage unit configured to store parameters for an alignment between the display panel and the viewpoint divider; an image processor configured to calculate a rendering pitch according to the alignment between the display panel and the viewpoint divider by using the parameters stored in the parameter storage unit and generate an image signal to perform pixel mapping according to the rendering pitch; and a display panel driver configured to receive the image signal to drive the display panel.

Owner:SAMSUNG DISPLAY CO LTD

© 2025 PatSnap. All rights reserved.