Visual tracking and positioning method based on dense point cloud and composite view

A visual tracking and positioning method, applied in the information technology field, that achieves fast initial positioning.

Embodiment Construction

[0024] To make the purpose, technical solutions and advantages of the embodiments of the present invention clearer, the technical solutions in the embodiments are described clearly and completely below with reference to the accompanying drawings.

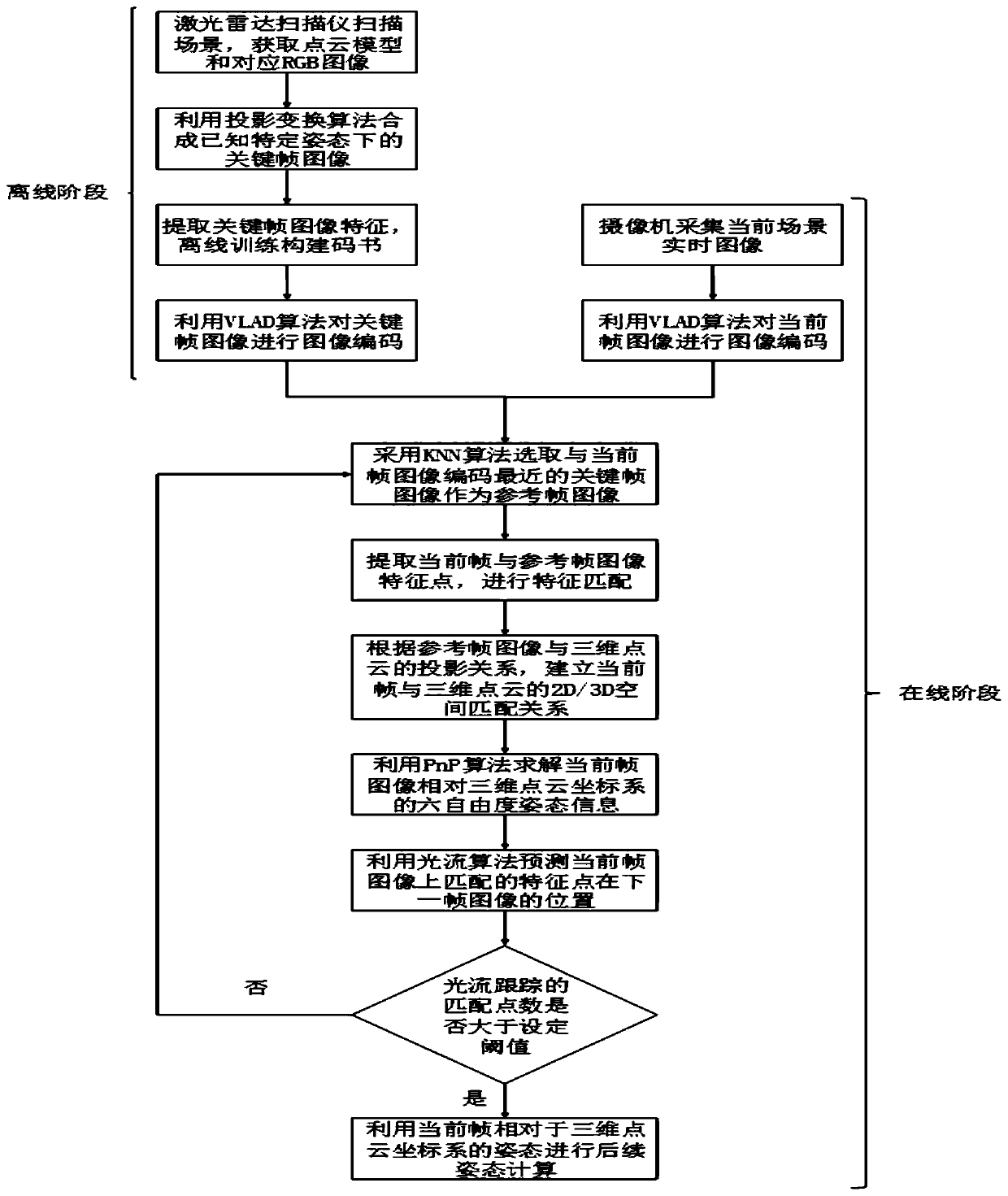

[0025] The design idea of the present invention is as follows: the three-dimensional point cloud obtained by the lidar is transformed by spatial back projection to generate a synthetic image under a known viewpoint, and the synthetic image is matched against the real-time image acquired by the camera to estimate the camera's real-time 6-degree-of-freedom pose. The method can be used for tracking navigation or auxiliary positioning in fields such as robotics, unmanned vehicles, unmanned aerial vehicles, virtual reality and augmented reality.
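The projection step in [0025] can be illustrated with a minimal sketch. It assumes an ideal pinhole camera with no lens distortion, a known world-to-camera rotation and translation, and points that carry a single intensity value. The function name `render_synthetic_view` and all parameter names are hypothetical and do not come from the patent. The sketch covers only the generation of a synthetic image from the point cloud; the image matching and pose estimation steps are not shown.

```python
from typing import List, Sequence, Tuple

# Hypothetical point layout: x, y, z in the world frame, plus an intensity value.
Point = Tuple[float, float, float, float]


def render_synthetic_view(
    points: Sequence[Point],
    rotation: Sequence[Sequence[float]],  # 3x3 matrix, world frame -> camera frame
    translation: Sequence[float],         # 3-vector, world frame -> camera frame
    fx: float, fy: float, cx: float, cy: float,  # assumed pinhole intrinsics
    width: int, height: int,
) -> Tuple[List[List[float]], List[List[float]]]:
    """Project a point cloud into a pinhole camera at a known viewpoint.

    Returns (image, depth). Pixels that no point falls on keep
    intensity 0.0 and depth infinity.
    """
    image = [[0.0] * width for _ in range(height)]
    depth = [[float("inf")] * width for _ in range(height)]

    for x, y, z, intensity in points:
        # Rigid transform of the point into the camera frame.
        xc = rotation[0][0] * x + rotation[0][1] * y + rotation[0][2] * z + translation[0]
        yc = rotation[1][0] * x + rotation[1][1] * y + rotation[1][2] * z + translation[1]
        zc = rotation[2][0] * x + rotation[2][1] * y + rotation[2][2] * z + translation[2]
        if zc <= 0.0:
            continue  # the point lies behind the camera

        # Perspective projection to pixel coordinates.
        u = int(round(fx * xc / zc + cx))
        v = int(round(fy * yc / zc + cy))

        # Depth buffering: keep only the nearest point for each pixel.
        if 0 <= u < width and 0 <= v < height and zc < depth[v][u]:
            depth[v][u] = zc
            image[v][u] = intensity

    return image, depth


# Usage: camera at the world origin looking along +z, 4x4 pixel image.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
cloud = [
    (0.0, 0.0, 2.0, 0.8),   # nearest point on the optical axis
    (0.0, 0.0, 5.0, 0.3),   # occluded by the point above
    (0.0, 0.0, -1.0, 1.0),  # behind the camera, skipped
]
image, depth = render_synthetic_view(
    cloud, identity, [0.0, 0.0, 0.0],
    fx=100.0, fy=100.0, cx=2.0, cy=2.0, width=4, height=4,
)
```

In this toy case both visible points project to pixel (2, 2), and the depth buffer keeps the nearer one. A dense point cloud would fill most pixels, whereas a sparse one leaves holes that a practical renderer would need to interpolate or splat over.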

[0026] The present invention is based on the visual tracking and positioning method of dense point cloud and s...
