Multi-modal emotion recognition method based on temporal convolutional network

A convolutional network and emotion recognition technology, applied in the fields of deep learning, pattern recognition, and audio and video processing, that addresses the problems of limited information and relatively low accuracy.
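The title names a temporal convolutional network (TCN), but this excerpt does not give its configuration. The following is therefore a minimal NumPy sketch of the generic TCN building block (causal dilated 1-D convolutions wrapped in a residual block), not the patent's actual network. The kernel size, dilations, channel widths, the optional 1x1 skip projection and the omission of weight normalization and dropout are all assumptions made for illustration.

```python
import numpy as np


def causal_dilated_conv1d(x, w, dilation):
    """Causal dilated 1-D convolution over the time axis.

    x: (T, C_in) sequence, one feature vector per time step.
    w: (K, C_in, C_out) kernel with K taps.
    y[t] uses only x[t], x[t - d], ..., x[t - (K - 1) * d]; positions before
    the start are zero-padded, so no future time step leaks into y[t].
    """
    T, c_in = x.shape
    K, _, c_out = w.shape
    pad = (K - 1) * dilation
    xp = np.concatenate([np.zeros((pad, c_in)), x], axis=0)  # left padding only
    y = np.zeros((T, c_out))
    for t in range(T):
        for k in range(K):
            # xp[t + pad] is x[t]; tap k reaches back (K - 1 - k) * dilation steps
            y[t] += xp[t + k * dilation] @ w[k]
    return y


def tcn_residual_block(x, w1, w2, dilation, w_skip=None):
    """Two causal dilated convolutions with ReLU, plus a residual connection.

    w_skip is an optional (C_in, C_out) matrix (a 1x1 convolution) used when
    input and output channel counts differ. Weight normalization and dropout,
    common in TCN implementations, are omitted in this sketch.
    """
    h = np.maximum(causal_dilated_conv1d(x, w1, dilation), 0.0)
    h = np.maximum(causal_dilated_conv1d(h, w2, dilation), 0.0)
    skip = x if w_skip is None else x @ w_skip
    return np.maximum(h + skip, 0.0)


def receptive_field(kernel_size, dilations):
    """Number of time steps seen by a stack of blocks (two convolutions each)."""
    return 1 + 2 * (kernel_size - 1) * sum(dilations)
```

Stacking such blocks with dilations 1, 2, 4, 8 and kernel size 3 gives `receptive_field(3, [1, 2, 4, 8]) == 61` time steps, which is why TCNs typically grow the dilation exponentially: long frame or audio-feature sequences are covered with only a few layers, while causality keeps each output dependent on past and current inputs only.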


Embodiment Construction

[0062] The present invention is further illustrated below with reference to the accompanying drawings and specific embodiments. It should be understood that these embodiments are only intended to illustrate the present invention and not to limit its scope. After reading the present invention, modifications of various equivalent forms made by those skilled in the art all fall within the scope defined by the appended claims of the present application.

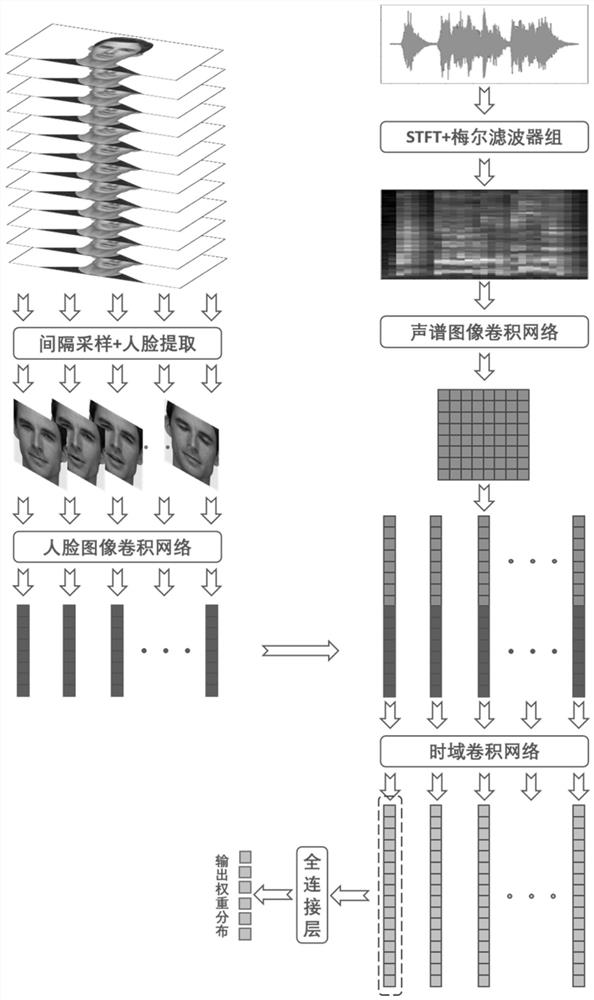

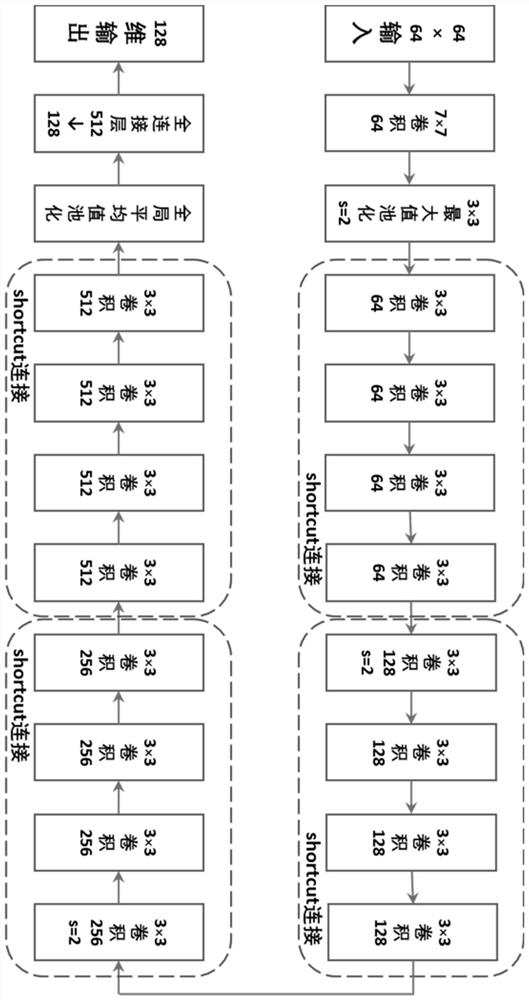

[0063] A multi-modal emotion recognition method based on a temporal convolutional network, as shown in Figure 1, includes:

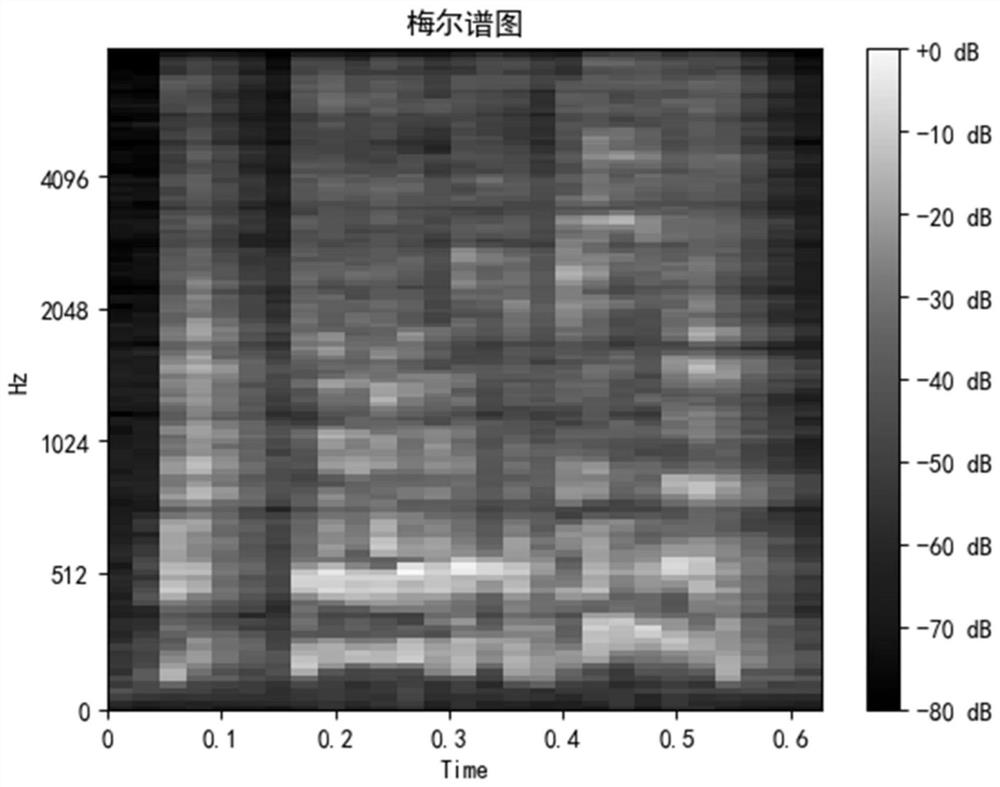

[0064] (1) Obtain a number of audio-video samples containing emotional information, sample the video-modality data of these samples at intervals, and perform face detection and facial key-point localization to obtain grayscale face image sequences.

[0065] This step specifically includes:

[0066] (1-1) Sampling the video modality da...
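Step (1) can be illustrated with a minimal NumPy sketch. It assumes decoded RGB frames in an array of shape (T, H, W, 3), a fixed sampling interval, and one face bounding box per sampled frame already supplied by some face detector. The detector itself, the key-point alignment step, the nearest-neighbour resize and the 64 x 64 output size are hypothetical placeholders, since this excerpt does not specify them. Grayscale conversion uses the ITU-R BT.601 luma weights (0.299, 0.587, 0.114), the same weights OpenCV's RGB-to-gray conversion applies.

```python
import numpy as np


def sample_frames(frames, interval):
    """Interval sampling: keep every `interval`-th frame, starting at frame 0."""
    if interval < 1:
        raise ValueError("interval must be >= 1")
    return frames[::interval]


def to_grayscale(frame_rgb):
    """Convert an (H, W, 3) uint8 RGB frame to (H, W) uint8 using BT.601 weights."""
    weights = np.array([0.299, 0.587, 0.114])
    gray = frame_rgb.astype(np.float64) @ weights
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)


def crop_face(gray, box, size):
    """Crop box = (x, y, w, h) and resize to (size, size) by nearest neighbour.

    The box is assumed to come from a face detector (hypothetical here);
    key-point-based alignment is not modelled in this sketch.
    """
    x, y, w, h = box
    face = gray[y:y + h, x:x + w]
    rows = np.arange(size) * h // size  # source row for each output row
    cols = np.arange(size) * w // size  # source column for each output column
    return face[np.ix_(rows, cols)]


def face_sequence(frames, interval, boxes, size=64):
    """Build a (N, size, size) grayscale face sequence from sampled frames."""
    sampled = sample_frames(frames, interval)
    return np.stack(
        [crop_face(to_grayscale(f), b, size) for f, b in zip(sampled, boxes)]
    )
```

For example, 10 frames sampled with `interval=3` keep frames 0, 3, 6 and 9, so four boxes are needed and `face_sequence` returns an array of shape (4, size, size). In a full pipeline the boxes, and any landmarks used for alignment, would be produced per sampled frame by the face detection and key-point localization described in step (1).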

