A method of generating a neural network model for video prediction

A neural network model and neural network technology, applied in the field of unsupervised prediction of video frames, that addresses problems such as optical flow occlusion, fast movement, sensitivity to changes in illumination or nonlinear structures, blurry predictions, and unsatisfactory prediction results, and achieves a good long-term prediction effect.


Embodiment Construction

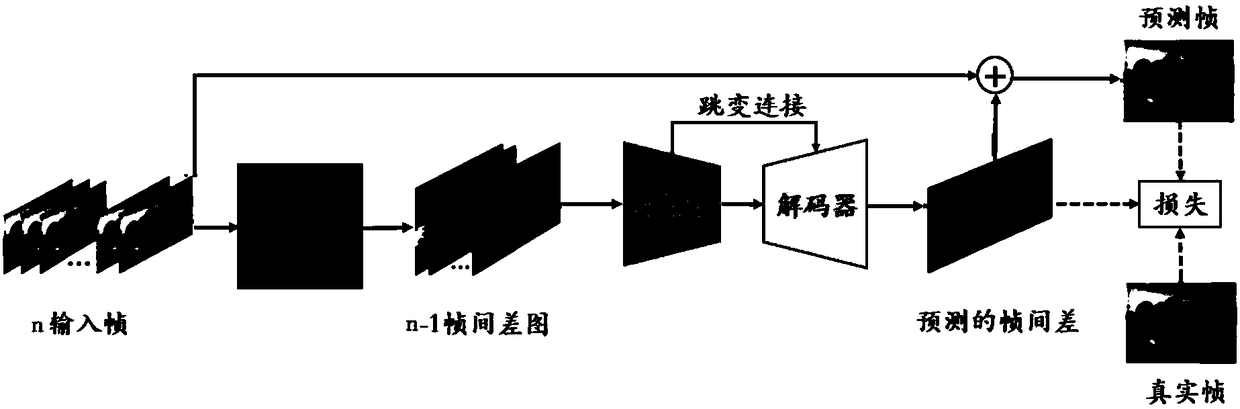

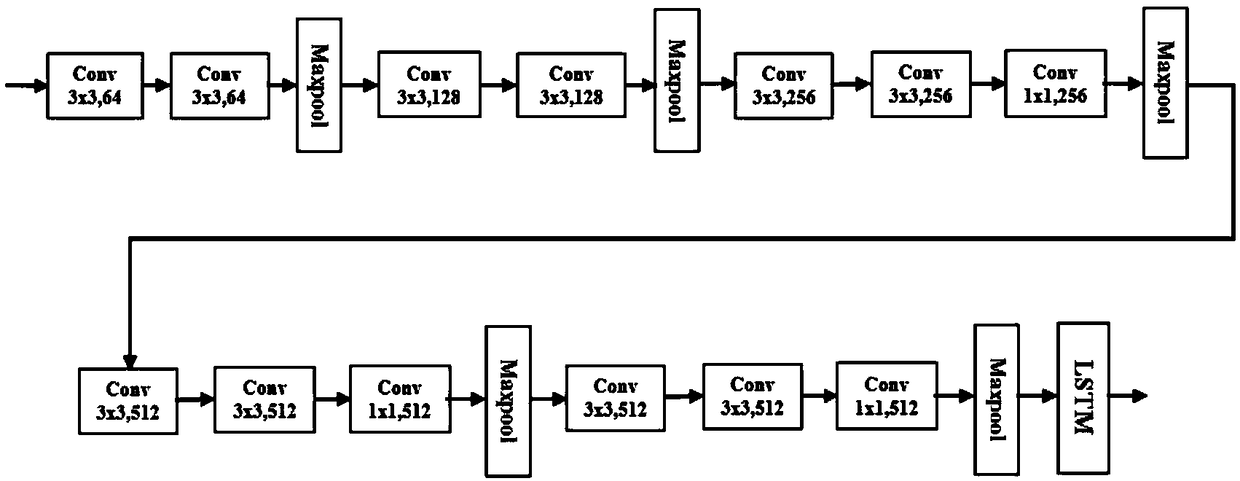

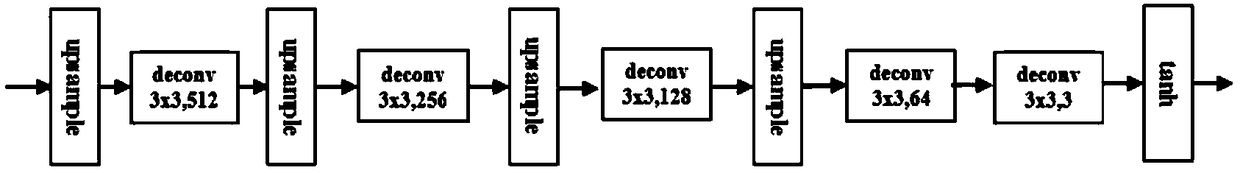

[0040] After studying the existing technology, the inventor found that current pixel-level video prediction techniques predict frame by frame. This requires a very large amount of computation both to build a video prediction model and to use the model for prediction, especially when the model is built by training a neural network. The inventor therefore proposes performing video prediction from the differences between multiple consecutive frames of a video file: the inter-frame differences of video samples are extracted, and a generator model G is established for encoding and decoding. The generator model G includes an encoder and a decoder, each with a neural network model structure. The encoder takes the inter-frame differences of the video samples as input, and a skip ("jump") connection between the encoder and the decoder is used to generate the inter-frame difference ΔX of the predicted frame, the sum of the predicted inter-frame diffe...
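The inter-frame difference idea in paragraph [0040] can be illustrated with a minimal NumPy sketch. Everything named here is hypothetical and not taken from the patent: `frame_differences`, `toy_generator`, and `predict_next_frame` are illustrative names, and `toy_generator` is an untrained stand-in (average pooling as the "encoder", nearest-neighbour upsampling as the "decoder", and an averaged skip path) rather than the generator model G itself. The source text is cut off before it states what ΔX is added to, so adding it to the last observed frame is an assumption made only for this example.

```python
import numpy as np


def frame_differences(video: np.ndarray) -> np.ndarray:
    """Return D[t] = X[t+1] - X[t] for a video of shape (T, H, W)."""
    video = video.astype(np.float32)
    return video[1:] - video[:-1]


def toy_generator(diffs: np.ndarray) -> np.ndarray:
    """Hypothetical, untrained placeholder for an encoder-decoder.

    Takes inter-frame differences of shape (T-1, H, W), with H and W even,
    and returns one predicted difference of shape (H, W).
    """
    last = diffs[-1]
    h, w = last.shape
    # "Encoder": 2x2 average pooling halves the spatial resolution.
    encoded = last.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))
    # "Decoder": nearest-neighbour upsampling restores the resolution.
    decoded = encoded.repeat(2, axis=0).repeat(2, axis=1)
    # Skip connection: the encoder input is merged with the decoder output.
    return 0.5 * (decoded + last)


def predict_next_frame(video: np.ndarray) -> np.ndarray:
    """Assumption: next frame = last observed frame + predicted difference."""
    delta_x = toy_generator(frame_differences(video))
    return video[-1].astype(np.float32) + delta_x


# Toy video: 5 frames of 4x4 pixels whose brightness grows by 1 per frame.
video = np.stack([np.full((4, 4), t) for t in range(5)])
diffs = frame_differences(video)
next_frame = predict_next_frame(video)

# The original frames can be recovered from the first frame plus the
# running sum of the differences, so the differences lose no information.
recovered = video[0] + np.cumsum(diffs, axis=0)
```

In a real system the placeholder would be replaced by trained convolutional encoder and decoder networks; the sketch only shows where the inter-frame differences enter and where a skip connection would merge encoder and decoder features.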
