166 results about "Efficient training process" patented technology

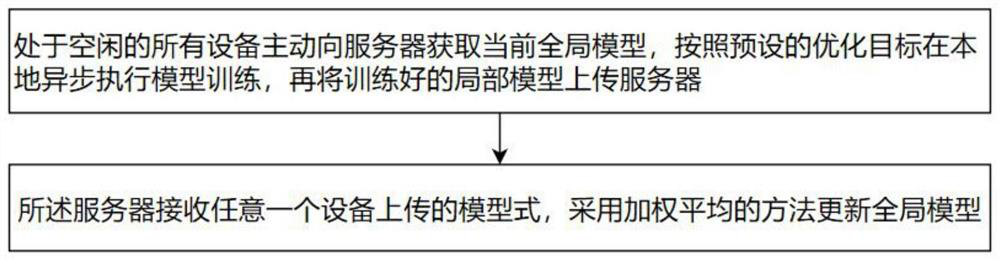

Edge computing-oriented federated learning method

Inactive | CN111708640A | Efficient training process | Make the most of free time | Resource allocation | Machine learning | Edge computing | Weighted average method

The invention provides an edge-computing-oriented federated learning method comprising the following steps: all idle devices actively fetch the current global model from a server, asynchronously train the model locally according to a preset optimization target, and upload the trained local model to the server; the server receives the local model uploaded by any one device and updates the global model by weighted averaging. The method combines asynchronous training with federated learning: in asynchronous federated optimization, all idle devices take part in asynchronous model training and the server updates the global model through a weighted average, so the idle time of all edge devices is fully used and model training is more efficient.
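The server-side update described above can be sketched as follows. This is a minimal illustration, not the patented procedure: the abstract does not say how the weights are chosen, so the mixing factor `alpha` and the function name `mix_into_global` are assumptions, and models are represented as flat lists of floats.

```python
from typing import List


def mix_into_global(global_w: List[float], local_w: List[float], alpha: float) -> List[float]:
    """Weighted average of the current global model and one uploaded local model.

    `alpha` is a hypothetical mixing weight in [0, 1]; the abstract does not
    specify how the weights of the average are chosen.
    """
    if len(global_w) != len(local_w):
        raise ValueError("models must have the same number of parameters")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return [(1.0 - alpha) * g + alpha * l for g, l in zip(global_w, local_w)]


global_model = [0.0, 0.0]
# Uploads are applied one at a time, in whatever order devices finish training.
for local_model in ([1.0, 2.0], [3.0, 0.0]):
    global_model = mix_into_global(global_model, local_model, alpha=0.5)
print(global_model)  # [1.75, 0.5]
```

Because each upload is merged as soon as it arrives, the server never waits for slow devices, which is the point of the asynchronous scheme.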

Owner:苏州联电能源发展有限公司

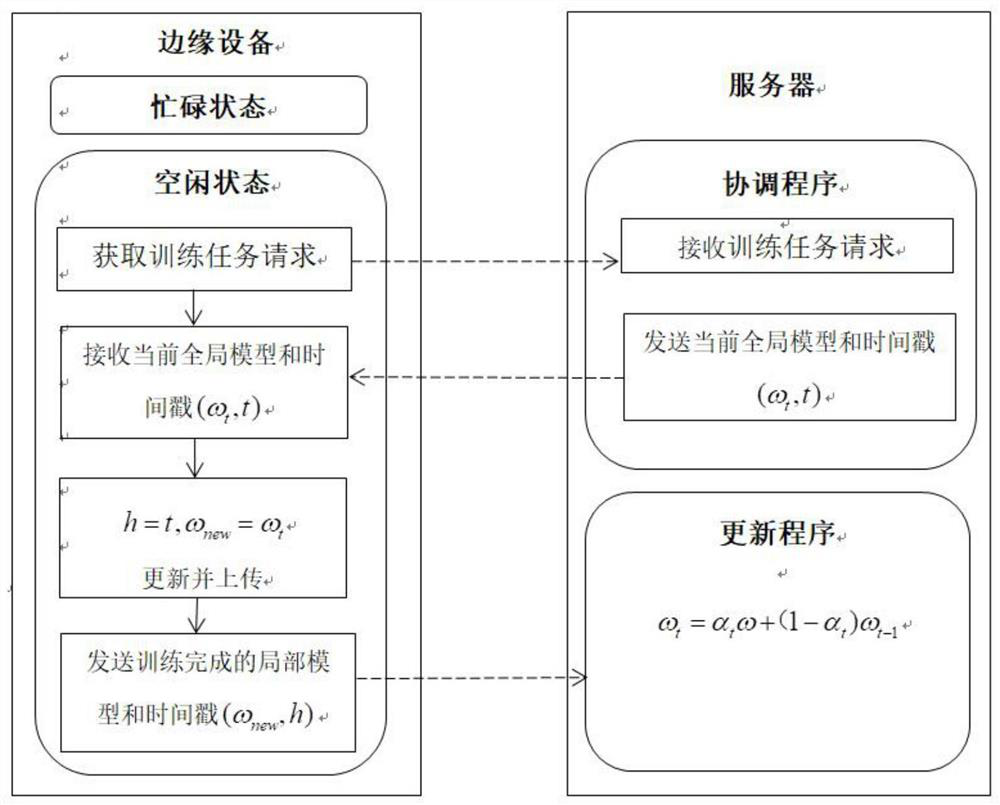

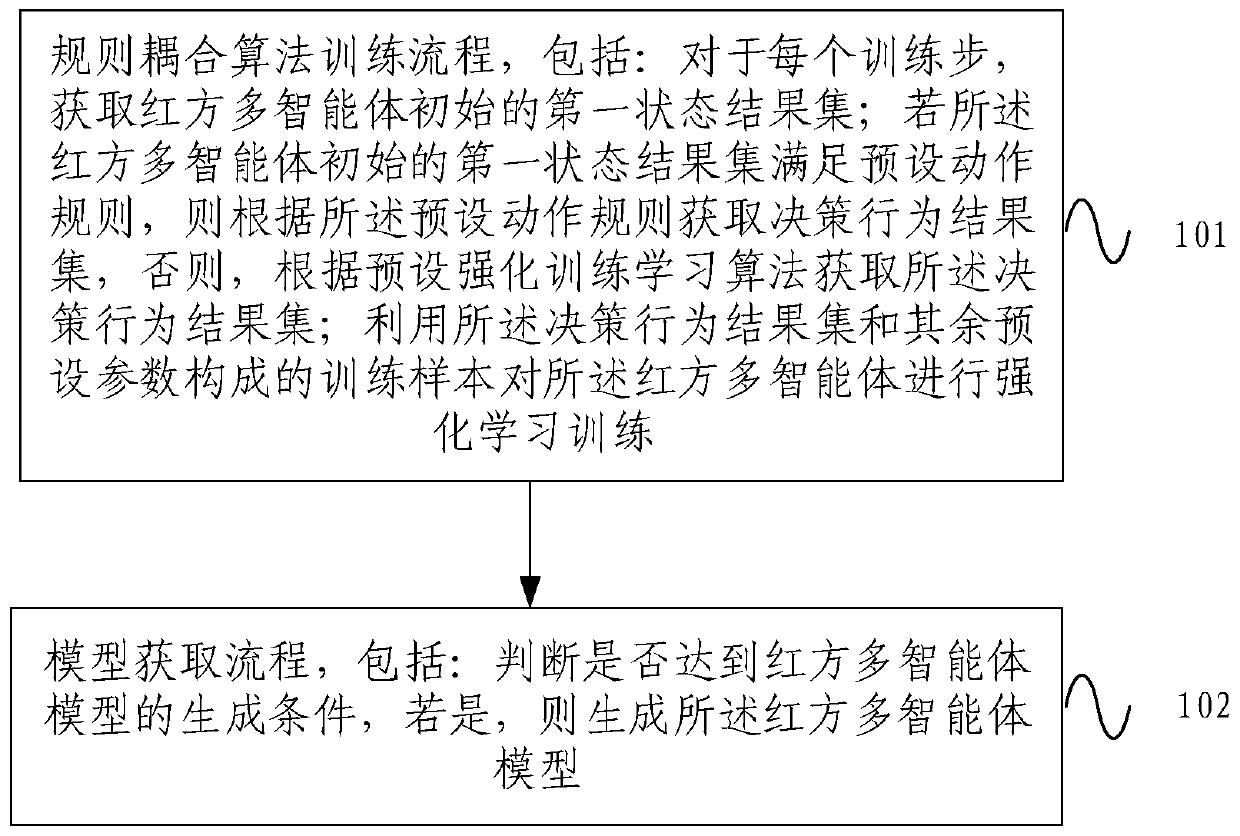

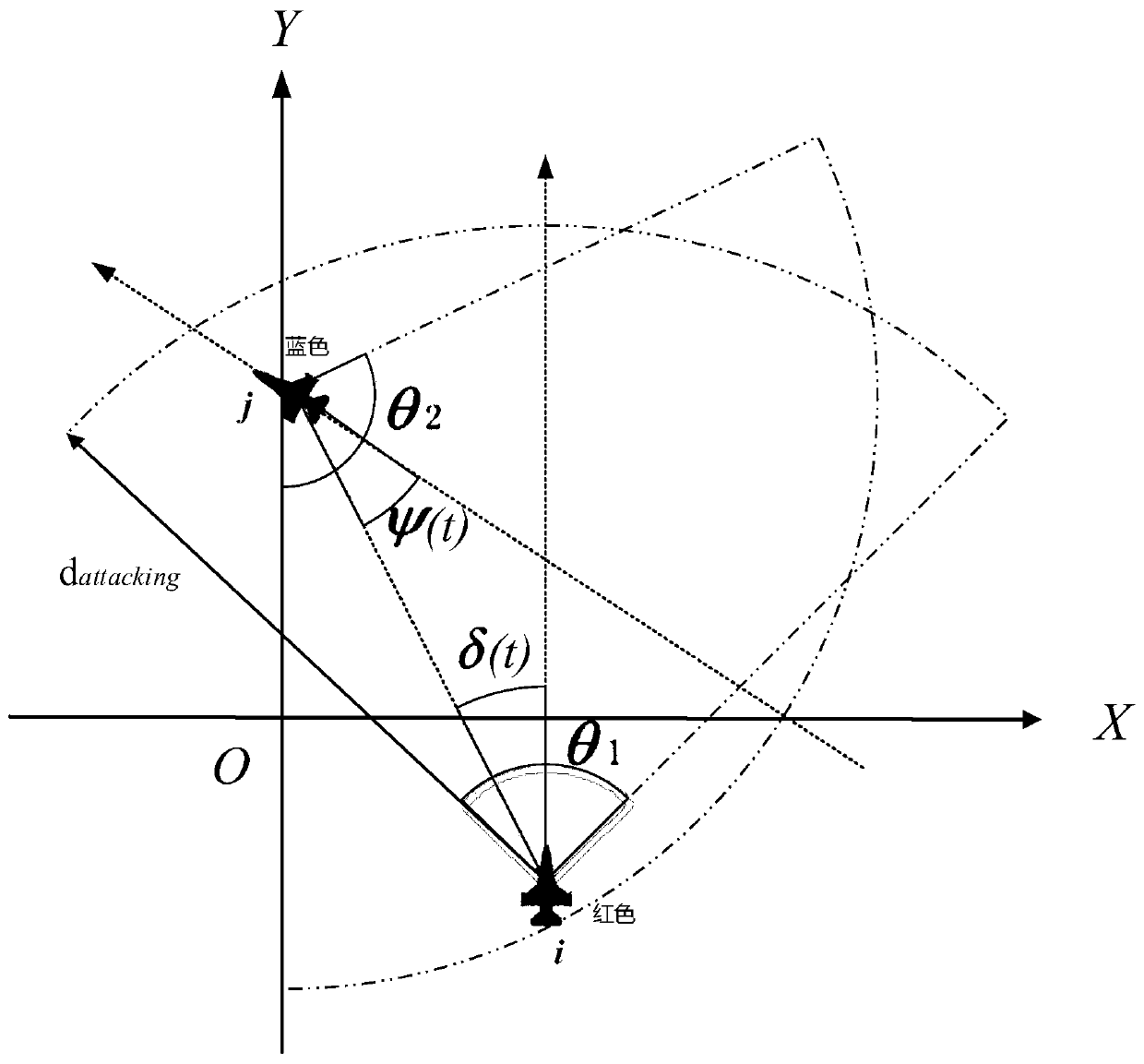

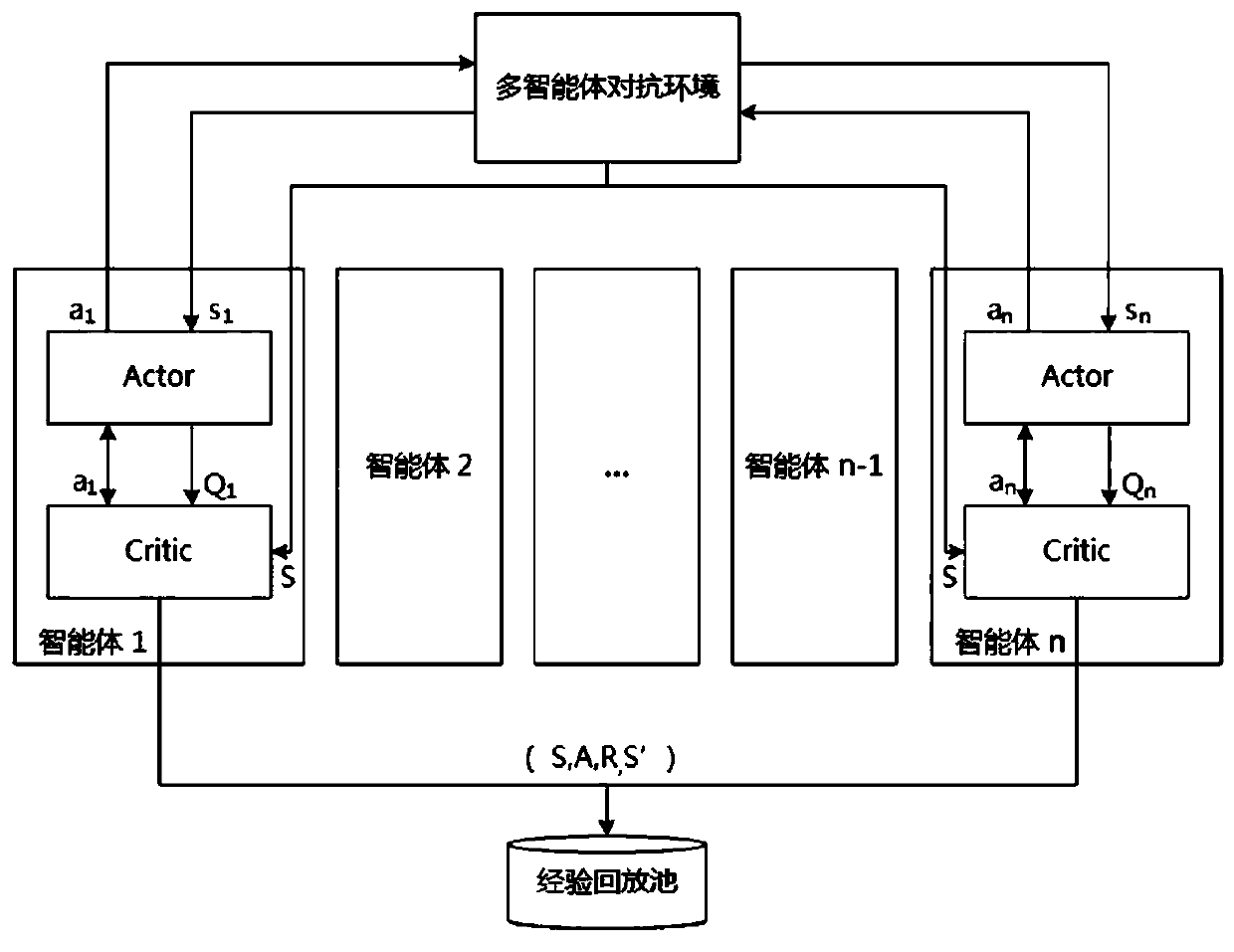

Reinforcement learning training optimization method and device for multi-agent confrontation

Active | CN110991545A | Improve training efficiency | Fast training | Character and pattern recognition | Artificial life | Algorithm | Engineering

The embodiment of the invention provides a reinforcement learning training optimization method and device for multi-agent confrontation. The method includes a rule-coupled algorithm training process: at each training step, an initial first state result set of the red-party multi-agent is acquired; if that set meets a preset action rule, a decision-making behavior result set is obtained according to the preset action rule; otherwise, the decision-making behavior result set is obtained according to a preset reinforcement learning algorithm. Reinforcement learning training is then performed on the red-party multi-agent using training samples formed from the decision-making behavior result set and other preset parameters. Throughout training, the preset action rule guides the agents' actions and avoids invalid actions, which addresses the excessive invalid exploration and slow training speed of prior-art training processes and significantly improves training efficiency.
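The rule-first, policy-otherwise decision described above can be sketched like this. The rule, the policy, the state fields, and the action names are all hypothetical; the abstract only states that a preset action rule takes precedence over the learning algorithm when it applies.

```python
import random
from typing import Callable, Dict, Optional, Tuple

State = Dict[str, float]
Action = str


def choose_action(
    state: State,
    rule: Callable[[State], Optional[Action]],
    policy: Callable[[State], Action],
) -> Tuple[Action, str]:
    """Return the action and its source: the rule if it fires, else the policy."""
    ruled = rule(state)
    if ruled is not None:
        return ruled, "rule"
    return policy(state), "policy"


def low_health_rule(state: State) -> Optional[Action]:
    """Hypothetical preset rule: retreat when health drops below 20."""
    return "retreat" if state["health"] < 20 else None


def random_policy(state: State) -> Action:
    """Stand-in for the reinforcement learning policy (random exploration)."""
    return random.choice(["advance", "hold", "attack"])


print(choose_action({"health": 10.0}, low_health_rule, random_policy))  # ('retreat', 'rule')
```

Both kinds of decision would then be stored as training samples, so the learner also benefits from the rule-guided steps.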

Owner:NAT INNOVATION INST OF DEFENSE TECH PLA ACAD OF MILITARY SCI

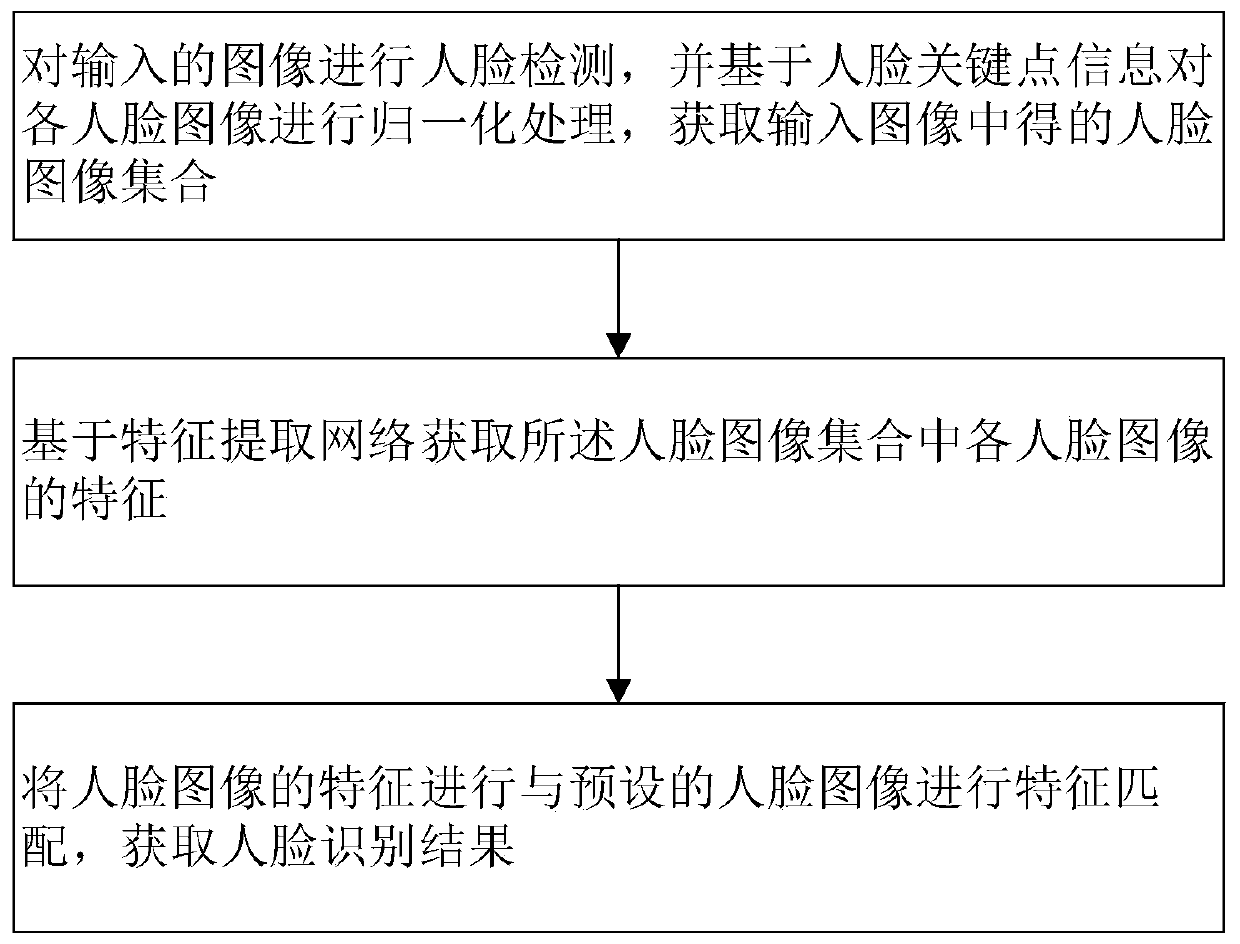

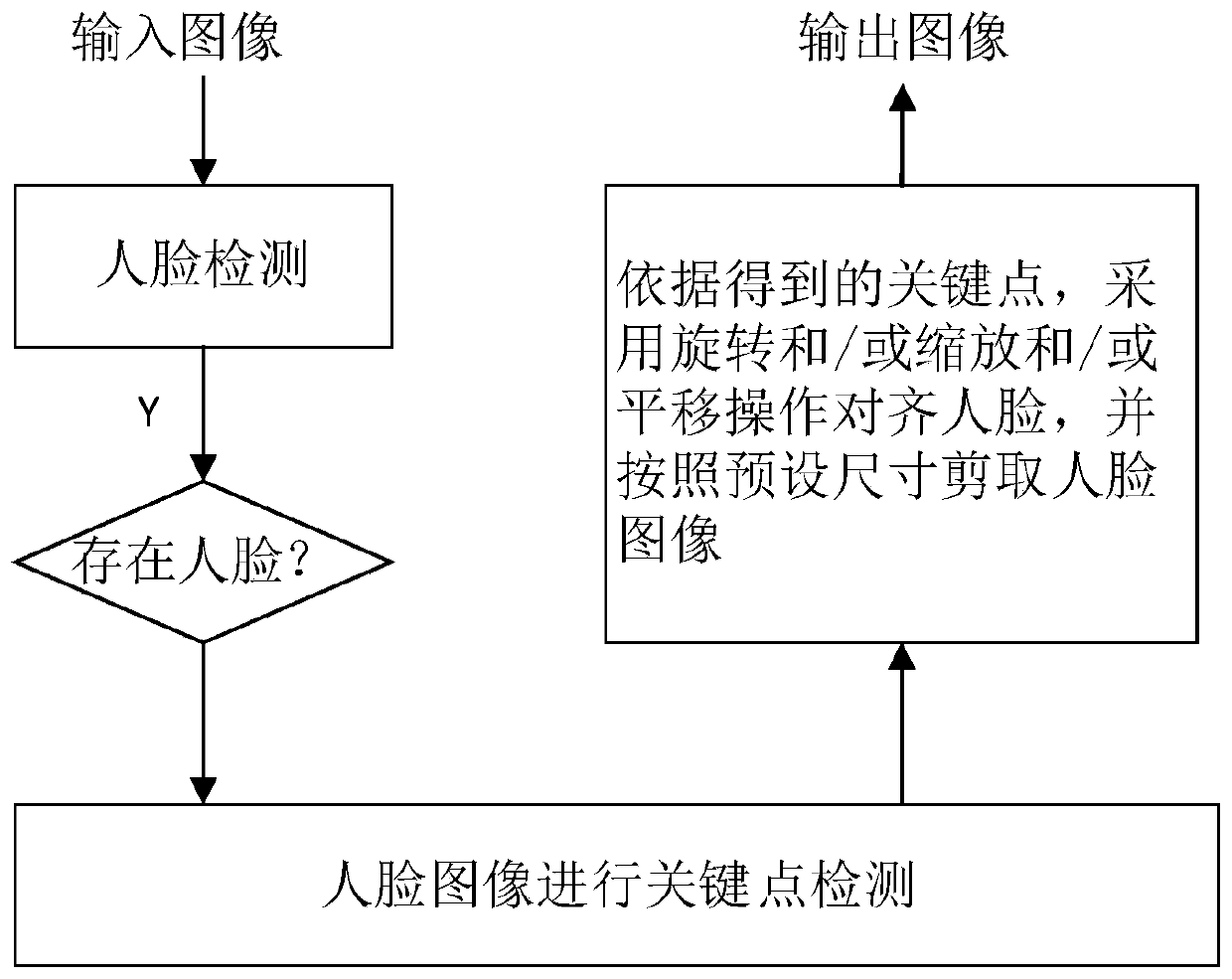

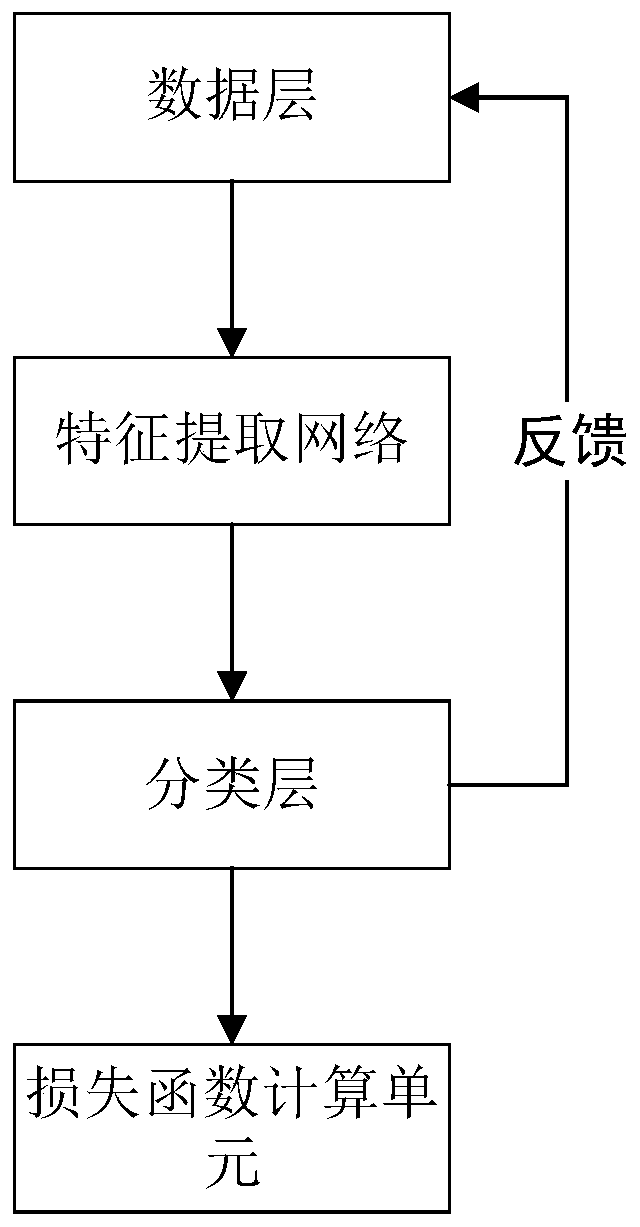

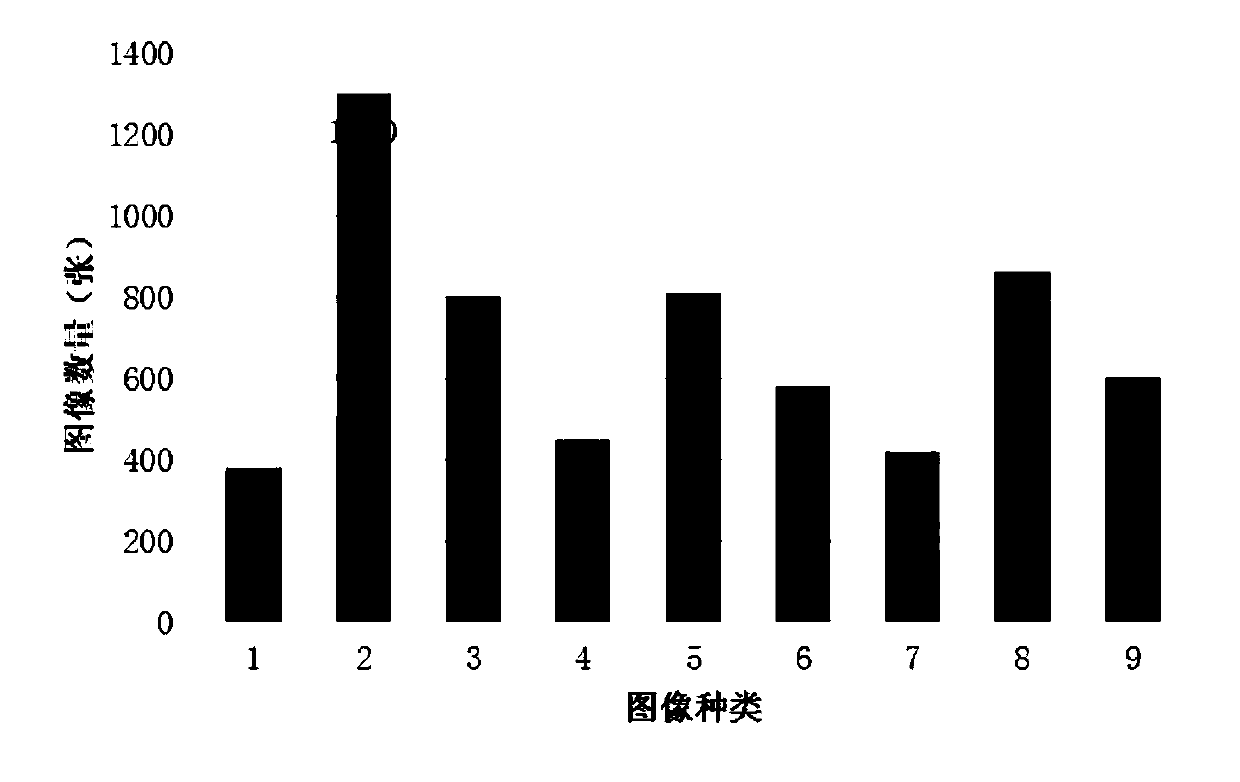

Neural-network-based large-scale unbalanced data face recognition method and system

Active | CN109948478A | Improve performance | Efficient model training | Character and pattern recognition | Neural architectures | Self adaptive | Face perception

The invention belongs to the field of face recognition and in particular relates to a neural-network-based method and system for face recognition on large-scale unbalanced data, aiming to solve the problems of optimizing over large-scale data and improving face recognition efficiency. The method improves the model's face recognition performance by improving the loss function and the sampling scheme: on the loss side, a loss function with an adaptive boundary margin is proposed to cope with unbalanced face data; on the sampling side, improved schemes are proposed for data sampling and classification template sampling. The method enables efficient model training on large-scale unbalanced face data and improves performance.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

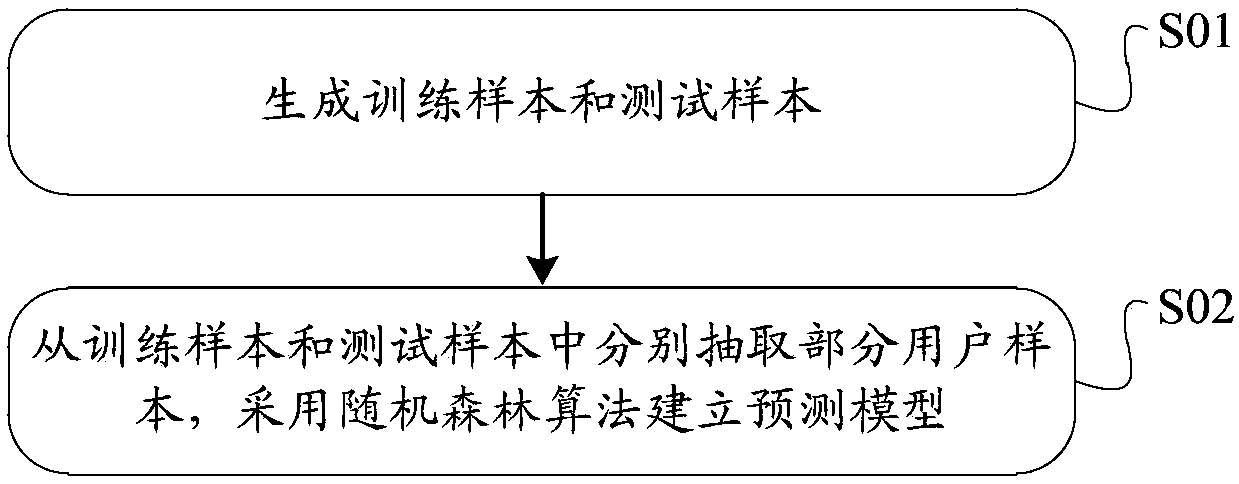

Training method and device for financial risk identification model, computer equipment and medium

Pending | CN111724083A | Improve generalization ability | Efficient training process | Finance | Character and pattern recognition | Engineering | Risk identification

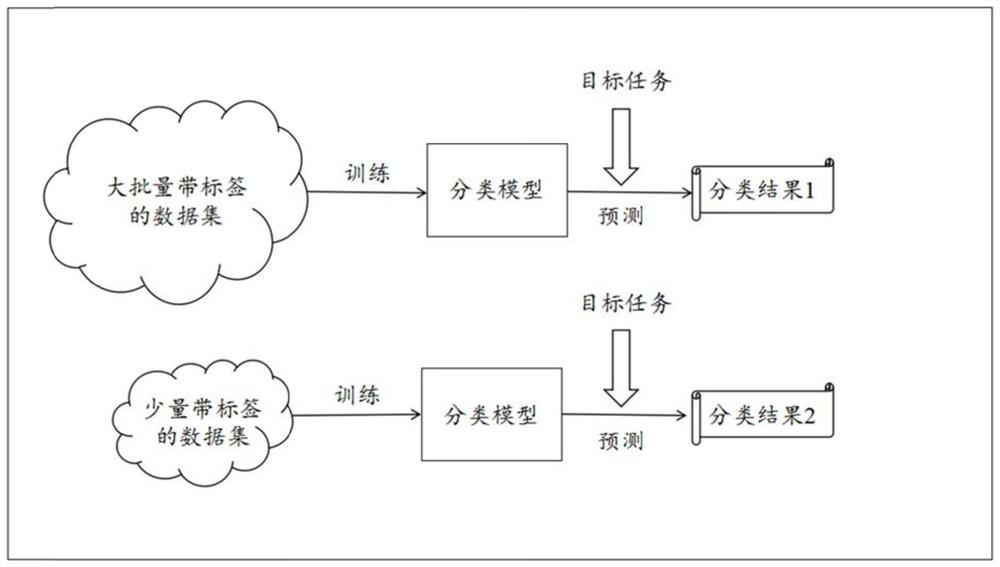

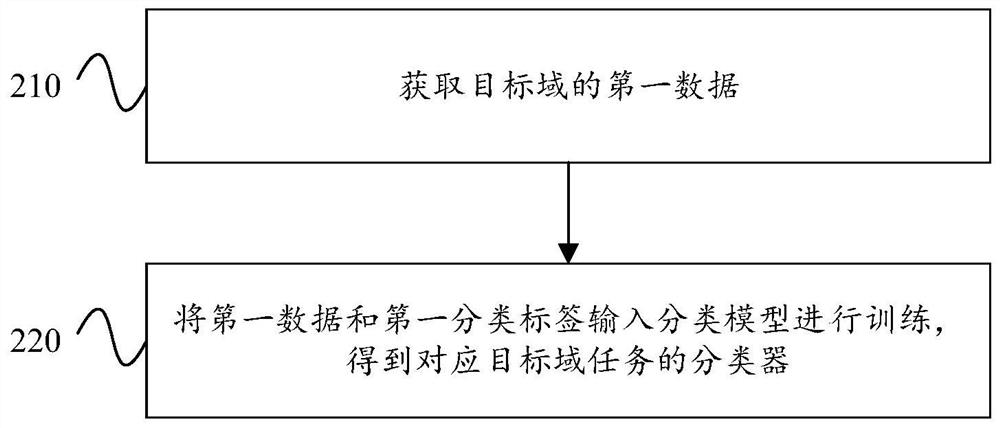

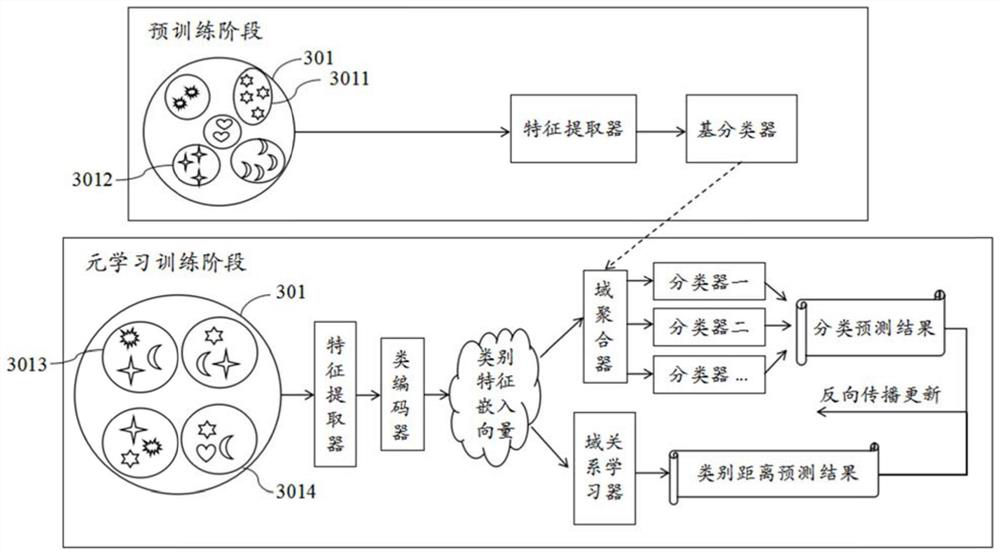

The invention discloses a training method and device for a financial risk identification model, computer equipment and a medium. The method comprises: obtaining first user data, carrying a first credit risk label, of a target-domain financial project; inputting the first user data into a meta-learner for training; and obtaining a classifier for the target-domain financial project for risk identification. Because the classifier is trained by meta-learning, prior knowledge from source-domain tasks can be transferred effectively, so fewer labeled samples are required for model training, the generalization performance of the identification model improves, and training is faster and more efficient. In addition, while the meta-learner is trained, the source-domain correlation among the categories of the task sets is learned, so that during transfer learning prior knowledge is transferred from tasks closer to the current target-domain task, which improves identification accuracy. The method can be widely applied in the technical field of machine learning.

Owner:TENCENT TECH (SHENZHEN) CO LTD

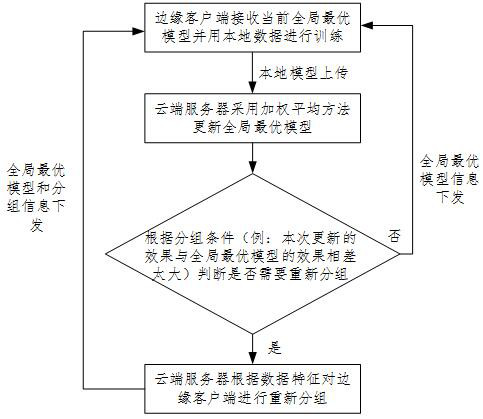

Federated learning model training method based on data feature perception aggregation

Pending | CN112488322A | Mitigate the effects of training | Efficient training process | Resource allocation | Character and pattern recognition | Dimensionality reduction | Engineering

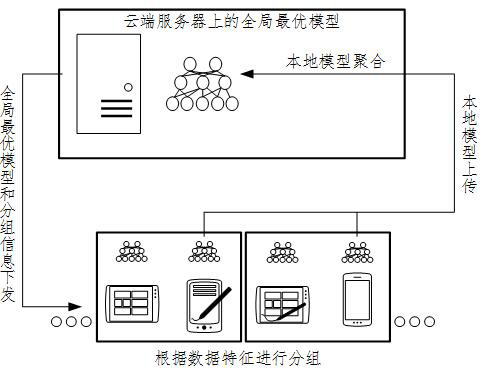

The invention provides a federated learning model training method based on data feature perception aggregation. A cloud server calculates a global optimal model from the local models uploaded by edge clients, reduces the dimensionality of the data features mined from the edge clients, screens out key features, and clusters the edge clients into groups based on those key features. The cloud server then issues the grouping information and the global optimal model to the edge clients. Each edge client trains a model locally on its own data, starting from the received global optimal model; within each edge client group, either one local model is selected at random or the best model in the group is selected and uploaded to the cloud server. The method makes full use of the data features of the edge clients and groups the clients accordingly, which avoids unnecessary communication, greatly relieves the influence of statistical heterogeneity on model training, and improves training efficiency.

Owner:HANGZHOU DIANZI UNIV

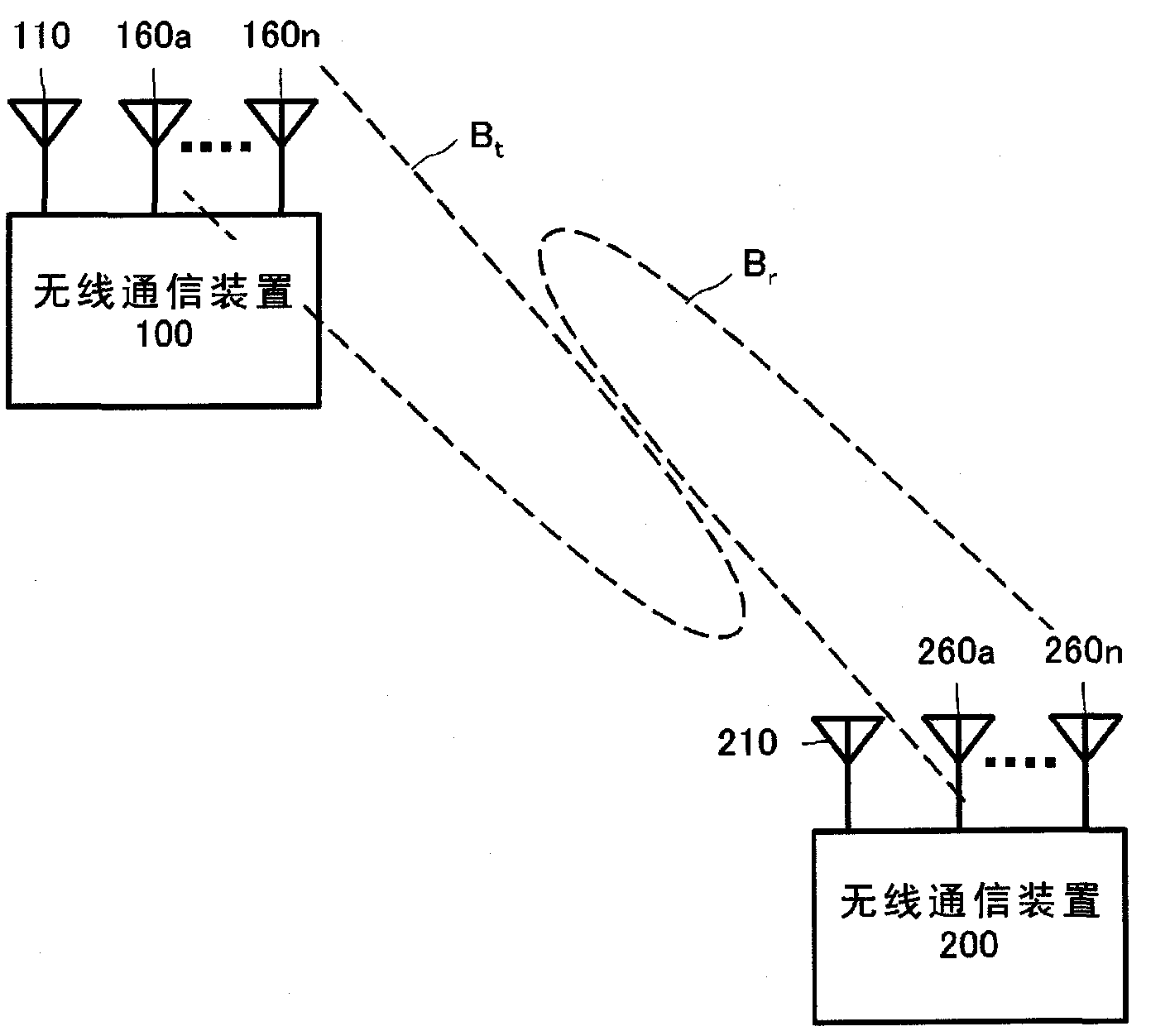

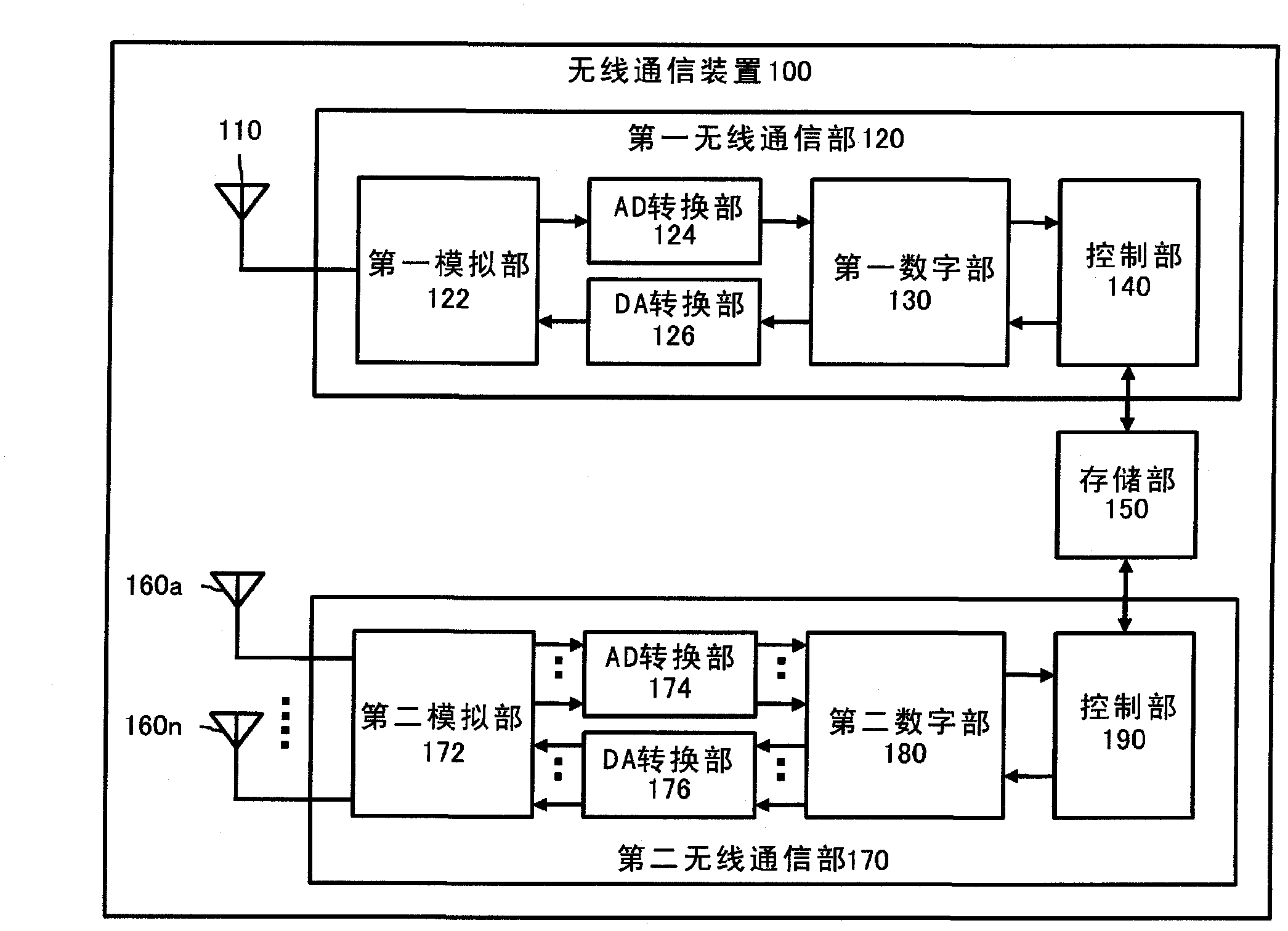

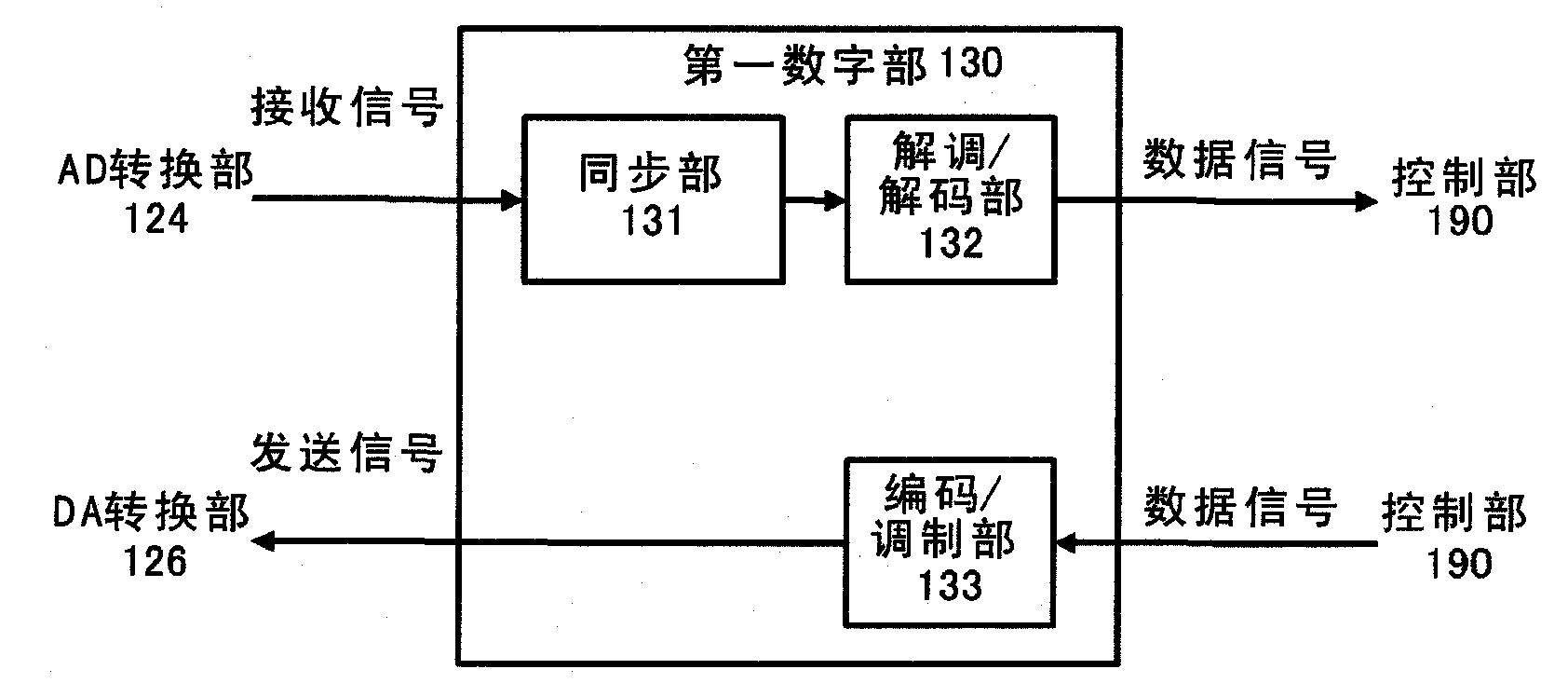

Communication device, communication method, computer program, and communication system

Inactive | CN102326339A | Simple method | Efficient training process | Polarisation/directional diversity | Network topologies | Communications system | Beam pattern

In addition to efficiently determining the antenna directivity between a transmitter and a receiver, the disclosed device, communication method and computer program efficiently feed back the determined results from the receiver to the transmitter. When transmission beam pattern order corresponding to various time slots in a signal for beam determination, or names representing the various transmission beam patterns are set communally through presetting or pre-negotiation between transmission and reception, the receiving side is able to predict reception power for each time slot based on shifts in the reception power of the beam determination signal, and also records and feeds back, in a notification signal, the transmission beam pattern name or time slot number that corresponds to the time slot realizing the maximum reception power.
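The receiver-side selection described above amounts to finding the time slot with the highest reception power and reporting the slot number or beam pattern name agreed for it. A minimal sketch, assuming the per-slot powers have already been measured; the beam names and dBm values are made up for illustration.

```python
from typing import List, Sequence, Tuple


def best_beam(slot_powers_dbm: Sequence[float], beam_order: Sequence[str]) -> Tuple[int, str]:
    """Return (slot number, beam pattern name) for the slot with maximum reception power.

    `beam_order[i]` is the transmission beam pattern that both sides agreed,
    by presetting or pre-negotiation, to use in time slot i.
    """
    if not slot_powers_dbm:
        raise ValueError("no measurements")
    if len(slot_powers_dbm) != len(beam_order):
        raise ValueError("one power measurement is needed per time slot")
    slot = max(range(len(slot_powers_dbm)), key=lambda i: slot_powers_dbm[i])
    return slot, beam_order[slot]


beam_order: List[str] = ["B0", "B1", "B2", "B3"]   # hypothetical pattern names
measured = [-71.5, -64.0, -58.2, -66.9]            # hypothetical powers in dBm
print(best_beam(measured, beam_order))  # (2, 'B2')
```

Feeding back only a slot number or a pattern name keeps the notification signal short, since the transmitter can map it back to a beam pattern on its own.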

Owner:SONY CORP

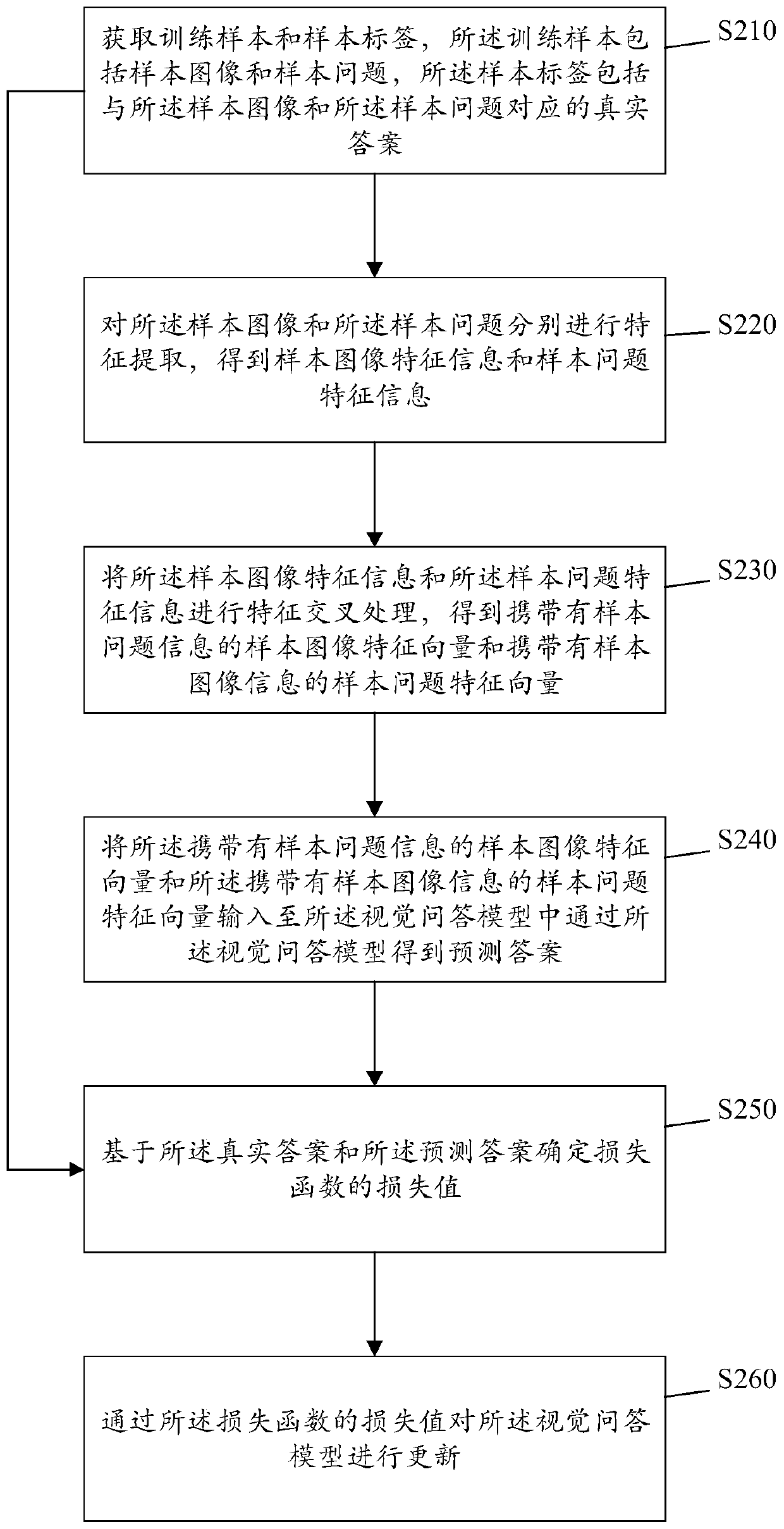

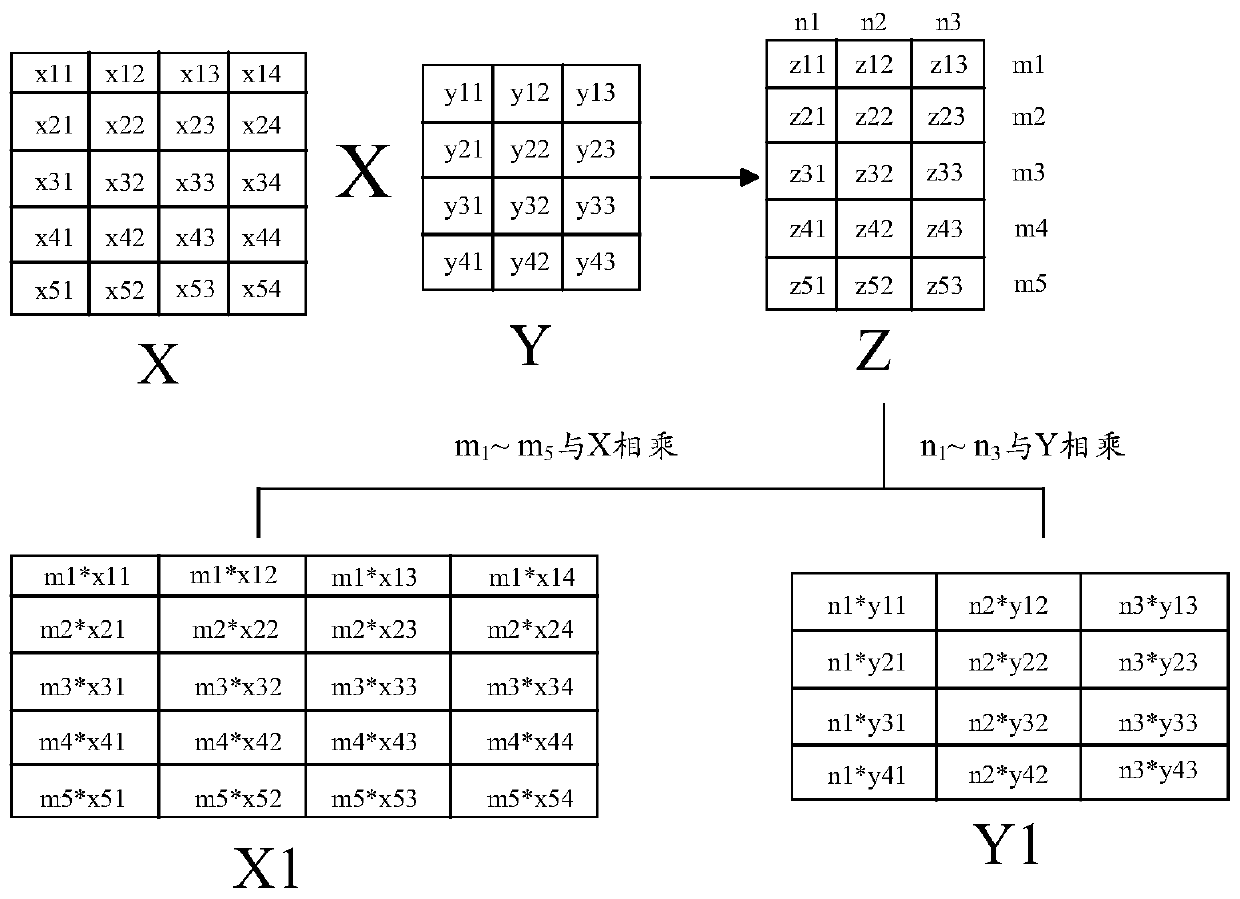

Visual question-answering model training method and device

Active | CN110348535A | Efficient training process | Increase richness | Character and pattern recognition | Feature vector | Sample image

The invention provides a visual question-answering model training method and device, and relates to the technical field of computers. The visual question-answering model training method comprises the steps of: obtaining a training sample and a sample label; extracting sample image feature information and sample question feature information; performing feature cross processing on the sample image feature information and the sample question feature information to obtain a sample image feature vector carrying the sample question information and a sample question feature vector carrying the sample image information; inputting both feature vectors into the visual question-answering model to obtain a predicted answer; determining the value of a loss function based on the real answer and the predicted answer; and updating the visual question-answering model using that loss value.

Owner:BEIJING KINGSOFT DIGITAL ENTERTAINMENT CO LTD +1

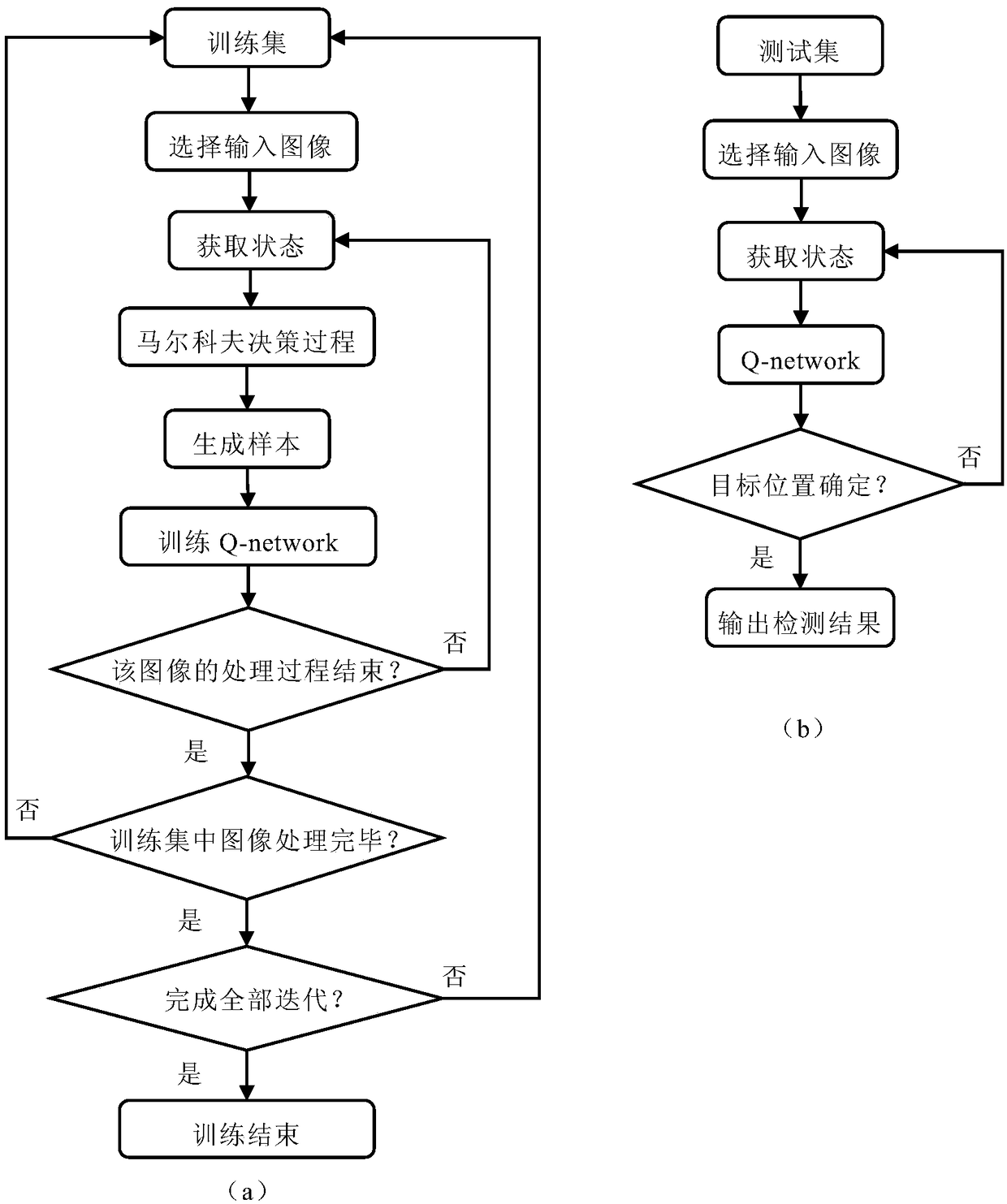

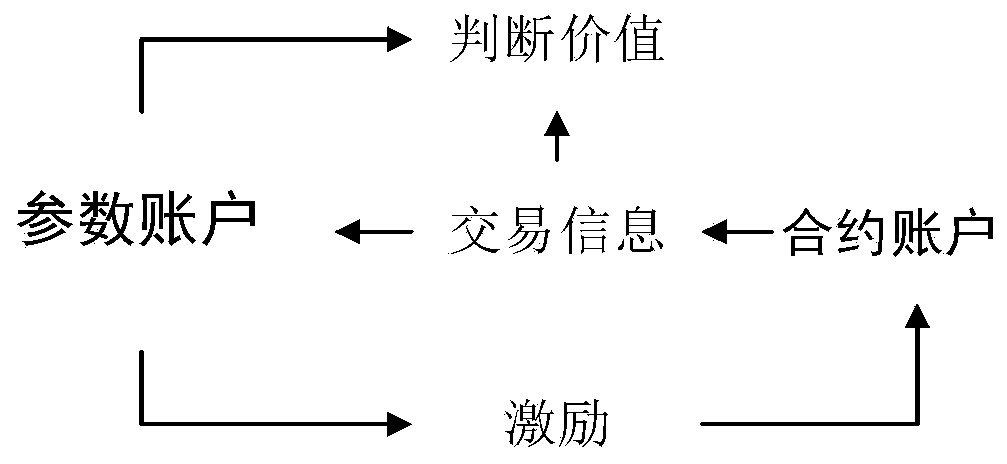

Target detection method for SAR (Synthetic Aperture Radar) image based on deep reinforcement learning

Active | CN108764006A | Save manpower and material resources | Improve detection accuracy | Scene recognition | Neural architectures | Imaging processing | Synthetic aperture radar

The invention relates to a target detection method for an SAR (Synthetic Aperture Radar) image based on deep reinforcement learning, which comprises the steps of S1, setting the number of iterations, and sequentially processing images in a training set in each iteration process; S2, inputting an image from the training set, and generating training samples by using the Markov decision process; S3, randomly selecting a certain number of samples, training the Q-network by adopting a gradient descent method, obtaining the state of a reduced observation area, generating a next sample until a preset termination condition is met, and terminating the image processing process; S4, returning back to the step S2, continuing to input the next image from the training set until all images are completely processed, and terminating the current iteration process; S5, continuing the next iteration process until the set number of iterations is met, and determining network parameters of the Q-network; and S6, performing target detection on an image in a test set through the trained Q-network, and outputting a detection result. The target detection method obtains good detection accuracy in target detection for the SAR image.

Owner:BEIHANG UNIV

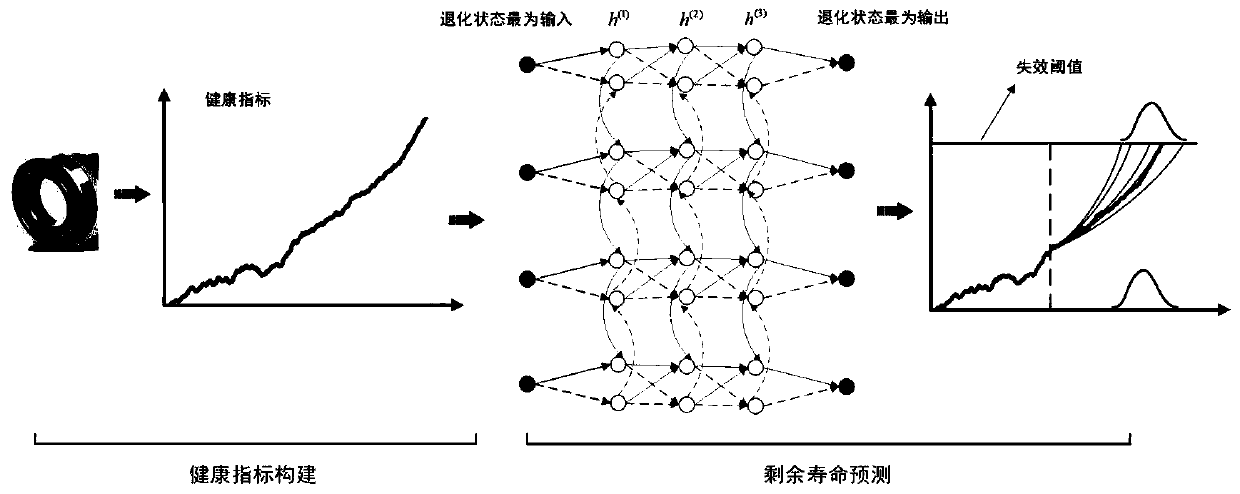

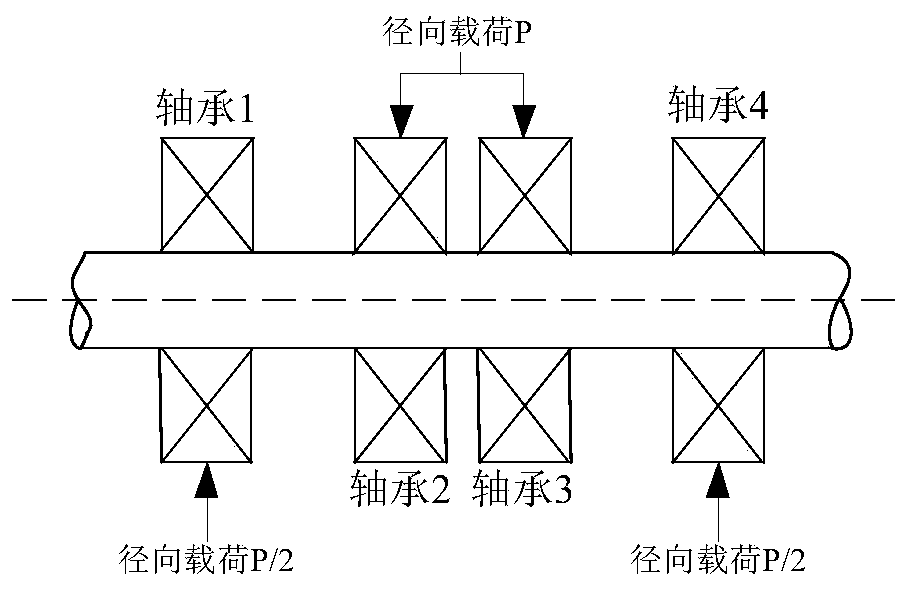

Rotary machine residual life prediction method based on a multi-layer bidirectional gated recurrent unit network

Active | CN110866314A | Efficiently obtain confidence intervals | High precision | Geometric CAD | Neural learning methods | Health index | Feature extraction

The invention discloses a rotary machine residual life prediction method based on a multi-layer bidirectional gated recurrent unit (GRU) network. The method comprises the following steps: acquiring a vibration signal; constructing health indexes; constructing a network training set; constructing a multi-layer bidirectional GRU network; training the network; carrying out network testing, residual life estimation and confidence interval acquisition; and evaluating the residual life prediction. The method exploits the strong feature extraction capability of deep learning, performs regression prediction with a bidirectional GRU neural network, and obtains the confidence interval of the residual life through the Bootstrap method. Because the accuracy of a recurrent neural network model is sensitive to the learning rate during training, and values that are too high or too low degrade prediction performance, the network is trained efficiently with a naturally exponentially decaying learning rate. The method can accurately predict the residual life of a rotary machine together with its confidence interval, can greatly reduce expensive unplanned maintenance, and helps avoid major accidents.
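Two of the ingredients named above, natural exponential decay of the learning rate and a Bootstrap confidence interval, can be sketched with the standard library alone. The decay constant, the percentile form of the bootstrap, and the sample residual-life values are assumptions for illustration; the abstract does not give the exact schedule or resampling scheme.

```python
import math
import random
from typing import Sequence, Tuple


def exp_decay_lr(initial_lr: float, decay_rate: float, epoch: int) -> float:
    """Natural exponential decay: lr(epoch) = initial_lr * exp(-decay_rate * epoch)."""
    return initial_lr * math.exp(-decay_rate * epoch)


def bootstrap_interval(
    values: Sequence[float], level: float = 0.95, n_resamples: int = 2000, seed: int = 0
) -> Tuple[float, float]:
    """Percentile bootstrap interval for the mean of `values`."""
    if not values:
        raise ValueError("need at least one value")
    rng = random.Random(seed)
    n = len(values)
    # Resample with replacement and record the mean of each resample.
    means = sorted(sum(rng.choices(values, k=n)) / n for _ in range(n_resamples))
    lower = means[int((1.0 - level) / 2.0 * (n_resamples - 1))]
    upper = means[int(math.ceil((1.0 + level) / 2.0 * (n_resamples - 1)))]
    return lower, upper


# Hypothetical residual-life predictions (hours) from repeated model runs.
rul_predictions = [118.0, 121.5, 125.0, 119.2, 123.8, 120.4]
low, high = bootstrap_interval(rul_predictions)
print([round(exp_decay_lr(0.01, 0.1, e), 5) for e in range(3)], (low, high))
```

The schedule starts at `initial_lr` and shrinks smoothly, which avoids having to hand-tune a single fixed learning rate.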

Owner:SOUTHEAST UNIV

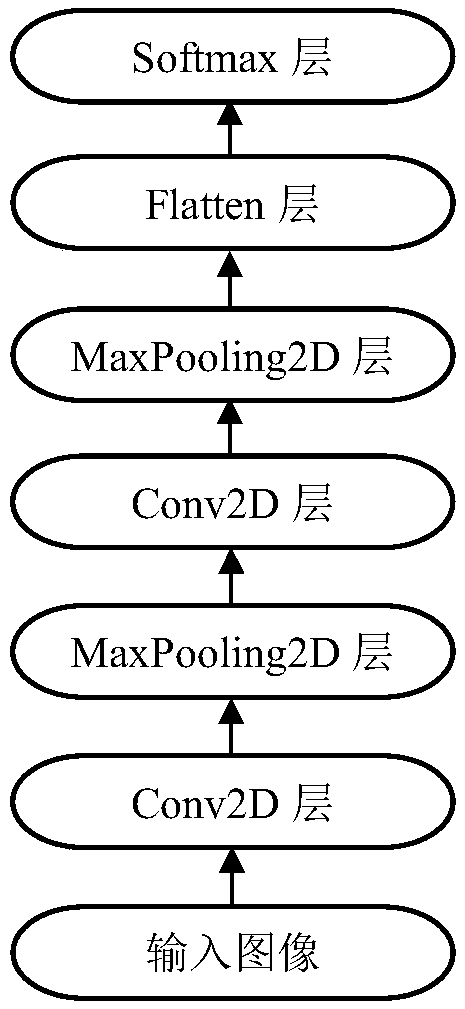

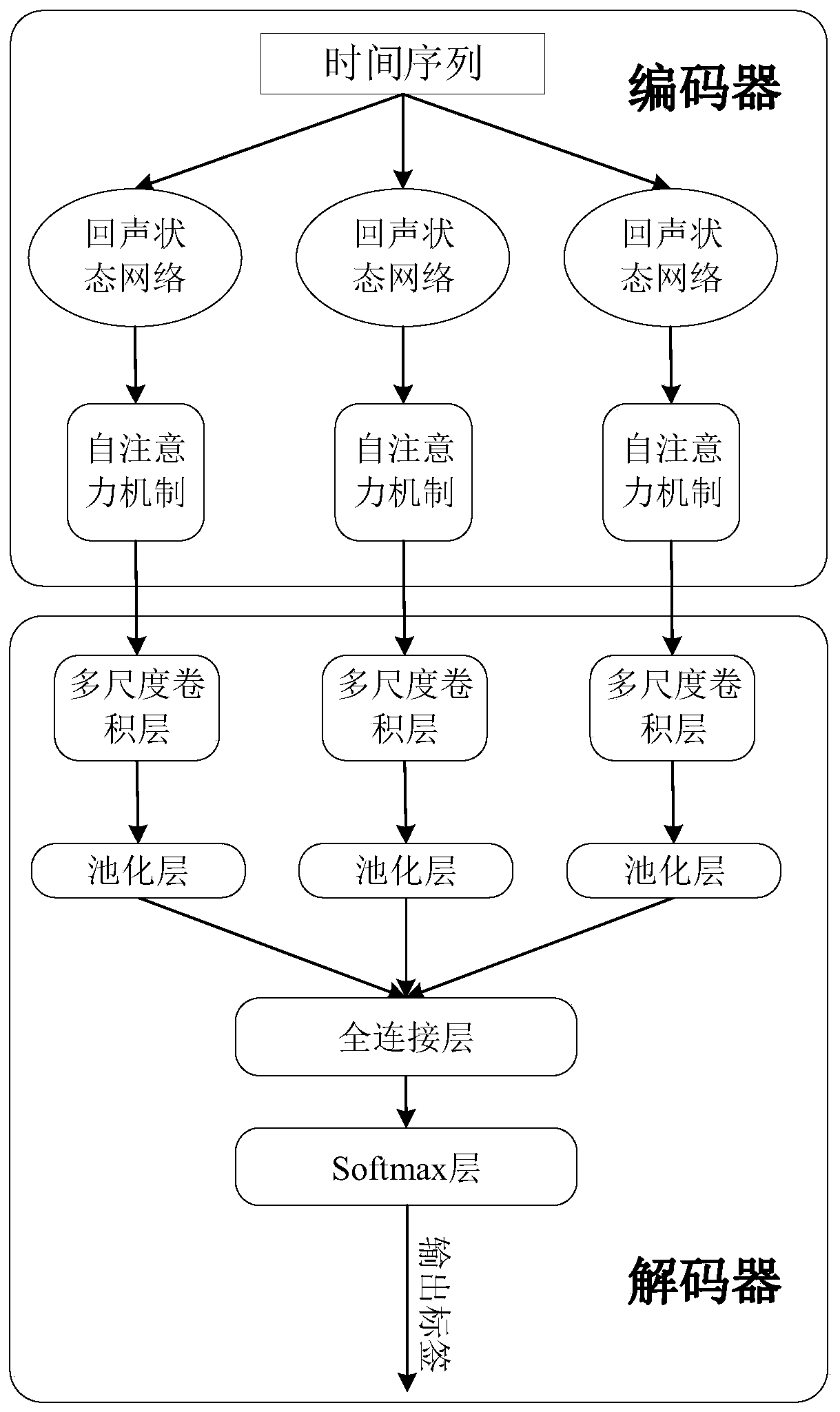

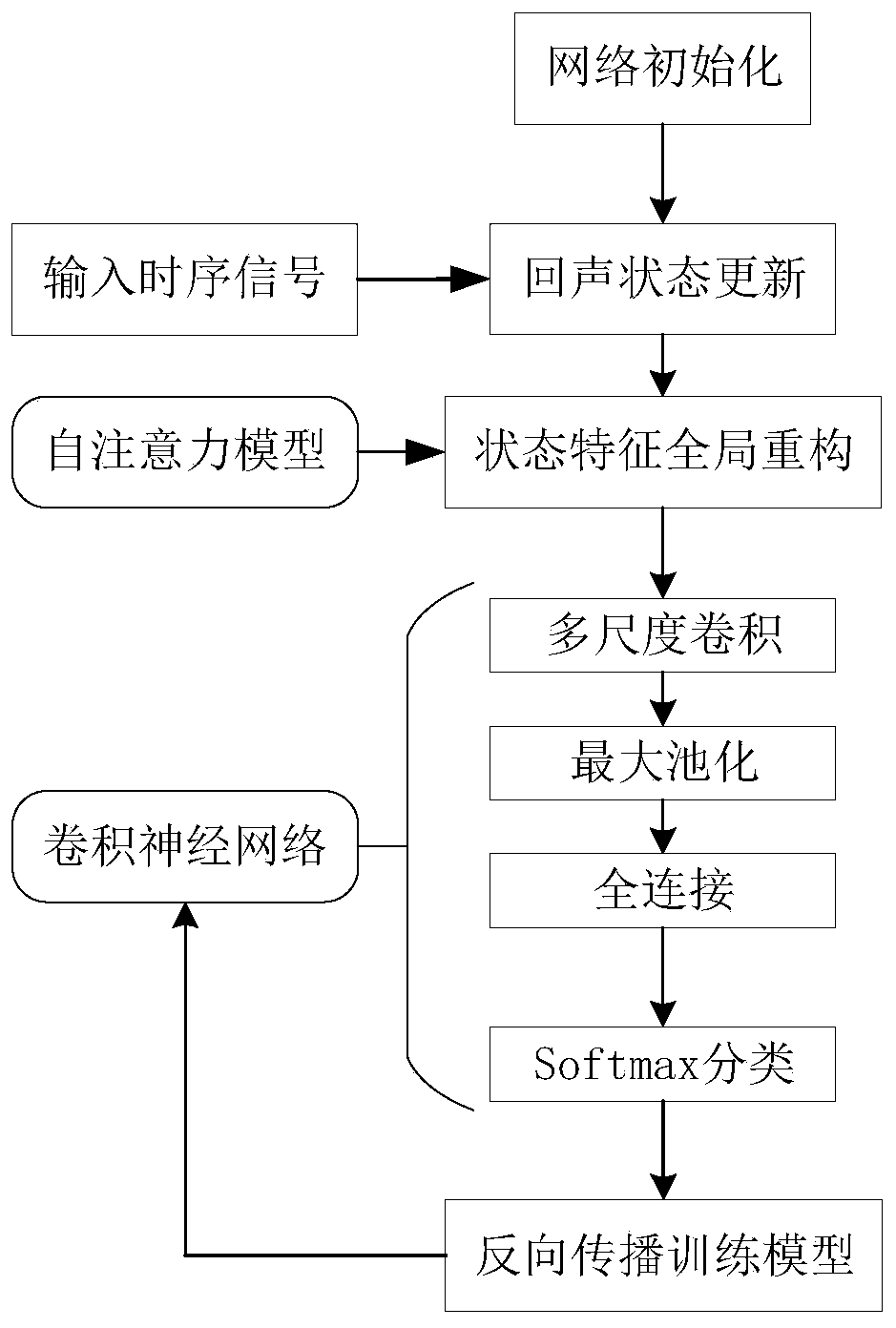

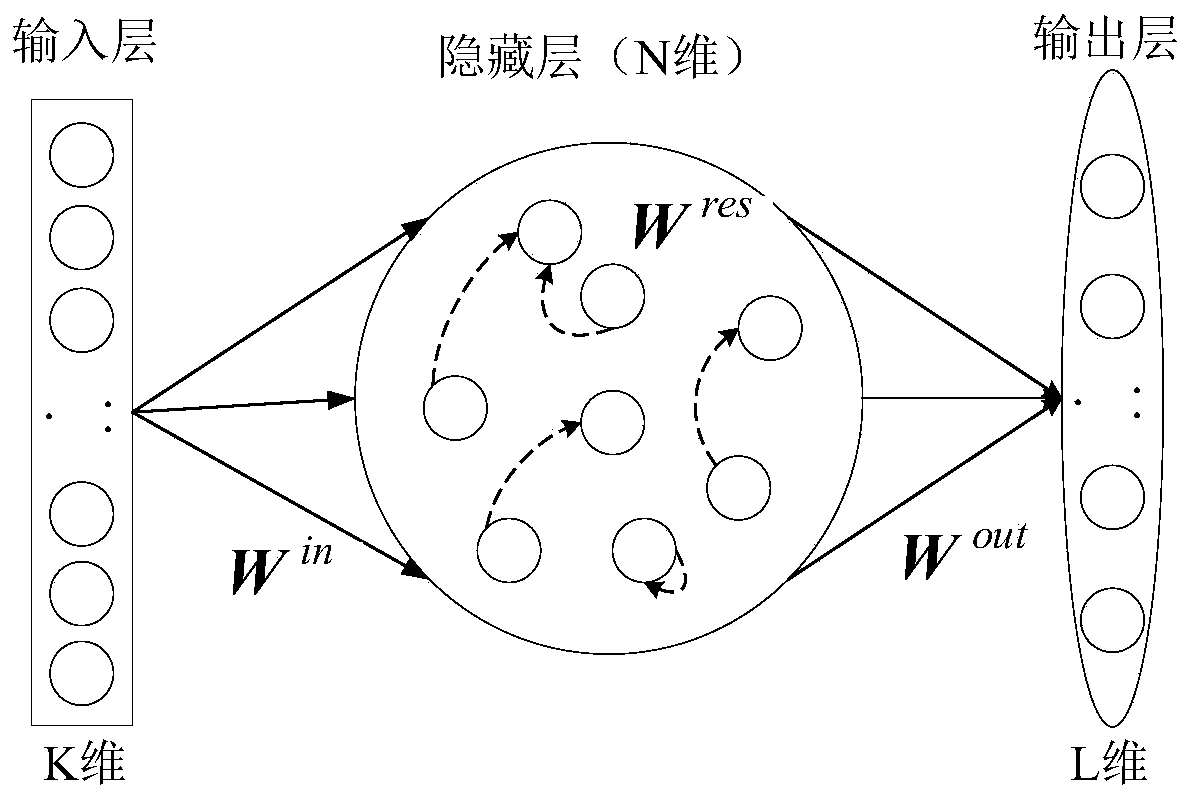

A convolutional echo state network time sequence classification method based on a multi-head self-attention mechanism

Inactive | CN109919205A | Keep training free | Implement Recode Integration | Character and pattern recognition | Neural architectures | Spacetime | Self attention

The invention discloses a convolutional echo state network time series classification method based on a multi-head self-attention mechanism. The method is built from two components, an encoder and a decoder. The encoder consists of echo state networks with a multi-head self-attention mechanism: an input time series is first mapped into a high-dimensional encoding by several echo state networks to generate the original echo state features, and the global spatio-temporal information of these features is then re-encoded by the self-attention mechanism, so that the re-integrated high-dimensional feature representation is more discriminative. Finally, a shallow convolutional neural network serves as the decoder to achieve high-precision classification. The resulting classification model inherits the high training efficiency of the echo state network, re-encodes the spatio-temporal feature information by introducing a self-attention model that adds no parameters, achieves high-precision time series classification, and is simple and efficient.

Owner:SOUTH CHINA UNIV OF TECH

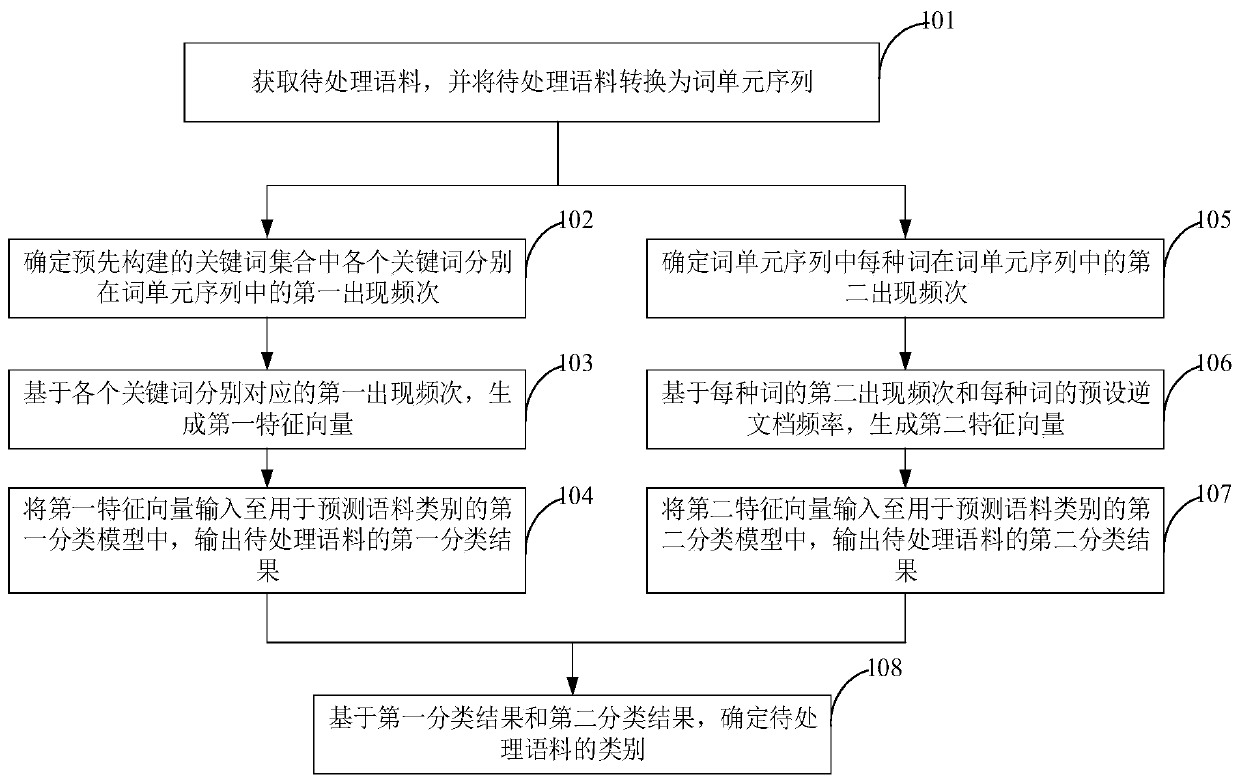

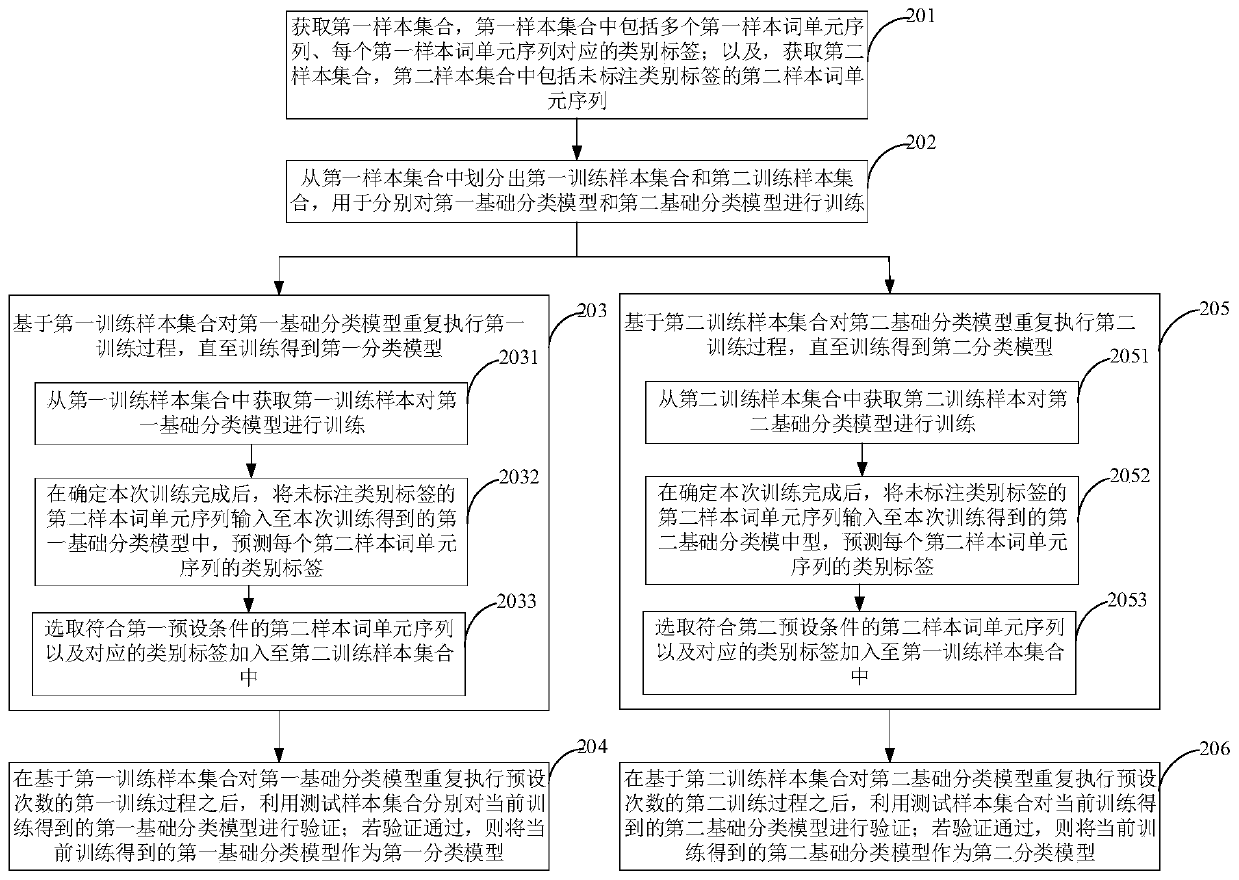

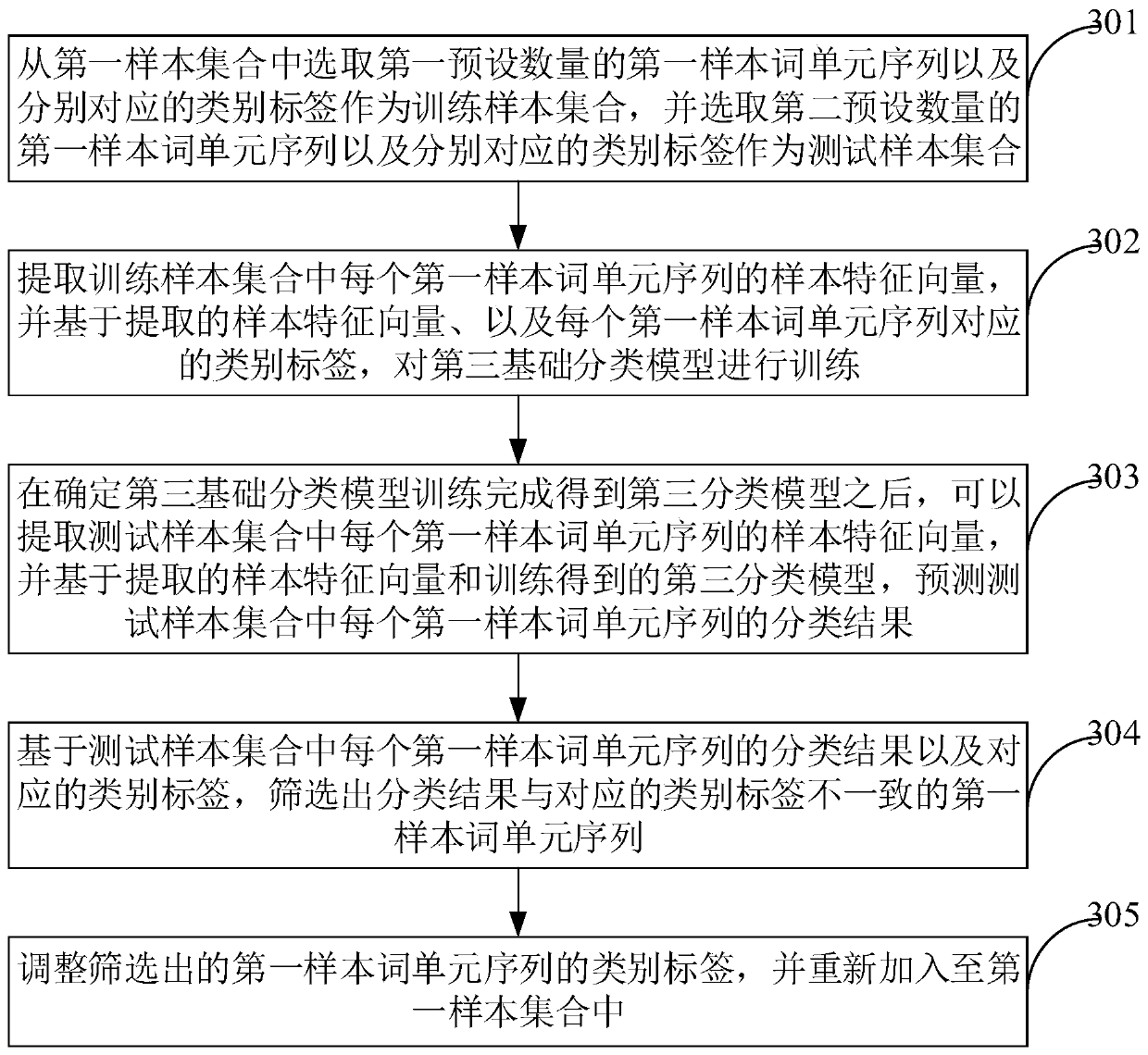

Classification model application and classification model training method and device

Inactive | CN110222171A | Improve accuracy | Reduce labor costs | Natural language data processing | Special data processing applications | Feature vector | Algorithm

The invention provides a classification model application and a classification model training method and device, and the method comprises the steps: obtaining a to-be-processed corpus, and converting the to-be-processed corpus into a word unit sequence; determining a first occurrence frequency of each keyword in a pre-constructed keyword set in the word unit sequence, and generating a first feature vector based on the first occurrence frequency corresponding to each keyword; determining a second occurrence frequency of each word in the word unit sequence in the word unit sequence, and generating a second feature vector based on the second occurrence frequency of each word and a preset inverse document frequency of each word; inputting the first feature vector into a first classification model, and outputting a first classification result of the to-be-processed corpus; and inputting the second feature vector into a second classification model, and outputting a second classification result of the to-be-processed corpus, and determining the category of the to-be-processed corpus based on the first classification result and the second classification result. By means of the method, the corpus classification accuracy can be improved.
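The two feature vectors described above can be sketched as a keyword-count vector and a frequency-times-IDF vector. Raw counts are used as the "occurrence frequency" here, which is an assumption, and the keyword set, vocabulary, and IDF values are invented for the example.

```python
from collections import Counter
from typing import Dict, List, Sequence


def keyword_vector(tokens: Sequence[str], keywords: Sequence[str]) -> List[int]:
    """First feature vector: how often each preset keyword occurs in the token sequence."""
    counts = Counter(tokens)
    return [counts[k] for k in keywords]


def tfidf_vector(tokens: Sequence[str], idf: Dict[str, float], vocabulary: Sequence[str]) -> List[float]:
    """Second feature vector: term count times a preset inverse document frequency."""
    counts = Counter(tokens)
    # Words without a preset IDF contribute 0.0.
    return [counts[w] * idf.get(w, 0.0) for w in vocabulary]


tokens = ["refund", "order", "refund", "late"]       # hypothetical word unit sequence
keywords = ["refund", "invoice"]                     # hypothetical keyword set
idf = {"refund": 1.5, "order": 0.5, "late": 2.0}     # hypothetical preset IDF values
vocabulary = ["late", "order", "refund"]

print(keyword_vector(tokens, keywords))        # [2, 0]
print(tfidf_vector(tokens, idf, vocabulary))   # [2.0, 0.5, 3.0]
```

Each vector would then go to its own classifier, and the two results are combined to decide the final category; the abstract does not say how they are combined, so that step is left out.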

Owner:NEW H3C BIG DATA TECH CO LTD

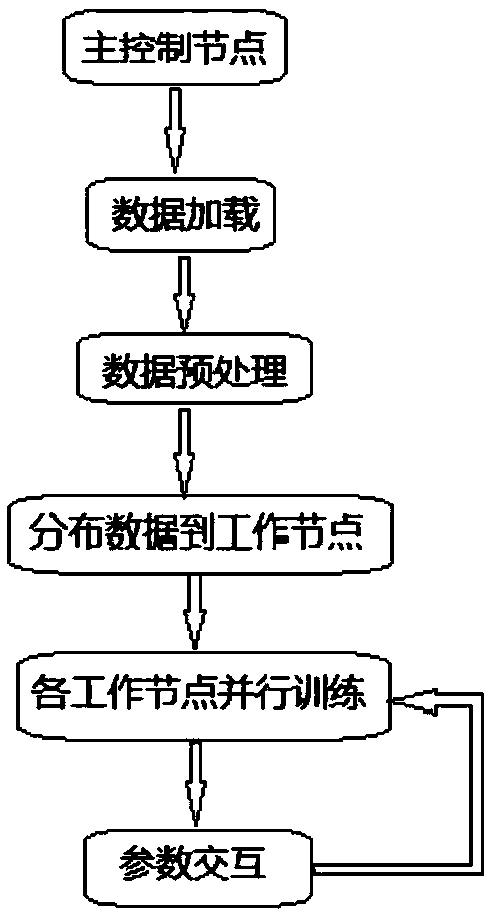

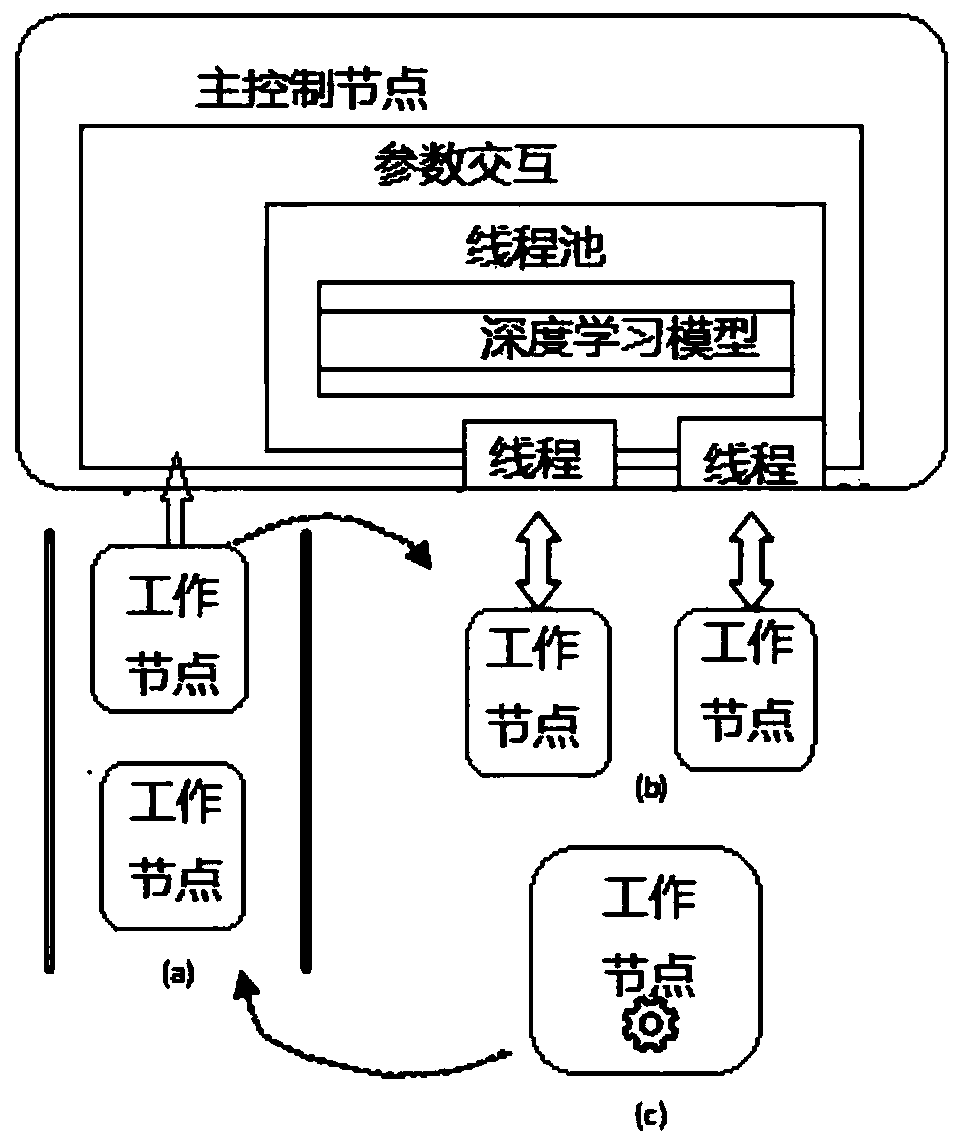

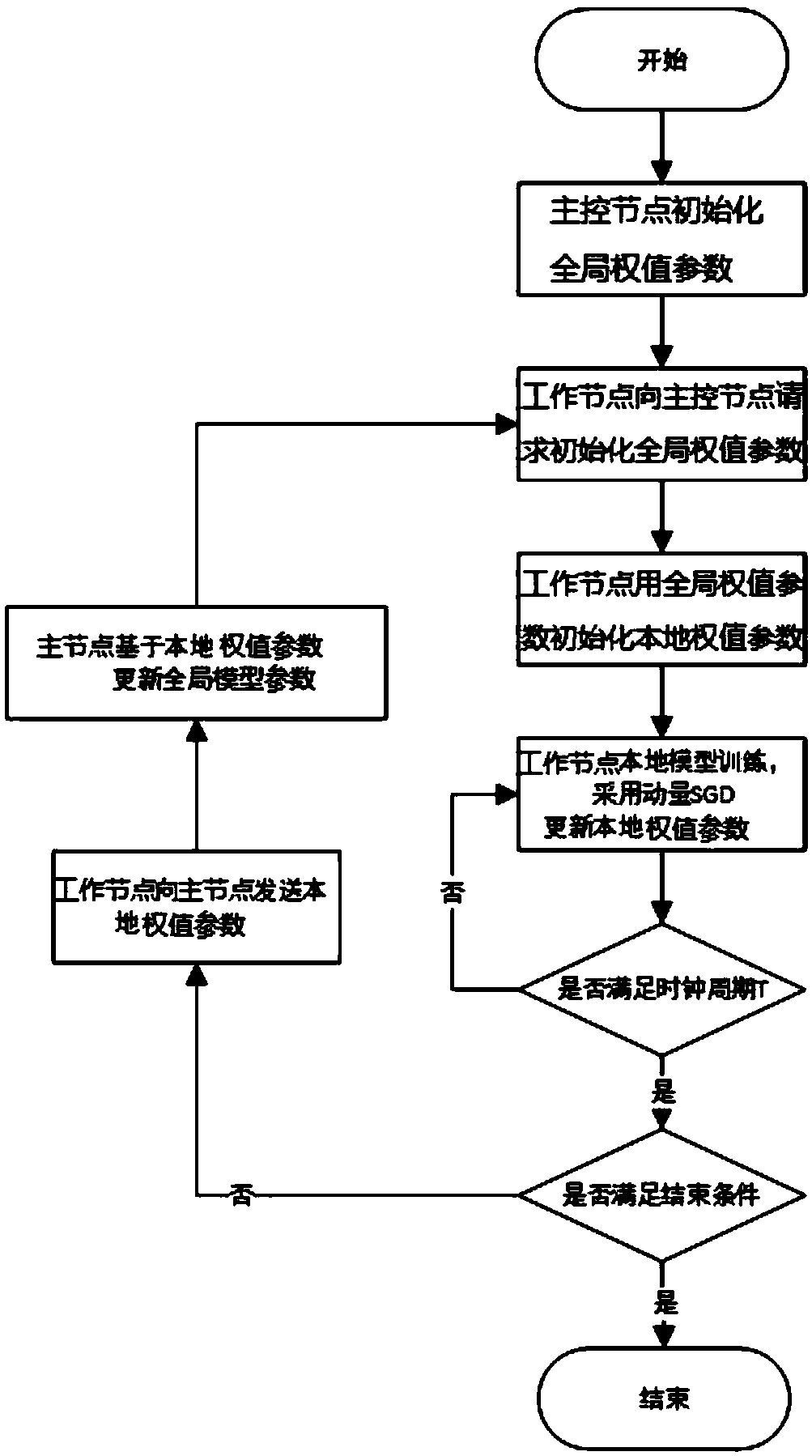

Distributed deep learning system based on momentum and pruning

Active | CN109299781A | Efficient training process | Quick classification | Neural architectures | Neural learning methods | Momentum | Telecommunications link

The invention provides a distributed deep learning system based on momentum and pruning, relating to the fields of cloud computing and deep learning. A Spark distributed cluster is used, comprising a master node and a plurality of worker nodes. Each worker node is connected to the master node through a communication link and stores part of the training data. Using a batch of its locally stored training data, a worker node runs the forward and backward passes of the deep learning model, obtains its weight-parameter update, and sends it to the master node, exchanging it with the master node's global weight parameters. The master node records the node information of the worker nodes, balances the weight parameters obtained from them, and transmits the resulting weight parameters to each worker node. This exchange between the master node and the worker nodes is repeated until the iteration limit or the convergence condition is reached. The invention addresses the slow convergence of asynchronous algorithms in a distributed environment and also improves their running speed.

Owner:ANHUI UNIVERSITY OF TECHNOLOGY +1

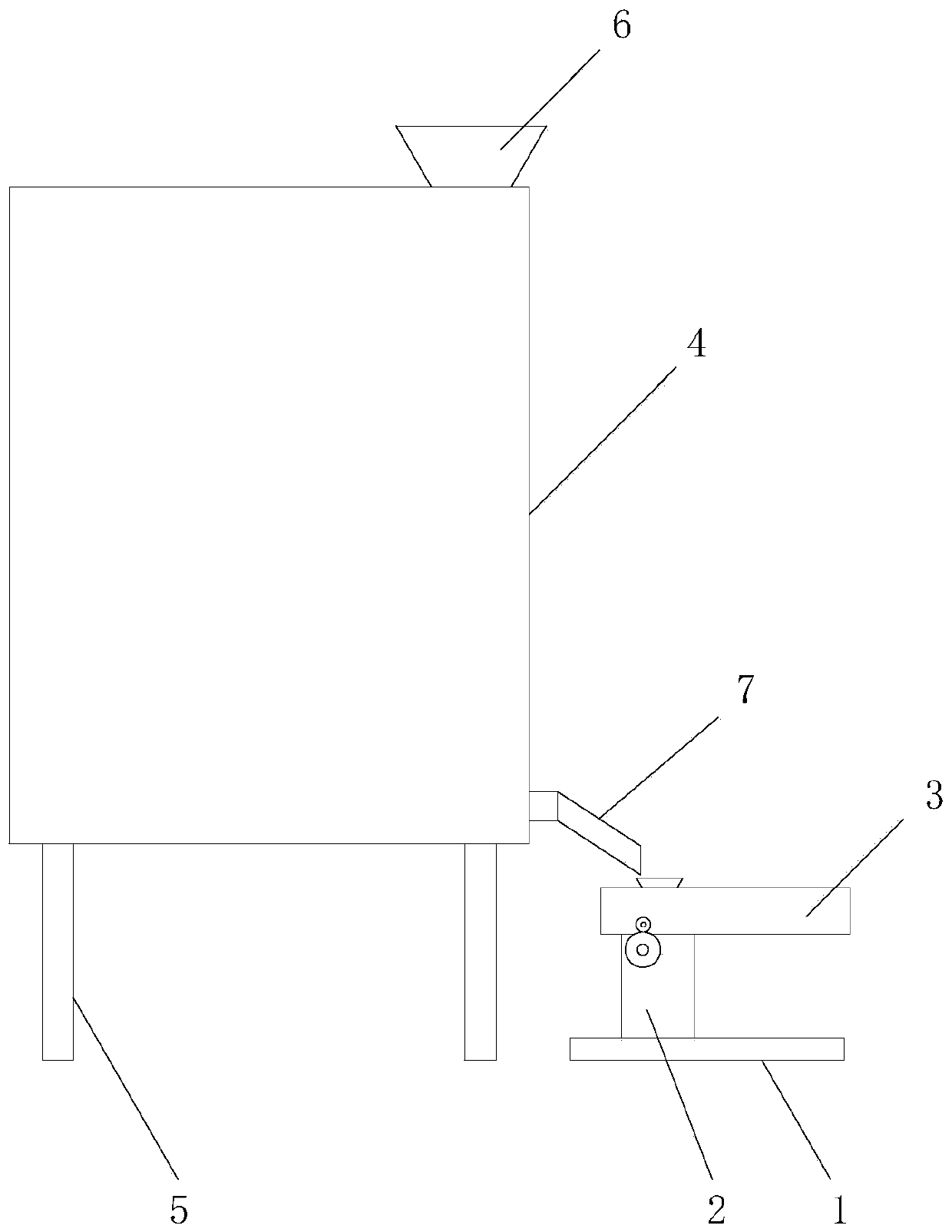

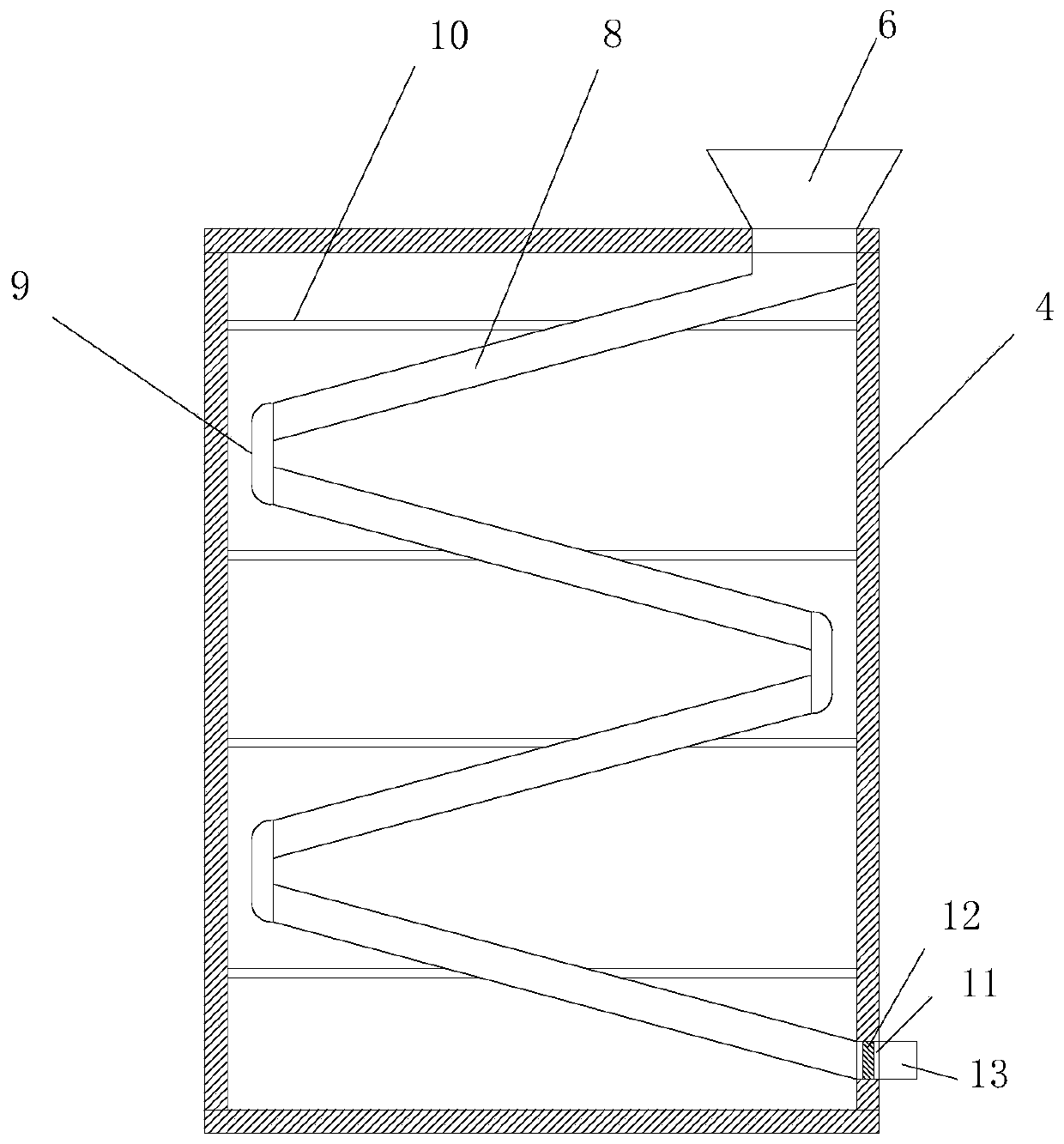

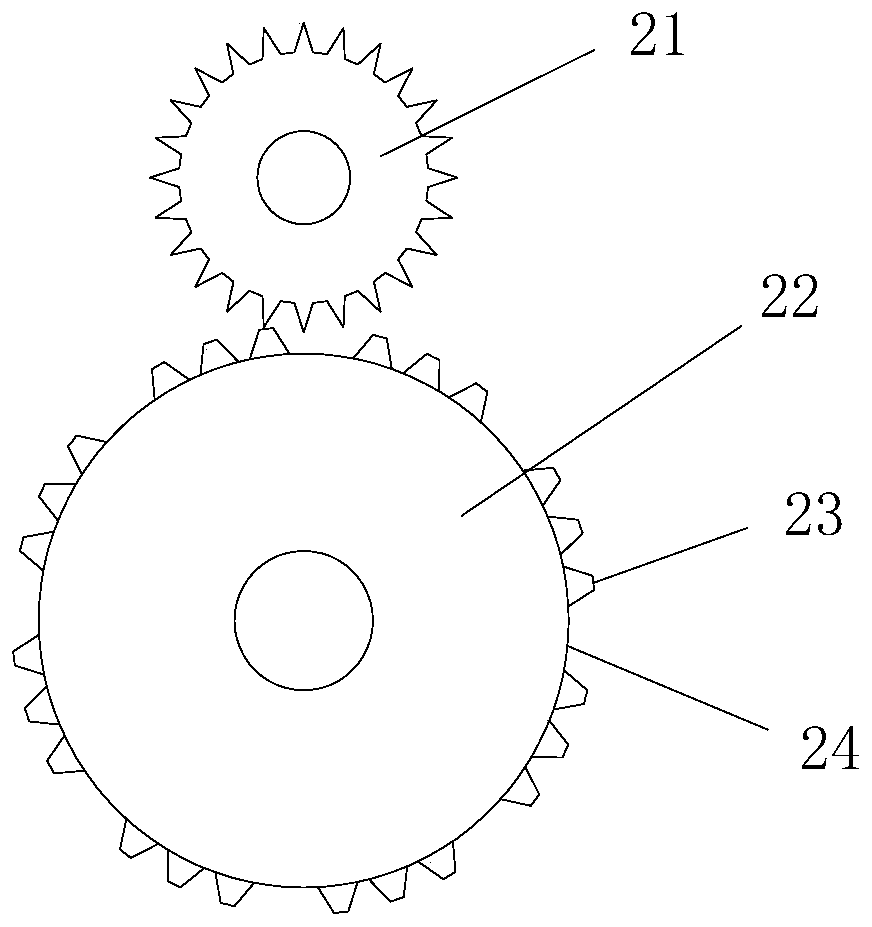

Football catching training device

The invention relates to a football catching training device comprising a base. A mounting base is fixedly mounted on the upper end face of the base, a launching cylinder is arranged on the mounting base, a transmitting device is arranged on the launching cylinder, a ball storage box is arranged at the upper left of the launching cylinder, a plurality of evenly distributed support rods are fixedly mounted on the lower end face of the ball storage box, and a ball feeding port is fixedly arranged in the upper end face of the ball storage box. By providing a ball storage rack, a driving tooth area, a tooth removing area and related parts, the device achieves automatic ball feeding and continuous ball serving with a high degree of automation and an improved training effect. It solves the problem that most current football catching training devices need continuous manual ball feeding and have a low degree of automation, which interrupts training and weakens the training effect.

Owner:XUZHOU NORMAL UNIVERSITY

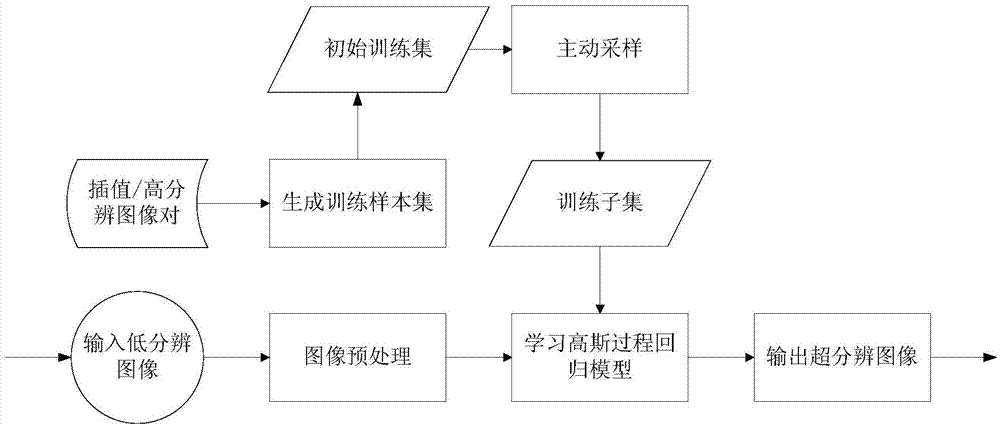

Image super-resolution method based on active sampling and Gaussian process regression

Active | CN105447840A | Efficient training process | Rich reconstruction details | Image enhancement | Image analysis | Video monitoring | Test sample

The present invention discloses an image super-resolution method based on active sampling and Gaussian process regression, which mainly aims to solve the problem of poor super-resolution effects of a texture region in the prior art. According to the method, a training set is constructed by fully using a large number of existing external natural images, and a sample information amount is measured by using characterization and diversity as indexes, so as to extract a simplified training subset, which enables training of a Gaussian process regression model to be more efficient. The implementation steps are as follows: 1. generating an external training sample set, and performing active sampling on the external training sample set to obtain a training subset; 2. learning a Gaussian process regression model based on the training subset; 3. preprocessing a test image and generating a test sample set; and 5. applying the learned Gaussian process regression model on the test sample set, and predicting and outputting a super-resolution image. The method has a relatively strong super-resolution ability and is capable of restoring more detail information in regions such as texture, so that the method can be used for video monitoring, criminal investigation, aerospace, high definition entertainment and video or image compression.

Owner:XIDIAN UNIV

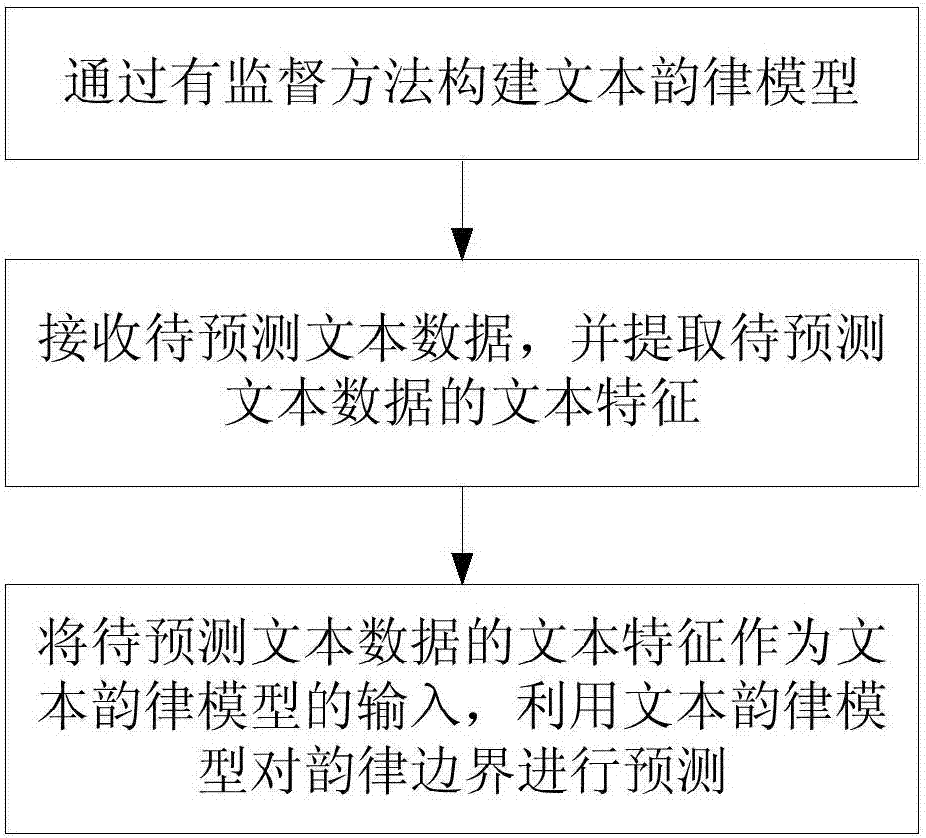

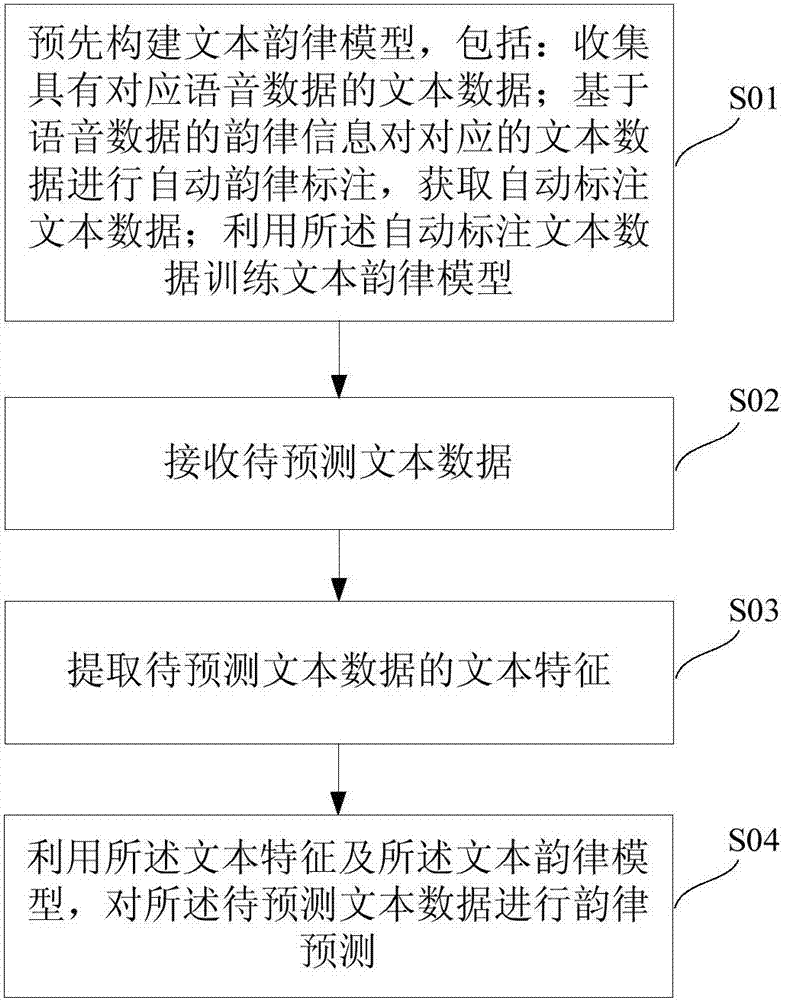

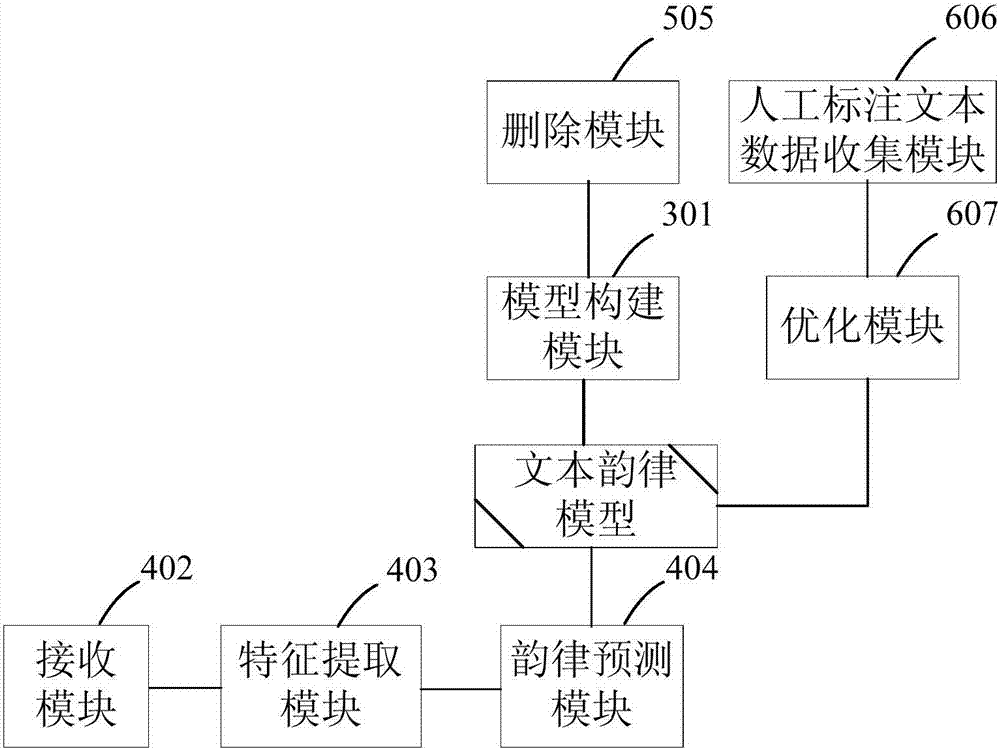

Rhythm predicting method and system

Active | CN107039034A | Efficient acquisition | Efficient training process | Speech synthesis | Speech sound | Voice data

The invention discloses a rhythm predicting method and system. The method comprises: creating a text rhythm model in advance; gathering text data that has corresponding voice data; automatically marking the rhythm of the text data based on the rhythm information of the corresponding voice data, yielding automatically marked text data; training the text rhythm model with the automatically marked text data; receiving to-be-predicted text data; extracting the text features of the to-be-predicted text data; and finally using the text features and the text rhythm model to predict the rhythm of the to-be-predicted text data. Because all gathered text data has corresponding voice data, and the voice data carries real rhythm information, the text data can be marked automatically. The method and system thus solve the prior-art problem that the rhythm boundaries of all training text data must be marked manually before the text rhythm model can be trained, which incurs high labor cost and takes a long time.

Owner:IFLYTEK CO LTD

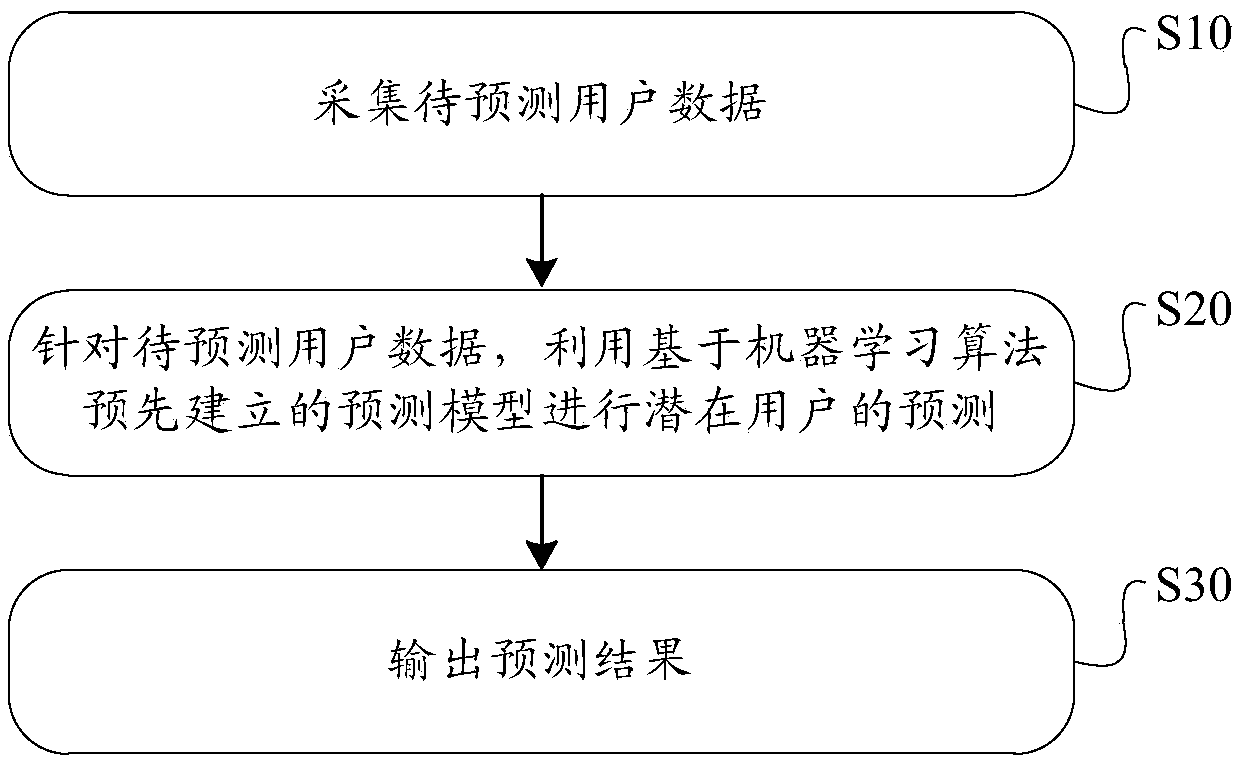

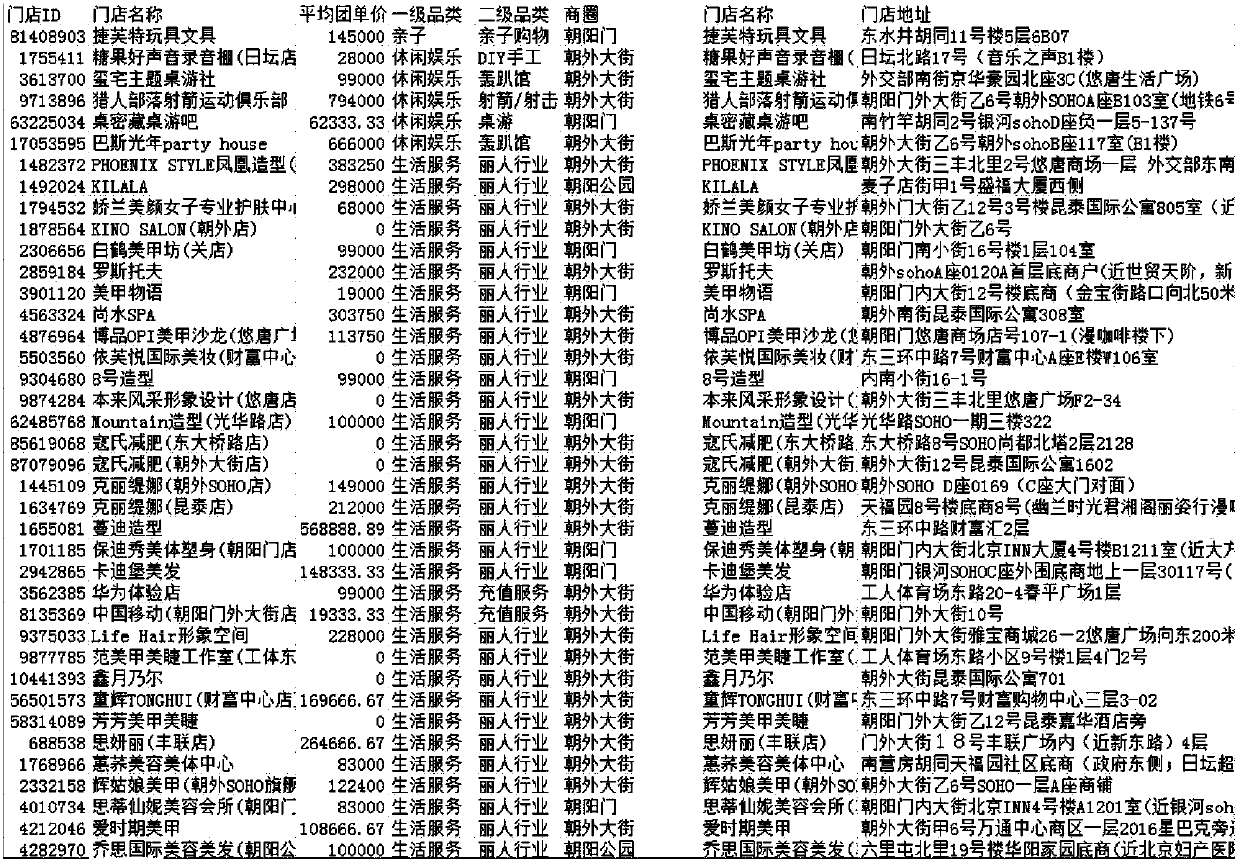

Product potential user mining method and device

Active | CN107657267A | Improve screening efficiency | Save time and cost | Character and pattern recognition | Market data gathering | Time cost | Data mining

The invention discloses a product potential user mining method and device. The method comprises the following steps: collecting the user data to be predicted; predicting potential users from that data with a prediction model pre-established using a machine learning algorithm; and outputting the prediction result. Because potential users of a product are obtained through the pre-established prediction model, the method greatly improves the efficiency of screening potential users compared with manual screening and effectively saves labor and time costs.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

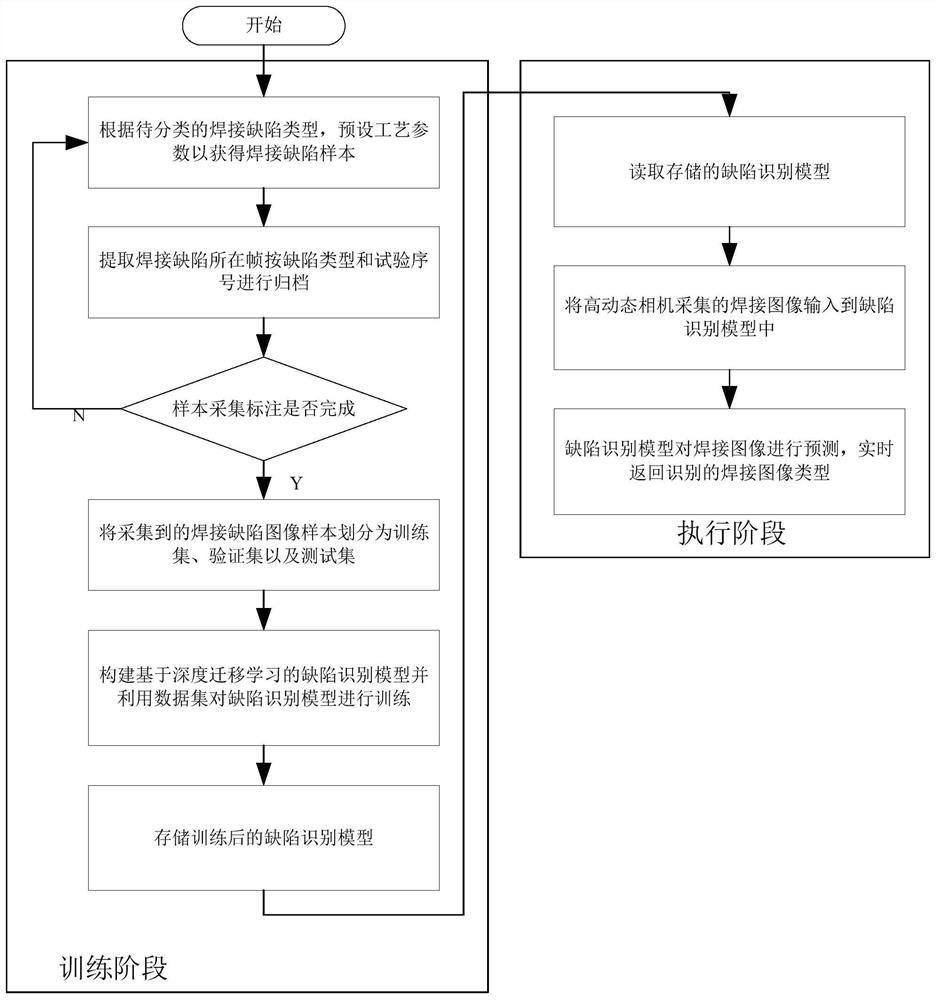

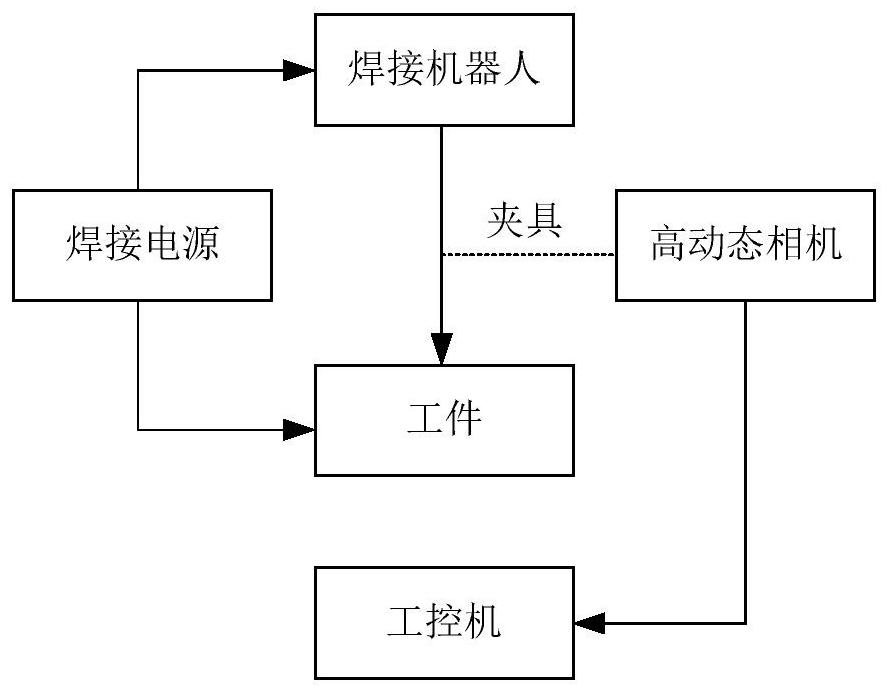

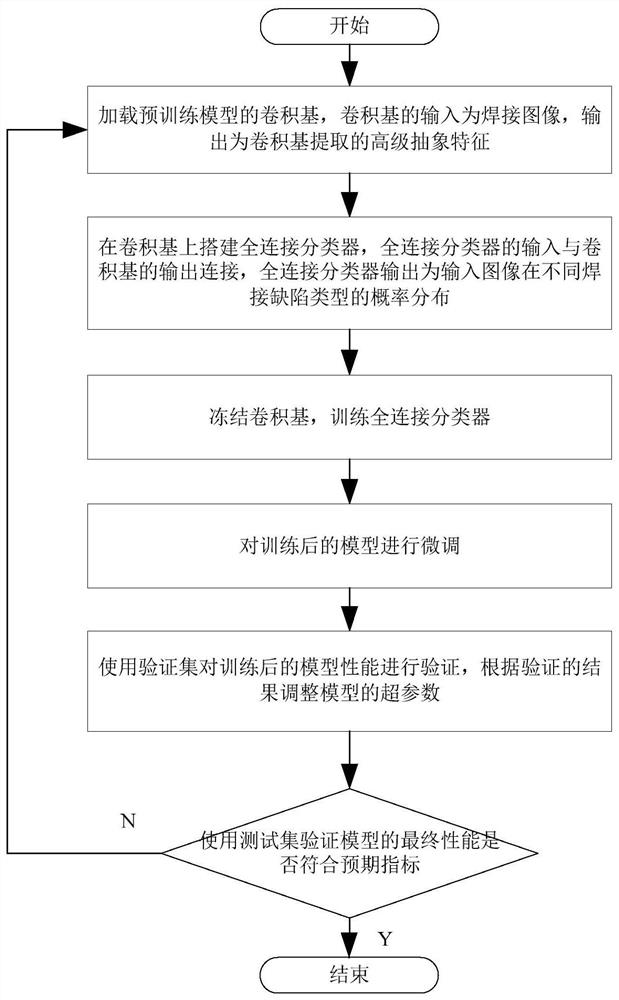

Welding image defect identification method and device, storage medium and equipment

Active | CN112116560A | High degree of automation | Efficient training process | Image enhancement | Image analysis | Pattern recognition | Engineering

The invention provides a welding image defect recognition method and device, a storage medium and equipment. The method comprises: acquiring a to-be-recognized welding image; inputting it into a defect recognition model; and recognizing the welding image with the model to obtain the welding image type, that is, whether the welding image has defects and, if so, the defect type. The initial defect recognition model is trained as follows: a defect recognition model is formed by combining the convolutional base of a pre-trained model with a fully connected classifier, and transfer training is then carried out on it. Transfer training here means freezing the convolutional base, training the fully connected classifier, and then fine-tuning the defect recognition model. By combining deep learning with transfer learning, the defect recognition model can be trained efficiently with a limited number of samples, and the accuracy of welding image defect recognition is improved.

Owner:SOUTH CHINA UNIV OF TECH

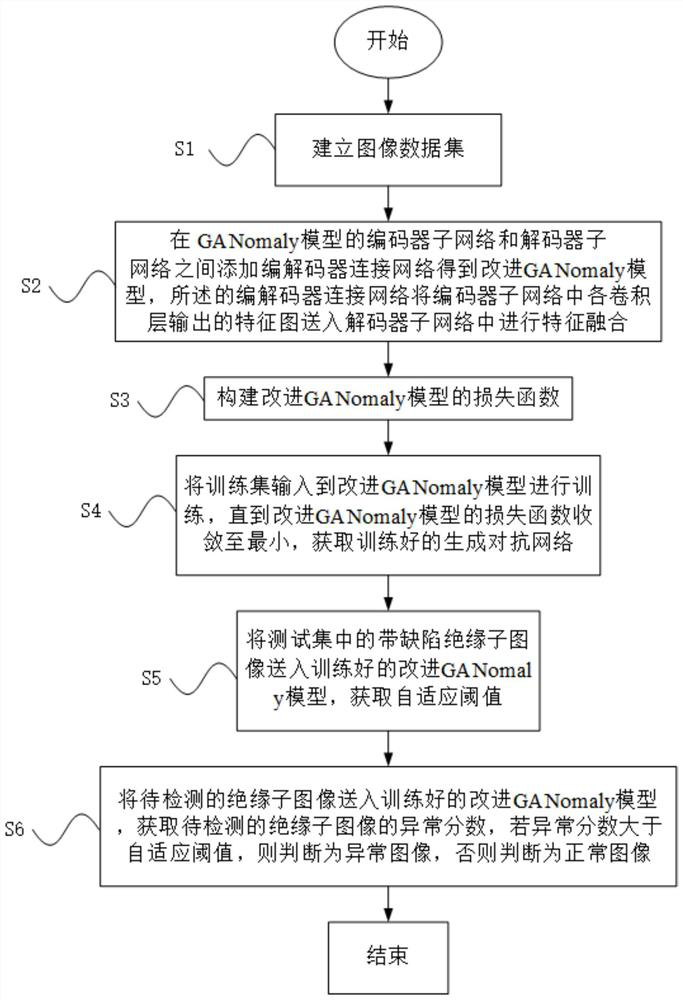

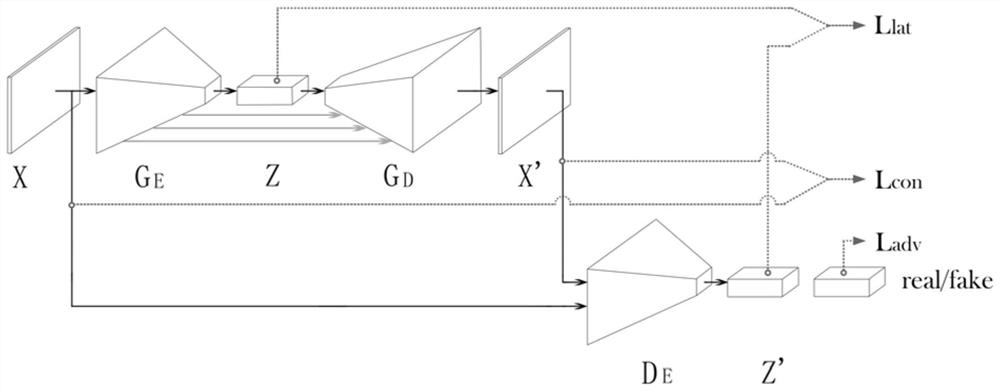

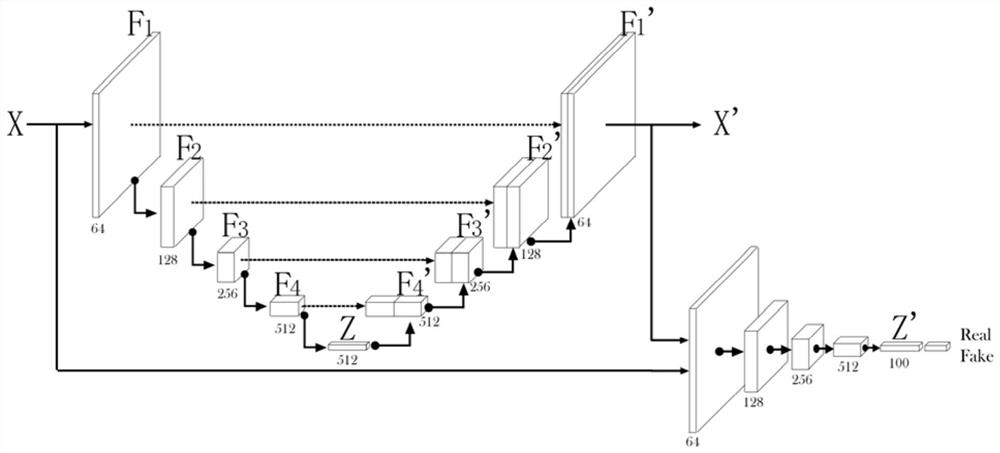

High-voltage line insulator defect detection method based on generative adversarial network

Pending | CN112184654A | Solve the small number of negative samples | Solve difficult collection | Image enhancement | Image analysis | Data set | Algorithm

The invention relates to a high-voltage line insulator defect detection method based on a generative adversarial network. The method comprises the following steps: establishing an image data set; constructing an improved GANomaly model, in which the feature map output by each convolution layer of the encoder sub-network is sent to the decoder sub-network for feature fusion; constructing a loss function for the improved GANomaly model; training the improved GANomaly model; feeding the defective insulator images of the test set into the trained model to obtain an adaptive threshold; and feeding a to-be-detected insulator image into the trained model to judge the image type. Compared with the prior art, the method addresses the problems that negative samples among insulator images are few and hard to collect and that labeling data takes a great deal of effort, and it improves the feasibility and adaptability of the training process.

Owner:SHANGHAI UNIVERSITY OF ELECTRIC POWER

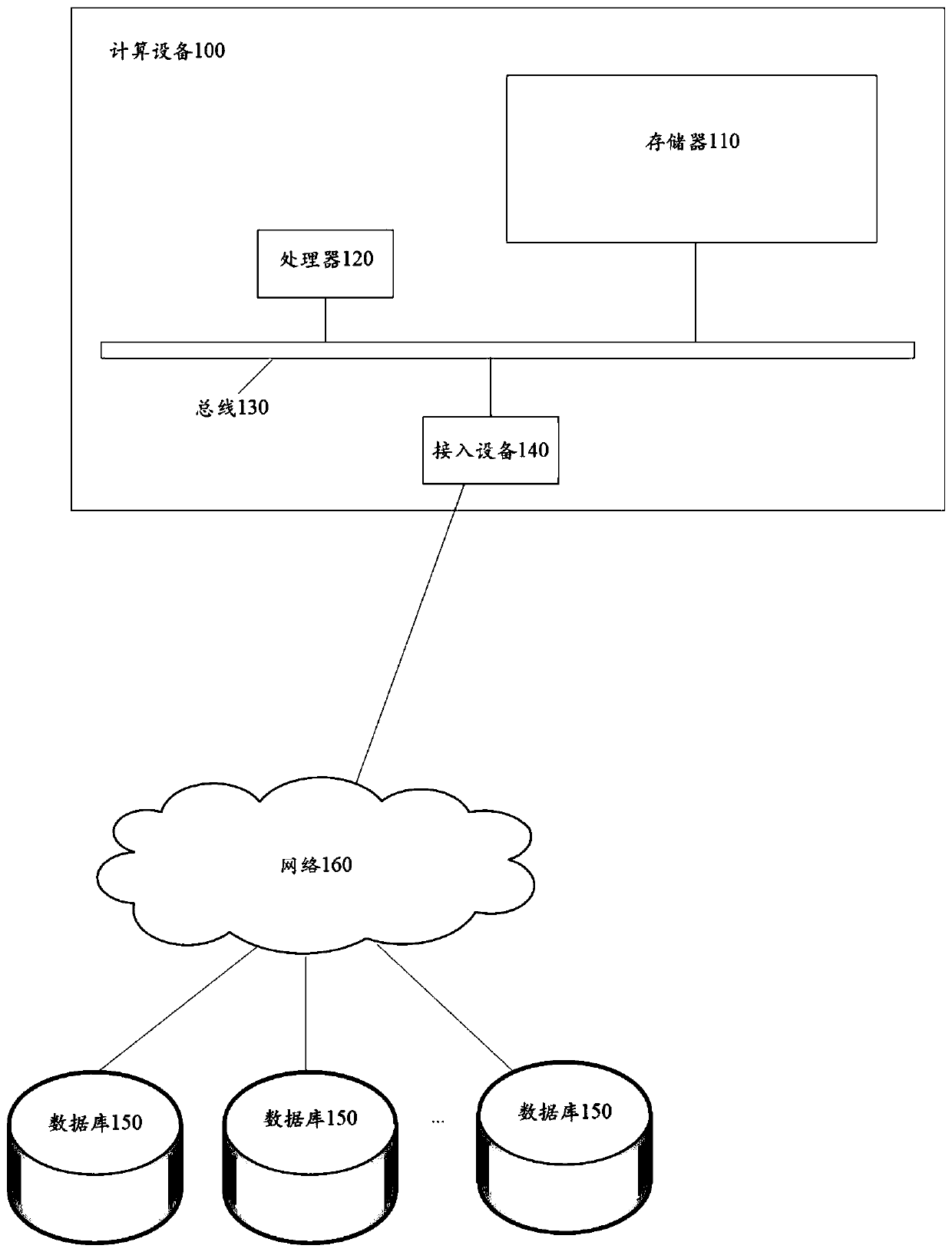

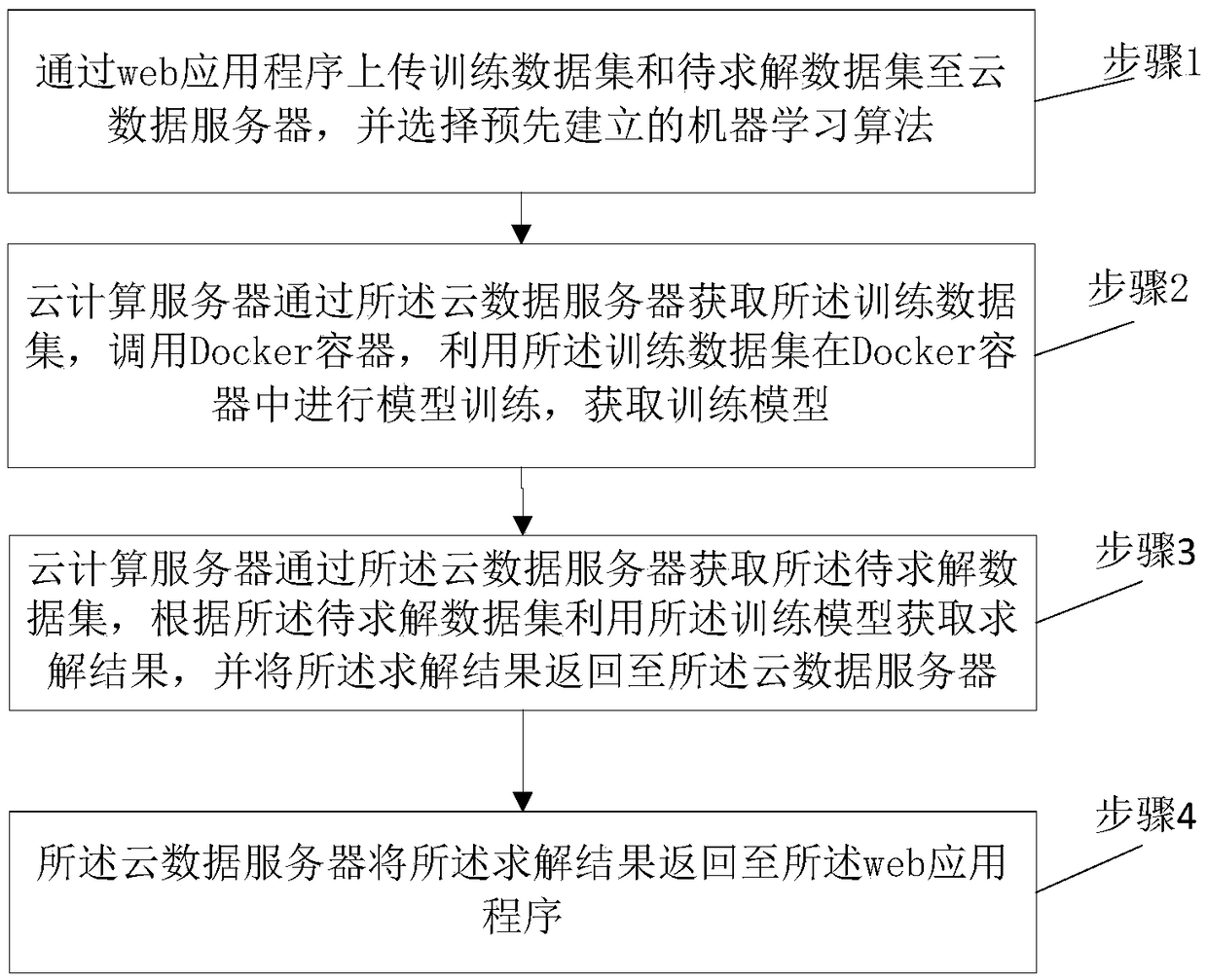

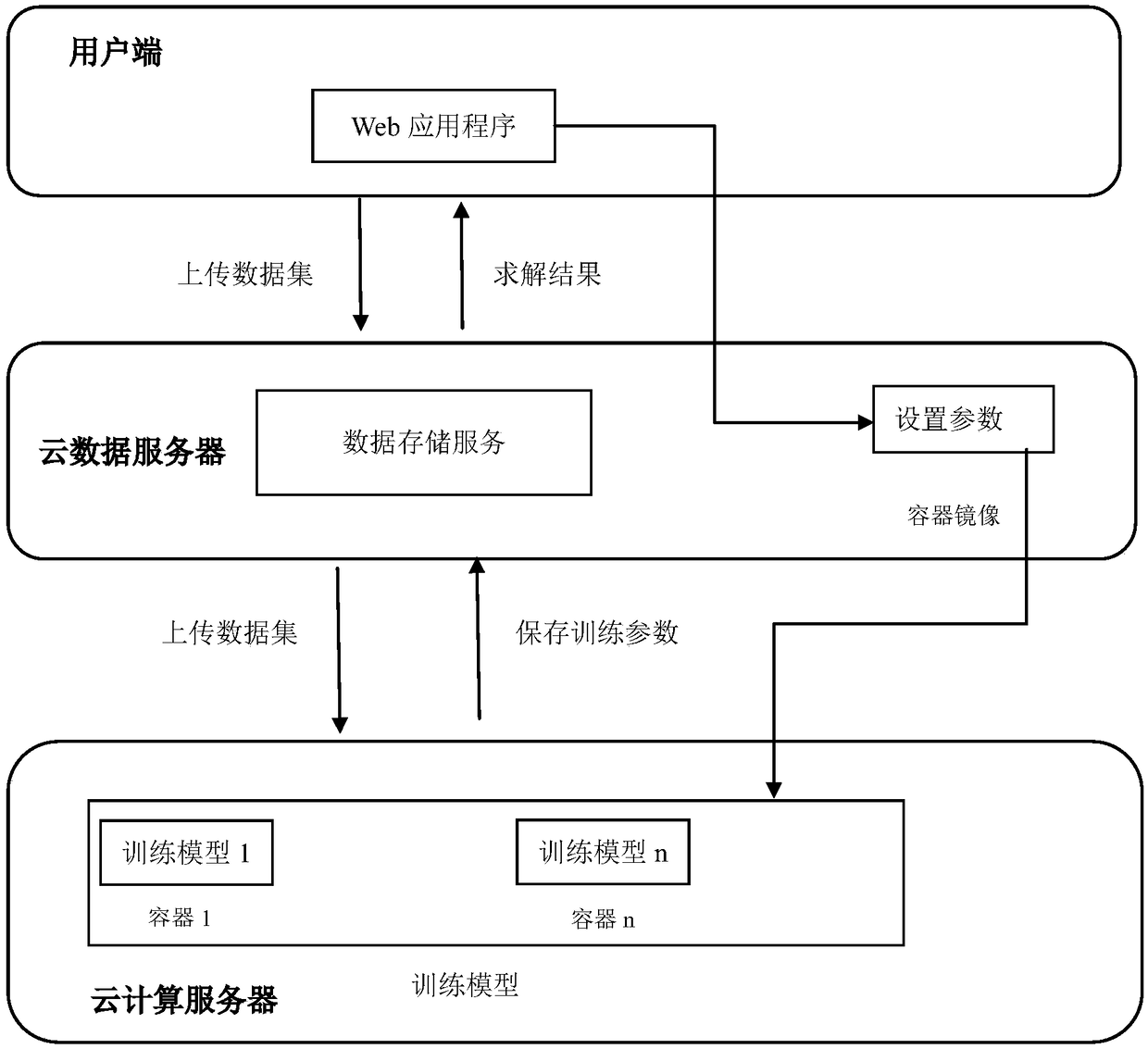

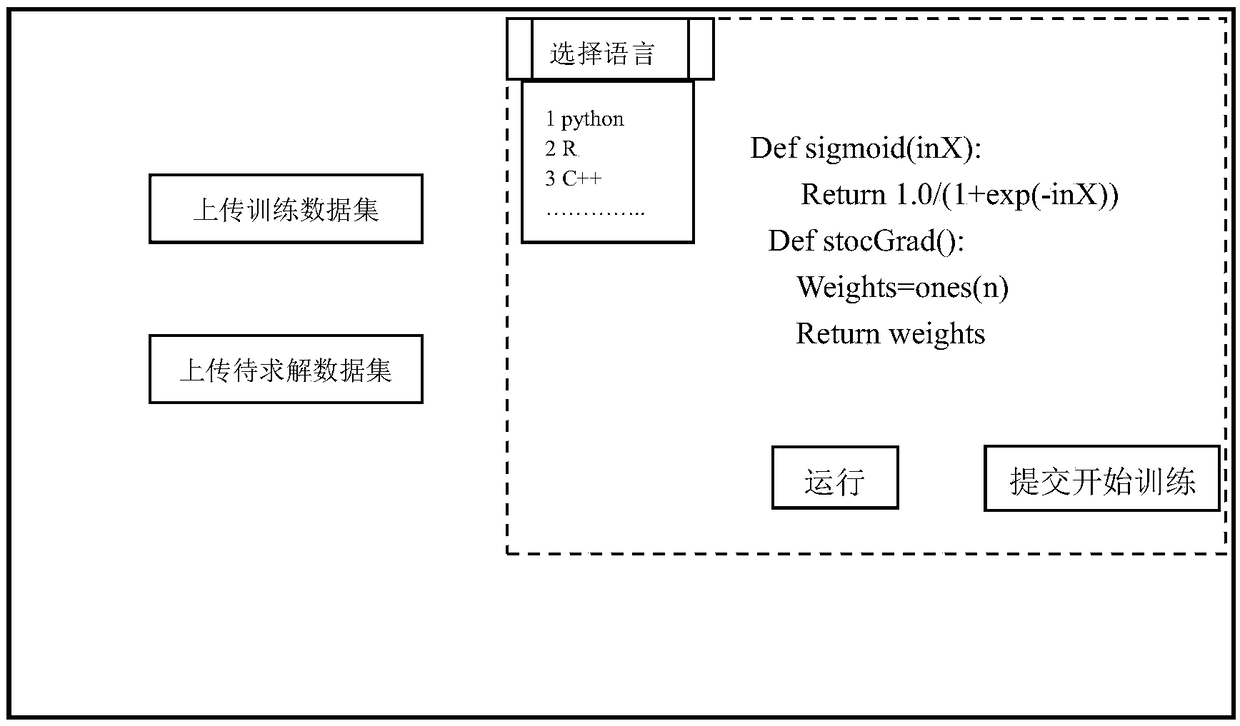

Machine learning algorithm whole-process training method and system based on cloud infrastructure

Pending | CN108665072A | Efficient training process | Efficient construction | Computing models | Cloud computing | Process training

The invention relates to a machine learning algorithm whole-process training method and system based on a cloud infrastructure. The method comprises the following steps: a training data set and a to-be-solved problem data set are uploaded to a cloud data server through a web application program, and a preset machine learning algorithm is selected; a cloud computing server obtains the training data set from the cloud data server and trains a model on it to obtain a trained model; the cloud computing server obtains the to-be-solved problem data set from the cloud data server, applies the trained model to it to obtain solving results, and returns the solving results to the cloud data server; the cloud data server returns the solving results to the web application program. Because the computing environments and computing resources are deployed in the cloud, the method helps research and development staff build machine learning models quickly and train them efficiently, reducing time cost as well as software and hardware purchasing cost.

Owner:CHINA ELECTRIC POWER RES INST +2

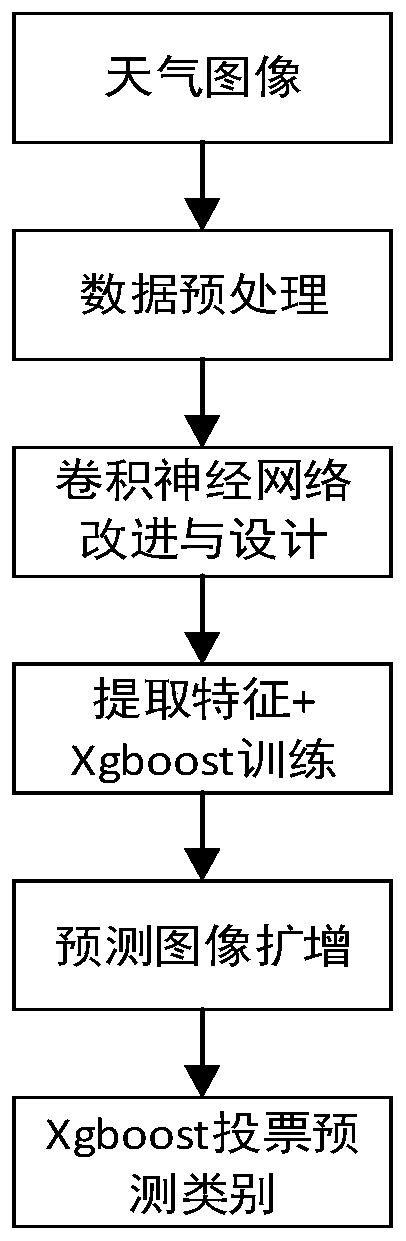

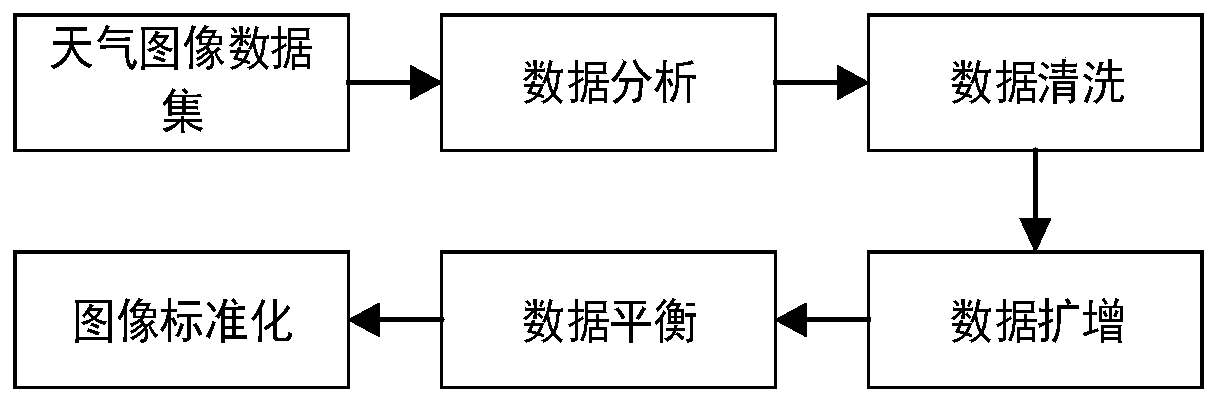

Weather image intelligent identification method and system based on multi-depth convolutional neural network fusion

Active | CN111476713A | Improve performance | Improve accuracy | Image enhancement | Image analysis | Feature extraction | Engineering

The invention discloses a weather image intelligent identification method and system based on multi-depth convolutional neural network fusion. The method comprises the following steps: A, collecting images and preprocessing them; B, establishing four deep convolutional neural network models with different structures, modifying the fully connected layers of each model, adding a feature layer, and training the networks on the high-quality training data obtained in step A; C, extracting the features of the newly added feature layers of the four resulting deep learning models and training an XGBoost ensemble learning model on them to obtain a fusion model; and D, augmenting a weather image to be identified, identifying each augmented image with the fusion model, and voting to obtain the identification type with the highest number of votes. The method solves the low efficiency of manually extracting image features in traditional weather recognition methods and effectively improves the recognition accuracy of the deep learning model.
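The final voting step can be sketched as follows, assuming the fusion model has already been applied to several variants of the same input image and returned one label per variant. The labels are invented, and breaking ties by first occurrence is a choice made here, not something the abstract specifies.

```python
from collections import Counter
from typing import Sequence


def majority_vote(labels: Sequence[str]) -> str:
    """Return the label with the most votes; ties go to the label seen first."""
    if not labels:
        raise ValueError("no predictions to vote on")
    return Counter(labels).most_common(1)[0][0]


# Hypothetical per-variant predictions for one weather image.
predictions = ["rain", "fog", "rain", "snow", "rain"]
print(majority_vote(predictions))  # rain
```

Voting over several variants makes the final label less sensitive to any single crop, flip, or scaling of the input.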

Owner:CENT SOUTH UNIV

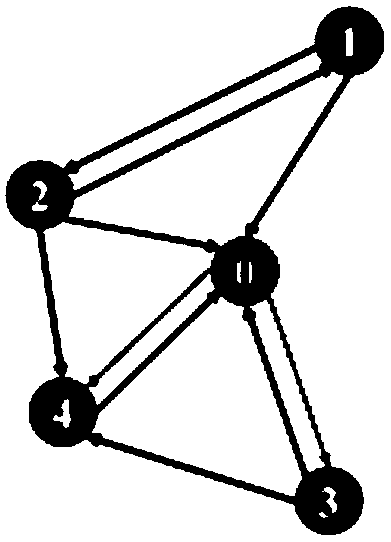

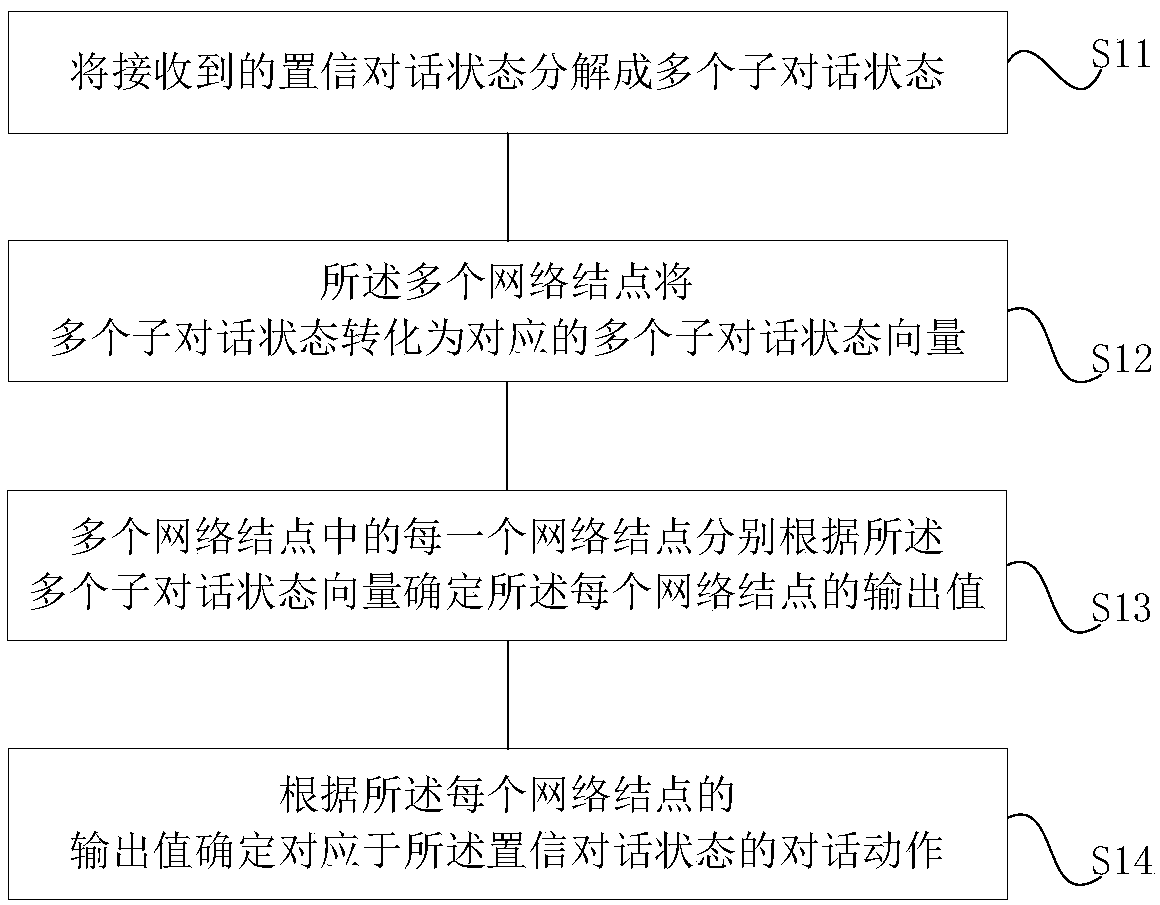

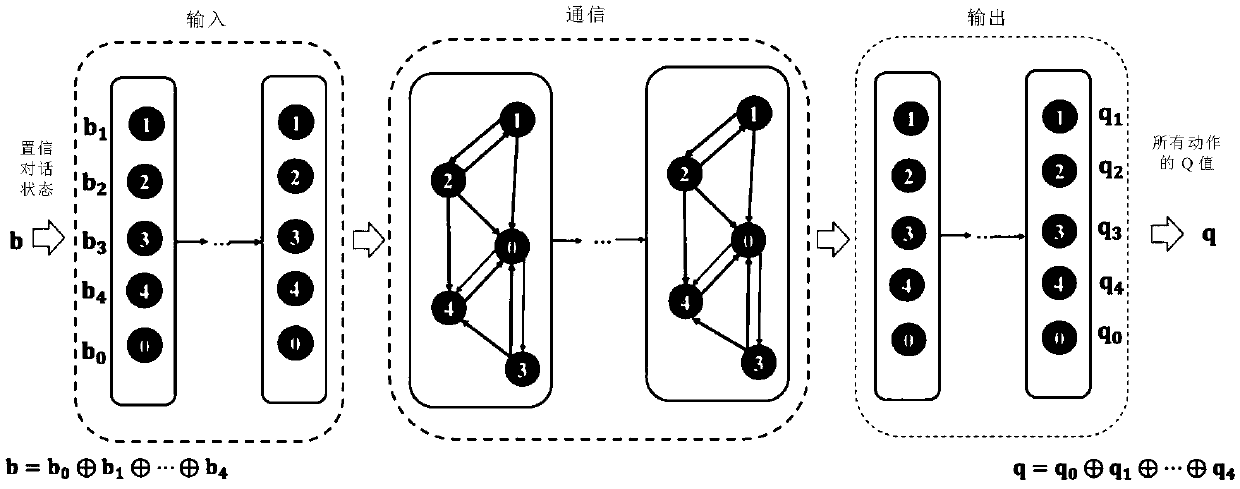

Structured-neural-network-based dialogue method and system, equipment and storage medium

Active | CN108962238A | Efficient training process | Improve performance | Semantic analysis | Speech recognition | Data mining | Artificial intelligence

The invention discloses a structured-neural-network-based dialogue method and system, equipment and a storage medium. The method comprises: decomposing a received confidence dialog state into a plurality of sub-dialog states; transforming the sub-dialog states into corresponding sub-dialog state vectors by a plurality of network nodes; determining the output value of each network node according to the sub-dialog state vectors; and determining the dialog action corresponding to the confidence dialog state according to the output values of the network nodes. With the structured neural network, the resulting neural network dialogue strategy can be trained more efficiently, and a high-performing model can be obtained from a small amount of dialogue interaction data.

Owner:AISPEECH CO LTD

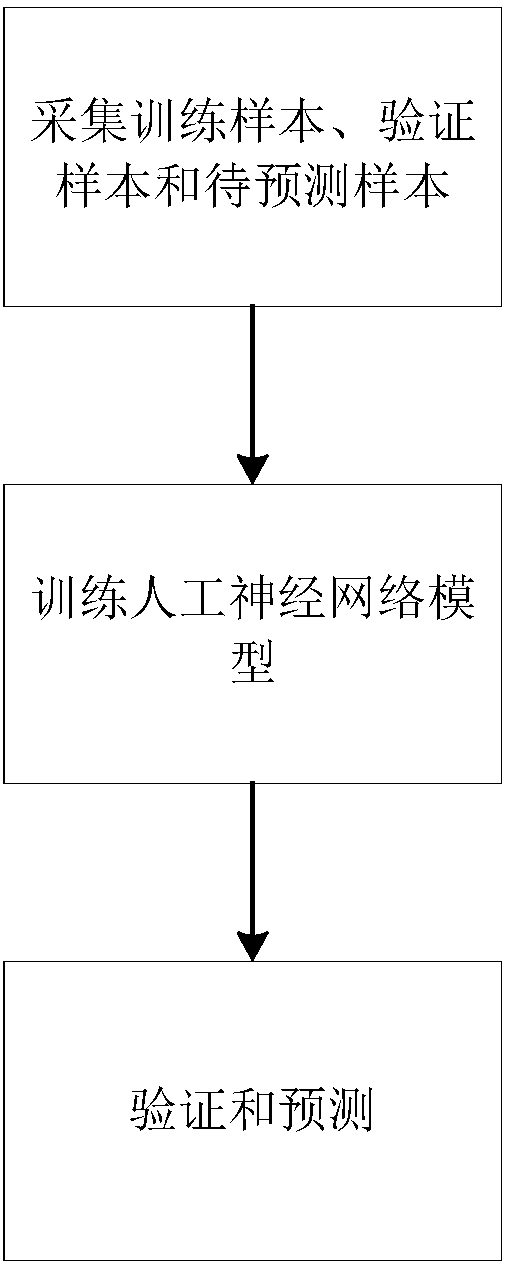

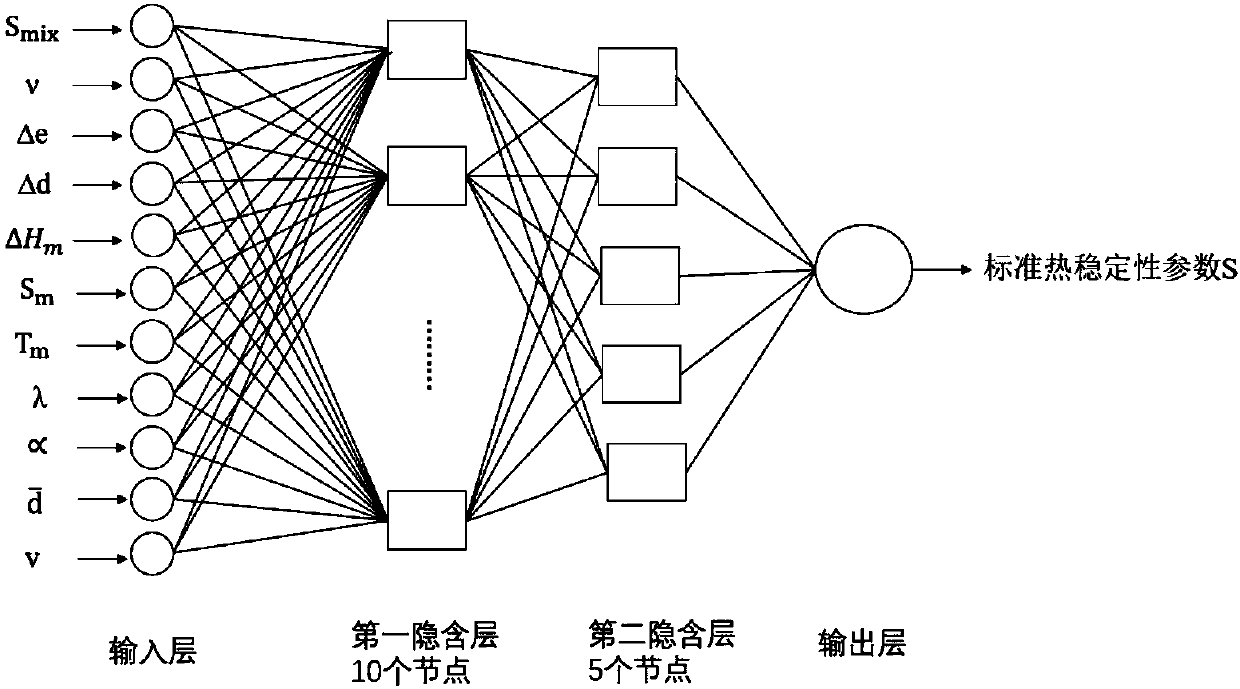

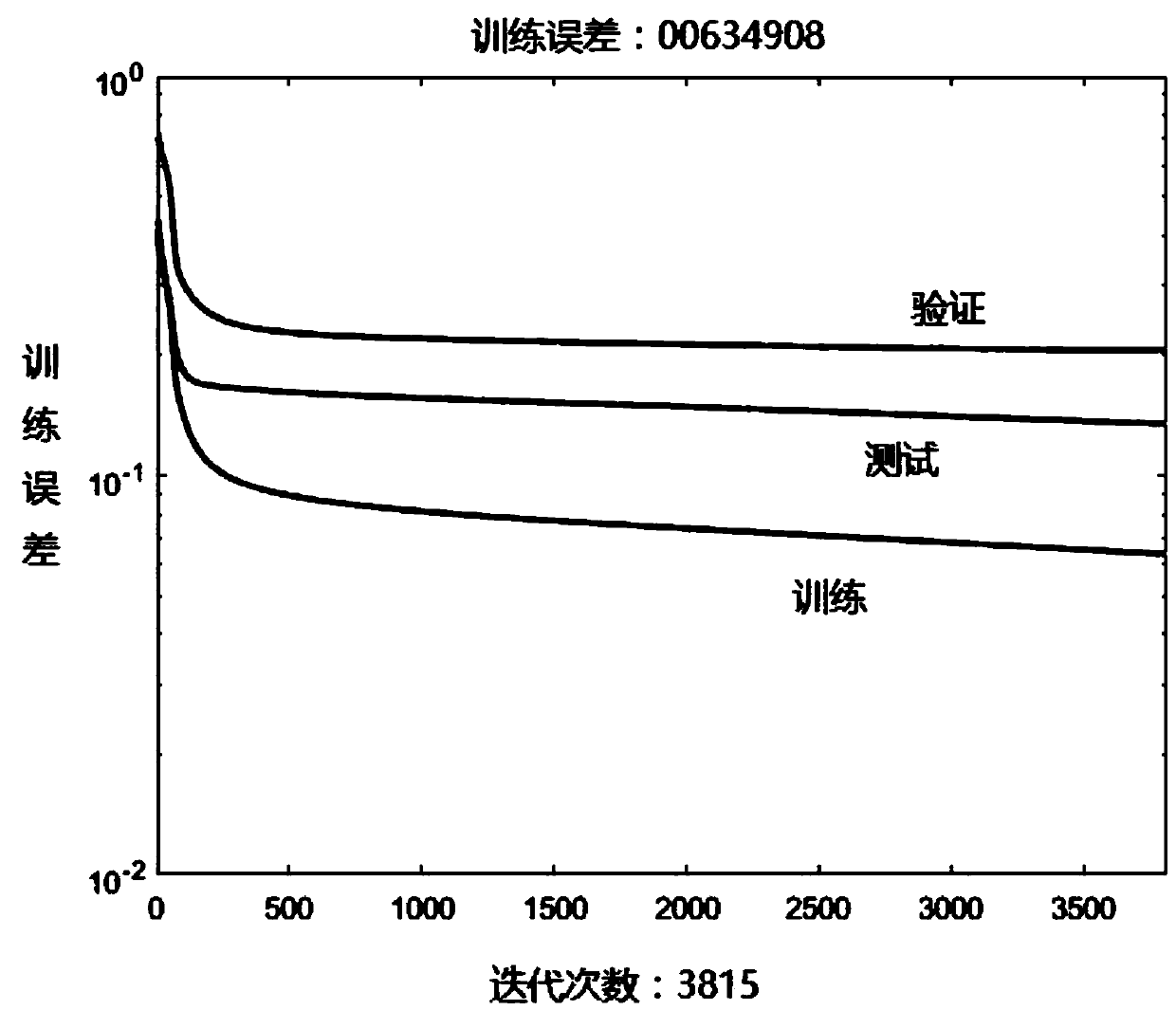

Artificial neural network predicting method of amorphous alloy thermoplasticity forming performance

Active | CN108256689A | Improved thermoplastic formability | Shorten the time | Forecasting | Neural learning methods | Index test | Predictive methods

The invention belongs to the field of prediction of amorphous alloy thermoplasticity forming performance, and discloses an artificial neural network predicting method of the amorphous alloy thermoplasticity forming performance. The predicting method comprises the following steps of a, selecting multiple performance parameters and collecting data of the performance parameters, dividing the data into a training sample, a verification sample and a to-be-predicted sample, and testing to obtain feature index test values corresponding to the training sample and the verification sample; b, selecting an artificial neural network model as an initial predicting model for the amorphous alloy thermoplasticity forming performance, adopting the training sample to train the artificial neural network model, and determining an improved predicting model; c, adopting the verification sample to verify the improved predicting model, finally obtaining a final predicting model, and adopting the final predicting model for prediction. By means of the artificial neural network predicting method of the amorphous alloy thermoplasticity forming performance, the amorphous alloy thermoplasticity forming performance is effectively predicted without experiments, guidance is provided for development of an amorphous alloy system suitable for thermoplasticity forming, the time for developing the new amorphous alloy system is greatly shortened, and the money cost for developing of the new amorphous alloy system is greatly reduced.

Owner:HUAZHONG UNIV OF SCI & TECH

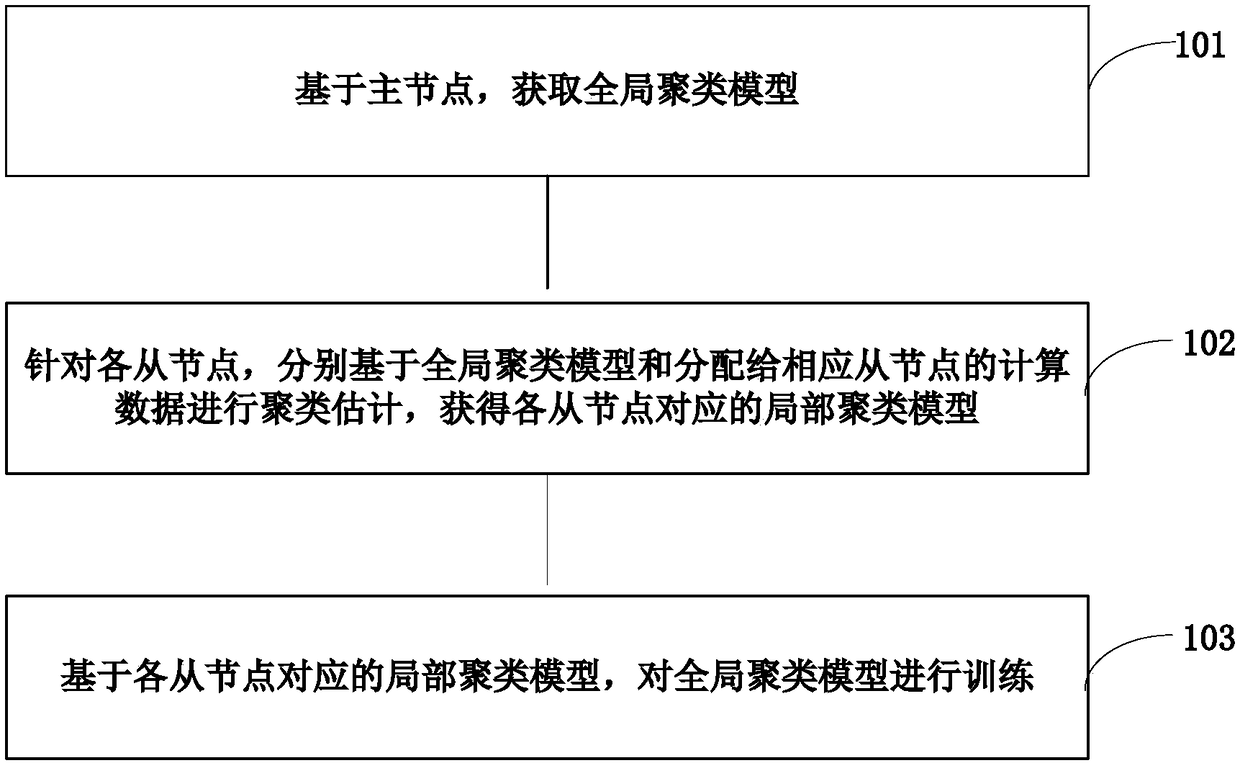

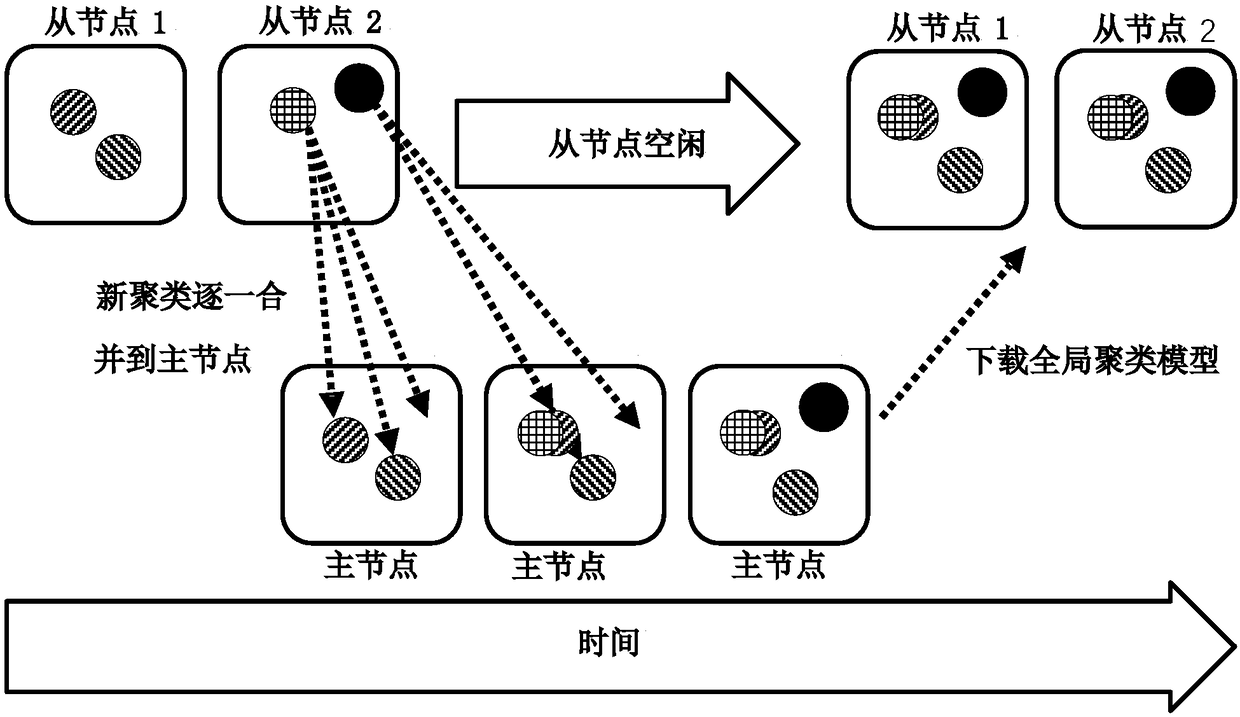

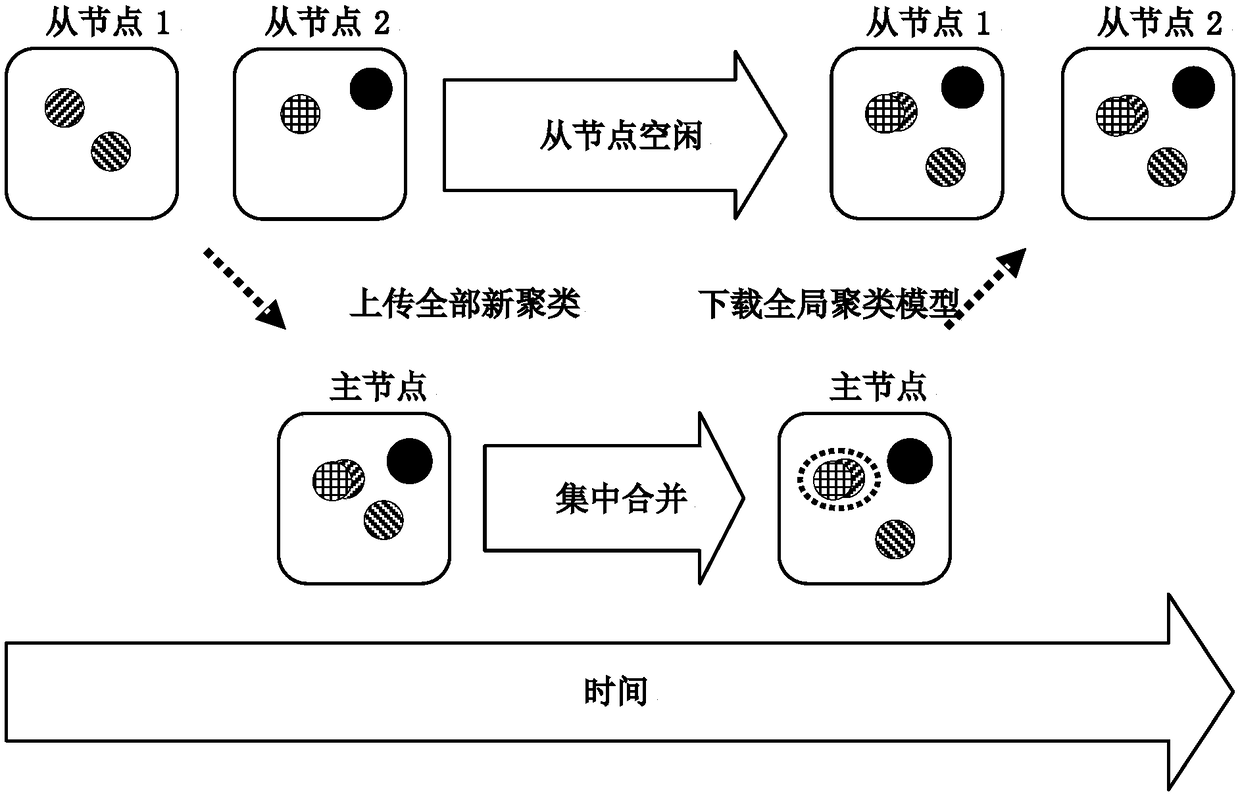

Clustering model training method and device, electronic equipment and computer storage medium

Inactive | CN108229528A | Reduce sync rate | Efficient training process | Character and pattern recognition | Electric equipment | Machine learning

The embodiment of the invention discloses a clustering model training method and device, electronic equipment and a computer storage medium. The distributed system comprises master nodes and at least one slave node, and the master nodes are communicatively connected with all the slave nodes. The method comprises the following steps: a global clustering model is acquired at the master nodes; each slave node acquires the global clustering model from the master nodes, performs clustering estimation based on the global clustering model and the calculation data allocated to that slave node, and obtains its own local clustering model; and the global clustering model is trained based on the local clustering models obtained from all the slave nodes. With this training method, the synchronization rate among the calculation nodes is lowered and clustering efficiency is improved.

Owner:BEIJING SENSETIME TECH DEV CO LTD
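The master/slave round described in this abstract can be illustrated with a small sketch. It assumes k-means as the clustering model and per-cluster sums and counts as the "local clustering model"; the abstract names neither, and the function names (`local_estimate`, `merge`, `train`) are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]

def nearest(p: Point, centroids: List[Point]) -> int:
    """Index of the centroid closest to point p (squared Euclidean distance)."""
    return min(
        range(len(centroids)),
        key=lambda k: (p[0] - centroids[k][0]) ** 2 + (p[1] - centroids[k][1]) ** 2,
    )

def local_estimate(shard: List[Point], centroids: List[Point]):
    """Slave step (assumed): per-cluster coordinate sums and counts for one data shard."""
    sums = [[0.0, 0.0] for _ in centroids]
    counts = [0] * len(centroids)
    for p in shard:
        k = nearest(p, centroids)
        sums[k][0] += p[0]
        sums[k][1] += p[1]
        counts[k] += 1
    return sums, counts

def merge(centroids: List[Point], local_models) -> List[Point]:
    """Master step (assumed): combine all local models into new global centroids."""
    merged = []
    for k, old in enumerate(centroids):
        n = sum(counts[k] for _, counts in local_models)
        if n == 0:
            merged.append(old)  # keep an empty cluster where it was
            continue
        sx = sum(sums[k][0] for sums, _ in local_models)
        sy = sum(sums[k][1] for sums, _ in local_models)
        merged.append((sx / n, sy / n))
    return merged

def train(shards: List[List[Point]], centroids: List[Point], rounds: int = 5) -> List[Point]:
    """One synchronization per round: broadcast the global model, gather local models."""
    for _ in range(rounds):
        local_models = [local_estimate(shard, centroids) for shard in shards]
        centroids = merge(centroids, local_models)
    return centroids

shards = [
    [(0.0, 0.0), (0.2, 0.1), (5.0, 5.0)],
    [(0.1, 0.3), (5.2, 4.9), (4.8, 5.1)],
]
final_centroids = train(shards, [(0.0, 0.0), (5.0, 5.0)])
```

Each slave sends only a compact summary once per round, which is one plausible way to keep synchronization between nodes infrequent.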

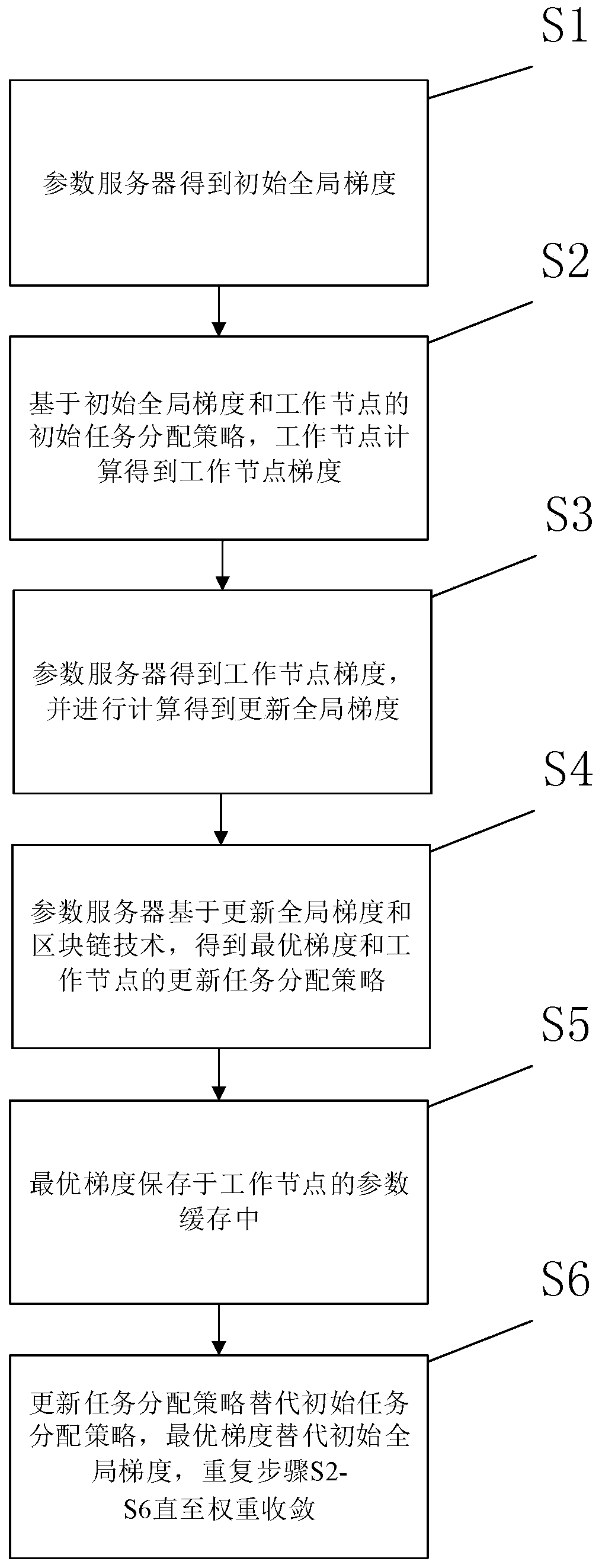

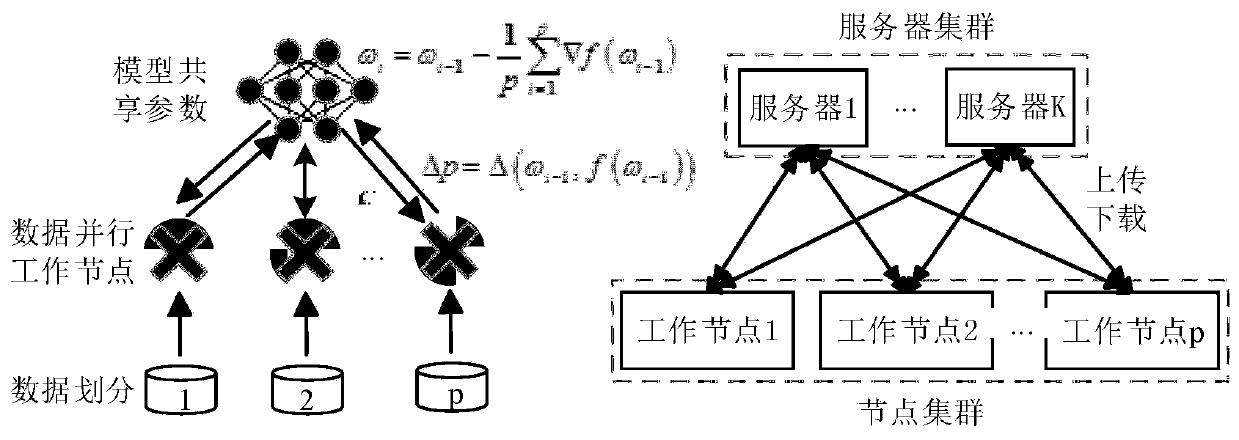

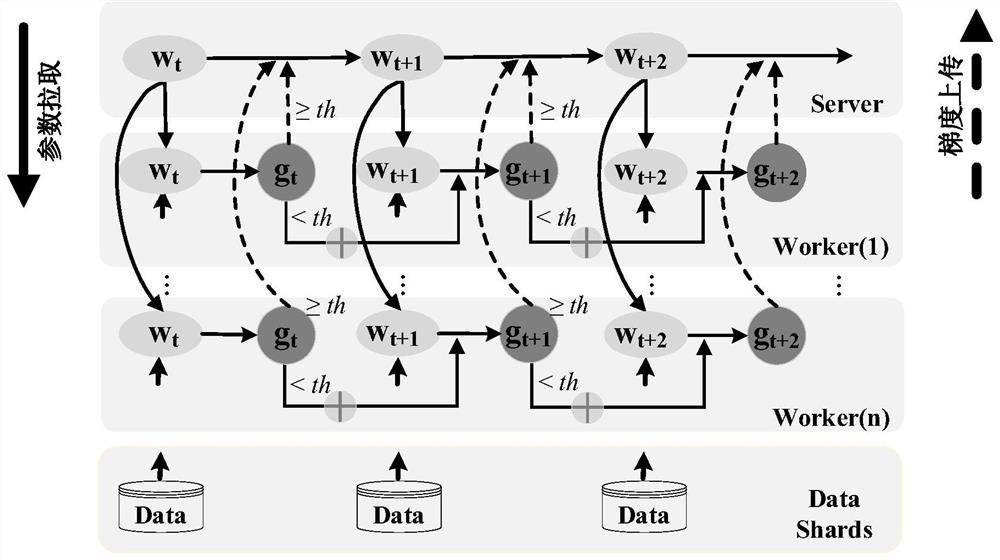

Distributed stochastic gradient descent method

Active | CN110929878A | Efficient training process | Avoid collecting | Machine learning | Stochastic gradient descent | Distributed computing

The invention relates to a distributed stochastic gradient descent method comprising the following steps: S1, a parameter server obtains an initial global gradient; S2, based on the initial global gradient and an initial task allocation strategy, each working node calculates a working node gradient; S3, the parameter server collects the working node gradients and calculates an updated global gradient; S4, based on the updated global gradient and blockchain technology, the parameter server obtains an optimal gradient and an updated task allocation strategy for the working nodes; S5, the optimal gradient is stored in a parameter cache of the working node; S6, the updated task allocation strategy replaces the initial task allocation strategy, the optimal gradient replaces the initial global gradient, and steps S2-S6 are repeated until the weights converge. Compared with the prior art, the method avoids collecting poor model parameters, increases the convergence speed of the model, and shortens the overall training time.

Owner:TONGJI UNIV
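The worker/server loop in steps S2, S3 and S6 resembles a plain synchronous parameter-server scheme, which the following sketch illustrates on a one-parameter least-squares model. It is not the patented method: the blockchain-based selection of an optimal gradient and the task reallocation (S4, S5) are not modelled, each worker uses its full shard rather than sampled mini-batches, and all names are hypothetical.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair for the toy model y ≈ w * x

def worker_gradient(w: float, shard: List[Sample]) -> float:
    """Worker step: gradient of 0.5 * (w*x - y)^2 averaged over the local shard."""
    return sum((w * x - y) * x for x, y in shard) / len(shard)

def server_aggregate(grads: List[float], sizes: List[int]) -> float:
    """Server step: shard-size-weighted average of the worker gradients."""
    total = sum(sizes)
    return sum(g * n for g, n in zip(grads, sizes)) / total

def train(shards: List[List[Sample]], w: float = 0.0, lr: float = 0.1,
          tol: float = 1e-10, max_rounds: int = 10_000) -> float:
    """Repeat gather/aggregate/update until the weight stops changing."""
    sizes = [len(s) for s in shards]
    for _ in range(max_rounds):
        grads = [worker_gradient(w, s) for s in shards]
        w_new = w - lr * server_aggregate(grads, sizes)
        if abs(w_new - w) < tol:
            return w_new
        w = w_new
    return w

# Two workers holding samples of y = 2x; the loop should recover w close to 2.
shards = [[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]]
w_final = train(shards)
```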

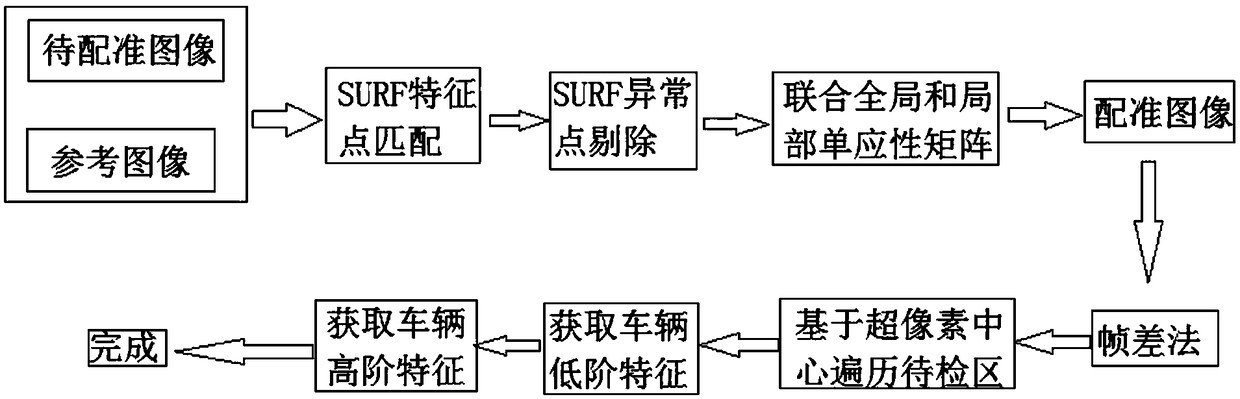

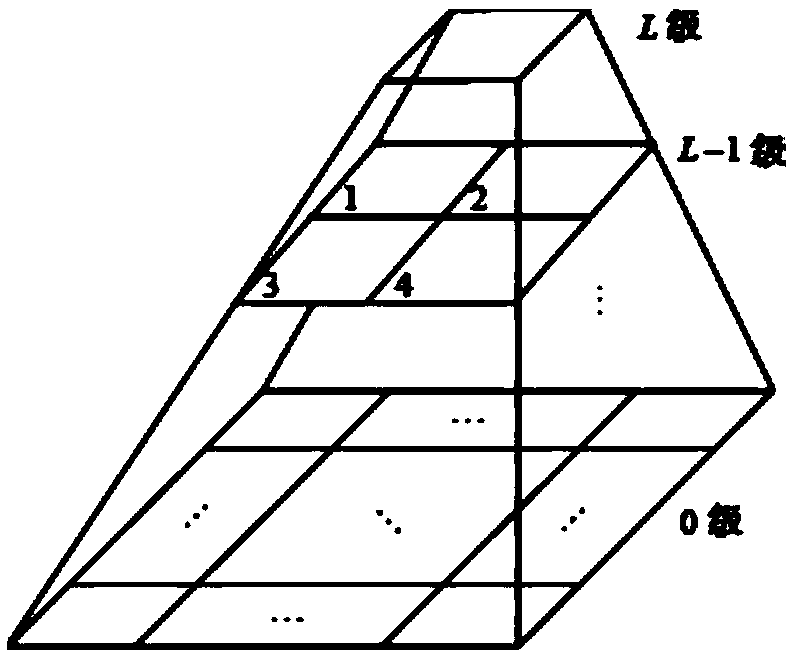

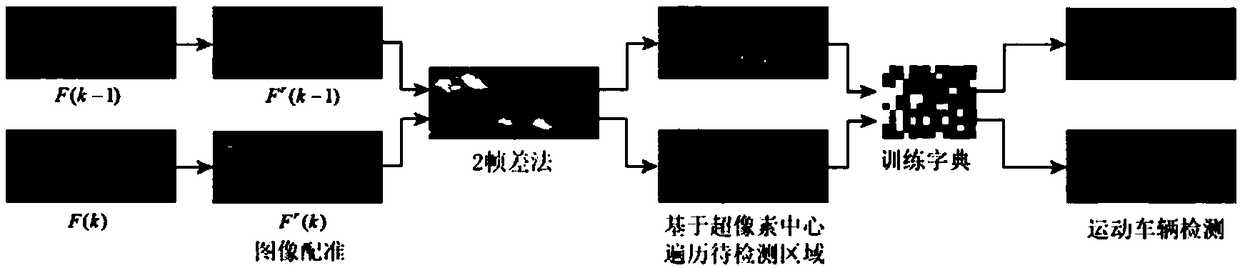

A moving vehicle detection method based on aerial video of unmanned aerial vehicle

Active | CN109376641A | Improve detection efficiency | Improve robustness | Character and pattern recognition | Feature point matching | Transformation matrix

The invention discloses a moving vehicle detection method based on aerial video from an unmanned aerial vehicle (UAV). First, the SURF algorithm is used to match image feature points and remove outliers, and a UAV image registration algorithm combining global and local homography matrices is used to obtain the transformation matrix, compensating for the adverse effects of airborne camera motion. A two-frame difference method then reduces the area to be detected, and the remaining region is traversed according to superpixel centers. Next, low-order features are extracted with a multi-channel HOG feature algorithm, high-order features are obtained by introducing the context information of the vehicle, and the two are fused into multi-order features of the target vehicle. Finally, the multi-order features are combined with a dictionary learning algorithm to detect moving vehicles. The method suppresses the influence of airborne camera motion, handles vehicle deformation and background interference in the image, and improves the robustness and real-time performance of moving vehicle detection.

Owner:CHECC DATA CO LTD +1
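The two-frame difference step that narrows the search area can be sketched as follows. The sketch assumes the two grayscale frames have already been registered against each other, represents frames as nested lists, and uses a hypothetical threshold of 25; none of these details come from the abstract.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # grayscale intensities, one inner list per row

def frame_difference_mask(prev: Frame, curr: Frame, threshold: int = 25) -> List[List[bool]]:
    """Mark pixels whose intensity changed by more than the threshold."""
    return [
        [abs(c - p) > threshold for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]

def bounding_box(mask: List[List[bool]]) -> Optional[Tuple[int, int, int, int]]:
    """Smallest (top, left, bottom, right) box covering all changed pixels."""
    coords = [(r, c) for r, row in enumerate(mask) for c, hit in enumerate(row) if hit]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return (min(rows), min(cols), max(rows), max(cols))

# A static background of intensity 10 with two pixels that brightened.
prev_frame = [[10] * 5 for _ in range(4)]
curr_frame = [row[:] for row in prev_frame]
curr_frame[1][2] = 200
curr_frame[2][3] = 200

region = bounding_box(frame_difference_mask(prev_frame, curr_frame))
```

Only the returned region would then need to be scanned by the later, more expensive feature extraction stages.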

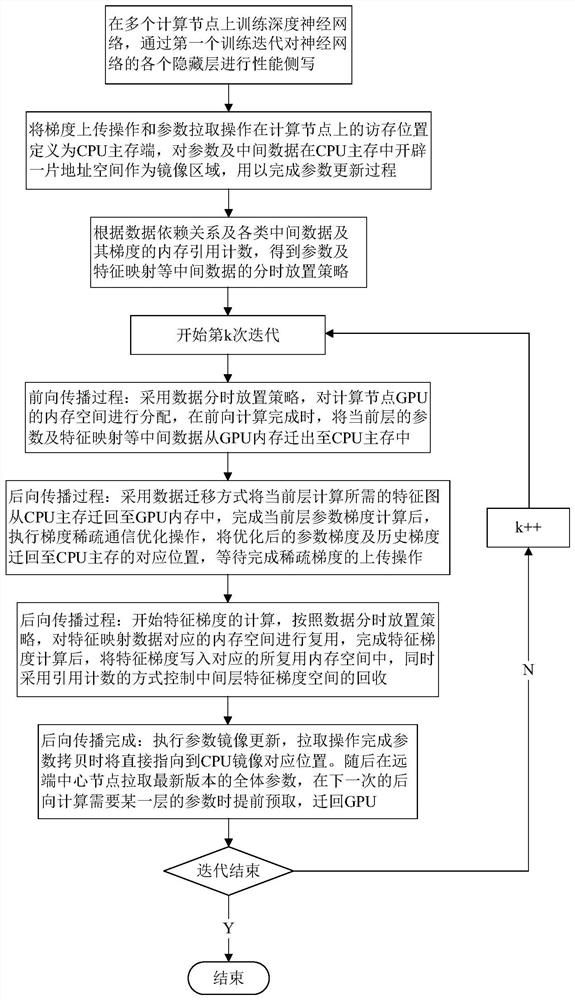

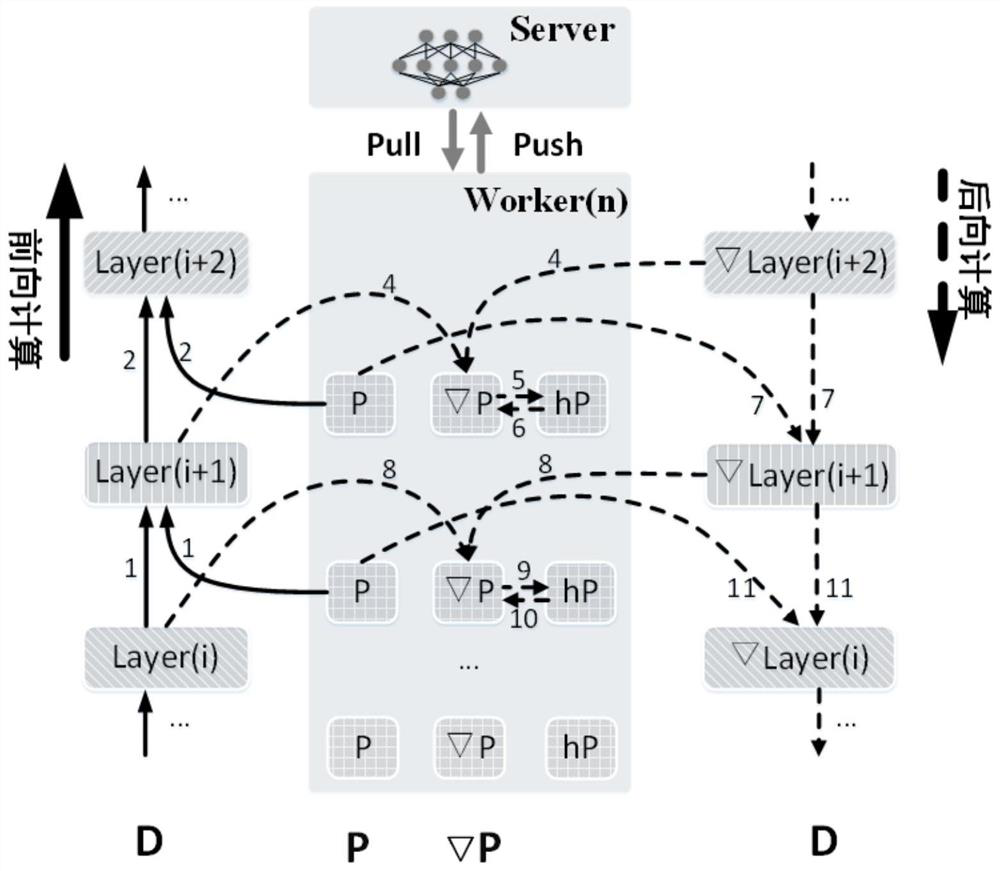

Resource management method and system for large-scale distributed deep learning

Pending | CN111858072A | Reduce memory consumption | Address the double pressure | Resource allocation | Neural learning methods | Parallel computing | Data access

The invention discloses a resource management method and system for large-scale distributed deep learning. The method and system optimize the management of memory resources for parameters and gradient intermediate data during neural network training, and ensure a reasonable allocation of distributed communication bandwidth. Cross-layer memory reuse is realized: intermediate data required by iterative computation and sparse communication are migrated into CPU main memory and migrated back when needed, which reduces inter-layer memory consumption. On the basis of reasonable CPU-GPU data migration, intra-layer memory reuse is realized by exploiting the independence of intra-layer computation and memory access operations, which reduces intra-layer memory consumption as much as possible. Distributed parameter communication is optimized while efficient use of memory resources is ensured. Data access in the distributed parameter updating stage is redirected so that CPU main memory serves as a mirror access area in which accesses to parameters and gradients are completed, which solves the problems of missing gradient data and out-of-bounds parameter writes.

Owner:HUAZHONG UNIV OF SCI & TECH

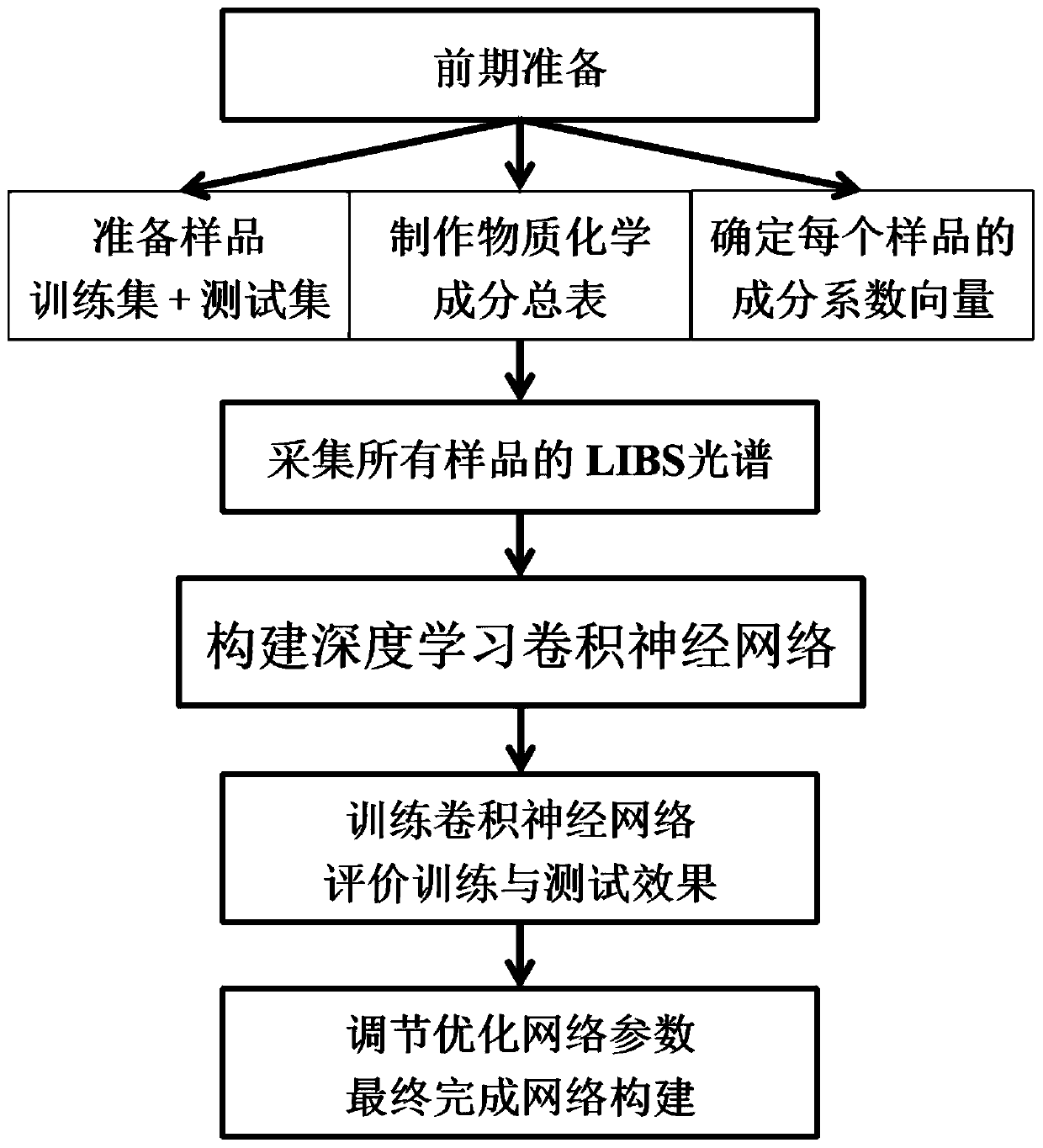

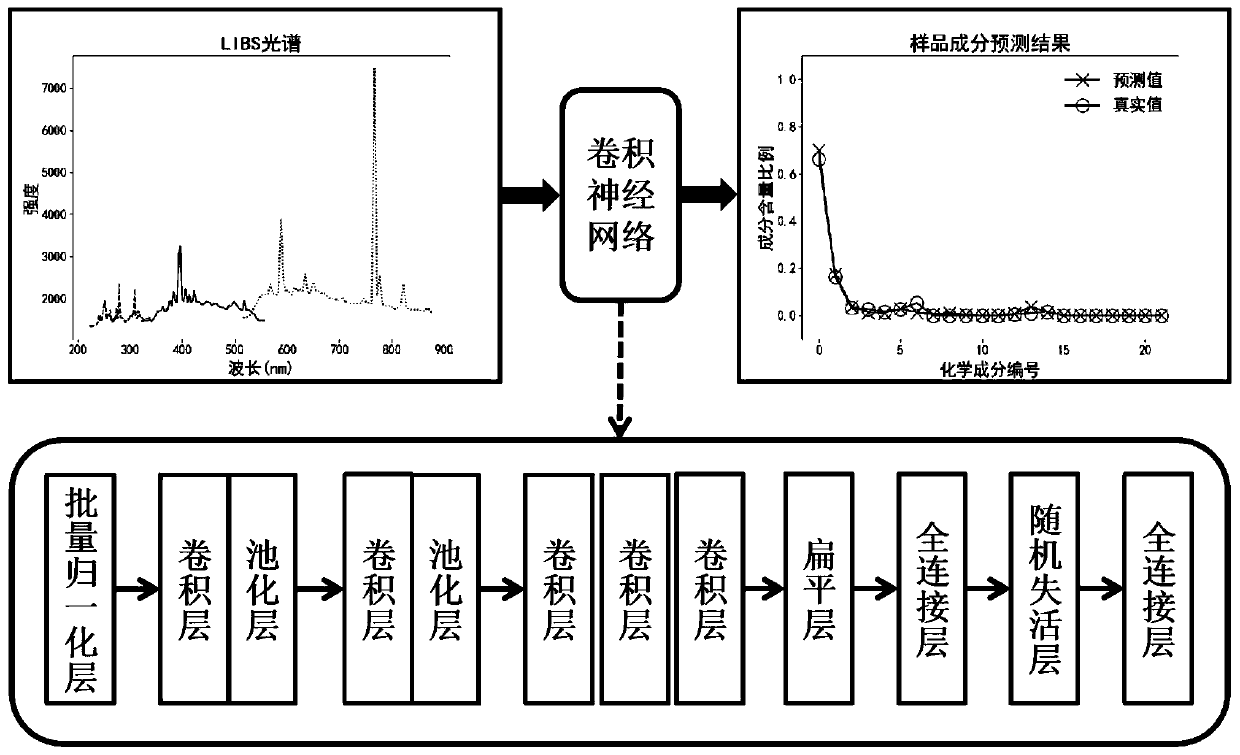

LIBS multi-component quantitative inversion method based on deep learning convolutional neural network

Active | CN110705372A | Strong ability to deal with complex nonlinear problems | High Quantitative Analysis Accuracy | Character and pattern recognition | Analysis by thermal excitation | Feature extraction | Algorithm

The invention discloses a LIBS (laser-induced breakdown spectroscopy) multi-component quantitative inversion method based on a deep learning convolutional neural network, suitable for the field of laser spectrum analysis. The method applies the particular strengths of convolutional neural networks in image feature recognition to the quantitative inversion of LIBS spectra. With the network construction scheme designed by the invention, feature extraction and deep learning can be performed on the spectral line shape of a sample's LIBS spectrum; after the network is trained on the LIBS spectra of known samples, it can analyze and predict the contents of multiple chemical components of an unknown sample simultaneously. The method is simple to operate, efficient to train, accurate and robust. It is suitable for quantitative analysis of LIBS spectra, particularly spectra with relatively complex line shapes and relatively strong interference noise.

Owner:SHANGHAI INST OF TECHNICAL PHYSICS - CHINESE ACAD OF SCI

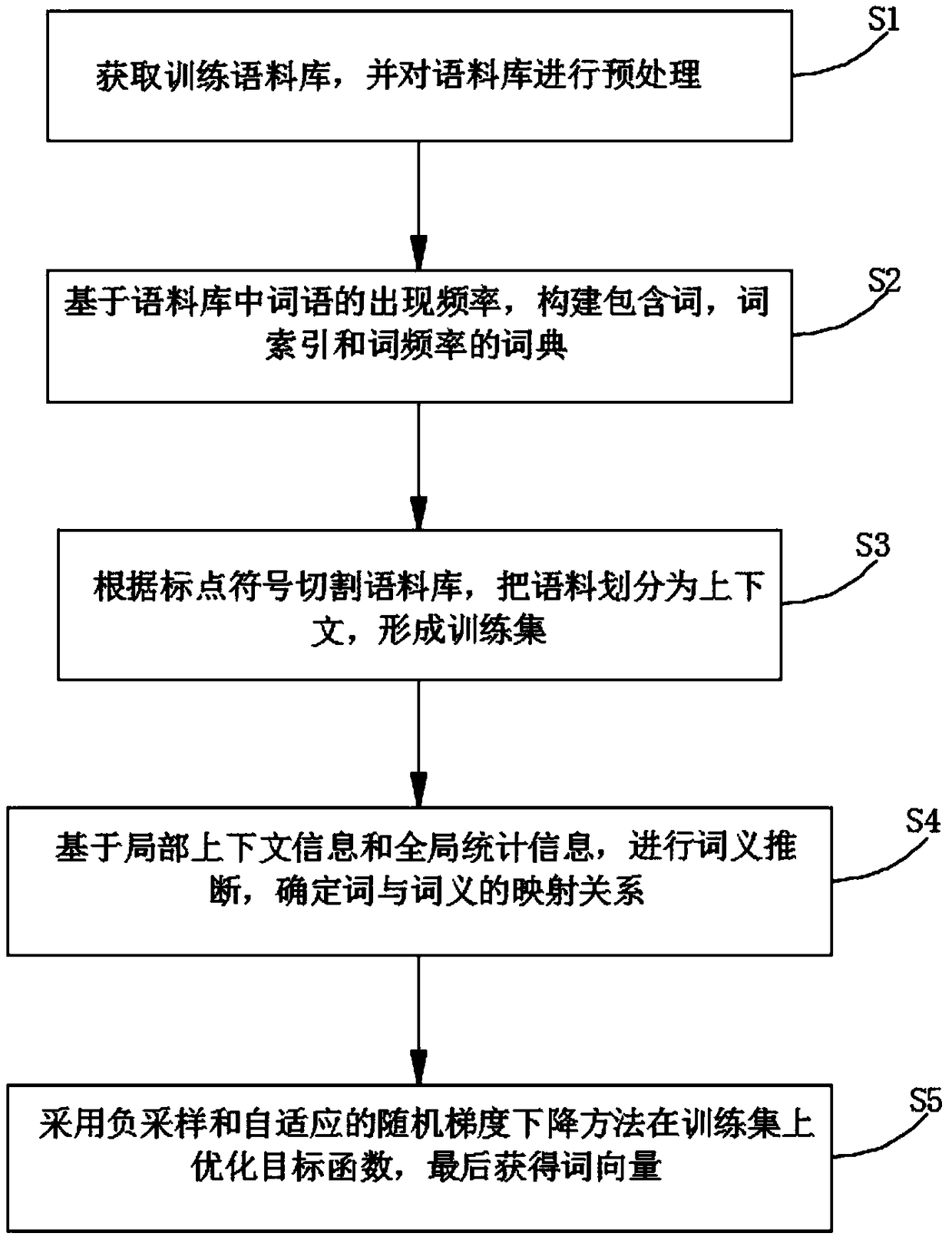

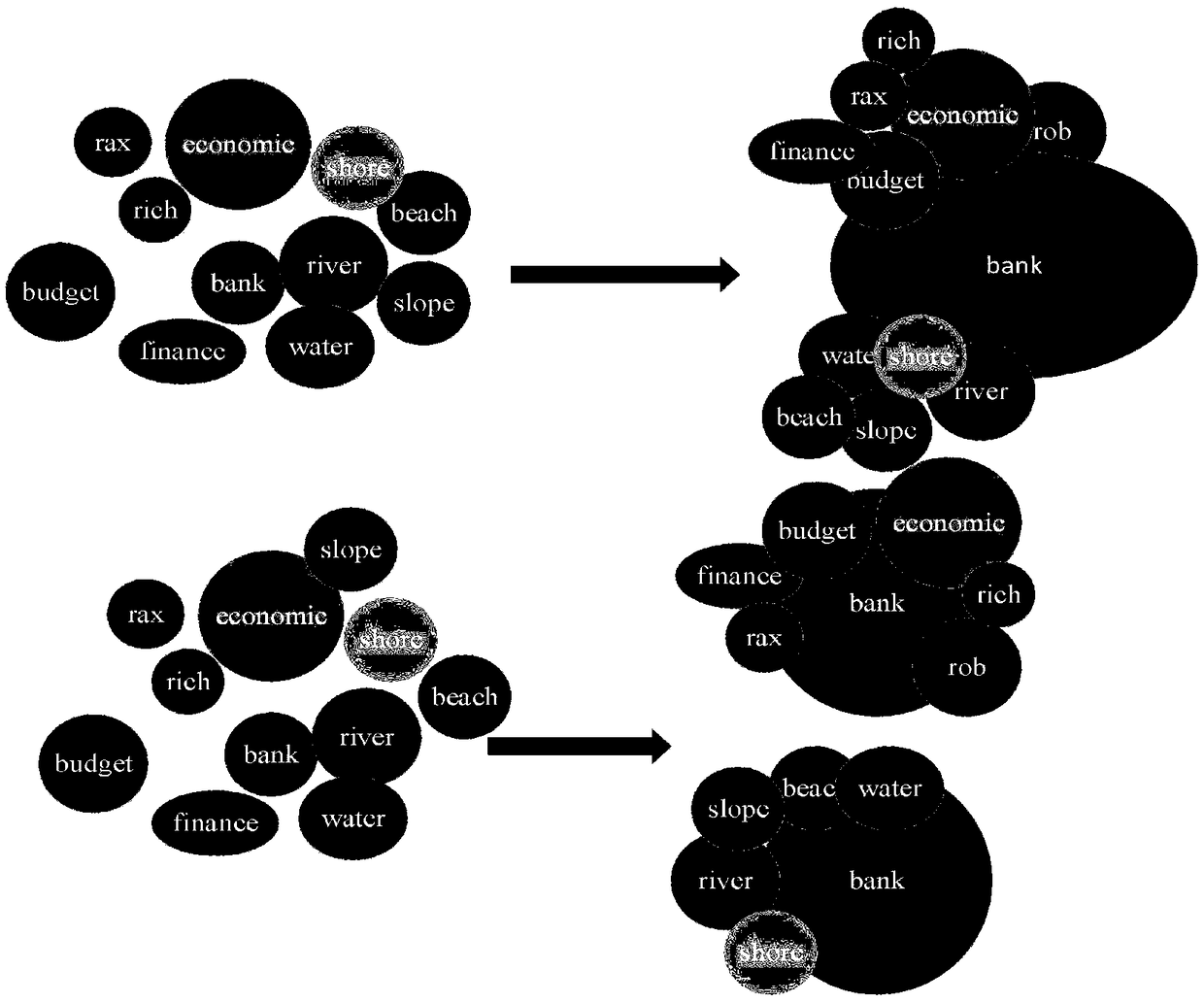

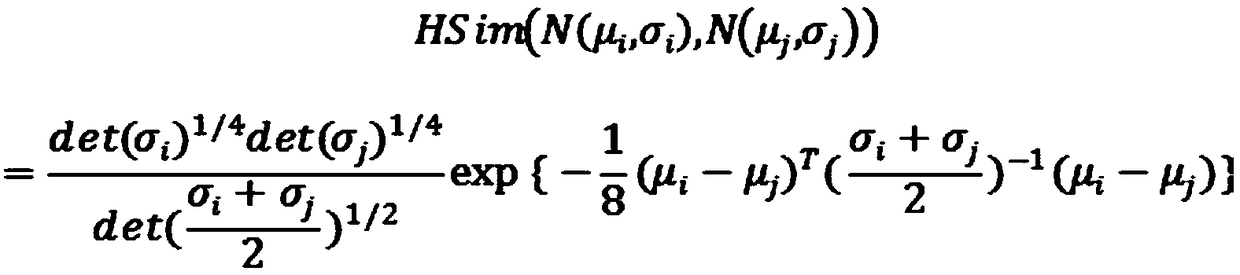

Word vector generation method based on Gaussian distribution

Active | CN108733647A | Avoid Point Estimation Features | Fix the problem of assuming a fixed number of senses | Semantic analysis | Character and pattern recognition | Inclusion relation | Algorithm

The present invention discloses a word vector generation method based on the Gaussian distribution. The method comprises: first, preprocessing the corpus; second, dividing the corpus text at punctuation marks; then combining local and global information to infer word meanings and determining the mapping between words and word meanings; and finally, obtaining the word vectors by optimizing the objective function. The innovations and beneficial effects of the technical scheme are as follows: 1, words are represented by Gaussian distributions, which avoids the point-estimate nature of traditional word vectors and brings richer information to the word vectors, such as probability mass, connotation of meaning and inclusion relationships; 2, multiple Gaussian distributions are used to represent each word, which accommodates the linguistic characteristics of words in natural language; and 3, the similarity between Gaussian distributions is defined based on the Hellinger distance, and by combining parameter updating with word meaning discrimination, the number of word meanings can be inferred adaptively, which solves the problem that prior-art models assume a fixed number of word meanings.

Owner:SUN YAT SEN UNIV
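For reference, the Hellinger distance between two Gaussians has a closed form. The sketch below computes it under the assumption of diagonal covariances, which the abstract does not state; for each dimension the Bhattacharyya coefficient is sqrt(2·σ1·σ2 / (σ1² + σ2²)) · exp(−(μ1 − μ2)² / (4·(σ1² + σ2²))), and the distance is sqrt(1 − product of the per-dimension coefficients). The function name is hypothetical, and how the patent turns the distance into a similarity is not shown.

```python
import math
from typing import Sequence

def hellinger_diag_gaussians(mu1: Sequence[float], sigma1: Sequence[float],
                             mu2: Sequence[float], sigma2: Sequence[float]) -> float:
    """Hellinger distance between two Gaussians with diagonal covariance.

    mu*: per-dimension means; sigma*: per-dimension standard deviations (> 0).
    """
    bc = 1.0  # Bhattacharyya coefficient, a product over independent dimensions
    for m1, s1, m2, s2 in zip(mu1, sigma1, mu2, sigma2):
        var_sum = s1 * s1 + s2 * s2
        bc *= math.sqrt(2.0 * s1 * s2 / var_sum) * math.exp(-((m1 - m2) ** 2) / (4.0 * var_sum))
    # Guard against tiny negative values caused by floating-point rounding.
    return math.sqrt(max(0.0, 1.0 - bc))

# Identical distributions are at distance 0; well-separated ones approach 1.
d_same = hellinger_diag_gaussians([0.0, 1.0], [1.0, 2.0], [0.0, 1.0], [1.0, 2.0])
d_near = hellinger_diag_gaussians([0.0, 1.0], [1.0, 2.0], [0.5, 1.0], [1.0, 2.0])
d_far = hellinger_diag_gaussians([0.0, 1.0], [1.0, 2.0], [10.0, 1.0], [1.0, 2.0])
```

Because the distance is bounded between 0 and 1, it is convenient for comparing the several Gaussian components that represent different senses of a word.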

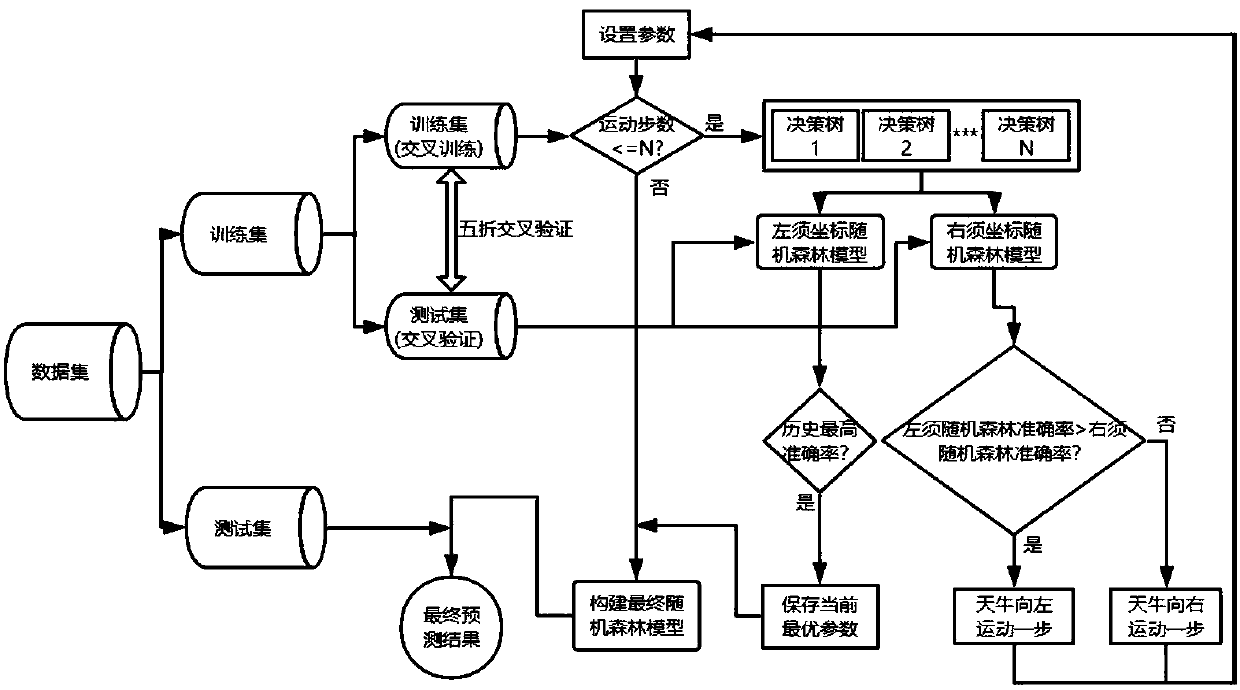

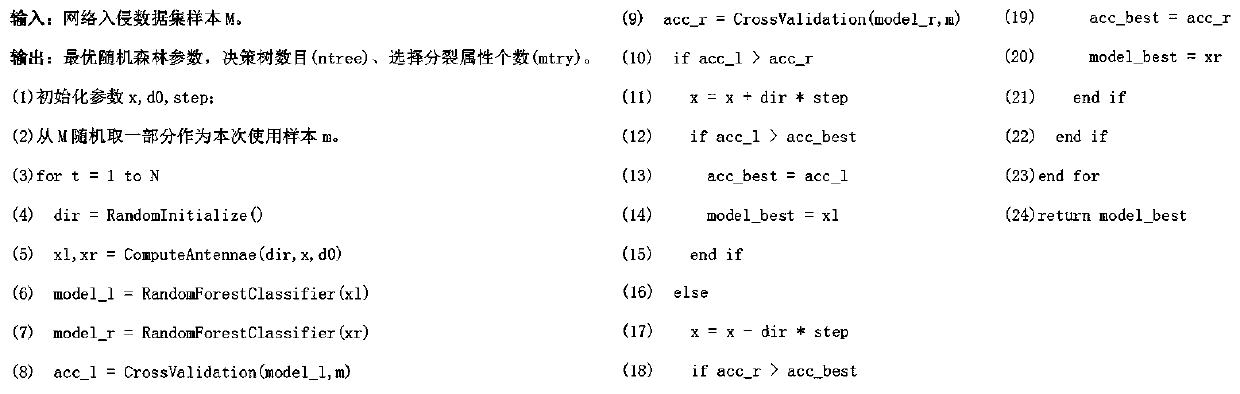

Network intrusion detection method combining longicorn stigma with random forest

Inactive | CN110138766A | Improve accuracy | Efficient training process | Character and pattern recognition | Transmission | Traffic flow analysis | Random forest

The invention relates to a network intrusion detection method combining longicorn stigma with a random forest. It addresses network intrusion monitoring with machine learning and can train a highly accurate network intrusion monitoring model in less time. When abnormal traffic passes through, the detection system identifies the problem through traffic analysis and generates a corresponding signal. Compared with random forest algorithms optimized by particle swarm optimization or by fruit fly optimization, the method completes model training and detection more efficiently.

Owner:FUZHOU UNIV
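If "longicorn stigma" is read as the beetle antennae search (BAS) metaheuristic, which is an assumption about the translation rather than something the abstract confirms, the search loop might look like the sketch below. The quadratic objective is a hypothetical stand-in for whatever score the patent derives from the random forest, and the decay constants are illustrative only.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

def beetle_antennae_search(objective: Callable[[Sequence[float]], float],
                           x0: Sequence[float], steps: int = 200,
                           antenna: float = 1.0, step: float = 1.0,
                           decay: float = 0.95, seed: int = 0) -> Tuple[List[float], float]:
    """Minimize `objective` with a single-agent beetle antennae search."""
    rng = random.Random(seed)
    x = list(x0)
    best_x, best_f = list(x), objective(x)
    for _ in range(steps):
        # Random unit vector giving the orientation of the two antennae.
        b = [rng.gauss(0.0, 1.0) for _ in x]
        norm = math.sqrt(sum(v * v for v in b)) or 1.0
        b = [v / norm for v in b]
        # Probe the objective at both antenna tips.
        tip_a = [xi + antenna * bi for xi, bi in zip(x, b)]
        tip_b = [xi - antenna * bi for xi, bi in zip(x, b)]
        diff = objective(tip_a) - objective(tip_b)
        sign = (diff > 0) - (diff < 0)
        # Step away from the tip with the larger (worse) objective value.
        x = [xi - step * bi * sign for xi, bi in zip(x, b)]
        f = objective(x)
        if f < best_f:
            best_x, best_f = list(x), f
        # Shrink the antenna length and step size over time.
        antenna = antenna * decay + 0.01
        step *= decay
    return best_x, best_f

def toy_objective(params: Sequence[float]) -> float:
    """Hypothetical stand-in for a validation error to be minimized."""
    return (params[0] - 1.0) ** 2 + (params[1] + 0.5) ** 2

start = [3.0, -2.0]
best_params, best_score = beetle_antennae_search(toy_objective, start)
```

In a real setting the objective would wrap model training and validation, so each iteration costs two extra evaluations for the antenna probes plus one for the new position.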

Watermark recognition online training, sample preparation and removal methods, watermark recognition online training device, equipment and medium

Pending | CN112419135A | Efficient identification | Generate intelligence | Image enhancement | Image analysis | Pattern recognition | Data set

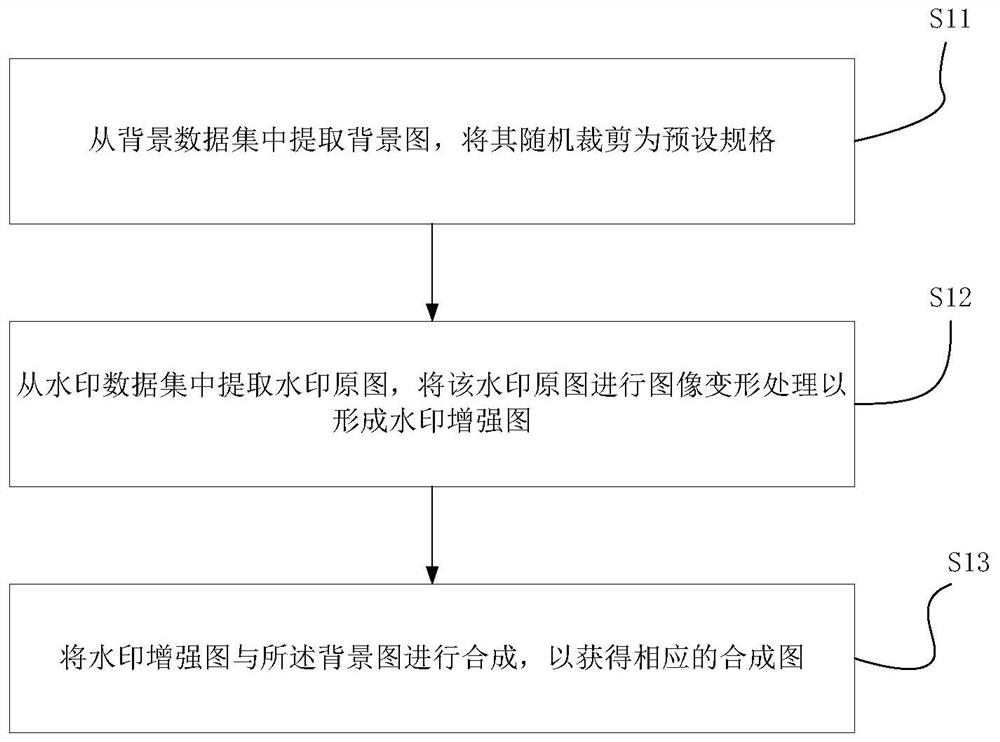

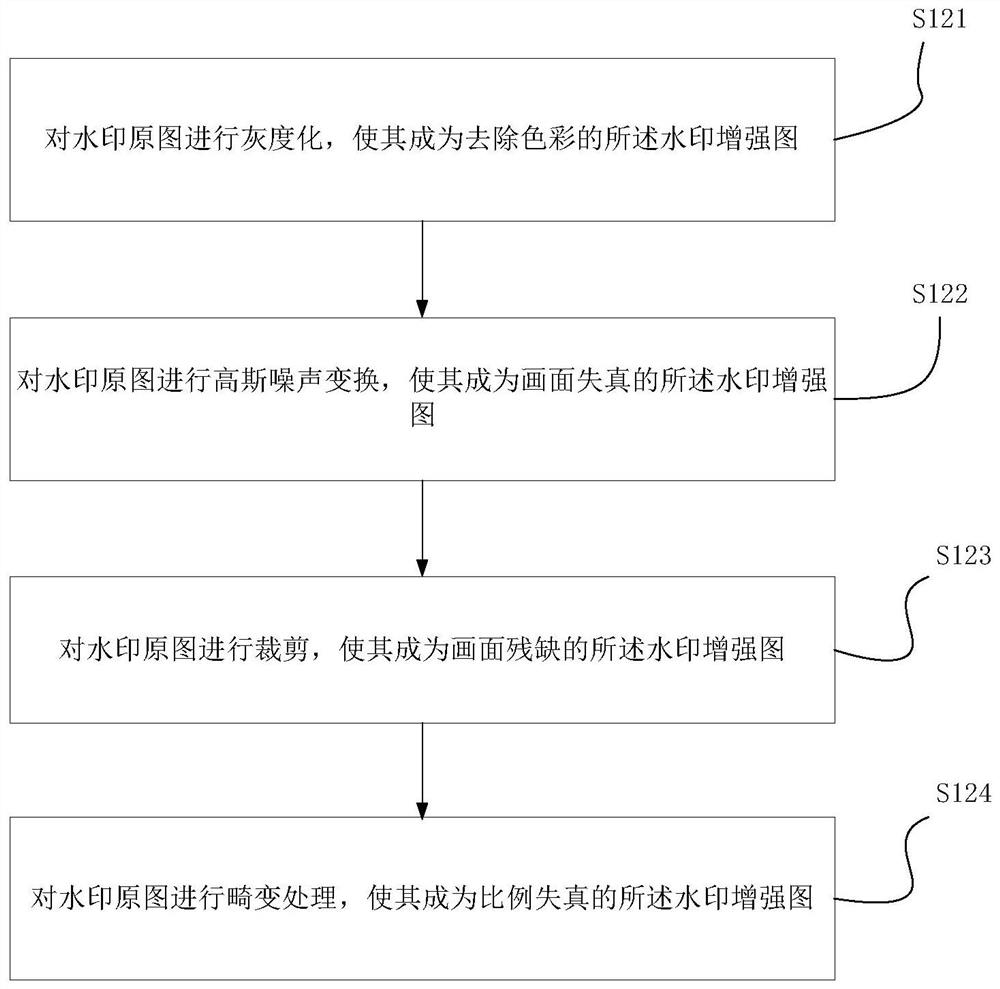

The invention discloses methods for watermark recognition online training, sample preparation and watermark removal, together with a watermark recognition online training device, equipment and a medium. The training method comprises the steps of: extracting a background image from a background data set and randomly cropping it to a preset size; extracting an original watermark image from the watermark data set and applying image deformation to it to form an enhanced watermark image; compositing the enhanced watermark image with the background image to obtain a corresponding composite image; and feeding the composite image as a training sample into a preset image segmentation model, which has an encoder-decoder structure and is suited to capturing multi-scale features, so that after training the model can remove the original watermark image from an image to be identified. According to the invention, watermarked training samples can be synthesized automatically online and used immediately to train the image segmentation model, giving the model the ability to identify and remove watermarks in pictures.

Owner:GUANGZHOU HUADUO NETWORK TECH
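The sample synthesis steps (random crop, watermark deformation, compositing) can be sketched on small grayscale images stored as nested lists. Nearest-neighbour scaling stands in for the unspecified "image deformation", alpha blending for the unspecified compositing, and the returned mask is an assumed segmentation target; all function names are hypothetical.

```python
import random
from typing import List, Tuple

Image = List[List[int]]  # grayscale intensities, one inner list per row

def random_crop(img: Image, height: int, width: int, rng: random.Random) -> Image:
    """Cut a height x width window at a random position of the background."""
    top = rng.randint(0, len(img) - height)
    left = rng.randint(0, len(img[0]) - width)
    return [row[left:left + width] for row in img[top:top + height]]

def scale_nearest(img: Image, factor: float) -> Image:
    """Resize with nearest-neighbour sampling (one simple kind of deformation)."""
    src_h, src_w = len(img), len(img[0])
    out_h, out_w = max(1, round(src_h * factor)), max(1, round(src_w * factor))
    return [
        [img[min(src_h - 1, int(r / factor))][min(src_w - 1, int(c / factor))]
         for c in range(out_w)]
        for r in range(out_h)
    ]

def composite(background: Image, watermark: Image, top: int, left: int,
              alpha: float) -> Tuple[Image, Image]:
    """Alpha-blend non-zero watermark pixels onto the background.

    Returns the composite image and a 0/1 mask of the watermarked pixels.
    """
    out = [row[:] for row in background]
    mask = [[0] * len(row) for row in background]
    for r, wm_row in enumerate(watermark):
        for c, wm_value in enumerate(wm_row):
            y, x = top + r, left + c
            if wm_value > 0 and 0 <= y < len(out) and 0 <= x < len(out[0]):
                out[y][x] = round((1 - alpha) * out[y][x] + alpha * wm_value)
                mask[y][x] = 1
    return out, mask

rng = random.Random(0)
background = random_crop([[100] * 8 for _ in range(8)], 6, 6, rng)
enhanced = scale_nearest([[255, 0], [0, 255]], 2)
sample, target_mask = composite(background, enhanced, top=1, left=1, alpha=0.5)
```

Generating samples this way means every training batch can use a fresh background, watermark placement and deformation without storing any pre-rendered images.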
