Image retrieval method, device and system

An image retrieval technology, applied in special data processing applications, instruments, and electrical digital data processing. It addresses the problems of low retrieval efficiency, low retrieval accuracy, and long retrieval time, with the effects of improving retrieval efficiency, eliminating erroneous local feature matches, and defining similarity more precisely.


Examples

Embodiment 1

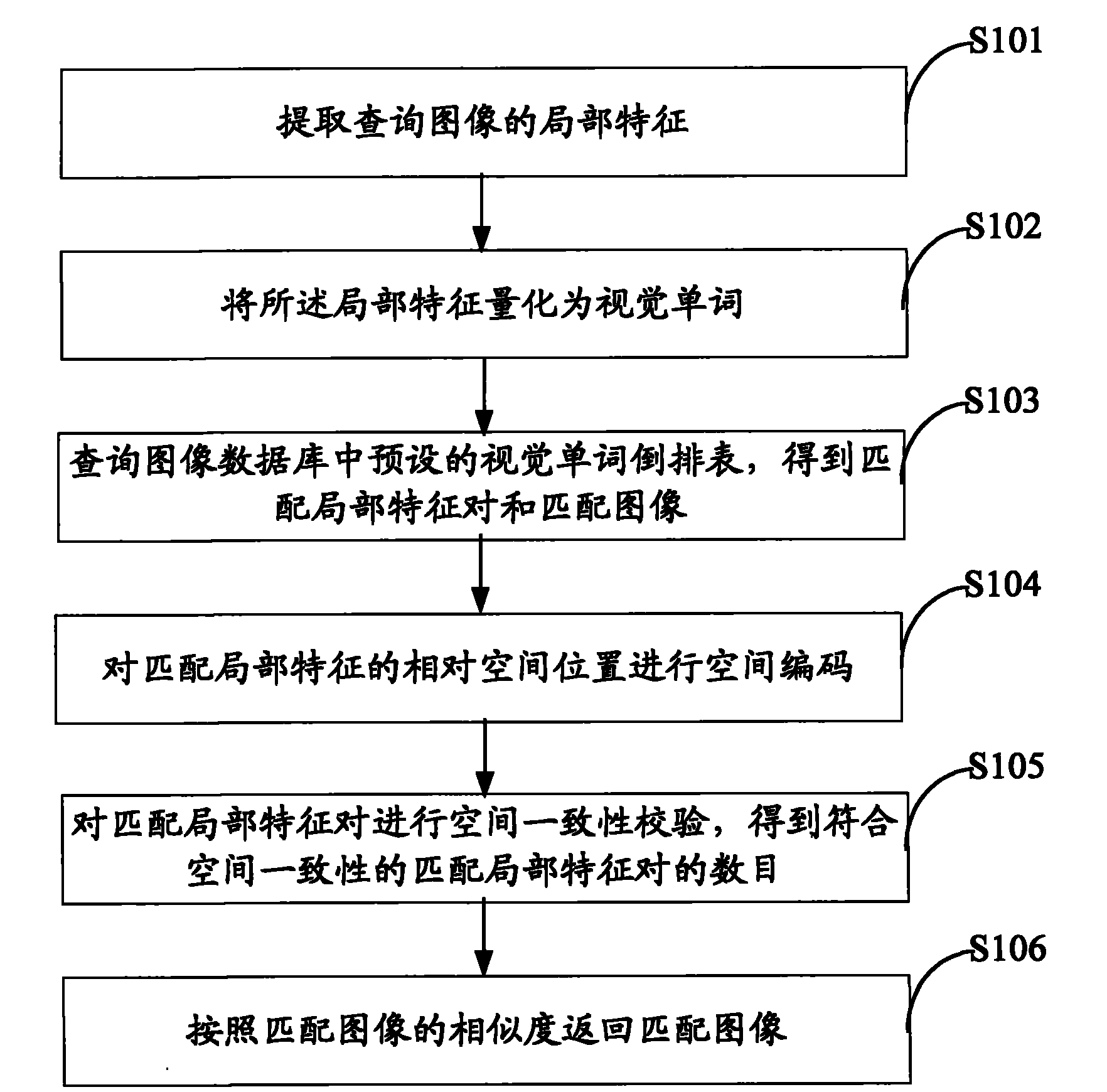

[0039] Figure 1 shows a schematic flow chart of the image retrieval method provided in this embodiment. The method includes the following steps:

[0040] Step S101, extracting local features of the query image;

[0041] Step S102, quantizing the local features into visual words;

[0042] The visual words may be defined as follows: local features are extracted from training images, the local features are clustered, and the center of each cluster is taken as a visual word. Multiple cluster centers yield multiple visual words, from which a visual word codebook can be generated that contains the local features, the visual words, and the correspondence between them. Since visual words carry no explicit semantic information, the size of the visual word codebook, that is, the number of visual words it contains, is generally determined empirically from experimental test data. In addition, in this embodiment, a visual word codebook ...
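The codebook construction and the quantization of step S102 can be sketched as follows. This is a minimal illustration rather than the patented implementation: it assumes plain k-means (Lloyd's algorithm) as the clustering method, squared Euclidean distance as the metric, and toy 2-D vectors in place of real local feature descriptors. The function names `build_codebook`, `nearest_word`, and `quantize` are hypothetical.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_word(feature, codebook):
    """Index of the closest cluster center, i.e. the visual word ID."""
    return min(range(len(codebook)),
               key=lambda k: squared_distance(feature, codebook[k]))


def build_codebook(training_features, num_words, iterations=20, seed=0):
    """Cluster training features with k-means (assumed clustering method)
    and return the cluster centers as the visual words."""
    rng = random.Random(seed)
    centers = [list(f) for f in rng.sample(training_features, num_words)]
    for _ in range(iterations):
        clusters = [[] for _ in range(num_words)]
        for f in training_features:
            clusters[nearest_word(f, centers)].append(f)
        for k, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous center
                dim = len(members[0])
                centers[k] = [sum(m[d] for m in members) / len(members)
                              for d in range(dim)]
    return centers


def quantize(features, codebook):
    """Map each local feature to the ID of its nearest visual word."""
    return [nearest_word(f, codebook) for f in features]


# Toy training descriptors forming two well-separated groups.
training = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.2),
            (5.0, 5.1), (5.2, 4.9), (4.9, 5.0)]
codebook = build_codebook(training, num_words=2)
words = quantize([(0.1, 0.1), (5.1, 5.0)], codebook)
```

Here `num_words` plays the role of the codebook size, which the text says is chosen empirically; the two query descriptors fall into different clusters and so receive different visual word IDs.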

Embodiment 2

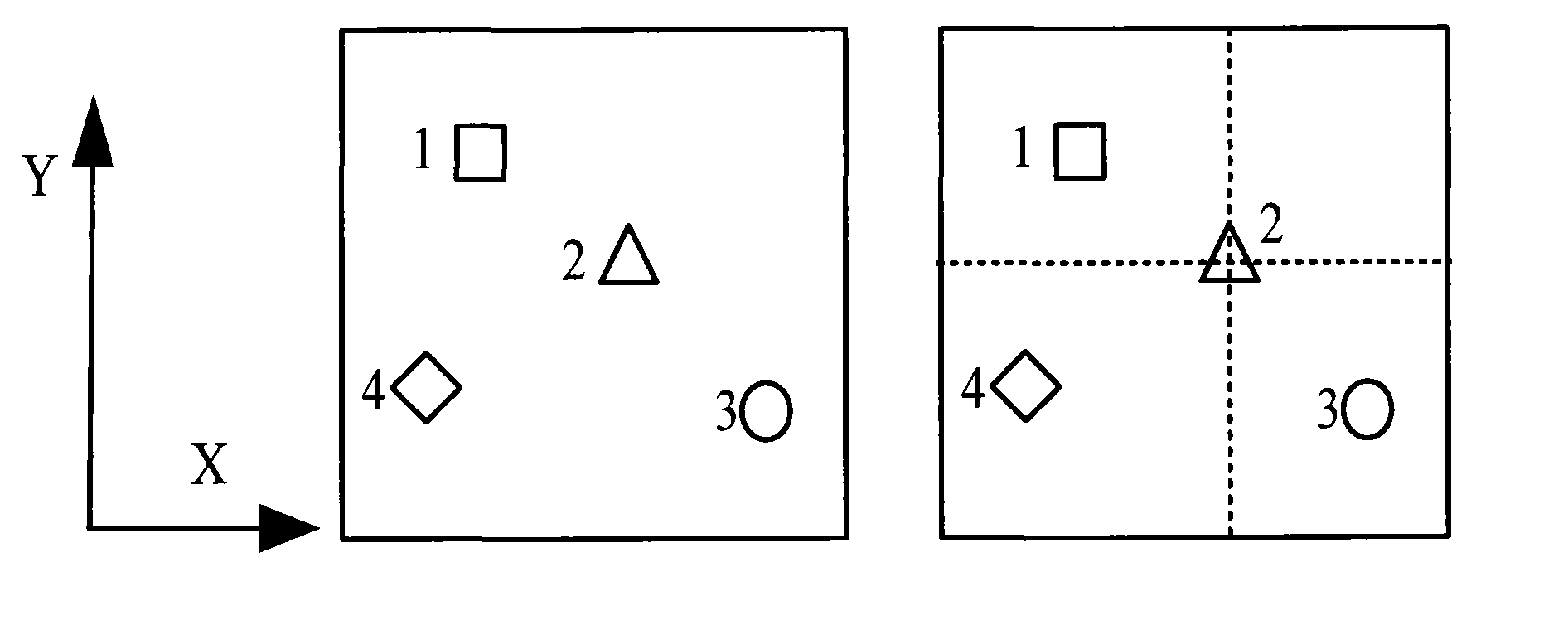

[0062] This embodiment provides an implementation of spatially encoding the relative spatial positional relationship of local features in an image, specifically as follows:

[0063] The relative positional relationship of two matching local features in the horizontal direction is described to obtain the horizontal spatial code map;

[0064] The relative positional relationship of two matching local features in the vertical direction is described to obtain the vertical spatial code map.

[0065] For a query image or matching image, two spatial code maps are generated, denoted X-map and Y-map. X-map describes the relative spatial position of two matched local features along the horizontal direction (X-axis), and Y-map describes their relative spatial position along the vertical direction (Y-axis). For example, given a query image I, there are K matching local featu...
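As a rough sketch of the X-map and Y-map construction, the following builds two K x K binary maps from the coordinates of K matched local features. The exact encoding rule is cut off in the text above, so the convention used here is an assumption: entry (i, j) is 1 when feature j lies at or beyond feature i along the axis, and 0 otherwise. The function name `spatial_code_maps` is hypothetical.

```python
def spatial_code_maps(points):
    """Build binary X-map and Y-map for a list of (x, y) feature positions.

    Assumed convention: xmap[i][j] == 1 if x_j >= x_i, else 0,
    and ymap[i][j] == 1 if y_j >= y_i, else 0.
    """
    k = len(points)
    xmap = [[0] * k for _ in range(k)]
    ymap = [[0] * k for _ in range(k)]
    for i, (xi, yi) in enumerate(points):
        for j, (xj, yj) in enumerate(points):
            xmap[i][j] = 1 if xj >= xi else 0
            ymap[i][j] = 1 if yj >= yi else 0
    return xmap, ymap


# Three matched features in one image (toy coordinates).
points = [(10, 40), (30, 20), (20, 30)]
xmap, ymap = spatial_code_maps(points)
# xmap == [[1, 1, 1], [0, 1, 0], [0, 1, 1]]
# ymap == [[1, 0, 0], [1, 1, 1], [1, 0, 1]]
```

Because each entry depends only on the ordering of two coordinates, the maps are unchanged when the image is translated or uniformly scaled, which is what makes them comparable between a query image and a matching image.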

Embodiment 3

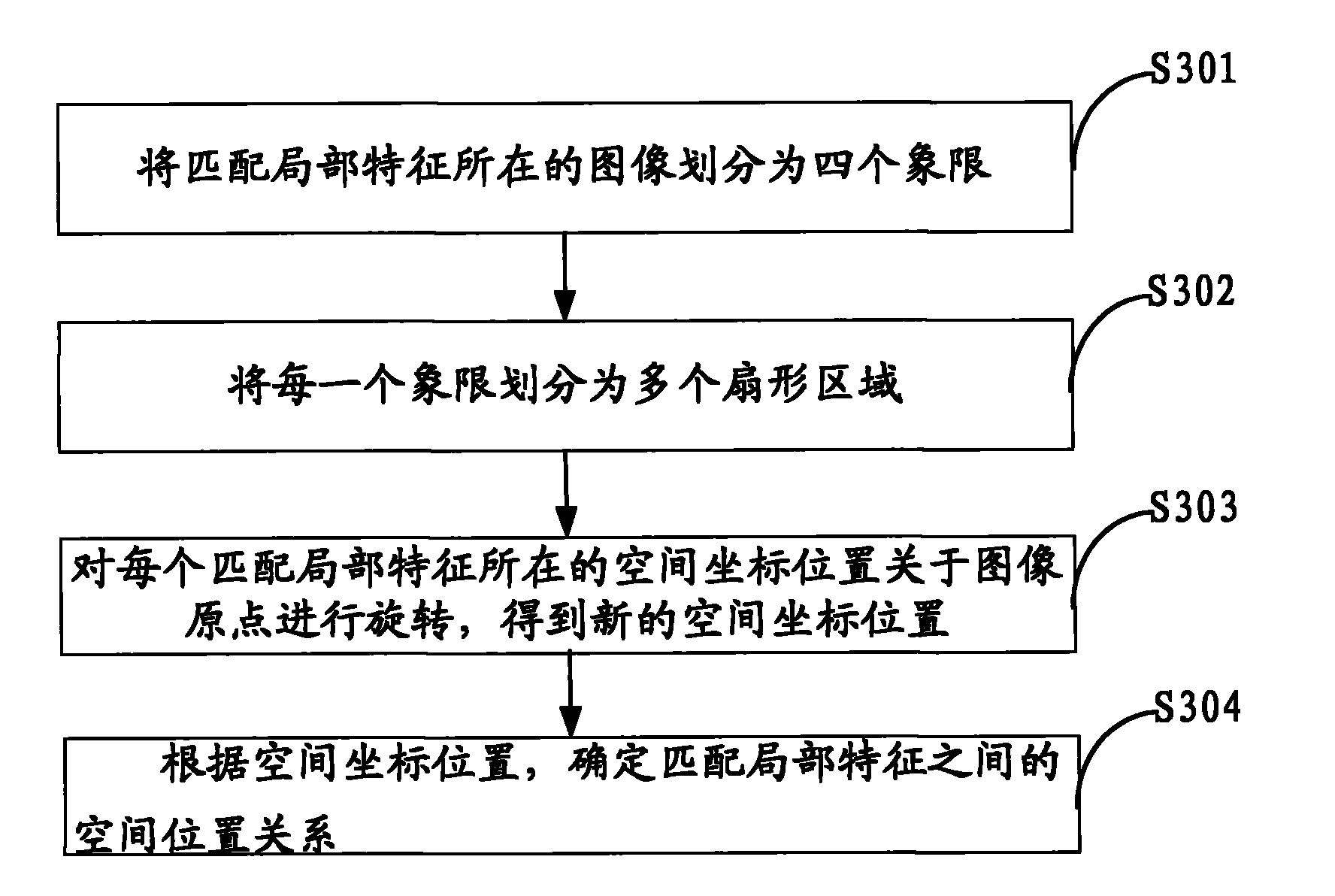

[0086] This embodiment provides an implementation of the spatial consistency check performed on the spatial coordinate positions of the matching local feature pairs in the query image spatial code map and the matching image spatial code map, specifically as follows:

[0087] Suppose that, according to the quantization of the local features, the query image $I_q$ and the matching image $I_m$ have N pairs of matching local features. In step S104 described in Embodiment 1, the relative spatial positions of these matching local features in $I_q$ and $I_m$ are spatially coded to obtain the query image spatial code map $(GX_q, GY_q)$ and the matching image spatial code map $(GX_m, GY_m)$. To compare the consistency of the relative spatial positions of the matching local features between the query image and the matching image, an XOR operation is performed on $GX_q$ and $GX_m$, and on $GY_q$ and $GY_m$, as shown in formula (10) and formula (11):

[008...
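The XOR comparison of the two pairs of spatial code maps can be illustrated with the short sketch below. The element-wise XOR follows the description above; the per-feature disagreement count is an added assumption for illustration only, since the text is cut off before it states how the XOR result is used. The maps are small hand-written examples, and the names `xor_maps` and `inconsistency_counts` are hypothetical.

```python
def xor_maps(map_q, map_m):
    """Element-wise XOR of two binary maps of equal size.
    A 1 marks a feature pair whose relative order differs between images."""
    return [[a ^ b for a, b in zip(row_q, row_m)]
            for row_q, row_m in zip(map_q, map_m)]


def inconsistency_counts(vx, vy):
    """Illustrative assumption: number of disagreements per feature,
    summed over the horizontal and vertical XOR maps."""
    return [sum(rx) + sum(ry) for rx, ry in zip(vx, vy)]


# Query image maps for three matched features (toy data).
gx_q = [[1, 1, 1], [0, 1, 0], [0, 1, 1]]
gy_q = [[1, 0, 0], [1, 1, 1], [1, 0, 1]]
# Matching image maps; feature 1 sits in a different relative position.
gx_m = [[1, 0, 1], [1, 1, 1], [0, 0, 1]]
gy_m = [[1, 1, 0], [0, 1, 0], [1, 1, 1]]

vx = xor_maps(gx_q, gx_m)   # [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
vy = xor_maps(gy_q, gy_m)   # [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
counts = inconsistency_counts(vx, vy)   # [2, 4, 2]
```

In this toy case feature 1 accumulates the most disagreements, which is the kind of signal a consistency check could use to flag a likely false match.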
