Robot positioning method with fusion of visual features and IMU information

A robot positioning technology based on visual features, classified under instruments, computing, and character and pattern recognition. It addresses problems such as the inability to cope with challenging motion environments, and it improves positioning robustness.

Specific Embodiment

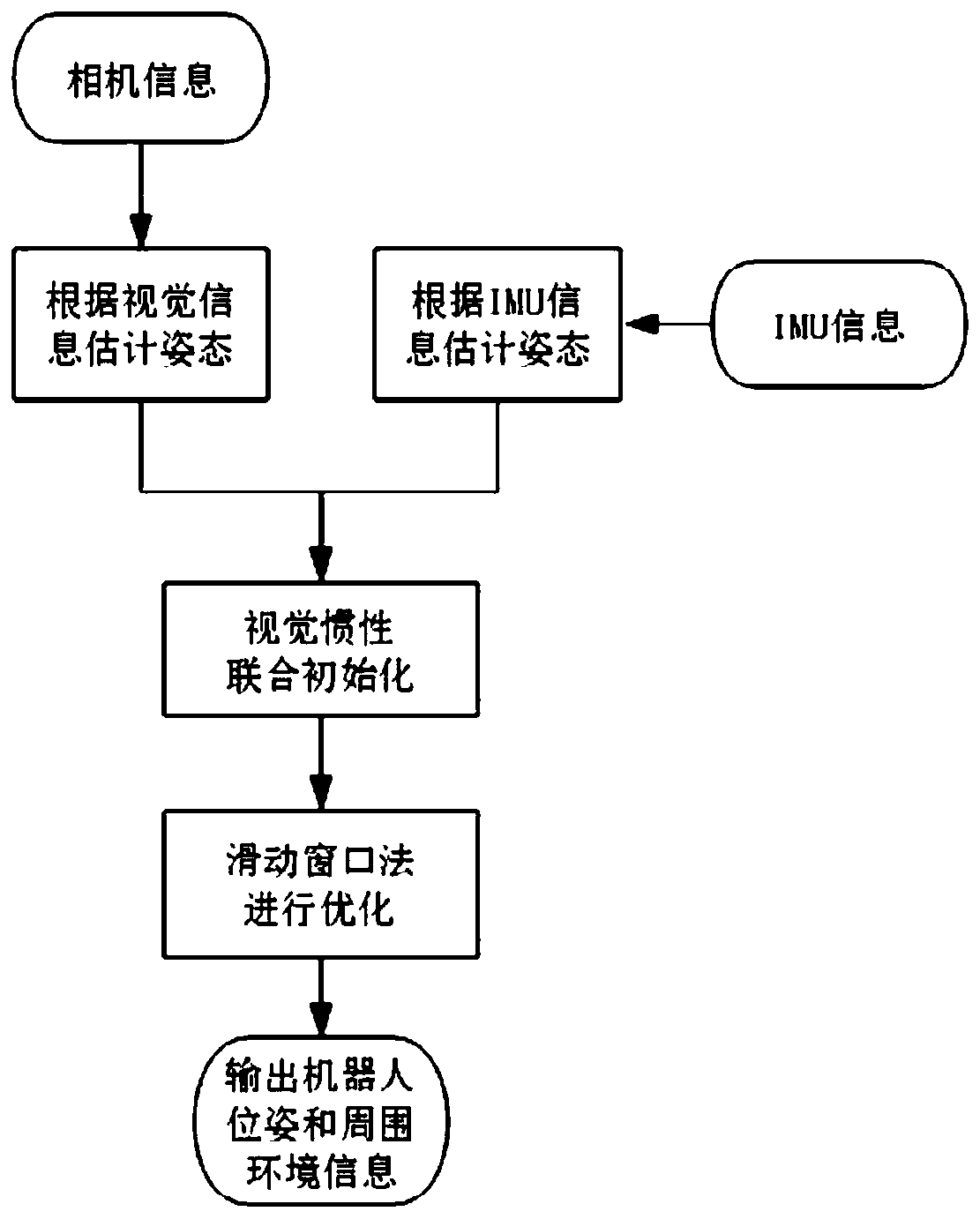

[0061] Referring to Figure 2, the method of the invention is implemented as follows:

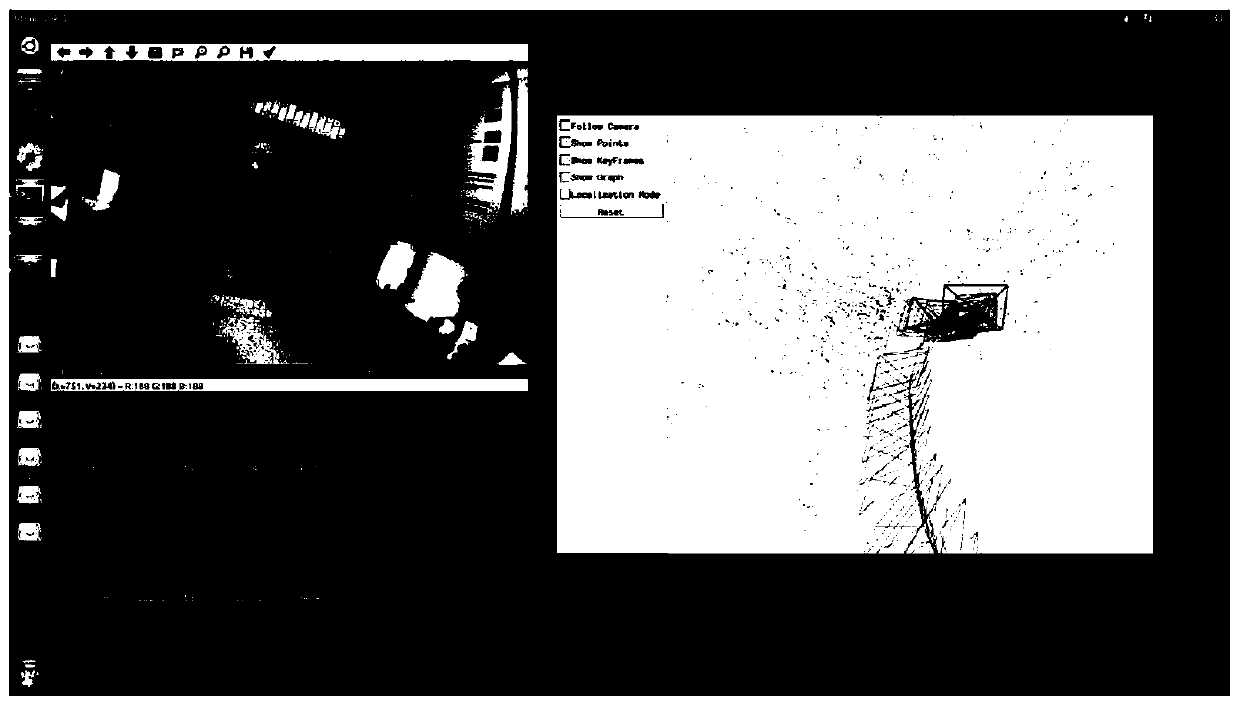

[0062] After connecting the MYNT EYE inertial navigation camera to the EAIBOT mobile robot car via USB, power on the Lenovo ThinkPad, enter the camera launch command, and start the algorithm. In this example, the camera samples at 30 Hz, keyframes are sampled at a lower rate, and the IMU samples at 100 Hz.
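At these rates roughly 100 / 30 ≈ 3.3 IMU samples arrive between consecutive image frames. Visual-inertial pipelines commonly bucket those samples per frame interval before integrating them. The patent does not describe how it aligns the two streams, so the following is only a sketch under the assumption of ideal, evenly spaced timestamps (real devices have jitter and clock offsets); the function names are hypothetical.

```python
from bisect import bisect_right
from typing import List, Sequence

CAMERA_HZ = 30.0  # camera sampling rate given in the example
IMU_HZ = 100.0    # IMU sampling rate given in the example


def timestamps(rate_hz: float, duration_s: float) -> List[float]:
    """Hypothetical helper: ideal, evenly spaced sample times in [0, duration_s)."""
    n = int(duration_s * rate_hz)
    return [i / rate_hz for i in range(n)]


def group_imu_by_frame(frame_ts: Sequence[float],
                       imu_ts: Sequence[float]) -> List[List[float]]:
    """For each pair of consecutive frames, collect the IMU timestamps in the
    half-open interval (t_k, t_{k+1}]. imu_ts must be sorted ascending."""
    groups = []
    for t0, t1 in zip(frame_ts, frame_ts[1:]):
        lo = bisect_right(imu_ts, t0)  # first IMU sample strictly after t0
        hi = bisect_right(imu_ts, t1)  # one past the last sample at or before t1
        groups.append(list(imu_ts[lo:hi]))
    return groups
```

Over one second this yields 29 frame intervals, each holding 3 or 4 IMU samples, which is the data an IMU preintegration step between frames would consume.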

[0063] Step 1: The invention first applies the ORB feature extraction algorithm to the image frames captured by the MYNT EYE camera to extract a rich set of ORB feature points. Then, from the pixel positions of the matched feature points in two image frames, it uses multi-view geometry to recover the motion of the monocular camera, which gives an estimate of the robot's motion.
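The patent does not say which two-view solver it uses. As a hedged illustration, this sketch assumes the ORB features have already been matched (for example with OpenCV's `cv2.ORB_create` and a Hamming-distance `cv2.BFMatcher`) and converted to normalized image coordinates using the camera intrinsics. It then substitutes the classic linear eight-point algorithm for the essential matrix, followed by the standard four-way decomposition with a cheirality check. All function names are hypothetical. For a monocular camera the translation is recovered only up to scale.

```python
import numpy as np


def estimate_essential(x1: np.ndarray, x2: np.ndarray) -> np.ndarray:
    """Linear eight-point estimate of the essential matrix E.

    x1, x2: (N, 2) arrays (N >= 8) of matched points in normalized image
    coordinates (pixels with K^-1 applied), so that [x2, 1] E [x1, 1]^T = 0.
    """
    n = x1.shape[0]
    if n < 8:
        raise ValueError("need at least 8 correspondences")
    h1 = np.hstack([x1, np.ones((n, 1))])
    h2 = np.hstack([x2, np.ones((n, 1))])
    # Row k is the outer product h2_k h1_k^T flattened row-major, so
    # A @ E.ravel() stacks the epipolar constraints x2^T E x1 = 0.
    A = np.einsum("ni,nj->nij", h2, h1).reshape(n, 9)
    _, _, vt = np.linalg.svd(A)
    E = vt[-1].reshape(3, 3)  # least-squares null vector of A
    # Enforce essential-matrix structure: singular values (s, s, 0).
    u, s, vt = np.linalg.svd(E)
    sigma = (s[0] + s[1]) / 2.0
    return u @ np.diag([sigma, sigma, 0.0]) @ vt


def triangulate(P1, P2, p1, p2):
    """Linear (DLT) triangulation of one point observed at p1 and p2."""
    A = np.vstack([
        p1[0] * P1[2] - P1[0],
        p1[1] * P1[2] - P1[1],
        p2[0] * P2[2] - P2[0],
        p2[1] * P2[2] - P2[1],
    ])
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


def recover_pose(E, x1, x2):
    """Choose (R, t) among the four decompositions of E by cheirality: the
    correct one puts the most triangulated points in front of both cameras.
    Convention: a camera-1 point X maps to R @ X + t in camera 2; t is unit length.
    """
    u, _, vt = np.linalg.svd(E)
    # E is defined only up to sign, so flipping U or V^T keeps a valid E
    # and makes the candidate rotations proper (det = +1).
    if np.linalg.det(u) < 0:
        u = -u
    if np.linalg.det(vt) < 0:
        vt = -vt
    W = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
    P1 = np.hstack([np.eye(3), np.zeros((3, 1))])
    best, best_count = None, -1
    for R in (u @ W @ vt, u @ W.T @ vt):
        for t in (u[:, 2], -u[:, 2]):
            P2 = np.hstack([R, t[:, None]])
            count = 0
            for p1, p2 in zip(x1, x2):
                X = triangulate(P1, P2, p1, p2)
                if X[2] > 0 and (R @ X + t)[2] > 0:
                    count += 1
            if count > best_count:
                best, best_count = (R, t), count
    return best
```

This linear solver has no outlier rejection. With real ORB matches, which always contain some mismatches, OpenCV's `cv2.findEssentialMat` with RANSAC followed by `cv2.recoverPose` performs the same two steps more robustly.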

[0064] Assume that the coordinates of a point in space are $P_i = [X_i, Y_i, Z_i]^T$, whose projected pixel coordinates are $U_i = [u_i, v_i]^T$, the...
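The paragraph above is truncated, but it appears to set up the standard pinhole camera model. Assuming that model, where the intrinsic matrix $K$, the pose $(R, t)$ and the depth $s_i$ are our notation rather than the source's, the projection reads $s_i [u_i, v_i, 1]^T = K (R P_i + t)$. Pose estimation then typically minimizes the squared reprojection error between observed and projected pixels. The sketch below computes both. The function names and the intrinsic values in the test are hypothetical, not the MYNT EYE calibration.

```python
import numpy as np


def project(K: np.ndarray, R: np.ndarray, t: np.ndarray, P: np.ndarray) -> np.ndarray:
    """Pinhole projection of world points P (N, 3) to pixels (N, 2):
    s_i [u_i, v_i, 1]^T = K (R P_i + t), with s_i the depth in the camera frame."""
    Pc = P @ R.T + t                   # world frame -> camera frame
    uvw = Pc @ K.T                     # apply the intrinsics
    return uvw[:, :2] / uvw[:, 2:3]    # divide by the depth s_i


def reprojection_error(K, R, t, P, U) -> float:
    """Least-squares cost 0.5 * sum_i ||U_i - proj(P_i)||^2 over all points."""
    r = U - project(K, R, t, P)
    return 0.5 * float(np.sum(r * r))
```

For example, with $f_x = f_y = 400$, principal point $(320, 240)$ and identity pose, the point $(1, 0.5, 4)$ projects to $u = 400 \cdot 1/4 + 320 = 420$ and $v = 400 \cdot 0.5/4 + 240 = 290$.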
