Fully combined visual navigation/inertial navigation method

A visual navigation and inertial navigation technology, applied in the field of fully combined visual navigation/inertial navigation. It addresses two problems: the combination result cannot be optimal, and the prior information on the object space coordinates of image feature points is not taken into account.

Embodiment

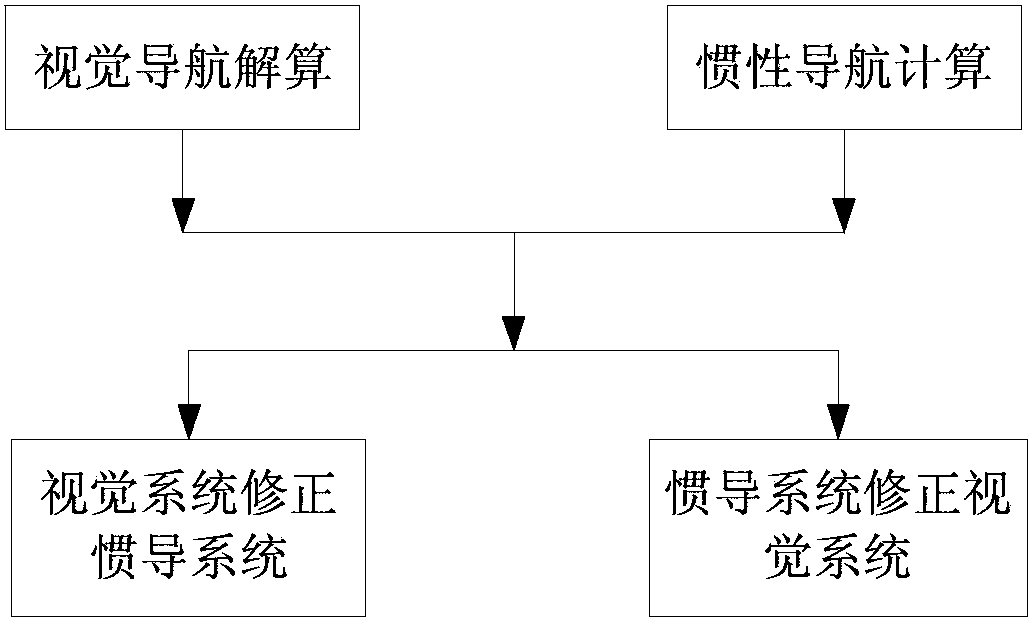

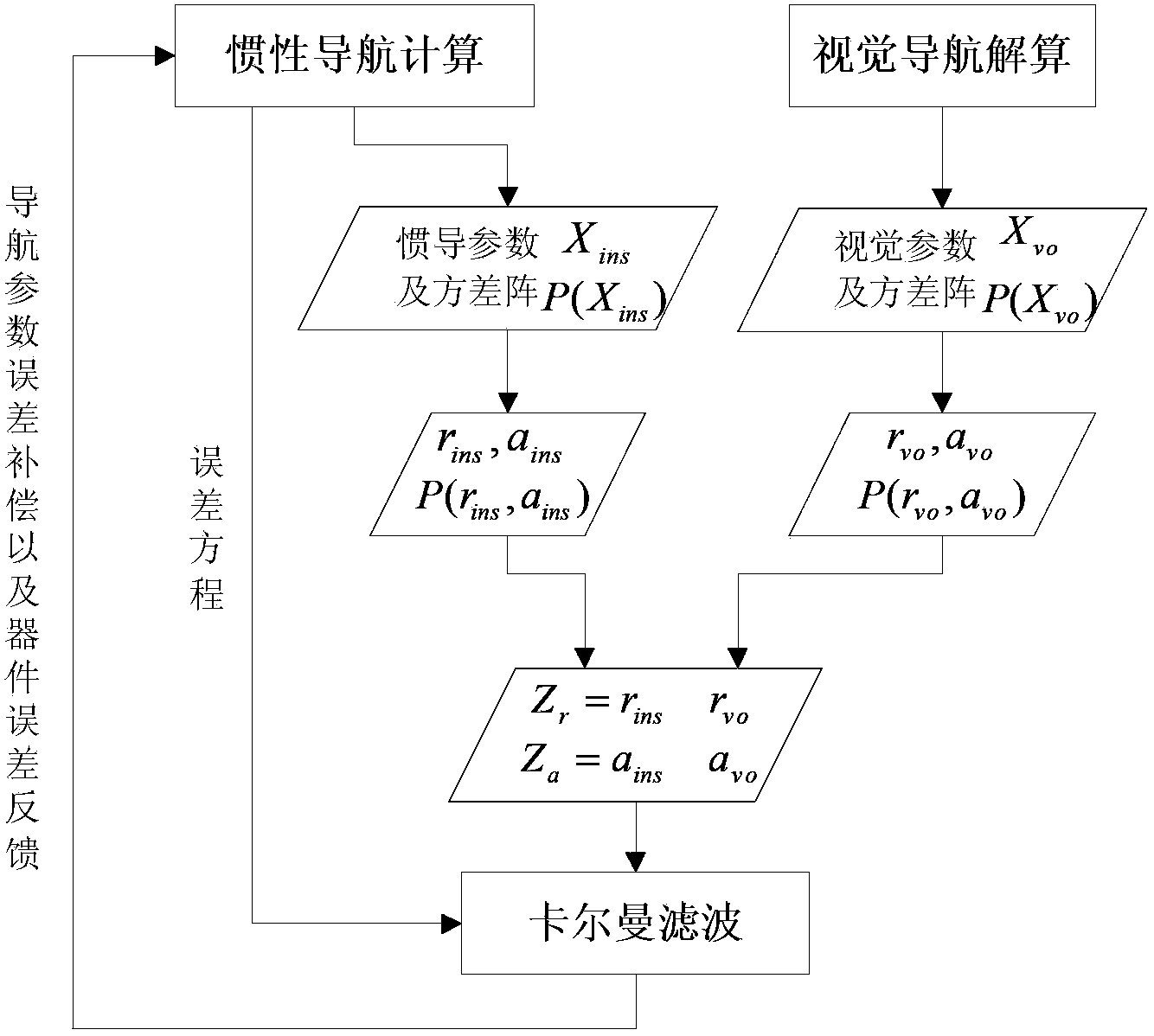

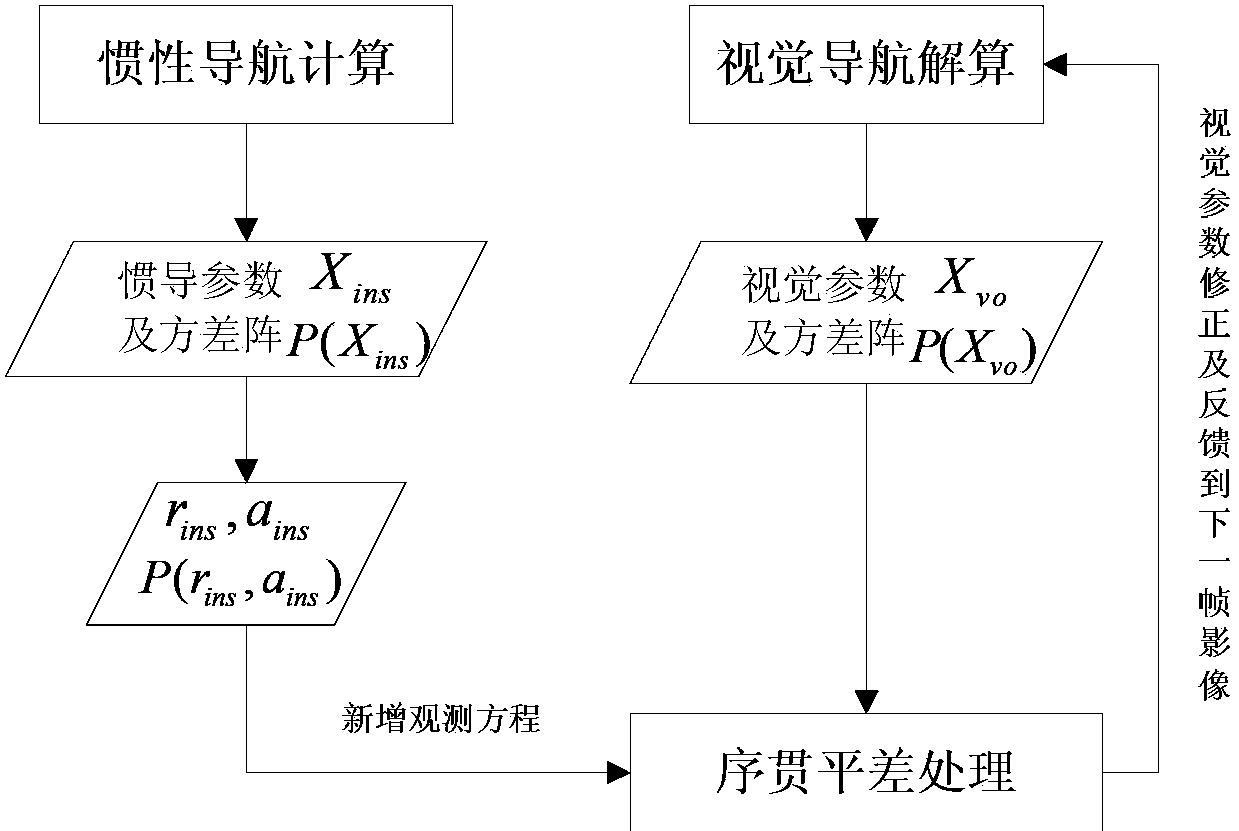

[0085] As shown in Figure 1, the fully combined visual navigation/inertial navigation method includes the following steps:

[0086] 1) Visual navigation solution

[0087] The procedure is as follows:

[0088] (1) Initialize the camera's exterior orientation elements. Typically, several control points are placed around the starting position of the visual navigation, and the camera's exterior orientation elements are obtained by space resection.
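Space resection of this kind can be sketched as a small Gauss-Newton iteration on the collinearity condition. The sketch below is illustrative only and is not the patent's implementation: the rotation-angle order, the sign convention (camera looking along its negative z axis), the numerical Jacobian, the function names `rotation`, `project`, `solve`, and `resect`, and all control-point coordinates are assumptions made for the example.

```python
import math

def rotation(omega, phi, kappa):
    """Rotation taking object-space offsets into the camera frame.
    Built as Rx(omega) * Ry(phi) * Rz(kappa); this order is an assumption."""
    co, so = math.cos(omega), math.sin(omega)
    cp, sp = math.cos(phi), math.sin(phi)
    ck, sk = math.cos(kappa), math.sin(kappa)
    rx = [[1, 0, 0], [0, co, -so], [0, so, co]]
    ry = [[cp, 0, sp], [0, 1, 0], [-sp, 0, cp]]
    rz = [[ck, -sk, 0], [sk, ck, 0], [0, 0, 1]]
    mul = lambda a, b: [[sum(a[i][k] * b[k][j] for k in range(3))
                         for j in range(3)] for i in range(3)]
    return mul(mul(rx, ry), rz)

def project(pose, point, f):
    """Image coordinates of an object point for pose = (Xs, Ys, Zs, omega, phi, kappa)."""
    r = rotation(*pose[3:])
    d = [point[k] - pose[k] for k in range(3)]
    u, v, w = (sum(r[i][k] * d[k] for k in range(3)) for i in range(3))
    return (-f * u / w, -f * v / w)

def solve(a, b):
    """Solve a square linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        for r in range(c + 1, n):
            k = m[r][c] / m[c][c]
            for j in range(c, n + 1):
                m[r][j] -= k * m[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][j] * x[j] for j in range(r + 1, n))) / m[r][r]
    return x

def resect(control, observed, f, pose0, iterations=15, h=1e-6):
    """Estimate the six exterior orientation elements from control points."""
    pose = list(pose0)
    for _ in range(iterations):
        rows, resid = [], []
        for pt, (x_obs, y_obs) in zip(control, observed):
            x, y = project(pose, pt, f)
            jx, jy = [], []
            for i in range(6):  # central-difference Jacobian, one parameter at a time
                hi, lo = pose[:], pose[:]
                hi[i] += h
                lo[i] -= h
                (xh, yh), (xl, yl) = project(hi, pt, f), project(lo, pt, f)
                jx.append((xh - xl) / (2 * h))
                jy.append((yh - yl) / (2 * h))
            rows += [jx, jy]
            resid += [x_obs - x, y_obs - y]
        # Normal equations (J^T J) delta = J^T r of the least-squares step
        normal = [[sum(r[i] * r[j] for r in rows) for j in range(6)] for i in range(6)]
        rhs = [sum(r[i] * e for r, e in zip(rows, resid)) for i in range(6)]
        pose = [p + d for p, d in zip(pose, solve(normal, rhs))]
    return pose

# Synthetic check: hypothetical control points (metres) and focal length (mm).
control = [(-50.0, -40.0, 0.0), (45.0, -35.0, 10.0), (40.0, 50.0, 5.0),
           (-45.0, 45.0, 20.0), (0.0, 0.0, 15.0), (20.0, -10.0, 2.0)]
true_pose = (3.0, -2.0, 100.0, 0.02, -0.03, 0.05)
f = 50.0
observed = [project(true_pose, p, f) for p in control]
estimated = resect(control, observed, f, pose0=(0.0, 0.0, 95.0, 0.0, 0.0, 0.0))
```

With noise-free synthetic observations, `estimated` should agree with `true_pose` to within numerical precision. A real system would need a reasonable initial pose and would typically weight the observations.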

[0089] (2) As the carrier moves, the stereo camera captures the images at the current moment, from which feature points are extracted and matched. Feature point matching has two parts: matching feature points between the left and right images at the current moment, and matching the left image at the current moment against the left image at the previous moment.
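The two matching passes described above can be sketched as follows. The source does not name a descriptor or a matching criterion, so the nearest-neighbour search with a ratio test, the function names `match` and `stereo_temporal_tracks`, and the toy two-dimensional descriptors are all assumptions chosen for illustration.

```python
import math

def match(desc_a, desc_b, ratio=0.8):
    """Nearest-neighbour matching with a ratio test (an assumed criterion).
    Returns {index in desc_a: index in desc_b} for unambiguous matches."""
    matches = {}
    for i, da in enumerate(desc_a):
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if not dists:
            continue
        # Accept only if the best candidate is clearly better than the runner-up.
        if len(dists) == 1 or dists[0][0] < ratio * dists[1][0]:
            matches[i] = dists[0][1]
    return matches

def stereo_temporal_tracks(left_now, right_now, left_prev, ratio=0.8):
    """Keep features of the current left image that are matched both in the
    current right image and in the previous left image."""
    stereo = match(left_now, right_now, ratio)    # left/right at the current moment
    temporal = match(left_now, left_prev, ratio)  # current left vs previous left
    common = sorted(stereo.keys() & temporal.keys())
    return [(i, stereo[i], temporal[i]) for i in common]

# Toy descriptors; the third current-left feature is ambiguous in the stereo pass.
left_now = [(0.0, 0.0), (1.0, 0.0), (0.5, 0.0)]
right_now = [(0.0, 0.05), (1.0, 0.02), (5.0, 5.0)]
left_prev = [(1.0, 0.1), (0.1, 0.0)]
tracks = stereo_temporal_tracks(left_now, right_now, left_prev)
```

Each tuple in `tracks` holds the feature's index in the current left image, the current right image, and the previous left image. Only features present in both passes link a stereo observation to the previous moment.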

[0090] (3) Based on the collinearity equations, the observation equation is established for all the feature points...
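For reference, the collinearity condition in its common textbook form is given below. The patent's own observation equation is truncated in this excerpt, so the notation here is an assumption and not the patent's: $(x, y)$ are the image coordinates of a feature point, $(x_0, y_0, f)$ the interior orientation elements, $(X, Y, Z)$ the object space coordinates of the point, $(X_S, Y_S, Z_S)$ the projection center, and $a_i, b_i, c_i$ the rotation matrix elements determined by the three angular exterior orientation elements.

```latex
\begin{aligned}
x - x_0 &= -f\,\frac{a_1 (X - X_S) + b_1 (Y - Y_S) + c_1 (Z - Z_S)}
                    {a_3 (X - X_S) + b_3 (Y - Y_S) + c_3 (Z - Z_S)},\\[4pt]
y - y_0 &= -f\,\frac{a_2 (X - X_S) + b_2 (Y - Y_S) + c_2 (Z - Z_S)}
                    {a_3 (X - X_S) + b_3 (Y - Y_S) + c_3 (Z - Z_S)}.
\end{aligned}
```

Each matched feature point contributes one such pair of equations per image in which it is observed.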
