Deep learning based video retrieval method

A deep-learning-based video retrieval technology, applied in the field of computer vision, that addresses problems such as the inaccurate description of high-dimensional feature differences. Its effects are improved matching accuracy, precise retrieval, and the avoidance of false detections and missed detections.

Embodiment Construction

[0029] The specific implementation of the present invention is further described below in conjunction with the accompanying drawings, but the implementation and protection of the present invention are not limited thereto.

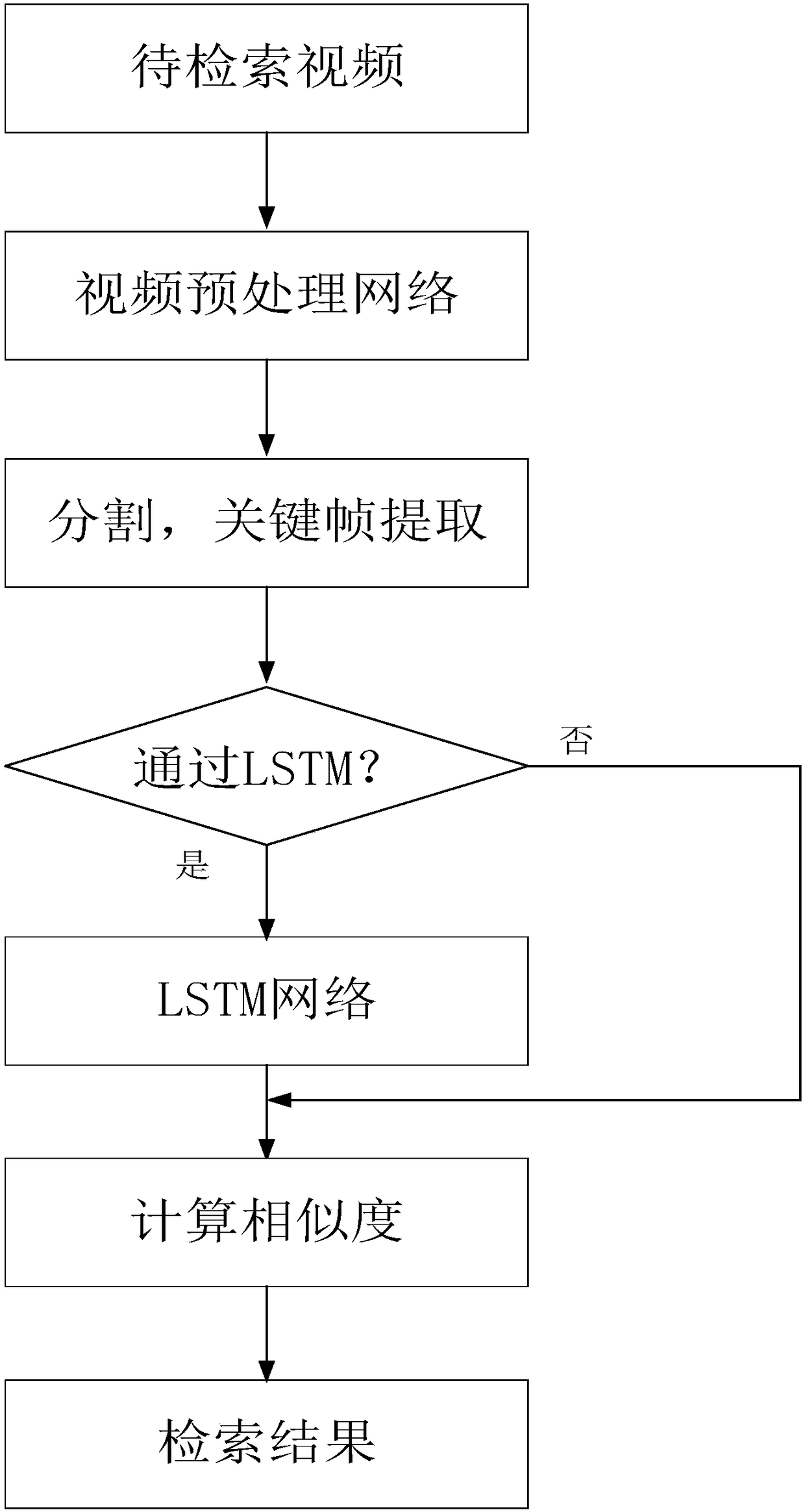

[0030] The embodiment of the present invention provides a video retrieval method based on deep learning, the steps of which are shown in Figure 1. The specific implementation steps of the method are as follows:

[0031] Network training part:

[0032] Step 1) Construct a video preprocessing network using the Inception Net V3 network structure. Inception Net, in its original form (GoogLeNet), is a 22-layer deep convolutional neural network. The last layer of the network gives the best classification performance, so the output of the last layer is selected as the input feature vector.
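The idea in Step 1, running a frame through a layered network and keeping the final layer's output as the feature vector, can be sketched as follows. This is only an illustration under stated assumptions: the tiny fully connected network, its layer sizes, and the names `build_toy_network` and `last_layer_output` are hypothetical stand-ins, not Inception Net V3 and not the patent's implementation.

```python
import random

def relu(v):
    """Element-wise ReLU activation."""
    return [max(0.0, x) for x in v]

def dense(v, weights, bias):
    """Fully connected layer: one weight row and one bias per output unit."""
    return [sum(w * x for w, x in zip(row, v)) + b
            for row, b in zip(weights, bias)]

def build_toy_network(sizes, seed=0):
    """Hypothetical stand-in for a deep CNN: a stack of small dense layers
    with fixed pseudo-random weights (assumption, for illustration only)."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                   for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers

def last_layer_output(network, frame):
    """Pass the frame through every layer and return the final layer's
    output, which is then used as the frame's feature vector."""
    v = frame
    for i, (weights, bias) in enumerate(network):
        v = dense(v, weights, bias)
        if i < len(network) - 1:  # ReLU on hidden layers only
            v = relu(v)
    return v

# A flattened 12-value "frame" and a 12 -> 8 -> 5 toy network.
network = build_toy_network([12, 8, 5])
frame = [i / 12 for i in range(12)]
feature = last_layer_output(network, frame)
```

In a real pipeline the toy network would be replaced by the trained Inception Net V3 model, and `frame` by a decoded, resized video frame; the selection of the last layer's output is the only part this sketch is meant to show.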

[0033] Step 2) Train the video preprocessing network. YouTube-8M is used as the training data set; it contains 8 million videos and a total of 4800 label categories. In order to e...
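As a rough illustration of training against the 4800 label categories mentioned in Step 2, the sketch below encodes a video's labels as a multi-hot target and scores predictions with a sigmoid binary cross-entropy loss. The source text is cut off before it describes the training objective, so the choice of loss is an assumption made here for illustration, and the label ids and function names are hypothetical. It relies only on the fact that a YouTube-8M video can carry more than one label.

```python
import math

NUM_CLASSES = 4800  # label category count stated in the text

def multi_hot(label_ids, num_classes=NUM_CLASSES):
    """Encode a video's label ids as a 0/1 target vector."""
    target = [0.0] * num_classes
    for i in label_ids:
        if not 0 <= i < num_classes:
            raise ValueError(f"label id {i} out of range")
        target[i] = 1.0
    return target

def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def binary_cross_entropy(logits, target, eps=1e-12):
    """Mean per-class binary cross-entropy (assumed loss, not from the source)."""
    total = 0.0
    for z, t in zip(logits, target):
        p = min(max(sigmoid(z), eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(target)

# Hypothetical video tagged with three of the 4800 categories.
target = multi_hot([3, 17, 4021])

# Logits that agree with the labels versus logits that carry no information.
confident_logits = [8.0 if t == 1.0 else -8.0 for t in target]
uninformed_logits = [0.0] * NUM_CLASSES

loss_good = binary_cross_entropy(confident_logits, target)
loss_flat = binary_cross_entropy(uninformed_logits, target)
```

Predictions that match the labels give a much smaller loss than uninformed ones, which is the signal a training loop would minimize under this assumed objective.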
