A multimodal video scene segmentation method based on sound and vision

A video scene segmentation technology, applied in speech analysis, character and pattern recognition, instruments, and related fields, that improves segmentation accuracy

Embodiment Construction

[0016] The details of the technical solution are described below with reference to the accompanying drawings. Note that the described embodiments are intended to aid understanding of the present invention and do not limit it in any way.

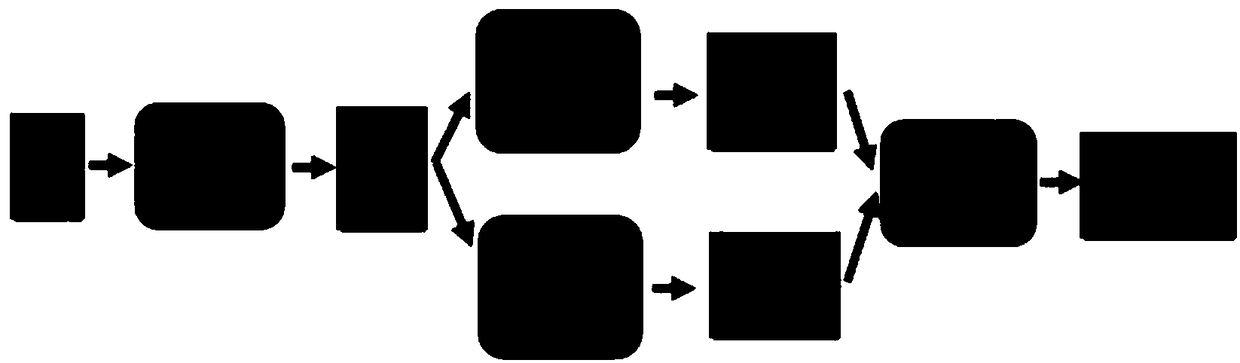

[0017] The implementation process of the present invention is shown in Figure 1:

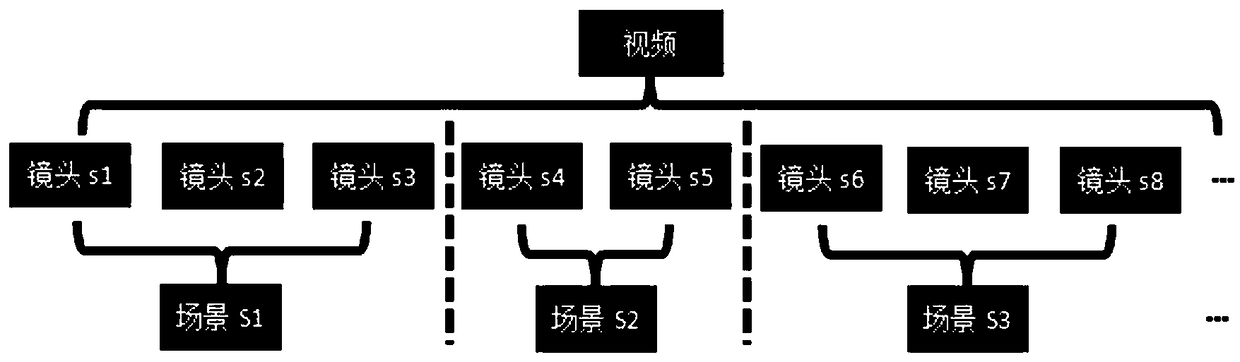

[0018] In this embodiment of the present invention, the temporal boundaries of shots are first determined using a combined feature built from tracking-flow continuity and the continuity of the global image color distribution, and the video is divided into segments composed of shots. Tracking-flow continuity refers to the fact that objects or regions within a single shot move continuously, whereas their motion changes abruptly at a shot boundary.

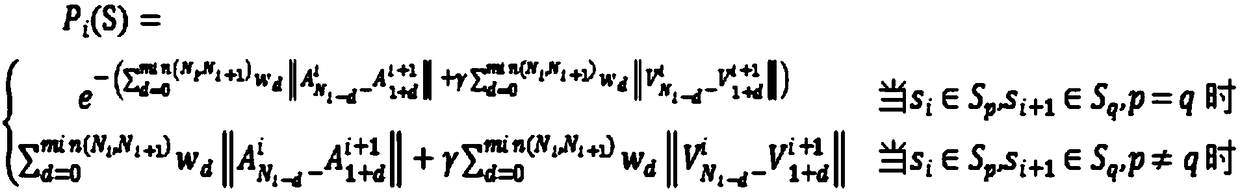
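The color-continuity half of the shot boundary test in [0018] can be illustrated with a minimal sketch. It is not the patented method: it uses only a global RGB histogram, omits the tracking-flow cue entirely, and every name and parameter in it (`color_histogram`, `detect_shot_boundaries`, `bins_per_channel`, the `threshold` of 0.5, the flattened-pixel frame representation) is a hypothetical choice made for illustration.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each in 0..255
Frame = Sequence[Pixel]        # assumption: a frame flattened to a sequence of pixels


def color_histogram(frame: Frame, bins_per_channel: int = 4) -> List[float]:
    """Normalized global RGB histogram of one frame."""
    step = 256 / bins_per_channel
    last = bins_per_channel - 1
    hist = [0.0] * (bins_per_channel ** 3)
    for r, g, b in frame:
        # Quantize each channel, clamping to the last bin for safety.
        rq = min(int(r / step), last)
        gq = min(int(g / step), last)
        bq = min(int(b / step), last)
        hist[(rq * bins_per_channel + gq) * bins_per_channel + bq] += 1.0
    total = float(len(frame)) or 1.0
    return [h / total for h in hist]


def histogram_distance(h1: Sequence[float], h2: Sequence[float]) -> float:
    """1 minus histogram intersection: 0 for identical, 1 for disjoint histograms."""
    return 1.0 - sum(min(a, b) for a, b in zip(h1, h2))


def detect_shot_boundaries(frames: Sequence[Frame], threshold: float = 0.5) -> List[int]:
    """Return each index i at which frame i is assumed to start a new shot."""
    hists = [color_histogram(f) for f in frames]
    return [
        i for i in range(1, len(hists))
        if histogram_distance(hists[i - 1], hists[i]) > threshold
    ]


def split_into_shots(num_frames: int, boundaries: Sequence[int]) -> List[Tuple[int, int]]:
    """Turn boundary indices into half-open (start, end) frame ranges."""
    starts = [0] + list(boundaries)
    ends = list(boundaries) + [num_frames]
    return [(s, e) for s, e in zip(starts, ends) if e > s]


if __name__ == "__main__":
    # Synthetic clip: three uniformly red frames followed by two blue ones.
    red = [(255, 0, 0)] * 16
    blue = [(0, 0, 255)] * 16
    clip = [red, red, red, blue, blue]
    cuts = detect_shot_boundaries(clip)
    print(cuts, split_into_shots(len(clip), cuts))
```

A fixed threshold on a single cue is fragile under flashes or fast camera motion, which is presumably why the embodiment combines color continuity with tracking-flow continuity rather than relying on either alone.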

[0019] In the embodiment of the present invention, the optical flow field between adjacent frames in the video is calculated to obtain the motion amount between...
