Video semantic scene segmentation and labeling method

A video semantic scene technology in the field of computer video processing. It addresses the lack of methods that segment and label videos by multiple semantic scenes, with the effect of improving user experience and enjoyment.

Examples

Embodiment 1

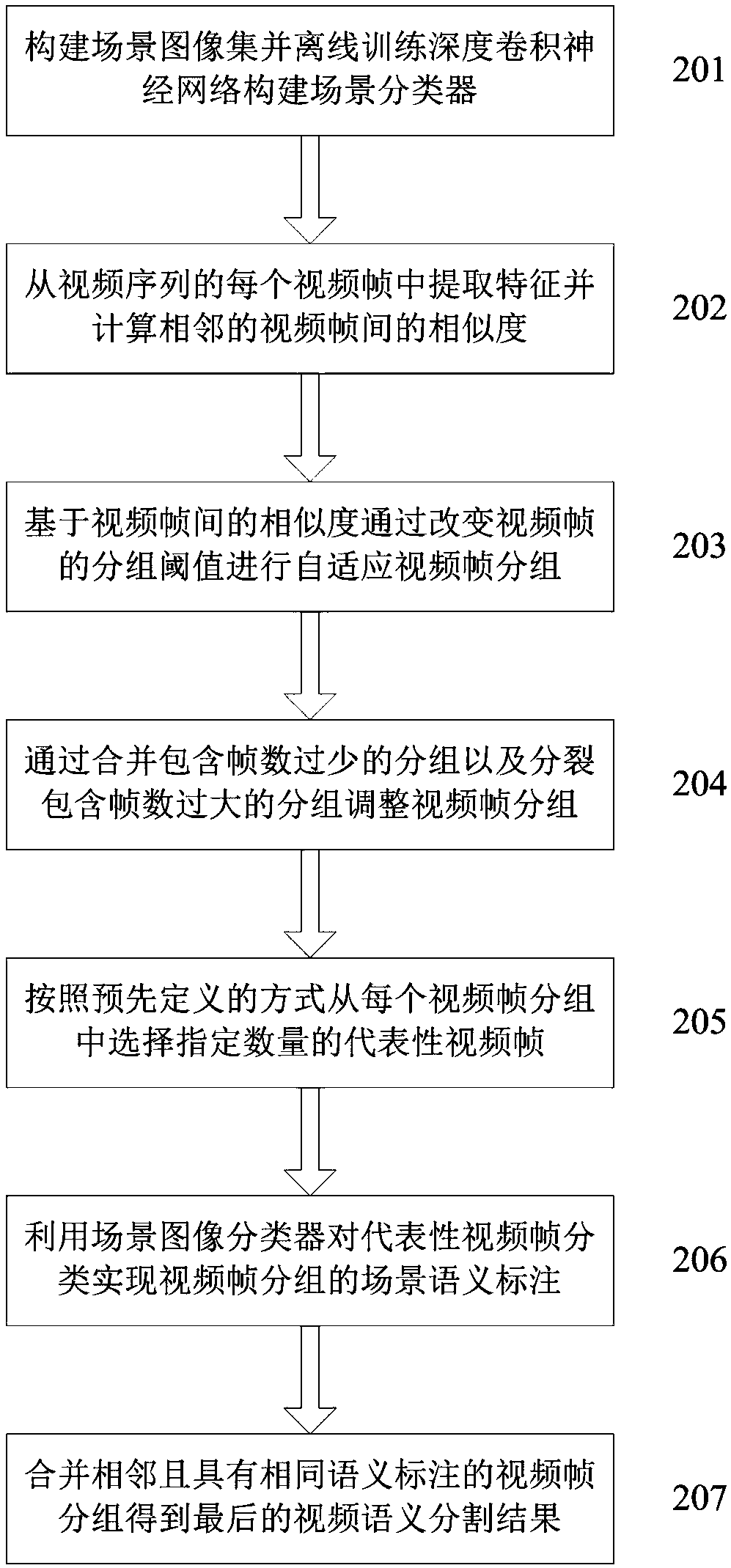

[0051] This embodiment provides a method for semantic scene segmentation and labeling of video sequences. The method is described in detail below with reference to Figure 1:

[0052] Step 201: Train a deep convolutional neural network on a set of labeled scene images to construct a scene classifier. The scene classifier predicts the probability that an input image belongs to each scene category;
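Step 201 only requires that the classifier output one probability per scene category. A common way to obtain this from a CNN is to apply a softmax to the final layer's raw scores (logits). This is an assumption here, not something the text above states. The minimal sketch below covers only that output stage. The category names and logit values are hypothetical, and the network itself is out of scope.

```python
import math
from typing import Dict, List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw final-layer scores into probabilities that sum to 1."""
    # Subtracting the max logit avoids overflow in exp() without changing the result.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def scene_probabilities(logits: Sequence[float], categories: Sequence[str]) -> Dict[str, float]:
    """Map each scene category name to its predicted probability."""
    if len(logits) != len(categories):
        raise ValueError("need exactly one logit per scene category")
    return dict(zip(categories, softmax(logits)))


def top_scene(probs: Dict[str, float]) -> Tuple[str, float]:
    """Return the most probable scene category and its probability."""
    return max(probs.items(), key=lambda kv: kv[1])


if __name__ == "__main__":
    # Hypothetical categories and logits, for illustration only.
    categories = ["beach", "kitchen", "office"]
    logits = [2.0, 0.5, -1.0]
    probs = scene_probabilities(logits, categories)
    print(top_scene(probs))
```

With these hypothetical inputs, `top_scene` selects `"beach"`, the category with the largest logit. Softmax preserves the ordering of the logits while making the outputs comparable as probabilities.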

[0053] In this embodiment, the labeled scene image set used to train the scene classifier can be taken from existing image datasets such as Places and SUN397. It can also be built by collecting images of the scenes of interest. The scene categories are the categories available for semantic scene annotation of the video;
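Paragraph [0053] allows the training set to combine an existing dataset with self-collected scene images. The sketch below merges several image folders into one labeled set and gives each scene category a single index. It assumes a hypothetical layout with one subfolder per scene category (for example `root/beach/*.jpg`). Real datasets such as Places or SUN397 ship with their own label files, so treat the layout and the accepted file suffixes as assumptions.

```python
from pathlib import Path
from typing import Dict, Iterable, List, Tuple

# Hypothetical set of accepted image file extensions.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def build_labeled_set(roots: Iterable[Path]) -> Tuple[Dict[str, int], List[Tuple[Path, int]]]:
    """Merge one or more image folders into a single labeled training set.

    Assumes each root holds one subfolder per scene category (hypothetical
    layout). Folders with the same category name in different roots share
    one label index.
    """
    roots = list(roots)
    # Take the union of category names so every category gets one stable index.
    names = sorted({d.name for r in roots for d in r.iterdir() if d.is_dir()})
    class_to_idx = {name: i for i, name in enumerate(names)}

    samples: List[Tuple[Path, int]] = []
    for root in roots:
        for cat_dir in sorted(d for d in root.iterdir() if d.is_dir()):
            label = class_to_idx[cat_dir.name]
            for img in sorted(cat_dir.iterdir()):
                # Skip non-image files such as notes or metadata.
                if img.is_file() and img.suffix.lower() in IMAGE_SUFFIXES:
                    samples.append((img, label))
    return class_to_idx, samples
```

A call such as `build_labeled_set([Path("places_subset"), Path("my_scenes")])` would return the category-to-index mapping and the `(image_path, label)` pairs for training. The folder names here are illustrative only.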

[0054] The deep convolutional neural network can use a classic network structure such as VGG-Net or ResNet. The output of the last layer of the network is the dist...
