Height information-based unmanned vehicle lane scene segmentation method

A scene segmentation technology based on height information, classified under instruments, character and pattern recognition, and computer components. It addresses problems such as excessive noise and unclear boundaries between road and non-road areas, and reduces noise in the segmentation result.

Embodiment

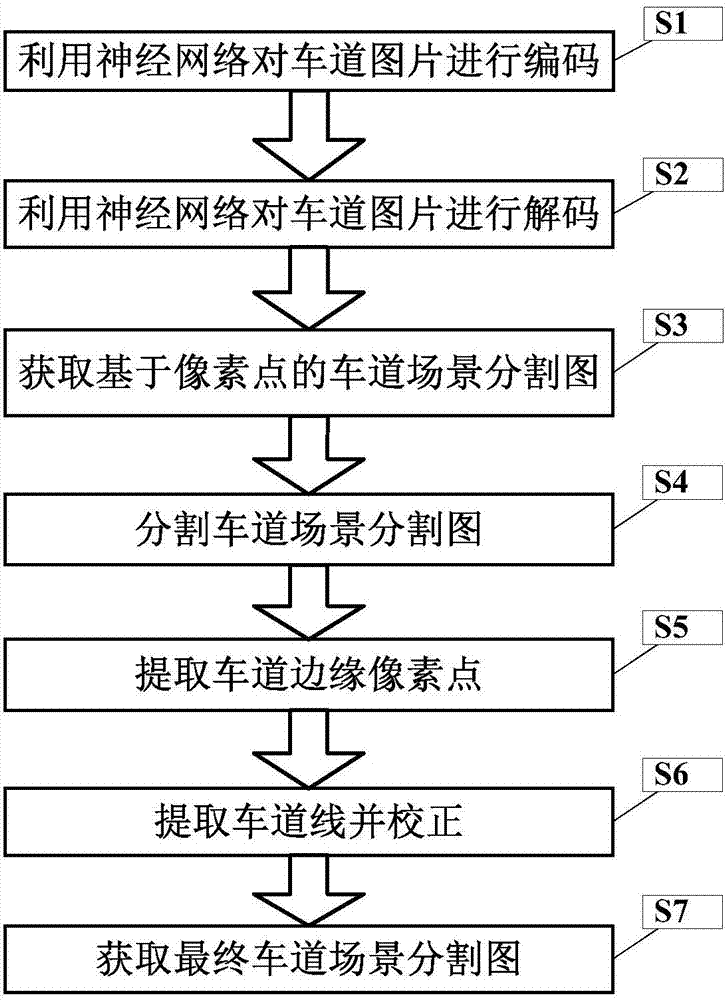

[0025] Figure 1 is a flowchart of the height-information-based scene segmentation method for unmanned vehicle lanes according to the present invention.

[0026] In this embodiment, as shown in Figure 1, the height-information-based unmanned vehicle lane scene segmentation method of the present invention comprises the following steps:

[0027] S1. Encode the lane image with a neural network

[0028] In this embodiment, lane images are collected by the vehicle-mounted camera and input into the neural network. The convolution and pooling operations in the encoding part of the network extract features from the input lane images, producing a sparse feature map.
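The text does not state the pooling type, window size, or stride used in the encoder. As a minimal sketch of how a pooling step shrinks a feature map, the example below assumes 2x2 max pooling with stride 2, a common choice in encoder networks; the function name `max_pool_2x2` is hypothetical and not taken from the patent.

```python
from typing import List

Matrix = List[List[float]]


def max_pool_2x2(feature_map: Matrix) -> Matrix:
    """Downsample a 2-D feature map by keeping the maximum of each
    non-overlapping 2x2 window (stride 2). A trailing odd row or column
    is dropped, as in 'valid' pooling. Window size and pooling type are
    assumptions for illustration only."""
    rows = len(feature_map) // 2
    cols = len(feature_map[0]) // 2 if feature_map else 0
    pooled: Matrix = []
    for i in range(rows):
        row = []
        for j in range(cols):
            # The four entries of the 2x2 window whose top-left corner is (2i, 2j)
            window = (
                feature_map[2 * i][2 * j],
                feature_map[2 * i][2 * j + 1],
                feature_map[2 * i + 1][2 * j],
                feature_map[2 * i + 1][2 * j + 1],
            )
            row.append(max(window))
        pooled.append(row)
    return pooled


fm = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [6, 2, 3, 1],
]
print(max_pool_2x2(fm))  # [[4, 5], [6, 7]]
```

Each pooling stage of this kind halves the height and width of the feature map, which is how repeated convolution and pooling in the encoder yield a compact feature representation of the lane image.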

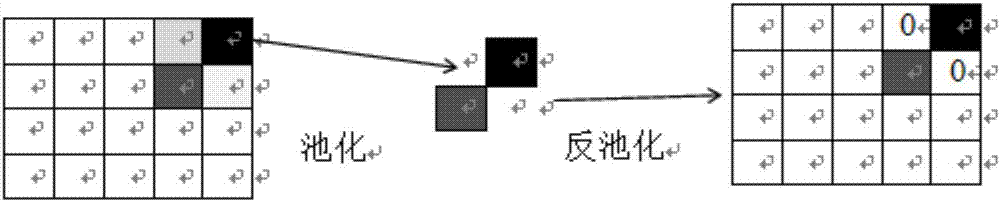

[0029] In this embodiment, each convolutional layer operates as follows: 1) the template matrix is shifted across the image pixel matrix and multiplied with it, that is, the corresponding positions of the m...
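The shift-and-multiply operation described above matches the sliding-window computation of a standard convolutional layer. Because the paragraph is truncated, the sketch below does not reproduce the patent's remaining steps. It assumes a single-channel image, stride 1, no padding, and that the products at each position are summed, as in a conventional convolutional layer. The name `conv2d_valid` is hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the template matrix `kernel` over `image` one pixel at a time
    (stride 1, no padding). At each position, multiply the overlapping
    entries element-wise and sum the products. As in most CNN frameworks,
    the kernel is not flipped (i.e. this is cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out: Matrix = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            # Multiply-accumulate over the window anchored at (i, j)
            for u in range(kh):
                for v in range(kw):
                    acc += image[i + u][j + v] * kernel[u][v]
            row.append(acc)
        out.append(row)
    return out


img = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
k = [
    [1, 0],
    [0, -1],
]
print(conv2d_valid(img, k))  # [[-4.0, -4.0], [-4.0, -4.0]]
```

With an H x W image and a kh x kw template, this unpadded, stride-1 form produces an (H - kh + 1) x (W - kw + 1) output. Real encoders often pad the input to preserve spatial size and stack many such kernels per layer.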
