2394 results for "Binocular single vision" patented technology

In biology, binocular vision is a type of vision in which an animal having two eyes is able to perceive a single three-dimensional image of its surroundings.

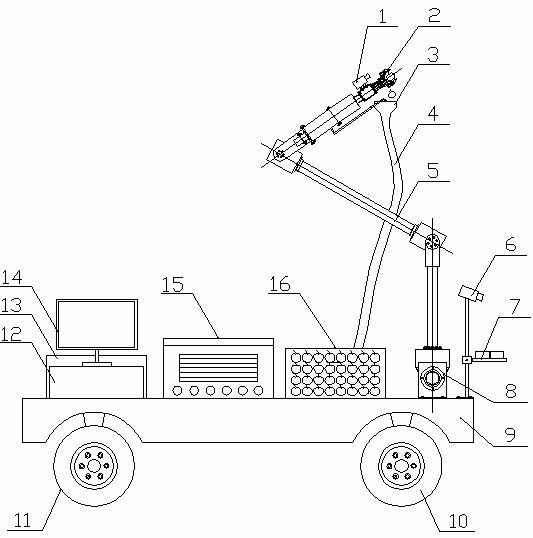

Wheel type mobile fruit picking robot and fruit picking method

Status: Inactive | Publication: CN102124866A | Keywords: Reduce energy consumption, Shorten speed, Programme-controlled manipulator, Picking devices, Ultrasonic sensor, Data acquisition

Owner: NANJING AGRICULTURAL UNIVERSITY

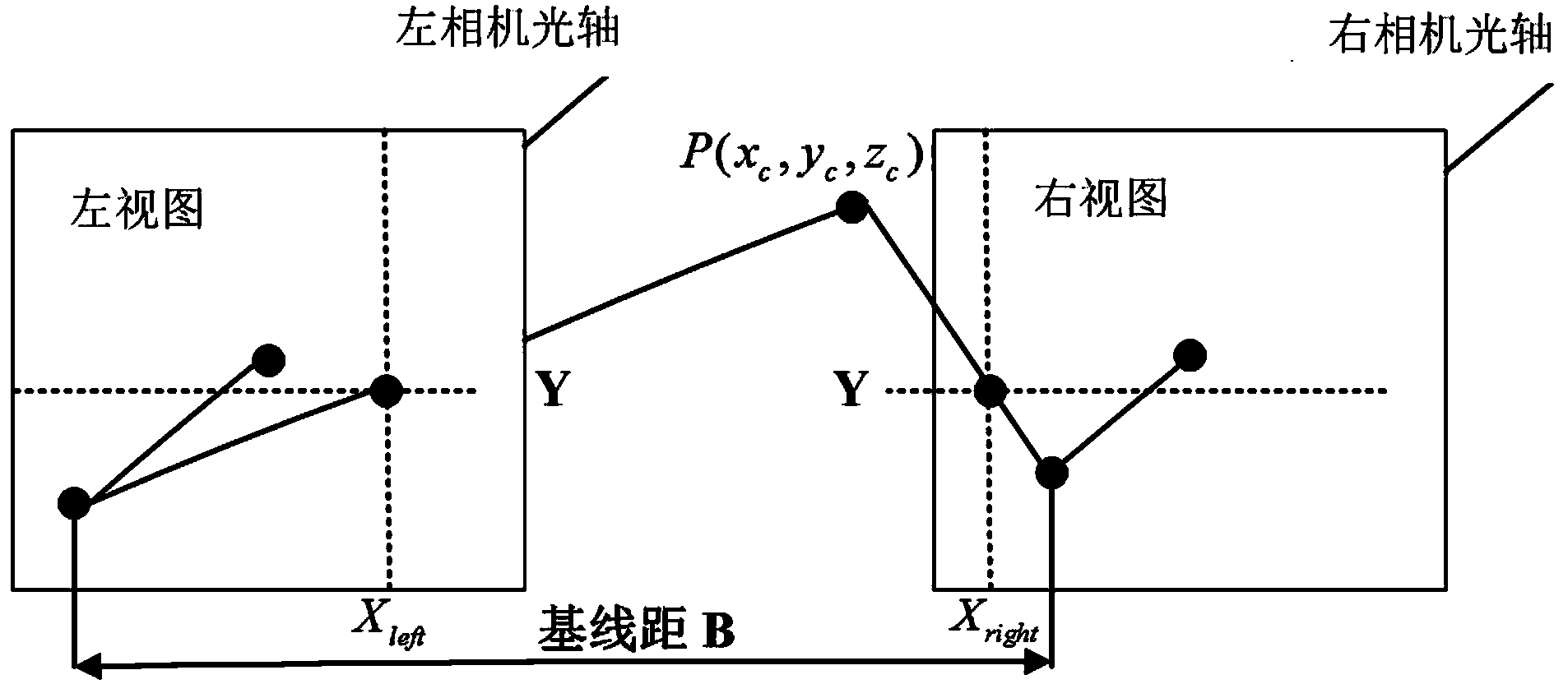

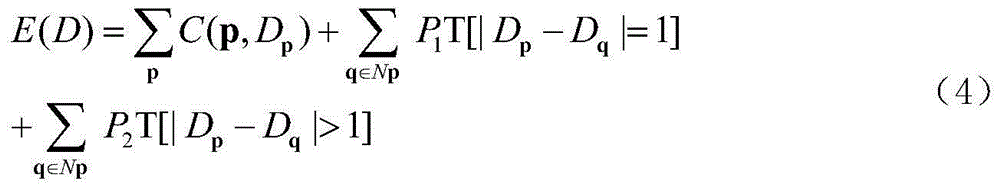

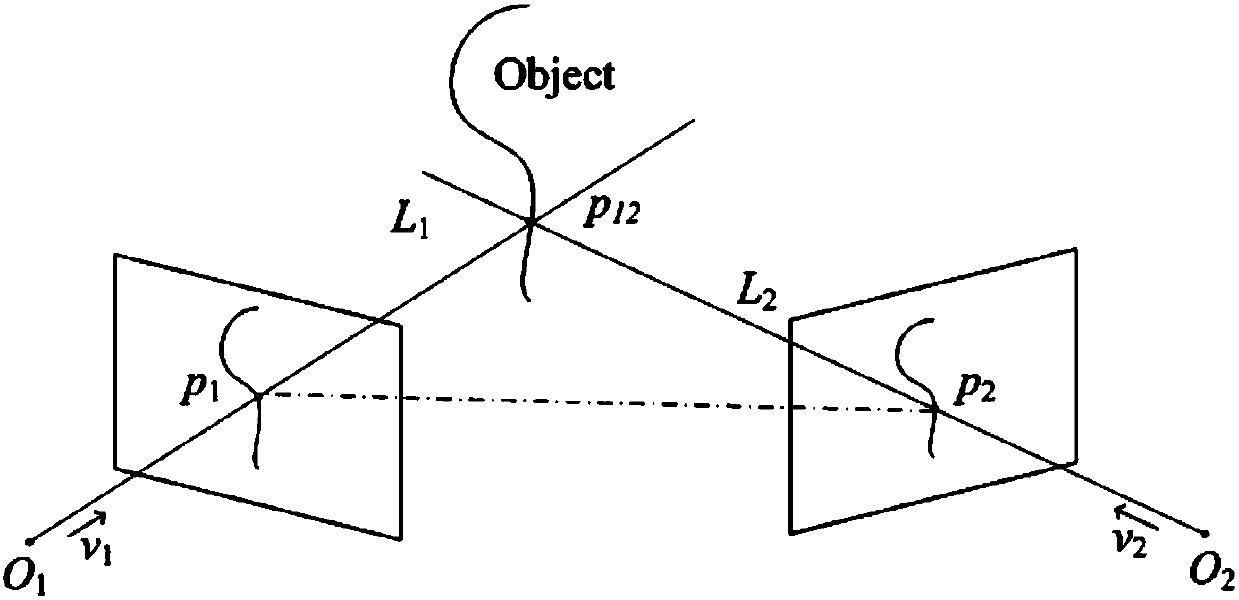

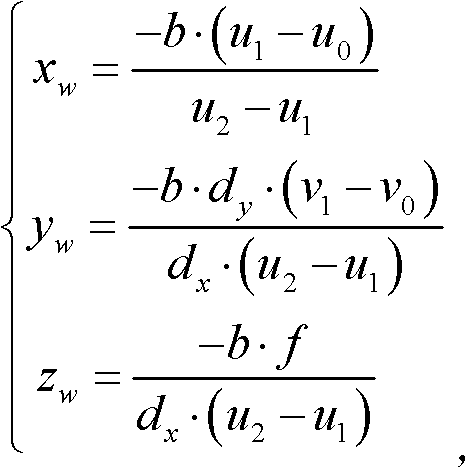

Parallax optimization algorithm-based binocular stereo vision automatic measurement method

Status: Inactive | Publication: CN103868460A | Keywords: Accurate and automatic acquisition, Complete 3D point cloud information, Image analysis, Using optical means, Binocular stereo, Non targeted

The invention discloses a binocular stereo vision automatic measurement method based on a parallax (disparity) optimization algorithm. The method comprises the following steps: (1) obtaining a rectified binocular view; (2) performing stereo matching with the left view as the base image to obtain a preliminary disparity map; (3) in the rectified left view, keeping the target object area in color as the master map and setting all non-target areas to black; (4) obtaining a complete disparity map of the target object area; (5) obtaining a three-dimensional point cloud from the complete disparity map using a projection model; (6) reprojecting the coordinates of the point cloud to compose a coordinate-related pixel map; and (7) automatically measuring the length and width of the target object with a morphological method. The method simplifies the binocular measurement process. It also reduces the influence of specular reflection, foreshortening, perspective distortion, low texture and repeated texture on smooth surfaces. It achieves automatic, intelligent measurement, widens the application range of binocular measurement, and provides technical support for subsequent robot binocular vision.

Owner: GUILIN UNIV OF ELECTRONIC TECH
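
Steps 3 to 5 and step 7 can be illustrated with a short sketch. The abstract does not specify its projection model, so the code assumes a rectified pinhole stereo pair. In that model depth is Z = f·B/d, where f is the focal length in pixels, B is the baseline and d is the disparity. Non-target pixels (the blacked-out area of step 3) are skipped. The axis-aligned extent is only a crude stand-in for the morphological length and width measurement of step 7. All function names are hypothetical.

```python
from typing import List, Optional, Tuple

Point3D = Tuple[float, float, float]


def reproject_masked_disparity(
    disparity: List[List[float]],
    mask: List[List[bool]],
    focal_px: float,
    baseline_m: float,
    cx: float,
    cy: float,
) -> List[Point3D]:
    """Back-project target-area disparities into camera-frame 3D points.

    Assumes a rectified pinhole stereo pair (Z = f * B / d). Pixels outside
    the target mask, and pixels with no valid match (d <= 0), are skipped.
    """
    points: List[Point3D] = []
    for v, (d_row, m_row) in enumerate(zip(disparity, mask)):
        for u, (d, keep) in enumerate(zip(d_row, m_row)):
            if not keep or d <= 0:
                continue
            z = focal_px * baseline_m / d  # depth from disparity
            x = (u - cx) * z / focal_px    # column -> lateral offset
            y = (v - cy) * z / focal_px    # row -> vertical offset
            points.append((x, y, z))
    return points


def extent_xy(points: List[Point3D]) -> Optional[Tuple[float, float]]:
    """Axis-aligned extent of the cloud along X and Y (same unit as baseline)."""
    if not points:
        return None
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return max(xs) - min(xs), max(ys) - min(ys)


# Usage: a 2x2 target patch at 1 m depth with one pixel masked out.
cloud = reproject_masked_disparity(
    [[10.0, 10.0], [10.0, 10.0]],
    [[True, True], [True, False]],
    focal_px=100.0, baseline_m=0.1, cx=0.0, cy=0.0,
)
print(len(cloud), extent_xy(cloud))
```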

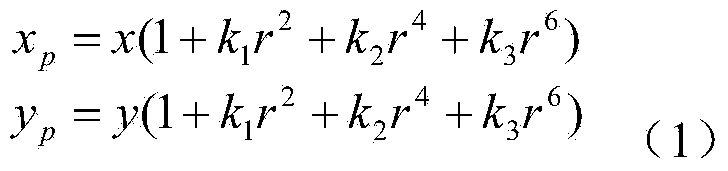

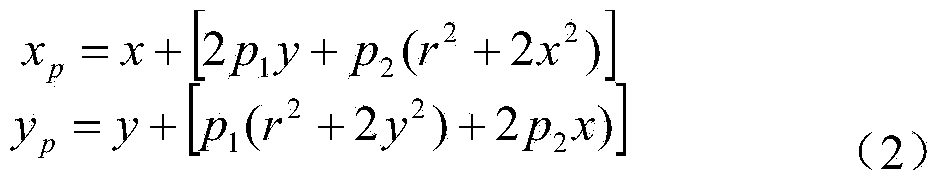

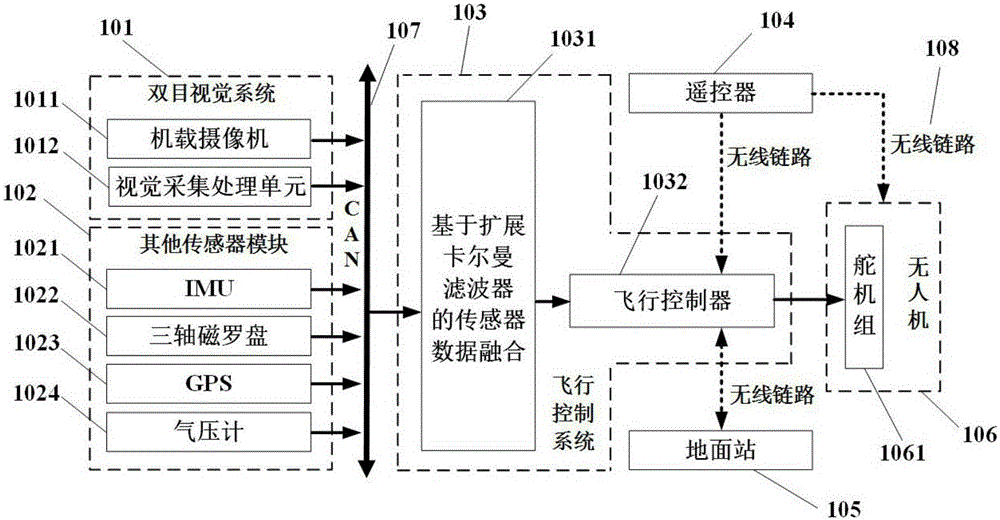

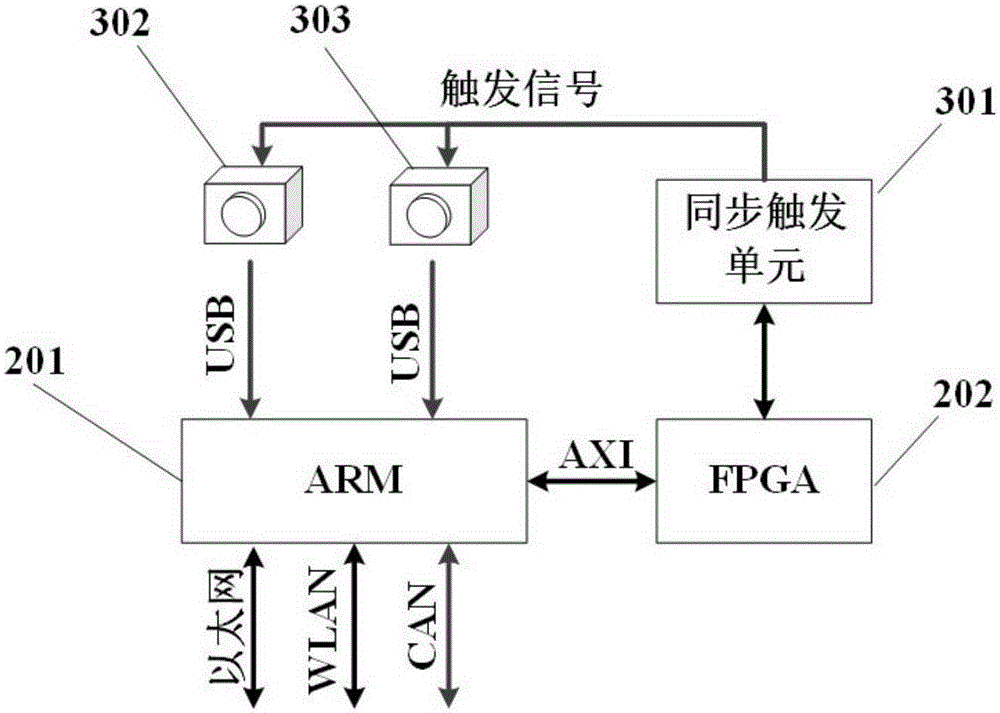

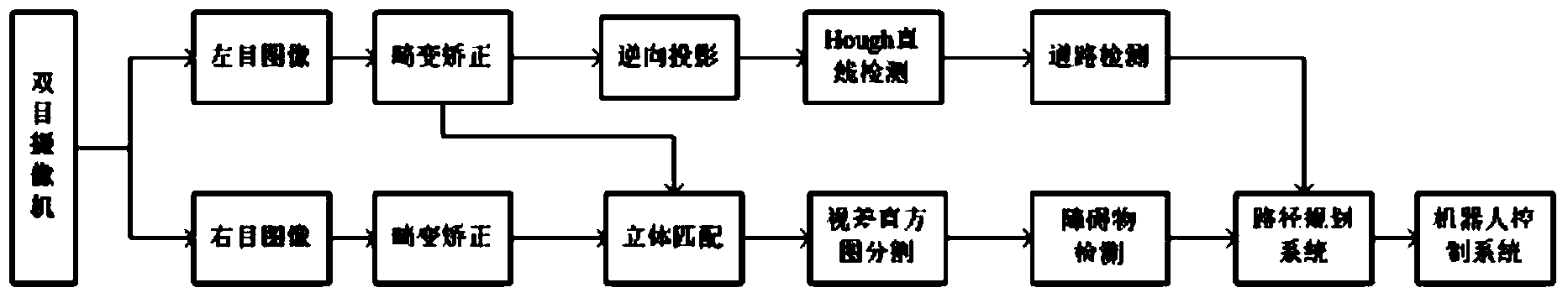

Unmanned aerial vehicle autonomous obstacle detection system and method based on binocular vision

Status: Inactive | Publication: CN105222760A | Keywords: Realize the function of effective obstacle avoidance, Realize the function of obstacle avoidance, Transmission systems, Picture taking arrangements, Uncrewed vehicle, Obstacle avoidance

The invention relates to an unmanned aerial vehicle (UAV) autonomous obstacle detection system and method based on binocular vision. The system comprises a binocular vision system, other sensor modules and a flight control system, all mounted on the UAV. The method works as follows:

- The binocular vision system acquires visual information about the UAV's flight environment and processes it to obtain obstacle information.
- The other sensor modules acquire the UAV's state information.
- The flight control system receives the obstacle information and the state information, plans a flight path and sends flight control instructions to the UAV.
- The UAV flies around obstacles according to those instructions.

Visual information is fused with information from the other sensors to perceive the flight environment, and flight path control and path planning are used to avoid obstacles. In this way the system and method address the problem of vision-based obstacle avoidance for UAVs and make obstacle avoidance possible with an onboard camera.

Owner: 一飞智控(天津)科技有限公司 (Yifei Zhikong (Tianjin) Technology Co., Ltd.)

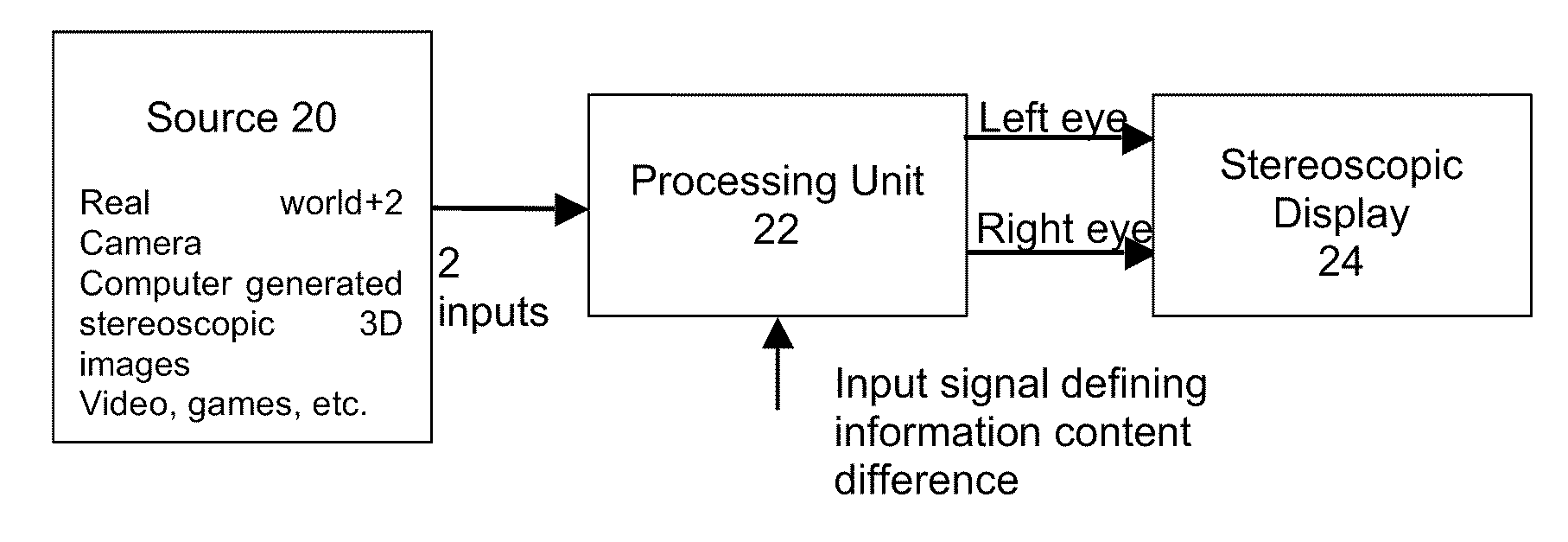

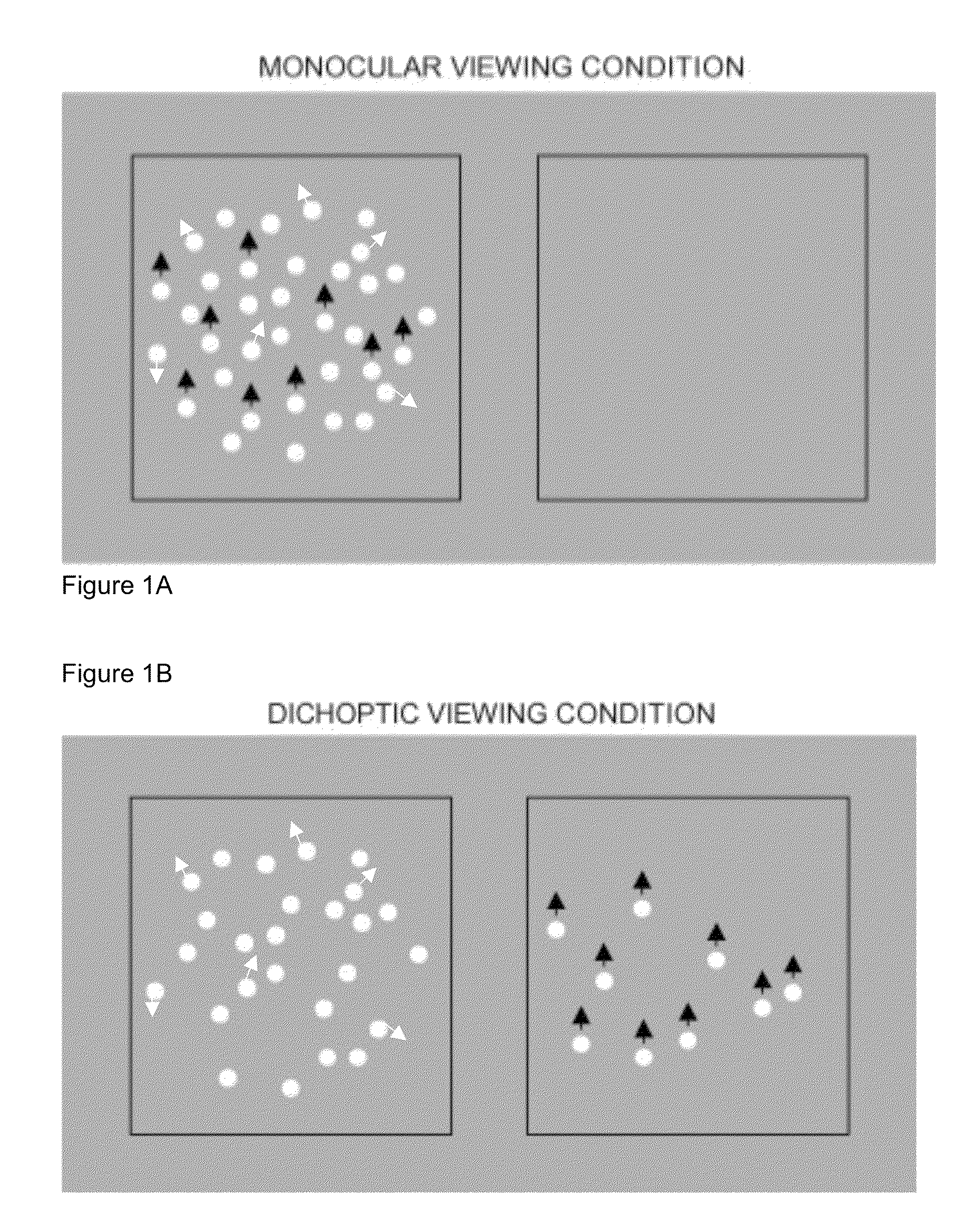

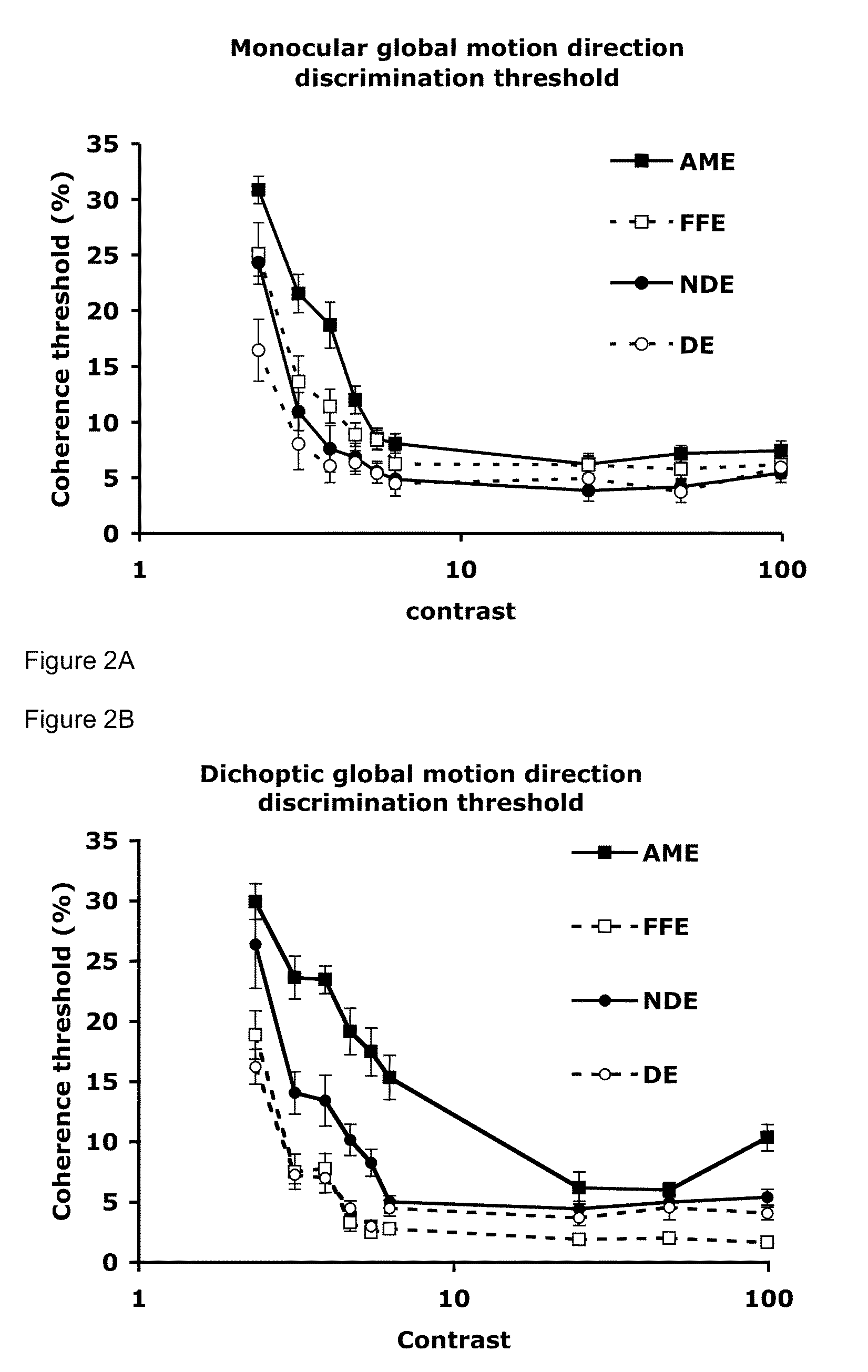

Binocular vision assessment and/or therapy

Status: Active | Publication: US20100283969A1 | Keywords: Big contrast, Increase contrast, Eye exercisers, Eye diagnostics, Therapeutic effect, Bilateral eyes

This invention is a game platform for the assessment and/or treatment of disorders of binocular vision, such as amblyopia. The game content is designed to maximize the potential therapeutic effect. It draws on advanced research in ophthalmology and on advanced display technology that renders images independently to each eye. In particular, the game content engages the two eyes at different levels of difficulty, forcing the amblyopic eye to work harder to regain its performance in the visual system. The invention also provides a mobile device for the assessment and/or treatment of binocular vision. The device can interact with an eye care specialist and includes mechanisms for ensuring that it is used properly.

Owner: MCGILL UNIV

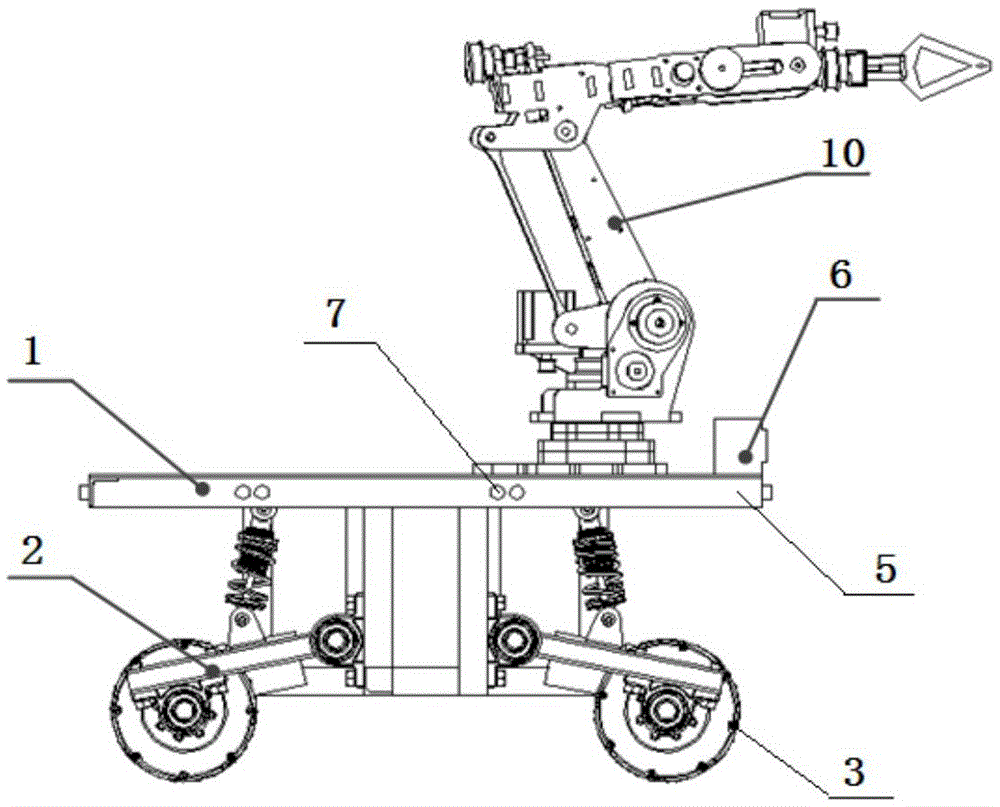

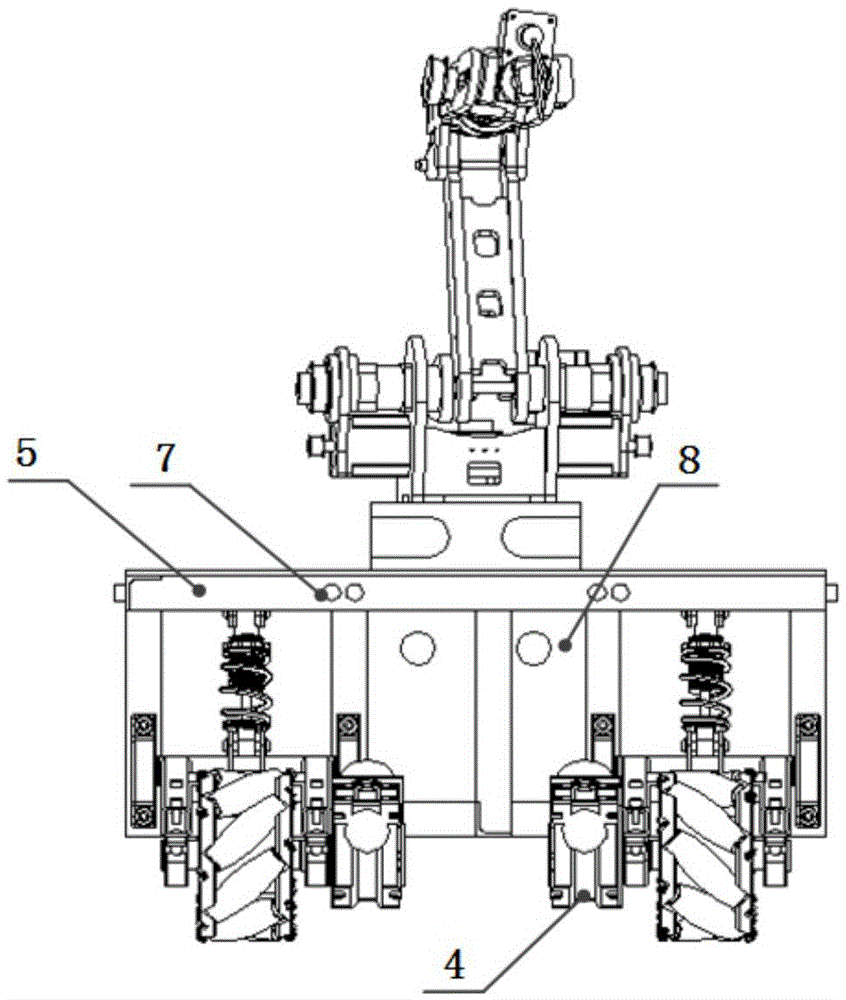

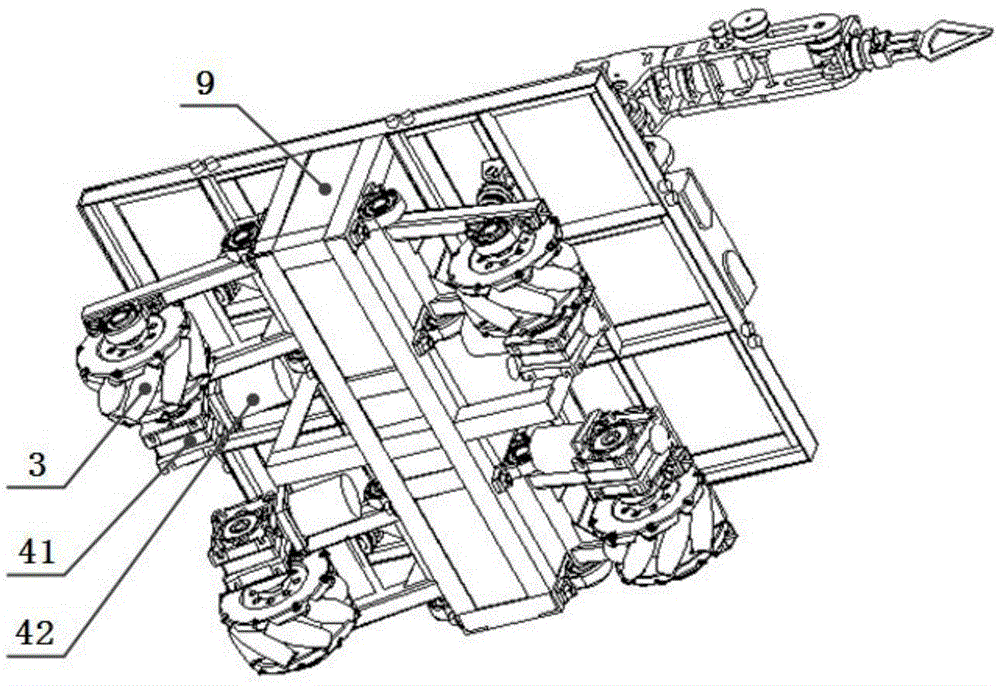

Omnidirectional moving transfer robot with Mecanum wheels

Status: Inactive | Publication: CN105479433A | Keywords: Improve motor flexibility, High degree of intelligence, Manipulator, Vehicle frame, Drive wheel

The invention discloses an omnidirectional mobile transfer robot with Mecanum wheels. The robot comprises a six-degree-of-freedom mechanical arm, an omnidirectional mobile base, a binocular vision device, a master control box and a remote monitoring device. The base consists of a frame with several Mecanum wheels mounted beneath it, and each wheel is a drive wheel. Distance-measuring sensors are arranged around the periphery of the frame, and each sensor is fixed to the frame through its own independent suspension module.

Matching the rotational speed and direction of every wheel lets the platform move in any direction within the plane, which markedly improves the maneuverability of the whole transfer device. The structure also keeps the Mecanum wheels in full contact with the ground, which improves operational stability and control precision. The binocular vision device guides the robot as it moves and carries out object handling and transport tasks. The robot completes the whole process independently, giving it a higher degree of intelligence.

Owner: JIANGSU UNIV OF SCI & TECH
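
The omnidirectional motion comes from matching the four wheel speeds. The following is a minimal inverse-kinematics sketch. It assumes a rectangular four-wheel layout with rollers in the common X configuration. The sign convention, the parameter names and the function name are assumptions and are not taken from the patent.

```python
from typing import Tuple


def mecanum_wheel_speeds(
    vx: float, vy: float, wz: float,
    wheel_radius: float, half_length: float, half_width: float,
) -> Tuple[float, float, float, float]:
    """Inverse kinematics of a four-wheel Mecanum base (X-configuration rollers).

    vx: forward speed (m/s), vy: leftward speed (m/s), wz: yaw rate (rad/s, CCW).
    half_length / half_width: distances from the base centre to the wheel axles.
    Returns wheel angular speeds (rad/s) as (front_left, front_right, rear_left, rear_right).
    """
    k = half_length + half_width  # effective lever arm for rotation
    fl = (vx - vy - k * wz) / wheel_radius
    fr = (vx + vy + k * wz) / wheel_radius
    rl = (vx + vy - k * wz) / wheel_radius
    rr = (vx - vy + k * wz) / wheel_radius
    return fl, fr, rl, rr


# Usage: pure sideways motion to the left; diagonal wheel pairs spin in opposite directions.
print(mecanum_wheel_speeds(0.0, 1.0, 0.0, 0.5, 0.25, 0.25))
```

Any combination of vx, vy and wz maps to a unique set of wheel speeds. This is what allows the base to translate and rotate simultaneously.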

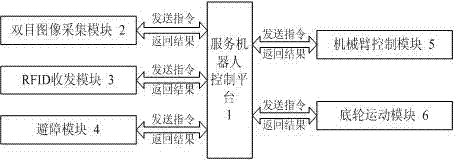

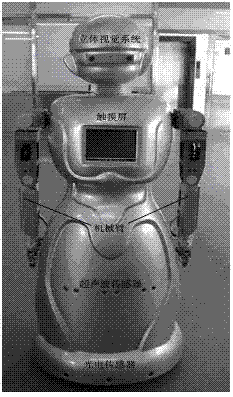

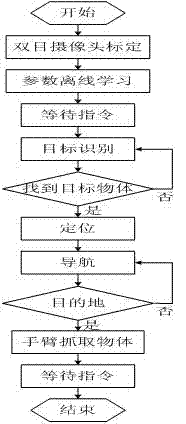

Binocular vision-based robot target identifying and gripping system and method

Status: Inactive | Publication: CN102902271A | Keywords: Intelligent service mode, Humanized service, Manipulator, Position/course control in two dimensions, Engineering, Obstacle avoidance

The invention discloses a binocular vision-based robot target identification and gripping system and method. The system comprises a binocular image acquisition module, an RFID (Radio Frequency Identification) transceiver module, a chassis movement module, an obstacle avoidance module and a mechanical arm control module. The method comprises the following steps:

1. calibrating the binocular camera;
2. establishing and converting coordinate systems;
3. identifying and locating the target;
4. navigating autonomously and avoiding obstacles;
5. controlling the mechanical arms to grip the target object.

The invention lets the robot interact intelligently with its environment and enhances its gripping capability. Besides identifying and locating the target object, the system builds a navigation database. Once the target object has been positioned, the robot can therefore travel automatically to the area where it is located. The system controls human-like mechanical arms that grip along a specific anthropomorphic path, which makes the robot's service mode more intelligent and human-friendly.

Owner: SHANGHAI UNIV
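
Step 2 of the method, establishing and converting coordinate systems, typically amounts to chaining rigid transforms. This lets a target located in the camera frame be expressed in the arm's base frame. The sketch below assumes that the rotation and translation between frames are already known from calibration. All helper names are hypothetical.

```python
from typing import List, Sequence, Tuple

Matrix4 = List[List[float]]


def make_transform(rotation: Sequence[Sequence[float]], translation: Sequence[float]) -> Matrix4:
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    return [
        [rotation[0][0], rotation[0][1], rotation[0][2], translation[0]],
        [rotation[1][0], rotation[1][1], rotation[1][2], translation[1]],
        [rotation[2][0], rotation[2][1], rotation[2][2], translation[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]


def compose(a: Matrix4, b: Matrix4) -> Matrix4:
    """Matrix product a @ b: the resulting transform applies b first, then a."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform_point(t: Matrix4, p: Sequence[float]) -> Tuple[float, float, float]:
    """Map a 3D point through a homogeneous transform."""
    x, y, z = p
    return (
        t[0][0] * x + t[0][1] * y + t[0][2] * z + t[0][3],
        t[1][0] * x + t[1][1] * y + t[1][2] * z + t[1][3],
        t[2][0] * x + t[2][1] * y + t[2][2] * z + t[2][3],
    )


# Usage: camera mounted 0.5 m above and 0.1 m ahead of the arm base, axes aligned.
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
T_base_cam = make_transform(I3, [0.1, 0.0, 0.5])
print(transform_point(T_base_cam, (0.0, 0.0, 1.0)))
```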

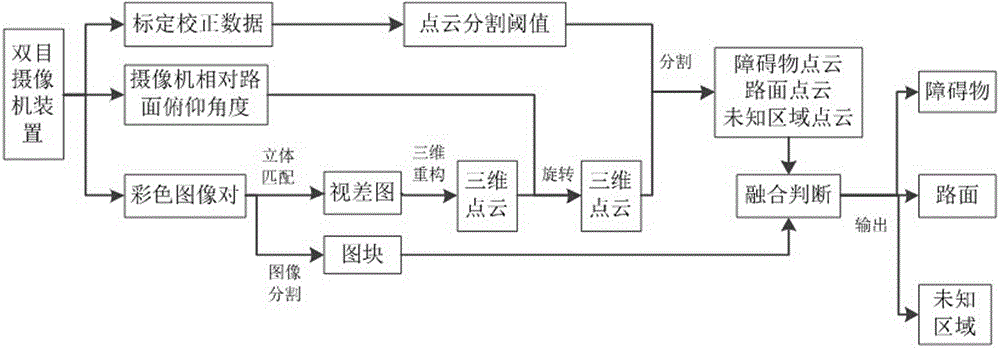

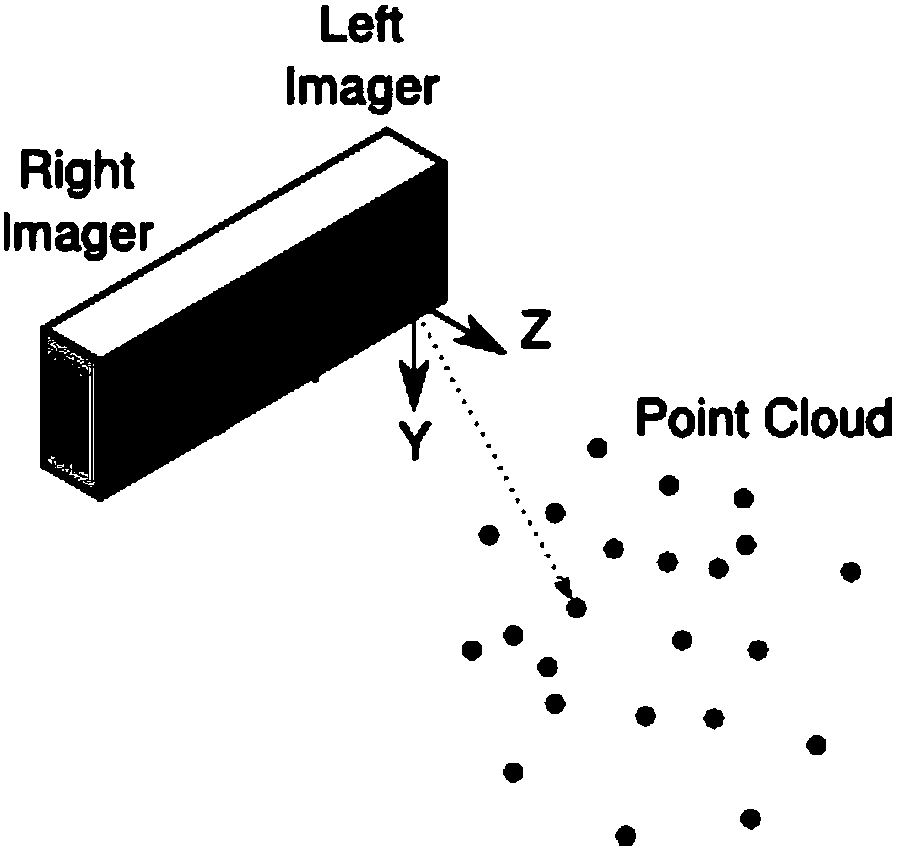

Binocular vision obstacle detection method based on three-dimensional point cloud segmentation

Status: Inactive | Publication: CN103955920A | Keywords: Improve reliability, Improve practicality, Image analysis, 3D modelling, Reference map, Camera image

The invention provides a binocular vision obstacle detection method based on three-dimensional point cloud segmentation. The method comprises the following steps:

- synchronously capturing images from two cameras of the same specification, calibrating and rectifying the binocular camera, and calculating a three-dimensional point cloud segmentation threshold;
- obtaining a three-dimensional point cloud with a stereo matching algorithm and three-dimensional reconstruction, and segmenting the reference image into image blocks;
- automatically detecting the road surface height in the point cloud, then applying the segmentation threshold to divide the cloud into a road surface point cloud, obstacle point clouds at different positions and unknown-region point clouds;
- combining the segmented point clouds with the segmented image blocks to verify the obstacles and the road surface, and determining the position ranges of the obstacles, the road surface and the unknown regions.

The method can still detect the camera height and the road surface height in complex environments, and it estimates the three-dimensional segmentation threshold automatically. It combines the point cloud segmentation with color image segmentation to integrate color information. The result is highly robust obstacle detection with higher reliability and practicality.

Owner: GUILIN UNIV OF ELECTRONIC TECH +1
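
The height-based split into road, obstacle and unknown point clouds can be sketched as follows. This illustrates the idea under stated assumptions and is not the patented procedure:

- z points up;
- road points are the majority, so the median height approximates the road surface;
- the fixed tolerance `tol` stands in for the automatically estimated segmentation threshold.

Points below the road surface are labeled unknown purely as an illustrative choice. The function name is hypothetical.

```python
from statistics import median
from typing import Dict, List, Tuple

Point3D = Tuple[float, float, float]  # (x, y, z), z pointing up (assumed)


def segment_by_height(
    points: List[Point3D], tol: float
) -> Tuple[float, Dict[str, List[Point3D]]]:
    """Estimate the road height and split points into road / obstacle / unknown.

    Assumes road points dominate, so the median z approximates the road
    surface. Points within +/- tol of it are road, higher points are
    obstacles, and points below it are left as unknown.
    """
    if not points:
        raise ValueError("empty point cloud")
    road_z = median([p[2] for p in points])
    classes: Dict[str, List[Point3D]] = {"road": [], "obstacle": [], "unknown": []}
    for p in points:
        dz = p[2] - road_z
        if abs(dz) <= tol:
            classes["road"].append(p)
        elif dz > tol:
            classes["obstacle"].append(p)
        else:
            classes["unknown"].append(p)
    return road_z, classes
```

A median is robust to a minority of obstacle points. A histogram-mode estimate would be a reasonable alternative when obstacles cover much of the view.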

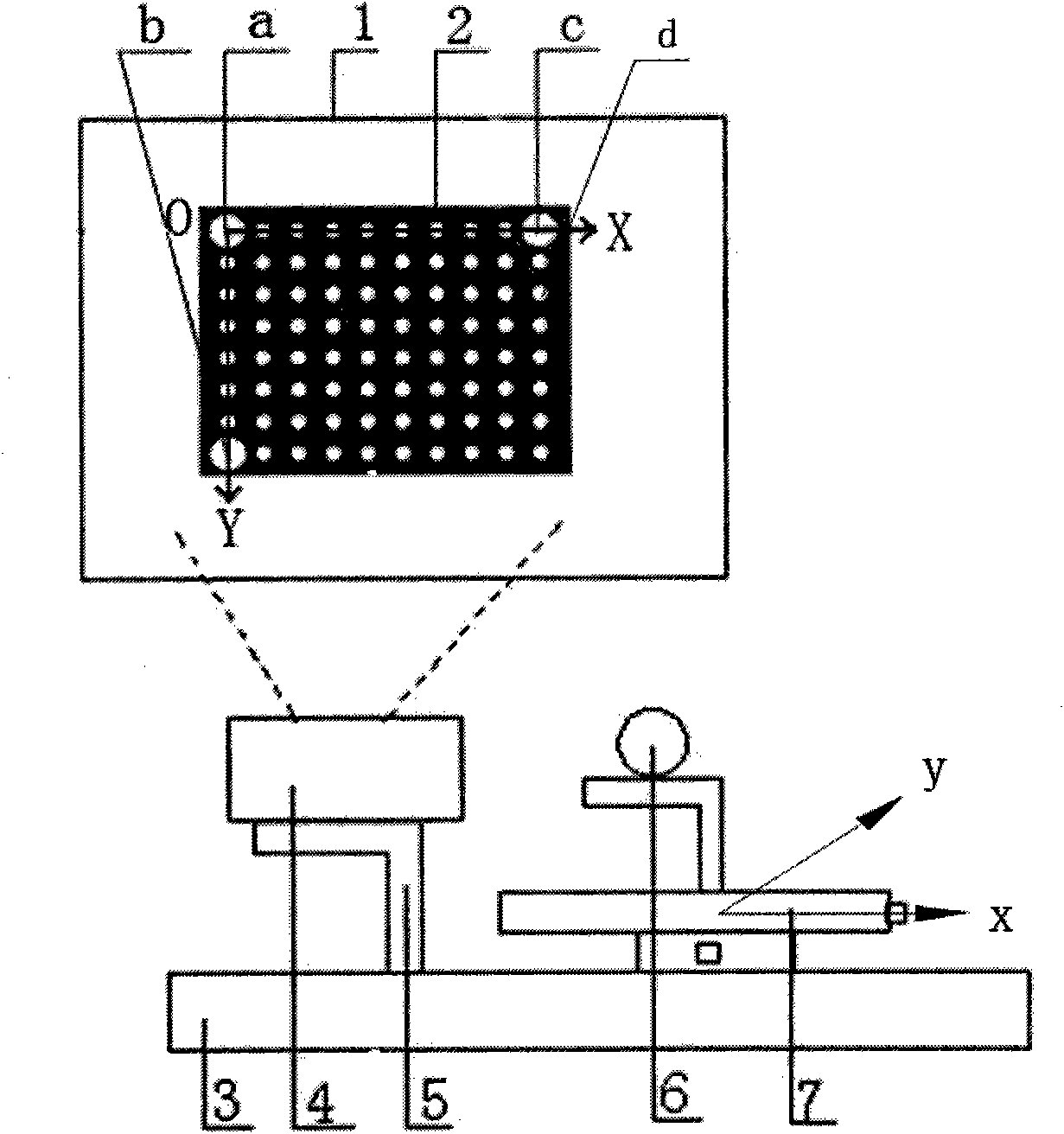

Camera on-field calibration method in measuring system

Status: Active | Publication: CN101876532A | Keywords: Easy extraction, Overcome the adverse effects of opaque imaging, Image analysis, Using optical means, Theodolite, Size measurement

The invention discloses an on-site camera calibration method for a measuring system. It belongs to the field of computer vision inspection, and in particular to on-site calibration of the intrinsic and extrinsic parameters of the cameras in a size measuring system for large forgings. The measuring system contains two cameras and one projector. The calibration method comprises the following steps:

1. manufacturing calibration targets for the cameras' intrinsic and extrinsic parameters;
2. projecting the intrinsic parameter targets and capturing images;
3. extracting feature points from the images with an image processing algorithm in Matlab;
4. formulating equations and solving them for the cameras' intrinsic parameters;
5. processing the images captured simultaneously by the left and right cameras;
6. measuring the actual distance between target circle centers with a left and a right theodolite, solving for a scale factor, and from it the actual extrinsic parameters.

The method adapts well to on-site conditions. In a binocular vision measuring system for large forgings, a filter plate blocks infrared light and makes the images opaque and illegible; projecting the targets with the projector overcomes this problem. The method suits large scenes with complex backgrounds.

Owner: DALIAN UNIV OF TECH
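
The scale-factor step can be illustrated as follows. If the relative pose of the two cameras is recovered only up to an unknown scale, a distance measured independently (here, by the theodolites) fixes that scale. The sketch assumes the reconstructed circle-center coordinates and the up-to-scale translation are already available. The function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def scale_factor(
    center_a: Sequence[float], center_b: Sequence[float], true_distance: float
) -> float:
    """Ratio of the surveyed (theodolite) distance to the up-to-scale reconstructed distance."""
    reconstructed = math.dist(center_a, center_b)
    if reconstructed == 0:
        raise ValueError("the two reconstructed centres coincide")
    return true_distance / reconstructed


def rescale_translation(t: Sequence[float], s: float) -> Tuple[float, ...]:
    """Apply the scale factor to an extrinsic translation known only up to scale."""
    return tuple(s * c for c in t)


# Usage: reconstructed centres 5 units apart, surveyed distance 10 -> factor 2.
s = scale_factor((0.0, 0.0, 0.0), (3.0, 4.0, 0.0), 10.0)
print(s, rescale_translation((0.5, 0.0, -0.25), s))
```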

Autonomous obstacle avoidance and navigation method for unmanned aerial vehicle-based field search and rescue

Status: Inactive | Publication: CN107656545A | Keywords: Guaranteed accuracy, Satellite radio beaconing, Target-seeking control, Ultrasonic sensor, Detect and avoid

The invention provides an autonomous obstacle avoidance and navigation method for unmanned aerial vehicle (UAV)-based field search and rescue. It combines global navigation based on GPS signals with an autonomous obstacle avoidance and navigation algorithm based on binocular vision and ultrasound. First, the UAV searches along a planned path under GPS navigation. After a target is found, the binocular vision and ultrasound algorithm takes over:

- a binocular camera acquires a disparity map containing depth information;
- the disparity map is reprojected into 3D space to obtain a point cloud;
- a cost map is built from the point cloud;
- the path is re-planned on the cost map to avoid obstacles.

When no GPS signal is available, visual-inertial odometry (VIO) keeps the UAV on the planned path. An ultrasonic sensor detects and avoids obstacles below the UAV as it approaches the target to be rescued. Together these enable autonomous UAV obstacle avoidance and navigation in field search and rescue scenes.

Owner: WUHAN UNIV
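
A cost map built from a point cloud can take many forms, and the abstract does not specify one. The minimal sketch below assumes a 2D grid around the UAV, a height band that counts as an obstacle, and a fixed-radius inflation so that the planner keeps clearance. The cell costs of 1.0 and 0.5 and the function name are arbitrary illustrative choices.

```python
import math
from typing import Dict, Iterable, Set, Tuple

Cell = Tuple[int, int]


def build_cost_map(
    points: Iterable[Tuple[float, float, float]],
    cell_size: float,
    z_min: float,
    z_max: float,
    inflate_cells: int = 1,
) -> Dict[Cell, float]:
    """Project obstacle points into a 2D grid and inflate them.

    Points whose height lies in [z_min, z_max] mark their cell as occupied
    (cost 1.0). Cells within `inflate_cells` (Chebyshev distance) of an
    occupied cell get cost 0.5. Cells absent from the dict are free (cost 0).
    """
    occupied: Set[Cell] = set()
    for x, y, z in points:
        if z_min <= z <= z_max:
            occupied.add((math.floor(x / cell_size), math.floor(y / cell_size)))
    cost: Dict[Cell, float] = {}
    for i, j in occupied:
        for di in range(-inflate_cells, inflate_cells + 1):
            for dj in range(-inflate_cells, inflate_cells + 1):
                cost.setdefault((i + di, j + dj), 0.5)
    for cell in occupied:
        cost[cell] = 1.0  # occupied cells override any inflation cost
    return cost
```

A planner such as A* can then treat the dict value as an extra traversal cost, or refuse to enter cells with cost 1.0.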

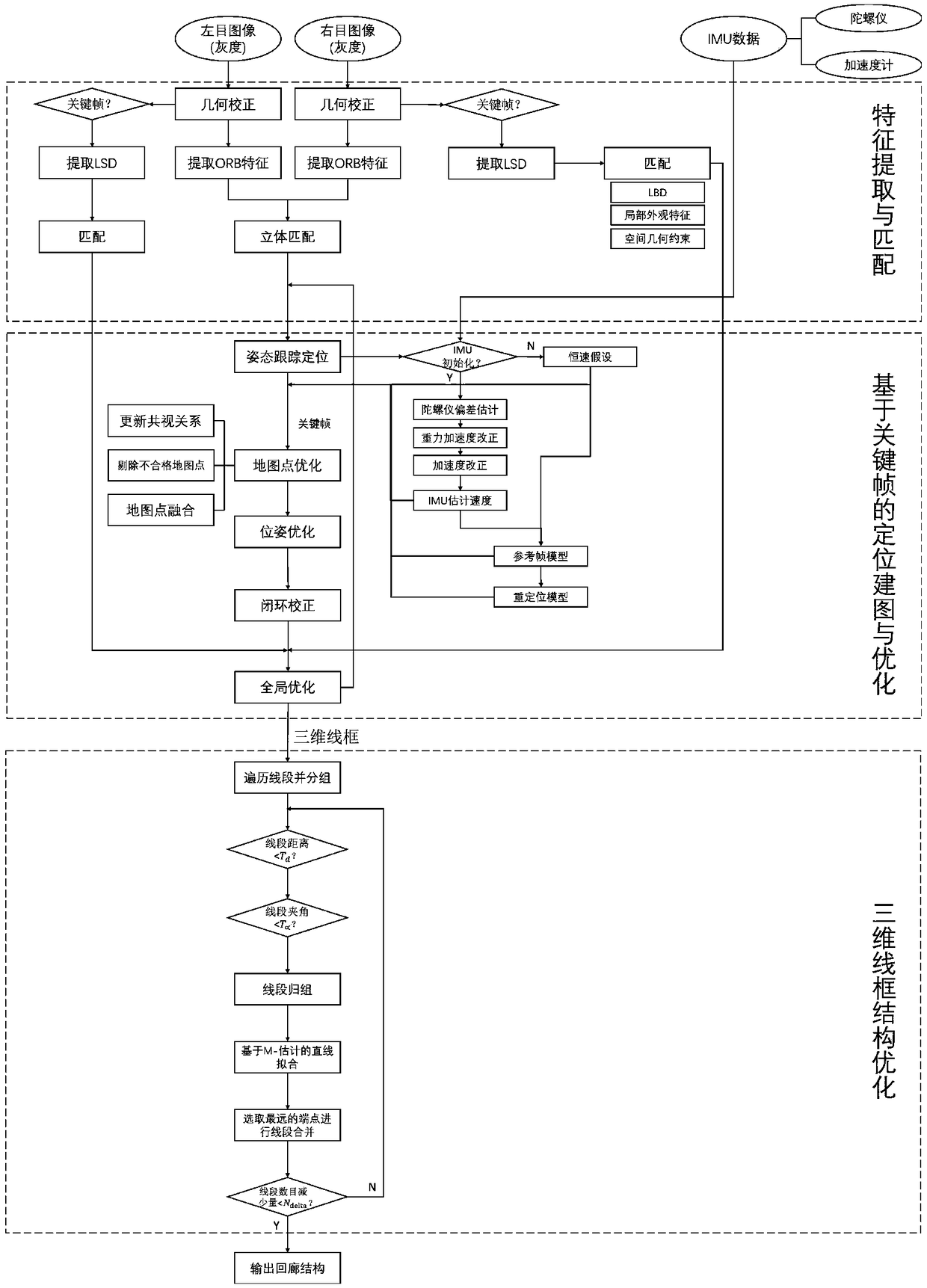

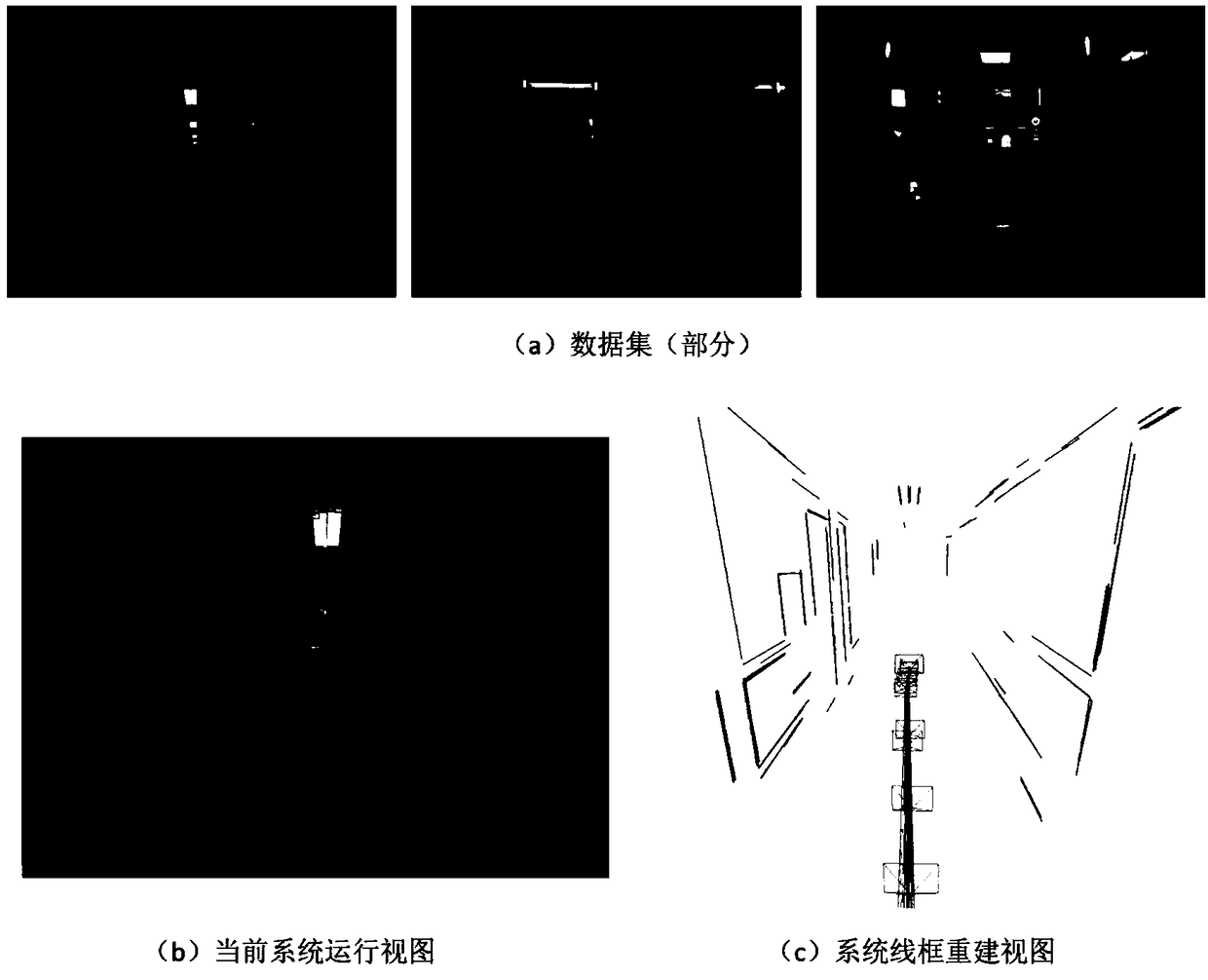

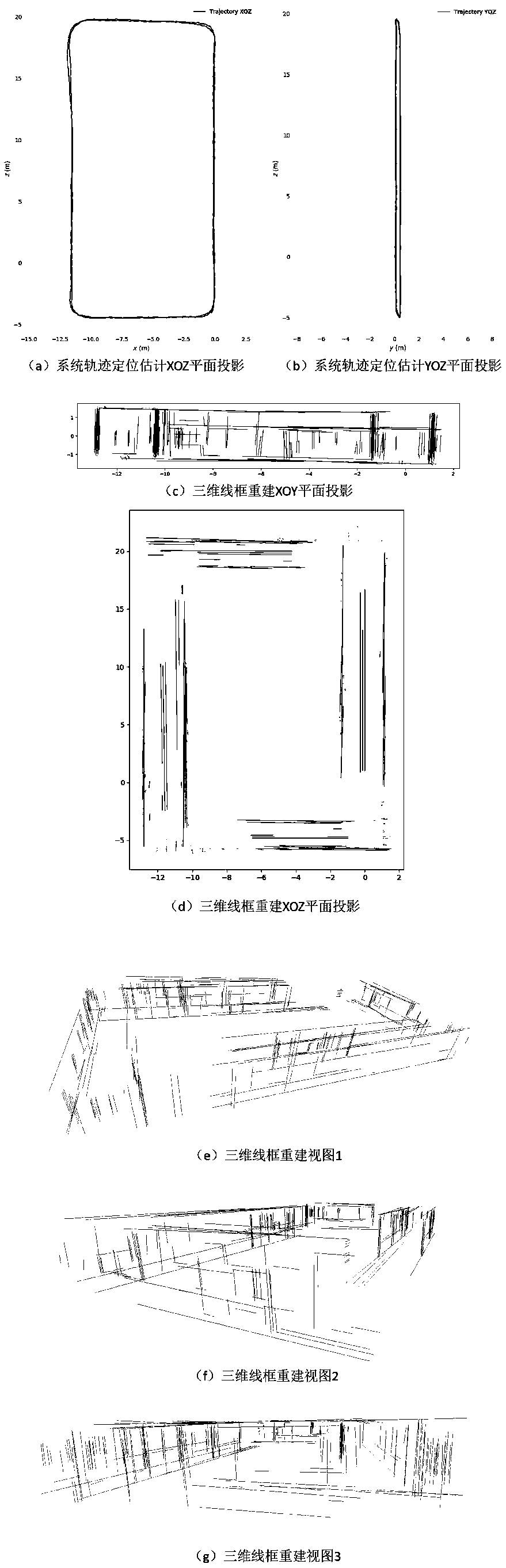

A three-dimensional wire frame structure method and system fusing a binocular camera and IMU positioning

Status: Active | Publication: CN109166149A | Keywords: Guaranteed uptime, Improve stability, Image enhancement, Image analysis, Three-dimensional space, Distance constraints

The invention relates to a three-dimensional wireframe structure method and system that fuses a binocular camera with IMU positioning. Building on binocular vision, it uses a divide-and-conquer strategy to initialize and fuse inertial measurement information and performs tracking, positioning and mapping. It runs robustly in indoor and outdoor environments and under complex motion. On the basis of accurate positioning, 3D wireframe reconstruction and iterative optimization are carried out using the keyframe poses. The wireframe is built as follows:

1. Line segments are matched using local features and spatial geometric constraints, then back-projected into three-dimensional space.
2. Using angle and distance constraints, the line segments are divided into sets.
3. Based on the grouping results, fitting regions are determined and the segments are merged.
4. Finally, a 3D wireframe structure is output.

Compared with traditional vision-based positioning and mapping methods, the invention fuses multi-source information to improve the stability and robustness of the system. In addition, line information is added to the keyframes to represent the structural characteristics of the three-dimensional environment sparsely, which improves computational efficiency.

Owner: WUHAN UNIV
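
Grouping line segments by angle and distance constraints can be sketched as greedy clustering. A segment joins an existing group if it is nearly parallel to the group's first segment and its midpoint lies close to that segment's supporting line. The thresholds, the greedy strategy and the function names are assumptions, because the abstract does not give the actual grouping rule.

```python
import math
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]
Segment = Tuple[Vec, Vec]


def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _unit_dir(seg: Segment) -> Vec:
    """Unit direction of a segment (assumes non-zero length)."""
    d = _sub(seg[1], seg[0])
    n = math.sqrt(_dot(d, d))
    return (d[0] / n, d[1] / n, d[2] / n)


def _point_line_distance(p: Vec, seg: Segment) -> float:
    """Distance from p to the infinite line through seg: |(p - a) x d| for unit d."""
    c = _cross(_sub(p, seg[0]), _unit_dir(seg))
    return math.sqrt(_dot(c, c))


def same_group(ref: Segment, seg: Segment, max_angle_rad: float, max_dist: float) -> bool:
    """Angle constraint (direction sign ignored) plus midpoint-to-line distance constraint."""
    cos_angle = min(1.0, abs(_dot(_unit_dir(ref), _unit_dir(seg))))
    mid = ((seg[0][0] + seg[1][0]) / 2, (seg[0][1] + seg[1][1]) / 2, (seg[0][2] + seg[1][2]) / 2)
    return math.acos(cos_angle) <= max_angle_rad and _point_line_distance(mid, ref) <= max_dist


def group_segments(segments: Sequence[Segment], max_angle_rad: float, max_dist: float) -> List[List[int]]:
    """Greedy grouping; each group is represented by its first segment."""
    groups: List[List[int]] = []
    for idx, seg in enumerate(segments):
        for g in groups:
            if same_group(segments[g[0]], seg, max_angle_rad, max_dist):
                g.append(idx)
                break
        else:
            groups.append([idx])
    return groups
```

Each resulting group could then be fitted with a single line and merged into one wireframe edge, corresponding to the fitting and merging step in the abstract.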

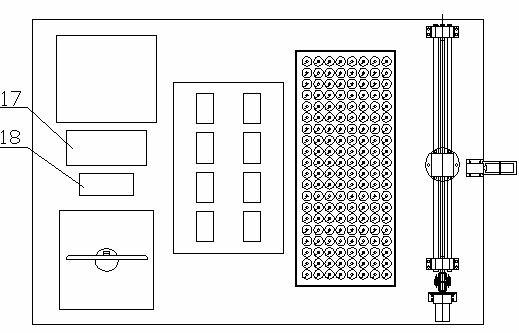

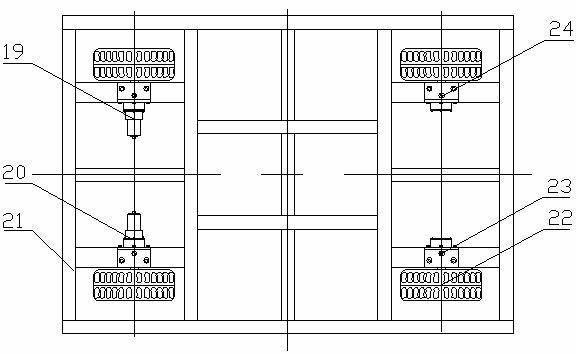

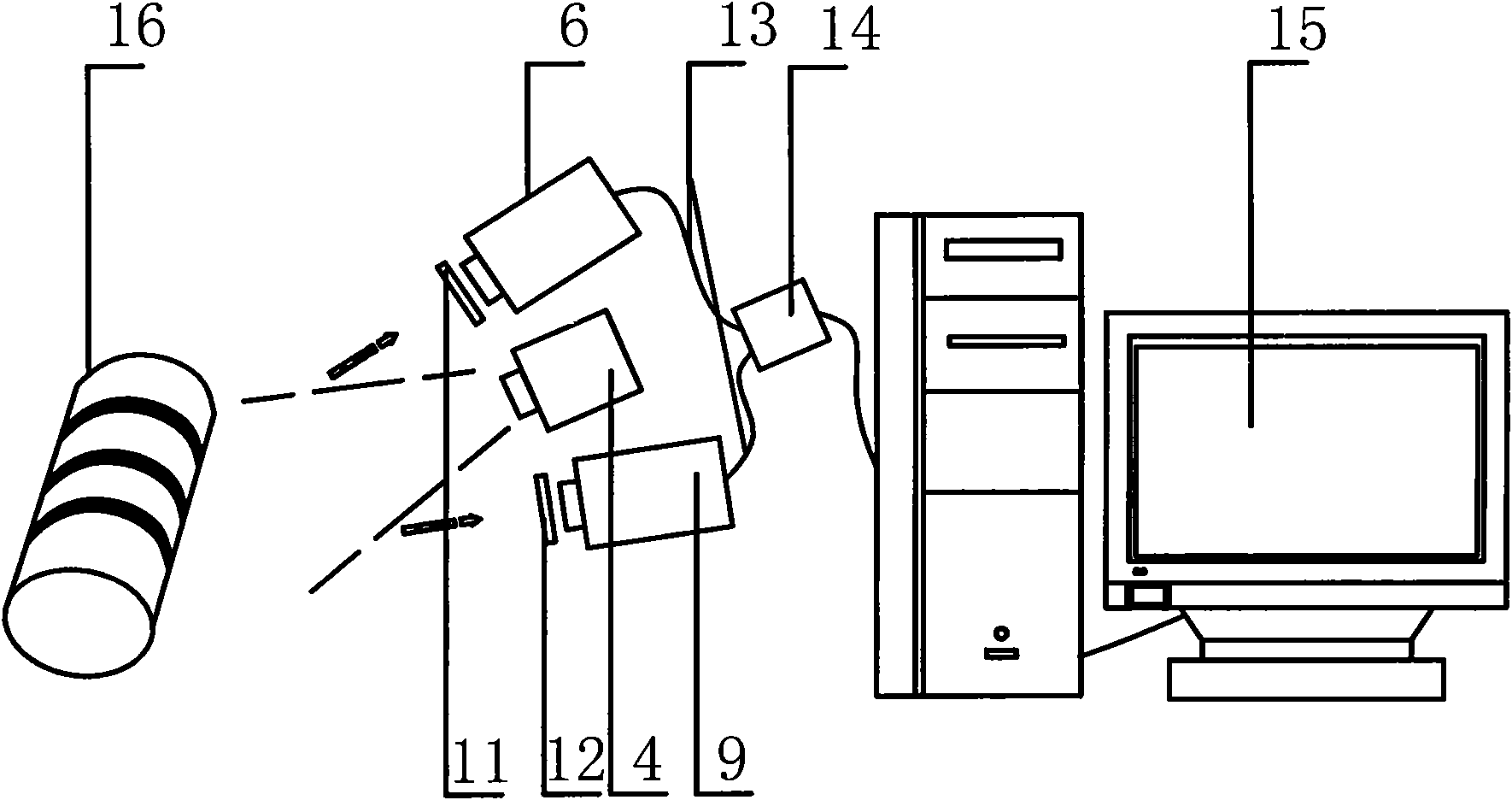

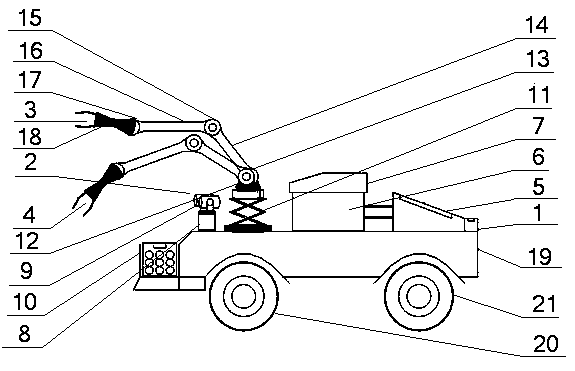

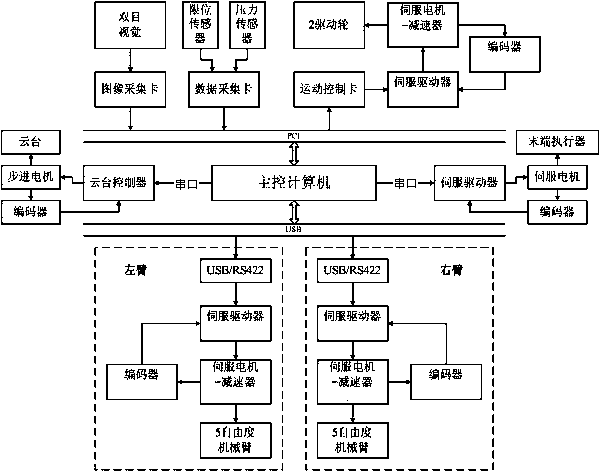

Double-manipulator fruit and vegetable harvesting robot system and fruit and vegetable harvesting method thereof

Status: Active | Publication: CN103503639A | Keywords: Realize automatic harvesting, Improve work efficiency, Programme-controlled manipulator, Picking devices, Simulation, Actuator

The invention discloses a double-manipulator fruit and vegetable harvesting robot system and a fruit and vegetable harvesting method using the system. The system has four main parts:

- a binocular stereo vision system, which provides visual navigation for the robot's walking motion and obtains position information for the harvesting targets and obstacles;
- a manipulator device, which grasps and separates the targets according to the positions of the targets and obstacles;
- a robot mobile platform, which moves autonomously in the working environment;
- a main control computer, which serves as the control center, integrates the control interface and all software modules, and controls the whole system.

The binocular stereo vision system comprises two color video cameras, an image capture card and an intelligent control pan-tilt head. The manipulator device comprises two five-degree-of-freedom manipulator bodies, joint servo drivers, actuator motors and similar components. The robot mobile platform comprises a wheeled body, a power source and power control device, and a fruit and vegetable harvesting device. The harvesting robot combines binocular vision with a bionic, anthropomorphic double-manipulator design, achieving autonomous navigation and automatic harvesting of fruit and vegetable targets.

Owner: 溧阳常大技术转移中心有限公司 (Liyang Changda Technology Transfer Center Co., Ltd.)

Binocular vision navigation system and method based on inspection robot in transformer substation

Status: Active | Publication: CN103400392A | Keywords: Driving path adjustment, Implement automatic detection, Image analysis, Position/course control in two dimensions, Transformer, Simulation

The invention discloses a binocular vision navigation system for an inspection robot in a transformer substation. The system comprises a robot body, an image acquisition system, a network transmission system, a vision analysis system, a route planning system and a robot control system:

- the image acquisition system is mounted at the front of the robot body, captures images of the road ahead, and uploads them to the vision analysis system via the network transmission system;
- the vision analysis system detects obstacles in the substation road area from the binocular images and the camera's intrinsic and extrinsic parameters;
- the route planning system plans a route from the detected environment information and adjusts the robot's walking route in time, so that the robot does not collide with obstacles;
- the robot control system moves the robot body along the planned route.

The invention also discloses a corresponding vision navigation method. The system and method improve the robot's adaptability to its environment, realize autonomous navigation of the electric power robot in complex outdoor environments, and improve the robot's flexibility and safety.

Owner: STATE GRID INTELLIGENCE TECH CO LTD

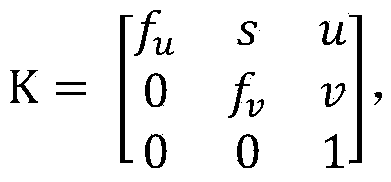

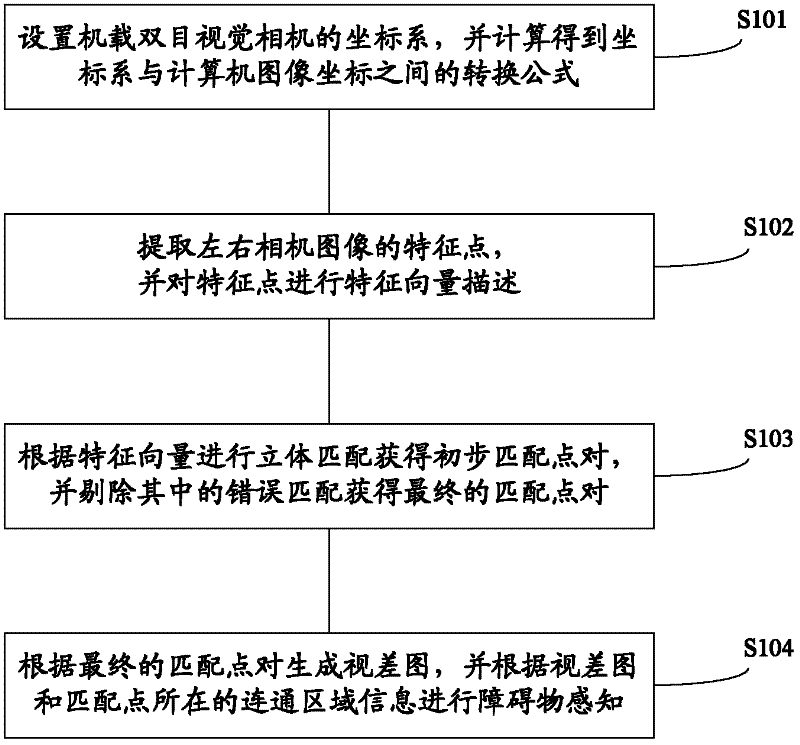

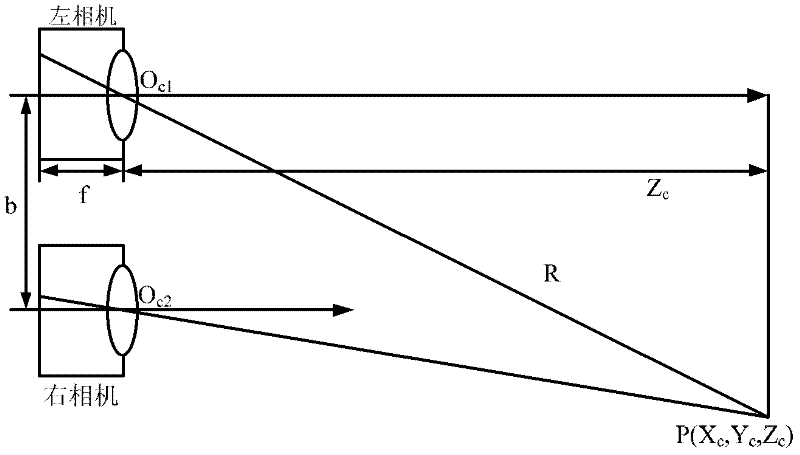

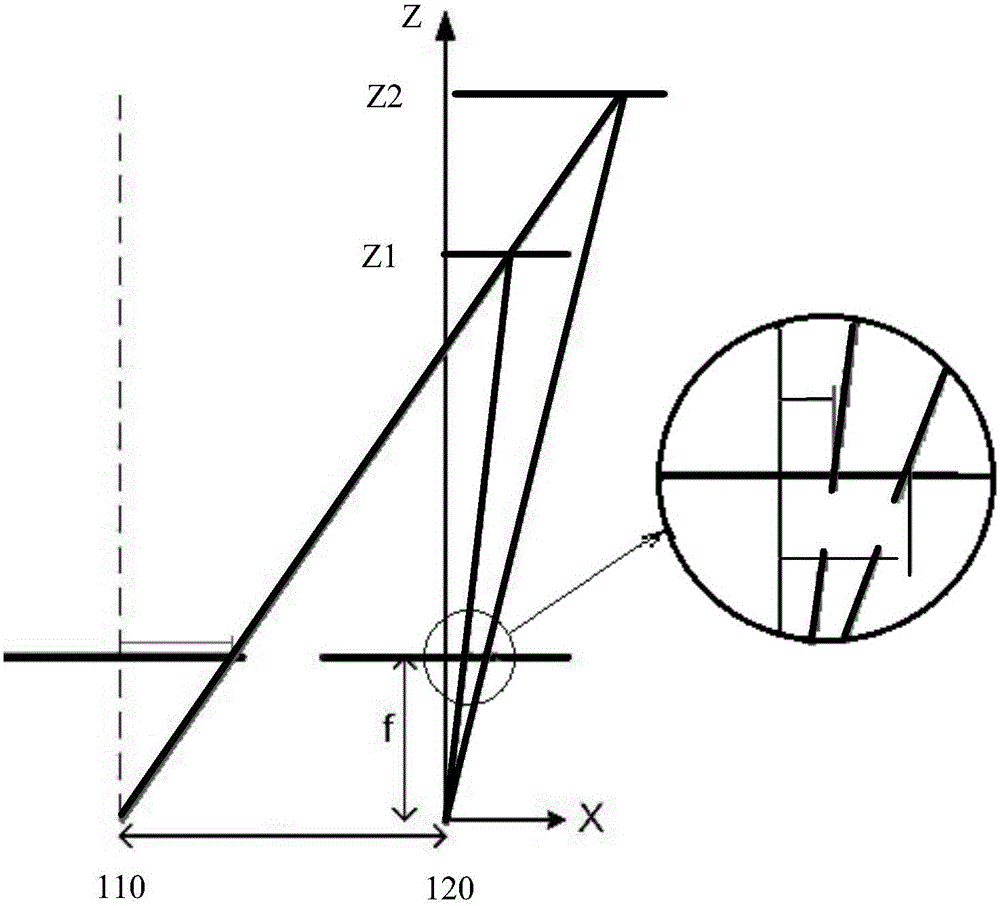

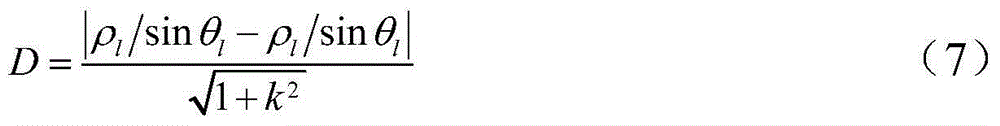

Method for barrier perception based on airborne binocular vision

The invention provides a method for obstacle perception based on airborne binocular vision. The airborne binocular vision camera comprises a left camera and a right camera. The method comprises the following steps:

1. setting up a coordinate system for the airborne binocular vision camera, and deriving from it a formula for converting between that coordinate system and the computer image coordinates of the images the camera captures;
2. extracting feature points from the captured images and computing a feature vector descriptor for each point;
3. stereo matching the left and right images according to the feature vectors to obtain preliminary matching point pairs;
4. eliminating false matches from the preliminary pairs to obtain the final matching point pairs;
5. creating a disparity map from the final matching point pairs;
6. performing obstacle perception based on the disparity map.

The method offers strong adaptability, good real-time performance and good concealment.

Owner: SHENZHEN AUTEL INTELLIGENT AVIATION TECH CO LTD
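
The mismatch-elimination step is not detailed in the abstract. One common approach, sketched below as an assumption, has two parts. First, a nearest-neighbour ratio test is applied to the feature vectors. Second, the rectified-stereo constraint is checked: a true match lies on the same image row and has positive disparity. The sketch uses Euclidean descriptor distance; binary descriptors such as ORB would use Hamming distance instead. The function name is hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Keypoint = Tuple[float, float]  # (u, v) pixel coordinates


def match_rectified(
    left_kp: Sequence[Keypoint],
    left_desc: Sequence[Sequence[float]],
    right_kp: Sequence[Keypoint],
    right_desc: Sequence[Sequence[float]],
    ratio: float = 0.8,
    max_row_diff: float = 1.0,
) -> List[Tuple[int, int, float]]:
    """Brute-force matching with two mismatch filters.

    1. Ratio test: keep a match only if the best descriptor distance is
       clearly smaller than the second best.
    2. Rectified epipolar check: matched points must lie on (nearly) the same
       row and have positive disparity u_left - u_right.
    Returns (left_index, right_index, disparity) triples.
    """
    matches: List[Tuple[int, int, float]] = []
    for i, dl in enumerate(left_desc):
        ranked = sorted((math.dist(dl, dr), j) for j, dr in enumerate(right_desc))
        if not ranked:
            continue
        best_dist, j = ranked[0]
        if len(ranked) > 1 and best_dist >= ratio * ranked[1][0]:
            continue  # ambiguous: second-best candidate is almost as close
        (ul, vl), (ur, vr) = left_kp[i], right_kp[j]
        disparity = ul - ur
        if abs(vl - vr) > max_row_diff or disparity <= 0:
            continue  # violates rectified-stereo geometry
        matches.append((i, j, disparity))
    return matches
```

The surviving (u, v, disparity) triples are the sparse input from which a disparity map can be built.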

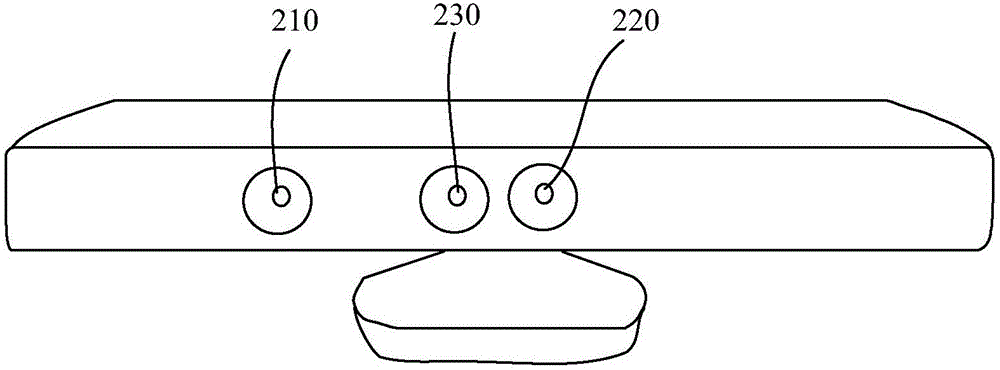

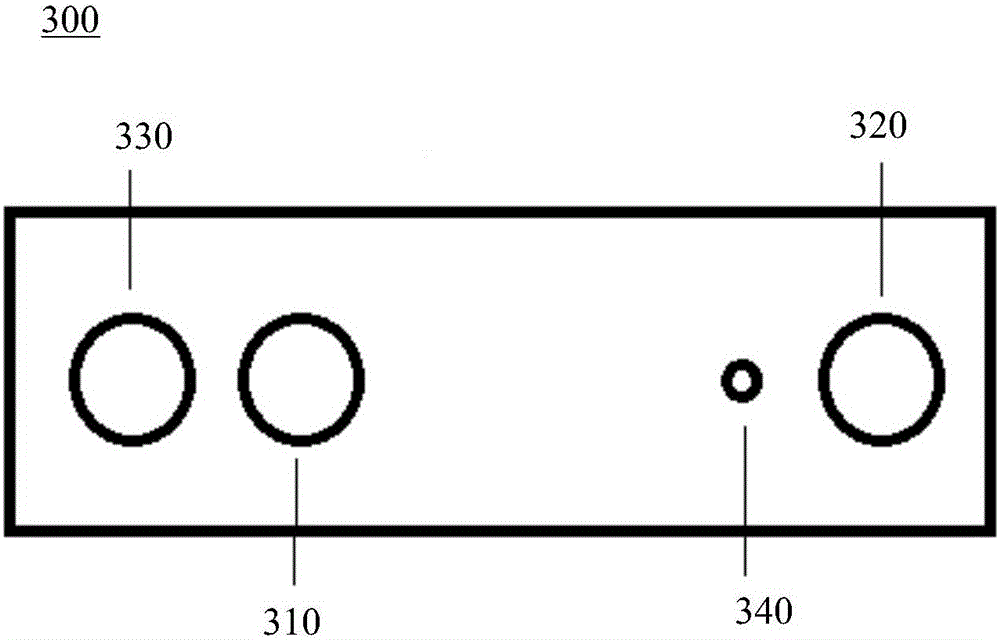

Device for obtaining depth image of three-dimensional scene

Status: Active | Publication: CN106550228A | Keywords: Television system details, Color television details, Visual perception, Structured light

The present invention relates to a device for obtaining a depth image of a three-dimensional scene. The device comprises an infrared projector, a first camera, a second camera and an infrared fill light source. The first camera is configured to receive infrared light, and the second camera is configured to receive visible color light. The two cameras are separated by a distance suitable for binocular vision. The infrared projector projects structured light in a first mode and does not project it in a second mode. In the second mode, the infrared fill light source selectively projects supplementary light.

Owner: SHANGHAI TIMENG INFORMATION TECH CO LTD

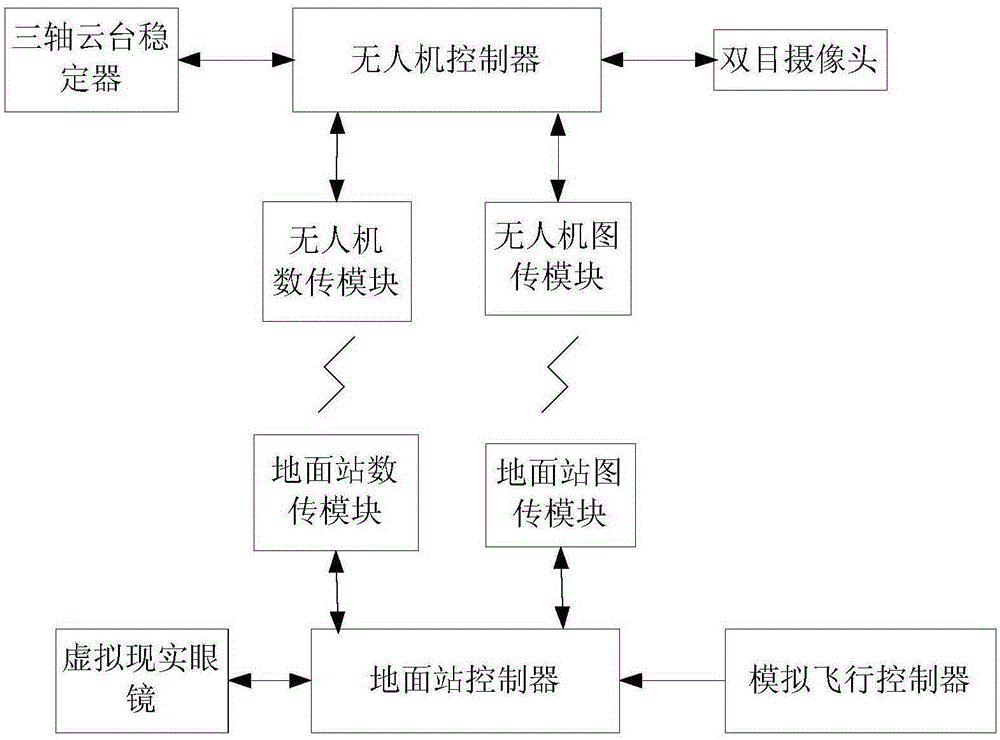

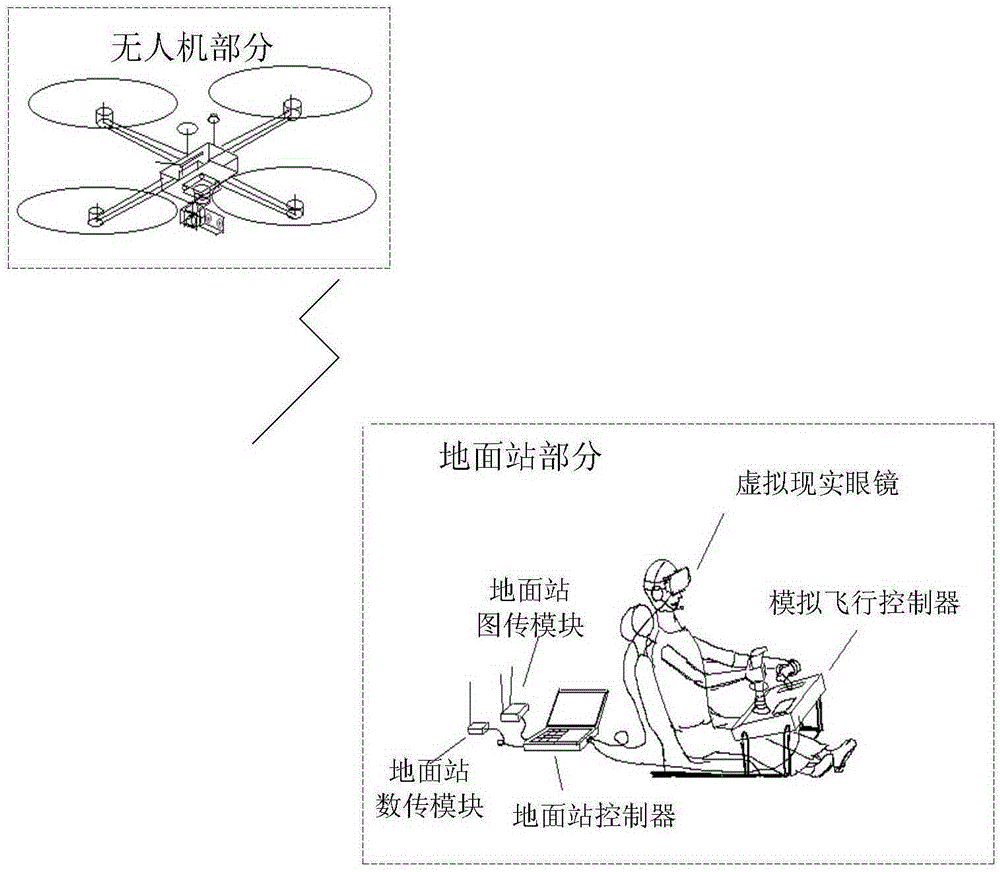

First-person immersive unmanned aerial vehicle driving system realized by virtue of virtual reality and binocular vision technology and driving method

Status: Inactive | Publication: CN105222761A | Keywords: Realize Virtual Migration, Track sync, Simulator control, Picture taking arrangements, Uncrewed vehicle, Eyewear

This first-person immersive unmanned aerial vehicle (UAV) piloting system and method use virtual reality and binocular vision technology, and belong to the technical field of UAVs. They address two problems with existing UAV piloting systems: the pilot cannot grasp the real-time flight state, and errors are likely.

- The UAV carries a binocular camera on an existing three-axis gimbal stabilizer.
- A UAV image transmission module sends the images back to the ground.
- A data transmission module and a pair of virtual reality goggles synchronize the three-axis gimbal with the movements of the pilot on the ground.
- The pilot wears the virtual reality goggles and sits in a simulated cockpit resembling a real helicopter cockpit.
- The pilot views the images captured by the binocular camera together with flight information, and controls the UAV through the data transmission module.

The system and method are suitable for UAV operation.

Owner: HARBIN INST OF TECH

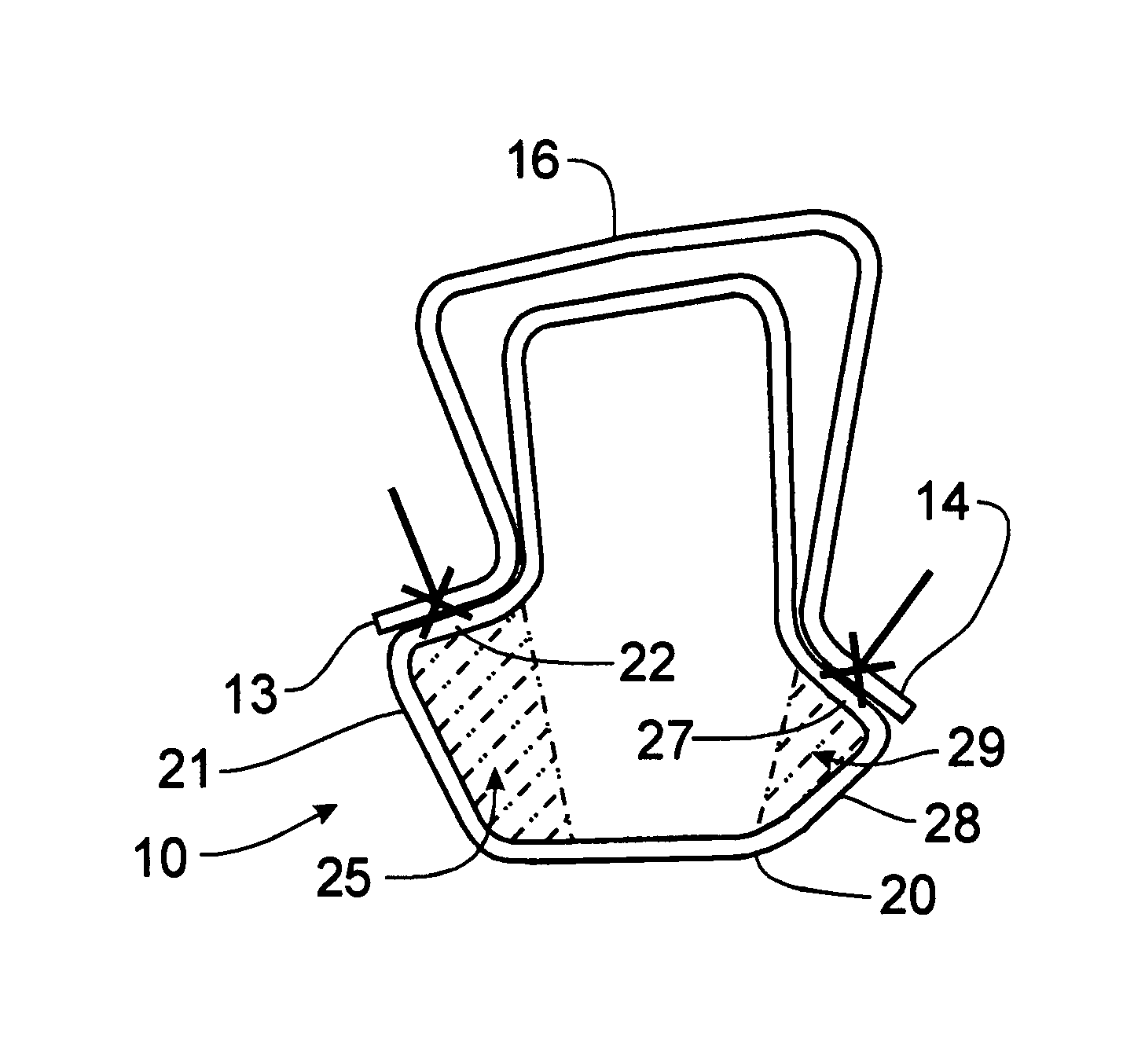

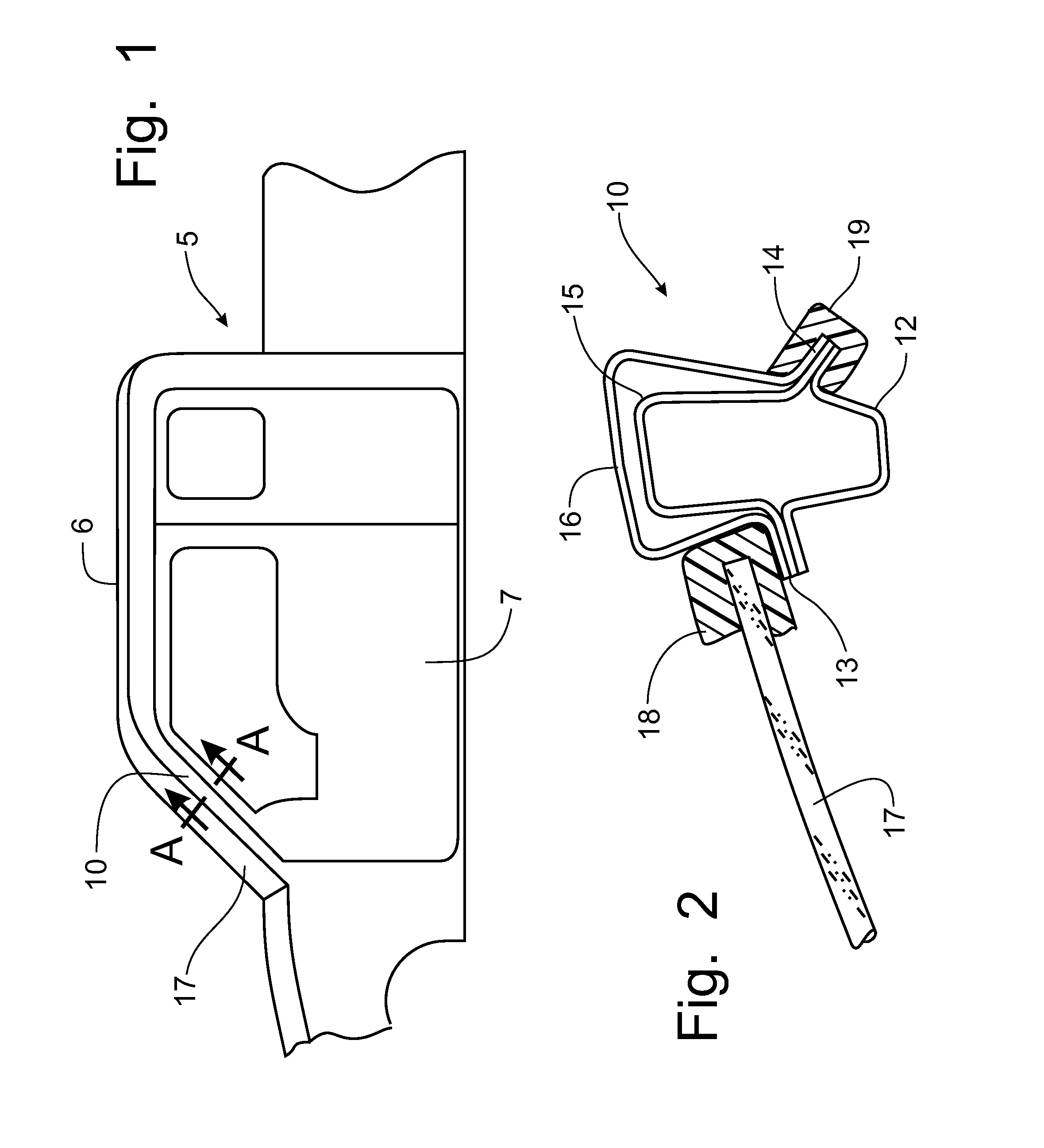

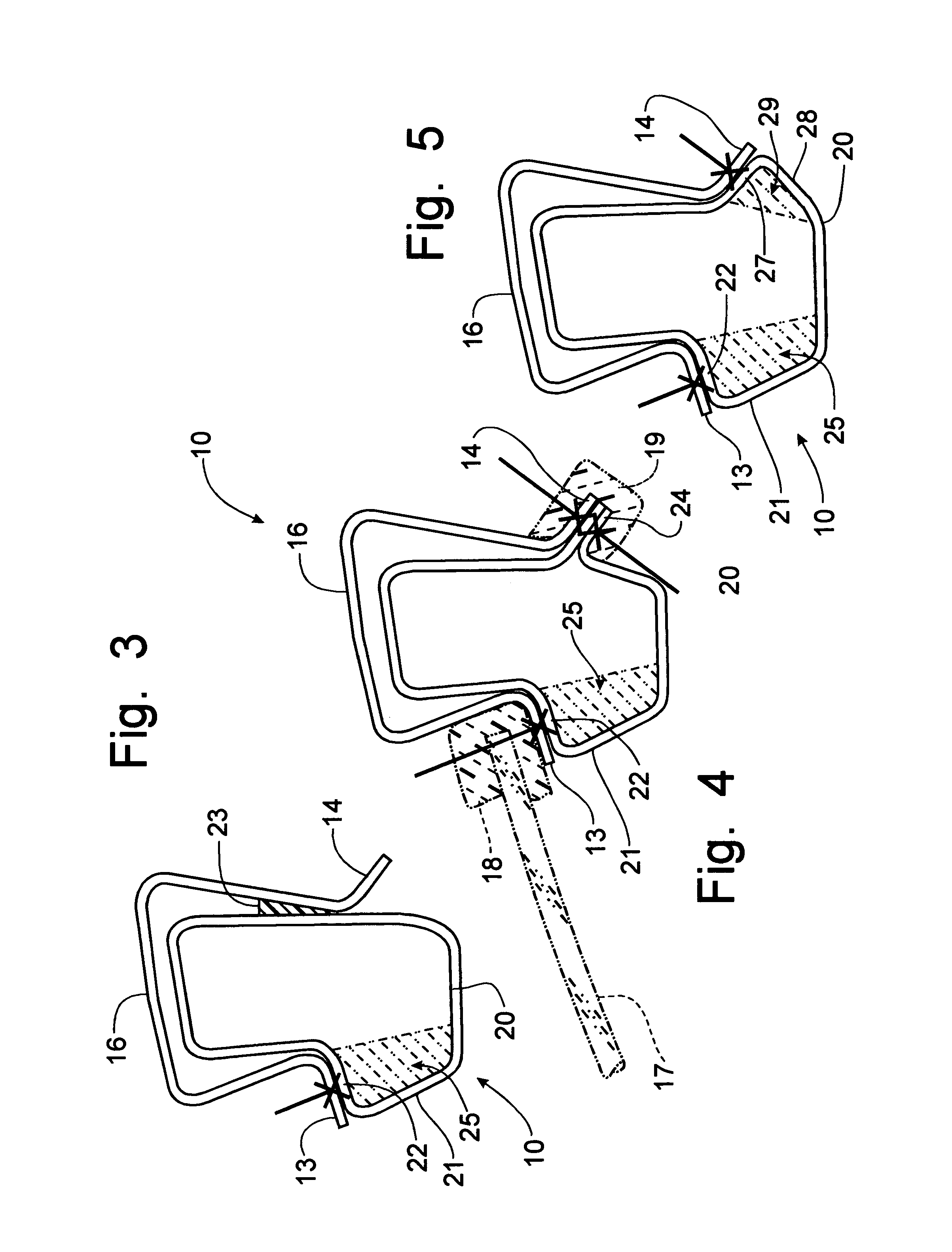

A-pillar structure for an automotive vehicle

Status: Inactive | Publication: US7344186B1 | Keywords: High strength, Little and no effect on vision requirement, Vehicle seats, Superstructure subunits, Mobile vehicle, Engineering

An A-pillar structure on an automotive vehicle is manufactured from a formed tubular member that replaces the conventional inner and inner reinforcement members. The door opening panel is formed with conventional flanges that are welded or adhered to the tubular member to provide a conventional uniform surface against which the windshield and front door seals can be supported. The door opening panel flanges can be welded by one-sided welding techniques, such as laser stitch welding, to the tubular member. As an option, the movement of the front door seal from the ab flange to the front door can provide an opportunity to form the tubular member with a still larger cross-section for enhanced strength. The distance dimension spanning the opposing flanges to define the standard arc for maintaining binocular vision is retained while increasing the strength of the A-pillar due to the enlarged cross-sectional configuration provided by the tubular member.

Owner: FORD GLOBAL TECH LLC

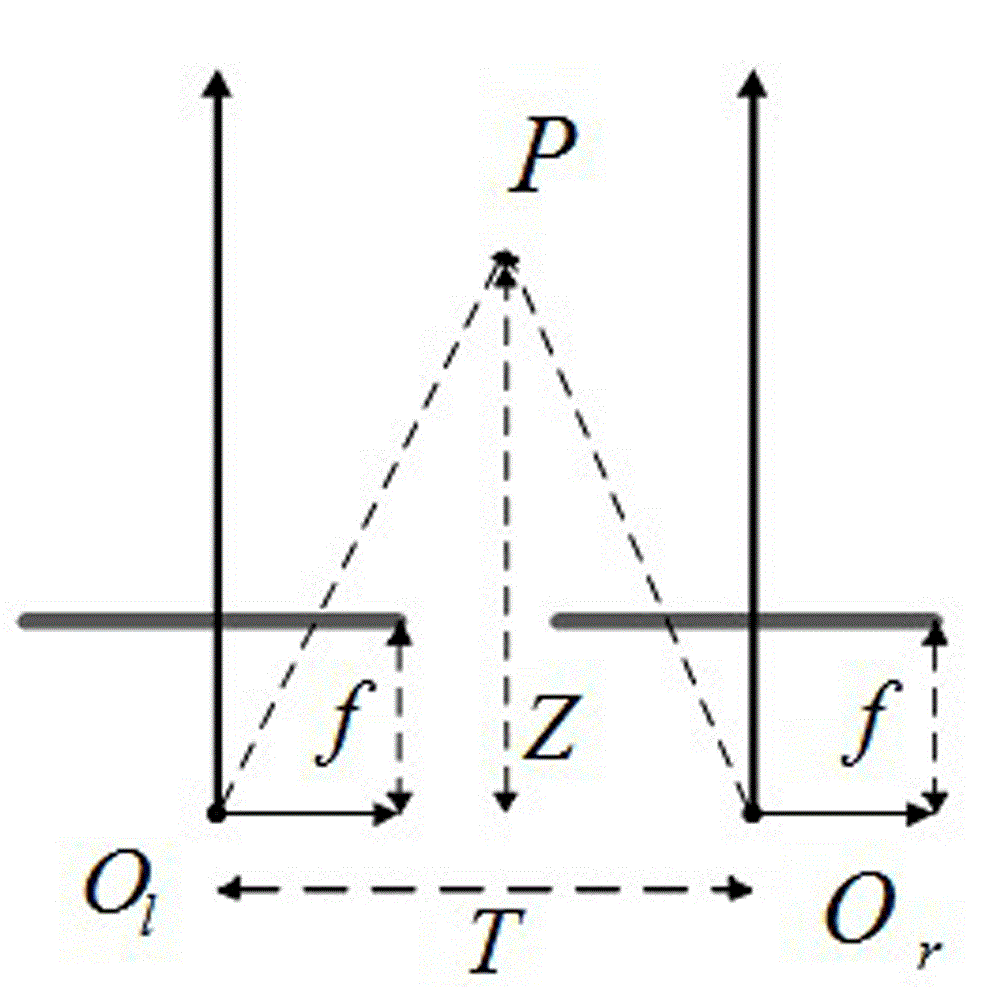

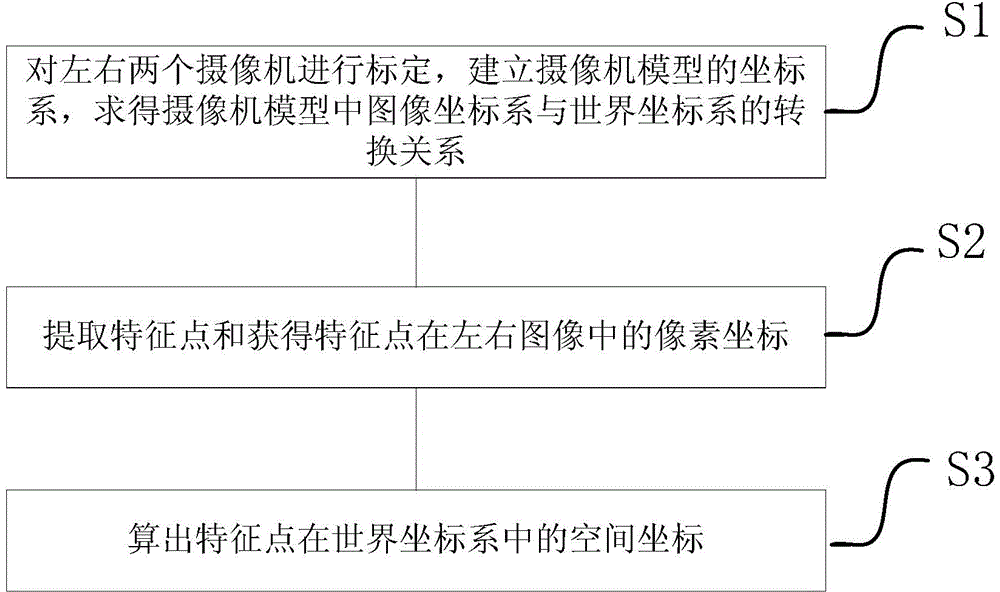

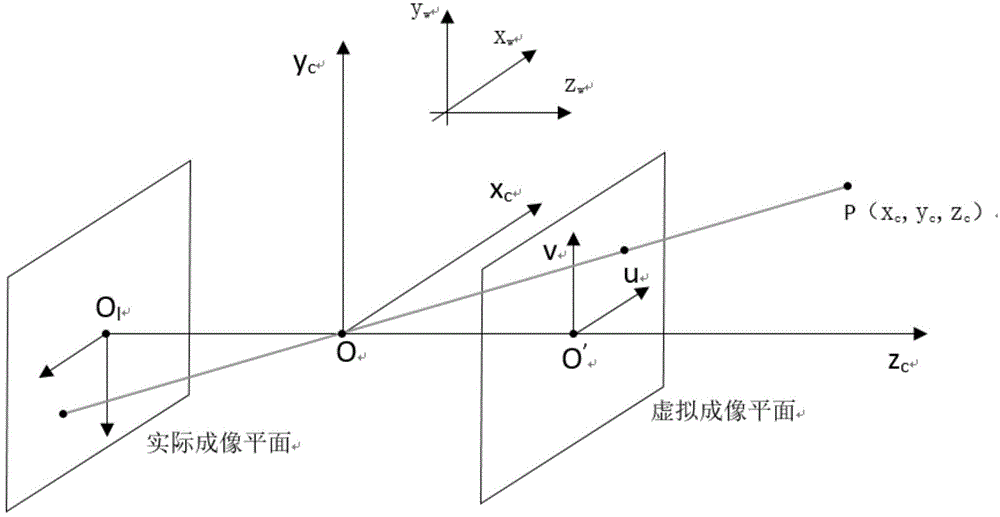

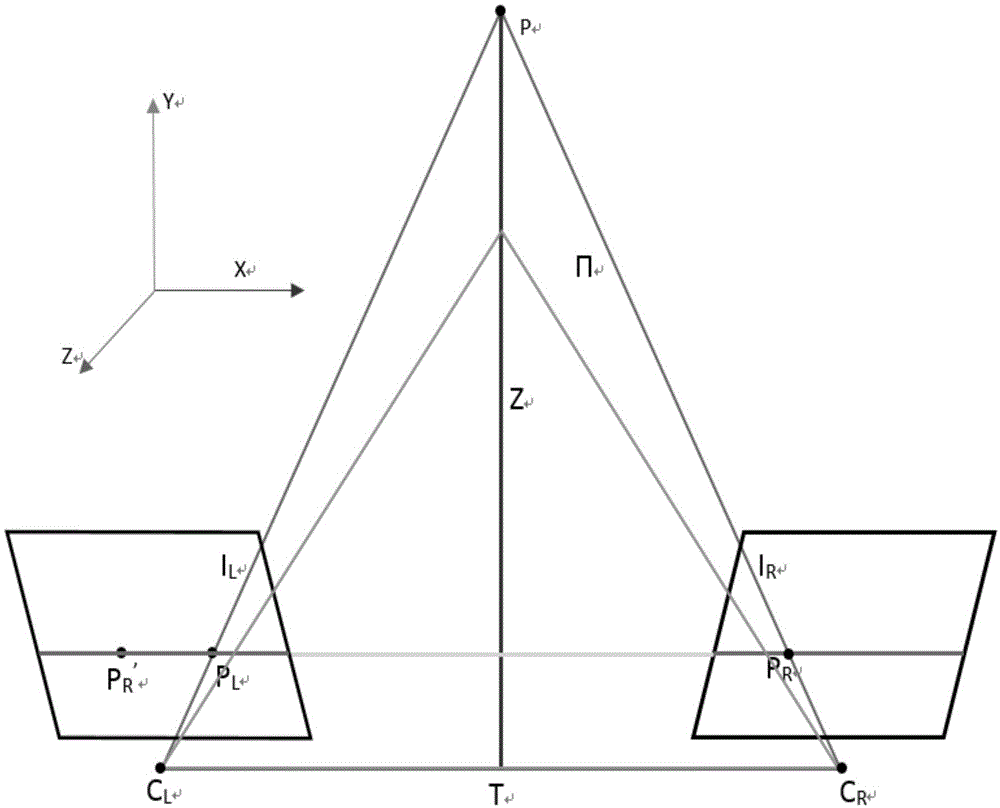

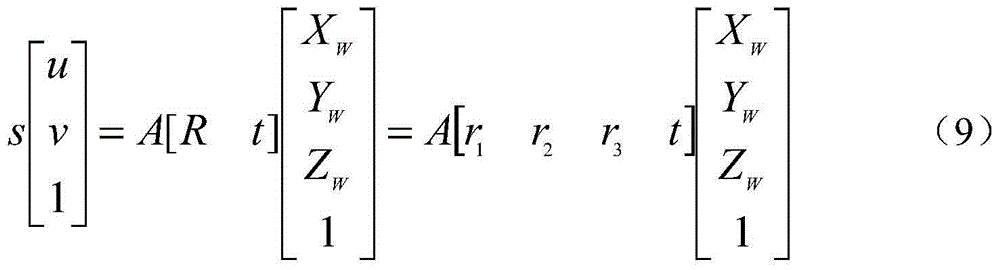

Physical coordinate positioning method based on binocular vision

Status: Active | Publication: CN104933718A | Keywords: High measurement accuracy, Improve measurement efficiency, Image enhancement, Image analysis, Visual perception, Binocular distance

The invention discloses a physical coordinate positioning method based on binocular vision, comprising the following steps:

- S1) setting up a left camera and a right camera, establishing a camera model coordinate system, and obtaining the conversion relationship between the camera model coordinate system and the world coordinate system;
- S2) extracting a feature point and obtaining its pixel coordinates in the left and right images;
- S3) calculating the spatial coordinates of the feature point in the world coordinate system.

The left and right cameras simulate two eyes. After the coordinate conversion model is established, feature points are extracted from each image captured by the two cameras, and their pixel coordinates are computed. The pixel coordinates are then converted into theoretical coordinates in the camera model, and finally the spatial coordinates of the target point are calculated. The method improves measurement accuracy and efficiency. This gives binocular coordinate positioning good application prospects in fields such as eye-in-hand industrial robot systems, industrial cutting, logistics and transportation, packaging, and optical inspection and processing.

Owner: INST OF INTELLIGENT MFG GUANGDONG ACAD OF SCI
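
Step S3 can be illustrated with linear triangulation. The sketch assumes each camera model is a 3x4 projection matrix P = K[R | t] built from known intrinsics and extrinsics. It solves the two-view linear system by least squares via the normal equations. This is a standard textbook technique and not necessarily the patent's own formula. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def projection_matrix(
    f: float, cx: float, cy: float,
    r: Sequence[Sequence[float]], t: Sequence[float],
) -> Matrix:
    """P = K [R | t] for a pinhole camera (square pixels, no skew)."""
    k = [[f, 0.0, cx], [0.0, f, cy], [0.0, 0.0, 1.0]]
    rt = [list(r[i]) + [t[i]] for i in range(3)]
    return [[sum(k[i][m] * rt[m][j] for m in range(3)) for j in range(4)]
            for i in range(3)]


def _solve3(a: Matrix, b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a 3x3 system."""
    m = [row[:] + [bv] for row, bv in zip(a, b)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def triangulate(
    p_left: Matrix, p_right: Matrix,
    uv_left: Tuple[float, float], uv_right: Tuple[float, float],
) -> Tuple[float, float, float]:
    """Linear (DLT-style) triangulation of one world point from two views."""
    rows: List[List[float]] = []
    rhs: List[float] = []
    for p, (u, v) in ((p_left, uv_left), (p_right, uv_right)):
        for coord, k in ((u, 0), (v, 1)):
            # coord * (row 3 of P) - (row k of P) gives one linear equation in X.
            eq = [coord * p[2][j] - p[k][j] for j in range(4)]
            rows.append(eq[:3])
            rhs.append(-eq[3])
    # Least squares via the normal equations A^T A X = A^T b.
    ata = [[sum(rows[n][i] * rows[n][j] for n in range(4)) for j in range(3)]
           for i in range(3)]
    atb = [sum(rows[n][i] * rhs[n] for n in range(4)) for i in range(3)]
    x, y, z = _solve3(ata, atb)
    return x, y, z
```

In one example, both cameras share the same intrinsics (f = 500, cx = 320, cy = 240), and the right camera is displaced 0.1 along X (t = (-0.1, 0, 0)). A point seen at (370, 240) in the left image and (320, 240) in the right image then triangulates to (0.1, 0, 1).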

Stereoscopic vision based emergency treatment device and method for running vehicles

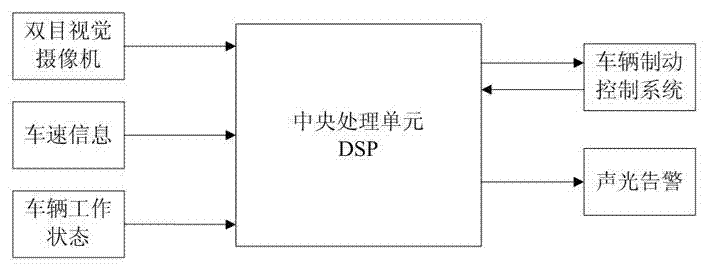

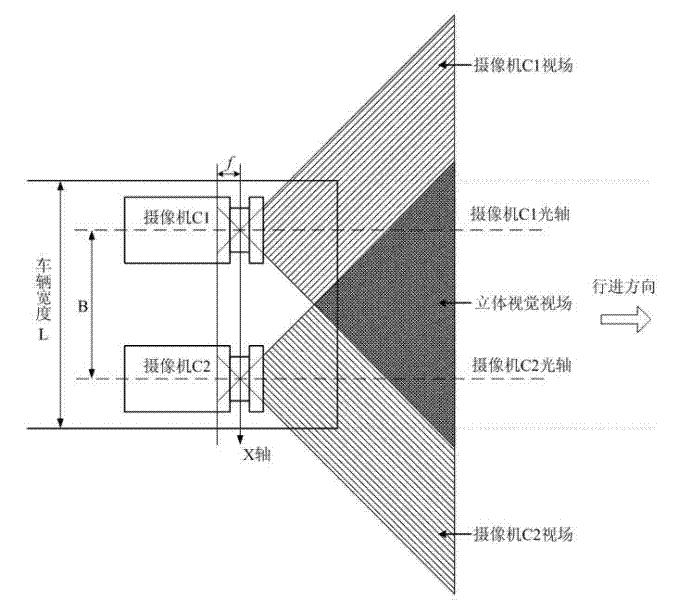

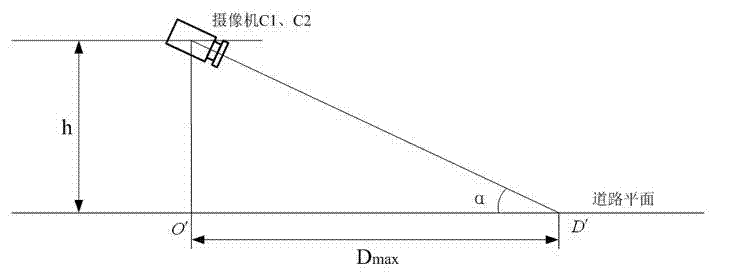

Status: Active | Publication: CN102390370A | Keywords: Reduce speed, Acoustic signal devices, Automatic initiations, Driver/operator, Vision based

The invention relates to a stereoscopic vision based emergency handling device and method for moving vehicles. The device comprises a binocular vision camera unit, an on-board bus interface, a central processing unit, a vehicle braking control system and an audible and visual alarm circuit. The device operates as follows:

- The binocular vision camera unit captures images of the road ahead of the vehicle.
- The DSP (digital signal processor)-based central processing unit performs fast real-time computation on the images to obtain a three-dimensional road scene. It compares the scene with a safe-driving road model set up by the system to judge whether obstacles or hazards lie in the vehicle's direction of travel.
- When a hazard is found, the vehicle braking control system is activated to reduce the vehicle's speed, and an audible and visual alarm is issued to the driver.
- The central processing unit is also connected to the vehicle's sensors via the in-vehicle bus to monitor the vehicle's state. When a mechanical or electrical fault occurs, the braking system is likewise activated to reduce speed, and an audible and visual alarm is issued.

The device can be fitted to ordinary motor vehicles to prevent accidents or reduce accident losses, improving driving safety.

Owner: HOHAI UNIV

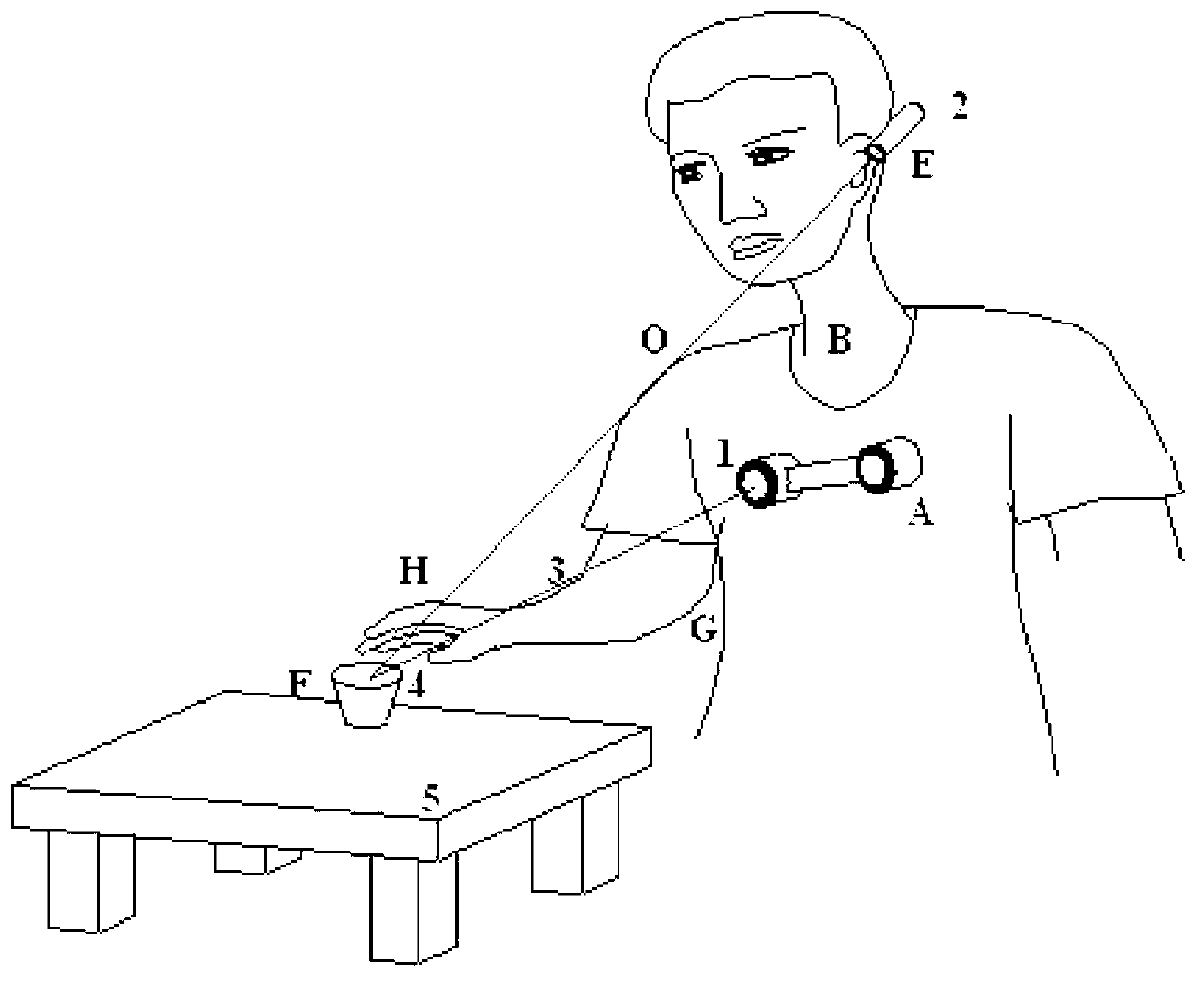

Man-machine interactive manipulator control system and method based on binocular vision

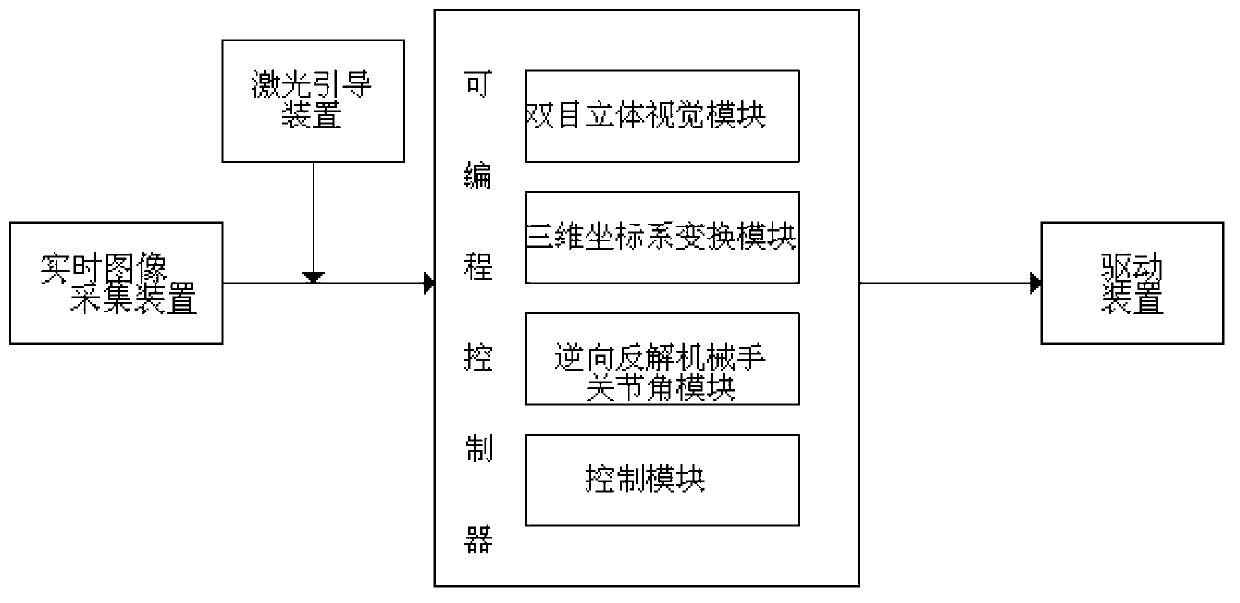

Status: Inactive | Publication: CN103271784A | Keywords: Control tension reduction, Improve participation, Programme-controlled manipulator, Prosthesis, System transformation, Visual perception

The invention discloses a human-machine interactive manipulator control system and method based on binocular vision. The control system consists of a real-time image acquisition device, a laser guiding device, a programmable controller and a driving device. The programmable controller consists of a binocular three-dimensional vision module, a three-dimensional coordinate system transformation module, a manipulator joint angle inverse-solution module and a control module.

Color features are extracted from the binocular images captured by the real-time image acquisition device and serve as the signal source for controlling the manipulator. The three-dimensional position of the red laser point in the real-time images is obtained through the binocular three-dimensional vision system and the three-dimensional coordinate transformation. This position is then used to control the manipulator during human-machine interactive object tracking. The system and method can effectively track and extract moving target objects in real time. They have wide applications, such as intelligent prosthetic limbs, explosive ordnance disposal robots, and manipulators that assist the elderly and disabled.

Owner: SHANDONG UNIV OF SCI & TECH
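
Extracting the red laser point as the control signal can be sketched as a color threshold followed by a centroid calculation. The thresholds, the plain RGB-tuple image format and the function name are assumptions. The left and right centroids would then be triangulated to obtain the point's 3D position.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255 (assumed format)


def red_spot_centroid(
    image: List[List[Pixel]], min_red: int = 200, max_other: int = 80
) -> Optional[Tuple[float, float]]:
    """Return the (u, v) centroid of strongly red pixels, or None if none are found."""
    sum_u = sum_v = 0.0
    count = 0
    for v, row in enumerate(image):
        for u, (r, g, b) in enumerate(row):
            # A laser dot is bright in red and weak in green and blue.
            if r >= min_red and g <= max_other and b <= max_other:
                sum_u += u
                sum_v += v
                count += 1
    if count == 0:
        return None
    return sum_u / count, sum_v / count
```

Averaging all pixels that pass the threshold gives sub-pixel precision, which helps the subsequent depth calculation.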

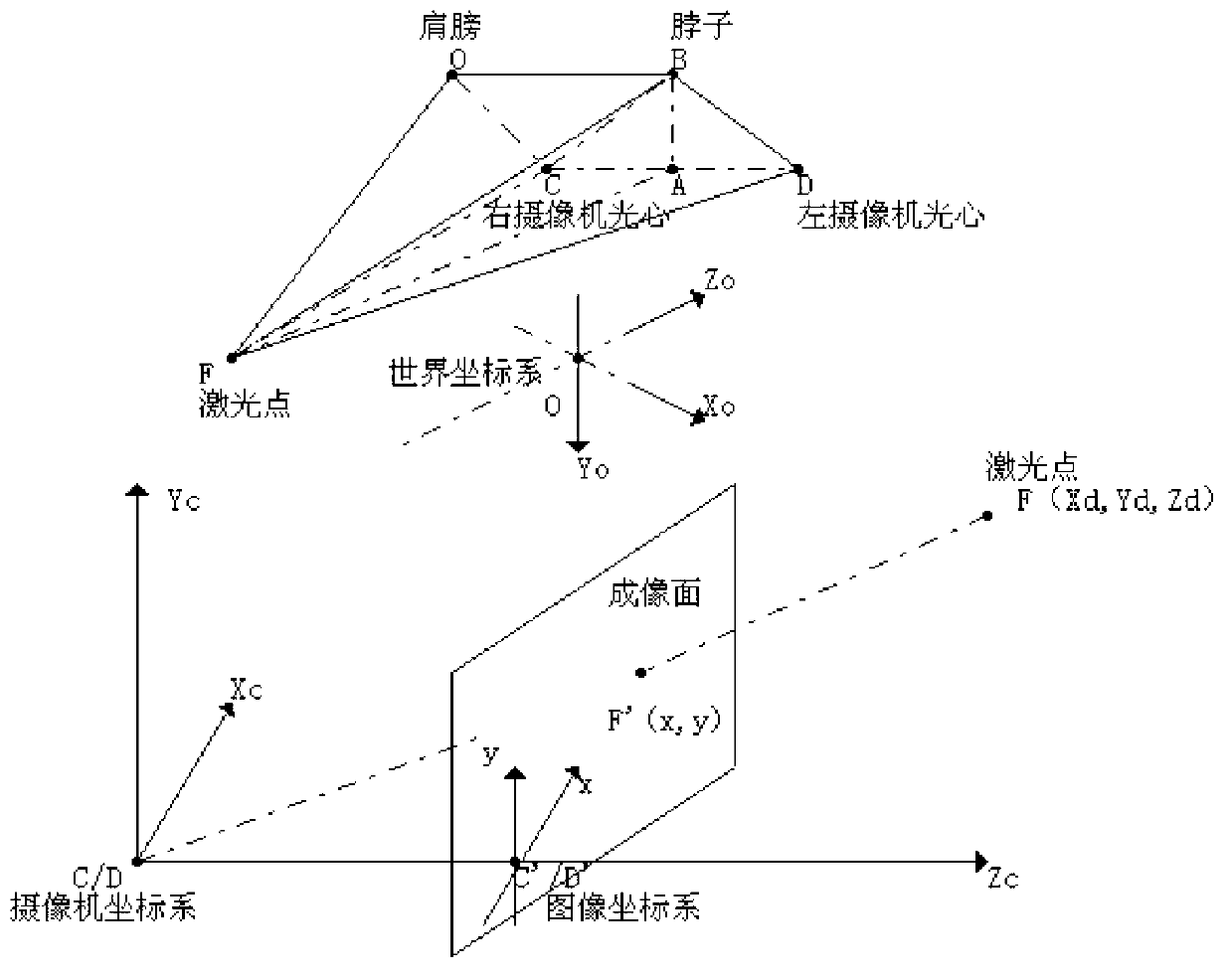

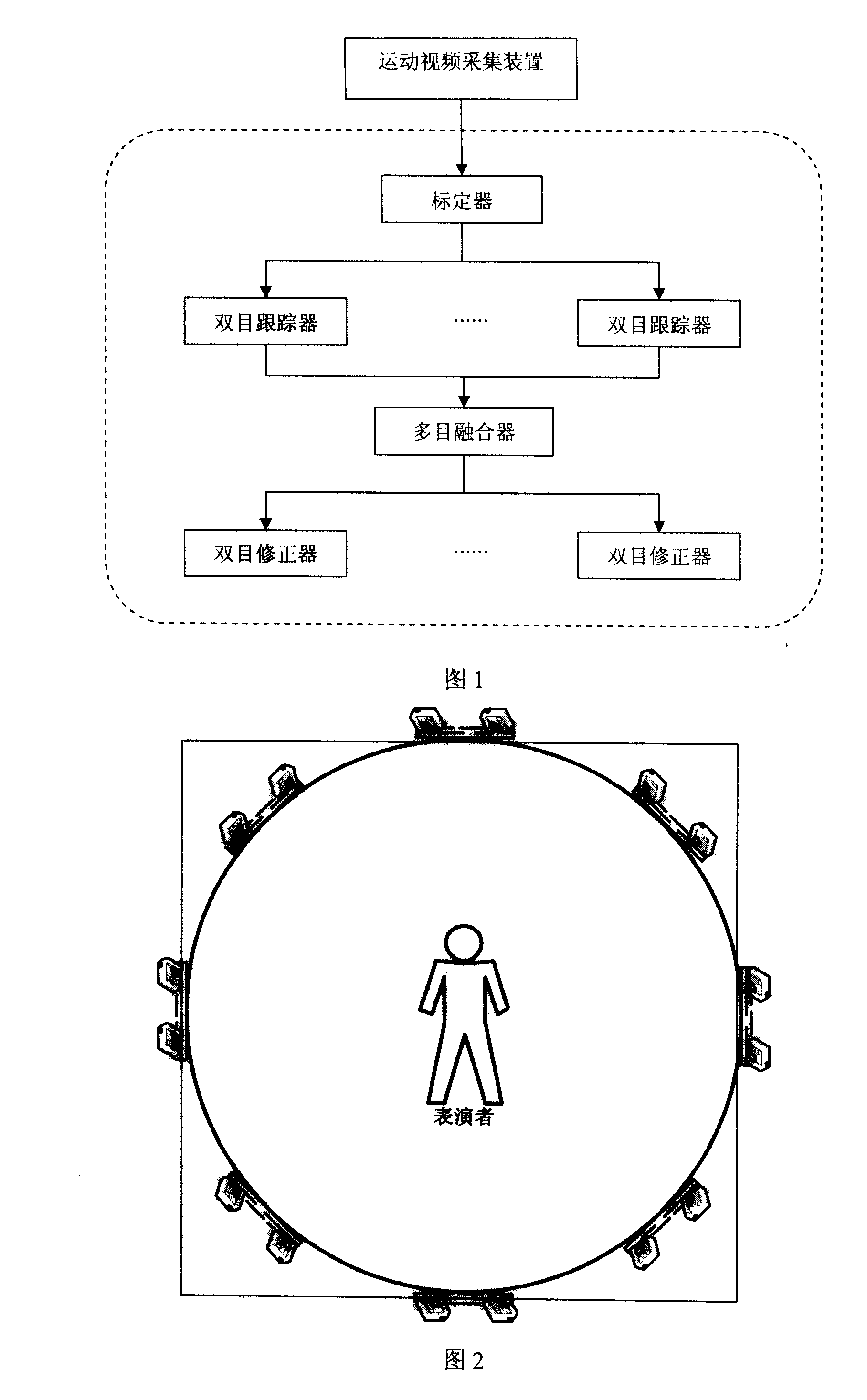

Method for capturing movement based on multiple binocular stereovision

The invention discloses a motion capture method based on multiple binocular stereo vision systems. The method proceeds as follows:

1. A motion video acquisition device is built and used to collect human motion video sequences from different orientations.
2. The multi-view motion video sequences captured by the cameras are calibrated.
3. Each binocular tracker matches and tracks the marker points.
4. The three-dimensional tracking results of the binocular trackers are fused.
5. The three-dimensional motion information of the marker points obtained by the multi-view fusion device is fed back to the binocular trackers to refine binocular tracking.

Building on binocular three-dimensional tracking, the invention fuses multiple groups of binocular three-dimensional motion data. It solves problems such as three-dimensional position acquisition, tracking and trajectory fusion for multiple marker points, and increases the number of trackable marker points. The resulting tracking is comparable to that of three-dimensional motion capture devices that use multiple infrared cameras.

Owner: NORTHWESTERN POLYTECHNICAL UNIV
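
The abstract does not say how the trackers' results are fused. One simple option, sketched here as an assumption, is an inverse-variance weighted mean of each marker's position across the binocular trackers that see it. More reliable trackers then contribute more. The variances, the weighting scheme and the function name are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def fuse_marker_estimates(estimates: Sequence[Tuple[Vec3, float]]) -> Optional[Vec3]:
    """Inverse-variance weighted mean of one marker's 3D position.

    `estimates` holds ((x, y, z), variance) pairs, one per binocular tracker
    that currently sees the marker. Returns None if no tracker sees it.
    """
    if not estimates:
        return None
    total_w = 0.0
    acc = [0.0, 0.0, 0.0]
    for point, variance in estimates:
        if variance <= 0:
            raise ValueError("variance must be positive")
        w = 1.0 / variance  # more certain trackers get more weight
        total_w += w
        for k in range(3):
            acc[k] += w * point[k]
    return acc[0] / total_w, acc[1] / total_w, acc[2] / total_w
```

The fused position could be fed back to each tracker as a prior for its next search window, which mirrors the feedback step described in the abstract.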

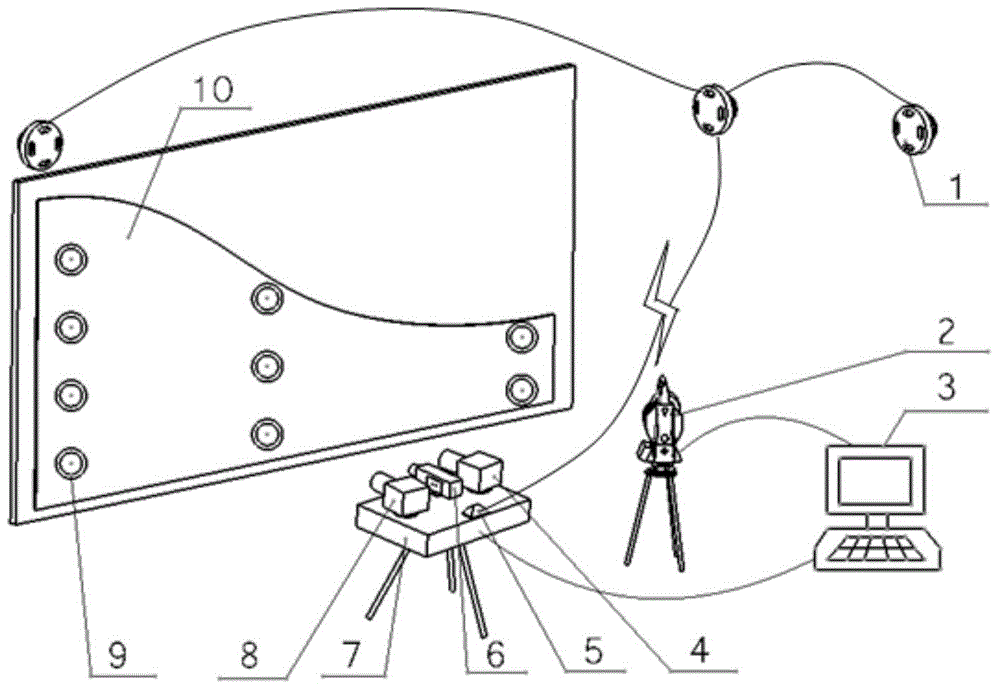

Geometric parameter visual measurement method for large composite board

Status: Active | Publication: CN104457569A | Keywords: Accurate measurement, Realize visual calibration work, Image analysis, Using optical means, Laser tracker, Position control

The invention relates to a visual measurement method for the geometric parameters of a large composite board and belongs to the field of visual measurement. The method enables fast on-site three-dimensional reconstruction and geometric parameter measurement of large composite boards. Measurement uses a binocular vision measurement system, a laser tracker system and an indoor Bluetooth positioning system:

- feature points are distributed on the composite board under measurement, and reflective targets are attached to them;
- the binocular vision measurement system is set up; it consists of a left camera, a right camera, a continuous line laser and an automatic position control platform;
- the laser tracker system and the indoor Bluetooth positioning system are installed, and the measurement is completed.

The method achieves fast, precise visual calibration of large composite boards. It improves the stitching precision of adjacent images and the overall measurement precision. It also overcomes the low efficiency, precision and stability of traditional measurement methods.

Owner: DALIAN UNIV OF TECH

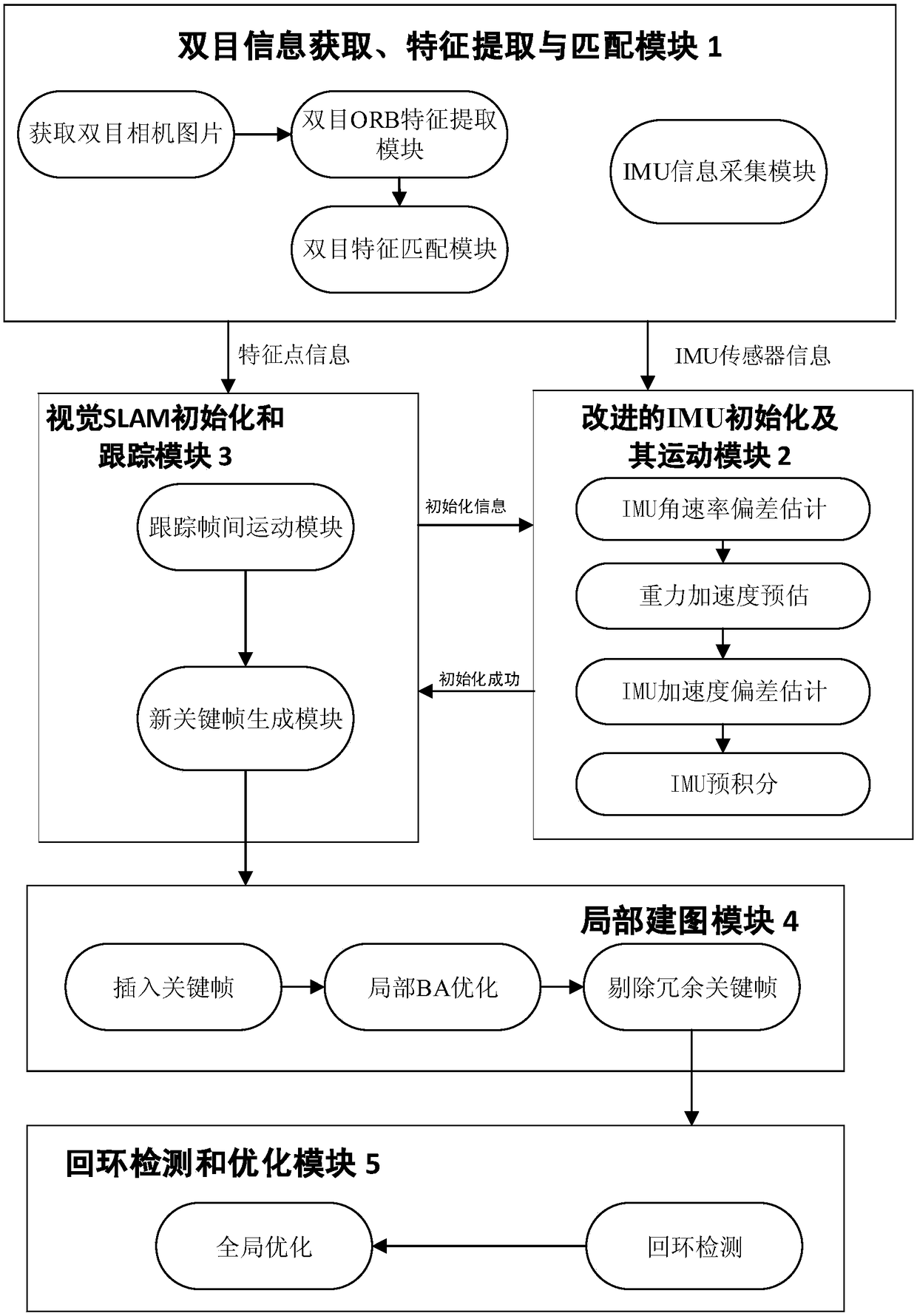

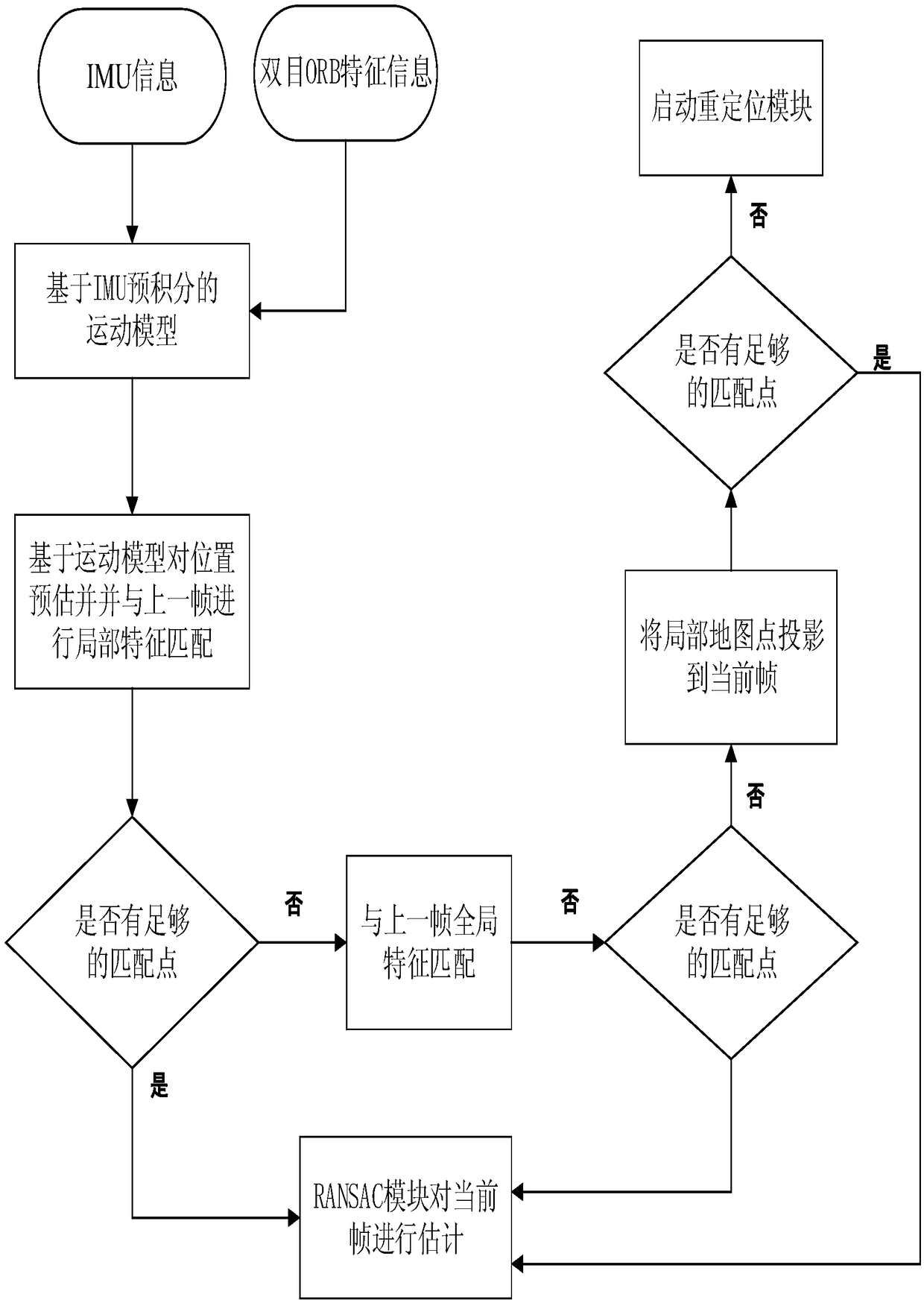

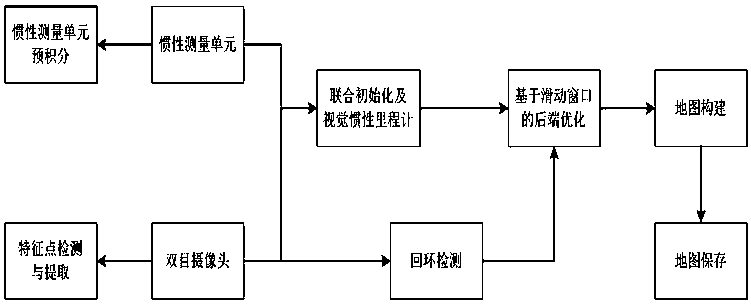

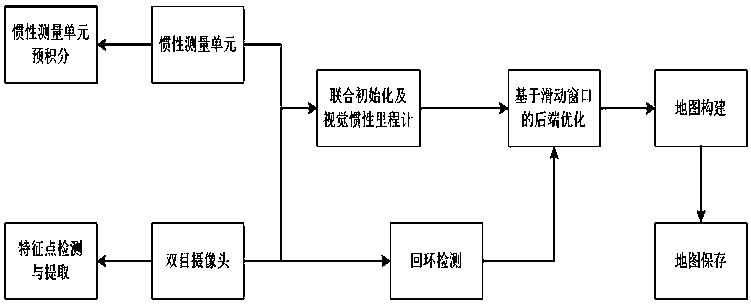

Robot positioning and map construction system based on binocular vision features and IMU information

Pending | CN108665540A | Improve robustness | Improve accuracy | Image enhancement | Image analysis | Global optimization | Inter frame

Disclosed is a robot positioning and map construction system based on binocular vision features and IMU information, comprising a binocular information collection, feature extraction and matching module; an improved IMU initialization and motion module; a visual SLAM algorithm initialization and tracking module; a local mapping module; and a loop detection and optimization module. The binocular information collection, feature extraction and matching module comprises a binocular ORB feature extraction sub-module, a binocular feature matching sub-module and an IMU information collection sub-module. The improved IMU initialization and motion module comprises an IMU angular-rate bias estimation sub-module, a gravitational acceleration prediction sub-module, an IMU acceleration bias estimation sub-module and an IMU pre-integration sub-module. The visual SLAM algorithm initialization and tracking module comprises an inter-frame motion tracking sub-module and a keyframe generation sub-module. The local mapping module comprises a new keyframe insertion sub-module, a local BA (bundle adjustment) optimization sub-module and a redundant keyframe elimination sub-module. The loop detection and optimization module comprises a loop detection sub-module and a global optimization sub-module. The resulting system offers good robustness, high accuracy and strong adaptability.

Owner:ZHEJIANG UNIV OF TECH
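The abstract names an IMU pre-integration sub-module without describing it. As a rough illustration only, the sketch below implements the textbook discrete pre-integration recurrence that accumulates rotation, velocity and position deltas between two keyframes from raw gyroscope and accelerometer samples. The function names are hypothetical, the biases are assumed fixed over the interval, and noise covariance propagation and bias Jacobians are deliberately left out; none of this is taken from the patent.

```python
import numpy as np


def so3_exp(phi):
    """Rodrigues' formula: rotation vector (3,) -> rotation matrix (3, 3)."""
    phi = np.asarray(phi, dtype=float)
    angle = np.linalg.norm(phi)
    if angle < 1e-12:
        return np.eye(3)
    k = phi / angle
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)


def preintegrate(gyro, accel, dt, bias_gyro, bias_accel):
    """Accumulate relative rotation, velocity and position between two keyframes.

    gyro, accel: (N, 3) raw IMU samples in the body frame; dt: sample period in seconds.
    Gravity is not removed here; it is added back when the deltas are combined
    with the keyframe states during optimization.
    """
    dR = np.eye(3)
    dv = np.zeros(3)
    dp = np.zeros(3)
    for w, a in zip(gyro, accel):
        a_corr = np.asarray(a, dtype=float) - bias_accel  # bias-corrected acceleration
        w_corr = np.asarray(w, dtype=float) - bias_gyro   # bias-corrected angular rate
        # Position uses the previous velocity and rotation, then velocity, then rotation.
        dp = dp + dv * dt + 0.5 * (dR @ a_corr) * dt ** 2
        dv = dv + (dR @ a_corr) * dt
        dR = dR @ so3_exp(w_corr * dt)
    return dR, dv, dp
```

Because the deltas depend only on the IMU samples and the bias estimates, they can be computed once per keyframe pair and reused each time the optimizer revisits that pair.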

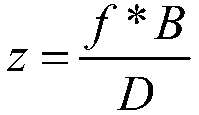

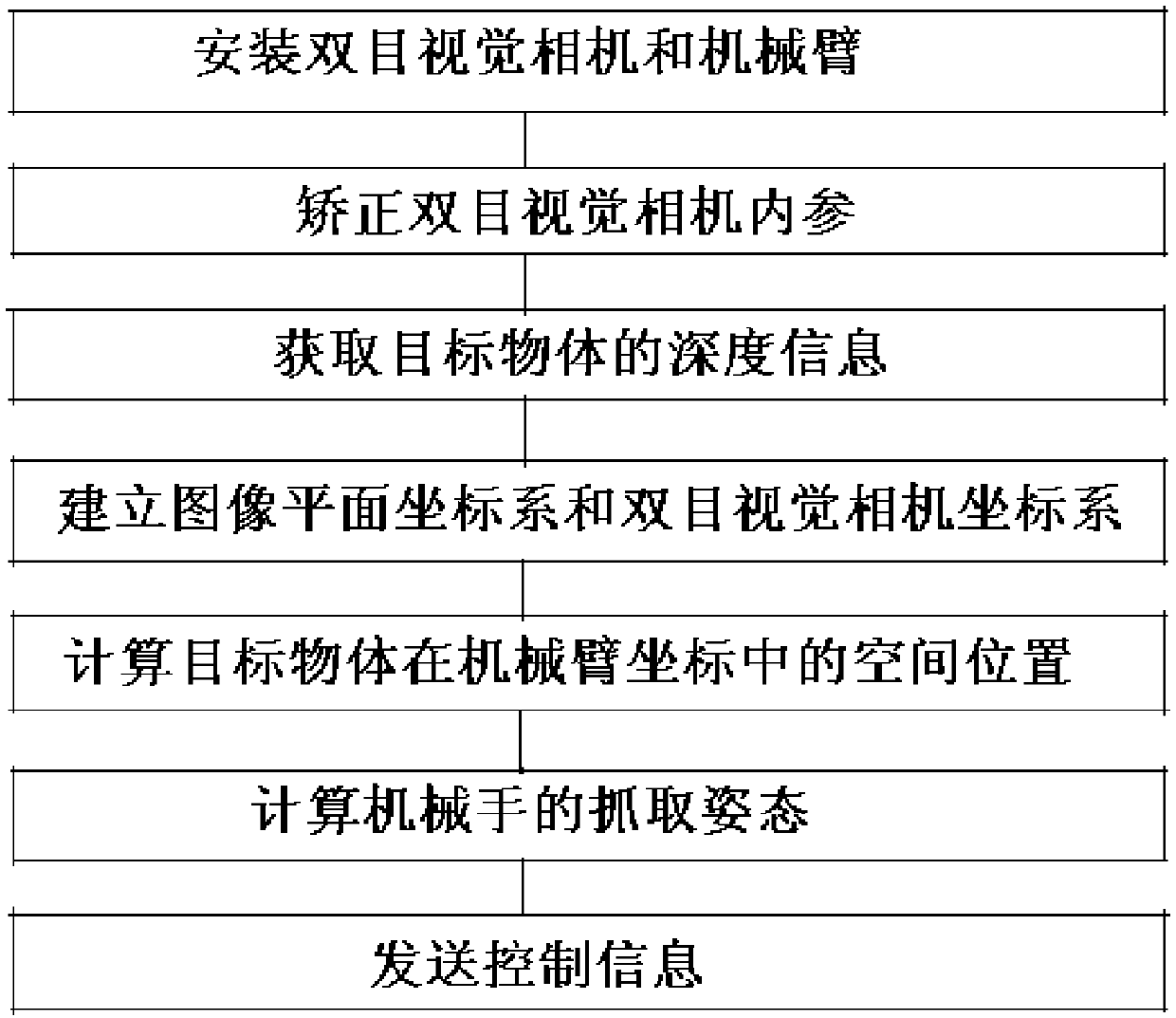

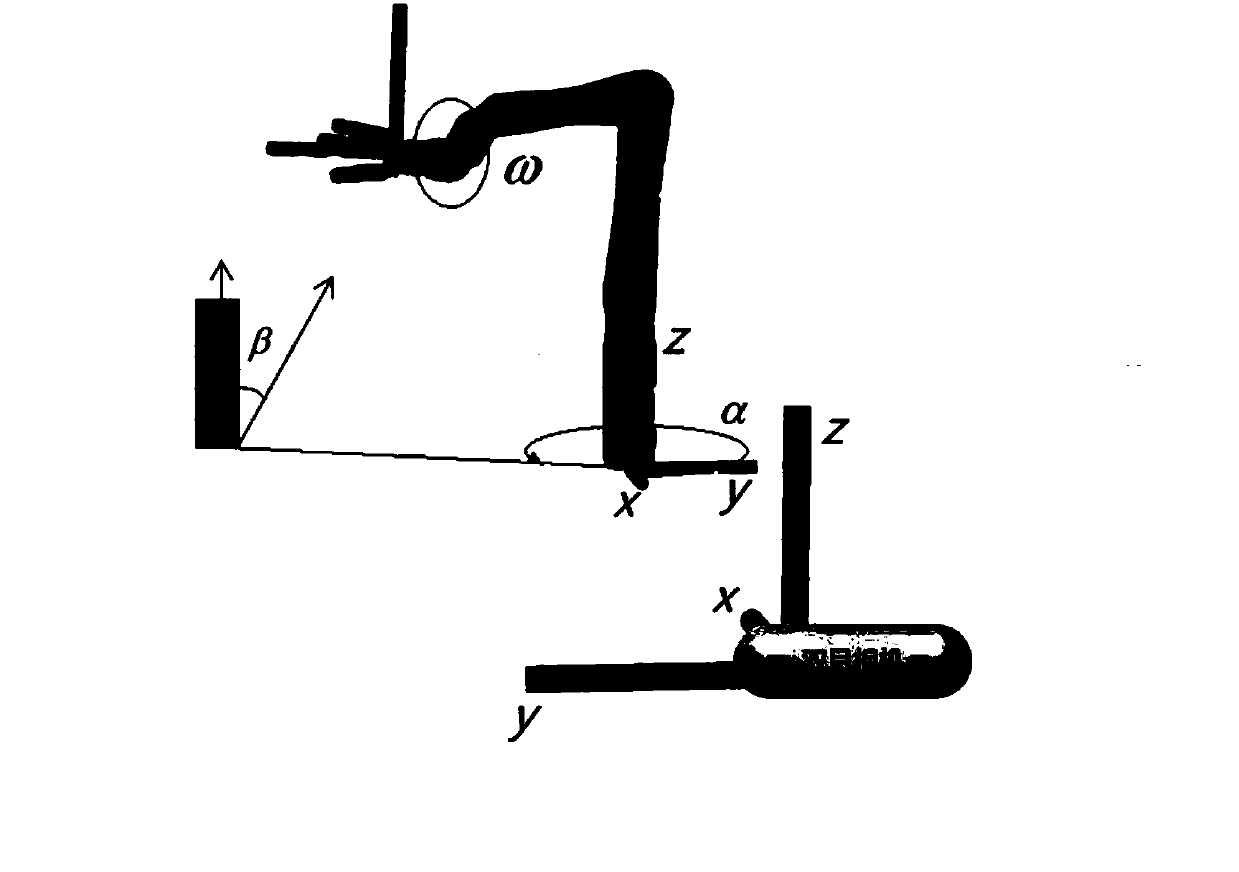

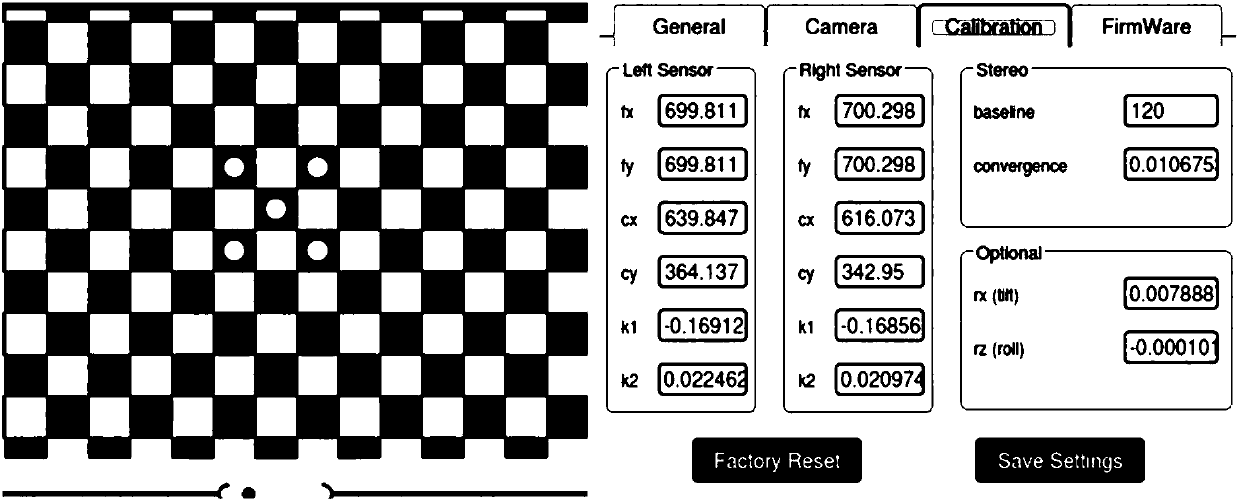

Binocular vision based object location grabbing method for mechanical arm

Active | CN107767423A | Simple method | Small amount of calculation | Image analysis | Visual technology | Manipulator

The invention relates to the fields of mechanical arms and computer vision. The binocular-vision-based object localization and grasping method for a mechanical arm comprises the steps of: 1, mounting a binocular vision camera and the mechanical arm; 2, calibrating the intrinsic parameters of the binocular vision camera; 3, obtaining depth information of the target object; 4, establishing an image plane coordinate system and a binocular vision camera coordinate system; 5, calculating the spatial position of the target object in the mechanical arm coordinate system; 6, calculating the grasping pose of the mechanical arm; 7, sending control information. Compared with the prior art, the method has the following advantages: 1, because the object recognition method is simple and its computational load is relatively low, it meets the timing requirements of real-time grasping; 2, it avoids the operation failures that occur in systems based on a traditional teaching (demonstration) approach when the pose of the target object differs slightly from the ideal pose.

Owner:DALIAN UNIV OF TECH
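Steps 3 to 5 of the method above (depth from the stereo pair, then a position in the camera frame, then a position in the arm frame) can be sketched for a single pixel. This assumes a rectified stereo pair with a pinhole model, so depth follows Z = f·B/d, and a known 4x4 homogeneous transform from the camera frame to the arm base frame (obtained, for example, by hand-eye calibration). The function names and all numbers in the usage note are illustrative and do not come from the patent.

```python
import numpy as np


def pixel_to_camera(u, v, disparity, fx, fy, cx, cy, baseline):
    """Back-project pixel (u, v) of the rectified left image into the left-camera frame.

    disparity: horizontal pixel offset between left and right images (must be > 0).
    fx, fy, cx, cy: intrinsics in pixels; baseline: camera separation in metres.
    """
    if disparity <= 0:
        raise ValueError("disparity must be positive")
    z = fx * baseline / disparity  # depth from disparity: Z = f * B / d
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.array([x, y, z])


def camera_to_arm(p_cam, T_arm_cam):
    """Map a 3D point from the camera frame to the arm base frame via a 4x4 transform."""
    p_h = np.append(p_cam, 1.0)  # homogeneous coordinates
    return (T_arm_cam @ p_h)[:3]
```

For example, with fx = fy = 700 px, cx = 320, cy = 240, a 0.12 m baseline and a disparity of 42 px at pixel (390, 240), the point lies 2.0 m in front of the camera at x = 0.2 m; a pure translation of (0.5, 0, 0.3) m to the arm base then places it at (0.7, 0, 2.3) m.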

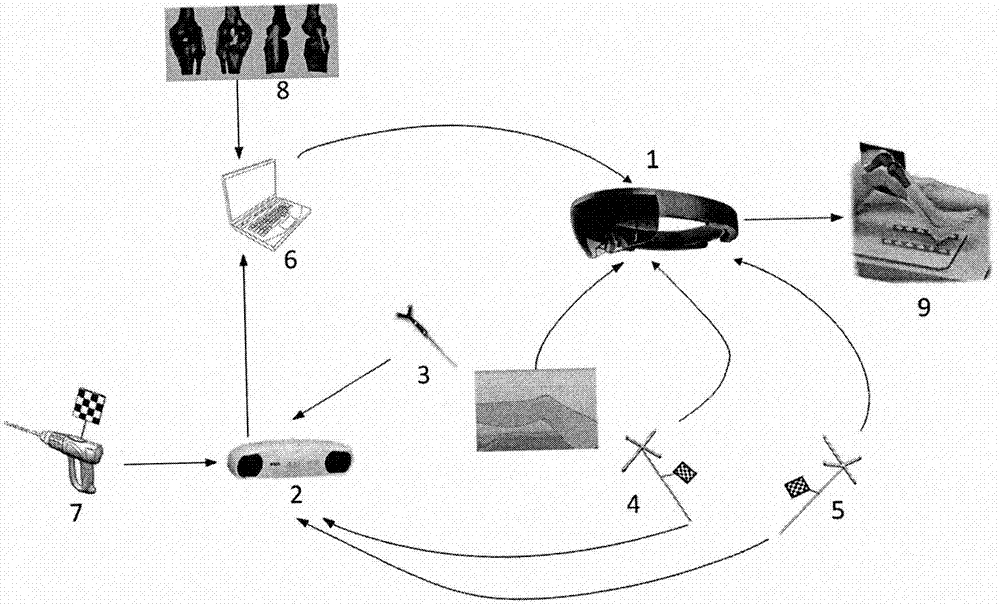

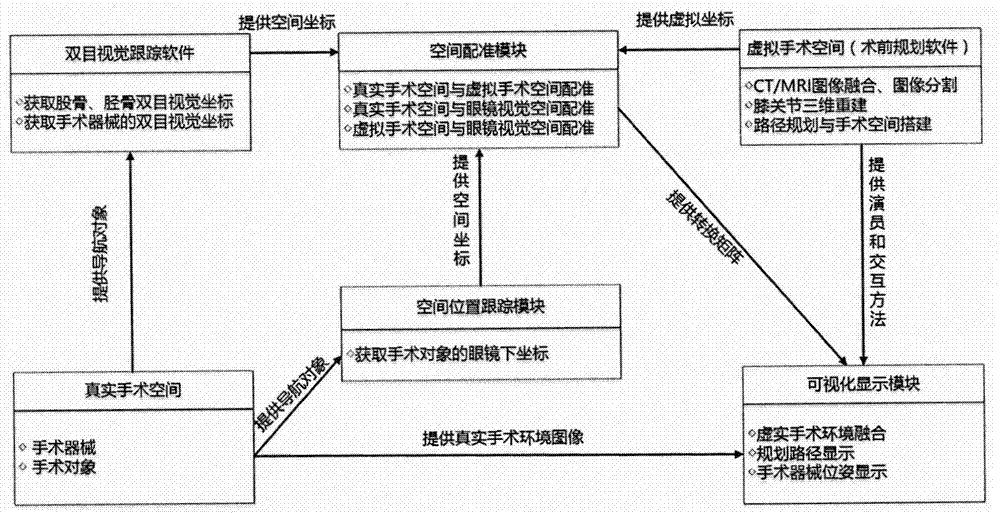

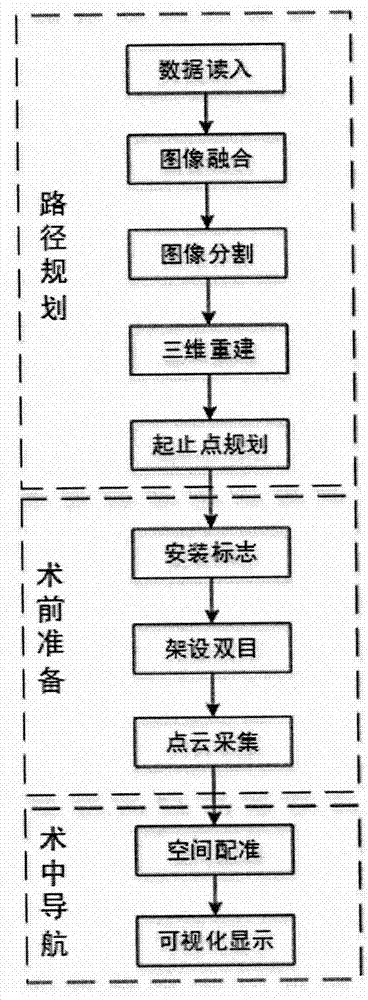

Augmented reality operation navigation system for reconstructing anterior cruciate ligament

Inactive | CN107536643A | Has a regular quadrilateral border | Has an asymmetrical image | Surgical navigation systems | Tibia | Posterior cruciate ligament

The invention relates to the field of medicine, in particular to an augmented reality surgical navigation system for anterior cruciate ligament reconstruction. The system consists of augmented reality glasses, surgical navigation software for anterior cruciate ligament reconstruction, a binocular vision unit, marker needles, femoral joint markers, tibial joint markers, a computer and vision tracking software; the surgical navigation software, binocular vision unit, marker needles, femoral joint markers, tibial joint markers and computer are installed on the augmented reality glasses, and the vision tracking software is installed on the computer. Before surgery, diagnostic CT and MRI data undergo image fusion and segmentation, three-dimensional reconstruction is performed, and the reconstructed three-dimensional model is used for preoperative planning. During surgery, the marker needles are used to collect anatomical points of the knee joint, which are registered with the preoperative knee joint model; based on the tibial and femoral markers, the three-dimensional knee joint model and the planned path are displayed in real time in the augmented reality glasses. The system enables precise localization of the start and end points of the anterior cruciate ligament, overcomes the narrow field of view of existing endoscopic surgery, and effectively reduces surgical risk.

Owner:BEIHANG UNIV

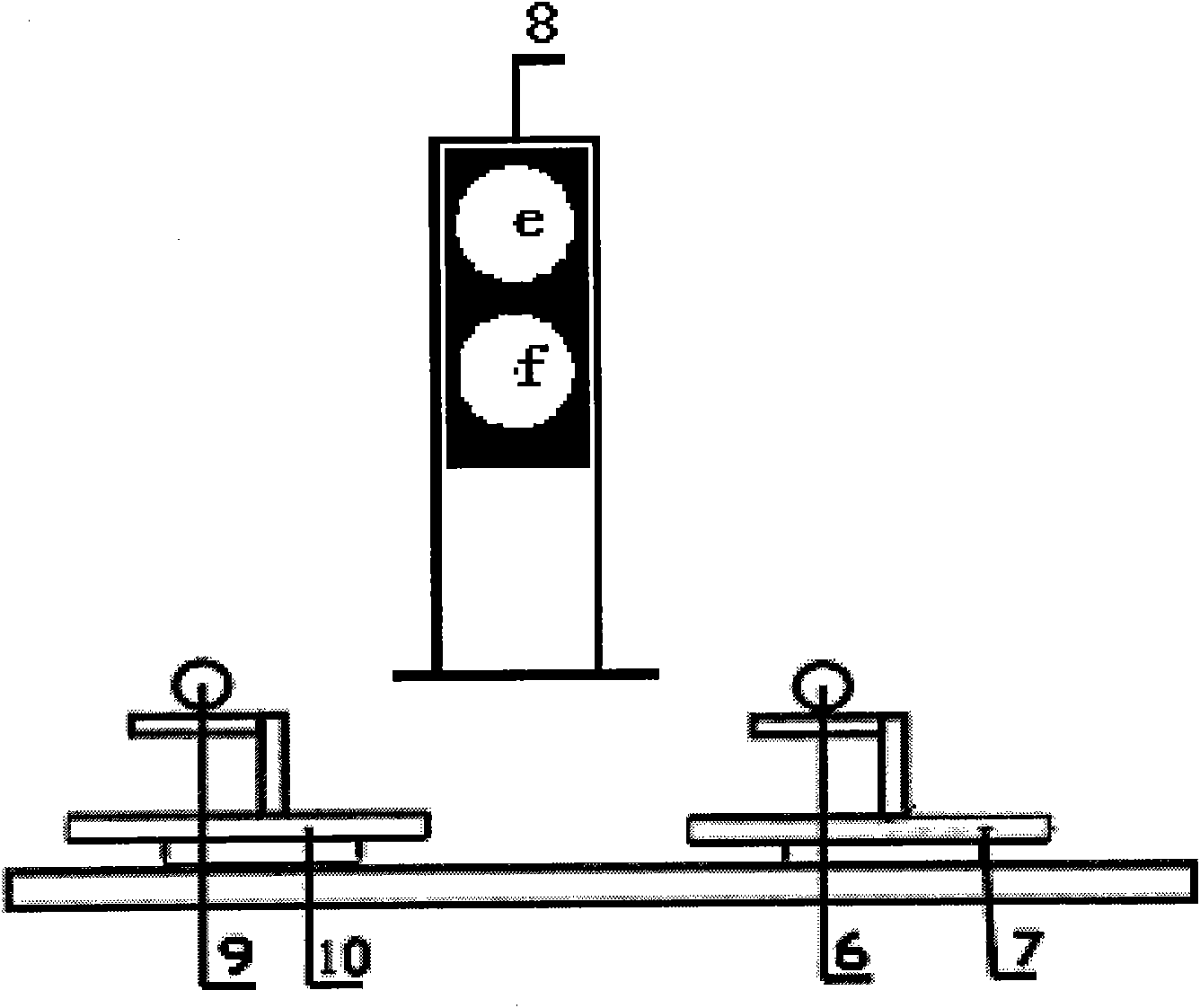

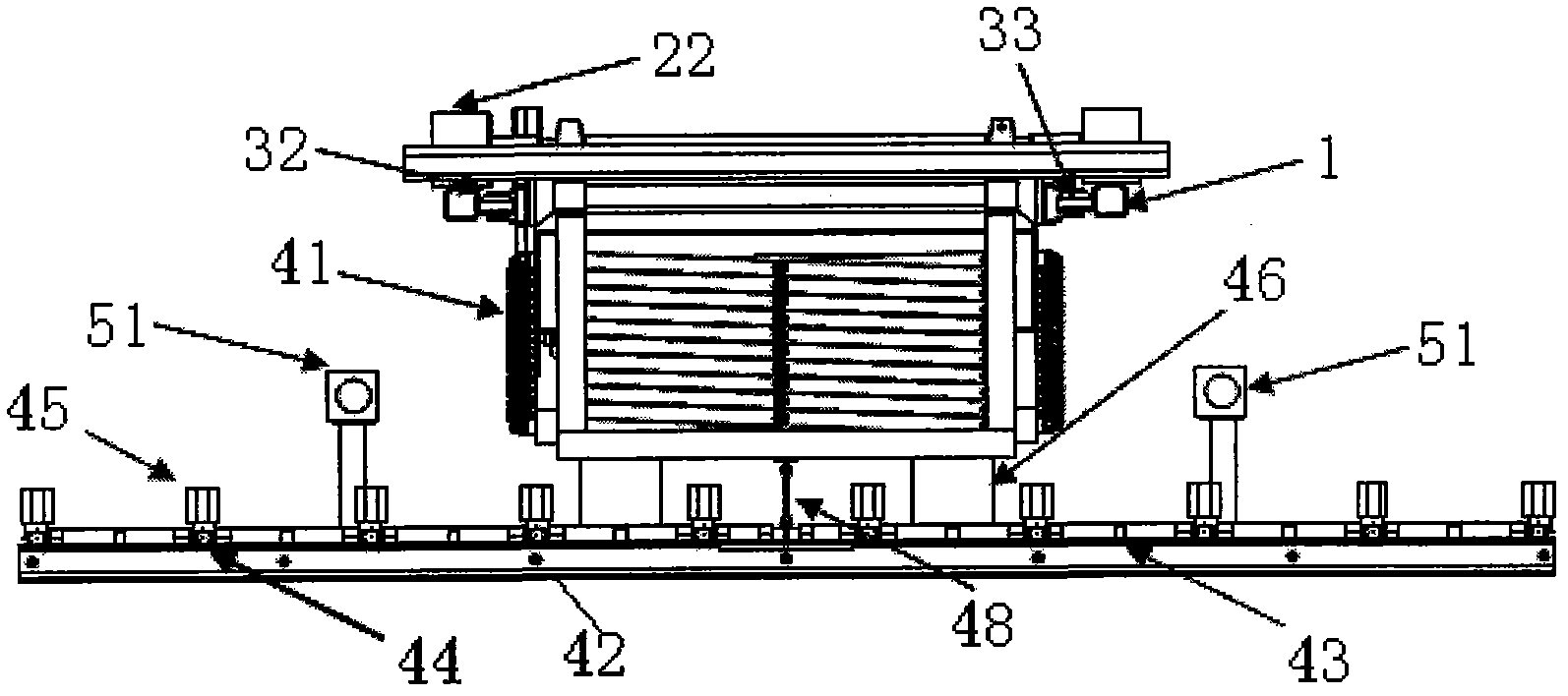

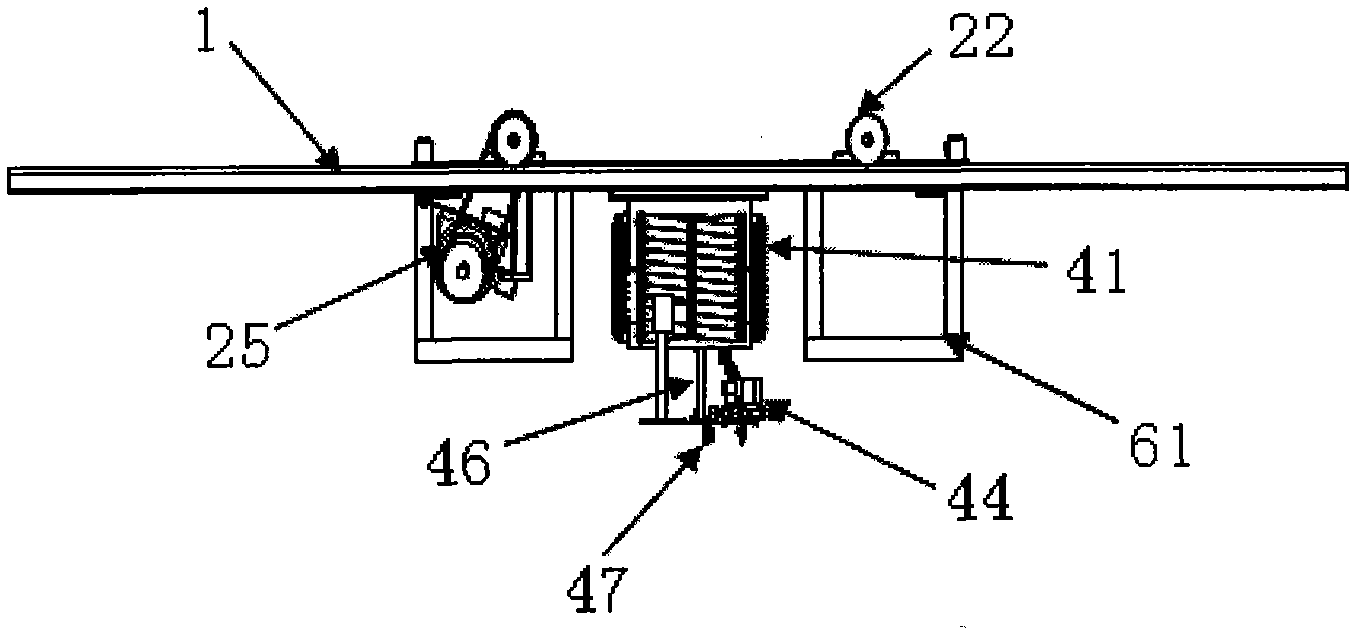

Greenhouse suspended self-propelled target-oriented sprayer system and operation method thereof

Inactive | CN102017938A | Avoid bodily harm | Improve labor efficiency | Spraying apparatus | Insect catchers and killers | Sprayer | Engineering

The invention relates to a greenhouse suspended self-propelled target-oriented sprayer system and its operation method. The system comprises a guide rail, a self-propelled mobile platform, a Hall sensor, a spraying manipulator device, a binocular vision system and a PLC (Programmable Logic Controller). A plurality of magnetic positioning marks are preinstalled on the guide rail; the self-propelled mobile platform runs on the guide rail through a walking mechanism and carries the Hall sensor. The spraying manipulator device comprises a Z-direction scissor-type hanger mounted on a frame; a spray boom is arranged horizontally below the hanger through a rotary positioning mechanism, a spray pipe is fixed to the spray boom, and a plurality of spray nozzles are arranged on the spray pipe at intervals, each independently controlled by a solenoid valve; the hanger can extend and retract. The binocular vision system comprises two CCD (Charge-Coupled Device) video cameras, fixed at the two ends of the spray boom respectively and connected to an upper computer running preinstalled control software. The PLC is electrically connected to the upper computer, the Hall sensor, a frequency converter, a drive controller, the solenoid valves and the CCD video cameras.

Owner:CHINA AGRI UNIV

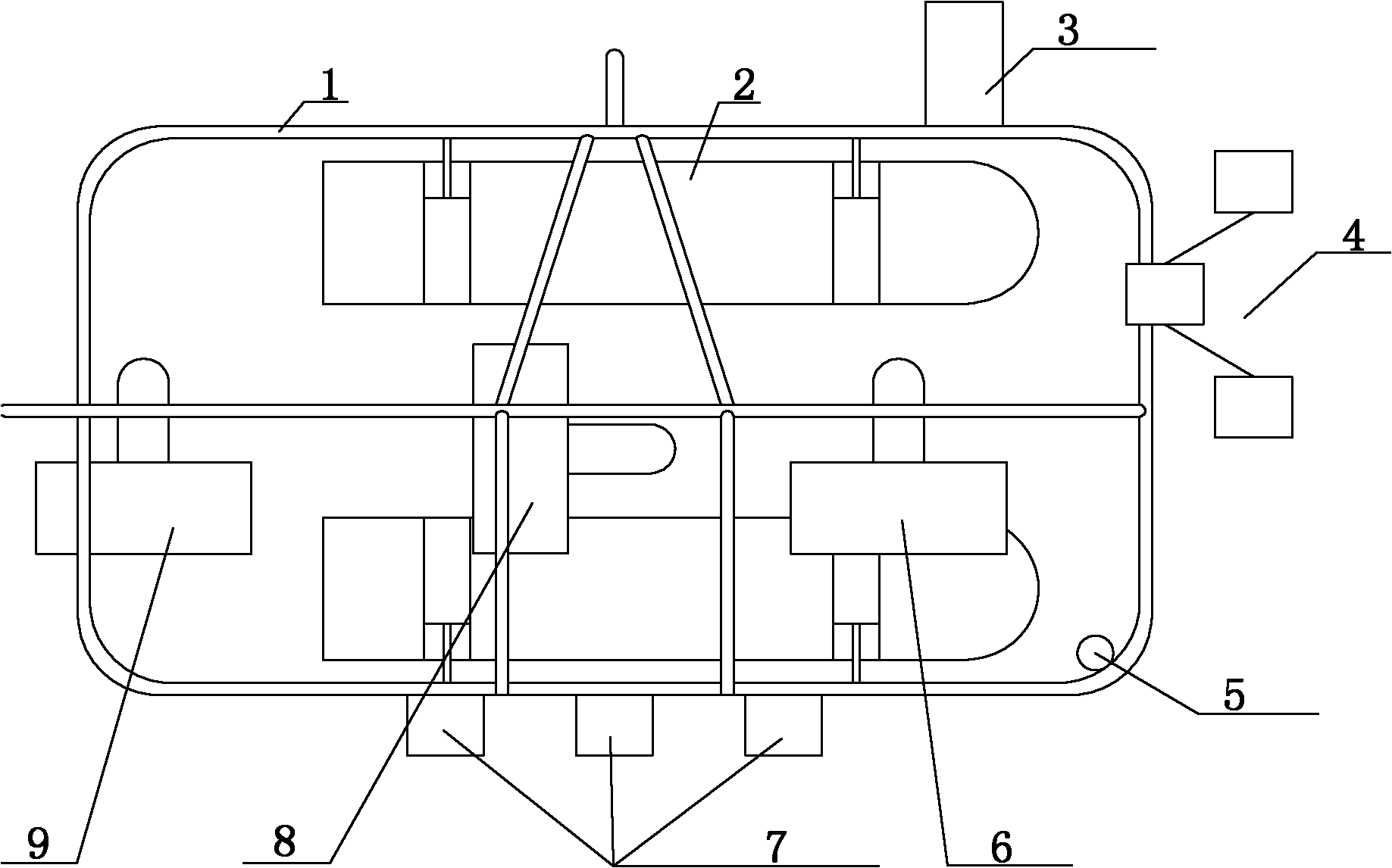

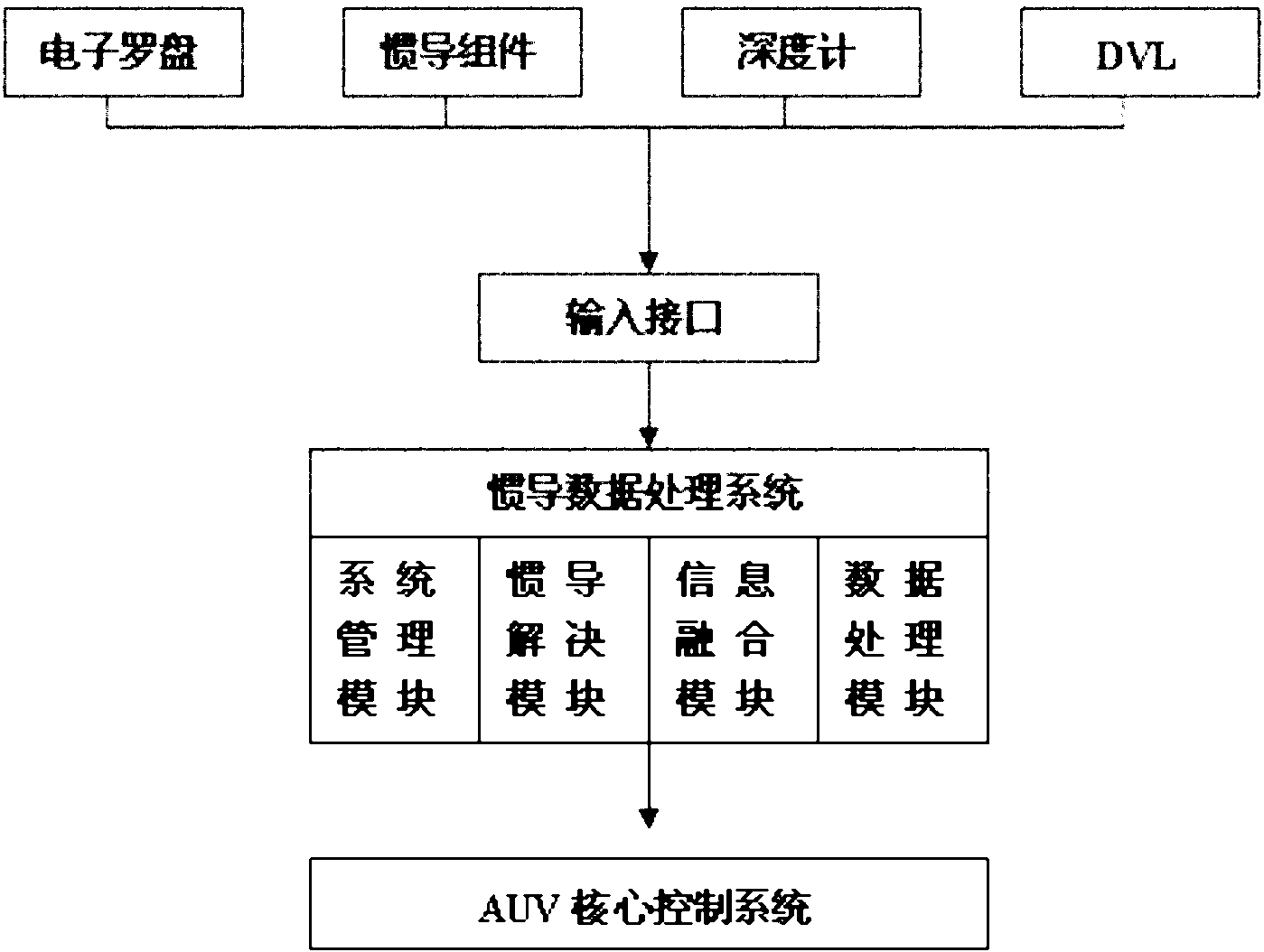

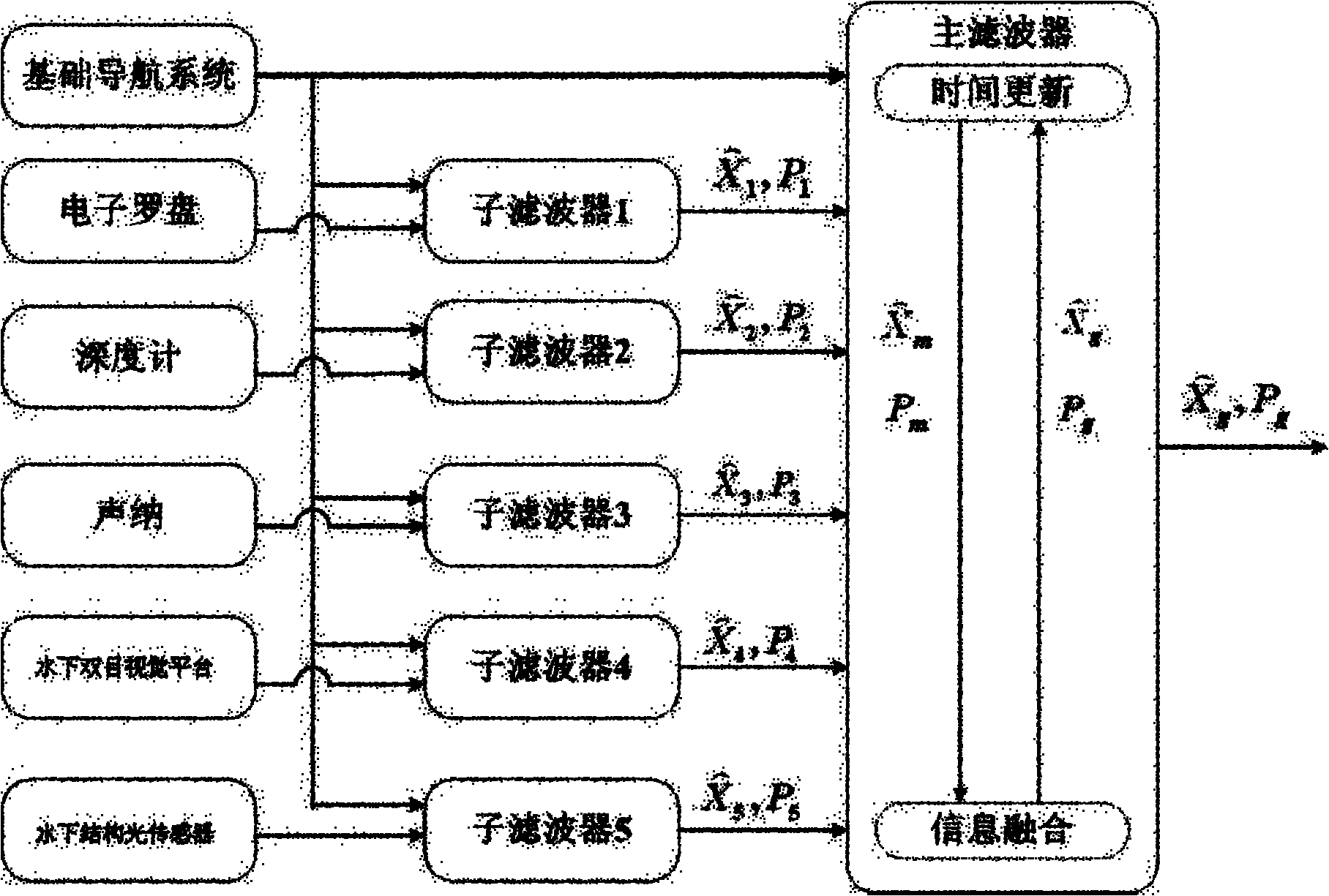

Autonomous underwater vehicle combined navigation system

Active | CN102042835A | Realize autonomous navigation | Precise navigation | Photogrammetry/videogrammetry | Navigation by speed/acceleration measurements | Navigation system | Visual perception

The invention relates to an underwater vehicle navigation system, in particular to an integrated navigation system for autonomous underwater vehicles. The system comprises an inertial base navigation device and an external-sensor navigation device: the inertial base navigation device comprises a Doppler velocimeter, a fiber-optic gyroscope, a pressure sensor, an electronic compass and a depth gauge, and the external-sensor navigation device comprises a sonar. The integrated navigation system further comprises underwater structured-light sensors and underwater binocular vision platforms. The structured-light sensors comprise a forward-looking sensor at the front of the vehicle's outer frame and a downward-looking sensor at the bottom of the outer frame; likewise, the binocular vision platforms comprise a forward-looking platform at the front and a downward-looking platform at the bottom of the outer frame. The forward-looking structured-light sensor and binocular vision platform form a forward-looking structured-light and vision module at the front of the outer frame, and the downward-looking sensor and platform form a downward-looking structured-light and vision module at the bottom of the outer frame.

Owner:OCEAN UNIV OF CHINA

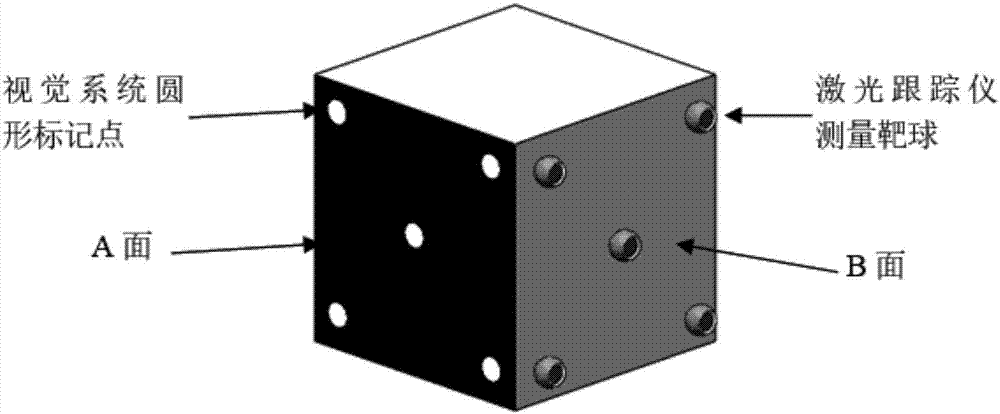

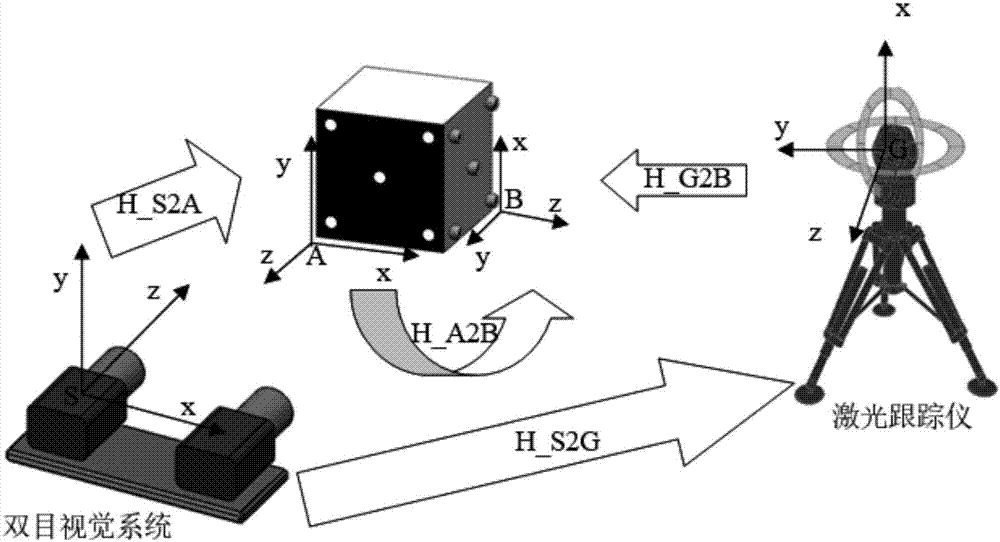

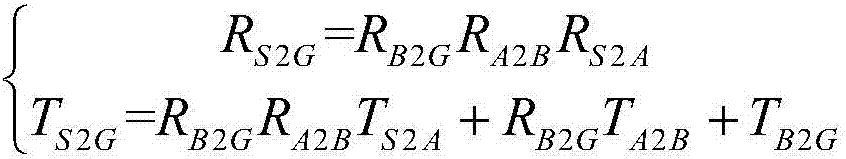

Global calibration method based on binocular visual system and laser tracker measurement system

Active | CN107883870A | Reduce difficulty | Avoid measurement errors | Using optical means | Observational error | Calibration result

The invention discloses a global calibration method based on a binocular vision system and a laser tracker measurement system. First, a rigid stereoscopic target is manufactured whose side A carries circular marker points for the binocular vision system and whose side B carries target balls for the laser tracker. Then the side-A and side-B coordinate systems of the target are established. Next, the transformation between the side-A coordinate system and the left camera coordinate system of the binocular vision system, and the transformation between the side-B coordinate system and the laser tracker world coordinate system, are solved. From these results, the transformation between the side-A and side-B coordinate systems is solved. Finally, the transformation between the left camera coordinate system of the binocular vision system and the laser tracker world coordinate system is solved, completing the global calibration. The method reduces the difficulty of directly machining and maintaining a high-precision stereoscopic target, avoids the measurement error introduced by direct measurement, and yields a more accurate calibration result.

Owner:四川雷得兴业信息科技有限公司
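The final step of the global calibration above is a composition of rigid transforms. The sketch below shows only that composition, assuming the three intermediate transforms have already been estimated and are stored as 4x4 homogeneous matrices, with the convention that `T_X_Y` maps coordinates in frame Y into frame X. The frame names mirror the abstract; the function names and the convention are assumptions of this example.

```python
import numpy as np


def make_transform(R, t):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector translation."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def invert_transform(T):
    """Invert a rigid transform using R^T instead of a general matrix inverse."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv


def chain_left_camera_to_tracker(T_left_A, T_tracker_B, T_A_B):
    """Return T_tracker_left, which maps left-camera coordinates into the tracker world frame.

    T_left_A: side-A target frame -> left camera (from the circular marker points).
    T_tracker_B: side-B target frame -> laser tracker world (from the target balls).
    T_A_B: side-B frame -> side-A frame (rigid geometry of the target).
    """
    # tracker <- B <- A <- left camera
    return T_tracker_B @ invert_transform(T_A_B) @ invert_transform(T_left_A)
```

Any point measured by the binocular system can then be expressed in the laser tracker frame as `T_tracker_left @ [x, y, z, 1]`, which is what allows the two systems' measurements to be merged.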

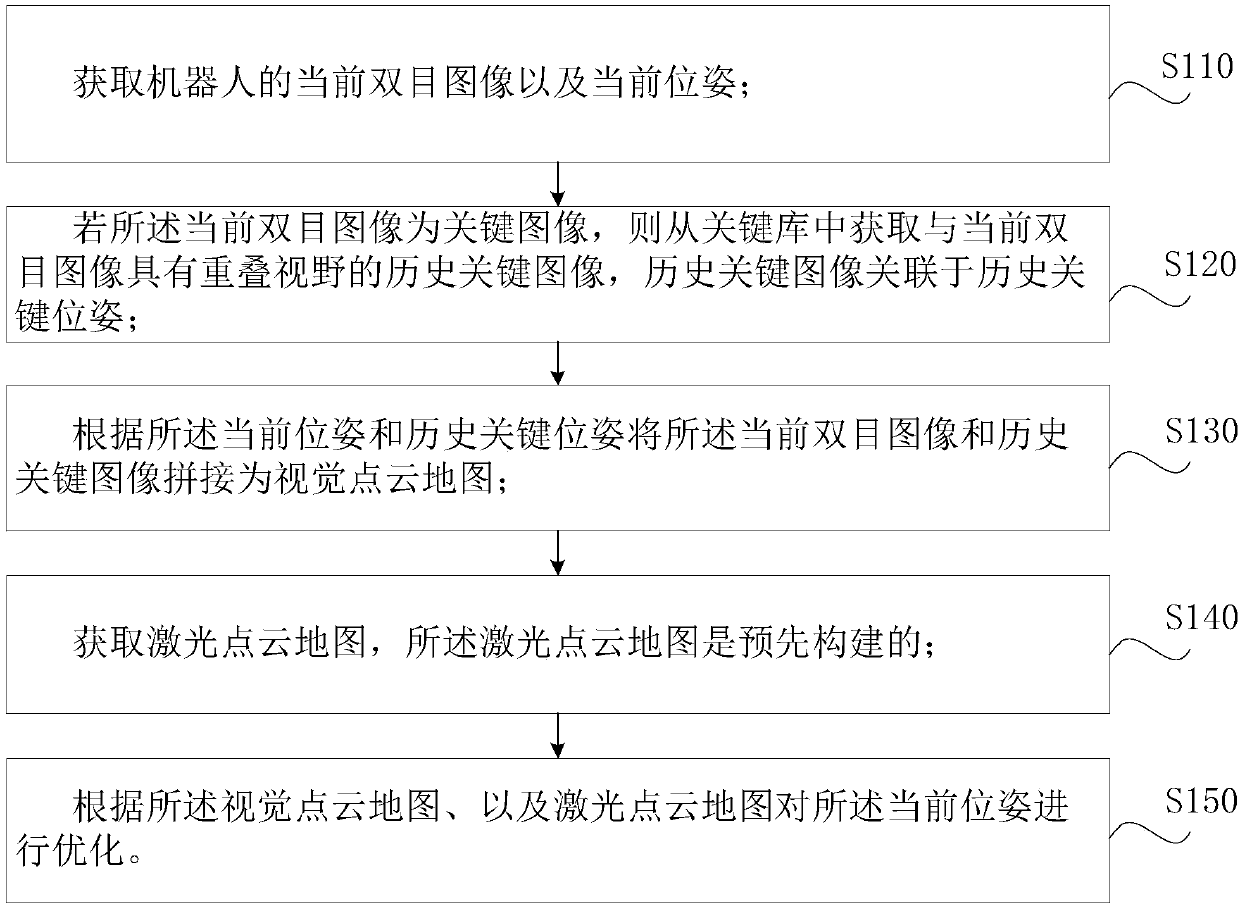

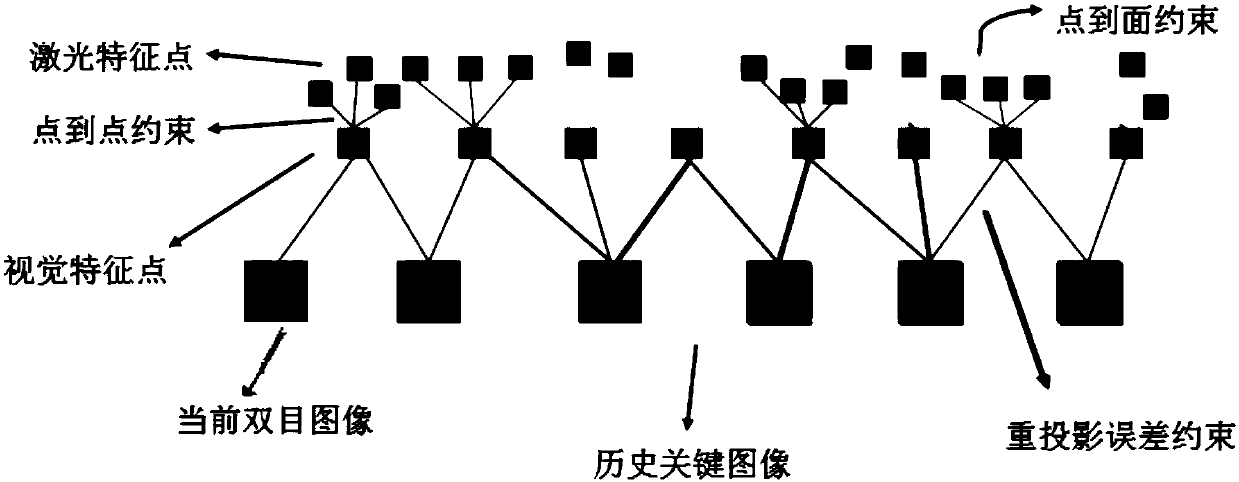

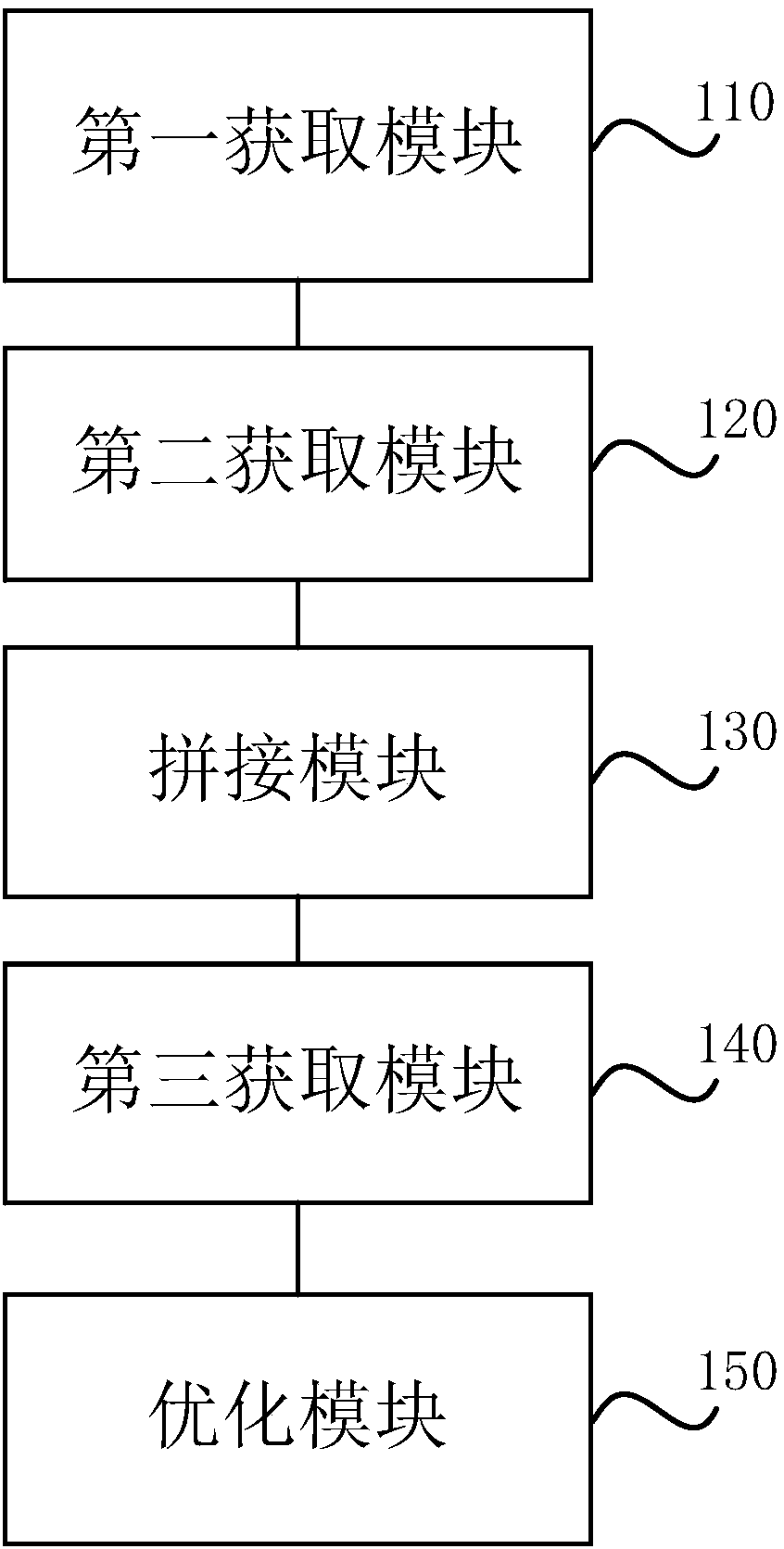

Binocular vision positioning method and binocular vision positioning device for robots, and storage medium

Active | CN107796397A | Lower requirement | High precision | Navigational calculation instruments | Picture taking arrangements | Visual field loss | Point cloud

The invention discloses a binocular vision positioning method and device for robots, and a storage medium. The positioning method comprises: acquiring the current binocular images and the current pose of the robot; if the current binocular images are keyframes, acquiring historical keyframe images from a keyframe library; stitching the current binocular images and the historical keyframe images according to the current pose and the historical keyframe poses to obtain a visual point cloud map; acquiring a prebuilt laser point cloud map; and optimizing the current pose according to the visual point cloud map and the laser point cloud map. The historical keyframe images acquired from the keyframe library have fields of view that overlap those of the current binocular images, and each historical keyframe image is associated with a historical keyframe pose. Because pose estimates are optimized against the prebuilt laser point cloud map at the moments corresponding to keyframes, cumulative pose estimation errors can be continually corrected during long-term robot operation; and because accurate laser point cloud map information is introduced into the optimization, the method and device achieve high positioning accuracy.

Owner:HANGZHOU JIAZHI TECH CO LTD
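The abstract does not specify how the visual point cloud is optimized against the laser point cloud map. A common building block for this kind of alignment is the closed-form SVD (Kabsch) solution for the rigid transform between corresponding points, which ICP-style methods repeat after re-estimating correspondences. The sketch below shows only that single step and assumes the point correspondences are already known; `rigid_align` is a hypothetical name and the technique is a generic stand-in, not the patented optimizer.

```python
import numpy as np


def rigid_align(src, dst):
    """Least-squares rigid transform (R, t) such that dst ~= R @ src + t (Kabsch, no scale).

    src, dst: (N, 3) arrays of corresponding points, N >= 3 and not all collinear.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    H = (src - mu_s).T @ (dst - mu_d)       # 3x3 cross-covariance of centred points
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))  # flip the last axis if SVD yields a reflection
    D = np.diag([1.0, 1.0, d])
    R = Vt.T @ D @ U.T
    t = mu_d - R @ mu_s
    return R, t
```

Applied to visual map points (`src`) and their matched laser map points (`dst`), the returned `(R, t)` is a pose correction that can be composed with the current pose estimate.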

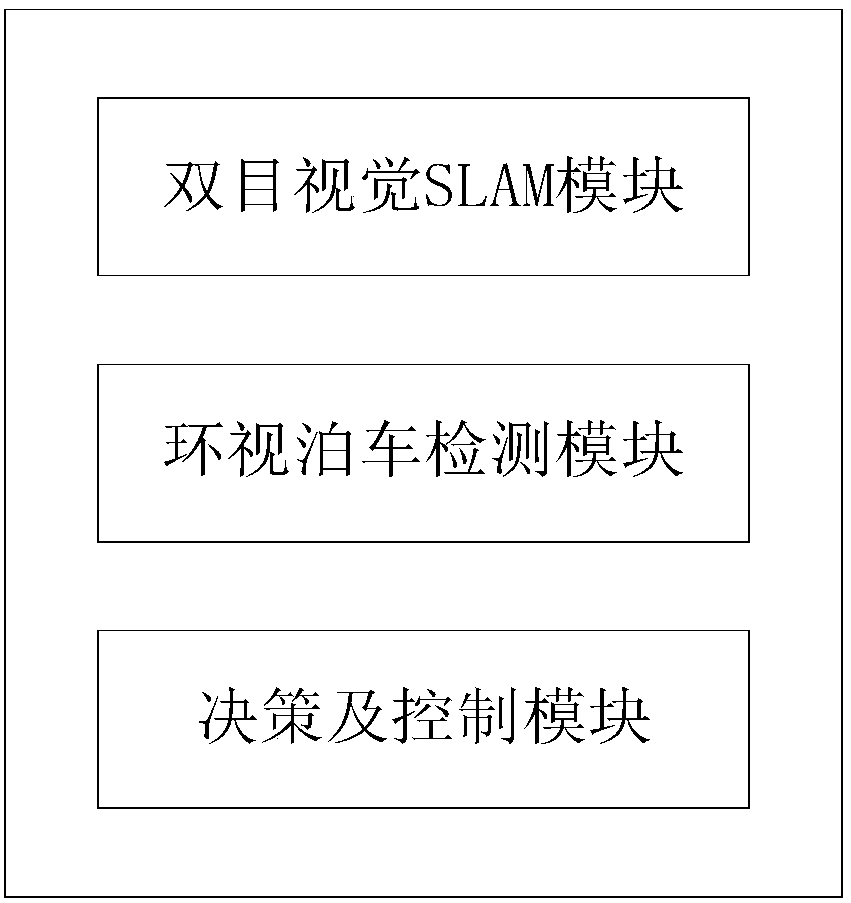

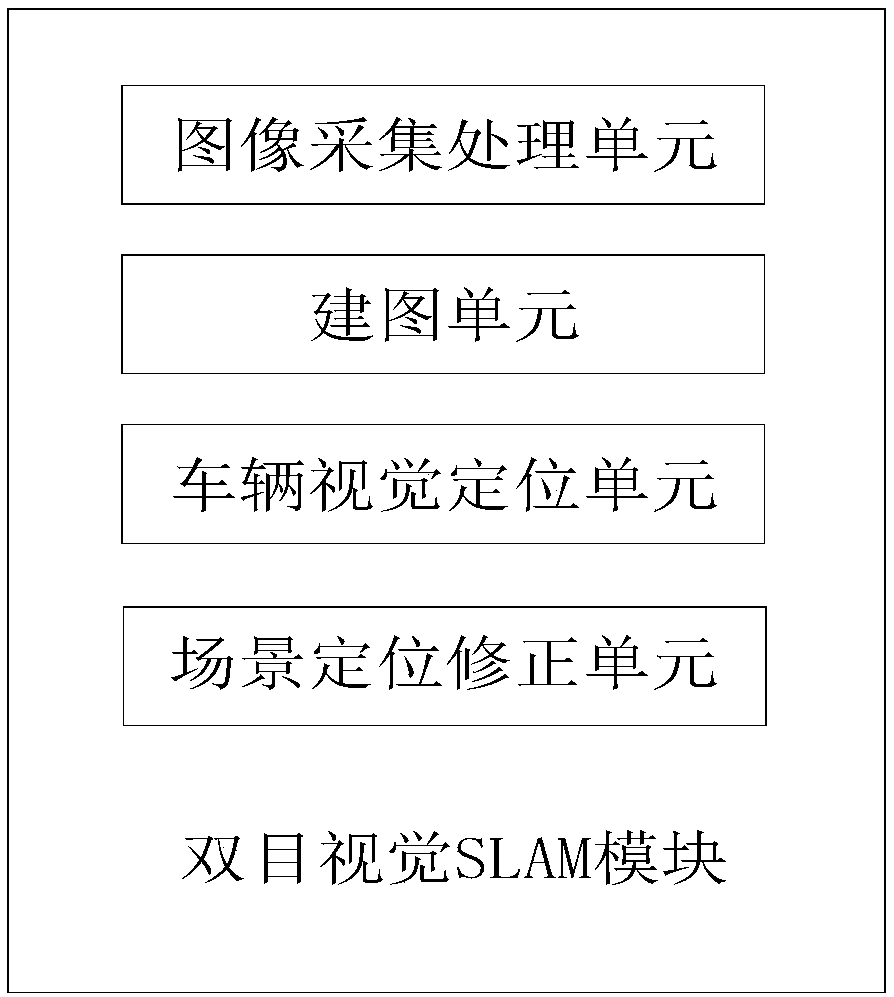

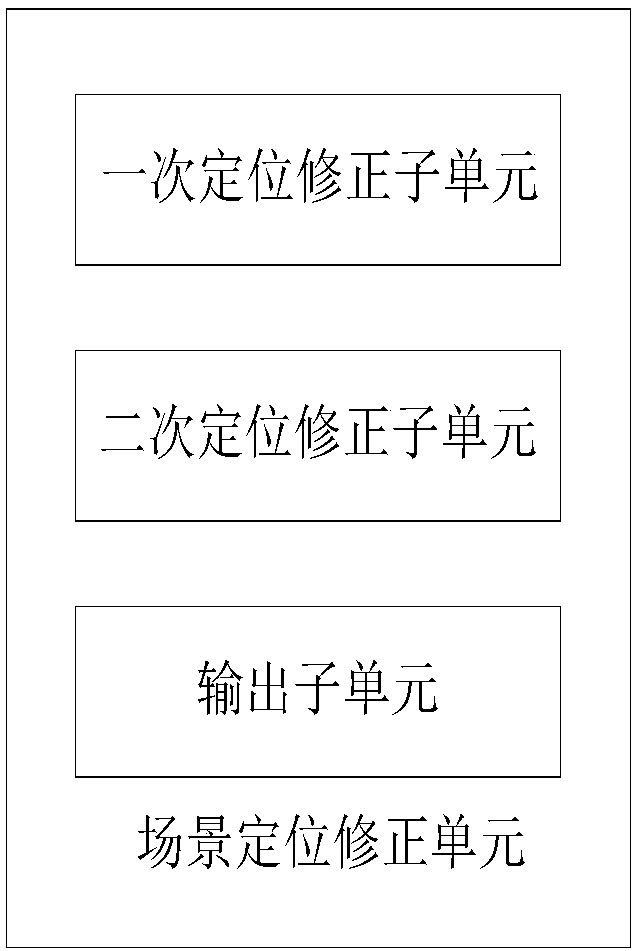

Autonomous parking system and method based on multi-vision inertial navigation integration

Active | CN107600067A | Accurate real-time location information | Stable real-time location information | Geolocation | Engineering

The invention discloses an autonomous parking system based on multi-vision and inertial navigation fusion. The system comprises a binocular vision SLAM module, a surround-view parking detection module and a decision and control module. The binocular vision SLAM module fuses the acquired parking lot images with inertial navigation data to build a simulated map of the parking lot, obtains real-time positioning information of the vehicle in the coordinate system of that map, and transmits it to the decision and control module. The surround-view parking detection module acquires data at the vehicle's current position, detects and recognizes the current environment, and transmits the detection result to the decision and control module. The decision and control module formulates an autonomous parking strategy for the vehicle according to the received positioning information of the vehicle in the simulated parking lot map and the current environment parameters. The invention also discloses an autonomous parking method based on multi-vision and inertial navigation fusion, which is used to implement the autonomous parking system.

Owner:SUN YAT SEN UNIV

Binocular vision indoor positioning and mapping method and device

Active | CN110044354A | Pose optimization | High precision | Internal combustion piston engines | Navigational calculation instruments | Slide window | Angular velocity

The invention discloses a binocular vision indoor positioning and mapping method and device. The method comprises the following steps: collecting left and right images in real time and calculating the initial pose of the camera; collecting angular velocity and acceleration information in real time and pre-integrating it to obtain the state of the inertial measurement unit (IMU); constructing a sliding window containing several image frames and nonlinearly optimizing the initial camera pose, with the visual error terms between image frames and the error terms of the IMU measurements as constraints, to obtain the optimized camera pose and IMU measurements; constructing a bag-of-words model for loop detection and correcting the optimized camera pose; and extracting features from the left and right images, converting them into words and matching them against the bag of words of the offline map. If the match succeeds, the optimized camera pose is obtained by optimization; if it fails, the left and right images are re-collected and matched again. The method and device can perform positioning and mapping in an unknown environment as well as positioning in an already mapped scene, with good precision and robustness.

Owner:SOUTHEAST UNIV
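The bag-of-words matching step above can be illustrated with a small scoring sketch. It assumes that a visual vocabulary has already mapped each extracted feature to an integer word id, that optional inverse-document-frequency weights are available, and it uses an L1 similarity score of the form s = 1 - 0.5·‖v1 - v2‖₁ on L1-normalised vectors, as offered by bag-of-words libraries such as DBoW2. The function names and the 0.3 acceptance threshold are hypothetical; the patent does not state its scoring rule.

```python
from collections import Counter


def bow_vector(word_ids, idf=None):
    """Build an L1-normalised bag-of-words vector (dict word_id -> weight).

    word_ids: visual-word ids, one per extracted feature.
    idf: optional dict word_id -> inverse-document-frequency weight (assumed precomputed).
    """
    counts = Counter(word_ids)
    total = sum(counts.values())
    if total == 0:
        return {}
    vec = {w: (n / total) * (idf.get(w, 1.0) if idf else 1.0) for w, n in counts.items()}
    norm = sum(vec.values())
    return {w: x / norm for w, x in vec.items()} if norm > 0 else vec


def l1_score(v1, v2):
    """Similarity in [0, 1] between two L1-normalised vectors: 1 - 0.5 * ||v1 - v2||_1."""
    words = set(v1) | set(v2)
    return 1.0 - 0.5 * sum(abs(v1.get(w, 0.0) - v2.get(w, 0.0)) for w in words)


def best_match(query, database, threshold=0.3):
    """Return (index, score) of the most similar stored frame, or None if below threshold."""
    scores = [(i, l1_score(query, v)) for i, v in enumerate(database)]
    if not scores:
        return None
    i, s = max(scores, key=lambda pair: pair[1])
    return (i, s) if s >= threshold else None
```

A `None` result corresponds to the "match unsuccessful" branch in the abstract, after which new left and right images would be collected. A successful match gives a candidate offline-map frame whose pose seeds the subsequent optimization.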

© 2025 PatSnap. All rights reserved.