Binocular vision positioning method and binocular vision positioning device for robots, and storage medium

A binocular vision positioning technology for robots, applied in the field of robot navigation, that addresses problems such as closed-loop optimization failure, large pose drift, and inaccurate robot pose estimation.



Examples

Embodiment 1

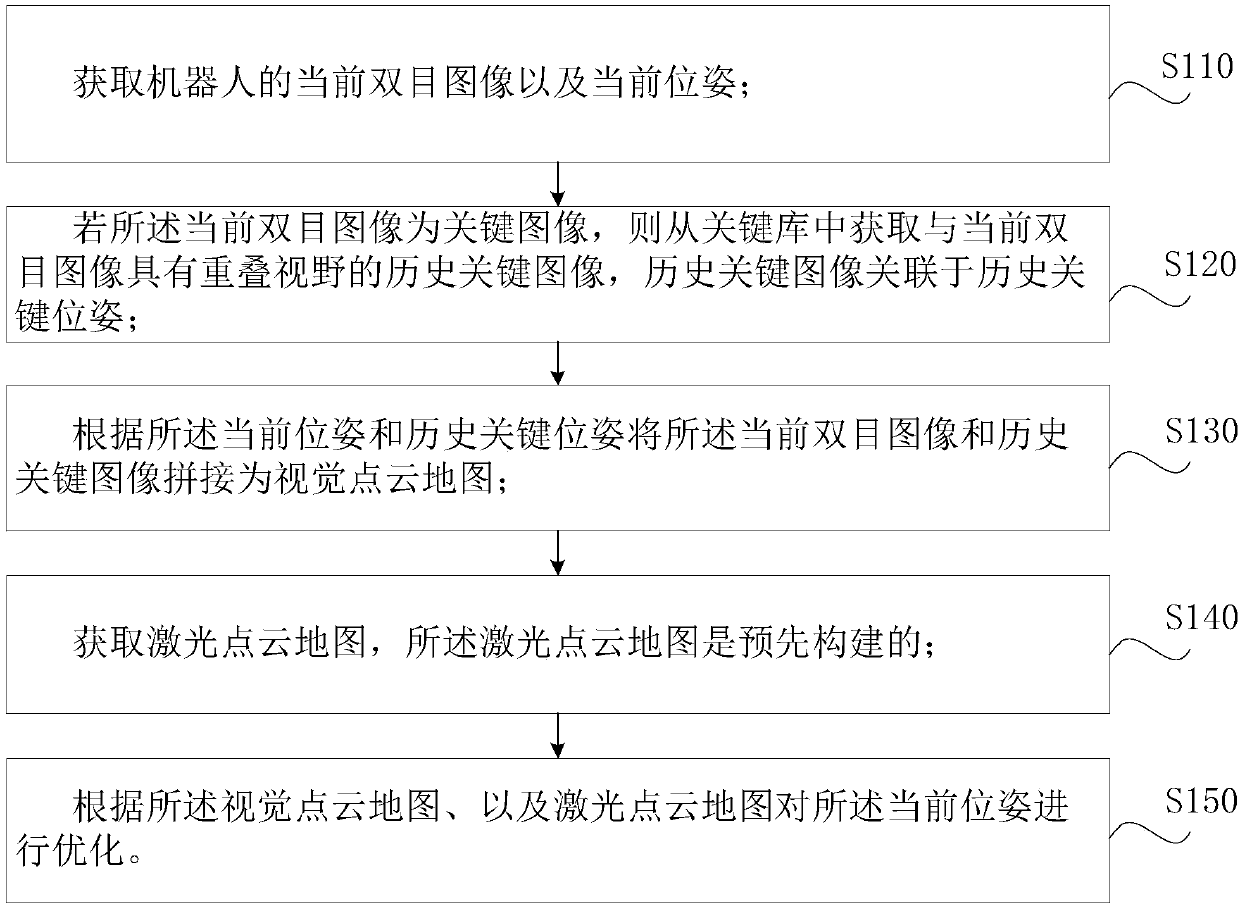

[0061] As shown in Figure 1, a binocular vision positioning method for a robot comprises the following steps:

[0062] Step S110, acquiring the current binocular image and the current pose of the robot.

[0063] As a preferred implementation, step S110 specifically includes the following sub-steps:

[0064] Step S111, acquiring the current binocular image of the robot, as well as the previous binocular image and previous pose of the robot;

[0065] Step S112, calculating the amount of pose change according to the current binocular image and the previous binocular image;

[0066] Step S113, calculating the current pose of the robot according to the amount of pose change and the previous pose.
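Sub-steps S111 to S113 amount to composing the previous pose with an estimated pose change. The following is a minimal sketch of step S113 only, under stated assumptions: poses are represented as 4x4 homogeneous transforms, the pose change is expressed in the previous body frame, and rotation is limited to yaw for brevity. The names `make_pose`, `compose`, and `update_pose` are hypothetical and do not come from the patent; how the pose change is computed from the two binocular images (step S112) is not shown.

```python
import math


def make_pose(yaw, tx, ty, tz):
    """Build a 4x4 homogeneous transform from a yaw angle (rad) and a translation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def compose(a, b):
    """Return the matrix product a * b for two 4x4 nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
        for i in range(4)
    ]


def update_pose(previous_pose, pose_change):
    """Step S113 (sketch): current pose = previous pose composed with the pose change.

    Assumption: pose_change is expressed in the previous body frame,
    so it is applied on the right.
    """
    return compose(previous_pose, pose_change)


# Previous pose: robot at (1, 2, 0), facing the +y direction.
previous_pose = make_pose(math.pi / 2, 1.0, 2.0, 0.0)
# Pose change (assumed output of step S112): 0.5 m straight ahead, no rotation.
pose_change = make_pose(0.0, 0.5, 0.0, 0.0)

current_pose = update_pose(previous_pose, pose_change)
current_position = (current_pose[0][3], current_pose[1][3], current_pose[2][3])
```

Because the robot faces +y in this example, moving 0.5 m forward changes only the y coordinate of `current_position`. Errors in each pose change accumulate through repeated composition, which is the source of the pose drift the method aims to correct.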

[0067] Using binocular vision to estimate the motion of the robot itself has a long research history. Most implementations use the image information of adjacent frames to est...

Embodiment 2

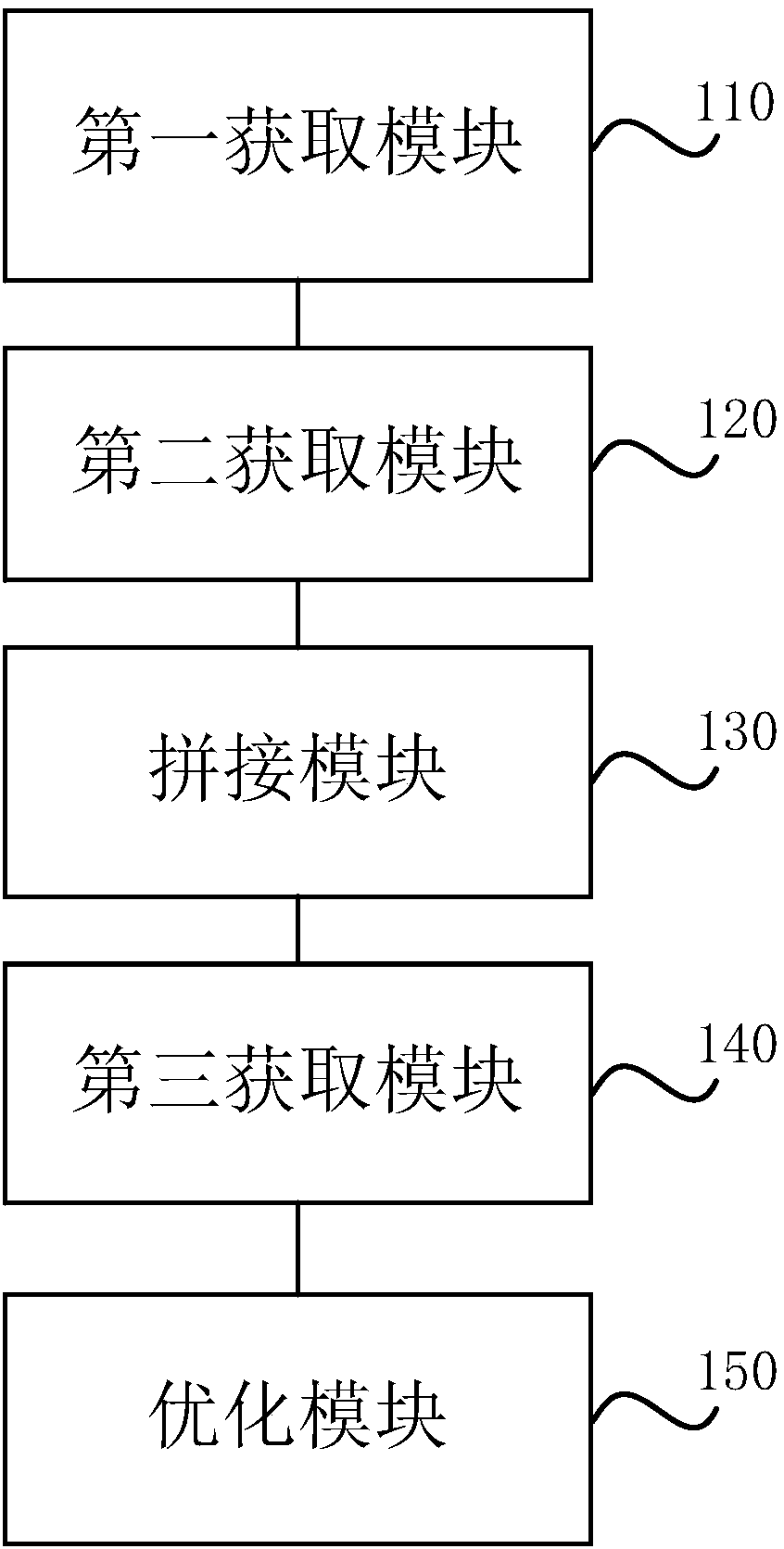

[0115] As shown in Figure 3, the robot binocular vision positioning device includes:

[0116] The first acquisition module 110 is used to acquire the current binocular image and the current pose of the robot;

[0117] The second acquisition module 120 is used to obtain from the key library, if the current binocular image is a key image, a historical key image whose field of view overlaps that of the current binocular image, where the historical key image is associated with a historical key pose;

[0118] The splicing module 130 is used to splice the current binocular image and the historical key image into a visual point cloud map according to the current pose and the historical key pose;

[0119] The third acquisition module 140 is used to obtain a pre-built laser point cloud map;

[0120] The optimization module 150 is used to optimize the current pose according to the visual point cloud map and the laser point cloud map.
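The data flow through the splicing module 130 and the optimization module 150 can be illustrated with a small sketch. It rests on several simplifying assumptions that are not stated in the source: the robot moves in a plane, so a pose is `(x, y, yaw)`; each image has already been converted to 2D points in the sensor frame; and each visual point is paired with a known laser map point. The source does not say how the optimization is performed, so a closed-form least-squares rigid alignment is swapped in here purely for illustration; a practical system would work in 3D and would need a correspondence search such as ICP. All function names are hypothetical.

```python
import math


def to_map(pose, points):
    """Transform 2D sensor-frame points into the map frame using pose (x, y, yaw)."""
    x, y, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return [(x + c * px - s * py, y + s * px + c * py) for px, py in points]


def splice(current_pose, current_points, key_pose, key_points):
    """Module 130 (sketch): merge both frames' points into one visual point cloud map."""
    return to_map(current_pose, current_points) + to_map(key_pose, key_points)


def rigid_align(source, target):
    """Least-squares 2D rigid transform (dx, dy, dyaw) that maps source onto target.

    Assumption: source[i] corresponds to target[i].
    """
    n = len(source)
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    tx = sum(q[0] for q in target) / n
    ty = sum(q[1] for q in target) / n
    cos_sum = sin_sum = 0.0
    for (px, py), (qx, qy) in zip(source, target):
        ax, ay = px - sx, py - sy
        bx, by = qx - tx, qy - ty
        cos_sum += ax * bx + ay * by  # dot product of centered pairs
        sin_sum += ax * by - ay * bx  # cross product of centered pairs
    dyaw = math.atan2(sin_sum, cos_sum)
    c, s = math.cos(dyaw), math.sin(dyaw)
    return tx - (c * sx - s * sy), ty - (s * sx + c * sy), dyaw


def apply_correction(pose, correction):
    """Module 150 (sketch): apply a map-frame rigid correction to a pose."""
    x, y, yaw = pose
    dx, dy, dyaw = correction
    c, s = math.cos(dyaw), math.sin(dyaw)
    return dx + c * x - s * y, dy + s * x + c * y, yaw + dyaw


# Synthetic, noise-free data: both visual poses share the same accumulated drift.
true_current, true_key = (1.0, 2.0, 0.3), (0.5, 1.5, 0.1)
drift = (0.2, -0.1, 0.05)
drifted_current = apply_correction(true_current, drift)
drifted_key = apply_correction(true_key, drift)
current_points = [(2.0, 0.5), (3.0, -1.0)]
key_points = [(2.5, 1.0), (4.0, 0.0)]

visual_map = splice(drifted_current, current_points, drifted_key, key_points)
laser_map = splice(true_current, current_points, true_key, key_points)  # stands in for the pre-built map

correction = rigid_align(visual_map, laser_map)
optimized_pose = apply_correction(drifted_current, correction)
```

With this synthetic data the visual map differs from the laser map by exactly one rigid transform, so the alignment recovers the drift and `optimized_pose` matches `true_current` up to floating-point error. With real, noisy data and unknown correspondences the result would only be an estimate.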

[0121]...

Embodiment 3

[0134] As shown in Figure 4, an electronic device includes a memory 200, a processor 300, and a program stored in the memory 200. The program is configured to be executed by the processor 300. When the processor 300 executes the program, the steps of the robot binocular vision positioning method described above are implemented.
