3D point cloud semantic segmentation method from a bird's-eye-view encoding perspective

A semantic segmentation technique based on bird's-eye-view encoding, applied in the field of computer vision. It addresses the high sparsity of point cloud data used for scene understanding, the difficulty of processing large-scale point clouds in real time, and the insufficient robustness of local features. Its effects include an enlarged receptive field and strong feature extraction and recognition ability.

Embodiment

[0066] The present invention provides a 3D point cloud semantic segmentation method from a bird's-eye-view encoding perspective, comprising two stages: training the network model and running the trained model.

[0067] 1. Training the network model

[0068] Training the 3D point cloud semantic segmentation network model under the bird's-eye-view encoding perspective first requires sufficient point cloud data. Each frame of the point cloud scene samples should contain, for every point, its XYZ coordinates, its reflectivity, and the semantic category to which it belongs. Taking the SemanticKITTI outdoor LiDAR point cloud dataset as an example, a total of 15,000 frames of scene point clouds are used as the training set and 3,000 frames of real point clouds are used as the validation set.
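As an illustration of the per-point data each frame must carry, the sketch below reads one SemanticKITTI frame. It assumes the public SemanticKITTI file layout (each scan `.bin` holds float32 records of x, y, z, reflectivity; each `.label` file holds one uint32 per point whose lower 16 bits are the semantic class). The function names `parse_frame` and `load_frame` are hypothetical and not part of the patent.

```python
import numpy as np

# Assumed SemanticKITTI layout (not specified in the patent text):
#   scan .bin   -> little-endian float32 records (x, y, z, reflectivity)
#   label .label -> little-endian uint32 per point; lower 16 bits = semantic class,
#                   upper 16 bits = instance id (ignored here).

def parse_frame(scan_bytes: bytes, label_bytes: bytes):
    """Return (xyz [N,3], reflectivity [N], semantic labels [N]) for one frame."""
    points = np.frombuffer(scan_bytes, dtype="<f4").reshape(-1, 4)
    raw_labels = np.frombuffer(label_bytes, dtype="<u4")
    if raw_labels.shape[0] != points.shape[0]:
        raise ValueError("scan and label data disagree on point count")
    semantic = raw_labels & 0xFFFF  # keep only the semantic-class bits
    return points[:, :3], points[:, 3], semantic


def load_frame(scan_path: str, label_path: str):
    """Read one frame from disk (paths are hypothetical examples)."""
    with open(scan_path, "rb") as f_scan, open(label_path, "rb") as f_label:
        return parse_frame(f_scan.read(), f_label.read())
```

Masking off the upper 16 bits matters because the raw label value also encodes an instance id; using it directly as a class index would produce out-of-range categories.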

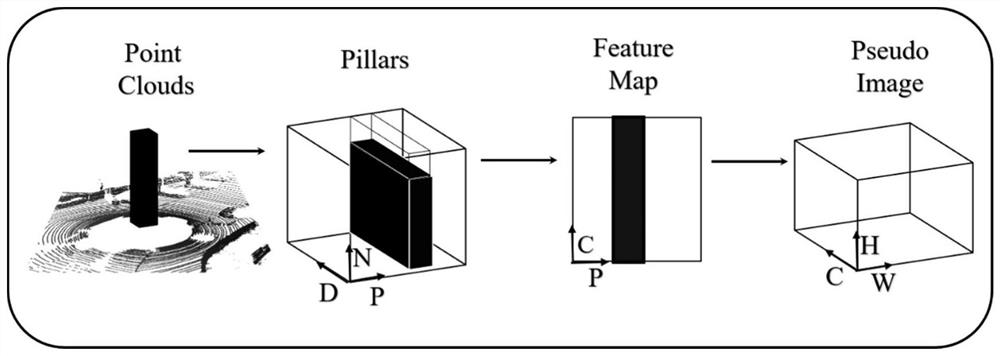

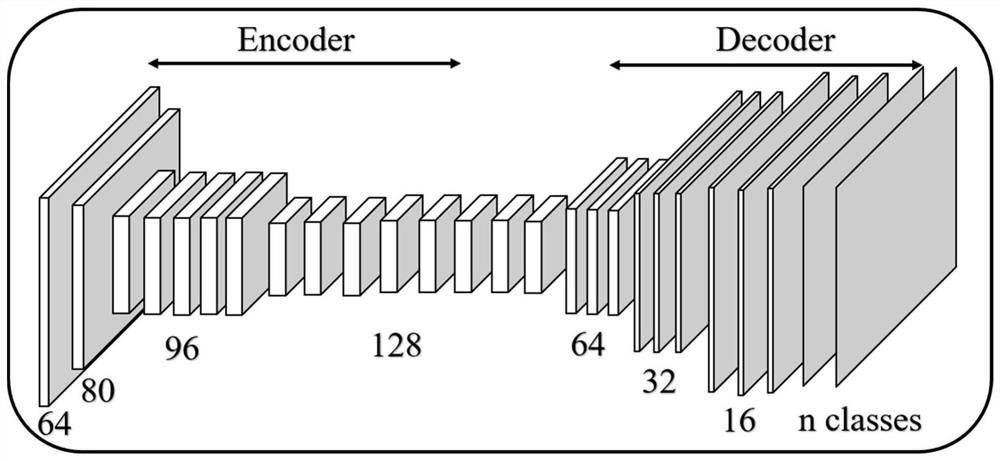

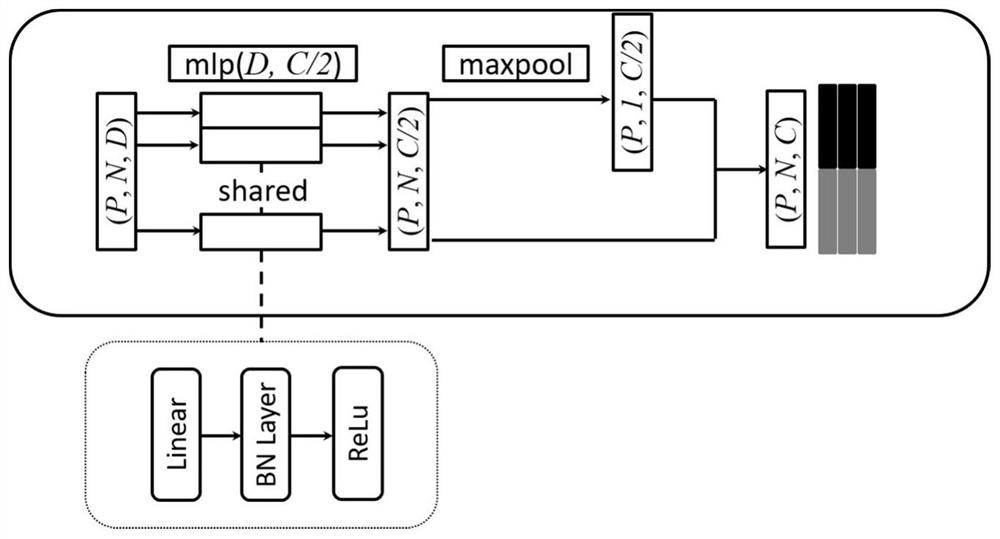

[0069] After a sufficient point cloud dataset has been obtained, each frame of point cloud is first encoded from the bird's-eye-view perspective into grid voxels under the bird's-eye view, and then use the simpl...
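The visible text does not give the grid shape, range, or resolution of the bird's-eye-view encoding. The sketch below therefore assumes a plain Cartesian BEV grid with hypothetical parameters (a 102.4 m × 102.4 m area centred on the sensor, 0.2 m cells) and shows only the core step of assigning each point to a grid cell by its x and y coordinates. It is not the patent's encoder, and `bev_encode` is a hypothetical name.

```python
import numpy as np

# Hypothetical grid parameters, NOT taken from the patent.
X_RANGE = (-51.2, 51.2)  # metres
Y_RANGE = (-51.2, 51.2)  # metres
CELL_SIZE = 0.2          # metres per BEV cell


def bev_encode(xyz: np.ndarray, x_range=X_RANGE, y_range=Y_RANGE, cell=CELL_SIZE):
    """Assign each point to a Cartesian BEV cell (z is not used for indexing).

    Returns:
      cells     [M,2] (row, col) cell index of every in-range point
      keep      [N]   boolean mask of points that fall inside the grid
      occupancy [H,W] number of points per cell
    """
    w = int(round((x_range[1] - x_range[0]) / cell))
    h = int(round((y_range[1] - y_range[0]) / cell))
    ix = np.floor((xyz[:, 0] - x_range[0]) / cell).astype(np.int64)
    iy = np.floor((xyz[:, 1] - y_range[0]) / cell).astype(np.int64)
    keep = (ix >= 0) & (ix < w) & (iy >= 0) & (iy < h)  # drop out-of-range points
    ix, iy = ix[keep], iy[keep]
    occupancy = np.zeros((h, w), dtype=np.int32)
    np.add.at(occupancy, (iy, ix), 1)  # unbuffered add counts repeated cells correctly
    return np.stack([iy, ix], axis=1), keep, occupancy
```

Collapsing the height axis in this way is what turns a sparse 3D scan into a dense 2D grid; per-cell features (for example, from the points sharing a cell) can then be computed and processed with 2D operations.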
