Robot grasp pose estimation method based on an object recognition deep learning model

A deep learning and pose estimation technology, applied in the field of computer vision, that addresses the problems of complex algorithms and time-consuming processing of three-dimensional information. It improves computing efficiency, raises the success rate, and reduces the range of point cloud segmentation.

Embodiment Construction

[0032] Several preferred embodiments of the present invention are described below with reference to the accompanying drawings, to make the technical content clearer and easier to understand. The present invention may be embodied in many different forms, and its scope of protection is not limited to the embodiments described here.

[0033] In the drawings, components with the same structure are denoted by the same numerals, and components with similar structures or functions are denoted by similar numerals. The size and thickness of each component in the drawings are shown arbitrarily, and the present invention does not limit the size or thickness of any component. For clarity of illustration, the thickness of components is exaggerated in some places in the drawings.

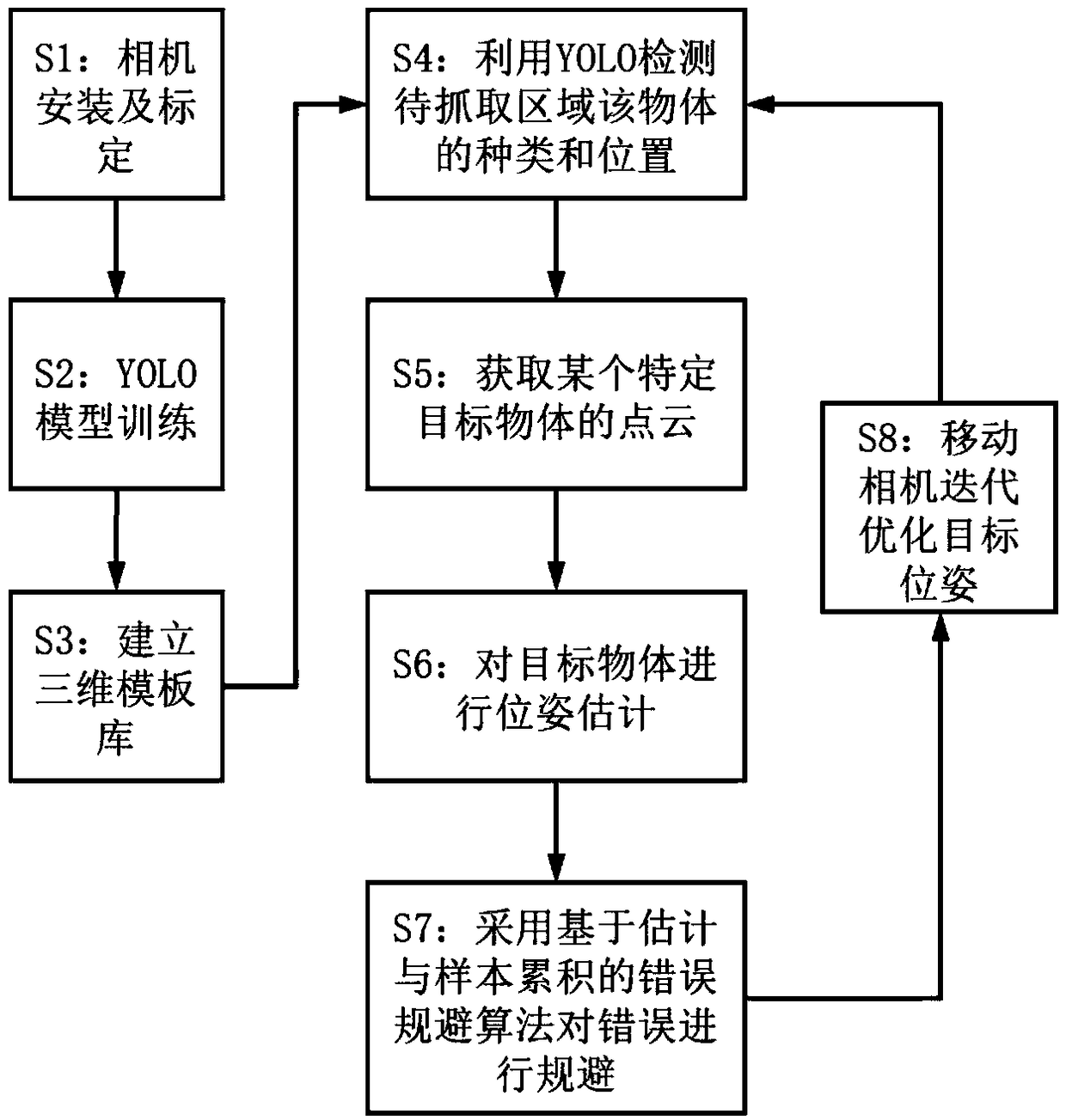

[0034] As shown in Figure 1, the robot used in the embodiment of the present invent...
