Sparse point cloud segmentation method and device

A sparse point cloud segmentation technology, applied in the field of image processing, that addresses the expensive hardware, low segmentation accuracy, and low efficiency of existing approaches. It improves accuracy and efficiency, reduces equipment cost, and has good practical application value.

Examples

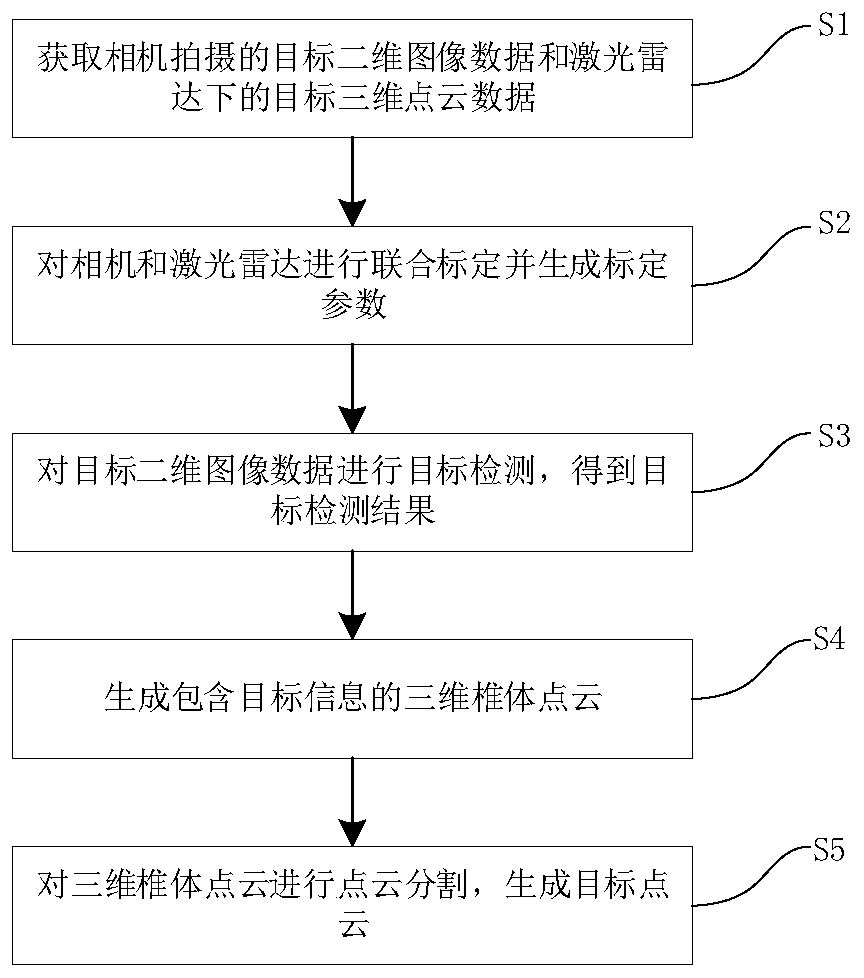

Embodiment 1

[0050] This embodiment differs both from traditional point cloud segmentation methods and from existing methods that apply deep learning directly. Traditional methods rely on purely mathematical models and geometric inference, such as region growing or model fitting, combined with robust estimators to fit linear and nonlinear models to the point cloud data. Such methods are fast and can achieve good segmentation results in simple scenes, but it is difficult to choose the size of the model when fitting objects, and the methods are sensitive to noise and perform poorly in complex scenes.
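As an illustration of the traditional "model fitting with a robust estimator" approach described in [0050], the following is a minimal sketch of RANSAC plane fitting in pure Python. It is not taken from the patent: the function names `fit_plane` and `ransac_plane`, the distance threshold, the iteration count, and the fixed random seed are all assumptions chosen for the example.

```python
import random


def fit_plane(p1, p2, p3):
    """Return (a, b, c, d) with unit normal for the plane through three
    points, or None if the points are (nearly) collinear."""
    ux, uy, uz = (p2[i] - p1[i] for i in range(3))
    vx, vy, vz = (p3[i] - p1[i] for i in range(3))
    # Normal vector is the cross product of the two edge vectors.
    a = uy * vz - uz * vy
    b = uz * vx - ux * vz
    c = ux * vy - uy * vx
    norm = (a * a + b * b + c * c) ** 0.5
    if norm < 1e-12:
        return None
    a, b, c = a / norm, b / norm, c / norm
    d = -(a * p1[0] + b * p1[1] + c * p1[2])
    return a, b, c, d


def ransac_plane(points, threshold=0.05, iterations=200, seed=0):
    """Hypothetical helper: find the plane supported by the most points.

    threshold is the maximum point-to-plane distance for an inlier.
    Returns (plane, inlier_indices).
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = fit_plane(*rng.sample(points, 3))
        if plane is None:
            continue
        a, b, c, d = plane
        inliers = [
            i for i, (x, y, z) in enumerate(points)
            if abs(a * x + b * y + c * z + d) <= threshold
        ]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers
```

The threshold plays the role of the model-size choice that [0050] identifies as hard to tune: too small and noisy surface points are rejected, too large and neighbouring objects are absorbed into the fitted model.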

[0051] Existing methods that apply deep learning directly to point cloud segmentation use feature descriptors to extract 3D features from the point cloud data, use machine learning techniques to learn the different object categories, and then use the resulting model to classify the ...

Embodiment 2

[0099] Figure 4 is a structural block diagram of the sparse point cloud segmentation device of this embodiment, which includes:

[0100] An image data acquisition module 10, configured to acquire the target two-dimensional image data captured by the camera and the target three-dimensional point cloud data captured by the laser radar;

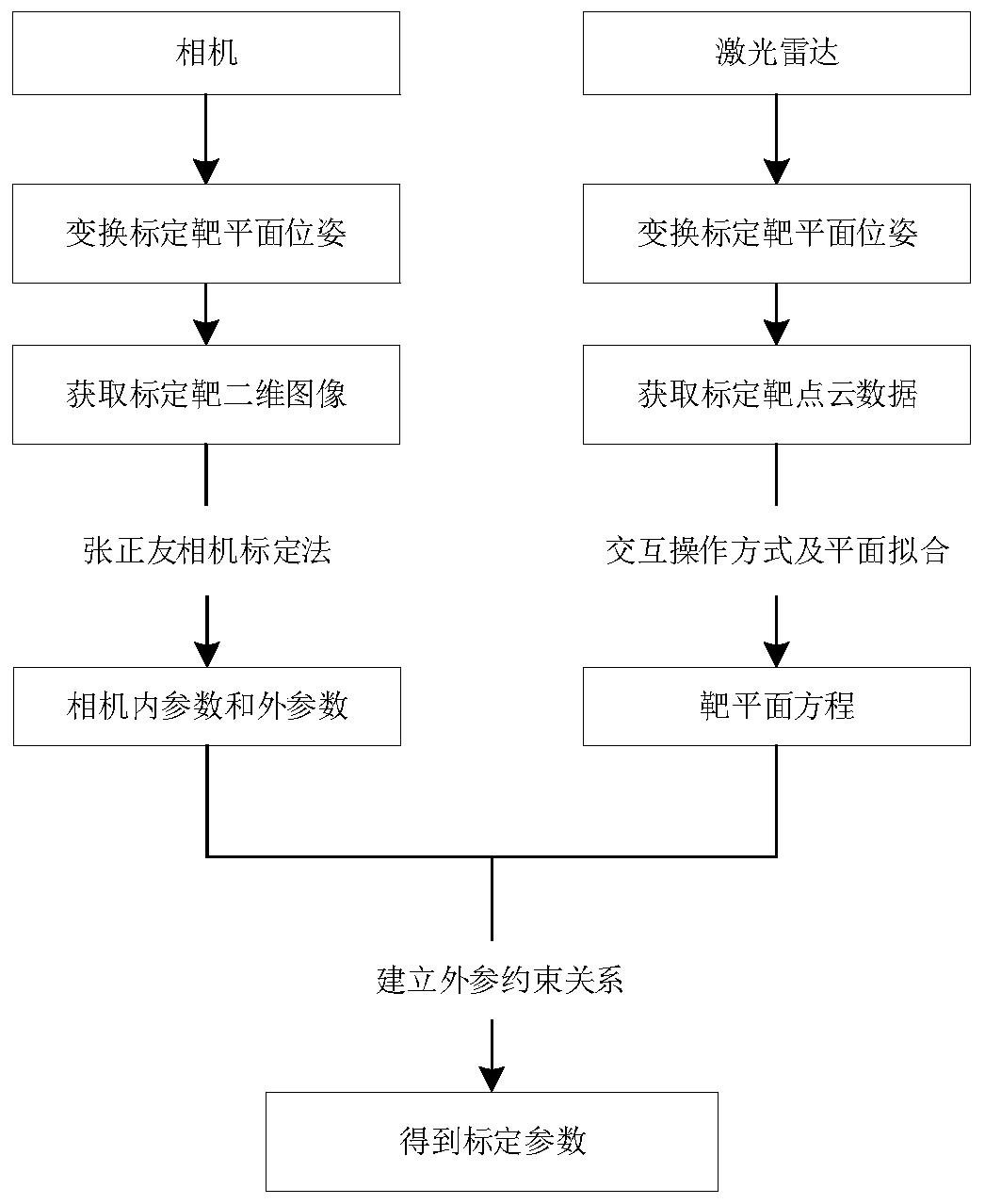

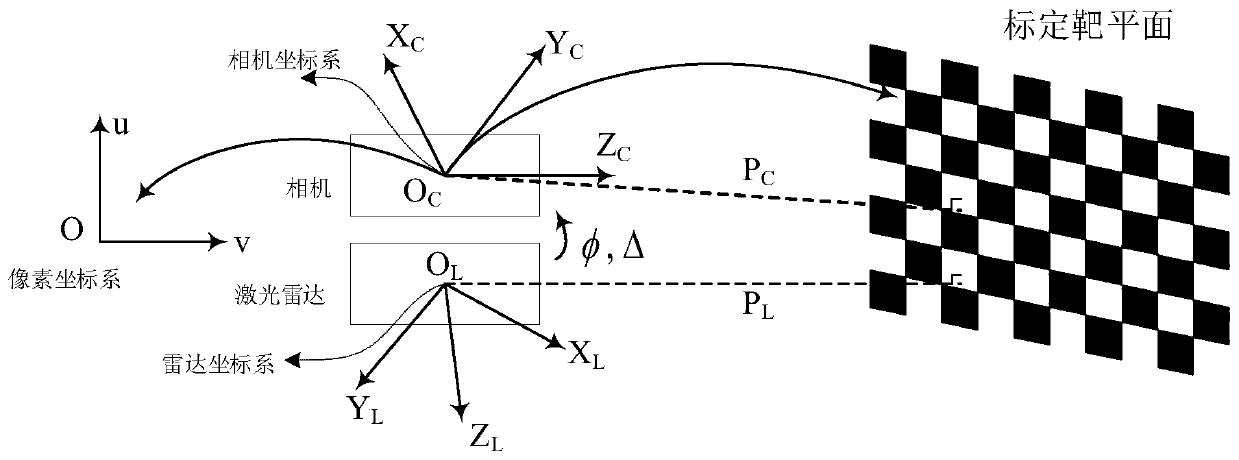

[0101] A joint calibration module 20, configured to jointly calibrate the camera and the laser radar and generate calibration parameters;

[0102] A target detection module 30, configured to perform target detection on the target two-dimensional image data to obtain a target detection result, the target detection result including the target category and the two-dimensional bounding box position coordinate information;

[0103] A three-dimensional cone point cloud generation module 40, configured to extract the three-dimensional points that can be converted to the target two-dimensional bounding box according to the two-dimensional bounding box position coordinate inform...
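The idea behind modules 20 to 40 can be sketched as follows: project each laser radar point into the image using the calibration parameters, and keep the points whose projection falls inside the detected two-dimensional bounding box. This is only a sketch under stated assumptions, not the patent's implementation. It assumes the calibration parameters take the form of a rotation `R` and translation `t` (laser radar frame to camera frame) plus pinhole intrinsics `K = (fx, fy, cx, cy)`, and the names `project_point` and `frustum_points` are hypothetical.

```python
def project_point(point, R, t, K):
    """Project a laser radar point into pixel coordinates.

    Assumed calibration form: R (3x3 nested lists) and t (length 3) map
    laser radar coordinates to camera coordinates; K = (fx, fy, cx, cy)
    are pinhole intrinsics. Returns (u, v, depth), or None when the
    point lies behind the camera.
    """
    xc, yc, zc = (
        sum(R[r][c] * point[c] for c in range(3)) + t[r] for r in range(3)
    )
    if zc <= 0:
        return None
    fx, fy, cx, cy = K
    return fx * xc / zc + cx, fy * yc / zc + cy, zc


def frustum_points(points, box, R, t, K):
    """Keep the 3D points whose image projection lies inside the 2D box.

    box is (x_min, y_min, x_max, y_max) in pixels, as a detector might
    report it. The kept points form the cone-shaped (frustum) cloud.
    """
    x_min, y_min, x_max, y_max = box
    kept = []
    for p in points:
        projected = project_point(p, R, t, K)
        if projected is None:
            continue
        u, v, _ = projected
        if x_min <= u <= x_max and y_min <= v <= y_max:
            kept.append(p)
    return kept
```

With an identity rotation, zero translation, and `K = (100, 100, 320, 240)`, a point at `(0, 0, 5)` projects to pixel `(320, 240)` and is kept by a box that contains that pixel, while a point behind the camera is discarded regardless of the box.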

Embodiment 3

[0106] This embodiment also provides a sparse point cloud segmentation device, including:

[0107] at least one processor, and a memory communicatively coupled to the at least one processor;

[0108] wherein the processor executes the method described in Embodiment 1 by invoking a computer program stored in the memory.

[0109] In addition, the present invention also provides a computer-readable storage medium storing computer-executable instructions, where the computer-executable instructions cause a computer to execute the method described in Embodiment 1.

[0110] In this embodiment of the present invention, the target two-dimensional image data captured by the camera and the target three-dimensional point cloud data captured by the laser radar are acquired, the camera and the laser radar are jointly calibrated to generate calibration parameters, and then the target two-dimensional image data i...
