38 results about How to "Reduce latency overhead" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

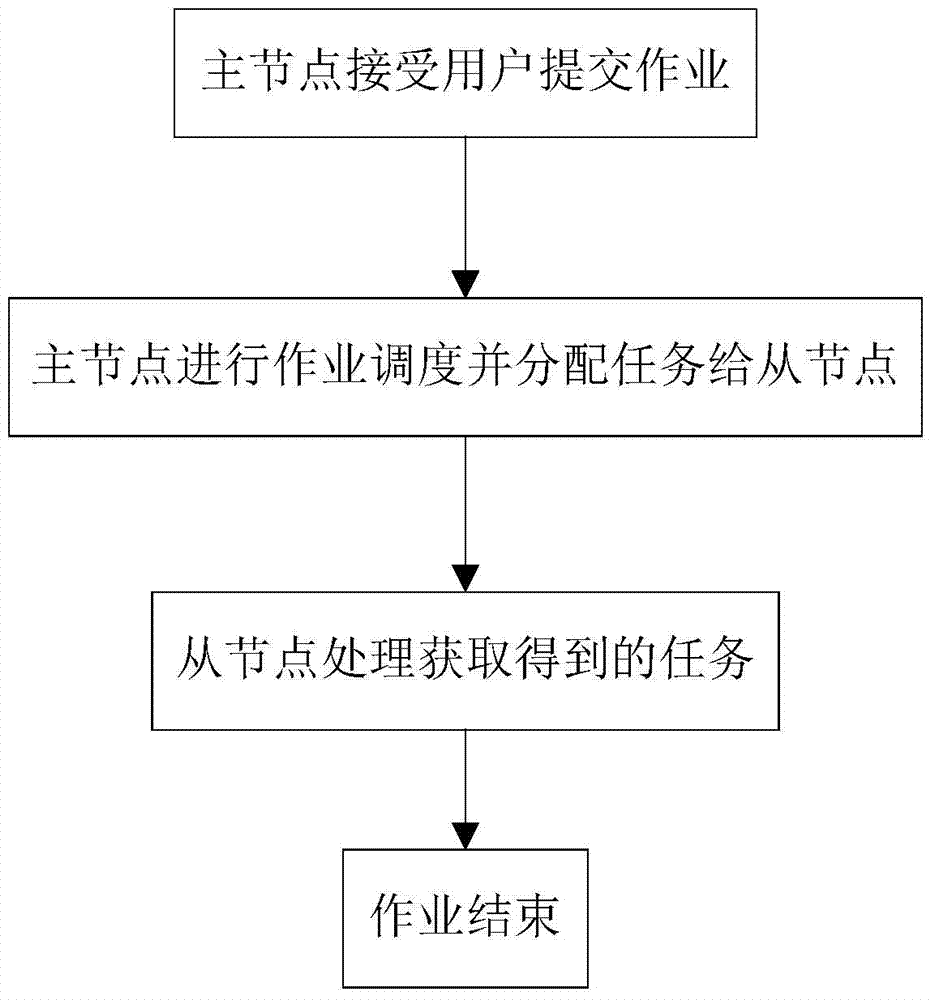

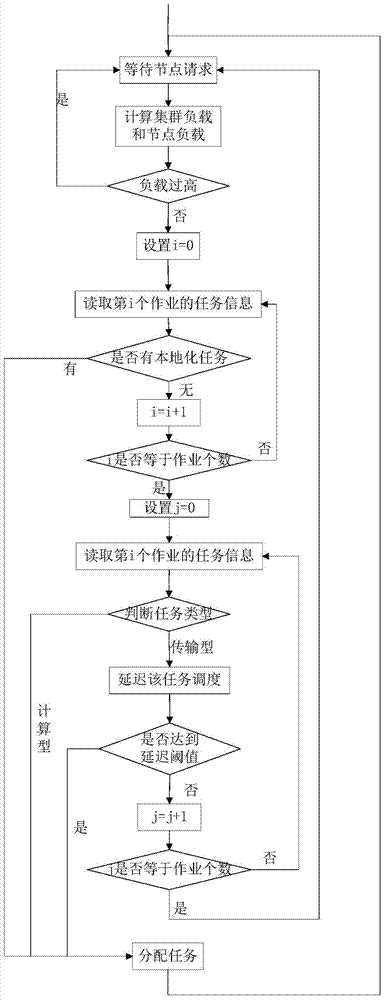

MapReduce optimizing method suitable for iterative computations

Active | CN103617087A | Tags: Increase the localization ratio, Reduce latency overhead, Resource allocation, Trunking, Dynamic data

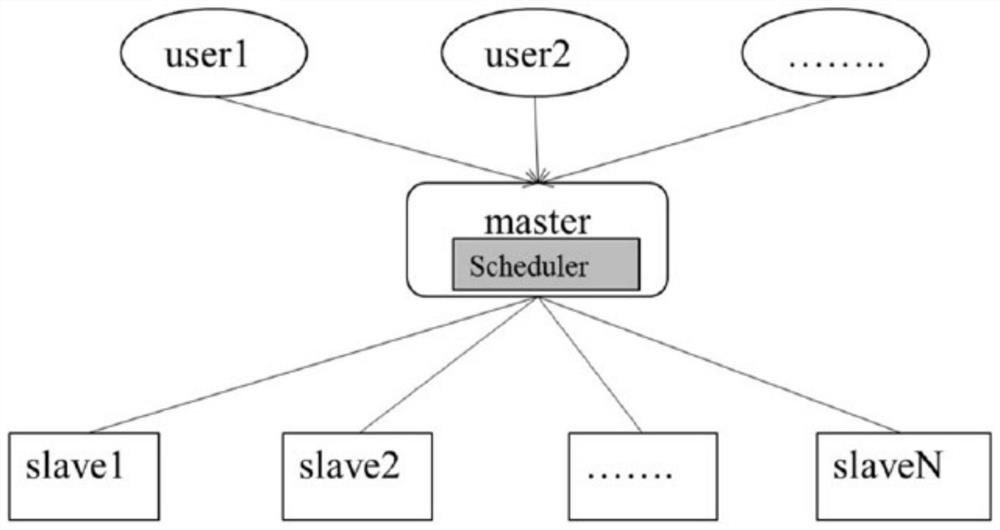

The invention discloses a MapReduce optimization method suitable for iterative computations. The method is applied to a Hadoop cluster comprising a master node and a plurality of worker nodes, and comprises the following steps: the master node receives a plurality of Hadoop jobs submitted by a user; the job service process of the master node places the jobs in a job queue, where they wait to be scheduled by the master node's job scheduler; the master node waits for task requests sent by the worker nodes; after receiving a task request, the job scheduler preferentially schedules data-local tasks; and if the worker node that sent the request has no local task, predictive scheduling is performed according to the task types of the Hadoop jobs. The method supports traditional data-intensive applications while also supporting iterative computations transparently and efficiently; dynamic data and static data can be handled separately, and the amount of data transferred is reduced.

Owner:HUAZHONG UNIV OF SCI & TECH
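
The scheduling order in the abstract (data-local tasks first, then a fallback that depends on job type) can be illustrated with a small Python model. This is a hypothetical sketch, not the patented algorithm: the `Task` fields, the `iterative` flag, and the rule "send an iterative job's tasks back to the node that already cached its static data" are assumptions chosen to make the idea concrete.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Task:
    job_id: str
    split_hosts: Set[str]    # nodes holding a replica of this task's input split
    iterative: bool = False  # hypothetical flag: the job re-reads static data every iteration


@dataclass
class Scheduler:
    queue: List[Task] = field(default_factory=list)
    # hypothetical record: node that last cached each iterative job's static data
    static_cache: Dict[str, str] = field(default_factory=dict)

    def assign(self, node: str) -> Optional[Task]:
        """Answer a task request from `node`, or return None if nothing suitable is queued."""
        # 1. Locality first: a task whose input split is stored on the requesting node.
        for i, task in enumerate(self.queue):
            if node in task.split_hosts:
                return self._take(i, node)
        # 2. Predictive fallback for iterative jobs: reuse static data already cached here.
        for i, task in enumerate(self.queue):
            if task.iterative and self.static_cache.get(task.job_id) == node:
                return self._take(i, node)
        # 3. Any task that is not pinned to another node's static-data cache.
        for i, task in enumerate(self.queue):
            if not (task.iterative and task.job_id in self.static_cache):
                return self._take(i, node)
        return None

    def _take(self, i: int, node: str) -> Task:
        task = self.queue.pop(i)
        if task.iterative:
            self.static_cache[task.job_id] = node  # static data now lives on this node
        return task
```

Step 3 deliberately leaves pinned iterative tasks waiting for their cache node, trading some idle time for less data transfer; a real scheduler would also need a timeout so such tasks are not starved.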

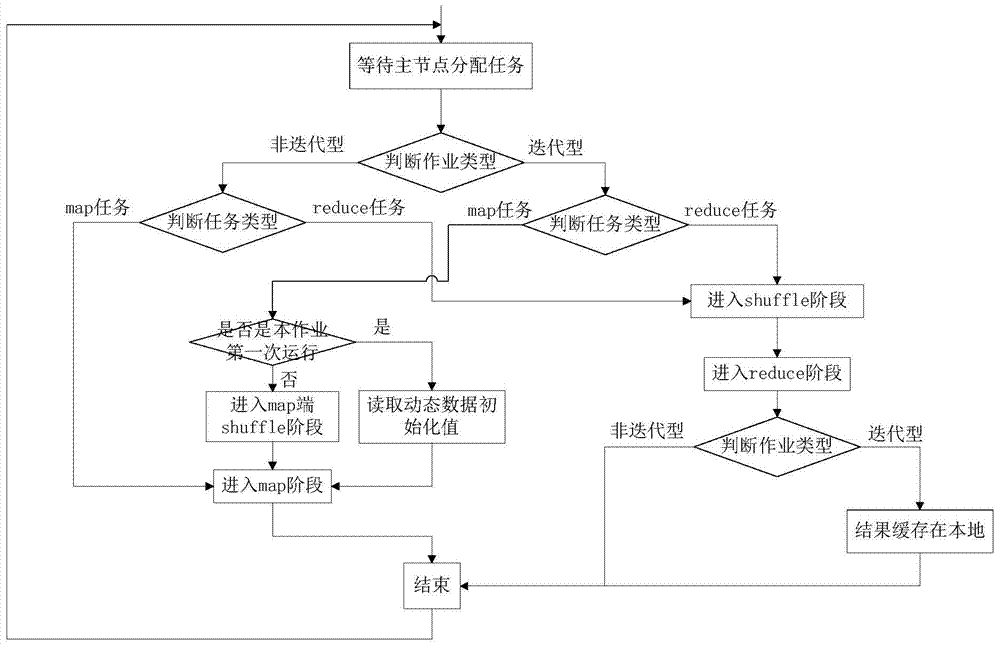

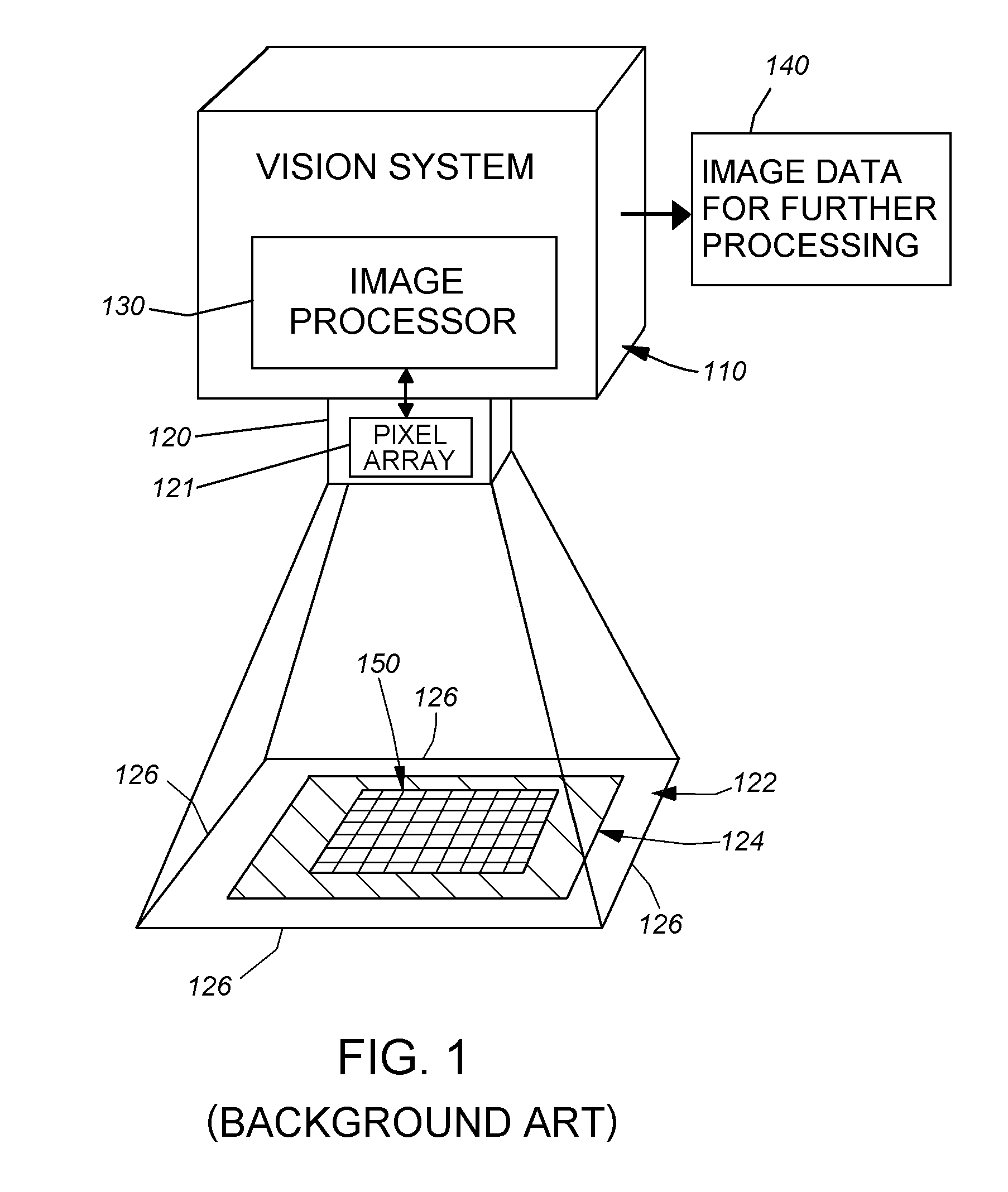

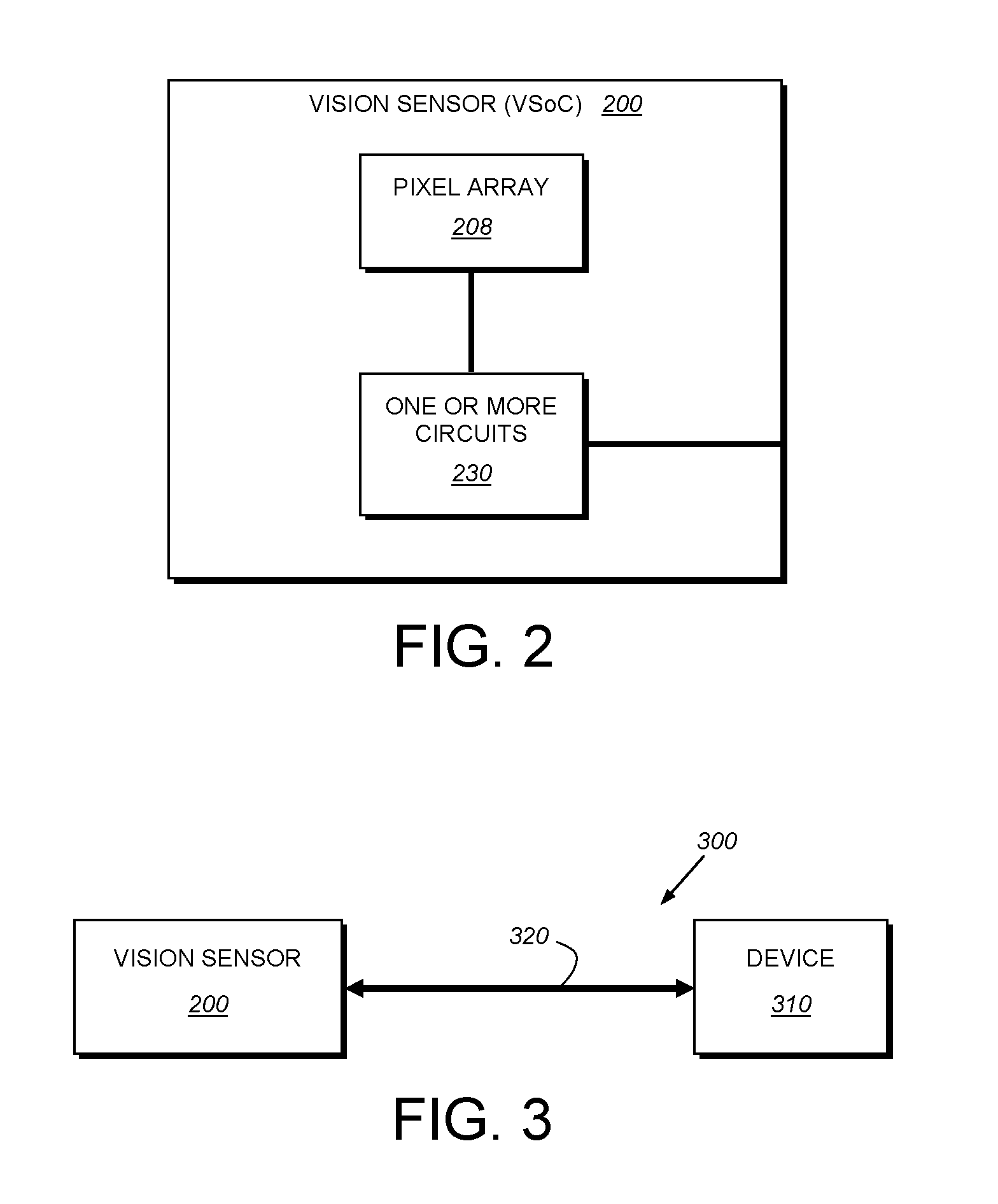

System and method for processing image data relative to a focus of attention within the overall image

Inactive | US20110211726A1 | Tags: Process time saving, Save data storage overhead, Television system details, Program control using wired connections, Sensor array, Digital data

This invention provides a system and method for processing discrete image data within an overall set of acquired image data based upon a focus of attention within that image. Such processing operates on a more limited subset of the overall image data to generate the output values required by the vision system process; such output values can include a decoded ID or other alphanumeric data. The system and method are performed in a vision system having two processor groups, along with a data memory that is smaller in capacity than the amount of image data to be read out from the sensor array. The first processor group comprises a plurality of SIMD processors and at least one general purpose processor, co-located on the same die as the data memory. A data reduction function operates within the same clock cycle as data readout from the sensor to generate a reduced data set that is stored in the on-die data memory. At least a portion of the overall, unreduced image data is concurrently (in the same clock cycle) transferred to the second processor while the first processor transmits at least one region indicator with respect to the reduced data set to the second processor. The region indicator represents at least one focus of attention for the second processor to operate upon.

Owner:COGNEX CORP
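
A software analogue of the "reduce, pick a focus of attention, then process only that region at full resolution" flow is sketched below. It is sequential Python rather than same-clock-cycle hardware, and the scoring rule (sum of absolute horizontal pixel differences per tile, a rough proxy for the edge density of an ID code) and the helper names are assumptions for illustration only.

```python
from typing import List, Tuple

Image = List[List[int]]
Roi = Tuple[int, int, int, int]  # (row, col, height, width) in full-resolution pixels


def reduce_image(img: Image, block: int) -> Image:
    """Hypothetical reduction: one edge-energy score per block x block tile."""
    h, w = len(img), len(img[0])
    return [
        [
            sum(abs(img[y][x + 1] - img[y][x])
                for y in range(by, by + block)
                for x in range(bx, min(bx + block, w - 1)))
            for bx in range(0, w - w % block, block)
        ]
        for by in range(0, h - h % block, block)
    ]


def region_indicator(reduced: Image, block: int) -> Roi:
    """Pick the highest-scoring tile of the reduced data as the focus of attention."""
    _, r, c = max((v, r, c) for r, row in enumerate(reduced) for c, v in enumerate(row))
    return r * block, c * block, block, block


def crop(img: Image, roi: Roi) -> Image:
    """Second stage: operate only on the full-resolution pixels inside the region."""
    r, c, h, w = roi
    return [row[c:c + w] for row in img[r:r + h]]
```

Only the small reduced array has to fit in on-die memory; the full image is touched again only inside the returned region.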

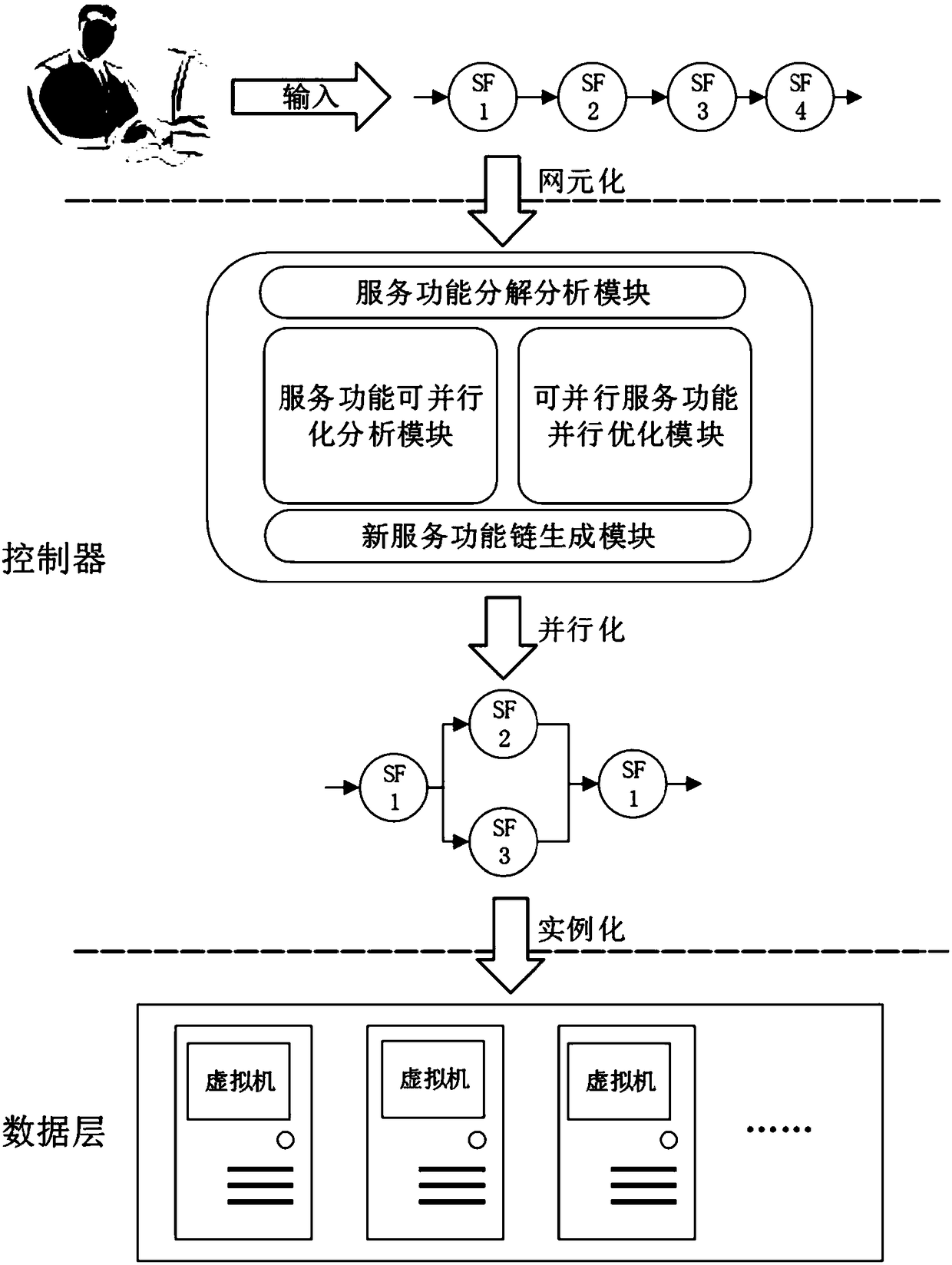

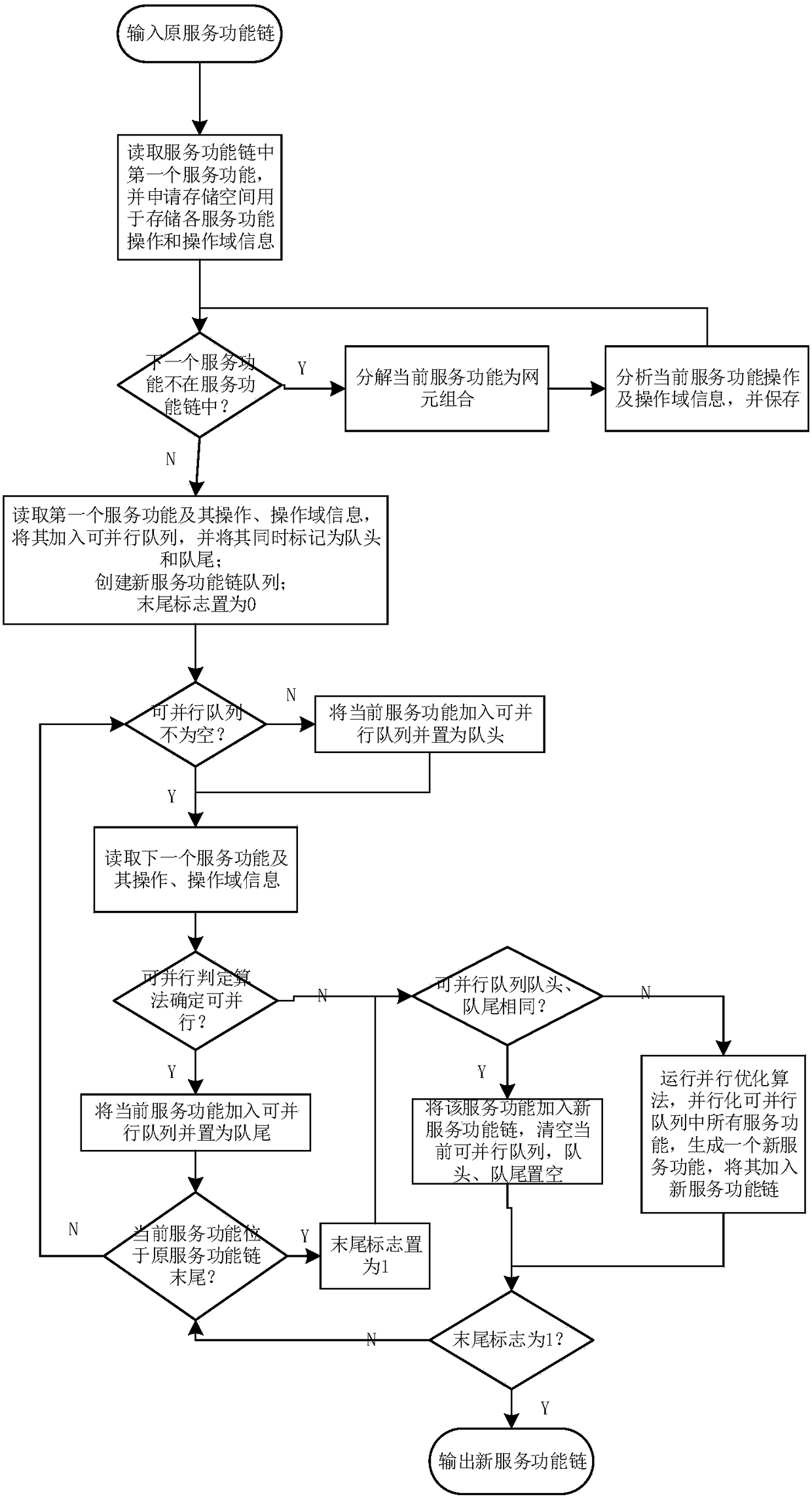

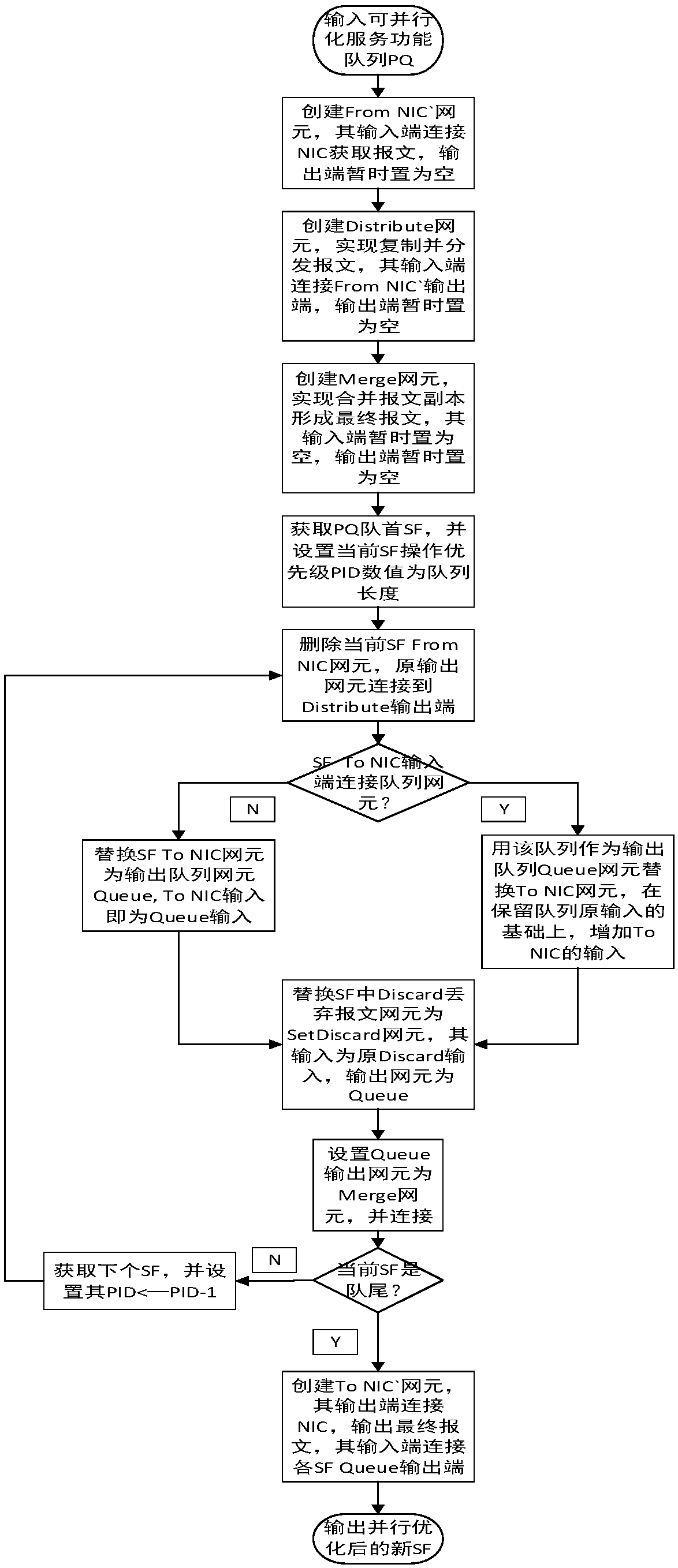

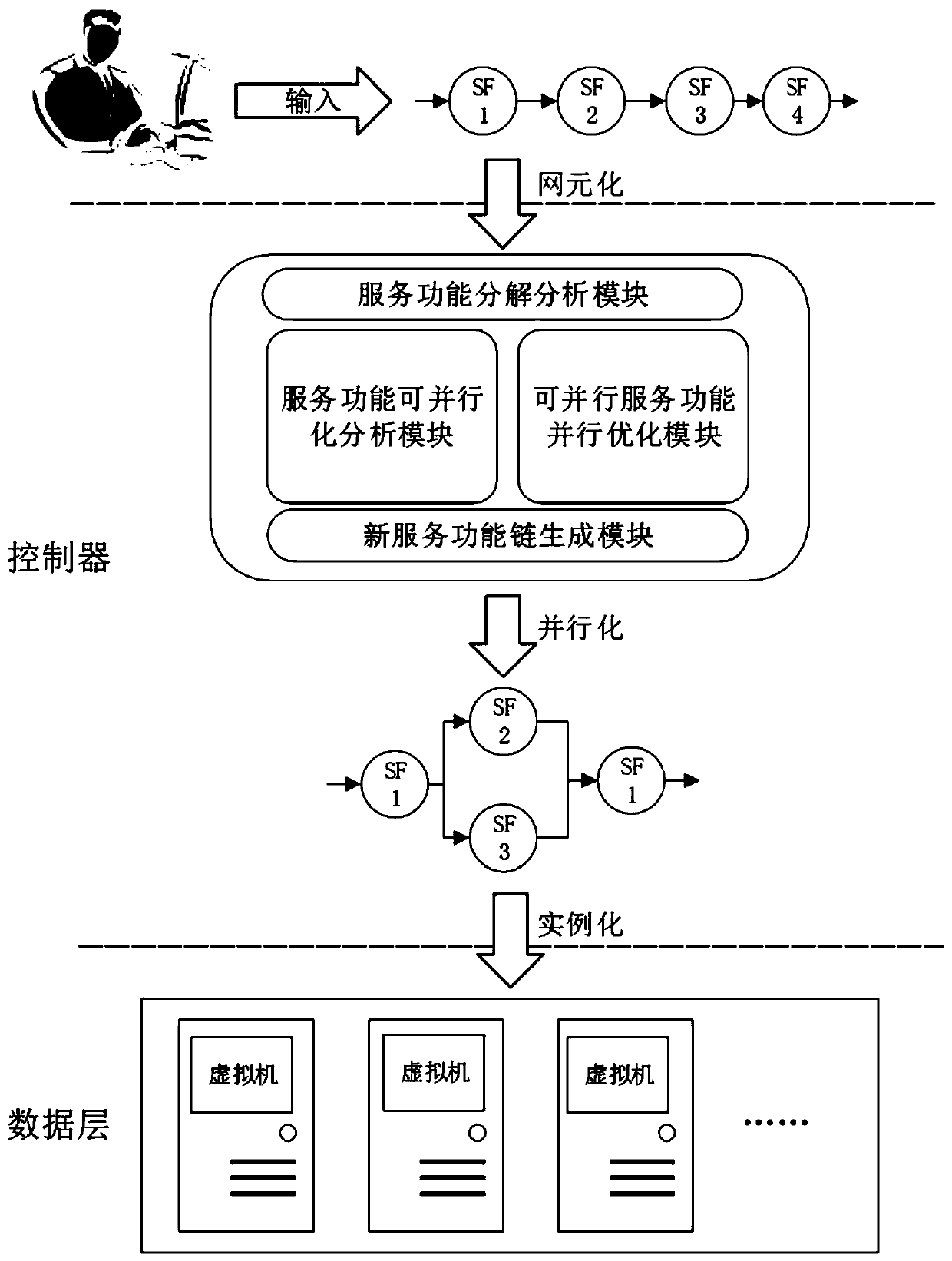

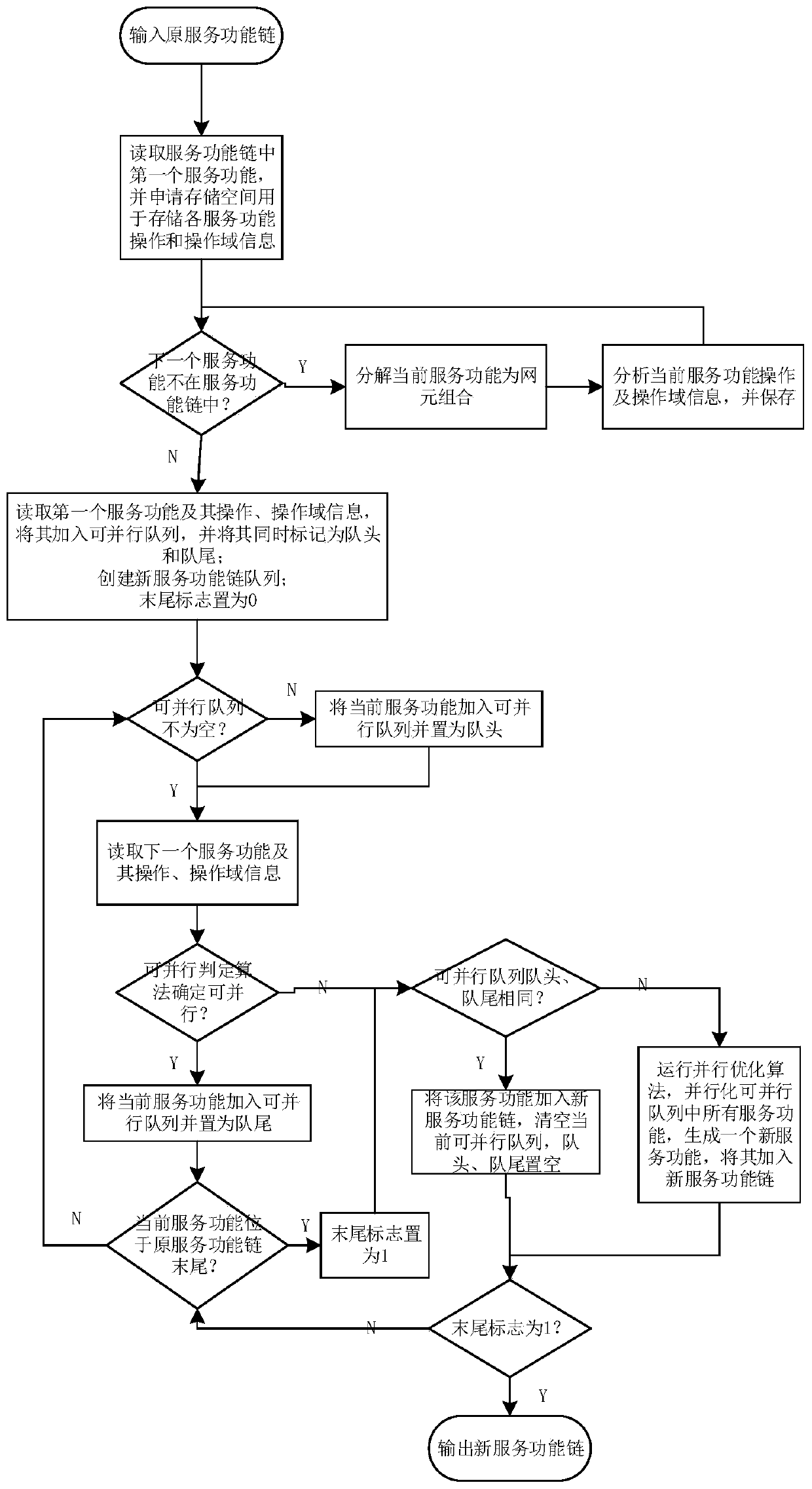

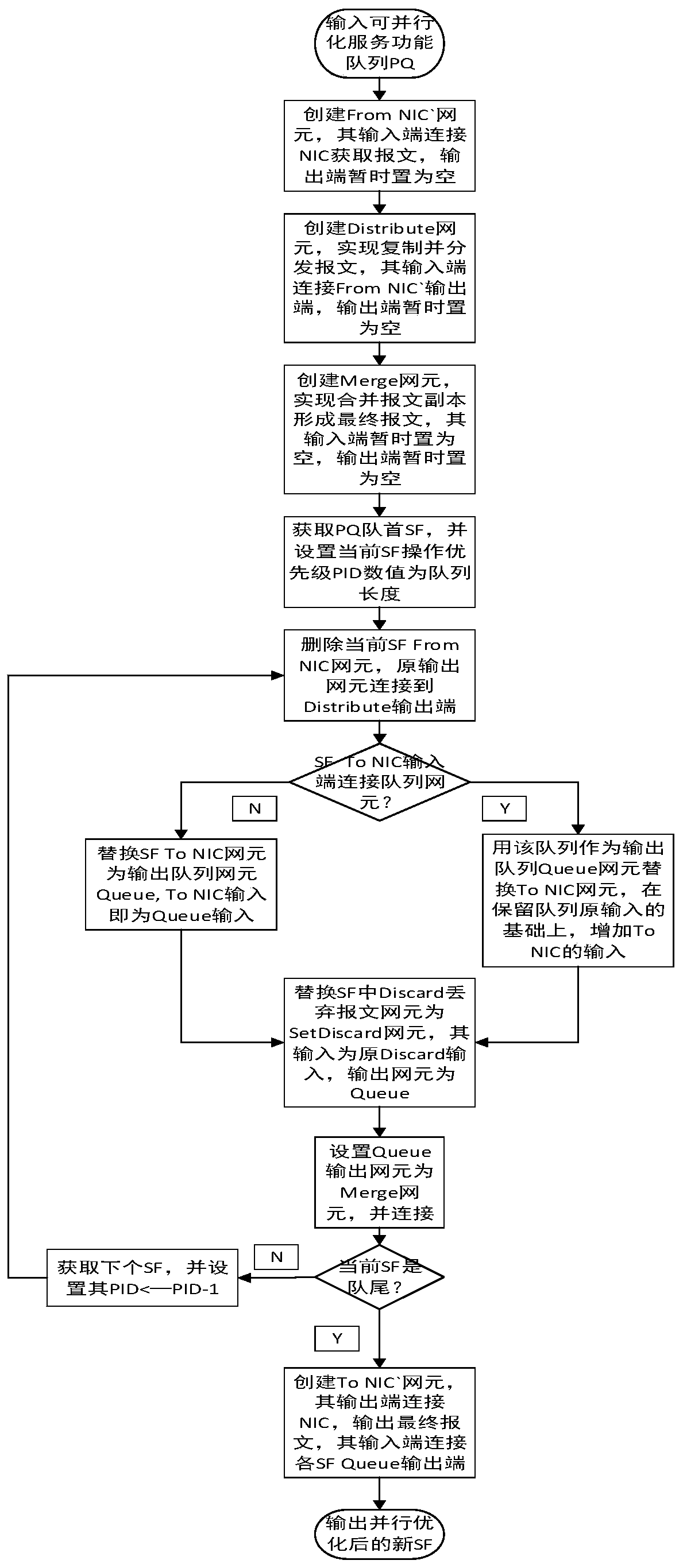

Method for realizing network element level parallel service function in network function virtualization environment

Active | CN108092803A | Tags: Improve parallelism, Shorten the length, Data switching networks, Software simulation/interpretation/emulation, Virtualization, Network Functions Virtualization

The invention discloses a method for realizing network-element-level parallel service functions in a network function virtualization environment. The method comprises the steps of: traversing a service function chain, running a service function decomposition analysis algorithm to decompose the service functions into basic message processing units called network elements, and determining and storing the operations each service function performs on messages together with the operation domains those operations act on; running a parallel judgment algorithm on the obtained operation and operation-domain information to determine which combinations of service functions in the chain can be parallelized; running a parallel optimization algorithm to combine the parallelizable service functions; and combining the non-parallel service functions with the parallel-optimized ones in the original service function order to establish a new service function chain. The new chain is effectively shorter than the original one, parallelism among the service functions is improved, and the delay incurred as messages traverse the service function chain is significantly reduced.

Owner:CHINA INFOMRAITON CONSULTING & DESIGNING INST CO LTD +1
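
The parallel judgment step can be pictured as a data-hazard check on packet fields. The sketch below is an assumption-laden illustration: it models each service function's "operation domain" as hypothetical read and write sets of header fields, treats two functions as parallelizable only when neither writes a field the other reads or writes, and greedily merges consecutive functions into stages so the original order is preserved.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class ServiceFunction:
    name: str
    reads: FrozenSet[str]   # packet fields the SF inspects (hypothetical operation domain)
    writes: FrozenSet[str]  # packet fields the SF modifies


def can_parallelize(a: ServiceFunction, b: ServiceFunction) -> bool:
    """Independent if there is no read-after-write, write-after-read or write-after-write overlap."""
    return not (a.writes & (b.reads | b.writes)) and not (b.writes & a.reads)


def parallelize_chain(chain: List[ServiceFunction]) -> List[List[ServiceFunction]]:
    """Greedily merge consecutive SFs into parallel stages, keeping the chain order."""
    stages: List[List[ServiceFunction]] = []
    for sf in chain:
        if stages and all(can_parallelize(sf, other) for other in stages[-1]):
            stages[-1].append(sf)   # independent of every SF in the current stage
        else:
            stages.append([sf])     # conflict: start a new sequential stage
    return stages
```

With a firewall, a monitor, a source NAT and a load balancer, this check yields two stages instead of four, which is the "shorter chain" effect the abstract describes; the rule is conservative and ignores actions such as packet drops.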

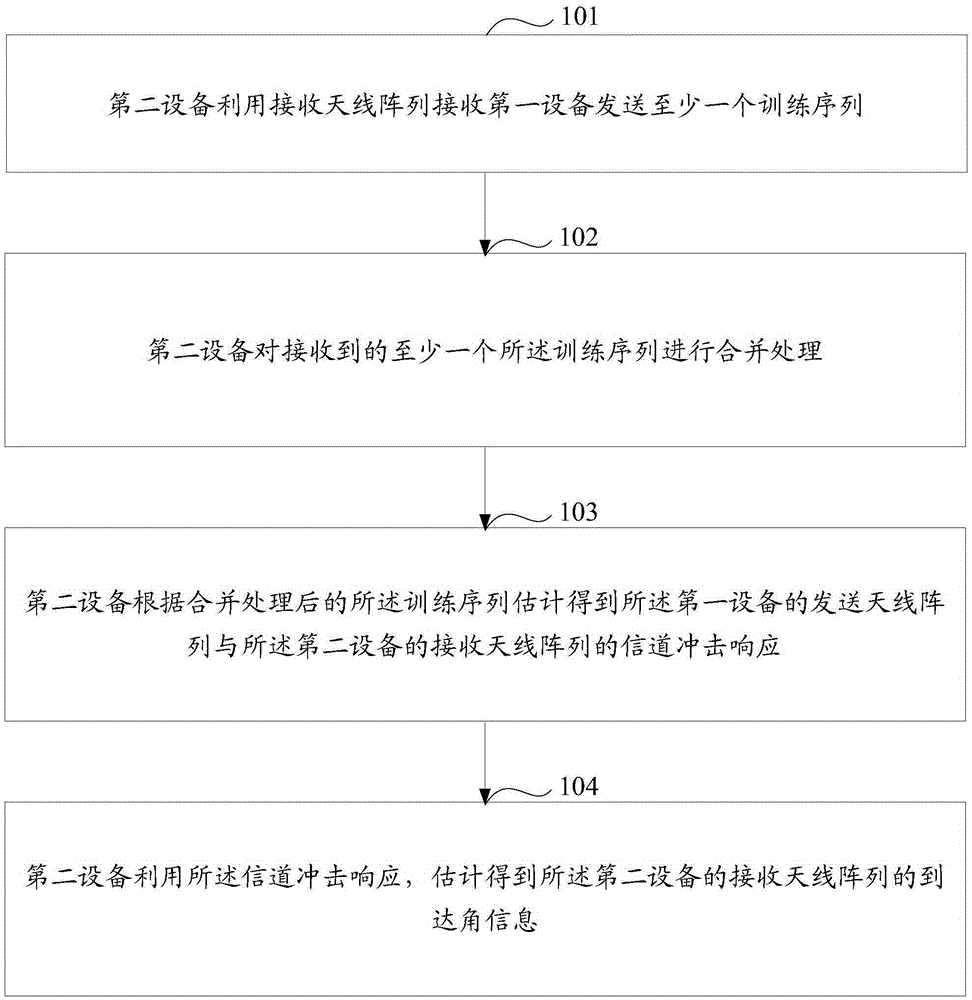

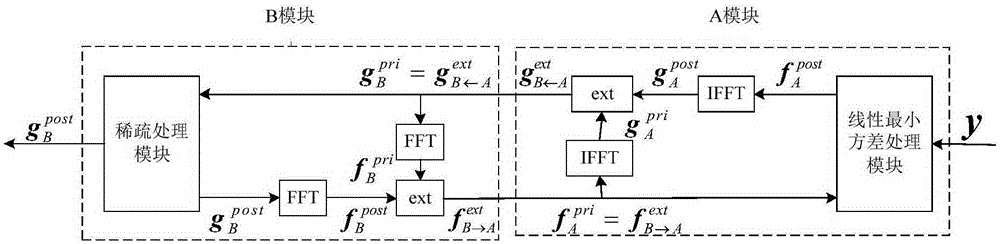

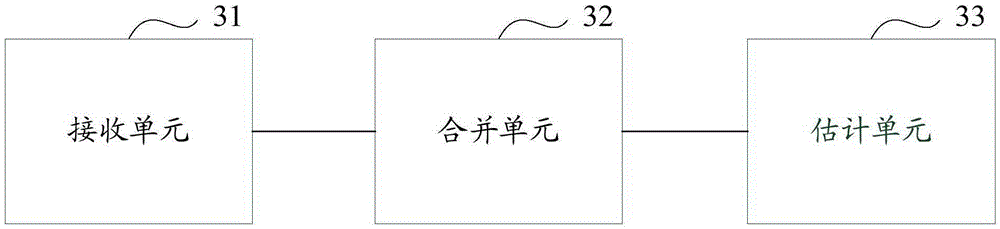

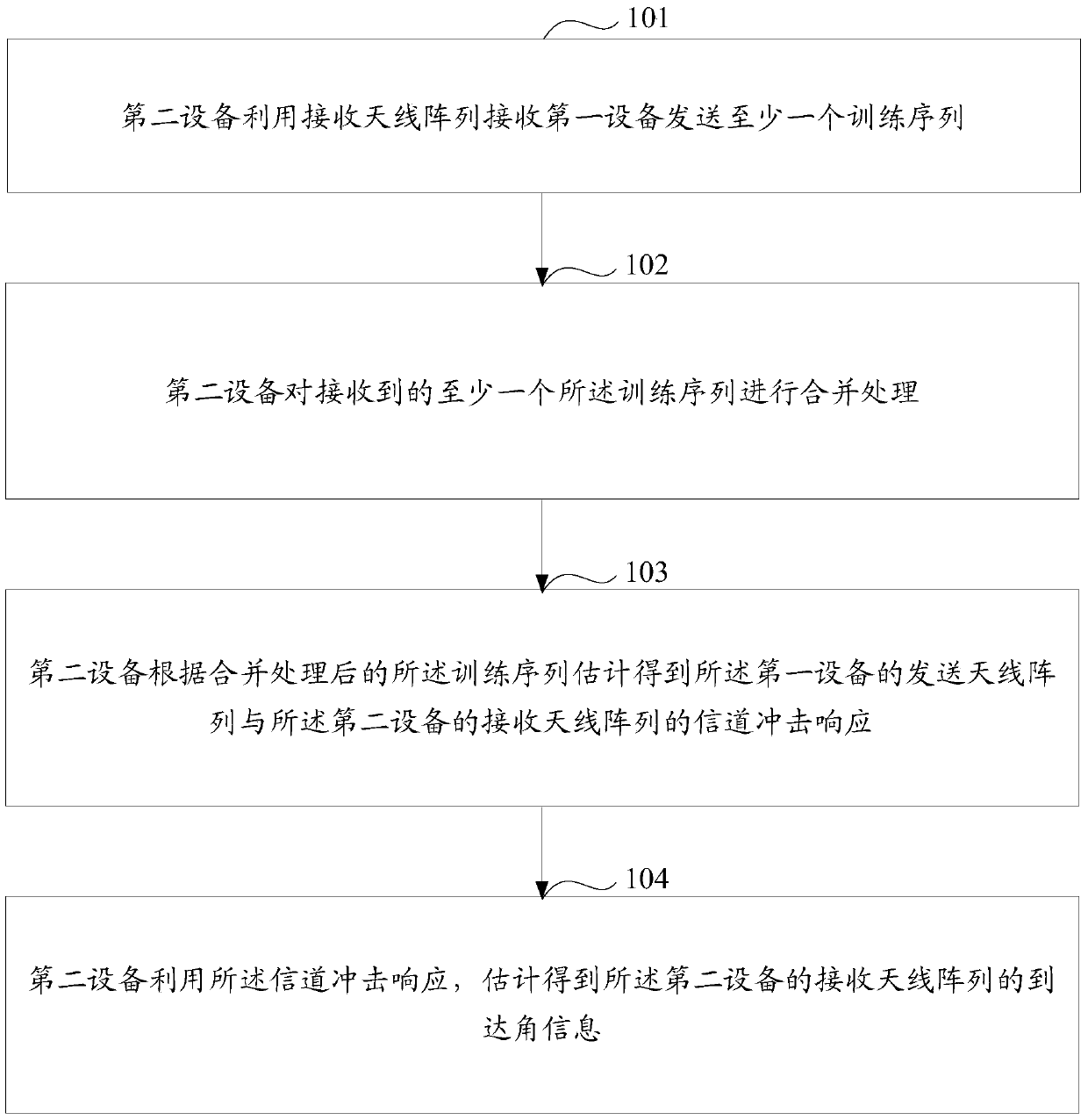

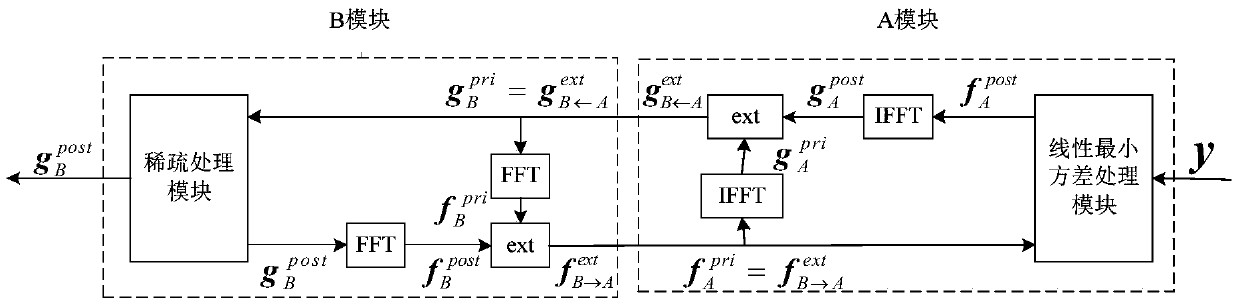

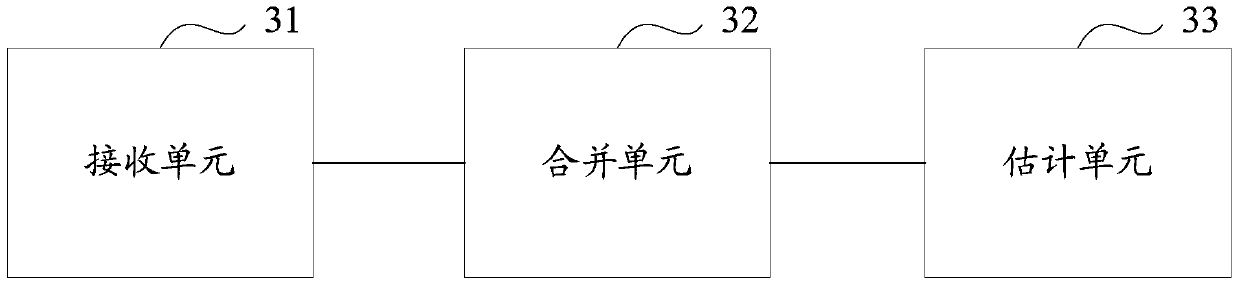

Method and equipment for determining antenna angles

Active | CN105246086A | Tags: Reduce latency overhead, Improve signal transmission efficiency, Network planning, Channel impulse response, Terminal equipment

The invention discloses a method and equipment for determining the arrival angles of antenna arrays. The method comprises the following steps: mobile terminal equipment receives, through its receiving antenna array, at least one training sequence transmitted by base station equipment, where one training sequence is transmitted in each time slot and each training sequence is beamformed by the base station equipment before transmission; the received training sequences are combined; the channel impulse responses between the transmitting antenna array of the base station equipment and the receiving antenna array of the mobile terminal equipment are estimated from the combined training sequences; and the arrival angle information of the mobile terminal's receiving antenna array is estimated from the channel impulse responses. Because the arrival angles are estimated from training sequences exchanged between the base station and the mobile terminal, the time-delay overhead of angle estimation is reduced. The method and equipment are suitable for millimeter-wave systems with large-scale antenna arrays, enable signal transmission in the millimeter-wave band, and effectively increase signal transmission efficiency.

Owner:BEIJING UNIV OF POSTS & TELECOMM
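
The last step, turning a channel estimate into an arrival angle, can be illustrated with a beam scan. This sketch makes simplifying assumptions not stated in the abstract: a half-wavelength uniform linear array, a single-path narrowband channel vector already estimated per time slot, simple averaging as the "combining" step, and a grid search over angles; the real method works from beamformed training sequences and channel impulse responses.

```python
import cmath
import math
from typing import List, Sequence


def steering(n_ant: int, theta_deg: float) -> List[complex]:
    """Response of an assumed half-wavelength uniform linear array at angle theta."""
    s = math.sin(math.radians(theta_deg))
    return [cmath.exp(1j * math.pi * n * s) for n in range(n_ant)]


def combine(estimates: Sequence[Sequence[complex]]) -> List[complex]:
    """Average per-slot channel estimates (a stand-in for combining training sequences)."""
    k = len(estimates)
    return [sum(col) / k for col in zip(*estimates)]


def estimate_aoa(h: Sequence[complex], step: float = 0.5) -> float:
    """Grid-search the angle whose steering vector best matches the channel vector h."""
    grid = [-90 + i * step for i in range(int(180 / step) + 1)]

    def score(theta: float) -> float:
        a = steering(len(h), theta)
        return abs(sum(hn * an.conjugate() for hn, an in zip(h, a)))

    return max(grid, key=score)
```

Averaging several slots before the search reduces noise in `h`, so one angle search replaces per-slot searches; the grid step bounds the angular resolution.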

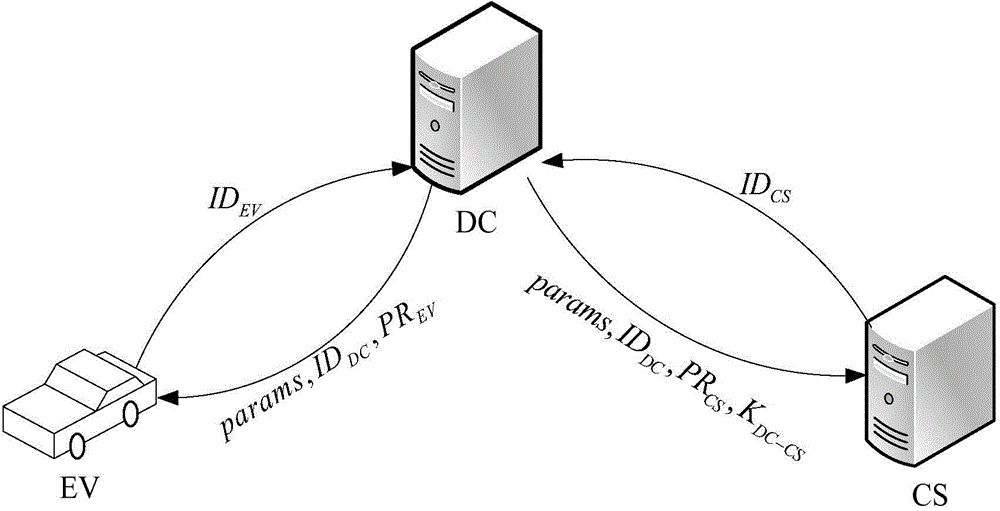

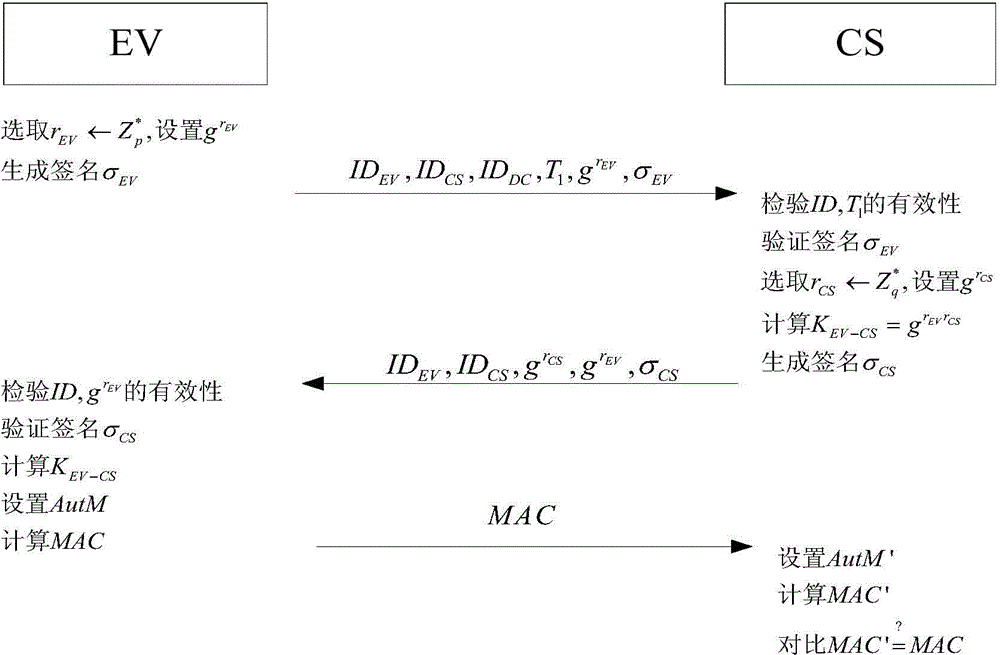

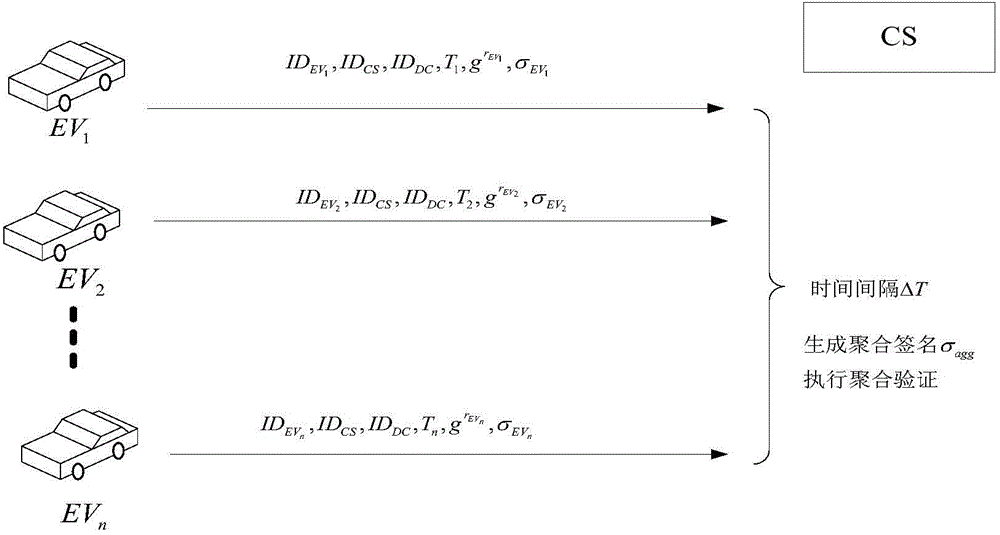

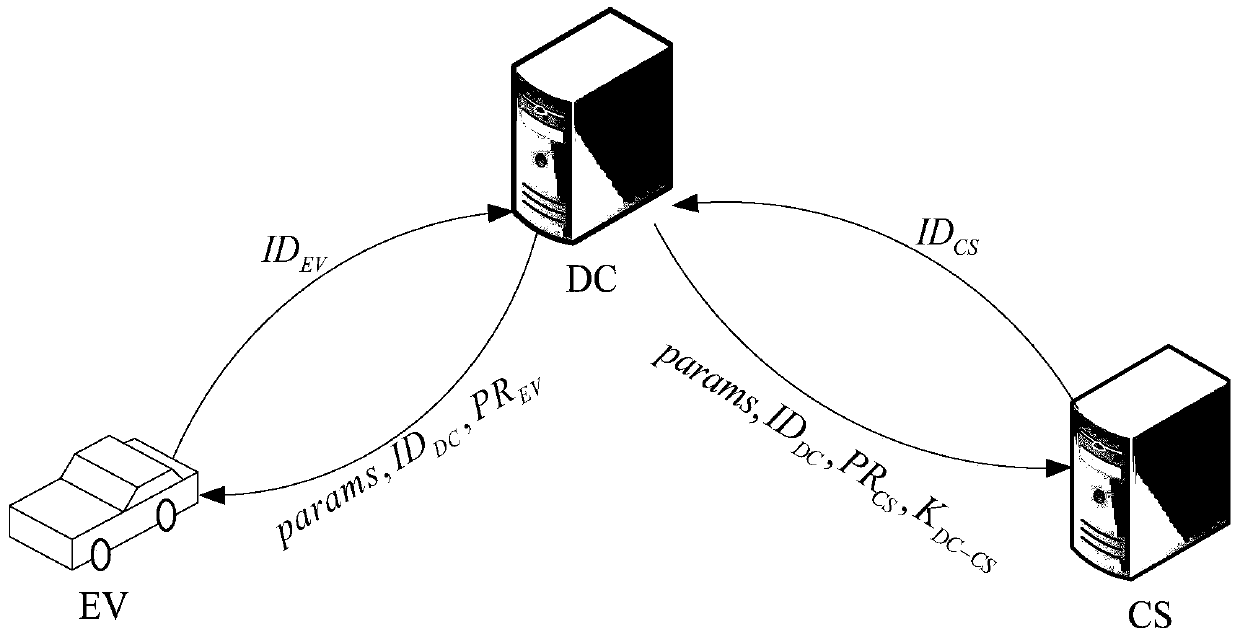

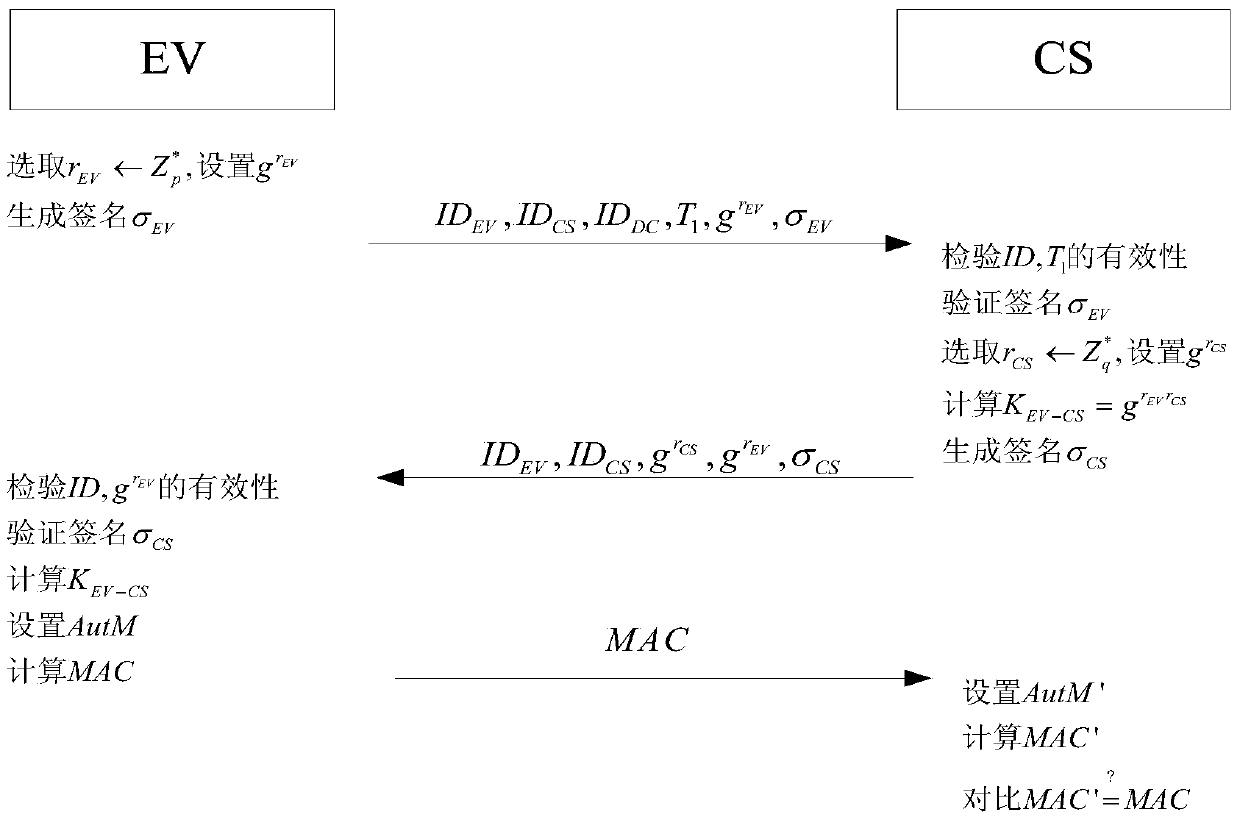

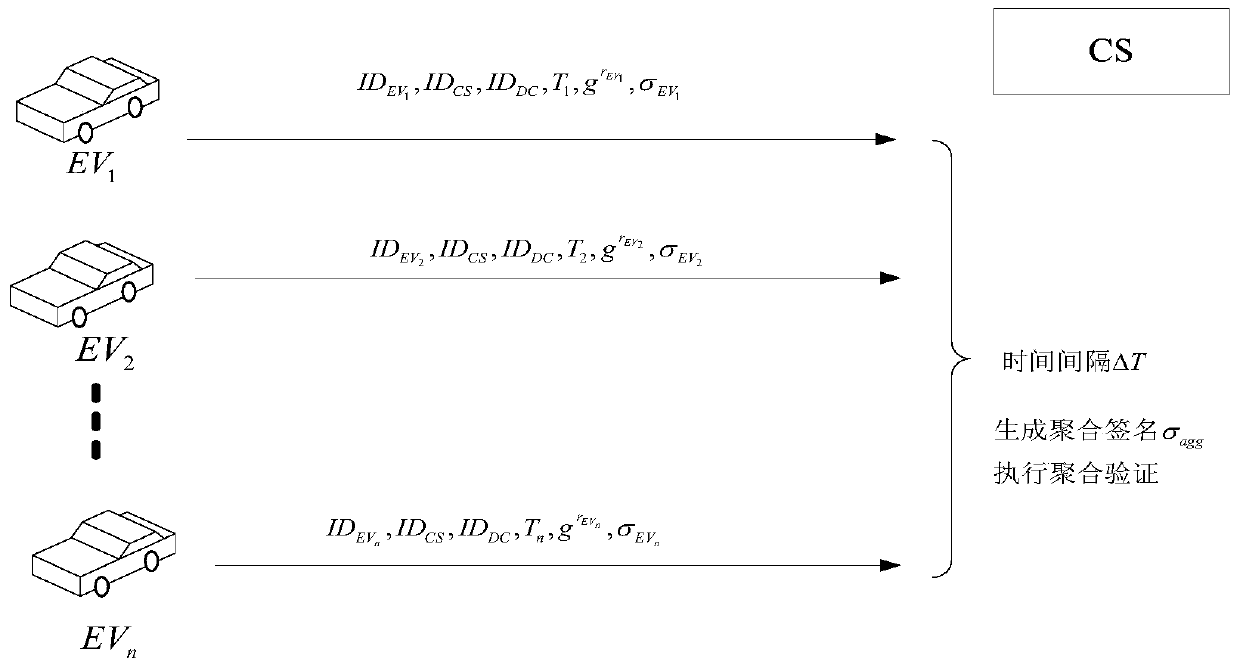

Access authentication method of electric automobile

The invention relates to an access authentication method for electric vehicles. The method comprises the following steps: an access authentication system for electric vehicles is established; access authentication is performed between the electric vehicles and a charging station; and the charging station performs batch signature authentication on the electric vehicles. The invention introduces identity-based aggregate signatures into the identity validity check of electric vehicles, and designs mutual authentication and session key negotiation between the electric vehicles and the access authentication system, which ensures proper use of the information resources in the station and fundamentally safeguards the security of the whole network system. The batch signature authentication mechanism lets the charging station verify the signatures of multiple electric vehicle terminals in aggregate, which lightens the computational load and improves performance. A typical identity-based aggregate signature scheme consists of five algorithms: system initialization, private key generation, signing, aggregation, and aggregate verification. Because a user's identity information can replace the user's public key, the high cost of certificates is avoided, and compressing multiple signatures into one enables efficient verification.

Owner:STATE GRID CORP OF CHINA +2

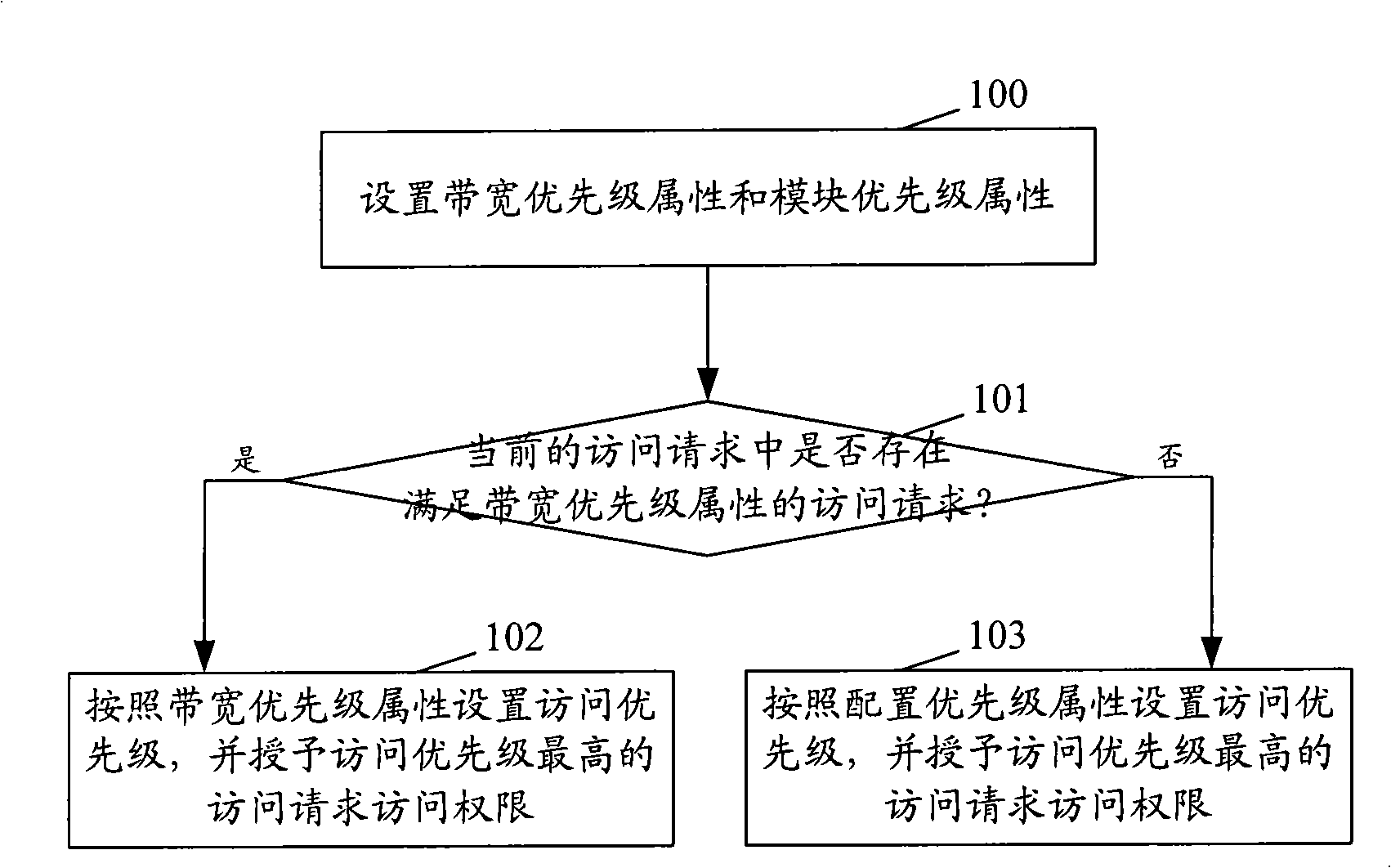

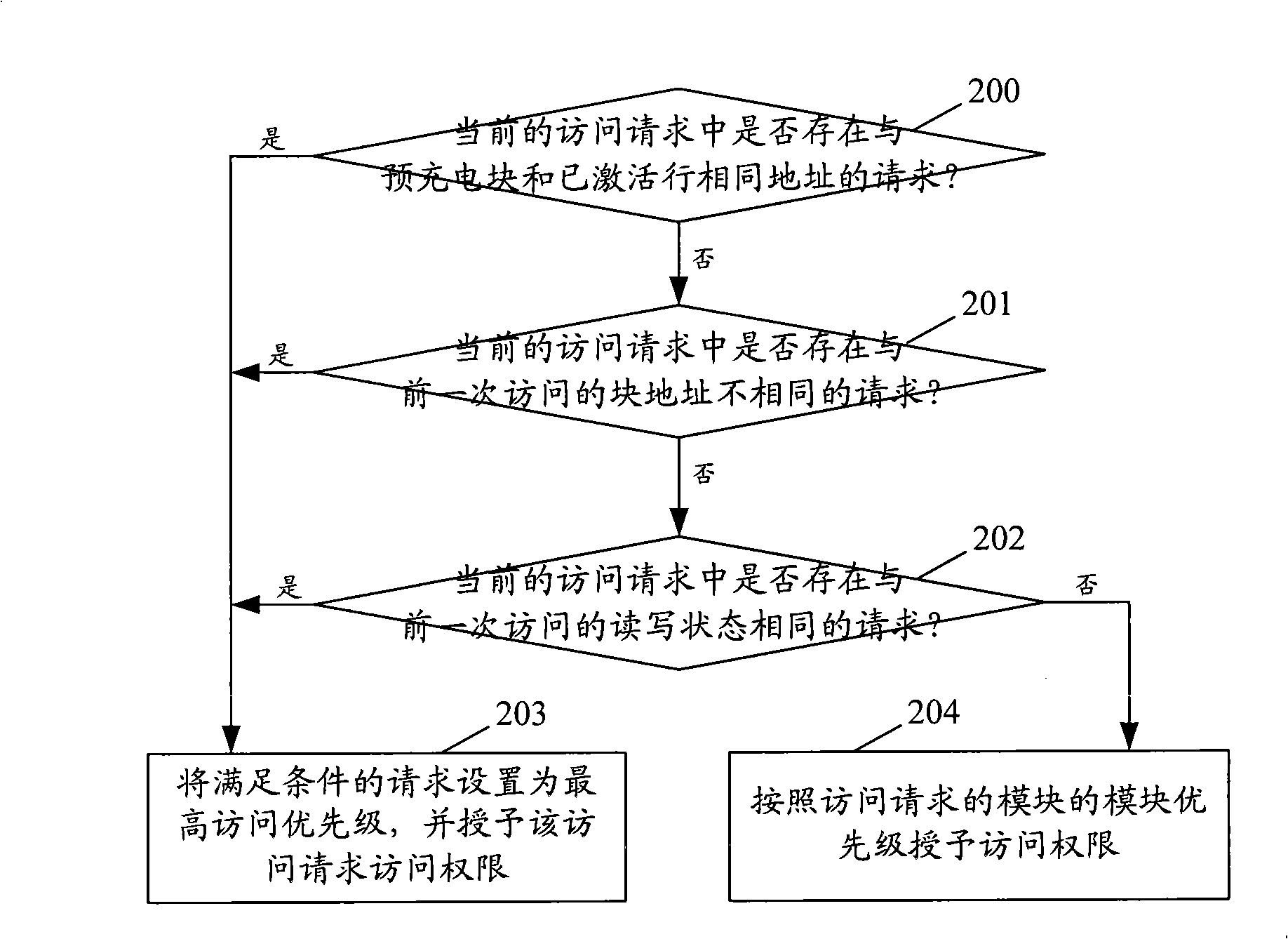

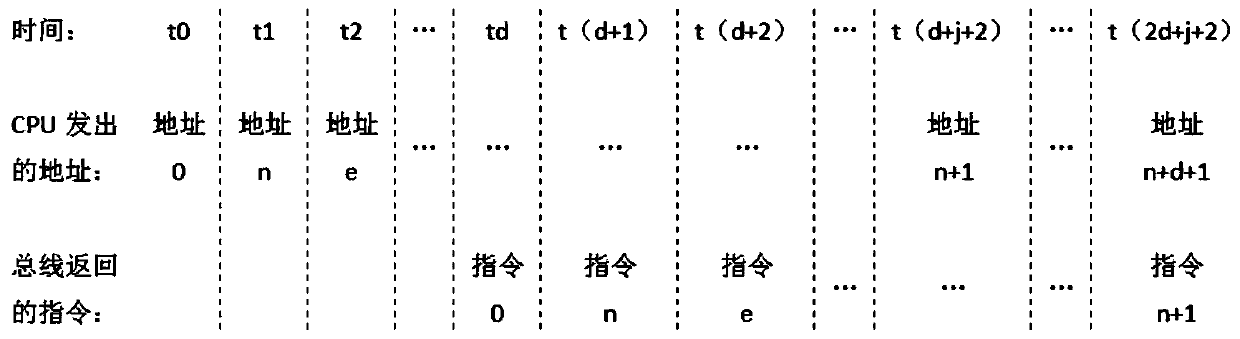

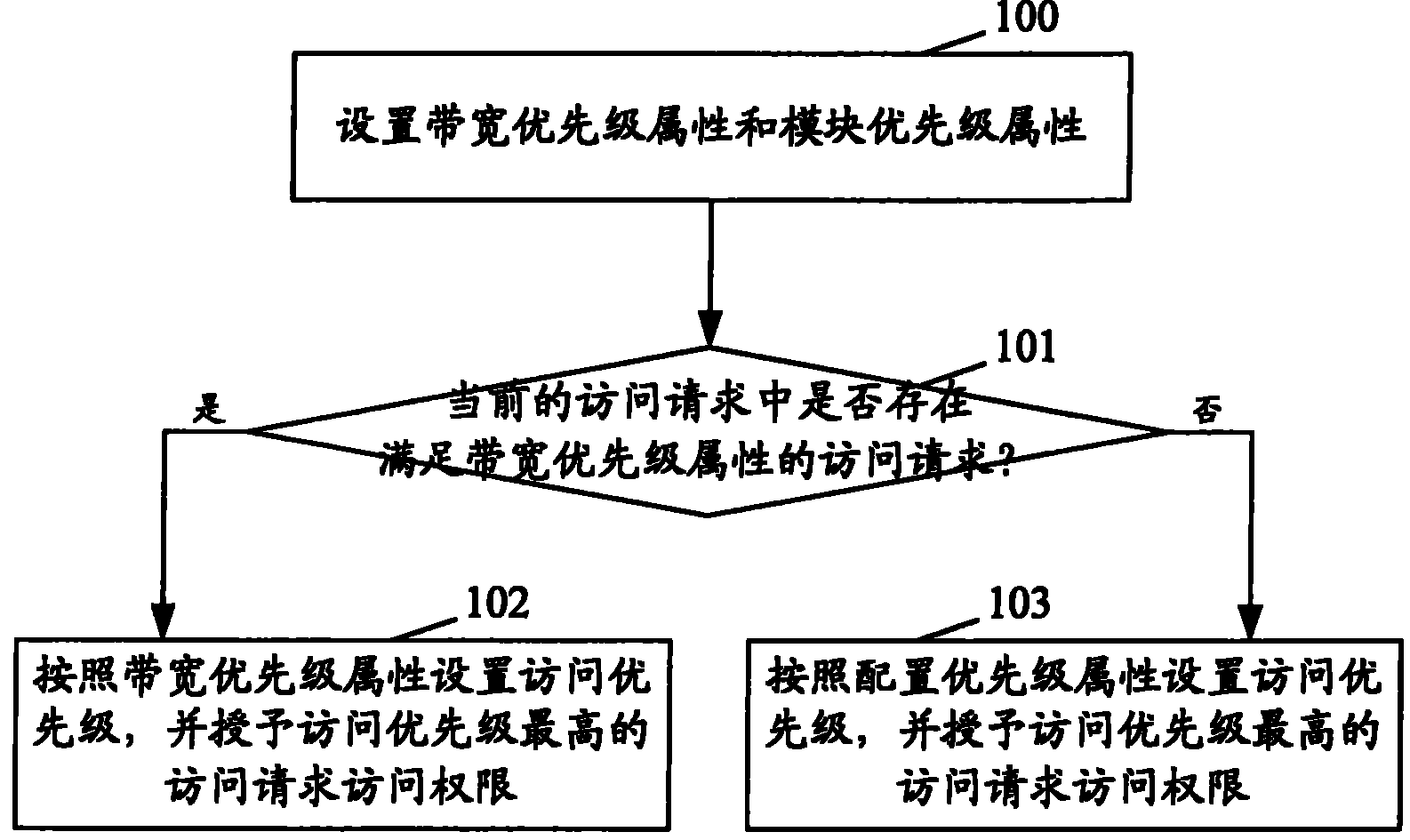

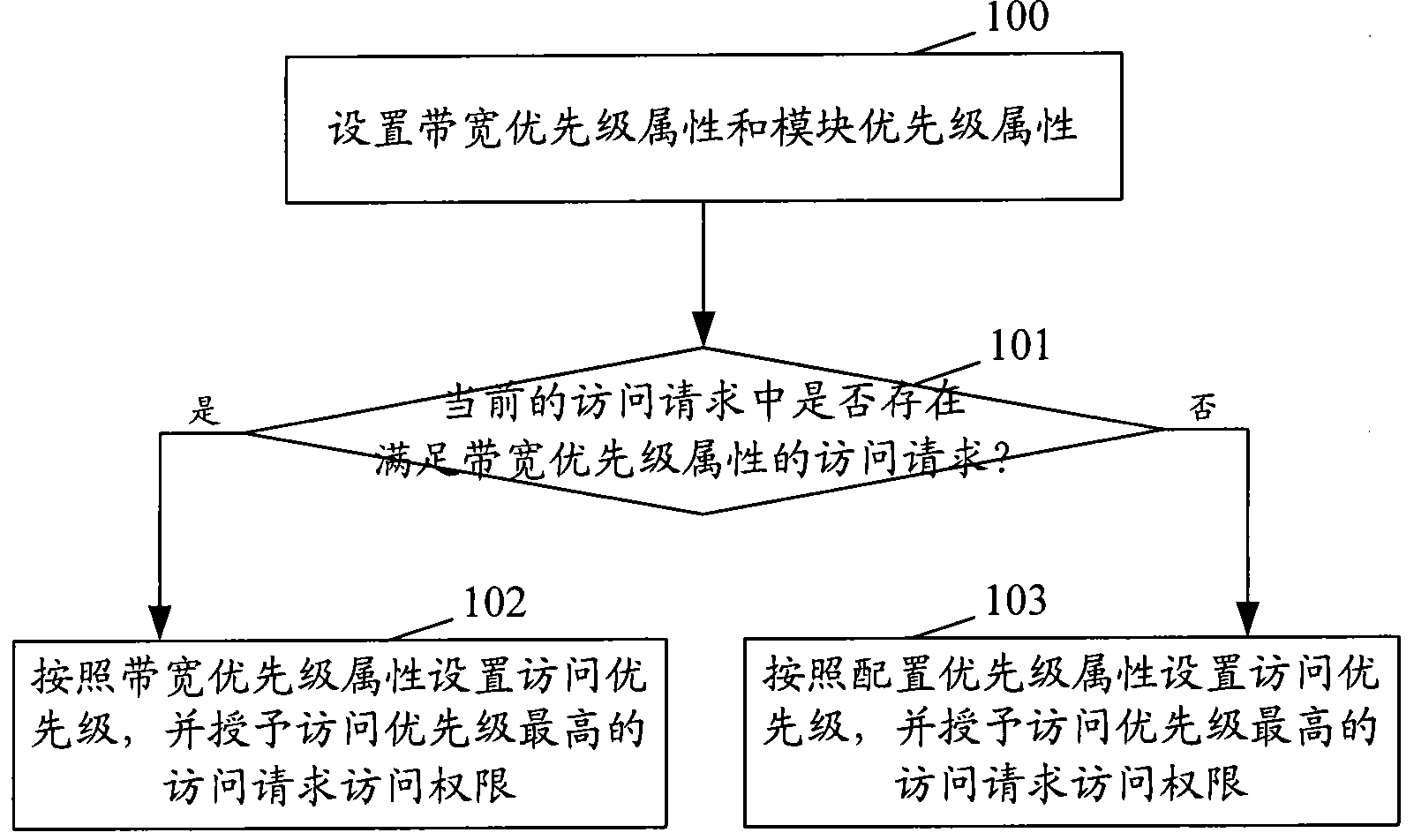

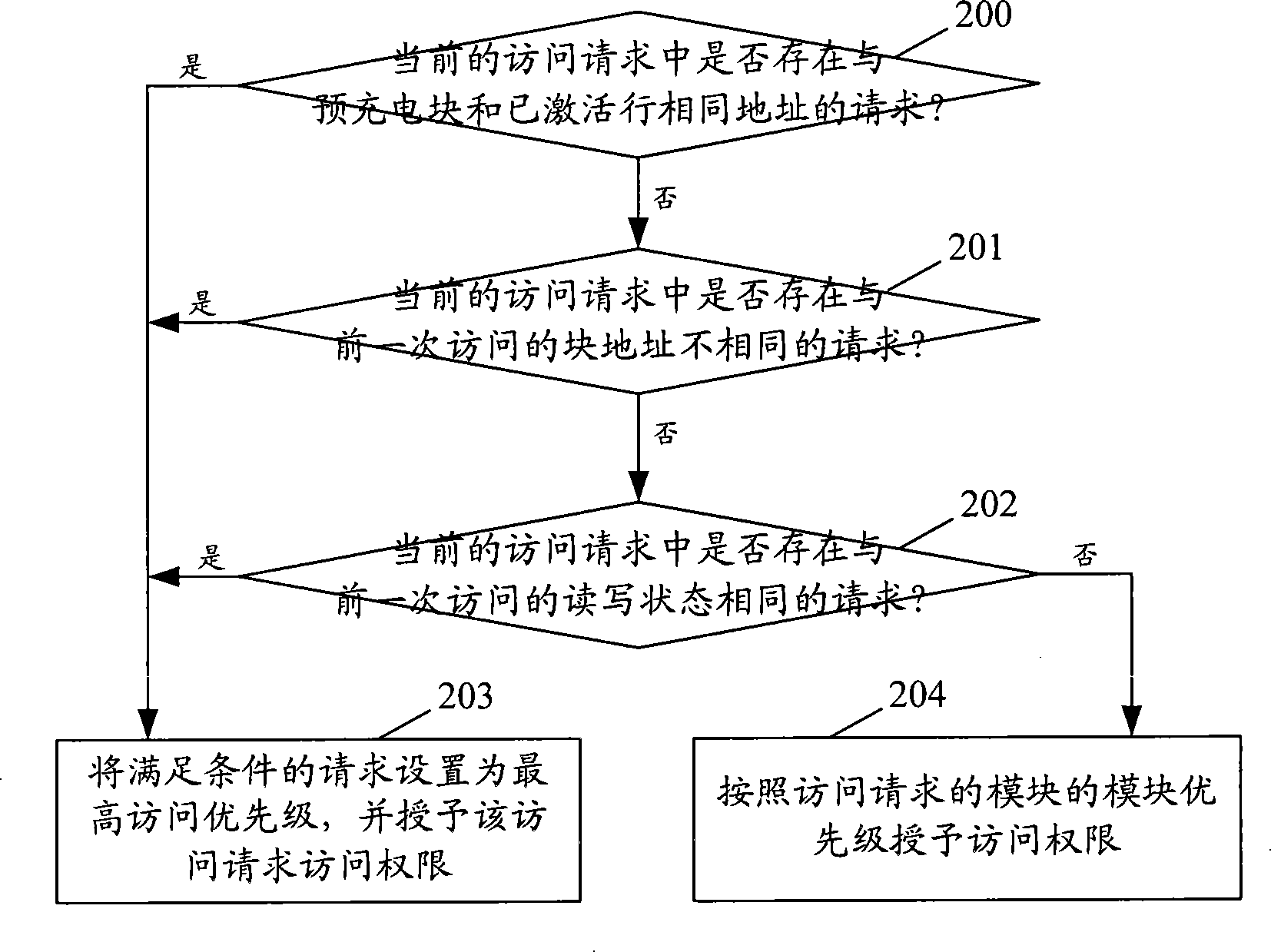

Method for access to external memory

Active | CN101271435A | Tags: Improve efficiency, Reduce latency overhead, Electric digital data processing, External storage, Data access

The present invention discloses a method for accessing an external storage device. A bandwidth priority attribute reflecting the physical characteristics of the external storage device is set, and whether the current access request is granted access is decided by evaluating that attribute, which increases the efficiency with which the device accesses the external storage. Consecutive data accesses are kept, as far as possible, within the same row of the same bank, which greatly improves the efficiency of consecutive accesses. While the data of the previous request is being transferred, the command and address of the current request are issued in parallel, hiding the command transmission delay; and bus turnaround time is reduced by keeping consecutive data accesses all writes or all reads.

Owner:JIANGSU DAHAI INTELLIGENT SYST
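
Two of the ideas above (keep consecutive accesses in the same open row, and avoid switching the bus between reads and writes) resemble a row-hit-first request scheduler. The Python sketch below is an illustrative assumption, not the patented controller: it ranks pending requests by row-buffer hit, then by matching the current bus direction, then by age. It does not model the bandwidth priority attribute, command/address pipelining, or starvation limits.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Request:
    bank: int
    row: int
    is_write: bool
    arrival: int  # lower value = older request


def pick_next(pending: List[Request], open_rows: Dict[int, int],
              last_is_write: Optional[bool]) -> Request:
    """Prefer a row-buffer hit, then the same bus direction, then the oldest request."""
    def priority(r: Request):
        row_hit = open_rows.get(r.bank) == r.row
        same_dir = last_is_write is None or r.is_write == last_is_write
        return (not row_hit, not same_dir, r.arrival)  # False sorts first
    return min(pending, key=priority)


def schedule(pending: List[Request]) -> List[Request]:
    """Drain the queue while tracking the open row per bank and the bus direction."""
    pending = list(pending)
    open_rows: Dict[int, int] = {}
    last: Optional[bool] = None
    order: List[Request] = []
    while pending:
        nxt = pick_next(pending, open_rows, last)
        pending.remove(nxt)
        open_rows[nxt.bank] = nxt.row  # activating a row leaves it open in that bank
        last = nxt.is_write
        order.append(nxt)
    return order
```

In the accompanying check, the second read to row 5 of bank 0 jumps ahead of an older read to row 7, saving one row activation.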

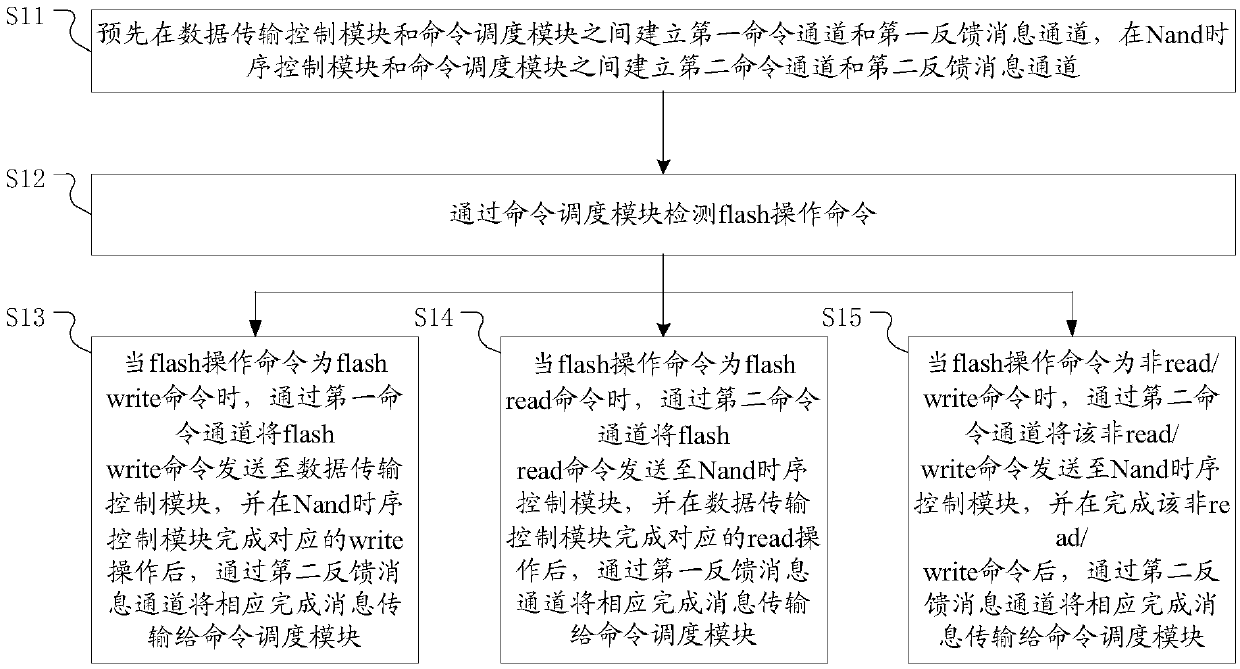

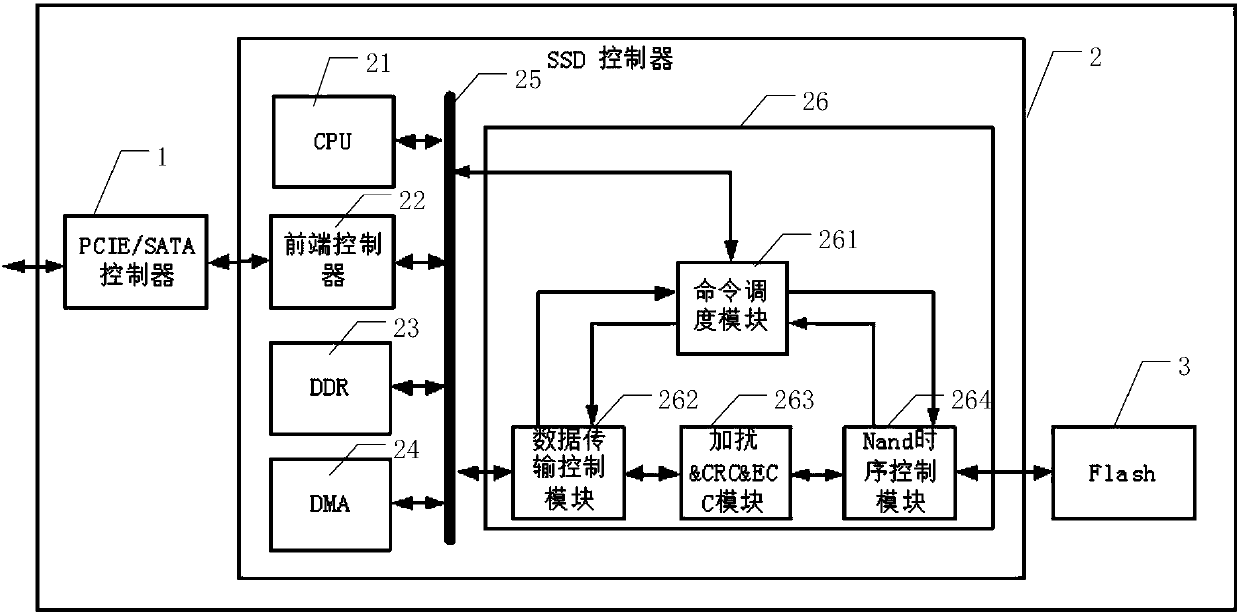

Data flow control method for SSD backend

Active | CN107632793A | Tags: Improve the efficiency of command response, Reduce latency overhead, Input/output to record carriers, Interprogram communication, Data stream, Computer module

The invention discloses a data flow control method for an SSD backend. The SSD backend includes a command scheduling module, a data transmission control module, an interference, CRC and ECC module, and a NAND timing control module. In the method, a first command channel and a first information feedback channel are established in advance between the data transmission control module and the command scheduling module, and a second command channel and a second information feedback channel are established between the NAND timing control module and the command scheduling module. By separating the processing of read/write commands from that of other operation commands, assigning them different command receiving channels, and completing the information feedback channels, the delay spent waiting for data at the NAND interface is reduced and the efficiency of command response at the NAND interface is improved.

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD

Resource allocation method and resource borrowing method

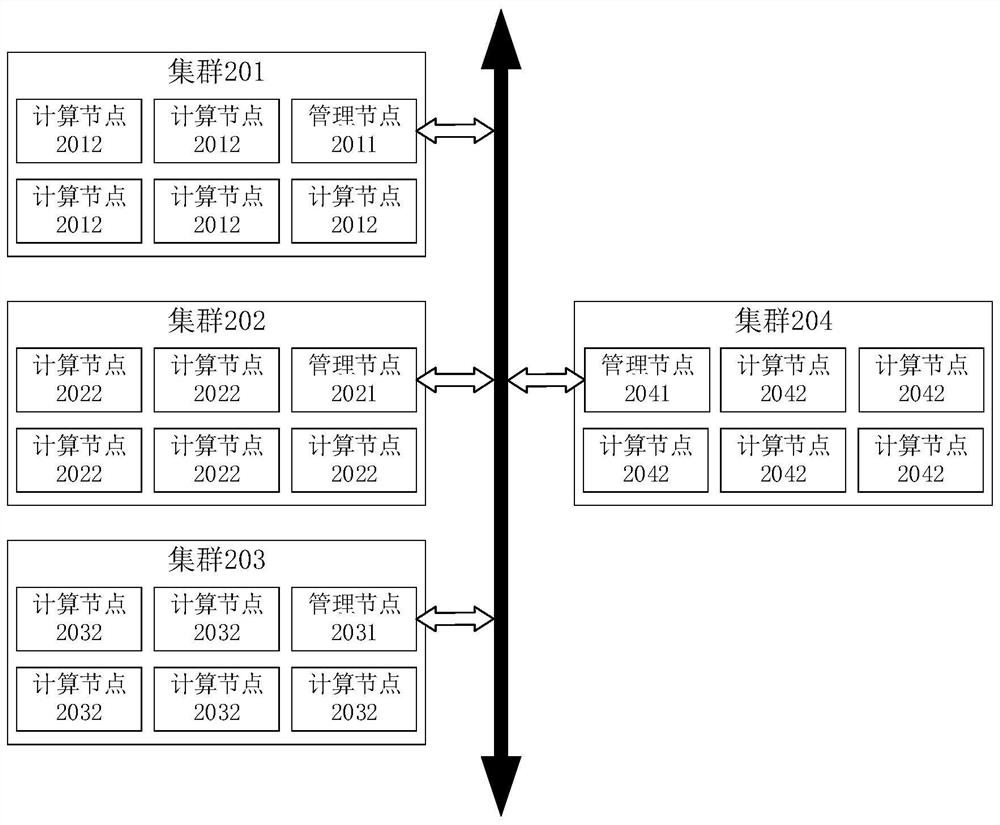

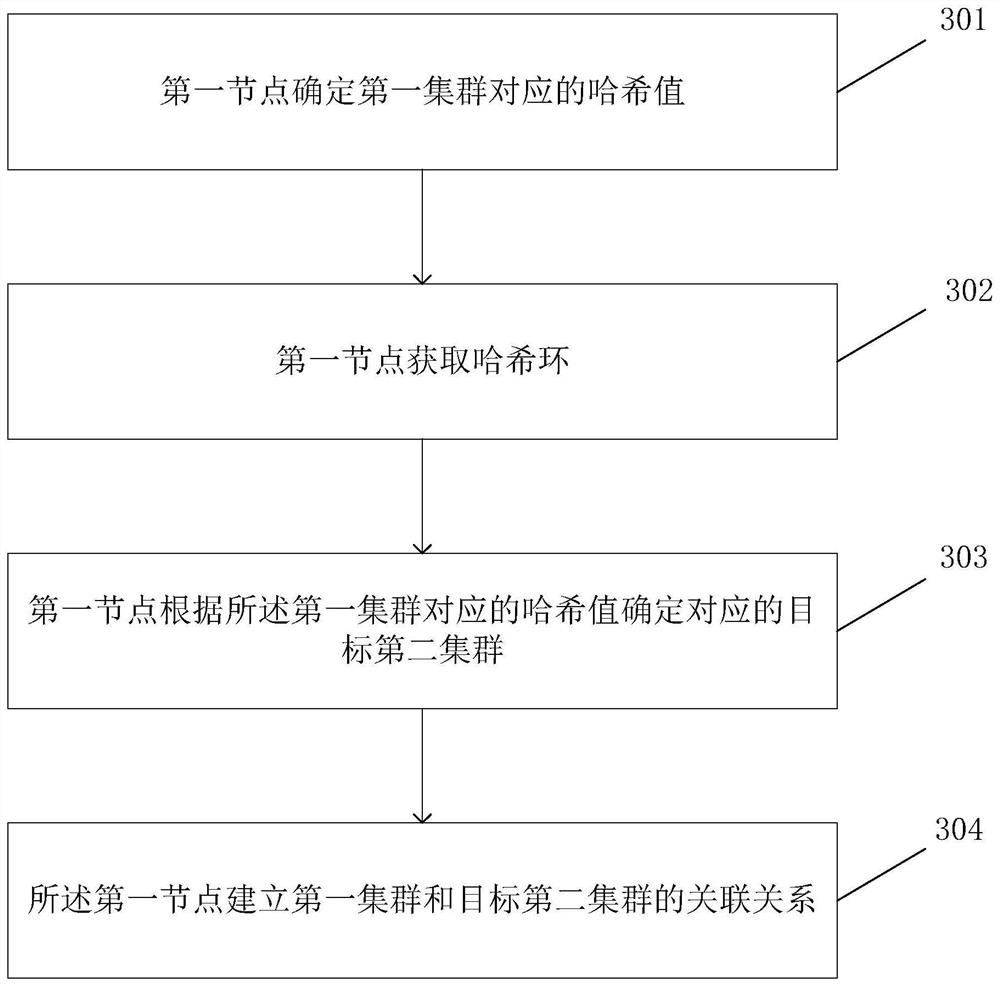

Pending | CN112306651A | Tags: Reduce latency overhead, Program initiation/switching, Resource allocation, Resource assignment, Distributed computing

The embodiments of the invention provide a resource allocation method that avoids the borrowing conflict caused by a plurality of busy clusters concurrently borrowing resources from the same idle cluster. The resource allocation method comprises the following steps: a first node determines a hash value corresponding to a first cluster; the first node acquires a hash ring; and the first node establishes an association relationship between the first cluster and a target second cluster, where the target second cluster associated with the first cluster is allowed to apply for resources from the first cluster.

Owner:HUAWEI TECH CO LTD
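
One way to read the abstract is that each lending (first) cluster uses a hash ring to pick exactly one borrowing (second) cluster, so no two borrowers ever contend for the same lender. The sketch below follows that reading under stated assumptions: SHA-256 as the ring hash, "clockwise successor" as the selection rule, and the helper names are all hypothetical rather than taken from the patent.

```python
import bisect
import hashlib
from typing import Dict, List


def ring_hash(key: str) -> int:
    """Stable 64-bit ring position (SHA-256 prefix; the hash choice is an assumption)."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class HashRing:
    def __init__(self, clusters: List[str]):
        self._points = sorted((ring_hash(c), c) for c in clusters)
        self._keys = [p for p, _ in self._points]

    def successor(self, key: str) -> str:
        """First cluster clockwise from key's position, wrapping past the end of the ring."""
        i = bisect.bisect_right(self._keys, ring_hash(key)) % len(self._keys)
        return self._points[i][1]


def associate(idle: List[str], busy: List[str]) -> Dict[str, str]:
    """Map each idle (lending) cluster to one busy (borrowing) cluster.

    Each lender gets exactly one permitted borrower, so two busy clusters
    can never contend for the same idle cluster's resources.
    """
    if not busy:
        return {}
    ring = HashRing(busy)
    return {lender: ring.successor(lender) for lender in idle}
```

Because the mapping is a pure function of cluster names, every node that computes it reaches the same association without extra coordination messages.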

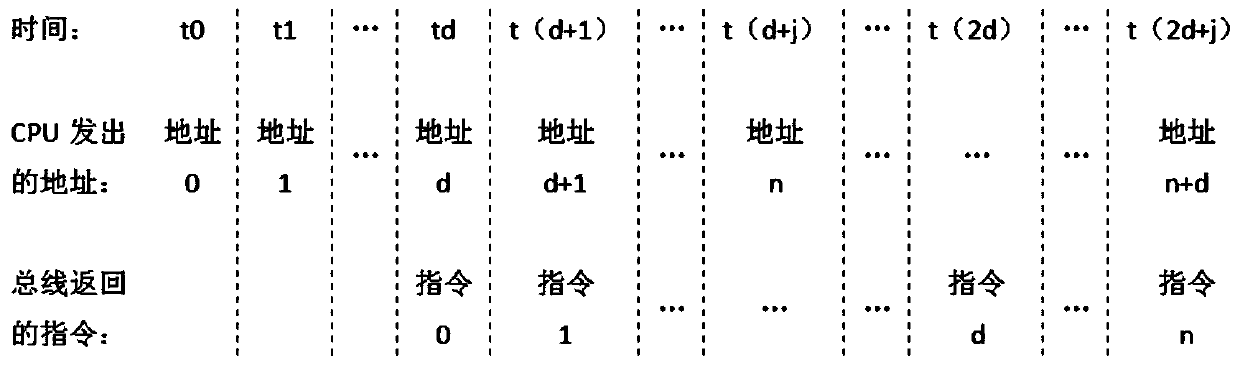

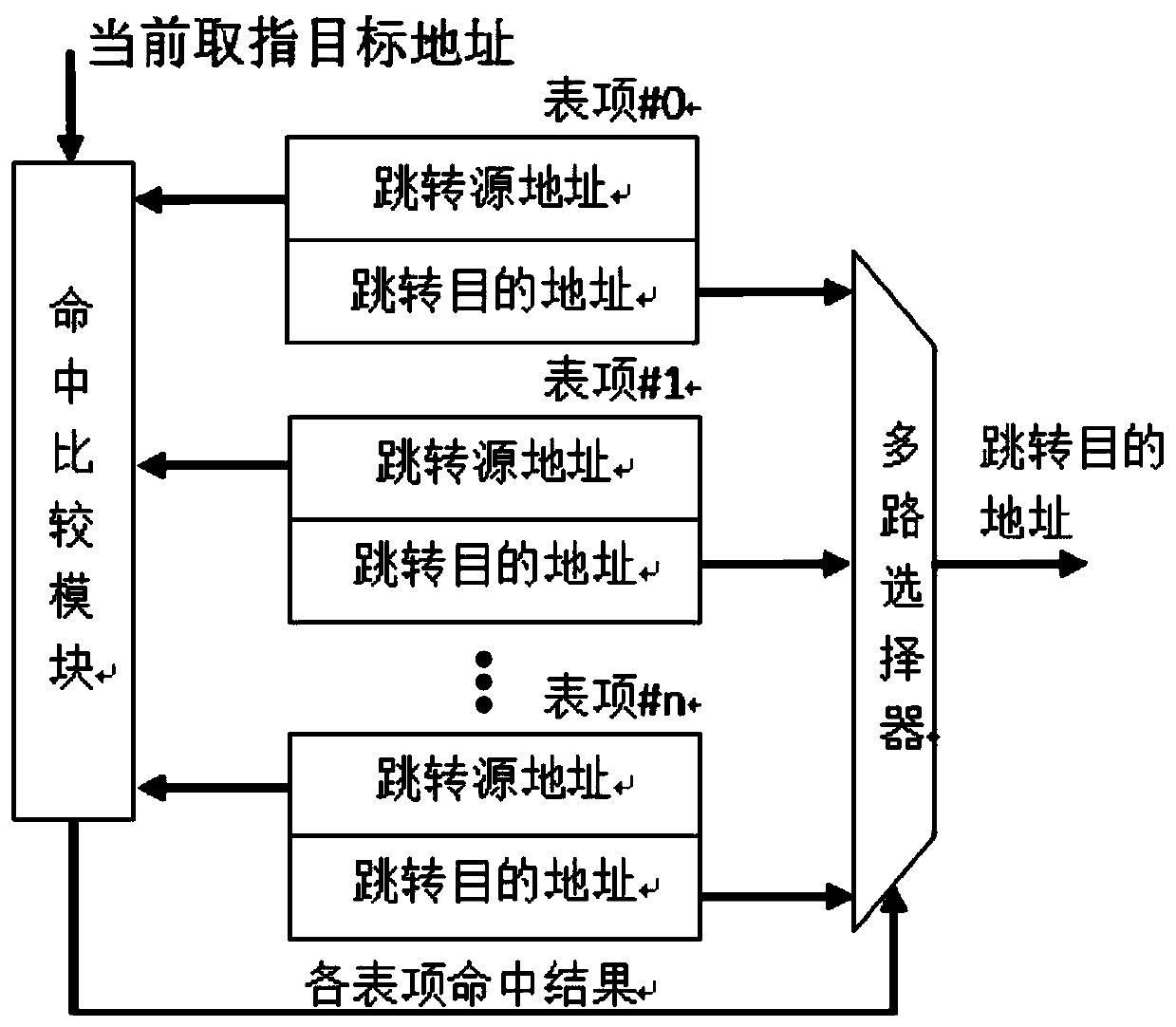

Method and circuit for reducing program skip overhead in CPU

Inactive | CN111124493A | Tags: Reduce latency overhead, Machine execution arrangements, Parallel computing, Lookup table

The invention provides a method for reducing program skip (jump) overhead in an embedded CPU (Central Processing Unit) and a circuit implementing it. In the method, a historical score value is added to each entry of the jump-address lookup table and the entries are compared by score, so that the most frequently occurring jump destination addresses are kept in the lookup table and the jump delay overhead is effectively reduced. In hardware, the method adopts a classic CPU cache structure and uses the score value as the basis for entry replacement.

Owner:天津国芯科技有限公司
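
Score-based replacement in the jump-address table can be modelled in a few lines of Python. This is a behavioural sketch under assumptions: the table is fully associative (the set-indexing of a real cache structure is omitted), the score is a saturating counter bumped on every hit with an assumed 4-bit ceiling, there is no score aging, and on a miss with a full table the lowest-scoring entry is evicted.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Entry:
    target: int
    score: int = 0


class ScoredJumpTable:
    """Hypothetical jump-target table with history-score replacement."""

    def __init__(self, capacity: int, max_score: int = 15):
        self.capacity = capacity
        self.max_score = max_score           # saturating-counter ceiling (assumed 4 bits)
        self.entries: Dict[int, Entry] = {}  # jump instruction address -> entry

    def lookup(self, pc: int) -> Optional[int]:
        """Return the cached target and bump its score; None means a miss."""
        entry = self.entries.get(pc)
        if entry is None:
            return None                      # miss: the pipeline pays the jump delay
        entry.score = min(entry.score + 1, self.max_score)
        return entry.target

    def insert(self, pc: int, target: int) -> None:
        """Record a resolved jump, evicting the lowest-scoring entry if the table is full."""
        if pc in self.entries:
            self.entries[pc].target = target
            return
        if len(self.entries) >= self.capacity:
            victim = min(self.entries, key=lambda k: self.entries[k].score)
            del self.entries[victim]
        self.entries[pc] = Entry(target)
```

Compared with LRU, a frequently taken jump that has not run recently still survives eviction, which is the effect the abstract attributes to the score field.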

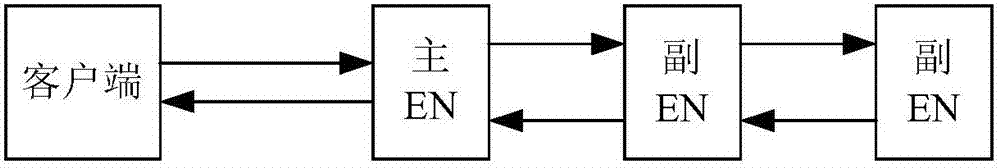

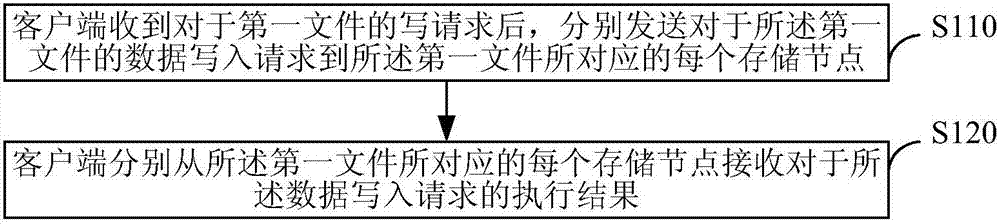

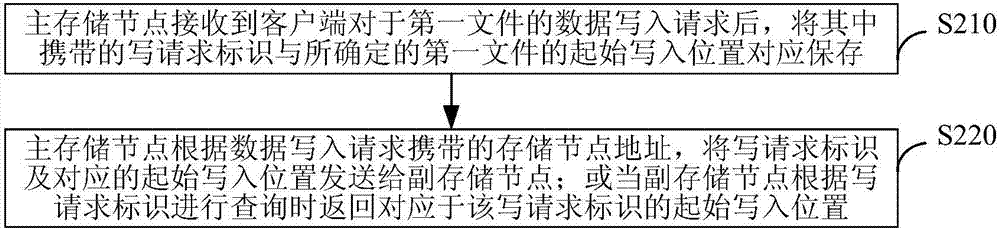

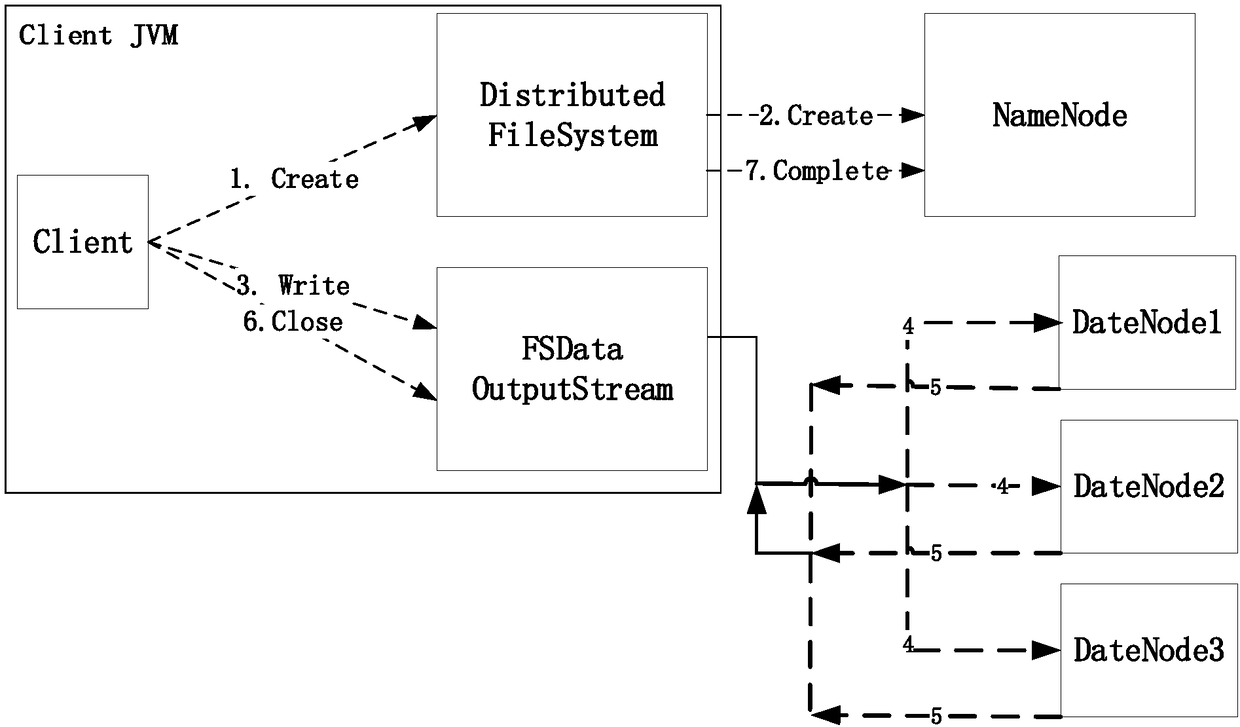

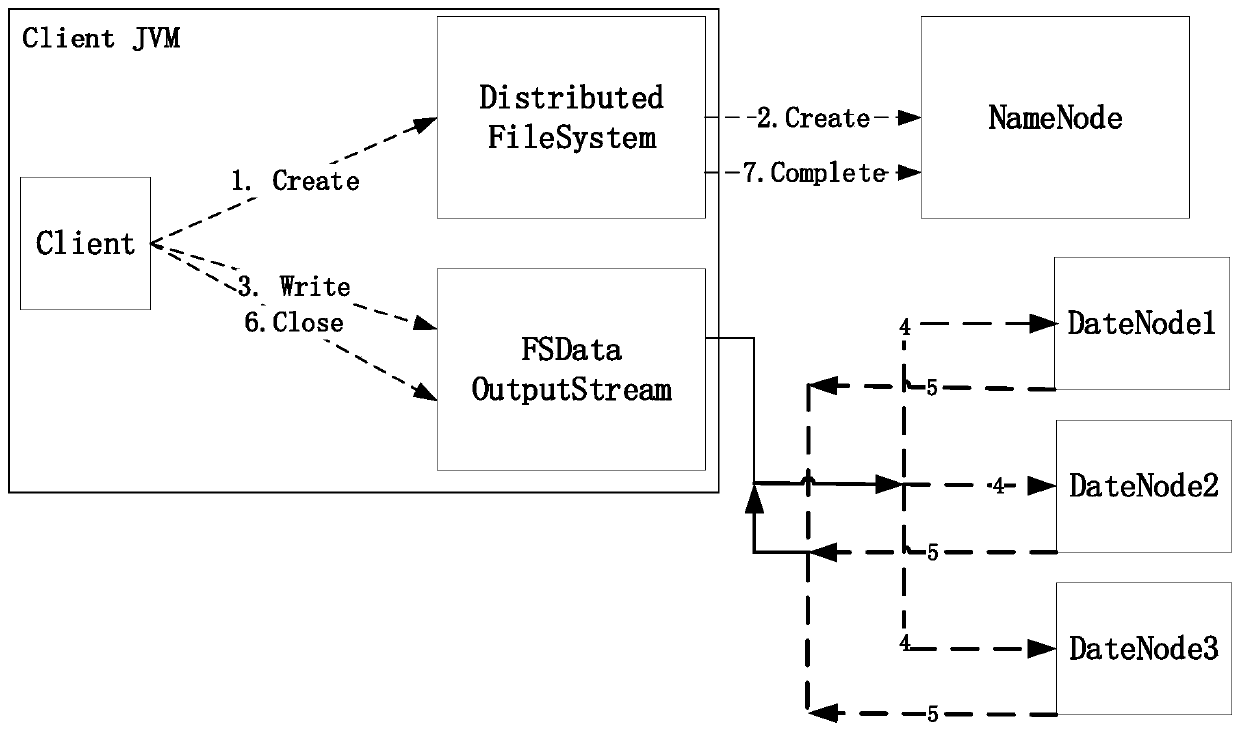

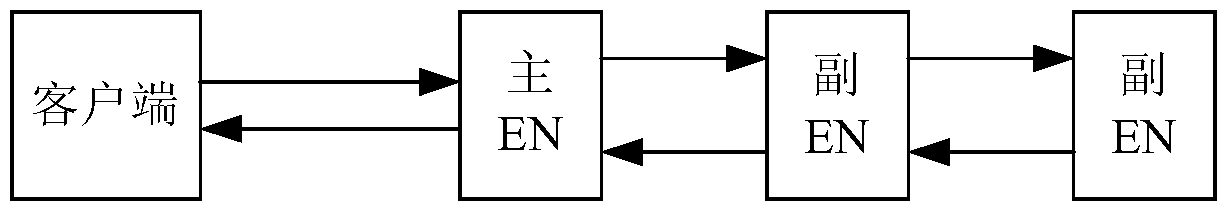

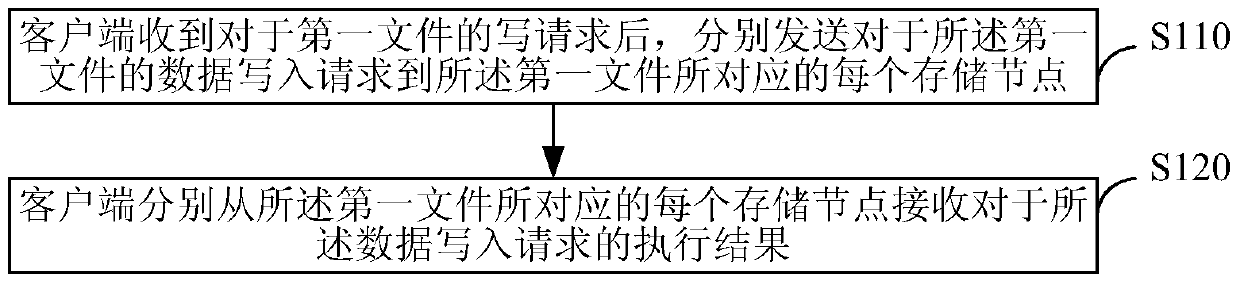

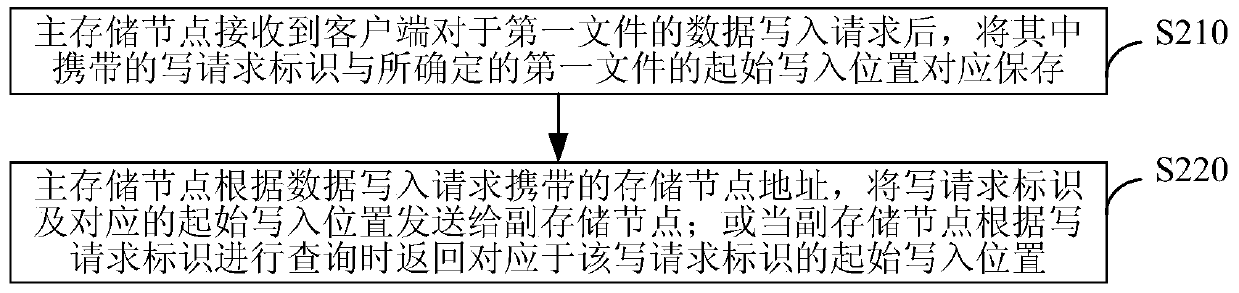

File write-in method in distributed system and apparatus thereof

Active | CN107493309A | Tags: Ensure consistency, Reduce consistency, Transmission, Special data processing applications, Time delays, Client-side

A file write method in a distributed system and an apparatus thereof are disclosed. The method comprises the following steps: after receiving a write request for a first file, a client sends a data write request for the first file to each storage node corresponding to the first file, where the data write request includes at least a write request identifier corresponding to the first file and a storage node address; the storage node address includes the address of the primary storage node and/or the address of the secondary storage node among the storage nodes corresponding to the first file, and is used for interaction between the primary and secondary storage nodes; and the client receives the execution result of the data write request from each storage node corresponding to the first file. While keeping the byte order of the multiple data replicas consistent, the invention reduces the number of hops over which data is sent, thereby decreasing write latency.

Owner:ALIBABA GRP HLDG LTD

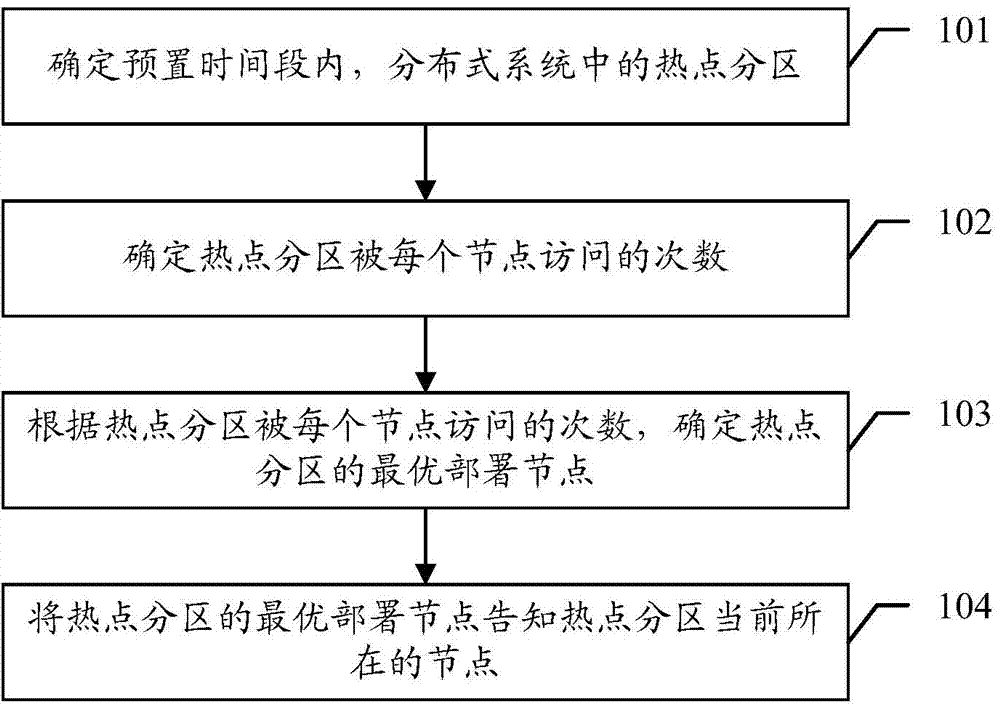

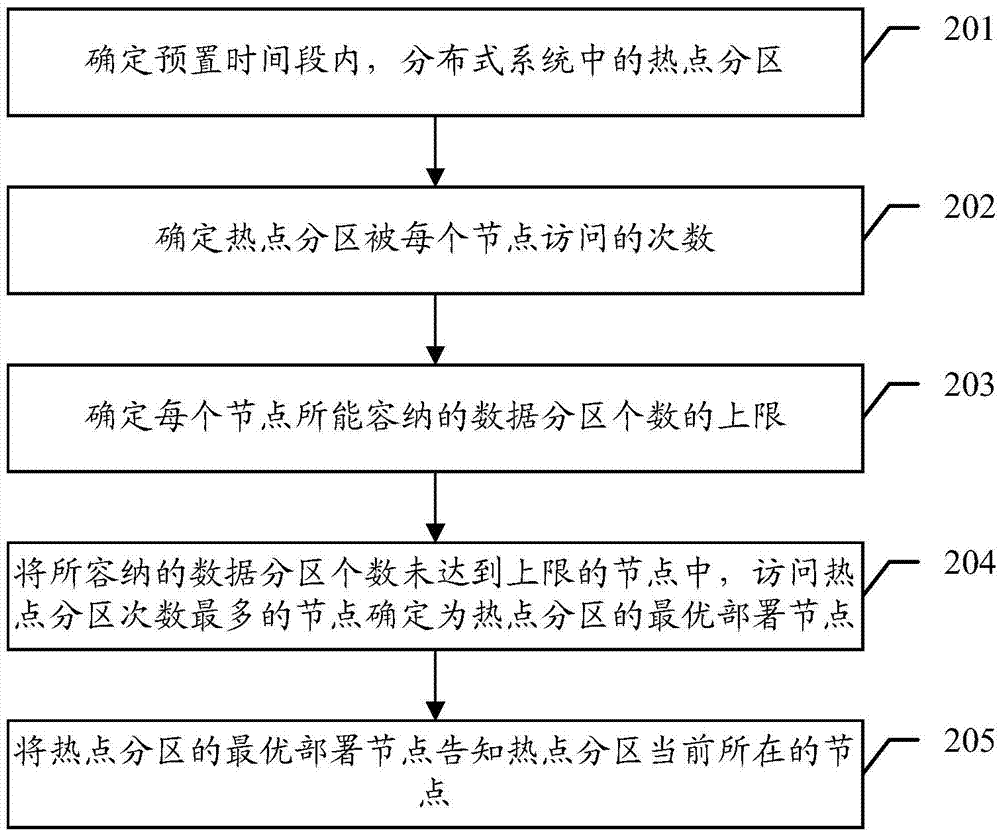

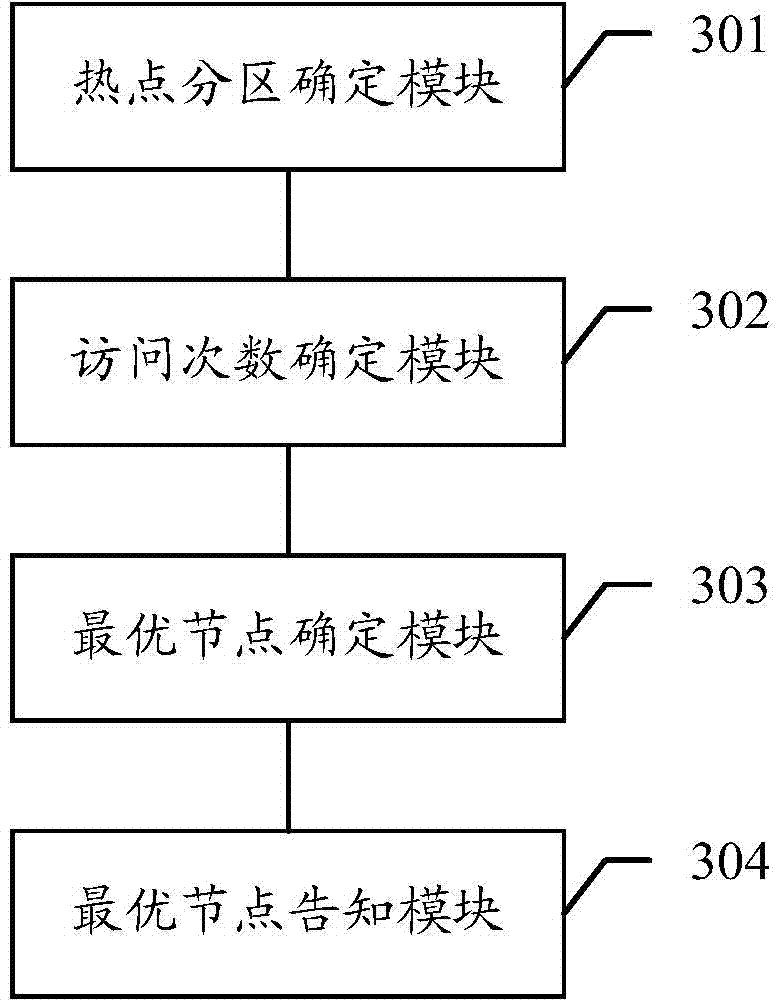

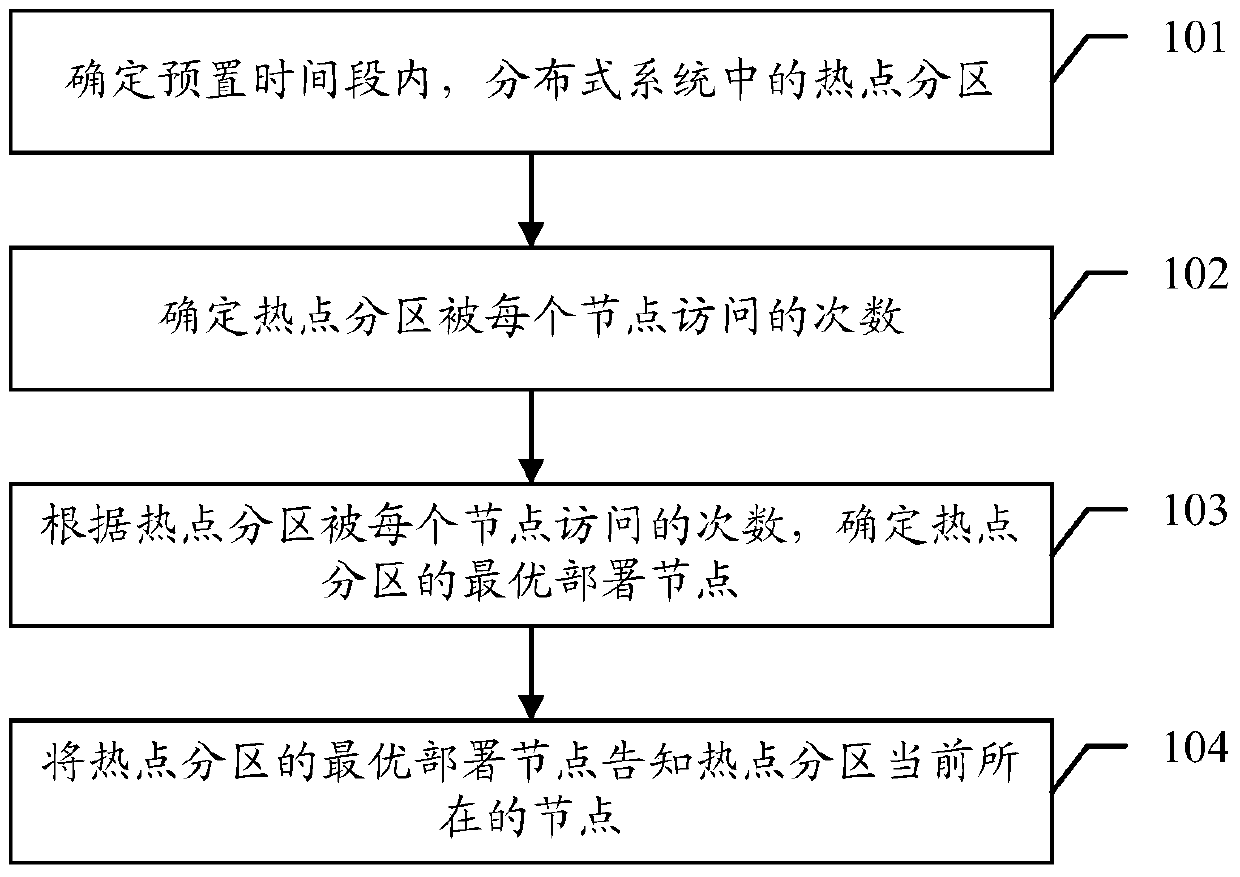

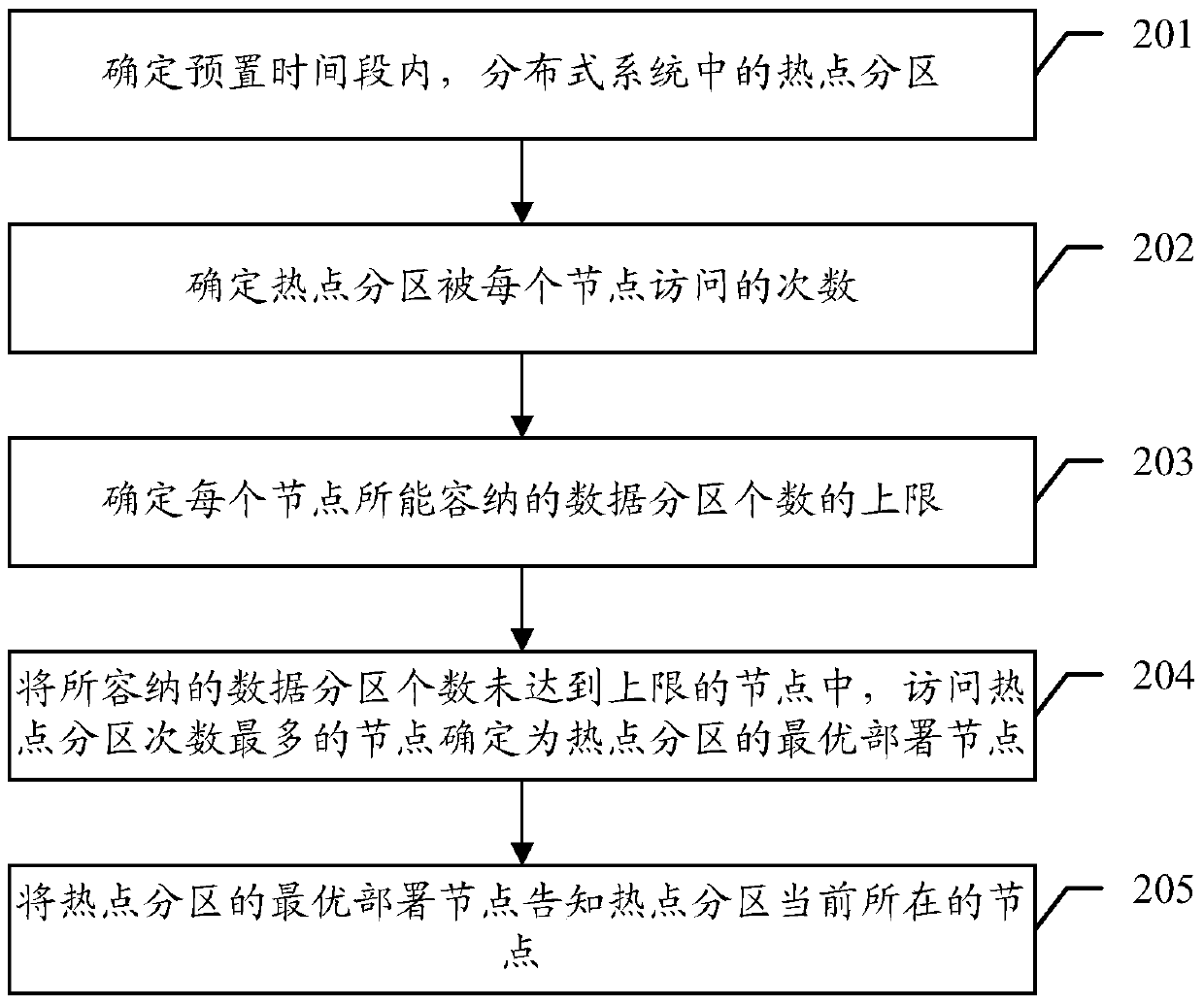

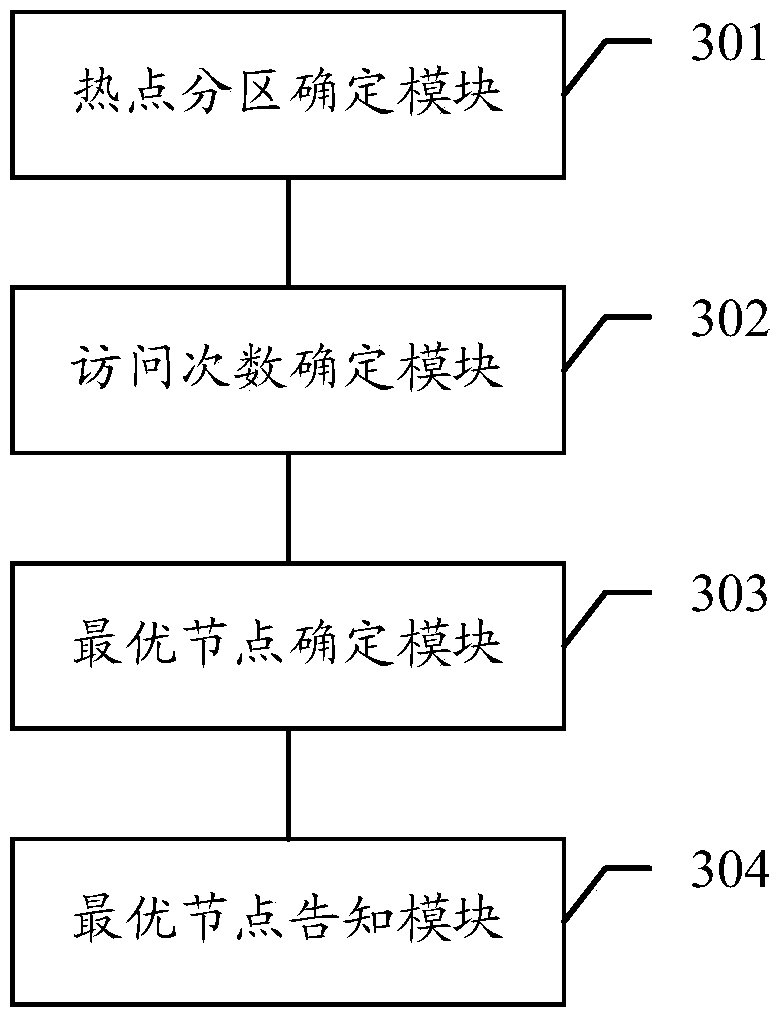

Memory partition deployment method and device

Active | CN104516952A | Tags: Reduce the number of times, Reduce latency overhead, Special data processing applications, Time delays, Optimal deployment

The invention discloses a memory partition deployment method for rationally deploying the memory partitions of a distributed system and reducing the system's latency overhead. The method comprises the steps of: determining the hot spot partitions in the distributed system within a preset time period, where the distributed system comprises multiple nodes on which the memory partitions are deployed, and a hot spot partition is a memory partition whose access frequency ranks among the highest in the system; determining the frequency with which each node accesses each hot spot partition; determining the optimal deployment node of each hot spot partition according to these per-node access frequencies; and notifying the node currently hosting the hot spot partition of that partition's optimal deployment node. The invention further provides a corresponding memory partition deployment device.

Owner:HUAWEI CLOUD COMPUTING TECH CO LTD
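
The four steps above map naturally onto access counting. In this hypothetical Python sketch, "hot" means the `top_k` partitions by total accesses in the window, and the optimal deployment node is assumed to be the node that accessed the partition most often; the abstract does not specify either rule, so both are illustrative choices.

```python
from collections import Counter
from typing import Dict, List, Tuple


def plan_hot_partitions(accesses: List[Tuple[str, str]], location: Dict[str, str],
                        top_k: int) -> Dict[str, str]:
    """Return {partition: target node} for hot partitions that should be moved.

    accesses: (node, partition) pairs observed during one time window.
    location: partition -> node that currently hosts it.
    """
    # Hot spot partitions: the top_k partitions by total access count.
    totals = Counter(p for _, p in accesses)
    hot = [p for p, _ in totals.most_common(top_k)]

    # Per-partition access counts broken down by the node issuing the access.
    per_node: Dict[str, Counter] = {}
    for node, p in accesses:
        per_node.setdefault(p, Counter())[node] += 1

    moves = {}
    for p in hot:
        best_node, _ = per_node[p].most_common(1)[0]
        if best_node != location[p]:
            moves[p] = best_node  # the current host would be notified of this target
    return moves
```

Moving a partition to its heaviest accessor turns the majority of its remote accesses into local ones, which is where the latency saving comes from.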

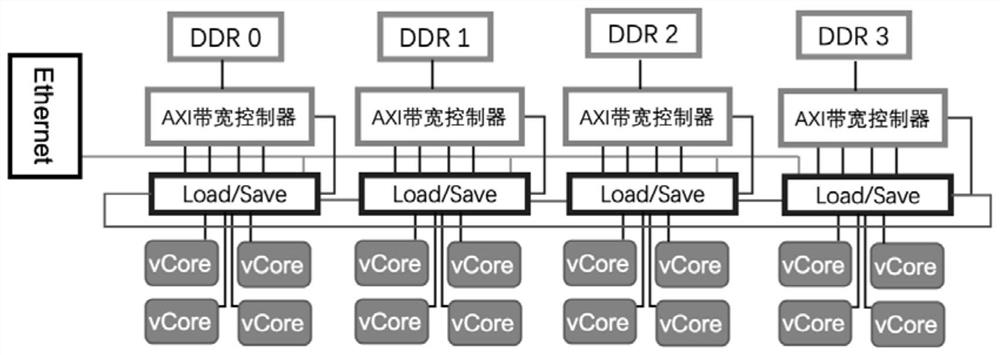

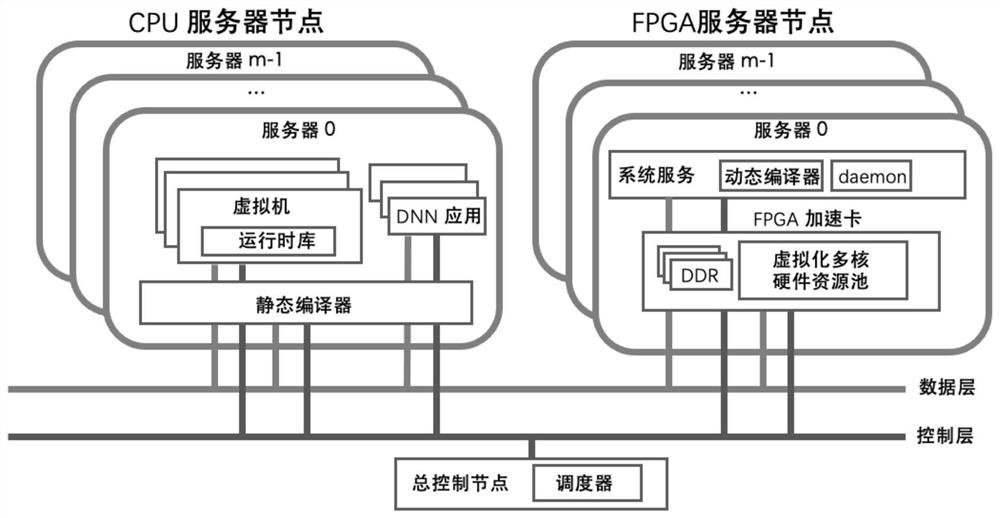

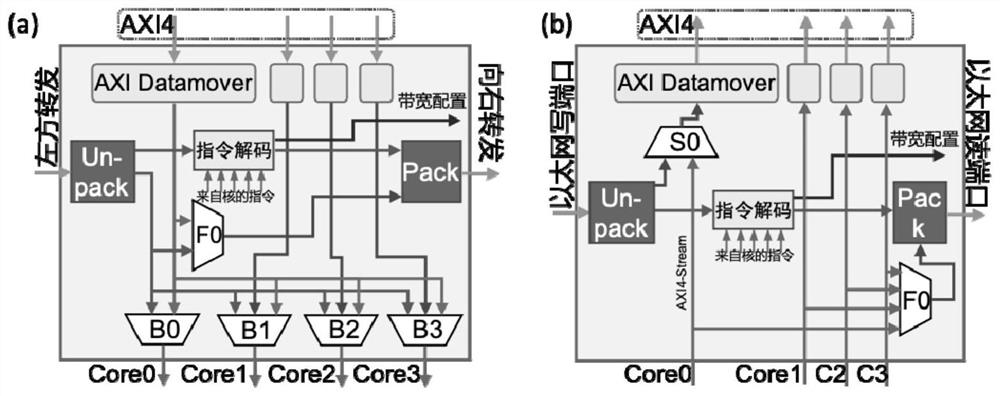

FPGA (Field Programmable Gate Array) virtualization hardware system stack design for cloud deep learning reasoning

Active | CN113420517A | Tags: Reduce latency overhead, Reduce calls, Design optimisation/simulation, CAD circuit design, Resource pool, Computer architecture

The invention discloses an FPGA (Field Programmable Gate Array) virtualization hardware system stack design for cloud deep learning inference, relating to the technical field of artificial intelligence. The design comprises a distributed FPGA hardware-assisted virtualization architecture, CPU (Central Processing Unit) server nodes that run virtual machine containers, a static compiler, and a deep neural network (DNN). The DNN is used to acquire a user instruction, which the static compiler compiles into an instruction packet; FPGA server computing nodes run the virtualization system service and FPGA acceleration cards, each card comprising a virtualized multi-core hardware resource pool and four DDR (double data rate synchronous dynamic random access memory) modules; and a master control node manages the CPU server nodes and the FPGA server computing nodes through a control layer. The scheme solves the prior-art problem that FPGA virtualization schemes for deep learning inference applications cannot be extended to distributed multi-node computing clusters.

Owner:TSINGHUA UNIV

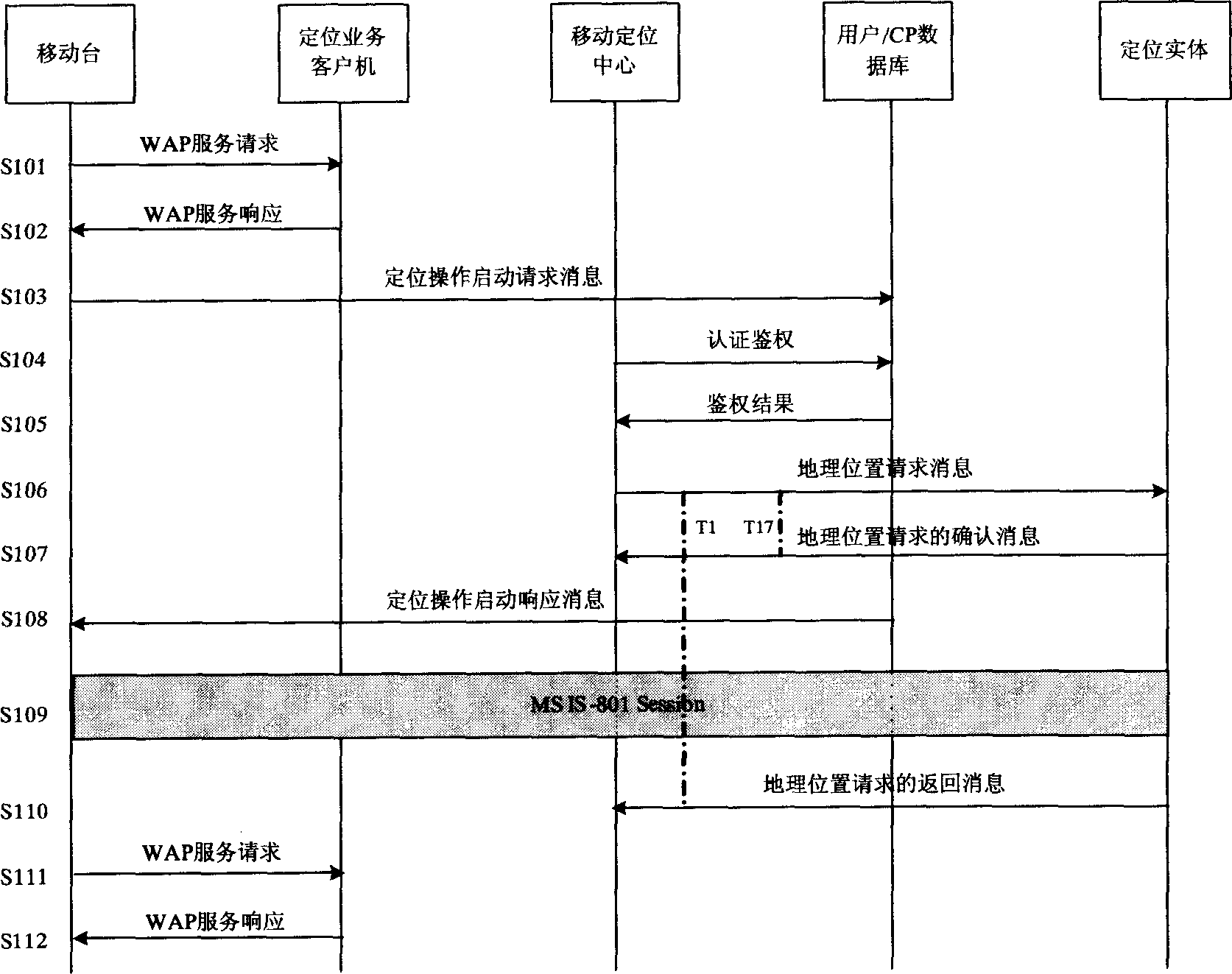

Method for implementing positioning based on mobile station

Active | CN1719938A | Tags: Simple process, Reduce latency overhead, Radio/inductive link selection arrangements, Location information based service, Telecommunications, Geolocation

This invention discloses a method for realizing mobile-station-based positioning, comprising the following steps: the mobile station initiates a WAP-based position request; the location service client processor receives the request and requests position information from the terminal; the mobile station initiates a positioning process request; the mobile station and the mobile positioning center interact to complete authorization and authentication; the mobile station, the positioning entity, and the mobile positioning center interact to compute and acquire the position information; and the mobile station sends its position information to the location service client processor, which provides location-based services to the mobile station.

Owner:CHINA TELECOM CORP LTD

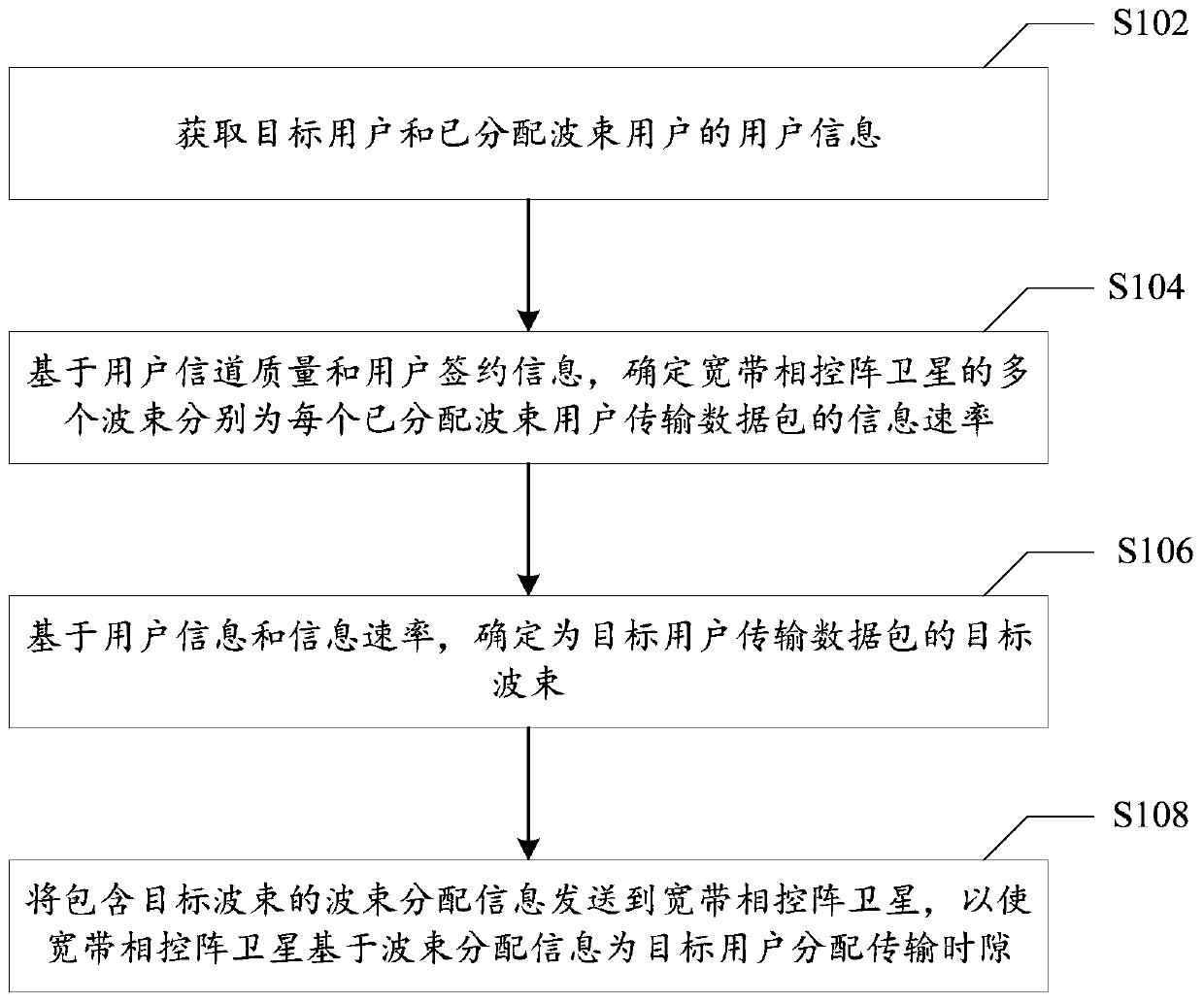

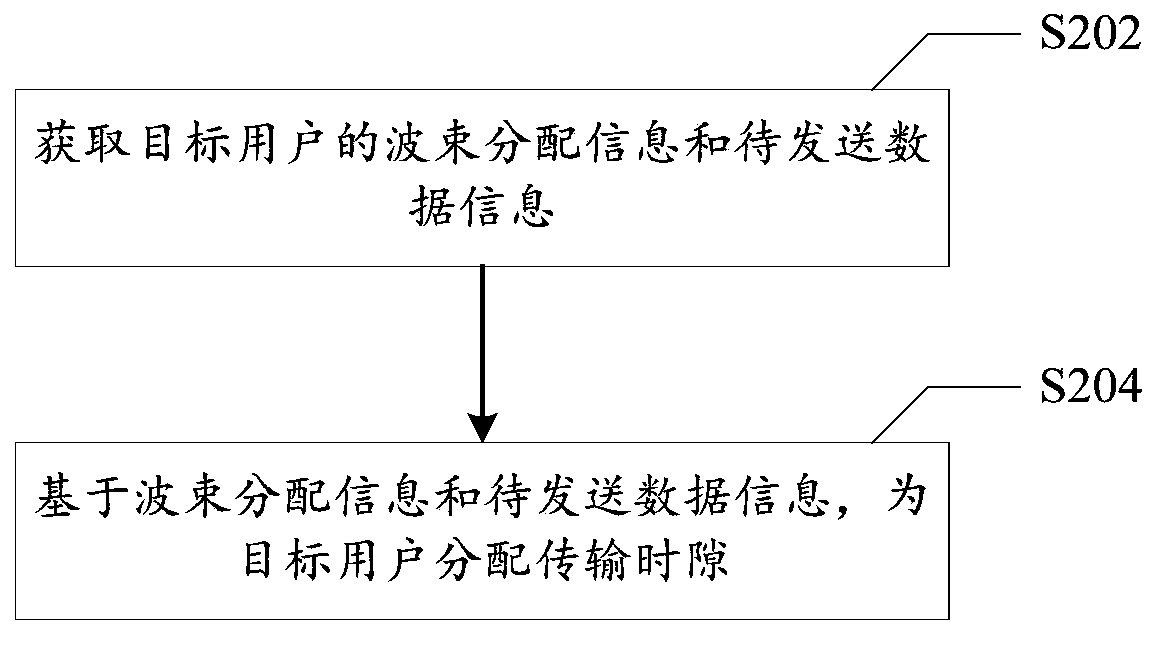

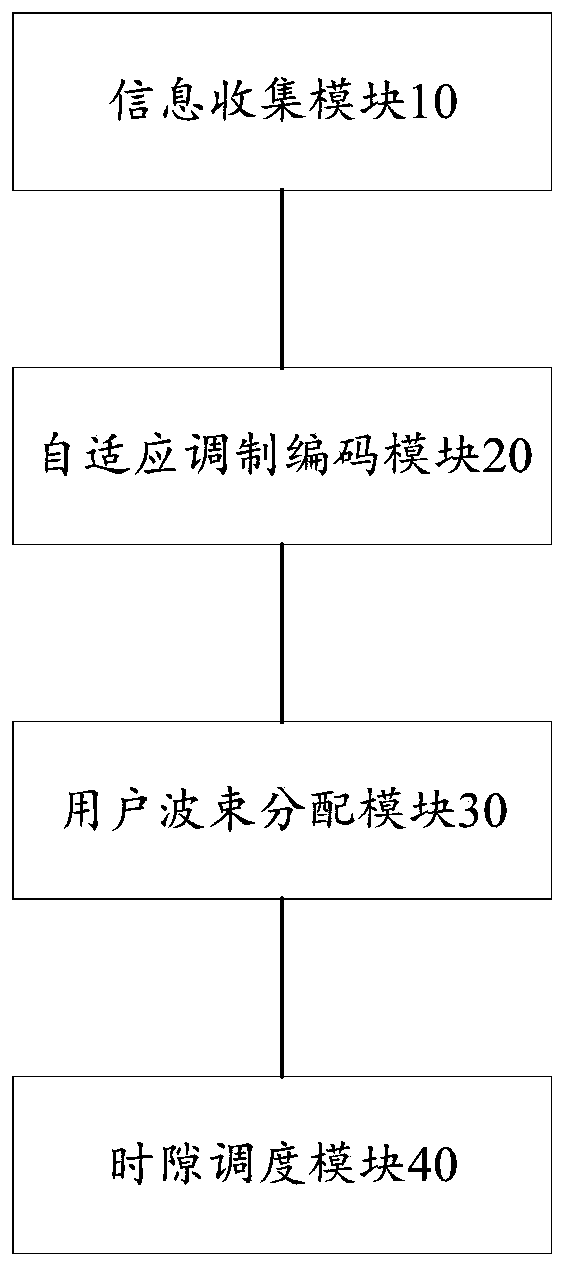

Double-layer resource allocation method and system for broadband phased array satellite

Active | CN111555799A | Tags: Reduce complexity, Reduce latency overhead, Radio transmission, Broadbanding, Ground station

The invention provides a double-layer resource allocation method and system for a broadband phased array satellite. The method is applied to the ground operation and control center of the broadband phased array satellite and comprises the following steps: acquiring user information of a target user and of the users already allocated to beams; based on user channel quality and user subscription information, determining the information rate at which each of the satellite's beams transmits data packets to each allocated user; determining, based on the user information and the information rates, a target beam for transmitting data packets to the target user; and sending beam allocation information containing the target beam to the satellite, so that the satellite allocates a transmission time slot to the target user based on that information. The invention solves the prior-art problems that performing allocation on board the satellite incurs excessive processing complexity, while performing it at a ground station incurs excessive transmission delay.

Owner:TSINGHUA UNIV +1

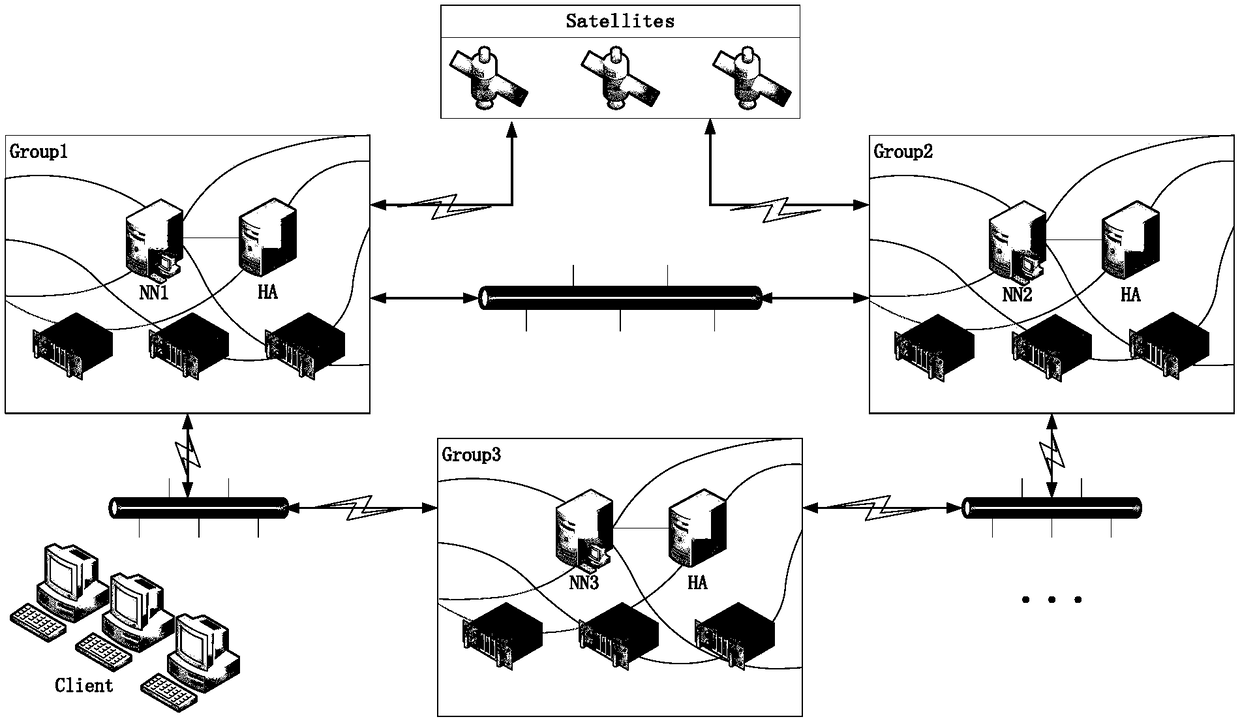

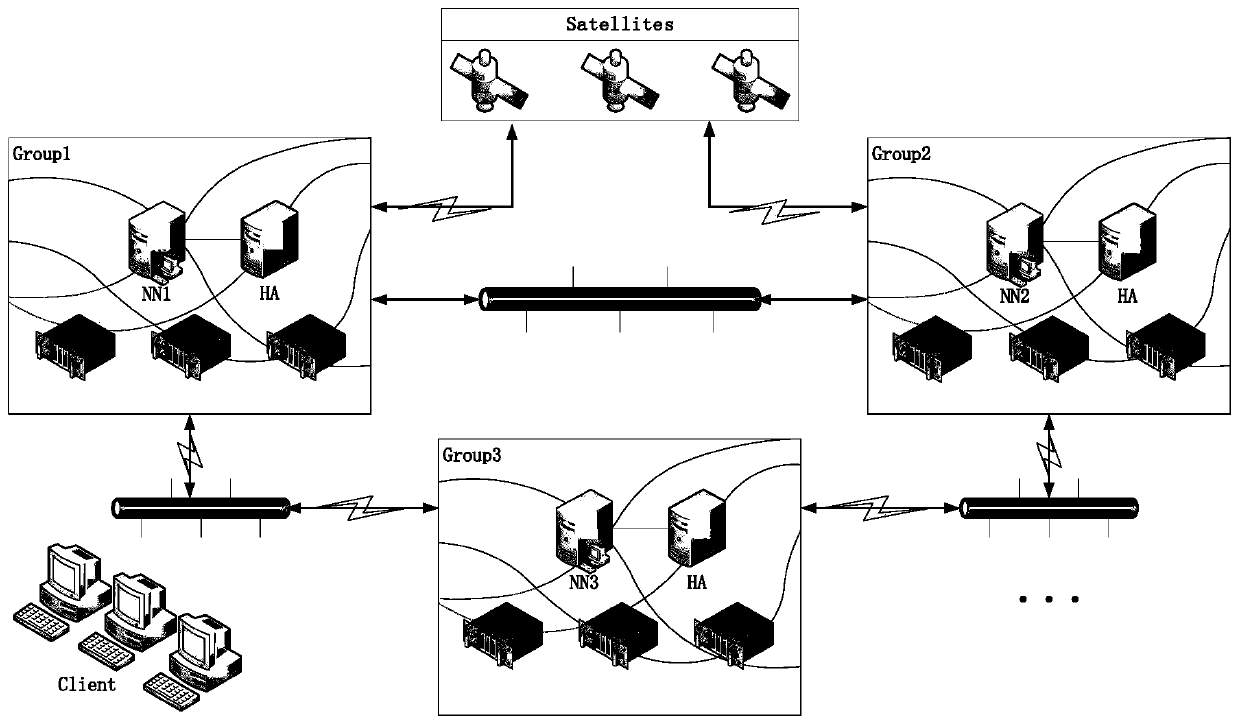

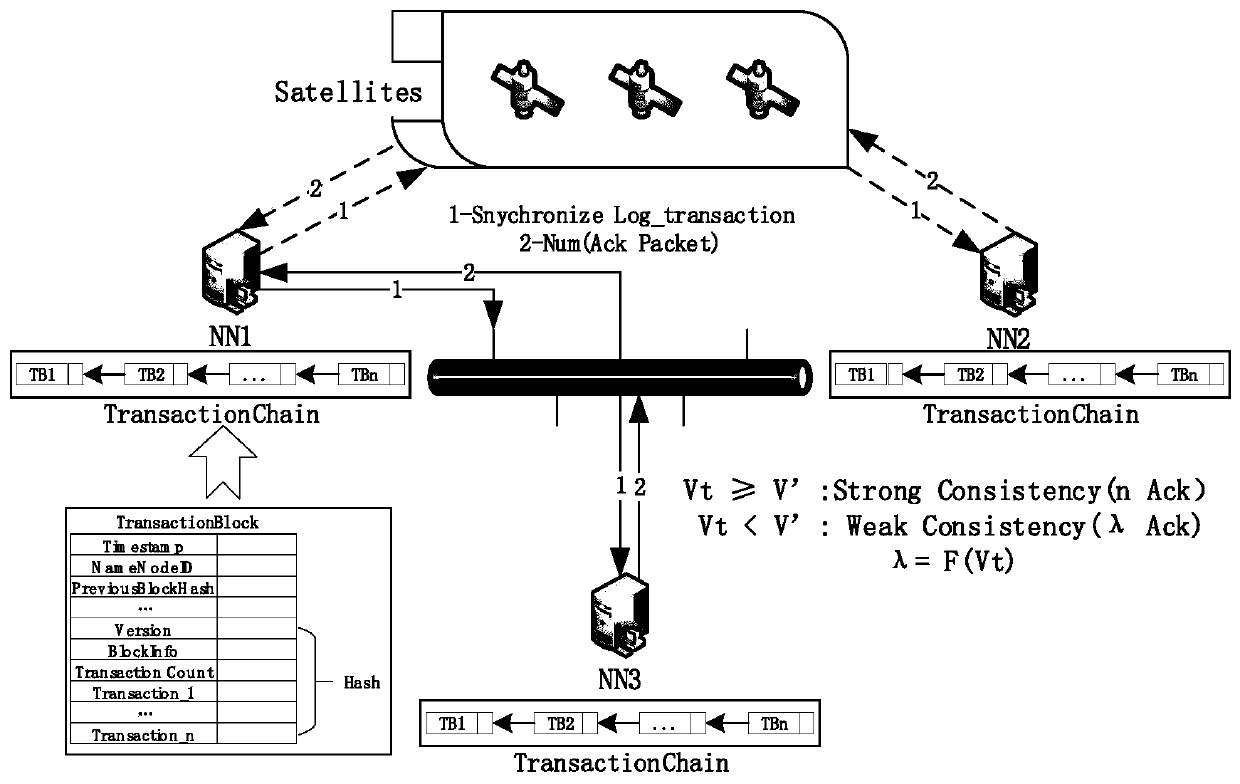

Data consistency system based on satellite network environment

Active | CN108111338A | Tags: Achieving Dynamic Consistency Guarantees, Fast read and write response, Data switching networks, Data synchronization, Granularity

The invention discloses a data consistency system based on a satellite network environment. The system comprises n gateway stations, each of which includes: a metadata server, which synchronizes metadata through a transaction chain using a chain-based high-consistency strategy when the average link speed is greater than or equal to a threshold, and a chain-based low-consistency strategy otherwise; a hot-standby metadata server, which takes over service when the metadata server shuts down; and a data server, which applies the chain-based high-consistency strategy to write operations on its data blocks when the average link speed is greater than or equal to the threshold, and the chain-based low-consistency strategy otherwise. The system ensures high-granularity consistency, provides fast read and write responses, and reduces the synchronization overhead of repeated data.

Owner:HUAZHONG UNIV OF SCI & TECH +1
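
The threshold-based switch between the two chain strategies can be sketched as follows. The concrete semantics are assumptions for illustration: "high consistency" is modelled as updating every replica in the chain before acknowledging the write, "low consistency" as acknowledging after the head replica (later propagation is not modelled), and the average link speed is a plain mean of hypothetical speed samples.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MetadataServer:
    name: str
    log: List[str] = field(default_factory=list)


def replicate(update: str, chain: List[MetadataServer], samples_mbps: List[float],
              threshold_mbps: float) -> int:
    """Apply an update along a replica chain; return replicas updated before the ack."""
    avg_speed = sum(samples_mbps) / len(samples_mbps)
    if avg_speed >= threshold_mbps:
        # High-consistency mode: the link is fast enough to update the whole chain synchronously.
        for server in chain:
            server.log.append(update)
        return len(chain)
    # Low-consistency mode: acknowledge after the head; the rest catch up later (not modeled).
    chain[0].log.append(update)
    return 1
```

The design choice is to pay for synchronous replication only when satellite links are fast, and to keep write latency bounded when they are not.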

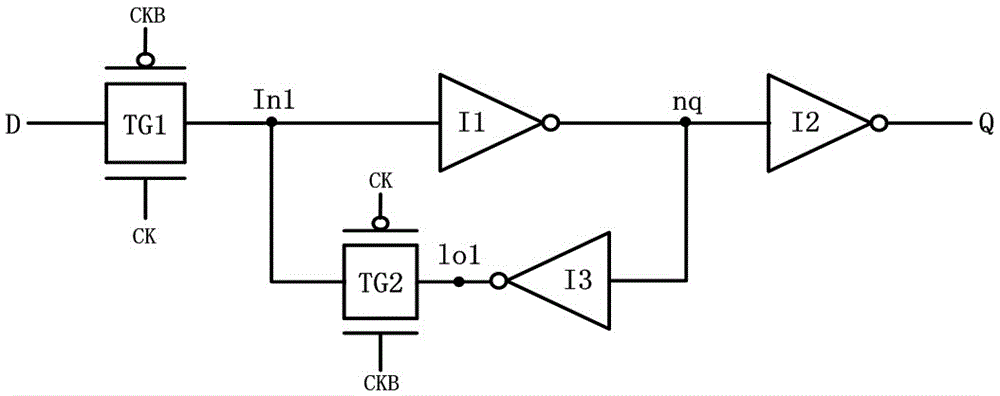

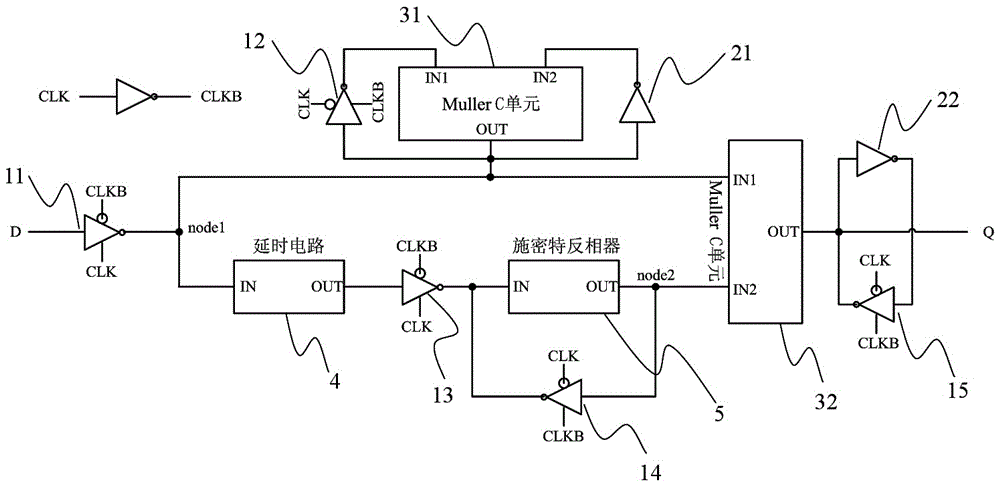

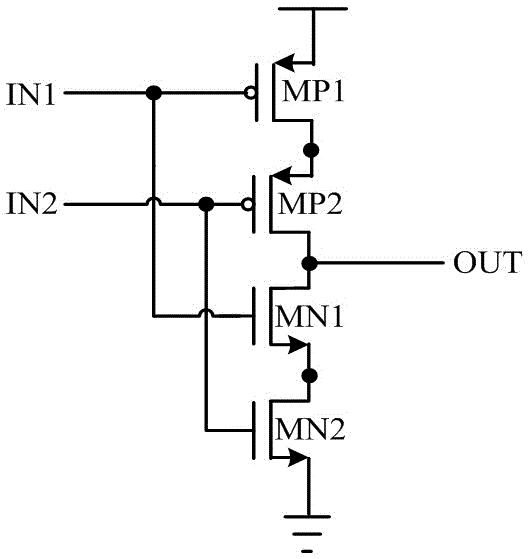

Single Event Upset and Single Event Transient Immunity Latch

Active | CN104270141B | Tags: Improve reliability, Reduce Design Complexity, Logic circuits, Single event upset, Computer science

The invention addresses the poor SEU (Single Event Upset) tolerance, poor SET (Single Event Transient) tolerance, complex structure, and high cost of existing integrated circuits, and provides a latch that resists both single event upsets and single event transient pulses: it shields the latch from SETs originating in the combinational logic and protects the data held in the latch from SEUs. The latch comprises five clocked inverters, two ordinary inverters, two Muller C-element circuits, a Schmitt inverter, and a delay circuit. The Muller C-elements filter out SEUs and SETs; the Schmitt inverter increases the critical charge of a sensitive node; and the delay circuit generates a delayed copy of a signal. The latch effectively eliminates the influence of radiation on the circuit and offers better radiation hardness, a simple circuit structure, and small area overhead.

Owner:HEFEI UNIV OF TECH
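
The SET-filtering principle (a Muller C-element fed by a signal and its delayed copy) can be shown with a discrete-time behavioural model. This is not a transistor-level model of the patented latch: the clocked inverters and the Schmitt inverter are omitted, and time is counted in abstract steps.

```python
from typing import List


def c_element(a: int, b: int, prev: int) -> int:
    """Muller C-element: the output follows the inputs only when they agree, else it holds."""
    return a if a == b else prev


def delay(signal: List[int], d: int) -> List[int]:
    """Delay line of d time steps; the first d samples repeat the initial value."""
    return [signal[0]] * d + signal[:-d] if d else list(signal)


def filter_set(signal: List[int], d: int) -> List[int]:
    """Pass a signal and its delayed copy through a C-element.

    A transient pulse shorter than d steps is never present on both inputs at
    once, so the C-element holds its previous value and the glitch is blocked.
    """
    delayed = delay(signal, d)
    out, prev = [], signal[0]
    for a, b in zip(signal, delayed):
        prev = c_element(a, b, prev)
        out.append(prev)
    return out
```

A 2-step glitch is suppressed with a 3-step delay, while a genuine transition still passes, arriving 3 steps late; that added latency is the usual price of delay-based SET filtering.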

A memory partition deployment method and device

Active | CN104516952B | Tags: Reduce the number of times, Reduce latency overhead, Special data processing applications, Time delays, Optimal deployment

The invention discloses a memory partition deployment method for rationally deploying the memory partitions of a distributed system and reducing the system's latency overhead. The method comprises the steps of: determining the hot spot partitions in the distributed system within a preset time period, where the distributed system comprises multiple nodes on which the memory partitions are deployed, and a hot spot partition is a memory partition whose access frequency ranks among the highest in the system; determining the frequency with which each node accesses each hot spot partition; determining the optimal deployment node of each hot spot partition according to these per-node access frequencies; and notifying the node currently hosting the hot spot partition of that partition's optimal deployment node. The invention further provides a corresponding memory partition deployment device.

Owner:HUAWEI CLOUD COMPUTING TECH CO LTD

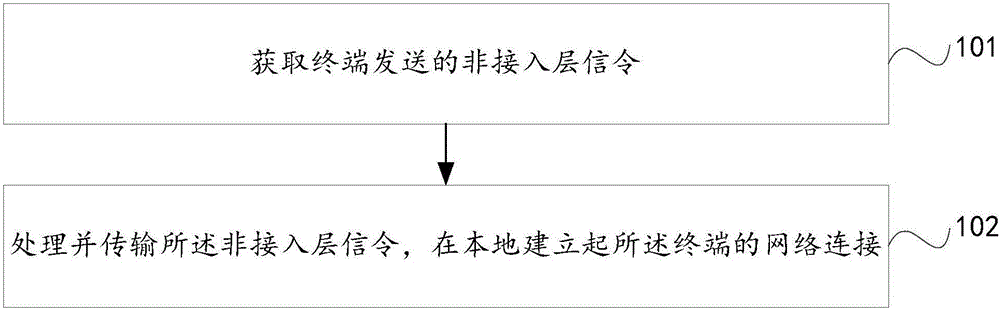

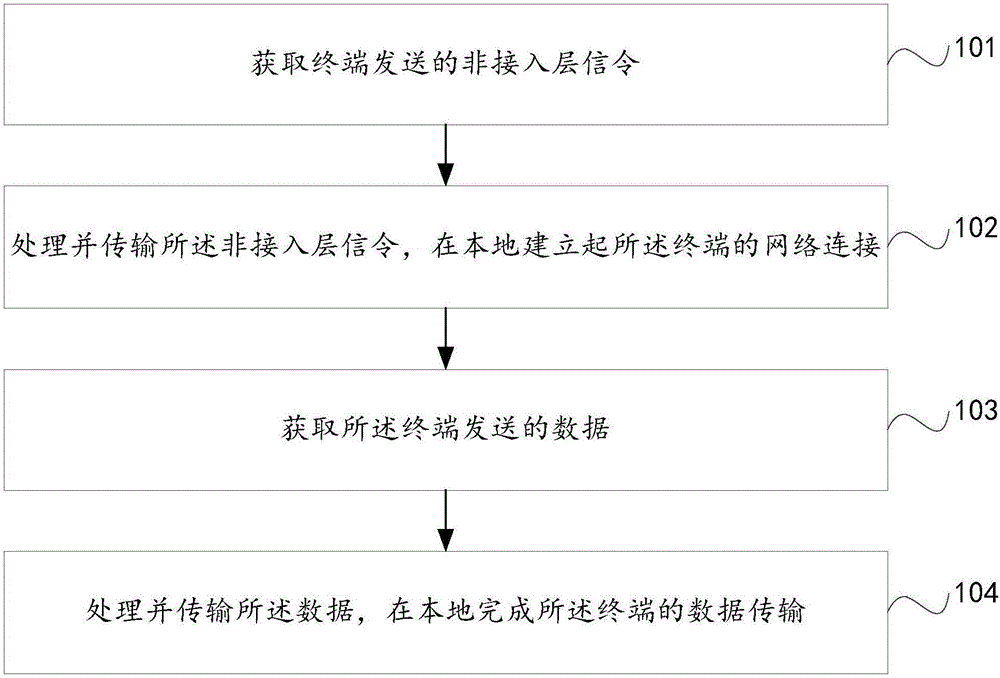

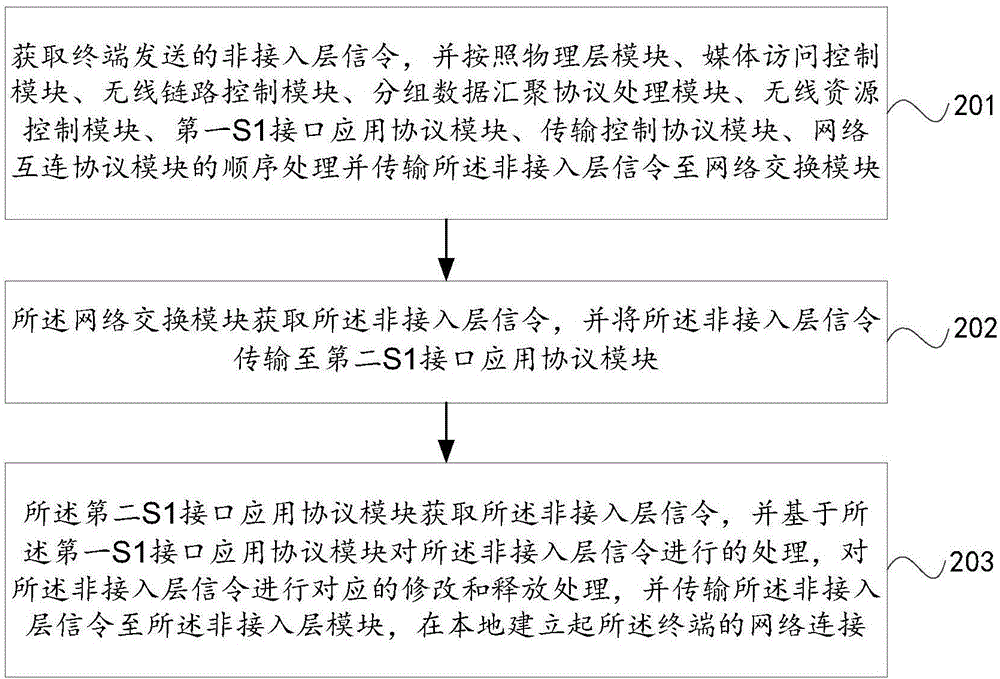

Communication method and base station

Inactive | CN106686700A | Tags: Improve experience, Reduce latency overhead, Access restriction, Network connection, Non-access stratum

The invention provides a communication method and a base station in the field of communication technology, which can complete terminal network access on the base station side. The method comprises: obtaining the non-access stratum (NAS) signaling sent by the terminal; and processing and forwarding the NAS signaling so that the terminal's network connection is established locally. The technical scheme applies to terminal communication.

Owner:NANJING BAILIAN INFORMATION TECH CO LTD

A method and device for determining antenna angle

Active | CN105246086B | Tags: Reduce latency overhead, Improve signal transmission efficiency, Network planning, Channel impulse response, Time delays

Owner:BEIJING UNIV OF POSTS & TELECOMM

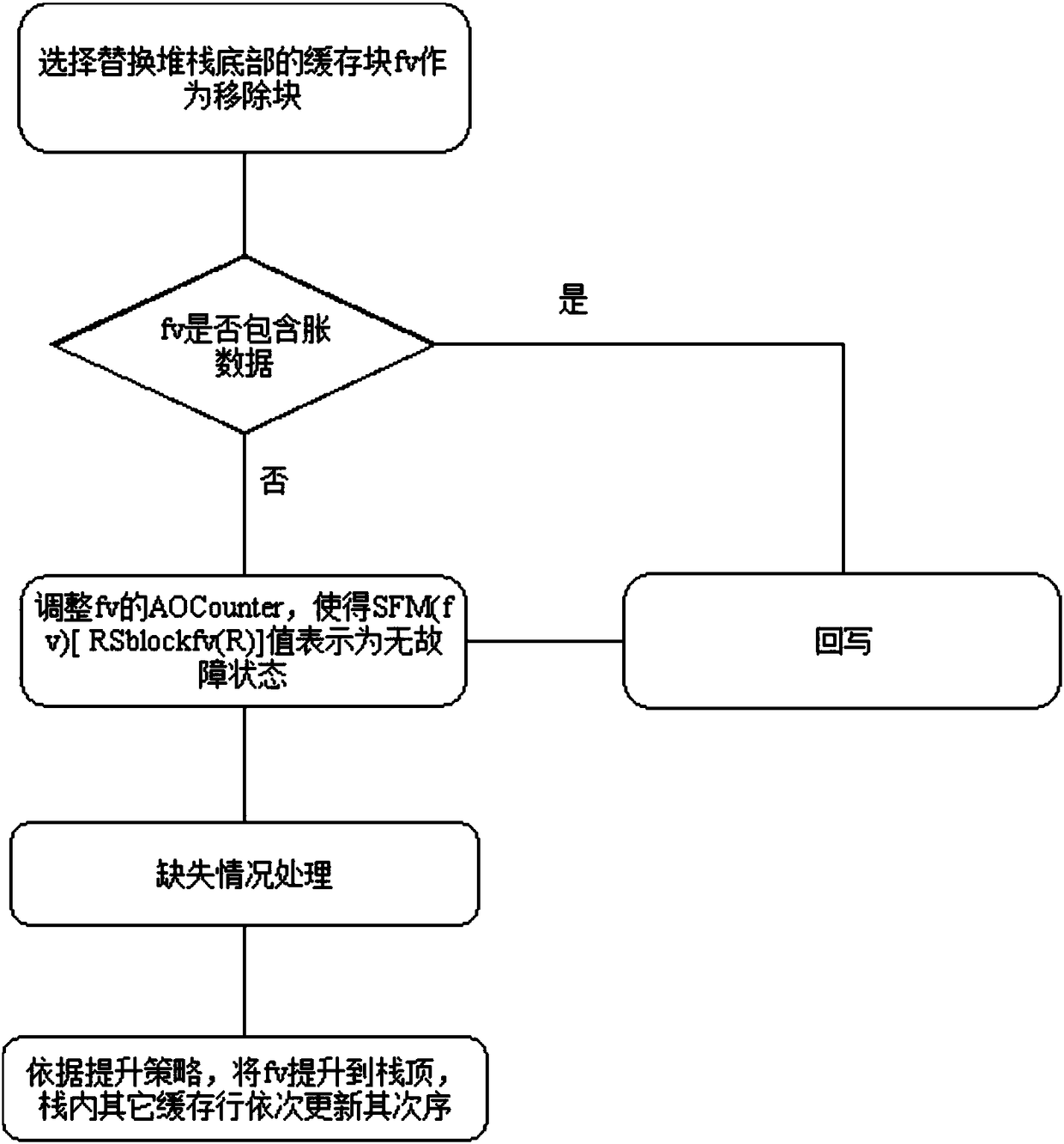

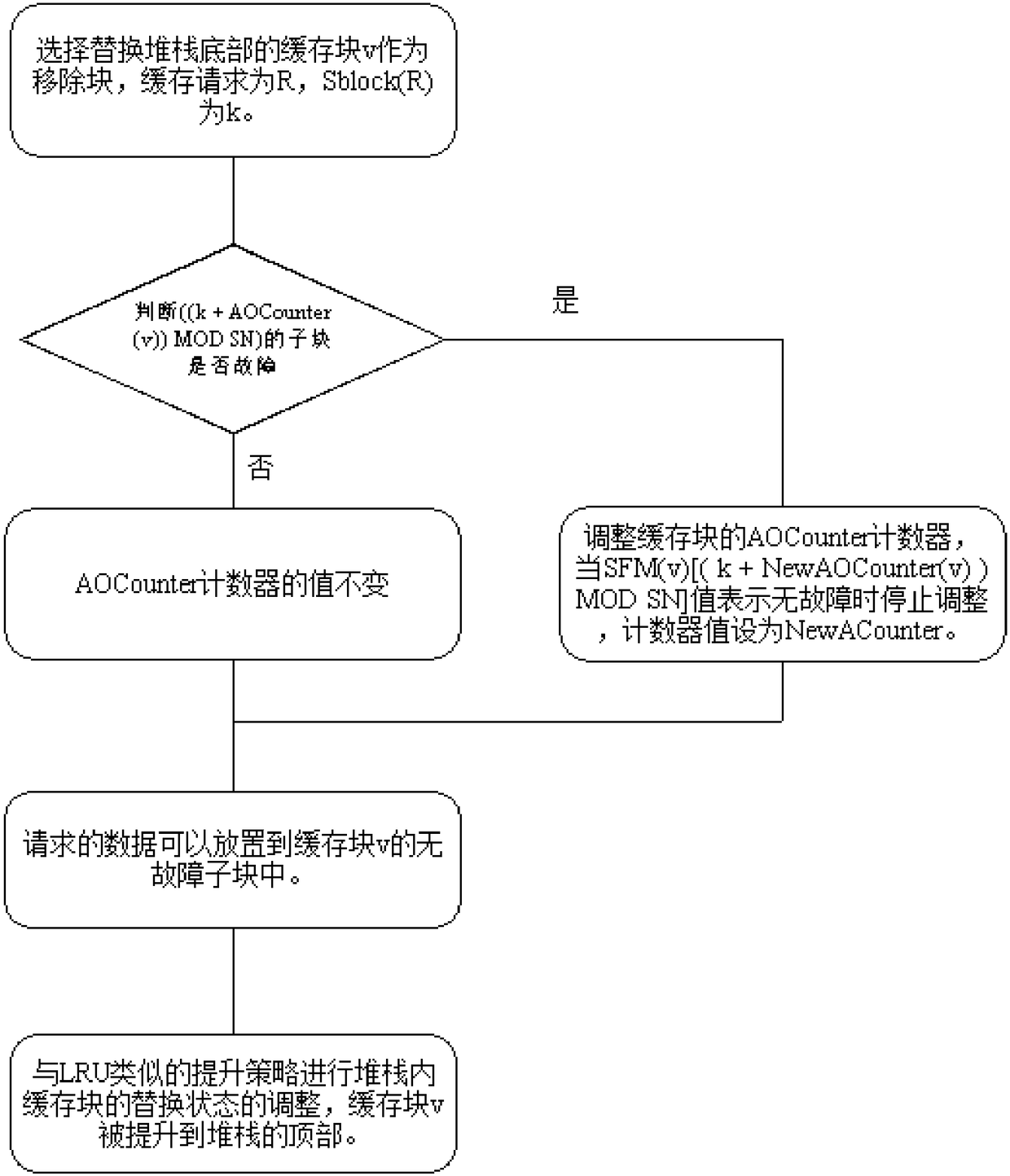

A High-Bandwidth Multi-Scale Fault Bitmap Cache Structure

Active | CN107329906B | Tags: Reduce latency overhead, Efficient recording, Memory systems, Fault tolerance, High bandwidth

The invention provides a mechanism and storage structure that can check logical-structure faults at every level of the cache quickly and with high bandwidth. Faults can be marked at multiple scales, for cache sets, cache lines, and cache sub-blocks, which supports a high-performance fault-tolerant cache structure. Two bitmap space configuration modes are provided, static allocation and dynamic allocation, and low storage overhead is achieved.

Owner:BEIJING UNIV OF POSTS & TELECOMM
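
Multi-scale fault marking can be illustrated with three bit vectors, one per scale. This Python sketch is hypothetical: it uses Python integers as bitmaps and a fixed (static) layout in which the bit index is derived from the set, way, and sub-block numbers; the dynamic allocation mode mentioned above is not modelled.

```python
class FaultBitmap:
    """Hypothetical three-scale fault map for a set-associative cache.

    Python ints serve as bit vectors: one bit per set, per line, and per sub-block.
    """

    def __init__(self, n_ways: int, n_sub: int):
        self.n_ways = n_ways
        self.n_sub = n_sub
        self.set_bits = 0   # bit s               -> whole set s is faulty
        self.line_bits = 0  # bit s*W + w         -> line (s, w) is faulty
        self.sub_bits = 0   # bit (s*W + w)*B + b -> sub-block b of line (s, w) is faulty

    def _line(self, s: int, w: int) -> int:
        return s * self.n_ways + w

    def mark_set(self, s: int) -> None:
        self.set_bits |= 1 << s

    def mark_line(self, s: int, w: int) -> None:
        self.line_bits |= 1 << self._line(s, w)

    def mark_sub(self, s: int, w: int, b: int) -> None:
        self.sub_bits |= 1 << (self._line(s, w) * self.n_sub + b)

    def usable(self, s: int, w: int, b: int) -> bool:
        """Check coarse scales first: a single bit can rule out a whole set or line."""
        if (self.set_bits >> s) & 1:
            return False
        line = self._line(s, w)
        if (self.line_bits >> line) & 1:
            return False
        return ((self.sub_bits >> (line * self.n_sub + b)) & 1) == 0
```

Marking a whole set with one bit instead of one bit per sub-block is what keeps the storage overhead low when faults are clustered.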

Method for access to external memory

Active | CN101271435B | Tags: Improve efficiency, Reduce latency overhead, Electric digital data processing, External storage, Data access

The present invention discloses a method for accessing an external storage device. A bandwidth priority attribute reflecting the physical characteristics of the external storage device is set, and whether the current access request is granted access is decided by evaluating that attribute, which increases the efficiency with which the device accesses the external storage. Consecutive data accesses are kept, as far as possible, within the same row of the same bank, which greatly improves the efficiency of consecutive accesses. While the data of the previous request is being transferred, the command and address of the current request are issued in parallel, hiding the command transmission delay; and bus turnaround time is reduced by keeping consecutive data accesses all writes or all reads.

Owner:JIANGSU DAHAI INTELLIGENT SYST

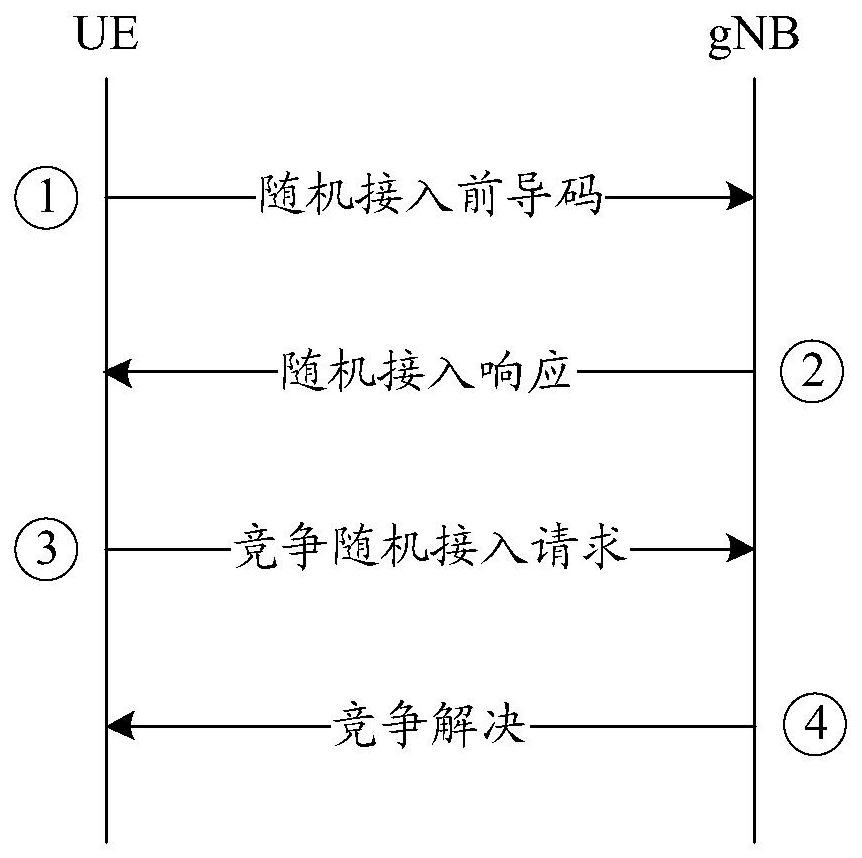

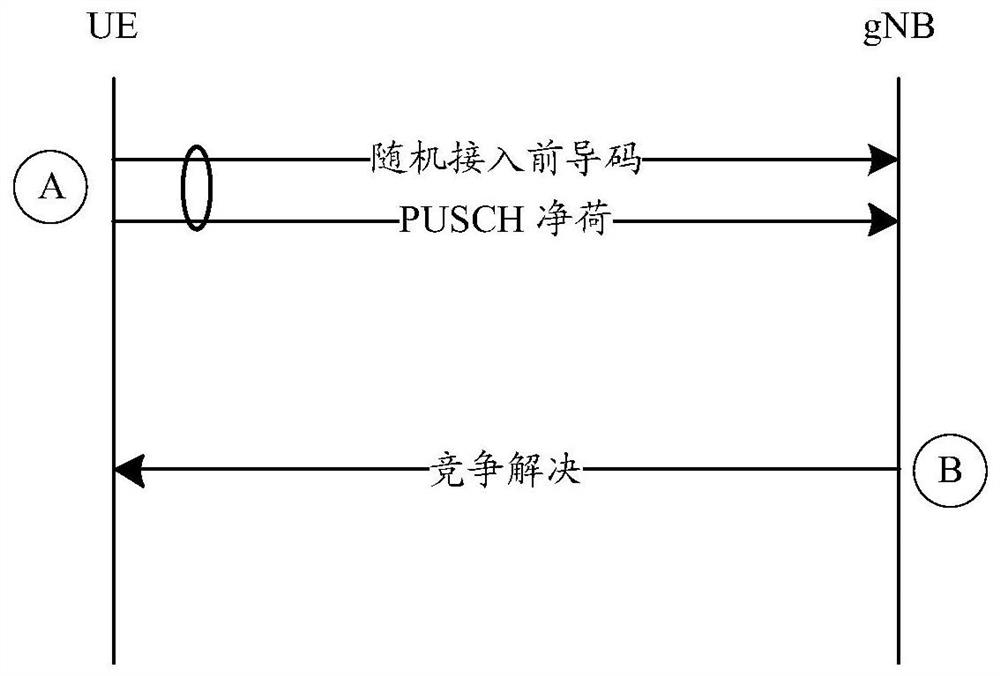

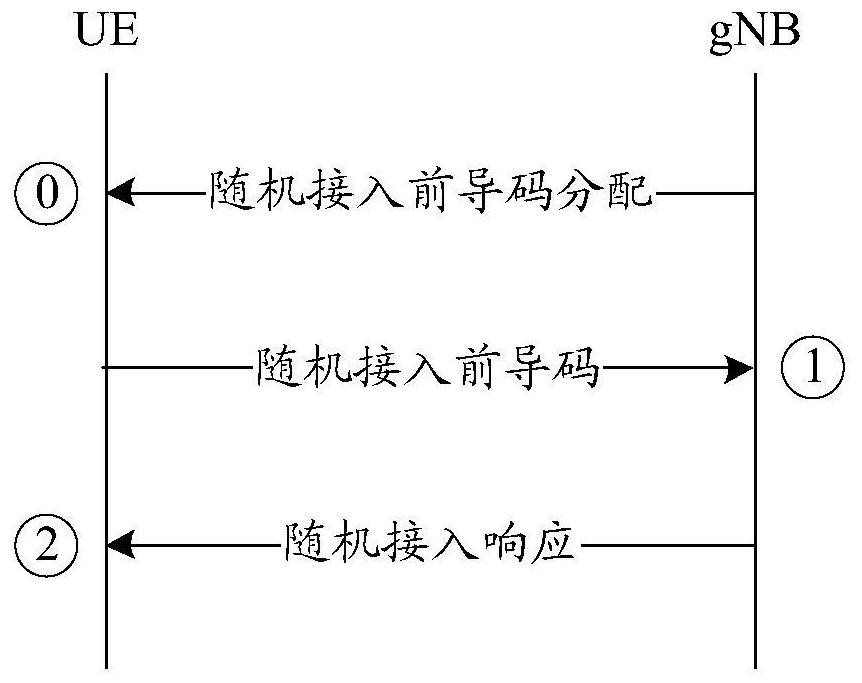

Random access method and device

Pending | CN113873671A | Tags: Reduce random access delay, Reduce latency overhead, Access restriction, Network topologies, Real-time computing, Satellite Telecommunications

The invention discloses a random access method and device. During random access, the base station to which a satellite cell belongs sends a random-access-related message through the ground cell accessed by the UE and indicates the cell type to which the message corresponds; the UE receives the random-access-related message from the ground cell and determines its corresponding cell type, which is either a ground cell or a satellite cell. The invention thus lets a ground cell assist a satellite cell with random access, which can shorten the random access delay of a satellite communication system and reduce the time-delay overhead.

Owner:DATANG MOBILE COMM EQUIP CO LTD

A method for access authentication of electric vehicles

The invention relates to an access authentication method for electric vehicles. The method comprises the following steps: an access authentication system for electric vehicles is established; access authentication is performed between the electric vehicles and a charging station; and the charging station performs batch signature authentication on the electric vehicles. The invention introduces identity-based aggregate signatures into the identity validity check of electric vehicles, and designs mutual authentication and session key negotiation between the electric vehicles and the access authentication system, which ensures proper use of the information resources in the station and fundamentally safeguards the security of the whole network system. The batch signature authentication mechanism lets the charging station verify the signatures of multiple electric vehicle terminals in aggregate, which lightens the computational load and improves performance. A typical identity-based aggregate signature scheme consists of five algorithms: system initialization, private key generation, signing, aggregation, and aggregate verification. Because a user's identity information can replace the user's public key, the high cost of certificates is avoided, and compressing multiple signatures into one enables efficient verification.

Owner:STATE GRID CORP OF CHINA +2

A Data Consistency System Based on Satellite Network Environment

Active | CN108111338B | Tags: Achieving Dynamic Consistency Guarantees, Fast read and write response, Data switching networks, Data synchronization, Granularity

The invention discloses a data consistency system based on a satellite network environment. The system comprises n gateway stations, each of which includes: a metadata server, which synchronizes metadata through a transaction chain using a chain-based high-consistency strategy when the average link speed is greater than or equal to a threshold, and a chain-based low-consistency strategy otherwise; a hot-standby metadata server, which takes over service when the metadata server shuts down; and a data server, which applies the chain-based high-consistency strategy to write operations on its data blocks when the average link speed is greater than or equal to the threshold, and the chain-based low-consistency strategy otherwise. The system ensures high-granularity consistency, provides fast read and write responses, and reduces the synchronization overhead of repeated data.

Owner:HUAZHONG UNIV OF SCI & TECH +1

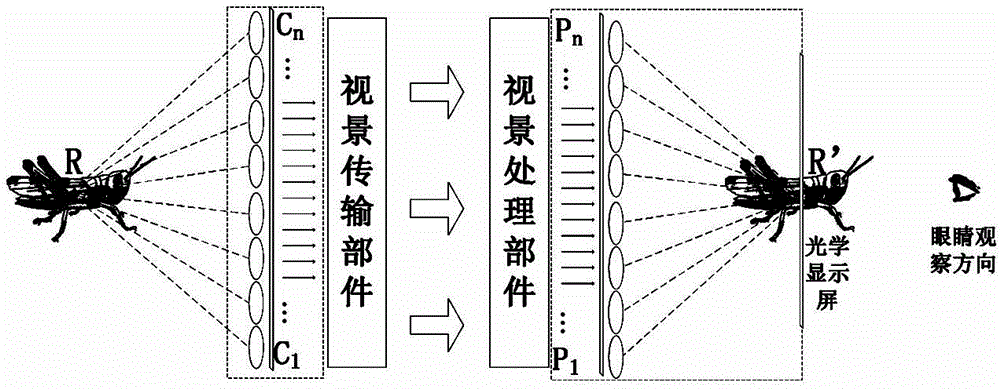

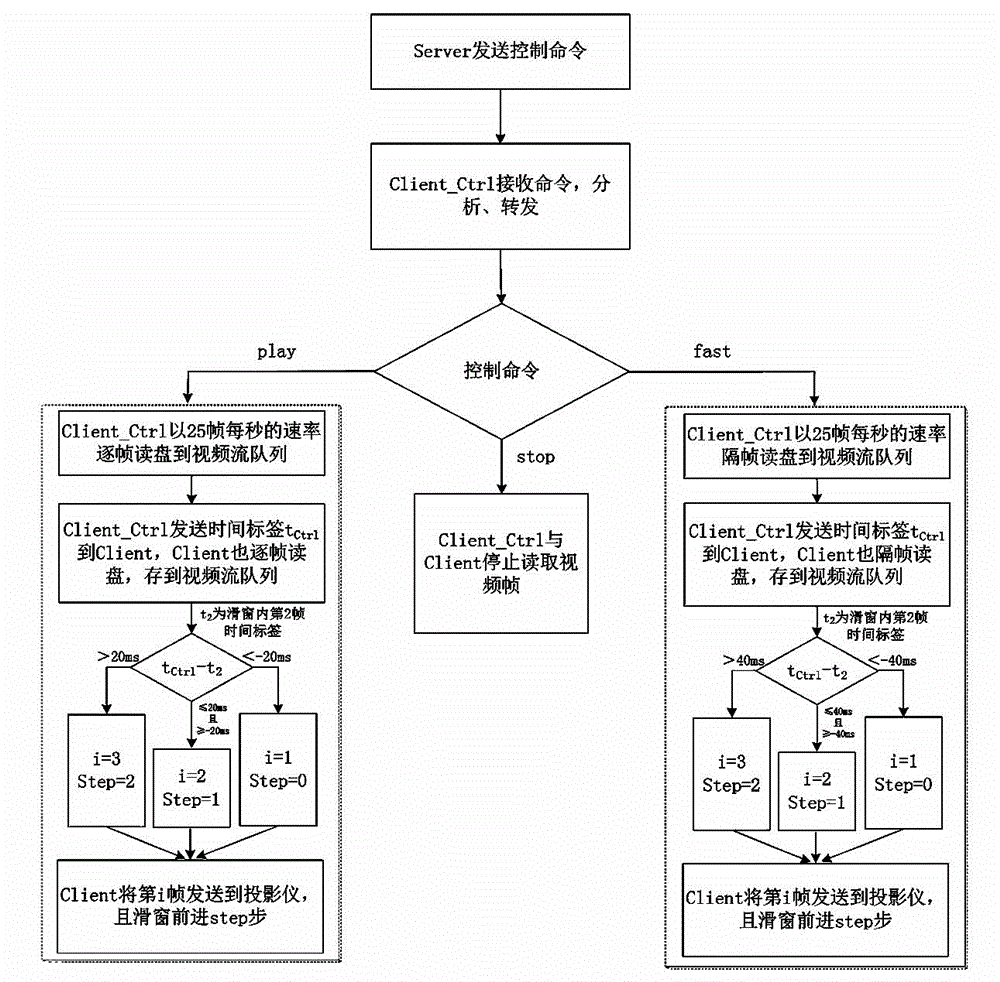

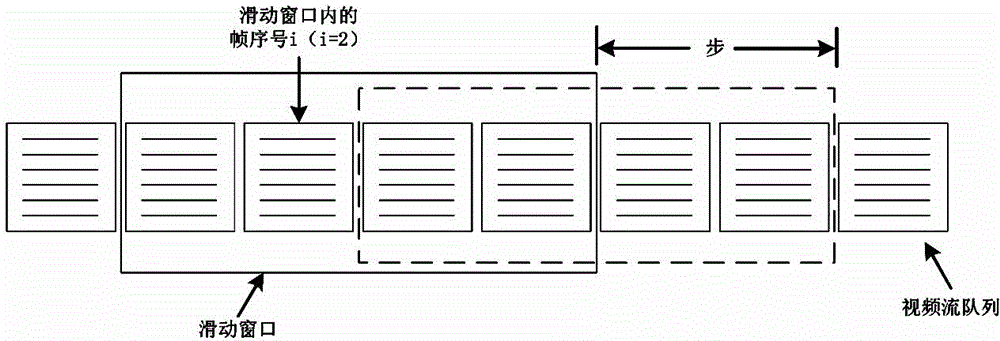

Video synchronous playback method for multi-view true 3D display system based on sliding window

Active | CN103873846B | Tags: Improve scalability, Reduce control error, Stereoscopic systems, Viewpoints, Synchronous control

The invention discloses a sliding-window-based video synchronous playback method for a multi-viewpoint true three-dimensional display system. The implementation steps are as follows: a synchronization control terminal and video playing clients are designated in advance, every video playing client is connected to the synchronization control terminal, and the synchronization control terminal is connected to a service control terminal; the synchronization control terminal determines the type of a playback control instruction; if it is a stop instruction, the synchronization control terminal and the video playing clients stop output; if it is a play or fast-forward instruction, the synchronization control terminal and the video playing clients each read video frames into a video stream queue, and whenever the synchronization control terminal outputs a video frame, it broadcasts the timestamp of the next frame to be played to all video playing clients, keeping them synchronized with the synchronization control terminal. The method offers good scalability, low delay dispersion, small synchronization control error, good synchronization performance, and fast, well-synchronized playback-progress adjustment.

Owner:NAT UNIV OF DEFENSE TECH
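The instruction handling and timestamp broadcast described in the abstract can be sketched in Python. This is an illustration under stated assumptions, not the patented method: the broadcast timestamp is taken to be that of the frame the controller has just output (the next frame the clients must play), the "sliding window" is modeled as a bounded per-endpoint queue that a lagging client may read ahead within, and fast-forward is treated exactly like play. `Node`, `SyncController`, and the window size are hypothetical.

```python
from collections import deque
from typing import Deque, List


class Node:
    """A playback endpoint with a bounded frame queue (assumed sliding window)."""

    def __init__(self, frames: List[int], window: int = 4):
        self.source = iter(frames)                     # frame timestamps in ms
        self.queue: Deque[int] = deque(maxlen=window)
        self.shown: List[int] = []
        self.stopped = False

    def read_frame(self) -> None:
        ts = next(self.source, None)
        if ts is not None:
            self.queue.append(ts)

    def play(self, ts: int) -> None:
        # Drop frames older than ts, reading ahead (bounded by the window) to catch up.
        for _ in range(self.queue.maxlen):
            while self.queue and self.queue[0] < ts:
                self.queue.popleft()
            if self.queue:
                break
            self.read_frame()
        if self.queue and self.queue[0] == ts:
            self.shown.append(self.queue.popleft())


class SyncController(Node):
    """Synchronization control terminal that drives all playback clients."""

    def __init__(self, frames: List[int], clients: List[Node], window: int = 4):
        super().__init__(frames, window)
        self.clients = clients

    def handle(self, instruction: str) -> None:
        nodes = [self, *self.clients]
        if instruction == "stop":
            for node in nodes:
                node.stopped = True
        elif instruction in ("play", "fast_forward"):
            for node in nodes:
                node.stopped = False
                node.read_frame()          # each endpoint queues one frame
            if self.queue:
                ts = self.queue[0]
                self.play(ts)              # controller outputs its frame...
                for client in self.clients:
                    client.play(ts)        # ...and broadcasts its timestamp
```

A client that starts one frame behind (for example with a stale frame at -40 ms) discards it on the first broadcast and reads ahead, so from then on it shows the same frames as the controller.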

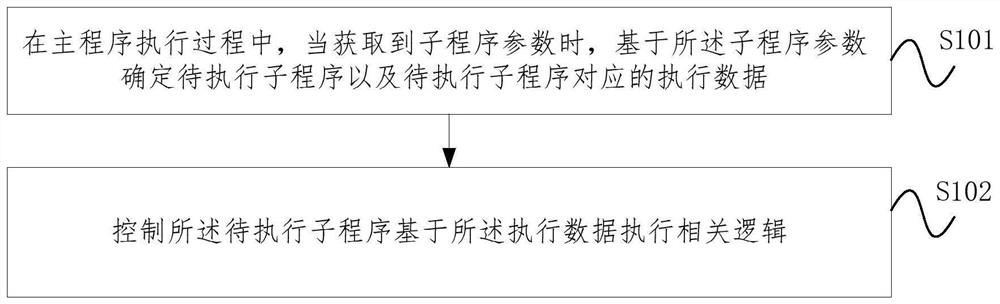

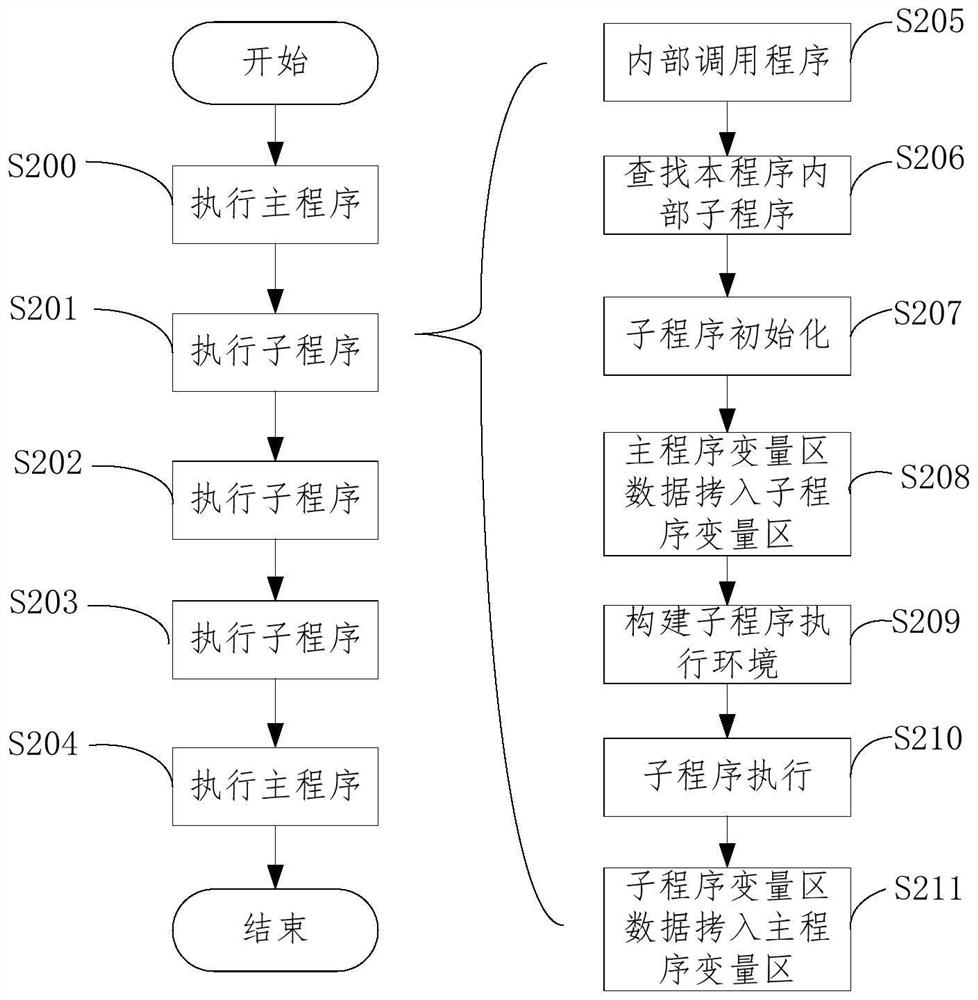

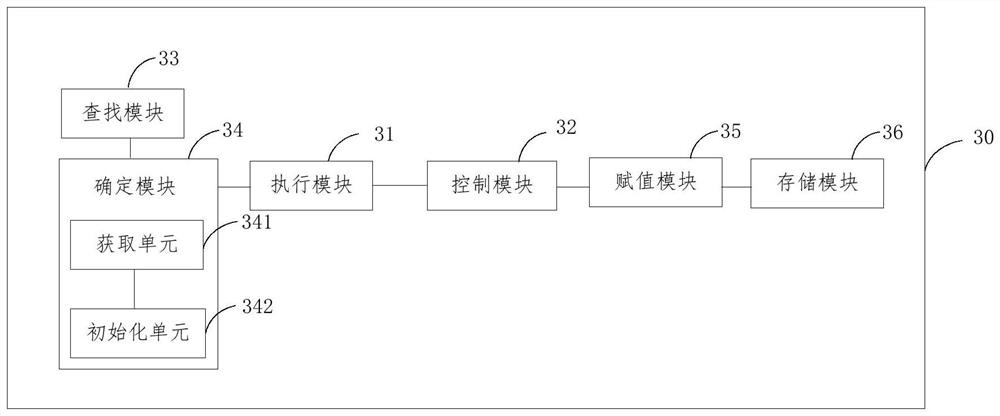

Program execution method and device, equipment and storage medium

Pending | CN114237769A | Reduce the number of interactions | Reduce latency overhead | Execution paradigms | Subroutine | Client

The invention relates to a program execution method and device, equipment, and a storage medium. The method comprises: during execution of a main program, when a subprogram parameter is obtained, determining the subprogram to be executed and its corresponding execution data based on that parameter; and controlling the subprogram to execute the related logic based on the execution data. Because the subprogram and its execution data are determined from the subprogram parameter, the number of subprogram queries is reduced; and because the subprogram is then driven by the execution data, what would otherwise be the execution of multiple programs becomes the execution of a single program, which improves execution efficiency and shortens execution time. In addition, fewer network messages are exchanged between the client and the server, which reduces network latency overhead as well as the processor overhead of the database.

Owner:北京人大金仓信息技术股份有限公司 (Beijing Renda Jincang Information Technology Co., Ltd.)
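The message-count saving claimed in the abstract can be illustrated with a Python sketch that compares a baseline client, which queries and then calls each subprogram separately, with a single main-program request that carries every subprogram parameter. This reading of "execution of multiple programs becomes execution of one program" as request batching is an assumption, and `SUBPROGRAMS`, `Server`, `execute_main`, and `run_naive` are hypothetical names rather than anything from the patent or a real database API.

```python
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical server-side subprograms, keyed by name.
SUBPROGRAMS: Dict[str, Callable[..., Any]] = {
    "add": lambda a, b: a + b,
    "scale": lambda x, k: x * k,
}

Call = Tuple[str, Dict[str, Any]]  # (subprogram name, execution data)


class Server:
    def __init__(self) -> None:
        self.messages = 0  # client->server network messages received

    def query_subprogram(self, name: str) -> bool:
        self.messages += 1
        return name in SUBPROGRAMS

    def call(self, name: str, data: Dict[str, Any]) -> Any:
        self.messages += 1
        return SUBPROGRAMS[name](**data)

    def execute_main(self, sub_params: List[Call]) -> List[Any]:
        # One message: resolve each subprogram and its execution data from
        # the parameters and run them all server-side.
        self.messages += 1
        return [SUBPROGRAMS[name](**data) for name, data in sub_params]


def run_naive(server: Server, calls: List[Call]) -> List[Any]:
    """Baseline: one query plus one call per subprogram (two messages each)."""
    results = []
    for name, data in calls:
        if server.query_subprogram(name):
            results.append(server.call(name, data))
    return results
```

For two subprograms the baseline sends four messages while `execute_main` sends one; the results are identical, so the saving is purely in round trips.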

Method for Realizing Network Element Level Parallel Service Functions in Network Functions Virtualization Environment

Active | CN108092803B | Improve parallelism | Shorten the length | Data switching networks | Software simulation/interpretation/emulation | Virtualization | Theoretical computer science

The invention discloses a method for realizing network element level parallel service functions in a network functions virtualization environment. The method comprises: traversing a service function chain and running a service function decomposition analysis algorithm that decomposes each service function into basic message processing units, called network elements, and determines and stores the operations each service function performs on messages and the operation domains those operations act on; running a parallel judgment algorithm on the obtained operation and operation-domain information to determine which combinations of service functions in the chain can be parallelized; running a parallel optimization algorithm that merges the parallelizable service functions; and arranging the non-parallel service functions and the parallel-optimized service functions according to the original service function order to build a new service function chain. The new service function chain is effectively shorter than the original one, parallelism among service functions is improved, and the delay incurred as messages traverse the service function chain is significantly reduced.

Owner:CHINA INFORMATION CONSULTING & DESIGNING INST CO LTD +1
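The parallel-judgment and chain-rebuilding steps can be sketched in Python. The abstract does not give its algorithms, so this sketch substitutes a common dependency rule: each service function is summarized by the packet fields it reads and writes (standing in for its "operation and operation domain"), two functions may run in parallel only if neither writes a field the other reads or writes, and consecutive compatible functions are grouped greedily so that the original order is preserved. `ServiceFunction`, `can_run_in_parallel`, `parallelize`, and the field names are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class ServiceFunction:
    name: str
    reads: FrozenSet[str]   # packet fields the SF inspects (assumed model)
    writes: FrozenSet[str]  # packet fields the SF modifies


def can_run_in_parallel(a: ServiceFunction, b: ServiceFunction) -> bool:
    # Assumed rule: no write-write and no read-write conflicts on any field.
    return not (a.writes & b.writes or a.writes & b.reads or a.reads & b.writes)


def parallelize(chain: List[ServiceFunction]) -> List[List[ServiceFunction]]:
    """Greedily group consecutive SFs into parallel stages, keeping chain order."""
    stages: List[List[ServiceFunction]] = []
    for sf in chain:
        if stages and all(can_run_in_parallel(sf, other) for other in stages[-1]):
            stages[-1].append(sf)
        else:
            stages.append([sf])
    return stages
```

For a chain of firewall, monitor, NAT, and load balancer, where only NAT rewrites the source address and only the load balancer rewrites the destination address, the four-hop chain collapses into two stages: the read-only firewall and monitor together, then NAT and the load balancer together.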

File writing method and device in a distributed system

Active | CN107493309B | Ensure consistency | Reduce consistency | Digital data information retrieval | Transmission | Computer hardware | Computer network

A file writing method and apparatus for a distributed system are disclosed. The method comprises the following steps: after receiving a write request for a first file, a client sends a data write request for the first file to each storage node corresponding to the first file. The data write request includes at least a write request identifier corresponding to the data write request and a storage node address; the storage node address includes the address of the main storage node and/or the addresses of the secondary storage nodes among the storage nodes corresponding to the first file, and is used for interaction between the main and secondary storage nodes. The client then receives the execution result of the data write request from each storage node corresponding to the first file. While keeping the byte order of the multiple copies of the data consistent, the invention reduces the number of hops over which data is sent, thereby lowering write latency.

Owner:ALIBABA GRP HLDG LTD
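The client-side fan-out in the abstract can be sketched in Python: the client builds one data write request carrying a shared request identifier plus the primary and secondary node addresses, sends it directly to every storage node, and collects a result from each. How the primary and secondaries use those addresses to agree on byte order is not described in the abstract and is left out; the comparison with a client-to-primary-to-secondary relay is an assumed baseline, and `WriteRequest`, `StorageNode`, and `client_write` are hypothetical names.

```python
import uuid
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class WriteRequest:
    request_id: str           # write request identifier
    primary: str              # address of the main storage node
    secondaries: List[str]    # addresses of the secondary storage nodes
    data: bytes


class StorageNode:
    def __init__(self, address: str) -> None:
        self.address = address
        self.log: Dict[str, bytes] = {}

    def handle(self, req: WriteRequest) -> str:
        # A real node would use req.primary / req.secondaries to coordinate
        # write ordering with its peers; this sketch only records the write.
        self.log[req.request_id] = req.data
        return "ok"


def client_write(nodes: Dict[str, StorageNode], primary: str,
                 secondaries: List[str], data: bytes) -> Dict[str, str]:
    """Send one request, with a shared ID, directly to every replica."""
    req = WriteRequest(str(uuid.uuid4()), primary, secondaries, data)
    # Each replica receives the data in one hop from the client, instead of
    # via the primary (client -> primary -> secondary, two hops).
    return {addr: nodes[addr].handle(req) for addr in [primary, *secondaries]}
```

Because every replica logs the write under the same request identifier, the nodes have a common key for ordering or deduplicating it later, while the data itself crosses the network only once per replica.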

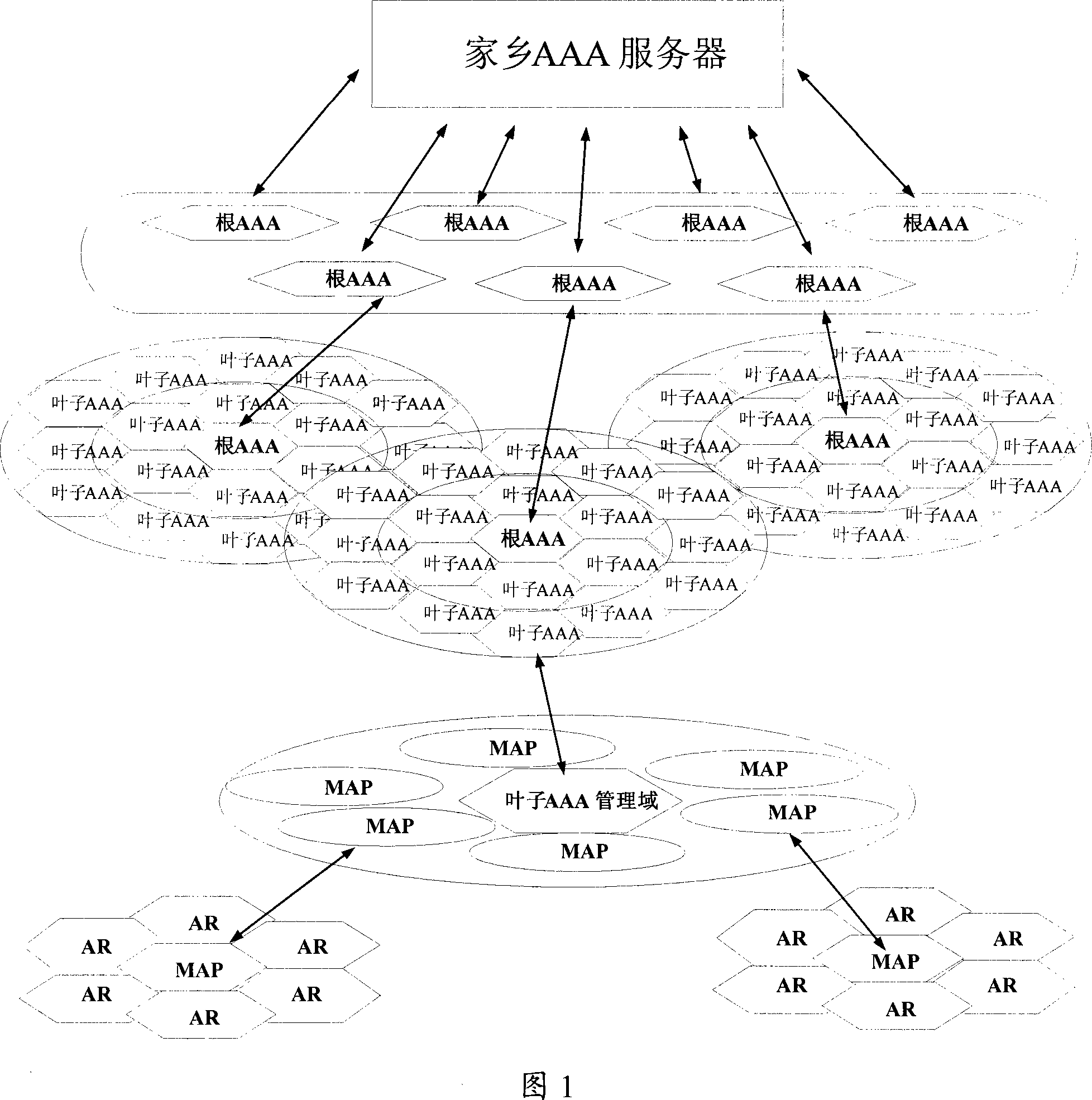

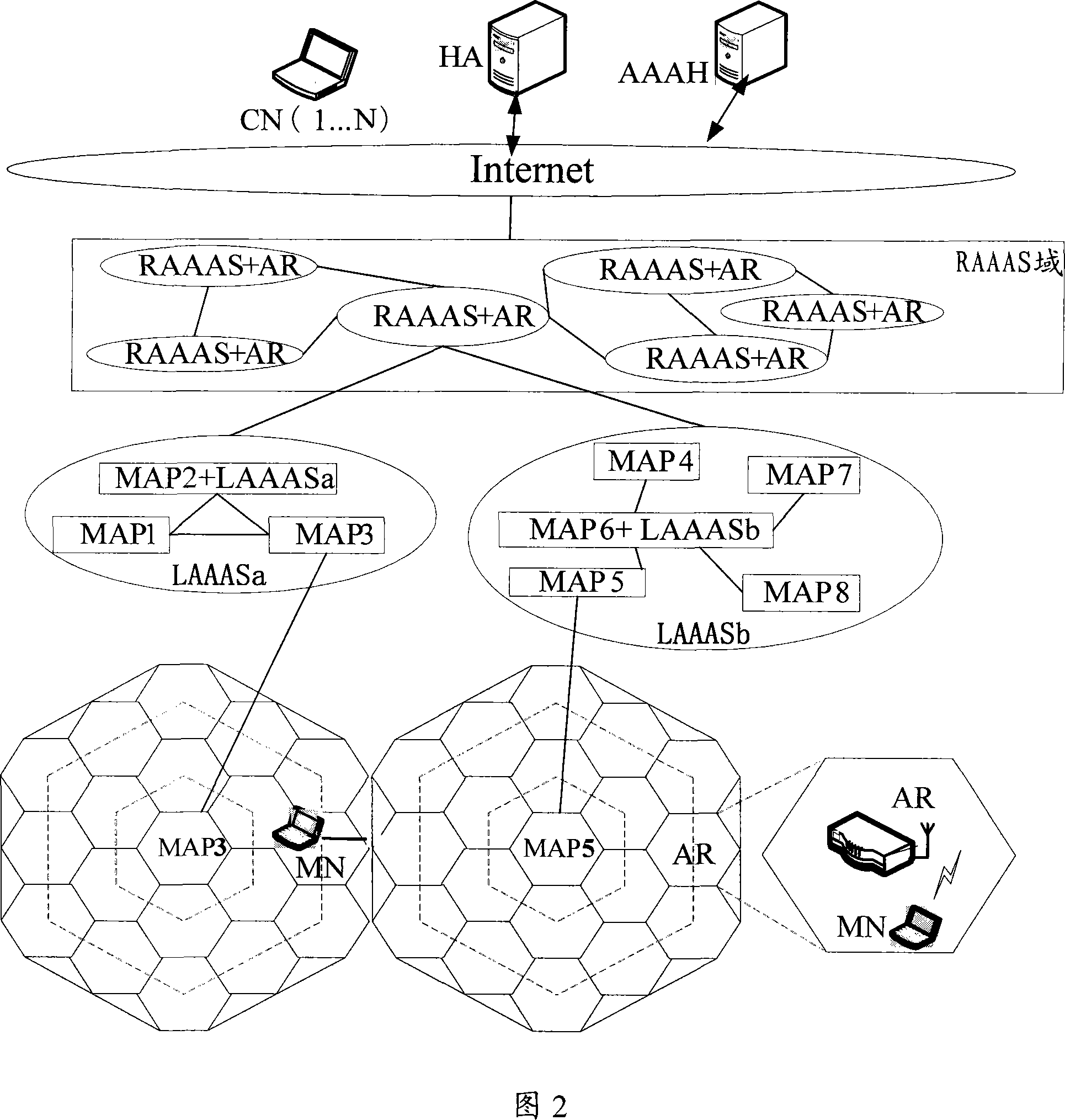

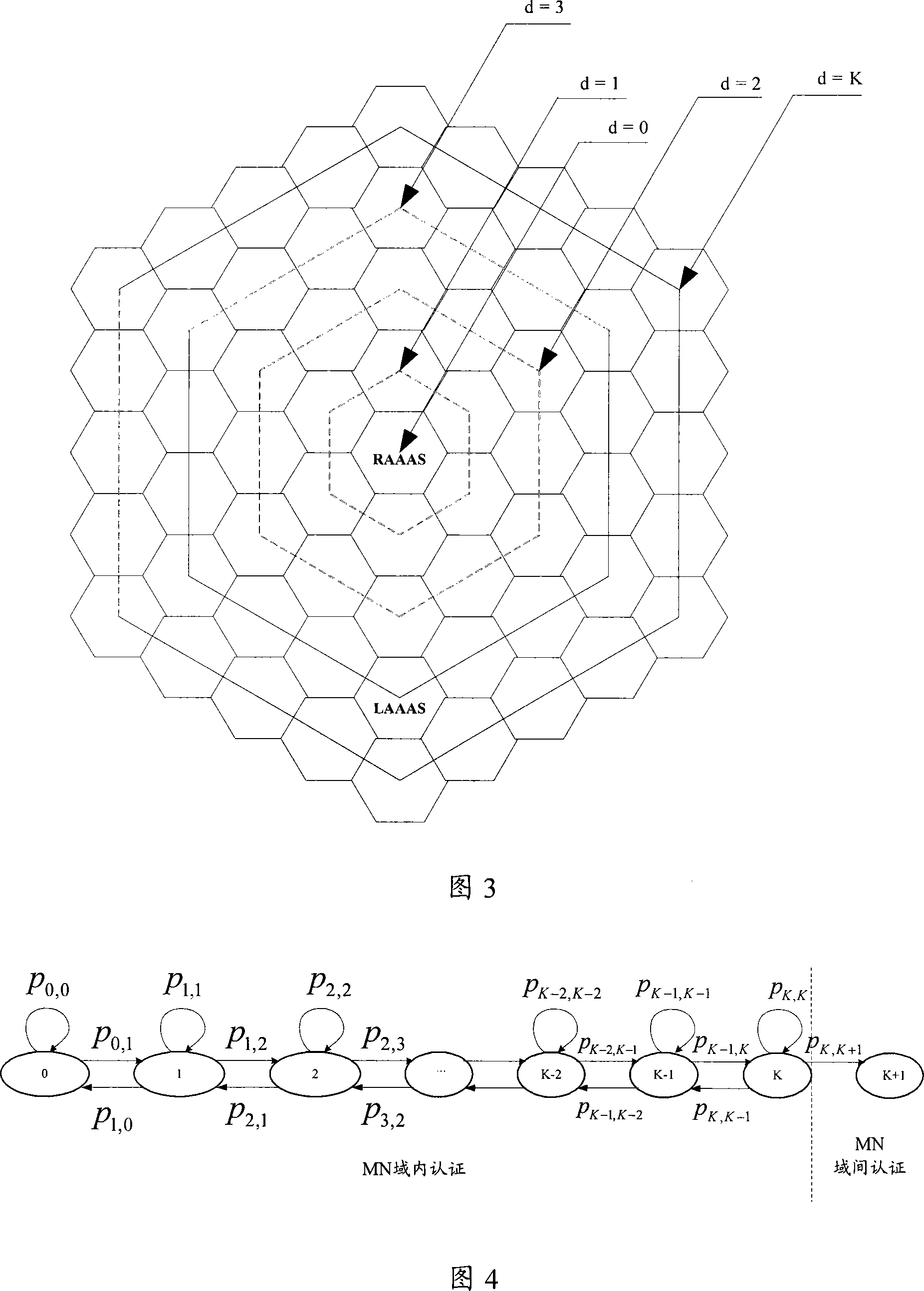

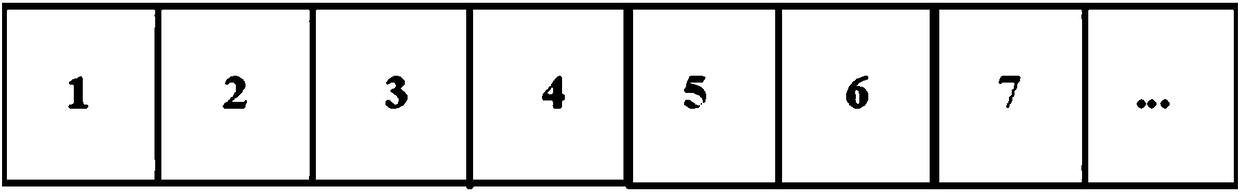

Mobility management system and method for layered AAA in the mobile Internet

Inactive | CN101222494B | Reduce latency overhead | Avoid inefficiency | Data switching networks | Network data management | Engineering | Mobility management

The present invention discloses a mobility management system and method based on layered AAA in the mobile Internet, and relates to the field of communication technology. The system comprises an AAAH, HAs, RAAASs, LAAASs, MAPs, and ARs. The AAAH processes authentication, authorization, and accounting requests from mobile devices in all RAAAS regions within the home management region. All RAAASs are deployed on the same logical plane, with the LAAASs subordinate to them beneath it, forming a tree structure. The AAAH is connected to at least two RAAASs and receives inter-domain authentication requests from a mobile node (MN) forwarded by the RAAASs; each RAAAS is connected to at least two LAAASs and receives authentication requests from mobile devices in its domain forwarded by the LAAASs; each LAAAS is connected to at least two MAPs, receives inter-domain authentication requests from MNs in its domain forwarded by the MAPs, and forwards them to the RAAAS. The present invention also discloses a management method based on this system.

Owner:BEIJING UNIV OF POSTS & TELECOMM
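The tree of AAA servers in the abstract suggests that a request is handled as low in the hierarchy as possible and forwarded upward only when it crosses a domain boundary. The Python sketch below models that idea under stated assumptions: RAAAS and LAAAS are read as regional and local AAA servers, and a movement from one MAP to another is assumed to be handled by the lowest server whose subtree contains both MAPs (their lowest common ancestor). `AAANode` and `handling_server` are hypothetical names; the patent's actual forwarding rules may differ.

```python
from typing import List, Optional


class AAANode:
    """A node in the AAA tree: AAAH -> RAAAS -> LAAAS -> MAP (assumed model)."""

    def __init__(self, name: str, parent: Optional["AAANode"] = None):
        self.name = name
        self.parent = parent

    def ancestors(self) -> List["AAANode"]:
        """This node followed by every node above it, up to the root."""
        node: Optional[AAANode] = self
        chain: List[AAANode] = []
        while node is not None:
            chain.append(node)
            node = node.parent
        return chain


def handling_server(old_map: AAANode, new_map: AAANode) -> str:
    """Return the lowest AAA server whose subtree covers both MAPs."""
    old_chain = old_map.ancestors()
    assert new_map.parent is not None, "a MAP must sit under an LAAAS"
    for node in new_map.parent.ancestors():  # start at the new MAP's LAAAS
        if node in old_chain:
            return node.name
    raise ValueError("MAPs are not in the same home management region")
```

In this model a move between two MAPs under the same LAAAS never leaves that LAAAS, a move between LAAASs of one region stops at the RAAAS, and only a move between regions reaches the AAAH, which is where the latency saving of the layering would come from.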

Dynamically patched high-performance on-chip cache fault-tolerant architecture

Active | CN107122256B | Guaranteed to work | Efficient repair | Non-redundant fault processing | Fault tolerant architecture | Parallel computing

The invention proposes a dynamically repairing, high-performance on-chip cache fault-tolerant architecture that handles intermittent bit failures and permanent faults promptly and efficiently at low overhead. A fault-aware replacement mechanism allows new faults to be tolerated at any time, ensuring that the cache keeps working normally; then, based on how cache blocks are used and accessed, faulty sub-blocks are dynamically repaired to reduce the impact of faults on cache performance.

Owner:BEIJING UNIV OF POSTS & TELECOMM
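The two mechanisms in the abstract, fault-aware replacement and usage-driven repair of faulty sub-blocks, can be illustrated for a single cache set in Python. Everything concrete here is an assumption made for the sketch: each way keeps a bitmask of faulty sub-blocks, a newly detected fault conservatively invalidates the line, victim selection prefers ways with the fewest unrepaired faults and breaks ties by LRU, and a limited pool of spare sub-blocks is spent on the most-accessed faulty way. `Way`, `CacheSet`, `SUBBLOCKS`, and the spare count are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

SUBBLOCKS = 4  # sub-blocks per cache line (hypothetical parameter)


@dataclass
class Way:
    tag: Optional[int] = None
    faulty: int = 0    # bitmask of sub-blocks with detected faults
    repaired: int = 0  # bitmask of faulty sub-blocks remapped to spares
    hits: int = 0
    last_use: int = 0

    def fault_count(self) -> int:
        return bin(self.faulty & ~self.repaired).count("1")


class CacheSet:
    def __init__(self, ways: int, spares: int):
        self.ways: List[Way] = [Way() for _ in range(ways)]
        self.spares = spares  # spare sub-blocks available for repair
        self.clock = 0

    def mark_fault(self, way: int, sub: int) -> None:
        # Record a newly detected fault and invalidate the possibly corrupt line.
        self.ways[way].faulty |= 1 << sub
        self.ways[way].tag = None

    def access(self, tag: int) -> bool:
        self.clock += 1
        for w in self.ways:
            if w.tag == tag:
                w.hits += 1
                w.last_use = self.clock
                return True
        # Fault-aware replacement: fewest unrepaired faults first, then LRU.
        victim = min(self.ways, key=lambda w: (w.fault_count(), w.last_use))
        victim.tag, victim.hits, victim.last_use = tag, 0, self.clock
        return False

    def repair(self) -> Optional[int]:
        """Spend one spare on the most-accessed way with an unrepaired fault."""
        candidates = [i for i, w in enumerate(self.ways) if w.fault_count()]
        if not candidates or self.spares == 0:
            return None
        i = max(candidates, key=lambda idx: self.ways[idx].hits)
        w = self.ways[i]
        pending = w.faulty & ~w.repaired
        w.repaired |= pending & -pending  # repair the lowest faulty sub-block
        self.spares -= 1
        return i
```

In a two-way set, once way 1 develops a fault the next miss is placed in the fault-free way 0 even though way 1 is the LRU candidate; after `repair` remaps the faulty sub-block, way 1 becomes an ordinary replacement candidate again.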


© 2025 PatSnap. All rights reserved.