121 results about "Fpga acceleration" patented technology

The Intel FPGA Acceleration Stack. The Acceleration Stack for Intel Xeon CPU with FPGAs is a robust collection of software, firmware, and tools designed and distributed by Intel to make it easier to develop and deploy Intel FPGAs for workload optimization in the data center.

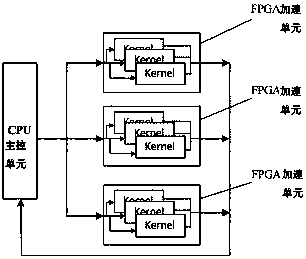

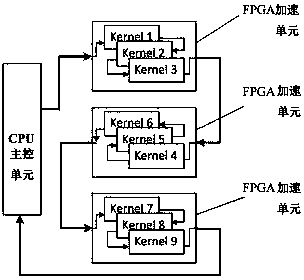

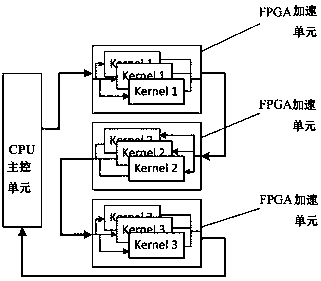

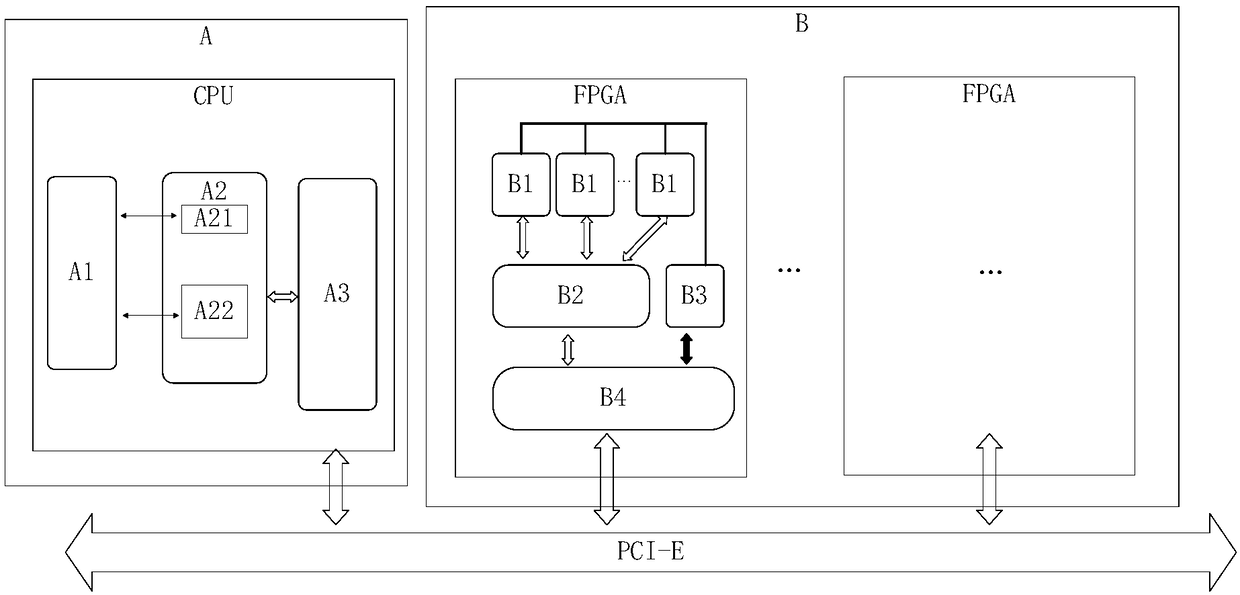

CPU+FPGA-based heterogeneous computing system and acceleration method thereof

Inactive | CN108776649A | Improve throughput | Improve computing power | Architecture with single central processing unit | Electric digital data processing | Assembly line | Computing systems

The invention discloses a CPU+FPGA-based heterogeneous computing system, and relates to the technical field of heterogeneous computing. In the system, multiple FPGA acceleration units cooperate with a CPU main control unit to complete the same computing task. The CPU main control unit is responsible for logic judgement and management control, and for distributing computing tasks to the FPGA acceleration units; the FPGA acceleration units are responsible for accelerating the computing tasks. Each FPGA acceleration unit is internally divided into a static area and a dynamic reconfigurable area: the static area implements PCIe-DMA communication, SRIO communication and DDR control, and the dynamic reconfigurable area executes the kernel functions distributed by the CPU main control unit and accelerates the computing tasks. The system can apply parallel acceleration and pipeline acceleration to computing tasks of different types, so as to greatly increase task processing throughput, shorten task execution time and greatly improve the computing performance of computers.

Owner:SHANDONG CHAOYUE DATA CONTROL ELECTRONICS CO LTD

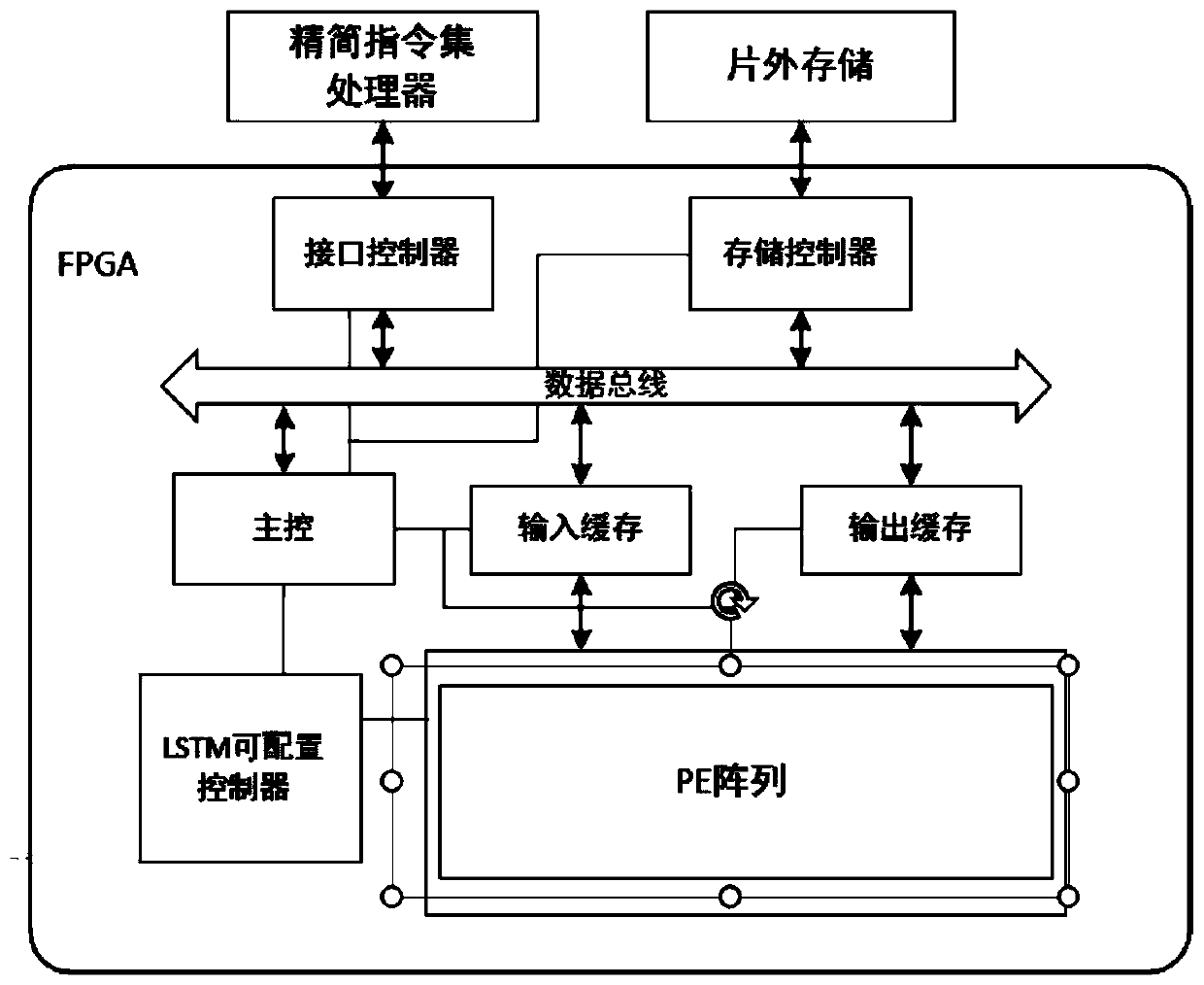

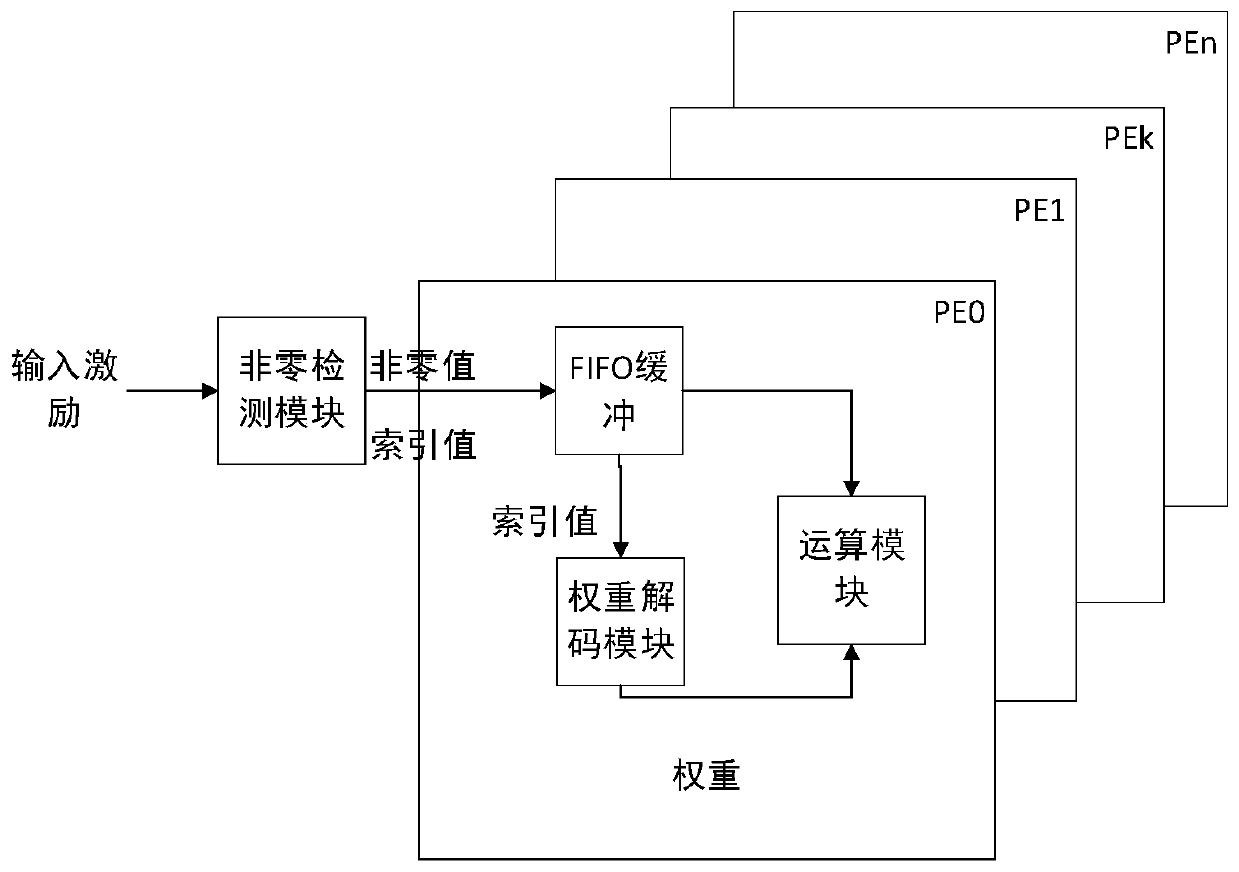

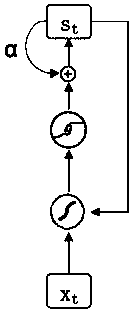

FPGA accelerator of LSTM neural network and acceleration method of FPGA accelerator

Active | CN110110851A | Improve computing power | Will not cause idle | Neural architectures | Physical realisation | Activation function | Control signal

The invention provides an FPGA accelerator of an LSTM neural network and an acceleration method of the FPGA accelerator. The accelerator comprises a data distribution unit, an operation unit, a control unit and a storage unit; the operation unit comprises a sparse matrix vector multiplication module, a nonlinear activation function module and an element-by-element multiplication and addition calculation module; the control unit sends a control signal to the data distribution unit, and the data distribution unit reads an input excitation value and a neural network weight parameter from the storage unit and inputs the input excitation value and the neural network weight parameter to the operation unit for operation. The operation resources are uniformly distributed to each operation unit according to the number of the non-zero weight values, so that idling of operation resources is avoided, and the operation performance of the whole network is improved. Meanwhile, the pruned neural network is stored in the form of the sparse network, the weight value of each column is stored in the same address space, the neural network is coded according to the row index, and the operation performance and the data throughput rate are improved under the condition that the precision is guaranteed.

Owner:NANJING UNIV
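
The storage and load-balancing ideas in the abstract above can be sketched in software. This is a minimal illustration, not the patented design: it assumes a column-wise layout in which each column's non-zero weights are stored together with their row index, and a simple greedy rule for spreading columns over operation units by non-zero count. The function names and the greedy rule are assumptions made for this example.

```python
def to_column_sparse(dense):
    """Keep only non-zero weights, grouped per column and tagged with their row index."""
    n_rows, n_cols = len(dense), len(dense[0])
    return [
        [(r, dense[r][c]) for r in range(n_rows) if dense[r][c] != 0]
        for c in range(n_cols)
    ]


def sparse_matvec(columns, x, n_rows):
    """Sparse matrix-vector product y = W @ x using the column-wise layout."""
    y = [0.0] * n_rows
    for c, entries in enumerate(columns):
        if x[c] == 0:
            continue  # a zero input contributes nothing to any row
        for r, w in entries:
            y[r] += w * x[c]
    return y


def balance_columns(columns, n_units):
    """Greedy assumption: give each column (largest first) to the least-loaded unit."""
    loads = [0] * n_units
    assignment = [[] for _ in range(n_units)]
    for c in sorted(range(len(columns)), key=lambda c: -len(columns[c])):
        unit = loads.index(min(loads))
        assignment[unit].append(c)
        loads[unit] += len(columns[c])
    return assignment, loads


weights = [[0, 2, 0],
           [1, 0, 0],
           [0, 3, 4]]
cols = to_column_sparse(weights)
print(sparse_matvec(cols, [1, 2, 3], n_rows=3))
print(balance_columns(cols, n_units=2))
```

Balancing on the non-zero count rather than on the column count is what keeps every unit busy for roughly the same number of multiply-accumulate steps.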

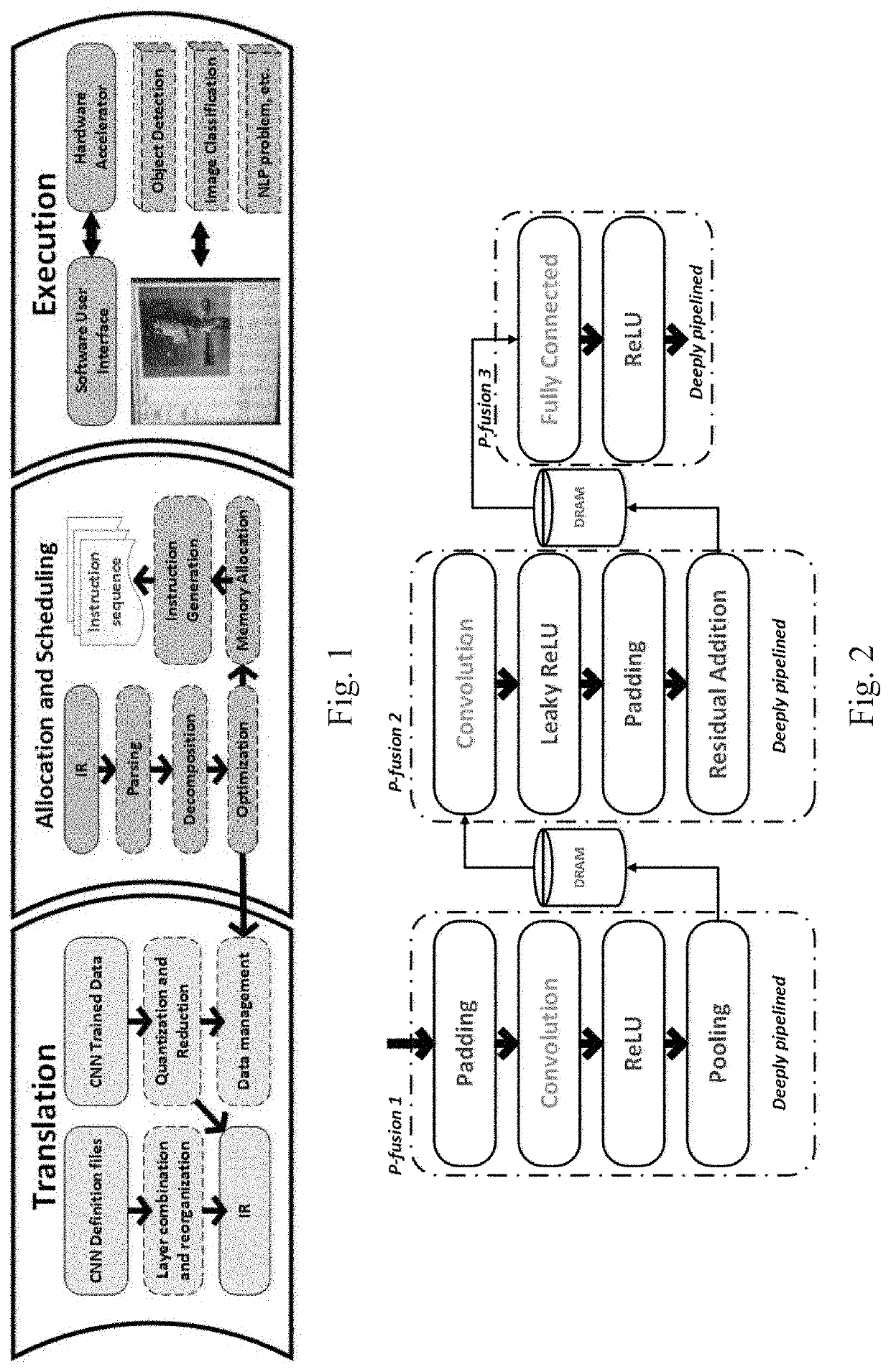

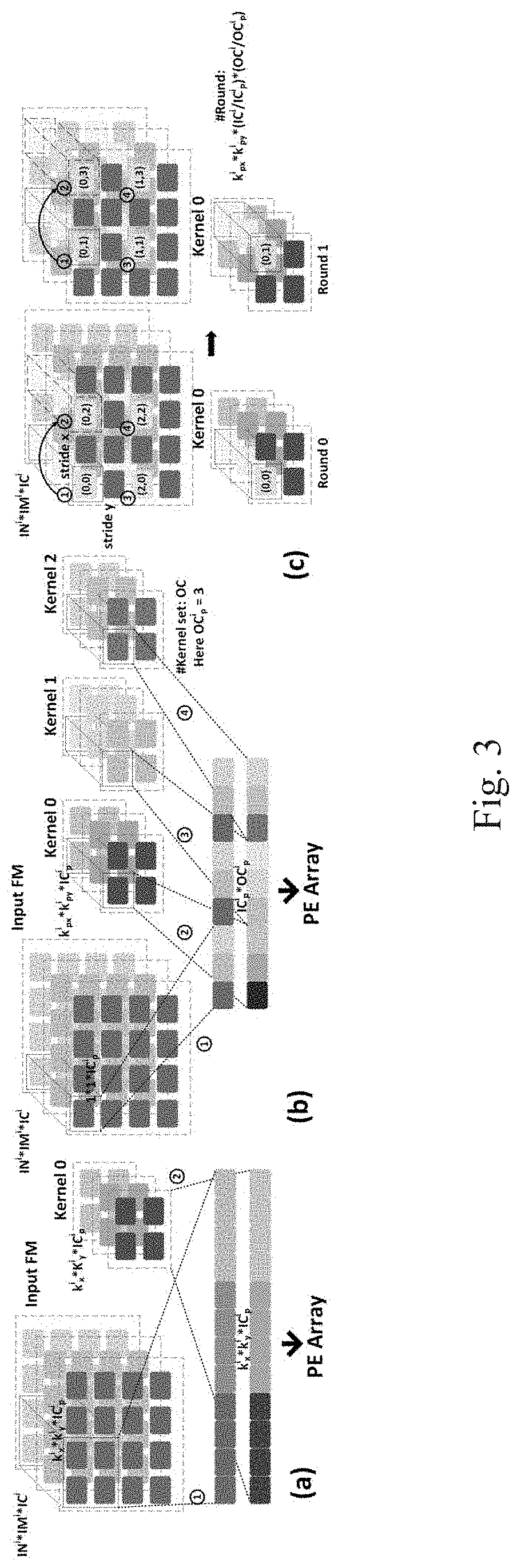

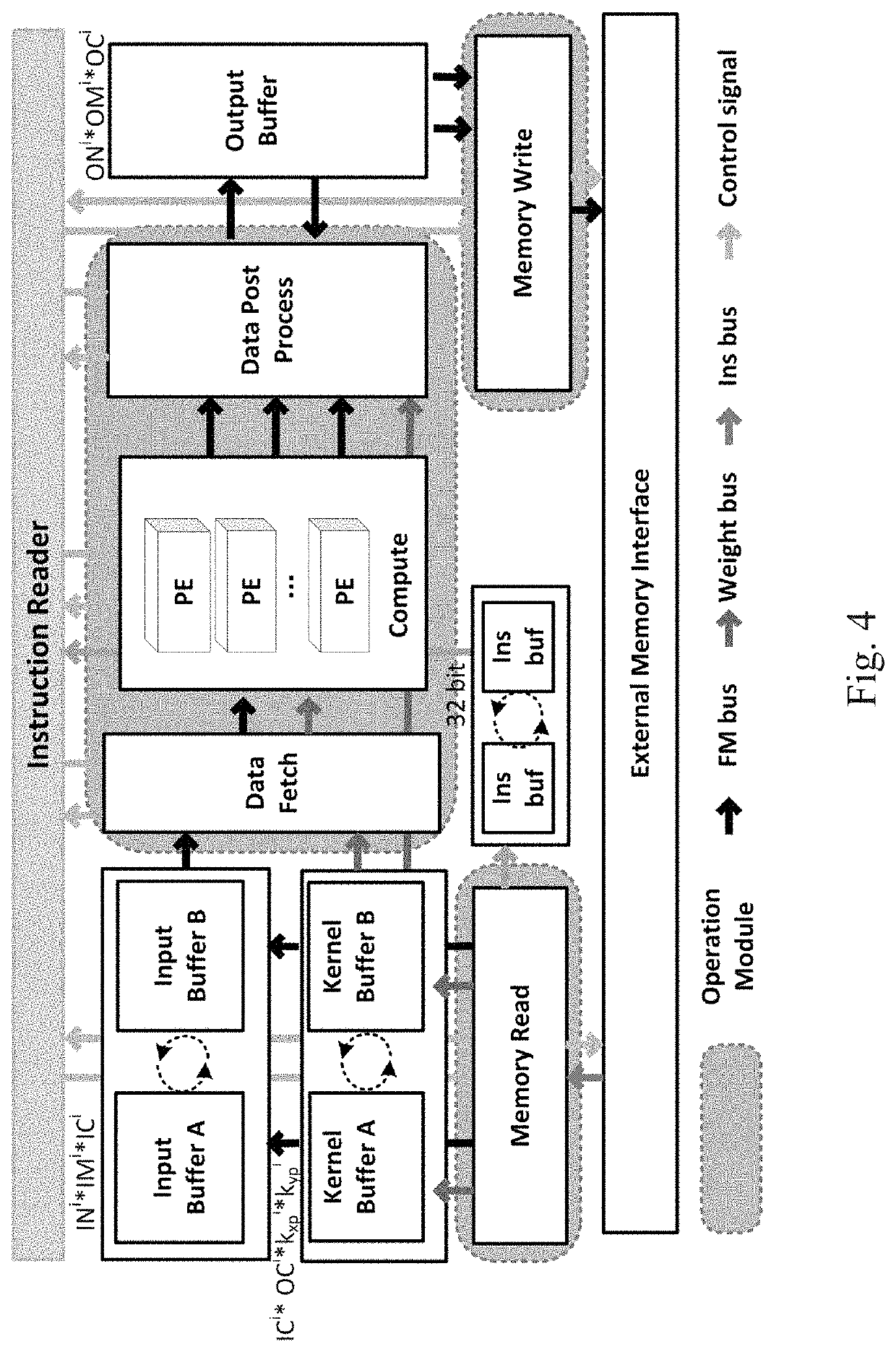

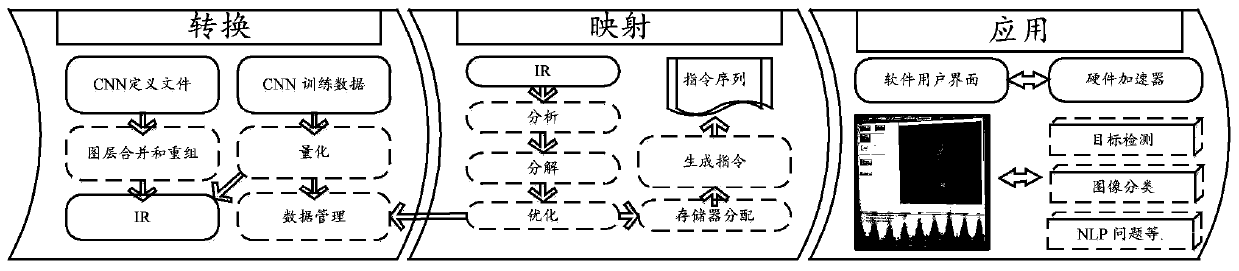

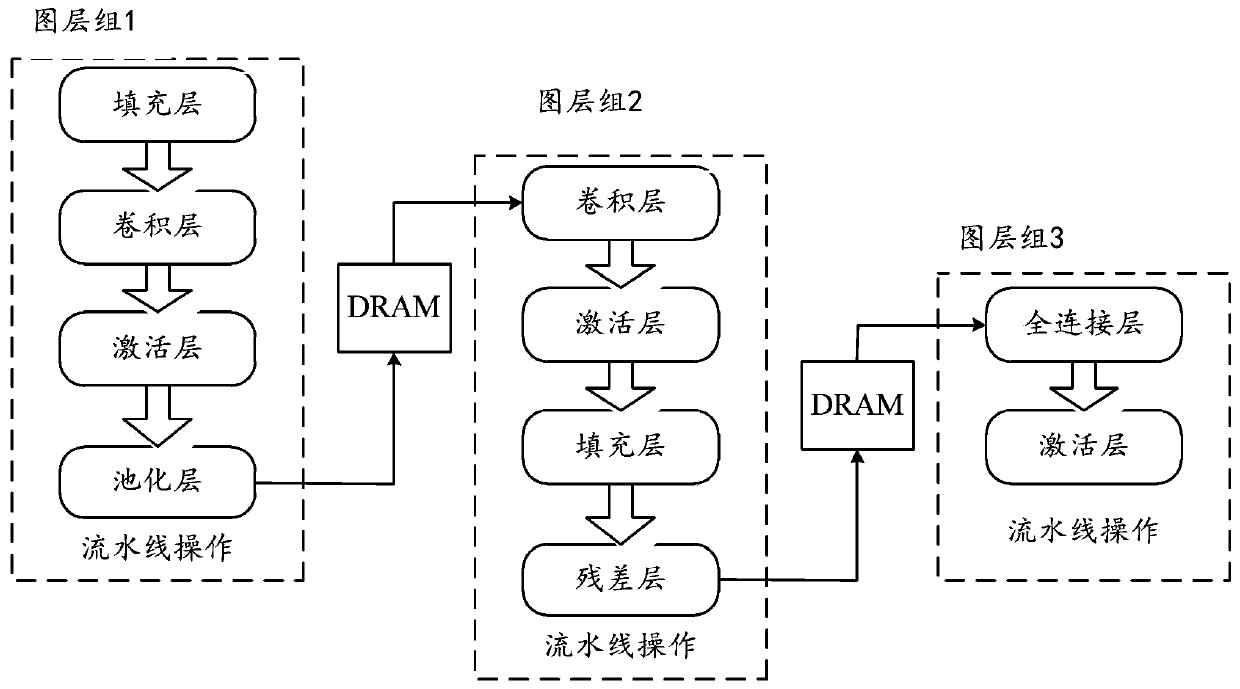

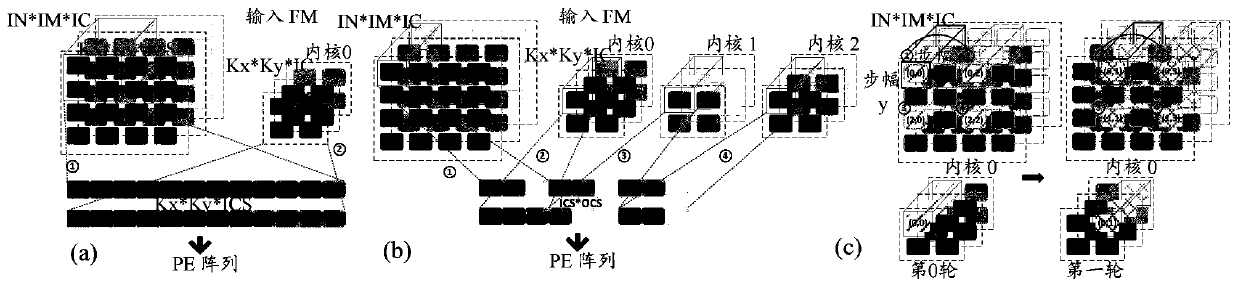

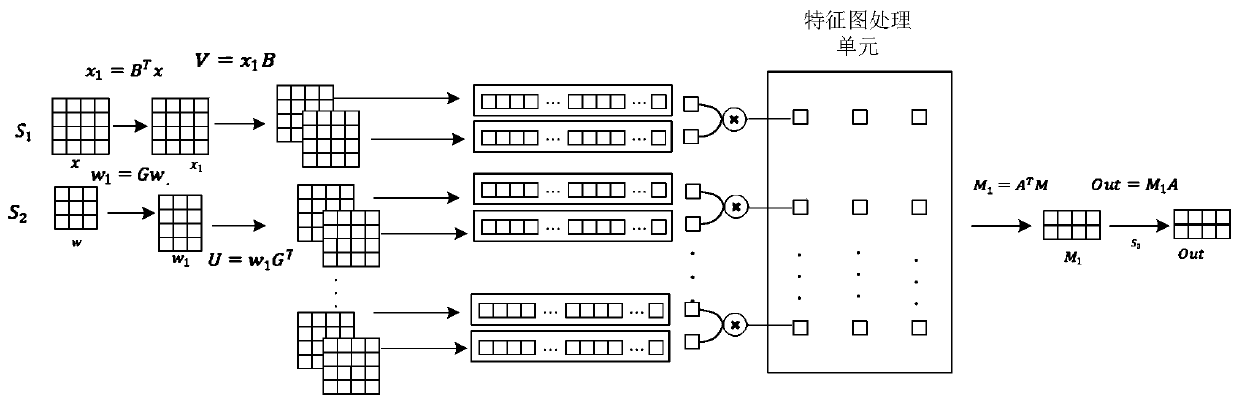

OPU-based CNN acceleration method and system

Inactive | US20200151019A1 | High complexity | Reduced versatility | Resource allocation | Kernel methods | Jazelle | Concurrent computation

An OPU-based CNN acceleration method and system are disclosed. The method includes (1) defining an OPU instruction set; (2) converting, through a compiler, the CNN configuration files that a deep learning framework generates for different target networks, selecting an optimal mapping strategy according to the OPU instruction set, configuring the mapping, generating the instructions of the different target networks, and completing the mapping; and (3) reading the instructions into the OPU, then running the instructions according to a parallel computing mode defined by the OPU instruction set, and completing the acceleration of the different target networks. By defining the instruction types and setting the instruction granularity, performing network reorganization optimization, searching the solution space for the mapping mode that ensures maximum throughput, and adopting a parallel computing mode in hardware, the invention solves the problem that existing FPGA acceleration work generates a specific individual accelerator for each CNN.

Owner:REDNOVA INNOVATIONS INC

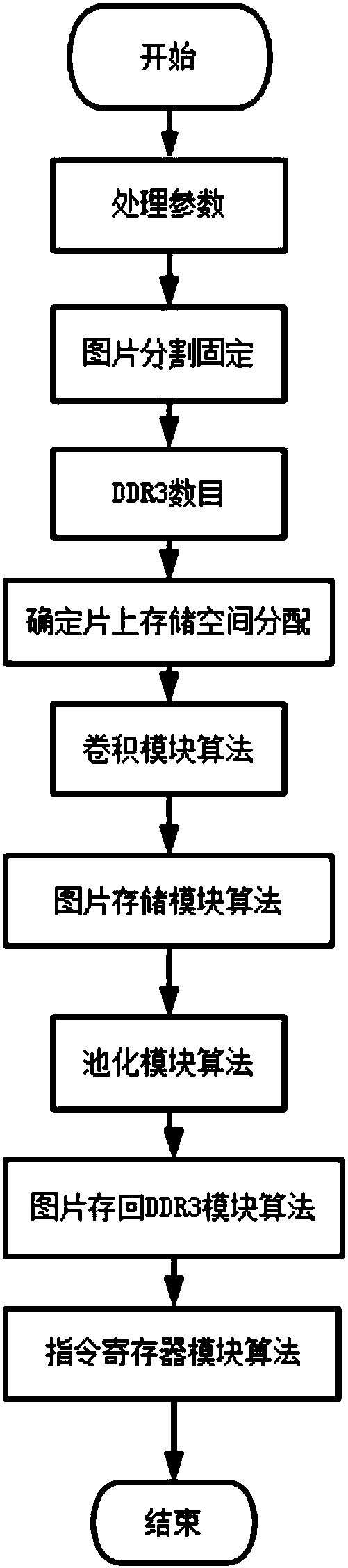

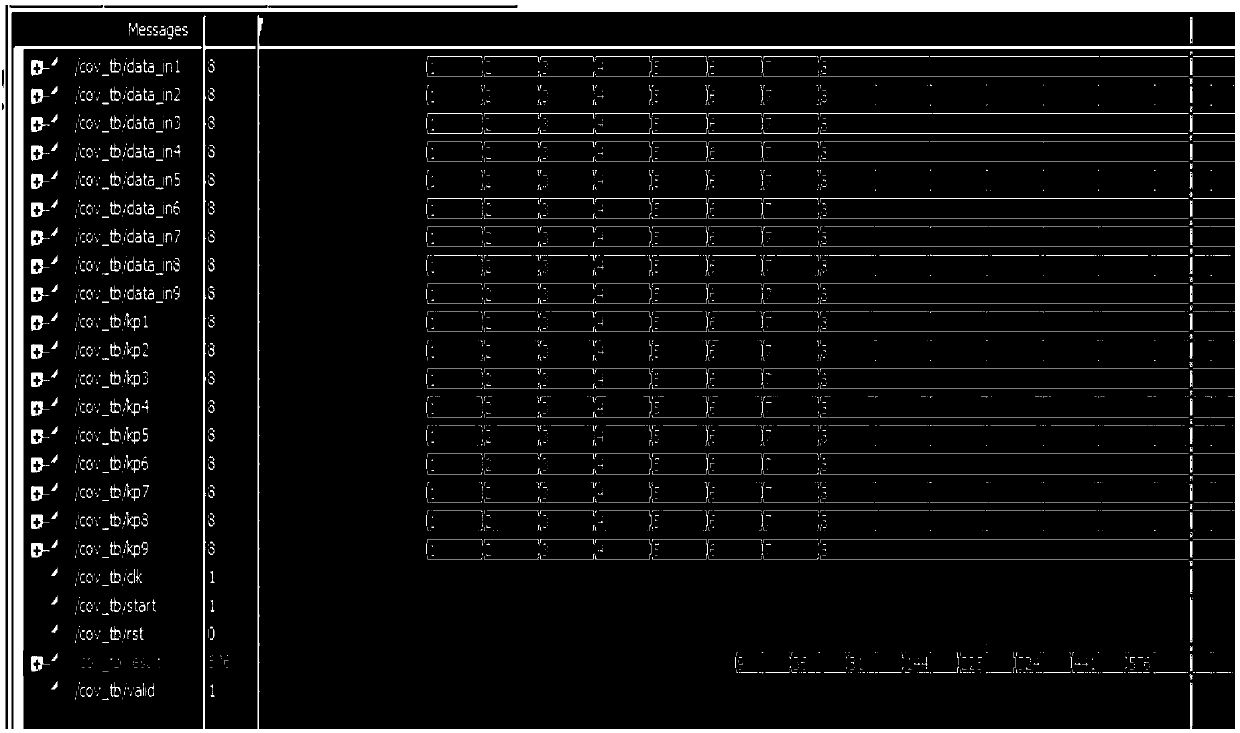

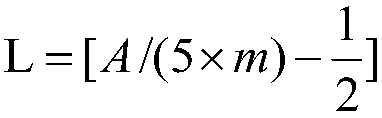

Image processing method based on FPGA accelerated convolution neural network framework

Active | CN108154229A | Accelerate the effect | Convolution calculation is uninterrupted | Neural architectures | Physical realisation | Resource utilization | Instruction register

The invention discloses an image processing method based on an FPGA-accelerated convolutional neural network framework, which mainly addresses the low resource utilization and slow speed of the prior art. The scheme comprises the following steps: 1) calculating a picture division fixed value according to the designed picture parameters and the FPGA resource parameters; 2) determining the DDR3 number according to the picture fixed value and allocating the block RAM resources; 3) constructing a convolutional neural network framework according to 1) and 2), wherein the framework comprises a picture storage module, a picture data distribution module, a convolution module, a pooling module, a module for storing pictures back to DDR3, and an instruction register group; 4) obtaining control instructions from the instruction register group, with the modules interacting through handshake signals, and processing the picture data according to the control instructions. The invention improves resource utilization and acceleration through the FPGA-accelerated convolutional neural network framework, and can be used for image classification, target recognition, speech recognition and natural language processing.

Owner:XIDIAN UNIV

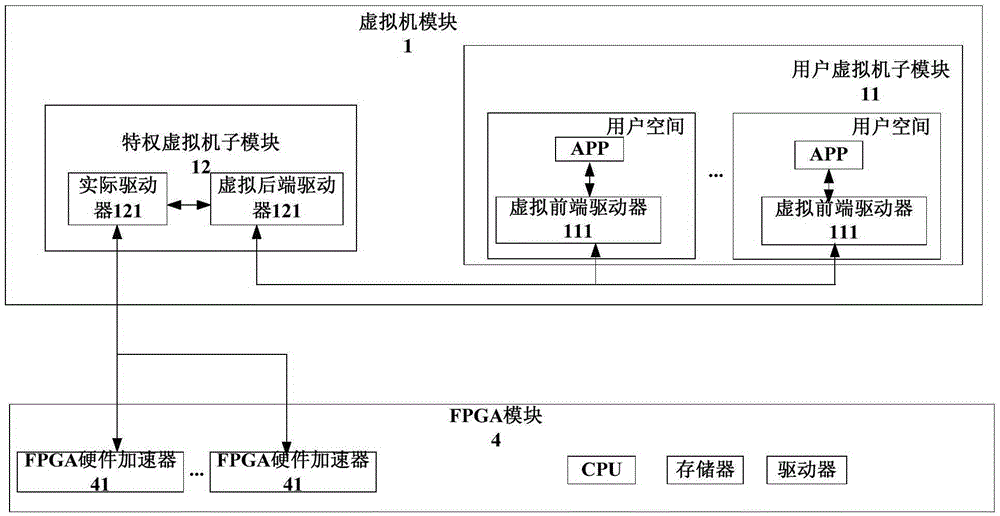

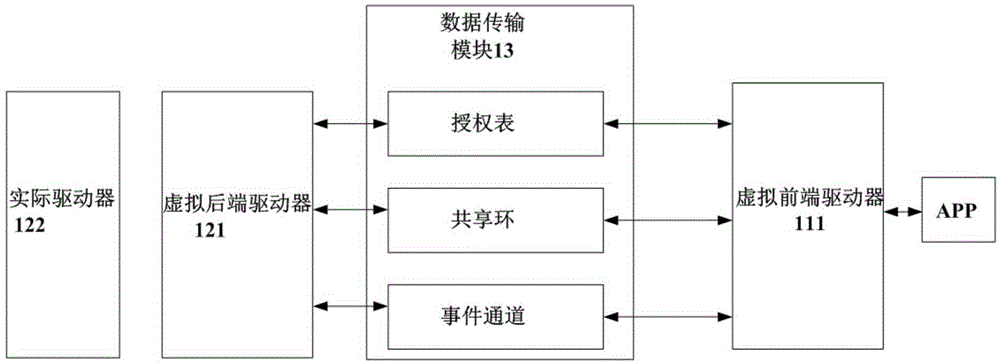

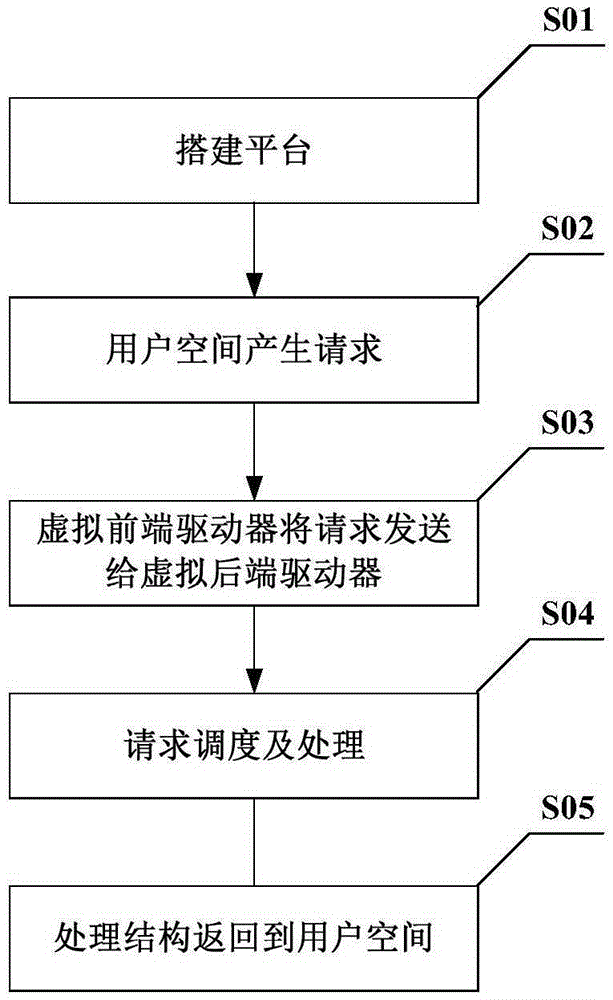

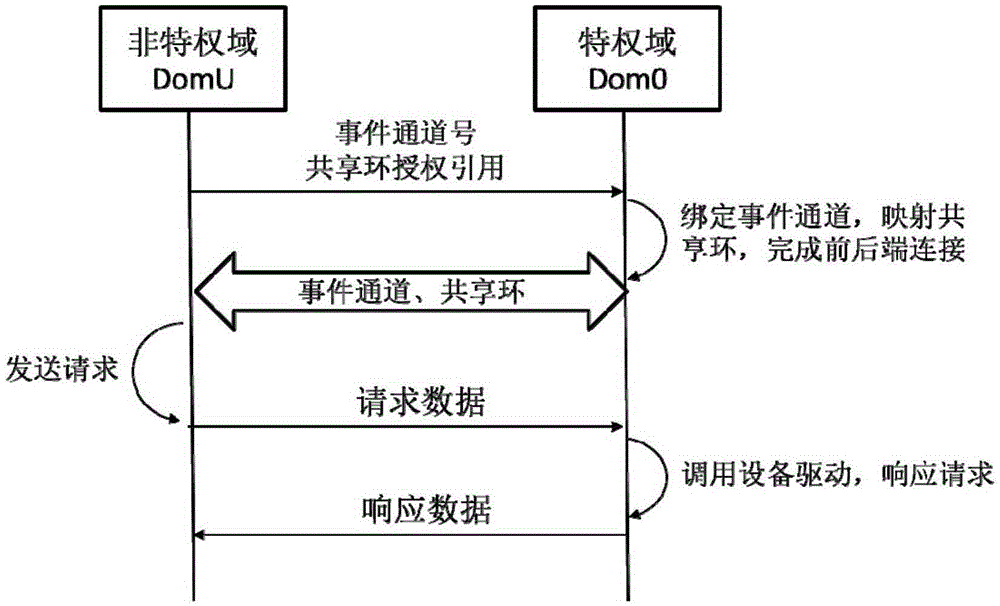

Xen-based FPGA accelerator virtualization platform and application

Active | CN105389199A | Realize front-end and back-end communication | Realize data transmission | Software simulation/interpretation/emulation | Virtualization | Data transmission

The present invention relates to a Xen-based FPGA accelerator virtualization platform and application. The Xen-based FPGA accelerator virtualization platform is characterized by comprising a virtual machine module (1) and an FPGA module (4) connected with each other. The virtual machine module (1) comprises a user virtual machine submodule (11), a privileged virtual machine submodule (12) and a data transmission module (13); the user virtual machine submodule (11) is provided with a virtual front-end driver (111); the privileged virtual machine submodule (12) is provided with a virtual back-end driver (121) and an actual driver (122); the virtual front-end driver (111) is connected to the virtual back-end driver (121) through the data transmission module (13); the actual driver (122) is connected to the FPGA module (4); and both the virtual front-end driver (111) and the actual driver (122) realize character device virtualization through a Xen. Compared with the prior art, the Xen-based FPGA accelerator virtualization platform disclosed by the present invention enables multiple users to simultaneously use the accelerator, thereby improving access speed and computational efficiency.

Owner:TONGJI UNIV

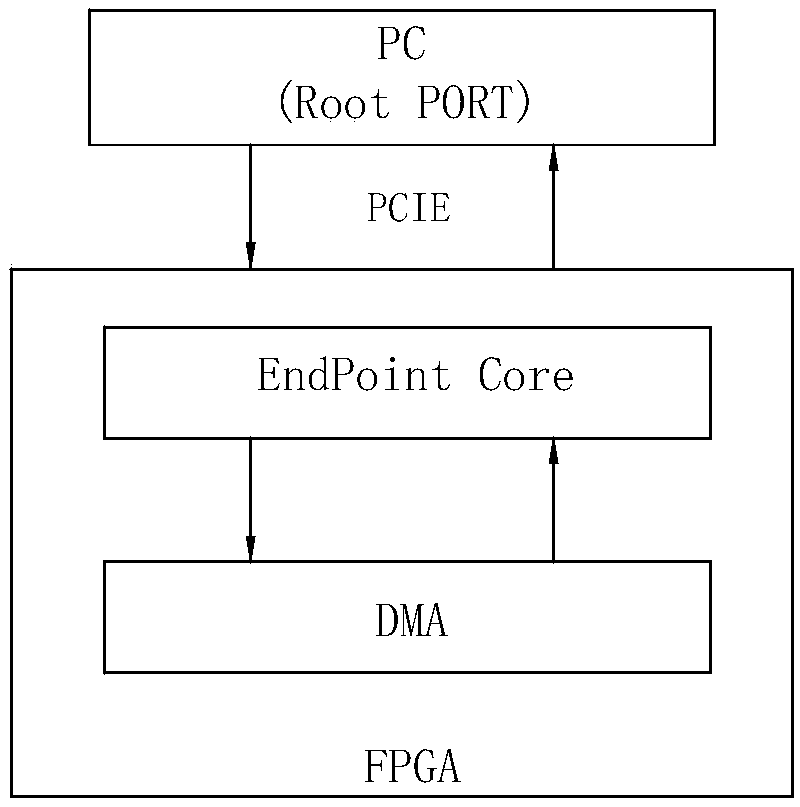

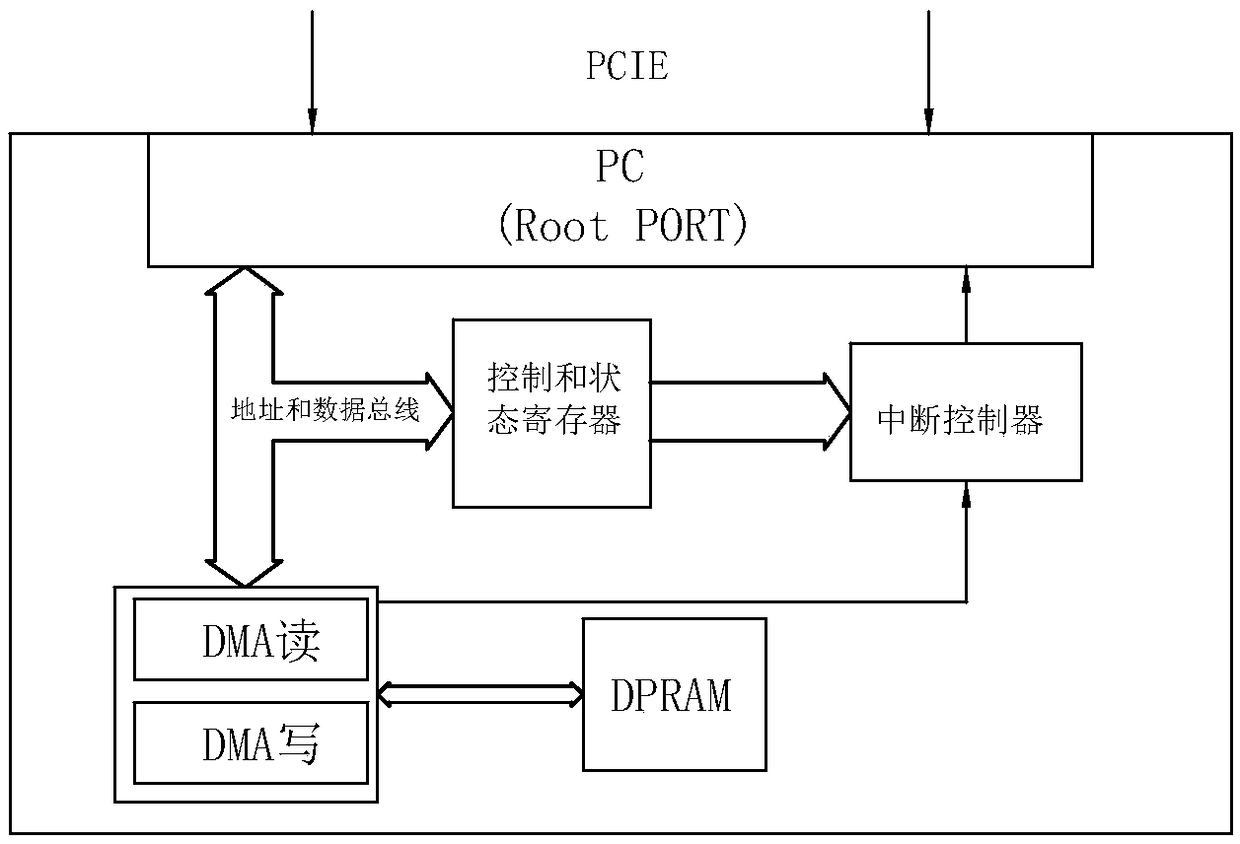

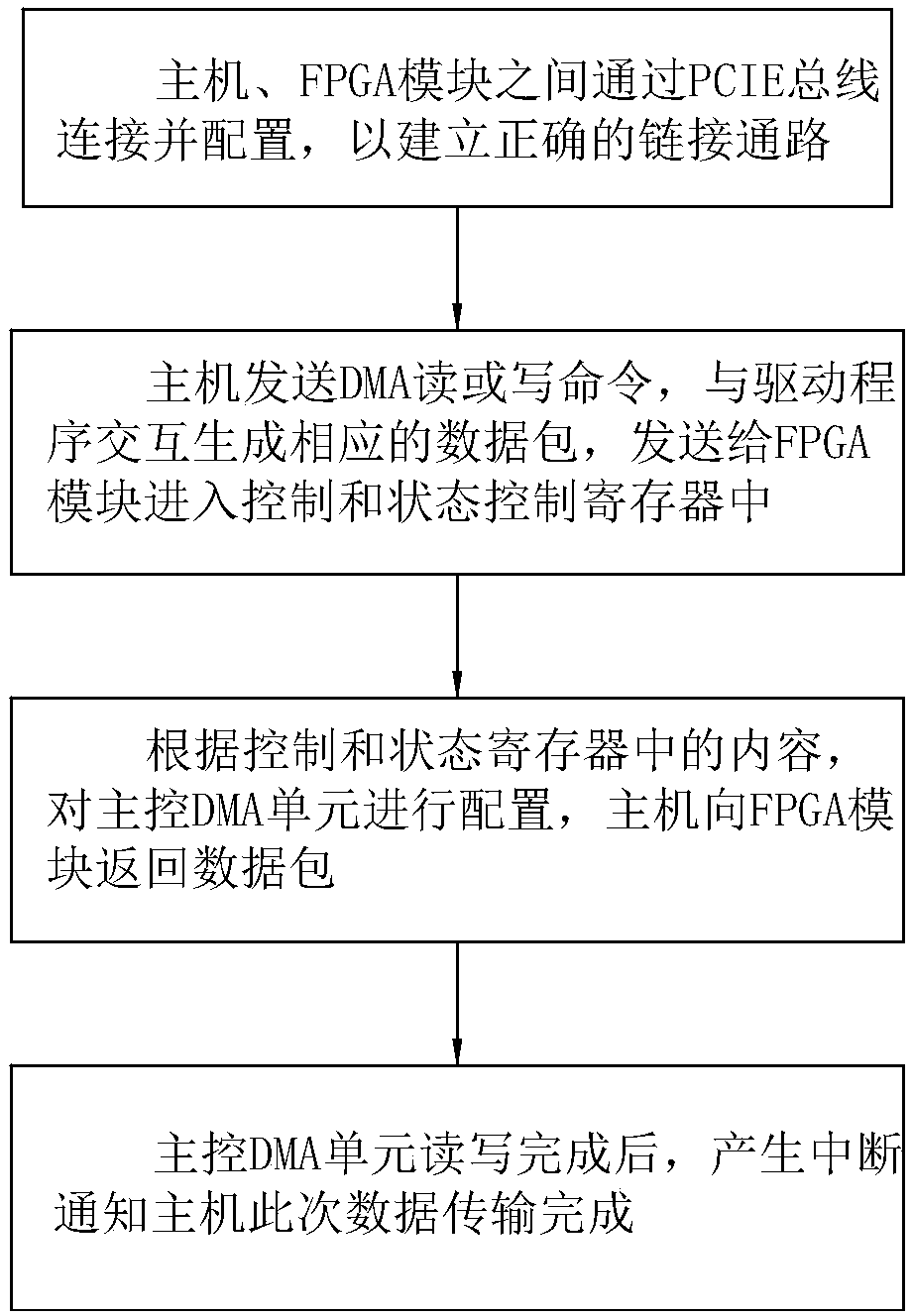

High-speed data transmission system and method based on PCIE

The invention discloses a high-speed data transmission system and method based on PCIE. The high-speed data transmission system comprises a host, an FPGA module and a PCIE bus. The PCIE bus provides fast communication between the host and the FPGA module, each of which has a PCIE interface plugged into the PCIE bus. The host is the main end of the PCIE bus; the FPGA module is the secondary end of the PCIE bus and completes DMA read and write operations. The advantages of the system and method are that DMA, address mapping, interrupts and the like are controlled between the host and the FPGA module, which improves the speed and ensures the reliability of data transmission. The system and method can be applied to equipment that exchanges data with the host at high speed, such as data acquisition cards and FPGA acceleration cards.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

FPGA-based network function acceleration method and system

Active | CN108319563A | Solve the problem of low resource utilization | Easy to handle | Digital computer details | Program control | Fpga acceleration | Network packet

The invention relates to an FPGA-based network function acceleration method and system. The method comprises the steps of establishing the network function acceleration system. The system comprises a physical machine and an acceleration card. The physical machine is connected with the acceleration card through a PCIe channel. The physical machine comprises a processor. The acceleration card comprises an FPGA and is used for providing network function acceleration for the processor. The processor is configured to query whether a required acceleration module exists in the FPGA or not when the acceleration card is needed to provide the network function acceleration; if yes, obtain an acceleration function ID corresponding to the required acceleration module; if not, select at least one partial reconfiguration region in the FPGA, configure the region as the required acceleration module and generate the corresponding acceleration function ID; and/or send an acceleration request to the FPGA, wherein the acceleration request comprises a to-be-processed data packet and the acceleration function ID. The FPGA is configured to send the acceleration request to the required acceleration module for acceleration processing according to the acceleration function ID.

Owner:HUAZHONG UNIV OF SCI & TECH
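
The query-or-configure flow in the abstract above can be modelled in a few lines. This is a hedged software sketch only: the class `AccelerationCard`, its methods, and the use of Python callables as stand-ins for acceleration modules are all hypothetical names chosen for illustration, not an API from the patent or from any FPGA toolkit.

```python
class AccelerationCard:
    """Toy model of an FPGA card with a fixed number of partial reconfiguration regions."""

    def __init__(self, n_regions):
        self.free_regions = list(range(n_regions))
        self.id_by_name = {}   # module name -> acceleration function ID
        self.module_by_id = {}  # acceleration function ID -> (region, module)

    def ensure_module(self, name, module):
        """Return the function ID, configuring a free region only if the module is absent."""
        if name in self.id_by_name:
            return self.id_by_name[name]
        if not self.free_regions:
            raise RuntimeError("no free partial reconfiguration region")
        region = self.free_regions.pop(0)
        function_id = len(self.module_by_id) + 1
        self.id_by_name[name] = function_id
        self.module_by_id[function_id] = (region, module)
        return function_id

    def accelerate(self, function_id, packet):
        """Route the request to the module identified by the function ID."""
        _region, module = self.module_by_id[function_id]
        return module(packet)


card = AccelerationCard(n_regions=2)
# A trivial byte checksum stands in for a real network function.
fid = card.ensure_module("checksum", lambda packet: sum(packet) & 0xFF)
print(fid, card.accelerate(fid, b"\x01\x02"))
```

A second call to `ensure_module("checksum", ...)` returns the same ID without consuming another region, which mirrors the "if yes, obtain the ID" branch.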

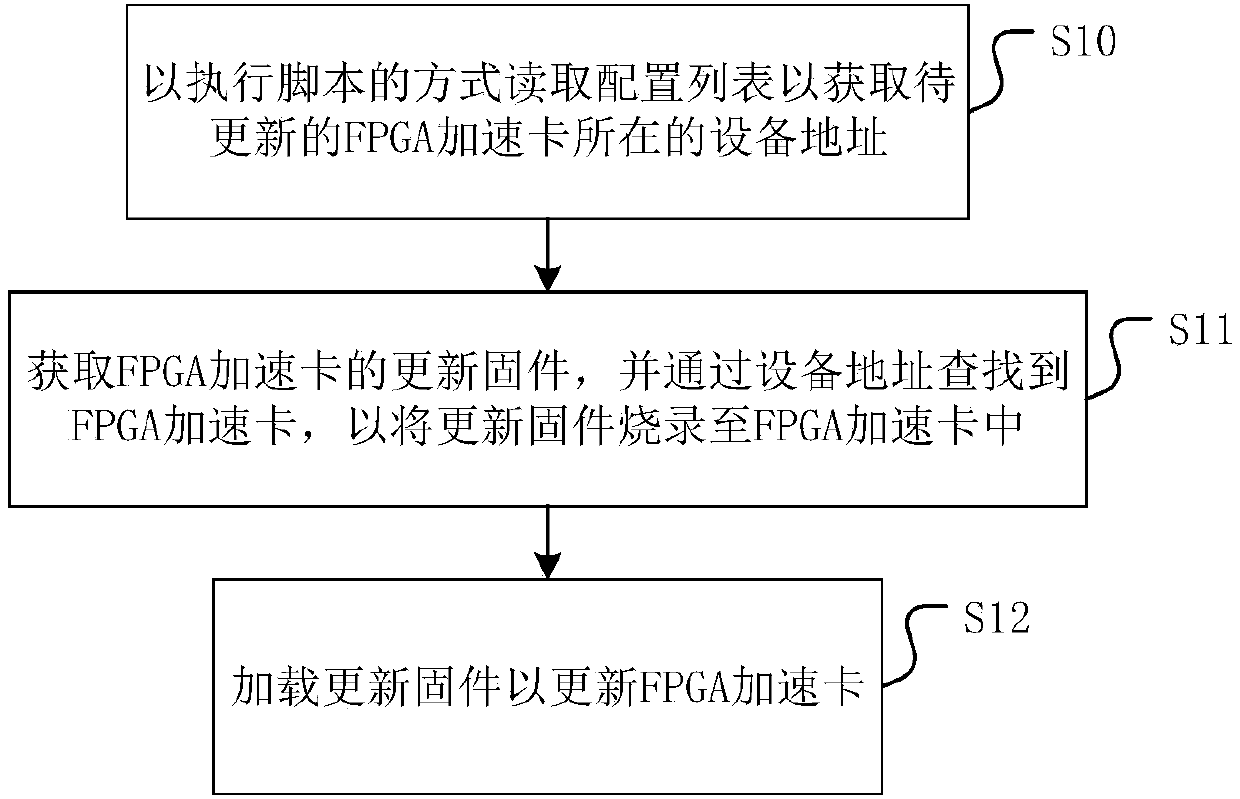

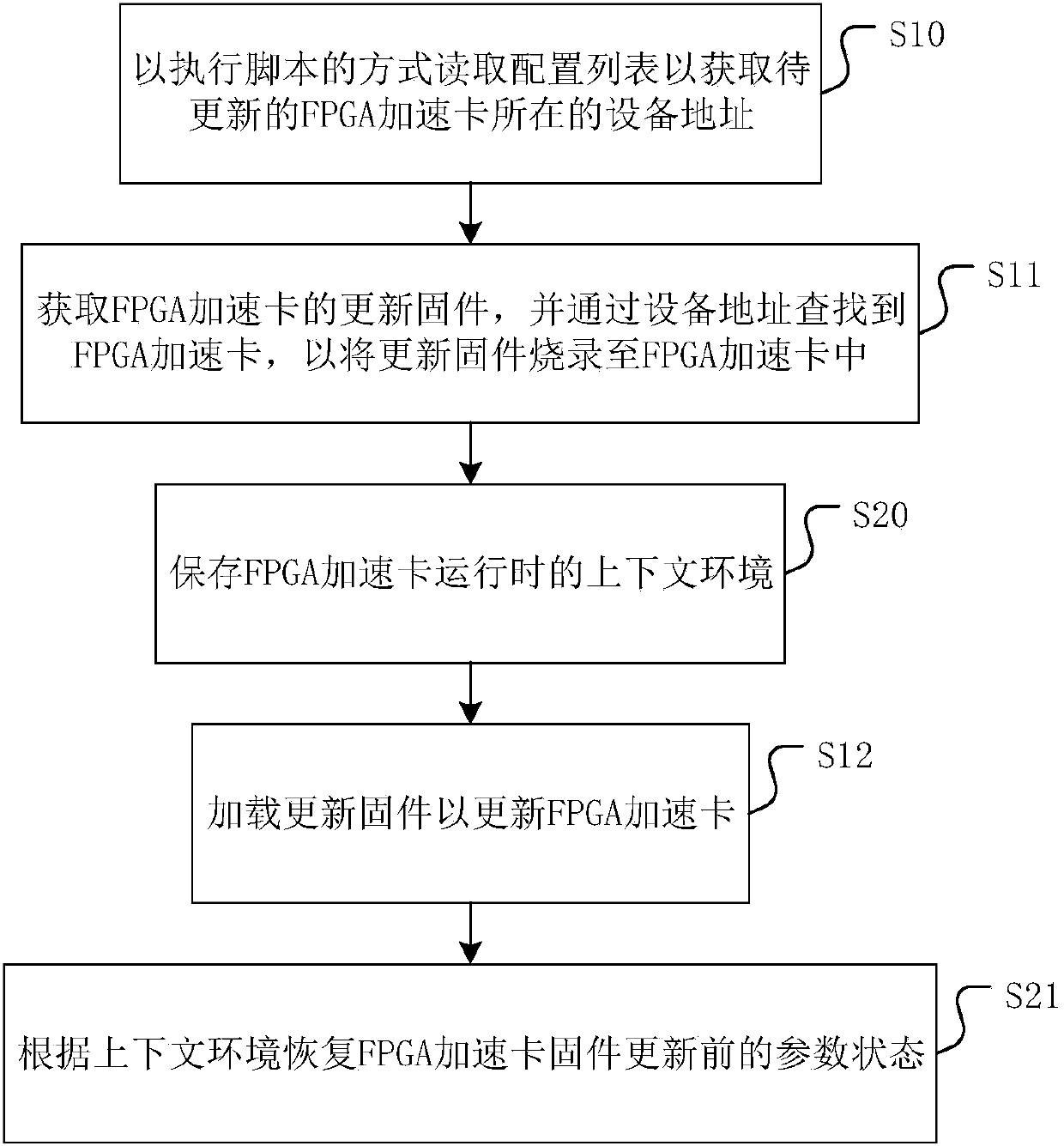

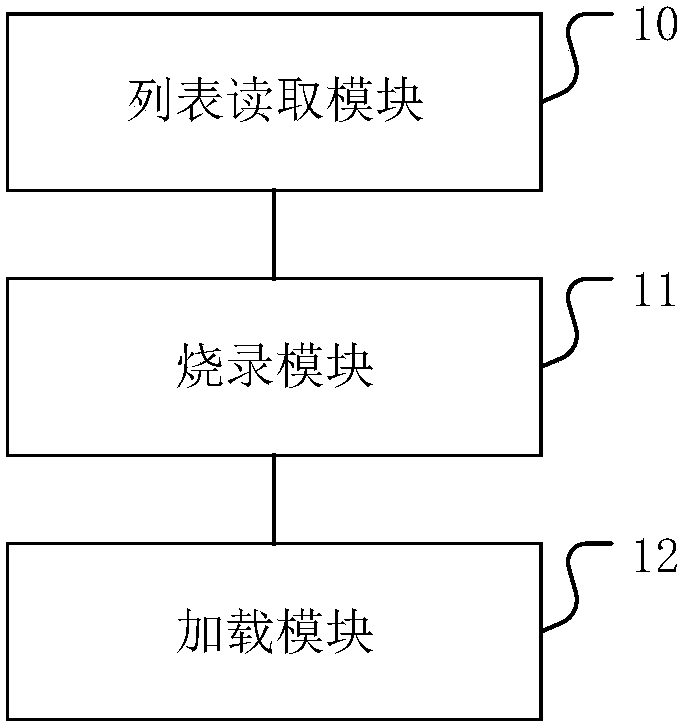

Firmware updating method, device and medium of FPGA accelerator card

Inactive | CN107656776A | Avoid the problem of low manual update efficiency | Improve usability | Program loading/initiating | High availability | Complex network

The invention discloses a firmware updating method, device and medium of an FPGA accelerator card. The method comprises the steps that a configuration list is read by executing a script so that the device address of the FPGA accelerator card to be updated can be acquired; the updating firmware of the FPGA accelerator card is acquired, and the FPGA accelerator card is found through the device address so that the updating firmware can be burnt into the FPGA accelerator card; the updating firmware is loaded so that the FPGA accelerator card is updated. Compared with manually burning the updating firmware into the FPGA accelerator cards one by one, the method lets the system update the corresponding FPGA accelerator cards automatically by running a script. This solves the low efficiency of manual updating when a complex network device environment contains a large number of FPGA accelerator cards, lowers the probability of errors caused by differences among the updating firmware of numerous cards, and guarantees high availability of the FPGA accelerator cards. Furthermore, the invention provides a firmware updating device and medium of the FPGA accelerator card, which have the same advantages.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

CNN acceleration method and system based on OPU

Active | CN110058883A | Overcoming universality issues | Overcome the inability to accurately predict the order of instructions | Kernel methods | Neural architectures | Granularity | Instruction set

The invention discloses a CNN acceleration method and system based on OPU, and relates to the field of CNN acceleration methods based on FPGA. The method includes that an OPU instruction set is defined; the compiler converts the CNN definition files of different target networks, selects an optimal accelerator to configure mapping according to the defined OPU instruction set, and generates instructions of different target networks to complete mapping; the OPU reads the compiled instruction, operates the instruction according to a parallel computing mode defined by the OPU instruction set, and completes acceleration of different target networks. According to the method, the instruction type is defined, the instruction granularity is set, network recombination optimization is carried out, a mapping mode for guaranteeing the maximum throughput is obtained by searching a solution space, and hardware adopts a parallel computing mode; the problem that an existing FPGA acceleration work aims at generating specific independent accelerators for different CNNs is solved, and the effects that the FPGA accelerator is not reconstructed, and acceleration of different network configurations is rapidly achieved through instructions are achieved.

Owner:梁磊

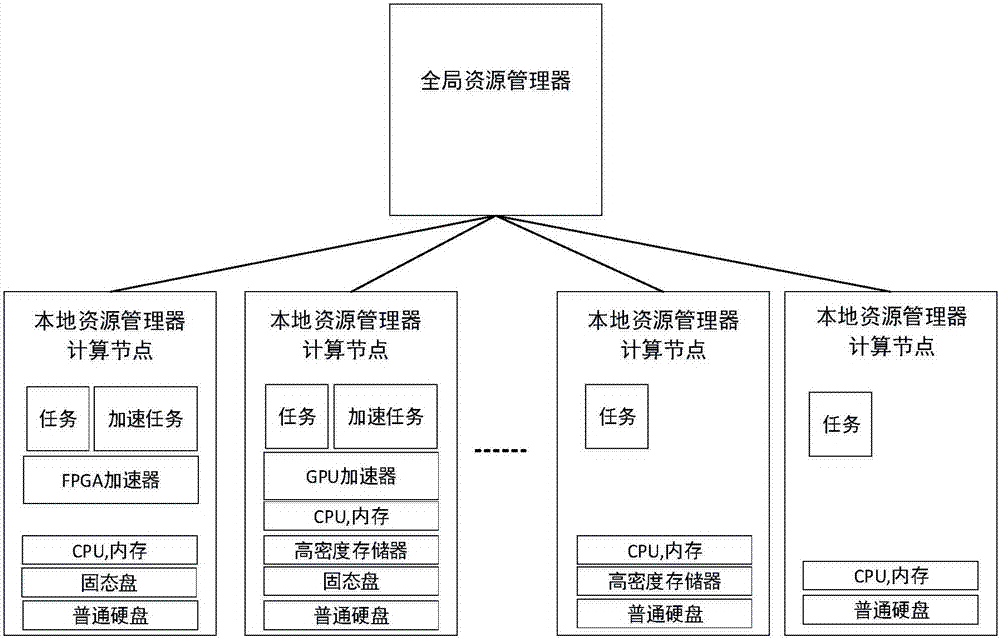

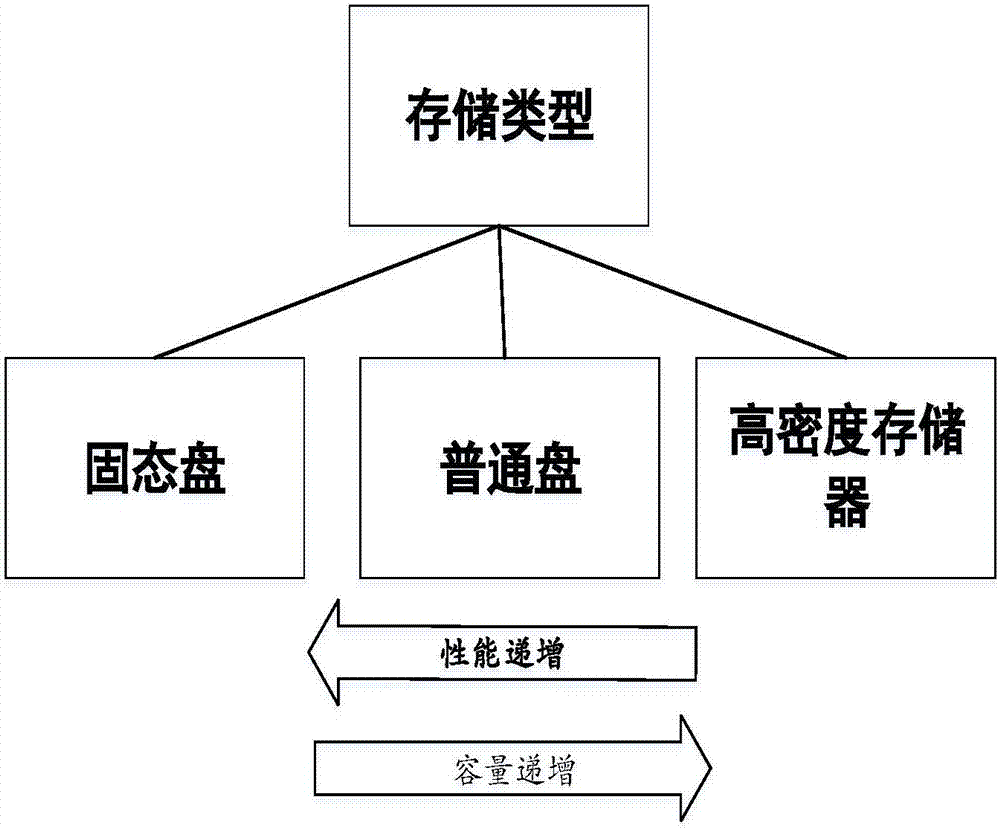

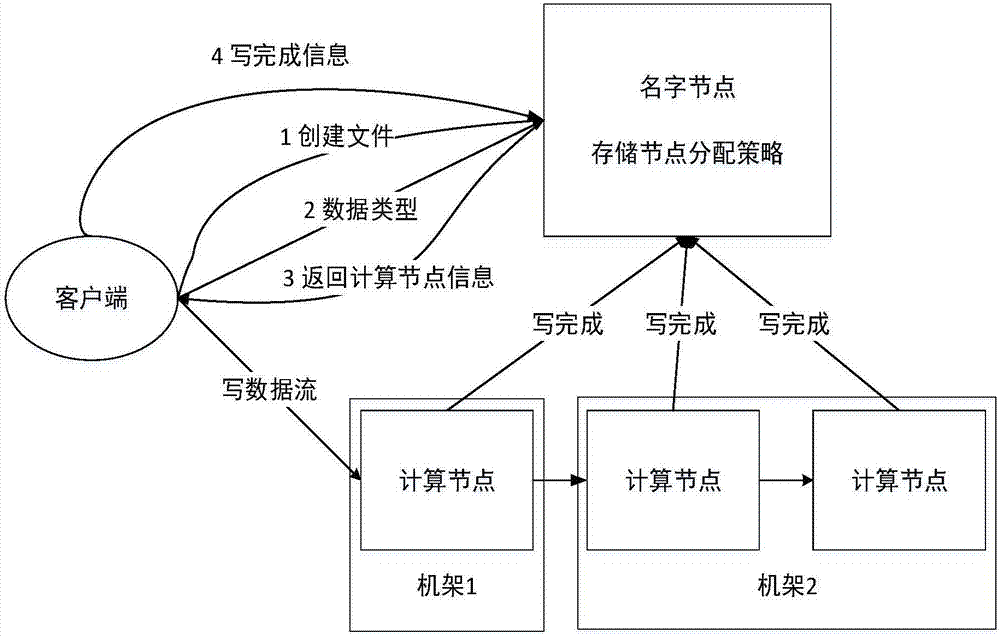

Hadoop heterogeneity method and system based on storage and acceleration optimization

Active | CN107102824A | Improve read and write performance | Improve application execution performance | Input/output to record carriers | Solid-state storage | Resource utilization

The invention discloses a Hadoop heterogeneity method and system based on storage and acceleration optimization, and belongs to the field of distributed calculation. According to the technical scheme, aiming at data processing requirements, a storage medium is divided into three types, including a solid storage medium, a common storage medium and a high-density storage medium, and the most appropriate storage mode is found for data of different types; meanwhile, an acceleration application of which calculation performance needs to be improved is positioned to an FPGA accelerator with a specific algorithm or a GPU accelerator to complete calculation so as to improve processing performance of the application, and algorithm functions and layouts of the FPGA and GPU accelerator can be statically switched. The invention further discloses the Hadoop heterogeneity system based on the storage and acceleration optimization. According to the Hadoop heterogeneity method and system based on the storage and acceleration optimization, reading and writing performance of a whole cluster and execution performance of an application task and the resource utilization rate of an acceleration device are improved.

Owner:HUAZHONG UNIV OF SCI & TECH

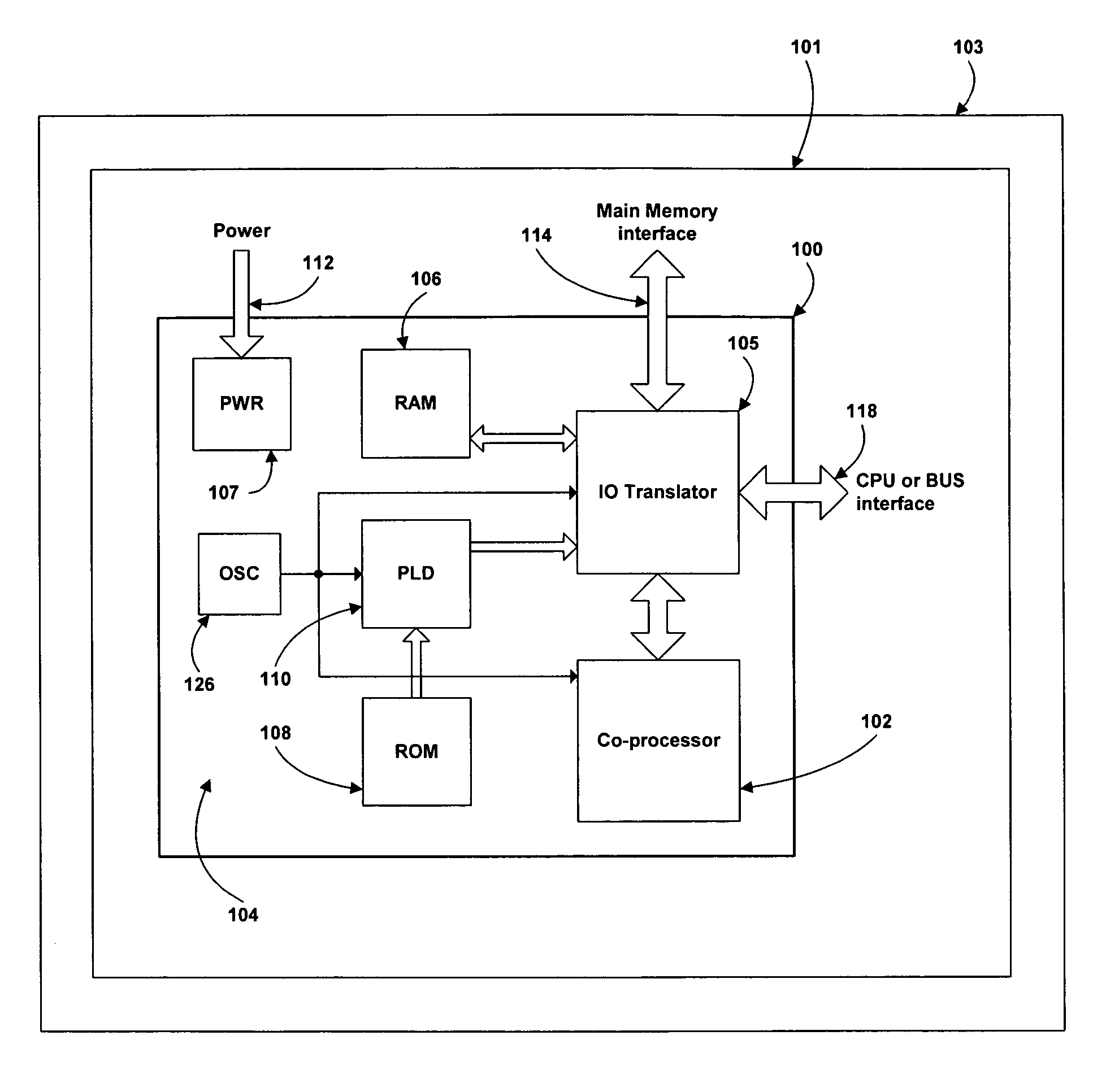

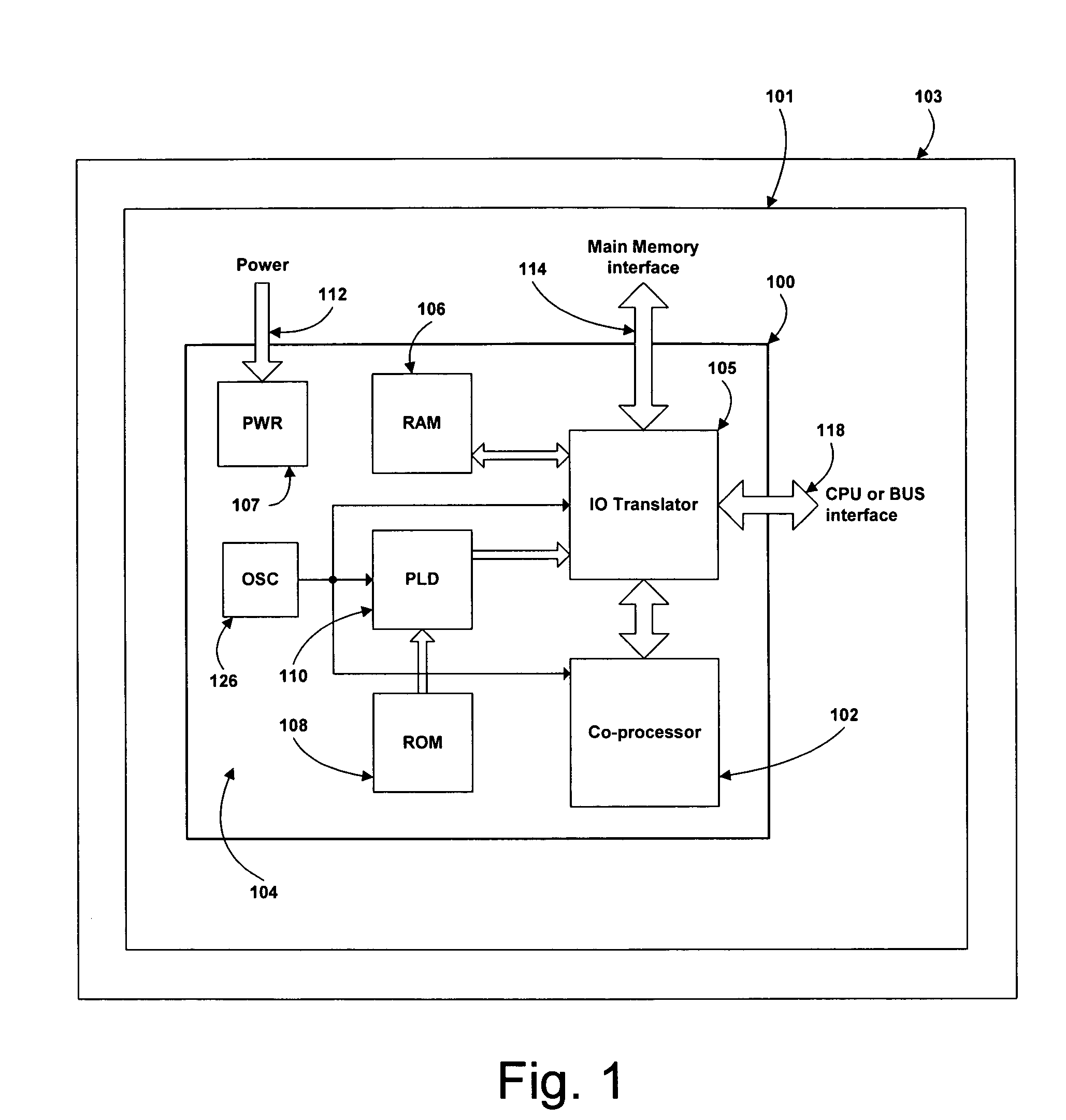

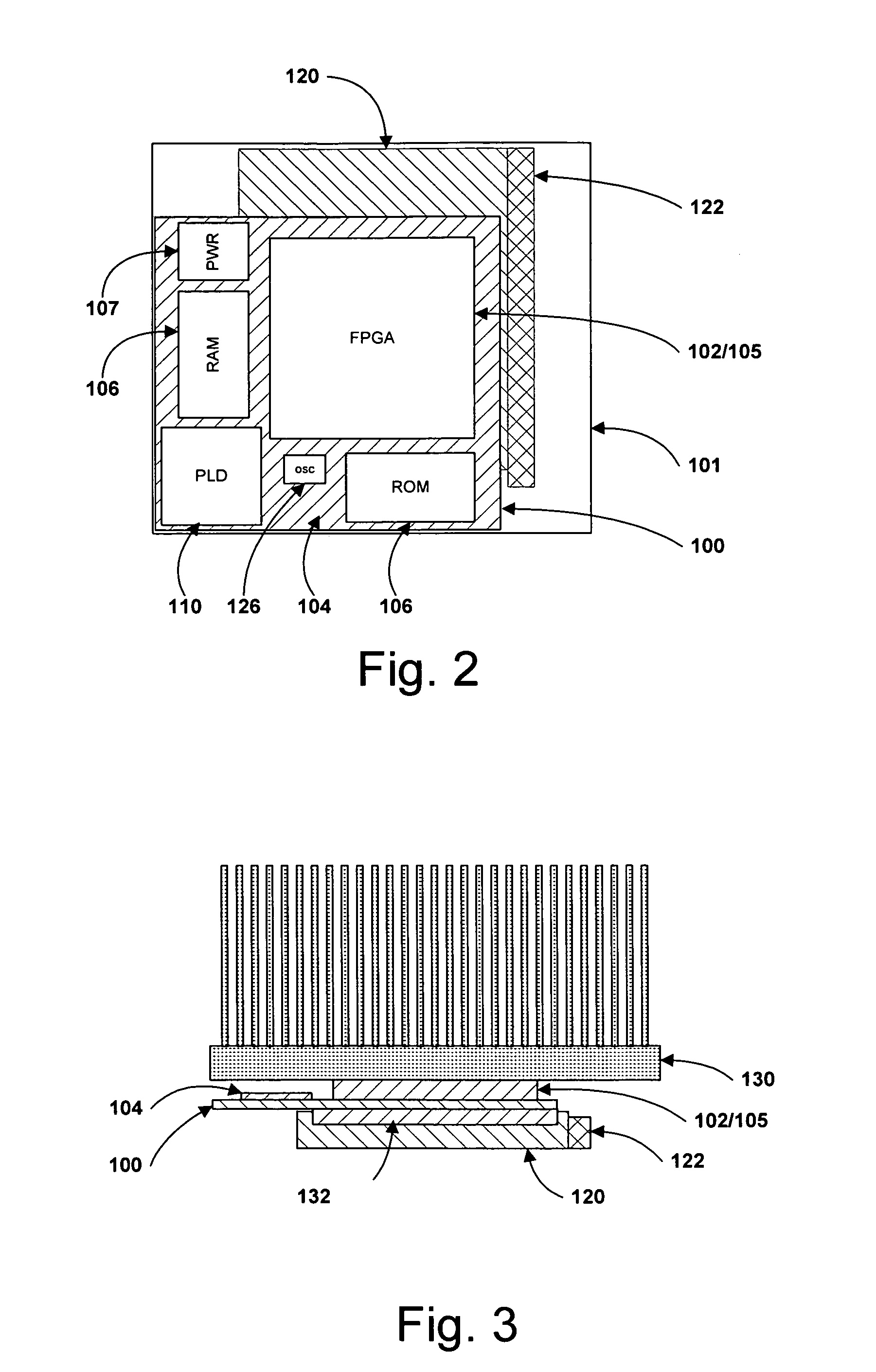

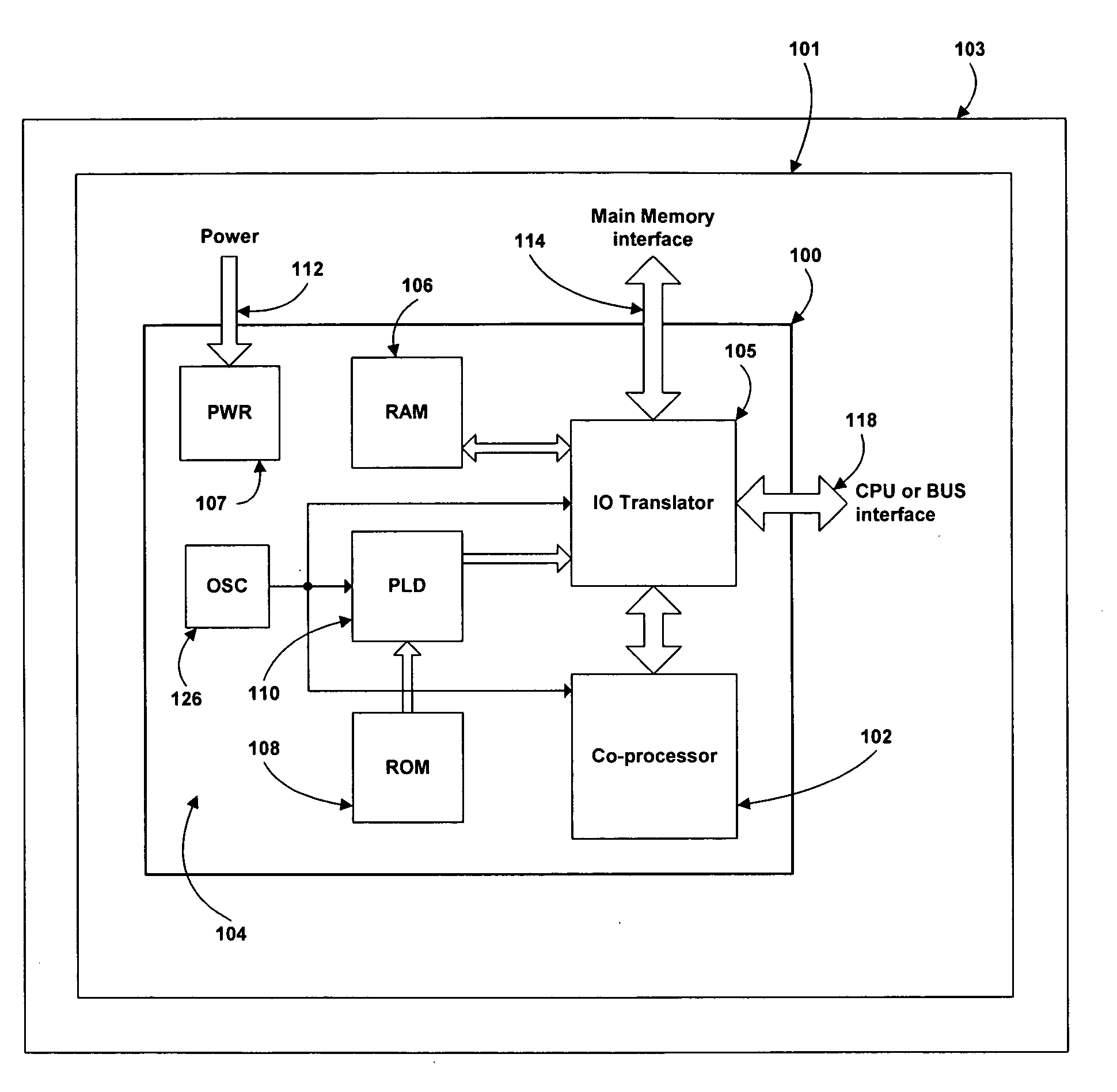

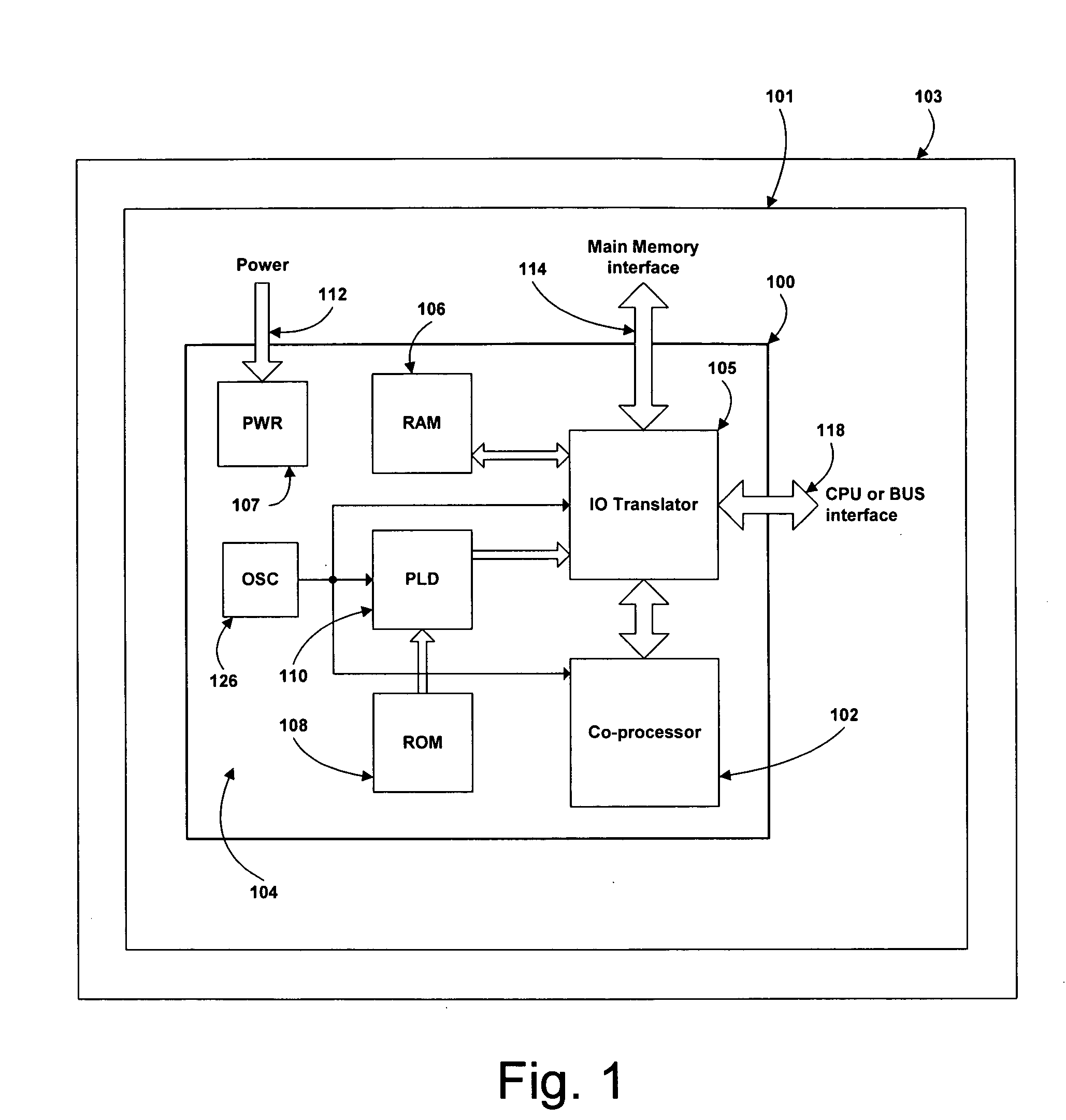

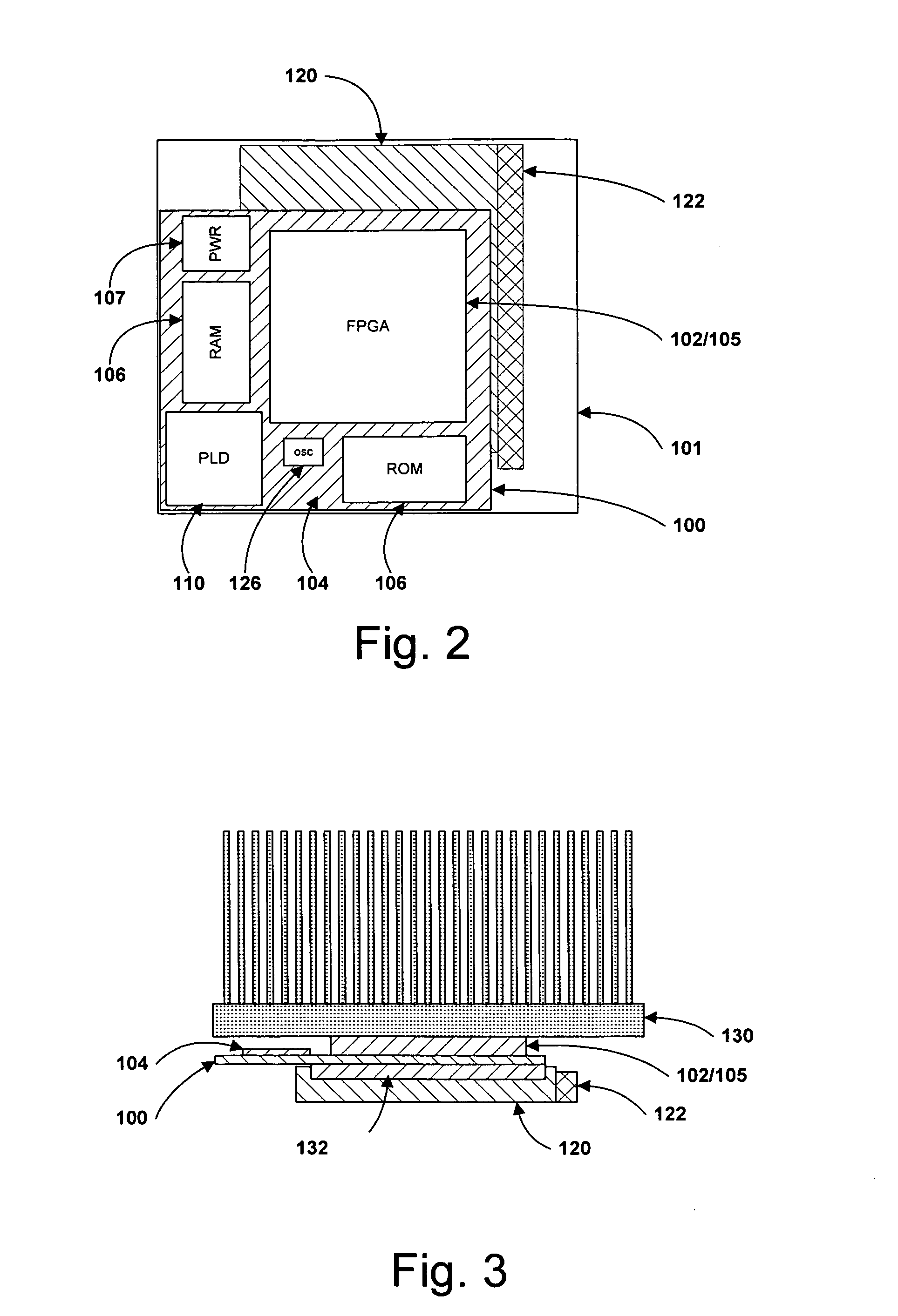

Systems and methods for providing co-processors to computing systems

Active | US7454550B2 | Simple design | Low cost | Digital data processing details | Printed circuit aspects | Computer hardware | Computerized system

Computing systems with conventional CPUs coupled to co-processors or accelerators implemented in FPGAs (Field Programmable Gate Arrays). One embodiment of the systems and methods according to the invention includes a FPGA accelerator implemented in a computer system by providing an adapter board configured to be used in a standard CPU socket.

Owner:XTREMEDATA INC

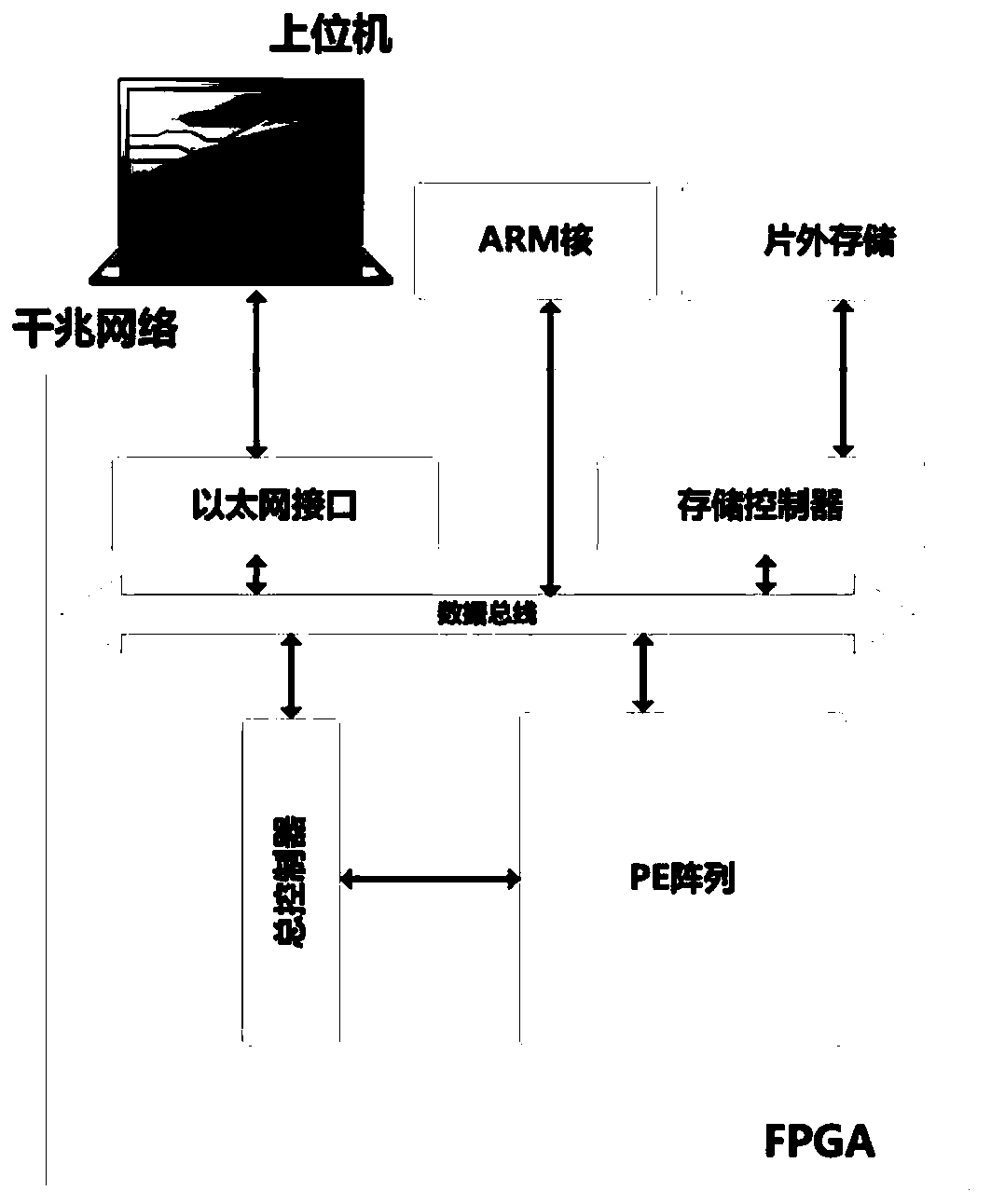

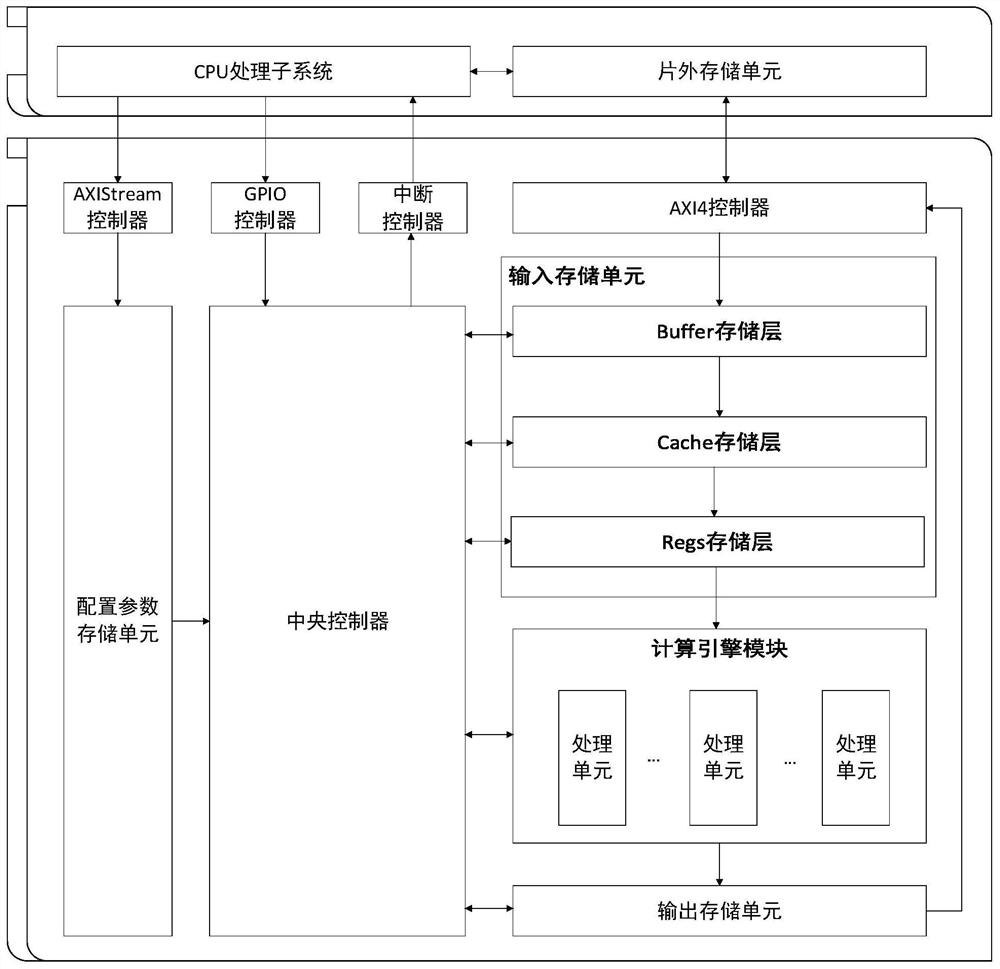

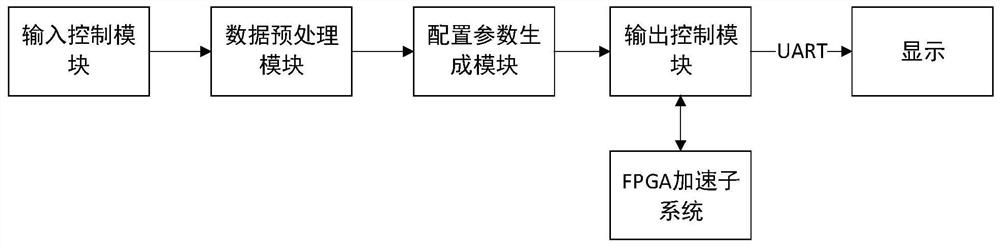

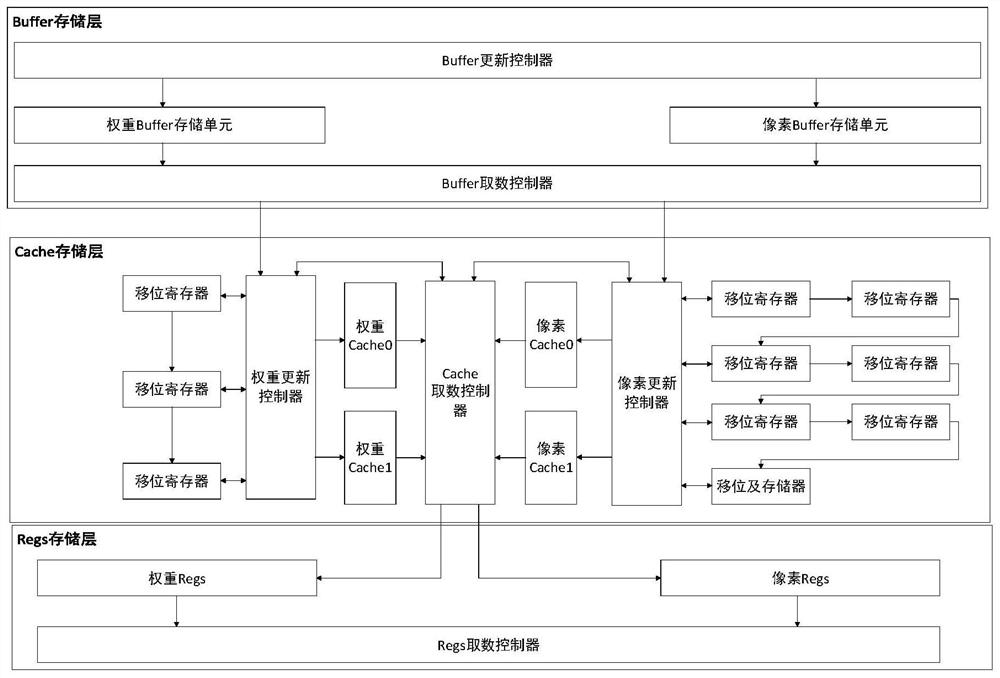

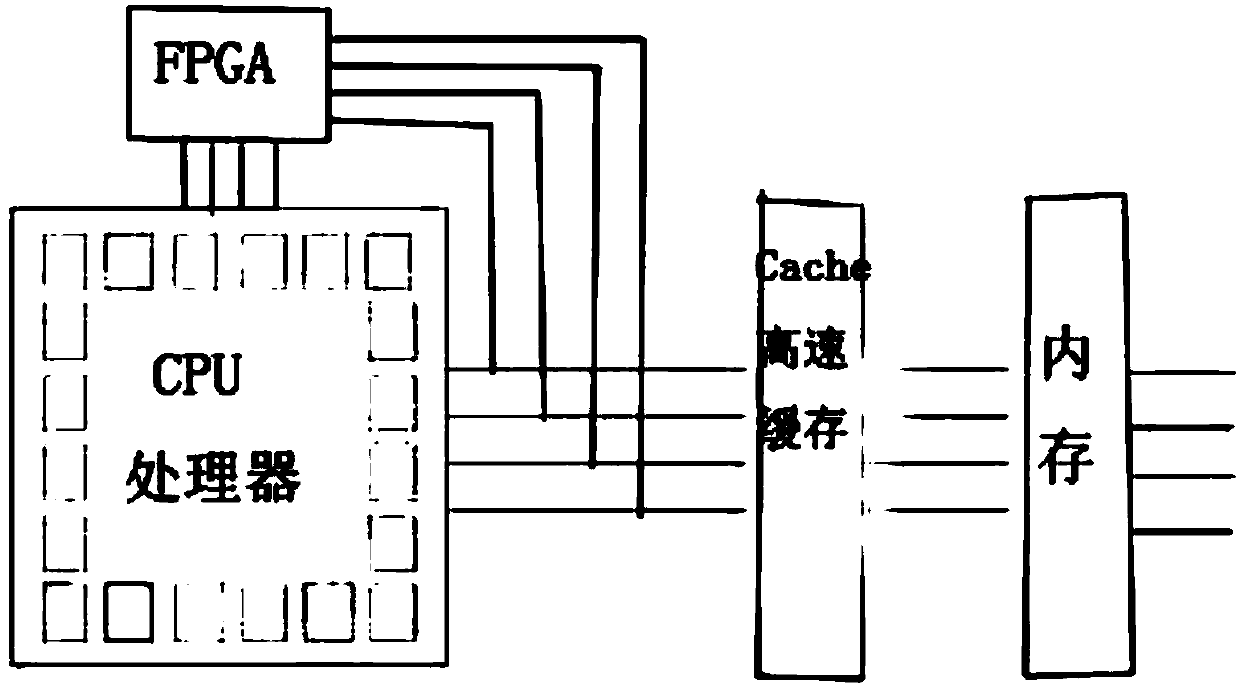

Convolutional neural network accelerator based on CPU-FPGA memory sharing

Active | CN111626403A | Reduce power consumption | Improve parallelism | Interprogram communication | Neural architectures | Input control | Term memory

The invention discloses a convolutional neural network accelerator based on CPU-FPGA memory sharing. A CPU processing subsystem comprises an input control module, a configuration parameter generation module and an output control module; the input control module receives and caches the pixel data and the weight data; the configuration parameter generation module controls configuration parameters; the output control module controls data transmission. The FPGA acceleration subsystem comprises an on-chip storage module, a calculation engine module and a control module; the on-chip storage module is used for buffering, reading and writing access of data; the calculation engine module accelerates calculation; and the control module controls the on-chip storage module to perform read-write operations on the data, and performs data exchange and calculation control with the calculation engine module. According to the method, the high parallelism, high throughput rate and low power consumption of the FPGA can be brought into full play, and the flexible and efficient data processing of the CPU can be fully utilized, so that the whole system can efficiently and quickly realize the inference process of the convolutional neural network with relatively low power consumption.

Owner:BEIHANG UNIV

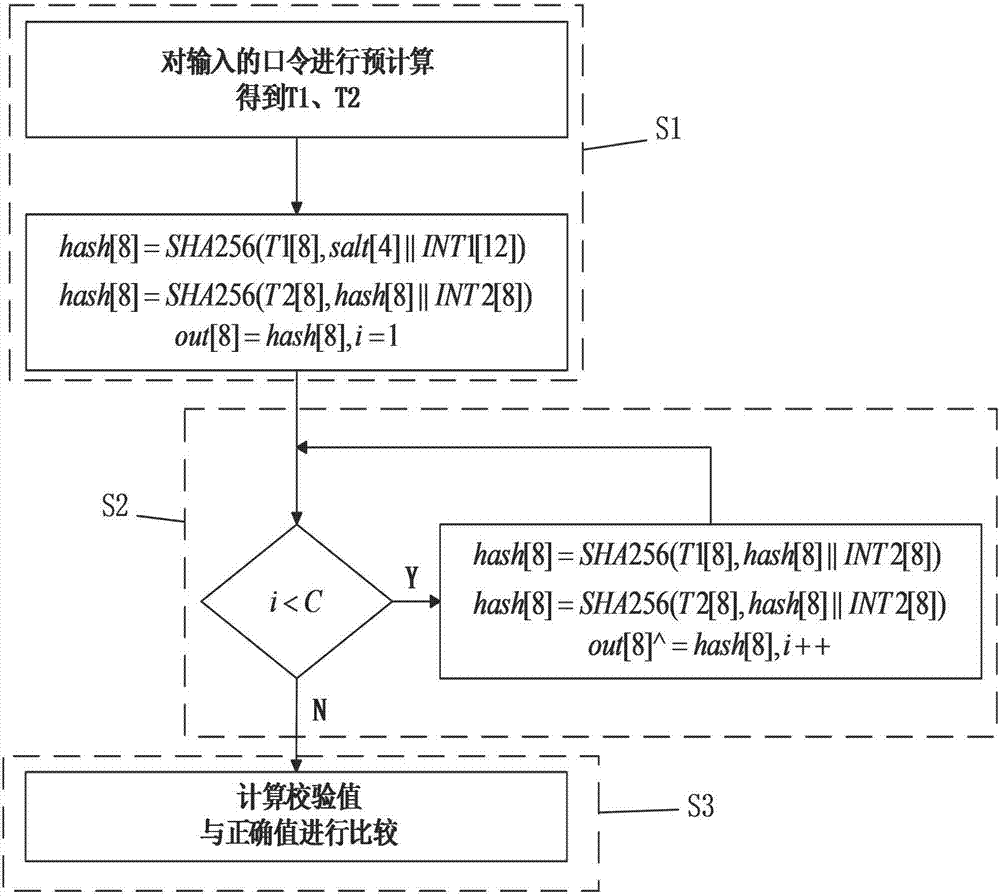

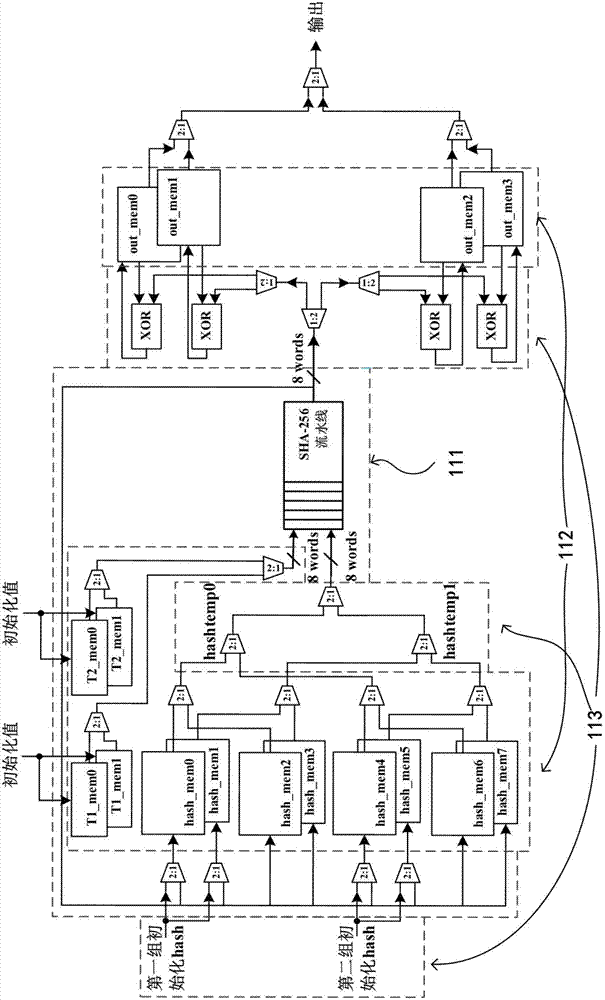

PBKDF2 cryptographic algorithm accelerating method and used device

Active | CN107135078A | Improve abstract ability | Improve reusability | Key distribution for secure communication | User identity/authority verification | Resource utilization | Bus interface

The invention discloses a PBKDF2 cryptographic algorithm accelerating device. The device comprises a CPU+FPGA (Field-Programmable Gate Array) heterogeneous system composed of an FPGA and a universal CPU. The invention further provides a PBKDF2 cryptographic algorithm accelerating method. The method comprises the following steps: 1) initial: computing a pre-computing part and a part before a loop body of a PBKDF2 algorithm is executed in a CPU, and transmitting a computed result to the FPGA via a bus interface; 2) loop: placing a computing-intensive loop body part in the PBKDF2 algorithm onto the FPGA, improving an acceleration effect and a resource utilization efficiency on the FPGA using an optimization means, and transmitting the computed result to the CPU via the bus interface; and 3) check: reading result data obtained after FPGA accelerated computing, and performing computed result summarization as well as check value computation and judgment.

Owner:北京骏戎嘉速科技有限公司
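
The three-stage split in the abstract above (initial, loop, check) maps directly onto the structure of PBKDF2. The sketch below is pure software and makes two assumptions the abstract does not state: HMAC-SHA256 as the pseudo-random function, and a derived key of exactly one output block. The function names are illustrative; only `hashlib` and `hmac` from the Python standard library are used.

```python
import hashlib
import hmac


def pbkdf2_single_block(password, salt, iterations, block_index=1):
    # 1) initial (CPU side): key the HMAC once and compute the first block U1.
    keyed = hmac.new(password, digestmod=hashlib.sha256)
    first = keyed.copy()
    first.update(salt + block_index.to_bytes(4, "big"))
    u = first.digest()
    acc = int.from_bytes(u, "big")

    # 2) loop (the compute-intensive part the abstract places on the FPGA):
    #    U_k = HMAC(password, U_{k-1}), XOR-accumulated into the result.
    for _ in range(iterations - 1):
        step = keyed.copy()  # reuse the pre-keyed state instead of re-keying
        step.update(u)
        u = step.digest()
        acc ^= int.from_bytes(u, "big")
    return acc.to_bytes(len(u), "big")


def check(password, salt, iterations, expected):
    # 3) check (CPU side): compare the derived key with the expected value.
    derived = pbkdf2_single_block(password, salt, iterations)
    return hmac.compare_digest(derived, expected)


reference = hashlib.pbkdf2_hmac("sha256", b"password", b"salt", 1000)
print(check(b"password", b"salt", 1000, reference))
```

Copying the pre-keyed HMAC state is the software analogue of the pre-computation step: the key-dependent work is done once, and only the per-iteration hashing remains inside the loop.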

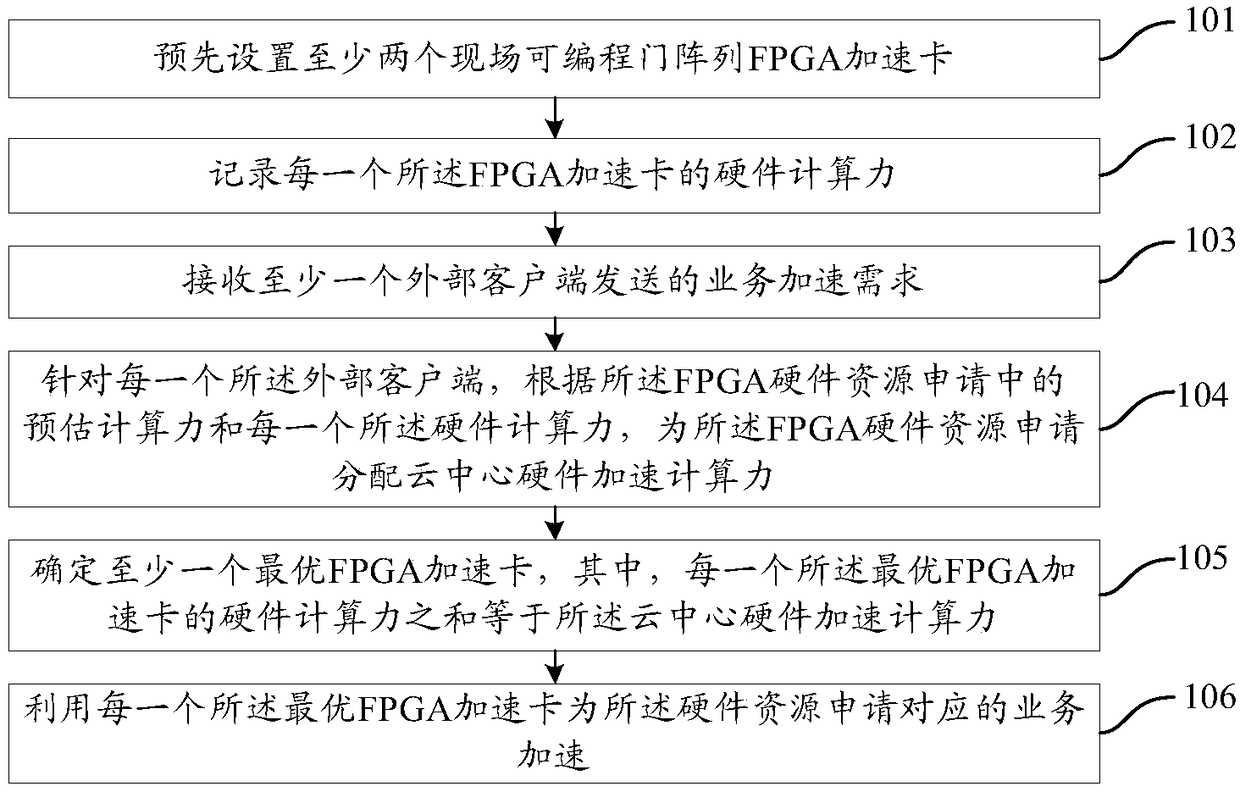

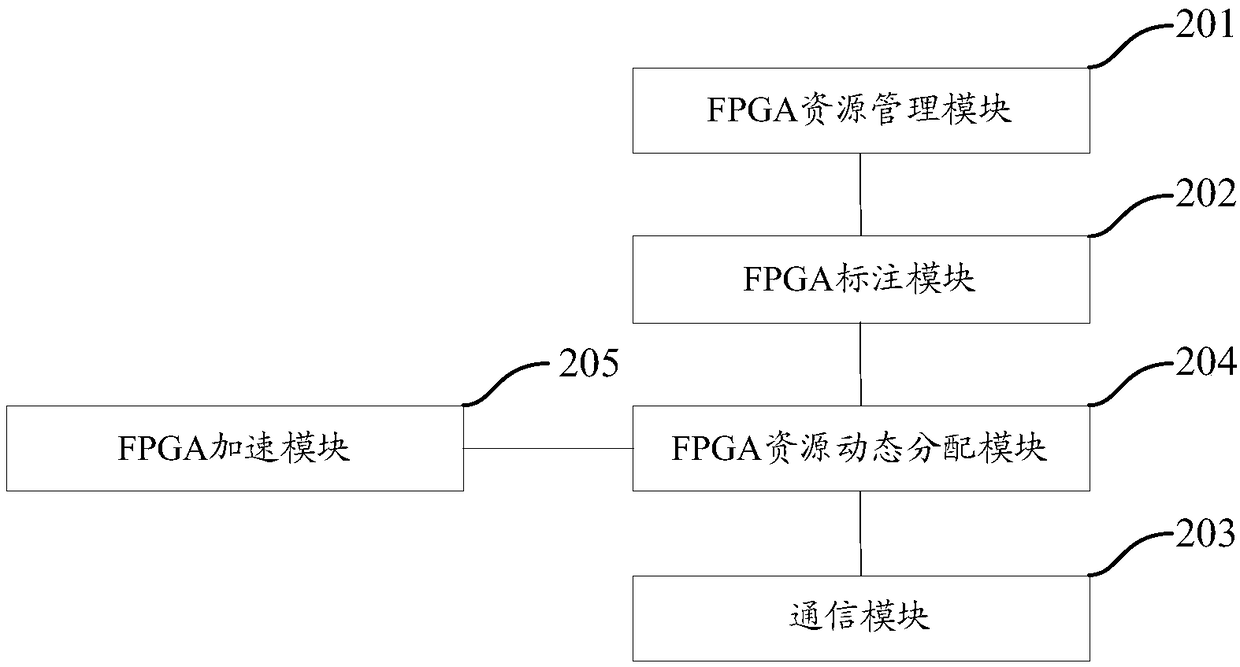

Hardware acceleration calculation power distribution method and system for cloud center, and cloud center

Active | CN108829512A | Improve acceleration | Improve computing power | Resource allocation | Energy efficient computing | Distribution method | Client-side

The invention provides a hardware acceleration calculation power distribution method and system for a cloud center, and the cloud center. The method comprises the steps of presetting at least two FPGA acceleration cards; recording the hardware calculation power of each FPGA acceleration card; receiving an FPGA hardware resource application sent by at least one external client; for each external client, according to the estimated calculation power in the FPGA hardware resource application and each piece of the hardware calculation power, distributing the hardware acceleration calculation power of the cloud center to the FPGA hardware resource application; determining at least one optimal FPGA acceleration card, wherein the sum of the hardware calculation power of the optimal FPGA acceleration cards is equal to the hardware acceleration calculation power of the cloud center; and accelerating a service corresponding to the hardware resource application by utilizing each optimal FPGA acceleration card. According to the scheme, the service acceleration capability of a server can be improved.

Owner:SHANDONG INSPUR SCI RES INST CO LTD
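
One way to picture the card-selection step in the abstract above is as a small subset search. The abstract does not say how the optimal cards are chosen, so the rule below is an assumption for illustration: pick the set of cards whose combined calculation power covers the client's estimate with the least surplus. Card names and power units are hypothetical.

```python
from itertools import combinations


def select_cards(card_power, requested):
    """Return (cards, total) covering `requested` with the smallest total power.

    Exhaustive search, which is fine for a handful of cards; this selection
    rule is an illustrative assumption, not the patented method.
    """
    names = sorted(card_power)
    best = None
    for k in range(1, len(names) + 1):
        for combo in combinations(names, k):
            total = sum(card_power[name] for name in combo)
            if total >= requested and (best is None or total < best[1]):
                best = (combo, total)
    if best is None:
        raise ValueError("requested power exceeds the cloud center's capacity")
    return best


recorded_power = {"fpga0": 4, "fpga1": 6, "fpga2": 10}
print(select_cards(recorded_power, requested=9))
```

Ties are resolved in favour of fewer cards because smaller subsets are examined first and a later subset must be strictly better to replace the current choice.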

Data processing method, system and terminal equipment

Pending | CN110399221A | Addressing the lack of ability to work independently | Program initiation/switching | Resource allocation | Data processing system | Terminal equipment

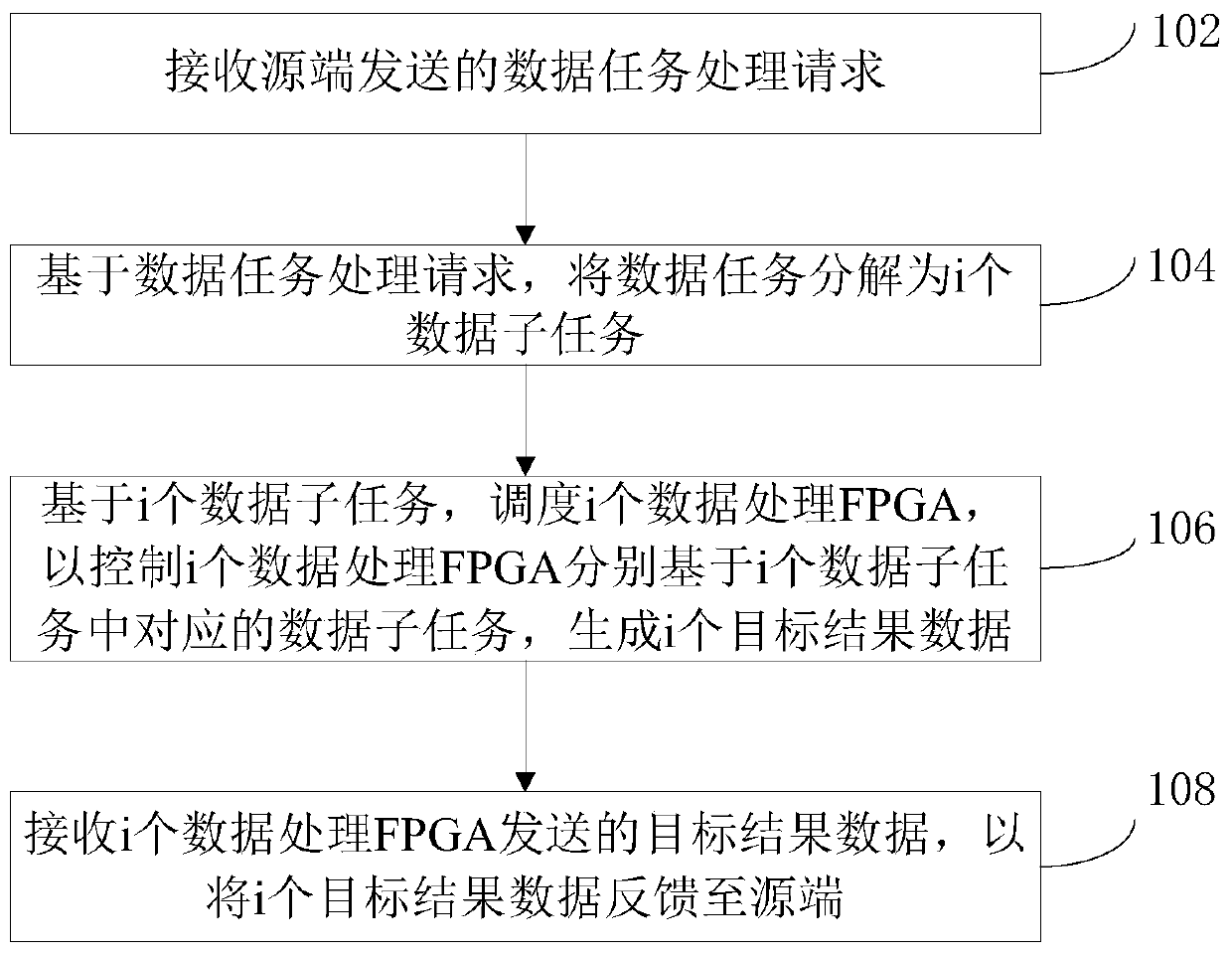

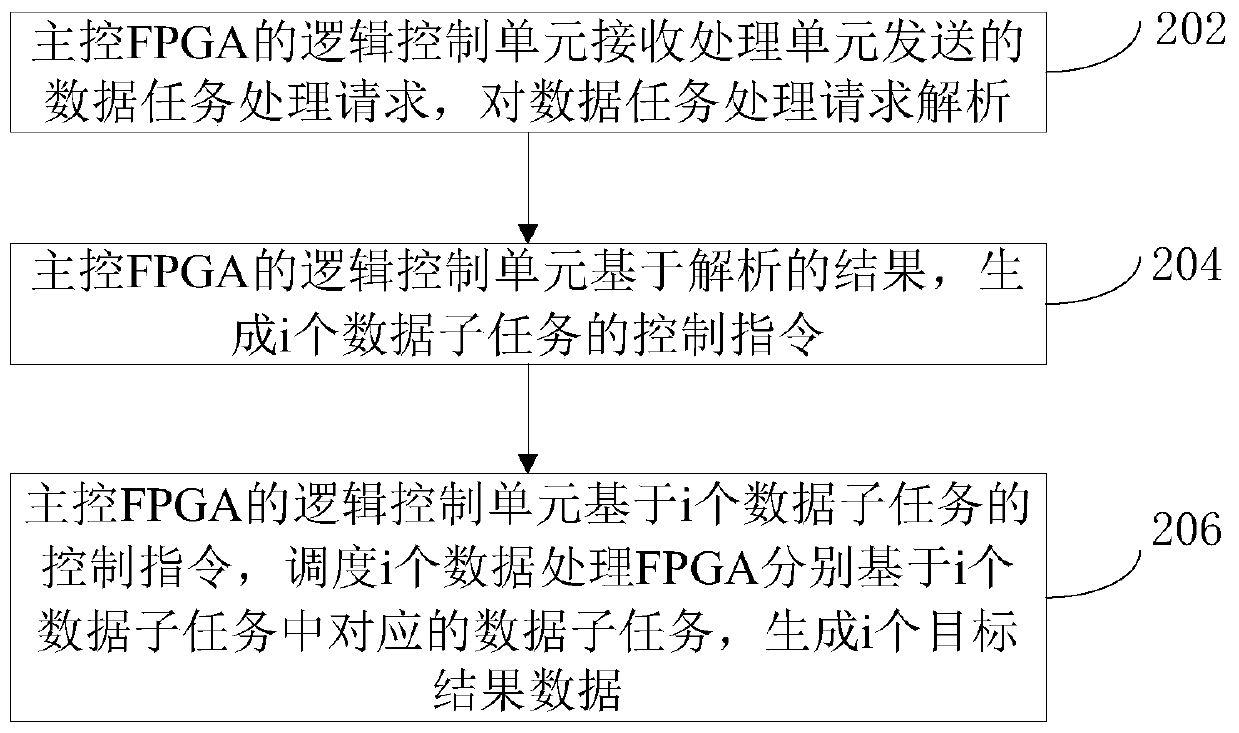

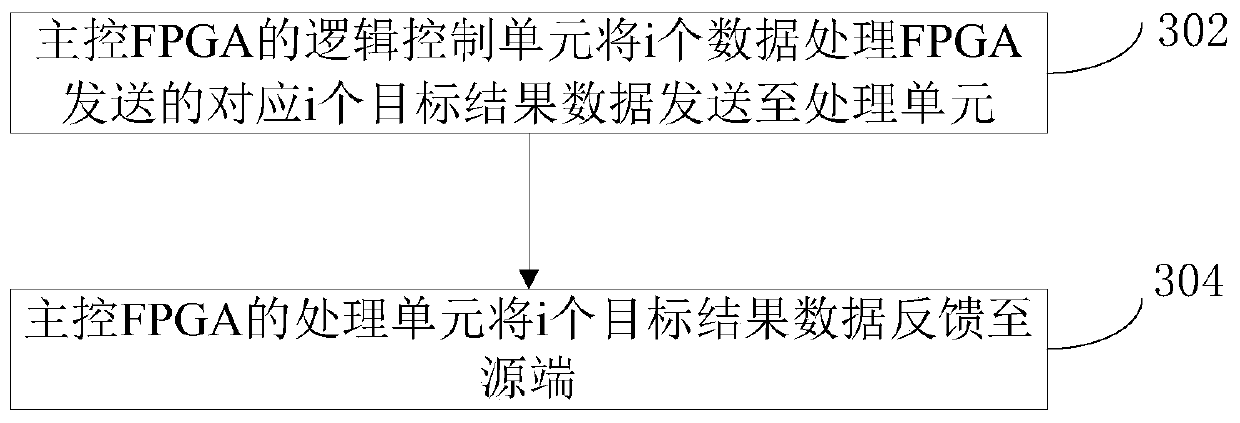

The invention provides a data processing method, a data processing system and terminal equipment. The method comprises the steps: receiving, at a main control FPGA that is at least logically independent from the source end, a data task processing request transmitted by the source end; decomposing the data task into i data subtasks based on the data task processing request; scheduling i data processing FPGAs based on the i data subtasks, so that each of the i data processing FPGAs generates target result data from its corresponding data subtask; and receiving the target result data sent by the i data processing FPGAs so as to feed back the i target result data to the source end. The method solves the problem that an FPGA acceleration card in the prior art must be inserted into a host such as a PC (Personal Computer) to work, and therefore lacks independent working capacity.

Owner:江苏鼎速网络科技有限公司
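
The decompose, schedule and gather sequence in the abstract above follows a familiar scatter/gather pattern. The sketch below imitates it in software under stated assumptions: worker threads stand in for the i data processing FPGAs, the task is a plain list split into contiguous chunks, and the names `split_task` and `main_control` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split_task(data, i):
    """Decompose a task into i contiguous subtasks (the last ones may be shorter)."""
    size = -(-len(data) // i)  # ceiling division
    return [data[k * size:(k + 1) * size] for k in range(i)]


def main_control(data, i, kernel):
    """Play the main control FPGA: split, dispatch to i workers, collect i results."""
    subtasks = split_task(data, i)
    with ThreadPoolExecutor(max_workers=i) as workers:
        # map() preserves subtask order, so result k belongs to subtask k.
        return list(workers.map(kernel, subtasks))


print(main_control(list(range(10)), i=3, kernel=sum))
```

Keeping results in subtask order lets the main control unit return them to the source end without any extra bookkeeping.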

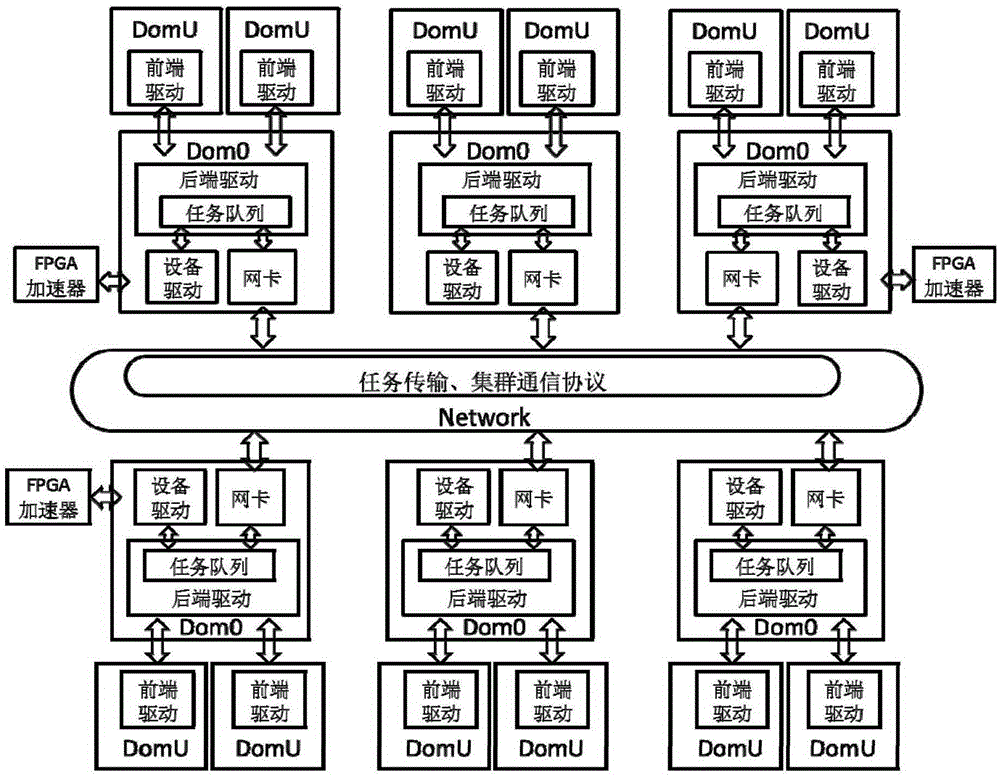

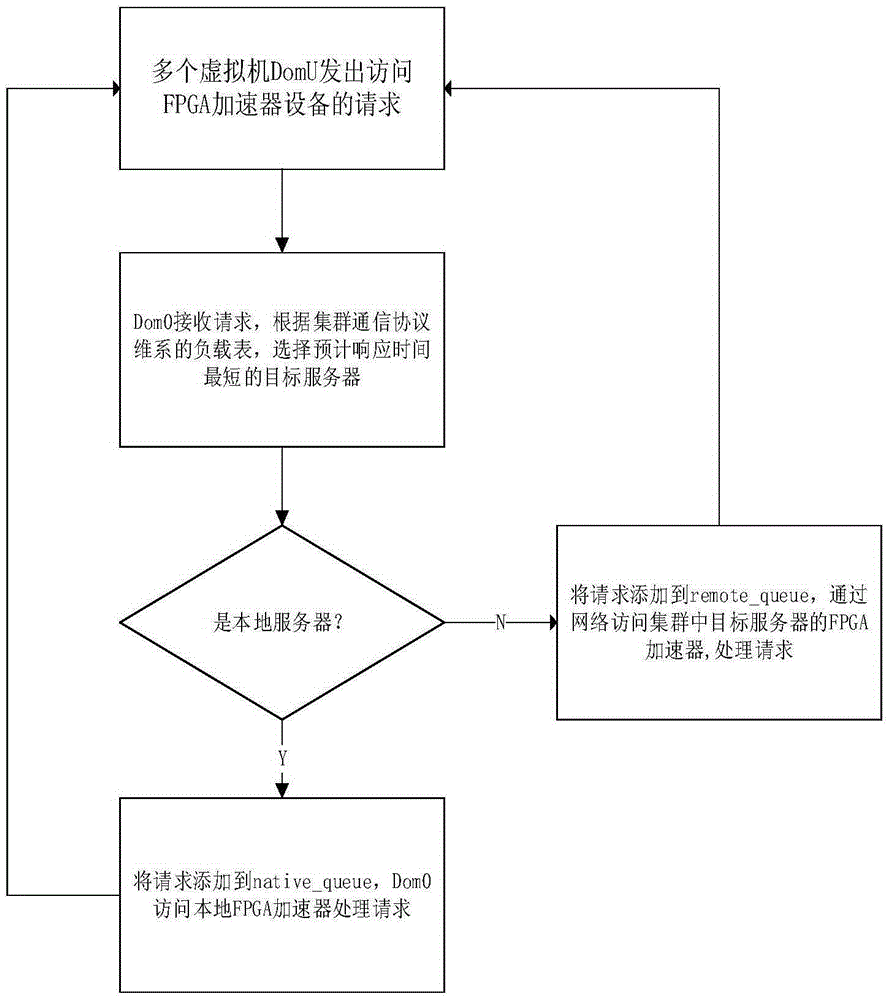

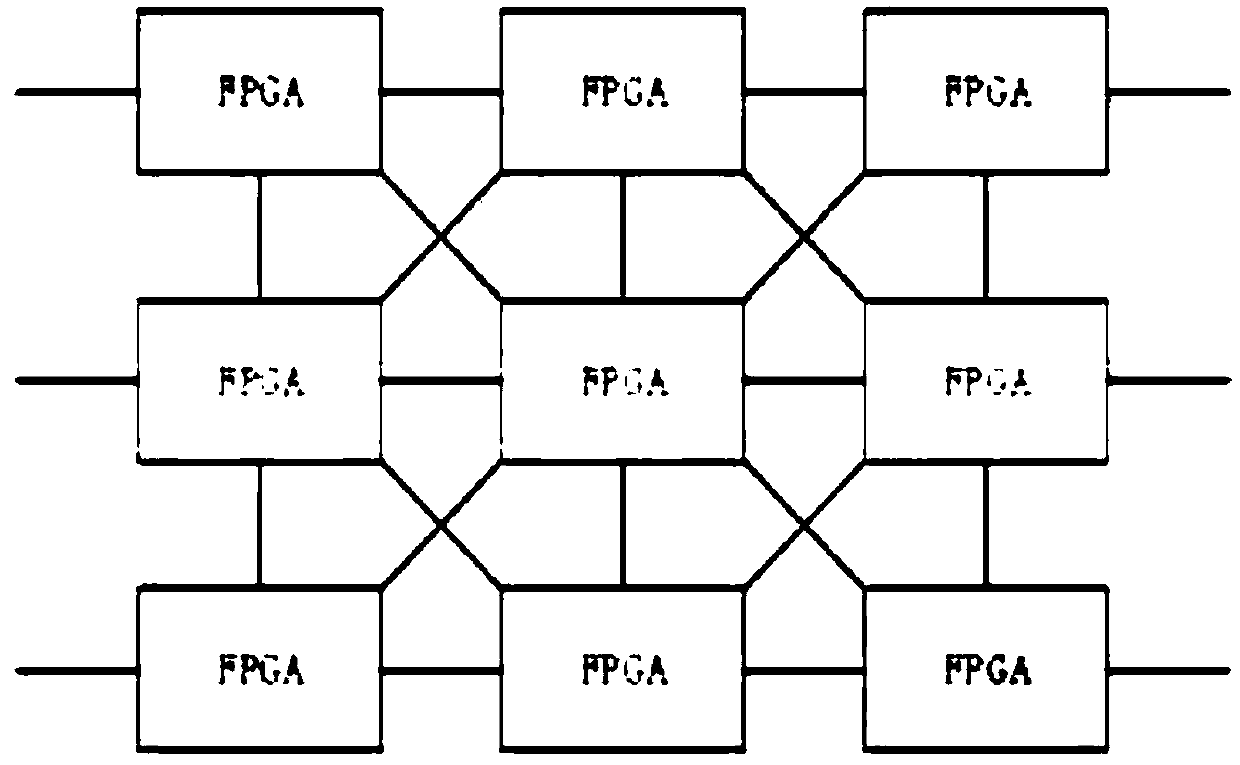

System and method for dispatching FPGA (Field Programmable Gate Array) accelerator based on Xen virtualized cluster

Active | CN105335211A | Short response time | Improve computing efficiency | Software simulation/interpretation/emulation | Virtualization | Network interface controller

The invention relates to a system and a method for dispatching an FPGA (Field Programmable Gate Array) accelerator based on a Xen virtualized cluster. The system comprises multiple servers, wherein the servers are mutually connected through an exchanger to form a cluster; each server comprises a privileged domain virtual machine, multiple non-privileged domain virtual machines and an FPGA; the privileged domain virtual machine is respectively in communication with the multiple non-privileged domain virtual machines and the FPGA; the multiple non-privileged domain virtual machines share the FPGA through the privileged domain virtual machine; the privileged domain virtual machine in each server is in communication with the privileged domain virtual machines in other servers through a network card. Compared with the prior art, the system and the method, disclosed by the invention, have the advantages that the equipment utilization rate is increased, the equipment cost is reduced, and the like.

Owner:TONGJI UNIV

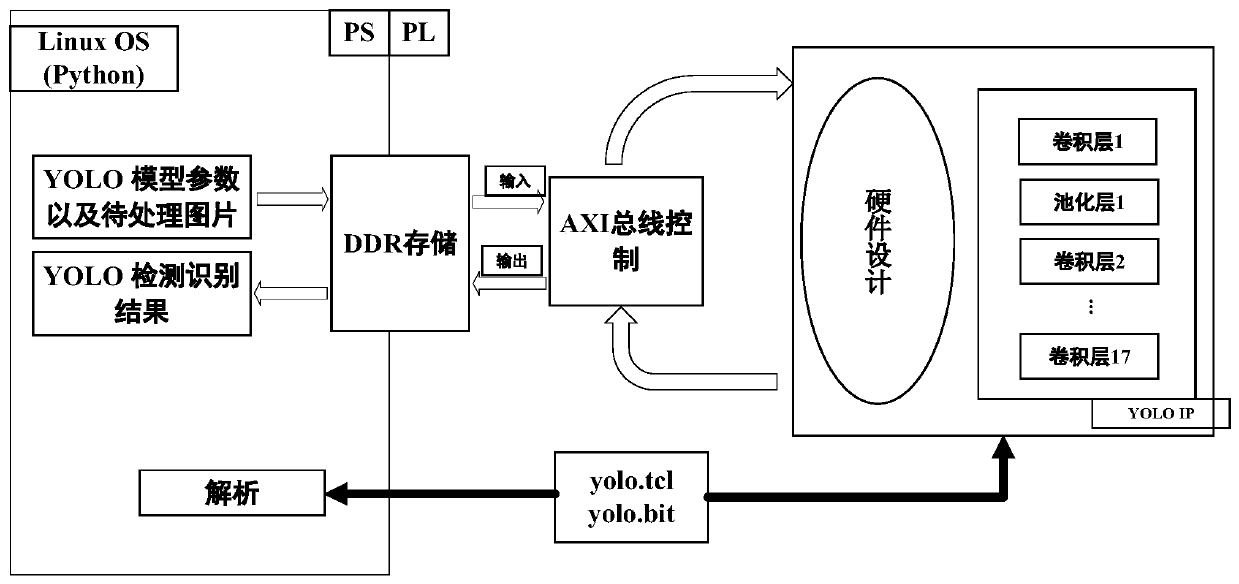

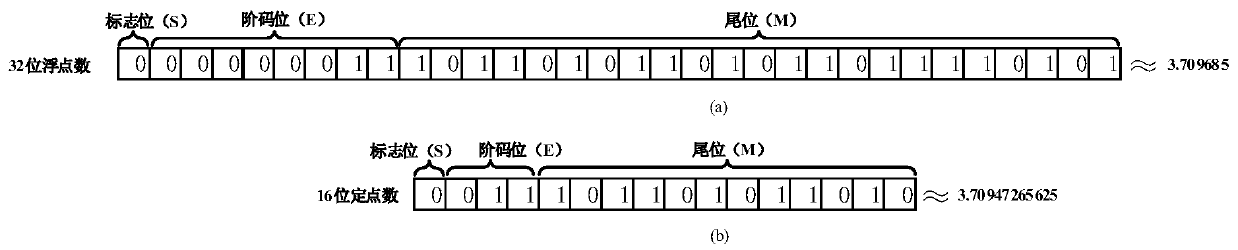

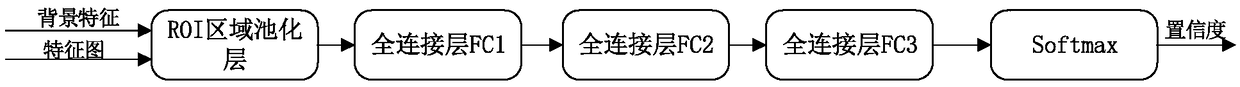

Winograd YOLOv2 target detection model method based on FPGA acceleration

Active | CN111459877A | Save resources | Reduce usage | Neural architectures | Architecture with single central processing unit | Computation complexity | Data path

The invention discloses a Winograd YOLOv2 target detection model method based on FPGA acceleration. The method employs a PYNQ board card whose main control chip comprises a processing system end (PS) and a programmable logic end (PL). The PS end caches a YOLO model and the feature map data of a to-be-detected image. The PL end caches the parameters of the YOLO model and the to-be-detected image in on-chip RAM, deploys a YOLO accelerator with a Winograd algorithm, completes the acceleration operation on the model, forms the data path of a hardware accelerator, and realizes target detection of the to-be-detected image. The operation result of the acceleration circuit can be read out, and image preprocessing and display are carried out. By adopting the technical scheme of the invention, the calculation complexity of the YOLO algorithm can be reduced; the FPGA accelerator storage optimization algorithm reduces the calculation time of the FPGA when accelerating the YOLO algorithm, accelerates the target detection, and effectively improves the performance of the target detection.

Owner:BEIJING TECHNOLOGY AND BUSINESS UNIVERSITY +1
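
To show why a Winograd algorithm lowers the calculation complexity mentioned in the abstract above, here is the standard one-dimensional minimal filtering case F(2,3): two outputs of a 3-tap filter computed with four multiplications instead of six. The abstract does not state which tile size the accelerator uses, so F(2,3) is an assumption picked as the simplest textbook instance.

```python
def direct_f23(d, g):
    """Two outputs of a 3-tap filter over four inputs: six multiplications."""
    return [d[0] * g[0] + d[1] * g[1] + d[2] * g[2],
            d[1] * g[0] + d[2] * g[1] + d[3] * g[2]]


def winograd_f23(d, g):
    """Same two outputs with four multiplications (m1..m4)."""
    # Filter-side terms; for fixed weights these can be precomputed once.
    g_sum = (g[0] + g[1] + g[2]) / 2
    g_alt = (g[0] - g[1] + g[2]) / 2
    m1 = (d[0] - d[2]) * g[0]
    m2 = (d[1] + d[2]) * g_sum
    m3 = (d[2] - d[1]) * g_alt
    m4 = (d[1] - d[3]) * g[2]
    return [m1 + m2 + m3, m2 - m3 - m4]


signal = [1, 2, 3, 4]
taps = [1, 2, 3]
print(direct_f23(signal, taps), winograd_f23(signal, taps))
```

Because convolution weights are fixed at inference time, the filter-side sums are a one-off cost, and the per-tile work is dominated by the four multiplications. The two-dimensional variants used for 3x3 convolutions nest this same transform along rows and columns.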

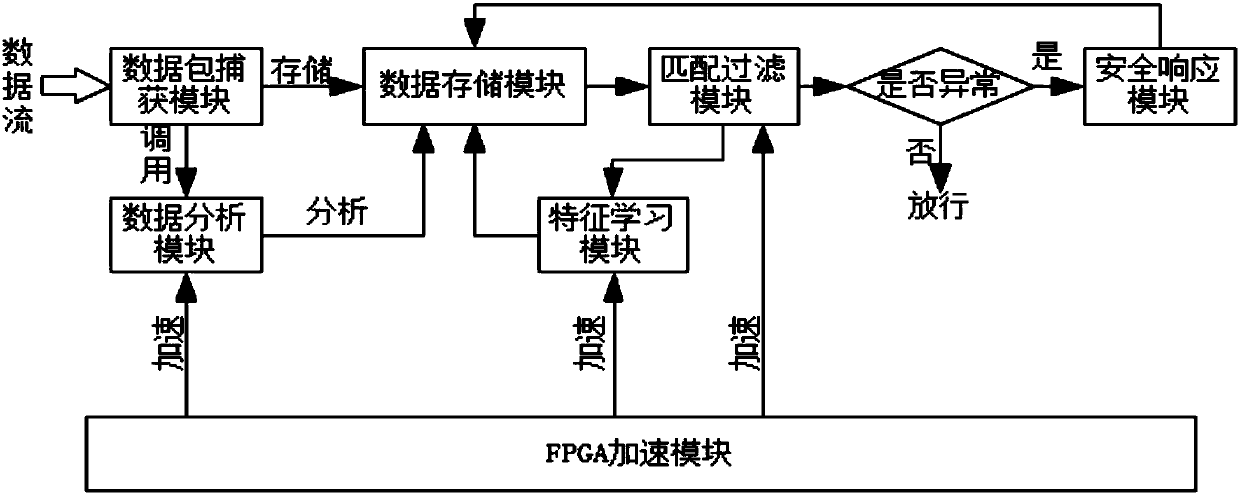

Intrusion prevention system and method

Inactive | CN107612948A | Improve detection matching speed | Strong computing power | Platform integrity maintenance | Transmission | Feature learning | Filter algorithm

The invention discloses an intrusion prevention system and method. The intrusion prevention system comprises a data packet capture module, a data packet analysis module, a matching filter module, an FPGA (field programmable gate array) acceleration platform and a feature learning module. The data packet capture module captures and stores the data packets entering a host; the data packet analysis module analyzes and reorganizes the data packets captured by the data packet capture module; the matching filter module matches and filters the captured data packets through a matching filter algorithm; the FPGA acceleration platform uses an FPGA computing system to accelerate data processing and the execution of the algorithms in a packet classification module, the matching filter module and a neural training module; the feature learning module performs neural training on the matched and filtered data using a neural network algorithm embedded in the FPGA acceleration platform. By the above principle, high calculation capability is achieved, intrusion behaviors can be detected before they occur, false alarms and false reports are avoided, the prevention effect is good, and the intrusion prevention system and method are well suited to intrusion prevention for big data.

Owner:INFORMATION & TELECOMM COMPANY SICHUAN ELECTRIC POWER

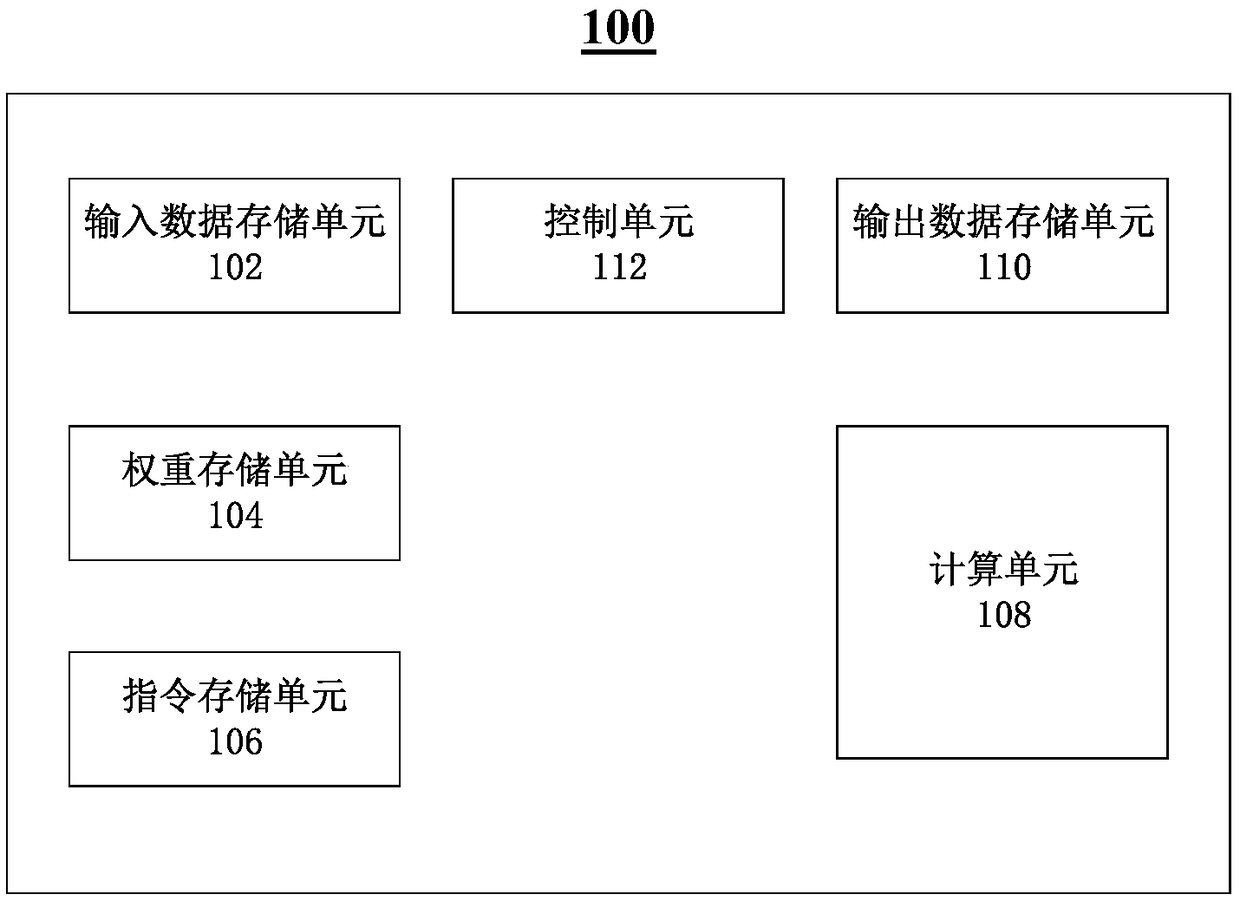

FPGA acceleration device, method and system for realizing neural network

Inactive | CN109492761A | Improve performance | Reduce power consumption | Neural architectures | Physical realisation | Graphics | Fpga implementations

The invention discloses an FPGA acceleration device, method and system for realizing a neural network. The device includes at least one storage unit for storing operation instructions, operation data, and weight data of the n sub-networks constituting the neural network, where n is an integer greater than 1; multiple computing units for executing the vector multiplication and addition operations in neural network calculation according to the operation instructions, the operation data, the weight data and the execution sequence j of the n sub-networks, wherein the initial value of j is 1, and the final calculation result of the sub-network with execution sequence j serves as the input of the sub-network with execution sequence j + 1; and a control unit, connected with the at least one storage unit and the multiple computing units, for obtaining the operation instructions through the at least one storage unit and parsing them to control the multiple computing units. The FPGA is used for accelerating the operation process of the neural network, and compared with a general-purpose processor and a graphics processor, the method has the advantages of high performance and low power consumption.

Owner:SHENZHEN LINTSENSE TECH CO LTD
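
The execution order described in the abstract above, where the result of sub-network j becomes the input of sub-network j + 1, can be written as a short loop. This is a minimal sketch assuming each sub-network is a single weight matrix plus bias evaluated by vector multiply-add; the data layout and function names are hypothetical.

```python
def multiply_add(weights, bias, x):
    """One vector multiply-add stage: y[r] = sum(weights[r][c] * x[c]) + bias[r]."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def run_subnetworks(subnetworks, x):
    """Run sub-networks in execution sequence j = 1..n, chaining outputs to inputs."""
    for weights, bias in subnetworks:
        x = multiply_add(weights, bias, x)
    return x


# Two toy sub-networks: a 2x2 stage followed by a 1x2 stage.
network = [
    ([[1, 0], [0, 2]], [0, 1]),
    ([[1, 1]], [0]),
]
print(run_subnetworks(network, [3, 4]))
```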

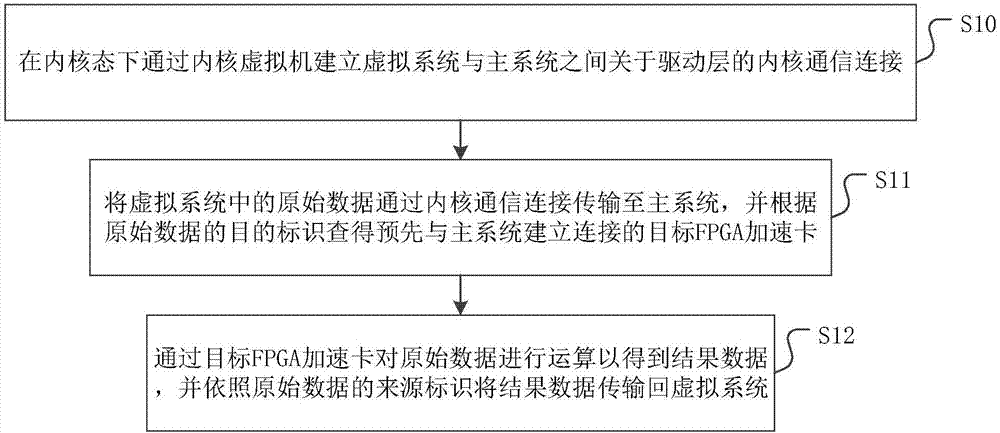

Access method, device and medium on FPGA accelerator card, and medium

Inactive | CN107977256A | Achieve access | Realize the use effect | Software simulation/interpretation/emulation | Computer hardware | Access method

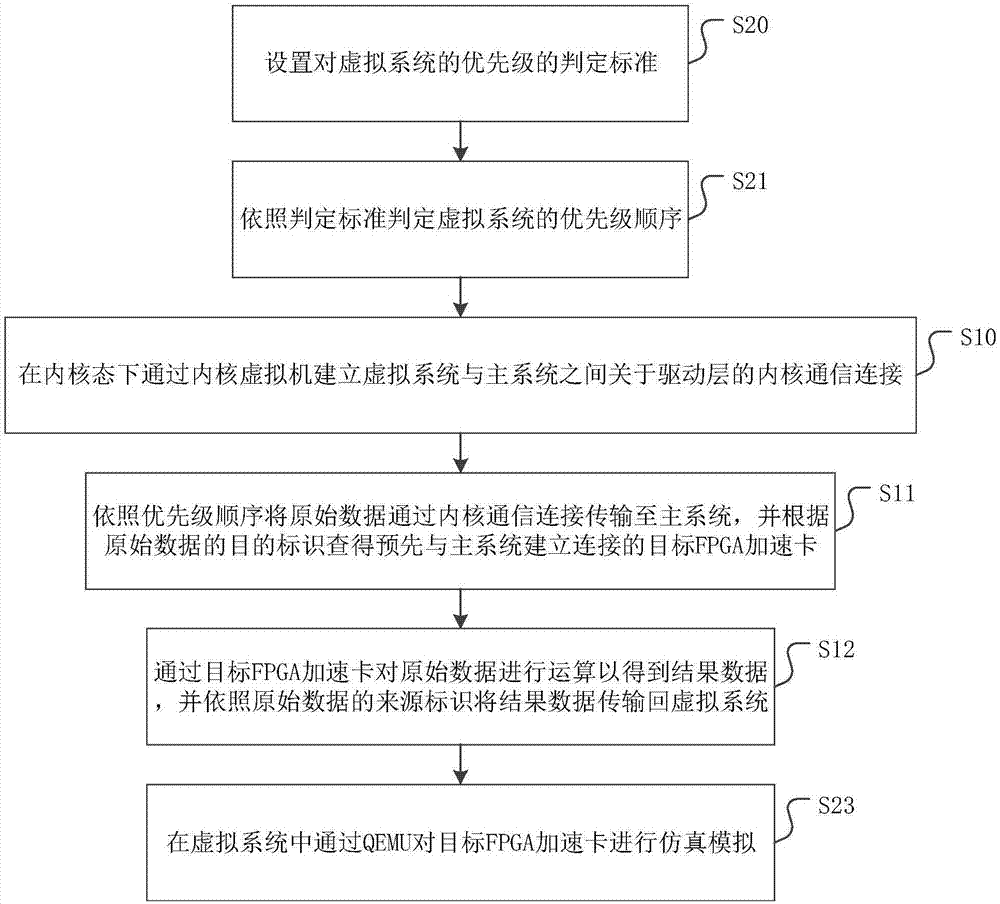

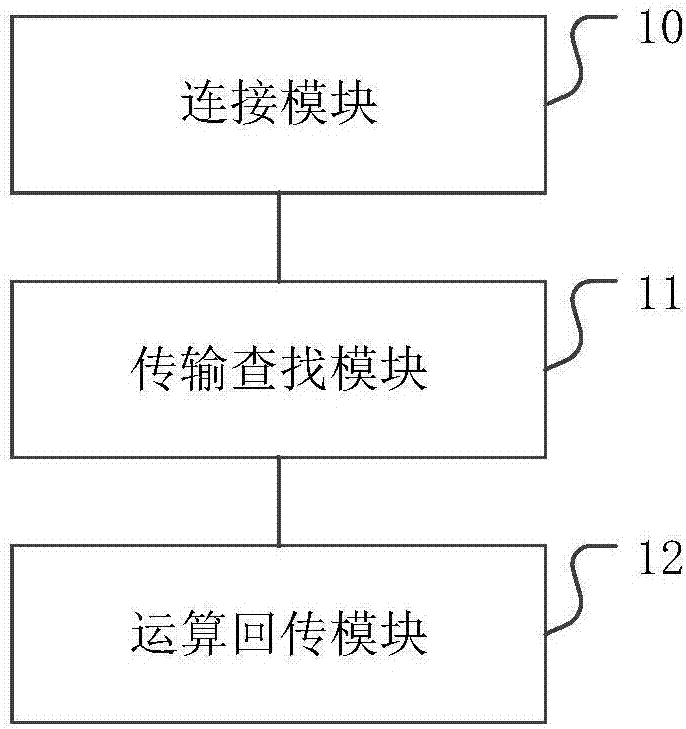

The invention discloses an access method, device and medium on an FPGA accelerator card. The method comprises the following steps: establishing a kernel communication connection at the driver layer between a virtual system and a main system through a kernel virtual machine in kernel state; transmitting the original data in the virtual system to the main system through the kernel communication connection, and searching for the target FPGA accelerator card that has established a connection with the main system in advance according to a destination identifier of the original data; operating on the original data through the target FPGA accelerator card to obtain result data, and transmitting the result data back to the virtual system according to a source identifier of the original data. Through the access method disclosed by the invention, access to and use of the FPGA accelerator card by the virtual system are realized, and the accuracy of data transmission between the various virtual systems and the FPGA accelerator cards and the high availability of the whole mechanism are guaranteed. Furthermore, the invention provides an access device and medium on the FPGA accelerator card with the above advantages.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

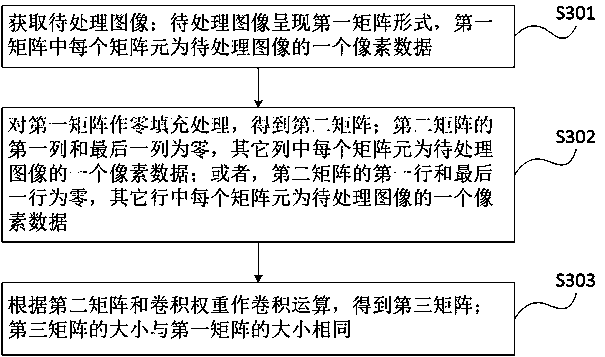

Image filling method and device in FPGA acceleration of convolution neural network

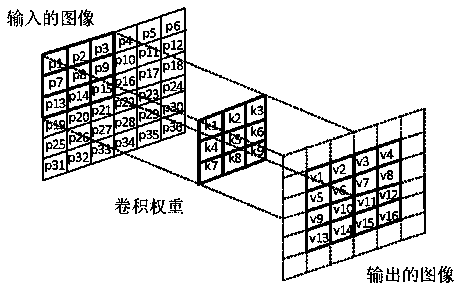

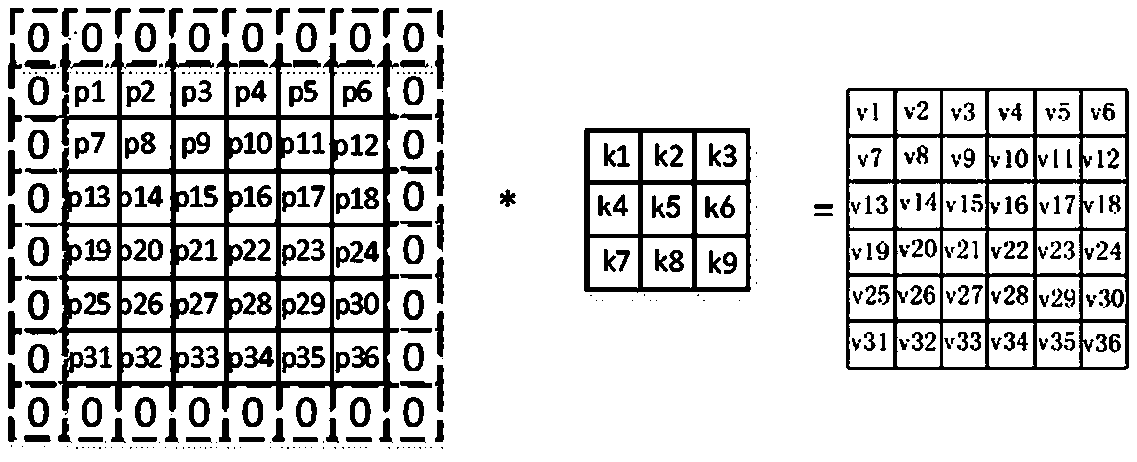

Active | CN109461119A | Same size | Geometric image transformation | Neural architectures | Pattern recognition | Convolution

The invention discloses an image filling method and device in FPGA acceleration of a convolution neural network. The method comprises the following steps: an image to be processed is acquired; the image to be processed is represented as a first matrix, and each matrix element in the first matrix is one pixel datum of the image to be processed; a zero filling process is performed on the first matrix to obtain a second matrix, in which the first column and the last column are zeros and each matrix element in the other columns is one pixel datum of the image to be processed, or alternatively the first row and the last row are zeros and each matrix element in the other rows is one pixel datum of the image to be processed; a convolution calculation is performed according to the second matrix and the convolution weights to obtain a third matrix whose size is the same as the size of the first matrix. In this way, the calculated image can have the same size as the original image.

Owner:深兰人工智能芯片研究院(江苏)有限公司
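
The effect of the zero filling described in the abstract above is easy to check numerically. The sketch below pads either the columns or the rows, as the two alternatives in the abstract describe, and then applies an unpadded ("valid") sliding-window product; the 1x3 and 3x1 kernels are assumptions chosen so that padding one dimension alone restores the original size. As is common in CNN code, the kernel is not flipped.

```python
def pad_columns(matrix):
    """Second matrix, variant 1: a zero first column and a zero last column."""
    return [[0] + list(row) + [0] for row in matrix]


def pad_rows(matrix):
    """Second matrix, variant 2: a zero first row and a zero last row."""
    width = len(matrix[0])
    return [[0] * width] + [list(row) for row in matrix] + [[0] * width]


def convolve_valid(matrix, kernel):
    """Slide the kernel over the matrix without any implicit padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(matrix) - kh + 1
    out_w = len(matrix[0]) - kw + 1
    return [[sum(matrix[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


first = [[1, 2, 3],
         [4, 5, 6]]
third = convolve_valid(pad_columns(first), [[1, 1, 1]])
print(third)  # same 2x3 shape as `first`
```

Without the padding, the 1x3 kernel would shrink each row from three values to one; the two zero columns are exactly what is needed to keep the width at three.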

Systems and methods for providing co-processors to computing systems

Active | US20060149883A1 | Simple design | Low cost | Digital data processing details | Printed circuit aspects | Computer hardware | Computerized system

Computing systems with conventional CPUs coupled to co-processors or accelerators implemented in FPGAs (Field Programmable Gate Arrays). One embodiment of the systems and methods according to the invention includes a FPGA accelerator implemented in a computer system by providing an adapter board configured to be used in a standard CPU socket.

Owner:XTREMEDATA INC

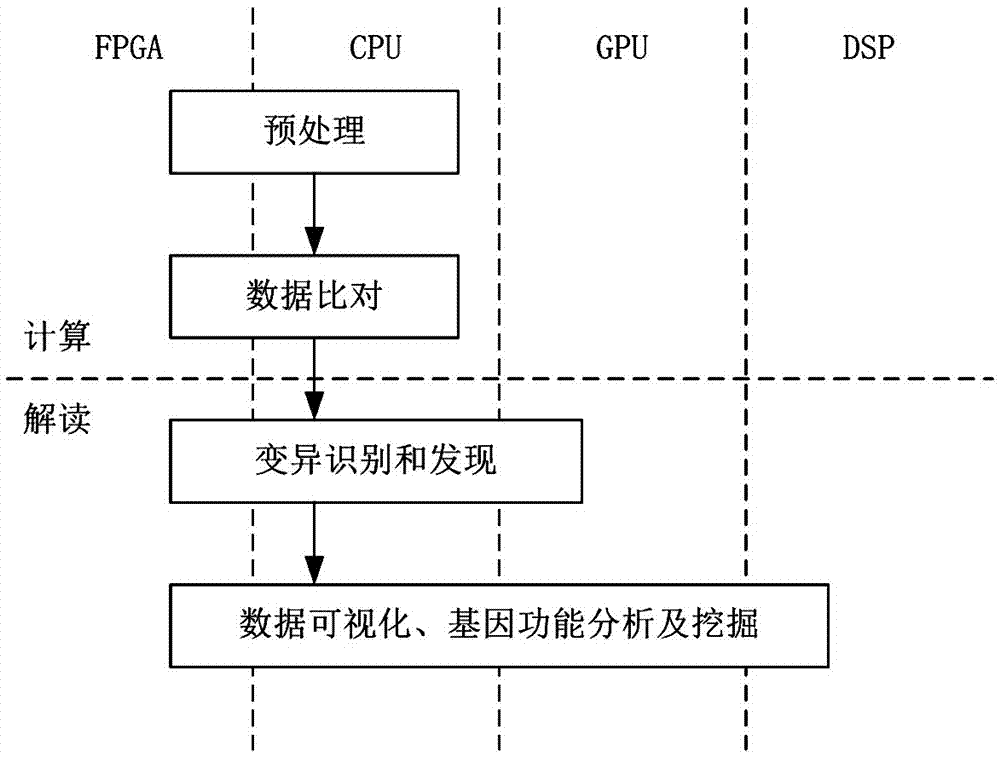

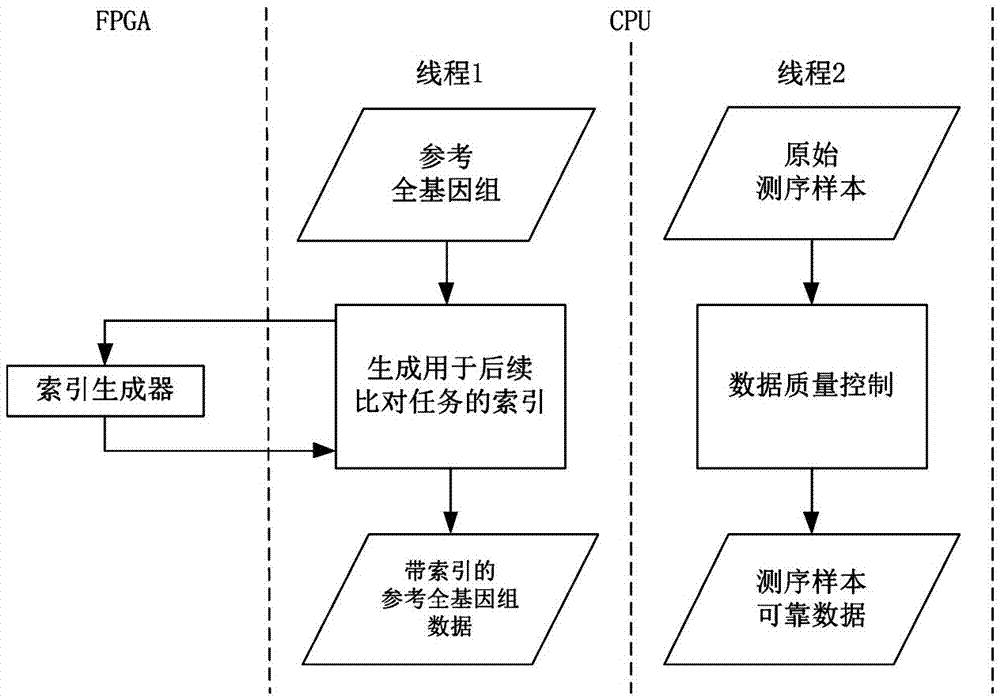

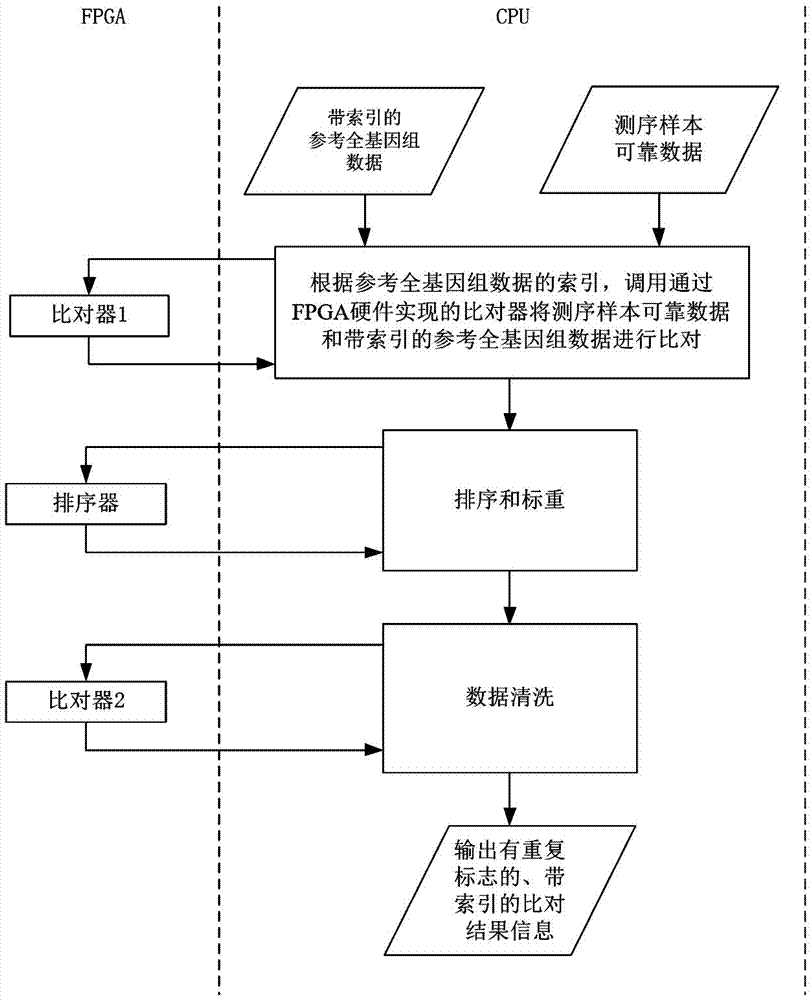

Whole genome sequencing data calculation interpretation method

Inactive | CN107194204A | Improve real-time performance | Add depth | Biostatistics | Sequence analysis | Whole genome sequencing | Genomic data

The invention discloses a whole genome sequencing data calculation and interpretation method comprising the following steps: reference whole genome data used for whole genome sequencing and organic sequencing sample data are input and preprocessed; the CPU (Central Processing Unit) calls an FPGA (Field Programmable Gate Array) to accelerate the comparison of the reliable sequencing sample data with the indexed reference whole genome data, obtaining a comparison result with repeat labels and an index; the CPU calls the FPGA and a GPU (Graphics Processing Unit) to accelerate genome reassembly of the reliable sequencing sample data and variant identification on the comparison result with repeat labels and the index; the CPU calls the GPU and a DSP (Digital Signal Processor) to accelerate visual processing, and calls a deep learning model implemented in hardware on the FPGA to analyze and mine the whole genome and variant functions on the basis of the visual processing result. The method makes combined use of the GPU, DSP and FPGA processors for acceleration, and has the advantages of being fast, real-time, accurate and in-depth, easy to understand, and varied in form.

Owner:GENETALKS BIO TECH CHANGSHA CO LTD

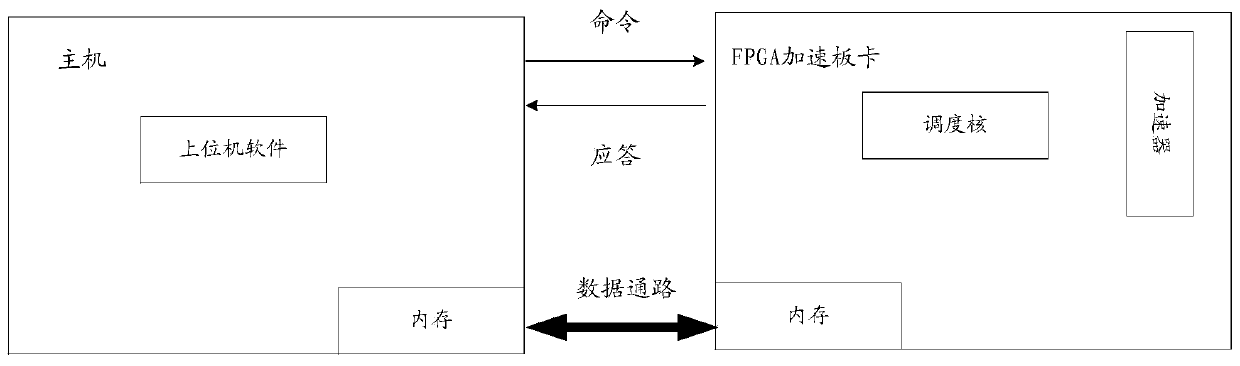

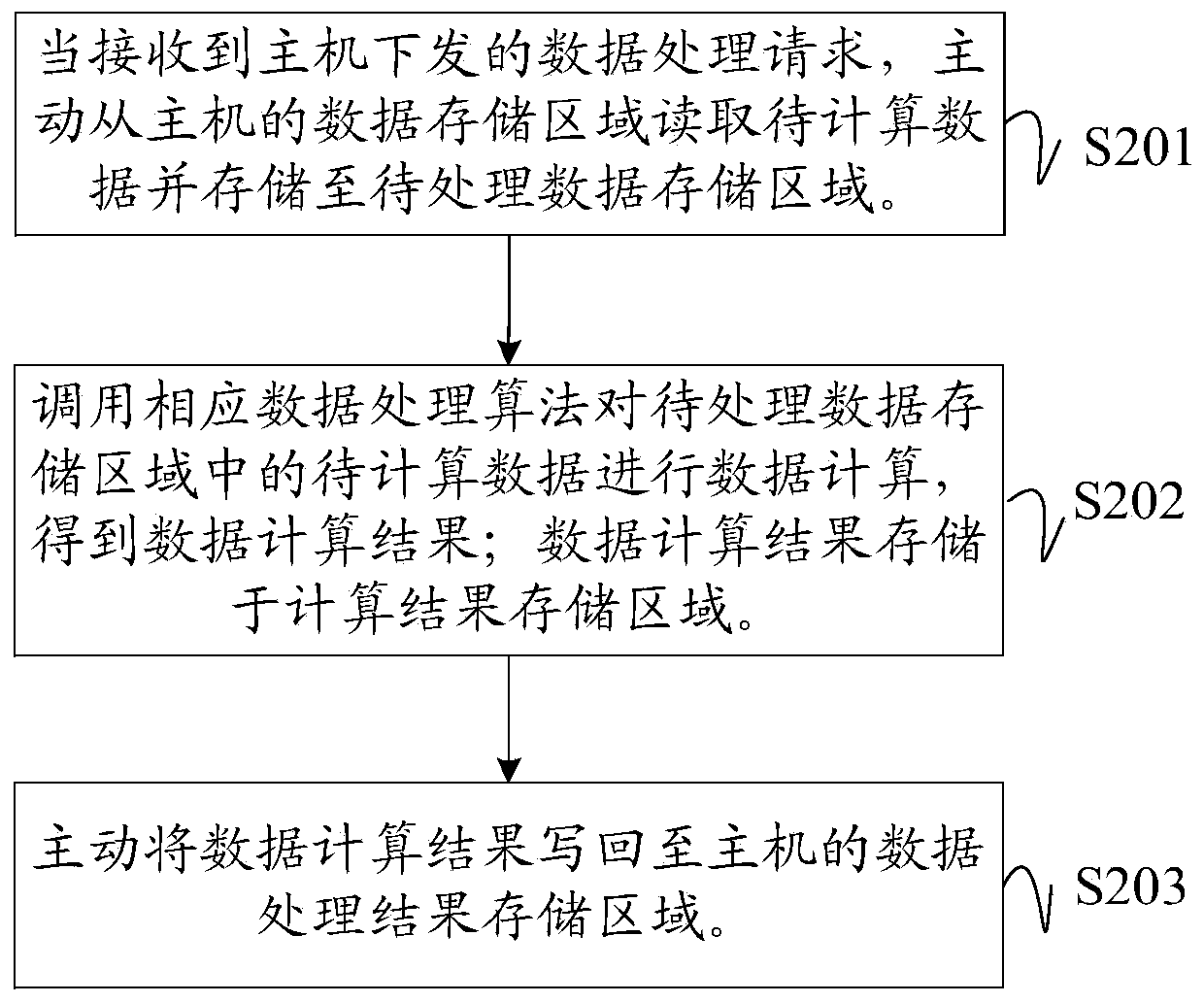

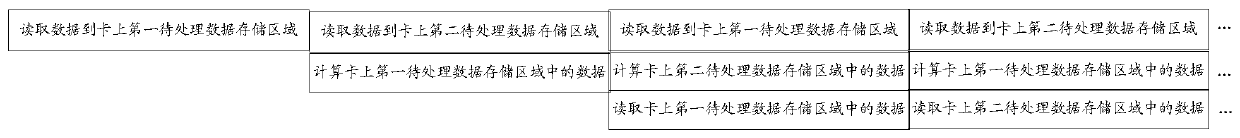

Data processing method and device of heterogeneous computing platform and readable storage medium

Inactive | CN111143272A | Increase autonomy | Reduce the latency of data transfer operations | Digital computer details | Electric digital data processing | Host memory | Data transmission

The invention discloses a data processing method and device for a heterogeneous computing platform and a computer-readable storage medium. The method comprises the following steps: a data storage area and a data processing result storage area are allocated in advance in the host memory space, and a to-be-processed data storage area and a calculation result storage area are allocated in the memory space of the FPGA acceleration board card; after the host stores the to-be-calculated data in the data storage area, a data processing request is issued to the FPGA acceleration board card, and the FPGA acceleration board card actively reads the to-be-calculated data from the data storage area and stores it in its own to-be-processed data storage area; a corresponding data processing algorithm is called to compute on the data in the to-be-processed data storage area, and the data calculation result is stored in the calculation result storage area; finally, the data calculation result is actively written back to the data processing result storage area of the host. The data transmission efficiency of the heterogeneous computing platform is improved, and the computing performance of the FPGA acceleration board card is improved.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

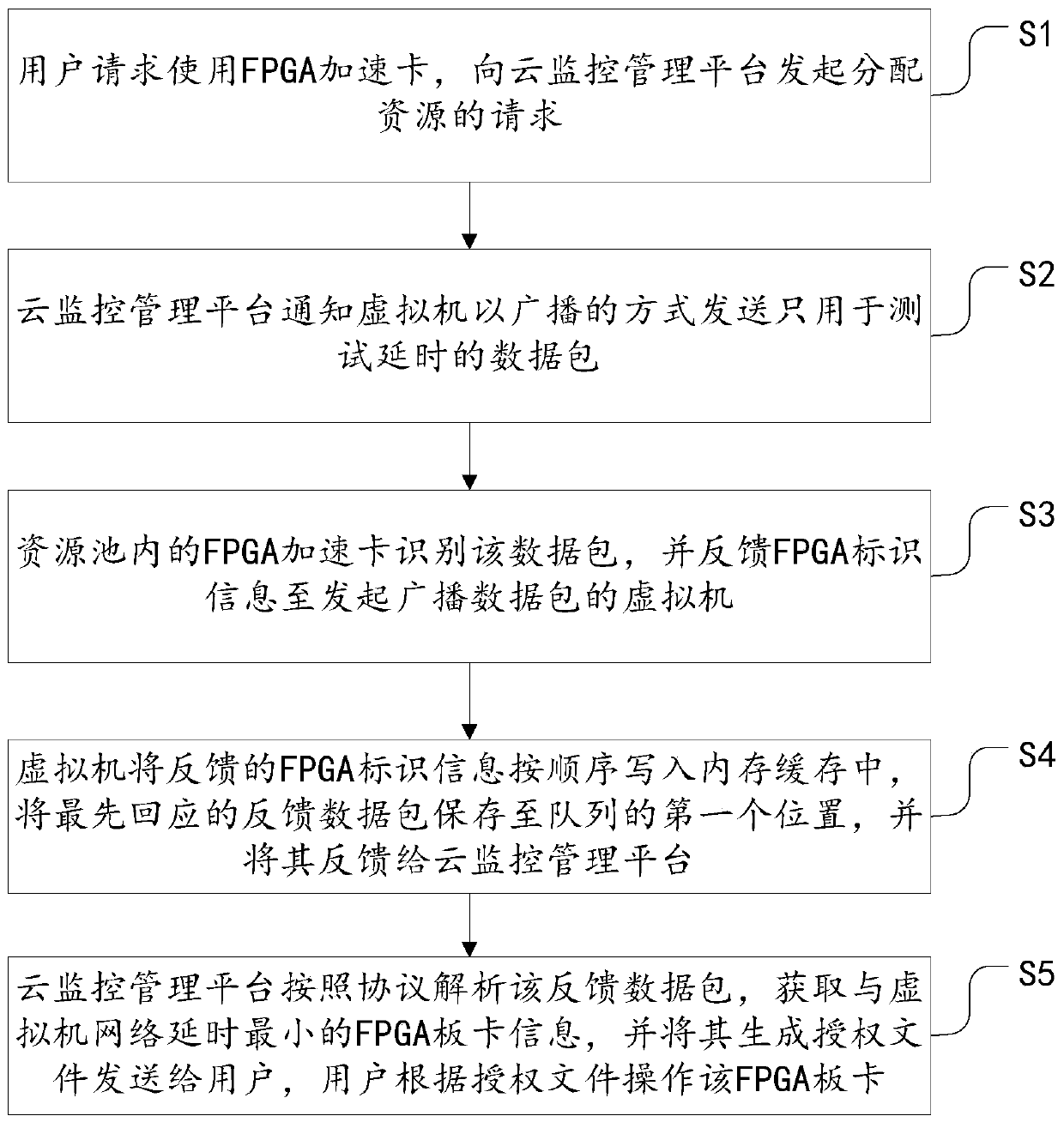

FPGA cloud platform acceleration resource allocation method and system

Active | CN110618871A | Improve experience | Protect effective rights and interests | Resource allocation | Resource pool | Distribution method

The invention provides an FPGA cloud platform acceleration resource allocation method and system. Accelerator card resources are allocated and coordinated according to the delay between a user host and the FPGA accelerator cards deployed in each network segment. When a user applies to use an FPGA, the FPGA accelerator card in the FPGA resource pool with the minimum delay to the host is allocated to that user, thereby realizing allocation of the acceleration resources of the FPGA cloud platform. A cloud monitoring management platform can obtain the transmission delay to the virtual machine network according to the different geographic positions of the FPGA board cards in the FPGA resource pool, and can allocate the board card with the minimum delay to each user. In addition, unauthorized users are effectively prevented from arbitrarily accessing the acceleration resources in the resource pool, which protects the rights and interests of the resource pool owner. The method and system thus protect FPGA accelerator cards that a user is not authorized to use, ensure that the network delay of the board card allocated to the user is minimal, achieve the optimal acceleration effect, and improve the user experience.

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD
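The allocation rule described in the abstract above (give an authorized user the free card with the lowest delay to that user's host) can be sketched as follows. This is an illustrative sketch only, not the patented implementation: the function name `allocate_card`, the data layout (a list of free card IDs and a dictionary of measured delays keyed by host and card), and the example values are all hypothetical.

```python
def allocate_card(user, host, free_cards, delay_ms, authorized_users):
    """Pick the free FPGA card with the smallest measured delay to `host`.

    Returns the chosen card ID, or None if the user is not authorized
    or no card is free. The chosen card is removed from `free_cards`.
    """
    if user not in authorized_users:
        return None  # unauthorized users never reach the resource pool
    if not free_cards:
        return None
    best = min(free_cards, key=lambda card: delay_ms[(host, card)])
    free_cards.remove(best)
    return best


# Hypothetical pool of three cards with delays measured from host "h1".
free_cards = ["card-a", "card-b", "card-c"]
delay_ms = {("h1", "card-a"): 5.0, ("h1", "card-b"): 1.2, ("h1", "card-c"): 3.0}
authorized_users = {"alice"}

first_pick = allocate_card("alice", "h1", free_cards, delay_ms, authorized_users)
denied = allocate_card("mallory", "h1", free_cards, delay_ms, authorized_users)
second_pick = allocate_card("alice", "h1", free_cards, delay_ms, authorized_users)
print(first_pick, denied, second_pick)
```

A real platform would refresh the delay measurements periodically and return cards to the pool on release; both are omitted here for brevity.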

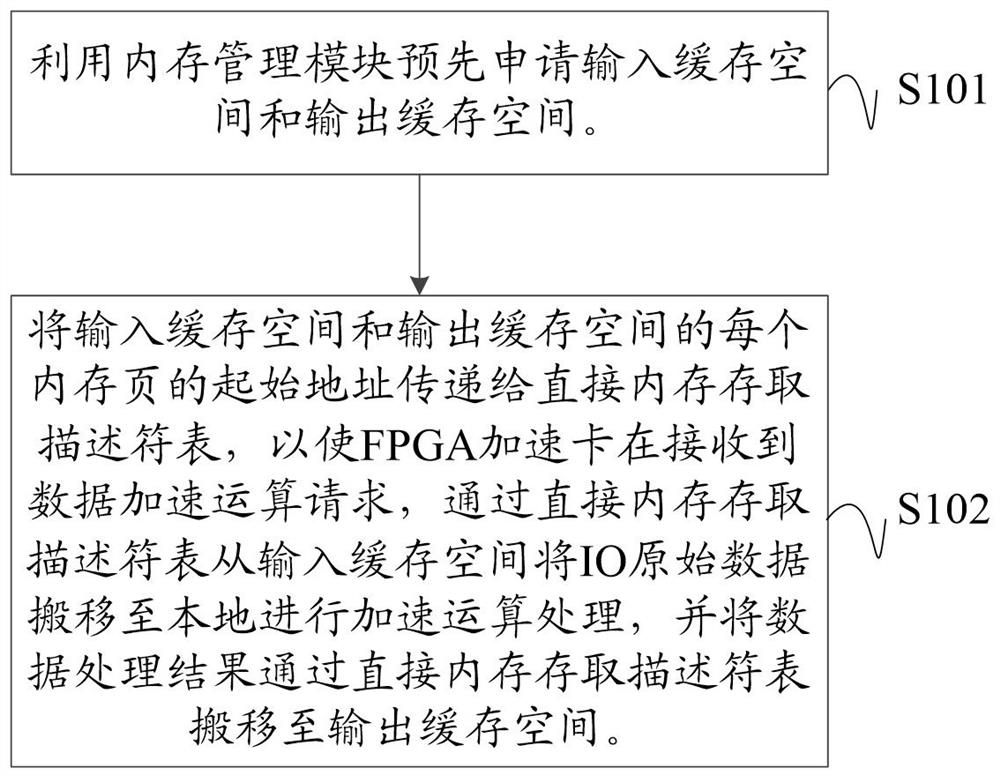

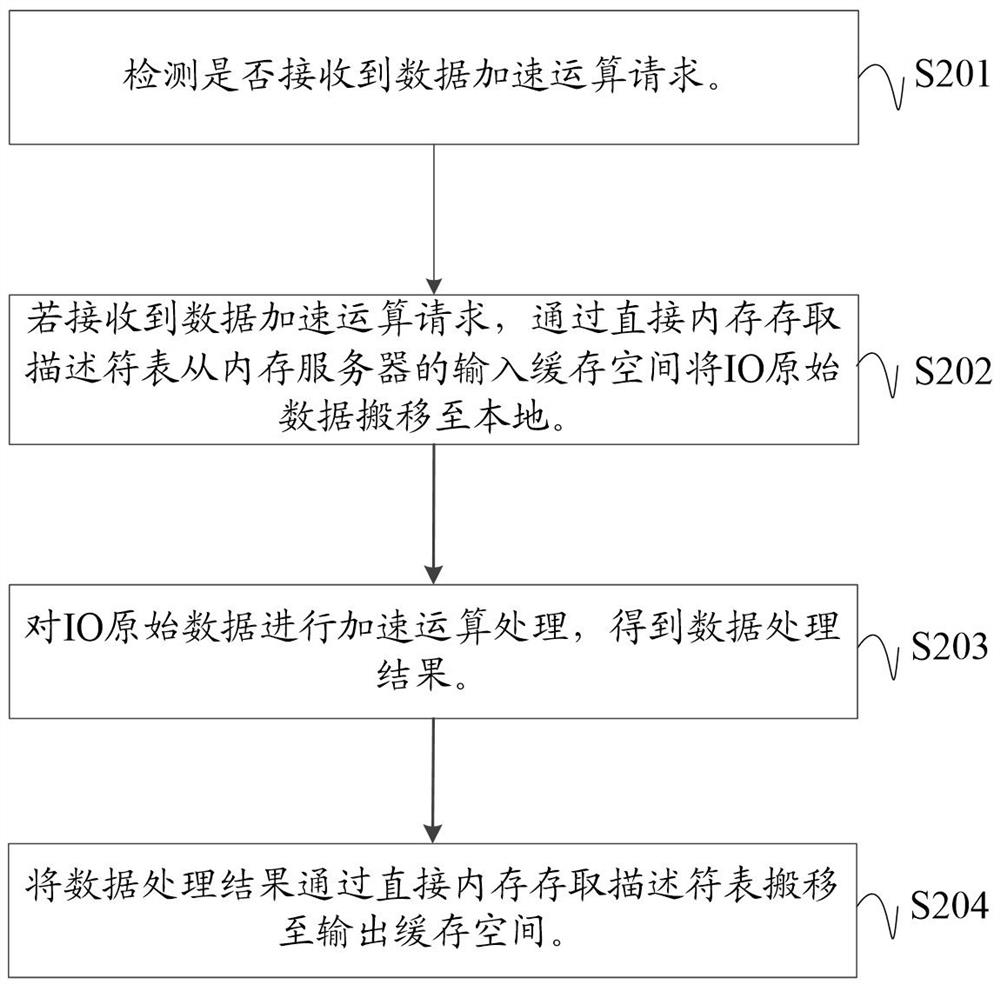

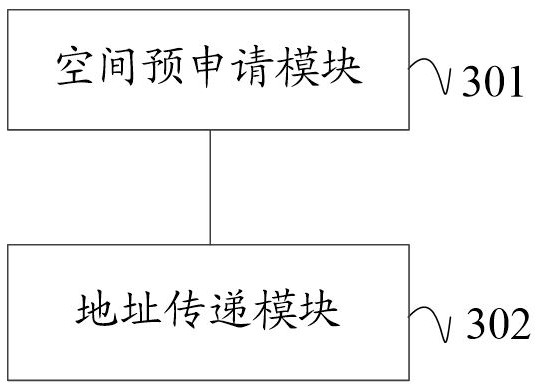

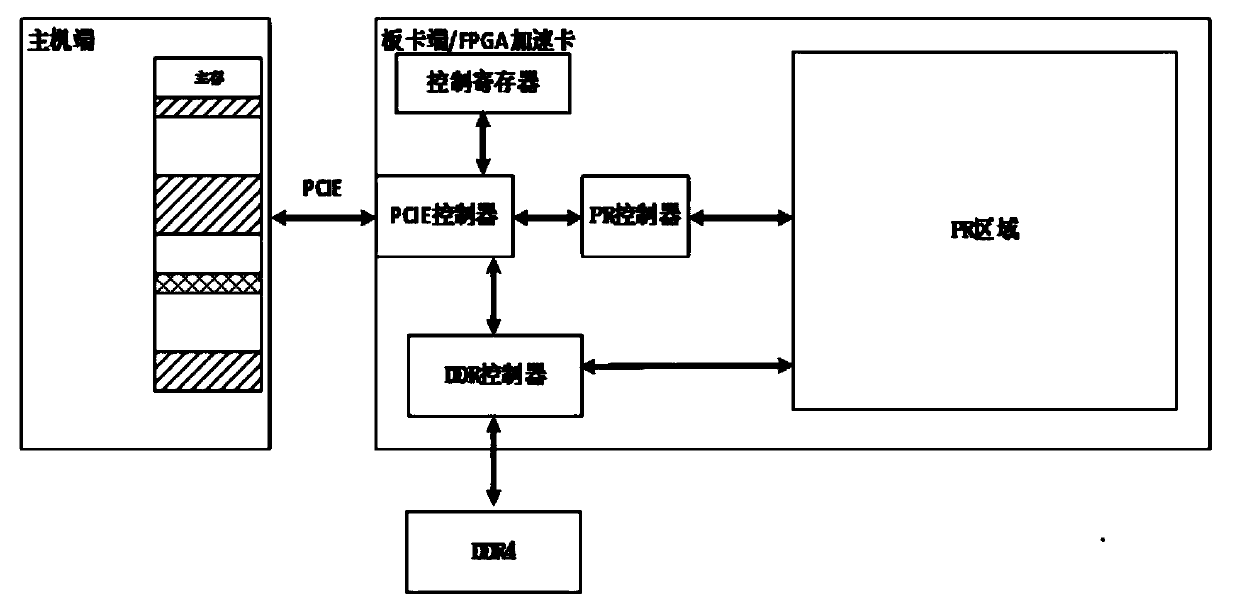

Data acceleration operation processing method and device and computer readable storage medium

The invention discloses a data acceleration operation processing method and device and a computer-readable storage medium. The method comprises the following steps: a storage server applies in advance for an input cache space and an output cache space by means of a memory management module, and transmits the initial address of each memory page of the input cache space and the output cache space to a direct memory access descriptor table, wherein the input cache space stores the IO original data corresponding to a user data request, and the output cache space stores the data processing result obtained after the IO original data has undergone the accelerated operation; an FPGA acceleration card receives a data acceleration operation request, transfers the IO original data from the input cache space to its local memory through the direct memory access descriptor table, performs the accelerated operation on the IO original data, and transfers the data processing result to the output cache space through the direct memory access descriptor table. Memory copying is therefore avoided while the FPGA acceleration card processes data for the storage server, and the performance loss of the storage server during acceleration is effectively reduced.

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD
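The role of the descriptor table in the abstract above can be modelled in a few lines of Python. This is a software-only simulation under stated assumptions, not driver code: `HostMemory`, `write_buffer`, `read_buffer`, `card_process`, the 4-byte page size, and the upper-casing "accelerated operation" are all hypothetical stand-ins. The point it illustrates is that the card finds the input and output pages through the table of page start addresses, so the host never copies data into an intermediate buffer.

```python
PAGE_SIZE = 4  # deliberately tiny so the example spans several pages


class HostMemory:
    """Toy model of host memory: page start address -> page contents."""

    def __init__(self):
        self.pages = {}
        self.next_addr = 0x1000

    def alloc(self, n_pages):
        """Allocate pages and return their start addresses."""
        addrs = []
        for _ in range(n_pages):
            self.pages[self.next_addr] = bytearray(PAGE_SIZE)
            addrs.append(self.next_addr)
            self.next_addr += PAGE_SIZE
        return addrs


def write_buffer(mem, addrs, data):
    """Scatter `data` across the pages listed in `addrs`."""
    for i, addr in enumerate(addrs):
        chunk = data[i * PAGE_SIZE:(i + 1) * PAGE_SIZE]
        mem.pages[addr][:len(chunk)] = chunk


def read_buffer(mem, addrs, length):
    """Gather `length` bytes from the pages listed in `addrs`."""
    return b"".join(bytes(mem.pages[a]) for a in addrs)[:length]


def card_process(mem, descriptor_table, length, op):
    """Simulated card: read via the table, apply `op`, write the result back."""
    raw = read_buffer(mem, descriptor_table["input"], length)
    result = op(raw)
    write_buffer(mem, descriptor_table["output"], result)
    return len(result)


mem = HostMemory()
# The descriptor table holds the start address of every page of both buffers.
table = {"input": mem.alloc(2), "output": mem.alloc(2)}
write_buffer(mem, table["input"], b"abcdef")
n = card_process(mem, table, 6, bytes.upper)
output = read_buffer(mem, table["output"], n)
print(output)
```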

Data processing method, device and system and FPGA acceleration card

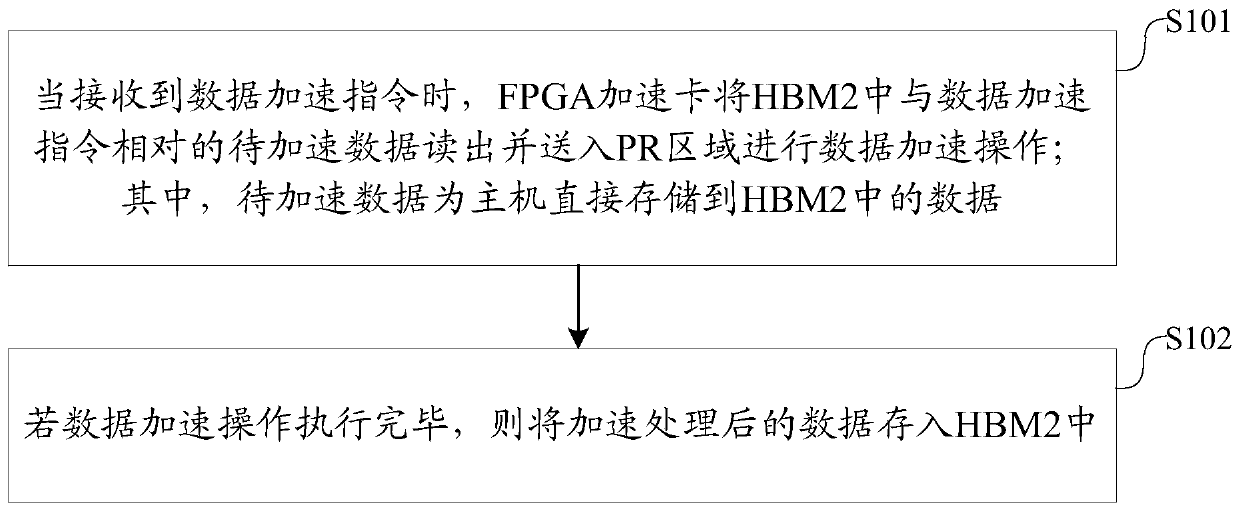

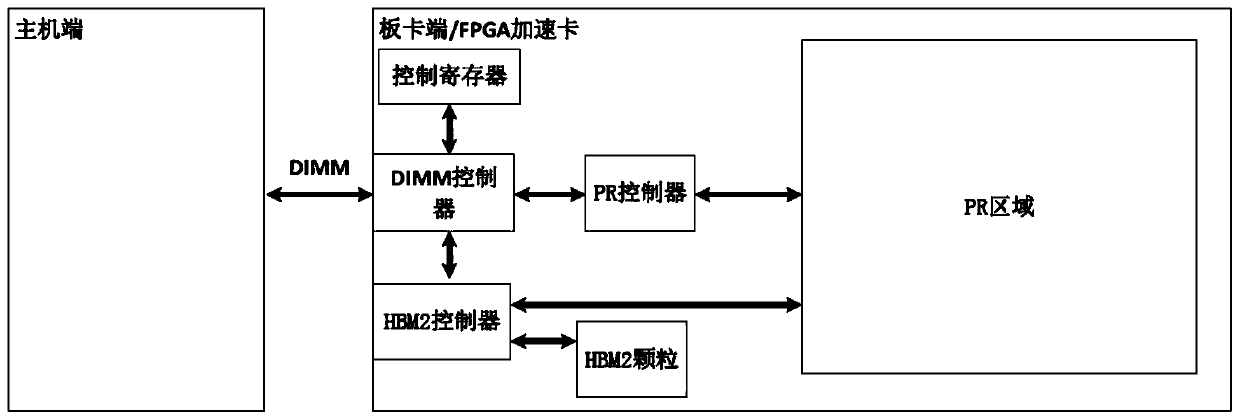

Inactive | CN109739784A | Reduce data transfer time | Easy to deploy | Electric digital data processing | Data processing system | Data transmission time

The invention discloses a data processing method comprising the following steps: when a data acceleration instruction is received, an FPGA acceleration card reads the to-be-accelerated data corresponding to the data acceleration instruction from an HBM2 and sends it into a PR region for the data acceleration operation, wherein the to-be-accelerated data is data stored directly in the HBM2 by the host; when the data acceleration operation is completed, the accelerated data is stored back into the HBM2. According to the method, the host stores data directly into the HBM2 memory chips on the FPGA acceleration card; the FPGA acceleration card can read the data directly from the HBM2 and send it into the PR region for acceleration, and after acceleration is completed, the data is written back to the HBM2 memory chips. The data transmission time and the number of data migrations between the host and the FPGA acceleration card are therefore greatly reduced, and deployment is more convenient. The invention also discloses a data processing device, a data processing system and an FPGA accelerator card, which have the same beneficial effects.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

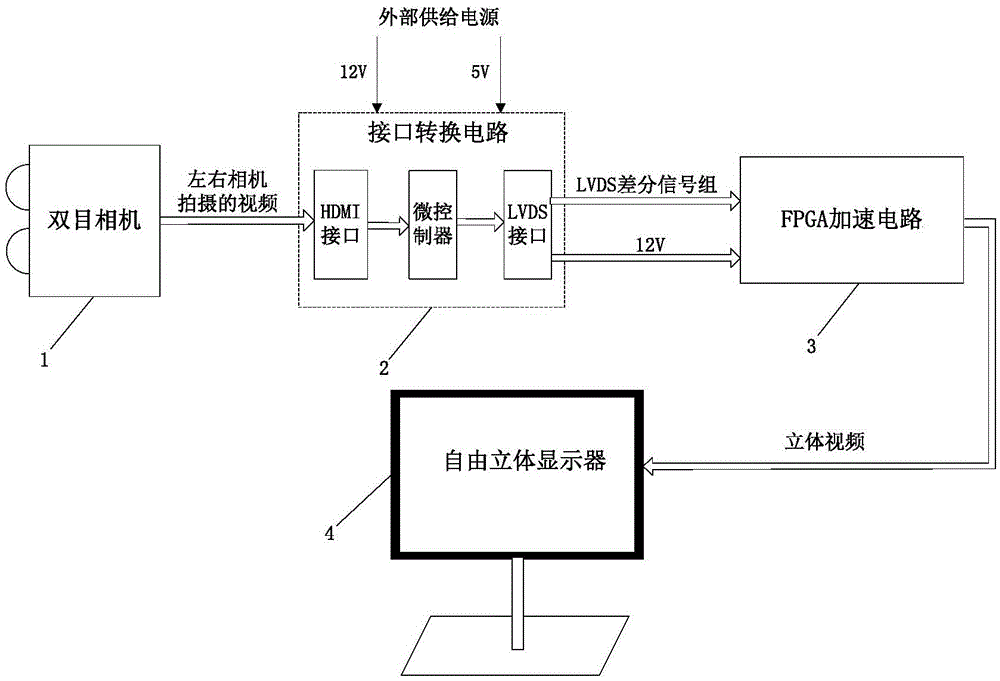

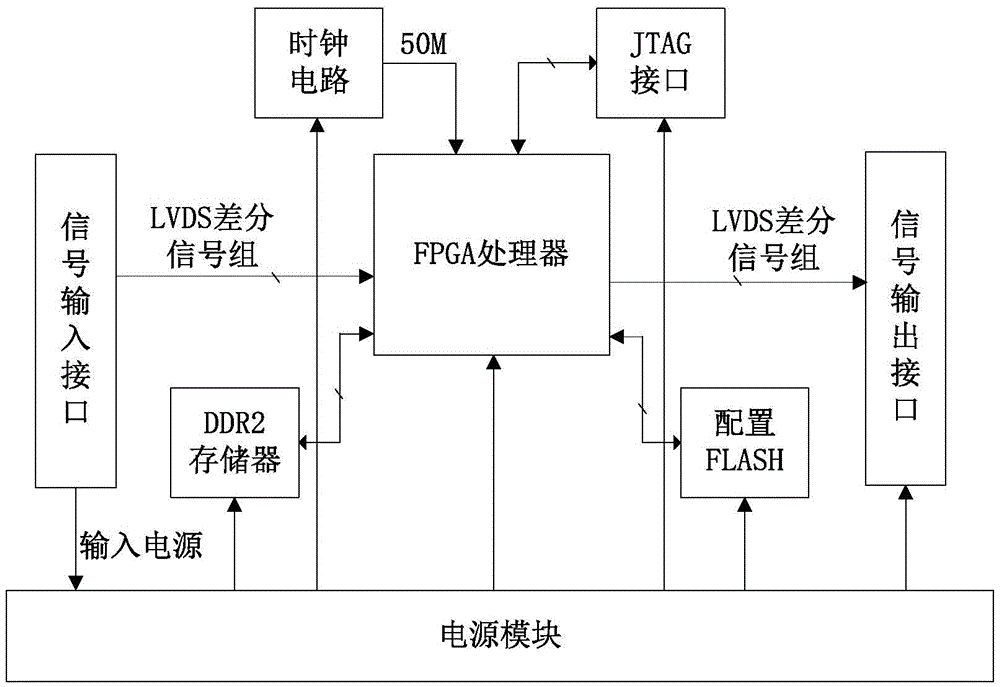

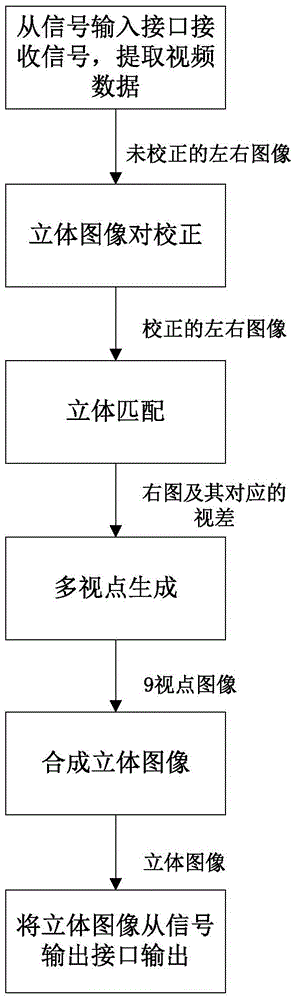

Binocular vision auto-stereoscopic display system

Inactive | CN105611270A | Save transmission bandwidth | Reduce storage space | Stereoscopic systems | Stereoscopic video | Stereo matching

The present invention discloses a binocular vision auto-stereoscopic display system. The system comprises a binocular camera, an interface conversion circuit, an FPGA acceleration circuit and an auto-stereoscopic display. The binocular camera collects real scenes and transmits the collected videos to the interface conversion circuit through an HDMI interface; the interface conversion circuit transmits the received videos to the FPGA acceleration circuit through an LVDS interface; the FPGA acceleration circuit receives the videos, processes them frame by frame through stereo matching, multi-view generation and stereoscopic image synthesis in sequence, and outputs the finally synthesized stereoscopic image to the auto-stereoscopic display. A viewer standing at the corresponding position in front of the stereoscopic display perceives a stereoscopic effect. The system exploits the parallel and pipelined processing capabilities of the FPGA to realize real-time auto-stereoscopic display. Experimental results show that the system displays stereoscopic video at a resolution of 1920×1080 at a frame rate of about 30 frames per second.

Owner:HUAZHONG UNIV OF SCI & TECH

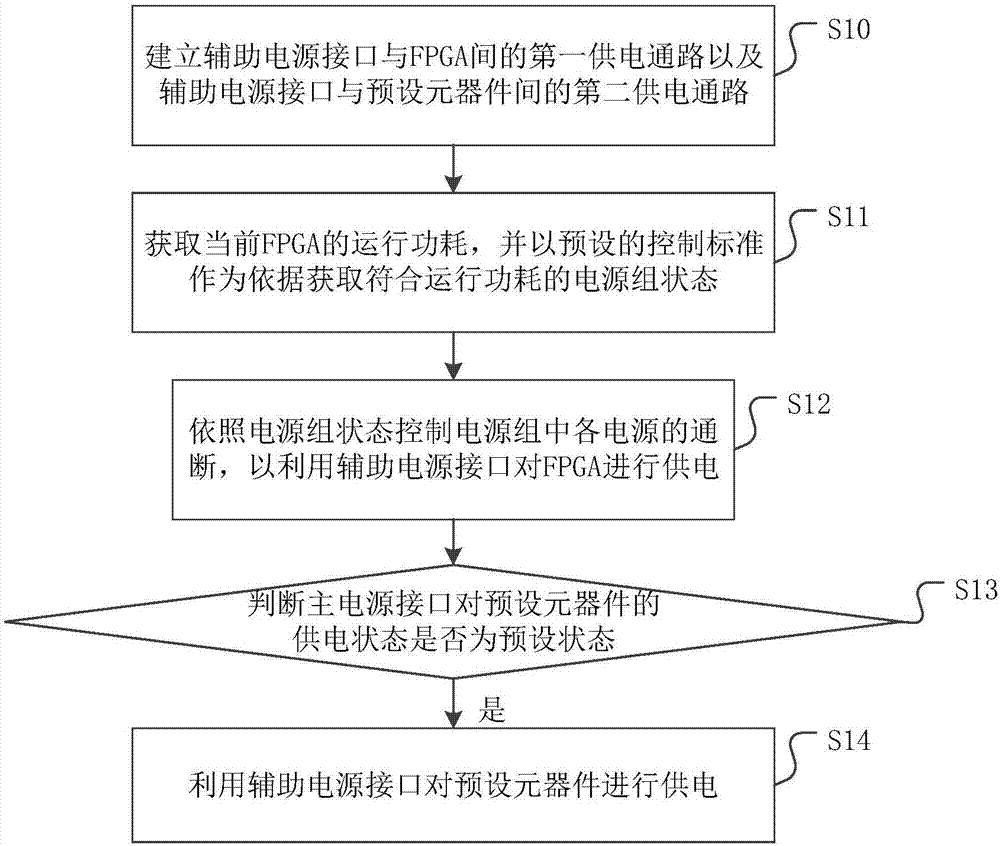

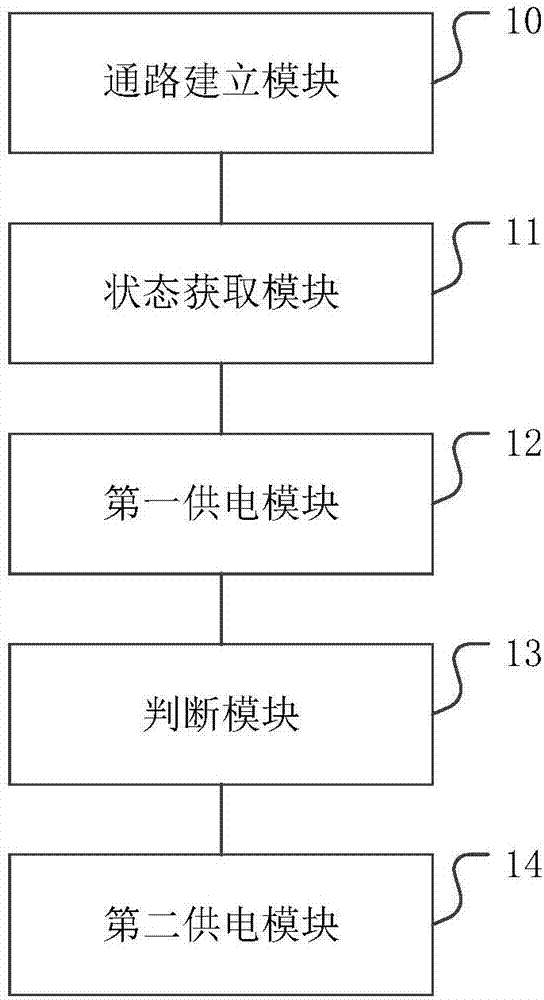

Method and device for power supply control of FPGA accelerator card auxiliary power supply, and medium

Inactive | CN108008801A | Increase flexibility | Improve efficiency | Volume/mass flow measurement | Power supply for data processing | State control | FPGA acceleration

The invention discloses a method and a device for power supply control of an FPGA accelerator card auxiliary power supply, and a medium. The method comprises the following steps: establishing a first power supply path between an auxiliary power interface and an FPGA, and a second power supply path between the auxiliary power interface and a preset component; obtaining the current operating power consumption of the FPGA and, on the basis of a preset control standard, obtaining a power supply group state that meets the operating power consumption; according to the power supply group state, controlling the on-off state of each power supply in the power supply group so that the FPGA is powered through the auxiliary power interface; determining whether the state of the main power interface that supplies power to the preset component is a preset state and, if so, using the auxiliary power interface to supply power to the preset component. The auxiliary power supply is thereby used more reasonably and flexibly, and the safe operation of each component and the overall working efficiency are guaranteed. In addition, the invention also provides a device for power supply control of an FPGA accelerator card auxiliary power supply, and a medium, which have the same beneficial effects.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

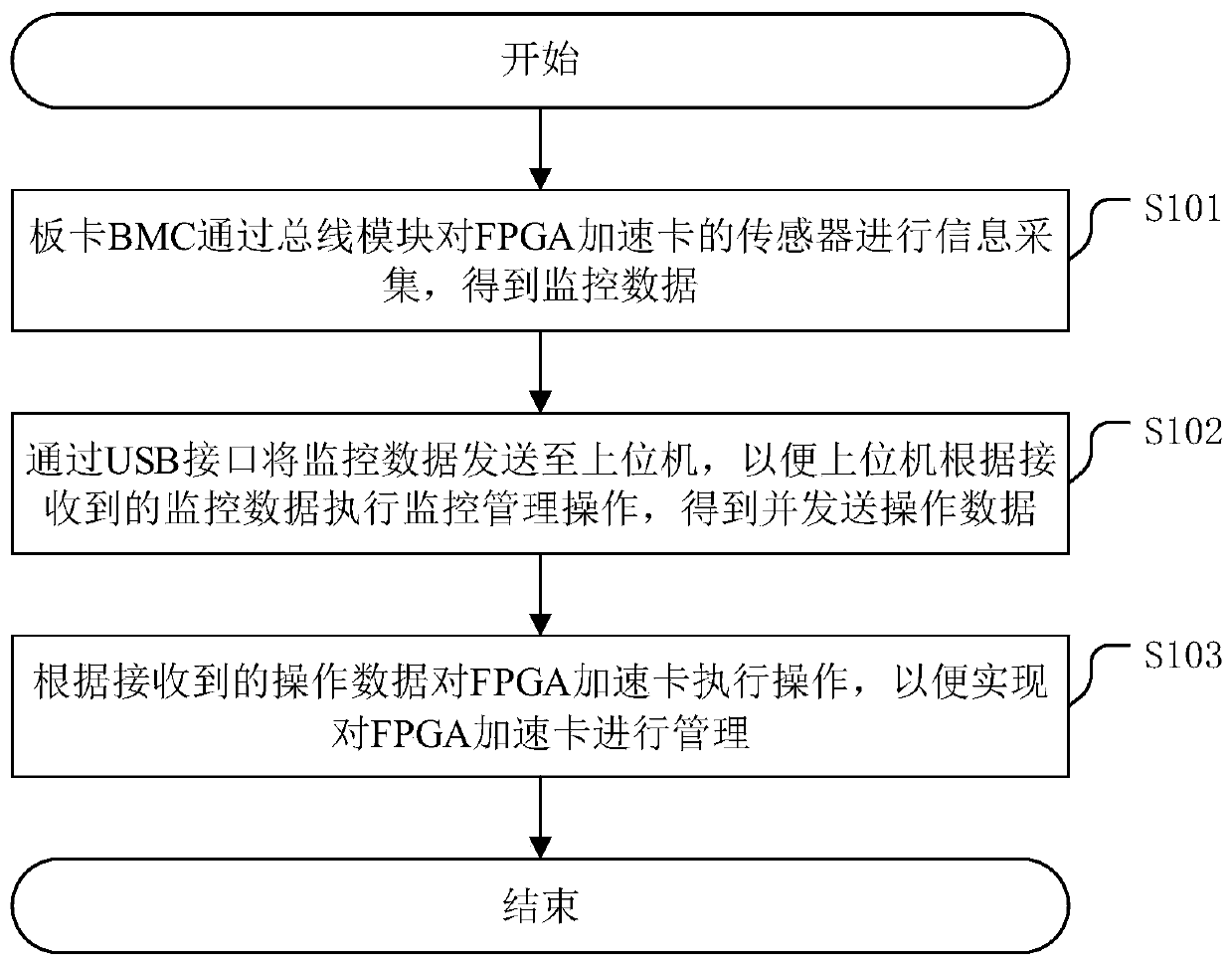

FPGA accelerator card management method and related device

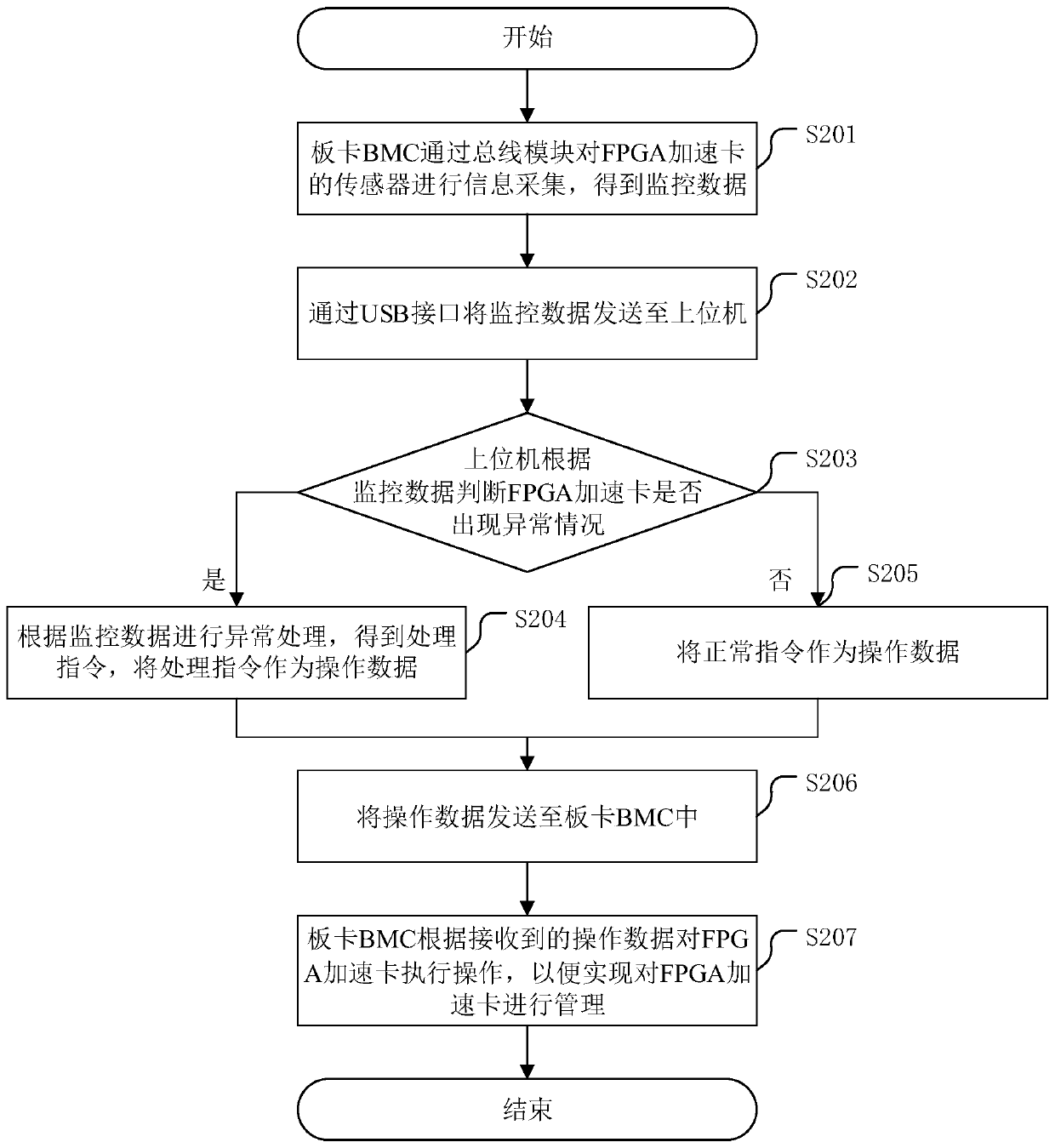

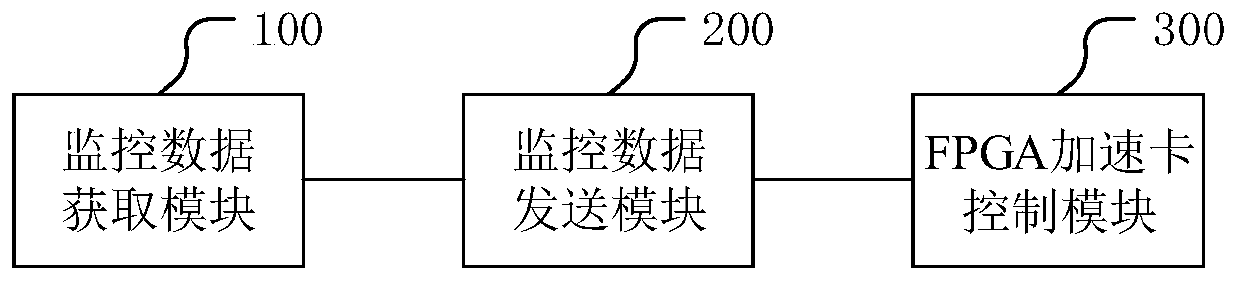

Inactive | CN110399220A | Improve versatility | Increase flexibility | Resource allocation | Hardware monitoring | Computer module | Data transmission

The invention discloses an FPGA accelerator card management method comprising the following steps: a board card BMC collects information from the sensors of an FPGA accelerator card through a bus module to obtain monitoring data; the monitoring data is sent to an upper computer through a USB interface; the upper computer executes a monitoring management operation according to the received monitoring data and obtains and sends out operation data; an operation is executed on the FPGA accelerator card according to the received operation data so as to manage the FPGA accelerator card. The monitoring data is first collected by the board card BMC and then sent to the upper computer through the USB interface rather than through a dedicated data transmission interface, which reduces both the difficulty and the implementation cost of monitoring management. The invention further discloses a management system for the FPGA accelerator card, a case management device of a server and a computer-readable storage medium, which have the same beneficial effects.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD

