37 results about "Jazelle" patented technology

Jazelle DBX (Direct Bytecode eXecution) is an extension that allows some ARM processors to execute Java bytecode in hardware as a third execution state alongside the existing ARM and Thumb modes. Jazelle functionality was specified in the ARMv5TEJ architecture and the first processor with Jazelle technology was the ARM926EJ-S. Jazelle is denoted by a "J" appended to the CPU name, except for post-v5 cores where it is required (albeit only in trivial form) for architecture conformance.

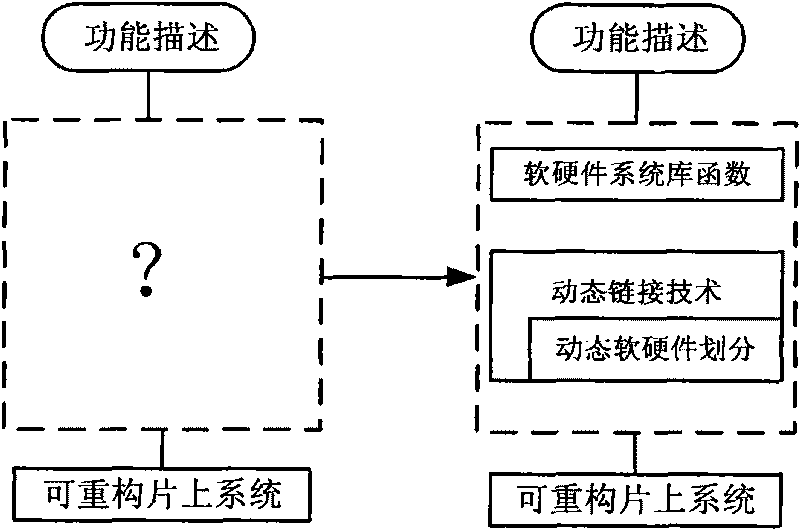

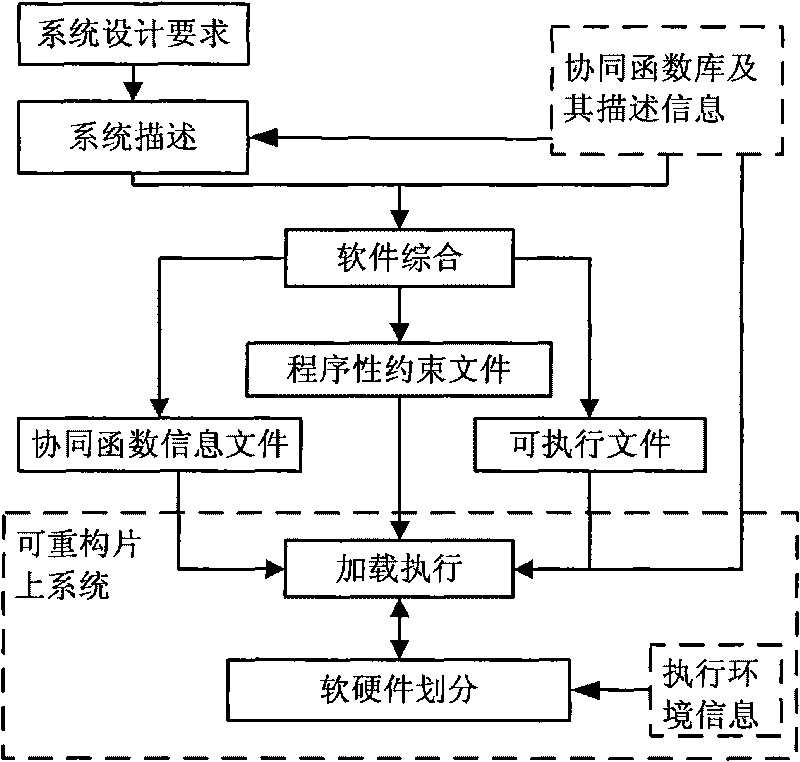

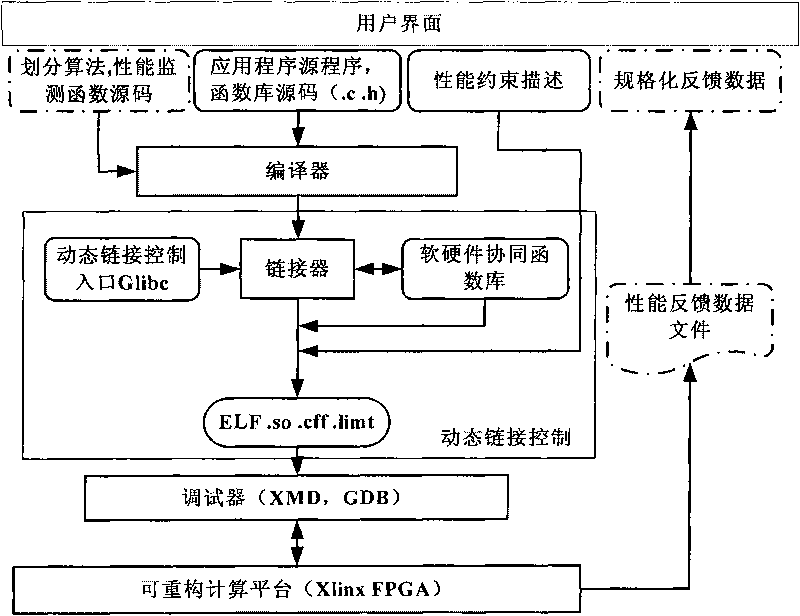

Procedure level software and hardware collaborative design automatized development method

Inactive · CN101763265A · Increase profit · Easy to divide · Specific program execution arrangements · High-level programming language · Hardware implementations

The invention provides a procedure-level automated development method for software/hardware co-design, comprising the following steps: step 1, describing the system functions in a high-level language, including calls to the software/hardware co-designed functions; step 2, dynamically partitioning the functions between software and hardware; step 3, linking and executing; and step 4, checking for completion (if all functions have finished executing, the method ends; otherwise the parameters used for partitioning are returned to step 2 and the next iteration begins). The invention uses a unified procedure-level software/hardware programming model to hide differences in the underlying hardware implementation, so that reconfigurable devices are transparent to program users. The programming model encapsulates hardware accelerators as C language functions for ease of programming, and it supports dynamic software/hardware partitioning at run time, so the partitioning is transparent to programmers and the utilization of reconfigurable resources is improved.

Owner:HUNAN UNIV

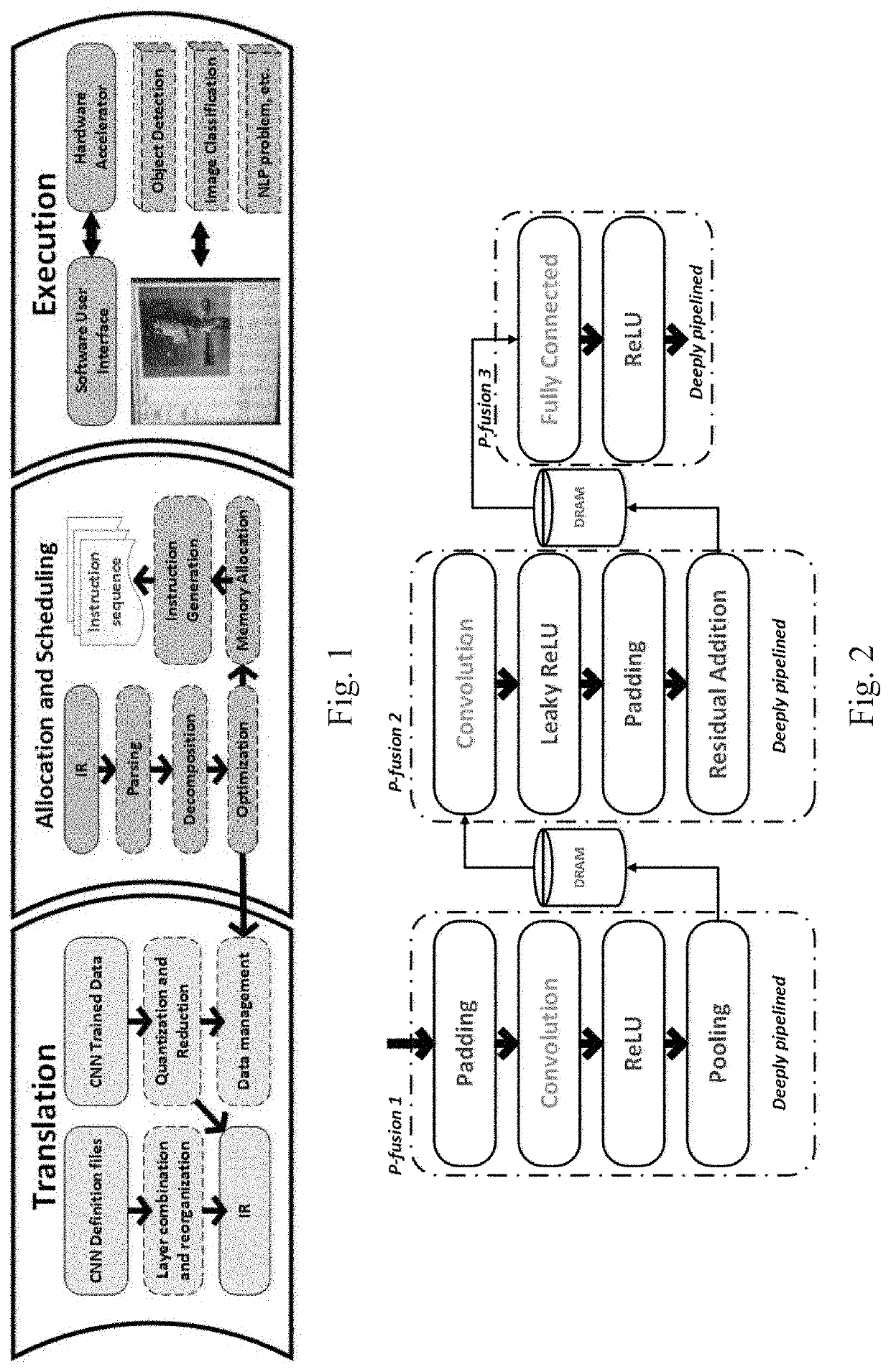

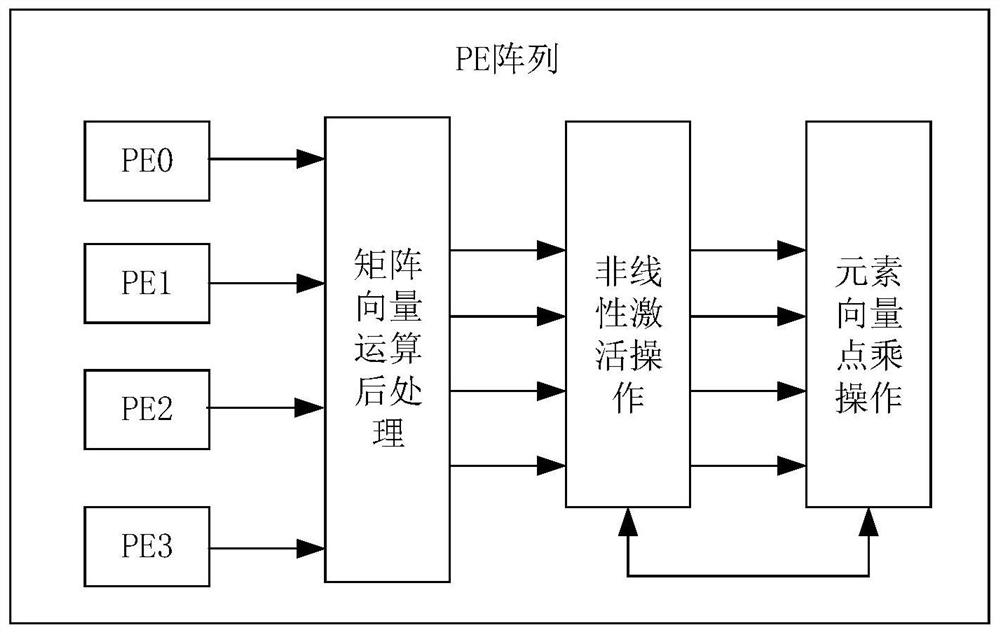

OPU-based CNN acceleration method and system

Inactive · US20200151019A1 · High complexity · Reduced versatility · Resource allocation · Kernel methods · Jazelle · Concurrent computation

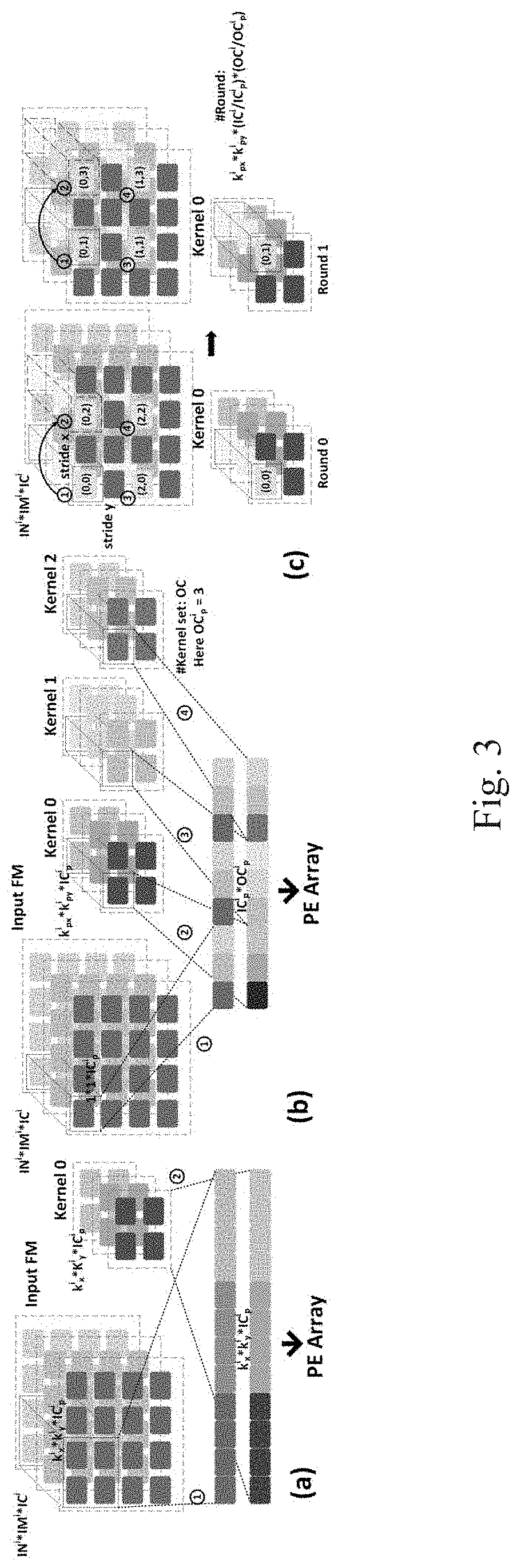

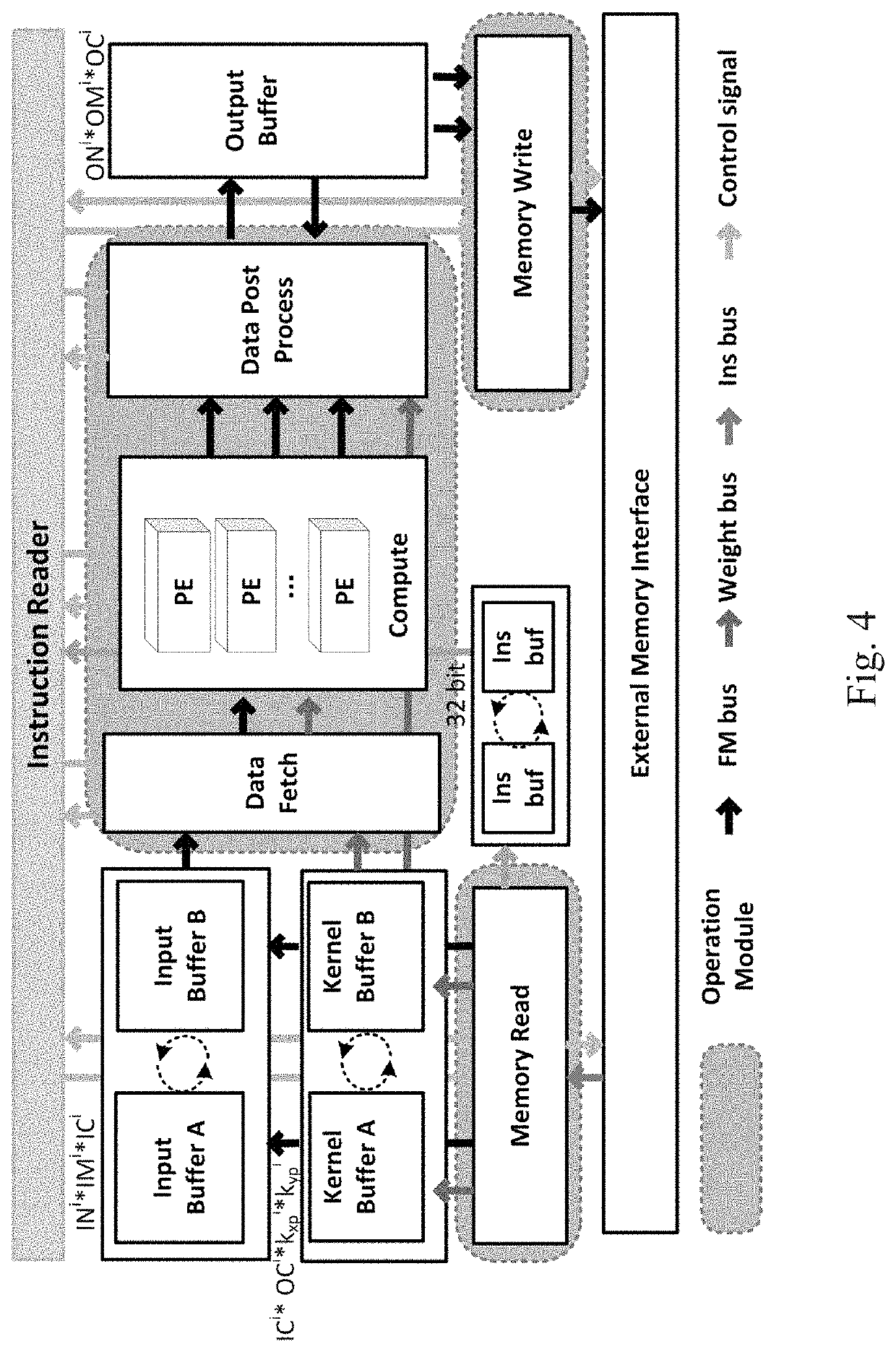

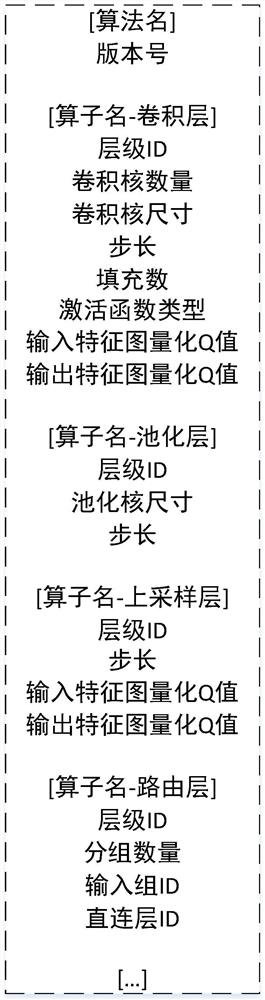

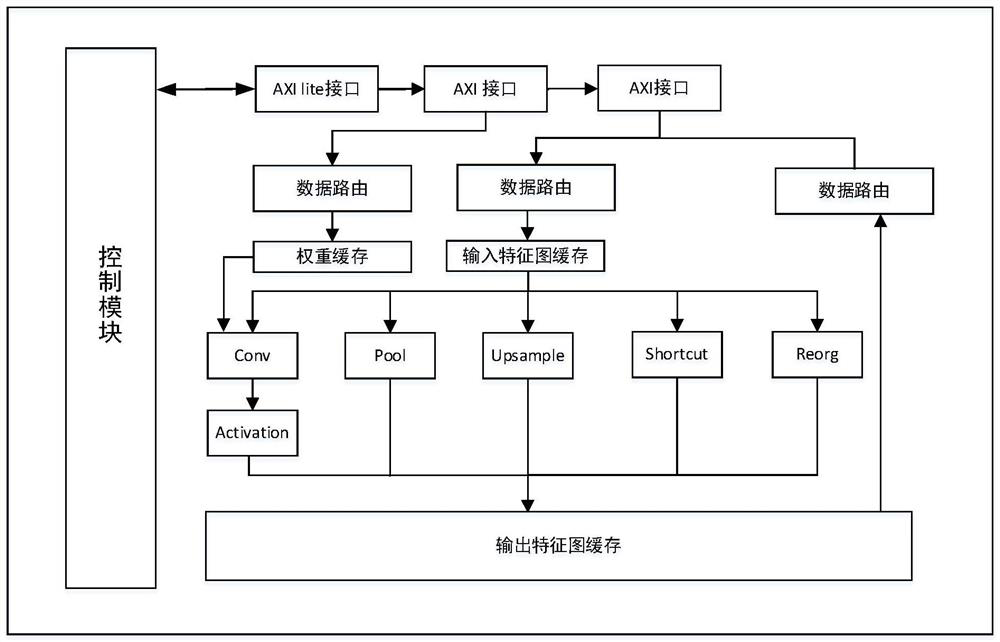

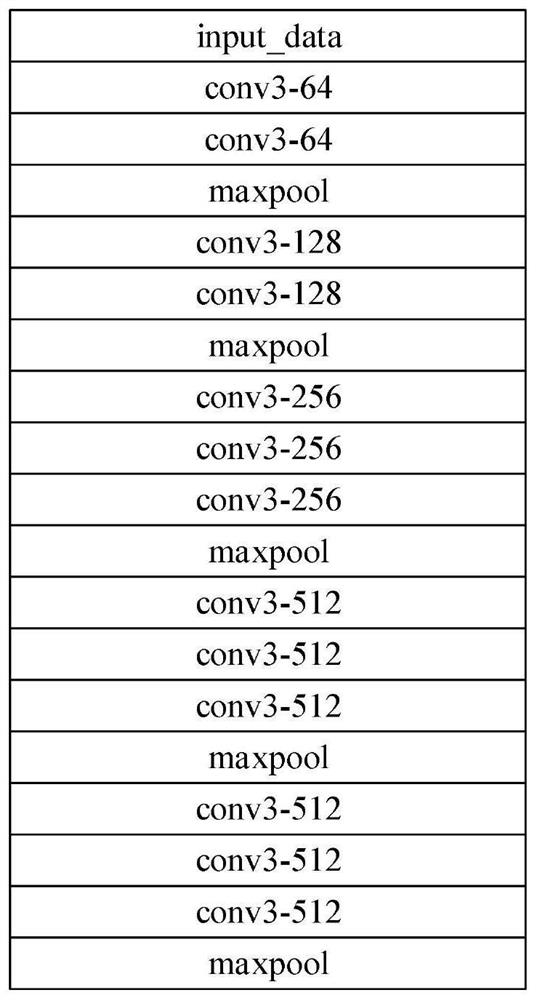

An OPU-based CNN acceleration method and system are disclosed. The method includes (1) defining an OPU instruction set; (2) converting, through a compiler, the CNN configuration files that a deep learning framework generates for different target networks, selecting an optimal mapping strategy according to the OPU instruction set, configuring the mapping, and generating the instructions of the different target networks to complete the mapping; and (3) reading the instructions into the OPU and running them according to the parallel computing mode defined by the OPU instruction set, thereby accelerating the different target networks. The present invention solves the problem that existing FPGA acceleration generates a specific individual accelerator for each CNN. It does so by defining the instruction types and setting the instruction granularity, performing network reorganization optimization, searching the solution space for the mapping that ensures maximum throughput, and having the hardware adopt the parallel computing mode.

Owner:REDNOVA INNOVATIONS INC

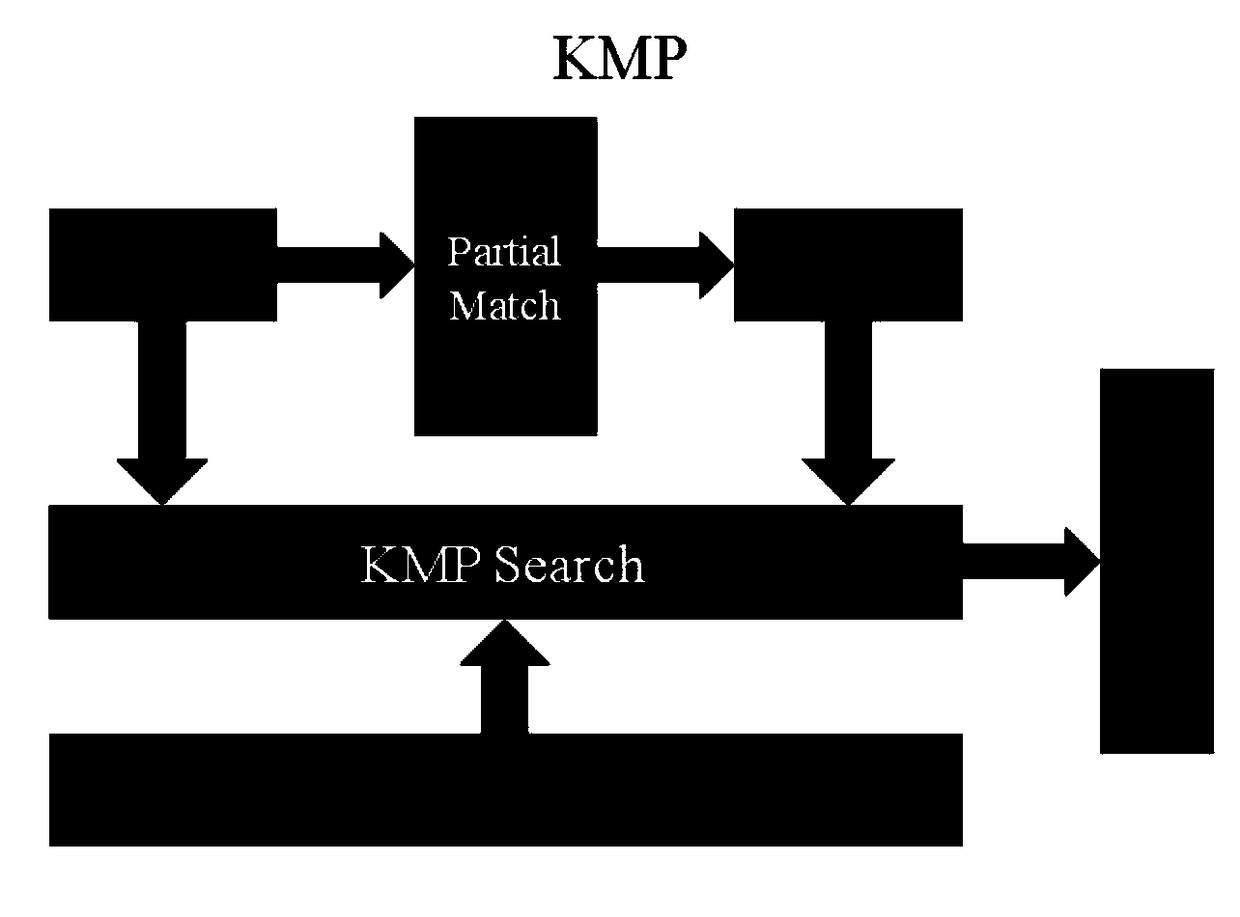

FPGA-based acceleration platform and design method for gene sequencing string matching algorithm

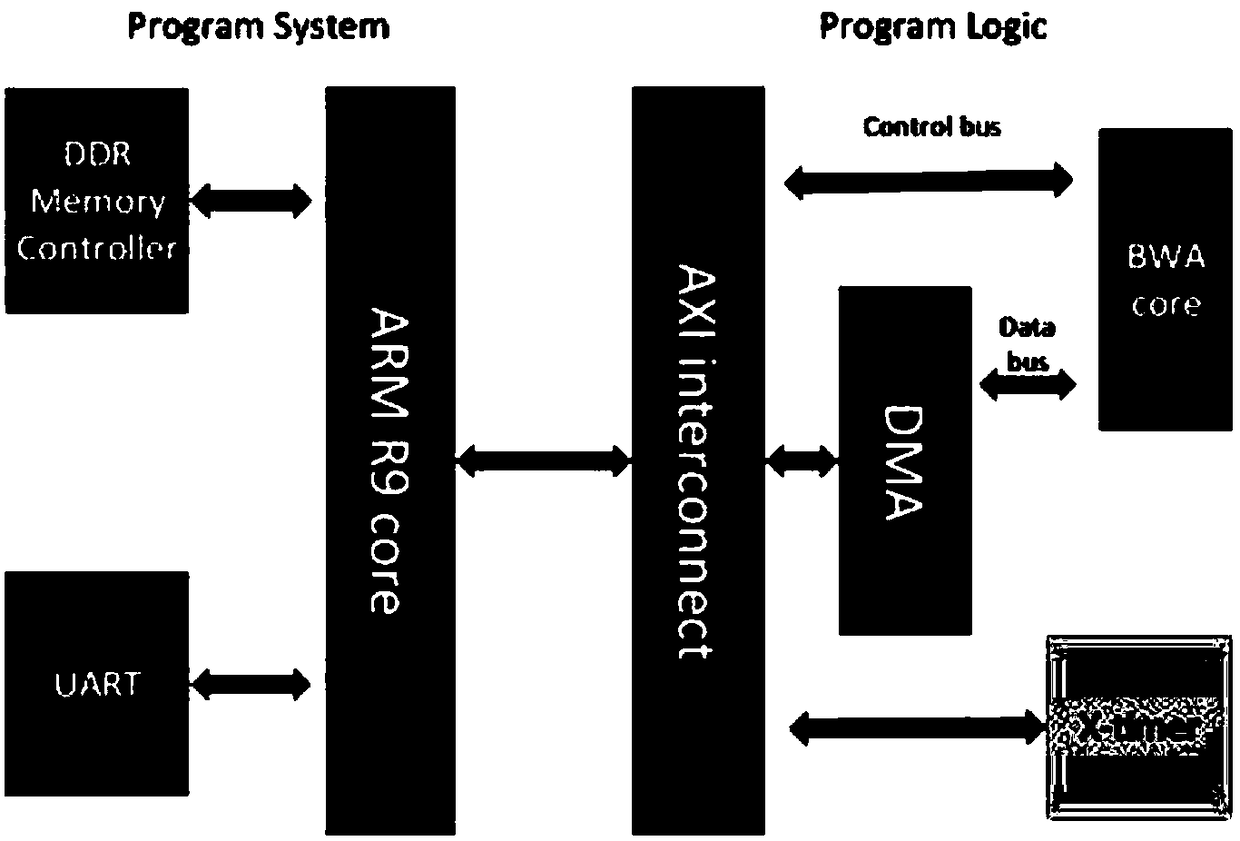

Pending · CN108595917A · Improve performance · Hybridisation · Special data processing applications · General purpose · Program logic

The invention discloses an FPGA-based acceleration platform and a design method for a gene sequencing string matching algorithm. The acceleration platform includes a PS (Program System) end and a PL (Program Logic) end. The PS end includes a general-purpose processor and a DRAM, and it runs the software-side code and controls the hardware part; the PL end contains a plurality of IP cores that can be solidified as required to perform the corresponding tasks. First, the general-purpose processor writes string data into the DRAM; the FPGA then reads the string data from the DRAM, starts the calculation, and writes the result back into the DRAM; finally, the general-purpose processor reads the matching result from the DRAM. The accelerator of the invention deploys multiple independent IP cores for calculation on the FPGA, and the IP cores run in a pipelined mode, so that programmers without hardware knowledge can easily obtain good performance by using existing FPGA resources.

Owner:SUZHOU INST FOR ADVANCED STUDY USTC

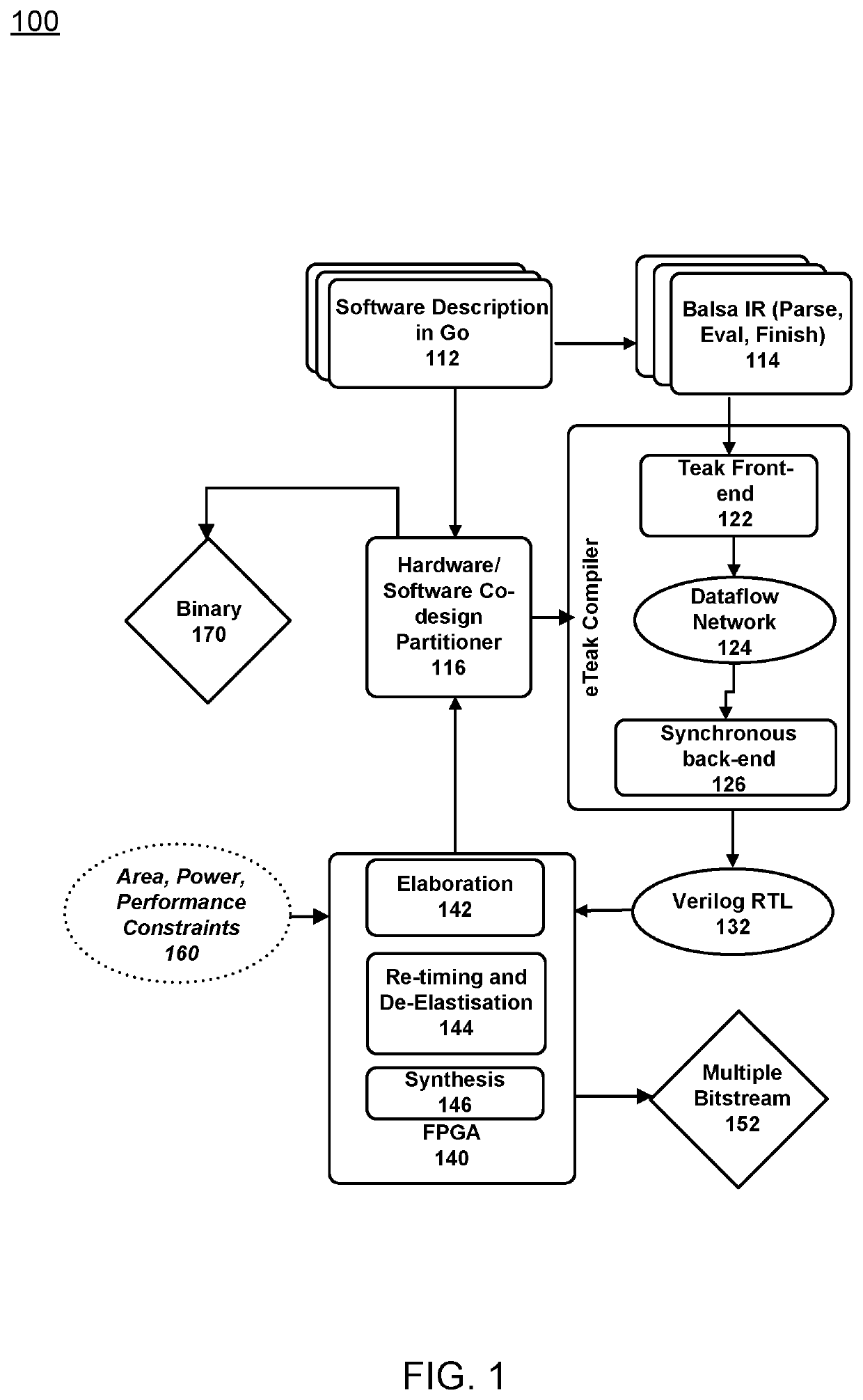

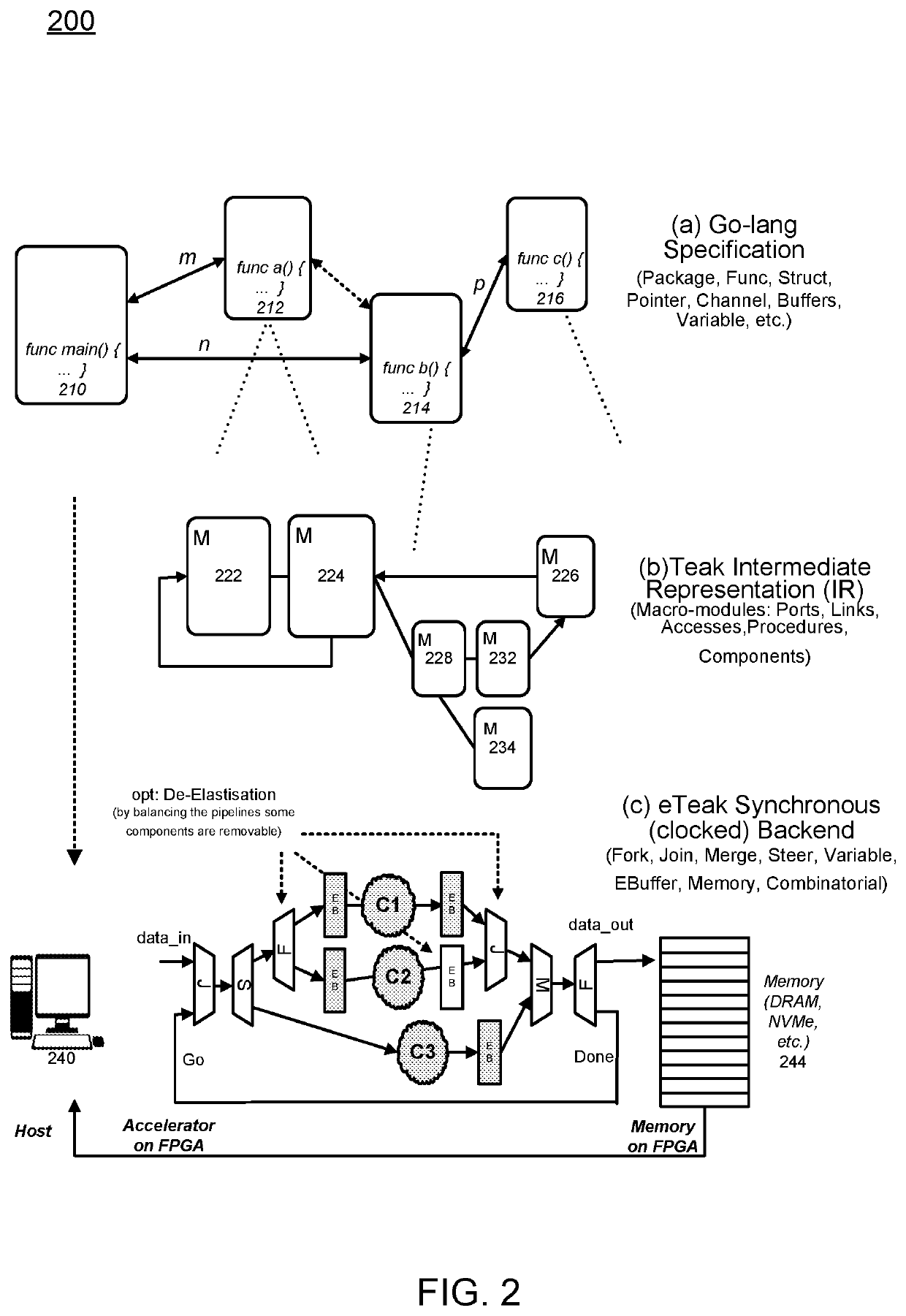

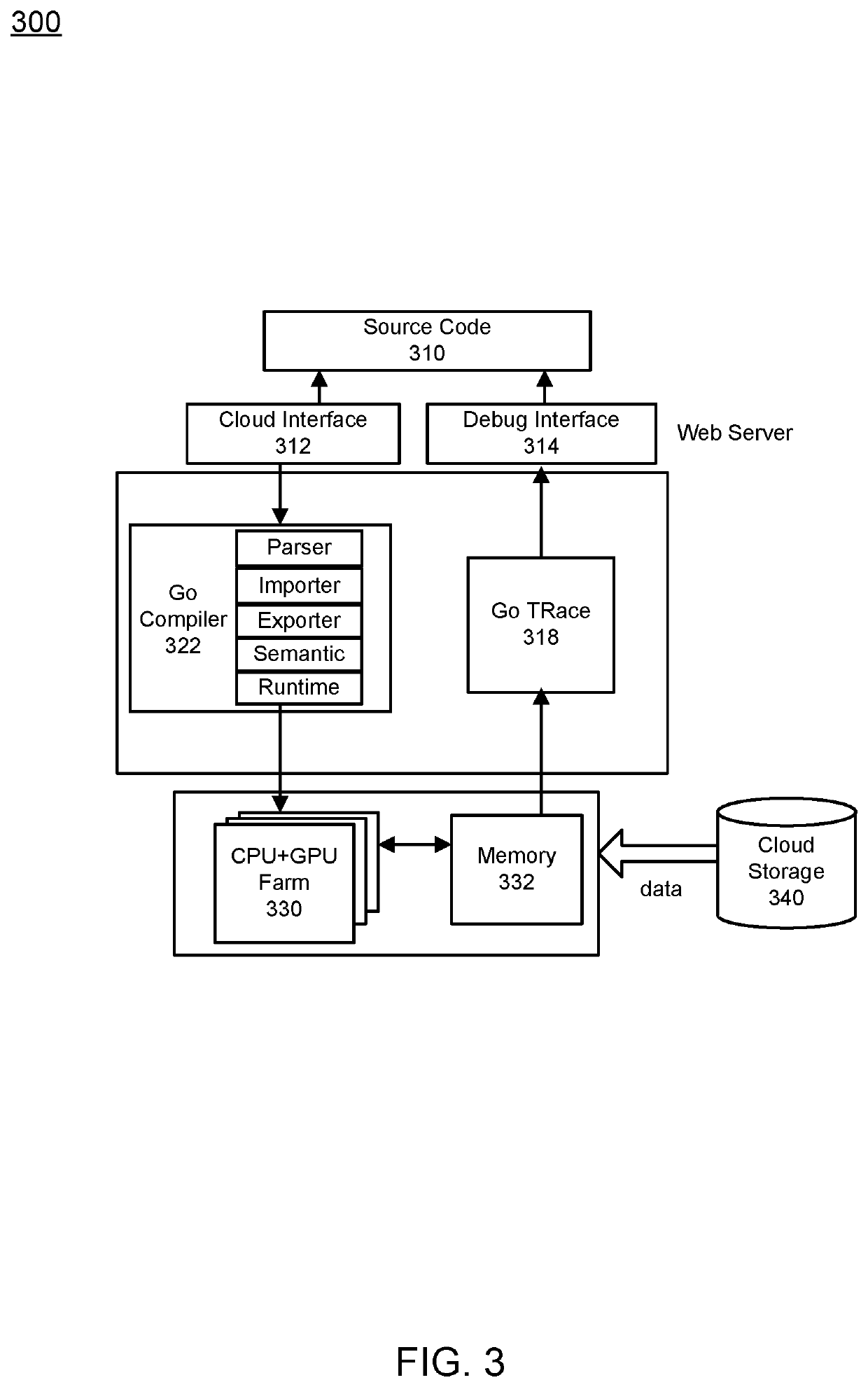

Synthesis Path For Transforming Concurrent Programs Into Hardware Deployable on FPGA-Based Cloud Infrastructures

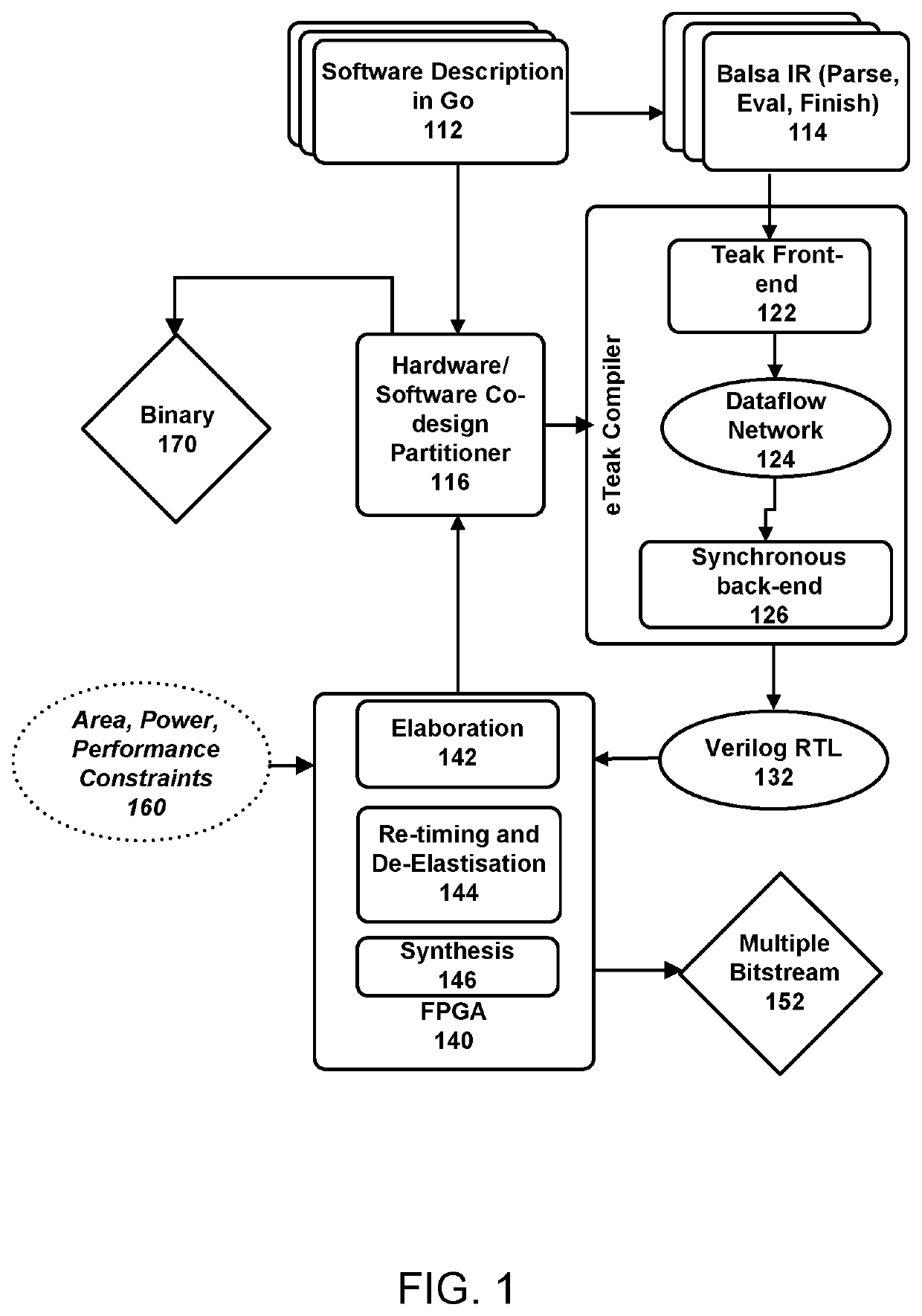

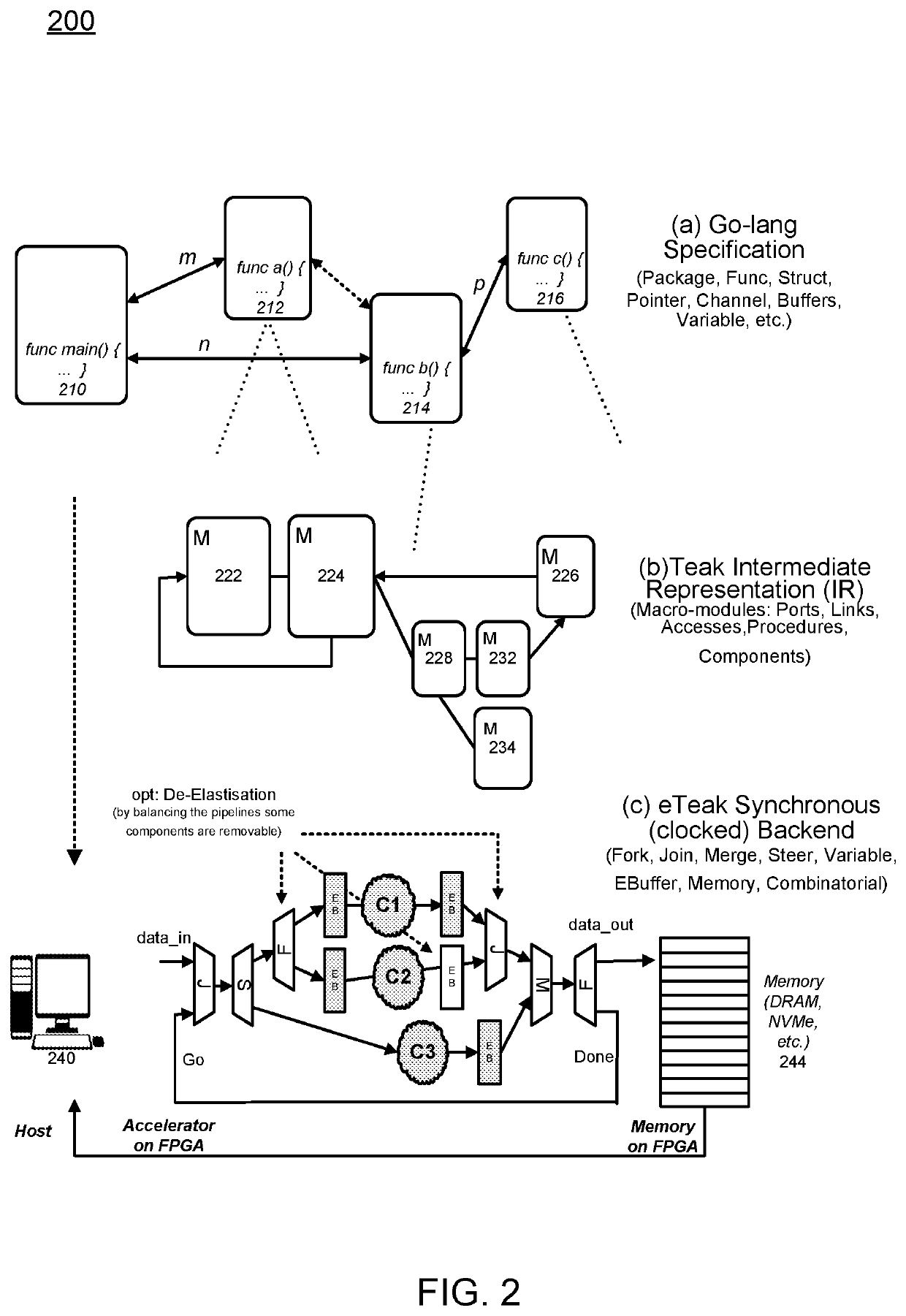

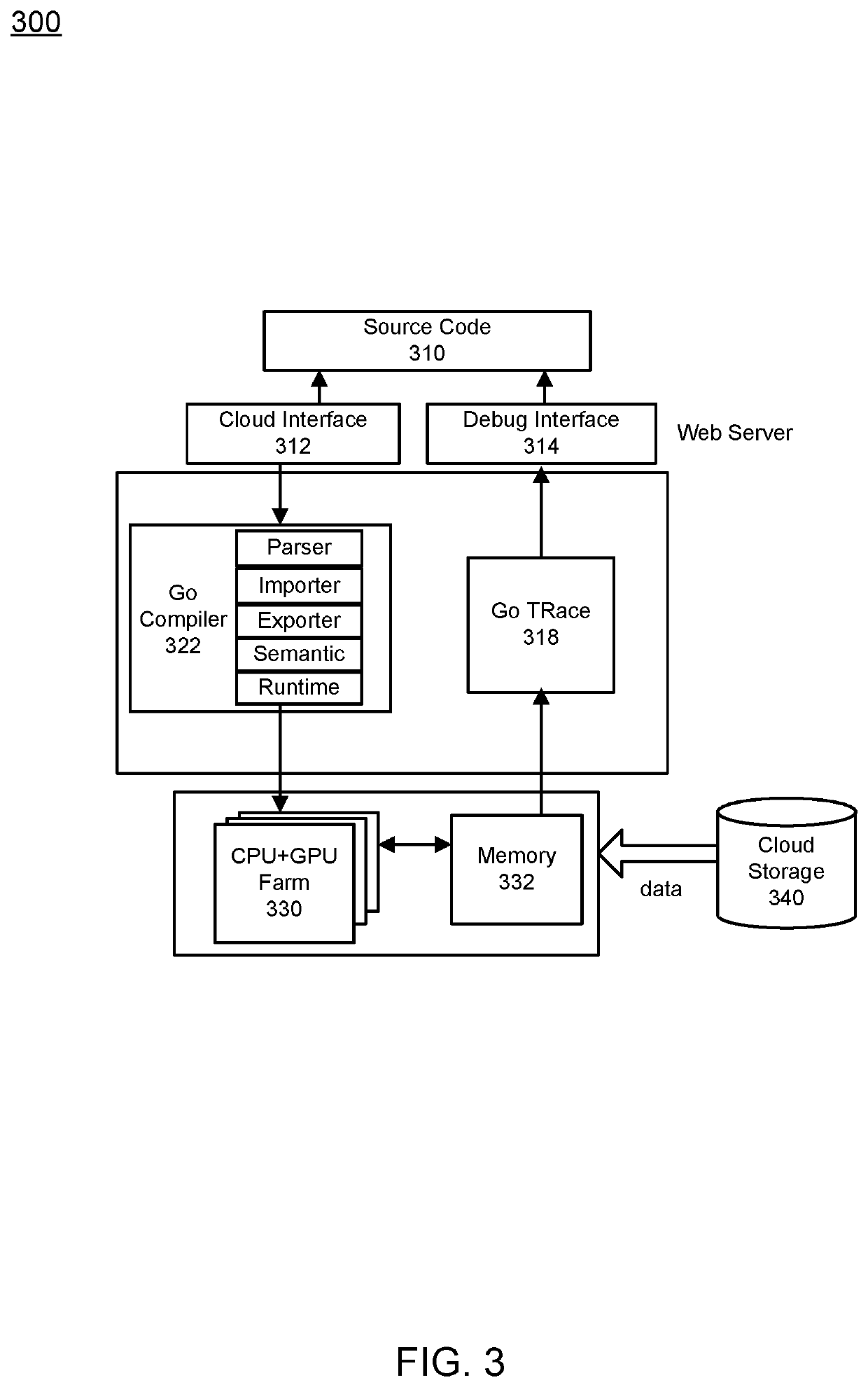

Exploiting FPGAs for acceleration may be performed by transforming concurrent programs. One example mode of operation may provide one or more of creating synchronous hardware accelerators from concurrent asynchronous programs at software level, by obtaining input as software instructions describing concurrent behavior via a model of communicating sequential processes (CSP) of message exchange between concurrent processes performed via channels, mapping, on a computing device, each of the concurrent processes to synchronous dataflow primitives, comprising at least one of join, fork, merge, steer, variable, and arbiter, producing a clocked digital logic description for upload to one or more field programmable gate array (FPGA) devices, performing primitive remapping of the output design for throughput, clock rate and resource usage via retiming, and creating an annotated graph of the input software description for debugging of concurrent code for the field FPGA devices.

Owner:RECONFIGURE IO LTD

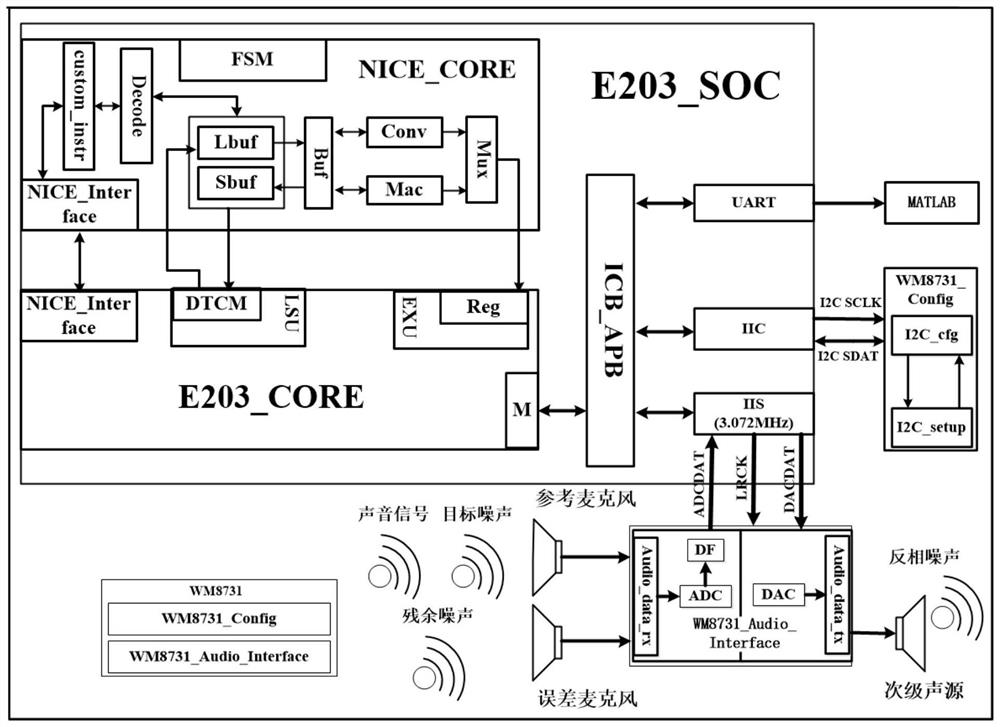

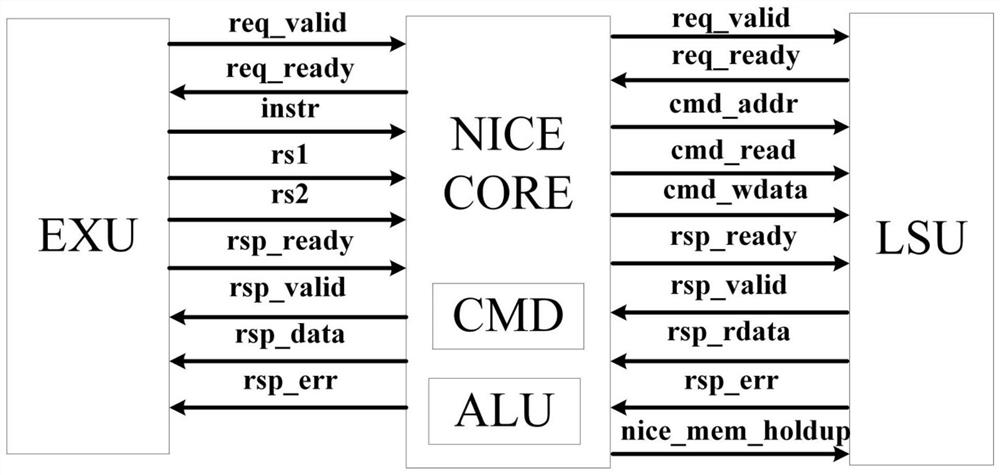

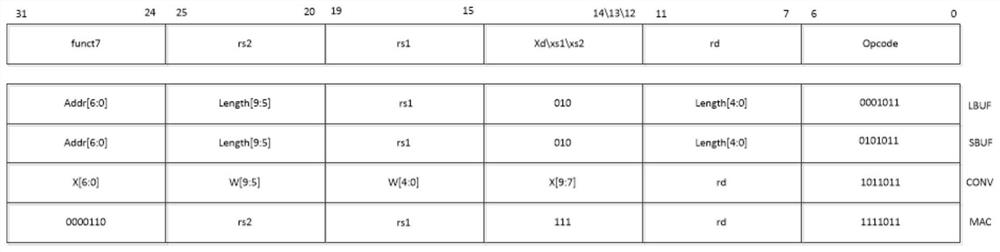

Audio noise reduction accelerator system and method based on RISC v custom instruction set expansion

Pending · CN113851103A · Reduce waste · Strong configurability · Sound producing devices · Computer hardware · Computer architecture

The invention discloses an audio noise reduction accelerator system and method based on RISC-V custom instruction set extension, and belongs to the technical field of integrated circuits. The system mainly comprises an E203_CORE, a NICE_CORE, a NICE_Interface, an E203_SOC, a WM8731 audio codec module, and an FxLMS audio noise reduction algorithm. The E203_CORE is connected to the NICE_CORE through the NICE_Interface; the E203_CORE, the NICE_CORE and the related peripheral ports together form the E203_SOC; the E203_SOC is connected to the WM8731 audio codec module; and the FxLMS audio noise reduction algorithm is downloaded into the core of the RISC-V processor through software programming and run there. Compared with a processor based on the ARM instruction set architecture, a processor using a RISC-V custom instruction set can accelerate a specific part of the computation in the FxLMS algorithm; area, power consumption, granularity and similar concerns can be better optimized, and the flexibility and feasibility of the algorithm are improved.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

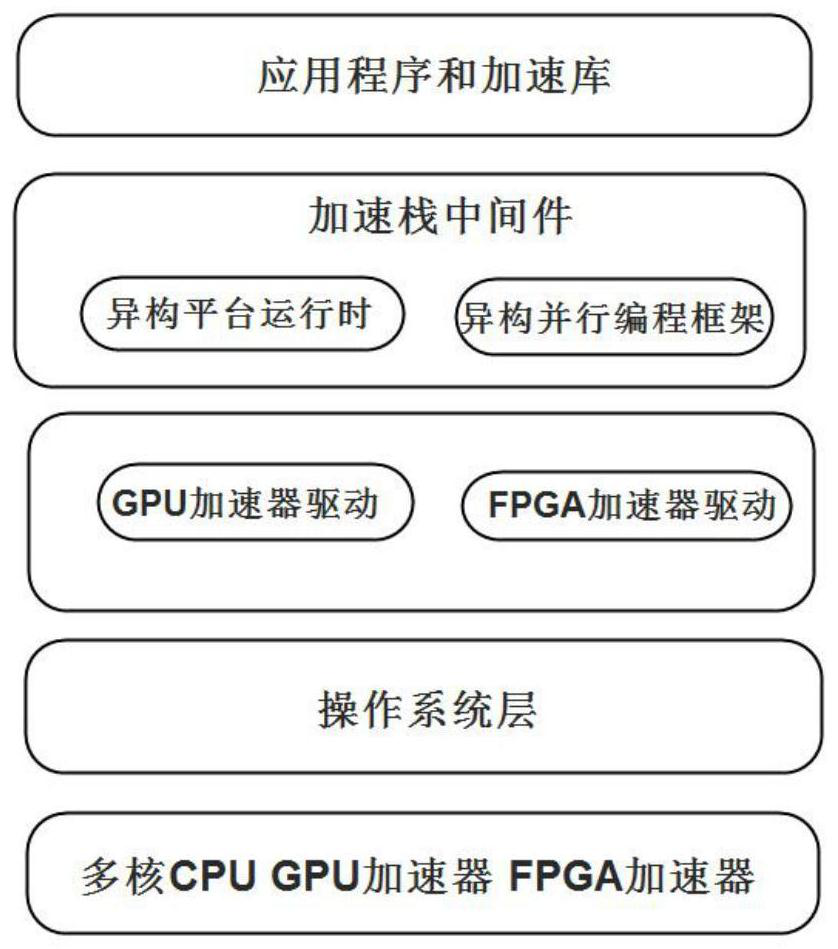

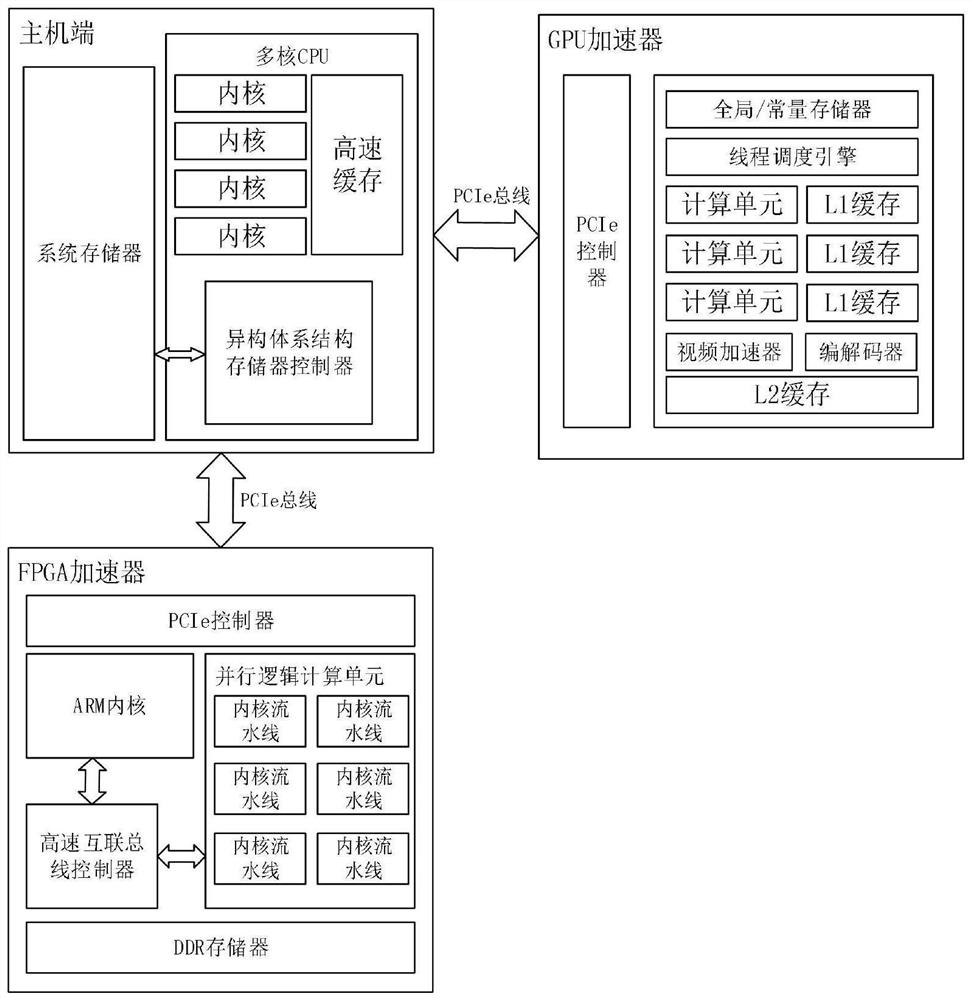

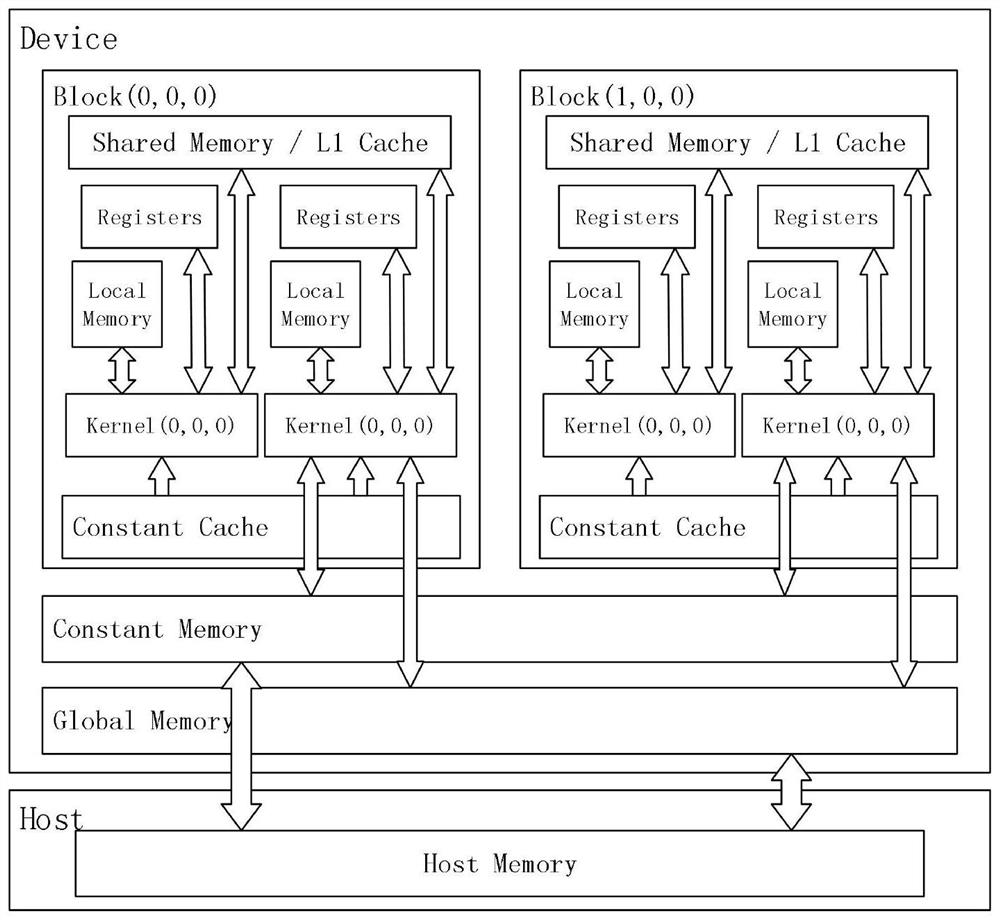

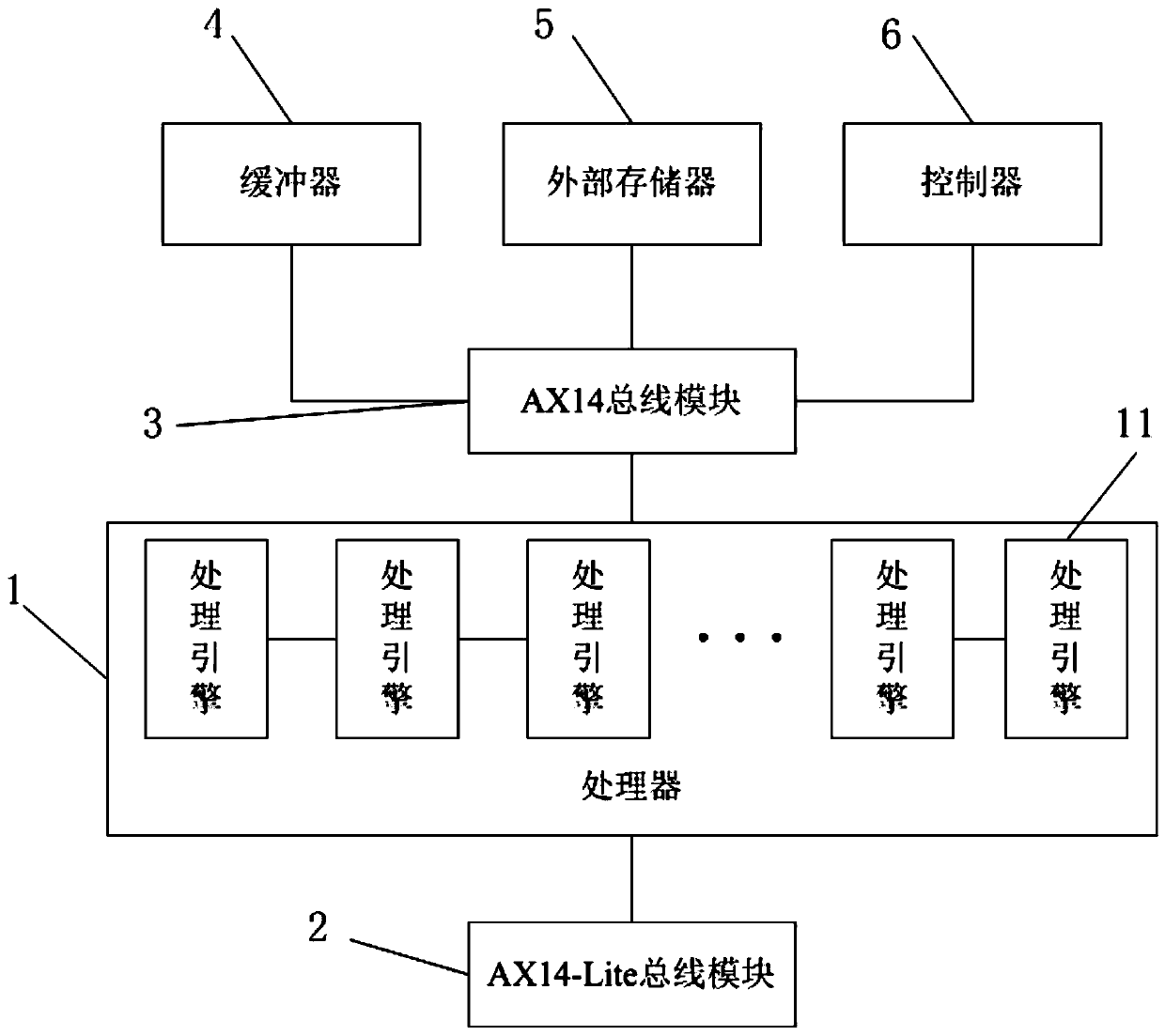

Domestication heterogeneous computing acceleration platform

Pending · CN111611198A · Improve computing power · Realize localization substitution · Architecture with single central processing unit · Electric digital data processing · Computer architecture · Concurrent computation

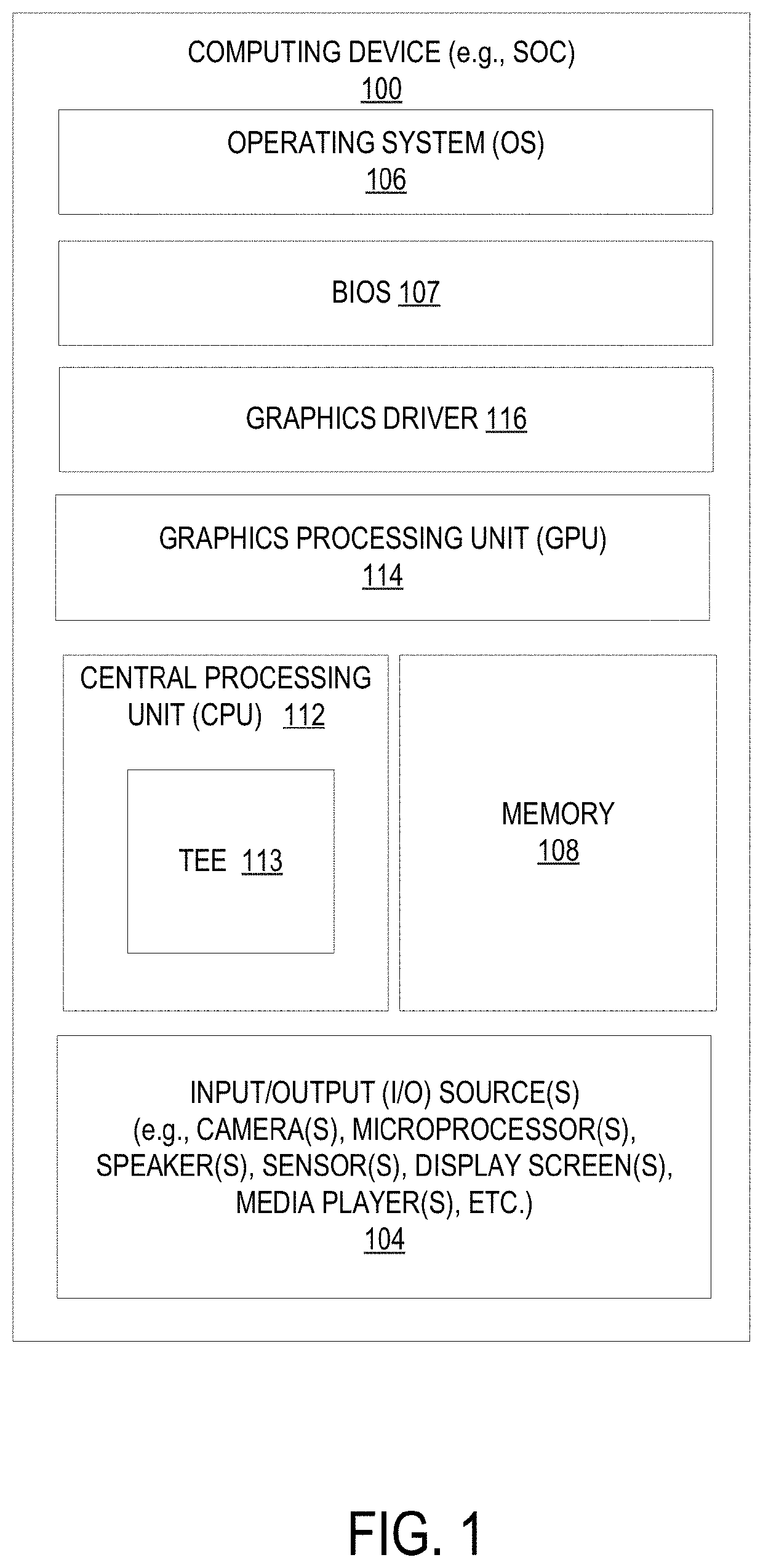

The invention relates to a domestication heterogeneous computing acceleration platform comprising an accelerator hardware platform, an operating system layer, a GPU accelerator driving layer, an FPGA accelerator driving layer, heterogeneous acceleration stack middleware, an application program and an acceleration library. The accelerator hardware platform is responsible for allocating and scheduling computing and storage resources; the GPU accelerator driving layer and the FPGA accelerator driving layer provide low-level management interfaces to the hardware's internal resources for the heterogeneous platform middleware to call; the acceleration stack middleware maps the computing and storage resources of the heterogeneous system into operating system user space and provides a standardized calling interface for the top-level application program; and the acceleration library provides parallelized basic operations and low-level optimization. When an application program is executed, the host submits a compute kernel and an execution instruction, and the computation is executed in a compute unit on the device. A heterogeneous many-core acceleration stack and a heterogeneous parallel computing framework are constructed, the differences between heterogeneous system platforms are hidden, and domestication of heterogeneous acceleration software and hardware platforms is achieved.

Owner:TIANJIN QISUO PRECISION ELECTROMECHANICAL TECH

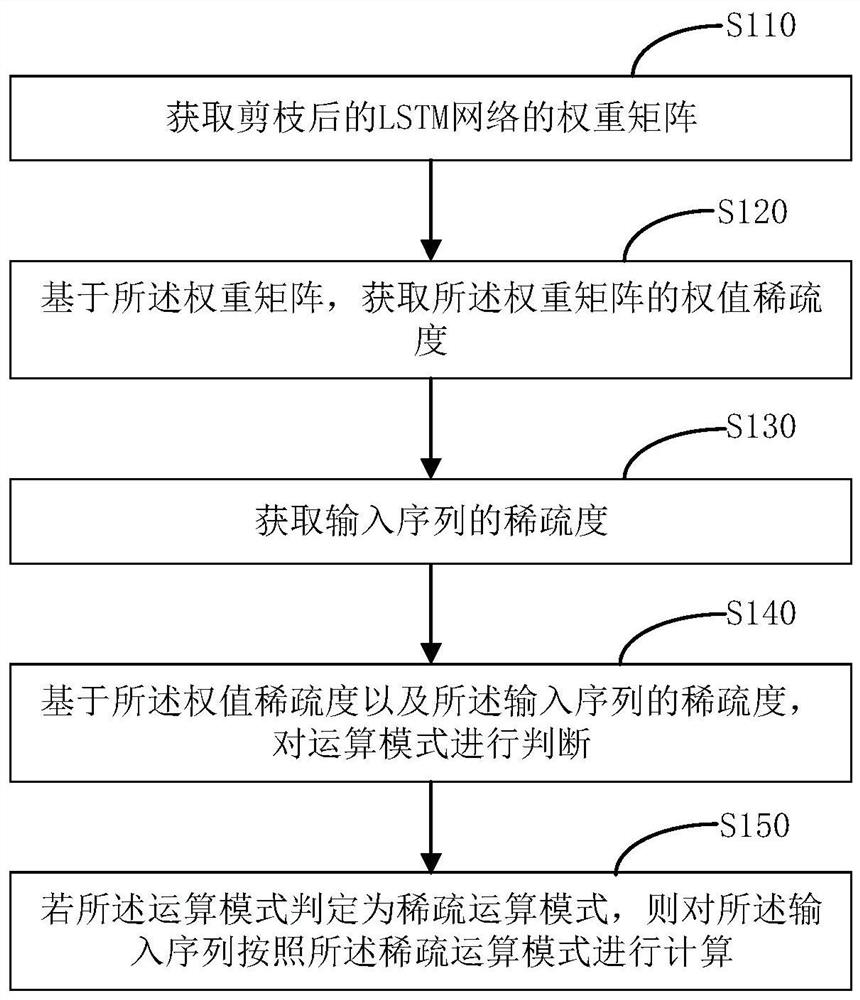

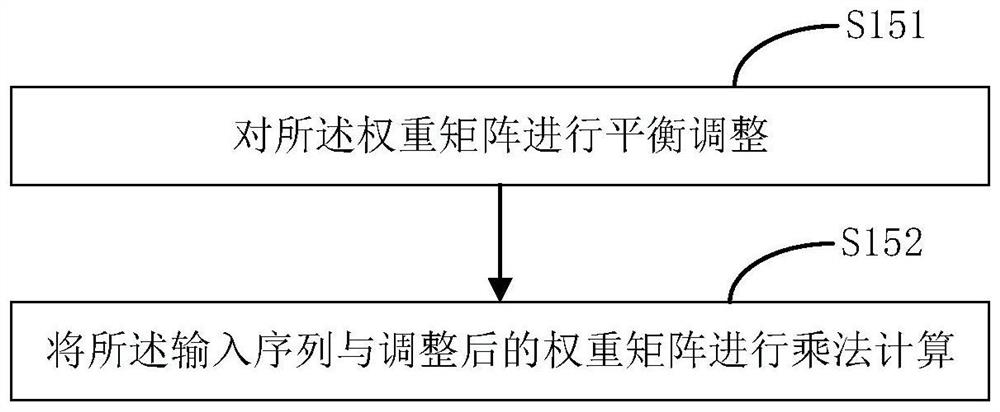

LSTM model optimization method, accelerator, device and medium

Pending · CN113537465A · Get weight sparsity · Get sparsity · Neural architectures · Physical realisation · Jazelle · Process engineering

The invention discloses an LSTM model optimization method, an accelerator, a device and a medium. The method comprises the following steps: acquiring a weight matrix of a pruned LSTM network; based on the weight matrix, obtaining the weight sparseness of the weight matrix; obtaining the sparseness of an input sequence; based on the weight sparseness and the sparseness of the input sequence, judging an operation mode; and if the operation mode is judged to be a sparse operation mode, calculating the input sequence according to the sparse operation mode. The invention aims to improve the energy efficiency ratio of the LSTM hardware accelerator, and is simple to implement and easy to deploy.
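The mode decision described in this abstract can be illustrated with a minimal Python sketch. The abstract does not say how the two sparseness values are combined, so the rule in `choose_mode` (either value reaching a `threshold` of 0.5) is purely an assumption, and the function names `sparsity`, `choose_mode` and `matvec` are hypothetical.

```python
from typing import List, Sequence


def sparsity(values: Sequence[float]) -> float:
    """Fraction of entries that are exactly zero (0.0 for an empty input)."""
    values = list(values)
    if not values:
        return 0.0
    return sum(1 for v in values if v == 0) / len(values)


def choose_mode(weights: List[List[float]], inputs: List[float],
                threshold: float = 0.5) -> str:
    """Pick an operation mode from weight sparsity and input sparsity."""
    weight_sparsity = sparsity([w for row in weights for w in row])
    input_sparsity = sparsity(inputs)
    # Assumed rule (not taken from the patent): go sparse when either
    # measure reaches the threshold.
    if weight_sparsity >= threshold or input_sparsity >= threshold:
        return "sparse"
    return "dense"


def matvec(weights: List[List[float]], inputs: List[float],
           mode: str) -> List[float]:
    """Matrix-vector product; in sparse mode zero operands are skipped."""
    out = []
    for row in weights:
        acc = 0.0
        for w, x in zip(row, inputs):
            if mode == "sparse" and (w == 0 or x == 0):
                continue  # a hardware accelerator would skip this multiply
            acc += w * x
        out.append(acc)
    return out
```

Both modes produce the same numerical result; the sparse mode only avoids work on zero operands, which is where an energy saving could come from in hardware.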

Owner:SHENZHEN ECHIEV AUTONOMOUS DRIVING TECH CO LTD

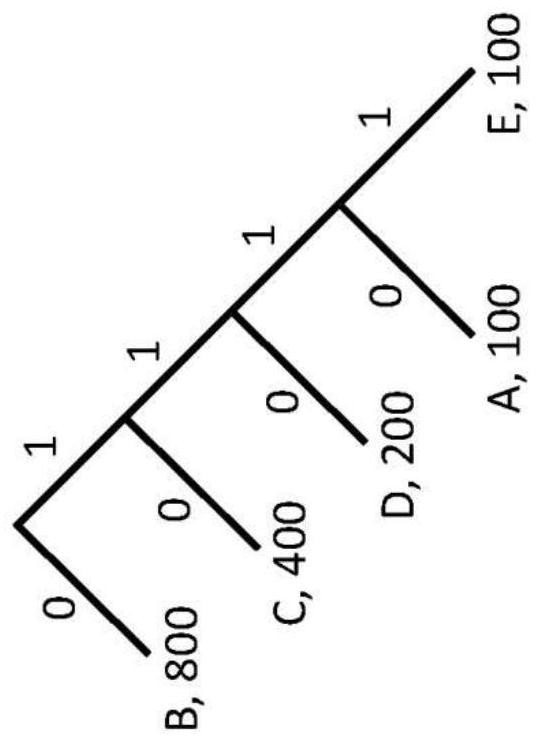

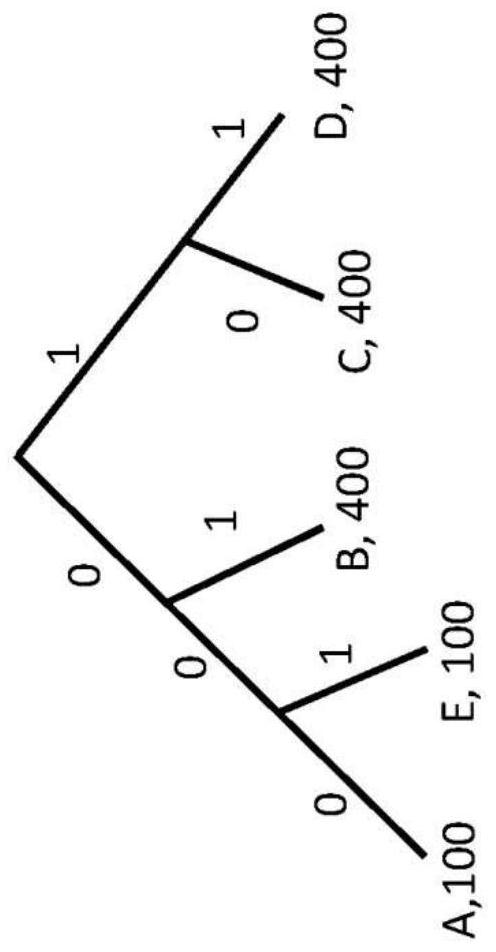

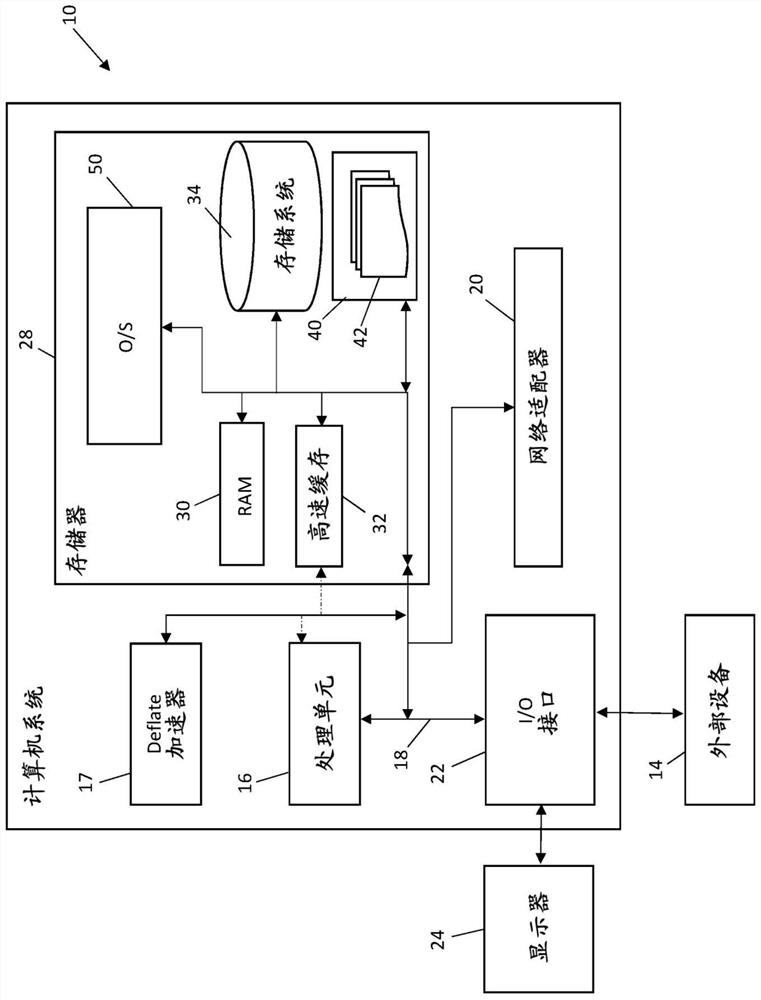

Reducing latch count to save hardware area for dynamic huffman table generation

Embodiments of the invention are directed to a DEFLATE compression accelerator and to a method for reducing a latch count required for symbol sorting when generating a dynamic Huffman table. The accelerator includes an input buffer and a Lempel-Ziv 77 (LZ77) compressor communicatively coupled to an output of the input buffer. The accelerator further includes a Huffman encoder communicatively coupled to the LZ77 compressor. The Huffman encoder includes a bit translator. The accelerator further includes an output buffer communicatively coupled to the Huffman encoder.

Owner:IBM CORP

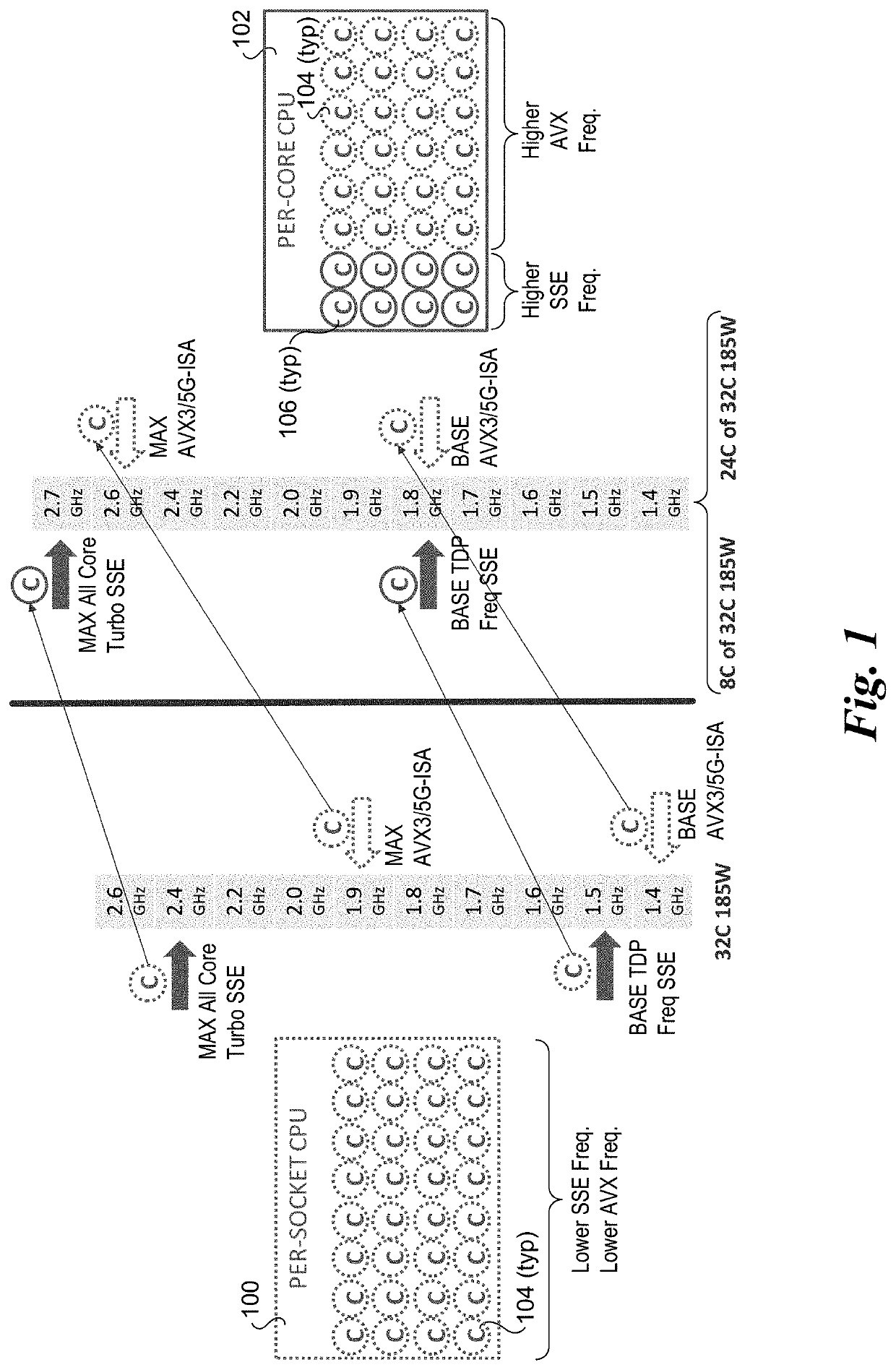

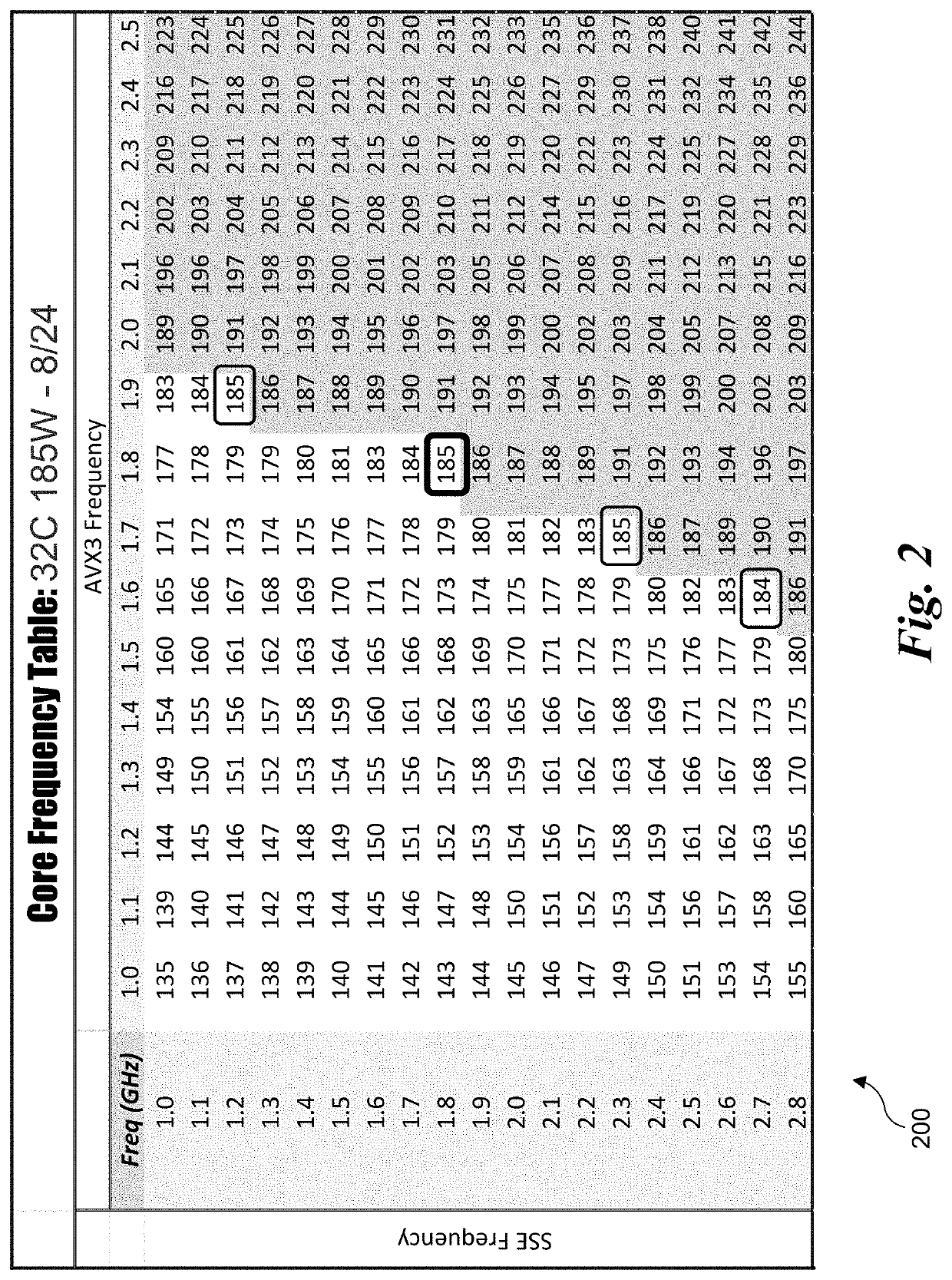

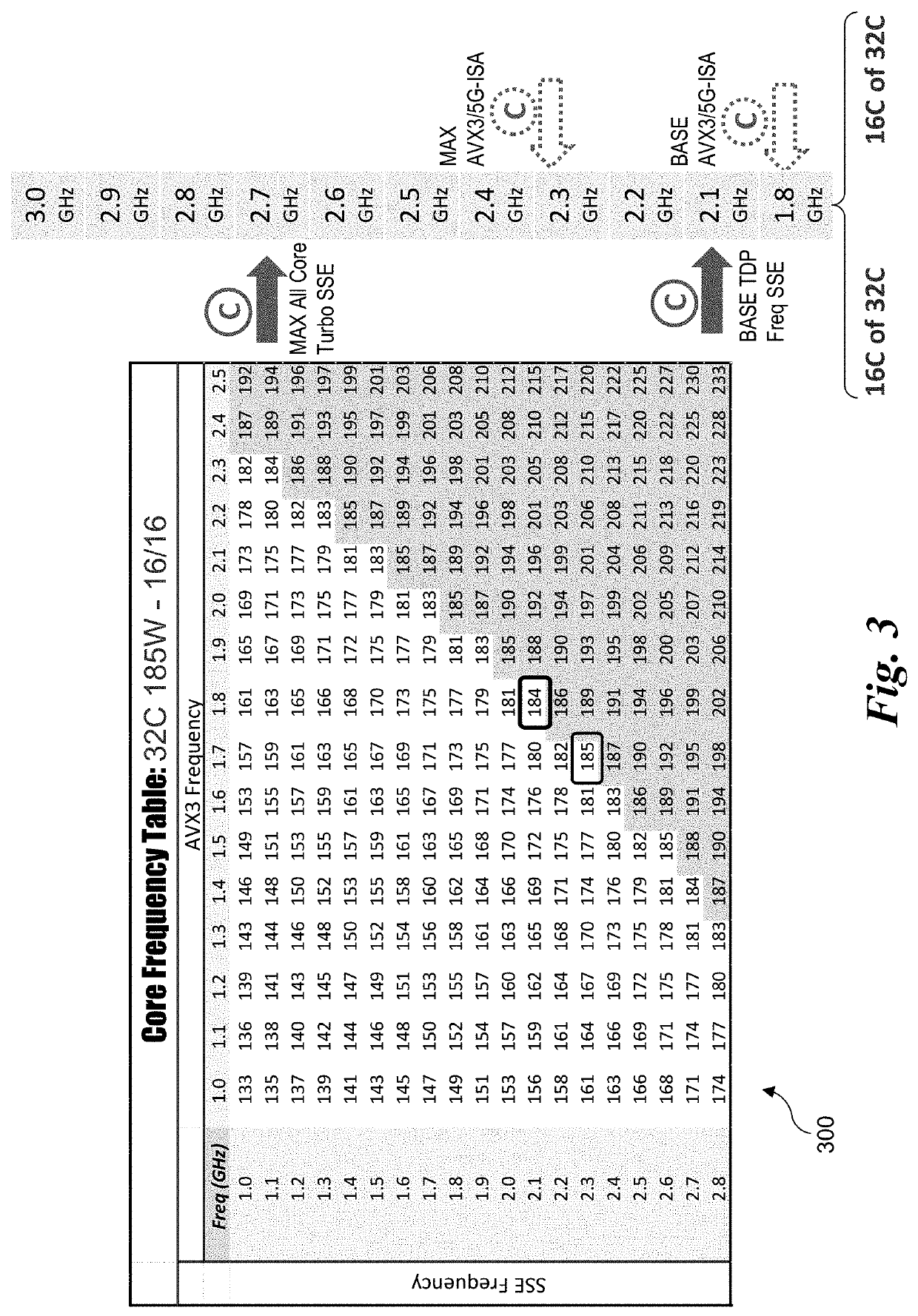

Frequency scaling for per-core accelerator assignments

Pending · US20210334101A1 · Concurrent instruction execution · Energy efficient computing · Jazelle · Computer architecture

Methods for frequency scaling for per-core accelerator assignments and associated apparatus. A processor includes a CPU (central processing unit) having multiple cores that can be selectively configured to support frequency scaling and instruction extensions. Under this approach, some cores can be configured to support a selective set of AVX instructions (such as AVX3 / 5G-ISA instructions) and / or AMX instructions, while other cores are configured to not support these AVX / AMX instructions. In one aspect, the selective AVX / AMX instructions are implemented in one or more ISA extension units that are separate from the main processor core (or otherwise comprise a separate block of circuitry in a processor core) and that can be selectively enabled or disabled. This enables cores having the separate unit(s) disabled to consume less power and / or operate at higher frequencies, while supporting the selective AVX / AMX instructions using other cores. These capabilities enhance performance and provide flexibility to handle a variety of applications requiring use of advanced AVX / AMX instructions to support accelerated workloads.

Owner:INTEL CORP

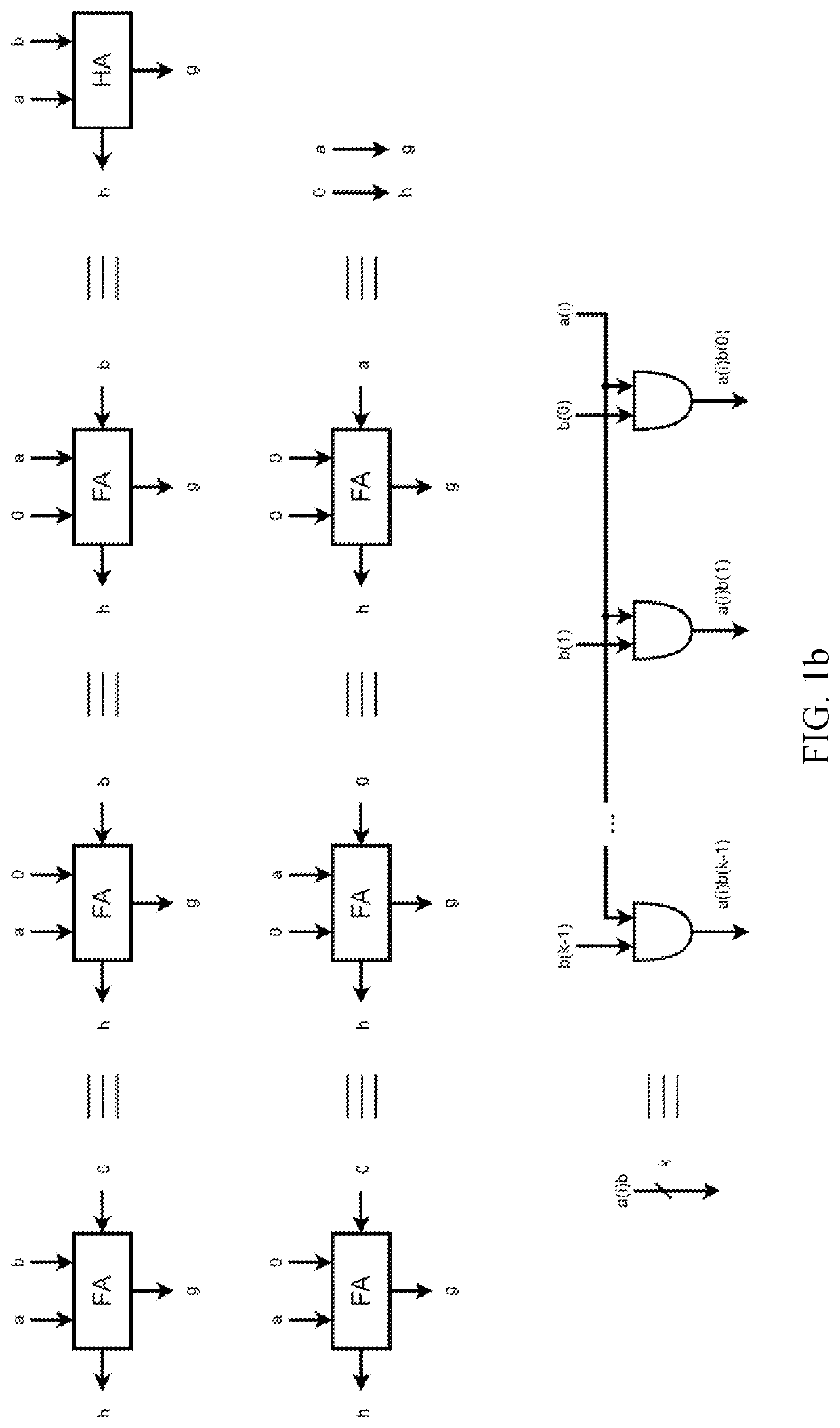

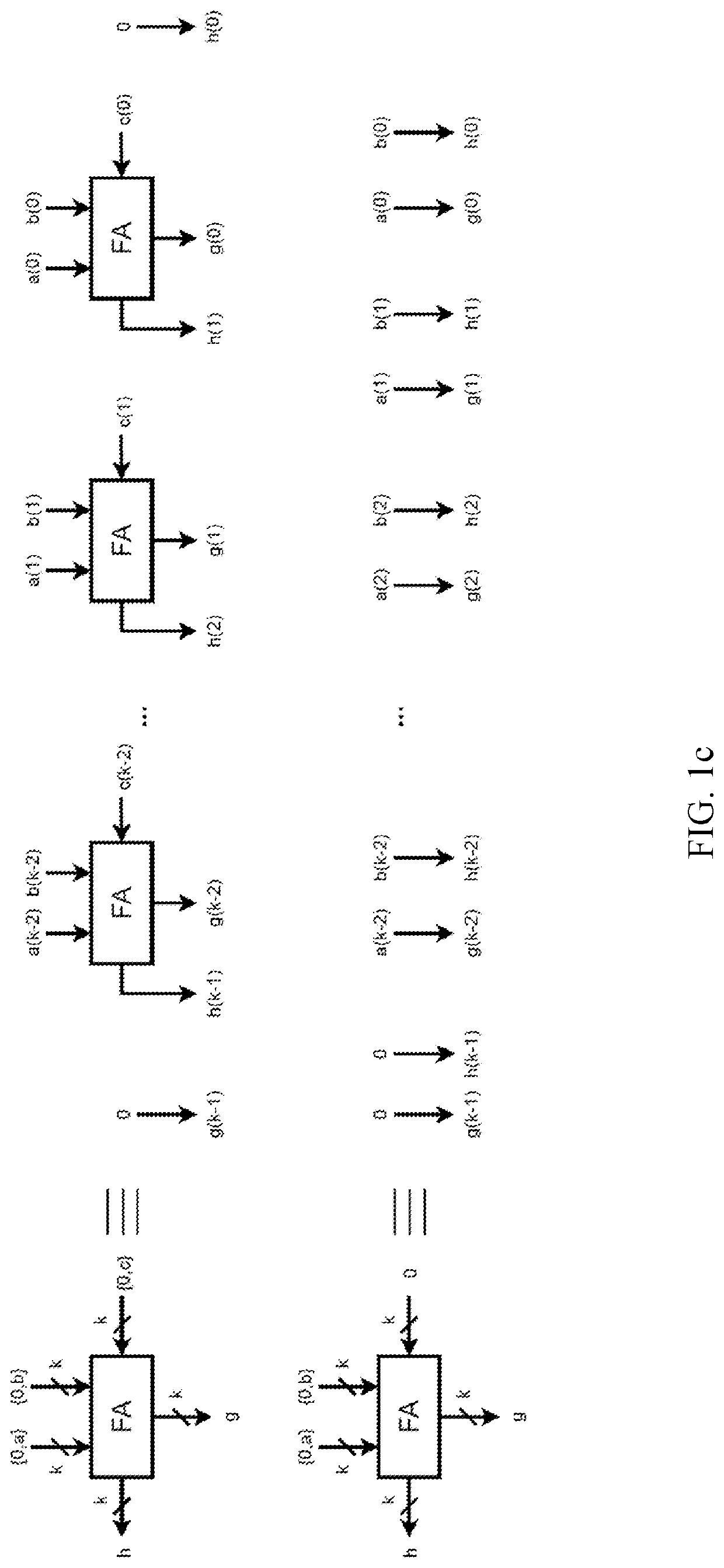

Architecture for small and efficient modular multiplication using carry-save adders

Active · US11210067B1 · Reduce complexity · Lower area · Computations using residue arithmetic · Jazelle · Computer architecture

A computer processing system having at least one accelerator operably configured to compute modular multiplication with a modulus of special form and having a systolic carry-save architecture configured to implement Montgomery multiplication and reduction and having multiple processing element types composed of Full Adders and AND gates.
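For readers unfamiliar with Montgomery multiplication, the following Python sketch shows the arithmetic that such an accelerator computes. It is a plain software version of the textbook algorithm with R = 2^k and does not model the systolic carry-save architecture or the special-form modulus of the patent. The function names and the choice of `k` are illustrative assumptions, and `pow(n, -1, r)` requires Python 3.8 or later.

```python
def montgomery_setup(n: int, k: int):
    """Precompute R = 2**k and n' with n * n' = -1 (mod R) for odd n < R."""
    if n % 2 == 0 or n >= (1 << k):
        raise ValueError("n must be odd and smaller than 2**k")
    r = 1 << k
    n_prime = (-pow(n, -1, r)) % r
    return r, n_prime


def redc(t: int, n: int, k: int, n_prime: int) -> int:
    """Montgomery reduction: return t * R^-1 mod n for 0 <= t < n * R."""
    mask = (1 << k) - 1
    m = ((t & mask) * n_prime) & mask  # makes t + m*n divisible by R
    u = (t + m * n) >> k               # exact division by R
    return u - n if u >= n else u      # single conditional subtraction


def mod_mul(a: int, b: int, n: int, k: int) -> int:
    """Compute a * b mod n through the Montgomery domain."""
    r, n_prime = montgomery_setup(n, k)
    a_bar = (a * r) % n                # convert operands into Montgomery form
    b_bar = (b * r) % n
    c_bar = redc(a_bar * b_bar, n, k, n_prime)  # Montgomery product
    return redc(c_bar, n, k, n_prime)           # convert back to normal form
```

The reduction replaces division by `n` with shifts and masks against a power of two, which is the property that makes the method attractive for adder-based hardware.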

Owner:PQSECURE TECH LLC

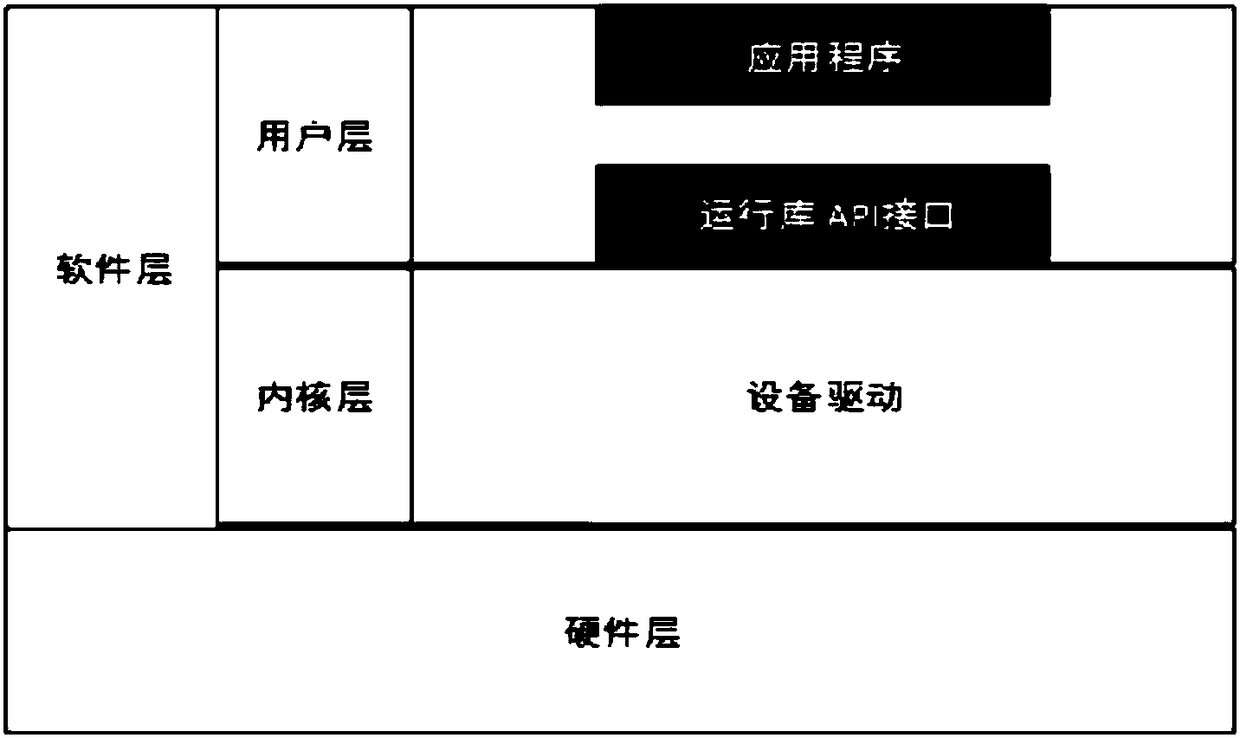

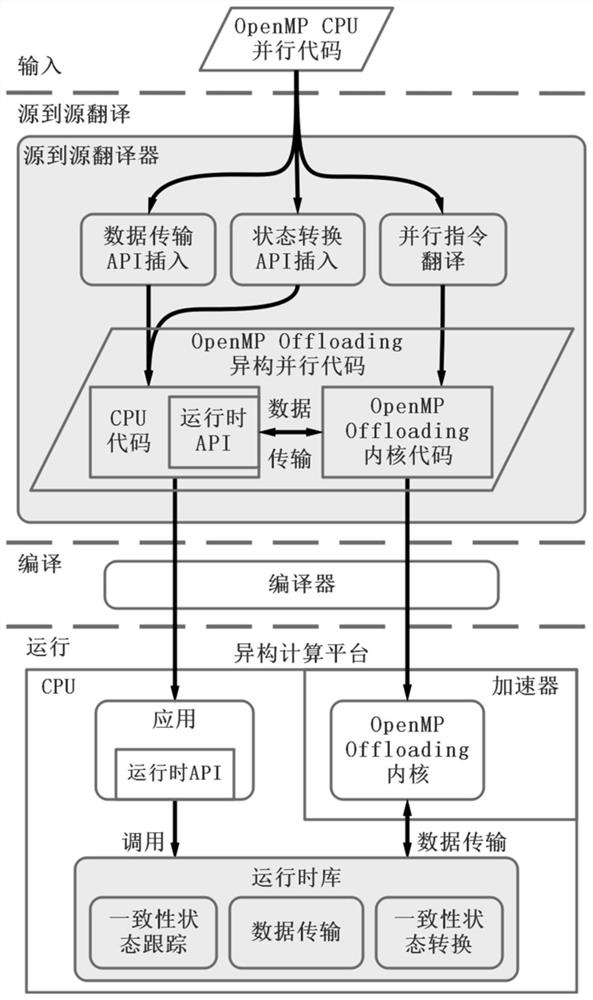

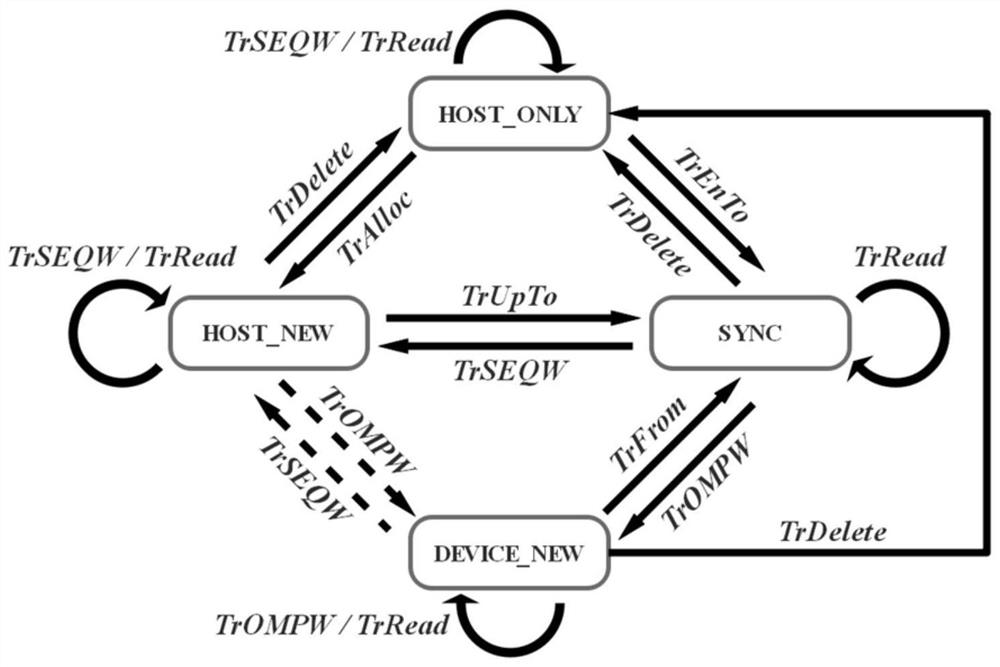

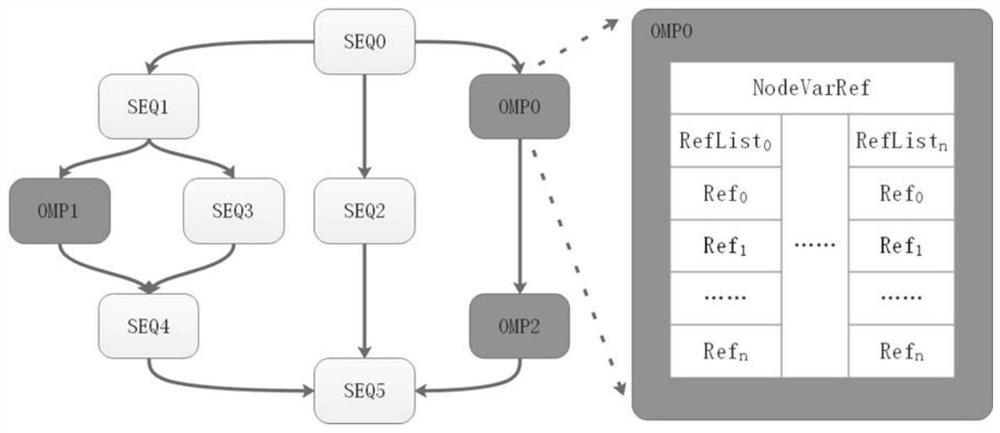

Automatic transplanting and optimizing method for heterogeneous parallel program

Pending · CN111966397A · Reduce workload · Ensure consistency · Program code adaption · Code compilation · Jazelle · Computer architecture

The invention discloses an automatic porting and optimizing method for heterogeneous parallel programs, and belongs to the field of heterogeneous parallel program development. The invention aims to port CPU parallel programs automatically and improve program performance while reducing developers' workload, thereby solving the problems of parallel directive conversion, data transfer management and optimization. The method proceeds as follows: a framework for an automatic heterogeneous parallel program porting system is constructed, which automatically translates an OpenMP CPU parallel program into an OpenMP Offloading heterogeneous parallel program; consistency state transitions are formalized so that, while data consistency is guaranteed, transfer operations are optimized and redundant data transfers are reduced; a runtime library is designed to provide automatic data transfer management and optimization and to maintain the consistency state of each variable's memory area; and a source-to-source translator is designed to convert parallel directives automatically and insert runtime API calls automatically. The method can automatically identify CPU parallel directives and convert them into accelerator parallel directives, so that program performance is improved.

Owner:HARBIN INST OF TECH

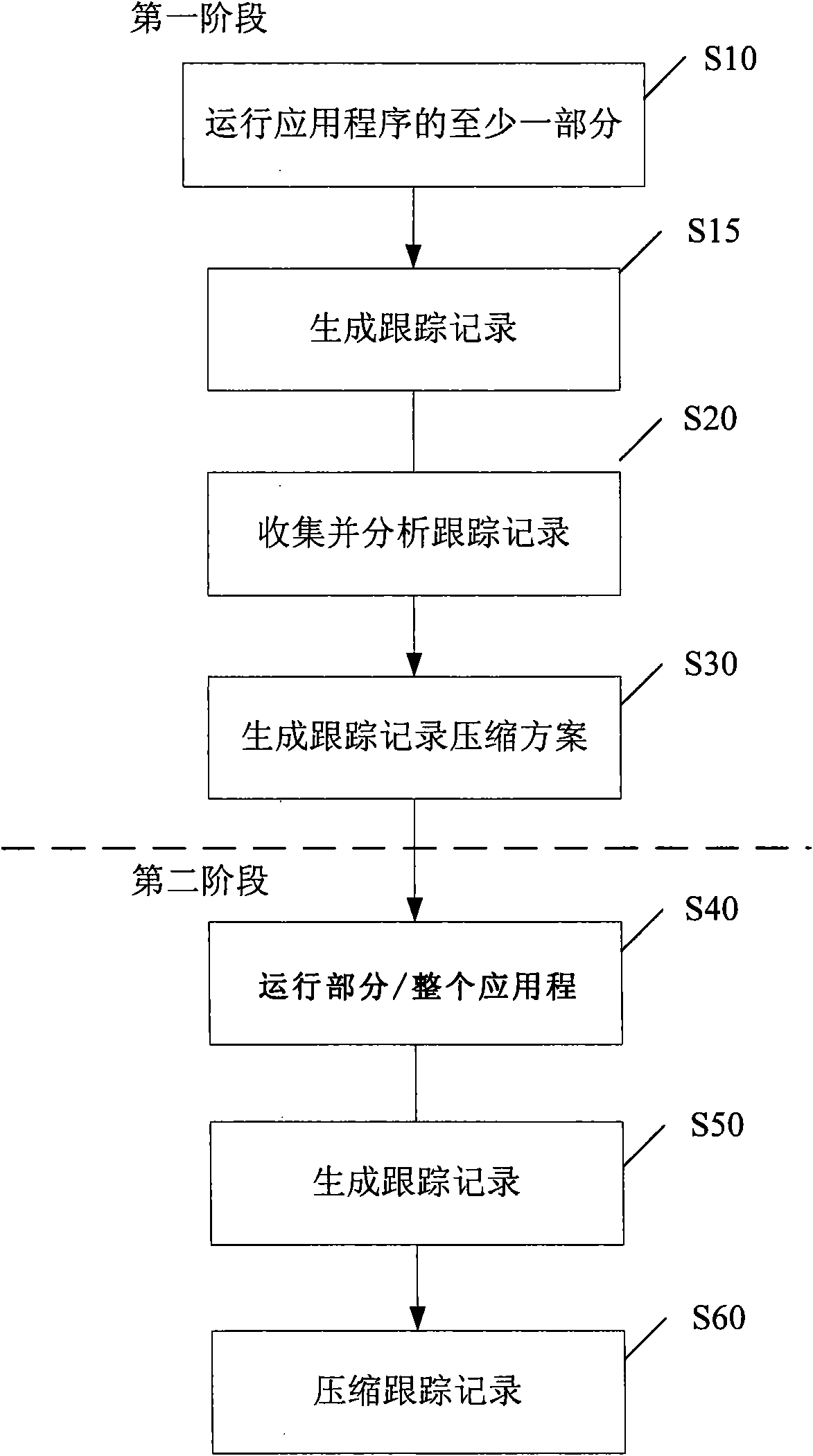

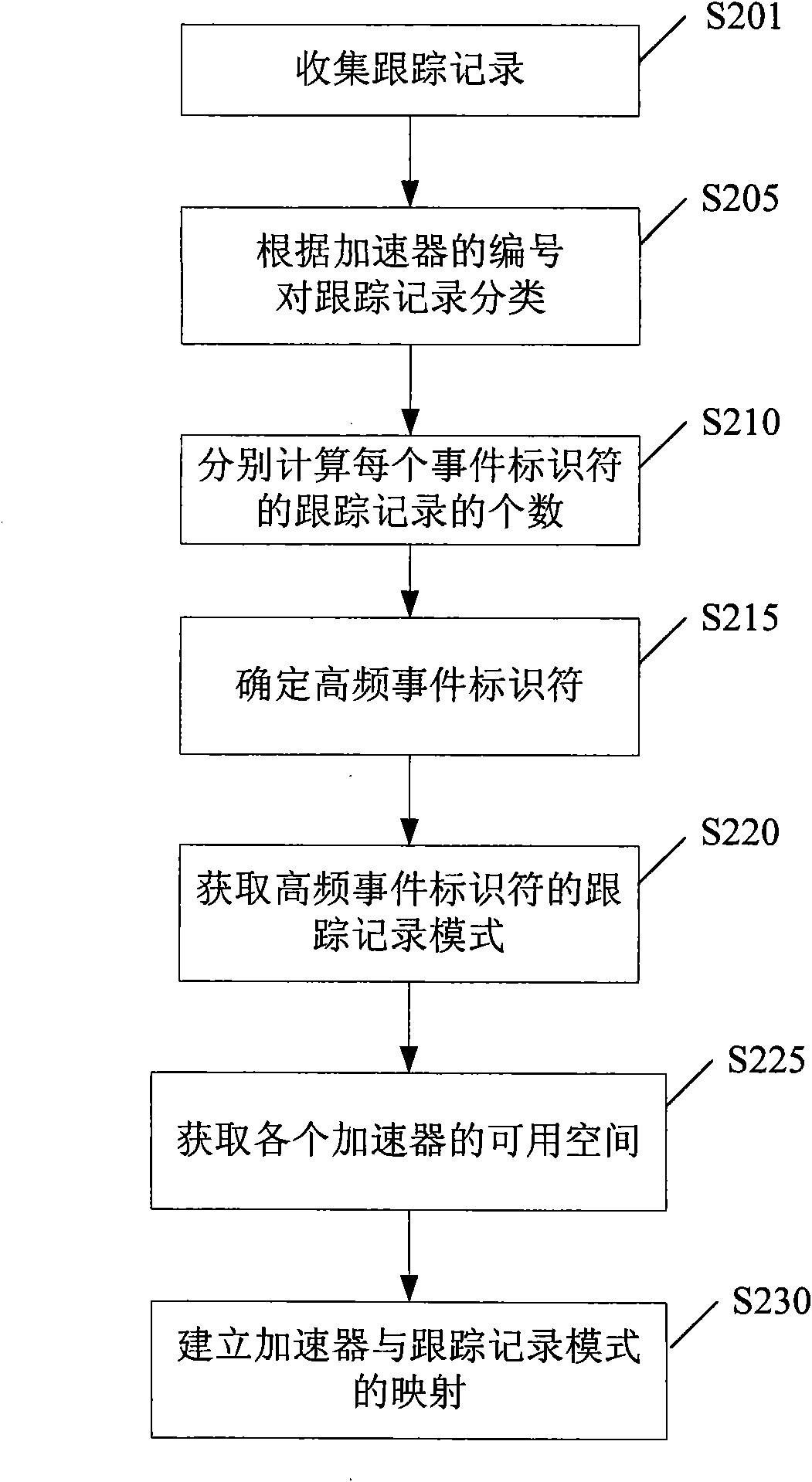

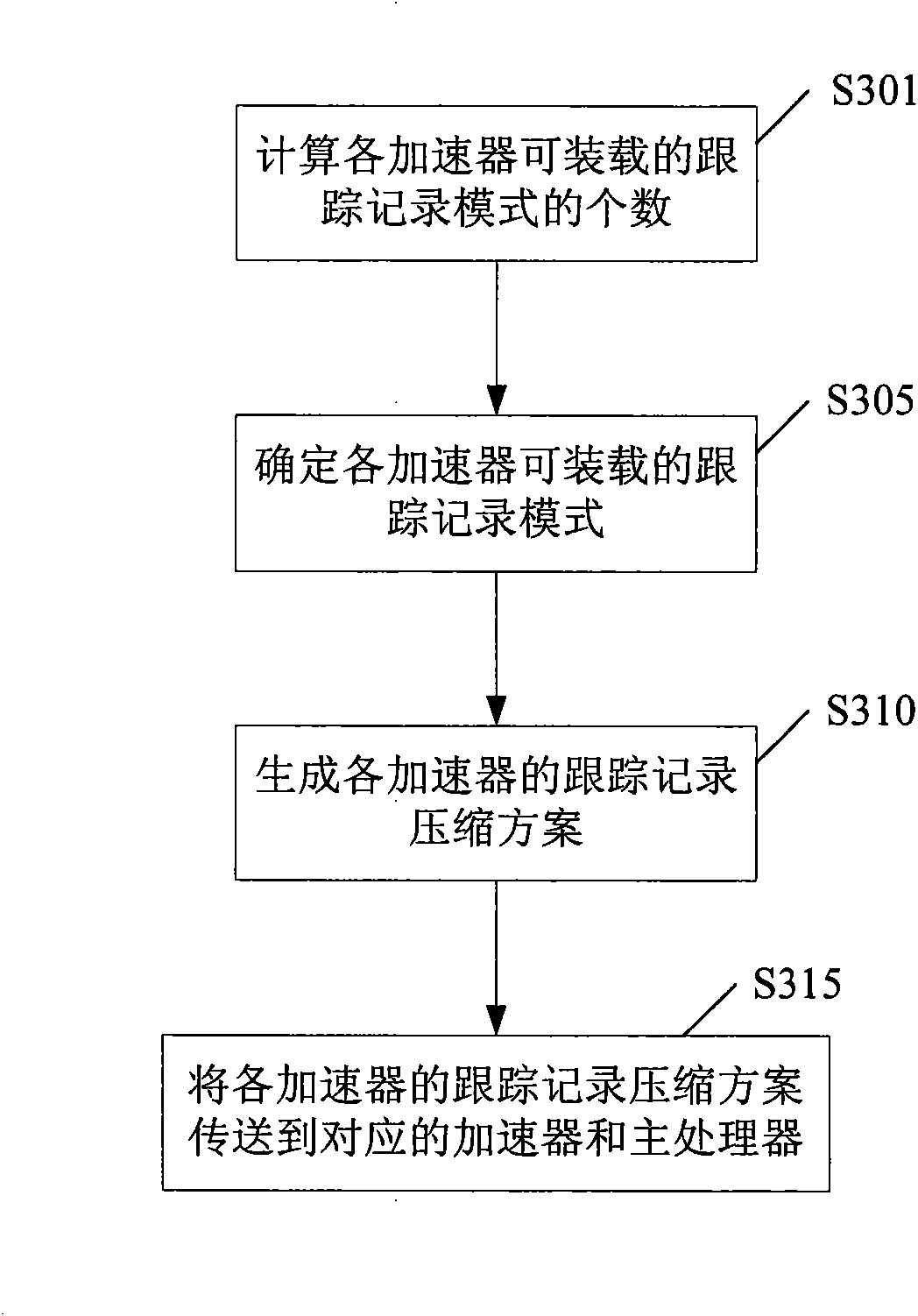

Method and device for generating track record compression scheme of application program

Inactive · CN101615157A · Reduce transfer time · Software testing/debugging · Application software · Master processor

The invention relates to a method for generating a track record compression scheme of an application program, wherein the application program is operated on a heterogeneous multi-core system structure which comprises a main processor and a plurality of accelerators. The method comprises the following steps: operating at least a part of the application program on the heterogeneous multi-core system structure; collecting and analyzing track records which are generated by a plurality of the accelerators and comprise event identifiers and track data; and generating the track record compression scheme of the application program according to an analyzing result. The method can be applied to the heterogeneous multi-core system structure, and not only can compress the totally same track records, but also can compress the partially same track records by using a track record mode similar to the track records. Time for transmitting the track records can be shortened by compressing the track records of high-frequency events so as to reduce the influence on the behavior of the application program. The invention also discloses a device for generating the track record compression scheme of the application program, a method and a device for compressing the track records of the application program, and the heterogeneous multi-core system structure.

Owner:IBM CORP

Reconfigurable secret key splitting side channel attack resistant rsa-4k accelerator

Inactive · US20220085993A1 · Key distribution for secure communication · Public key for secure communication · Computer hardware · Jazelle

Owner:INTEL CORP

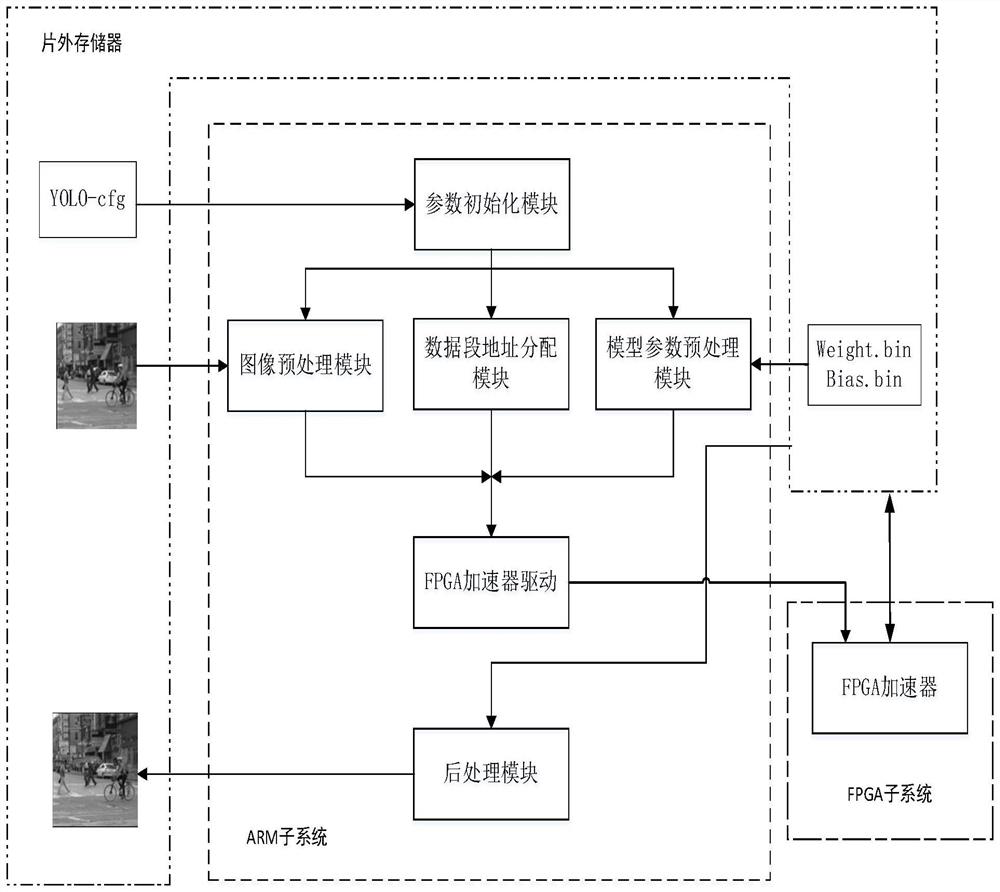

General hardware accelerator system platform oriented to YOLO algorithm and capable of being rapidly deployed

Pending · CN114662681A · Improve performance · Reduce power consumption · Neural architectures · Physical realisation · Jazelle · High density

A universal hardware accelerator system platform oriented to a YOLO algorithm and capable of being rapidly deployed belongs to the technical field of computers, considers the deployment requirements of the YOLO target detection algorithm on rapidness, high performance and low power consumption, and has a wide application scene. The platform is composed of an ARM subsystem, an FPGA subsystem and an off-chip memory, the ARM subsystem is responsible for parameter initialization, image preprocessing, model parameter preprocessing, data field address allocation, FPGA accelerator driving and image post-processing, and the FPGA subsystem is responsible for high-density calculation of a YOLO algorithm. After the platform is started, a YOLO algorithm configuration file is read, accelerator driving parameters are initialized, a to-be-detected image is preprocessed, then model weight and bias data are read, quantization, fusion and reordering are executed, an FPGA subsystem is driven to execute model calculation, and a calculation result is post-processed to obtain a target detection image. According to the method, the YOLO algorithm can be quickly deployed on the premise of high performance and low power consumption.

Owner:BEIJING UNIV OF TECH

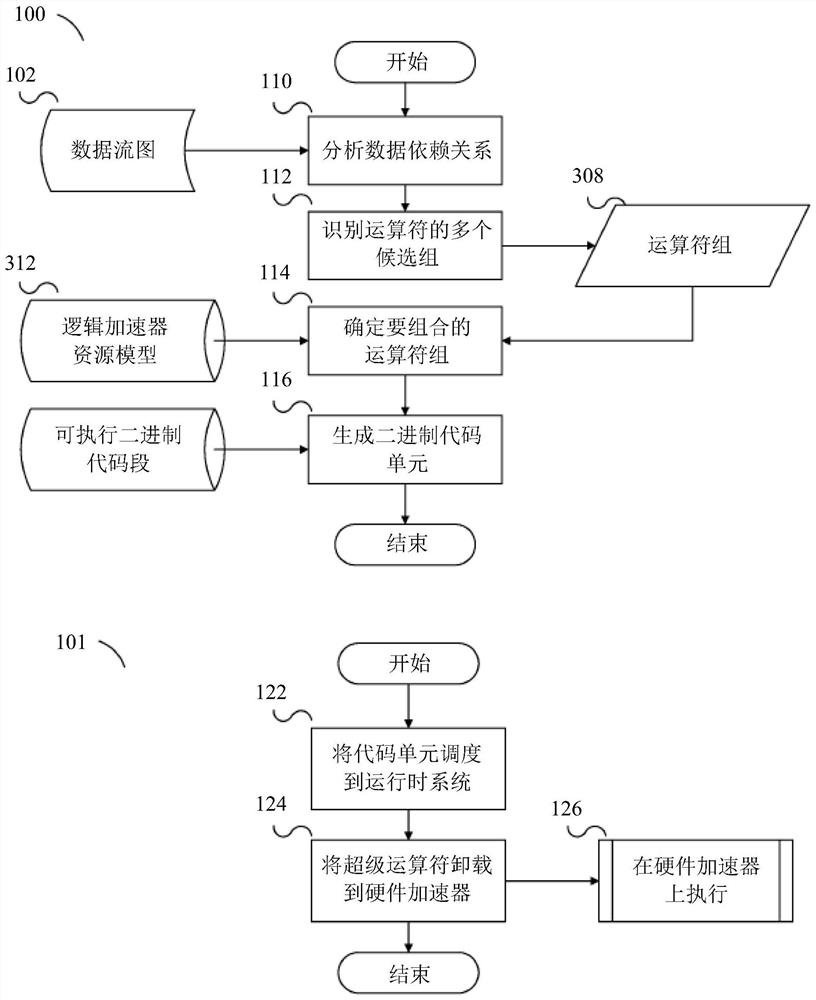

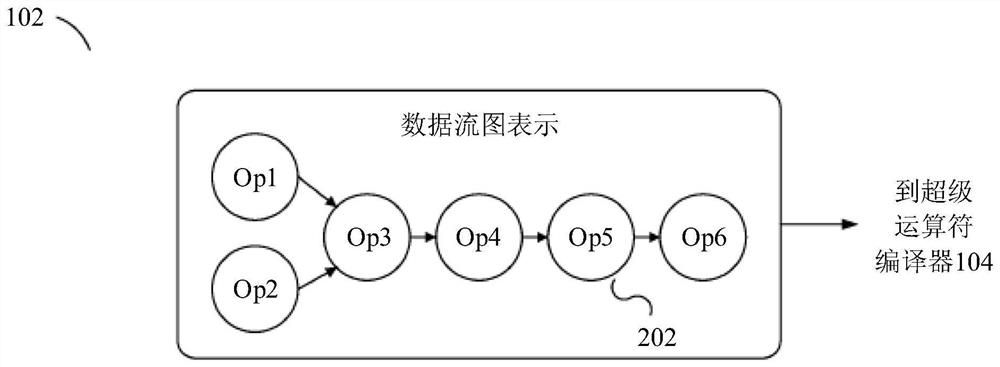

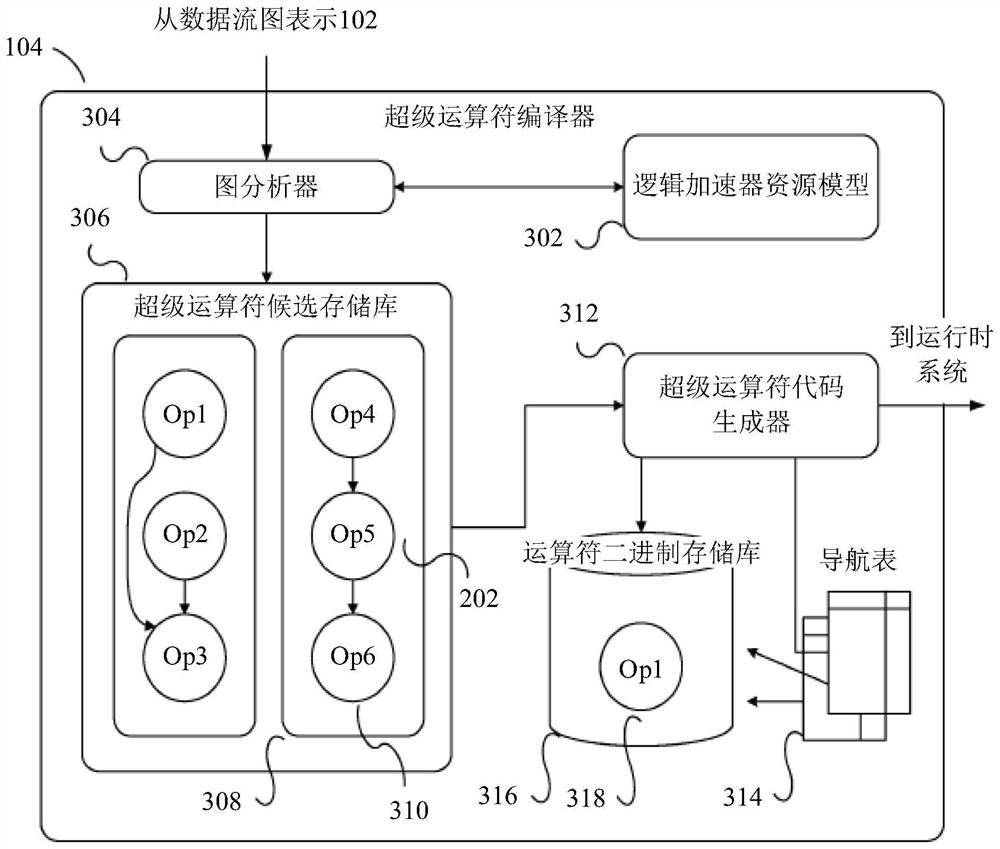

Method and apparatus for enabling autonomous acceleration of data stream AI applications

A method includes analyzing a dataflow graph representing data dependencies between operators of a dataflow application to identify a plurality of candidate groups of the operators. Depending on characteristics of a given hardware accelerator and operators of a given candidate group of the plurality of candidate groups, it is determined whether the operators of the given candidate group are to be combined. Upon determining that the operators of the given candidate group are to be combined, retrieving executable binary code segments corresponding to the operators of the given candidate group; generating a binary code unit including the executable binary code segment and metadata representing execution of a control flow among the executable binary code segment; and scheduling the code unit to the given hardware accelerator to execute the code unit.

Owner:HUAWEI TECH CO LTD

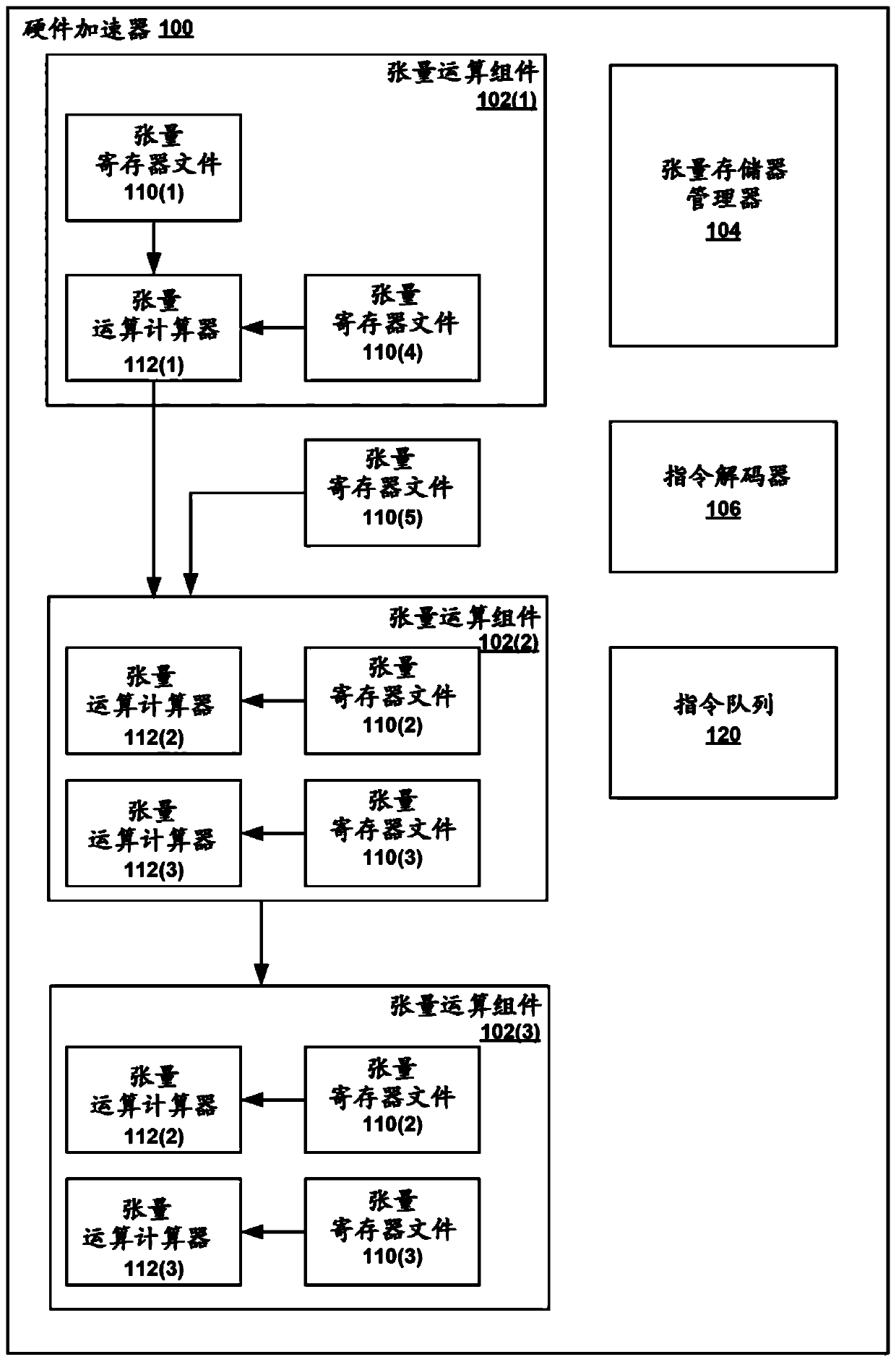

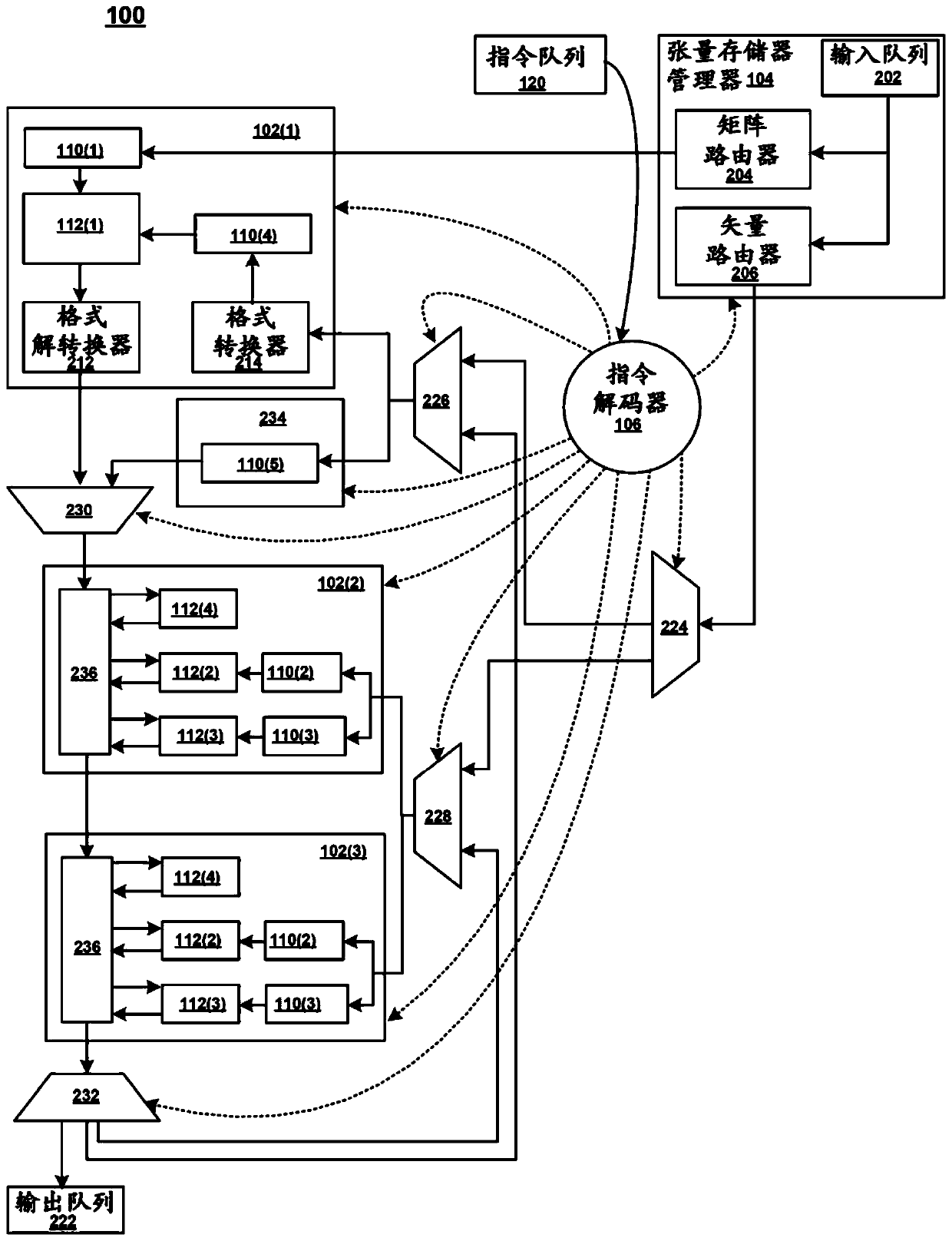

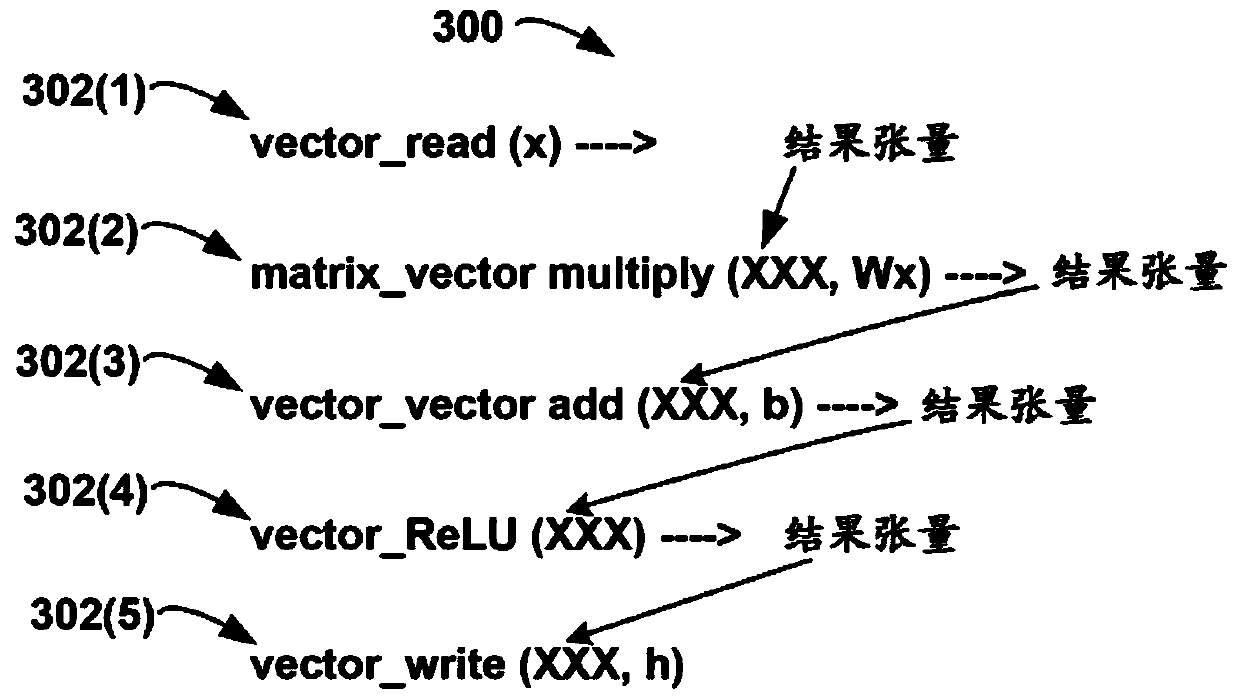

Tensor processor instruction set architecture

A hardware accelerator having an efficient instruction set is disclosed. An apparatus may comprise logic configured to access a first and a second machine instruction. The second machine instruction may be missing a tensor operand needed to execute the second machine instruction. The logic may be further configured to execute the first machine instruction, resulting in a tensor. The logic may be further configured to execute the second machine instruction using the resultant tensor as the missing tensor operand.
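The chaining behaviour described here, where an instruction without a tensor operand consumes the result of the preceding instruction, can be illustrated with a toy interpreter in Python. The `Instr` class, the `OPS` table and the element-wise operations are hypothetical stand-ins, not the instruction set of the patent.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Tensor = List[float]


@dataclass
class Instr:
    op: str
    operand_a: Optional[Tensor] = None  # None means "operand is missing"
    operand_b: Optional[Tensor] = None


# Hypothetical element-wise operations standing in for tensor instructions.
OPS: Dict[str, Callable[[Tensor, Tensor], Tensor]] = {
    "add": lambda a, b: [x + y for x, y in zip(a, b)],
    "mul": lambda a, b: [x * y for x, y in zip(a, b)],
}


def run(program: List[Instr]) -> Tensor:
    """Execute instructions in order, forwarding each result to the next."""
    last: Optional[Tensor] = None
    for instr in program:
        # A missing first operand is filled with the previous result.
        a = instr.operand_a if instr.operand_a is not None else last
        if a is None or instr.operand_b is None:
            raise ValueError(f"{instr.op}: no tensor available for an operand")
        last = OPS[instr.op](a, instr.operand_b)
    if last is None:
        raise ValueError("empty program")
    return last
```

For example, `run([Instr("add", [1, 2], [3, 4]), Instr("mul", None, [2, 2])])` adds the two vectors and then multiplies the implicit result by `[2, 2]`, so the second instruction never has to name its input.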

Owner:MICROSOFT TECH LICENSING LLC

Cross-platform data analysis method

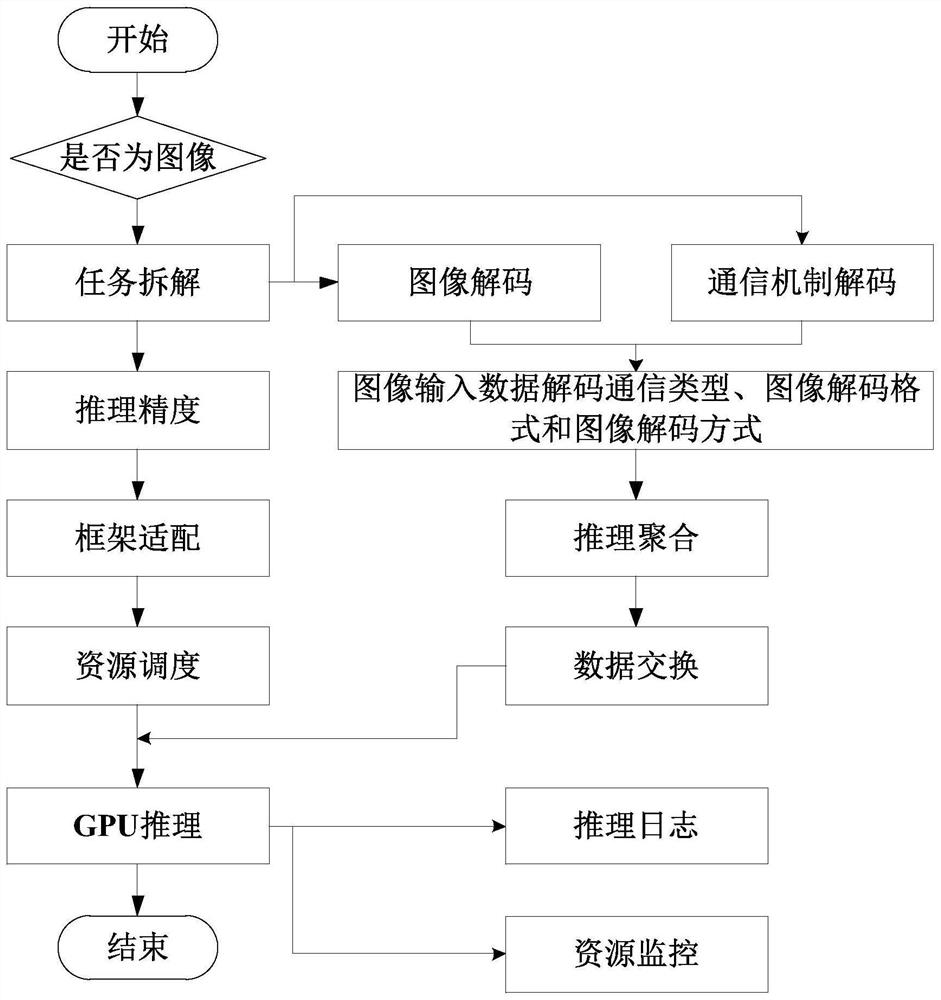

Pending · CN114115992A · Many solutions · Solve the cost · Program code adaption · Inference methods · Jazelle · Hardware architecture

The invention relates to a cross-platform data analysis method, which solves the problems of many edge hardware architectures and high model acceleration deployment cost by additionally arranging a user reasoning interface on an accelerator structure layer. Compared with a format conversion process in a model edge deployment process of a traditional method, the development efficiency is obviously improved; and the later AI reasoning code maintenance cost of the edge equipment is effectively reduced.

Owner:中科计算技术西部研究院

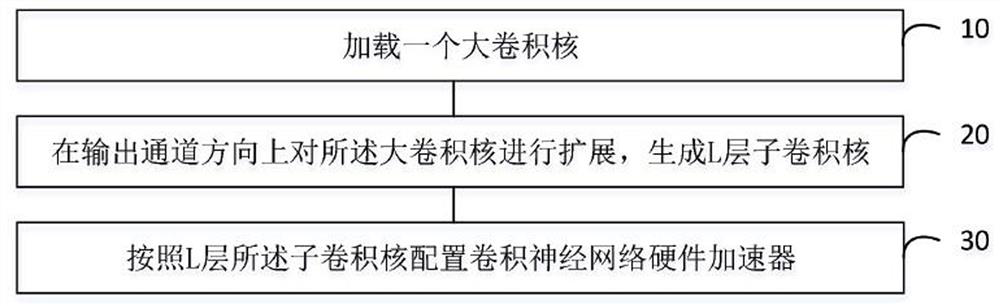

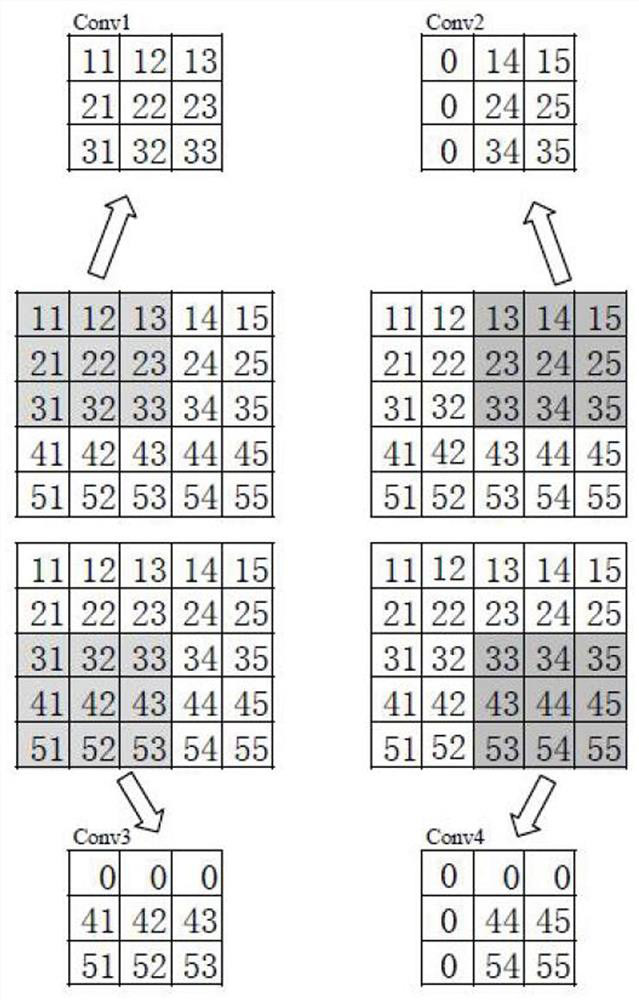

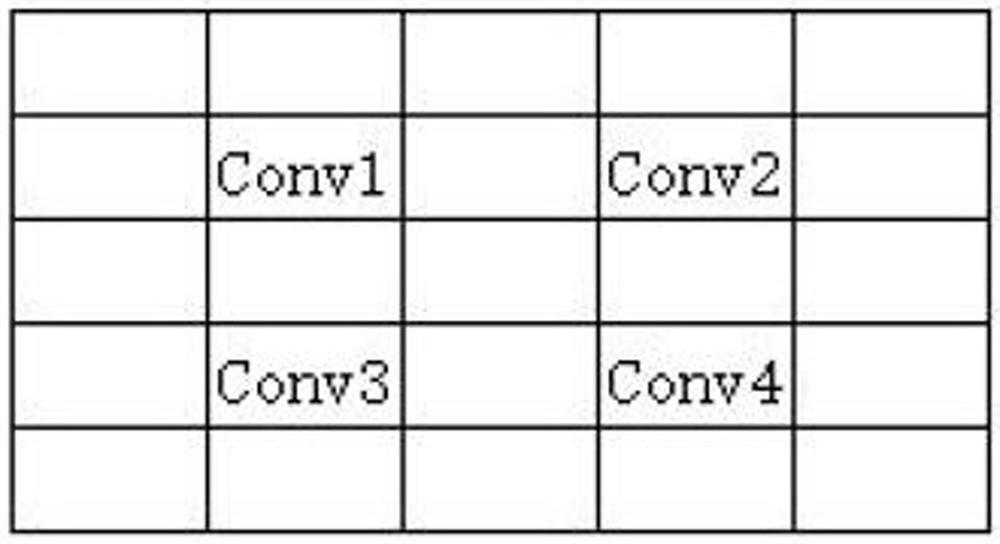

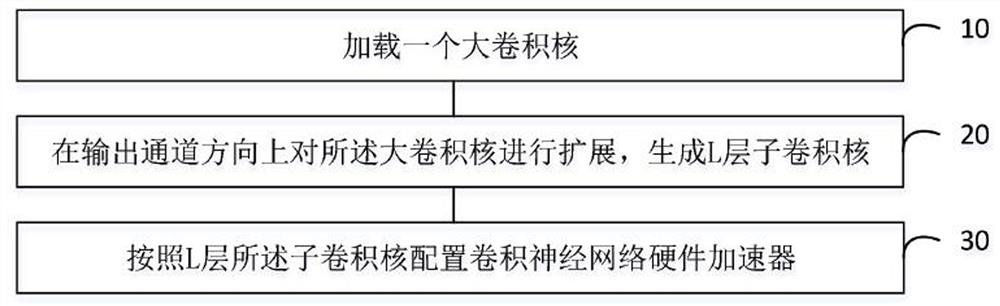

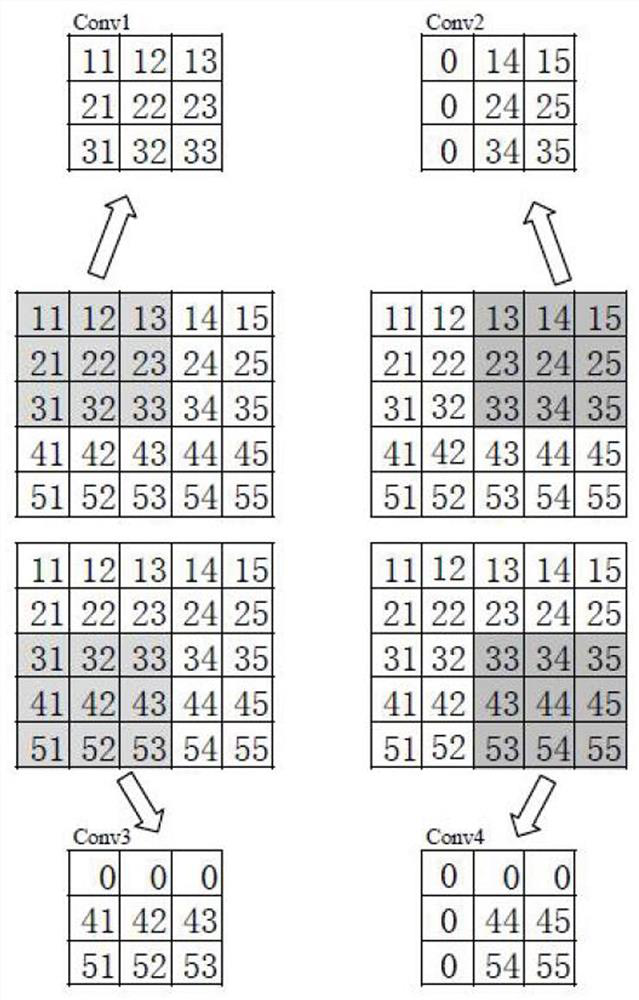

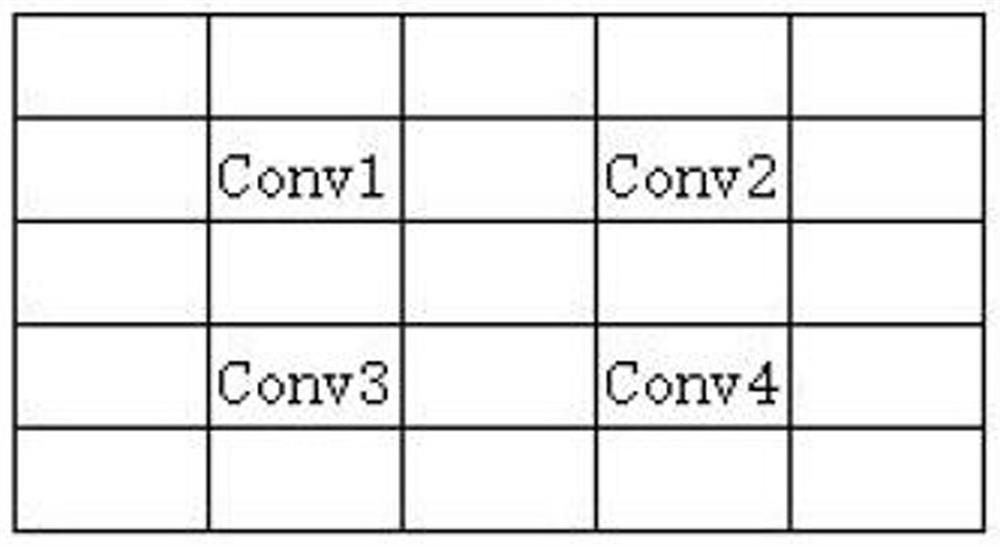

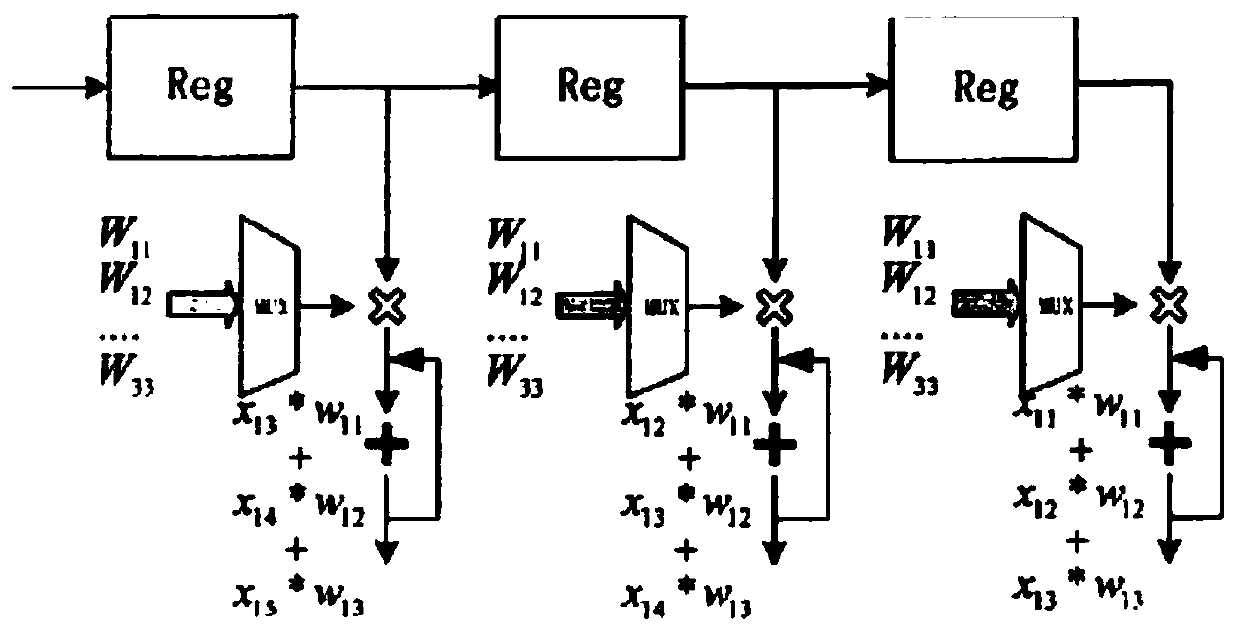

Large convolution kernel hardware implementation method, computer equipment and storage medium

Active · CN113780544B · Reduce complexity · Easy to handle · Neural architectures · Physical realisation · Computer hardware · Jazelle

The large convolution kernel hardware implementation method, computer equipment, and computer-readable storage medium provided by the application include loading a large convolution kernel; expanding the large convolution kernel in the direction of the output channel to generate a layer of 3×3 sub-convolution kernels; and configuring the convolutional neural network hardware accelerator according to the layer of 3×3 sub-convolution kernels. The large convolution kernel hardware implementation method provided by this application can split the large convolution kernel into several 3×3 sub-convolution kernels, with overlapping parts between the 3×3 sub-convolution kernels; the complex large convolution kernel operation is deployed directly on the existing simple convolution hardware in the NPU, which reduces the complexity of the NPU hardware and improves the processing performance of the NPU.
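The general idea of computing a large convolution from 3×3 pieces can be sketched in plain Python. This sketch uses a simpler decomposition than the abstract describes: it cuts a square kernel into non-overlapping, zero-padded 3×3 tiles and sums the shifted partial results, whereas the patent expands along the output channel and uses overlapping sub-kernels. All function names are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[int]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Reference 'valid' 2-D correlation with the full-size kernel."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + u][j + v] * kernel[u][v]
                 for u in range(kh) for v in range(kw))
             for j in range(ow)] for i in range(oh)]


def split_into_3x3(kernel: Matrix) -> List[Tuple[int, int, Matrix]]:
    """Cut a square kernel into zero-padded 3x3 tiles with their offsets."""
    k = len(kernel)
    tiles = []
    for r in range(0, k, 3):
        for c in range(0, k, 3):
            tile = [[kernel[r + u][c + v] if r + u < k and c + v < k else 0
                     for v in range(3)] for u in range(3)]
            tiles.append((r, c, tile))
    return tiles


def conv2d_via_tiles(image: Matrix, kernel: Matrix) -> Matrix:
    """Accumulate one shifted 3x3 pass per tile into the output."""
    k = len(kernel)
    oh, ow = len(image) - k + 1, len(image[0]) - k + 1
    out = [[0] * ow for _ in range(oh)]
    for r, c, tile in split_into_3x3(kernel):
        for i in range(oh):
            for j in range(ow):
                for u in range(3):
                    for v in range(3):
                        if tile[u][v]:  # zero taps contribute nothing
                            out[i][j] += image[i + r + u][j + c + v] * tile[u][v]
    return out
```

Each tile corresponds to one pass that a fixed 3×3 convolution engine could execute, with the partial sums accumulated afterwards; the result matches the direct large-kernel computation.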

Owner:南京风兴科技有限公司

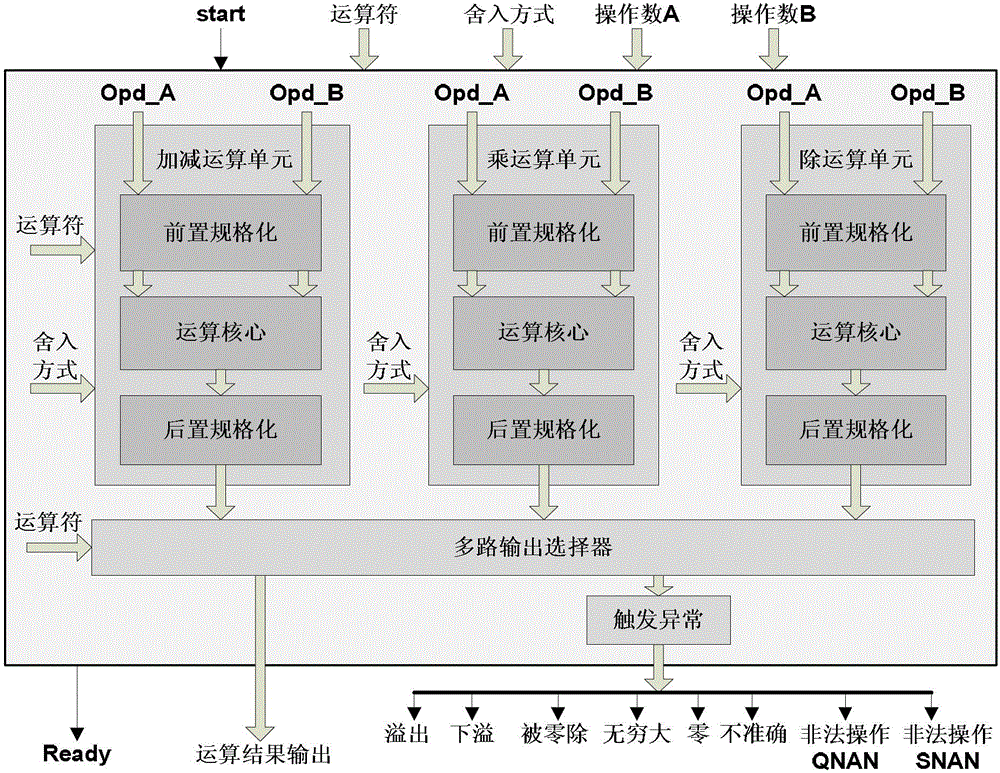

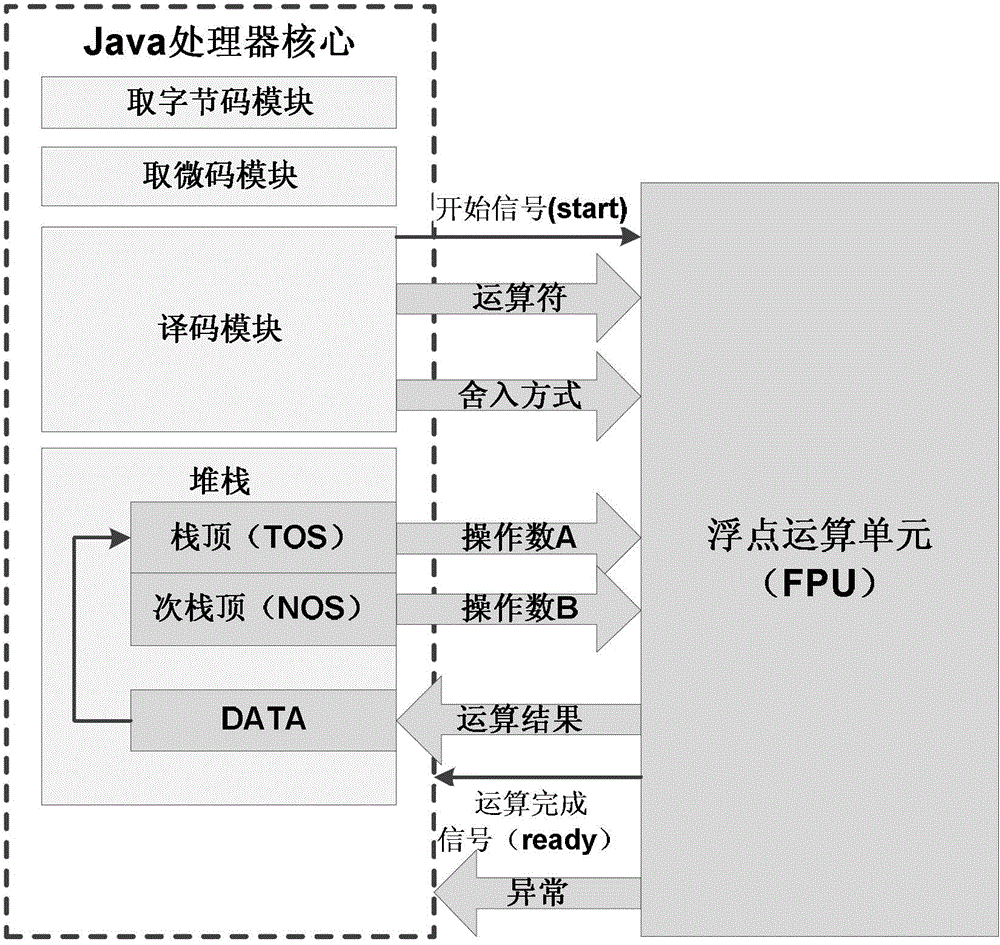

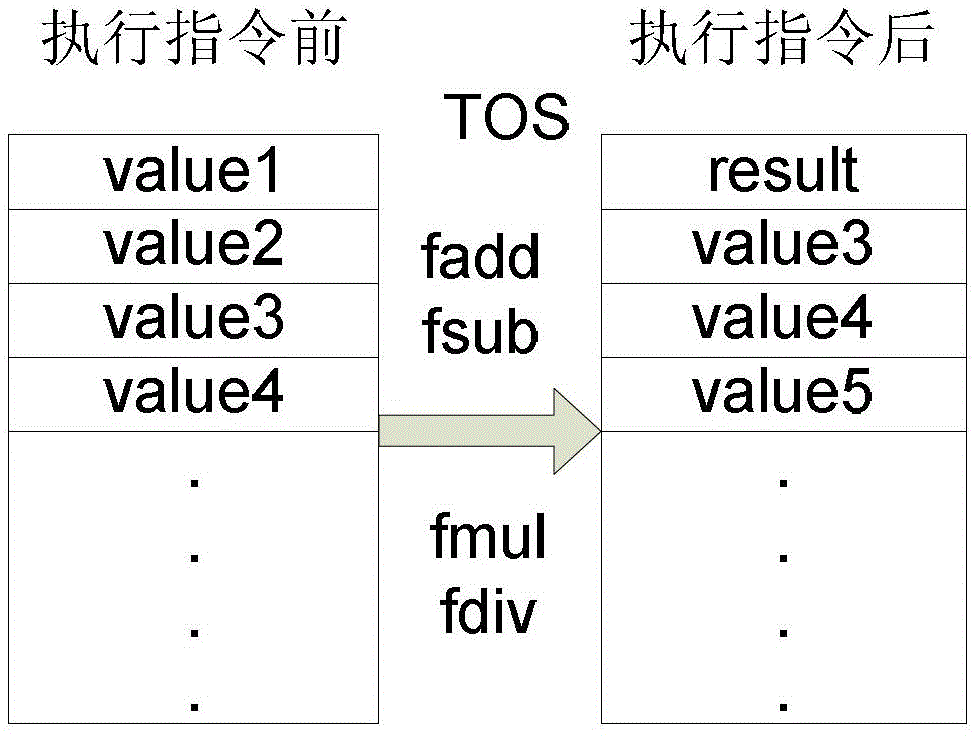

Floating-point unit of Java processor and control method thereof

Inactive · CN102722353A · Run directly · Guaranteed uptime · Digital data processing details · Processor register · Floating-point unit

The invention discloses a floating-point unit (FPU) of a Java processor and a control method thereof, which meet the requirements of embedded systems for high-accuracy operations. The FPU serves as a hardware accelerator and is connected directly to the core of the Java processor; its input port receives a clock input, a start signal, an operand A, an operand B, an operator and a rounding mode from the core of the Java processor. The FPU comprises operation units and a demultiplexer. The FPU selects the corresponding operation unit according to the input operator, and the operation units implement the basic operations; they are divided into an addition / subtraction unit, a multiplication unit and a division unit. The demultiplexer selects the result register of the corresponding operation unit according to the input operator and checks whether the operation and the result to be output trigger a floating-point exception as defined by the standard. If so, the demultiplexer outputs the corresponding exception signal; otherwise, the output port of the FPU sends a completion signal and the result in the register to the core of the Java processor.

Owner:SYSU HUADU IND SCI & TECH INST

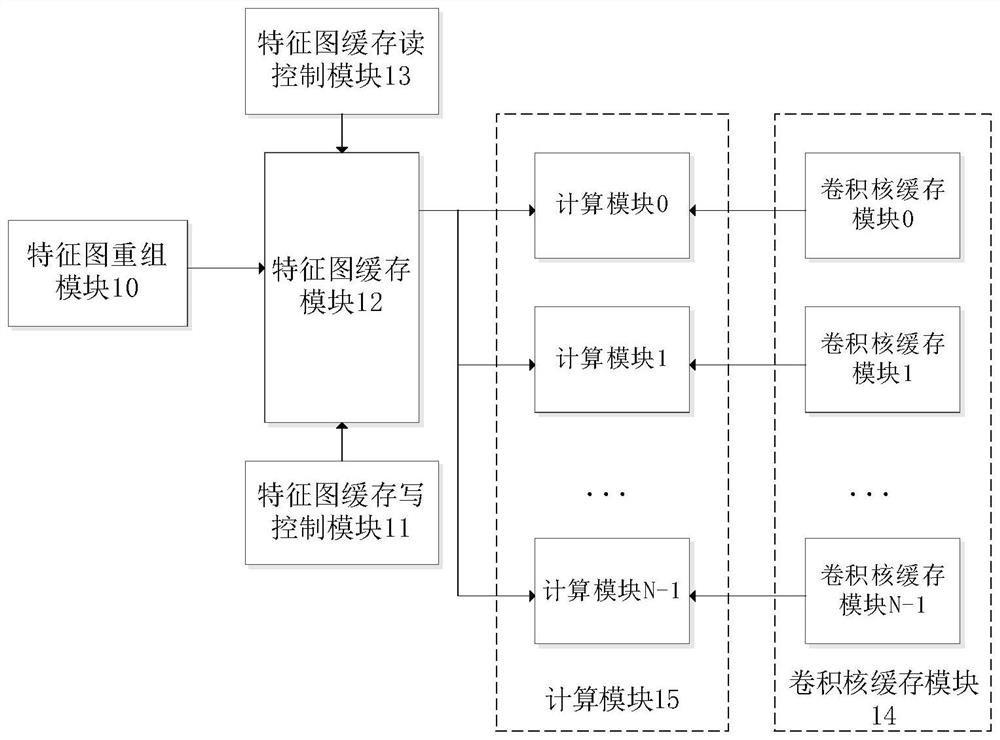

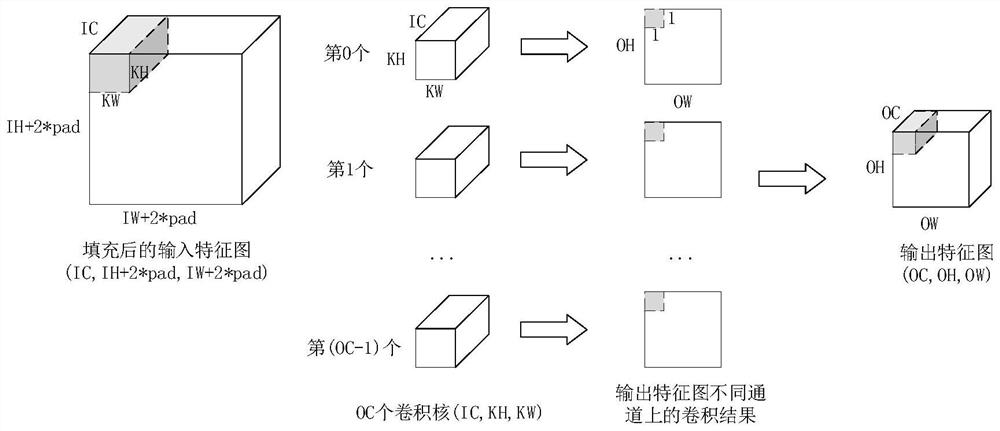

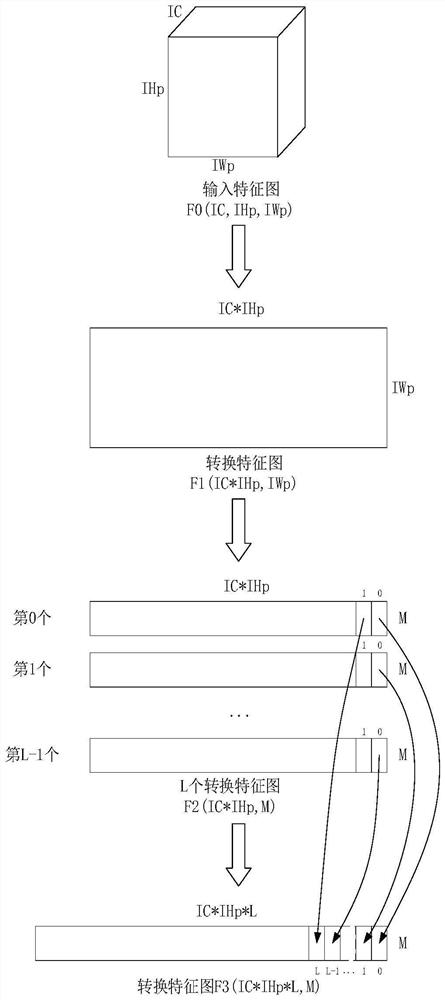

Convolution operation hardware accelerator and data processing method

Pending · CN114330656A · Efficient input · Improve computing efficiency · Program control · Neural architectures · Computer hardware · Jazelle

The invention discloses a convolution operation hardware accelerator which is characterized in that a feature graph recombination module segments each row of input feature data of an input feature graph, and each segment of data is M at most; the feature map cache write control module generates a feature map cache unit sequence number and a cache write address to be written into each piece of input feature data, the sequence number and the cache write address are written into the feature map cache module, and at most M pieces of input feature data are written into M feature map cache units in parallel in the same clock period; the feature map cache read control module reads at most M pieces of input feature data in parallel in a single clock period in one convolution operation; the convolution kernel cache module writes a convolution kernel of a single output channel into M convolution kernel cache units according to the fact that M pieces of convolution kernel data are taken as a group; and the calculation module performs corresponding convolution operation on the convolution kernel data and the input feature data. According to the invention, the hardware accelerator can support the convolution operation of different size parameters, and the calculation efficiency of the convolution operation is improved.

Owner:HANGZHOU FEISHU TECH CO LTD

Large convolution kernel hardware implementation method, computer device and storable medium

Active · CN113780544A · Reduce complexity · Easy to handle · Neural architectures · Physical realisation · Computer hardware · Jazelle

The invention provides a large convolution kernel hardware implementation method, a computer device and a computer readable storage medium. The method comprises the steps of loading a large convolution kernel; expanding the large convolution kernel in an output channel direction to generate a layer 3*3 sub convolution kernel; and configuring a convolutional neural network hardware accelerator according to the layer 3*3 sub-convolution kernel. According to the large convolution kernel hardware implementation method provided by the invention, the large convolution kernel can be split into a plurality of 3*3 sub-convolution kernels, and the 3*3 sub-convolution kernels have overlapped parts; and the complex large convolution kernel operation is directly deployed on the existing simple convolution hardware in an NPU, so that the complexity of the NPU hardware is reduced, and the processing performance of the NPU is improved.

Owner:南京风兴科技有限公司

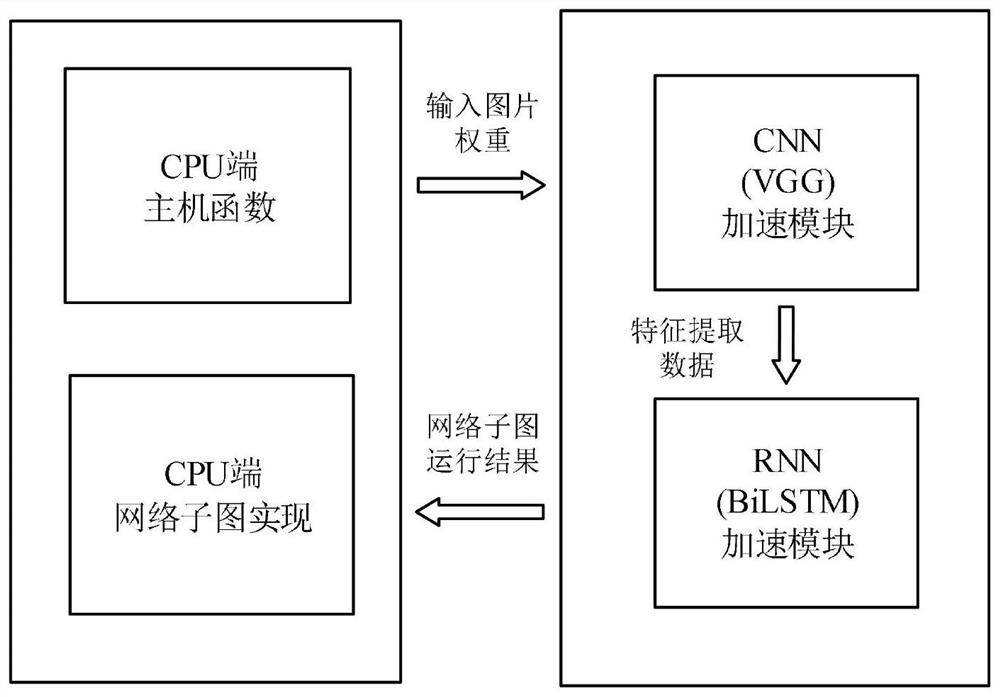

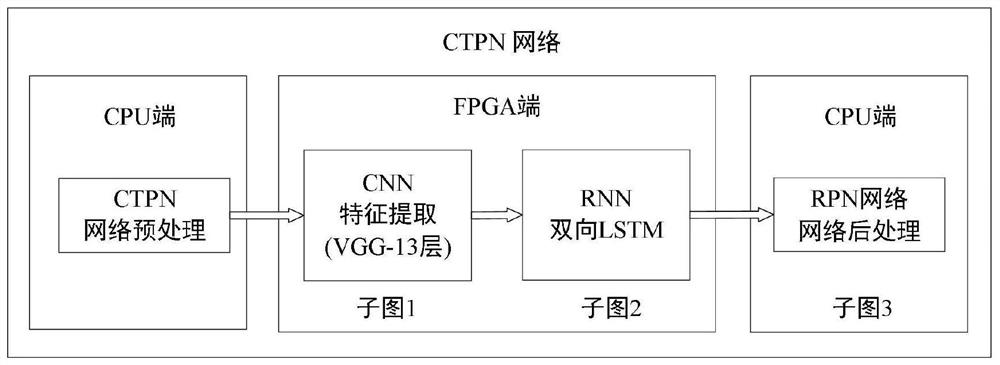

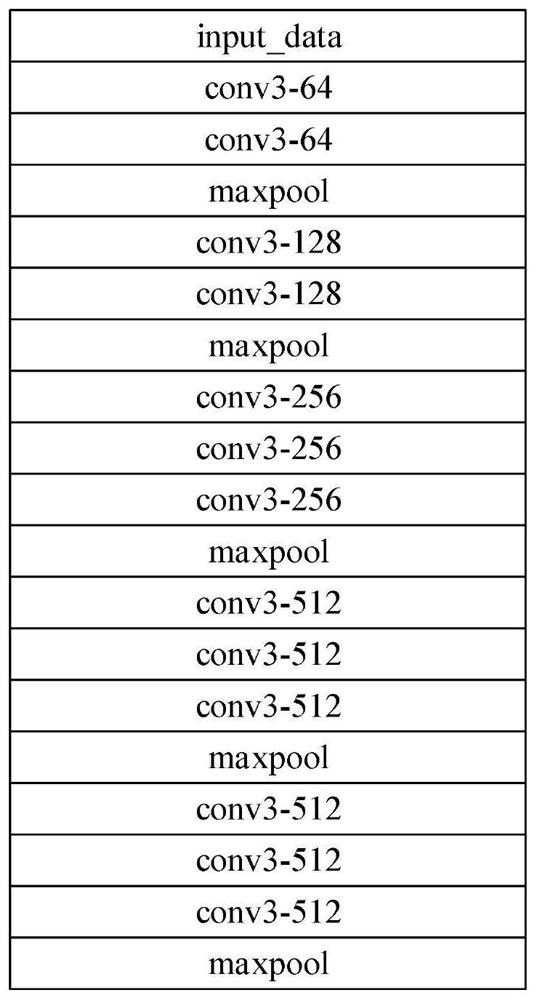

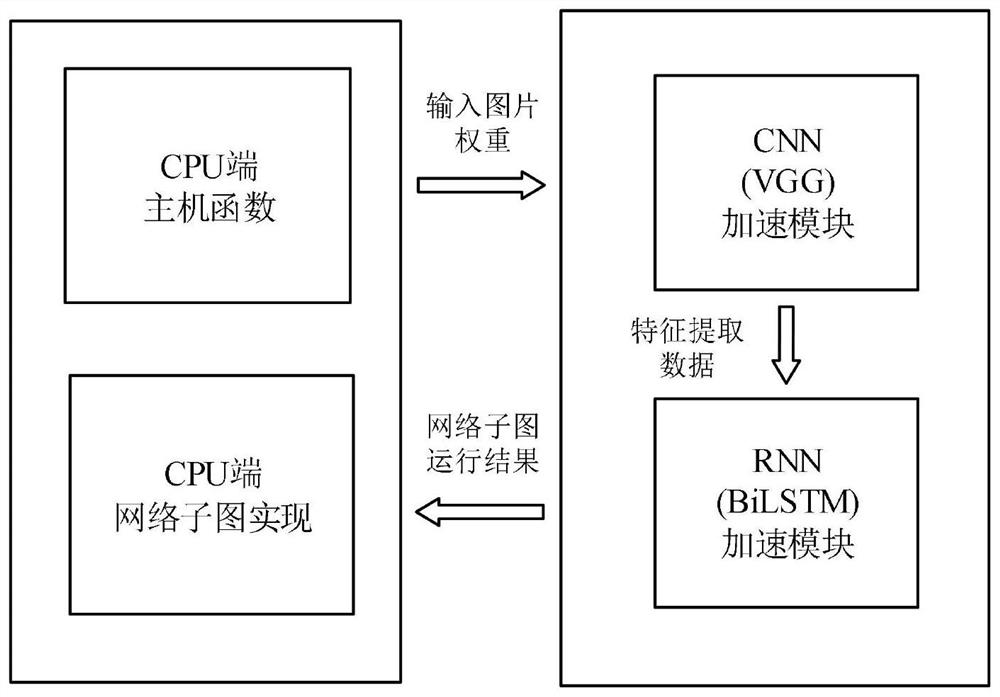

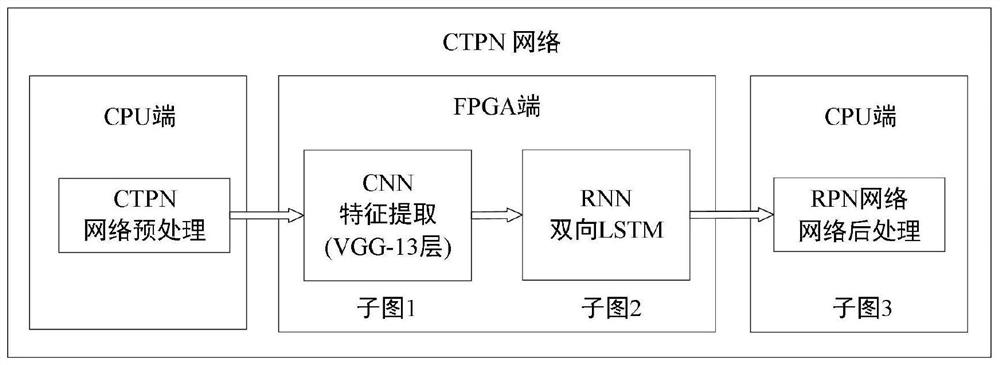

Heterogeneous acceleration system and method based on CTPN network

Active · CN112732638A · Reduce Design Complexity · Improve parallelism · Neural architectures · Architecture with single central processing unit · Character recognition · Jazelle

The invention provides a heterogeneous acceleration system and method based on a CTPN network. The heterogeneous acceleration system comprises a CPU end and an FPGA end; the FPGA end comprises a first sub-graph and a second sub-graph, and the CPU end comprises a third sub-graph. The first sub-graph comprises the CNN part of the CTPN network, the second sub-graph comprises the RNN part, and the third sub-graph comprises the remaining part of the CTPN network. The first sub-graph and the second sub-graph are executed at the FPGA end, and the third sub-graph is executed at the CPU end; the output of the FPGA end is used as the input of the third sub-graph, and the CPU end completes network inference and obtains the final result. According to the system and method, the inference speed of the CTPN network can be greatly improved with only a slight reduction in precision, so that the accelerator can better realize real-time scene character recognition.

Owner:SHANGHAI JIAO TONG UNIV +1

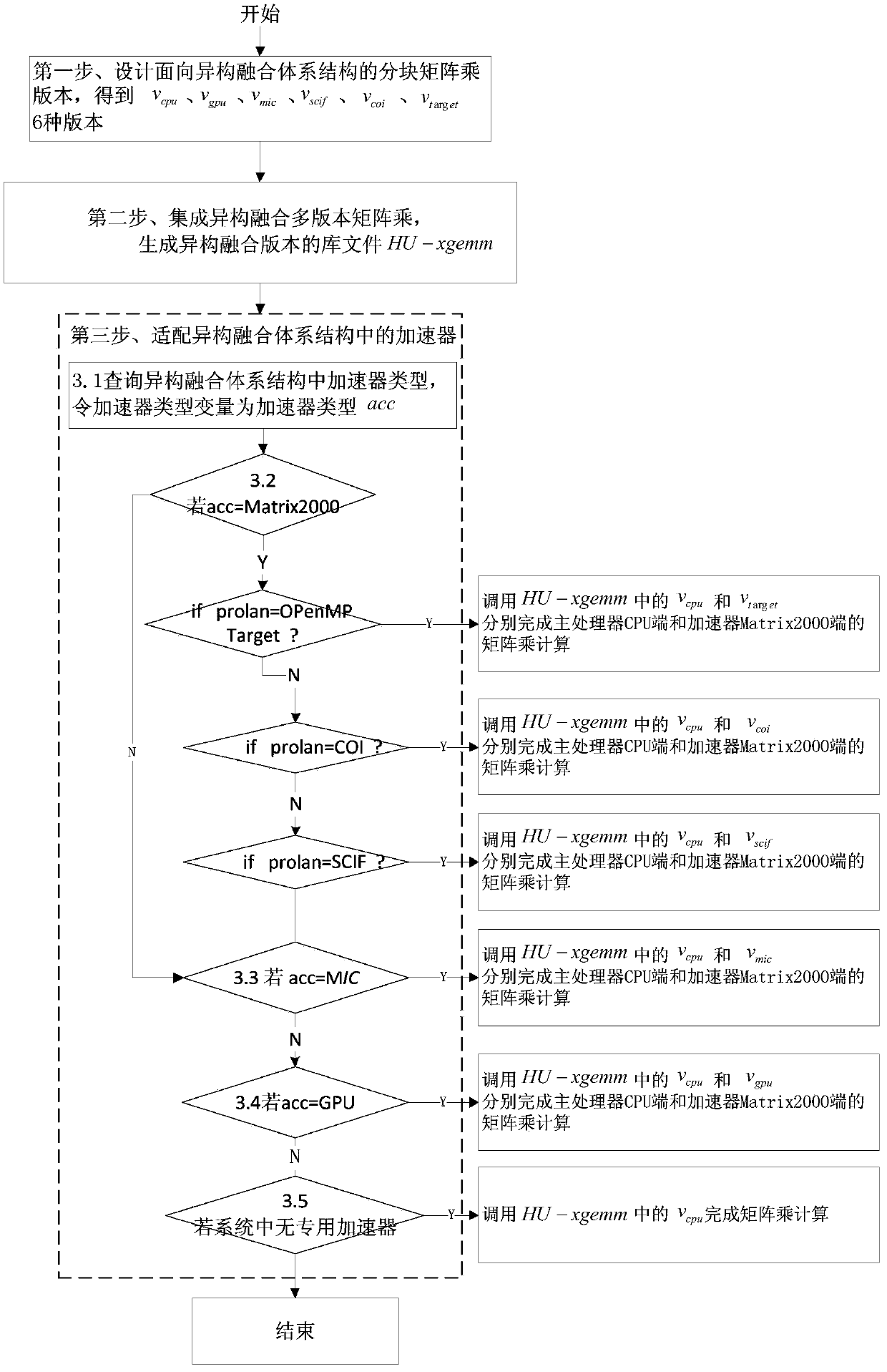

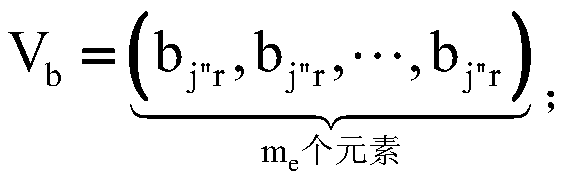

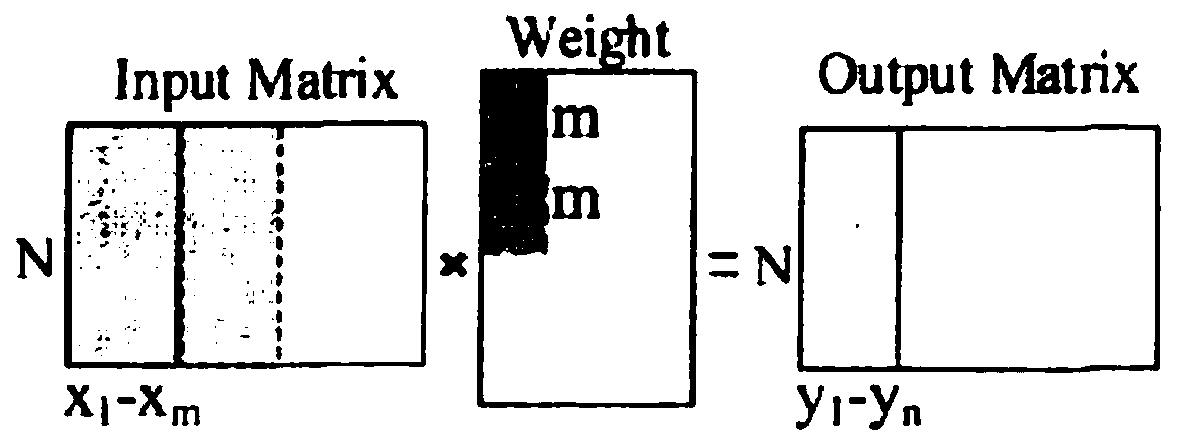

Matrix Multiplication Acceleration Method for Heterogeneous Fusion Architecture

Active · CN109871512B · Reduce development difficulty · Reduce workload · Digital data processing details · Complex mathematical operations · Computational science · Jazelle

The invention discloses a matrix multiplication acceleration method oriented to a heterogeneous fusion system structure. It aims to provide a universal matrix multiplication acceleration method for different many-core accelerator target system structures and to improve the use efficiency of a heterogeneous system. According to the technical scheme, block matrix multiplication versions oriented to the heterogeneous fusion system structure are designed first, comprising vcpu, vgpu, vmic, vscif, vcoi and vtarget; the multi-version matrix multiplication is then integrated and packaged to generate the heterogeneous fusion library file HU-xgemm; finally, HU-xgemm is used to adapt to the accelerators in the heterogeneous fusion system structure. The invention adapts itself to different target accelerators and processors: matrix multiplication is carried out adaptively according to the topological structure of the CPUs or accelerators in each heterogeneous fusion system structure, FMA parallel computing is carried out, the matrix multiplication speed is increased, and the use efficiency of the heterogeneous system is improved.

Owner:NAT UNIV OF DEFENSE TECH

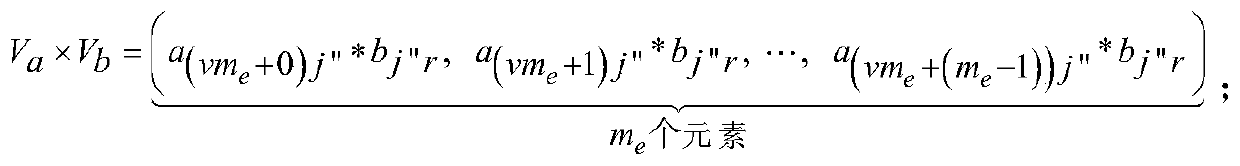

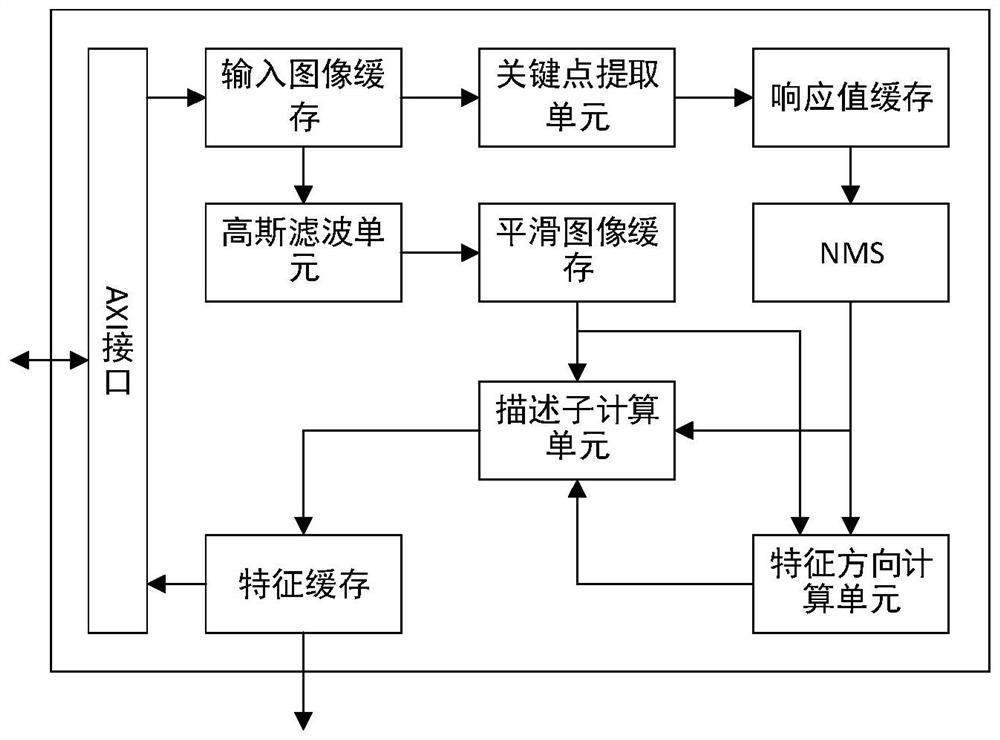

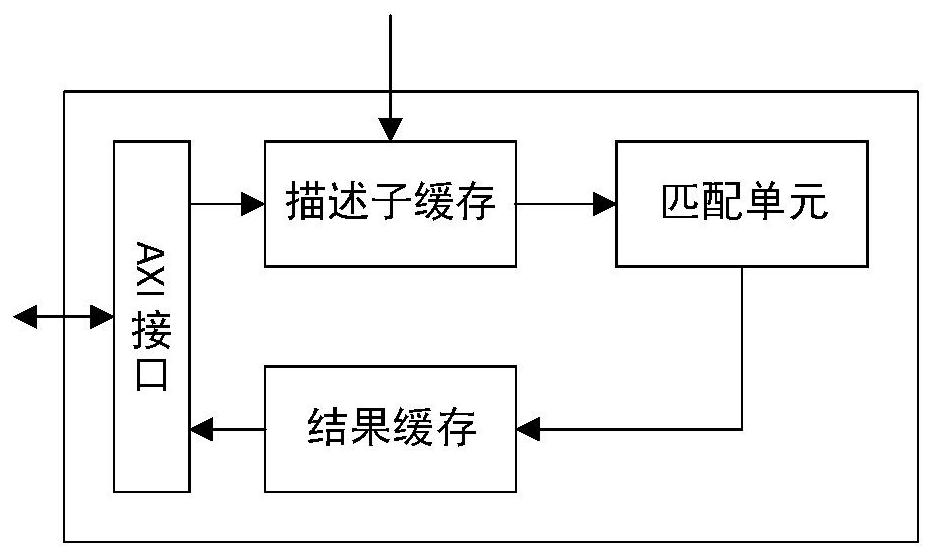

An ORB-SLAM hardware accelerator

Active · CN109919825B · Reduce power consumption · Increase frame rate · Image enhancement · Image analysis · Computer hardware · Jazelle

The invention discloses an ORB-SLAM hardware accelerator, which comprises: an FPGA hardware acceleration module, which accelerates feature extraction and feature matching; a sensor module, which captures images; and a processor system, which acts as the host controlling the FPGA hardware acceleration module and the sensor module and is responsible for pose estimation, pose optimization and map updating. The invention uses the FPGA hardware acceleration module to accelerate the most computation-intensive and time-consuming stages of the ORB-SLAM process. This effectively improves the running speed of ORB-SLAM, reduces power consumption, greatly improves energy efficiency, and makes ORB-SLAM easier to deploy on power-constrained platforms.

Owner:BEIHANG UNIV

Heterogeneous acceleration system and method based on CTPN network

Active · CN112732638B · Reduce Design Complexity · Improve parallelism · Neural architectures · Architecture with single central processing unit · Jazelle · Text recognition

The present invention provides a heterogeneous acceleration system and method based on a CTPN network, including a CPU end and an FPGA end; the FPGA end includes a first sub-graph and a second sub-graph, and the CPU end includes a third sub-graph. The first sub-graph includes the CNN part of the CTPN network, the second sub-graph includes the RNN part, and the third sub-graph includes the rest of the CTPN network. The first sub-graph and the second sub-graph are executed on the FPGA side, and the third sub-graph is executed on the CPU side; the output of the FPGA side is used as the input of the third sub-graph, and the CPU side completes network inference and obtains the final result. The present invention can greatly increase the inference speed of the CTPN network with little decrease in precision, and enables the accelerator to better realize real-time scene character recognition.

Owner: SHANGHAI JIAOTONG UNIV +1
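The three-way partition in the abstract above can be pictured as a chain of sub-graphs, each tagged with the device it runs on. The sketch below shows only that data flow; `SubGraph`, `run_partitioned` and the three lambda stages are hypothetical stand-ins, not the CTPN layers or any real FPGA runtime API.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class SubGraph:
    name: str
    device: str  # "FPGA" or "CPU" (a label only; nothing is offloaded here)
    fn: Callable[[list], list]


def run_partitioned(subgraphs: List[SubGraph], x: list) -> Tuple[list, list]:
    """Run sub-graphs in order, feeding each output to the next stage.

    Returns the final output and a trace of (name, device) pairs.
    """
    trace = []
    for sg in subgraphs:
        x = sg.fn(x)
        trace.append((sg.name, sg.device))
    return x, trace


# Placeholder computations standing in for the CNN, RNN and remaining layers.
ctpn = [
    SubGraph("cnn", "FPGA", lambda x: [v * 2 for v in x]),
    SubGraph("rnn", "FPGA", lambda x: [sum(x[: i + 1]) for i in range(len(x))]),
    SubGraph("rest", "CPU", lambda x: [v for v in x if v > 4]),
]
result, trace = run_partitioned(ctpn, [1, 2, 3])
```

In a real deployment the hand-off between the second and third stage is where data crosses from the FPGA back to the CPU, so that boundary is the one worth keeping small.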

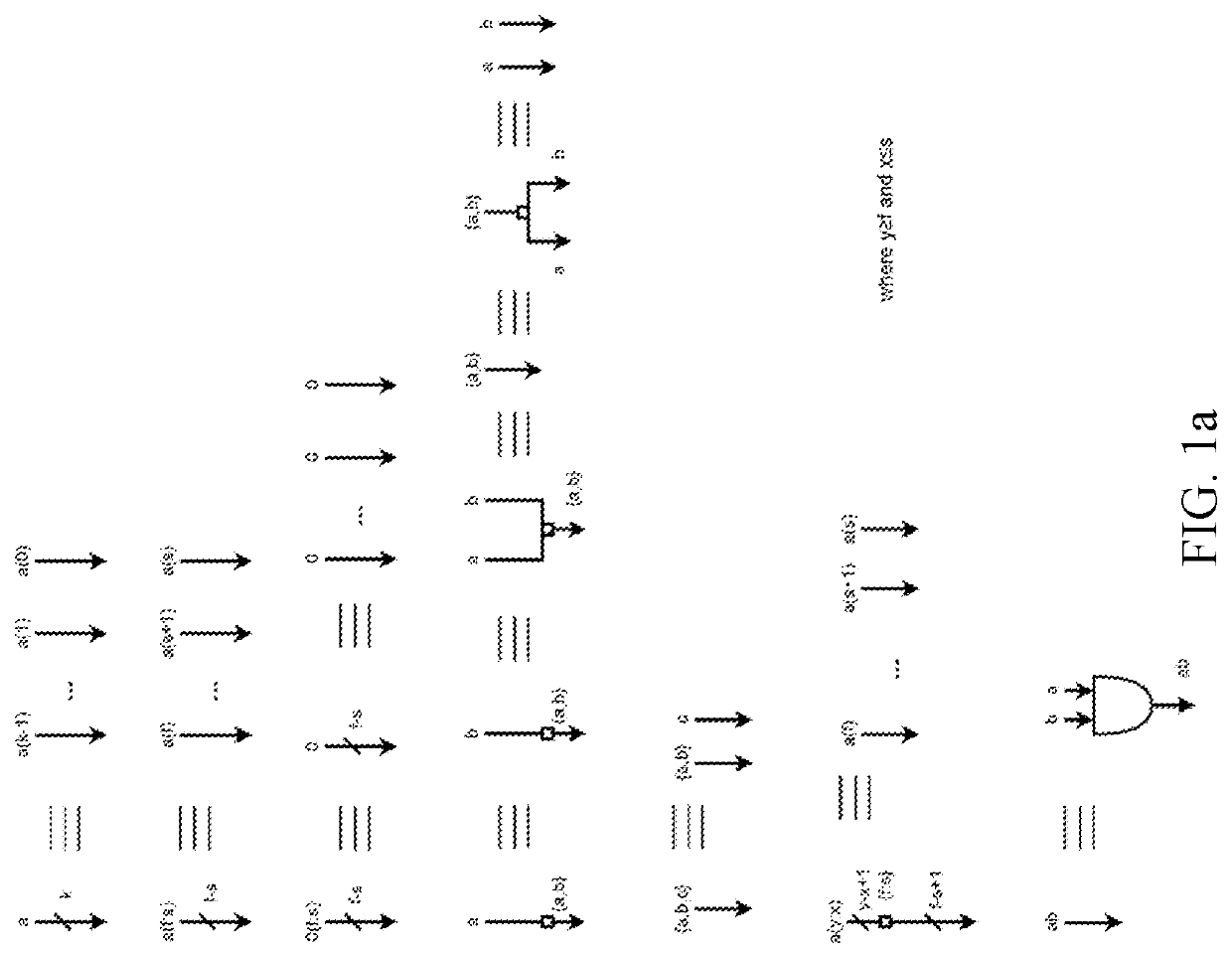

Synthesis path for transforming concurrent programs into hardware deployable on FPGA-based cloud infrastructures

Exploiting FPGAs for acceleration may be performed by transforming concurrent programs. One example mode of operation may provide one or more of: creating synchronous hardware accelerators from concurrent asynchronous programs at the software level by obtaining input as software instructions describing concurrent behavior via a communicating sequential processes (CSP) model of message exchange between concurrent processes performed via channels; mapping, on a computing device, each of the concurrent processes to synchronous dataflow primitives comprising at least one of join, fork, merge, steer, variable, and arbiter; producing a clocked digital logic description for upload to one or more field programmable gate array (FPGA) devices; performing primitive remapping of the output design for throughput, clock rate and resource usage via retiming; and creating an annotated graph of the input software description for debugging of concurrent code for the FPGA devices.

Owner: RECONFIGURE IO LTD
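Four of the dataflow primitives named in the abstract above (fork, join, merge, steer) can be modelled in software as small functions over token queues. The semantics below follow common dataflow usage and are an assumption; the patent abstract does not define them, and the function signatures are hypothetical.

```python
from collections import deque


def fork(src: deque, outs: list) -> None:
    """Copy every token on src to each output channel."""
    while src:
        tok = src.popleft()
        for out in outs:
            out.append(tok)


def join(ins: list, out: deque) -> None:
    """Emit one tuple each time every input channel holds a token."""
    while ins and all(ins):  # an empty deque is falsy
        out.append(tuple(ch.popleft() for ch in ins))


def merge(ins: list, out: deque) -> None:
    """Forward all pending tokens to out (drained in input order here;
    a hardware merge would take whichever token arrives first)."""
    for ch in ins:
        while ch:
            out.append(ch.popleft())


def steer(ctrl: deque, data: deque, out_true: deque, out_false: deque) -> None:
    """Route each data token to out_true or out_false based on a control token."""
    while ctrl and data:
        target = out_true if ctrl.popleft() else out_false
        target.append(data.popleft())
```

A CSP process that reads from two channels and writes to one would map to a `join` feeding a compute node; a `select` over channels maps naturally to `merge`, and a conditional send to `steer`.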

Execution unit accelerator

Active | US10929134B2 | Instruction analysis | Processor architectures/configuration | Jazelle | Computer architecture

A processor to facilitate acceleration of instruction execution is disclosed. The processor includes a plurality of execution units (EUs), each including: an instruction decode unit to decode an instruction into one or more operands and an opcode defining an operation to be performed at an accelerator; a register file having a plurality of registers to store the one or more operands; and an accelerator having programmable hardware to retrieve the one or more operands from the register file and perform the operation on the one or more operands.

Owner: INTEL CORP
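The decode, register-file and accelerator flow described in the abstract above can be mimicked with a toy software model. Everything here is hypothetical: the text instruction format (`"mac 3 4 5"`), the class names, and the idea of "programming" the accelerator by registering a Python callable are illustrative assumptions, not the patented microarchitecture.

```python
class Accelerator:
    """Programmable unit: maps an opcode to an operation supplied at run time."""

    def __init__(self):
        self.ops = {}

    def program(self, opcode, fn):
        self.ops[opcode] = fn

    def execute(self, opcode, regs, reg_ids):
        # Operands are fetched from the register file, not passed directly.
        return self.ops[opcode](*(regs[i] for i in reg_ids))


class ExecutionUnit:
    def __init__(self, num_regs=4):
        self.regs = [0] * num_regs  # register file
        self.accel = Accelerator()

    @staticmethod
    def decode(instr):
        """Split a text instruction into an opcode and integer operands."""
        opcode, *operands = instr.split()
        return opcode, [int(v) for v in operands]

    def run(self, instr):
        opcode, operands = self.decode(instr)
        reg_ids = list(range(len(operands)))
        for i, value in zip(reg_ids, operands):
            self.regs[i] = value  # store operands in the register file
        return self.accel.execute(opcode, self.regs, reg_ids)
```

For example, after `eu = ExecutionUnit()` and `eu.accel.program("mac", lambda a, b, c: a * b + c)`, calling `eu.run("mac 3 4 5")` decodes the instruction, stores the three operands, and has the accelerator compute the multiply-accumulate.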

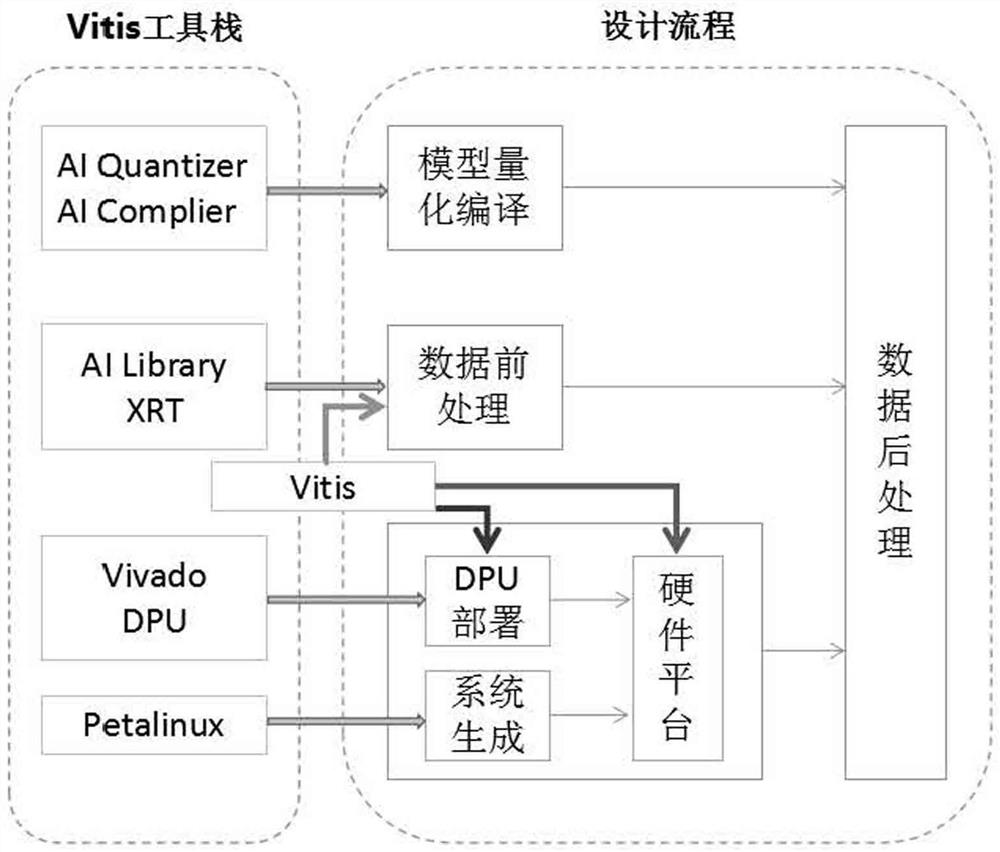

Target detection full-process acceleration method and system based on Vitis stack

Pending | CN114298298A | Improve reusability | Strong expandability | Physical realisation | Data transformation | Algorithm

The invention discloses a target detection full-process acceleration method and system based on the Vitis stack. The method comprises the following steps: first, the network is fine-tuned, the model is quantized with the Vitis quantizer and compiled with the Vitis compiler, which converts the original network model and reduces the data size; data storage transformation and fixed-point conversion of data types are applied to the host program data to improve data parallelism; task-level parallelism and data optimization directives are then applied to the whole process to accelerate data operations; next, a hardware accelerator and the system files are created with Vivado and PetaLinux respectively, a hardware platform is formed through Vitis integration, and the PS side and the PL side exchange data over an AXI bus; finally, the optimizations of the preceding steps are integrated to form full-process acceleration. This design can increase the throughput of neural network algorithms deployed on embedded edge devices and achieves an acceleration effect in the field of target detection.

Owner: SUN YAT SEN UNIV +2
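The fixed-point conversion step mentioned in the abstract above can be illustrated with a generic signed fixed-point encoder. This is a textbook scheme with saturation, not the Vitis quantizer's algorithm; the function names and the default of 8 total bits with 4 fractional bits are assumptions chosen for the example.

```python
def to_fixed(values, frac_bits=4, total_bits=8):
    """Convert floats to signed fixed-point integers with saturation.

    Each value is scaled by 2**frac_bits, rounded (Python's round() uses
    round-half-to-even), and clamped to the signed total_bits range.
    """
    scale = 1 << frac_bits
    lo = -(1 << (total_bits - 1))
    hi = (1 << (total_bits - 1)) - 1
    return [max(lo, min(hi, round(v * scale))) for v in values]


def from_fixed(codes, frac_bits=4):
    """Recover approximate floats from fixed-point integers."""
    scale = 1 << frac_bits
    return [c / scale for c in codes]
```

With the defaults, `to_fixed([0.5, -1.25, 100.0])` yields `[8, -20, 127]`: the first two values are represented exactly, while 100.0 saturates at the largest 8-bit code. Narrower integer data is what lets more values move per bus transfer and more operations run in parallel on the programmable logic.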

Alexnet forward network accelerator based on FPGA (Field Programmable Gate Array)

Pending | CN111340206A | Reduce redundant data | Reduce consumption | Neural architectures | Physical realisation | Jazelle | External storage

Owner: YUNNAN UNIV

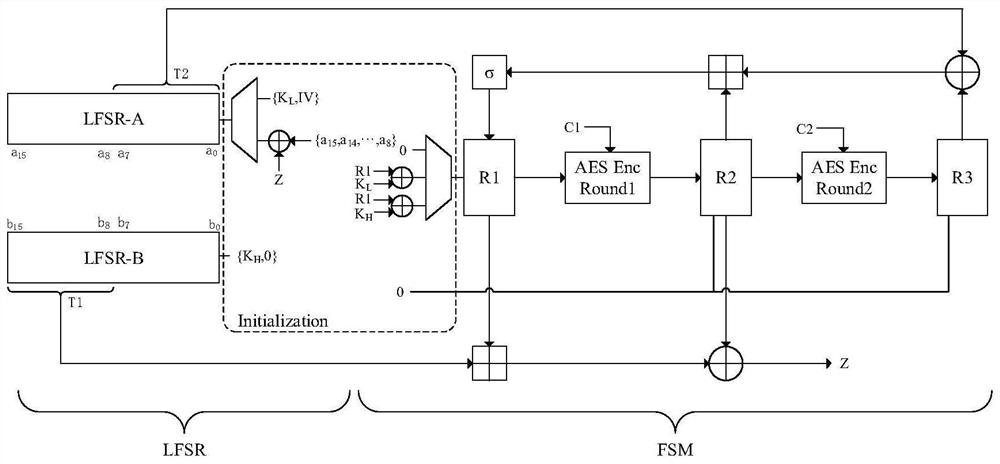

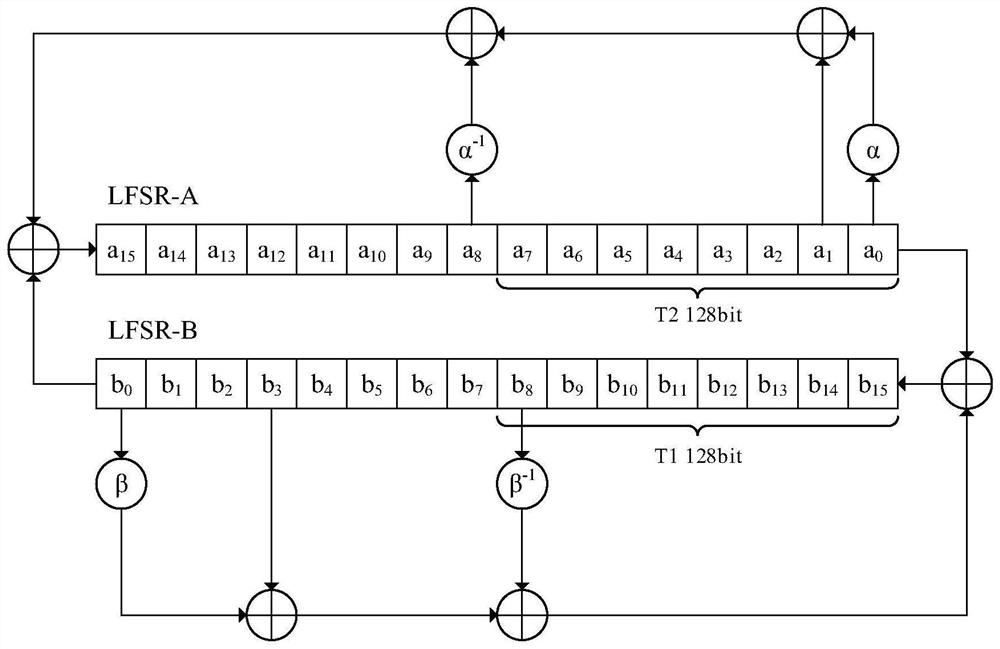

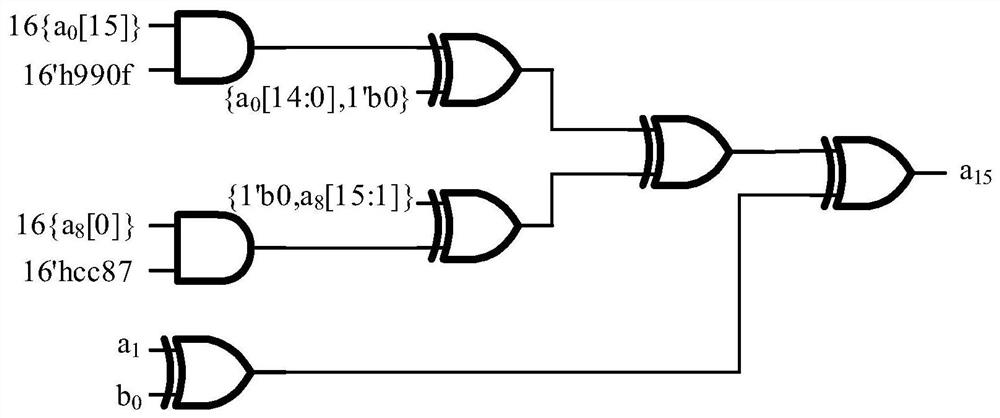

SNOW-V algorithm accelerator applied to 5G system and acceleration method thereof

Pending | CN114039719A | Meet the needs of ultra-high-speed encryption | Easy to implement | Encryption apparatus with shift registers/memories | Plaintext | Shift register

Owner: NANJING UNIV

© 2025 PatSnap. All rights reserved.