131 results about "Runtime library" patented technology

In computer programming, a runtime library (RTL) is a set of low-level routines used by a compiler to invoke some of the behaviors of a runtime environment, by inserting calls to the runtime library into the compiled executable binary. The runtime environment implements the execution model, built-in functions, and other fundamental behaviors of a programming language. During execution (run time) of that computer program, execution of those calls to the runtime library causes communication between the executable binary and the runtime environment. A runtime library often includes built-in functions for memory management or exception handling. Therefore, a runtime library is always specific to the platform and compiler.

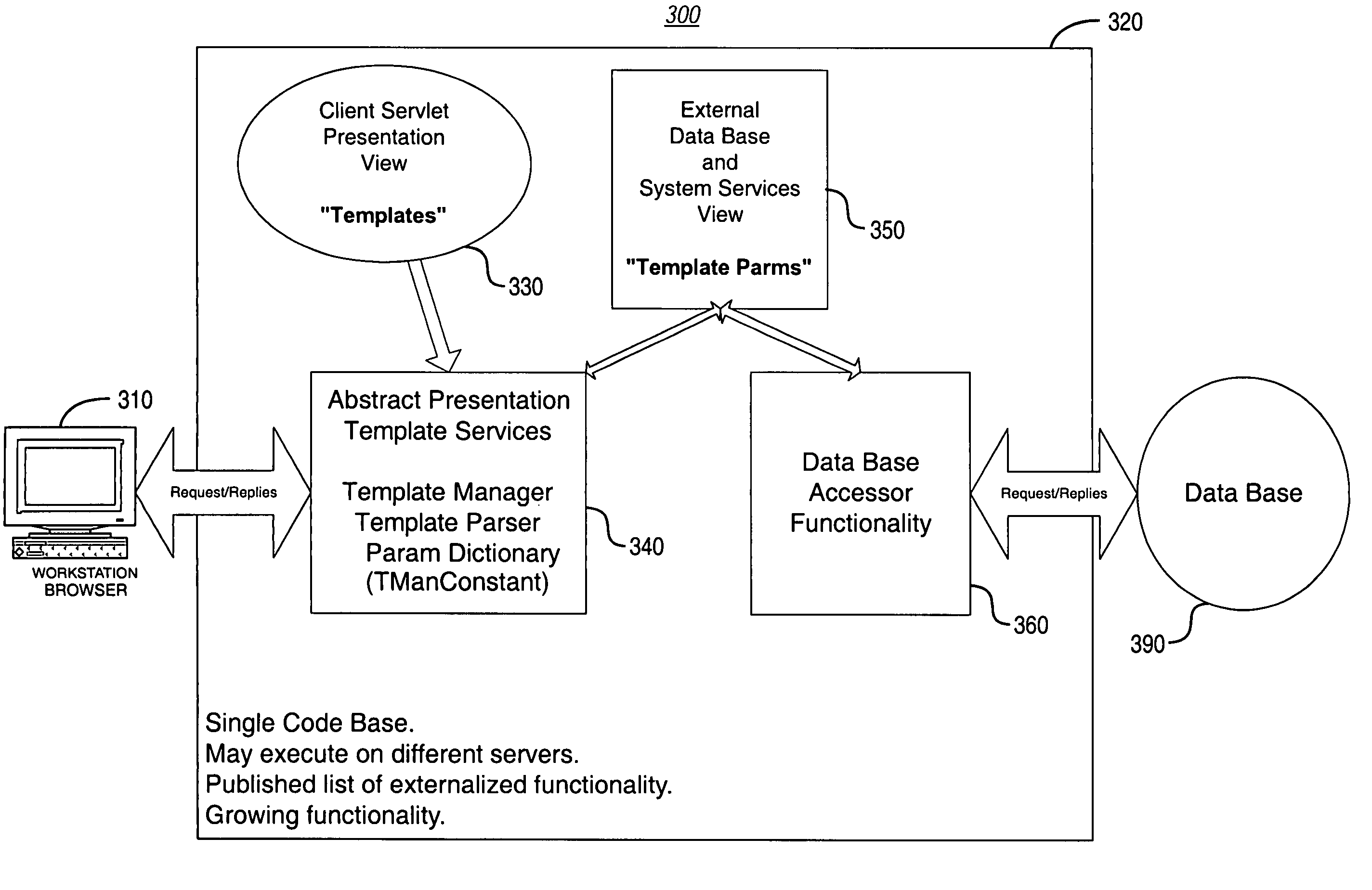

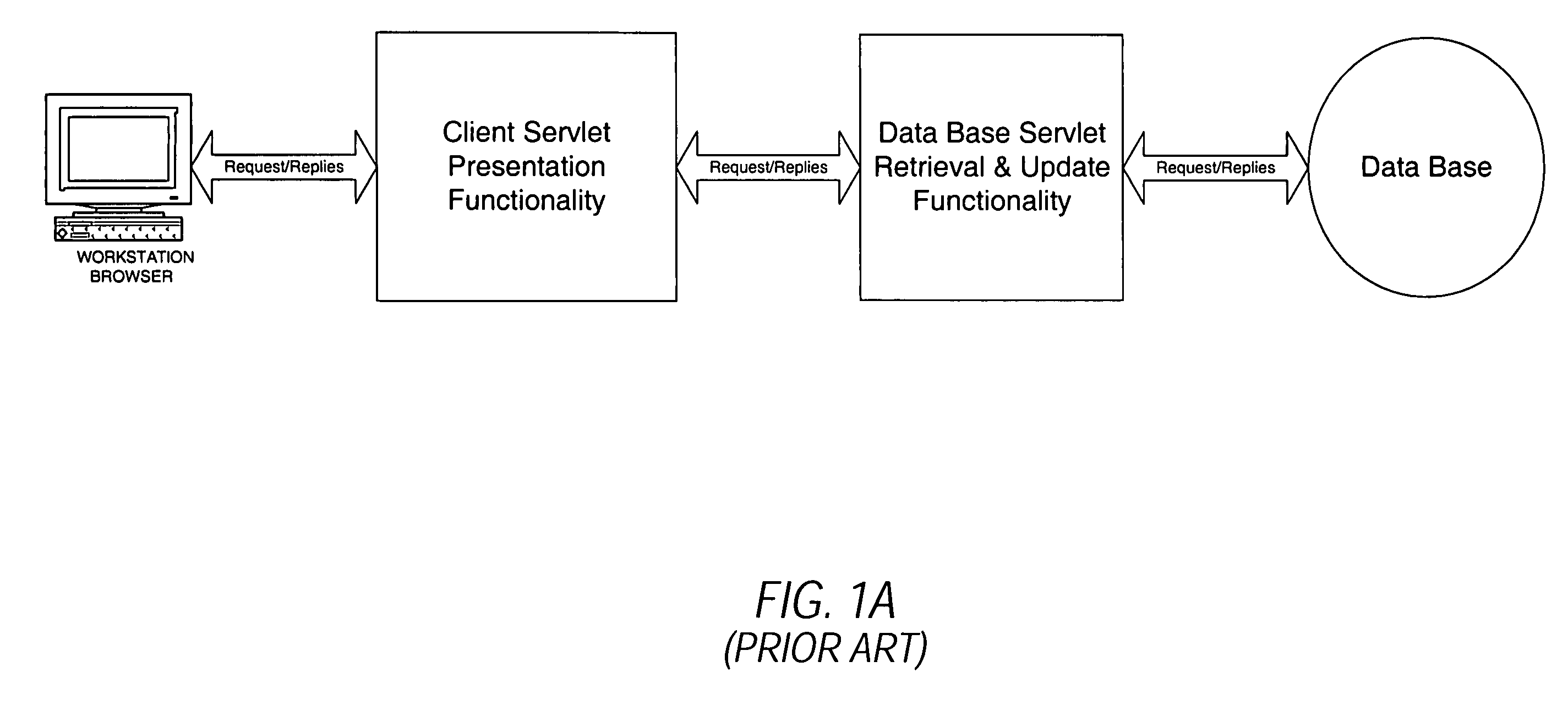

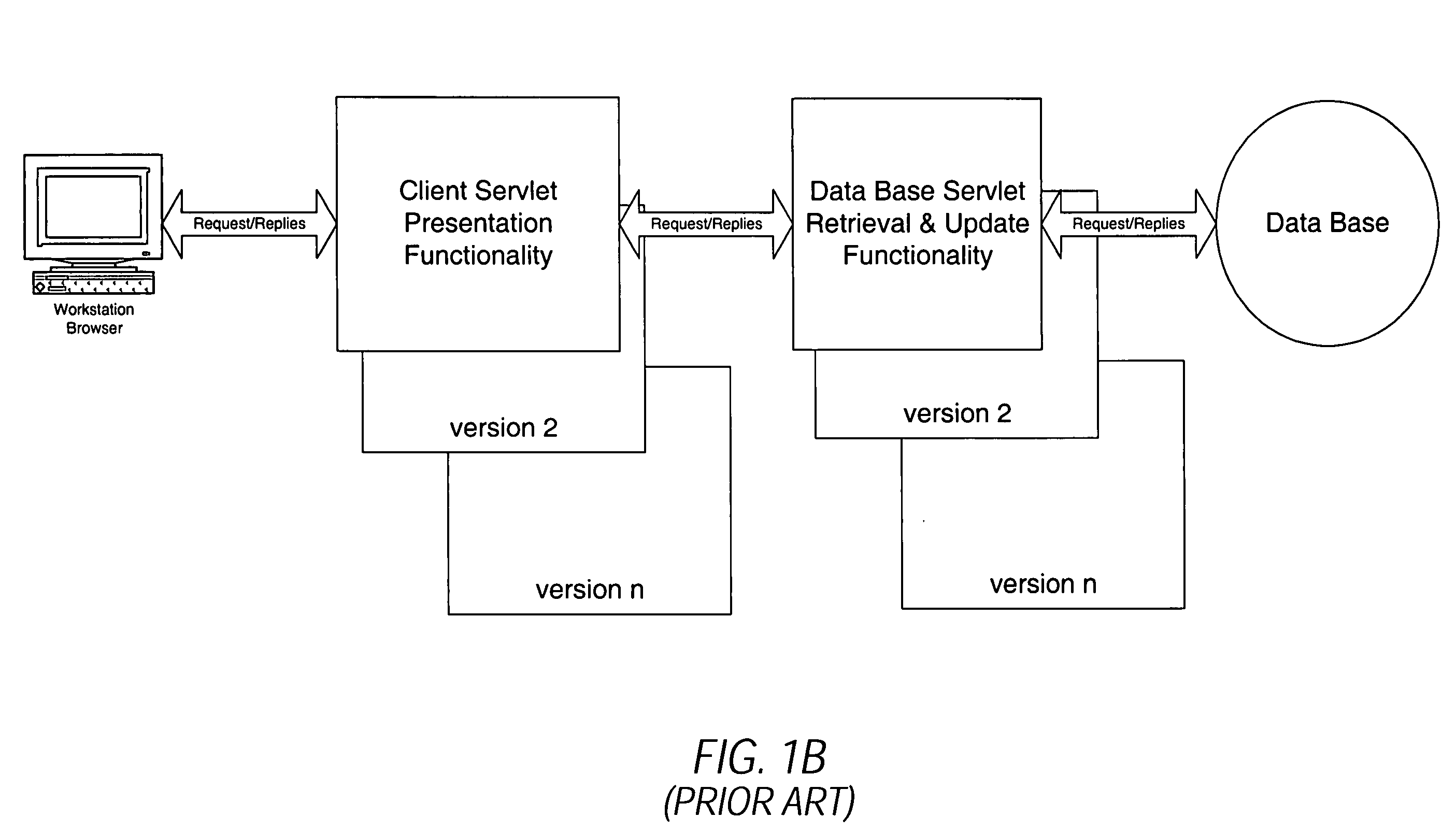

System and methodology for dynamic application environment employing runtime execution templates

Inactive | US7111231B1 | Digital data information retrieval | Special data processing applications | The Internet | Application software

A dynamic application environment or system is described that includes a client, a run-time system, and a back-end database. The client comprises a workstation or desktop PC running browser software, such as Microsoft Internet Explorer or Netscape Navigator. The back-end database comprises a back-end (e.g., server-based) database system, such as an SQL-based database system. The run-time system includes a collection or repository module for storing “presentation templates,” a Template Services Module or engine, a Template Parameters Module, and a Database Accessor Module. The presentation templates are employed for presentation of the application to the user interface (at the client). At application run-time, the templates are provided to the Template Services Module, which includes a Template Manager and a Template Parser. These provide generic processing of the templates, which may be assembled to complete a finished product (i.e., run-time application). For instance, the Template Services Module knows how to load and parse a template and then look up its parameters, regardless of the application-specific details (e.g., user interface implementation) embodied by the template. In use, the system is deployed with presentation templates that represent the various client views of the target application, for the various platforms that the application is deployed to. In this manner, when new functionality needs to be added to the system, it may simply be added by expanding the run-time library portion of the system.

Owner:INTELLISYNC CORP

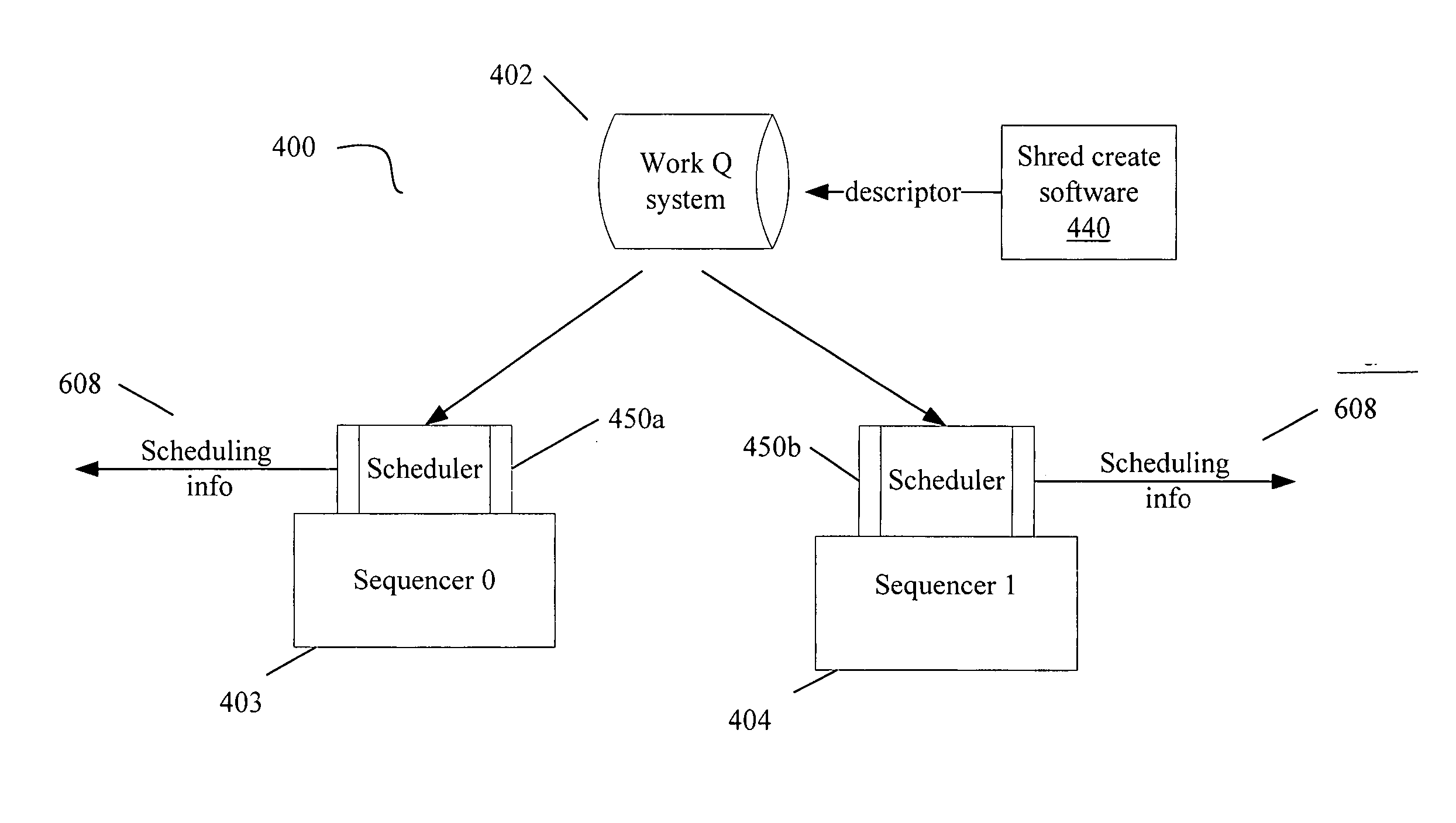

Scheduling optimizations for user-level threads

Inactive | US20070074217A1 | Multiprogramming arrangements | Memory systems | Operational system | Thread scheduling

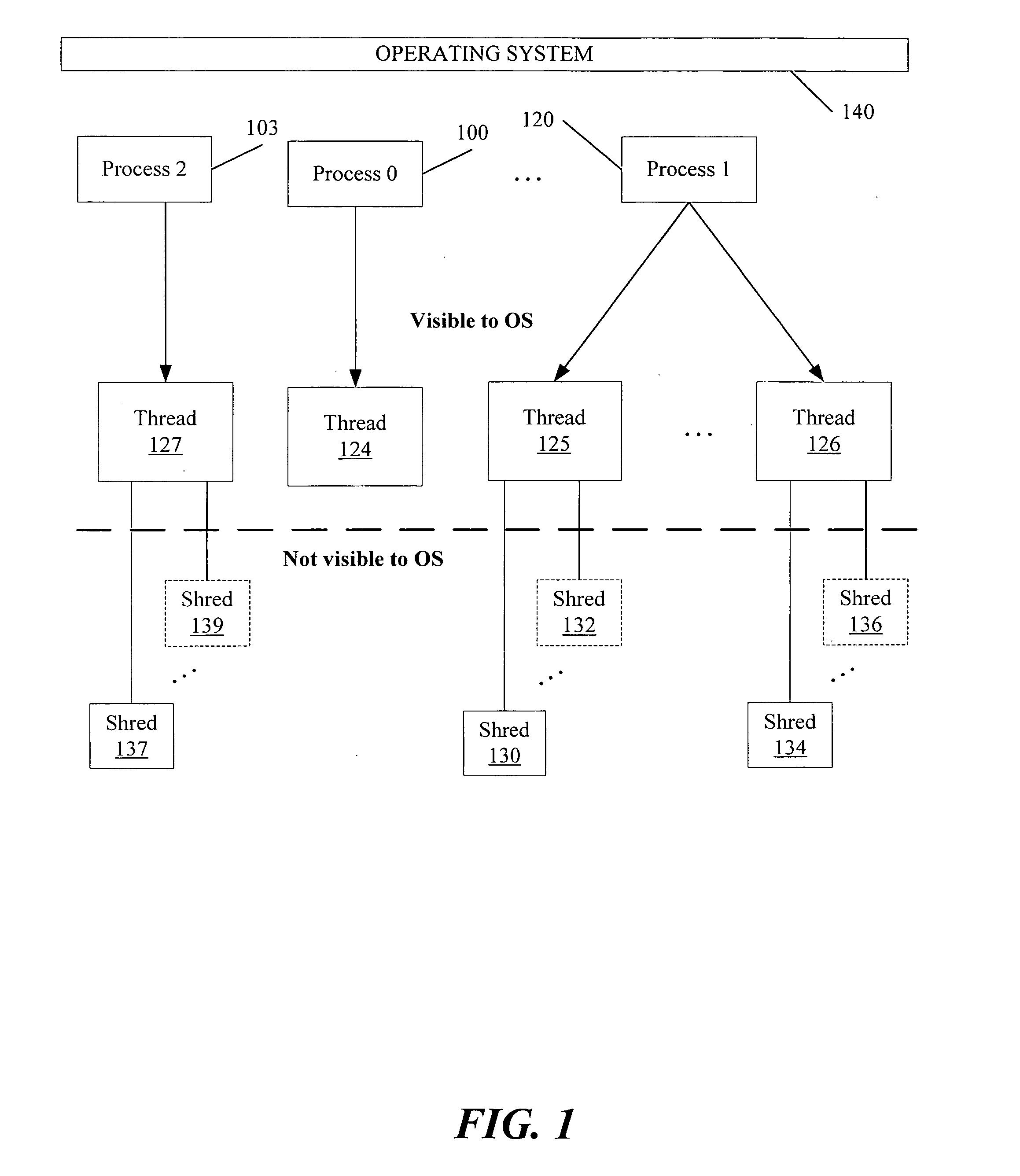

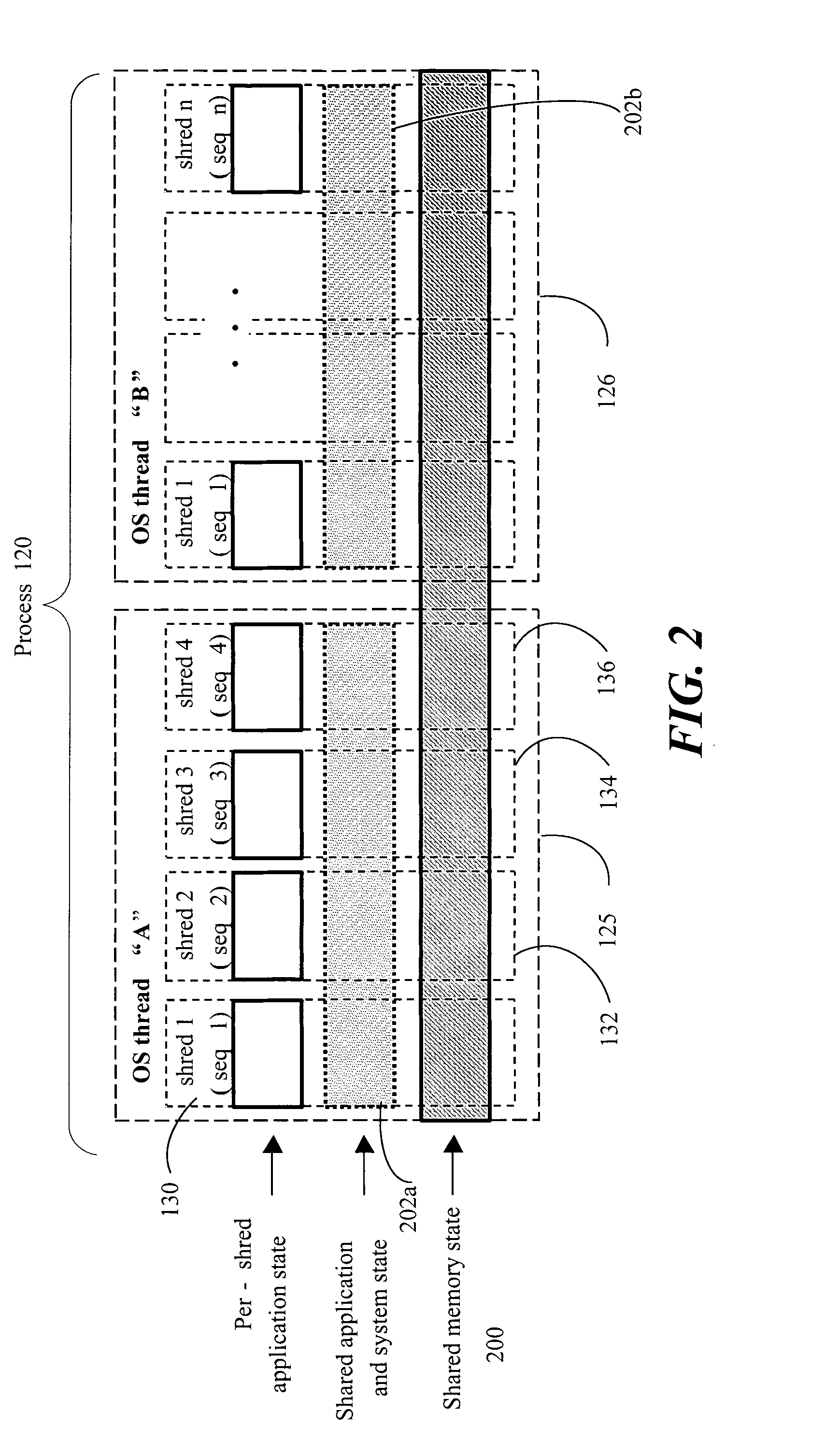

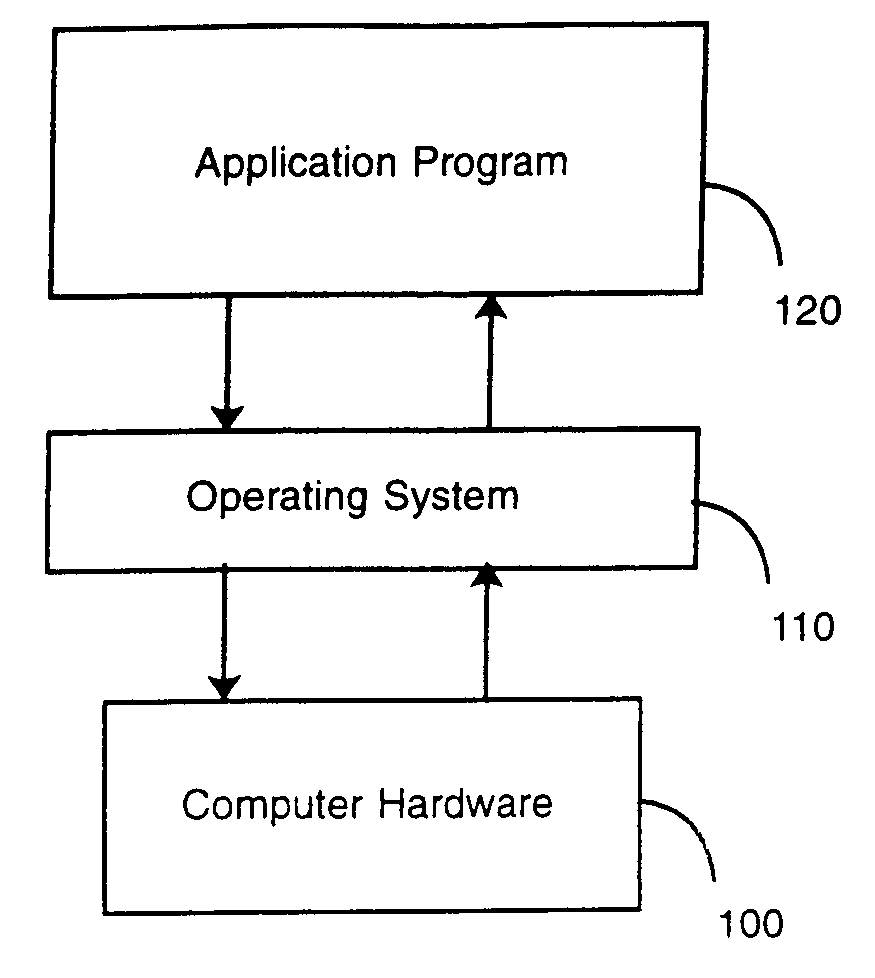

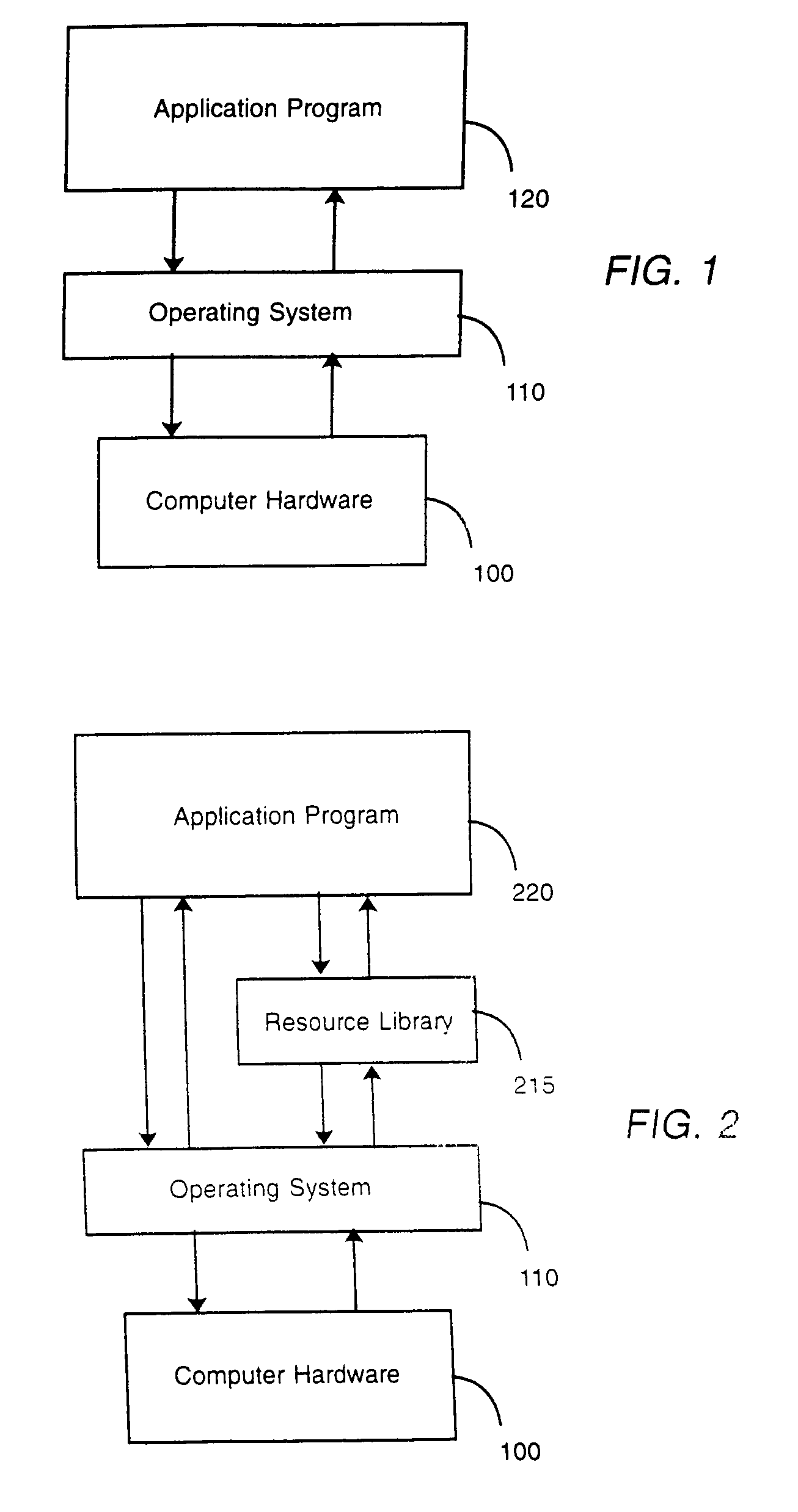

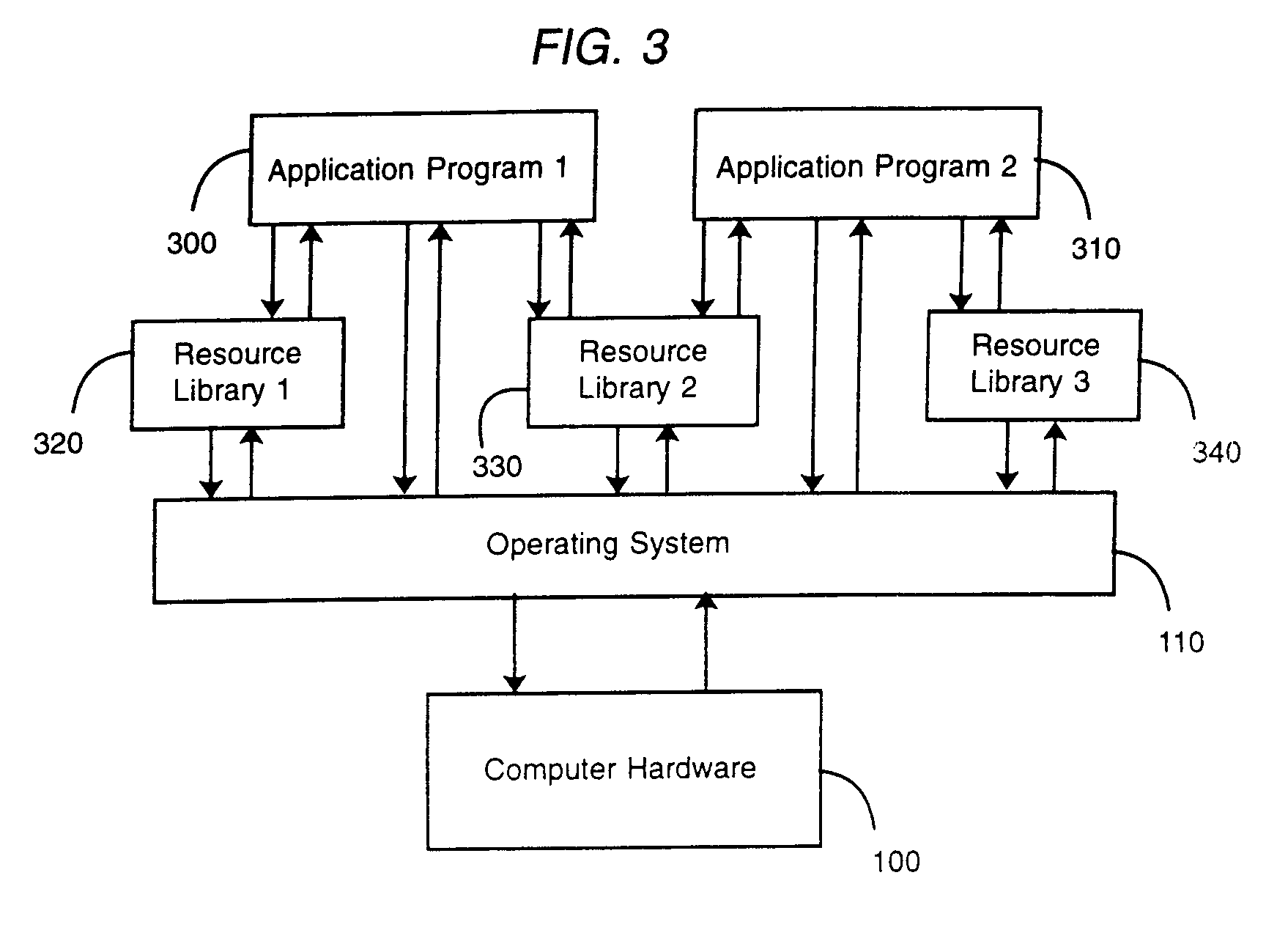

Method, apparatus and system embodiments to schedule user-level OS-independent “shreds” without intervention of an operating system. For at least one embodiment, the shred is scheduled for execution by a scheduler routine rather than the operating system. The scheduler routine resides in user space and may be part of a runtime library. The library may also include monitoring logic that monitors execution of a shredded program and provides scheduling hints, based on shred dependence information, to the scheduler. In addition, the scheduler may further optimize shred scheduling by taking into account information about a system's configuration of thread execution hardware. Other embodiments are also described and claimed.
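As a loose software analogy for scheduling in user space, the sketch below runs cooperative tasks from a scheduler routine without asking the operating system to switch between them. It is not the patented mechanism: Python generators stand in for shreds, and `run_shreds`, `worker`, and the round-robin policy are hypothetical names and choices made only for illustration.

```python
from collections import deque


def worker(steps):
    """A cooperative task: yields control back to the scheduler after each step."""
    for i in range(steps):
        yield i


def run_shreds(shreds):
    """Round-robin scheduler living entirely in user code.

    shreds: list of (name, generator) pairs.
    Returns a trace of (name, step) tuples in execution order.
    """
    ready = deque(shreds)
    trace = []
    while ready:
        name, gen = ready.popleft()
        try:
            step = next(gen)           # run the task until it yields
        except StopIteration:
            continue                   # task finished, drop it from the queue
        trace.append((name, step))
        ready.append((name, gen))      # requeue behind the other ready tasks
    return trace


trace = run_shreds([("a", worker(2)), ("b", worker(1))])
```

A scheduler that used dependence hints, as the abstract mentions, would reorder the ready queue instead of always taking the head.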

Owner:INTEL CORP

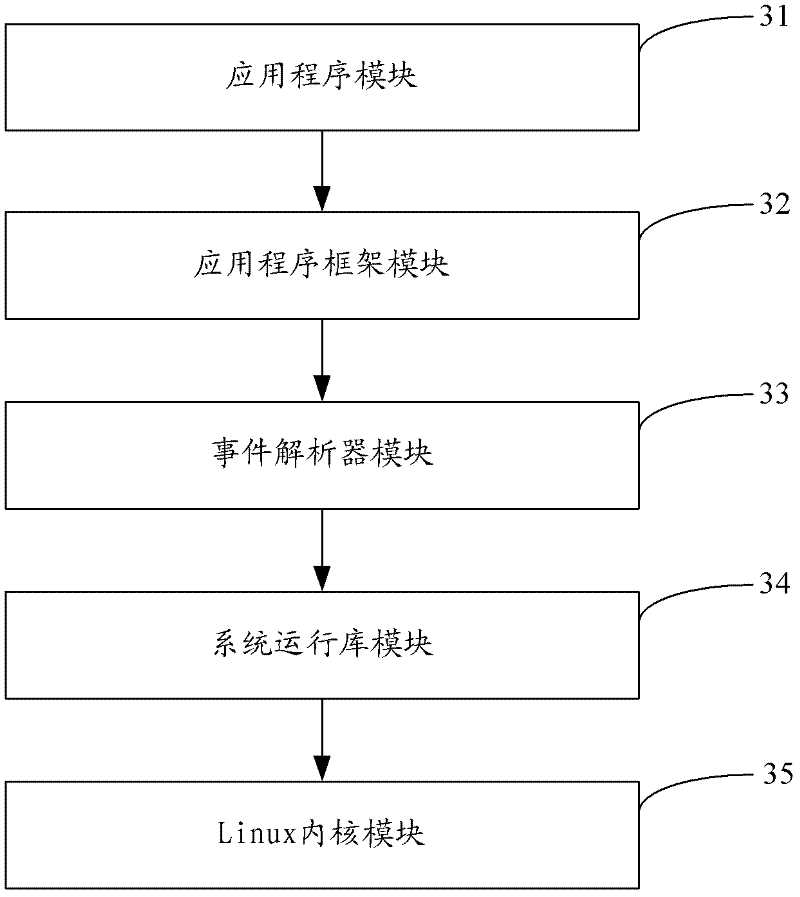

Android system development framework and development device

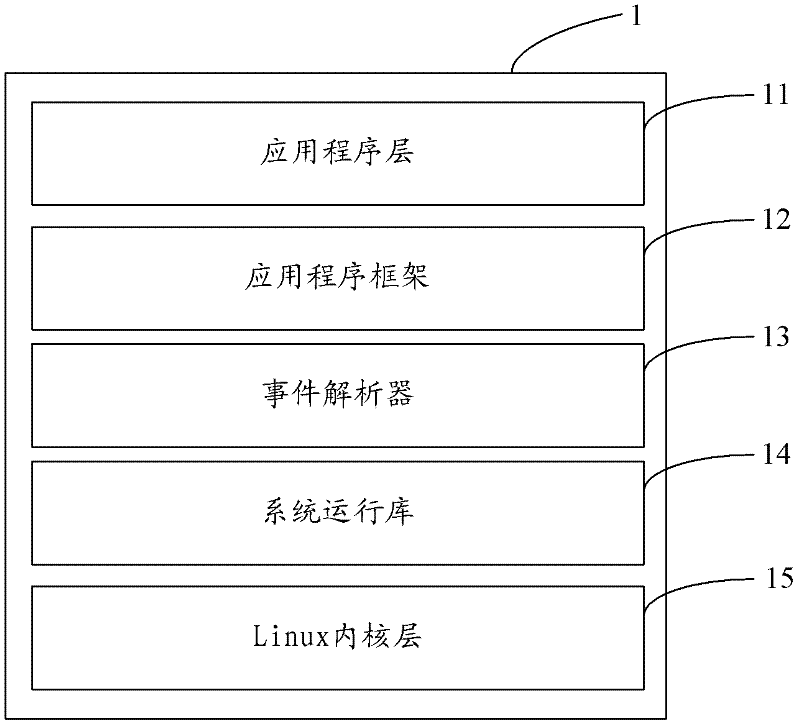

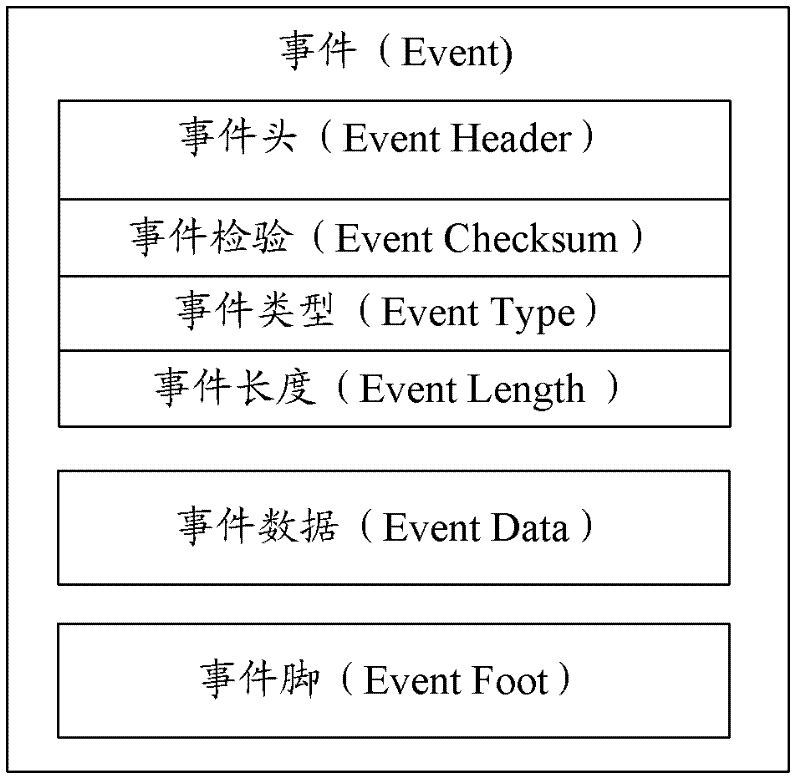

Active | CN102314348A | Reduce coupling | Solve the problems of low development efficiency and poor portability | Specific program execution arrangements | Coupling | Interaction interface

The invention belongs to the technical field of computers and provides an Android system development framework and a development device. The framework comprises an application layer, an application framework, a system runtime library, and a Linux kernel layer, and is characterized in that it also comprises an event analyzer, which standardizes the interaction information received from an interaction interface. By adding this event analyzer to the existing Android system development framework, the embodiment of the invention addresses the problem that the existing Android system does not provide an effective development framework, which makes application development inefficient and applications hard to port. It improves the development efficiency and portability of application programs while reducing coupling within the Android system.

Owner:TCL CORPORATION

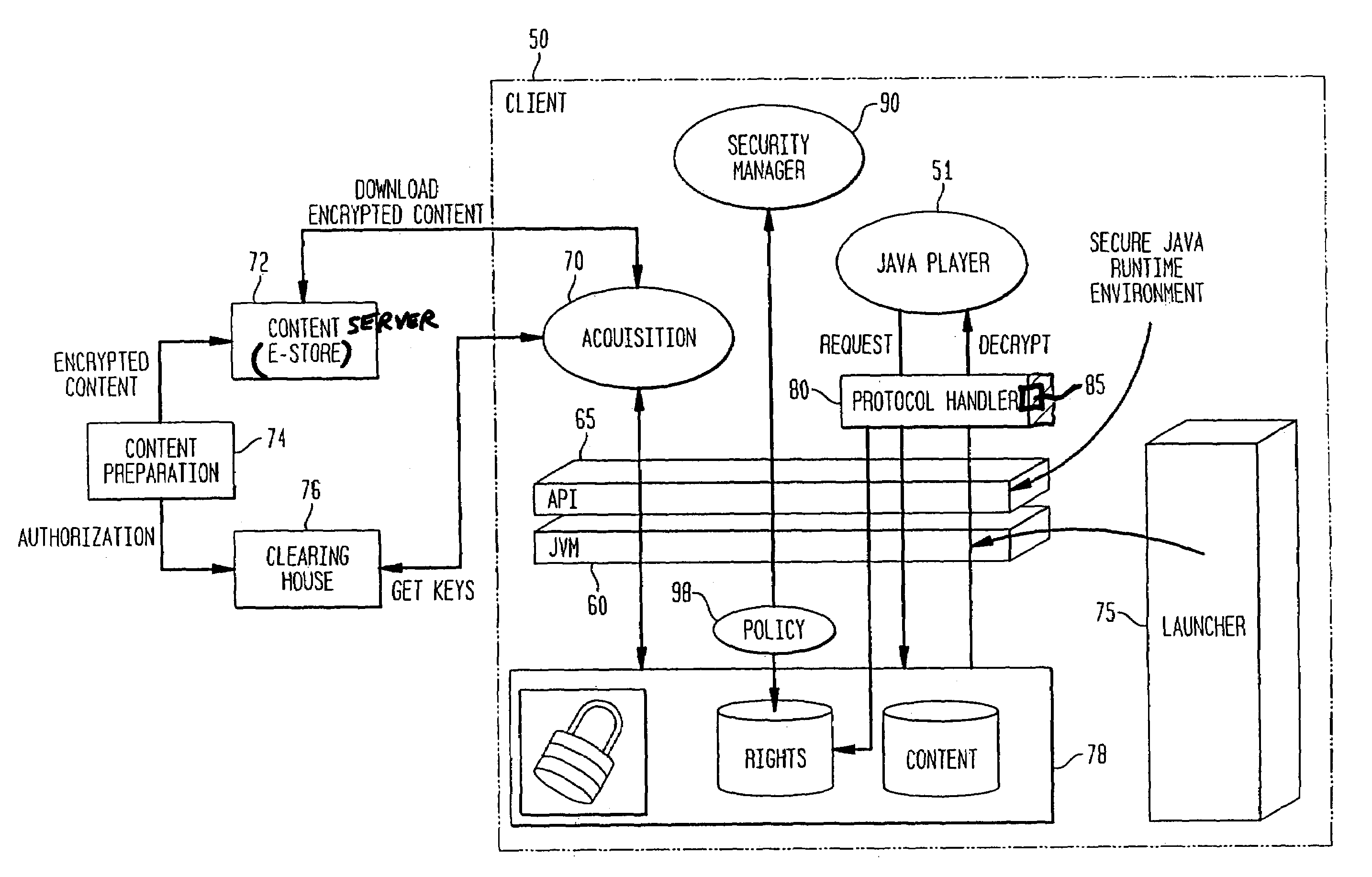

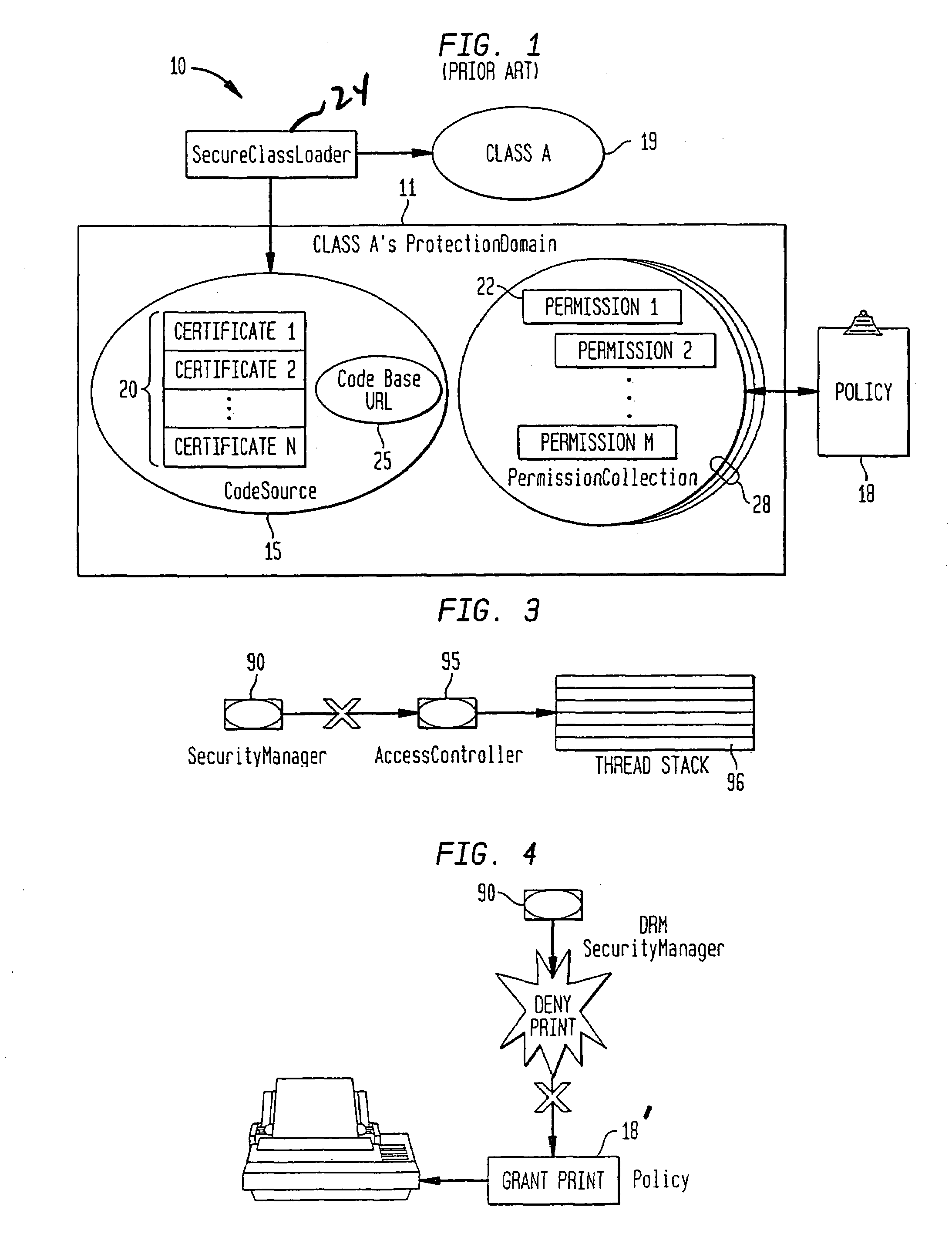

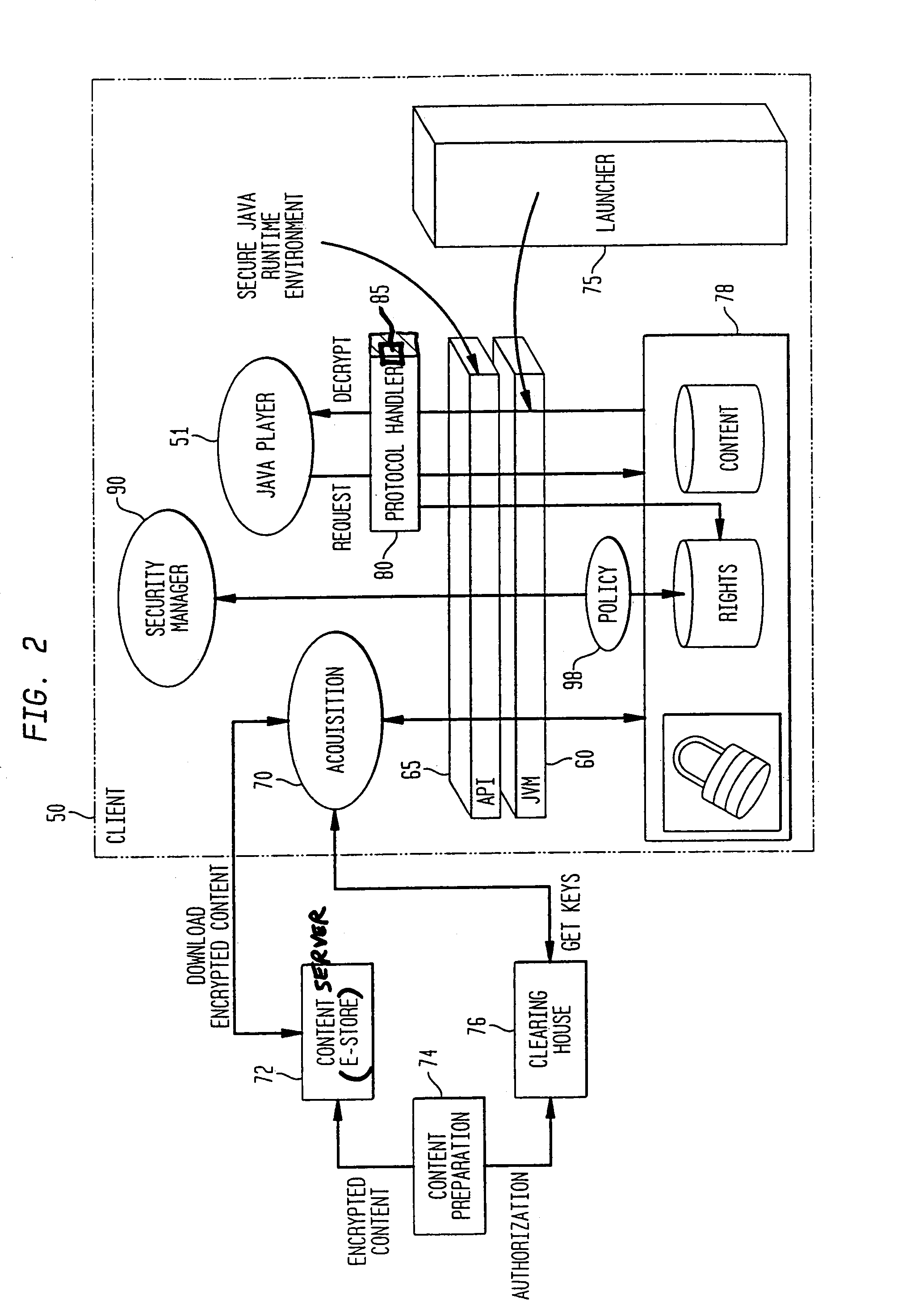

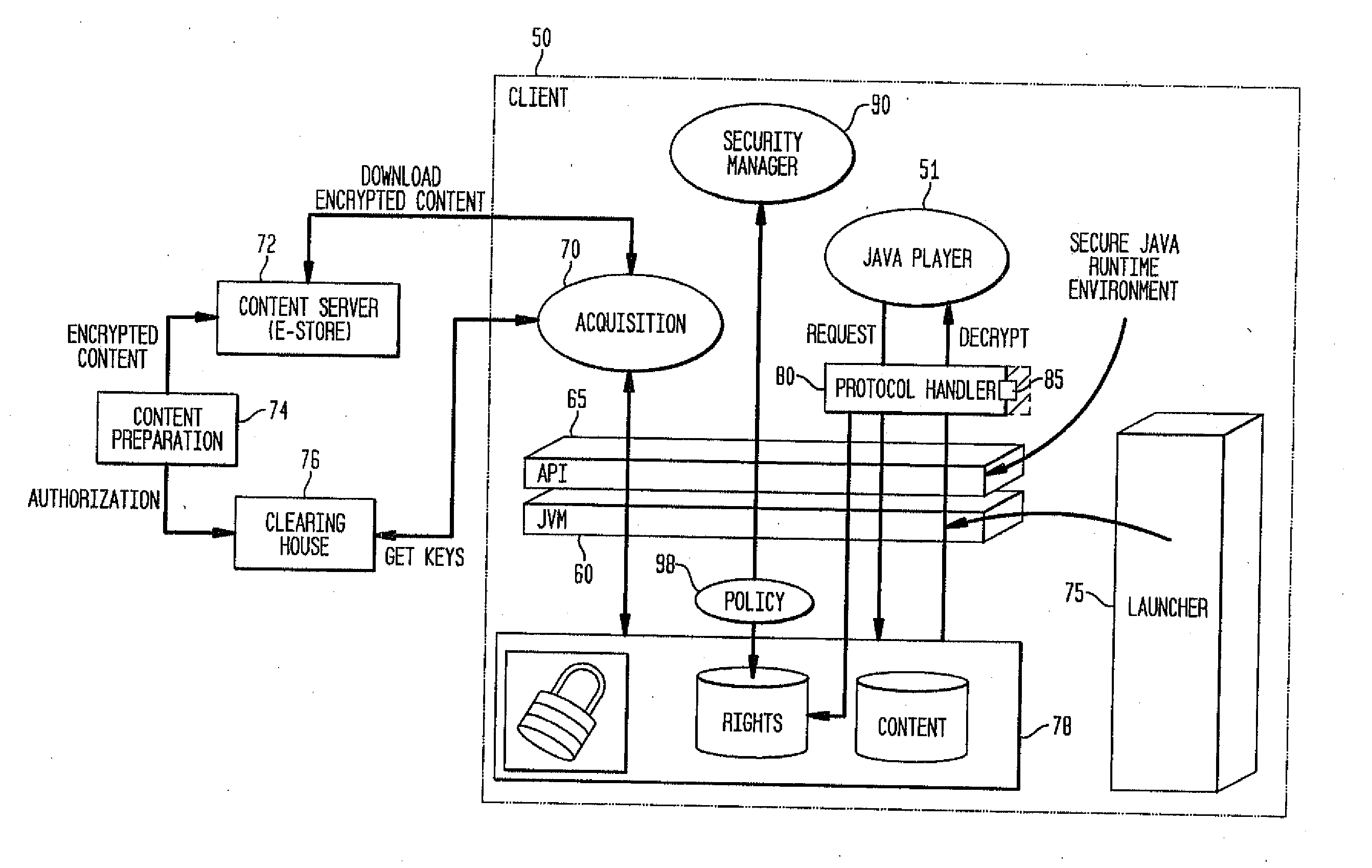

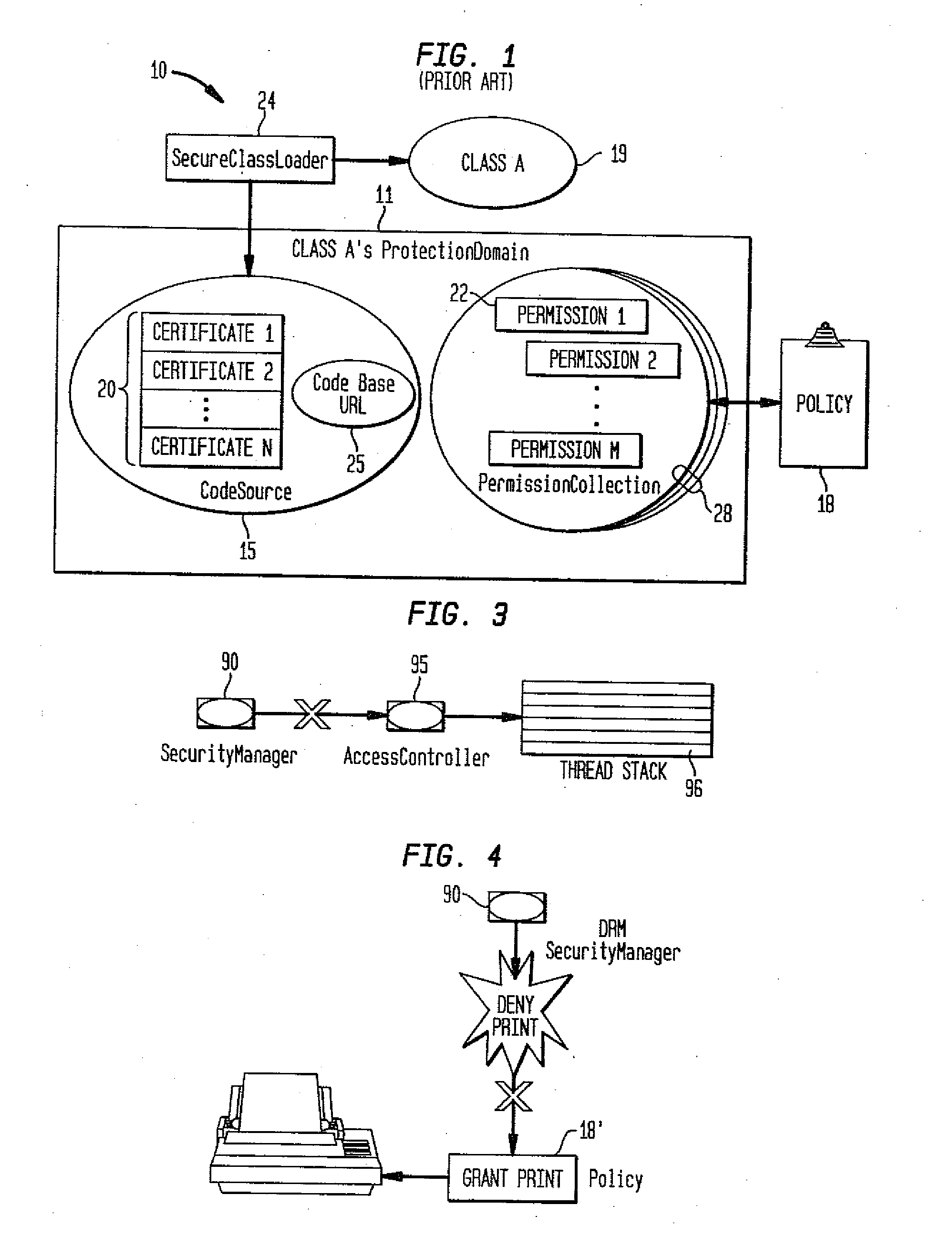

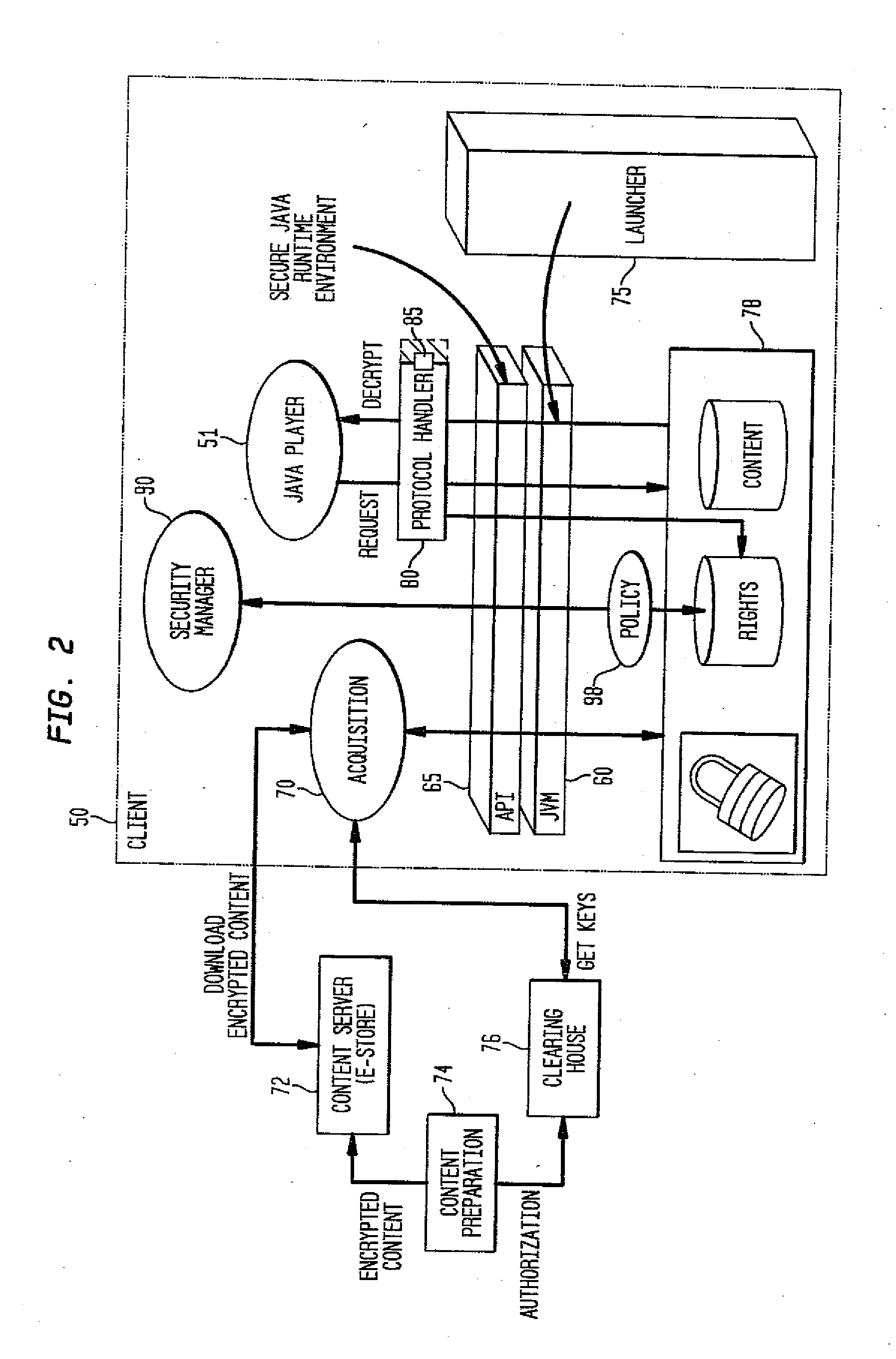

System and method for supporting digital rights management in an enhanced Java™ 2 runtime environment

Inactive | US7308717B2 | Provide protection | Digital data processing details | Analogue secrecy/subscription systems | Digital rights management system | Software engineering

A digital rights management (DRM) system and methodology for a Java client implementing a Java Runtime Environment (JRE). The JRE comprises a Java Virtual Machine (JVM) and Java runtime libraries components and is capable of executing a player application for presenting content that can be presented through a Java program (e.g., a Java application, applet, servlet, bean, etc.) and downloaded from a content server to the client. The DRM system includes an acquisition component for receiving downloaded protected contents; and a dynamic rights management layer located between the JRE and player application for receiving requests to view or play downloaded protected contents from the player, and, in response to each request, determining the rights associated with protected content and enabling viewing or playing of the protected contents via the player application if permitted according to the rights. By providing a DRM-enabled Java runtime, which does not affect the way non-DRM-related programs work, DRM content providers will not require the installation of customized players. By securing the runtime, every Java™ player automatically and transparently becomes a DRM-enabled player.

Owner:IBM CORP

Flexible and extensible Java bytecode instrumentation system

Active | US20030149960A1 | Error detection/correction | Digital computer details | Injection point | Code injection

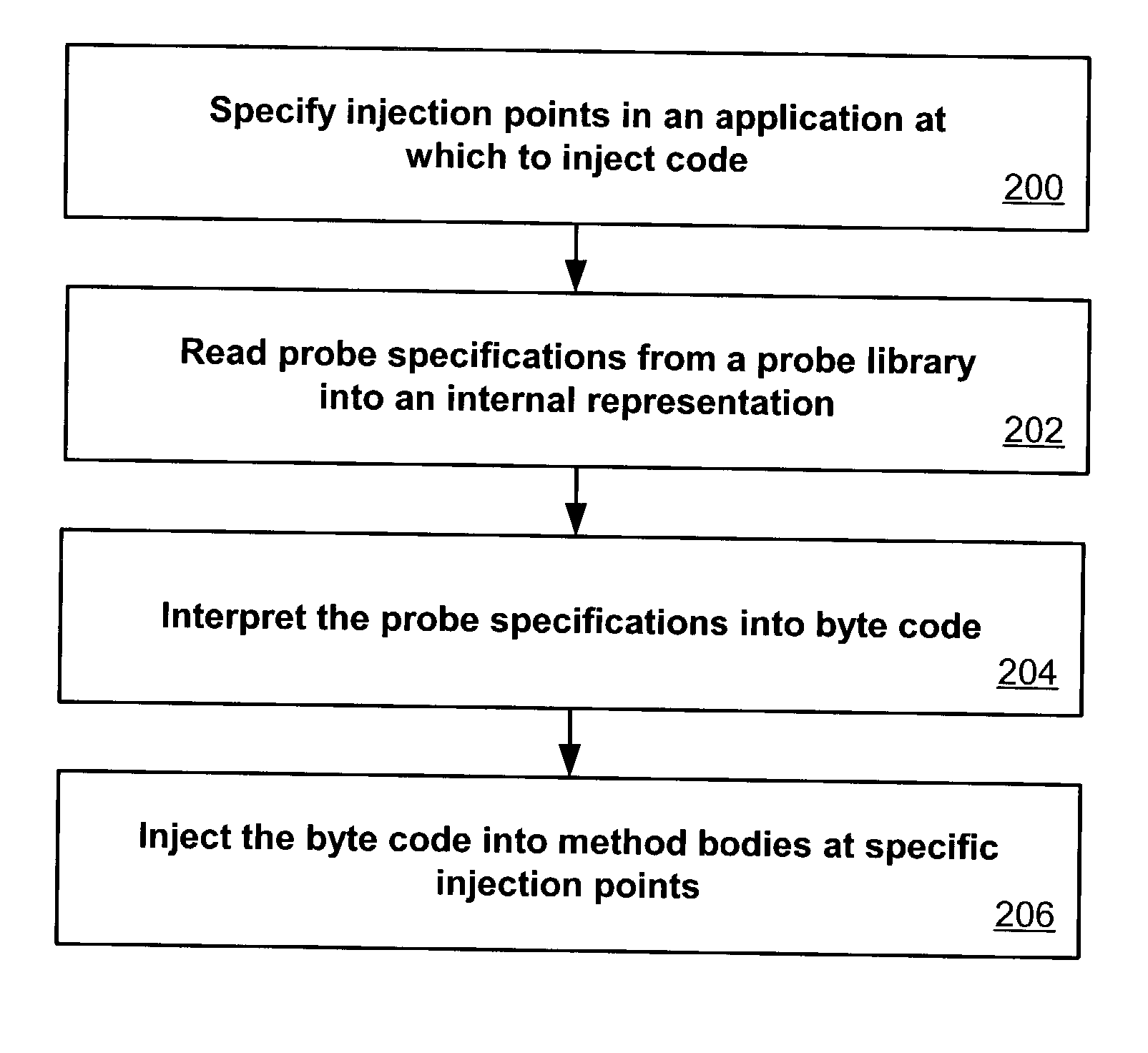

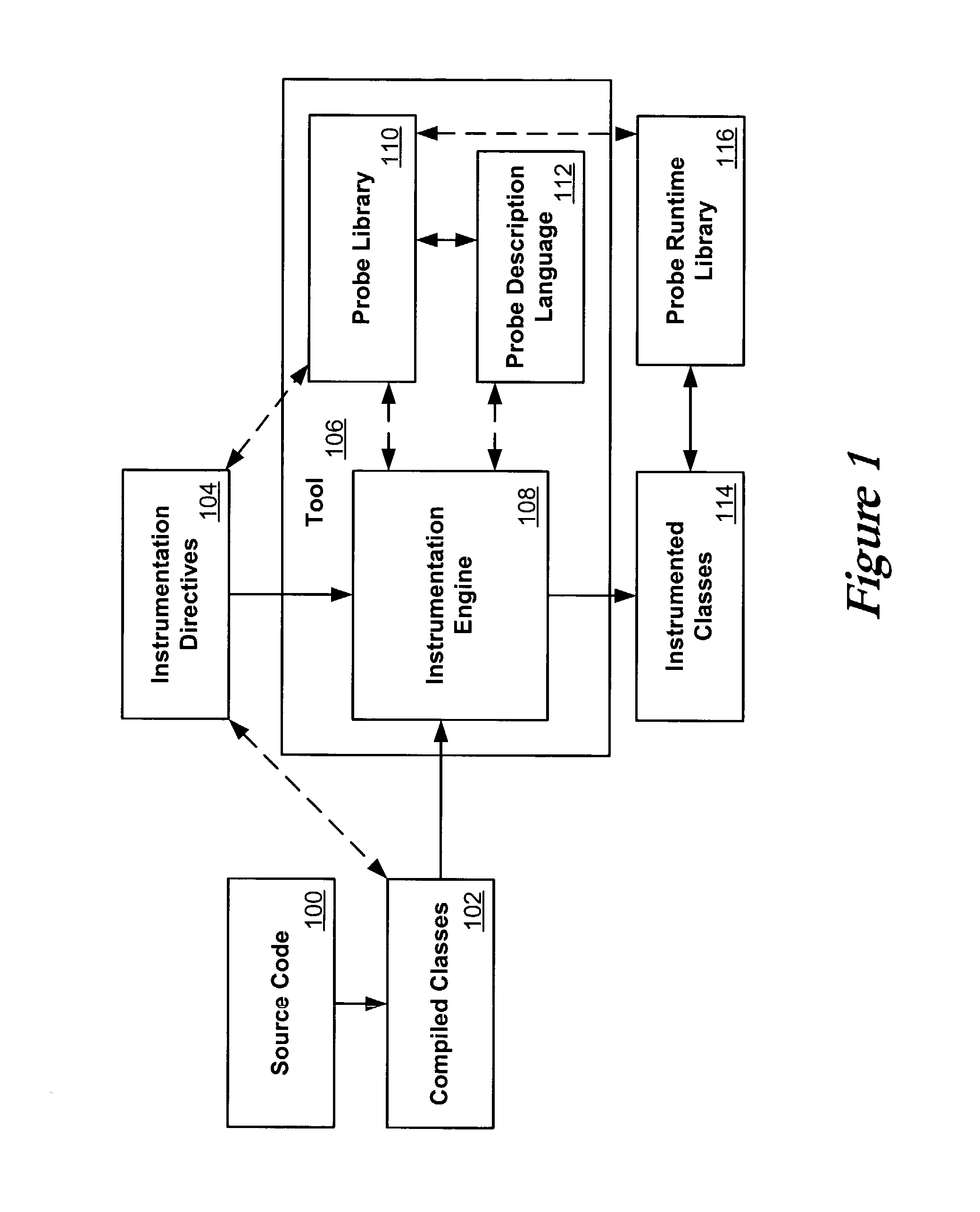

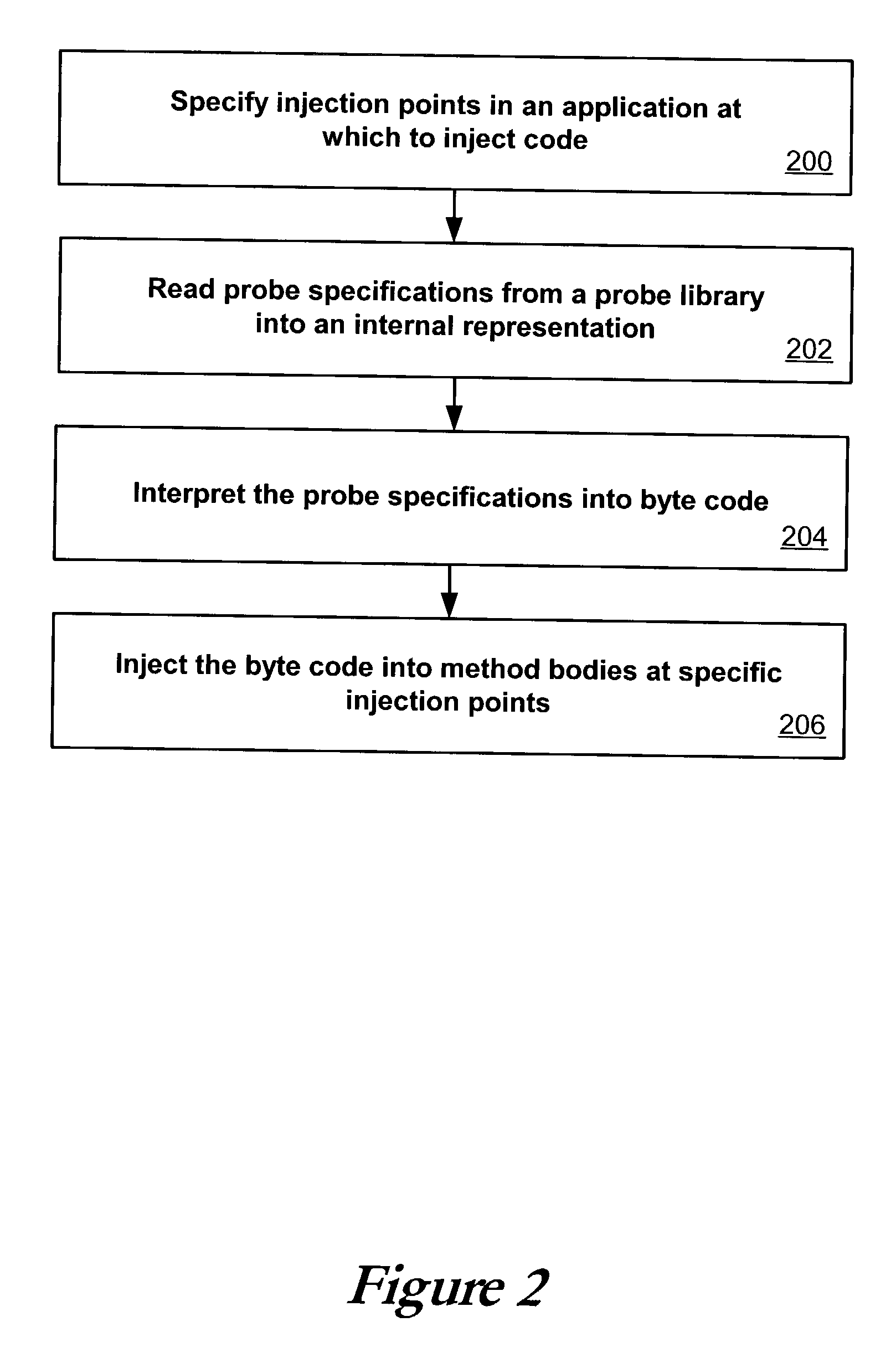

Code can be injected into a compiled application through the use of probes comprised of instrumentation code. Probes can be implemented in a custom high level language that hides low level instruction details. A directive file contains instructions on injecting a probe into a compiled application. An instrumentation engine reads these instructions and injects the probe into the compiled application at the appropriate injection points. Multiple probes can be used, and can be stored in a probe library. Each probe can inject code into the application at, for example, a package, class, method, or line of the compiled application. Calls can also be made to external runtime libraries. This description is not intended to be a complete description of, or limit the scope of, the invention. Other features, aspects, and objects of the invention can be obtained from a review of the specification, the figures, and the claims.

Owner:ORACLE INT CORP

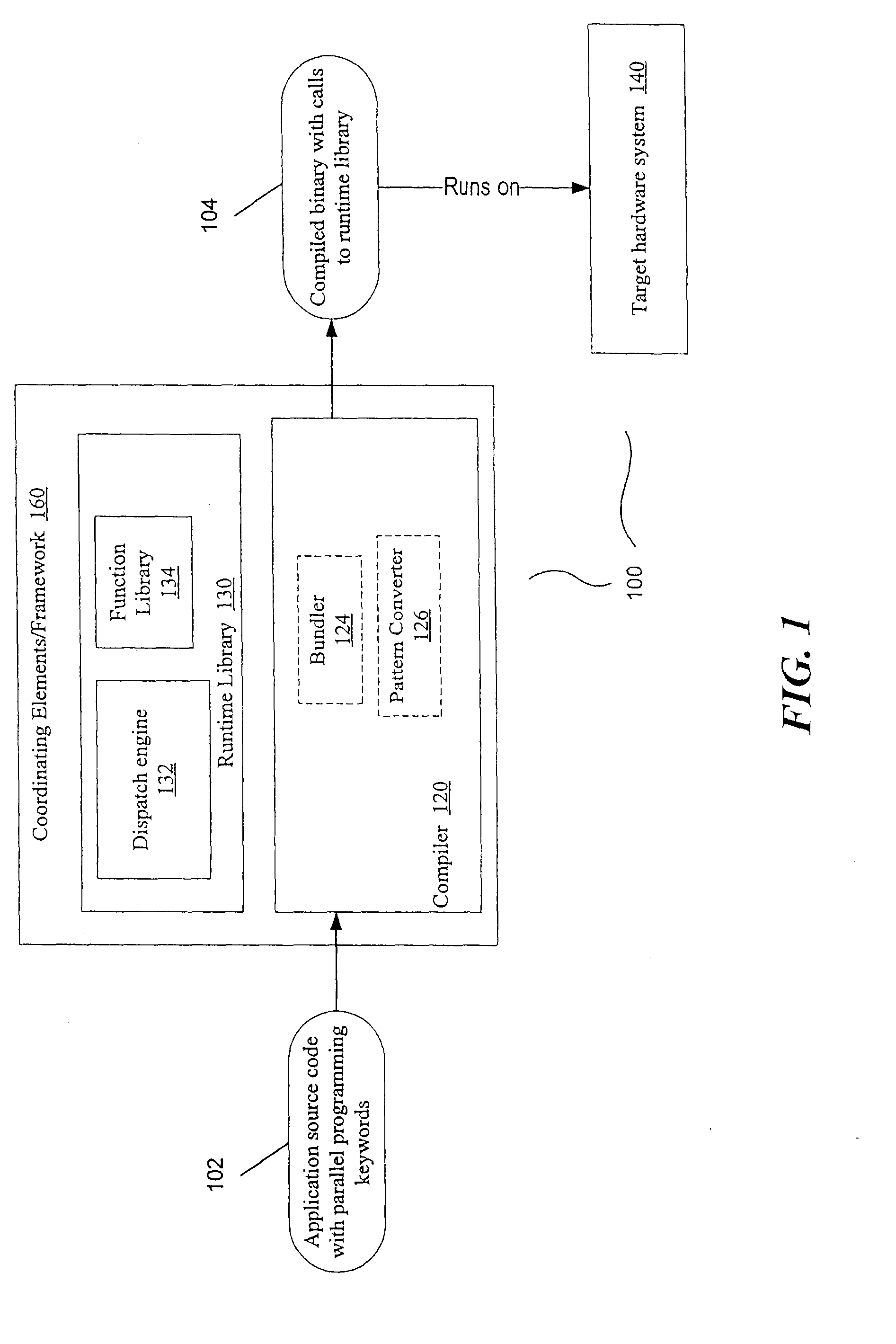

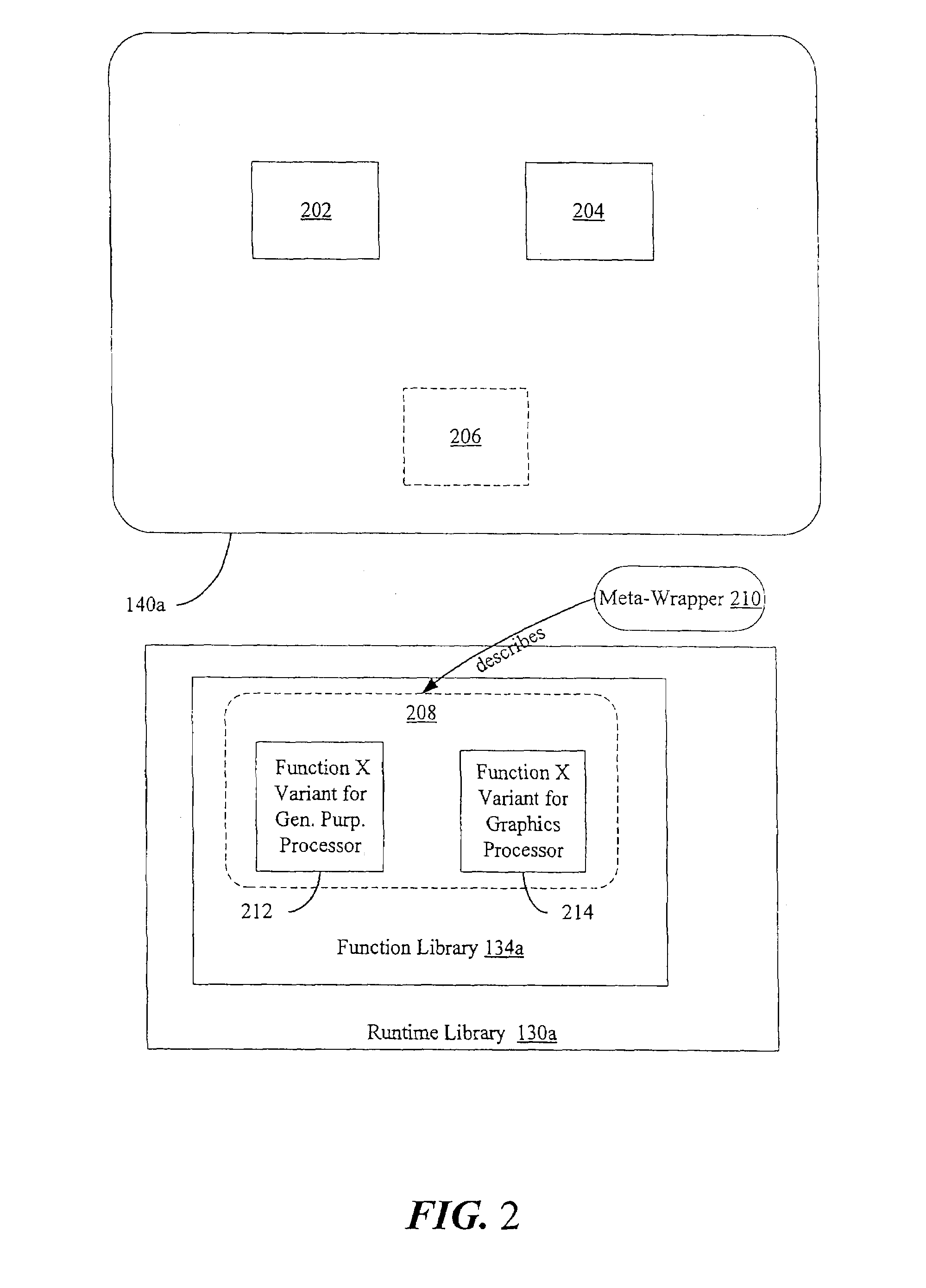

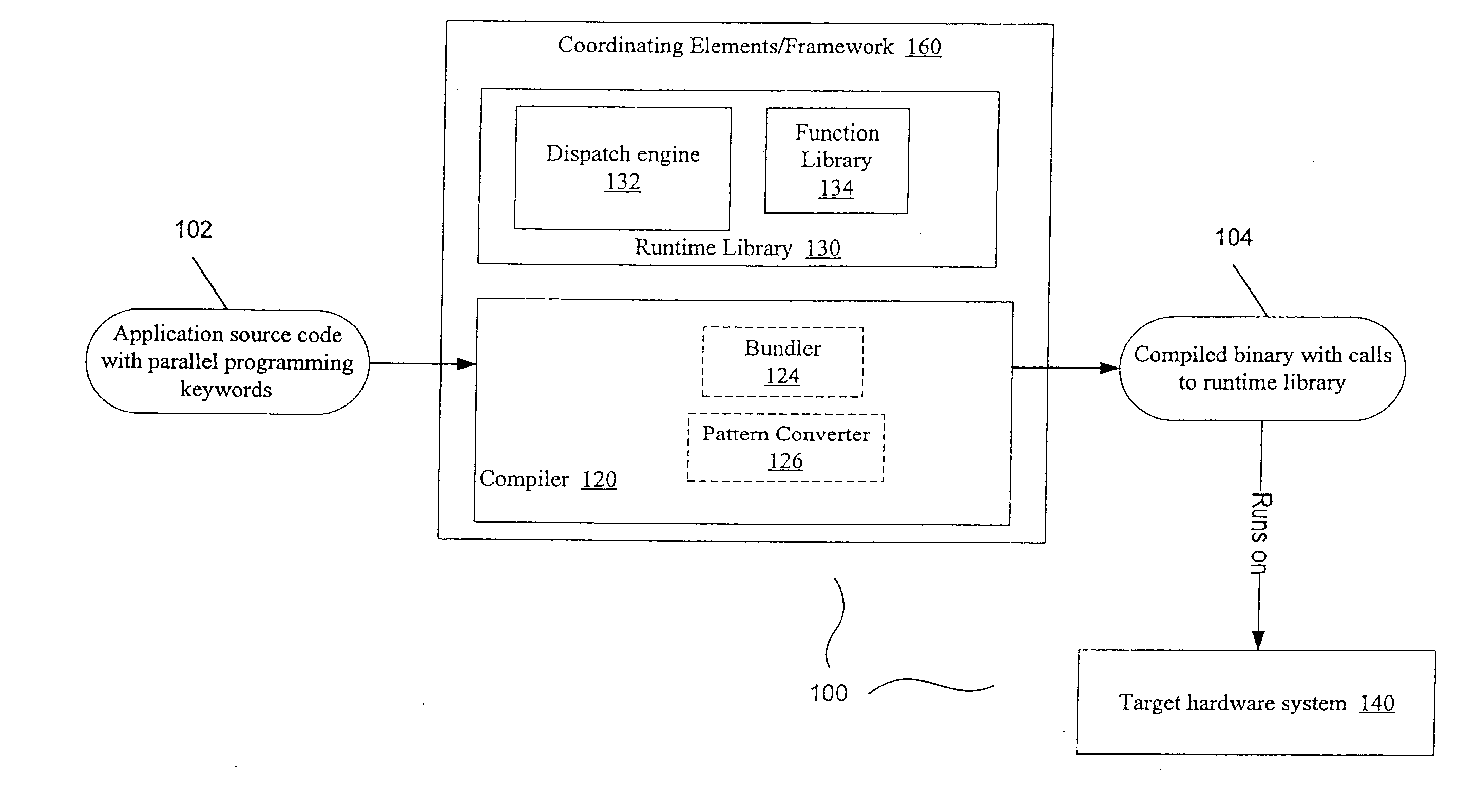

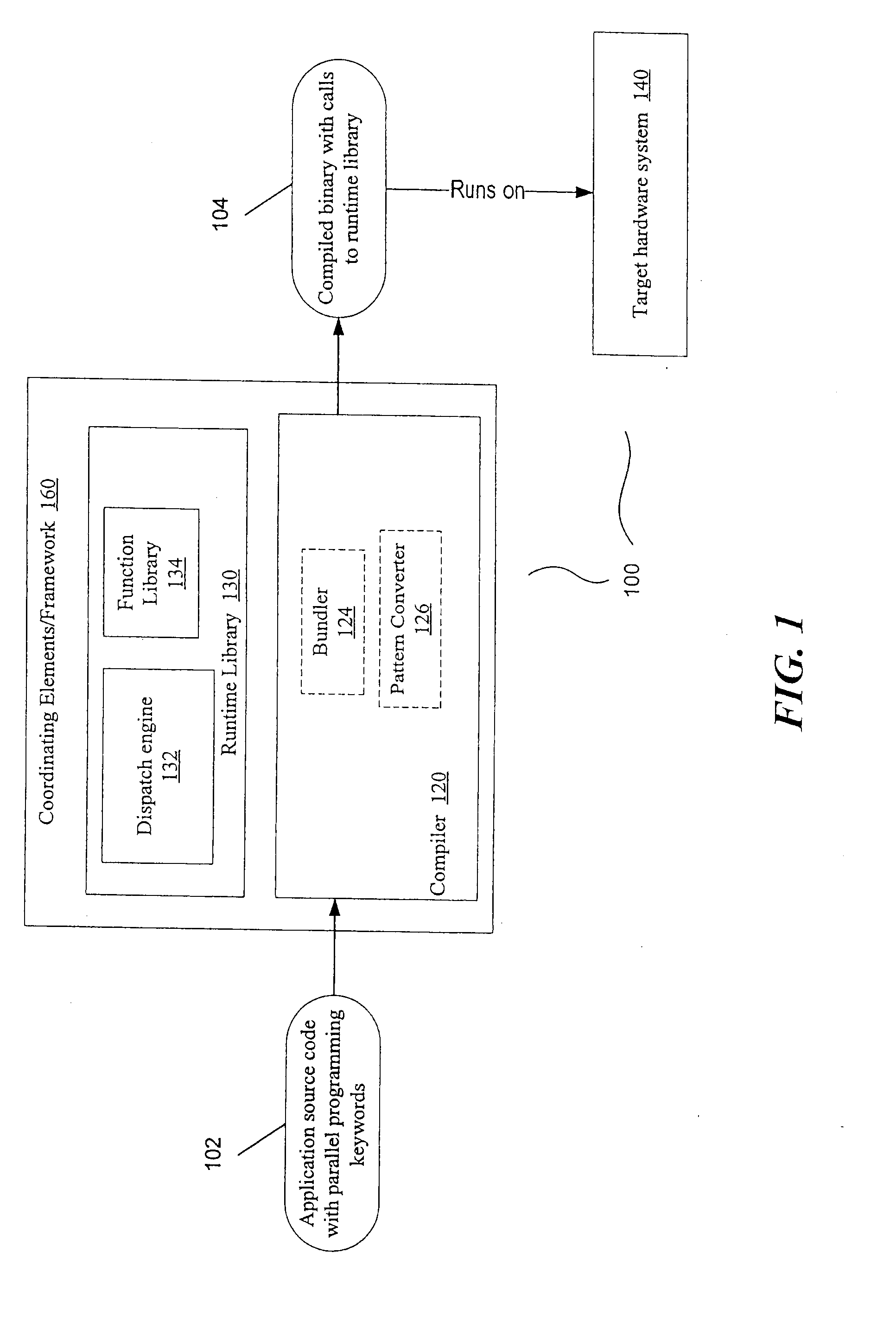

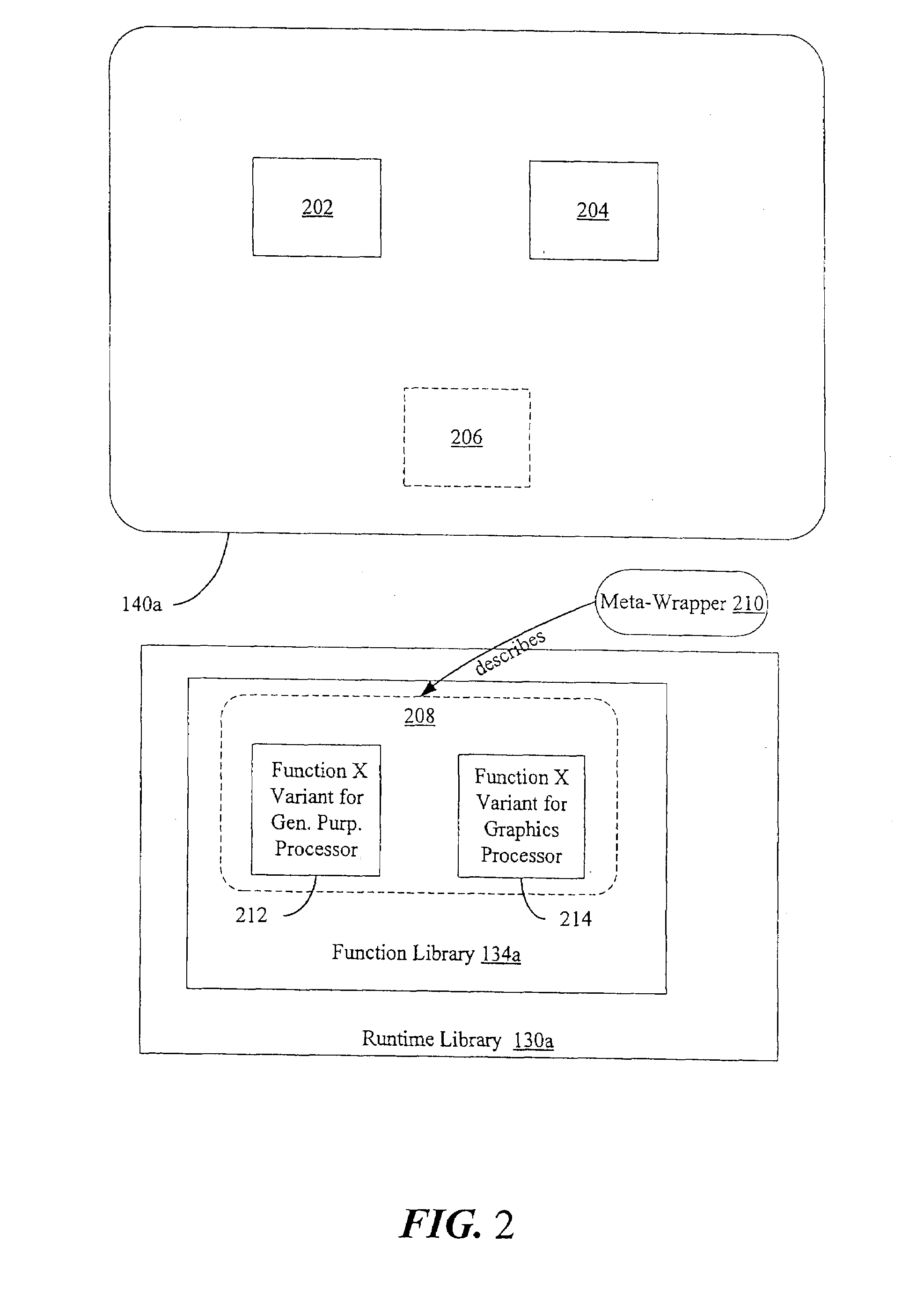

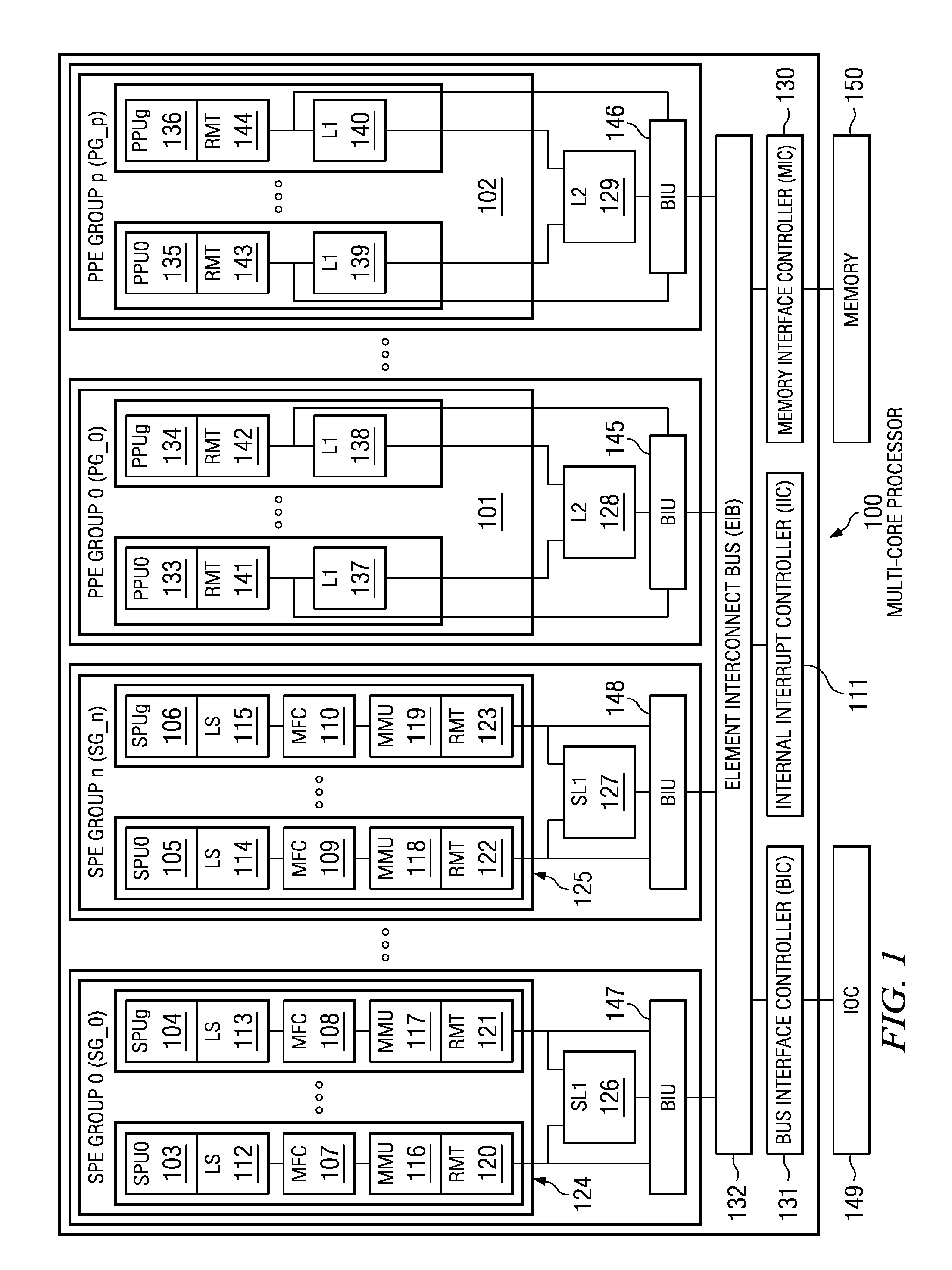

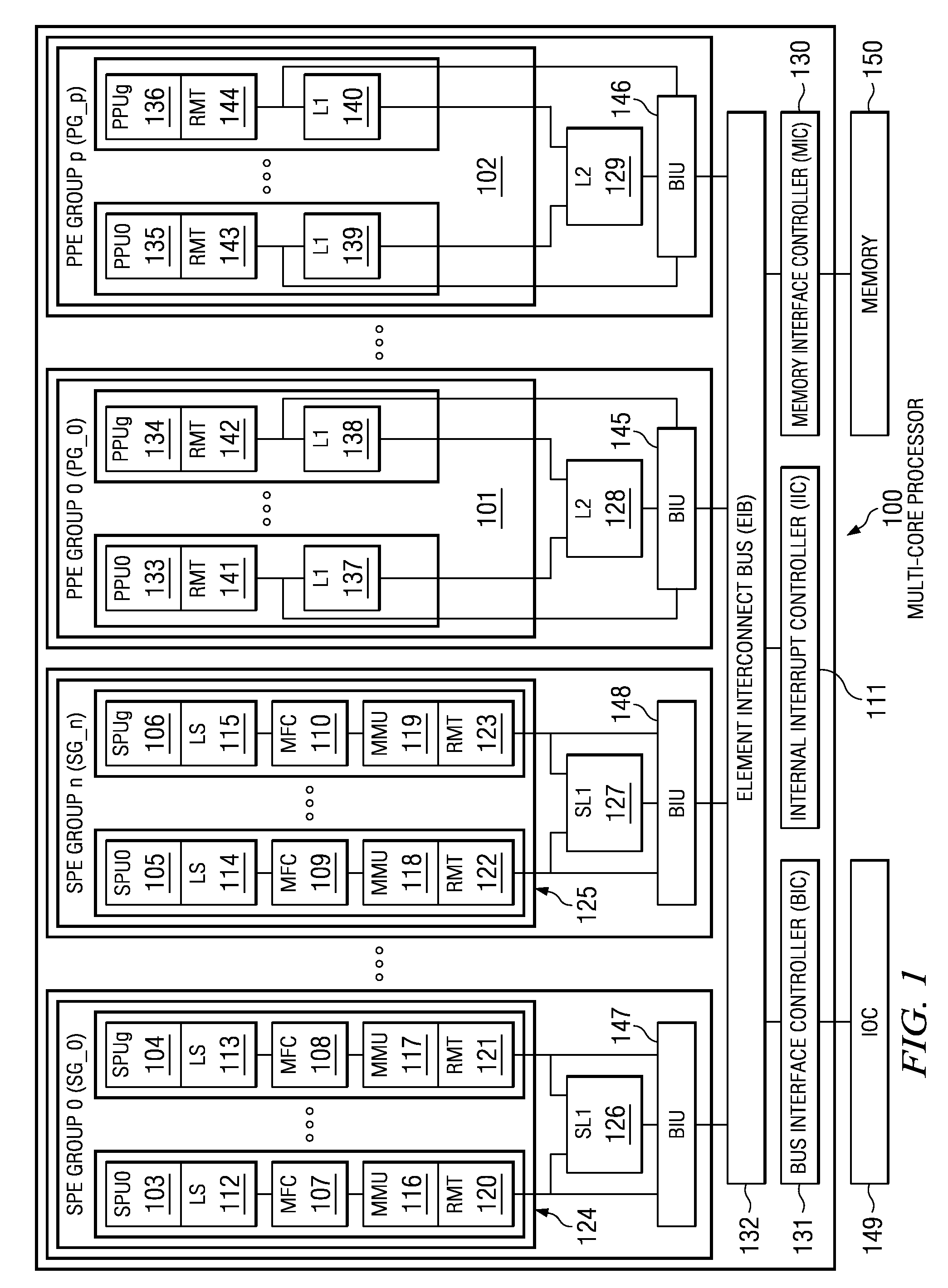

Compiler and runtime for heterogeneous multiprocessor systems

Active | US8296743B2 | Specific program execution arrangements | Memory systems | Theoretical computer science | Generic function

Owner:INTEL CORP

Compiler and Runtime for Heterogeneous Multiprocessor Systems

Active | US20090158248A1 | Specific program execution arrangements | Memory systems | Theoretical computer science | Generic function

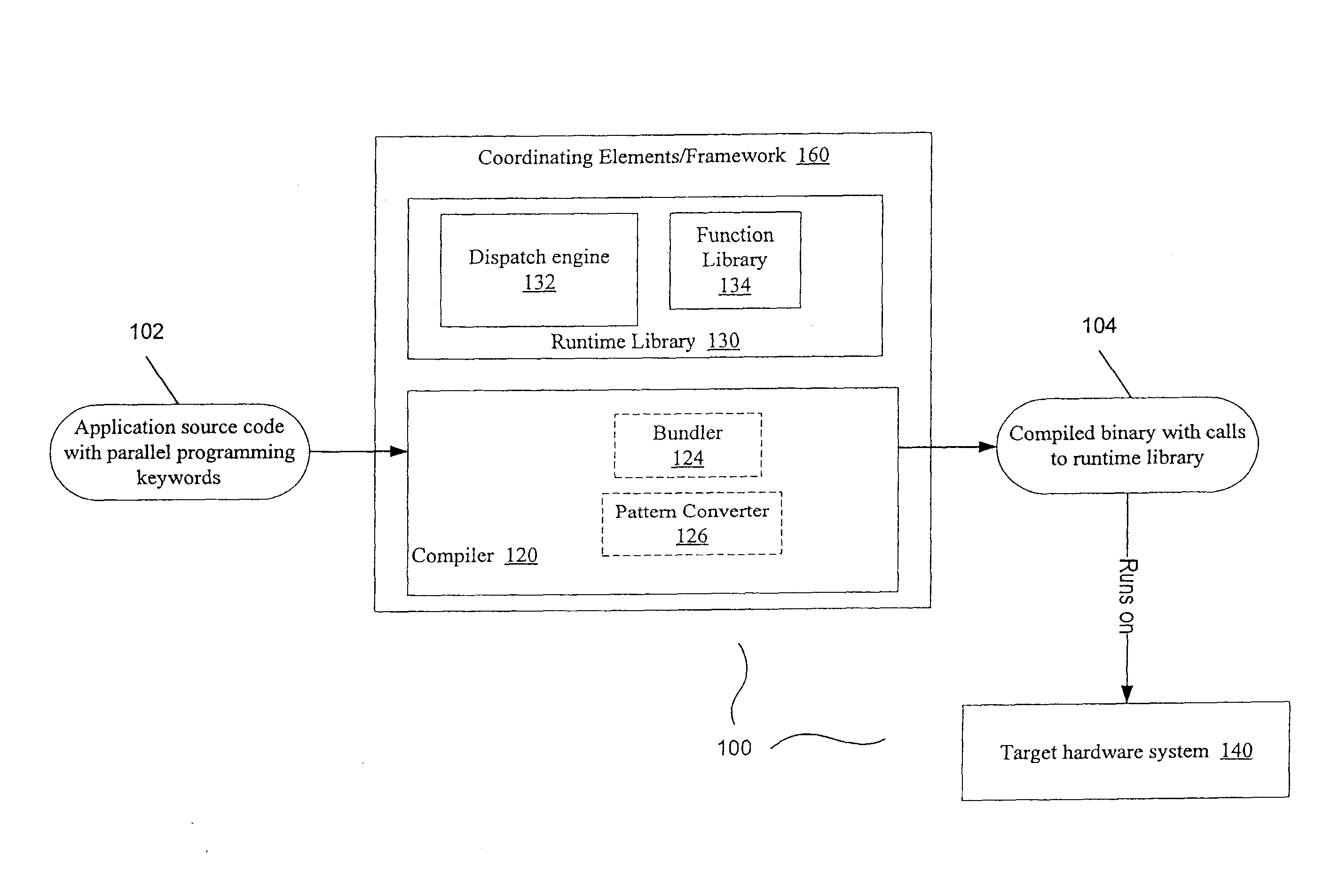

Presented are embodiments of methods and systems for library-based compilation and dispatch to automatically spread computations of a program across heterogeneous cores in a processing system. The source program contains a parallel-programming keyword, such as mapreduce, from a high-level, library-oriented parallel programming language. The compiler inserts one or more calls for a generic function, associated with the parallel-programming keyword, into the compiled code. A runtime library provides a predicate-based library system that includes multiple hardware specific implementations (“variants”) of the generic function. A runtime dispatch engine dynamically selects the best-available (e.g., most specific) variant, from a bundle of hardware-specific variants, for a given input and machine configuration. That is, the dispatch engine may take into account run-time availability of processing elements, choose one of them, and then select for dispatch an appropriate variant to be executed on the selected processing element. Other embodiments are also described and claimed.
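The variant selection described in this abstract can be pictured with a small sketch. It is an illustration under assumptions, not the patented design: `Variant`, `dispatch`, and the numeric `specificity` score are hypothetical, and "most specific" is modeled simply as the highest score among the variants whose predicate accepts the input and the machine configuration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple


@dataclass
class Variant:
    """One hardware-specific implementation of a generic function (hypothetical model)."""
    name: str
    predicate: Callable[[Any, Dict[str, Any]], bool]  # can this variant run here?
    specificity: int                                   # higher means more specialized
    impl: Callable[[Any], Any]


def dispatch(variants: List[Variant], data: Any, machine: Dict[str, Any]) -> Tuple[str, Any]:
    """Run the most specific variant whose predicate holds; return (variant name, result)."""
    applicable = [v for v in variants if v.predicate(data, machine)]
    if not applicable:
        raise LookupError("no variant applies to this input and machine")
    best = max(applicable, key=lambda v: v.specificity)
    return best.name, best.impl(data)


# Two variants of a "reduce by sum" generic function. Both compute the same
# result; only the selection logic differs.
variants = [
    Variant("cpu_generic", lambda d, m: True, 0, lambda d: sum(d)),
    Variant("gpu_large",
            lambda d, m: bool(m.get("gpu_free", False)) and len(d) >= 4,
            10,
            lambda d: sum(d)),
]
```

With this setup, a large input on a machine reporting a free GPU goes to `gpu_large`, while a small input or a machine without a free GPU falls back to `cpu_generic`.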

Owner:INTEL CORP

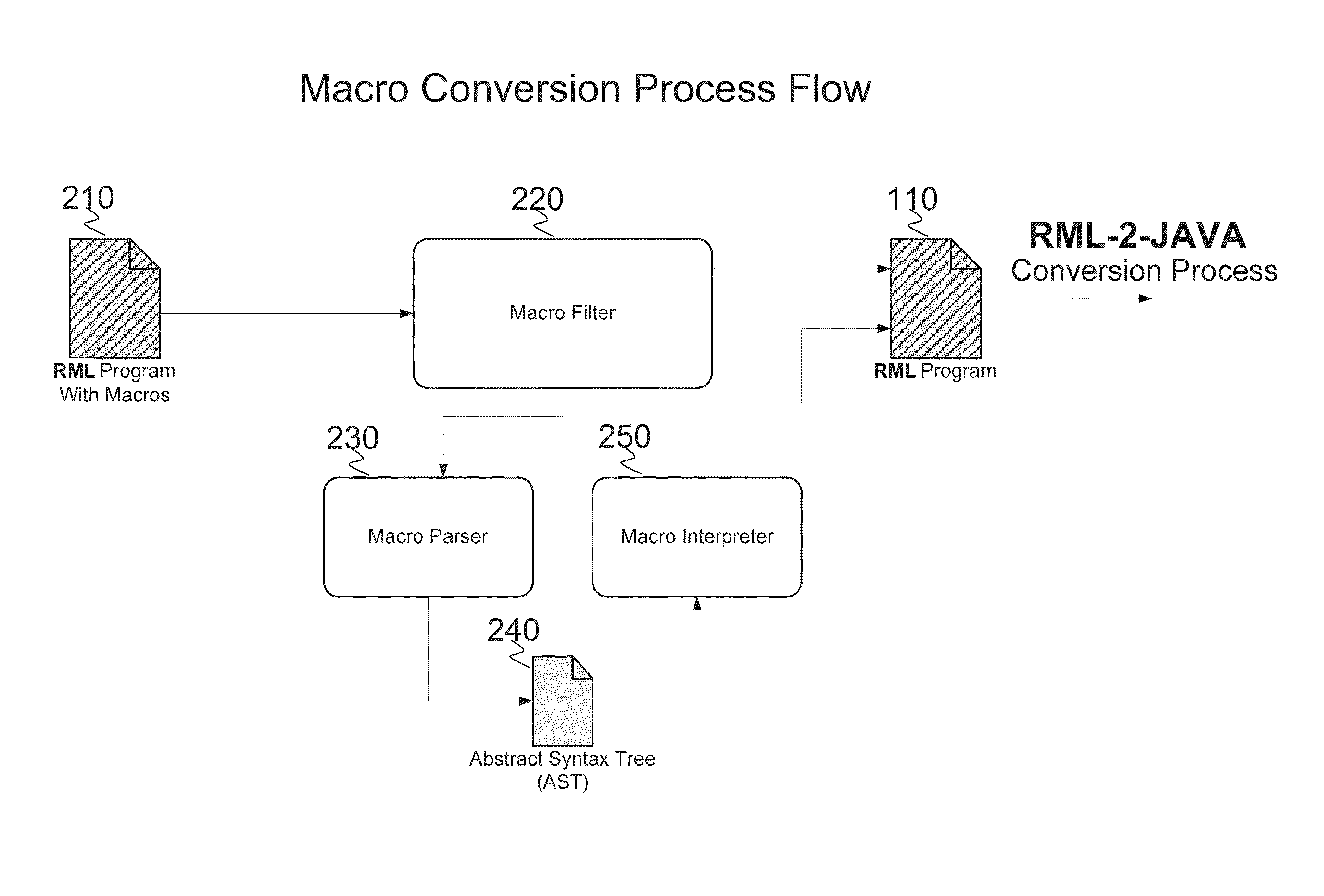

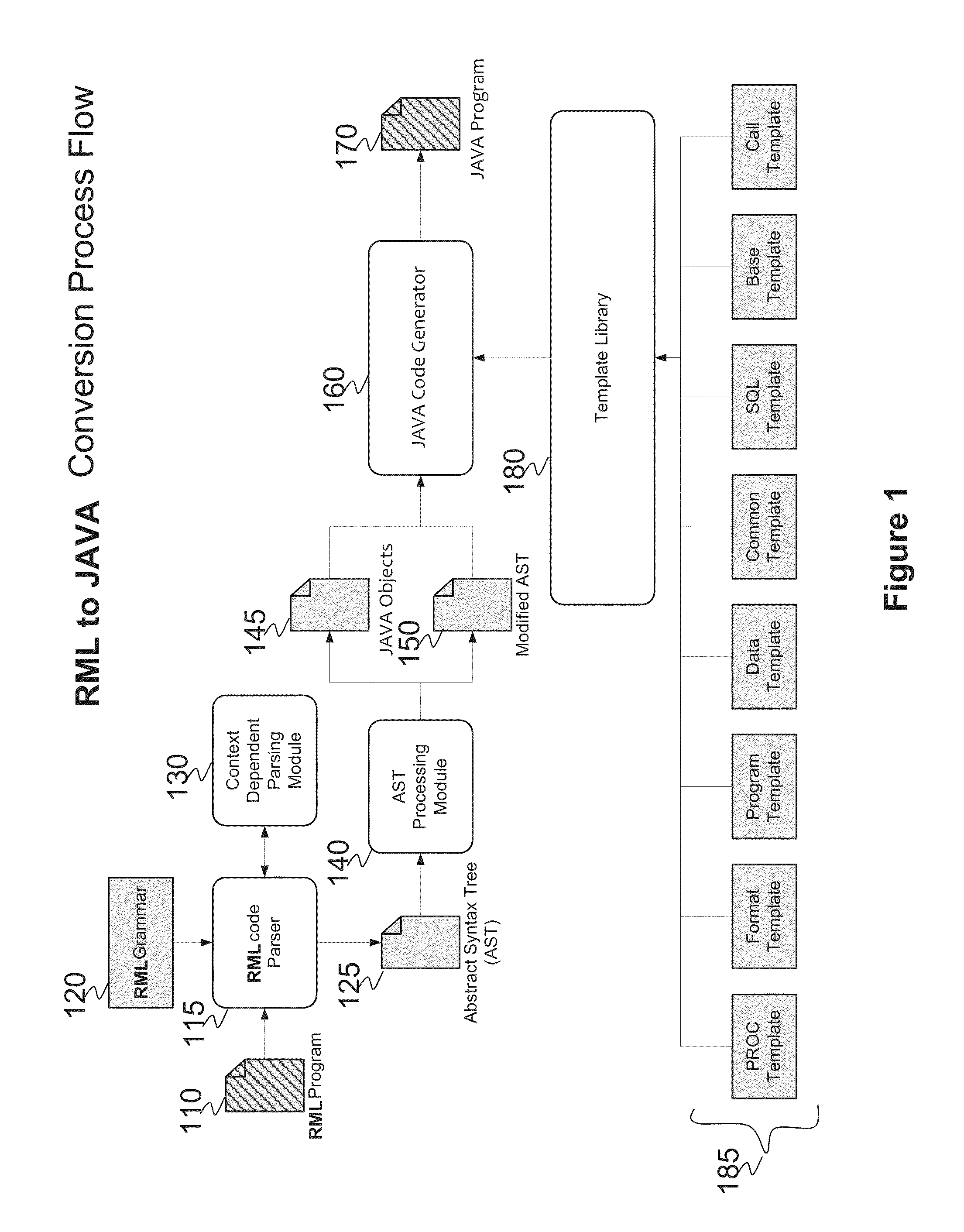

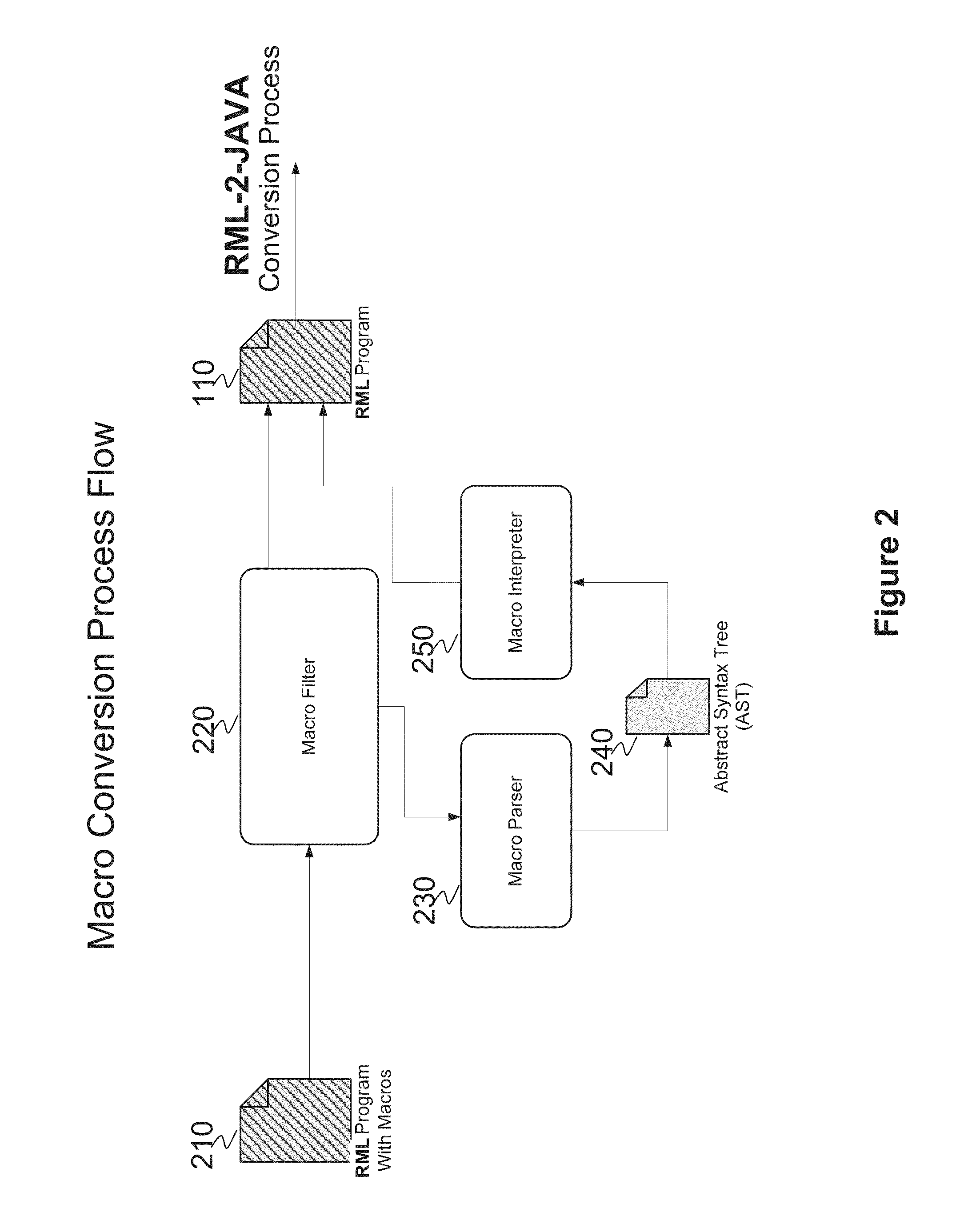

System and method for converting base SAS runtime macro language scripts to JAVA target language

Active | US8453126B1 | Overcome incidence | Overcome the impact | Program control | Memory systems | Control flow | Lexical analysis

A system and method for automated conversion of a SAS runtime macro language (RML) program to a target language program such as JAVA. RML macros are identified and converted for inclusion in the RML program. A lexer is applied to generate a stream of tokens, including a token type for ambiguous tokens. A context dependent parsing module, including a token filter to resolve ambiguous tokens, assists the parser in generating an abstract syntax tree (AST), which is modified to express RML specific control flow constructs with target language program elements. The elements of the modified AST are replaced with target language templates from a library, with template parameters filled from the corresponding AST element. A run time library is provided for execution of the target language program.

Owner:DULLES RES

Systems and methods for efficient scheduling of concurrent applications in multithreaded processors

Active | US20130332711A1 | Efficient execution | Easy to add | Instruction analysis | Digital computer details | Instruction set design | Concurrency control

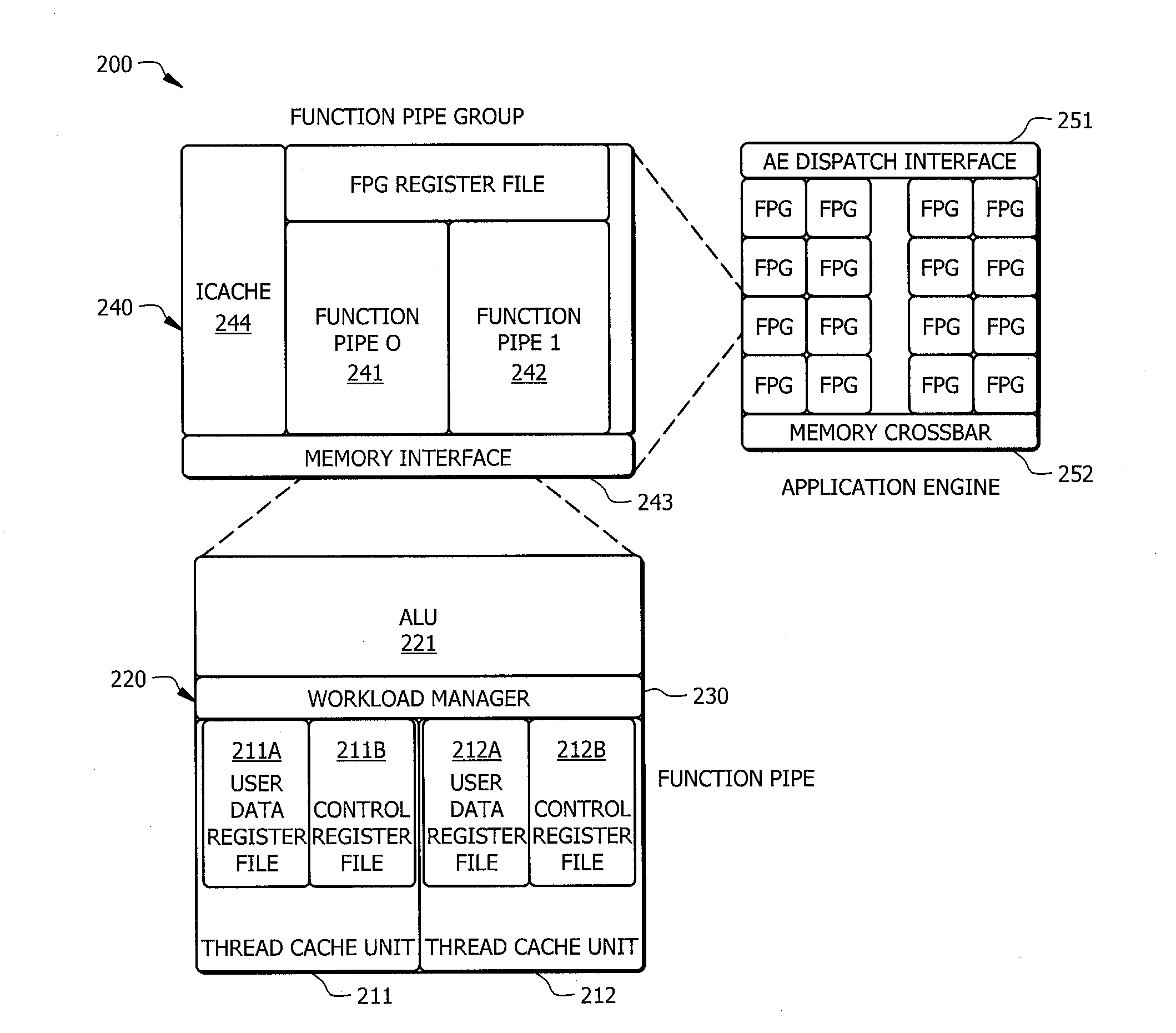

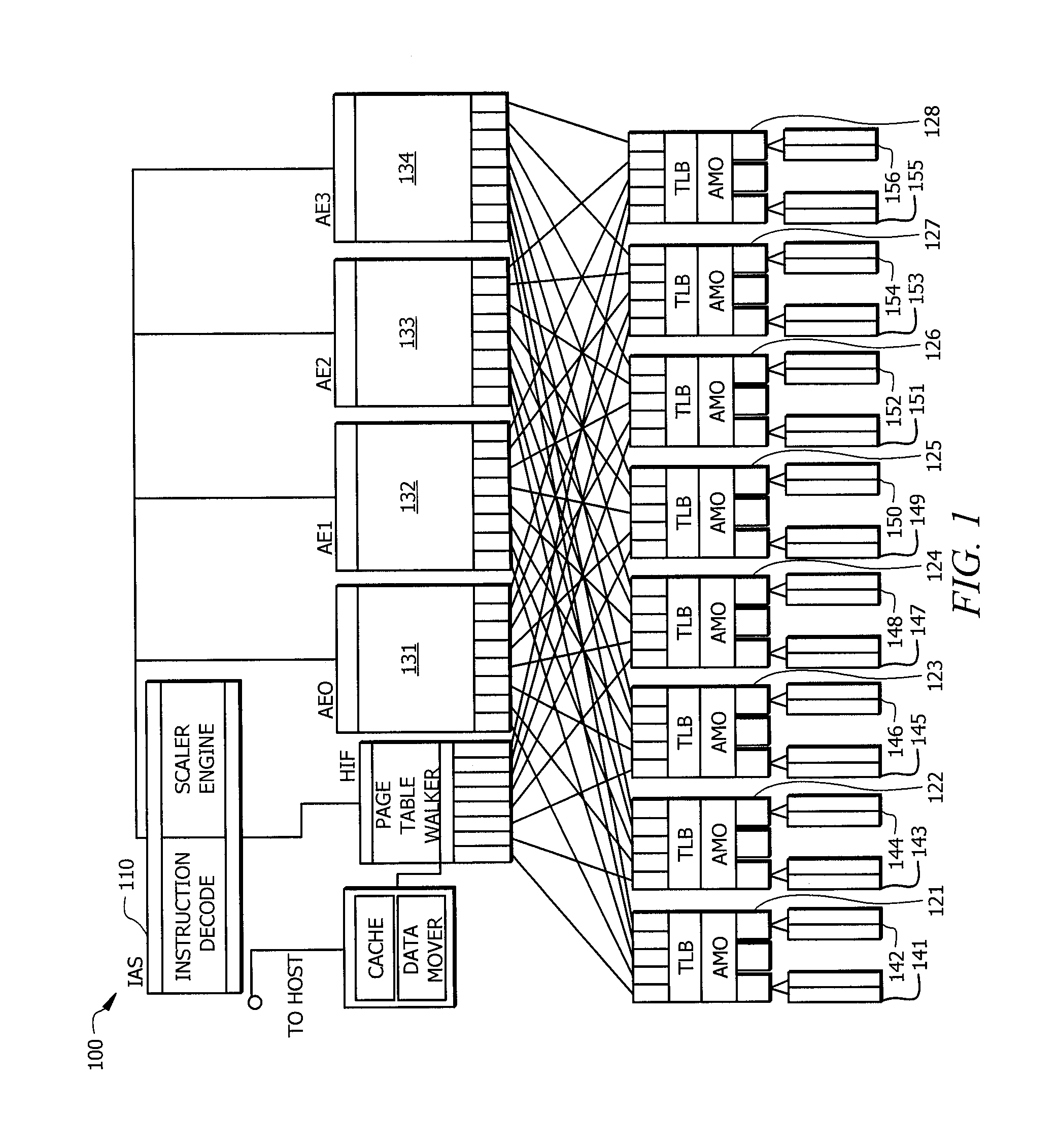

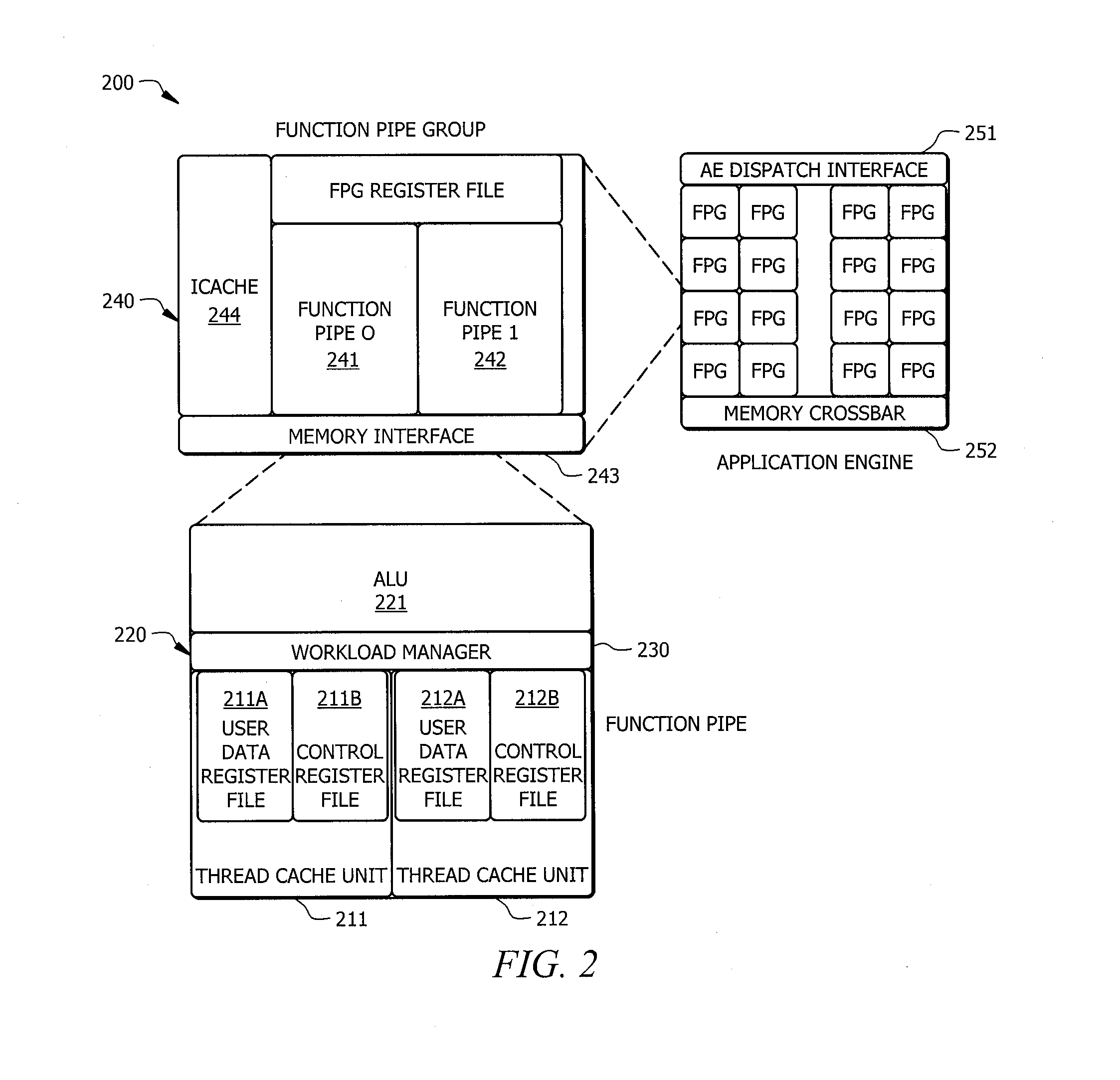

Systems and methods which provide a modular processor framework and instruction set architecture designed to efficiently execute applications whose memory access patterns are irregular or non-unit stride are disclosed. A hybrid multithreading framework (HMTF) of embodiments provides a framework for constructing tightly coupled, chip-multithreading (CMT) processors that contain specific features well-suited to hiding latency to main memory and executing highly concurrent applications. The HMTF of embodiments includes an instruction set designed specifically to exploit the high degree of parallelism and concurrency control mechanisms present in the HMTF hardware modules. The instruction format implemented by a HMTF of embodiments is designed to give the architecture, the runtime libraries, and/or the application ultimate control over how and when concurrency between thread cache units is initiated. For example, one or more bits of the instruction payload may be designated as a context switch bit (CTX) for expressly controlling context switching.

Owner:MICRON TECH INC

Method and apparatus for multi-version updates of application services

Active | US7140012B2 | Easy to update | Data processing applications | Version control | Application software | Runtime library

Successor versions of an application service provision runtime library of an application service provision apparatus are provided with corresponding update services to facilitate upgrade of applications to selected ones of the successor versions on request. In various embodiments, a dispatcher of the application service provision apparatus is provided with complementary functions to coordinate the servicing of the upgrade requests. In some embodiments, each of the update services is equipped to upgrade the application from an immediate predecessor version of the runtime library. In other embodiments, each of the update services is equipped to upgrade the application from any predecessor version of the runtime library.

Owner:ORACLE INT CORP

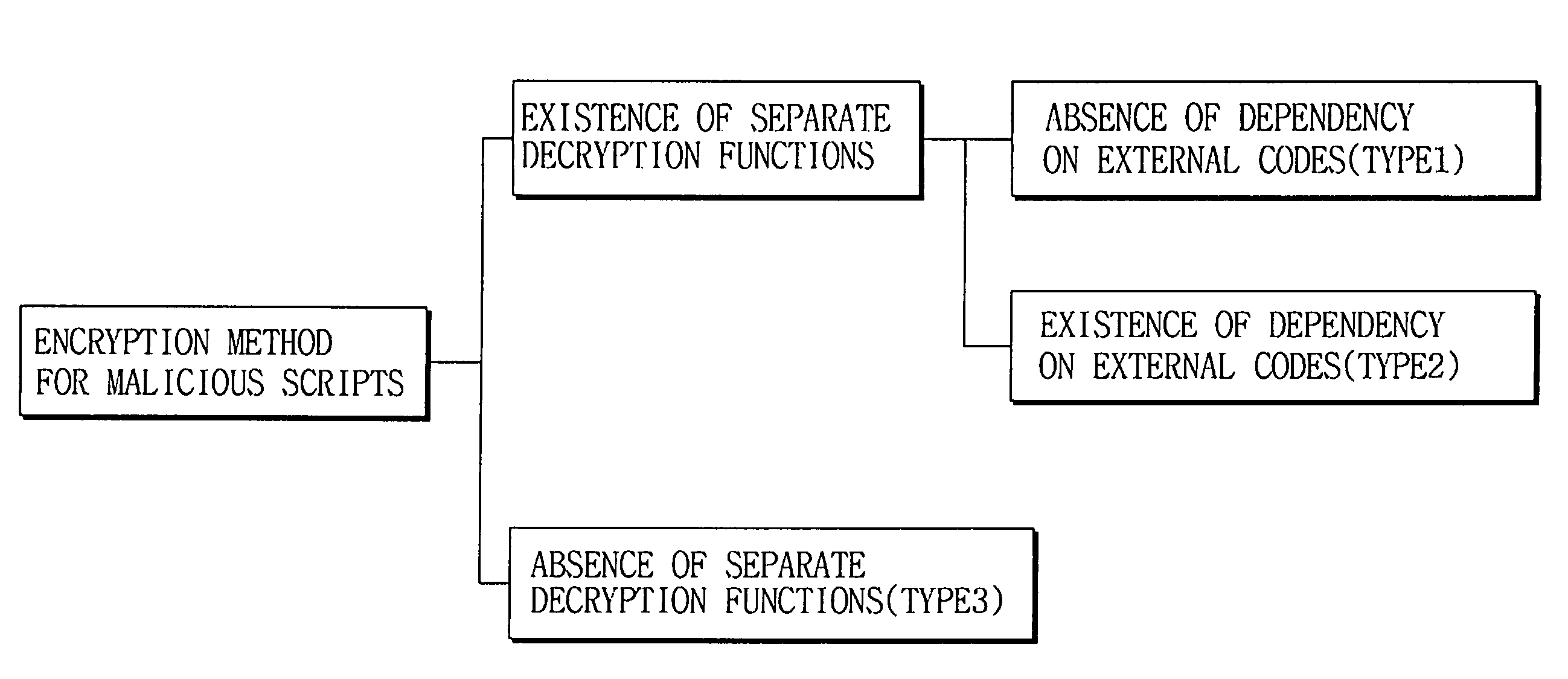

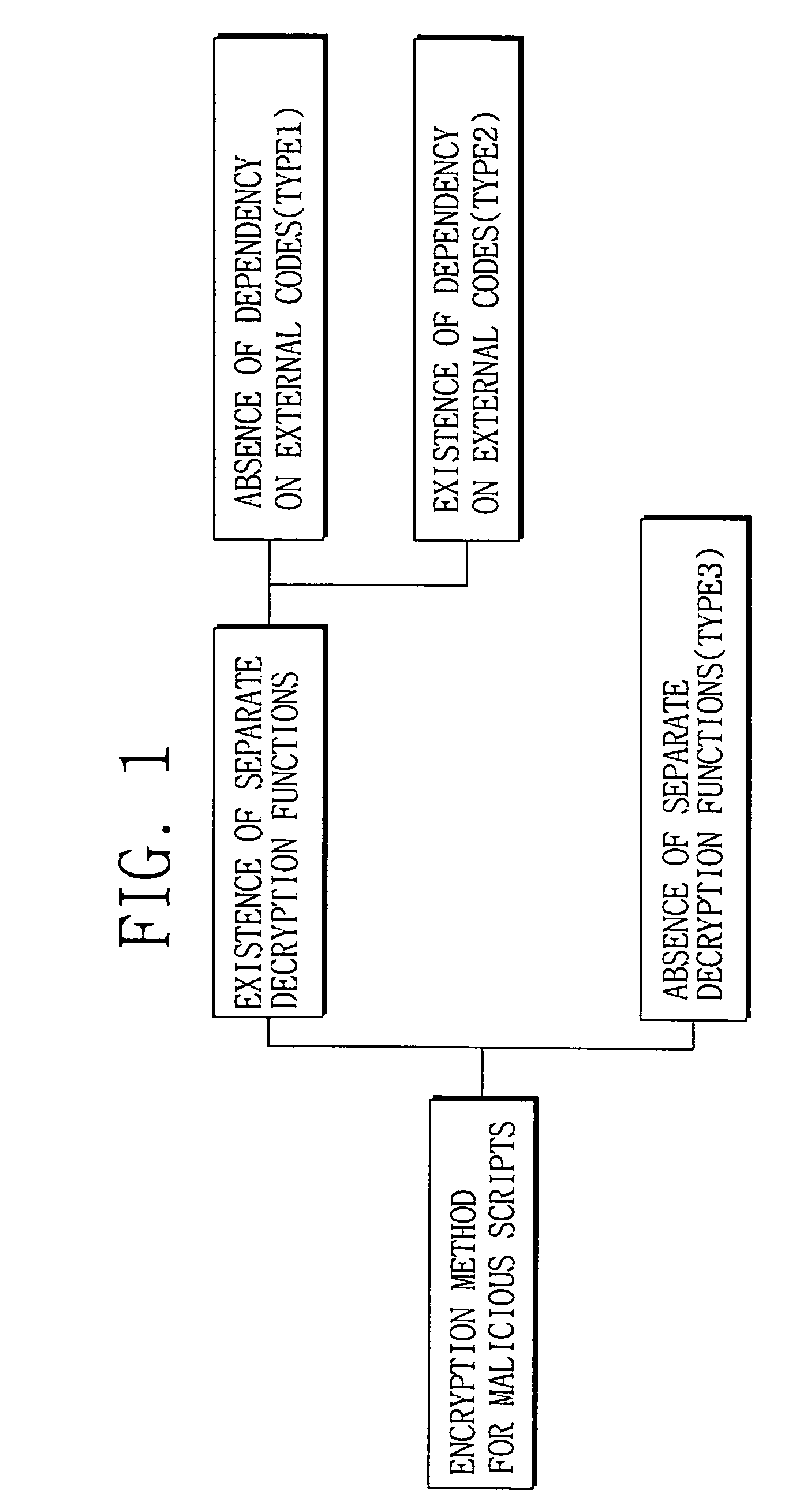

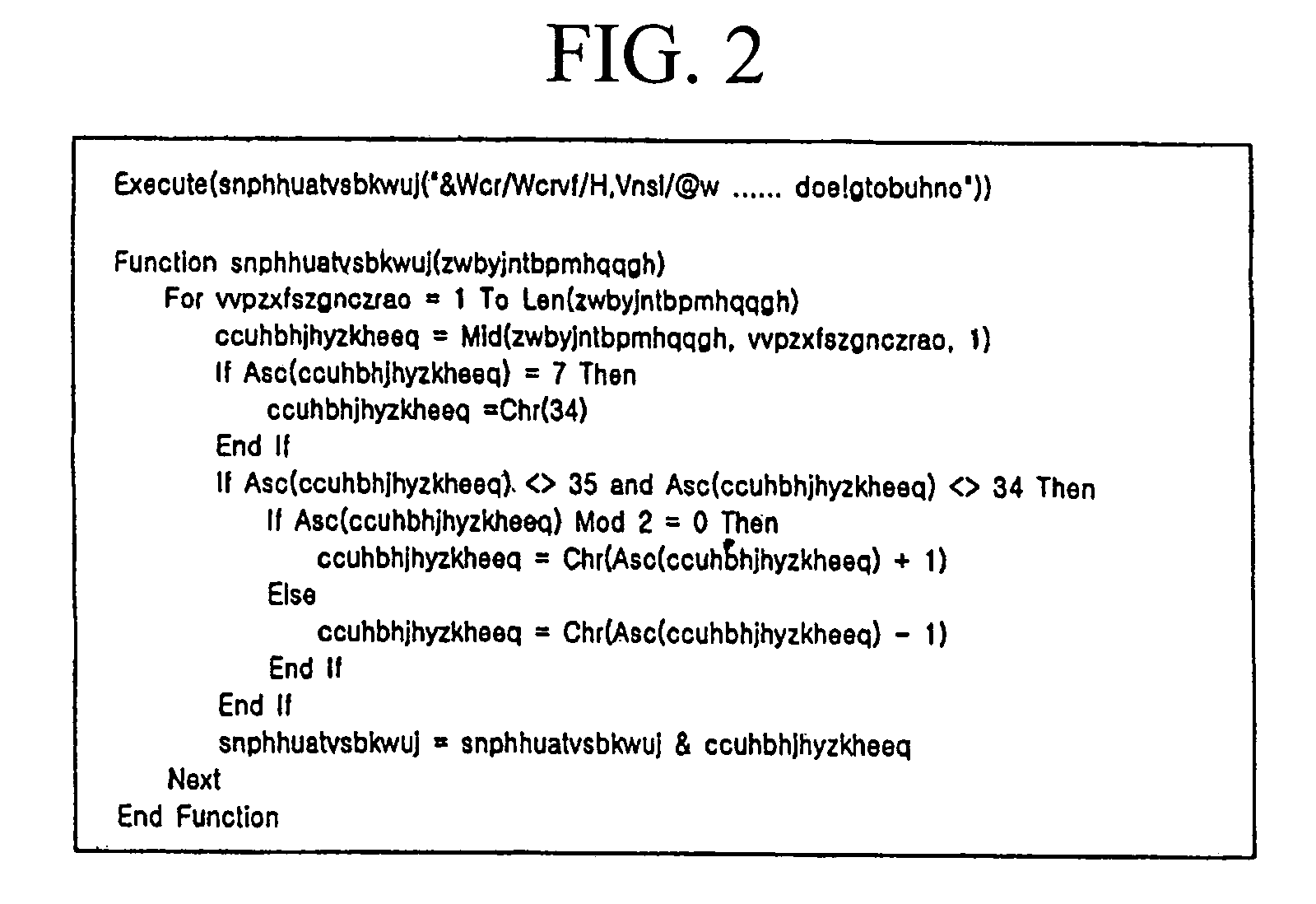

Method of decrypting and analyzing encrypted malicious scripts

Active | US7409718B1 | Flexible response | Memory loss protection | Error detection/correction | Programming language | Function definition

Disclosed herein is a method of analyzing and decrypting encrypted malicious scripts. The method of the present invention comprises the steps of classifying a malicious script encryption method into a case where a decryption function exists in malicious scripts and is an independent function that is not dependent on external codes such as run time library, a case where a decryption function exists and is a dependent function that is dependent on external codes, and a case where a decryption function does not exist; and if the decryption function exists in malicious scripts and is the independent function that is not dependent on the external codes, extracting a call expression and a function definition for the independent function, executing or emulating the extracted call expression and function definition for the independent function, and obtaining a decrypted script by putting a result value based on the execution or emulation into an original script at which an original call expression is located. According to the present invention, unknown malicious codes can be promptly and easily decrypted through only a single decryption algorithm without any additional data. In addition to the decryption of encrypted codes, complexity of later code analysis can also be reduced by substituting constants for all values that can be set as constants in a relevant script.

Owner:AJOU UNIV IND ACADEMIC COOP FOUND

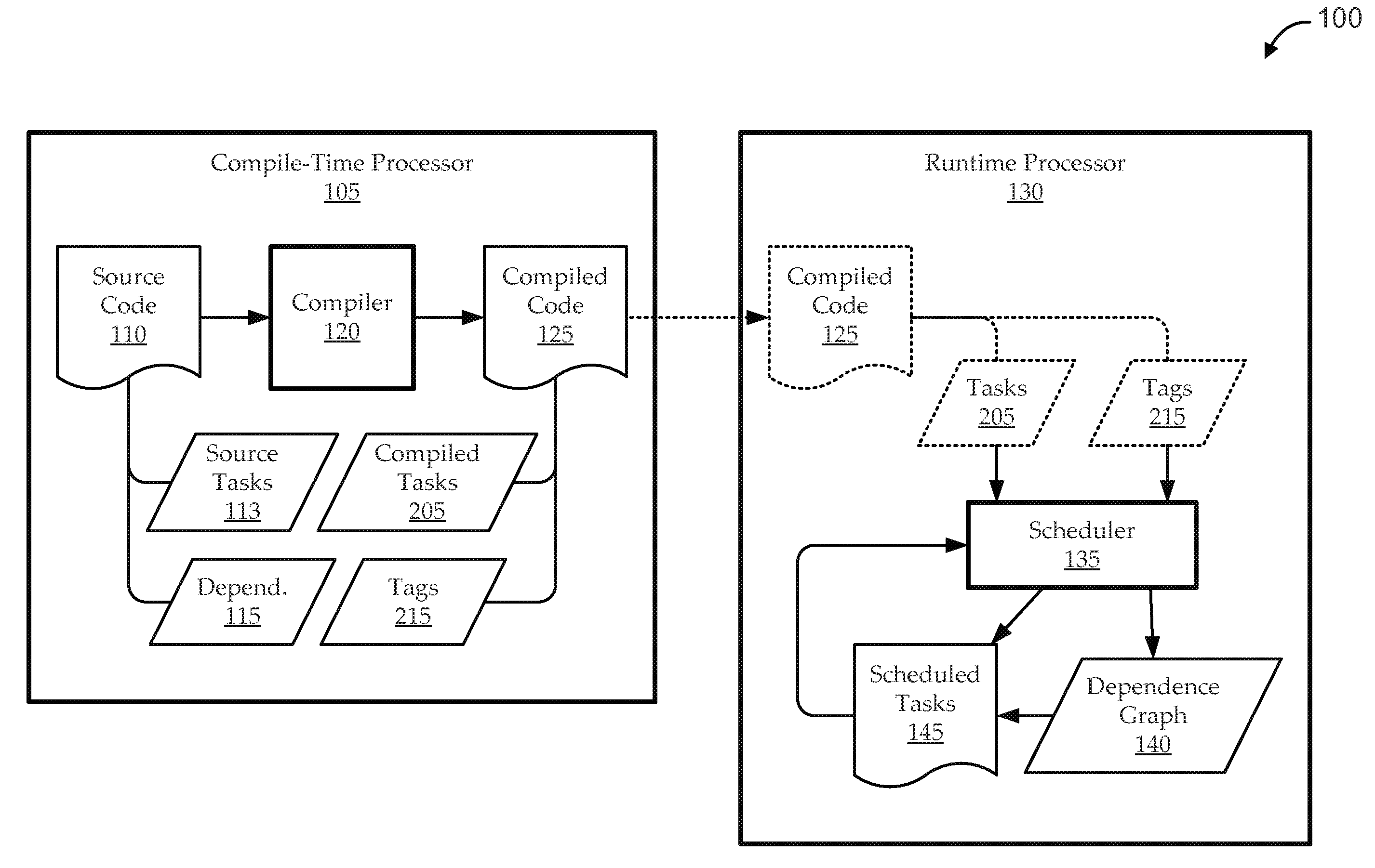

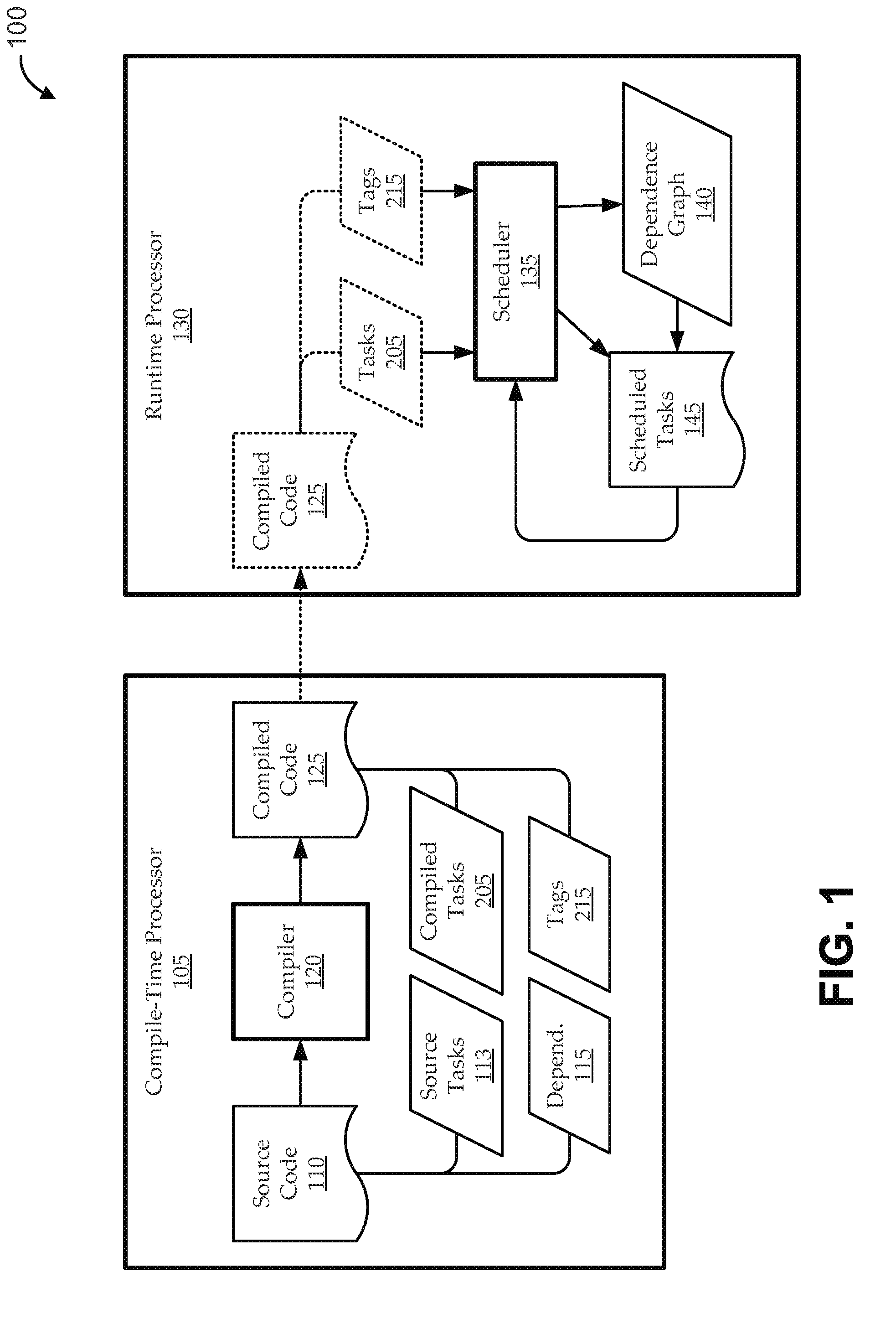

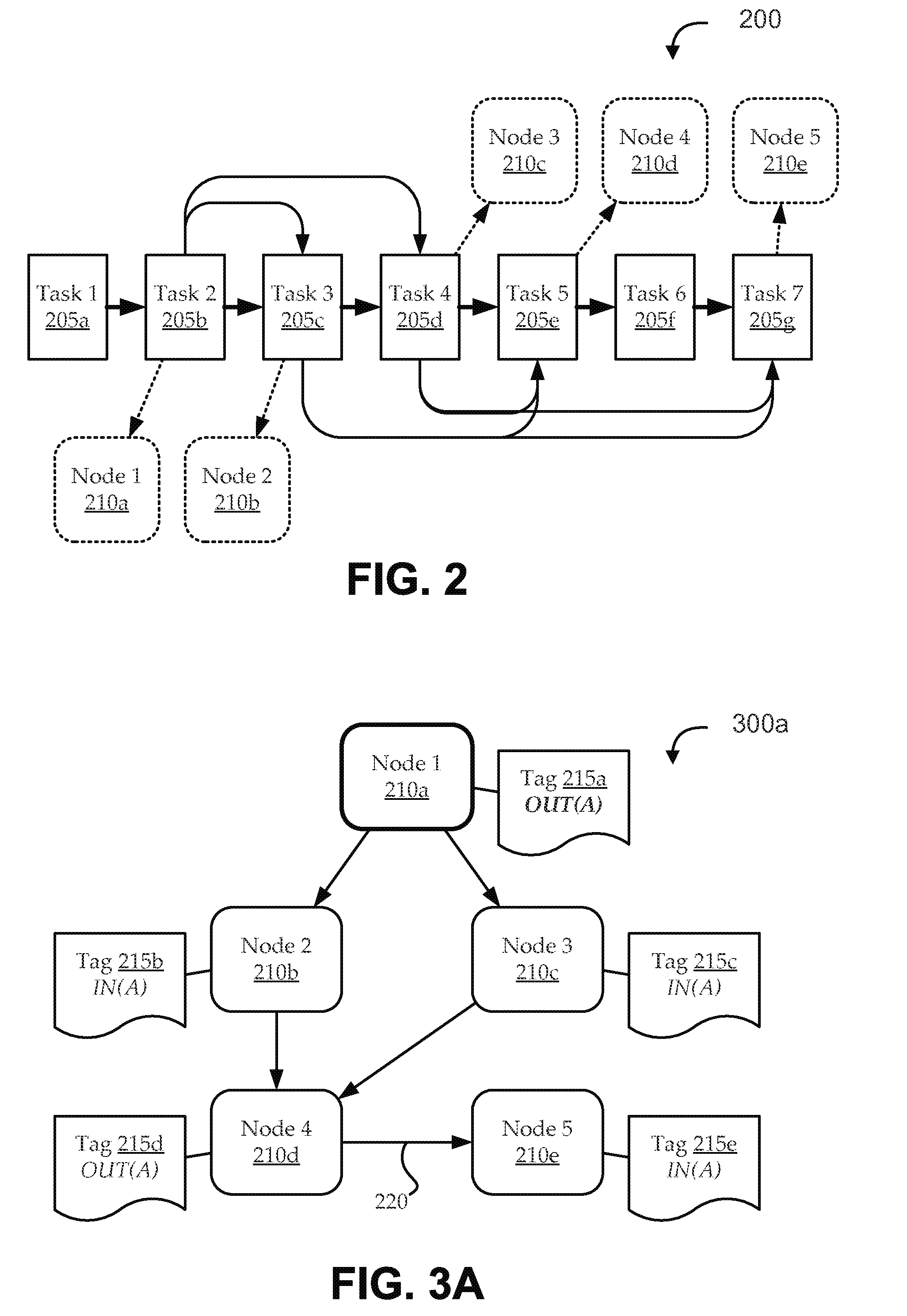

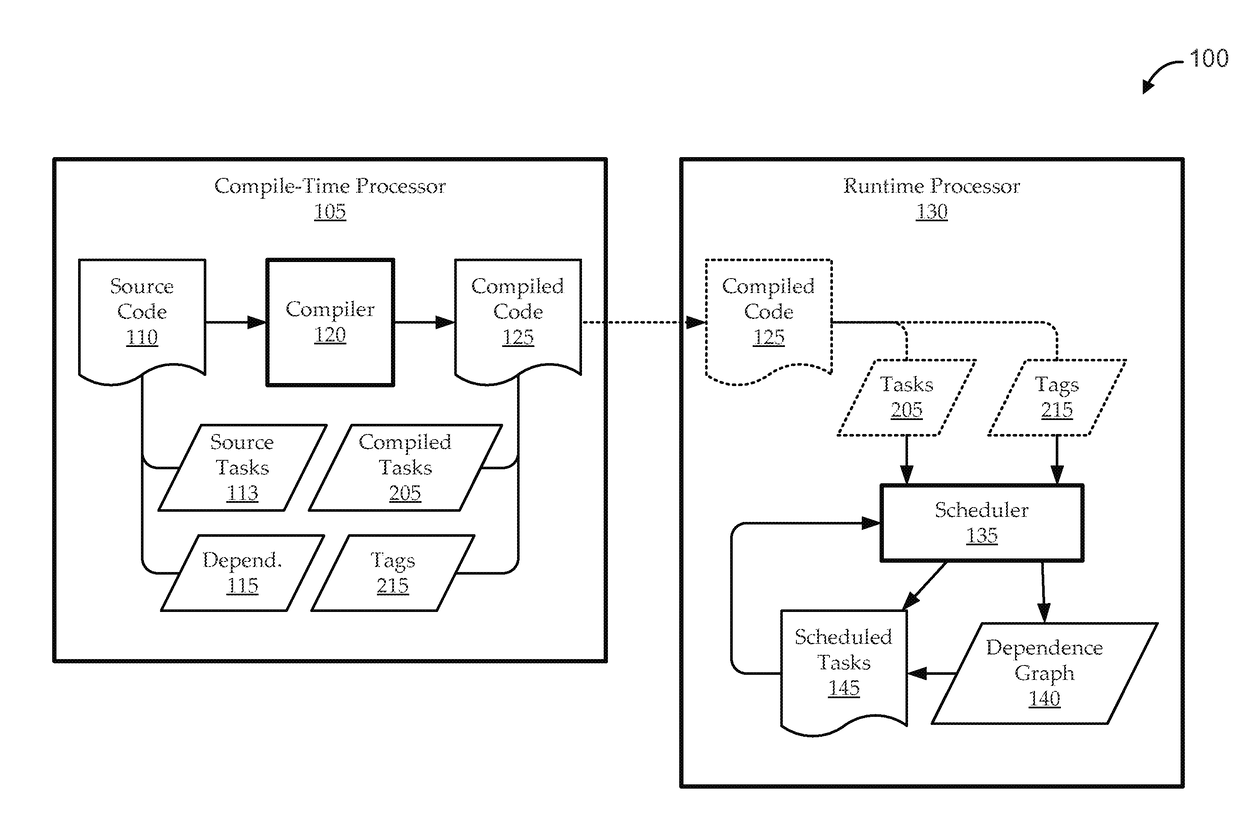

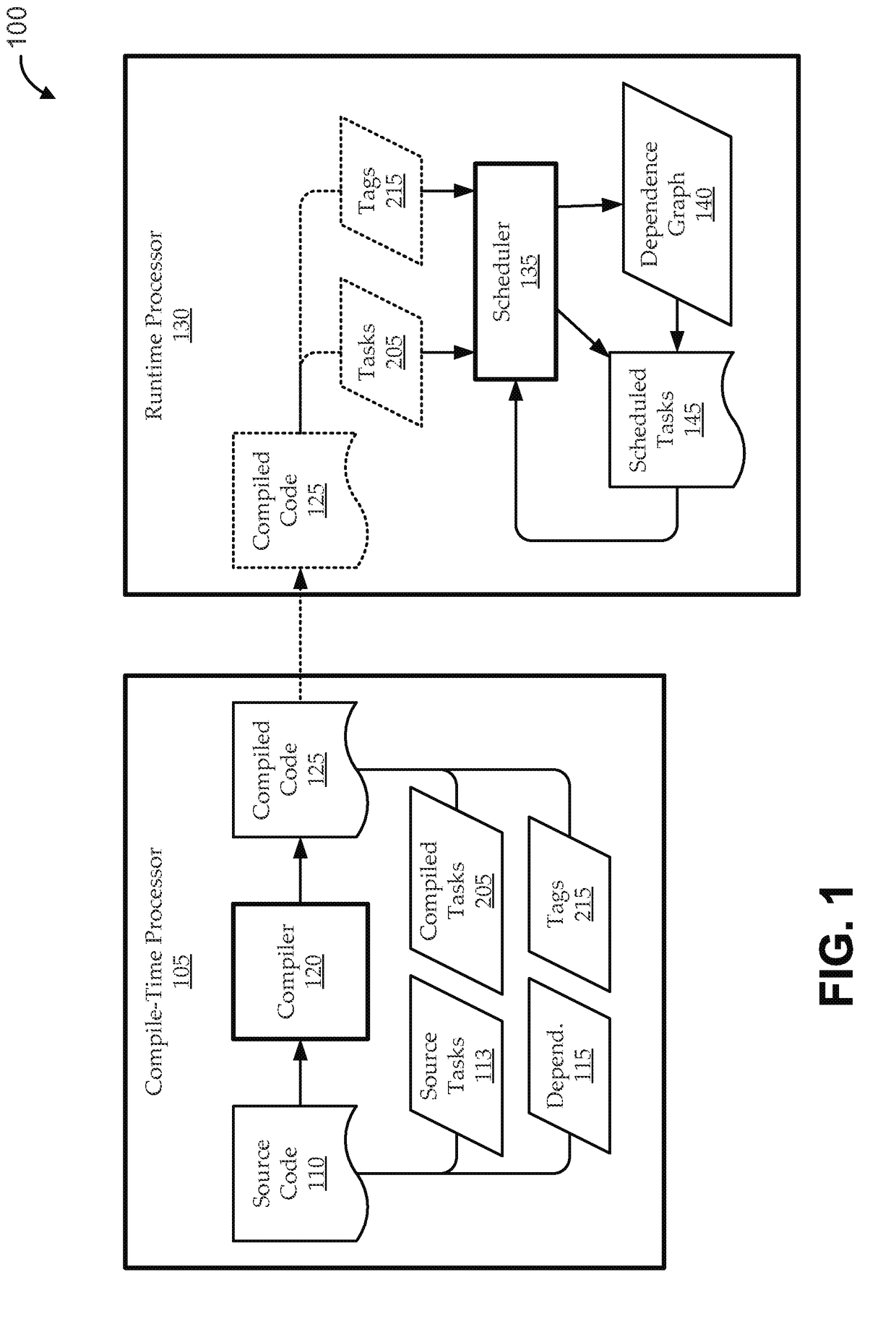

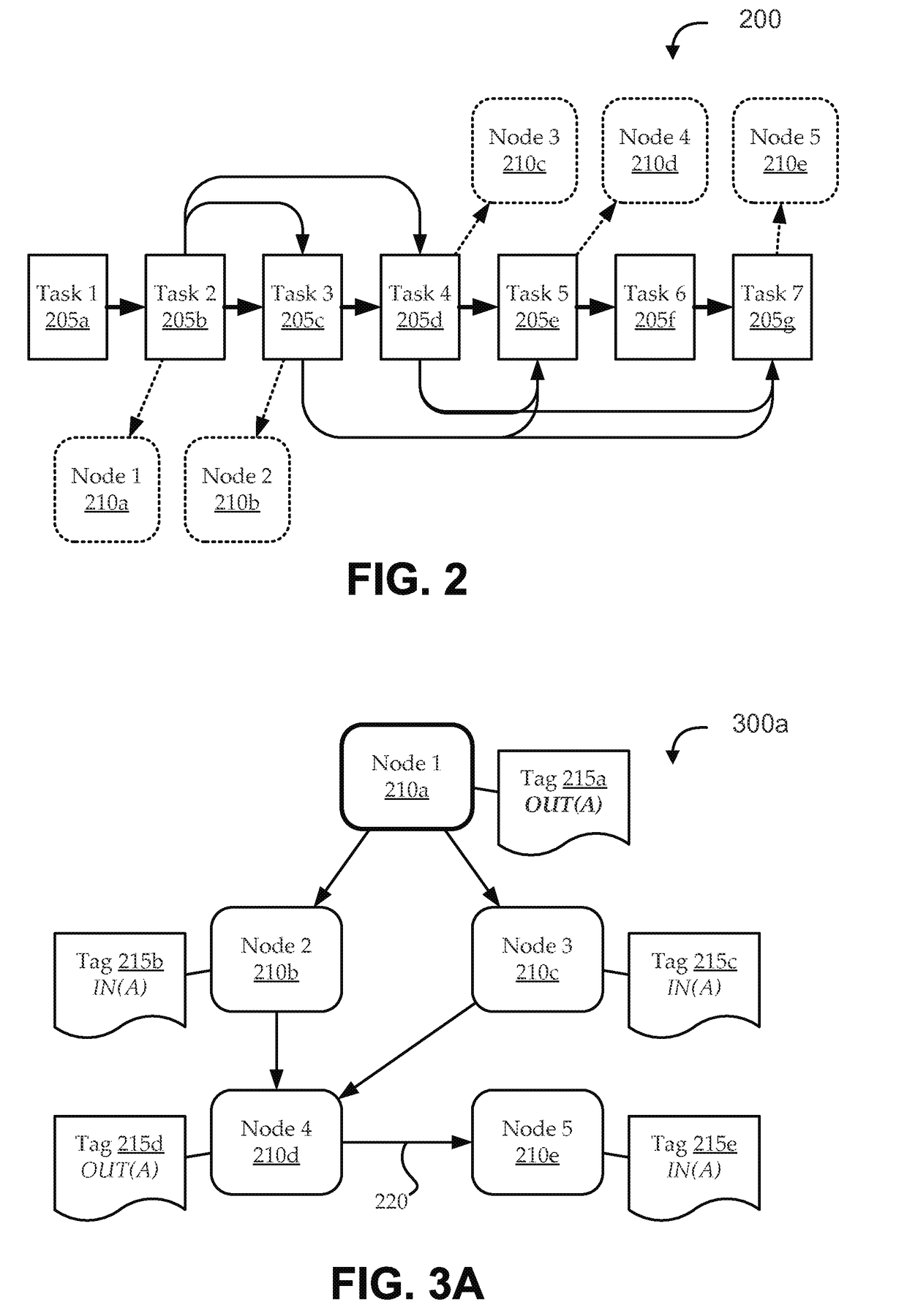

Runtime handling of task dependencies using dependence graphs

Active | US20150268992A1 | Preserves task dependencies | Program initiation/switching | Memory systems | Task dependency | Runtime library

Embodiments include systems and methods for handling task dependencies in a runtime environment using dependence graphs. For example, a computer-implemented runtime engine includes runtime libraries configured to handle tasks and task dependencies. The task dependencies can be converted into data dependencies. At runtime, as the runtime engine encounters tasks and associated data dependencies, it can add those identified tasks as nodes of a dependence graph, and can add edges between the nodes that correspond to the data dependencies without deadlock. The runtime engine can schedule the tasks for execution according to a topological traversal of the dependence graph in a manner that preserves task dependencies substantially as defined by the source code.
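The idea of turning task dependencies into data dependencies and scheduling by topological traversal can be sketched as follows. This is a minimal illustration under assumptions: each task is described by hypothetical `reads` and `writes` lists, edges are derived from read-after-write, write-after-write, and write-after-read conflicts in program order, and Kahn's algorithm stands in for whatever traversal the runtime engine actually uses.

```python
from collections import defaultdict, deque


def build_graph(tasks):
    """tasks: list of (name, reads, writes) in program order.

    Returns a mapping node -> set of successor nodes. Edges always point from
    an earlier task to a later one, so the graph is acyclic.
    """
    edges = defaultdict(set)
    last_writer = {}                 # data item -> task that last wrote it
    readers = defaultdict(list)      # data item -> tasks that read it since the last write
    for name, reads, writes in tasks:
        edges[name]                  # make sure the node exists even without successors
        for item in reads:
            if item in last_writer:
                edges[last_writer[item]].add(name)   # read-after-write
            readers[item].append(name)
        for item in writes:
            if item in last_writer:
                edges[last_writer[item]].add(name)   # write-after-write
            for reader in readers[item]:
                if reader != name:
                    edges[reader].add(name)          # write-after-read
            last_writer[item] = name
            readers[item] = []
    return edges


def schedule(edges):
    """Kahn's algorithm: return the tasks in an order that respects every edge."""
    indegree = {node: 0 for node in edges}
    for node in edges:
        for succ in edges[node]:
            indegree[succ] += 1
    ready = deque(node for node in edges if indegree[node] == 0)
    order = []
    while ready:
        node = ready.popleft()
        order.append(node)
        for succ in sorted(edges[node]):
            indegree[succ] -= 1
            if indegree[succ] == 0:
                ready.append(succ)
    if len(order) != len(edges):
        raise ValueError("dependence graph contains a cycle")
    return order


tasks = [
    ("A", [], ["x"]),
    ("B", ["x"], ["y"]),
    ("C", ["x"], ["z"]),
    ("D", ["y", "z"], []),
]
graph = build_graph(tasks)
order = schedule(graph)
```

In this example `B` and `C` both depend only on `A`, so a parallel runtime could run them concurrently once `A` completes; the sequential order returned here is just one valid traversal.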

Owner:ORACLE INT CORP

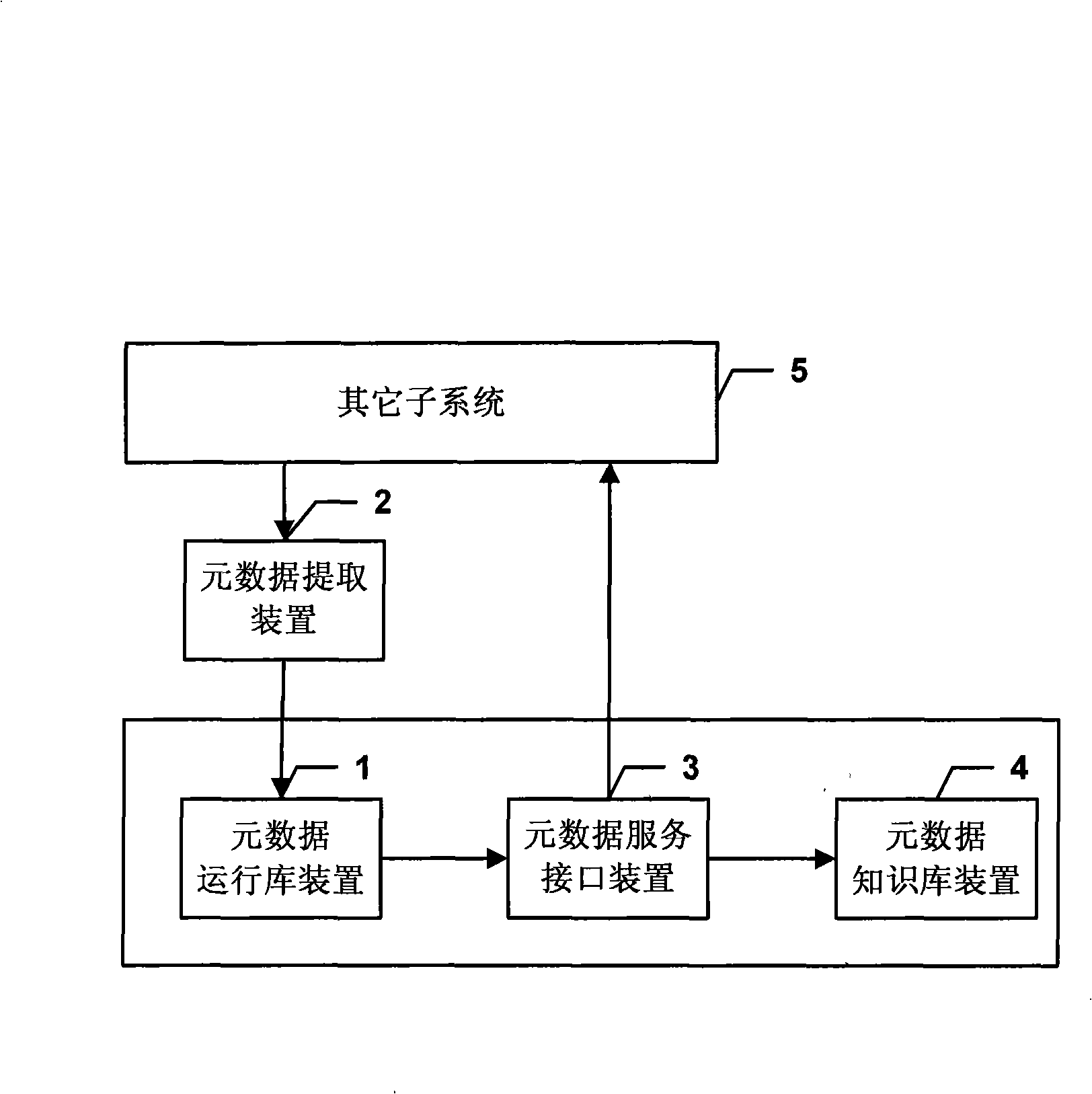

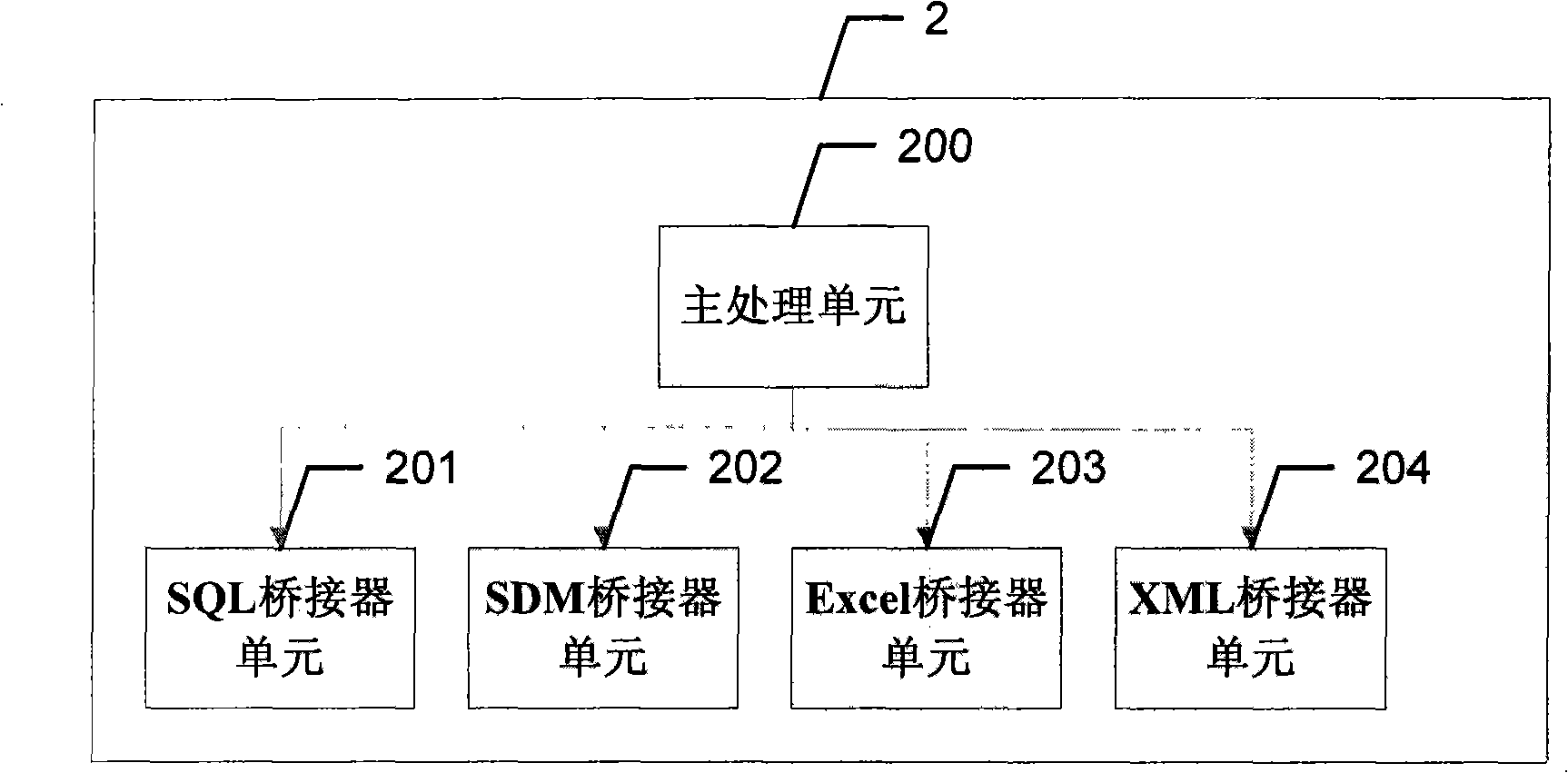

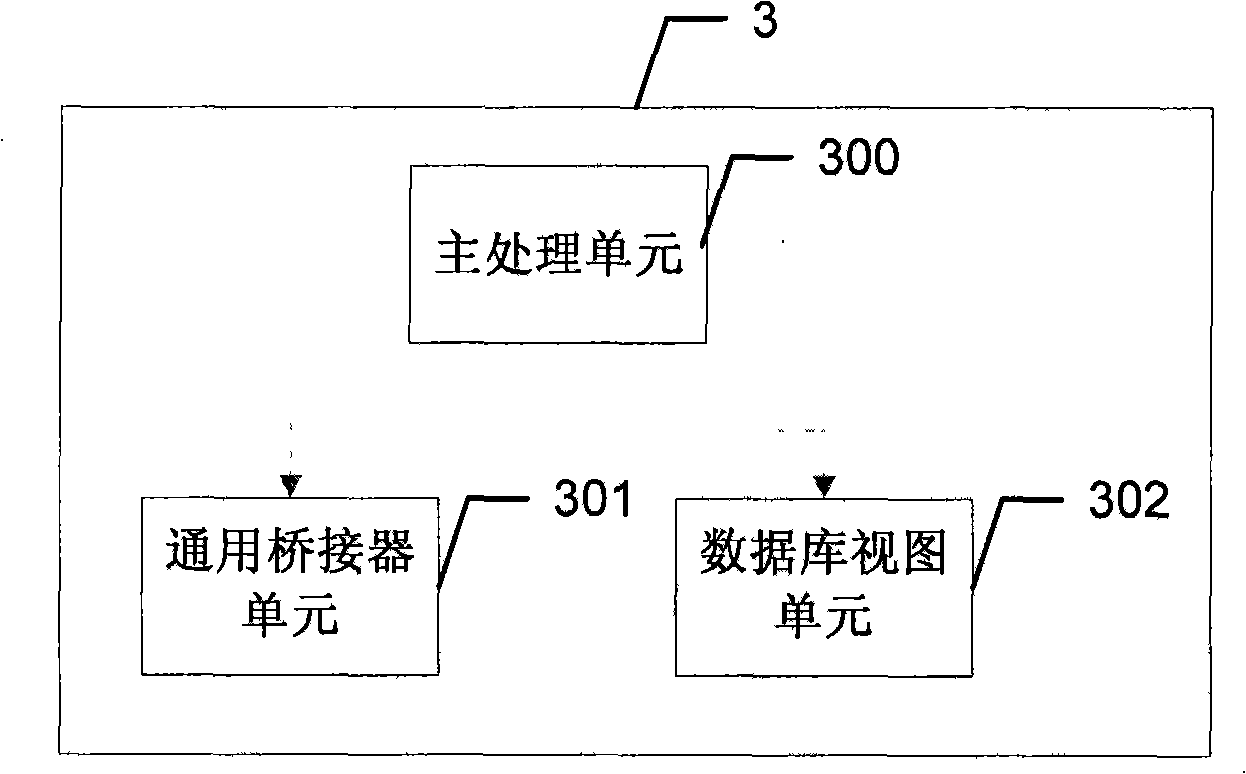

Metadata management system with bidirectional interactive characteristics and implementation method thereof

Active | CN101515290A | Reduce management difficulty | Guaranteed accuracy | Special data processing applications | Form analysis | Metadata management

The invention discloses a metadata management system with bidirectional interactive characteristics and an implementation method thereof. A metadata data extraction device extracts the metadata of all subsystems of an EDW to a metadata runtime library device, and a universal relational metadata model stores the metadata; a conventional bridge extracts the metadata of the relational metadata model to a metadata knowledge base device of an object metadata model to form analysis results for inquiry by a user; a database view supplies the needed metadata information to other subsystems. The invention uses a bidirectional metadata management system framework, can support the metadata extracted from other subsystems of the EDW to be stored into the metadata management system, and meanwhile, supports providing the needed metadata information to other subsystems of the EDW, solves the running management problem of the metadata and the EDW, reduces the management difficulty of the EDW, guarantees the accuracy of the metadata and causes the subsystems to efficiently communicate and stably run.

Owner:INDUSTRIAL AND COMMERCIAL BANK OF CHINA

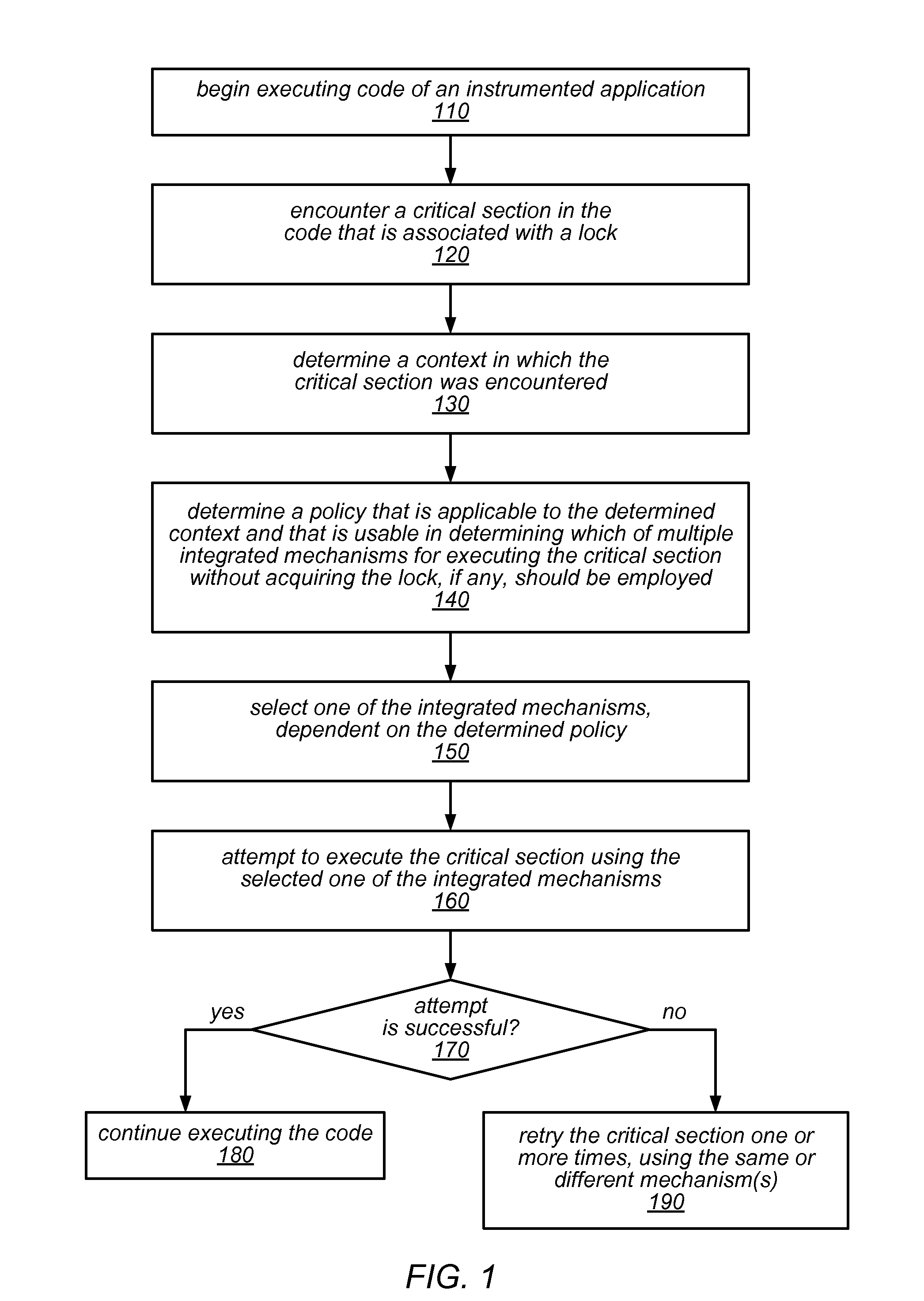

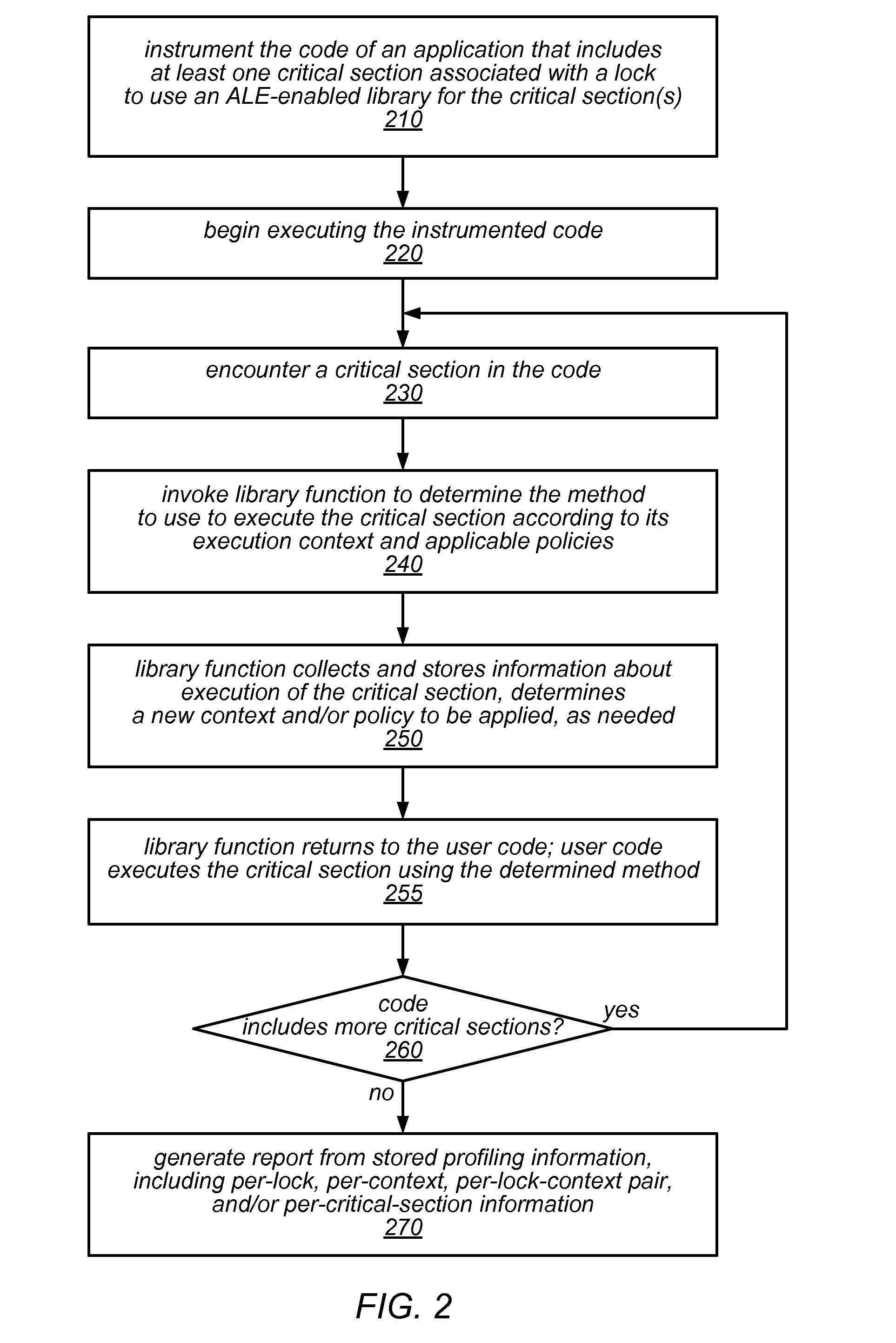

Systems and Methods for Adaptive Integration of Hardware and Software Lock Elision Techniques

Active | US20150026688A1 | Improve scalability | Improve performance | Specific access rights | Program initiation/switching | Software lockout | Parallel computing

Particular techniques for improving the scalability of concurrent programs (e.g., lock-based applications) may be effective in some environments and for some workloads, but not others. The systems described herein may automatically choose appropriate ones of these techniques to apply when executing lock-based applications at runtime, based on observations of the application in the current environment and with the current workload. In one example, two techniques for improving lock scalability (e.g., transactional lock elision using hardware transactional memory, and optimistic software techniques) may be integrated together. A lightweight runtime library built for this purpose may adapt its approach to managing concurrency by dynamically selecting one or more of these techniques (at different times) during execution of a given application. In this Adaptive Lock Elision approach, the techniques may be selected (based on pluggable policies) at runtime to achieve good performance on different platforms and for different workloads.

Owner:ORACLE INT CORP

System and method for supporting digital rights management in an enhanced Java™ 2 runtime environment

Inactive | US20080060083A1 | Provide protection | Digital data processing details | Analogue secrecy/subscription systems | Digital rights management system | Rights management

A digital rights management (DRM) system and methodology for a Java client implementing a Java Runtime Environment (JRE). The JRE comprises a Java Virtual Machine (JVM) and Java runtime libraries components and is capable of executing a player application for presenting content that can be presented through a Java program (e.g., a Java application, applet, servlet, bean, etc.) and downloaded from a content server to the client. The DRM system includes an acquisition component for receiving downloaded protected contents; and a dynamic rights management layer located between the JRE and player application for receiving requests to view or play downloaded protected contents from the player, and, in response to each request, determining the rights associated with protected content and enabling viewing or playing of the protected contents via the player application if permitted according to the rights. By providing a DRM-enabled Java runtime, which does not affect the way non-DRM-related programs work, DRM content providers will not require the installation of customized players. By securing the runtime, every Java™ player automatically and transparently becomes a DRM-enabled player.

Owner:INT BUSINESS MASCH CORP

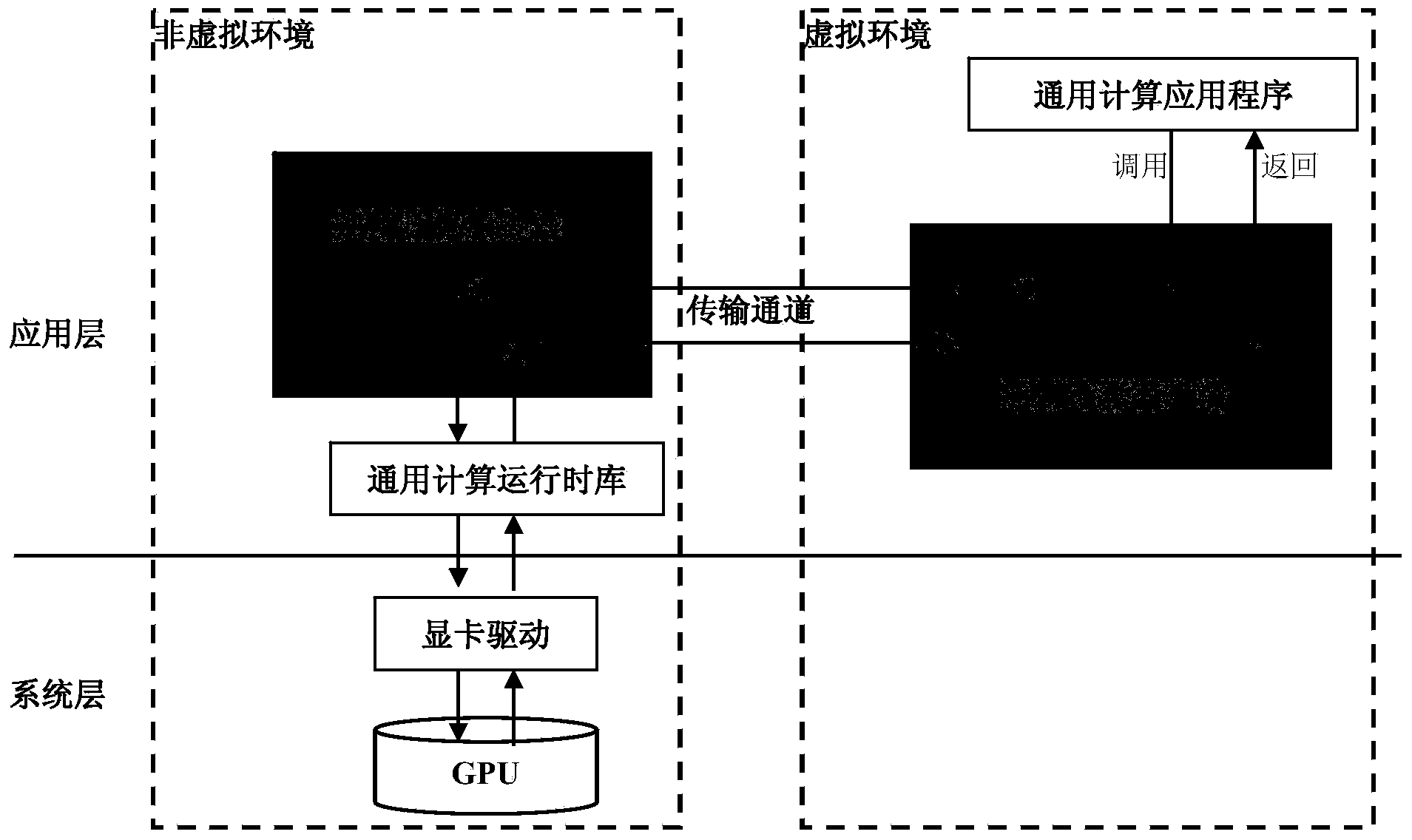

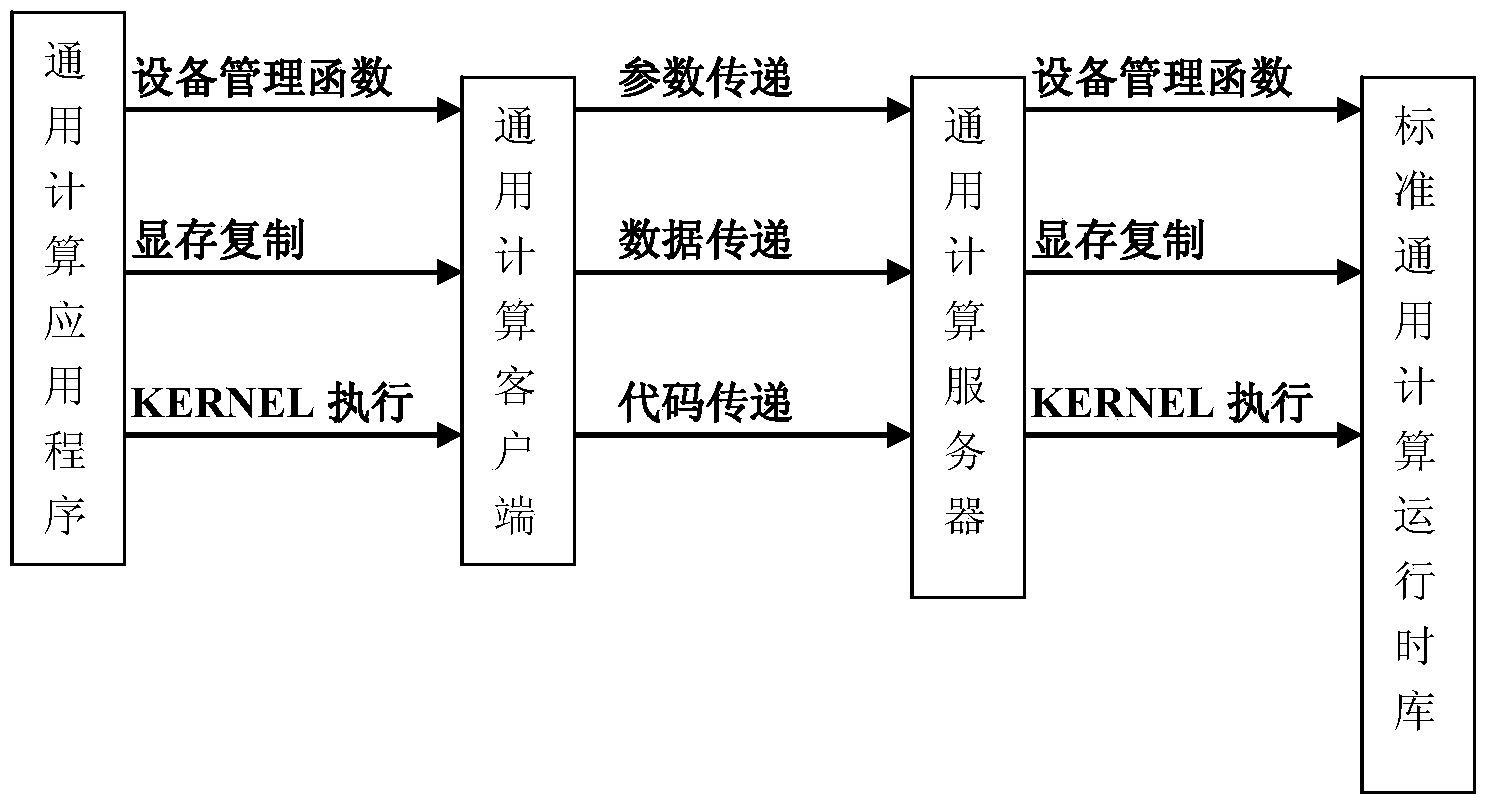

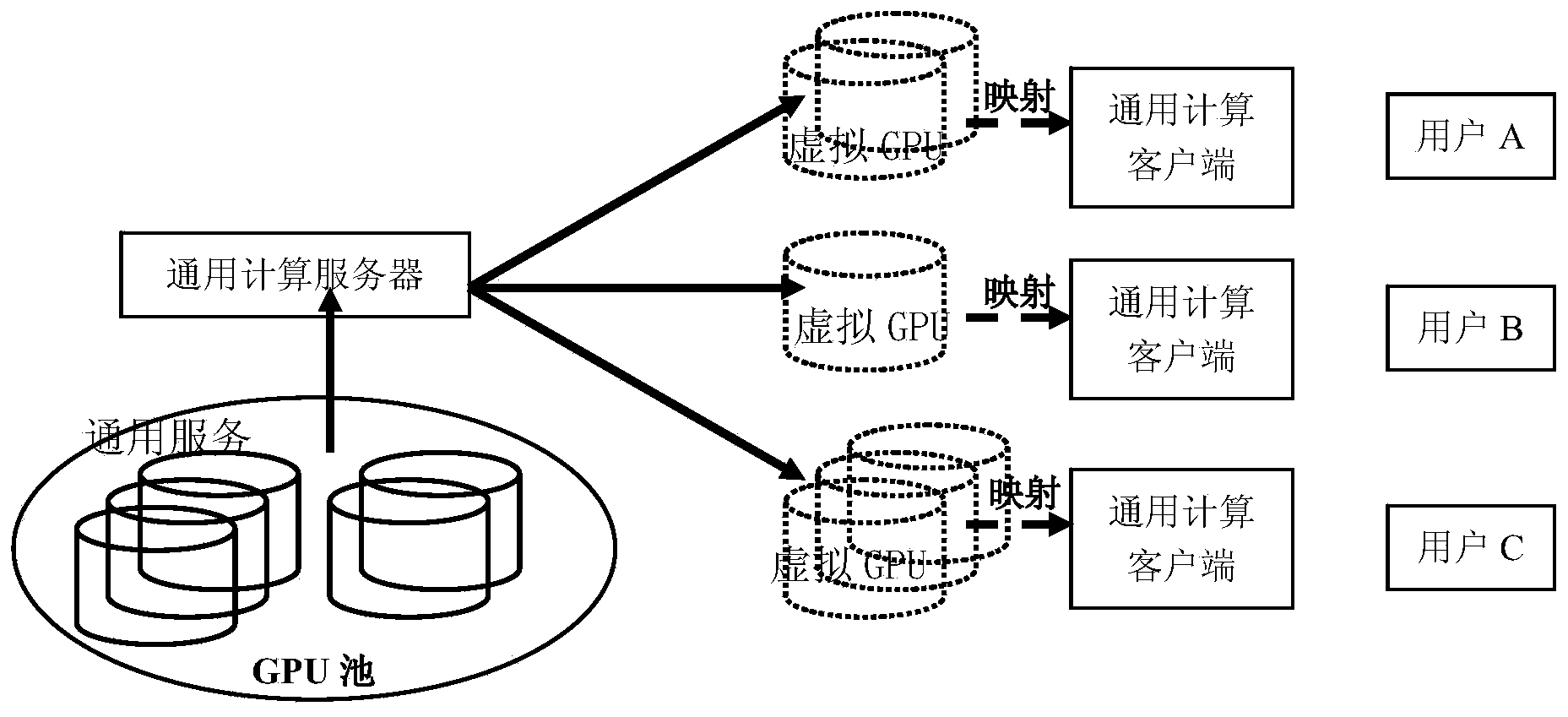

General purpose computation virtualization implementation method based on dynamic library interception

Active | CN103761139A | Improve efficiency | Low cost | Resource allocation | Software simulation/interpretation/emulation | Virtualization | Time-division multiplexing

The invention discloses a method for virtualizing general-purpose computation based on dynamic library interception. To overcome the shortcomings of existing virtual machine technology in supporting general-purpose computation, the method intercepts the general-purpose computation runtime library in real time and redirects all calls to general-purpose computation functions made in the virtual environment to a non-virtual environment. Because the non-virtual environment has general-purpose computation capability, it completes the actual computation tasks and returns the results to the virtual environment. The method is fully transparent to users in the virtual environment. Building on this dynamic library virtualization, and treating the GPU resources in a GPU pool as the objects of allocation, one physical GPU is logically virtualized into multiple GPUs through space-division and time-division multiplexing, so that one GPU can be shared by multiple users.

Owner:HUNAN UNIV

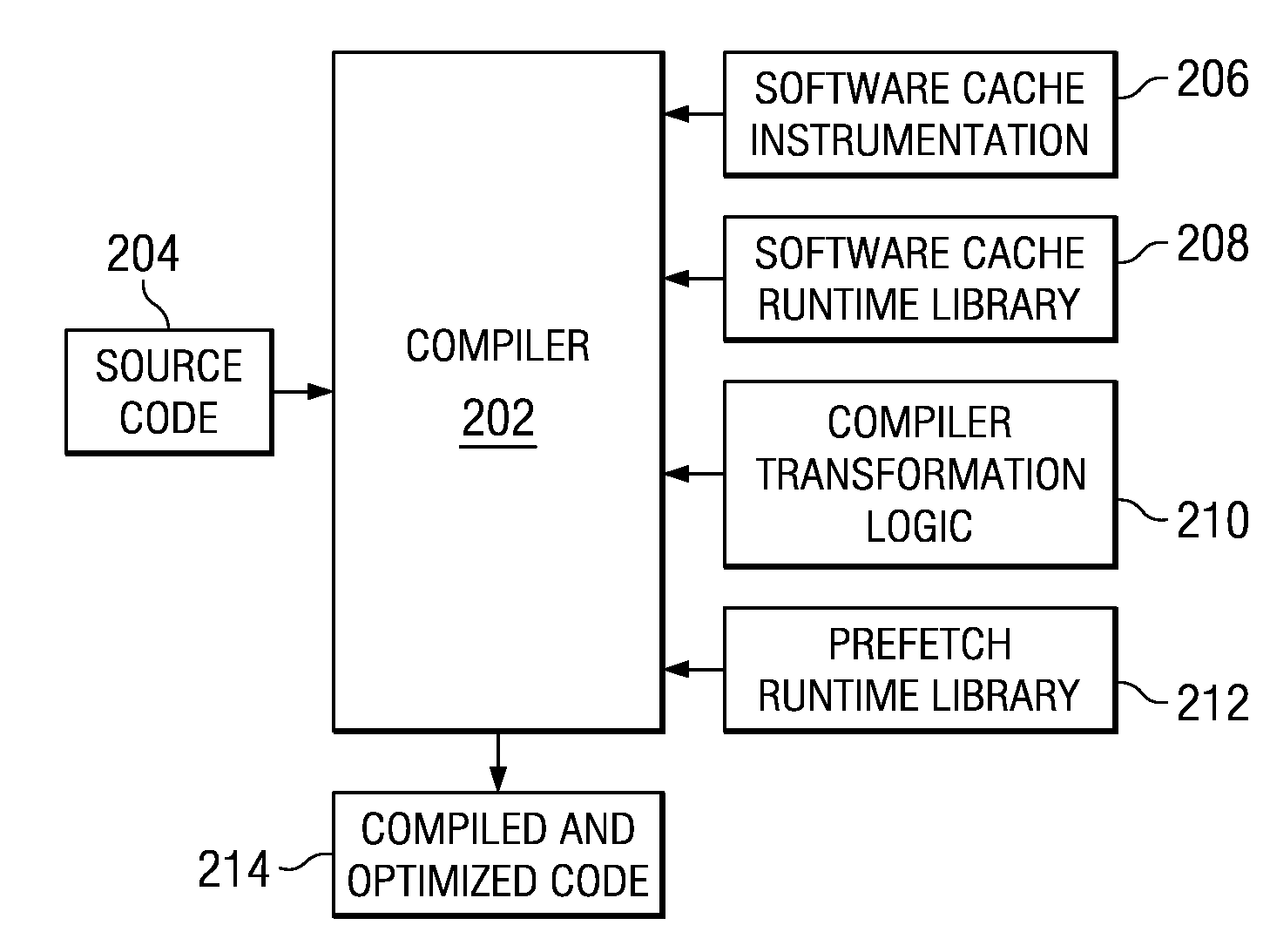

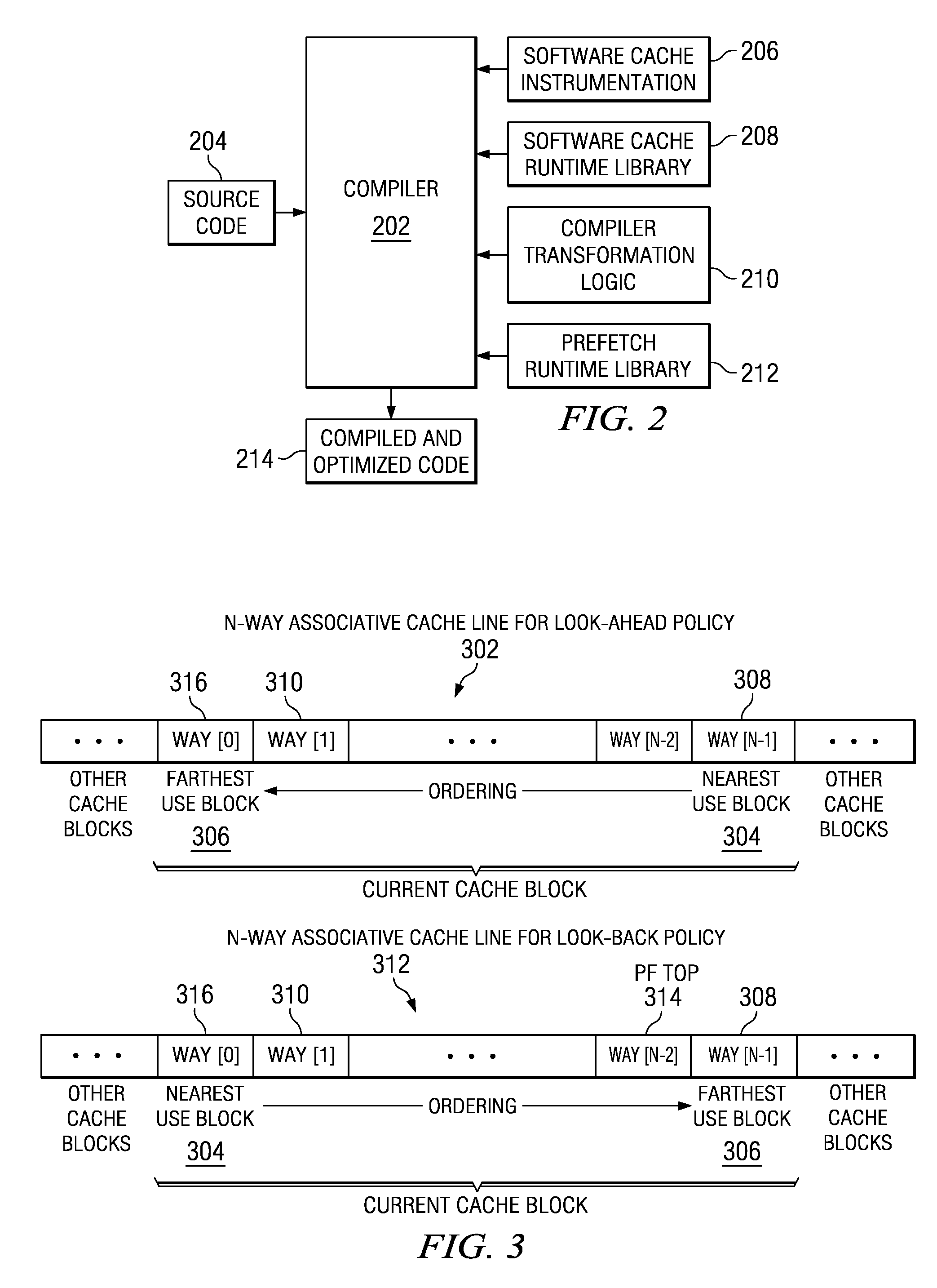

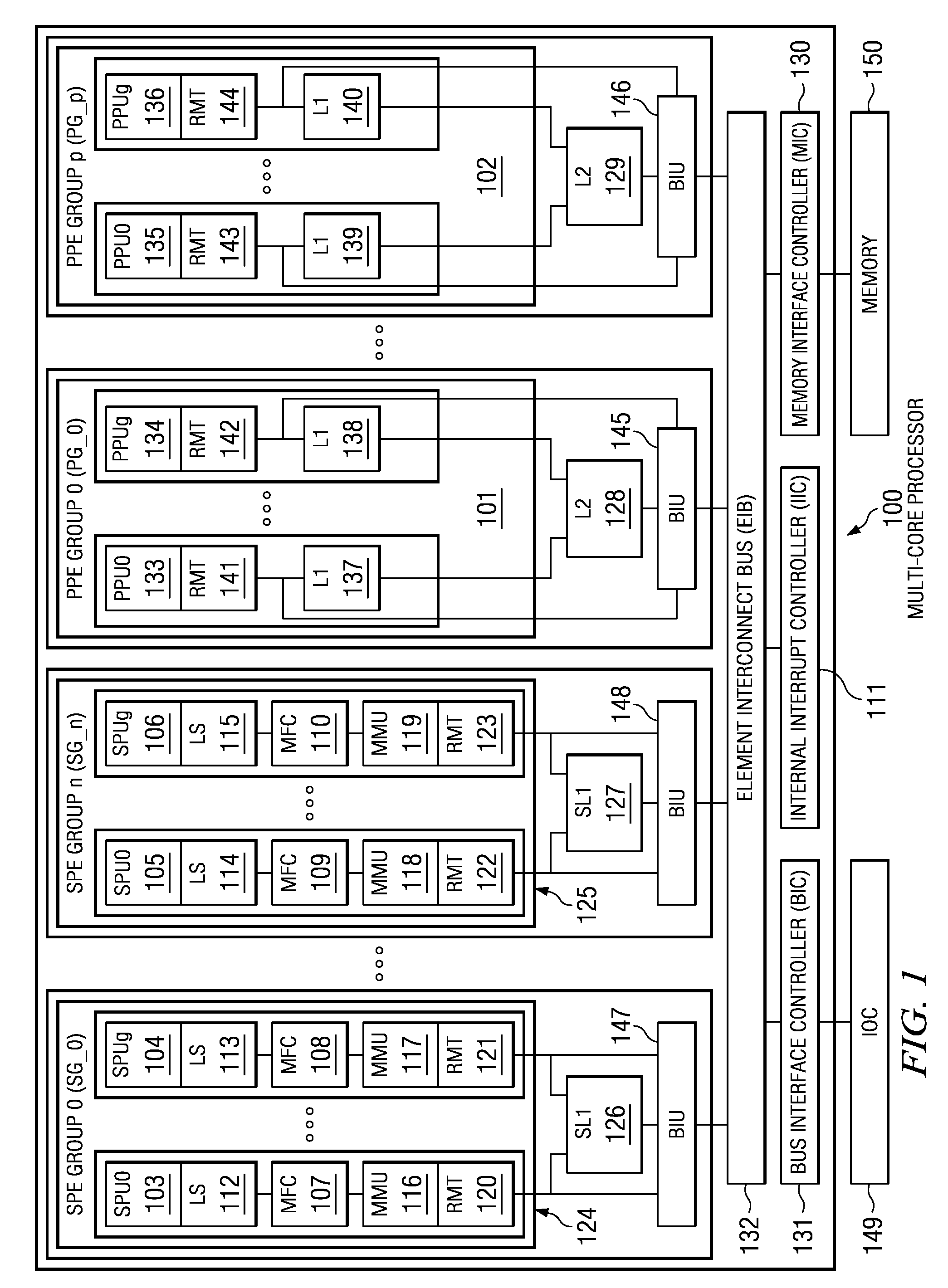

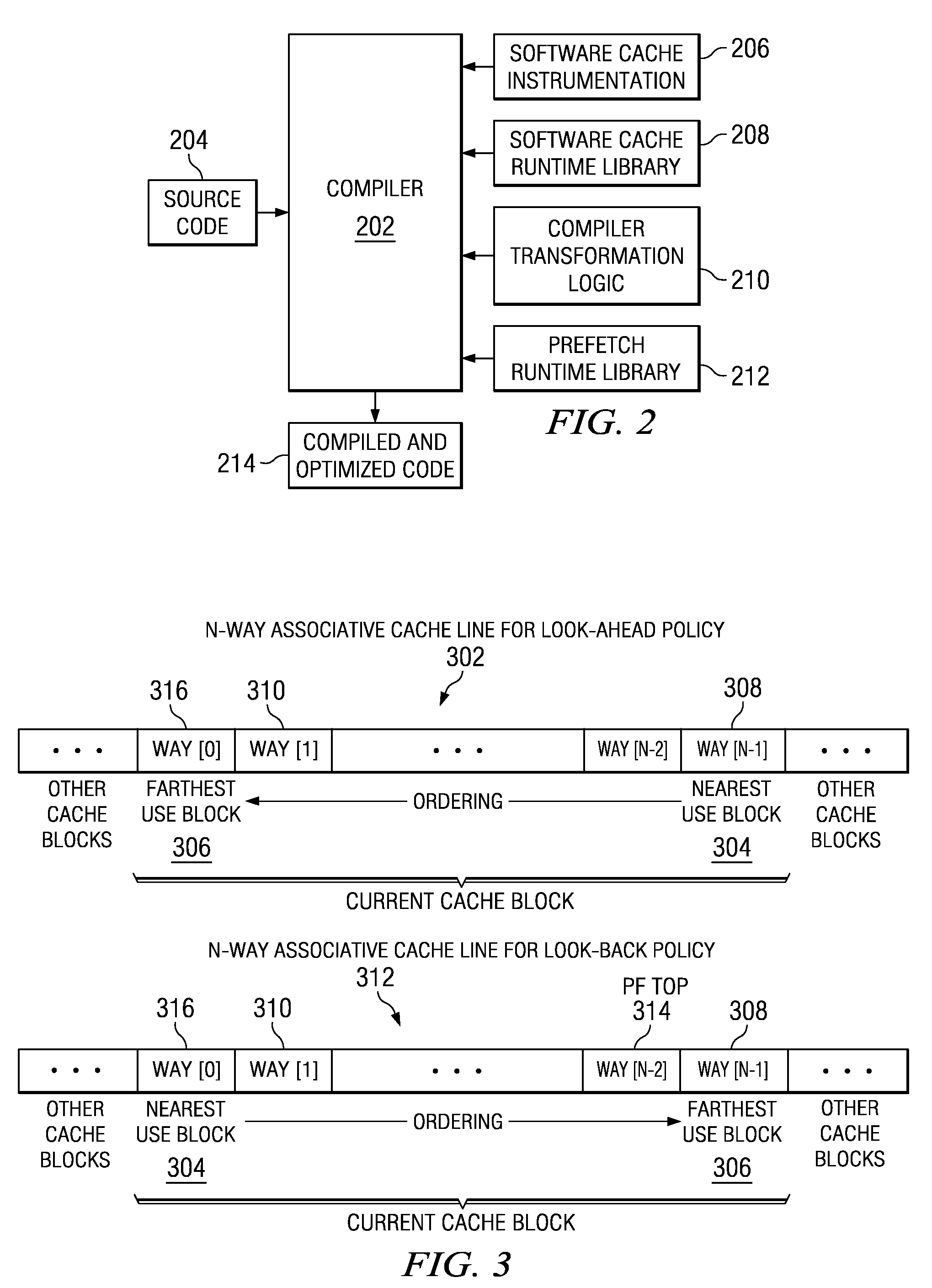

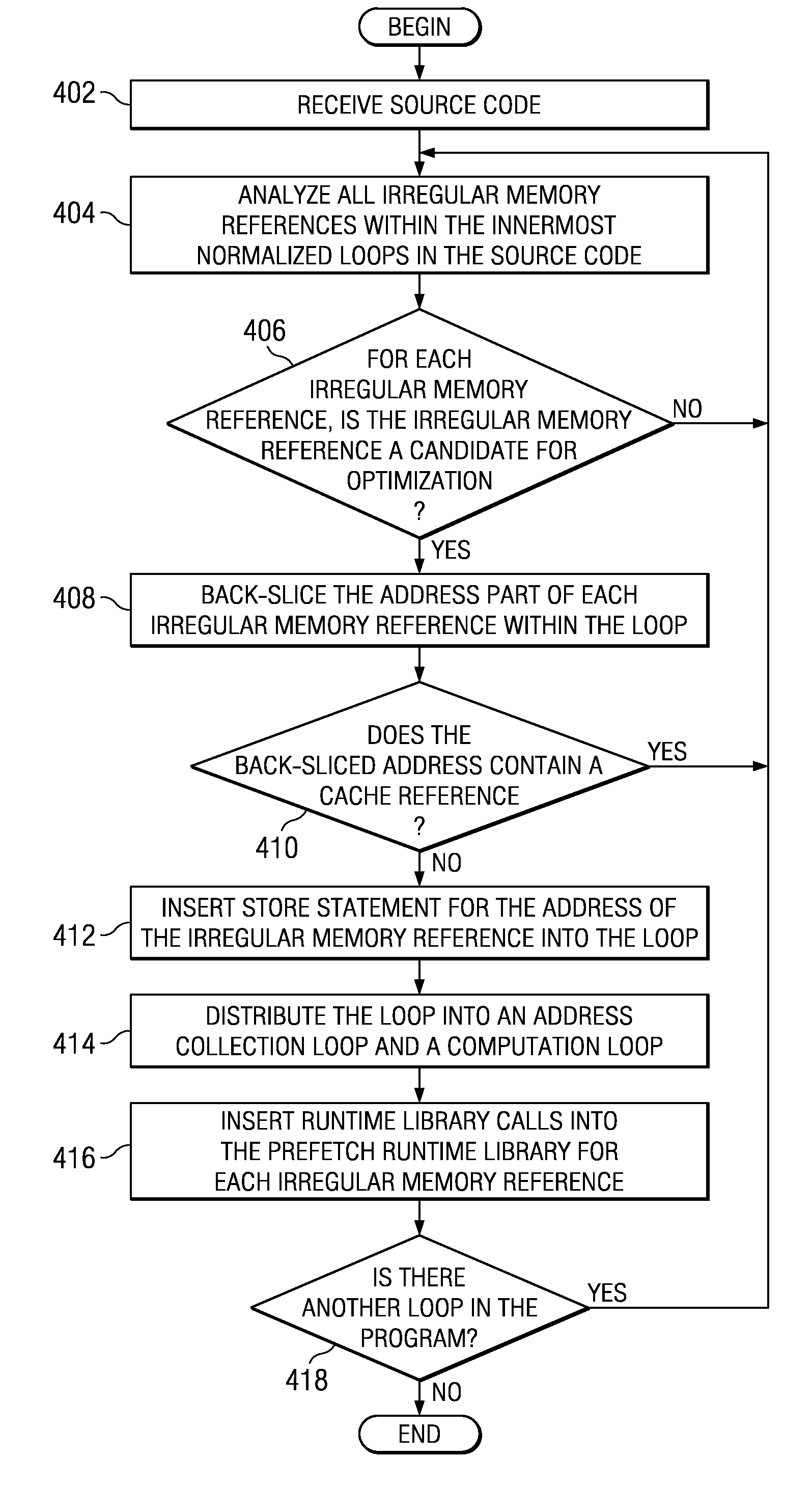

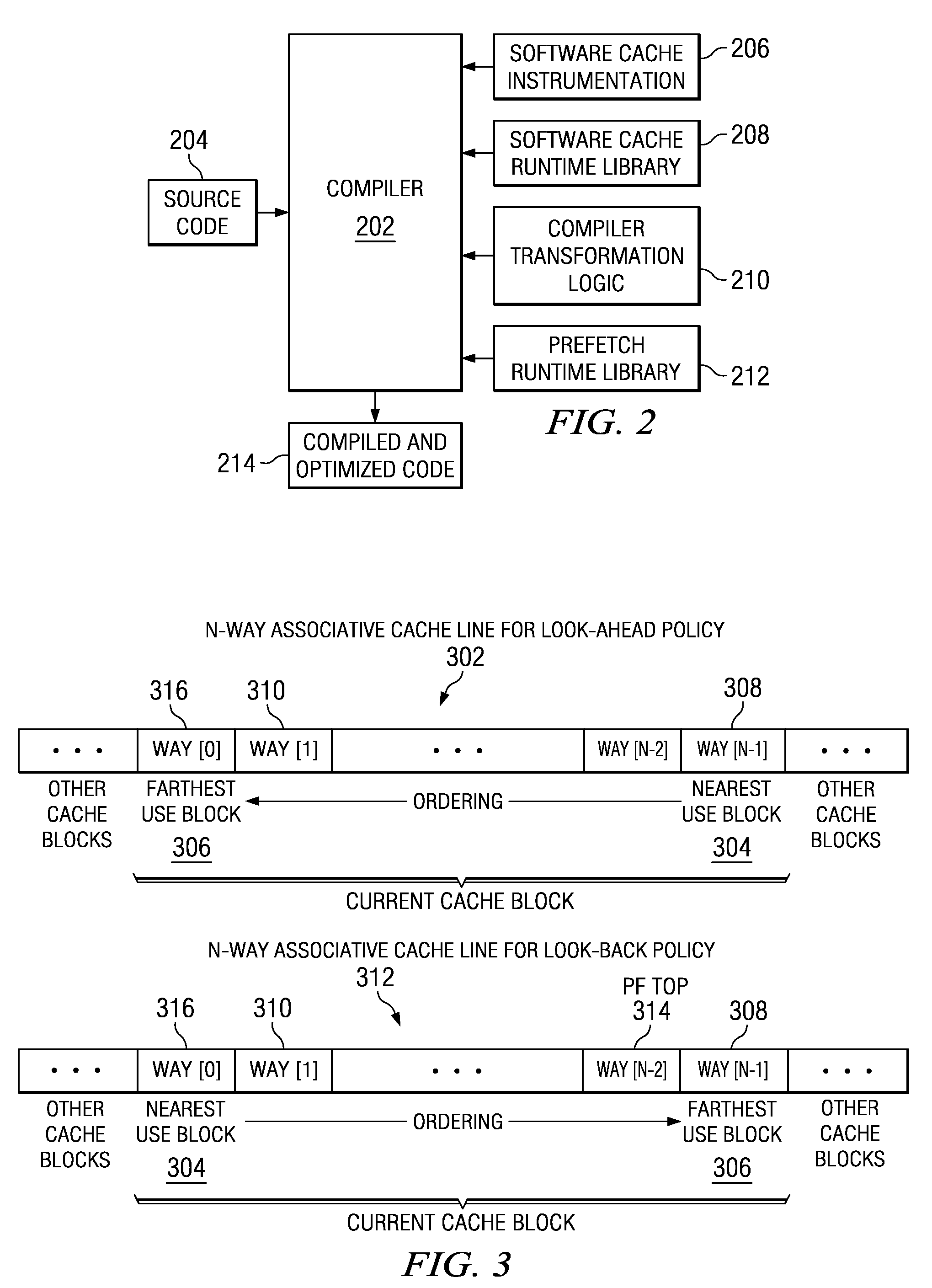

Prefetching Irregular Data References for Software Controlled Caches

Prefetching irregular memory references into a software controlled cache is provided. A compiler analyzes source code to identify at least one of a plurality of loops that contain an irregular memory reference. The compiler determines if the irregular memory reference within the at least one loop is a candidate for optimization. Responsive to an indication that the irregular memory reference may be optimized, the compiler determines if the irregular memory reference is valid for prefetching. Responsive to an indication that the irregular memory reference is valid for prefetching, a store statement for an address of the irregular memory reference is inserted into the at least one loop. A runtime library call is inserted into a prefetch runtime library for the irregular memory reference. Data associated with the irregular memory reference is prefetched into the software controlled cache when the runtime library call is invoked.

Owner:IBM CORP

Runtime handling of task dependencies using dependence graphs

Active | US9652286B2 | Preserves task dependencies | Program initiation/switching | Task dependency | Runtime library

Embodiments include systems and methods for handling task dependencies in a runtime environment using dependence graphs. For example, a computer-implemented runtime engine includes runtime libraries configured to handle tasks and task dependencies. The task dependencies can be converted into data dependencies. At runtime, as the runtime engine encounters tasks and associated data dependencies, it can add those identified tasks as nodes of a dependence graph, and can add edges between the nodes that correspond to the data dependencies without deadlock. The runtime engine can schedule the tasks for execution according to a topological traversal of the dependence graph in a manner that preserves task dependencies substantially as defined by the source code.

Owner:ORACLE INT CORP

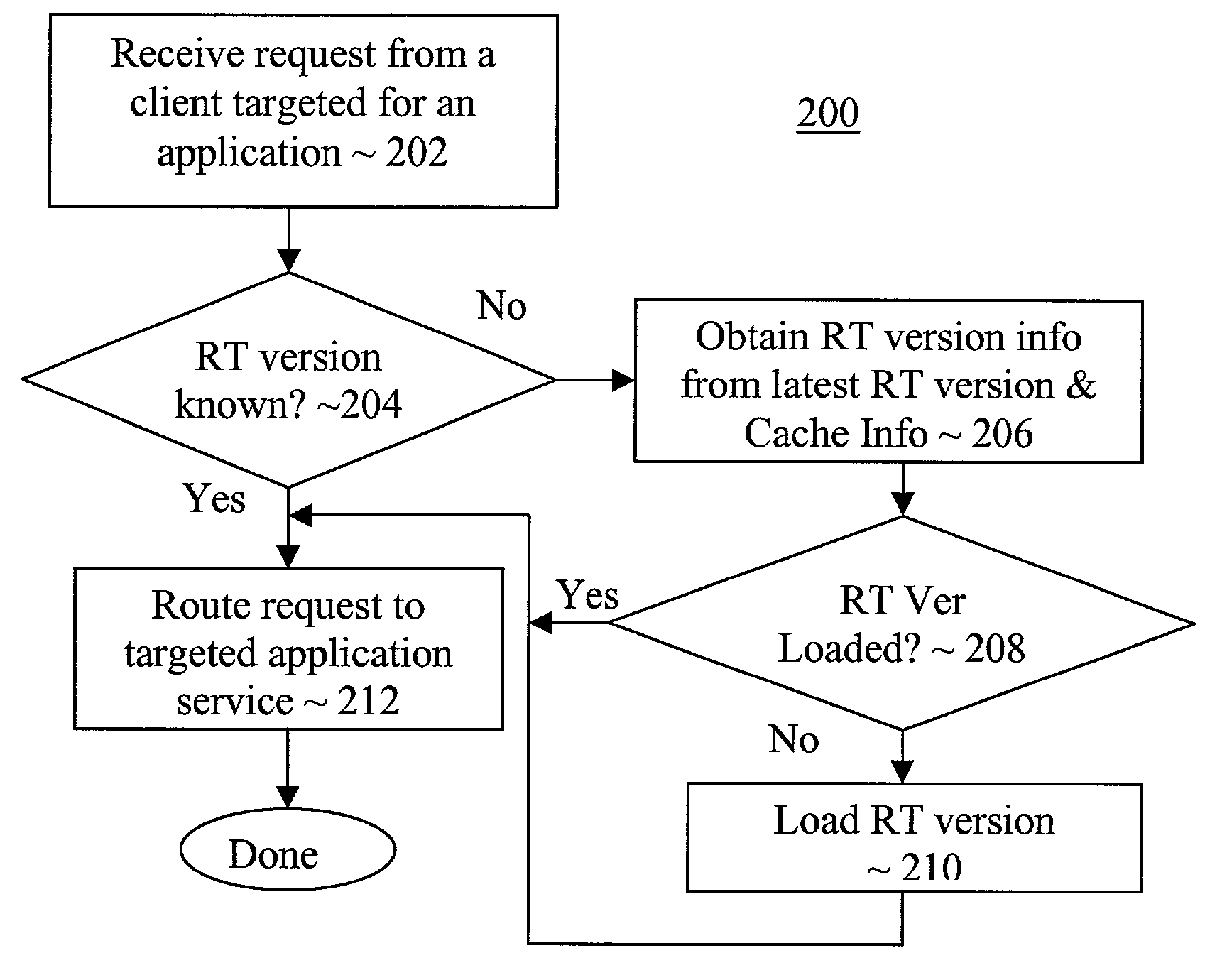

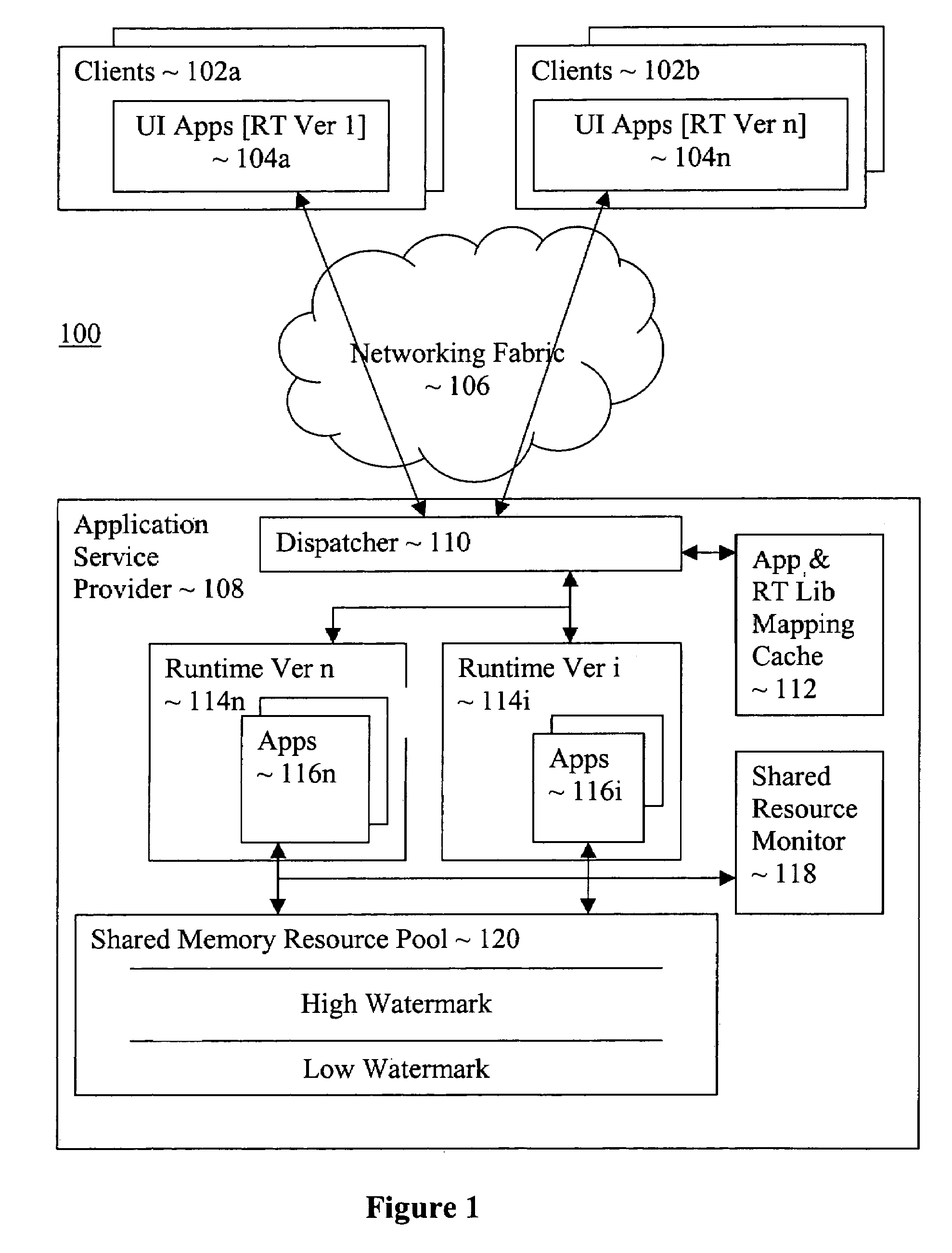

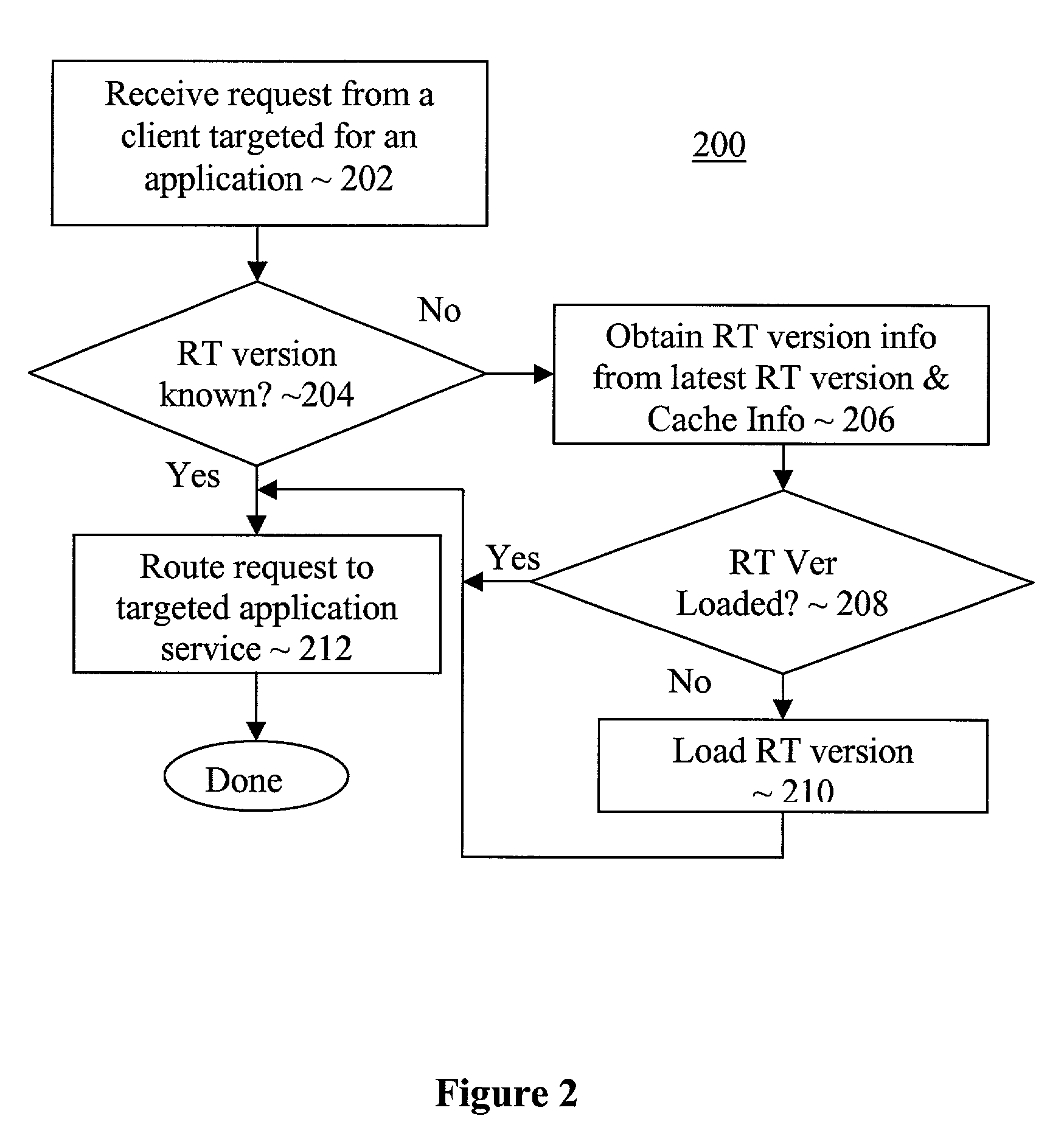

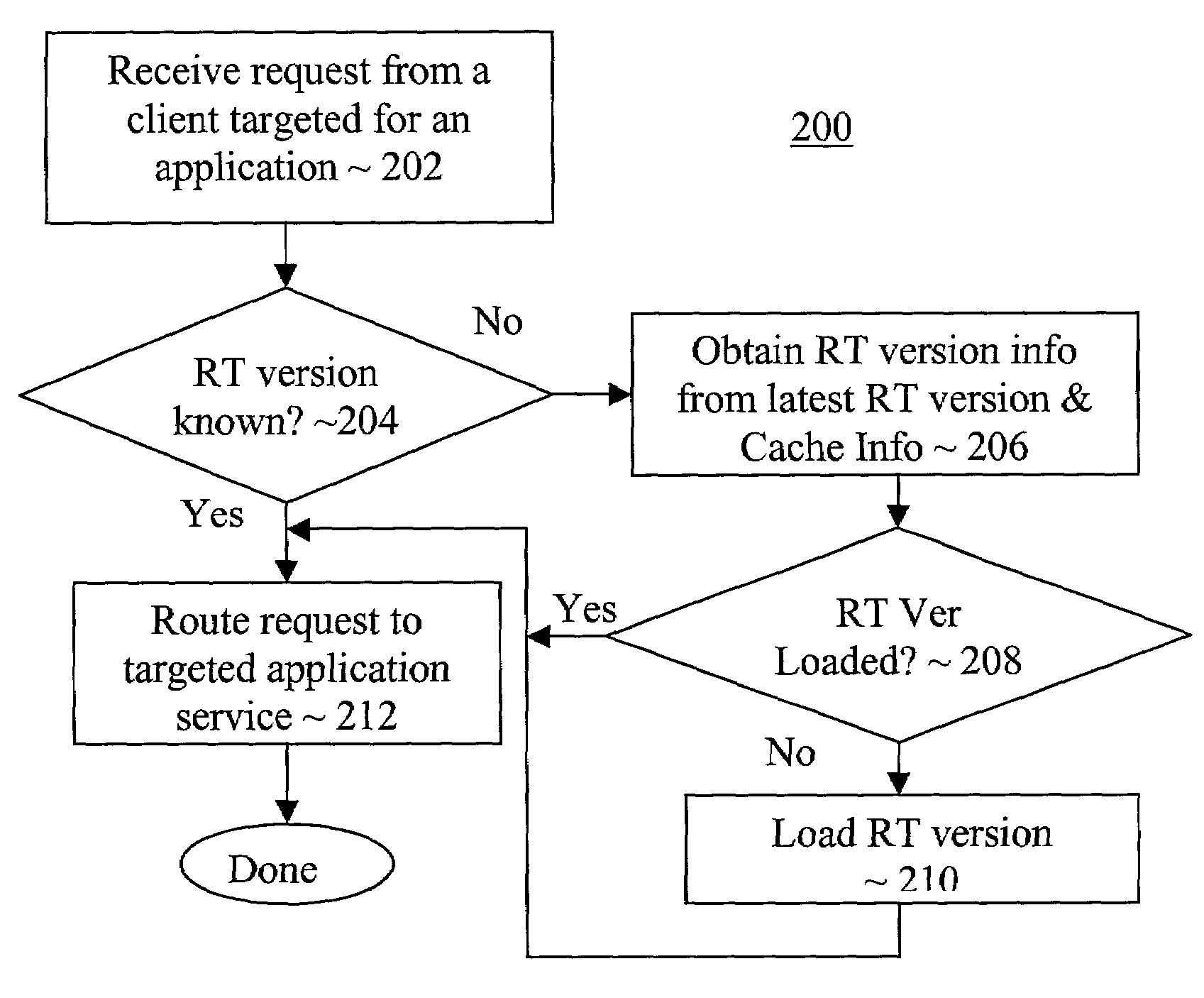

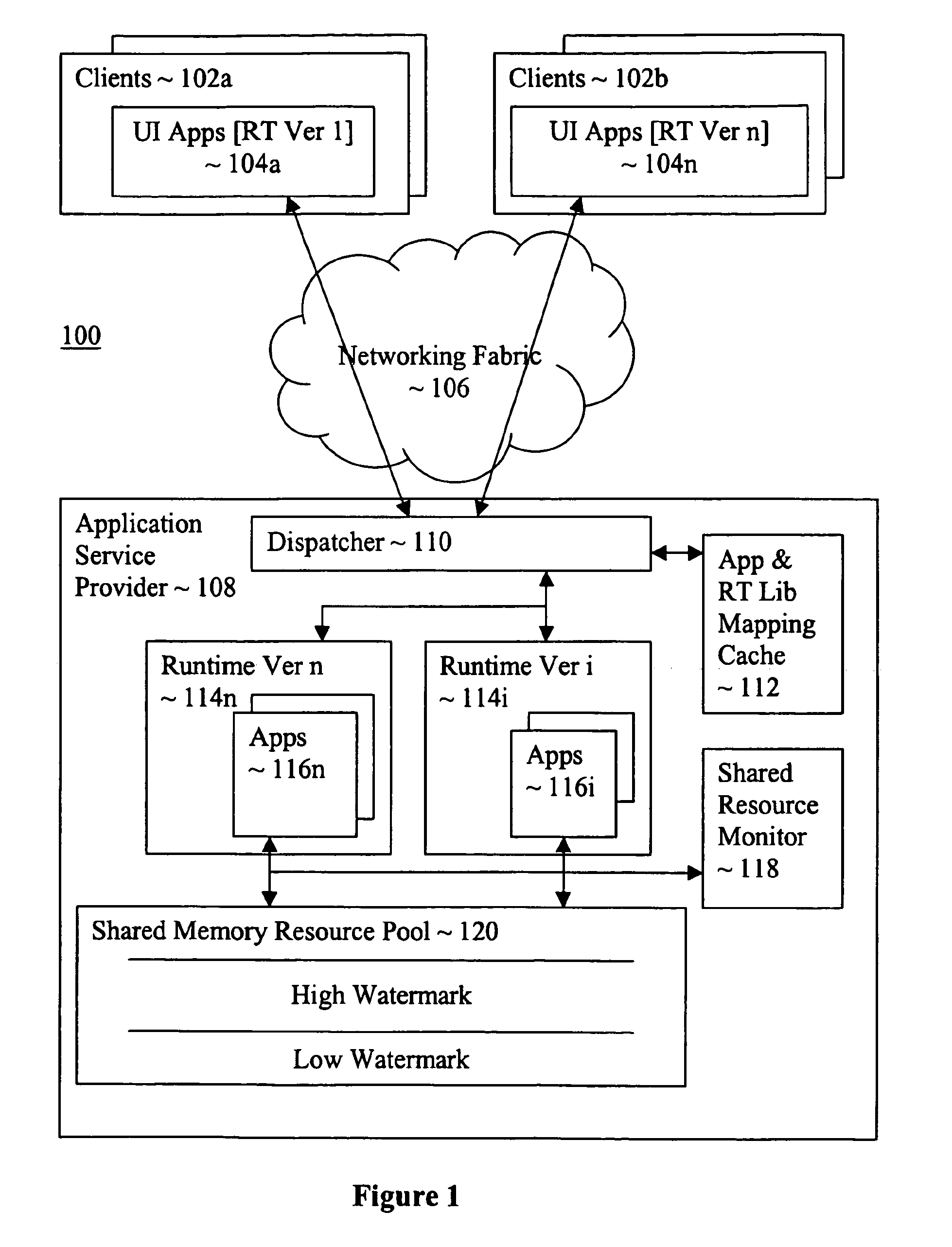

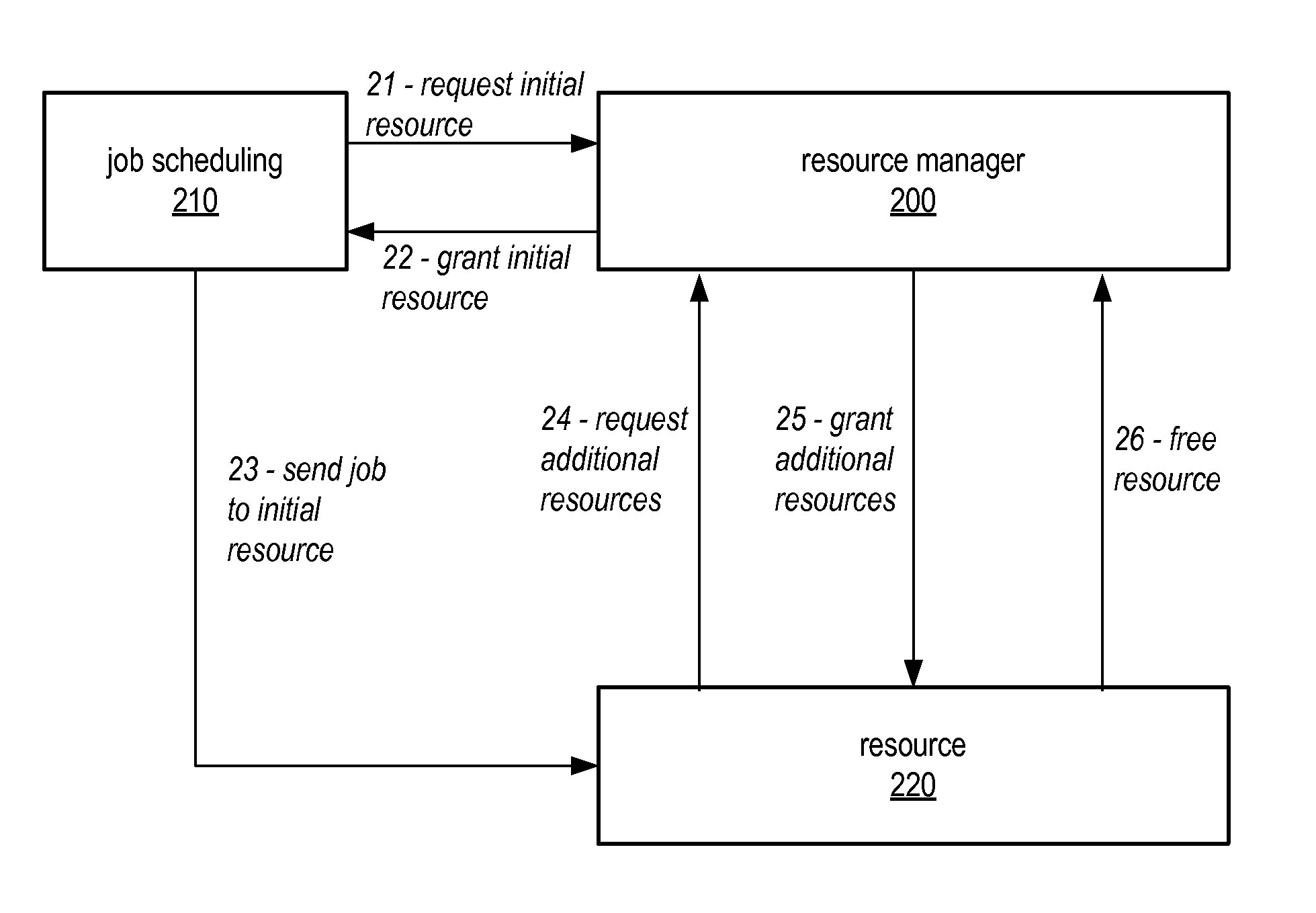

Multi-version hosting of application services

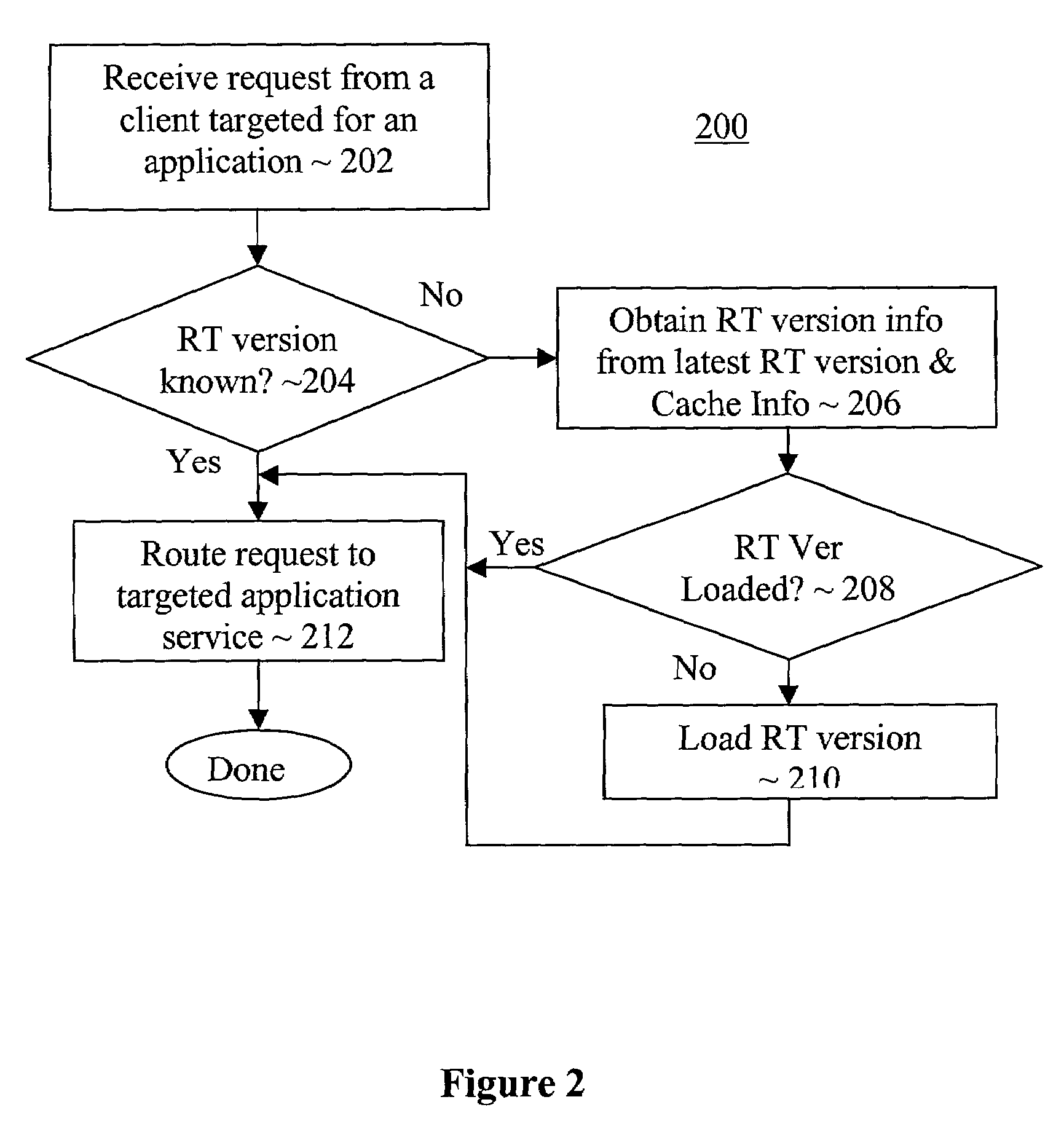

An application service provision apparatus is provided with one or more components to perform a dispatching and a shared resource monitoring function to allow applications to be hosted with multiple versions of a hosting service runtime library in a more efficient manner. The dispatching function, upon receipt of a request for service for an application, determines if the version of the runtime library required is known. If not, the dispatching function turns to the latest version of the runtime library to determine the version required. In one embodiment, the required earlier versions are loaded only on an as-needed basis. The shared resource monitoring function, upon detecting aggregated allocation of a shared resource reaching a pre-determined threshold, requests consumers of the shared resource to provide tracked last used times of their allocations. In response, the monitoring function selects a number of the allocations for release, and instructs the shared resource consumers accordingly.
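The shared resource monitoring step can be sketched as a small selection routine. The abstract does not say how allocations are chosen for release, so the policy here is an assumption: release the least recently used allocations first until usage falls to a hypothetical `target` level. The function name and the tuple layout are also illustrative.

```python
def select_for_release(allocations, threshold, target):
    """Pick allocations to release once aggregate usage reaches the threshold.

    allocations: list of (consumer, alloc_id, size, last_used) tuples, where
    last_used is the tracked last-used time reported by the consumer.
    Returns a list of (consumer, alloc_id) pairs, oldest first.
    """
    total = sum(size for _, _, size, _ in allocations)
    if total < threshold:
        return []                                   # below the threshold, nothing to do
    to_release = []
    for consumer, alloc_id, size, _ in sorted(allocations, key=lambda a: a[3]):
        if total <= target:
            break
        to_release.append((consumer, alloc_id))     # least recently used goes first
        total -= size
    return to_release


allocations = [
    ("app1", "a", 40, 100),
    ("app2", "b", 30, 50),
    ("app1", "c", 30, 200),
]
picked = select_for_release(allocations, threshold=100, target=60)
```

Here the aggregate allocation is 100, which reaches the threshold, so the two oldest allocations (`b`, then `a`) are selected and usage drops to 30.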

Owner:ORACLE INT CORP

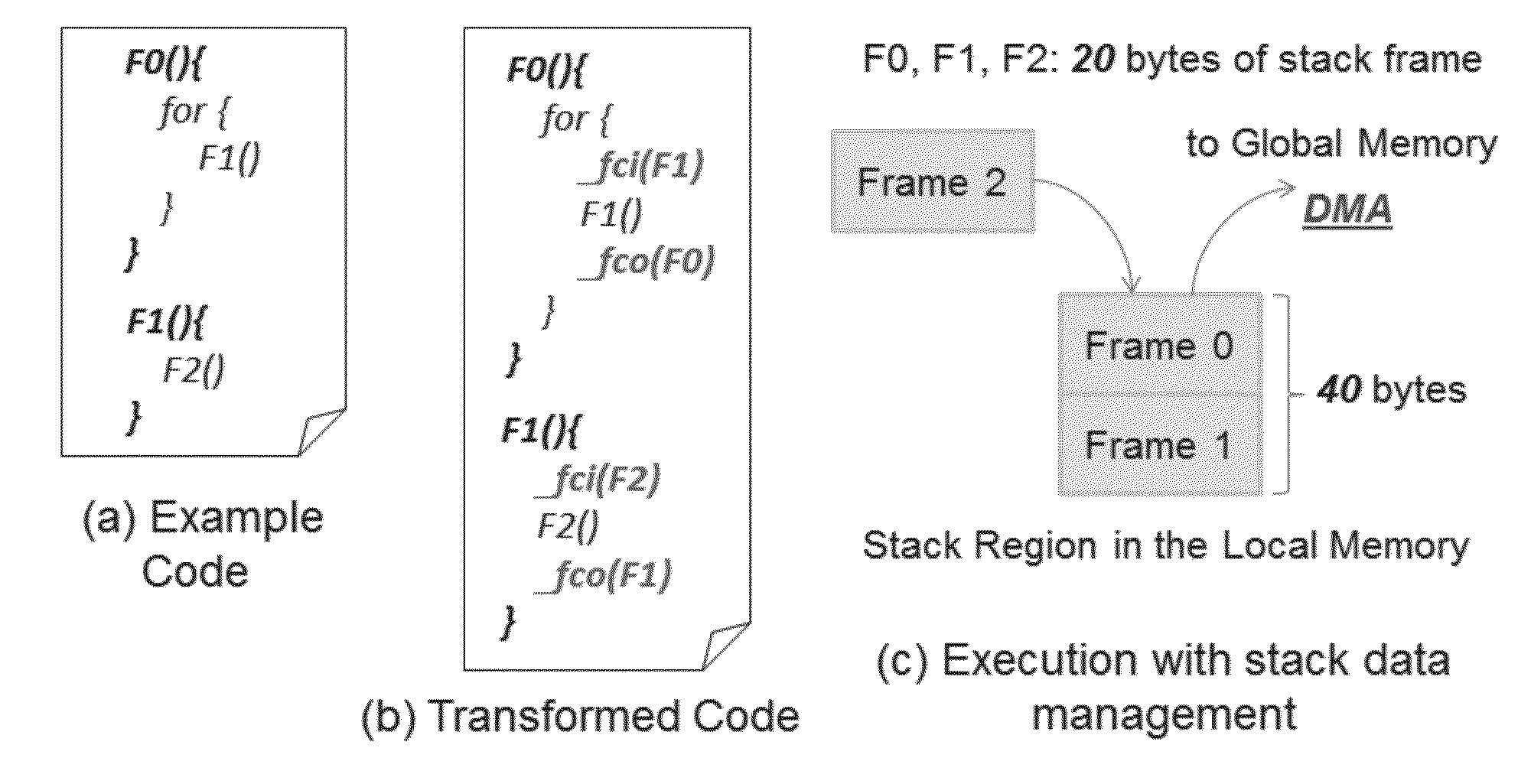

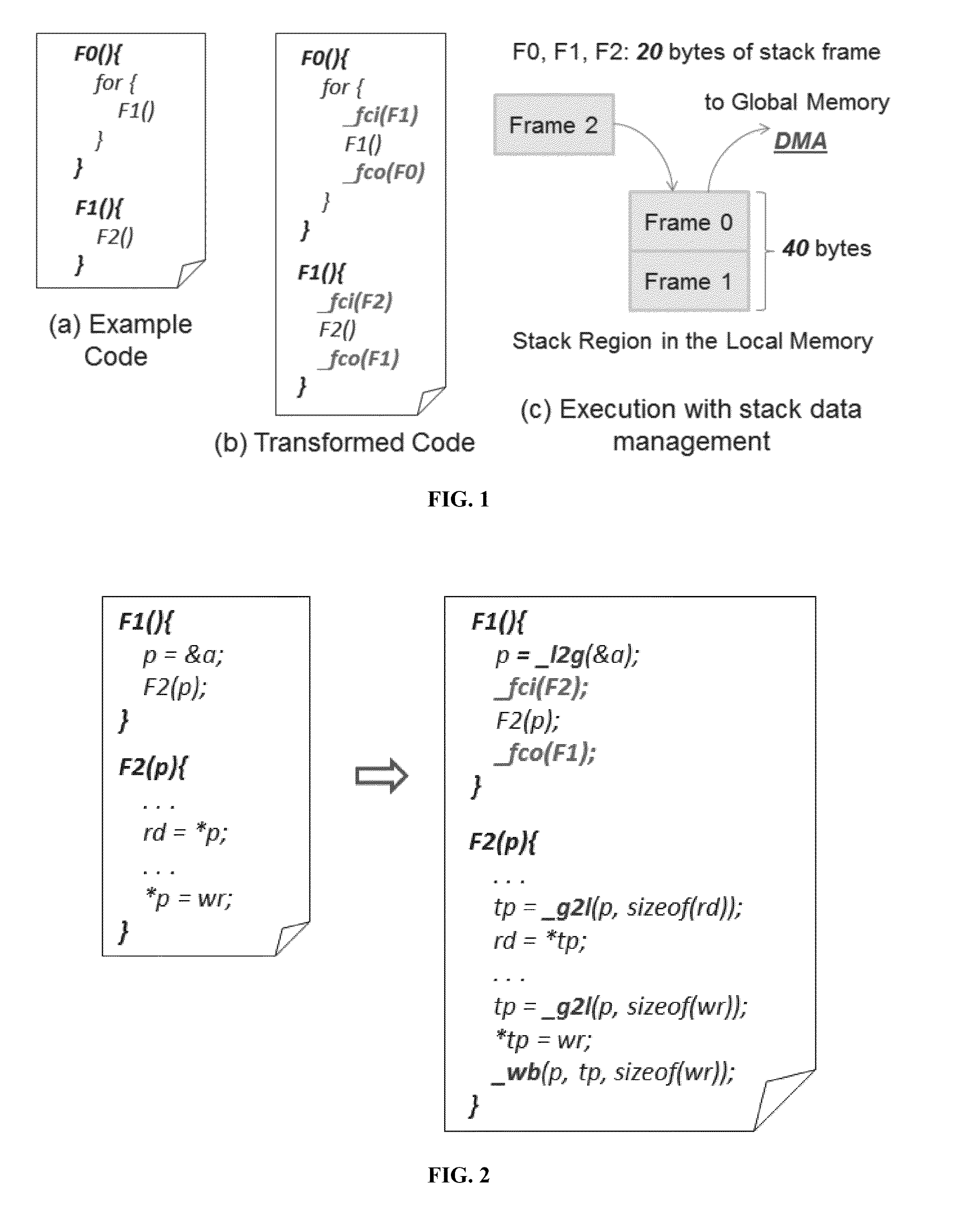

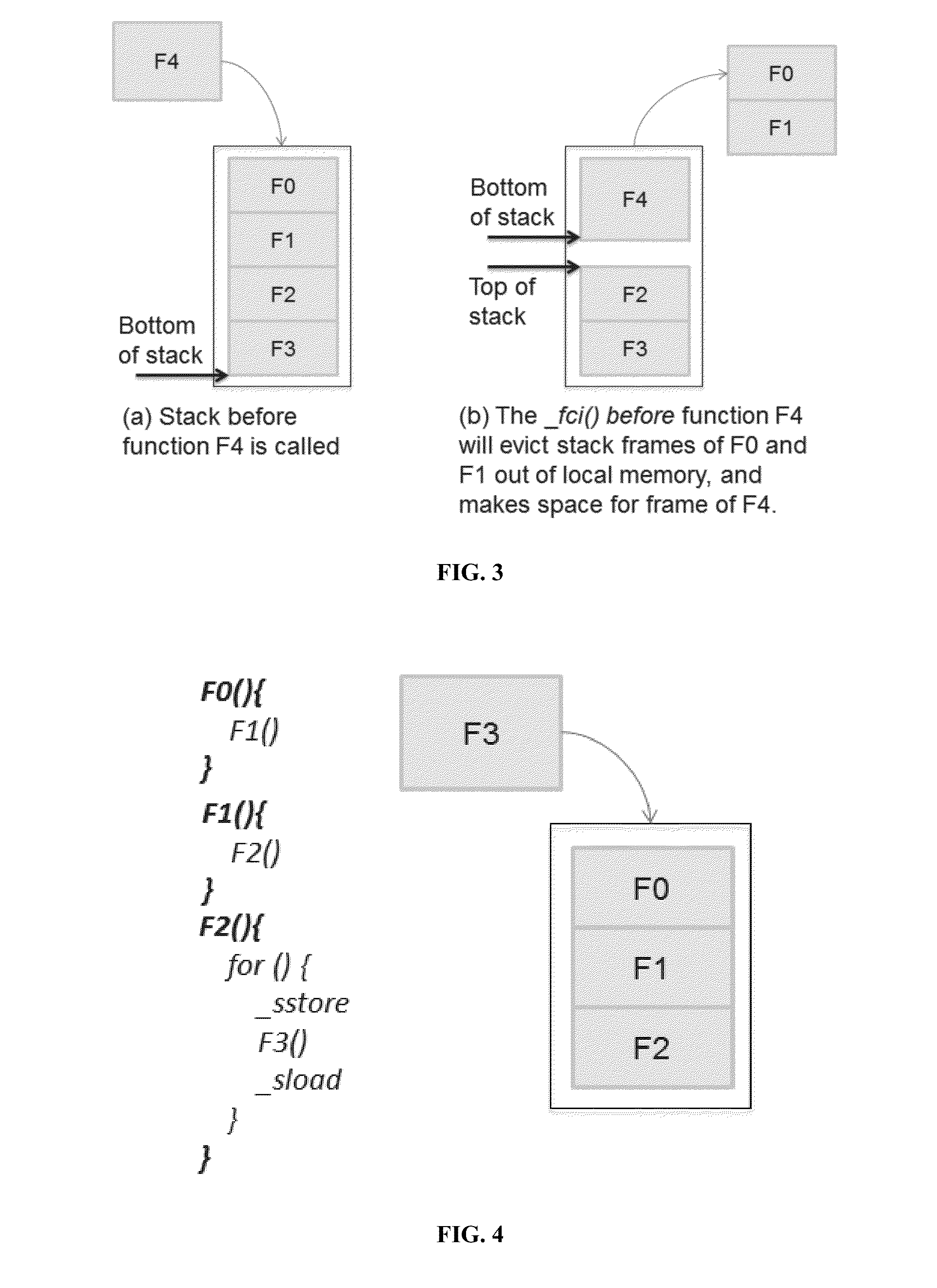

Stack Data Management for Software Managed Multi-Core Processors

Inactive | US20140282454A1 | Minimal cost | Easy to cut | Resource allocation | Software engineering | Integer linear programming formulation | Data management

Methods and apparatus for managing stack data in multi-core processors having scratchpad memory or limited local memory. In one embodiment, stack data management calls are inserted into software in accordance with an integer linear programming formulation and a smart stack data management heuristic. In another embodiment, stack management and pointer management functions are inserted before and after function calls and pointer references, respectively. The calls may be inserted in an automated fashion by a compiler utilizing an optimized stack data management runtime library.

Owner:ARIZONA STATE UNIVERSITY

Dynamically Controlling a Prefetching Range of a Software Controlled Cache

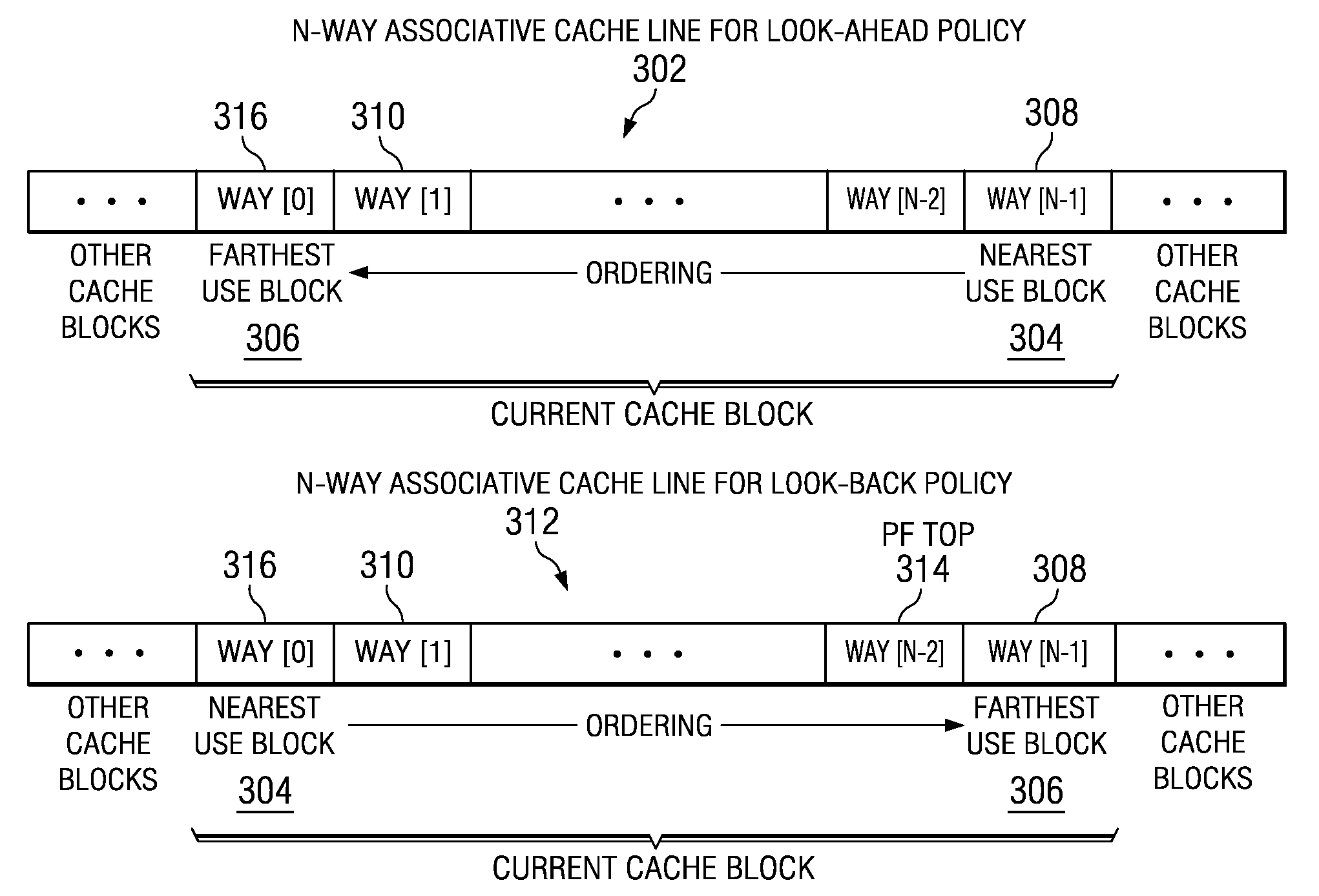

Inactive | US20090254733A1 | Memory addressing/allocation/relocation | Micro-instruction address formation | Parallel computing | Source code

Dynamically controlling a prefetching range of a software controlled cache is provided. A compiler analyzes source code to identify at least one of a plurality of loops that contain irregular memory references. For each irregular memory reference in the source code, the compiler determines whether the irregular memory reference is a candidate for optimization. Responsive to identifying an irregular memory reference that may be optimized, the compiler determines whether the irregular memory reference is valid for prefetching. If the irregular memory reference is valid for prefetching, a store statement for an address of the irregular memory reference is inserted into the at least one loop. A runtime library call is inserted into a prefetch runtime library to dynamically prefetch the irregular memory references. Data associated with the irregular memory references are dynamically prefetched into the software controlled cache when the runtime library call is invoked.

Owner:IBM CORP

Dynamically controlling a prefetching range of a software controlled cache

Inactive | US8146064B2 | Memory systems | Micro-instruction address formation | Parallel computing | Runtime library

Dynamically controlling a prefetching range of a software controlled cache is provided. A compiler analyzes source code to identify at least one of a plurality of loops that contain irregular memory references. For each irregular memory reference in the source code, the compiler determines whether the irregular memory reference is a candidate for optimization. Responsive to identifying an irregular memory reference that may be optimized, the compiler determines whether the irregular memory reference is valid for prefetching. If the irregular memory reference is valid for prefetching, a store statement for an address of the irregular memory reference is inserted into the at least one loop. A runtime library call is inserted into a prefetch runtime library to dynamically prefetch the irregular memory references. Data associated with the irregular memory references are dynamically prefetched into the software controlled cache when the runtime library call is invoked.

Owner:IBM CORP

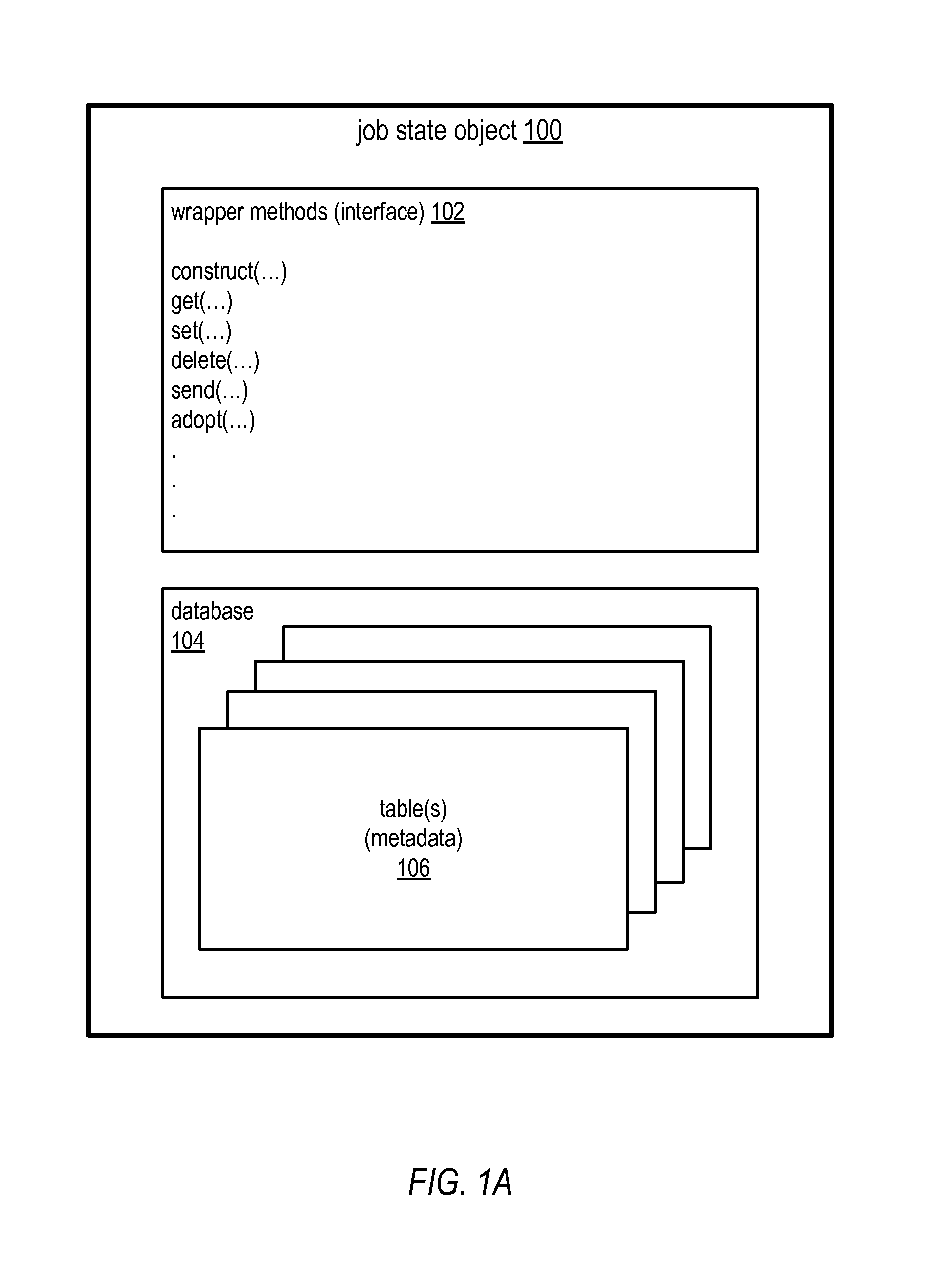

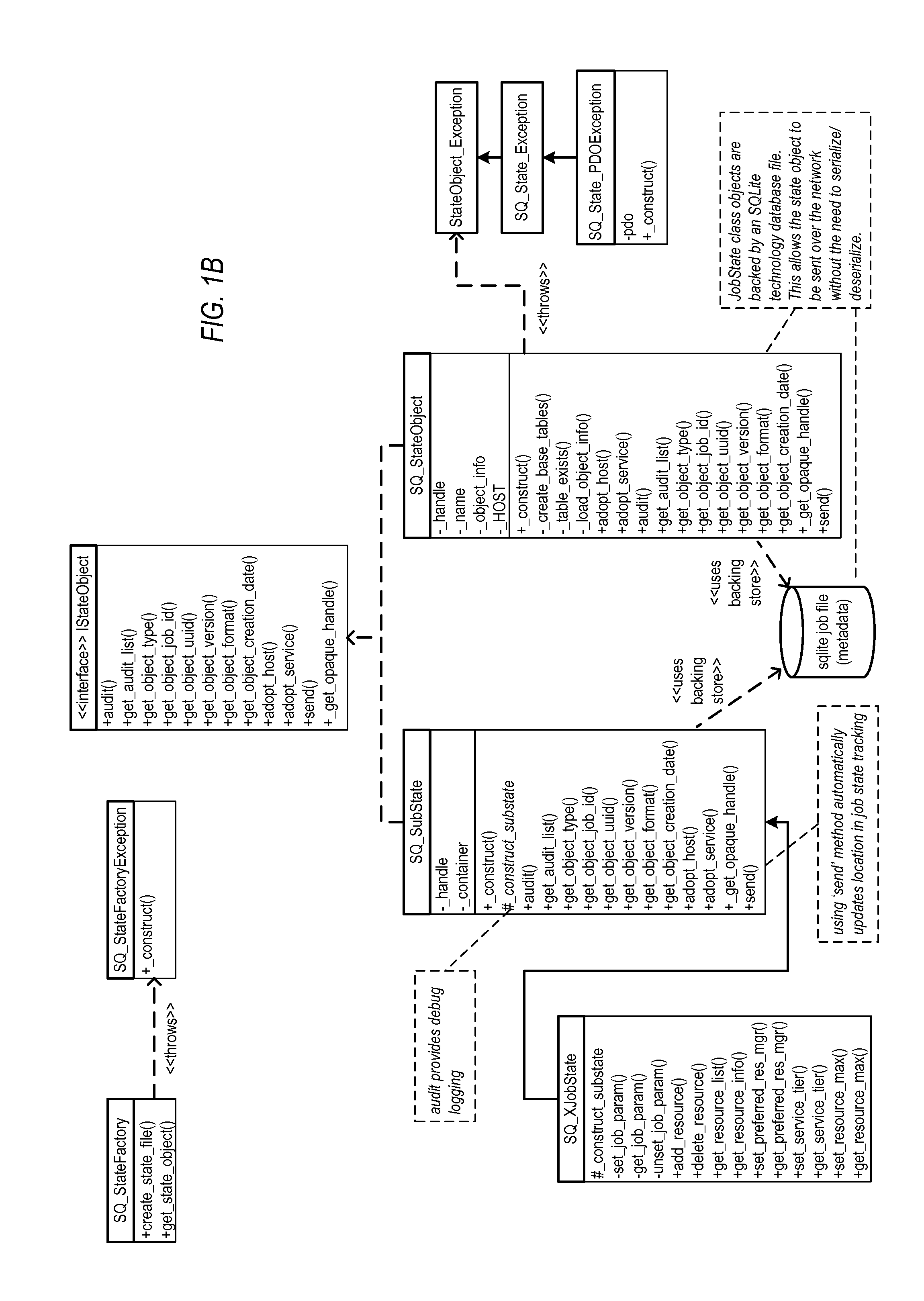

Methods and Apparatus for State Objects in Cluster Computing

Active | US20130219397A1 | Flexible application | Less application overhead | Energy efficient ICT | Digital data processing details | Relational database | Database engine

Embodiments of a mobile state object for storing and transporting job metadata on a cluster computing system may use a database as an envelope for the metadata. A state object may include a database that stores the job metadata and wrapper methods. A small database engine may be employed. Since the entire database exists within a single file, complex, extensible applications may be created on the same base state object, and the state object can be sent across the network with the state intact, along with history of the object. An SQLite technology database engine, or alternatively other single file relational database engine technologies, may be used as the database engine. To support the database engine, compute nodes on the cluster may be configured with a runtime library for the database engine via which applications or other entities may access the state file database.

Owner:ADOBE SYST INC

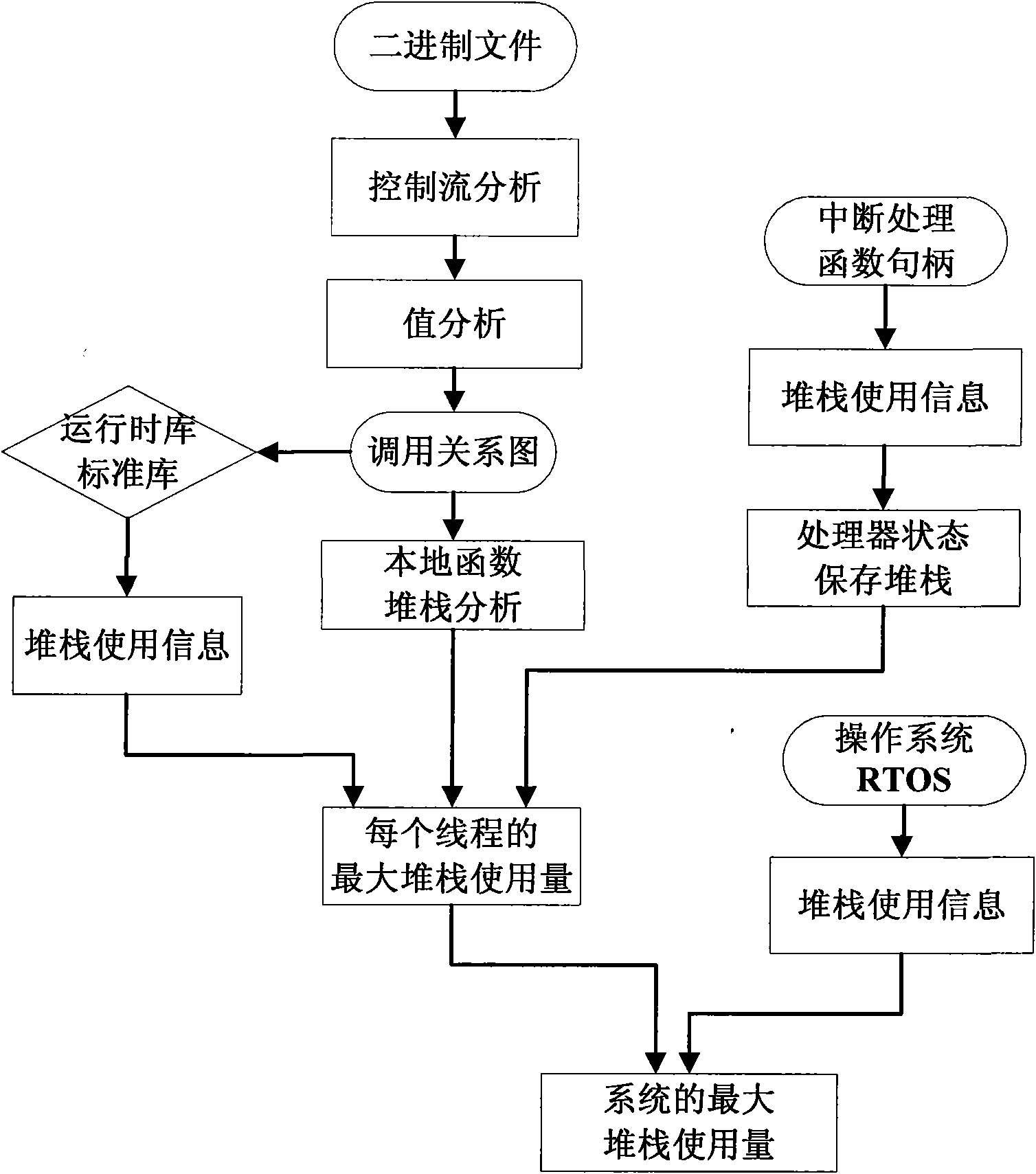

Method for accurately estimating stack demand in embedded system

Inactive | CN101876923A | Guarantee safety and reliability | The calculation result is accurate | Resource allocation | Memory systems | Operational system | Relational graph

The invention provides a method for accurately estimating stack demand in an embedded system. Using a complete function call graph, the method analyzes the stack demand of local functions, the runtime library, and the standard library for each call tree, and sums the results. It then determines the maximum stack demand of the interrupt routines at each priority level, sums these, and adds the extra stack needed to save the processor state. Summing these results gives the maximum stack demand of a single thread. The complete function call graph is obtained as follows: control flow analysis is performed to obtain a preliminary function call graph; indirect calls and recursive calls are then analyzed further using the value analysis method of an abstract interpretation algorithm; and function calls that cannot be analyzed are annotated by users. For multiple threads, the single-thread step is repeated for each thread and the results are summed; adding the stack demand of the operating system to this sum gives the total stack demand of the system.
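A simplified version of the single-thread estimate can be sketched as follows. It rests on several assumptions that the abstract does not confirm: the call graph is already complete and acyclic (recursion is not handled), each function has a known frame size, the worst case for a call tree is taken as its deepest call path, and each interrupt priority level contributes its largest handler plus a fixed `context_save` cost. All names and numbers are hypothetical.

```python
def max_stack(call_graph, frame_size, root, _memo=None):
    """Worst-case stack use of `root`: its own frame plus its deepest callee chain."""
    memo = {} if _memo is None else _memo
    if root in memo:
        return memo[root]
    deepest_callee = max(
        (max_stack(call_graph, frame_size, callee, memo)
         for callee in call_graph.get(root, [])),
        default=0,
    )
    memo[root] = frame_size[root] + deepest_callee
    return memo[root]


def thread_stack_demand(call_graph, frame_size, entry, isrs_by_priority, context_save):
    """Thread entry demand plus, per priority level, the largest ISR and a context save."""
    main_demand = max_stack(call_graph, frame_size, entry)
    interrupt_demand = sum(
        max(max_stack(call_graph, frame_size, isr) for isr in isrs) + context_save
        for isrs in isrs_by_priority.values()
    )
    return main_demand + interrupt_demand


call_graph = {
    "main": ["f", "g"],
    "f": ["h"],
    "g": [],
    "h": [],
    "isr_lo": ["h"],
    "isr_hi": [],
}
frame_size = {"main": 32, "f": 16, "g": 64, "h": 8, "isr_lo": 24, "isr_hi": 40}
isrs_by_priority = {1: ["isr_lo"], 2: ["isr_hi"]}

main_only = max_stack(call_graph, frame_size, "main")
total = thread_stack_demand(call_graph, frame_size, "main", isrs_by_priority, context_save=16)
```

In this example the deepest path from `main` goes through `g` (32 + 64 bytes), and the two interrupt levels add 48 and 56 bytes, because nested interrupts at different priorities can be live at the same time.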

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI

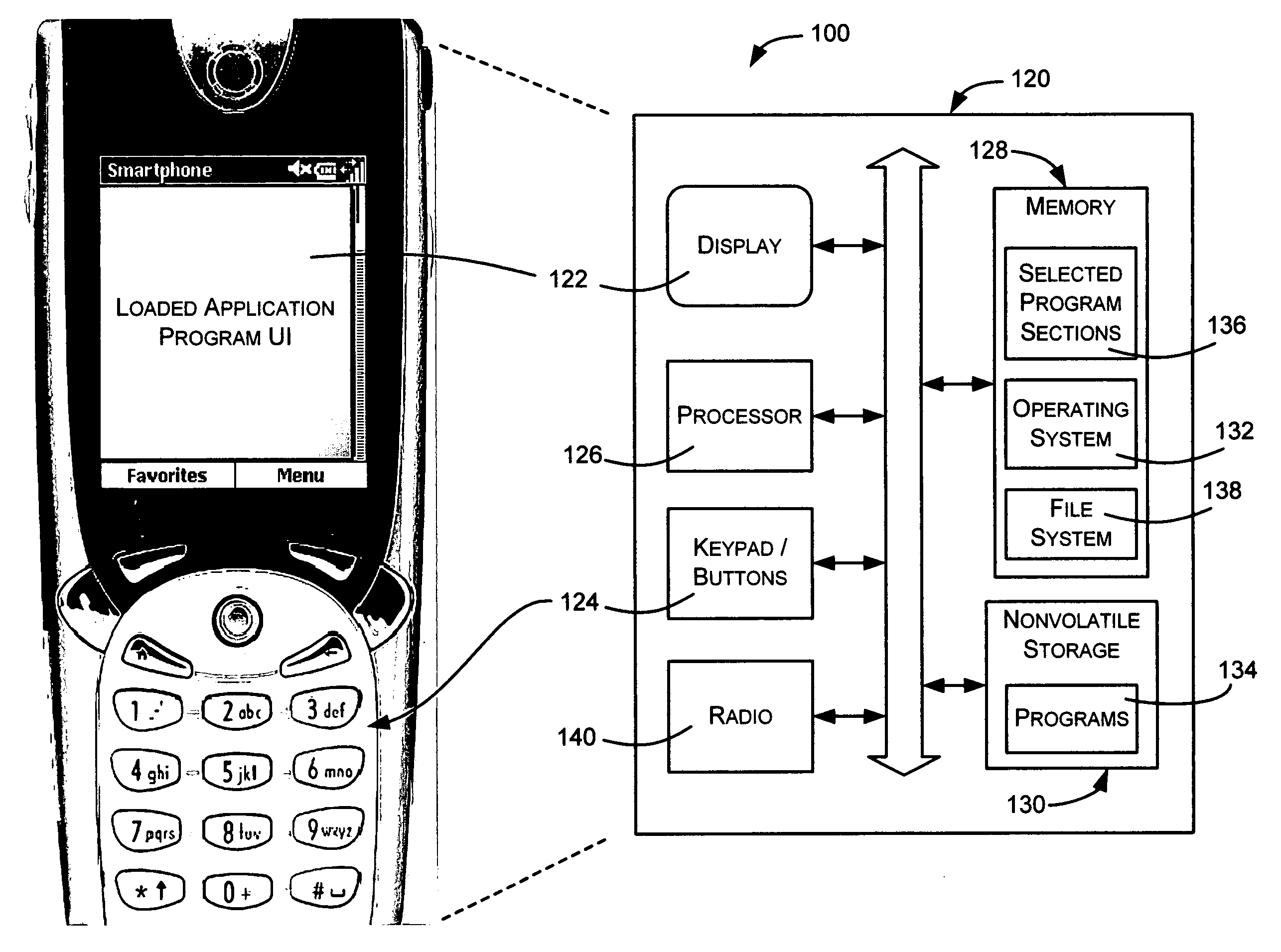

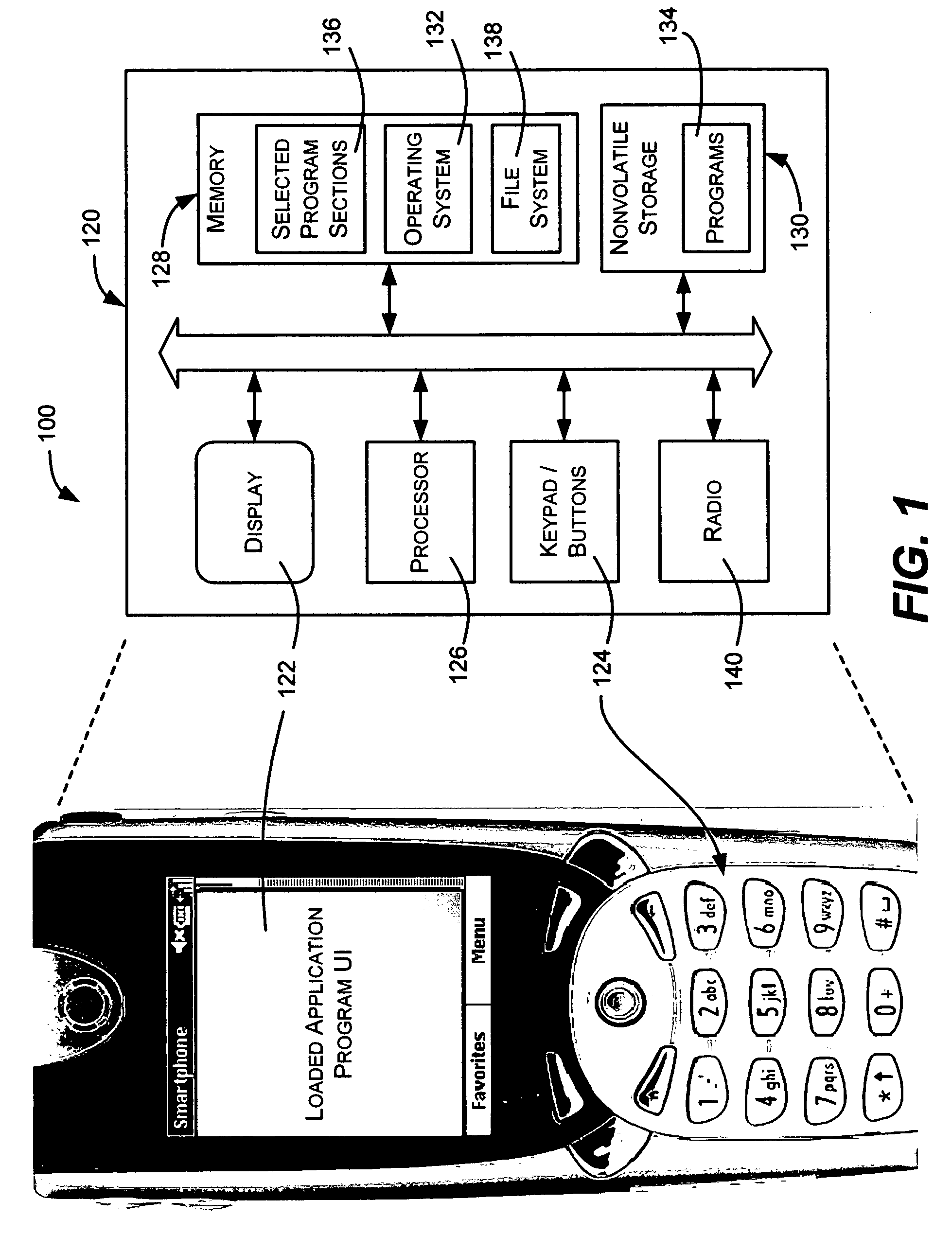

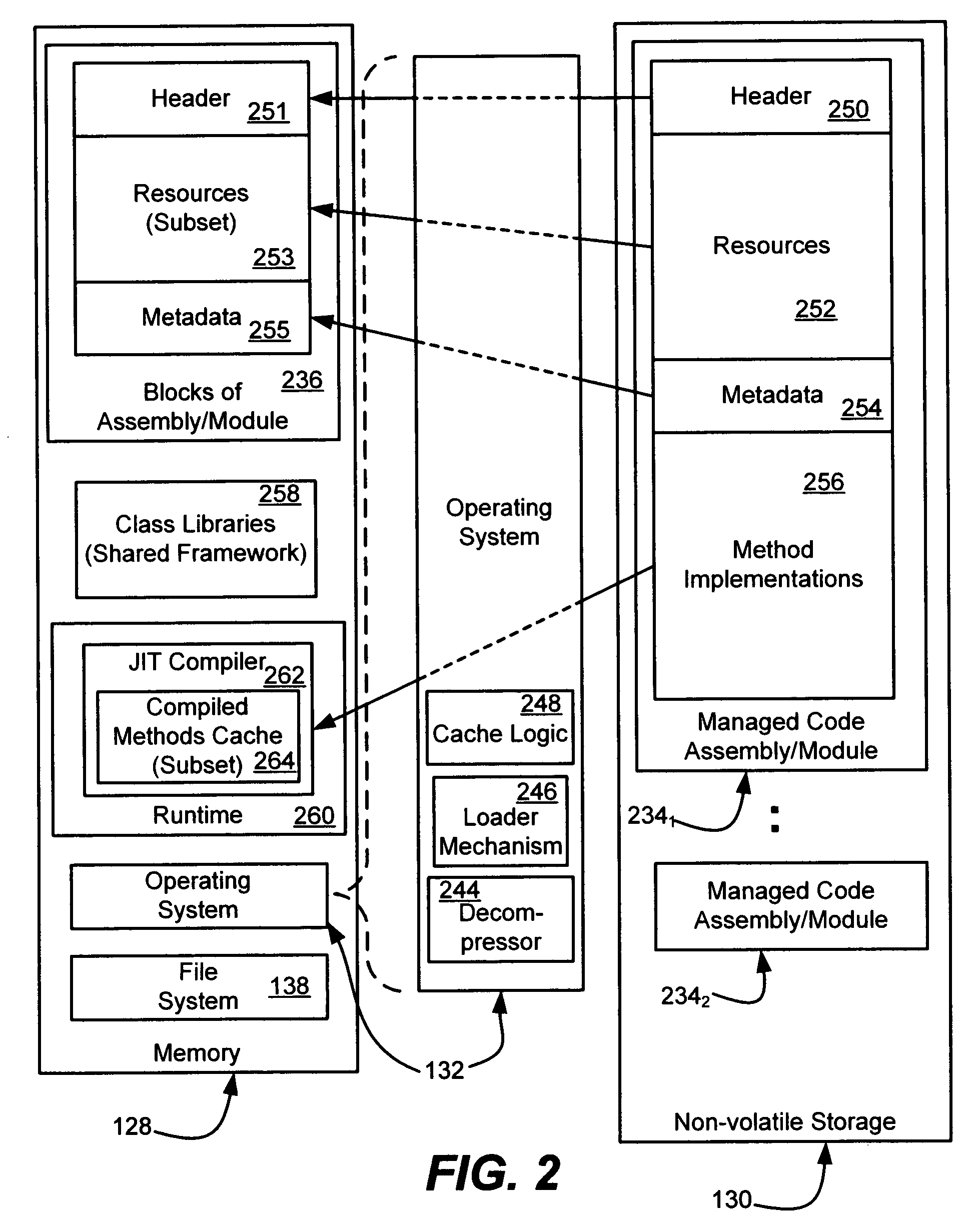

Method and system for caching managed code for efficient execution on resource-constrained devices

Inactive | US20060190932A1 | Reduce memory needed | Efficient management | Software engineering | Specific program execution arrangements | Managed code | Term memory

Owner:MICROSOFT TECH LICENSING LLC

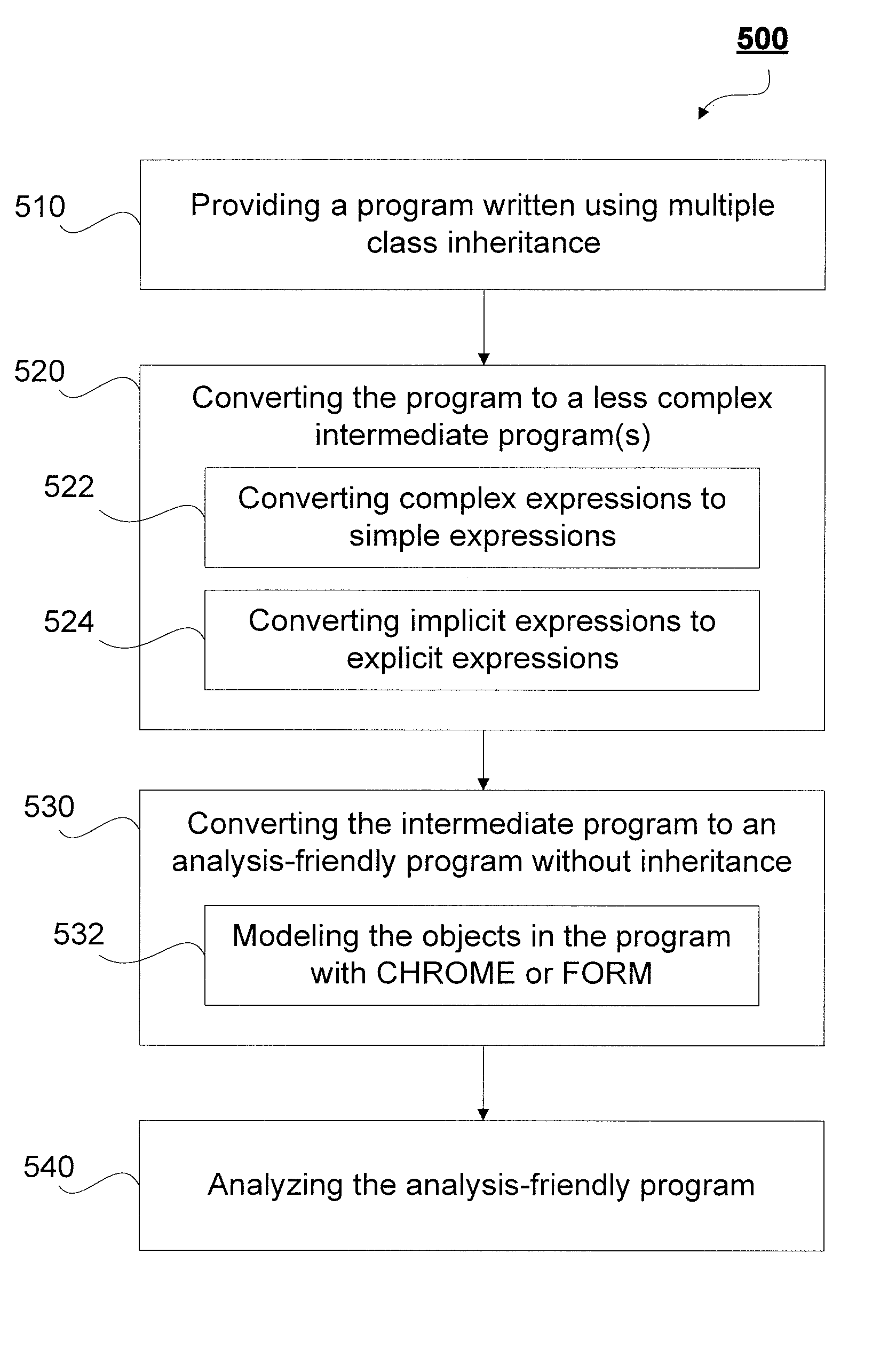

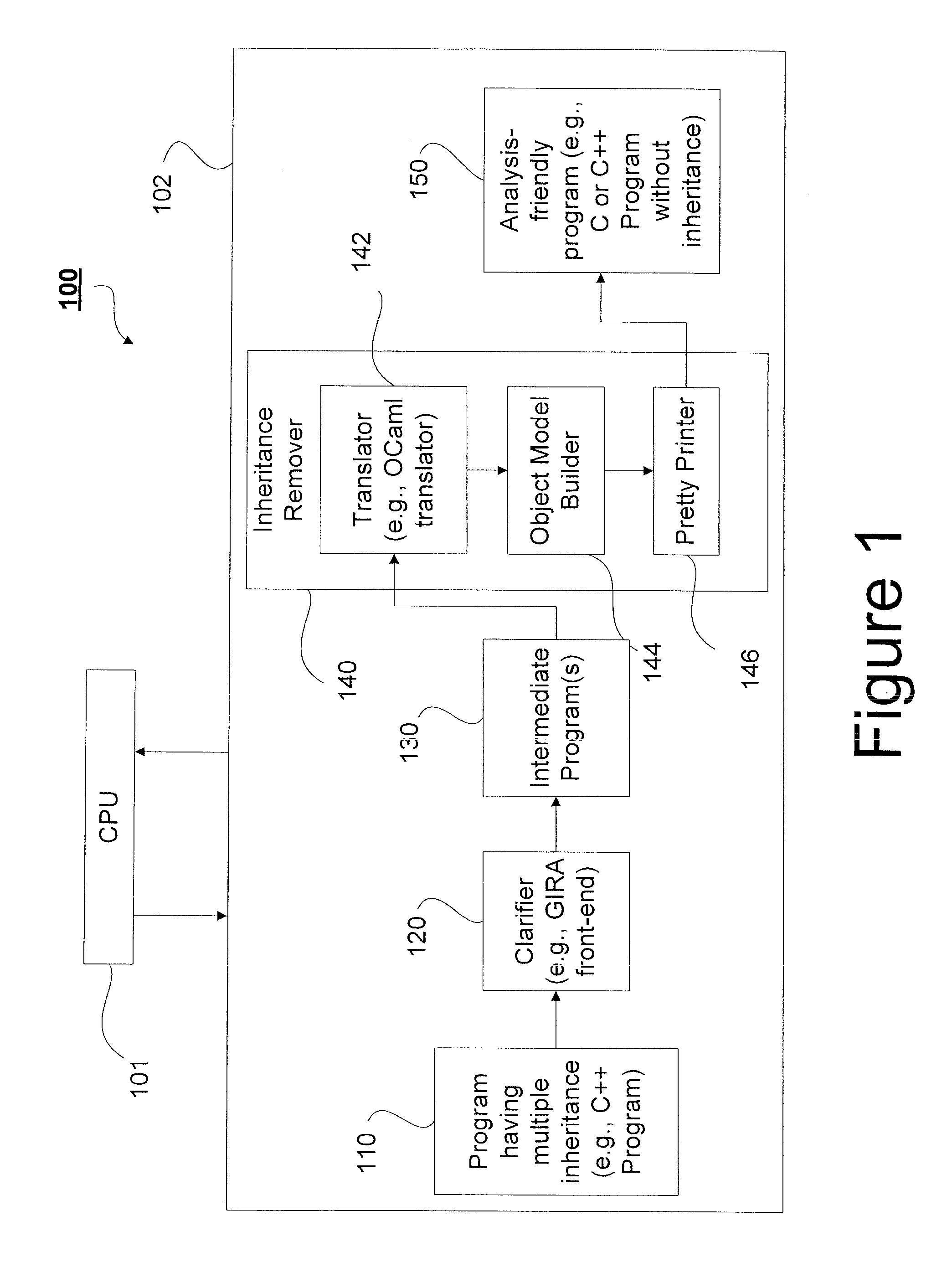

Embedding class hierarchy into object models for multiple class inheritance

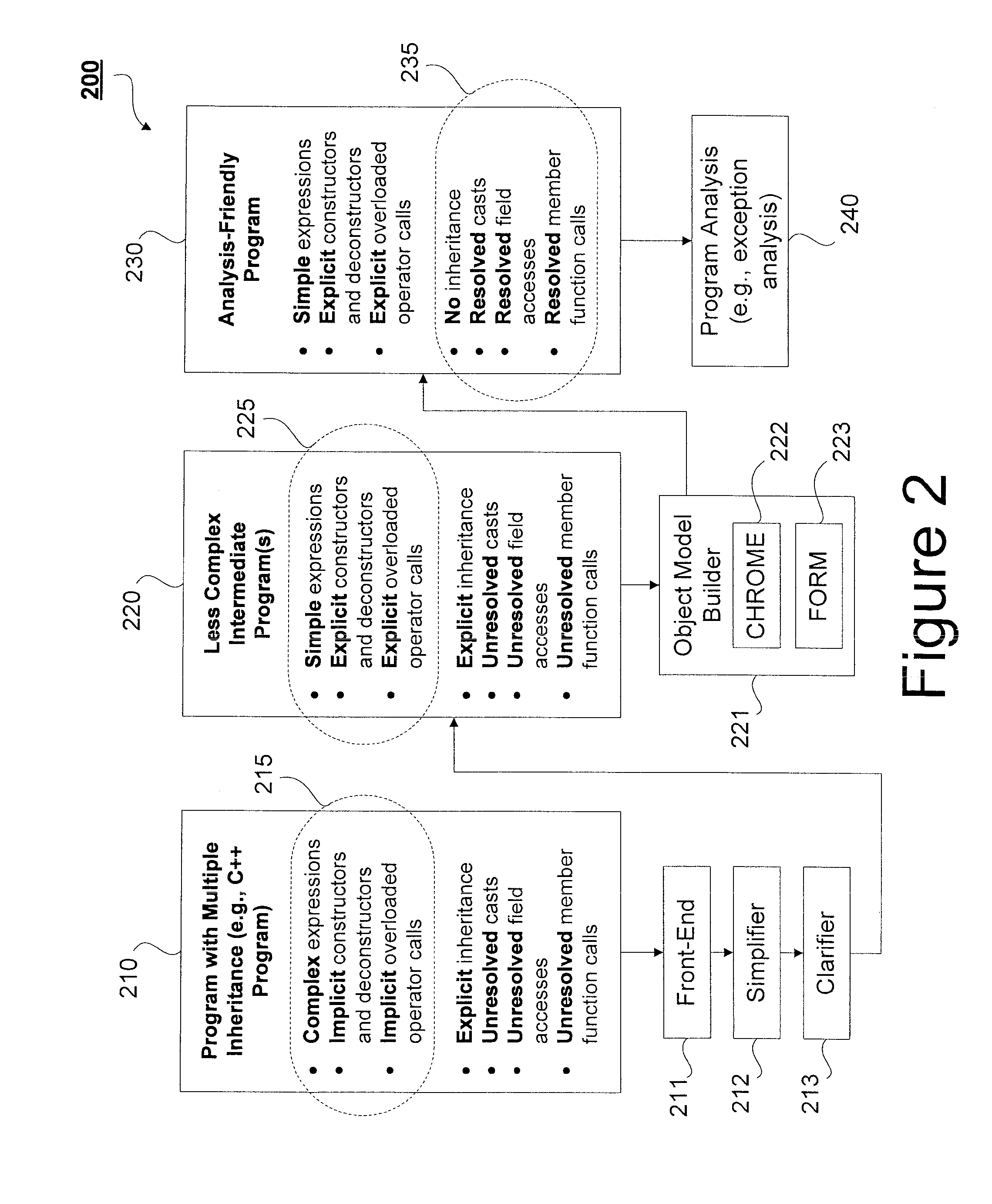

A model is provided for transforming a program with a priori given class hierarchy that is induced by inheritance. An inheritance remover is configured to remove inheritance from a given program to produce an analysis-friendly program which does not include virtual-function pointer tables and runtime libraries associated with inheritance-related operations. The analysis-friendly program preserves the semantics of the given program with respect to a given class hierarchy. A clarifier is configured to identify implicit expressions and function calls and transform the given program into at least one intermediate program having explicit expressions and function calls.

Owner:NEC CORP

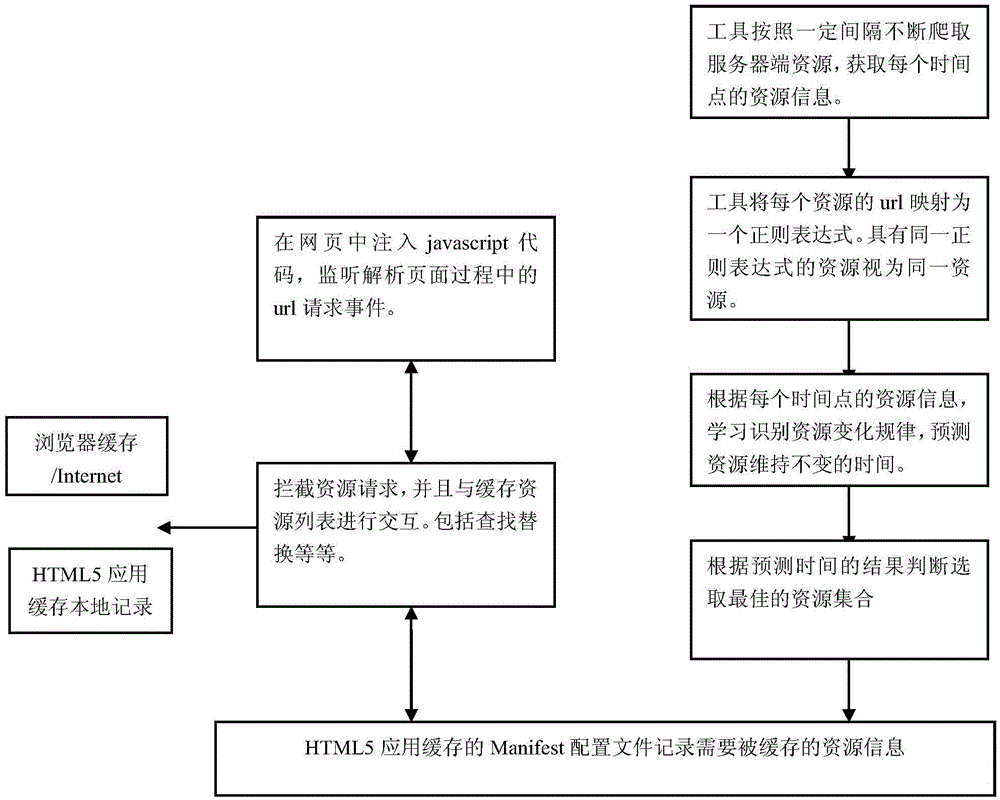

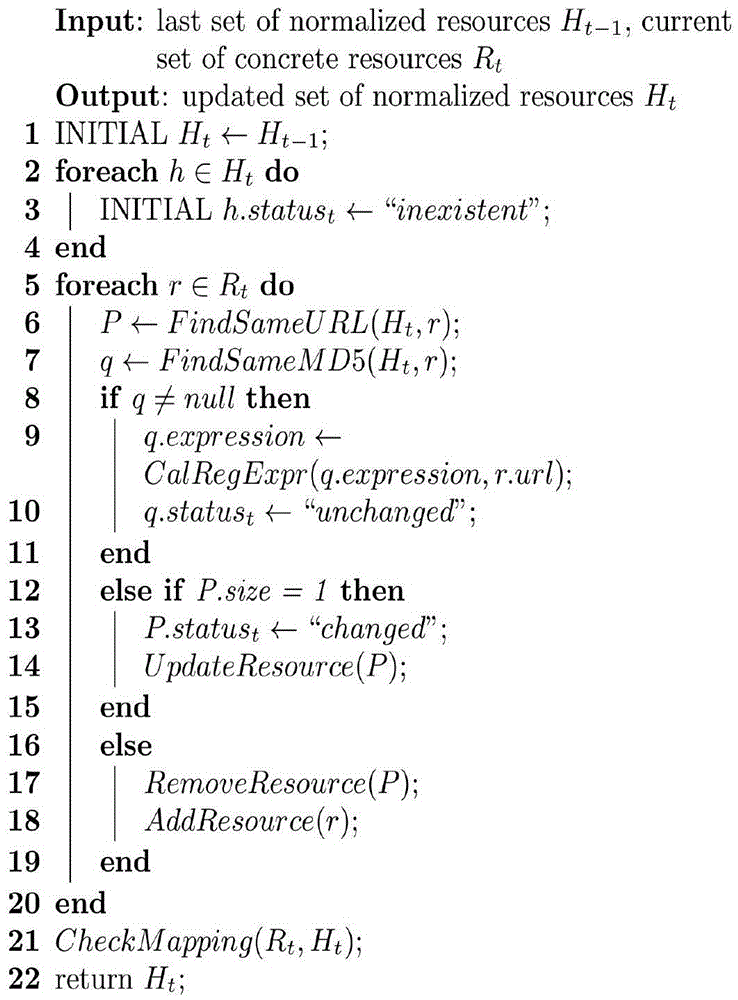

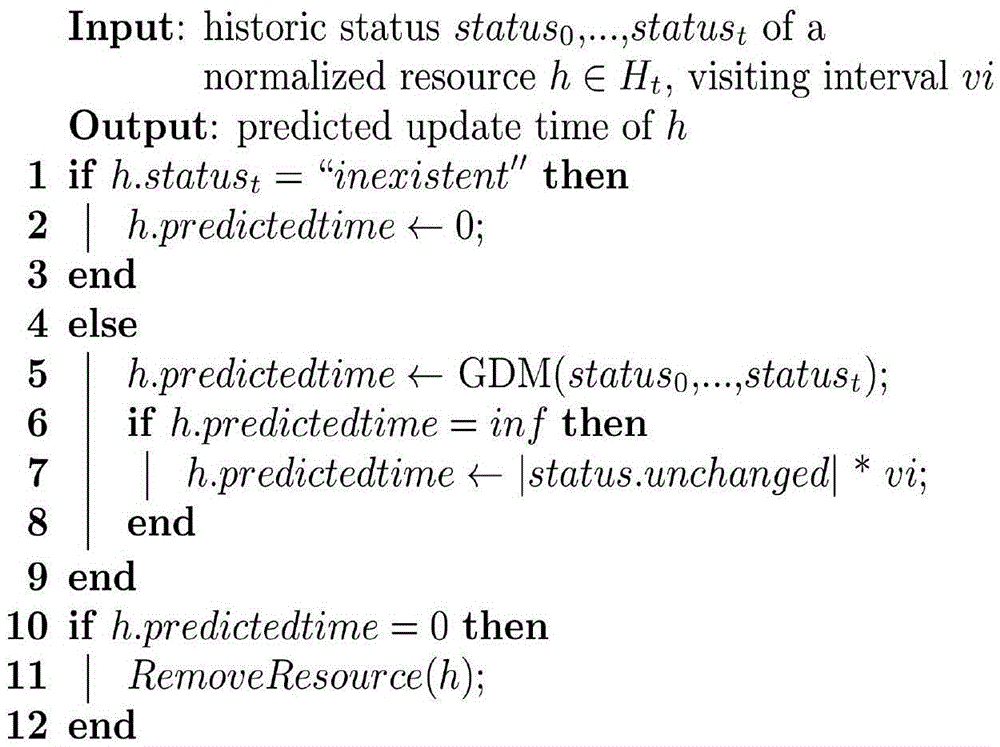

HTML5 application cache based mobile Web cache optimization method

ActiveCN105550338AEasy accessImprove cache hit ratioWeb data indexingInformation formatHTML5Cache optimization

Owner:PEKING UNIV

System method and apparatus for authorizing access

InactiveUS8027925B1Specific access rightsDigital data processing detailsSoftware licenseApplication software

The present invention comprises a method and apparatus for enforcing software licenses for resource libraries such as an application program interface (API), a toolkit, a framework, a runtime library, a dynamic link library (DLL), an applet (e.g. a Java or ActiveX applet), or any other reusable resource. The present invention allows the resource library to be selectively used only by authorized end user software programs. The present invention can be used to enforce a “per-program” licensing scheme for a resource library whereby the resource library is licensed only for use with particular software programs. In one embodiment, a license text string and a corresponding license key are embedded in a program that has been licensed to use a resource library. The license text string and the license key are supplied, for example, by a resource library vendor to a program developer who wants to use the resource library with an end user program being developed. The license text string includes information about the terms of the license under which the end user program is allowed to use the resource library. The license key is used to authenticate the license text string. The resource library in turn is provided with means for reading the license text string and the license key, and for determining, using the license key, whether the license text string is authentic and whether the license text string has been altered. Resource library functions are made available only to a program having an authentic and unaltered license text string.
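The check described in this abstract, where a license key authenticates a license text string and library functions are exposed only when that string is authentic and unaltered, can be sketched as follows. This is a minimal illustration and not the patented scheme: the abstract does not name a cryptographic primitive, so the use of HMAC-SHA256 with a vendor secret is an assumption, and `make_license_key`, `is_authorized`, `ResourceLibrary`, and the sample license text are hypothetical.

```python
import hashlib
import hmac


def make_license_key(license_text: str, vendor_secret: bytes) -> str:
    """Vendor side: derive a key that authenticates the license text (assumed HMAC)."""
    return hmac.new(vendor_secret, license_text.encode("utf-8"), hashlib.sha256).hexdigest()


def is_authorized(license_text: str, license_key: str, vendor_secret: bytes) -> bool:
    """Library side: accept only an authentic, unaltered license text."""
    expected = make_license_key(license_text, vendor_secret)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected.encode("utf-8"), license_key.encode("utf-8"))


class ResourceLibrary:
    """Hypothetical resource library that gates its functions on the license check."""

    def __init__(self, vendor_secret: bytes):
        self._secret = vendor_secret

    def double(self, license_text: str, license_key: str, value: int) -> int:
        if not is_authorized(license_text, license_key, self._secret):
            raise PermissionError("license text is missing, altered, or not authentic")
        return value * 2


secret = b"hypothetical-vendor-secret"
text = "Licensed for use with ExampleApp only"
key = make_license_key(text, secret)

library = ResourceLibrary(secret)
print(library.double(text, key, 21))  # 42
```

A shared secret embedded in the library is a simplification. A public-key signature would let the library verify a license without holding the material needed to mint new ones.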

Owner:APPLE INC

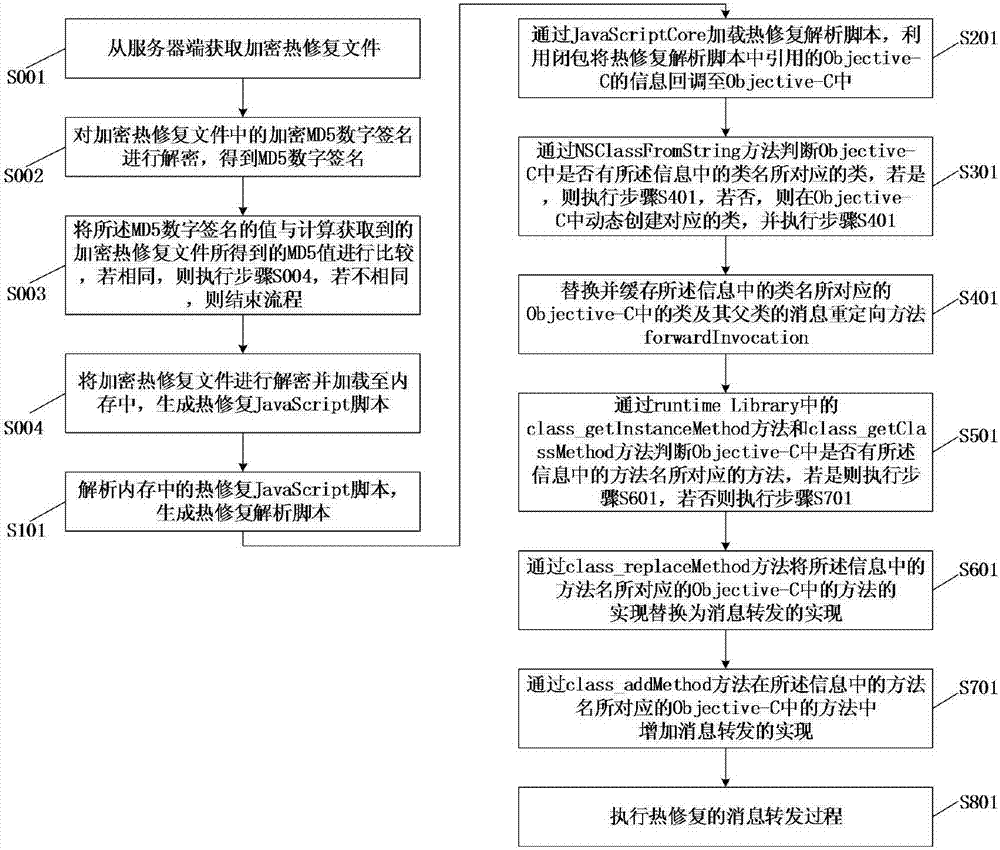

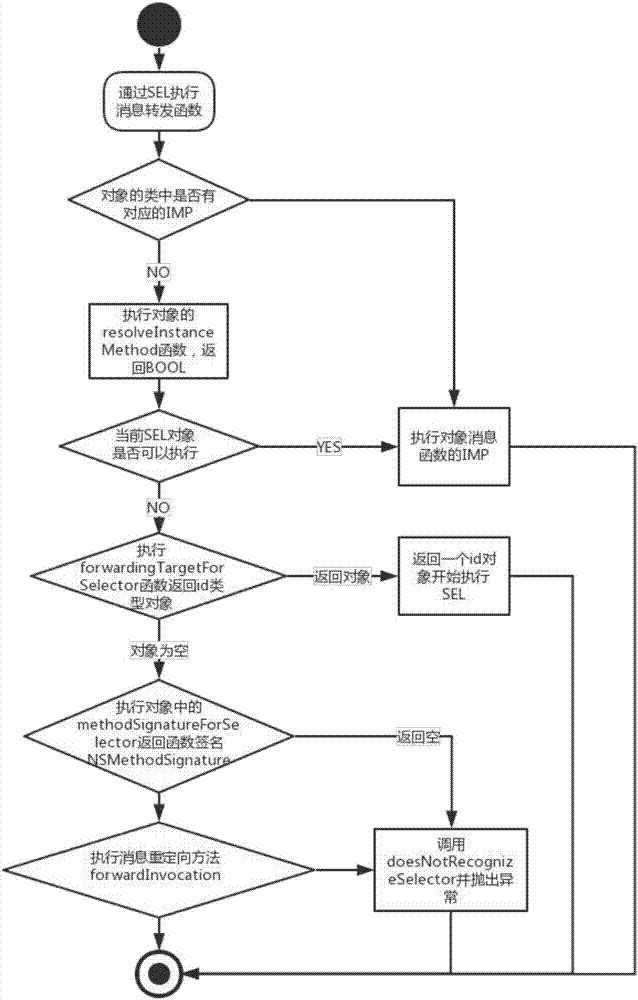

Method of solving iOS hot-fix problem and user terminal

ActiveCN107391171AAddressing the Attack SituationResolve Review Denied IssuesSoftware engineeringUser identity/authority verificationFixed-functionAlgorithm

The invention discloses a method of solving an iOS hot-fix problem and a user terminal. The method avoids the respondsToSelector and performSelector methods: it queries whether an Objective-C method exists by using the class_getInstanceMethod and class_getClassMethod functions in the runtime library, and it applies NSInvocation and forwardInvocation to redirect, forward, and invoke messages. By relieving the sensitive-function problem that exists in current open-source technology, the method enables a product with a hot-fix function to pass the strict review of Apple Inc.

Owner:GUANGDONG YOUMAI INFORMATION COMM TECH

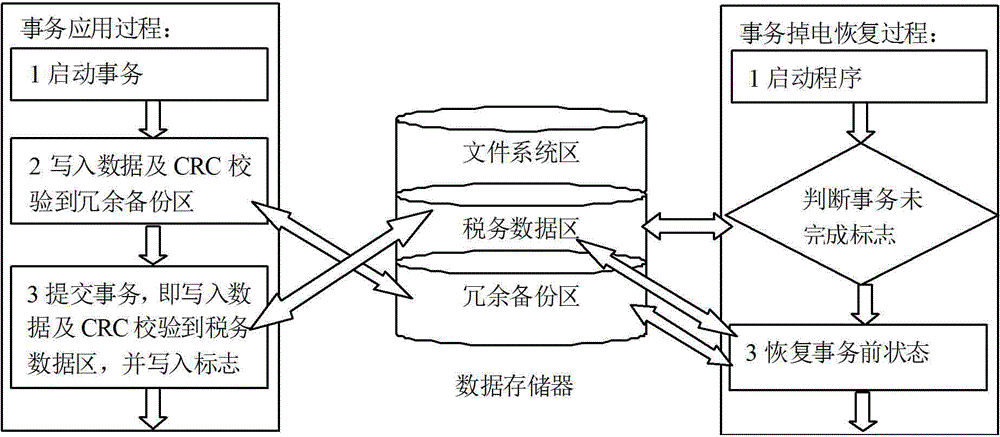

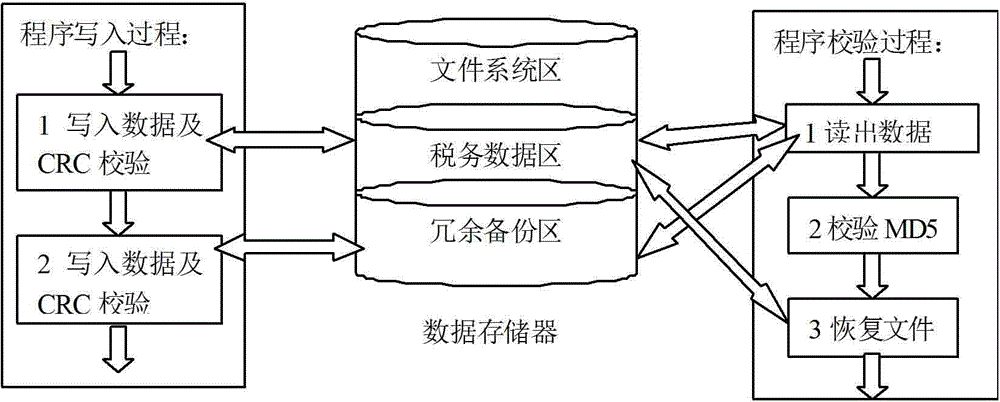

Abnormal power failure data recovery method

InactiveCN104133740AGuaranteed synchronous storageEnable secure storageRedundant operation error correctionElectricityRecovery method

The invention discloses a data recovery method for abnormal power failure. The method is implemented as follows: three regions, namely a file system region, a tax data region, and a redundant backup region, are set up in the data memory of an embedded network billing machine; a redundant backup program runtime library and an operation function library are encapsulated in the redundant backup region, and the operation function library comprises a file creating function, a reading function, and a writing function; when a tax-data-related transaction is to be stored in the tax data region, the transaction is first written into the redundant backup region, and after the transaction has been committed in the redundant backup region, the original files are written into the tax data region; and when an abnormal power failure occurs, a transaction power-failure recovery program is started, and data recovery is completed through the redundant backup region. Compared with the prior art, the method enables important data such as invoice volume data and invoice details of network billing to be stored synchronously, ensures the integrity of service execution, and better meets the safe-storage requirement of the machine.
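The write ordering in this abstract (commit to the redundant backup region first, then write the tax data region, and replay from the backup after a power failure) can be sketched in Python using ordinary files as stand-ins for the memory regions. This is a hedged illustration only: the file-based layout, the JSON record format, the atomic-rename commit marker, and the `crash_before_data_write` flag used to simulate a power failure are assumptions, not details from the patent.

```python
import json
import os


def _write_durably(path: str, payload: bytes) -> None:
    """Write a file and force it to stable storage before returning."""
    with open(path, "wb") as f:
        f.write(payload)
        f.flush()
        os.fsync(f.fileno())


def store_transaction(record: dict, backup_path: str, data_path: str,
                      crash_before_data_write: bool = False) -> None:
    payload = json.dumps(record).encode("utf-8")
    # Step 1: commit the transaction in the redundant backup region.
    tmp = backup_path + ".tmp"
    _write_durably(tmp, payload)
    os.replace(tmp, backup_path)  # atomic rename marks the backup as committed
    if crash_before_data_write:
        return  # simulated abnormal power failure
    # Step 2: only now write the tax data region, then retire the backup copy.
    _write_durably(data_path, payload)
    os.remove(backup_path)


def recover(backup_path: str, data_path: str) -> bool:
    """Run at power-up: replay a committed but unfinished transaction."""
    if not os.path.exists(backup_path):
        return False  # nothing was in flight
    with open(backup_path, "rb") as f:
        payload = f.read()
    _write_durably(data_path, payload)
    os.remove(backup_path)
    return True
```

Calling `store_transaction(..., crash_before_data_write=True)` followed by `recover(...)` leaves the data file holding the interrupted record, which mirrors the recovery path the abstract describes. The sketch overwrites the whole data file for brevity, whereas a real device would append or update records in place.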

Owner:INSPUR QILU SOFTWARE IND


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com