Large convolution kernel hardware implementation method, computer equipment and storage medium

A technology for large convolution kernel hardware implementation and storage media, applied in the field of convolutional neural networks. It addresses problems such as increased NPU waiting time and reduced actual NPU processing performance, and achieves improved processing performance and reduced complexity.


Examples

Example 1

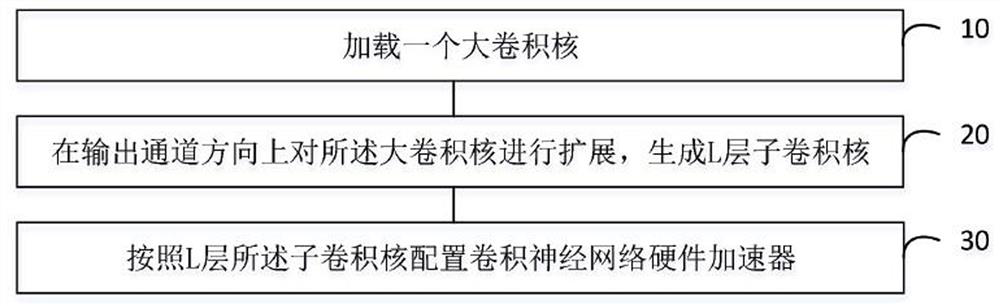

[0055] Example 1: the large convolution kernel hardware implementation method disclosed in this embodiment is applied to the implementation of a 5×5 convolution kernel.

[0056] A (out_ch, in_ch, 5, 5) large convolution kernel is expanded in the direction of the output channel to generate two layers of 3×3 sub-convolution kernels, and the convolutional neural network hardware accelerator is configured according to the generated two layers of 3×3 sub-convolution kernels.

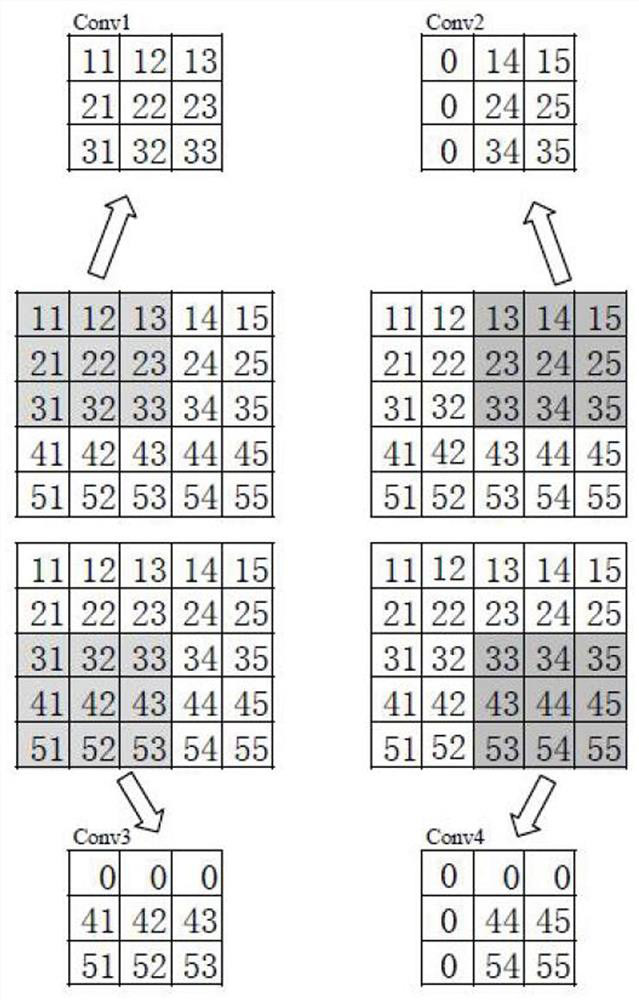

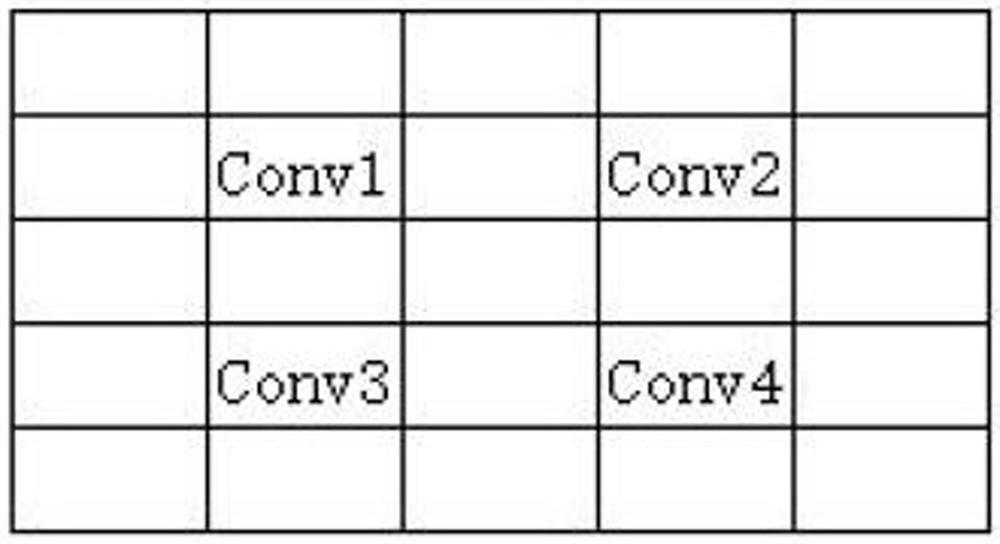

[0057] Referring to Figure 2, the first layer of sub-convolution kernels is generated. First, in the direction of the output channel, the 5×5 convolution kernel is expanded and four 3×3 sub-convolution kernels Conv1, Conv2, Conv3, and Conv4 are selected with a step size of 2, producing the first layer of sub-convolution kernels (out_ch×4, in_ch, 3, 3). Any position where a newly generated 3×3 sub-convolution kernel overlaps an already generated 3×3 sub-convolution kernel is filled with 0; that is, the...
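The first-layer split described in [0057] can be sketched for a single (output channel, input channel) slice as follows. This is a minimal illustration in plain Python, not the patent's implementation: the names `split_kernel` and `reassemble` are hypothetical, the tile ordering (row by row) is an assumption, and the second layer is not modelled because its description is cut off in this excerpt.

```python
def split_kernel(kernel, sub=3, stride=2):
    """Split a square 2-D kernel into sub x sub tiles taken at the given stride.

    Positions already covered by an earlier tile are zero-filled in later
    tiles, so every original weight appears in exactly one tile.
    Returns a list of (row_offset, col_offset, tile).
    """
    k = len(kernel)
    offsets = range(0, k - sub + 1, stride)  # 0, 2 for k=5
    claimed = [[False] * k for _ in range(k)]
    tiles = []
    for r0 in offsets:
        for c0 in offsets:
            tile = [[0] * sub for _ in range(sub)]
            for i in range(sub):
                for j in range(sub):
                    r, c = r0 + i, c0 + j
                    if not claimed[r][c]:  # overlap positions stay 0
                        tile[i][j] = kernel[r][c]
                        claimed[r][c] = True
            tiles.append((r0, c0, tile))
    return tiles


def reassemble(tiles, k):
    """Add every tile back at its offset to recover the k x k kernel."""
    out = [[0] * k for _ in range(k)]
    for r0, c0, tile in tiles:
        for i, row in enumerate(tile):
            for j, w in enumerate(row):
                out[r0 + i][c0 + j] += w
    return out


# Illustrative 5x5 kernel with weights 1..25 (made-up values).
kernel5 = [[5 * r + c + 1 for c in range(5)] for r in range(5)]
tiles5 = split_kernel(kernel5)  # four tiles, standing in for Conv1..Conv4
```

Because overlaps are zero-filled, adding the four tiles back at their offsets reproduces the original 5×5 weights exactly, which is the property the zero-filling rule is meant to guarantee.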

Example 2

[0066] Example 2: the large convolution kernel hardware implementation method disclosed in this embodiment is applied to the implementation of a 7×7 convolution kernel.

[0067] A (out_ch, in_ch, 7, 7) large convolution kernel is expanded in the direction of the output channel to generate three layers of 3×3 sub-convolution kernels, and the convolutional neural network hardware accelerator is configured according to the generated three layers of 3×3 sub-convolution kernels. For the filling scheme of each sub-convolution kernel, see Figure 5.

[0068] The first layer of sub-convolution kernels is generated. First, in the direction of the output channel, the 7×7 convolution kernel is expanded and nine 3×3 sub-convolution kernels Conv1, Conv2, ..., Conv9 are selected with a step size of 2, producing the first layer of sub-convolution kernels (out_ch×9, in_ch, 3, 3). Any position where a newly generated 3×3 sub-convolution kernel overlaps an already generated 3×3 sub-con...
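To illustrate why a stride-2 split with zero-filled overlaps preserves the result, the sketch below splits a 7×7 kernel into nine 3×3 tiles and checks that summing the shifted 3×3 outputs matches the direct 7×7 convolution. The shift-and-add recombination is a stand-in chosen for this check, since the patent's second and third layers are not shown in this excerpt; all function names and the test data are hypothetical.

```python
def conv2d_valid(image, kernel):
    """Plain 'valid' cross-correlation of a 2-D image with a 2-D kernel."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[y + i][x + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for x in range(ow)] for y in range(oh)]


def split_kernel(kernel, sub=3, stride=2):
    """Stride-based split; overlaps with earlier tiles are zero-filled."""
    k = len(kernel)
    offsets = range(0, k - sub + 1, stride)  # 0, 2, 4 for k=7
    claimed = [[False] * k for _ in range(k)]
    tiles = []
    for r0 in offsets:
        for c0 in offsets:
            tile = [[0] * sub for _ in range(sub)]
            for i in range(sub):
                for j in range(sub):
                    if not claimed[r0 + i][c0 + j]:
                        tile[i][j] = kernel[r0 + i][c0 + j]
                        claimed[r0 + i][c0 + j] = True
            tiles.append((r0, c0, tile))
    return tiles


def conv_via_tiles(image, tiles, k):
    """Sum the 3x3 outputs, each read at its tile's (row, col) offset.

    Assumption: this shift-and-add step replaces the patent's later
    layers, which are truncated in the source text.
    """
    oh, ow = len(image) - k + 1, len(image[0]) - k + 1
    out = [[0] * ow for _ in range(oh)]
    for r0, c0, tile in tiles:
        part = conv2d_valid(image, tile)
        for y in range(oh):
            for x in range(ow):
                out[y][x] += part[y + r0][x + c0]
    return out


# Made-up integer data so the comparison is exact.
image = [[(3 * y + 7 * x) % 11 for x in range(9)] for y in range(9)]
kernel7 = [[(7 * r + c) % 5 - 2 for c in range(7)] for r in range(7)]

tiles7 = split_kernel(kernel7)  # nine tiles, standing in for Conv1..Conv9
direct = conv2d_valid(image, kernel7)
tiled = conv_via_tiles(image, tiles7, 7)
```

In this sketch `direct` and `tiled` are equal because each 7×7 weight lands in exactly one tile, so no product is counted twice.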
