LSTM model optimization method, accelerator, device and medium

An LSTM model optimization method, applied in the fields of neural learning methods, biological neural network models, and neural architectures. It addresses problems such as the difficulty of deploying models, the high power consumption of computing platforms, and the inability of some computing platforms to host LSTMs. Its effects include enhanced overall performance and a wider range of applicability, improved computing efficiency and speed, and easier hardware deployment.



Examples

Example 1

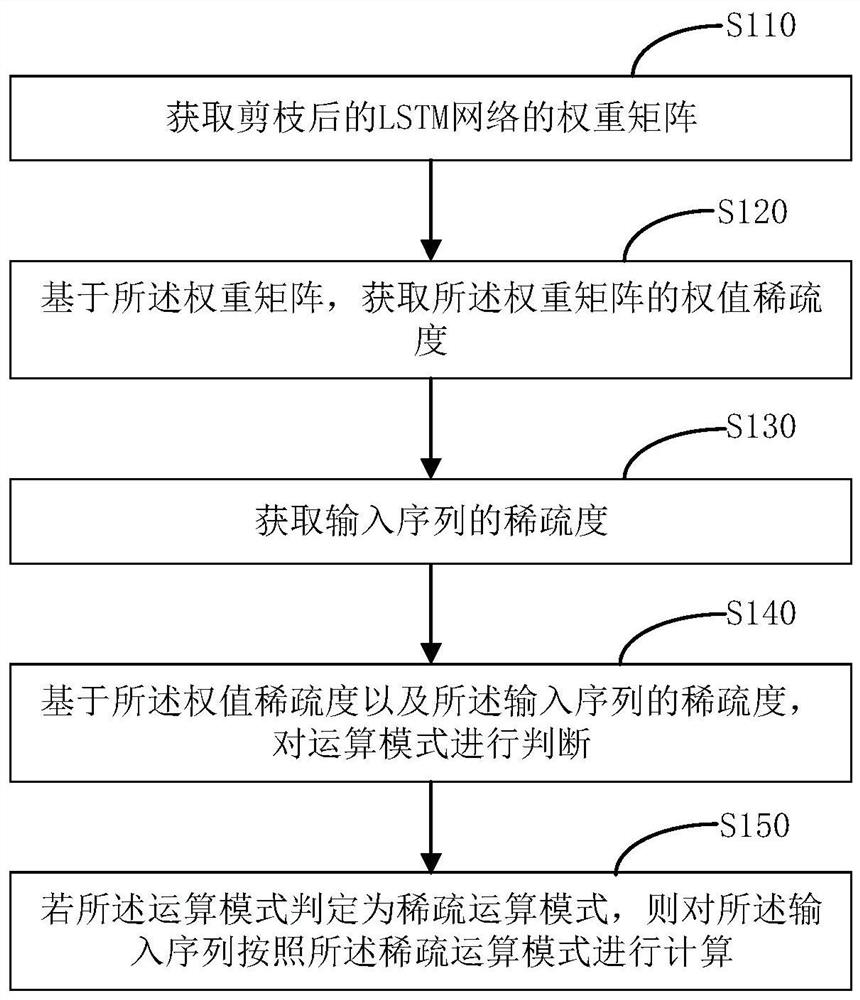

[0051] Referring to Figure 1, which shows the first embodiment of the LSTM model optimization method of the present application, the method includes:

[0052] Step S110: Obtain the weight matrix of the pruned LSTM network.

[0053] Specifically, a recurrent neural network (RNN) based on long short-term memory (LSTM) is a neural network model for processing sequence data. It effectively alleviates the vanishing and exploding gradient problems and is widely used in intelligent cognition tasks such as speech recognition, behavior recognition, and natural language processing.
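To show where the LSTM weight matrices enter the computation, a single time step of a standard LSTM cell can be sketched as follows. This is a minimal pure-Python illustration, not the application's implementation; the stacked gate layout (input, forget, cell, output) and the names `lstm_cell`, `W`, `U`, and `b` are assumptions. The two matrix-vector products per step dominate the cost, which is why pruning and sparsity matter for this model.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(w, v):
    # Dense matrix-vector product; w is a list of rows.
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]

def lstm_cell(x, h, c, W, U, b):
    """One LSTM time step (illustrative sketch).

    W: 4H x D input weights, U: 4H x H recurrent weights, b: 4H biases.
    Gate rows are assumed to be stacked as input, forget, cell, output.
    """
    H = len(h)
    # Pre-activations for all four gates at once.
    z = [wx + uh + bi for wx, uh, bi in zip(matvec(W, x), matvec(U, h), b)]
    i = [sigmoid(v) for v in z[0:H]]            # input gate
    f = [sigmoid(v) for v in z[H:2 * H]]        # forget gate
    g = [math.tanh(v) for v in z[2 * H:3 * H]]  # candidate cell state
    o = [sigmoid(v) for v in z[3 * H:4 * H]]    # output gate
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new
```

Running `lstm_cell` over each element of an input sequence, carrying `h` and `c` forward from step to step, yields the network's outputs.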

[0054] Specifically, the pruning operation compresses the LSTM network to reduce its storage and computing costs. Methods for compressing LSTM networks include, but are not limited to, parameter pruning and sharing, low-rank factorization, transferred/compact convolutional filters, and knowledge distillation.
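Step S110 takes the weight matrix of an already-pruned network as input, and this excerpt does not state which pruning criterion is used. The sketch below assumes plain unstructured magnitude pruning (zeroing the smallest-magnitude weights), one common form of parameter pruning; `magnitude_prune` and its `ratio` parameter are hypothetical names.

```python
def magnitude_prune(w, ratio):
    """Zero out roughly the `ratio` fraction of entries with the smallest |value|.

    w is a list of rows; a new pruned matrix is returned and w is not modified.
    """
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be in [0, 1]")
    flat = sorted(abs(v) for row in w for v in row)
    k = int(len(flat) * ratio)  # number of entries to remove
    if k == 0:
        return [row[:] for row in w]
    threshold = flat[k - 1]
    # Entries at or below the threshold are zeroed; ties may remove slightly more than k.
    return [[0.0 if abs(v) <= threshold else v for v in row] for row in w]
```

Unstructured pruning of this kind leaves zeros scattered through the matrix, which is the weight sparsity measured later in Step S220.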

[0055] Specifically, the weight matrix is ...

Example 2

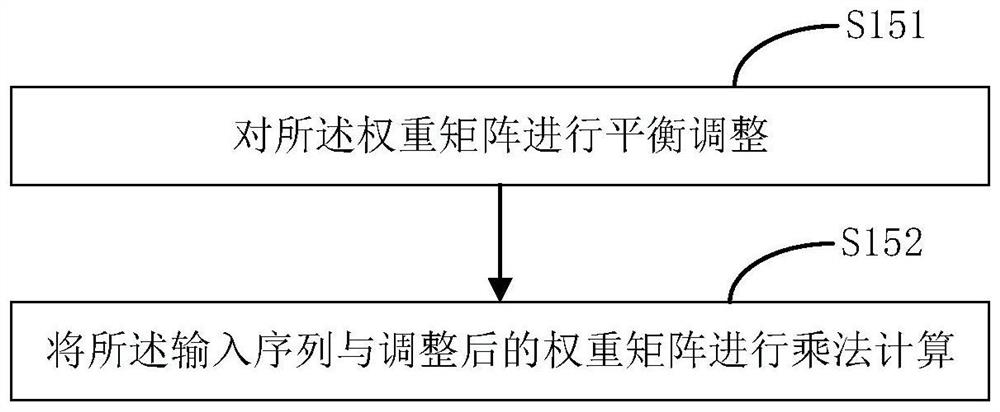

[0090] Referring to Figure 6, which shows the second embodiment of the LSTM model optimization method of the present application, the method includes:

[0091] Step S210: Obtain the weight matrix of the pruned LSTM network.

[0092] Step S220: Obtain the weight sparsity of the weight matrix.

[0093] Step S230: Obtain the sparsity of the input sequence.
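Steps S220 and S230 do not define sparsity in this excerpt. A common definition, assumed here, is the fraction of elements that are zero (or within a tolerance `eps` of zero). The helper names `zero_fraction`, `weight_sparsity`, and `sequence_sparsity` are hypothetical.

```python
from itertools import chain

def zero_fraction(values, eps=0.0):
    """Fraction of values whose magnitude is <= eps (exact zeros by default)."""
    values = list(values)
    if not values:
        return 0.0
    return sum(1 for v in values if abs(v) <= eps) / len(values)

def weight_sparsity(w, eps=0.0):
    # w: weight matrix as a list of rows (Step S220).
    return zero_fraction(chain.from_iterable(w), eps)

def sequence_sparsity(xs, eps=0.0):
    # xs: input sequence as a list of per-time-step vectors (Step S230).
    return zero_fraction(chain.from_iterable(xs), eps)
```

Both measures share one helper so that the weight and input thresholds compared in the next steps are computed on the same scale.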

[0094] Step S240: If the weight sparsity is greater than or equal to the weight sparsity threshold and/or the input sequence sparsity is greater than or equal to the input sequence sparsity threshold, determine that the sparse operation mode is to be used.

[0095] Step S250: Process the input sequence in the sparse operation mode.
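This excerpt does not describe how the sparse operation mode computes. One common approach, assumed here purely for illustration, is to store the pruned weights in compressed sparse row (CSR) form and multiply only the stored non-zeros, also skipping zero input elements. This targets the matrix-vector products that dominate an LSTM step; `to_csr` and `csr_matvec` are hypothetical names.

```python
def to_csr(w):
    """Convert a dense matrix (list of rows) to CSR arrays (values, col_idx, row_ptr)."""
    values, col_idx, row_ptr = [], [], [0]
    for row in w:
        for j, v in enumerate(row):
            if v != 0.0:
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr

def csr_matvec(csr, x):
    """Compute y = W x using only the stored non-zero weights."""
    values, col_idx, row_ptr = csr
    y = []
    for r in range(len(row_ptr) - 1):
        acc = 0.0
        for k in range(row_ptr[r], row_ptr[r + 1]):
            xj = x[col_idx[k]]
            if xj != 0.0:  # exploit input sparsity as well as weight sparsity
                acc += values[k] * xj
        y.append(acc)
    return y
```

The work per product scales with the number of non-zero weights rather than the full matrix size, which only pays off when sparsity is high; this is the trade-off the threshold comparison is meant to capture.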

[0096] Step S260: If the weight sparsity is less than the weight sparsity threshold and/or the input sequence sparsity is less than the input sequence sparsity threshold, determine that the dense (intensive) computing mode is to be used.
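Read literally, the "and/or" in Steps S240 and S260 lets the two conditions overlap (for example, high weight sparsity with low input sparsity satisfies both), so an implementation has to choose one resolution. The sketch below assumes sparse mode is selected when either sparsity reaches its threshold, making the intensive (dense) mode the exact complement. The function name, the mode labels, and the threshold values used in the example are hypothetical; no threshold values are given in this excerpt.

```python
def select_mode(w_sparsity, x_sparsity, w_threshold, x_threshold):
    """Return "sparse" or "dense" for one layer/input pair.

    Assumption: "and/or" is resolved as OR for sparse mode, so dense mode
    is chosen only when both sparsities fall below their thresholds.
    """
    if w_sparsity >= w_threshold or x_sparsity >= x_threshold:
        return "sparse"
    return "dense"

# Example with hypothetical thresholds of 0.5 for both measures.
print(select_mode(0.8, 0.1, 0.5, 0.5))
print(select_mode(0.2, 0.1, 0.5, 0.5))
```

Resolving the overlap with AND instead would favor the dense mode; which choice is faster depends on the target hardware.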

[0097] Step S270: Calculate the input sequence ...
