Patents

Literature

33 results about "Sparse matrix-vector multiplication" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

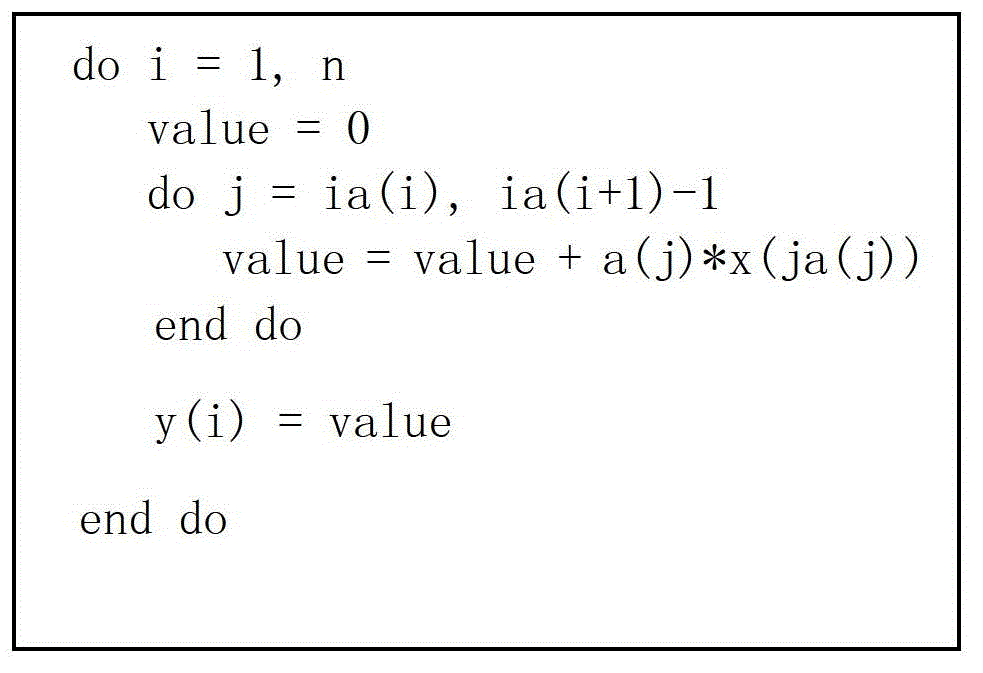

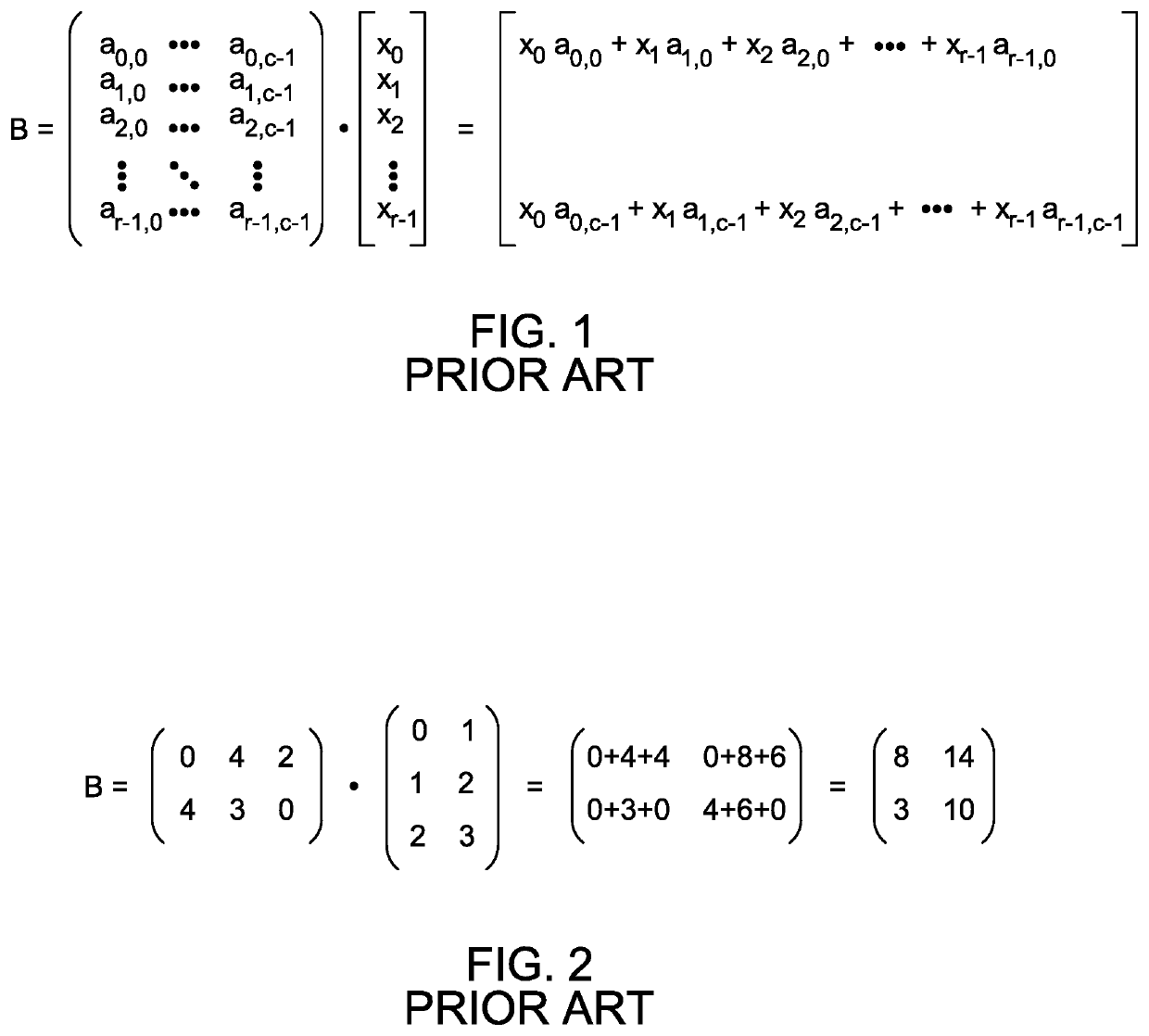

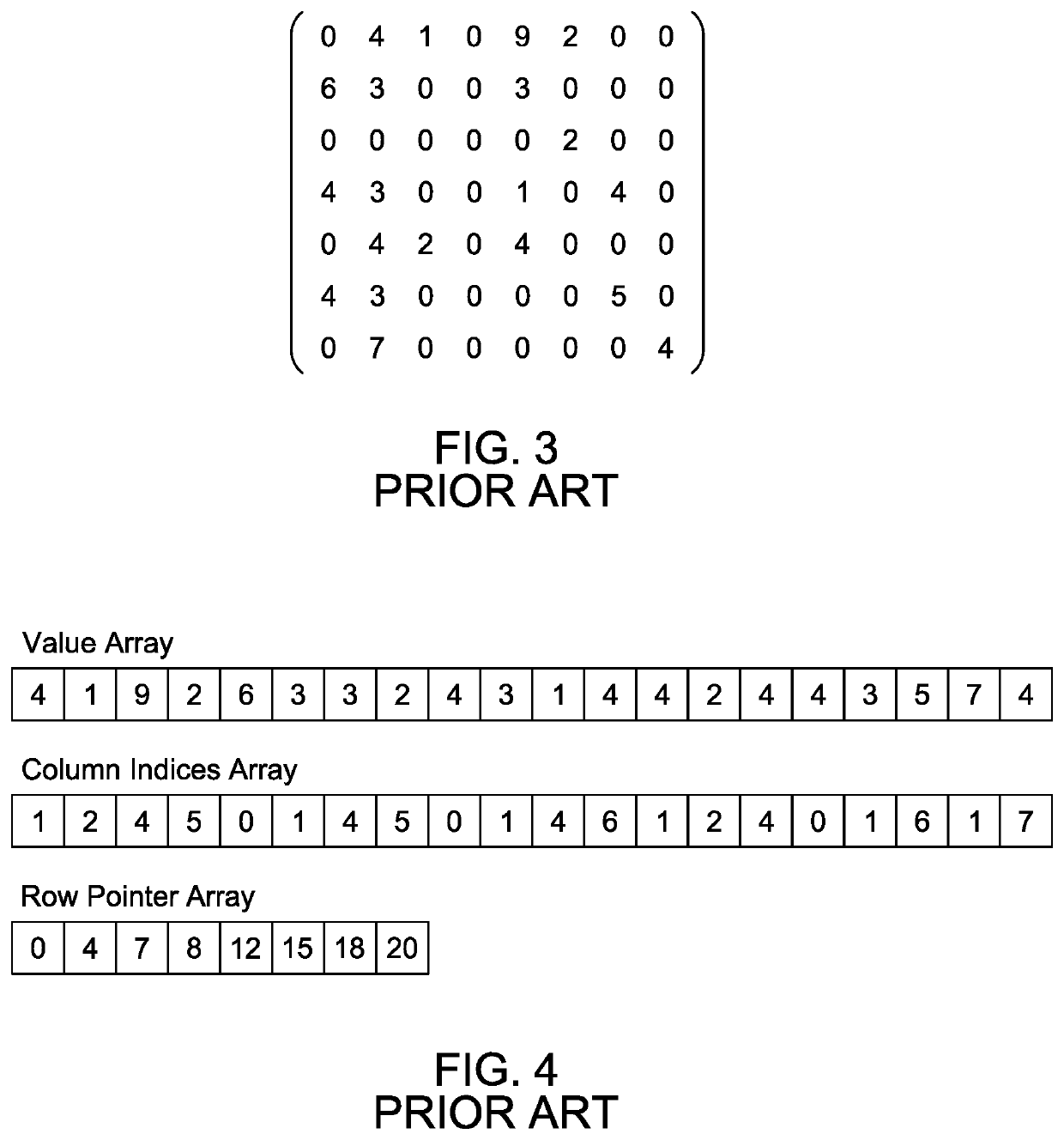

Sparse matrix-vector multiplication (SpMV) of the form is a widely used computational kernel existing in many scientific applications. The input matrix is sparse. The input vector and the output vector are dense. In case of repeated operation involving the same input matrix but possibly changing numerical values of its elements, can be preprocessed to reduce both the parallel and sequential run time of the SpMV kernel.

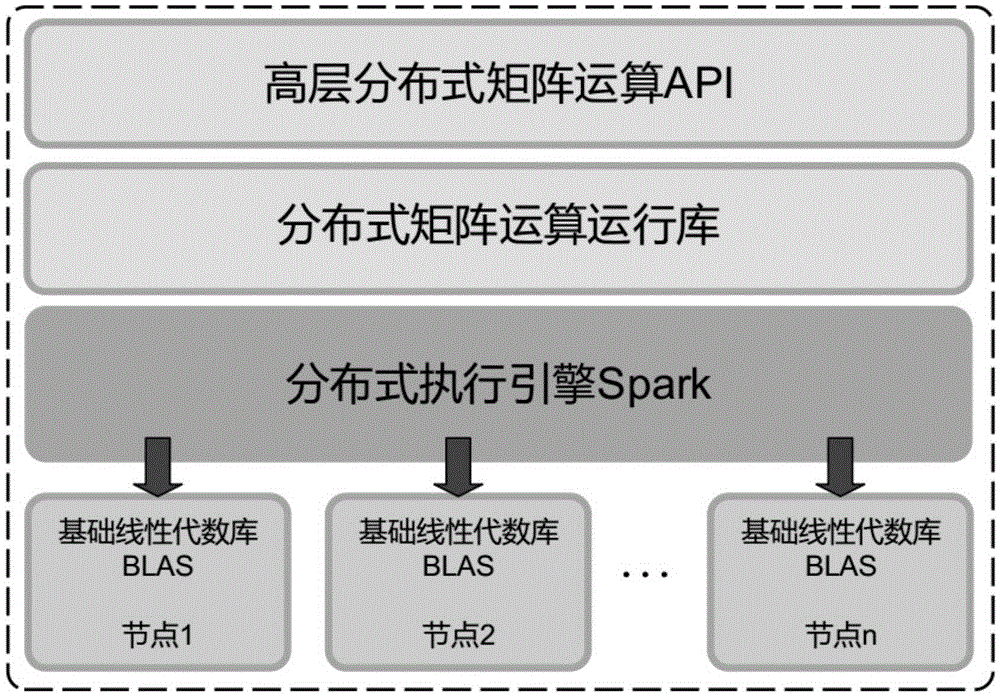

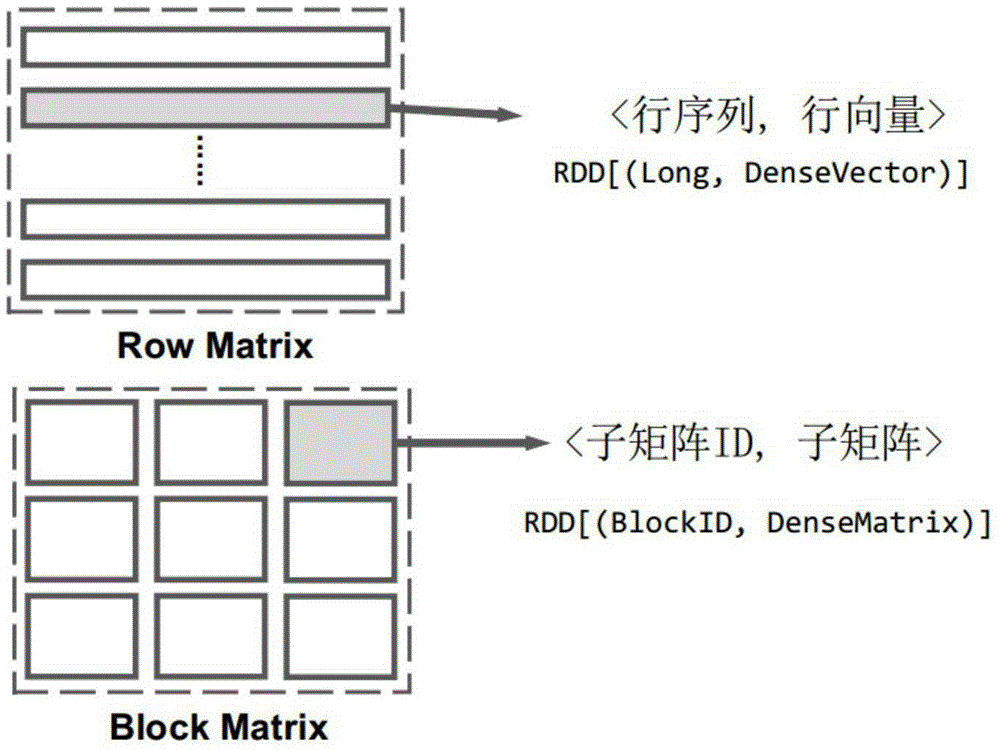

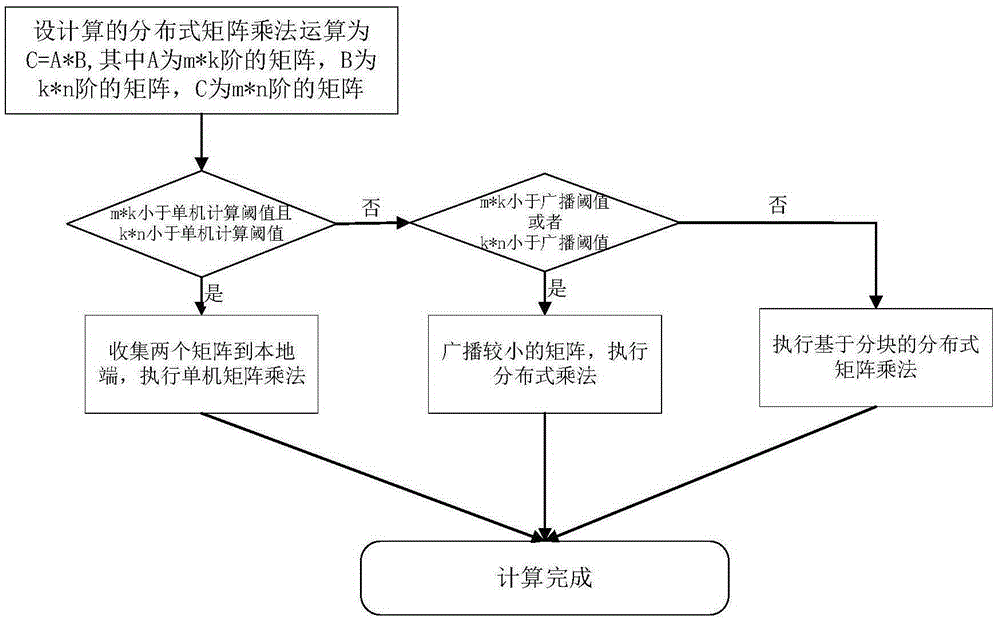

Matrix calculation method of distributed large-scale matrix multiplication based on Spark

InactiveCN105426344ATroubleshoot fault tolerance issuesReduce learning costsComplex mathematical operationsDistributed File SystemDistributed Computing Environment

The invention discloses a matrix calculation method of distributed large-scale matrix multiplication based on Spark. The method comprises the following steps of adopting a system framework based on a distributed computation execution engine Spark and single-machine BLAS (Basic Linear Algebra Subprograms); defining an operation interface related to a packaging matrix in a distributed system, reading a matrix file from a distributed file system, and selecting a proper scheme to execute the distributed multiplication for the quantity of resource of a distributed computing environment and the scale of a to-be-processed matrix; if the scales of the two matrixes are small, gathering to the locality to carry out single-machine multiplication; if the scale of one matrix is smaller, broadcasting the matrix execution multiplication; and if the scales of the two matrixes are large, adopting block-based distributed matrix multiplication. As for the latter two conditions, the invention respectively provides two efficient solutions, so that the problems of low performance and the poor expansibility of the existing big data processing platform about the distributed matrix operation are solved.

Owner:NANJING UNIV

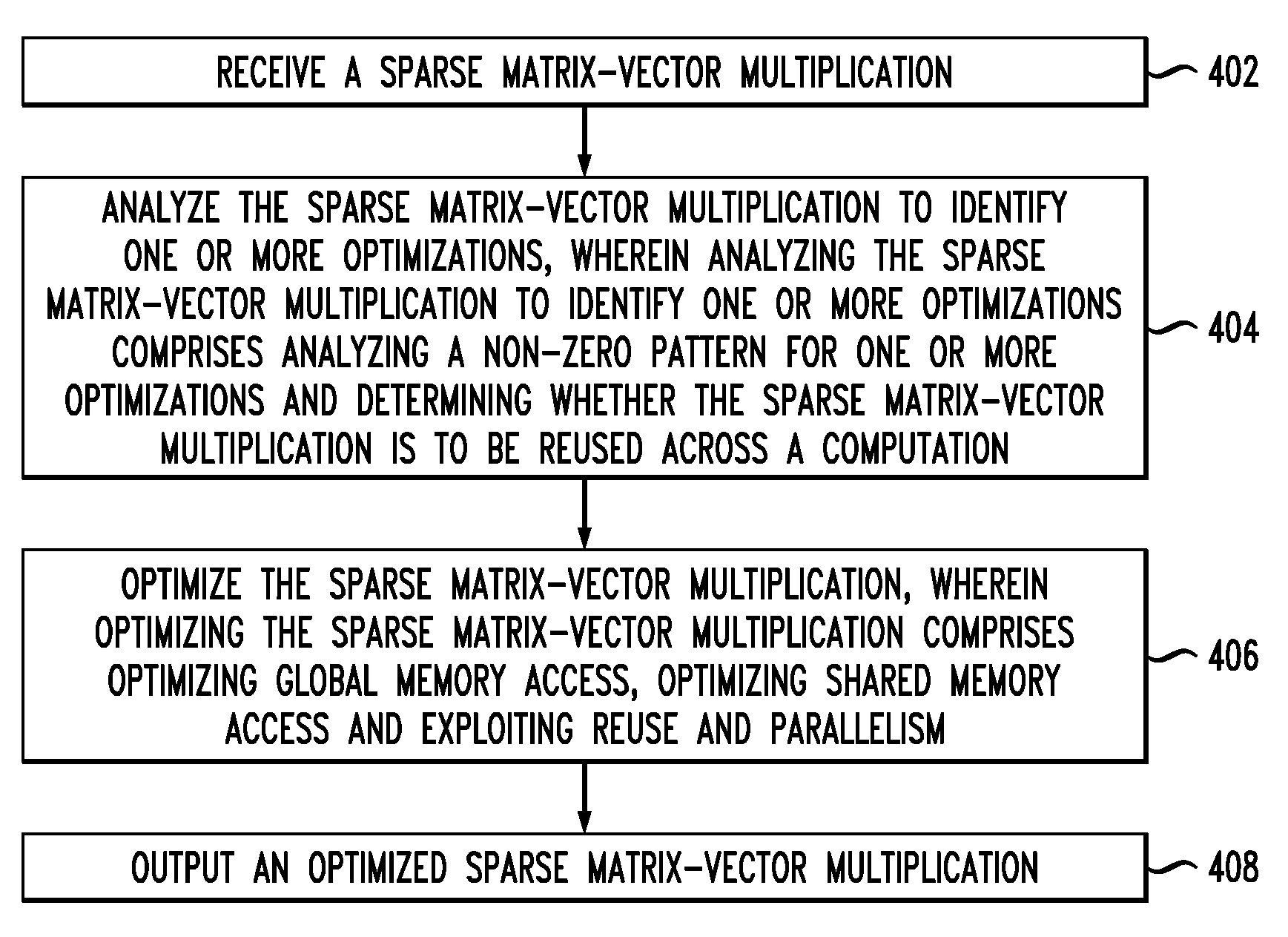

Sparse Matrix-Vector Multiplication on Graphics Processor Units

InactiveUS20110078226A1Computation using non-contact making devicesDigital computer detailsGraphicsMatrix multiplication

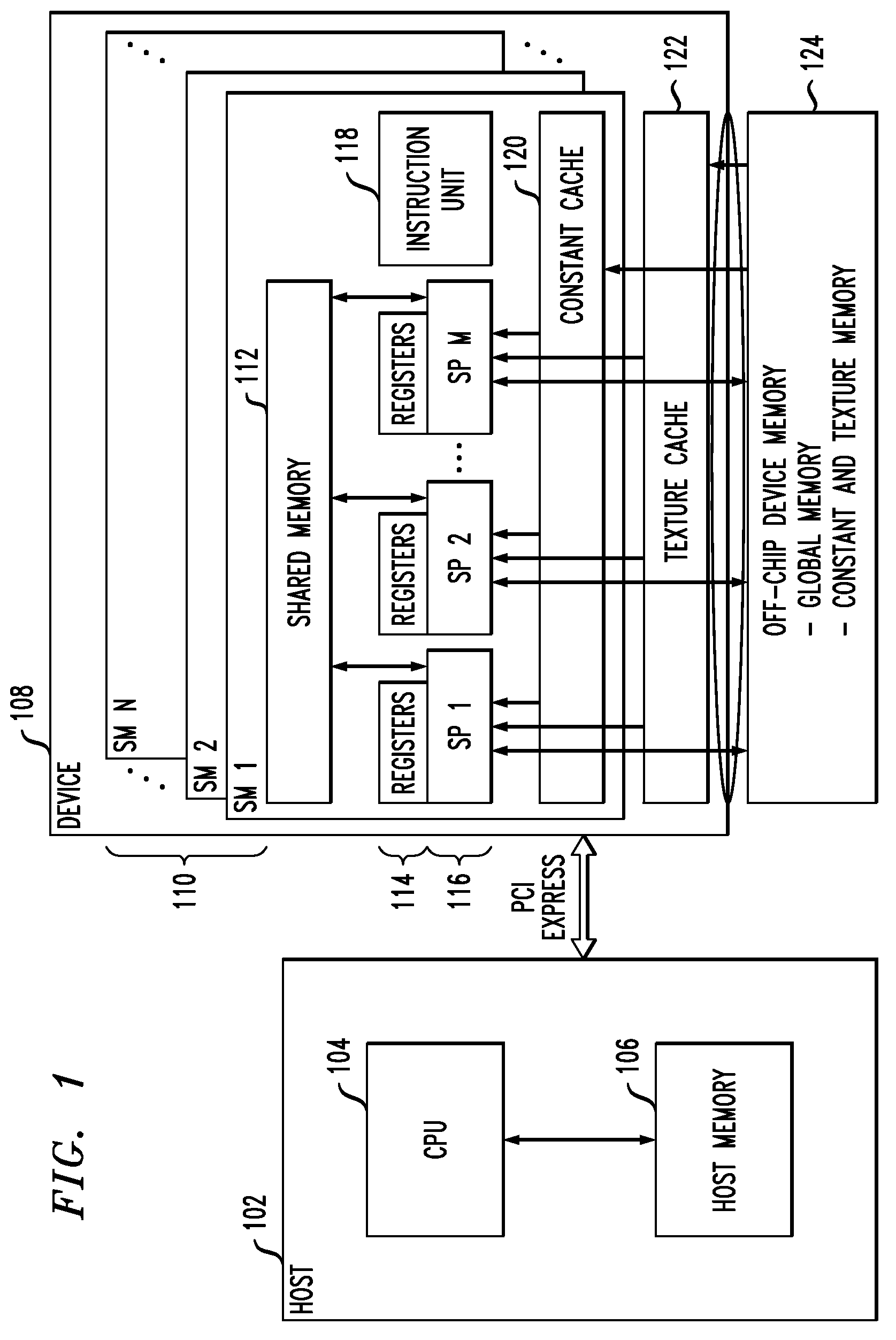

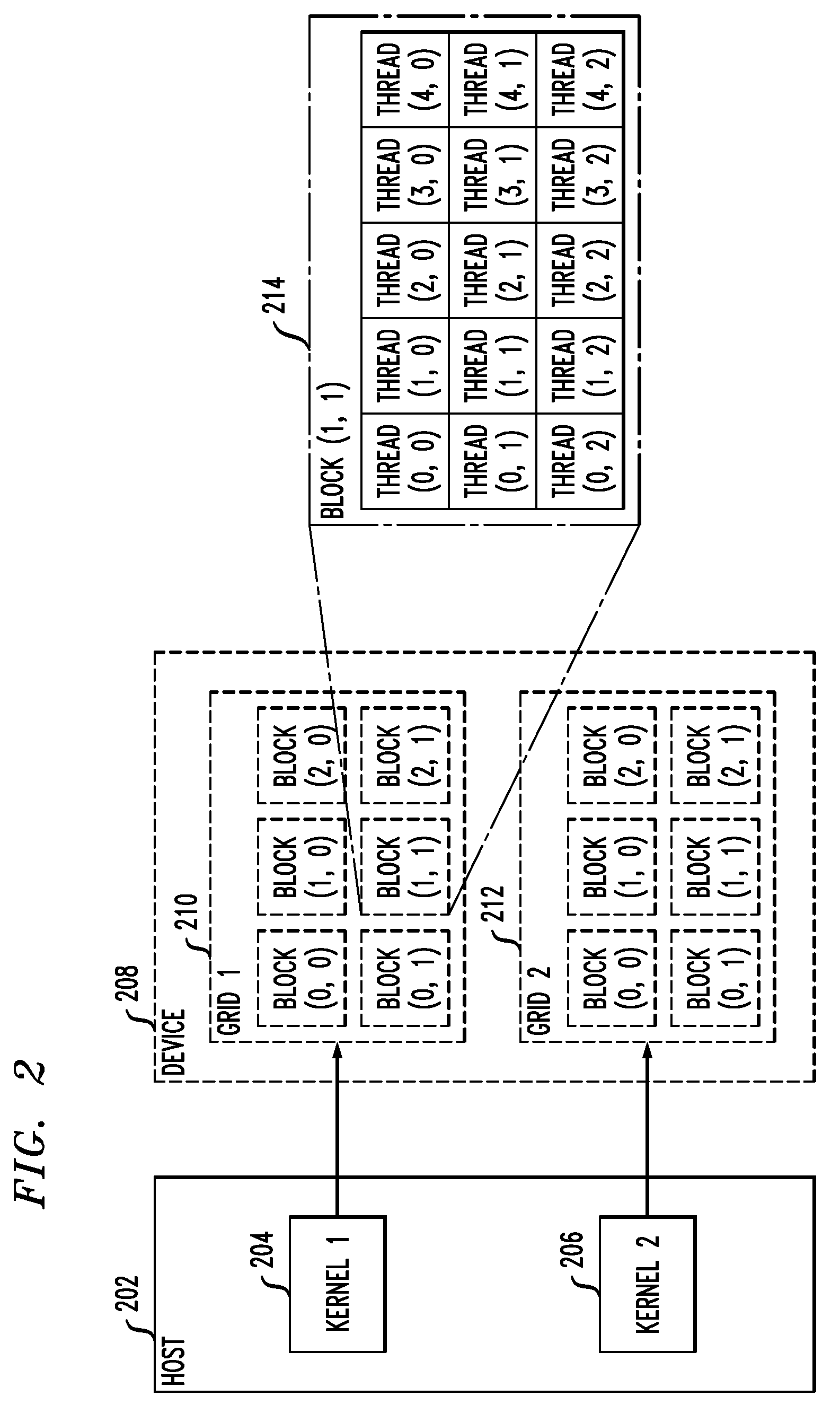

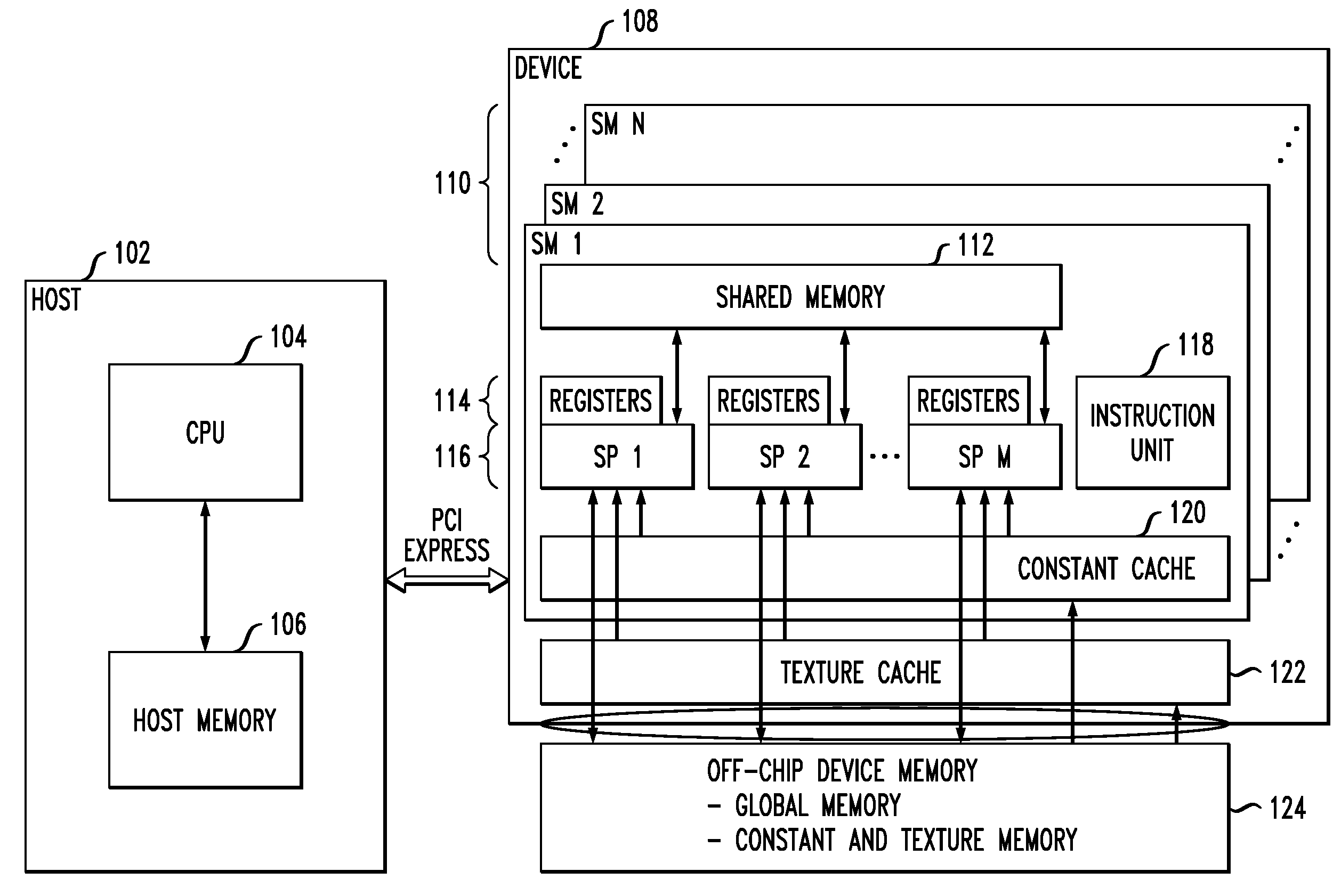

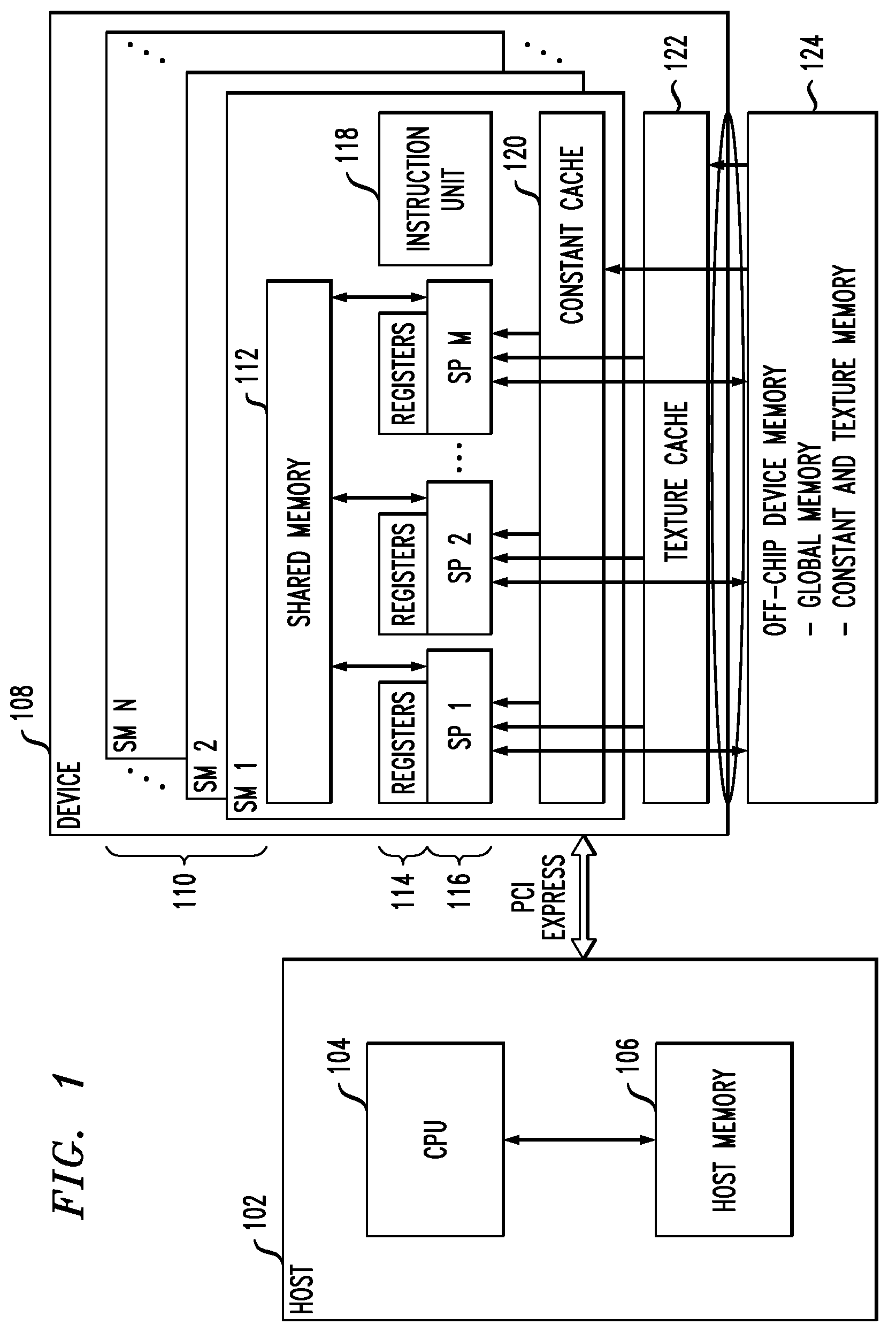

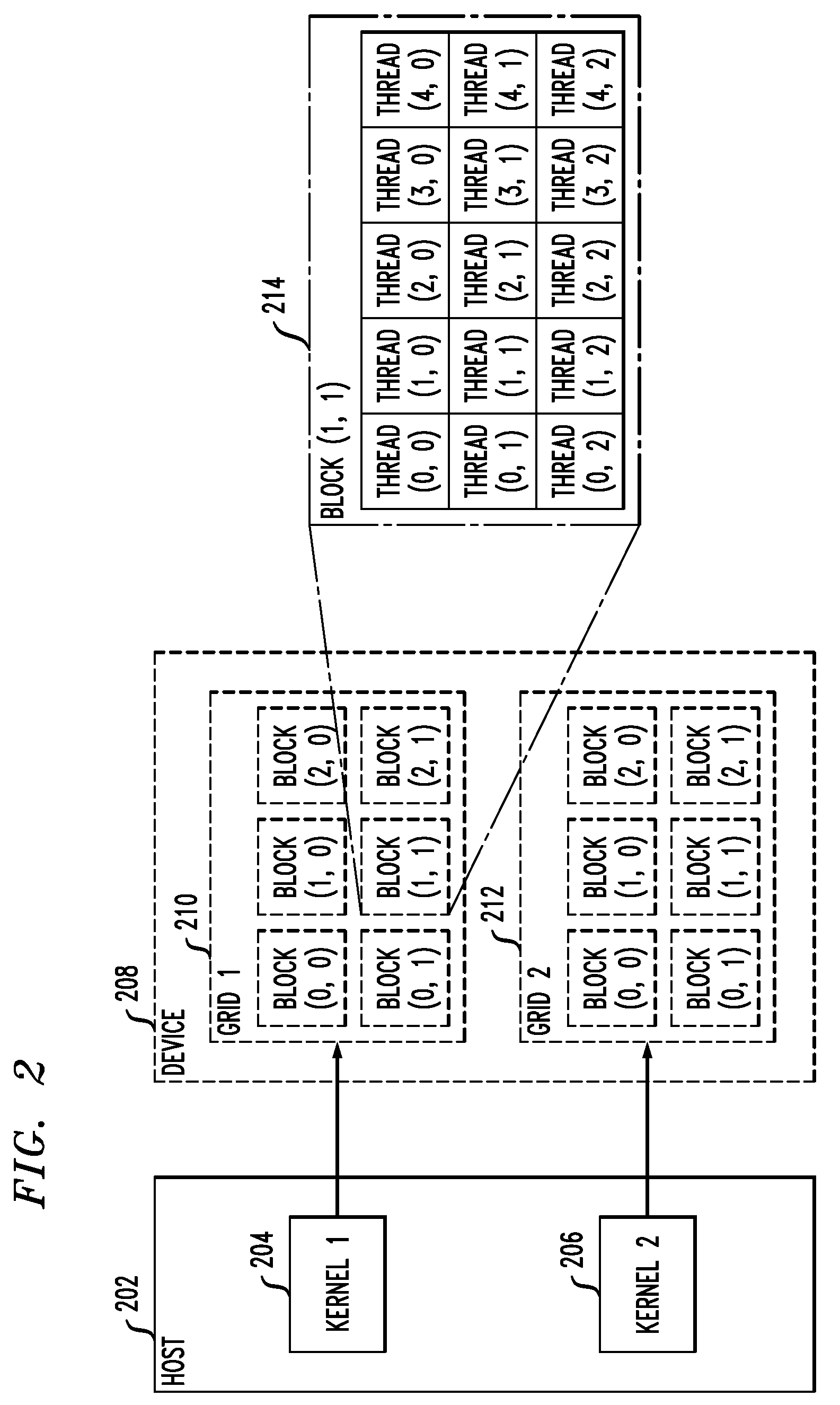

Techniques for optimizing sparse matrix-vector multiplication (SpMV) on a graphics processing unit (GPU) are provided. The techniques include receiving a sparse matrix-vector multiplication, analyzing the sparse matrix-vector multiplication to identify one or more optimizations, wherein analyzing the sparse matrix-vector multiplication to identify one or more optimizations comprises analyzing a non-zero pattern for one or more optimizations and determining whether the sparse matrix-vector multiplication is to be reused across computation, optimizing the sparse matrix-vector multiplication, wherein optimizing the sparse matrix-vector multiplication comprises optimizing global memory access, optimizing shared memory access and exploiting reuse and parallelism, and outputting an optimized sparse matrix-vector multiplication.

Owner:IBM CORP

Sparse matrix storage method CSRL (Compressed Sparse Row with Local Information) and SpMV (Sparse Matrix Vector Multiplication) realization method based on same

ActiveCN103336758AReduce accessIncrease profitComplex mathematical operationsSparse matrix vectorArray data structure

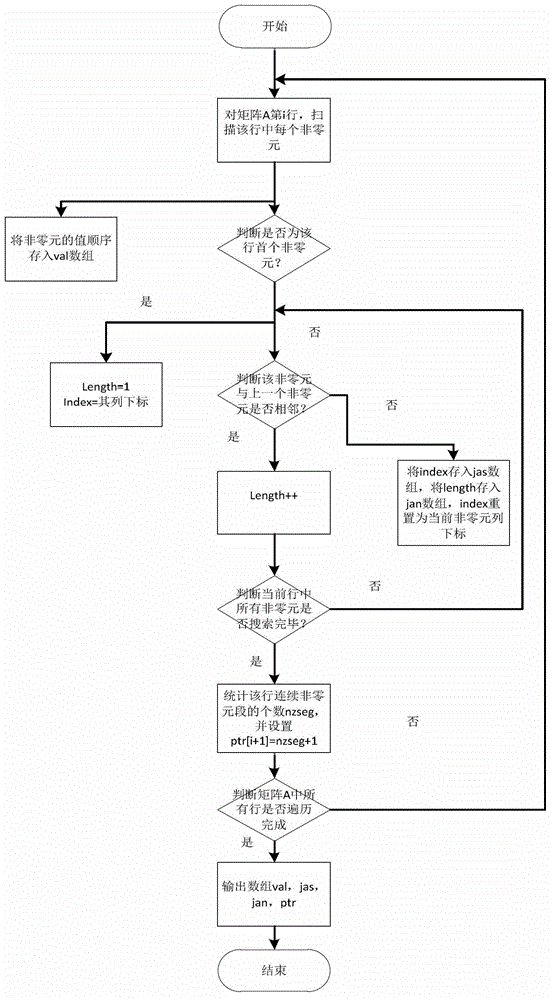

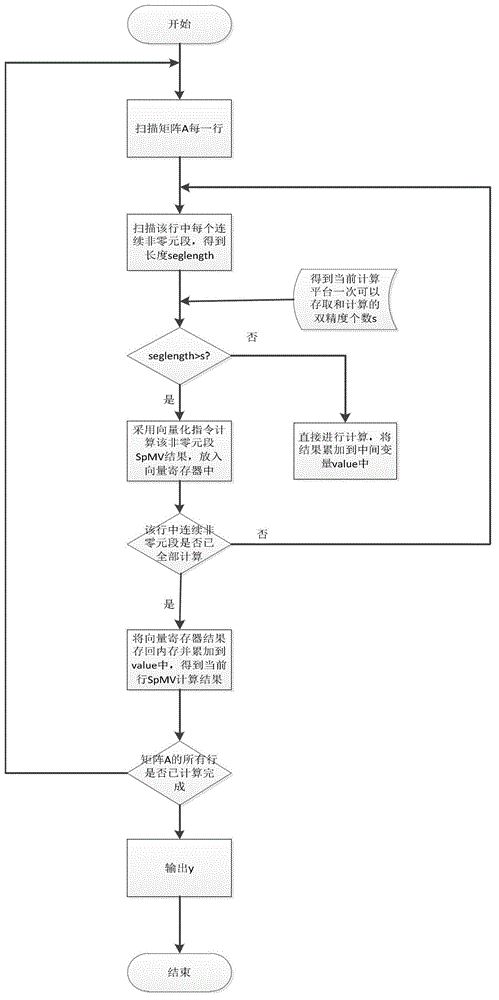

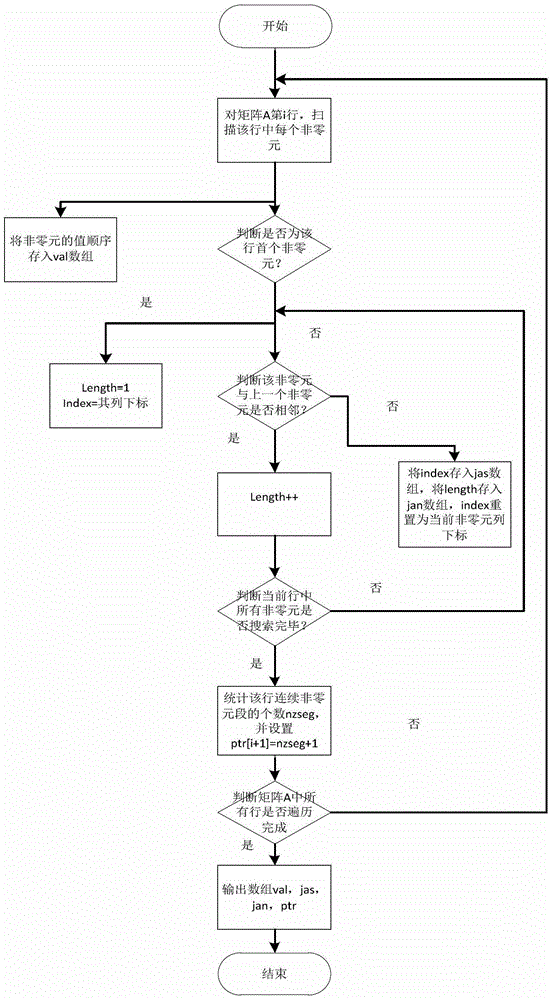

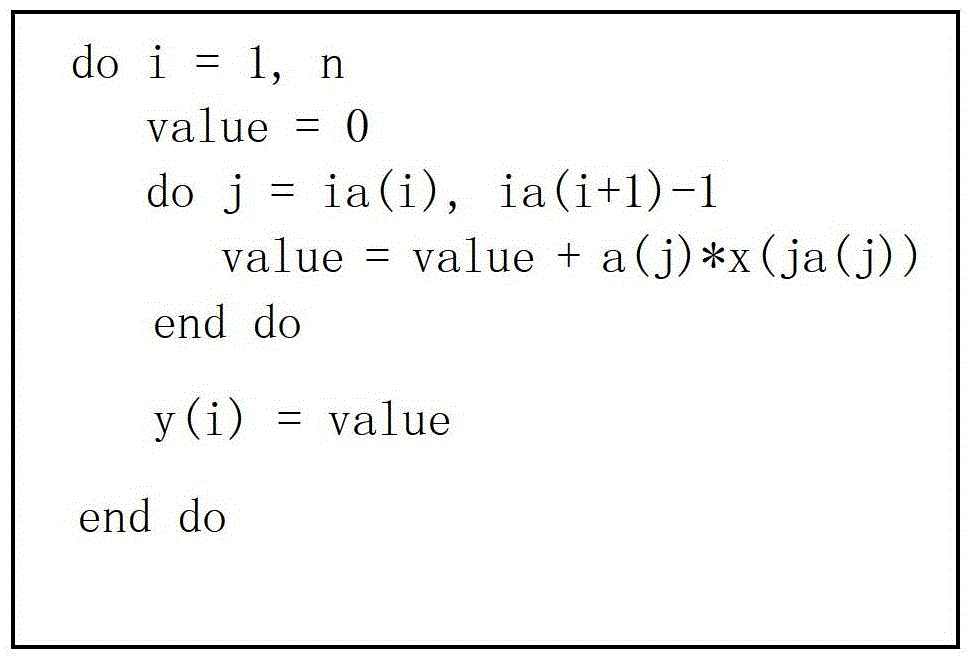

The invention discloses a sparse matrix storage method CSRL (Compressed Sparse Row with Local Information) and an SpMV (Sparse Matrix Vector Multiplication) realization method based on the same. The storage method comprises the following steps of scanning a sparse matrix A in rows and storing each non-zero element value information in an array val sequence; defining a plurality of non-zero elements with continuous row subscripts as a continuous non-zero element section, recording a row subscript of a first element in each continuous non-zero element section by use of an array jas, and recording the number of non-zero elements in each continuous non-zero element section by use of an array jan; and recording an initial index of a first continuous non-zero element section in each row of the sparse matrix A by use of an array ptr. According to the data storage method, row indexes of the non-zero elements are combined and stored, so that the storage space requirement is reduced, the data locality of the sparse matrix is fully excavated, access and calculation can be performed by use of an SIMD (Single Instruction Multiple Data) instruction, the access frequency of an internal storage can be reduced, and the SpMV performance is improved.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI

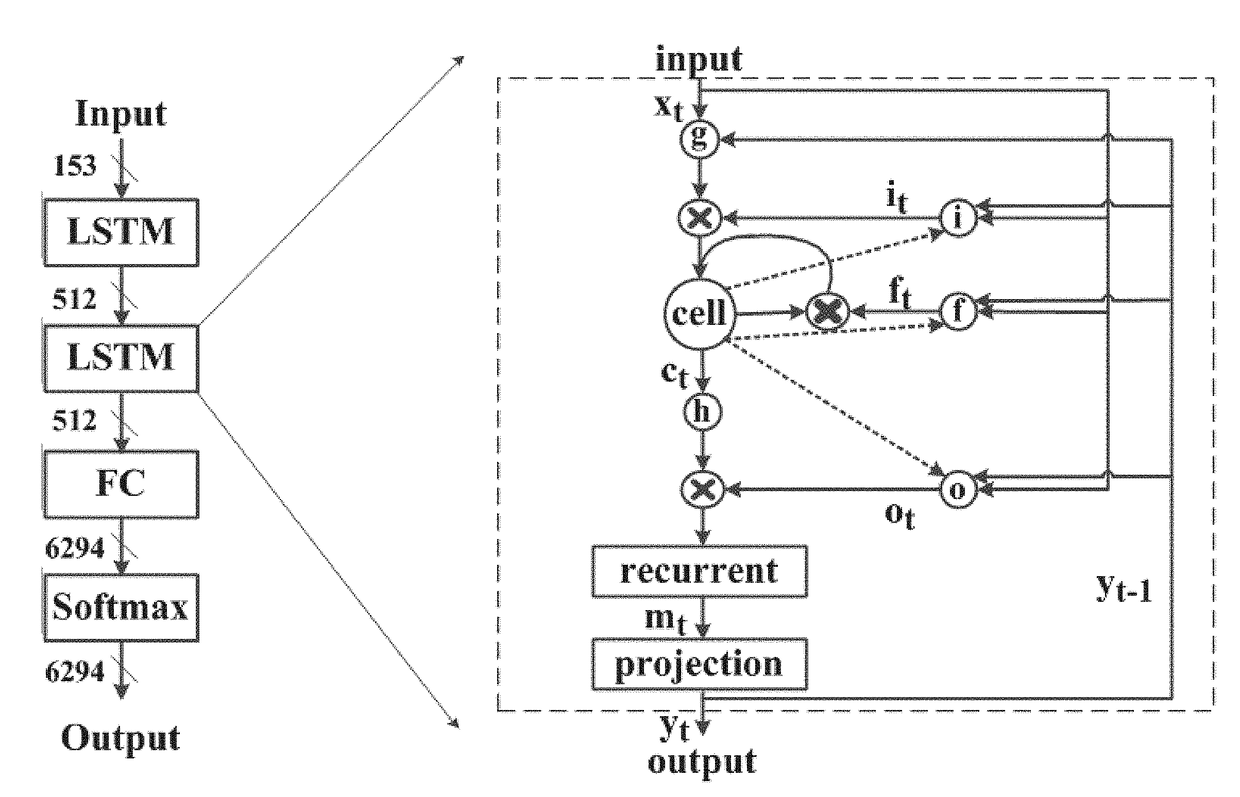

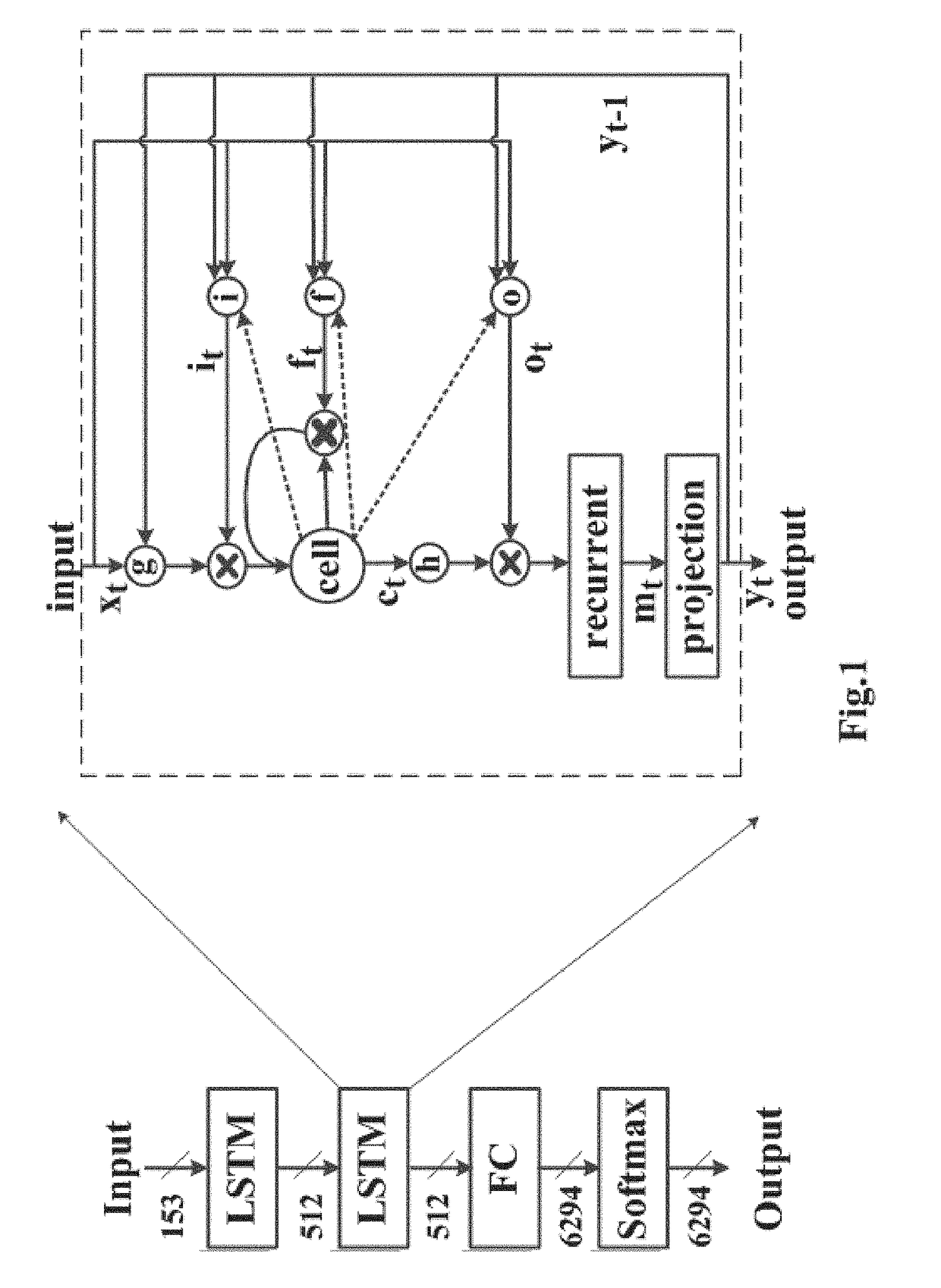

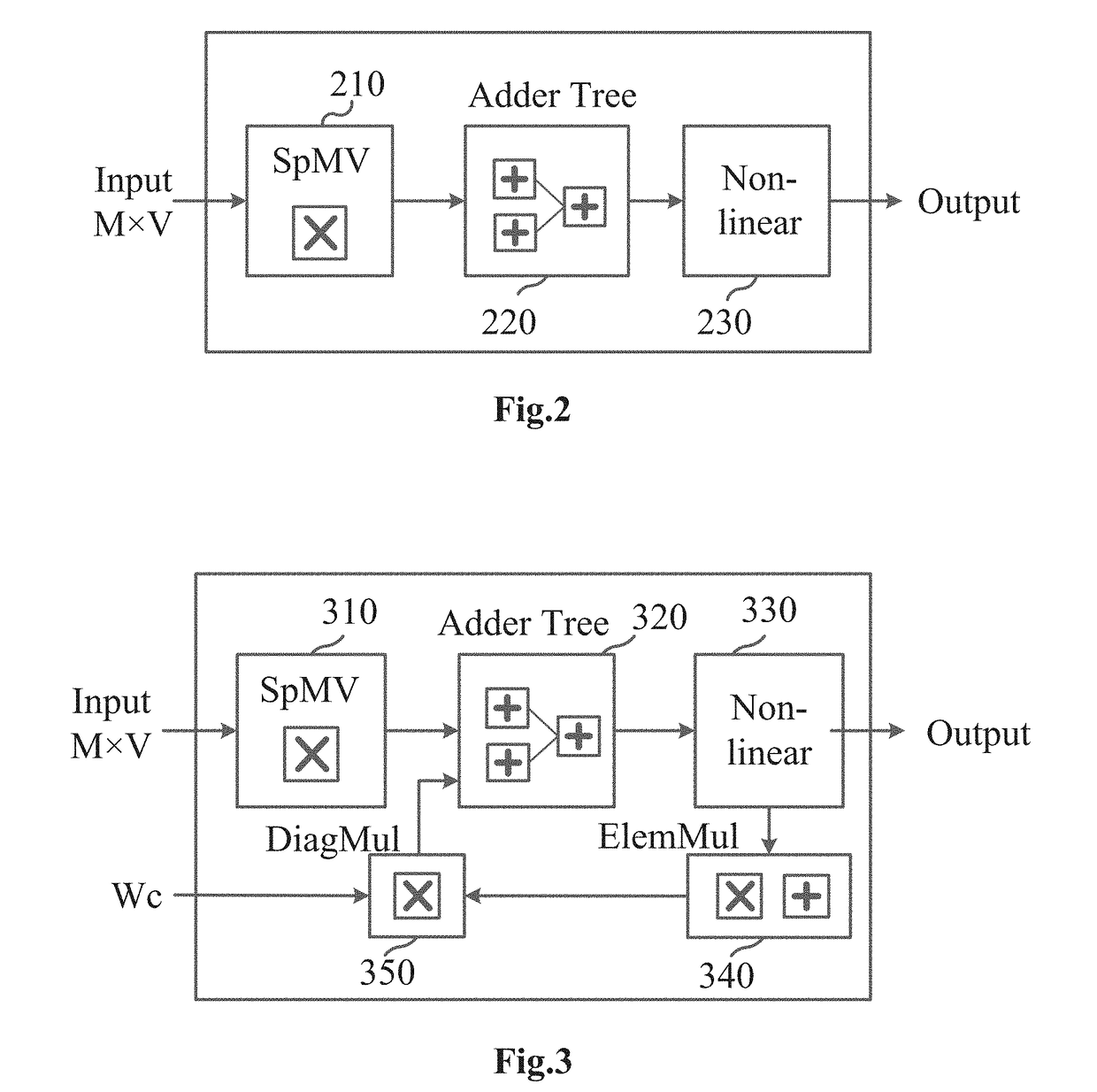

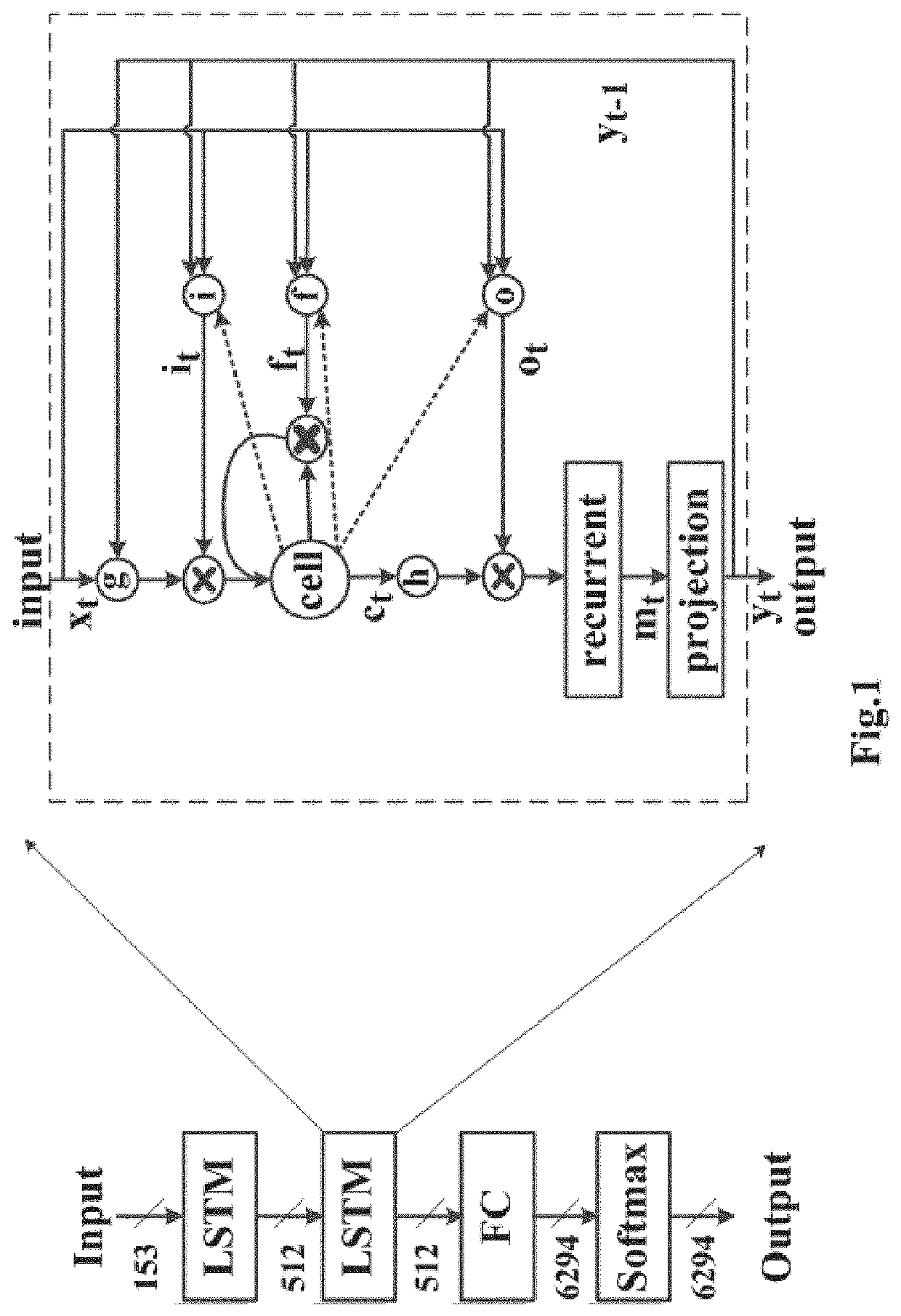

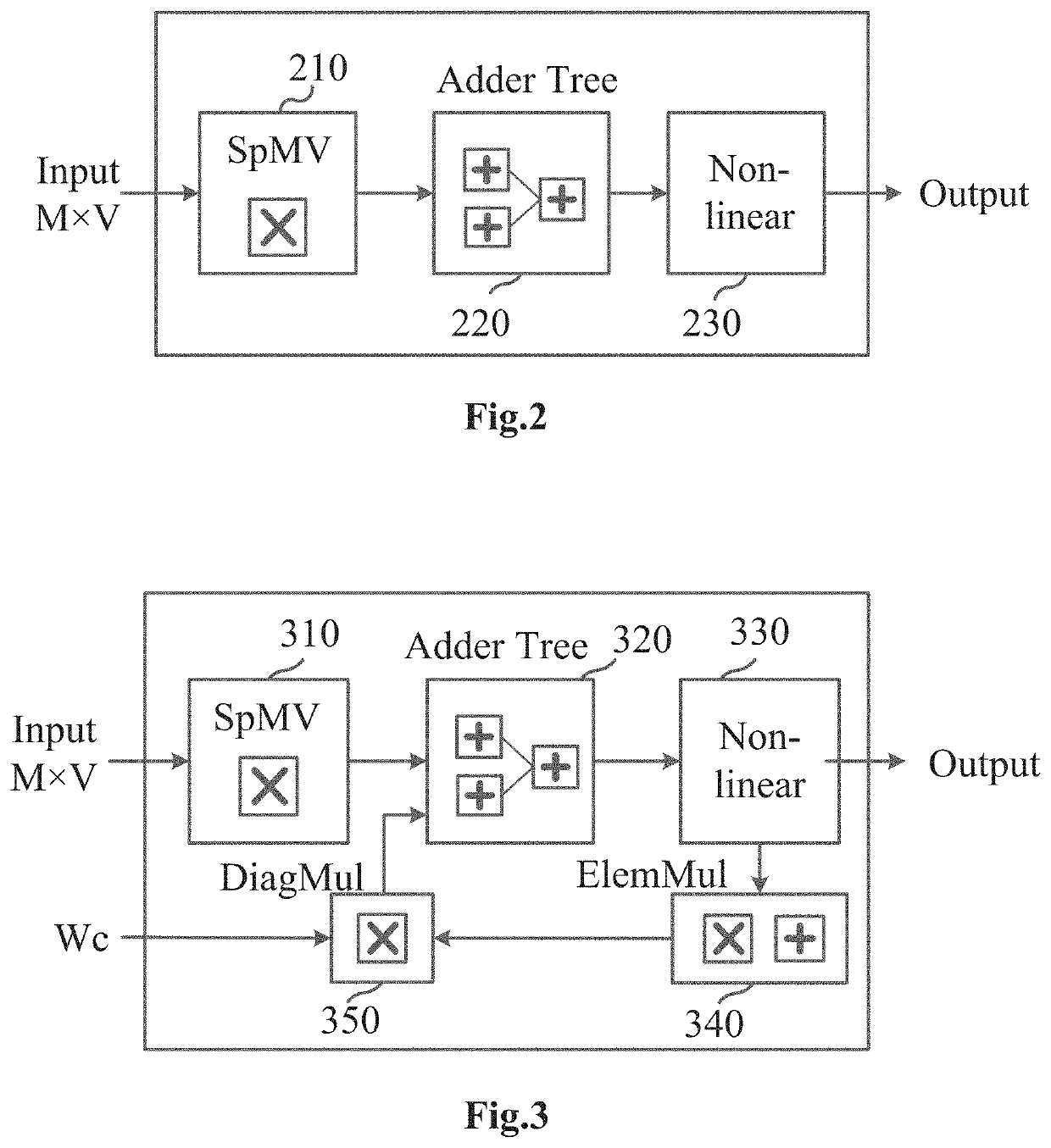

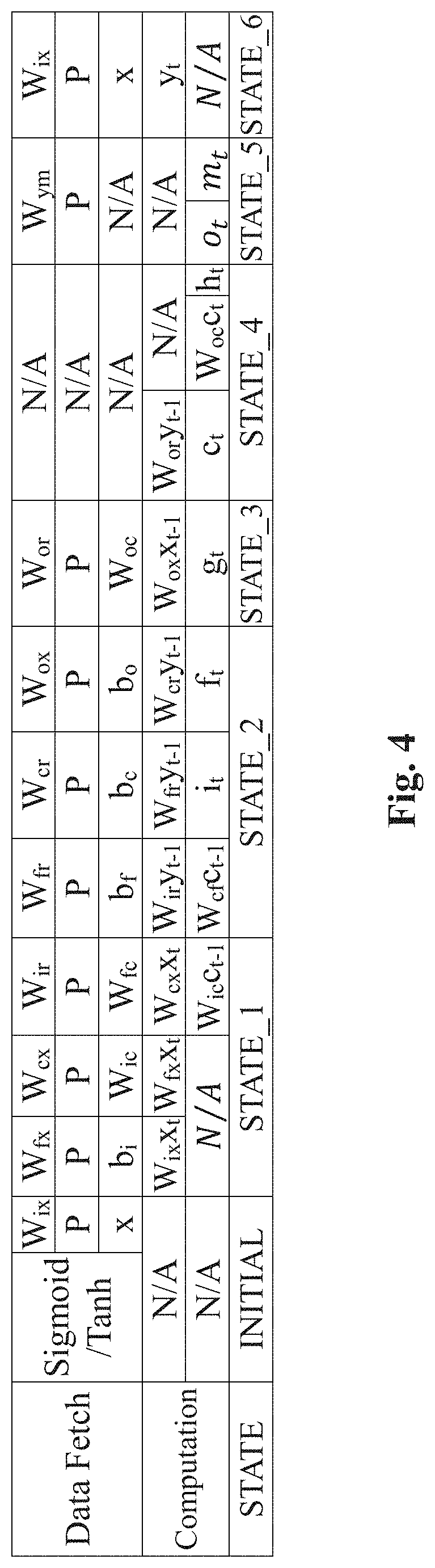

Hardware Accelerator for Compressed LSTM

ActiveUS20180174036A1Easy to operateImprove forecastNeural architecturesPhysical realisationActivation functionComputer module

Hardware accelerator for compressed Long Short Term Memory (LSTM) is disclosed. The accelerator comprise a sparse matrix-vector multiplication module for performing multiplication operation between all sparse matrices in the LSTM and vectors to sequentially obtain a plurality of sparse matrix-vector multiplication results. A addition tree module are also included for accumulating a plurality of said sparse matrix multiplication results to obtain an accumulated result. And a non-linear operation module passes the accumulated results through an activation function to generate non-linear operation result. That is, the present accelerator adopts pipeline design to overlap the time of data transfer and computation for compressed LSTM.

Owner:XILINX TECH BEIJING LTD

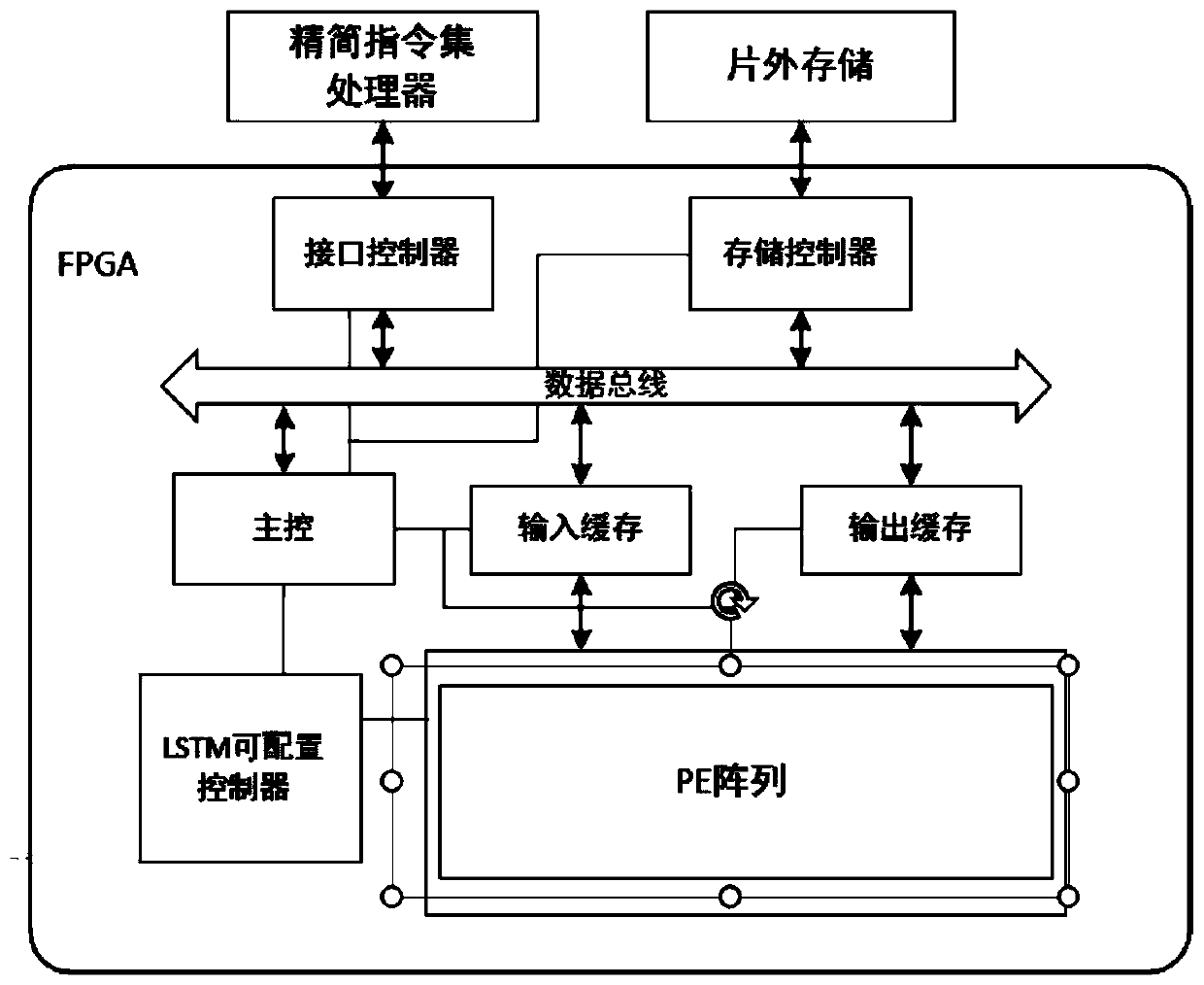

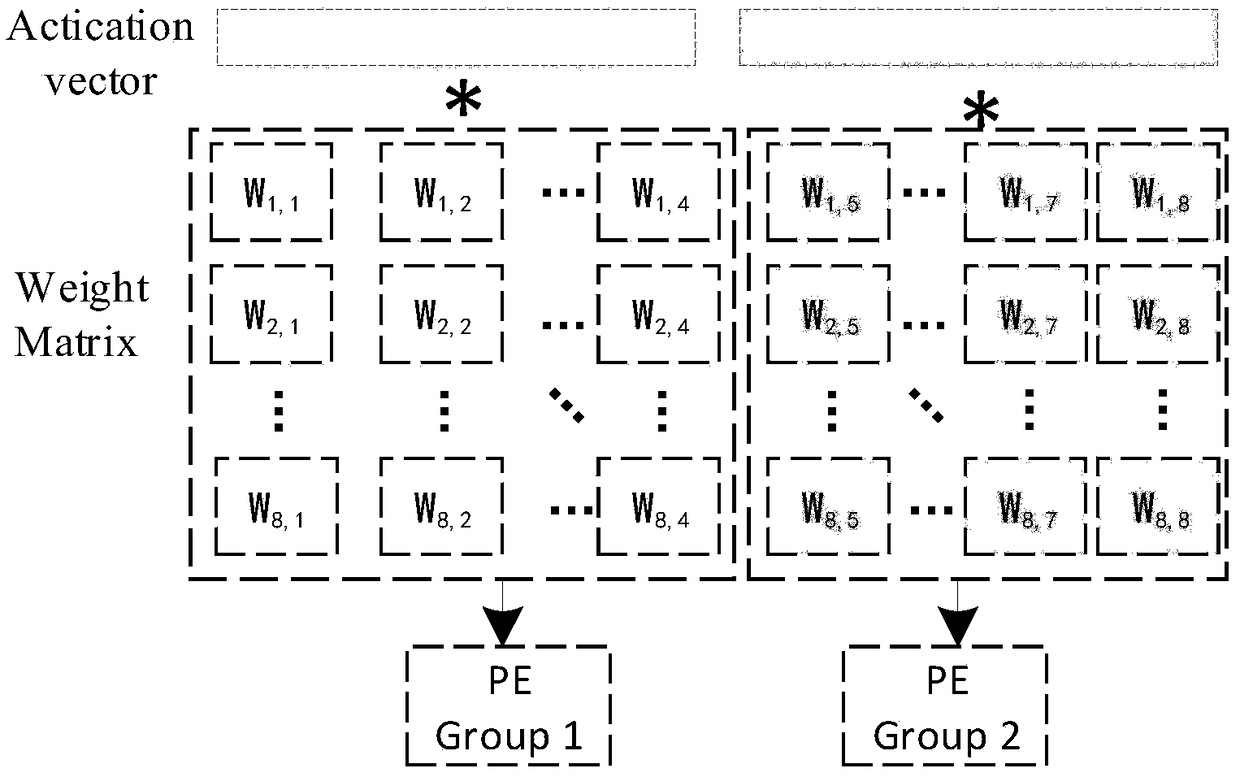

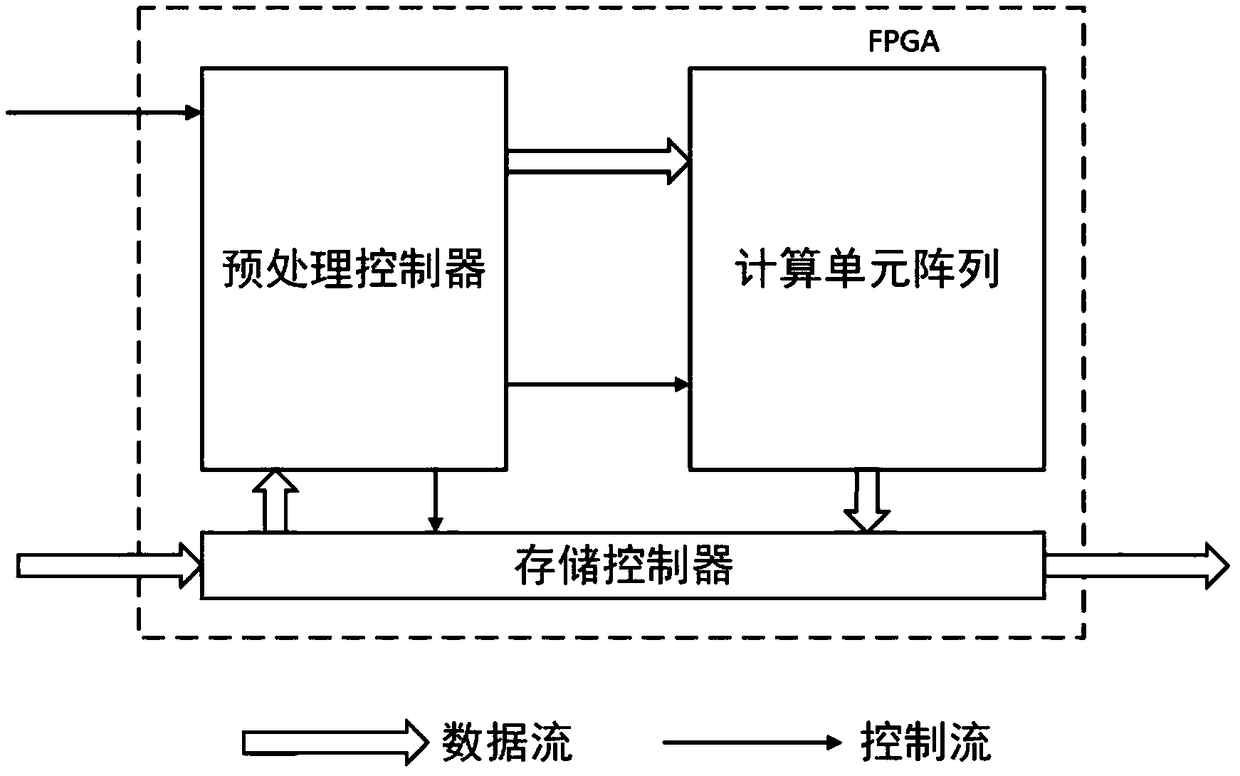

FPGA accelerator of LSTM neural network and acceleration method of FPGA accelerator

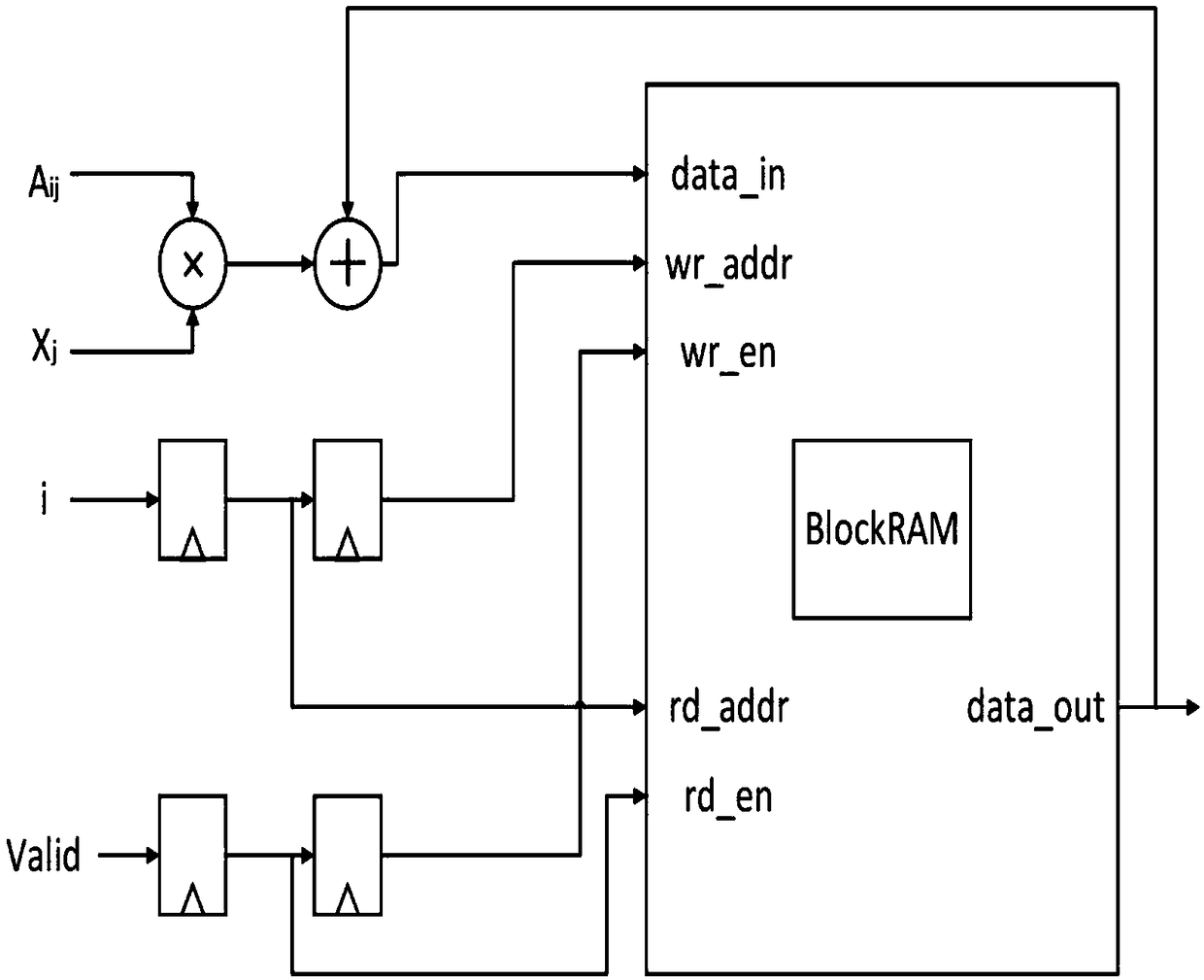

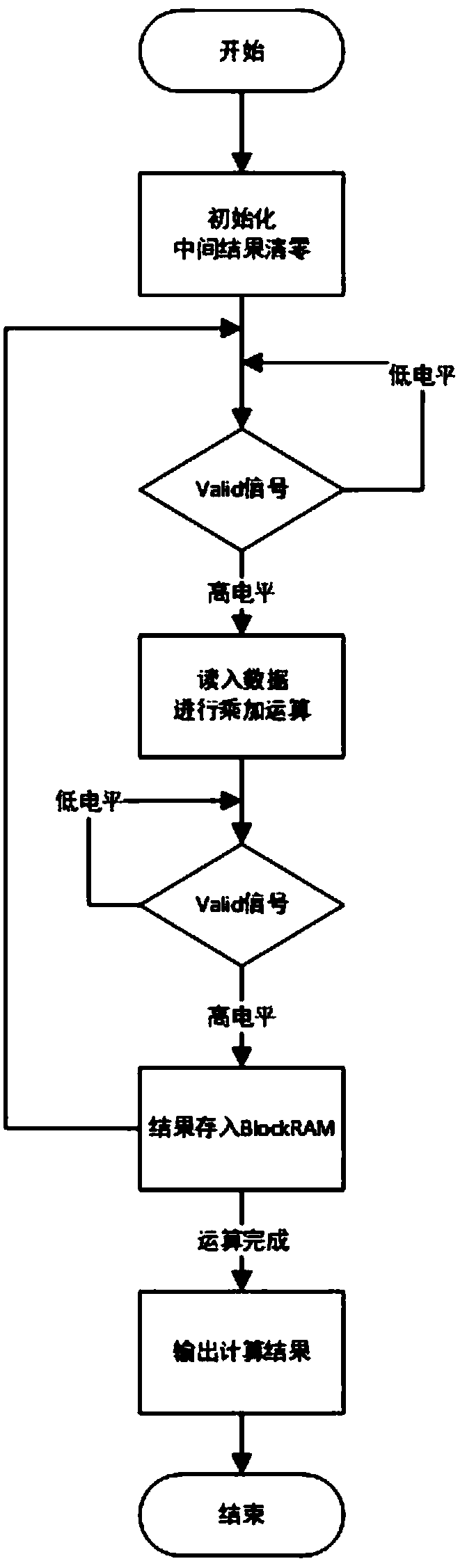

ActiveCN110110851AImprove computing powerWill not cause idleNeural architecturesPhysical realisationActivation functionControl signal

The invention provides an FPGA accelerator of an LSTM neural network and an acceleration method of the FPGA accelerator. The accelerator comprises a data distribution unit, an operation unit, a control unit and a storage unit; the operation unit comprises a sparse matrix vector multiplication module, a nonlinear activation function module and an element-by-element multiplication and addition calculation module; the control unit sends a control signal to the data distribution unit, and the data distribution unit reads an input excitation value and a neural network weight parameter from the storage unit and inputs the input excitation value and the neural network weight parameter to the operation unit for operation. The operation resources are uniformly distributed to each operation unit according to the number of the non-zero weight values, so that idling of operation resources is avoided, and the operation performance of the whole network is improved. Meanwhile, the pruned neural network is stored in the form of the sparse network, the weight value of each column is stored in the same address space, the neural network is coded according to the row index, and the operation performance and the data throughput rate are improved under the condition that the precision is guaranteed.

Owner:NANJING UNIV

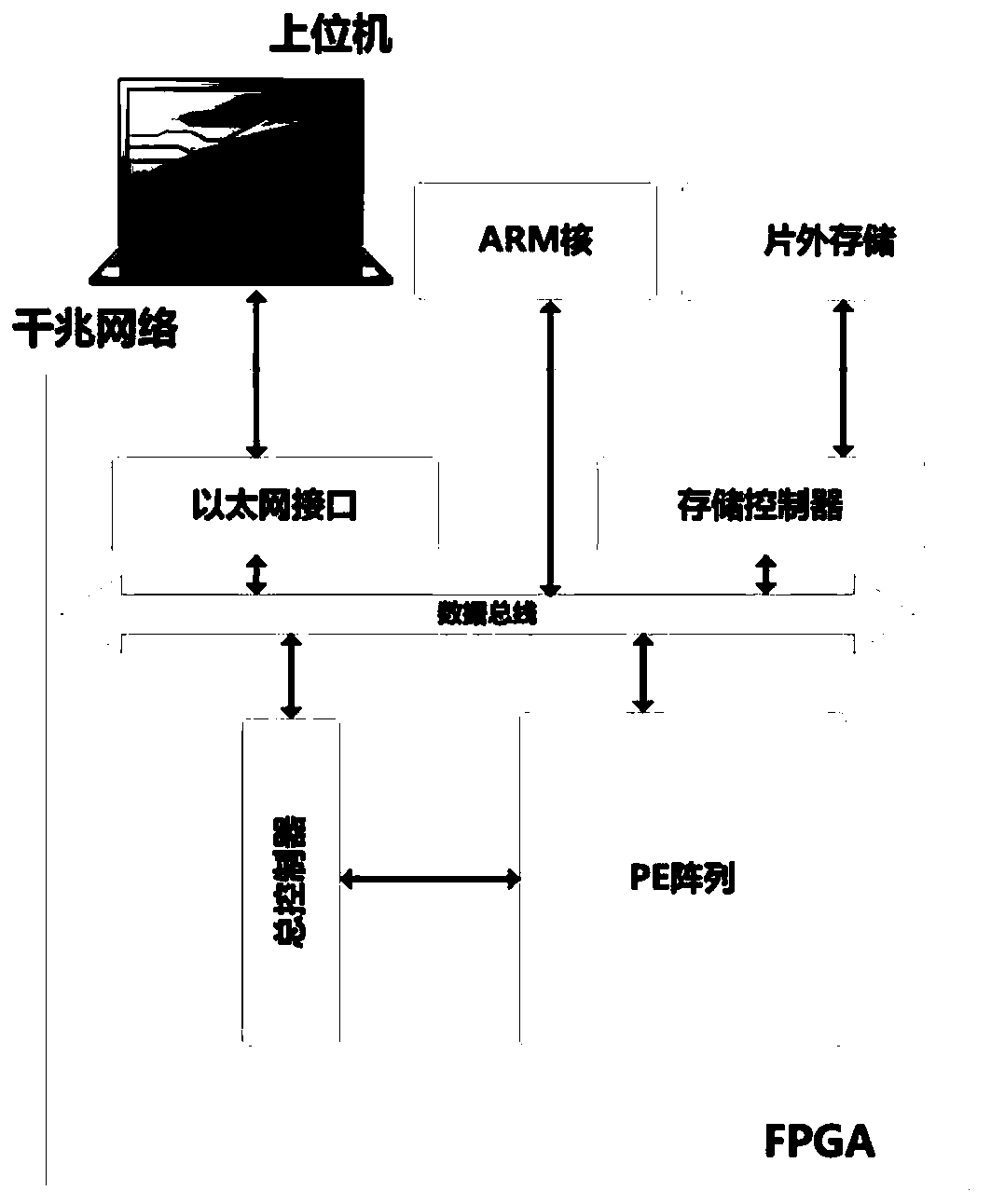

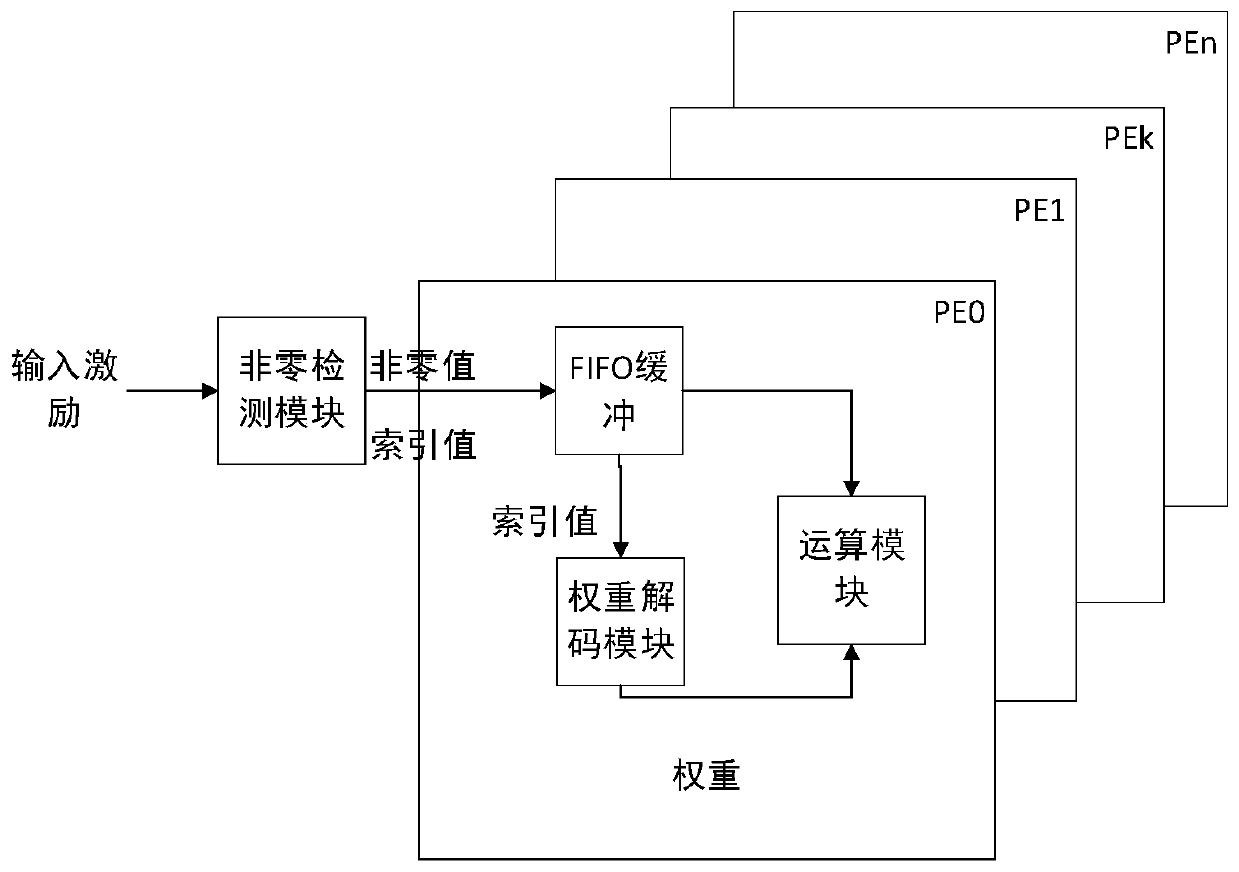

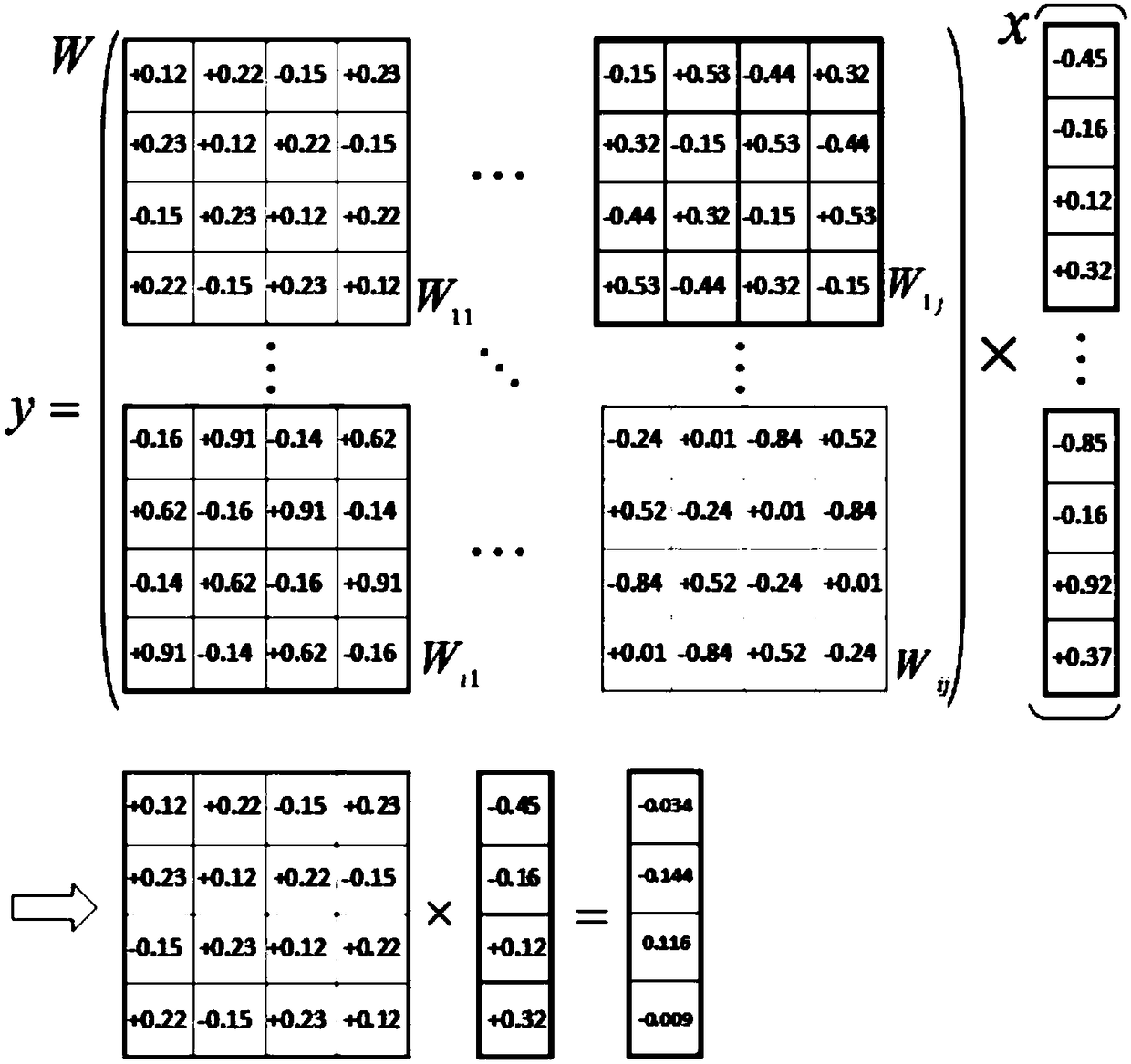

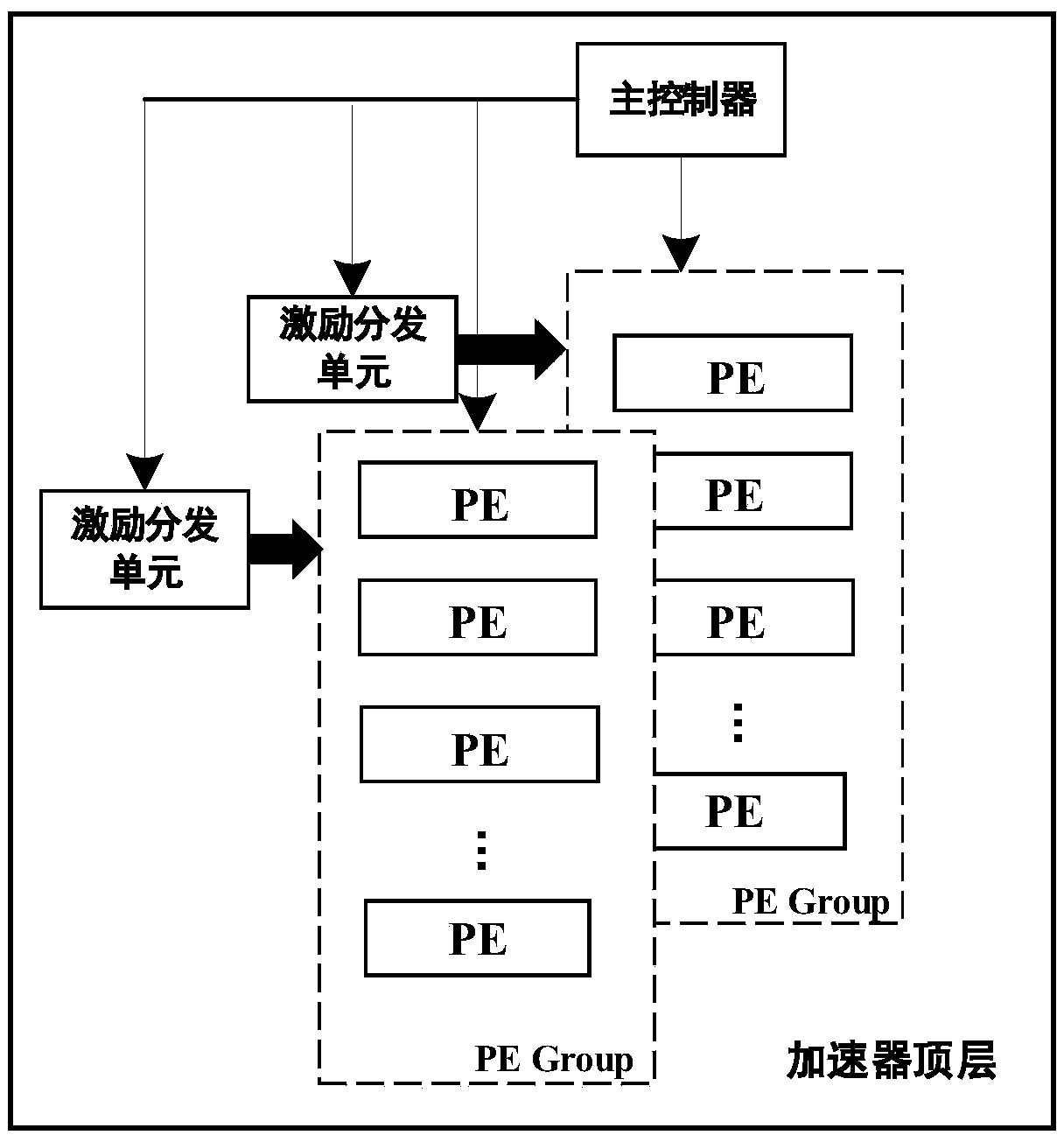

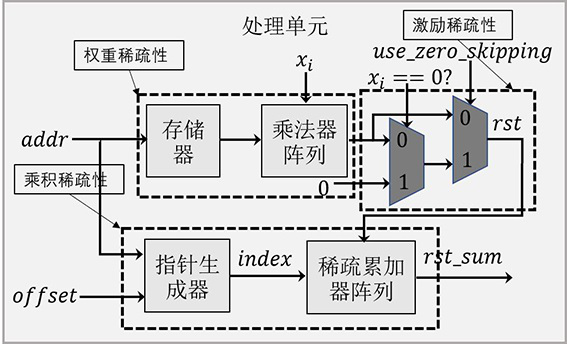

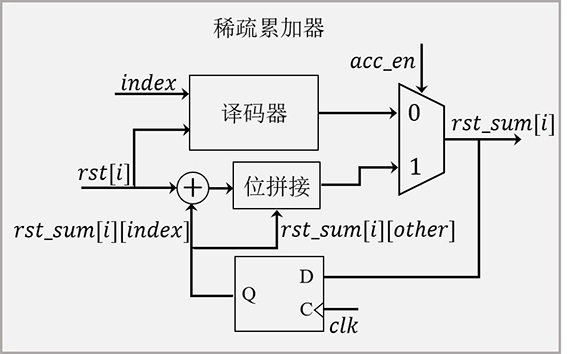

Aneural network acceleration system based on a block cyclic sparse matrix

ActiveCN109472350AReduce excessive storage accessReduce energy consumptionNeural architecturesPhysical realisationSparse matrix vectorNerve network

The invention relates to a neural network acceleration system based on a block cyclic sparse matrix, and the system comprises an extensible processing unit array which stores a part of weights of a neural network, and carries out the decoding and operation of a compressed network; The main controller is mainly responsible for controlling the operation process; And the excitation distribution unitis used for distributing non-zero operation data to the extensible processing unit array under the control of the main controller. The method has the beneficial effects that the characteristics of a block cyclic sparse matrix are effectively utilized, the problem of unbalanced load of sparse matrix vector multiplication operation is solved, and the utilization rate of operation units is improved;By utilizing the sparsity of excitation and weight, the use of on-chip storage is reduced, and redundant operation is skipped, so that the throughput of a hardware accelerator is improved, and the real-time requirement for processing a deep neural network is met.

Owner:NANJING UNIV

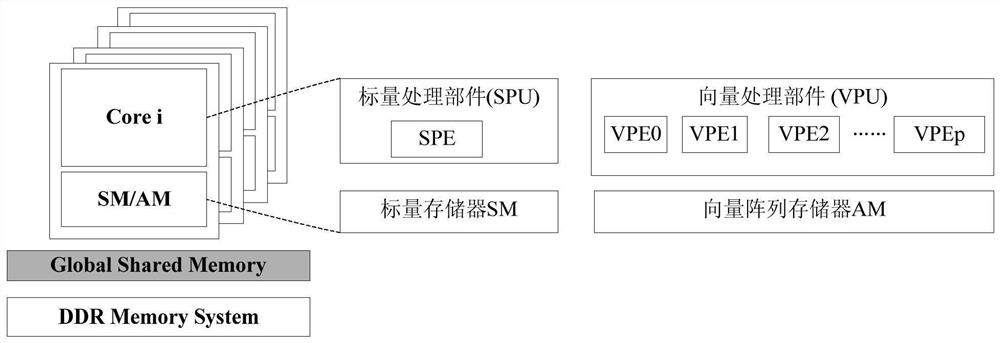

Method for realizing heterogeneous many-core of sparse matrix-vector multiplication based on domestic SW26010 processors

ActiveCN106775594AImprove performanceReduce the total memory accessMachine execution arrangementsSparse matrix vectorEuclidean vector

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI

Sparse matrix-vector multiplication on graphics processor units

InactiveUS8364739B2Computation using non-contact making devicesDigital computer detailsGraphicsMatrix multiplication

Owner:INT BUSINESS MASCH CORP

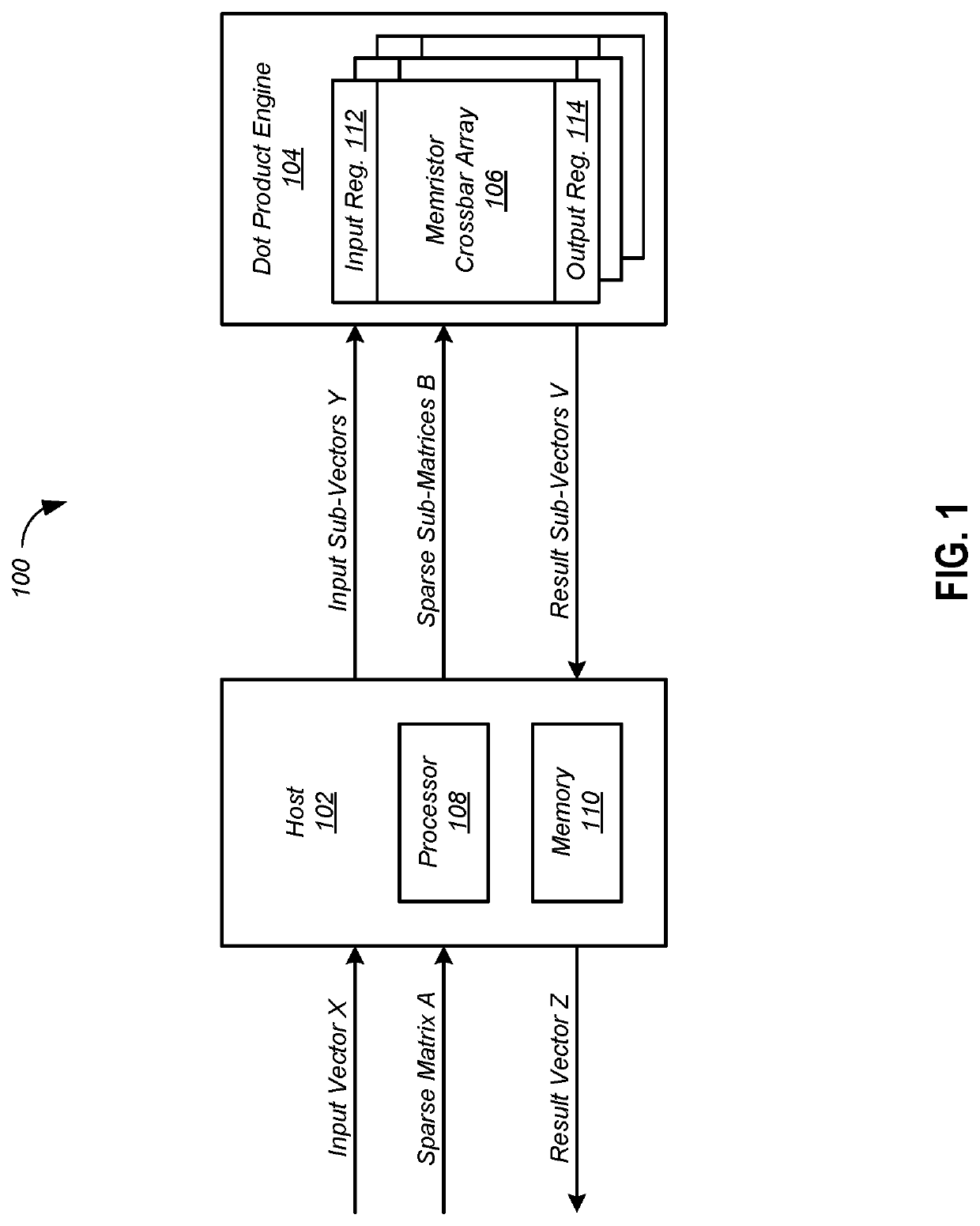

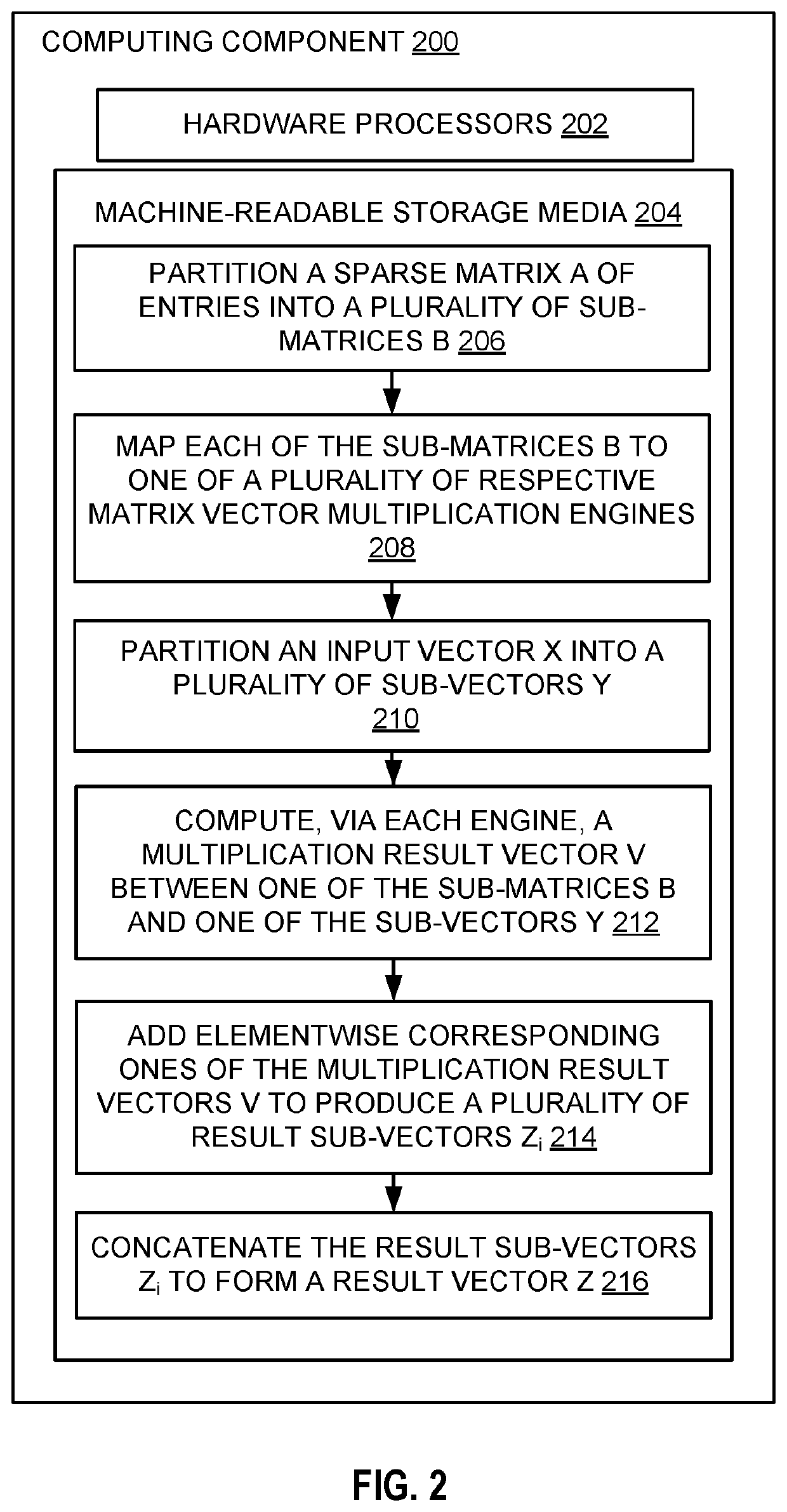

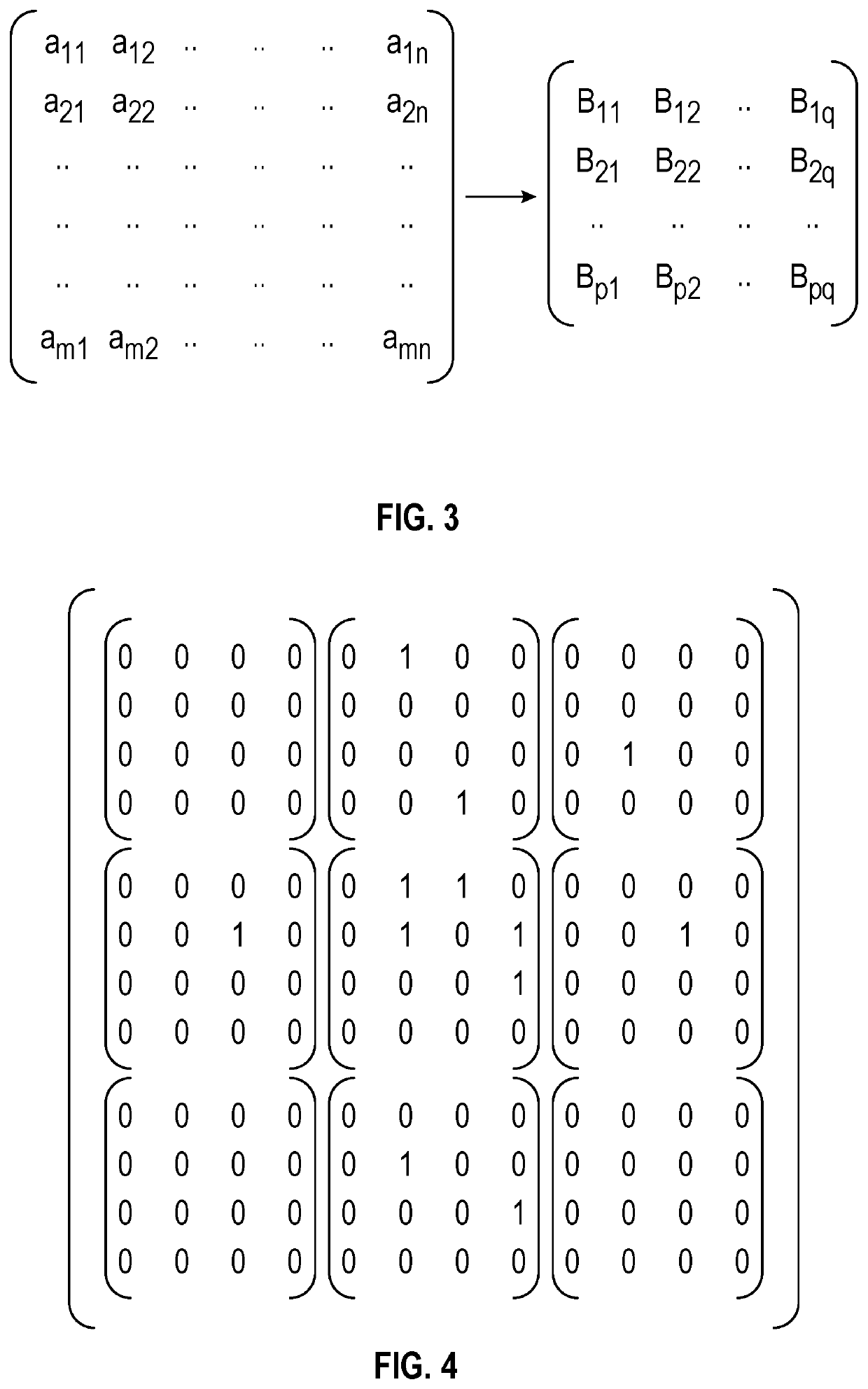

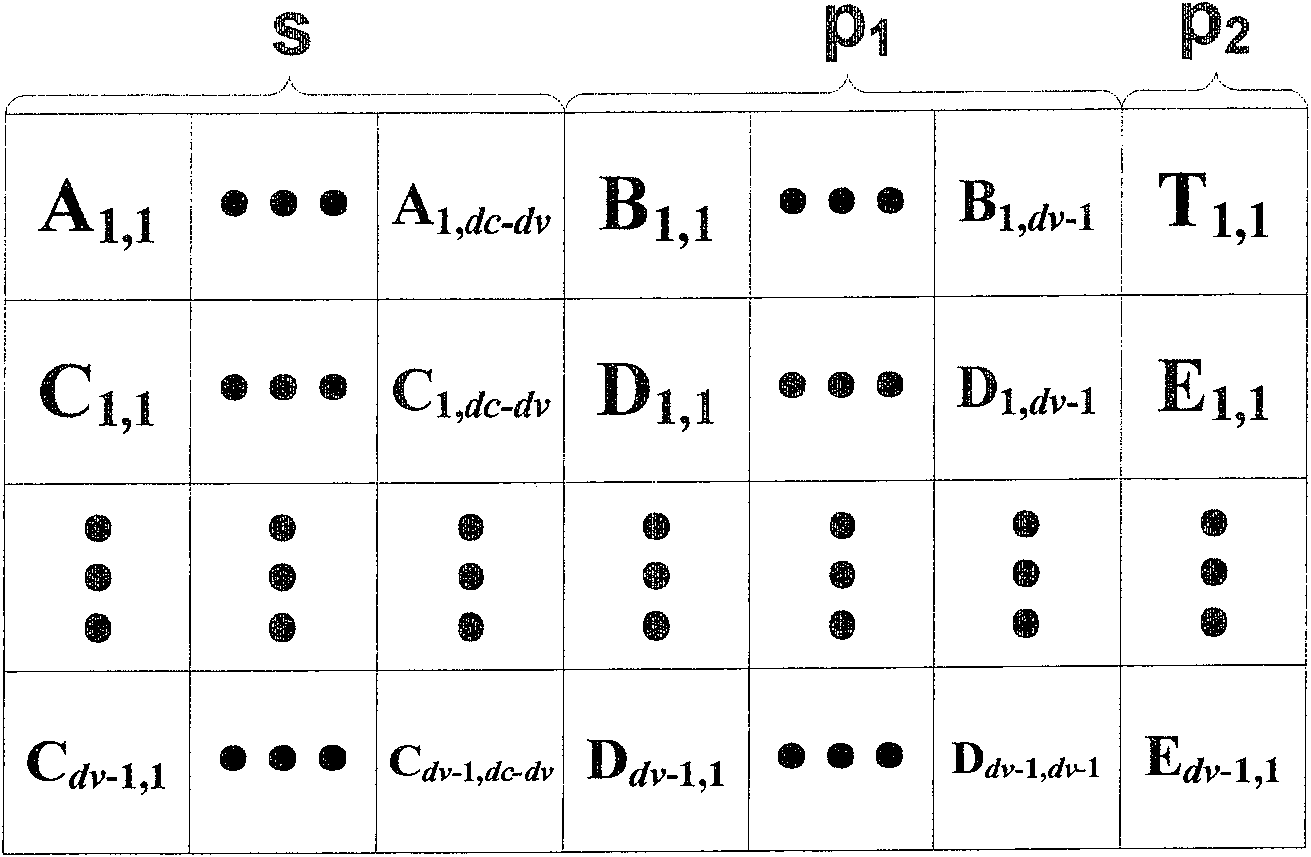

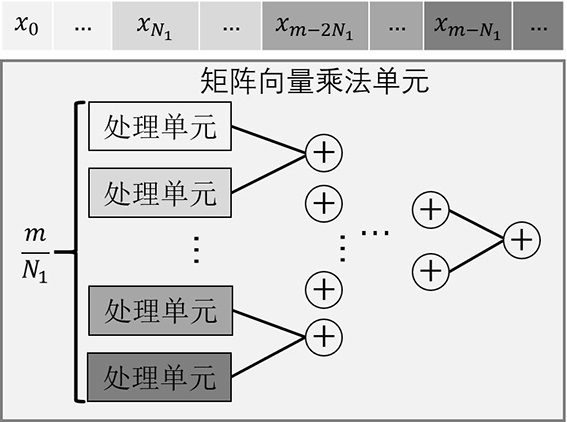

Sparse matrix vector multiplication with a matrix vector multiplication unit

ActiveUS20200117700A1Neural architecturesPhysical realisationStructural engineeringMechanical engineering

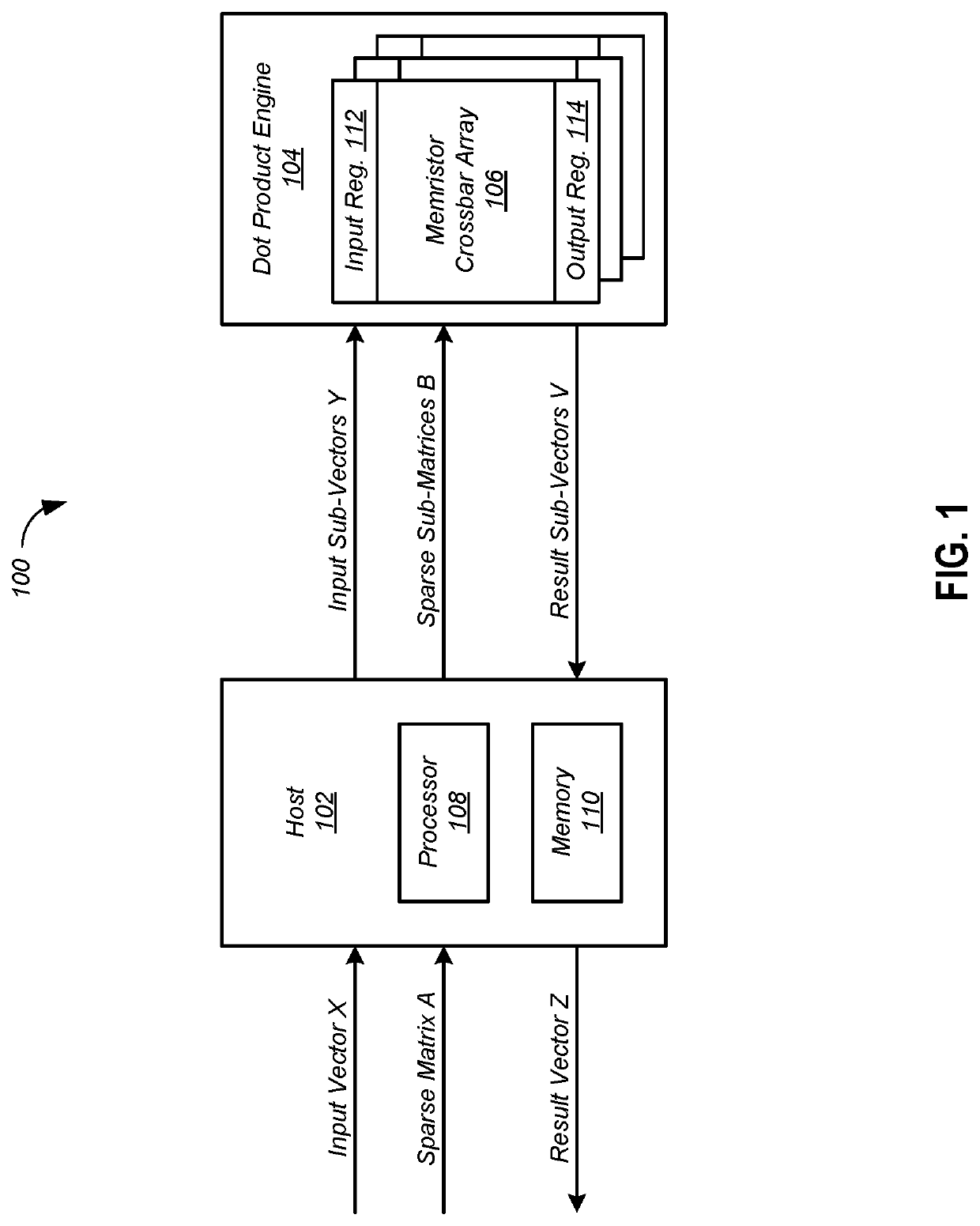

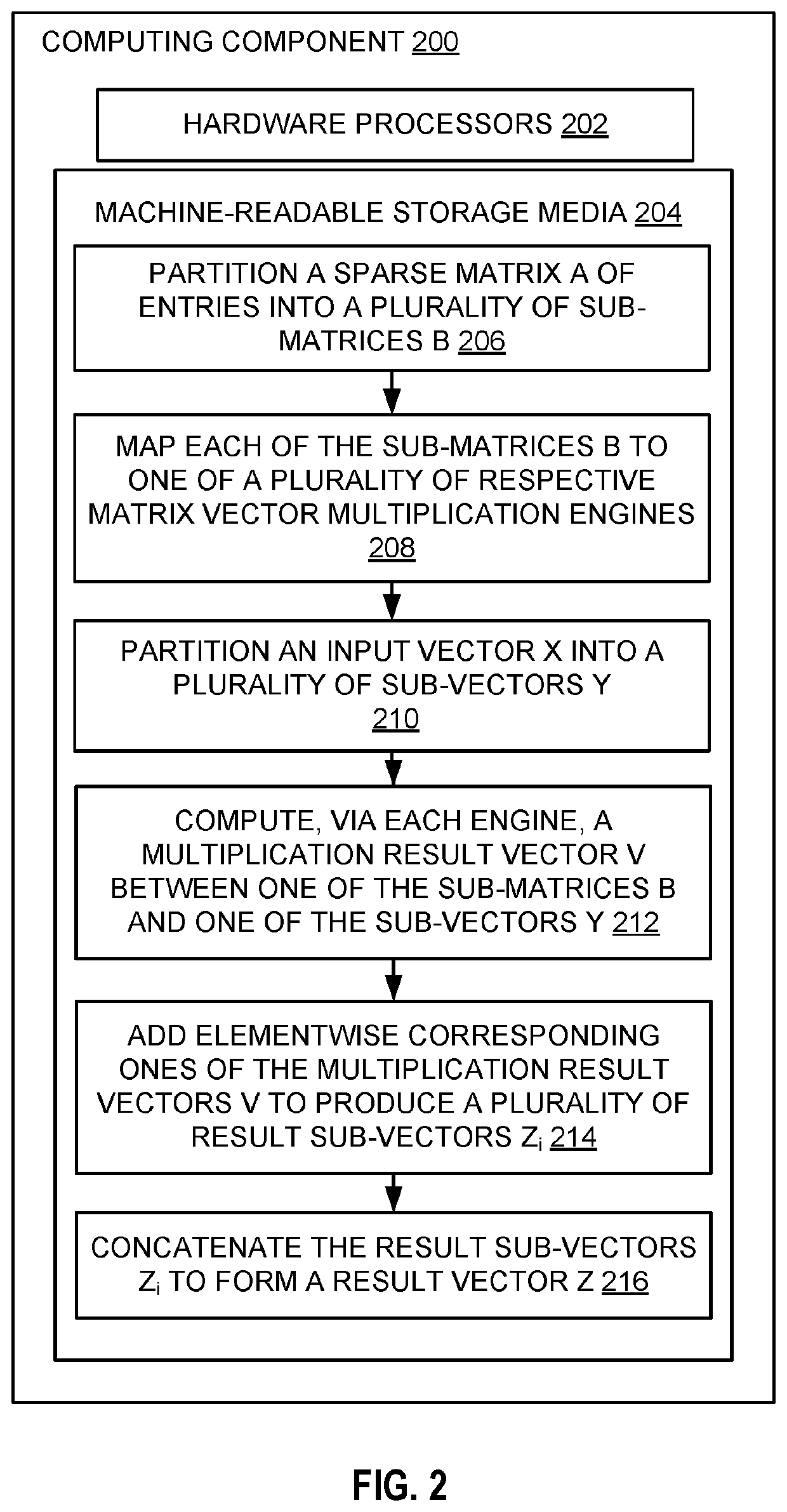

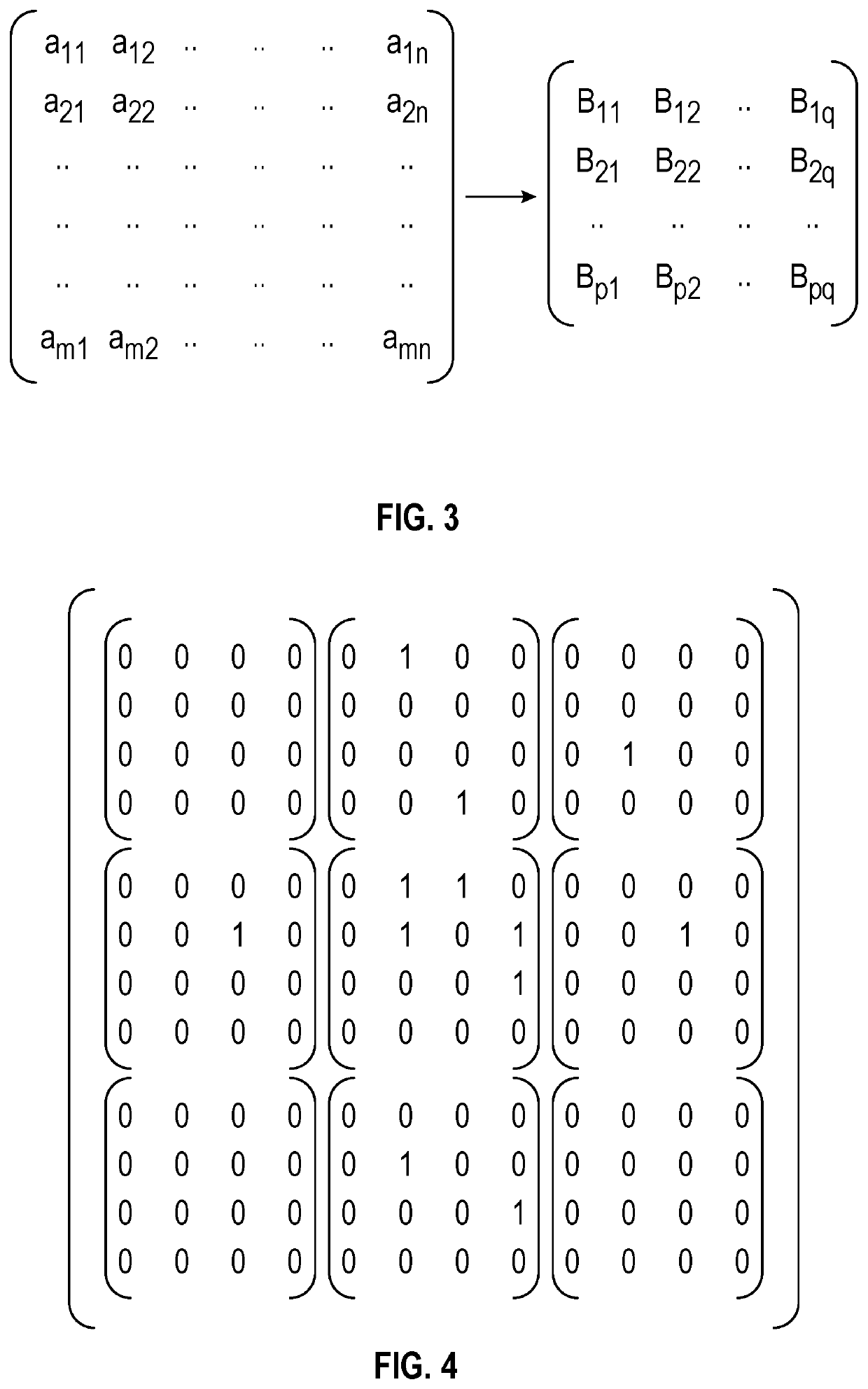

Systems and methods are provided for sparse matrix vector multiplication with a matrix vector multiplication unit. The method includes partitioning a sparse matrix of entries into a plurality of sub-matrices; mapping each of the sub-matrices to one of a plurality of respective matrix vector multiplication engines; partitioning an input vector into a plurality of sub-vectors; computing, via each matrix vector multiplication engine, a plurality of intermediate result vectors each resulting from a multiplication of one of the sub-matrices and one of the sub-vectors; for each set of rows of the sparse matrix, adding elementwise the intermediate result vectors to produce a plurality of result sub-vectors; and concatenating the result sub-vectors to form a result vector.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

FPGA-based expandable sparse matrix vector multiplication processor

PendingCN108710505AEasy to operateImprove data throughputMachine execution arrangementsSparse matrix vectorControl store

Owner:NANJING UNIV

Parallel computing method for sparse matrix vector multiplication of Shenwei architecture

ActiveCN108446253AIncrease spaceExtension of timeComplex mathematical operationsSparse matrix vectorHardware architecture

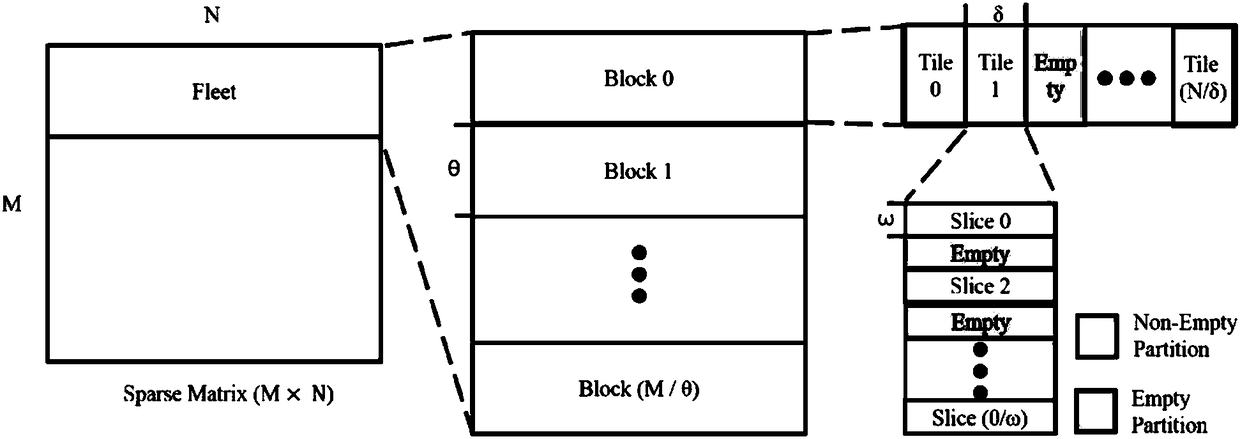

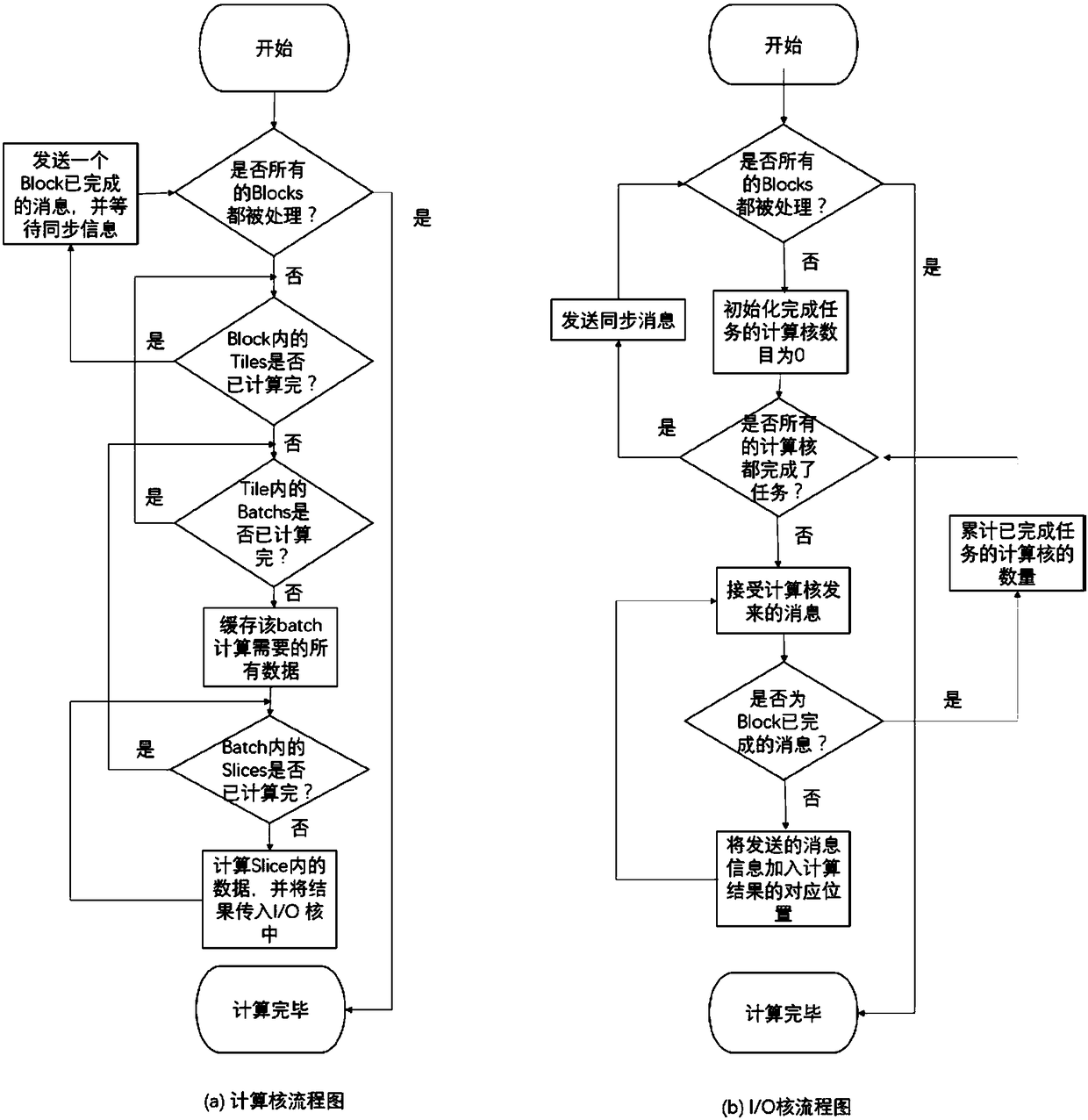

The present invention relates to a parallel computing method for sparse matrix vector multiplication of a Shenwei architecture. The method comprises: dividing a sparse matrix from an original single-level data structure into a multi-level data structure, wherein the multi-level data structure comprises four levels of fleet, block, tile, and slice; and corresponding the multi-level data structure to a Shenwei hardware architecture and the calculation hierarchy. According to the method disclosed by the present invention, the spatial and temporal locality of the data is improved, and the number of times of interactions between the core group and the memory is reduced.

Owner:BEIHANG UNIV

Data classification method and system based on convolutional neural network, medium and equipment

ActiveCN110909801AReduce training timeReduce memory consumptionCharacter and pattern recognitionNeural architecturesData setConcurrent computation

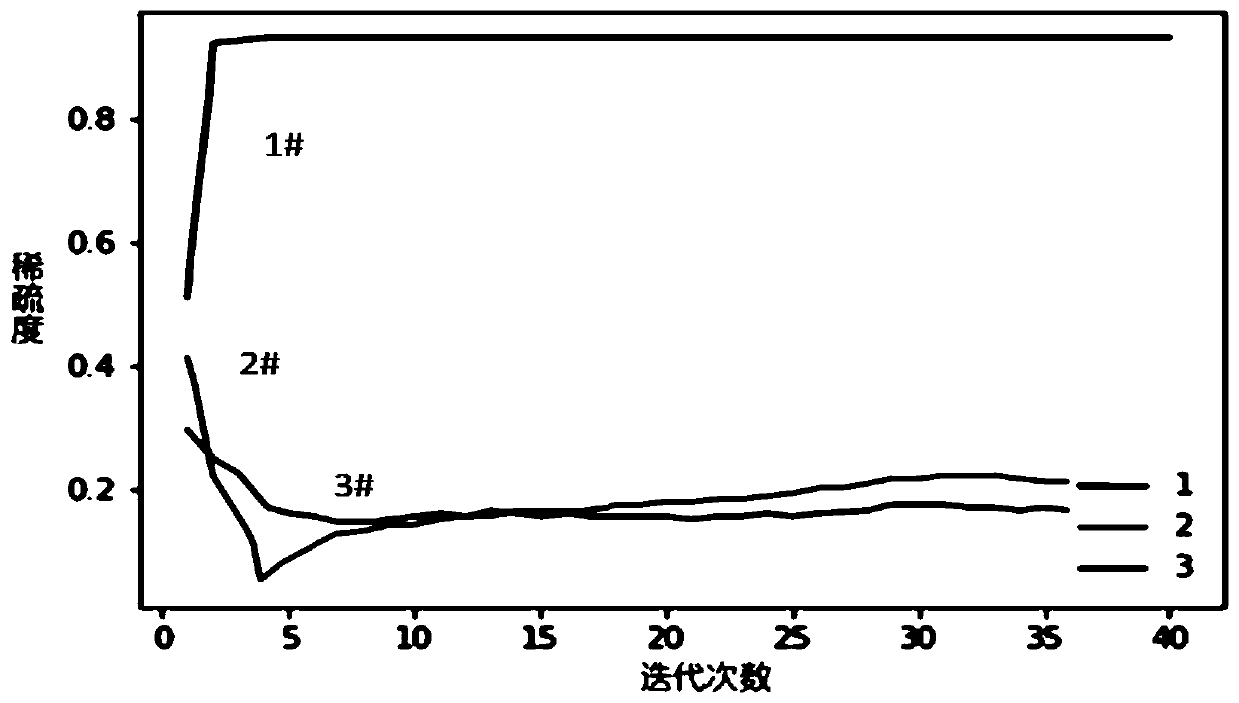

The invention provides a data classification method and system based on a convolutional neural network, a medium and equipment. The data classification method comprises the steps: carrying out the preprocessing of obtained classification data, and constructing a data set; constructing a convolutional neural network, wherein the convolutional neural network at least comprises a convolutional layerused for extracting local features, and the convolutional layer compresses a feature matrix, and sparse matrix vector multiplication operation is performed on the generated sparse matrix on a graphicprocessing unit, and data in a data set is utilized to train the convolutional neural network; and preprocessing the to-be-classified data, inputting the preprocessed to-be-classified data into the trained convolutional neural network model, and outputting a data classification result. According to the data classification method, the feature matrix of the convolutional layer is compressed, and parallel computation is performed on the GPU, so that the memory consumption and zero value computation in the computation process are reduced, and the training time and memory consumption of the neuralnetwork are reduced.

Owner:SHANDONG NORMAL UNIV

Heterogeneous parallel computing method for sparse matrix-vector multiplication

InactiveCN105068787AExcellent computing characteristicsThe generation method is simpleConcurrent instruction executionMemory systemsParallel computingData storing

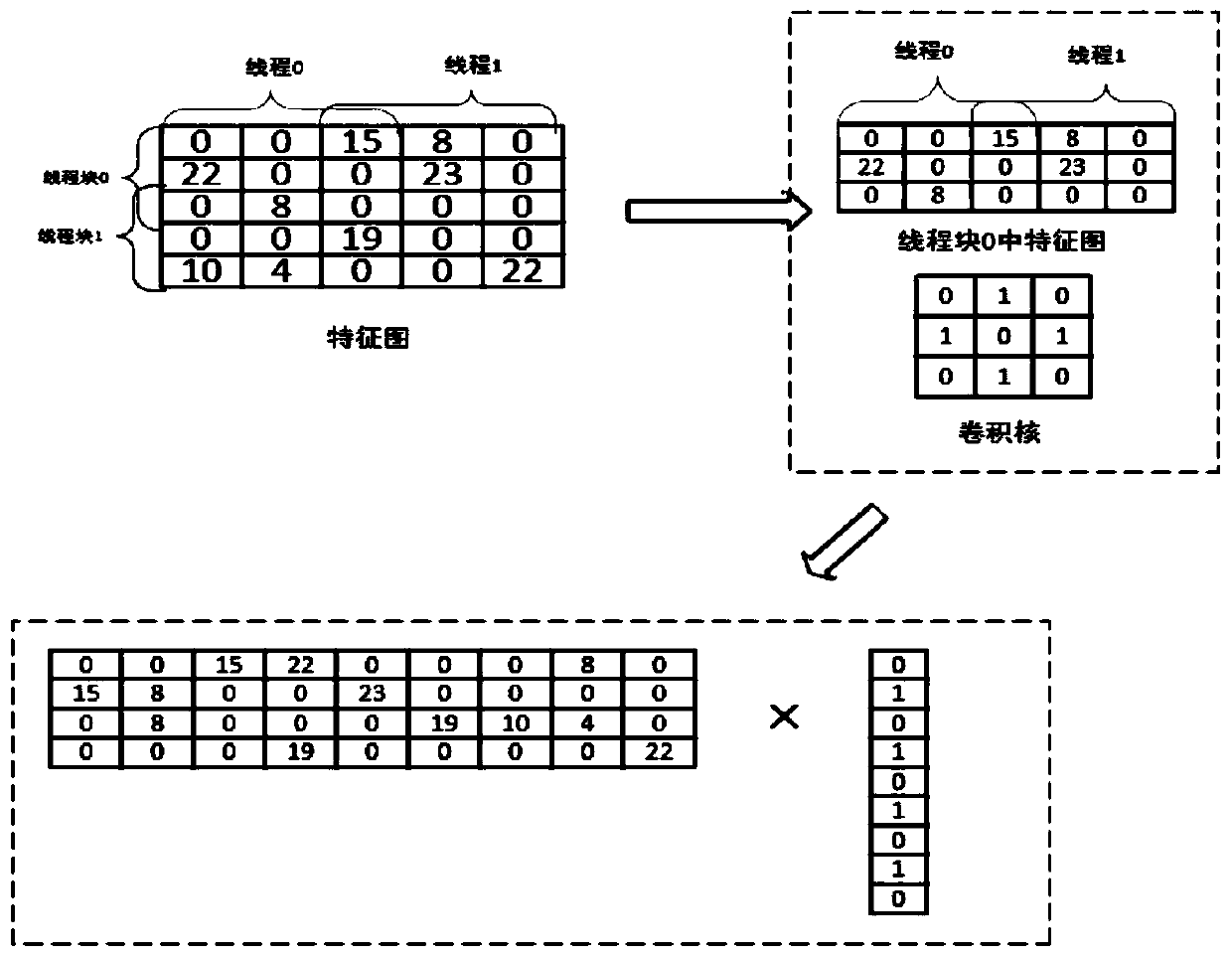

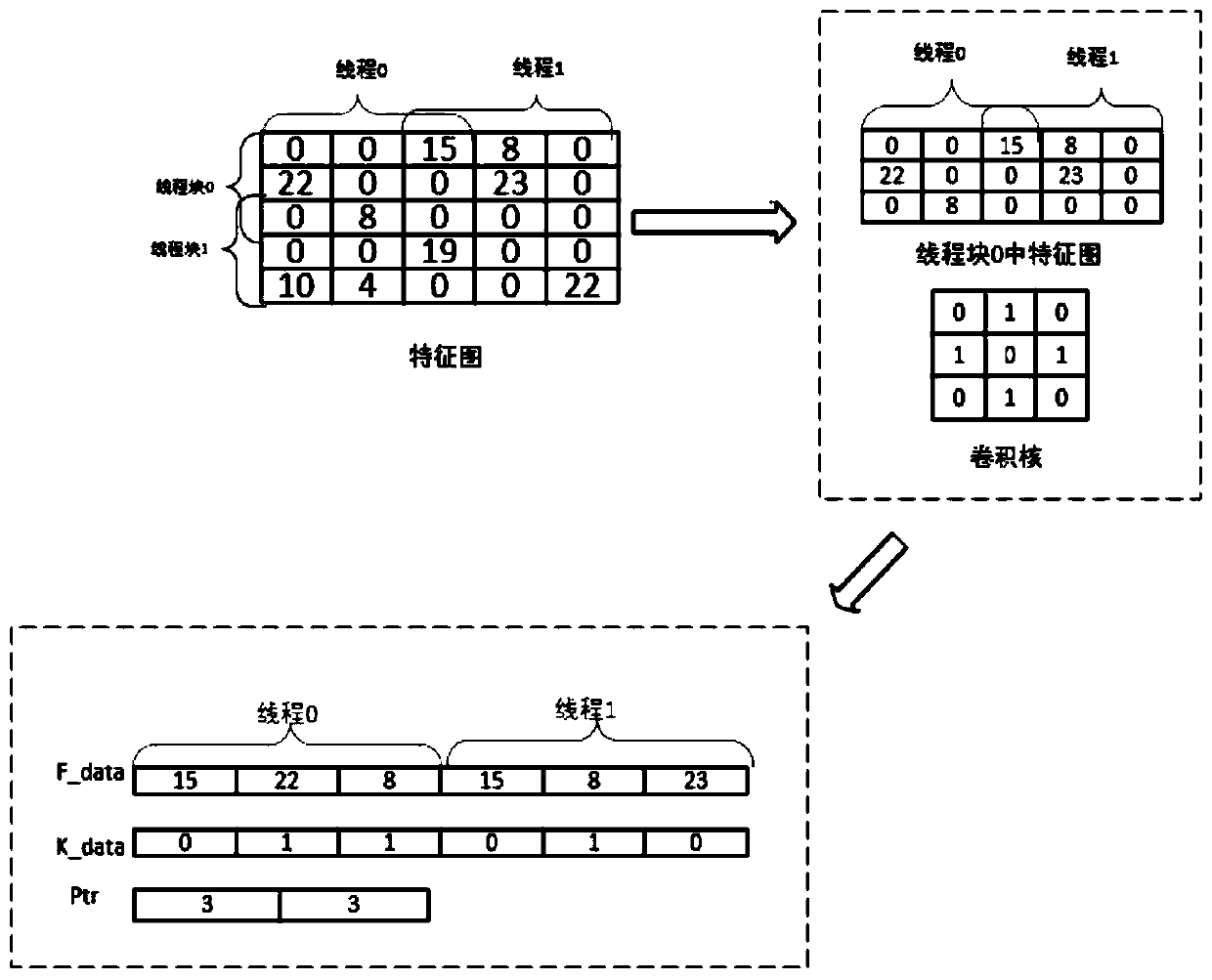

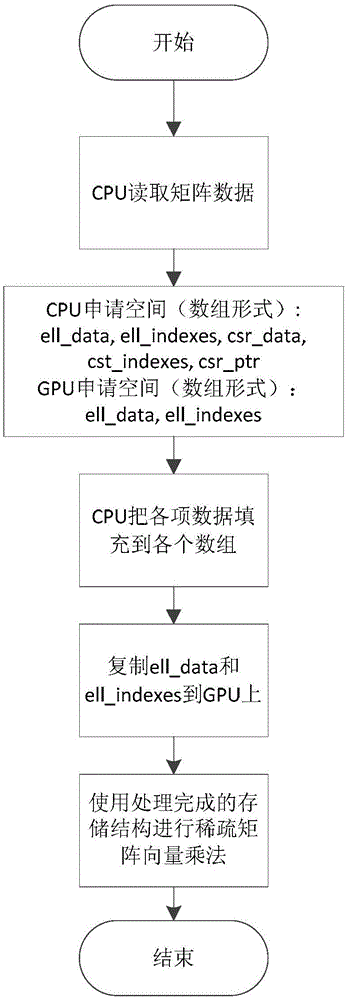

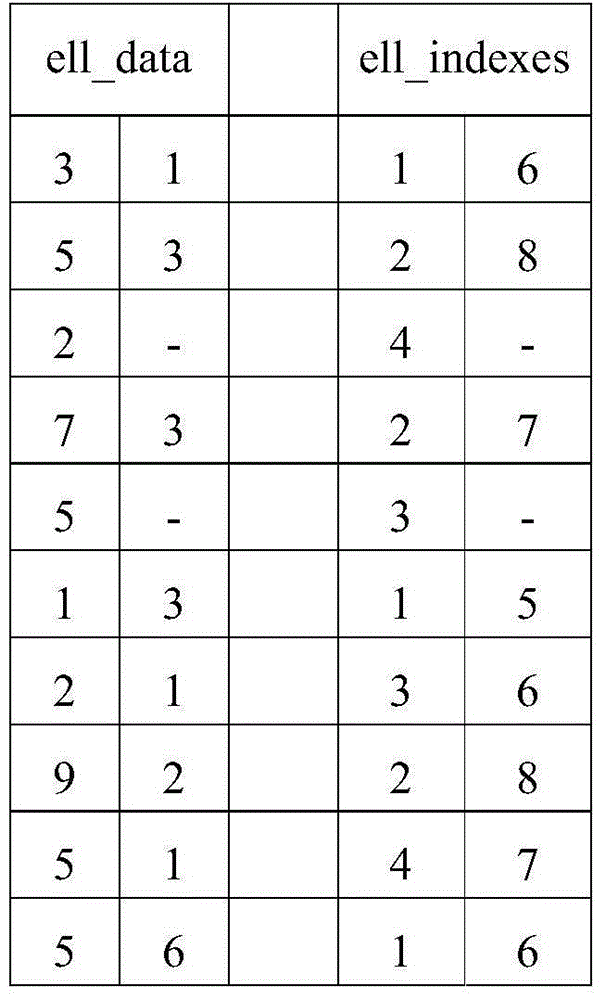

The invention discloses a heterogeneous parallel computing method for sparse matrix-vector multiplication. The method comprises the following steps of: reading a sparse matrix stored in a hard disk by a CPU, determining an adjustable parameter K of the spare matrix, and according to the adjustable parameter K of the spare matrix, applying memory storage space including storage space required for an ELL (ELLPACK) storage structure and a CSR (Compressed Sparse Row) storage structure; at the same time, applying GPU storage space required for the ELL storage structure; filling memory storage space applied by the CPU with matrix data to generate a mixed storage structure; copying data stored in the ELL storage structure in a memory to the GPU storage space for storage; and finally, performing sparse matrix-vector multiplication by using the storage structure after completion of processing. According to the computing method, the computing capabilities of the CPU and a GPU are utilized at the same time when a sparse matrix-vector multiplication computation task is executed by a computer, so that the best computing characteristics of the CPU and the GPU can be exerted separately.

Owner:SOUTH CHINA UNIV OF TECH

Data transmission method for sparse matrix vector multiplication and DMA transmission device

PendingCN111061997ARealize irregular accessEfficiently provideComplex mathematical operationsComputer hardwareParallel computing

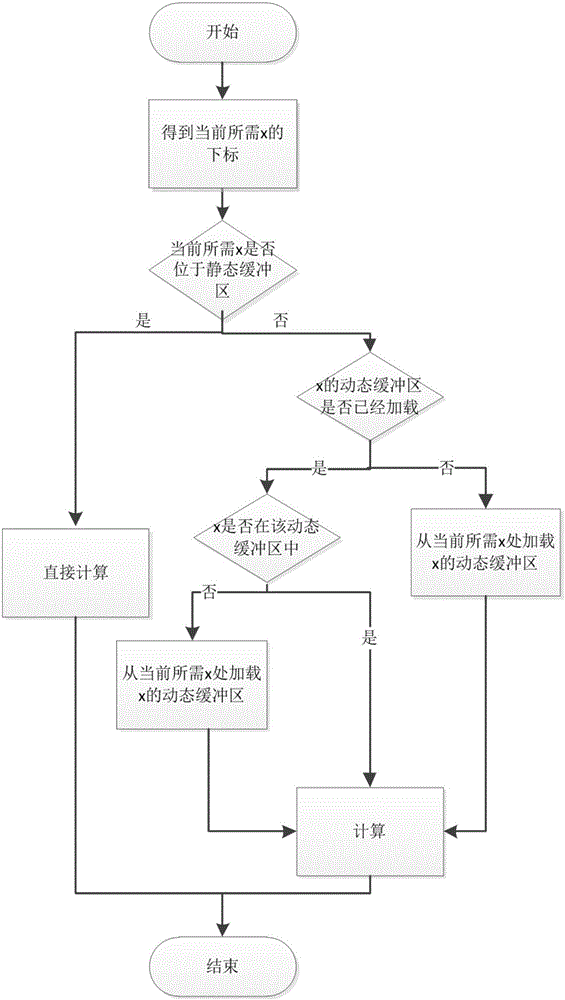

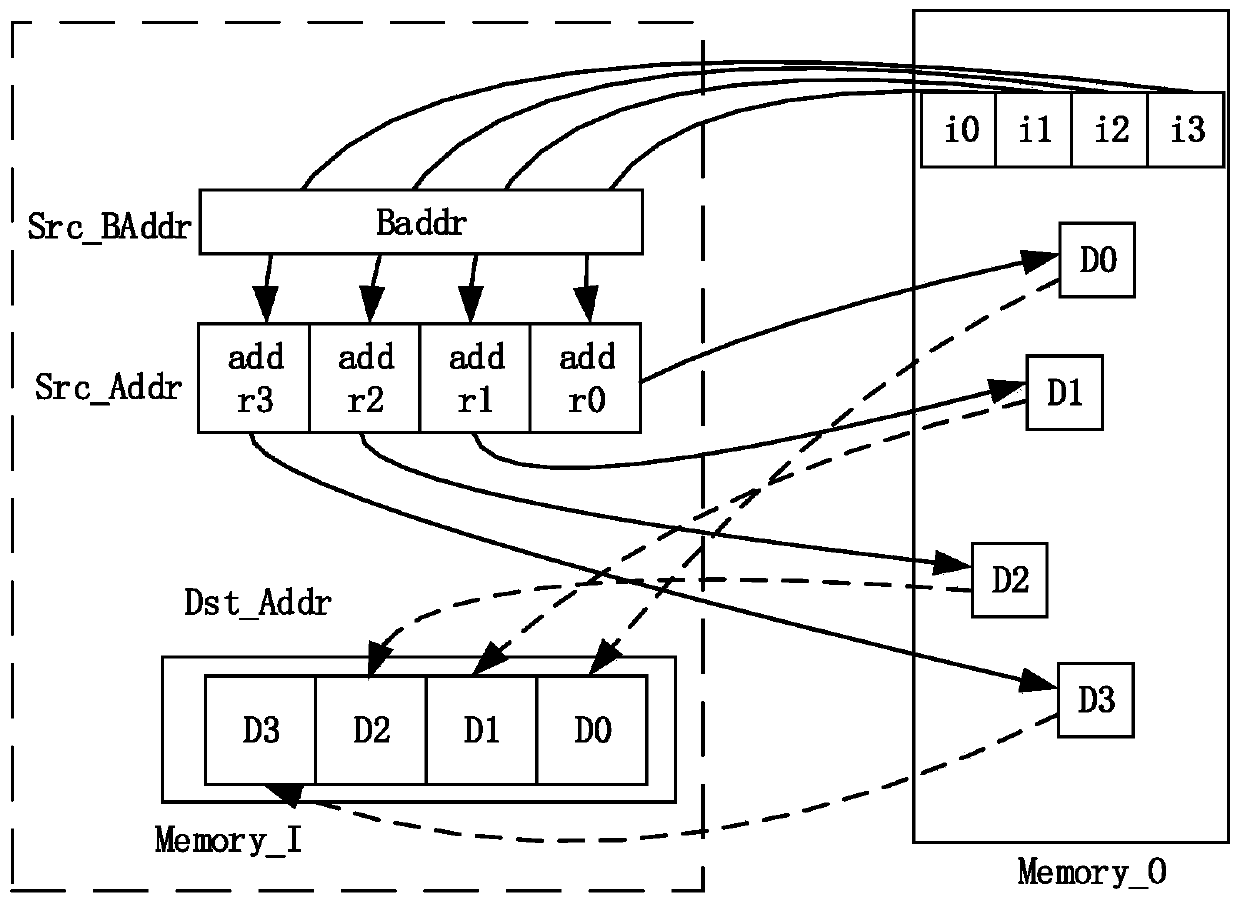

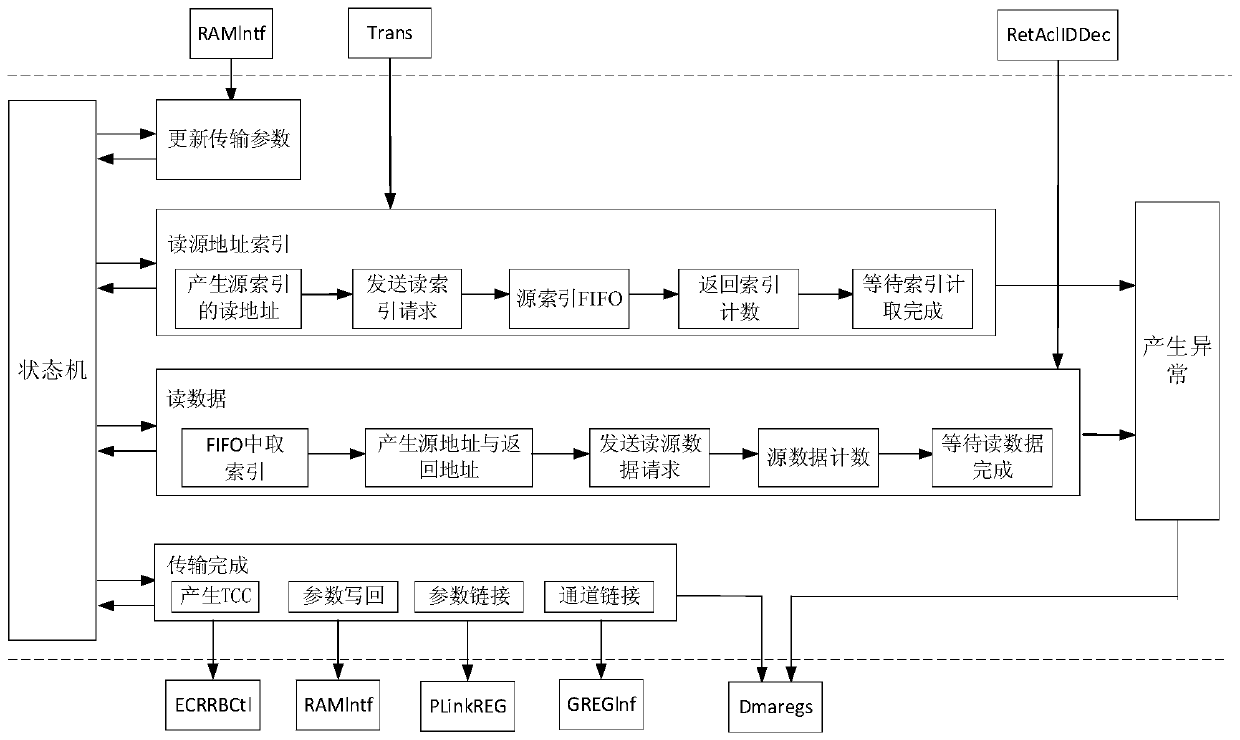

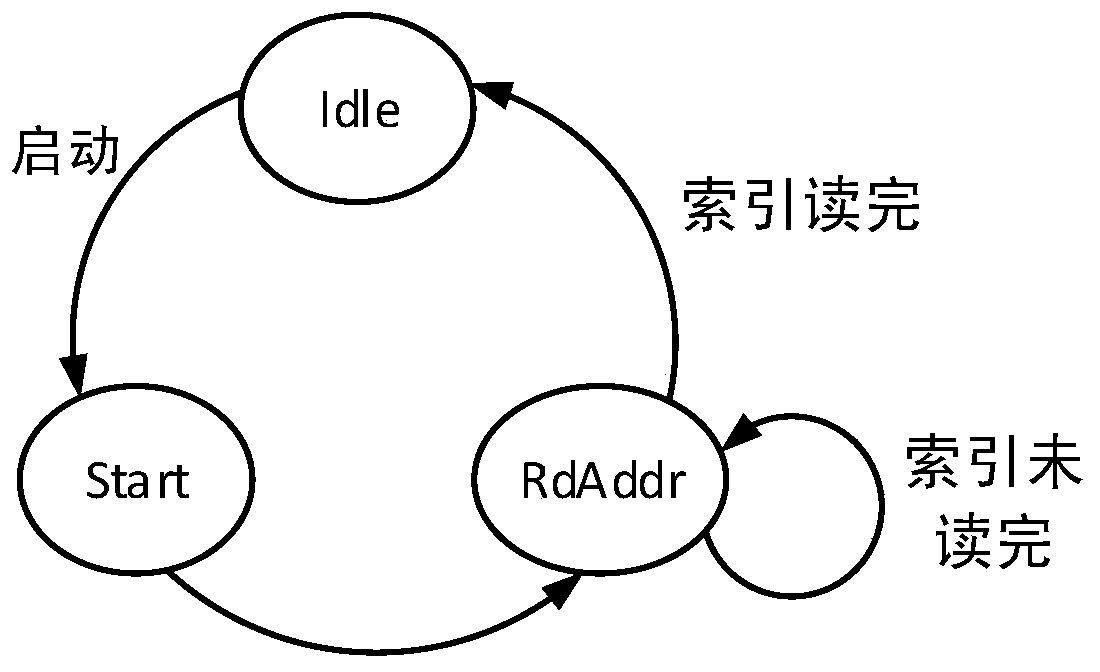

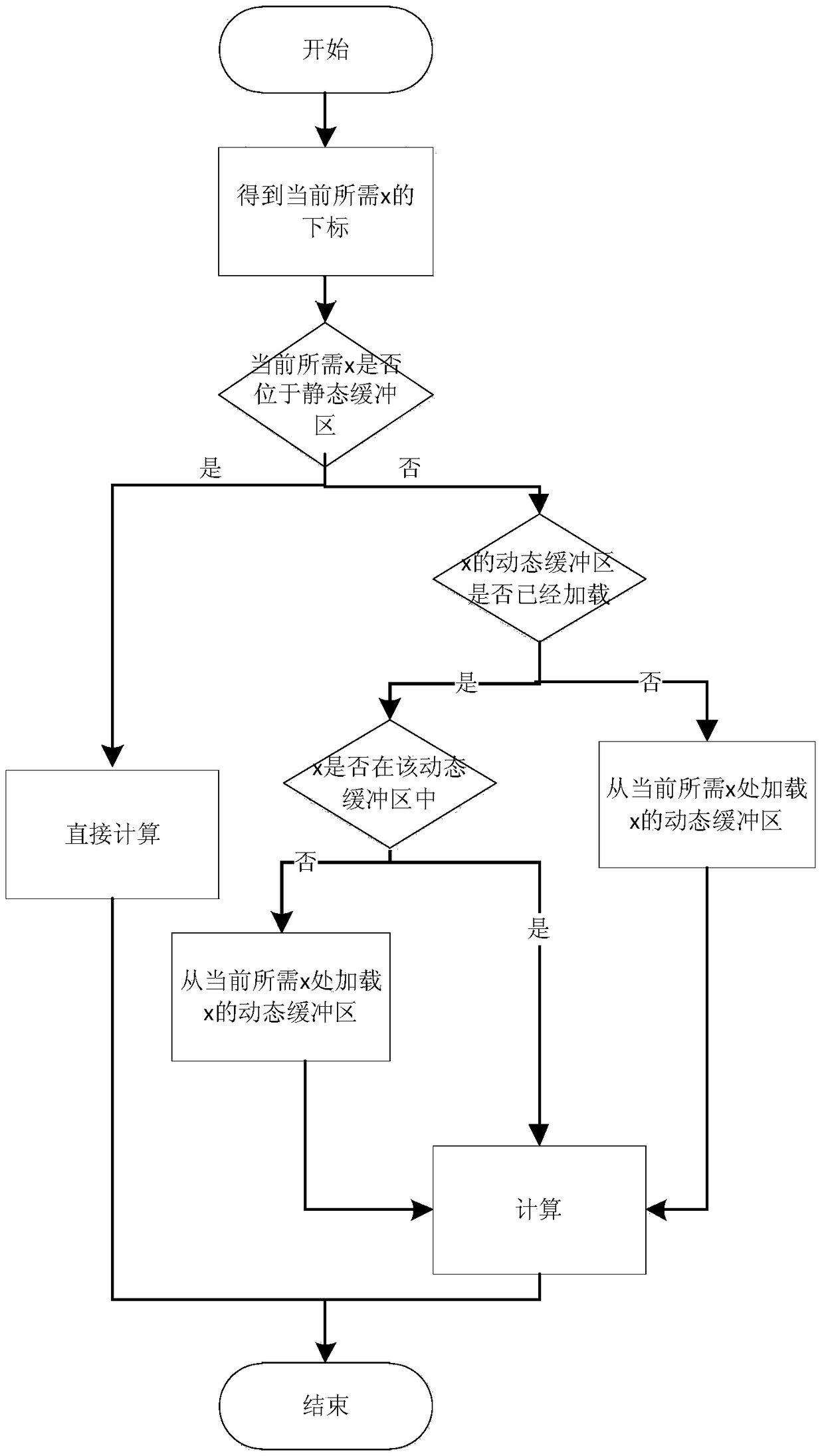

The invention discloses a data transmission method for sparse matrix vector multiplication and a DMA transmission device. The method comprises the following steps: when x vector data needs to be accessed in the sparse matrix vector multiplication solving process, configuring a DMA (Direct Memory Access) data channel APip so as to carry out irregular memory access on the x vector data according toa SuperGather data transmission mode; during memory access, the DMA data channel APip firstly reading the index of the source address from the outside of the core; and generating an actual read address of the source data according to the base address and the index of the read source address, sending a read data request with a read return address according to the generated actual read address of the source data, and moving x vector data required by sparse matrix vector multiplication calculation to the in-core memory bank from the outside of the core. The method has the advantages of being simple in implementation method, high in access speed, high in accuracy, less in occupied resource, flexible in application and the like.

Owner:NAT UNIV OF DEFENSE TECH

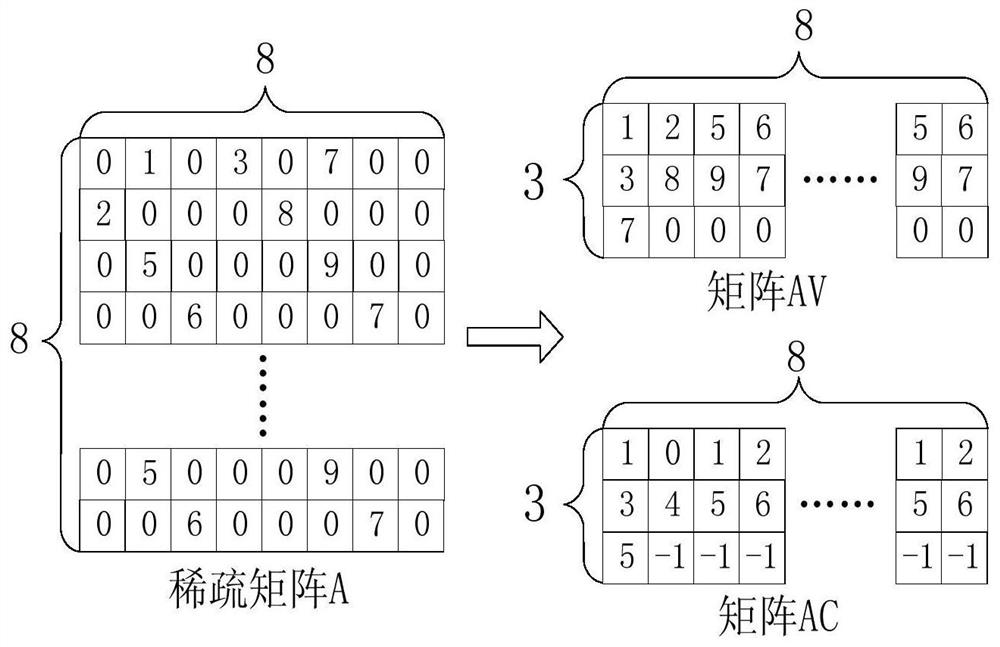

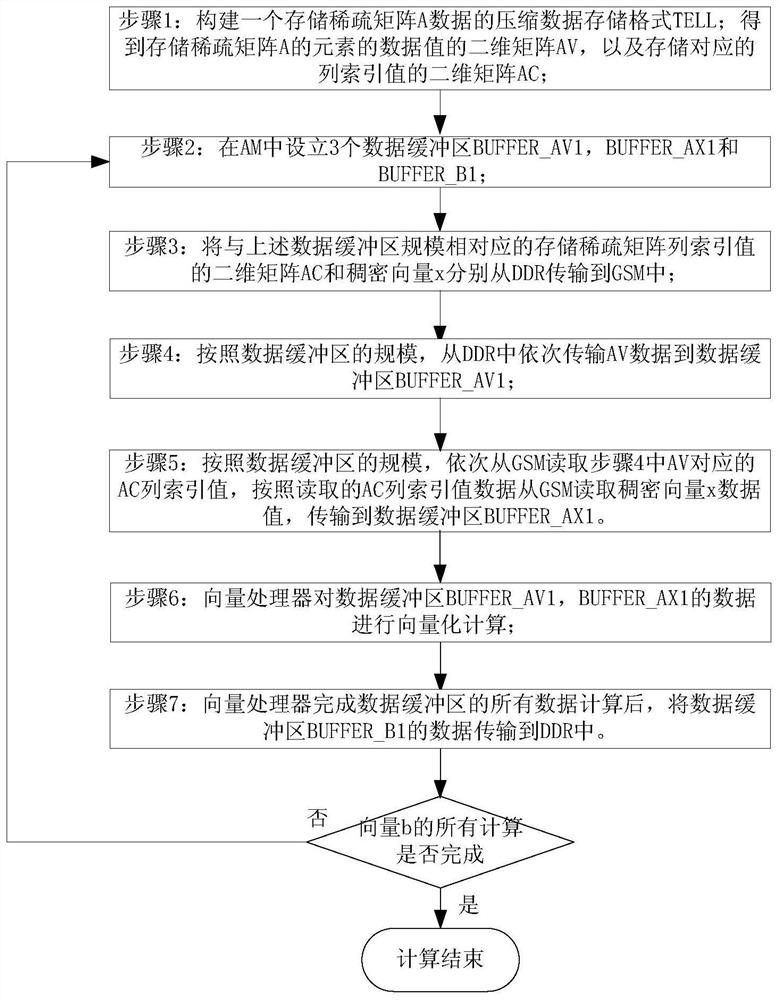

Sparse matrix vector multiplication vectorization implementation method

ActiveCN111859277AImprove resource utilizationImplement vectorizationComplex mathematical operationsDouble data rateAlgorithm

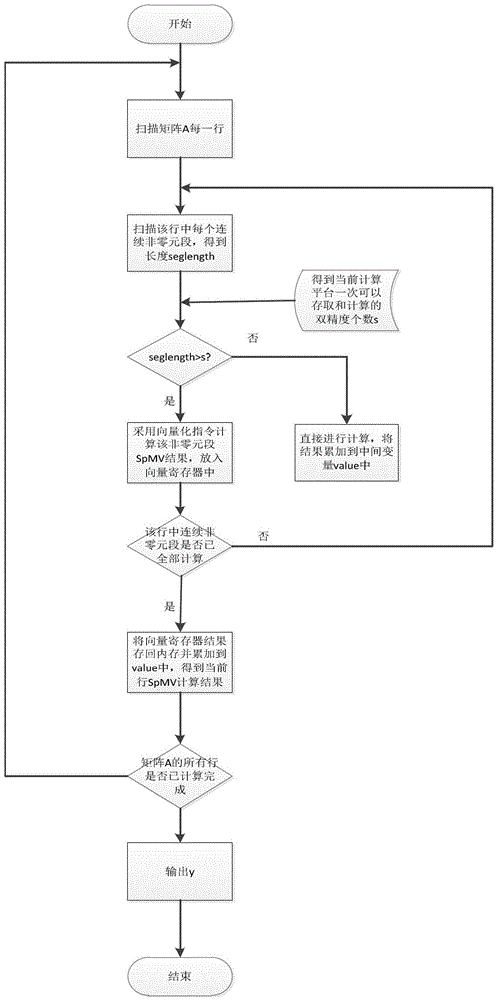

The invention discloses a sparse matrix vector multiplication vectorization implementation method, which comprises the following steps of: 1, respectively constructing a first matrix and a second matrix in a DDR (Double Data Rate), reading non-zero element data values in a sparse matrix to be calculated according to rows and storing the non-zero element data values in the first matrix according tocolumns, and storing corresponding column index values in the second matrix according to columns; 2, configuring a data buffer area in AM; 3, respectively transmitting the dense vector to be calculated and the data in the second matrix from the DDR to the GSM; 4, sequentially transmitting the data in the first matrix from the DDR to a data buffer area; 5, reading a column index value in the second matrix, reading a data value of the to-be-calculated dense vector according to the read column index value, and transmitting the data value to a data buffer area; and step 6, performing vectorization calculation on the data in the data buffer area. The method has the advantages of simple implementation operation, high resource utilization rate, high calculation efficiency, low hardware overheadand the like.

Owner:NAT UNIV OF DEFENSE TECH

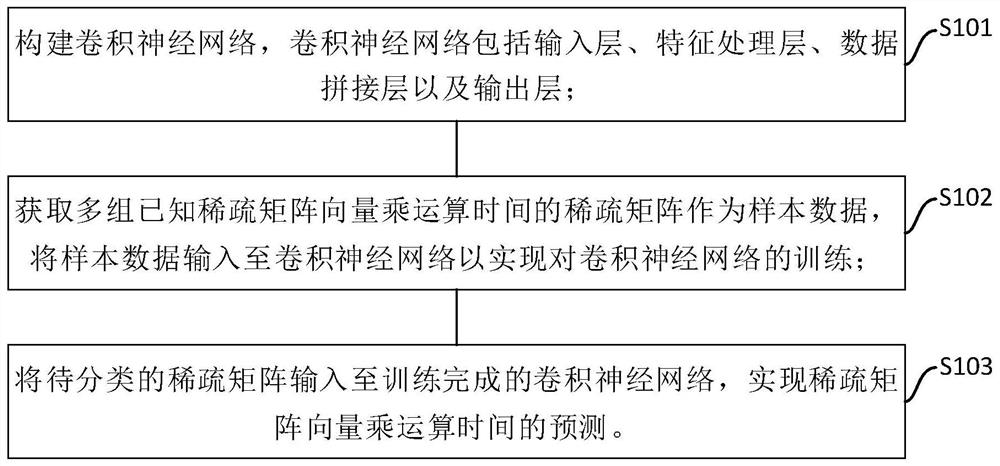

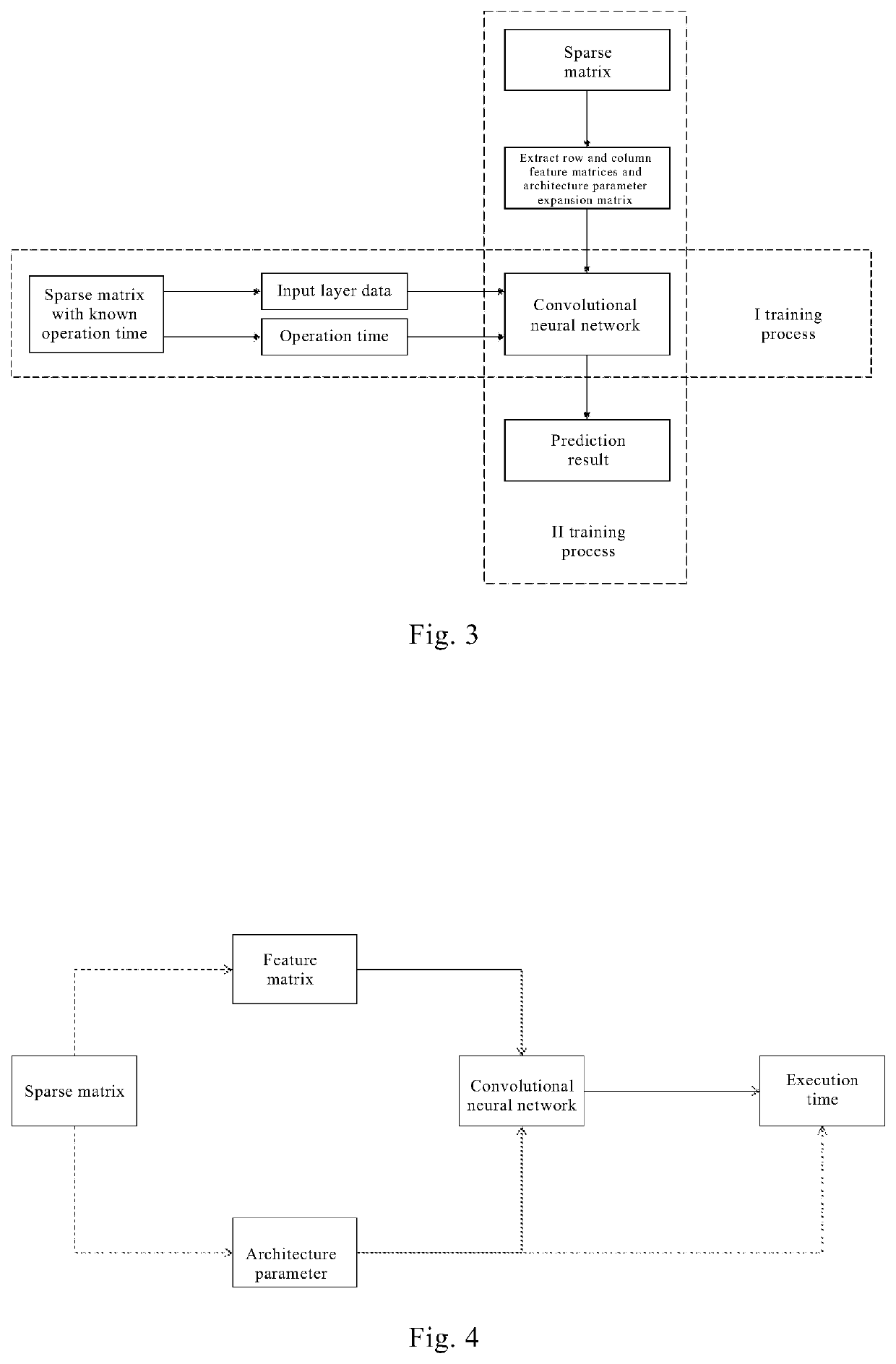

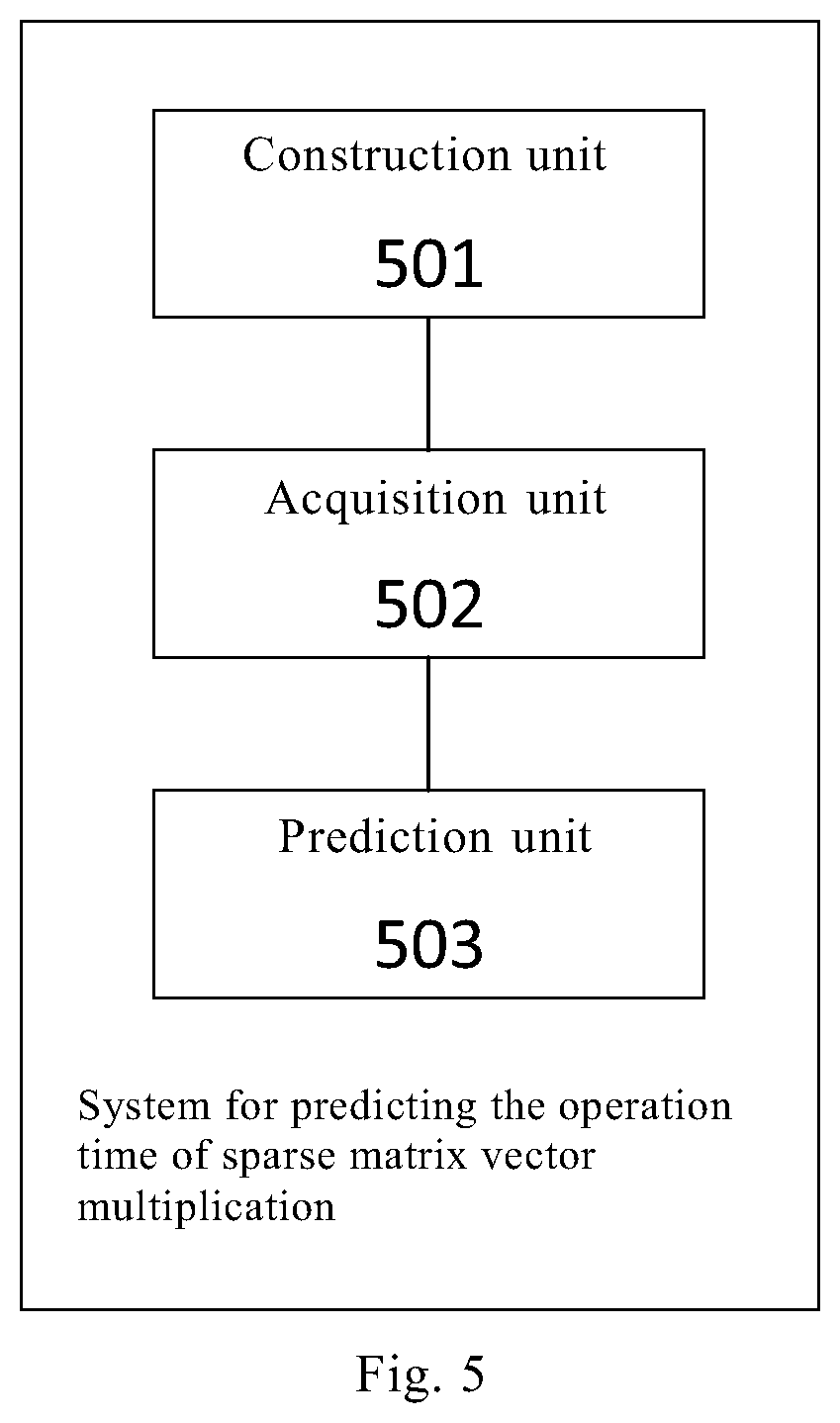

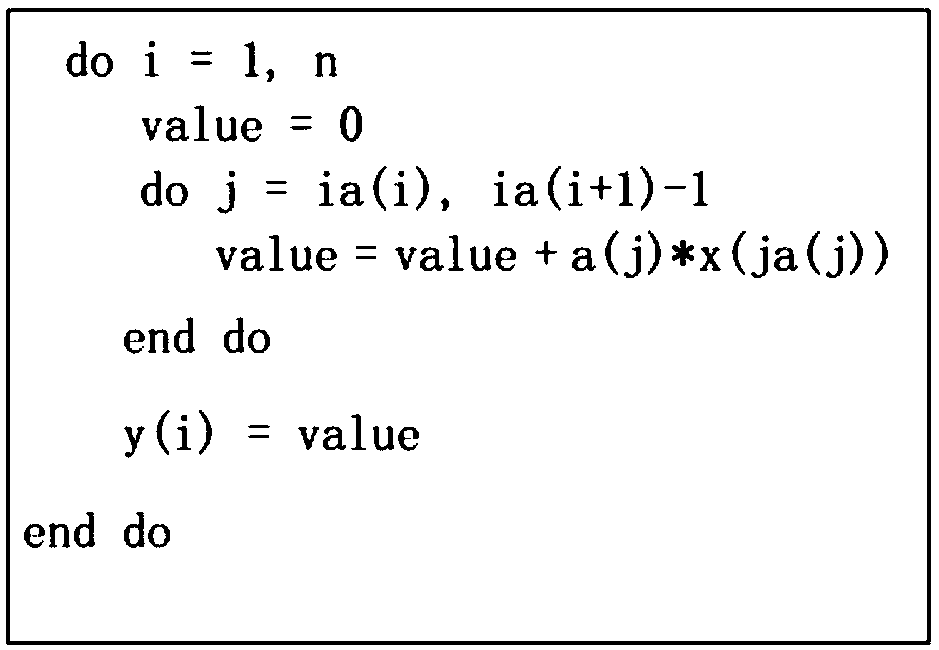

Prediction method and system for vector multiplication operation time of sparse matrix

ActiveCN111914213AReduce complexityDigital data processing detailsNeural architecturesSparse matrix vectorAlgorithm

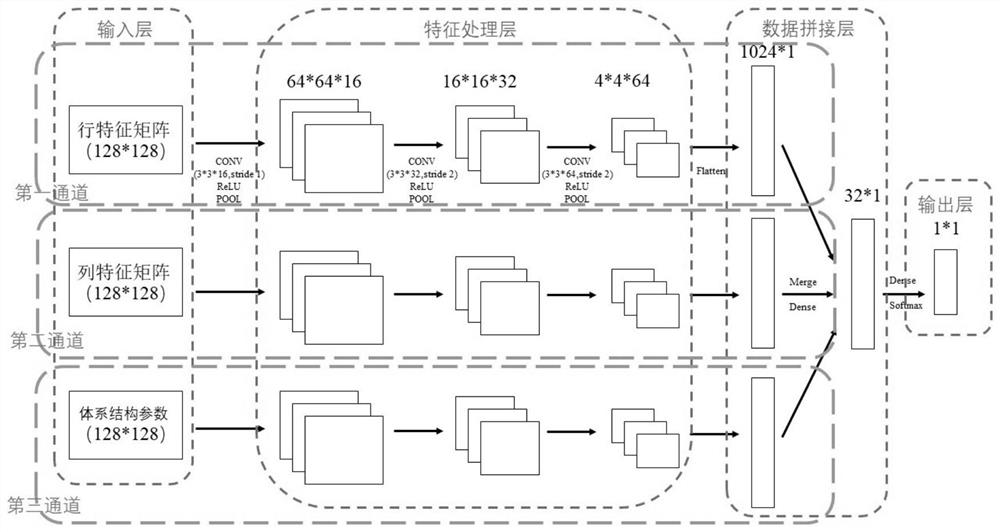

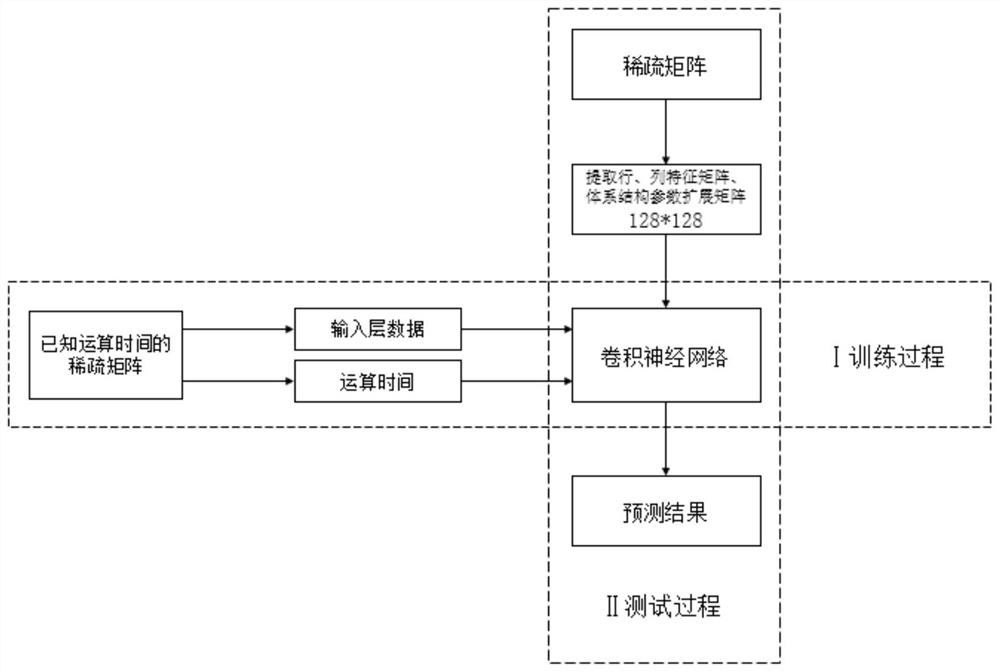

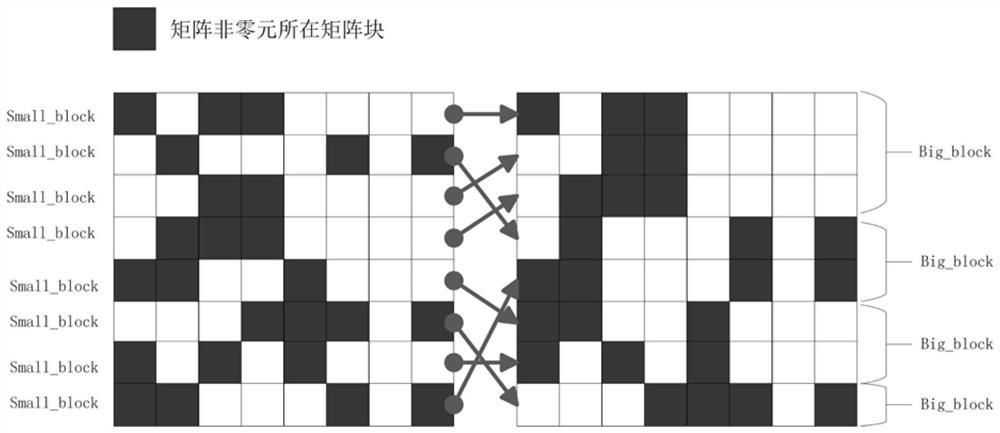

The invention relates to a prediction method and system for vector multiplication operation time of a sparse matrix, and the method comprises the following steps: constructing a convolutional neural network which comprises an input layer, a feature processing layer, a data splicing layer and an output layer, wherein the input layer is used for inputting the characteristics of a row characteristicmatrix, the characteristics of a column characteristic matrix and the characteristics of an architecture parameter extension matrix in a sparse matrix; the feature processing layer is used for extracting features in the previous layer; the data splicing layer is used for splicing the extracted features of the row feature matrix, the extracted features of the column feature matrix and the extractedfeatures of the architecture parameter extension matrix; the output layer is used for outputting a prediction result and obtaining a plurality of groups of sparse matrixes with known sparse matrix vector multiplication operation time as sample data, and inputting the sample data into the convolutional neural network to realize training of the convolutional neural network; and inputting a sparse matrix to be classified into the trained convolutional neural network to realize prediction of sparse matrix vector multiplication operation time.

Owner:CHINA INSTITUTE OF ATOMIC ENERGY +1

Many-core architecture-oriented sparse matrix vector multiplication many-core optimization method

InactiveCN112560356AImprove acceleration performanceThe optimization effect is obviousDesign optimisation/simulationComputational scienceSparse matrix vector

The invention discloses a many-core architecture-oriented sparse matrix vector multiplication many-core optimization method. The method comprises the following steps of S1, knowing a sparse matrix A of which the row number is m and the column number is n and a vector x of which the length is n; solving a vector y with the length of m, wherein y = Ax is the dot product of the sparse matrix A and the vector x; the method comprises the following steps: S1, defining the size blkxsize of an x vector block, and partitioning the x vector element according to an x vector element subscript to partitionan x vector; and S2, counting the number of the x vector block corresponding to the column number of each row of non-zero elements in the original sparse matrix, namely the sparse matrix A. Accordingto the blocking information of the x vector, namely the number information of the x vector block where the x vector element obtained by solving in the step S1 is located, thereby counting the numberinformation of the x vector block required by each row of the sparse matrix when the sparse matrix vector is multiplied. According to the method, the overall many-core acceleration performance is improved, the locality of data access is improved, and the optimization effect on unstructured grid CFD application is obvious.

Owner:JIANGNAN INST OF COMPUTING TECH

Sparse matrix vector multiplication with a matrix vector multiplication unit

ActiveUS10726096B2Neural architecturesPhysical realisationStructural engineeringMechanical engineering

Systems and methods are provided for sparse matrix vector multiplication with a matrix vector multiplication unit. The method includes partitioning a sparse matrix of entries into a plurality of sub-matrices; mapping each of the sub-matrices to one of a plurality of respective matrix vector multiplication engines; partitioning an input vector into a plurality of sub-vectors; computing, via each matrix vector multiplication engine, a plurality of intermediate result vectors each resulting from a multiplication of one of the sub-matrices and one of the sub-vectors; for each set of rows of the sparse matrix, adding elementwise the intermediate result vectors to produce a plurality of result sub-vectors; and concatenating the result sub-vectors to form a result vector.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

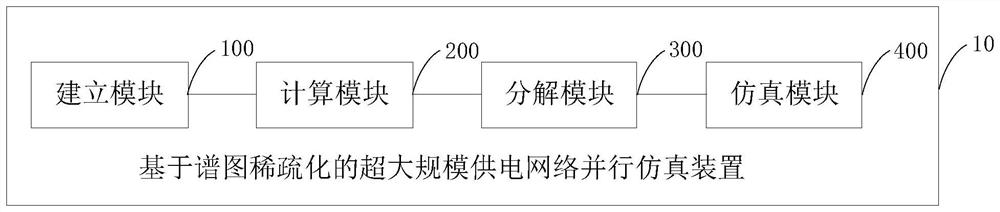

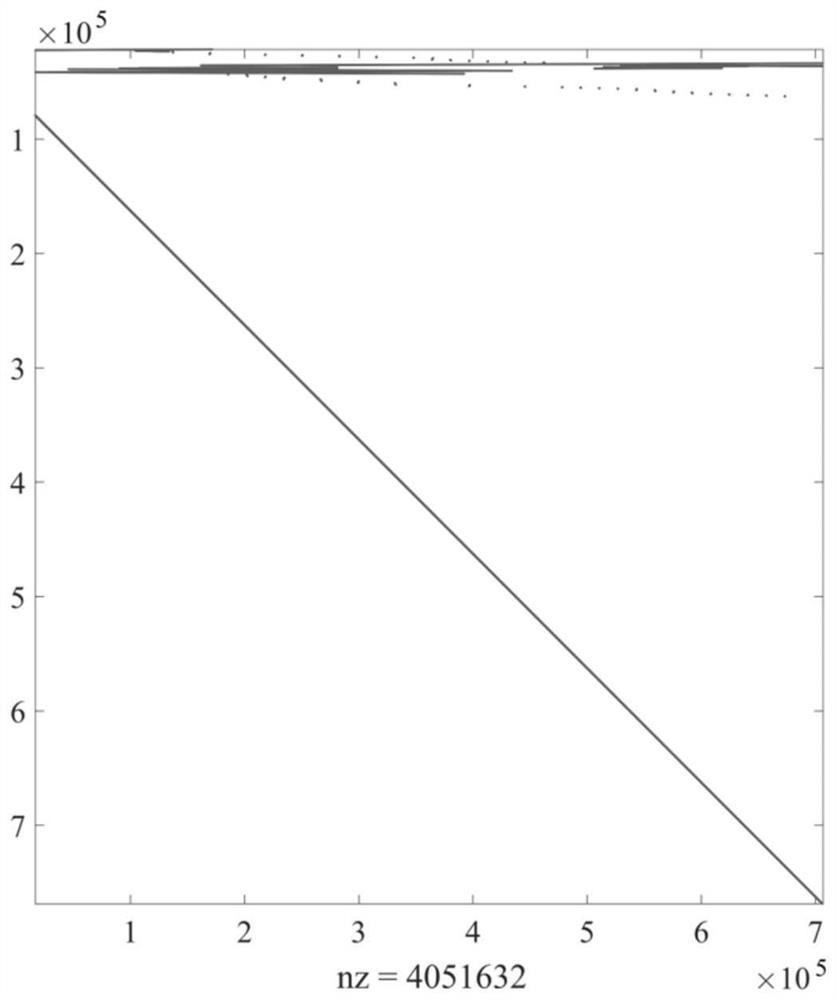

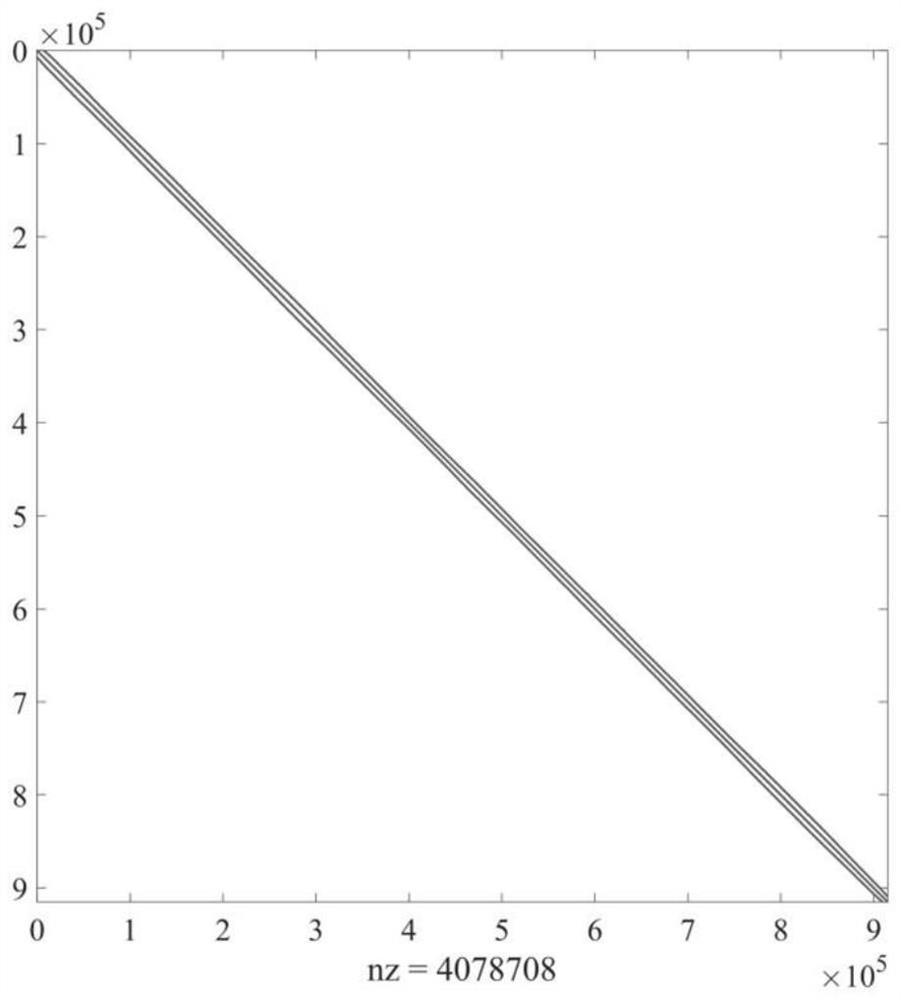

On-chip super-large-scale power supply network parallel simulation method based on spectrogram rarefaction

PendingCN114004186ALow memory usageFast convergenceOther databases indexingComputer aided designIrregular matrixTheoretical computer science

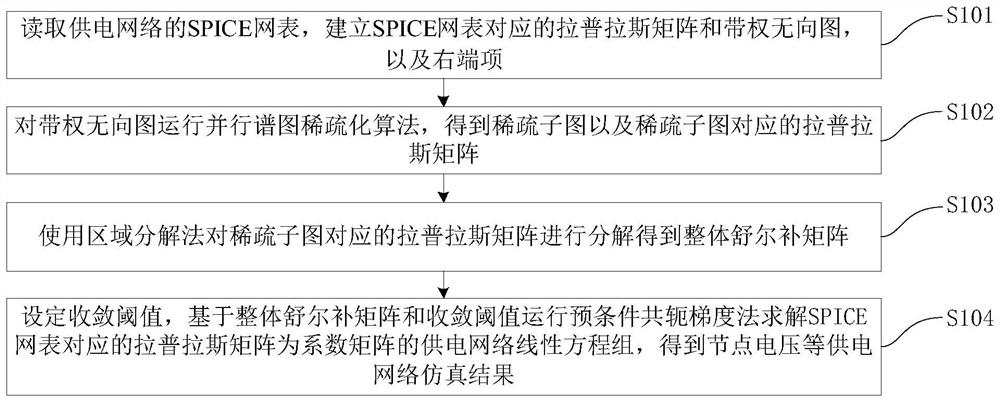

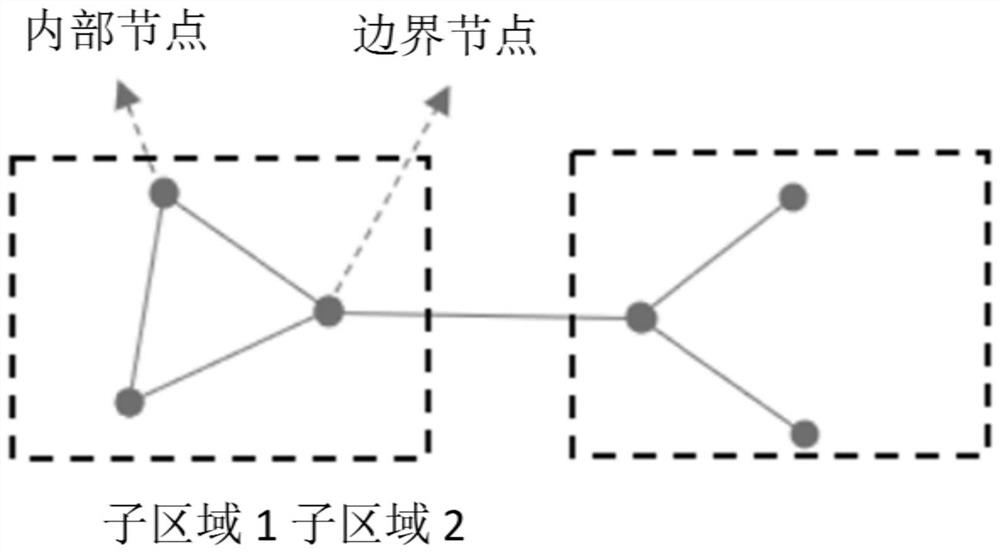

The invention discloses a super-large-scale power supply network parallel simulation method and device based on spectrogram rarefaction, and the method comprises the steps: reading an SPICE netlist of a power supply network, and building a Laplacian matrix and a weighted undirected graph corresponding to the SPICE netlist, and a right-end item; running a parallel spectrogram sparsification algorithm on the weighted undirected graph to obtain a sparse sub-graph and a Laplacian matrix corresponding to the sparse sub-graph; decomposing the Laplacian matrix corresponding to the sparse sub-graph by using a region decomposition method to obtain an integral Schel complement matrix; and setting a convergence threshold, solving a power supply network linear equation set which takes a Laplacian matrix corresponding to the SPICE netlist as a coefficient matrix based on an integral Schel complement matrix and a convergence threshold operation precondition conjugate gradient method, and obtaining power supply network simulation results of node voltage and the like. Therefore, the problems that an existing spectrogram rarefaction algorithm is achieved in a serial mode, and even though sparse matrix vector multiplication and vector addition in the PCG iteration process are prone to parallel, the parallel efficiency of the Cholesky decomposition process, the previous substitution process and the back substitution process of a sparse and irregular matrix is low are solved.

Owner:TSINGHUA UNIV

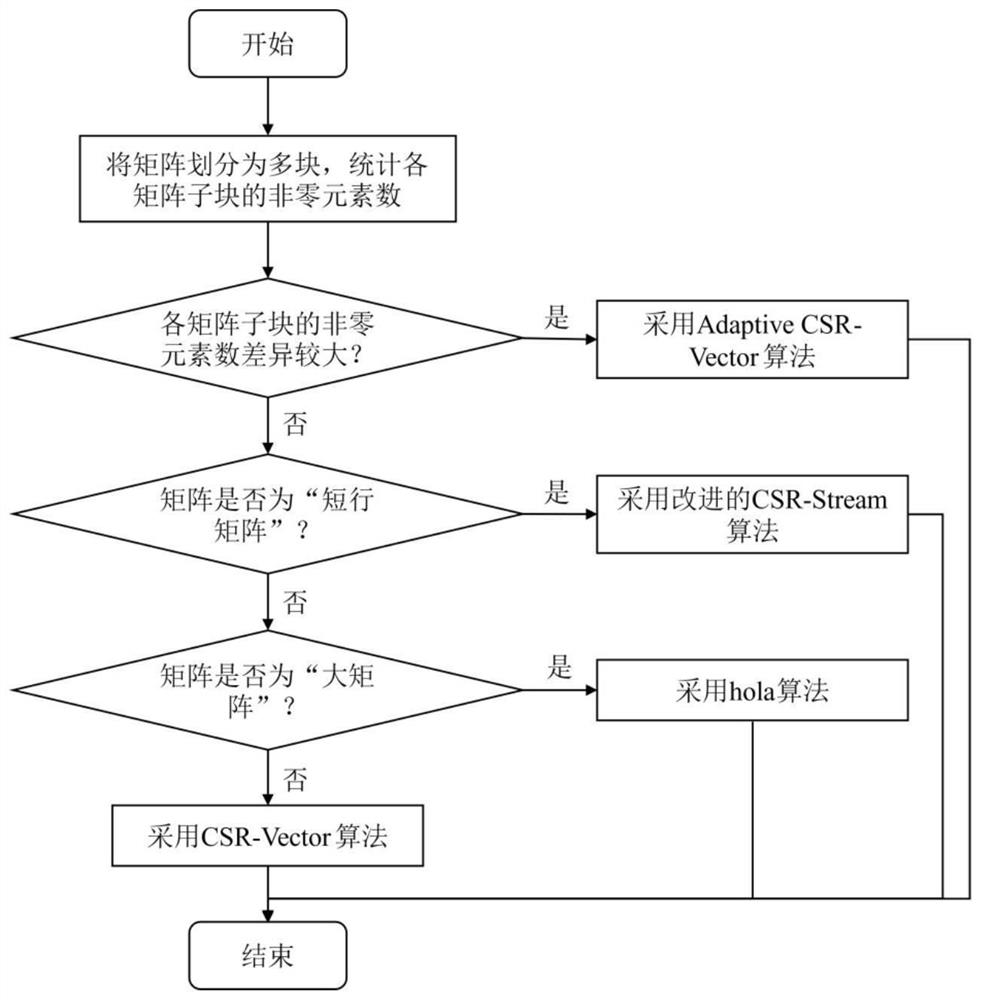

Adaptive sparse matrix vector multiplication strategy selection and optimization method

PendingCN114491401AEasy to useCategory detailsComplex mathematical operationsTheoretical computer scienceStreaming algorithm

The invention discloses a self-adaptive sparse matrix vector multiplication strategy selection and optimization method, which is suitable for a GPU (Graphics Processing Unit) architecture, and comprises the following steps: partitioning a to-be-processed matrix according to rows, counting the number of non-zero elements of each matrix sub-block, and if the difference multiple of the number of non-zero elements of each matrix sub-block is higher than a first preset threshold value, determining that the matrix is not processed; if yes, processing is carried out by adopting a self-adaptive CSR-Vector algorithm; counting the row average non-zero element number of the to-be-processed matrix, and if the row average non-zero element number of the matrix is lower than a second preset threshold value, adopting an improved CSR-Stream algorithm for solving; counting the number of non-zero elements of the to-be-processed matrix, and if the number of the non-zero elements of the to-be-processed matrix is larger than a third preset threshold value, adopting a hola algorithm for solving; and if all the conditions are not met, a CSR-Vector algorithm is adopted for solving. According to the method, adaptive efficient SpMV solving for different application problems is realized.

Owner:UNIV OF SCI & TECH BEIJING

Hardware accelerator for compressed LSTM

ActiveUS10691996B2Easy to operateImprove forecastNeural architecturesPhysical realisationComputer hardwareComputational science

Hardware accelerator for compressed Long Short Term Memory (LSTM) is disclosed. The accelerator comprise a sparse matrix-vector multiplication module for performing multiplication operation between all sparse matrices in the LSTM and vectors to sequentially obtain a plurality of sparse matrix-vector multiplication results. A addition tree module are also included for accumulating a plurality of said sparse matrix multiplication results to obtain an accumulated result. And a non-linear operation module passes the accumulated results through an activation function to generate non-linear operation result. That is, the present accelerator adopts pipeline design to overlap the time of data transfer and computation for compressed LSTM.

Owner:XILINX TECH BEIJING LTD

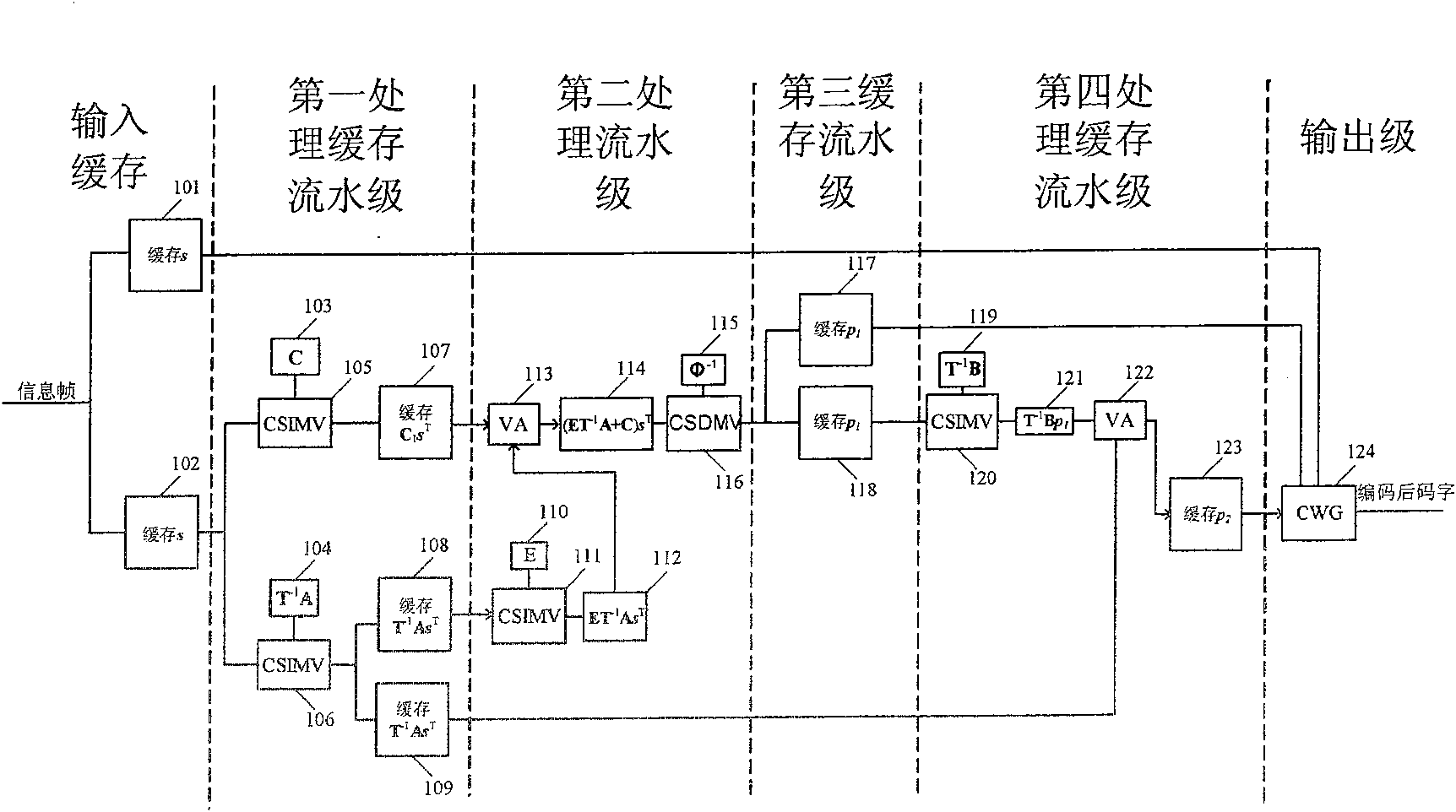

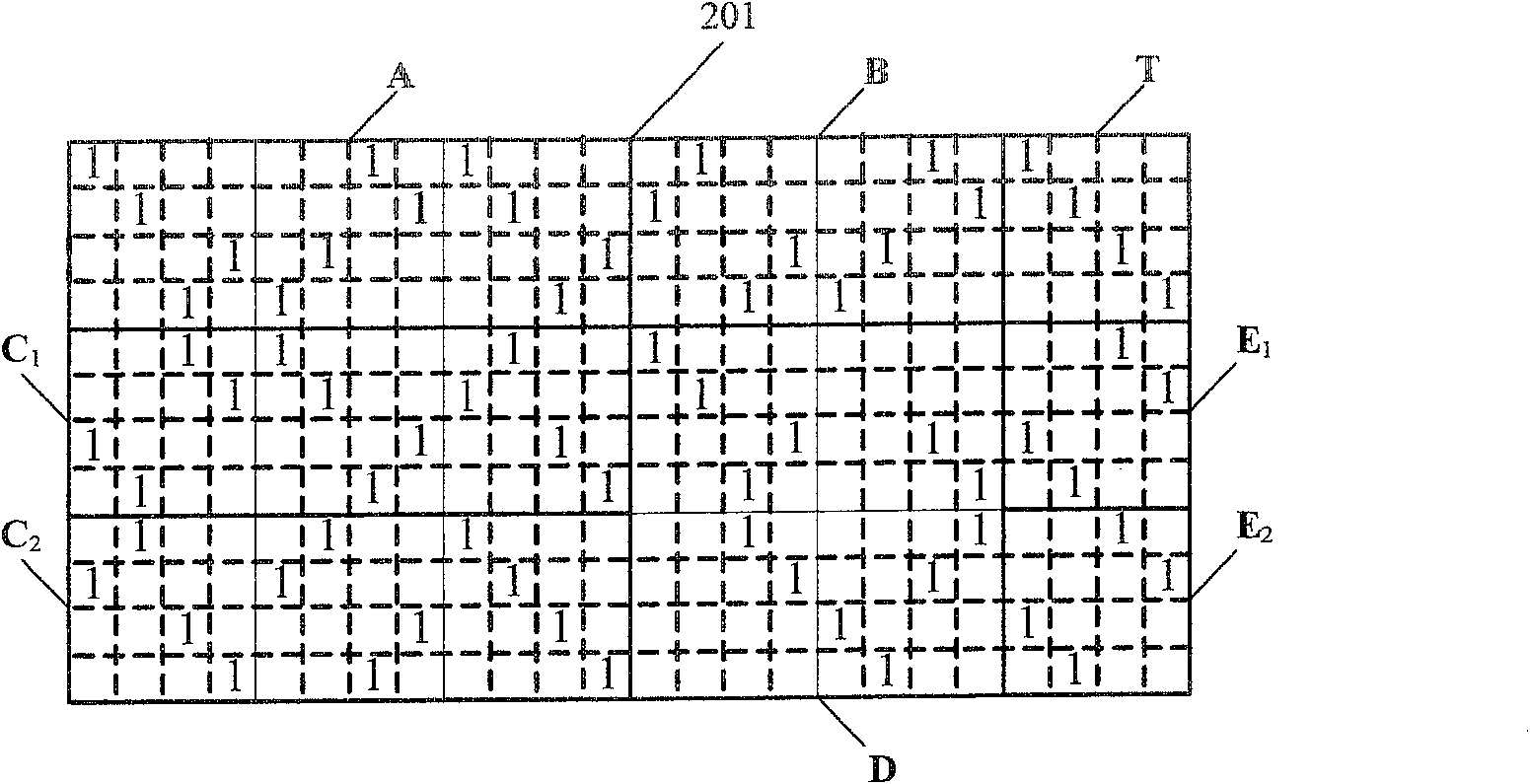

Encoder of LDPC code of layered quasi-circulation extended structure

InactiveCN100566183CSave resourcesImprove throughputError correction/detection using multiple parity bitsVariable-length codeParallel computing

Owner:SHANGHAI JIAOTONG UNIV

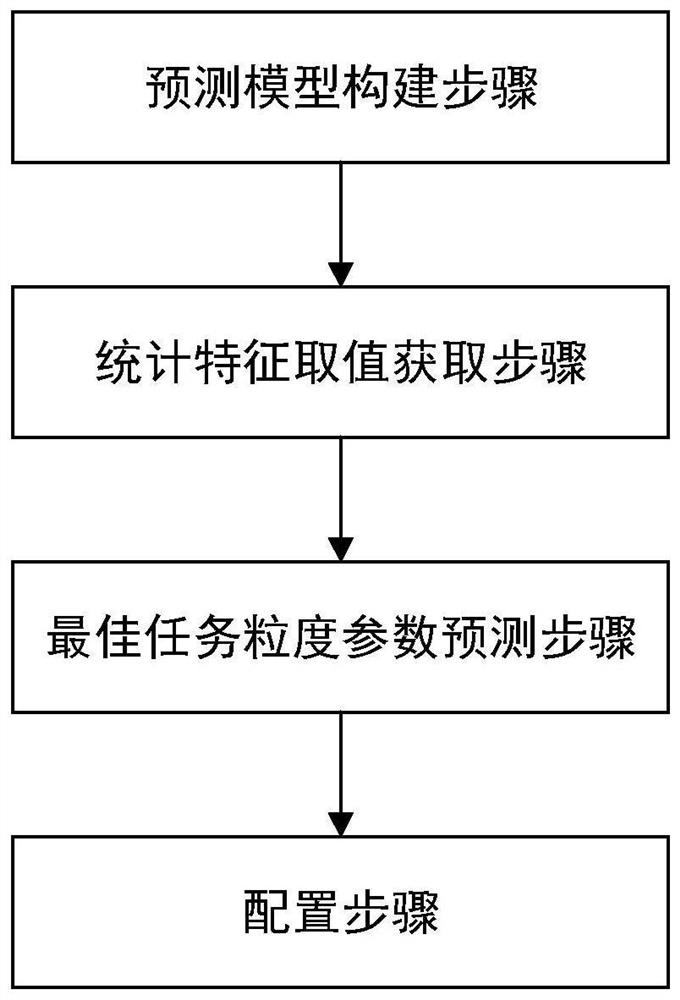

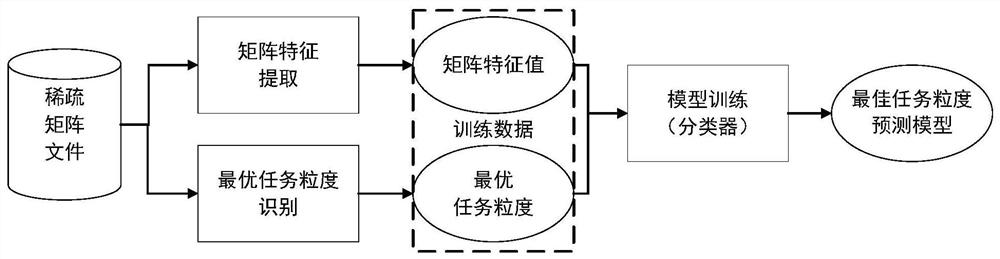

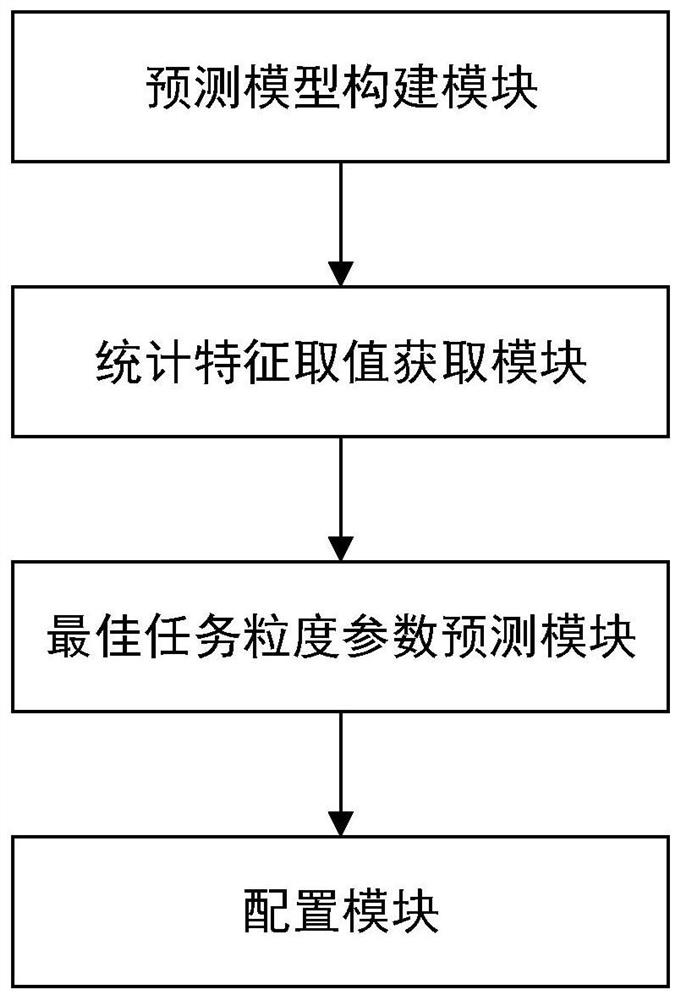

Sparse matrix vector multiplication parallel task granularity parameter automatic tuning method and device

ActiveCN111984418AImprove load balancingGuaranteed uptimeProgram initiation/switchingResource allocationComputational scienceSparse matrix vector

The invention belongs to the field of parallel computing and discloses an automatic tuning method and device for sparse matrix vector multiplication parallel task granularity parameters. The method comprises a prediction model construction step, constructing a prediction model through a machine learning method; a statistical characteristic value obtaining step, analyzing the matrix original data file to obtain a statistical characteristic value of the matrix; an optimal task granularity parameter prediction step, inputting the obtained statistical characteristic value into a prediction model,and predicting an optimal parallel task granularity parameter value of the SpMV program when the matrix characteristic value is used as input; and a configuration step of adjusting the system task granularity during parallel running according to the prediction result. The device comprises a prediction model construction module, a statistical characteristic value acquisition module, an optimal taskgranularity parameter prediction module and a configuration module. According to the method, the parallel task granularity of the SpMV in different input matrixes is adaptively selected, so a purposeof improving the load balance and the overall computing performance of the parallel program is achieved.

Owner:NAT UNIV OF DEFENSE TECH

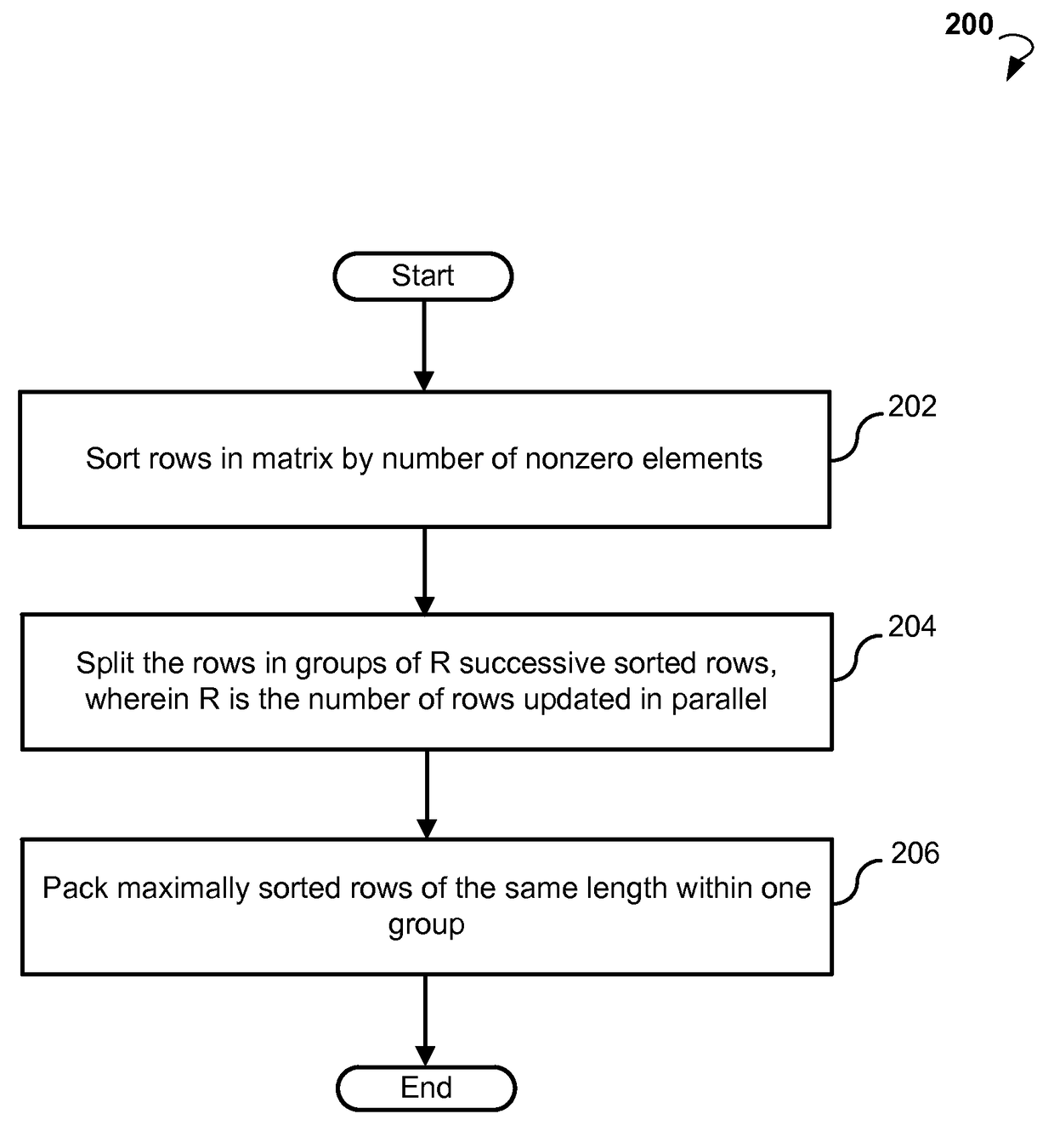

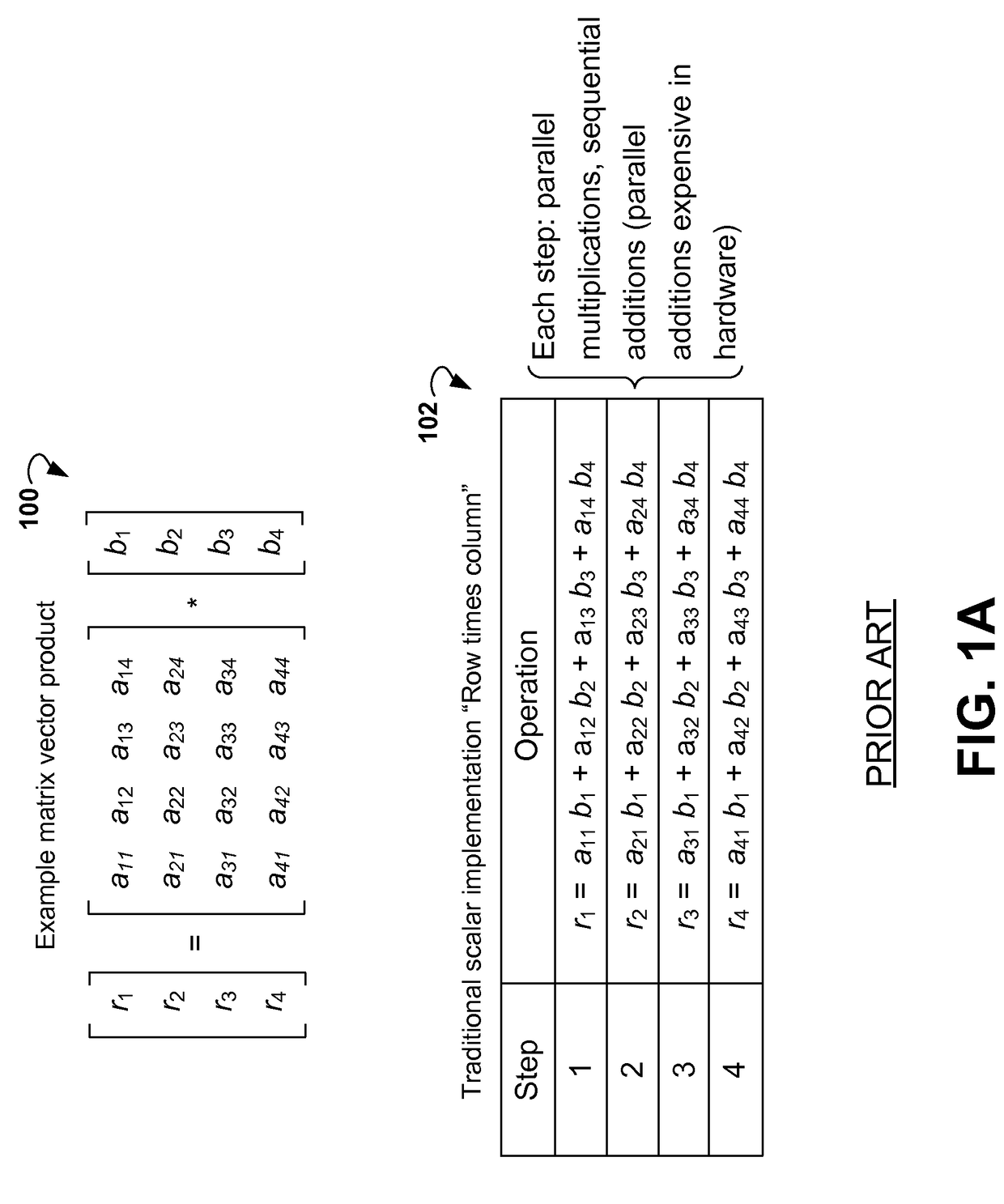

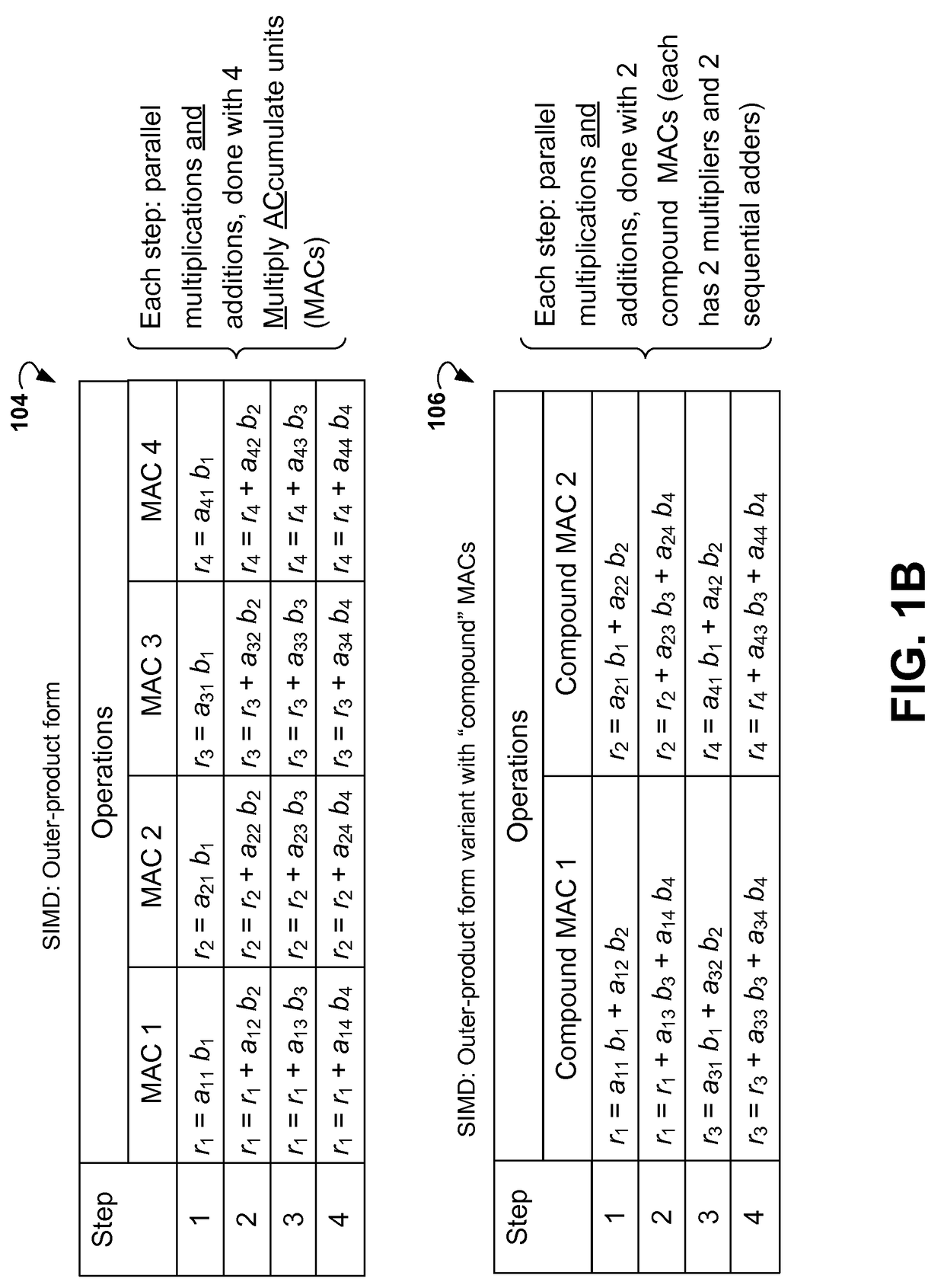

Sparse matrix vector multiplication

InactiveUS20180067899A1Well formedComplex mathematical operationsC-elementSparse matrix-vector multiplication

Systems and methods for multiplying a sparse matrix by a vector using a single instruction multiple data (SIMD) architecture are provided. An example method includes sorting rows of the sparse matrix by a number of non-zero elements in the rows to generate sorted rows. The sorted rows are split to generate groups of the sorted rows. The number of rows in each group of the sorted rows is equal to the number of rows updated in parallel. The method allows for packing the sorted rows in each of the groups to generate packed rows. Each of the packed rows within the same group has the same length. Per clock cycle, C elements of the packed rows and data for selecting elements of the vector are provided to computational units in the SIMD architecture, where C is the number of computational units.

Owner:KNOWLES ELECTRONICS INC

Sparse matrix calculations untilizing ightly tightly coupled memory and gather/scatter engine

ActiveUS11481214B2Register arrangementsMemory adressing/allocation/relocationComputational scienceEngineering

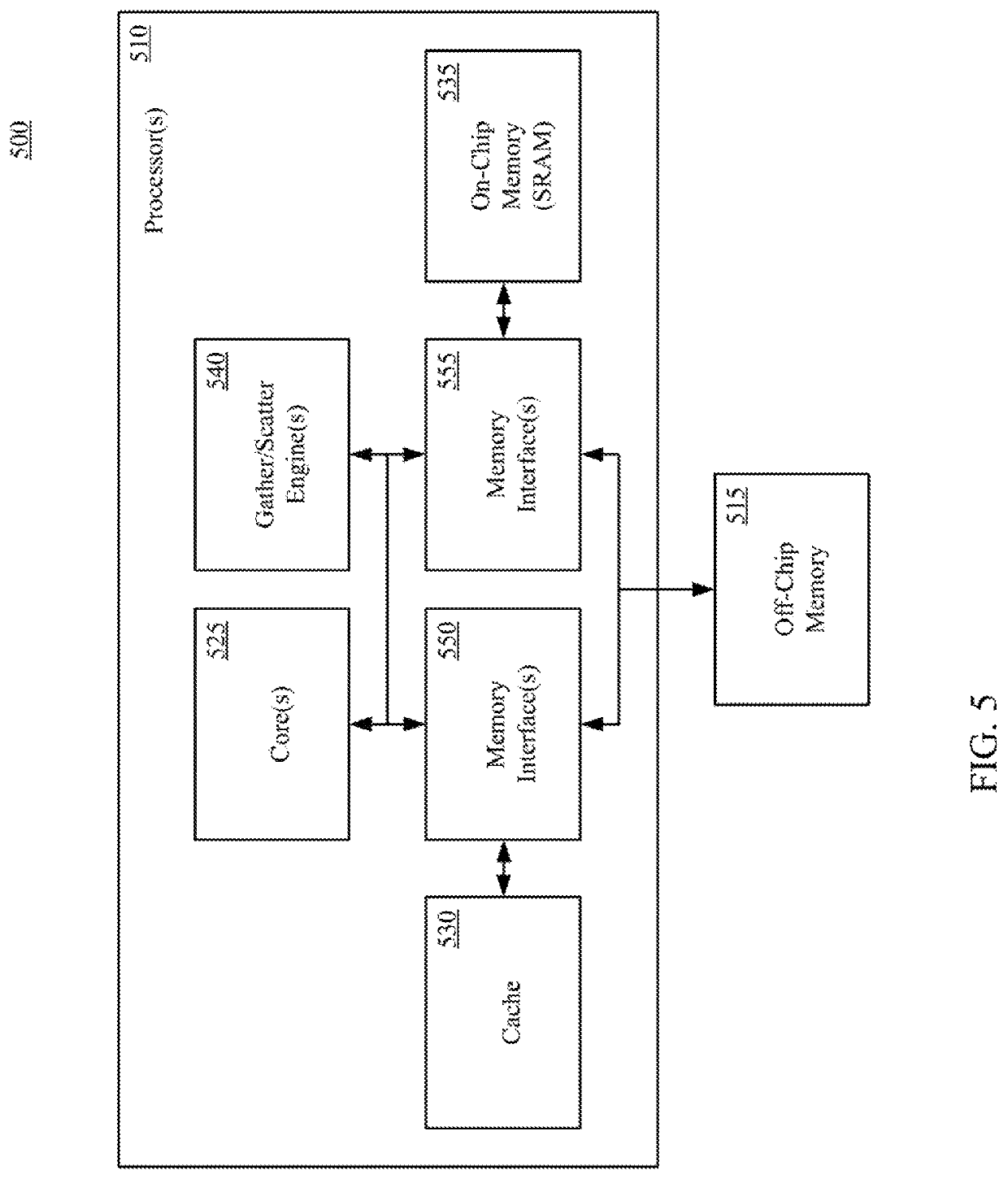

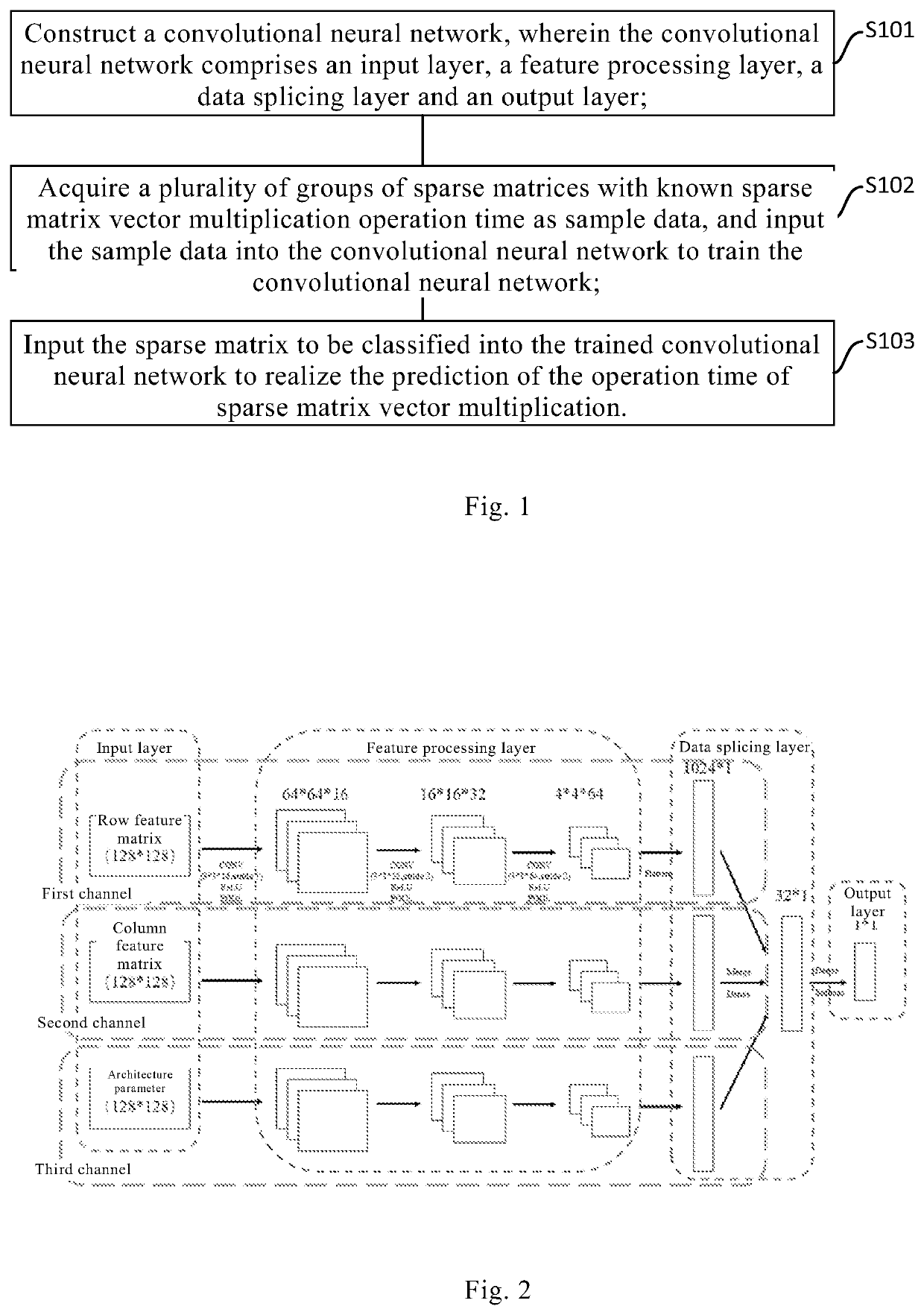

A processor for sparse matrix calculation can include an on-chip memory, a cache, a gather / scatter engine and a core. The on-chip memory can be configured to store a first matrix or vector, and the cache can be configured to store a compressed sparse second matrix data structure. The compressed sparse second matrix data structure can include: a value array including non-zero element values of the sparse second matrix, where each entry includes a given number of element values; and a column index array where each entry includes the given number of offsets matching the value array. The gather / scatter engine can be configured to gather element values of the first matrix or vector using the column index array of the sparse second matrix. In a horizontal implementation, the gather / scatter engine can be configured to gather sets of element values from different sub-banks within a same row based on the column index array of the sparse matrix. In a vertical implementation, the gather / scatter engine can be configured to gather sets of element values from different rows based on the column index array of the sparse matrix. In a hybrid horizontal / vertical implementation, the gather / scatter engine can be configured to gather sets of element values from sets of rows and from different sub-banks within the same rows based on the column index array of the sparse matrix. The core can be configured to perform sparse matrix-matrix multiplication or sparse-matrix-vector multiplication using the gathered elements of the first matrix or vector and the value array of the compressed sparse second matrix.

Owner:ALIBABA GRP HLDG LTD

Method and System for Predicting Operation Time of Sparse Matrix Vector Multiplication

PendingUS20220164413A1Reduce complexityDigital data processing detailsNeural learning methodsAlgorithmSparse matrix-vector multiplication

The disclosure relates to a method and a system for predicting the operation time of sparse matrix vector multiplication. The method comprises constructing a convolutional neural network comprising an input layer, a feature processing layer, a data splicing layer and an output layer for outputting prediction results. The method further comprises acquiring a plurality of groups of sparse matrices with known sparse matrix vector multiplication operation time as sample data, inputting the sample data into the convolutional neural network to train the convolutional neural network, and inputting the sparse matrix to be classified into the trained convolutional neural network to realize the prediction of the operation time of sparse matrix vector multiplication.

Owner:CHINA INSTITUTE OF ATOMIC ENERGY +1

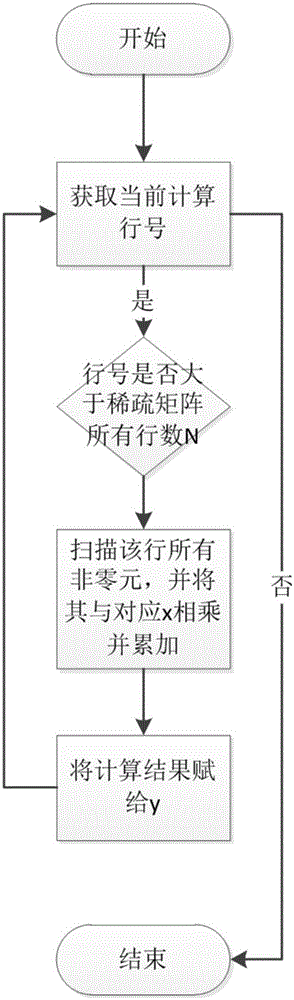

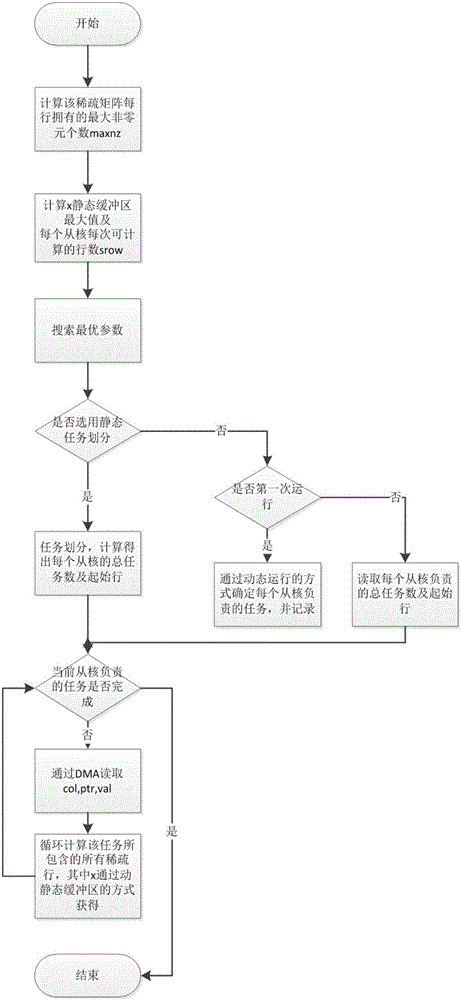

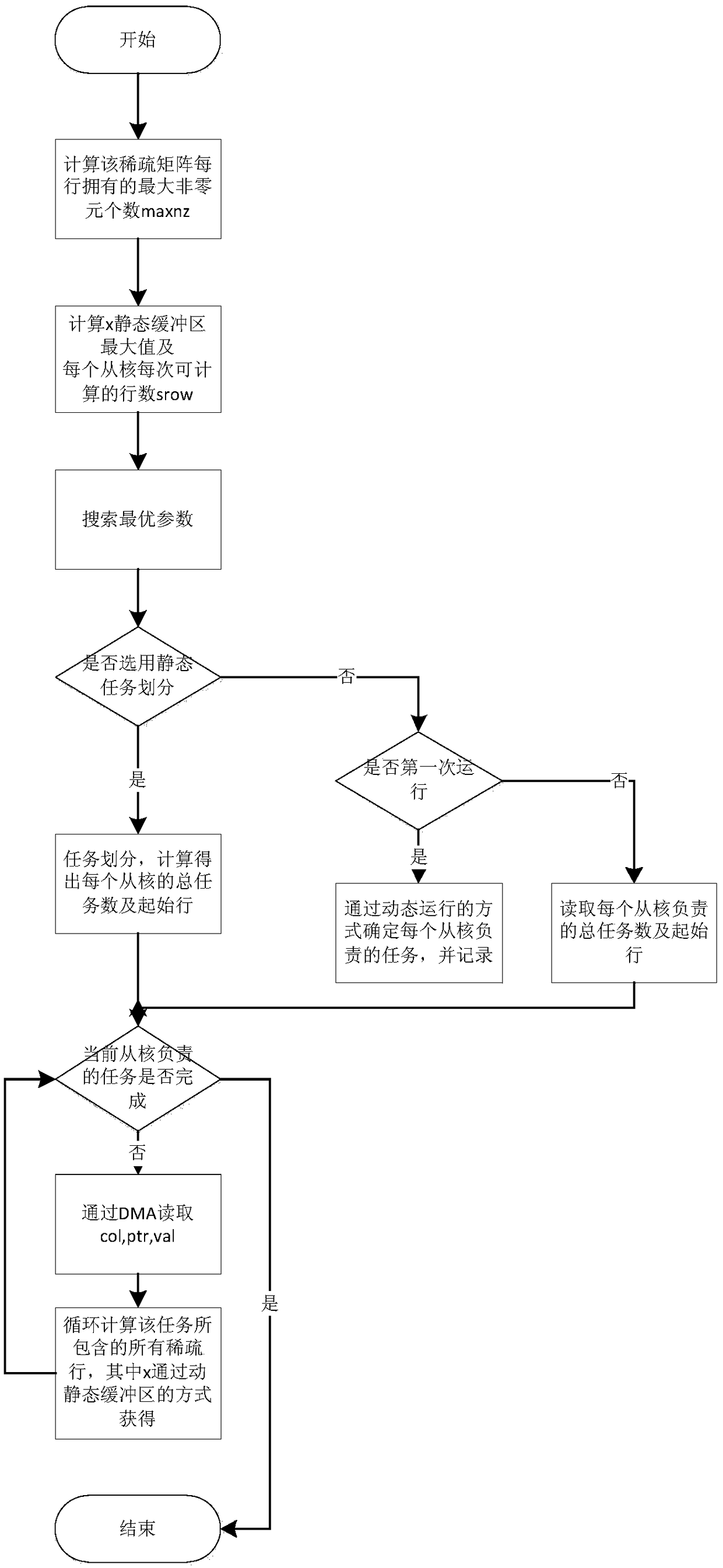

An implementation method of heterogeneous many-core based on sparse matrix-vector multiplication based on Shenwei 26010 processor

ActiveCN106775594BImprove performanceReduce the total memory accessMachine execution arrangementsSparse matrix vectorEuclidean vector

The invention discloses a method for realizing heterogeneous many-core of sparse matrix-vector multiplication based on domestic SW26010 processors. As the non-zero elements of a sparse matrix are distributed very irregularly, two different static and dynamic task partitioning methods are designed in the method, so as to adapt to different sparse matrices; a set of dynamic and static cache mechanism is provided, so as to promote the memory access hit rate of a vector x; a set of self-adapting optimization method is provided, specific to the sparse matrix, the optimal execution parameters can be dynamically selected, so as to promote the running performance. According to the method disclosed by the invention, 16 sparse matrices in a Matrix Market matrix set are adopted to conduct test, the running edition SpMV has about 10 times of acceleration to the utmost extent compared with the single main core of a domestic SW processor, and the average speed-up ratio is 6.51.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI

A sparse matrix storage method using compressed sparse rows with local information and an implementation method of spmv based on the method

ActiveCN103336758BReduce accessIncrease profitComplex mathematical operationsSparse matrix vectorArray data structure

The invention discloses a sparse matrix storage method CSRL (Compressed Sparse Row with Local Information) and an SpMV (Sparse Matrix Vector Multiplication) realization method based on the same. The storage method comprises the following steps of scanning a sparse matrix A in rows and storing each non-zero element value information in an array val sequence; defining a plurality of non-zero elements with continuous row subscripts as a continuous non-zero element section, recording a row subscript of a first element in each continuous non-zero element section by use of an array jas, and recording the number of non-zero elements in each continuous non-zero element section by use of an array jan; and recording an initial index of a first continuous non-zero element section in each row of the sparse matrix A by use of an array ptr. According to the data storage method, row indexes of the non-zero elements are combined and stored, so that the storage space requirement is reduced, the data locality of the sparse matrix is fully excavated, access and calculation can be performed by use of an SIMD (Single Instruction Multiple Data) instruction, the access frequency of an internal storage can be reduced, and the SpMV performance is improved.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI

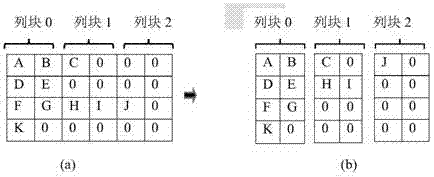

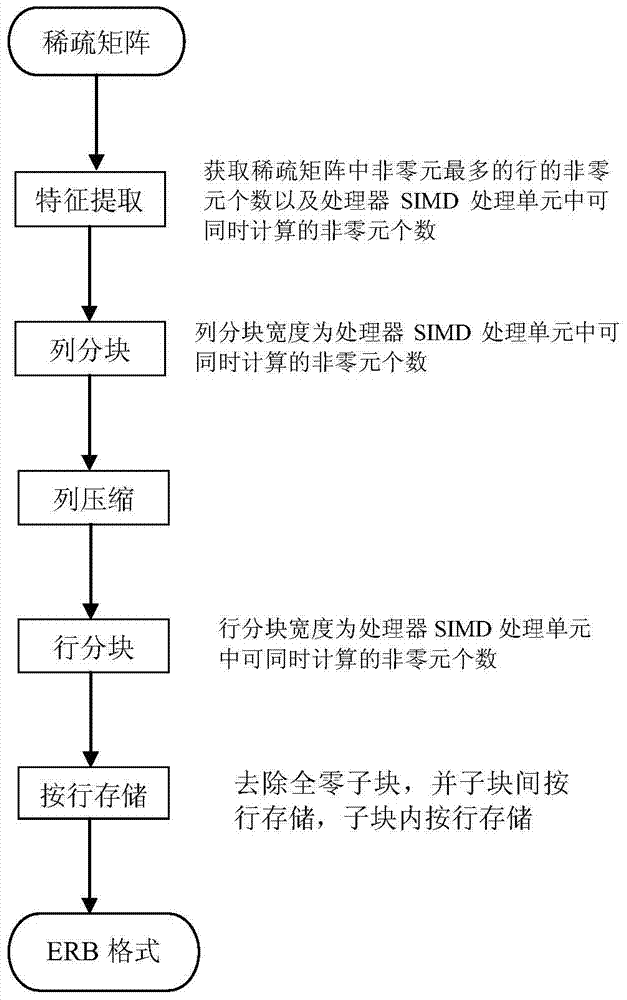

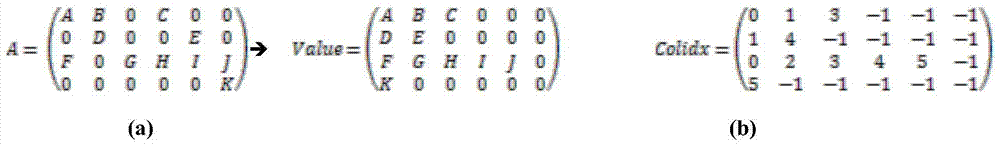

A sparse matrix storage method on simd many-core processor with multi-level cache

ActiveCN104636273BHigh densityIncrease profitConcurrent instruction executionMemory systemsSparse matrix vectorArray data structure

The invention discloses a storage method of a sparse matrix on an SIMD multi-core processor with a multi-level cache. The method includes the steps of firstly, the maximum value a of the number of row nonzero elements in a matrix A and the number b of the nonzero elements which can be calculated at the same time by a processor SIMD unit are acquired, and a minimum value which is larger than a and is the multiple of b is calculated to serve as a temperature row width; secondly, for the matrix A, array Value and Colidx respectively sequentially stores the nonzero element value of each row and line coordinates, and 0 and -1 are respectively supplemented to the tail of each row whose number of the nonzero elements does not reach the temporary row width; thirdly, partitioning according to b lines is performed on Colidx and Value; fourthly, each line block is compressed according to rows, and the rows, with the nonzero elements, in the line block is allowed to centralize on the upper portion of the line block; sixthly, partitioning is performed on the line blocks according to b rows to obtain sub-blocks; all-zero sub-blocks are removed, and the sub-blocks are stored according to rows. The method has the advantages that the sparse matrix is divided into dense sub-blocks, the utilization of the processor SIMD processing unit and a register are increased while the locality of the nonzero elements is kept, and sparse matrix vector multiplication performance is increased.

Owner:UNIV OF SCI & TECH OF CHINA

Sparse matrix-vector multiplication computational unit for permuted block-diagonal weight matrices

ActiveCN110889259BAvoid multiplicationAvoid cumulative operationsCAD circuit designAlgorithmComputational physics

Owner:扬州伊达实业有限公司

Features

- R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

Why Patsnap Eureka

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Social media

Patsnap Eureka Blog

Learn More Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com