CNN acceleration method and system based on OPU

An OPU-based CNN acceleration method and system that combines accelerator and mapping techniques to address the high complexity of hardware upgrades and the poor versatility of existing accelerators.

Examples

Embodiment 1

[0091] A CNN acceleration method based on an OPU comprises the following steps:

[0092] Define the OPU instruction set;

[0093] The compiler converts the CNN definition files of different target networks, selects the optimal accelerator configuration mapping according to the defined OPU instruction set, and generates instructions for the different target networks to complete the mapping;

[0094] The OPU reads the compiled instructions and runs them according to the parallel computing mode defined by the OPU instruction set, thereby accelerating the different target networks;

[0095] The OPU instruction set comprises unconditional instructions, which are executed directly and provide configuration parameters for conditional instructions, and conditional instructions, which are executed once their trigger conditions are met. The OPU instruction set is defined so that the instruction granularity is optimized according to the CNN network research results a...
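The split between the two instruction kinds in [0095] can be illustrated with a minimal sketch. It is not the patented design: the class names, the `load_done` event, and the `kernel_size` parameter are all hypothetical, chosen only to show an unconditional instruction writing parameters immediately while a conditional instruction waits for its trigger.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class UnconditionalInstr:
    """Executes as soon as it is issued; only writes configuration parameters."""
    params: Dict[str, int]


@dataclass
class ConditionalInstr:
    """Executes only after its trigger condition has been raised."""
    name: str
    trigger: str  # hypothetical event name signalled by the hardware


@dataclass
class ToyOPU:
    """Toy model of the execution rule, not of the real OPU microarchitecture."""
    param_regs: Dict[str, int] = field(default_factory=dict)
    pending: List[ConditionalInstr] = field(default_factory=list)
    trace: List[Tuple[str, Dict[str, int]]] = field(default_factory=list)

    def issue(self, instr) -> None:
        if isinstance(instr, UnconditionalInstr):
            # Directly executed: update the parameter registers.
            self.param_regs.update(instr.params)
        else:
            # Conditional: park it until its trigger fires.
            self.pending.append(instr)

    def raise_event(self, event: str) -> None:
        ready = [i for i in self.pending if i.trigger == event]
        self.pending = [i for i in self.pending if i.trigger != event]
        for instr in ready:
            # Each triggered instruction runs with the parameters set so far.
            self.trace.append((instr.name, dict(self.param_regs)))


opu = ToyOPU()
opu.issue(UnconditionalInstr({"kernel_size": 3}))
opu.issue(ConditionalInstr("compute", trigger="load_done"))
opu.raise_event("load_done")
```

In this sketch the `compute` instruction does not run when it is issued; it runs when `load_done` is raised and picks up whatever parameters the earlier unconditional instruction wrote.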

Embodiment 2

[0124] Based on Embodiment 1, the definition of the OPU instruction set of this application is refined as follows:

[0125] The instruction set defined in this application must address the universality of the processor that executes it. Specifically, instruction execution time in existing CNN acceleration systems is highly uncertain, so the instruction sequence cannot be accurately predicted, which limits the universality of the processor corresponding to the instruction set. The technical means adopted are: define conditional instructions, define unconditional instructions, and set the instruction granularity. For conditional instructions, define their composition and set their registers and execution method: a conditional instruction is executed after the trigger condition written by the hardware is satisfied, and its registers comprise a parameter register and a trigger condition register; set the parameter configur...

Embodiment 3

[0141] Based on Embodiment 1, the compilation steps are refined as follows:

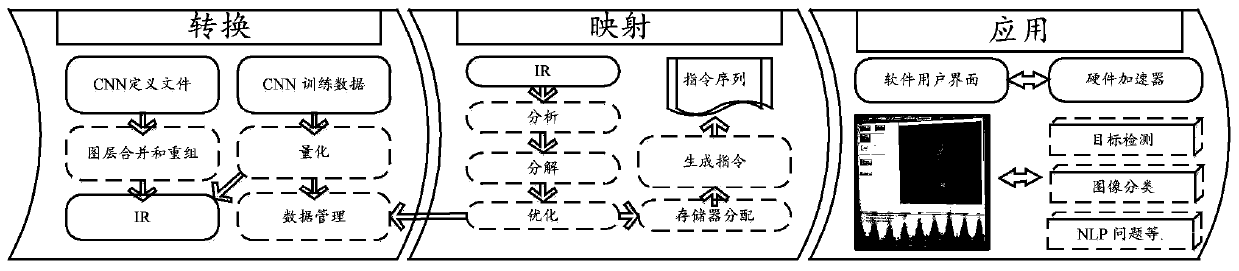

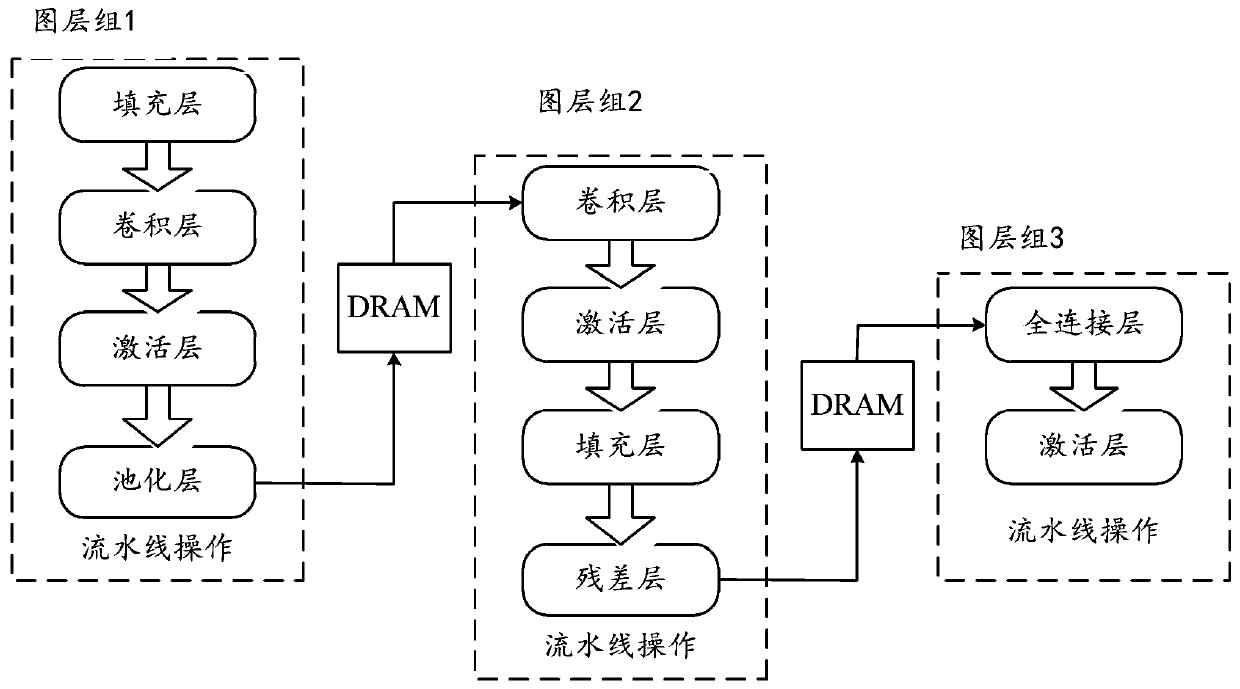

[0142] Convert the CNN definition files of different target networks, select the optimal accelerator configuration mapping according to the defined OPU instruction set, and generate instructions for the different target networks to complete the mapping;

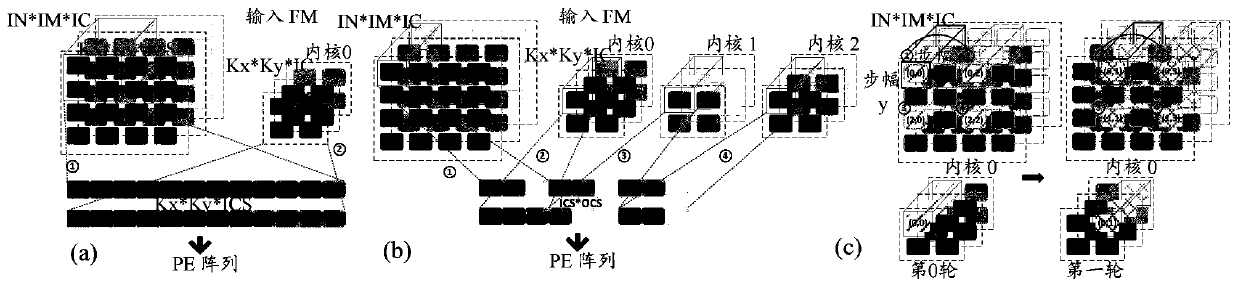

[0143] The conversion includes file conversion, network layer reorganization, and generation of a unified intermediate representation (IR);

[0144] The mapping includes parsing the IR, searching the solution space according to the parsed information to obtain a mapping that guarantees maximum throughput, and expressing that mapping as an instruction sequence according to the defined OPU instruction set, thereby generating instructions for the different target networks.
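The conversion step in [0143] can be pictured with a small sketch. The visible text does not say how layers are reorganized, so the grouping rule here (each convolution or fully connected layer absorbs the activation and pooling layers that follow it) is an assumption, and the dictionary layout of the IR records is hypothetical.

```python
from typing import Dict, List

# Assumption: these layer types start a new group in the IR.
COMPUTE_LAYERS = {"conv", "fc"}


def reorganize(layers: List[Dict]) -> List[Dict]:
    """Group each compute layer with the non-compute layers that follow it.

    Returns a list of IR records, one per group, in network order.
    """
    groups: List[Dict] = []
    for layer in layers:
        if layer["type"] in COMPUTE_LAYERS or not groups:
            # Start a new group anchored on this layer.
            groups.append({"index": len(groups), "main": layer, "post_ops": []})
        else:
            # Attach activation/pooling-style layers to the current group.
            groups[-1]["post_ops"].append(layer["type"])
    return groups


network = [
    {"type": "conv", "out_channels": 64},
    {"type": "relu"},
    {"type": "pool"},
    {"type": "fc", "out_channels": 10},
]
ir = reorganize(network)
```

With this rule the four-layer toy network collapses into two IR records, which gives a later mapping stage fewer and more uniform units to schedule.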

[0145] The corresponding compiler includes a conversion unit, which is used to perform file conversion, network layer reorganization, and generate IR a...
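The mapping step in [0144] (search the solution space for the highest-throughput mapping, then express it as an instruction sequence) can be sketched as an exhaustive search over a toy cost model. Everything specific here is an assumption made for illustration: the two parallelism factors `pin` and `pout`, the `mac_budget` limit, the cycle estimate, and the instruction names `SET_PARALLELISM` and `COMPUTE_LAYER` do not come from the source.

```python
import math
from itertools import product
from typing import Dict, List, Tuple

Config = Tuple[int, int]  # (input-channel parallelism, output-channel parallelism)


def estimate_cycles(layer: Dict, pin: int, pout: int) -> int:
    """Toy cost model: one cycle per (pin x pout) channel tile per output pixel."""
    return (math.ceil(layer["cin"] / pin)
            * math.ceil(layer["cout"] / pout)
            * layer["pixels"])


def search_mapping(layers: List[Dict], mac_budget: int) -> Config:
    """Enumerate configurations within the MAC budget; keep the fastest overall."""
    factors = [1, 2, 4, 8, 16, 32, 64]
    candidates = [(pin, pout) for pin, pout in product(factors, repeat=2)
                  if pin * pout <= mac_budget]
    # Fewest total cycles for the whole network means highest throughput here.
    return min(candidates,
               key=lambda cfg: sum(estimate_cycles(layer, *cfg) for layer in layers))


def emit_instructions(layers: List[Dict], cfg: Config) -> List[tuple]:
    """Express the chosen mapping as a (hypothetical) instruction sequence."""
    pin, pout = cfg
    sequence: List[tuple] = [("SET_PARALLELISM", pin, pout)]
    for index, _ in enumerate(layers):
        sequence.append(("COMPUTE_LAYER", index))
    return sequence


parsed_ir = [
    {"cin": 3, "cout": 64, "pixels": 100},
    {"cin": 64, "cout": 64, "pixels": 100},
]
best = search_mapping(parsed_ir, mac_budget=64)
program = emit_instructions(parsed_ir, best)
```

A real compiler would search a much larger space and use a cost model calibrated to the hardware; the point of the sketch is only the shape of the flow, from parsed IR to a selected configuration to an instruction list.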
