70 results about "Block ram" patented technology

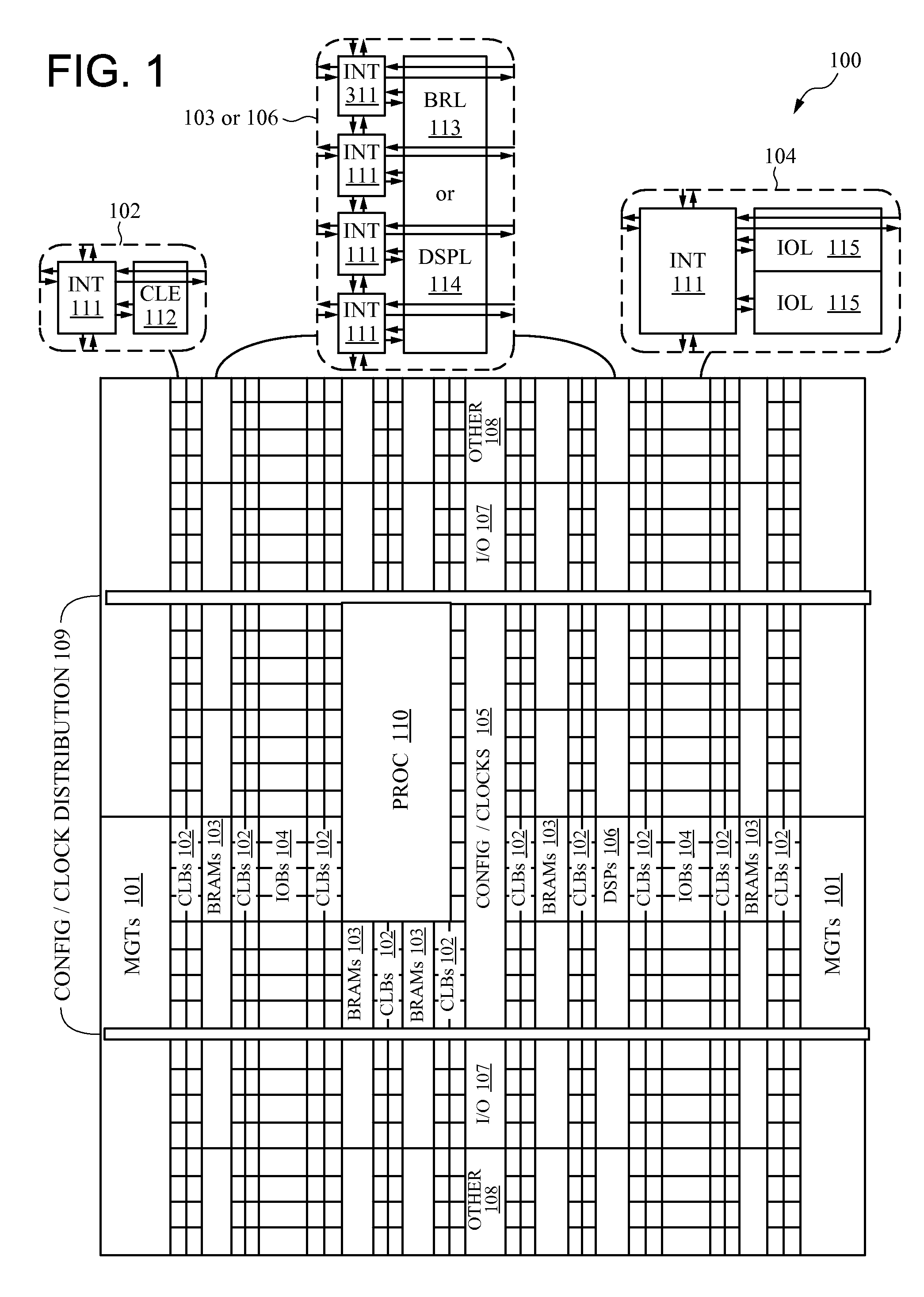

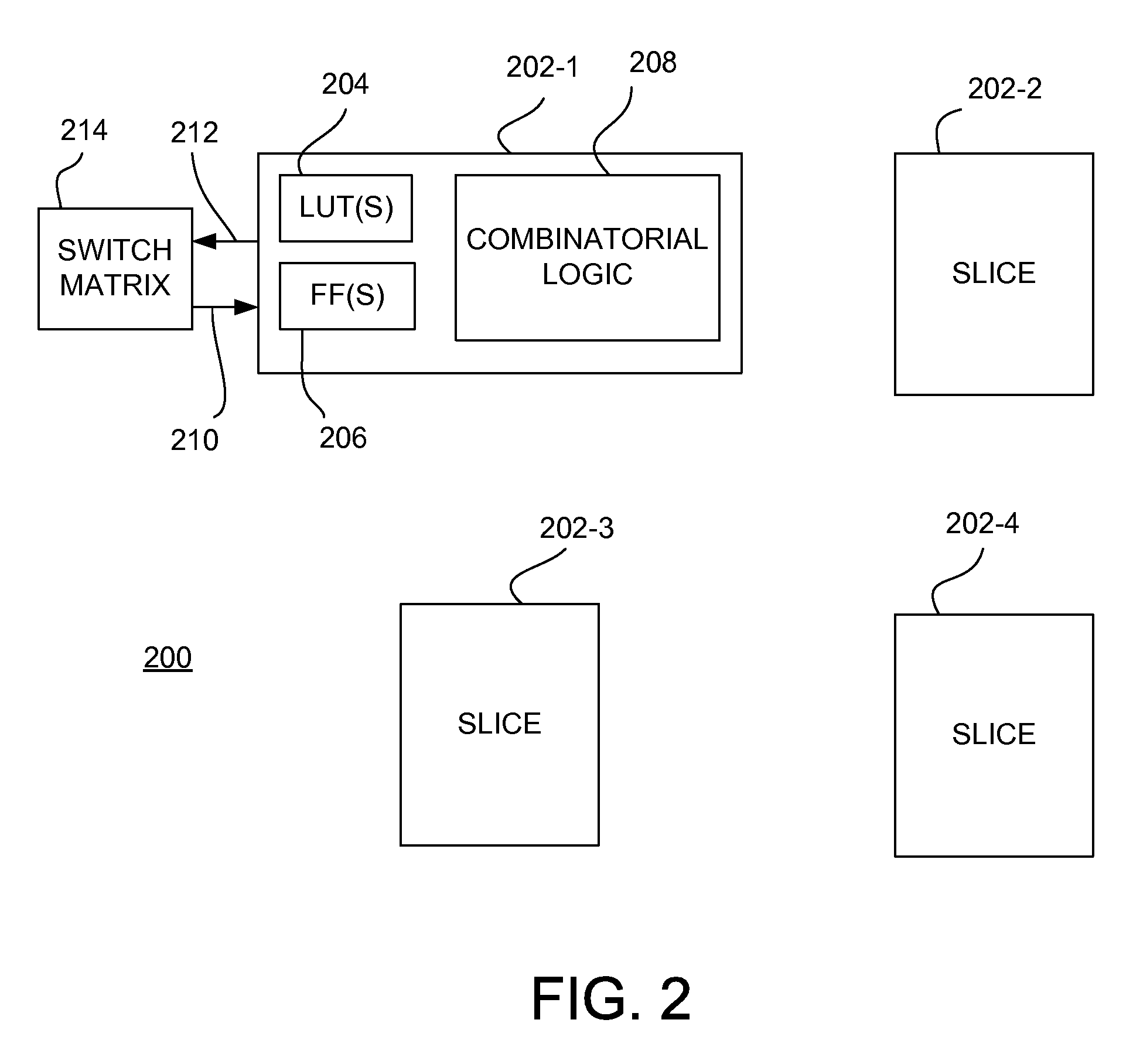

Block RAM (or BRAM) stands for Block Random Access Memory. Block RAMs are used for storing large amounts of data inside an FPGA. They are one of four commonly identified components on an FPGA datasheet.

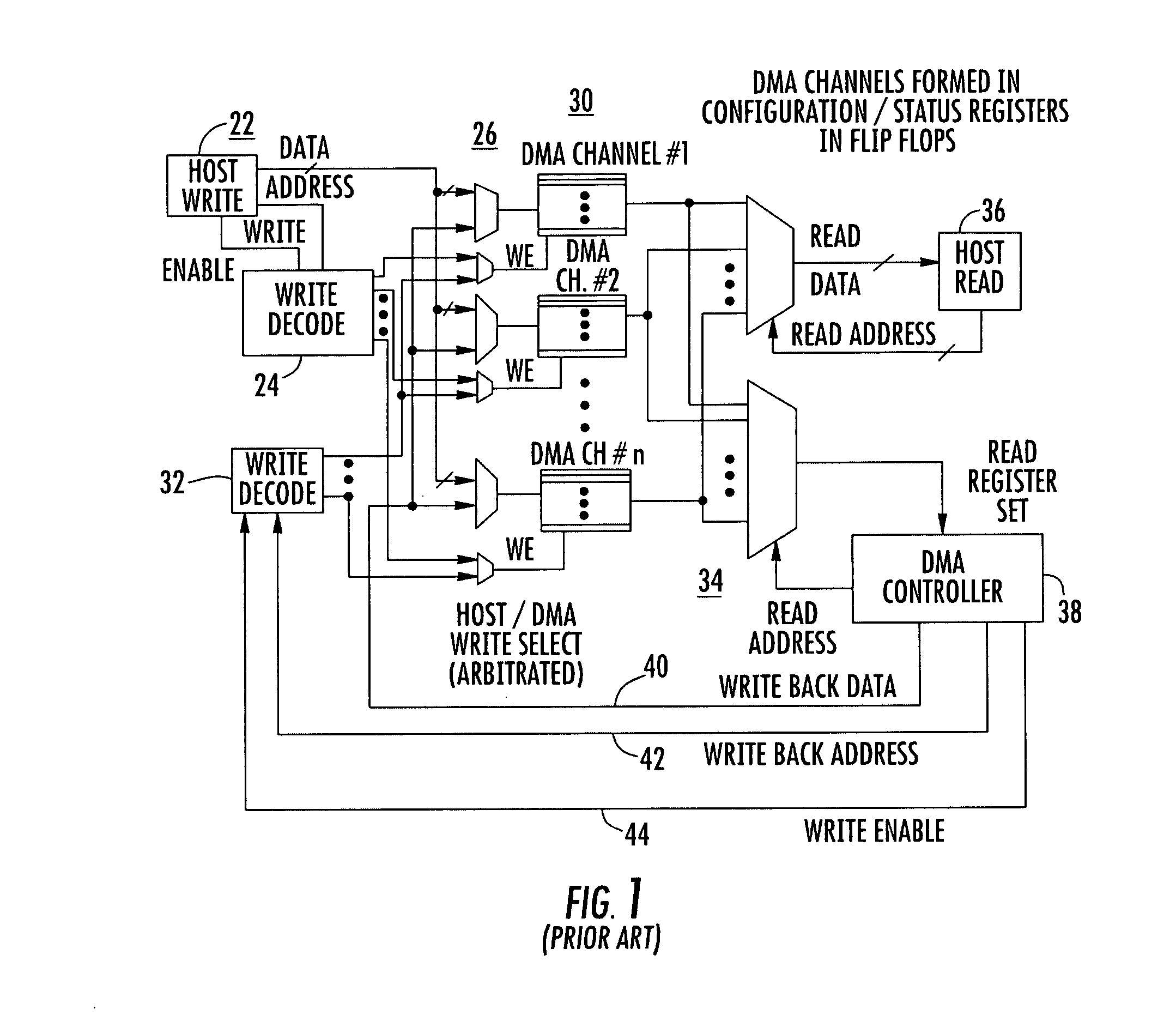

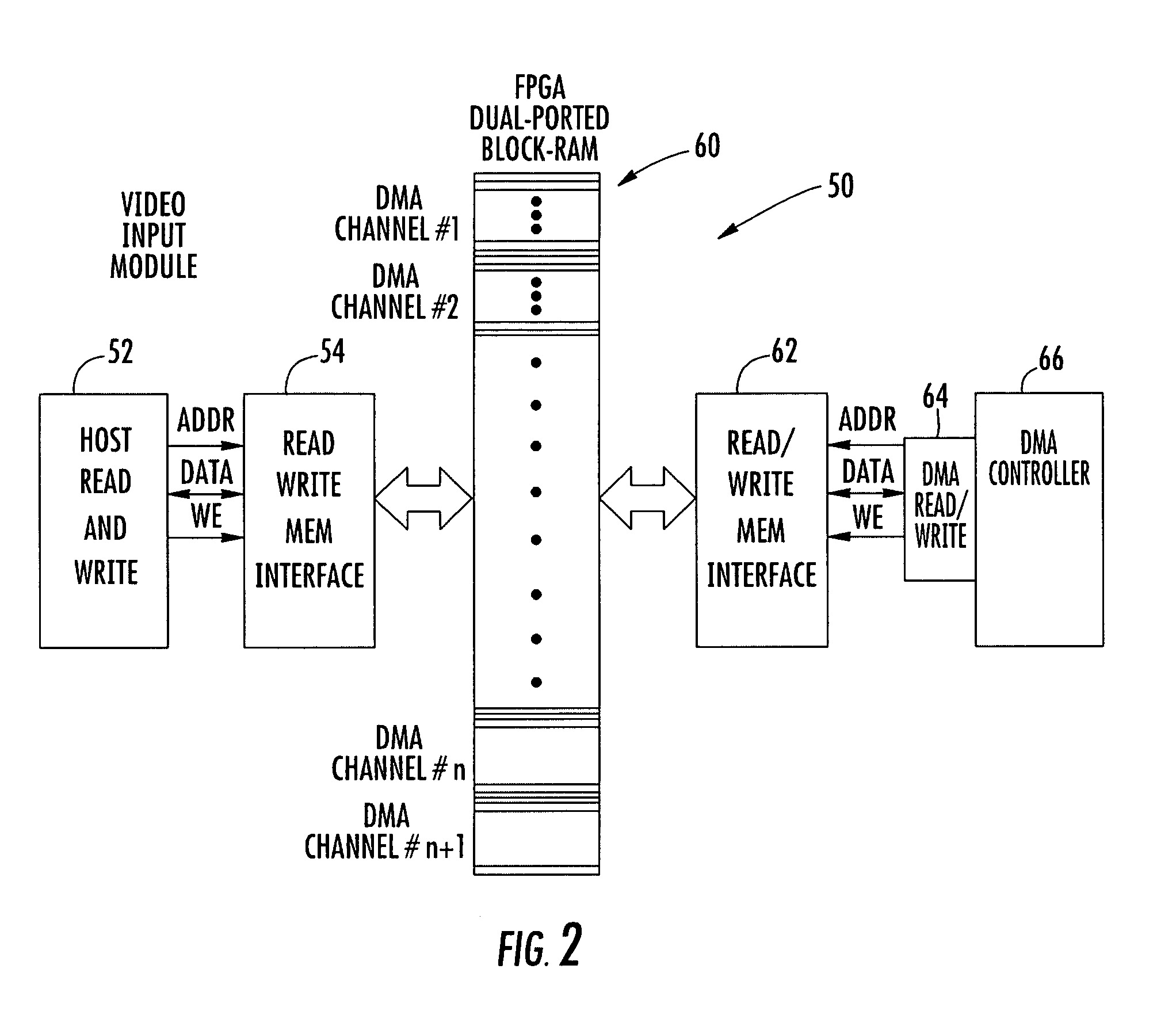

Video multiviewer system using direct memory access (DMA) registers and block ram

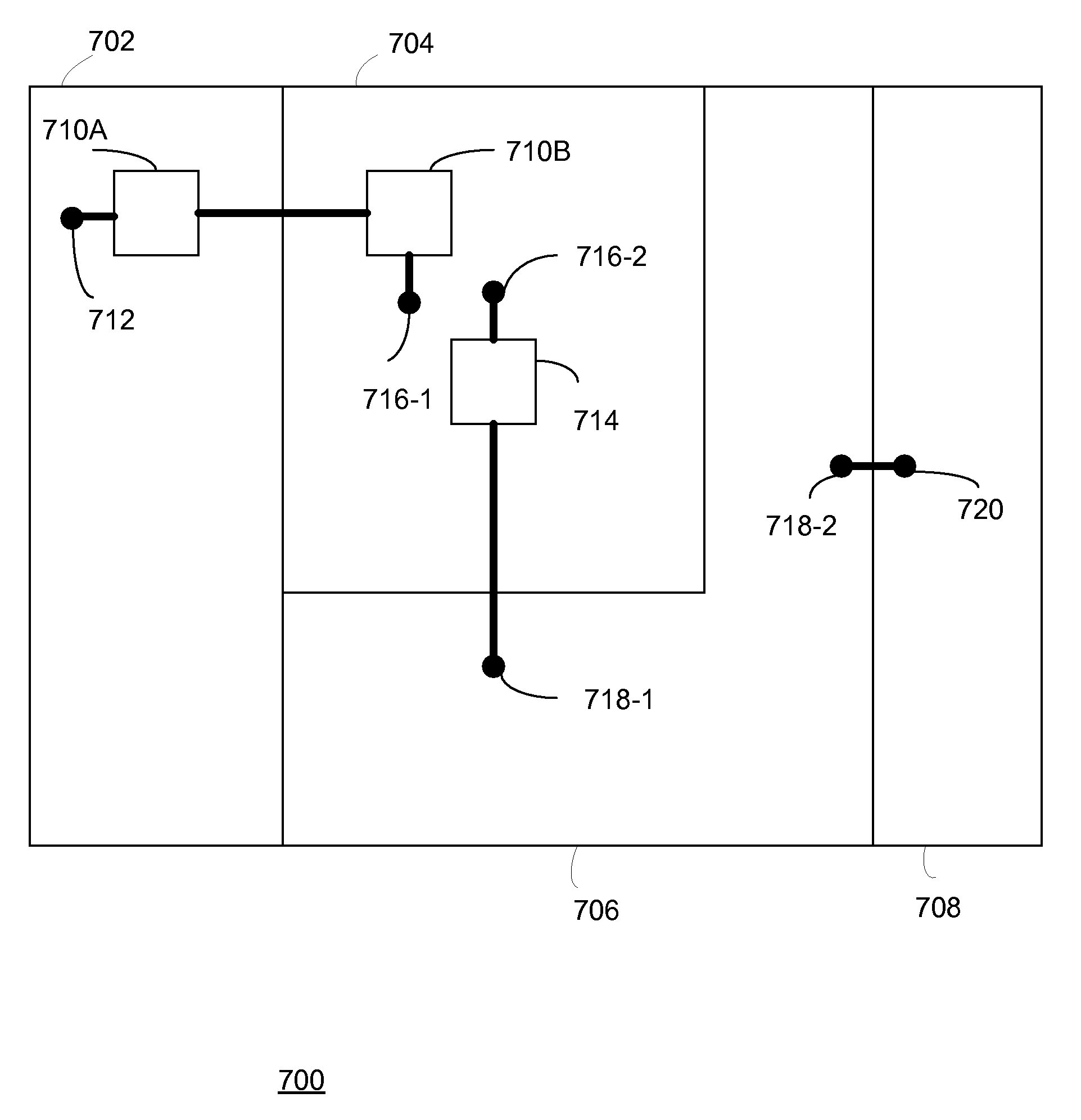

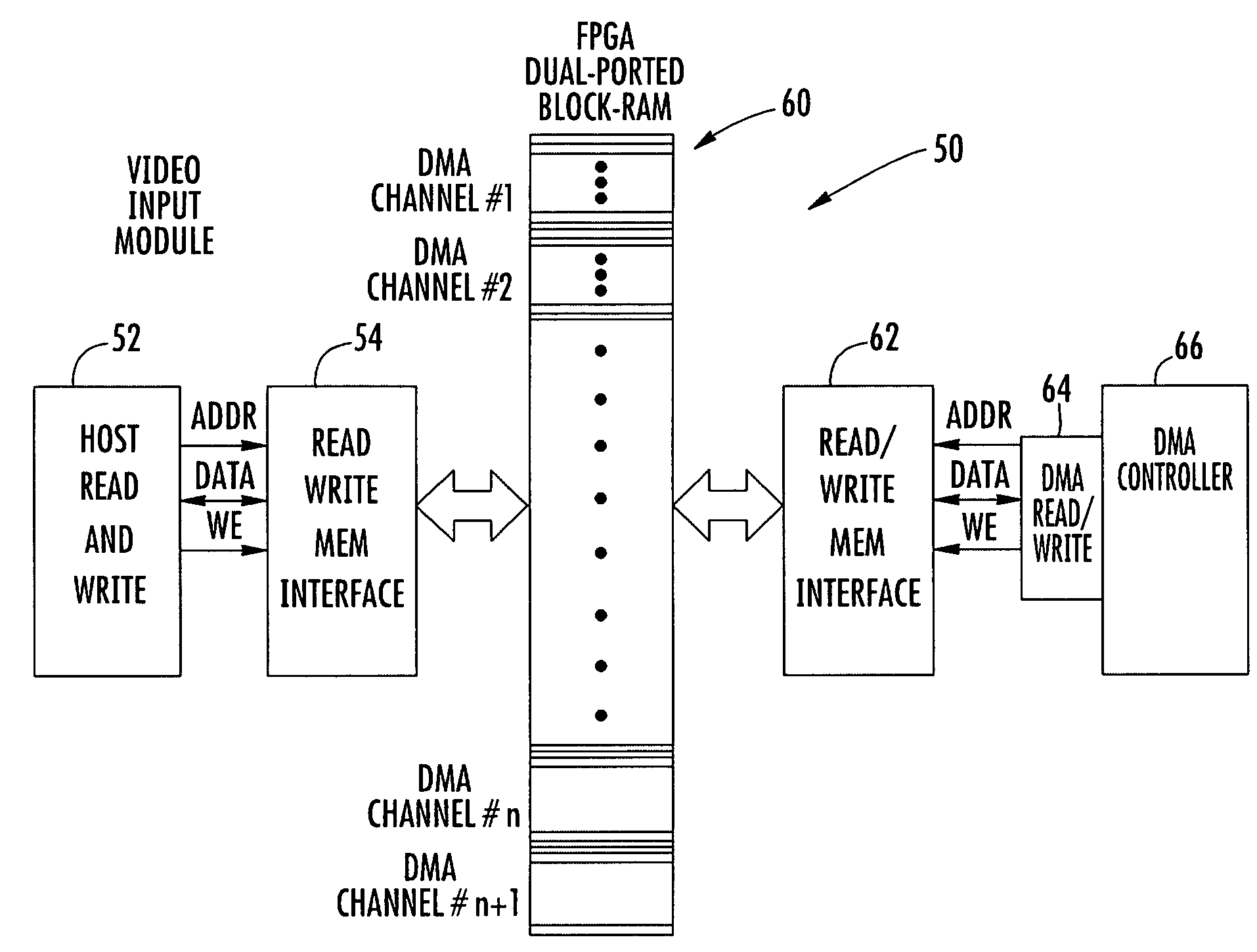

A video multiviewer system includes a Graphics Processing Unit (GPU) that includes a GPU memory. A video input module is operative with the GPU for receiving video data and transferring the video data to the GPU memory via a Direct Memory Access (DMA). A programmable circuit such as a Field Programmable Gate Array (FPGA) includes a multi-ported and in one aspect a dual ported block Random Access Memory (RAM) configured for a plurality of DMA channels for receiving video data and allowing uninterrupted operation of consecutive DMA transfers of video data to the GPU memory. A display displays the multiple video windows based upon video data received within the GPU memory.

Owner:IMAGINE COMM

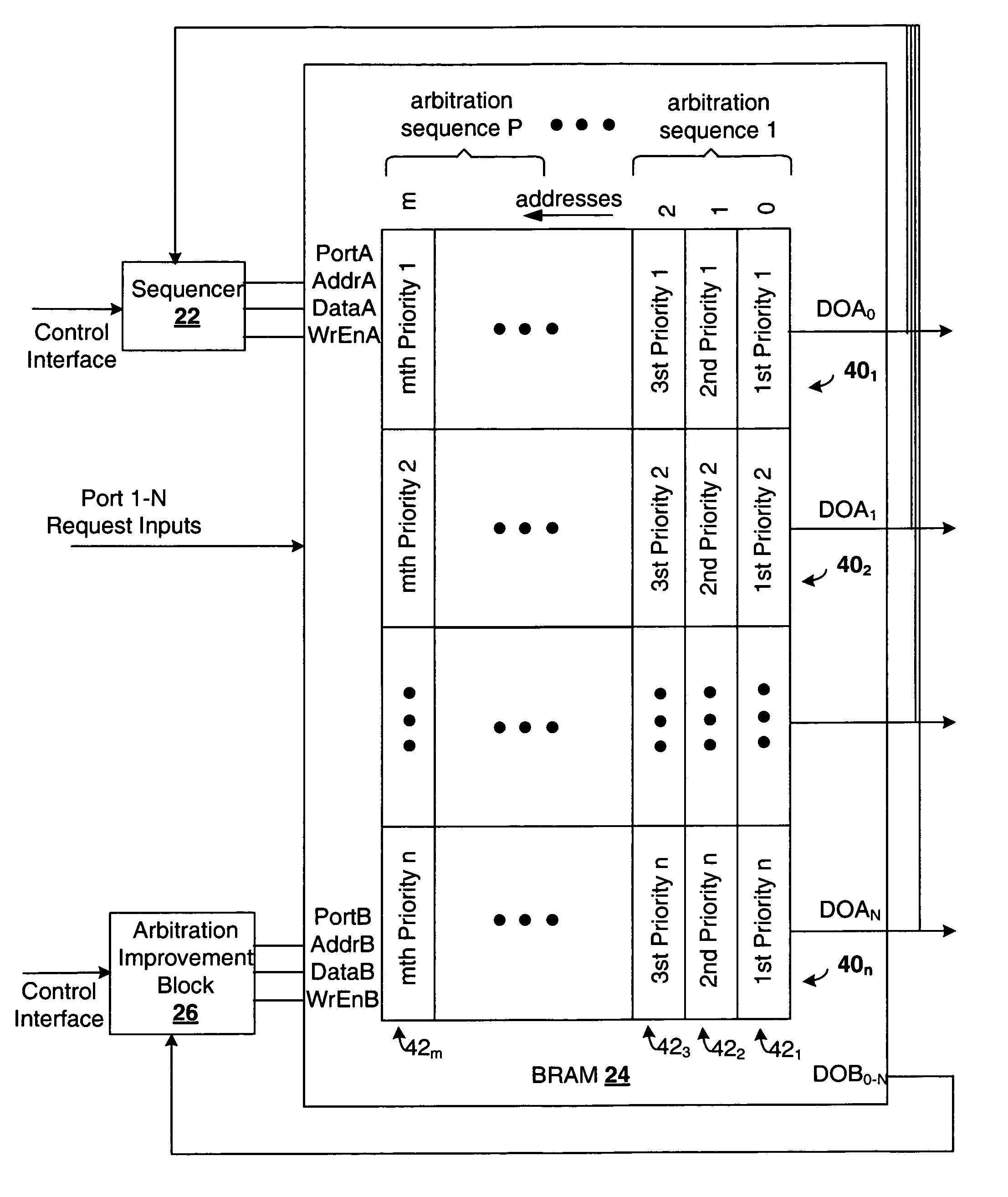

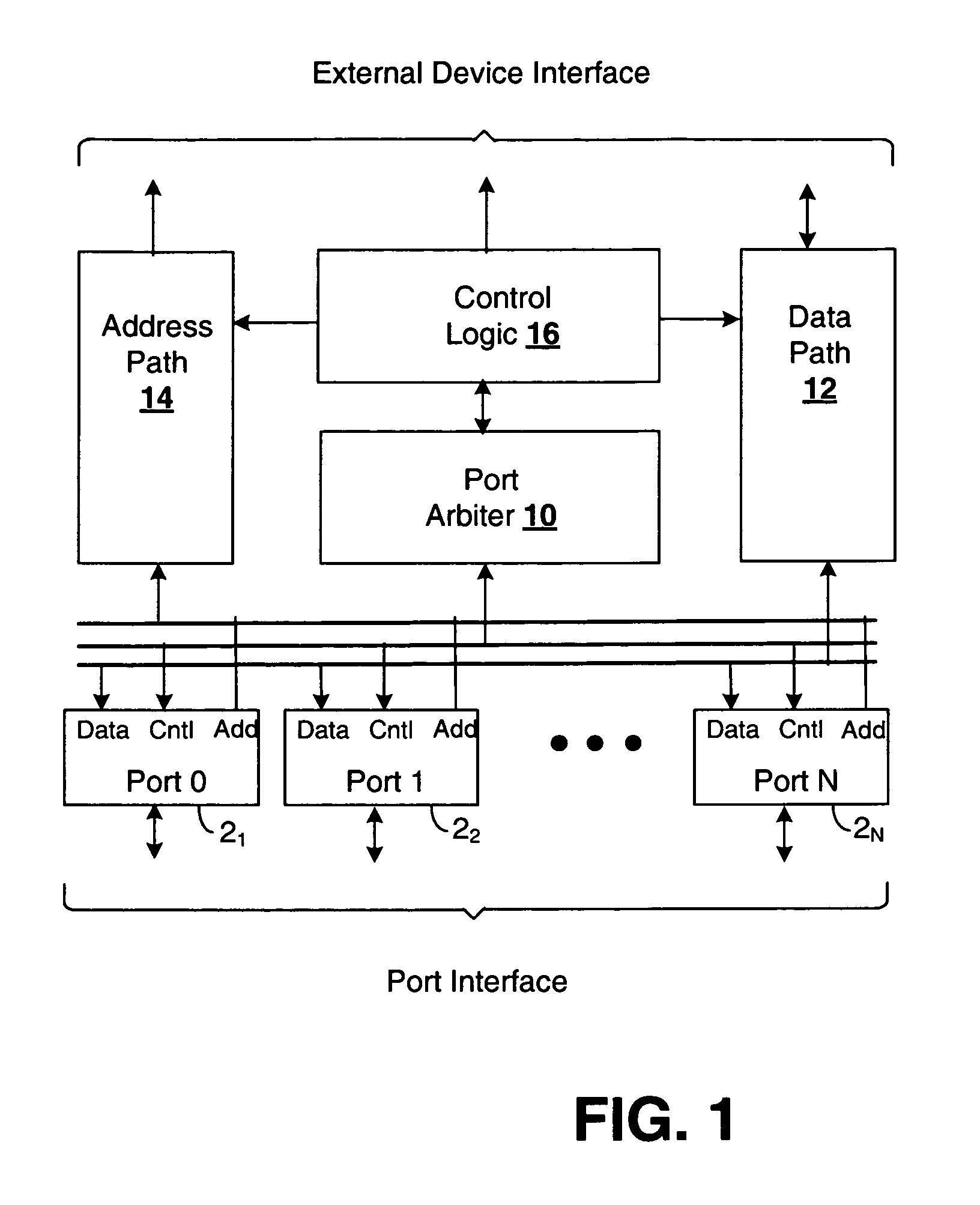

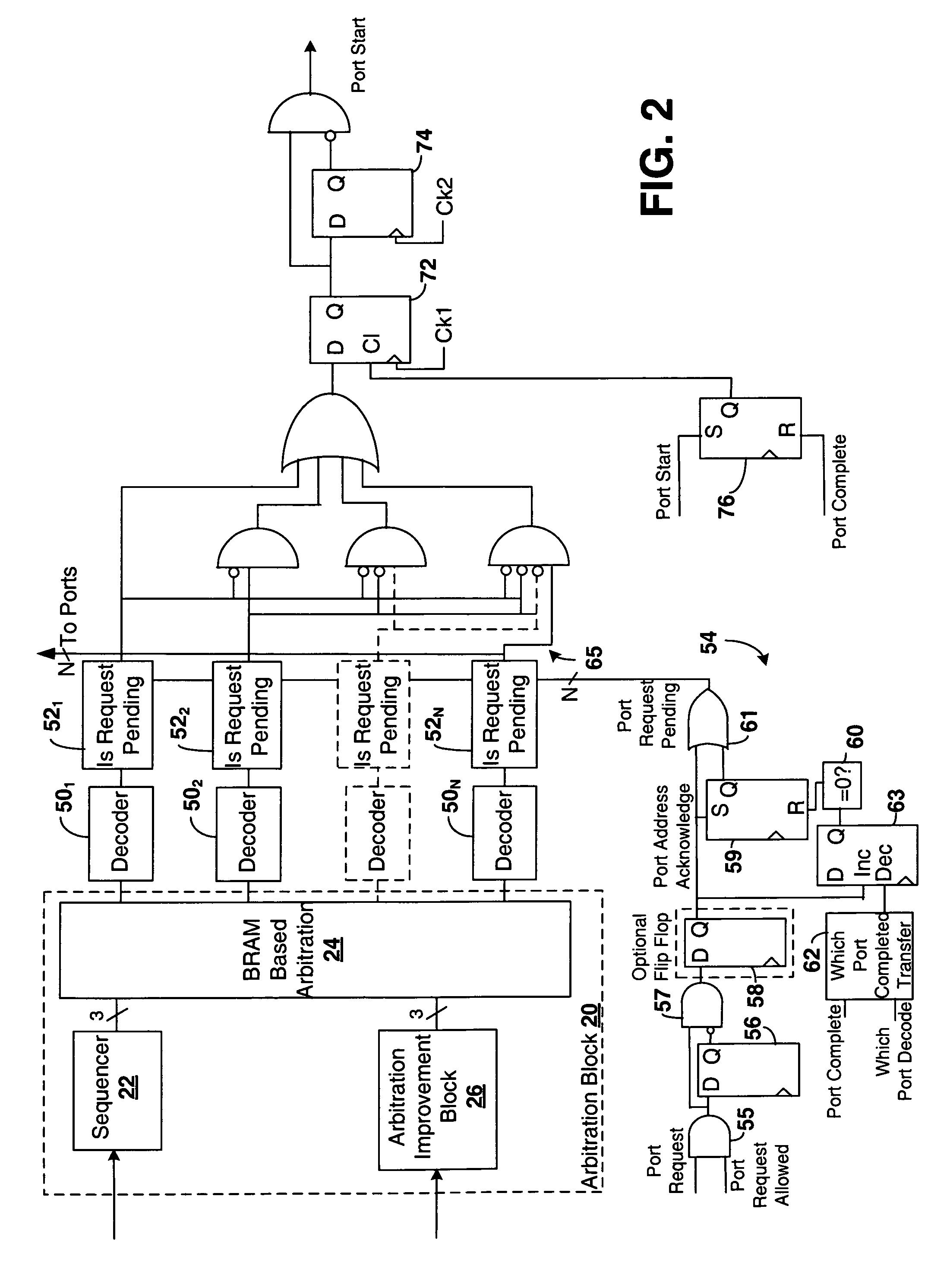

Architecture for dynamically reprogrammable arbitration using memory

An architecture for a Block RAM (BRAM) based arbiter is provided to enable a programmable logic device (PLD) to efficiently form a memory controller, or other device requiring arbitration. The PLD arbiter provides low latency with a high clock frequency, even when implementing complex arbitration, by using BRAM to minimize the PLD resources required. The architecture allows multiple complex arbitration algorithms to be used by allowing the multiple algorithms to be stored in BRAM. With multiple algorithms, dynamic configurability of the arbitration can be provided without halting the arbiter by simply changing an algorithm stored in BRAM. Additionally, algorithms can be dynamically modified by writing to the BRAM. With BRAM memory used for arbitration, PLD resources that would otherwise be wasted are freed up to be used by other components of the system.

Owner:XILINX INC
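
The table-driven idea in this abstract can be illustrated with a small software model. This is only a sketch under stated assumptions: the table layout (addressed by the last grant and the request bits), the two example policies, and the names `build_table`, `round_robin`, `fixed_priority` and `TableArbiter` are all hypothetical and are not taken from the patent.

```python
def round_robin(n, last, req):
    """Grant the first requester after `last`, wrapping around."""
    for offset in range(1, n + 1):
        cand = (last + offset) % n
        if req >> cand & 1:
            return cand
    return None  # nobody is requesting


def fixed_priority(n, last, req):
    """Grant the lowest-numbered requester, ignoring history."""
    for cand in range(n):
        if req >> cand & 1:
            return cand
    return None


def build_table(n, pick):
    """Precompute a flat table addressed by (last_grant, request_bits).

    The list stands in for the BRAM contents (assumed layout).
    """
    table = [None] * (n << n)
    for last in range(n):
        for req in range(1 << n):
            table[(last << n) | req] = pick(n, last, req)
    return table


class TableArbiter:
    """Arbiter whose policy is whatever table is currently loaded."""

    def __init__(self, n, table):
        self.n = n
        self.table = table
        self.last = n - 1  # so requester 0 is first in round-robin order

    def load(self, table):
        """Swap the algorithm by rewriting the table; state is kept."""
        self.table = table

    def arbitrate(self, req):
        """One lookup per decision: no policy logic runs here."""
        grant = self.table[(self.last << self.n) | req]
        if grant is not None:
            self.last = grant
        return grant
```

Each decision is a single table read, and calling `load` with a different table changes the policy without rebuilding the arbiter, which mirrors the dynamic reconfiguration the abstract describes.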

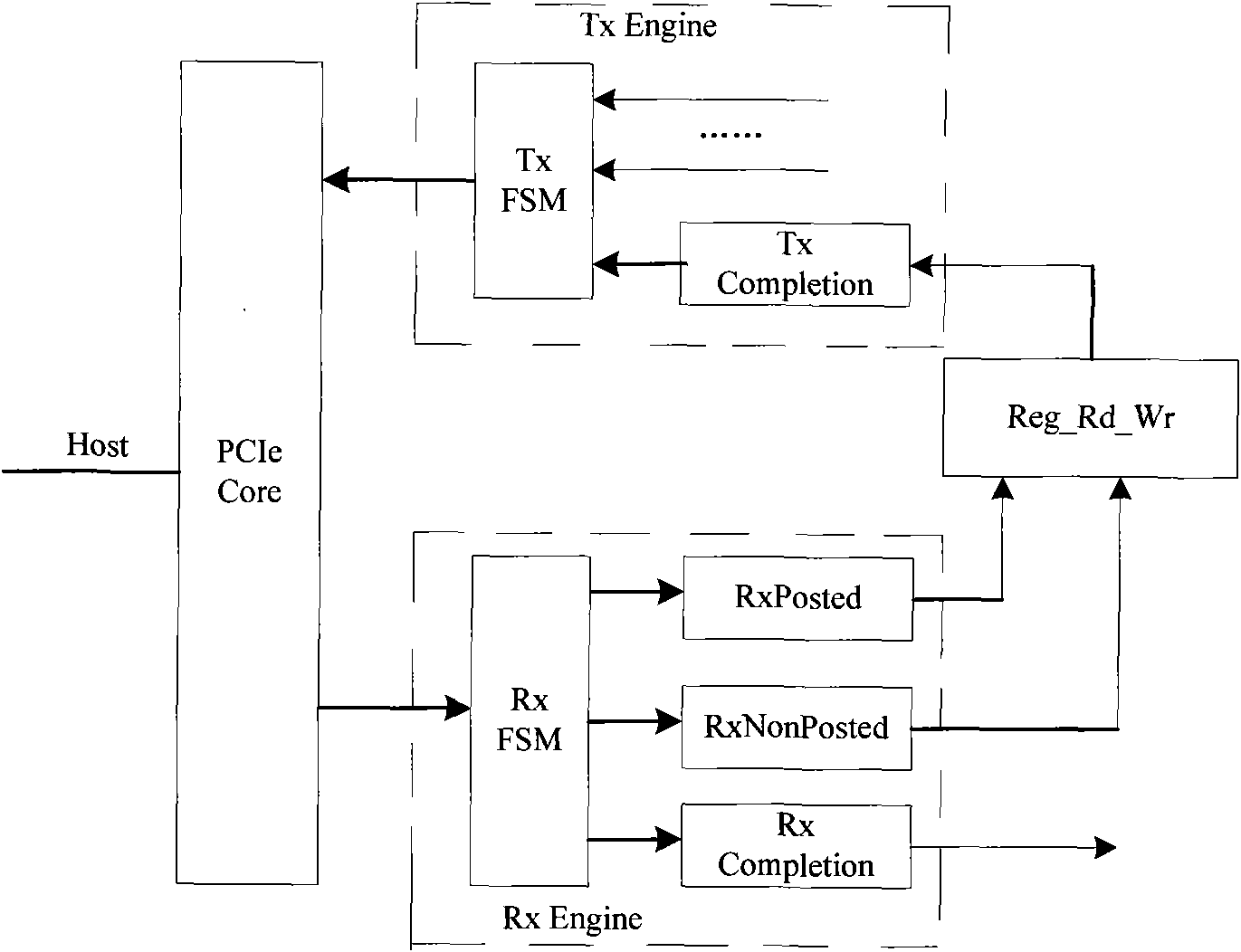

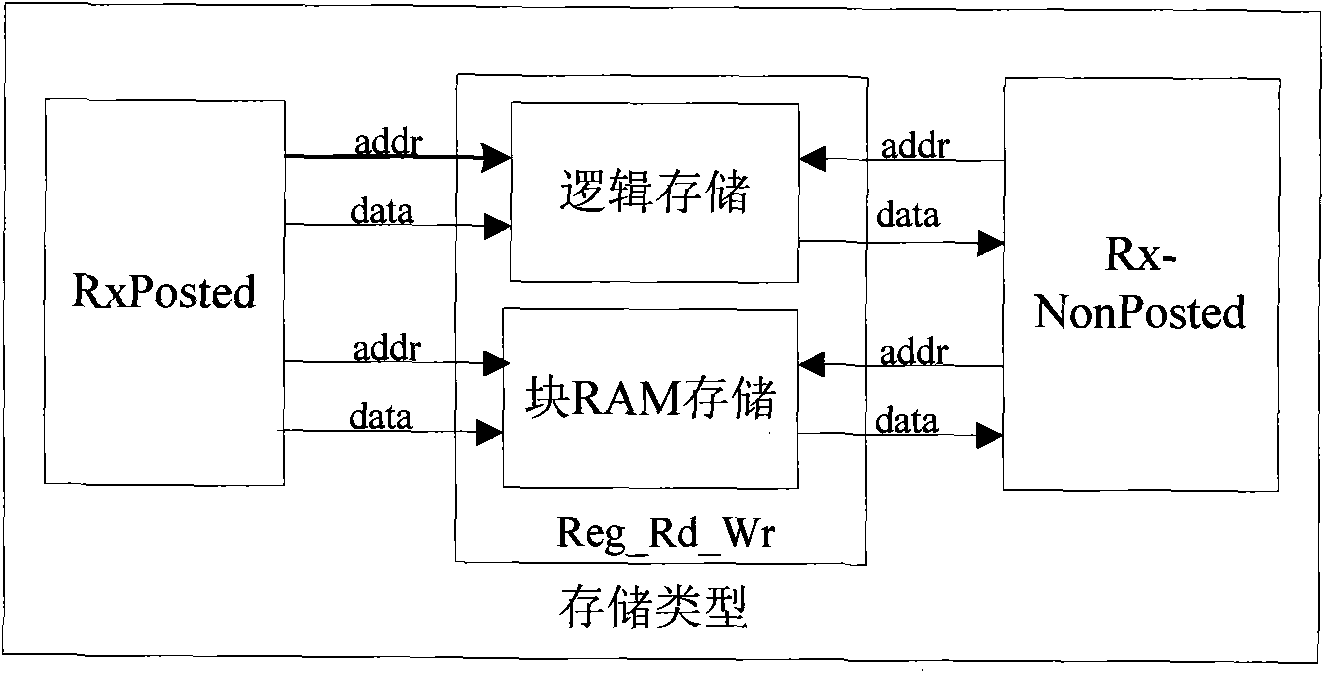

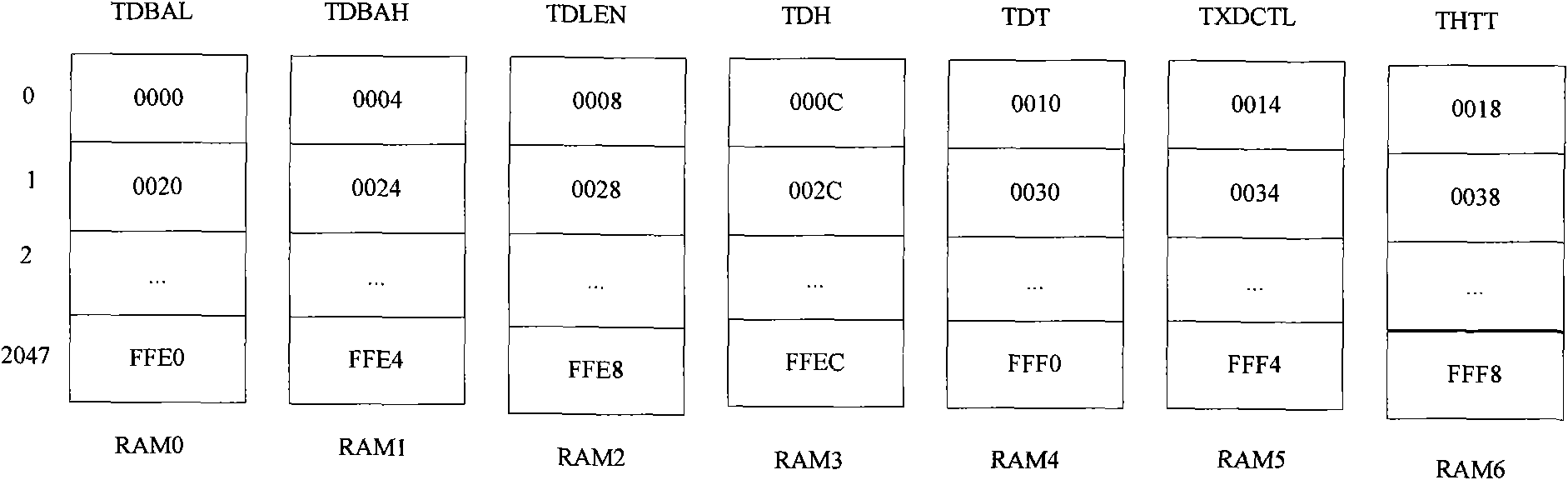

Method for storing multiple virtual queues data based on FPGA

Active; CN101599294A; Flexible and efficient handling; Flexible and efficient data control; Read-only memories; Digital storage; External storage; Computer architecture

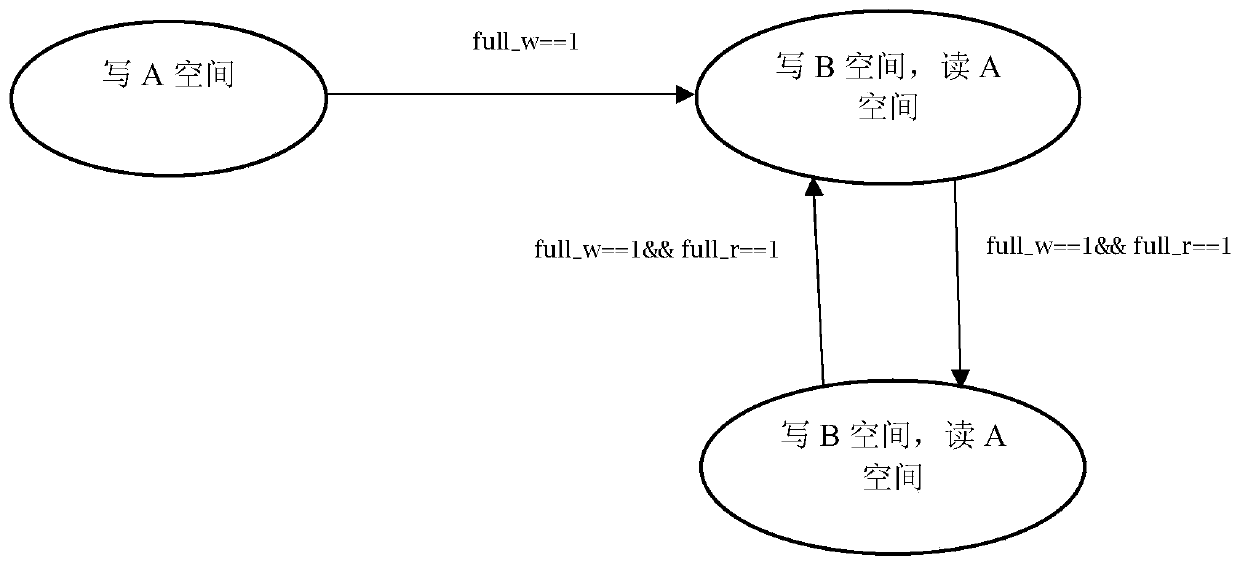

The invention is a method for storing the data of multiple virtual queues in an FPGA. It builds on technology for implementing network card data reads and writes in an FPGA, and adopts a plurality of register blocks, which contain a receiving engine module, a read-write module and a block-shaped memory. Each register block belongs to one of the queues, and the block-shaped memory is provided with a read-write mode state switching module. Both the block-shaped memory and the read-write mode state switching module support three operational modes and use the block RAM in the FPGA to store and process all registers of a large number of queues (for example, 2048 queues), thus allowing simultaneous reading and writing of the related registers in the same queue. The method solves the problems of insufficient logic resources, slow storage speed, long storage cycles and the like found in logic-based storage and external storage in the prior art.

Owner:南京城市云计算中心有限公司

Integrated logic analysis module based on PCIe (peripheral component interconnection express) for FPGA (field programmable gate array)

Active; CN102495920A; Solve the situation where the margin is not enough; Meet the transmission protocol requirements; Special data processing applications; Internal memory; Computer architecture

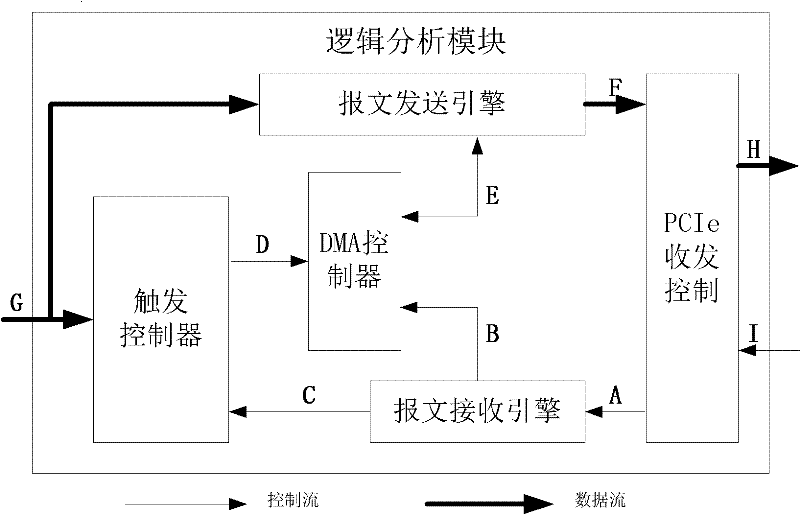

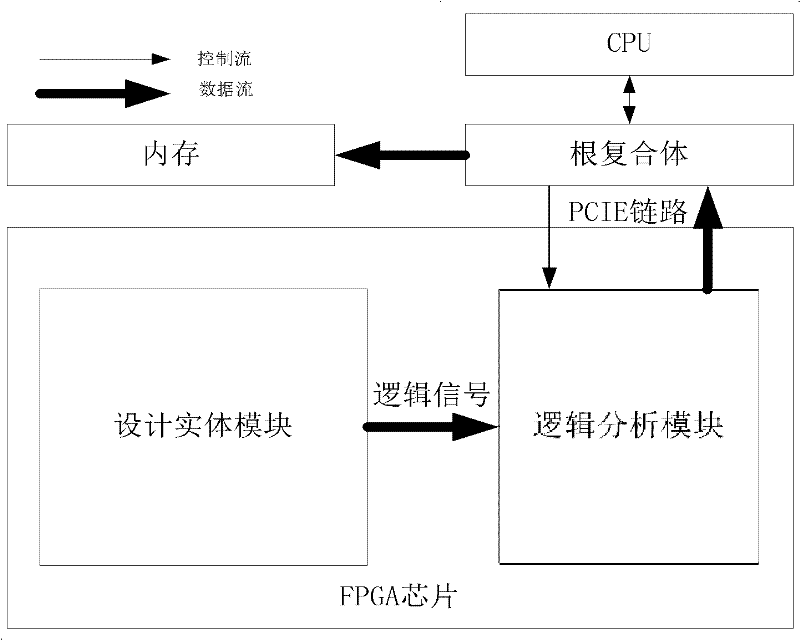

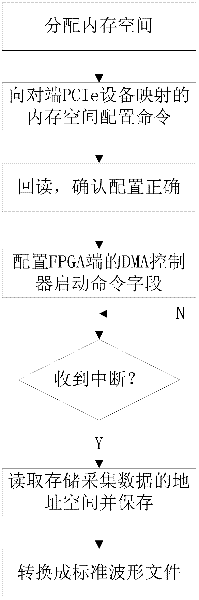

An integrated logic analysis module based on PCIe (peripheral component interconnection express) for an FPGA (field programmable gate array), which comprises a trigger controller, a DMA (direct memory access) controller, a message transmitting engine, a message receiving engine and a PCIe receiving and transmitting controller. The integrated logic analysis module not only can realize all functions of SignalTap or Chipscope, but also can solve the problem of insufficient allowance of a Block RAM (random access memory) in a large-size design, and at the moment, as data are exported and stored in an internal memory of a CPU (central processing unit) side instead of being stored in a chip, sufficient data can be collected under permission of the internal memory. In addition, a trigger module is a register-level code, accordingly, more complicated triggering setting can be realized only by means of modifying the code, and the integrated logic analysis module is far more flexible than the SignalTap or the Chipscope. Besides, in the large-sized design, a CPU and an FPGA are commonly arranged in the same system, a PCIe link is a common channel of multiple high-speed systems, and accordingly the integrated logic analysis module is wide in application.

Owner:南京中新赛克科技有限责任公司

Cache control method and system, storage medium, computer equipment and application

Active; CN111782578A; Reduce problems with large areas; Reduce the problem of large chip area; Architecture with single central processing unit; Energy efficient computing; Time delays; Processing element

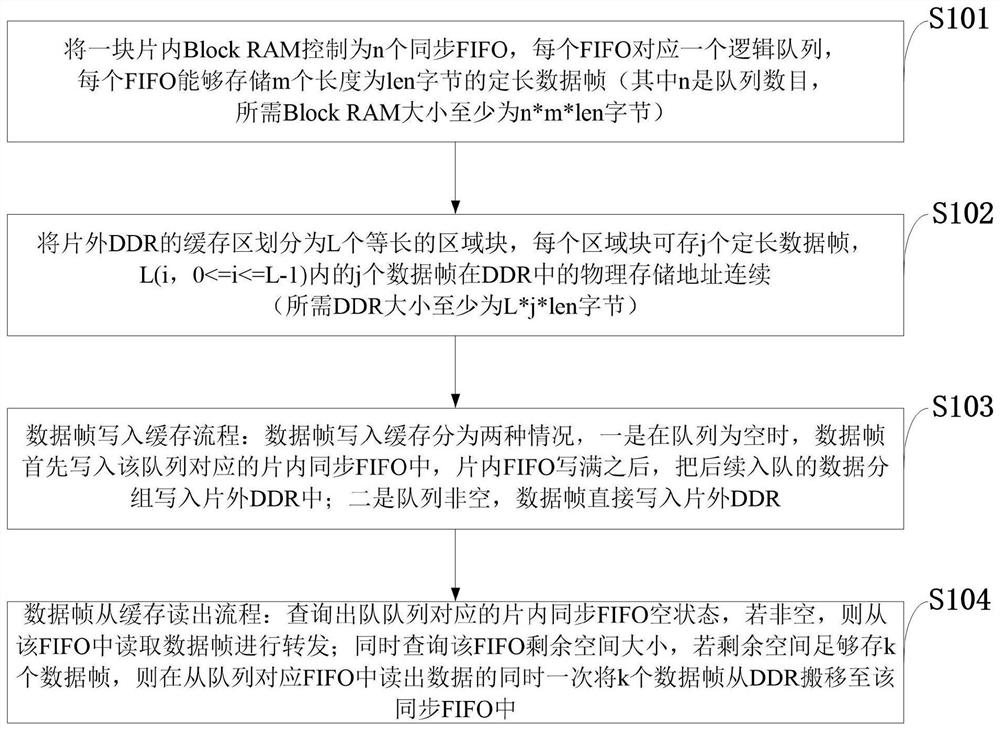

The invention belongs to the technical field of data exchange and discloses a cache control method and system, a storage medium, computer equipment and an application. The method comprises the steps of: controlling one Block RAM so that it is used as a plurality of three-channel synchronous FIFOs, writing data frames into the corresponding on-chip FIFOs when the data frames enter a queue, and writing the data into an off-chip DDR once the on-chip FIFOs are full; dividing the DDR storage space into L area blocks with continuous addresses; and, when data dequeues, moving k continuous data frames from the DDR to the on-chip FIFO while the data is read from the corresponding on-chip FIFO. According to the invention, the processing speed and the traffic burst resistance of the packet processing unit are improved, the DDR bandwidth utilization rate is increased, and the time delay of DDR data reading is reduced. According to the invention, the processing speed of the switching unit is accelerated, a relatively high DDR bandwidth utilization rate is realized, and the influence of absolute time delay brought by a DDR device on the processing time of the switching unit is reduced.

Owner:XIDIAN UNIV
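
The enqueue and dequeue behaviour in this abstract can be modelled as a small queue that spills to a larger backing store. The sketch below is an assumption-laden illustration: the class name `SpillQueue`, the use of `collections.deque` for both levels, and the rule that new frames go to the backing store whenever it is non-empty (to preserve order) are not stated in the patent, and the division of DDR into L area blocks is not modelled.

```python
from collections import deque


class SpillQueue:
    """A small on-chip FIFO that overflows into a large off-chip store."""

    def __init__(self, on_chip_depth, k):
        self.depth = on_chip_depth   # capacity of the on-chip FIFO
        self.k = k                   # frames moved back per dequeue
        self.on_chip = deque()       # stands in for the Block RAM FIFO
        self.ddr = deque()           # stands in for the off-chip DDR

    def enqueue(self, frame):
        # Use the on-chip FIFO only while nothing is waiting in DDR,
        # otherwise frames would overtake each other.
        if not self.ddr and len(self.on_chip) < self.depth:
            self.on_chip.append(frame)
        else:
            self.ddr.append(frame)

    def dequeue(self):
        frame = self.on_chip.popleft()
        # Refill: move up to k consecutive frames from DDR back on chip.
        for _ in range(self.k):
            if not self.ddr or len(self.on_chip) >= self.depth:
                break
            self.on_chip.append(self.ddr.popleft())
        return frame
```

Reads are always served from the on-chip FIFO, so the latency of the backing store is hidden as long as the refill keeps up.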

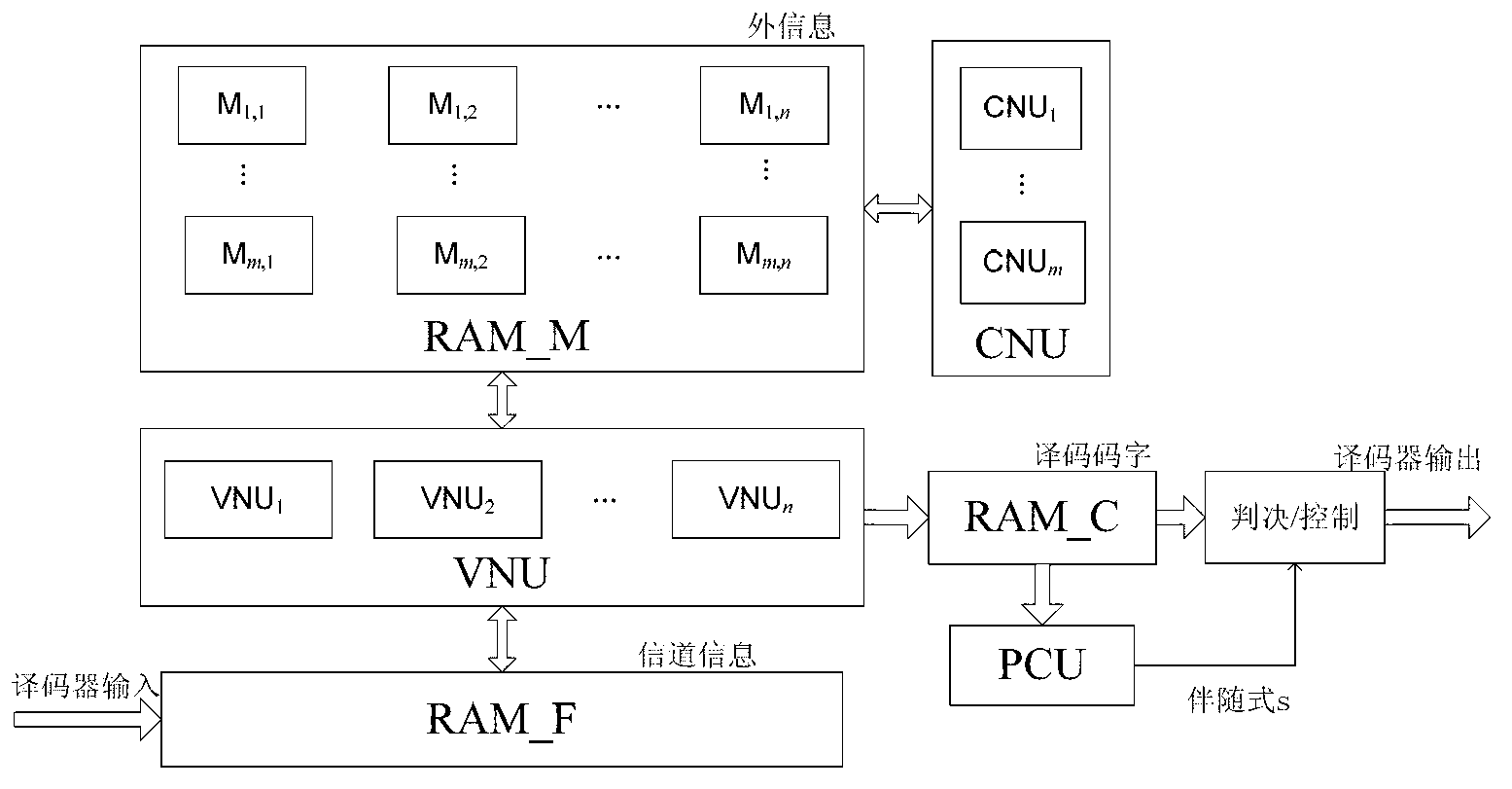

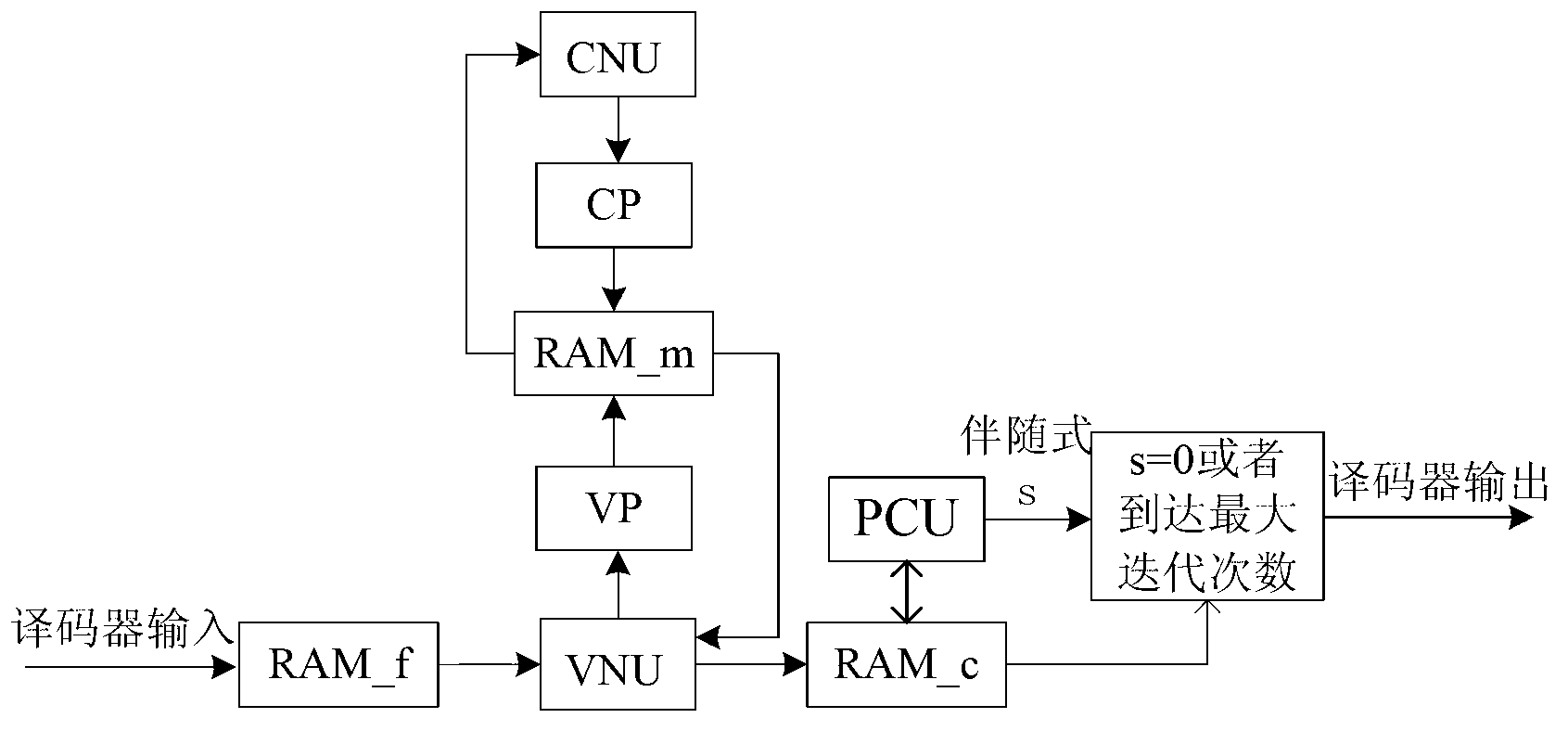

Realization method for QC-LDPC (Quasi-Cyclic Low-Density Parity-Check) decoder for improving node processing parallelism

Active; CN103220003A; Overcoming the bottleneck of low throughput limiting system rate; Improve throughput; Error correction/detection using multiple parity bits; Random access memory; Block ram

The invention relates to a realization method for a QC-LDPC (Quasi-Cyclic Low-Density Parity-Check) decoder for improving the node processing parallelism. The decoder comprises a variable node information updating unit (VNU), a variable node information packing unit (VP), a check node information updating unit (CNU), a check node information packing unit (CP), a check equation computing unit (PCU), storage blocks RAM (Random Access Memory)_f and RAM_m, each with a storage bit width of (Qh) bits, and a storage block RAM_c with a storage bit width of h bits. According to the realization method disclosed by the invention, due to the adoption of the node information packing units, simultaneous reading and writing of batch data in a memory can be effectively realized and the problem of memory access conflicts is solved; and the amount of data stored in each address unit of the memory is increased, so that the parallelism of a processing unit of the LDPC decoder can be improved. The realization method for the QC-LDPC decoder has the characteristics of high throughput, few hardware resources, low design complexity and the like.

Owner:XIAN INSTITUE OF SPACE RADIO TECH

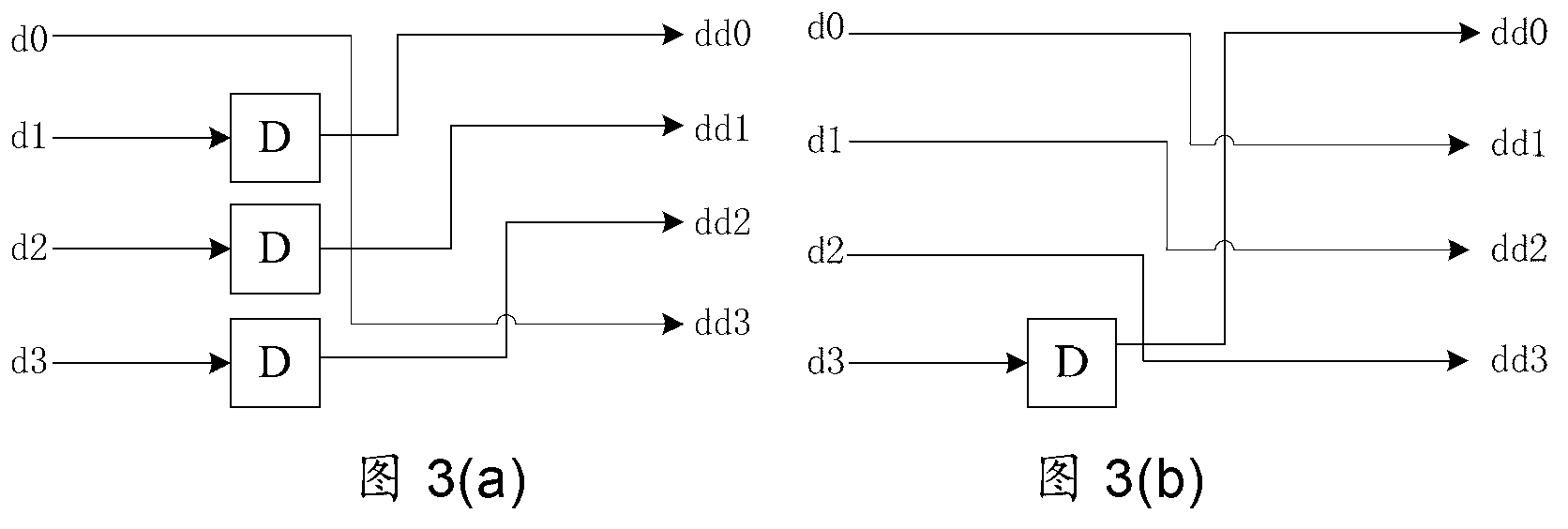

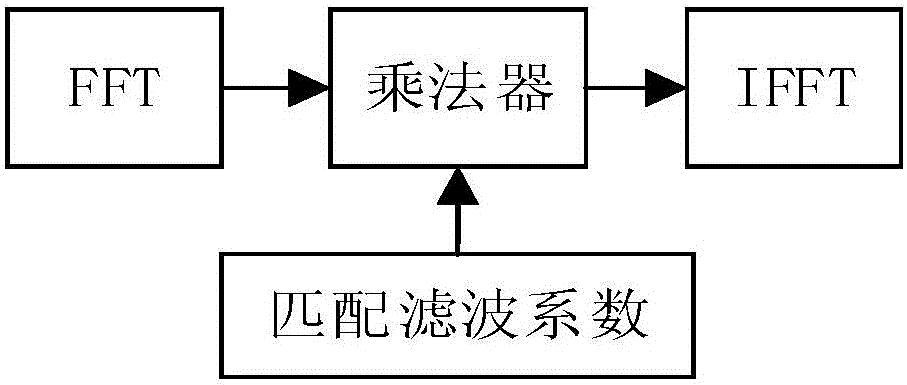

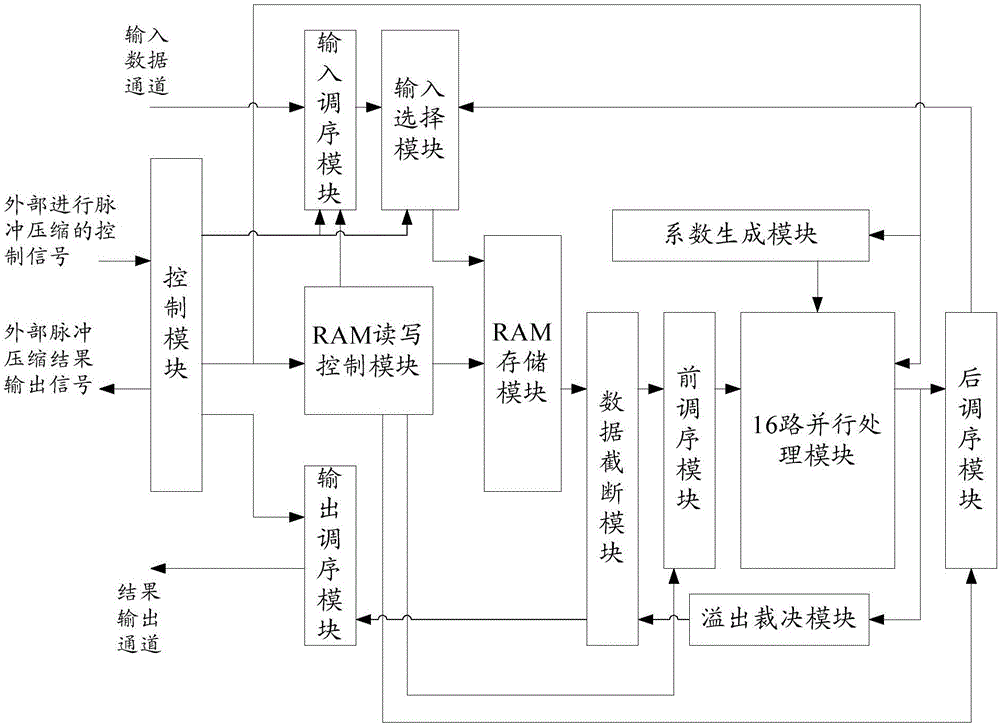

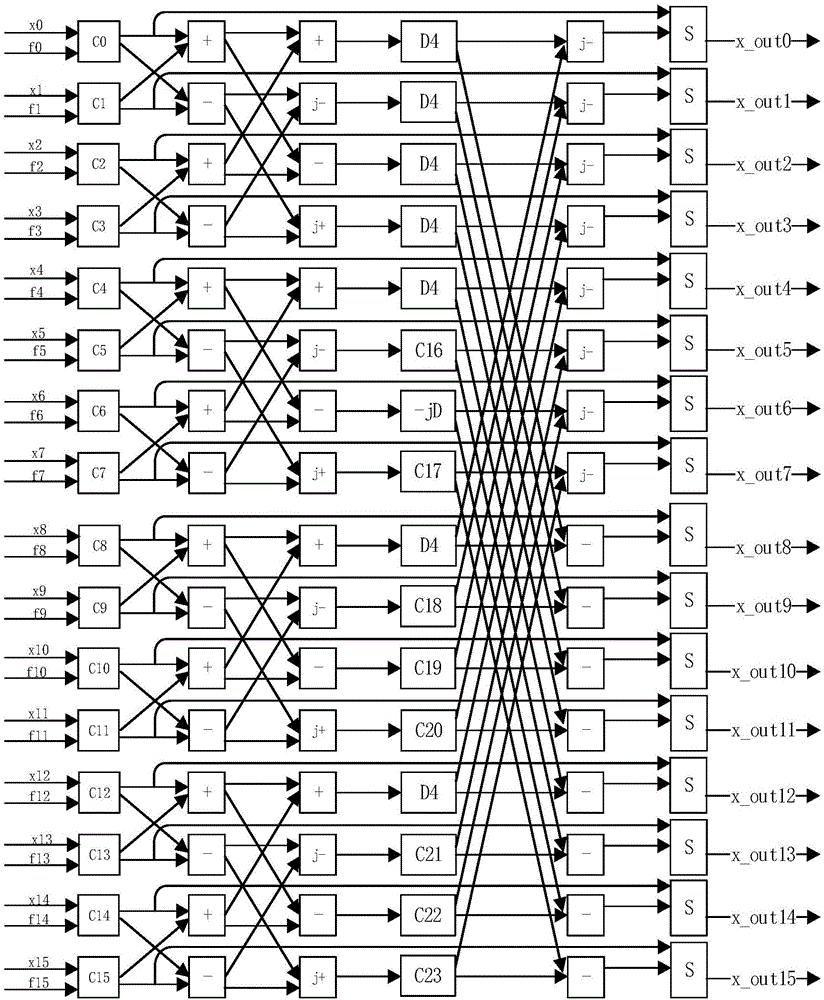

Device and method for realizing pulse compression of 65536 points on basis of FPGA (Field Programmable Gate Array)

Active; CN106484658A; Improve parallelism; Processing speed; Complex mathematical operations; Triangular function; Rotation factor

The invention belongs to the technical field of radar signal processing and discloses a device and method for realizing pulse compression of 65536 points on the basis of an FPGA (Field Programmable Gate Array). The implementation process comprises the following steps of: firstly receiving data from 16 paths in parallel, and storing the data into 16 block RAMs by adopting the principle of parallel reading and no conflict; then reading 16 numbers once by a production line mode, carrying out 4 stages of radix-16 butterfly computation and in-situ storage and finishing FFT (Fast Fourier Transform) processing; carrying out parallel and matched filtering processing on 16 paths and in-situ storage; then realizing IFFT processing by 4 stages of butterfly computation; and finally outputting for 16 paths in parallel, wherein rotation factors and matched filtering coefficients are generated in real time by using sectional linear triangular function tables and a mode of checking table differences. The device and method disclosed by the invention have the advantages that the use of RAM resources in the FPGA is greatly saved, the processing speed is obviously increased, and the data throughput is increased.

Owner:XIDIAN UNIV
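
The processing chain in this abstract (FFT, matched filtering in the frequency domain, then IFFT) can be shown at toy scale. This is a sketch only: it uses a recursive radix-2 FFT in plain Python rather than the four-stage radix-16 structure of the patent (note that 16^4 = 65536), it does not model the 16 parallel block RAM banks or the interpolated twiddle tables, and the function names `fft` and `pulse_compress` are hypothetical.

```python
import cmath


def fft(x, inverse=False):
    """Recursive radix-2 FFT; len(x) must be a power of two.

    The inverse transform is unscaled (caller divides by N).
    """
    n = len(x)
    if n == 1:
        return list(x)
    sign = 1 if inverse else -1
    even = fft(x[0::2], inverse)
    odd = fft(x[1::2], inverse)
    out = [0] * n
    for k in range(n // 2):
        w = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + w
        out[k + n // 2] = even[k] - w
    return out


def pulse_compress(rx, ref):
    """Circular matched filter: IFFT(FFT(rx) * conj(FFT(ref)))."""
    n = len(rx)
    rx_f = fft(rx)
    ref_f = fft(list(ref) + [0] * (n - len(ref)))  # zero-pad the reference
    product = [a * b.conjugate() for a, b in zip(rx_f, ref_f)]
    return [v / n for v in fft(product, inverse=True)]


# Usage: a 4-sample code delayed by 5 samples inside a 16-sample window.
ref = [1, -1, 1, 1]
rx = [0] * 16
rx[5:9] = ref
magnitudes = [abs(v) for v in pulse_compress(rx, ref)]
peak_index = magnitudes.index(max(magnitudes))
```

The output peaks at the delay of the echo, with a peak value equal to the energy of the reference code.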

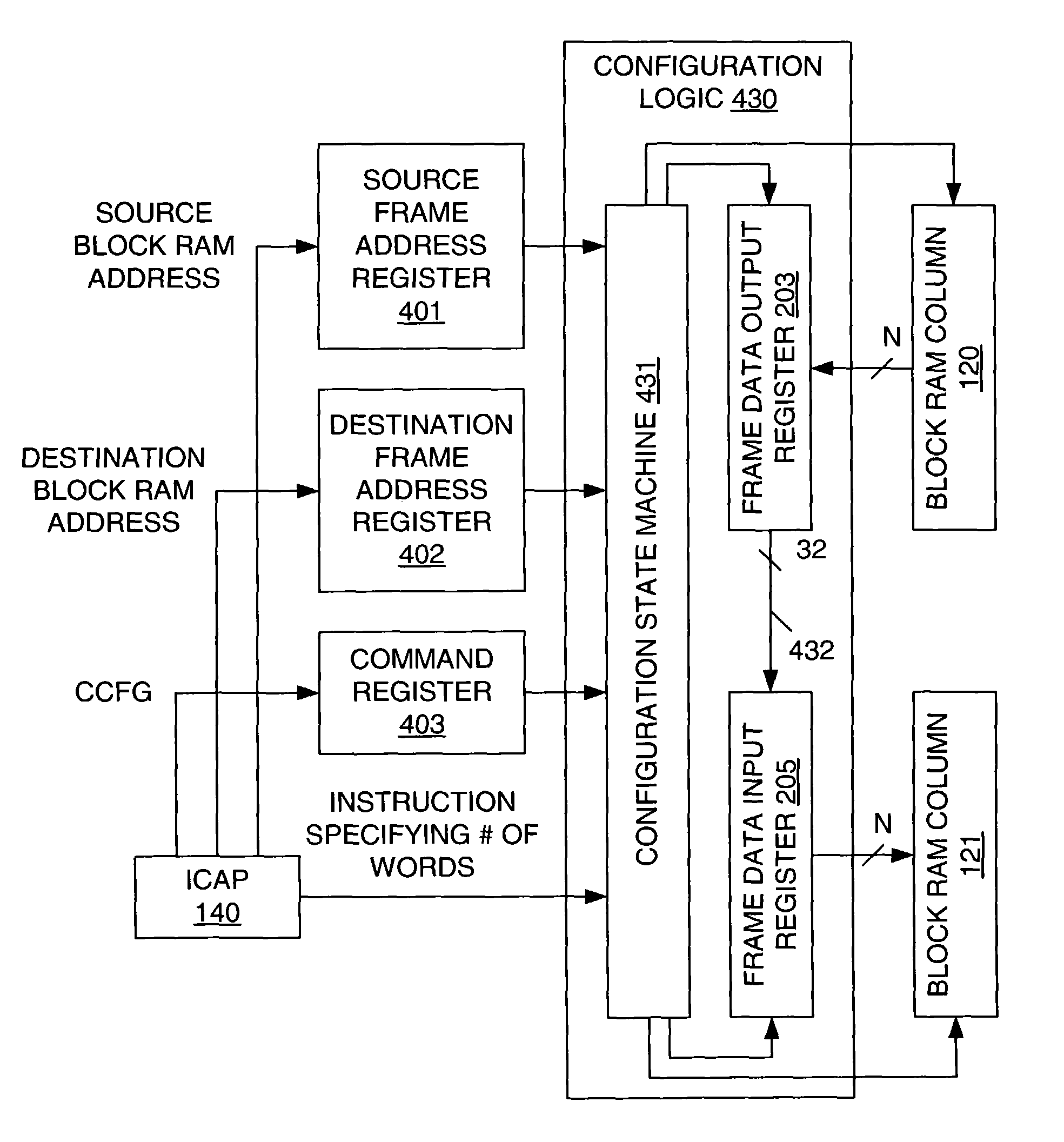

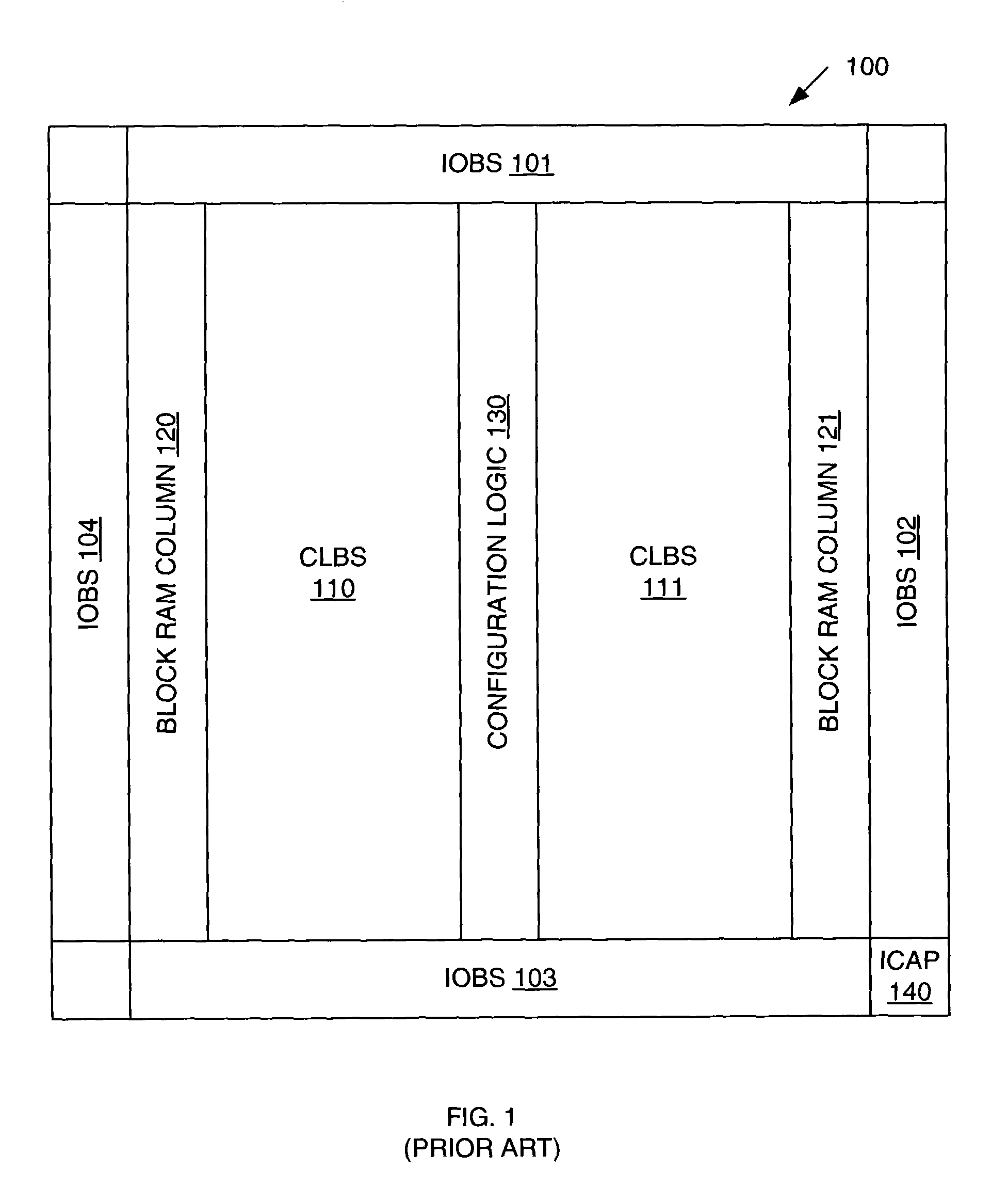

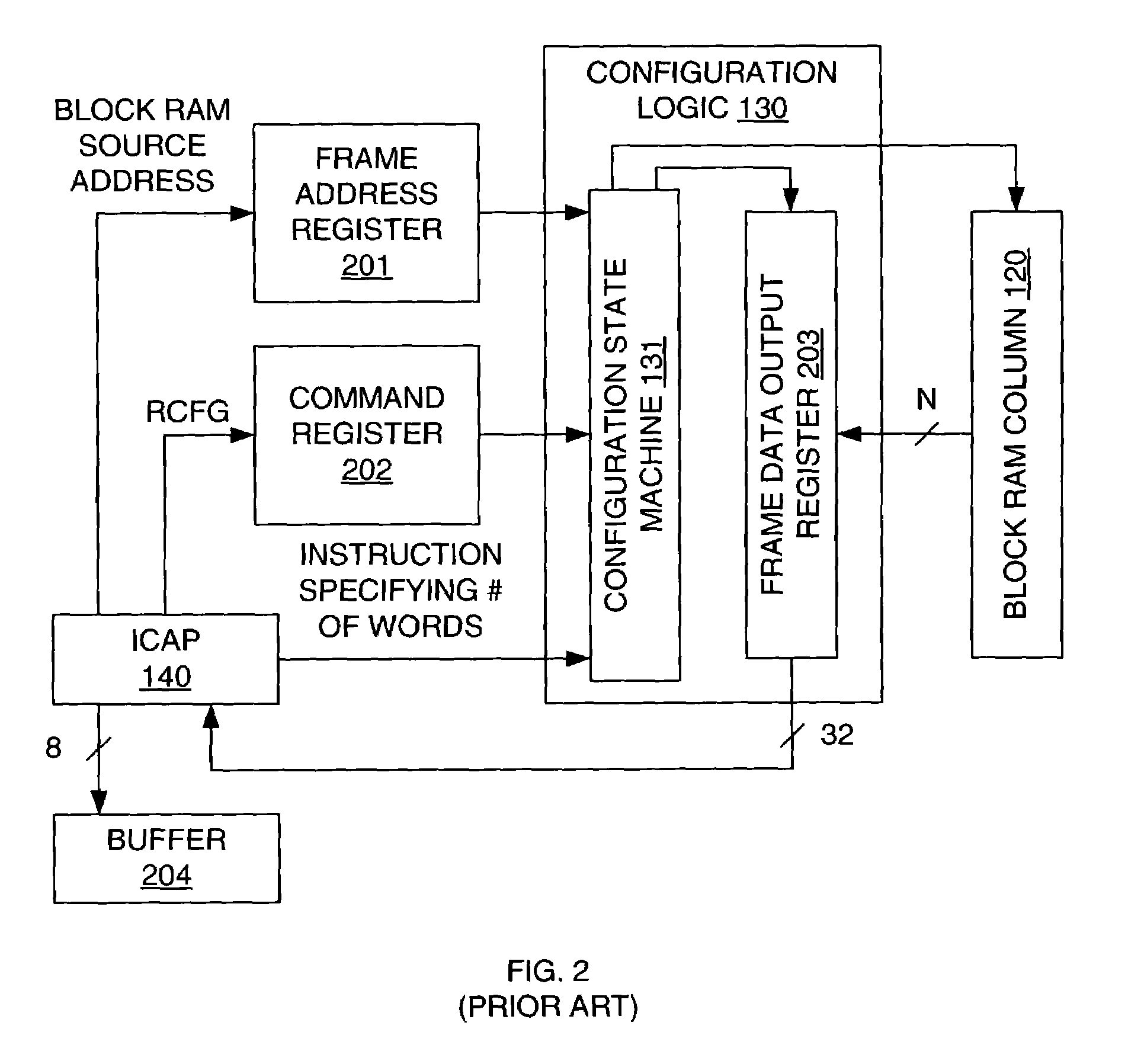

Field programmable gate array (FPGA) configuration data path for module communication

Active; US7080226B1; Solid-state devices; Logic circuits using elementary logic circuit components; Processor register; Block ram

Data is transferred on a field programmable gate array (FPGA) by (1) retrieving a first set of data from a first block RAM column of a configuration memory of the FPGA, (2) storing the first set of data retrieved from the first block RAM column in a frame data output register, (3) transferring the first set of data from the frame data output register directly to a frame data input register through a configuration bus of the FPGA, and (4) transferring the first set of data from the frame data input register to a second block RAM column of the configuration memory. The configuration bus is wide (e.g., 32-bits), thereby resulting in a high data transfer bandwidth.

Owner:XILINX INC

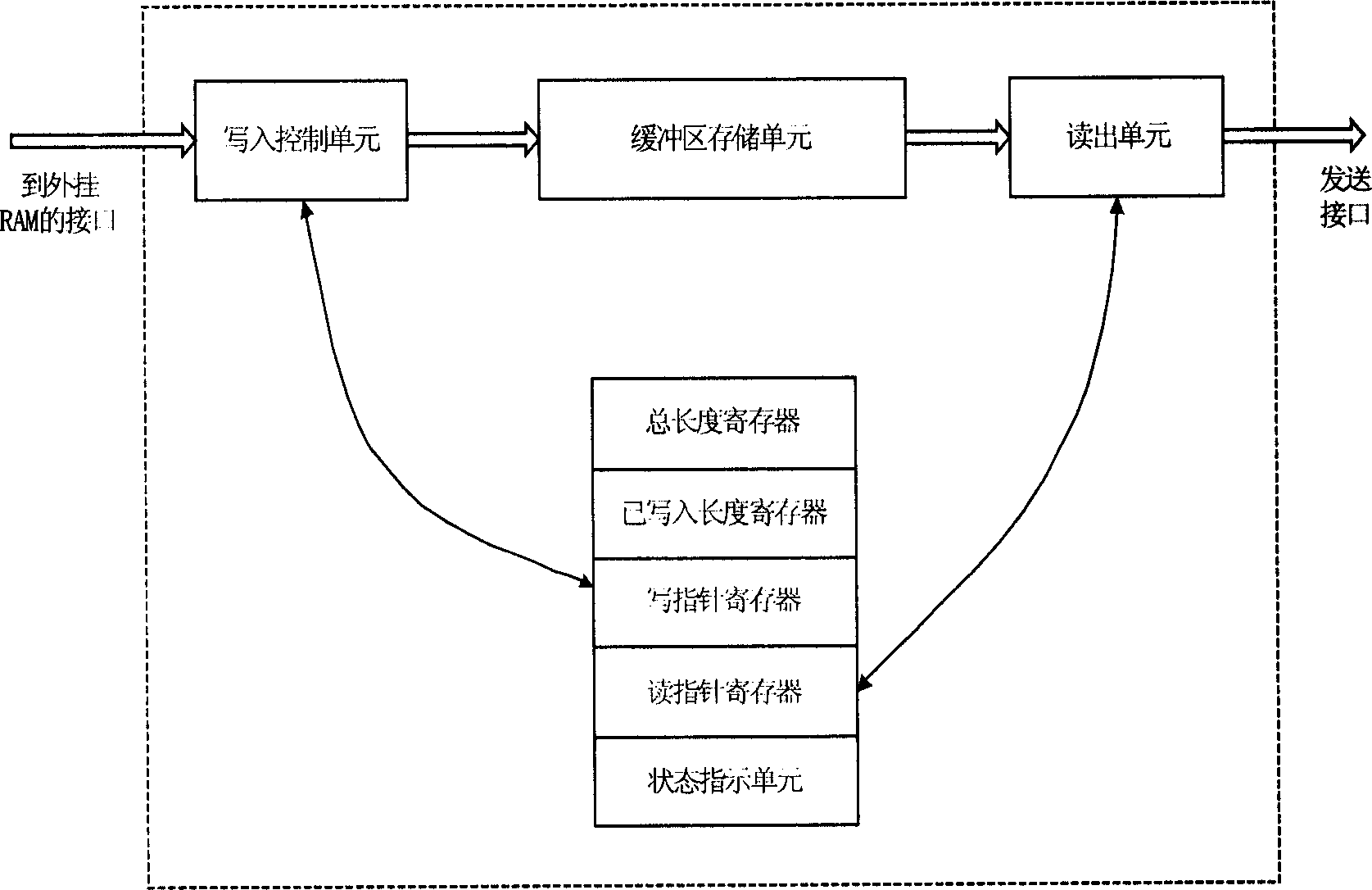

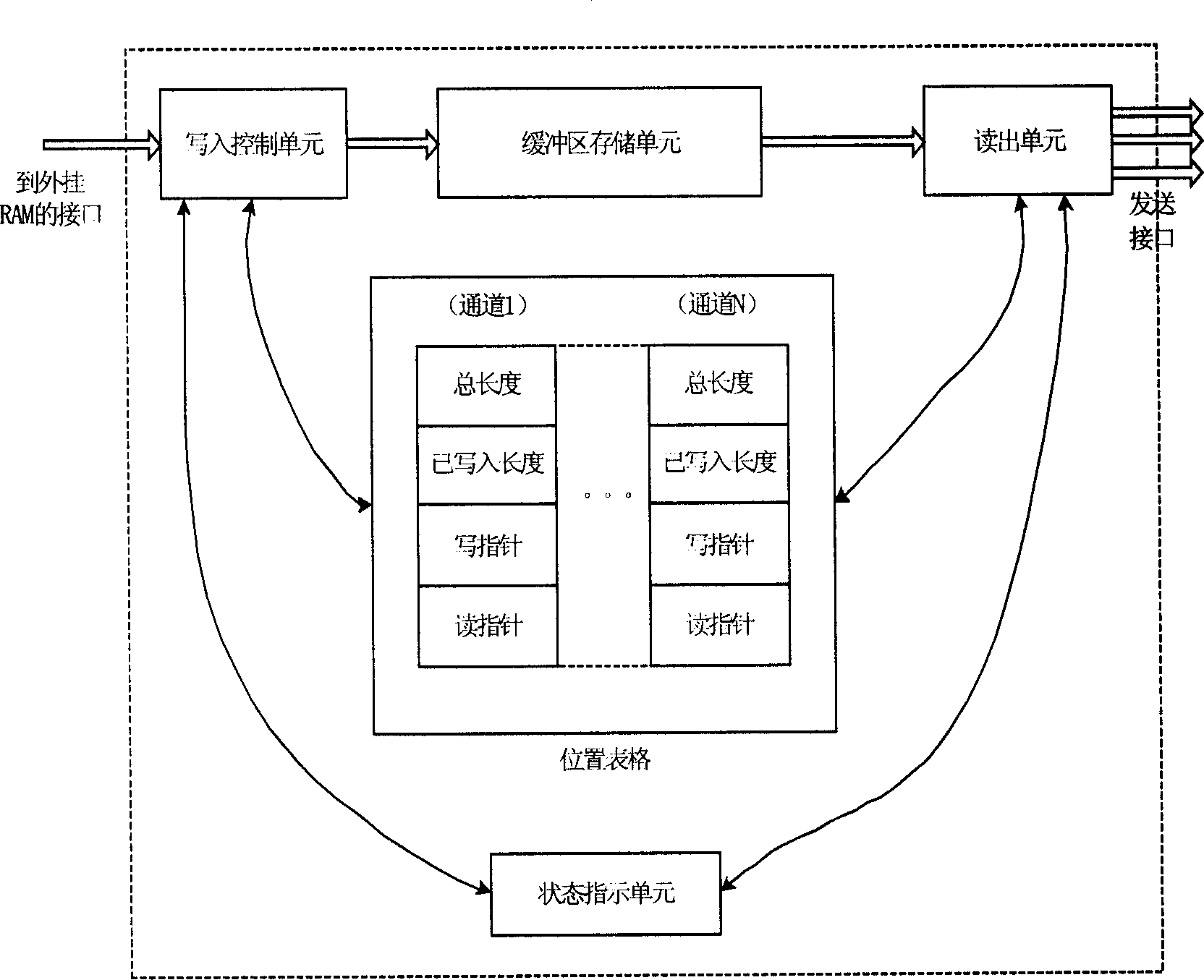

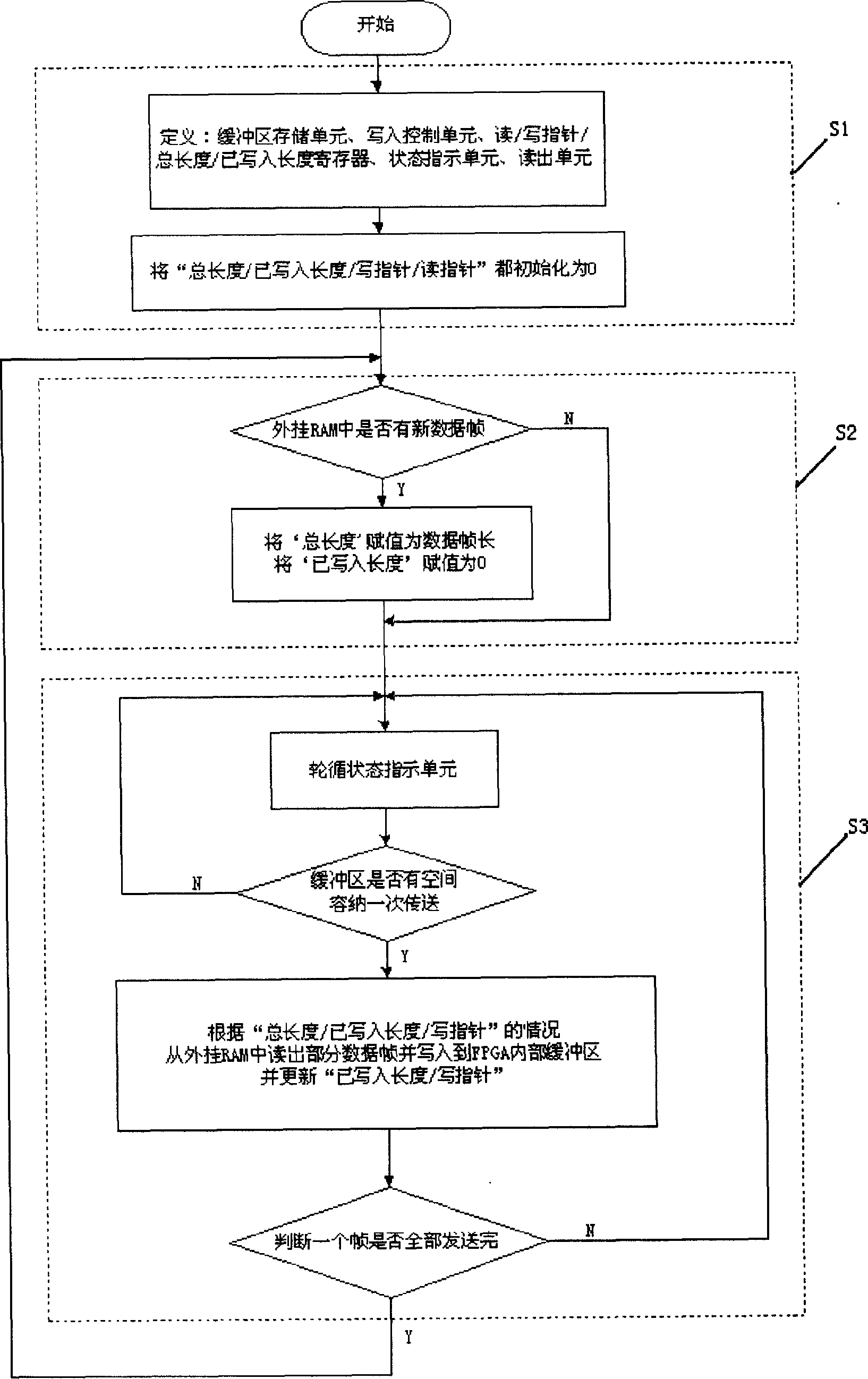

Method and device for managing transmitting buffer area in field programmable gate array

The present invention relates to method and device for managing the transmission buffer area in FPGA. Inside the FPGA, there are defined buffer area memory unit, write-in control unit, write / read pointer register, total length / written length register, state indication unit and read out unit. The data frames to be transmitted is first read out from the plugged RAM in several times with the write-in control unit, then written to the buffer area memory unit in FPGA, and finally read out from the buffer area memory unit and sent out with the read out unit. The present invention has very small FPGA block RAM resource occupied by the buffer area even if in case of long data frame.

Owner:ZTE CORP

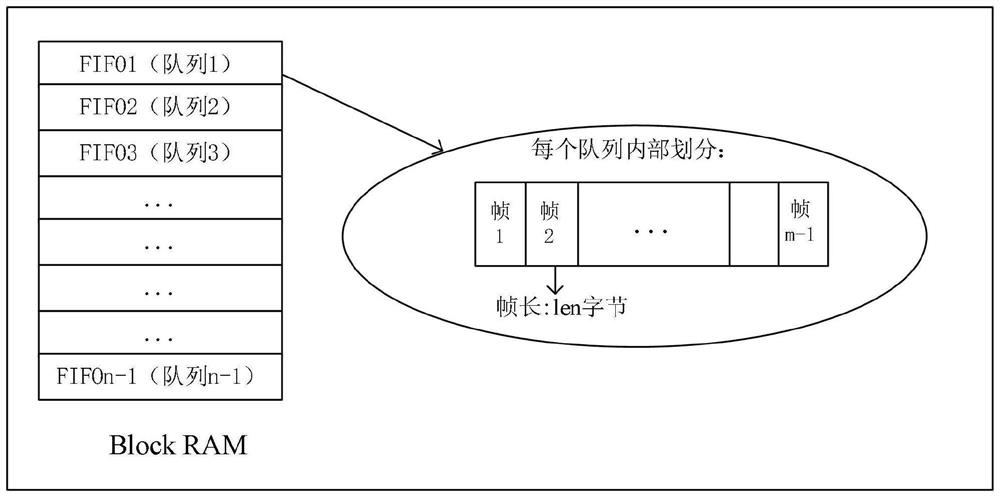

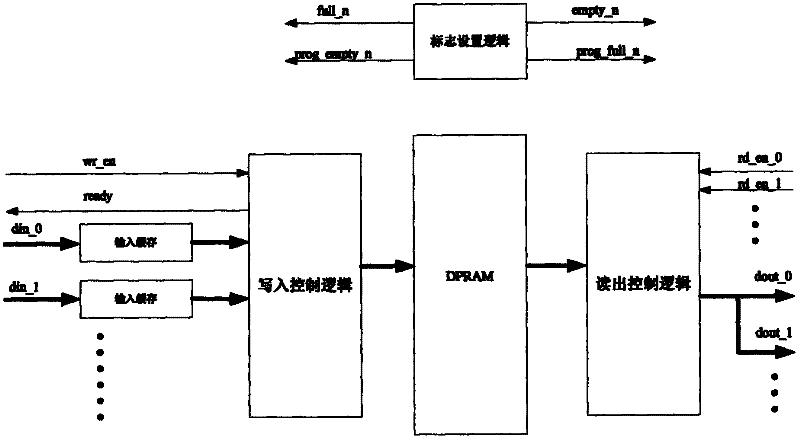

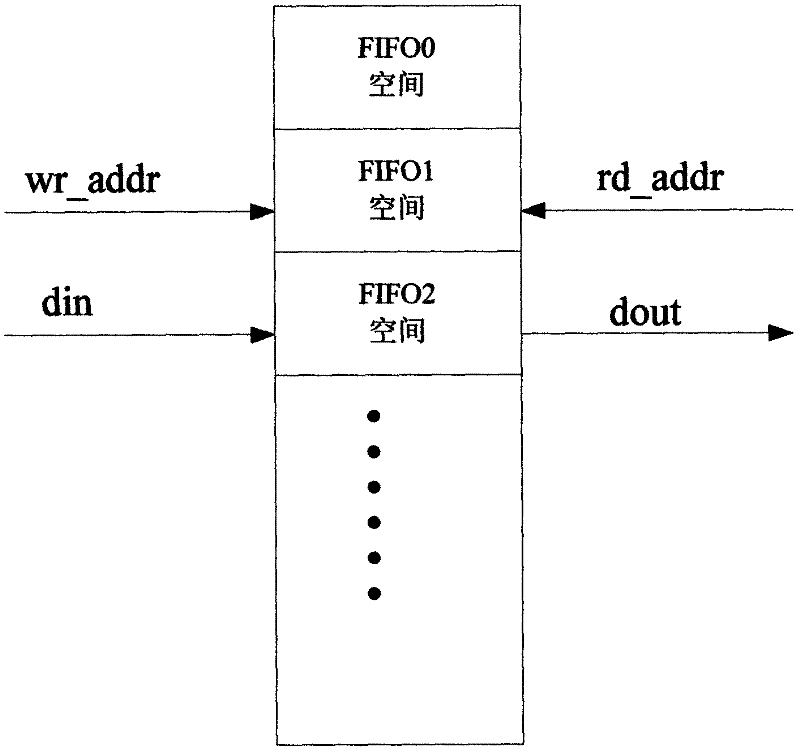

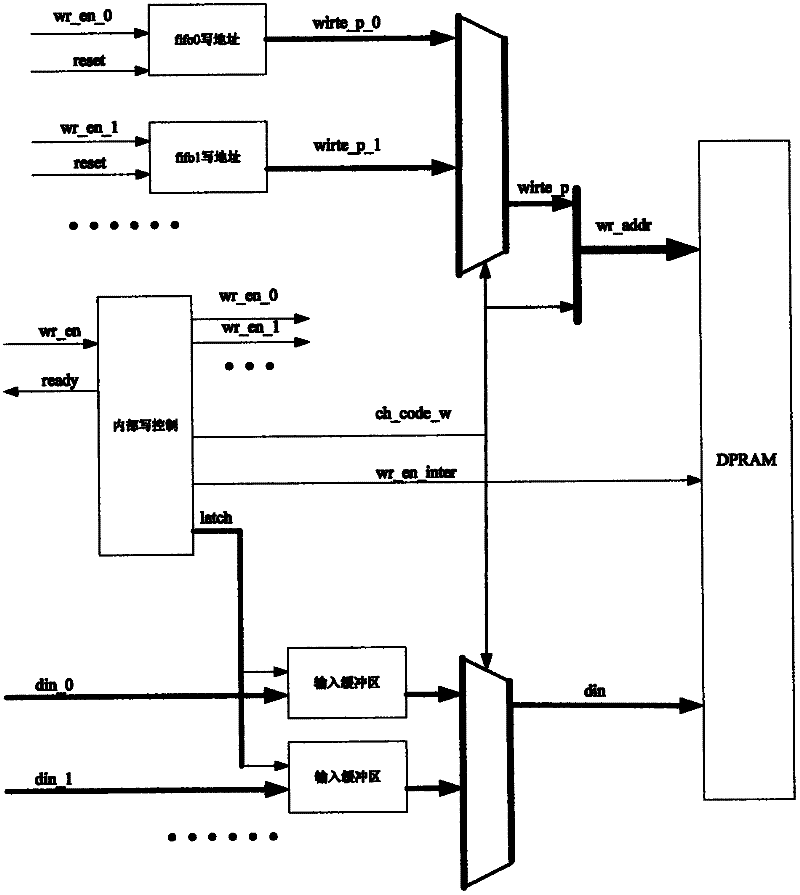

Parallel write-in multi-FIFO (first in,first out) implementation method based on single chip block RAM (random access memory)

Inactive; CN102520902A; Take advantage of; Increase profit; Data conversion; Static random-access memory; Random access memory

The invention discloses a parallel write-in multi-FIFO (first in,first out) implementation method based on a single chip block RAM (random access memory). The implementation method comprises the following steps of: instantiating a block RAM into a DPRAM (dual port random access memory) to be used for storing data of each channel FIFO, wherein each channel FIFO has a corresponding memory space in the DPRAM; receiving each channel FIFO parallel data write-in requirement by an input buffer area and a write-in control logic, and writing data to the corresponding input buffer area of each channel FIFO; generating an inner writing order by the input buffer area and the write-in control logic after the input buffer areas receive the data, taking out each channel FIFO data from the input buffer areas; and sequentially writing in the memory space of each channel FIFO; when receiving external any channel FIFO reading requirement, reading the control logic according to the requirement, reading data from the memory space of the channel FIFO, and sending FIFO data to an output port; and setting the marks for marking the empty, full, programmable empty and programmable full states of each channel FIFO for logic. The implementation method provided by the invention can implement a plurality of FIFOs requiring parallel write-in and readout in a random sequence.

Owner:FUSHUN OPTOELECTRONICS TECH
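
A software model may help make the shared-memory layout concrete. The sketch below assumes equal fixed-size regions per channel and serialises same-cycle writes in channel order; the class `MultiFifo` and its method names are hypothetical, and the programmable empty/full flags mentioned in the abstract are left out.

```python
class MultiFifo:
    """Several FIFOs sharing one memory array, one region per channel."""

    def __init__(self, channels, depth):
        self.depth = depth
        self.mem = [None] * (channels * depth)  # the single dual-port RAM
        self.wr = [0] * channels                # per-channel write pointers
        self.rd = [0] * channels                # per-channel read pointers
        self.count = [0] * channels             # per-channel fill levels

    def _addr(self, ch, ptr):
        # Each channel owns addresses [ch * depth, (ch + 1) * depth).
        return ch * self.depth + ptr

    def empty(self, ch):
        return self.count[ch] == 0

    def full(self, ch):
        return self.count[ch] == self.depth

    def write_parallel(self, items):
        """Accept {channel: value} presented together; return channels stored.

        Writes are buffered conceptually and committed one at a time,
        because the RAM has a single write port.
        """
        accepted = []
        for ch in sorted(items):
            if self.full(ch):
                continue  # this channel's write is rejected
            self.mem[self._addr(ch, self.wr[ch])] = items[ch]
            self.wr[ch] = (self.wr[ch] + 1) % self.depth
            self.count[ch] += 1
            accepted.append(ch)
        return accepted

    def read(self, ch):
        """Read one value from any channel, in any order across channels."""
        if self.empty(ch):
            raise IndexError("channel FIFO is empty")
        value = self.mem[self._addr(ch, self.rd[ch])]
        self.rd[ch] = (self.rd[ch] + 1) % self.depth
        self.count[ch] -= 1
        return value
```

Because every channel has its own pointers and region, channels can be read in arbitrary order without disturbing one another.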

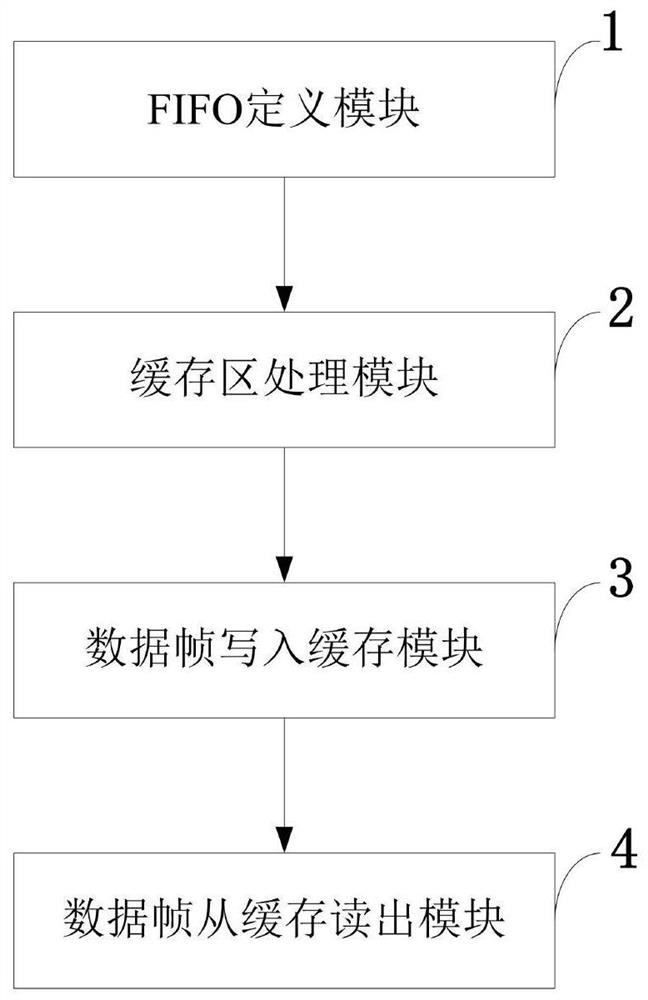

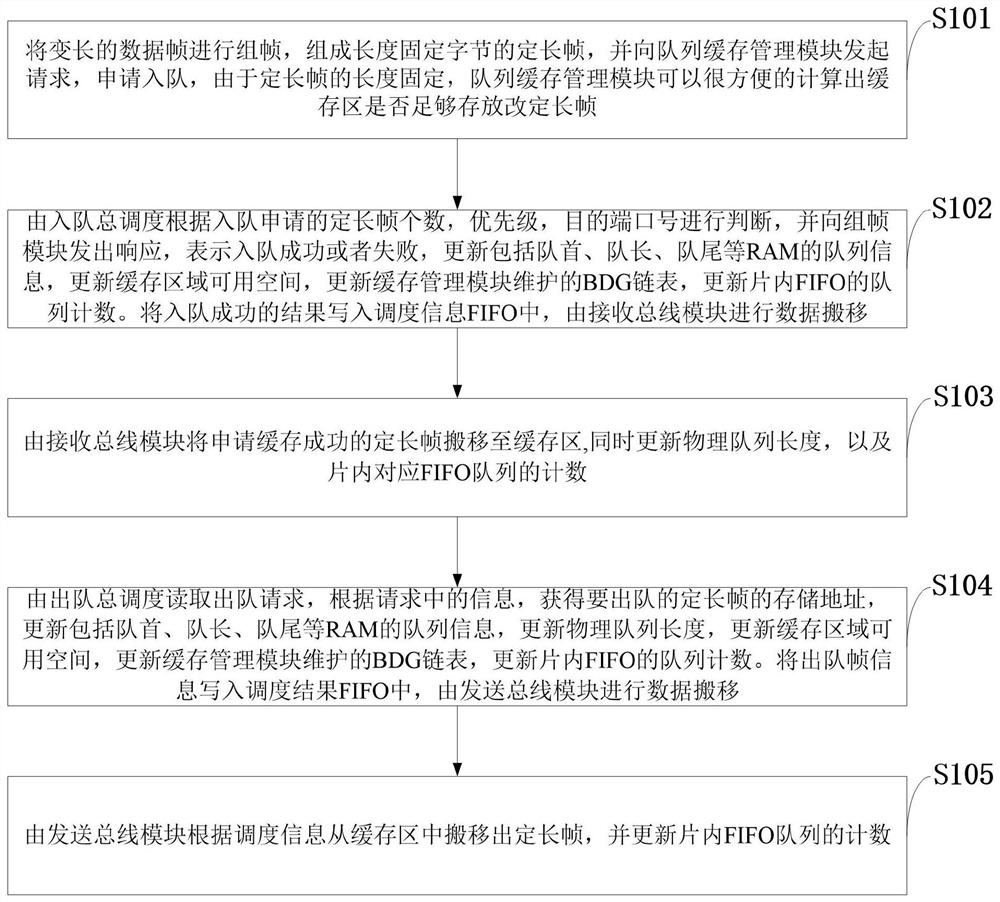

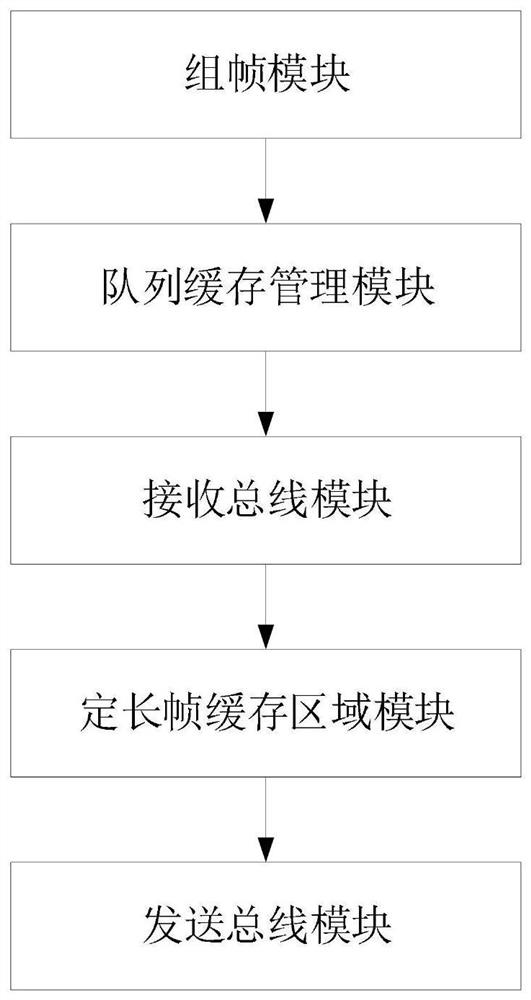

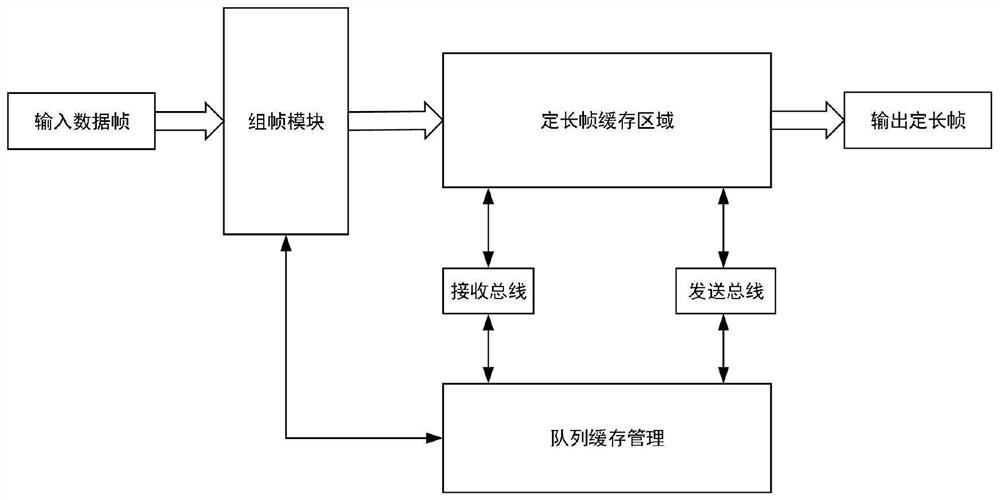

Queue cache management method and system, storage medium, computer equipment and application

Active; CN112084136A; Simplified management; Increase exchange rate; Other databases indexing; Architecture with single central processing unit; Shard; Parallel computing

The invention belongs to the technical field of data exchange, and discloses a queue cache management method and system, a storage medium, computer equipment and an application. The method includes framing data frames that have undergone flow classification and grouping before they enter a queue cache management module, converting the variable-length Ethernet data frames into fixed-length frames with a fixed number of bytes; and applying peripheral control to an on-chip Block RAM, so that a configurable multi-channel FIFO queue is formed and used for storing the fixed-length frames. According to the invention, the fixed-length frames of different queues are stored in the whole Block RAM, the storage area is selected in a configurable mode to be presented outwards as the whole RAM or as a plurality of FIFO queues, a proper storage scheme is selected according to the storage area, the utilization rate of storage resources is increased, and the processing and forwarding efficiency of data frames is improved. According to the invention, internal fragments can be avoided as much as possible, the overall rate of the system is improved, and the utilization rate of storage resources is greatly improved.

Owner:XIDIAN UNIV

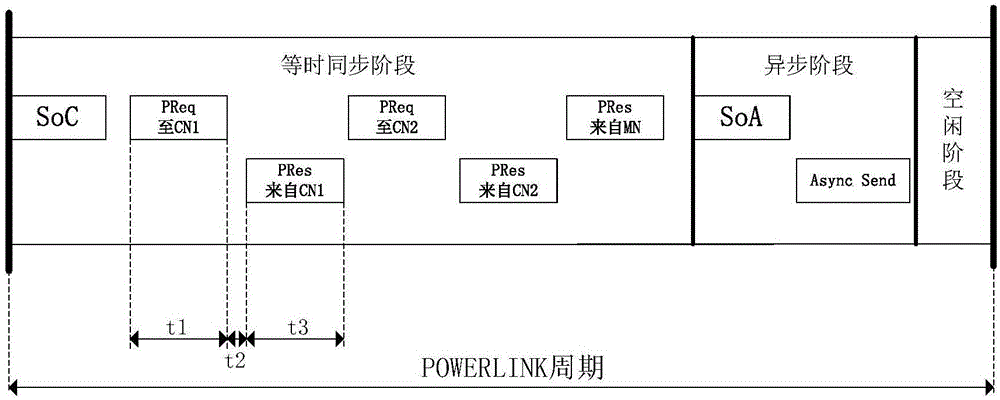

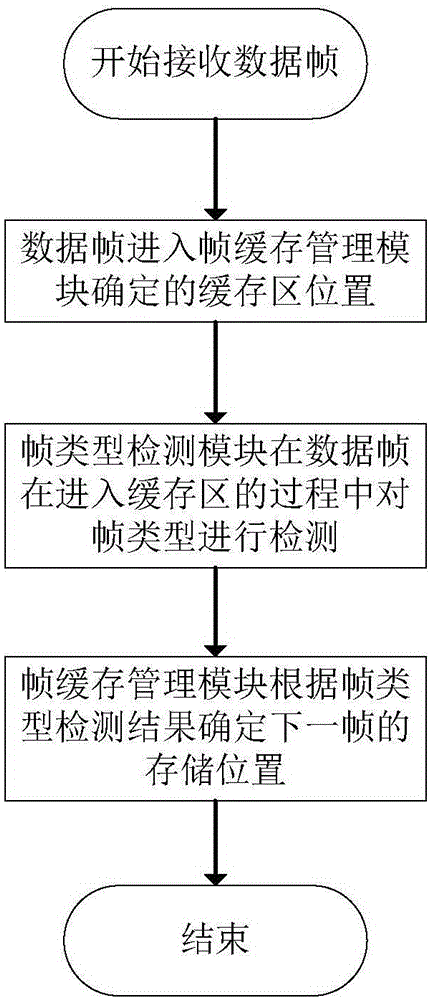

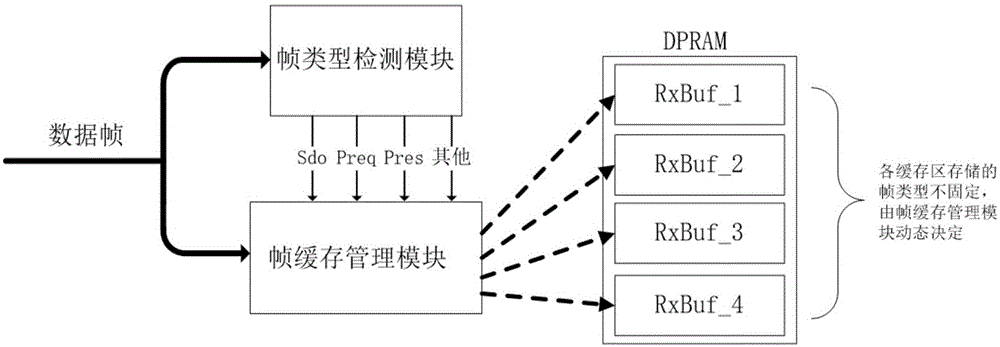

POWERLINK slave station frame buffer management system based on FPGA (Field Programmable Gate Array)

Active; CN105162786A; Solve the problem of being covered; Avoid overwriting; Data switching networks; Random access memory; Computer module

The invention discloses a POWERLINK slave station frame buffer management system based on an FPGA (Field Programmable Gate Array). A data frame type detection module and a data frame buffer management module are realized in the FPGA, a block RAM (Random Access Memory) in the FPGA is taken as a frame buffer region, the frame type is recognized in a data frame receiving process by the data frame type detection module, a result is transmitted to the buffer management module, and the buffer management module dynamically arranges a storage structure, so that different types of frames are stored in different regions of the RAM without interference on each other. Through dynamic management of the storage structure, a problem of data loss of the conventional linear storage structure due to frame coverage is avoided.

Owner:HUAZHONG UNIV OF SCI & TECH

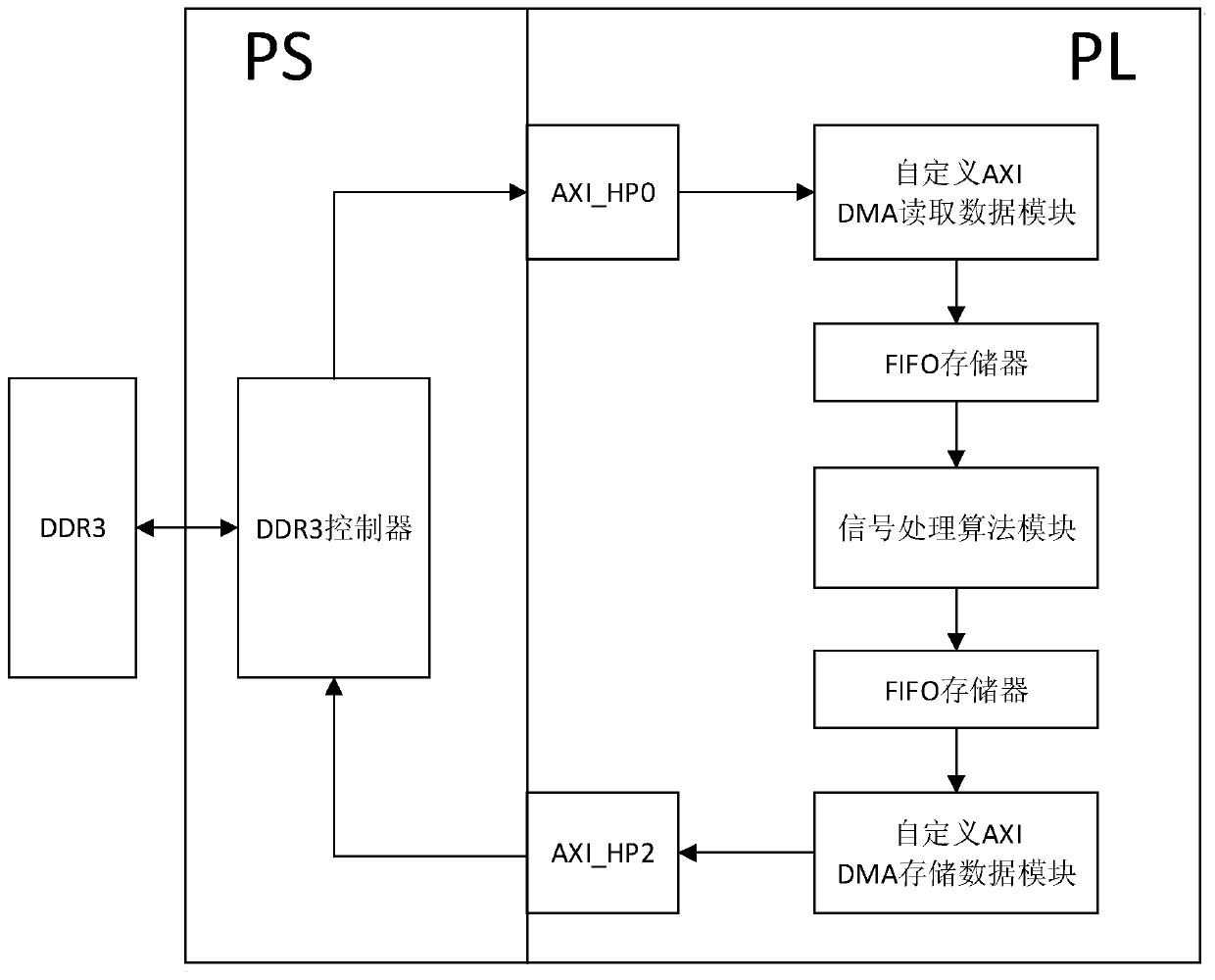

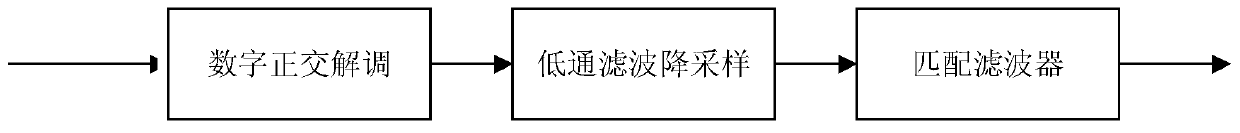

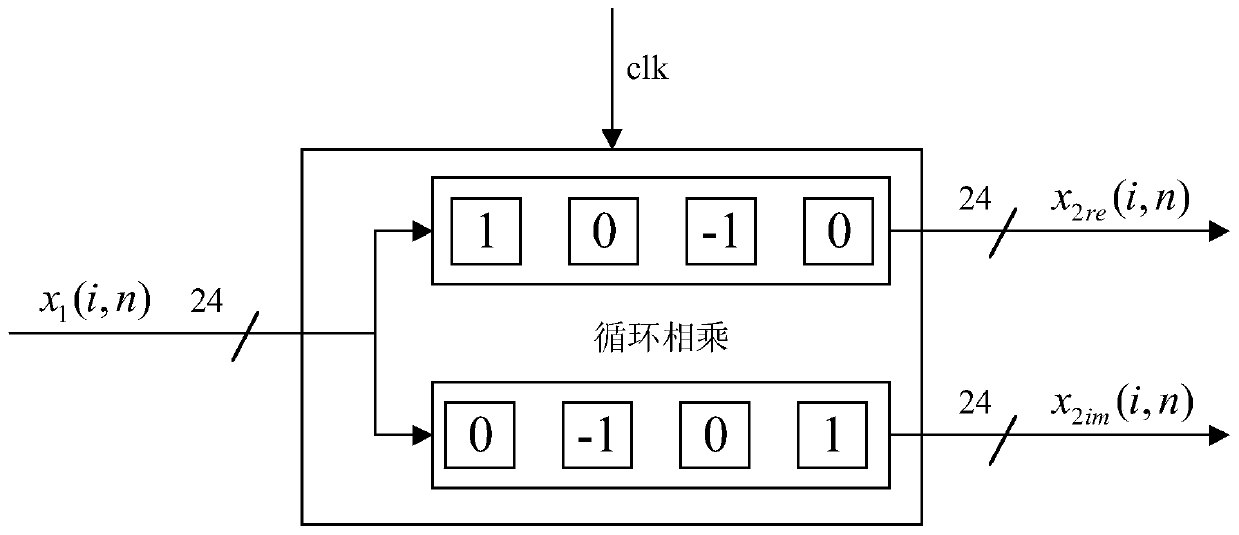

Side-scan sonar signal processing method based on ZYNQ

Active; CN110244304A; Fast operation; Acoustic wave reradiation; Quadrature demodulation; Sonar signal processing

The invention discloses a side-scan sonar signal processing method based on ZYNQ. The method comprises the following steps of 1) reading AD original acquisition data in a DDR3 shared memory outside a PS end at a PL end by utilizing a FIFO memory, a self-defined DMA reading data module, EMIO and a logic unit of an AXI-HP interface; 2) carrying out digital quadrature demodulation, low-pass filtering downsampling and matched filtering processing on the AD original acquisition data in the step 1) by utilizing an FIR IP core, a Block RAM IP core, a multiplier IP core and a CORDIC IP core; and 3) storing a processing result in the step 2) into the DDR3 shared memory outside the PS end at the PL end by utilizing the FIFO memory, the self-defined DMA reading data module, a PL-PS interrupt and the logic unit of the AXI-HP interface. According to the method, the speed and precision of the side-scanning sonar signal processing can be effectively improved.

Owner:ZHEJIANG UNIV OF TECH

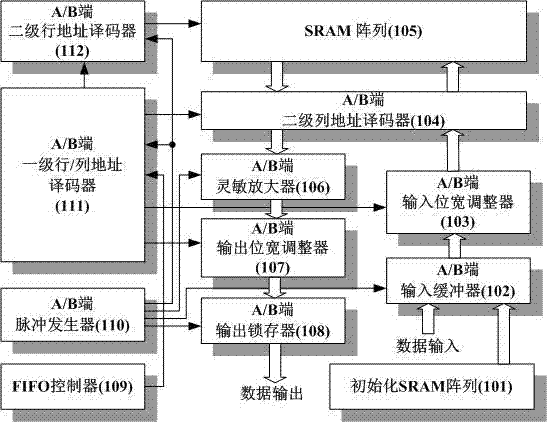

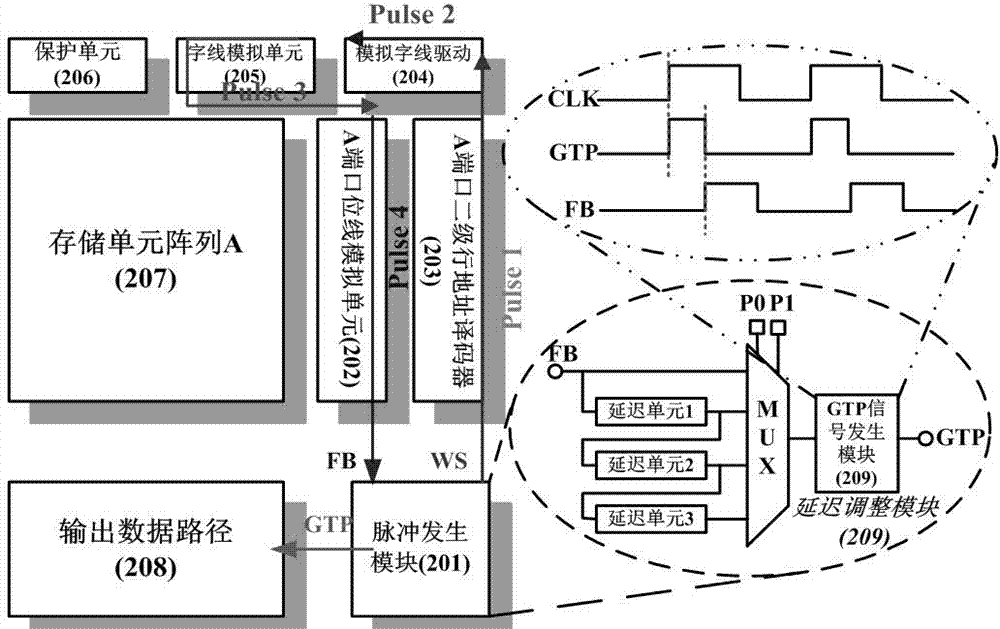

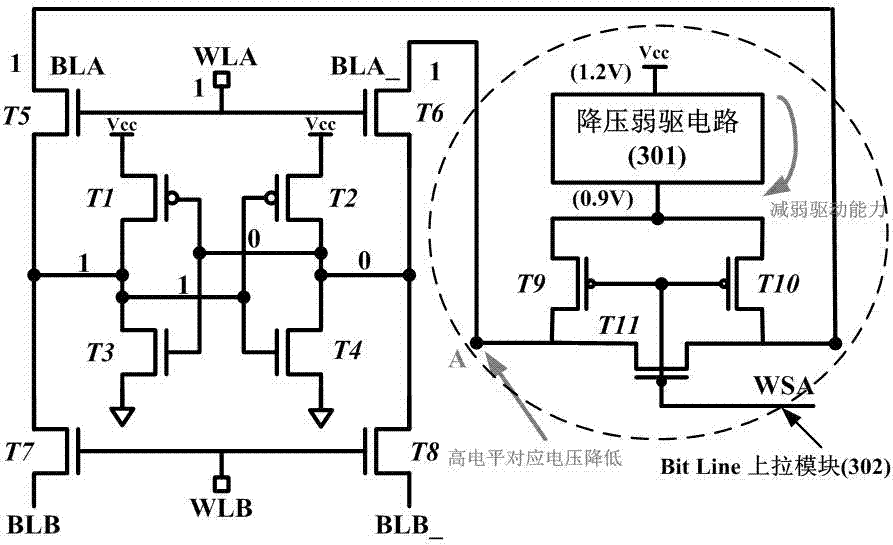

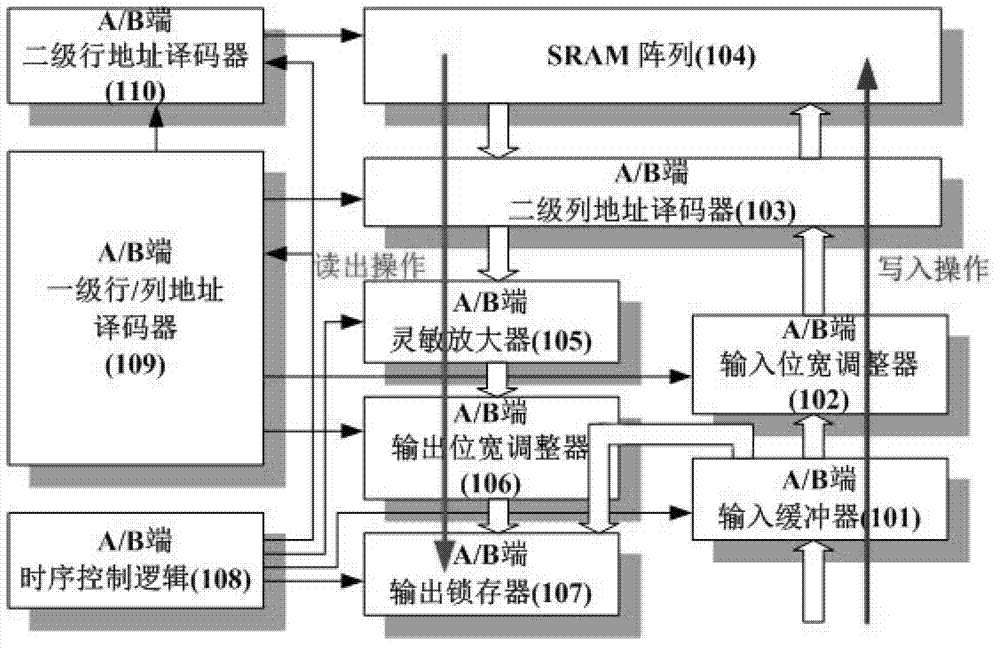

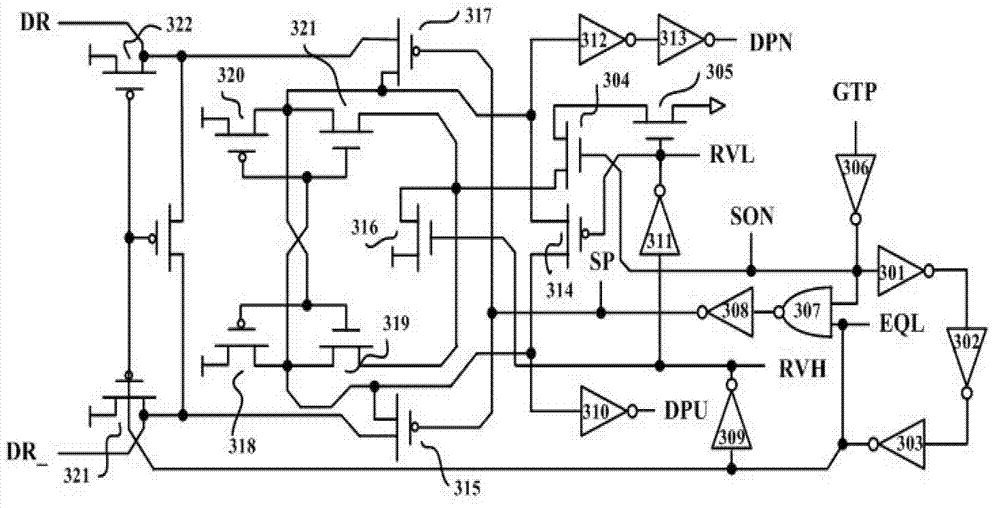

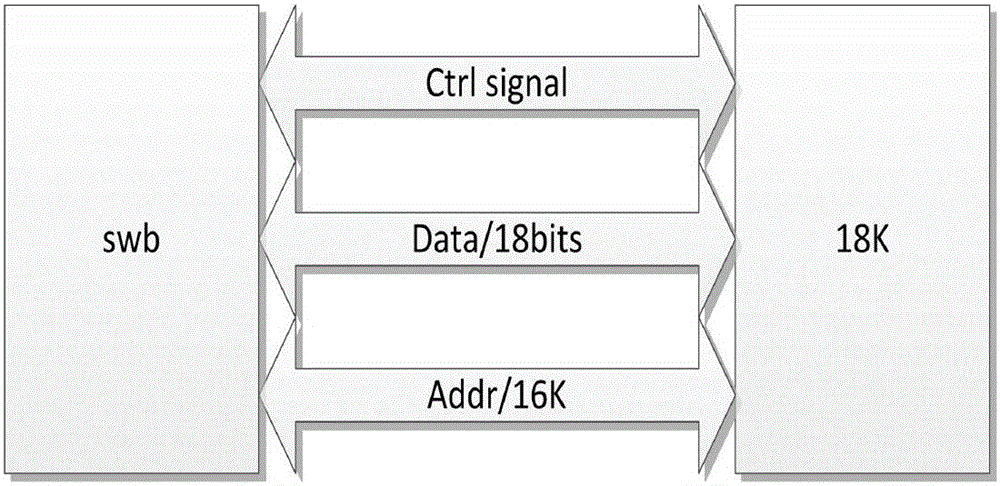

Embedded independent dual-port BRAM (block RAM) IP hardcore of FPGA (field programmable gate array)

Active; CN103500584A; Obvious power optimization purpose; Improve adaptability; Digital storage; Bit line; Computer architecture

The invention belongs to the technical field of an FPGA (field programmable gate array), and particularly relates to an embedded independent dual-port BRAM (block RAM) IP hardcore of an FPGA. According to the embedded independent dual-port BRAM IP hardcore, a circuit simulation delay control module is introduced into a module, and the transmission delay of circuit signals under different process angles, working temperatures and voltages is obtained through dynamic simulation and fed back to a pulse generation module for control so as to improve the asynchronous time sequence control reliability of overall design. Moreover, the static leakage power consumption is reduced by use of a high-threshold tube SRAM; by optimizing the size of a transmission gate of the SRAM, the drive capability of a bit-line pull-up module is reduced, and the dynamic power consumption caused by mutual pull of drive sources is lowered, so that the power consumption of the BRAM IP hardcore is remarkably reduced. By adopting the BRAM IP hardcore provided by the invention, the programmable storage resource supported by the Block RAM can be applied more widely.

Owner:FUDAN UNIV

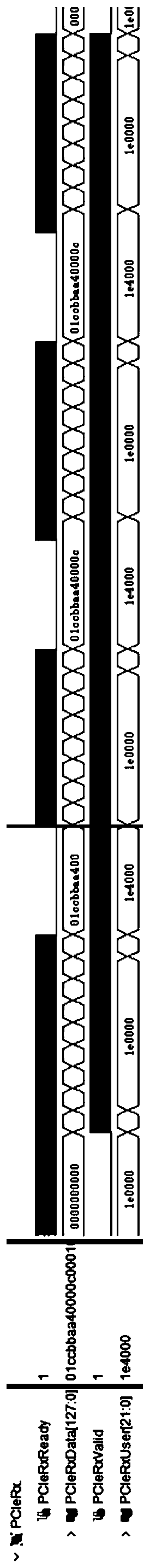

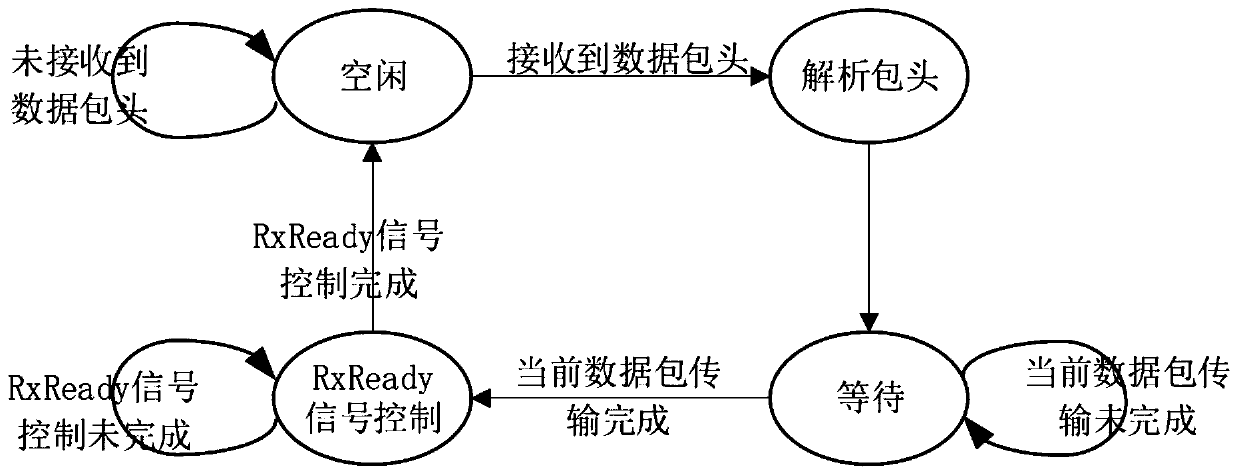

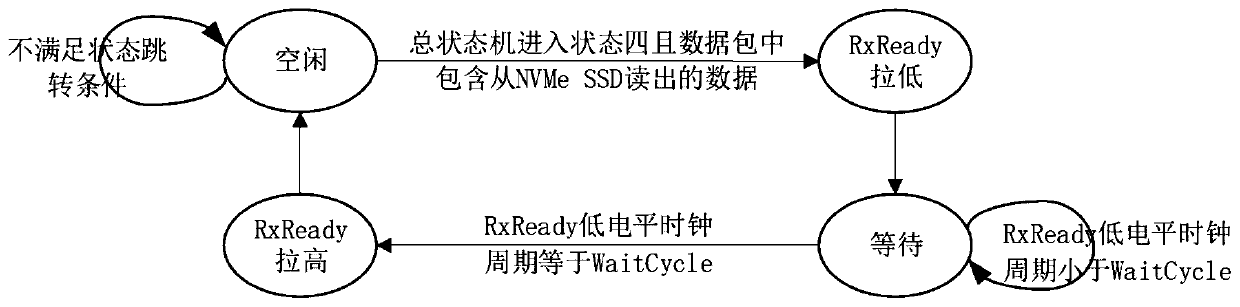

NVMe SSD reading speed and optical fiber interface speed adaptive matching method

Active; CN110209613A; Sufficient timing margin; Improve development; Electric digital data processing; Streaming data; Adaptive matching

The invention discloses an NVMe SSD reading speed and optical fiber interface speed adaptive matching method in the technical field of data storage. In order to solve the problem that prior-art NVMe SSD reading speed control methods occupy a large amount of data cache resources in an FPGA, the method comprises the following steps: first, the FPGA receives a data packet of read data returned from an NVMe SSD, and then an RxRead signal is pulled low for five clock periods. The invention controls the sending speed of data packets when the NVMe SSD reads data by using a RxReady signal of an AXI-Stream data receiving interface on a PCIe hard core, so that the NVMe SSD data reading speed matches the optical data interface speed. A complete reading command does not need to be split into a plurality of sub-commands. Enough timing margin is reserved for the data packet receiving and parsing process, and development is easy. In addition, the method reduces the requirement for cache resources in the data reading process: 50% and 92% of Block RAM cache resources are saved when the logic block size of the NVMe SSD is 512 bytes and 4 KB respectively. The method can be widely applied in the technical field of data storage.

Owner:HARBIN INST OF TECH

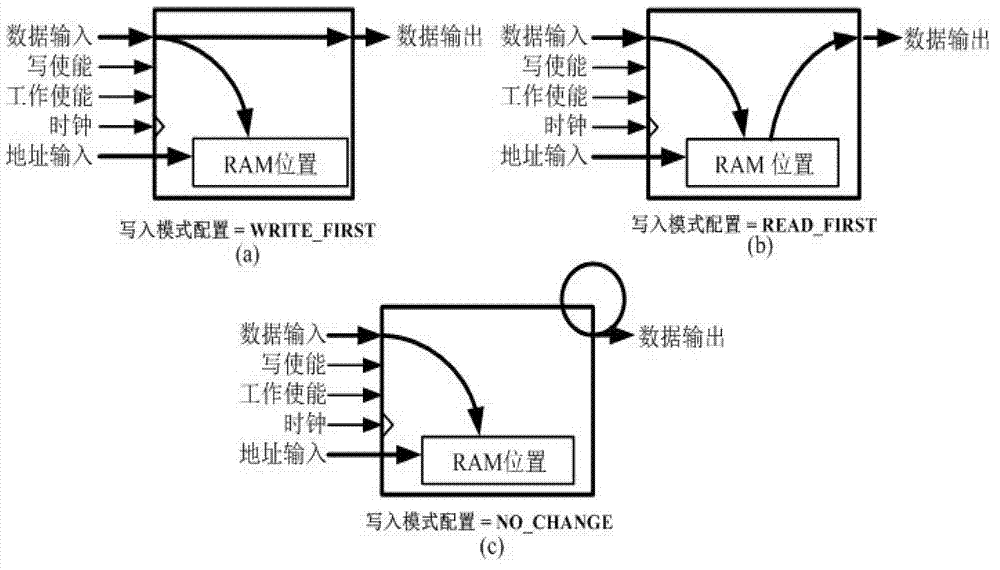

Block RAM (random-access memory) with multiple Write-Modes in FPGA (field programmable gate array)

Active; CN103198854A; Guaranteed functional correctness; Consider high speed; Digital storage; Computer architecture; Hemt circuits

The invention belongs to the technical field of electronics, and in particular relates to a timing control circuit design for the write operation of an IP (intellectual property) hard core Block RAM (random-access memory) embedded in an FPGA (field programmable gate array). The specification sets out the timing control requirements under the corresponding Write-Modes and also discloses that the delay in the current working environment is obtained through a dynamic redundant circuit simulation technique and fed back to a Write_Mode control module, thereby implementing a configurable circuit design for write operations in different working environments with the different Write-Modes. According to the invention, the three different Write-Mode configurations required in the Block RAM design can be realized, and the high speed and reliability of the circuit under various working states are fully ensured.

Owner:FUDAN UNIV

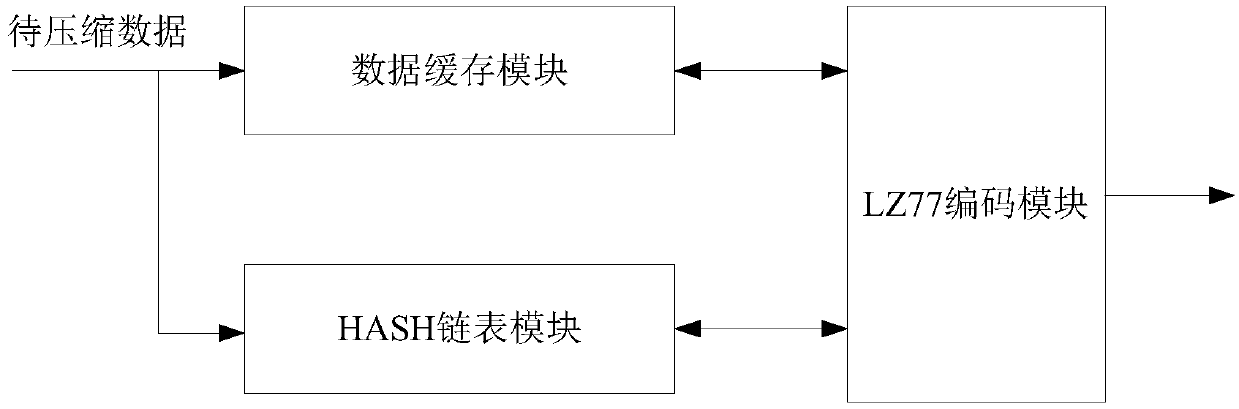

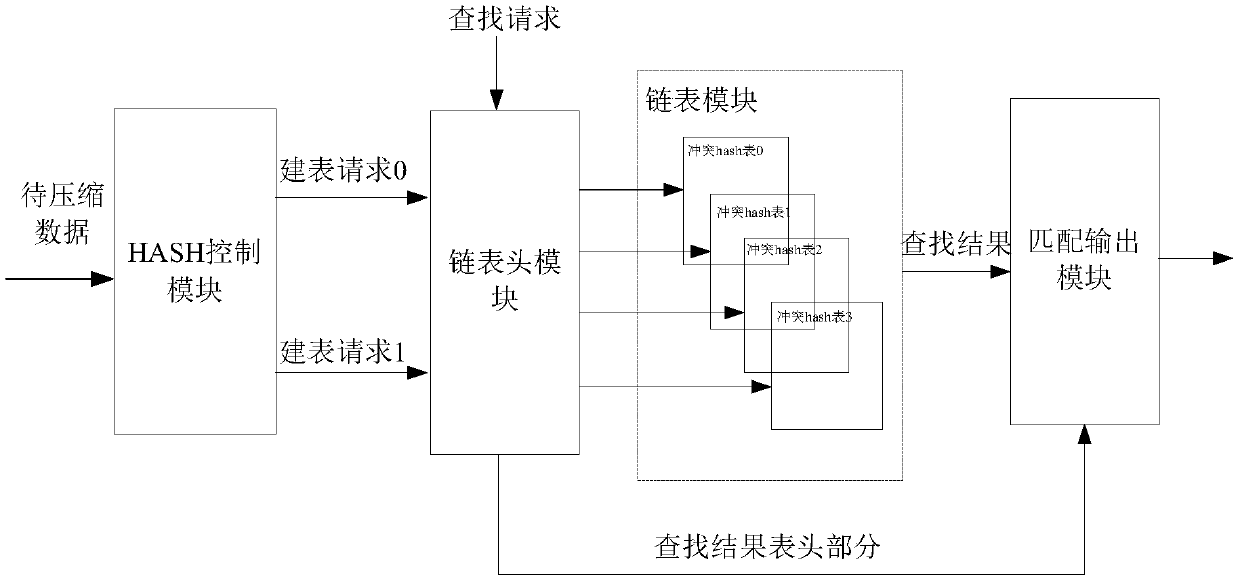

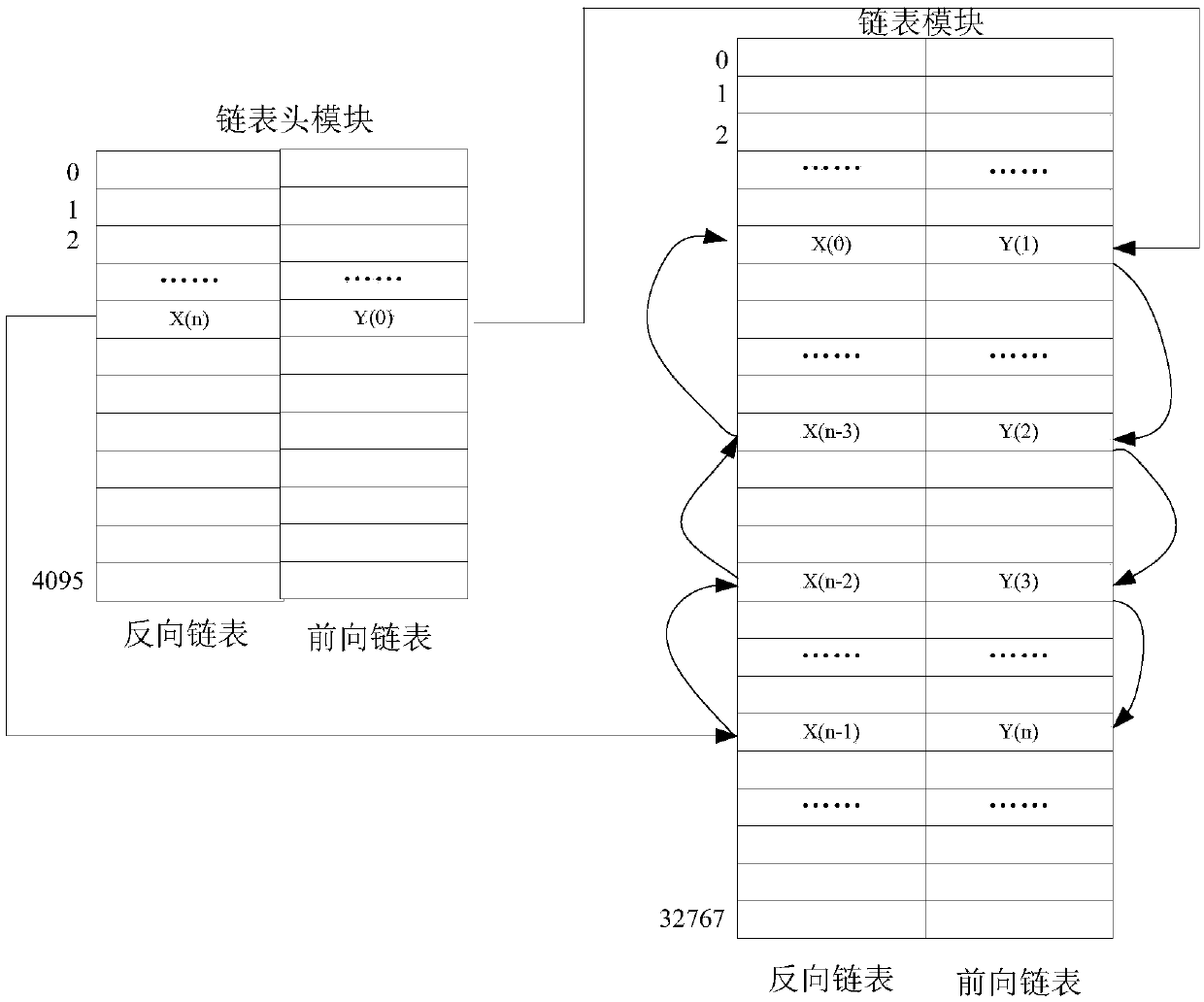

Device and method for quickly implementing LZ77 compression based on FPGA

Active; CN109672449A; Eliminate redundancy; Achieve compression; Code conversion; Data compression; Original data

The invention relates to a device and a method for quickly implementing LZ77 compression based on an FPGA. A data cache module in the compression device stores the original data in a compression sliding window and implements this function by using block RAM resources in the FPGA; a HASH linked list module constructs and stores a HASH dictionary and implements this function by using block RAM plus logic resources in the FPGA; an LZ77 encoding module implements the search for and encoding of identical character strings. In the compression process, a circular cache equal in size to the compression window is constructed to store a HASH conflict linked list, the conflict linked list is stored in the order of the data to be compressed, and the window removal operation is replaced by circular overwriting. When an identical character string is searched for by using the HASH linked list, the matching character string is searched for from two directions simultaneously by using the parallel processing advantages of the FPGA, and candidates that have the same HASH value but different characters are removed in advance by preprocessing during compressed encoding, thereby quickly eliminating data redundancy to achieve LZ77 data compression.

Owner:WUHAN ZHONGYUAN HUADIAN SCI & TECH
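
The hash dictionary and the circularly overwritten conflict list can be sketched in software. The example below is a simplified, sequential illustration under several assumptions: the window size, the 3-byte hash, the chain-length limit, and the token format (a literal byte value or a `(distance, length)` tuple) are all chosen for the example, and the two-direction parallel search and candidate pre-filtering of the patent are not modelled.

```python
WINDOW = 256      # sliding-window size (assumed)
MIN_MATCH = 3     # shortest match worth encoding
MAX_MATCH = 18    # longest match encoded in one token
HASH_SIZE = 4096  # entries in the hash head table


def _hash3(data, i):
    """Hash the three bytes starting at position i."""
    return ((data[i] << 10) ^ (data[i + 1] << 5) ^ data[i + 2]) & (HASH_SIZE - 1)


def compress(data):
    head = [-1] * HASH_SIZE  # hash -> most recent position with that hash
    prev = [-1] * WINDOW     # circular conflict list, indexed by pos % WINDOW
    out, i, n = [], 0, len(data)
    while i < n:
        best_len, best_dist = 0, 0
        if i + MIN_MATCH <= n:
            cand, steps = head[_hash3(data, i)], 0
            # Walk the chain while candidates are still inside the window.
            while cand >= 0 and i - cand <= WINDOW and steps < 32:
                length = 0
                while (length < MAX_MATCH and i + length < n
                       and data[cand + length] == data[i + length]):
                    length += 1
                if length > best_len:
                    best_len, best_dist = length, i - cand
                cand = prev[cand % WINDOW]
                steps += 1
        if best_len >= MIN_MATCH:
            out.append((best_dist, best_len))
            advance = best_len
        else:
            out.append(data[i])
            advance = 1
        # Insert the consumed positions; old slots are simply overwritten,
        # so no explicit removal of expired window entries is needed.
        for j in range(i, min(i + advance, n - MIN_MATCH + 1)):
            h = _hash3(data, j)
            prev[j % WINDOW] = head[h]
            head[h] = j
        i += advance
    return out


def decompress(tokens):
    out = bytearray()
    for t in tokens:
        if isinstance(t, tuple):
            dist, length = t
            for _ in range(length):
                out.append(out[-dist])  # byte-wise copy allows overlap
        else:
            out.append(t)
    return bytes(out)
```

A position's slot in `prev` is only overwritten by the position exactly one window later, so every chain entry that is still inside the window is guaranteed to be valid.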

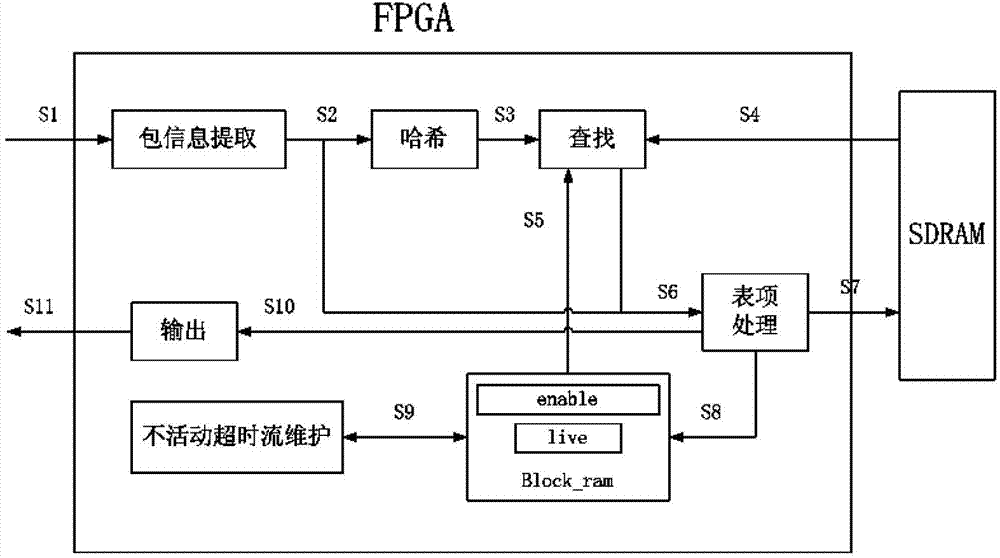

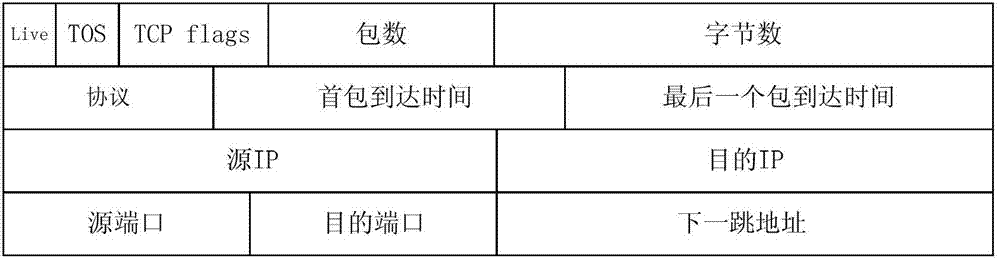

Network data management method based on one-way parallel multiple chain lists and system thereof

Inactive; CN103095595A; Improve efficiency; Effective control; Data switching networks; Packet arrival; Stream management

The invention discloses a network data management method based on one-way parallel multiple chain lists. The method includes the following steps: S100, receiving link flow copied by a light split device, analyzing the link flow packet by packet, obtaining the quintuple of each packet, and recording the arrival time of each packet; S200, hashing each quintuple to a flow identification (ID), and judging whether the current packets corresponding to the quintuples are synchronize (SYN) packets; S300, reading out the table body data of the current packets from SD Ram and Block Ram, and comparing the table body data with the quintuples corresponding to the packets to confirm whether the packets match the table body. The network data management method based on one-way parallel multiple chain lists and its system achieve packet-by-packet treatment in flow management through a simple algorithm, effectively control inactivity-timeout flows, are high in algorithm efficiency and short in time consumption, are low in cost because a field programmable gate array (FPGA) is adopted as the processing core, and are convenient to popularize and use.

Owner:大连环宇移动科技有限公司 +1

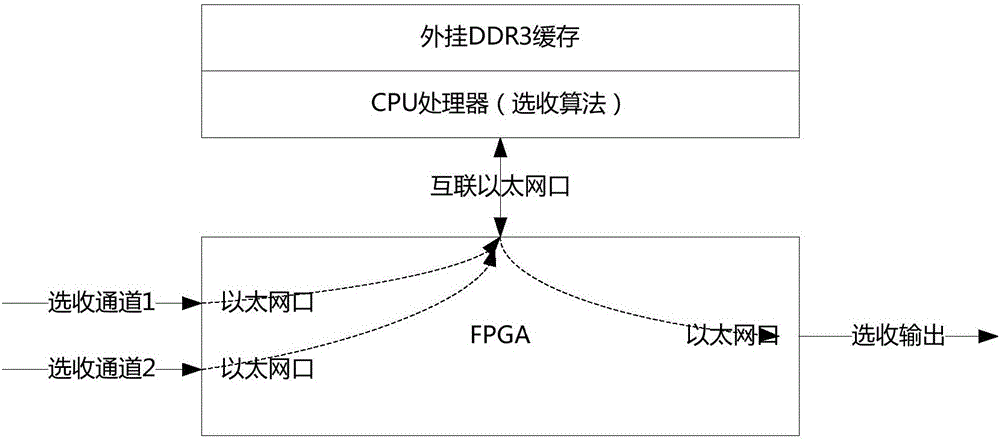

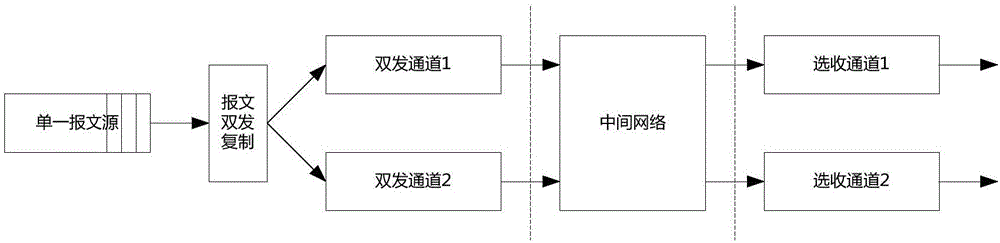

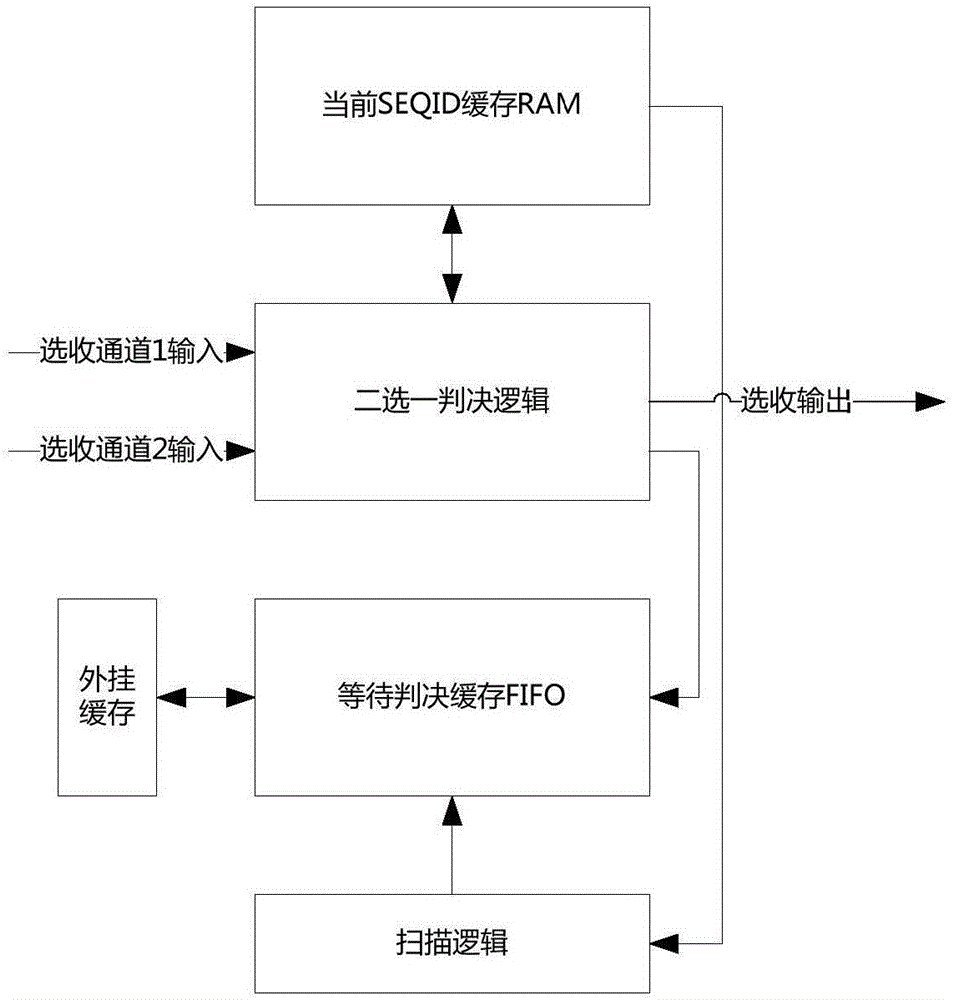

Circuit structure for implementing alternative of messages

Active; CN106656852A; Easy to implement; Save control resources; Data switching networks; Multiplexing; Computer module

The invention discloses a circuit structure for implementing alternative of messages. The circuit structure comprises an alternative judgment logic module, a judgment waiting buffer module, a current SEQID (SEQuence IDentifier) buffer module, and a scanning logic module, wherein the judgment waiting buffer module and the current SEQID buffer module are connected with the alternative judgment logic module; the scanning logic module is connected with the judgment waiting buffer module; an inlet of the alternative judgment logic module is connected with two selecting and receiving channels; and messages of two selecting and receiving channels are from dual-transmitter messages of the same source equipment. The circuit structure for implementing alternative of the messages, which is disclosed by the invention, adopts the independent alternative judgment logic module and judgment waiting buffer module, so that functions of the respective modules are relatively single, and the circuit structure is convenient to implement a logic circuit; and moreover, the judgment waiting buffer module is managed in an RAM (SDP BLOCK RAM) multiplexing mode, so that a great quantity of judgment waiting buffer module control resources are saved, and a message group number of an alternative circuit can support a range to 1K (1024) and even more.

Owner:STATE GRID ZHEJIANG ELECTRIC POWER CO LTD SHAOXING POWER SUPPLY CO +3

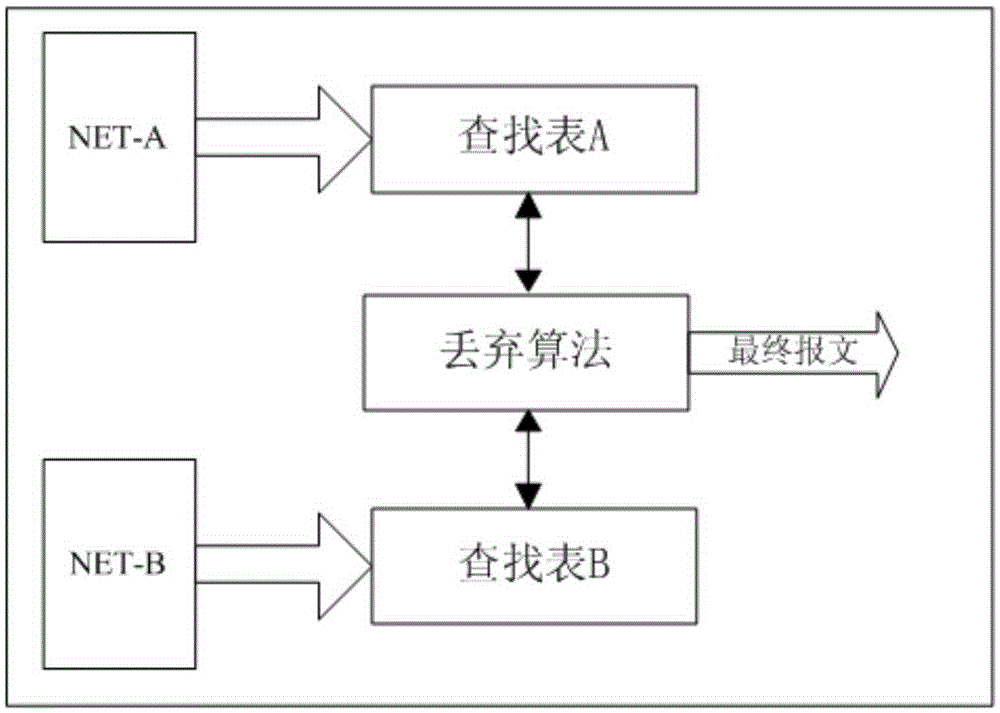

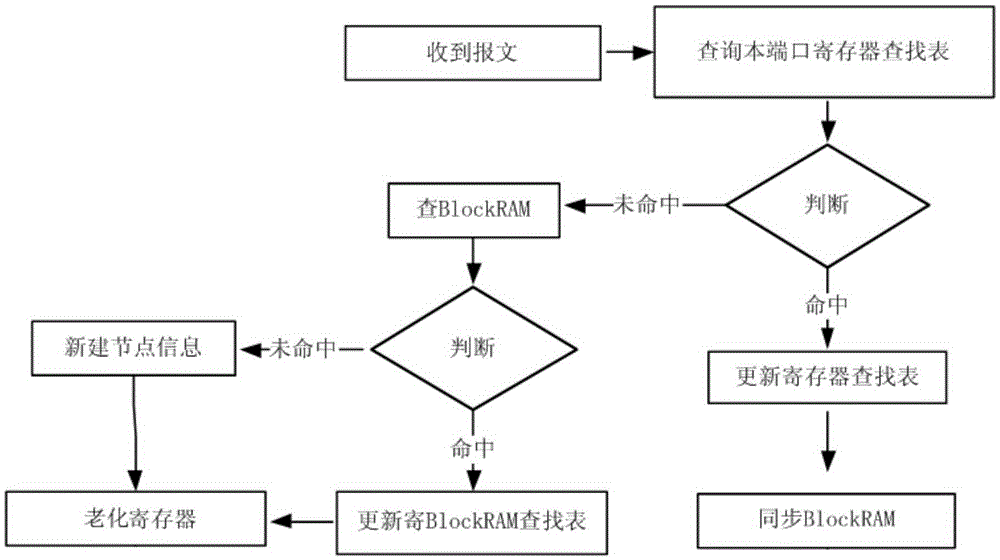

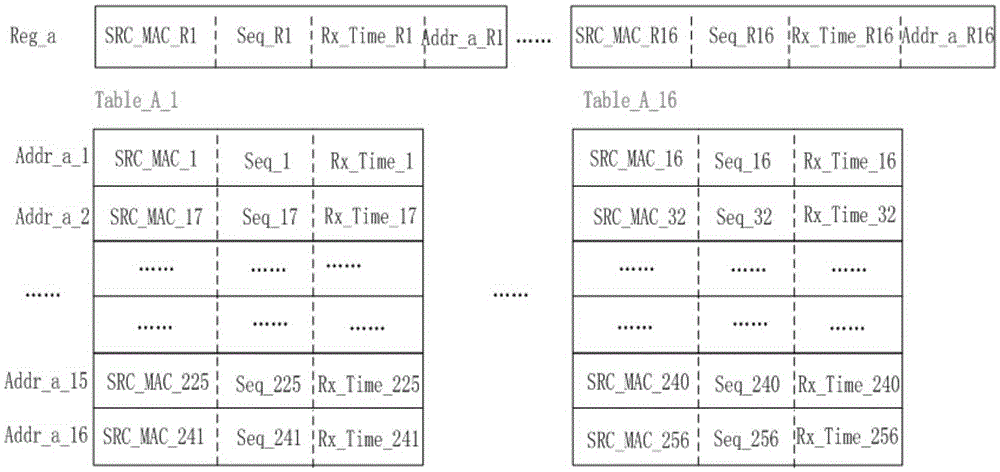

Lookup table optimization method for parallel redundancy protocol (PRP)/high reliable seamless redundancy (HSR) message dropping algorithm

Inactive; CN105357126A; Quick response; Reduce table lookup delay; Data switching networks; Processor register; Parallel computing

The invention discloses a lookup table optimization method for a parallel redundancy protocol (PRP) / high reliable seamless redundancy (HSR) message dropping algorithm. A field programmable gate array (FPGA) internal register and a Block random access memory (RAM) are used for storing message information tables in a hierarchical way; and according to a hierarchical inquiring method, the register is used as a first grade of lookup table, the Block RAM is used as a second grade of lookup table, the register is a Cache of the Block RAM, the content of the Cache of the register is updated and optimized in real time by use of a pipeline aging mechanism, the Block RAM is updated synchronously, and the mapping relation and data consistency of the Block RAM and the content of the Cache are maintained. According to the lookup table optimization method, the FPGA internal register and the Block RAM are used for storing the message information tables in the hierarchical way, the hierarchical inquiring method and the register aging mechanism are adopted, the register speed and Block RAM capacity are balanced, and the lookup table time delay is reduced, so that the network response speed is improved.

Owner:NARI TECH CO LTD +4
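
The hierarchical lookup in this abstract behaves like a small write-through cache in front of a larger table. The following sketch is illustrative only: the class `TwoLevelTable`, the age counter per entry, the oldest-first eviction rule, and the use of a Python `set` for the second level are assumptions, since the abstract does not specify how the aging mechanism is implemented.

```python
class TwoLevelTable:
    """Duplicate detector with a fast small level and a large slow level."""

    def __init__(self, cache_size, max_age):
        self.cache_size = cache_size
        self.max_age = max_age
        self.cache = {}     # key -> age; stands in for the registers
        self.bram = set()   # stands in for the Block RAM table
        self.hits_l1 = 0
        self.hits_l2 = 0

    def _install(self, key):
        # Evict the oldest cached entry when the register level is full.
        if len(self.cache) >= self.cache_size:
            oldest = max(self.cache, key=self.cache.get)
            del self.cache[oldest]
        self.cache[key] = 0

    def seen_before(self, key):
        """Return True for a duplicate; otherwise record the key."""
        if key in self.cache:          # first level: registers
            self.cache[key] = 0        # refresh its age
            self.hits_l1 += 1
            return True
        if key in self.bram:           # second level: Block RAM
            self._install(key)
            self.hits_l2 += 1
            return True
        self.bram.add(key)             # write both levels together
        self._install(key)
        return False

    def tick(self):
        """Age every cached entry and drop those past max_age."""
        for key in list(self.cache):
            self.cache[key] += 1
            if self.cache[key] > self.max_age:
                del self.cache[key]
```

Recently seen keys are answered from the small level, while the large level keeps the full history, which is the speed and capacity balance the abstract describes.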

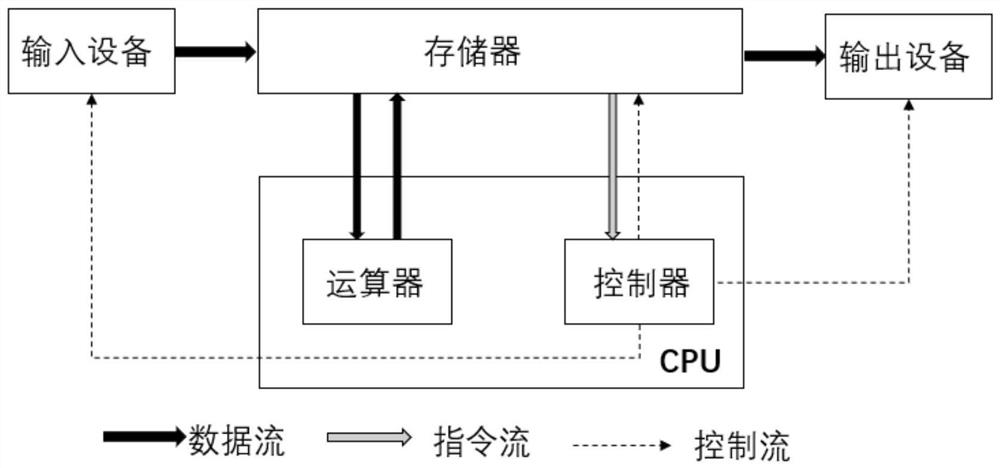

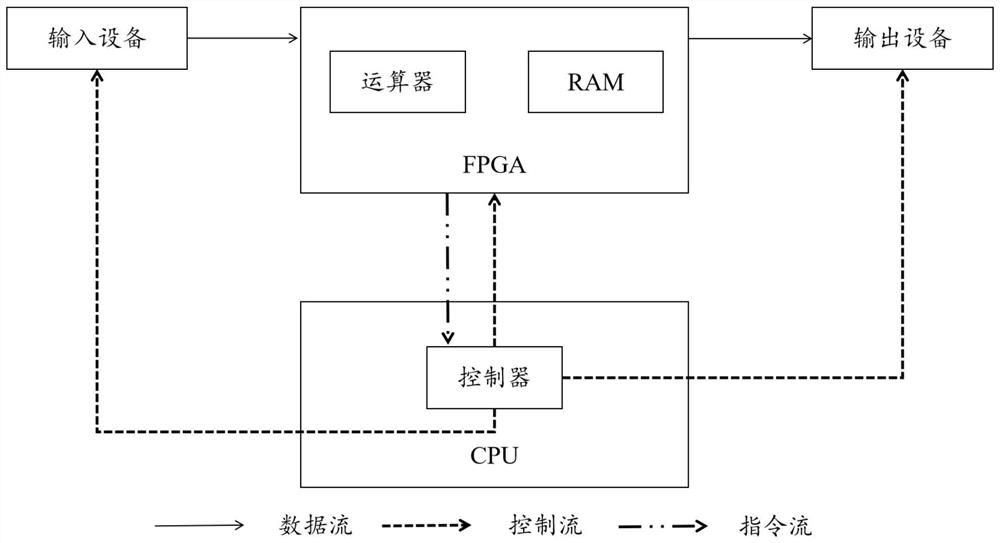

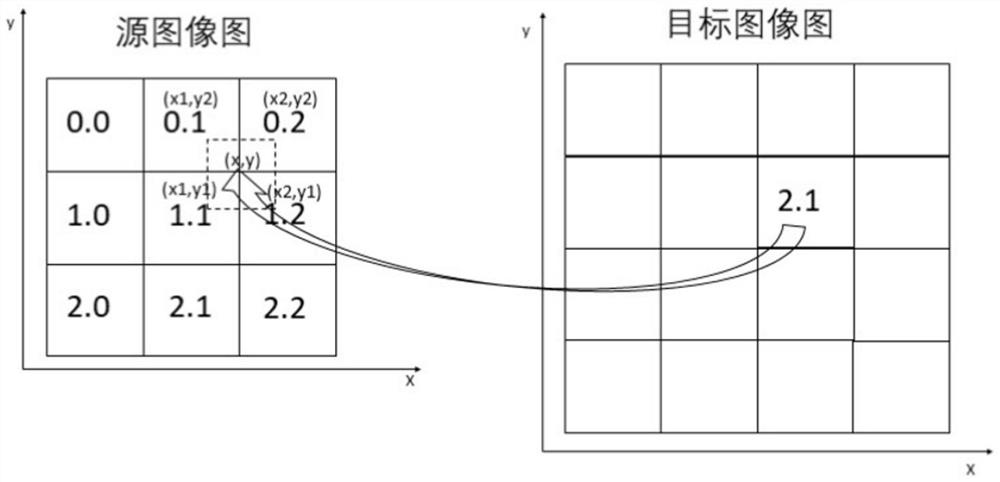

Image processing chip, method and equipment

Pending; CN114463178A; Improve processing speed; Improve scalability; Image enhancement; Image analysis; Imaging processing; Random access memory

The invention provides an image processing chip, method and equipment, a block RAM (Random Access Memory) in an FPGA (Field Programmable Gate Array) is used for replacing a memory, a CPU (Central Processing Unit) arithmetic unit processing part is transplanted into the FPGA, primary linear interpolation calculation is carried out on an image in the horizontal direction and the vertical direction respectively, a color channel numerical value of a target point is obtained, and a floating point coordinate is further obtained; and calculating according to the floating point coordinates to obtain an incidence relation between the original image coordinates and the target image coordinates, and zooming the image according to the incidence relation. The technical problem of image edge blurring is solved, the image processing speed and the zooming effect are improved, the real-time performance is achieved, and the cost is reduced.

Owner:浙江派大芯集成电路有限公司
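
Linear interpolation applied once horizontally and once vertically is commonly known as bilinear interpolation. The sketch below shows that general technique on a single-channel image stored as a list of rows; the function name `bilinear_resize` and the corner-aligned coordinate mapping are assumptions, and nothing here reflects the patent's actual hardware data path.

```python
def bilinear_resize(img, out_h, out_w):
    """Scale a 2D list of pixel values with bilinear interpolation."""
    in_h, in_w = len(img), len(img[0])
    out = []
    for y in range(out_h):
        # Floating-point source row for this target row (corner-aligned).
        fy = y * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(fy)
        y1 = min(y0 + 1, in_h - 1)
        wy = fy - y0
        row = []
        for x in range(out_w):
            fx = x * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(fx)
            x1 = min(x0 + 1, in_w - 1)
            wx = fx - x0
            # Horizontal pass on the two neighbouring rows.
            top = img[y0][x0] * (1 - wx) + img[y0][x1] * wx
            bottom = img[y1][x0] * (1 - wx) + img[y1][x1] * wx
            # Vertical pass between the two intermediate values.
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out
```

For a color image the same routine would be applied to each channel separately.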

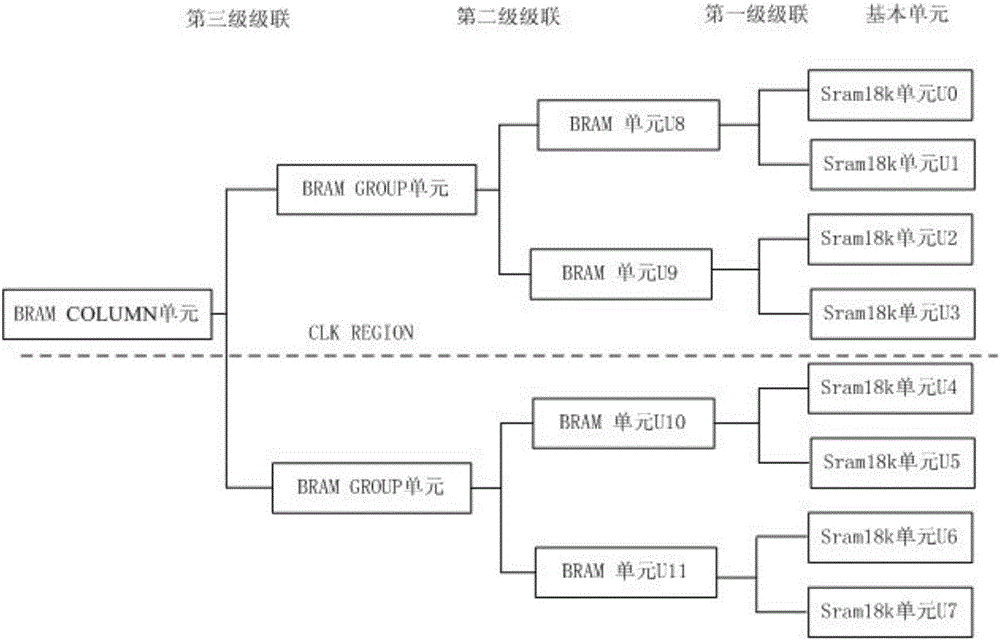

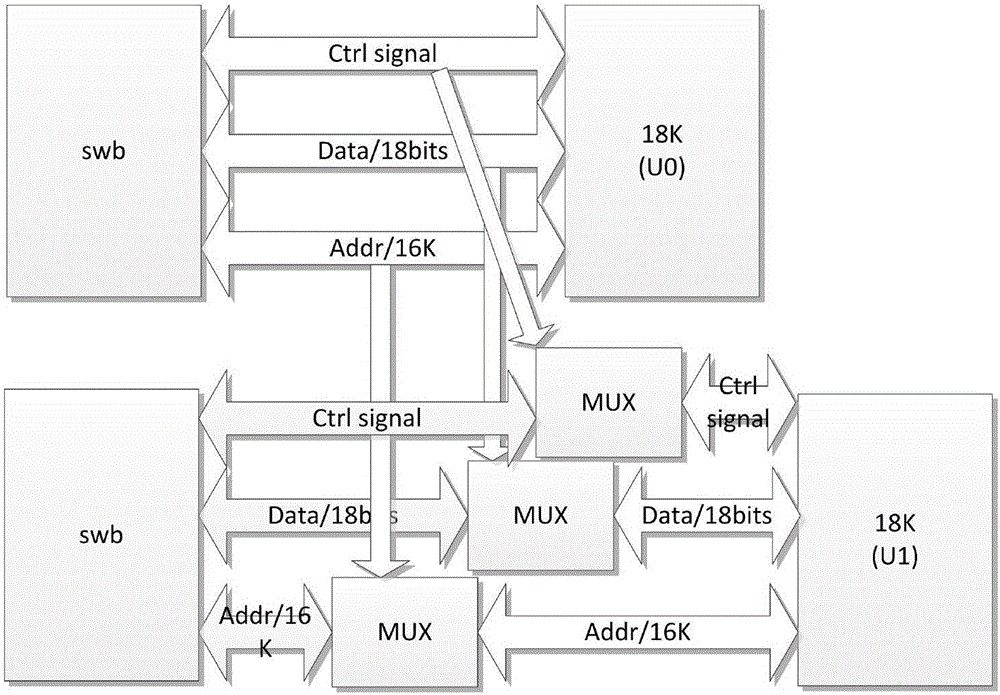

BLOCK RAM (Random Access Memory) cascade structure of field programmable gate array FPGA

Inactive; CN105808474A; Meet storage needs; Save peripheral wiring resources; Electric digital data processing; Static random-access memory; Computer architecture

The invention relates to a BLOCK RAM (Random Access Memory) cascade structure of a field programmable gate array FPGA. The BLOCK RAM cascade structure comprises a column of SRAM18K (Static Random Access Memory) units, wherein two adjacent SRAM18K units in one column of the SRAM18K units are in address cascade or data parallel connection through a first stage ASIC (Application Specific Integrated Circuit) cascade logic routing architecture, and one BLOCK RAM unit is formed, and the like, taking wiring of a CLK REGION in the FPGA as the limit, two adjacent BLOCK RAM units are cascaded and one BRAM GROUP unit is formed; and two adjacent BRAM GROUP units are cascaded to form one BRAM COLUMN unit, and a BLOCK RAM cascade structure with the required address depth and data width can be formed. The BLOCK RAM cascade structure of the field programmable gate array FPGA provided by the invention can realize a memory cell RAM with the required address depth and data width, simplify a software algorithm and save peripheral wiring resources.

Owner:58TH RES INST OF CETC

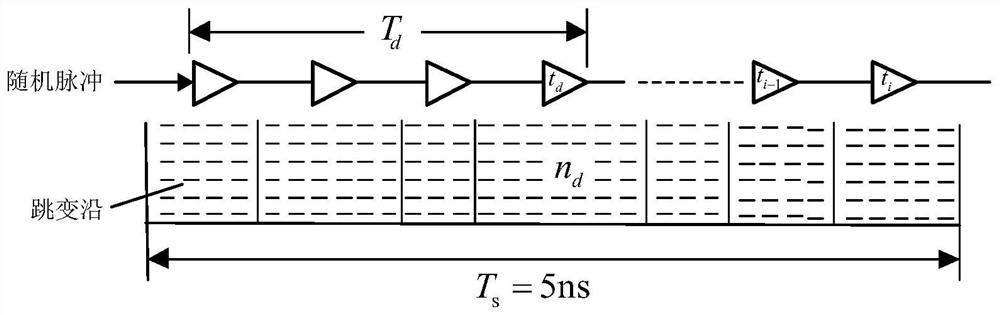

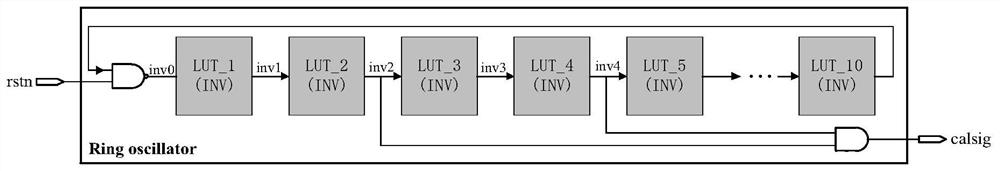

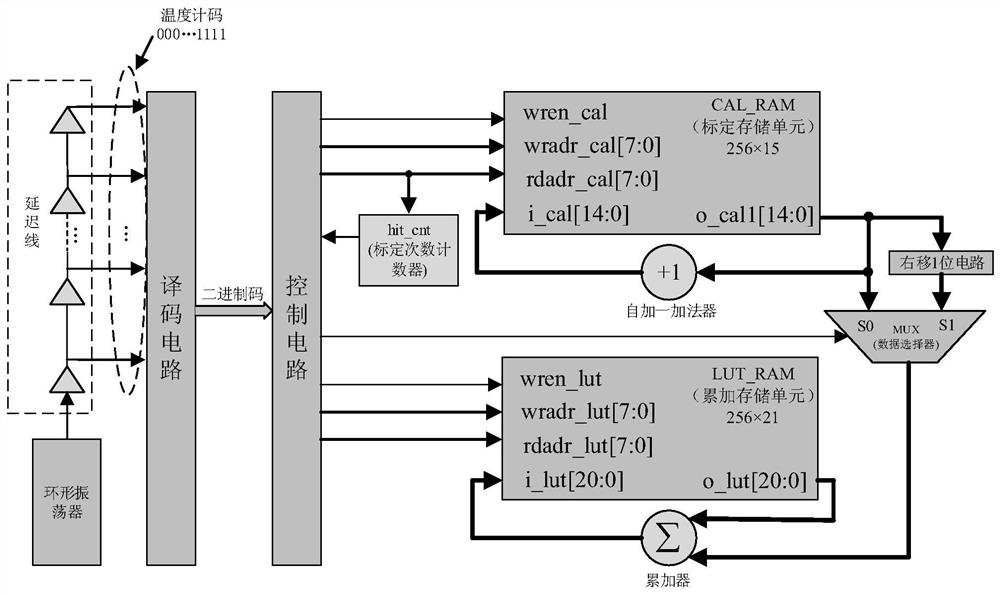

Delay line calibration circuit for FPGA type time-to-digital converter

Inactive; CN112578661A; Avoid occupying; Good precision; Time-to-digital converters; Converters; Finite-state machine

The invention discloses a delay line calibration circuit for an FPGA type time-to-digital converter. The delay line calibration circuit is composed of a ring oscillator circuit, a control circuit and CAL_RAM and LUT_RAM storage units; the ring oscillator circuit is controlled by a reset signal to start oscillation and is used for generating a random pulse signal for calibration; the control circuit controls reading and writing of the CAL_RAM and the LUT_RAM through a finite-state machine, and completes the calibration of the delay line and the accumulation of count values; the CAL_RAM and LUT_RAM circuits are realized by calling a Block RAM IP core; in the calibration state, the CAL_RAM is used for storing the number of transitions observed at each delay unit in the delay line; and in the accumulation state, the LUT_RAM is used for storing the accumulated value of the transition counts. The calibration circuit provided by the invention is highly portable, can quickly perform online calibration of the delay line, reduces the influence of temperature and voltage on the delay of the delay line, and improves the measurement precision of a TDC.

Owner:TIANJIN UNIV
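
The two states described in the abstract above (per-tap counting into CAL_RAM, then accumulation into LUT_RAM) can be sketched as follows. This is a software illustration only: the function names are hypothetical, and the conversion from accumulated counts to time in `bin_edges_ps` follows the common code-density convention, which the abstract does not spell out.

```python
def calibrate(tap_hits, num_taps):
    """Return (cal_ram, lut_ram) for a list of tap indices hit by random pulses."""
    # Calibration state: count how often each delay unit was hit.
    cal_ram = [0] * num_taps
    for tap in tap_hits:
        cal_ram[tap] += 1

    # Accumulation state: running sum of the counts, one entry per tap.
    lut_ram = [0] * num_taps
    running = 0
    for i, count in enumerate(cal_ram):
        running += count
        lut_ram[i] = running
    return cal_ram, lut_ram


def bin_edges_ps(lut_ram, period_ps):
    """Assumed code-density step: scale accumulated counts to the clock period."""
    total = lut_ram[-1]
    return [period_ps * c / total for c in lut_ram]


cal_ram, lut_ram = calibrate([0, 1, 1, 3], num_taps=4)
edges = bin_edges_ps(lut_ram, period_ps=1000)
```

A tap that is never hit (tap 2 in the usage above) gets a zero-width bin, which is how this scheme absorbs uneven delays between taps.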

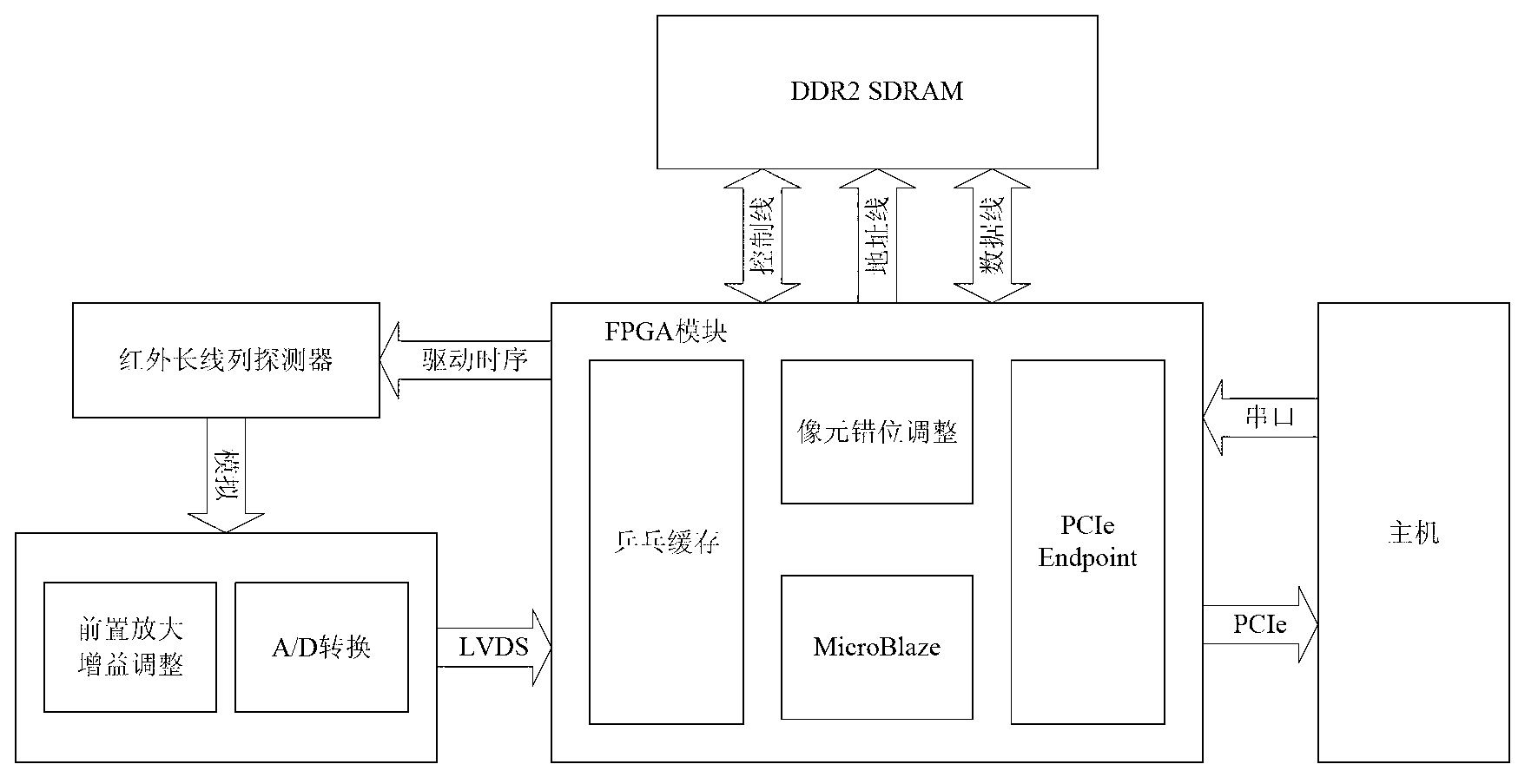

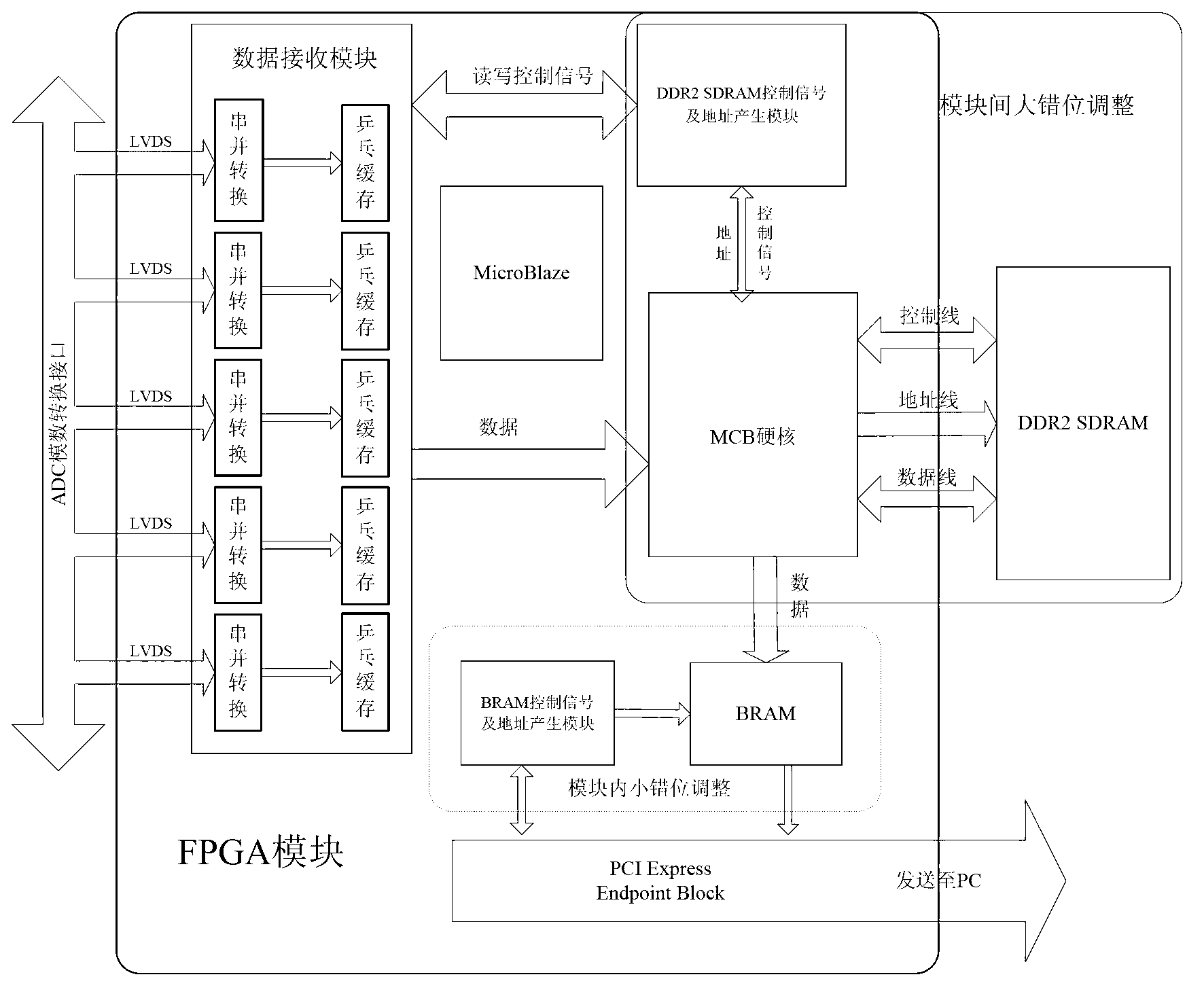

Pixel misplacement adjustment method of infrared long-line detector

Inactive | CN103258311A | Realize reading and writing | Easy programming | Image data processing details | Reusability | Modularity

The invention discloses a pixel misplacement adjustment method for a multi-piece-spliced infrared long-line detector. The method is mainly used in scanning imaging systems based on infrared long-line detectors. The technical scheme mainly includes the following steps: (1) misplacement parameters are adjusted in real time by a microprocessor (MicroBlaze) in an FPGA; (2) DDR2 SDRAM is read through a memory controller (Memory Control Block, MCB) so that large misplacement among the modules of the long-line detector is adjusted; (3) small misplacement inside the modules is adjusted through the block memory (Block RAM) built into the FPGA; (4) data are sent to a host computer for display over the PCI Express protocol. The advantages of the method are that ping-pong buffering, DDR2 SDRAM and the PCI Express protocol allow data transmission at a high data rate, the misplacement parameters can be adjusted in real time through the embedded microprocessor, long-line detectors with different numbers of modules are supported, the degree of modularization is high, and reusability is good.

Owner:SHANGHAI INST OF TECHNICAL PHYSICS - CHINESE ACAD OF SCI

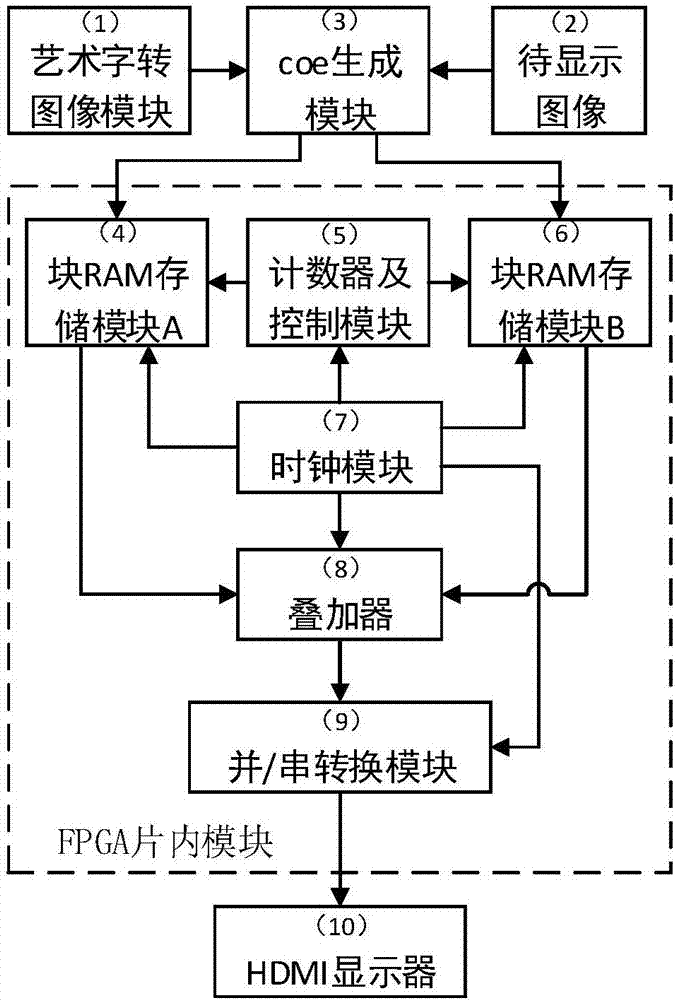

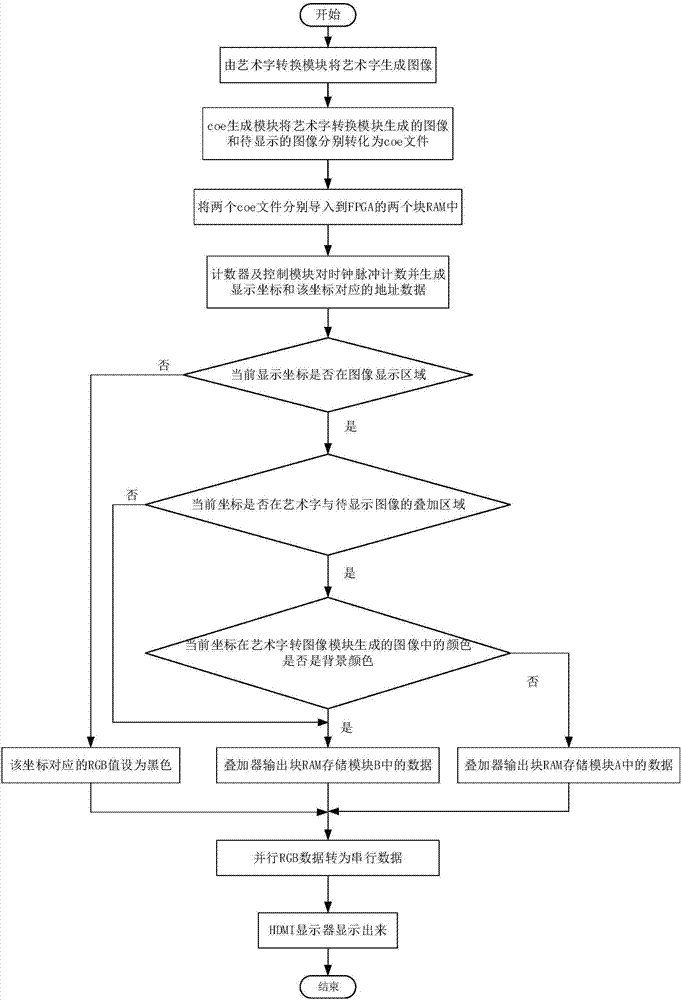

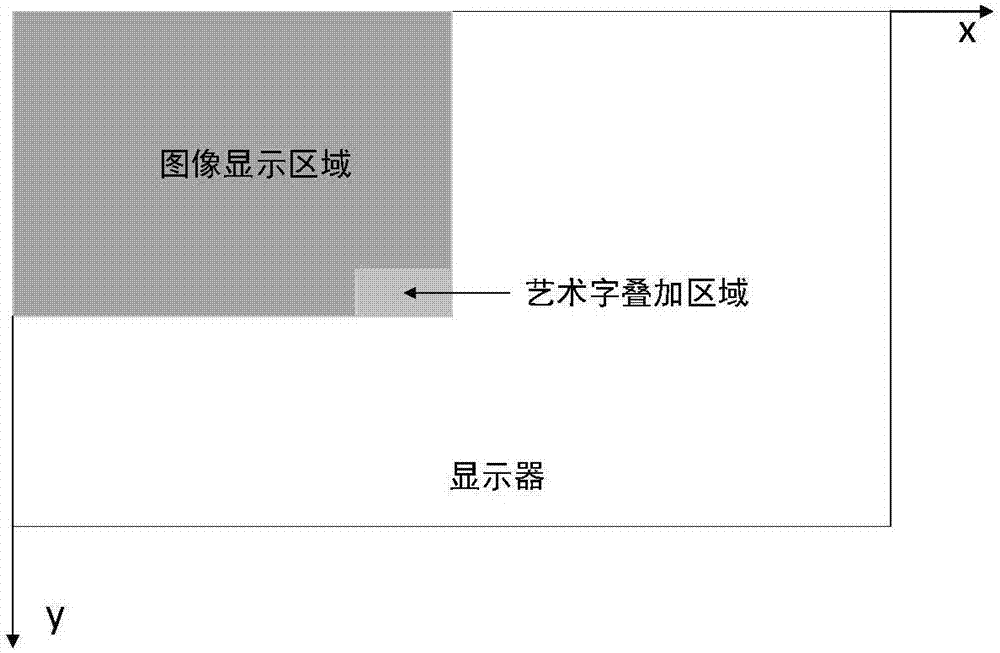

Device and method for superimposing art fonts on image based on FPGA

Inactive | CN107888848A | Meet needs | Improve experience | Television system details | Color television details | Digital video | Computer module

The invention provides a device and method for superimposing art fonts on an image based on an FPGA. The device comprises an art font-to-image module (1), a coe generation module (3), a block RAM module A (4), a counter and control module (5), a block RAM module B (6), a clock module (7), a superimposer (8), a parallel/serial conversion module (9) and an HDMI display (10). The device and the method solve the technical problem in the prior art that superimposed characters look stiff when an image is displayed on an HDMI display with an FPGA as the master control chip. With the device and the method, different art fonts can be superimposed when the image is displayed, the growing demands of the digital video display field are satisfied, and the user experience is improved.

Owner:HARBIN ENG UNIV

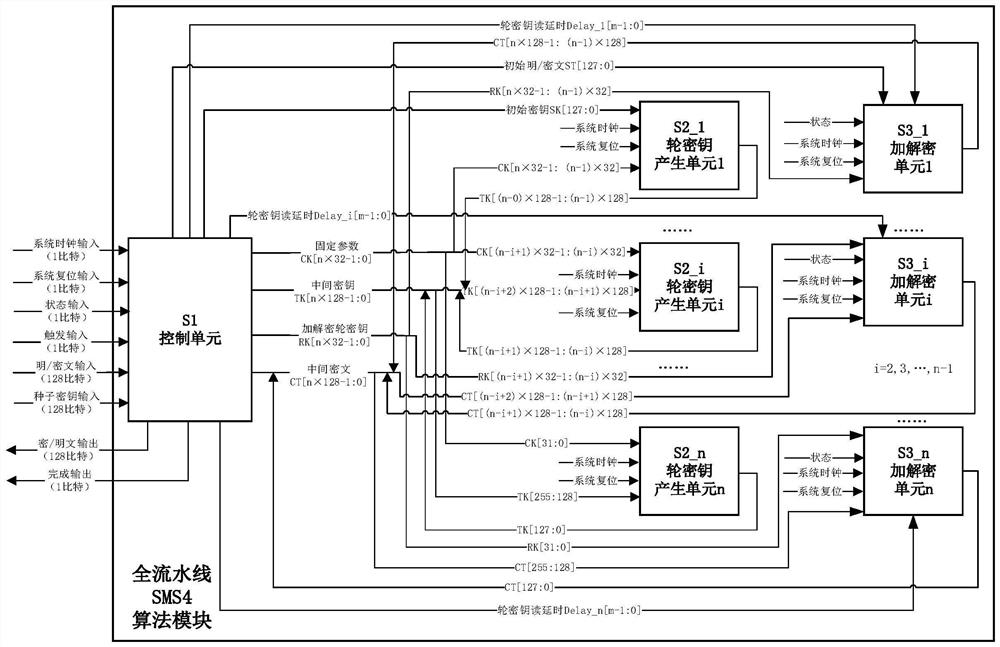

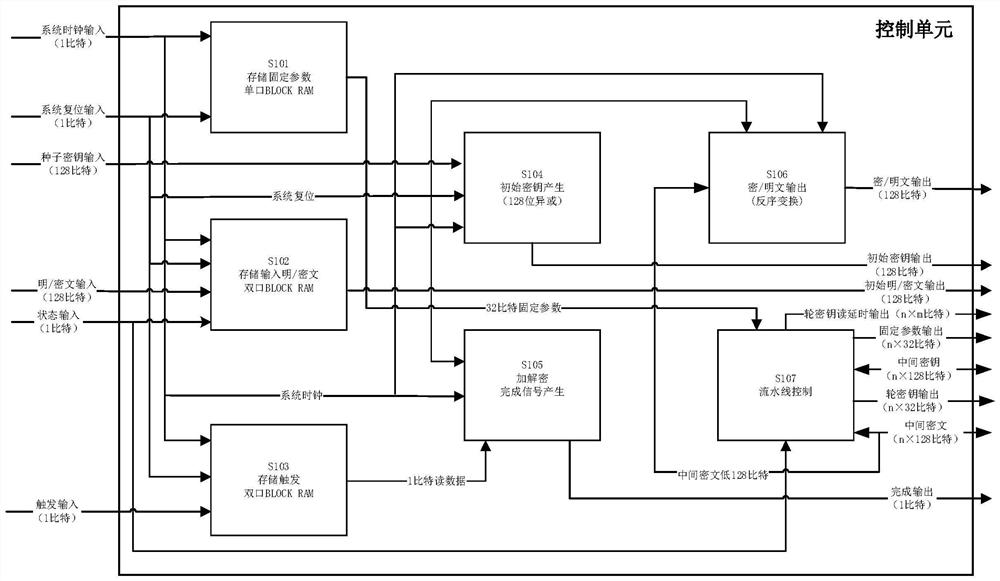

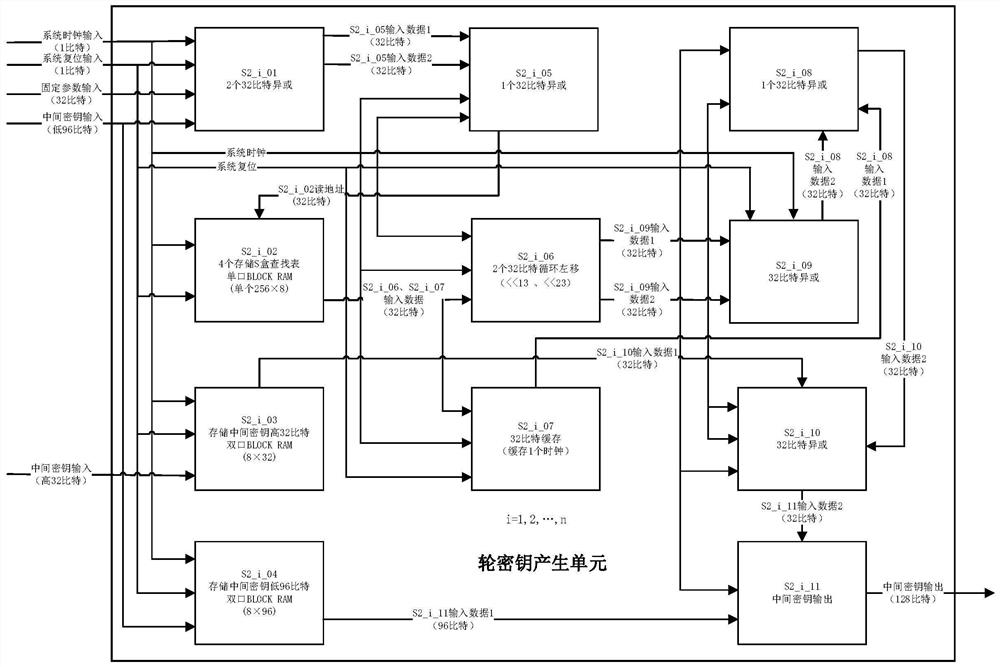

Full-pipeline SMS4 encryption and decryption method and system

Active | CN112350819A | Improve throughput | Reduce resource consumption | Encryption apparatus with shift registers/memories | Plaintext | Computer architecture

The invention discloses a full-pipeline SMS4 encryption and decryption method and system. The method uses a full-pipeline processing architecture based on Block RAM: all operational circuits that carry out the n key generations and the n encryption and decryption rounds of a single encryption or decryption conversion are connected completely in series; intermediate operation data that must be held for multiple clock periods are cached in Block RAM, which also stores the S-box lookup table and the fixed-parameter lookup table, the intermediate operation data comprising a ciphertext, an intermediate key, a round key and an encryption and decryption trigger; continuous input processing of plaintext and ciphertext is realized through the full-pipeline architecture; and multi-clock-period delayed output of the intermediate operation data is realized through the read-write time difference of the dual-port Block RAM. The SMS4 algorithm is implemented with a pipeline architecture, and the Block RAM resources embedded in the FPGA are fully used for multi-clock-period caching of a large amount of intermediate operation data and for S-box lookup table storage in the round key generation units and the encryption and decryption units, so that the consumption of configurable logic block resources is minimized and the information throughput rate is maximized.

Owner:SICHUAN JIUZHOU ELECTRIC GROUP
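
The "read-write time difference of the dual-port Block RAM" mentioned in the abstract above is commonly realized as a circular buffer whose read address trails the write address by a fixed offset. The sketch below models that idea in software. It is an illustration, not the patented circuit: the class name `BramDelayLine` is hypothetical, and the model ignores the extra read latency of a real synchronous Block RAM.

```python
class BramDelayLine:
    """Delay a data stream by `delay` clock cycles using one dual-port memory."""

    def __init__(self, delay, depth=None):
        # Depth must exceed the delay so both ports never hit the same address.
        self.delay = delay
        self.depth = depth if depth is not None else delay + 1
        assert self.depth > self.delay
        self.mem = [None] * self.depth
        self.wr_addr = 0

    def clock(self, data_in):
        """One clock cycle: port B reads the old word, port A writes the new one."""
        rd_addr = (self.wr_addr - self.delay) % self.depth
        data_out = self.mem[rd_addr]
        self.mem[self.wr_addr] = data_in
        self.wr_addr = (self.wr_addr + 1) % self.depth
        return data_out


line = BramDelayLine(delay=3)
outputs = [line.clock(value) for value in range(6)]
```

Here `outputs` is `[None, None, None, 0, 1, 2]`: each word reappears three cycles after it was written, and only one address counter is needed rather than a chain of registers.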

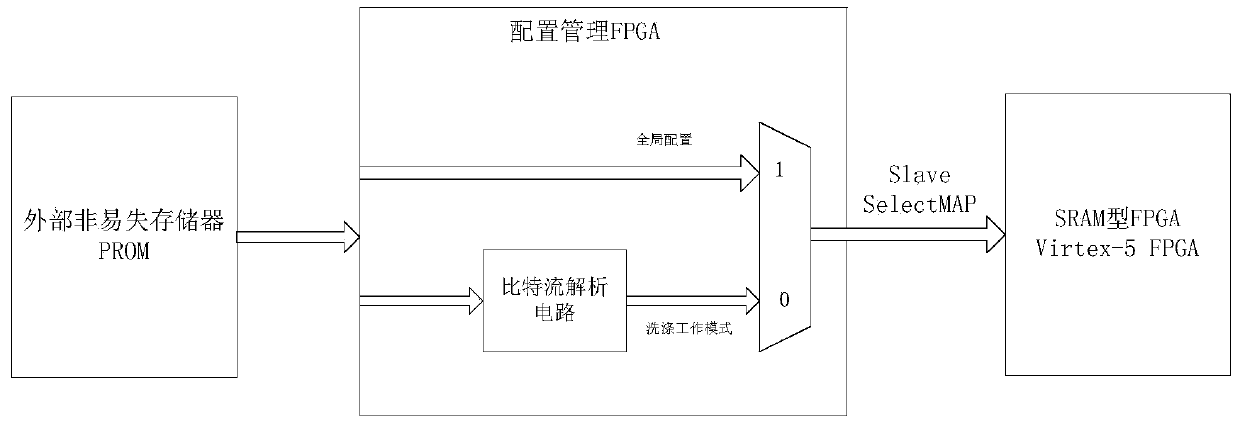

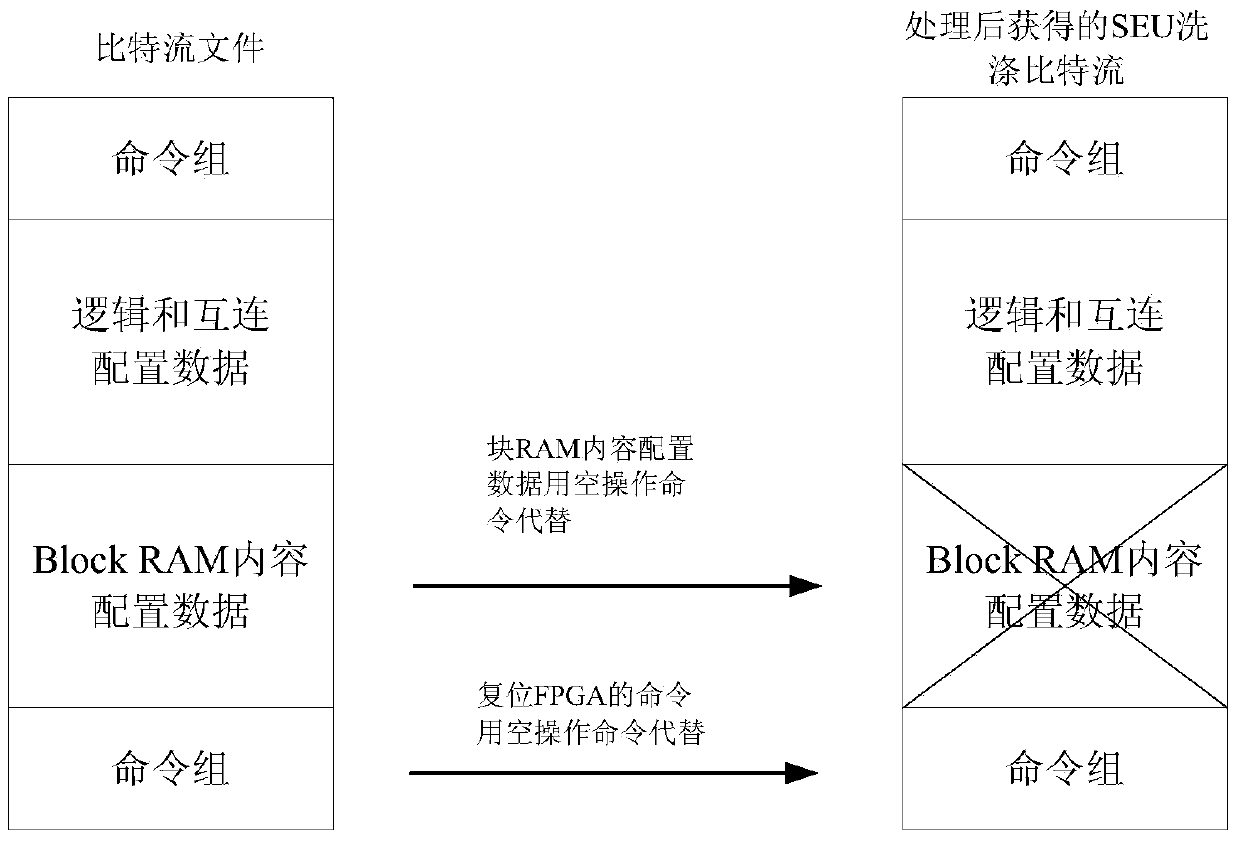

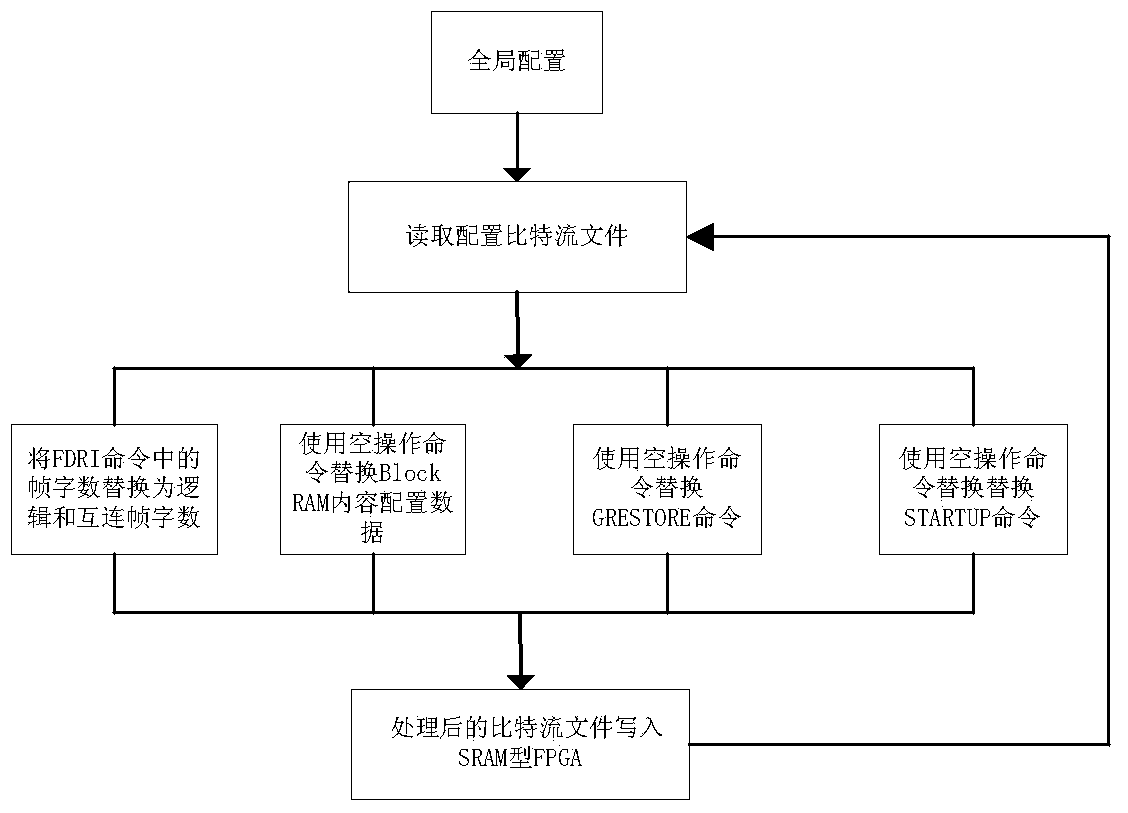

SRAM (static random access memory) type FPGA SEU (field programmable gate array single event upset) operation fixing method

The invention discloses an SRAM (static random access memory) type FPGA SEU (field programmable gate array single event upset) operation fixing method, belonging to the technical field of configuration of SRAM-type FPGAs. The method comprises the following steps: 1) after an SRAM-type FPGA is powered on, a configuration management FPGA performs a full configuration of the SRAM-type FPGA; 2) the configuration management FPGA reads the configuration bitstream file, scans it and carries out the following steps: when a frame write command FDRI is detected in the bitstream file, the frame word count carried by the FDRI is replaced with the frame word count of the logic and interconnection frames; when Block RAM content configuration data is detected, it is replaced with no-operation commands; when a register reset command GRESTORE is detected, the GRESTORE is replaced with a no-operation command; when an I/O start command STARTUP is detected, the STARTUP is replaced with a no-operation command; 3) the bitstream file processed in step 2) is written into the SRAM-type FPGA; and 4) step 2) and step 3) are repeated until the SRAM-type FPGA stops working. The method is suitable for SEU operation fixing of SRAM-type FPGAs.

Owner:NO 513 INST THE FIFTH INST OF CHINA AEROSPACE SCI & TECH

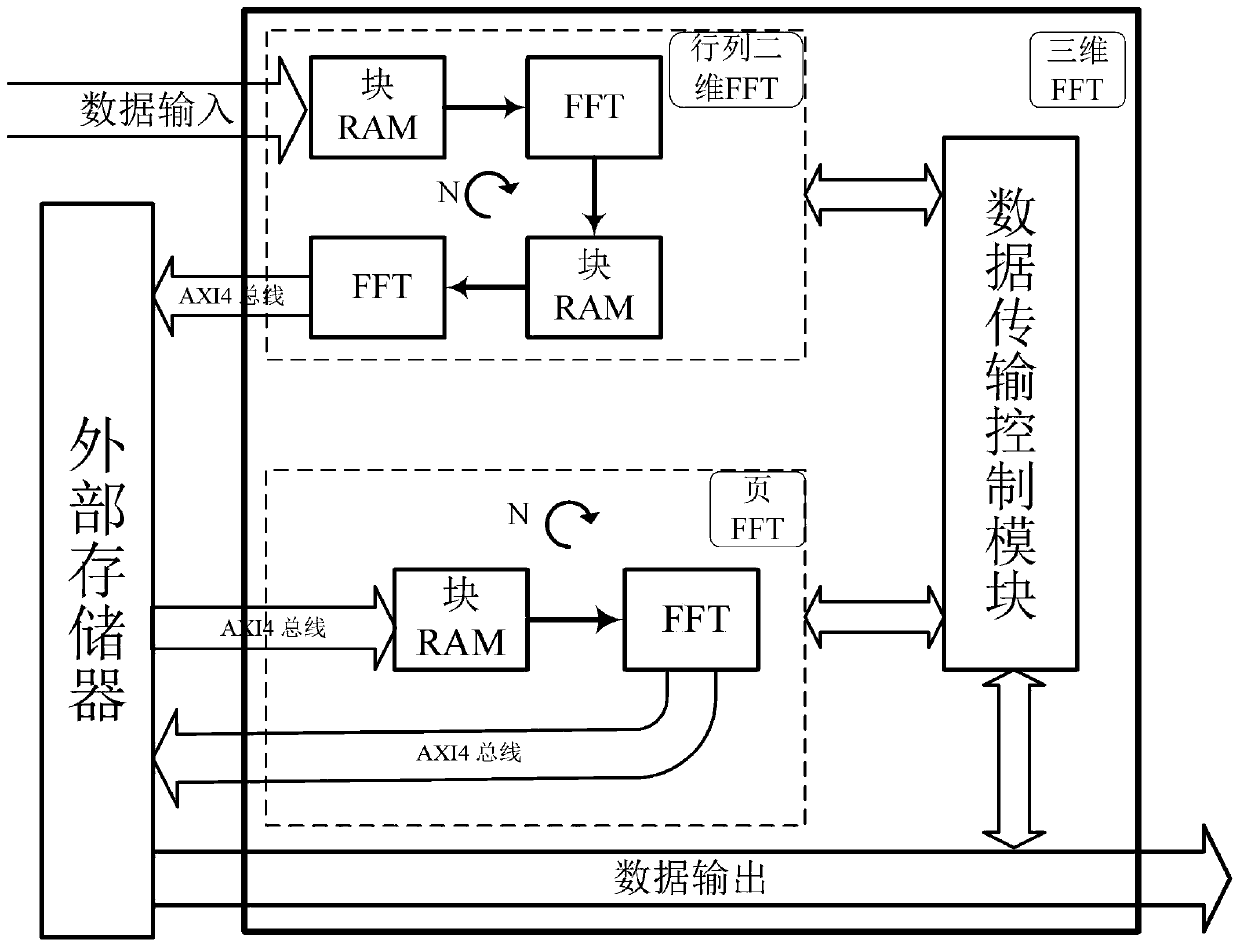

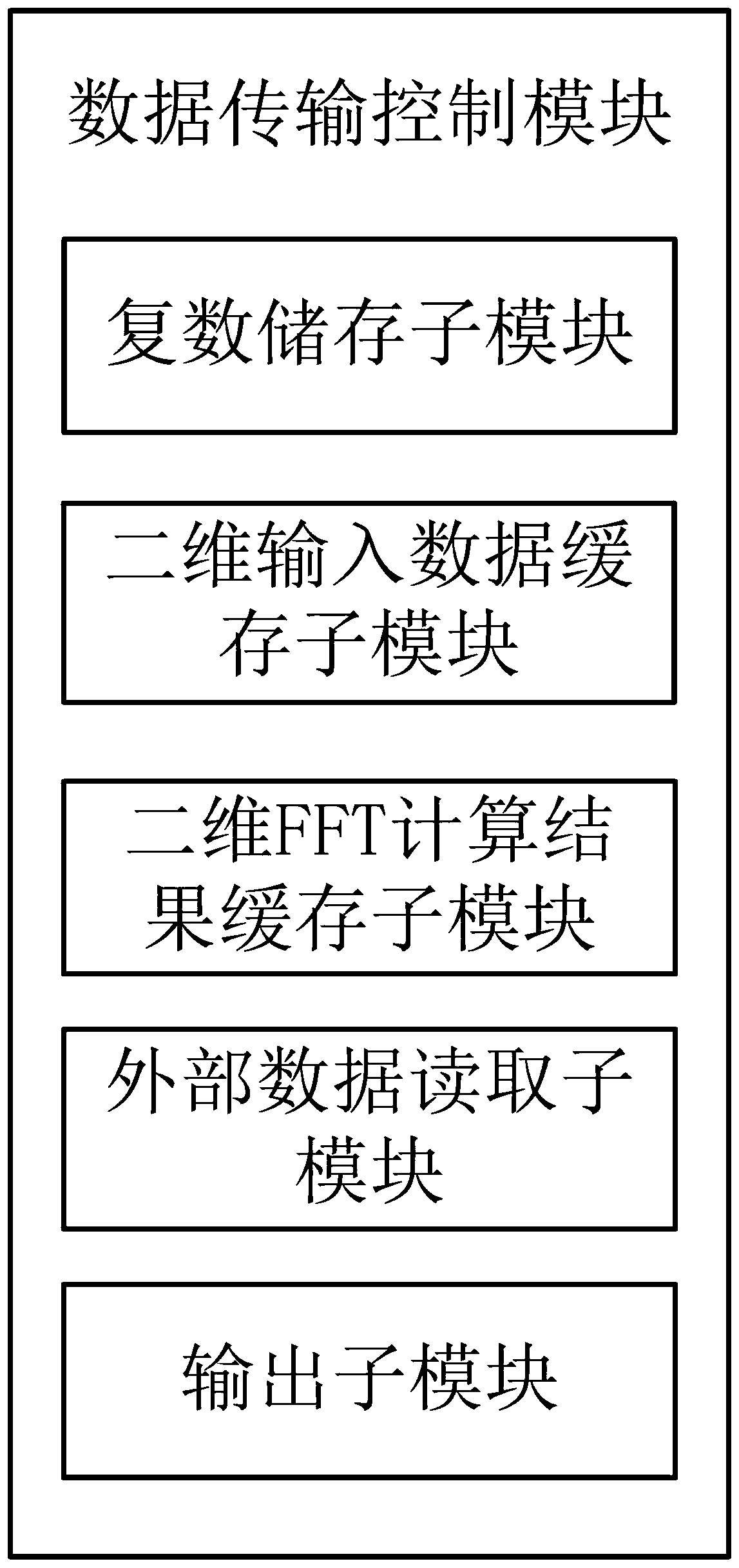

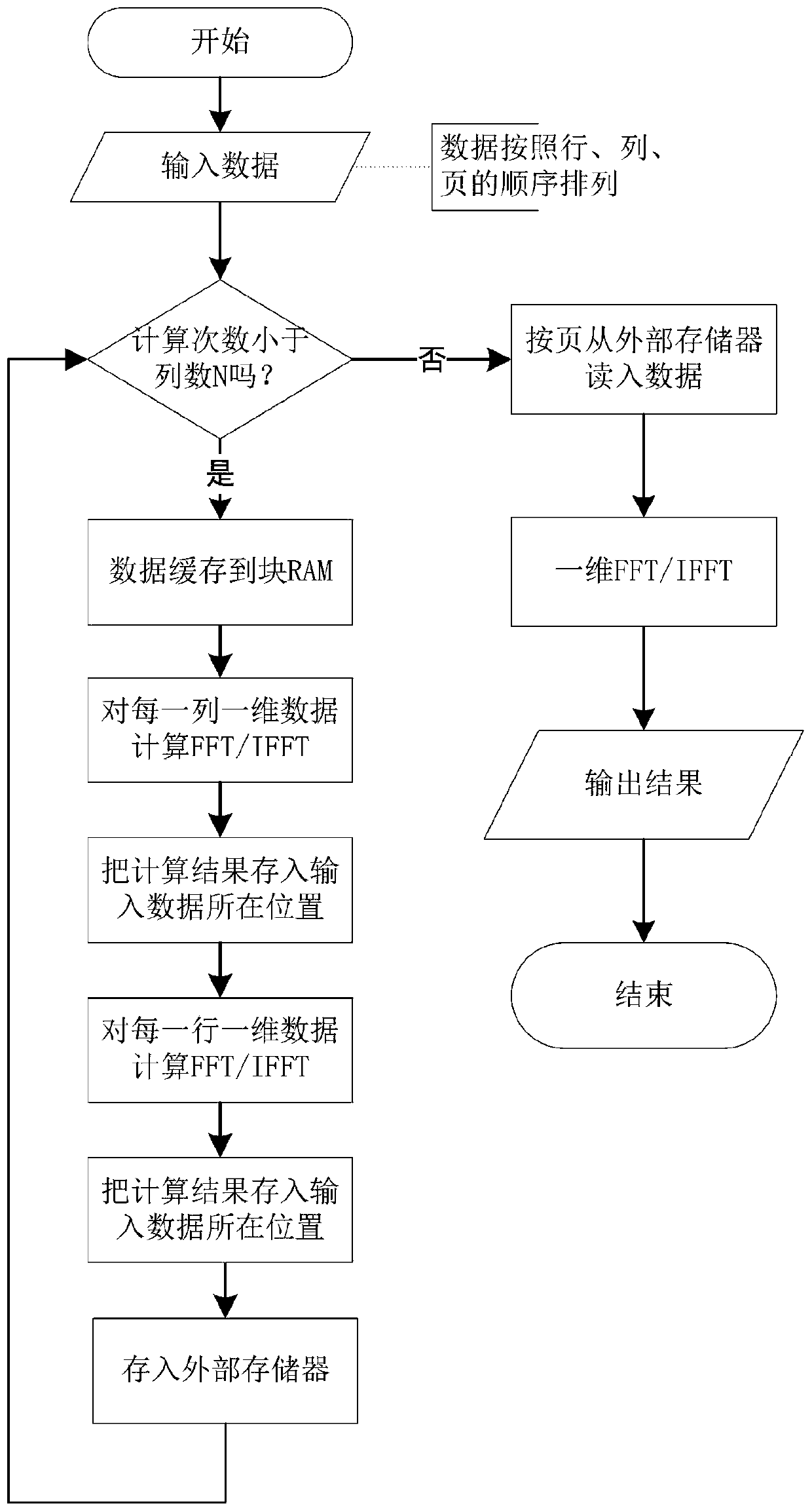

Three-dimensional FFT calculation device based on FPGA

Active | CN110647719A | Solve the situation where large-scale 3D FFT cannot be calculated | Meet actual needs | Complex mathematical operations | ICT adaptation | External storage | Parallel computing

The invention discloses a three-dimensional FFT calculation device based on an FPGA. The device mainly solves the problem in the prior art that FFT calculation cannot be performed on large three-dimensional data. The FPGA comprises a one-dimensional FFT calculator, a two-dimensional FFT calculator, a data buffer area and an external memory; the data buffer area is built from the internal block RAM of the FPGA, and the external memory caches the results calculated by the two-dimensional FFT calculator. The three-dimensional FFT calculator calculates the FFT of the third-dimension data from the two-dimensional FFT results cached in the external memory to obtain the three-dimensional FFT result. The data transmission control module generates address information to control the caching of the intermediate two-dimensional FFT results to the external memory through an AXI4 bus, and controls the FPGA to read data from the external memory in page order. The method has high calculation precision, the calculated three-dimensional FFT result meets practical requirements, and the method can be used for simulating electromagnetic scattering from a dynamic sea surface.

Owner:XIDIAN UNIV +1
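
The decomposition described in the abstract above (a two-dimensional transform per page, followed by a one-dimensional transform along the third dimension) relies on the separability of the discrete Fourier transform. The sketch below checks that idea on a tiny volume. It makes several assumptions: a naive O(n²) DFT is swapped in for the FFT for brevity, the volume is a nested list indexed `[z][y][x]`, and all function names are hypothetical.

```python
import cmath


def dft(x):
    """Naive 1D discrete Fourier transform (stand-in for an FFT core)."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * cmath.pi * j * k / n) for k in range(n))
            for j in range(n)]


def dft2(page):
    """2D transform of one page: along x for every row, then along y."""
    rows = [dft(row) for row in page]
    cols = [dft(list(col)) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]  # transpose back to [y][x]


def dft3_by_pages(volume):
    """3D transform built from per-page 2D results plus 1D transforms along z."""
    # In the abstract, these per-page results are cached in external memory.
    pages = [dft2(page) for page in volume]
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    out = [[[0j] * nx for _ in range(ny)] for _ in range(nz)]
    for y in range(ny):
        for x in range(nx):
            line = dft([pages[z][y][x] for z in range(nz)])
            for z in range(nz):
                out[z][y][x] = line[z]
    return out


volume = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]
spectrum = dft3_by_pages(volume)
```

For this 2 x 2 x 2 volume, `spectrum[0][0][0]` is the sum of all samples. The third stage reads one value from every page per transform, which is the strided access pattern that motivates the page-ordered reads from external memory.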

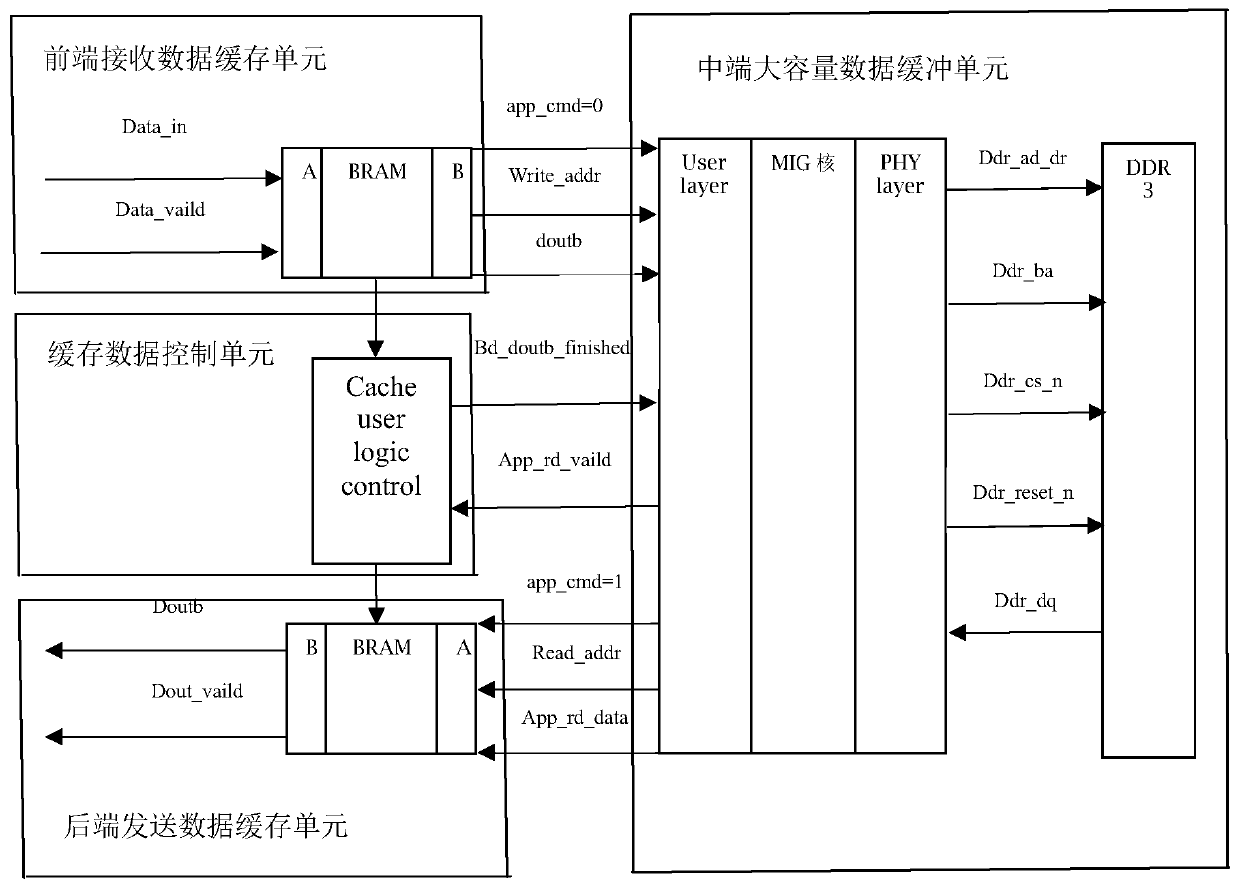

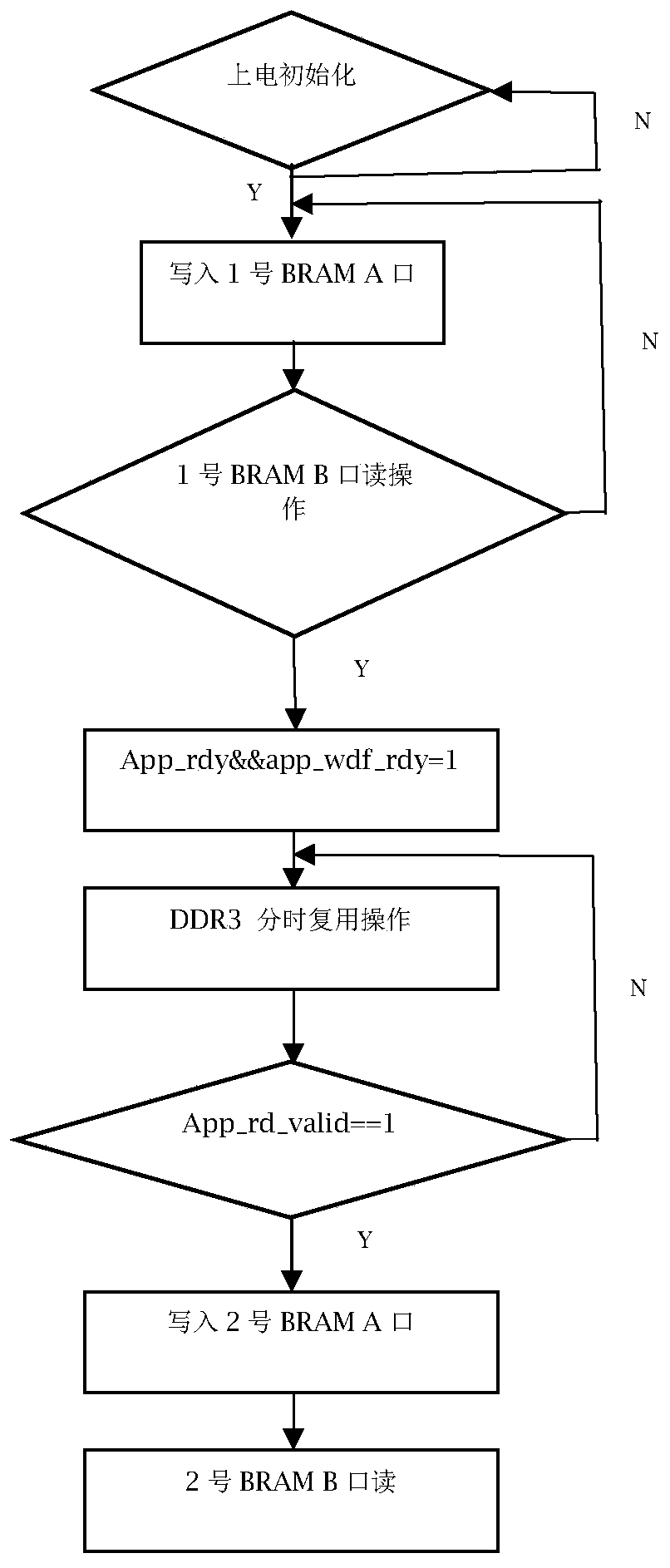

High-speed data caching structure and method

Pending | CN111338983A | Large capacity | Controls are simple and straightforward | Memory systems | Data control | Control cell

The invention discloses a high-speed data caching structure and method. The structure comprises a front-end receive data cache unit, a middle-end large-capacity data cache unit, a rear-end send data cache unit and a cache control unit. The front-end receive data cache unit comprises a dual-port Block RAM (BRAM): port A of this BRAM is used for writing the data to be cached, and port B is used for reading that data out to the middle-end large-capacity data cache unit. The middle-end large-capacity data cache unit comprises a high-speed DDR3 memory chip; writes and reads are executed as time-shared ping-pong operations, with write priority guaranteed to be higher than read priority. The rear-end send data cache unit comprises a dual-port Block RAM: port A of this BRAM is used for writing the data read from the DDR, and port B is used for reading data out to the next processing stage. The cache control unit controls the execution of the three units. The method can improve program execution efficiency and reduce the bit error rate in systems that need large capacity and have relatively strict real-time requirements.

Owner:SOUTHEAST UNIV
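
The three-stage flow in the abstract above, with writes to the middle stage taking priority over reads, can be sketched as a simple arbiter over three queues. This is a heavily simplified model: the DDR3 ping-pong banks are collapsed into a single queue, each stage moves one word per time slot, and the class name `CachePipeline` and its `rear_capacity` parameter are hypothetical.

```python
from collections import deque


class CachePipeline:
    """Front buffer -> large middle store -> rear buffer, writes before reads."""

    def __init__(self, rear_capacity=4):
        self.front = deque()  # front-end BRAM: filled on port A, drained on port B
        self.ddr = deque()    # middle-end large-capacity store (DDR3 in the abstract)
        self.rear = deque()   # rear-end BRAM: filled on port A, drained on port B
        self.rear_capacity = rear_capacity

    def push(self, word):
        """Source side writes a word into the front-end buffer."""
        self.front.append(word)

    def tick(self):
        """One time slot on the middle store; a pending write always wins."""
        if self.front:
            self.ddr.append(self.front.popleft())
            return "write"
        if self.ddr and len(self.rear) < self.rear_capacity:
            self.rear.append(self.ddr.popleft())
            return "read"
        return "idle"

    def pop(self):
        """Sink side reads a word from the rear-end buffer, or None if empty."""
        return self.rear.popleft() if self.rear else None


pipe = CachePipeline()
pipe.push("w0")
pipe.push("w1")
slots = [pipe.tick() for _ in range(4)]
first = pipe.pop()
```

Giving writes priority means incoming data is never dropped while the front buffer has room, at the cost of delaying reads when the input is busy.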


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com