30 results about "Block random access memory" patented technology

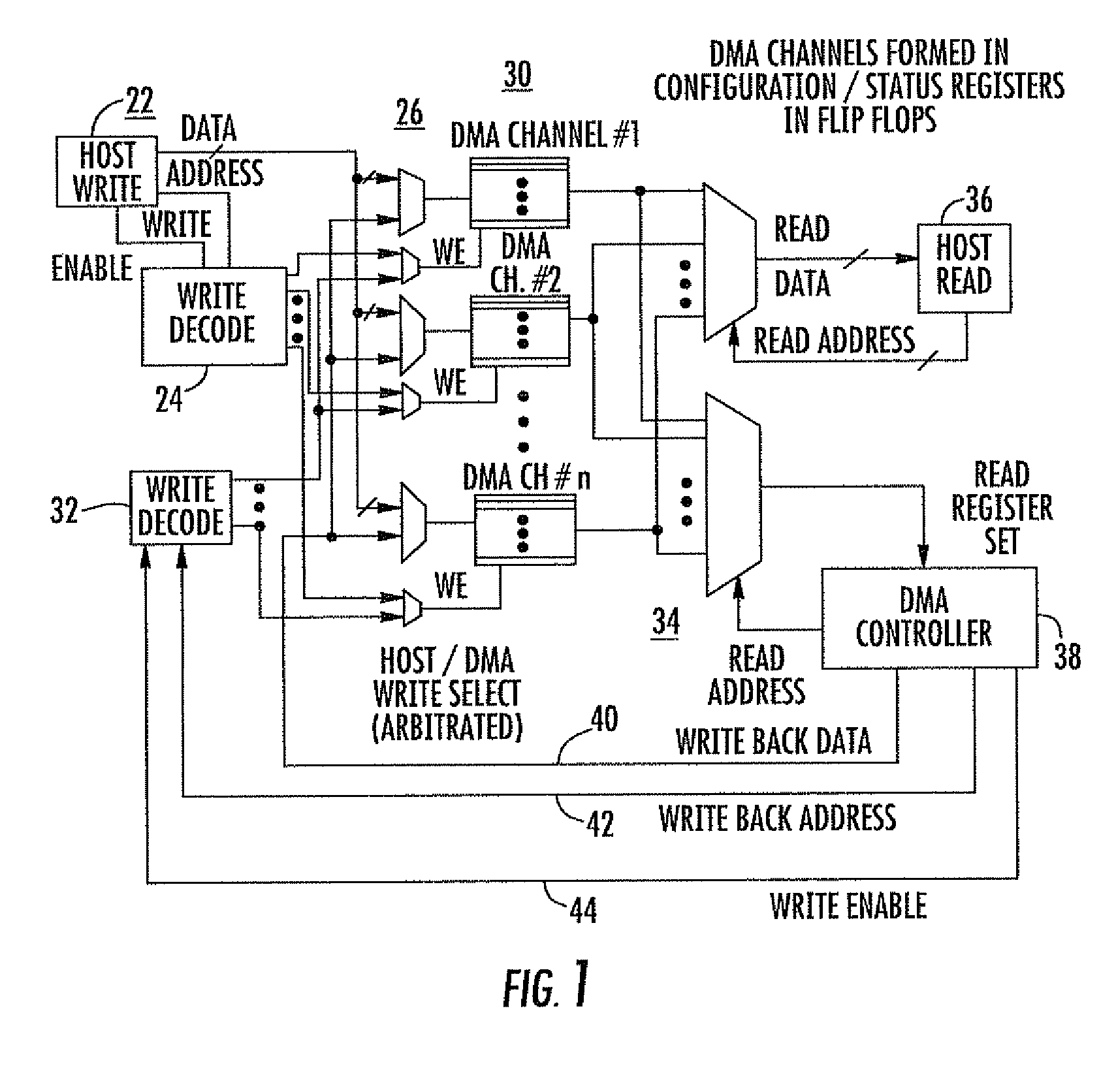

Video multiviewer system using direct memory access (DMA) registers and block RAM

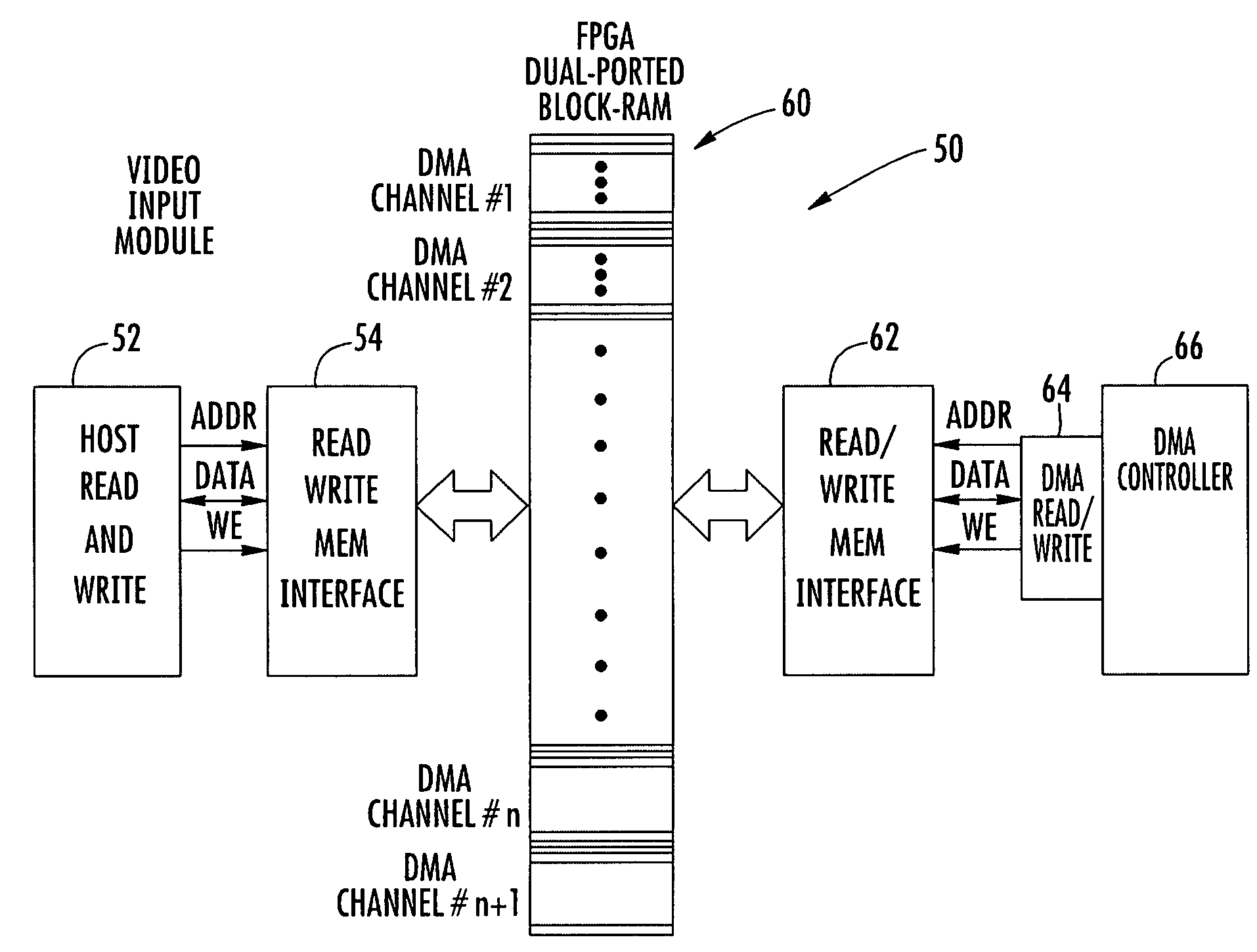

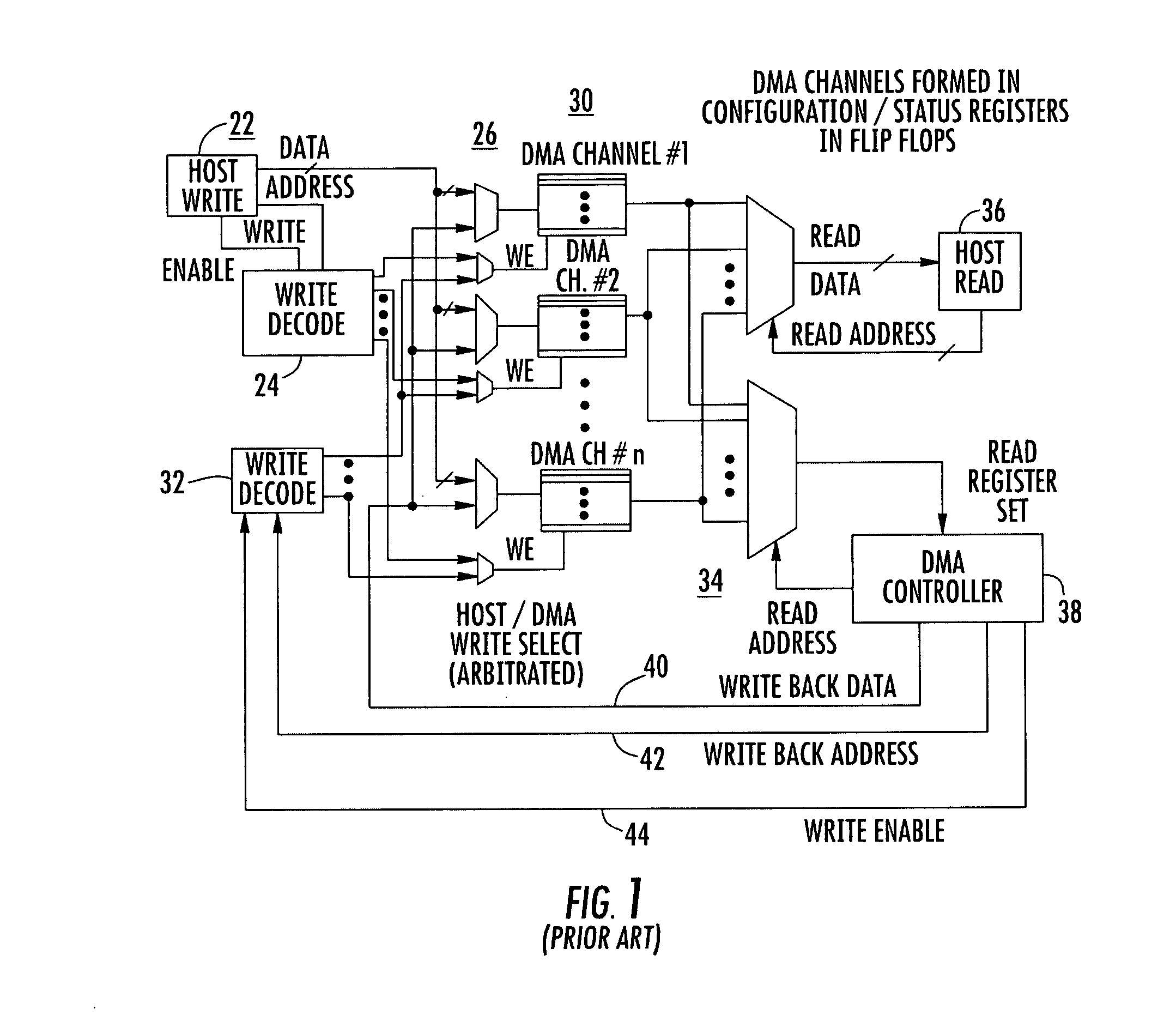

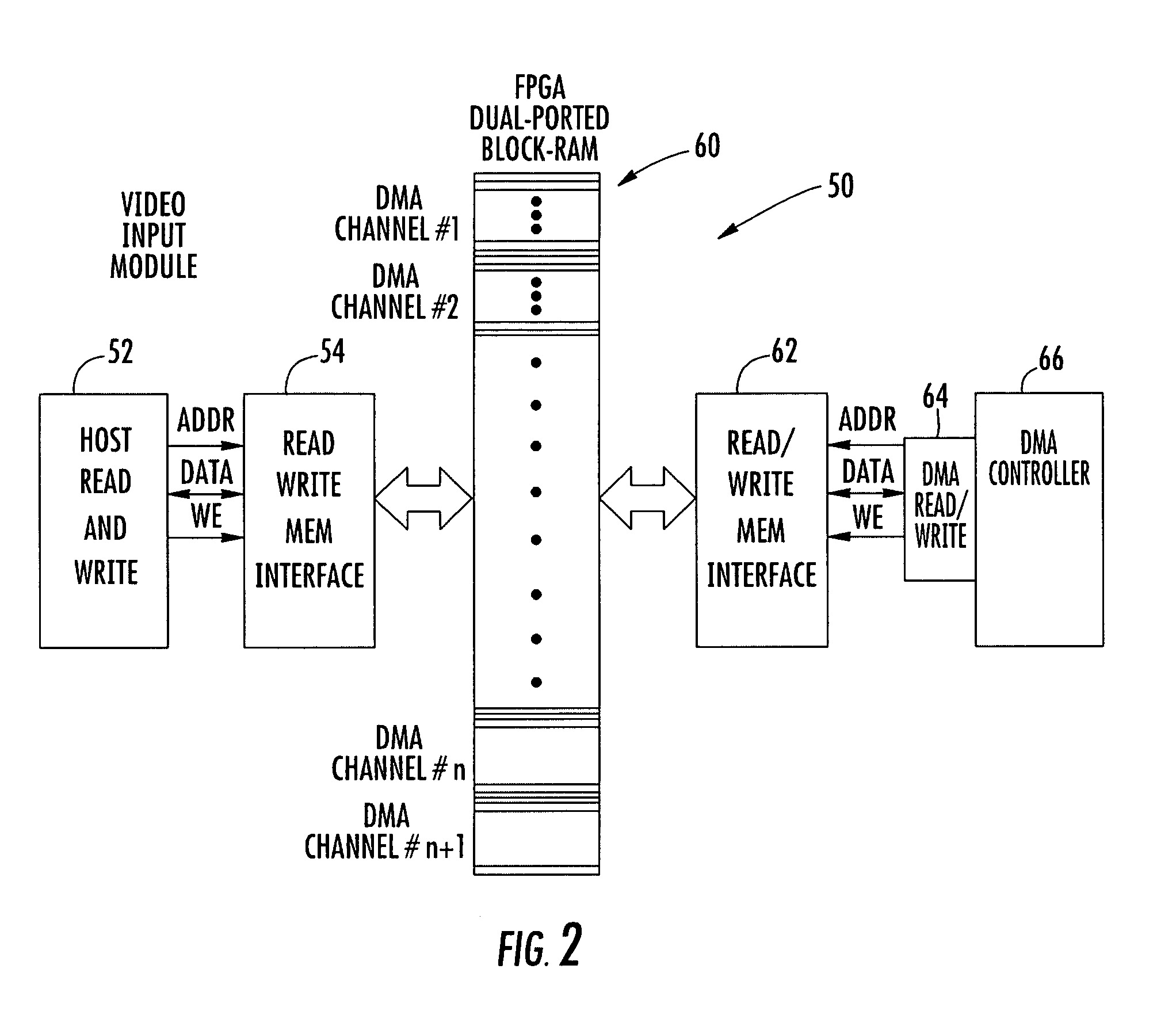

A video multiviewer system includes a Graphics Processing Unit (GPU) that includes a GPU memory. A video input module is operative with the GPU for receiving video data and transferring the video data to the GPU memory via a Direct Memory Access (DMA). A programmable circuit such as a Field Programmable Gate Array (FPGA) includes a multi-ported and in one aspect a dual ported block Random Access Memory (RAM) configured for a plurality of DMA channels for receiving video data and allowing uninterrupted operation of consecutive DMA transfers of video data to the GPU memory. A display displays the multiple video windows based upon video data received within the GPU memory.

Owner:IMAGINE COMM

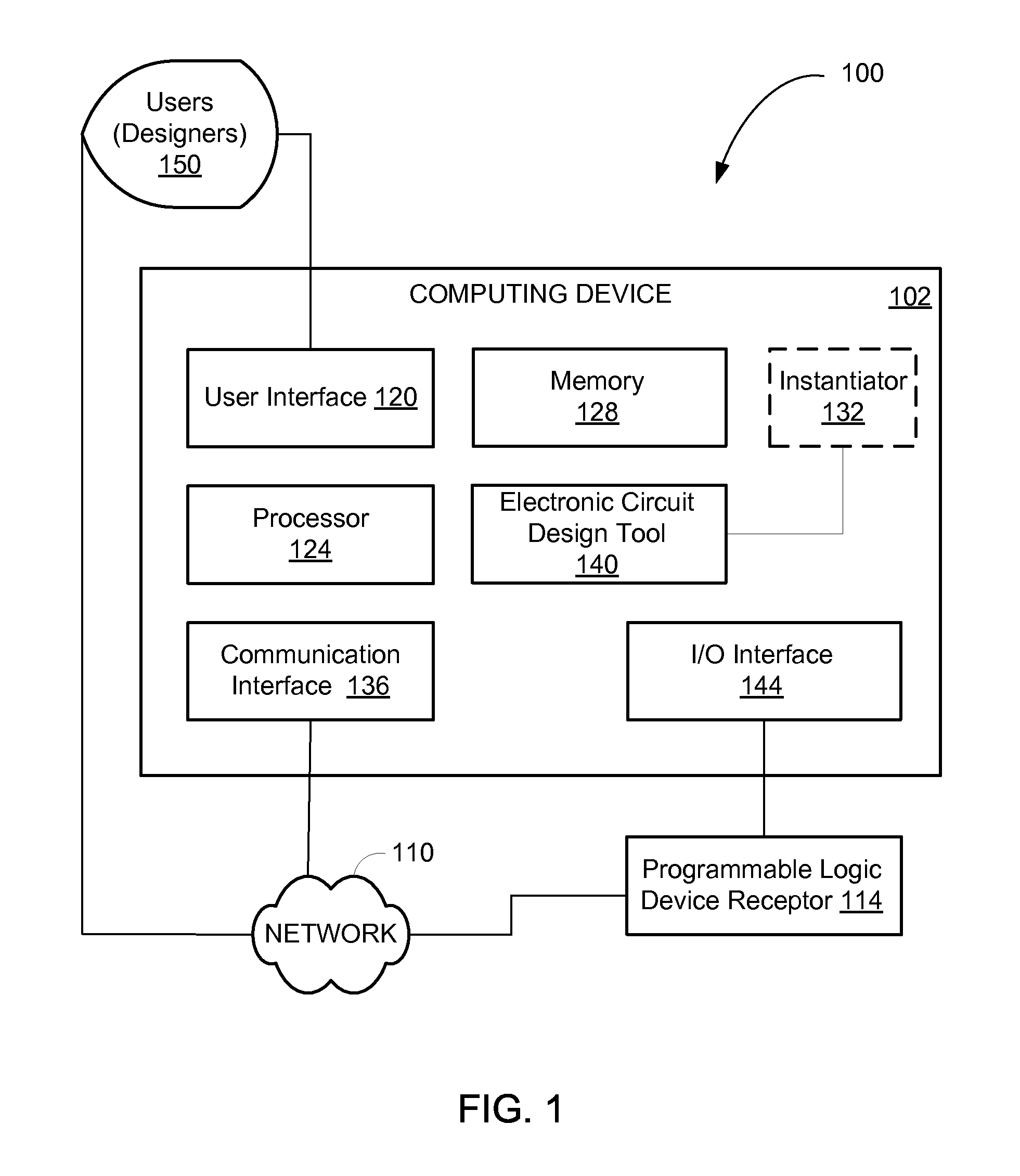

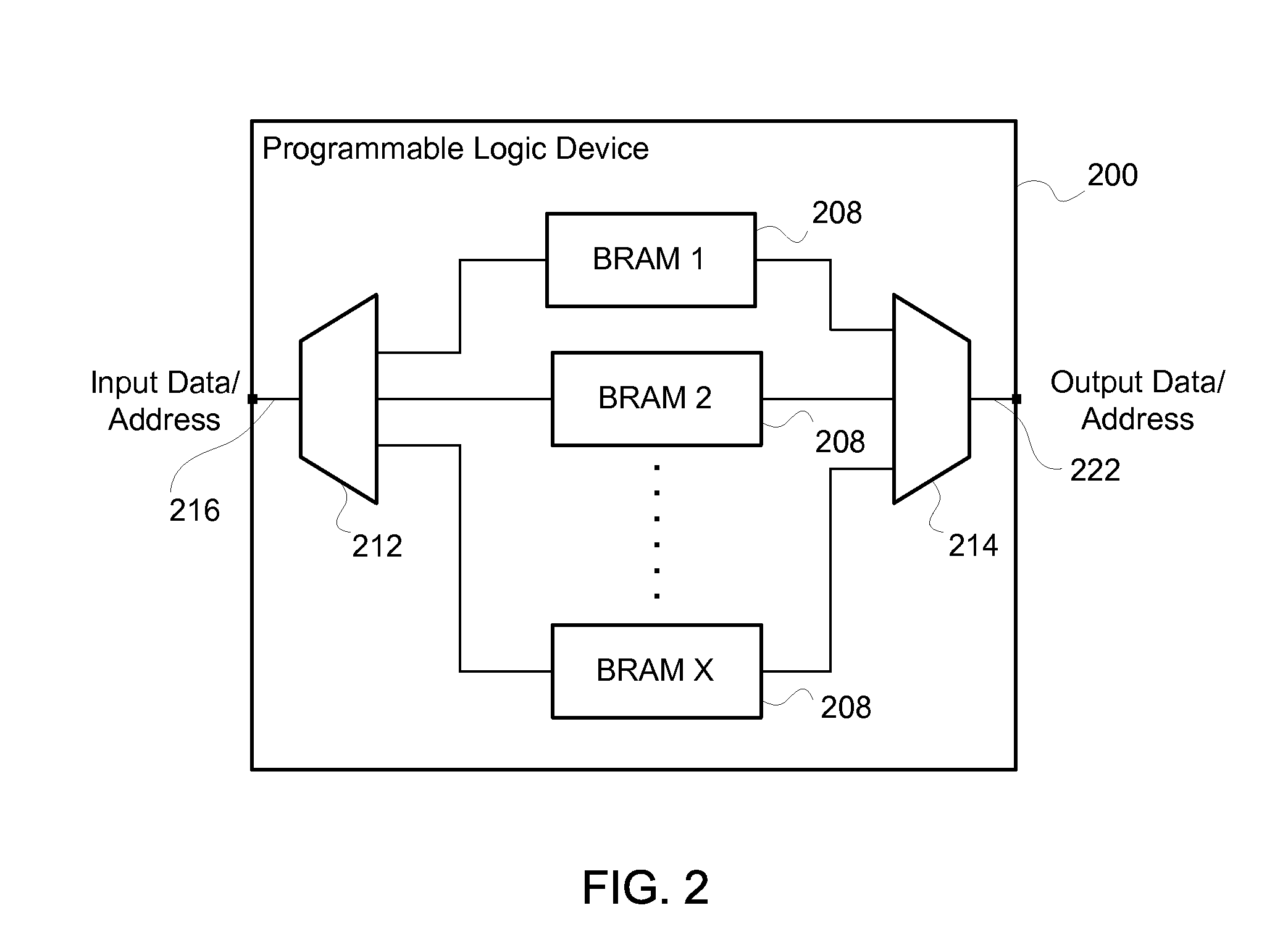

System for memory instantiation and management

Inactive; US20110231628A1; Memory addressing/allocation/relocation; Computer aided design; Random access memory; Programmable logic device

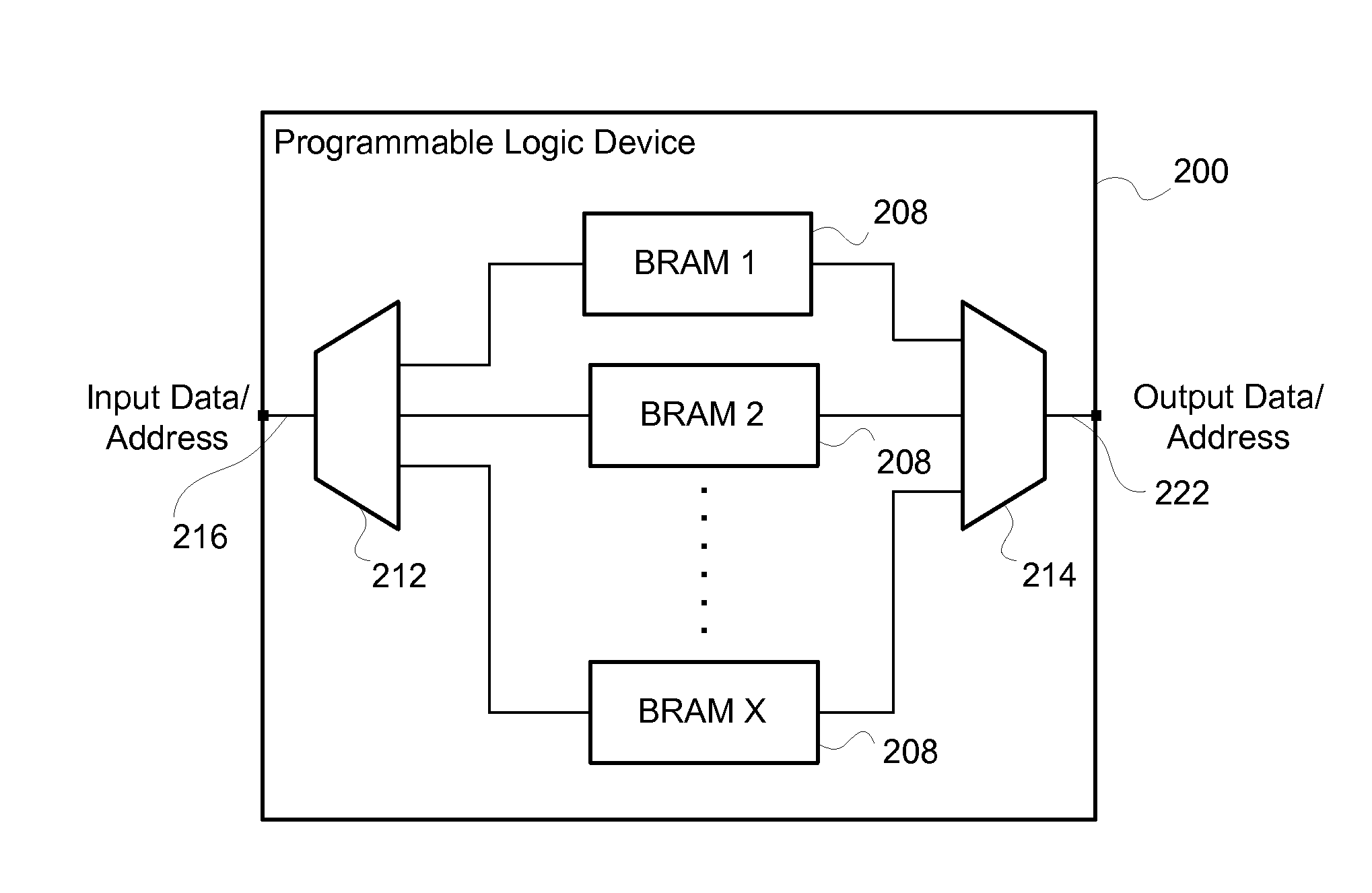

A system for memory instantiation in a programmable logic device (PLD) includes a computing device having a processor and memory coupled with the PLD. The processor is configured to receive memory parameters including at least a data width and a data depth. The processor is also configured to determine a number and sizes of block random access memory (BRAM) primitives required for data storage based on the memory parameters and based on one or more sizes of BRAM primitives available on the programmable logic device. In one example, the processor minimizes a size of the total number of BRAMs required for instantiation on the PLD. The processor is also configured to instantiate the determined number and corresponding sizes of the BRAM primitives in logic for configuration of the programmable logic device to include a device memory within the available BRAM primitives thereof corresponding to the determined number and sizes of the BRAM primitives.
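As a rough illustration of the sizing step described above, the following Python sketch picks, for a requested data width and depth, the primitive shape that needs the fewest blocks. The shape table and the name `plan_brams` are assumptions made for illustration; they are not taken from the patent, and real devices offer different primitive sizes. The sketch also restricts itself to a single shape per memory, whereas the abstract allows a mix of sizes.

```python
from math import ceil

# Hypothetical (depth, width) aspect ratios of one BRAM primitive.
# These are placeholder values, not figures from the patent or a datasheet.
PRIMITIVE_SHAPES = [
    (16384, 1), (8192, 2), (4096, 4), (2048, 9), (1024, 18), (512, 36),
]


def plan_brams(data_width, data_depth, shapes=PRIMITIVE_SHAPES):
    """Return (count, (depth, width)) for the shape needing the fewest primitives."""
    if data_width <= 0 or data_depth <= 0:
        raise ValueError("data_width and data_depth must be positive")
    best = None
    for depth, width in shapes:
        # Primitives are tiled side by side for width and stacked for depth.
        count = ceil(data_width / width) * ceil(data_depth / depth)
        if best is None or count < best[0]:
            best = (count, (depth, width))
    return best


if __name__ == "__main__":
    print(plan_brams(32, 1024))
```

On ties the first shape in the table wins, so the order of `PRIMITIVE_SHAPES` acts as a simple preference list.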

Owner:HARMAN INT IND INC

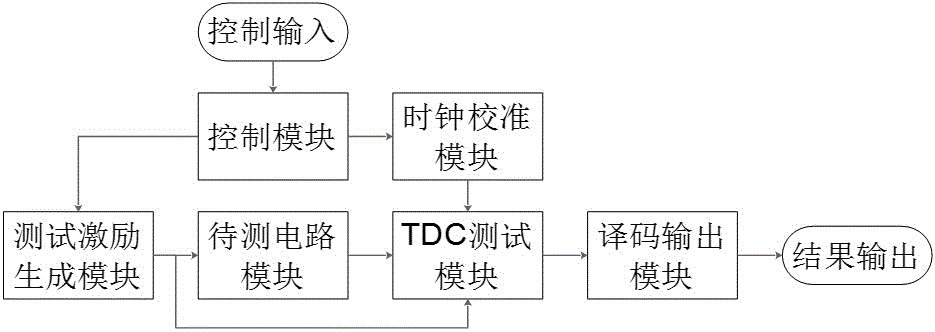

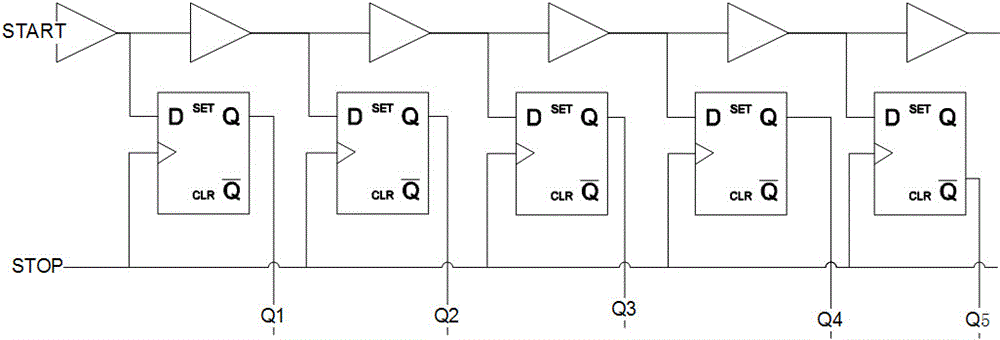

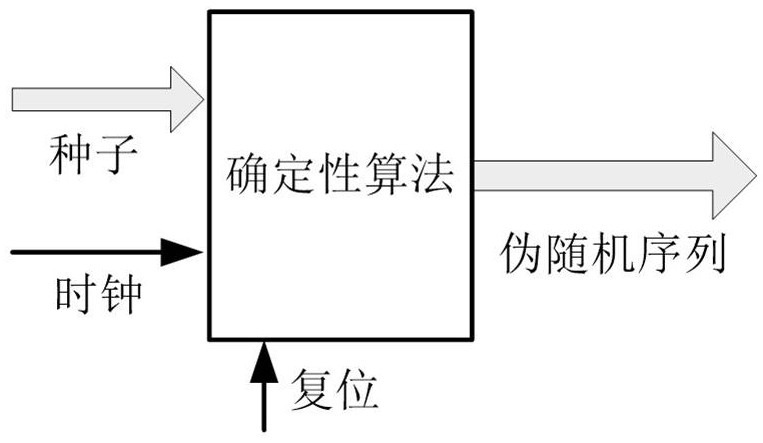

FPGA circuit transmission delay test system and method based on TDC

Inactive; CN105842610A; Delay combinatorial logic delay; Reduce demand; Electronic circuit testing; Digital converter; Built-in self-test

The invention belongs to the technical field of integrated circuits and discloses an FPGA (Field Programmable Gate Array) circuit transmission delay test system and method based on a TDC (time-to-digital converter). The test system comprises a circuit module under test, a test excitation generation module, a TDC module, a decoding output module, a time calibration module and a control module. The system uses the TDC method to count delay chains, which makes it more convenient to test the transmission delay of circuits inside an FPGA, and uses resources inside the FPGA chip to construct a BIST (Built-in Self Test) for the test. It features low test cost, good interference immunity, good portability and independence from test tools, and is of value for testing the various transmission delay parameters in an FPGA, including switch parameters, interconnection delay, combinational logic delay and CLK-Q delay of a configurable logic block (CLB), a programmable input/output block (IOB), a block random access memory (BRAM), a digital signal processor (DSP) and a programmable interconnection FPGA combination module circuit.

Owner:FUDAN UNIV

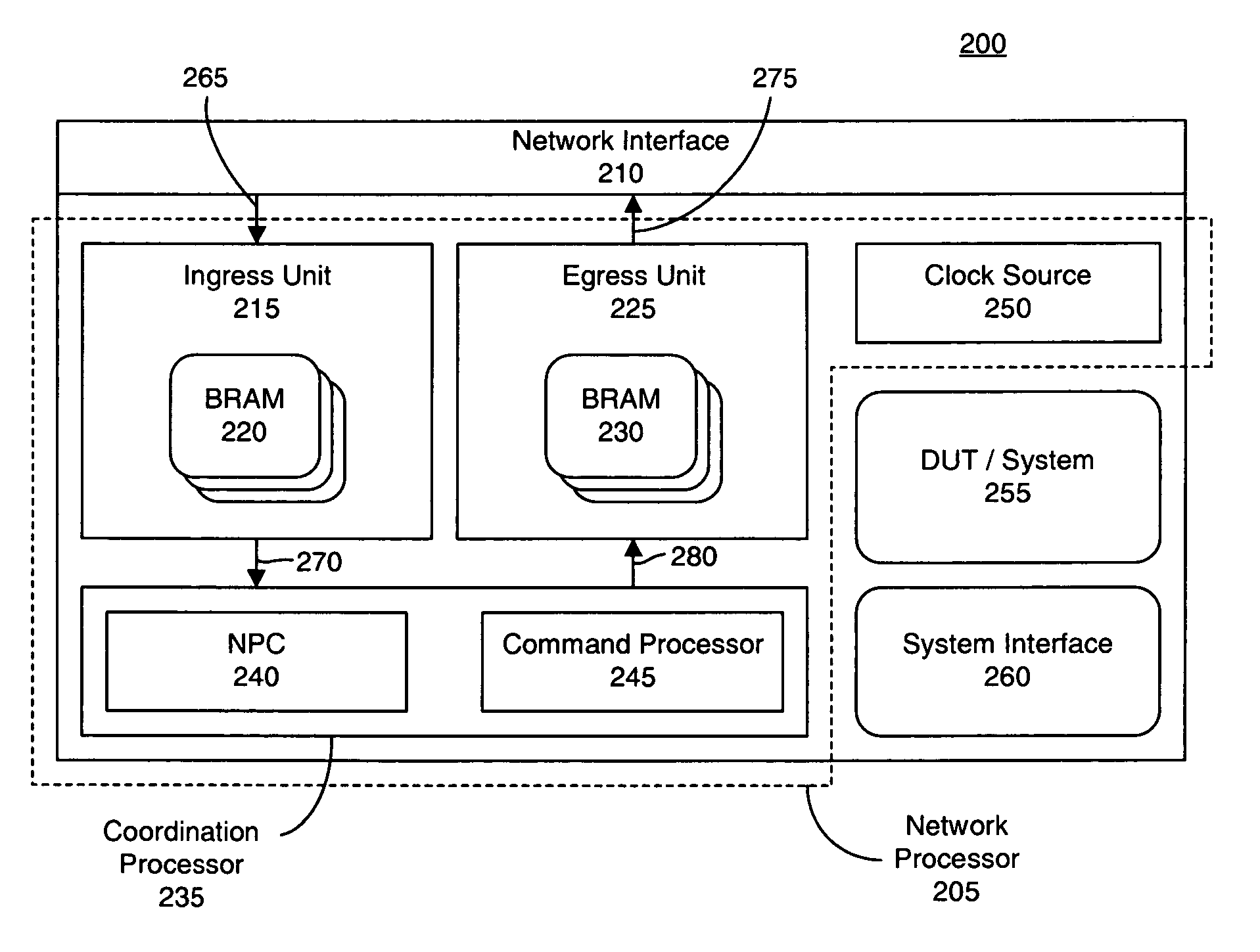

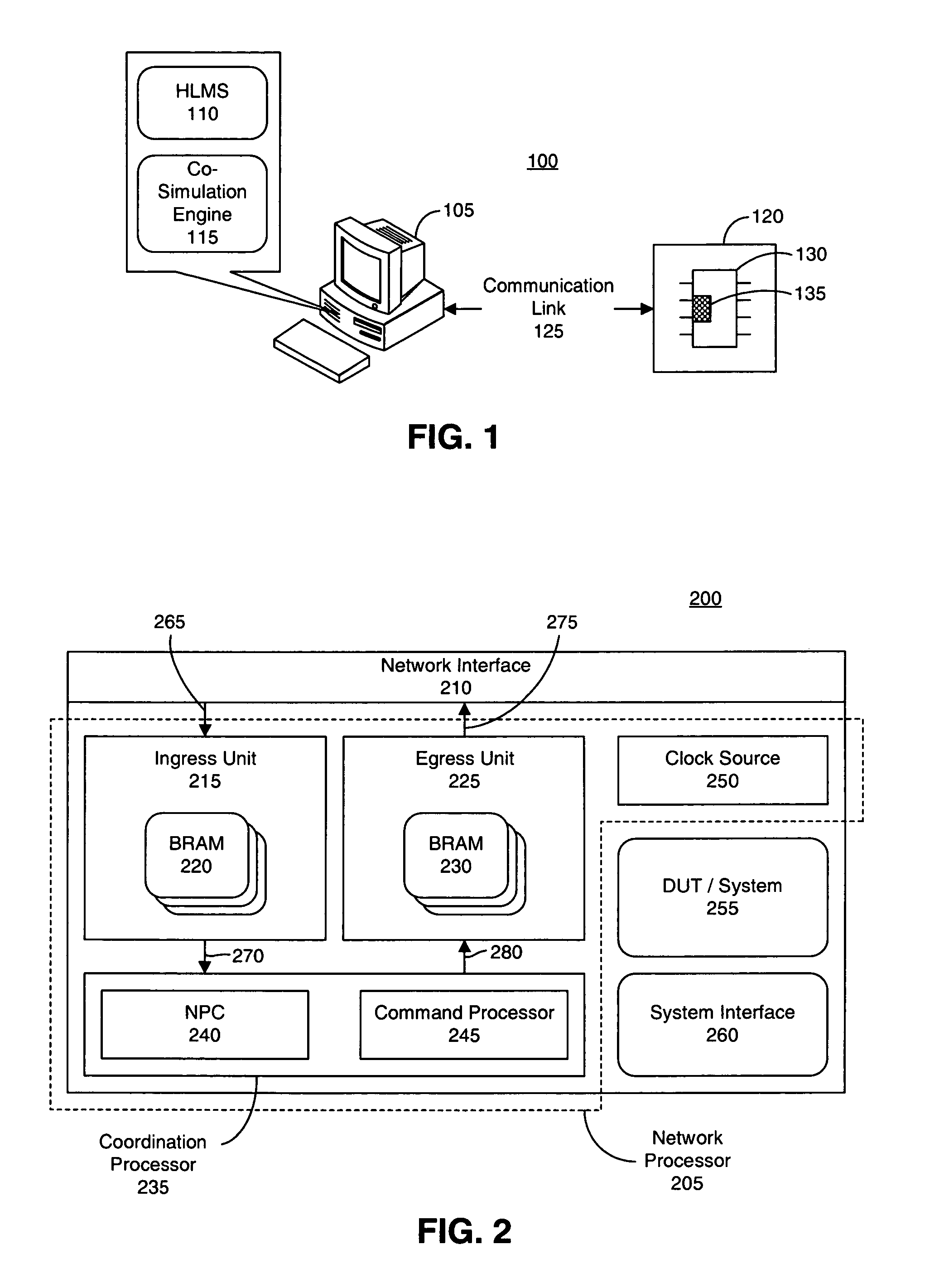

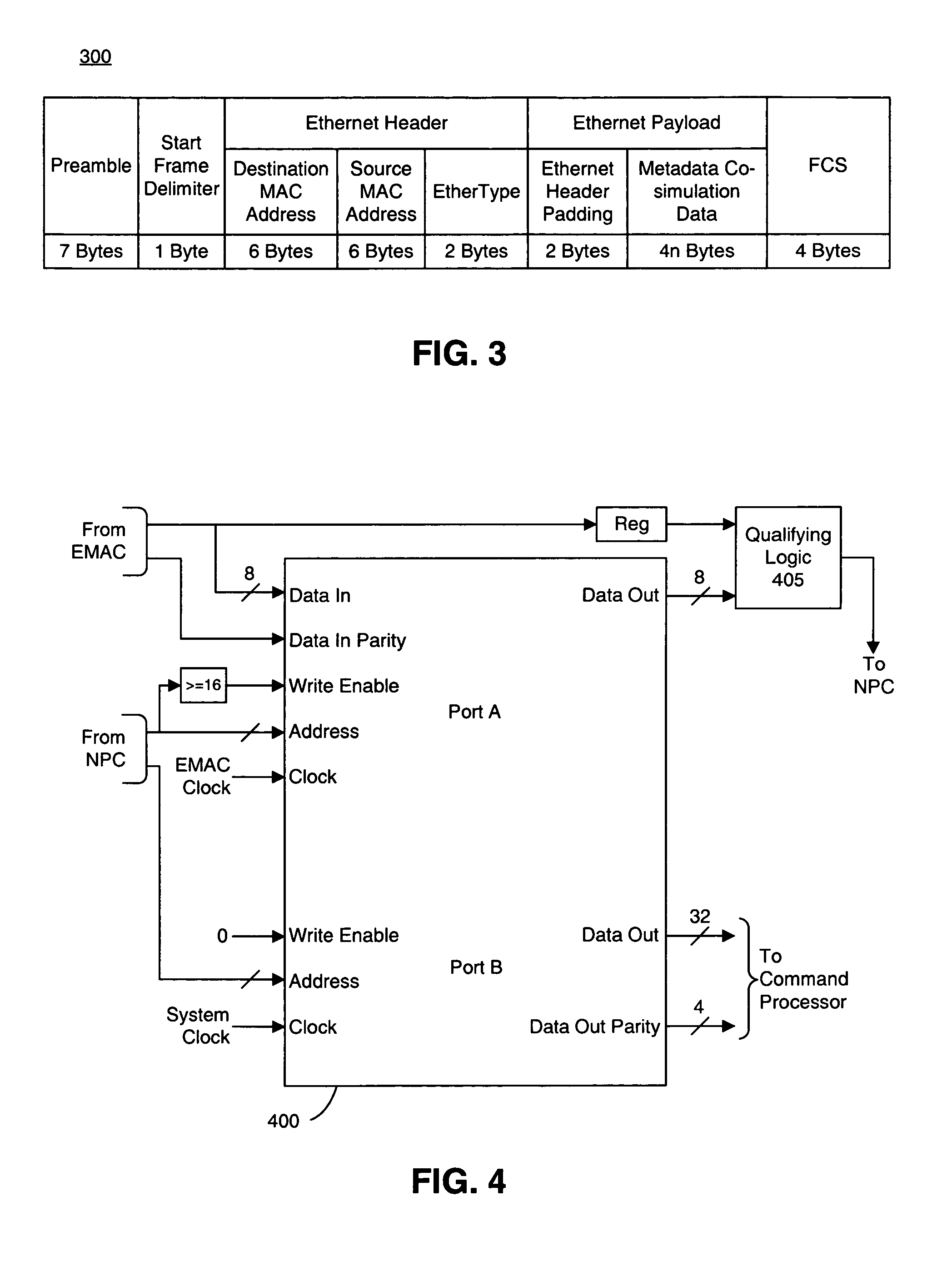

Parameterizable compact network processor for low-level communication with an integrated circuit

Active; US7590137B1; Analogue computers for electric apparatus; Time-division multiplex; Random access memory; Network processor

A network processor, disposed on an integrated circuit can include an ingress unit having a dual port block random access memory and an egress unit having a dual port block random access memory. The network processor further can include a network interface configured to write packetized data to the ingress unit and read packetized data from the egress unit as well as a coordination processor configured to coordinate movement of data between the network interface, the ingress unit, and the egress unit.

Owner:XILINX INC

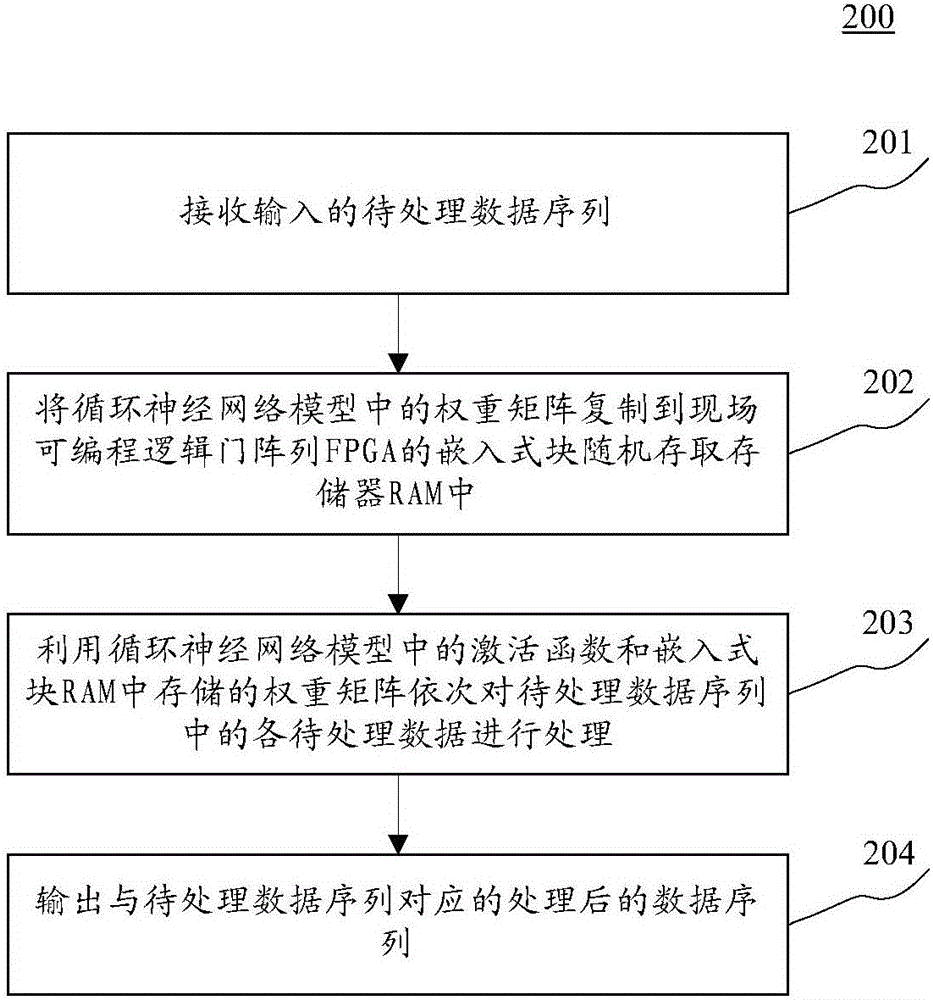

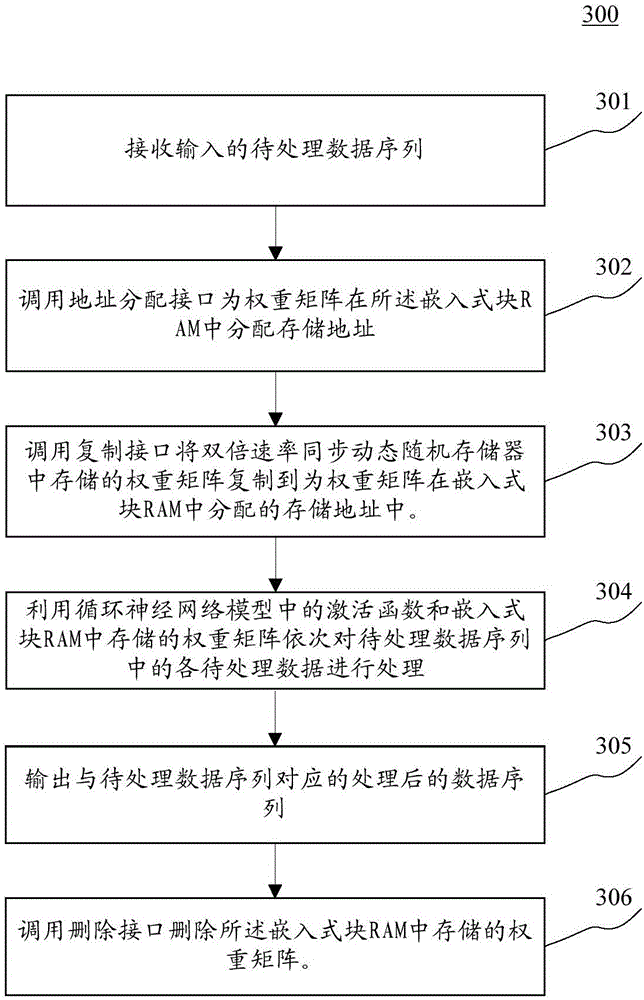

Method and apparatus for processing data sequences

Inactive; CN106529669A; Improve the efficiency of processing data sequences; Physical realisation; Activation function; Algorithm

The application discloses a method and apparatus for processing data sequences. The method comprises: an inputted to-be-processed data sequence is received; a weight matrix of a recurrent neural network model is copied to an embedded block random access memory (RAM) of a field programmable gate array (FPGA); all to-be-processed data are processed in the to-be-processed data sequence by using an activation function in the recurrent neural network model and the weight matrix stored into the embedded block random access memory; and a processed data sequence corresponding to the to-be-processed data sequence is outputted. Therefore, the efficiency of processing the data sequence by the recurrent neural network model is improved.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

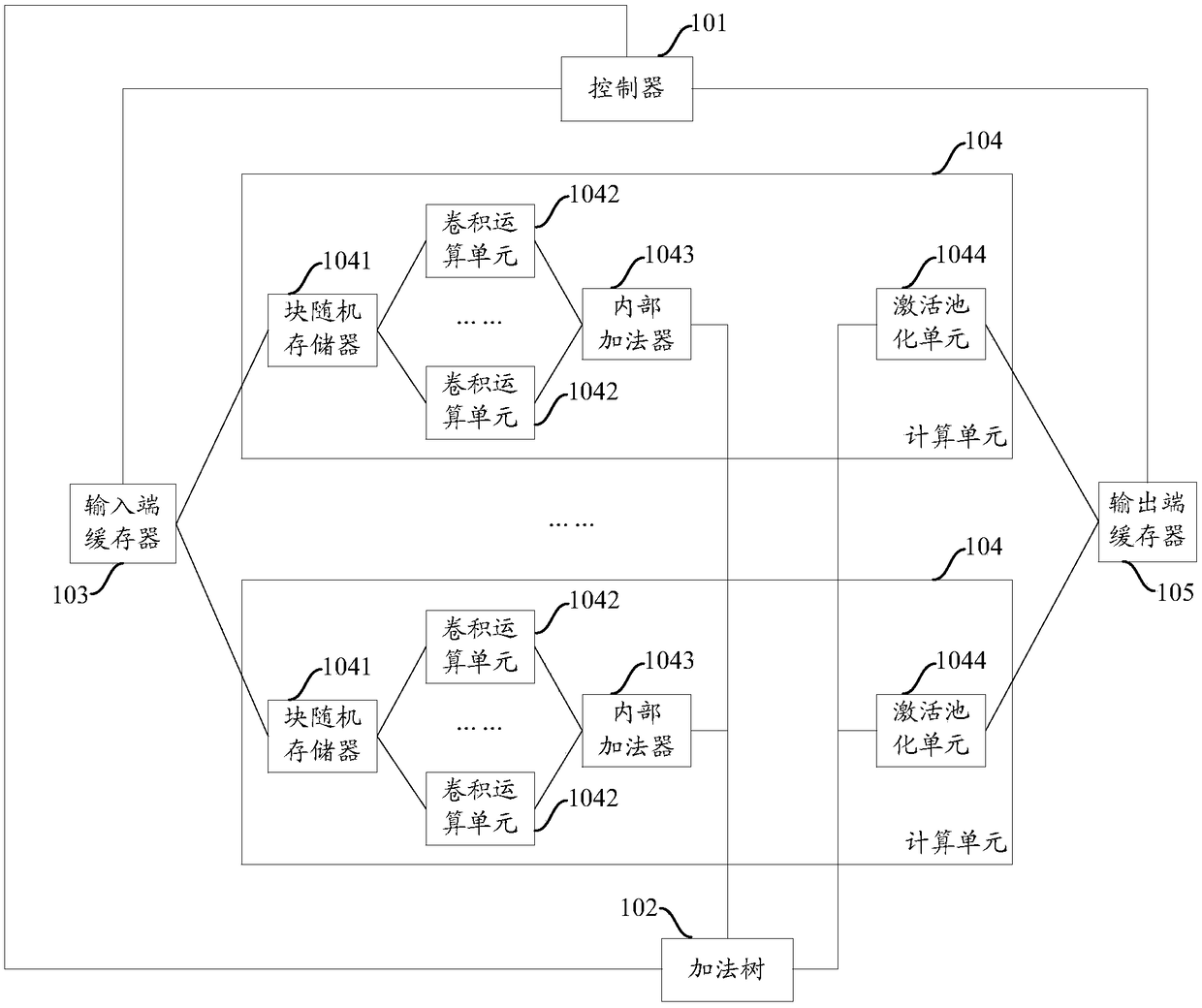

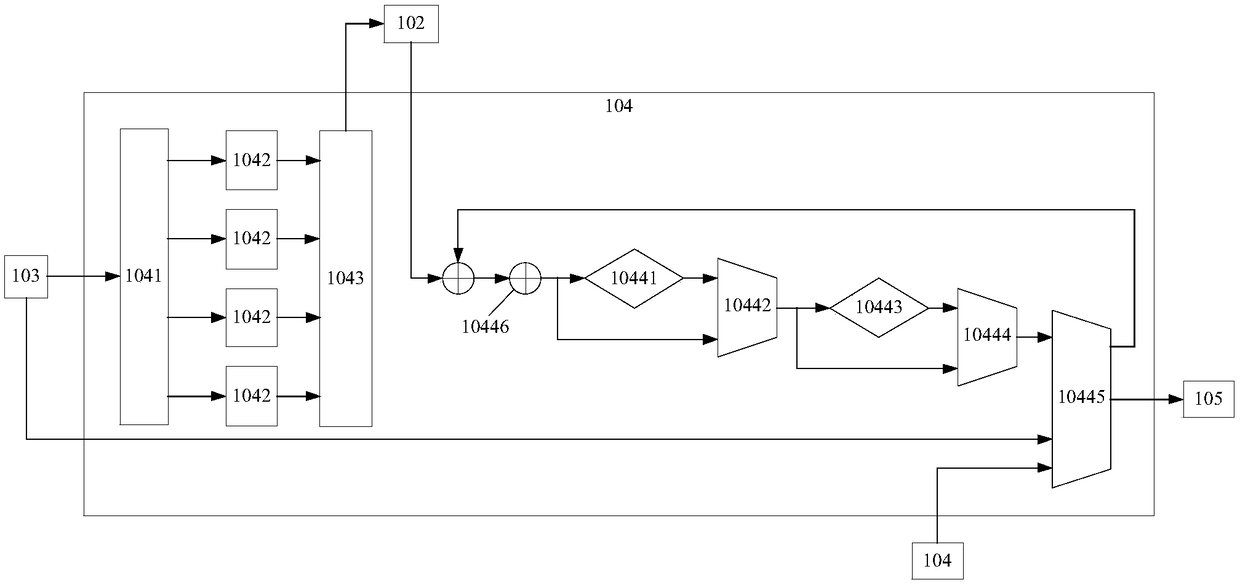

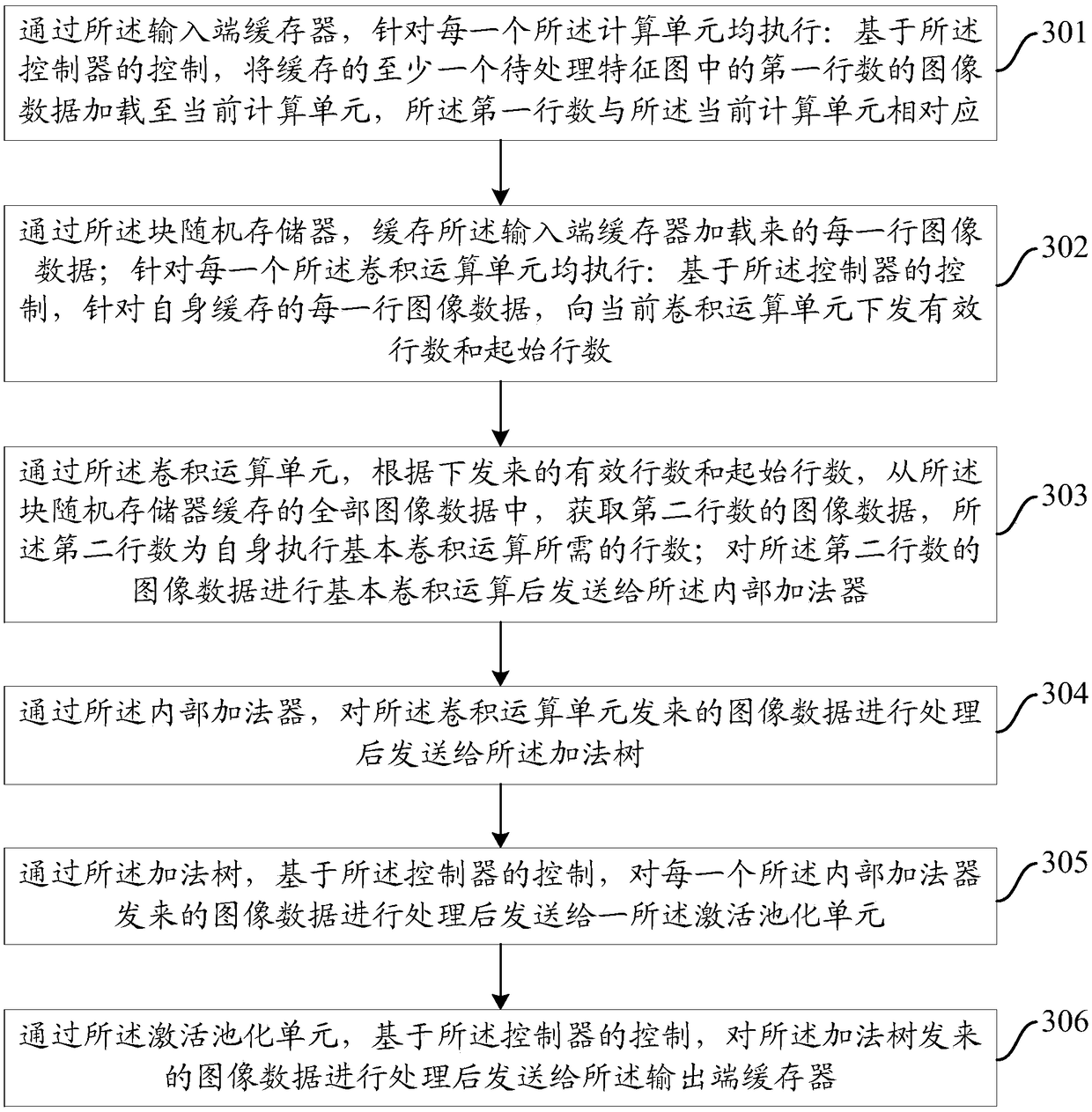

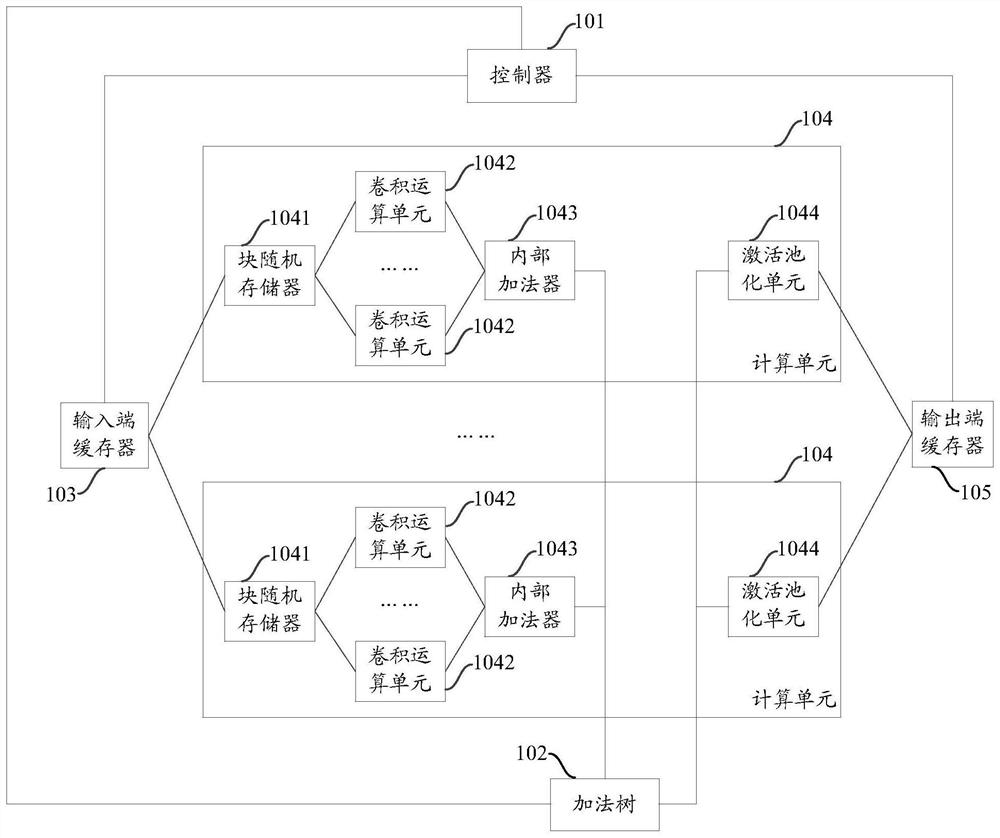

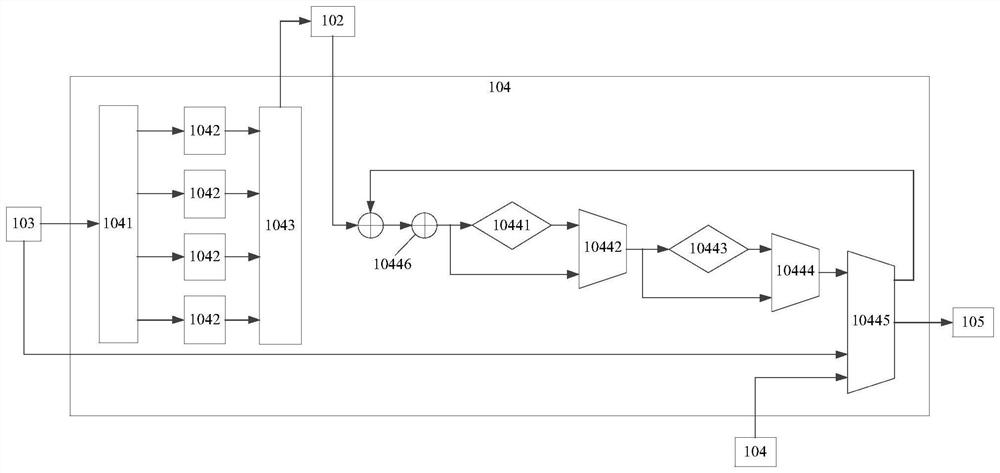

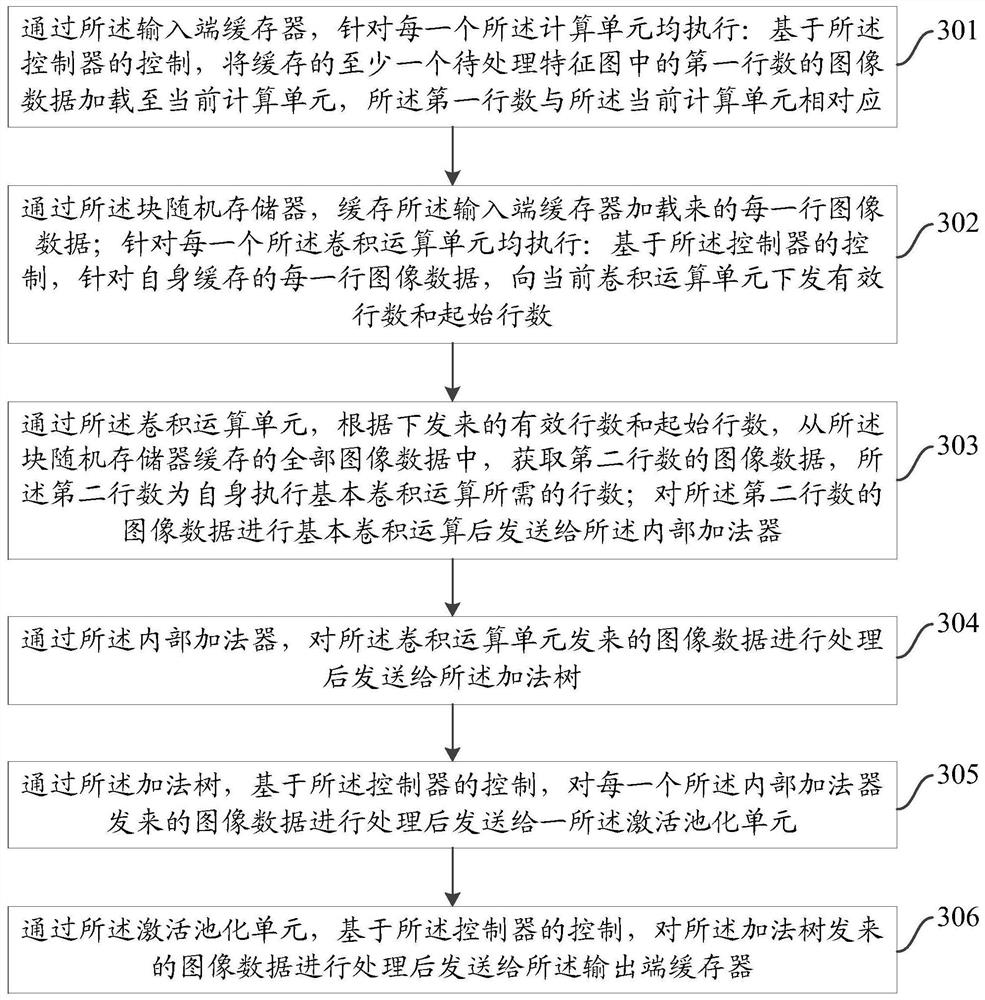

A basic calculation unit and a calculation method of a convolutional neural network

Active; CN109165728A; Completion time controllable; Neural architectures; Physical realisation; Completion time; Parallel computing

The invention provides a basic calculation unit and a calculation method of a convolutional neural network. The basic calculation unit comprises a controller, an addition tree, an input buffer, a plurality of calculation units and an output buffer. Each calculation unit includes a block random access memory, a plurality of convolutional operation units, an internal adder, and an activation pooling unit. Under the control of the controller, the input buffer loads the image data of the corresponding line numbers to each calculation unit, and the block random access memory sends the effective line number and the starting line number to each convolutional operation unit so that it obtains the image data of the corresponding line numbers. The convolutional operation unit processes the image data and sends it to the addition tree through the internal adder. The addition tree processes the image data sent from each internal adder and sends it to an activation pooling unit. The activation pooling unit processes the image data and then sends the data to the output buffer. The algorithm can be implemented in hardware, so that the algorithm completion time is controllable.

Owner:INSPUR GROUP CO LTD

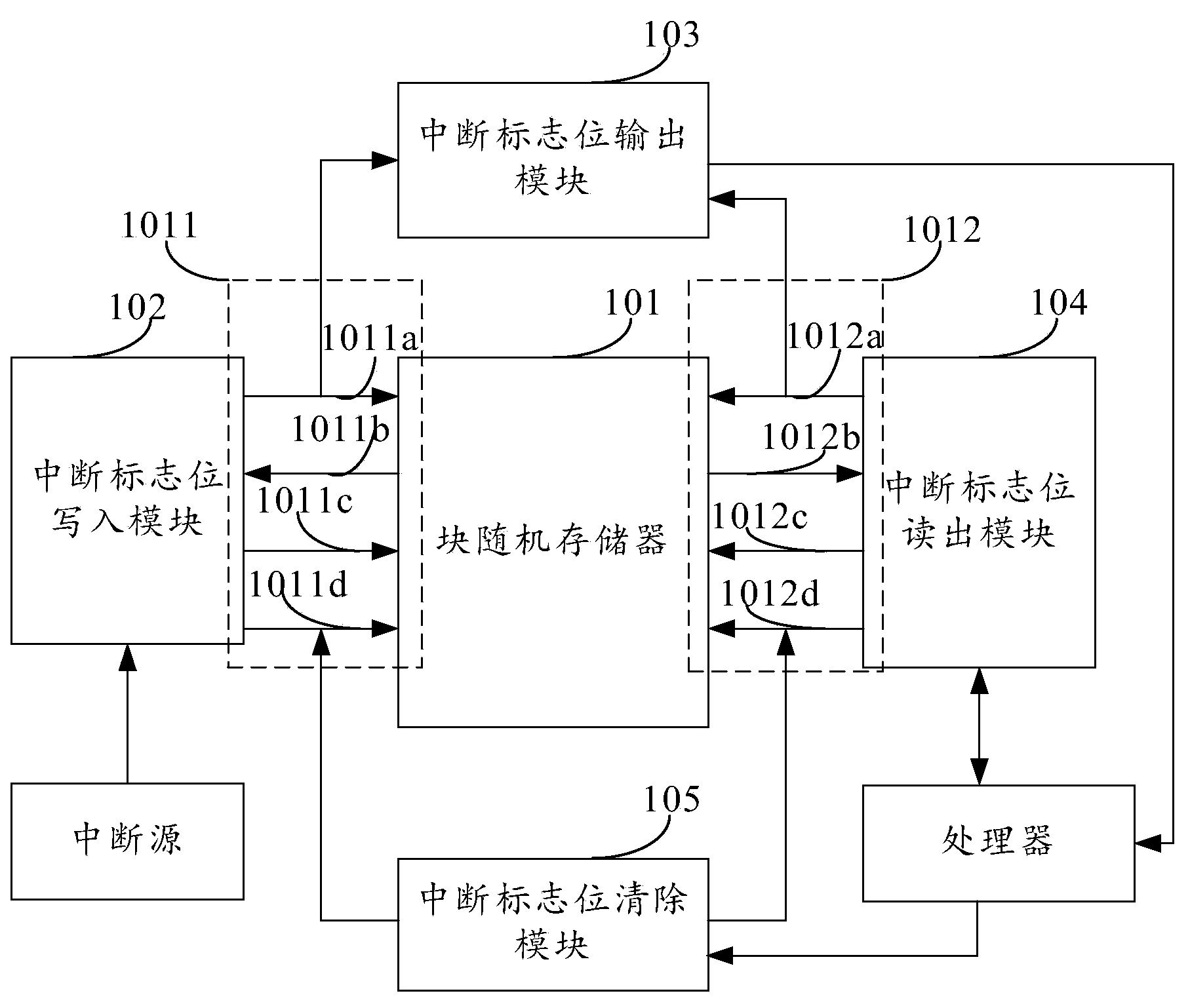

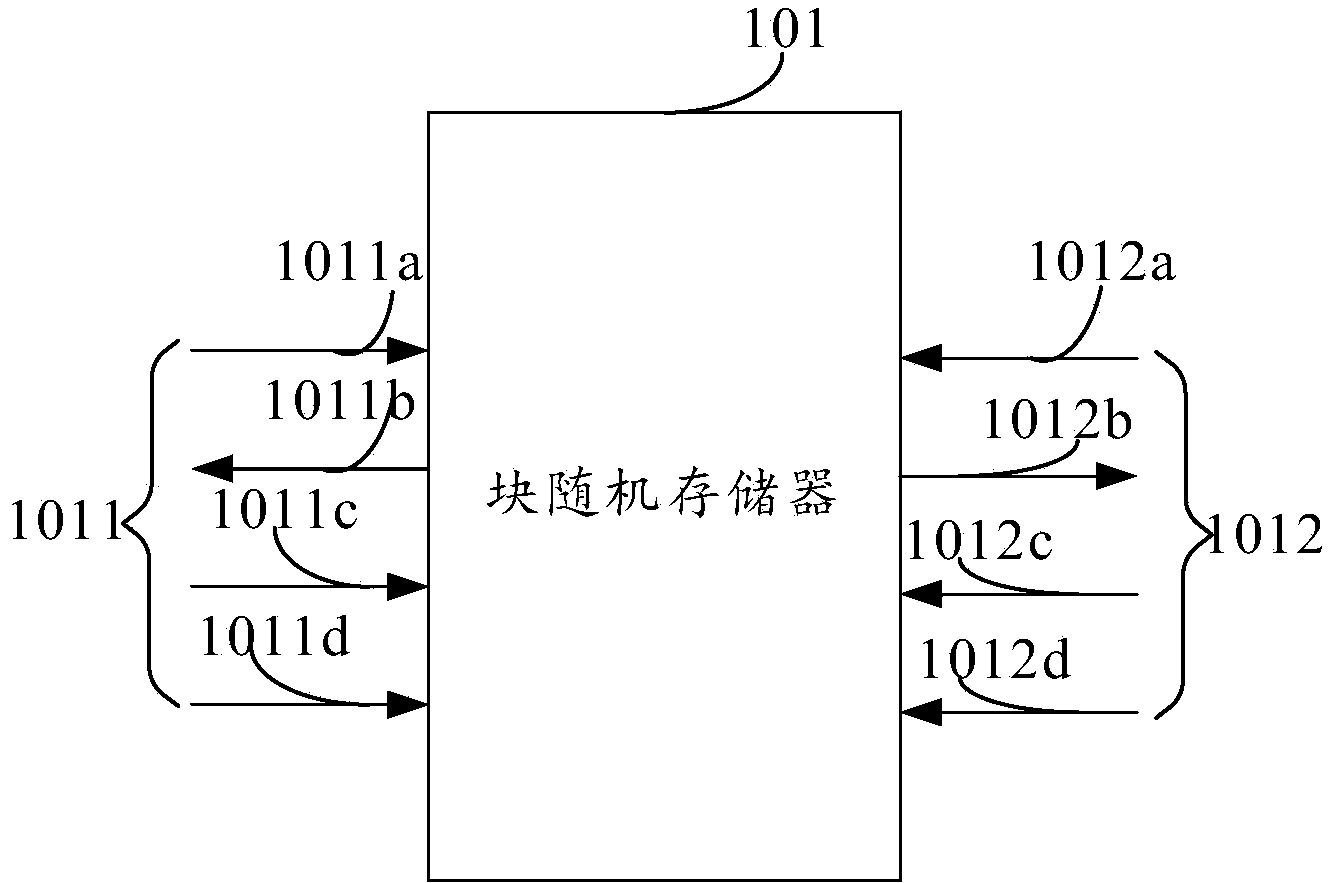

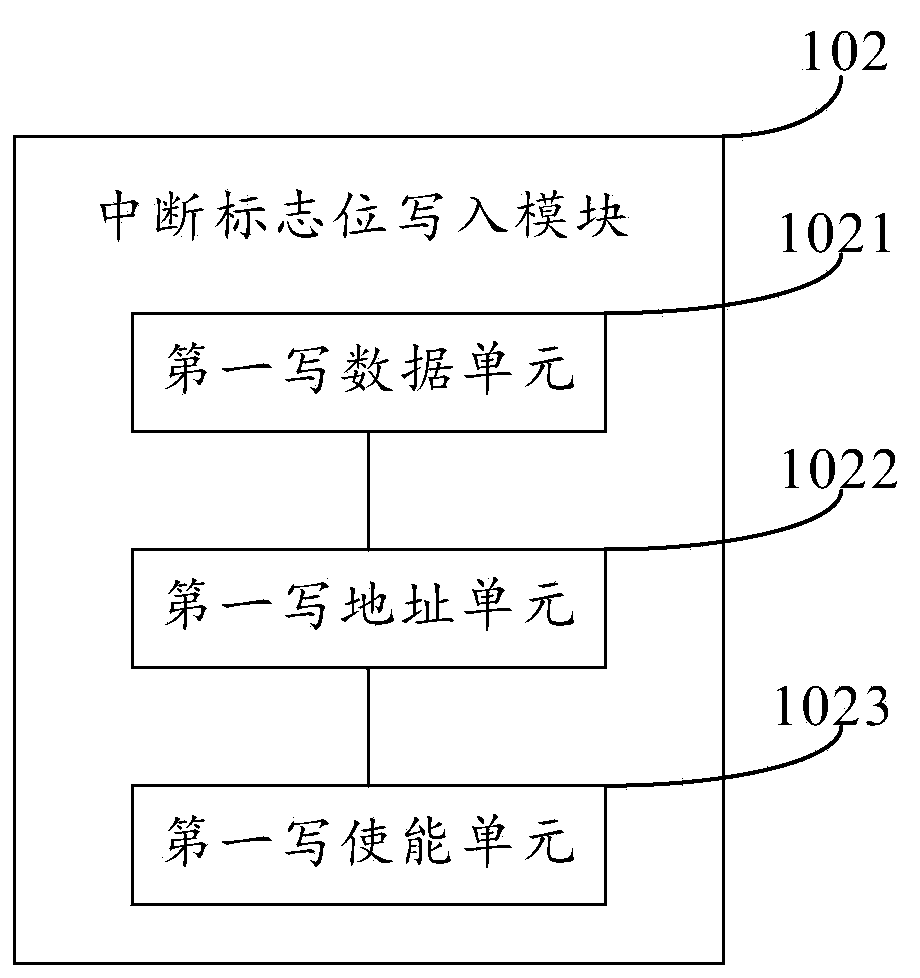

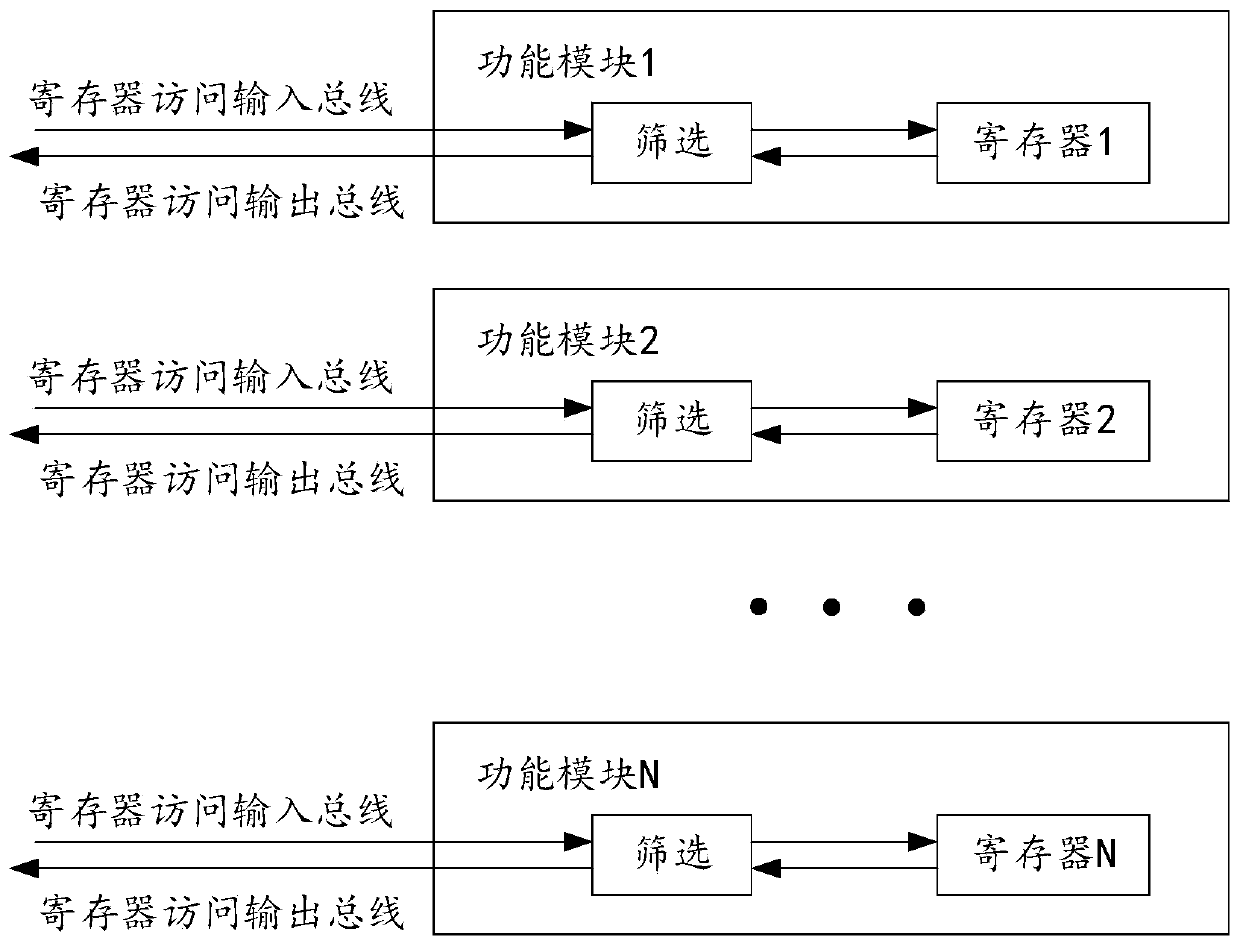

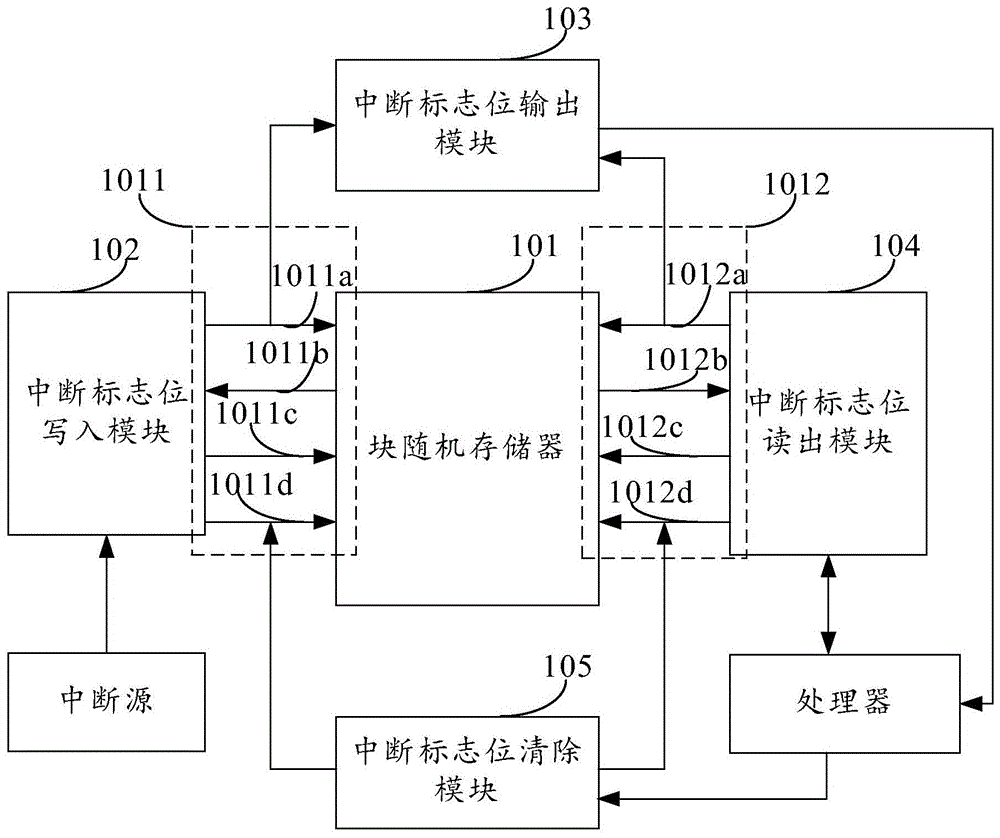

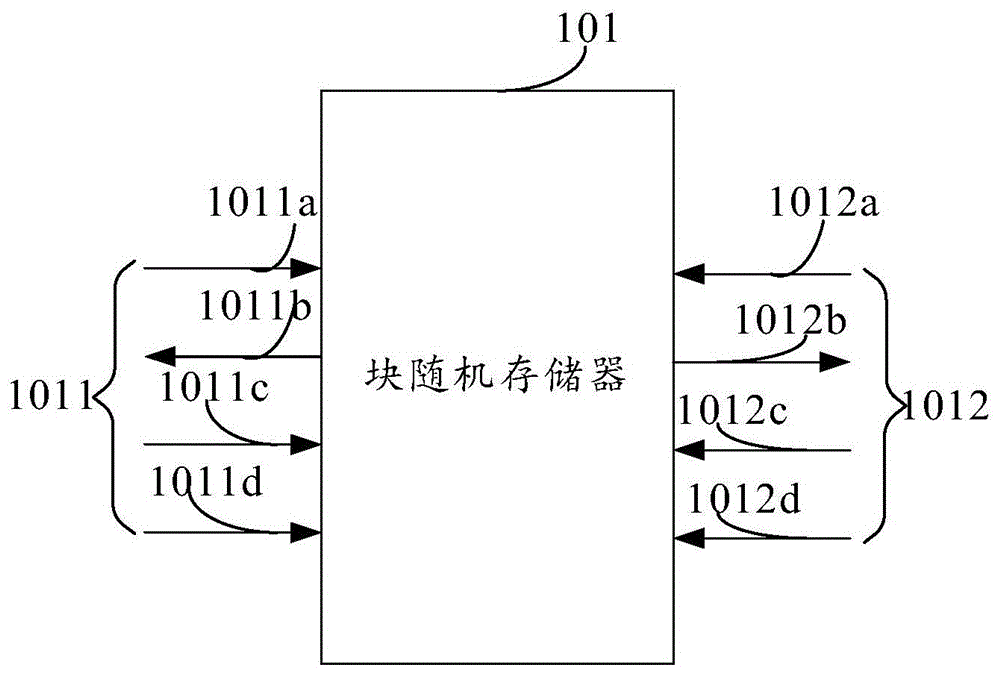

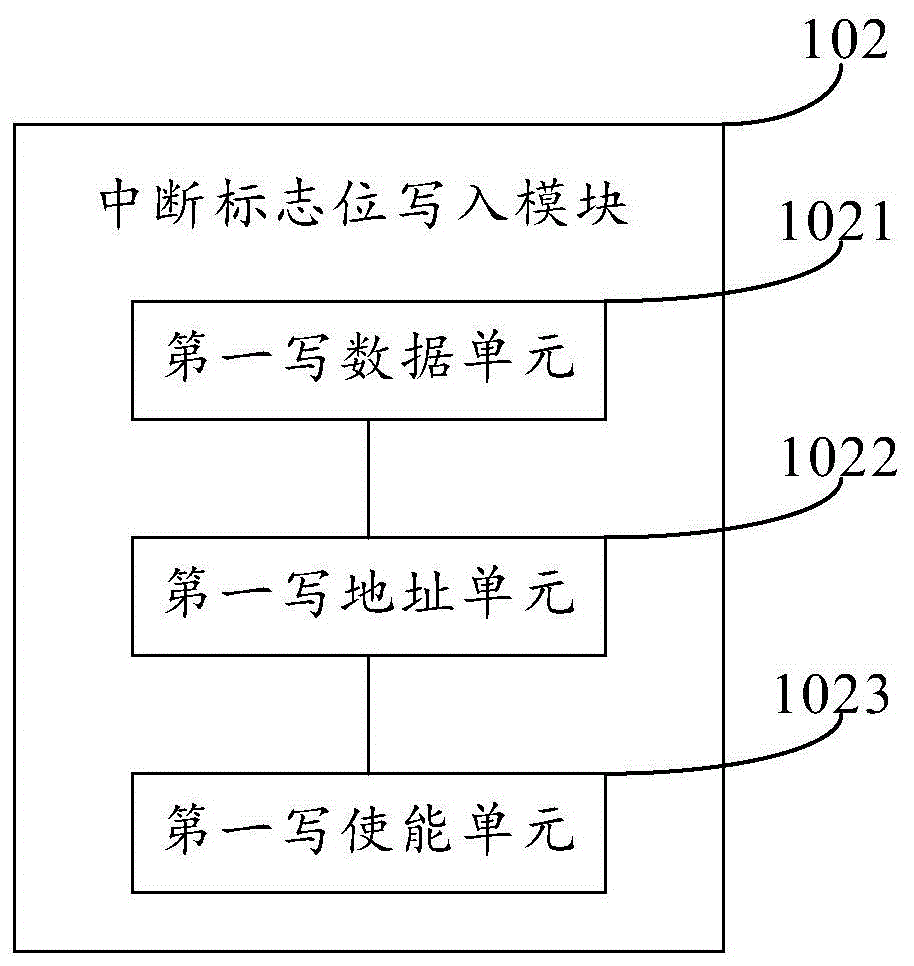

Interrupt processing device and method

The embodiment of the invention provides an interrupt processing device and method. The interrupt processing device and method are applied to the technical field of interrupt control, can save hardware resources, and can reduce hardware cost. The interrupt processing device comprises a block random access memory, an interrupt flag bit write-in module, an interrupt flag bit output module, an interrupt flag bit read-out module and an interrupt flag bit removal module, wherein the block random access memory at least comprises m memory cell groups; the interrupt flag bit write-in module is used for writing interrupt flag bit information of any function module in the memory cell group corresponding to the function module; the interrupt flag bit output module is used for generating an interrupt event processing request and sending the interrupt event processing request to a processor; the interrupt flag bit read-out module is used for obtaining the interrupt flag bit information of the memory cell group corresponding to the function module and sending the interrupt flag bit information to the processor; the interrupt flag bit removal module is used for removing effective information corresponding to an interrupt source. The interrupt processing device is used for processing an interrupt event.
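The division of labour among the four modules can be pictured with a small software model. The Python sketch below is only an analogy under stated assumptions: the class name `InterruptFlagMemory`, the 32-bit group width and the one-bit-per-interrupt-source layout are hypothetical and do not come from the patent.

```python
class InterruptFlagMemory:
    """Software model of a block RAM holding one word of flags per function module."""

    def __init__(self, groups, bits_per_group=32):
        # Assumption: each memory cell group is one word, one bit per interrupt source.
        self.bits_per_group = bits_per_group
        self.mem = [0] * groups

    def _check(self, group, source):
        if not 0 <= group < len(self.mem):
            raise IndexError("no such memory cell group")
        if not 0 <= source < self.bits_per_group:
            raise IndexError("no such interrupt source")

    def write_flag(self, group, source):
        """Write-in module: record that `source` of module `group` raised an interrupt."""
        self._check(group, source)
        self.mem[group] |= 1 << source

    def request_pending(self):
        """Output module: an interrupt request is raised while any flag is set."""
        return any(self.mem)

    def read_flags(self, group):
        """Read-out module: return the flag word of one function module."""
        self._check(group, 0)
        return self.mem[group]

    def clear_flag(self, group, source):
        """Removal module: clear the bit that belongs to one interrupt source."""
        self._check(group, source)
        self.mem[group] &= ~(1 << source)
```

A processor-side handler in this model would poll `request_pending()`, call `read_flags()` for the module of interest, service each set bit, and then call `clear_flag()` for it.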

Owner:RUIJIE NETWORKS CO LTD

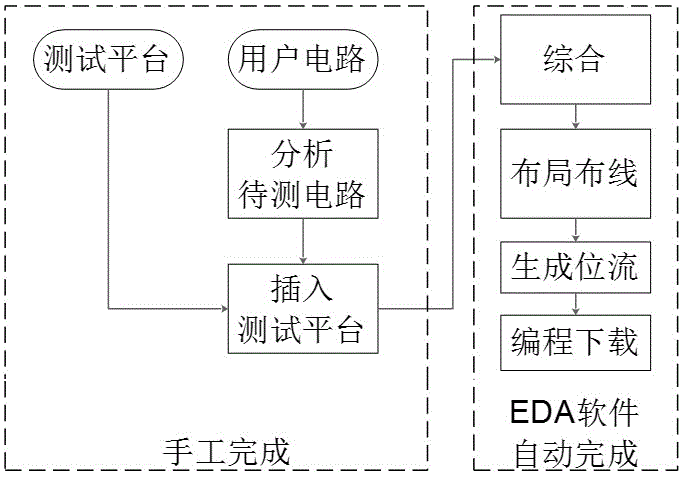

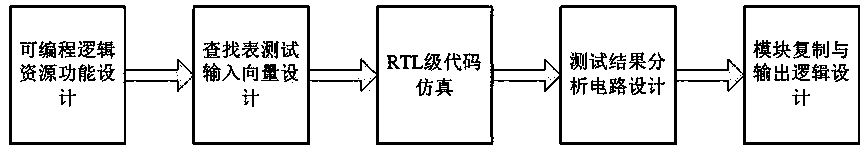

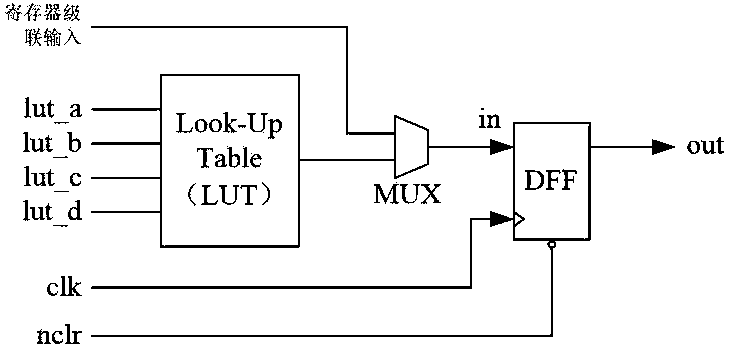

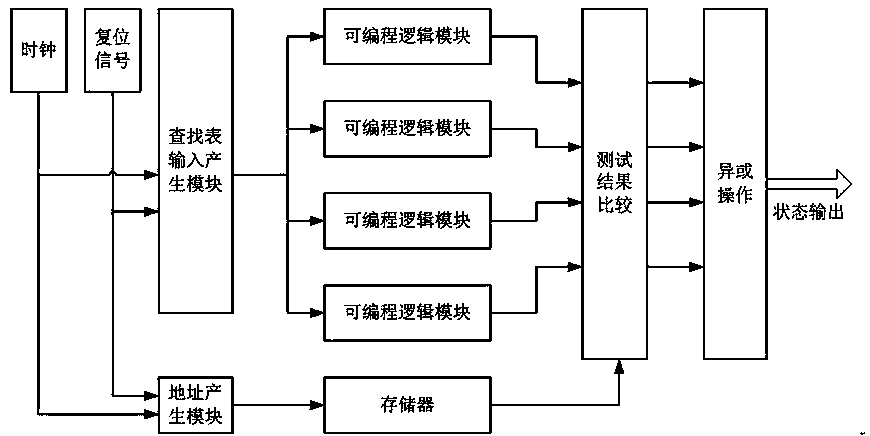

Screening and testing method of FPGA programmable logic resources

Active; CN109445366A; Settlement fee; Solve difficulty; Programme control; Computer control; Test efficiency; Computer module

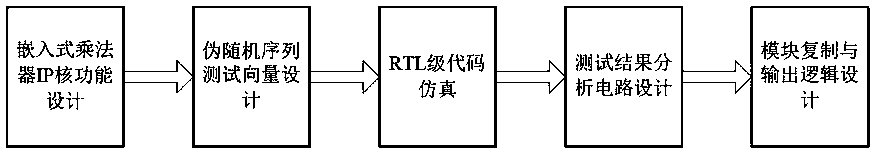

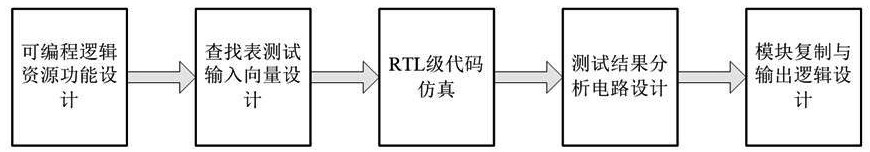

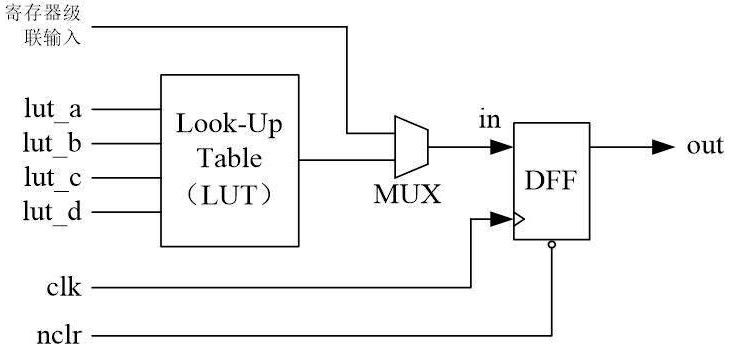

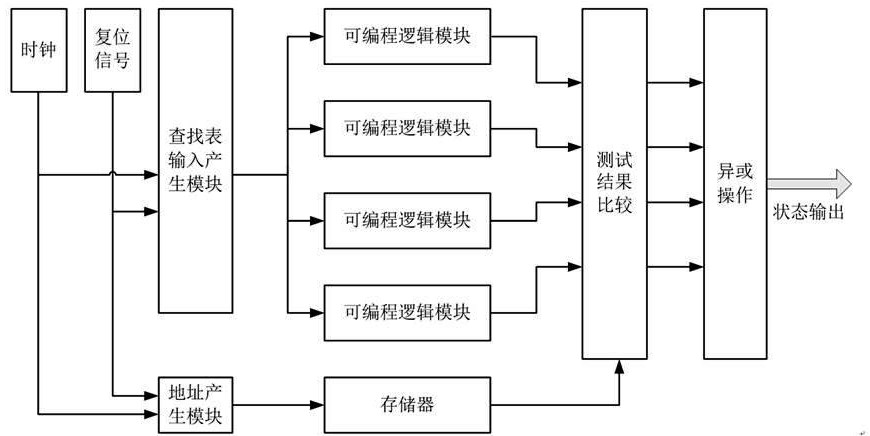

The invention relates to a screening and testing method of FPGA programmable logic resources. The method comprises the following steps: (1) programmable logic resource function design; (2) lookup table testing input vector design; (3) RTL-level code simulation; (4) testing result analysis circuit design; and (5) module copying and output logic design. The screening and testing method adopts a BIST-based testing approach, which overcomes the high expense and technical difficulty of ATE testing. All LUT modules and DFF modules can be covered with only two sets of matching codes, which improves testing efficiency. The method makes full use of the programmable nature of the FPGA chip and of the rich channel resources and block random access memory (BRAM) in the chip. The method is simple to implement, highly portable, and has certain engineering application value.

Owner:济南国科芯微电子科技有限公司

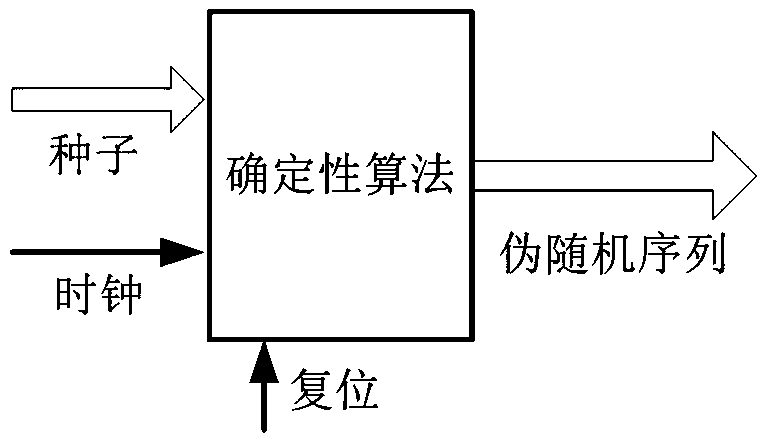

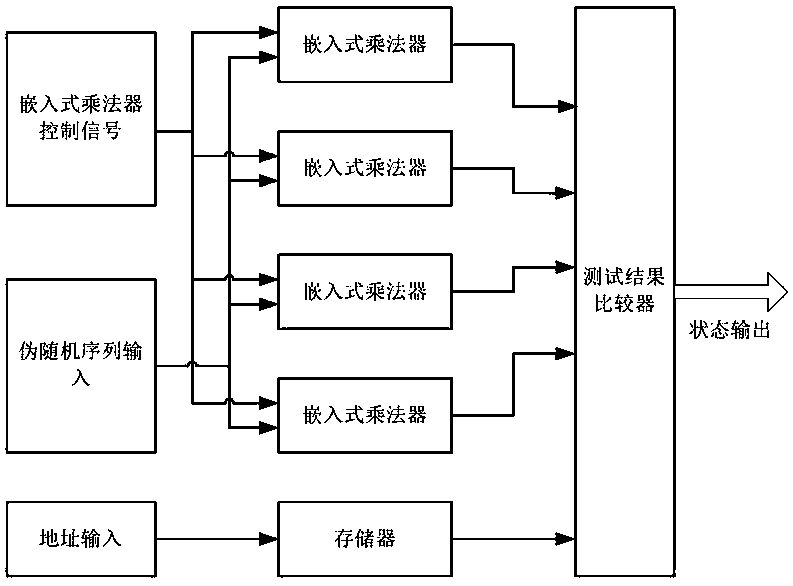

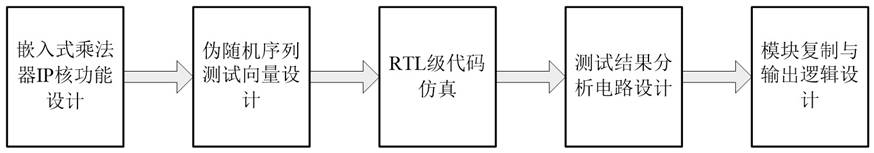

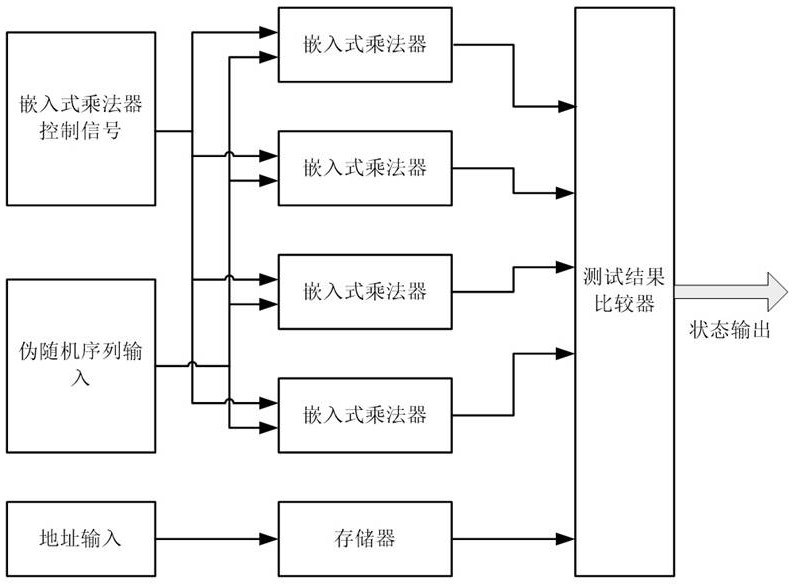

Screening and testing method of FPGA embedded multiplier

Active; CN109445365A; Settlement fee; Solve difficulty; Programme control; Computer control; Test efficiency; Core function

The invention relates to a method for testing the performance of an FPGA embedded multiplier. The method comprises the following steps: (1) embedded multiplier IP core function design; (2) pseudorandom sequence test vector design; (3) RTL-level code simulation; (4) testing result analysis circuit design; and (5) module copying and output logic design. The screening and testing method of the FPGA embedded multiplier adopts a BIST-based testing approach, which overcomes the high expense and technical difficulty of ATE testing. In addition, a pseudorandom sequence is used as the stimulus input, which shortens the testing time and improves testing efficiency. The method makes full use of the programmable nature of the FPGA chip and of the rich channel resources and block random access memory (BRAM) in the chip. The method is simple to implement, highly portable, and has certain engineering application value.

Owner:青岛中科青芯电子科技有限公司

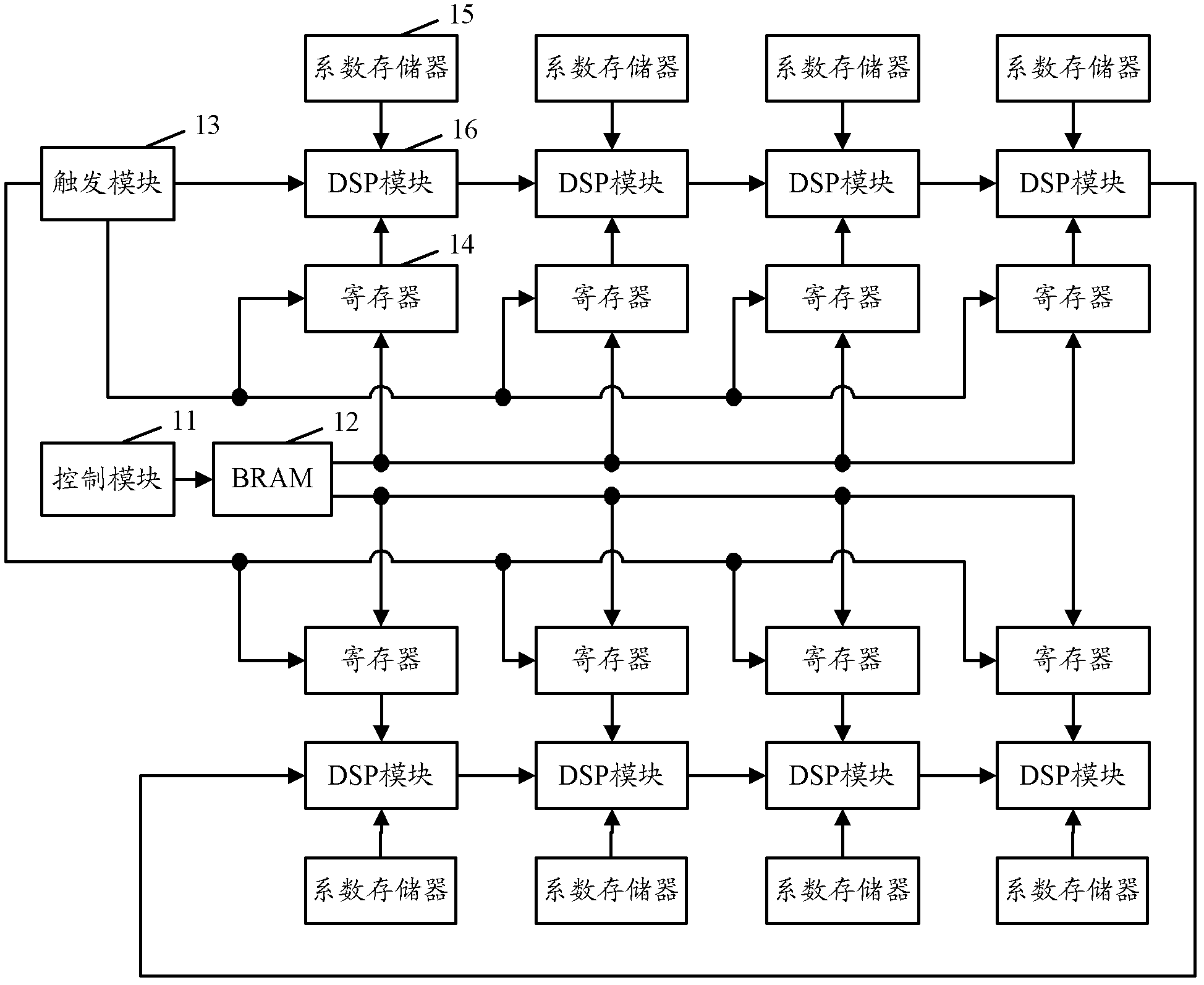

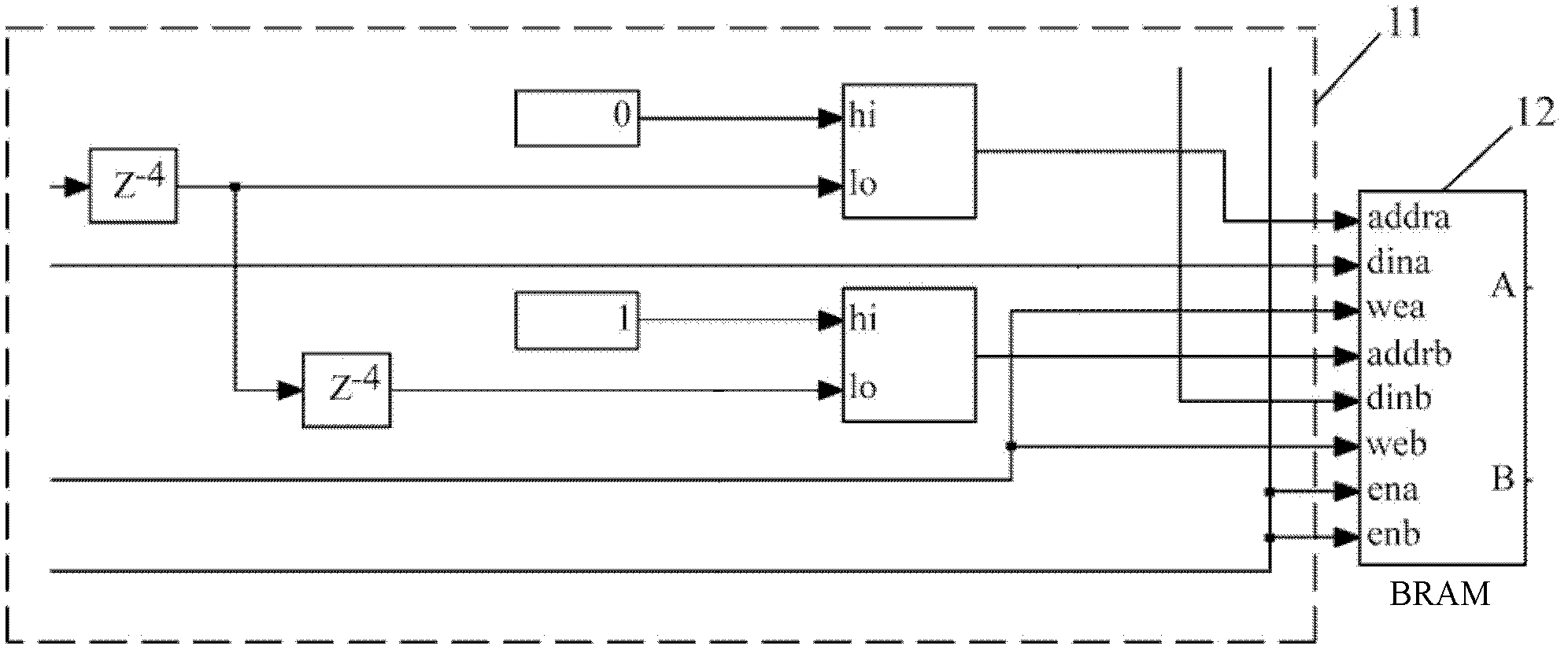

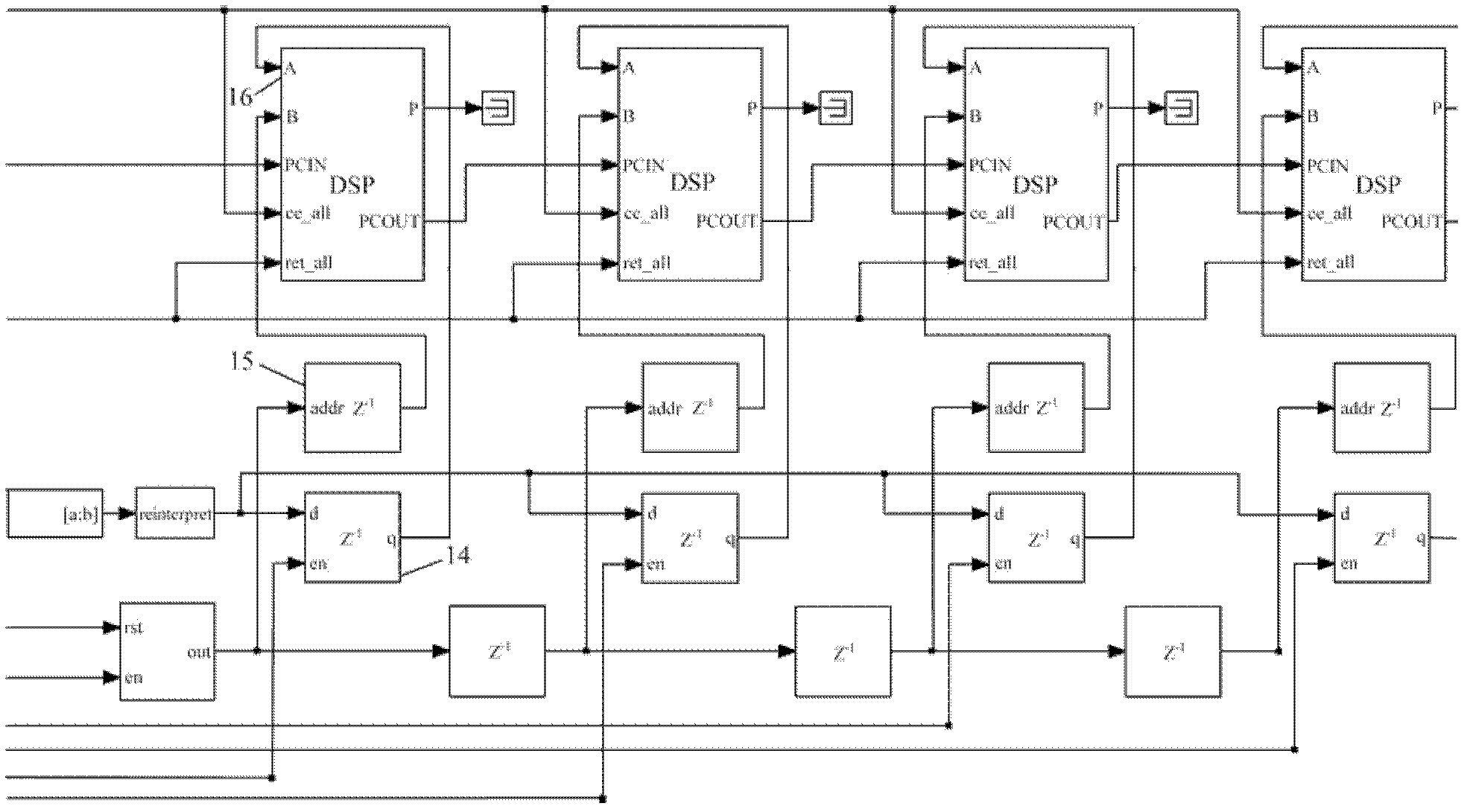

Cache handling device in multi-channel multi-phase filter

Inactive; CN102545831A; Save resources; Take advantage of; Digital technique network; Processor register; Parallel computing

The invention discloses a cache handling device in a multi-channel multi-phase filter. The cache handling device comprises a control module, a BRAM (Block Random Access Memory), a triggering module, a plurality of registers, a plurality of coefficient memories and a plurality of cascaded DSP (Digital Signal Processor) modules, wherein the quantities of the registers, the coefficient memories and the DSP modules are the same, and each stage of DSP module corresponds to different registers and coefficient memories. According to the invention, two output ports of the BRAM are used for outputting data to the DSP modules, coefficients are stored in independent coefficient memories, and only logical resources are consumed during realization; the plurality of additionally arranged registers are used for storing data output by the output ports of the BRAM, so as to achieve multi-phase processing, at the moment, each output port of the BRAM can provide cache service for the plurality of DSP modules; and therefore, the cache handling device provided by the invention can be used to make the best of the BRAM, thereby reducing the use amount of the BRAM and saving the BRAM resource.

Owner:DATANG MOBILE COMM EQUIP CO LTD

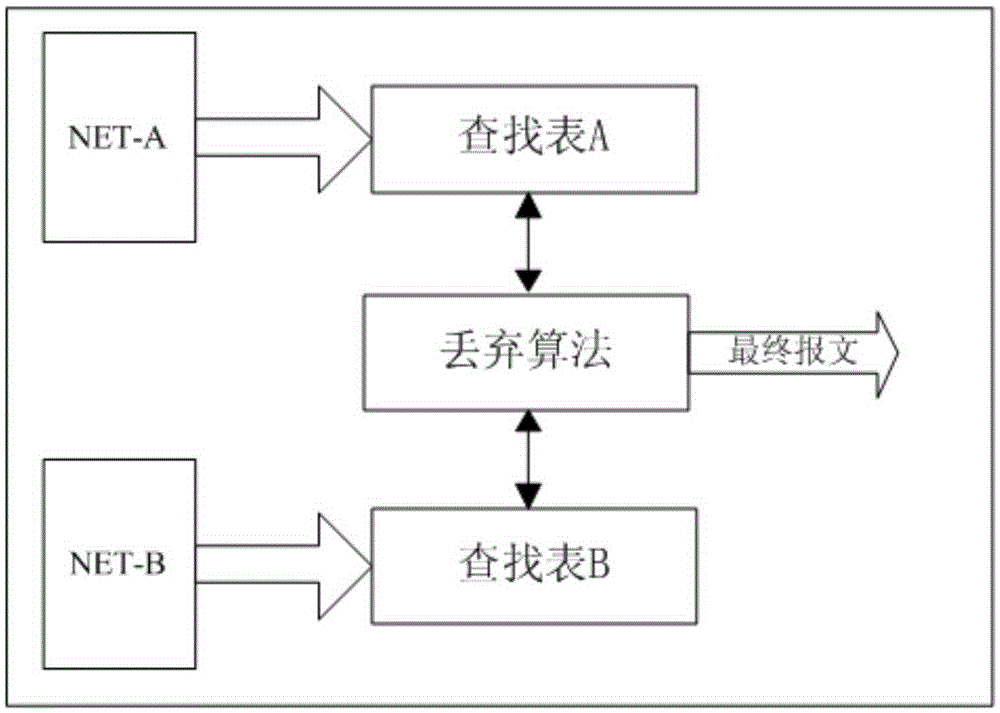

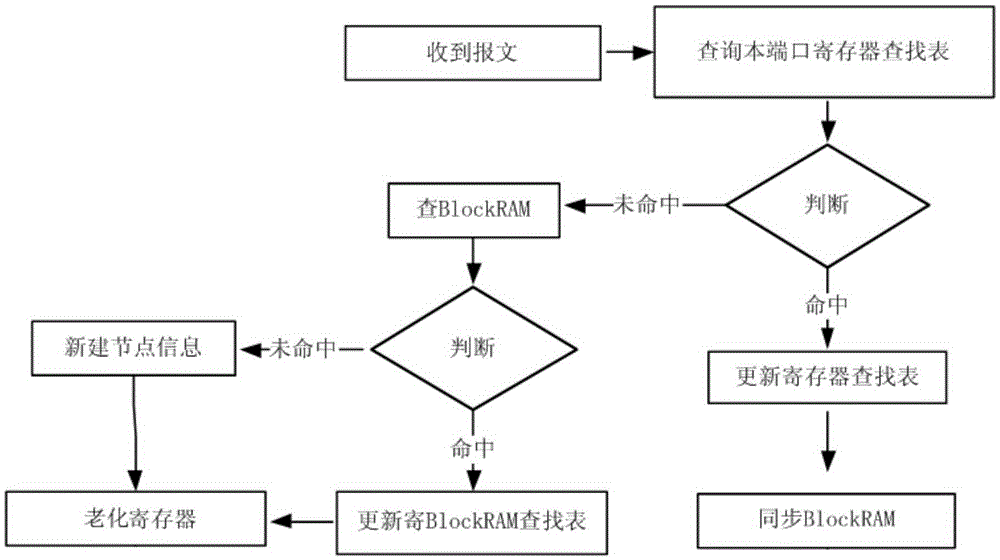

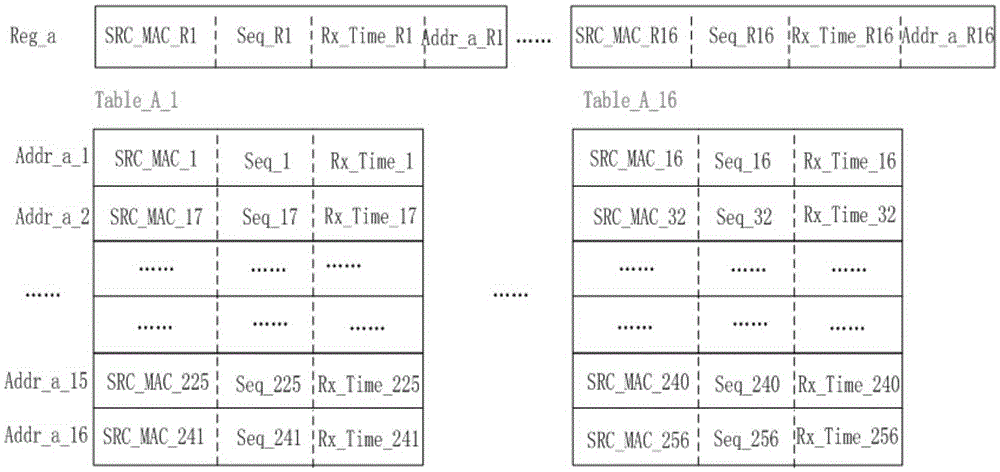

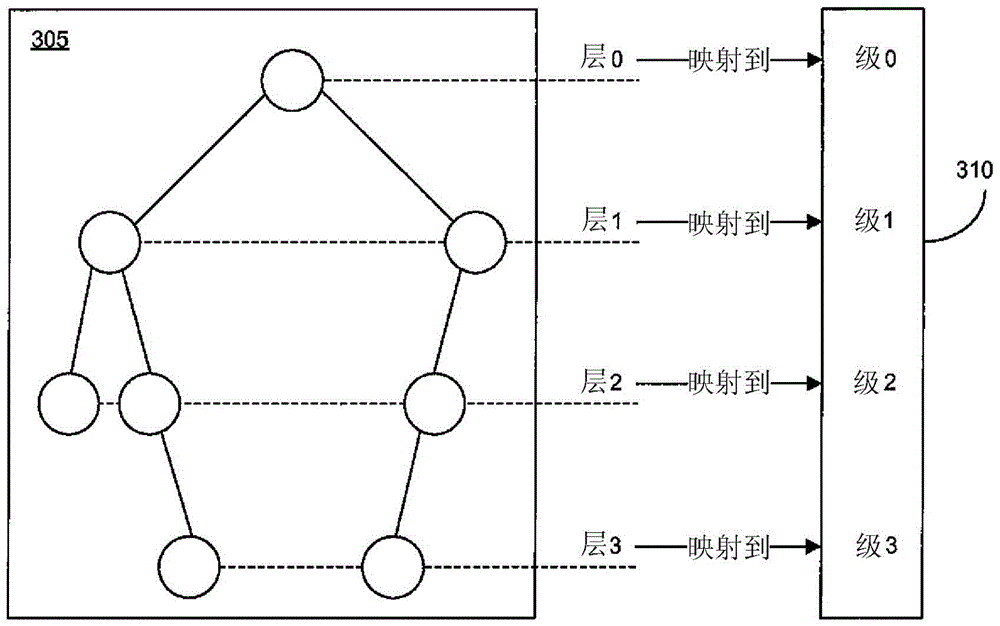

Lookup table optimization method for parallel redundancy protocol (PRP)/high reliable seamless redundancy (HSR) message dropping algorithm

Inactive; CN105357126A; Quick response; Reduce table lookup delay; Data switching networks; Processor register; Parallel computing

The invention discloses a lookup table optimization method for a parallel redundancy protocol (PRP) / high reliable seamless redundancy (HSR) message dropping algorithm. A field programmable gate array (FPGA) internal register and a Block random access memory (RAM) are used for storing message information tables in a hierarchical way; and according to a hierarchical inquiring method, the register is used as a first grade of lookup table, the Block RAM is used as a second grade of lookup table, the register is a Cache of the Block RAM, the content of the Cache of the register is updated and optimized in real time by use of a pipeline aging mechanism, the Block RAM is updated synchronously, and the mapping relation and data consistency of the Block RAM and the content of the Cache are maintained. According to the lookup table optimization method, the FPGA internal register and the Block RAM are used for storing the message information tables in the hierarchical way, the hierarchical inquiring method and the register aging mechanism are adopted, the register speed and Block RAM capacity are balanced, and the lookup table time delay is reduced, so that the network response speed is improved.
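A minimal software sketch of the two-level query, assuming a small register cache in front of a larger Block RAM table, may help picture the scheme. The class name `TwoLevelTable`, the eviction rule (drop the oldest entry when the cache is full) and the tick-based ageing are illustrative assumptions; the abstract does not specify the details of its pipeline ageing mechanism.

```python
class TwoLevelTable:
    """Register cache (level 1) in front of a Block RAM table (level 2)."""

    def __init__(self, cache_size=4, max_age=3):
        self.cache_size = cache_size
        self.max_age = max_age
        self.cache = {}   # key -> [value, age]; models the fast registers
        self.bram = {}    # key -> value; models the larger Block RAM

    def _cache_put(self, key, value):
        if key not in self.cache and len(self.cache) >= self.cache_size:
            # Assumed policy: evict the entry that has aged the most.
            oldest = max(self.cache, key=lambda k: self.cache[k][1])
            del self.cache[oldest]
        self.cache[key] = [value, 0]

    def insert(self, key, value):
        """Update both levels together so their contents stay consistent."""
        self.bram[key] = value
        self._cache_put(key, value)

    def lookup(self, key):
        """Return (value, level), where level says which table answered."""
        if key in self.cache:
            self.cache[key][1] = 0          # a hit refreshes the entry
            return self.cache[key][0], "register"
        if key in self.bram:
            value = self.bram[key]
            self._cache_put(key, value)     # promote for later lookups
            return value, "bram"
        return None, "miss"

    def tick(self):
        """Age every cached entry; expired entries remain only in Block RAM."""
        for key in list(self.cache):
            self.cache[key][1] += 1
            if self.cache[key][1] > self.max_age:
                del self.cache[key]
```

Frequently seen keys keep being answered from the register level, while rarely seen keys fall back to the slower but larger second level.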

Owner:NARI TECH CO LTD +4

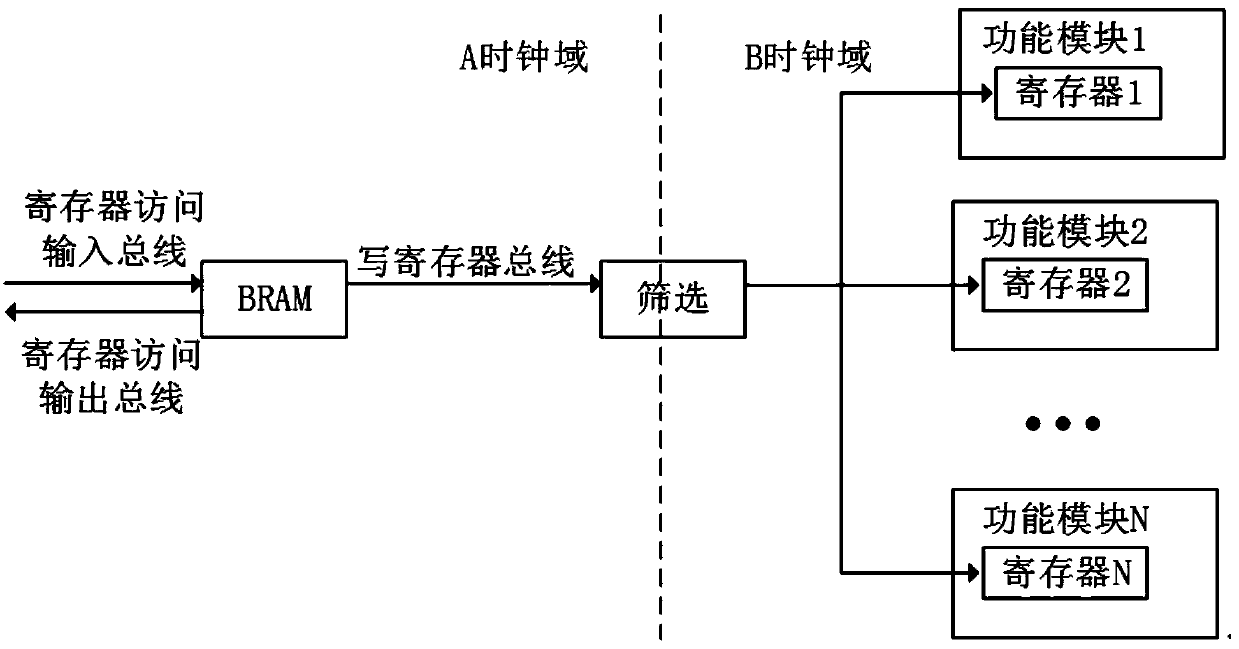

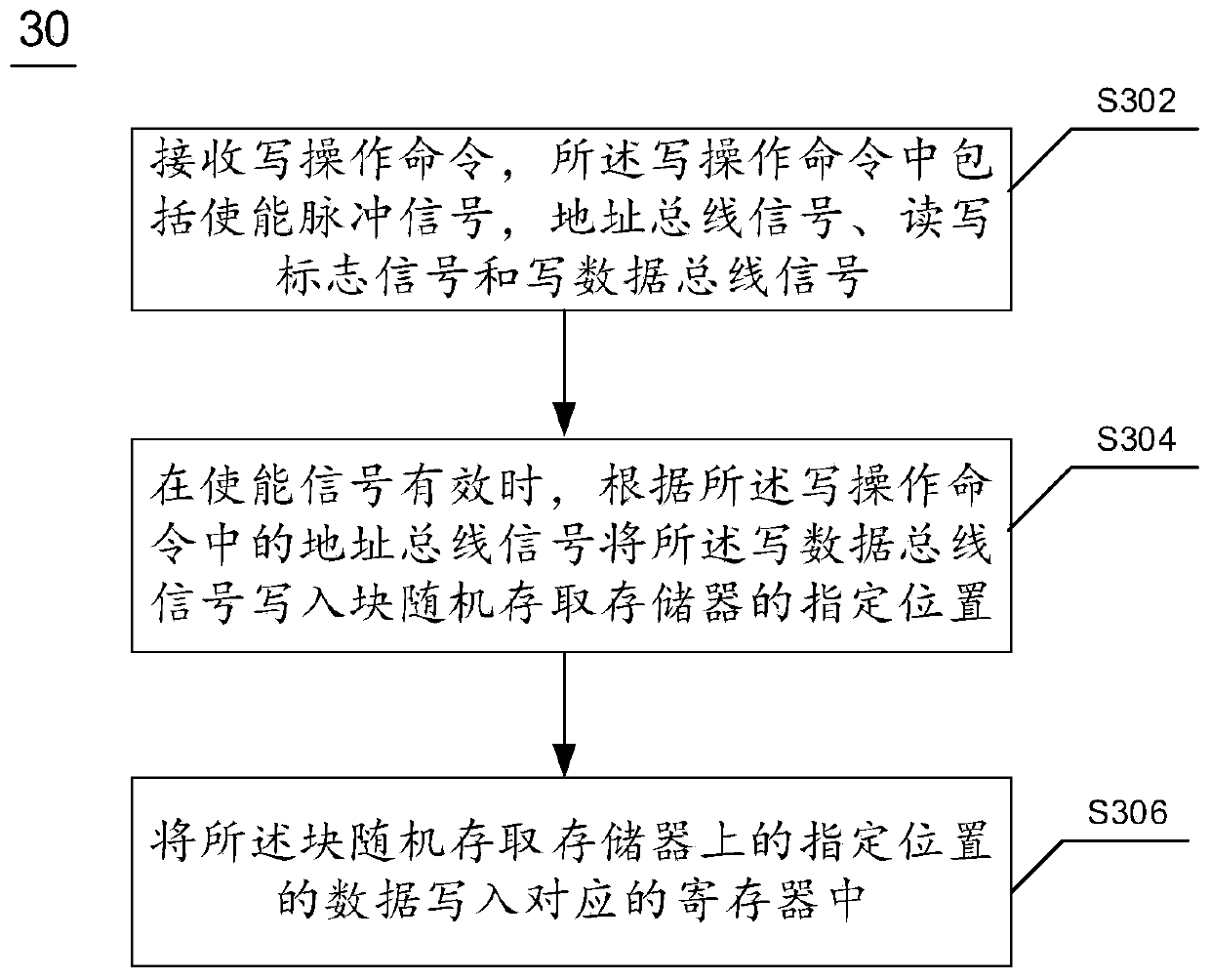

Method and device for accessing register, electronic equipment and readable medium

Active; CN111488297A; Save logic resources; Register arrangements; Energy efficient computing; Random access memory; Engineering

The invention relates to a method and device for accessing a register, electronic equipment and a computer readable medium. The method comprises the following steps: receiving a write operation command, wherein the write operation command comprises an enable pulse signal, an address bus signal, a read-write mark signal and a write data bus signal; when the enable signal is valid, writing the write data bus signal into a specified position of a block random access memory according to the address bus signal in the write operation command; and writing the data of the specified position in the block random access memory into a corresponding register. According to the method and device for accessing the register, the electronic equipment and the computer readable medium, screened signals of the register can be kept stable within a certain period of time, timing constraints can be relaxed more easily, logic resources are saved, and cross-clock-domain read-write operation can be carried out on the register.

Owner:HANGZHOU DPTECH TECH

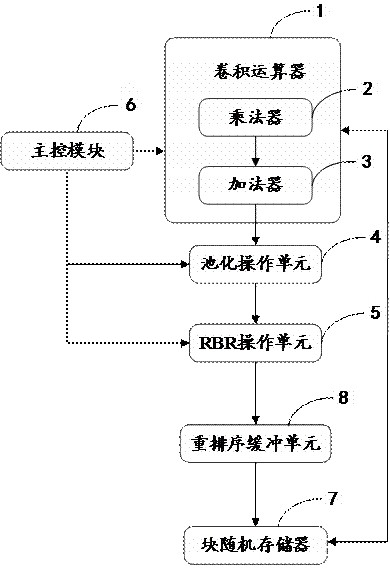

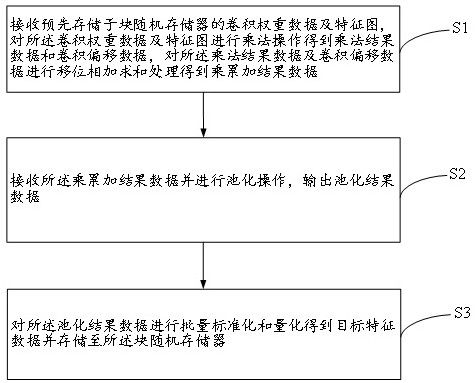

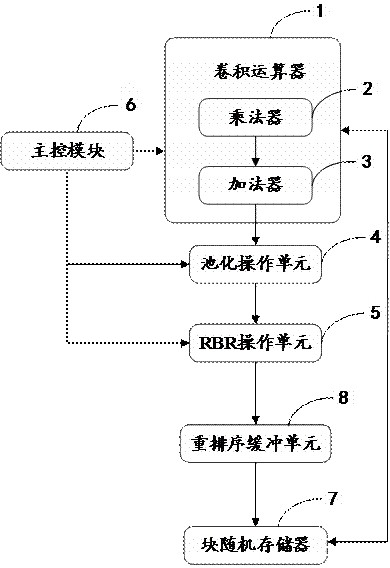

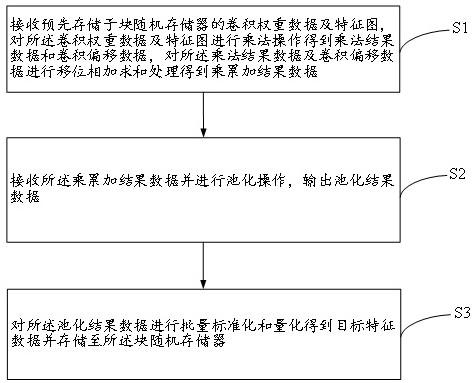

Object Detection Hardware Accelerator and Acceleration Method

Active; CN112230884B; Shorten the time; Reduce power consumption; Computation using non-contact making devices; Physical realisation; Computer hardware; Binary multiplier

The present invention relates to the field of data processing and provides a target detection hardware accelerator and an acceleration method. The accelerator includes a convolution operator integrating a multiplier and an adder. The convolution operator receives convolution weight data and feature maps stored in advance in block random access memory; the multiplier multiplies the convolution weight data by the feature maps to obtain multiplication result data and convolution offset data; and the adder performs shift, addition and summation on the multiplication result data and convolution offset data to obtain multiply-accumulate result data. A pooling operation unit receives the multiply-accumulate result data, performs a pooling operation, and outputs pooling result data. An RBR operation unit performs batch normalization and quantization on the pooling result data to obtain target feature data, which is stored in the block random access memory. The invention can reduce the time and power consumption required by the accelerator for data transfer, and improve the working efficiency of the accelerator.

Owner:JIHUA LAB

An interrupt processing device and interrupt processing method

The embodiment of the invention provides an interrupt processing device and method. The interrupt processing device and method are applied to the technical field of interrupt control, can save hardware resources, and can reduce hardware cost. The interrupt processing device comprises a block random access memory, an interrupt flag bit write-in module, an interrupt flag bit output module, an interrupt flag bit read-out module and an interrupt flag bit removal module, wherein the block random access memory at least comprises m memory cell groups; the interrupt flag bit write-in module is used for writing interrupt flag bit information of any function module in the memory cell group corresponding to the function module; the interrupt flag bit output module is used for generating an interrupt event processing request and sending the interrupt event processing request to a processor; the interrupt flag bit read-out module is used for obtaining the interrupt flag bit information of the memory cell group corresponding to the function module and sending the interrupt flag bit information to the processor; the interrupt flag bit removal module is used for removing effective information corresponding to an interrupt source. The interrupt processing device is used for processing an interrupt event.

Owner:RUIJIE NETWORKS CO LTD

A basic computing unit and computing method of a convolutional neural network

Active; CN109165728B; Completion time controllable; Neural architectures; Physical realisation; Theoretical computer science; Controller (computing)

The invention provides a basic computing unit and a computing method of a convolutional neural network. The basic computing unit includes a controller, an addition tree, an input buffer, several computing units, and an output buffer; each computing unit includes a block random access memory, several convolution operation units, an internal adder and an activation pooling unit. Under the control of the controller, the input buffer loads the image data of the corresponding number of lines to each computing unit, and the block random access memory sends the effective number of lines and the initial number of lines to each convolution operation unit so that it can obtain the image data of the corresponding number of lines. The convolution operation unit processes the image data and sends it to the addition tree through the internal adder; the addition tree processes the image data sent by each internal adder and sends it to an activation pooling unit; the activation pooling unit processes the image data and sends it to the output buffer. The solution can realize the algorithm in hardware, so that the completion time of the algorithm can be controlled.

Owner:INSPUR GROUP CO LTD

Data processing method and device

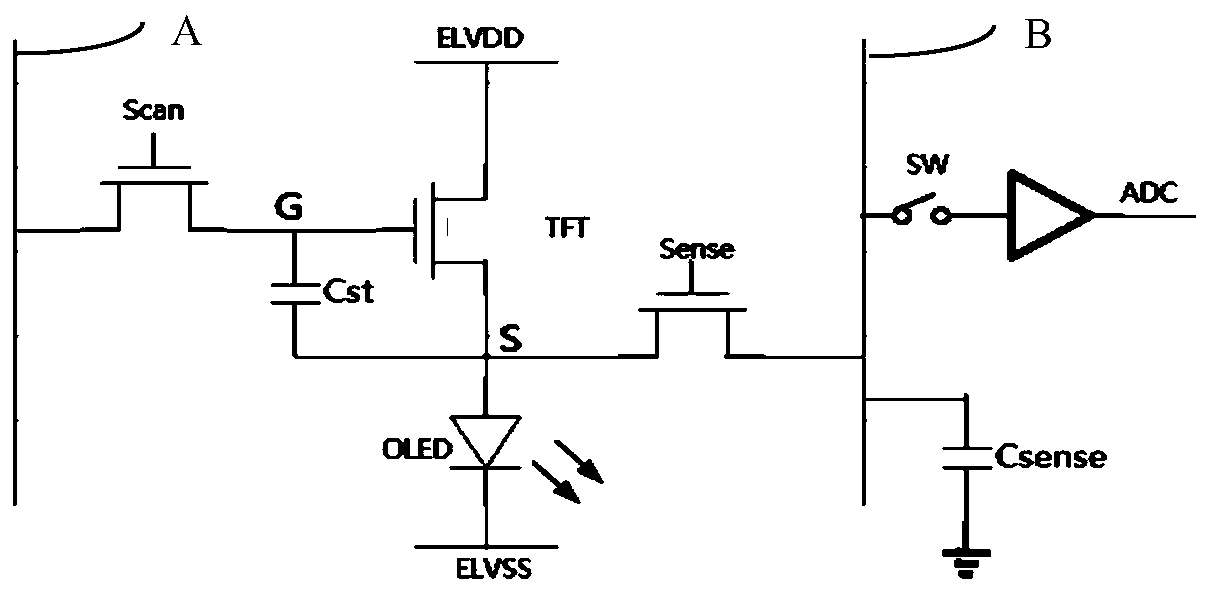

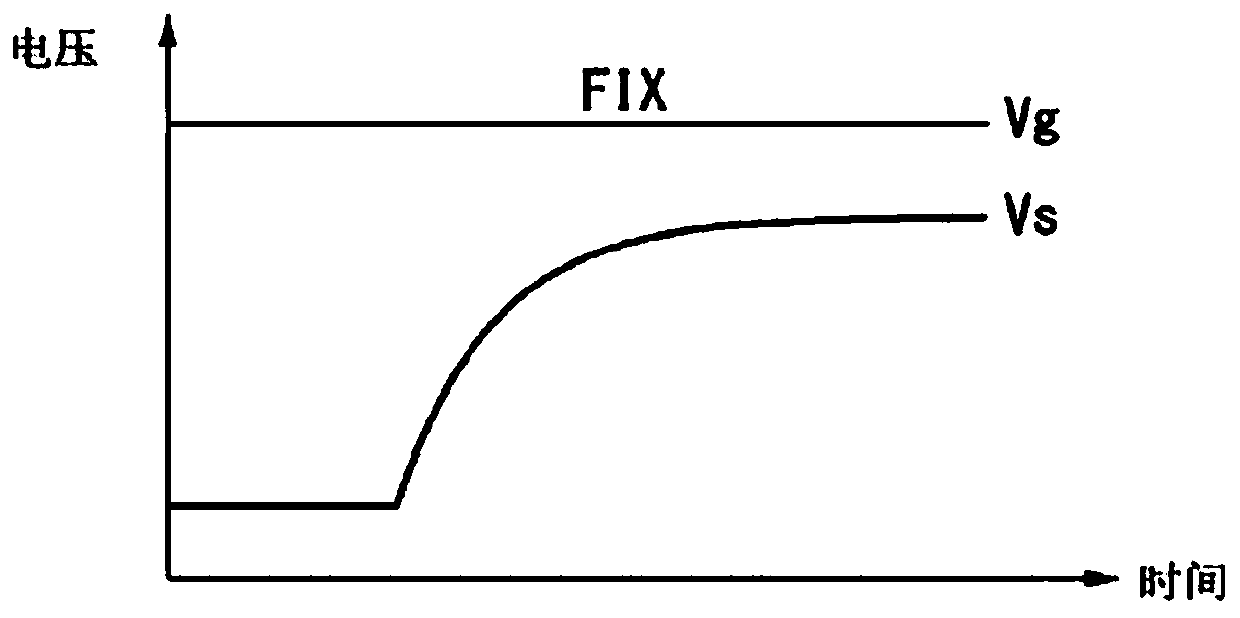

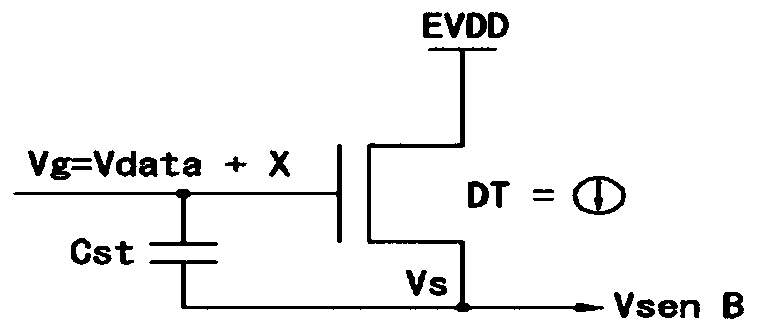

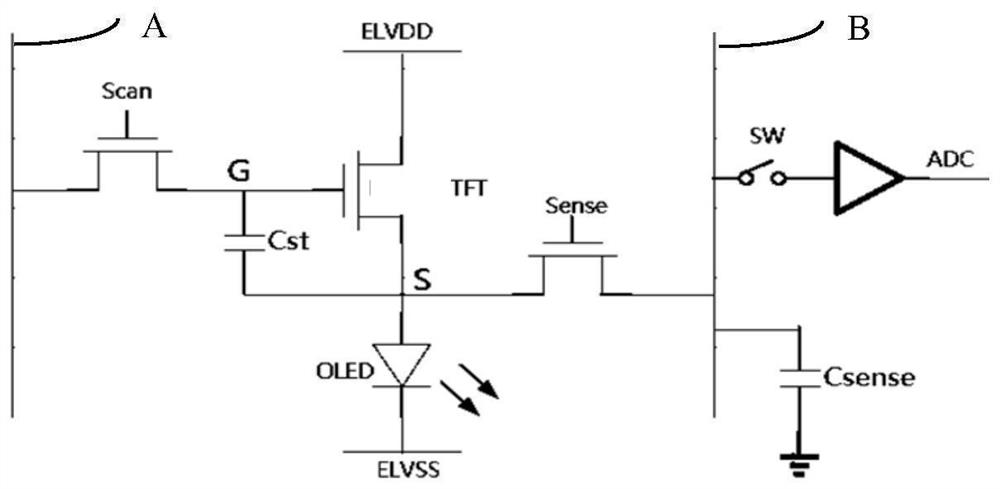

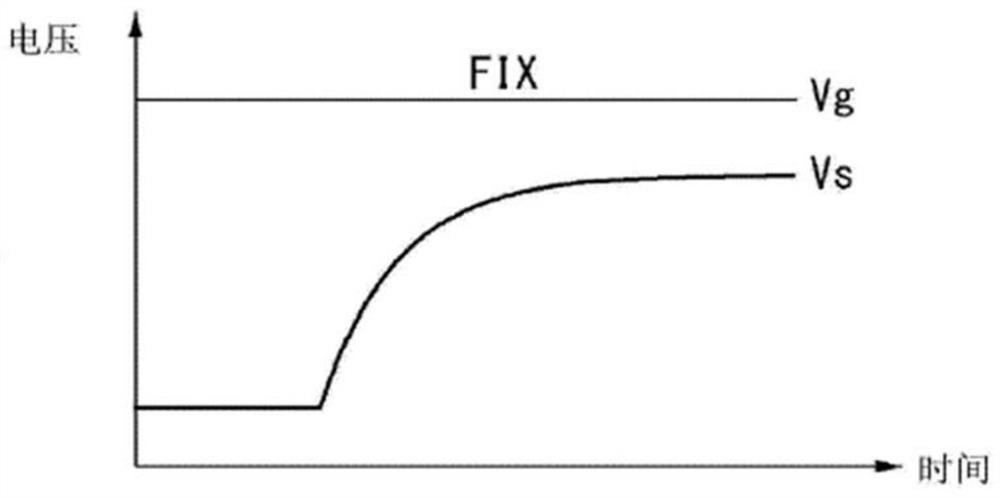

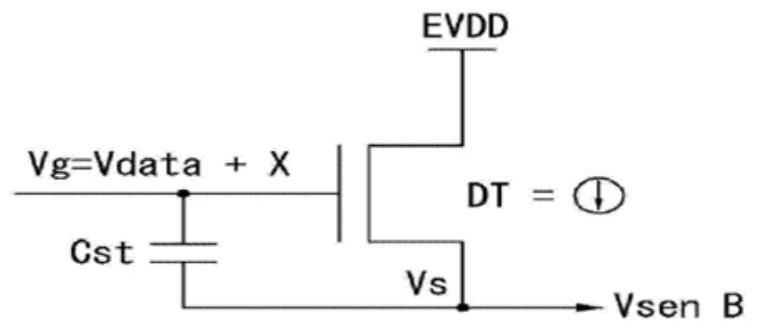

Active; CN110610679A; Reduce usage; Low cost; Static indicating devices; Input/output processes for data processing; Computer hardware; Data information

The embodiment of the invention provides a data processing method and a device, and the method comprises the steps: obtaining the data information of pixels in a display device, and the data information comprises the compensation data of the pixels and the position of the pixels; according to a preset mapping relationship, determining a storage position of compensation data of the pixels in a block random access memory, wherein the block random access memory comprises a plurality of groups of data units which are stored in sequence, the sequences in which the data units with adjacent storage positions are taken out are adjacent, the data units comprise a plurality of compensation data which are taken out in the same clock period, and the plurality of compensation data are stored in the block random access memory in sequence; and storing the compensation data in a storage space corresponding to the storage position. Therefore, the compensation data can be preferentially stored in one block random access memory, the storage capacity of the block random access memory is fully utilized, and the use number of the block random access memory is reduced, so that the cost of the FPGA is reduced.
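One way to picture such a mapping is sketched below in Python. It assumes, purely for illustration, that pixels are read out in raster order and that a fixed number of compensation values (`per_cycle`) is fetched per clock; the abstract only says that the mapping is preset, so both assumptions and the function names are hypothetical.

```python
def storage_position(row, col, panel_width, per_cycle):
    """Map a pixel position to (word address, lane inside the word)."""
    index = row * panel_width + col      # assumed raster-order readout
    return divmod(index, per_cycle)


def pack_compensation(rows, per_cycle):
    """Pack per-pixel compensation values into consecutive memory words.

    Each word models one data unit: the values fetched in the same clock cycle.
    Unused lanes in the last word are left at 0.
    """
    panel_width = len(rows[0])
    total = len(rows) * panel_width
    word_count = -(-total // per_cycle)  # ceiling division
    words = [[0] * per_cycle for _ in range(word_count)]
    for r, row in enumerate(rows):
        for c, value in enumerate(row):
            addr, lane = storage_position(r, c, panel_width, per_cycle)
            words[addr][lane] = value
    return words


if __name__ == "__main__":
    print(pack_compensation([[1, 2, 3], [4, 5, 6]], per_cycle=4))
```

Because a row is allowed to continue into the next word instead of starting a fresh one, no memory word is left partly empty except possibly the last, which is the kind of dense packing the abstract credits with reducing the number of block RAMs.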

Owner:BOE TECH GRP CO LTD

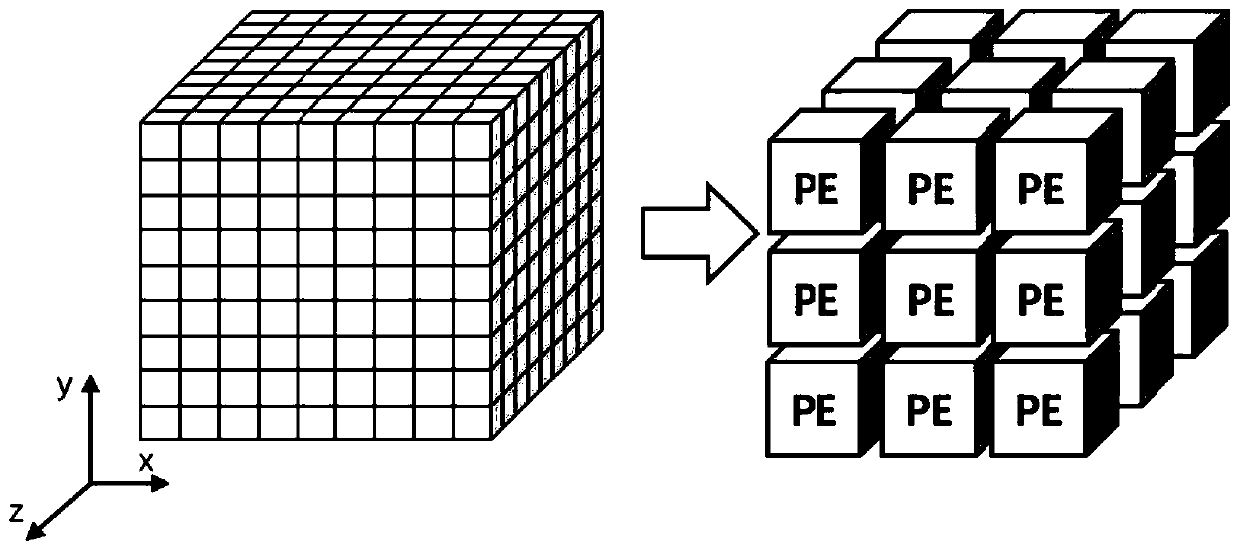

Time domain finite difference method hardware accelerator architecture and implementation method thereof

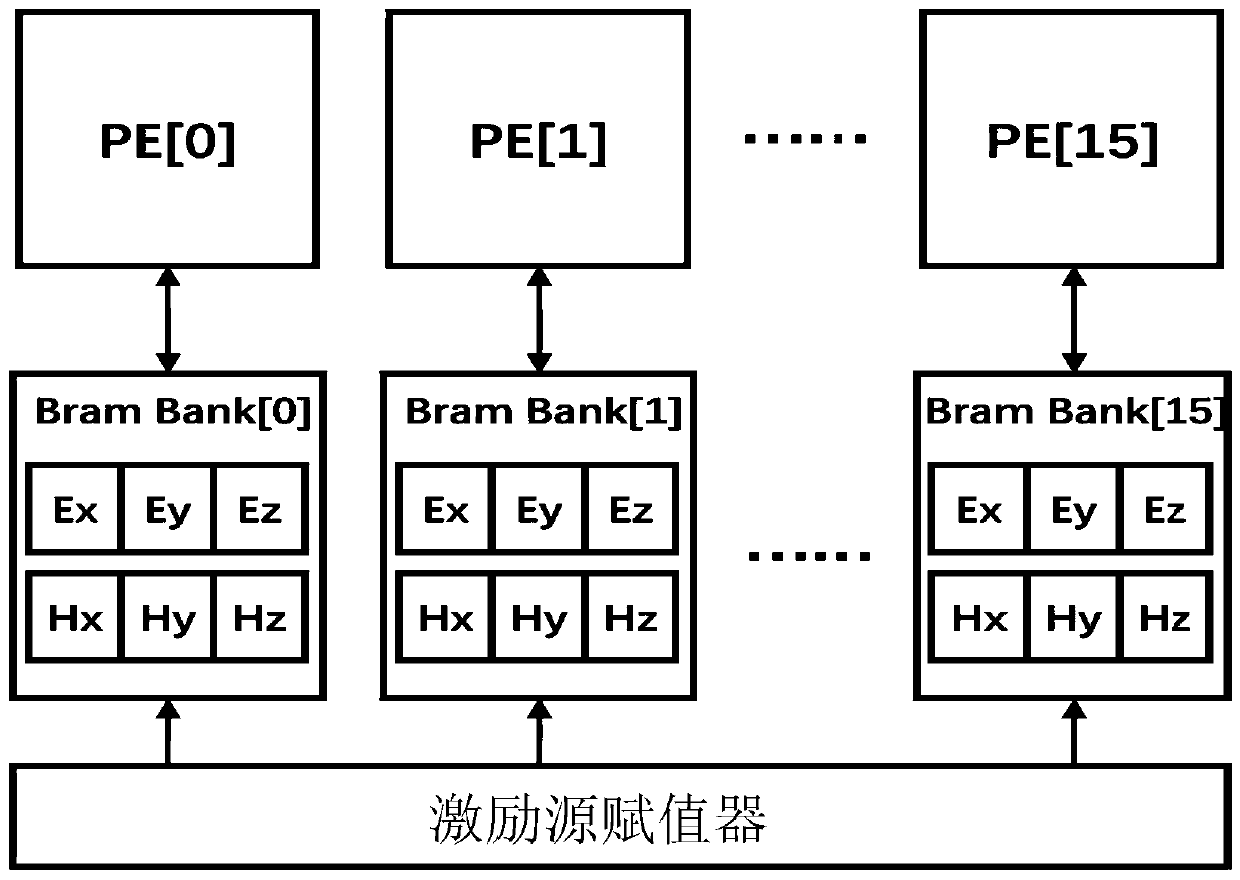

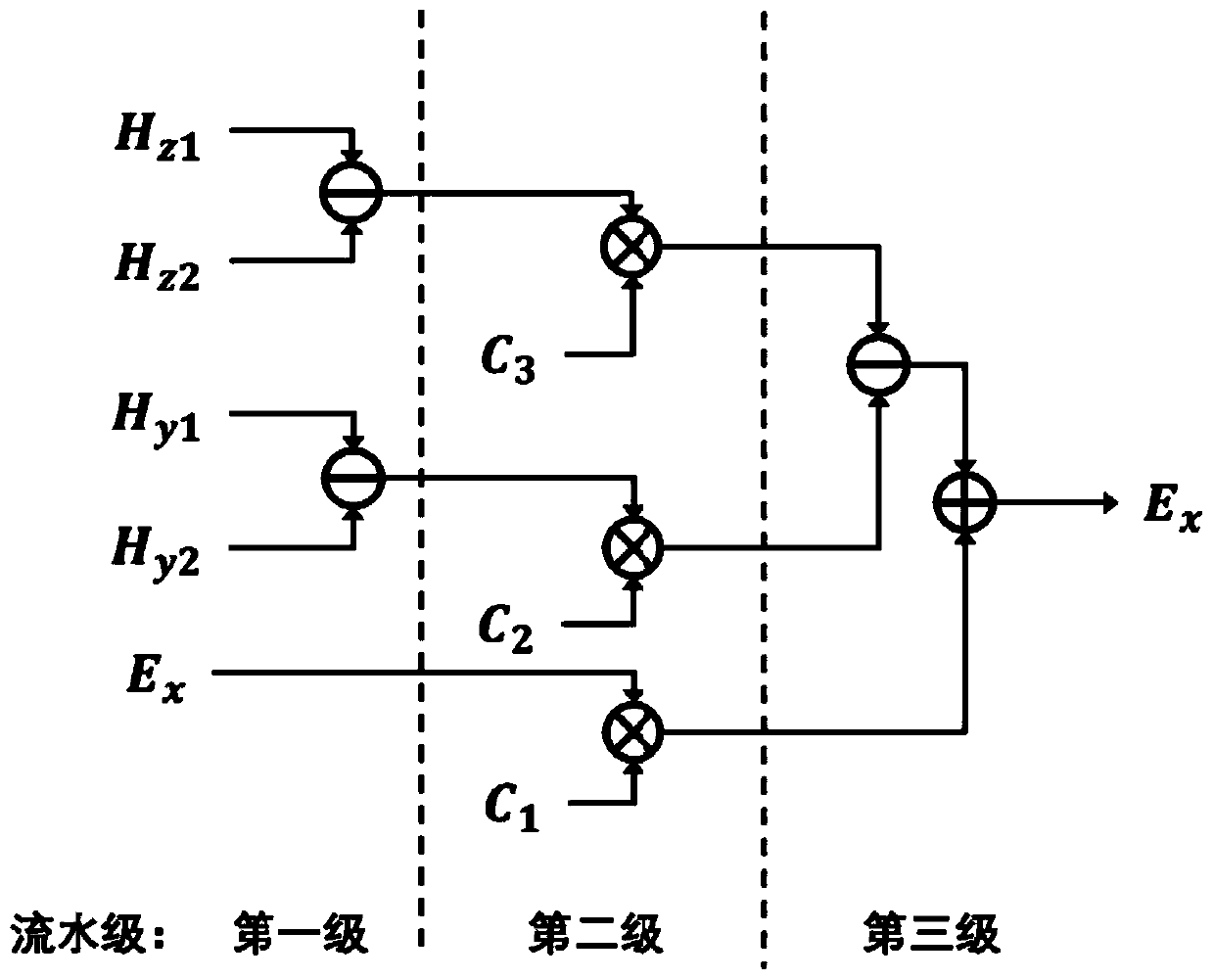

Active; CN111209249A; Increase profit; Smooth connection; Architecture with single central processing unit; Electric digital data processing; Parallel computing; Hardware acceleration

The invention discloses a time domain finite difference method hardware accelerator architecture. The architecture comprises a chain-type arrangement of calculation processing units (PEs), multiple groups of storage blocks and an excitation source assignment device. The chain-type arrangement is a combination of a plurality of PEs arranged in a one-dimensional direction and is used for calculating the electric field value and/or the magnetic field value of the three-dimensional grid points of the electromagnetic field simulation; each storage block is composed of a plurality of block random access memories and is used for storing the electric field values and/or the magnetic field values of the grid points calculated by the PEs; and the excitation source assignment device is used for assigning the electric field value and/or the magnetic field value stored in the storage block. According to the invention, the utilization rate of the PEs can be improved, the wiring difficulty and time delay comprehensively realized on the FPGA are reduced, the off-chip storage bandwidth capability is increased, and the expansibility and grid adaptability of the PE array are improved. The method can be applied to the field of hardware accelerators based on FPGA platforms.

Owner:SUN YAT SEN UNIV

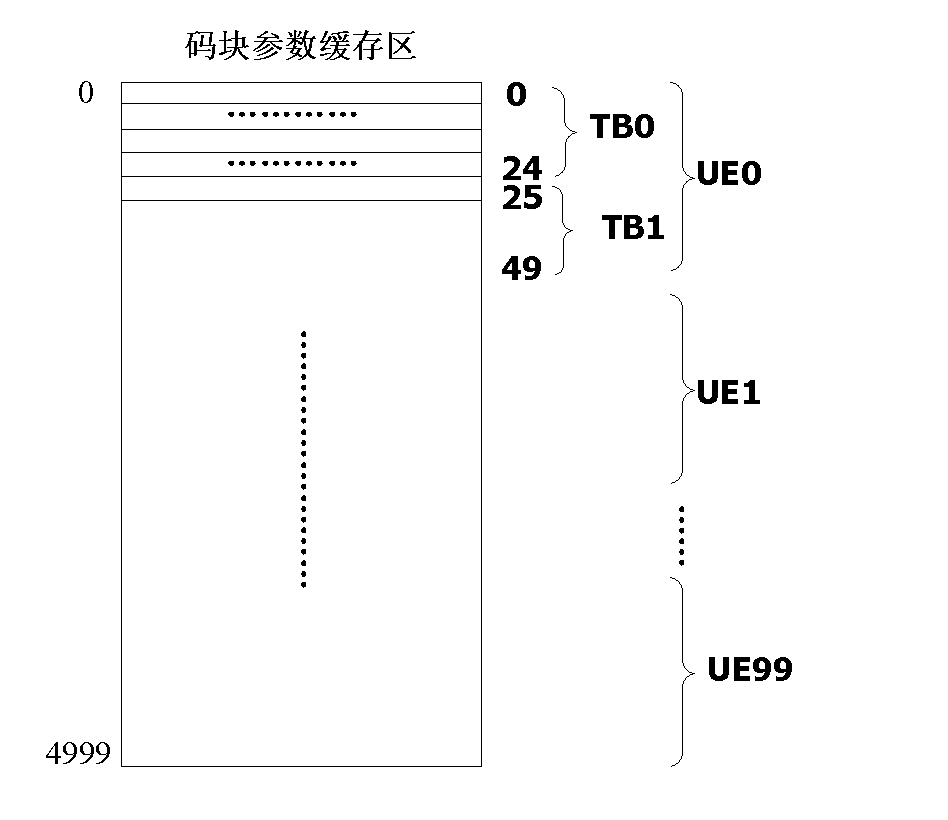

Method and system for dynamic caching of user information

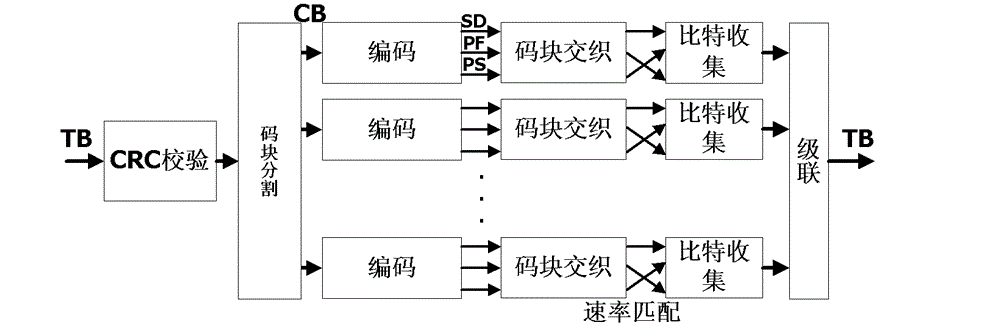

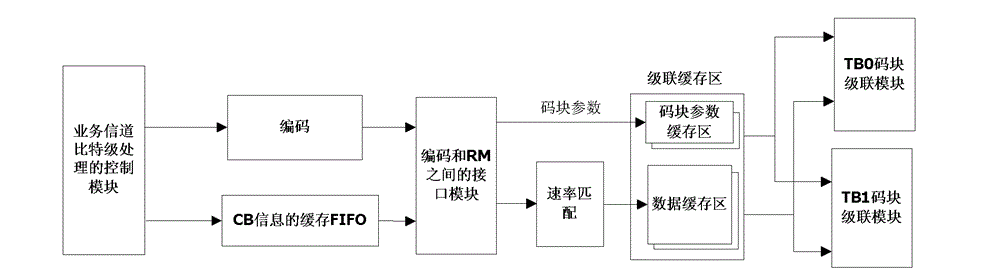

Active; CN102685810B; Reduce waste; Network traffic/resource management; Transmission; Coding block; User equipment

The present invention provides a method and system for dynamically buffering user information, which are used for solving the problem of excessive resources for buffering user information. In the present invention, by storing a starting address of a Transport Block (TB) located in a code block parameter buffer area into a TB buffer area, and storing the starting address of each code block located in the data buffer area into the code block parameter buffer area according to the starting address of the TB located in the code block parameter buffer area, wherein the code block is segmented from the TB, a concatenation module, when a concatenation is performed, finds out the starting address of the TB located in the code block parameter buffer area from the TB buffer area according to a User Equipment (UE) index, and finds out the starting address of the code block located in the data buffer area according to a current code block index maintained by the concatenation module. Thus the waste of resources for buffering user information is decreased and more Block Random Access Memories (BRAMs) are saved.
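The two levels of indirection can be modelled in a few lines of Python. This is a sketch under assumptions: the class and method names are hypothetical, and the buffers are modelled as Python lists and a dictionary rather than fixed-size memories.

```python
class UserInfoBuffers:
    """TB buffer -> code block parameter buffer -> data buffer."""

    def __init__(self):
        self.tb_buffer = {}         # UE index -> start address of its TB in cb_param_buffer
        self.cb_param_buffer = []   # one entry per code block: its start address in data_buffer
        self.data_buffer = []       # code block payloads stored back to back

    def store_tb(self, ue_index, code_blocks):
        """Store a transport block that has been segmented into code blocks."""
        self.tb_buffer[ue_index] = len(self.cb_param_buffer)
        for block in code_blocks:
            self.cb_param_buffer.append(len(self.data_buffer))
            self.data_buffer.extend(block)

    def code_block_start(self, ue_index, cb_index):
        """Resolve where a code block starts in the data buffer, as the concatenation step would."""
        tb_start = self.tb_buffer[ue_index]
        return self.cb_param_buffer[tb_start + cb_index]
```

Since every block is placed directly after the previous one, no fixed-size slot has to be reserved per user, which is where the saving in buffer memory comes from in this model.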

Owner:NANJING ZHONGXING XIN SOFTWARE CO LTD

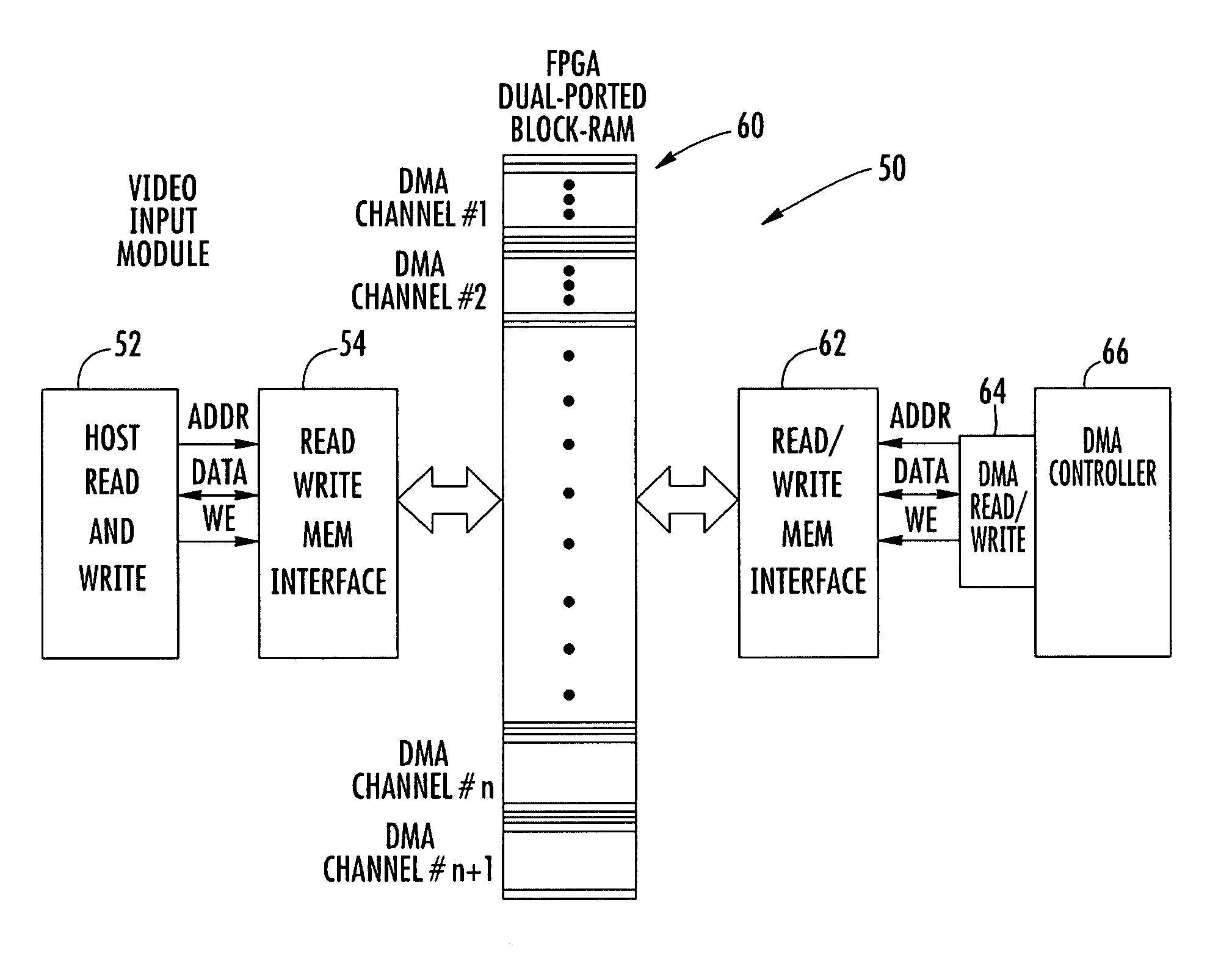

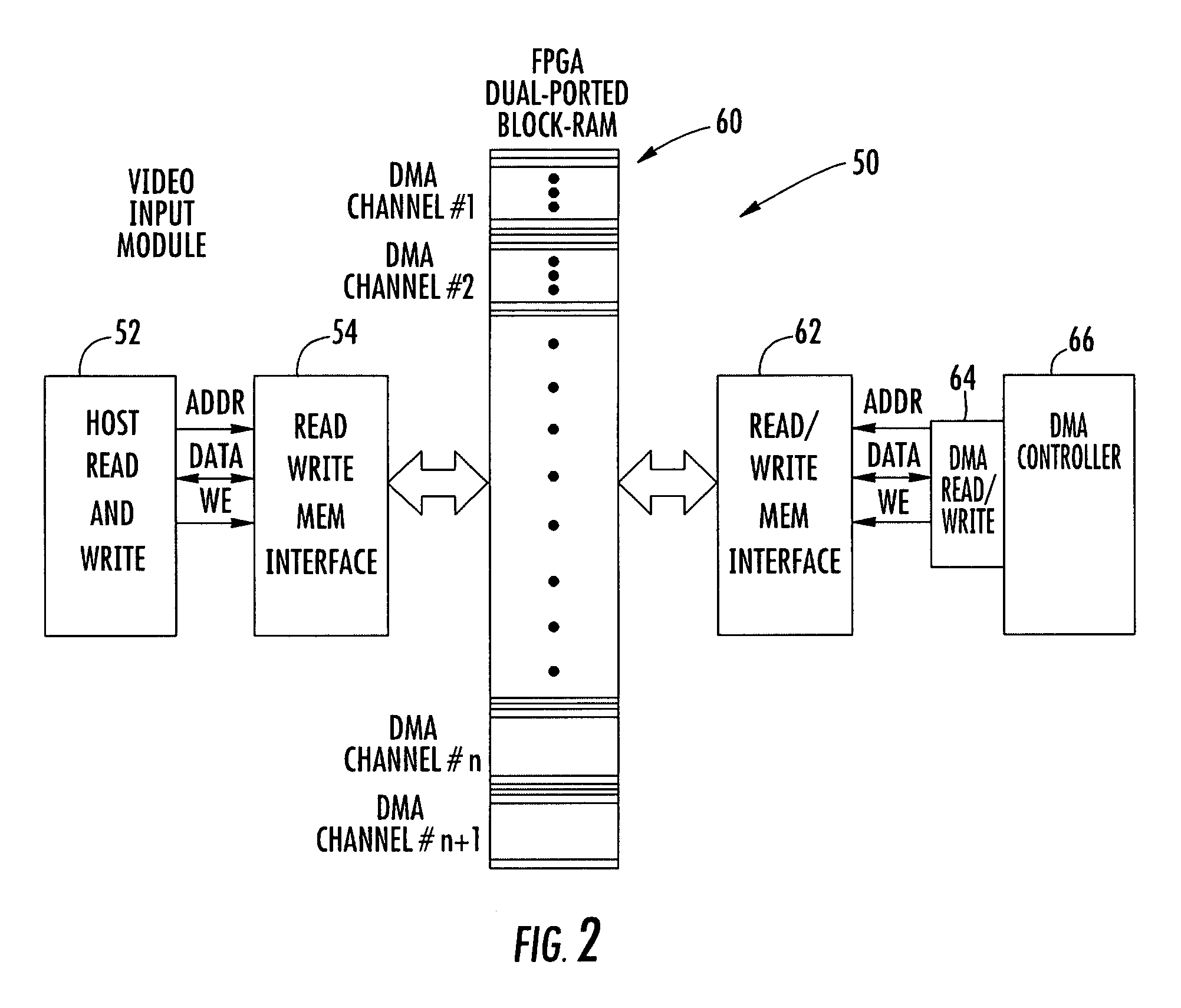

Video multiviewer system using direct memory access (DMA) registers and block RAM

A video multiviewer system includes a Graphics Processing Unit (GPU) that includes a GPU memory. A video input module is operative with the GPU for receiving video data and transferring the video data to the GPU memory via a Direct Memory Access (DMA). A programmable circuit such as a Field Programmable Gate Array (FPGA) includes a multi-ported and in one aspect a dual ported block Random Access Memory (RAM) configured for a plurality of DMA channels for receiving video data and allowing uninterrupted operation of consecutive DMA transfers of video data to the GPU memory. A display displays the multiple video windows based upon video data received within the GPU memory.

Owner:IMAGINE COMM

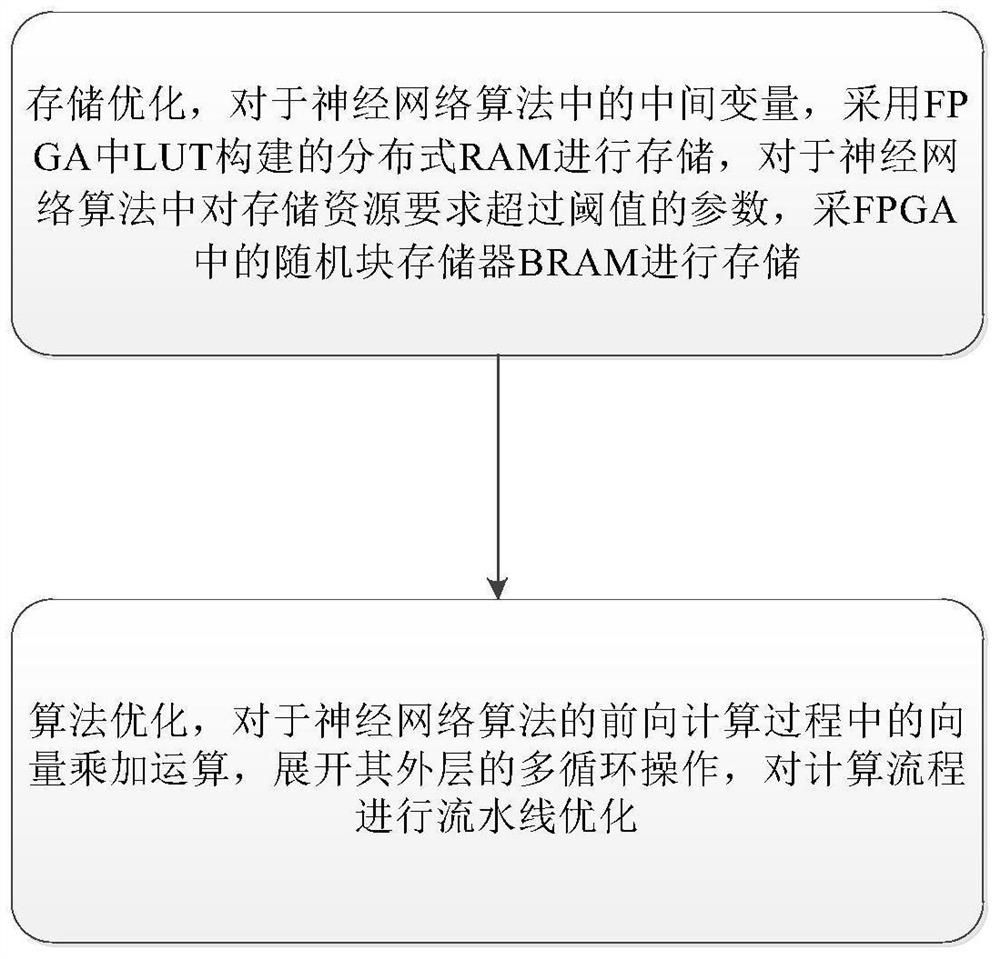

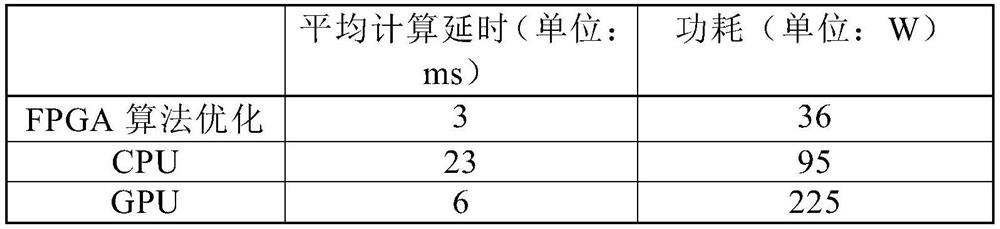

FPGA (Field Programmable Gate Array) platform-oriented recurrent neural network algorithm optimization method

Pending; CN114841332A; Improve computing efficiency; Improve resource usage; Design optimisation/simulation; CAD circuit design; Random access memory; Theoretical computer science

The invention belongs to the technical field of data processing, and particularly relates to an FPGA platform-oriented recurrent neural network algorithm optimization method. The method comprises the following steps: storage optimization, in which intermediate variables of the neural network algorithm are stored in distributed RAM (Random Access Memory) built from the LUTs (Look-Up Tables) in an FPGA (Field Programmable Gate Array), and parameters of the neural network algorithm whose storage resource requirements exceed a threshold value are stored in BRAM (Block Random Access Memory) in the FPGA; and algorithm optimization, in which, for the vector multiplication and addition operations in the forward calculation of the neural network algorithm, multi-loop operation is carried out on the outer layer of the vector multiplication and addition operation, and pipeline optimization is carried out on the calculation process. According to the invention, the calculation efficiency and the resource utilization rate of the recurrent neural network are improved, and the calculation time delay and power consumption are reduced.
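The storage rule can be stated as a small decision function. The Python sketch below is illustrative only: the 4096-bit default threshold, the array names in the usage note and the choice to keep below-threshold parameters in distributed RAM are assumptions, since the abstract does not give a threshold value or say where small parameters go.

```python
def choose_storage(kind, bits, threshold_bits=4096):
    """Pick a memory type for one array of the network.

    kind: "intermediate" for intermediate variables, "parameter" for weights and biases.
    bits: storage requirement of the array in bits.
    """
    if kind not in ("intermediate", "parameter"):
        raise ValueError("kind must be 'intermediate' or 'parameter'")
    if kind == "parameter" and bits > threshold_bits:
        return "BRAM"
    # Intermediate variables go to LUT-based distributed RAM; placing small
    # parameters there as well is an assumption of this sketch.
    return "distributed RAM"


def plan_storage(arrays, threshold_bits=4096):
    """arrays maps a name to (kind, bits); returns a name -> memory type mapping."""
    return {
        name: choose_storage(kind, bits, threshold_bits)
        for name, (kind, bits) in arrays.items()
    }
```

For example, `plan_storage({"h": ("intermediate", 2048), "W": ("parameter", 65536)})` places `h` in distributed RAM and `W` in BRAM.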

Owner:SHENYANG AIRCRAFT DESIGN INST AVIATION IND CORP OF CHINA

Target detection hardware accelerator and acceleration method

Active; CN112230884A; Shorten the time; Reduce power consumption; Computation using non-contact making devices; Physical realisation; Computer hardware; Binary multiplier

The invention relates to the field of data processing, and provides a target detection hardware accelerator and an acceleration method, and the accelerator comprises: a convolution arithmetic unit integrated with a multiplier and an adder, wherein the convolution arithmetic unit receives convolution weight data and a feature map which are stored in a block random access memory in advance, the multiplier performs multiplication operation on the convolution weight data and the feature map to obtain multiplication result data and convolution offset data, and the adder performs shift addition summation processing on the multiplication result data and the convolution offset data to obtain multiply-accumulate result data; a pooling operation unit which is used for receiving the multiply-accumulate result data, performing pooling operation and outputting pooling result data; and an RBR operation unit which is used for performing batch standardization and quantification on the pooling result data to obtain target feature data and storing the target feature data in the block random access memory. The time and power consumption required by the accelerator for data carrying can be reduced, and the working efficiency of the accelerator is improved.

Owner:JIHUA LAB

A data processing method and device

Active; CN110610679B; Reduce usage; Low cost; Static indicating devices; Input/output processes for data processing; Computer hardware; Data information

An embodiment of the present invention provides a data processing method and device. The method acquires data information of pixels in a display device, the data information including compensation data and pixel positions of the pixels; determines, according to a preset mapping relationship, the storage location of the compensation data of the pixels in the block random access memory, wherein the block random access memory includes multiple groups of data units stored sequentially, data units with adjacent storage positions are taken out in adjacent order, each data unit includes a plurality of compensation data taken out in the same clock cycle, and the plurality of compensation data are sequentially stored in the block random access memory; and stores the compensation data in a storage space corresponding to the storage location. In this way, the compensation data can be preferentially stored in one block random access memory, the storage capacity of the block random access memory can be fully utilized, and the number of block random access memories used can be reduced, thereby reducing the cost of the FPGA.

Owner:BOE TECH GRP CO LTD

A screening test method for FPGA embedded multiplier

Active; CN109445365B; Shorten test time; Improve test efficiency; Programme control; Computer control; Computer architecture; Logisim

The invention relates to a performance testing method of an FPGA embedded multiplier, comprising the following steps: (1) IP core function design of the embedded multiplier; (2) pseudo-random sequence test vector design; (3) RTL-level code simulation; (4) test result analysis circuit design; (5) module replication and output logic design. The screening test method of the FPGA embedded multiplier provided by the present invention adopts a test method based on BIST, which overcomes the high cost and technical difficulty of ATE testing; at the same time, a pseudo-random sequence is used as the stimulus input, which reduces the test time and improves testing efficiency. This method makes full use of the programmable characteristics of the FPGA chip, as well as the abundant programmable logic units and embedded memory units (Block Random Access Memory, BRAM) inside the chip. The invention has simple implementation steps, strong portability and certain engineering application value.

Owner:青岛中科青芯电子科技有限公司

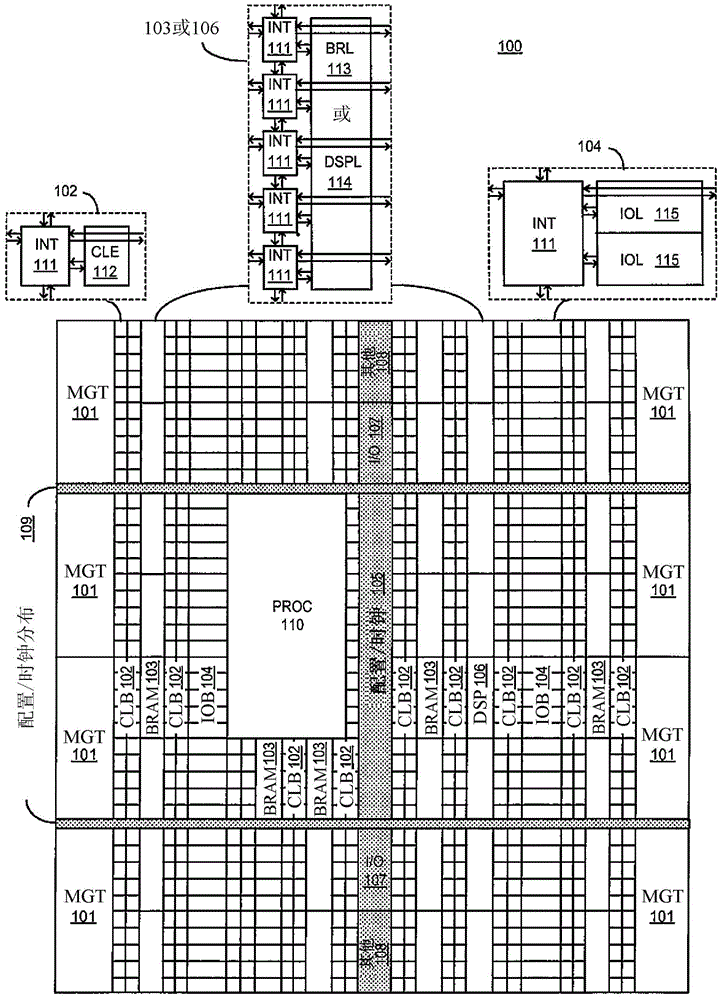

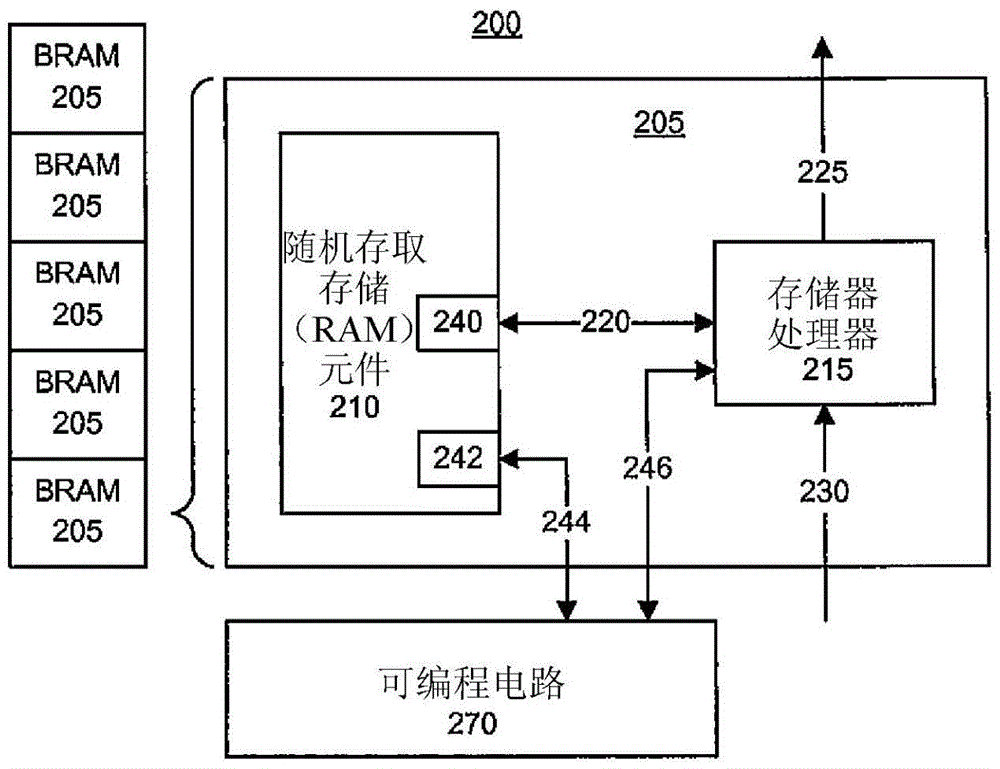

Embedded memory and special purpose processor structures within integrated circuits

The integrated circuit may include a programmable circuit (270) operable according to a first clock frequency, and a memory block (205). The memory block may include a storage element (210) having at least one data port and a memory processor (215) coupled to the data port of the storage element and to the programmable circuit. The memory processor may be operable according to a second clock frequency higher than the first clock frequency. Furthermore, the memory processor may be hardwired and dedicated to performing operations in the storage elements of the memory block.

Owner:XILINX INC

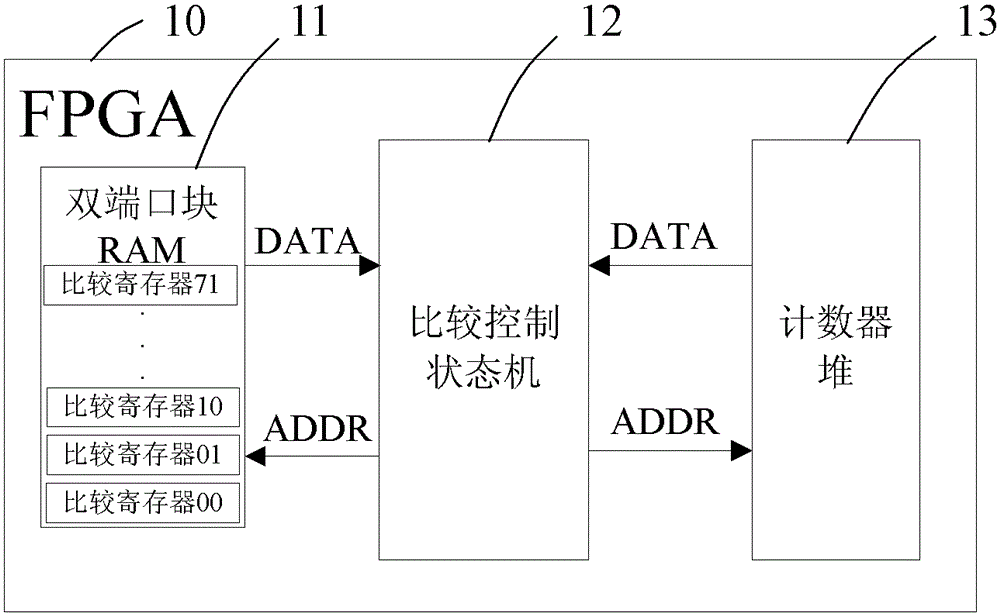

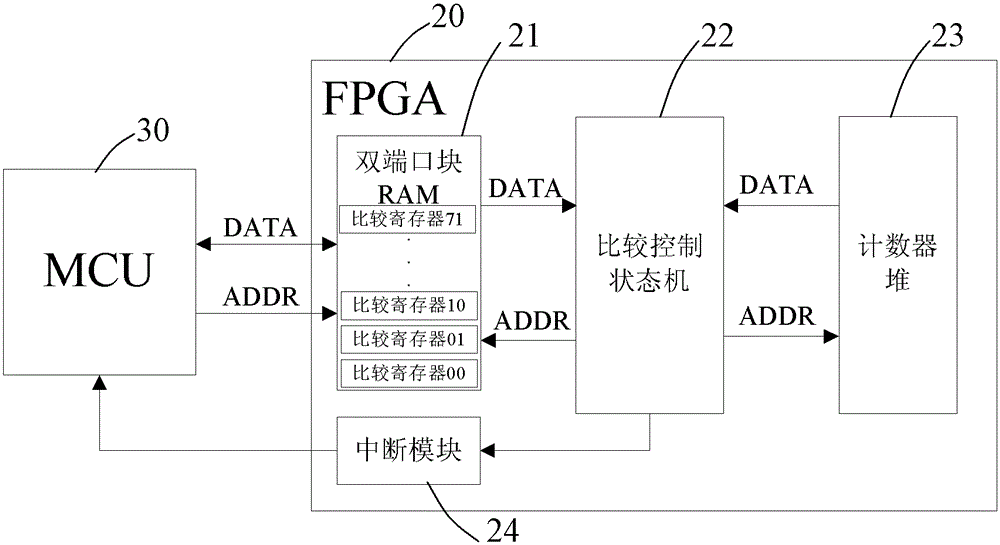

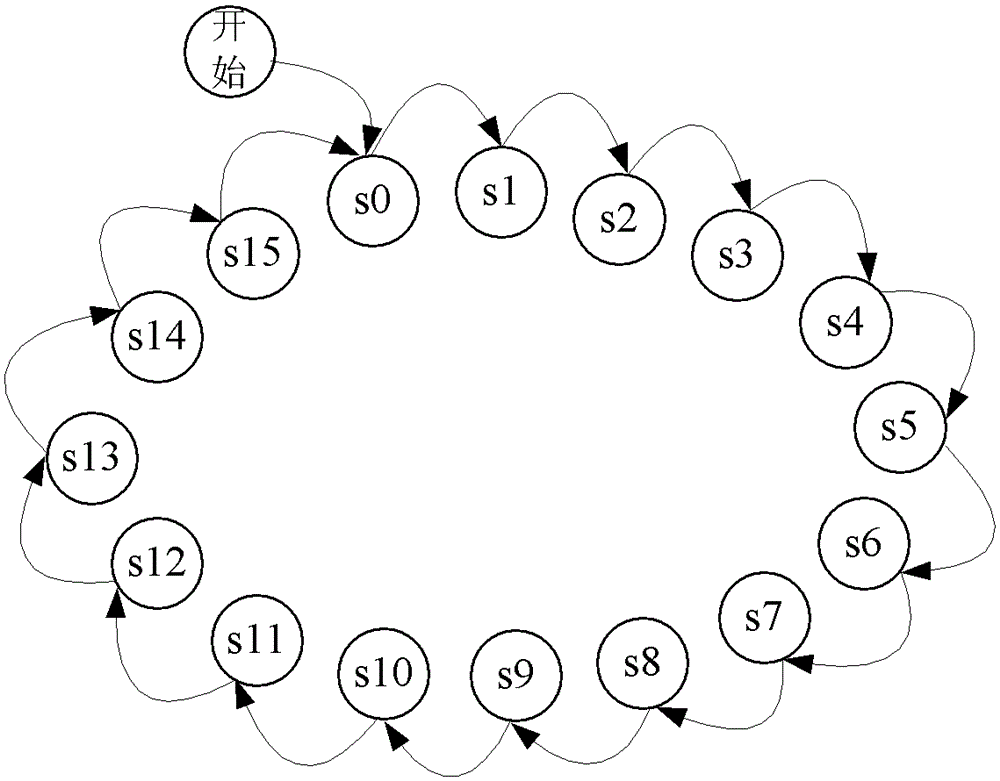

System and method for realizing PLC high-speed pulse counting based on FPGA

Active | CN103187967B | Programme control in sequence/logic controllers | Pulse technique | Processor register | Programmable logic controller

The invention provides a system for implementing high-speed pulse counting in a programmable logic controller (PLC) based on a field programmable gate array (FPGA). The FPGA comprises a dual-port block random access memory (RAM), a comparison control state machine, and a counter pile. The dual-port block RAM holds multiple groups of comparison registers. The counter pile comprises multiple counters that count input pulses, and each counter corresponds to one group of comparison registers in the dual-port block RAM. The comparison control state machine cyclically reads the value of each comparison register from the dual-port block RAM and compares it with the value of the corresponding counter in the counter pile. The invention further provides a corresponding method. Because the comparison data are stored in the dual-port block RAM inside the FPGA and the comparison control state machine cyclically compares them with the counter values, the PLC can count high-speed pulses and compare the counts.
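
The cyclic comparison can be modelled in software roughly as below. This is a behavioural sketch under assumptions, not the patented hardware: the class name `CounterPile`, the equality match condition, and the one-register-per-step scan order are hypothetical.

```python
class CounterPile:
    """Behavioural model: counters plus compare registers held in a 'block RAM'."""

    def __init__(self, compare_groups):
        # compare_groups[i] lists the compare values for counter i
        # (stands in for the contents of the dual-port block RAM).
        self.bram = [list(group) for group in compare_groups]
        self.counters = [0] * len(self.bram)
        # Scan order of the state machine: every register of every group.
        self._scan = [(c, r) for c, group in enumerate(self.bram)
                      for r in range(len(group))]
        self._pos = 0

    def pulse(self, channel):
        """Count one input pulse on the given channel."""
        self.counters[channel] += 1

    def step(self):
        """One state-machine step: read one compare register and compare it.

        Returns (counter, register) on a match, otherwise None.
        """
        if not self._scan:
            return None
        c, r = self._scan[self._pos]
        self._pos = (self._pos + 1) % len(self._scan)  # wrap around: cyclic read
        return (c, r) if self.counters[c] == self.bram[c][r] else None


pile = CounterPile([[3], [2, 5]])
pile.pulse(1)
pile.pulse(1)
results = [pile.step() for _ in range(3)]
```

Here counter 1 has reached its first compare value, so the second step reports a match while the other two do not.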

Owner:SHENZHEN INOVANCE TECH

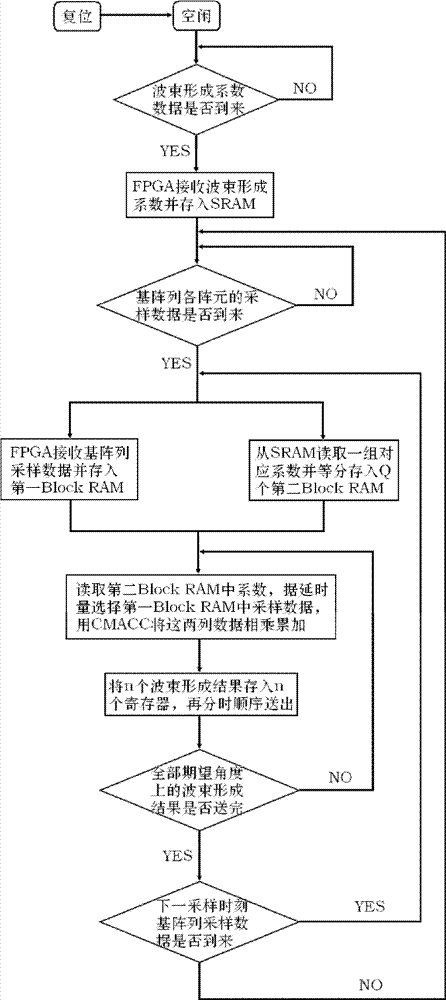

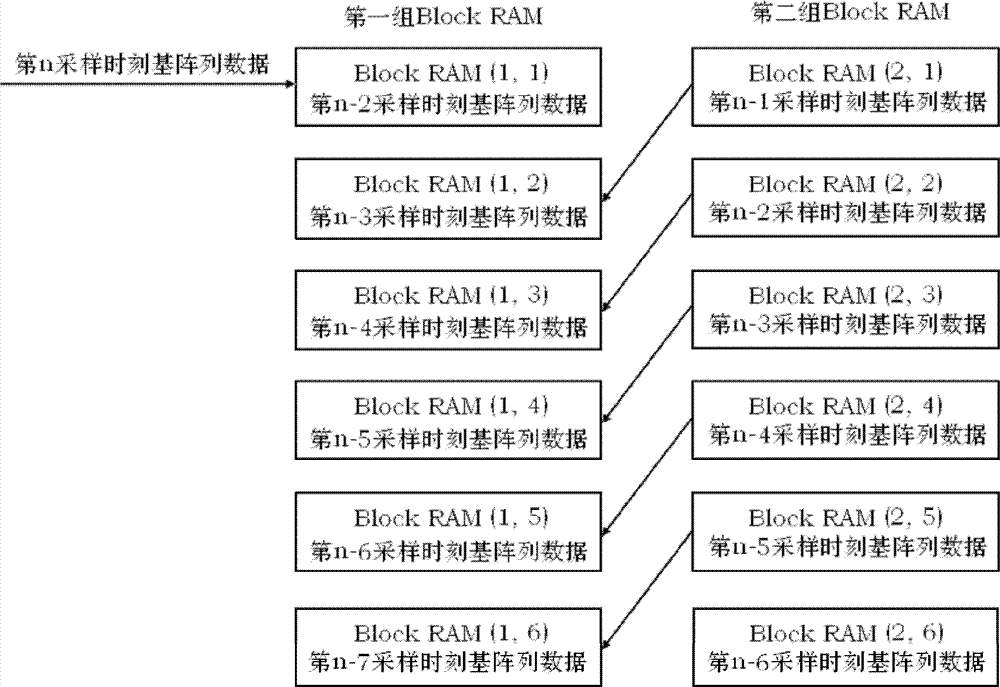

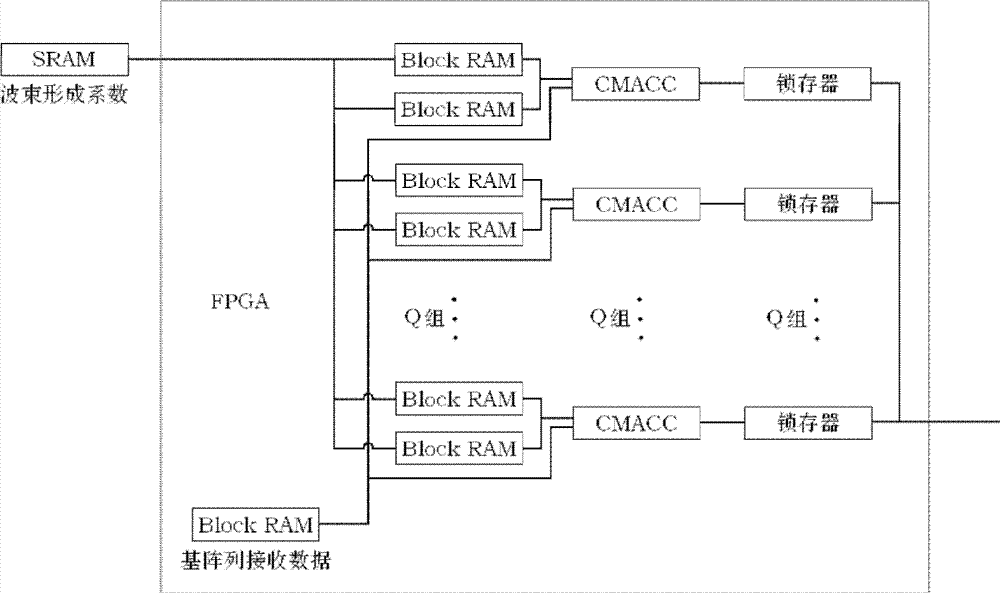

Real-time parallel time-delay and phase-shift beam forming method based on field programmable gate array (FPGA)

Inactive | CN102565775B | Save storage space | Accurate Beamforming Results | Spatial transmit diversity | Wave based measurement systems | Static random-access memory | Phase shifted

The invention discloses a real-time parallel time-delay and phase-shift beamforming method based on a field programmable gate array (FPGA), which includes: (1) the FPGA receives half of the beamforming coefficient data for the expected angles and stores it in an attached static random access memory (SRAM); (2) the FPGA receives sample data from the received signal of each array element and stores it in first Block random access memories (RAMs), reading and writing alternately between two groups of first Block RAMs; a corresponding group of beamforming coefficient data is read from the SRAM and stored in a group of Q second Block RAMs of the FPGA; (3) the beamforming coefficient data in the Q second Block RAMs are read simultaneously, and one sample in the first Block RAM is selected according to the time-delay amount; the two sets of data are multiplied and accumulated to obtain Q beamforming results, which are stored in Q latches; the results are sent out sequentially by time sharing until all beamforming results have been sent; and (4) before the sample data for the next sampling instant of each array element's received signal arrive, step (2) is executed again, and this repeats until beamforming of all received sample data is finished.
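
Step (3) is a multiply-accumulate over the array elements, and can be sketched as below. The sketch assumes integer sample delays and complex coefficients that carry the phase shift; the function name `beamform` and its argument layout are hypothetical, and the half-coefficient storage and ping-pong buffering of steps (1) and (2) are not modelled.

```python
def beamform(history, delays, coeffs):
    """Compute Q beam outputs for the current sampling instant.

    history[m]   : recent samples of array element m, newest last
    delays[q][m] : integer delay (in samples) of element m for beam q
    coeffs[q][m] : complex weight (phase shift) of element m for beam q
    """
    outputs = []
    for q in range(len(coeffs)):
        acc = 0j
        for m, samples in enumerate(history):
            delayed = samples[-1 - delays[q][m]]   # select sample by delay amount
            acc += coeffs[q][m] * delayed          # multiply and accumulate
        outputs.append(acc)
    return outputs


# Two elements, one beam: element 1 is delayed by one sample and rotated by 90 degrees.
beams = beamform(history=[[1, 2, 3], [4, 5, 6]],
                 delays=[[0, 1]],
                 coeffs=[[1, 1j]])
```

In the FPGA the outer loop over the Q beams would run in parallel, one coefficient memory per beam; the software loop is sequential only for clarity.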

Owner:ZHEJIANG UNIV

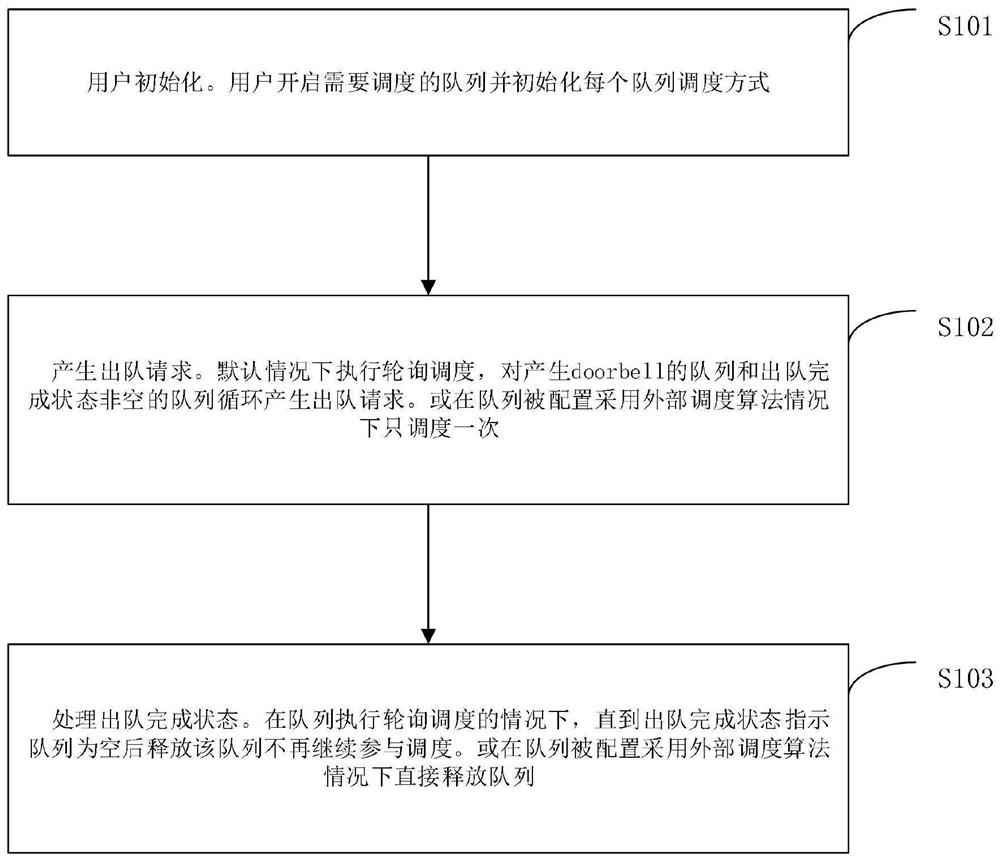

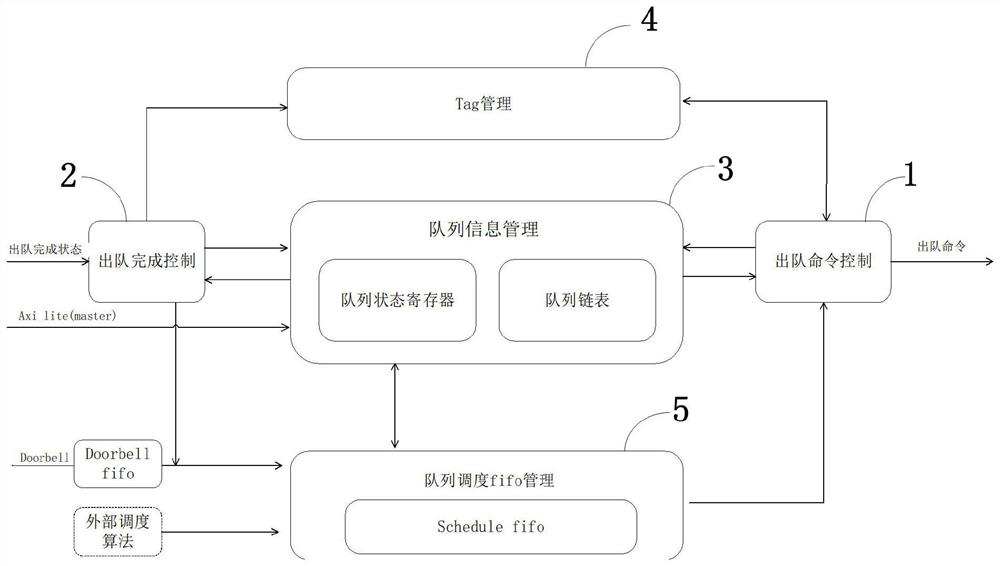

Dynamic configurable high-performance queue scheduling method, system, processor and protocol

Pending | CN114827048A | Overcome limitations | Improve compatibility | Transmission | Information support | Random access memory

The invention belongs to the technical field of multi-queue scheduling in network equipment and discloses a dynamically configurable high-performance queue scheduling method, system, processor and protocol. The method stores queue state information in the BRAM (Block Random Access Memory) or URAM (UltraRAM) resources of an FPGA (Field Programmable Gate Array). An external user can dynamically configure the queue state information through an AXI-Lite interface and disable or enable any specified queue, which allows precise transmission control over multiple queues. The default scheduling method is high-performance round-robin (polling) scheduling, and it can be extended or replaced with any other scheduling algorithm. The scheduling algorithm uses a pipeline structure that supports two different operations: generating a dequeue request and receiving a dequeue completion status. A FIFO stores the scheduled queues and drives the round-robin scheduling; each dequeue request is assigned a unique Tag number, and a linked list of Tag numbers is maintained for each queue in order to track the multiple dequeue operations being processed in parallel. Flexibility and expandability are thereby greatly improved.
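
The scheduling structure described above can be sketched in software as follows. This is an illustrative model under assumptions, not the patented pipeline: the class `RoundRobinScheduler` and its method names are hypothetical, per-queue backlog is reduced to a packet count, and a `deque` stands in for the per-queue linked list of Tag numbers.

```python
from collections import deque
from itertools import count


class RoundRobinScheduler:
    def __init__(self, num_queues):
        self.enabled = [True] * num_queues       # configurable queue state
        self.backlog = [0] * num_queues          # packets waiting per queue
        self.fifo = deque()                      # queues currently scheduled
        self.in_fifo = [False] * num_queues
        self.outstanding = [deque() for _ in range(num_queues)]  # tags per queue
        self._tags = count()                     # source of unique Tag numbers

    def _activate(self, q):
        if self.enabled[q] and self.backlog[q] > 0 and not self.in_fifo[q]:
            self.fifo.append(q)
            self.in_fifo[q] = True

    def enqueue(self, q):
        self.backlog[q] += 1
        self._activate(q)

    def set_enabled(self, q, flag):
        """Models a configuration write that disables or enables a queue."""
        self.enabled[q] = flag
        if flag:
            self._activate(q)

    def request_dequeue(self):
        """Operation 1: pick the next queue and issue a tagged dequeue request."""
        while self.fifo:
            q = self.fifo.popleft()
            self.in_fifo[q] = False
            if not self.enabled[q] or self.backlog[q] == 0:
                continue                          # skip disabled or empty queues
            tag = next(self._tags)
            self.backlog[q] -= 1
            self.outstanding[q].append(tag)
            self._activate(q)                     # back of the FIFO: round robin
            return q, tag
        return None

    def complete(self, q, tag):
        """Operation 2: receive the completion status of a dequeue request."""
        self.outstanding[q].remove(tag)


sched = RoundRobinScheduler(3)
for q in (0, 0, 1):
    sched.enqueue(q)
first = sched.request_dequeue()
second = sched.request_dequeue()
sched.set_enabled(0, False)
blocked = sched.request_dequeue()
sched.set_enabled(0, True)
third = sched.request_dequeue()
sched.complete(0, first[1])
```

Re-appending a served queue to the back of the FIFO is what gives round-robin order; swapping in another policy would mean changing only how `request_dequeue` chooses the next queue.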

Owner:XIDIAN UNIV

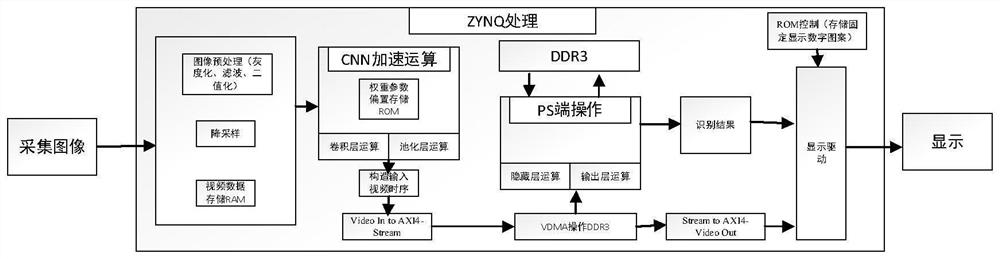

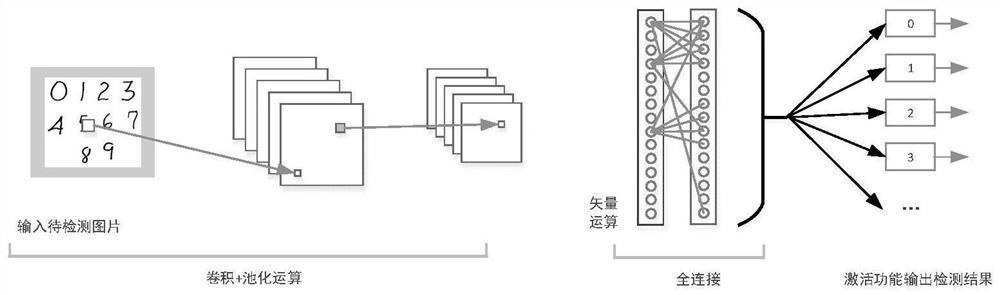

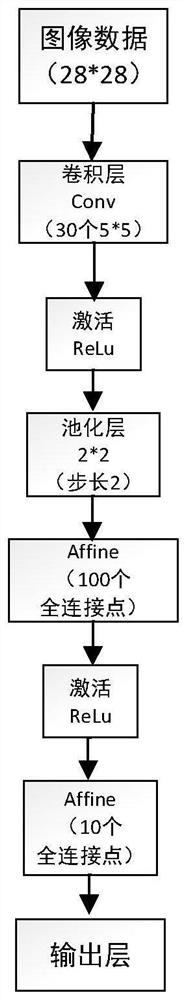

Handwritten numeral recognition implementation method

Pending | CN114299514A | Simple structure | Fast recognition | Neural architectures | Digital ink recognition | Activation function | Double data rate

The invention discloses a handwritten numeral recognition implementation method and belongs to the field of image recognition. The method deploys a convolutional neural network on a ZYNQ embedded hardware platform and performs handwritten numeral recognition through collaborative software and hardware acceleration. The method comprises the following steps: first, the input image is converted to grayscale and binarized, the recognition frame is matched in size to the data set pictures, and the recognition frame image is stored in a BRAM (Block Random Access Memory) storage unit; next, the convolution, activation function and pooling operations on the recognition frame image data are accelerated at the PL end; the pooled image data are then arranged into a camera timing sequence and transmitted to the DDR (Double Data Rate) memory at the PS end; finally, the hidden layer and output layer operations are completed at the PS end, and the recognition result is transmitted to the PL end for display. The method accelerates the inference of part of the neural network and quickly recognizes handwritten digits in a picture.
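
The first preprocessing step (grayscale conversion followed by binarization) can be illustrated with the sketch below. The integer luma weights, the threshold of 128, the polarity (bright pixels map to 1), and the names `to_gray` and `binarize` are assumptions for illustration; the abstract does not specify them.

```python
def to_gray(rgb):
    """Integer approximation of luma: (77 R + 150 G + 29 B) / 256."""
    r, g, b = rgb
    return (77 * r + 150 * g + 29 * b) >> 8


def binarize(image, threshold=128):
    """Convert an image of (R, G, B) tuples into a 0/1 bitmap."""
    return [[1 if to_gray(px) >= threshold else 0 for px in row]
            for row in image]


bitmap = binarize([[(255, 255, 255), (0, 0, 0)],
                   [(200, 10, 10), (10, 200, 10)]])
```

The weights sum to 256, so the shift keeps the result in the 0 to 255 range without any floating-point arithmetic, which suits a hardware implementation.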

Owner:NANJING UNIV OF SCI & TECH

A screening test method for FPGA programmable logic resources

Active | CN109445366B | Improve test efficiency | Simple implementation steps | Programme control | Computer control | Test efficiency | Computer architecture

The invention relates to a screening test method for FPGA programmable logic resources. The method comprises the following steps: (1) programmable logic resource function design; (2) lookup table test input vector design; (3) RTL-level code simulation; (4) test result analysis circuit design; and (5) module replication and output logic design. The method adopts a BIST-based test approach, which overcomes the high cost and technical difficulty of ATE testing. All LUT modules and DFF modules can be covered with only two sets of matching codes, which improves test efficiency. The method makes full use of the programmable nature of the FPGA chip and of the rich channel resources and block random access memory (BRAM) inside the chip. It is simple to implement, highly portable, and has engineering application value.

Owner:济南国科芯微电子科技有限公司

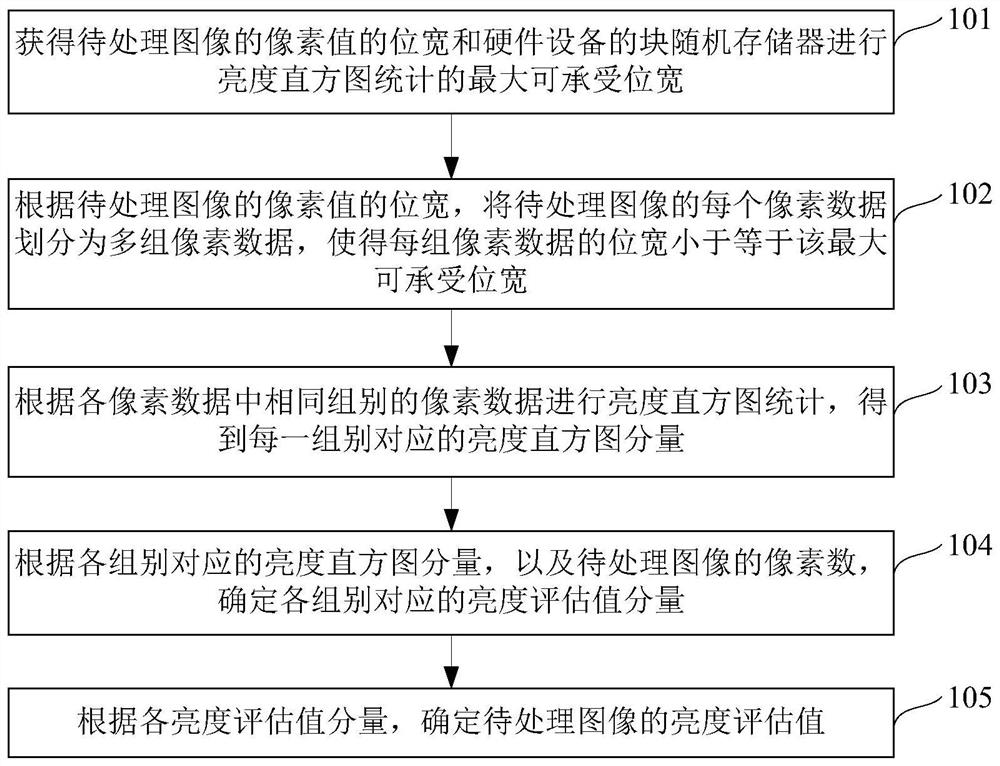

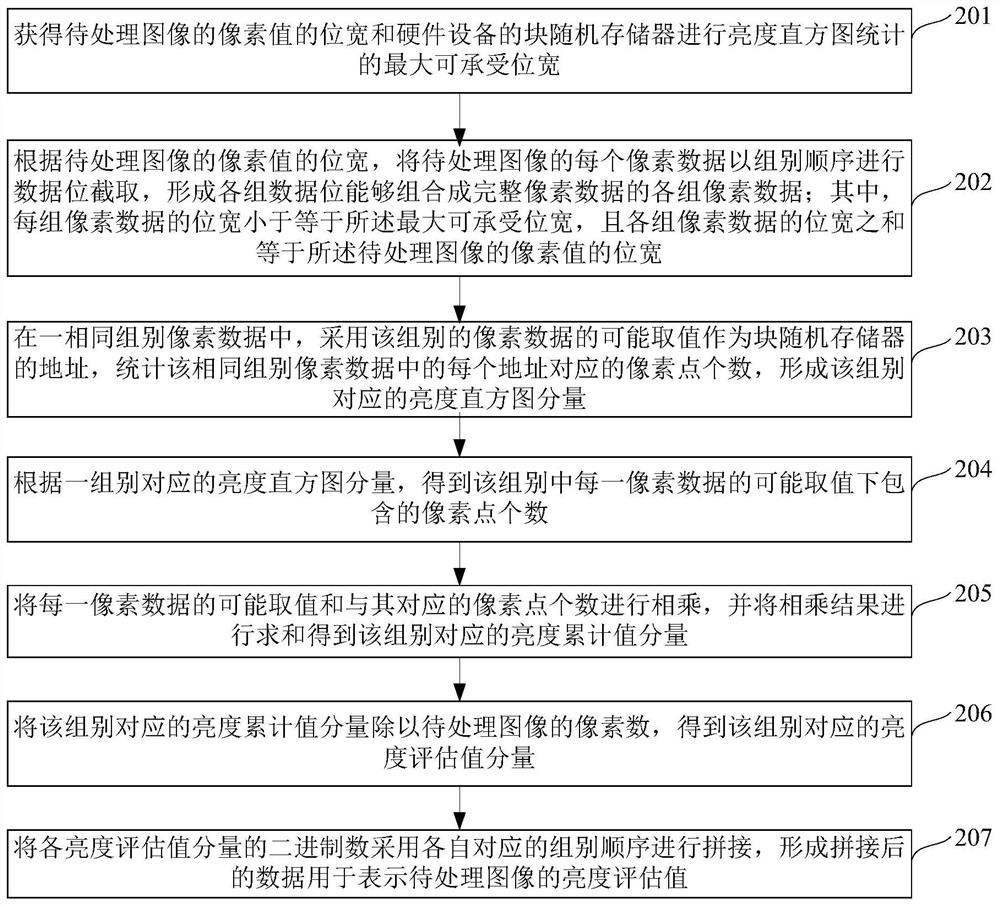

An image brightness statistical method and imaging equipment

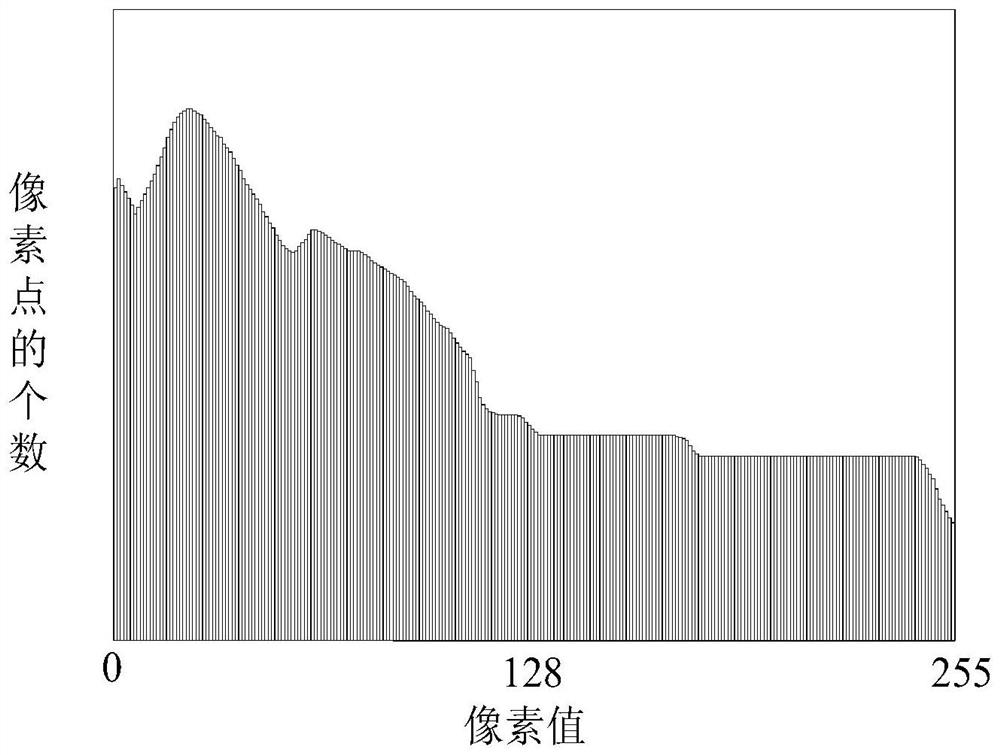

Active | CN111107274B | Convenient brightness statistics | Processing power limit | Television system details | Image enhancement | Imaging processing | Radiology

The invention provides an image brightness statistical method and an imaging device, relating to the technical field of image processing. The method includes: obtaining the bit width of the pixel values of the image to be processed and the maximum bit width supported by the block random access memory of the hardware device that performs brightness histogram statistics; dividing each pixel datum, according to the bit width of the pixel values, into multiple groups of pixel data so that the bit width of each group is less than or equal to that maximum bit width; performing brightness histogram statistics on the same group of pixel data across all pixel data to obtain the brightness histogram component corresponding to each group; determining the brightness evaluation value component corresponding to each group from that group's brightness histogram component and the number of pixels in the image to be processed; and determining the brightness evaluation value of the image to be processed from the brightness evaluation value components. The invention makes it convenient to compute brightness statistics, and thus brightness evaluation values, for images with a high bit width.
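
One way to read the grouping step is sketched below. It assumes the brightness evaluation value is the mean pixel value, which the abstract does not state; under that assumption the mean decomposes exactly into per-group components weighted by each group's bit position. The names `split_histograms` and `mean_brightness` are hypothetical.

```python
def split_histograms(pixels, pixel_bits, max_bits):
    """Build one histogram per bit group, each with at most 2**max_bits bins."""
    n_groups = -(-pixel_bits // max_bits)          # ceiling division
    mask = (1 << max_bits) - 1
    hists = [[0] * (1 << max_bits) for _ in range(n_groups)]
    for p in pixels:
        for g in range(n_groups):
            hists[g][(p >> (g * max_bits)) & mask] += 1
    return hists


def mean_brightness(hists, max_bits, n_pixels):
    """Combine per-group components into the mean pixel value (assumed metric)."""
    total = 0.0
    for g, hist in enumerate(hists):
        component = sum(value * hits for value, hits in enumerate(hist)) / n_pixels
        total += component * (1 << (g * max_bits))  # weight by bit position
    return total


# 12-bit pixels handled by a memory that only supports 6-bit histogram addresses.
pixels = [0, 4095, 1000, 2048]
hists = split_histograms(pixels, pixel_bits=12, max_bits=6)
mean = mean_brightness(hists, max_bits=6, n_pixels=len(pixels))
```

Two 64-bin histograms replace one 4096-bin histogram here. The trade-off is that the per-group histograms no longer give the full distribution of pixel values, only statistics that are linear in the pixel value, such as the mean.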

Owner:BEIJING TUSEN ZHITU TECH CO LTD


© 2025 PatSnap. All rights reserved.