623 results about "Controller (computing)" patented technology

In computing and especially in computer hardware, a controller is a chip, an expansion card, or a stand-alone device that interfaces with a peripheral device. This may be a link between two parts of a computer (for example, a memory controller that manages access to memory for the computer) or a controller on an external device that manages the operation of (and connection with) that device.

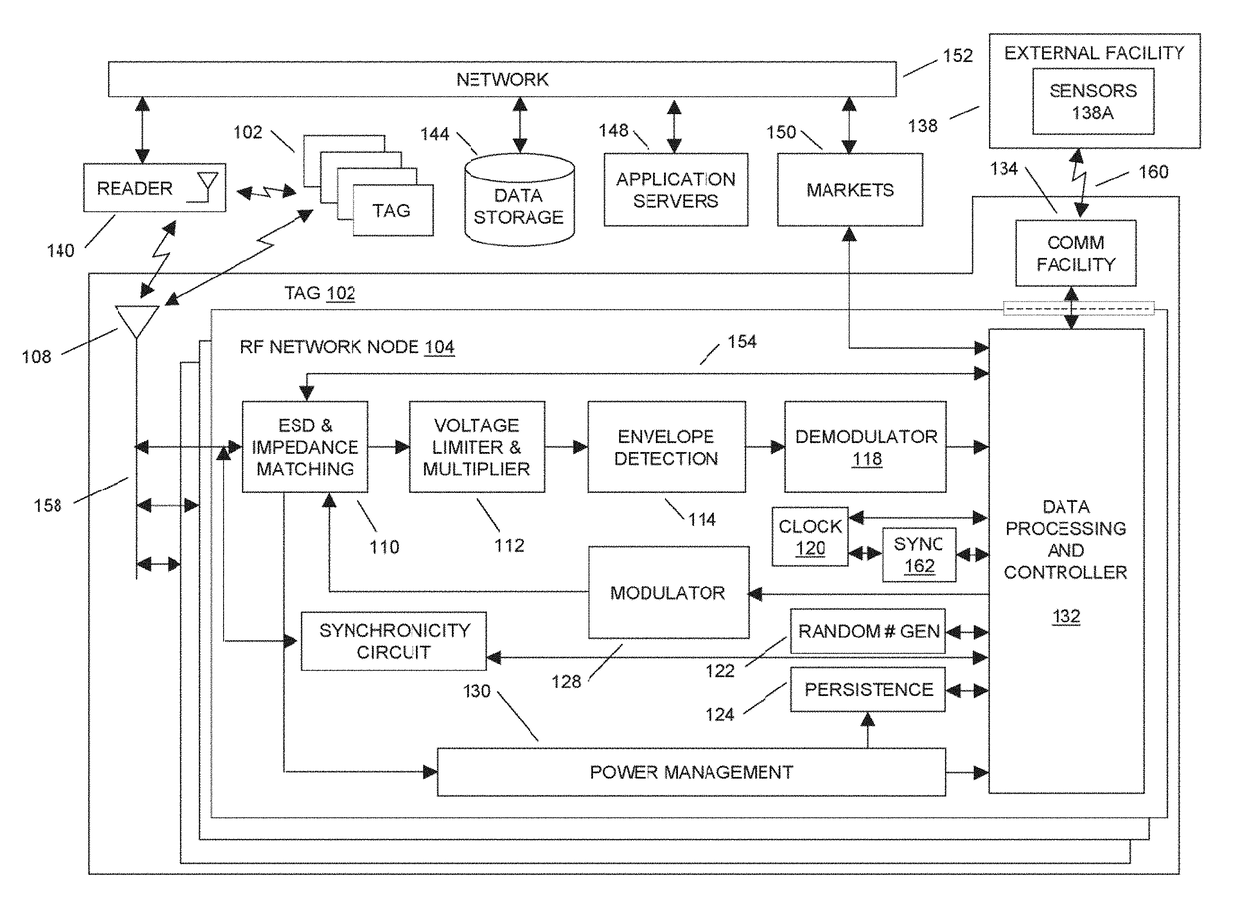

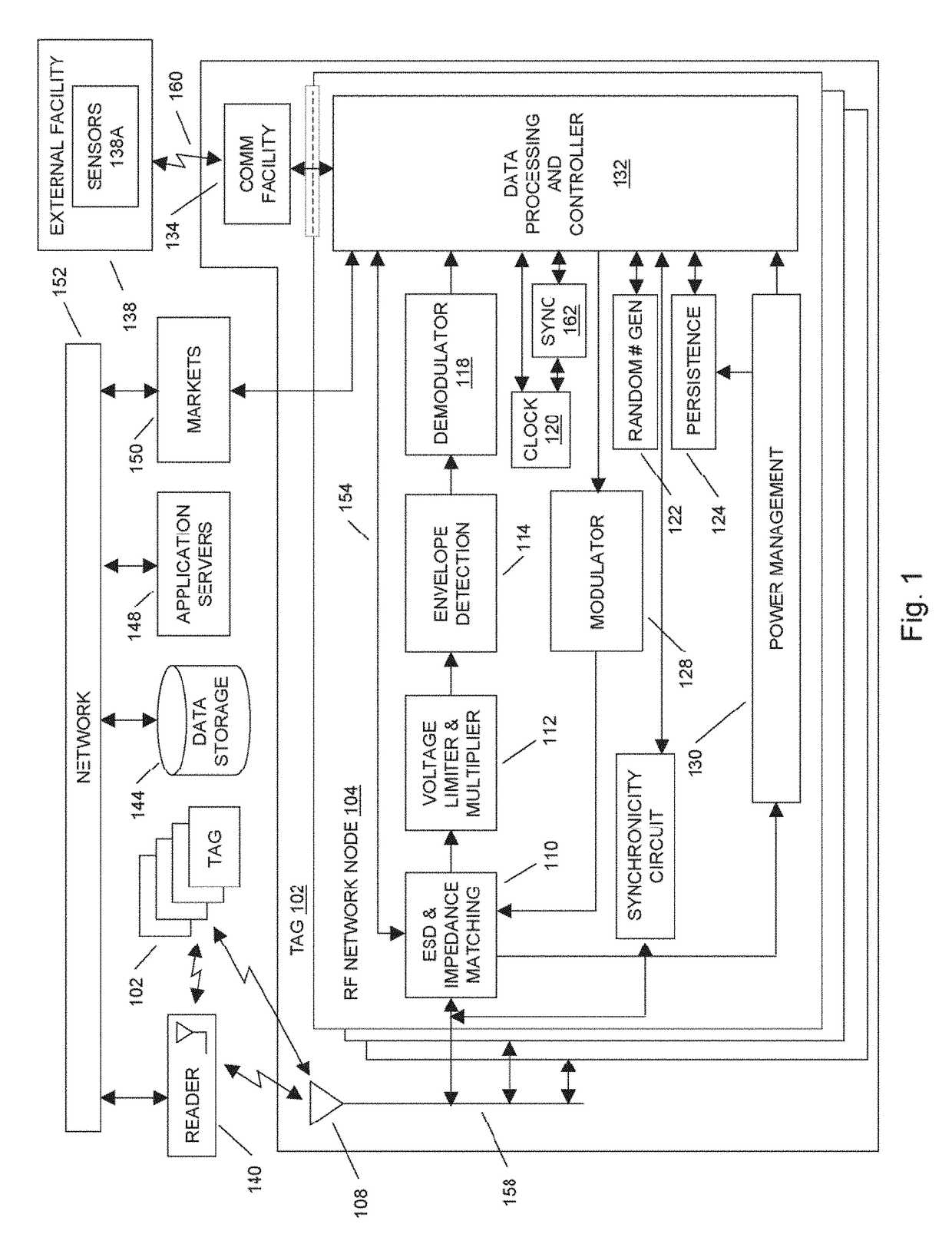

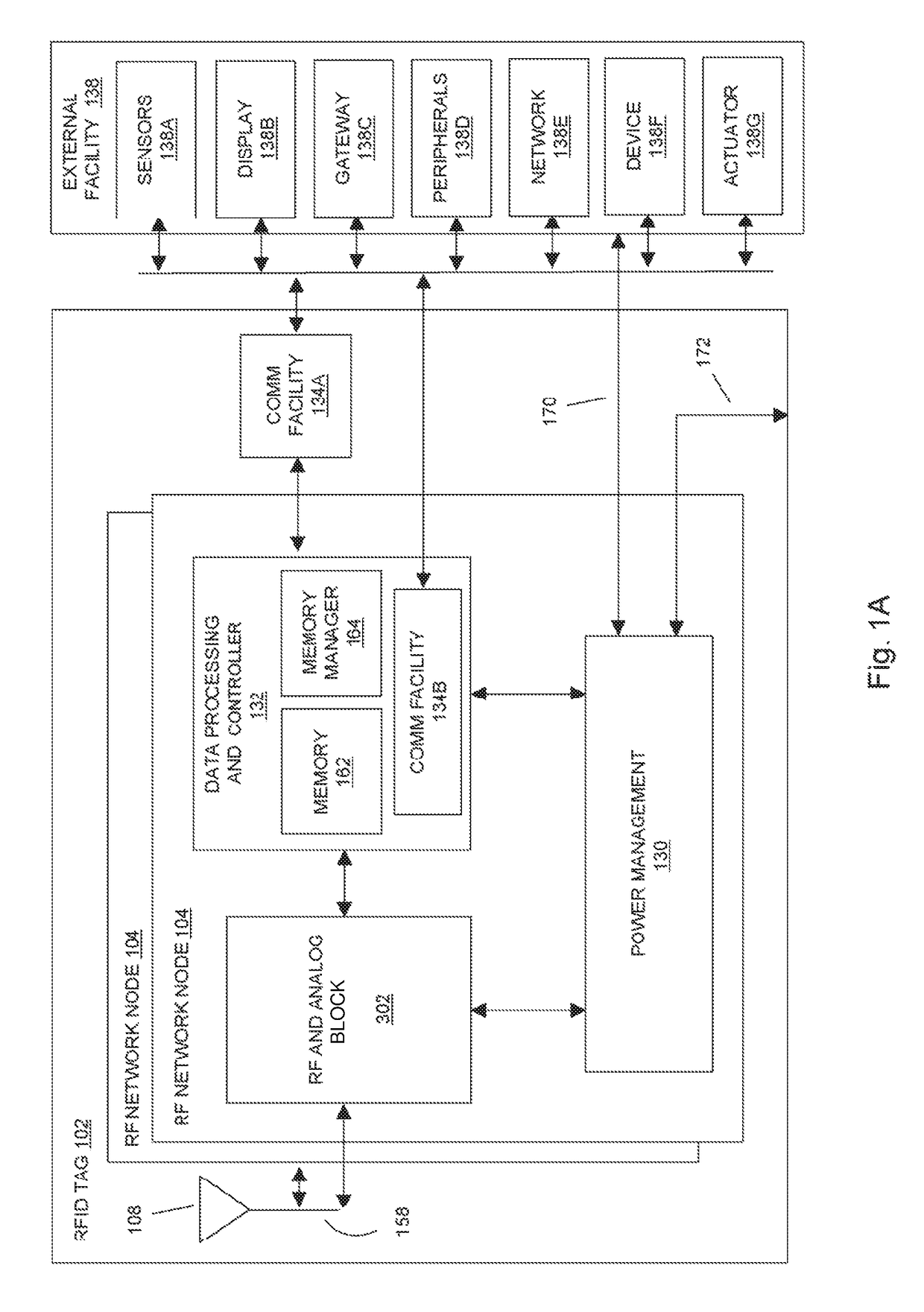

Operating systems for an RFID tag

Active | US9953193B2 | Tags: Enhanced radiation, Memory record carrier reading problems, Co-operative working arrangements, Computer hardware, Operational system

Owner:TEGO INC

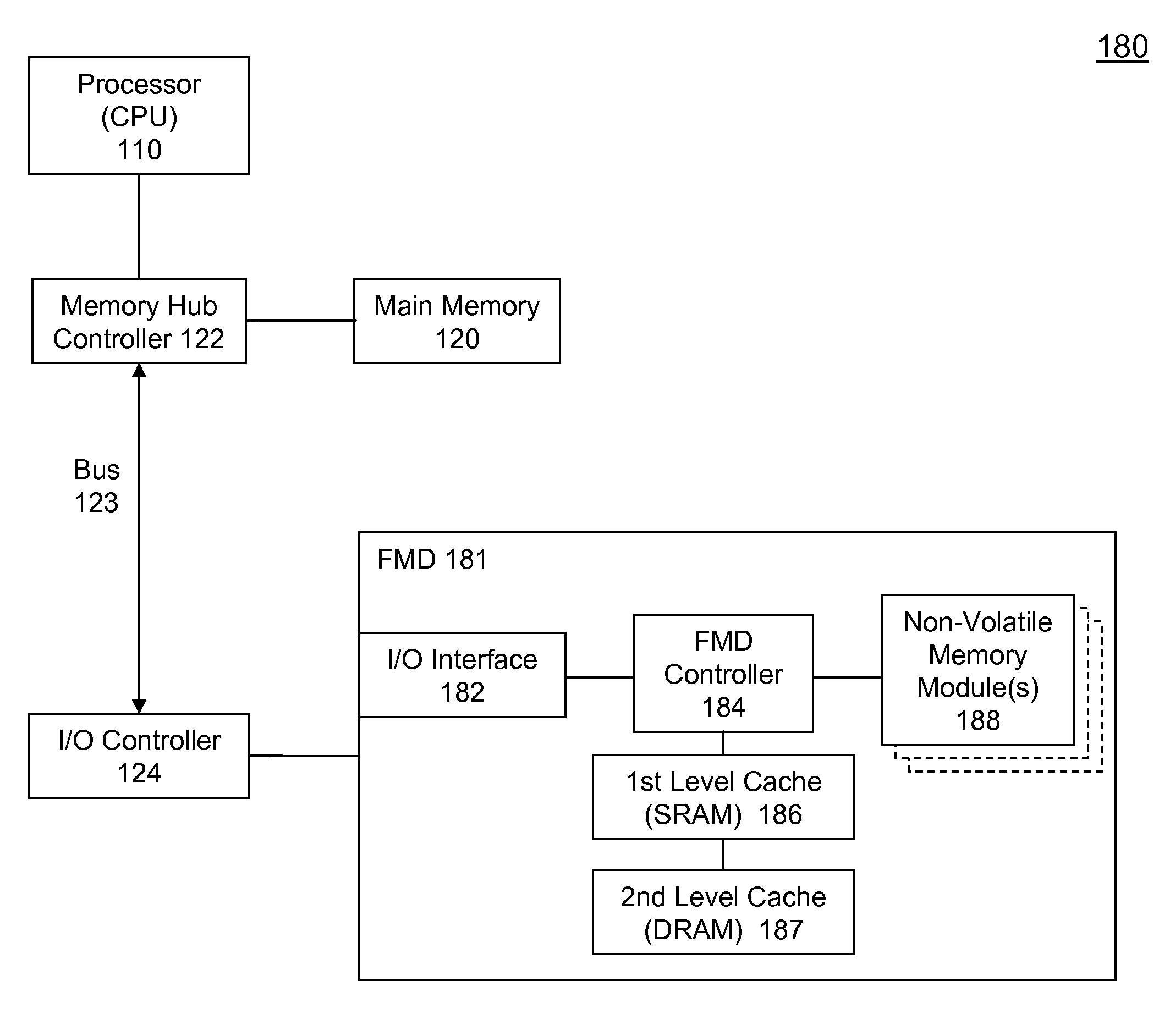

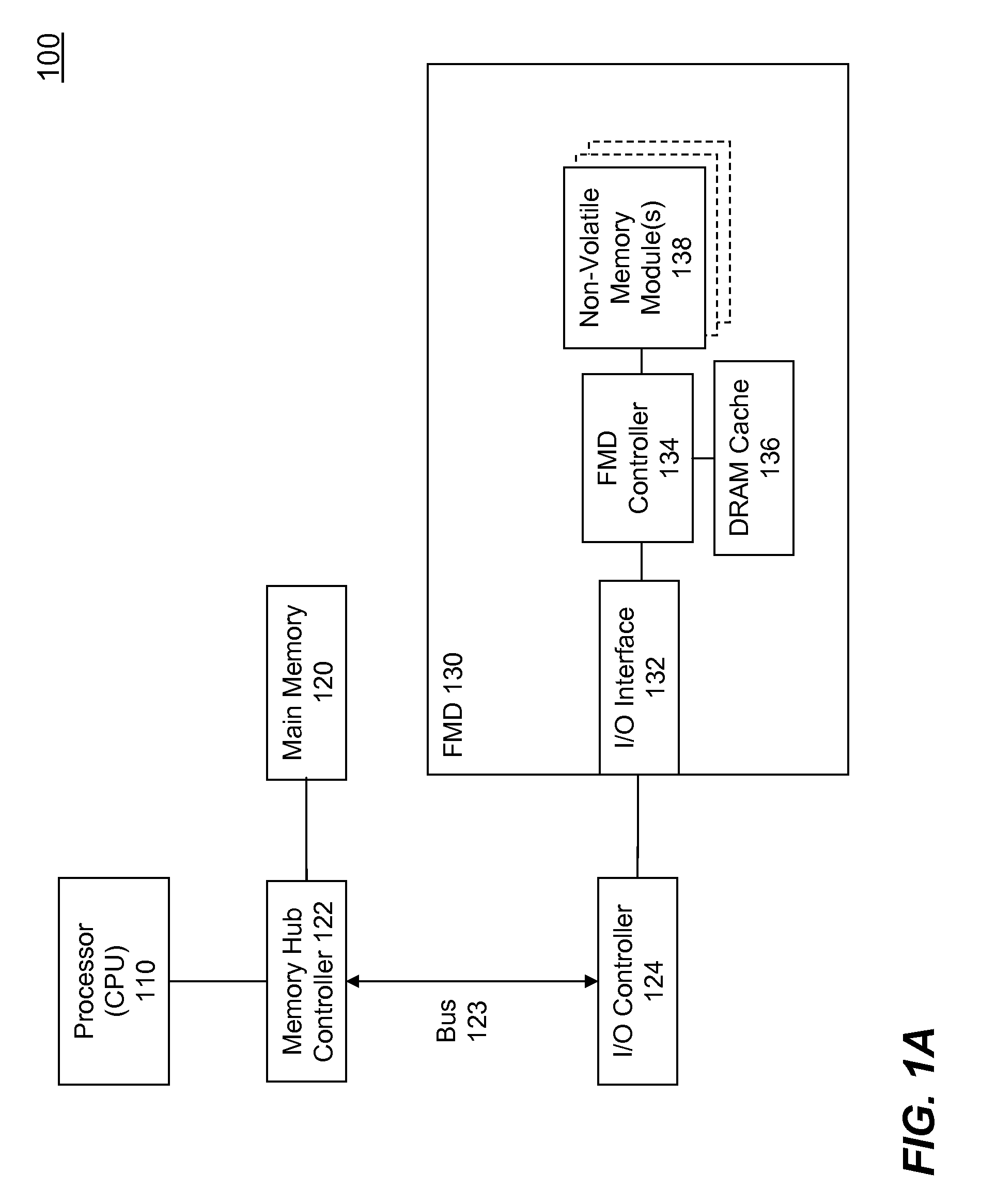

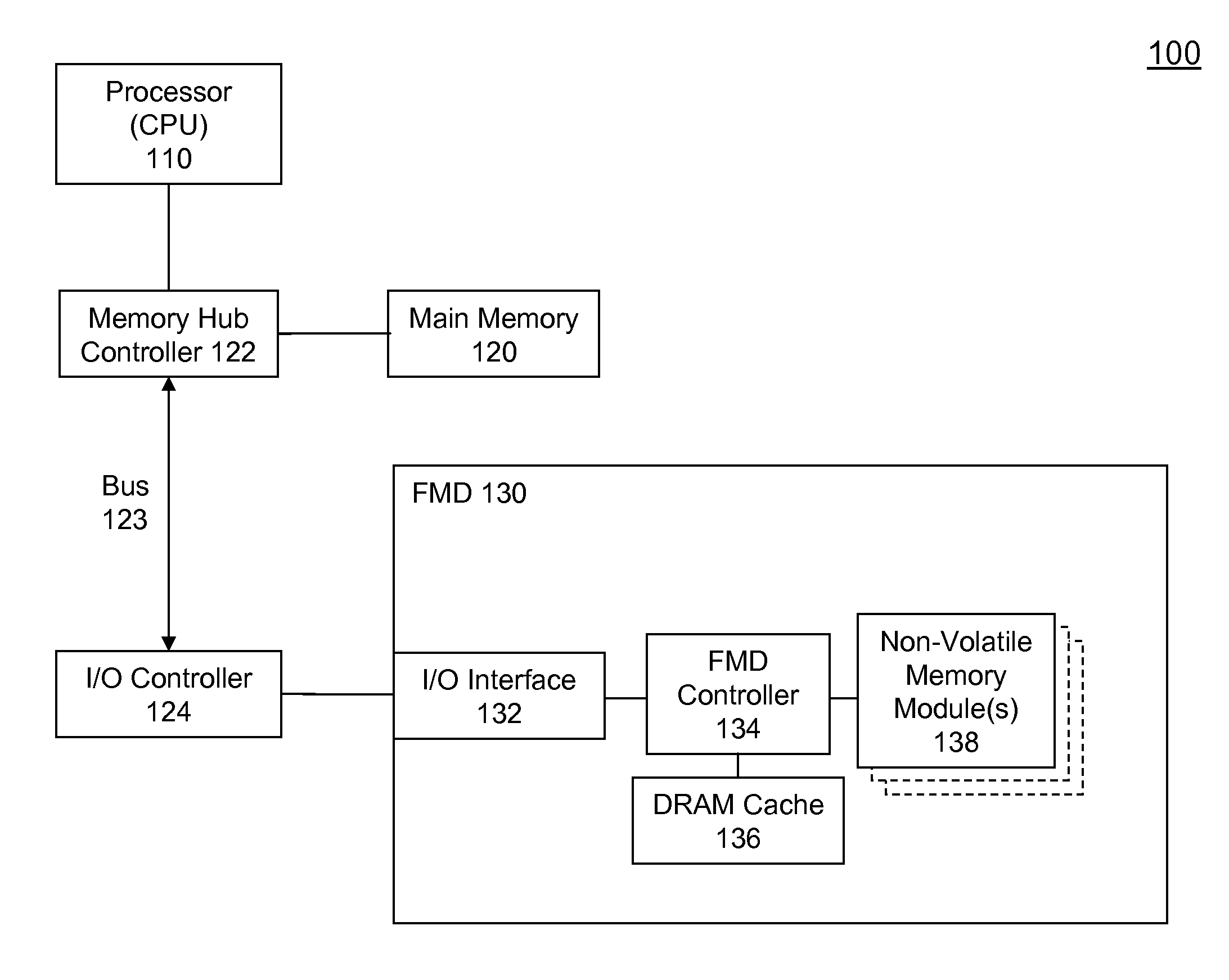

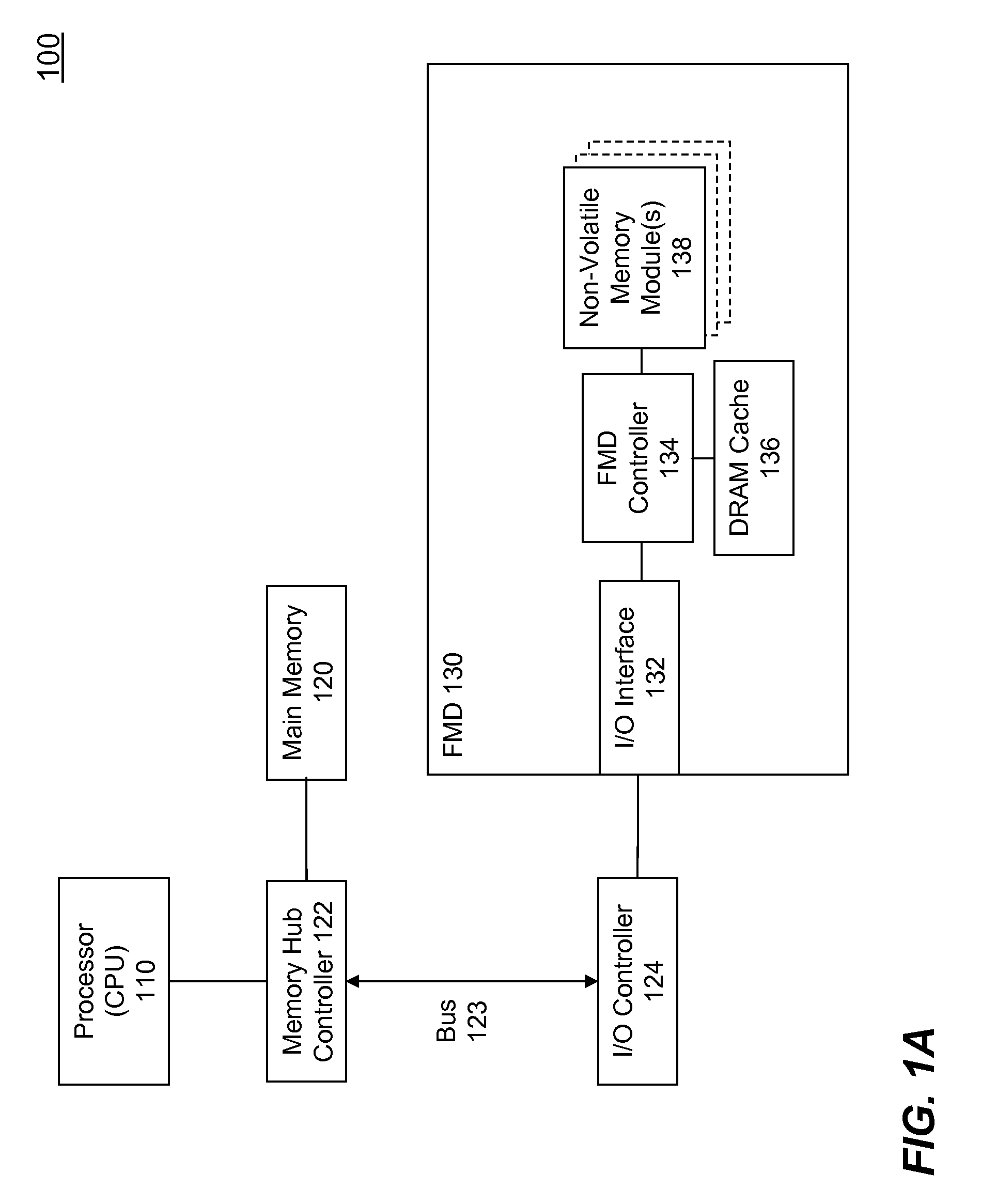

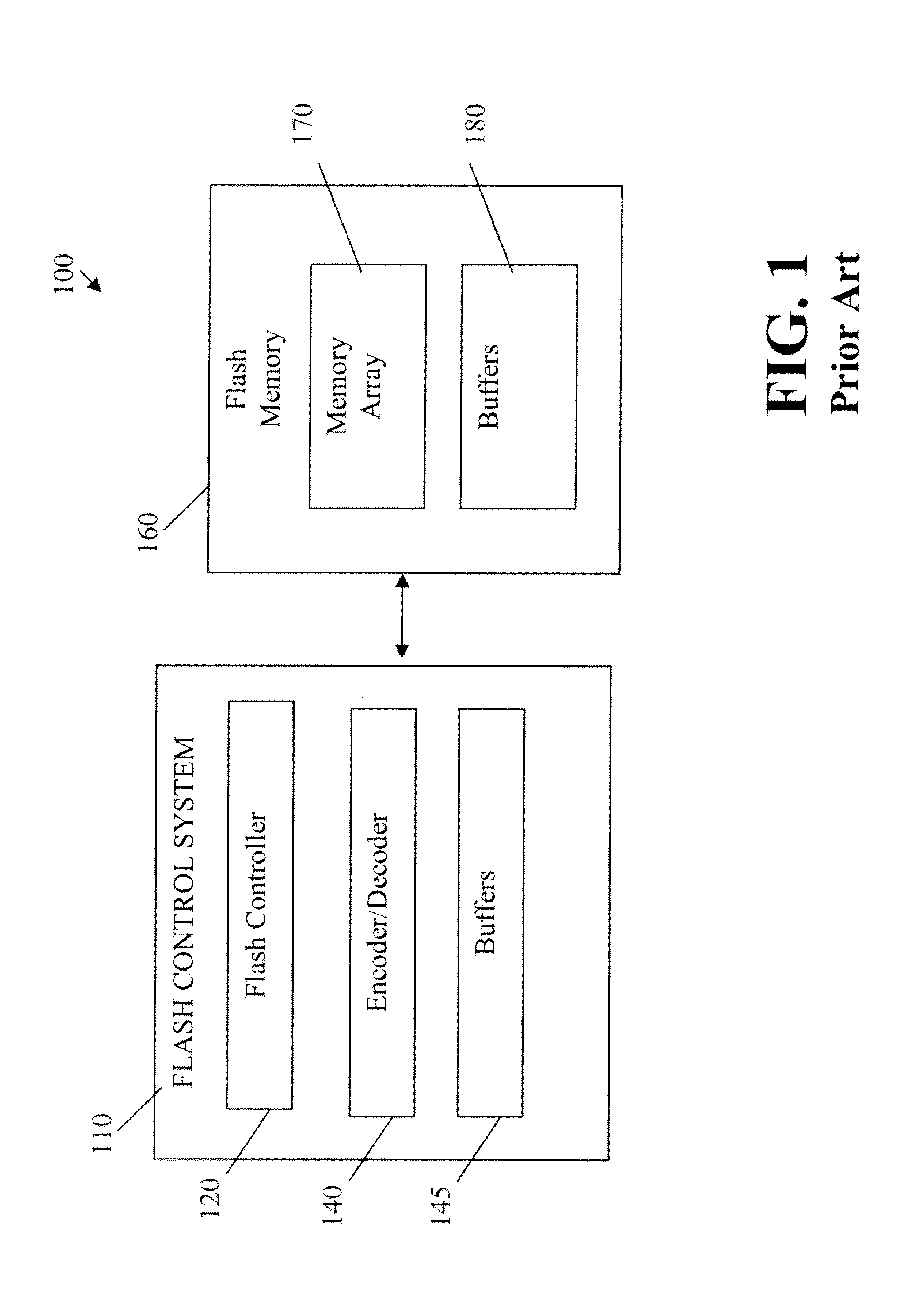

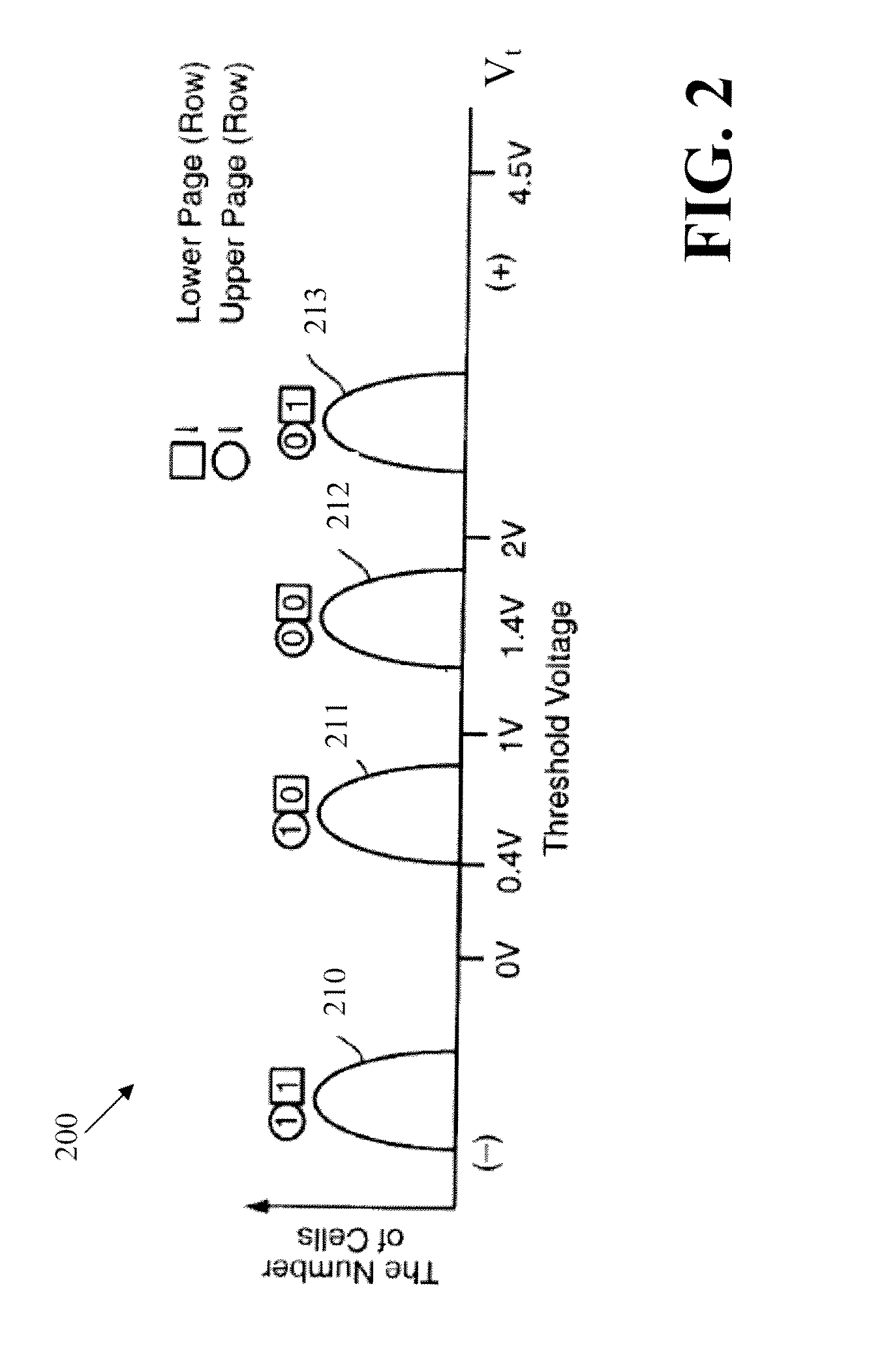

High Performance Flash Memory Devices (FMD)

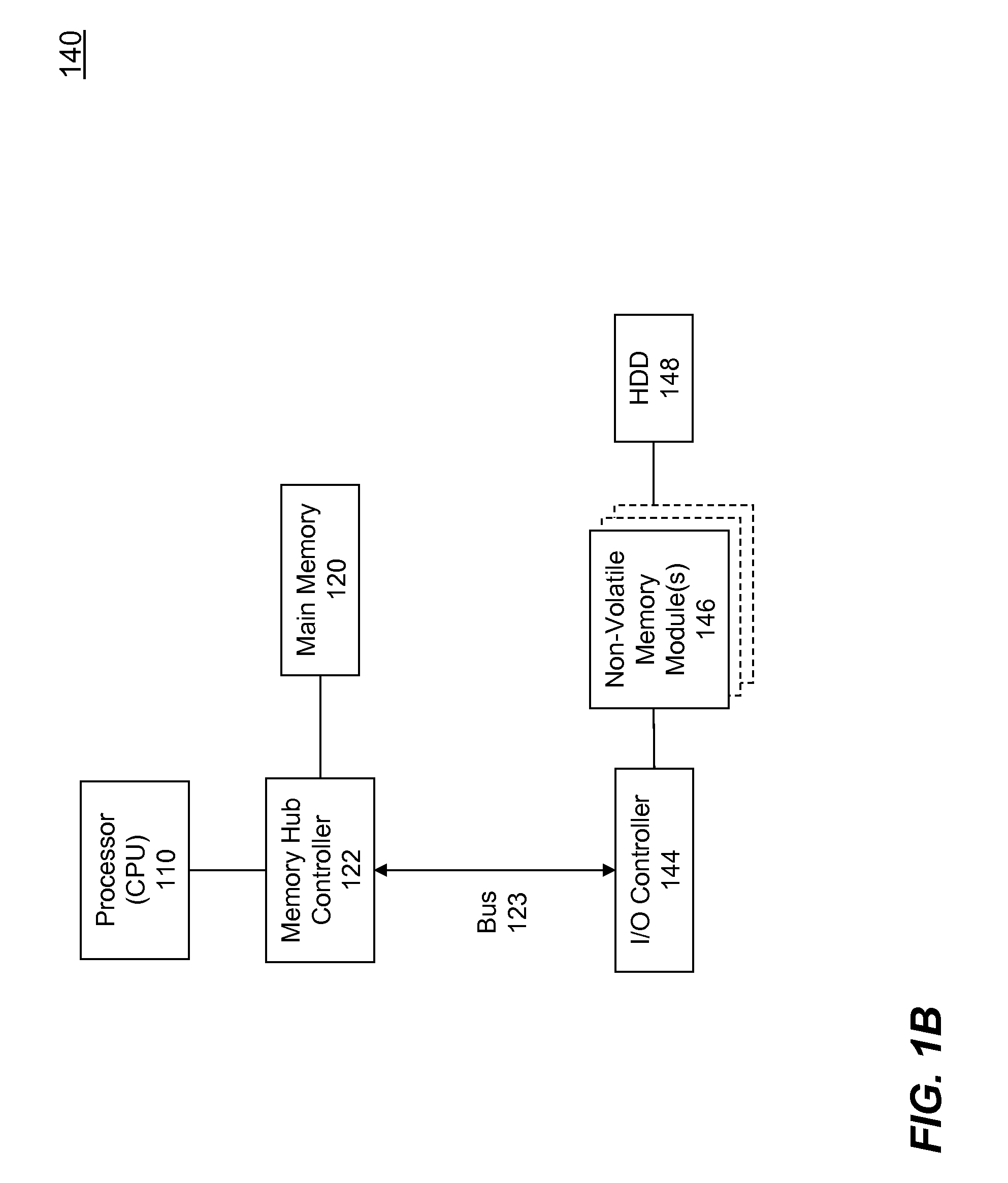

Inactive | US20080147968A1 | Tags: Improve performance, Improve efficiency, Error detection/correction, Memory addressing/allocation/relocation, Control data, Auxiliary memory

High performance flash memory devices (FMD) are described. According to one exemplary embodiment of the invention, a high performance FMD includes an I/O interface, an FMD controller, and at least one non-volatile memory module along with at least one corresponding channel controller. The I/O interface is configured to connect the high performance FMD to a host computing device. The FMD controller is configured to control data transfer (e.g., data reading, data writing/programming, and data erasing) operations between the host computing device and the non-volatile memory module. The at least one non-volatile memory module, comprising one or more non-volatile memory chips, is configured as secondary storage for the host computing device. The at least one channel controller is configured to ensure proper and efficient data transfer between a set of data buffers located in the FMD controller and the at least one non-volatile memory module.

Owner:SUPER TALENT TECH CORP

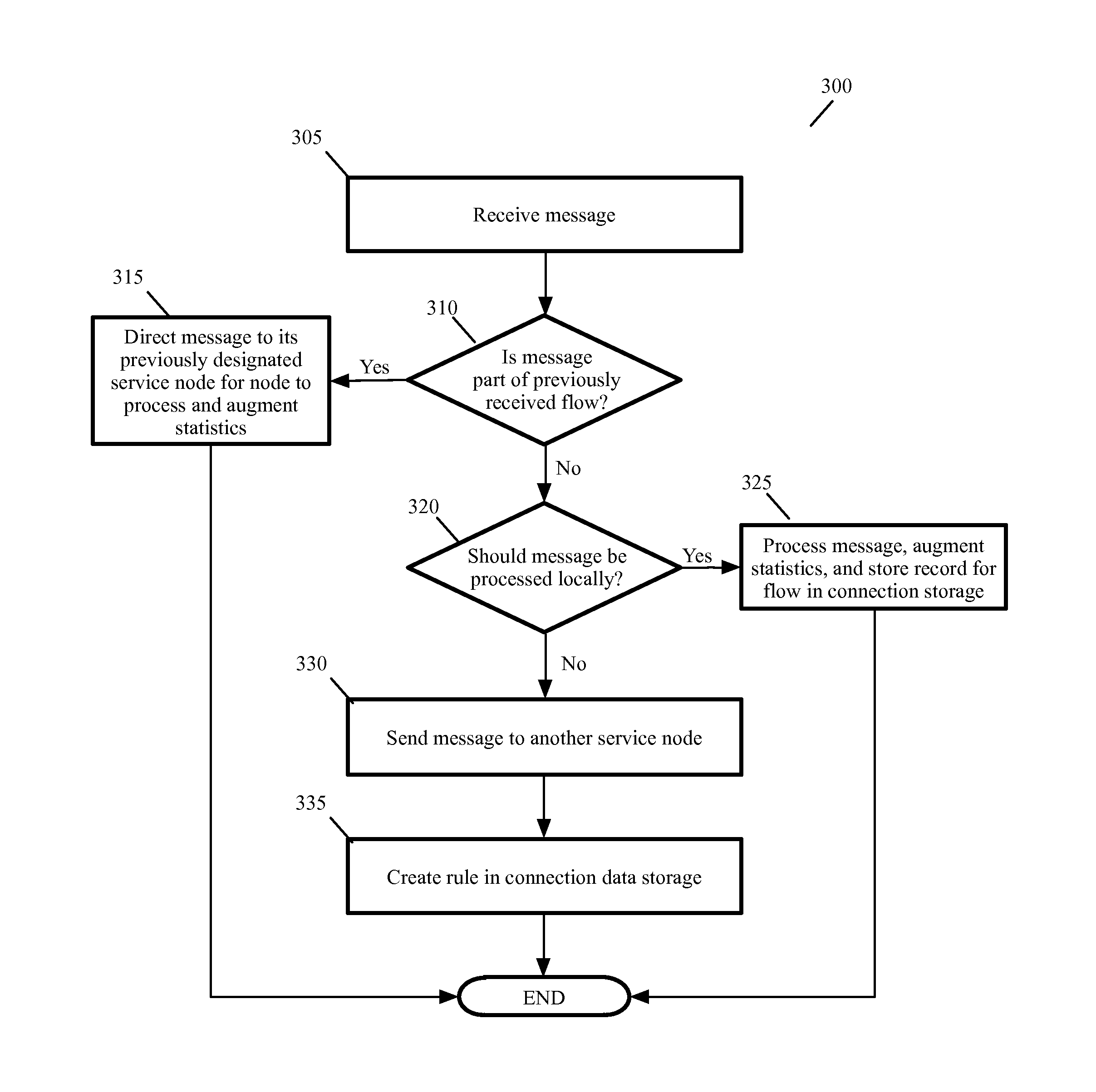

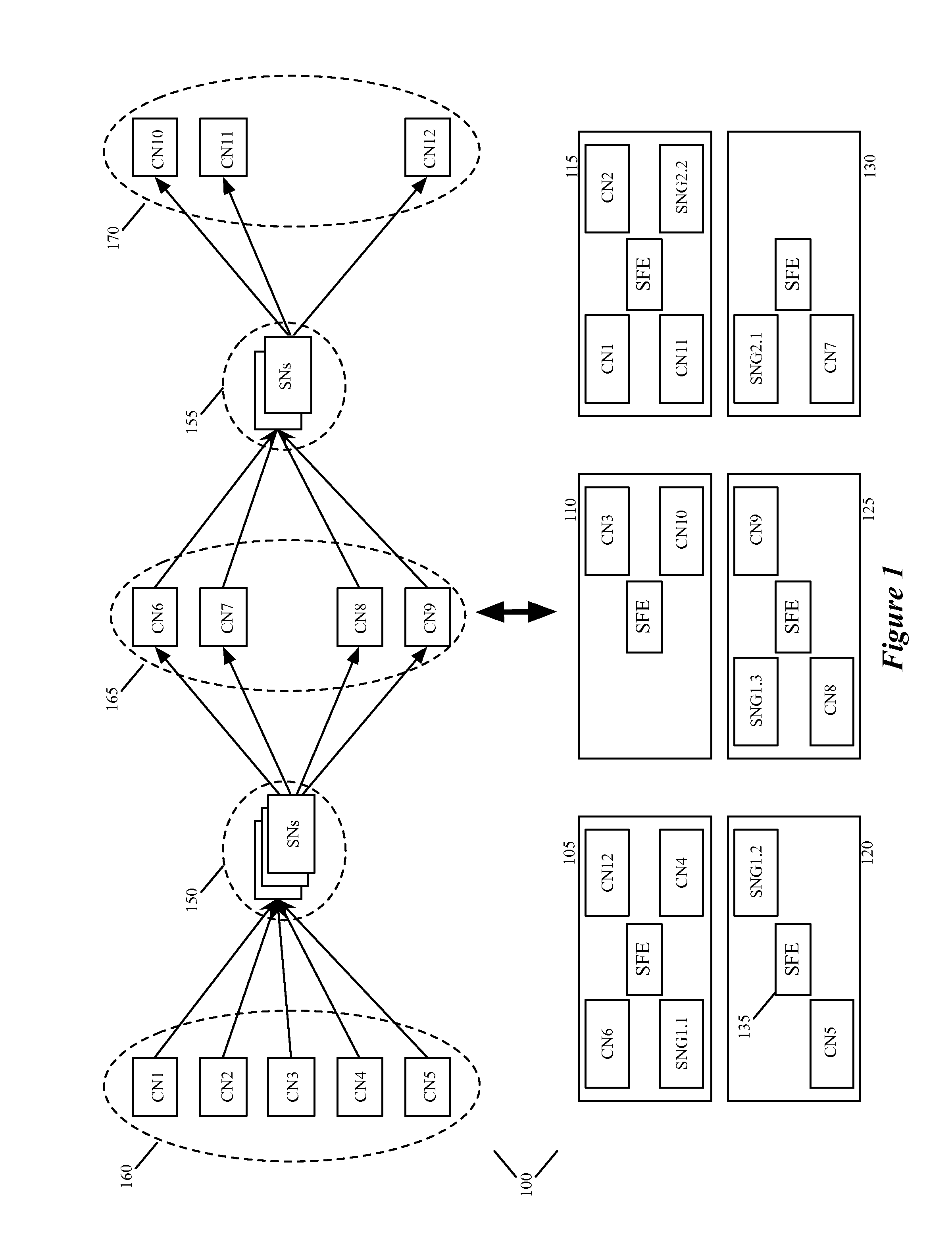

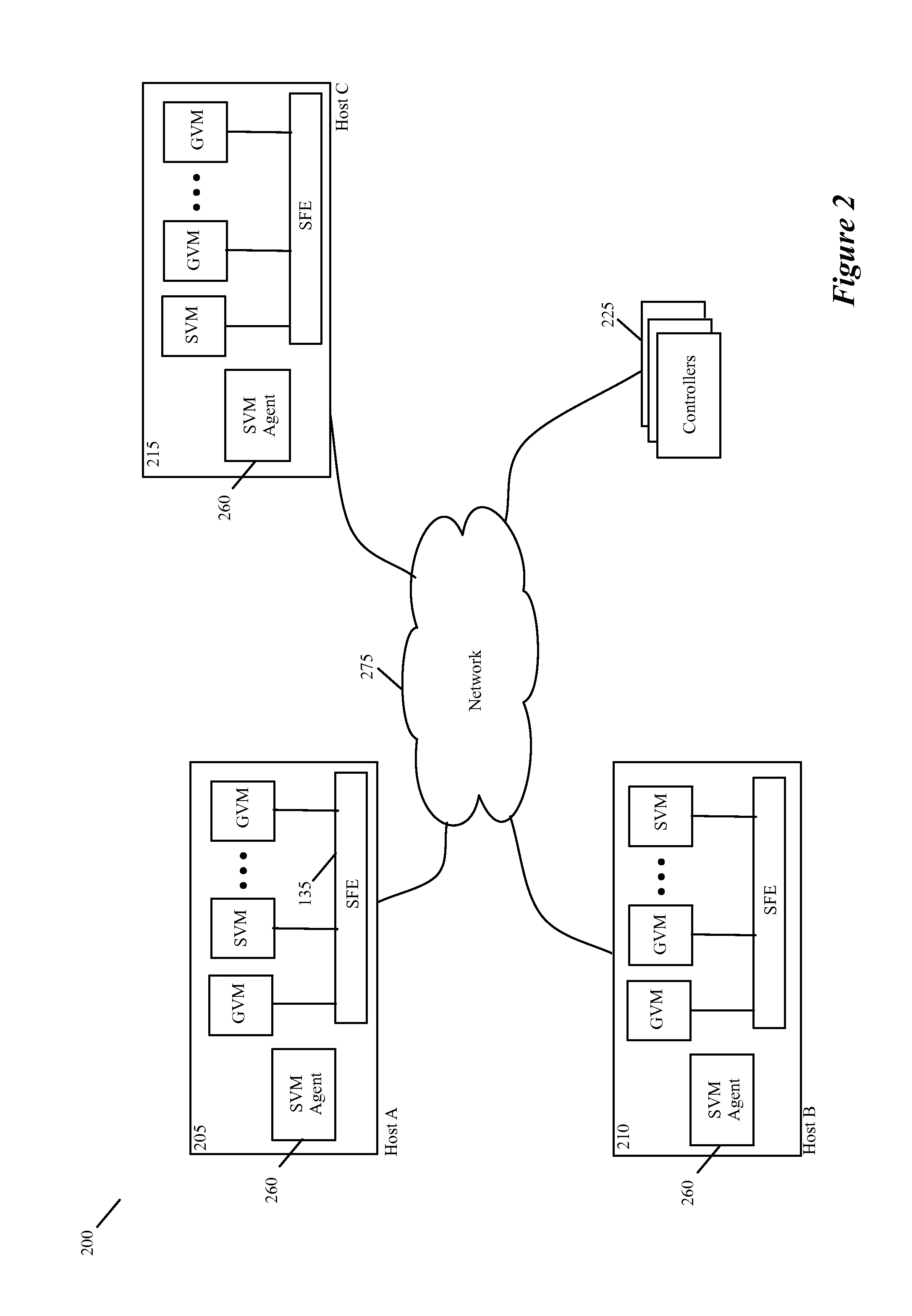

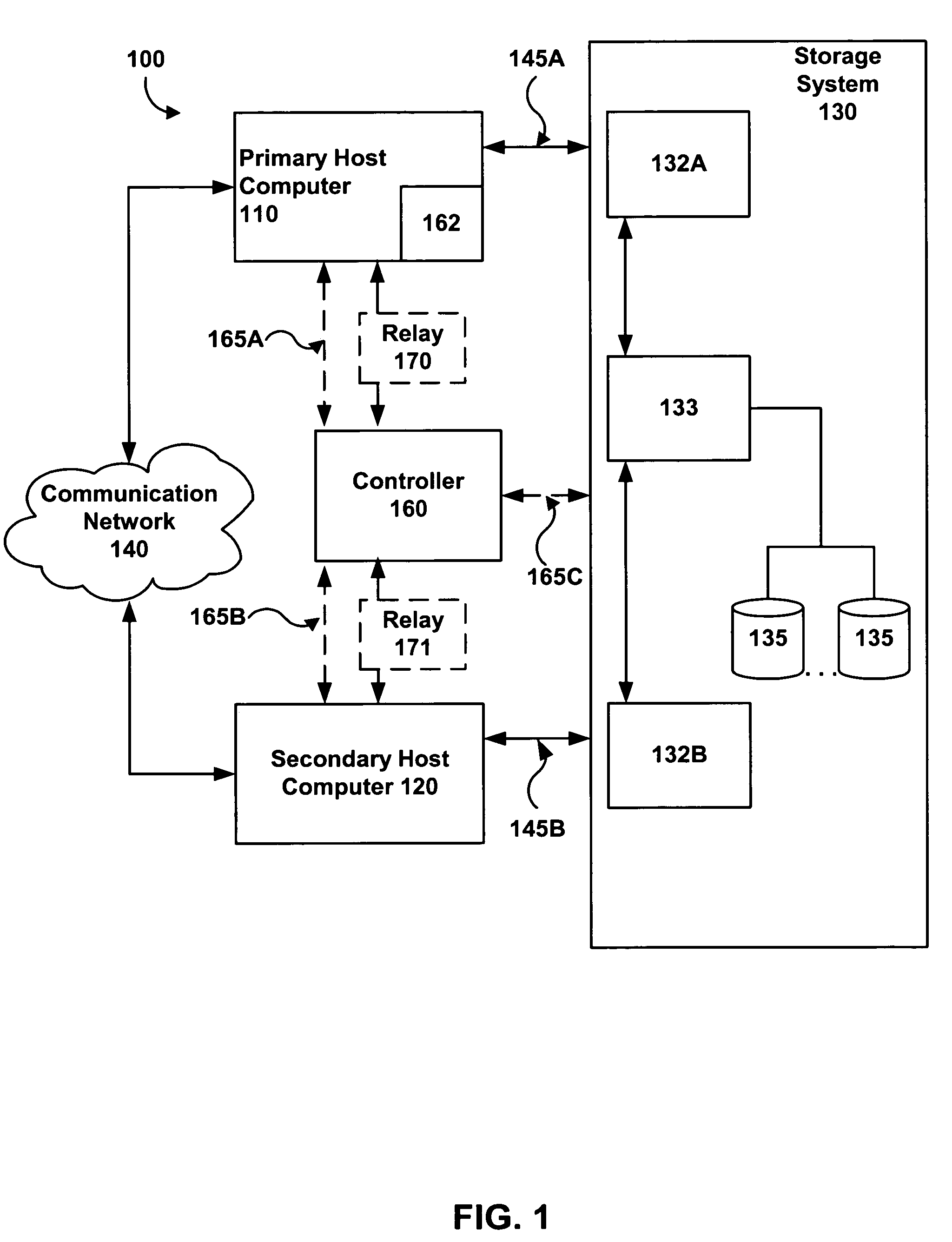

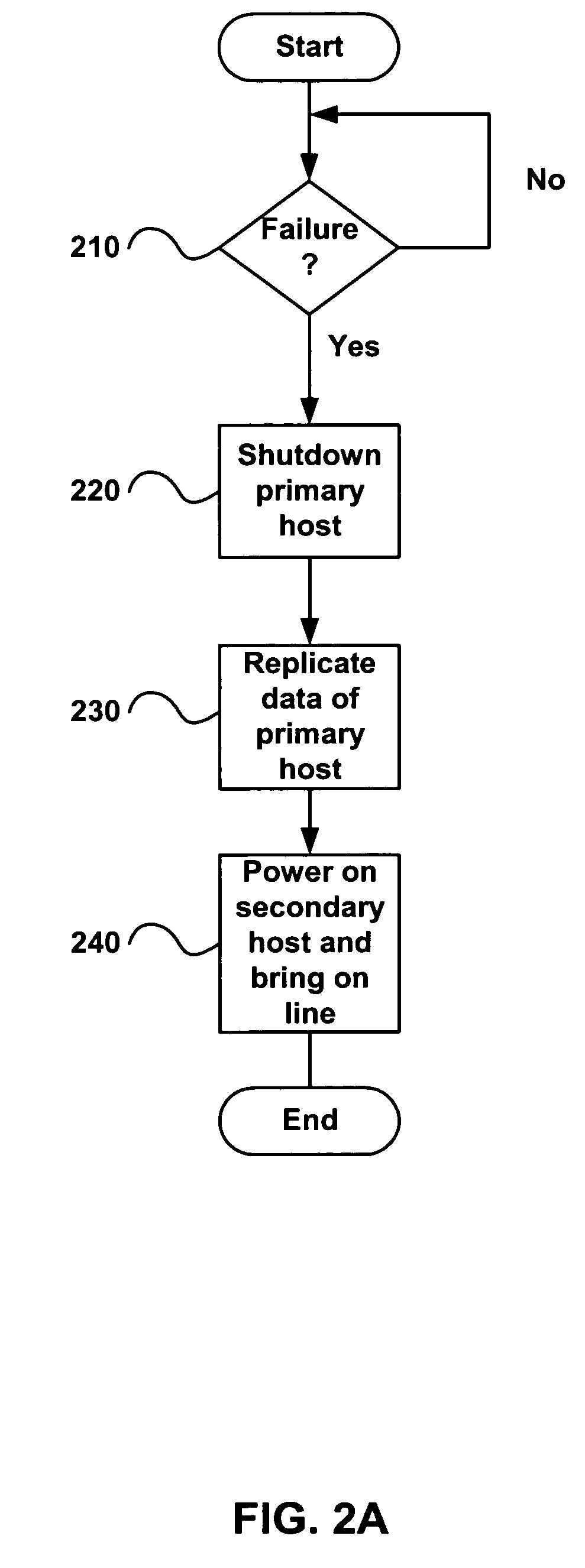

Method and apparatus for providing a service with a plurality of service nodes

Some embodiments provide an elastic architecture for providing a service in a computing system. To perform a service on the data messages, the service architecture uses a service node (SN) group that includes one primary service node (PSN) and zero or more secondary service nodes (SSNs). The service can be performed on a data message by either the PSN or one of the SSNs. However, in addition to performing the service, the PSN also performs a load balancing operation that assesses the load on each service node (i.e., on the PSN or each SSN), and based on this assessment, has the data messages distributed to the service node(s) in its SN group. Based on the assessed load, the PSN in some embodiments also has one or more SSNs added to or removed from its SN group. To add or remove an SSN to or from the service node group, the PSN in some embodiments directs a set of controllers to add (e.g., instantiate or allocate) or remove the SSN to or from the SN group. Also, to assess the load on the service nodes, the PSN in some embodiments receives message load data from the controller set, which collects such data from each service node. In other embodiments, the PSN receives such load data directly from the SSNs.

Owner:NICIRA
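
A minimal Python sketch of the PSN behaviour described above, assuming the number of assigned messages as the load metric and hypothetical high/low watermarks for growing or shrinking the group. The class and method names are illustrative and not taken from the patent, and the controller set that instantiates or removes SSNs is collapsed into a direct list update.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # compare nodes by identity, not by field values
class ServiceNode:
    name: str
    load: int = 0  # assumed load metric: messages currently assigned


@dataclass
class ServiceNodeGroup:
    primary: ServiceNode
    secondaries: list = field(default_factory=list)
    high_water: float = 10.0  # hypothetical average load that triggers adding an SSN
    low_water: float = 2.0    # hypothetical average load that allows removing an SSN

    def nodes(self):
        return [self.primary, *self.secondaries]

    def dispatch(self) -> str:
        """Assess every node (PSN included) and assign one message to the least loaded."""
        target = min(self.nodes(), key=lambda n: n.load)
        target.load += 1
        return target.name

    def resize(self) -> str:
        """Add or remove an SSN based on the group's average load."""
        nodes = self.nodes()
        avg = sum(n.load for n in nodes) / len(nodes)
        if avg > self.high_water:
            self.secondaries.append(ServiceNode(f"ssn{len(self.secondaries) + 1}"))
            return "added"
        if avg < self.low_water and self.secondaries:
            idle = min(self.secondaries, key=lambda n: n.load)
            self.secondaries.remove(idle)
            return "removed"
        return "unchanged"
```

Ties go to the first node in the list, so the PSN absorbs work first when the group is idle; a real deployment would more likely weight the PSN lower, since it also carries the load-balancing work.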

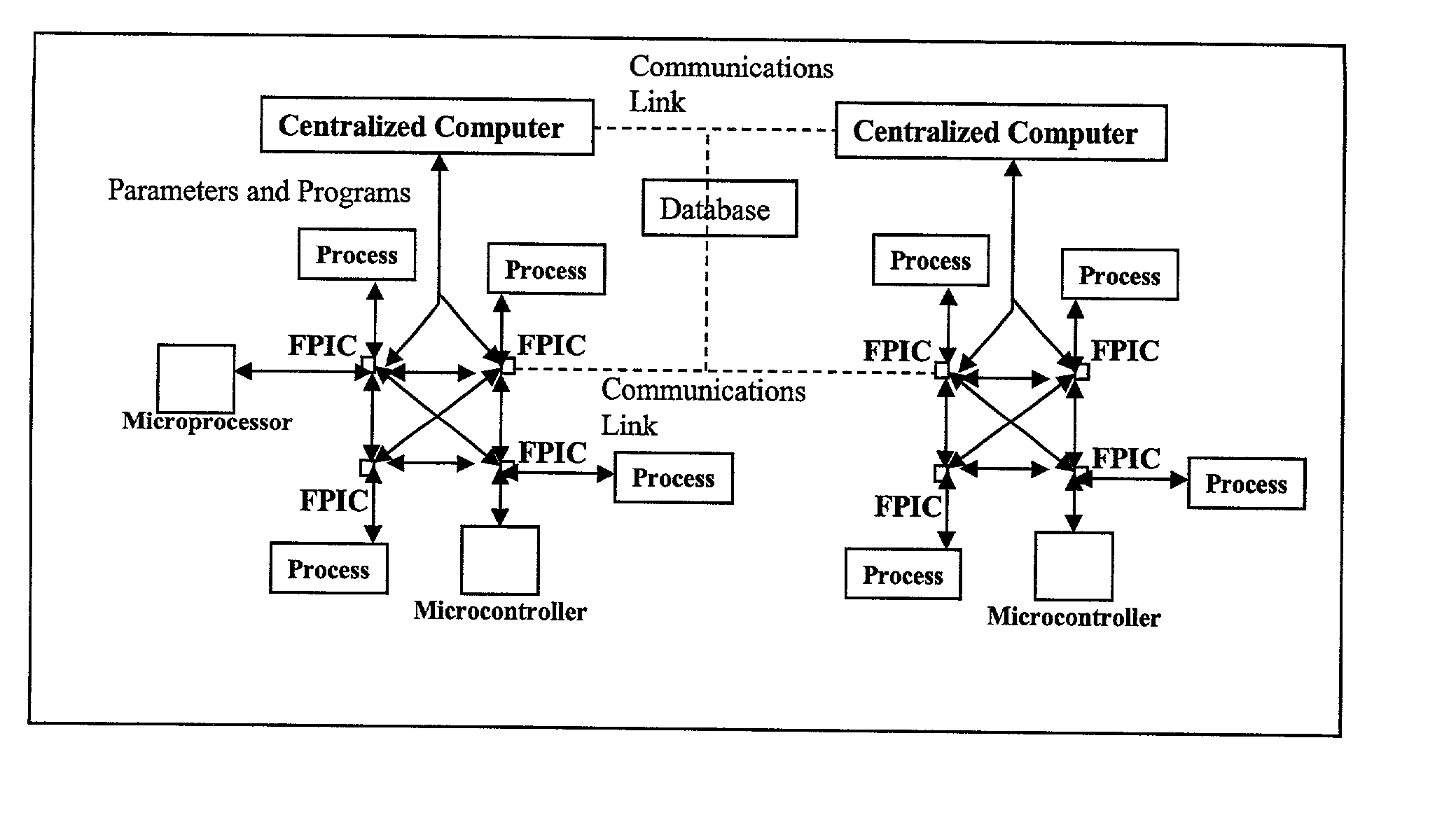

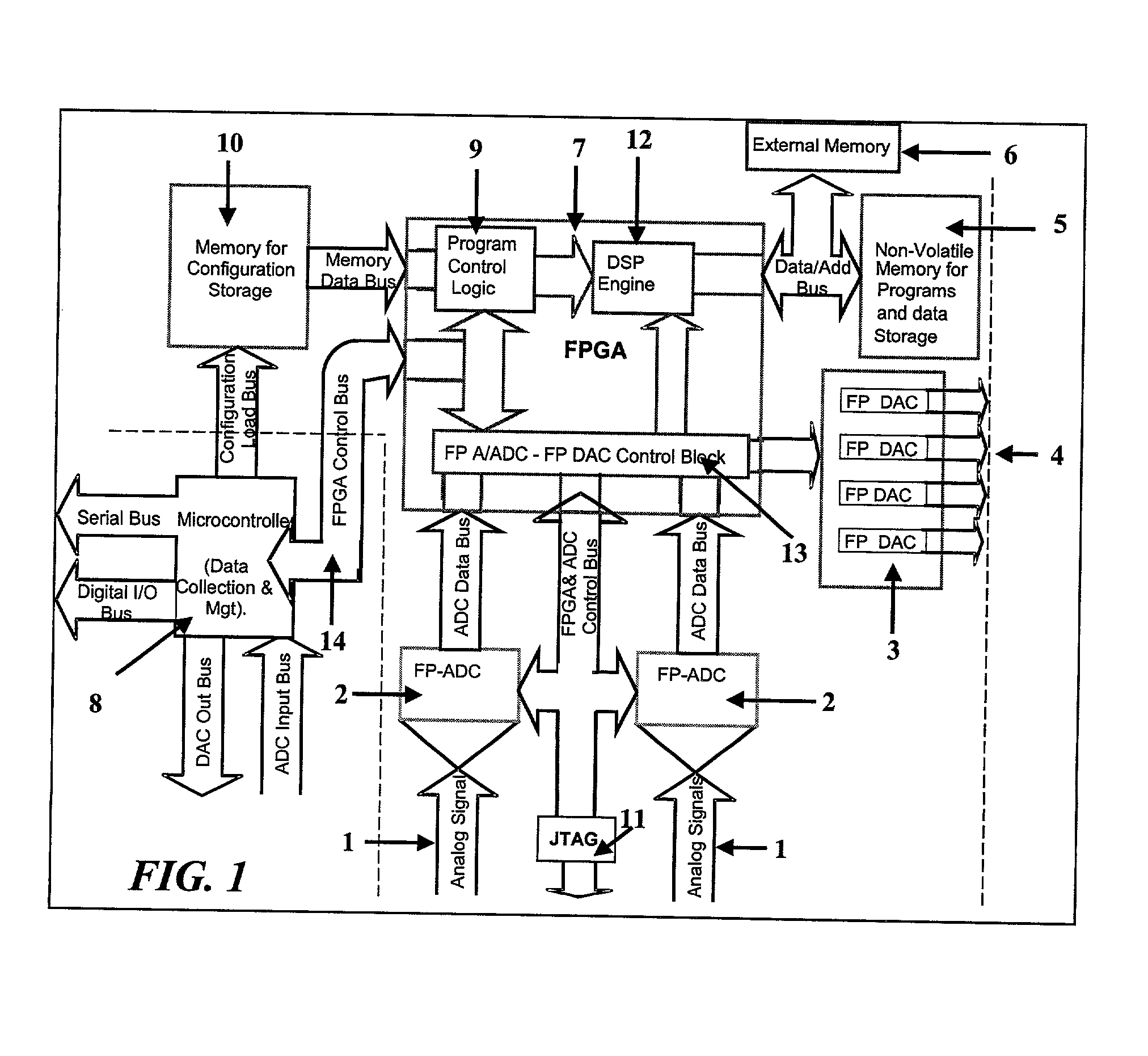

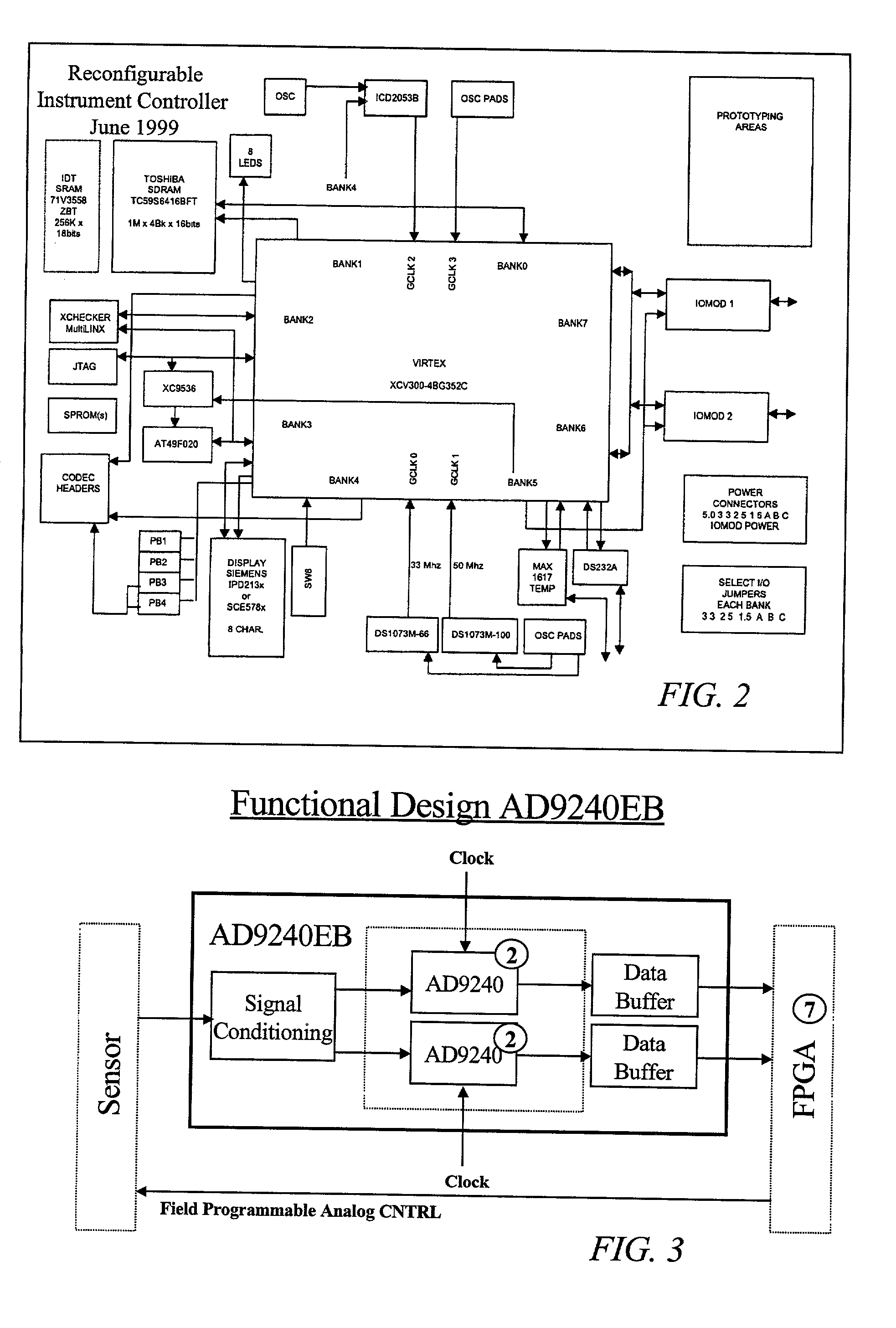

High performance hybrid micro-computer

The Field Programmable Instrument Controller (FPIC) is a stand-alone, low- to high-performance, clocked or unclocked multiprocessor that operates as a microcontroller with versatile interface and operating options. The FPIC can also be used as a concurrent processor for a microcontroller or other processor. A tightly coupled Multiple Chip Module design incorporates non-volatile memories, a large field programmable gate array (FPGA), field programmable high-precision analog-to-digital converters, field programmable digital-to-analog signal generators, and multiple ports of external mass data storage and control processors. The FPIC has an inherently open architecture with in-situ reprogrammability and state preservation capability for discontinuous operations. It is designed to operate in multiple roles, including but not limited to high-speed parallel digital signal processing; a co-processor for precision control feedback during analog or hybrid computing; high-speed monitoring for condition-based maintenance; and distributed real-time process control. The FPIC is characterized by low power, small size, and low weight.

Owner:BLEMEL KENNETH G

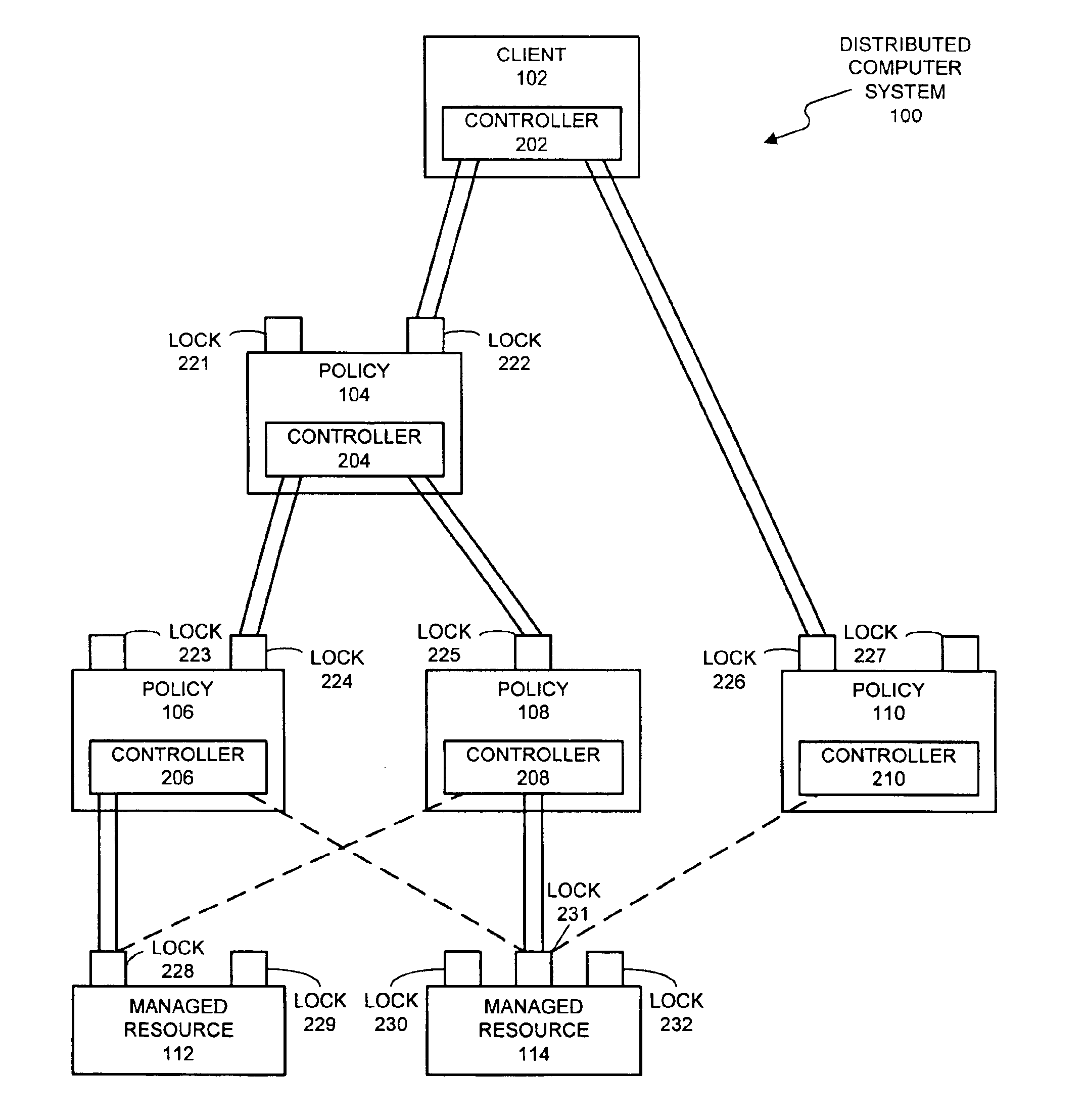

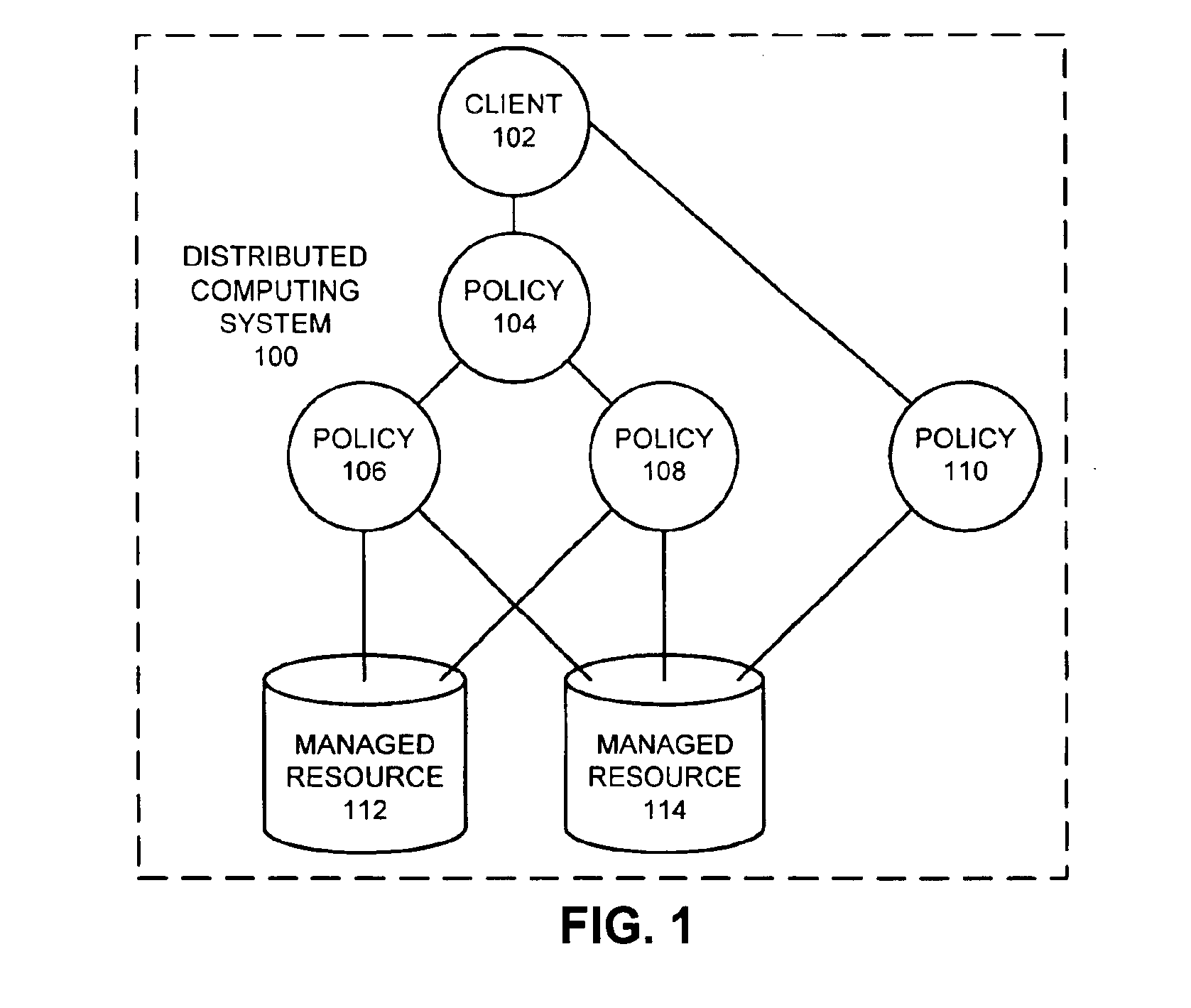

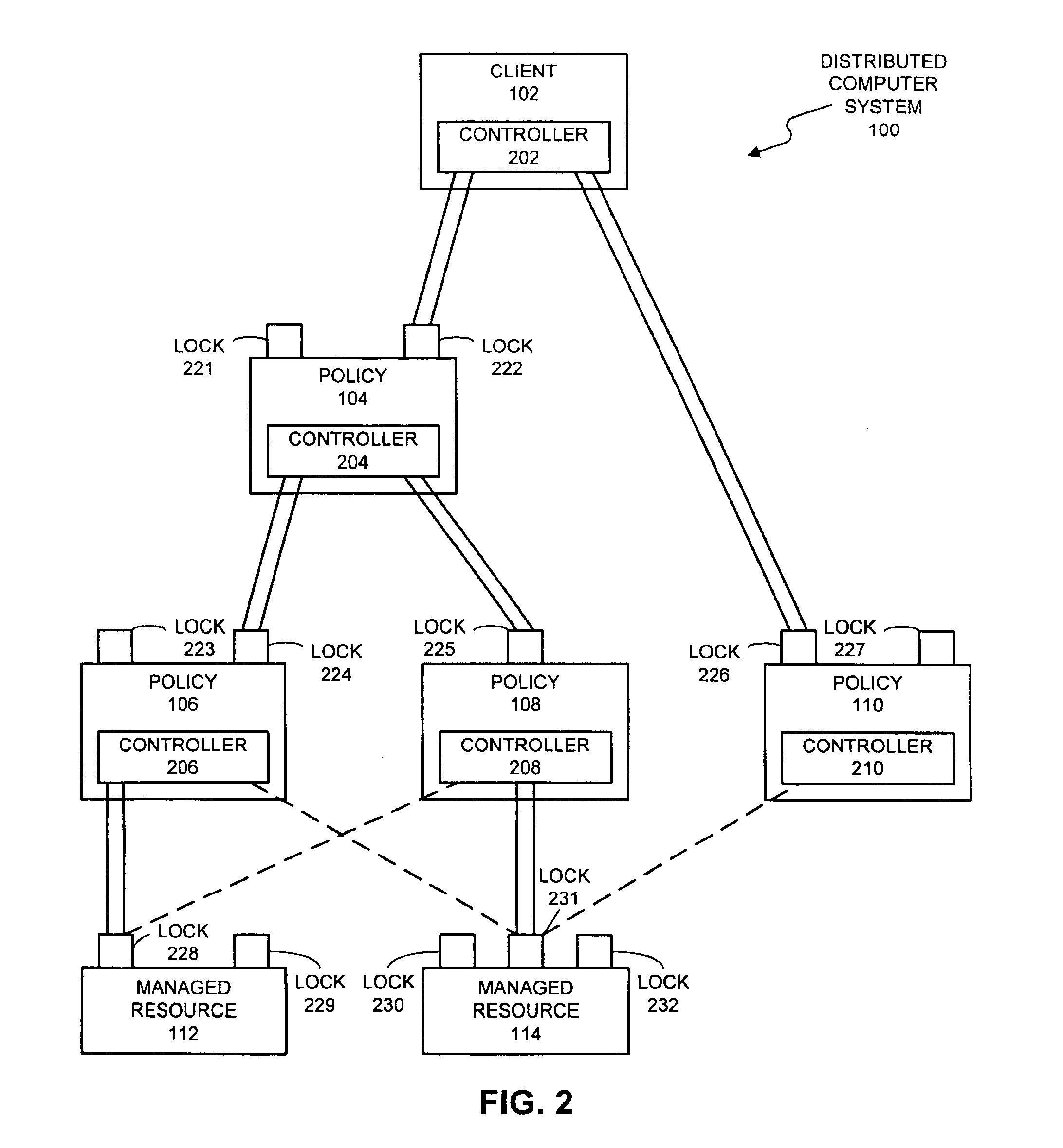

Method and apparatus for concurrency control in a policy-based management system

Inactive | US6865549B1 | Tags: Facilitates concurrency control, Data processing applications, Program synchronisation, Concurrency control, Policy-based management

A system that facilitates concurrency control for a policy-based management system that controls resources in a distributed computing system. The system operates by receiving a request to perform an operation on a lockable resource from a controller in the distributed computing system. This controller sends the request in order to enforce a first policy for controlling resources in the distributed computing system. In response to the request, the system determines whether the controller holds a lock on the lockable resource. If so, the system allows the controller to execute the operation on the lockable resource. If not, the system gives the controller an opportunity to acquire the lock. If the controller is able to acquire the lock, the system allows the controller to execute the operation on the lockable resource.

Owner:ORACLE INT CORP
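
A minimal sketch of the check-then-acquire flow above, assuming a single in-process lock table guarded by a mutex. `LockManager`, `try_acquire`, and `execute` are hypothetical names, and raising `PermissionError` when the lock cannot be obtained is an assumption, since the abstract does not say what happens in that case.

```python
import threading


class LockManager:
    """Hypothetical lock table mapping each lockable resource to the controller holding it."""

    def __init__(self):
        self._guard = threading.Lock()  # protects the table itself
        self._owners = {}               # resource id -> controller id

    def try_acquire(self, resource, controller) -> bool:
        """True if the controller already holds the lock or acquires it now."""
        with self._guard:
            owner = self._owners.get(resource)
            if owner is None:
                self._owners[resource] = controller
                return True
            return owner == controller

    def release(self, resource, controller) -> None:
        with self._guard:
            if self._owners.get(resource) == controller:
                del self._owners[resource]

    def execute(self, resource, controller, operation):
        # Holds the lock, or gets the opportunity to acquire it: run the operation.
        if self.try_acquire(resource, controller):
            return operation()
        # Assumed policy: refuse; a real system might queue or retry instead.
        raise PermissionError(f"{controller} cannot lock {resource}")
```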

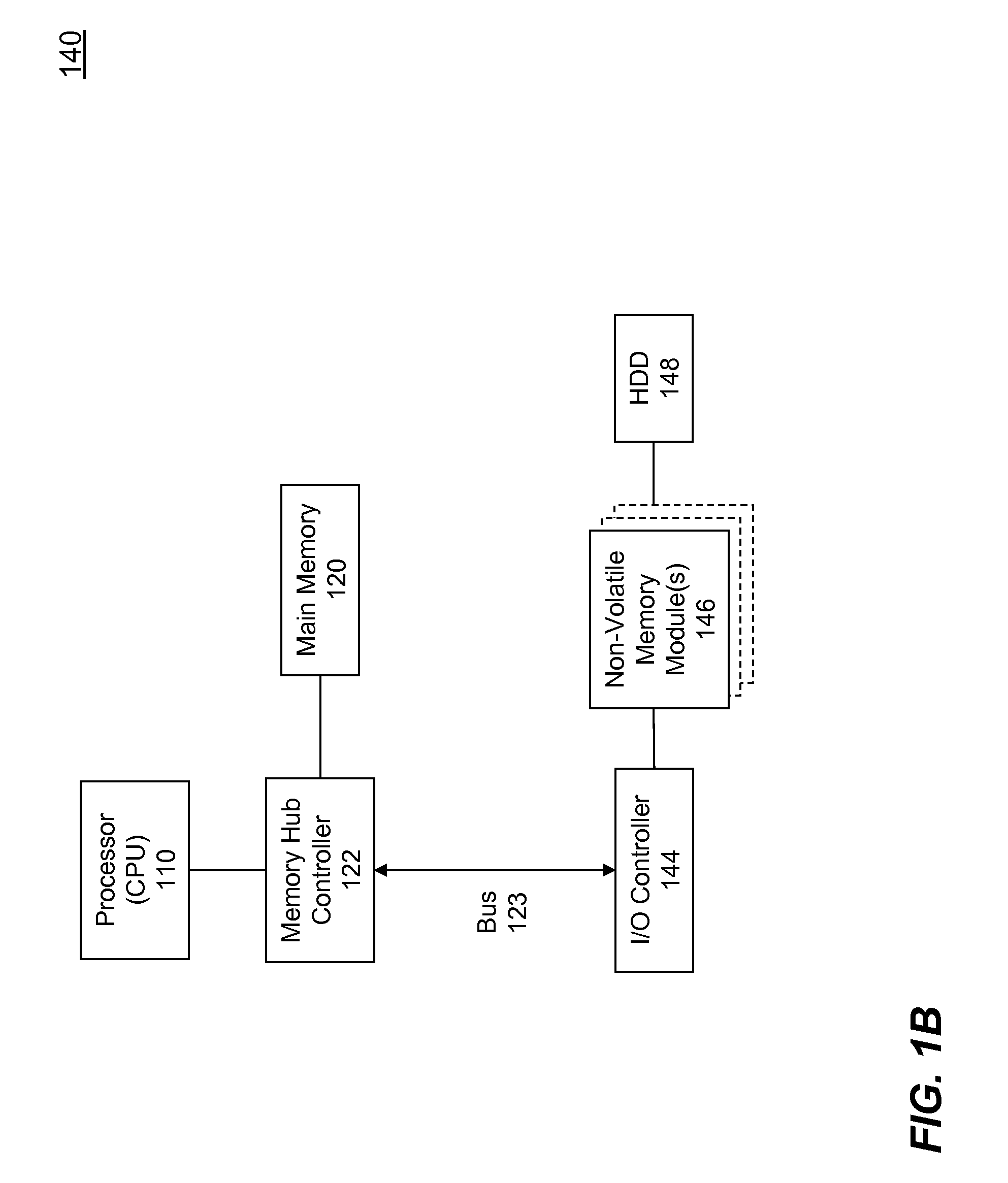

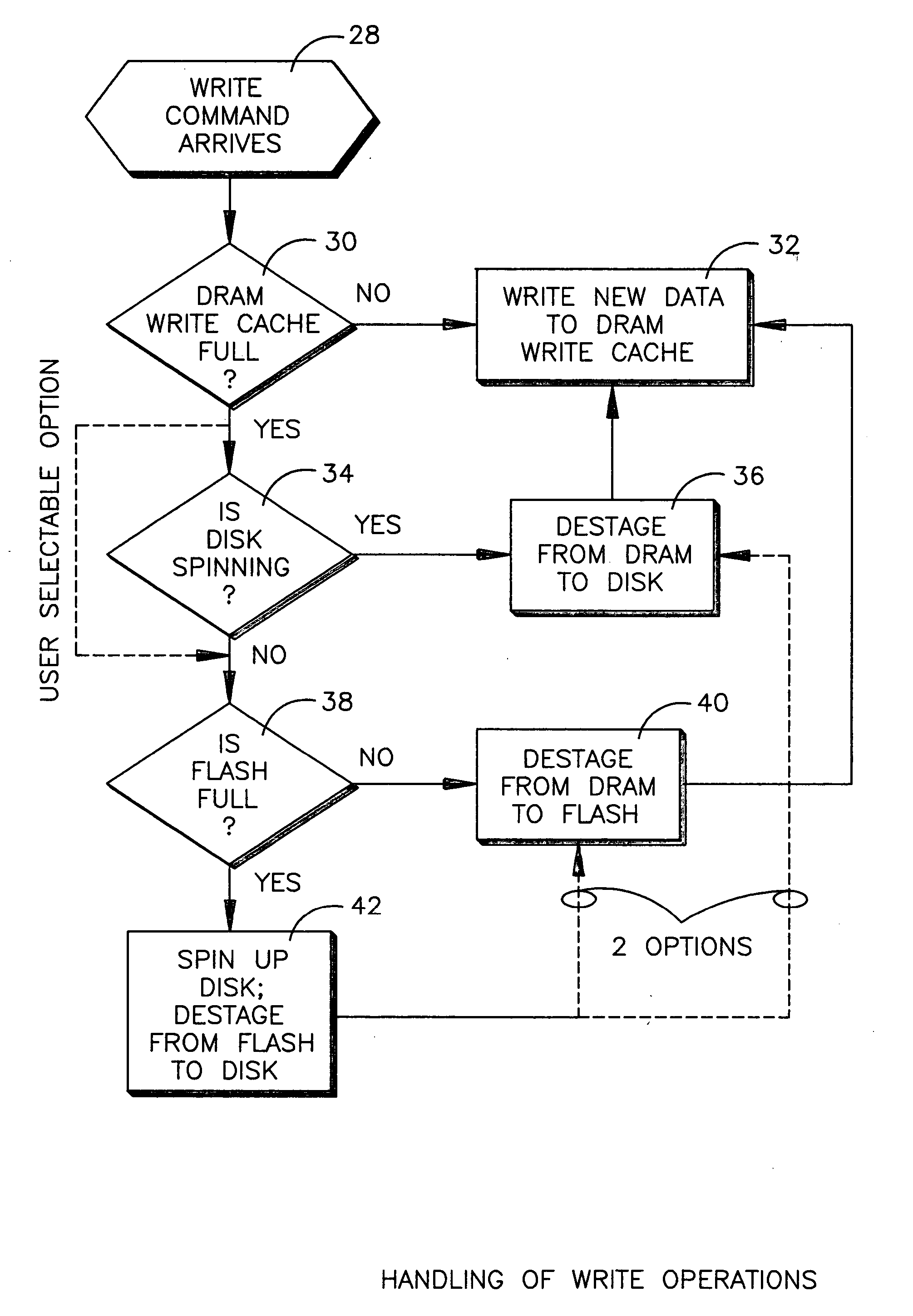

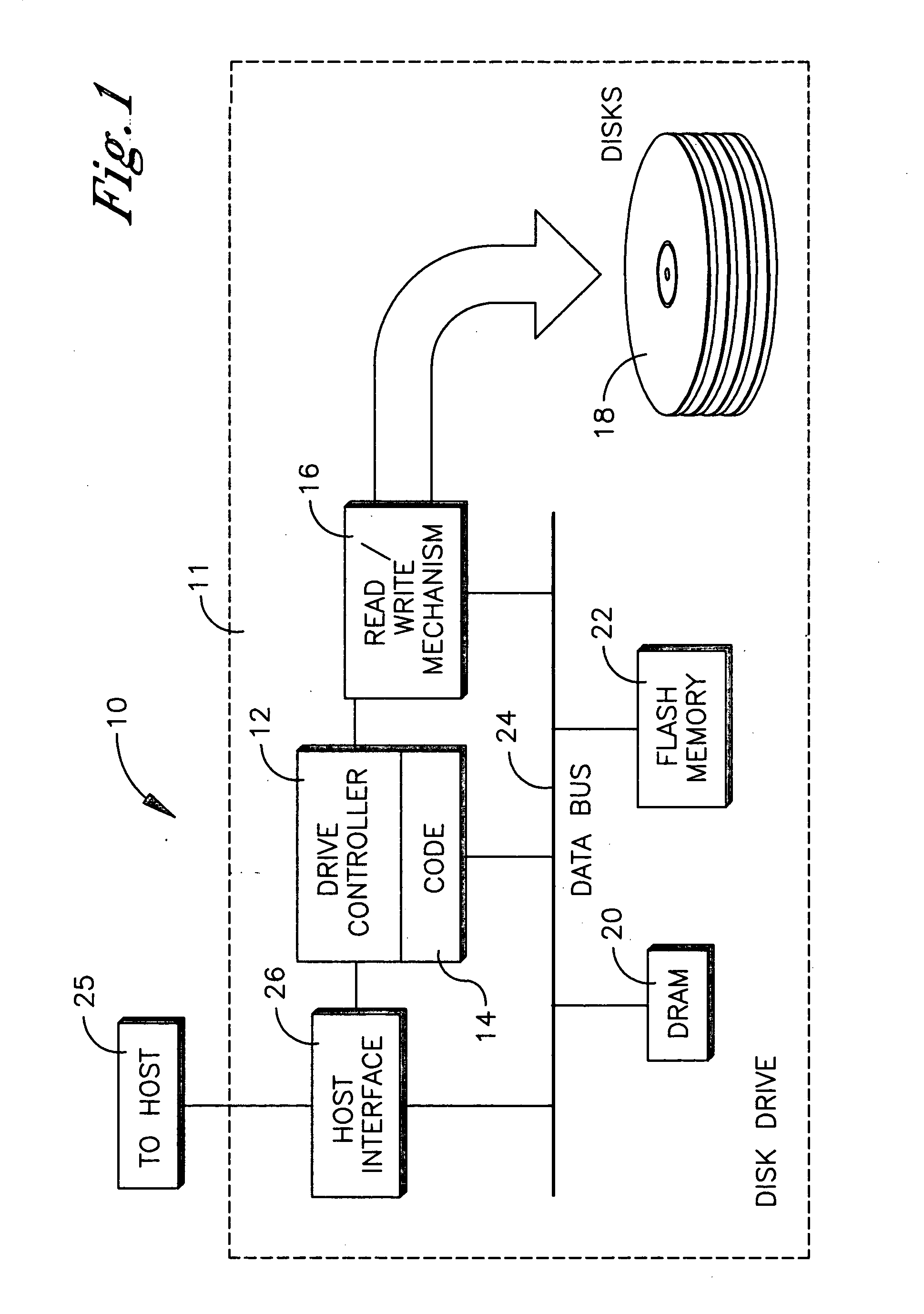

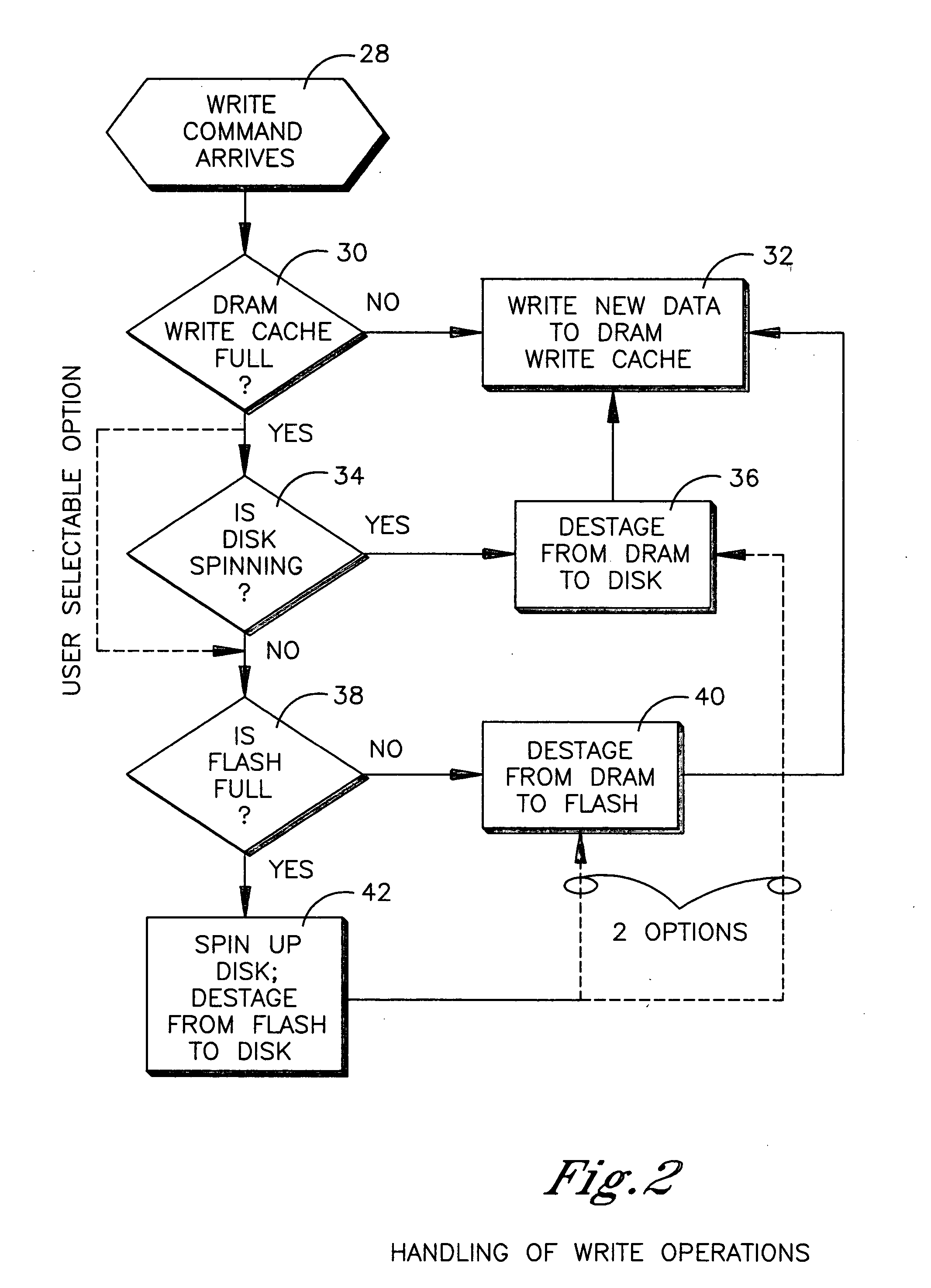

HDD having both DRAM and flash memory

Inactive | US20060080501A1 | Tags: Energy efficient ICT, Digital data processing details, Hard disc drive, Mobile computing

A mobile computing hard disk drive has both a flash memory device and a DRAM device, with the HDD controller managing data storage between disk, DRAM, and flash both when write requests arrive and when the HDD is idle to optimize flash memory device life and system performance.

Owner:HITACHI GLOBAL STORAGE TECH NETHERLANDS BV

High performance flash memory devices (FMD)

Inactive | US7827348B2 | Tags: Improve performance, Improve efficiency, Error detection/correction, Static storage, Control data, Auxiliary memory

High performance flash memory devices (FMD) are described. According to one exemplary embodiment of the invention, a high performance FMD includes an I/O interface, an FMD controller, and at least one non-volatile memory module along with at least one corresponding channel controller. The I/O interface is configured to connect the high performance FMD to a host computing device. The FMD controller is configured to control data transfer (e.g., data reading, data writing/programming, and data erasing) operations between the host computing device and the non-volatile memory module. The at least one non-volatile memory module, comprising one or more non-volatile memory chips, is configured as secondary storage for the host computing device. The at least one channel controller is configured to ensure proper and efficient data transfer between a set of data buffers located in the FMD controller and the at least one non-volatile memory module.

Owner:SUPER TALENT TECH CORP

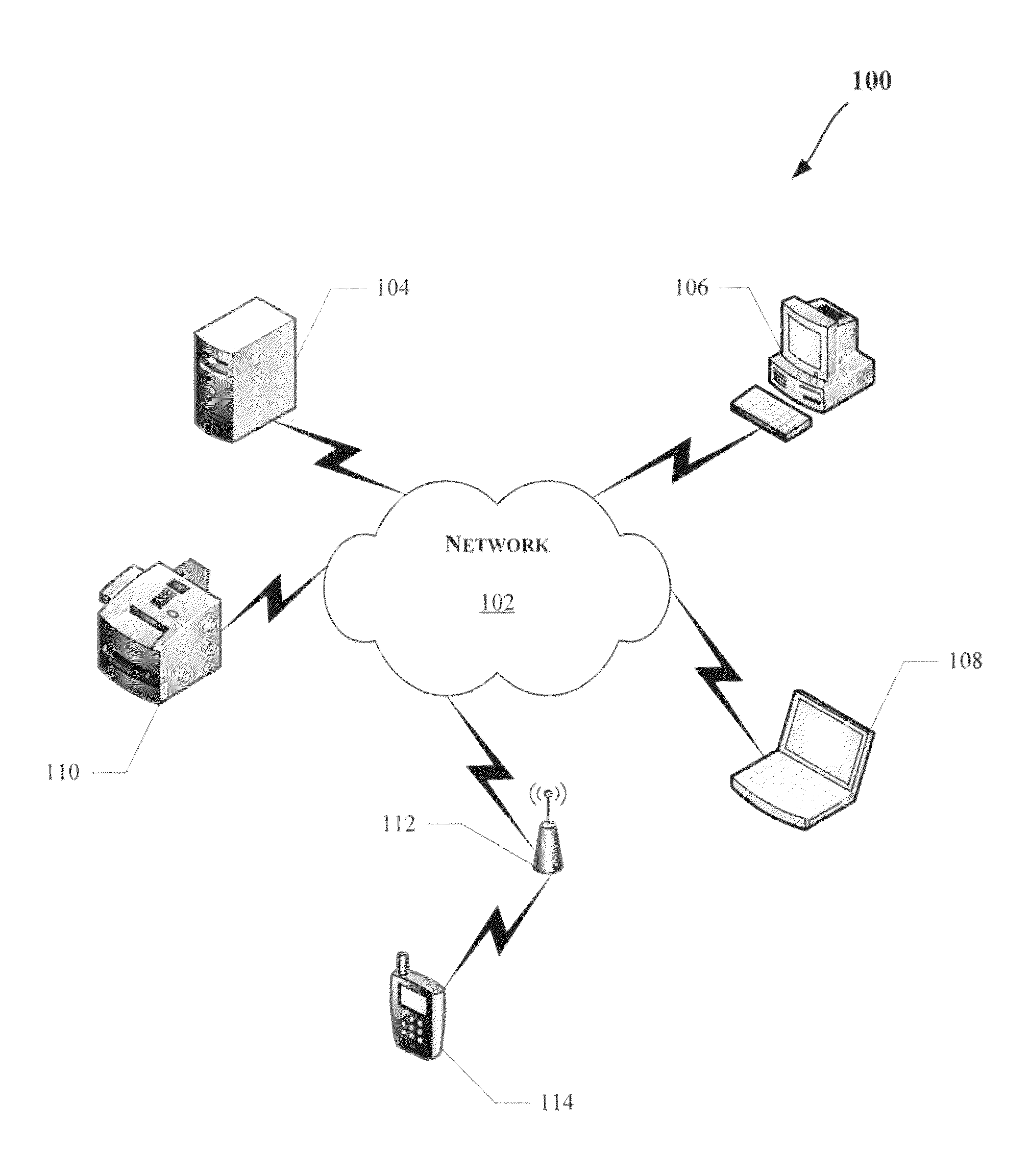

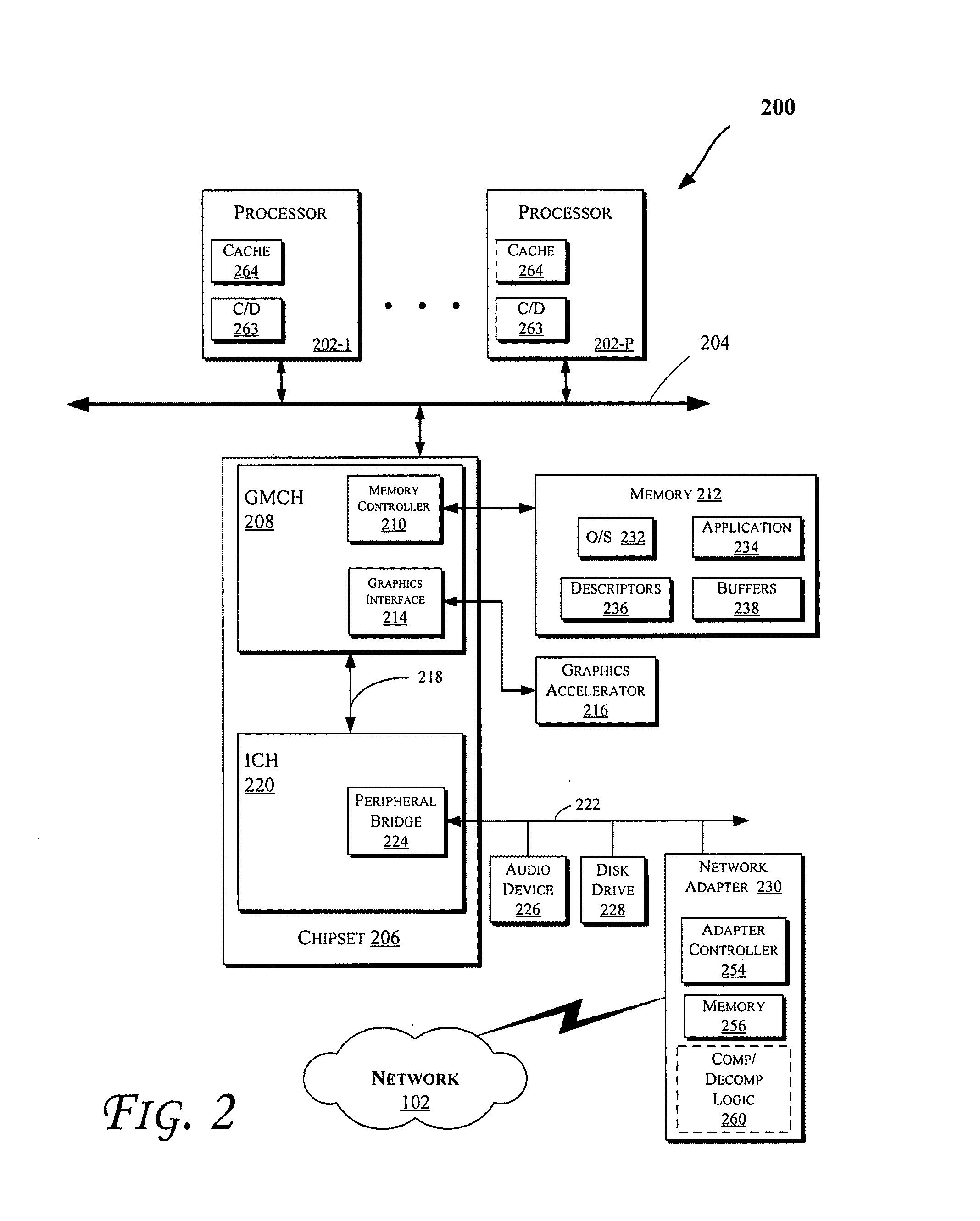

Network packet payload compression

Active | US8001278B2 | Tags: Energy efficient ICT, Multiple digital computer combinations, Payload (computing), Term memory

Methods and apparatus relating to network packet payload compression/decompression are described. In an embodiment, an uncompressed packet payload may be compressed before being transferred between various components of a computing system. For example, a packet payload may be compressed prior to transfer between network interface cards or controllers (NICs) and storage devices (e.g., including a main system memory and/or cache(s)), as well as between processors (or processor cores) and storage devices (e.g., including main system memory and/or caches). Other embodiments are also disclosed.

Owner:INTEL CORP
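
The abstract does not name a compression algorithm. As an illustration only, the sketch below uses DEFLATE through Python's standard `zlib` module and adds a hypothetical one-byte flag so that payloads which do not shrink are sent uncompressed.

```python
import zlib

RAW, DEFLATED = 0, 1  # hypothetical one-byte frame flag


def pack_payload(payload: bytes, level: int = 6) -> bytes:
    """Compress before transfer, keeping the result only if it is actually smaller."""
    compressed = zlib.compress(payload, level)
    if len(compressed) < len(payload):
        return bytes([DEFLATED]) + compressed
    return bytes([RAW]) + payload


def unpack_payload(frame: bytes) -> bytes:
    """Undo pack_payload on the receiving side (NIC, memory, or core)."""
    flag, body = frame[0], frame[1:]
    return zlib.decompress(body) if flag == DEFLATED else body
```

The size check matters for short or already-compressed payloads, where the zlib header and checksum alone can make the output larger than the input.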

Computational method, system, and apparatus

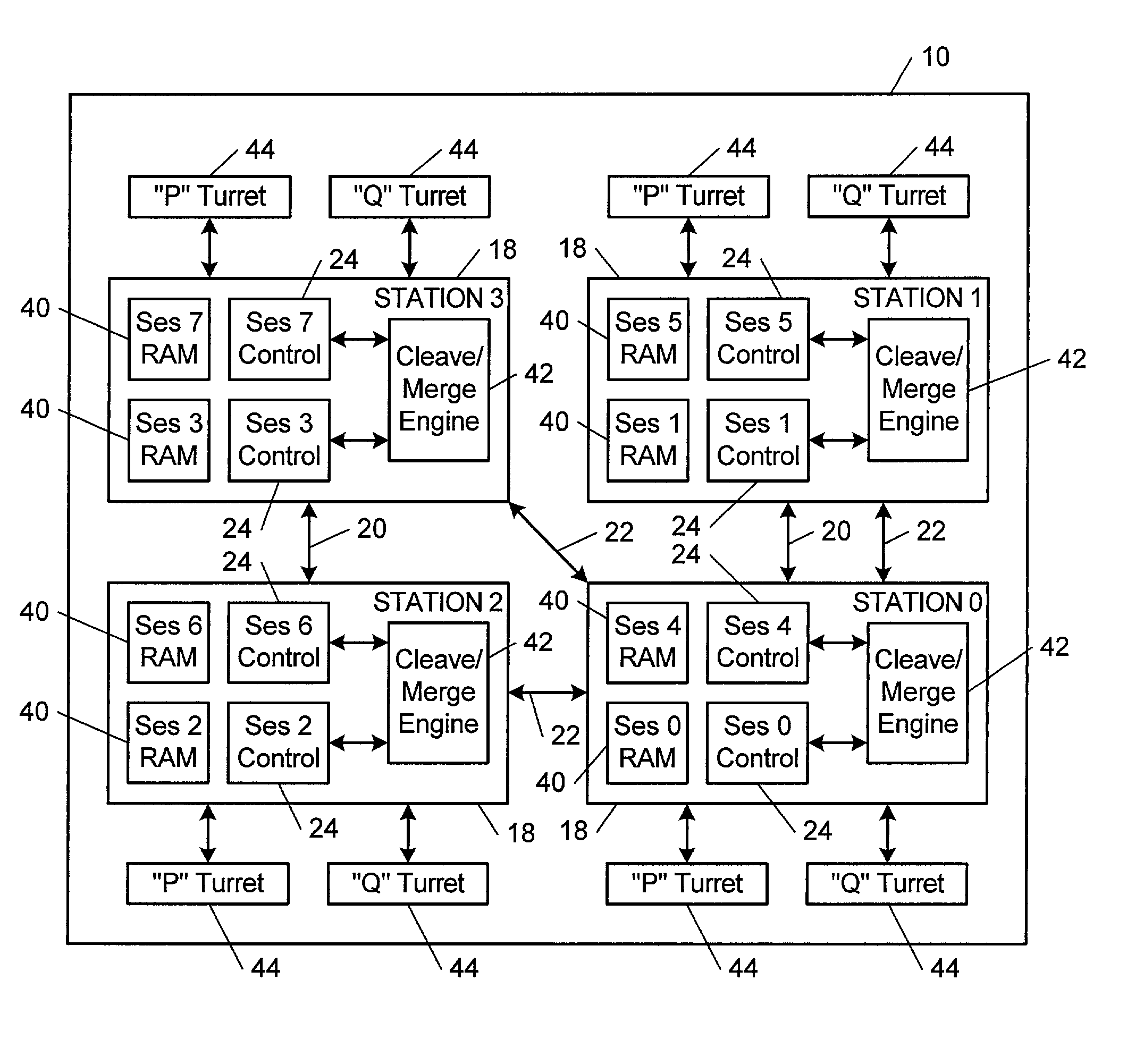

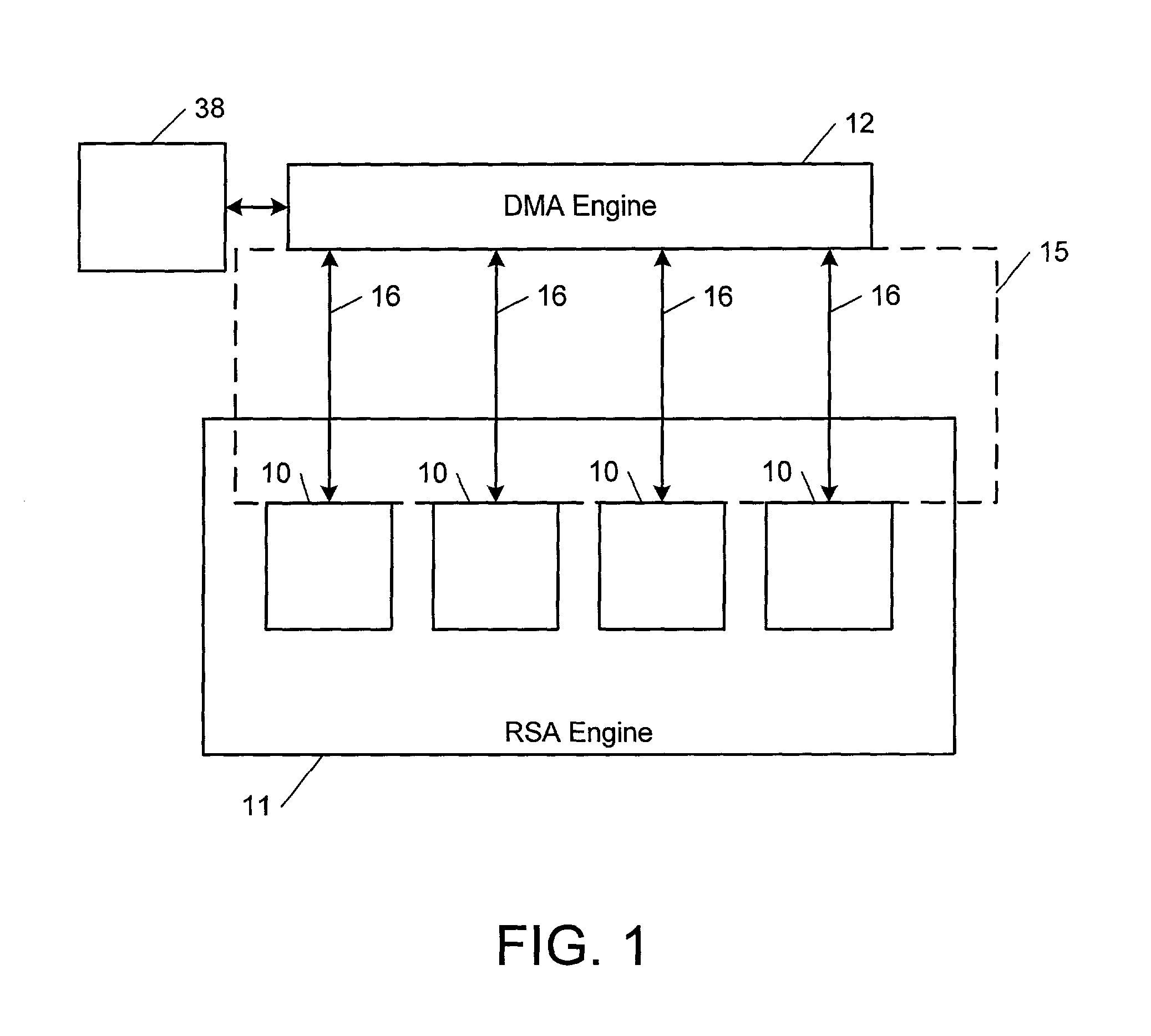

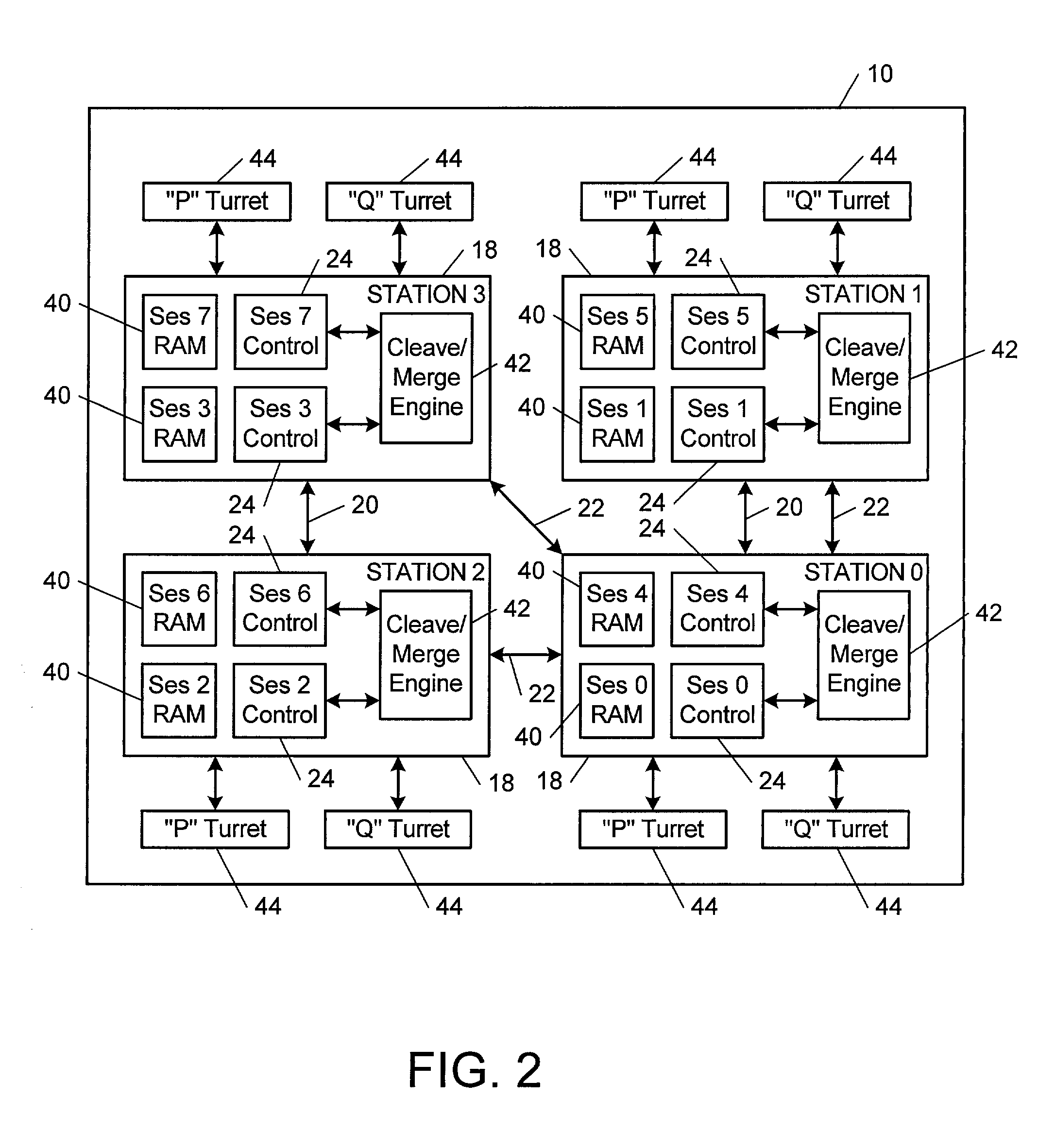

A method, system, and apparatus for performing computations. In a method, arguments X and K are loaded into session memory, and X mod P and X mod Q are computed to give, respectively, XP and XQ. XP and XQ are exponentiated to compute, respectively, CP and CQ. CP and CQ are merged to compute C, which is then retrieved from the session memory. A system includes a computing device and at least one computational apparatus, wherein the computing device is configured to use the computational apparatus to perform accelerated computations. An apparatus includes a chaining controller and a plurality of computational devices. A first chaining subset of the plurality of computational devices includes at least two of the plurality of computational devices, and the chaining controller is configured to instruct the first chaining subset to operate as a first computational chain.

Owner:NCIPHER LIMITED ACTING BY & THROUGH ITS WHOLLY OWNED SUBSIDIARY NCIPHER +1
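
The method steps match the Chinese Remainder Theorem (CRT) split commonly used to speed up RSA-style modular exponentiation. A sketch, assuming X is the input and that K supplies the exponent d and the distinct primes P and Q (the abstract does not spell out what K contains):

```python
def crt_modexp(x: int, d: int, p: int, q: int) -> int:
    """Compute x**d mod (p*q) using the CRT split; p and q must be distinct primes."""
    xp, xq = x % p, x % q          # XP = X mod P, XQ = X mod Q
    cp = pow(xp, d, p)             # CP = XP^d mod P
    cq = pow(xq, d, q)             # CQ = XQ^d mod Q
    # Merge CP and CQ into C with Garner's formula: C = CQ + Q * ((CP - CQ) * Q^-1 mod P)
    q_inv = pow(q, -1, p)          # modular inverse (Python 3.8+)
    h = ((cp - cq) * q_inv) % p
    return cq + q * h
```

Production implementations typically precompute the reduced exponents d mod (P-1) and d mod (Q-1) to shorten each half-size exponentiation; the sketch keeps the full exponent for simplicity.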

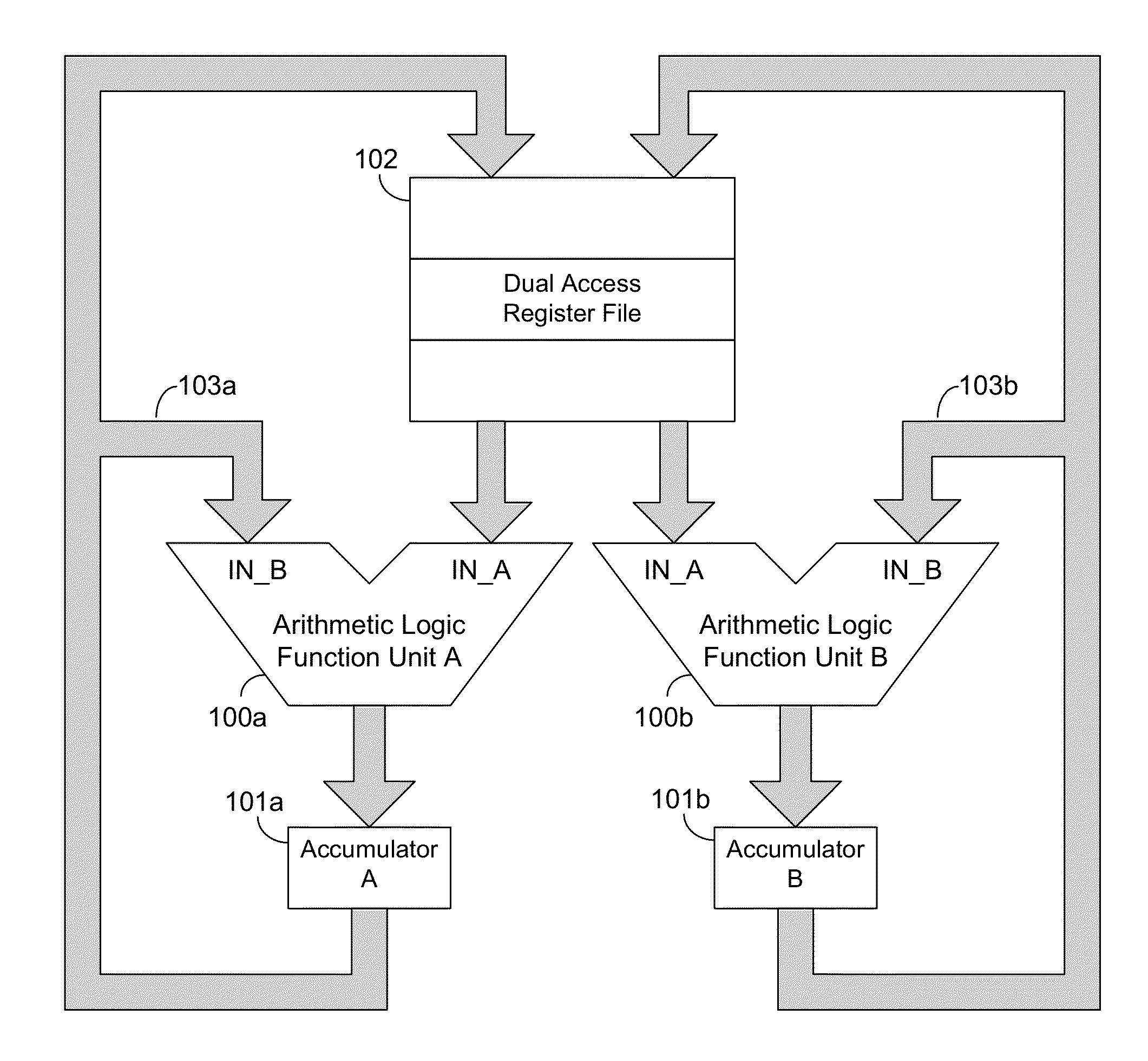

Residue number arithmetic logic unit

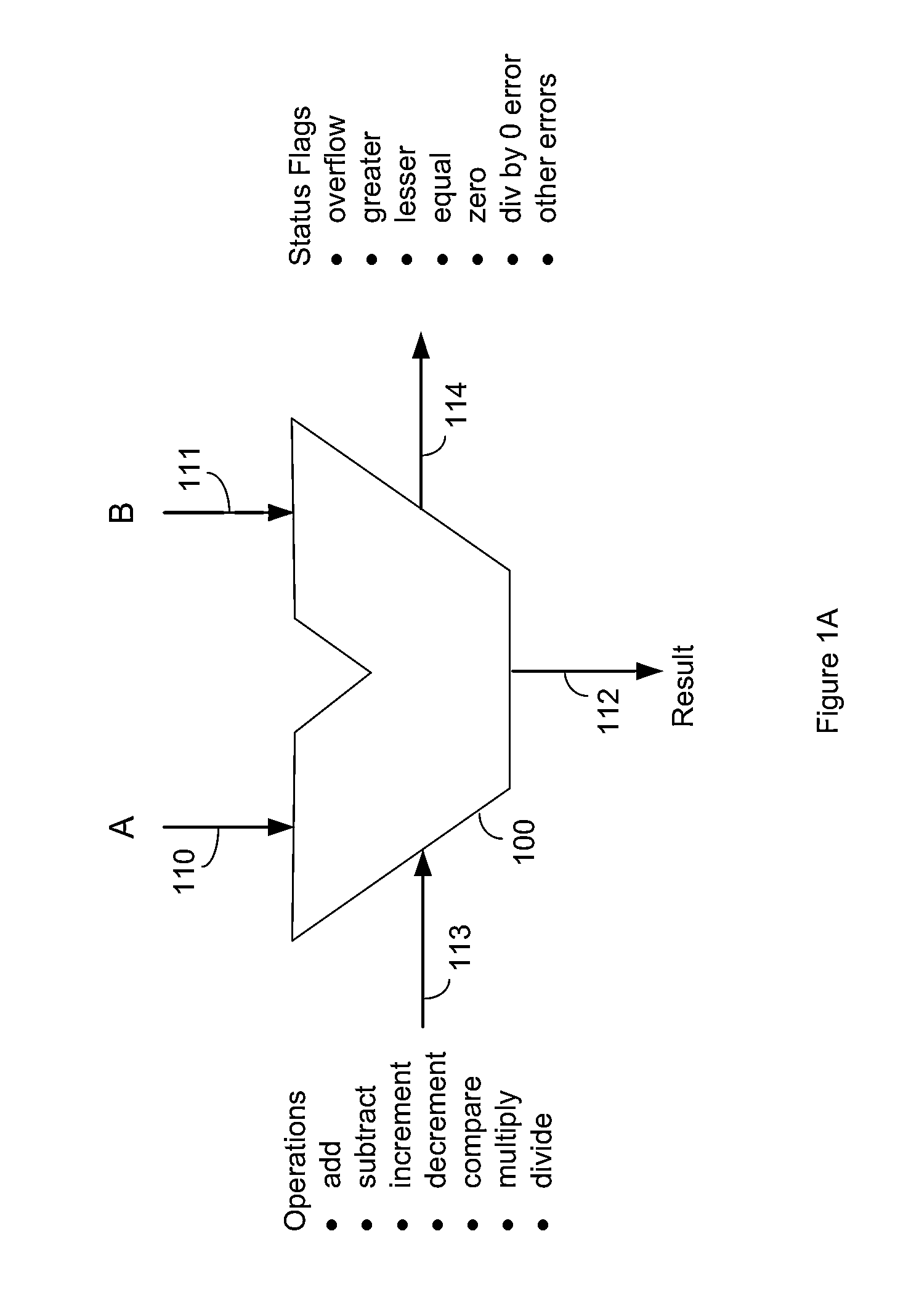

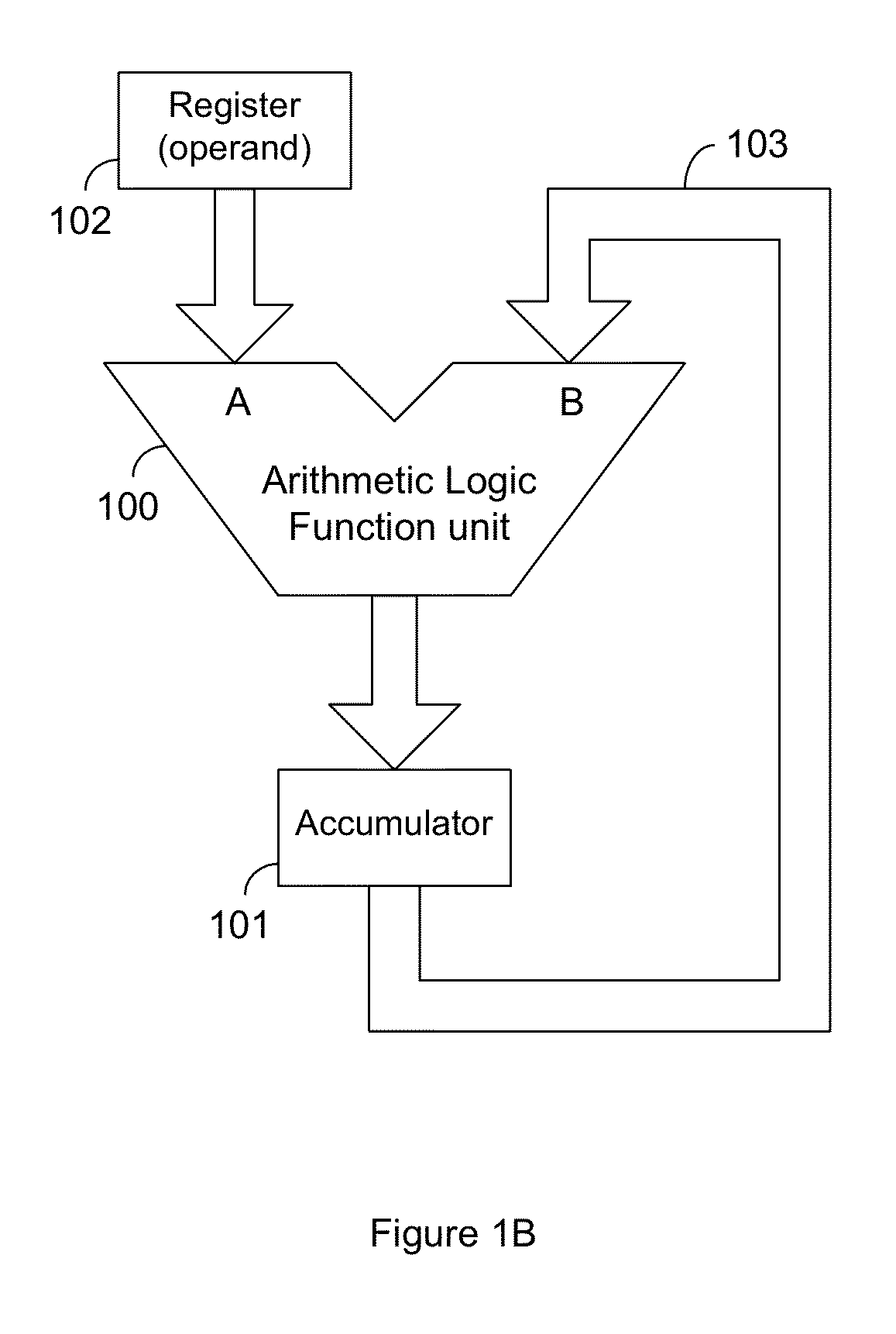

Active | US20130311532A1 | Tags: Improve performance, Conserving, Program control, Computation using denominational number representation, Arithmetic logic unit, Numbering system

Methods and systems for residue number system based ALUs, processors, and other hardware provide the full range of arithmetic operations while taking advantage of the benefits of the residue numbers in certain operations. In one or more embodiments, an RNS ALU or processor comprises a plurality of digit slices configured to perform modular arithmetic functions. Operation of the digit slices may be controlled by a controller. Residue numbers may be converted to and from fixed or mixed radix number systems for internal use and for use in various computing systems.

Owner:OLSEN IP RESERVE
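
A small sketch of residue arithmetic, assuming three hypothetical pairwise-coprime moduli (7, 11, 13), one per digit slice, for a representable range of 1001. Each slice works only on its own residue, so addition and multiplication need no carries between slices. Conversion back uses CRT reconstruction rather than the mixed-radix conversion the abstract mentions.

```python
from math import prod

MODULI = (7, 11, 13)  # hypothetical digit-slice moduli; range is 7 * 11 * 13 = 1001


def to_rns(x: int, moduli=MODULI) -> tuple:
    return tuple(x % m for m in moduli)


def rns_add(a, b, moduli=MODULI) -> tuple:
    # Each digit slice adds independently modulo its own modulus.
    return tuple((x + y) % m for x, y, m in zip(a, b, moduli))


def rns_mul(a, b, moduli=MODULI) -> tuple:
    return tuple((x * y) % m for x, y, m in zip(a, b, moduli))


def from_rns(r, moduli=MODULI) -> int:
    """Chinese Remainder Theorem reconstruction back to an ordinary integer."""
    big_m = prod(moduli)
    total = 0
    for ri, mi in zip(r, moduli):
        partial = big_m // mi
        total += ri * partial * pow(partial, -1, mi)
    return total % big_m
```

Results are only meaningful while they stay below the product of the moduli (here 123 * 7 = 861 < 1001); detecting overflow and comparing magnitudes are the hard parts that an RNS ALU has to add on top.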

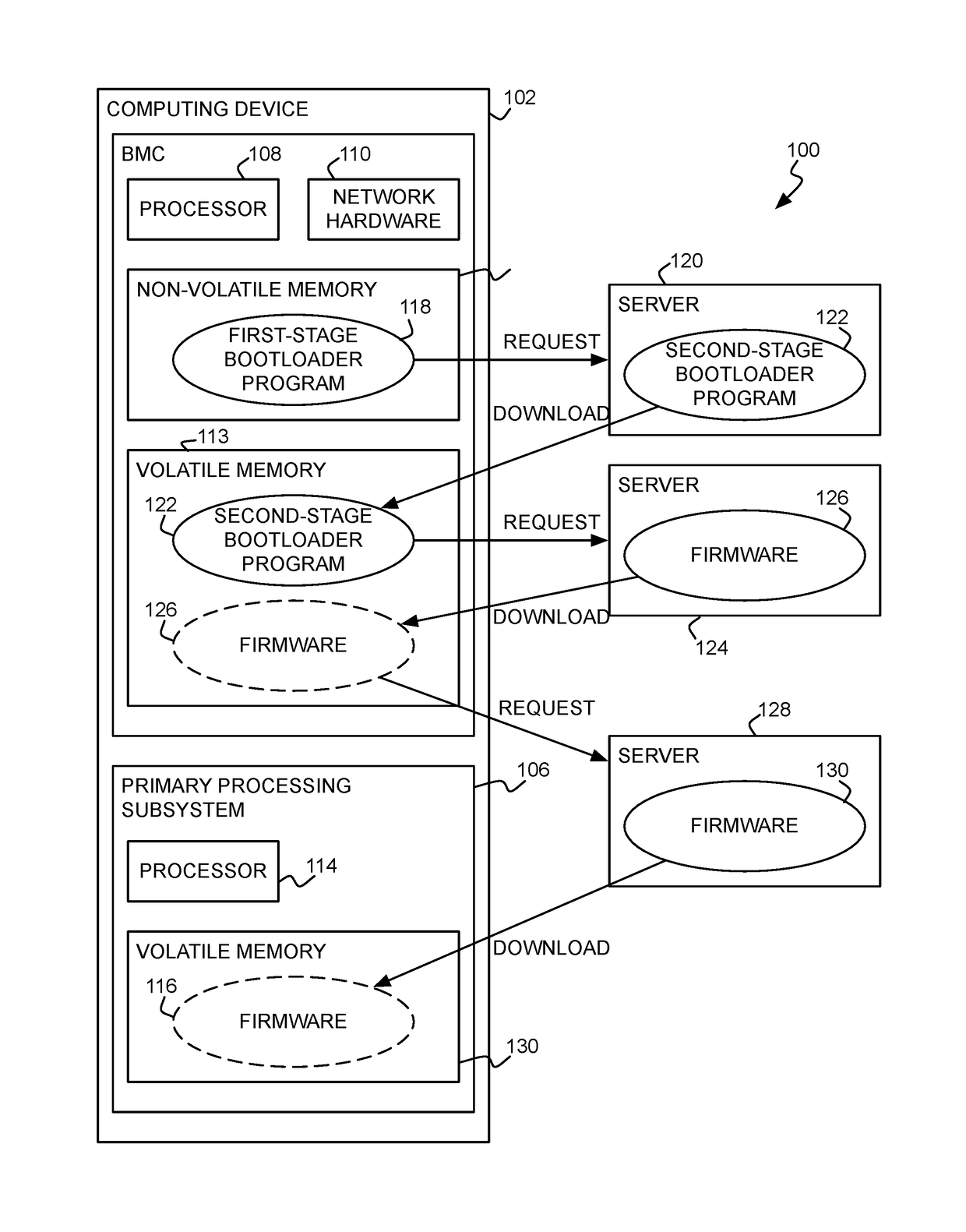

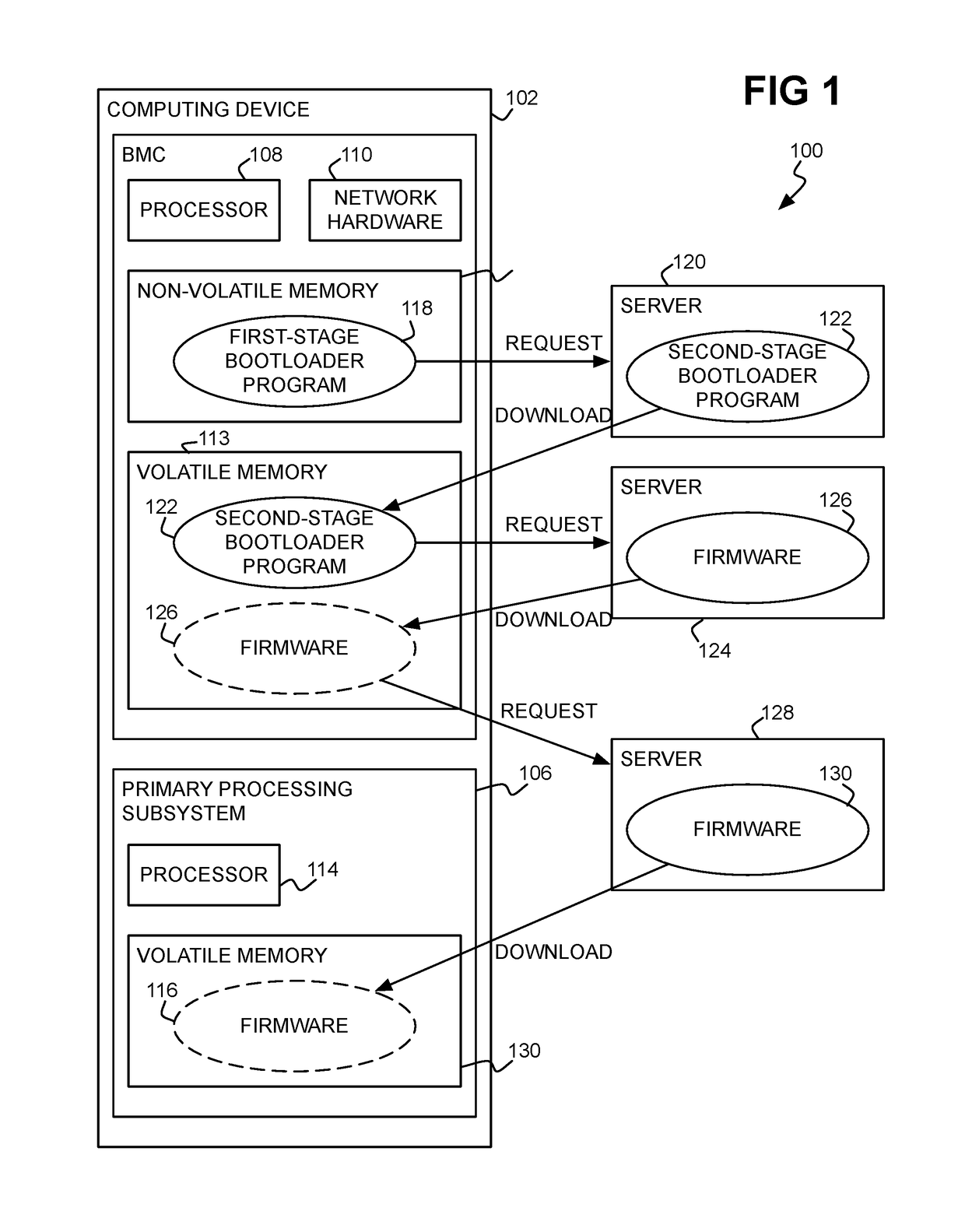

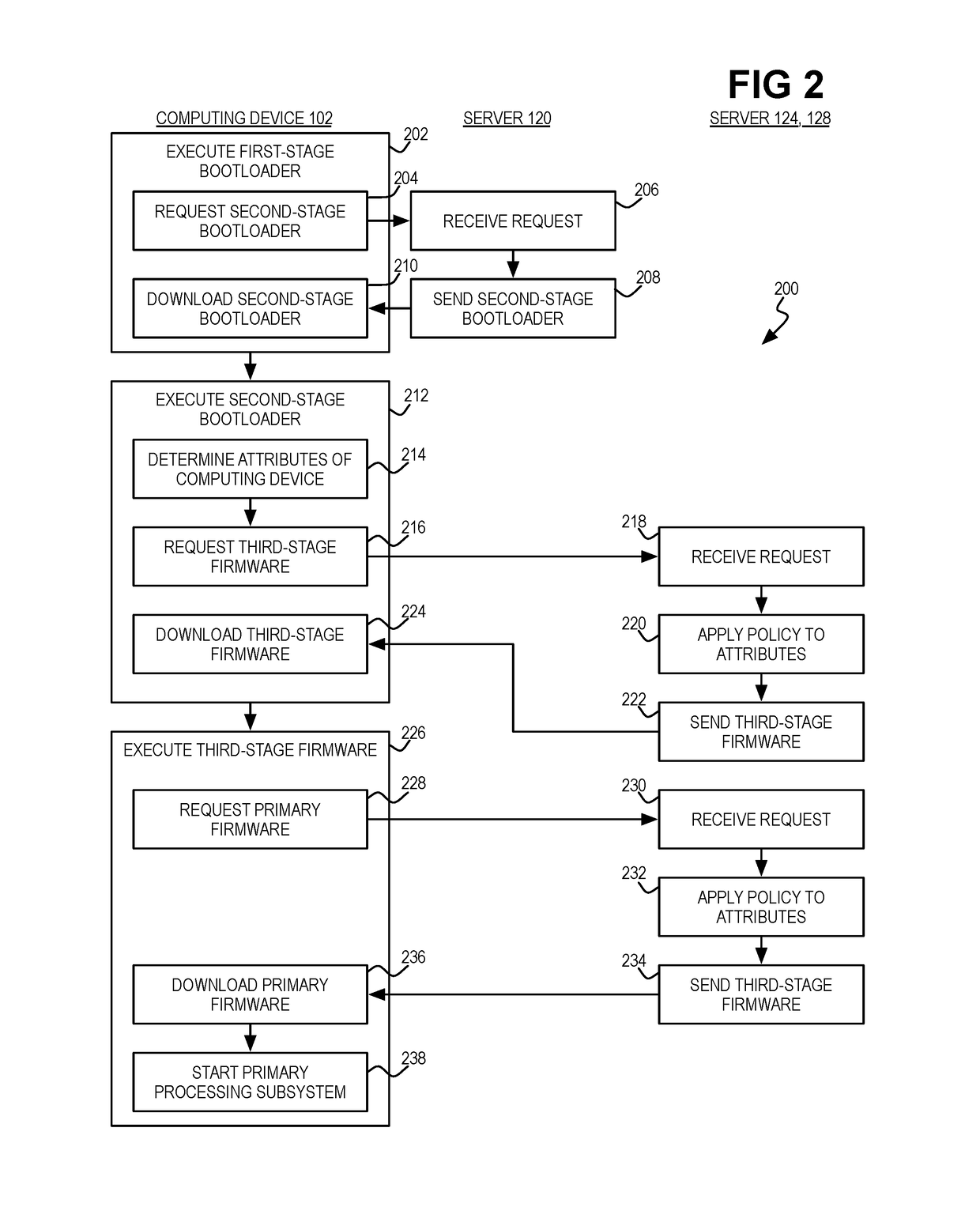

Multiple-stage bootloader and firmware for baseboard manager controller and primary processing subsystem of computing device

At power-on of a computing device, a baseboard management controller (BMC) of the computing device executes a first-stage bootloader program to download a second-stage bootloader program from a first server. The BMC executes the second-stage bootloader program to download third-stage firmware of the BMC from a second server. The BMC executes the third-stage firmware to download firmware of a primary processing subsystem of the computing device from a third server, and to start the primary processing subsystem by causing the primary processing subsystem to execute the firmware of the primary processing subsystem.

Owner:LENOVO GLOBAL TECH INT LTD

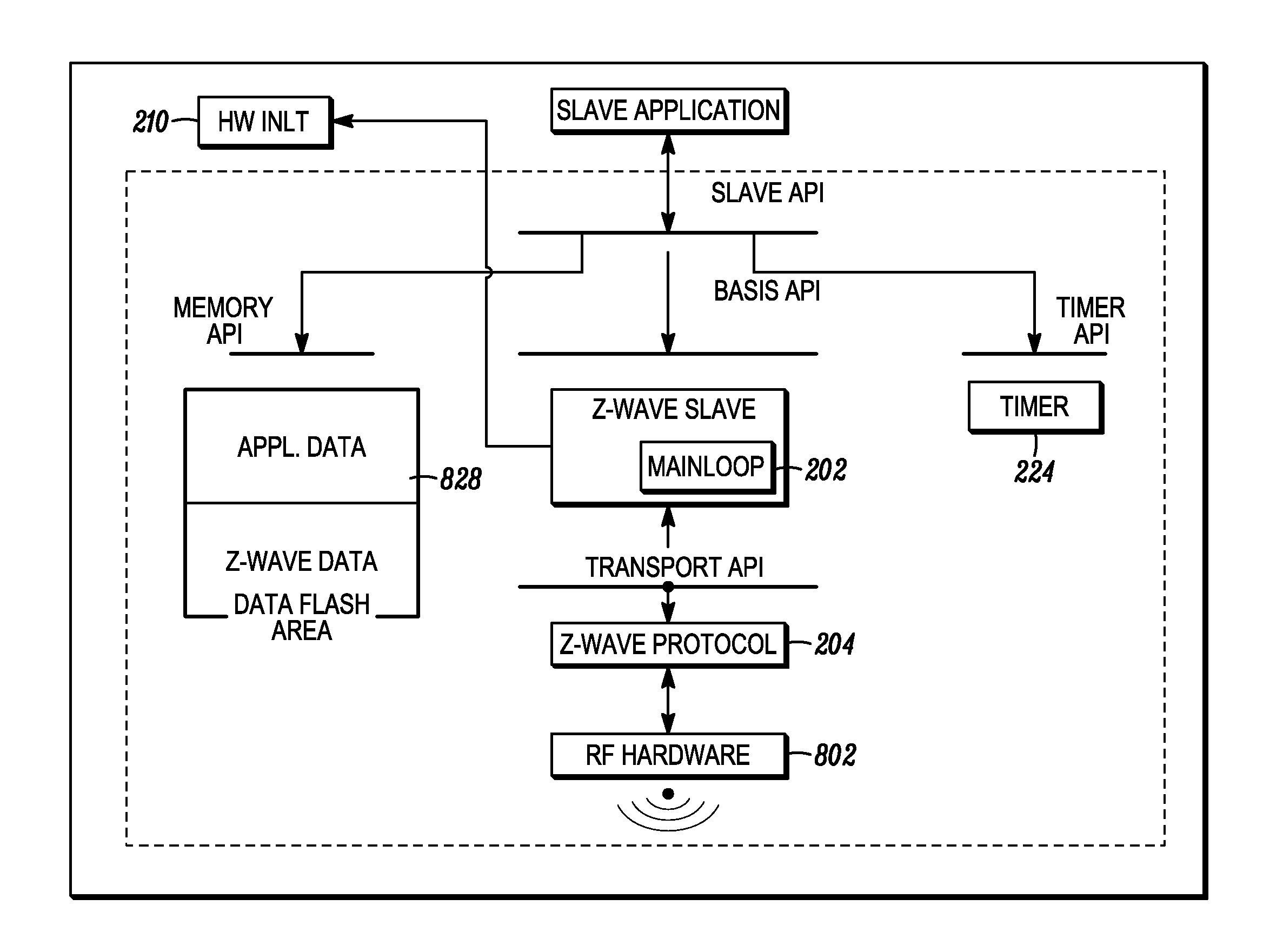

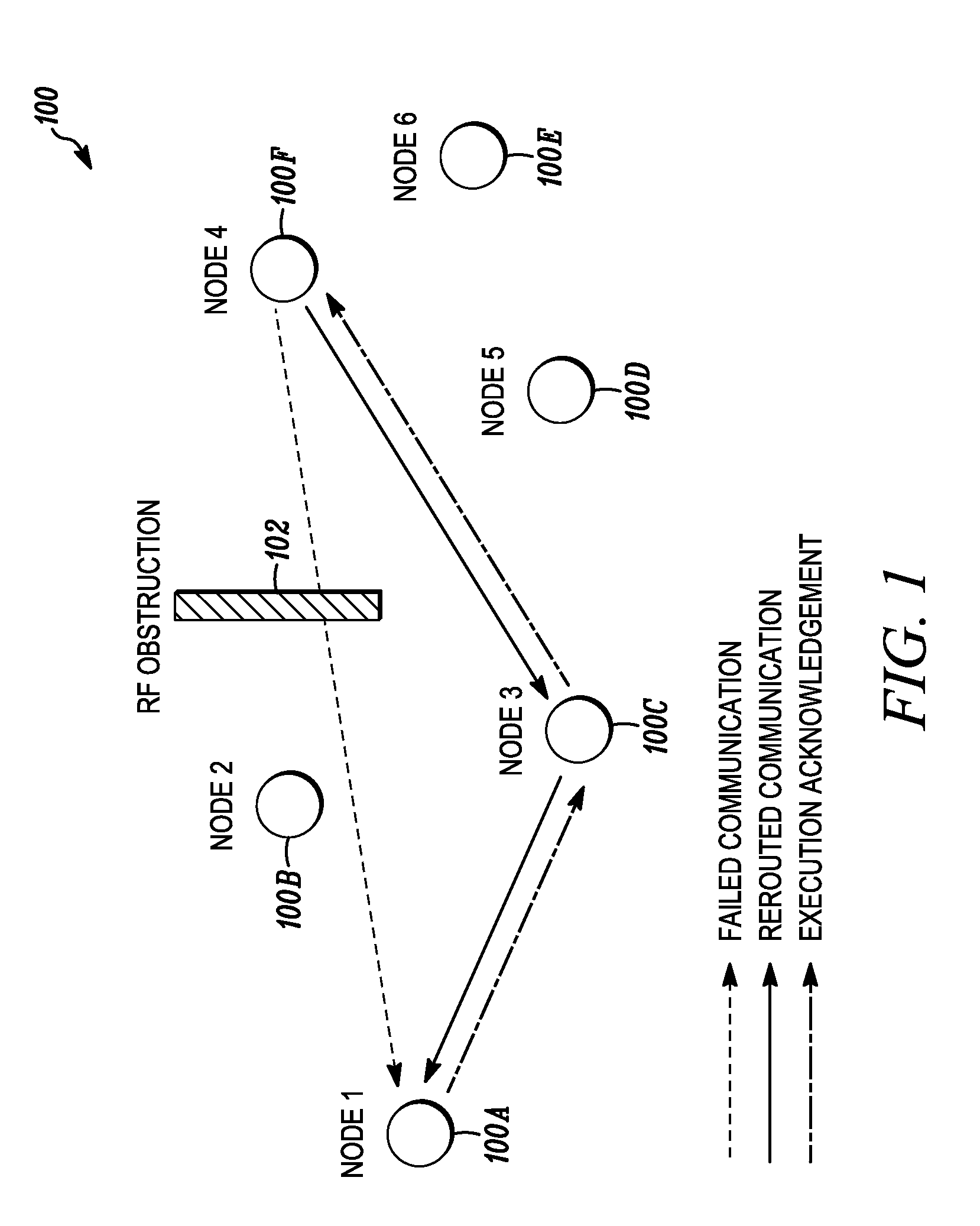

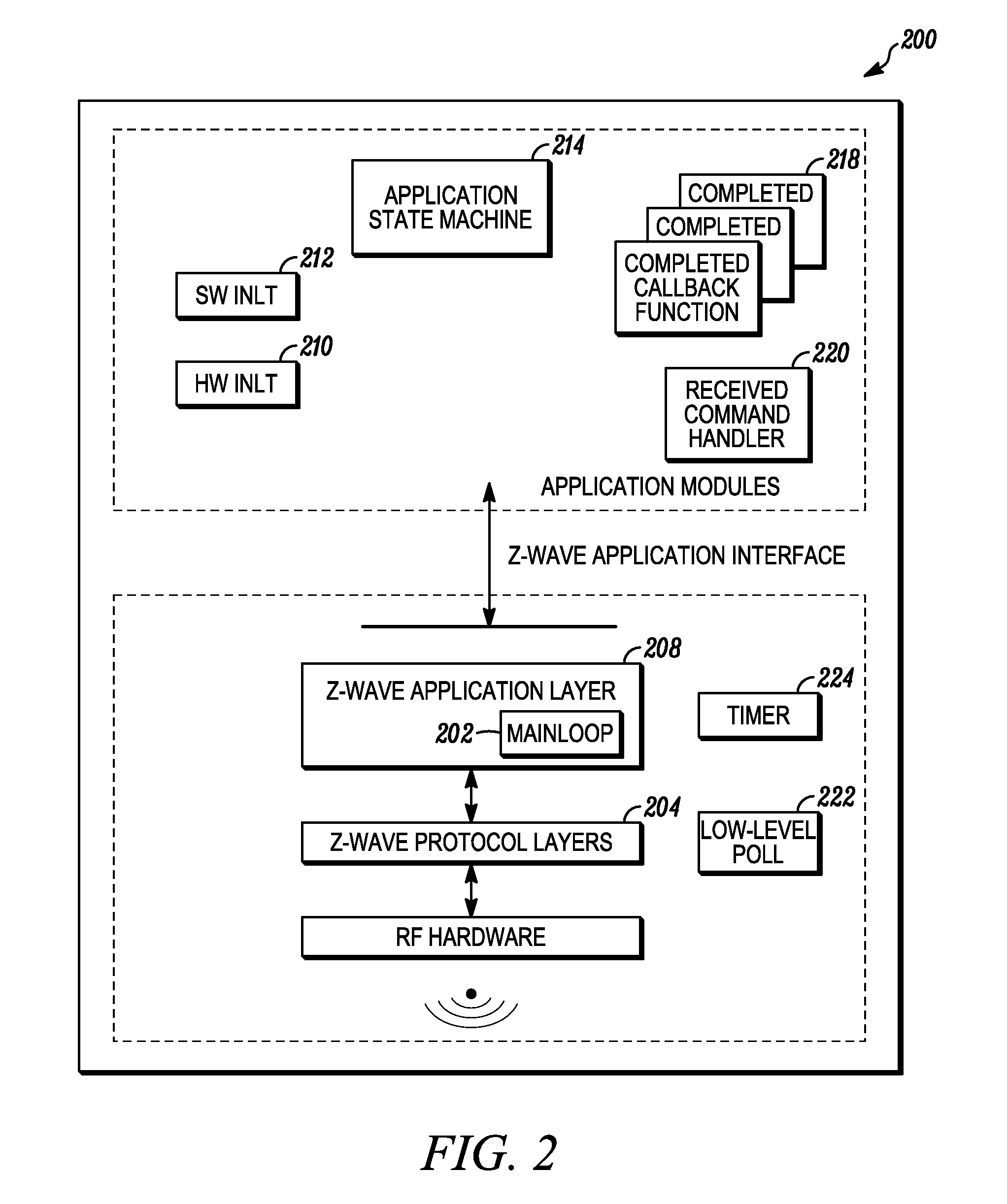

Method for uniquely addressing a group of network units in a sub-network

Inactive | US20130219482A1 | Tags: Maintaining protection against unwanted intrusion, Lower latency, Network traffic/resource management, Digital data processing details, Time delays, Broadcasting

Owner:SILICON LAB INC

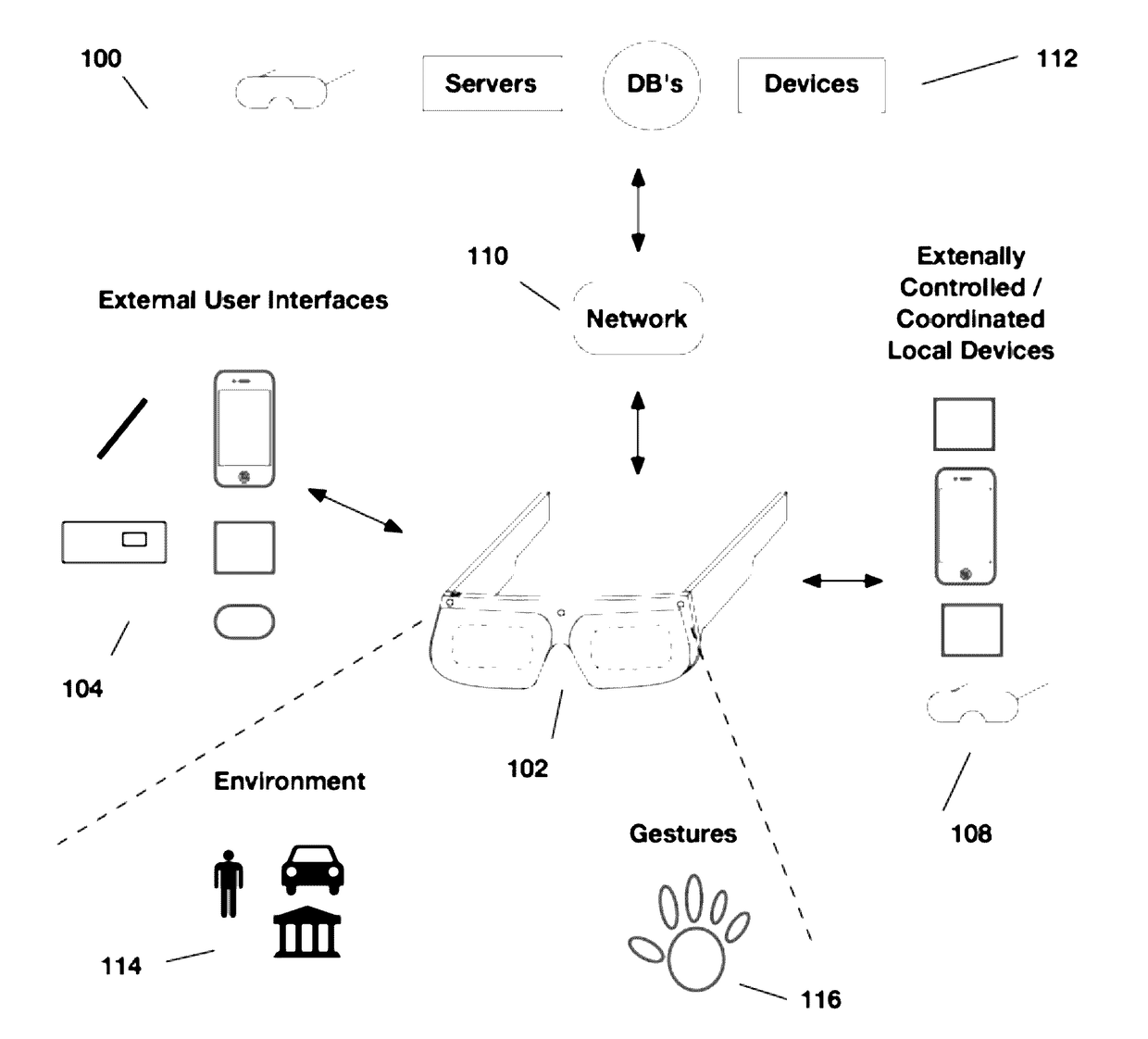

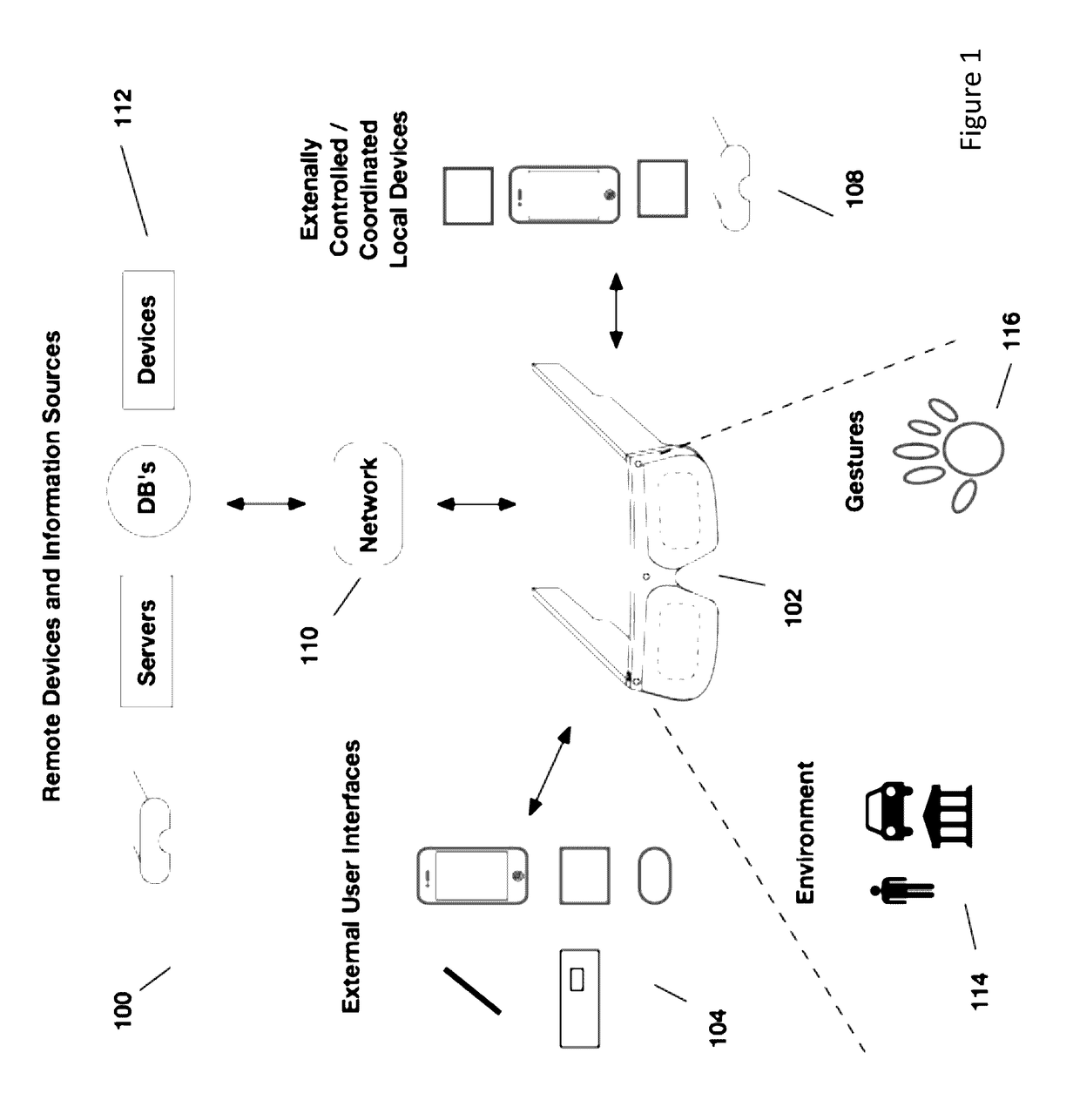

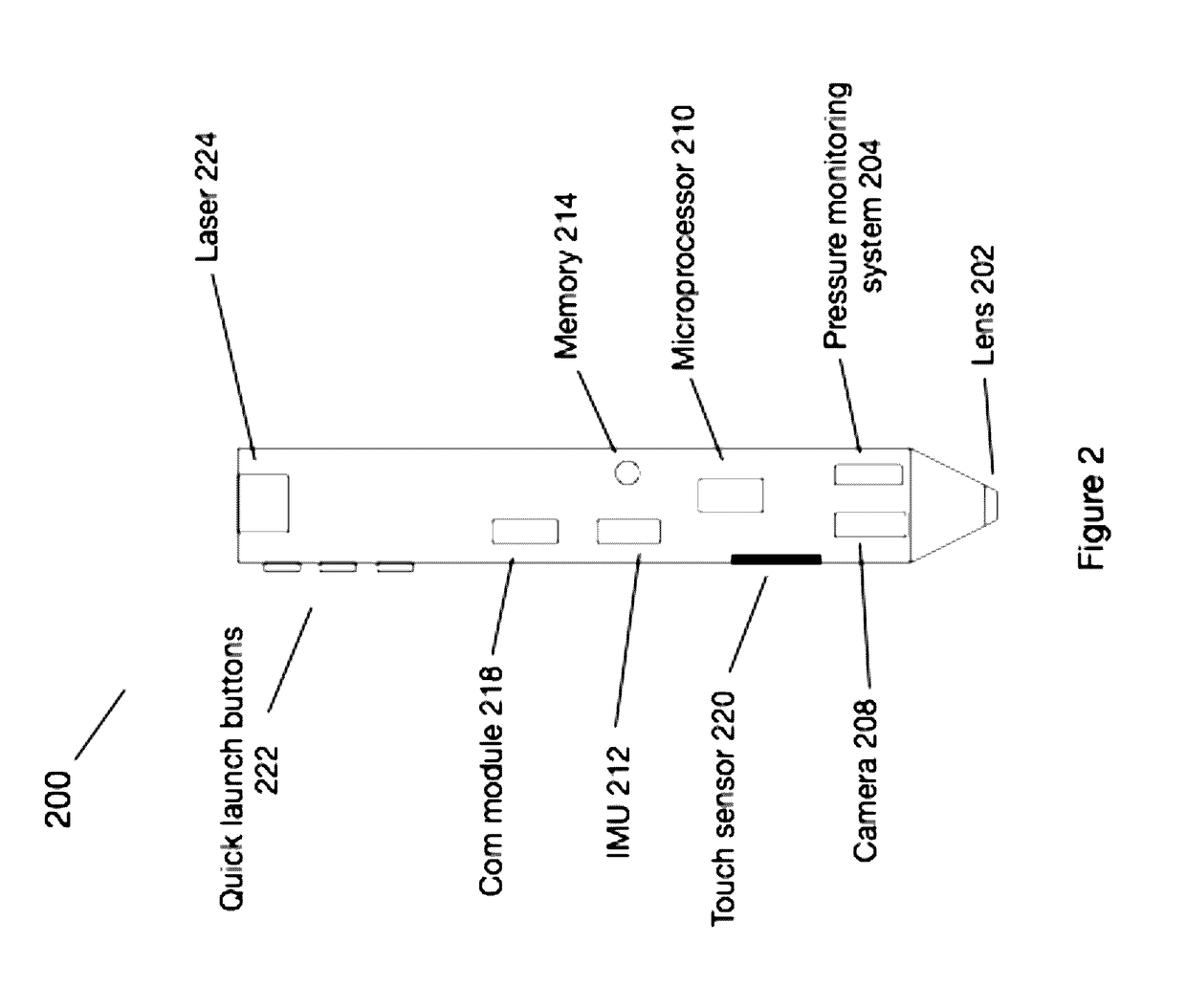

External user interface for head worn computing

Inactive | US20170100664A1 | Tags: Input/output for user-computer interaction, Details for portable computers, Control system, Hand held

Aspects of the present invention relate to a multi-sided hand-held controller for a head-worn computing system. The hand-held computer controller includes a first user control interface on a first side of the hand-held controller, a second user control interface on a second side of the hand-held controller, and a control system adapted to detect which of the first side and second side is an active control side of the controller, positioned properly for user interaction. Following the detection, the control system can activate the active control side to accept user interactions for control of a computer.

Owner:OSTERHOUT GROUP INC

Method and apparatus for providing additional resources for a host computer

Inactive | US7281031B1 | Tags: Lower performance requirements, Digital computer details, Data switching networks, Auto-configuration, E-commerce

Owner:EMC IP HLDG CO LLC

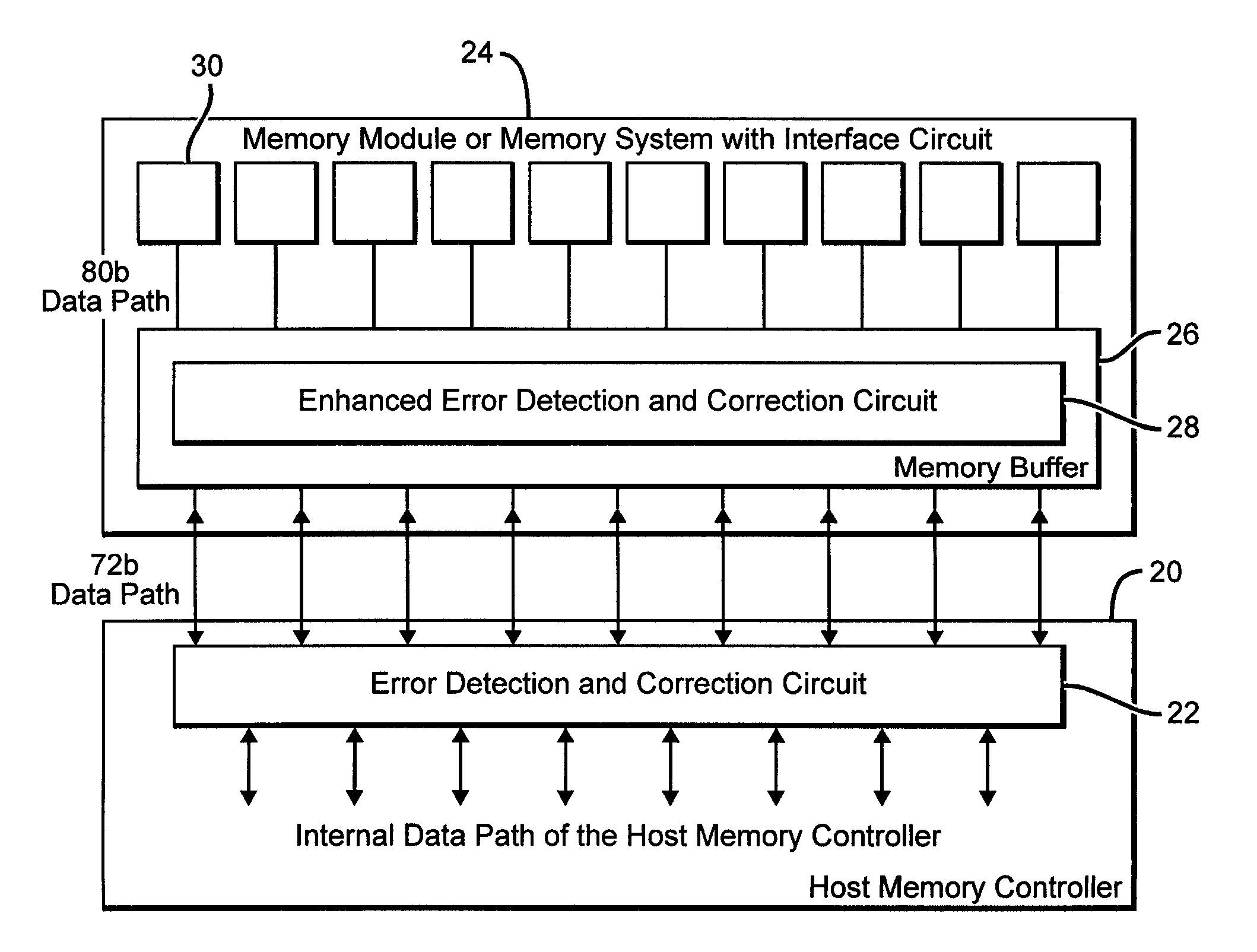

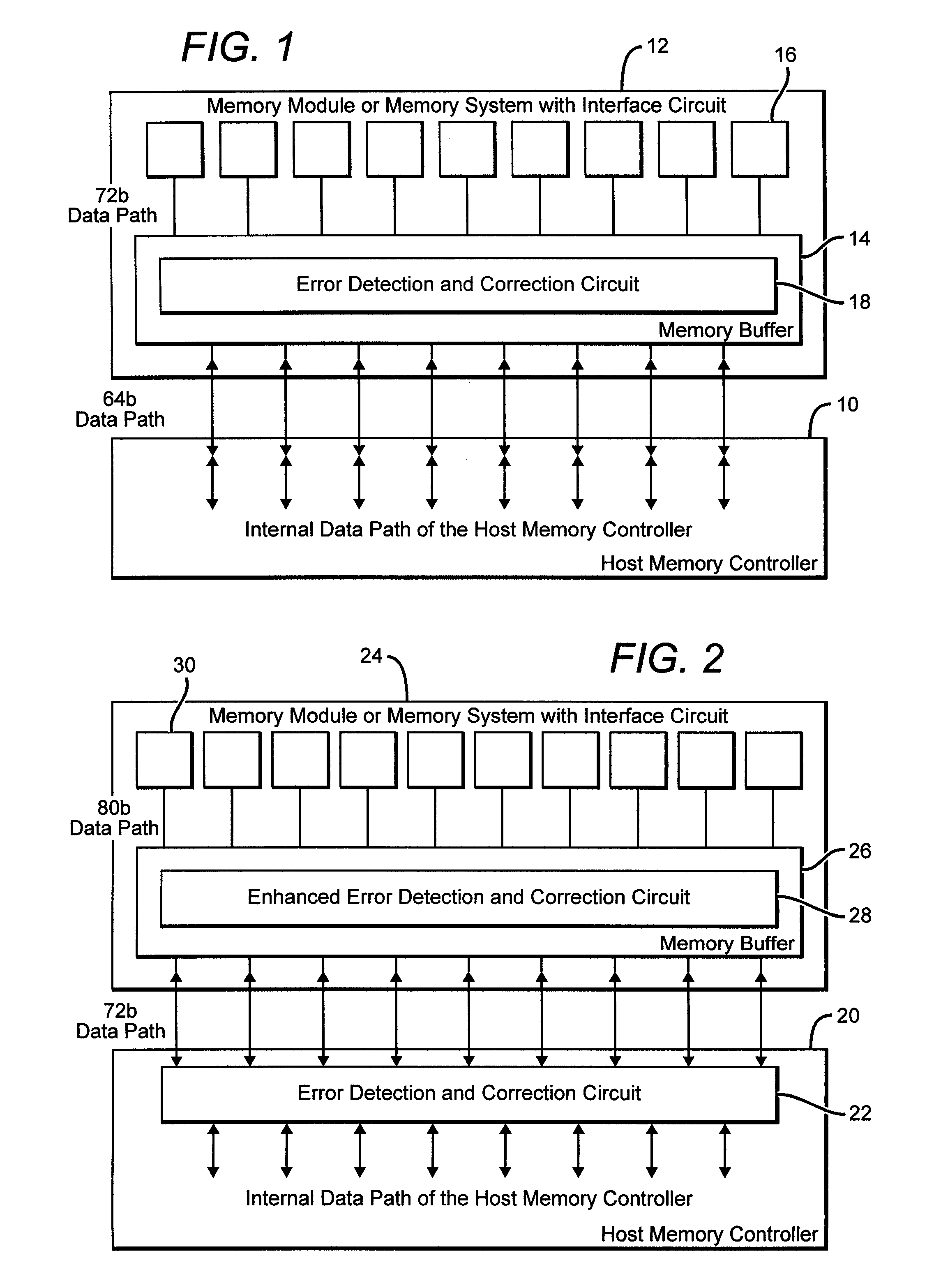

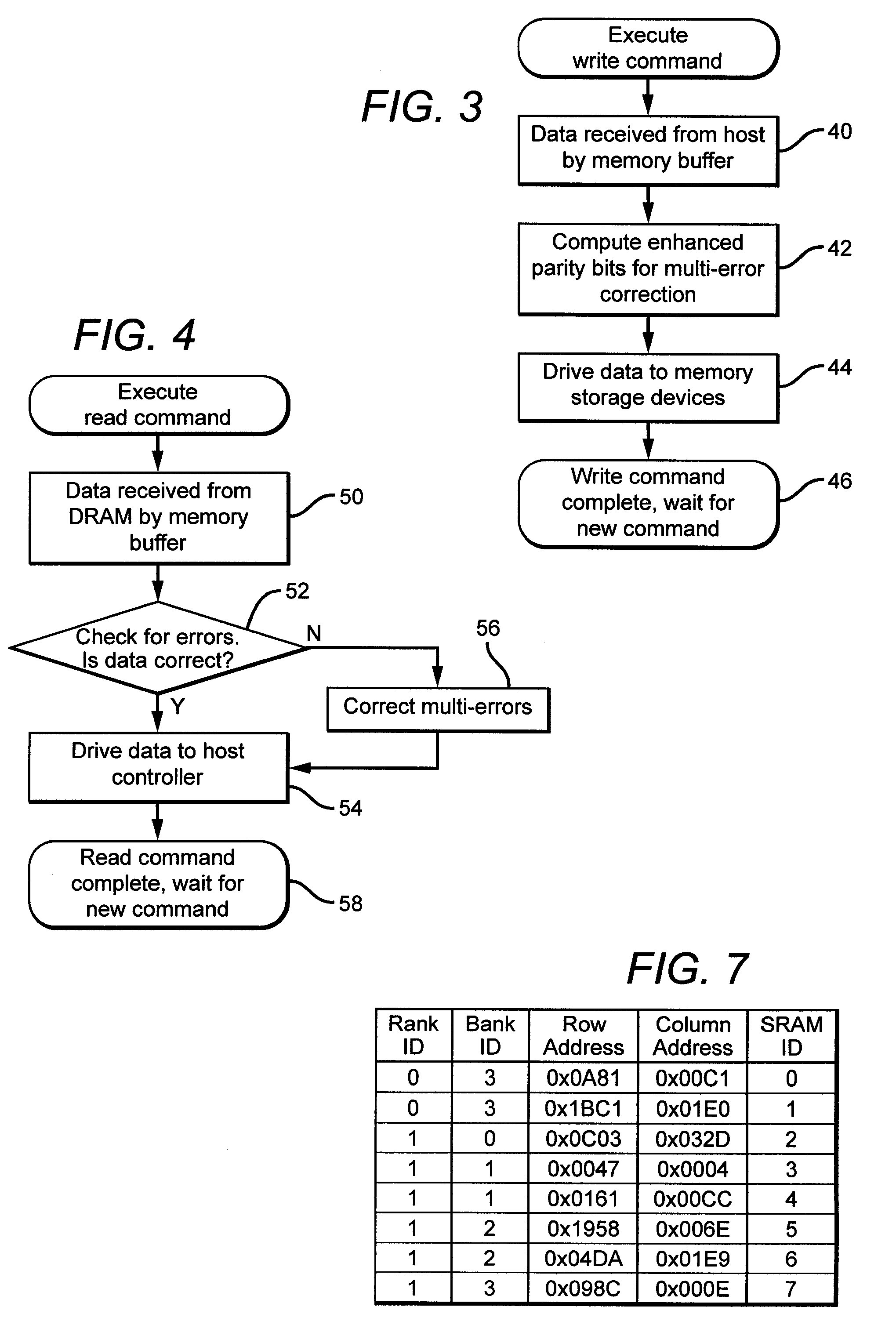

Systems and methods for error detection and correction in a memory module which includes a memory buffer

Active | US20120266041A1 | Tags: Without compromising integrity requirement, Error prevention, Transmission systems, Multiplexing, Multiplexer

The present systems include a memory module containing a plurality of RAM chips, typically DRAM, and a memory buffer arranged to buffer data between the DRAM and a host controller. The memory buffer includes an error detection and correction circuit arranged to ensure the integrity of the stored data words. One way in which this may be accomplished is by computing parity bits for each data word and storing them in parallel with each data word. The error detection and correction circuit can be arranged to detect and correct single errors, or multi-errors if the host controller includes its own error detection and correction circuit. Alternatively, the locations of faulty storage cells can be determined and stored in an address match table, which is then used to control multiplexers that direct data around the faulty cells, to redundant DRAM chips in one embodiment or to embedded SRAM in another.

Owner:RAMBUS INC
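
To illustrate single-error correction with stored parity bits, the sketch below uses a Hamming(7,4) code on 4-bit words. This is a stand-in chosen for brevity; memory buffers and ECC memory typically use wider SEC-DED codes, and the abstract does not specify the code.

```python
def hamming74_encode(nibble: int) -> list:
    """Encode 4 data bits into 7 bits: positions 1..7 are p1 p2 d1 p4 d2 d3 d4."""
    code = [0] * 8                       # index 0 unused so indices match positions
    code[3], code[5], code[6], code[7] = [(nibble >> i) & 1 for i in range(4)]
    code[1] = code[3] ^ code[5] ^ code[7]  # parity over positions with bit 0 set
    code[2] = code[3] ^ code[6] ^ code[7]  # parity over positions with bit 1 set
    code[4] = code[5] ^ code[6] ^ code[7]  # parity over positions with bit 2 set
    return code[1:]


def hamming74_decode(bits) -> tuple:
    """Return (data nibble, syndrome); a nonzero syndrome is the corrected bit position."""
    code = [0] + list(bits)
    syndrome = 0
    for pos in range(1, 8):
        if code[pos]:
            syndrome ^= pos              # XOR of positions of set bits is 0 when valid
    if syndrome:
        code[syndrome] ^= 1              # flip the single erroneous bit back
    nibble = code[3] | (code[5] << 1) | (code[6] << 2) | (code[7] << 3)
    return nibble, syndrome
```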

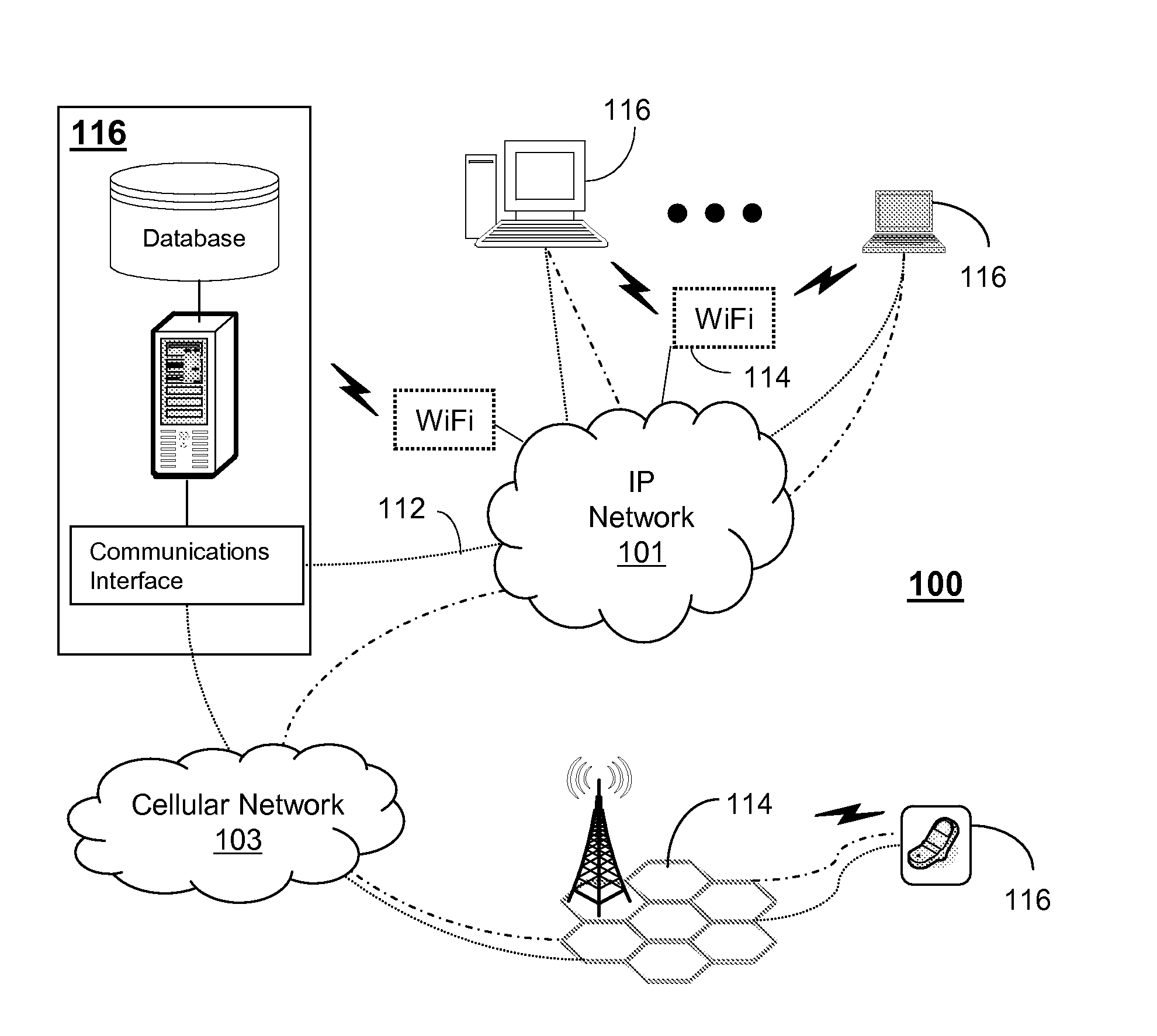

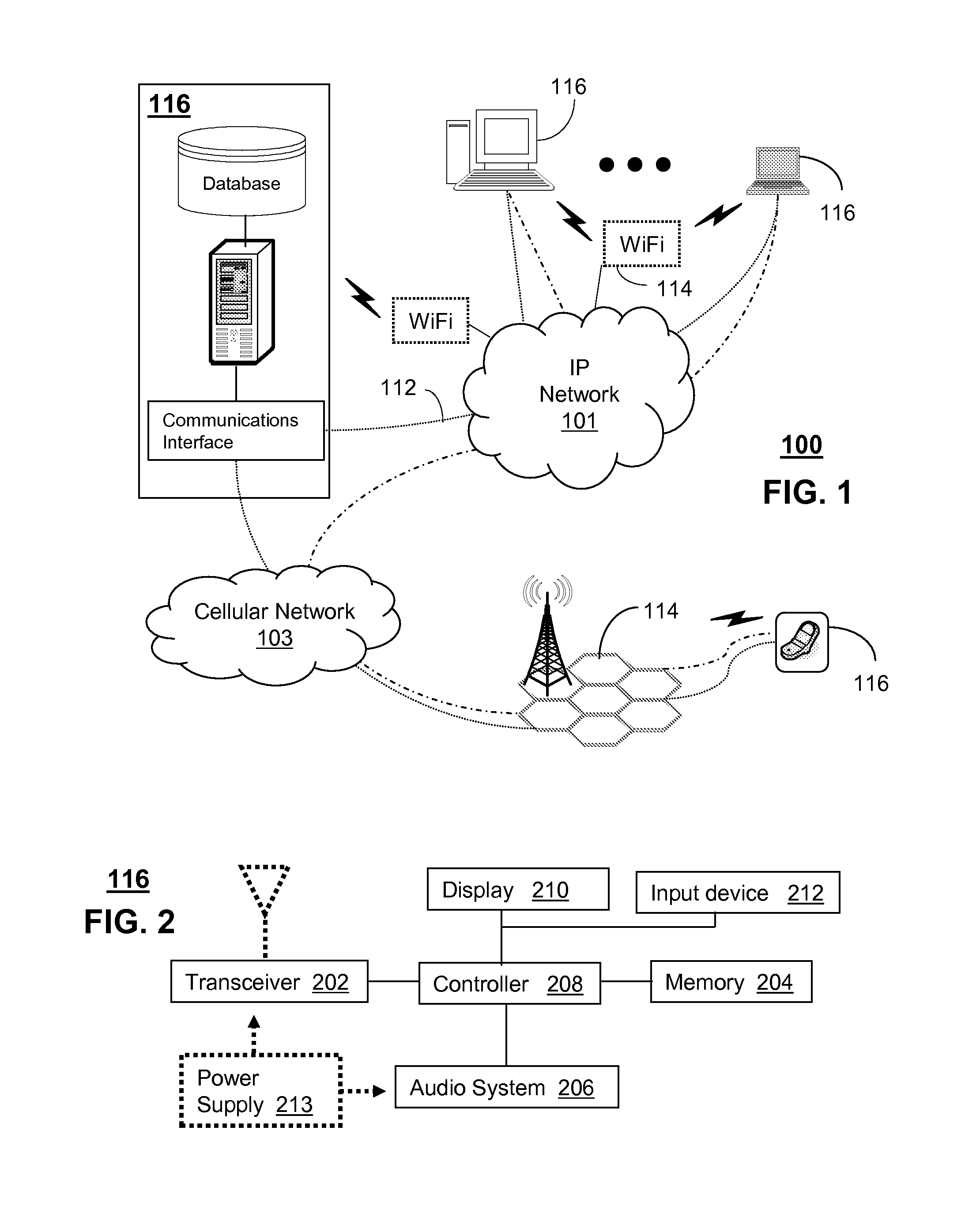

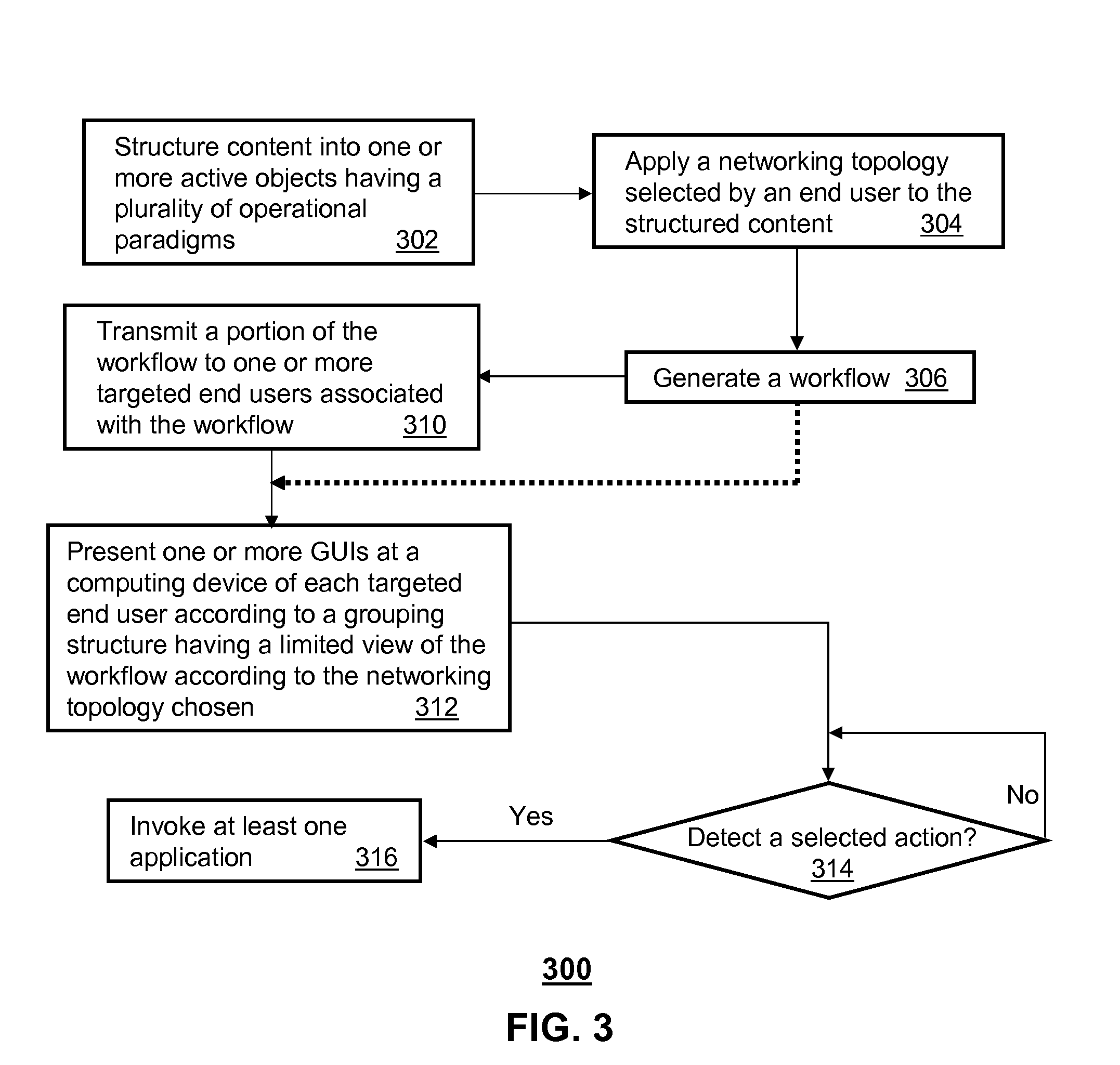

Method and apparatus for configuring a workflow

Inactive | US20070250613A1 | Tags: Digital computer details, Office automation, Software engineering, Controller (computing)

An apparatus and method are disclosed for configuring a workflow. An apparatus that incorporates teachings of the present disclosure may include, for example, a computing device having a controller programmed to identify one or more active objects from a content source, and structure the one or more active objects into a workflow having a networking topology and a plurality of operational paradigms. Additional embodiments are disclosed.

Owner:SBC KNOWLEDGE VENTURES LP

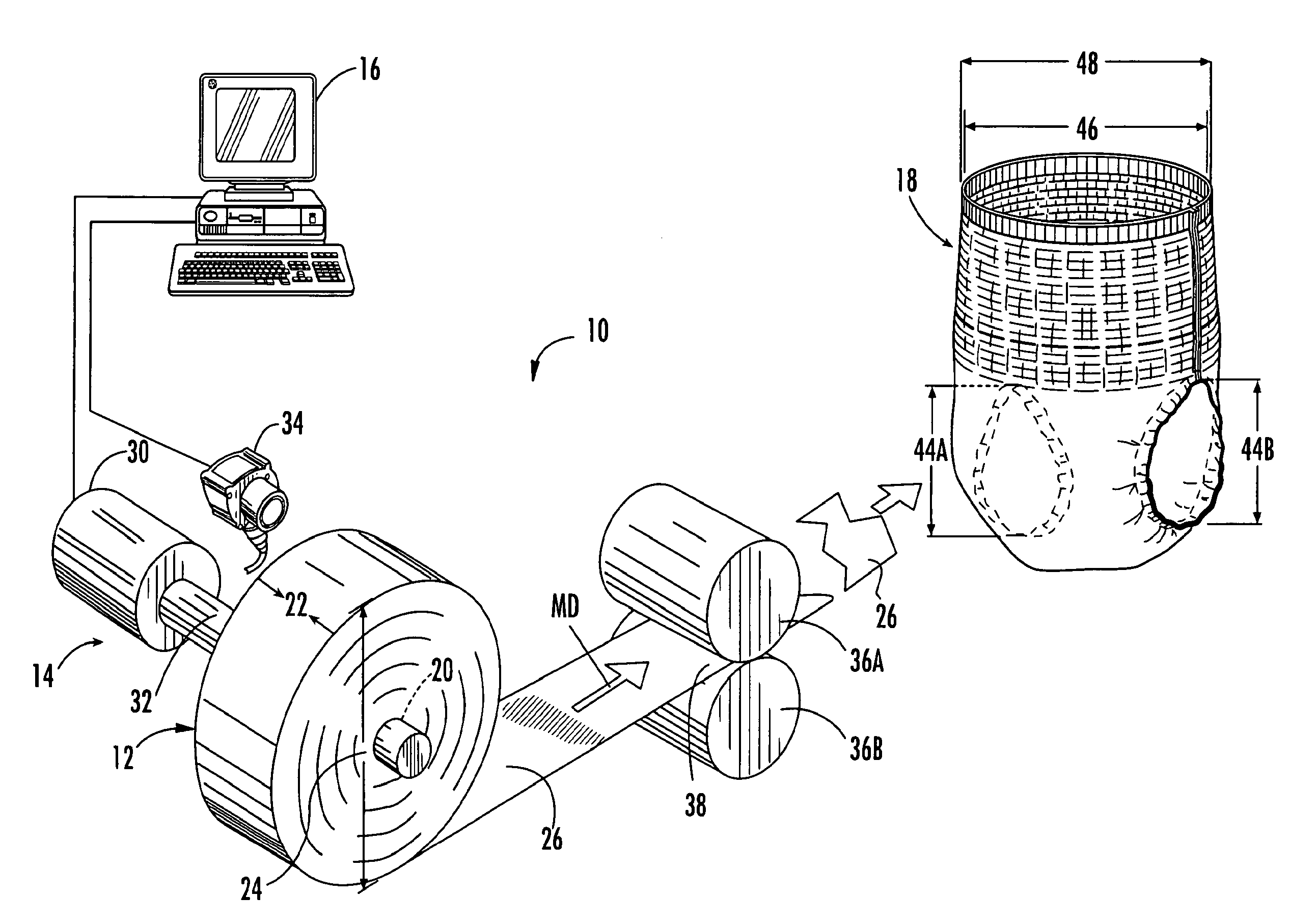

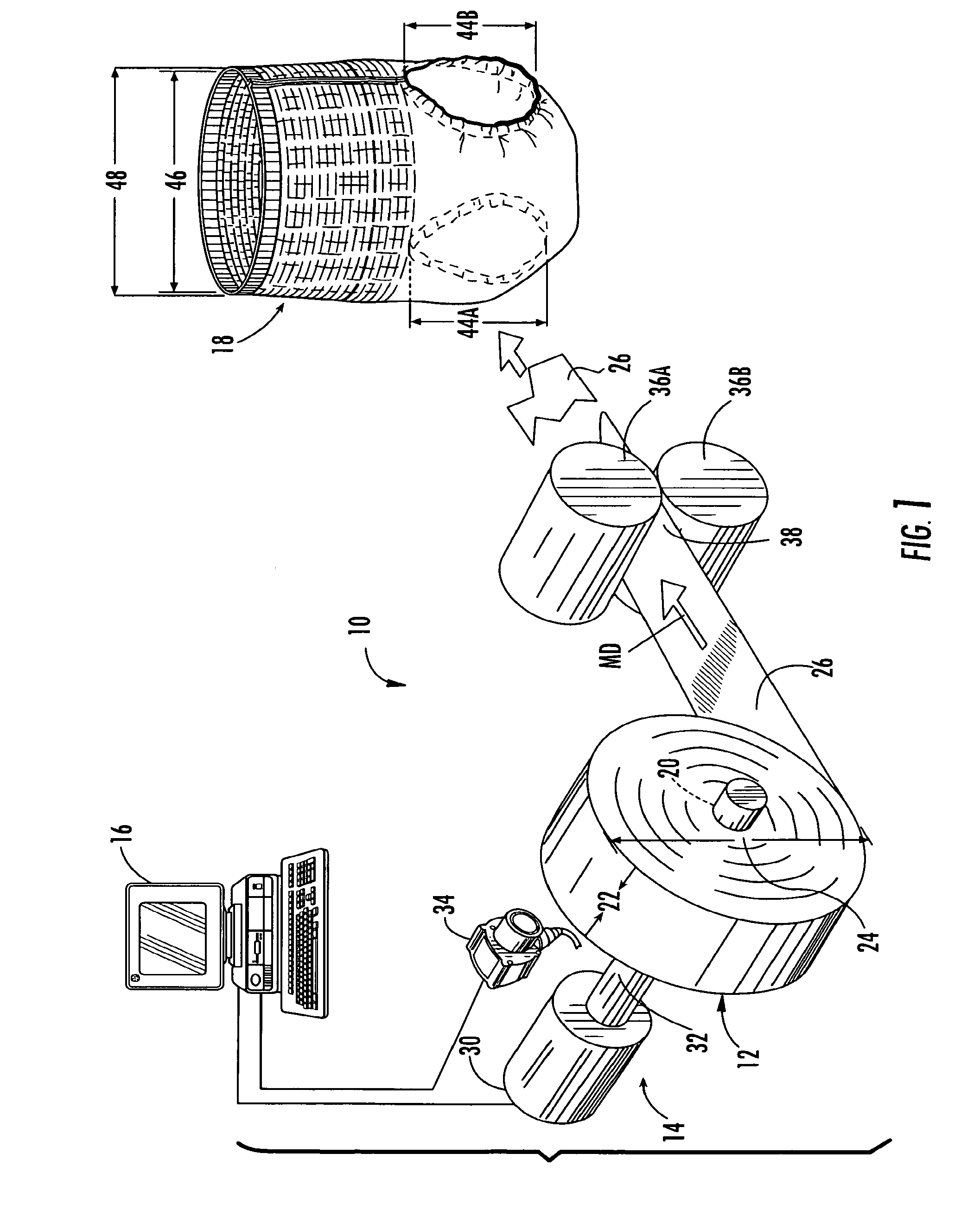

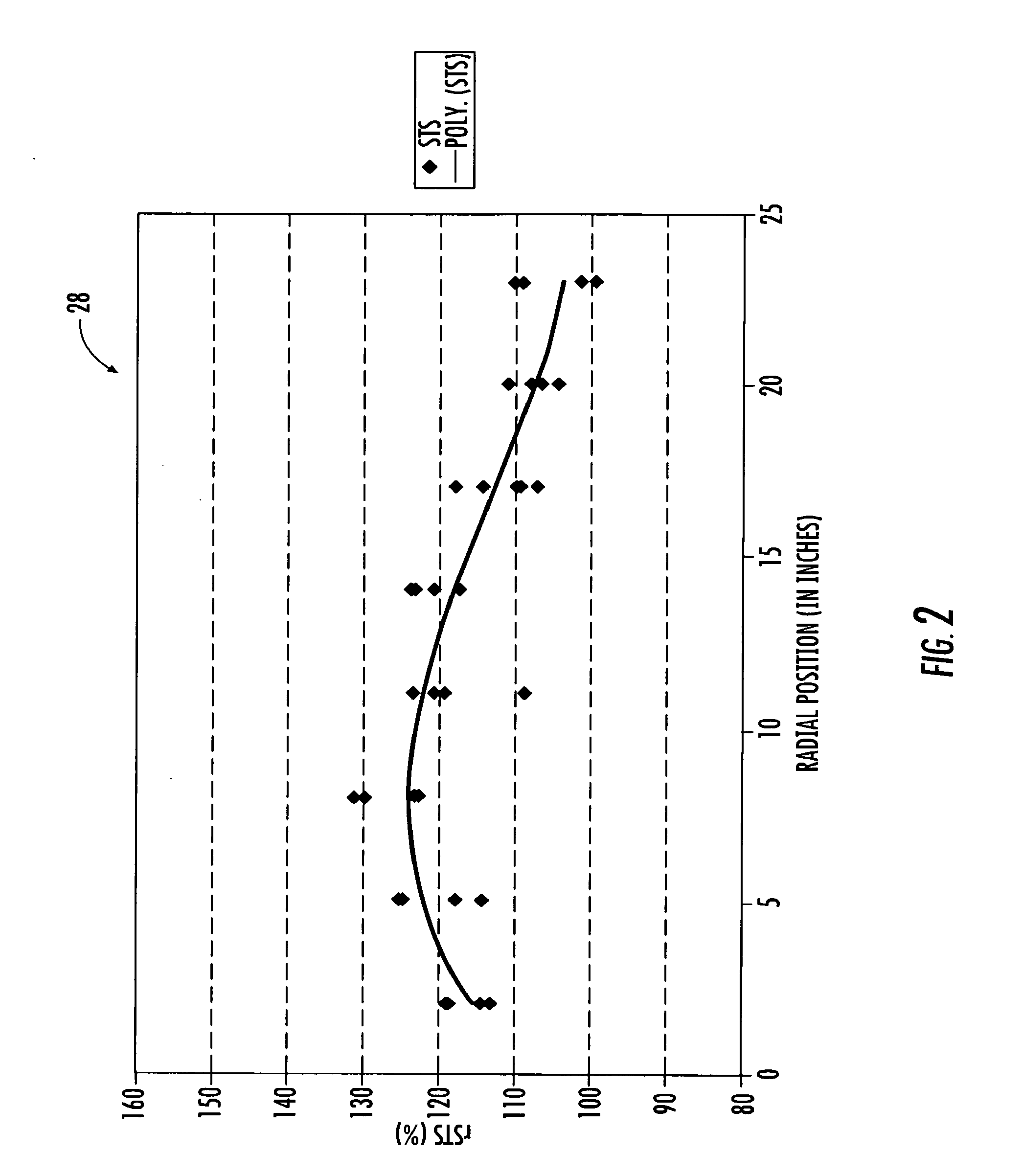

Through-roll profile unwind control system and method

Active | US20070131817A1 | Tags: Reduce material consumption, Same size, Filament handling, Absorbent pads, Process systems, Control system

A method of unwinding material in a process system for producing a product includes the steps of loading a roll of material in an unwind system, programming a controller with an unwind equation, the controller in communication with the unwind system, computing a material feed rate based on a predetermined product circumference and an average stretch-to-stop roll profile, and putting a diameter of the roll of material and the material feed rate in the unwind equation of the controller, and unwinding a varying amount of material from the roll of material at a varying unwind speed, the controller being responsive to the unwind equation to vary the unwind speed for forming a plurality of products, each product defining a respective circumference substantially equal to the predetermined product circumference.

Owner:KIMBERLY-CLARK WORLDWIDE INC
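
The unwind equation itself is not given in the abstract. Whatever its exact form, it has to respect the basic kinematics: for a fixed linear feed rate, the roll's rotational speed rises as its diameter shrinks. A sketch of that relation only, with hypothetical units (metres and metres per minute):

```python
import math


def unwind_rpm(feed_rate_m_per_min: float, roll_diameter_m: float) -> float:
    """Rotational speed (rev/min) that pays out material at the given linear rate.

    One revolution releases pi * D metres of material, so rpm = v / (pi * D).
    """
    if roll_diameter_m <= 0:
        raise ValueError("roll diameter must be positive")
    return feed_rate_m_per_min / (math.pi * roll_diameter_m)
```

For example, holding the feed rate constant while the roll diameter falls from 1.0 m to 0.5 m doubles the required rotational speed.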

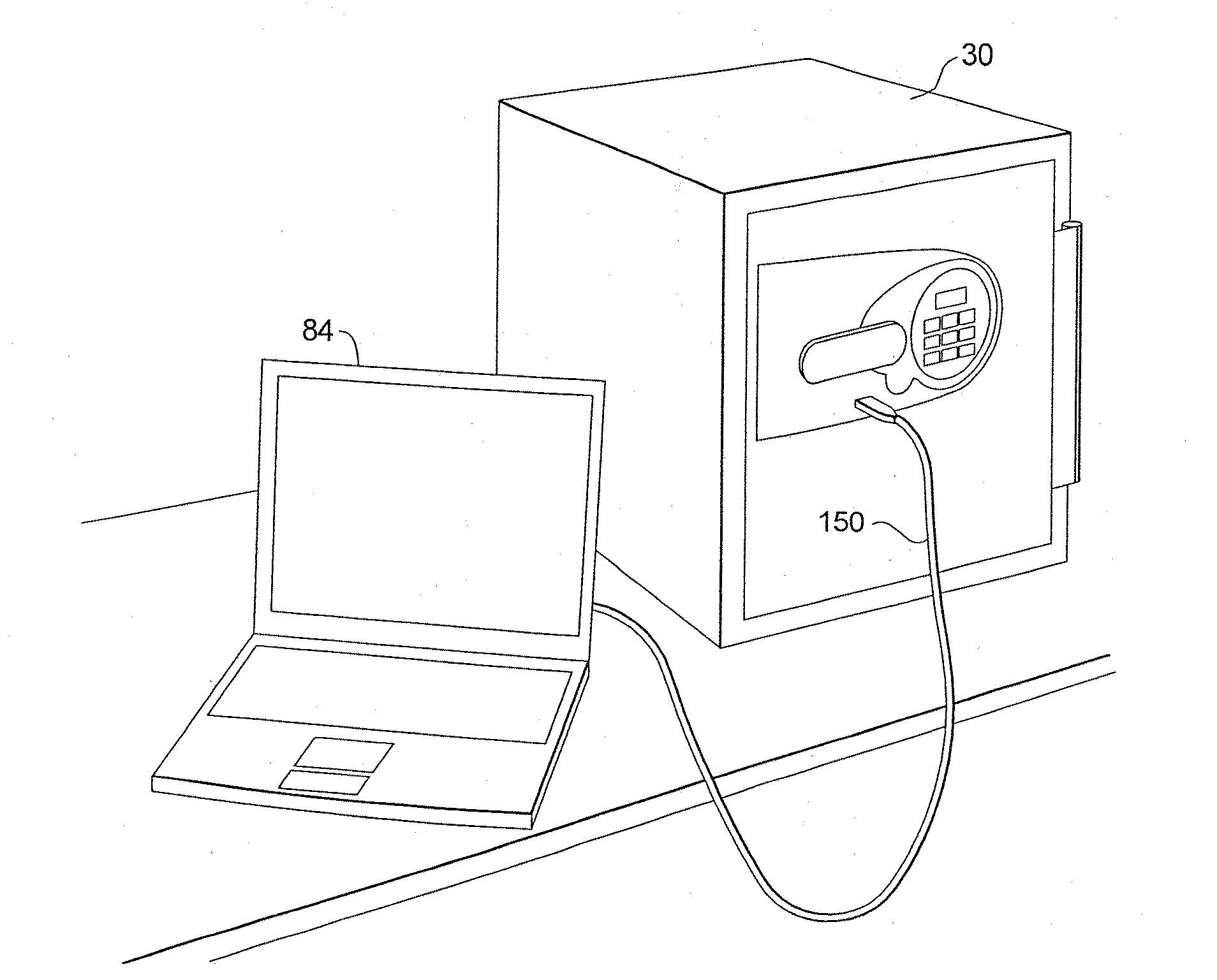

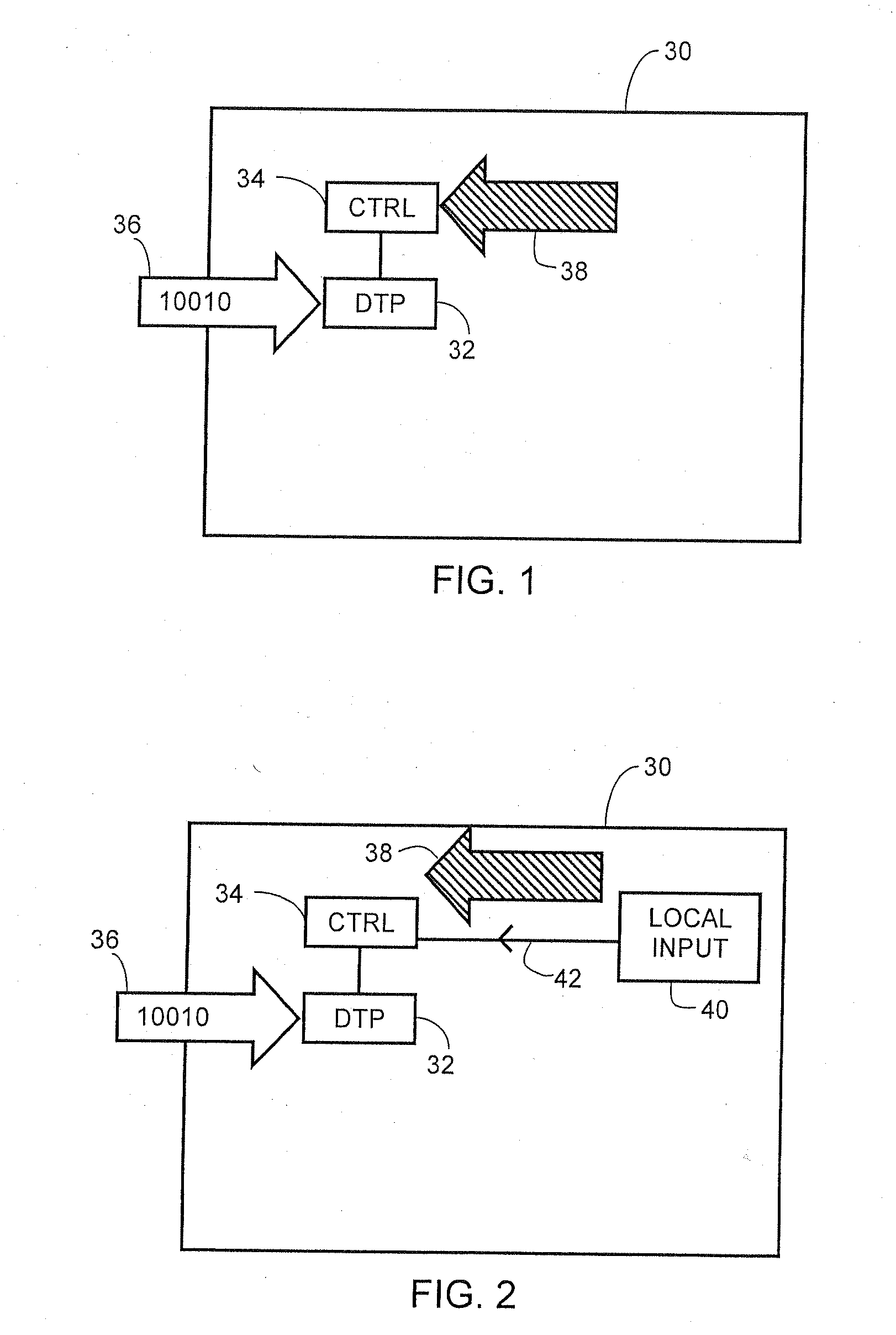

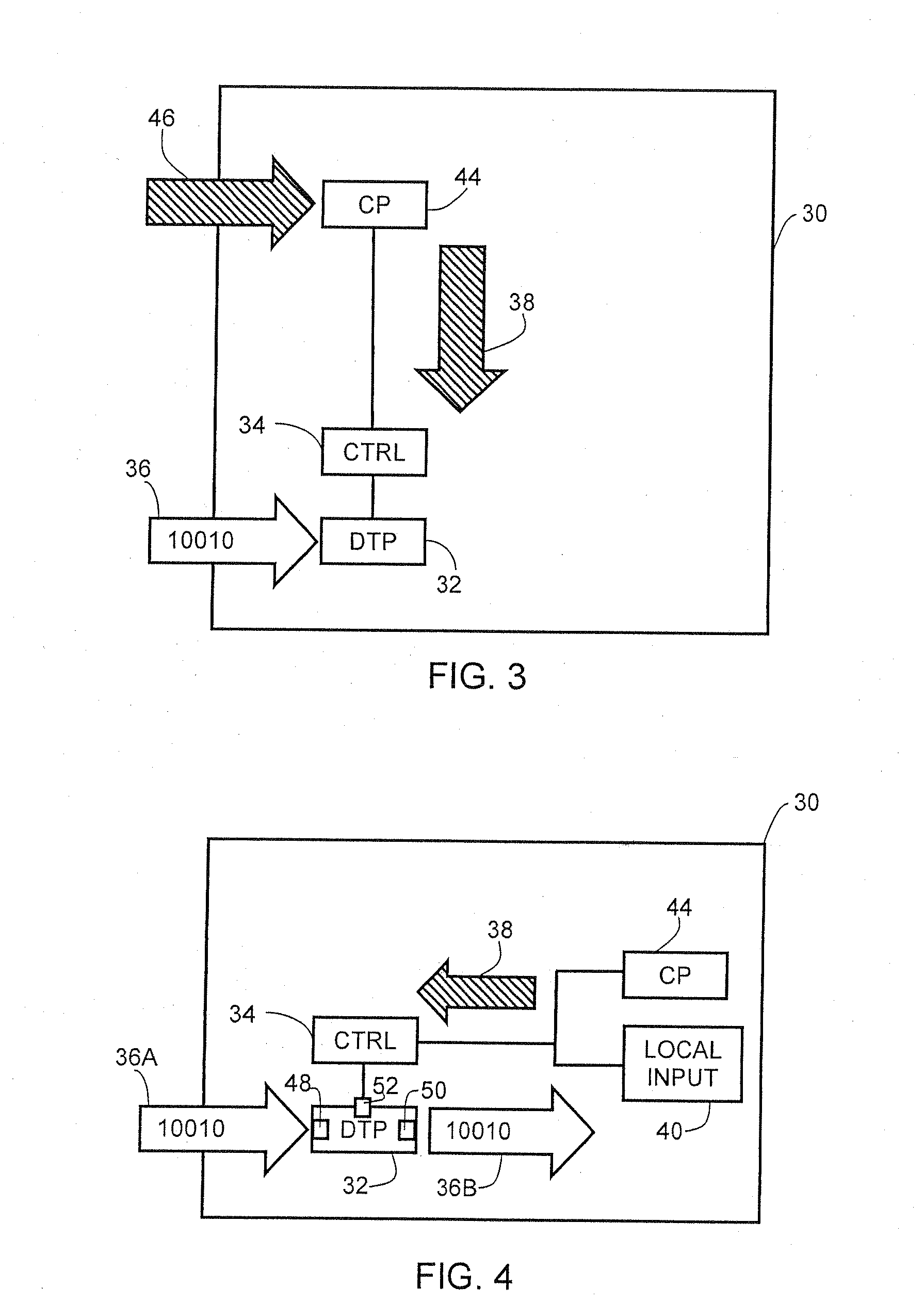

Safe with controllable data transfer capability

Active | US20090165682A1 | Tags: Volume/mass flow measurement, Internal/peripheral component protection, Data transmission, Embedded system

A safe including a safe controller coupled to a data transfer port is provided. The safe controller is configured to selectively enable device data to pass through the data transfer port when a valid code is received by the safe controller. A system for controlling data communications with an internal device in a safe is also provided. The system includes an external computing device configured to execute a series of instructions, and a safe. The safe includes a data transfer port coupled to the external computing device and the internal device. The safe also includes a safe controller coupled to the data transfer port, wherein the safe controller is configured to selectively enable communication between the external computing device and the internal device when a valid code is received by the safe controller.

Owner:SENTRY SAFE

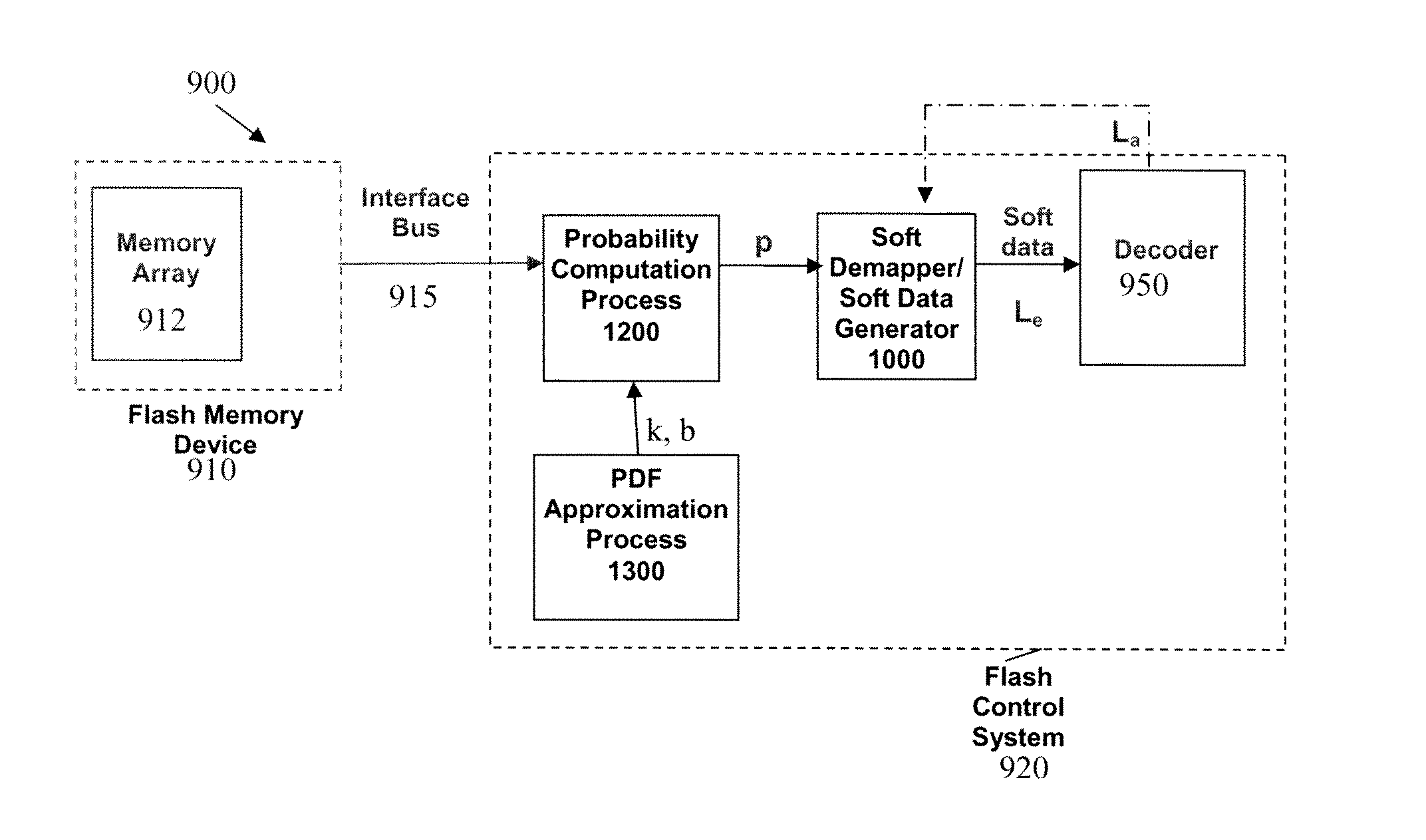

Methods and apparatus for computing a probability value of a received value in communication or storage systems

Active | US20110246136A1 | Tags: Other decoding techniques, Digital computer details, Communications system, Algorithm

Methods and apparatus are provided for computing a probability value of a received value in communication or storage systems. A probability value for a received value in a communication system or a memory device is computed by obtaining at least one received value; identifying a segment of a function corresponding to the received value, wherein the function is defined over a plurality of segments, wherein each of the segments has an associated set of parameters; and calculating the probability value using the set of parameters associated with the identified segment. A probability value for a received value in a communication system or a memory device can also be computed by calculating the probability value for the received value using a first distribution, wherein the first distribution is predefined and wherein a mapped version of the first distribution approximates a distribution of the received values and wherein the calculating step is implemented by a processor, a controller, a read channel, a signal processing unit or a decoder.

Owner:AVAGO TECH INT SALES PTE LTD
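
A sketch of the segment-lookup step, assuming a log-linear model per segment (log p = a + b * y) with made-up breakpoints and parameters. The returned values are unnormalized; real parameters would be fitted to the channel or memory read statistics.

```python
import bisect
import math

# Hypothetical segmentation of the received-value axis and per-segment (a, b)
# parameters for log p = a + b * y, chosen so adjacent segments meet at breakpoints.
BREAKPOINTS = [-1.0, 0.0, 1.0]
SEGMENT_PARAMS = [(0.0, 2.0), (-1.0, 1.0), (-1.0, -1.0), (0.0, -2.0)]


def segment_index(y: float) -> int:
    """Identify which segment of the piecewise function contains y."""
    return bisect.bisect_right(BREAKPOINTS, y)


def probability_value(y: float) -> float:
    """Evaluate the (unnormalized) probability value using that segment's parameters."""
    a, b = SEGMENT_PARAMS[segment_index(y)]
    return math.exp(a + b * y)
```

Storing a few parameters per segment keeps the lookup to one binary search and one exponential, which is the kind of saving that makes such a scheme attractive in a read channel or decoder.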

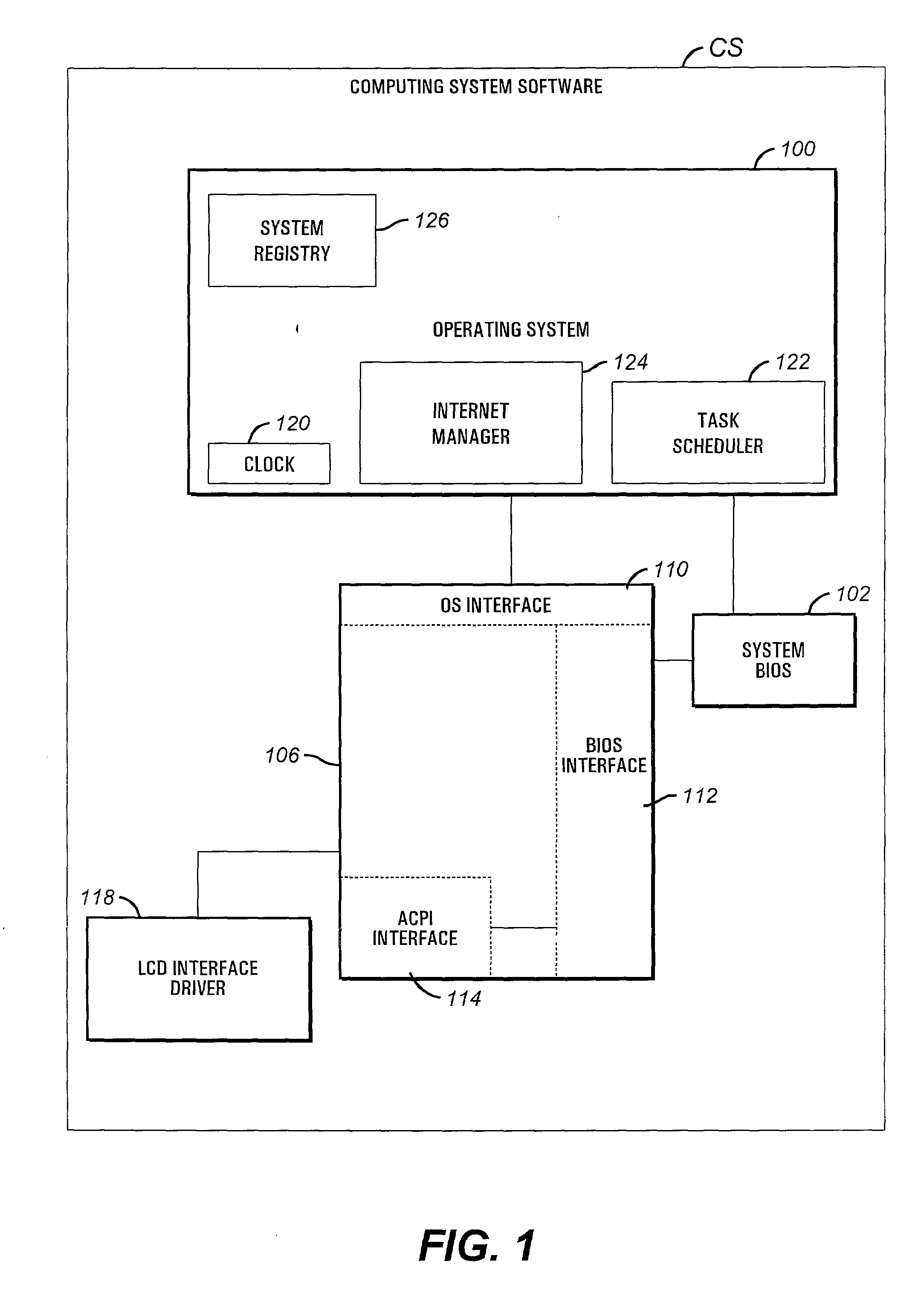

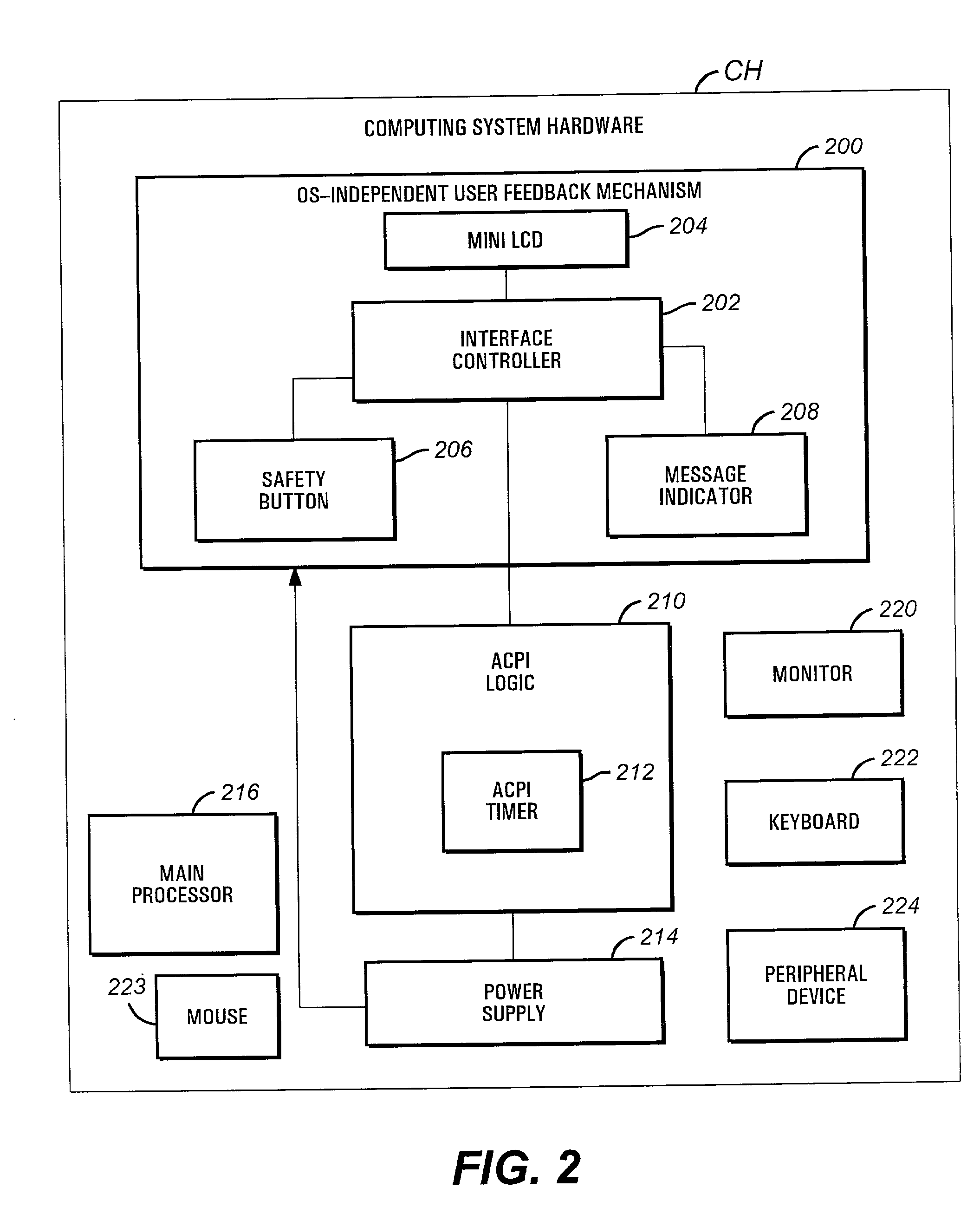

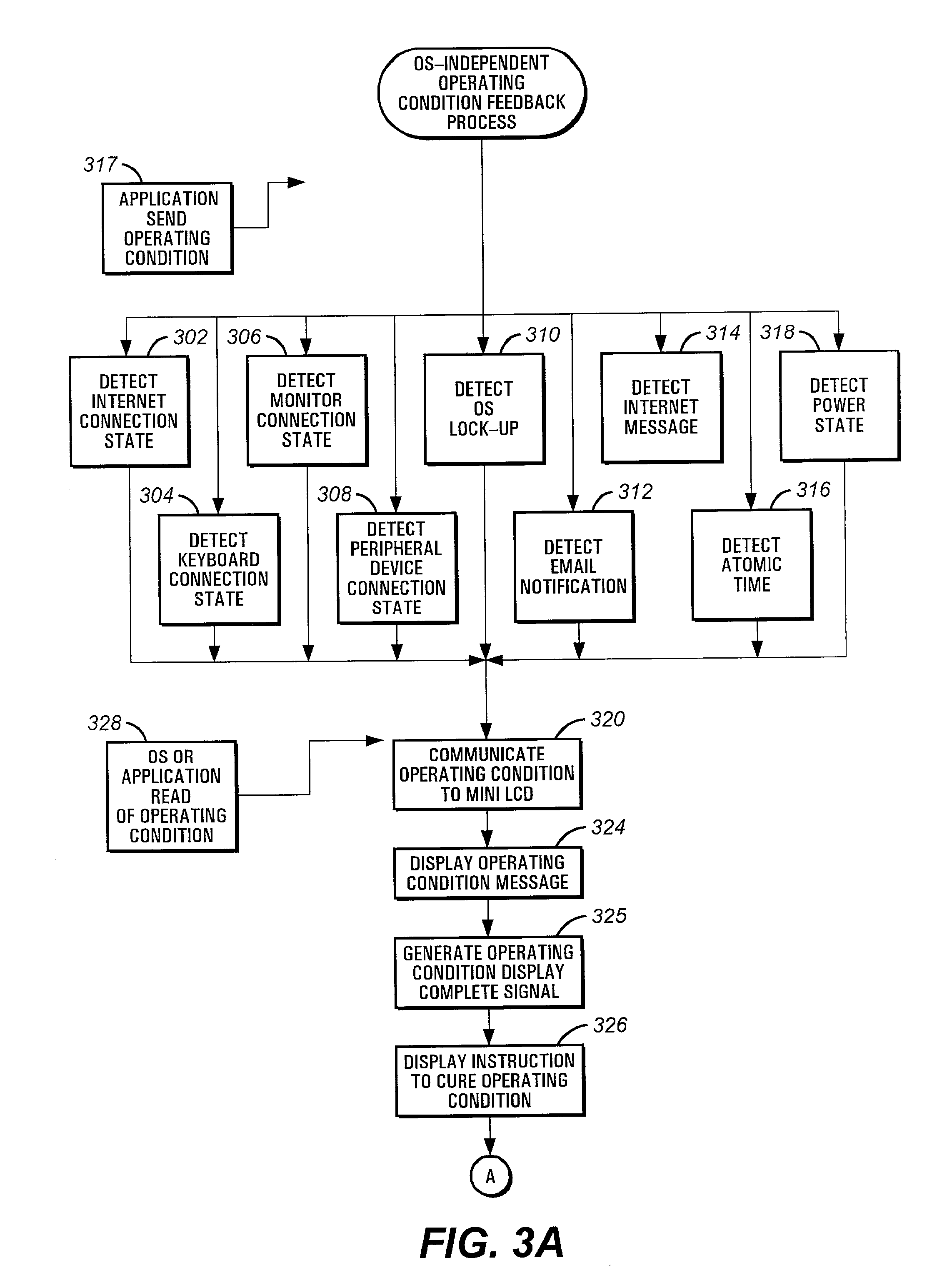

Operating system-independent computing system user feedback mechanism

Inactive | US20010007140A1 | Tags: Digital data processing details, Hardware monitoring, Operational system, The Internet

A computing system employs a user feedback mechanism to monitor operating conditions of the computing system and to alert a user to the operating conditions independently of an operating system of the computing system. The user feedback mechanism includes a display panel to display operating condition messages to the user and includes a controller to monitor operating condition signals. Some examples of operating conditions include a connection state of the computing system to the Internet, a connection state of a peripheral device to the computing system, a new e-mail notification message, a new Internet message and atomic time from a network server coupled to the computing system. The user feedback mechanism may include a safety button to signal a power supply to power off the computing system independently of the operating system.

Owner:HEWLETT PACKARD DEV CO LP

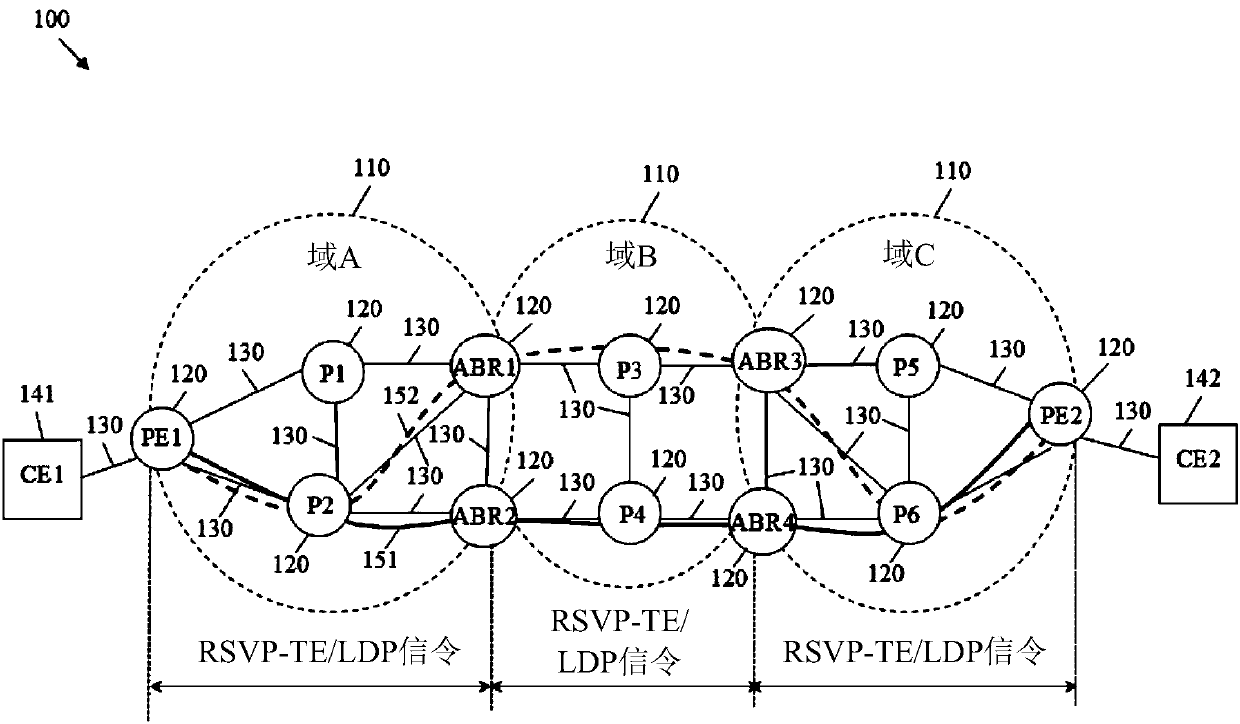

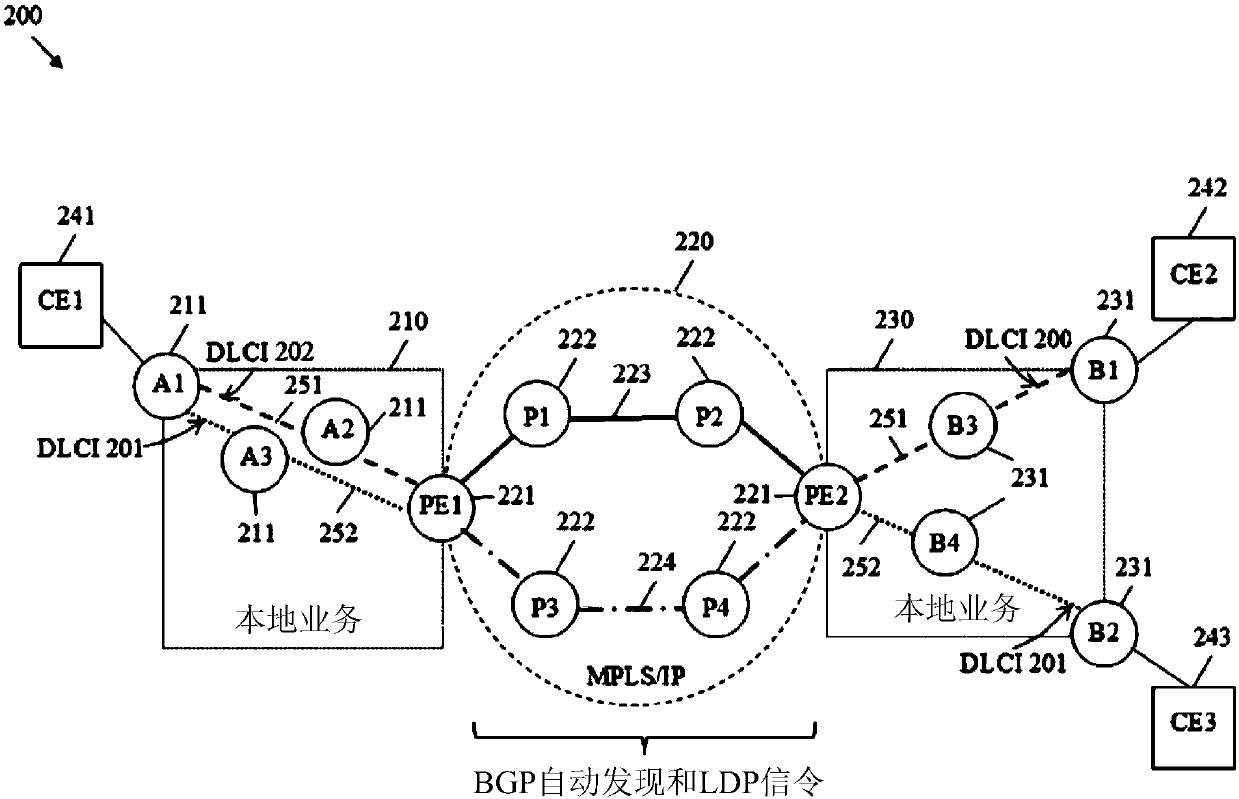

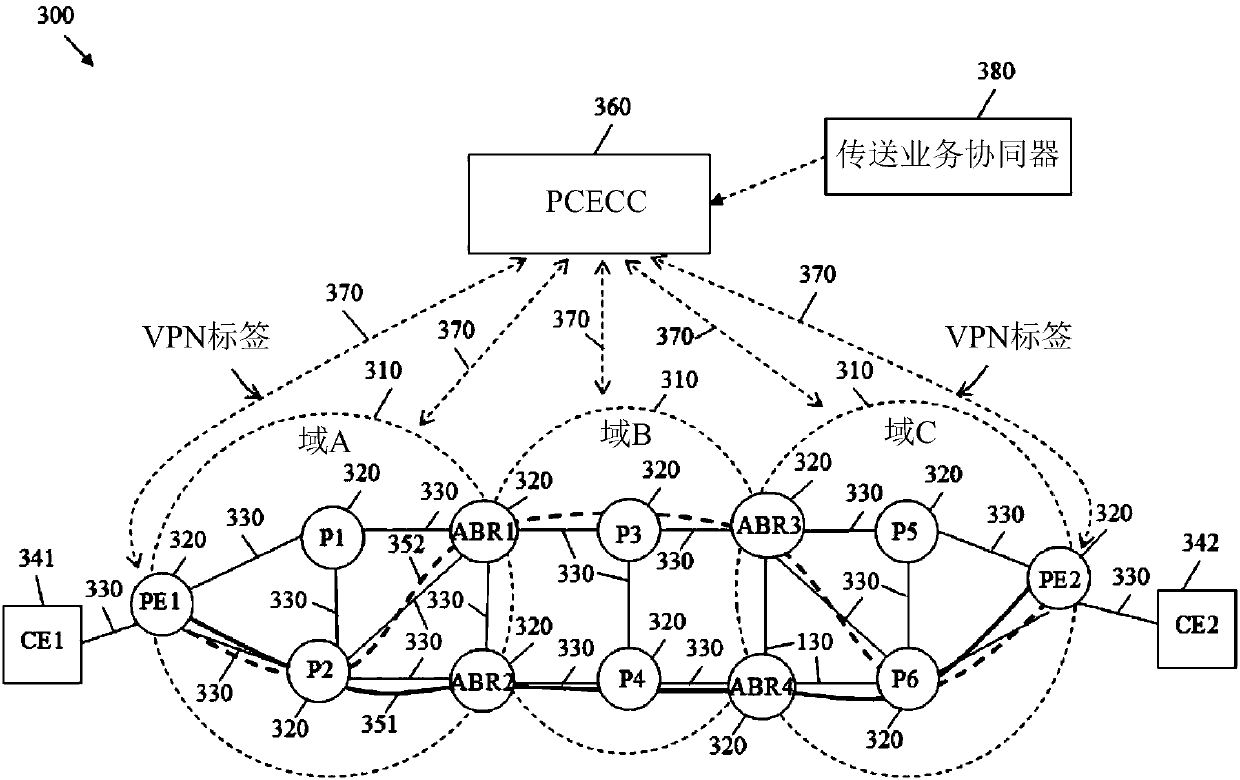

Path computation element central controllers (PCECCs) for network services

The invention discloses path computation element central controllers (PCECCs) for network services. According to the invention, a method implemented by a path computation element centralized controller (PCECC) comprises: receiving a service request to provision a service between a first edge node and a second edge node in a network; computing a path for a label switched path (LSP) from the first edge node to the second edge node in response to the service request; reserving label information for forwarding traffic of the service on the LSP; and sending a label update message to a third node on the path to facilitate forwarding of the traffic of the service on the path, wherein the label update message comprises the label information.

Owner:HUAWEI TECH CO LTD
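
A sketch of the PCECC steps (compute a path, reserve labels, emit per-node label updates), assuming Dijkstra's algorithm on a static cost graph and a simple counter for label allocation that starts at 16, since MPLS labels 0 to 15 are reserved. The update format is a hypothetical dictionary, not the PCEP message encoding.

```python
import heapq
from itertools import count


def shortest_path(graph, src, dst):
    """Dijkstra over graph = {node: {neighbor: cost}}; returns a node list or None."""
    dist, prev = {src: 0}, {}
    heap = [(0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == dst:
            break
        if d > dist[node]:
            continue  # stale heap entry
        for nbr, cost in graph.get(node, {}).items():
            nd = d + cost
            if nd < dist.get(nbr, float("inf")):
                dist[nbr], prev[nbr] = nd, node
                heapq.heappush(heap, (nd, nbr))
    if dst not in dist:
        return None
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]


def provision_lsp(graph, ingress, egress, first_label=16):
    """Compute the LSP path, reserve one label per downstream hop, build label updates."""
    path = shortest_path(graph, ingress, egress)
    if path is None:
        raise ValueError(f"no path from {ingress} to {egress}")
    labels = count(first_label)  # labels 0-15 are reserved in MPLS
    in_label = {node: next(labels) for node in path[1:]}
    updates = []
    for i, node in enumerate(path):
        nxt = path[i + 1] if i + 1 < len(path) else None
        updates.append({"node": node,
                        "in_label": in_label.get(node),
                        "out_label": in_label.get(nxt)})
    return path, updates
```

Because the controller allocates every label centrally, each node's outgoing label is simply the next hop's incoming label, and no distributed label signalling is needed.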

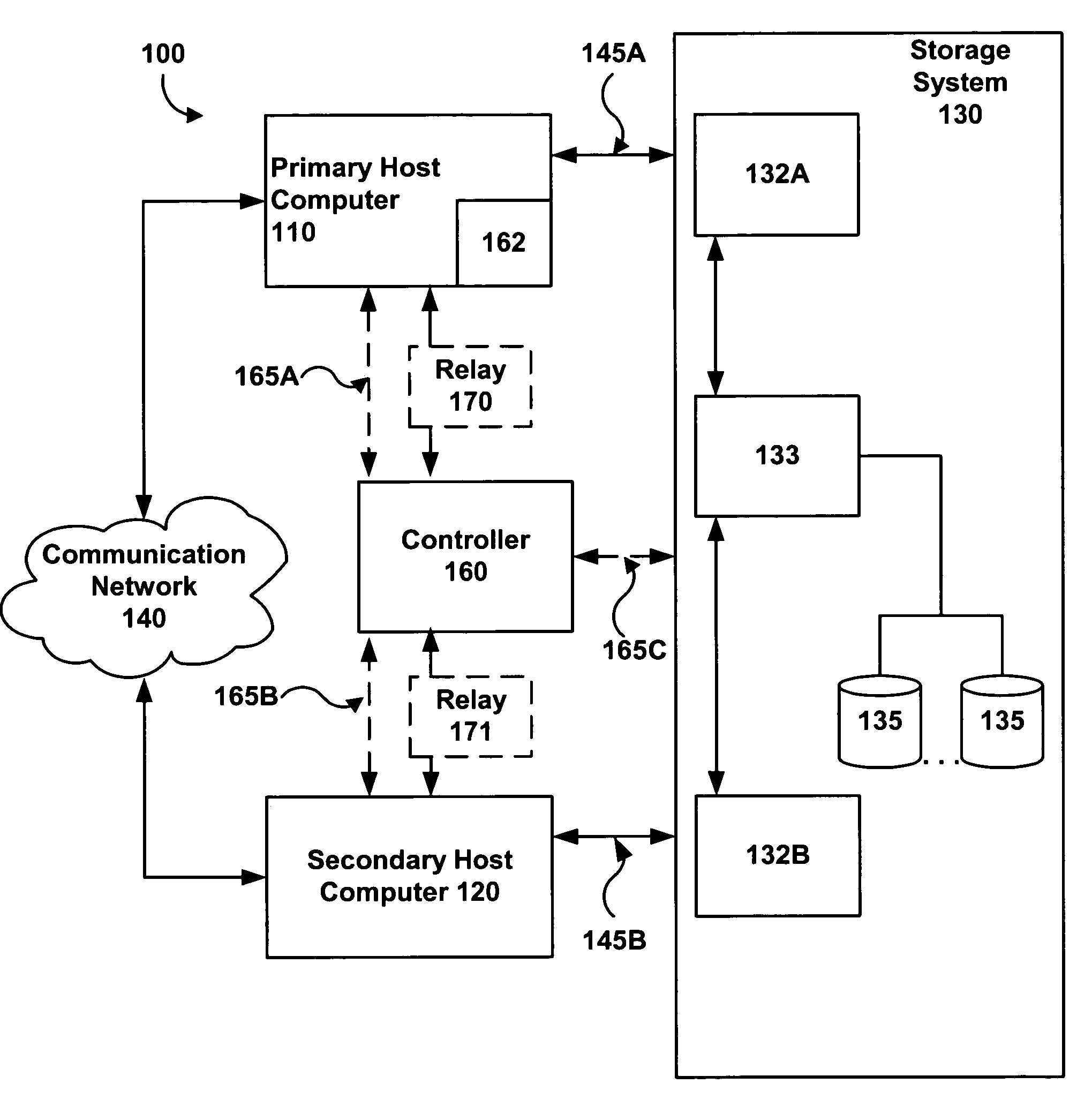

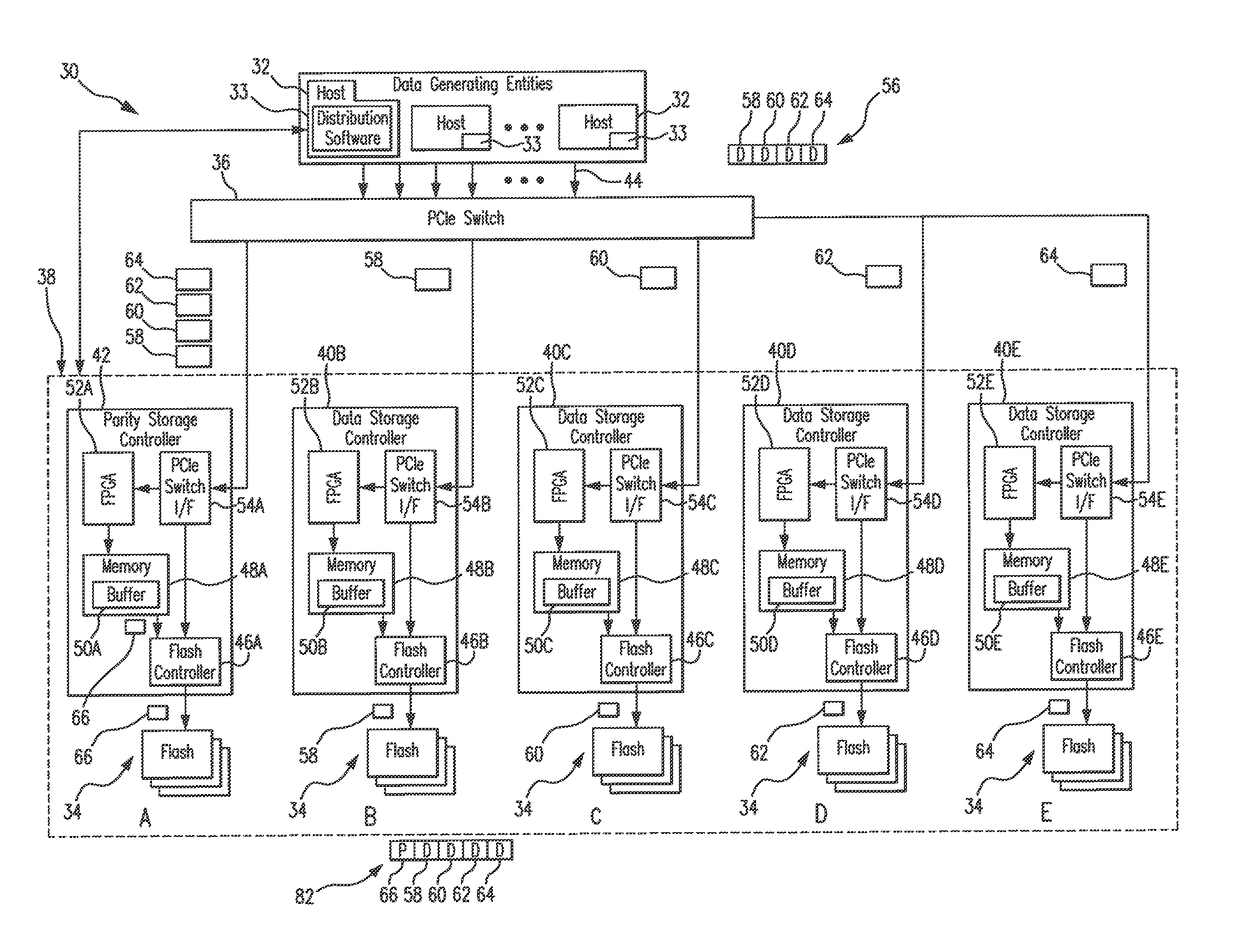

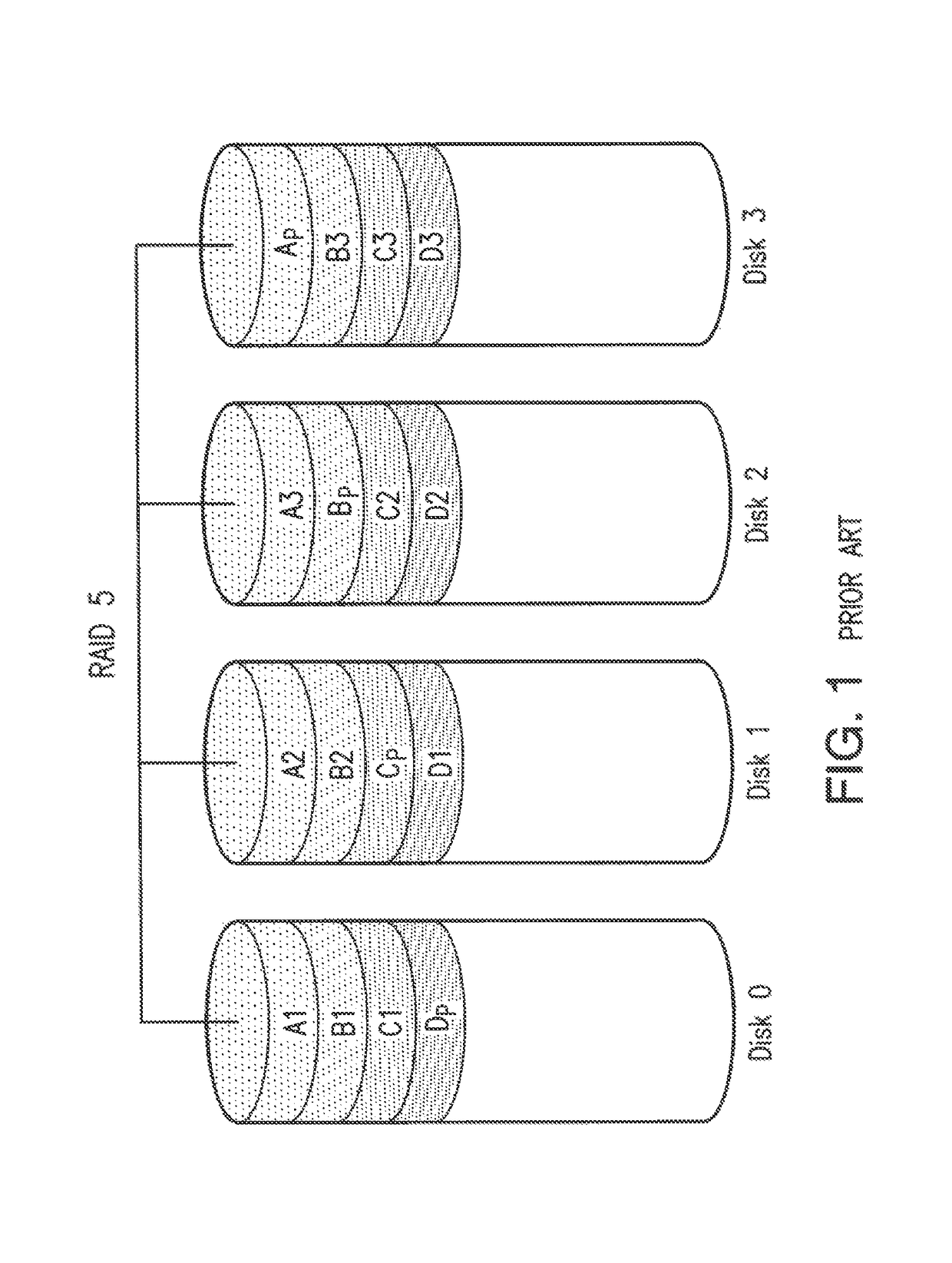

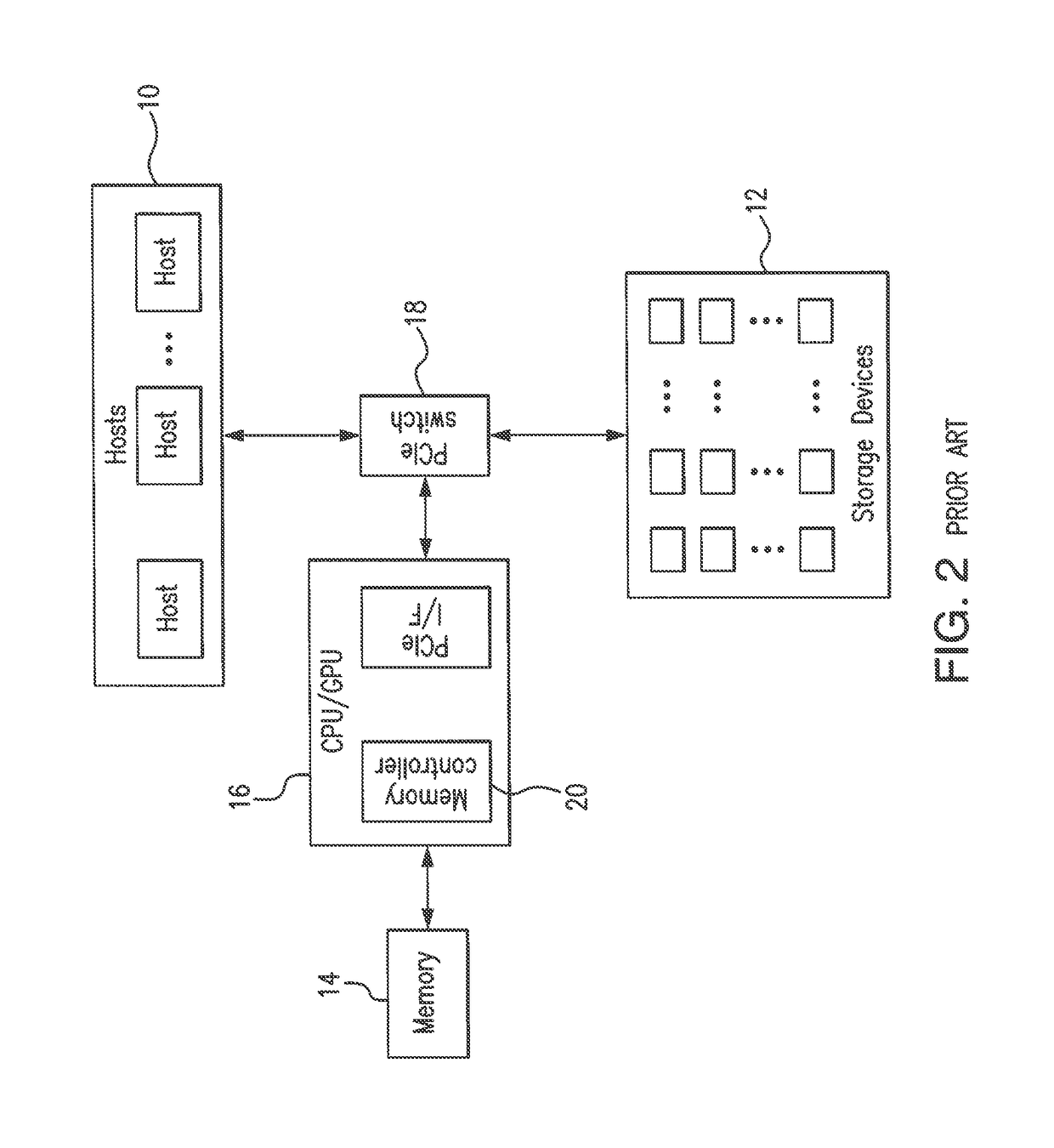

Data storage system and method for data migration between high-performance computing architectures and data storage devices using storage controller with distributed XOR capability

Active | US9639457B1 | Tags: Efficient and inexpensive way, Efficient and inexpensive, Memory architecture accessing/allocation, Error detection/correction, RAID, Control store

In the data storage system, the storage area network performs XOR operations on incoming data for parity generation without buffering data through a centralized RAID engine or processor. The hardware for calculating the XOR data is distributed to incrementally calculate data parity in parallel across each data channel, and may be implemented as a set of low-bandwidth FPGAs that scale efficiently as the amount of storage memory increases. A host adaptively appoints data storage controllers in the storage area network to perform XOR parity operations on data passing through them. The system provides data migration and parity generation in a simple and effective manner and reduces cost and power consumption.

Owner:DATADIRECT NETWORKS
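
A sketch of the parity arithmetic, assuming equal-sized blocks. Because XOR is associative and commutative, each controller can fold the block passing through it into a running parity in any order, and any single lost block can be rebuilt by XOR-ing the surviving blocks with the parity. The function names are hypothetical.

```python
def xor_blocks(a: bytes, b: bytes) -> bytes:
    if len(a) != len(b):
        raise ValueError("blocks must be the same size")
    return bytes(x ^ y for x, y in zip(a, b))


def fold_parity(blocks) -> bytes:
    """Incrementally XOR each channel's block into a running parity."""
    blocks = list(blocks)
    parity = bytes(len(blocks[0]))  # an all-zero block is the XOR identity
    for block in blocks:
        parity = xor_blocks(parity, block)
    return parity


def rebuild_missing(surviving, parity: bytes) -> bytes:
    """Recover one lost block as the XOR of the surviving blocks and the parity."""
    return fold_parity(list(surviving) + [parity])
```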

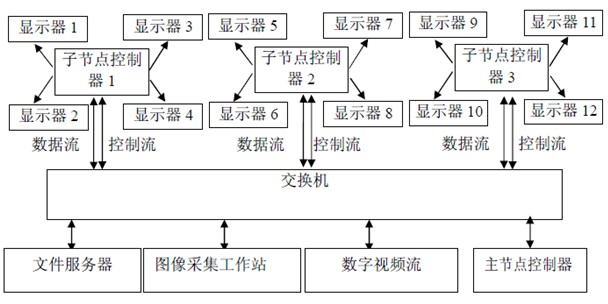

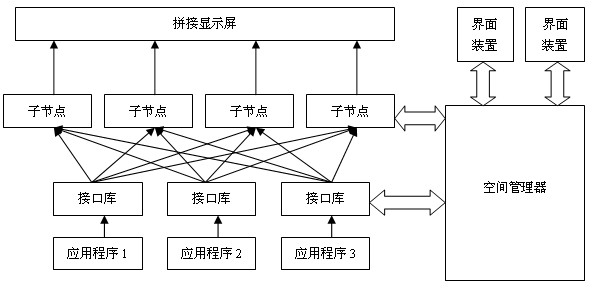

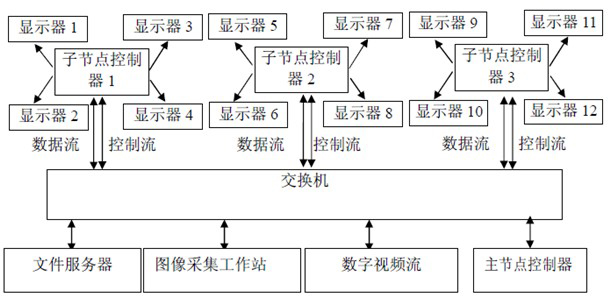

Multi-screen parallel massive information display system

Inactive | CN102541501A | Tags: Strong information processing ability, Breakthrough signal processing speed constraints, Concurrent instruction execution, Digital output to display device, Video memory, Data pack

The invention provides a multi-screen parallel massive information display system. The system divides an integrated splicing wall into units of four screens; each group of four screens is controlled by an accessory node controller, and the accessory node controllers are connected to a master node controller through a high-speed network. Under the unified allocation and organization of the master node controller, external massive data (including analog video signals, digital signals from a computer, and the like) are quantized and digitally processed, transmitted over the high-speed network, and finally delivered to the accessory node controllers, which render the images in parallel into video memory and display them on a high-definition splicing display screen. The system combines CPU (central processing unit)+CPU parallel processing technology, cloud computing technology, and high-speed network technology, so that massive data that a traditional system cannot process and display can be displayed quickly and in real time.

Owner:GUANGZHOU HANYANG INFORMATION TECH

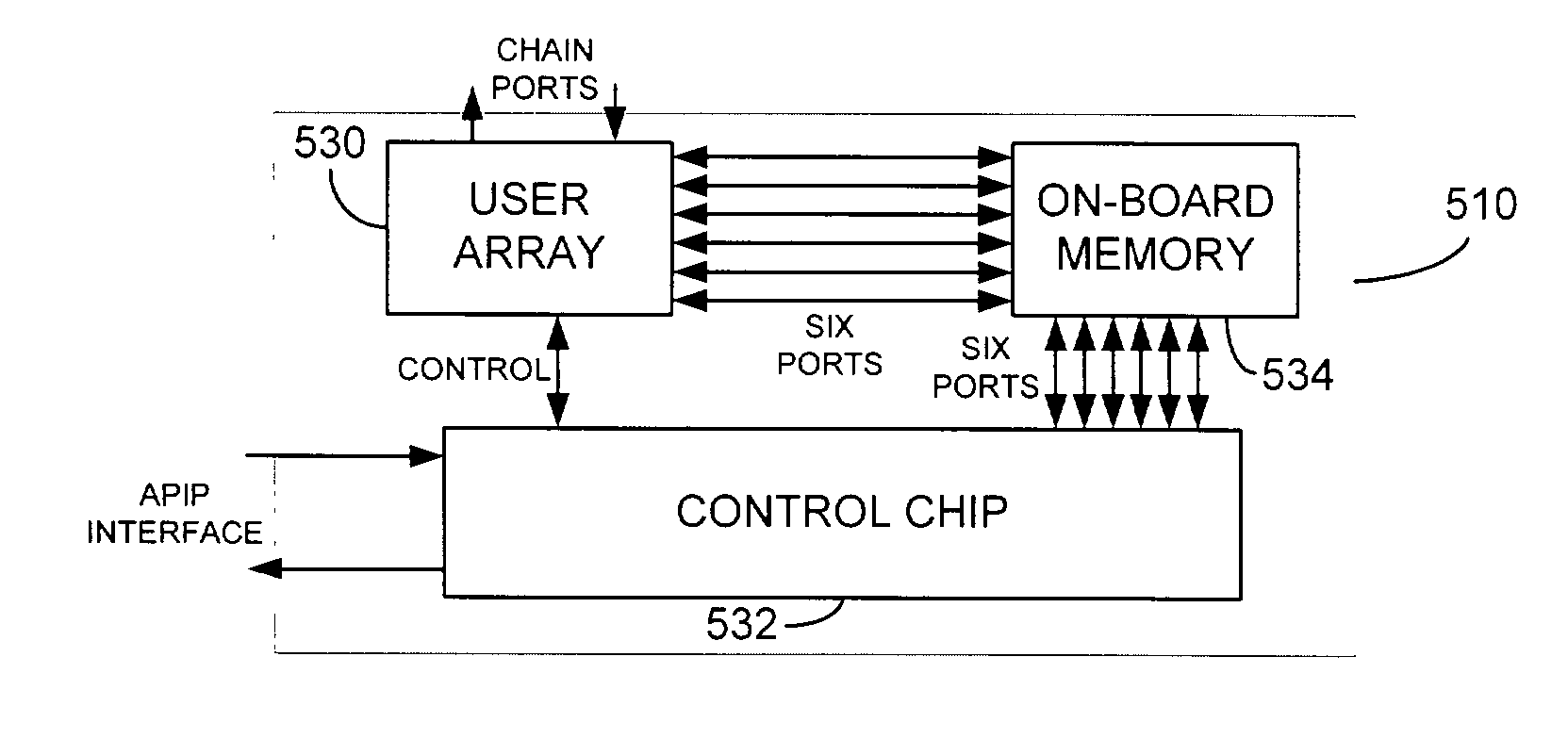

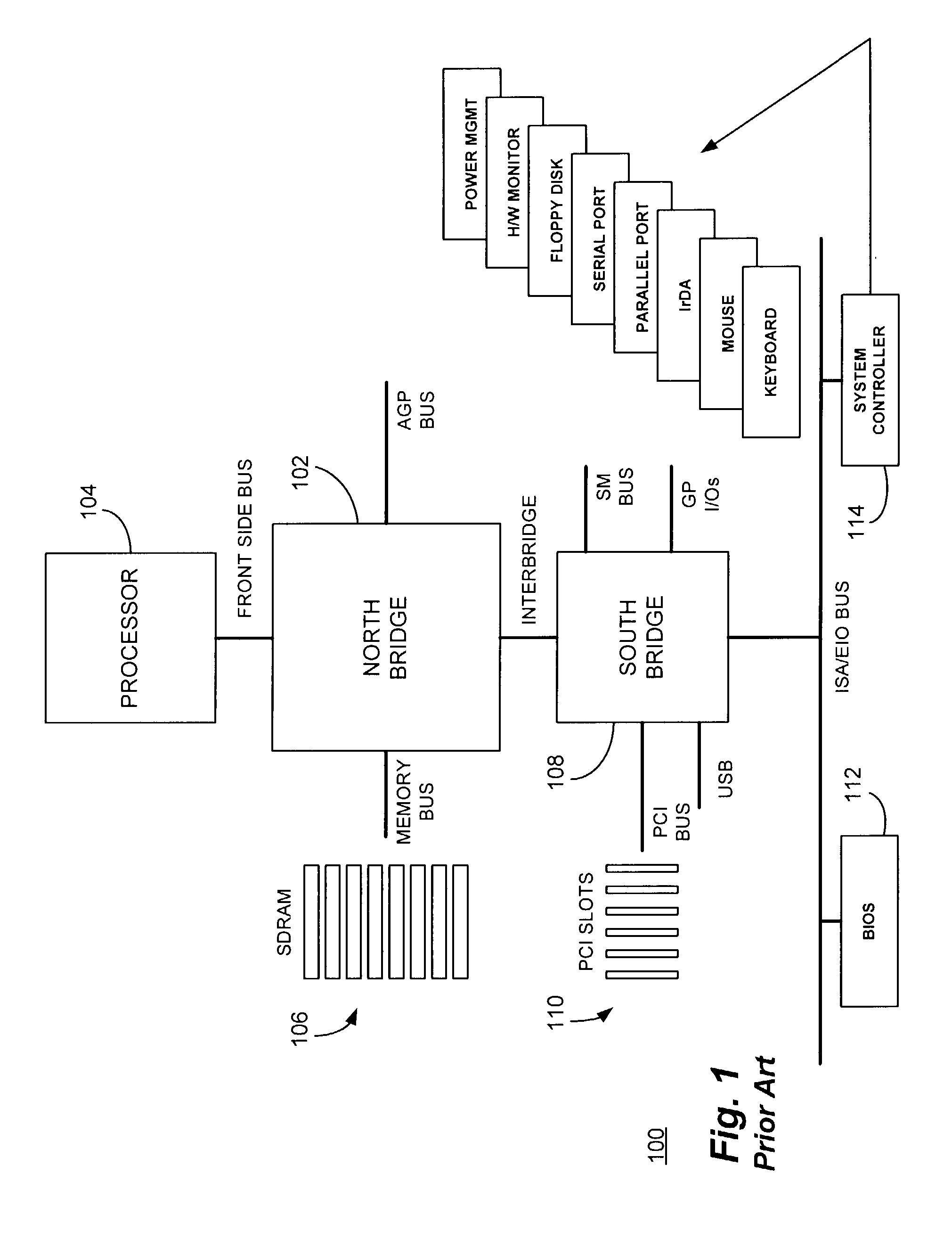

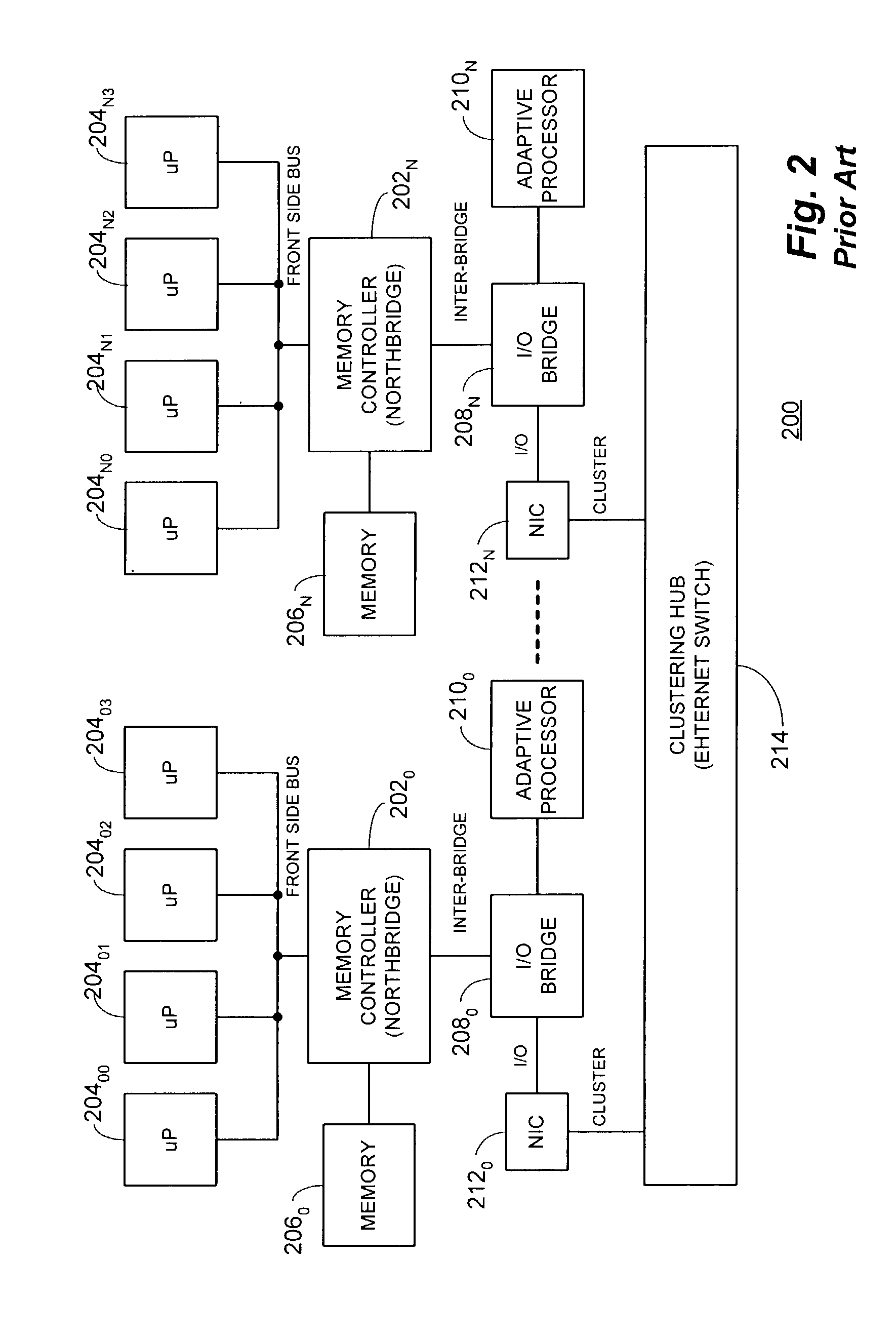

Computer system architecture and memory controller for close-coupling within a hybrid processing system utilizing an adaptive processor interface port

Inactive | US7003593B2 | Tags: Equal latency, Equal memory bandwidth, Computer control, Simulator control, Close coupling, Computer architecture

A computer system architecture and memory controller for close-coupling within a hybrid computing system using an adaptive processor interface port (“APIP”) added to, or in conjunction with, the memory and I/O controller chip of the core logic. Memory accesses to and from this port, as well as the main microprocessor bus, are then arbitrated by the memory control circuitry forming a portion of the controller chip. In this fashion, both the microprocessors and the adaptive processors of the hybrid computing system exhibit equal memory bandwidth and latency. In addition, because it is a separate electrical port from the microprocessor bus, the APIP is not required to comply with, and participate in, the full front-side bus (FSB) protocol. This reduces protocol overhead, which results in a higher payload yield on the interface.

Owner:SRC COMP

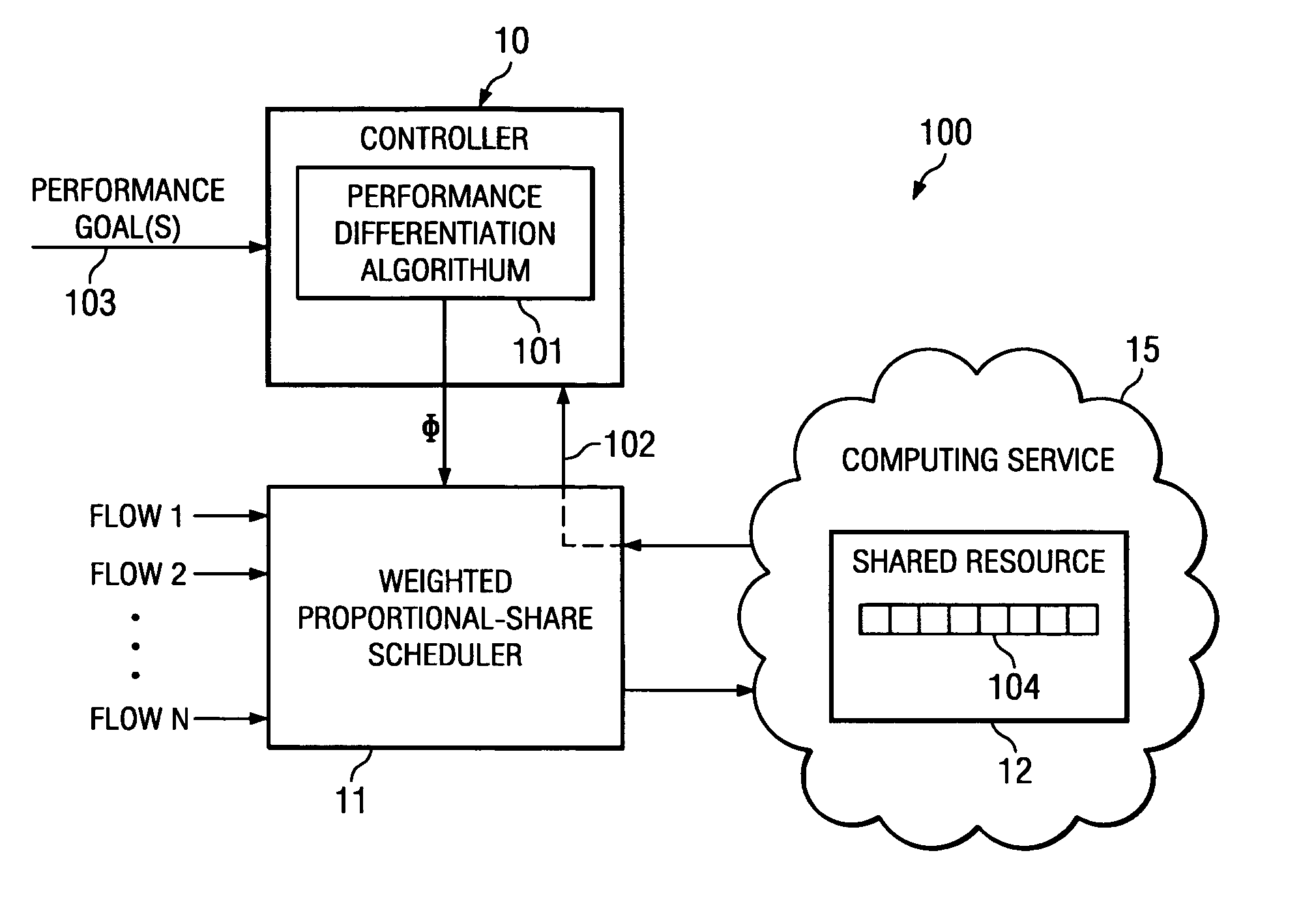

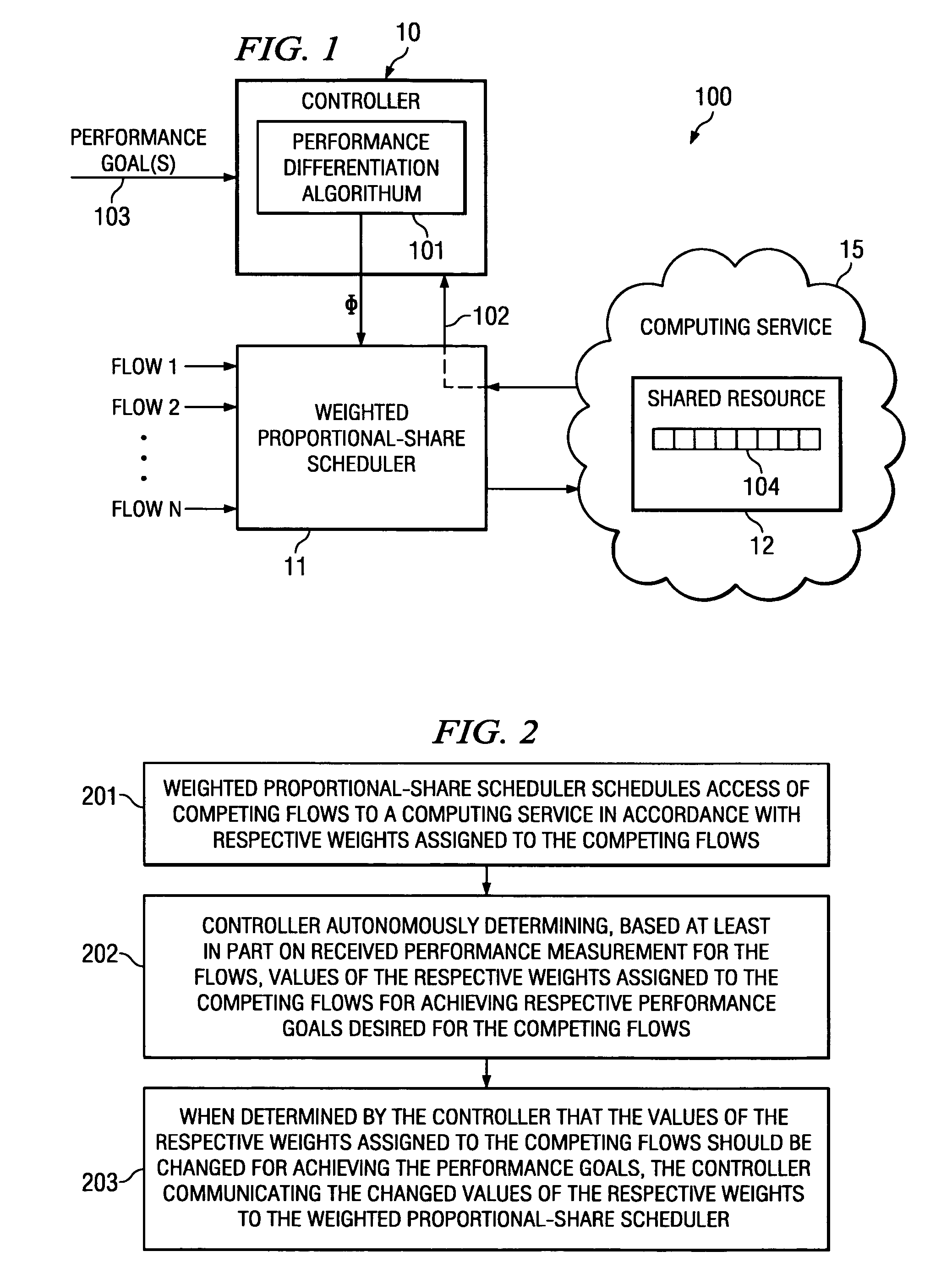

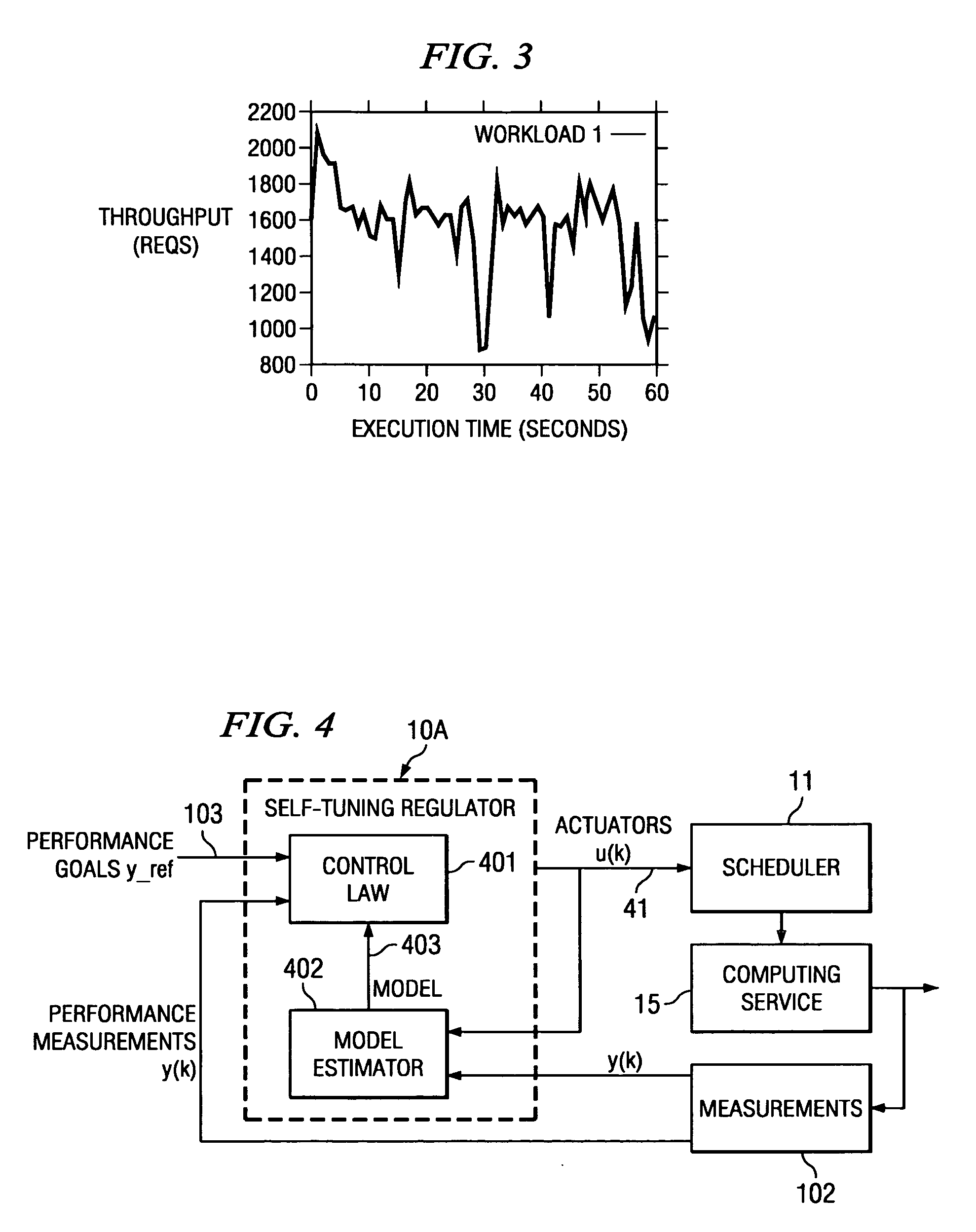

System and method for dynamically controlling weights assigned to consumers competing for a shared resource

Inactive | US20060294044A1 | Tags: Error prevention, Frequency-division multiplex details, Proportional share, Shared resource

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

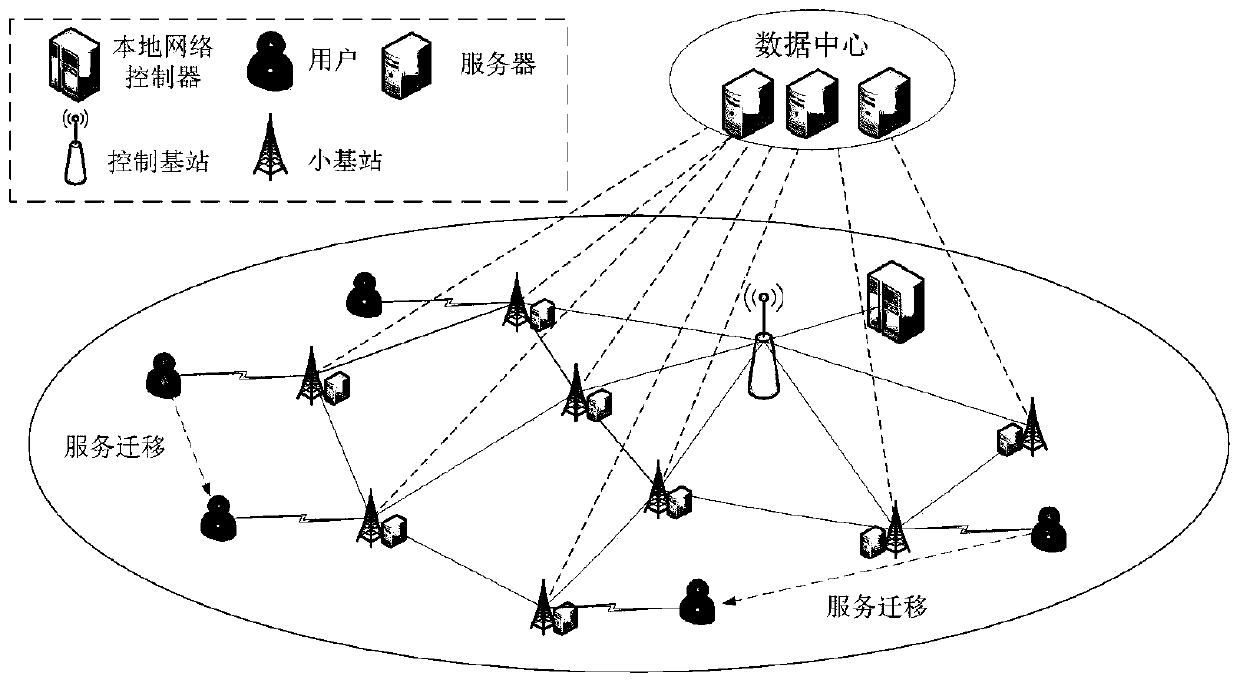

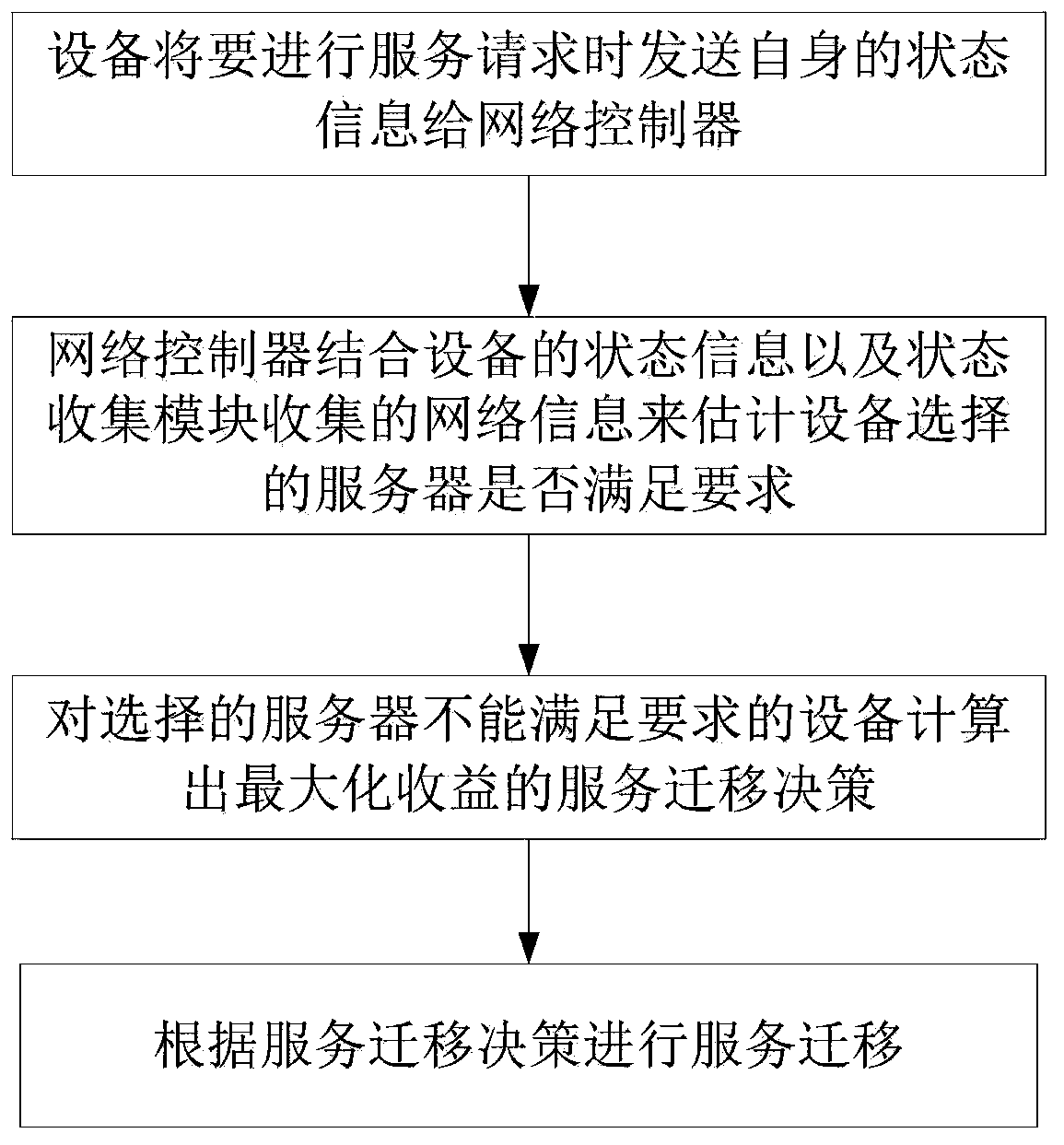

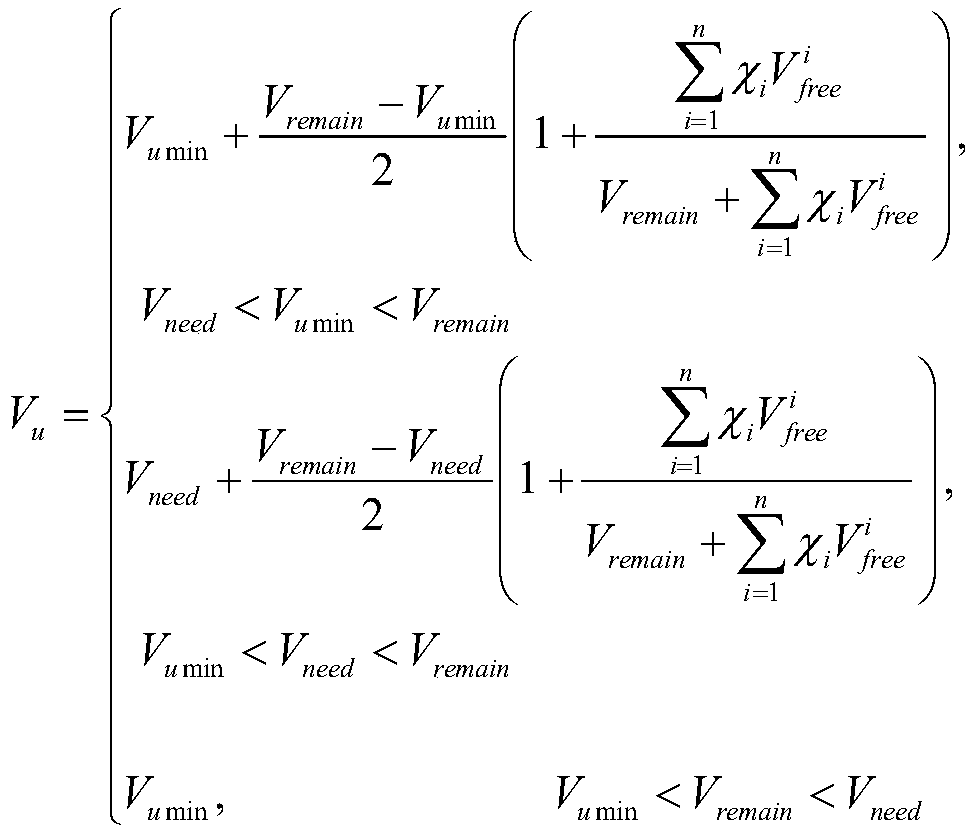

Particle swarm-based service migration method in edge computing environment

The invention relates to a particle swarm-based service migration method in an edge computing environment, in the technical field of mobile communication. The method comprises the following steps: S1, modeling the servers; S2, reporting service requests: each device sends its own state information to a local network controller; S3, identifying devices whose delay does not meet the requirement: the local network controller estimates each device's delay from the received device state information and the network information collected by the state collection module, and judges whether the delay requirement of the corresponding task type is met; and S4, formulating a migration decision: for the devices that cannot meet the requirements, a server is selected by jointly considering energy consumption and time delay together with factors such as task size, task type, link stability, and server state, and the migration decision with the maximum benefit is formulated. According to the invention, service migration can be triggered in real time when device performance falls below the standard, and the benefit of service migration is maximized while the task requirements of each device are met.

Owner:CHONGQING UNIV

System and method for a hierarchical system management architecture of a highly scalable computing system

Inactive | US7219156B1 | Tags: Hardware monitoring, Multiple digital computer combinations, Scalable computing, Systems management

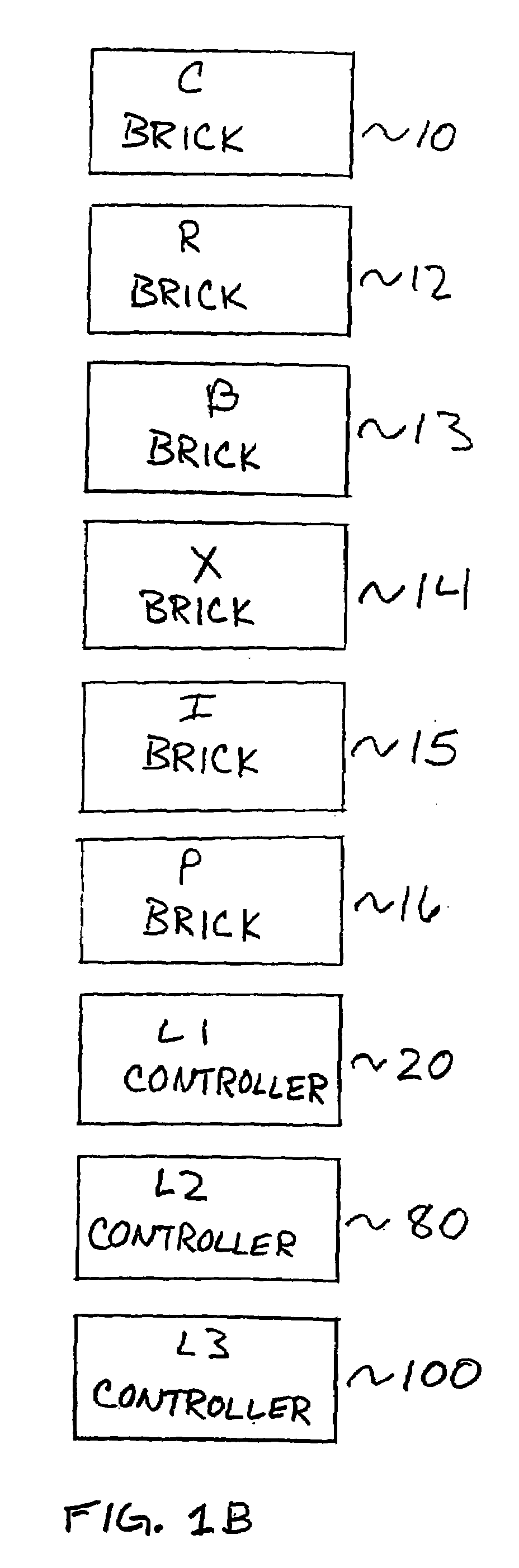

A modular computer system includes at least two processing functional modules, each including a processing unit adapted to process data and adapted to input/output data to other functional modules through at least two ports, with each port including a plurality of data lines. At least one routing functional module is adapted to route data and adapted to input/output data to other functional modules through at least two ports, with each port including a plurality of data lines. At least one input or output functional module is adapted to input or output data and adapted to input/output data to other functional modules through at least one port including a plurality of data lines. Each processing, routing, and input or output functional module includes a local controller adapted to control the local operation of the associated functional module, wherein the local controller is adapted to input and output control information over control lines connected to the respective ports of its functional module. At least one system controller functional module is adapted to communicate with one or more local controllers and provide control at a level above the local controllers. Each of the functional modules is adapted to be cabled together with a single cable that includes a plurality of data lines and control lines, such that control lines in each module are connected together and data lines in each unit are connected together. Each of the local controllers is adapted to detect other local controllers to which it is connected and thereby collectively determine the overall configuration of a system.

Owner:MORGAN STANLEY +1

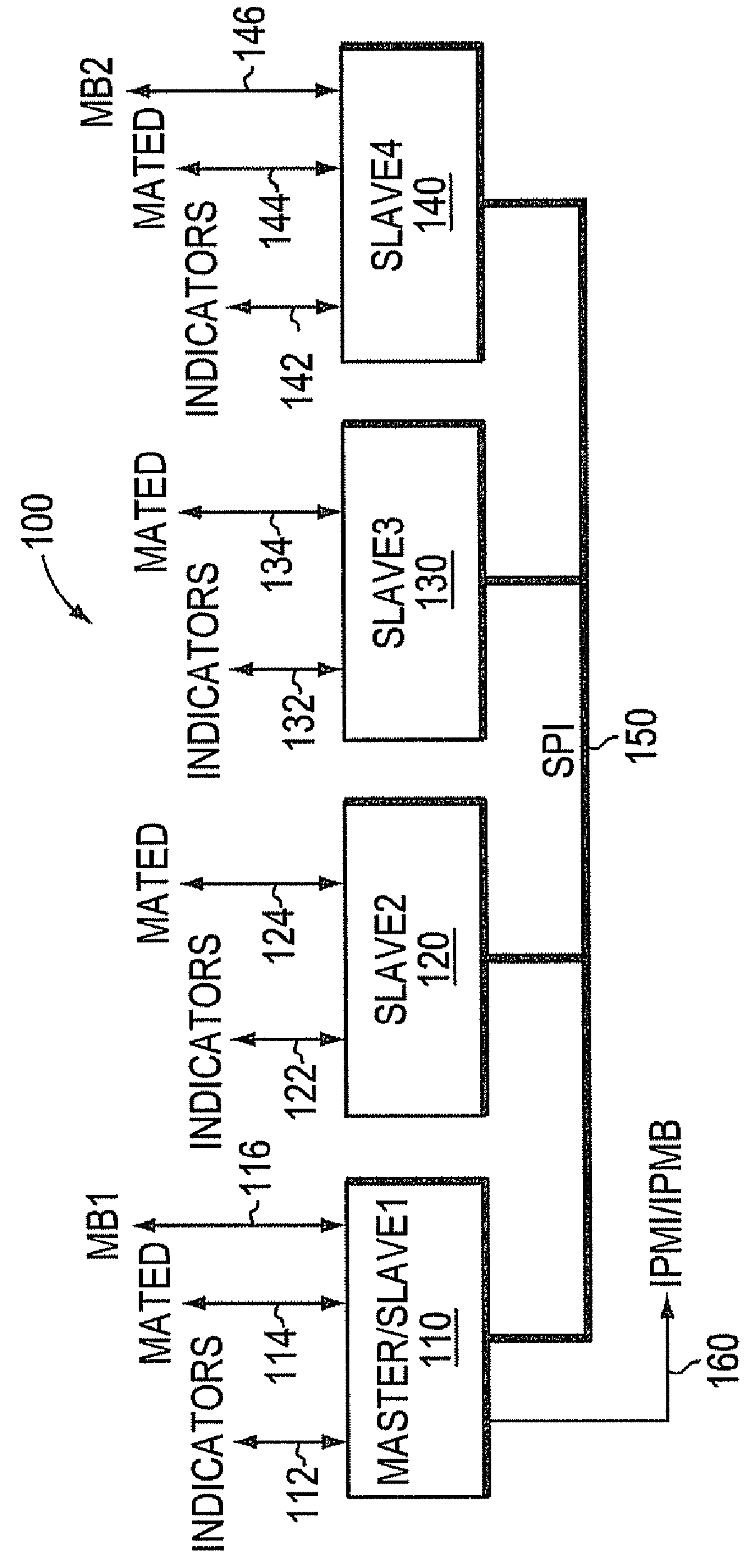

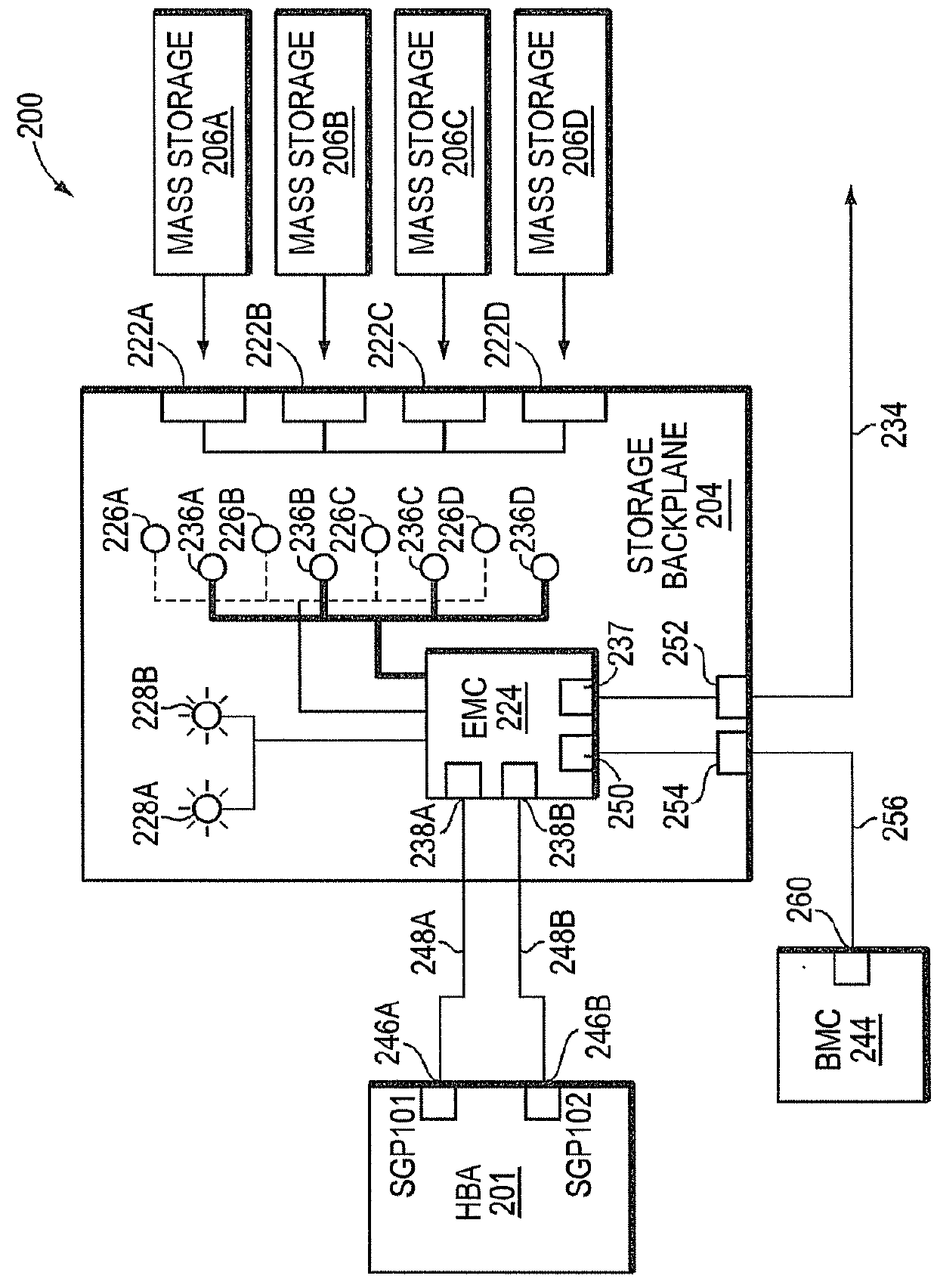

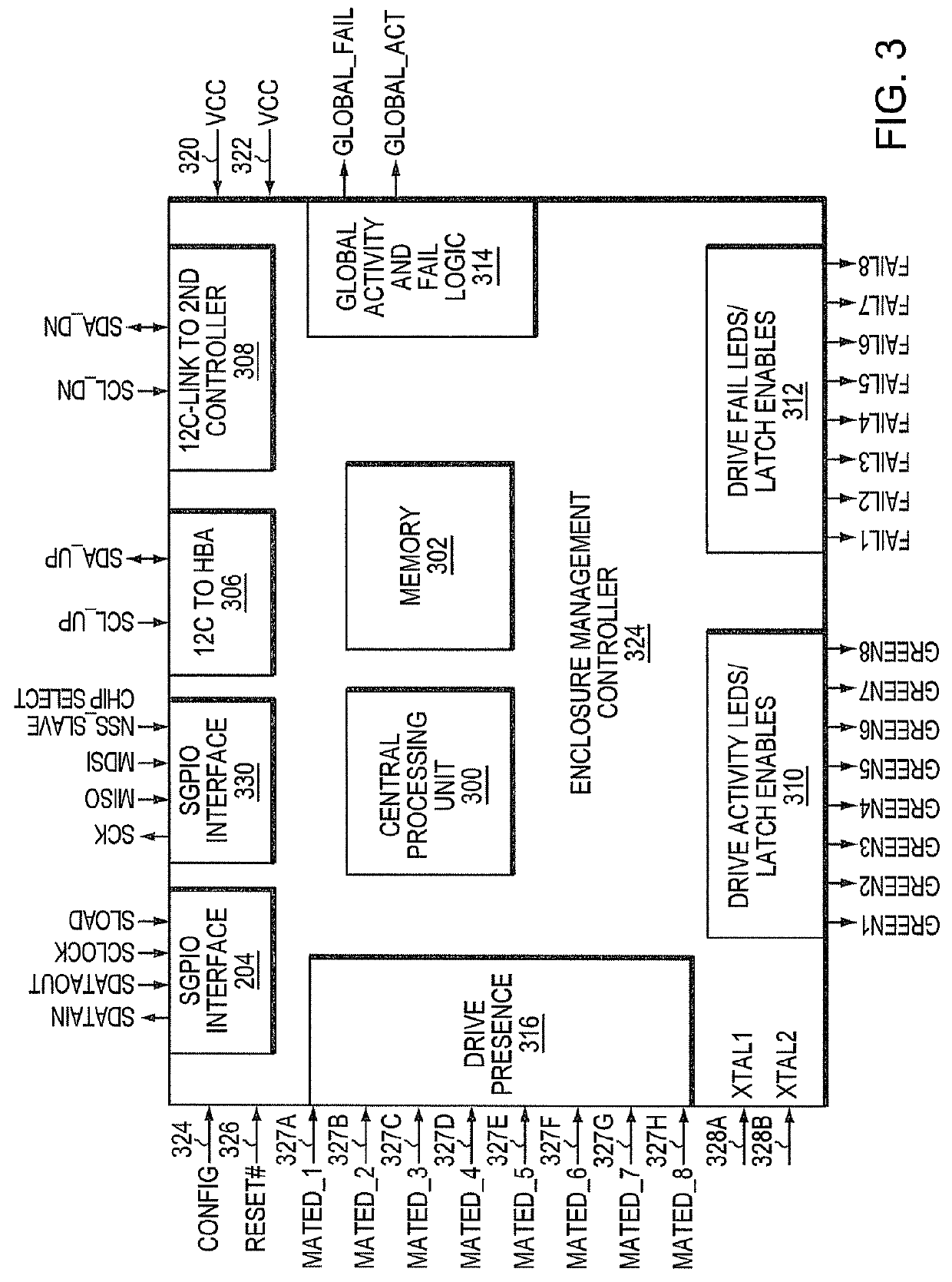

Drive mapping using a plurality of connected enclosure management controllers

According to one aspect, a computing system having a plurality of enclosure management controllers (EMCs) is disclosed. In one embodiment, the EMCs are communicatively coupled to each other and each EMC is operatively connected to a corresponding plurality of drive slots and at least one of a plurality of drive slot status indicators. Each EMC is operative to receive enclosure management data, detect an operational status of the drive slots, and generate drive slot status data. One of the EMCs is configured to function at least partly as a master EMC to receive drive slot status data and, based on received enclosure management data and received drive slot status data, generate mapped data for each one of the EMCs for selectively activating at least one of the drive slot status indicators to indicate corresponding operational status.

Owner:AMERICAN MEGATRENDS

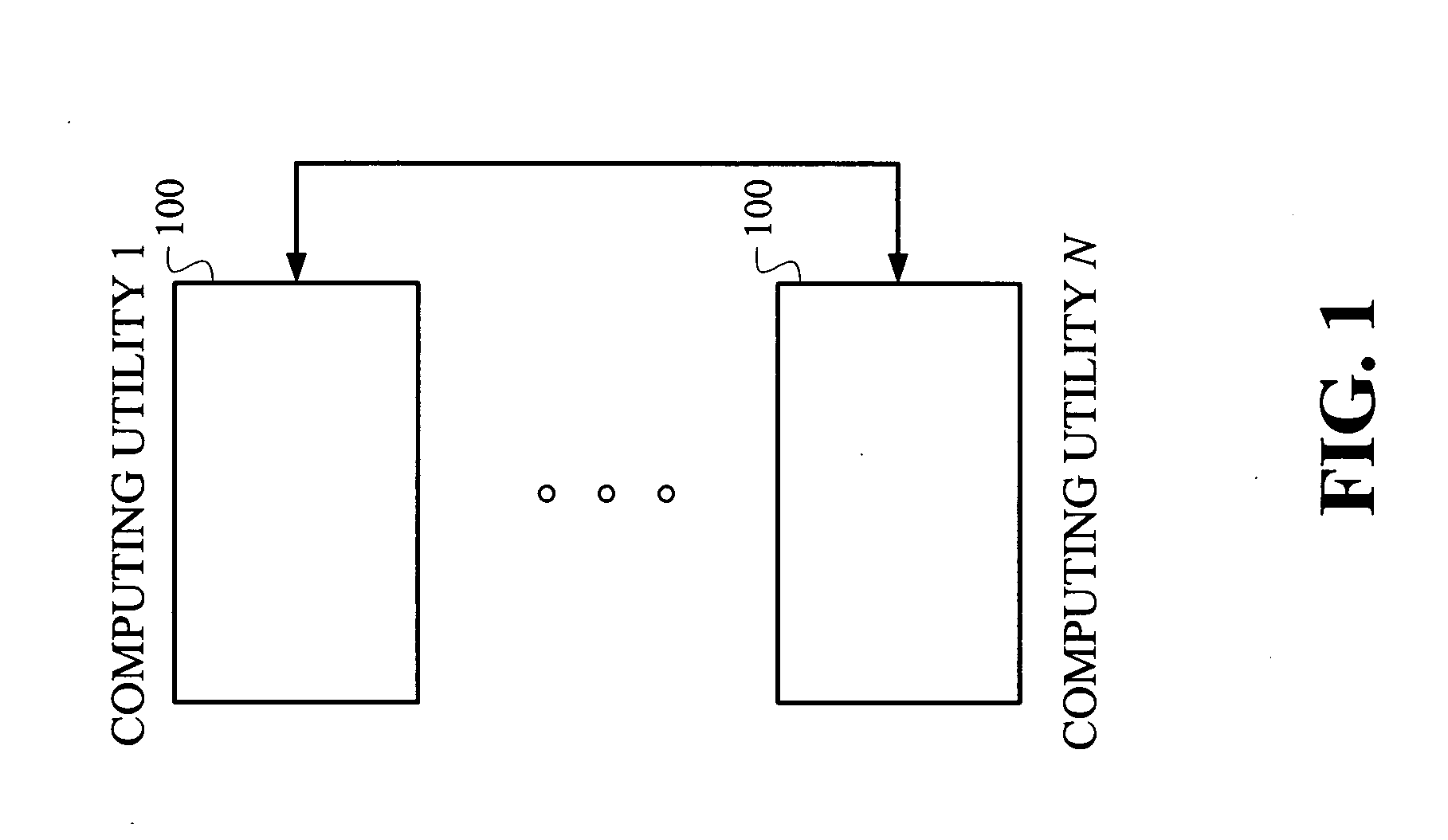

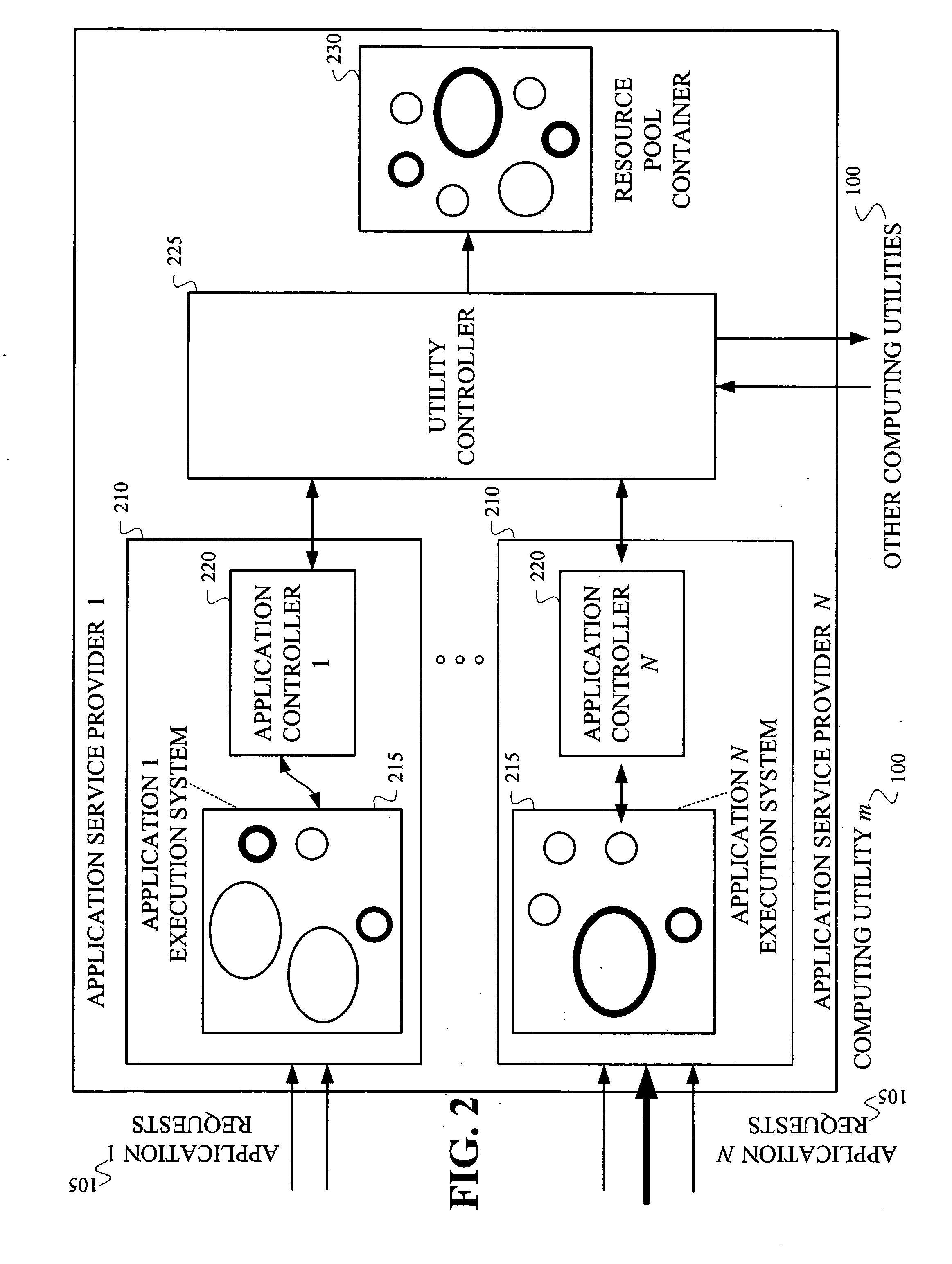

Systems and methods for business-level resource optimizations in computing utilities

Inactive | US20060112075A1 | Tags: Resources, Special data processing applications, Computer network, Controller (computing)

Techniques are provided for use with computing utilities. For example, in one aspect of the invention, a method for use in a computing utility, wherein the computing utility comprises a plurality of application service provider systems and a utility controller, and each application service provider system comprises an application controller, comprises the following steps. An application request to one of the plurality of application service provider systems is obtained. Then, in response to the application request, at least one of the following occurs: (i) the application controller of the application service provider system to which the application request is directed computes a value of a business metric associated with a resource action; and (ii) the utility controller computes a value of a business metric associated with a resource action.

Owner:IBM CORP

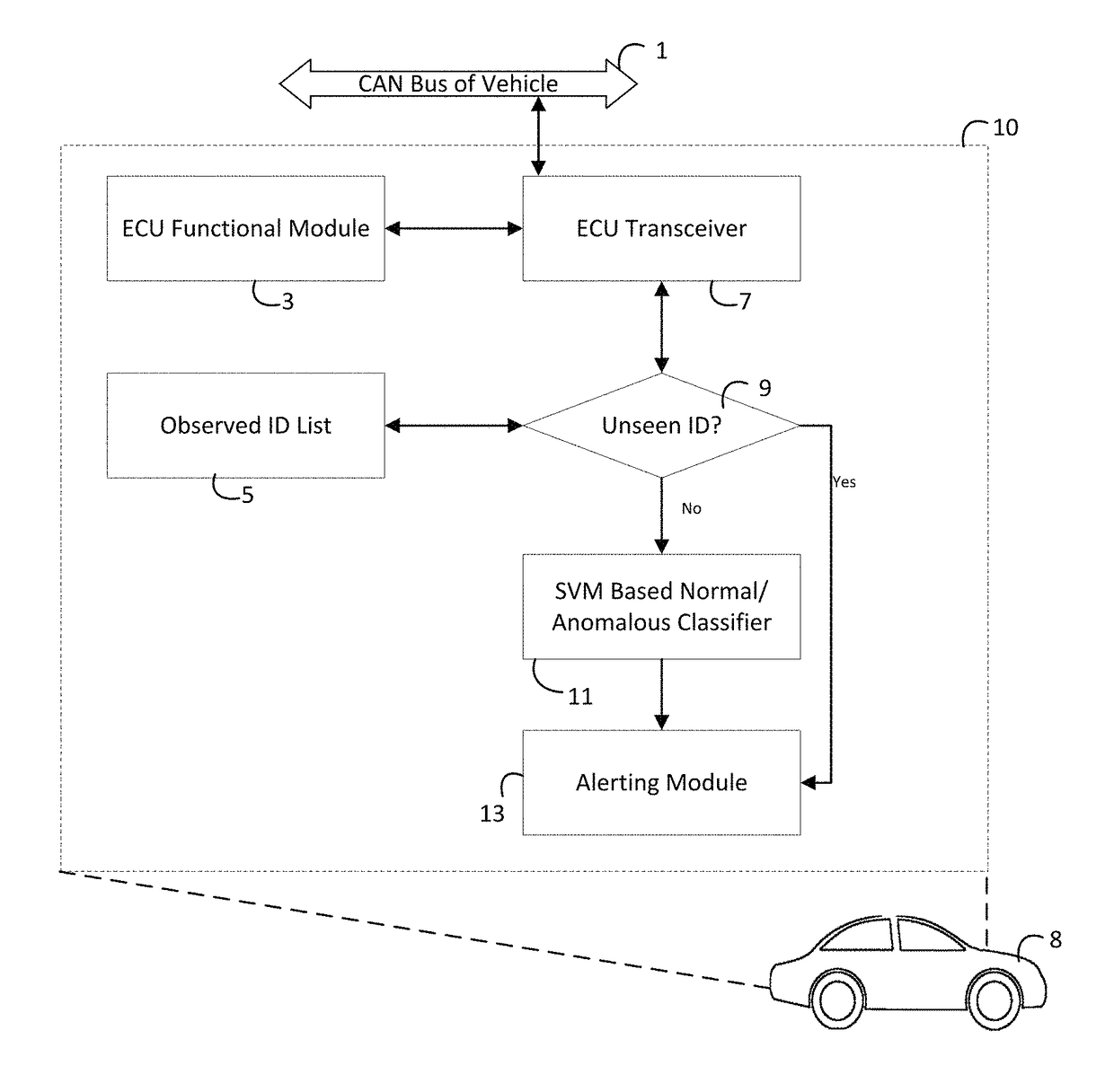

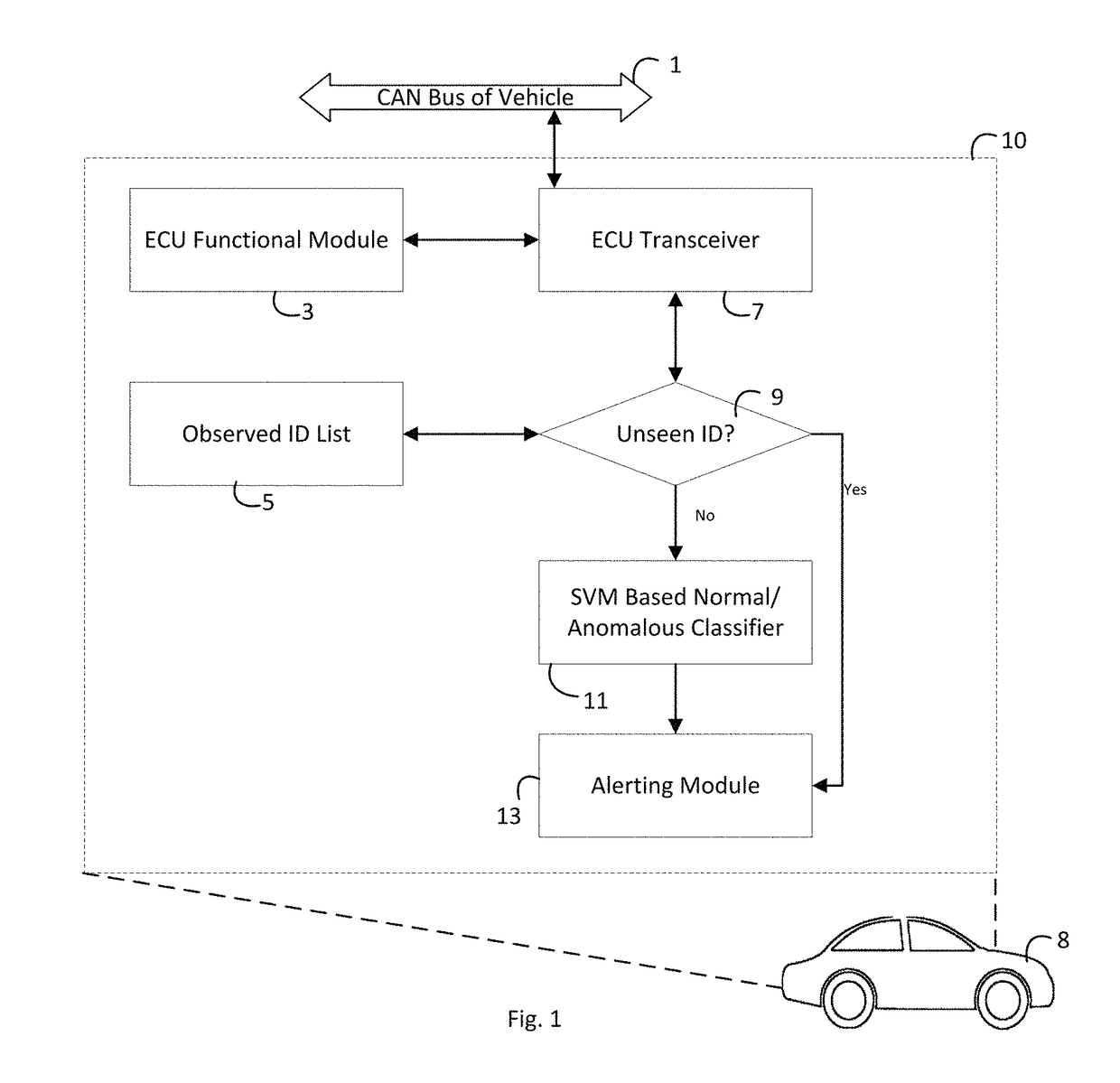

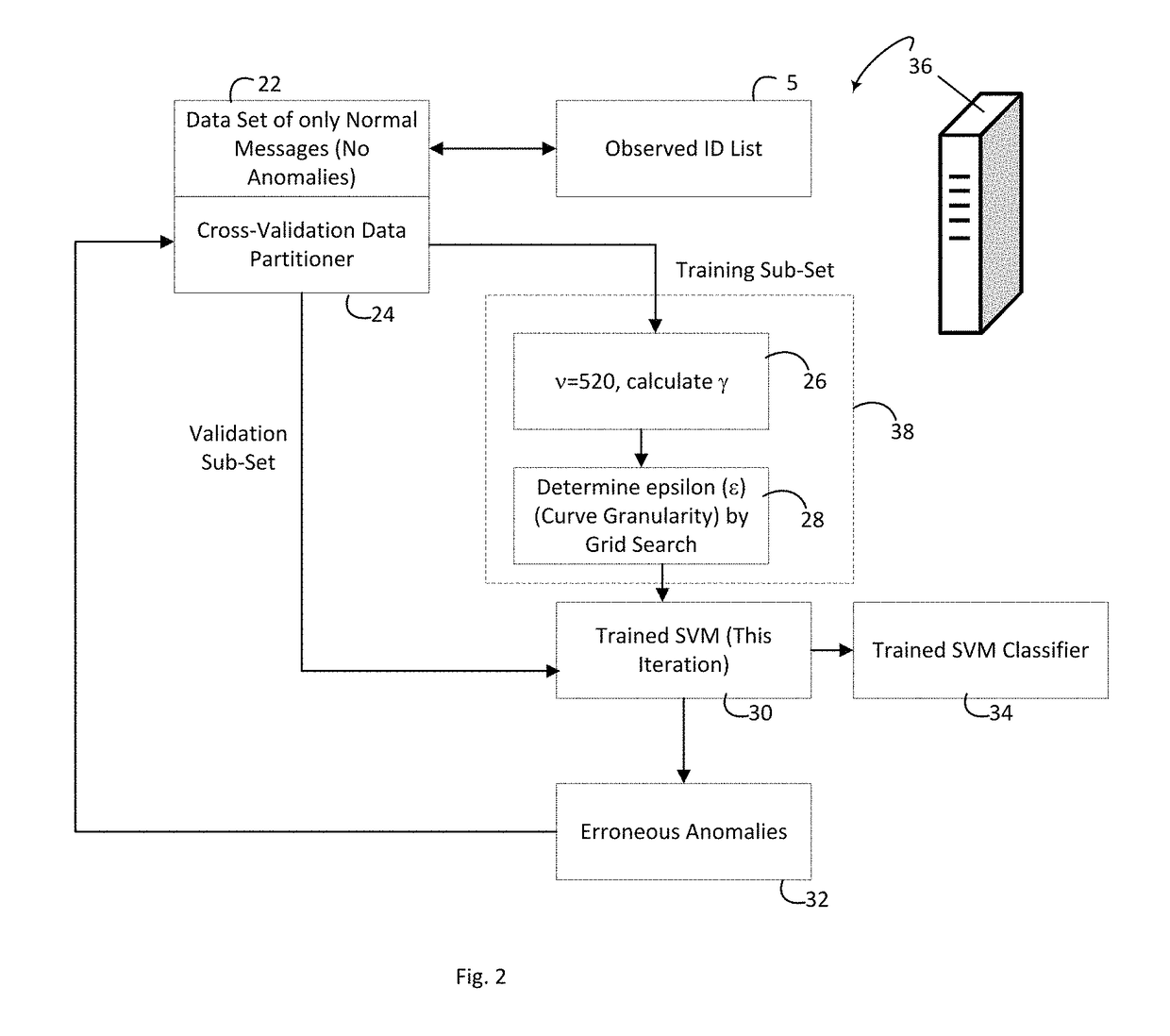

Anomaly detection for vehicular networks for intrusion and malfunction detection

A security monitoring system for a Controller Area Network (CAN) comprises an Electronic Control Unit (ECU) operatively connected to the CAN bus. The ECU is programmed to classify a message read from the CAN bus as either normal or anomalous using an SVM-based classifier with a Radial Basis Function (RBF) kernel. The classifying includes computing a hyperplane curvature parameter γ of the RBF kernel as γ=ƒ(D), where ƒ( ) denotes a function and D denotes CAN bus message density as a function of time. In some such embodiments, γ=ƒ(Var(D)), where Var(D) denotes the variance of the CAN bus message density as a function of time. The security monitoring system may be installed in a vehicle (e.g., automobile, truck, watercraft, aircraft) that includes a vehicle CAN bus, with the ECU operatively connected to the vehicle CAN bus to read messages communicated on the CAN bus. By not relying on any proprietary knowledge of arbitration IDs from manufacturers through their DBC files, this anomaly detector truly functions as a zero-knowledge detector.

Owner:BATTELLE MEMORIAL INST
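
A sketch of the γ = ƒ(Var(D)) idea, assuming message density is counted per fixed time window and using a hypothetical ƒ that shrinks γ as the density variance grows; the abstract does not disclose ƒ. The resulting γ would then be passed to an RBF-kernel SVM (for example, the `gamma` parameter of scikit-learn's `SVC`).

```python
import math
from statistics import pvariance


def message_density(timestamps, window: float) -> list:
    """Count CAN messages per fixed time window (density as a function of time)."""
    if not timestamps:
        return []
    start = min(timestamps)
    n_bins = int((max(timestamps) - start) // window) + 1
    counts = [0] * n_bins
    for t in timestamps:
        counts[int((t - start) // window)] += 1
    return counts


def gamma_from_density(density, base: float = 1.0) -> float:
    # Hypothetical choice of f: bursty traffic (high variance) gives a smoother kernel.
    return base / (1.0 + pvariance(density))


def rbf_kernel(x, y, gamma: float) -> float:
    """K(x, y) = exp(-gamma * ||x - y||^2)."""
    sq = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq)
```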
